problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.1k to 25.4k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

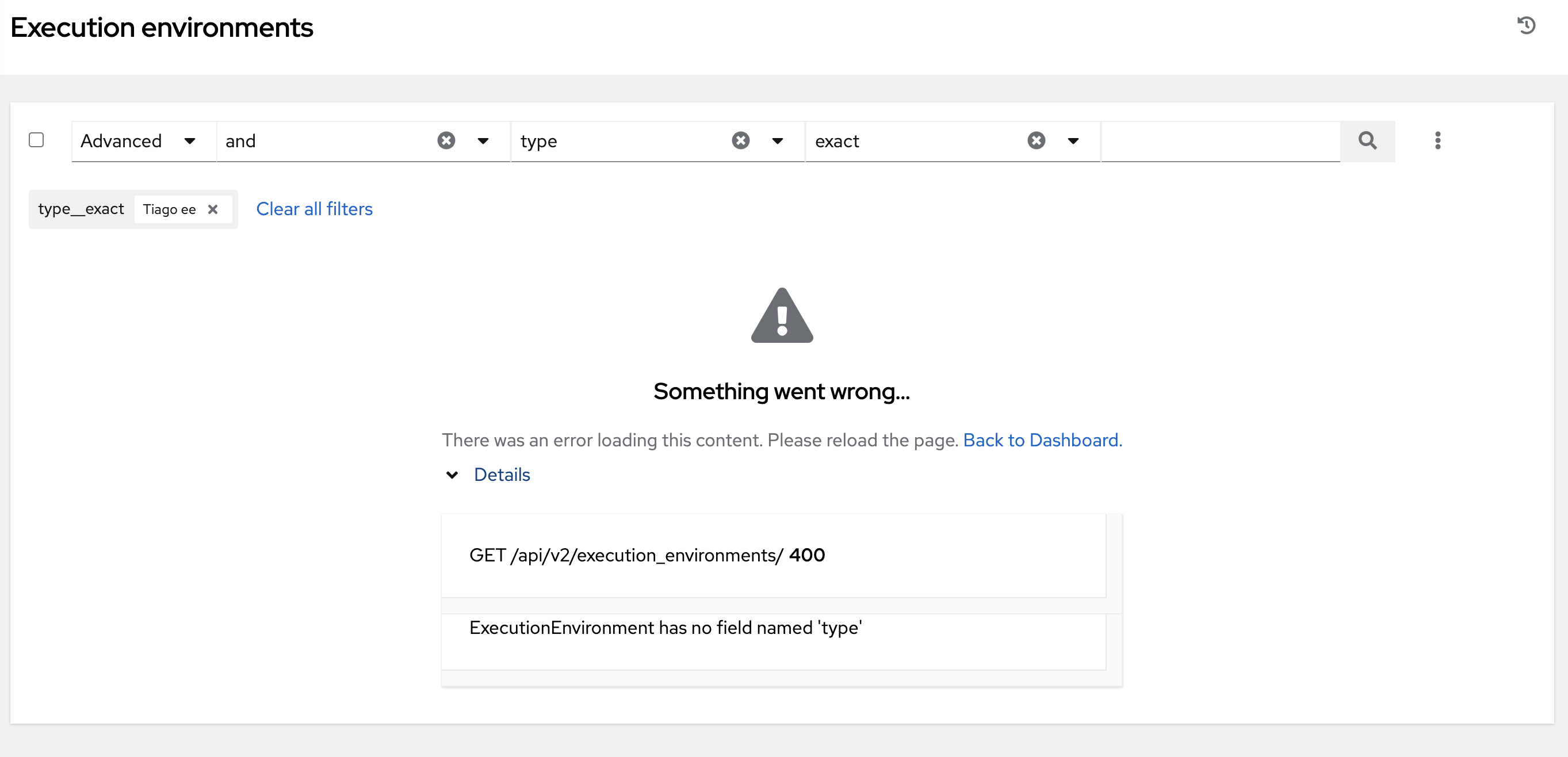

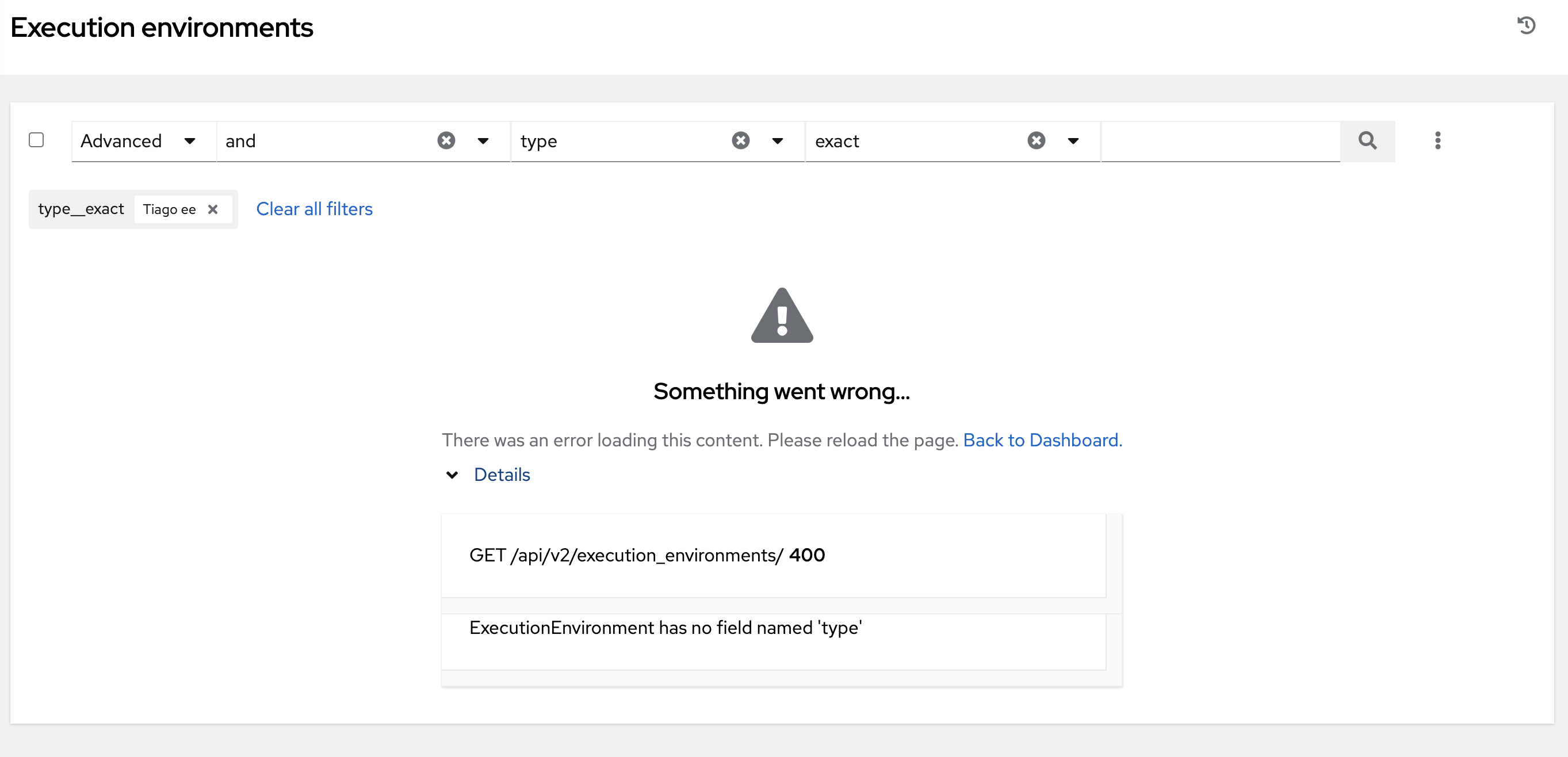

gh_patches_debug_14981 | rasdani/github-patches | git_diff | python-pillow__Pillow-2530 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

later commits to PR 2509 broke the compress_level=1 setting

This is a follow-up to #2509 .

Using the same test file from #2508 with current head (1.7.6-5795-g82c51e4 / 82c51e4):

https://cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png

```

from PIL import Image

Image.open("cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png").show()

Image.open("cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png").save("m.png", compress_level=1)

```

The file sizes are as follows:

```

-rw-------. 1 Hin-Tak Hin-Tak 28907 May 14 00:59 tmpEYXgFV.PNG

-rw-------. 1 Hin-Tak Hin-Tak 28907 May 14 00:42 tmpud2qIG.PNG

-rw-rw-r--. 1 Hin-Tak Hin-Tak 36127 May 14 00:44 m.png

-rw-rw-r--. 1 Hin-Tak Hin-Tak 36127 May 14 01:00 m.png

```

The original is 29069 bytes. I did test in #2509 that I got the 36127 larger file size for minimum compression, instead of what 1.7.6-5795-g82c51e4 / 82c51e4 is now giving, the 28907 smaller file size.

So some of @wiredfool 's "improvements" broke the minimum compression setting, which worked when I last tested it in my #2509 series.

Anyway, you should aim at

```

from PIL import Image

Image.open("cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png").show()

```

giving a /tmp/tmpPWRSTU.PNG of around 36k, not 28k.

Considering my experience in #2509, I'd prefer to switch off notifications for this.

--- END ISSUE ---
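
The symptom in the issue (a requested compression level that has no effect, with no error raised) is typical of an option passed under a name the consumer never looks up. The sketch below is a minimal illustration of that failure mode, not Pillow's actual code: `save_png` is a hypothetical stand-in that, by assumption, reads its setting with a dictionary lookup and a default of `-1`.

```python
def save_png(**params):
    """Hypothetical stand-in for a writer that reads its options by name."""
    # An unrecognised key is not an error here: the lookup simply
    # falls back to the default value.
    return params.get("compress_level", -1)


hyphenated = {"compress-level": 1}   # hyphen in the key
underscored = {"compress_level": 1}  # underscore in the key

# Both dictionaries unpack without complaint, but only one of them
# carries a key that the lookup above will find.
level_with_hyphen = save_png(**hyphenated)
level_with_underscore = save_png(**underscored)
print(level_with_hyphen, level_with_underscore)
```

Under these assumptions, the hyphenated key is accepted silently and the default is used, which would be consistent with the unchanged file size reported above.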

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `PIL/ImageShow.py`

Content:

```

1 #

2 # The Python Imaging Library.

3 # $Id$

4 #

5 # im.show() drivers

6 #

7 # History:

8 # 2008-04-06 fl Created

9 #

10 # Copyright (c) Secret Labs AB 2008.

11 #

12 # See the README file for information on usage and redistribution.

13 #

14

15 from __future__ import print_function

16

17 from PIL import Image

18 import os

19 import sys

20

21 if sys.version_info >= (3, 3):

22 from shlex import quote

23 else:

24 from pipes import quote

25

26 _viewers = []

27

28

29 def register(viewer, order=1):

30 try:

31 if issubclass(viewer, Viewer):

32 viewer = viewer()

33 except TypeError:

34 pass # raised if viewer wasn't a class

35 if order > 0:

36 _viewers.append(viewer)

37 elif order < 0:

38 _viewers.insert(0, viewer)

39

40

41 def show(image, title=None, **options):

42 r"""

43 Display a given image.

44

45 :param image: An image object.

46 :param title: Optional title. Not all viewers can display the title.

47 :param \**options: Additional viewer options.

48 :returns: True if a suitable viewer was found, false otherwise.

49 """

50 for viewer in _viewers:

51 if viewer.show(image, title=title, **options):

52 return 1

53 return 0

54

55

56 class Viewer(object):

57 """Base class for viewers."""

58

59 # main api

60

61 def show(self, image, **options):

62

63 # save temporary image to disk

64 if image.mode[:4] == "I;16":

65 # @PIL88 @PIL101

66 # "I;16" isn't an 'official' mode, but we still want to

67 # provide a simple way to show 16-bit images.

68 base = "L"

69 # FIXME: auto-contrast if max() > 255?

70 else:

71 base = Image.getmodebase(image.mode)

72 if base != image.mode and image.mode != "1" and image.mode != "RGBA":

73 image = image.convert(base)

74

75 return self.show_image(image, **options)

76

77 # hook methods

78

79 format = None

80 options = {}

81

82 def get_format(self, image):

83 """Return format name, or None to save as PGM/PPM"""

84 return self.format

85

86 def get_command(self, file, **options):

87 raise NotImplementedError

88

89 def save_image(self, image):

90 """Save to temporary file, and return filename"""

91 return image._dump(format=self.get_format(image), **self.options)

92

93 def show_image(self, image, **options):

94 """Display given image"""

95 return self.show_file(self.save_image(image), **options)

96

97 def show_file(self, file, **options):

98 """Display given file"""

99 os.system(self.get_command(file, **options))

100 return 1

101

102 # --------------------------------------------------------------------

103

104 if sys.platform == "win32":

105

106 class WindowsViewer(Viewer):

107 format = "BMP"

108

109 def get_command(self, file, **options):

110 return ('start "Pillow" /WAIT "%s" '

111 '&& ping -n 2 127.0.0.1 >NUL '

112 '&& del /f "%s"' % (file, file))

113

114 register(WindowsViewer)

115

116 elif sys.platform == "darwin":

117

118 class MacViewer(Viewer):

119 format = "PNG"

120 options = {'compress-level': 1}

121

122 def get_command(self, file, **options):

123 # on darwin open returns immediately resulting in the temp

124 # file removal while app is opening

125 command = "open -a /Applications/Preview.app"

126 command = "(%s %s; sleep 20; rm -f %s)&" % (command, quote(file),

127 quote(file))

128 return command

129

130 register(MacViewer)

131

132 else:

133

134 # unixoids

135

136 def which(executable):

137 path = os.environ.get("PATH")

138 if not path:

139 return None

140 for dirname in path.split(os.pathsep):

141 filename = os.path.join(dirname, executable)

142 if os.path.isfile(filename) and os.access(filename, os.X_OK):

143 return filename

144 return None

145

146 class UnixViewer(Viewer):

147 format = "PNG"

148 options = {'compress-level': 1}

149

150 def show_file(self, file, **options):

151 command, executable = self.get_command_ex(file, **options)

152 command = "(%s %s; rm -f %s)&" % (command, quote(file),

153 quote(file))

154 os.system(command)

155 return 1

156

157 # implementations

158

159 class DisplayViewer(UnixViewer):

160 def get_command_ex(self, file, **options):

161 command = executable = "display"

162 return command, executable

163

164 if which("display"):

165 register(DisplayViewer)

166

167 class XVViewer(UnixViewer):

168 def get_command_ex(self, file, title=None, **options):

169 # note: xv is pretty outdated. most modern systems have

170 # imagemagick's display command instead.

171 command = executable = "xv"

172 if title:

173 command += " -name %s" % quote(title)

174 return command, executable

175

176 if which("xv"):

177 register(XVViewer)

178

179 if __name__ == "__main__":

180 # usage: python ImageShow.py imagefile [title]

181 print(show(Image.open(sys.argv[1]), *sys.argv[2:]))

182

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/PIL/ImageShow.py b/PIL/ImageShow.py

--- a/PIL/ImageShow.py

+++ b/PIL/ImageShow.py

@@ -117,7 +117,7 @@

class MacViewer(Viewer):

format = "PNG"

- options = {'compress-level': 1}

+ options = {'compress_level': 1}

def get_command(self, file, **options):

# on darwin open returns immediately resulting in the temp

@@ -145,7 +145,7 @@

class UnixViewer(Viewer):

format = "PNG"

- options = {'compress-level': 1}

+ options = {'compress_level': 1}

def show_file(self, file, **options):

command, executable = self.get_command_ex(file, **options)

| {"golden_diff": "diff --git a/PIL/ImageShow.py b/PIL/ImageShow.py\n--- a/PIL/ImageShow.py\n+++ b/PIL/ImageShow.py\n@@ -117,7 +117,7 @@\n \n class MacViewer(Viewer):\n format = \"PNG\"\n- options = {'compress-level': 1}\n+ options = {'compress_level': 1}\n \n def get_command(self, file, **options):\n # on darwin open returns immediately resulting in the temp\n@@ -145,7 +145,7 @@\n \n class UnixViewer(Viewer):\n format = \"PNG\"\n- options = {'compress-level': 1}\n+ options = {'compress_level': 1}\n \n def show_file(self, file, **options):\n command, executable = self.get_command_ex(file, **options)\n", "issue": "later commits to PR 2509 broken the compress_level=1 setting\nThis is a follow-up to #2509 .\r\n\r\nUsing the same test file from #2508 with current head ( 1.7.6-5795-g82c51e4 / 82c51e4 is )\r\nhttps://cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png\r\n\r\n```\r\nfrom PIL import Image\r\nImage.open(\"cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png\").show()\r\nImage.open(\"cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png\").save(\"m.png\", compress_level=1)\r\n```\r\n\r\nThe file size are as follows:\r\n```\r\n-rw-------. 1 Hin-Tak Hin-Tak 28907 May 14 00:59 tmpEYXgFV.PNG\r\n-rw-------. 1 Hin-Tak Hin-Tak 28907 May 14 00:42 tmpud2qIG.PNG\r\n-rw-rw-r--. 1 Hin-Tak Hin-Tak 36127 May 14 00:44 m.png\r\n-rw-rw-r--. 1 Hin-Tak Hin-Tak 36127 May 14 01:00 m.png\r\n```\r\n\r\nThe original is 29069 bytes. 
I did test in #2509 that I got the 36127 larger file size for minimum compression, instead of what 1.7.6-5795-g82c51e4 / 82c51e4 is now giving, the 28907 smaller file size.\r\nSo some of @wiredfool 's \"improvements\" broke the minumum compression setting, which I last tested worked in my #2509 series.\r\n\r\nAnyway, you should aim at\r\n\r\n```\r\nfrom PIL import Image\r\nImage.open(\"cloud.githubusercontent.com/assets/327203/25754502/be994452-31bf-11e7-85da-9cc7fb2811bd.png\").show()\r\n```\r\n\r\ngiving a /tmp/tmpPWRSTU.PNG of around 36k, not 28k.\r\n\r\nConsidered my experience in #2509, I'd prefer to switch off notification for this.\r\n\n", "before_files": [{"content": "#\n# The Python Imaging Library.\n# $Id$\n#\n# im.show() drivers\n#\n# History:\n# 2008-04-06 fl Created\n#\n# Copyright (c) Secret Labs AB 2008.\n#\n# See the README file for information on usage and redistribution.\n#\n\nfrom __future__ import print_function\n\nfrom PIL import Image\nimport os\nimport sys\n\nif sys.version_info >= (3, 3):\n from shlex import quote\nelse:\n from pipes import quote\n\n_viewers = []\n\n\ndef register(viewer, order=1):\n try:\n if issubclass(viewer, Viewer):\n viewer = viewer()\n except TypeError:\n pass # raised if viewer wasn't a class\n if order > 0:\n _viewers.append(viewer)\n elif order < 0:\n _viewers.insert(0, viewer)\n\n\ndef show(image, title=None, **options):\n r\"\"\"\n Display a given image.\n\n :param image: An image object.\n :param title: Optional title. 
Not all viewers can display the title.\n :param \\**options: Additional viewer options.\n :returns: True if a suitable viewer was found, false otherwise.\n \"\"\"\n for viewer in _viewers:\n if viewer.show(image, title=title, **options):\n return 1\n return 0\n\n\nclass Viewer(object):\n \"\"\"Base class for viewers.\"\"\"\n\n # main api\n\n def show(self, image, **options):\n\n # save temporary image to disk\n if image.mode[:4] == \"I;16\":\n # @PIL88 @PIL101\n # \"I;16\" isn't an 'official' mode, but we still want to\n # provide a simple way to show 16-bit images.\n base = \"L\"\n # FIXME: auto-contrast if max() > 255?\n else:\n base = Image.getmodebase(image.mode)\n if base != image.mode and image.mode != \"1\" and image.mode != \"RGBA\":\n image = image.convert(base)\n\n return self.show_image(image, **options)\n\n # hook methods\n\n format = None\n options = {}\n\n def get_format(self, image):\n \"\"\"Return format name, or None to save as PGM/PPM\"\"\"\n return self.format\n\n def get_command(self, file, **options):\n raise NotImplementedError\n\n def save_image(self, image):\n \"\"\"Save to temporary file, and return filename\"\"\"\n return image._dump(format=self.get_format(image), **self.options)\n\n def show_image(self, image, **options):\n \"\"\"Display given image\"\"\"\n return self.show_file(self.save_image(image), **options)\n\n def show_file(self, file, **options):\n \"\"\"Display given file\"\"\"\n os.system(self.get_command(file, **options))\n return 1\n\n# --------------------------------------------------------------------\n\nif sys.platform == \"win32\":\n\n class WindowsViewer(Viewer):\n format = \"BMP\"\n\n def get_command(self, file, **options):\n return ('start \"Pillow\" /WAIT \"%s\" '\n '&& ping -n 2 127.0.0.1 >NUL '\n '&& del /f \"%s\"' % (file, file))\n\n register(WindowsViewer)\n\nelif sys.platform == \"darwin\":\n\n class MacViewer(Viewer):\n format = \"PNG\"\n options = {'compress-level': 1}\n\n def get_command(self, file, 
**options):\n # on darwin open returns immediately resulting in the temp\n # file removal while app is opening\n command = \"open -a /Applications/Preview.app\"\n command = \"(%s %s; sleep 20; rm -f %s)&\" % (command, quote(file),\n quote(file))\n return command\n\n register(MacViewer)\n\nelse:\n\n # unixoids\n\n def which(executable):\n path = os.environ.get(\"PATH\")\n if not path:\n return None\n for dirname in path.split(os.pathsep):\n filename = os.path.join(dirname, executable)\n if os.path.isfile(filename) and os.access(filename, os.X_OK):\n return filename\n return None\n\n class UnixViewer(Viewer):\n format = \"PNG\"\n options = {'compress-level': 1}\n\n def show_file(self, file, **options):\n command, executable = self.get_command_ex(file, **options)\n command = \"(%s %s; rm -f %s)&\" % (command, quote(file),\n quote(file))\n os.system(command)\n return 1\n\n # implementations\n\n class DisplayViewer(UnixViewer):\n def get_command_ex(self, file, **options):\n command = executable = \"display\"\n return command, executable\n\n if which(\"display\"):\n register(DisplayViewer)\n\n class XVViewer(UnixViewer):\n def get_command_ex(self, file, title=None, **options):\n # note: xv is pretty outdated. 
most modern systems have\n # imagemagick's display command instead.\n command = executable = \"xv\"\n if title:\n command += \" -name %s\" % quote(title)\n return command, executable\n\n if which(\"xv\"):\n register(XVViewer)\n\nif __name__ == \"__main__\":\n # usage: python ImageShow.py imagefile [title]\n print(show(Image.open(sys.argv[1]), *sys.argv[2:]))\n", "path": "PIL/ImageShow.py"}], "after_files": [{"content": "#\n# The Python Imaging Library.\n# $Id$\n#\n# im.show() drivers\n#\n# History:\n# 2008-04-06 fl Created\n#\n# Copyright (c) Secret Labs AB 2008.\n#\n# See the README file for information on usage and redistribution.\n#\n\nfrom __future__ import print_function\n\nfrom PIL import Image\nimport os\nimport sys\n\nif sys.version_info >= (3, 3):\n from shlex import quote\nelse:\n from pipes import quote\n\n_viewers = []\n\n\ndef register(viewer, order=1):\n try:\n if issubclass(viewer, Viewer):\n viewer = viewer()\n except TypeError:\n pass # raised if viewer wasn't a class\n if order > 0:\n _viewers.append(viewer)\n elif order < 0:\n _viewers.insert(0, viewer)\n\n\ndef show(image, title=None, **options):\n r\"\"\"\n Display a given image.\n\n :param image: An image object.\n :param title: Optional title. 
Not all viewers can display the title.\n :param \\**options: Additional viewer options.\n :returns: True if a suitable viewer was found, false otherwise.\n \"\"\"\n for viewer in _viewers:\n if viewer.show(image, title=title, **options):\n return 1\n return 0\n\n\nclass Viewer(object):\n \"\"\"Base class for viewers.\"\"\"\n\n # main api\n\n def show(self, image, **options):\n\n # save temporary image to disk\n if image.mode[:4] == \"I;16\":\n # @PIL88 @PIL101\n # \"I;16\" isn't an 'official' mode, but we still want to\n # provide a simple way to show 16-bit images.\n base = \"L\"\n # FIXME: auto-contrast if max() > 255?\n else:\n base = Image.getmodebase(image.mode)\n if base != image.mode and image.mode != \"1\" and image.mode != \"RGBA\":\n image = image.convert(base)\n\n return self.show_image(image, **options)\n\n # hook methods\n\n format = None\n options = {}\n\n def get_format(self, image):\n \"\"\"Return format name, or None to save as PGM/PPM\"\"\"\n return self.format\n\n def get_command(self, file, **options):\n raise NotImplementedError\n\n def save_image(self, image):\n \"\"\"Save to temporary file, and return filename\"\"\"\n return image._dump(format=self.get_format(image), **self.options)\n\n def show_image(self, image, **options):\n \"\"\"Display given image\"\"\"\n return self.show_file(self.save_image(image), **options)\n\n def show_file(self, file, **options):\n \"\"\"Display given file\"\"\"\n os.system(self.get_command(file, **options))\n return 1\n\n# --------------------------------------------------------------------\n\nif sys.platform == \"win32\":\n\n class WindowsViewer(Viewer):\n format = \"BMP\"\n\n def get_command(self, file, **options):\n return ('start \"Pillow\" /WAIT \"%s\" '\n '&& ping -n 2 127.0.0.1 >NUL '\n '&& del /f \"%s\"' % (file, file))\n\n register(WindowsViewer)\n\nelif sys.platform == \"darwin\":\n\n class MacViewer(Viewer):\n format = \"PNG\"\n options = {'compress_level': 1}\n\n def get_command(self, file, 
**options):\n # on darwin open returns immediately resulting in the temp\n # file removal while app is opening\n command = \"open -a /Applications/Preview.app\"\n command = \"(%s %s; sleep 20; rm -f %s)&\" % (command, quote(file),\n quote(file))\n return command\n\n register(MacViewer)\n\nelse:\n\n # unixoids\n\n def which(executable):\n path = os.environ.get(\"PATH\")\n if not path:\n return None\n for dirname in path.split(os.pathsep):\n filename = os.path.join(dirname, executable)\n if os.path.isfile(filename) and os.access(filename, os.X_OK):\n return filename\n return None\n\n class UnixViewer(Viewer):\n format = \"PNG\"\n options = {'compress_level': 1}\n\n def show_file(self, file, **options):\n command, executable = self.get_command_ex(file, **options)\n command = \"(%s %s; rm -f %s)&\" % (command, quote(file),\n quote(file))\n os.system(command)\n return 1\n\n # implementations\n\n class DisplayViewer(UnixViewer):\n def get_command_ex(self, file, **options):\n command = executable = \"display\"\n return command, executable\n\n if which(\"display\"):\n register(DisplayViewer)\n\n class XVViewer(UnixViewer):\n def get_command_ex(self, file, title=None, **options):\n # note: xv is pretty outdated. most modern systems have\n # imagemagick's display command instead.\n command = executable = \"xv\"\n if title:\n command += \" -name %s\" % quote(title)\n return command, executable\n\n if which(\"xv\"):\n register(XVViewer)\n\nif __name__ == \"__main__\":\n # usage: python ImageShow.py imagefile [title]\n print(show(Image.open(sys.argv[1]), *sys.argv[2:]))\n", "path": "PIL/ImageShow.py"}]} | 2,565 | 183 |

gh_patches_debug_3770 | rasdani/github-patches | git_diff | joke2k__faker-1046 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fake ISBN10 causes "Registrant/Publication not found"

In rare cases the `fake.isbn10` method throws an exception with the following message: `Exception: Registrant/Publication not found in registrant rule list. `

A full exception message:

```

/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:70: in isbn10

ean, group, registrant, publication = self._body()

/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:41: in _body

registrant, publication = self._registrant_publication(reg_pub, rules)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

reg_pub = '64799998'

rules = [RegistrantRule(min='0000000', max='1999999', registrant_length=2), RegistrantRule(min='2000000', max='2279999', regis...'6480000', max='6489999', registrant_length=7), RegistrantRule(min='6490000', max='6999999', registrant_length=3), ...]

@staticmethod

def _registrant_publication(reg_pub, rules):

""" Separate the registration from the publication in a given

string.

:param reg_pub: A string of digits representing a registration

and publication.

:param rules: A list of RegistrantRules which designate where

to separate the values in the string.

:returns: A (registrant, publication) tuple of strings.

"""

for rule in rules:

if rule.min <= reg_pub <= rule.max:

reg_len = rule.registrant_length

break

else:

> raise Exception('Registrant/Publication not found in registrant '

'rule list.')

E Exception: Registrant/Publication not found in registrant rule list.

/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:59: Exception

```

### Steps to reproduce

Call `faker.providers.isbn.Provider._registrant_publication` with any of the following values for the `reg_pub` param: `64799998`, `39999999`. These values are valid randomly generated strings from [L34](https://github.com/joke2k/faker/blob/master/faker/providers/isbn/__init__.py#L37).

Code:

```python

from faker.providers.isbn import Provider

from faker.providers.isbn.rules import RULES

# Fails; throws an exception

Provider._registrant_publication('64799998', RULES['978']['0'])

Provider._registrant_publication('39999999', RULES['978']['1'])

# Works; but may be invalid

Provider._registrant_publication('64799998', RULES['978']['1'])

Provider._registrant_publication('39999999', RULES['978']['0'])

```

### Expected behavior

The `faker.providers.isbn.Provider._body` should generate valid `reg_pub` values.

### Actual behavior

It generates values for `reg_pub` that are not accepted by the rules defined in `faker.providers.isbn.rules`.

--- END ISSUE ---
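
The failure described in the issue can be reproduced in isolation, because the rule bounds are 7-character strings while `reg_pub` has 8 characters and the comparison is lexicographic. The sketch below is illustrative only: the `RegistrantRule` tuple is rebuilt locally, the second rule is the one quoted in the traceback, and the bounds of the first rule are an assumption made for the example.

```python
from collections import namedtuple

# Local stand-in for the rule type; field names follow the traceback.
RegistrantRule = namedtuple(
    "RegistrantRule", ["min", "max", "registrant_length"]
)

rules = [
    RegistrantRule("6460000", "6479999", 3),  # assumed bounds, for illustration
    RegistrantRule("6480000", "6489999", 7),  # quoted in the traceback
]


def find_rule(value, rules):
    """Return the first rule whose string range contains value, else None."""
    for rule in rules:
        if rule.min <= value <= rule.max:
            return rule
    return None


reg_pub = "64799998"

# The 8-character string sorts after "6479999" (it is longer with the same
# prefix) and before "6480000", so it falls between the two rules.
full_match = find_rule(reg_pub, rules)

# Comparing a 7-character string against 7-character bounds closes the gap.
trimmed_match = find_rule(reg_pub[:-1], rules)
print(full_match, trimmed_match)
```

With these example rules, the full string matches nothing while the string without its last digit lands in the first rule.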

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `faker/providers/isbn/__init__.py`

Content:

```

1 # coding=utf-8

2

3 from __future__ import unicode_literals

4 from .. import BaseProvider

5 from .isbn import ISBN, ISBN10, ISBN13

6 from .rules import RULES

7

8

9 class Provider(BaseProvider):

10 """ Generates fake ISBNs. ISBN rules vary across languages/regions

11 so this class makes no attempt at replicating all of the rules. It

12 only replicates the 978 EAN prefix for the English registration

13 groups, meaning the first 4 digits of the ISBN-13 will either be

14 978-0 or 978-1. Since we are only replicating 978 prefixes, every

15 ISBN-13 will have a direct mapping to an ISBN-10.

16

17 See https://www.isbn-international.org/content/what-isbn for the

18 format of ISBNs.

19 See https://www.isbn-international.org/range_file_generation for the

20 list of rules pertaining to each prefix/registration group.

21 """

22

23 def _body(self):

24 """ Generate the information required to create an ISBN-10 or

25 ISBN-13.

26 """

27 ean = self.random_element(RULES.keys())

28 reg_group = self.random_element(RULES[ean].keys())

29

30 # Given the chosen ean/group, decide how long the

31 # registrant/publication string may be.

32 # We must allocate for the calculated check digit, so

33 # subtract 1

34 reg_pub_len = ISBN.MAX_LENGTH - len(ean) - len(reg_group) - 1

35

36 # Generate a registrant/publication combination

37 reg_pub = self.numerify('#' * reg_pub_len)

38

39 # Use rules to separate the registrant from the publication

40 rules = RULES[ean][reg_group]

41 registrant, publication = self._registrant_publication(reg_pub, rules)

42 return [ean, reg_group, registrant, publication]

43

44 @staticmethod

45 def _registrant_publication(reg_pub, rules):

46 """ Separate the registration from the publication in a given

47 string.

48 :param reg_pub: A string of digits representing a registration

49 and publication.

50 :param rules: A list of RegistrantRules which designate where

51 to separate the values in the string.

52 :returns: A (registrant, publication) tuple of strings.

53 """

54 for rule in rules:

55 if rule.min <= reg_pub <= rule.max:

56 reg_len = rule.registrant_length

57 break

58 else:

59 raise Exception('Registrant/Publication not found in registrant '

60 'rule list.')

61 registrant, publication = reg_pub[:reg_len], reg_pub[reg_len:]

62 return registrant, publication

63

64 def isbn13(self, separator='-'):

65 ean, group, registrant, publication = self._body()

66 isbn = ISBN13(ean, group, registrant, publication)

67 return isbn.format(separator)

68

69 def isbn10(self, separator='-'):

70 ean, group, registrant, publication = self._body()

71 isbn = ISBN10(ean, group, registrant, publication)

72 return isbn.format(separator)

73

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/faker/providers/isbn/__init__.py b/faker/providers/isbn/__init__.py

--- a/faker/providers/isbn/__init__.py

+++ b/faker/providers/isbn/__init__.py

@@ -52,7 +52,7 @@

:returns: A (registrant, publication) tuple of strings.

"""

for rule in rules:

- if rule.min <= reg_pub <= rule.max:

+ if rule.min <= reg_pub[:-1] <= rule.max:

reg_len = rule.registrant_length

break

else:

| {"golden_diff": "diff --git a/faker/providers/isbn/__init__.py b/faker/providers/isbn/__init__.py\n--- a/faker/providers/isbn/__init__.py\n+++ b/faker/providers/isbn/__init__.py\n@@ -52,7 +52,7 @@\n :returns: A (registrant, publication) tuple of strings.\n \"\"\"\n for rule in rules:\n- if rule.min <= reg_pub <= rule.max:\n+ if rule.min <= reg_pub[:-1] <= rule.max:\n reg_len = rule.registrant_length\n break\n else:\n", "issue": "Fake ISBN10 causes \"Registrant/Publication not found\"\nIn rare cases the `fake.isbn10` method throws an exception with the following message: `Exception: Registrant/Publication not found in registrant rule list. `\r\n\r\nA full exception message:\r\n```\r\n/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:70: in isbn10\r\n ean, group, registrant, publication = self._body()\r\n/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:41: in _body\r\n registrant, publication = self._registrant_publication(reg_pub, rules)\r\n_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ \r\nreg_pub = '64799998'\r\nrules = [RegistrantRule(min='0000000', max='1999999', registrant_length=2), RegistrantRule(min='2000000', max='2279999', regis...'6480000', max='6489999', registrant_length=7), RegistrantRule(min='6490000', max='6999999', registrant_length=3), ...]\r\n\r\n @staticmethod\r\n def _registrant_publication(reg_pub, rules):\r\n \"\"\" Separate the registration from the publication in a given\r\n string.\r\n :param reg_pub: A string of digits representing a registration\r\n and publication.\r\n :param rules: A list of RegistrantRules which designate where\r\n to separate the values in the string.\r\n :returns: A (registrant, publication) tuple of strings.\r\n \"\"\"\r\n for rule in rules:\r\n if rule.min <= reg_pub <= rule.max:\r\n reg_len = rule.registrant_length\r\n break\r\n else:\r\n> raise Exception('Registrant/Publication not found in registrant '\r\n 'rule list.')\r\nE 
Exception: Registrant/Publication not found in registrant rule list.\r\n\r\n/usr/local/lib/python3.6/site-packages/faker/providers/isbn/__init__.py:59: Exception\r\n```\r\n### Steps to reproduce\r\n\r\nCall `faker.providers.isbn.Provider._registrant_publication` with any of the following values for the `reg_pub` param: `64799998`, `39999999`. These values are valid randomly generated strings from [L34](https://github.com/joke2k/faker/blob/master/faker/providers/isbn/__init__.py#L37).\r\n\r\nCode:\r\n```python\r\nfrom faker.providers.isbn import Provider\r\nfrom faker.providers.isbn.rules import RULES\r\n\r\n# Fails; throws an exception\r\nProvider._registrant_publication('64799998', RULES['978']['0'])\r\nProvider._registrant_publication('39999999', RULES['978']['1'])\r\n\r\n# Works; but may be invalid\r\nProvider._registrant_publication('64799998', RULES['978']['1'])\r\nProvider._registrant_publication('39999999', RULES['978']['0'])\r\n```\r\n\r\n### Expected behavior\r\n\r\nThe `faker.providers.isbn.Provider._body` should generate valid `reg_pub` values.\r\n\r\n### Actual behavior\r\n\r\nIt generates values for `reg_pub` that are not accepted by the rules defined in `faker.providers.isbn.rules`.\r\n\n", "before_files": [{"content": "# coding=utf-8\n\nfrom __future__ import unicode_literals\nfrom .. import BaseProvider\nfrom .isbn import ISBN, ISBN10, ISBN13\nfrom .rules import RULES\n\n\nclass Provider(BaseProvider):\n \"\"\" Generates fake ISBNs. ISBN rules vary across languages/regions\n so this class makes no attempt at replicating all of the rules. It\n only replicates the 978 EAN prefix for the English registration\n groups, meaning the first 4 digits of the ISBN-13 will either be\n 978-0 or 978-1. 
Since we are only replicating 978 prefixes, every\n ISBN-13 will have a direct mapping to an ISBN-10.\n\n See https://www.isbn-international.org/content/what-isbn for the\n format of ISBNs.\n See https://www.isbn-international.org/range_file_generation for the\n list of rules pertaining to each prefix/registration group.\n \"\"\"\n\n def _body(self):\n \"\"\" Generate the information required to create an ISBN-10 or\n ISBN-13.\n \"\"\"\n ean = self.random_element(RULES.keys())\n reg_group = self.random_element(RULES[ean].keys())\n\n # Given the chosen ean/group, decide how long the\n # registrant/publication string may be.\n # We must allocate for the calculated check digit, so\n # subtract 1\n reg_pub_len = ISBN.MAX_LENGTH - len(ean) - len(reg_group) - 1\n\n # Generate a registrant/publication combination\n reg_pub = self.numerify('#' * reg_pub_len)\n\n # Use rules to separate the registrant from the publication\n rules = RULES[ean][reg_group]\n registrant, publication = self._registrant_publication(reg_pub, rules)\n return [ean, reg_group, registrant, publication]\n\n @staticmethod\n def _registrant_publication(reg_pub, rules):\n \"\"\" Separate the registration from the publication in a given\n string.\n :param reg_pub: A string of digits representing a registration\n and publication.\n :param rules: A list of RegistrantRules which designate where\n to separate the values in the string.\n :returns: A (registrant, publication) tuple of strings.\n \"\"\"\n for rule in rules:\n if rule.min <= reg_pub <= rule.max:\n reg_len = rule.registrant_length\n break\n else:\n raise Exception('Registrant/Publication not found in registrant '\n 'rule list.')\n registrant, publication = reg_pub[:reg_len], reg_pub[reg_len:]\n return registrant, publication\n\n def isbn13(self, separator='-'):\n ean, group, registrant, publication = self._body()\n isbn = ISBN13(ean, group, registrant, publication)\n return isbn.format(separator)\n\n def isbn10(self, separator='-'):\n ean, group, 
registrant, publication = self._body()\n isbn = ISBN10(ean, group, registrant, publication)\n return isbn.format(separator)\n", "path": "faker/providers/isbn/__init__.py"}], "after_files": [{"content": "# coding=utf-8\n\nfrom __future__ import unicode_literals\nfrom .. import BaseProvider\nfrom .isbn import ISBN, ISBN10, ISBN13\nfrom .rules import RULES\n\n\nclass Provider(BaseProvider):\n \"\"\" Generates fake ISBNs. ISBN rules vary across languages/regions\n so this class makes no attempt at replicating all of the rules. It\n only replicates the 978 EAN prefix for the English registration\n groups, meaning the first 4 digits of the ISBN-13 will either be\n 978-0 or 978-1. Since we are only replicating 978 prefixes, every\n ISBN-13 will have a direct mapping to an ISBN-10.\n\n See https://www.isbn-international.org/content/what-isbn for the\n format of ISBNs.\n See https://www.isbn-international.org/range_file_generation for the\n list of rules pertaining to each prefix/registration group.\n \"\"\"\n\n def _body(self):\n \"\"\" Generate the information required to create an ISBN-10 or\n ISBN-13.\n \"\"\"\n ean = self.random_element(RULES.keys())\n reg_group = self.random_element(RULES[ean].keys())\n\n # Given the chosen ean/group, decide how long the\n # registrant/publication string may be.\n # We must allocate for the calculated check digit, so\n # subtract 1\n reg_pub_len = ISBN.MAX_LENGTH - len(ean) - len(reg_group) - 1\n\n # Generate a registrant/publication combination\n reg_pub = self.numerify('#' * reg_pub_len)\n\n # Use rules to separate the registrant from the publication\n rules = RULES[ean][reg_group]\n registrant, publication = self._registrant_publication(reg_pub, rules)\n return [ean, reg_group, registrant, publication]\n\n @staticmethod\n def _registrant_publication(reg_pub, rules):\n \"\"\" Separate the registration from the publication in a given\n string.\n :param reg_pub: A string of digits representing a registration\n and publication.\n 
:param rules: A list of RegistrantRules which designate where\n to separate the values in the string.\n :returns: A (registrant, publication) tuple of strings.\n \"\"\"\n for rule in rules:\n if rule.min <= reg_pub[:-1] <= rule.max:\n reg_len = rule.registrant_length\n break\n else:\n raise Exception('Registrant/Publication not found in registrant '\n 'rule list.')\n registrant, publication = reg_pub[:reg_len], reg_pub[reg_len:]\n return registrant, publication\n\n def isbn13(self, separator='-'):\n ean, group, registrant, publication = self._body()\n isbn = ISBN13(ean, group, registrant, publication)\n return isbn.format(separator)\n\n def isbn10(self, separator='-'):\n ean, group, registrant, publication = self._body()\n isbn = ISBN10(ean, group, registrant, publication)\n return isbn.format(separator)\n", "path": "faker/providers/isbn/__init__.py"}]} | 1,870 | 126 |
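
The change in the row above (comparing `reg_pub[:-1]` instead of `reg_pub` against each rule) can be illustrated with a minimal sketch. It assumes the rule bounds are strings one digit shorter than the generated registrant/publication string, which is what the failing values in the issue suggest. The rule values, the `RegistrantRule` tuple, and the `split_reg_pub` helper are hypothetical and used only for illustration; they are not copied from `faker.providers.isbn.rules`.

```python
from collections import namedtuple

# Hypothetical stand-in for the rule objects; the real ones expose the same
# three attributes (min, max, registrant_length) according to the source above.
RegistrantRule = namedtuple("RegistrantRule", ["min", "max", "registrant_length"])

# Illustrative 7-digit ranges only (assumption, not the real rule table).
EXAMPLE_RULES = [
    RegistrantRule("0000000", "1999999", 2),
    RegistrantRule("6460000", "6479999", 7),
]


def split_reg_pub(reg_pub, rules, truncate=True):
    """Split an 8-digit string into (registrant, publication).

    With truncate=True the last digit is dropped before the range check,
    mirroring the patched comparison. With truncate=False the full string
    is compared, mirroring the original behaviour.
    """
    key = reg_pub[:-1] if truncate else reg_pub
    for rule in rules:
        # Lexicographic string comparison: a longer string that starts with
        # rule.max sorts after rule.max, which is why the untruncated check fails.
        if rule.min <= key <= rule.max:
            reg_len = rule.registrant_length
            return reg_pub[:reg_len], reg_pub[reg_len:]
    raise ValueError("Registrant/Publication not found in registrant rule list.")
```

Under these assumed ranges, `split_reg_pub("64799998", EXAMPLE_RULES)` finds a match, while the same call with `truncate=False` raises, because `"64799998"` compares greater than `"6479999"`.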

gh_patches_debug_35799 | rasdani/github-patches | git_diff | Parsl__parsl-1804 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add account option to slurm provider

See Parsl/libsubmit#68

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `parsl/providers/slurm/slurm.py`

Content:

```

1 import os

2 import math

3 import time

4 import logging

5 import typeguard

6

7 from typing import Optional

8

9 from parsl.channels import LocalChannel

10 from parsl.channels.base import Channel

11 from parsl.launchers import SingleNodeLauncher

12 from parsl.launchers.launchers import Launcher

13 from parsl.providers.cluster_provider import ClusterProvider

14 from parsl.providers.provider_base import JobState, JobStatus

15 from parsl.providers.slurm.template import template_string

16 from parsl.utils import RepresentationMixin, wtime_to_minutes

17

18 logger = logging.getLogger(__name__)

19

20 translate_table = {

21 'PD': JobState.PENDING,

22 'R': JobState.RUNNING,

23 'CA': JobState.CANCELLED,

24 'CF': JobState.PENDING, # (configuring),

25 'CG': JobState.RUNNING, # (completing),

26 'CD': JobState.COMPLETED,

27 'F': JobState.FAILED, # (failed),

28 'TO': JobState.TIMEOUT, # (timeout),

29 'NF': JobState.FAILED, # (node failure),

30 'RV': JobState.FAILED, # (revoked) and

31 'SE': JobState.FAILED

32 } # (special exit state

33

34

35 class SlurmProvider(ClusterProvider, RepresentationMixin):

36 """Slurm Execution Provider

37

38 This provider uses sbatch to submit, squeue for status and scancel to cancel

39 jobs. The sbatch script to be used is created from a template file in this

40 same module.

41

42 Parameters

43 ----------

44 partition : str

45 Slurm partition to request blocks from. If none, no partition slurm directive will be specified.

46 channel : Channel

47 Channel for accessing this provider. Possible channels include

48 :class:`~parsl.channels.LocalChannel` (the default),

49 :class:`~parsl.channels.SSHChannel`, or

50 :class:`~parsl.channels.SSHInteractiveLoginChannel`.

51 nodes_per_block : int

52 Nodes to provision per block.

53 cores_per_node : int

54 Specify the number of cores to provision per node. If set to None, executors

55 will assume all cores on the node are available for computation. Default is None.

56 mem_per_node : int

57 Specify the real memory to provision per node in GB. If set to None, no

58 explicit request to the scheduler will be made. Default is None.

59 min_blocks : int

60 Minimum number of blocks to maintain.

61 max_blocks : int

62 Maximum number of blocks to maintain.

63 parallelism : float

64 Ratio of provisioned task slots to active tasks. A parallelism value of 1 represents aggressive

65 scaling where as many resources as possible are used; parallelism close to 0 represents

66 the opposite situation in which as few resources as possible (i.e., min_blocks) are used.

67 walltime : str

68 Walltime requested per block in HH:MM:SS.

69 scheduler_options : str

70 String to prepend to the #SBATCH blocks in the submit script to the scheduler.

71 worker_init : str

72 Command to be run before starting a worker, such as 'module load Anaconda; source activate env'.

73 exclusive : bool (Default = True)

74 Requests nodes which are not shared with other running jobs.

75 launcher : Launcher

76 Launcher for this provider. Possible launchers include

77 :class:`~parsl.launchers.SingleNodeLauncher` (the default),

78 :class:`~parsl.launchers.SrunLauncher`, or

79 :class:`~parsl.launchers.AprunLauncher`

80 move_files : Optional[Bool]: should files be moved? by default, Parsl will try to move files.

81 """

82

83 @typeguard.typechecked

84 def __init__(self,

85 partition: Optional[str],

86 channel: Channel = LocalChannel(),

87 nodes_per_block: int = 1,

88 cores_per_node: Optional[int] = None,

89 mem_per_node: Optional[int] = None,

90 init_blocks: int = 1,

91 min_blocks: int = 0,

92 max_blocks: int = 1,

93 parallelism: float = 1,

94 walltime: str = "00:10:00",

95 scheduler_options: str = '',

96 worker_init: str = '',

97 cmd_timeout: int = 10,

98 exclusive: bool = True,

99 move_files: bool = True,

100 launcher: Launcher = SingleNodeLauncher()):

101 label = 'slurm'

102 super().__init__(label,

103 channel,

104 nodes_per_block,

105 init_blocks,

106 min_blocks,

107 max_blocks,

108 parallelism,

109 walltime,

110 cmd_timeout=cmd_timeout,

111 launcher=launcher)

112

113 self.partition = partition

114 self.cores_per_node = cores_per_node

115 self.mem_per_node = mem_per_node

116 self.exclusive = exclusive

117 self.move_files = move_files

118 self.scheduler_options = scheduler_options + '\n'

119 if exclusive:

120 self.scheduler_options += "#SBATCH --exclusive\n"

121 if partition:

122 self.scheduler_options += "#SBATCH --partition={}\n".format(partition)

123 self.worker_init = worker_init + '\n'

124

125 def _status(self):

126 ''' Internal: Do not call. Returns the status list for a list of job_ids

127

128 Args:

129 self

130

131 Returns:

132 [status...] : Status list of all jobs

133 '''

134 job_id_list = ','.join(self.resources.keys())

135 cmd = "squeue --job {0}".format(job_id_list)

136 logger.debug("Executing sqeueue")

137 retcode, stdout, stderr = self.execute_wait(cmd)

138 logger.debug("sqeueue returned")

139

140 # Execute_wait failed. Do no update

141 if retcode != 0:

142 logger.warning("squeue failed with non-zero exit code {} - see https://github.com/Parsl/parsl/issues/1588".format(retcode))

143 return

144

145 jobs_missing = list(self.resources.keys())

146 for line in stdout.split('\n'):

147 parts = line.split()

148 if parts and parts[0] != 'JOBID':

149 job_id = parts[0]

150 status = translate_table.get(parts[4], JobState.UNKNOWN)

151 logger.debug("Updating job {} with slurm status {} to parsl status {}".format(job_id, parts[4], status))

152 self.resources[job_id]['status'] = JobStatus(status)

153 jobs_missing.remove(job_id)

154

155 # squeue does not report on jobs that are not running. So we are filling in the

156 # blanks for missing jobs, we might lose some information about why the jobs failed.

157 for missing_job in jobs_missing:

158 logger.debug("Updating missing job {} to completed status".format(missing_job))

159 self.resources[missing_job]['status'] = JobStatus(JobState.COMPLETED)

160

161 def submit(self, command, tasks_per_node, job_name="parsl.slurm"):

162 """Submit the command as a slurm job.

163

164 Parameters

165 ----------

166 command : str

167 Command to be made on the remote side.

168 tasks_per_node : int

169 Command invocations to be launched per node

170 job_name : str

171 Name for the job (must be unique).

172 Returns

173 -------

174 None or str

175 If at capacity, returns None; otherwise, a string identifier for the job

176 """

177

178 scheduler_options = self.scheduler_options

179 worker_init = self.worker_init

180 if self.mem_per_node is not None:

181 scheduler_options += '#SBATCH --mem={}g\n'.format(self.mem_per_node)

182 worker_init += 'export PARSL_MEMORY_GB={}\n'.format(self.mem_per_node)

183 if self.cores_per_node is not None:

184 cpus_per_task = math.floor(self.cores_per_node / tasks_per_node)

185 scheduler_options += '#SBATCH --cpus-per-task={}'.format(cpus_per_task)

186 worker_init += 'export PARSL_CORES={}\n'.format(cpus_per_task)

187

188 job_name = "{0}.{1}".format(job_name, time.time())

189

190 script_path = "{0}/{1}.submit".format(self.script_dir, job_name)

191 script_path = os.path.abspath(script_path)

192

193 logger.debug("Requesting one block with {} nodes".format(self.nodes_per_block))

194

195 job_config = {}

196 job_config["submit_script_dir"] = self.channel.script_dir

197 job_config["nodes"] = self.nodes_per_block

198 job_config["tasks_per_node"] = tasks_per_node

199 job_config["walltime"] = wtime_to_minutes(self.walltime)

200 job_config["scheduler_options"] = scheduler_options

201 job_config["worker_init"] = worker_init

202 job_config["user_script"] = command

203

204 # Wrap the command

205 job_config["user_script"] = self.launcher(command,

206 tasks_per_node,

207 self.nodes_per_block)

208

209 logger.debug("Writing submit script")

210 self._write_submit_script(template_string, script_path, job_name, job_config)

211

212 if self.move_files:

213 logger.debug("moving files")

214 channel_script_path = self.channel.push_file(script_path, self.channel.script_dir)

215 else:

216 logger.debug("not moving files")

217 channel_script_path = script_path

218

219 retcode, stdout, stderr = self.execute_wait("sbatch {0}".format(channel_script_path))

220

221 job_id = None

222 if retcode == 0:

223 for line in stdout.split('\n'):

224 if line.startswith("Submitted batch job"):

225 job_id = line.split("Submitted batch job")[1].strip()

226 self.resources[job_id] = {'job_id': job_id, 'status': JobStatus(JobState.PENDING)}

227 else:

228 print("Submission of command to scale_out failed")

229 logger.error("Retcode:%s STDOUT:%s STDERR:%s", retcode, stdout.strip(), stderr.strip())

230 return job_id

231

232 def cancel(self, job_ids):

233 ''' Cancels the jobs specified by a list of job ids

234

235 Args:

236 job_ids : [<job_id> ...]

237

238 Returns :

239 [True/False...] : If the cancel operation fails the entire list will be False.

240 '''

241

242 job_id_list = ' '.join(job_ids)

243 retcode, stdout, stderr = self.execute_wait("scancel {0}".format(job_id_list))

244 rets = None

245 if retcode == 0:

246 for jid in job_ids:

247 self.resources[jid]['status'] = JobStatus(JobState.CANCELLED) # Setting state to cancelled

248 rets = [True for i in job_ids]

249 else:

250 rets = [False for i in job_ids]

251

252 return rets

253

254 @property

255 def status_polling_interval(self):

256 return 60

257

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/parsl/providers/slurm/slurm.py b/parsl/providers/slurm/slurm.py

--- a/parsl/providers/slurm/slurm.py

+++ b/parsl/providers/slurm/slurm.py

@@ -43,6 +43,9 @@

----------

partition : str

Slurm partition to request blocks from. If none, no partition slurm directive will be specified.

+ account : str

+ Slurm account to which to charge resources used by the job. If none, the job will use the

+ user's default account.

channel : Channel

Channel for accessing this provider. Possible channels include

:class:`~parsl.channels.LocalChannel` (the default),

@@ -77,12 +80,13 @@

:class:`~parsl.launchers.SingleNodeLauncher` (the default),

:class:`~parsl.launchers.SrunLauncher`, or

:class:`~parsl.launchers.AprunLauncher`

- move_files : Optional[Bool]: should files be moved? by default, Parsl will try to move files.

+ move_files : Optional[Bool]: should files be moved? by default, Parsl will try to move files.

"""

@typeguard.typechecked

def __init__(self,

partition: Optional[str],

+ account: Optional[str] = None,

channel: Channel = LocalChannel(),

nodes_per_block: int = 1,

cores_per_node: Optional[int] = None,

@@ -115,11 +119,14 @@

self.mem_per_node = mem_per_node

self.exclusive = exclusive

self.move_files = move_files

+ self.account = account

self.scheduler_options = scheduler_options + '\n'

if exclusive:

self.scheduler_options += "#SBATCH --exclusive\n"

if partition:

self.scheduler_options += "#SBATCH --partition={}\n".format(partition)

+ if account:

+ self.scheduler_options += "#SBATCH --account={}\n".format(account)

self.worker_init = worker_init + '\n'

def _status(self):
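
The effect of the diff above on the generated submit script can be sketched in isolation. This is a minimal standalone sketch, not the provider itself: `build_scheduler_options` is a hypothetical helper that only mirrors the string assembly done in `SlurmProvider.__init__` after the patch, and the partition and account names in the usage note are made up.

```python
from typing import Optional


def build_scheduler_options(scheduler_options: str = "",
                            exclusive: bool = True,
                            partition: Optional[str] = None,
                            account: Optional[str] = None) -> str:
    """Assemble the #SBATCH directive block the way the patched constructor does."""
    options = scheduler_options + "\n"
    if exclusive:
        options += "#SBATCH --exclusive\n"
    if partition:
        options += "#SBATCH --partition={}\n".format(partition)
    # New in the patch: only emit the directive when an account is given,
    # so that omitting it leaves the scheduler's default account in effect.
    if account:
        options += "#SBATCH --account={}\n".format(account)
    return options
```

For example, `build_scheduler_options(partition="debug", account="proj123")` yields a block ending in `#SBATCH --account=proj123`, whereas leaving `account` as `None` produces no `--account` line at all.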

| {"golden_diff": "diff --git a/parsl/providers/slurm/slurm.py b/parsl/providers/slurm/slurm.py\n--- a/parsl/providers/slurm/slurm.py\n+++ b/parsl/providers/slurm/slurm.py\n@@ -43,6 +43,9 @@\n ----------\n partition : str\n Slurm partition to request blocks from. If none, no partition slurm directive will be specified.\n+ account : str\n+ Slurm account to which to charge resources used by the job. If none, the job will use the\n+ user's default account.\n channel : Channel\n Channel for accessing this provider. Possible channels include\n :class:`~parsl.channels.LocalChannel` (the default),\n@@ -77,12 +80,13 @@\n :class:`~parsl.launchers.SingleNodeLauncher` (the default),\n :class:`~parsl.launchers.SrunLauncher`, or\n :class:`~parsl.launchers.AprunLauncher`\n- move_files : Optional[Bool]: should files be moved? by default, Parsl will try to move files.\n+ move_files : Optional[Bool]: should files be moved? by default, Parsl will try to move files.\n \"\"\"\n \n @typeguard.typechecked\n def __init__(self,\n partition: Optional[str],\n+ account: Optional[str] = None,\n channel: Channel = LocalChannel(),\n nodes_per_block: int = 1,\n cores_per_node: Optional[int] = None,\n@@ -115,11 +119,14 @@\n self.mem_per_node = mem_per_node\n self.exclusive = exclusive\n self.move_files = move_files\n+ self.account = account\n self.scheduler_options = scheduler_options + '\\n'\n if exclusive:\n self.scheduler_options += \"#SBATCH --exclusive\\n\"\n if partition:\n self.scheduler_options += \"#SBATCH --partition={}\\n\".format(partition)\n+ if account:\n+ self.scheduler_options += \"#SBATCH --account={}\\n\".format(account)\n self.worker_init = worker_init + '\\n'\n \n def _status(self):\n", "issue": "Add account option to slurm provider\nSee Parsl/libsubmit#68\n", "before_files": [{"content": "import os\nimport math\nimport time\nimport logging\nimport typeguard\n\nfrom typing import Optional\n\nfrom parsl.channels import LocalChannel\nfrom parsl.channels.base import Channel\nfrom 
parsl.launchers import SingleNodeLauncher\nfrom parsl.launchers.launchers import Launcher\nfrom parsl.providers.cluster_provider import ClusterProvider\nfrom parsl.providers.provider_base import JobState, JobStatus\nfrom parsl.providers.slurm.template import template_string\nfrom parsl.utils import RepresentationMixin, wtime_to_minutes\n\nlogger = logging.getLogger(__name__)\n\ntranslate_table = {\n 'PD': JobState.PENDING,\n 'R': JobState.RUNNING,\n 'CA': JobState.CANCELLED,\n 'CF': JobState.PENDING, # (configuring),\n 'CG': JobState.RUNNING, # (completing),\n 'CD': JobState.COMPLETED,\n 'F': JobState.FAILED, # (failed),\n 'TO': JobState.TIMEOUT, # (timeout),\n 'NF': JobState.FAILED, # (node failure),\n 'RV': JobState.FAILED, # (revoked) and\n 'SE': JobState.FAILED\n} # (special exit state\n\n\nclass SlurmProvider(ClusterProvider, RepresentationMixin):\n \"\"\"Slurm Execution Provider\n\n This provider uses sbatch to submit, squeue for status and scancel to cancel\n jobs. The sbatch script to be used is created from a template file in this\n same module.\n\n Parameters\n ----------\n partition : str\n Slurm partition to request blocks from. If none, no partition slurm directive will be specified.\n channel : Channel\n Channel for accessing this provider. Possible channels include\n :class:`~parsl.channels.LocalChannel` (the default),\n :class:`~parsl.channels.SSHChannel`, or\n :class:`~parsl.channels.SSHInteractiveLoginChannel`.\n nodes_per_block : int\n Nodes to provision per block.\n cores_per_node : int\n Specify the number of cores to provision per node. If set to None, executors\n will assume all cores on the node are available for computation. Default is None.\n mem_per_node : int\n Specify the real memory to provision per node in GB. If set to None, no\n explicit request to the scheduler will be made. 
Default is None.\n min_blocks : int\n Minimum number of blocks to maintain.\n max_blocks : int\n Maximum number of blocks to maintain.\n parallelism : float\n Ratio of provisioned task slots to active tasks. A parallelism value of 1 represents aggressive\n scaling where as many resources as possible are used; parallelism close to 0 represents\n the opposite situation in which as few resources as possible (i.e., min_blocks) are used.\n walltime : str\n Walltime requested per block in HH:MM:SS.\n scheduler_options : str\n String to prepend to the #SBATCH blocks in the submit script to the scheduler.\n worker_init : str\n Command to be run before starting a worker, such as 'module load Anaconda; source activate env'.\n exclusive : bool (Default = True)\n Requests nodes which are not shared with other running jobs.\n launcher : Launcher\n Launcher for this provider. Possible launchers include\n :class:`~parsl.launchers.SingleNodeLauncher` (the default),\n :class:`~parsl.launchers.SrunLauncher`, or\n :class:`~parsl.launchers.AprunLauncher`\n move_files : Optional[Bool]: should files be moved? 
by default, Parsl will try to move files.\n \"\"\"\n\n @typeguard.typechecked\n def __init__(self,\n partition: Optional[str],\n channel: Channel = LocalChannel(),\n nodes_per_block: int = 1,\n cores_per_node: Optional[int] = None,\n mem_per_node: Optional[int] = None,\n init_blocks: int = 1,\n min_blocks: int = 0,\n max_blocks: int = 1,\n parallelism: float = 1,\n walltime: str = \"00:10:00\",\n scheduler_options: str = '',\n worker_init: str = '',\n cmd_timeout: int = 10,\n exclusive: bool = True,\n move_files: bool = True,\n launcher: Launcher = SingleNodeLauncher()):\n label = 'slurm'\n super().__init__(label,\n channel,\n nodes_per_block,\n init_blocks,\n min_blocks,\n max_blocks,\n parallelism,\n walltime,\n cmd_timeout=cmd_timeout,\n launcher=launcher)\n\n self.partition = partition\n self.cores_per_node = cores_per_node\n self.mem_per_node = mem_per_node\n self.exclusive = exclusive\n self.move_files = move_files\n self.scheduler_options = scheduler_options + '\\n'\n if exclusive:\n self.scheduler_options += \"#SBATCH --exclusive\\n\"\n if partition:\n self.scheduler_options += \"#SBATCH --partition={}\\n\".format(partition)\n self.worker_init = worker_init + '\\n'\n\n def _status(self):\n ''' Internal: Do not call. Returns the status list for a list of job_ids\n\n Args:\n self\n\n Returns:\n [status...] : Status list of all jobs\n '''\n job_id_list = ','.join(self.resources.keys())\n cmd = \"squeue --job {0}\".format(job_id_list)\n logger.debug(\"Executing sqeueue\")\n retcode, stdout, stderr = self.execute_wait(cmd)\n logger.debug(\"sqeueue returned\")\n\n # Execute_wait failed. 
Do no update\n if retcode != 0:\n logger.warning(\"squeue failed with non-zero exit code {} - see https://github.com/Parsl/parsl/issues/1588\".format(retcode))\n return\n\n jobs_missing = list(self.resources.keys())\n for line in stdout.split('\\n'):\n parts = line.split()\n if parts and parts[0] != 'JOBID':\n job_id = parts[0]\n status = translate_table.get(parts[4], JobState.UNKNOWN)\n logger.debug(\"Updating job {} with slurm status {} to parsl status {}\".format(job_id, parts[4], status))\n self.resources[job_id]['status'] = JobStatus(status)\n jobs_missing.remove(job_id)\n\n # squeue does not report on jobs that are not running. So we are filling in the\n # blanks for missing jobs, we might lose some information about why the jobs failed.\n for missing_job in jobs_missing:\n logger.debug(\"Updating missing job {} to completed status\".format(missing_job))\n self.resources[missing_job]['status'] = JobStatus(JobState.COMPLETED)\n\n def submit(self, command, tasks_per_node, job_name=\"parsl.slurm\"):\n \"\"\"Submit the command as a slurm job.\n\n Parameters\n ----------\n command : str\n Command to be made on the remote side.\n tasks_per_node : int\n Command invocations to be launched per node\n job_name : str\n Name for the job (must be unique).\n Returns\n -------\n None or str\n If at capacity, returns None; otherwise, a string identifier for the job\n \"\"\"\n\n scheduler_options = self.scheduler_options\n worker_init = self.worker_init\n if self.mem_per_node is not None:\n scheduler_options += '#SBATCH --mem={}g\\n'.format(self.mem_per_node)\n worker_init += 'export PARSL_MEMORY_GB={}\\n'.format(self.mem_per_node)\n if self.cores_per_node is not None:\n cpus_per_task = math.floor(self.cores_per_node / tasks_per_node)\n scheduler_options += '#SBATCH --cpus-per-task={}'.format(cpus_per_task)\n worker_init += 'export PARSL_CORES={}\\n'.format(cpus_per_task)\n\n job_name = \"{0}.{1}\".format(job_name, time.time())\n\n script_path = 
\"{0}/{1}.submit\".format(self.script_dir, job_name)\n script_path = os.path.abspath(script_path)\n\n logger.debug(\"Requesting one block with {} nodes\".format(self.nodes_per_block))\n\n job_config = {}\n job_config[\"submit_script_dir\"] = self.channel.script_dir\n job_config[\"nodes\"] = self.nodes_per_block\n job_config[\"tasks_per_node\"] = tasks_per_node\n job_config[\"walltime\"] = wtime_to_minutes(self.walltime)\n job_config[\"scheduler_options\"] = scheduler_options\n job_config[\"worker_init\"] = worker_init\n job_config[\"user_script\"] = command\n\n # Wrap the command\n job_config[\"user_script\"] = self.launcher(command,\n tasks_per_node,\n self.nodes_per_block)\n\n logger.debug(\"Writing submit script\")\n self._write_submit_script(template_string, script_path, job_name, job_config)\n\n if self.move_files:\n logger.debug(\"moving files\")\n channel_script_path = self.channel.push_file(script_path, self.channel.script_dir)\n else:\n logger.debug(\"not moving files\")\n channel_script_path = script_path\n\n retcode, stdout, stderr = self.execute_wait(\"sbatch {0}\".format(channel_script_path))\n\n job_id = None\n if retcode == 0:\n for line in stdout.split('\\n'):\n if line.startswith(\"Submitted batch job\"):\n job_id = line.split(\"Submitted batch job\")[1].strip()\n self.resources[job_id] = {'job_id': job_id, 'status': JobStatus(JobState.PENDING)}\n else:\n print(\"Submission of command to scale_out failed\")\n logger.error(\"Retcode:%s STDOUT:%s STDERR:%s\", retcode, stdout.strip(), stderr.strip())\n return job_id\n\n def cancel(self, job_ids):\n ''' Cancels the jobs specified by a list of job ids\n\n Args:\n job_ids : [<job_id> ...]\n\n Returns :\n [True/False...] 
: If the cancel operation fails the entire list will be False.\n '''\n\n job_id_list = ' '.join(job_ids)\n retcode, stdout, stderr = self.execute_wait(\"scancel {0}\".format(job_id_list))\n rets = None\n if retcode == 0:\n for jid in job_ids:\n self.resources[jid]['status'] = JobStatus(JobState.CANCELLED) # Setting state to cancelled\n rets = [True for i in job_ids]\n else:\n rets = [False for i in job_ids]\n\n return rets\n\n @property\n def status_polling_interval(self):\n return 60\n", "path": "parsl/providers/slurm/slurm.py"}], "after_files": [{"content": "import os\nimport math\nimport time\nimport logging\nimport typeguard\n\nfrom typing import Optional\n\nfrom parsl.channels import LocalChannel\nfrom parsl.channels.base import Channel\nfrom parsl.launchers import SingleNodeLauncher\nfrom parsl.launchers.launchers import Launcher\nfrom parsl.providers.cluster_provider import ClusterProvider\nfrom parsl.providers.provider_base import JobState, JobStatus\nfrom parsl.providers.slurm.template import template_string\nfrom parsl.utils import RepresentationMixin, wtime_to_minutes\n\nlogger = logging.getLogger(__name__)\n\ntranslate_table = {\n 'PD': JobState.PENDING,\n 'R': JobState.RUNNING,\n 'CA': JobState.CANCELLED,\n 'CF': JobState.PENDING, # (configuring),\n 'CG': JobState.RUNNING, # (completing),\n 'CD': JobState.COMPLETED,\n 'F': JobState.FAILED, # (failed),\n 'TO': JobState.TIMEOUT, # (timeout),\n 'NF': JobState.FAILED, # (node failure),\n 'RV': JobState.FAILED, # (revoked) and\n 'SE': JobState.FAILED\n} # (special exit state\n\n\nclass SlurmProvider(ClusterProvider, RepresentationMixin):\n \"\"\"Slurm Execution Provider\n\n This provider uses sbatch to submit, squeue for status and scancel to cancel\n jobs. The sbatch script to be used is created from a template file in this\n same module.\n\n Parameters\n ----------\n partition : str\n Slurm partition to request blocks from. 
If none, no partition slurm directive will be specified.\n account : str\n Slurm account to which to charge resources used by the job. If none, the job will use the\n user's default account.\n channel : Channel\n Channel for accessing this provider. Possible channels include\n :class:`~parsl.channels.LocalChannel` (the default),\n :class:`~parsl.channels.SSHChannel`, or\n :class:`~parsl.channels.SSHInteractiveLoginChannel`.\n nodes_per_block : int\n Nodes to provision per block.\n cores_per_node : int\n Specify the number of cores to provision per node. If set to None, executors\n will assume all cores on the node are available for computation. Default is None.\n mem_per_node : int\n Specify the real memory to provision per node in GB. If set to None, no\n explicit request to the scheduler will be made. Default is None.\n min_blocks : int\n Minimum number of blocks to maintain.\n max_blocks : int\n Maximum number of blocks to maintain.\n parallelism : float\n Ratio of provisioned task slots to active tasks. A parallelism value of 1 represents aggressive\n scaling where as many resources as possible are used; parallelism close to 0 represents\n the opposite situation in which as few resources as possible (i.e., min_blocks) are used.\n walltime : str\n Walltime requested per block in HH:MM:SS.\n scheduler_options : str\n String to prepend to the #SBATCH blocks in the submit script to the scheduler.\n worker_init : str\n Command to be run before starting a worker, such as 'module load Anaconda; source activate env'.\n exclusive : bool (Default = True)\n Requests nodes which are not shared with other running jobs.\n launcher : Launcher\n Launcher for this provider. Possible launchers include\n :class:`~parsl.launchers.SingleNodeLauncher` (the default),\n :class:`~parsl.launchers.SrunLauncher`, or\n :class:`~parsl.launchers.AprunLauncher`\n move_files : Optional[Bool]: should files be moved? 
by default, Parsl will try to move files.\n \"\"\"\n\n @typeguard.typechecked\n def __init__(self,\n partition: Optional[str],\n account: Optional[str] = None,\n channel: Channel = LocalChannel(),\n nodes_per_block: int = 1,\n cores_per_node: Optional[int] = None,\n mem_per_node: Optional[int] = None,\n init_blocks: int = 1,\n min_blocks: int = 0,\n max_blocks: int = 1,\n parallelism: float = 1,\n walltime: str = \"00:10:00\",\n scheduler_options: str = '',\n worker_init: str = '',\n cmd_timeout: int = 10,\n exclusive: bool = True,\n move_files: bool = True,\n launcher: Launcher = SingleNodeLauncher()):\n label = 'slurm'\n super().__init__(label,\n channel,\n nodes_per_block,\n init_blocks,\n min_blocks,\n max_blocks,\n parallelism,\n walltime,\n cmd_timeout=cmd_timeout,\n launcher=launcher)\n\n self.partition = partition\n self.cores_per_node = cores_per_node\n self.mem_per_node = mem_per_node\n self.exclusive = exclusive\n self.move_files = move_files\n self.account = account\n self.scheduler_options = scheduler_options + '\\n'\n if exclusive:\n self.scheduler_options += \"#SBATCH --exclusive\\n\"\n if partition:\n self.scheduler_options += \"#SBATCH --partition={}\\n\".format(partition)\n if account:\n self.scheduler_options += \"#SBATCH --account={}\\n\".format(account)\n self.worker_init = worker_init + '\\n'\n\n def _status(self):\n ''' Internal: Do not call. Returns the status list for a list of job_ids\n\n Args:\n self\n\n Returns:\n [status...] : Status list of all jobs\n '''\n job_id_list = ','.join(self.resources.keys())\n cmd = \"squeue --job {0}\".format(job_id_list)\n logger.debug(\"Executing sqeueue\")\n retcode, stdout, stderr = self.execute_wait(cmd)\n logger.debug(\"sqeueue returned\")\n\n # Execute_wait failed. 
Do no update\n if retcode != 0:\n logger.warning(\"squeue failed with non-zero exit code {} - see https://github.com/Parsl/parsl/issues/1588\".format(retcode))\n return\n\n jobs_missing = list(self.resources.keys())\n for line in stdout.split('\\n'):\n parts = line.split()\n if parts and parts[0] != 'JOBID':\n job_id = parts[0]\n status = translate_table.get(parts[4], JobState.UNKNOWN)\n logger.debug(\"Updating job {} with slurm status {} to parsl status {}\".format(job_id, parts[4], status))\n self.resources[job_id]['status'] = JobStatus(status)\n jobs_missing.remove(job_id)\n\n # squeue does not report on jobs that are not running. So we are filling in the\n # blanks for missing jobs, we might lose some information about why the jobs failed.\n for missing_job in jobs_missing:\n logger.debug(\"Updating missing job {} to completed status\".format(missing_job))\n self.resources[missing_job]['status'] = JobStatus(JobState.COMPLETED)\n\n def submit(self, command, tasks_per_node, job_name=\"parsl.slurm\"):\n \"\"\"Submit the command as a slurm job.\n\n Parameters\n ----------\n command : str\n Command to be made on the remote side.\n tasks_per_node : int\n Command invocations to be launched per node\n job_name : str\n Name for the job (must be unique).\n Returns\n -------\n None or str\n If at capacity, returns None; otherwise, a string identifier for the job\n \"\"\"\n\n scheduler_options = self.scheduler_options\n worker_init = self.worker_init\n if self.mem_per_node is not None:\n scheduler_options += '#SBATCH --mem={}g\\n'.format(self.mem_per_node)\n worker_init += 'export PARSL_MEMORY_GB={}\\n'.format(self.mem_per_node)\n if self.cores_per_node is not None:\n cpus_per_task = math.floor(self.cores_per_node / tasks_per_node)\n scheduler_options += '#SBATCH --cpus-per-task={}'.format(cpus_per_task)\n worker_init += 'export PARSL_CORES={}\\n'.format(cpus_per_task)\n\n job_name = \"{0}.{1}\".format(job_name, time.time())\n\n script_path = 
\"{0}/{1}.submit\".format(self.script_dir, job_name)\n script_path = os.path.abspath(script_path)\n\n logger.debug(\"Requesting one block with {} nodes\".format(self.nodes_per_block))\n\n job_config = {}\n job_config[\"submit_script_dir\"] = self.channel.script_dir\n job_config[\"nodes\"] = self.nodes_per_block\n job_config[\"tasks_per_node\"] = tasks_per_node\n job_config[\"walltime\"] = wtime_to_minutes(self.walltime)\n job_config[\"scheduler_options\"] = scheduler_options\n job_config[\"worker_init\"] = worker_init\n job_config[\"user_script\"] = command\n\n # Wrap the command\n job_config[\"user_script\"] = self.launcher(command,\n tasks_per_node,\n self.nodes_per_block)\n\n logger.debug(\"Writing submit script\")\n self._write_submit_script(template_string, script_path, job_name, job_config)\n\n if self.move_files:\n logger.debug(\"moving files\")\n channel_script_path = self.channel.push_file(script_path, self.channel.script_dir)\n else:\n logger.debug(\"not moving files\")\n channel_script_path = script_path\n\n retcode, stdout, stderr = self.execute_wait(\"sbatch {0}\".format(channel_script_path))\n\n job_id = None\n if retcode == 0:\n for line in stdout.split('\\n'):\n if line.startswith(\"Submitted batch job\"):\n job_id = line.split(\"Submitted batch job\")[1].strip()\n self.resources[job_id] = {'job_id': job_id, 'status': JobStatus(JobState.PENDING)}\n else:\n print(\"Submission of command to scale_out failed\")\n logger.error(\"Retcode:%s STDOUT:%s STDERR:%s\", retcode, stdout.strip(), stderr.strip())\n return job_id\n\n def cancel(self, job_ids):\n ''' Cancels the jobs specified by a list of job ids\n\n Args:\n job_ids : [<job_id> ...]\n\n Returns :\n [True/False...] 
: If the cancel operation fails the entire list will be False.\n '''\n\n job_id_list = ' '.join(job_ids)\n retcode, stdout, stderr = self.execute_wait(\"scancel {0}\".format(job_id_list))\n rets = None\n if retcode == 0:\n for jid in job_ids:\n self.resources[jid]['status'] = JobStatus(JobState.CANCELLED) # Setting state to cancelled\n rets = [True for i in job_ids]\n else:\n rets = [False for i in job_ids]\n\n return rets\n\n @property\n def status_polling_interval(self):\n return 60\n", "path": "parsl/providers/slurm/slurm.py"}]} | 3,248 | 459 |

gh_patches_debug_6354 | rasdani/github-patches | git_diff | iterative__dvc-2627 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Issue with dvc push to AWS s3 remote

**Please provide information about your setup**

DVC version(i.e. `dvc --version`), Platform and method of installation (pip, homebrew, pkg Mac, exe (Windows), DEB(Linux), RPM(Linux))

DVC: 0.62.1

Mac: Mojave 10.13

Install with pip

issue with `dvc push`

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `dvc/progress.py`

Content:

```
1 """Manages progress bars for dvc repo."""
2 from __future__ import print_function
3 import logging
4 from tqdm import tqdm
5 from concurrent.futures import ThreadPoolExecutor
6 from funcy import merge
7
8 logger = logging.getLogger(__name__)
9
10
11 class TqdmThreadPoolExecutor(ThreadPoolExecutor):
12     """
13     Ensure worker progressbars are cleared away properly.
14     """
15
16     def __enter__(self):
17         """
18         Creates a blank initial dummy progress bar if needed so that workers
19         are forced to create "nested" bars.
20         """
21         blank_bar = Tqdm(bar_format="Multi-Threaded:", leave=False)
22         if blank_bar.pos > 0:
23             # already nested - don't need a placeholder bar
24             blank_bar.close()
25         self.bar = blank_bar
26         return super(TqdmThreadPoolExecutor, self).__enter__()
27
28     def __exit__(self, *a, **k):
29         super(TqdmThreadPoolExecutor, self).__exit__(*a, **k)
30         self.bar.close()
31
32
33 class Tqdm(tqdm):
34     """
35     maximum-compatibility tqdm-based progressbars
36     """
37
38     BAR_FMT_DEFAULT = (
39         "{percentage:3.0f}%|{bar:10}|"
40         "{desc:{ncols_desc}.{ncols_desc}}{n}/{total}"
41         " [{elapsed}<{remaining}, {rate_fmt:>11}{postfix}]"
42     )
43     BAR_FMT_NOTOTAL = (
44         "{desc:{ncols_desc}.{ncols_desc}}{n}"
45         " [{elapsed}<??:??, {rate_fmt:>11}{postfix}]"
46     )
47
48     def __init__(
49         self,
50         iterable=None,
51         disable=None,
52         level=logging.ERROR,
53         desc=None,
54         leave=False,
55         bar_format=None,
56         bytes=False,  # pylint: disable=W0622
57         **kwargs
58     ):
59         """
60         bytes : shortcut for
61             `unit='B', unit_scale=True, unit_divisor=1024, miniters=1`
62         desc : persists after `close()`
63         level : effective logging level for determining `disable`;
64             used only if `disable` is unspecified
65         kwargs : anything accepted by `tqdm.tqdm()`
66         """
67         kwargs = kwargs.copy()
68         kwargs.setdefault("unit_scale", True)
69         if bytes:
70             bytes_defaults = dict(
71                 unit="B", unit_scale=True, unit_divisor=1024, miniters=1
72             )
73             kwargs = merge(bytes_defaults, kwargs)
74         self.desc_persist = desc
75         if disable is None:
76             disable = logger.getEffectiveLevel() > level
77         super(Tqdm, self).__init__(
78             iterable=iterable,
79             disable=disable,
80             leave=leave,
81             desc=desc,
82             bar_format="!",
83             **kwargs
84         )
85         if bar_format is None:
86             if self.__len__():
87                 self.bar_format = self.BAR_FMT_DEFAULT
88             else:
89                 self.bar_format = self.BAR_FMT_NOTOTAL
90         else:
91             self.bar_format = bar_format
92         self.refresh()
93
94     def update_desc(self, desc, n=1):
95         """
96         Calls `set_description_str(desc)` and `update(n)`
97         """
98         self.set_description_str(desc, refresh=False)
99         self.update(n)
100
101     def update_to(self, current, total=None):
102         if total:
103             self.total = total  # pylint: disable=W0613,W0201
104         self.update(current - self.n)
105
106     def close(self):
107         if self.desc_persist is not None:
108             self.set_description_str(self.desc_persist, refresh=False)
109         super(Tqdm, self).close()
110
111     @property
112     def format_dict(self):
113         """inject `ncols_desc` to fill the display width (`ncols`)"""
114         d = super(Tqdm, self).format_dict
115         ncols = d["ncols"] or 80
116         ncols_desc = ncols - len(self.format_meter(ncols_desc=1, **d)) + 1
117         d["ncols_desc"] = max(ncols_desc, 0)
118         return d
119
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/dvc/progress.py b/dvc/progress.py
--- a/dvc/progress.py
+++ b/dvc/progress.py
@@ -114,5 +114,11 @@
         d = super(Tqdm, self).format_dict
         ncols = d["ncols"] or 80
         ncols_desc = ncols - len(self.format_meter(ncols_desc=1, **d)) + 1
-        d["ncols_desc"] = max(ncols_desc, 0)
+        ncols_desc = max(ncols_desc, 0)
+        if ncols_desc:
+            d["ncols_desc"] = ncols_desc
+        else:
+            # work-around for zero-width desc
+            d["ncols_desc"] = 1
+            d["desc"] = 0
         return d