problem_id stringlengths 18-22 | source stringclasses 1 value | task_type stringclasses 1 value | in_source_id stringlengths 13-58 | prompt stringlengths 1.1k-25.4k | golden_diff stringlengths 145-5.13k | verification_info stringlengths 582-39.1k | num_tokens int64 271-4.1k | num_tokens_diff int64 47-1.02k |

|---|---|---|---|---|---|---|---|---|

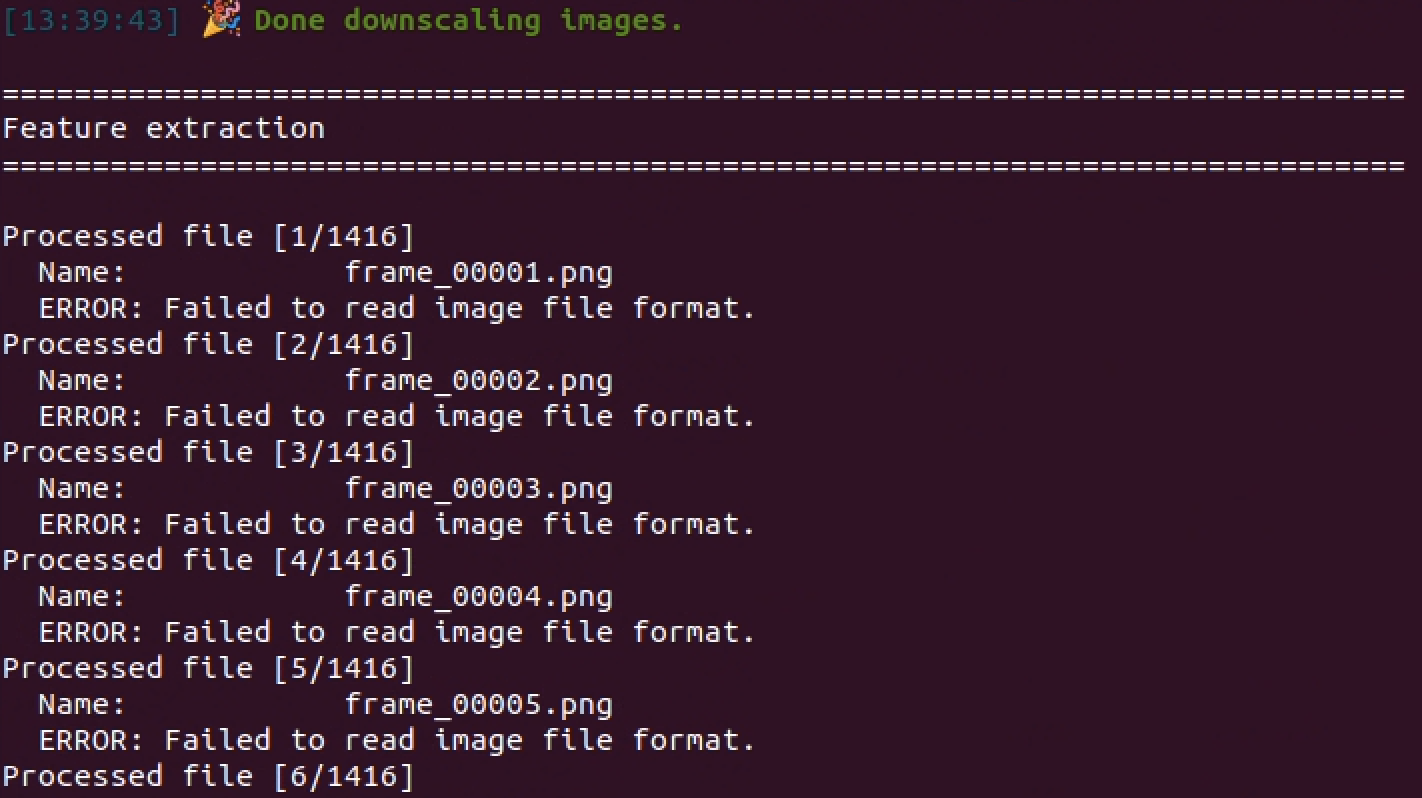

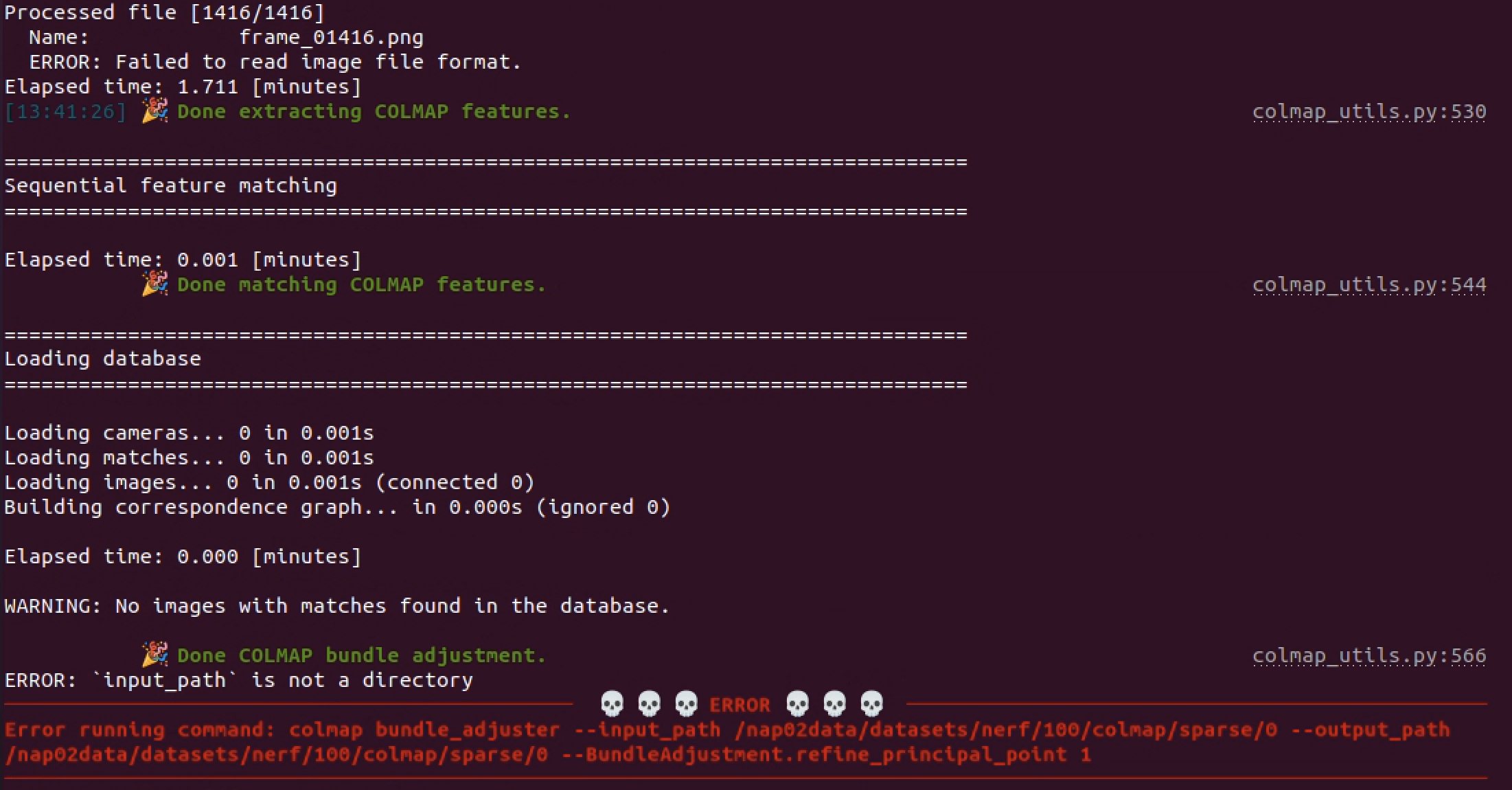

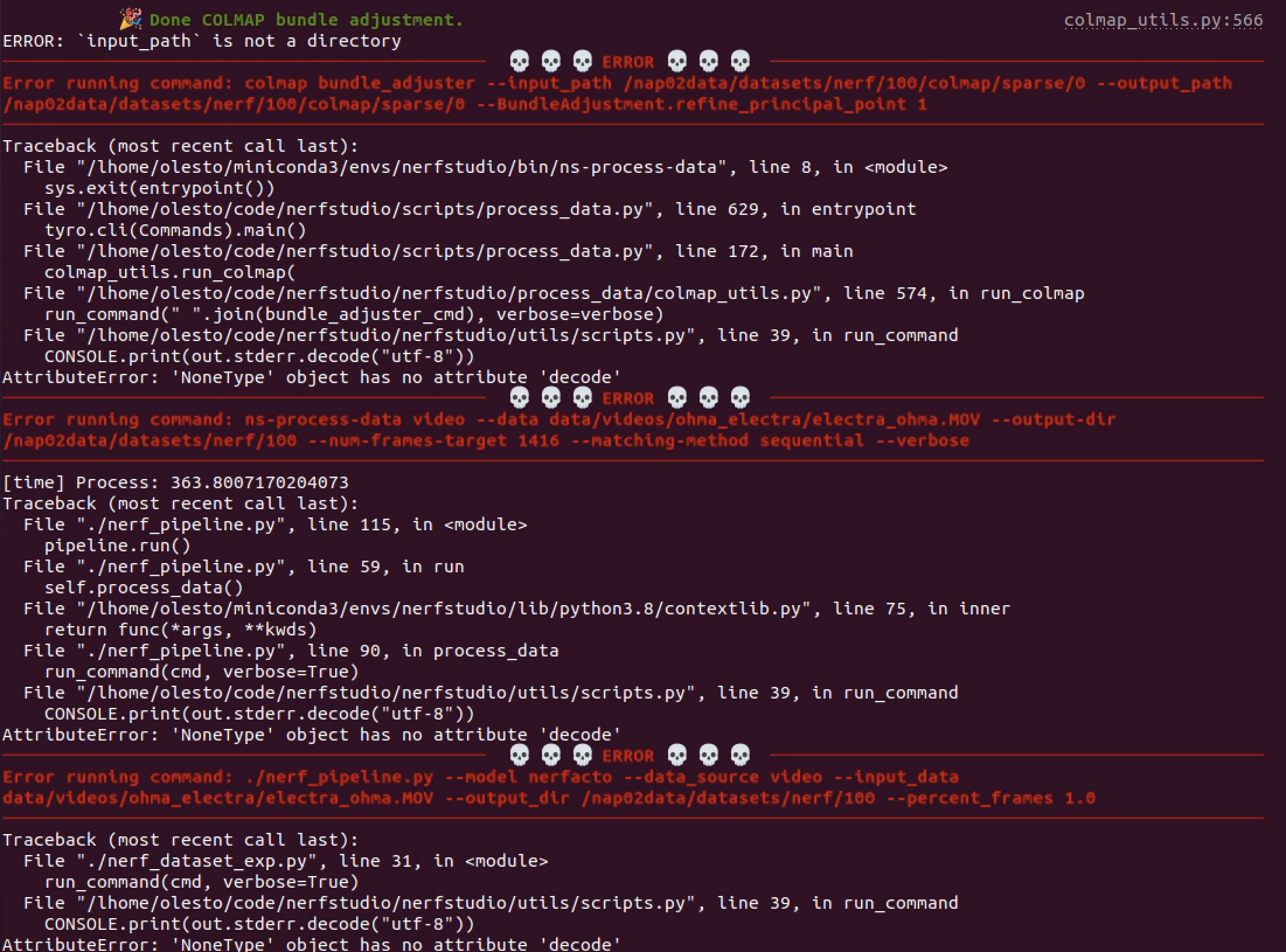

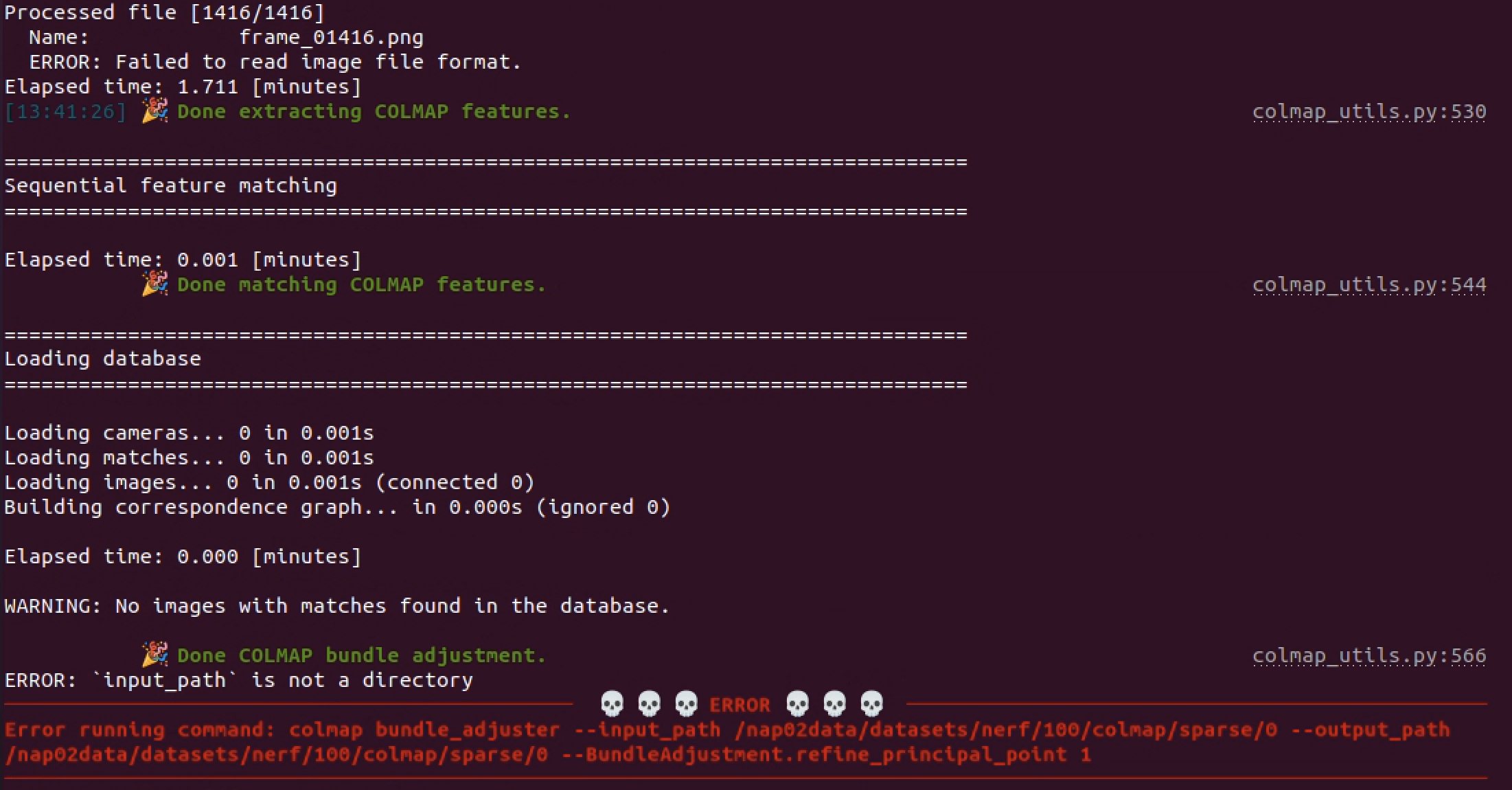

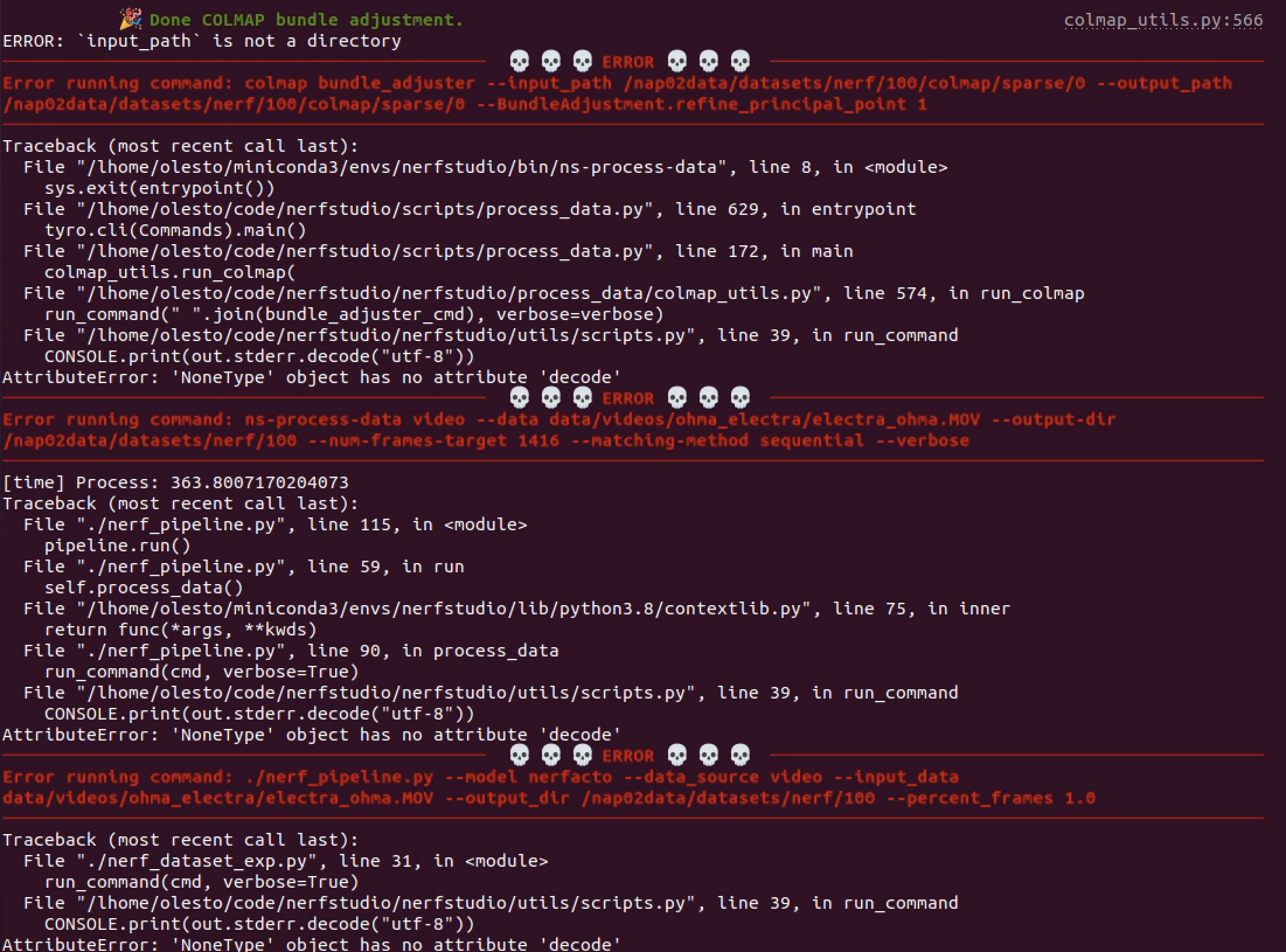

gh_patches_debug_35965 | rasdani/github-patches | git_diff | ethereum__web3.py-914 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Erorr in websockets.py: '<=' not supported between instances of 'int' and 'NoneType'

* web3 (4.3.0)

* websockets (4.0.1)

* Python: 3.6

* OS: osx HighSierra

### What was wrong?

`web3 = Web3(Web3.WebsocketProvider("ws://10.224.12.6:8546"))`

`web3.eth.syncing //returns data`

The websocket is clearly open but when I run a filter which is supposed to have many entries, I get the following error trace:

Upon running: `data = web3.eth.getFilterLogs(new_block_filter.filter_id)`, I get:

```

~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/web3/providers/websocket.py in make_request(self, method, params)
     81             WebsocketProvider._loop
     82         )
---> 83         return future.result()

/anaconda3/lib/python3.6/concurrent/futures/_base.py in result(self, timeout)
    430                 raise CancelledError()
    431             elif self._state == FINISHED:
--> 432                 return self.__get_result()
    433             else:
    434                 raise TimeoutError()

/anaconda3/lib/python3.6/concurrent/futures/_base.py in __get_result(self)
    382     def __get_result(self):
    383         if self._exception:
--> 384             raise self._exception
    385         else:
    386             return self._result

~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/web3/providers/websocket.py in coro_make_request(self, request_data)
     71         async with self.conn as conn:
     72             await conn.send(request_data)
---> 73             return json.loads(await conn.recv())
     74
     75     def make_request(self, method, params):

~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/websockets/protocol.py in recv(self)
    321             next_message.cancel()
    322             if not self.legacy_recv:
--> 323                 raise ConnectionClosed(self.close_code, self.close_reason)
    324
    325     @asyncio.coroutine

~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/websockets/exceptions.py in __init__(self, code, reason)
    145         self.reason = reason
    146         message = "WebSocket connection is closed: "
--> 147         if 3000 <= code < 4000:
    148             explanation = "registered"
    149         elif 4000 <= code < 5000:

TypeError: '<=' not supported between instances of 'int' and 'NoneType'

```

The same filter runs fine (albeit a bit slow) using `Web3.HTTPProvider()`

--- END ISSUE ---
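
The last frame of the traceback can be reproduced in isolation. The sketch below is not the `websockets` source: `describe_close_code` and `describe_close_code_safe` are hypothetical helpers, and the `"private use"` label is an assumption. It only illustrates why a connection that closes without a close code (`None`) turns the range check into a `TypeError` on Python 3, and what a guard could look like.

```python
def describe_close_code(code):
    """Hypothetical helper mirroring the range check shown in the traceback."""
    if 3000 <= code < 4000:
        return "registered"
    elif 4000 <= code < 5000:
        return "private use"  # assumed label, for illustration only
    return "unknown"


def describe_close_code_safe(code):
    """Same check, but tolerates a connection closed without a close code."""
    if code is None:
        return "no close code"
    return describe_close_code(code)


try:
    describe_close_code(None)
    error_message = ""
except TypeError as exc:
    # Python 3 refuses to order an int against None.
    error_message = str(exc)

print(error_message)
print(describe_close_code_safe(None))
```

The point of the sketch is that the `TypeError` is a secondary symptom: the underlying event is the server closing the connection, which is consistent with the reporter seeing it only on a request that returns many entries.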

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `web3/providers/websocket.py`

Content:

```

1 import asyncio
2 import json
3 import logging
4 import os
5 from threading import (
6     Thread,
7 )
8
9 import websockets
10
11 from web3.providers.base import (
12     JSONBaseProvider,
13 )
14
15
16 def _start_event_loop(loop):
17     asyncio.set_event_loop(loop)
18     loop.run_forever()
19     loop.close()
20
21
22 def _get_threaded_loop():
23     new_loop = asyncio.new_event_loop()
24     thread_loop = Thread(target=_start_event_loop, args=(new_loop,), daemon=True)
25     thread_loop.start()
26     return new_loop
27
28
29 def get_default_endpoint():
30     return os.environ.get('WEB3_WS_PROVIDER_URI', 'ws://127.0.0.1:8546')
31
32
33 class PersistentWebSocket:
34
35     def __init__(self, endpoint_uri, loop):
36         self.ws = None
37         self.endpoint_uri = endpoint_uri
38         self.loop = loop
39
40     async def __aenter__(self):
41         if self.ws is None:
42             self.ws = await websockets.connect(uri=self.endpoint_uri, loop=self.loop)
43         return self.ws
44
45     async def __aexit__(self, exc_type, exc_val, exc_tb):
46         if exc_val is not None:
47             try:
48                 await self.ws.close()
49             except Exception:
50                 pass
51             self.ws = None
52
53
54 class WebsocketProvider(JSONBaseProvider):
55     logger = logging.getLogger("web3.providers.WebsocketProvider")
56     _loop = None
57
58     def __init__(self, endpoint_uri=None):
59         self.endpoint_uri = endpoint_uri
60         if self.endpoint_uri is None:
61             self.endpoint_uri = get_default_endpoint()
62         if WebsocketProvider._loop is None:
63             WebsocketProvider._loop = _get_threaded_loop()
64         self.conn = PersistentWebSocket(self.endpoint_uri, WebsocketProvider._loop)
65         super().__init__()
66
67     def __str__(self):
68         return "WS connection {0}".format(self.endpoint_uri)
69
70     async def coro_make_request(self, request_data):
71         async with self.conn as conn:
72             await conn.send(request_data)
73             return json.loads(await conn.recv())
74
75     def make_request(self, method, params):
76         self.logger.debug("Making request WebSocket. URI: %s, "
77                           "Method: %s", self.endpoint_uri, method)
78         request_data = self.encode_rpc_request(method, params)
79         future = asyncio.run_coroutine_threadsafe(
80             self.coro_make_request(request_data),
81             WebsocketProvider._loop
82         )
83         return future.result()
84

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/web3/providers/websocket.py b/web3/providers/websocket.py
--- a/web3/providers/websocket.py
+++ b/web3/providers/websocket.py
@@ -8,10 +8,15 @@
 
 import websockets
 
+from web3.exceptions import (
+    ValidationError,
+)
 from web3.providers.base import (
     JSONBaseProvider,
 )
 
+RESTRICTED_WEBSOCKET_KWARGS = {'uri', 'loop'}
+
 
 def _start_event_loop(loop):
     asyncio.set_event_loop(loop)
@@ -32,14 +37,17 @@
 
 class PersistentWebSocket:
 
-    def __init__(self, endpoint_uri, loop):
+    def __init__(self, endpoint_uri, loop, websocket_kwargs):
         self.ws = None
         self.endpoint_uri = endpoint_uri
         self.loop = loop
+        self.websocket_kwargs = websocket_kwargs
 
     async def __aenter__(self):
         if self.ws is None:
-            self.ws = await websockets.connect(uri=self.endpoint_uri, loop=self.loop)
+            self.ws = await websockets.connect(
+                uri=self.endpoint_uri, loop=self.loop, **self.websocket_kwargs
+            )
         return self.ws
 
     async def __aexit__(self, exc_type, exc_val, exc_tb):
@@ -55,13 +63,26 @@
     logger = logging.getLogger("web3.providers.WebsocketProvider")
     _loop = None
 
-    def __init__(self, endpoint_uri=None):
+    def __init__(self, endpoint_uri=None, websocket_kwargs=None):
         self.endpoint_uri = endpoint_uri
         if self.endpoint_uri is None:
             self.endpoint_uri = get_default_endpoint()
         if WebsocketProvider._loop is None:
             WebsocketProvider._loop = _get_threaded_loop()
-        self.conn = PersistentWebSocket(self.endpoint_uri, WebsocketProvider._loop)
+        if websocket_kwargs is None:
+            websocket_kwargs = {}
+        else:
+            found_restricted_keys = set(websocket_kwargs.keys()).intersection(
+                RESTRICTED_WEBSOCKET_KWARGS
+            )
+            if found_restricted_keys:
+                raise ValidationError(
+                    '{0} are not allowed in websocket_kwargs, '
+                    'found: {1}'.format(RESTRICTED_WEBSOCKET_KWARGS, found_restricted_keys)
+                )
+        self.conn = PersistentWebSocket(
+            self.endpoint_uri, WebsocketProvider._loop, websocket_kwargs
+        )
         super().__init__()
 
     def __str__(self):
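
The validation step in the patch above can be exercised on its own. This is a minimal sketch rather than the web3 implementation: `check_websocket_kwargs` is a hypothetical standalone function, `ValueError` stands in for web3's `ValidationError`, and `max_size` is used only as an example of a keyword a caller might want to forward (whether the installed `websockets` version accepts it is an assumption).

```python
RESTRICTED_KWARGS = {"uri", "loop"}


def check_websocket_kwargs(websocket_kwargs=None):
    """Return a kwargs dict that is safe to forward to the connect call."""
    if websocket_kwargs is None:
        return {}
    found = set(websocket_kwargs).intersection(RESTRICTED_KWARGS)
    if found:
        # ValueError stands in for web3's ValidationError in this sketch.
        raise ValueError(
            "{0} are not allowed in websocket_kwargs, found: {1}".format(
                sorted(RESTRICTED_KWARGS), sorted(found)
            )
        )
    return dict(websocket_kwargs)


# A caller-supplied option that does not clash with the provider's own.
accepted = check_websocket_kwargs({"max_size": 2 ** 24})

# 'loop' is set by the provider itself, so passing it is rejected.
try:
    check_websocket_kwargs({"loop": None})
    rejected = False
except ValueError:
    rejected = True

print(accepted, rejected)
```

Rejecting `uri` and `loop` up front keeps the later `connect(uri=..., loop=..., **kwargs)` call from failing with a duplicate-keyword error at connection time.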

| {"golden_diff": "diff --git a/web3/providers/websocket.py b/web3/providers/websocket.py\n--- a/web3/providers/websocket.py\n+++ b/web3/providers/websocket.py\n@@ -8,10 +8,15 @@\n \n import websockets\n \n+from web3.exceptions import (\n+ ValidationError,\n+)\n from web3.providers.base import (\n JSONBaseProvider,\n )\n \n+RESTRICTED_WEBSOCKET_KWARGS = {'uri', 'loop'}\n+\n \n def _start_event_loop(loop):\n asyncio.set_event_loop(loop)\n@@ -32,14 +37,17 @@\n \n class PersistentWebSocket:\n \n- def __init__(self, endpoint_uri, loop):\n+ def __init__(self, endpoint_uri, loop, websocket_kwargs):\n self.ws = None\n self.endpoint_uri = endpoint_uri\n self.loop = loop\n+ self.websocket_kwargs = websocket_kwargs\n \n async def __aenter__(self):\n if self.ws is None:\n- self.ws = await websockets.connect(uri=self.endpoint_uri, loop=self.loop)\n+ self.ws = await websockets.connect(\n+ uri=self.endpoint_uri, loop=self.loop, **self.websocket_kwargs\n+ )\n return self.ws\n \n async def __aexit__(self, exc_type, exc_val, exc_tb):\n@@ -55,13 +63,26 @@\n logger = logging.getLogger(\"web3.providers.WebsocketProvider\")\n _loop = None\n \n- def __init__(self, endpoint_uri=None):\n+ def __init__(self, endpoint_uri=None, websocket_kwargs=None):\n self.endpoint_uri = endpoint_uri\n if self.endpoint_uri is None:\n self.endpoint_uri = get_default_endpoint()\n if WebsocketProvider._loop is None:\n WebsocketProvider._loop = _get_threaded_loop()\n- self.conn = PersistentWebSocket(self.endpoint_uri, WebsocketProvider._loop)\n+ if websocket_kwargs is None:\n+ websocket_kwargs = {}\n+ else:\n+ found_restricted_keys = set(websocket_kwargs.keys()).intersection(\n+ RESTRICTED_WEBSOCKET_KWARGS\n+ )\n+ if found_restricted_keys:\n+ raise ValidationError(\n+ '{0} are not allowed in websocket_kwargs, '\n+ 'found: {1}'.format(RESTRICTED_WEBSOCKET_KWARGS, found_restricted_keys)\n+ )\n+ self.conn = PersistentWebSocket(\n+ self.endpoint_uri, WebsocketProvider._loop, websocket_kwargs\n+ )\n 
super().__init__()\n \n def __str__(self):\n", "issue": "Erorr in websockets.py: '<=' not supported between instances of 'int' and 'NoneType'\n* web3 (4.3.0)\r\n* websockets (4.0.1)\r\n* Python: 3.6\r\n* OS: osx HighSierra\r\n\r\n\r\n### What was wrong?\r\n\r\n`web3 = Web3(Web3.WebsocketProvider(\"ws://10.224.12.6:8546\"))`\r\n`web3.eth.syncing //returns data`\r\n\r\nThe websocket is clearly open but when I run a filter which is supposed to have many entries, I get the following error trace:\r\n\r\nUpon running: `data = web3.eth.getFilterLogs(new_block_filter.filter_id)`, I get:\r\n\r\n```\r\n~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/web3/providers/websocket.py in make_request(self, method, params)\r\n 81 WebsocketProvider._loop\r\n 82 )\r\n---> 83 return future.result()\r\n\r\n/anaconda3/lib/python3.6/concurrent/futures/_base.py in result(self, timeout)\r\n 430 raise CancelledError()\r\n 431 elif self._state == FINISHED:\r\n--> 432 return self.__get_result()\r\n 433 else:\r\n 434 raise TimeoutError()\r\n\r\n/anaconda3/lib/python3.6/concurrent/futures/_base.py in __get_result(self)\r\n 382 def __get_result(self):\r\n 383 if self._exception:\r\n--> 384 raise self._exception\r\n 385 else:\r\n 386 return self._result\r\n\r\n~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/web3/providers/websocket.py in coro_make_request(self, request_data)\r\n 71 async with self.conn as conn:\r\n 72 await conn.send(request_data)\r\n---> 73 return json.loads(await conn.recv())\r\n 74 \r\n 75 def make_request(self, method, params):\r\n\r\n~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/websockets/protocol.py in recv(self)\r\n 321 next_message.cancel()\r\n 322 if not self.legacy_recv:\r\n--> 323 raise ConnectionClosed(self.close_code, self.close_reason)\r\n 324 \r\n 325 @asyncio.coroutine\r\n\r\n~/Desktop/contracts-py/contracts/lib/python3.6/site-packages/websockets/exceptions.py in __init__(self, code, reason)\r\n 145 self.reason = reason\r\n 
146 message = \"WebSocket connection is closed: \"\r\n--> 147 if 3000 <= code < 4000:\r\n 148 explanation = \"registered\"\r\n 149 elif 4000 <= code < 5000:\r\n\r\nTypeError: '<=' not supported between instances of 'int' and 'NoneType'\r\n```\r\n\r\nThe same filter runs fine (albeit a bit slow) using `Web3.HTTPProvider()`\r\n\r\n\n", "before_files": [{"content": "import asyncio\nimport json\nimport logging\nimport os\nfrom threading import (\n Thread,\n)\n\nimport websockets\n\nfrom web3.providers.base import (\n JSONBaseProvider,\n)\n\n\ndef _start_event_loop(loop):\n asyncio.set_event_loop(loop)\n loop.run_forever()\n loop.close()\n\n\ndef _get_threaded_loop():\n new_loop = asyncio.new_event_loop()\n thread_loop = Thread(target=_start_event_loop, args=(new_loop,), daemon=True)\n thread_loop.start()\n return new_loop\n\n\ndef get_default_endpoint():\n return os.environ.get('WEB3_WS_PROVIDER_URI', 'ws://127.0.0.1:8546')\n\n\nclass PersistentWebSocket:\n\n def __init__(self, endpoint_uri, loop):\n self.ws = None\n self.endpoint_uri = endpoint_uri\n self.loop = loop\n\n async def __aenter__(self):\n if self.ws is None:\n self.ws = await websockets.connect(uri=self.endpoint_uri, loop=self.loop)\n return self.ws\n\n async def __aexit__(self, exc_type, exc_val, exc_tb):\n if exc_val is not None:\n try:\n await self.ws.close()\n except Exception:\n pass\n self.ws = None\n\n\nclass WebsocketProvider(JSONBaseProvider):\n logger = logging.getLogger(\"web3.providers.WebsocketProvider\")\n _loop = None\n\n def __init__(self, endpoint_uri=None):\n self.endpoint_uri = endpoint_uri\n if self.endpoint_uri is None:\n self.endpoint_uri = get_default_endpoint()\n if WebsocketProvider._loop is None:\n WebsocketProvider._loop = _get_threaded_loop()\n self.conn = PersistentWebSocket(self.endpoint_uri, WebsocketProvider._loop)\n super().__init__()\n\n def __str__(self):\n return \"WS connection {0}\".format(self.endpoint_uri)\n\n async def coro_make_request(self, request_data):\n async 
with self.conn as conn:\n await conn.send(request_data)\n return json.loads(await conn.recv())\n\n def make_request(self, method, params):\n self.logger.debug(\"Making request WebSocket. URI: %s, \"\n \"Method: %s\", self.endpoint_uri, method)\n request_data = self.encode_rpc_request(method, params)\n future = asyncio.run_coroutine_threadsafe(\n self.coro_make_request(request_data),\n WebsocketProvider._loop\n )\n return future.result()\n", "path": "web3/providers/websocket.py"}], "after_files": [{"content": "import asyncio\nimport json\nimport logging\nimport os\nfrom threading import (\n Thread,\n)\n\nimport websockets\n\nfrom web3.exceptions import (\n ValidationError,\n)\nfrom web3.providers.base import (\n JSONBaseProvider,\n)\n\nRESTRICTED_WEBSOCKET_KWARGS = {'uri', 'loop'}\n\n\ndef _start_event_loop(loop):\n asyncio.set_event_loop(loop)\n loop.run_forever()\n loop.close()\n\n\ndef _get_threaded_loop():\n new_loop = asyncio.new_event_loop()\n thread_loop = Thread(target=_start_event_loop, args=(new_loop,), daemon=True)\n thread_loop.start()\n return new_loop\n\n\ndef get_default_endpoint():\n return os.environ.get('WEB3_WS_PROVIDER_URI', 'ws://127.0.0.1:8546')\n\n\nclass PersistentWebSocket:\n\n def __init__(self, endpoint_uri, loop, websocket_kwargs):\n self.ws = None\n self.endpoint_uri = endpoint_uri\n self.loop = loop\n self.websocket_kwargs = websocket_kwargs\n\n async def __aenter__(self):\n if self.ws is None:\n self.ws = await websockets.connect(\n uri=self.endpoint_uri, loop=self.loop, **self.websocket_kwargs\n )\n return self.ws\n\n async def __aexit__(self, exc_type, exc_val, exc_tb):\n if exc_val is not None:\n try:\n await self.ws.close()\n except Exception:\n pass\n self.ws = None\n\n\nclass WebsocketProvider(JSONBaseProvider):\n logger = logging.getLogger(\"web3.providers.WebsocketProvider\")\n _loop = None\n\n def __init__(self, endpoint_uri=None, websocket_kwargs=None):\n self.endpoint_uri = endpoint_uri\n if self.endpoint_uri is None:\n 
self.endpoint_uri = get_default_endpoint()\n if WebsocketProvider._loop is None:\n WebsocketProvider._loop = _get_threaded_loop()\n if websocket_kwargs is None:\n websocket_kwargs = {}\n else:\n found_restricted_keys = set(websocket_kwargs.keys()).intersection(\n RESTRICTED_WEBSOCKET_KWARGS\n )\n if found_restricted_keys:\n raise ValidationError(\n '{0} are not allowed in websocket_kwargs, '\n 'found: {1}'.format(RESTRICTED_WEBSOCKET_KWARGS, found_restricted_keys)\n )\n self.conn = PersistentWebSocket(\n self.endpoint_uri, WebsocketProvider._loop, websocket_kwargs\n )\n super().__init__()\n\n def __str__(self):\n return \"WS connection {0}\".format(self.endpoint_uri)\n\n async def coro_make_request(self, request_data):\n async with self.conn as conn:\n await conn.send(request_data)\n return json.loads(await conn.recv())\n\n def make_request(self, method, params):\n self.logger.debug(\"Making request WebSocket. URI: %s, \"\n \"Method: %s\", self.endpoint_uri, method)\n request_data = self.encode_rpc_request(method, params)\n future = asyncio.run_coroutine_threadsafe(\n self.coro_make_request(request_data),\n WebsocketProvider._loop\n )\n return future.result()\n", "path": "web3/providers/websocket.py"}]} | 1,632 | 544 |

gh_patches_debug_34363 | rasdani/github-patches | git_diff | localstack__localstack-1082 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Localstack Elasticsearch plugin Ingest User Agent Processor not available

Plugin `Ingest User Agent Processor` is installed by default for Elasticsearch (ELK) on AWS. It is not the case in Localstack and think we basically expect it.

In addition, I was not able to install it manually through command `bin/elasticsearch-plugin install ingest-user-agent` as bin/elasticsearch-plugin is missing.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `localstack/constants.py`

Content:

```

1 import os
2 import localstack_client.config
3
4 # LocalStack version
5 VERSION = '0.8.10'
6
7 # default AWS region
8 if 'DEFAULT_REGION' not in os.environ:
9     os.environ['DEFAULT_REGION'] = 'us-east-1'
10 DEFAULT_REGION = os.environ['DEFAULT_REGION']
11
12 # constant to represent the "local" region, i.e., local machine
13 REGION_LOCAL = 'local'
14
15 # dev environment
16 ENV_DEV = 'dev'
17
18 # backend service ports, for services that are behind a proxy (counting down from 4566)
19 DEFAULT_PORT_APIGATEWAY_BACKEND = 4566
20 DEFAULT_PORT_KINESIS_BACKEND = 4565
21 DEFAULT_PORT_DYNAMODB_BACKEND = 4564
22 DEFAULT_PORT_S3_BACKEND = 4563
23 DEFAULT_PORT_SNS_BACKEND = 4562
24 DEFAULT_PORT_SQS_BACKEND = 4561
25 DEFAULT_PORT_ELASTICSEARCH_BACKEND = 4560
26 DEFAULT_PORT_CLOUDFORMATION_BACKEND = 4559
27
28 DEFAULT_PORT_WEB_UI = 8080
29
30 LOCALHOST = 'localhost'
31
32 # version of the Maven dependency with Java utility code
33 LOCALSTACK_MAVEN_VERSION = '0.1.15'
34
35 # map of default service APIs and ports to be spun up (fetch map from localstack_client)
36 DEFAULT_SERVICE_PORTS = localstack_client.config.get_service_ports()
37
38 # host to bind to when starting the services
39 BIND_HOST = '0.0.0.0'
40
41 # AWS user account ID used for tests
42 TEST_AWS_ACCOUNT_ID = '000000000000'
43 os.environ['TEST_AWS_ACCOUNT_ID'] = TEST_AWS_ACCOUNT_ID
44
45 # root code folder
46 LOCALSTACK_ROOT_FOLDER = os.path.realpath(os.path.join(os.path.dirname(os.path.realpath(__file__)), '..'))
47
48 # virtualenv folder
49 LOCALSTACK_VENV_FOLDER = os.path.join(LOCALSTACK_ROOT_FOLDER, '.venv')
50 if not os.path.isdir(LOCALSTACK_VENV_FOLDER):
51     # assuming this package lives here: <python>/lib/pythonX.X/site-packages/localstack/
52     LOCALSTACK_VENV_FOLDER = os.path.realpath(os.path.join(LOCALSTACK_ROOT_FOLDER, '..', '..', '..'))
53
54 # API Gateway path to indicate a user request sent to the gateway
55 PATH_USER_REQUEST = '_user_request_'
56
57 # name of LocalStack Docker image
58 DOCKER_IMAGE_NAME = 'localstack/localstack'
59
60 # environment variable name to tag local test runs
61 ENV_INTERNAL_TEST_RUN = 'LOCALSTACK_INTERNAL_TEST_RUN'
62
63 # content types
64 APPLICATION_AMZ_JSON_1_0 = 'application/x-amz-json-1.0'
65 APPLICATION_AMZ_JSON_1_1 = 'application/x-amz-json-1.1'
66 APPLICATION_JSON = 'application/json'
67
68 # Lambda defaults
69 LAMBDA_TEST_ROLE = 'arn:aws:iam::%s:role/lambda-test-role' % TEST_AWS_ACCOUNT_ID
70
71 # installation constants
72 ELASTICSEARCH_JAR_URL = 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.0.zip'
73 DYNAMODB_JAR_URL = 'https://s3-us-west-2.amazonaws.com/dynamodb-local/dynamodb_local_latest.zip'
74 ELASTICMQ_JAR_URL = 'https://s3-eu-west-1.amazonaws.com/softwaremill-public/elasticmq-server-0.14.2.jar'
75 STS_JAR_URL = 'http://central.maven.org/maven2/com/amazonaws/aws-java-sdk-sts/1.11.14/aws-java-sdk-sts-1.11.14.jar'
76
77 # API endpoint for analytics events
78 API_ENDPOINT = 'https://api.localstack.cloud/v1'
79

```

Path: `localstack/services/install.py`

Content:

```

1 #!/usr/bin/env python
2
3 import os
4 import sys
5 import glob
6 import shutil
7 import logging
8 import tempfile
9 from localstack.constants import (DEFAULT_SERVICE_PORTS, ELASTICMQ_JAR_URL, STS_JAR_URL,
10     ELASTICSEARCH_JAR_URL, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)
11 from localstack.utils.common import download, parallelize, run, mkdir, save_file, unzip, rm_rf, chmod_r
12
13 THIS_PATH = os.path.dirname(os.path.realpath(__file__))
14 ROOT_PATH = os.path.realpath(os.path.join(THIS_PATH, '..'))
15
16 INSTALL_DIR_INFRA = '%s/infra' % ROOT_PATH
17 INSTALL_DIR_NPM = '%s/node_modules' % ROOT_PATH
18 INSTALL_DIR_ES = '%s/elasticsearch' % INSTALL_DIR_INFRA
19 INSTALL_DIR_DDB = '%s/dynamodb' % INSTALL_DIR_INFRA
20 INSTALL_DIR_KCL = '%s/amazon-kinesis-client' % INSTALL_DIR_INFRA
21 INSTALL_DIR_ELASTICMQ = '%s/elasticmq' % INSTALL_DIR_INFRA
22 INSTALL_PATH_LOCALSTACK_FAT_JAR = '%s/localstack-utils-fat.jar' % INSTALL_DIR_INFRA
23 TMP_ARCHIVE_ES = os.path.join(tempfile.gettempdir(), 'localstack.es.zip')
24 TMP_ARCHIVE_DDB = os.path.join(tempfile.gettempdir(), 'localstack.ddb.zip')
25 TMP_ARCHIVE_STS = os.path.join(tempfile.gettempdir(), 'aws-java-sdk-sts.jar')
26 TMP_ARCHIVE_ELASTICMQ = os.path.join(tempfile.gettempdir(), 'elasticmq-server.jar')
27 URL_LOCALSTACK_FAT_JAR = ('http://central.maven.org/maven2/' +
28     'cloud/localstack/localstack-utils/{v}/localstack-utils-{v}-fat.jar').format(v=LOCALSTACK_MAVEN_VERSION)
29
30 # set up logger
31 LOGGER = logging.getLogger(__name__)
32
33
34 def install_elasticsearch():
35     if not os.path.exists(INSTALL_DIR_ES):
36         LOGGER.info('Downloading and installing local Elasticsearch server. This may take some time.')
37         mkdir(INSTALL_DIR_INFRA)
38         # download and extract archive
39         download_and_extract_with_retry(ELASTICSEARCH_JAR_URL, TMP_ARCHIVE_ES, INSTALL_DIR_INFRA)
40         elasticsearch_dir = glob.glob(os.path.join(INSTALL_DIR_INFRA, 'elasticsearch*'))
41         if not elasticsearch_dir:
42             raise Exception('Unable to find Elasticsearch folder in %s' % INSTALL_DIR_INFRA)
43         shutil.move(elasticsearch_dir[0], INSTALL_DIR_ES)
44
45         for dir_name in ('data', 'logs', 'modules', 'plugins', 'config/scripts'):
46             dir_path = '%s/%s' % (INSTALL_DIR_ES, dir_name)
47             mkdir(dir_path)
48             chmod_r(dir_path, 0o777)
49
50
51 def install_elasticmq():
52     if not os.path.exists(INSTALL_DIR_ELASTICMQ):
53         LOGGER.info('Downloading and installing local ElasticMQ server. This may take some time.')
54         mkdir(INSTALL_DIR_ELASTICMQ)
55         # download archive
56         if not os.path.exists(TMP_ARCHIVE_ELASTICMQ):
57             download(ELASTICMQ_JAR_URL, TMP_ARCHIVE_ELASTICMQ)
58         shutil.copy(TMP_ARCHIVE_ELASTICMQ, INSTALL_DIR_ELASTICMQ)
59
60
61 def install_kinesalite():
62     target_dir = '%s/kinesalite' % INSTALL_DIR_NPM
63     if not os.path.exists(target_dir):
64         LOGGER.info('Downloading and installing local Kinesis server. This may take some time.')
65         run('cd "%s" && npm install' % ROOT_PATH)
66
67
68 def install_dynamodb_local():
69     if not os.path.exists(INSTALL_DIR_DDB):
70         LOGGER.info('Downloading and installing local DynamoDB server. This may take some time.')
71         mkdir(INSTALL_DIR_DDB)
72         # download and extract archive
73         download_and_extract_with_retry(DYNAMODB_JAR_URL, TMP_ARCHIVE_DDB, INSTALL_DIR_DDB)
74
75     # fix for Alpine, otherwise DynamoDBLocal fails with:
76     # DynamoDBLocal_lib/libsqlite4java-linux-amd64.so: __memcpy_chk: symbol not found
77     if is_alpine():
78         ddb_libs_dir = '%s/DynamoDBLocal_lib' % INSTALL_DIR_DDB
79         patched_marker = '%s/alpine_fix_applied' % ddb_libs_dir
80         if not os.path.exists(patched_marker):
81             patched_lib = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +
82                 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/libsqlite4java-linux-amd64.so')
83             patched_jar = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +
84                 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/sqlite4java.jar')
85             run("curl -L -o %s/libsqlite4java-linux-amd64.so '%s'" % (ddb_libs_dir, patched_lib))
86             run("curl -L -o %s/sqlite4java.jar '%s'" % (ddb_libs_dir, patched_jar))
87             save_file(patched_marker, '')
88
89     # fix logging configuration for DynamoDBLocal
90     log4j2_config = """<Configuration status="WARN">
91       <Appenders>
92         <Console name="Console" target="SYSTEM_OUT">
93           <PatternLayout pattern="%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n"/>
94         </Console>
95       </Appenders>
96       <Loggers>
97         <Root level="WARN"><AppenderRef ref="Console"/></Root>
98       </Loggers>
99     </Configuration>"""
100     log4j2_file = os.path.join(INSTALL_DIR_DDB, 'log4j2.xml')
101     save_file(log4j2_file, log4j2_config)
102     run('cd "%s" && zip -u DynamoDBLocal.jar log4j2.xml || true' % INSTALL_DIR_DDB)
103
104
105 def install_amazon_kinesis_client_libs():
106     # install KCL/STS JAR files
107     if not os.path.exists(INSTALL_DIR_KCL):
108         mkdir(INSTALL_DIR_KCL)
109         if not os.path.exists(TMP_ARCHIVE_STS):
110             download(STS_JAR_URL, TMP_ARCHIVE_STS)
111         shutil.copy(TMP_ARCHIVE_STS, INSTALL_DIR_KCL)
112     # Compile Java files
113     from localstack.utils.kinesis import kclipy_helper
114     classpath = kclipy_helper.get_kcl_classpath()
115     java_files = '%s/utils/kinesis/java/com/atlassian/*.java' % ROOT_PATH
116     class_files = '%s/utils/kinesis/java/com/atlassian/*.class' % ROOT_PATH
117     if not glob.glob(class_files):
118         run('javac -cp "%s" %s' % (classpath, java_files))
119
120
121 def install_lambda_java_libs():
122     # install LocalStack "fat" JAR file (contains all dependencies)
123     if not os.path.exists(INSTALL_PATH_LOCALSTACK_FAT_JAR):
124         LOGGER.info('Downloading and installing LocalStack Java libraries. This may take some time.')
125         download(URL_LOCALSTACK_FAT_JAR, INSTALL_PATH_LOCALSTACK_FAT_JAR)
126
127
128 def install_component(name):
129     if name == 'kinesis':
130         install_kinesalite()
131     elif name == 'dynamodb':
132         install_dynamodb_local()
133     elif name == 'es':
134         install_elasticsearch()
135     elif name == 'sqs':
136         install_elasticmq()
137
138
139 def install_components(names):
140     parallelize(install_component, names)
141     install_lambda_java_libs()
142
143
144 def install_all_components():
145     install_components(DEFAULT_SERVICE_PORTS.keys())
146
147
148 # -----------------
149 # HELPER FUNCTIONS
150 # -----------------
151
152
153 def is_alpine():
154     try:
155         run('cat /etc/issue | grep Alpine', print_error=False)
156         return True
157     except Exception:
158         return False
159
160
161 def download_and_extract_with_retry(archive_url, tmp_archive, target_dir):
162
163     def download_and_extract():
164         if not os.path.exists(tmp_archive):
165             download(archive_url, tmp_archive)
166         unzip(tmp_archive, target_dir)
167
168     try:
169         download_and_extract()
170     except Exception:
171         # try deleting and re-downloading the zip file
172         LOGGER.info('Unable to extract file, re-downloading ZIP archive: %s' % tmp_archive)
173         rm_rf(tmp_archive)
174         download_and_extract()
175
176
177 if __name__ == '__main__':
178
179     if len(sys.argv) > 1:
180         if sys.argv[1] == 'libs':
181             print('Initializing installation.')
182             logging.basicConfig(level=logging.INFO)
183             logging.getLogger('requests').setLevel(logging.WARNING)
184             install_all_components()
185             print('Done.')
186         elif sys.argv[1] == 'testlibs':
187             # Install additional libraries for testing
188             install_amazon_kinesis_client_libs()
189

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

| diff --git a/localstack/constants.py b/localstack/constants.py
--- a/localstack/constants.py
+++ b/localstack/constants.py
@@ -70,6 +70,9 @@
 
 # installation constants
 ELASTICSEARCH_JAR_URL = 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.0.zip'
+# https://docs.aws.amazon.com/ja_jp/elasticsearch-service/latest/developerguide/aes-supported-plugins.html
+ELASTICSEARCH_PLUGIN_LIST = ['analysis-icu', 'ingest-attachment', 'ingest-user-agent', 'analysis-kuromoji',
+    'mapper-murmur3', 'mapper-size', 'analysis-phonetic', 'analysis-smartcn', 'analysis-stempel', 'analysis-ukrainian']
 DYNAMODB_JAR_URL = 'https://s3-us-west-2.amazonaws.com/dynamodb-local/dynamodb_local_latest.zip'
 ELASTICMQ_JAR_URL = 'https://s3-eu-west-1.amazonaws.com/softwaremill-public/elasticmq-server-0.14.2.jar'
 STS_JAR_URL = 'http://central.maven.org/maven2/com/amazonaws/aws-java-sdk-sts/1.11.14/aws-java-sdk-sts-1.11.14.jar'
diff --git a/localstack/services/install.py b/localstack/services/install.py
--- a/localstack/services/install.py
+++ b/localstack/services/install.py
@@ -7,7 +7,7 @@
 import logging
 import tempfile
 from localstack.constants import (DEFAULT_SERVICE_PORTS, ELASTICMQ_JAR_URL, STS_JAR_URL,
-    ELASTICSEARCH_JAR_URL, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)
+    ELASTICSEARCH_JAR_URL, ELASTICSEARCH_PLUGIN_LIST, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)
 from localstack.utils.common import download, parallelize, run, mkdir, save_file, unzip, rm_rf, chmod_r
 
 THIS_PATH = os.path.dirname(os.path.realpath(__file__))
@@ -47,6 +47,14 @@
             mkdir(dir_path)
             chmod_r(dir_path, 0o777)
 
+        # install default plugins
+        for plugin in ELASTICSEARCH_PLUGIN_LIST:
+            if is_alpine():
+                # https://github.com/pires/docker-elasticsearch/issues/56
+                os.environ['ES_TMPDIR'] = '/tmp'
+            plugin_binary = os.path.join(INSTALL_DIR_ES, 'bin', 'elasticsearch-plugin')
+            run('%s install %s' % (plugin_binary, plugin))
+
 
 def install_elasticmq():
     if not os.path.exists(INSTALL_DIR_ELASTICMQ):
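
The plugin loop added by the patch above boils down to building one shell command per plugin. The following is a rough sketch under stated assumptions, not LocalStack code: `plugin_install_commands` is a hypothetical helper, the install directory is a made-up relative path, and nothing is executed; it only shows the command strings that the loop would hand to `run`.

```python
import os


def plugin_install_commands(install_dir, plugins):
    """Build one `elasticsearch-plugin install` command string per plugin."""
    plugin_binary = os.path.join(install_dir, "bin", "elasticsearch-plugin")
    return ["%s install %s" % (plugin_binary, plugin) for plugin in plugins]


# Hypothetical install directory and a shortened plugin list.
commands = plugin_install_commands(
    os.path.join("infra", "elasticsearch"),
    ["ingest-user-agent", "analysis-icu"],
)
for command in commands:
    print(command)
```

Keeping command construction separate from execution makes the list of plugins easy to check without downloading anything.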

| {"golden_diff": "diff --git a/localstack/constants.py b/localstack/constants.py\n--- a/localstack/constants.py\n+++ b/localstack/constants.py\n@@ -70,6 +70,9 @@\n \n # installation constants\n ELASTICSEARCH_JAR_URL = 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.0.zip'\n+# https://docs.aws.amazon.com/ja_jp/elasticsearch-service/latest/developerguide/aes-supported-plugins.html\n+ELASTICSEARCH_PLUGIN_LIST = ['analysis-icu', 'ingest-attachment', 'ingest-user-agent', 'analysis-kuromoji',\n+ 'mapper-murmur3', 'mapper-size', 'analysis-phonetic', 'analysis-smartcn', 'analysis-stempel', 'analysis-ukrainian']\n DYNAMODB_JAR_URL = 'https://s3-us-west-2.amazonaws.com/dynamodb-local/dynamodb_local_latest.zip'\n ELASTICMQ_JAR_URL = 'https://s3-eu-west-1.amazonaws.com/softwaremill-public/elasticmq-server-0.14.2.jar'\n STS_JAR_URL = 'http://central.maven.org/maven2/com/amazonaws/aws-java-sdk-sts/1.11.14/aws-java-sdk-sts-1.11.14.jar'\ndiff --git a/localstack/services/install.py b/localstack/services/install.py\n--- a/localstack/services/install.py\n+++ b/localstack/services/install.py\n@@ -7,7 +7,7 @@\n import logging\n import tempfile\n from localstack.constants import (DEFAULT_SERVICE_PORTS, ELASTICMQ_JAR_URL, STS_JAR_URL,\n- ELASTICSEARCH_JAR_URL, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)\n+ ELASTICSEARCH_JAR_URL, ELASTICSEARCH_PLUGIN_LIST, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)\n from localstack.utils.common import download, parallelize, run, mkdir, save_file, unzip, rm_rf, chmod_r\n \n THIS_PATH = os.path.dirname(os.path.realpath(__file__))\n@@ -47,6 +47,14 @@\n mkdir(dir_path)\n chmod_r(dir_path, 0o777)\n \n+ # install default plugins\n+ for plugin in ELASTICSEARCH_PLUGIN_LIST:\n+ if is_alpine():\n+ # https://github.com/pires/docker-elasticsearch/issues/56\n+ os.environ['ES_TMPDIR'] = '/tmp'\n+ plugin_binary = os.path.join(INSTALL_DIR_ES, 'bin', 'elasticsearch-plugin')\n+ run('%s install %s' % (plugin_binary, plugin))\n+\n \n def 
install_elasticmq():\n if not os.path.exists(INSTALL_DIR_ELASTICMQ):\n", "issue": "Localstack Elasticsearch plugin Ingest User Agent Processor not available\nPlugin `Ingest User Agent Processor` is installed by default for Elasticsearch (ELK) on AWS. It is not the case in Localstack and think we basically expect it.\r\n\r\nIn addition, I was not able to install it manually through command `bin/elasticsearch-plugin install ingest-user-agent` as bin/elasticsearch-plugin is missing.\n", "before_files": [{"content": "import os\nimport localstack_client.config\n\n# LocalStack version\nVERSION = '0.8.10'\n\n# default AWS region\nif 'DEFAULT_REGION' not in os.environ:\n os.environ['DEFAULT_REGION'] = 'us-east-1'\nDEFAULT_REGION = os.environ['DEFAULT_REGION']\n\n# constant to represent the \"local\" region, i.e., local machine\nREGION_LOCAL = 'local'\n\n# dev environment\nENV_DEV = 'dev'\n\n# backend service ports, for services that are behind a proxy (counting down from 4566)\nDEFAULT_PORT_APIGATEWAY_BACKEND = 4566\nDEFAULT_PORT_KINESIS_BACKEND = 4565\nDEFAULT_PORT_DYNAMODB_BACKEND = 4564\nDEFAULT_PORT_S3_BACKEND = 4563\nDEFAULT_PORT_SNS_BACKEND = 4562\nDEFAULT_PORT_SQS_BACKEND = 4561\nDEFAULT_PORT_ELASTICSEARCH_BACKEND = 4560\nDEFAULT_PORT_CLOUDFORMATION_BACKEND = 4559\n\nDEFAULT_PORT_WEB_UI = 8080\n\nLOCALHOST = 'localhost'\n\n# version of the Maven dependency with Java utility code\nLOCALSTACK_MAVEN_VERSION = '0.1.15'\n\n# map of default service APIs and ports to be spun up (fetch map from localstack_client)\nDEFAULT_SERVICE_PORTS = localstack_client.config.get_service_ports()\n\n# host to bind to when starting the services\nBIND_HOST = '0.0.0.0'\n\n# AWS user account ID used for tests\nTEST_AWS_ACCOUNT_ID = '000000000000'\nos.environ['TEST_AWS_ACCOUNT_ID'] = TEST_AWS_ACCOUNT_ID\n\n# root code folder\nLOCALSTACK_ROOT_FOLDER = os.path.realpath(os.path.join(os.path.dirname(os.path.realpath(__file__)), '..'))\n\n# virtualenv folder\nLOCALSTACK_VENV_FOLDER = 
os.path.join(LOCALSTACK_ROOT_FOLDER, '.venv')\nif not os.path.isdir(LOCALSTACK_VENV_FOLDER):\n # assuming this package lives here: <python>/lib/pythonX.X/site-packages/localstack/\n LOCALSTACK_VENV_FOLDER = os.path.realpath(os.path.join(LOCALSTACK_ROOT_FOLDER, '..', '..', '..'))\n\n# API Gateway path to indicate a user request sent to the gateway\nPATH_USER_REQUEST = '_user_request_'\n\n# name of LocalStack Docker image\nDOCKER_IMAGE_NAME = 'localstack/localstack'\n\n# environment variable name to tag local test runs\nENV_INTERNAL_TEST_RUN = 'LOCALSTACK_INTERNAL_TEST_RUN'\n\n# content types\nAPPLICATION_AMZ_JSON_1_0 = 'application/x-amz-json-1.0'\nAPPLICATION_AMZ_JSON_1_1 = 'application/x-amz-json-1.1'\nAPPLICATION_JSON = 'application/json'\n\n# Lambda defaults\nLAMBDA_TEST_ROLE = 'arn:aws:iam::%s:role/lambda-test-role' % TEST_AWS_ACCOUNT_ID\n\n# installation constants\nELASTICSEARCH_JAR_URL = 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.0.zip'\nDYNAMODB_JAR_URL = 'https://s3-us-west-2.amazonaws.com/dynamodb-local/dynamodb_local_latest.zip'\nELASTICMQ_JAR_URL = 'https://s3-eu-west-1.amazonaws.com/softwaremill-public/elasticmq-server-0.14.2.jar'\nSTS_JAR_URL = 'http://central.maven.org/maven2/com/amazonaws/aws-java-sdk-sts/1.11.14/aws-java-sdk-sts-1.11.14.jar'\n\n# API endpoint for analytics events\nAPI_ENDPOINT = 'https://api.localstack.cloud/v1'\n", "path": "localstack/constants.py"}, {"content": "#!/usr/bin/env python\n\nimport os\nimport sys\nimport glob\nimport shutil\nimport logging\nimport tempfile\nfrom localstack.constants import (DEFAULT_SERVICE_PORTS, ELASTICMQ_JAR_URL, STS_JAR_URL,\n ELASTICSEARCH_JAR_URL, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)\nfrom localstack.utils.common import download, parallelize, run, mkdir, save_file, unzip, rm_rf, chmod_r\n\nTHIS_PATH = os.path.dirname(os.path.realpath(__file__))\nROOT_PATH = os.path.realpath(os.path.join(THIS_PATH, '..'))\n\nINSTALL_DIR_INFRA = '%s/infra' % 
ROOT_PATH\nINSTALL_DIR_NPM = '%s/node_modules' % ROOT_PATH\nINSTALL_DIR_ES = '%s/elasticsearch' % INSTALL_DIR_INFRA\nINSTALL_DIR_DDB = '%s/dynamodb' % INSTALL_DIR_INFRA\nINSTALL_DIR_KCL = '%s/amazon-kinesis-client' % INSTALL_DIR_INFRA\nINSTALL_DIR_ELASTICMQ = '%s/elasticmq' % INSTALL_DIR_INFRA\nINSTALL_PATH_LOCALSTACK_FAT_JAR = '%s/localstack-utils-fat.jar' % INSTALL_DIR_INFRA\nTMP_ARCHIVE_ES = os.path.join(tempfile.gettempdir(), 'localstack.es.zip')\nTMP_ARCHIVE_DDB = os.path.join(tempfile.gettempdir(), 'localstack.ddb.zip')\nTMP_ARCHIVE_STS = os.path.join(tempfile.gettempdir(), 'aws-java-sdk-sts.jar')\nTMP_ARCHIVE_ELASTICMQ = os.path.join(tempfile.gettempdir(), 'elasticmq-server.jar')\nURL_LOCALSTACK_FAT_JAR = ('http://central.maven.org/maven2/' +\n 'cloud/localstack/localstack-utils/{v}/localstack-utils-{v}-fat.jar').format(v=LOCALSTACK_MAVEN_VERSION)\n\n# set up logger\nLOGGER = logging.getLogger(__name__)\n\n\ndef install_elasticsearch():\n if not os.path.exists(INSTALL_DIR_ES):\n LOGGER.info('Downloading and installing local Elasticsearch server. This may take some time.')\n mkdir(INSTALL_DIR_INFRA)\n # download and extract archive\n download_and_extract_with_retry(ELASTICSEARCH_JAR_URL, TMP_ARCHIVE_ES, INSTALL_DIR_INFRA)\n elasticsearch_dir = glob.glob(os.path.join(INSTALL_DIR_INFRA, 'elasticsearch*'))\n if not elasticsearch_dir:\n raise Exception('Unable to find Elasticsearch folder in %s' % INSTALL_DIR_INFRA)\n shutil.move(elasticsearch_dir[0], INSTALL_DIR_ES)\n\n for dir_name in ('data', 'logs', 'modules', 'plugins', 'config/scripts'):\n dir_path = '%s/%s' % (INSTALL_DIR_ES, dir_name)\n mkdir(dir_path)\n chmod_r(dir_path, 0o777)\n\n\ndef install_elasticmq():\n if not os.path.exists(INSTALL_DIR_ELASTICMQ):\n LOGGER.info('Downloading and installing local ElasticMQ server. 
This may take some time.')\n mkdir(INSTALL_DIR_ELASTICMQ)\n # download archive\n if not os.path.exists(TMP_ARCHIVE_ELASTICMQ):\n download(ELASTICMQ_JAR_URL, TMP_ARCHIVE_ELASTICMQ)\n shutil.copy(TMP_ARCHIVE_ELASTICMQ, INSTALL_DIR_ELASTICMQ)\n\n\ndef install_kinesalite():\n target_dir = '%s/kinesalite' % INSTALL_DIR_NPM\n if not os.path.exists(target_dir):\n LOGGER.info('Downloading and installing local Kinesis server. This may take some time.')\n run('cd \"%s\" && npm install' % ROOT_PATH)\n\n\ndef install_dynamodb_local():\n if not os.path.exists(INSTALL_DIR_DDB):\n LOGGER.info('Downloading and installing local DynamoDB server. This may take some time.')\n mkdir(INSTALL_DIR_DDB)\n # download and extract archive\n download_and_extract_with_retry(DYNAMODB_JAR_URL, TMP_ARCHIVE_DDB, INSTALL_DIR_DDB)\n\n # fix for Alpine, otherwise DynamoDBLocal fails with:\n # DynamoDBLocal_lib/libsqlite4java-linux-amd64.so: __memcpy_chk: symbol not found\n if is_alpine():\n ddb_libs_dir = '%s/DynamoDBLocal_lib' % INSTALL_DIR_DDB\n patched_marker = '%s/alpine_fix_applied' % ddb_libs_dir\n if not os.path.exists(patched_marker):\n patched_lib = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +\n 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/libsqlite4java-linux-amd64.so')\n patched_jar = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +\n 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/sqlite4java.jar')\n run(\"curl -L -o %s/libsqlite4java-linux-amd64.so '%s'\" % (ddb_libs_dir, patched_lib))\n run(\"curl -L -o %s/sqlite4java.jar '%s'\" % (ddb_libs_dir, patched_jar))\n save_file(patched_marker, '')\n\n # fix logging configuration for DynamoDBLocal\n log4j2_config = \"\"\"<Configuration status=\"WARN\">\n <Appenders>\n <Console name=\"Console\" target=\"SYSTEM_OUT\">\n <PatternLayout pattern=\"%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n\"/>\n </Console>\n </Appenders>\n <Loggers>\n <Root level=\"WARN\"><AppenderRef ref=\"Console\"/></Root>\n 
</Loggers>\n </Configuration>\"\"\"\n log4j2_file = os.path.join(INSTALL_DIR_DDB, 'log4j2.xml')\n save_file(log4j2_file, log4j2_config)\n run('cd \"%s\" && zip -u DynamoDBLocal.jar log4j2.xml || true' % INSTALL_DIR_DDB)\n\n\ndef install_amazon_kinesis_client_libs():\n # install KCL/STS JAR files\n if not os.path.exists(INSTALL_DIR_KCL):\n mkdir(INSTALL_DIR_KCL)\n if not os.path.exists(TMP_ARCHIVE_STS):\n download(STS_JAR_URL, TMP_ARCHIVE_STS)\n shutil.copy(TMP_ARCHIVE_STS, INSTALL_DIR_KCL)\n # Compile Java files\n from localstack.utils.kinesis import kclipy_helper\n classpath = kclipy_helper.get_kcl_classpath()\n java_files = '%s/utils/kinesis/java/com/atlassian/*.java' % ROOT_PATH\n class_files = '%s/utils/kinesis/java/com/atlassian/*.class' % ROOT_PATH\n if not glob.glob(class_files):\n run('javac -cp \"%s\" %s' % (classpath, java_files))\n\n\ndef install_lambda_java_libs():\n # install LocalStack \"fat\" JAR file (contains all dependencies)\n if not os.path.exists(INSTALL_PATH_LOCALSTACK_FAT_JAR):\n LOGGER.info('Downloading and installing LocalStack Java libraries. 
This may take some time.')\n download(URL_LOCALSTACK_FAT_JAR, INSTALL_PATH_LOCALSTACK_FAT_JAR)\n\n\ndef install_component(name):\n if name == 'kinesis':\n install_kinesalite()\n elif name == 'dynamodb':\n install_dynamodb_local()\n elif name == 'es':\n install_elasticsearch()\n elif name == 'sqs':\n install_elasticmq()\n\n\ndef install_components(names):\n parallelize(install_component, names)\n install_lambda_java_libs()\n\n\ndef install_all_components():\n install_components(DEFAULT_SERVICE_PORTS.keys())\n\n\n# -----------------\n# HELPER FUNCTIONS\n# -----------------\n\n\ndef is_alpine():\n try:\n run('cat /etc/issue | grep Alpine', print_error=False)\n return True\n except Exception:\n return False\n\n\ndef download_and_extract_with_retry(archive_url, tmp_archive, target_dir):\n\n def download_and_extract():\n if not os.path.exists(tmp_archive):\n download(archive_url, tmp_archive)\n unzip(tmp_archive, target_dir)\n\n try:\n download_and_extract()\n except Exception:\n # try deleting and re-downloading the zip file\n LOGGER.info('Unable to extract file, re-downloading ZIP archive: %s' % tmp_archive)\n rm_rf(tmp_archive)\n download_and_extract()\n\n\nif __name__ == '__main__':\n\n if len(sys.argv) > 1:\n if sys.argv[1] == 'libs':\n print('Initializing installation.')\n logging.basicConfig(level=logging.INFO)\n logging.getLogger('requests').setLevel(logging.WARNING)\n install_all_components()\n print('Done.')\n elif sys.argv[1] == 'testlibs':\n # Install additional libraries for testing\n install_amazon_kinesis_client_libs()\n", "path": "localstack/services/install.py"}], "after_files": [{"content": "import os\nimport localstack_client.config\n\n# LocalStack version\nVERSION = '0.8.10'\n\n# default AWS region\nif 'DEFAULT_REGION' not in os.environ:\n os.environ['DEFAULT_REGION'] = 'us-east-1'\nDEFAULT_REGION = os.environ['DEFAULT_REGION']\n\n# constant to represent the \"local\" region, i.e., local machine\nREGION_LOCAL = 'local'\n\n# dev environment\nENV_DEV = 
'dev'\n\n# backend service ports, for services that are behind a proxy (counting down from 4566)\nDEFAULT_PORT_APIGATEWAY_BACKEND = 4566\nDEFAULT_PORT_KINESIS_BACKEND = 4565\nDEFAULT_PORT_DYNAMODB_BACKEND = 4564\nDEFAULT_PORT_S3_BACKEND = 4563\nDEFAULT_PORT_SNS_BACKEND = 4562\nDEFAULT_PORT_SQS_BACKEND = 4561\nDEFAULT_PORT_ELASTICSEARCH_BACKEND = 4560\nDEFAULT_PORT_CLOUDFORMATION_BACKEND = 4559\n\nDEFAULT_PORT_WEB_UI = 8080\n\nLOCALHOST = 'localhost'\n\n# version of the Maven dependency with Java utility code\nLOCALSTACK_MAVEN_VERSION = '0.1.15'\n\n# map of default service APIs and ports to be spun up (fetch map from localstack_client)\nDEFAULT_SERVICE_PORTS = localstack_client.config.get_service_ports()\n\n# host to bind to when starting the services\nBIND_HOST = '0.0.0.0'\n\n# AWS user account ID used for tests\nTEST_AWS_ACCOUNT_ID = '000000000000'\nos.environ['TEST_AWS_ACCOUNT_ID'] = TEST_AWS_ACCOUNT_ID\n\n# root code folder\nLOCALSTACK_ROOT_FOLDER = os.path.realpath(os.path.join(os.path.dirname(os.path.realpath(__file__)), '..'))\n\n# virtualenv folder\nLOCALSTACK_VENV_FOLDER = os.path.join(LOCALSTACK_ROOT_FOLDER, '.venv')\nif not os.path.isdir(LOCALSTACK_VENV_FOLDER):\n # assuming this package lives here: <python>/lib/pythonX.X/site-packages/localstack/\n LOCALSTACK_VENV_FOLDER = os.path.realpath(os.path.join(LOCALSTACK_ROOT_FOLDER, '..', '..', '..'))\n\n# API Gateway path to indicate a user request sent to the gateway\nPATH_USER_REQUEST = '_user_request_'\n\n# name of LocalStack Docker image\nDOCKER_IMAGE_NAME = 'localstack/localstack'\n\n# environment variable name to tag local test runs\nENV_INTERNAL_TEST_RUN = 'LOCALSTACK_INTERNAL_TEST_RUN'\n\n# content types\nAPPLICATION_AMZ_JSON_1_0 = 'application/x-amz-json-1.0'\nAPPLICATION_AMZ_JSON_1_1 = 'application/x-amz-json-1.1'\nAPPLICATION_JSON = 'application/json'\n\n# Lambda defaults\nLAMBDA_TEST_ROLE = 'arn:aws:iam::%s:role/lambda-test-role' % TEST_AWS_ACCOUNT_ID\n\n# installation 
constants\nELASTICSEARCH_JAR_URL = 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.0.zip'\n# https://docs.aws.amazon.com/ja_jp/elasticsearch-service/latest/developerguide/aes-supported-plugins.html\nELASTICSEARCH_PLUGIN_LIST = ['analysis-icu', 'ingest-attachment', 'ingest-user-agent', 'analysis-kuromoji',\n 'mapper-murmur3', 'mapper-size', 'analysis-phonetic', 'analysis-smartcn', 'analysis-stempel', 'analysis-ukrainian']\nDYNAMODB_JAR_URL = 'https://s3-us-west-2.amazonaws.com/dynamodb-local/dynamodb_local_latest.zip'\nELASTICMQ_JAR_URL = 'https://s3-eu-west-1.amazonaws.com/softwaremill-public/elasticmq-server-0.14.2.jar'\nSTS_JAR_URL = 'http://central.maven.org/maven2/com/amazonaws/aws-java-sdk-sts/1.11.14/aws-java-sdk-sts-1.11.14.jar'\n\n# API endpoint for analytics events\nAPI_ENDPOINT = 'https://api.localstack.cloud/v1'\n", "path": "localstack/constants.py"}, {"content": "#!/usr/bin/env python\n\nimport os\nimport sys\nimport glob\nimport shutil\nimport logging\nimport tempfile\nfrom localstack.constants import (DEFAULT_SERVICE_PORTS, ELASTICMQ_JAR_URL, STS_JAR_URL,\n ELASTICSEARCH_JAR_URL, ELASTICSEARCH_PLUGIN_LIST, DYNAMODB_JAR_URL, LOCALSTACK_MAVEN_VERSION)\nfrom localstack.utils.common import download, parallelize, run, mkdir, save_file, unzip, rm_rf, chmod_r\n\nTHIS_PATH = os.path.dirname(os.path.realpath(__file__))\nROOT_PATH = os.path.realpath(os.path.join(THIS_PATH, '..'))\n\nINSTALL_DIR_INFRA = '%s/infra' % ROOT_PATH\nINSTALL_DIR_NPM = '%s/node_modules' % ROOT_PATH\nINSTALL_DIR_ES = '%s/elasticsearch' % INSTALL_DIR_INFRA\nINSTALL_DIR_DDB = '%s/dynamodb' % INSTALL_DIR_INFRA\nINSTALL_DIR_KCL = '%s/amazon-kinesis-client' % INSTALL_DIR_INFRA\nINSTALL_DIR_ELASTICMQ = '%s/elasticmq' % INSTALL_DIR_INFRA\nINSTALL_PATH_LOCALSTACK_FAT_JAR = '%s/localstack-utils-fat.jar' % INSTALL_DIR_INFRA\nTMP_ARCHIVE_ES = os.path.join(tempfile.gettempdir(), 'localstack.es.zip')\nTMP_ARCHIVE_DDB = os.path.join(tempfile.gettempdir(), 
'localstack.ddb.zip')\nTMP_ARCHIVE_STS = os.path.join(tempfile.gettempdir(), 'aws-java-sdk-sts.jar')\nTMP_ARCHIVE_ELASTICMQ = os.path.join(tempfile.gettempdir(), 'elasticmq-server.jar')\nURL_LOCALSTACK_FAT_JAR = ('http://central.maven.org/maven2/' +\n 'cloud/localstack/localstack-utils/{v}/localstack-utils-{v}-fat.jar').format(v=LOCALSTACK_MAVEN_VERSION)\n\n# set up logger\nLOGGER = logging.getLogger(__name__)\n\n\ndef install_elasticsearch():\n if not os.path.exists(INSTALL_DIR_ES):\n LOGGER.info('Downloading and installing local Elasticsearch server. This may take some time.')\n mkdir(INSTALL_DIR_INFRA)\n # download and extract archive\n download_and_extract_with_retry(ELASTICSEARCH_JAR_URL, TMP_ARCHIVE_ES, INSTALL_DIR_INFRA)\n elasticsearch_dir = glob.glob(os.path.join(INSTALL_DIR_INFRA, 'elasticsearch*'))\n if not elasticsearch_dir:\n raise Exception('Unable to find Elasticsearch folder in %s' % INSTALL_DIR_INFRA)\n shutil.move(elasticsearch_dir[0], INSTALL_DIR_ES)\n\n for dir_name in ('data', 'logs', 'modules', 'plugins', 'config/scripts'):\n dir_path = '%s/%s' % (INSTALL_DIR_ES, dir_name)\n mkdir(dir_path)\n chmod_r(dir_path, 0o777)\n\n # install default plugins\n for plugin in ELASTICSEARCH_PLUGIN_LIST:\n if is_alpine():\n # https://github.com/pires/docker-elasticsearch/issues/56\n os.environ['ES_TMPDIR'] = '/tmp'\n plugin_binary = os.path.join(INSTALL_DIR_ES, 'bin', 'elasticsearch-plugin')\n run('%s install %s' % (plugin_binary, plugin))\n\n\ndef install_elasticmq():\n if not os.path.exists(INSTALL_DIR_ELASTICMQ):\n LOGGER.info('Downloading and installing local ElasticMQ server. 
This may take some time.')\n mkdir(INSTALL_DIR_ELASTICMQ)\n # download archive\n if not os.path.exists(TMP_ARCHIVE_ELASTICMQ):\n download(ELASTICMQ_JAR_URL, TMP_ARCHIVE_ELASTICMQ)\n shutil.copy(TMP_ARCHIVE_ELASTICMQ, INSTALL_DIR_ELASTICMQ)\n\n\ndef install_kinesalite():\n target_dir = '%s/kinesalite' % INSTALL_DIR_NPM\n if not os.path.exists(target_dir):\n LOGGER.info('Downloading and installing local Kinesis server. This may take some time.')\n run('cd \"%s\" && npm install' % ROOT_PATH)\n\n\ndef install_dynamodb_local():\n if not os.path.exists(INSTALL_DIR_DDB):\n LOGGER.info('Downloading and installing local DynamoDB server. This may take some time.')\n mkdir(INSTALL_DIR_DDB)\n # download and extract archive\n download_and_extract_with_retry(DYNAMODB_JAR_URL, TMP_ARCHIVE_DDB, INSTALL_DIR_DDB)\n\n # fix for Alpine, otherwise DynamoDBLocal fails with:\n # DynamoDBLocal_lib/libsqlite4java-linux-amd64.so: __memcpy_chk: symbol not found\n if is_alpine():\n ddb_libs_dir = '%s/DynamoDBLocal_lib' % INSTALL_DIR_DDB\n patched_marker = '%s/alpine_fix_applied' % ddb_libs_dir\n if not os.path.exists(patched_marker):\n patched_lib = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +\n 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/libsqlite4java-linux-amd64.so')\n patched_jar = ('https://rawgit.com/bhuisgen/docker-alpine/master/alpine-dynamodb/' +\n 'rootfs/usr/local/dynamodb/DynamoDBLocal_lib/sqlite4java.jar')\n run(\"curl -L -o %s/libsqlite4java-linux-amd64.so '%s'\" % (ddb_libs_dir, patched_lib))\n run(\"curl -L -o %s/sqlite4java.jar '%s'\" % (ddb_libs_dir, patched_jar))\n save_file(patched_marker, '')\n\n # fix logging configuration for DynamoDBLocal\n log4j2_config = \"\"\"<Configuration status=\"WARN\">\n <Appenders>\n <Console name=\"Console\" target=\"SYSTEM_OUT\">\n <PatternLayout pattern=\"%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n\"/>\n </Console>\n </Appenders>\n <Loggers>\n <Root level=\"WARN\"><AppenderRef ref=\"Console\"/></Root>\n 
</Loggers>\n </Configuration>\"\"\"\n log4j2_file = os.path.join(INSTALL_DIR_DDB, 'log4j2.xml')\n save_file(log4j2_file, log4j2_config)\n run('cd \"%s\" && zip -u DynamoDBLocal.jar log4j2.xml || true' % INSTALL_DIR_DDB)\n\n\ndef install_amazon_kinesis_client_libs():\n # install KCL/STS JAR files\n if not os.path.exists(INSTALL_DIR_KCL):\n mkdir(INSTALL_DIR_KCL)\n if not os.path.exists(TMP_ARCHIVE_STS):\n download(STS_JAR_URL, TMP_ARCHIVE_STS)\n shutil.copy(TMP_ARCHIVE_STS, INSTALL_DIR_KCL)\n # Compile Java files\n from localstack.utils.kinesis import kclipy_helper\n classpath = kclipy_helper.get_kcl_classpath()\n java_files = '%s/utils/kinesis/java/com/atlassian/*.java' % ROOT_PATH\n class_files = '%s/utils/kinesis/java/com/atlassian/*.class' % ROOT_PATH\n if not glob.glob(class_files):\n run('javac -cp \"%s\" %s' % (classpath, java_files))\n\n\ndef install_lambda_java_libs():\n # install LocalStack \"fat\" JAR file (contains all dependencies)\n if not os.path.exists(INSTALL_PATH_LOCALSTACK_FAT_JAR):\n LOGGER.info('Downloading and installing LocalStack Java libraries. 
This may take some time.')\n download(URL_LOCALSTACK_FAT_JAR, INSTALL_PATH_LOCALSTACK_FAT_JAR)\n\n\ndef install_component(name):\n if name == 'kinesis':\n install_kinesalite()\n elif name == 'dynamodb':\n install_dynamodb_local()\n elif name == 'es':\n install_elasticsearch()\n elif name == 'sqs':\n install_elasticmq()\n\n\ndef install_components(names):\n parallelize(install_component, names)\n install_lambda_java_libs()\n\n\ndef install_all_components():\n install_components(DEFAULT_SERVICE_PORTS.keys())\n\n\n# -----------------\n# HELPER FUNCTIONS\n# -----------------\n\n\ndef is_alpine():\n try:\n run('cat /etc/issue | grep Alpine', print_error=False)\n return True\n except Exception:\n return False\n\n\ndef download_and_extract_with_retry(archive_url, tmp_archive, target_dir):\n\n def download_and_extract():\n if not os.path.exists(tmp_archive):\n download(archive_url, tmp_archive)\n unzip(tmp_archive, target_dir)\n\n try:\n download_and_extract()\n except Exception:\n # try deleting and re-downloading the zip file\n LOGGER.info('Unable to extract file, re-downloading ZIP archive: %s' % tmp_archive)\n rm_rf(tmp_archive)\n download_and_extract()\n\n\nif __name__ == '__main__':\n\n if len(sys.argv) > 1:\n if sys.argv[1] == 'libs':\n print('Initializing installation.')\n logging.basicConfig(level=logging.INFO)\n logging.getLogger('requests').setLevel(logging.WARNING)\n install_all_components()\n print('Done.')\n elif sys.argv[1] == 'testlibs':\n # Install additional libraries for testing\n install_amazon_kinesis_client_libs()\n", "path": "localstack/services/install.py"}]} | 3,646 | 591 |

gh_patches_debug_19160 | rasdani/github-patches | git_diff | marshmallow-code__webargs-368 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

AttributeError: module 'typing' has no attribute 'NoReturn' with Python 3.5.3

I get this error when running the tests with Python 3.5.3.

```
tests/test_py3/test_aiohttpparser_async_functions.py:6: in <module>
    from webargs.aiohttpparser import parser, use_args, use_kwargs
webargs/aiohttpparser.py:72: in <module>
    class AIOHTTPParser(AsyncParser):
webargs/aiohttpparser.py:148: in AIOHTTPParser
    ) -> typing.NoReturn:
E   AttributeError: module 'typing' has no attribute 'NoReturn'
```

The docs say [`typing.NoReturn`](https://docs.python.org/3/library/typing.html#typing.NoReturn) was added in 3.6.5. However, [the tests pass on Travis](https://travis-ci.org/marshmallow-code/webargs/jobs/486701760) with Python 3.5.6.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `webargs/aiohttpparser.py`

Content:

```
1 """aiohttp request argument parsing module.
2
3 Example: ::
4
5     import asyncio
6     from aiohttp import web
7
8     from webargs import fields
9     from webargs.aiohttpparser import use_args
10
11
12     hello_args = {
13         'name': fields.Str(required=True)
14     }
15     @asyncio.coroutine
16     @use_args(hello_args)
17     def index(request, args):
18         return web.Response(
19             body='Hello {}'.format(args['name']).encode('utf-8')
20         )
21
22     app = web.Application()
23     app.router.add_route('GET', '/', index)
24 """
25 import typing
26
27 from aiohttp import web
28 from aiohttp.web import Request
29 from aiohttp import web_exceptions
30 from marshmallow import Schema, ValidationError
31 from marshmallow.fields import Field
32
33 from webargs import core
34 from webargs.core import json
35 from webargs.asyncparser import AsyncParser
36
37
38 def is_json_request(req: Request) -> bool:
39     content_type = req.content_type
40     return core.is_json(content_type)
41
42
43 class HTTPUnprocessableEntity(web.HTTPClientError):
44     status_code = 422
45
46
47 # Mapping of status codes to exception classes
48 # Adapted from werkzeug
49 exception_map = {422: HTTPUnprocessableEntity}
50
51
52 def _find_exceptions() -> None:
53     for name in web_exceptions.__all__:
54         obj = getattr(web_exceptions, name)
55         try:
56             is_http_exception = issubclass(obj, web_exceptions.HTTPException)
57         except TypeError:
58             is_http_exception = False
59         if not is_http_exception or obj.status_code is None:
60             continue
61         old_obj = exception_map.get(obj.status_code, None)
62         if old_obj is not None and issubclass(obj, old_obj):
63             continue
64         exception_map[obj.status_code] = obj
65
66
67 # Collect all exceptions from aiohttp.web_exceptions
68 _find_exceptions()
69 del _find_exceptions
70
71
72 class AIOHTTPParser(AsyncParser):
73     """aiohttp request argument parser."""
74
75     __location_map__ = dict(
76         match_info="parse_match_info", **core.Parser.__location_map__
77     )
78
79     def parse_querystring(self, req: Request, name: str, field: Field) -> typing.Any:
80         """Pull a querystring value from the request."""
81         return core.get_value(req.query, name, field)
82
83     async def parse_form(self, req: Request, name: str, field: Field) -> typing.Any:
84         """Pull a form value from the request."""
85         post_data = self._cache.get("post")
86         if post_data is None:
87             self._cache["post"] = await req.post()
88         return core.get_value(self._cache["post"], name, field)
89
90     async def parse_json(self, req: Request, name: str, field: Field) -> typing.Any:
91         """Pull a json value from the request."""
92         json_data = self._cache.get("json")
93         if json_data is None:
94             if not (req.body_exists and is_json_request(req)):
95                 return core.missing
96             try:
97                 json_data = await req.json(loads=json.loads)
98             except json.JSONDecodeError as e:
99                 if e.doc == "":
100                     return core.missing
101                 else:
102                     return self.handle_invalid_json_error(e, req)
103             self._cache["json"] = json_data
104         return core.get_value(json_data, name, field, allow_many_nested=True)
105
106     def parse_headers(self, req: Request, name: str, field: Field) -> typing.Any:
107         """Pull a value from the header data."""
108         return core.get_value(req.headers, name, field)
109
110     def parse_cookies(self, req: Request, name: str, field: Field) -> typing.Any:
111         """Pull a value from the cookiejar."""
112         return core.get_value(req.cookies, name, field)
113
114     def parse_files(self, req: Request, name: str, field: Field) -> None:
115         raise NotImplementedError(
116             "parse_files is not implemented. You may be able to use parse_form for "
117             "parsing upload data."
118         )
119
120     def parse_match_info(self, req: Request, name: str, field: Field) -> typing.Any:
121         """Pull a value from the request's ``match_info``."""
122         return core.get_value(req.match_info, name, field)
123
124     def get_request_from_view_args(
125         self, view: typing.Callable, args: typing.Iterable, kwargs: typing.Mapping
126     ) -> Request:
127         """Get request object from a handler function or method. Used internally by
128         ``use_args`` and ``use_kwargs``.
129         """
130         req = None
131         for arg in args:
132             if isinstance(arg, web.Request):
133                 req = arg
134                 break
135             elif isinstance(arg, web.View):
136                 req = arg.request
137                 break
138         assert isinstance(req, web.Request), "Request argument not found for handler"
139         return req
140
141     def handle_error(
142         self,
143         error: ValidationError,
144         req: Request,
145         schema: Schema,
146         error_status_code: typing.Union[int, None] = None,
147         error_headers: typing.Union[typing.Mapping[str, str], None] = None,
148     ) -> typing.NoReturn:
149         """Handle ValidationErrors and return a JSON response of error messages
150         to the client.
151         """
152         error_class = exception_map.get(
153             error_status_code or self.DEFAULT_VALIDATION_STATUS
154         )
155         if not error_class:
156             raise LookupError("No exception for {0}".format(error_status_code))
157         headers = error_headers
158         raise error_class(
159             body=json.dumps(error.messages).encode("utf-8"),
160             headers=headers,
161             content_type="application/json",
162         )
163
164     def handle_invalid_json_error(
165         self, error: json.JSONDecodeError, req: Request, *args, **kwargs
166     ) -> typing.NoReturn:
167         error_class = exception_map[400]
168         messages = {"json": ["Invalid JSON body."]}
169         raise error_class(
170             body=json.dumps(messages).encode("utf-8"), content_type="application/json"
171         )
172
173
174 parser = AIOHTTPParser()
175 use_args = parser.use_args  # type: typing.Callable
176 use_kwargs = parser.use_kwargs  # type: typing.Callable
177
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/webargs/aiohttpparser.py b/webargs/aiohttpparser.py
--- a/webargs/aiohttpparser.py
+++ b/webargs/aiohttpparser.py
@@ -145,7 +145,7 @@
         schema: Schema,
         error_status_code: typing.Union[int, None] = None,
         error_headers: typing.Union[typing.Mapping[str, str], None] = None,
-    ) -> typing.NoReturn:
+    ) -> "typing.NoReturn":
         """Handle ValidationErrors and return a JSON response of error messages
         to the client.
         """
@@ -163,7 +163,7 @@
 
     def handle_invalid_json_error(
         self, error: json.JSONDecodeError, req: Request, *args, **kwargs
-    ) -> typing.NoReturn:
+    ) -> "typing.NoReturn":
         error_class = exception_map[400]
         messages = {"json": ["Invalid JSON body."]}
         raise error_class(
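
A minimal sketch of why quoting the annotation, as the diff above does, plausibly avoids the `AttributeError`: a quoted annotation is stored as a plain string, so the attribute lookup is never performed when the function is defined. The names `old_typing`, `fail`, and `lookup_fails` are hypothetical and exist only for this illustration; `old_typing` is an empty namespace standing in for a `typing` module that lacks `NoReturn`, not the real module.

```python
import types

# Hypothetical stand-in for the `typing` module on an interpreter that
# predates NoReturn. It is an empty namespace, not the real module.
old_typing = types.SimpleNamespace()


def fail() -> "old_typing.NoReturn":
    """Defined without error: the quoted annotation is kept as a string."""
    raise RuntimeError("this function never returns normally")


def lookup_fails() -> bool:
    """Return True if resolving the attribute directly raises AttributeError."""
    try:
        old_typing.NoReturn
    except AttributeError:
        return True
    return False
```

Static type checkers can still interpret the quoted form, while the interpreter itself only sees a string, which is presumably why the patch did not need a version check.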

| {"golden_diff": "diff --git a/webargs/aiohttpparser.py b/webargs/aiohttpparser.py\n--- a/webargs/aiohttpparser.py\n+++ b/webargs/aiohttpparser.py\n@@ -145,7 +145,7 @@\n schema: Schema,\n error_status_code: typing.Union[int, None] = None,\n error_headers: typing.Union[typing.Mapping[str, str], None] = None,\n- ) -> typing.NoReturn:\n+ ) -> \"typing.NoReturn\":\n \"\"\"Handle ValidationErrors and return a JSON response of error messages\n to the client.\n \"\"\"\n@@ -163,7 +163,7 @@\n \n def handle_invalid_json_error(\n self, error: json.JSONDecodeError, req: Request, *args, **kwargs\n- ) -> typing.NoReturn:\n+ ) -> \"typing.NoReturn\":\n error_class = exception_map[400]\n messages = {\"json\": [\"Invalid JSON body.\"]}\n raise error_class(\n", "issue": "AttributeError: module 'typing' has no attribute 'NoReturn' with Python 3.5.3\nI get this error when running the tests with Python 3.5.3.\r\n\r\n```\r\ntests/test_py3/test_aiohttpparser_async_functions.py:6: in <module>\r\n from webargs.aiohttpparser import parser, use_args, use_kwargs\r\nwebargs/aiohttpparser.py:72: in <module>\r\n class AIOHTTPParser(AsyncParser):\r\nwebargs/aiohttpparser.py:148: in AIOHTTPParser\r\n ) -> typing.NoReturn:\r\nE AttributeError: module 'typing' has no attribute 'NoReturn'\r\n```\r\n\r\nThe docs say [`typing.NoReturn`](https://docs.python.org/3/library/typing.html#typing.NoReturn) was added in 3.6.5. 
However, [the tests pass on Travis](https://travis-ci.org/marshmallow-code/webargs/jobs/486701760) with Python 3.5.6.\n", "before_files": [{"content": "\"\"\"aiohttp request argument parsing module.\n\nExample: ::\n\n import asyncio\n from aiohttp import web\n\n from webargs import fields\n from webargs.aiohttpparser import use_args\n\n\n hello_args = {\n 'name': fields.Str(required=True)\n }\n @asyncio.coroutine\n @use_args(hello_args)\n def index(request, args):\n return web.Response(\n body='Hello {}'.format(args['name']).encode('utf-8')\n )\n\n app = web.Application()\n app.router.add_route('GET', '/', index)\n\"\"\"\nimport typing\n\nfrom aiohttp import web\nfrom aiohttp.web import Request\nfrom aiohttp import web_exceptions\nfrom marshmallow import Schema, ValidationError\nfrom marshmallow.fields import Field\n\nfrom webargs import core\nfrom webargs.core import json\nfrom webargs.asyncparser import AsyncParser\n\n\ndef is_json_request(req: Request) -> bool:\n content_type = req.content_type\n return core.is_json(content_type)\n\n\nclass HTTPUnprocessableEntity(web.HTTPClientError):\n status_code = 422\n\n\n# Mapping of status codes to exception classes\n# Adapted from werkzeug\nexception_map = {422: HTTPUnprocessableEntity}\n\n\ndef _find_exceptions() -> None:\n for name in web_exceptions.__all__:\n obj = getattr(web_exceptions, name)\n try:\n is_http_exception = issubclass(obj, web_exceptions.HTTPException)\n except TypeError:\n is_http_exception = False\n if not is_http_exception or obj.status_code is None:\n continue\n old_obj = exception_map.get(obj.status_code, None)\n if old_obj is not None and issubclass(obj, old_obj):\n continue\n exception_map[obj.status_code] = obj\n\n\n# Collect all exceptions from aiohttp.web_exceptions\n_find_exceptions()\ndel _find_exceptions\n\n\nclass AIOHTTPParser(AsyncParser):\n \"\"\"aiohttp request argument parser.\"\"\"\n\n __location_map__ = dict(\n match_info=\"parse_match_info\", **core.Parser.__location_map__\n )\n\n 
def parse_querystring(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a querystring value from the request.\"\"\"\n return core.get_value(req.query, name, field)\n\n async def parse_form(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a form value from the request.\"\"\"\n post_data = self._cache.get(\"post\")\n if post_data is None:\n self._cache[\"post\"] = await req.post()\n return core.get_value(self._cache[\"post\"], name, field)\n\n async def parse_json(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a json value from the request.\"\"\"\n json_data = self._cache.get(\"json\")\n if json_data is None:\n if not (req.body_exists and is_json_request(req)):\n return core.missing\n try:\n json_data = await req.json(loads=json.loads)\n except json.JSONDecodeError as e:\n if e.doc == \"\":\n return core.missing\n else:\n return self.handle_invalid_json_error(e, req)\n self._cache[\"json\"] = json_data\n return core.get_value(json_data, name, field, allow_many_nested=True)\n\n def parse_headers(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the header data.\"\"\"\n return core.get_value(req.headers, name, field)\n\n def parse_cookies(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the cookiejar.\"\"\"\n return core.get_value(req.cookies, name, field)\n\n def parse_files(self, req: Request, name: str, field: Field) -> None:\n raise NotImplementedError(\n \"parse_files is not implemented. 
You may be able to use parse_form for \"\n \"parsing upload data.\"\n )\n\n def parse_match_info(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the request's ``match_info``.\"\"\"\n return core.get_value(req.match_info, name, field)\n\n def get_request_from_view_args(\n self, view: typing.Callable, args: typing.Iterable, kwargs: typing.Mapping\n ) -> Request:\n \"\"\"Get request object from a handler function or method. Used internally by\n ``use_args`` and ``use_kwargs``.\n \"\"\"\n req = None\n for arg in args:\n if isinstance(arg, web.Request):\n req = arg\n break\n elif isinstance(arg, web.View):\n req = arg.request\n break\n assert isinstance(req, web.Request), \"Request argument not found for handler\"\n return req\n\n def handle_error(\n self,\n error: ValidationError,\n req: Request,\n schema: Schema,\n error_status_code: typing.Union[int, None] = None,\n error_headers: typing.Union[typing.Mapping[str, str], None] = None,\n ) -> typing.NoReturn:\n \"\"\"Handle ValidationErrors and return a JSON response of error messages\n to the client.\n \"\"\"\n error_class = exception_map.get(\n error_status_code or self.DEFAULT_VALIDATION_STATUS\n )\n if not error_class:\n raise LookupError(\"No exception for {0}\".format(error_status_code))\n headers = error_headers\n raise error_class(\n body=json.dumps(error.messages).encode(\"utf-8\"),\n headers=headers,\n content_type=\"application/json\",\n )\n\n def handle_invalid_json_error(\n self, error: json.JSONDecodeError, req: Request, *args, **kwargs\n ) -> typing.NoReturn:\n error_class = exception_map[400]\n messages = {\"json\": [\"Invalid JSON body.\"]}\n raise error_class(\n body=json.dumps(messages).encode(\"utf-8\"), content_type=\"application/json\"\n )\n\n\nparser = AIOHTTPParser()\nuse_args = parser.use_args # type: typing.Callable\nuse_kwargs = parser.use_kwargs # type: typing.Callable\n", "path": "webargs/aiohttpparser.py"}], "after_files": [{"content": "\"\"\"aiohttp 
request argument parsing module.\n\nExample: ::\n\n import asyncio\n from aiohttp import web\n\n from webargs import fields\n from webargs.aiohttpparser import use_args\n\n\n hello_args = {\n 'name': fields.Str(required=True)\n }\n @asyncio.coroutine\n @use_args(hello_args)\n def index(request, args):\n return web.Response(\n body='Hello {}'.format(args['name']).encode('utf-8')\n )\n\n app = web.Application()\n app.router.add_route('GET', '/', index)\n\"\"\"\nimport typing\n\nfrom aiohttp import web\nfrom aiohttp.web import Request\nfrom aiohttp import web_exceptions\nfrom marshmallow import Schema, ValidationError\nfrom marshmallow.fields import Field\n\nfrom webargs import core\nfrom webargs.core import json\nfrom webargs.asyncparser import AsyncParser\n\n\ndef is_json_request(req: Request) -> bool:\n content_type = req.content_type\n return core.is_json(content_type)\n\n\nclass HTTPUnprocessableEntity(web.HTTPClientError):\n status_code = 422\n\n\n# Mapping of status codes to exception classes\n# Adapted from werkzeug\nexception_map = {422: HTTPUnprocessableEntity}\n\n\ndef _find_exceptions() -> None:\n for name in web_exceptions.__all__:\n obj = getattr(web_exceptions, name)\n try:\n is_http_exception = issubclass(obj, web_exceptions.HTTPException)\n except TypeError:\n is_http_exception = False\n if not is_http_exception or obj.status_code is None:\n continue\n old_obj = exception_map.get(obj.status_code, None)\n if old_obj is not None and issubclass(obj, old_obj):\n continue\n exception_map[obj.status_code] = obj\n\n\n# Collect all exceptions from aiohttp.web_exceptions\n_find_exceptions()\ndel _find_exceptions\n\n\nclass AIOHTTPParser(AsyncParser):\n \"\"\"aiohttp request argument parser.\"\"\"\n\n __location_map__ = dict(\n match_info=\"parse_match_info\", **core.Parser.__location_map__\n )\n\n def parse_querystring(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a querystring value from the request.\"\"\"\n return 
core.get_value(req.query, name, field)\n\n async def parse_form(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a form value from the request.\"\"\"\n post_data = self._cache.get(\"post\")\n if post_data is None:\n self._cache[\"post\"] = await req.post()\n return core.get_value(self._cache[\"post\"], name, field)\n\n async def parse_json(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a json value from the request.\"\"\"\n json_data = self._cache.get(\"json\")\n if json_data is None:\n if not (req.body_exists and is_json_request(req)):\n return core.missing\n try:\n json_data = await req.json(loads=json.loads)\n except json.JSONDecodeError as e:\n if e.doc == \"\":\n return core.missing\n else:\n return self.handle_invalid_json_error(e, req)\n self._cache[\"json\"] = json_data\n return core.get_value(json_data, name, field, allow_many_nested=True)\n\n def parse_headers(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the header data.\"\"\"\n return core.get_value(req.headers, name, field)\n\n def parse_cookies(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the cookiejar.\"\"\"\n return core.get_value(req.cookies, name, field)\n\n def parse_files(self, req: Request, name: str, field: Field) -> None:\n raise NotImplementedError(\n \"parse_files is not implemented. You may be able to use parse_form for \"\n \"parsing upload data.\"\n )\n\n def parse_match_info(self, req: Request, name: str, field: Field) -> typing.Any:\n \"\"\"Pull a value from the request's ``match_info``.\"\"\"\n return core.get_value(req.match_info, name, field)\n\n def get_request_from_view_args(\n self, view: typing.Callable, args: typing.Iterable, kwargs: typing.Mapping\n ) -> Request:\n \"\"\"Get request object from a handler function or method. 
Used internally by\n ``use_args`` and ``use_kwargs``.\n \"\"\"\n req = None\n for arg in args:\n if isinstance(arg, web.Request):\n req = arg\n break\n elif isinstance(arg, web.View):\n req = arg.request\n break\n assert isinstance(req, web.Request), \"Request argument not found for handler\"\n return req\n\n def handle_error(\n self,\n error: ValidationError,\n req: Request,\n schema: Schema,\n error_status_code: typing.Union[int, None] = None,\n error_headers: typing.Union[typing.Mapping[str, str], None] = None,\n ) -> \"typing.NoReturn\":\n \"\"\"Handle ValidationErrors and return a JSON response of error messages\n to the client.\n \"\"\"\n error_class = exception_map.get(\n error_status_code or self.DEFAULT_VALIDATION_STATUS\n )\n if not error_class:\n raise LookupError(\"No exception for {0}\".format(error_status_code))\n headers = error_headers\n raise error_class(\n body=json.dumps(error.messages).encode(\"utf-8\"),\n headers=headers,\n content_type=\"application/json\",\n )\n\n def handle_invalid_json_error(\n self, error: json.JSONDecodeError, req: Request, *args, **kwargs\n ) -> \"typing.NoReturn\":\n error_class = exception_map[400]\n messages = {\"json\": [\"Invalid JSON body.\"]}\n raise error_class(\n body=json.dumps(messages).encode(\"utf-8\"), content_type=\"application/json\"\n )\n\n\nparser = AIOHTTPParser()\nuse_args = parser.use_args # type: typing.Callable\nuse_kwargs = parser.use_kwargs # type: typing.Callable\n", "path": "webargs/aiohttpparser.py"}]} | 2,246 | 222 |

gh_patches_debug_3699 | rasdani/github-patches | git_diff | plone__Products.CMFPlone-2982 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

PasswordResetView::getErrors function never called.

## BUG

<!--

Read https://plone.org/support/bugs first!

Please use the labels on GitHub, with at least one of the types: bug, regression, question, enhancement.

Please include tracebacks, screenshots, code of debugging sessions or code that reproduces the issue if possible.

The best reproductions are in plain Plone installations without addons or at least with minimal needed addons installed.

-->

### What I did:

I tried to reset the password as a normal Plone user, with a PDB breakpoint set at https://github.com/plone/Products.CMFPlone/blob/master/Products/CMFPlone/browser/login/password_reset.py#L159

URL: {site url}/passwordreset/e3127df738bc41e1976cc36cc9832132?userid=local_manager

### What I expect to happen:

I expected a call `RegistrationTool.testPasswordValidity(password, password2)