| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_7890 | rasdani/github-patches | git_diff | googleapis__google-auth-library-python-846 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

JWT decoding depends upon deprecated function

#### Environment details

- OS: Debian 11 (bullseye)

- Python version: 3.9.2

- pip version: 20.3.4

- `google-auth` version: 1.30

#### Steps to reproduce

Decode a JWT token from Google Cloud Identity-Aware Proxy

#### Error

The following deprecation warnings are issues from the `cryptography` library:

```

/usr/local/lib/python3.9/dist-packages/google/auth/crypt/es256.py:56: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead

/usr/local/lib/python3.9/dist-packages/google/auth/crypt/es256.py:57: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead

```

The changes necessary seem self evident. The [function in question][frombytes] exists in Python 3.2+ and therefore falls within the currently stated supported versions (3.5+).

[frombytes]: https://docs.python.org/3/library/stdtypes.html#int.from_bytes

--- END ISSUE ---
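The issue asks for a one-for-one swap. `int.from_bytes` (Python 3.2+) takes the same `byteorder` argument as the deprecated `cryptography.utils.int_from_bytes`. Below is a minimal, stdlib-only sketch of the raw `r || s` handling done in `ES256Verifier.verify`, plus `int.to_bytes` for the reverse direction used when signing. The helper names `split_raw_signature` and `join_raw_signature` are hypothetical and not part of `google-auth`. Unlike `verify`, which returns `False` on a wrong length, this sketch raises `ValueError`.

```python
def split_raw_signature(sig_bytes: bytes) -> tuple:
    """Split a 64-byte raw ES256 signature (r || s) into two integers.

    Hypothetical helper mirroring ES256Verifier.verify, using the stdlib
    int.from_bytes instead of the deprecated cryptography.utils.int_from_bytes.
    """
    if len(sig_bytes) != 64:
        raise ValueError("raw ES256 signatures must be exactly 64 bytes")
    r = int.from_bytes(sig_bytes[:32], byteorder="big")
    s = int.from_bytes(sig_bytes[32:], byteorder="big")
    return r, s


def join_raw_signature(r: int, s: int) -> bytes:
    """Pack r and s back into two 32-byte big-endian halves (r || s)."""
    return r.to_bytes(32, byteorder="big") + s.to_bytes(32, byteorder="big")


# Round trip: r = 1, s = 2 encoded as two zero-padded 32-byte halves.
raw = (1).to_bytes(32, "big") + (2).to_bytes(32, "big")
print(split_raw_signature(raw))                              # (1, 2)
print(join_raw_signature(*split_raw_signature(raw)) == raw)  # True
```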

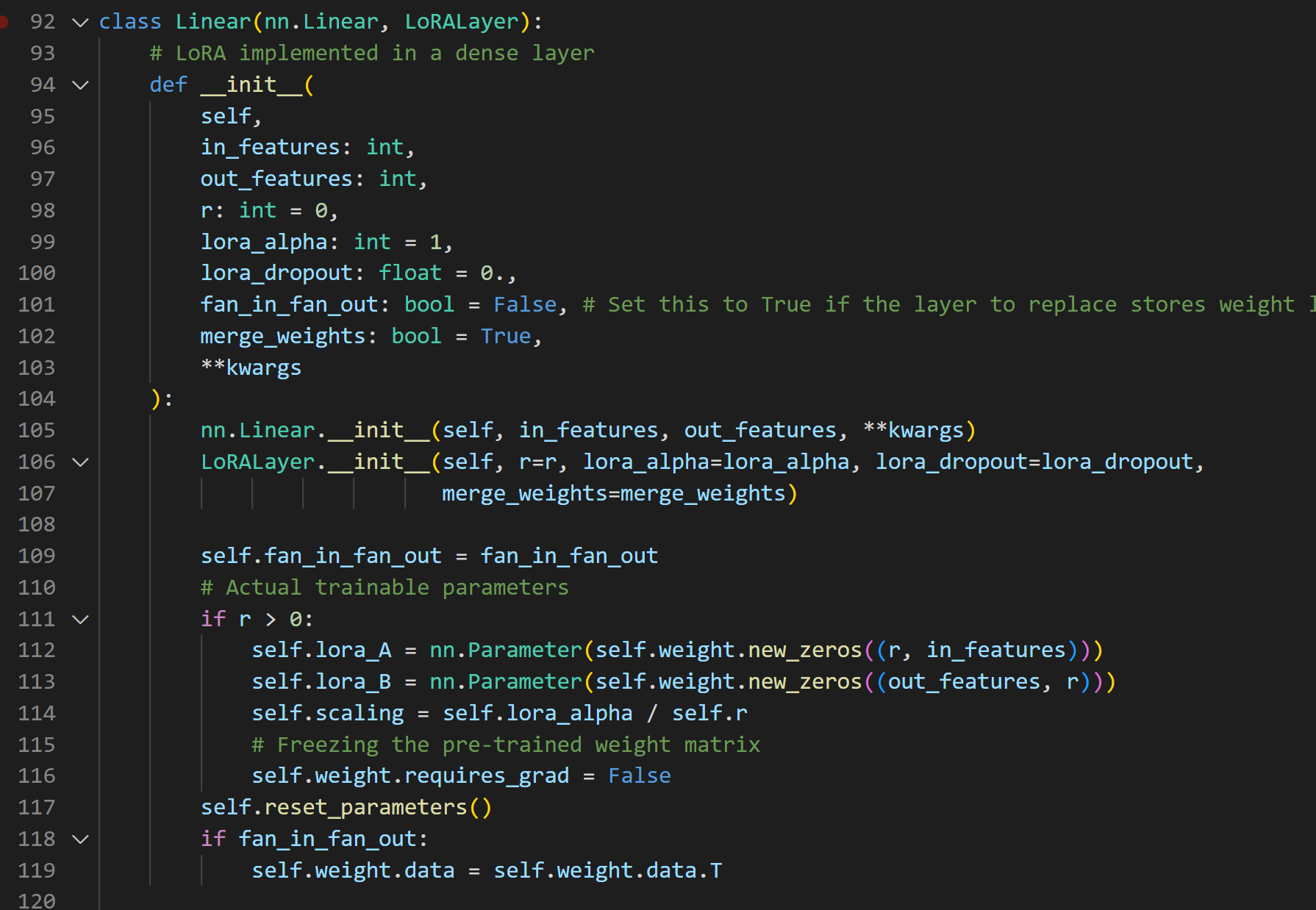

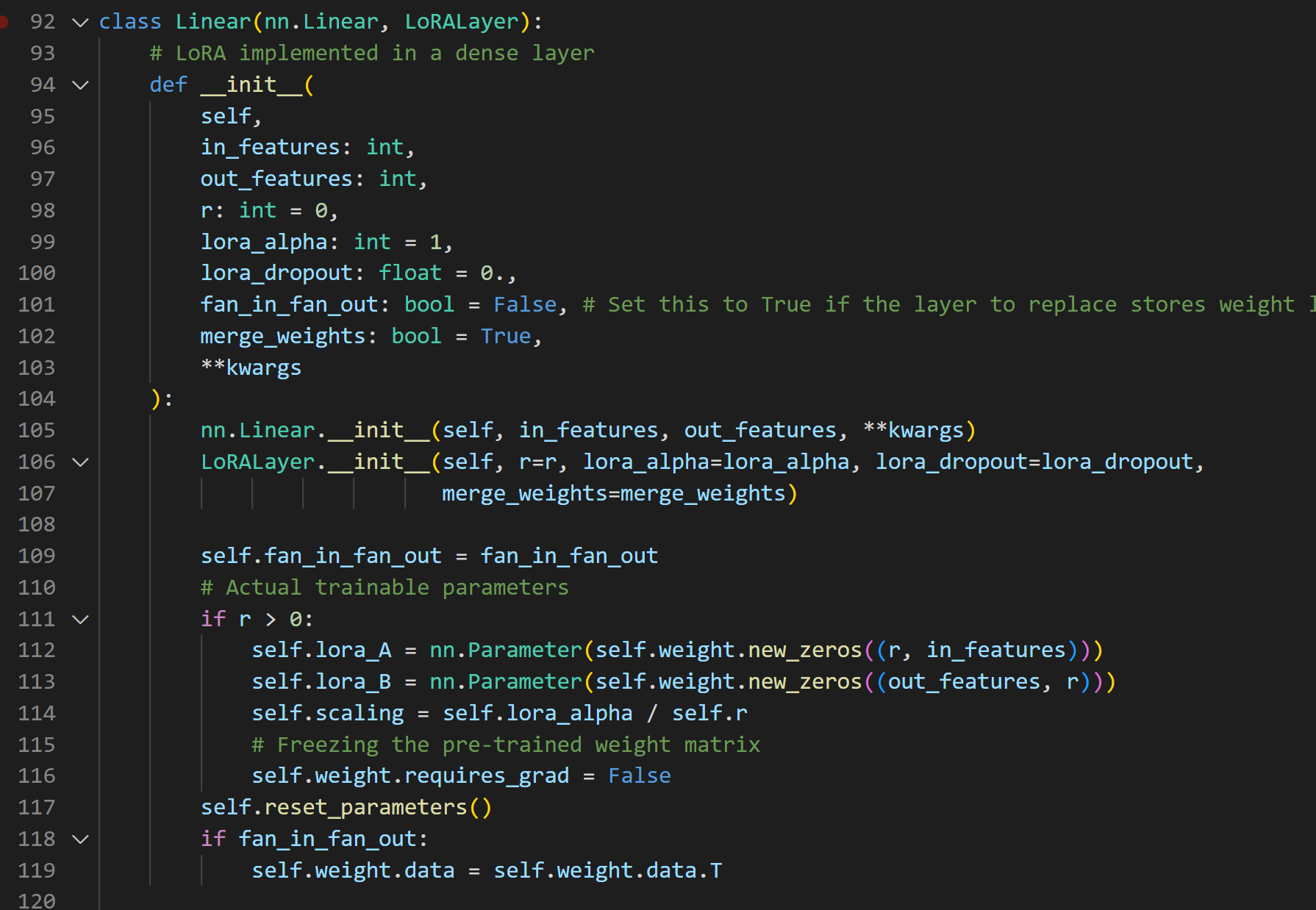

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `google/auth/crypt/es256.py`

Content:

```

1 # Copyright 2017 Google Inc.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """ECDSA (ES256) verifier and signer that use the ``cryptography`` library.

16 """

17

18 from cryptography import utils

19 import cryptography.exceptions

20 from cryptography.hazmat import backends

21 from cryptography.hazmat.primitives import hashes

22 from cryptography.hazmat.primitives import serialization

23 from cryptography.hazmat.primitives.asymmetric import ec

24 from cryptography.hazmat.primitives.asymmetric import padding

25 from cryptography.hazmat.primitives.asymmetric.utils import decode_dss_signature

26 from cryptography.hazmat.primitives.asymmetric.utils import encode_dss_signature

27 import cryptography.x509

28

29 from google.auth import _helpers

30 from google.auth.crypt import base

31

32

33 _CERTIFICATE_MARKER = b"-----BEGIN CERTIFICATE-----"

34 _BACKEND = backends.default_backend()

35 _PADDING = padding.PKCS1v15()

36

37

38 class ES256Verifier(base.Verifier):

39 """Verifies ECDSA cryptographic signatures using public keys.

40

41 Args:

42 public_key (

43 cryptography.hazmat.primitives.asymmetric.ec.ECDSAPublicKey):

44 The public key used to verify signatures.

45 """

46

47 def __init__(self, public_key):

48 self._pubkey = public_key

49

50 @_helpers.copy_docstring(base.Verifier)

51 def verify(self, message, signature):

52 # First convert (r||s) raw signature to ASN1 encoded signature.

53 sig_bytes = _helpers.to_bytes(signature)

54 if len(sig_bytes) != 64:

55 return False

56 r = utils.int_from_bytes(sig_bytes[:32], byteorder="big")

57 s = utils.int_from_bytes(sig_bytes[32:], byteorder="big")

58 asn1_sig = encode_dss_signature(r, s)

59

60 message = _helpers.to_bytes(message)

61 try:

62 self._pubkey.verify(asn1_sig, message, ec.ECDSA(hashes.SHA256()))

63 return True

64 except (ValueError, cryptography.exceptions.InvalidSignature):

65 return False

66

67 @classmethod

68 def from_string(cls, public_key):

69 """Construct an Verifier instance from a public key or public

70 certificate string.

71

72 Args:

73 public_key (Union[str, bytes]): The public key in PEM format or the

74 x509 public key certificate.

75

76 Returns:

77 Verifier: The constructed verifier.

78

79 Raises:

80 ValueError: If the public key can't be parsed.

81 """

82 public_key_data = _helpers.to_bytes(public_key)

83

84 if _CERTIFICATE_MARKER in public_key_data:

85 cert = cryptography.x509.load_pem_x509_certificate(

86 public_key_data, _BACKEND

87 )

88 pubkey = cert.public_key()

89

90 else:

91 pubkey = serialization.load_pem_public_key(public_key_data, _BACKEND)

92

93 return cls(pubkey)

94

95

96 class ES256Signer(base.Signer, base.FromServiceAccountMixin):

97 """Signs messages with an ECDSA private key.

98

99 Args:

100 private_key (

101 cryptography.hazmat.primitives.asymmetric.ec.ECDSAPrivateKey):

102 The private key to sign with.

103 key_id (str): Optional key ID used to identify this private key. This

104 can be useful to associate the private key with its associated

105 public key or certificate.

106 """

107

108 def __init__(self, private_key, key_id=None):

109 self._key = private_key

110 self._key_id = key_id

111

112 @property

113 @_helpers.copy_docstring(base.Signer)

114 def key_id(self):

115 return self._key_id

116

117 @_helpers.copy_docstring(base.Signer)

118 def sign(self, message):

119 message = _helpers.to_bytes(message)

120 asn1_signature = self._key.sign(message, ec.ECDSA(hashes.SHA256()))

121

122 # Convert ASN1 encoded signature to (r||s) raw signature.

123 (r, s) = decode_dss_signature(asn1_signature)

124 return utils.int_to_bytes(r, 32) + utils.int_to_bytes(s, 32)

125

126 @classmethod

127 def from_string(cls, key, key_id=None):

128 """Construct a RSASigner from a private key in PEM format.

129

130 Args:

131 key (Union[bytes, str]): Private key in PEM format.

132 key_id (str): An optional key id used to identify the private key.

133

134 Returns:

135 google.auth.crypt._cryptography_rsa.RSASigner: The

136 constructed signer.

137

138 Raises:

139 ValueError: If ``key`` is not ``bytes`` or ``str`` (unicode).

140 UnicodeDecodeError: If ``key`` is ``bytes`` but cannot be decoded

141 into a UTF-8 ``str``.

142 ValueError: If ``cryptography`` "Could not deserialize key data."

143 """

144 key = _helpers.to_bytes(key)

145 private_key = serialization.load_pem_private_key(

146 key, password=None, backend=_BACKEND

147 )

148 return cls(private_key, key_id=key_id)

149

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/google/auth/crypt/es256.py b/google/auth/crypt/es256.py

--- a/google/auth/crypt/es256.py

+++ b/google/auth/crypt/es256.py

@@ -53,8 +53,8 @@

sig_bytes = _helpers.to_bytes(signature)

if len(sig_bytes) != 64:

return False

- r = utils.int_from_bytes(sig_bytes[:32], byteorder="big")

- s = utils.int_from_bytes(sig_bytes[32:], byteorder="big")

+ r = int.from_bytes(sig_bytes[:32], byteorder="big")

+ s = int.from_bytes(sig_bytes[32:], byteorder="big")

asn1_sig = encode_dss_signature(r, s)

message = _helpers.to_bytes(message)

| {"golden_diff": "diff --git a/google/auth/crypt/es256.py b/google/auth/crypt/es256.py\n--- a/google/auth/crypt/es256.py\n+++ b/google/auth/crypt/es256.py\n@@ -53,8 +53,8 @@\n sig_bytes = _helpers.to_bytes(signature)\n if len(sig_bytes) != 64:\n return False\n- r = utils.int_from_bytes(sig_bytes[:32], byteorder=\"big\")\n- s = utils.int_from_bytes(sig_bytes[32:], byteorder=\"big\")\n+ r = int.from_bytes(sig_bytes[:32], byteorder=\"big\")\n+ s = int.from_bytes(sig_bytes[32:], byteorder=\"big\")\n asn1_sig = encode_dss_signature(r, s)\n \n message = _helpers.to_bytes(message)\n", "issue": "JWT decoding depends upon deprecated function\n#### Environment details\r\n\r\n - OS: Debian 11 (bullseye)\r\n - Python version: 3.9.2\r\n - pip version: 20.3.4\r\n - `google-auth` version: 1.30\r\n\r\n#### Steps to reproduce\r\n\r\nDecode a JWT token from Google Cloud Identity-Aware Proxy\r\n\r\n#### Error\r\n\r\nThe following deprecation warnings are issues from the `cryptography` library:\r\n\r\n```\r\n/usr/local/lib/python3.9/dist-packages/google/auth/crypt/es256.py:56: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead\r\n/usr/local/lib/python3.9/dist-packages/google/auth/crypt/es256.py:57: CryptographyDeprecationWarning: int_from_bytes is deprecated, use int.from_bytes instead\r\n```\r\n\r\nThe changes necessary seem self evident. 
The [function in question][frombytes] exists in Python 3.2+ and therefore falls within the currently stated supported versions (3.5+).\r\n\r\n[frombytes]: https://docs.python.org/3/library/stdtypes.html#int.from_bytes\n", "before_files": [{"content": "# Copyright 2017 Google Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"ECDSA (ES256) verifier and signer that use the ``cryptography`` library.\n\"\"\"\n\nfrom cryptography import utils\nimport cryptography.exceptions\nfrom cryptography.hazmat import backends\nfrom cryptography.hazmat.primitives import hashes\nfrom cryptography.hazmat.primitives import serialization\nfrom cryptography.hazmat.primitives.asymmetric import ec\nfrom cryptography.hazmat.primitives.asymmetric import padding\nfrom cryptography.hazmat.primitives.asymmetric.utils import decode_dss_signature\nfrom cryptography.hazmat.primitives.asymmetric.utils import encode_dss_signature\nimport cryptography.x509\n\nfrom google.auth import _helpers\nfrom google.auth.crypt import base\n\n\n_CERTIFICATE_MARKER = b\"-----BEGIN CERTIFICATE-----\"\n_BACKEND = backends.default_backend()\n_PADDING = padding.PKCS1v15()\n\n\nclass ES256Verifier(base.Verifier):\n \"\"\"Verifies ECDSA cryptographic signatures using public keys.\n\n Args:\n public_key (\n cryptography.hazmat.primitives.asymmetric.ec.ECDSAPublicKey):\n The public key used to verify signatures.\n \"\"\"\n\n def __init__(self, public_key):\n self._pubkey = public_key\n\n 
@_helpers.copy_docstring(base.Verifier)\n def verify(self, message, signature):\n # First convert (r||s) raw signature to ASN1 encoded signature.\n sig_bytes = _helpers.to_bytes(signature)\n if len(sig_bytes) != 64:\n return False\n r = utils.int_from_bytes(sig_bytes[:32], byteorder=\"big\")\n s = utils.int_from_bytes(sig_bytes[32:], byteorder=\"big\")\n asn1_sig = encode_dss_signature(r, s)\n\n message = _helpers.to_bytes(message)\n try:\n self._pubkey.verify(asn1_sig, message, ec.ECDSA(hashes.SHA256()))\n return True\n except (ValueError, cryptography.exceptions.InvalidSignature):\n return False\n\n @classmethod\n def from_string(cls, public_key):\n \"\"\"Construct an Verifier instance from a public key or public\n certificate string.\n\n Args:\n public_key (Union[str, bytes]): The public key in PEM format or the\n x509 public key certificate.\n\n Returns:\n Verifier: The constructed verifier.\n\n Raises:\n ValueError: If the public key can't be parsed.\n \"\"\"\n public_key_data = _helpers.to_bytes(public_key)\n\n if _CERTIFICATE_MARKER in public_key_data:\n cert = cryptography.x509.load_pem_x509_certificate(\n public_key_data, _BACKEND\n )\n pubkey = cert.public_key()\n\n else:\n pubkey = serialization.load_pem_public_key(public_key_data, _BACKEND)\n\n return cls(pubkey)\n\n\nclass ES256Signer(base.Signer, base.FromServiceAccountMixin):\n \"\"\"Signs messages with an ECDSA private key.\n\n Args:\n private_key (\n cryptography.hazmat.primitives.asymmetric.ec.ECDSAPrivateKey):\n The private key to sign with.\n key_id (str): Optional key ID used to identify this private key. 
This\n can be useful to associate the private key with its associated\n public key or certificate.\n \"\"\"\n\n def __init__(self, private_key, key_id=None):\n self._key = private_key\n self._key_id = key_id\n\n @property\n @_helpers.copy_docstring(base.Signer)\n def key_id(self):\n return self._key_id\n\n @_helpers.copy_docstring(base.Signer)\n def sign(self, message):\n message = _helpers.to_bytes(message)\n asn1_signature = self._key.sign(message, ec.ECDSA(hashes.SHA256()))\n\n # Convert ASN1 encoded signature to (r||s) raw signature.\n (r, s) = decode_dss_signature(asn1_signature)\n return utils.int_to_bytes(r, 32) + utils.int_to_bytes(s, 32)\n\n @classmethod\n def from_string(cls, key, key_id=None):\n \"\"\"Construct a RSASigner from a private key in PEM format.\n\n Args:\n key (Union[bytes, str]): Private key in PEM format.\n key_id (str): An optional key id used to identify the private key.\n\n Returns:\n google.auth.crypt._cryptography_rsa.RSASigner: The\n constructed signer.\n\n Raises:\n ValueError: If ``key`` is not ``bytes`` or ``str`` (unicode).\n UnicodeDecodeError: If ``key`` is ``bytes`` but cannot be decoded\n into a UTF-8 ``str``.\n ValueError: If ``cryptography`` \"Could not deserialize key data.\"\n \"\"\"\n key = _helpers.to_bytes(key)\n private_key = serialization.load_pem_private_key(\n key, password=None, backend=_BACKEND\n )\n return cls(private_key, key_id=key_id)\n", "path": "google/auth/crypt/es256.py"}], "after_files": [{"content": "# Copyright 2017 Google Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the 
specific language governing permissions and\n# limitations under the License.\n\n\"\"\"ECDSA (ES256) verifier and signer that use the ``cryptography`` library.\n\"\"\"\n\nfrom cryptography import utils\nimport cryptography.exceptions\nfrom cryptography.hazmat import backends\nfrom cryptography.hazmat.primitives import hashes\nfrom cryptography.hazmat.primitives import serialization\nfrom cryptography.hazmat.primitives.asymmetric import ec\nfrom cryptography.hazmat.primitives.asymmetric import padding\nfrom cryptography.hazmat.primitives.asymmetric.utils import decode_dss_signature\nfrom cryptography.hazmat.primitives.asymmetric.utils import encode_dss_signature\nimport cryptography.x509\n\nfrom google.auth import _helpers\nfrom google.auth.crypt import base\n\n\n_CERTIFICATE_MARKER = b\"-----BEGIN CERTIFICATE-----\"\n_BACKEND = backends.default_backend()\n_PADDING = padding.PKCS1v15()\n\n\nclass ES256Verifier(base.Verifier):\n \"\"\"Verifies ECDSA cryptographic signatures using public keys.\n\n Args:\n public_key (\n cryptography.hazmat.primitives.asymmetric.ec.ECDSAPublicKey):\n The public key used to verify signatures.\n \"\"\"\n\n def __init__(self, public_key):\n self._pubkey = public_key\n\n @_helpers.copy_docstring(base.Verifier)\n def verify(self, message, signature):\n # First convert (r||s) raw signature to ASN1 encoded signature.\n sig_bytes = _helpers.to_bytes(signature)\n if len(sig_bytes) != 64:\n return False\n r = int.from_bytes(sig_bytes[:32], byteorder=\"big\")\n s = int.from_bytes(sig_bytes[32:], byteorder=\"big\")\n asn1_sig = encode_dss_signature(r, s)\n\n message = _helpers.to_bytes(message)\n try:\n self._pubkey.verify(asn1_sig, message, ec.ECDSA(hashes.SHA256()))\n return True\n except (ValueError, cryptography.exceptions.InvalidSignature):\n return False\n\n @classmethod\n def from_string(cls, public_key):\n \"\"\"Construct an Verifier instance from a public key or public\n certificate string.\n\n Args:\n public_key (Union[str, bytes]): The 
public key in PEM format or the\n x509 public key certificate.\n\n Returns:\n Verifier: The constructed verifier.\n\n Raises:\n ValueError: If the public key can't be parsed.\n \"\"\"\n public_key_data = _helpers.to_bytes(public_key)\n\n if _CERTIFICATE_MARKER in public_key_data:\n cert = cryptography.x509.load_pem_x509_certificate(\n public_key_data, _BACKEND\n )\n pubkey = cert.public_key()\n\n else:\n pubkey = serialization.load_pem_public_key(public_key_data, _BACKEND)\n\n return cls(pubkey)\n\n\nclass ES256Signer(base.Signer, base.FromServiceAccountMixin):\n \"\"\"Signs messages with an ECDSA private key.\n\n Args:\n private_key (\n cryptography.hazmat.primitives.asymmetric.ec.ECDSAPrivateKey):\n The private key to sign with.\n key_id (str): Optional key ID used to identify this private key. This\n can be useful to associate the private key with its associated\n public key or certificate.\n \"\"\"\n\n def __init__(self, private_key, key_id=None):\n self._key = private_key\n self._key_id = key_id\n\n @property\n @_helpers.copy_docstring(base.Signer)\n def key_id(self):\n return self._key_id\n\n @_helpers.copy_docstring(base.Signer)\n def sign(self, message):\n message = _helpers.to_bytes(message)\n asn1_signature = self._key.sign(message, ec.ECDSA(hashes.SHA256()))\n\n # Convert ASN1 encoded signature to (r||s) raw signature.\n (r, s) = decode_dss_signature(asn1_signature)\n return utils.int_to_bytes(r, 32) + utils.int_to_bytes(s, 32)\n\n @classmethod\n def from_string(cls, key, key_id=None):\n \"\"\"Construct a RSASigner from a private key in PEM format.\n\n Args:\n key (Union[bytes, str]): Private key in PEM format.\n key_id (str): An optional key id used to identify the private key.\n\n Returns:\n google.auth.crypt._cryptography_rsa.RSASigner: The\n constructed signer.\n\n Raises:\n ValueError: If ``key`` is not ``bytes`` or ``str`` (unicode).\n UnicodeDecodeError: If ``key`` is ``bytes`` but cannot be decoded\n into a UTF-8 ``str``.\n ValueError: If 
``cryptography`` \"Could not deserialize key data.\"\n \"\"\"\n key = _helpers.to_bytes(key)\n private_key = serialization.load_pem_private_key(\n key, password=None, backend=_BACKEND\n )\n return cls(private_key, key_id=key_id)\n", "path": "google/auth/crypt/es256.py"}]} | 2,055 | 182 |

gh_patches_debug_12156 | rasdani/github-patches | git_diff | nltk__nltk-3022 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

nltk.chat.chatbot() endless loop

When I type `import nltk` followed by `nltk.chat.chatbots()`, it lists/asks which one I want to talk to, and then endlessly scrolls the following: ` Enter a number in the range 1-5: Error: bad chatbot number`, in both Jupyter and Spyder.

--- END ISSUE ---
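In the reported environments, the loop most likely spins because `sys.stdin.readline()` returns an empty string immediately, as it does at end-of-file, instead of waiting for the user. The `while True` loop has no exit for that case. The patch for this row switches to the built-in `input()`. Below is a minimal sketch of that prompt, assuming only the standard library. `prompt_choice` and its `ask` parameter are hypothetical names, added so the prompt can be exercised without a terminal.

```python
import io


def prompt_choice(botcount, ask=input):
    """Prompt until the user enters a number in 1..botcount.

    `ask` defaults to the built-in input(), which notebook frontends
    typically replace with their own prompt; on a closed stdin it raises
    EOFError instead of returning "" forever like readline().
    """
    while True:
        choice = ask(f"\nEnter a number in the range 1-{botcount}: ").strip()
        if choice.isdigit() and 1 <= int(choice) <= botcount:
            return int(choice)
        print("   Error: bad chatbot number")


# At end-of-file, readline() returns "" immediately on every call, so a loop
# that only re-reads after bad input never gets a valid answer.
dead_stdin = io.StringIO("")
print(repr(dead_stdin.readline()), repr(dead_stdin.readline()))  # '' ''

# Simulated user input: two bad answers, then a valid one.
answers = iter(["abc", "9", "2"])
print(prompt_choice(5, ask=lambda prompt: next(answers)))  # two errors, then 2
```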

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `nltk/chat/__init__.py`

Content:

```

1 # Natural Language Toolkit: Chatbots

2 #

3 # Copyright (C) 2001-2022 NLTK Project

4 # Authors: Steven Bird <[email protected]>

5 # URL: <https://www.nltk.org/>

6 # For license information, see LICENSE.TXT

7

8 # Based on an Eliza implementation by Joe Strout <[email protected]>,

9 # Jeff Epler <[email protected]> and Jez Higgins <[email protected]>.

10

11 """

12 A class for simple chatbots. These perform simple pattern matching on sentences

13 typed by users, and respond with automatically generated sentences.

14

15 These chatbots may not work using the windows command line or the

16 windows IDLE GUI.

17 """

18

19 from nltk.chat.eliza import eliza_chat

20 from nltk.chat.iesha import iesha_chat

21 from nltk.chat.rude import rude_chat

22 from nltk.chat.suntsu import suntsu_chat

23 from nltk.chat.util import Chat

24 from nltk.chat.zen import zen_chat

25

26 bots = [

27 (eliza_chat, "Eliza (psycho-babble)"),

28 (iesha_chat, "Iesha (teen anime junky)"),

29 (rude_chat, "Rude (abusive bot)"),

30 (suntsu_chat, "Suntsu (Chinese sayings)"),

31 (zen_chat, "Zen (gems of wisdom)"),

32 ]

33

34

35 def chatbots():

36 import sys

37

38 print("Which chatbot would you like to talk to?")

39 botcount = len(bots)

40 for i in range(botcount):

41 print(" %d: %s" % (i + 1, bots[i][1]))

42 while True:

43 print("\nEnter a number in the range 1-%d: " % botcount, end=" ")

44 choice = sys.stdin.readline().strip()

45 if choice.isdigit() and (int(choice) - 1) in range(botcount):

46 break

47 else:

48 print(" Error: bad chatbot number")

49

50 chatbot = bots[int(choice) - 1][0]

51 chatbot()

52

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/nltk/chat/__init__.py b/nltk/chat/__init__.py

--- a/nltk/chat/__init__.py

+++ b/nltk/chat/__init__.py

@@ -33,15 +33,12 @@

def chatbots():

- import sys

-

print("Which chatbot would you like to talk to?")

botcount = len(bots)

for i in range(botcount):

print(" %d: %s" % (i + 1, bots[i][1]))

while True:

- print("\nEnter a number in the range 1-%d: " % botcount, end=" ")

- choice = sys.stdin.readline().strip()

+ choice = input(f"\nEnter a number in the range 1-{botcount}: ").strip()

if choice.isdigit() and (int(choice) - 1) in range(botcount):

break

else:

| {"golden_diff": "diff --git a/nltk/chat/__init__.py b/nltk/chat/__init__.py\n--- a/nltk/chat/__init__.py\n+++ b/nltk/chat/__init__.py\n@@ -33,15 +33,12 @@\n \n \n def chatbots():\n- import sys\n-\n print(\"Which chatbot would you like to talk to?\")\n botcount = len(bots)\n for i in range(botcount):\n print(\" %d: %s\" % (i + 1, bots[i][1]))\n while True:\n- print(\"\\nEnter a number in the range 1-%d: \" % botcount, end=\" \")\n- choice = sys.stdin.readline().strip()\n+ choice = input(f\"\\nEnter a number in the range 1-{botcount}: \").strip()\n if choice.isdigit() and (int(choice) - 1) in range(botcount):\n break\n else:\n", "issue": "nltk.chat.chatbot() endless loop\nWhen I type `import nltk` followed by `nltk.chat.chatbots()`, it lists/asks which one I want to talk to, and then endlessly scrolls the following: ` Enter a number in the range 1-5: Error: bad chatbot number`, in both Jupyter and Spyder.\n", "before_files": [{"content": "# Natural Language Toolkit: Chatbots\n#\n# Copyright (C) 2001-2022 NLTK Project\n# Authors: Steven Bird <[email protected]>\n# URL: <https://www.nltk.org/>\n# For license information, see LICENSE.TXT\n\n# Based on an Eliza implementation by Joe Strout <[email protected]>,\n# Jeff Epler <[email protected]> and Jez Higgins <[email protected]>.\n\n\"\"\"\nA class for simple chatbots. 
These perform simple pattern matching on sentences\ntyped by users, and respond with automatically generated sentences.\n\nThese chatbots may not work using the windows command line or the\nwindows IDLE GUI.\n\"\"\"\n\nfrom nltk.chat.eliza import eliza_chat\nfrom nltk.chat.iesha import iesha_chat\nfrom nltk.chat.rude import rude_chat\nfrom nltk.chat.suntsu import suntsu_chat\nfrom nltk.chat.util import Chat\nfrom nltk.chat.zen import zen_chat\n\nbots = [\n (eliza_chat, \"Eliza (psycho-babble)\"),\n (iesha_chat, \"Iesha (teen anime junky)\"),\n (rude_chat, \"Rude (abusive bot)\"),\n (suntsu_chat, \"Suntsu (Chinese sayings)\"),\n (zen_chat, \"Zen (gems of wisdom)\"),\n]\n\n\ndef chatbots():\n import sys\n\n print(\"Which chatbot would you like to talk to?\")\n botcount = len(bots)\n for i in range(botcount):\n print(\" %d: %s\" % (i + 1, bots[i][1]))\n while True:\n print(\"\\nEnter a number in the range 1-%d: \" % botcount, end=\" \")\n choice = sys.stdin.readline().strip()\n if choice.isdigit() and (int(choice) - 1) in range(botcount):\n break\n else:\n print(\" Error: bad chatbot number\")\n\n chatbot = bots[int(choice) - 1][0]\n chatbot()\n", "path": "nltk/chat/__init__.py"}], "after_files": [{"content": "# Natural Language Toolkit: Chatbots\n#\n# Copyright (C) 2001-2022 NLTK Project\n# Authors: Steven Bird <[email protected]>\n# URL: <https://www.nltk.org/>\n# For license information, see LICENSE.TXT\n\n# Based on an Eliza implementation by Joe Strout <[email protected]>,\n# Jeff Epler <[email protected]> and Jez Higgins <[email protected]>.\n\n\"\"\"\nA class for simple chatbots. 
These perform simple pattern matching on sentences\ntyped by users, and respond with automatically generated sentences.\n\nThese chatbots may not work using the windows command line or the\nwindows IDLE GUI.\n\"\"\"\n\nfrom nltk.chat.eliza import eliza_chat\nfrom nltk.chat.iesha import iesha_chat\nfrom nltk.chat.rude import rude_chat\nfrom nltk.chat.suntsu import suntsu_chat\nfrom nltk.chat.util import Chat\nfrom nltk.chat.zen import zen_chat\n\nbots = [\n (eliza_chat, \"Eliza (psycho-babble)\"),\n (iesha_chat, \"Iesha (teen anime junky)\"),\n (rude_chat, \"Rude (abusive bot)\"),\n (suntsu_chat, \"Suntsu (Chinese sayings)\"),\n (zen_chat, \"Zen (gems of wisdom)\"),\n]\n\n\ndef chatbots():\n print(\"Which chatbot would you like to talk to?\")\n botcount = len(bots)\n for i in range(botcount):\n print(\" %d: %s\" % (i + 1, bots[i][1]))\n while True:\n choice = input(f\"\\nEnter a number in the range 1-{botcount}: \").strip()\n if choice.isdigit() and (int(choice) - 1) in range(botcount):\n break\n else:\n print(\" Error: bad chatbot number\")\n\n chatbot = bots[int(choice) - 1][0]\n chatbot()\n", "path": "nltk/chat/__init__.py"}]} | 882 | 200 |

gh_patches_debug_11612 | rasdani/github-patches | git_diff | DataDog__dd-trace-py-542 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Leaking sockets and connections

It looks like ddtrace writer is not closing the http connections it opens.

```

File "../lib/python3.7/threading.py", line 885, in _bootstrap

self._bootstrap_inner()

File "../lib/python3.7/threading.py", line 917, in _bootstrap_inner

self.run()

File "../lib/python3.7/threading.py", line 865, in run

self._target(*self._args, **self._kwargs)

File "../lib/python3.7/site-packages/ddtrace/writer.py", line 168, in _target

result_services = None

File "../lib/python3.7/http/client.py", line 408, in close

self._close_conn()

File "../lib/python3.7/http/client.py", line 401, in _close_conn

fp.close()

File "../lib/python3.7/socket.py", line 660, in close

self._sock = None

File "../lib/python3.7/warnings.py", line 99, in _showwarnmsg

msg.file, msg.line)

File "/app/core.py", line 30, in warn_with_traceback

traceback.print_stack(file=log)

../lib/python3.7/socket.py:660: ResourceWarning: unclosed <socket.socket fd=14, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=6, laddr=('0.0.0.0', 54954), raddr=('0.0.0.0', 8126)>

self._sock = None

```

Looking at the code, the issue is in the `_put` method of the `API` object. It creates an `HTTPConnection` but doesn't close it.

--- END ISSUE ---
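The leak happens because `_put` creates a new `HTTPConnection` for every request and returns without calling `close()`. Below is a minimal sketch of the usual try/finally pattern, assuming Python 3's `http.client` (the listing imports it through `ddtrace.compat.httplib`). `put_and_close` is a hypothetical helper, not ddtrace's API. It returns the status and body rather than the response object that `_put` currently hands back.

```python
import http.client


def put_and_close(host, port, endpoint, data, headers):
    """Send one PUT request and always release the connection afterwards.

    Hypothetical helper, not ddtrace's API. The body is read before
    conn.close() runs, because closing the connection can also close a
    still-pending response, after which its body can no longer be read.
    """
    conn = http.client.HTTPConnection(host, port)
    try:
        conn.request("PUT", endpoint, data, headers)
        response = conn.getresponse()
        body = response.read()  # consume the body while the socket is open
        return response.status, body
    finally:
        conn.close()  # runs on success and on error, so no socket is leaked
```

The body is consumed inside the `try`. Callers that currently parse the response later, as `_parse_response_json` does with `response.read()`, would therefore need to work from the returned bytes instead.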

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `ddtrace/api.py`

Content:

```

1 # stdlib

2 import logging

3 import time

4 import ddtrace

5 from json import loads

6

7 # project

8 from .encoding import get_encoder, JSONEncoder

9 from .compat import httplib, PYTHON_VERSION, PYTHON_INTERPRETER, get_connection_response

10

11

12 log = logging.getLogger(__name__)

13

14 TRACE_COUNT_HEADER = 'X-Datadog-Trace-Count'

15

16 _VERSIONS = {'v0.4': {'traces': '/v0.4/traces',

17 'services': '/v0.4/services',

18 'compatibility_mode': False,

19 'fallback': 'v0.3'},

20 'v0.3': {'traces': '/v0.3/traces',

21 'services': '/v0.3/services',

22 'compatibility_mode': False,

23 'fallback': 'v0.2'},

24 'v0.2': {'traces': '/v0.2/traces',

25 'services': '/v0.2/services',

26 'compatibility_mode': True,

27 'fallback': None}}

28

29 def _parse_response_json(response):

30 """

31 Parse the content of a response object, and return the right type,

32 can be a string if the output was plain text, or a dictionnary if

33 the output was a JSON.

34 """

35 if hasattr(response, 'read'):

36 body = response.read()

37 try:

38 if not isinstance(body, str) and hasattr(body, 'decode'):

39 body = body.decode('utf-8')

40 if hasattr(body, 'startswith') and body.startswith('OK'):

41 # This typically happens when using a priority-sampling enabled

42 # library with an outdated agent. It still works, but priority sampling

43 # will probably send too many traces, so the next step is to upgrade agent.

44 log.debug("'OK' is not a valid JSON, please make sure trace-agent is up to date")

45 return

46 content = loads(body)

47 return content

48 except (ValueError, TypeError) as err:

49 log.debug("unable to load JSON '%s': %s" % (body, err))

50

51 class API(object):

52 """

53 Send data to the trace agent using the HTTP protocol and JSON format

54 """

55 def __init__(self, hostname, port, headers=None, encoder=None, priority_sampling=False):

56 self.hostname = hostname

57 self.port = port

58

59 self._headers = headers or {}

60 self._version = None

61

62 if priority_sampling:

63 self._set_version('v0.4', encoder=encoder)

64 else:

65 self._set_version('v0.3', encoder=encoder)

66

67 self._headers.update({

68 'Datadog-Meta-Lang': 'python',

69 'Datadog-Meta-Lang-Version': PYTHON_VERSION,

70 'Datadog-Meta-Lang-Interpreter': PYTHON_INTERPRETER,

71 'Datadog-Meta-Tracer-Version': ddtrace.__version__,

72 })

73

74 def _set_version(self, version, encoder=None):

75 if version not in _VERSIONS:

76 version = 'v0.2'

77 if version == self._version:

78 return

79 self._version = version

80 self._traces = _VERSIONS[version]['traces']

81 self._services = _VERSIONS[version]['services']

82 self._fallback = _VERSIONS[version]['fallback']

83 self._compatibility_mode = _VERSIONS[version]['compatibility_mode']

84 if self._compatibility_mode:

85 self._encoder = JSONEncoder()

86 else:

87 self._encoder = encoder or get_encoder()

88 # overwrite the Content-type with the one chosen in the Encoder

89 self._headers.update({'Content-Type': self._encoder.content_type})

90

91 def _downgrade(self):

92 """

93 Downgrades the used encoder and API level. This method must fallback to a safe

94 encoder and API, so that it will success despite users' configurations. This action

95 ensures that the compatibility mode is activated so that the downgrade will be

96 executed only once.

97 """

98 self._set_version(self._fallback)

99

100 def send_traces(self, traces):

101 if not traces:

102 return

103 start = time.time()

104 data = self._encoder.encode_traces(traces)

105 response = self._put(self._traces, data, len(traces))

106

107 # the API endpoint is not available so we should downgrade the connection and re-try the call

108 if response.status in [404, 415] and self._fallback:

109 log.debug('calling endpoint "%s" but received %s; downgrading API', self._traces, response.status)

110 self._downgrade()

111 return self.send_traces(traces)

112

113 log.debug("reported %d traces in %.5fs", len(traces), time.time() - start)

114 return response

115

116 def send_services(self, services):

117 if not services:

118 return

119 s = {}

120 for service in services:

121 s.update(service)

122 data = self._encoder.encode_services(s)

123 response = self._put(self._services, data)

124

125 # the API endpoint is not available so we should downgrade the connection and re-try the call

126 if response.status in [404, 415] and self._fallback:

127 log.debug('calling endpoint "%s" but received %s; downgrading API', self._services, response.status)

128 self._downgrade()

129 return self.send_services(services)

130

131 log.debug("reported %d services", len(services))

132 return response

133

134 def _put(self, endpoint, data, count=0):

135 conn = httplib.HTTPConnection(self.hostname, self.port)

136

137 headers = self._headers

138 if count:

139 headers = dict(self._headers)

140 headers[TRACE_COUNT_HEADER] = str(count)

141

142 conn.request("PUT", endpoint, data, headers)

143 return get_connection_response(conn)

144

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/ddtrace/api.py b/ddtrace/api.py

--- a/ddtrace/api.py

+++ b/ddtrace/api.py

@@ -133,11 +133,13 @@

def _put(self, endpoint, data, count=0):

conn = httplib.HTTPConnection(self.hostname, self.port)

-

- headers = self._headers

- if count:

- headers = dict(self._headers)

- headers[TRACE_COUNT_HEADER] = str(count)

-

- conn.request("PUT", endpoint, data, headers)

- return get_connection_response(conn)

+ try:

+ headers = self._headers

+ if count:

+ headers = dict(self._headers)

+ headers[TRACE_COUNT_HEADER] = str(count)

+

+ conn.request("PUT", endpoint, data, headers)

+ return get_connection_response(conn)

+ finally:

+ conn.close()

| {"golden_diff": "diff --git a/ddtrace/api.py b/ddtrace/api.py\n--- a/ddtrace/api.py\n+++ b/ddtrace/api.py\n@@ -133,11 +133,13 @@\n \n def _put(self, endpoint, data, count=0):\n conn = httplib.HTTPConnection(self.hostname, self.port)\n-\n- headers = self._headers\n- if count:\n- headers = dict(self._headers)\n- headers[TRACE_COUNT_HEADER] = str(count)\n-\n- conn.request(\"PUT\", endpoint, data, headers)\n- return get_connection_response(conn)\n+ try:\n+ headers = self._headers\n+ if count:\n+ headers = dict(self._headers)\n+ headers[TRACE_COUNT_HEADER] = str(count)\n+\n+ conn.request(\"PUT\", endpoint, data, headers)\n+ return get_connection_response(conn)\n+ finally:\n+ conn.close()\n", "issue": "Leaking sockets and connections\nIt looks like ddtrace writer is not closing the http connections it opens. \r\n\r\n```\r\n File \"../lib/python3.7/threading.py\", line 885, in _bootstrap\r\n self._bootstrap_inner()\r\n File \"../lib/python3.7/threading.py\", line 917, in _bootstrap_inner\r\n self.run()\r\n File \"../lib/python3.7/threading.py\", line 865, in run\r\n self._target(*self._args, **self._kwargs)\r\n File \"../lib/python3.7/site-packages/ddtrace/writer.py\", line 168, in _target\r\n result_services = None\r\n File \"../lib/python3.7/http/client.py\", line 408, in close\r\n self._close_conn()\r\n File \"../lib/python3.7/http/client.py\", line 401, in _close_conn\r\n fp.close()\r\n File \"../lib/python3.7/socket.py\", line 660, in close\r\n self._sock = None\r\n File \"../lib/python3.7/warnings.py\", line 99, in _showwarnmsg\r\n msg.file, msg.line)\r\n File \"/app/core.py\", line 30, in warn_with_traceback\r\n traceback.print_stack(file=log)\r\n../lib/python3.7/socket.py:660: ResourceWarning: unclosed <socket.socket fd=14, family=AddressFamily.AF_INET, type=SocketKind.SOCK_STREAM, proto=6, laddr=('0.0.0.0', 54954), raddr=('0.0.0.0', 8126)>\r\n self._sock = None\r\n```\r\n\r\n\r\nLooking at the code, the issue is in the `_put` method of the `API` object. 
It creates an `HTTPConnection` but doesn't close it. \n", "before_files": [{"content": "# stdlib\nimport logging\nimport time\nimport ddtrace\nfrom json import loads\n\n# project\nfrom .encoding import get_encoder, JSONEncoder\nfrom .compat import httplib, PYTHON_VERSION, PYTHON_INTERPRETER, get_connection_response\n\n\nlog = logging.getLogger(__name__)\n\nTRACE_COUNT_HEADER = 'X-Datadog-Trace-Count'\n\n_VERSIONS = {'v0.4': {'traces': '/v0.4/traces',\n 'services': '/v0.4/services',\n 'compatibility_mode': False,\n 'fallback': 'v0.3'},\n 'v0.3': {'traces': '/v0.3/traces',\n 'services': '/v0.3/services',\n 'compatibility_mode': False,\n 'fallback': 'v0.2'},\n 'v0.2': {'traces': '/v0.2/traces',\n 'services': '/v0.2/services',\n 'compatibility_mode': True,\n 'fallback': None}}\n\ndef _parse_response_json(response):\n \"\"\"\n Parse the content of a response object, and return the right type,\n can be a string if the output was plain text, or a dictionnary if\n the output was a JSON.\n \"\"\"\n if hasattr(response, 'read'):\n body = response.read()\n try:\n if not isinstance(body, str) and hasattr(body, 'decode'):\n body = body.decode('utf-8')\n if hasattr(body, 'startswith') and body.startswith('OK'):\n # This typically happens when using a priority-sampling enabled\n # library with an outdated agent. 
It still works, but priority sampling\n # will probably send too many traces, so the next step is to upgrade agent.\n log.debug(\"'OK' is not a valid JSON, please make sure trace-agent is up to date\")\n return\n content = loads(body)\n return content\n except (ValueError, TypeError) as err:\n log.debug(\"unable to load JSON '%s': %s\" % (body, err))\n\nclass API(object):\n \"\"\"\n Send data to the trace agent using the HTTP protocol and JSON format\n \"\"\"\n def __init__(self, hostname, port, headers=None, encoder=None, priority_sampling=False):\n self.hostname = hostname\n self.port = port\n\n self._headers = headers or {}\n self._version = None\n\n if priority_sampling:\n self._set_version('v0.4', encoder=encoder)\n else:\n self._set_version('v0.3', encoder=encoder)\n\n self._headers.update({\n 'Datadog-Meta-Lang': 'python',\n 'Datadog-Meta-Lang-Version': PYTHON_VERSION,\n 'Datadog-Meta-Lang-Interpreter': PYTHON_INTERPRETER,\n 'Datadog-Meta-Tracer-Version': ddtrace.__version__,\n })\n\n def _set_version(self, version, encoder=None):\n if version not in _VERSIONS:\n version = 'v0.2'\n if version == self._version:\n return\n self._version = version\n self._traces = _VERSIONS[version]['traces']\n self._services = _VERSIONS[version]['services']\n self._fallback = _VERSIONS[version]['fallback']\n self._compatibility_mode = _VERSIONS[version]['compatibility_mode']\n if self._compatibility_mode:\n self._encoder = JSONEncoder()\n else:\n self._encoder = encoder or get_encoder()\n # overwrite the Content-type with the one chosen in the Encoder\n self._headers.update({'Content-Type': self._encoder.content_type})\n\n def _downgrade(self):\n \"\"\"\n Downgrades the used encoder and API level. This method must fallback to a safe\n encoder and API, so that it will success despite users' configurations. 
This action\n ensures that the compatibility mode is activated so that the downgrade will be\n executed only once.\n \"\"\"\n self._set_version(self._fallback)\n\n def send_traces(self, traces):\n if not traces:\n return\n start = time.time()\n data = self._encoder.encode_traces(traces)\n response = self._put(self._traces, data, len(traces))\n\n # the API endpoint is not available so we should downgrade the connection and re-try the call\n if response.status in [404, 415] and self._fallback:\n log.debug('calling endpoint \"%s\" but received %s; downgrading API', self._traces, response.status)\n self._downgrade()\n return self.send_traces(traces)\n\n log.debug(\"reported %d traces in %.5fs\", len(traces), time.time() - start)\n return response\n\n def send_services(self, services):\n if not services:\n return\n s = {}\n for service in services:\n s.update(service)\n data = self._encoder.encode_services(s)\n response = self._put(self._services, data)\n\n # the API endpoint is not available so we should downgrade the connection and re-try the call\n if response.status in [404, 415] and self._fallback:\n log.debug('calling endpoint \"%s\" but received %s; downgrading API', self._services, response.status)\n self._downgrade()\n return self.send_services(services)\n\n log.debug(\"reported %d services\", len(services))\n return response\n\n def _put(self, endpoint, data, count=0):\n conn = httplib.HTTPConnection(self.hostname, self.port)\n\n headers = self._headers\n if count:\n headers = dict(self._headers)\n headers[TRACE_COUNT_HEADER] = str(count)\n\n conn.request(\"PUT\", endpoint, data, headers)\n return get_connection_response(conn)\n", "path": "ddtrace/api.py"}], "after_files": [{"content": "# stdlib\nimport logging\nimport time\nimport ddtrace\nfrom json import loads\n\n# project\nfrom .encoding import get_encoder, JSONEncoder\nfrom .compat import httplib, PYTHON_VERSION, PYTHON_INTERPRETER, get_connection_response\n\n\nlog = 
logging.getLogger(__name__)\n\nTRACE_COUNT_HEADER = 'X-Datadog-Trace-Count'\n\n_VERSIONS = {'v0.4': {'traces': '/v0.4/traces',\n 'services': '/v0.4/services',\n 'compatibility_mode': False,\n 'fallback': 'v0.3'},\n 'v0.3': {'traces': '/v0.3/traces',\n 'services': '/v0.3/services',\n 'compatibility_mode': False,\n 'fallback': 'v0.2'},\n 'v0.2': {'traces': '/v0.2/traces',\n 'services': '/v0.2/services',\n 'compatibility_mode': True,\n 'fallback': None}}\n\ndef _parse_response_json(response):\n \"\"\"\n Parse the content of a response object, and return the right type,\n can be a string if the output was plain text, or a dictionnary if\n the output was a JSON.\n \"\"\"\n if hasattr(response, 'read'):\n body = response.read()\n try:\n if not isinstance(body, str) and hasattr(body, 'decode'):\n body = body.decode('utf-8')\n if hasattr(body, 'startswith') and body.startswith('OK'):\n # This typically happens when using a priority-sampling enabled\n # library with an outdated agent. It still works, but priority sampling\n # will probably send too many traces, so the next step is to upgrade agent.\n log.debug(\"'OK' is not a valid JSON, please make sure trace-agent is up to date\")\n return\n content = loads(body)\n return content\n except (ValueError, TypeError) as err:\n log.debug(\"unable to load JSON '%s': %s\" % (body, err))\n\nclass API(object):\n \"\"\"\n Send data to the trace agent using the HTTP protocol and JSON format\n \"\"\"\n def __init__(self, hostname, port, headers=None, encoder=None, priority_sampling=False):\n self.hostname = hostname\n self.port = port\n\n self._headers = headers or {}\n self._version = None\n\n if priority_sampling:\n self._set_version('v0.4', encoder=encoder)\n else:\n self._set_version('v0.3', encoder=encoder)\n\n self._headers.update({\n 'Datadog-Meta-Lang': 'python',\n 'Datadog-Meta-Lang-Version': PYTHON_VERSION,\n 'Datadog-Meta-Lang-Interpreter': PYTHON_INTERPRETER,\n 'Datadog-Meta-Tracer-Version': ddtrace.__version__,\n })\n\n 
def _set_version(self, version, encoder=None):\n if version not in _VERSIONS:\n version = 'v0.2'\n if version == self._version:\n return\n self._version = version\n self._traces = _VERSIONS[version]['traces']\n self._services = _VERSIONS[version]['services']\n self._fallback = _VERSIONS[version]['fallback']\n self._compatibility_mode = _VERSIONS[version]['compatibility_mode']\n if self._compatibility_mode:\n self._encoder = JSONEncoder()\n else:\n self._encoder = encoder or get_encoder()\n # overwrite the Content-type with the one chosen in the Encoder\n self._headers.update({'Content-Type': self._encoder.content_type})\n\n def _downgrade(self):\n \"\"\"\n Downgrades the used encoder and API level. This method must fallback to a safe\n encoder and API, so that it will success despite users' configurations. This action\n ensures that the compatibility mode is activated so that the downgrade will be\n executed only once.\n \"\"\"\n self._set_version(self._fallback)\n\n def send_traces(self, traces):\n if not traces:\n return\n start = time.time()\n data = self._encoder.encode_traces(traces)\n response = self._put(self._traces, data, len(traces))\n\n # the API endpoint is not available so we should downgrade the connection and re-try the call\n if response.status in [404, 415] and self._fallback:\n log.debug('calling endpoint \"%s\" but received %s; downgrading API', self._traces, response.status)\n self._downgrade()\n return self.send_traces(traces)\n\n log.debug(\"reported %d traces in %.5fs\", len(traces), time.time() - start)\n return response\n\n def send_services(self, services):\n if not services:\n return\n s = {}\n for service in services:\n s.update(service)\n data = self._encoder.encode_services(s)\n response = self._put(self._services, data)\n\n # the API endpoint is not available so we should downgrade the connection and re-try the call\n if response.status in [404, 415] and self._fallback:\n log.debug('calling endpoint \"%s\" but received %s; downgrading 
API', self._services, response.status)\n self._downgrade()\n return self.send_services(services)\n\n log.debug(\"reported %d services\", len(services))\n return response\n\n def _put(self, endpoint, data, count=0):\n conn = httplib.HTTPConnection(self.hostname, self.port)\n try:\n headers = self._headers\n if count:\n headers = dict(self._headers)\n headers[TRACE_COUNT_HEADER] = str(count)\n\n conn.request(\"PUT\", endpoint, data, headers)\n return get_connection_response(conn)\n finally:\n conn.close()\n", "path": "ddtrace/api.py"}]} | 2,251 | 198 |
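
The ddtrace golden diff above wraps the body of `_put` in `try`/`finally`. This makes `conn.close()` run on every exit path, including when `conn.request(...)` raises. The sketch below illustrates only that cleanup guarantee. `FakeConnection` and `put` are hypothetical stand-ins for `httplib.HTTPConnection` and `API._put`, so the example runs offline. It does not model real `http.client` socket or response behaviour.

```python
class FakeConnection:
    """Hypothetical stand-in for an HTTP connection; performs no network I/O."""

    def __init__(self, fail=False):
        self.fail = fail
        self.closed = False

    def request(self, method, url, body=None, headers=None):
        # Simulate an unreachable trace agent when fail=True.
        if self.fail:
            raise ConnectionRefusedError("agent not reachable")

    def getresponse(self):
        return "response"

    def close(self):
        self.closed = True


def put(conn, endpoint, data, headers):
    # Same shape as the patched _put: close() runs whether request()
    # succeeds or raises, so the connection is never leaked.
    try:
        conn.request("PUT", endpoint, data, headers)
        return conn.getresponse()
    finally:
        conn.close()
```

In the success case the response is returned and the connection is closed. In the failure case the exception still propagates to the caller, but only after `close()` has run.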

gh_patches_debug_2358 | rasdani/github-patches | git_diff | mars-project__mars-3146 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[BUG] There may be duplicate `WorkerMetaAPI` creation in `TaskStageProcessor`

**Describe the bug**

We can see a lot of duplicate `WorkerMetaAPI` creation in the method `_update_result_meta` of `mars/services/task/execution/mars/stage.py` when there are many chunk results and their bands are the same:

```python

for tileable in tile_context.values():

chunks = [c.data for c in tileable.chunks]

for c, params_fields in zip(chunks, self._get_params_fields(tileable)):

address = chunk_to_result[c].meta["bands"][0][0]

meta_api = await WorkerMetaAPI.create(session_id, address)

call = meta_api.get_chunk_meta.delay(c.key, fields=params_fields)

worker_meta_api_to_chunk_delays[meta_api][c] = call

```

There are 3639 chunk results and 200 workers in my job, and it takes 1414s to create the worker meta APIs.

Maybe we can cache the worker meta APIs to avoid duplicate creation.

**To Reproduce**

1. Your Python version: python-3.7.9

2. The version of Mars you use: master

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mars/services/meta/api/oscar.py`

Content:

```

1 # Copyright 1999-2021 Alibaba Group Holding Ltd.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15

16 from typing import Dict, List, Any

17

18 from .... import oscar as mo

19 from ....core import ChunkType

20 from ....core.operand import Fuse

21 from ....lib.aio import alru_cache

22 from ....typing import BandType

23 from ....utils import get_chunk_params

24 from ..core import get_meta_type

25 from ..store import AbstractMetaStore

26 from ..supervisor.core import MetaStoreManagerActor, MetaStoreActor

27 from ..worker.core import WorkerMetaStoreManagerActor

28 from .core import AbstractMetaAPI

29

30

31 class BaseMetaAPI(AbstractMetaAPI):

32 def __init__(self, session_id: str, meta_store: mo.ActorRefType[AbstractMetaStore]):

33 # make sure all meta types registered

34 from .. import metas

35

36 del metas

37

38 self._session_id = session_id

39 self._meta_store = meta_store

40

41 @mo.extensible

42 async def set_tileable_meta(

43 self, tileable, memory_size: int = None, store_size: int = None, **extra

44 ):

45 from ....dataframe.core import (

46 DATAFRAME_TYPE,

47 DATAFRAME_GROUPBY_TYPE,

48 SERIES_GROUPBY_TYPE,

49 )

50

51 params = tileable.params.copy()

52 if isinstance(

53 tileable, (DATAFRAME_TYPE, DATAFRAME_GROUPBY_TYPE, SERIES_GROUPBY_TYPE)

54 ):

55 # dataframe needs some special process for now

56 del params["columns_value"]

57 del params["dtypes"]

58 params.pop("key_dtypes", None)

59 params["dtypes_value"] = tileable.dtypes_value

60 params["nsplits"] = tileable.nsplits

61 params.update(extra)

62 meta = get_meta_type(type(tileable))(

63 object_id=tileable.key,

64 **params,

65 memory_size=memory_size,

66 store_size=store_size

67 )

68 return await self._meta_store.set_meta(tileable.key, meta)

69

70 @mo.extensible

71 async def get_tileable_meta(

72 self, object_id: str, fields: List[str] = None

73 ) -> Dict[str, Any]:

74 return await self._meta_store.get_meta(object_id, fields=fields)

75

76 @mo.extensible

77 async def del_tileable_meta(self, object_id: str):

78 return await self._meta_store.del_meta(object_id)

79

80 @classmethod

81 def _extract_chunk_meta(

82 cls,

83 chunk: ChunkType,

84 memory_size: int = None,

85 store_size: int = None,

86 bands: List[BandType] = None,

87 fields: List[str] = None,

88 exclude_fields: List[str] = None,

89 **extra

90 ):

91 if isinstance(chunk.op, Fuse):

92 # fuse op

93 chunk = chunk.chunk

94 params = get_chunk_params(chunk)

95 chunk_key = extra.pop("chunk_key", chunk.key)

96 object_ref = extra.pop("object_ref", None)

97 params.update(extra)

98

99 if object_ref:

100 object_refs = (

101 [object_ref] if not isinstance(object_ref, list) else object_ref

102 )

103 else:

104 object_refs = []

105

106 if fields is not None:

107 fields = set(fields)

108 params = {k: v for k, v in params.items() if k in fields}

109 elif exclude_fields is not None:

110 exclude_fields = set(exclude_fields)

111 params = {k: v for k, v in params.items() if k not in exclude_fields}

112

113 return get_meta_type(type(chunk))(

114 object_id=chunk_key,

115 **params,

116 bands=bands,

117 memory_size=memory_size,

118 store_size=store_size,

119 object_refs=object_refs

120 )

121

122 @mo.extensible

123 async def set_chunk_meta(

124 self,

125 chunk: ChunkType,

126 memory_size: int = None,

127 store_size: int = None,

128 bands: List[BandType] = None,

129 fields: List[str] = None,

130 exclude_fields: List[str] = None,

131 **extra

132 ):

133 """

134 Parameters

135 ----------

136 chunk: ChunkType

137 chunk to set meta

138 memory_size: int

139 memory size for chunk data

140 store_size: int

141 serialized size for chunk data

142 bands:

143 chunk data bands

144 fields: list

145 fields to include in meta

146 exclude_fields: list

147 fields to exclude in meta

148 extra

149

150 Returns

151 -------

152

153 """

154 meta = self._extract_chunk_meta(

155 chunk,

156 memory_size=memory_size,

157 store_size=store_size,

158 bands=bands,

159 fields=fields,

160 exclude_fields=exclude_fields,

161 **extra

162 )

163 return await self._meta_store.set_meta(meta.object_id, meta)

164

165 @set_chunk_meta.batch

166 async def batch_set_chunk_meta(self, args_list, kwargs_list):

167 set_chunk_metas = []

168 for args, kwargs in zip(args_list, kwargs_list):

169 meta = self._extract_chunk_meta(*args, **kwargs)

170 set_chunk_metas.append(

171 self._meta_store.set_meta.delay(meta.object_id, meta)

172 )

173 return await self._meta_store.set_meta.batch(*set_chunk_metas)

174

175 @mo.extensible

176 async def get_chunk_meta(

177 self, object_id: str, fields: List[str] = None, error="raise"

178 ):

179 return await self._meta_store.get_meta(object_id, fields=fields, error=error)

180

181 @get_chunk_meta.batch

182 async def batch_get_chunk_meta(self, args_list, kwargs_list):

183 get_chunk_metas = []

184 for args, kwargs in zip(args_list, kwargs_list):

185 get_chunk_metas.append(self._meta_store.get_meta.delay(*args, **kwargs))

186 return await self._meta_store.get_meta.batch(*get_chunk_metas)

187

188 @mo.extensible

189 async def del_chunk_meta(self, object_id: str):

190 """

191 Parameters

192 ----------

193 object_id: str

194 chunk id

195 """

196 return await self._meta_store.del_meta(object_id)

197

198 @del_chunk_meta.batch

199 async def batch_del_chunk_meta(self, args_list, kwargs_list):

200 del_chunk_metas = []

201 for args, kwargs in zip(args_list, kwargs_list):

202 del_chunk_metas.append(self._meta_store.del_meta.delay(*args, **kwargs))

203 return await self._meta_store.del_meta.batch(*del_chunk_metas)

204

205 @mo.extensible

206 async def add_chunk_bands(self, object_id: str, bands: List[BandType]):

207 return await self._meta_store.add_chunk_bands(object_id, bands)

208

209 @add_chunk_bands.batch

210 async def batch_add_chunk_bands(self, args_list, kwargs_list):

211 add_chunk_bands_tasks = []

212 for args, kwargs in zip(args_list, kwargs_list):

213 add_chunk_bands_tasks.append(

214 self._meta_store.add_chunk_bands.delay(*args, **kwargs)

215 )

216 return await self._meta_store.add_chunk_bands.batch(*add_chunk_bands_tasks)

217

218 @mo.extensible

219 async def remove_chunk_bands(self, object_id: str, bands: List[BandType]):

220 return await self._meta_store.remove_chunk_bands(object_id, bands)

221

222 @remove_chunk_bands.batch

223 async def batch_remove_chunk_bands(self, args_list, kwargs_list):

224 remove_chunk_bands_tasks = []

225 for args, kwargs in zip(args_list, kwargs_list):

226 remove_chunk_bands_tasks.append(

227 self._meta_store.remove_chunk_bands.delay(*args, **kwargs)

228 )

229 return await self._meta_store.remove_chunk_bands.batch(

230 *remove_chunk_bands_tasks

231 )

232

233 @mo.extensible

234 async def get_band_chunks(self, band: BandType) -> List[str]:

235 return await self._meta_store.get_band_chunks(band)

236

237

238 class MetaAPI(BaseMetaAPI):

239 @classmethod

240 @alru_cache(cache_exceptions=False)

241 async def create(cls, session_id: str, address: str) -> "MetaAPI":

242 """

243 Create Meta API.

244

245 Parameters

246 ----------

247 session_id : str

248 Session ID.

249 address : str

250 Supervisor address.

251

252 Returns

253 -------

254 meta_api

255 Meta api.

256 """

257 meta_store_ref = await mo.actor_ref(address, MetaStoreActor.gen_uid(session_id))

258

259 return MetaAPI(session_id, meta_store_ref)

260

261

262 class MockMetaAPI(MetaAPI):

263 @classmethod

264 async def create(cls, session_id: str, address: str) -> "MetaAPI":

265 # create an Actor for mock

266 try:

267 meta_store_manager_ref = await mo.create_actor(

268 MetaStoreManagerActor,

269 "dict",

270 dict(),

271 address=address,

272 uid=MetaStoreManagerActor.default_uid(),

273 )

274 except mo.ActorAlreadyExist:

275 # ignore if actor exists

276 meta_store_manager_ref = await mo.actor_ref(

277 MetaStoreManagerActor,

278 address=address,

279 uid=MetaStoreManagerActor.default_uid(),

280 )

281 try:

282 await meta_store_manager_ref.new_session_meta_store(session_id)

283 except mo.ActorAlreadyExist:

284 pass

285 return await super().create(session_id=session_id, address=address)

286

287

288 class WorkerMetaAPI(BaseMetaAPI):

289 @classmethod

290 @alru_cache(cache_exceptions=False)

291 async def create(cls, session_id: str, address: str) -> "WorkerMetaAPI":

292 """

293 Create worker meta API.

294

295 Parameters

296 ----------

297 session_id : str

298 Session ID.

299 address : str

300 Worker address.

301

302 Returns

303 -------

304 meta_api

305 Worker meta api.

306 """

307 worker_meta_store_manager_ref = await mo.actor_ref(

308 uid=WorkerMetaStoreManagerActor.default_uid(), address=address

309 )

310 worker_meta_store_ref = (

311 await worker_meta_store_manager_ref.new_session_meta_store(session_id)

312 )

313 return WorkerMetaAPI(session_id, worker_meta_store_ref)

314

315

316 class MockWorkerMetaAPI(WorkerMetaAPI):

317 @classmethod

318 async def create(cls, session_id: str, address: str) -> "WorkerMetaAPI":

319 # create an Actor for mock

320 try:

321 await mo.create_actor(

322 WorkerMetaStoreManagerActor,

323 "dict",

324 dict(),

325 address=address,

326 uid=WorkerMetaStoreManagerActor.default_uid(),

327 )

328 except mo.ActorAlreadyExist:

329 # ignore if actor exists

330 await mo.actor_ref(

331 WorkerMetaStoreManagerActor,

332 address=address,

333 uid=WorkerMetaStoreManagerActor.default_uid(),

334 )

335 return await super().create(session_id, address)

336

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/mars/services/meta/api/oscar.py b/mars/services/meta/api/oscar.py

--- a/mars/services/meta/api/oscar.py

+++ b/mars/services/meta/api/oscar.py

@@ -237,7 +237,7 @@

class MetaAPI(BaseMetaAPI):

@classmethod

- @alru_cache(cache_exceptions=False)

+ @alru_cache(maxsize=1024, cache_exceptions=False)

async def create(cls, session_id: str, address: str) -> "MetaAPI":

"""

Create Meta API.