modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

DrishtiSharma/LayoutLMv3-Finetuned-CORD_100 | DrishtiSharma | 2022-09-18T19:38:50Z | 83 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"layoutlmv3",

"token-classification",

"generated_from_trainer",

"dataset:cord-layoutlmv3",

"license:cc-by-nc-sa-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T18:35:30Z | ---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

datasets:

- cord-layoutlmv3

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: LayoutLMv3-Finetuned-CORD_100

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: cord-layoutlmv3

type: cord-layoutlmv3

config: cord

split: train

args: cord

metrics:

- name: Precision

type: precision

value: 0.9524870081662955

- name: Recall

type: recall

value: 0.9603293413173652

- name: F1

type: f1

value: 0.9563920983973164

- name: Accuracy

type: accuracy

value: 0.9647707979626485

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# LayoutLMv3-Finetuned-CORD_100

This model is a fine-tuned version of [microsoft/layoutlmv3-base](https://huggingface.co/microsoft/layoutlmv3-base) on the cord-layoutlmv3 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1948

- Precision: 0.9525

- Recall: 0.9603

- F1: 0.9564

- Accuracy: 0.9648
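
The reported F1 can be cross-checked against the reported precision and recall, since F1 is their harmonic mean. A minimal sketch, using the unrounded values from this card's metadata block (the helper name `f1_score` is chosen here for illustration only):

```python
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall."""
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Unrounded precision and recall from the model-index metadata of this card.
precision = 0.9524870081662955
recall = 0.9603293413173652

f1 = f1_score(precision, recall)
print(f"F1 = {f1:.4f}")
```

The result should agree with the F1 value listed above to the reported precision.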

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1.1e-05

- train_batch_size: 5

- eval_batch_size: 5

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 3000
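
To make the `linear` scheduler setting concrete, the sketch below computes a linearly decaying learning rate from the values listed above. It assumes no warm-up, because the card lists none; the function name `linear_lr` and its `warmup_steps` parameter are illustrative, not part of any library.

```python
def linear_lr(step: int,
              base_lr: float = 1.1e-05,
              total_steps: int = 3000,
              warmup_steps: int = 0) -> float:
    """Learning rate at `step` for linear warm-up followed by linear decay to zero."""
    if warmup_steps > 0 and step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warm-up.
        return base_lr * step / warmup_steps
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 1500, 3000):
    print(step, linear_lr(step))
```

Under these assumptions the rate starts at the configured `learning_rate`, halves by the midpoint, and reaches zero at the final training step.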

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.56 | 250 | 0.9568 | 0.7298 | 0.7844 | 0.7561 | 0.7992 |

| 1.3271 | 3.12 | 500 | 0.5239 | 0.8398 | 0.8713 | 0.8553 | 0.8858 |

| 1.3271 | 4.69 | 750 | 0.3586 | 0.8945 | 0.9207 | 0.9074 | 0.9300 |

| 0.3495 | 6.25 | 1000 | 0.2716 | 0.9298 | 0.9416 | 0.9357 | 0.9410 |

| 0.3495 | 7.81 | 1250 | 0.2331 | 0.9198 | 0.9356 | 0.9276 | 0.9474 |

| 0.1725 | 9.38 | 1500 | 0.2134 | 0.9379 | 0.9499 | 0.9438 | 0.9529 |

| 0.1725 | 10.94 | 1750 | 0.2079 | 0.9401 | 0.9513 | 0.9457 | 0.9605 |

| 0.1116 | 12.5 | 2000 | 0.1992 | 0.9554 | 0.9618 | 0.9586 | 0.9656 |

| 0.1116 | 14.06 | 2250 | 0.1941 | 0.9517 | 0.9588 | 0.9553 | 0.9631 |

| 0.0762 | 15.62 | 2500 | 0.1966 | 0.9503 | 0.9588 | 0.9545 | 0.9639 |

| 0.0762 | 17.19 | 2750 | 0.1951 | 0.9510 | 0.9588 | 0.9549 | 0.9626 |

| 0.0636 | 18.75 | 3000 | 0.1948 | 0.9525 | 0.9603 | 0.9564 | 0.9648 |
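
The Epoch column follows from the step count and the batch size. A minimal sketch, assuming a single device and a training split of 800 examples; that size is not stated in the card and is only inferred here from the table (250 steps at batch size 5 corresponding to about 1.56 epochs):

```python
import math


def epoch_at_step(step: int, num_examples: int, batch_size: int) -> float:
    """Convert an optimizer step count into (fractional) epochs."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return step / steps_per_epoch


# ASSUMPTION: 800 training examples, inferred from the table rather than stated.
NUM_EXAMPLES = 800
BATCH_SIZE = 5  # train_batch_size from the hyperparameters above

for step in (250, 1000, 3000):
    print(step, epoch_at_step(step, NUM_EXAMPLES, BATCH_SIZE))
```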

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

lizaboiarchuk/bert-tiny-oa-finetuned | lizaboiarchuk | 2022-09-18T19:05:02Z | 83 | 0 | transformers | [

"transformers",

"tf",

"bert",

"fill-mask",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-09-18T07:27:29Z | ---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: lizaboiarchuk/bert-tiny-oa-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# lizaboiarchuk/bert-tiny-oa-finetuned

This model is a fine-tuned version of [prajjwal1/bert-tiny](https://huggingface.co/prajjwal1/bert-tiny) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.0626

- Validation Loss: 3.7514

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -525, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16
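
The serialized optimizer above wraps its decay schedule in a 1000-step warm-up with `power` 1.0. The sketch below shows only that warm-up phase, as a plain-Python approximation rather than the Keras implementation; the function name `warmup_lr` is hypothetical. A negative `decay_steps` would be consistent with a run shorter than the warm-up period, although the card does not state the total number of training steps.

```python
def warmup_lr(step: int,
              initial_lr: float = 2e-05,
              warmup_steps: int = 1000,
              power: float = 1.0) -> float:
    """Learning rate during polynomial warm-up; capped at initial_lr afterwards."""
    fraction = min(step, warmup_steps) / warmup_steps
    return initial_lr * fraction ** power


for step in (0, 250, 500, 1000):
    print(step, warmup_lr(step))
```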

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.6311 | 4.1088 | 0 |

| 4.2579 | 3.7859 | 1 |

| 4.0635 | 3.7253 | 2 |

| 4.0658 | 3.6842 | 3 |

| 4.0626 | 3.7514 | 4 |

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Tokenizers 0.12.1

|

ssharm87/t5-small-finetuned-xsum-ss | ssharm87 | 2022-09-18T17:13:52Z | 110 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-18T07:18:08Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- xsum

metrics:

- rouge

model-index:

- name: t5-small-finetuned-xsum-ss

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: xsum

type: xsum

config: default

split: train

args: default

metrics:

- name: Rouge1

type: rouge

value: 26.3663

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-xsum-ss

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the xsum dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5823

- Rouge1: 26.3663

- Rouge2: 6.4727

- Rougel: 20.538

- Rougelsum: 20.5411

- Gen Len: 18.8006

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 0.25

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:------:|:---------:|:-------:|

| 2.8125 | 0.25 | 3189 | 2.5823 | 26.3663 | 6.4727 | 20.538 | 20.5411 | 18.8006 |
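
The single logged step count can be related to the fractional `num_epochs` setting. A minimal sketch, assuming a single device and the commonly cited XSum training split size of 204,045 examples (neither is stated in this card); the helper name `max_steps` is illustrative:

```python
import math


def max_steps(num_examples: int, batch_size: int, num_epochs: float) -> int:
    """Number of optimizer steps for a (possibly fractional) number of epochs."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return math.ceil(num_epochs * steps_per_epoch)


# ASSUMPTION: 204,045 training examples in XSum; batch size and epochs are from the card.
steps = max_steps(num_examples=204_045, batch_size=16, num_epochs=0.25)
print(steps)
```

Under these assumptions the computed value matches the Step column of the table above.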

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/lula-13 | sd-concepts-library | 2022-09-18T16:57:51Z | 0 | 6 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T16:57:44Z | ---

license: mit

---

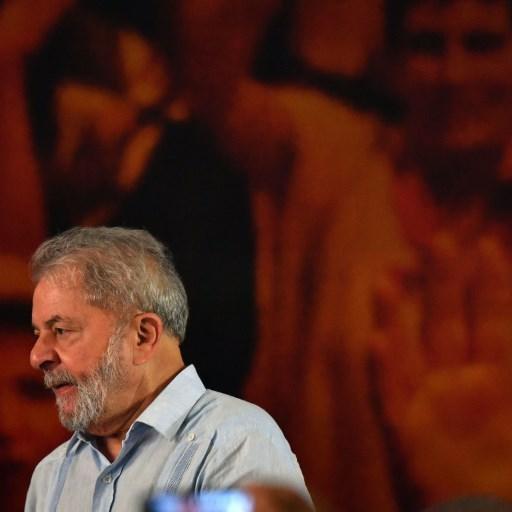

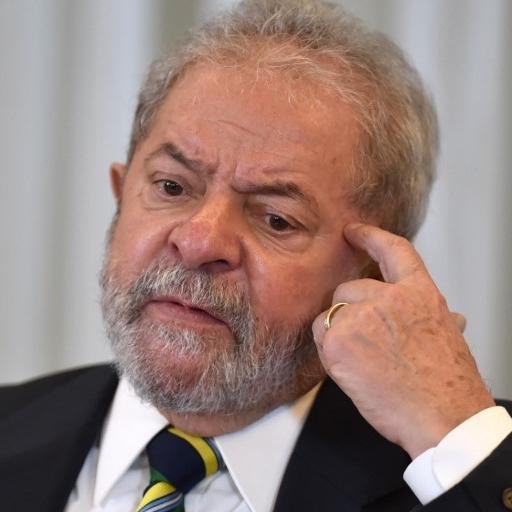

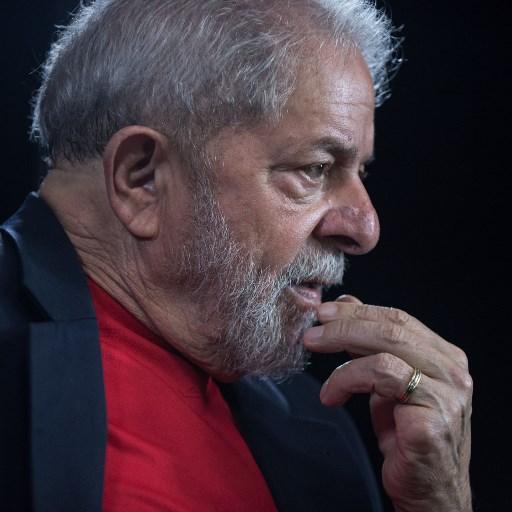

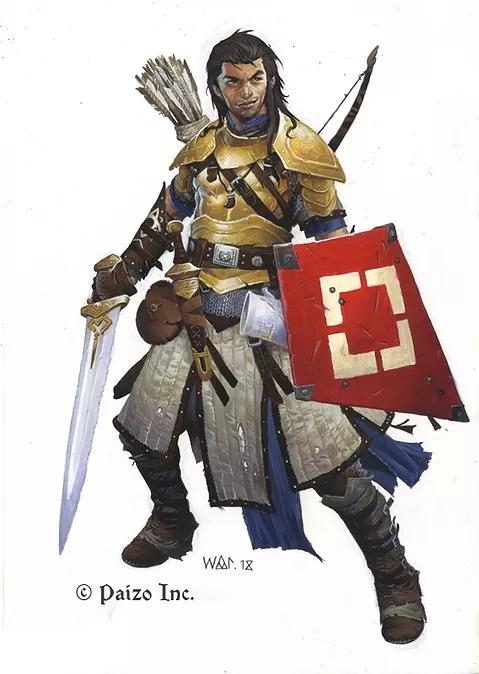

### Lula 13 on Stable Diffusion

This is the `<lula-13>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/rail-scene | sd-concepts-library | 2022-09-18T14:28:03Z | 0 | 1 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T14:27:48Z | ---

license: mit

---

### Rail Scene on Stable Diffusion

This is the `<rail-pov>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

jayanta/aaraki-vit-base-patch16-224-in21k-finetuned-cifar10 | jayanta | 2022-09-18T14:16:57Z | 220 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-09-17T11:53:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: mit-b2-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8523956723338485

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8580847578266328

name: F1

- type: precision

value: 0.8587893412503379

name: Precision

- type: recall

value: 0.8593508500772797

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mit-b2-finetuned-memes

This model is a fine-tuned version of [aaraki/vit-base-patch16-224-in21k-finetuned-cifar10](https://huggingface.co/aaraki/vit-base-patch16-224-in21k-finetuned-cifar10) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4137

- Accuracy: 0.8524

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
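
The relationship between `train_batch_size`, `gradient_accumulation_steps`, and `total_train_batch_size` can be written out directly, along with the number of warm-up steps implied by `lr_scheduler_warmup_ratio`. A minimal sketch, assuming a single device and the 400 total steps shown in the results table; both helper names are illustrative:

```python
import math


def effective_batch_size(per_device: int, accumulation_steps: int, num_devices: int = 1) -> int:
    """Examples consumed per optimizer update."""
    return per_device * accumulation_steps * num_devices


def warmup_step_count(total_steps: int, warmup_ratio: float) -> int:
    """Warm-up steps implied by a warm-up ratio."""
    return math.ceil(total_steps * warmup_ratio)


total = effective_batch_size(per_device=32, accumulation_steps=4)
warmup = warmup_step_count(total_steps=400, warmup_ratio=0.1)
print(total, warmup)
```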

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.9727 | 0.99 | 40 | 0.8400 | 0.7334 |

| 0.5305 | 1.99 | 80 | 0.5147 | 0.8284 |

| 0.3124 | 2.99 | 120 | 0.4698 | 0.8145 |

| 0.2263 | 3.99 | 160 | 0.3892 | 0.8563 |

| 0.1453 | 4.99 | 200 | 0.3874 | 0.8570 |

| 0.1255 | 5.99 | 240 | 0.4097 | 0.8470 |

| 0.0989 | 6.99 | 280 | 0.3860 | 0.8570 |

| 0.0755 | 7.99 | 320 | 0.4141 | 0.8539 |

| 0.08 | 8.99 | 360 | 0.4049 | 0.8594 |

| 0.0639 | 9.99 | 400 | 0.4137 | 0.8524 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

huynguyen208/bert-finetuned-ner | huynguyen208 | 2022-09-18T13:36:26Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T13:09:28Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9307387862796834

- name: Recall

type: recall

value: 0.9498485358465163

- name: F1

type: f1

value: 0.9401965683824755

- name: Accuracy

type: accuracy

value: 0.9860187201977983

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0620

- Precision: 0.9307

- Recall: 0.9498

- F1: 0.9402

- Accuracy: 0.9860

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0868 | 1.0 | 1756 | 0.0699 | 0.9197 | 0.9352 | 0.9274 | 0.9821 |

| 0.0324 | 2.0 | 3512 | 0.0659 | 0.9202 | 0.9455 | 0.9327 | 0.9849 |

| 0.0162 | 3.0 | 5268 | 0.0620 | 0.9307 | 0.9498 | 0.9402 | 0.9860 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Shaz/augh | Shaz | 2022-09-18T12:49:50Z | 0 | 0 | null | [

"region:us"

]

| null | 2022-09-17T19:10:50Z | import requests

API_URL = "https://api-inference.huggingface.co/models/gpt2"

headers = {"Authorization": f"Bearer {API_TOKEN}"}

def query(payload):

    response = requests.post(API_URL, headers=headers, json=payload)

    return response.json()

output = query({

"inputs": "Can you please let us know more details about your ",

}) |

sd-concepts-library/lizardman | sd-concepts-library | 2022-09-18T11:42:28Z | 0 | 3 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T11:42:22Z | ---

license: mit

---

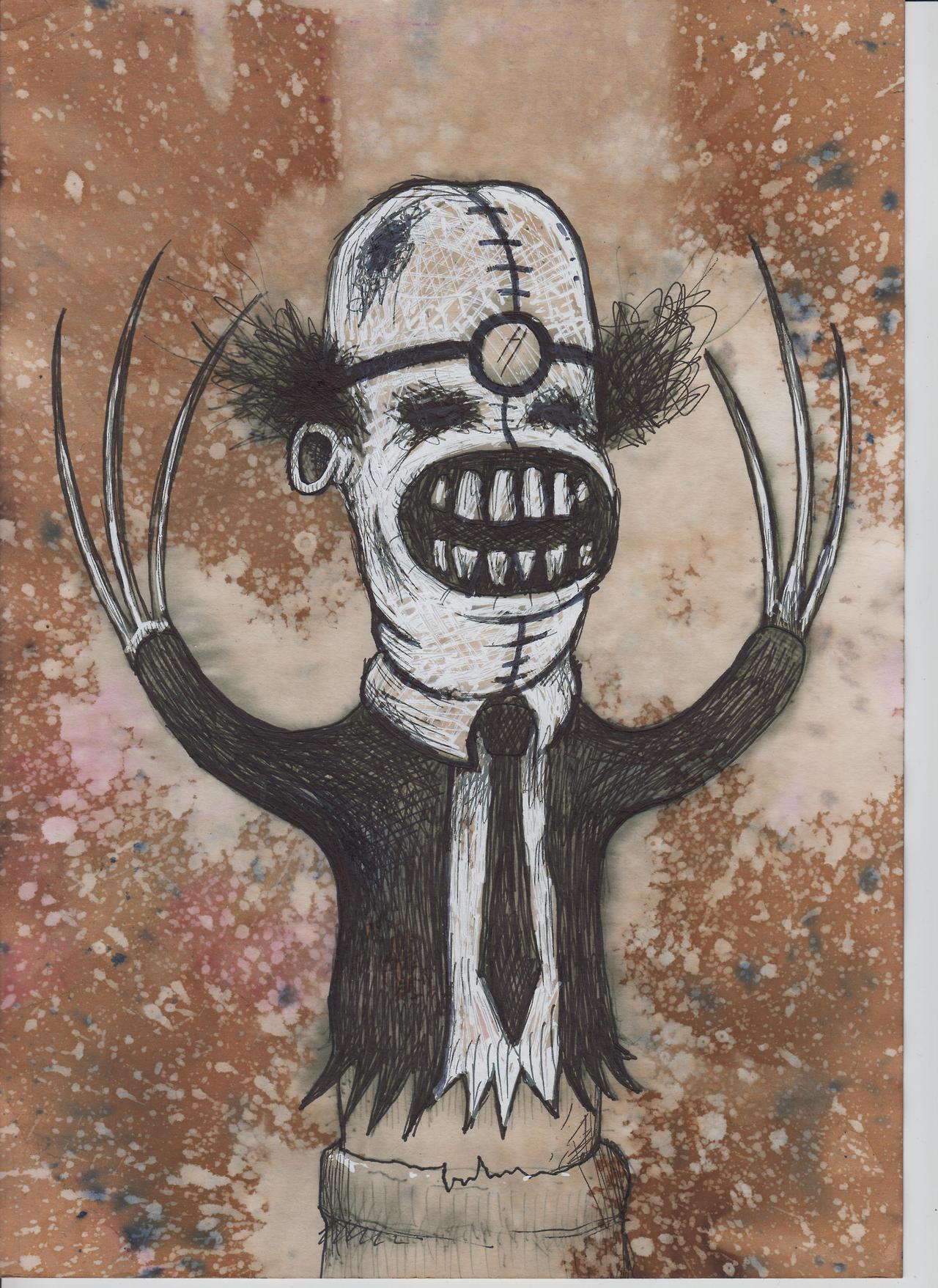

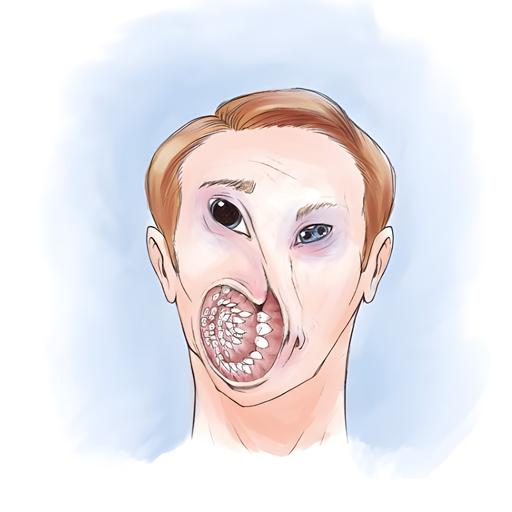

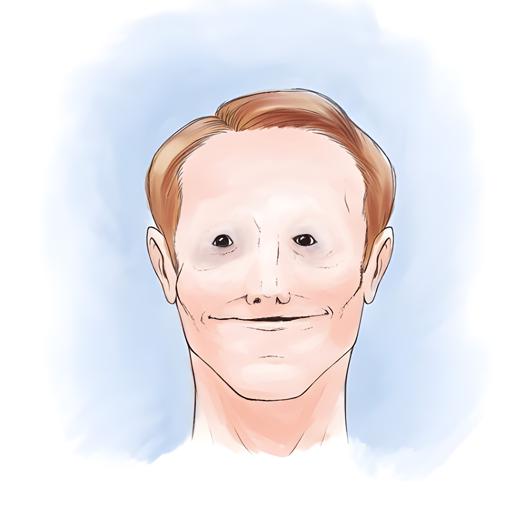

### Lizardman on Stable Diffusion

This is the `PlaceholderTokenLizardman` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

ydmeira/beit-finetuned-pokemon | ydmeira | 2022-09-18T11:35:48Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"beit",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2022-09-03T10:34:50Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: beit-finetuned-pokemon

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# beit-finetuned-pokemon

This model is a fine-tuned version of [ydmeira/beit-finetuned-pokemon](https://huggingface.co/ydmeira/beit-finetuned-pokemon) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0219

- Mean Iou: 0.4955

- Mean Accuracy: 0.9910

- Overall Accuracy: 0.9910

- Per Category Iou: [0.0, 0.9909617791470107]

- Per Category Accuracy: [nan, 0.9909617791470107]
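
The aggregate numbers above can be read off the per-category lists: the mean IoU is roughly half of the second category's IoU because the first category scores 0.0, while the mean accuracy equals the second category's accuracy if the `nan` entry is skipped. A minimal sketch, assuming a NaN-ignoring mean (the card does not say how the metric was aggregated; `nan_mean` is a hypothetical helper):

```python
import math


def nan_mean(values):
    """Mean of the values that are not NaN; NaN if none remain."""
    kept = [v for v in values if not math.isnan(v)]
    return sum(kept) / len(kept) if kept else float("nan")


per_category_iou = [0.0, 0.9909617791470107]
per_category_accuracy = [float("nan"), 0.9909617791470107]

mean_iou = nan_mean(per_category_iou)
mean_accuracy = nan_mean(per_category_accuracy)
print(f"{mean_iou:.4f} {mean_accuracy:.4f}")
```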

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mean Iou | Mean Accuracy | Overall Accuracy | Per Category Iou | Per Category Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|:-------------:|:----------------:|:-------------------------:|:-------------------------:|

| 0.0354 | 0.21 | 1000 | 0.0347 | 0.4978 | 0.9955 | 0.9955 | [0.0, 0.9955007125868244] | [nan, 0.9955007125868244] |

| 0.0273 | 0.43 | 2000 | 0.0277 | 0.4951 | 0.9903 | 0.9903 | [0.0, 0.9902709092544748] | [nan, 0.9902709092544748] |

| 0.0307 | 0.64 | 3000 | 0.0788 | 0.4875 | 0.9751 | 0.9751 | [0.0, 0.9750850921785902] | [nan, 0.9750850921785902] |

| 0.0295 | 0.85 | 4000 | 0.0412 | 0.4939 | 0.9877 | 0.9877 | [0.0, 0.9877162657609527] | [nan, 0.9877162657609527] |

| 0.0255 | 1.07 | 5000 | 0.0842 | 0.4862 | 0.9723 | 0.9723 | [0.0, 0.972304346385062] | [nan, 0.972304346385062] |

| 0.0253 | 1.28 | 6000 | 0.0325 | 0.4950 | 0.9901 | 0.9901 | [0.0, 0.9900621363084688] | [nan, 0.9900621363084688] |

| 0.0239 | 1.49 | 7000 | 0.0440 | 0.4917 | 0.9835 | 0.9835 | [0.0, 0.9834701005512881] | [nan, 0.9834701005512881] |

| 0.0238 | 1.71 | 8000 | 0.0338 | 0.4950 | 0.9900 | 0.9900 | [0.0, 0.9899977115151821] | [nan, 0.9899977115151821] |

| 0.0223 | 1.92 | 9000 | 0.0319 | 0.4950 | 0.9900 | 0.9900 | [0.0, 0.989994712810938] | [nan, 0.989994712810938] |

| 0.0231 | 2.13 | 10000 | 0.0382 | 0.4921 | 0.9841 | 0.9841 | [0.0, 0.984106425591889] | [nan, 0.984106425591889] |

| 0.0205 | 2.35 | 11000 | 0.0450 | 0.4926 | 0.9851 | 0.9851 | [0.0, 0.9851146530893756] | [nan, 0.9851146530893756] |

| 0.0201 | 2.56 | 12000 | 0.0265 | 0.4954 | 0.9908 | 0.9908 | [0.0, 0.9908277212846449] | [nan, 0.9908277212846449] |

| 0.0188 | 2.77 | 13000 | 0.0377 | 0.4933 | 0.9866 | 0.9866 | [0.0, 0.9865726862234793] | [nan, 0.9865726862234793] |

| 0.0181 | 2.99 | 14000 | 0.0219 | 0.4955 | 0.9910 | 0.9910 | [0.0, 0.9909617791470107] | [nan, 0.9909617791470107] |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

debbiesoon/prot_bert_bfd-disopro | debbiesoon | 2022-09-18T11:33:41Z | 106 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T09:58:56Z | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: prot_bert_bfd-disopro

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# prot_bert_bfd-disopro

This model is a fine-tuned version of [Rostlab/prot_bert_bfd](https://huggingface.co/Rostlab/prot_bert_bfd) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3062

- Precision: 0.8640

- Recall: 0.8772

- F1: 0.8202

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 0.0734 | 1.0 | 60 | 0.3415 | 0.7691 | 0.8770 | 0.8195 |

| 0.5288 | 2.0 | 120 | 0.2993 | 0.7691 | 0.8770 | 0.8195 |

| 0.3888 | 3.0 | 180 | 0.3062 | 0.8640 | 0.8772 | 0.8202 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

olympictafira/cAT | olympictafira | 2022-09-18T11:13:25Z | 0 | 1 | null | [

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"arxiv:2207.12598",

"arxiv:2112.10752",

"arxiv:2103.00020",

"arxiv:2205.11487",

"arxiv:1910.09700",

"license:other",

"region:us"

]

| text-to-image | 2022-09-18T11:12:18Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

inference: false

extra_gated_prompt: |-

One more step before getting this model.

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. CompVis claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

Please read the full license here: https://huggingface.co/spaces/CompVis/stable-diffusion-license

By clicking on "Access repository" below, you accept that your *contact information* (email address and username) can be shared with the model authors as well.

extra_gated_fields:

I have read the License and agree with its terms: checkbox

---

# Stable Diffusion v1-4 Model Card

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input.

For more information about how Stable Diffusion functions, please have a look at [🤗's Stable Diffusion with 🧨Diffusers blog](https://huggingface.co/blog/stable_diffusion).

The **Stable-Diffusion-v1-4** checkpoint was initialized with the weights of the [Stable-Diffusion-v1-2](https://huggingface.co/CompVis/stable-diffusion-v1-2)

checkpoint and subsequently fine-tuned on 225k steps at resolution 512x512 on "laion-aesthetics v2 5+" and 10% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).
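
Classifier-free guidance combines an unconditional and a text-conditioned noise prediction at sampling time. A minimal sketch, assuming the standard formulation from the linked paper and using plain Python lists in place of tensors; the function name `guided_noise` is hypothetical, and the default scale of 7.5 is the `guidance_scale` used in this card's examples:

```python
def guided_noise(noise_uncond, noise_cond, guidance_scale=7.5):
    """Extrapolate from the unconditional prediction toward the conditional one."""
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


# Toy two-element "noise predictions" standing in for UNet outputs.
uncond = [0.0, 1.0]
cond = [1.0, 1.0]
print(guided_noise(uncond, cond))
```

With a scale of 1.0 the result reduces to the conditional prediction; larger values push the sample further toward the text prompt.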

The weights here are intended to be used with the 🧨 Diffusers library. If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, [come here](https://huggingface.co/CompVis/stable-diffusion-v-1-4-original).

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying out in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([CLIP ViT-L/14](https://arxiv.org/abs/2103.00020)) as suggested in the [Imagen paper](https://arxiv.org/abs/2205.11487).

- **Resources for more information:** [GitHub Repository](https://github.com/CompVis/stable-diffusion), [Paper](https://arxiv.org/abs/2112.10752).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

We recommend using [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion.

```bash

pip install --upgrade diffusers transformers scipy

```

Run this command to log in with your HF Hub token if you haven't before:

```bash

huggingface-cli login

```

Running the pipeline with the default PNDM scheduler:

```python

import torch
from torch import autocast
from diffusers import StableDiffusionPipeline

model_id = "CompVis/stable-diffusion-v1-4"
device = "cuda"

# use_auth_token=True reads the token saved by `huggingface-cli login`.
pipe = StableDiffusionPipeline.from_pretrained(model_id, use_auth_token=True)
pipe = pipe.to(device)

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt, guidance_scale=7.5).images[0]

image.save("astronaut_rides_horse.png")

```

**Note**:

If you are limited by GPU memory and have less than 10GB of GPU RAM available, please make sure to load the StableDiffusionPipeline in float16 precision instead of the default float32 precision as done above. You can do so by telling diffusers to expect the weights to be in float16 precision:

```py

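import torch
from torch import autocast
from diffusers import StableDiffusionPipeline

model_id = "CompVis/stable-diffusion-v1-4"
device = "cuda"

# Load the float16 weights from the "fp16" revision to reduce GPU memory use.
pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16, revision="fp16", use_auth_token=True)
pipe = pipe.to(device)

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt, guidance_scale=7.5).images[0]

image.save("astronaut_rides_horse.png")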

```

To swap out the noise scheduler, pass it to `from_pretrained`:

```python

from torch import autocast
from diffusers import StableDiffusionPipeline, LMSDiscreteScheduler

model_id = "CompVis/stable-diffusion-v1-4"

# Use the K-LMS scheduler here instead
scheduler = LMSDiscreteScheduler(beta_start=0.00085, beta_end=0.012, beta_schedule="scaled_linear", num_train_timesteps=1000)
pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, use_auth_token=True)
pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt, guidance_scale=7.5).images[0]

image.save("astronaut_rides_horse.png")

```

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), but applies in the same way to Stable Diffusion v1_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a large-scale dataset, [LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult material and is not fit for product use without additional safety mechanisms and considerations.

- No additional measures were used to deduplicate the dataset. As a result, we observe some degree of memorization for images that are duplicated in the training data.

The training data can be searched at [https://rom1504.github.io/clip-retrieval/](https://rom1504.github.io/clip-retrieval/) to possibly assist in the detection of memorized images.

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/), which consists of images that are primarily limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and Western cultures are often set as the default. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

### Safety Module

The intended use of this model is with the [Safety Checker](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/safety_checker.py) in Diffusers.

This checker works by checking model outputs against known hard-coded NSFW concepts.

The concepts are intentionally hidden to reduce the likelihood of reverse-engineering this filter.

Specifically, the checker compares the class probability of harmful concepts in the embedding space of the `CLIPTextModel` *after generation* of the images.

The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept.
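The comparison described above can be pictured with a minimal sketch. This is not the Diffusers implementation: the function names, the toy two-dimensional embeddings, and the threshold values are all hypothetical, and the only idea taken from the text is that a similarity score per concept is compared against a hand-set per-concept value.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def flagged_concepts(image_embedding, concept_embeddings, thresholds):
    """Return the names of concepts whose similarity exceeds their threshold.

    All inputs are illustrative placeholders, not values from the real checker.
    """
    flagged = []
    for name, concept_embedding in concept_embeddings.items():
        score = cosine_similarity(image_embedding, concept_embedding) - thresholds[name]
        if score > 0:
            flagged.append(name)
    return flagged

# Toy data: two made-up concepts in a 2-dimensional embedding space.
concepts = {"concept_a": [1.0, 0.0], "concept_b": [0.0, 1.0]}
limits = {"concept_a": 0.5, "concept_b": 0.5}
print(flagged_concepts([1.0, 0.1], concepts, limits))
```

In this toy setup an image embedding pointing mostly along `concept_a` is flagged for that concept only.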

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-2B (en) and subsets thereof (see next section)

**Training Procedure**

Stable Diffusion v1-4 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through a ViT-L/14 text-encoder.

- The non-pooled output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet.
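As a rough illustration of the downsampling arithmetic in the first step above, the following sketch computes the latent shape for a given image size. The function name `latent_shape` and the divisibility check are assumptions made for the example; the factor of 8 and the 4 latent channels are the values stated in the list.

```python
def latent_shape(height, width, factor=8, latent_channels=4):
    """Return the (H/f, W/f, C) latent shape for an H x W x 3 image."""
    # Assumption for this sketch: reject sizes that do not divide evenly.
    if height % factor or width % factor:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (height // factor, width // factor, latent_channels)

print(latent_shape(512, 512))  # (64, 64, 4)
```

So a `512x512` RGB image corresponds to a `64x64x4` latent, which is the space in which the diffusion model operates.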

We currently provide four checkpoints, which were trained as follows.

- [`stable-diffusion-v1-1`](https://huggingface.co/CompVis/stable-diffusion-v1-1): 237,000 steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en). 194,000 steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

- [`stable-diffusion-v1-2`](https://huggingface.co/CompVis/stable-diffusion-v1-2): Resumed from `stable-diffusion-v1-1`. 515,000 steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en, filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

- [`stable-diffusion-v1-3`](https://huggingface.co/CompVis/stable-diffusion-v1-3): Resumed from `stable-diffusion-v1-2`. 195,000 steps at resolution `512x512` on "laion-improved-aesthetics" and 10 % dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- [`stable-diffusion-v1-4`](https://huggingface.co/CompVis/stable-diffusion-v1-4): Resumed from `stable-diffusion-v1-2`. 225,000 steps at resolution `512x512` on "laion-aesthetics v2 5+" and 10 % dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 2

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
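The batch and learning-rate entries above can be checked with a short sketch. Reading the product `32 x 8 x 2 x 4` as nodes x GPUs per node x gradient accumulation x per-GPU batch is an interpretation of the list, and the linear shape of the warmup is an assumption (the card only says "warmup"); `warmup_constant_lr` is a hypothetical helper name.

```python
# Interpretation of "32 x 8 x 2 x 4 = 2048" (the role of each factor is assumed).
nodes, gpus_per_node = 32, 8
grad_accumulations, per_gpu_batch = 2, 4
effective_batch = nodes * gpus_per_node * grad_accumulations * per_gpu_batch
print(effective_batch)  # 2048

def warmup_constant_lr(step, peak_lr=1e-4, warmup_steps=10_000):
    """Ramp linearly to peak_lr over warmup_steps, then hold it constant."""
    return peak_lr * min(1.0, step / warmup_steps)

for step in (0, 5_000, 10_000, 100_000):
    print(step, warmup_constant_lr(step))
```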

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 PLMS steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.
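For readers unfamiliar with what the guidance scale controls, here is a minimal sketch of one commonly used form of classifier-free guidance: the unconditional noise prediction is pushed toward the text-conditioned one by the scale factor. The function name and the plain-list representation are assumptions for illustration, and the parameterization may differ from the one used to produce the evaluation.

```python
def guided_noise(eps_uncond, eps_cond, guidance_scale):
    """Combine unconditional and conditional noise predictions element-wise.

    A scale of 1.0 returns the conditional prediction unchanged;
    larger scales extrapolate further away from the unconditional one.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

# Toy two-element predictions (hypothetical values).
print(guided_noise([0.0, 1.0], [1.0, 1.0], 1.0))
print(guided_noise([0.0, 1.0], [1.0, 1.0], 3.0))
```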

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 150000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 11250 kg CO2 eq.
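The two figures above imply an average emission rate per GPU-hour, which can be derived with simple arithmetic. The power draw and grid carbon intensity behind the estimate are not given in the card, so this sketch only divides the reported totals.

```python
hours_used = 150_000   # reported GPU-hours
carbon_kg = 11_250     # reported kg CO2 eq.

kg_per_gpu_hour = carbon_kg / hours_used
print(f"{kg_per_gpu_hour:.3f} kg CO2 eq. per GPU-hour")
```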

## Citation

```bibtex

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

```

*This model card was written by: Robin Rombach and Patrick Esser and is based on the [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).* |

huggingtweets/perpetualg00se | huggingtweets | 2022-09-18T10:25:36Z | 109 | 1 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-09-18T10:20:59Z | ---

language: en

thumbnail: http://www.huggingtweets.com/perpetualg00se/1663496719106/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1245588692573409281/mGWMt1q7_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">PerpetualG00se</div>

<div style="text-align: center; font-size: 14px;">@perpetualg00se</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from PerpetualG00se.

| Data | PerpetualG00se |

| --- | --- |

| Tweets downloaded | 3166 |

| Retweets | 514 |

| Short tweets | 628 |

| Tweets kept | 2024 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/32gxsmj0/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @perpetualg00se's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/17rf9oo3) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/17rf9oo3/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/perpetualg00se')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

venkateshdas/roberta-base-squad2-ta-qna-roberta3e | venkateshdas | 2022-09-18T10:22:29Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-09-18T10:13:04Z | ---

license: cc-by-4.0

tags:

- generated_from_trainer

model-index:

- name: roberta-base-squad2-ta-qna-roberta3e

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-squad2-ta-qna-roberta3e

This model is a fine-tuned version of [deepset/roberta-base-squad2](https://huggingface.co/deepset/roberta-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4671

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 87 | 0.5221 |

| No log | 2.0 | 174 | 0.4408 |

| No log | 3.0 | 261 | 0.4671 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/glass-prism-cube | sd-concepts-library | 2022-09-18T07:38:27Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T07:38:16Z | ---

license: mit

---

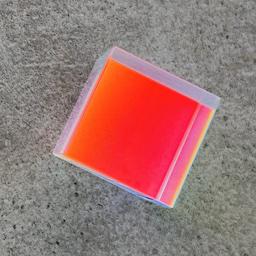

### glass prism cube on Stable Diffusion

This is the `<glass-prism-cube>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

debbiesoon/prot_bert_bfd-disoDNA | debbiesoon | 2022-09-18T06:50:23Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T04:33:19Z | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: prot_bert_bfd-disoDNA

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# prot_bert_bfd-disoDNA

This model was trained from scratch on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1323

- Precision: 0.9442

- Recall: 0.9717

- F1: 0.9578

## Model description

This is a token classification model designed to predict the intrinsically disordered regions of amino acid sequences on the level of DNA disorder annotation.

## Intended uses & limitations

This model expects amino acid sequences in which each residue character is separated by a single space. Each residue is assigned one of two labels:

'0': No disorder

'1': Disordered

Example Inputs :

D E A Q F K E C Y D T C H K E C S D K G N G F T F C E M K C D T D C S V K D V K E K L E N Y K P K N

M A S E E L Q K D L E E V K V L L E K A T R K R V R D A L T A E K S K I E T E I K N K M Q Q K S Q K K A E L L D N E K P A A V V A P I T T G Y T D G I S Q I S L

M D V F M K G L S K A K E G V V A A A E K T K Q G V A E A A G K T K E G V L Y V G S K T K E G V V H G V A T V A E K T K E Q V T N V G G A V V T G V T A V A Q K T V E G A G S I A A A T G F V K K D Q L G K N E E G A P Q E G I L E D M P V D P D N E A Y E M P S E E G Y Q D Y E P E A

M E L V L K D A Q S A L T V S E T T F G R D F N E A L V H Q V V V A Y A A G A R Q G T R A Q K T R A E V T G S G K K P W R Q K G T G R A R S G S I K S P I W R S G G V T F A

A R P Q D H S Q K V N K K M Y R G A L K S I L S E L V R Q D R L I V V E K F S V E A P K T K L L A Q K L K D M A L E D V L I I T G E L D E N L F L A A R N L H K V D V R D A T G I D P V S L I A F D K V V M T A D A V K Q V E E M L A

M S D K P D M A E I E K F D K S K L K K T E T Q E K N P L P S K E T I E Q E K Q A G E S
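Sequences are often stored without spaces, so a small preprocessing step may be needed to match the input format shown above. The helper below is a hypothetical sketch (the name `space_residues` and the uppercasing are assumptions, not part of the model's tooling).

```python
def space_residues(sequence):
    """Return the sequence with exactly one space between residue letters."""
    # Drop any existing whitespace first so already-spaced input is unchanged.
    compact = "".join(sequence.split())
    return " ".join(compact.upper())

print(space_residues("MSDKPDMAEI"))  # M S D K P D M A E I
```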

## Training and evaluation data

Training and evaluation data were retrieved from https://www.csuligroup.com/DeepDISOBind/#Materials (Accessed March 2022).

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 0.0213 | 1.0 | 61 | 0.1322 | 0.9442 | 0.9717 | 0.9578 |

| 0.0212 | 2.0 | 122 | 0.1322 | 0.9442 | 0.9717 | 0.9578 |

| 0.1295 | 3.0 | 183 | 0.1323 | 0.9442 | 0.9717 | 0.9578 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/dsmuses | sd-concepts-library | 2022-09-18T06:37:28Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T06:37:17Z | ---

license: mit

---

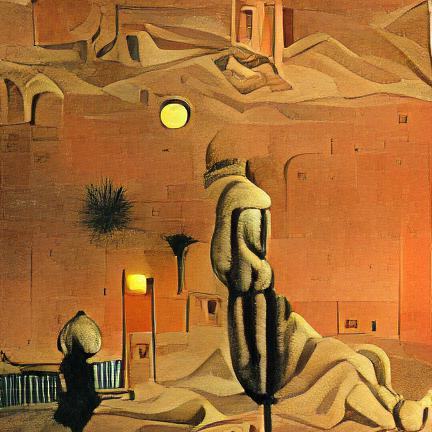

### DSmuses on Stable Diffusion

This is the `<DSmuses>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/threestooges | sd-concepts-library | 2022-09-18T05:40:11Z | 0 | 1 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T05:40:07Z | ---

license: mit

---

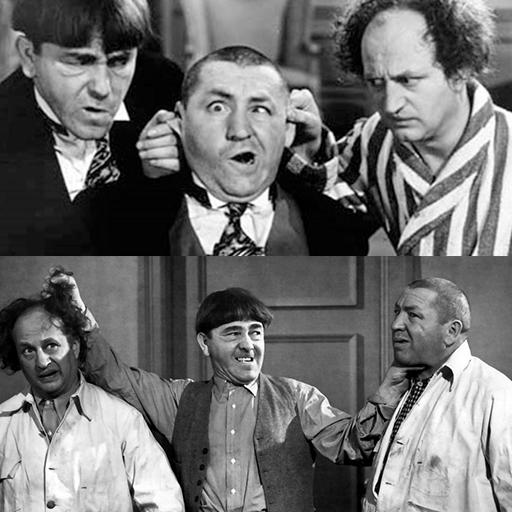

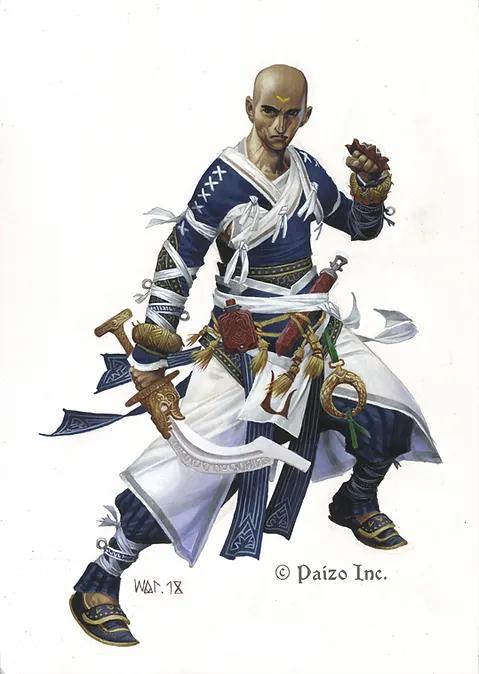

### threestooges on Stable Diffusion

This is the `<threestooges>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

rosskrasner/testcatdog | rosskrasner | 2022-09-18T03:56:03Z | 0 | 0 | fastai | [

"fastai",

"region:us"

]

| null | 2022-09-14T03:29:28Z | ---

tags:

- fastai

---

# Amazing!

🥳 Congratulations on hosting your fastai model on the Hugging Face Hub!

# Some next steps

1. Fill out this model card with more information (see the template below and the [documentation here](https://huggingface.co/docs/hub/model-repos))!

2. Create a demo in Gradio or Streamlit using 🤗 Spaces ([documentation here](https://huggingface.co/docs/hub/spaces)).

3. Join the fastai community on the [Fastai Discord](https://discord.com/invite/YKrxeNn)!

Greetings fellow fastlearner 🤝! Don't forget to delete this content from your model card.

---

# Model card

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

|

sd-concepts-library/loab-character | sd-concepts-library | 2022-09-18T00:46:01Z | 0 | 4 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T00:45:48Z | ---

license: mit

---

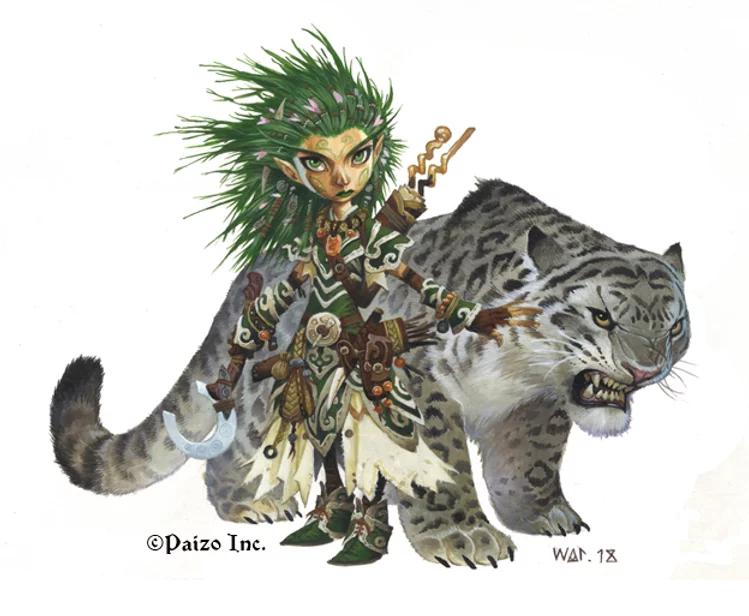

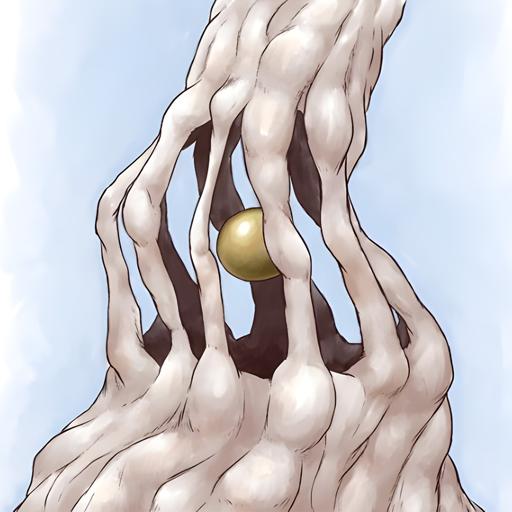

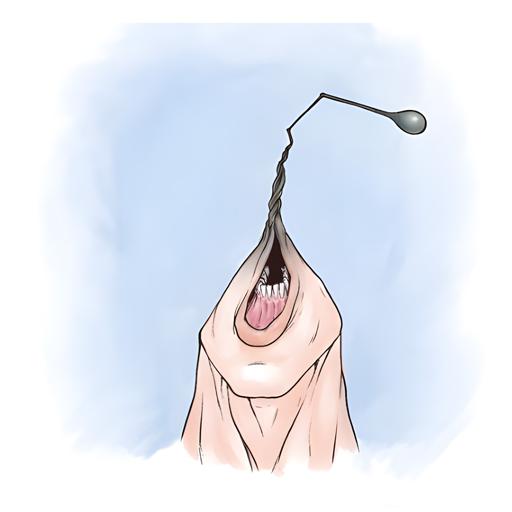

### Loab Character on Stable Diffusion

This is the `<loab-character>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

pikodemo/ppo-LunarLander-v2 | pikodemo | 2022-09-18T00:11:48Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T14:59:15Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: -553.66 +/- 175.78

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

sd-concepts-library/valorantstyle | sd-concepts-library | 2022-09-17T23:55:16Z | 0 | 20 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T23:55:05Z | ---

license: mit

---

### valorantstyle on Stable Diffusion

This is the `<valorant>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

reinoudbosch/pegasus-samsum | reinoudbosch | 2022-09-17T23:03:24Z | 99 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"dataset:samsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T22:26:31Z | ---

tags:

- generated_from_trainer

datasets:

- samsum

model-index:

- name: pegasus-samsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-samsum

This model is a fine-tuned version of [google/pegasus-cnn_dailymail](https://huggingface.co/google/pegasus-cnn_dailymail) on the samsum dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4814

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.7052 | 0.54 | 500 | 1.4814 |
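The hyperparameters and the single logged row can be related with a little arithmetic. This sketch assumes a single training device (the card does not state the device count) and treats the logged epoch value as rounded, so the derived numbers are estimates only.

```python
per_device_batch = 1
grad_accum_steps = 16
num_devices = 1  # assumption: not stated in the card

# Matches the reported total_train_batch_size of 16.
total_batch = per_device_batch * grad_accum_steps * num_devices

# From the results table: step 500 was logged at epoch 0.54.
logged_step, logged_epoch = 500, 0.54
steps_per_epoch = logged_step / logged_epoch
approx_examples = steps_per_epoch * total_batch

print(total_batch)
print(round(steps_per_epoch), round(approx_examples))
```

Under these assumptions one epoch is roughly 926 optimizer steps, so the logged row was taken a little past the halfway point of the single training epoch.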

### Framework versions

- Transformers 4.16.2

- Pytorch 1.11.0

- Datasets 2.0.0

- Tokenizers 0.11.0

|

sd-concepts-library/paul-noir | sd-concepts-library | 2022-09-17T21:40:41Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T21:40:35Z | ---

license: mit

---

### Paul Noir on Stable Diffusion

This is the `<paul-noir>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

Bistolero/1ep_seq_25_6b | Bistolero | 2022-09-17T21:23:44Z | 111 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:gem",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T21:07:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- gem

model-index:

- name: kapakapa

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# kapakapa

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the gem dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 15

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 14

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/r-crumb-style | sd-concepts-library | 2022-09-17T21:15:16Z | 0 | 5 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T21:15:11Z | ---

license: mit

---

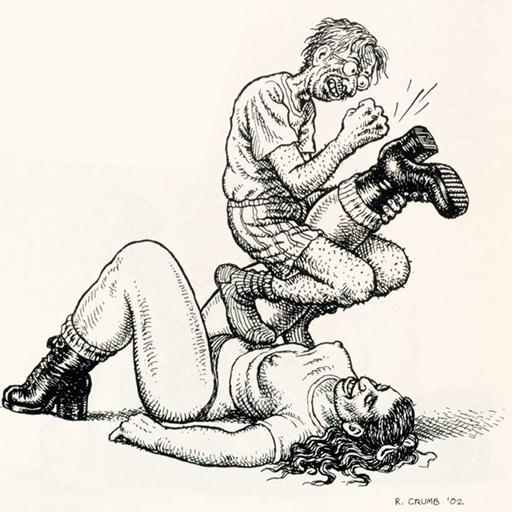

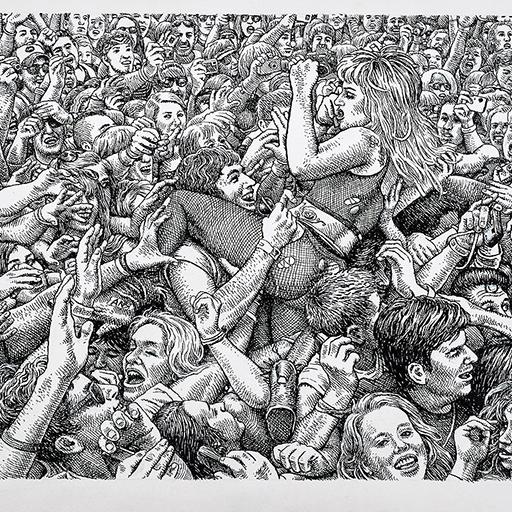

### r crumb style on Stable Diffusion

This is the `<rcrumb>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

anechaev/Reinforce-U5CartPole | anechaev | 2022-09-17T20:43:09Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T20:41:20Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-U5CartPole

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 46.40 +/- 7.76

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

vangenugtenr/autobiographical_interview_scoring | vangenugtenr | 2022-09-17T20:39:50Z | 162 | 0 | transformers | [

"transformers",

"tf",

"distilbert",

"text-classification",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-17T20:31:46Z | ---

license: cc-by-nc-sa-4.0

---

|

sd-concepts-library/3d-female-cyborgs | sd-concepts-library | 2022-09-17T20:15:59Z | 0 | 39 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T20:15:45Z | ---

license: mit

---

### 3d Female Cyborgs on Stable Diffusion

This is the `<A female cyborg>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

tavakolih/all-MiniLM-L6-v2-pubmed-full | tavakolih | 2022-09-17T19:59:09Z | 1,201 | 9 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"dataset:pubmed",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2022-09-17T19:59:01Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

datasets:

- pubmed

---

# tavakolih/all-MiniLM-L6-v2-pubmed-full

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('tavakolih/all-MiniLM-L6-v2-pubmed-full')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=tavakolih/all-MiniLM-L6-v2-pubmed-full)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 221 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```
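To make the loss parameters above more concrete, here is a dependency-free sketch of the idea behind a multiple-negatives ranking loss: each anchor should score highest against its own positive, with every other positive in the batch acting as a negative. It is a simplified illustration, not the sentence-transformers implementation; the function names and toy vectors are hypothetical, while `scale=20.0` and cosine similarity mirror the parameters listed.

```python
import math

def cos_sim(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mnr_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where row i's correct 'class' is positive i."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [scale * cos_sim(anchor, positive) for positive in positives]
        # Numerically stable log-sum-exp over the row of scaled similarities.
        top = max(logits)
        log_sum_exp = top + math.log(sum(math.exp(l - top) for l in logits))
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)

anchors = [[1.0, 0.0], [0.0, 1.0]]
print(mnr_loss(anchors, [[1.0, 0.0], [0.0, 1.0]]))  # pairs aligned: loss near 0
print(mnr_loss(anchors, [[0.0, 1.0], [1.0, 0.0]]))  # pairs swapped: loss near 20
```

The scale factor sharpens the softmax, so well-separated pairs drive the loss close to zero while mismatched pairs are penalized heavily.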

Parameters of the fit()-Method:

```

{

"epochs": 10,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```
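One consequence of these settings is worth sketching. Assuming that `steps_per_epoch: null` means one pass over the 221-batch DataLoader per epoch, and that `WarmupLinear` ramps the learning rate linearly from zero over `warmup_steps`, the 10 epochs give about 2,210 steps, fewer than the 10,000 warmup steps. Under those assumptions the learning rate would never reach the configured `2e-05`. The helper name below is hypothetical.

```python
def warmup_linear_lr(step, base_lr=2e-5, warmup_steps=10_000, total_steps=2_210):
    """Linear warmup to base_lr, then linear decay to zero at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))

steps_per_epoch, epochs = 221, 10  # DataLoader length and epochs from the card
total_steps = steps_per_epoch * epochs

peak_lr = max(warmup_linear_lr(s, total_steps=total_steps) for s in range(total_steps + 1))
print(total_steps, peak_lr)
```

If the assumptions hold, training ends while still in warmup, at roughly 22% of the nominal learning rate.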

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Normalize()

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Tritkoman/Kvenfinnishtranslator | Tritkoman | 2022-09-17T18:38:22Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"autotrain",

"translation",

"en",

"fi",

"dataset:Tritkoman/autotrain-data-wnkeknrr",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

]

| translation | 2022-09-17T18:36:53Z | ---

tags:

- autotrain

- translation

language:

- en

- fi

datasets:

- Tritkoman/autotrain-data-wnkeknrr

co2_eq_emissions:

emissions: 0.007023045912239053

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1495654541

- CO2 Emissions (in grams): 0.0070

## Validation Metrics

- Loss: 2.873

- SacreBLEU: 22.653

- Gen len: 7.114 |

dumitrescustefan/gpt-neo-romanian-780m | dumitrescustefan | 2022-09-17T18:24:19Z | 260 | 12 | transformers | [

"transformers",

"pytorch",

"gpt_neo",

"text-generation",

"romanian",

"text generation",

"causal lm",

"gpt-neo",

"ro",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-08-29T15:31:26Z | ---

language:

- ro

license: mit # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

tags:

- romanian

- text generation

- causal lm

- gpt-neo

---

# GPT-Neo Romanian 780M

This model is a GPT-Neo transformer decoder model designed using EleutherAI's replication of the GPT-3 architecture.

It was trained on a thoroughly cleaned corpus of Romanian text of about 40GB composed of Oscar, Opus, Wikipedia, literature and various other bits and pieces of text, joined together and deduplicated. It was trained for about a month, totaling 1.5M steps on a v3-32 TPU machine.

### Authors:

* Dumitrescu Stefan

* Mihai Ilie

### Evaluation

Evaluation to be added soon, also on [https://github.com/dumitrescustefan/Romanian-Transformers](https://github.com/dumitrescustefan/Romanian-Transformers)

### Acknowledgements

Thanks [TPU Research Cloud](https://sites.research.google/trc/about/) for the TPUv3 machine needed to train this model!

|

RICHPOOL/RICHPOOL_MINER | RICHPOOL | 2022-09-17T17:42:59Z | 0 | 0 | null | [

"region:us"

]

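| null | 2022-09-17T17:39:16Z | ### Open-Source Miner (NtMiner) - Richpool Professional Edition

Open source, clean install, no dev-fee skimming

Hugging Face download mirror

#### Original software source code

https://github.com/ntminer/NtMiner

#### License

The LGPL license.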

|

sd-concepts-library/durer-style | sd-concepts-library | 2022-09-17T16:36:56Z | 0 | 7 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T16:36:49Z | ---

license: mit

---

### durer style on Stable Diffusion

This is the `<drr-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

theojolliffe/pegasus-model-3-x25 | theojolliffe | 2022-09-17T15:48:03Z | 108 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T14:27:08Z | ---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: pegasus-model-3-x25

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-model-3-x25

This model is a fine-tuned version of [theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback](https://huggingface.co/theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.5668

- Rouge1: 61.9972

- Rouge2: 48.1531

- Rougel: 48.845

- Rougelsum: 59.5019

- Gen Len: 123.0814
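
To make the ROUGE figures above easier to read, here is a minimal sketch of a ROUGE-1 F1 computation. It assumes the reported scores are F-measures scaled by 100, as is common in Trainer example scripts; the card does not confirm this. The function name `rouge1_f1` and the whitespace tokenization are illustrative simplifications, and real ROUGE implementations typically add stemming and more careful tokenization.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: unigram overlap between two texts.

    Hypothetical helper for illustration only; not the scorer used
    to produce the numbers in this card.
    """
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())

    # Clipped overlap: each unigram counts at most as often as it
    # appears in both texts.
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0

    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    score = rouge1_f1("the cat", "the cat sat")
    # Scale by 100 to match the convention assumed for the card.
    print(f"ROUGE-1 F1: {100 * score:.4f}")
```

Under that assumption, a Rouge1 of 61.9972 would correspond to an average unigram F1 of roughly 0.62 between generated and reference summaries.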

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:------:|:---------:|:--------:|

| 1.144 | 1.0 | 883 | 0.5668 | 61.9972 | 48.1531 | 48.845 | 59.5019 | 123.0814 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Eksperymenty/Pong-PLE-v0 | Eksperymenty | 2022-09-17T14:44:18Z | 0 | 0 | null | [

"Pong-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T14:44:08Z | ---

tags:

- Pong-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pong-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pong-PLE-v0

type: Pong-PLE-v0

metrics:

- type: mean_reward

value: -16.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pong-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pong-PLE-v0**.

To learn how to use this model and train your own, check Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5
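
For readers unfamiliar with Reinforce, the sketch below shows two pieces of arithmetic behind a card like this one: the discounted returns that the policy gradient weights actions by, and a `mean +/- std` summary over evaluation episodes. The function names, the discount factor, and the use of a population standard deviation are assumptions for illustration; the card does not state how the agent was trained or evaluated.

```python
import statistics


def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns = []
    running = 0.0
    # Walk backwards so each return reuses the one computed after it.
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def summarize_rewards(episode_rewards):
    """Format total episode rewards as 'mean +/- std' (population std assumed)."""
    mean = statistics.mean(episode_rewards)
    std = statistics.pstdev(episode_rewards)
    return f"{mean:.2f} +/- {std:.2f}"


if __name__ == "__main__":
    print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
    # Hypothetical evaluation where every episode scores the same.
    print(summarize_rewards([-16.0] * 10))
```

A standard deviation of 0.00, as reported in the metadata above, means every evaluation episode ended with the same total reward.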

|

jayanta/swin-base-patch4-window7-224-20epochs-finetuned-memes | jayanta | 2022-09-17T13:02:25Z | 216 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-09-17T12:07:58Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-base-patch4-window7-224-20epochs-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.847758887171561

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8504084378729573

name: F1

- type: precision

value: 0.8519647060733512

name: Precision

- type: recall

value: 0.8523956723338485

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-base-patch4-window7-224-20epochs-finetuned-memes

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7090

- Accuracy: 0.8478

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 20
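
The relationship between these hyperparameters can be sketched in plain Python. The example assumes a single device (so the total batch size is the per-device batch size times the accumulation steps) and a linear schedule that warms up from zero and then decays to zero; the 800 total steps are taken from the last row of the results table below. The function names are hypothetical and this is not the Trainer's own code.

```python
import math


def total_train_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Effective batch size per optimizer step (single device assumed)."""
    return per_device_batch * grad_accum_steps * num_devices


def linear_lr_with_warmup(step, base_lr, total_steps, warmup_ratio):
    """Learning rate at a given optimizer step.

    Rises linearly from 0 to base_lr during warmup, then decays
    linearly back to 0 at total_steps.
    """
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    print(total_train_batch_size(32, 4))
    for step in (0, 40, 80, 440, 800):
        lr = linear_lr_with_warmup(step, 0.00012, 800, 0.1)
        print(step, f"{lr:.6f}")
```

With these values the warmup covers the first 80 optimizer steps, which is about the first two epochs at 40 steps per epoch.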

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0238 | 0.99 | 40 | 0.9636 | 0.6445 |

| 0.777 | 1.99 | 80 | 0.6591 | 0.7666 |

| 0.4763 | 2.99 | 120 | 0.5381 | 0.8130 |

| 0.3215 | 3.99 | 160 | 0.5244 | 0.8253 |

| 0.2179 | 4.99 | 200 | 0.5123 | 0.8238 |

| 0.1868 | 5.99 | 240 | 0.5052 | 0.8308 |

| 0.154 | 6.99 | 280 | 0.5444 | 0.8338 |

| 0.1166 | 7.99 | 320 | 0.6318 | 0.8238 |

| 0.1099 | 8.99 | 360 | 0.5656 | 0.8338 |

| 0.0925 | 9.99 | 400 | 0.6057 | 0.8338 |

| 0.0779 | 10.99 | 440 | 0.5942 | 0.8393 |

| 0.0629 | 11.99 | 480 | 0.6112 | 0.8400 |

| 0.0742 | 12.99 | 520 | 0.6588 | 0.8331 |

| 0.0752 | 13.99 | 560 | 0.6143 | 0.8408 |

| 0.0577 | 14.99 | 600 | 0.6450 | 0.8516 |

| 0.0589 | 15.99 | 640 | 0.6787 | 0.8400 |

| 0.0555 | 16.99 | 680 | 0.6641 | 0.8454 |

| 0.052 | 17.99 | 720 | 0.7213 | 0.8524 |

| 0.0589 | 18.99 | 760 | 0.6917 | 0.8470 |

| 0.0506 | 19.99 | 800 | 0.7090 | 0.8478 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

test1234678/distilbert-base-uncased-distilled-clinc | test1234678 | 2022-09-17T12:34:43Z | 108 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:clinc_oos",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-17T07:24:42Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- clinc_oos

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-distilled-clinc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: clinc_oos

type: clinc_oos

config: plus

split: train

args: plus

metrics:

- name: Accuracy

type: accuracy

value: 0.9461290322580646

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-clinc