modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-07-14 00:44:55

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 519

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-07-14 00:44:41

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

jimmy880219/bert-base-chinese-finetuned-squad | jimmy880219 | 2022-10-30T13:25:52Z | 10 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-10-30T12:22:01Z | ---

tags:

- generated_from_trainer

model-index:

- name: bert-base-chinese-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-chinese-finetuned-squad

This model is a fine-tuned version of [bert-base-chinese](https://huggingface.co/bert-base-chinese) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 11.3796

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.7051 | 1.0 | 6911 | 11.3796 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

shrdlu9/bert-base-cased-ud-NER | shrdlu9 | 2022-10-30T12:02:07Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"ner",

"en",

"endpoints_compatible",

"region:us"

]

| null | 2022-10-30T11:01:00Z | ---

language:

- en

tags:

- ner

metrics:

- seqeval

---

## Overview

This model consists of a bert-base-cased model fine-tuned for Named Entity Recognition (NER) with 18 NE tags on the Universal Dependencies English dataset.

\

https://universaldependencies.org/en/index.html

\

The recognized NE tags are:

| CARDINAL | cardinal value |

|-----------------------|------------------------|

| DATE | date value |

| EVENT | event name |

| FAC | building name |

| GPE | geo-political entity |

| LANGUAGE | language name |

| LAW | law name |

| LOC | location name |

| MONEY | money name |

| NORP | affiliation |

| ORDINAL | ordinal value |

| ORG | organization name |

| PERCENT | percent value |

| PERSON | person name |

| PRODUCT | product name |

| QUANTITY | quantity value |

| TIME | time value |

| WORK_OF_ART | name of work of art |

A fine-tuned bert-base-uncased model is also available. |

tlttl/tluo_xml_roberta_base_amazon_review_sentiment_v3 | tlttl | 2022-10-30T11:23:42Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-30T07:54:25Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: tluo_xml_roberta_base_amazon_review_sentiment_v3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tluo_xml_roberta_base_amazon_review_sentiment_v3

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9456

- Accuracy: 0.6023

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 123

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 1.056 | 0.33 | 5000 | 0.9885 | 0.5642 |

| 0.944 | 0.67 | 10000 | 0.9574 | 0.5913 |

| 0.9505 | 1.0 | 15000 | 0.9674 | 0.579 |

| 0.8902 | 1.33 | 20000 | 0.9660 | 0.5945 |

| 0.8851 | 1.67 | 25000 | 0.9470 | 0.5888 |

| 0.8714 | 2.0 | 30000 | 0.9456 | 0.6023 |

| 0.7967 | 2.33 | 35000 | 0.9662 | 0.5978 |

| 0.767 | 2.67 | 40000 | 0.9738 | 0.5987 |

| 0.7595 | 3.0 | 45000 | 0.9740 | 0.5988 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Tokenizers 0.13.1

|

NlpHUST/vi-word-segmentation | NlpHUST | 2022-10-30T09:45:24Z | 140 | 4 | transformers | [

"transformers",

"pytorch",

"electra",

"token-classification",

"word segmentation",

"vi",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-10-30T04:48:30Z | ---

widget:

- text: "Phát biểu tại phiên thảo luận về tình hình kinh tế xã hội của Quốc hội sáng 28/10 , Bộ trưởng Bộ LĐ-TB&XH Đào Ngọc Dung khái quát , tại phiên khai mạc kỳ họp , lãnh đạo chính phủ đã báo cáo , đề cập tương đối rõ ràng về việc thực hiện các chính sách an sinh xã hội"

tags:

- word segmentation

language:

- vi

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: vi-word-segmentation

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vi-word-segmentation

This model is a fine-tuned version of [NlpHUST/electra-base-vn](https://huggingface.co/NlpHUST/electra-base-vn) on an vlsp 2013 vietnamese word segmentation dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0501

- Precision: 0.9833

- Recall: 0.9838

- F1: 0.9835

- Accuracy: 0.9911

## Model description

More information needed

## Intended uses & limitations

You can use this model with Transformers *pipeline* for NER.

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

from transformers import pipeline

tokenizer = AutoTokenizer.from_pretrained("NlpHUST/vi-word-segmentation")

model = AutoModelForTokenClassification.from_pretrained("NlpHUST/vi-word-segmentation")

nlp = pipeline("token-classification", model=model, tokenizer=tokenizer)

example = "Phát biểu tại phiên thảo luận về tình hình kinh tế xã hội của Quốc hội sáng 28/10 , Bộ trưởng Bộ LĐ-TB&XH Đào Ngọc Dung khái quát , tại phiên khai mạc kỳ họp , lãnh đạo chính phủ đã báo cáo , đề cập tương đối rõ ràng về việc thực hiện các chính sách an sinh xã hội"

ner_results = nlp(example)

example_tok = ""

for e in ner_results:

if "##" in e["word"]:

example_tok = example_tok + e["word"].replace("##","")

elif e["entity"] =="I":

example_tok = example_tok + "_" + e["word"]

else:

example_tok = example_tok + " " + e["word"]

print(example_tok)

Phát_biểu tại phiên thảo_luận về tình_hình kinh_tế xã_hội của Quốc_hội sáng 28 / 10 , Bộ_trưởng Bộ LĐ - TB [UNK] XH Đào_Ngọc_Dung khái_quát , tại phiên khai_mạc kỳ họp , lãnh_đạo chính_phủ đã báo_cáo , đề_cập tương_đối rõ_ràng về việc thực_hiện các chính_sách an_sinh xã_hội

```

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0168 | 1.0 | 4712 | 0.0284 | 0.9813 | 0.9825 | 0.9819 | 0.9904 |

| 0.0107 | 2.0 | 9424 | 0.0350 | 0.9789 | 0.9814 | 0.9802 | 0.9895 |

| 0.005 | 3.0 | 14136 | 0.0364 | 0.9826 | 0.9843 | 0.9835 | 0.9909 |

| 0.0033 | 4.0 | 18848 | 0.0434 | 0.9830 | 0.9831 | 0.9830 | 0.9908 |

| 0.0017 | 5.0 | 23560 | 0.0501 | 0.9833 | 0.9838 | 0.9835 | 0.9911 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

fumi13/q-Taxi-v3 | fumi13 | 2022-10-30T09:40:15Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-10-30T09:40:07Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="fumi13/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

fumi13/q-FrozenLake-v1-4x4-noSlippery | fumi13 | 2022-10-30T09:27:39Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-10-30T09:27:30Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="fumi13/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

Tritkoman/English2Sardinian | Tritkoman | 2022-10-30T07:41:31Z | 102 | 0 | transformers | [

"transformers",

"pytorch",

"autotrain",

"translation",

"en",

"it",

"dataset:Tritkoman/autotrain-data-gatvotva",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

]

| translation | 2022-10-30T07:31:37Z | ---

tags:

- autotrain

- translation

language:

- en

- it

datasets:

- Tritkoman/autotrain-data-gatvotva

co2_eq_emissions:

emissions: 14.908336657166226

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1931765297

- CO2 Emissions (in grams): 14.9083

## Validation Metrics

- Loss: 2.666

- SacreBLEU: 17.990

- Gen len: 64.922 |

g30rv17ys/ddpm-hkuoct-dr-256-200ep | g30rv17ys | 2022-10-30T06:16:58Z | 0 | 0 | diffusers | [

"diffusers",

"tensorboard",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

]

| null | 2022-10-29T19:28:18Z | ---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: imagefolder

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-hkuoct-dr-256-200ep

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/geevegeorge/ddpm-hkuoct-dr-256-200ep/tensorboard?#scalars)

|

hsc748NLP/TfhBERT | hsc748NLP | 2022-10-30T05:37:15Z | 6 | 2 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-10-21T14:52:14Z | ---

license: apache-2.0

---

https://github.com/hsc748NLP/SikuBERT-for-digital-humanities-and-classical-Chinese-information-processing |

hsc748NLP/BtfhBERT | hsc748NLP | 2022-10-30T05:36:55Z | 162 | 3 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-10-21T14:52:37Z | ---

license: apache-2.0

---

https://github.com/hsc748NLP/SikuBERT-for-digital-humanities-and-classical-Chinese-information-processing |

bharadwajkg/sample-beauty-cardiffnlp-twitter-roberta-base-sentiment | bharadwajkg | 2022-10-30T05:01:44Z | 103 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-29T07:45:57Z | ---

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- recall

model-index:

- name: sample-beauty-cardiffnlp-twitter-roberta-base-sentiment

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sample-beauty-cardiffnlp-twitter-roberta-base-sentiment

This model is a fine-tuned version of [cardiffnlp/twitter-roberta-base-sentiment](https://huggingface.co/cardiffnlp/twitter-roberta-base-sentiment) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3954

- Accuracy: 0.9

- F1: 0.6805

- Recall: 0.6647

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Ankit15nov/xlm-roberta-base-finetuned-panx-it | Ankit15nov | 2022-10-30T03:24:38Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-10-30T03:22:50Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-it

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.it

metrics:

- name: F1

type: f1

value: 0.8199834847233691

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-it

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2484

- F1: 0.8200

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.7739 | 1.0 | 70 | 0.3264 | 0.7482 |

| 0.3054 | 2.0 | 140 | 0.2655 | 0.7881 |

| 0.1919 | 3.0 | 210 | 0.2484 | 0.8200 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.5.1

- Datasets 1.16.1

- Tokenizers 0.10.3

|

sd-concepts-library/leif-jones | sd-concepts-library | 2022-10-30T01:21:56Z | 0 | 2 | null | [

"license:mit",

"region:us"

]

| null | 2022-10-30T01:21:52Z | ---

license: mit

---

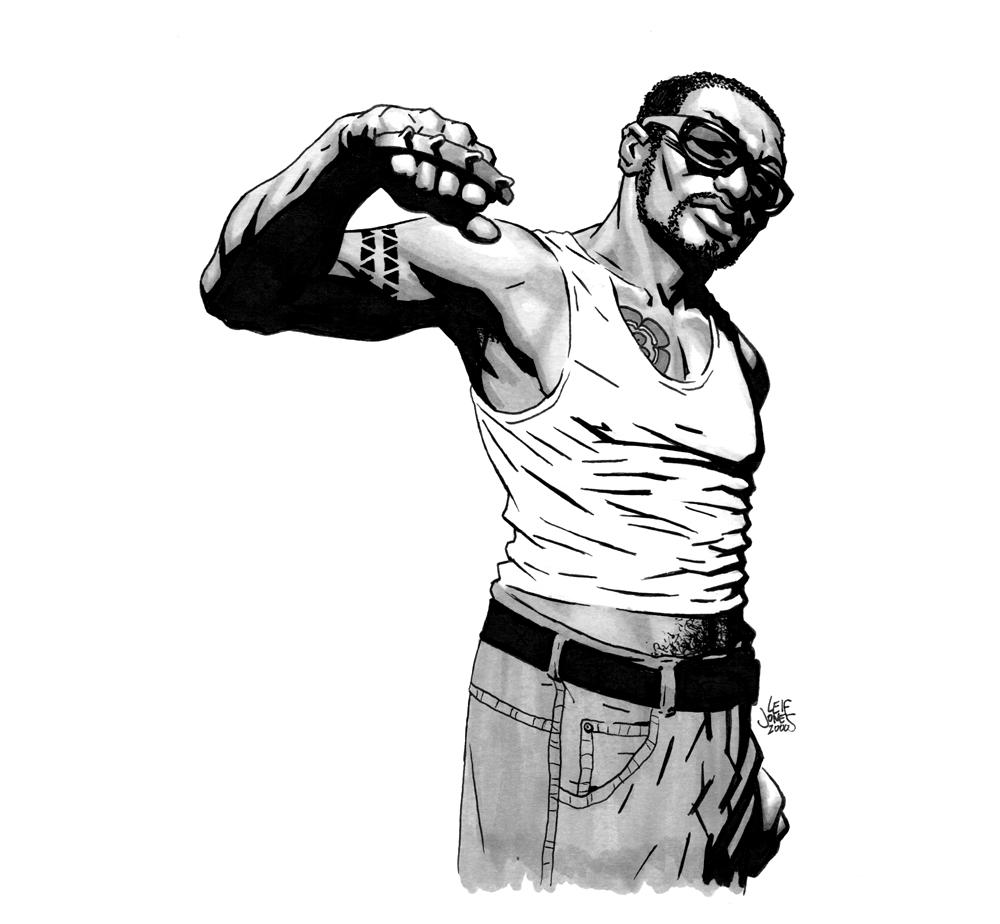

### leif jones on Stable Diffusion

This is the `<leif-jones>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

tlttl/tluo_xml_roberta_base_amazon_review_sentiment_v2 | tlttl | 2022-10-30T00:51:07Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-29T15:21:12Z | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: tluo_xml_roberta_base_amazon_review_sentiment_v2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tluo_xml_roberta_base_amazon_review_sentiment_v2

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9630

- Accuracy: 0.6057

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 123

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 1.0561 | 0.33 | 5000 | 0.9954 | 0.567 |

| 0.948 | 0.67 | 10000 | 0.9641 | 0.5862 |

| 0.9557 | 1.0 | 15000 | 0.9605 | 0.589 |

| 0.8891 | 1.33 | 20000 | 0.9420 | 0.5875 |

| 0.8889 | 1.67 | 25000 | 0.9397 | 0.592 |

| 0.8777 | 2.0 | 30000 | 0.9236 | 0.6042 |

| 0.778 | 2.33 | 35000 | 0.9612 | 0.5972 |

| 0.7589 | 2.67 | 40000 | 0.9728 | 0.5995 |

| 0.7593 | 3.0 | 45000 | 0.9630 | 0.6057 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

nqhuy/ASR_Phimtailieu_WithLM | nqhuy | 2022-10-30T00:09:00Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-10-26T01:38:17Z | ---

tags:

- generated_from_trainer

model-index:

- name: ASR_Phimtailieu_WithLM

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ASR_Phimtailieu_WithLM

This model was trained from scratch on the None dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.5235

- eval_wer: 0.2531

- eval_runtime: 570.9035

- eval_samples_per_second: 15.467

- eval_steps_per_second: 1.934

- epoch: 2.58

- step: 39000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.42184e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 25

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

ifrz/wav2vec2-large-xlsr-galician | ifrz | 2022-10-29T23:47:47Z | 4,518 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-04-29T08:55:46Z | # wav2vec2-large-xlsr-galician

---

language: gl

datasets:

- OpenSLR 77

- mozilla-foundation common_voice_8_0

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: Galician wav2vec2-large-xlsr-galician

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset_1:

name: OpenSLR

type: openslr

args: gl

dataset_2:

name: mozilla-foundation

type: common voice

args: gl

metrics:

- name: Test WER

type: wer

value: 7.12

---

# Model

Fine-tuned model for Galician language

Based on the [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) self-supervised model

Fine-tune with audio labelled from [OpenSLR](https://openslr.org/77/) and Mozilla [Common_Voice](https://commonvoice.mozilla.org/gl) (both datasets previously refined)

Check training metrics to see results

# Testing

Make sure that the audio speech input is sampled at 16kHz (mono).

```python

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

model = Wav2Vec2ForCTC.from_pretrained("ifrz/wav2vec2-large-xlsr-galician")

processor = Wav2Vec2Processor.from_pretrained("ifrz/wav2vec2-large-xlsr-galician")

# Reading taken audio clip

import librosa, torch

audio, rate = librosa.load("./gl_test_1.wav", sr = 16000)

# Taking an input value

input_values = processor(audio, sampling_rate=16_000, return_tensors = "pt", padding="longest").input_values

# Storing logits (non-normalized prediction values)

logits = model(input_values).logits

# Storing predicted ids

prediction = torch.argmax(logits, dim = -1)

# Passing the prediction to the tokenzer decode to get the transcription

transcription = processor.batch_decode(prediction)[0]

print(transcription)

``` |

prakharz/DIAL-T0 | prakharz | 2022-10-29T23:39:24Z | 4 | 3 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"arxiv:2205.12673",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-10-29T23:35:26Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: DIAL_T0

results: []

widget:

- text: "Instruction: Edit the provided response into a response that is fluent and coherent to the dialogue context. \n\nInput: [CONTEXT] How may I help you? [ENDOFTURN] I left a suitcase on the train to London the other day. [RESPONSE] Can describe itit , sir ? It will help us find [ENDOFDIALOGUE] [QUESTION] Given this context and response provided, the edited response is"

- text: "Instruction: Generate a response that starts with the provided initial phrase. \n\nInput: [INITIAL_PHRASE] Please describe [CONTEXT] How may I help you? [ENDOFTURN] I left a suitcase on the train to London the other day. [ENDOFDIALOGUE] [QUESTION] A response with the provided initial phrase is"

- text: "Instruction: Generate a response that starts with the provided initial phrase and contains the provided keywords. \n\nInput: [INITIAL PHRASE] Please describe [KEYWORDS] color, any documents [CONTEXT] How may I help you? [ENDOFTURN] I left a suitcase on the train to London the other day. [ENDOFDIALOGUE] [QUESTION] A response with the provided initial phrase and keywords is"

- text: "Instruction: What is the intent of the response \n\nInput: [CONTEXT] How may I help you? [RESPONSE] I left a suitcase on the train to London the other day. [ENDOFDIALOGUE] [OPTIONS] booking, reservation change, checkout, lost&found, time information, security, schedules [QUESTION] The intent of the response is"

- text: "Instruction: Generate a summary for the following dialog context. \n\nInput: [CONTEXT] Ann: Wanna go out? [ENDOFTURN] Kate: Not really, I feel sick. [ENDOFTURN] Ann: Drink mint tea, they say it helps. Ok, so we'll meet up another time. Take care! [ENDOFTURN] Kate: Thanks! [ENDOFDIALOGUE] [QUESTION] For this dialogue, the summary is: "

- text: "Instruction: Consider the context of the conversation and a document and generate an answer accordingly \n\nInput: [CONTEXT] How may I help you? [ENDOFTURN] I left a suitcase on the train to London the other day. [ENDOFDIALOGUE] [QUESTION] What is the response of the following question: Where was the person going to?"

- text: "Instruction: Generate a response using the provided background knowledge. \n\nInput: [KNOWLEDGE] Emailid for cases related to lost and found is [email protected] [CONTEXT] How may I help you? [ENDOFTURN] I left a suitcase on the train to London the other day. [ENDOFDIALOGUE] [QUESTION] Generate a response using the information from the background knowledge."

---

# InstructDial

Instruction tuning is an emergent paradigm in NLP wherein natural language instructions are leveraged with language models to induce zero-shot performance on unseen tasks. Instructions have been shown to enable good performance on unseen tasks and datasets in both large and small language models. Dialogue is an especially interesting area to explore instruction tuning because dialogue systems perform multiple kinds of tasks related to language (e.g., natural language understanding and generation, domain-specific interaction), yet instruction tuning has not been systematically explored for dialogue-related tasks. We introduce InstructDial, an instruction tuning framework for dialogue, which consists of a repository of 48 diverse dialogue tasks in a unified text-to-text format created from 59 openly available dialogue datasets. Next, we explore cross-task generalization ability on models tuned on InstructDial across diverse dialogue tasks. Our analysis reveals that InstructDial enables good zero-shot performance on unseen datasets and tasks such as dialogue evaluation and intent detection, and even better performance in a few-shot setting. To ensure that models adhere to instructions, we introduce novel meta-tasks. We establish benchmark zero-shot and few-shot performance of models trained using the proposed framework on multiple dialogue tasks.

[Paper](https://arxiv.org/abs/2205.12673)

# Dial_T0

T5-xl 3B type model trained on InstructDial tasks. This model is a fine-tuned version of bigscience/T0_3B model

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

All tasks in InstructDial framework (including all dialogue eval tasks)

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 9

- eval_batch_size: 9

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 72

- total_eval_batch_size: 72

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.3.2

- Tokenizers 0.12.1

|

psdwizzard/Boredape-Diffusion | psdwizzard | 2022-10-29T23:14:07Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

]

| null | 2022-10-29T22:41:39Z | ---

license: creativeml-openrail-m

---

Boredape Diffusion

This is the fine-tuned Stable Diffusion model trained on images Bored Ape Yacht Club. Make your own sometimes busted looking Bored Apes.

Use keyword boredape |

sd-concepts-library/edgerunners-style-v2 | sd-concepts-library | 2022-10-29T23:01:46Z | 0 | 6 | null | [

"license:mit",

"region:us"

]

| null | 2022-10-29T23:01:35Z | ---

license: mit

---

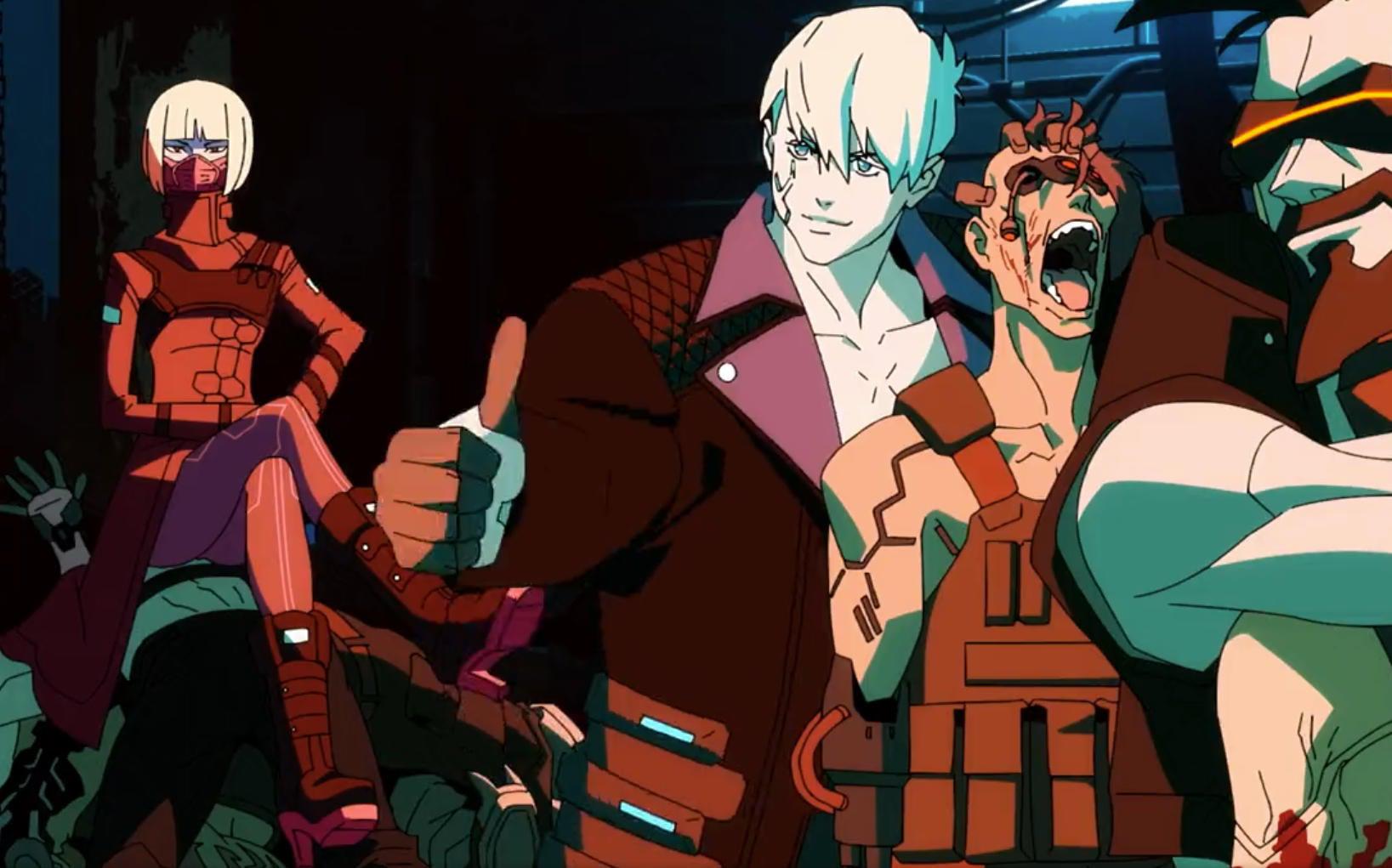

### Edgerunners Style v2 on Stable Diffusion

This is the `<edgerunners-style-av-v2>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

beautifulpichai/all-MiniLM-L12-v2-ledgar-contrastive | beautifulpichai | 2022-10-29T22:45:34Z | 6 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2022-10-29T22:45:25Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 2451 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 2451,

"warmup_steps": 246,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Normalize()

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Alt41r/gpt-simpson | Alt41r | 2022-10-29T22:44:18Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"Text Generation",

"conversational",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-10-29T20:35:04Z | ---

language:

- en

tags:

- Text Generation

- conversational

widget:

- text: "Do you like beer?"

example_title: "Example 1"

- text: "Who are you?"

example_title: "Example 2"

--- |

sergiocannata/convnext-tiny-224-finetuned-brs | sergiocannata | 2022-10-29T22:41:21Z | 31 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"convnext",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-10-29T22:16:22Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

- f1

model-index:

- name: convnext-tiny-224-finetuned-brs

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8235294117647058

- name: F1

type: f1

value: 0.7272727272727272

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# convnext-tiny-224-finetuned-brs

This model is a fine-tuned version of [facebook/convnext-tiny-224](https://huggingface.co/facebook/convnext-tiny-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8667

- Accuracy: 0.8235

- F1: 0.7273

- Precision (ppv): 0.8

- Recall (sensitivity): 0.6667

- Specificity: 0.9091

- Npv: 0.8333

- Auc: 0.7879

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision (ppv) | Recall (sensitivity) | Specificity | Npv | Auc |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------------:|:--------------------:|:-----------:|:------:|:------:|

| 0.6766 | 6.25 | 100 | 0.7002 | 0.4706 | 0.5263 | 0.3846 | 0.8333 | 0.2727 | 0.75 | 0.5530 |

| 0.6408 | 12.49 | 200 | 0.6770 | 0.6471 | 0.5714 | 0.5 | 0.6667 | 0.6364 | 0.7778 | 0.6515 |

| 0.464 | 18.74 | 300 | 0.6624 | 0.5882 | 0.5882 | 0.4545 | 0.8333 | 0.4545 | 0.8333 | 0.6439 |

| 0.4295 | 24.98 | 400 | 0.6938 | 0.5294 | 0.5 | 0.4 | 0.6667 | 0.4545 | 0.7143 | 0.5606 |

| 0.3952 | 31.25 | 500 | 0.5974 | 0.7059 | 0.6154 | 0.5714 | 0.6667 | 0.7273 | 0.8 | 0.6970 |

| 0.1082 | 37.49 | 600 | 0.6163 | 0.6471 | 0.5 | 0.5 | 0.5 | 0.7273 | 0.7273 | 0.6136 |

| 0.1997 | 43.74 | 700 | 0.6155 | 0.7059 | 0.6154 | 0.5714 | 0.6667 | 0.7273 | 0.8 | 0.6970 |

| 0.1267 | 49.98 | 800 | 0.9063 | 0.6471 | 0.5714 | 0.5 | 0.6667 | 0.6364 | 0.7778 | 0.6515 |

| 0.1178 | 56.25 | 900 | 0.8672 | 0.7059 | 0.6667 | 0.5556 | 0.8333 | 0.6364 | 0.875 | 0.7348 |

| 0.2008 | 62.49 | 1000 | 0.7049 | 0.8235 | 0.7692 | 0.7143 | 0.8333 | 0.8182 | 0.9 | 0.8258 |

| 0.0996 | 68.74 | 1100 | 0.4510 | 0.8235 | 0.7692 | 0.7143 | 0.8333 | 0.8182 | 0.9 | 0.8258 |

| 0.0115 | 74.98 | 1200 | 0.7561 | 0.8235 | 0.7692 | 0.7143 | 0.8333 | 0.8182 | 0.9 | 0.8258 |

| 0.0177 | 81.25 | 1300 | 1.0400 | 0.7059 | 0.6667 | 0.5556 | 0.8333 | 0.6364 | 0.875 | 0.7348 |

| 0.0261 | 87.49 | 1400 | 0.9139 | 0.8235 | 0.7692 | 0.7143 | 0.8333 | 0.8182 | 0.9 | 0.8258 |

| 0.028 | 93.74 | 1500 | 0.7367 | 0.7647 | 0.7143 | 0.625 | 0.8333 | 0.7273 | 0.8889 | 0.7803 |

| 0.0056 | 99.98 | 1600 | 0.8667 | 0.8235 | 0.7273 | 0.8 | 0.6667 | 0.9091 | 0.8333 | 0.7879 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

RafboOrg/ppo-LunarLander-v2 | RafboOrg | 2022-10-29T22:04:13Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-10-29T21:32:52Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 216.33 +/- 18.78

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

SirVeggie/Aeolian | SirVeggie | 2022-10-29T21:50:20Z | 0 | 4 | null | [

"license:creativeml-openrail-m",

"region:us"

]

| null | 2022-10-17T17:36:12Z | ---

license: creativeml-openrail-m

---

# Aeolian stable diffusion model

Original artist: WLOP\

Patreon: https://www.patreon.com/wlop/posts

An original character created and drawn by WLOP for his webcomic Ghostblade.

## Basic explanation

Token and Class words are what guide the AI to produce images similar to the trained style/object/character.

Include any mix of these words in the prompt to produce verying results, or exclude them to have a less pronounced effect.

There is usually at least a slight stylistic effect even without the words, but it is recommended to include at least one.

Adding token word/phrase class word/phrase at the start of the prompt in that order produces results most similar to the trained concept, but they can be included elsewhere as well. Some models produce better results when not including all token/class words.

3k models are are more flexible, while 5k models produce images closer to the trained concept.

I recommend 2k/3k models for normal use, and 5k/6k models for model merging and use without token/class words.

However it can be also very prompt specific. I highly recommend self-experimentation.

## Comparison

Aeolian and aeolian_3000 are quite similar with slight differences.

Epoch 5 and 6 versions were earlier in the waifu diffusion 1.3 training process, so it is easier to produce more varied, non anime, results.

## aeolian

```

token: m_aeolian

class: §¶•

base: waifu diffusion 1.2-e5

notes: 2020 step training

```

## aeolian_3000

```

token: m_aeolian

class: §¶•

base: waifu diffusion 1.2-e6

notes: 3000 step training

```

## aeolian_v2

```

token: m_concept

class: §

base: waifu diffusion 1.3

notes: 1.3 model, which may give some benefits over 1.2-e5

```

## License

This embedding is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

beautifulpichai/all-MiniLM-L6-v2-ledgar-contrastive | beautifulpichai | 2022-10-29T21:15:08Z | 6 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2022-10-29T21:14:59Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 2451 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 2451,

"warmup_steps": 246,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Normalize()

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

NikitaBaramiia/dqn-SpaceInvadersNoFrameskip-v4 | NikitaBaramiia | 2022-10-29T21:11:12Z | 3 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-10-29T21:10:39Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 451.00 +/- 99.62

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga NikitaBaramiia -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga NikitaBaramiia -f logs/

rl_zoo3 enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga NikitaBaramiia

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 500000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

|

huggingtweets/mcpeachpies | huggingtweets | 2022-10-29T20:45:06Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-10-29T20:33:46Z | ---

language: en

thumbnail: http://www.huggingtweets.com/mcpeachpies/1667076223314/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1396209493415845888/vye-v8UP_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">mcpeachpies 🍑</div>

<div style="text-align: center; font-size: 14px;">@mcpeachpies</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from mcpeachpies 🍑.

| Data | mcpeachpies 🍑 |

| --- | --- |

| Tweets downloaded | 3239 |

| Retweets | 208 |

| Short tweets | 1076 |

| Tweets kept | 1955 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/2ys0xeox/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @mcpeachpies's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/d1x4t5yn) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/d1x4t5yn/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/mcpeachpies')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

Athithya/finetuning-sentiment-model-3000-samples | Athithya | 2022-10-29T19:52:08Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-29T19:31:37Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: finetuning-sentiment-model-3000-samples

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

ankur-gupta/dummy | ankur-gupta | 2022-10-29T18:36:52Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"t5",

"feature-extraction",

"generated_from_keras_callback",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| feature-extraction | 2022-10-27T21:35:24Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: dummy

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# dummy

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.23.1

- TensorFlow 2.10.0

- Datasets 2.6.1

- Tokenizers 0.13.1

|

Stancld/long-t5-local-large | Stancld | 2022-10-29T18:18:34Z | 13 | 0 | transformers | [

"transformers",

"tf",

"longt5",

"text2text-generation",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-10-29T18:13:19Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: long-t5-local-large

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# long-t5-local-large

This model is a fine-tuned version of [google/long-t5-local-large](https://huggingface.co/google/long-t5-local-large) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.24.0.dev0

- TensorFlow 2.9.0

- Datasets 2.2.2

- Tokenizers 0.11.6

|

Stancld/long-t5-local-base | Stancld | 2022-10-29T18:13:08Z | 7 | 0 | transformers | [

"transformers",

"tf",

"longt5",

"text2text-generation",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-10-29T18:11:08Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: long-t5-local-base

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# long-t5-local-base

This model is a fine-tuned version of [google/long-t5-local-base](https://huggingface.co/google/long-t5-local-base) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.24.0.dev0

- TensorFlow 2.9.0

- Datasets 2.2.2

- Tokenizers 0.11.6

|

Stancld/long-t5-tglobal-large | Stancld | 2022-10-29T18:11:04Z | 12 | 0 | transformers | [

"transformers",

"tf",

"longt5",

"text2text-generation",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-10-29T18:04:59Z | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: long-t5-tglobal-large

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# long-t5-tglobal-large

This model is a fine-tuned version of [google/long-t5-tglobal-large](https://huggingface.co/google/long-t5-tglobal-large) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.24.0.dev0

- TensorFlow 2.9.0

- Datasets 2.2.2

- Tokenizers 0.11.6

|

ViktorDo/SciBERT-WIKI_Epiphyte_Finetuned | ViktorDo | 2022-10-29T17:39:03Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-29T16:21:50Z | ---

tags:

- generated_from_trainer

model-index:

- name: SciBERT-WIKI_Epiphyte_Finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SciBERT-WIKI_Epiphyte_Finetuned

This model is a fine-tuned version of [allenai/scibert_scivocab_uncased](https://huggingface.co/allenai/scibert_scivocab_uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0530

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.0782 | 1.0 | 2094 | 0.0624 |

| 0.0591 | 2.0 | 4188 | 0.0481 |

| 0.0278 | 3.0 | 6282 | 0.0530 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

huggingtweets/wayneradiotv | huggingtweets | 2022-10-29T17:30:09Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-10-29T17:30:00Z | ---

language: en

thumbnail: https://github.com/borisdayma/huggingtweets/blob/master/img/logo.png?raw=true

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1511060623072927747/xvz5xYEj_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">wayneradiotv</div>

<div style="text-align: center; font-size: 14px;">@wayneradiotv</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from wayneradiotv.

| Data | wayneradiotv |

| --- | --- |

| Tweets downloaded | 3227 |

| Retweets | 1142 |

| Short tweets | 365 |

| Tweets kept | 1720 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3nfxw79q/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @wayneradiotv's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2dhlzg3t) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2dhlzg3t/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/wayneradiotv')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

huggingtweets/socpens | huggingtweets | 2022-10-29T17:04:28Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-10-29T17:03:12Z | ---

language: en

thumbnail: http://www.huggingtweets.com/socpens/1667063063525/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1404907635934216205/unH2FvUy_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">scorpy</div>

<div style="text-align: center; font-size: 14px;">@socpens</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from scorpy.

| Data | scorpy |

| --- | --- |

| Tweets downloaded | 3236 |

| Retweets | 758 |

| Short tweets | 423 |

| Tweets kept | 2055 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1xewzfqo/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @socpens's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1u64kl11) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1u64kl11/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/socpens')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

ViktorDo/SciBERT-WIKI_Growth_Form_Finetuned | ViktorDo | 2022-10-29T16:06:48Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-10-29T14:41:58Z | ---

tags:

- generated_from_trainer

model-index:

- name: SciBERT-WIKI_Growth_Form_Finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SciBERT-WIKI_Growth_Form_Finetuned

This model is a fine-tuned version of [allenai/scibert_scivocab_uncased](https://huggingface.co/allenai/scibert_scivocab_uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2853

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.259 | 1.0 | 2320 | 0.2713 |

| 0.195 | 2.0 | 4640 | 0.2513 |

| 0.149 | 3.0 | 6960 | 0.2853 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

wskhanh/bert-finetuned-squad | wskhanh | 2022-10-29T15:05:55Z | 7 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-10-28T13:24:57Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1

- Datasets 2.6.1

- Tokenizers 0.13.1

|

huggingtweets/donvesh | huggingtweets | 2022-10-29T11:48:30Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-10-29T11:47:07Z | ---

language: en

thumbnail: http://www.huggingtweets.com/donvesh/1667044106194/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div