modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-07-16 00:42:46

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 522

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-07-16 00:42:16

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

LoneStriker/Yi-34B-Spicyboros-3.1-2-4.65bpw-h6-exl2 | LoneStriker | 2023-11-17T12:28:36Z | 6 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"dataset:unalignment/spicy-3.1",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2023-11-17T12:26:23Z | ---

license: other

license_name: yi-license

license_link: LICENSE

datasets:

- unalignment/spicy-3.1

---

# Fine-tune of Y-34B with Spicyboros-3.1

Three epochs of fine tuning with @jondurbin's SpicyBoros-3.1 dataset. 4.65bpw should fit on a single 3090/4090, 5.0bpw, 6.0bpw, and 8.0bpw will require more than one GPU 24 GB VRAM GPU.

**Please note:** you may have to turn down repetition penalty to 1.0. The model seems to get into "thesaurus" mode sometimes without this change.

# Original Yi-34B Model Card Below

<div align="center">

<h1>

Yi

</h1>

</div>

## Introduction

The **Yi** series models are large language models trained from scratch by developers at [01.AI](https://01.ai/). The first public release contains two base models with the parameter size of 6B and 34B.

## News

- 🎯 **2023/11/02**: The base model of `Yi-6B` and `Yi-34B`

## Model Performance

| Model | MMLU | CMMLU | C-Eval | GAOKAO | BBH | Commonsense Reasoning | Reading Comprehension | Math & Code |

| :------------ | :------: | :------: | :------: | :------: | :------: | :-------------------: | :-------------------: | :---------: |

| | 5-shot | 5-shot | 5-shot | 0-shot | 3-shot@1 | - | - | - |

| LLaMA2-34B | 62.6 | - | - | - | 44.1 | 69.9 | 68.0 | 26.0 |

| LLaMA2-70B | 68.9 | 53.3 | - | 49.8 | 51.2 | 71.9 | 69.4 | 36.8 |

| Baichuan2-13B | 59.2 | 62.0 | 58.1 | 54.3 | 48.8 | 64.3 | 62.4 | 23.0 |

| Qwen-14B | 66.3 | 71.0 | 72.1 | 62.5 | 53.4 | 73.3 | 72.5 | 39.8 |

| Skywork-13B | 62.1 | 61.8 | 60.6 | 68.1 | 41.7 | 72.4 | 61.4 | 24.9 |

| InternLM-20B | 62.1 | 59.0 | 58.8 | 45.5 | 52.5 | 78.3 | - | 26.0 |

| Aquila-34B | 67.8 | 71.4 | 63.1 | - | - | - | - | - |

| Falcon-180B | 70.4 | 58.0 | 57.8 | 59.0 | 54.0 | 77.3 | 68.8 | 34.0 |

| Yi-6B | 63.2 | 75.5 | 72.0 | 72.2 | 42.8 | 72.3 | 68.7 | 19.8 |

| **Yi-34B** | **76.3** | **83.7** | **81.4** | **82.8** | **54.3** | **80.1** | **76.4** | **37.1** |

While benchmarking open-source models, we have observed a disparity between the results generated by our pipeline and those reported in public sources (e.g. OpenCampus). Upon conducting a more in-depth investigation of this difference, we have discovered that various models may employ different prompts, post-processing strategies, and sampling techniques, potentially resulting in significant variations in the outcomes. Our prompt and post-processing strategy remains consistent with the original benchmark, and greedy decoding is employed during evaluation without any post-processing for the generated content. For scores that did not report by original author (including score reported with different setting), we try to get results with our pipeline.

To extensively evaluate model's capability, we adopted the methodology outlined in Llama2. Specifically, we included PIQA, SIQA, HellaSwag, WinoGrande, ARC, OBQA, and CSQA to assess common sense reasoning. SquAD, QuAC, and BoolQ were incorporated to evaluate reading comprehension. CSQA was exclusively tested using a 7-shot setup, while all other tests were conducted in a 0-shot configuration. Additionally, we introduced GSM8K (8-shot@1), MATH (4-shot@1), HumanEval (0-shot@1), and MBPP (3-shot@1) under the category "Math & Code". Due to technical constraints, we did not test Falcon-180 on QuAC and OBQA; the score is derived by averaging the scores on the remaining tasks. Since the scores for these two tasks are generally lower than the average, we believe that Falcon-180B's performance was not underestimated.

## Disclaimer

Although we use data compliance checking algorithms during the training process to ensure the compliance of the trained model to the best of our ability, due to the complexity of the data and the diversity of language model usage scenarios, we cannot guarantee that the model will generate correct and reasonable output in all scenarios. Please be aware that there is still a risk of the model producing problematic outputs. We will not be responsible for any risks and issues resulting from misuse, misguidance, illegal usage, and related misinformation, as well as any associated data security concerns.

## License

The Yi series model must be adhere to the [Model License Agreement](https://huggingface.co/01-ai/Yi-34B/blob/main/LICENSE).

For any questions related to licensing and copyright, please contact us ([[email protected]](mailto:[email protected])).

|

onangeko/Pixelcopter-PLE-v0 | onangeko | 2023-11-17T12:27:57Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-16T12:29:08Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pixelcopter-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 35.20 +/- 42.21

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

pragnakalpdev32/lora-trained-xl-person-new_25 | pragnakalpdev32 | 2023-11-17T12:17:06Z | 3 | 1 | diffusers | [

"diffusers",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

]

| text-to-image | 2023-11-17T12:13:45Z |

---

license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: A photo of sks person

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- lora

inference: true

---

# LoRA DreamBooth - pragnakalpdev32/lora-trained-xl-person-new_25

These are LoRA adaption weights for stabilityai/stable-diffusion-xl-base-1.0. The weights were trained on A photo of sks person using [DreamBooth](https://dreambooth.github.io/). You can find some example images in the following.

LoRA for the text encoder was enabled: False.

Special VAE used for training: None.

|

bradmin/reward-gpt-duplicate-answer-2 | bradmin | 2023-11-17T12:16:14Z | 6 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"gpt_neox",

"text-classification",

"generated_from_trainer",

"base_model:EleutherAI/polyglot-ko-1.3b",

"base_model:finetune:EleutherAI/polyglot-ko-1.3b",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-11-17T11:45:29Z | ---

license: apache-2.0

base_model: EleutherAI/polyglot-ko-1.3b

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: reward-gpt-duplicate-answer-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# reward-gpt-duplicate-answer-2

This model is a fine-tuned version of [EleutherAI/polyglot-ko-1.3b](https://huggingface.co/EleutherAI/polyglot-ko-1.3b) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0162

- Accuracy: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 9e-06

- train_batch_size: 6

- eval_batch_size: 6

- seed: 2023

- gradient_accumulation_steps: 10

- total_train_batch_size: 60

- optimizer: Adam with betas=(0.9,0.95) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.1389 | 0.24 | 100 | 0.0358 | 0.0 |

| 0.104 | 0.47 | 200 | 0.0283 | 0.0 |

| 0.0881 | 0.71 | 300 | 0.0163 | 0.0 |

| 0.0764 | 0.94 | 400 | 0.0162 | 0.0 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

TheBloke/sqlcoder-34b-alpha-AWQ | TheBloke | 2023-11-17T12:07:59Z | 26 | 5 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"base_model:defog/sqlcoder-34b-alpha",

"base_model:quantized:defog/sqlcoder-34b-alpha",

"license:cc-by-4.0",

"autotrain_compatible",

"text-generation-inference",

"4-bit",

"awq",

"region:us"

]

| text-generation | 2023-11-17T10:32:56Z | ---

base_model: defog/sqlcoder-34b-alpha

inference: false

language:

- en

license: cc-by-4.0

model_creator: Defog.ai

model_name: SQLCoder 34B Alpha

model_type: llama

pipeline_tag: text-generation

prompt_template: "## Task\nGenerate a SQL query to answer the following question:\n\

`{prompt}`\n\n### Database Schema\nThis query will run on a database whose schema\

\ is represented in this string:\nCREATE TABLE products (\n product_id INTEGER\

\ PRIMARY KEY, -- Unique ID for each product\n name VARCHAR(50), -- Name of the\

\ product\n price DECIMAL(10,2), -- Price of each unit of the product\n quantity\

\ INTEGER -- Current quantity in stock\n);\n\nCREATE TABLE sales (\n sale_id INTEGER\

\ PRIMARY KEY, -- Unique ID for each sale\n product_id INTEGER, -- ID of product\

\ sold\n customer_id INTEGER, -- ID of customer who made purchase\n salesperson_id\

\ INTEGER, -- ID of salesperson who made the sale\n sale_date DATE, -- Date the\

\ sale occurred\n quantity INTEGER -- Quantity of product sold\n);\n\n-- sales.product_id\

\ can be joined with products.product_id\n\n### SQL\nGiven the database schema,\

\ here is the SQL query that answers `{prompt}`:\n```sql\n"

quantized_by: TheBloke

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# SQLCoder 34B Alpha - AWQ

- Model creator: [Defog.ai](https://huggingface.co/defog)

- Original model: [SQLCoder 34B Alpha](https://huggingface.co/defog/sqlcoder-34b-alpha)

<!-- description start -->

## Description

This repo contains AWQ model files for [Defog.ai's SQLCoder 34B Alpha](https://huggingface.co/defog/sqlcoder-34b-alpha).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

### About AWQ

AWQ is an efficient, accurate and blazing-fast low-bit weight quantization method, currently supporting 4-bit quantization. Compared to GPTQ, it offers faster Transformers-based inference with equivalent or better quality compared to the most commonly used GPTQ settings.

It is supported by:

- [Text Generation Webui](https://github.com/oobabooga/text-generation-webui) - using Loader: AutoAWQ

- [vLLM](https://github.com/vllm-project/vllm) - Llama and Mistral models only

- [Hugging Face Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference)

- [Transformers](https://huggingface.co/docs/transformers) version 4.35.0 and later, from any code or client that supports Transformers

- [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) - for use from Python code

<!-- description end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/sqlcoder-34b-alpha-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/sqlcoder-34b-alpha-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/sqlcoder-34b-alpha-GGUF)

* [Defog.ai's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/defog/sqlcoder-34b-alpha)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Sqlcoder

```

## Task

Generate a SQL query to answer the following question:

`{prompt}`

### Database Schema

This query will run on a database whose schema is represented in this string:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

CREATE TABLE sales (

sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale

product_id INTEGER, -- ID of product sold

customer_id INTEGER, -- ID of customer who made purchase

salesperson_id INTEGER, -- ID of salesperson who made the sale

sale_date DATE, -- Date the sale occurred

quantity INTEGER -- Quantity of product sold

);

-- sales.product_id can be joined with products.product_id

### SQL

Given the database schema, here is the SQL query that answers `{prompt}`:

```sql

```

<!-- prompt-template end -->

<!-- licensing start -->

## Licensing

The creator of the source model has listed its license as `cc-by-4.0`, and this quantization has therefore used that same license.

As this model is based on Llama 2, it is also subject to the Meta Llama 2 license terms, and the license files for that are additionally included. It should therefore be considered as being claimed to be licensed under both licenses. I contacted Hugging Face for clarification on dual licensing but they do not yet have an official position. Should this change, or should Meta provide any feedback on this situation, I will update this section accordingly.

In the meantime, any questions regarding licensing, and in particular how these two licenses might interact, should be directed to the original model repository: [Defog.ai's SQLCoder 34B Alpha](https://huggingface.co/defog/sqlcoder-34b-alpha).

<!-- licensing end -->

<!-- README_AWQ.md-provided-files start -->

## Provided files, and AWQ parameters

I currently release 128g GEMM models only. The addition of group_size 32 models, and GEMV kernel models, is being actively considered.

Models are released as sharded safetensors files.

| Branch | Bits | GS | AWQ Dataset | Seq Len | Size |

| ------ | ---- | -- | ----------- | ------- | ---- |

| [main](https://huggingface.co/TheBloke/sqlcoder-34b-alpha-AWQ/tree/main) | 4 | 128 | [code](https://huggingface.co/datasets/nickrosh/Evol-Instruct-Code-80k-v1/viewer/) | 4096 | 18.31 GB

<!-- README_AWQ.md-provided-files end -->

<!-- README_AWQ.md-text-generation-webui start -->

## How to easily download and use this model in [text-generation-webui](https://github.com/oobabooga/text-generation-webui)

Please make sure you're using the latest version of [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

It is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.

1. Click the **Model tab**.

2. Under **Download custom model or LoRA**, enter `TheBloke/sqlcoder-34b-alpha-AWQ`.

3. Click **Download**.

4. The model will start downloading. Once it's finished it will say "Done".

5. In the top left, click the refresh icon next to **Model**.

6. In the **Model** dropdown, choose the model you just downloaded: `sqlcoder-34b-alpha-AWQ`

7. Select **Loader: AutoAWQ**.

8. Click Load, and the model will load and is now ready for use.

9. If you want any custom settings, set them and then click **Save settings for this model** followed by **Reload the Model** in the top right.

10. Once you're ready, click the **Text Generation** tab and enter a prompt to get started!

<!-- README_AWQ.md-text-generation-webui end -->

<!-- README_AWQ.md-use-from-vllm start -->

## Multi-user inference server: vLLM

Documentation on installing and using vLLM [can be found here](https://vllm.readthedocs.io/en/latest/).

- Please ensure you are using vLLM version 0.2 or later.

- When using vLLM as a server, pass the `--quantization awq` parameter.

For example:

```shell

python3 -m vllm.entrypoints.api_server --model TheBloke/sqlcoder-34b-alpha-AWQ --quantization awq --dtype auto

```

- When using vLLM from Python code, again set `quantization=awq`.

For example:

```python

from vllm import LLM, SamplingParams

prompts = [

"Tell me about AI",

"Write a story about llamas",

"What is 291 - 150?",

"How much wood would a woodchuck chuck if a woodchuck could chuck wood?",

]

prompt_template=f'''## Task

Generate a SQL query to answer the following question:

`{prompt}`

### Database Schema

This query will run on a database whose schema is represented in this string:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

CREATE TABLE sales (

sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale

product_id INTEGER, -- ID of product sold

customer_id INTEGER, -- ID of customer who made purchase

salesperson_id INTEGER, -- ID of salesperson who made the sale

sale_date DATE, -- Date the sale occurred

quantity INTEGER -- Quantity of product sold

);

-- sales.product_id can be joined with products.product_id

### SQL

Given the database schema, here is the SQL query that answers `{prompt}`:

```sql

'''

prompts = [prompt_template.format(prompt=prompt) for prompt in prompts]

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

llm = LLM(model="TheBloke/sqlcoder-34b-alpha-AWQ", quantization="awq", dtype="auto")

outputs = llm.generate(prompts, sampling_params)

# Print the outputs.

for output in outputs:

prompt = output.prompt

generated_text = output.outputs[0].text

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

```

<!-- README_AWQ.md-use-from-vllm start -->

<!-- README_AWQ.md-use-from-tgi start -->

## Multi-user inference server: Hugging Face Text Generation Inference (TGI)

Use TGI version 1.1.0 or later. The official Docker container is: `ghcr.io/huggingface/text-generation-inference:1.1.0`

Example Docker parameters:

```shell

--model-id TheBloke/sqlcoder-34b-alpha-AWQ --port 3000 --quantize awq --max-input-length 3696 --max-total-tokens 4096 --max-batch-prefill-tokens 4096

```

Example Python code for interfacing with TGI (requires [huggingface-hub](https://github.com/huggingface/huggingface_hub) 0.17.0 or later):

```shell

pip3 install huggingface-hub

```

```python

from huggingface_hub import InferenceClient

endpoint_url = "https://your-endpoint-url-here"

prompt = "Tell me about AI"

prompt_template=f'''## Task

Generate a SQL query to answer the following question:

`{prompt}`

### Database Schema

This query will run on a database whose schema is represented in this string:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

CREATE TABLE sales (

sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale

product_id INTEGER, -- ID of product sold

customer_id INTEGER, -- ID of customer who made purchase

salesperson_id INTEGER, -- ID of salesperson who made the sale

sale_date DATE, -- Date the sale occurred

quantity INTEGER -- Quantity of product sold

);

-- sales.product_id can be joined with products.product_id

### SQL

Given the database schema, here is the SQL query that answers `{prompt}`:

```sql

'''

client = InferenceClient(endpoint_url)

response = client.text_generation(prompt,

max_new_tokens=128,

do_sample=True,

temperature=0.7,

top_p=0.95,

top_k=40,

repetition_penalty=1.1)

print(f"Model output: ", response)

```

<!-- README_AWQ.md-use-from-tgi end -->

<!-- README_AWQ.md-use-from-python start -->

## Inference from Python code using Transformers

### Install the necessary packages

- Requires: [Transformers](https://huggingface.co/docs/transformers) 4.35.0 or later.

- Requires: [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) 0.1.6 or later.

```shell

pip3 install --upgrade "autoawq>=0.1.6" "transformers>=4.35.0"

```

Note that if you are using PyTorch 2.0.1, the above AutoAWQ command will automatically upgrade you to PyTorch 2.1.0.

If you are using CUDA 11.8 and wish to continue using PyTorch 2.0.1, instead run this command:

```shell

pip3 install https://github.com/casper-hansen/AutoAWQ/releases/download/v0.1.6/autoawq-0.1.6+cu118-cp310-cp310-linux_x86_64.whl

```

If you have problems installing [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) using the pre-built wheels, install it from source instead:

```shell

pip3 uninstall -y autoawq

git clone https://github.com/casper-hansen/AutoAWQ

cd AutoAWQ

pip3 install .

```

### Transformers example code (requires Transformers 4.35.0 and later)

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

model_name_or_path = "TheBloke/sqlcoder-34b-alpha-AWQ"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)

model = AutoModelForCausalLM.from_pretrained(

model_name_or_path,

low_cpu_mem_usage=True,

device_map="cuda:0"

)

# Using the text streamer to stream output one token at a time

streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

prompt = "Tell me about AI"

prompt_template=f'''## Task

Generate a SQL query to answer the following question:

`{prompt}`

### Database Schema

This query will run on a database whose schema is represented in this string:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

CREATE TABLE sales (

sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale

product_id INTEGER, -- ID of product sold

customer_id INTEGER, -- ID of customer who made purchase

salesperson_id INTEGER, -- ID of salesperson who made the sale

sale_date DATE, -- Date the sale occurred

quantity INTEGER -- Quantity of product sold

);

-- sales.product_id can be joined with products.product_id

### SQL

Given the database schema, here is the SQL query that answers `{prompt}`:

```sql

'''

# Convert prompt to tokens

tokens = tokenizer(

prompt_template,

return_tensors='pt'

).input_ids.cuda()

generation_params = {

"do_sample": True,

"temperature": 0.7,

"top_p": 0.95,

"top_k": 40,

"max_new_tokens": 512,

"repetition_penalty": 1.1

}

# Generate streamed output, visible one token at a time

generation_output = model.generate(

tokens,

streamer=streamer,

**generation_params

)

# Generation without a streamer, which will include the prompt in the output

generation_output = model.generate(

tokens,

**generation_params

)

# Get the tokens from the output, decode them, print them

token_output = generation_output[0]

text_output = tokenizer.decode(token_output)

print("model.generate output: ", text_output)

# Inference is also possible via Transformers' pipeline

from transformers import pipeline

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

**generation_params

)

pipe_output = pipe(prompt_template)[0]['generated_text']

print("pipeline output: ", pipe_output)

```

<!-- README_AWQ.md-use-from-python end -->

<!-- README_AWQ.md-compatibility start -->

## Compatibility

The files provided are tested to work with:

- [text-generation-webui](https://github.com/oobabooga/text-generation-webui) using `Loader: AutoAWQ`.

- [vLLM](https://github.com/vllm-project/vllm) version 0.2.0 and later.

- [Hugging Face Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference) version 1.1.0 and later.

- [Transformers](https://huggingface.co/docs/transformers) version 4.35.0 and later.

- [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) version 0.1.1 and later.

<!-- README_AWQ.md-compatibility end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, NimbleBox.ai, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

# Original model card: Defog.ai's SQLCoder 34B Alpha

# Defog SQLCoder

**Updated on Nov 14 to reflect benchmarks for SQLCoder-34B**

Defog's SQLCoder is a state-of-the-art LLM for converting natural language questions to SQL queries.

[Interactive Demo](https://defog.ai/sqlcoder-demo/) | [🤗 HF Repo](https://huggingface.co/defog/sqlcoder-34b-alpha) | [♾️ Colab](https://colab.research.google.com/drive/1z4rmOEiFkxkMiecAWeTUlPl0OmKgfEu7?usp=sharing) | [🐦 Twitter](https://twitter.com/defogdata)

## TL;DR

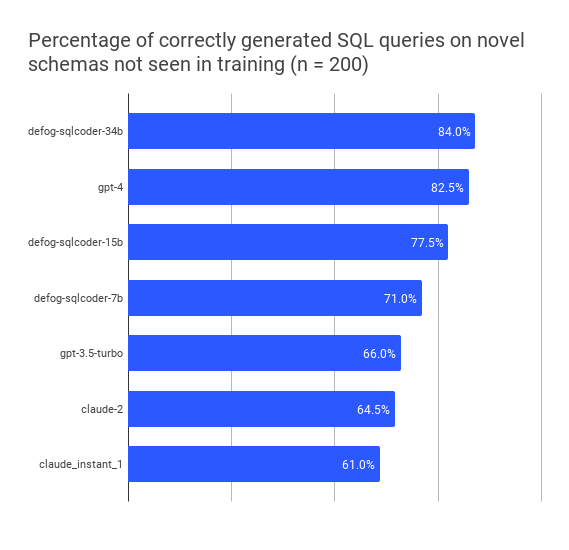

SQLCoder-34B is a 34B parameter model that outperforms `gpt-4` and `gpt-4-turbo` for natural language to SQL generation tasks on our [sql-eval](https://github.com/defog-ai/sql-eval) framework, and significantly outperforms all popular open-source models.

SQLCoder-34B is fine-tuned on a base CodeLlama model.

## Results on novel datasets not seen in training

| model | perc_correct |

|-|-|

| defog-sqlcoder-34b | 84.0 |

| gpt4-turbo-2023-11-09 | 82.5 |

| gpt4-2023-11-09 | 82.5 |

| defog-sqlcoder2 | 77.5 |

| gpt4-2023-08-28 | 74.0 |

| defog-sqlcoder-7b | 71.0 |

| gpt-3.5-2023-10-04 | 66.0 |

| claude-2 | 64.5 |

| gpt-3.5-2023-08-28 | 61.0 |

| claude_instant_1 | 61.0 |

| text-davinci-003 | 52.5 |

## License

The code in this repo (what little there is of it) is Apache-2 licensed. The model weights have a `CC BY-SA 4.0` license. The TL;DR is that you can use and modify the model for any purpose – including commercial use. However, if you modify the weights (for example, by fine-tuning), you must open-source your modified weights under the same license terms.

## Training

Defog was trained on more than 20,000 human-curated questions. These questions were based on 10 different schemas. None of the schemas in the training data were included in our evaluation framework.

You can read more about our [training approach](https://defog.ai/blog/open-sourcing-sqlcoder2-7b/) and [evaluation framework](https://defog.ai/blog/open-sourcing-sqleval/).

## Results by question category

We classified each generated question into one of 5 categories. The table displays the percentage of questions answered correctly by each model, broken down by category.

| | date | group_by | order_by | ratio | join | where |

| -------------- | ---- | -------- | -------- | ----- | ---- | ----- |

| sqlcoder-34b | 80 | 94.3 | 88.6 | 74.3 | 82.9 | 82.9 |

| gpt-4 | 68 | 94.3 | 85.7 | 77.1 | 85.7 | 80 |

| sqlcoder2-15b | 76 | 80 | 77.1 | 60 | 77.1 | 77.1 |

| sqlcoder-7b | 64 | 82.9 | 74.3 | 54.3 | 74.3 | 74.3 |

| gpt-3.5 | 68 | 77.1 | 68.6 | 37.1 | 71.4 | 74.3 |

| claude-2 | 52 | 71.4 | 74.3 | 57.1 | 65.7 | 62.9 |

| claude-instant | 48 | 71.4 | 74.3 | 45.7 | 62.9 | 60 |

| gpt-3 | 32 | 71.4 | 68.6 | 25.7 | 57.1 | 54.3 |

<img width="831" alt="image" src="https://github.com/defog-ai/sqlcoder/assets/5008293/79c5bdc8-373c-4abd-822e-e2c2569ed353">

## Using SQLCoder

You can use SQLCoder via the `transformers` library by downloading our model weights from the Hugging Face repo. We have added sample code for [inference](./inference.py) on a [sample database schema](./metadata.sql).

```bash

python inference.py -q "Question about the sample database goes here"

# Sample question:

# Do we get more revenue from customers in New York compared to customers in San Francisco? Give me the total revenue for each city, and the difference between the two.

```

You can also use a demo on our website [here](https://defog.ai/sqlcoder-demo)

## Hardware Requirements

SQLCoder-34B has been tested on a 4xA10 GPU with `float16` weights. You can also load an 8-bit and 4-bit quantized version of the model on consumer GPUs with 20GB or more of memory – like RTX 4090, RTX 3090, and Apple M2 Pro, M2 Max, or M2 Ultra Chips with 20GB or more of memory.

## Todo

- [x] Open-source the v1 model weights

- [x] Train the model on more data, with higher data variance

- [ ] Tune the model further with Reward Modelling and RLHF

- [ ] Pretrain a model from scratch that specializes in SQL analysis

|

DamarJati/face-hand-YOLOv5 | DamarJati | 2023-11-17T12:06:28Z | 0 | 4 | null | [

"tensorboard",

"yolov5",

"anime",

"Face detection",

"object-detection",

"en",

"dataset:DamarJati/face-hands-YOLOv5",

"region:us"

]

| object-detection | 2023-11-16T22:46:05Z | ---

datasets:

- DamarJati/face-hands-YOLOv5

language:

- en

tags:

- yolov5

- anime

- Face detection

pipeline_tag: object-detection

---

# YOLOv5 Model for Face and Hands Detection

## Overview

This repository contains a YOLOv5 model trained for detecting faces and hands. The model is based on the YOLOv5 architecture and has been fine-tuned on a custom dataset.

## Model Information

- **Model Name:** yolov5-face-hands

- **Framework:** PyTorch

- **Version:** 1.0.0

- **Model List** ["face", "null1", "null2", "hands"]

- **The list model used is 0 and 3** ["0", "1", "2", "3"]

|

:-------------------------------------:|:-------------------------------------:

## Usage

### Installation

```bash

pip install torch torchvision

pip install yolov5

```

### Load Model

```bash

import torch

# Load the YOLOv5 model

model = torch.hub.load('ultralytics/yolov5', 'custom', path='path/to/your/model.pt', force_reload=True)

# Set device (GPU or CPU)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

# Set model to evaluation mode

model.eval()

```

### Inference

```bash

import cv2

# Load and preprocess an image

image_path = 'path/to/your/image.jpg'

image = cv2.imread(image_path)

results = model(image)

# Display results (customize based on your needs)

results.show()

# Extract bounding box information

bboxes = results.xyxy[0].cpu().numpy()

for bbox in bboxes:

label_index = int(bbox[5])

label_mapping = ["face", "null1", "null2", "hands"]

label = label_mapping[label_index]

confidence = bbox[4]

print(f"Detected {label} with confidence {confidence:.2f}")

```

## License

This model is released under the MIT License. See LICENSE for more details.

## Citation

If you find this model useful, please consider citing the YOLOv5 repository:

```bibtex

@misc{jati2023customyolov5,

author = {Damar Jati},

title = {Custom YOLOv5 Model for Face and Hands Detection},

year = {2023},

orcid: {\url{https://orcid.org/0009-0002-0758-2712}}

publisher = {Hugging Face Model Hub},

howpublished = {\url{https://huggingface.co/DamarJati/face-hand-YOLOv5}}

}

``` |

LoneStriker/Yi-34B-Spicyboros-3.1-2-4.0bpw-h6-exl2 | LoneStriker | 2023-11-17T12:00:37Z | 10 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"dataset:unalignment/spicy-3.1",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2023-11-17T11:58:46Z | ---

license: other

license_name: yi-license

license_link: LICENSE

datasets:

- unalignment/spicy-3.1

---

# Fine-tune of Y-34B with Spicyboros-3.1

Three epochs of fine tuning with @jondurbin's SpicyBoros-3.1 dataset. 4.65bpw should fit on a single 3090/4090, 5.0bpw, 6.0bpw, and 8.0bpw will require more than one GPU 24 GB VRAM GPU.

**Please note:** you may have to turn down repetition penalty to 1.0. The model seems to get into "thesaurus" mode sometimes without this change.

# Original Yi-34B Model Card Below

<div align="center">

<h1>

Yi

</h1>

</div>

## Introduction

The **Yi** series models are large language models trained from scratch by developers at [01.AI](https://01.ai/). The first public release contains two base models with the parameter size of 6B and 34B.

## News

- 🎯 **2023/11/02**: The base model of `Yi-6B` and `Yi-34B`

## Model Performance

| Model | MMLU | CMMLU | C-Eval | GAOKAO | BBH | Commonsense Reasoning | Reading Comprehension | Math & Code |

| :------------ | :------: | :------: | :------: | :------: | :------: | :-------------------: | :-------------------: | :---------: |

| | 5-shot | 5-shot | 5-shot | 0-shot | 3-shot@1 | - | - | - |

| LLaMA2-34B | 62.6 | - | - | - | 44.1 | 69.9 | 68.0 | 26.0 |

| LLaMA2-70B | 68.9 | 53.3 | - | 49.8 | 51.2 | 71.9 | 69.4 | 36.8 |

| Baichuan2-13B | 59.2 | 62.0 | 58.1 | 54.3 | 48.8 | 64.3 | 62.4 | 23.0 |

| Qwen-14B | 66.3 | 71.0 | 72.1 | 62.5 | 53.4 | 73.3 | 72.5 | 39.8 |

| Skywork-13B | 62.1 | 61.8 | 60.6 | 68.1 | 41.7 | 72.4 | 61.4 | 24.9 |

| InternLM-20B | 62.1 | 59.0 | 58.8 | 45.5 | 52.5 | 78.3 | - | 26.0 |

| Aquila-34B | 67.8 | 71.4 | 63.1 | - | - | - | - | - |

| Falcon-180B | 70.4 | 58.0 | 57.8 | 59.0 | 54.0 | 77.3 | 68.8 | 34.0 |

| Yi-6B | 63.2 | 75.5 | 72.0 | 72.2 | 42.8 | 72.3 | 68.7 | 19.8 |

| **Yi-34B** | **76.3** | **83.7** | **81.4** | **82.8** | **54.3** | **80.1** | **76.4** | **37.1** |

While benchmarking open-source models, we have observed a disparity between the results generated by our pipeline and those reported in public sources (e.g. OpenCampus). Upon conducting a more in-depth investigation of this difference, we have discovered that various models may employ different prompts, post-processing strategies, and sampling techniques, potentially resulting in significant variations in the outcomes. Our prompt and post-processing strategy remains consistent with the original benchmark, and greedy decoding is employed during evaluation without any post-processing for the generated content. For scores that did not report by original author (including score reported with different setting), we try to get results with our pipeline.

To extensively evaluate model's capability, we adopted the methodology outlined in Llama2. Specifically, we included PIQA, SIQA, HellaSwag, WinoGrande, ARC, OBQA, and CSQA to assess common sense reasoning. SquAD, QuAC, and BoolQ were incorporated to evaluate reading comprehension. CSQA was exclusively tested using a 7-shot setup, while all other tests were conducted in a 0-shot configuration. Additionally, we introduced GSM8K (8-shot@1), MATH (4-shot@1), HumanEval (0-shot@1), and MBPP (3-shot@1) under the category "Math & Code". Due to technical constraints, we did not test Falcon-180 on QuAC and OBQA; the score is derived by averaging the scores on the remaining tasks. Since the scores for these two tasks are generally lower than the average, we believe that Falcon-180B's performance was not underestimated.

## Disclaimer

Although we use data compliance checking algorithms during the training process to ensure the compliance of the trained model to the best of our ability, due to the complexity of the data and the diversity of language model usage scenarios, we cannot guarantee that the model will generate correct and reasonable output in all scenarios. Please be aware that there is still a risk of the model producing problematic outputs. We will not be responsible for any risks and issues resulting from misuse, misguidance, illegal usage, and related misinformation, as well as any associated data security concerns.

## License

The Yi series model must be adhere to the [Model License Agreement](https://huggingface.co/01-ai/Yi-34B/blob/main/LICENSE).

For any questions related to licensing and copyright, please contact us ([[email protected]](mailto:[email protected])).

|

xiaol/RWKV-v5.2-7B-novel-completion-control-0.4-16k | xiaol | 2023-11-17T11:47:47Z | 0 | 0 | null | [

"license:apache-2.0",

"region:us"

]

| null | 2023-11-17T09:32:48Z | ---

license: apache-2.0

---

### RWKV novel style tuned with specific instructions

We hear you, we need control the direction of completion from the summary, so you can do what you want with this experimental model, welcome to let us know what you concern mostly. |

SenY/LECO | SenY | 2023-11-17T11:46:49Z | 0 | 30 | null | [

"license:other",

"region:us"

]

| null | 2023-07-22T00:26:30Z | ---

license: other

---

It is a repository for storing as many LECOs as I can think of, emphasizing quantity over quality.

Files will continue to be added as needed.

Because the guidance_scale parameter is somewhat excessive, these LECOs tend to be very sensitive and too effective; using a weight of -0.1 to -1 is appropriate in most cases.

All LECOs are trained with target eq positive, erase settings.

The target is a one of among danbooru's GENERAL tags what most frequently used in order from the top to the bottom, and sometimes I also add phrases that I have personally come up with.

``` prompts.yaml

- target: "$query"

positive: "$query"

unconditional: ""

neutral: ""

action: "erase"

guidance_scale: 1.0

resolution: 512

batch_size: 4

```

```config.yaml

prompts_file: prompts.yaml

pretrained_model:

name_or_path: "/storage/model-1892-0000-0000.safetensors"

v2: false

v_pred: false

network:

type: "lierla"

rank: 4

alpha: 1.0

training_method: "full"

train:

precision: "bfloat16"

noise_scheduler: "ddim"

iterations: 50

lr: 1

optimizer: "Prodigy"

lr_scheduler: "cosine"

max_denoising_steps: 50

save:

name: "$query"

path: "/stable-diffusion-webui/models/Lora/LECO/"

per_steps: 50

precision: "float16"

logging:

use_wandb: false

verbose: false

other:

use_xformers: true

```

|

abduldattijo/videomae-base-finetuned-ucf101-subset-V3KILLER | abduldattijo | 2023-11-17T11:44:28Z | 12 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"videomae",

"video-classification",

"generated_from_trainer",

"base_model:abduldattijo/videomae-base-finetuned-ucf101-subset",

"base_model:finetune:abduldattijo/videomae-base-finetuned-ucf101-subset",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

]

| video-classification | 2023-11-16T08:31:43Z | ---

license: cc-by-nc-4.0

base_model: abduldattijo/videomae-base-finetuned-ucf101-subset

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: videomae-base-finetuned-ucf101-subset-V3KILLER

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# videomae-base-finetuned-ucf101-subset-V3KILLER

This model is a fine-tuned version of [abduldattijo/videomae-base-finetuned-ucf101-subset](https://huggingface.co/abduldattijo/videomae-base-finetuned-ucf101-subset) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2181

- Accuracy: 0.9615

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 5960

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.3447 | 0.03 | 150 | 0.1339 | 0.9579 |

| 0.3161 | 1.03 | 300 | 0.1538 | 0.9465 |

| 0.3386 | 2.03 | 450 | 0.3260 | 0.9019 |

| 0.3572 | 3.03 | 600 | 0.1967 | 0.9311 |

| 0.3699 | 4.03 | 750 | 0.1661 | 0.9505 |

| 0.3125 | 5.03 | 900 | 0.3292 | 0.9205 |

| 0.4785 | 6.03 | 1050 | 0.2029 | 0.9324 |

| 0.3477 | 7.03 | 1200 | 0.1534 | 0.9385 |

| 0.2909 | 8.03 | 1350 | 0.1265 | 0.9571 |

| 0.2646 | 9.03 | 1500 | 0.1239 | 0.9586 |

| 0.3339 | 10.03 | 1650 | 0.1341 | 0.9628 |

| 0.0954 | 11.03 | 1800 | 0.1835 | 0.9423 |

| 0.3861 | 12.03 | 1950 | 0.2241 | 0.9467 |

| 0.248 | 13.03 | 2100 | 0.1258 | 0.9620 |

| 0.2513 | 14.03 | 2250 | 0.2217 | 0.9357 |

| 0.1133 | 15.03 | 2400 | 0.2129 | 0.9406 |

| 0.1421 | 16.03 | 2550 | 0.3006 | 0.9264 |

| 0.0248 | 17.03 | 2700 | 0.3868 | 0.9142 |

| 0.0166 | 18.03 | 2850 | 0.2594 | 0.9518 |

| 0.0874 | 19.03 | 3000 | 0.3652 | 0.9252 |

| 0.0889 | 20.03 | 3150 | 0.2249 | 0.9533 |

| 0.0804 | 21.03 | 3300 | 0.2027 | 0.9628 |

| 0.0019 | 22.03 | 3450 | 0.4682 | 0.9212 |

| 0.0405 | 23.03 | 3600 | 0.2425 | 0.9493 |

| 0.0847 | 24.03 | 3750 | 0.2456 | 0.9558 |

| 0.1656 | 25.03 | 3900 | 0.2623 | 0.9505 |

| 0.1007 | 26.03 | 4050 | 0.2389 | 0.9484 |

| 0.0616 | 27.03 | 4200 | 0.2529 | 0.9543 |

| 0.0005 | 28.03 | 4350 | 0.1521 | 0.9732 |

| 0.0006 | 29.03 | 4500 | 0.4115 | 0.9165 |

| 0.0007 | 30.03 | 4650 | 0.4279 | 0.9220 |

| 0.0004 | 31.03 | 4800 | 0.3572 | 0.9372 |

| 0.0003 | 32.03 | 4950 | 0.3314 | 0.9419 |

| 0.0002 | 33.03 | 5100 | 0.4008 | 0.9347 |

| 0.0611 | 34.03 | 5250 | 0.4632 | 0.9239 |

| 0.0003 | 35.03 | 5400 | 0.3756 | 0.9368 |

| 0.0003 | 36.03 | 5550 | 0.3745 | 0.9429 |

| 0.163 | 37.03 | 5700 | 0.3967 | 0.9383 |

| 0.0059 | 38.03 | 5850 | 0.3808 | 0.9389 |

| 0.0003 | 39.02 | 5960 | 0.3824 | 0.9395 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

kalypso42/q-FrozenLake-v1-4x4-noSlippery | kalypso42 | 2023-11-17T11:43:13Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-17T11:43:11Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="kalypso42/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

jrad98/ppo-Pyramids | jrad98 | 2023-11-17T11:30:28Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"Pyramids",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

]

| reinforcement-learning | 2023-11-17T11:30:25Z | ---

library_name: ml-agents

tags:

- Pyramids

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: jrad98/ppo-Pyramids

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

shandung/fine_tuned_modelsFinal_2 | shandung | 2023-11-17T11:19:13Z | 8 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"m2m_100",

"text2text-generation",

"generated_from_trainer",

"base_model:facebook/m2m100_418M",

"base_model:finetune:facebook/m2m100_418M",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-11-17T11:14:49Z | ---

license: mit

base_model: facebook/m2m100_418M

tags:

- generated_from_trainer

model-index:

- name: fine_tuned_modelsFinal_2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# fine_tuned_modelsFinal_2

This model is a fine-tuned version of [facebook/m2m100_418M](https://huggingface.co/facebook/m2m100_418M) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1230

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 2376

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.5433 | 18.35 | 500 | 0.1457 |

| 0.0408 | 36.7 | 1000 | 0.1265 |

| 0.0181 | 55.05 | 1500 | 0.1300 |

| 0.0103 | 73.39 | 2000 | 0.1226 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Tokenizers 0.15.0

|

crom87/sd_base-db-selfies30-1e-06-priorp | crom87 | 2023-11-17T11:15:06Z | 7 | 0 | diffusers | [

"diffusers",

"tensorboard",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"dreambooth",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:finetune:runwayml/stable-diffusion-v1-5",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-11-16T09:19:47Z |

---

license: creativeml-openrail-m

base_model: runwayml/stable-diffusion-v1-5

instance_prompt: TOKstyle person

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- dreambooth

inference: true

---

# DreamBooth - crom87/sd_base-db-selfies30-1e-06-priorp

This is a dreambooth model derived from runwayml/stable-diffusion-v1-5. The weights were trained on TOKstyle person using [DreamBooth](https://dreambooth.github.io/).

You can find some example images in the following.

DreamBooth for the text encoder was enabled: True.

|

vetertann/fb7-test | vetertann | 2023-11-17T11:04:38Z | 0 | 0 | null | [

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:ybelkada/falcon-7b-sharded-bf16",

"base_model:finetune:ybelkada/falcon-7b-sharded-bf16",

"region:us"

]

| null | 2023-11-17T10:17:20Z | ---

base_model: ybelkada/falcon-7b-sharded-bf16

tags:

- generated_from_trainer

model-index:

- name: fb7-test

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# fb7-test

This model is a fine-tuned version of [ybelkada/falcon-7b-sharded-bf16](https://huggingface.co/ybelkada/falcon-7b-sharded-bf16) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.03

- training_steps: 180

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

amazon/FalconLite | amazon | 2023-11-17T11:00:22Z | 327 | 170 | transformers | [

"transformers",

"RefinedWeb",

"text-generation",

"custom_code",

"license:apache-2.0",

"autotrain_compatible",

"region:us"

]

| text-generation | 2023-08-01T14:18:59Z | ---

license: apache-2.0

inference: false

---

# FalconLite Model

FalconLite is a quantized version of the [Falcon 40B SFT OASST-TOP1 model](https://huggingface.co/OpenAssistant/falcon-40b-sft-top1-560), capable of processing long (i.e. 11K tokens) input sequences while consuming 4x less GPU memory. By utilizing 4-bit [GPTQ quantization](https://github.com/PanQiWei/AutoGPTQ) and adapted [dynamic NTK](https://www.reddit.com/r/LocalLLaMA/comments/14mrgpr/dynamically_scaled_rope_further_increases/) RotaryEmbedding, FalconLite achieves a balance between latency, accuracy, and memory efficiency. With the ability to process 5x longer contexts than the original model, FalconLite is useful for applications such as topic retrieval, summarization, and question-answering. FalconLite can be deployed on a single AWS `g5.12x` instance with [TGI 0.9.2](https://github.com/huggingface/text-generation-inference/tree/v0.9.2), making it suitable for applications that require high performance in resource-constrained environments.

## *New!* FalconLite2 Model ##

To keep up with the updated model FalconLite2, please refer to [FalconLite2](https://huggingface.co/amazon/FalconLite2).

## Model Details

- **Developed by:** [AWS Contributors](https://github.com/orgs/aws-samples/teams/aws-prototype-ml-apac)

- **Model type:** [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

- **Language:** English

- **Quantized from weights:** [Falcon 40B SFT OASST-TOP1 model](https://huggingface.co/OpenAssistant/falcon-40b-sft-top1-560)

- **Modified from layers:** [Text-Generation-Inference 0.9.2](https://github.com/huggingface/text-generation-inference/tree/v0.9.2)

- **License:** Apache 2.0

- **Contact:** [GitHub issues](https://github.com/awslabs/extending-the-context-length-of-open-source-llms/issues)

- **Blogpost:** [Extend the context length of Falcon40B to 10k](https://medium.com/@chenwuperth/extend-the-context-length-of-falcon40b-to-10k-85d81d32146f)

## Deploy FalconLite ##

SSH login to an AWS `g5.12x` instance with the [Deep Learning AMI](https://aws.amazon.com/releasenotes/aws-deep-learning-ami-gpu-pytorch-2-0-ubuntu-20-04/).

### Start LLM server

```bash

git clone https://github.com/awslabs/extending-the-context-length-of-open-source-llms.git falconlite-dev

cd falconlite-dev/script

./docker_build.sh

./start_falconlite.sh

```

### Perform inference

```bash

# after FalconLite has been completely started

pip install -r requirements-client.txt

python falconlite_client.py

```

### *New!* Amazon SageMaker Deployment ###

To deploy FalconLite on SageMaker endpoint, please follow [this notebook](https://github.com/awslabs/extending-the-context-length-of-open-source-llms/blob/main/custom-tgi-ecr/deploy.ipynb).

**Important** - When using FalconLite for inference for the first time, it may require a brief 'warm-up' period that can take 10s of seconds. However, subsequent inferences should be faster and return results in a more timely manner. This warm-up period is normal and should not affect the overall performance of the system once the initialisation period has been completed.

## Evalution Result ##

We evaluated FalconLite against benchmarks that are specifically designed to assess the capabilities of LLMs in handling longer contexts. All evaluations were conducted without fine-tuning the model.

### Accuracy ###

|Eval task|Input length| Input length | Input length| Input length|

|----------|-------------|-------------|------------|-----------|

| | 2800 ~ 3800| 5500 ~ 5600 |7500 ~ 8300 | 9300 ~ 11000 |

| [Topic Retrieval](https://lmsys.org/blog/2023-06-29-longchat/) | 100% | 100% | 92% | 92% |

| [Line Retrieval](https://lmsys.org/blog/2023-06-29-longchat/#longeval-results) | 38% | 12% | 8% | 4% |

| [Pass key Retrieval](https://github.com/epfml/landmark-attention/blob/main/llama/run_test.py#L101) | 100% | 100% | 100% | 100% |

|Eval task| Test set Accuracy | Hard subset Accuracy|

|----------|-------------|-------------|

| [Question Answering with Long Input Texts](https://nyu-mll.github.io/quality/) | 46.9% | 40.8% |

### Performance ###

**metrics** = the average number of generated tokens per second (TPS) =

`nb-generated-tokens` / `end-to-end-response-time`

The `end-to-end-response-time` = when the last token is generated - when the inference request is received

|Instance| Input length | Input length| Input length|Input length|

|----------|-------------|-------------|------------|------------|

| | 20 | 3300 | 5500 |10000 |

| g5.48x | 22 tps | 12 tps | 12 tps | 12 tps |

| g5.12x | 18 tps | 11 tps | 11 tps | 10 tps |

## Limitations ##

* Our evaluation shows that FalconLite's capability in `Line Retrieval` is limited, and requires further effort.

* While `g5.12x` is sufficient for FalconLite to handle 10K long contexts, a larger instance with more memory capcacity such as `g5.48x` is recommended for sustained, heavy workloads.

* Before using the FalconLite model, it is important to perform your own independent assessment, and take measures to ensure that your use would comply with your own specific quality control practices and standards, and that your use would comply with the local rules, laws, regulations, licenses and terms that apply to you, and your content. |

CADM97/Reinforce2 | CADM97 | 2023-11-17T10:59:17Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-17T10:59:11Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce2

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 43.00 +/- 31.52

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

HamdanXI/bert-paradetox-1Token-split-masked | HamdanXI | 2023-11-17T10:58:07Z | 6 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"fill-mask",

"generated_from_trainer",

"base_model:google-bert/bert-base-uncased",

"base_model:finetune:google-bert/bert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-11-17T10:01:23Z | ---

license: apache-2.0

base_model: bert-base-uncased

tags:

- generated_from_trainer

model-index:

- name: bert-paradetox-1Token-split-masked

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-paradetox-1Token-split-masked

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0005

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 237 | 0.0014 |

| No log | 2.0 | 474 | 0.0006 |

| 0.3349 | 3.0 | 711 | 0.0005 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

Gracoy/swin-tiny-patch4-window7-224-Kaggle_test_20231117 | Gracoy | 2023-11-17T10:47:44Z | 7 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"swin",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:microsoft/swin-tiny-patch4-window7-224",

"base_model:finetune:microsoft/swin-tiny-patch4-window7-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2023-11-17T09:53:36Z | ---

license: apache-2.0

base_model: microsoft/swin-tiny-patch4-window7-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-tiny-patch4-window7-224-Kaggle_test_20231117

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: validation

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9336188436830836

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-Kaggle_test_20231117

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2057

- Accuracy: 0.9336

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.2786 | 0.99 | 22 | 0.2184 | 0.9368 |

| 0.1598 | 1.98 | 44 | 0.1826 | 0.9347 |

| 0.1352 | 2.97 | 66 | 0.2057 | 0.9336 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

alfredowh/poca-SoccerTwos | alfredowh | 2023-11-17T10:38:05Z | 33 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"SoccerTwos",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-SoccerTwos",

"region:us"

]

| reinforcement-learning | 2023-11-17T10:34:24Z | ---

library_name: ml-agents

tags:

- SoccerTwos

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SoccerTwos

---

# **poca** Agent playing **SoccerTwos**

This is a trained model of a **poca** agent playing **SoccerTwos**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: alfredo-wh/poca-SoccerTwos

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

under-tree/transformer-en-ru | under-tree | 2023-11-17T10:26:12Z | 12 | 0 | transformers | [

"transformers",

"pytorch",

"marian",

"text2text-generation",

"generated_from_trainer",

"base_model:Helsinki-NLP/opus-mt-en-ru",

"base_model:finetune:Helsinki-NLP/opus-mt-en-ru",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-11-13T15:09:42Z | ---

license: apache-2.0

base_model: Helsinki-NLP/opus-mt-en-ru

tags:

- generated_from_trainer

model-index:

- name: transformer-en-ru

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# transformer-en-ru

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-ru](https://huggingface.co/Helsinki-NLP/opus-mt-en-ru) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Framework versions

- Transformers 4.35.0.dev0

- Pytorch 2.1.0a0+32f93b1

- Datasets 2.14.5

- Tokenizers 0.14.1

|

Jukaboo/Llama2_7B_chat_meetingBank_ft_adapters_EOS_3 | Jukaboo | 2023-11-17T10:23:02Z | 0 | 0 | null | [

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"base_model:finetune:meta-llama/Llama-2-7b-chat-hf",

"region:us"

]

| null | 2023-11-17T09:09:18Z | ---

base_model: meta-llama/Llama-2-7b-chat-hf

tags:

- generated_from_trainer

model-index:

- name: Llama2_7B_chat_meetingBank_ft_adapters_EOS_3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Llama2_7B_chat_meetingBank_ft_adapters_EOS_3

This model is a fine-tuned version of [meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8142

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.05

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.2757 | 0.2 | 65 | 1.9499 |

| 1.8931 | 0.4 | 130 | 1.8631 |

| 1.6246 | 0.6 | 195 | 1.8294 |

| 2.2049 | 0.8 | 260 | 1.8142 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

logichacker/my_awesome_swag_model | logichacker | 2023-11-17T10:13:26Z | 7 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"bert",

"multiple-choice",

"generated_from_trainer",

"dataset:swag",

"base_model:google-bert/bert-base-uncased",

"base_model:finetune:google-bert/bert-base-uncased",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| multiple-choice | 2023-11-17T09:25:28Z | ---

license: apache-2.0

base_model: bert-base-uncased

tags:

- generated_from_trainer

datasets:

- swag

metrics:

- accuracy

model-index:

- name: my_awesome_swag_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_swag_model

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the swag dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0449

- Accuracy: 0.7878

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.7784 | 1.0 | 4597 | 0.5916 | 0.7655 |

| 0.3817 | 2.0 | 9194 | 0.6262 | 0.7813 |

| 0.1508 | 3.0 | 13791 | 1.0449 | 0.7878 |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

Weyaxi/Nebula-7B-checkpoints | Weyaxi | 2023-11-17T09:52:02Z | 0 | 0 | peft | [

"peft",

"tensorboard",

"en",

"dataset:garage-bAInd/Open-Platypus",

"license:apache-2.0",

"region:us"

]

| null | 2023-10-03T19:12:34Z | ---

license: apache-2.0

datasets:

- garage-bAInd/Open-Platypus

language:

- en

tags:

- peft

---

<a href="https://www.buymeacoffee.com/PulsarAI" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/v2/default-yellow.png" alt="Buy Me A Coffee" style="height: 60px !important;width: 217px !important;" ></a>

# Nebula-7b-Checkpoints

Checkpoints of Nebula-7B. Finetuned from [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1).

## Lora Weights

You can access lora weights from here:

[PulsarAI/Nebula-7B-Lora](https://huggingface.co/PulsarAI/Nebula-7B-Lora)

## Original Weights

You can access original weights from here:

[PulsarAI/Nebula-7B](https://huggingface.co/PulsarAI/Nebula-7B) |