| modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-07-15 00:43:56) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 521 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-07-15 00:40:56) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

lmqg/mt5-small-zhquad-qag | lmqg | 2023-11-10T16:21:15Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"mt5",

"text2text-generation",

"questions and answers generation",

"zh",

"dataset:lmqg/qag_zhquad",

"arxiv:2210.03992",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-11-10T15:53:12Z |

---

license: cc-by-4.0

metrics:

- bleu4

- meteor

- rouge-l

- bertscore

- moverscore

language: zh

datasets:

- lmqg/qag_zhquad

pipeline_tag: text2text-generation

tags:

- questions and answers generation

widget:

- text: "南安普敦的警察服务由汉普郡警察提供。南安普敦行动的主要基地是一座新的八层专用建筑,造价3000万英镑。该建筑位于南路,2011年启用,靠近 南安普敦中央 火车站。此前,南安普顿市中心的行动位于市民中心西翼,但由于设施老化,加上计划在旧警察局和地方法院建造一座新博物馆,因此必须搬迁。在Portswood、Banister Park、Hille和Shirley还有其他警察局,在南安普顿中央火车站还有一个英国交通警察局。"

example_title: "Questions & Answers Generation Example 1"

model-index:

- name: lmqg/mt5-small-zhquad-qag

results:

- task:

name: Text2text Generation

type: text2text-generation

dataset:

name: lmqg/qag_zhquad

type: default

args: default

metrics:

- name: QAAlignedF1Score-BERTScore (Question & Answer Generation)

type: qa_aligned_f1_score_bertscore_question_answer_generation

value: 75.47

- name: QAAlignedRecall-BERTScore (Question & Answer Generation)

type: qa_aligned_recall_bertscore_question_answer_generation

value: 75.41

- name: QAAlignedPrecision-BERTScore (Question & Answer Generation)

type: qa_aligned_precision_bertscore_question_answer_generation

value: 75.56

- name: QAAlignedF1Score-MoverScore (Question & Answer Generation)

type: qa_aligned_f1_score_moverscore_question_answer_generation

value: 52.42

- name: QAAlignedRecall-MoverScore (Question & Answer Generation)

type: qa_aligned_recall_moverscore_question_answer_generation

value: 52.33

- name: QAAlignedPrecision-MoverScore (Question & Answer Generation)

type: qa_aligned_precision_moverscore_question_answer_generation

value: 52.53

---

# Model Card of `lmqg/mt5-small-zhquad-qag`

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) for the question & answer pair generation task on the [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) dataset (dataset_name: default), trained via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [google/mt5-small](https://huggingface.co/google/mt5-small)

- **Language:** zh

- **Training data:** [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="zh", model="lmqg/mt5-small-zhquad-qag")

# model prediction

question_answer_pairs = model.generate_qa("南安普敦的警察服务由汉普郡警察提供。南安普敦行动的主要基地是一座新的八层专用建筑,造价3000万英镑。该建筑位于南路,2011年启用,靠近南安普敦中央火车站。此前,南安普顿市中心的行动位于市民中心西翼,但由于设施老化,加上计划在旧警察局和地方法院建造一座新博物馆,因此必须搬迁。在Portswood、Banister Park、Hille和Shirley还有其他警察局,在南安普顿中央火车站还有一个英国交通警察局。")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/mt5-small-zhquad-qag")

output = pipe("南安普敦的警察服务由汉普郡警察提供。南安普敦行动的主要基地是一座新的八层专用建筑,造价3000万英镑。该建筑位于南路,2011年启用,靠近 南安普敦中央 火车站。此前,南安普顿市中心的行动位于市民中心西翼,但由于设施老化,加上计划在旧警察局和地方法院建造一座新博物馆,因此必须搬迁。在Portswood、Banister Park、Hille和Shirley还有其他警察局,在南安普顿中央火车站还有一个英国交通警察局。")

```
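The `transformers` pipeline returns all question-answer pairs as a single generated string. The sketch below splits that string into `(question, answer)` tuples, assuming the pairs follow the `question: ..., answer: ...` pattern and are joined by ` | ` (the format `lmqg` is believed to parse; check it against real model output). `parse_qa_pairs` is a hypothetical helper, not part of `lmqg` or `transformers`:

```python
from typing import List, Tuple


def parse_qa_pairs(generated: str) -> List[Tuple[str, str]]:
    """Split a raw QAG string into (question, answer) pairs.

    Assumed format (hypothetical, verify against real output):
    "question: <q1>, answer: <a1> | question: <q2>, answer: <a2>"
    """
    pairs = []
    for chunk in generated.split(" | "):
        chunk = chunk.strip()
        # Skip fragments that do not match the assumed pattern instead of raising
        if not chunk.startswith("question: ") or ", answer: " not in chunk:
            continue
        question, answer = chunk[len("question: "):].split(", answer: ", 1)
        pairs.append((question.strip(), answer.strip()))
    return pairs
```

With the pipeline example above, the input would be `output[0]["generated_text"]`.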

## Evaluation

- ***Metric (Question & Answer Generation)***: [raw metric file](https://huggingface.co/lmqg/mt5-small-zhquad-qag/raw/main/eval/metric.first.answer.paragraph.questions_answers.lmqg_qag_zhquad.default.json)

| | Score | Type | Dataset |

|:--------------------------------|--------:|:--------|:-------------------------------------------------------------------|

| QAAlignedF1Score (BERTScore) | 75.47 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |

| QAAlignedF1Score (MoverScore) | 52.42 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |

| QAAlignedPrecision (BERTScore) | 75.56 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |

| QAAlignedPrecision (MoverScore) | 52.53 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |

| QAAlignedRecall (BERTScore) | 75.41 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |

| QAAlignedRecall (MoverScore) | 52.33 | default | [lmqg/qag_zhquad](https://huggingface.co/datasets/lmqg/qag_zhquad) |
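The QA-aligned scores compare generated and reference question-answer pairs by best match. A minimal sketch of the assumed definition: precision averages, over generated pairs, the best similarity to any reference pair; recall does the reverse; F1 is their harmonic mean. The `similarity` argument stands in for BERTScore or MoverScore, and serializing each pair as one string is an assumption:

```python
from typing import Callable, Dict, Sequence


def qa_aligned_scores(predictions: Sequence[str], references: Sequence[str],
                      similarity: Callable[[str, str], float]) -> Dict[str, float]:
    """Best-match precision, recall and F1 between two sets of QA pairs for one paragraph.

    Each QA pair is assumed to be serialized as a single string
    (e.g. "question: ..., answer: ...") before scoring.
    """
    # Precision: how well each generated pair is covered by some reference pair
    precision = sum(max(similarity(p, r) for r in references) for p in predictions) / len(predictions)
    # Recall: how well each reference pair is covered by some generated pair
    recall = sum(max(similarity(r, p) for p in predictions) for r in references) / len(references)
    f1 = 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}
```

If the reported numbers are per-paragraph scores averaged over the test set (an assumption), the corpus-level F1 need not equal the harmonic mean of the corpus-level precision and recall, which would be consistent with the small gaps in the table above.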

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qag_zhquad

- dataset_name: default

- input_types: ['paragraph']

- output_types: ['questions_answers']

- prefix_types: None

- model: google/mt5-small

- max_length: 512

- max_length_output: 256

- epoch: 12

- batch: 8

- lr: 0.001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 8

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/lmqg/mt5-small-zhquad-qag/raw/main/trainer_config.json).
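With gradient accumulation, each optimizer update sees `batch × gradient_accumulation_steps` examples. Assuming single-device training (the card does not state the number of devices), the effective batch size is:

```latex
B_{\text{eff}} = \text{batch} \times \text{gradient\_accumulation\_steps} = 8 \times 8 = 64
```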

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

panverz/translate | panverz | 2023-11-10T16:12:45Z | 0 | 0 | null | [

"region:us"

]

| null | 2023-11-10T16:11:42Z | from googletrans import LANGUAGES, Translator
import deepl

# Initialize Google Translate (unofficial googletrans client; assumes a synchronous release such as 4.0.0rc1)
translator = Translator()

# Initialize DeepL with the official deepl-python client
deepl_api_key = 'YOUR_DEEPL_API_KEY'
deepl_translator = deepl.Translator(deepl_api_key)

# Text to translate
text = 'Hello, how are you?'

# Translate using Google Translate into every language googletrans knows
google_translations = {}
for lang_code, lang_name in LANGUAGES.items():
    translation = translator.translate(text, dest=lang_code).text
    google_translations[lang_name] = translation
print('Google Translate:', google_translations)

# Translate using DeepL: DeepL supports a smaller set of target languages with its own
# codes (e.g. "EN-US", "PT-BR"), so iterate over DeepL's list instead of googletrans.LANGUAGES
deepl_translations = {}
for language in deepl_translator.get_target_languages():
    result = deepl_translator.translate_text(text, target_lang=language.code)
    deepl_translations[language.name] = result.text
print('DeepL:', deepl_translations) |

matekadlicsko/opus-mt-tc-big-hu-en-finetuned-telex-news | matekadlicsko | 2023-11-10T16:12:36Z | 5 | 1 | transformers | [

"transformers",

"safetensors",

"marian",

"text2text-generation",

"translation",

"hu",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| translation | 2023-11-10T15:26:58Z | ---

license: apache-2.0

language:

- hu

- en

pipeline_tag: translation

---

## Hungarian to English Translation Model (Fine-Tuned)

**Language Pair:**

- Source Language: Hungarian

- Target Language: English

**Model Description:**

This model is a fine-tuned version of [opus-mt-tc-big-hu-en](https://huggingface.co/Helsinki-NLP/opus-mt-tc-big-hu-en) for Hungarian to English translation. It was fine-tuned on articles from [telex](https://telex.hu/), a Hungarian news site, to enhance the model's performance.

**License:**

The model is released under the [Apache License 2.0](https://www.apache.org/licenses/LICENSE-2.0), which is a permissive open-source license. It allows users to use, modify, and distribute the software for any purpose, with additional provisions related to patents.

**Note:**

Users are encouraged to review and comply with the terms of the Apache License 2.0 when using or contributing to this model. Additionally, citation and attribution to the original models and data sources are appreciated.

---

|

Nunofofo/rr | Nunofofo | 2023-11-10T16:08:50Z | 0 | 0 | null | [

"license:openrail",

"region:us"

]

| null | 2023-11-10T16:07:57Z | ---

license: openrail

license_name: open

license_link: LICENSE

---

|

Danielbrdz/CodeBarcenas-1b | Danielbrdz | 2023-11-10T16:07:18Z | 1,500 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_bigcode",

"text-generation",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2023-11-10T15:42:39Z | ---

license: llama2

---

CodeBarcenas

A model specialized in the Python language.

Based on the model: WizardLM/WizardCoder-1B-V1.0

Trained on the dataset: mlabonne/Evol-Instruct-Python-1k

Made with ❤️ in Guadalupe, Nuevo Leon, Mexico 🇲🇽 |

skroed/musicgen-medium | skroed | 2023-11-10T15:57:22Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"musicgen",

"text-to-audio",

"arxiv:2306.05284",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

]

| text-to-audio | 2023-10-28T18:48:45Z | ---

inference: true

tags:

- musicgen

license: cc-by-nc-4.0

pipeline_tag: text-to-audio

widget:

- text: a funky house with 80s hip hop vibes

example_title: Prompt 1

- text: a chill song with influences from lofi, chillstep and downtempo

example_title: Prompt 2

- text: a catchy beat for a podcast intro

example_title: Prompt 3

---

# MusicGen - Medium - 1.5B

MusicGen is a text-to-music model capable of generating high-quality music samples conditioned on text descriptions or audio prompts.

It is a single stage auto-regressive Transformer model trained over a 32kHz EnCodec tokenizer with 4 codebooks sampled at 50 Hz.

Unlike existing methods, like MusicLM, MusicGen doesn't require a self-supervised semantic representation, and it generates all 4 codebooks in one pass.

By introducing a small delay between the codebooks, we show we can predict them in parallel, thus having only 50 auto-regressive steps per second of audio.
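The delay trick can be illustrated on a toy token grid: codebook `k` is shifted right by `k` steps, so at each autoregressive step the model emits one token per codebook in parallel, at the cost of `K - 1` extra steps. A minimal NumPy sketch under that reading of the paper; `pad_id`, the helper names, and the frame-count helpers (which ignore the extra delay steps) are illustrative assumptions, not MusicGen's actual code:

```python
import numpy as np

FRAME_RATE_HZ = 50  # EnCodec frame rate stated in this card


def apply_delay_pattern(codes: np.ndarray, pad_id: int = -1) -> np.ndarray:
    """Shift codebook k right by k steps.

    codes: integer array of shape (K, T), K codebooks by T frames.
    Returns shape (K, T + K - 1); slots with no token are filled with pad_id.
    """
    num_codebooks, num_frames = codes.shape
    delayed = np.full((num_codebooks, num_frames + num_codebooks - 1), pad_id, dtype=codes.dtype)
    for k in range(num_codebooks):
        delayed[k, k:k + num_frames] = codes[k]
    return delayed


def seconds_for_tokens(max_new_tokens: int, frame_rate: int = FRAME_RATE_HZ) -> float:
    """Approximate audio length produced by a number of generation steps."""
    return max_new_tokens / frame_rate


def frames_for_seconds(duration_s: float, frame_rate: int = FRAME_RATE_HZ) -> int:
    """Approximate number of generation steps needed for a target duration."""
    return int(round(duration_s * frame_rate))
```

Under these assumptions, `max_new_tokens=256` in the Transformers example corresponds to roughly 5.12 seconds, and `duration=8` in the Audiocraft example to about 400 steps.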

MusicGen was published in [Simple and Controllable Music Generation](https://arxiv.org/abs/2306.05284) by *Jade Copet, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi, Alexandre Défossez*.

Four checkpoints are released:

- [small](https://huggingface.co/facebook/musicgen-small)

- [**medium** (this checkpoint)](https://huggingface.co/facebook/musicgen-medium)

- [large](https://huggingface.co/facebook/musicgen-large)

- [melody](https://huggingface.co/facebook/musicgen-melody)

## Example

Try out MusicGen yourself!

* Audiocraft Colab:

<a target="_blank" href="https://colab.research.google.com/drive/1fxGqfg96RBUvGxZ1XXN07s3DthrKUl4-?usp=sharing">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

* Hugging Face Colab:

<a target="_blank" href="https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/MusicGen.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

* Hugging Face Demo:

<a target="_blank" href="https://huggingface.co/spaces/facebook/MusicGen">

<img src="https://huggingface.co/datasets/huggingface/badges/raw/main/open-in-hf-spaces-sm.svg" alt="Open in HuggingFace"/>

</a>

## 🤗 Transformers Usage

You can run MusicGen locally with the 🤗 Transformers library from version 4.31.0 onwards.

1. First install the 🤗 [Transformers library](https://github.com/huggingface/transformers) and scipy:

```

pip install --upgrade pip

pip install --upgrade transformers scipy

```

2. Run inference via the `Text-to-Audio` (TTA) pipeline. You can run MusicGen through the TTA pipeline in just a few lines of code!

```python

from transformers import pipeline

import scipy.io.wavfile

synthesiser = pipeline("text-to-audio", "facebook/musicgen-medium")

music = synthesiser("lo-fi music with a soothing melody", forward_params={"do_sample": True})

# Write the waveform returned by the pipeline (the original snippet referenced an undefined `audio`)
scipy.io.wavfile.write("musicgen_out.wav", rate=music["sampling_rate"], data=music["audio"])

```

3. Run inference via the Transformers modelling code. You can use the processor + generate code to convert text into a mono 32 kHz audio waveform for more fine-grained control.

```python

from transformers import AutoProcessor, MusicgenForConditionalGeneration

processor = AutoProcessor.from_pretrained("facebook/musicgen-medium")

model = MusicgenForConditionalGeneration.from_pretrained("facebook/musicgen-medium")

inputs = processor(

text=["80s pop track with bassy drums and synth", "90s rock song with loud guitars and heavy drums"],

padding=True,

return_tensors="pt",

)

audio_values = model.generate(**inputs, max_new_tokens=256)

```

4. Listen to the audio samples either in an ipynb notebook:

```python

from IPython.display import Audio

sampling_rate = model.config.audio_encoder.sampling_rate

Audio(audio_values[0].numpy(), rate=sampling_rate)

```

Or save them as a `.wav` file using a third-party library, e.g. `scipy`:

```python

import scipy.io.wavfile

sampling_rate = model.config.audio_encoder.sampling_rate

scipy.io.wavfile.write("musicgen_out.wav", rate=sampling_rate, data=audio_values[0, 0].numpy())

```

For more details on using the MusicGen model for inference using the 🤗 Transformers library, refer to the [MusicGen docs](https://huggingface.co/docs/transformers/model_doc/musicgen).

## Audiocraft Usage

You can also run MusicGen locally through the original [Audiocraft library](https://github.com/facebookresearch/audiocraft):

1. First install the [`audiocraft` library](https://github.com/facebookresearch/audiocraft)

```

pip install git+https://github.com/facebookresearch/audiocraft.git

```

2. Make sure to have [`ffmpeg`](https://ffmpeg.org/download.html) installed:

```

apt-get install ffmpeg

```

3. Run the following Python code:

```py

from audiocraft.models import MusicGen

from audiocraft.data.audio import audio_write

model = MusicGen.get_pretrained("medium")

model.set_generation_params(duration=8) # generate 8 seconds.

descriptions = ["happy rock", "energetic EDM"]

wav = model.generate(descriptions) # generates 2 samples.

for idx, one_wav in enumerate(wav):
    # Will save under {idx}.wav, with loudness normalization at -14 dB LUFS.
    audio_write(f'{idx}', one_wav.cpu(), model.sample_rate, strategy="loudness")

```

## Model details

**Organization developing the model:** The FAIR team of Meta AI.

**Model date:** MusicGen was trained between April 2023 and May 2023.

**Model version:** This is the version 1 of the model.

**Model type:** MusicGen consists of an EnCodec model for audio tokenization and an auto-regressive language model based on the transformer architecture for music modeling. The model comes in different sizes (300M, 1.5B and 3.3B parameters) and two variants: a model trained for the text-to-music generation task and a model trained for melody-guided music generation.

**Paper or resources for more information:** More information can be found in the paper [Simple and Controllable Music Generation](https://arxiv.org/abs/2306.05284).

**Citation details:**

```

@misc{copet2023simple,

title={Simple and Controllable Music Generation},

author={Jade Copet and Felix Kreuk and Itai Gat and Tal Remez and David Kant and Gabriel Synnaeve and Yossi Adi and Alexandre Défossez},

year={2023},

eprint={2306.05284},

archivePrefix={arXiv},

primaryClass={cs.SD}

}

```

**License:** Code is released under MIT, model weights are released under CC-BY-NC 4.0.

**Where to send questions or comments about the model:** Questions and comments about MusicGen can be sent via the [Github repository](https://github.com/facebookresearch/audiocraft) of the project, or by opening an issue.

## Intended use

**Primary intended use:** The primary use of MusicGen is research on AI-based music generation, including:

- Research efforts, such as probing and better understanding the limitations of generative models to further improve the state of science

- Generation of music guided by text or melody to understand current abilities of generative AI models by machine learning amateurs

**Primary intended users:** The primary intended users of the model are researchers in audio, machine learning and artificial intelligence, as well as amateurs seeking to better understand those models.

**Out-of-scope use cases:** The model should not be used on downstream applications without further risk evaluation and mitigation. The model should not be used to intentionally create or disseminate music pieces that create hostile or alienating environments for people. This includes generating music that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

## Metrics

**Model performance measures:** We used the following objective measures to evaluate the model on a standard music benchmark:

- Frechet Audio Distance computed on features extracted from a pre-trained audio classifier (VGGish)

- Kullback-Leibler Divergence on label distributions extracted from a pre-trained audio classifier (PaSST)

- CLAP Score between audio embedding and text embedding extracted from a pre-trained CLAP model
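Once the classifier outputs and embeddings are available, the KL and CLAP measures reduce to short formulas. A minimal NumPy sketch, assuming the PaSST label distributions and CLAP embeddings have already been extracted (the models themselves are not included), and assuming the KL direction is reference distribution `p` against generated distribution `q`:

```python
import numpy as np


def kl_divergence(p, q, eps: float = 1e-12) -> float:
    """KL(p || q) = sum_i p_i * log(p_i / q_i) for two discrete label distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p, q = p / p.sum(), q / q.sum()  # normalise in case inputs are unnormalised scores
    mask = p > 0  # terms with p_i = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / np.maximum(q[mask], eps))))


def cosine_similarity(a, b) -> float:
    """Cosine similarity between two embedding vectors (e.g. CLAP audio vs. text)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
```

Lower KL means the generated audio's label distribution is closer to the reference; higher cosine similarity means the audio embedding is closer to the text embedding.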

Additionally, we ran qualitative studies with human participants, evaluating the performance of the model along the following axes:

- Overall quality of the music samples;

- Text relevance to the provided text input;

- Adherence to the melody for melody-guided music generation.

More details on performance measures and human studies can be found in the paper.

**Decision thresholds:** Not applicable.

## Evaluation datasets

The model was evaluated on the [MusicCaps benchmark](https://www.kaggle.com/datasets/googleai/musiccaps) and on an in-domain held-out evaluation set, with no artist overlap with the training set.

## Training datasets

The model was trained on licensed data using the following sources: the [Meta Music Initiative Sound Collection](https://www.fb.com/sound), [Shutterstock music collection](https://www.shutterstock.com/music) and the [Pond5 music collection](https://www.pond5.com/). See the paper for more details about the training set and corresponding preprocessing.

## Evaluation results

Below are the objective metrics obtained on MusicCaps with the released model. Note that for the publicly released models, we had all the datasets go through a state-of-the-art music source separation method, namely using the open source [Hybrid Transformer for Music Source Separation](https://github.com/facebookresearch/demucs) (HT-Demucs), in order to keep only the instrumental part. This explains the difference in objective metrics with the models used in the paper.

| Model | Frechet Audio Distance | KLD | Text Consistency | Chroma Cosine Similarity |

|---|---|---|---|---|

| facebook/musicgen-small | 4.88 | 1.42 | 0.27 | - |

| **facebook/musicgen-medium** | 5.14 | 1.38 | 0.28 | - |

| facebook/musicgen-large | 5.48 | 1.37 | 0.28 | - |

| facebook/musicgen-melody | 4.93 | 1.41 | 0.27 | 0.44 |

More information can be found in the paper [Simple and Controllable Music Generation](https://arxiv.org/abs/2306.05284), in the Results section.

## Limitations and biases

**Data:** The data sources used to train the model are created by music professionals and covered by legal agreements with the right holders. The model is trained on 20K hours of data; we believe that scaling the model on larger datasets can further improve its performance.

**Mitigations:** Vocals have been removed from the data source using corresponding tags, and then using a state-of-the-art music source separation method, namely using the open source [Hybrid Transformer for Music Source Separation](https://github.com/facebookresearch/demucs) (HT-Demucs).

**Limitations:**

- The model is not able to generate realistic vocals.

- The model has been trained with English descriptions and will not perform as well in other languages.

- The model does not perform equally well for all music styles and cultures.

- The model sometimes generates the end of a song, collapsing to silence.

- It is sometimes difficult to assess what types of text descriptions provide the best generations. Prompt engineering may be required to obtain satisfying results.

**Biases:** The source of data is potentially lacking diversity and all music cultures are not equally represented in the dataset. The model may not perform equally well on the wide variety of music genres that exist. The generated samples from the model will reflect the biases from the training data. Further work on this model should include methods for balanced and just representations of cultures, for example, by scaling the training data to be both diverse and inclusive.

**Risks and harms:** Biases and limitations of the model may lead to generation of samples that may be considered as biased, inappropriate or offensive. We believe that providing the code to reproduce the research and train new models will help broaden the application to new and more representative data.

**Use cases:** Users must be aware of the biases, limitations and risks of the model. MusicGen is a model developed for artificial intelligence research on controllable music generation. As such, it should not be used for downstream applications without further investigation and mitigation of risks. |

Nostradamused/Taxi-v3 | Nostradamused | 2023-11-10T15:55:08Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-10T15:55:06Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.52 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

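import pickle

import gymnasium as gym  # or `import gym` on older setups
from huggingface_hub import hf_hub_download

# `load_from_hub` is a helper from the Hugging Face Deep RL course, not a library function;
# download the pickled model dictionary and load it directly instead
path = hf_hub_download(repo_id="Nostradamused/Taxi-v3", filename="q-learning.pkl")
with open(path, "rb") as f:
    model = pickle.load(f)

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])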

```
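The `mean_reward` above is reported as mean ± standard deviation over evaluation episodes. A minimal sketch of how such a figure can be computed from a Q-table with a greedy policy, assuming the Gymnasium `reset()`/`step()` API with a five-value `step` return; the helper names are hypothetical:

```python
import numpy as np


def greedy_action(qtable: np.ndarray, state: int) -> int:
    """Return the action with the highest Q-value in `state` (ties go to the lowest index)."""
    return int(np.argmax(qtable[state]))


def summarize_returns(episode_returns) -> tuple:
    """Mean and (population) standard deviation of per-episode returns."""
    returns = np.asarray(episode_returns, dtype=float)
    return float(returns.mean()), float(returns.std())


def evaluate_greedy(env, qtable: np.ndarray, n_episodes: int, max_steps: int) -> tuple:
    """Run the greedy policy for n_episodes and summarise the undiscounted returns.

    Assumes reset() -> (obs, info) and step(a) -> (obs, reward, terminated, truncated, info).
    """
    returns = []
    for _ in range(n_episodes):
        state, _info = env.reset()
        total = 0.0
        for _ in range(max_steps):
            state, reward, terminated, truncated, _info = env.step(greedy_action(qtable, state))
            total += reward
            if terminated or truncated:
                break
        returns.append(total)
    return summarize_returns(returns)
```

The key holding the Q-table inside the loaded dictionary (for example `model["qtable"]`) and the episode and step counts are assumptions; inspect `model.keys()` before calling `evaluate_greedy(env, ...)`.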

|

GraydientPlatformAPI/gasm-z | GraydientPlatformAPI | 2023-11-10T15:51:21Z | 3 | 1 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"license:openrail",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-11-10T15:46:07Z | ---

license: openrail

library_name: diffusers

pipeline_tag: text-to-image

--- |

PsiPi/lmsys_vicuna-13b-v1.5-16k-exl2-3.0bpw | PsiPi | 2023-11-10T15:39:56Z | 6 | 1 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"arxiv:2307.09288",

"arxiv:2306.05685",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-11-10T14:43:26Z | ---

inference: false

license: llama2

---

# Vicuna Model Card

## Model Details

Vicuna is a chat assistant trained by fine-tuning Llama 2 on user-shared conversations collected from ShareGPT.

- **Developed by:** [LMSYS](https://lmsys.org/)

- **Model type:** An auto-regressive language model based on the transformer architecture

- **License:** Llama 2 Community License Agreement

- **Finetuned from model:** [Llama 2](https://arxiv.org/abs/2307.09288)

### Model Sources

- **Repository:** https://github.com/lm-sys/FastChat

- **Blog:** https://lmsys.org/blog/2023-03-30-vicuna/

- **Paper:** https://arxiv.org/abs/2306.05685

- **Demo:** https://chat.lmsys.org/

## Uses

The primary use of Vicuna is research on large language models and chatbots.

The primary intended users of the model are researchers and hobbyists in natural language processing, machine learning, and artificial intelligence.

## How to Get Started with the Model

- Command line interface: https://github.com/lm-sys/FastChat#vicuna-weights

- APIs (OpenAI API, Huggingface API): https://github.com/lm-sys/FastChat/tree/main#api

## Training Details

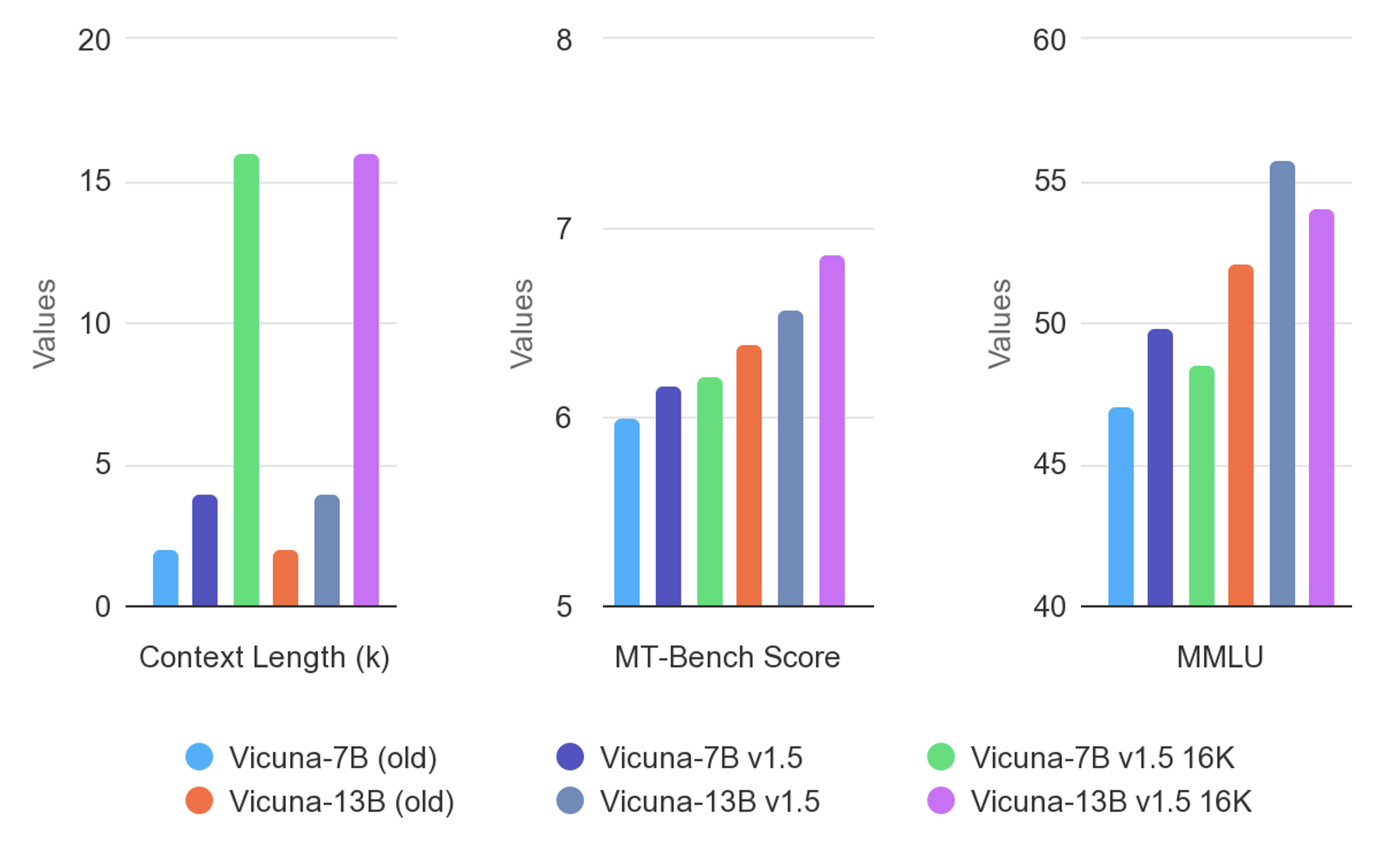

Vicuna v1.5 (16k) is fine-tuned from Llama 2 with supervised instruction fine-tuning and linear RoPE scaling.
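Linear RoPE scaling (also called position interpolation) divides token positions by a constant factor before computing the rotary angles, so a long sequence is mapped onto the position range seen during pre-training. A minimal NumPy sketch; a factor of 4 (16K divided by Llama 2's 4K context) is an assumption, not stated in this card, and real implementations differ in how they pair dimensions (Hugging Face's Llama code rotates the two halves of each head rather than adjacent pairs):

```python
import numpy as np


def rope_angles(positions, head_dim: int, base: float = 10000.0, scale: float = 1.0) -> np.ndarray:
    """Rotary angles theta[p, i] = (p / scale) * base^(-2i / head_dim).

    scale > 1 is linear RoPE scaling: positions are compressed by `scale`.
    """
    inv_freq = base ** (-np.arange(0, head_dim, 2) / head_dim)
    scaled_positions = np.asarray(positions, dtype=float) / scale
    return np.outer(scaled_positions, inv_freq)


def apply_rope(x: np.ndarray, angles: np.ndarray) -> np.ndarray:
    """Rotate each adjacent pair (x[2i], x[2i+1]) of every row of x by the given angles."""
    x_even, x_odd = x[..., 0::2], x[..., 1::2]
    cos, sin = np.cos(angles), np.sin(angles)
    out = np.empty_like(x)
    out[..., 0::2] = x_even * cos - x_odd * sin
    out[..., 1::2] = x_even * sin + x_odd * cos
    return out
```

With `scale=4.0`, position 16380 receives exactly the angles that position 4095 had without scaling, which is what lets the 4K-trained model attend over 16K tokens after fine-tuning.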

The training data is around 125K conversations collected from ShareGPT.com. These conversations are packed into sequences that contain 16K tokens each.
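Packing concatenates tokenized conversations and cuts the stream into fixed-length training sequences. A generic concatenate-and-chunk sketch follows; it is not FastChat's actual preprocessing, and details such as separator tokens, attention masking across conversation boundaries, and handling of the leftover tail are assumptions (here the tail is dropped):

```python
from itertools import chain
from typing import List


def pack_sequences(token_lists: List[List[int]], max_len: int = 16384) -> List[List[int]]:
    """Concatenate tokenized conversations and split them into chunks of exactly max_len tokens."""
    flat = list(chain.from_iterable(token_lists))
    # Drop the final partial chunk so every packed sequence has exactly max_len tokens
    usable = (len(flat) // max_len) * max_len
    return [flat[i:i + max_len] for i in range(0, usable, max_len)]
```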

See more details in the "Training Details of Vicuna Models" section in the appendix of this [paper](https://arxiv.org/pdf/2306.05685.pdf).

## Evaluation

Vicuna is evaluated with standard benchmarks, human preference, and LLM-as-a-judge. See more details in this [paper](https://arxiv.org/pdf/2306.05685.pdf) and [leaderboard](https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard).

## Difference between different versions of Vicuna

See [vicuna_weights_version.md](https://github.com/lm-sys/FastChat/blob/main/docs/vicuna_weights_version.md) |

Vargol/lcm_sdxl_full_model | Vargol | 2023-11-10T15:37:05Z | 2 | 0 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

]

| text-to-image | 2023-11-10T11:40:02Z | ---

license: openrail++

tags:

- text-to-image

- stable-diffusion

---

This is a copy of the SDXL base model (stabilityai/stable-diffusion-xl-base-1.0) with the UNet replaced by the LCM-distilled UNet (latent-consistency/lcm-sdxl) and the scheduler config set to default to the LCM scheduler.

This makes LCM SDXL run as a standard Diffusion Pipeline:

```py

from diffusers import DiffusionPipeline

import torch

pipe = DiffusionPipeline.from_pretrained(
    "Vargol/lcm_sdxl_full_model", variant='fp16', torch_dtype=torch.float16
).to("mps")  # "mps" targets Apple Silicon; use "cuda" on an NVIDIA GPU

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

generator = torch.manual_seed(0)

image = pipe(

prompt=prompt, num_inference_steps=4, generator=generator, guidance_scale=8.0

).images[0]

image.save('distilled.png')

``` |

nickrobinson/distilbert-base-uncased-finetuned-emotion | nickrobinson | 2023-11-10T15:29:30Z | 4 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-11-10T15:21:15Z | ---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

config: split

split: validation

args: split

metrics:

- name: Accuracy

type: accuracy

value: 0.9235

- name: F1

type: f1

value: 0.9232572625951749

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2213

- Accuracy: 0.9235

- F1: 0.9233

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8544 | 1.0 | 250 | 0.3350 | 0.902 | 0.9008 |

| 0.2605 | 2.0 | 500 | 0.2213 | 0.9235 | 0.9233 |

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

Spleonard1/my_awesome_qa_model | Spleonard1 | 2023-11-10T15:17:09Z | 5 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| question-answering | 2023-11-10T15:14:27Z | ---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: my_awesome_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_qa_model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6857

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 250 | 2.4027 |

| 2.7785 | 2.0 | 500 | 1.7883 |

| 2.7785 | 3.0 | 750 | 1.6857 |

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

darshsingh1/sqlcoder2-fasttrain_7k | darshsingh1 | 2023-11-10T15:14:03Z | 0 | 0 | null | [

"safetensors",

"generated_from_trainer",

"dataset:mpachauri/DatasetTrimmed",

"base_model:meta-llama/Llama-2-7b-hf",

"base_model:finetune:meta-llama/Llama-2-7b-hf",

"region:us"

]

| null | 2023-11-08T13:15:13Z | ---

base_model: meta-llama/Llama-2-7b-hf

tags:

- generated_from_trainer

model-index:

- name: sqlcoder2-fasttrain_7k

results: []

datasets:

- mpachauri/DatasetTrimmed

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sqlcoder2-fasttrain_7k

This model is a fine-tuned version of [meta-llama/Llama-2-7b-hf](https://huggingface.co/meta-llama/Llama-2-7b-hf) on the [mpachauri/DatasetTrimmed](https://huggingface.co/datasets/mpachauri/DatasetTrimmed) dataset.

It achieves the following results on the evaluation set:

- Loss: nan

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.5

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 500

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 7.4687 | 0.65 | 500 | nan |

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1 |

piotrklima/ppo-LunarLander-v2 | piotrklima | 2023-11-10T15:04:44Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-10T15:04:24Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 260.77 +/- 13.88
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

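A minimal loading sketch is shown below. The checkpoint filename is an assumption; check the repository's file list for the actual name.

```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# Download the checkpoint from the Hub (the filename is an assumed placeholder)
checkpoint = load_from_hub(
    repo_id="piotrklima/ppo-LunarLander-v2",
    filename="ppo-LunarLander-v2.zip",
)
model = PPO.load(checkpoint)
```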
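The `mean_reward` metadata value is reported as a mean and a standard deviation over evaluation episodes. In Stable-Baselines3 this pair is typically produced by `evaluate_policy` from `stable_baselines3.common.evaluation`, which returns the mean and the (population) standard deviation of per-episode rewards. The sketch below reproduces only the summary step on hypothetical returns; the numbers are illustrative and are not this agent's evaluation results.

```python
import statistics


def format_mean_reward(episode_returns):
    """Summarize per-episode returns as 'mean +/- std', in the style of the mean_reward metadata."""
    mean = statistics.fmean(episode_returns)
    # Population standard deviation (the default of numpy's np.std), not the sample estimate
    std = statistics.pstdev(episode_returns)
    return mean, std, f"{mean:.2f} +/- {std:.2f}"


# Hypothetical per-episode returns, not results from this model
mean, std, summary = format_mean_reward([250.0, 270.0, 260.0, 260.0])
print(summary)
```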

|

AntoineD/MiniLM_uncased_classification_tools_qlora_fr | AntoineD | 2023-11-10T15:01:45Z | 0 | 0 | null | [

"generated_from_trainer",

"base_model:microsoft/MiniLM-L12-H384-uncased",

"base_model:finetune:microsoft/MiniLM-L12-H384-uncased",

"license:mit",

"region:us"

]

| null | 2023-11-10T14:59:12Z | ---

license: mit

base_model: microsoft/MiniLM-L12-H384-uncased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:
- name: MiniLM_uncased_classification_tools_qlora_fr
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# MiniLM_uncased_classification_tools_qlora_fr

This model is a fine-tuned version of [microsoft/MiniLM-L12-H384-uncased](https://huggingface.co/microsoft/MiniLM-L12-H384-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7495

- Accuracy: 0.5

- Learning Rate: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 24

- eval_batch_size: 192

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 60

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Learning Rate |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:-------------:|
| No log | 1.0 | 7 | 2.0845 | 0.075 | 0.0001 |
| No log | 2.0 | 14 | 2.0852 | 0.075 | 0.0001 |
| No log | 3.0 | 21 | 2.0851 | 0.075 | 0.0001 |
| No log | 4.0 | 28 | 2.0855 | 0.075 | 0.0001 |
| No log | 5.0 | 35 | 2.0858 | 0.075 | 0.0001 |
| No log | 6.0 | 42 | 2.0861 | 0.075 | 9e-05 |
| No log | 7.0 | 49 | 2.0863 | 0.075 | 0.0001 |
| No log | 8.0 | 56 | 2.0859 | 0.075 | 0.0001 |
| No log | 9.0 | 63 | 2.0856 | 0.075 | 0.0001 |
| No log | 10.0 | 70 | 2.0855 | 0.075 | 0.0001 |
| No log | 11.0 | 77 | 2.0848 | 0.075 | 0.0001 |
| No log | 12.0 | 84 | 2.0844 | 0.075 | 8e-05 |
| No log | 13.0 | 91 | 2.0829 | 0.075 | 0.0001 |
| No log | 14.0 | 98 | 2.0825 | 0.075 | 0.0001 |
| No log | 15.0 | 105 | 2.0811 | 0.1 | 0.0001 |
| No log | 16.0 | 112 | 2.0793 | 0.175 | 0.0001 |
| No log | 17.0 | 119 | 2.0771 | 0.125 | 0.0001 |
| No log | 18.0 | 126 | 2.0750 | 0.175 | 7e-05 |
| No log | 19.0 | 133 | 2.0723 | 0.175 | 0.0001 |
| No log | 20.0 | 140 | 2.0683 | 0.175 | 0.0001 |
| No log | 21.0 | 147 | 2.0637 | 0.175 | 0.0001 |
| No log | 22.0 | 154 | 2.0574 | 0.175 | 0.0001 |
| No log | 23.0 | 161 | 2.0507 | 0.2 | 0.0001 |
| No log | 24.0 | 168 | 2.0419 | 0.325 | 6e-05 |
| No log | 25.0 | 175 | 2.0318 | 0.35 | 0.0001 |
| No log | 26.0 | 182 | 2.0214 | 0.4 | 0.0001 |
| No log | 27.0 | 189 | 2.0083 | 0.4 | 0.0001 |
| No log | 28.0 | 196 | 1.9949 | 0.4 | 0.0001 |
| No log | 29.0 | 203 | 1.9781 | 0.4 | 0.0001 |
| No log | 30.0 | 210 | 1.9609 | 0.4 | 5e-05 |
| No log | 31.0 | 217 | 1.9475 | 0.425 | 0.0000 |
| No log | 32.0 | 224 | 1.9317 | 0.45 | 0.0000 |
| No log | 33.0 | 231 | 1.9131 | 0.45 | 0.0000 |
| No log | 34.0 | 238 | 1.9015 | 0.475 | 0.0000 |
| No log | 35.0 | 245 | 1.8906 | 0.5 | 0.0000 |
| No log | 36.0 | 252 | 1.8740 | 0.475 | 4e-05 |
| No log | 37.0 | 259 | 1.8613 | 0.5 | 0.0000 |
| No log | 38.0 | 266 | 1.8552 | 0.525 | 0.0000 |
| No log | 39.0 | 273 | 1.8389 | 0.5 | 0.0000 |
| No log | 40.0 | 280 | 1.8302 | 0.5 | 0.0000 |
| No log | 41.0 | 287 | 1.8228 | 0.5 | 0.0000 |
| No log | 42.0 | 294 | 1.8244 | 0.525 | 3e-05 |
| No log | 43.0 | 301 | 1.8048 | 0.5 | 0.0000 |
| No log | 44.0 | 308 | 1.7944 | 0.525 | 0.0000 |
| No log | 45.0 | 315 | 1.7929 | 0.5 | 0.0000 |
| No log | 46.0 | 322 | 1.7904 | 0.5 | 0.0000 |
| No log | 47.0 | 329 | 1.7810 | 0.5 | 0.0000 |
| No log | 48.0 | 336 | 1.7790 | 0.5 | 2e-05 |
| No log | 49.0 | 343 | 1.7758 | 0.5 | 0.0000 |
| No log | 50.0 | 350 | 1.7677 | 0.525 | 0.0000 |
| No log | 51.0 | 357 | 1.7626 | 0.525 | 0.0000 |
| No log | 52.0 | 364 | 1.7579 | 0.525 | 0.0000 |
| No log | 53.0 | 371 | 1.7552 | 0.525 | 0.0000 |
| No log | 54.0 | 378 | 1.7544 | 0.525 | 1e-05 |
| No log | 55.0 | 385 | 1.7523 | 0.525 | 0.0000 |
| No log | 56.0 | 392 | 1.7510 | 0.525 | 0.0000 |
| No log | 57.0 | 399 | 1.7501 | 0.525 | 5e-06 |
| No log | 58.0 | 406 | 1.7498 | 0.525 | 0.0000 |
| No log | 59.0 | 413 | 1.7496 | 0.525 | 0.0000 |
| No log | 60.0 | 420 | 1.7495 | 0.5 | 0.0 |
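The learning-rate column above is consistent with a linear decay from the initial rate of 1e-4 to zero over 420 optimizer steps (60 epochs of 7 steps), with no warmup listed. Most rows appear to be rounded to four decimal places, which is why mid-training values show as `0.0001` or `0.0000`. The sketch below computes the unrounded values using the standard linear formula; it is a reconstruction from the table, not code taken from this training run.

```python
def linear_decay_lr(step, base_lr=1e-4, total_steps=420):
    """Learning rate after `step` optimizer steps under a linear schedule without warmup."""
    # Decays linearly from base_lr at step 0 to 0.0 at total_steps (clamped at zero afterwards)
    return base_lr * max(0.0, (total_steps - step) / total_steps)


# Epoch/step pairs taken from the table
for epoch, step in [(6, 42), (12, 84), (30, 210), (57, 399), (60, 420)]:
    print(f"epoch {epoch:>2} (step {step:>3}): lr = {linear_decay_lr(step):.1e}")
```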

### Framework versions

- Transformers 4.34.0

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.14.1

|

AntoineD/MiniLM_uncased_classification_tools_fr | AntoineD | 2023-11-10T14:56:00Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:microsoft/MiniLM-L12-H384-uncased",

"base_model:finetune:microsoft/MiniLM-L12-H384-uncased",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-11-10T14:50:18Z | ---

license: mit

base_model: microsoft/MiniLM-L12-H384-uncased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:
- name: MiniLM_uncased_classification_tools_fr
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# MiniLM_uncased_classification_tools_fr

This model is a fine-tuned version of [microsoft/MiniLM-L12-H384-uncased](https://huggingface.co/microsoft/MiniLM-L12-H384-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3165

- Accuracy: 0.925

- Learning Rate: 0.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 24

- eval_batch_size: 192

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 60

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Learning Rate |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:-------------:|
| No log | 1.0 | 7 | 2.0607 | 0.35 | 0.0001 |
| No log | 2.0 | 14 | 1.9111 | 0.425 | 0.0001 |
| No log | 3.0 | 21 | 1.6543 | 0.45 | 0.0001 |
| No log | 4.0 | 28 | 1.4578 | 0.525 | 0.0001 |
| No log | 5.0 | 35 | 1.3136 | 0.65 | 0.0001 |
| No log | 6.0 | 42 | 1.2160 | 0.7 | 9e-05 |
| No log | 7.0 | 49 | 1.0786 | 0.725 | 0.0001 |
| No log | 8.0 | 56 | 1.0171 | 0.675 | 0.0001 |
| No log | 9.0 | 63 | 0.9491 | 0.7 | 0.0001 |
| No log | 10.0 | 70 | 0.8773 | 0.75 | 0.0001 |
| No log | 11.0 | 77 | 0.8019 | 0.75 | 0.0001 |
| No log | 12.0 | 84 | 0.7436 | 0.775 | 8e-05 |
| No log | 13.0 | 91 | 0.6747 | 0.825 | 0.0001 |
| No log | 14.0 | 98 | 0.7357 | 0.775 | 0.0001 |
| No log | 15.0 | 105 | 0.5386 | 0.85 | 0.0001 |
| No log | 16.0 | 112 | 0.6222 | 0.85 | 0.0001 |
| No log | 17.0 | 119 | 0.6284 | 0.85 | 0.0001 |
| No log | 18.0 | 126 | 0.4489 | 0.9 | 7e-05 |
| No log | 19.0 | 133 | 0.6431 | 0.85 | 0.0001 |
| No log | 20.0 | 140 | 0.6064 | 0.85 | 0.0001 |
| No log | 21.0 | 147 | 0.6948 | 0.825 | 0.0001 |
| No log | 22.0 | 154 | 0.5535 | 0.85 | 0.0001 |
| No log | 23.0 | 161 | 0.4672 | 0.875 | 0.0001 |
| No log | 24.0 | 168 | 0.4797 | 0.875 | 6e-05 |
| No log | 25.0 | 175 | 0.4908 | 0.9 | 0.0001 |
| No log | 26.0 | 182 | 0.5879 | 0.85 | 0.0001 |
| No log | 27.0 | 189 | 0.6601 | 0.85 | 0.0001 |
| No log | 28.0 | 196 | 0.6036 | 0.85 | 0.0001 |
| No log | 29.0 | 203 | 0.5495 | 0.85 | 0.0001 |
| No log | 30.0 | 210 | 0.5135 | 0.85 | 5e-05 |
| No log | 31.0 | 217 | 0.4767 | 0.875 | 0.0000 |
| No log | 32.0 | 224 | 0.4431 | 0.9 | 0.0000 |
| No log | 33.0 | 231 | 0.4681 | 0.875 | 0.0000 |
| No log | 34.0 | 238 | 0.5612 | 0.85 | 0.0000 |
| No log | 35.0 | 245 | 0.4495 | 0.9 | 0.0000 |
| No log | 36.0 | 252 | 0.4384 | 0.9 | 4e-05 |
| No log | 37.0 | 259 | 0.4378 | 0.875 | 0.0000 |
| No log | 38.0 | 266 | 0.4104 | 0.875 | 0.0000 |
| No log | 39.0 | 273 | 0.5060 | 0.875 | 0.0000 |
| No log | 40.0 | 280 | 0.4756 | 0.875 | 0.0000 |
| No log | 41.0 | 287 | 0.4558 | 0.875 | 0.0000 |
| No log | 42.0 | 294 | 0.4458 | 0.9 | 3e-05 |
| No log | 43.0 | 301 | 0.3969 | 0.875 | 0.0000 |
| No log | 44.0 | 308 | 0.4762 | 0.875 | 0.0000 |
| No log | 45.0 | 315 | 0.4891 | 0.875 | 0.0000 |
| No log | 46.0 | 322 | 0.4460 | 0.9 | 0.0000 |
| No log | 47.0 | 329 | 0.3892 | 0.925 | 0.0000 |
| No log | 48.0 | 336 | 0.4267 | 0.9 | 2e-05 |
| No log | 49.0 | 343 | 0.3327 | 0.9 | 0.0000 |
| No log | 50.0 | 350 | 0.3225 | 0.925 | 0.0000 |
| No log | 51.0 | 357 | 0.3223 | 0.925 | 0.0000 |
| No log | 52.0 | 364 | 0.3136 | 0.95 | 0.0000 |
| No log | 53.0 | 371 | 0.3109 | 0.925 | 0.0000 |
| No log | 54.0 | 378 | 0.3142 | 0.9 | 1e-05 |
| No log | 55.0 | 385 | 0.3168 | 0.925 | 0.0000 |
| No log | 56.0 | 392 | 0.3163 | 0.925 | 0.0000 |
| No log | 57.0 | 399 | 0.3174 | 0.925 | 5e-06 |
| No log | 58.0 | 406 | 0.3185 | 0.925 | 0.0000 |
| No log | 59.0 | 413 | 0.3168 | 0.925 | 0.0000 |
| No log | 60.0 | 420 | 0.3165 | 0.925 | 0.0 |
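The headline evaluation results (loss 0.3165, accuracy 0.925) match the final epoch, not the best one: in the full table, accuracy peaks at 0.95 at epoch 52, and validation loss is lowest (0.3109) at epoch 53. This suggests the last checkpoint was kept, although the card does not say so. The sketch below shows the selection logic on an excerpt of the table; in Transformers, a similar effect can be obtained with the `load_best_model_at_end` and `metric_for_best_model` training arguments.

```python
# (epoch, validation loss, accuracy): an excerpt of rows from the table
history = [
    (47, 0.3892, 0.925),
    (50, 0.3225, 0.925),
    (52, 0.3136, 0.95),
    (53, 0.3109, 0.925),
    (60, 0.3165, 0.925),
]

best_by_accuracy = max(history, key=lambda row: row[2])  # highest accuracy
best_by_loss = min(history, key=lambda row: row[1])      # lowest validation loss
final = history[-1]                                      # the checkpoint reported in the card

print("best accuracy:", best_by_accuracy)
print("lowest loss:  ", best_by_loss)
print("final epoch:  ", final)
```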

### Framework versions

- Transformers 4.34.0

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.14.1

|

linoyts/huggy_v28 | linoyts | 2023-11-10T14:49:55Z | 9 | 0 | diffusers | [

"diffusers",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

]

| text-to-image | 2023-11-10T14:09:52Z |

---

license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: A webpage in the style of <s0><s1><s2>

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- lora

inference: true

---

# LoRA DreamBooth - LinoyTsaban/huggy_v28

These are LoRA adaptation weights for stabilityai/stable-diffusion-xl-base-1.0. The weights were trained with [DreamBooth](https://dreambooth.github.io/) on the instance prompt `A webpage in the style of <s0><s1><s2>`.

LoRA for the text encoder was not enabled.

The special VAE madebyollin/sdxl-vae-fp16-fix was used for training.

|

quentino/ppo-LunarLander-v2 | quentino | 2023-11-10T14:45:07Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-10T14:44:47Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 257.12 +/- 19.75
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

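A minimal loading sketch is shown below. The checkpoint filename is an assumption; check the repository's file list for the actual name.

```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# Download the checkpoint from the Hub (the filename is an assumed placeholder)
checkpoint = load_from_hub(
    repo_id="quentino/ppo-LunarLander-v2",
    filename="ppo-LunarLander-v2.zip",
)
model = PPO.load(checkpoint)
```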

|

blabla1233/ppo-LunarLander-v2 | blabla1233 | 2023-11-10T14:44:31Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-11-10T14:43:50Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 250.88 +/- 14.95
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

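A minimal loading sketch is shown below. The checkpoint filename is an assumption; check the repository's file list for the actual name.

```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# Download the checkpoint from the Hub (the filename is an assumed placeholder)
checkpoint = load_from_hub(
    repo_id="blabla1233/ppo-LunarLander-v2",
    filename="ppo-LunarLander-v2.zip",
)
model = PPO.load(checkpoint)
```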

|

ChrisEsworthy/Covid_Misinformation_Model | ChrisEsworthy | 2023-11-10T14:35:54Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:spencer-gable-cook/COVID-19_Misinformation_Detector",

"base_model:finetune:spencer-gable-cook/COVID-19_Misinformation_Detector",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-04-12T11:55:41Z | ---

license: mit

tags:

- generated_from_trainer

base_model: spencer-gable-cook/COVID-19_Misinformation_Detector

model-index:
- name: Covid_Misinformation_Model
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Covid_Misinformation_Model

This model is a fine-tuned version of [spencer-gable-cook/COVID-19_Misinformation_Detector](https://huggingface.co/spencer-gable-cook/COVID-19_Misinformation_Detector) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1213

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.27.4

- Pytorch 1.9.0+cu111

- Datasets 2.11.0

- Tokenizers 0.12.1

|

NesrineBannour/CAS-privacy-preserving-model | NesrineBannour | 2023-11-10T14:29:32Z | 0 | 0 | transformers | [

"transformers",

"biomedical",

"clinical",

"pytorch",

"camembert",

"token-classification",

"fr",

"dataset:bigbio/cas",

"license:cc-by-sa-4.0",

"region:us"

]

| token-classification | 2023-10-10T11:34:38Z | ---

license: cc-by-sa-4.0

datasets:

- bigbio/cas

language:

- fr

metrics:

- f1

- precision

- recall

library_name: transformers

tags:

- biomedical

- clinical

- pytorch

- camembert

pipeline_tag: token-classification

inference: false

---

# Privacy-preserving mimic models for clinical named entity recognition in French

<!-- ## Paper abstract -->

In this [paper](https://doi.org/10.1016/j.jbi.2022.104073), we propose a Privacy-Preserving Mimic Models architecture that enables the generation of shareable models using the *mimic learning* approach. The idea of mimic learning is to annotate unlabeled public data with a *private teacher model* trained on the original sensitive data. The newly labeled public dataset is then used to train the *student models*. These *student models* can be shared without sharing the data itself or exposing the *private teacher model* that was built directly on this data.
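The data flow of mimic learning can be illustrated with a deliberately tiny stand-in. In the sketch below, a dictionary-based token tagger replaces the CamemBERT/NLstruct NER models, and the tokens and entity labels are hypothetical. The point it shows is that the student is trained only on teacher-produced silver labels over public text, so only the student needs to be released.

```python
from collections import Counter, defaultdict


def train_tagger(labeled_tokens):
    """Toy 'NER model': map each lowercased token to its most frequent tag."""
    counts = defaultdict(Counter)
    for token, tag in labeled_tokens:
        counts[token.lower()][tag] += 1
    return {token: c.most_common(1)[0][0] for token, c in counts.items()}


def annotate(model, tokens, default_tag="O"):
    """Label tokens with the toy model; unknown tokens get `default_tag`."""
    return [(token, model.get(token.lower(), default_tag)) for token in tokens]


# 1. Private, sensitive labeled data: only the teacher ever sees it (hypothetical examples)
private_data = [("Doliprane", "DRUG"), ("fièvre", "SIGN"), ("patient", "O"), ("Doliprane", "DRUG")]
teacher = train_tagger(private_data)

# 2. Public unlabeled text is annotated by the teacher, producing silver labels
public_tokens = ["Le", "patient", "prend", "Doliprane", "contre", "la", "fièvre"]
silver = annotate(teacher, public_tokens)

# 3. The student is trained only on the silver-labeled public data; it is the model that gets shared
student = train_tagger(silver)
print(annotate(student, ["Doliprane", "fièvre", "hier"]))
```

In the real setting the student generalizes beyond the exact tokens it saw, which the toy lookup table cannot do; the sketch only mirrors who trains on what.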

# CAS Privacy-Preserving Named Entity Recognition (NER) Mimic Model

<!-- Provide a quick summary of what the model is/does. -->

To generate the CAS Privacy-Preserving Mimic Model, we used a *private teacher model* to annotate the unlabeled [CAS clinical French corpus](https://aclanthology.org/W18-5614/). The *private teacher model* is an NER model trained on the [MERLOT clinical corpus](https://link.springer.com/article/10.1007/s10579-017-9382-y) and cannot be shared. Using the produced [silver annotations](https://zenodo.org/records/6451361), we trained the CAS *student model*, namely the CAS Privacy-Preserving NER Mimic Model. This approach can be viewed as a privacy-preserving transfer of knowledge from the *teacher* to the *student model*. We share only the weights of the CAS *student model*, which is trained on silver-labeled, publicly released data. We argue that no potential attack could reveal information about sensitive private data using the silver annotations generated by the *private teacher model* on publicly available, non-sensitive data.

Our model is built on the [CamemBERT](https://huggingface.co/camembert) model using the Natural Language Structuring ([NLstruct](https://github.com/percevalw/nlstruct)) library, which implements NER models that handle nested entities.

- **Paper:** [Privacy-preserving mimic models for clinical named entity recognition in French](https://doi.org/10.1016/j.jbi.2022.104073)

- **Produced gold and silver annotations for the [DEFT](https://deft.lisn.upsaclay.fr/2020/) and [CAS](https://aclanthology.org/W18-5614/) French clinical corpora:** https://zenodo.org/records/6451361

- **Developed by:** [Nesrine Bannour](https://github.com/NesrineBannour), [Perceval Wajsbürt](https://github.com/percevalw), [Bastien Rance](https://team.inria.fr/heka/fr/team-members/rance/), [Xavier Tannier](http://xavier.tannier.free.fr/) and [Aurélie Névéol](https://perso.limsi.fr/neveol/)

- **Language:** French

- **License:** cc-by-sa-4.0

<!-- ## Model Sources -->

<!-- Provide the basic links for the model. -->

<!-- ## Training Details

<!-- ### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

<!-- ### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

<!-- #### Training Hyperparameters -->

# Download the CAS Privacy-Preserving NER Mimic Model

```python
import os
import urllib.request

from huggingface_hub import hf_hub_url

repo_id = "NesrineBannour/CAS-privacy-preserving-model"
model_dir = "path/to/your/folder/"
os.makedirs(model_dir, exist_ok=True)

# Download the fastText embeddings file into the current directory
fasttext_url = hf_hub_url(repo_id=repo_id, filename="CAS-privacy-preserving-model_fasttext.txt")
urllib.request.urlretrieve(fasttext_url, fasttext_url.split('/')[-1])

# Download the model checkpoint into the chosen folder
model_url = hf_hub_url(repo_id=repo_id, filename="CAS-privacy-preserving-model.ckpt")
path_checkpoint = os.path.join(model_dir, model_url.split('/')[-1])
urllib.request.urlretrieve(model_url, path_checkpoint)
```

## 1. Load and use the model using only NLstruct

[NLstruct](https://github.com/percevalw/nlstruct) is the Python library we used to generate our CAS privacy-preserving NER mimic model; it handles nested entities.

### Install the NLstruct library

```

pip install nlstruct==0.1.0

```

### Use the model

```python

from nlstruct import load_pretrained

from nlstruct.datasets import load_from_brat, export_to_brat

ner_model = load_pretrained(path_checkpoint)

test_data = load_from_brat("path/to/brat/test")

test_predictions = ner_model.predict(test_data)

# Export the predictions into the BRAT standoff format

export_to_brat(test_predictions, filename_prefix="path/to/exported_brat")

```

## 2. Load the model using NLstruct and use it with the Medkit library

[Medkit](https://github.com/TeamHeka/medkit) is a Python library that facilitates the extraction of features from various modalities of patient data, including textual data.

### Install the Medkit library

```

python -m pip install 'medkit-lib'

```

### Use the model

Our model can be wrapped as a Medkit operation module as follows:

```python
import os
import urllib.request

from huggingface_hub import hf_hub_url
from nlstruct import load_pretrained
from medkit.io.brat import BratInputConverter, BratOutputConverter
from medkit.core import Attribute
from medkit.core.text import NEROperation, Entity, Span, Segment, span_utils


class CAS_matcher(NEROperation):
    def __init__(self):
        # Download the fastText file into the current directory if it is not there yet
        fasttext_url = hf_hub_url(repo_id="NesrineBannour/CAS-privacy-preserving-model", filename="CAS-privacy-preserving-model_fasttext.txt")
        if not os.path.exists("CAS-privacy-preserving-model_fasttext.txt"):
            urllib.request.urlretrieve(fasttext_url, fasttext_url.split('/')[-1])

        # Download the model checkpoint into ner_model/ if it is not there yet
        model_url = hf_hub_url(repo_id="NesrineBannour/CAS-privacy-preserving-model", filename="CAS-privacy-preserving-model.ckpt")
        path_checkpoint = os.path.join("ner_model", model_url.split('/')[-1])
        if not os.path.exists(path_checkpoint):
            os.makedirs("ner_model", exist_ok=True)
            urllib.request.urlretrieve(model_url, path_checkpoint)

        self.model = load_pretrained(path_checkpoint)
        self.model.eval()

    def run(self, segments):
        """Return entities for each match in `segments`.

        Parameters
        ----------
        segments:
            List of segments into which to look for matches.

        Returns
        -------
        List[Entity]
            Entities found in `segments`.
        """
        # Collect the entities predicted for each segment
        entities = []
        for segment in segments:
            matches = self.model.predict({"doc_id": segment.uid, "text": segment.text})
            entities.extend(self._matches_to_entities(matches, segment))
        return entities

    def _matches_to_entities(self, matches, segment: Segment):
        for match in matches["entities"]:
            text_all, spans_all = [], []
            # An entity may be made of several (possibly discontinuous) fragments
            for fragment in match["fragments"]:
                text, spans = span_utils.extract(
                    segment.text, segment.spans, [(fragment["begin"], fragment["end"])]
                )
                text_all.append(text)
                spans_all.extend(spans)
            text_all = "".join(text_all)
            entity = Entity(
                label=match["label"],
                text=text_all,
                spans=spans_all,
            )
            # Attach the model confidence to the entity
            score_attr = Attribute(
                label="confidence",
                value=float(match["confidence"]),
                #metadata=dict(model=self.model.path_checkpoint),
            )
            entity.attrs.add(score_attr)
            yield entity


brat_converter = BratInputConverter()
docs = brat_converter.load("path/to/brat/test")
matcher = CAS_matcher()
for doc in docs:
    entities = matcher.run([doc.raw_segment])
    for ent in entities:
        doc.anns.add(ent)

brat_output_converter = BratOutputConverter(attrs=[])
# To keep the same document names in the output folder
doc_names = [os.path.splitext(os.path.basename(doc.metadata["path_to_text"]))[0] for doc in docs]
brat_output_converter.save(docs, dir_path="path/to/exported_brat", doc_names=doc_names)
```

<!-- ## Evaluation of test data

<!-- This section describes the evaluation protocols and provides the results. -->

<!-- #### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

<!-- [More Information Needed]

### Results

[More Information Needed]

#### Summary -->

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions are estimated using the [Carbontracker](https://github.com/lfwa/carbontracker) tool. The version used at the time of our experiments computes its estimates using the 2017 average carbon intensity of the European Union instead of the value for France (294.21 gCO<sub>2</sub>eq/kWh vs. 85 gCO<sub>2</sub>eq/kWh). Therefore, our reported carbon footprint for training both the private model that generated the silver annotations and the CAS student model is overestimated.

- **Hardware Type:** GPU NVIDIA GTX 1080 Ti

- **Compute Region:** Gif-sur-Yvette, Île-de-France, France

- **Carbon Emitted:** 292 gCO<sub>2</sub>eq
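To get a rough sense of the overestimate, the reported figure can be rescaled to the French carbon intensity. The sketch below assumes that emissions are energy multiplied by carbon intensity and that the whole 292 g figure was computed with the EU value. It is a back-of-the-envelope estimate, not a number reported by the authors.

```python
def rescale_emissions(reported_g, reported_intensity, actual_intensity):
    """Rescale an emissions estimate (gCO2eq) to a different grid carbon intensity (gCO2eq/kWh)."""
    # Emissions = energy (kWh) x intensity, so the implied energy is reported / reported_intensity
    energy_kwh = reported_g / reported_intensity
    return energy_kwh * actual_intensity


eu_2017 = 294.21  # gCO2eq/kWh, intensity used by the Carbontracker version
france = 85.0     # gCO2eq/kWh, value for France quoted above
adjusted = rescale_emissions(292.0, eu_2017, france)
print(f"Implied energy: {292.0 / eu_2017:.3f} kWh, France-adjusted: {adjusted:.1f} gCO2eq")
```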

## Acknowledgements

We thank the institutions and colleagues who made it possible to use the datasets described in this study: the Biomedical Informatics Department at the Rouen University Hospital provided access to the LERUDI corpus, and Dr. Grabar (Université de Lille, CNRS, STL) granted permission to use the DEFT/CAS corpus. We would also like to thank the ITMO Cancer Aviesan for funding our research, and the [HeKA research team](https://team.inria.fr/heka/) for integrating our model into their library [Medkit](https://github.com/TeamHeka/medkit).

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

If you use this model in your research, please make sure to cite our paper:

```bibtex

@article{BANNOUR2022104073,
  title = {Privacy-preserving mimic models for clinical named entity recognition in French},
  journal = {Journal of Biomedical Informatics},
  volume = {130},
  pages = {104073},
  year = {2022},
  issn = {1532-0464},
  doi = {10.1016/j.jbi.2022.104073},
  url = {https://www.sciencedirect.com/science/article/pii/S1532046422000892}
}

```

<!-- ## Bias, Risks, and Limitations -->

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

<!-- [More Information Needed] -->

|

voxxer/ppo-SnowballTarget | voxxer | 2023-11-10T14:26:21Z | 2 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"SnowballTarget",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-SnowballTarget",

"region:us"

]

| reinforcement-learning | 2023-11-10T14:26:18Z | ---

library_name: ml-agents

tags:

- SnowballTarget

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SnowballTarget

---

# **ppo** Agent playing **SnowballTarget**

This is a trained model of a **ppo** agent playing **SnowballTarget** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction
- A *longer tutorial* to understand how ML-Agents works: https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. If the environment is part of the official ML-Agents environments, go to https://huggingface.co/unity
2. Find your model_id: voxxer/ppo-SnowballTarget
3. Select your `*.nn` / `*.onnx` file
4. Click on "Watch the agent play" 👀

|

alicelouis/Swin2e-4Lion | alicelouis | 2023-11-10T14:26:03Z | 7 | 0 | transformers | [

"transformers",

"safetensors",

"swin",

"image-classification",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2023-11-10T14:12:49Z | ---

license: mit

metrics:

- accuracy

---

```python
import requests
import torch
from PIL import Image
from transformers import AutoImageProcessor, SwinForImageClassification

# Download an example image from the COCO validation set
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("alicelouis/Swin2e-4Lion")
model = SwinForImageClassification.from_pretrained("alicelouis/Swin2e-4Lion")

inputs = processor(images=image, return_tensors="pt")
with torch.no_grad():
    outputs = model(**inputs)
logits = outputs.logits

# The model predicts one of the labels defined in model.config.id2label
predicted_class_idx = logits.argmax(-1).item()
print("Predicted class:", model.config.id2label[predicted_class_idx])
```

|

hkivancoral/hushem_1x_deit_tiny_sgd_lr0001_fold5 | hkivancoral | 2023-11-10T14:23:39Z | 8 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:facebook/deit-tiny-patch16-224",

"base_model:finetune:facebook/deit-tiny-patch16-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2023-11-10T14:21:48Z | ---

license: apache-2.0

base_model: facebook/deit-tiny-patch16-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:
- name: hushem_1x_deit_tiny_sgd_lr0001_fold5
  results:
  - task:
      name: Image Classification
      type: image-classification
    dataset:
      name: imagefolder
      type: imagefolder
      config: default
      split: test
      args: default
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.14634146341463414

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hushem_1x_deit_tiny_sgd_lr0001_fold5

This model is a fine-tuned version of [facebook/deit-tiny-patch16-224](https://huggingface.co/facebook/deit-tiny-patch16-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5789

- Accuracy: 0.1463

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 50
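With `lr_scheduler_type: linear` and `lr_scheduler_warmup_ratio: 0.1`, the learning rate first ramps up and then decays. The results table below shows 300 optimizer steps in total (50 epochs of 6 steps), so the warmup covers roughly the first 30 steps. The sketch below shows that shape under the stated assumptions; the exact rounding of the warmup length used by the training library may differ by a step.

```python
def linear_warmup_decay_lr(step, base_lr=1e-4, total_steps=300, warmup_ratio=0.1):
    """Learning rate after `step` optimizer steps: linear warmup, then linear decay to zero."""
    warmup_steps = round(total_steps * warmup_ratio)  # 30 steps for these settings
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup
        return base_lr * step / warmup_steps
    # Then decay linearly from base_lr to 0 over the remaining steps
    return base_lr * max(0.0, (total_steps - step) / (total_steps - warmup_steps))


for step in (0, 15, 30, 165, 300):
    print(step, linear_warmup_decay_lr(step))
```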

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| No log | 1.0 | 6 | 1.6455 | 0.1220 |
| 1.6035 | 2.0 | 12 | 1.6420 | 0.1220 |
| 1.6035 | 3.0 | 18 | 1.6386 | 0.1463 |
| 1.6142 | 4.0 | 24 | 1.6353 | 0.1463 |
| 1.5857 | 5.0 | 30 | 1.6321 | 0.1463 |
| 1.5857 | 6.0 | 36 | 1.6289 | 0.1463 |
| 1.5718 | 7.0 | 42 | 1.6259 | 0.1463 |
| 1.5718 | 8.0 | 48 | 1.6232 | 0.1463 |
| 1.5833 | 9.0 | 54 | 1.6206 | 0.1463 |
| 1.5737 | 10.0 | 60 | 1.6178 | 0.1463 |
| 1.5737 | 11.0 | 66 | 1.6153 | 0.1463 |
| 1.5614 | 12.0 | 72 | 1.6128 | 0.1220 |
| 1.5614 | 13.0 | 78 | 1.6104 | 0.1220 |
| 1.5648 | 14.0 | 84 | 1.6081 | 0.1220 |
| 1.5575 | 15.0 | 90 | 1.6060 | 0.1220 |
| 1.5575 | 16.0 | 96 | 1.6040 | 0.1220 |
| 1.5452 | 17.0 | 102 | 1.6020 | 0.1220 |
| 1.5452 | 18.0 | 108 | 1.6002 | 0.1220 |
| 1.5768 | 19.0 | 114 | 1.5984 | 0.1220 |
| 1.5464 | 20.0 | 120 | 1.5966 | 0.1220 |
| 1.5464 | 21.0 | 126 | 1.5950 | 0.1220 |
| 1.5149 | 22.0 | 132 | 1.5934 | 0.1220 |
| 1.5149 | 23.0 | 138 | 1.5920 | 0.1220 |
| 1.6056 | 24.0 | 144 | 1.5905 | 0.1220 |
| 1.5161 | 25.0 | 150 | 1.5892 | 0.1220 |
| 1.5161 | 26.0 | 156 | 1.5879 | 0.1220 |
| 1.519 | 27.0 | 162 | 1.5868 | 0.1220 |
| 1.519 | 28.0 | 168 | 1.5857 | 0.1220 |
| 1.5531 | 29.0 | 174 | 1.5848 | 0.1220 |
| 1.5347 | 30.0 | 180 | 1.5839 | 0.1220 |
| 1.5347 | 31.0 | 186 | 1.5831 | 0.1220 |
| 1.5238 | 32.0 | 192 | 1.5824 | 0.1220 |
| 1.5238 | 33.0 | 198 | 1.5817 | 0.1463 |
| 1.5463 | 34.0 | 204 | 1.5811 | 0.1463 |
| 1.5219 | 35.0 | 210 | 1.5805 | 0.1463 |
| 1.5219 | 36.0 | 216 | 1.5800 | 0.1463 |
| 1.5056 | 37.0 | 222 | 1.5797 | 0.1463 |
| 1.5056 | 38.0 | 228 | 1.5794 | 0.1463 |
| 1.5505 | 39.0 | 234 | 1.5791 | 0.1463 |
| 1.5261 | 40.0 | 240 | 1.5790 | 0.1463 |
| 1.5261 | 41.0 | 246 | 1.5789 | 0.1463 |
| 1.5175 | 42.0 | 252 | 1.5789 | 0.1463 |
| 1.5175 | 43.0 | 258 | 1.5789 | 0.1463 |
| 1.5317 | 44.0 | 264 | 1.5789 | 0.1463 |
| 1.5241 | 45.0 | 270 | 1.5789 | 0.1463 |
| 1.5241 | 46.0 | 276 | 1.5789 | 0.1463 |
| 1.5533 | 47.0 | 282 | 1.5789 | 0.1463 |
| 1.5533 | 48.0 | 288 | 1.5789 | 0.1463 |
| 1.4945 | 49.0 | 294 | 1.5789 | 0.1463 |
| 1.5379 | 50.0 | 300 | 1.5789 | 0.1463 |
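The accuracy column takes only two values, 0.1220 and 0.1463. The full-precision figure in the metadata, 0.14634146341463414, agrees with 6/41 in every printed digit. One plausible reading is a 41-image evaluation split, on which 0.1220 is 5 correct predictions and 0.1463 is 6. This is an assumption, since the card does not state the split size. Under that reading, every accuracy change in the table is a single image. A quick check:

```python
from fractions import Fraction

# Full-precision accuracy from the model-index metadata of this card.
reported_accuracy = 0.14634146341463414

# Closest fraction with denominator <= 100 to the reported value.
frac = Fraction(reported_accuracy).limit_denominator(100)
print(frac)  # 6/41

# Assuming a 41-image evaluation split, the two accuracies differ by one image.
eval_size = frac.denominator
for correct in (5, 6):
    # prints 0.1220 for 5 correct and 0.1463 for 6 correct
    print(f"{correct}/{eval_size} correct -> accuracy {correct / eval_size:.4f}")
```

A split of 82 images with 12 correct would also match the reported value. 41 is simply the smallest consistent size.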

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

VitaliiVrublevskyi/v10_bert-base-uncased-finetuned-mrpc | VitaliiVrublevskyi | 2023-11-10T14:21:33Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-11-10T14:09:00Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

- f1

model-index:
- name: v10_bert-base-uncased-finetuned-mrpc
  results:
  - task:
      name: Text Classification
      type: text-classification
    dataset:
      name: glue
      type: glue
      config: mrpc
      split: validation
      args: mrpc
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.8455882352941176
    - name: F1
      type: f1
      value: 0.8923076923076922

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# v10_bert-base-uncased-finetuned-mrpc

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the MRPC configuration of the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5079

- Accuracy: 0.8456

- F1: 0.8923

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 79

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5
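The Step column of the results table follows from these settings. This sketch rests on three assumptions, none of which the card states:

- training uses the standard GLUE MRPC training split of 3,668 sentence pairs;
- training runs on a single device without gradient accumulation;
- the Trainer keeps its default `logging_steps=500`.

Under those assumptions there are 115 steps per epoch and 575 in total. The first training-loss log then falls at step 500, in epoch 5, which would explain the "No log" entries for epochs 1 to 4.

```python
import math

TRAIN_PAIRS = 3668   # assumed size of the GLUE MRPC training split
BATCH_SIZE = 32      # train_batch_size
NUM_EPOCHS = 5       # num_epochs
LOGGING_STEPS = 500  # assumed Trainer default; not listed in the card

# The final, partial batch of each epoch still counts as an optimizer step.
steps_per_epoch = math.ceil(TRAIN_PAIRS / BATCH_SIZE)
total_steps = steps_per_epoch * NUM_EPOCHS

# Epoch during which the first training-loss value is logged.
first_logged_epoch = math.ceil(LOGGING_STEPS / steps_per_epoch)

print(steps_per_epoch, total_steps, first_logged_epoch)  # 115 575 5
```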

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|
| No log | 1.0 | 115 | 0.5043 | 0.7574 | 0.8319 |
| No log | 2.0 | 230 | 0.4095 | 0.8456 | 0.8919 |
| No log | 3.0 | 345 | 0.4298 | 0.8407 | 0.8889 |
| No log | 4.0 | 460 | 0.4580 | 0.8529 | 0.8962 |
| 0.3409 | 5.0 | 575 | 0.5079 | 0.8456 | 0.8923 |
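The final accuracy and F1 are consistent with a single confusion matrix. The counts below are a reconstruction derived here, not numbers reported in the card. They assume the 408-pair MRPC validation split contains 279 paraphrase (positive) pairs.

- Accuracy 0.8456 implies 345 correct predictions, i.e. 63 errors.
- F1 = 2TP / (2TP + FP + FN) = 0.8923 then gives TP = 261.
- It follows that FN = 18, FP = 45 and TN = 84.

The hypothetical helper below recomputes both metrics from those counts:

```python
def accuracy_and_f1(tp: int, fp: int, fn: int, tn: int) -> tuple[float, float]:
    """Binary accuracy and F1 (positive class = paraphrase) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, f1


# Counts reconstructed from the reported metrics (not taken from the card).
TP, FP, FN, TN = 261, 45, 18, 84

acc, f1 = accuracy_and_f1(TP, FP, FN, TN)
print(f"accuracy={acc:.4f} f1={f1:.4f}")  # accuracy=0.8456 f1=0.8923
```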

### Framework versions

- Transformers 4.28.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.13.3

|

nero1342/vcn-7b-v3-500it-crawl | nero1342 | 2023-11-10T14:21:30Z | 0 | 0 | peft | [

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:vilm/vietcuna-7b-v3",

"base_model:adapter:vilm/vietcuna-7b-v3",

"region:us"

]

| null | 2023-11-10T09:08:29Z | ---

library_name: peft

base_model: vilm/vietcuna-7b-v3

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
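The card does not yet provide loading code. The sketch below is an assumption-based starting point, not the author's documented usage. It assumes this repository holds a PEFT adapter for the causal language model `vilm/vietcuna-7b-v3`, the `base_model` in the metadata. It uses the standard `transformers` Auto classes and `peft.PeftModel.from_pretrained`. The prompt format expected by the fine-tuned model is not documented, so `generate_text` (a hypothetical helper) passes raw text through. The heavy imports are deferred into the loader so the module can be imported without downloading anything.

```python
BASE_MODEL_ID = "vilm/vietcuna-7b-v3"          # base_model from the card metadata
ADAPTER_ID = "nero1342/vcn-7b-v3-500it-crawl"  # this repository


def load_model_with_adapter():
    """Load the base causal LM and attach the PEFT adapter (task type assumed)."""
    # Deferred imports: peft and transformers are only needed when actually loading.
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(BASE_MODEL_ID)
    base_model = AutoModelForCausalLM.from_pretrained(BASE_MODEL_ID)
    model = PeftModel.from_pretrained(base_model, ADAPTER_ID)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def generate_text(tokenizer, model, prompt: str, max_new_tokens: int = 128) -> str:
    """Generate a continuation of a raw prompt with the model's default generation settings."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example usage (downloads several GB of weights for the 7B base model):
# tokenizer, model = load_model_with_adapter()
# print(generate_text(tokenizer, model, "Xin chào"))
```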

## Training Details

### Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters