modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp, UTC, 2020-02-15 11:33:14 to 2025-06-23 18:27:52) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 492 classes) | tags (sequence, 1 to 4.05k items) | pipeline_tag (string, 54 classes) | createdAt (timestamp, UTC, 2022-03-02 23:29:04 to 2025-06-23 18:25:26) | card (string, 11 to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

lenartlola/rick-bot | lenartlola | 2022-12-15T14:02:44Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-12-15T14:00:20Z | ---

tags:

- conversational

--- |

J3/ppo-Huggy | J3 | 2022-12-15T13:57:38Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2022-12-15T13:57:27Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on how to train your first agent with ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **play directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. In Step 1, enter your model_id: J3/ppo-Huggy

3. In Step 2, select your `*.nn` or `*.onnx` file

4. Click **Watch the agent play** 👀

|

Tereveni-AI/gpt2-124M-uk-fiction | Tereveni-AI | 2022-12-15T13:49:41Z | 30 | 3 | transformers | [

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"uk",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

language: uk

widget:

- text: "Но зла Юнона, суча дочка, "

tags:

- text-generation

---

Note: the **default code snippet above won't work** because this model uses `AlbertTokenizer` with `GPT2LMHeadModel`; see this [issue](https://github.com/huggingface/transformers/issues/4285).

## GPT2 124M Trained on Ukrainian Fiction

### Training details

The model was trained on a corpus of 4,040 fiction books (2.77 GiB in total).

Evaluation on the [brown-uk](https://github.com/brown-uk/corpus) corpus gives a perplexity of 50.16.
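Perplexity is the exponential of the mean per-token negative log-likelihood. The card does not say how the brown-uk figure was computed (context length, striding, tokenization of the reference text), so the sketch below only illustrates the formula on hypothetical per-token log-probabilities; `perplexity` is an illustrative helper, not part of `transformers`.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Return exp(-mean(log p)) for natural-log per-token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Hypothetical example: assigning probability 1/50 to every token gives
# perplexity 50, as uncertain as a uniform choice among 50 tokens.
log_probs = [math.log(1 / 50)] * 10
print(perplexity(log_probs))
```

With `transformers`, calling `GPT2LMHeadModel` with `labels=input_ids` returns the mean cross-entropy over the predicted tokens as `.loss`, so `math.exp(loss.item())` is the perplexity of that sequence; corpus-level scores are usually obtained by averaging the loss over all tokens before exponentiating.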

### Example usage:

```python
from transformers import AlbertTokenizer, GPT2LMHeadModel

# This model pairs an ALBERT (SentencePiece) tokenizer with a GPT-2 LM head.
tokenizer = AlbertTokenizer.from_pretrained("Tereveni-AI/gpt2-124M-uk-fiction")
model = GPT2LMHeadModel.from_pretrained("Tereveni-AI/gpt2-124M-uk-fiction")

input_ids = tokenizer.encode("Но зла Юнона, суча дочка,", add_special_tokens=False, return_tensors='pt')

# Sample three continuations, each at most 50 tokens long (prompt included).
outputs = model.generate(
    input_ids,
    do_sample=True,
    num_return_sequences=3,
    max_length=50
)

for i, out in enumerate(outputs):
    print("{}: {}".format(i, tokenizer.decode(out)))
```

Prints something like this:

```text

0: Но зла Юнона, суча дочка, яка затьмарила всі її таємниці: І хто з'їсть її душу, той помре». І, не дочекавшись гніву богів, посунула в пітьму, щоб не бачити перед собою. Але, за

1: Но зла Юнона, суча дочка, і довела мене до божевілля. Але він не знав нічого. Після того як я його побачив, мені стало зле. Я втратив рівновагу. Але в мене не було часу на роздуми. Я вже втратив надію

2: Но зла Юнона, суча дочка, не нарікала нам! — раптом вигукнула Юнона. — Це ти, старий йолопе! — мовила вона, не перестаючи сміятись. — Хіба ти не знаєш, що мені подобається ходити з тобою?

``` |

andresgtn/ppo-LunarLander-v2 | andresgtn | 2022-12-15T13:47:07Z | 0 | 1 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T13:46:32Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 222.99 +/- 15.34

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
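The usage snippet is still a template, but the `mean_reward` value in the metadata (`222.99 +/- 15.34`) follows a fixed convention: the mean and standard deviation of per-episode returns over a set of evaluation episodes. stable-baselines3's `evaluate_policy` computes these with `np.mean` and `np.std`, so the spread is the population standard deviation (ddof=0). The stdlib sketch below mirrors that on hypothetical returns; the number of evaluation episodes behind this card is not stated, and `summarize_returns` is an illustrative helper.

```python
import statistics
from typing import Sequence, Tuple


def summarize_returns(episode_returns: Sequence[float]) -> Tuple[float, float]:
    """Mean and population standard deviation of per-episode returns,
    in the `mean +/- std` form used by the model-index metadata."""
    mean = statistics.fmean(episode_returns)
    std = statistics.pstdev(episode_returns)  # ddof=0, like np.std's default
    return mean, std


# Hypothetical returns from 4 evaluation episodes (not this model's results).
returns = [210.0, 230.0, 240.0, 220.0]
mean, std = summarize_returns(returns)
print(f"mean_reward: {mean:.2f} +/- {std:.2f}")
```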

|

ntinosmg/q-FrozenLake-v1-4x4-noSlippery | ntinosmg | 2022-12-15T13:35:08Z | 0 | 0 | null | [

"FrozenLake-v1-4x4",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2022-08-13T18:33:02Z | ---

tags:

- FrozenLake-v1-4x4

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4

type: FrozenLake-v1-4x4

metrics:

- type: mean_reward

value: 0.61 +/- 0.49

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

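```python
import gym

# `load_from_hub` and `evaluate_agent` are helper functions defined in the
# Hugging Face Deep RL Course notebook, not library imports: define them first.
model = load_from_hub(repo_id="ntinosmg/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# This agent was trained on the non-slippery map, so pass is_slippery=False;
# check whether the environment needs any other attributes.
env = gym.make(model["env_id"], is_slippery=False)

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])
```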
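The snippet above only evaluates a stored Q-table. To show what such a table holds and how tabular Q-learning fills it, here is a self-contained sketch on a hand-written copy of the non-slippery 4x4 FrozenLake map, standing in for `gym.make("FrozenLake-v1", is_slippery=False)`. The `step`, `train`, and `evaluate` helpers are hypothetical, and the hyperparameters (learning rate 0.7, gamma 0.95, 10,000 episodes, epsilon decaying from 1.0 towards 0.05) are assumptions for illustration, not values read from this model's `q-learning.pkl`. Actions follow Gym's FrozenLake numbering (0 = LEFT, 1 = DOWN, 2 = RIGHT, 3 = UP).

```python
import math
import random
from typing import List, Tuple

# Hand-written copy of Gym's default 4x4 FrozenLake map:
# S = start, F = frozen, H = hole, G = goal.
MAP = ["SFFF", "FHFH", "FFFH", "HFFG"]
N_ROWS, N_COLS = len(MAP), len(MAP[0])
N_STATES, N_ACTIONS = N_ROWS * N_COLS, 4
# Gym's FrozenLake action order: 0 = LEFT, 1 = DOWN, 2 = RIGHT, 3 = UP.
MOVES = [(0, -1), (1, 0), (0, 1), (-1, 0)]
QTable = List[List[float]]


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Non-slippery transition: returns (next_state, reward, done)."""
    row, col = divmod(state, N_COLS)
    d_row, d_col = MOVES[action]
    # Moving into the edge of the grid leaves the agent in place.
    row = min(max(row + d_row, 0), N_ROWS - 1)
    col = min(max(col + d_col, 0), N_COLS - 1)
    cell = MAP[row][col]
    return row * N_COLS + col, (1.0 if cell == "G" else 0.0), cell in "HG"


def greedy_action(qtable: QTable, state: int) -> int:
    values = qtable[state]
    return values.index(max(values))  # ties go to the lowest action index


def train(episodes: int = 10_000, max_steps: int = 99, lr: float = 0.7,
          gamma: float = 0.95, seed: int = 0) -> QTable:
    rng = random.Random(seed)
    qtable = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for episode in range(episodes):
        # Epsilon-greedy exploration, decaying from 1.0 towards 0.05.
        epsilon = 0.05 + 0.95 * math.exp(-0.0005 * episode)
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.randrange(N_ACTIONS)
            else:
                action = greedy_action(qtable, state)
            next_state, reward, done = step(state, action)
            # Q-learning target; terminal states (holes, goal) have no future value.
            target = reward if done else reward + gamma * max(qtable[next_state])
            qtable[state][action] += lr * (target - qtable[state][action])
            state = next_state
            if done:
                break
    return qtable


def evaluate(qtable: QTable, max_steps: int = 99) -> float:
    """Reward of one greedy rollout from the start (the map is deterministic)."""
    state = 0
    for _ in range(max_steps):
        state, reward, done = step(state, greedy_action(qtable, state))
        if done:
            return reward
    return 0.0


qtable = train()
print(evaluate(qtable))
```

Each update moves `Q[s][a]` toward `r + gamma * max(Q[s'])`. Because this map is deterministic, one greedy rollout is enough to check the learned policy; on the slippery map the reward would have to be averaged over many evaluation episodes.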

|

Ari/whisper-small-af-za | Ari | 2022-12-15T13:33:47Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"hf-asr-leaderboard",

"af",

"dataset:google/fleurs",

"dataset:openslr/SLR32",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-12-13T13:37:08Z | ---

language:

- af

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

- hf-asr-leaderboard

datasets:

- google/fleurs

- openslr/SLR32

model-index:

- name: whisper-small-af-za - Ari

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

metrics:

- name: Wer

type: wer

value: 0.0

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-small-af-za - Ari

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.0002

- eval_wer: 0.0

- eval_runtime: 77.0592

- eval_samples_per_second: 2.569

- eval_steps_per_second: 0.324

- epoch: 14.6

- step: 2000
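Word error rate is the word-level edit distance between a transcript and its reference, divided by the number of reference words: WER = (S + D + I) / N. An `eval_wer` of 0.0 therefore means no substitutions, deletions, or insertions across the evaluation set, after whatever text normalization the training script applied (the card does not specify it). Whisper fine-tuning scripts commonly compute this with the `evaluate` library's `wer` metric. The stdlib sketch below is an illustrative re-implementation of the formula, not that library's code, and the example sentences are hypothetical.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein DP over words, one row at a time: before processing reference
    # word i, prev[j] is the distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```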

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
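With `lr_scheduler_type: linear`, the learning rate ramps linearly from 0 to the peak (here 1e-05) over the 500 warmup steps, then decays linearly to 0 at `training_steps` (5000), which is the rule implemented by `transformers.get_linear_schedule_with_warmup`. The stdlib sketch below reproduces that multiplier with the values listed above; `linear_warmup_lr` is an illustrative helper, not a `transformers` function.

```python
def linear_warmup_lr(step: int, peak_lr: float = 1e-5,
                     warmup_steps: int = 500, total_steps: int = 5000) -> float:
    """Learning rate at `step` under linear warmup followed by linear decay to 0,
    following the same piecewise rule as get_linear_schedule_with_warmup."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return peak_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


for s in (0, 250, 500, 2000, 5000):
    print(s, linear_warmup_lr(s))
```

At step 2000, where the evaluation above was reported, this schedule gives roughly 6.7e-06.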

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

tomekkorbak/eloquent_stallman | tomekkorbak | 2022-12-15T13:27:52Z | 0 | 0 | null | [

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-12-15T13:27:41Z | ---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:

- name: eloquent_stallman

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# eloquent_stallman

This model was trained starting from the `tomekkorbak/goofy_pasteur` checkpoint (`'from_scratch': False` in the full config below) on the 39 consecutive chunks of the tomekkorbak/detoxify-pile-chunk3 data listed in the metadata above, from tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 25000

- mixed_precision_training: Native AMP
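Here `train_batch_size: 16` with `gradient_accumulation_steps: 4` gives `total_train_batch_size: 64`. Gradients from 4 micro-batches of 16 sequences are accumulated before each optimizer step, and since 16 × 4 already equals 64, this implies training on a single device. The sketch below checks the identity behind this on a toy one-parameter least-squares model with hypothetical data. Dividing each micro-batch's mean-loss gradient by the number of accumulation steps, as the `Trainer` does with the loss, reproduces the full-batch gradient when the micro-batches are equally sized. It illustrates the arithmetic only and is not the training code used for this model.

```python
import random
from typing import List, Tuple

Batch = List[Tuple[float, float]]


def mean_loss_grad(w: float, batch: Batch) -> float:
    """Gradient w.r.t. w of the mean squared error of the model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, batch: Batch, micro_batch_size: int) -> float:
    """Sum of micro-batch gradients, each scaled by 1 / accumulation_steps."""
    micro_batches = [batch[i:i + micro_batch_size]
                     for i in range(0, len(batch), micro_batch_size)]
    accumulation_steps = len(micro_batches)
    return sum(mean_loss_grad(w, mb) / accumulation_steps for mb in micro_batches)


# Hypothetical data: 64 noisy samples of y = 3x.
rng = random.Random(42)
xs = [rng.uniform(-1.0, 1.0) for _ in range(64)]
data = [(x, 3.0 * x + rng.gauss(0.0, 0.1)) for x in xs]

full_batch = mean_loss_grad(0.5, data)                          # one batch of 64
accumulated = accumulated_grad(0.5, data, micro_batch_size=16)  # 4 micro-batches of 16
print(full_batch, accumulated)
```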

### Framework versions

- Transformers 4.24.0

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'filter_threshold': 0.00078,

'is_split_by_sentences': True,

'skip_tokens': 1661599744},

'generation': {'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'max_tokens': 64, 'num_samples': 4096},

'model': {'from_scratch': False,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'model_kwargs': {'revision': '81a1701e025d2c65ae6e8c2103df559071523ee0'},

'path_or_name': 'tomekkorbak/goofy_pasteur'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'eloquent_stallman',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'tokens_already_seen': 1661599744,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
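Both generation scenarios above sample with `temperature: 0.7`, `top_k: 0` (which disables top-k filtering in `transformers`) and `top_p: 0.9` (nucleus sampling). The sketch below shows the filtering step on a hypothetical 4-token vocabulary. It divides the logits by the temperature, applies a softmax, keeps the smallest set of most-probable tokens whose cumulative probability reaches `top_p`, and renormalizes. It is a simplified stand-in for the `transformers` logits warpers (it ignores details such as `min_tokens_to_keep`), and `top_p_distribution` is a hypothetical helper.

```python
import math
from typing import Dict, List


def top_p_distribution(logits: List[float], temperature: float = 0.7,
                       top_p: float = 0.9) -> Dict[int, float]:
    """Return {token_id: probability} after temperature scaling and nucleus filtering."""
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Walk tokens from most to least probable until the kept mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    # Renormalize so the surviving tokens form a probability distribution.
    return {i: probs[i] / mass for i in kept}


print(top_p_distribution([2.0, 1.0, 0.5, -1.0]))
```

A temperature below 1 sharpens the distribution before the nucleus cut, so fewer tokens are needed to reach the 0.9 mass.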

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/3o0evgmf |

Dreamoon/ppo-LunarLander-v2 | Dreamoon | 2022-12-15T13:21:13Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-09T20:34:55Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -64.07 +/- 67.43

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

LucianoDeben/ppo-Huggy | LucianoDeben | 2022-12-15T13:10:35Z | 1 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2022-12-15T13:10:21Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on how to train your first agent with ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **play directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. In Step 1, enter your model_id: LucianoDeben/ppo-Huggy

3. In Step 2, select your `*.nn` or `*.onnx` file

4. Click **Watch the agent play** 👀

|

gabrieleai/gamindocar-2000-700 | gabrieleai | 2022-12-15T13:06:17Z | 1 | 0 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2022-12-15T13:04:11Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### Gamindocar-2000-700 Dreambooth model trained by gabrieleai with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

|

ibadrehman/ppo-Huggy-01 | ibadrehman | 2022-12-15T12:40:16Z | 10 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2022-12-15T12:40:09Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on how to train your first agent with ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **play directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. In Step 1, enter your model_id: ibadrehman/ppo-Huggy-01

3. In Step 2, select your `*.nn` or `*.onnx` file

4. Click **Watch the agent play** 👀

|

bvk1ng/bipedal_walker_ppo | bvk1ng | 2022-12-15T12:39:15Z | 1 | 0 | stable-baselines3 | [

"stable-baselines3",

"BipedalWalker-v3",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T12:34:54Z | ---

library_name: stable-baselines3

tags:

- BipedalWalker-v3

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: Proximal Policy Optimisation (PPO)

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: BipedalWalker-v3

type: BipedalWalker-v3

metrics:

- type: mean_reward

value: 209.63 +/- 82.30

name: mean_reward

verified: false

---

# **Proximal Policy Optimisation (PPO)** Agent playing **BipedalWalker-v3**

This is a trained model of a **Proximal Policy Optimisation (PPO)** agent playing **BipedalWalker-v3** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

SRM47/gpt2-large-paraphraser | SRM47 | 2022-12-15T12:33:15Z | 9 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-12-15T10:50:36Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-large-paraphraser

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-large-paraphraser

This model is a fine-tuned version of [gpt2-large](https://huggingface.co/gpt2-large) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

scikit-learn/blog-example | scikit-learn | 2022-12-15T12:31:24Z | 0 | 0 | sklearn | [

"sklearn",

"skops",

"tabular-classification",

"region:us"

] | tabular-classification | 2022-12-15T12:21:52Z | ---

library_name: sklearn

tags:

- sklearn

- skops

- tabular-classification

model_file: model.pkl

widget:

structuredData:

area_mean:

- 407.4

- 1335.0

- 428.0

area_se:

- 26.99

- 77.02

- 17.12

area_worst:

- 508.9

- 1946.0

- 546.3

compactness_mean:

- 0.05991

- 0.1076

- 0.069

compactness_se:

- 0.01065

- 0.01895

- 0.01727

compactness_worst:

- 0.1049

- 0.3055

- 0.188

concave points_mean:

- 0.02069

- 0.08941

- 0.01393

concave points_se:

- 0.009175

- 0.01232

- 0.006747

concave points_worst:

- 0.06544

- 0.2112

- 0.06913

concavity_mean:

- 0.02638

- 0.1527

- 0.02669

concavity_se:

- 0.01245

- 0.02681

- 0.02045

concavity_worst:

- 0.08105

- 0.4159

- 0.1471

fractal_dimension_mean:

- 0.05934

- 0.05478

- 0.06057

fractal_dimension_se:

- 0.001461

- 0.001711

- 0.002922

fractal_dimension_worst:

- 0.06487

- 0.07055

- 0.07993

perimeter_mean:

- 73.28

- 134.8

- 75.51

perimeter_se:

- 2.684

- 4.119

- 1.444

perimeter_worst:

- 83.12

- 166.8

- 85.22

radius_mean:

- 11.5

- 20.64

- 11.84

radius_se:

- 0.3927

- 0.6137

- 0.2222

radius_worst:

- 12.97

- 25.37

- 13.3

smoothness_mean:

- 0.09345

- 0.09446

- 0.08871

smoothness_se:

- 0.00638

- 0.006211

- 0.005517

smoothness_worst:

- 0.1183

- 0.1562

- 0.128

symmetry_mean:

- 0.1834

- 0.1571

- 0.1533

symmetry_se:

- 0.02292

- 0.01276

- 0.01616

symmetry_worst:

- 0.274

- 0.2689

- 0.2535

texture_mean:

- 18.45

- 17.35

- 18.94

texture_se:

- 0.8429

- 0.6575

- 0.8652

texture_worst:

- 22.46

- 23.17

- 24.99

---

# Model description

This is a logistic regression model trained on the breast cancer dataset.

## Intended uses & limitations

This model was trained for educational purposes.

## Training Procedure

### Hyperparameters

The model was trained with the hyperparameters below.

<details>

<summary> Click to expand </summary>

| Hyperparameter | Value |
|--------------------------|-----------------------------------------------------------------|
| memory | |
| steps | [('scaler', StandardScaler()), ('model', LogisticRegression())] |
| verbose | False |
| scaler | StandardScaler() |
| model | LogisticRegression() |
| scaler__copy | True |
| scaler__with_mean | True |
| scaler__with_std | True |
| model__C | 1.0 |
| model__class_weight | |
| model__dual | False |
| model__fit_intercept | True |
| model__intercept_scaling | 1 |
| model__l1_ratio | |
| model__max_iter | 100 |
| model__multi_class | auto |
| model__n_jobs | |
| model__penalty | l2 |
| model__random_state | |
| model__solver | lbfgs |
| model__tol | 0.0001 |
| model__verbose | 0 |
| model__warm_start | False |

</details>
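The pipeline standardizes each feature with `StandardScaler` (`with_mean=True`, `with_std=True`) and then applies `LogisticRegression`. The stdlib sketch below spells out what those two steps compute at prediction time for a single feature. It z-scores with the population standard deviation, which is what `StandardScaler` uses, then applies a sigmoid to a linear score. The three inputs are the `area_mean` examples from the widget metadata, and the scaler statistics are fitted on just these three values for illustration, whereas the real pipeline takes them from the training data. The weight and intercept are hypothetical, since the fitted coefficients live in `model.pkl`, not in this card.

```python
import math
import statistics
from typing import List, Tuple


def fit_standard_scaler(values: List[float]) -> Tuple[float, float]:
    """Mean and population std (ddof=0), the statistics StandardScaler stores."""
    return statistics.fmean(values), statistics.pstdev(values)


def standardize(x: float, mean: float, std: float) -> float:
    return (x - mean) / std


def predict_proba(z: float, weight: float, intercept: float) -> float:
    """Binary logistic regression: P(class 1) = sigmoid(weight * z + intercept)."""
    return 1.0 / (1.0 + math.exp(-(weight * z + intercept)))


area_mean = [407.4, 1335.0, 428.0]  # example rows from the widget metadata
mean, std = fit_standard_scaler(area_mean)
z_scores = [standardize(x, mean, std) for x in area_mean]
# Hypothetical coefficients, for illustration only.
probs = [predict_proba(z, weight=-2.0, intercept=0.5) for z in z_scores]
print([round(z, 3) for z in z_scores], [round(p, 3) for p in probs])
```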

### Model Plot

The model plot is below.

`Pipeline(steps=[('scaler', StandardScaler()), ('model', LogisticRegression())])`
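At prediction time, a `StandardScaler` followed by `LogisticRegression` rescales each feature with the mean and standard deviation learned during fitting, then applies a logistic model to the result. The plain-Python sketch below illustrates that flow for a binary problem. It is not scikit-learn's implementation, and the training rows, weights, and bias are hypothetical, not this model's fitted values.

```python
import math
from statistics import mean, pstdev


def fit_scaler(rows):
    """Per-feature mean and population standard deviation (ddof=0).

    A zero standard deviation is replaced by 1.0 so constant features pass through.
    """
    columns = list(zip(*rows))
    means = [mean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds


def decision(row, means, stds, weights, bias):
    """Linear score (logit) of the standardized features."""
    z = [(x - m) / s for x, m, s in zip(row, means, stds)]
    return bias + sum(w * v for w, v in zip(weights, z))


def predict_proba(row, means, stds, weights, bias):
    """Probability of the positive class via the logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-decision(row, means, stds, weights, bias)))


def predict(row, means, stds, weights, bias):
    """Class 1 when the logit is strictly positive, otherwise class 0."""
    return int(decision(row, means, stds, weights, bias) > 0)


# Hypothetical training rows and coefficients, for illustration only
train_rows = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
means, stds = fit_scaler(train_rows)
weights, bias = [1.5, -0.5], 0.0
print(predict([3.0, 30.0], means, stds, weights, bias))
```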

## Evaluation Results

The evaluation results are shown below.

| Metric | Value |
|----------|----------|
| accuracy | 0.965035 |
| f1 score | 0.965035 |
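Accuracy and F1 in the table above are identical. The card does not say how F1 was averaged; one setting where the two always coincide is micro-averaged F1 for single-label classification, because every misclassification counts once as a false positive and once as a false negative. A minimal plain-Python sketch with made-up labels:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def micro_f1(y_true, y_pred):
    """F1 from true positives, false positives and false negatives pooled over all classes."""
    labels = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in labels:
        tp += sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp += sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn += sum(t == c and p != c for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up labels for a binary task
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]
print(accuracy(y_true, y_pred), micro_f1(y_true, y_pred))
```

Note that scikit-learn's default for binary F1 (`average="binary"`) scores only the positive class and generally differs from accuracy.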

# How to Get Started with the Model

Use the code below to get started with the model.

```python

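import json

import joblib
import pandas as pd

# Load the fitted pipeline; the filename must be passed as a string
clf = joblib.load("model.pkl")

# config.json stores an example input under ["sklearn"]["example_input"]
with open("config.json") as f:
    config = json.load(f)

clf.predict(pd.DataFrame.from_dict(config["sklearn"]["example_input"]))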

```

# Additional Content

## Confusion Matrix

|

MarcusAGray/q-FrozenLake-v1-8x8-noSlippery | MarcusAGray | 2022-12-15T12:24:56Z | 0 | 0 | null | [

"FrozenLake-v1-8x8-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T12:19:13Z | ---

tags:

- FrozenLake-v1-8x8-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:
- name: q-FrozenLake-v1-8x8-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-8x8-no_slippery
      type: FrozenLake-v1-8x8-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

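import pickle

import gym
from huggingface_hub import hf_hub_download

# Download the pickled model dictionary from the Hub and unpickle it
path = hf_hub_download(repo_id="MarcusAGray/q-FrozenLake-v1-8x8-noSlippery", filename="q-learning.pkl")
with open(path, "rb") as f:
    model = pickle.load(f)

# Don't forget to check if you need to add additional attributes (is_slippery=False etc.);
# the repo name indicates the 8x8, non-slippery map.
env = gym.make(model["env_id"], map_name="8x8", is_slippery=False)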

```
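The card does not describe training, but a tabular Q-learning agent like this one is typically trained with the update below and epsilon-greedy exploration. A minimal stdlib sketch; the learning rate (alpha=0.7), discount (gamma=0.95), and the transitions used are illustrative, not this agent's actual settings. Action indices follow Gym's `FrozenLake-v1` encoding.

```python
import random


def epsilon_greedy(q_row, epsilon, rng):
    """Random action with probability epsilon, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=q_row.__getitem__)  # first maximum on ties


def q_update(qtable, state, action, reward, next_state, done, alpha, gamma):
    """Tabular Q-learning: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    target = reward if done else reward + gamma * max(qtable[next_state])
    qtable[state][action] += alpha * (target - qtable[state][action])


# 8x8 map: 64 states, 4 actions (0=left, 1=down, 2=right, 3=up)
qtable = [[0.0] * 4 for _ in range(64)]
rng = random.Random(0)
# Illustrative transitions near the goal (state 63): right from 62 reaches the goal,
# right from 61 reaches 62
q_update(qtable, 62, 2, 1.0, 63, True, alpha=0.7, gamma=0.95)
q_update(qtable, 61, 2, 0.0, 62, False, alpha=0.7, gamma=0.95)
print(qtable[62][2], qtable[61][2], epsilon_greedy(qtable[61], 0.0, rng))
```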

|

emmashe15/ppo-LunarLander-v2-TEST | emmashe15 | 2022-12-15T12:10:45Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T11:54:29Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 262.61 +/- 21.13
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

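from huggingface_sb3 import load_from_hub
from stable_baselines3 import PPO

# load_from_hub downloads a file from the Hub and returns its local path.
# The checkpoint filename is not given in this card; "<checkpoint>.zip" is a placeholder.
checkpoint = load_from_hub(repo_id="emmashe15/ppo-LunarLander-v2-TEST", filename="<checkpoint>.zip")
model = PPO.load(checkpoint)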

```

|

supermy/c2m-mt5 | supermy | 2022-12-15T12:07:27Z | 4 | 3 | transformers | [

"transformers",

"pytorch",

"mt5",

"feature-extraction",

"zh",

"dataset:c2m",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2022-12-15T11:31:14Z | ---

language: zh

datasets: c2m

inference:
  parameters:
    max_length: 108
    num_return_sequences: 1
    do_sample: True
widget:
- text: "晋太元中,武陵人捕鱼为业。缘溪行,忘路之远近。忽逢桃花林,夹岸数百步,中无杂树,芳草鲜美,落英缤纷。渔人甚异之,复前行,欲穷其林。林尽水源,便得一山,山有小口,仿佛若有光。便舍船,从口入。初极狭,才通人。复行数十步,豁然开朗。土地平旷,屋舍俨然,有良田、美池、桑竹之属。阡陌交通,鸡犬相闻。其中往来种作,男女衣着,悉如外人。黄发垂髫,并怡然自乐。"
  example_title: "桃花源记"
- text: "往者不可谏,来者犹可追。"
  example_title: "来者犹可追"
- text: "逝者如斯夫!不舍昼夜。"
  example_title: "逝者如斯夫"

---

# Classical Chinese to Modern Chinese (文言文 to 现代文)

## Model description

## How to use

Call the model with a pipeline:

```python

>>> from transformers import pipeline

>>> model_checkpoint = "supermy/c2m-mt5"

>>> translator = pipeline("translation",
...                       model=model_checkpoint,
...                       num_return_sequences=1,
...                       max_length=52,
...                       truncation=True)

>>> translator("往者不可谏,来者犹可追。")

[{'translation_text': '过 去 的 事 情 不能 劝 谏 , 未来 的 事 情 还 可以 追 回 来 。 如 果 过 去 的 事 情 不能 劝 谏 , 那 么 , 未来 的 事 情 还 可以 追 回 来 。 如 果 过 去 的 事 情'}]

>>> translator("福兮祸所伏,祸兮福所倚。",do_sample=True)

[{'translation_text': '幸 福 是 祸 患 所 隐 藏 的 , 灾 祸 是 福 祸 所 依 托 的 。 这 些 都 是 幸 福 所 依 托 的 。 这 些 都 是 幸 福 所 带 来 的 。 幸 福 啊 , 也 是 幸 福'}]

>>> translator("成事不说,遂事不谏,既往不咎。", num_return_sequences=1,do_sample=True)

[{'translation_text': '事 情 不 高 兴 , 事 情 不 劝 谏 , 过 去 的 事 就 不 会 责 怪 。 事 情 没 有 多 久 了 , 事 情 没 有 多 久 , 事 情 没 有 多 久 了 , 事 情 没 有 多'}]

>>> translator("逝者如斯夫!不舍昼夜。",num_return_sequences=1,max_length=30)

[{'translation_text': '逝 去 的 人 就 像 这 样 啊 , 不分 昼夜 地 去 追 赶 它 们 。 这 样 的 人 就 不 会 忘 记'}]

```
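The outputs above separate every token with a space, likely an artifact of how the training targets were tokenized (the card does not say). A hypothetical post-processing helper, not part of this model or of `transformers`, that removes whitespace between Chinese characters and adjacent punctuation while leaving spaces between Latin-script words intact:

```python
import re

# CJK ideographs, CJK and full-width punctuation, and the ASCII punctuation
# that appears in the outputs above
_CJK = r"[\u3000-\u303f\u4e00-\u9fff\uff00-\uffef,.!?;:]"
_SPACE_BETWEEN_CJK = re.compile(rf"(?<={_CJK})\s+(?={_CJK})")


def join_cjk(text: str) -> str:
    """Remove whitespace that sits between two CJK characters or punctuation marks."""
    return _SPACE_BETWEEN_CJK.sub("", text)


print(join_cjk("过 去 的 事 情 不能 劝 谏 , 未来 的 事 情 还 可以 追 回 来 。"))
```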

Here is how to use this model to get the features of a given text in PyTorch:

```python

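from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("supermy/c2m-mt5")
model = AutoModelForSeq2SeqLM.from_pretrained("supermy/c2m-mt5")

text = "用你喜欢的任何文本替换我。"  # "Replace me with any text you like."
encoded_input = tokenizer(text, return_tensors='pt')

# Calling the full seq2seq model without decoder inputs raises an error,
# so run only the encoder to get per-token features.
output = model.get_encoder()(**encoded_input)
features = output.last_hidden_state  # shape: (batch, sequence_length, hidden_size)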

```

## Training data

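A very comprehensive Classical Chinese (ancient prose) to Modern Chinese parallel corpus, covering most of the major classical texts.

The raw crawled data is aligned at the passage level. After script-based sentence splitting (on full stops, semicolons, exclamation marks, and question marks) and manual proofreading, it yields roughly 960,000 sentence pairs in total. The `bitext` directory contains the aligned Classical-to-Modern Chinese parallel data. In addition, the `source` directory contains monolingual Classical Chinese data and the `target` directory contains monolingual Modern Chinese data; the files in these two directories are aligned line by line.

The data statistics are listed below. The short-texts category includes shorter classics such as the Analects (《论语》), Mencius (《孟子》), and Zuo Zhuan (《左传》), and has been merged with Zizhi Tongjian (《资治通鉴》).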

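| Book | Sentences |
|:--|:--|
| Short texts and 资治通鉴 | 348727 |
| 元史 | 21182 |
| 北史 | 25823 |
| 北书 | 10947 |
| 南史 | 13838 |
| 南齐书 | 13137 |
| 史记 | 17701 |
| 后汉书 | 17753 |
| 周书 | 14930 |
| 太平广记 | 59358 |
| 宋书 | 23794 |
| 宋史 | 77853 |
| 徐霞客游记 | 22750 |
| 新五代史 | 10147 |
| 新唐书 | 12359 |
| 旧五代史 | 11377 |
| 旧唐书 | 29185 |
| 明史 | 85179 |
| 晋书 | 21133 |
| 梁书 | 14318 |
| 水经注全 | 11630 |
| 汉书 | 37622 |
| 辽史 | 9278 |
| 金史 | 13758 |
| 陈书 | 7096 |
| 隋书 | 8204 |
| 魏书 | 28178 |
| **Total** | **967257** |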

Per-book statistics for the short-texts and 资治通鉴 category are listed below (these counts are not entirely accurate):

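| Book | Sentences |
|:--|:--|
| 资治通鉴 | ~79500 |
| 左传 | ~10900 |
| 大学章句集注 | 86 |
| 反经 | 4211 |
| 公孙龙子 | 73 |
| 管子 | 6266 |
| 鬼谷子 | 385 |
| 韩非子 | 4325 |
| 淮南子 | 2669 |
| 黄帝内经 | 6162 |
| 皇帝四经 | 243 |
| 将苑 | 100 |
| 金刚经 | 193 |
| 孔子家语 | 138 |
| 老子 | 398 |
| 了凡四训 | 31 |
| 礼记 | 4917 |
| 列子 | 1735 |
| 六韬 | 693 |
| 六祖坛经 | 949 |
| 论语 | 988 |
| 吕氏春秋 | 2473 |
| 孟子 | 1654 |
| 梦溪笔谈 | 1280 |
| 墨子 | 2921 |
| 千字文 | 82 |
| 清史稿 | 1604 |
| 三字经 | 234 |
| 山海经 | 919 |
| 伤寒论 | 712 |
| 商君书 | 916 |
| 尚书 | 1048 |
| 世说新语 | 3044 |
| 司马法 | 132 |
| 搜神记 | 1963 |
| 搜神后记 | 540 |
| 素书 | 61 |
| 孙膑兵法 | 230 |
| 孙子兵法 | 338 |
| 天工开物 | 807 |
| 尉缭子 | 226 |
| 文昌孝经 | 194 |
| 文心雕龙 | 1388 |
| 吴子 | 136 |
| 孝经 | 102 |
| 笑林广记 | 1496 |
| 荀子 | 3131 |
| 颜氏家训 | 510 |
| 仪礼 | 2495 |
| 易传 | 711 |
| 逸周书 | 1505 |
| 战国策 | 3318 |
| 贞观政要 | 1291 |
| 中庸 | 206 |
| 周礼 | 2026 |
| 周易 | 460 |
| 庄子 | 1698 |
| 百战奇略 | 800 |
| 论衡 | ~11900 |
| 智囊 | 2165 |
| 罗织经 | 188 |
| 朱子家训 | 31 |
| 抱朴子 | 217 |
| 地藏经 | 547 |
| 国语 | 3841 |
| 容斋随笔 | 2921 |
| 幼学琼林 | 1372 |
| 三略 | 268 |
| 围炉夜话 | 387 |
| 冰鉴 | 120 |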

If you use this corpus, please cite the source: https://github.com/NiuTrans/Classical-Modern

Thanks to the members who contributed to this corpus: 丁佳鹏, 杨文权, 刘晓晴, 曹润柘, and 罗应峰.


## Training procedure

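Trained for four full days on a 16 GB NVIDIA GPU, over 68 runs in total.

Training data: the [Classical Chinese dataset](https://huggingface.co/datasets/supermy/Classical-Modern). Base model: [MT5](https://huggingface.co/google/mt5-small).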
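The step counts in the training log below follow from the dataset size and batch size. A quick check, assuming the Hugging Face Trainer's default of keeping the final partial batch of each epoch (no `drop_last`):

```python
import math

num_examples = 967_255   # "Num examples" in the log
batch_size = 12          # total train batch size (one device, no gradient accumulation)
num_epochs = 6

# The last, partial batch of each epoch still counts as a step
steps_per_epoch = math.ceil(num_examples / batch_size)
total_steps = steps_per_epoch * num_epochs
# Epoch in progress when training resumed at global step 465000
resume_epoch = 465_000 // steps_per_epoch

print(steps_per_epoch, total_steps, resume_epoch)
```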

```

[INFO|trainer.py:1628] 2022-12-15 16:08:36,696 >> ***** Running training *****

[INFO|trainer.py:1629] 2022-12-15 16:08:36,696 >> Num examples = 967255

[INFO|trainer.py:1630] 2022-12-15 16:08:36,697 >> Num Epochs = 6

[INFO|trainer.py:1631] 2022-12-15 16:08:36,697 >> Instantaneous batch size per device = 12

[INFO|trainer.py:1632] 2022-12-15 16:08:36,697 >> Total train batch size (w. parallel, distributed & accumulation) = 12

[INFO|trainer.py:1633] 2022-12-15 16:08:36,697 >> Gradient Accumulation steps = 1

[INFO|trainer.py:1634] 2022-12-15 16:08:36,697 >> Total optimization steps = 483630

[INFO|trainer.py:1654] 2022-12-15 16:08:36,698 >> Continuing training from checkpoint, will skip to saved global_step

[INFO|trainer.py:1655] 2022-12-15 16:08:36,698 >> Continuing training from epoch 5

[INFO|trainer.py:1656] 2022-12-15 16:08:36,698 >> Continuing training from global step 465000

{'loss': 5.2906, 'learning_rate': 1.8743667679837894e-06, 'epoch': 5.78}

{'loss': 5.3196, 'learning_rate': 1.8226743584971985e-06, 'epoch': 5.78}

{'loss': 5.3467, 'learning_rate': 6.513243595310464e-08, 'epoch': 5.99}

{'loss': 5.3363, 'learning_rate': 1.344002646651366e-08, 'epoch': 6.0}

{'train_runtime': 6277.5234, 'train_samples_per_second': 924.494, 'train_steps_per_second': 77.042, 'train_loss': 0.2044413571775476, 'epoch': 6.0}

***** train metrics *****

epoch = 6.0

train_loss = 0.2044

train_runtime = 1:44:37.52

train_samples = 967255

train_samples_per_second = 924.494

train_steps_per_second = 77.042

12/15/2022 17:53:23 - INFO - __main__ - *** Evaluate ***

[INFO|trainer.py:2920] 2022-12-15 17:53:23,729 >> ***** Running Evaluation *****

[INFO|trainer.py:2922] 2022-12-15 17:53:23,729 >> Num examples = 200

[INFO|trainer.py:2925] 2022-12-15 17:53:23,729 >> Batch size = 12

100%|██████████| 17/17 [00:07<00:00, 2.29it/s]

[INFO|modelcard.py:443] 2022-12-15 17:53:32,737 >> Dropping the following result as it does not have all the necessary fields:

{'task': {'name': 'Translation', 'type': 'translation'}, 'metrics': [{'name': 'Bleu', 'type': 'bleu', 'value': 0.7225}]}

***** eval metrics *****

epoch = 6.0

eval_bleu = 0.7225

eval_gen_len = 12.285

eval_loss = 6.6782

eval_runtime = 0:00:07.77

eval_samples = 200

eval_samples_per_second = 25.721

eval_steps_per_second = 2.186

``` |

Nenma/finetuning-sentiment-model-25k-samples | Nenma | 2022-12-15T11:55:52Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-12-15T10:52:45Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:
- name: finetuning-sentiment-model-25k-samples
  results:
  - task:
      name: Text Classification
      type: text-classification
    dataset:
      name: imdb
      type: imdb
      config: plain_text
      split: train
      args: plain_text
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.93208
    - name: F1
      type: f1
      value: 0.932183081715792

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-25k-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2198

- Accuracy: 0.9321

- F1: 0.9322

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
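With `lr_scheduler_type: linear` and no warmup listed, the learning rate decays linearly from 2e-05 to zero over training. A minimal sketch of that schedule, mirroring the formula used by `transformers.get_linear_schedule_with_warmup`; the 3,126 total steps assume 25,000 training examples, as the model name suggests, which the card does not state explicitly:

```python
import math


def linear_lr(step, total_steps, base_lr=2e-5, warmup_steps=0):
    """Linear warmup to base_lr, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Assumption: 25,000 training examples (suggested by the model name, not stated)
steps_per_epoch = math.ceil(25_000 / 16)   # train_batch_size: 16
total_steps = steps_per_epoch * 2          # num_epochs: 2

for step in (0, steps_per_epoch, total_steps):
    print(step, linear_lr(step, total_steps))
```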

### Training results

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

odahl/q-FrozenLake-v1-4x4-noSlippery | odahl | 2022-12-15T11:44:12Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T11:41:11Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:
- name: q-FrozenLake-v1-4x4-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-4x4-no_slippery
      type: FrozenLake-v1-4x4-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

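import pickle

import gym
from huggingface_hub import hf_hub_download

# Download the pickled model dictionary from the Hub and unpickle it
path = hf_hub_download(repo_id="odahl/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")
with open(path, "rb") as f:
    model = pickle.load(f)

# Don't forget to check if you need to add additional attributes (is_slippery=False etc.);
# the repo name indicates the non-slippery 4x4 map (Gym's default map size).
env = gym.make(model["env_id"], is_slippery=False)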

```
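With `is_slippery=False` the environment is deterministic, so a greedy policy that reaches the goal once reaches it on every evaluation episode; this is why the score in the metadata has zero spread (1.00 +/- 0.00). The stdlib-only sketch below reimplements Gym's default 4x4 map and non-slippery moves itself instead of calling Gym, fills the Q-table by applying the Q-learning update with alpha=1 to every state-action pair in repeated sweeps (which amounts to Q-value iteration), and then evaluates the greedy policy. Sweeping all pairs instead of sampling episodes is a simplification to keep the example deterministic; the hyperparameters are illustrative, not this agent's.

```python
from statistics import mean, pstdev

# Gym's default 4x4 FrozenLake layout: S=start, F=frozen, H=hole, G=goal
MAP = ["SFFF", "FHFH", "FFFH", "HFFG"]
N = len(MAP)
# Gym's action encoding: 0=left, 1=down, 2=right, 3=up
MOVES = {0: (0, -1), 1: (1, 0), 2: (0, 1), 3: (-1, 0)}


def step(state, action):
    """Deterministic (is_slippery=False) move; returns (next_state, reward, done)."""
    row, col = divmod(state, N)
    dr, dc = MOVES[action]
    row = min(max(row + dr, 0), N - 1)  # moving into a wall leaves the agent in place
    col = min(max(col + dc, 0), N - 1)
    cell = MAP[row][col]
    return row * N + col, float(cell == "G"), cell in "GH"


def train(sweeps=20, alpha=1.0, gamma=0.95):
    """Apply the Q-learning update to every non-terminal (state, action) pair per sweep."""
    qtable = [[0.0] * 4 for _ in range(N * N)]
    for _ in range(sweeps):
        for s in range(N * N):
            if MAP[s // N][s % N] in "GH":
                continue  # episodes end on goal and hole cells
            for a in range(4):
                s2, r, done = step(s, a)
                target = r if done else r + gamma * max(qtable[s2])
                qtable[s][a] += alpha * (target - qtable[s][a])
    return qtable


def evaluate(qtable, episodes=100, max_steps=99):
    """Run the greedy policy from the start state; return mean and std of episode rewards."""
    rewards = []
    for _ in range(episodes):
        state, total = 0, 0.0
        for _ in range(max_steps):
            action = max(range(4), key=qtable[state].__getitem__)
            state, reward, done = step(state, action)
            total += reward
            if done:
                break
        rewards.append(total)
    return mean(rewards), pstdev(rewards)


mean_reward, std_reward = evaluate(train())
print(f"mean_reward={mean_reward:.2f} +/- {std_reward:.2f}")
```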

|

zhiyil/roberta-base-finetuned-cola | zhiyil | 2022-12-15T11:40:48Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"optimum_graphcore",

"roberta",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-12-15T11:11:45Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- matthews_correlation

model-index:
- name: roberta-base-finetuned-cola
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-cola

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6074

- Matthews Correlation: 0.6221

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- total_eval_batch_size: 5

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- training precision: Mixed Precision

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |
|:-------------:|:-----:|:----:|:---------------:|:--------------------:|
| 0.4536 | 1.0 | 534 | 0.4104 | 0.5738 |
| 0.4876 | 2.0 | 1068 | 0.5156 | 0.5729 |
| 0.1281 | 3.0 | 1602 | 0.5083 | 0.6145 |
| 0.0441 | 4.0 | 2136 | 0.5483 | 0.6119 |
| 0.2985 | 5.0 | 2670 | 0.6074 | 0.6221 |
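CoLA is scored with the Matthews correlation coefficient (MCC), which ranges from -1 to 1 and uses all four cells of the confusion matrix, so it stays informative when one class dominates. A minimal sketch of the binary formula; the counts in the usage line are made up:

```python
import math


def matthews_corrcoef(tp, tn, fp, fn):
    """Matthews correlation coefficient from binary confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Common convention: return 0.0 when any row or column of the matrix is empty
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


print(matthews_corrcoef(tp=50, tn=30, fp=10, fn=10))
```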

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cpu

- Datasets 2.7.1

- Tokenizers 0.12.0

|

tomekkorbak/kind_poitras | tomekkorbak | 2022-12-15T11:27:37Z | 0 | 0 | null | [

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-12-15T11:27:21Z | ---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:
- name: kind_poitras
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# kind_poitras

This model was fine-tuned from the `tomekkorbak/goofy_pasteur` checkpoint (see `from_scratch: False` and `path_or_name` in the full config below) on the 39 `tomekkorbak/detoxify-pile-chunk3-*` datasets listed in the metadata, from tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 25000

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.24.0

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'filter_threshold': 0.00078,

'is_split_by_sentences': True,

'skip_tokens': 1661599744},

'generation': {'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'max_tokens': 64, 'num_samples': 4096},

'model': {'from_scratch': False,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'model_kwargs': {'revision': '81a1701e025d2c65ae6e8c2103df559071523ee0'},

'path_or_name': 'tomekkorbak/goofy_pasteur'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'kind_poitras',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'tokens_already_seen': 1661599744,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
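Several numbers in this config fit together if each training sequence is 1,024 tokens long, which is an assumption (GPT-2's context length) rather than something the card states. Under it, the 1,661,599,744 tokens already seen are exactly 25,354 optimizer steps of 64 sequences (the same value as `save_steps`), and the remaining token budget is the 25,000 `training_steps` listed in the hyperparameters. The arithmetic:

```python
# Values from the card's hyperparameters and full config
per_device_train_batch_size = 16
gradient_accumulation_steps = 4
num_tokens = 3_300_000_000
tokens_already_seen = 1_661_599_744

# Assumption: every training sequence is 1,024 tokens (GPT-2's context length)
SEQ_LEN = 1024

effective_batch_size = per_device_train_batch_size * gradient_accumulation_steps
tokens_per_step = effective_batch_size * SEQ_LEN

total_steps = num_tokens // tokens_per_step
steps_already_done = tokens_already_seen // tokens_per_step
remaining_steps = total_steps - steps_already_done

print(effective_batch_size, tokens_per_step, total_steps, steps_already_done, remaining_steps)
```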

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/1u3qemu1 |

Kwaku/social_media_sa_finetuned_2 | Kwaku | 2022-12-15T11:25:09Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"text-classification",

"eng",

"dataset:banking77",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-12-11T17:12:39Z | ---

language: eng

datasets:

- banking77

---

# Social Media Sentiment Analysis Model (Finetuned 2)

This is an updated fine-tuned version of the [Social Media Sentiment Analysis Model](https://huggingface.co/Kwaku/social_media_sa), which is itself a fine-tuned version of [DistilBERT](https://huggingface.co/models?other=distilbert). It is best suited for sentiment analysis.

## Model Description

The Social Media Sentiment Analysis Model was trained on a [dataset of tweets](https://www.kaggle.com/code/mohamednabill7/sentiment-analysis-of-twitter-data/data) obtained from Kaggle.

## Intended Uses and Limitations

This model is meant for sentiment analysis. Because it was trained on a corpus of tweets, it is familiar with social media jargon.

### How to use

You can use this model directly with a pipeline for sentiment analysis:

```python

>>> from transformers import pipeline
>>> model_name = "Kwaku/social_media_sa_finetuned_2"
>>> classifier = pipeline("sentiment-analysis", model=model_name)
>>> result = classifier("I like this model")
>>> print(result)
[{'label': 'positive', 'score': 0.9992923736572266}]

```

### Limitations and bias

This model inherits the bias of its parent, [DistilBERT](https://huggingface.co/models?other=distilbert).

Besides that, it was trained on only 1,000 randomly selected sequences, so it does not achieve very high accuracy.

It nonetheless performs fairly well. |

GeneralAwareness/Dth | GeneralAwareness | 2022-12-15T11:05:02Z | 0 | 6 | null | [

"stable-diffusion",

"v2",

"text-to-image",

"image-to-image",

"Embedding",

"en",

"license:cc-by-nc-sa-4.0",

"region:us"

] | text-to-image | 2022-12-15T11:01:53Z | ---

license: cc-by-nc-sa-4.0

language:

- en

thumbnail: "https://huggingface.co/GeneralAwareness/Dth/resolve/main/an_ancient_bone_legion_dth.png"

tags:

- stable-diffusion

- v2

- text-to-image

- image-to-image

- Embedding

---

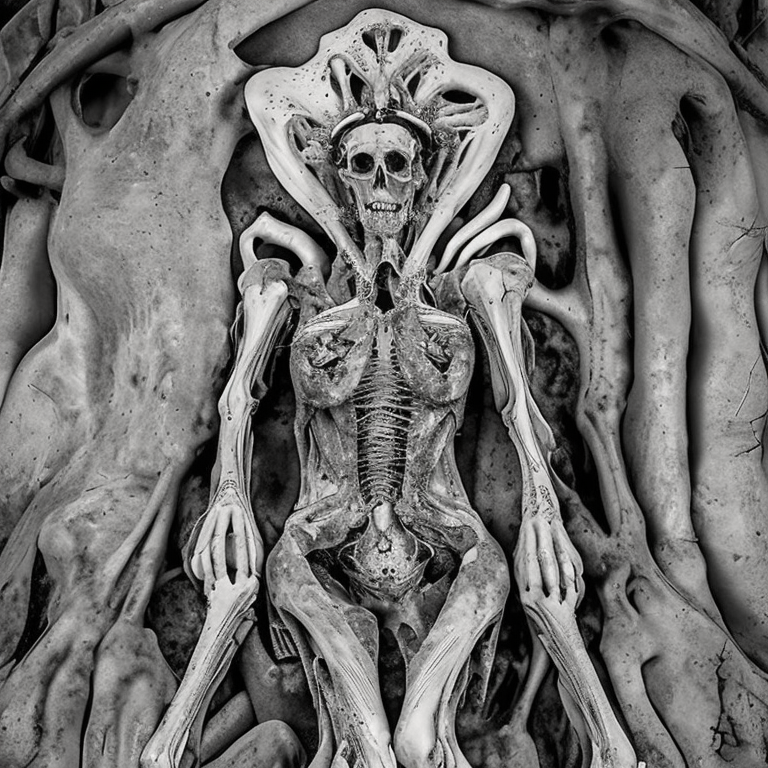

A Textual Inversion embedding by General Awareness for SD 2.x, trained on 768x768 images from various sources.

Install it by downloading the .pt embedding and putting it in the \embeddings folder.

A bones/death/pencil-drawing themed embedding, created with 16 vectors. Combining it with my previous Unddep embedding adds colour to water, among other effects; it is a good combination to try.

Use keyword: dth

Without this embedding and with this embedding.

Without this embedding and with this embedding.

Without this embedding and with this embedding.

A bone house.

The Bone Queen

The Bone Queen, queen of bones.

An ancient bone legion.

An abandoned house.

A stairway to Heaven

A tall proud tree.

A beautiful rose

|

ArthurinRUC/ppo-Huggy | ArthurinRUC | 2022-12-15T10:28:31Z | 11 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2022-12-15T10:28:24Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **play directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: ArthurinRUC/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

mikmascari/evalita-dm-BERT | mikmascari | 2022-12-15T10:21:53Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-12-14T16:14:08Z | A model for inferring prerequisite relations between Wikipedia pages. Italian only; domain-specific to data mining and coding.

Based on the work of NLP-CIC @ PRELEARN

eval_accuracy: 0.9176470588235294,

eval_f1: 0.8510638297872339,

eval_precision: 0.7692307692307693,

eval_recall: 0.9523809523809523,
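The F1 score is the harmonic mean of precision and recall, so the reported `eval_f1` should follow directly from `eval_precision` and `eval_recall`. The minimal Python sketch below checks this using only the numbers reported above. The helper name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values copied from the reported evaluation metrics.
precision = 0.7692307692307693
recall = 0.9523809523809523
print(f"{f1_score(precision, recall):.4f}")  # 0.8511
```

Precision is TP/(TP+FP) and recall is TP/(TP+FN). Because precision is lower than recall here, the model produced more false positives than false negatives on this evaluation set.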

Evaluated on the EVALITA PRELEARN task dataset (in-domain setting, Data Mining domain). |

metga97/ppo-Huggy | metga97 | 2022-12-15T10:12:42Z | 5 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] | reinforcement-learning | 2022-12-15T10:12:31Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial on training your first agent with ML-Agents and publishing it to the Hub:

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. In Step 1, write your model_id: metga97/ppo-Huggy

3. In Step 2, select your *.nn or *.onnx file

4. Click Watch the agent play 👀

|

LucianoDeben/ppo-LunarLander-v2 | LucianoDeben | 2022-12-15T09:57:30Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2022-12-15T09:56:57Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.91 +/- 19.36

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
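The metadata reports `mean_reward` as "258.91 +/- 19.36", meaning the mean and standard deviation of per-episode returns over the evaluation episodes. In stable-baselines3, `evaluate_policy` from `stable_baselines3.common.evaluation` returns such a (mean, std) pair by default. The minimal sketch below reproduces only the formatting step. It assumes the population standard deviation (ddof=0, the `numpy.std` default) and two-decimal rounding. The episode returns in the example are hypothetical placeholders, not this model's evaluation data.

```python
import statistics


def format_mean_reward(episode_returns):
    """Format per-episode returns as 'mean +/- std' with two decimals.

    Assumption: std is the population standard deviation (ddof=0),
    matching numpy.std's default.
    """
    mean = statistics.fmean(episode_returns)
    std = statistics.pstdev(episode_returns)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical returns for illustration only, not this model's results.
print(format_mean_reward([250.0, 260.0, 270.0]))  # 260.00 +/- 8.16
```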

|

tolerantpancake/NegativeLowResolution | tolerantpancake | 2022-12-15T09:37:43Z | 0 | 30 | null | [

"region:us"

] | null | 2022-12-15T09:36:59Z |

Here is a **negative prompt** embedding I created in the hopes of using embeddings to eliminate low-detail and low-fidelity images. **All 400-2400 step versions work very well to increase detail without losing coherence of the subject when used with other embeddings or large prompts. Treat the different training-step versions as a detail slider, 100-2400.** This is an experiment, but the results are already impressive. Play around with it; it can make some really cool images! An X/Y matrix is also provided here, showing the different versions combined with my other negative embedding, "Negative Mutation". |

tolerantpancake/NegativeMutation | tolerantpancake | 2022-12-15T09:36:43Z | 0 | 21 | null | [

"region:us"

] | null | 2022-12-15T09:11:41Z | Here is a **negative prompt** embedding I created in the hopes of using embeddings to eliminate anatomical mutations and disfigurements. **The 400-500 step versions seem to work best with no positive-prompt embeddings**; however, you can seemingly use all of them, up to 2400 steps, with very good results if you are using other embeddings in your positive prompt. This is an experiment, but the results are already impressive. Play around with it; it can make some really cool images! |

SRM47/gpt2-paraphraser | SRM47 | 2022-12-15T09:32:21Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-12-15T08:15:12Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-paraphraser

results: []

---


# gpt2-paraphraser

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed