modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-06-05 12:28:32

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 468

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 54

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-06-05 12:27:45

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

addy88/wav2vec2-assamese-stt | addy88 | 2021-12-19T16:55:56Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/addy88/wav2vec2-assamese-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/addy88/wav2vec2-assamese-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-bengali-stt | addy88 | 2021-12-19T16:52:02Z | 4 | 3 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-bengali-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-bengali-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-malayalam-stt | addy88 | 2021-12-19T16:36:31Z | 26 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-malayalam-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-malayalam-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-marathi-stt | addy88 | 2021-12-19T16:31:22Z | 21 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-marathi-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-marathi-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec-odia-stt | addy88 | 2021-12-19T15:56:01Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-odia-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-odia-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-urdu-stt | addy88 | 2021-12-19T15:47:47Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-urdu-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-urdu-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-nepali-stt | addy88 | 2021-12-19T15:36:06Z | 4 | 1 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-nepali-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-nepali-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

kco4776/soongsil-bert-wellness | kco4776 | 2021-12-19T15:23:09Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ## References

- [Soongsil-BERT](https://github.com/jason9693/Soongsil-BERT) |

addy88/wav2vec2-english-stt | addy88 | 2021-12-19T15:08:42Z | 17 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-english-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-english-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

addy88/wav2vec2-kannada-stt | addy88 | 2021-12-19T13:35:26Z | 248 | 1 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf

import torch

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import argparse

def parse_transcription(wav_file):

# load pretrained model

processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-kannada-stt")

model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-kannada-stt")

# load audio

audio_input, sample_rate = sf.read(wav_file)

# pad input values and return pt tensor

input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

# INFERENCE

# retrieve logits & take argmax

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

# transcribe

transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)

print(transcription)

``` |

microsoft/unispeech-1350-en-90-it-ft-1h | microsoft | 2021-12-19T13:19:29Z | 28 | 0 | transformers | [

"transformers",

"pytorch",

"unispeech",

"automatic-speech-recognition",

"audio",

"it",

"dataset:common_voice",

"arxiv:2101.07597",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- it

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

---

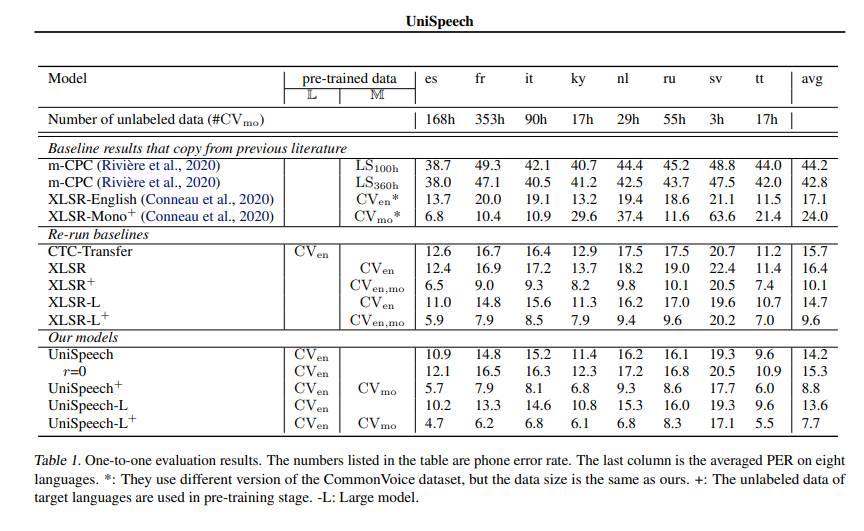

# UniSpeech-Large-plus ITALIAN

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The large model pretrained on 16kHz sampled speech audio and phonetic labels and consequently fine-tuned on 1h of Italian phonemes.

When using the model make sure that your speech input is also sampled at 16kHz and your text in converted into a sequence of phonemes.

[Paper: UniSpeech: Unified Speech Representation Learning

with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597)

Authors: Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang

**Abstract**

*In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech.

# Usage

This is an speech model that has been fine-tuned on phoneme classification.

## Inference

```python

import torch

from datasets import load_dataset

from transformers import AutoModelForCTC, AutoProcessor

import torchaudio.functional as F

model_id = "microsoft/unispeech-1350-en-90-it-ft-1h"

sample = next(iter(load_dataset("common_voice", "it", split="test", streaming=True)))

resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), 48_000, 16_000).numpy()

model = AutoModelForCTC.from_pretrained(model_id)

processor = AutoProcessor.from_pretrained(model_id)

input_values = processor(resampled_audio, return_tensors="pt").input_values

with torch.no_grad():

logits = model(input_values).logits

prediction_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(prediction_ids)

# => 'm ɪ a n n o f a tː o ʊ n o f f ɛ r t a k e n o n p o t e v o p r ɔ p r i o r i f j ʊ t a r e'

# for "Mi hanno fatto un\'offerta che non potevo proprio rifiutare."

```

## Evaluation

```python

from datasets import load_dataset, load_metric

import datasets

import torch

from transformers import AutoModelForCTC, AutoProcessor

model_id = "microsoft/unispeech-1350-en-90-it-ft-1h"

ds = load_dataset("mozilla-foundation/common_voice_3_0", "it", split="train+validation+test+other")

wer = load_metric("wer")

model = AutoModelForCTC.from_pretrained(model_id)

processor = AutoProcessor.from_pretrained(model_id)

# taken from

# https://github.com/microsoft/UniSpeech/blob/main/UniSpeech/examples/unispeech/data/it/phonesMatches_reduced.json

with open("./testSeqs_uniform_new_version.text", "r") as f:

lines = f.readlines()

# retrieve ids model is evaluated on

ids = [x.split("\t")[0] for x in lines]

ds = ds.filter(lambda p: p.split("/")[-1].split(".")[0] in ids, input_columns=["path"])

ds = ds.cast_column("audio", datasets.Audio(sampling_rate=16_000))

def decode(batch):

input_values = processor(batch["audio"]["array"], return_tensors="pt", sampling_rate=16_000)

logits = model(input_values).logits

pred_ids = torch.argmax(logits, axis=-1)

batch["prediction"] = processor.batch_decode(pred_ids)

batch["target"] = processor.tokenizer.phonemize(batch["sentence"])

return batch

out = ds.map(decode, remove_columns=ds.column_names)

per = wer.compute(predictions=out["prediction"], references=out["target"])

print("per", per)

# -> should give per 0.06685252146070828 - compare to results below

```

# Contribution

The model was contributed by [cywang](https://huggingface.co/cywang) and [patrickvonplaten](https://huggingface.co/patrickvonplaten).

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

# Official Results

See *UniSpeeech-L^{+}* - *it*:

|

new5558/simcse-model-wangchanberta-base-att-spm-uncased | new5558 | 2021-12-19T13:01:31Z | 80 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"camembert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2022-03-02T23:29:05Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# new5558/simcse-model-wangchanberta-base-att-spm-uncased

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

def cls_pooling(model_output, attention_mask):

return model_output[0][:,0]

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

model = AutoModel.from_pretrained('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, cls pooling.

sentence_embeddings = cls_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=new5558/simcse-model-wangchanberta-base-att-spm-uncased)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 5125 with parameters:

```

{'batch_size': 256, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 1e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 32, 'do_lower_case': False}) with Transformer model: CamembertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

rlagusrlagus123/XTC4096 | rlagusrlagus123 | 2021-12-19T11:19:34Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

tags:

- conversational

---

---

#12 epochs, each batch size 4, gradient accumulation steps 1, tail 4096.

#THIS SEEMS TO BE THE OPTIMAL SETUP. |

rlagusrlagus123/XTC20000 | rlagusrlagus123 | 2021-12-19T11:00:28Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

tags:

- conversational

---

---

#12 epochs, each batch size 2, gradient accumulation steps 2, tail 20000 |

NbAiLabArchive/test_w5_long_roberta_tokenizer | NbAiLabArchive | 2021-12-19T10:36:40Z | 41 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"tensorboard",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | Just for performing some experiments. Do not use. |

Langame/gpt2-waiting | Langame | 2021-12-19T09:02:26Z | 11 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"en",

"dataset:waiting-messages",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:04Z | ---

language:

- en # Example: en

license: mit # Example: apache-2.0 or any license from https://hf.co/docs/hub/model-repos#list-of-license-identifiers

tags:

- text-generation

datasets:

- waiting-messages # Example: common_voice. Use dataset id from https://hf.co/datasets

widget:

- text: 'List of funny waiting messages:'

example_title: 'Funny waiting messages'

---

# Langame/gpt2-waiting

This fine-tuned model can generate funny waiting messages.

[Langame](https://langa.me) uses these within its platform 😛.

|

Ayham/distilbert_gpt2_summarization_cnndm | Ayham | 2021-12-19T06:43:15Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:cnn_dailymail",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- cnn_dailymail

model-index:

- name: distilbert_gpt2_summarization_cnndm

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_gpt2_summarization_cnndm

This model is a fine-tuned version of [](https://huggingface.co/) on the cnn_dailymail dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Ayham/roberta_gpt2_summarization_xsum | Ayham | 2021-12-19T06:35:43Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: roberta_gpt2_summarization_xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta_gpt2_summarization_xsum

This model is a fine-tuned version of [](https://huggingface.co/) on the xsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Ayham/bert_gpt2_summarization_cnndm | Ayham | 2021-12-19T06:32:54Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:cnn_dailymail",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- cnn_dailymail

model-index:

- name: bert_gpt2_summarization_cnndm

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert_gpt2_summarization_cnndm

This model is a fine-tuned version of [](https://huggingface.co/) on the cnn_dailymail dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Ayham/xlnet_gpt2_summarization_xsum | Ayham | 2021-12-19T04:50:11Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: xlnet_gpt2_summarization_xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlnet_gpt2_summarization_xsum

This model is a fine-tuned version of [](https://huggingface.co/) on the xsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

zaccharieramzi/UNet-OASIS | zaccharieramzi | 2021-12-19T02:07:02Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # UNet-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil brain retrospective data from the OASIS database at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.unet import unet

model = unet(n_layers=4, layers_n_channels=[16, 32, 64, 128], layers_n_non_lins=2,)

model.load_weights('UNet-fastmri/model_weights.h5')

```

Using the model is then as simple as:

```python

model(zero_filled_recon)

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 29.8

- SSIM: 0.847

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/UNet-fastmri | zaccharieramzi | 2021-12-19T02:05:48Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # UNet-fastmri

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This model can be used to reconstruct single coil fastMRI data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil knee data from Siemens scanner at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.unet import unet

model = unet(n_layers=4, layers_n_channels=[16, 32, 64, 128], layers_n_non_lins=2,)

model.load_weights('UNet-fastmri/model_weights.h5')

```

Using the model is then as simple as:

```python

model(zero_filled_recon)

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [fastMRI dataset](https://fastmri.org/dataset/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [fastMRI dataset](https://fastmri.org/dataset/).

| Contrast | PD | PDFS |

|----------|-------|--------|

| PSNR | 33.64 | 29.89 |

| SSIM | 0.807 | 0.6334 |

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/KIKI-net-OASIS | zaccharieramzi | 2021-12-19T01:59:51Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # KIKI-net-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil brain retrospective data from the OASIS database at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.kiki_sep import full_kiki_net

from fastmri_recon.models.utils.non_linearities import lrelu

model = full_kiki_net(n_convs=16, n_filters=48, activation=lrelu)

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([

kspace, # shape: [n_slices, n_rows, n_cols, 1]

mask, # shape: [n_slices, n_rows, n_cols]

])

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 30.08

- SSIM: 0.853

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/CascadeNet-fastmri | zaccharieramzi | 2021-12-19T01:43:27Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # CascadeNet-fastmri

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This model can be used to reconstruct single coil fastMRI data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil knee data from Siemens scanner at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.cascading import cascade_net

model = cascade_net()

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([

kspace, # shape: [n_slices, n_rows, n_cols, 1]

mask, # shape: [n_slices, n_rows, n_cols]

])

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [fastMRI dataset](https://fastmri.org/dataset/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [fastMRI dataset](https://fastmri.org/dataset/).

| Contrast | PD | PDFS |

|----------|-------|--------|

| PSNR | 33.98 | 29.88 |

| SSIM | 0.811 | 0.6251 |

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/PDNet-fastmri | zaccharieramzi | 2021-12-19T01:32:10Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # PDNet-fastmri

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This model can be used to reconstruct single coil fastMRI data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil knee data from Siemens scanner at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.pdnet import pdnet

model = pdnet()

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([

kspace, # shape: [n_slices, n_rows, n_cols, 1]

mask, # shape: [n_slices, n_rows, n_cols]

])

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [fastMRI dataset](https://fastmri.org/dataset/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [fastMRI dataset](https://fastmri.org/dataset/).

| Contrast | PD | PDFS |

|----------|-------|--------|

| PSNR | 34.2 | 30.06 |

| SSIM | 0.818 | 0.9554 |

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

SoLID/sgd-output-plan-constructor | SoLID | 2021-12-18T21:00:54Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | ## Schema Guided Dialogue Output Plan Constructor

|

s3h/mt5-small-finetuned-src-to-trg | s3h | 2021-12-18T20:34:32Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: mt5-small-finetuned-src-to-trg

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-src-to-trg

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:-------:|

| No log | 1.0 | 40 | nan | 0.1737 | 3.1818 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.6.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

zaccharieramzi/XPDNet-brain-af8 | zaccharieramzi | 2021-12-18T17:09:08Z | 0 | 0 | null | [

"arxiv:2010.07290",

"arxiv:2106.00753",

"region:us"

] | null | 2022-03-02T23:29:05Z | # XPDNet-brain-af8

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This model was used to achieve the 2nd highest submission in terms of PSNR on the fastMRI dataset (see https://fastmri.org/leaderboards/).

It is a base model for acceleration factor 8.

The model uses 25 iterations and a medium MWCNN, and a big sensitivity maps refiner.

## Model description

For more details, see https://arxiv.org/abs/2010.07290.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct brain data from Siemens scanner at acceleration factor 8.

It was shown [here](https://arxiv.org/abs/2106.00753), that it can generalize well, although further tests are required.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

import tensorflow as tf

from fastmri_recon.models.subclassed_models.denoisers.proposed_params import get_model_specs

from fastmri_recon.models.subclassed_models.xpdnet import XPDNet

n_primal = 5

model_fun, model_kwargs, n_scales, res = [

(model_fun, kwargs, n_scales, res)

for m_name, m_size, model_fun, kwargs, _, n_scales, res in get_model_specs(n_primal=n_primal, force_res=False)

if m_name == 'MWCNN' and m_size == 'medium'

][0]

model_kwargs['use_bias'] = False

run_params = dict(

n_primal=n_primal,

multicoil=True,

n_scales=n_scales,

refine_smaps=True,

refine_big=True,

res=res,

output_shape_spec=True,

n_iter=25,

)

model = XPDNet(model_fun, model_kwargs, **run_params)

kspace_size = [1, 1, 320, 320]

inputs = [

tf.zeros(kspace_size + [1], dtype=tf.complex64), # kspace

tf.zeros(kspace_size, dtype=tf.complex64), # mask

tf.zeros(kspace_size, dtype=tf.complex64), # smaps

tf.constant([[320, 320]]), # shape

]

model(inputs)

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([

kspace, # shape: [n_slices, n_coils, n_rows, n_cols, 1]

mask, # shape: [n_slices, n_coils, n_rows, n_cols]

smaps, # shape: [n_slices, n_coils, n_rows, n_cols]

shape, # shape: [n_slices, 2]

])

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [fastMRI dataset](https://fastmri.org/dataset/).

## Training procedure

The training procedure is described in https://arxiv.org/abs/2010.07290.

This section is WIP.

## Evaluation results

On the fastMRI validation dataset, the same model with a smaller sensitivity maps refiner gives the following results for 30 validation volumes per contrast:

| Contrast | T1 | T2 | FLAIR | T1-POST |

|----------|--------|--------|--------|---------|

| PSNR | 38.57 | 37.41 | 36.81 | 38.90 |

| SSIM | 0.9348 | 0.9404 | 0.9086 | 0.9517 |

Further results can be seen on the fastMRI leaderboards for the test and challenge dataset: https://fastmri.org/leaderboards/

## Bibtex entry

```

@inproceedings{Ramzi2020d,

archivePrefix = {arXiv},

arxivId = {2010.07290},

author = {Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

booktitle = {ISMRM},

eprint = {2010.07290},

pages = {1--4},

title = {{XPDNet for MRI Reconstruction: an application to the 2020 fastMRI challenge}},

url = {http://arxiv.org/abs/2010.07290},

year = {2021}

}

```

|

FarisHijazi/wav2vec2-large-xls-r-300m-turkish-colab | FarisHijazi | 2021-12-18T15:19:16Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:04Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-300m-turkish-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-turkish-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 256

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 512

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.13.3

- Tokenizers 0.10.3

|

jcsilva/wav2vec2-base-timit-demo-colab | jcsilva | 2021-12-18T13:45:19Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-base-timit-demo-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7665

- Wer: 0.6956

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.052 | 0.8 | 100 | 3.0167 | 1.0 |

| 2.7436 | 1.6 | 200 | 1.9369 | 1.0006 |

| 1.4182 | 2.4 | 300 | 0.7665 | 0.6956 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

s-nlp/rubert-base-corruption-detector | s-nlp | 2021-12-18T09:28:50Z | 22 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"fluency",

"ru",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

language:

- ru

tags:

- fluency

---

This is a model for evaluation of naturalness of short Russian texts. It has been trained to distinguish human-written texts from their corrupted versions.

Corruption sources: random replacement, deletion, addition, shuffling, and re-inflection of words and characters, random changes of capitalization, round-trip translation, filling random gaps with T5 and RoBERTA models. For each original text, we sampled three corrupted texts, so the model is uniformly biased towards the `unnatural` label.

Data sources: web-corpora from [the Leipzig collection](https://wortschatz.uni-leipzig.de/en/download) (`rus_news_2020_100K`, `rus_newscrawl-public_2018_100K`, `rus-ru_web-public_2019_100K`, `rus_wikipedia_2021_100K`), comments from [OK](https://www.kaggle.com/alexandersemiletov/toxic-russian-comments) and [Pikabu](https://www.kaggle.com/blackmoon/russian-language-toxic-comments).

On our private test dataset, the model has achieved 40% rank correlation with human judgements of naturalness, which is higher than GPT perplexity, another popular fluency metric. |

nateraw/rare-puppers-demo | nateraw | 2021-12-17T22:48:47Z | 101 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2022-03-02T23:29:05Z | ---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: rare-puppers-demo

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.9101123809814453

---

# rare-puppers-demo

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

#### corgi

#### husky

#### samoyed

#### shiba inu

|

Harveenchadha/vakyansh-wav2vec2-punjabi-pam-10 | Harveenchadha | 2021-12-17T20:14:16Z | 1,400 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"audio",

"speech",

"pa",

"arxiv:2107.07402",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:04Z | ---

language: pa

#datasets:

#- Interspeech 2021

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

license: mit

model-index:

- name: Wav2Vec2 Vakyansh Punjabi Model by Harveen Chadha

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice hi

type: common_voice

args: pa

metrics:

- name: Test WER

type: wer

value: 33.17

---

Fine-tuned on Multilingual Pretrained Model [CLSRIL-23](https://arxiv.org/abs/2107.07402). The original fairseq checkpoint is present [here](https://github.com/Open-Speech-EkStep/vakyansh-models). When using this model, make sure that your speech input is sampled at 16kHz.

**Note: The result from this model is without a language model so you may witness a higher WER in some cases.**

|

microsoft/unispeech-sat-base-plus-sd | microsoft | 2021-12-17T18:40:56Z | 407 | 0 | transformers | [

"transformers",

"pytorch",

"unispeech-sat",

"audio-frame-classification",

"speech",

"en",

"arxiv:1912.07875",

"arxiv:2106.06909",

"arxiv:2101.00390",

"arxiv:2110.05752",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- en

tags:

- speech

---

# UniSpeech-SAT-Base for Speaker Diarization

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The model was pretrained on 16kHz sampled speech audio with utterance and speaker contrastive loss. When using the model, make sure that your speech input is also sampled at 16kHz.

The model was pre-trained on:

- 60,000 hours of [Libri-Light](https://arxiv.org/abs/1912.07875)

- 10,000 hours of [GigaSpeech](https://arxiv.org/abs/2106.06909)

- 24,000 hours of [VoxPopuli](https://arxiv.org/abs/2101.00390)

[Paper: UNISPEECH-SAT: UNIVERSAL SPEECH REPRESENTATION LEARNING WITH SPEAKER

AWARE PRE-TRAINING](https://arxiv.org/abs/2110.05752)

Authors: Sanyuan Chen, Yu Wu, Chengyi Wang, Zhengyang Chen, Zhuo Chen, Shujie Liu, Jian Wu, Yao Qian, Furu Wei, Jinyu Li, Xiangzhan Yu

**Abstract**

*Self-supervised learning (SSL) is a long-standing goal for speech processing, since it utilizes large-scale unlabeled data and avoids extensive human labeling. Recent years witness great successes in applying self-supervised learning in speech recognition, while limited exploration was attempted in applying SSL for modeling speaker characteristics. In this paper, we aim to improve the existing SSL framework for speaker representation learning. Two methods are introduced for enhancing the unsupervised speaker information extraction. First, we apply the multi-task learning to the current SSL framework, where we integrate the utterance-wise contrastive loss with the SSL objective function. Second, for better speaker discrimination, we propose an utterance mixing strategy for data augmentation, where additional overlapped utterances are created unsupervisely and incorporate during training. We integrate the proposed methods into the HuBERT framework. Experiment results on SUPERB benchmark show that the proposed system achieves state-of-the-art performance in universal representation learning, especially for speaker identification oriented tasks. An ablation study is performed verifying the efficacy of each proposed method. Finally, we scale up training dataset to 94 thousand hours public audio data and achieve further performance improvement in all SUPERB tasks..*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech-SAT.

# Fine-tuning details

The model is fine-tuned on the [LibriMix dataset](https://github.com/JorisCos/LibriMix) using just a linear layer for mapping the network outputs.

# Usage

## Speaker Diarization

```python

from transformers import Wav2Vec2FeatureExtractor, UniSpeechSatForAudioFrameClassification

from datasets import load_dataset

import torch

dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained('microsoft/unispeech-sat-base-plus-sd')

model = UniSpeechSatForAudioFrameClassification.from_pretrained('microsoft/unispeech-sat-base-plus-sd')

# audio file is decoded on the fly

inputs = feature_extractor(dataset[0]["audio"]["array"], return_tensors="pt")

logits = model(**inputs).logits

probabilities = torch.sigmoid(logits[0])

# labels is a one-hot array of shape (num_frames, num_speakers)

labels = (probabilities > 0.5).long()

```

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

|

Eyvaz/wav2vec2-base-russian-modified-kaggle | Eyvaz | 2021-12-17T18:39:50Z | 5 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:04Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

name: wav2vec2-base-russian-modified-kaggle

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-russian-modified-kaggle

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 12

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 24

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.1

- Datasets 1.13.3

- Tokenizers 0.10.3

|

cjrowe/afriberta_base-finetuned-tydiqa | cjrowe | 2021-12-17T18:21:22Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"sw",

"dataset:tydiqa",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:05Z | ---

language:

- sw

tags:

- generated_from_trainer

datasets:

- tydiqa

model-index:

- name: afriberta_base-finetuned-tydiqa

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# afriberta_base-finetuned-tydiqa

This model is a fine-tuned version of [castorini/afriberta_base](https://huggingface.co/castorini/afriberta_base) on the tydiqa dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3728

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 192 | 2.1359 |

| No log | 2.0 | 384 | 2.3409 |

| 0.8353 | 3.0 | 576 | 2.3728 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

microsoft/unispeech-sat-large-sv | microsoft | 2021-12-17T18:13:15Z | 240 | 4 | transformers | [

"transformers",

"pytorch",

"unispeech-sat",

"audio-xvector",

"speech",

"en",

"arxiv:1912.07875",

"arxiv:2106.06909",

"arxiv:2101.00390",

"arxiv:2110.05752",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- en

datasets:

tags:

- speech

---

# UniSpeech-SAT-Large for Speaker Verification

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The model was pretrained on 16kHz sampled speech audio with utterance and speaker contrastive loss. When using the model, make sure that your speech input is also sampled at 16kHz.

The model was pre-trained on:

- 60,000 hours of [Libri-Light](https://arxiv.org/abs/1912.07875)

- 10,000 hours of [GigaSpeech](https://arxiv.org/abs/2106.06909)

- 24,000 hours of [VoxPopuli](https://arxiv.org/abs/2101.00390)

[Paper: UNISPEECH-SAT: UNIVERSAL SPEECH REPRESENTATION LEARNING WITH SPEAKER

AWARE PRE-TRAINING](https://arxiv.org/abs/2110.05752)

Authors: Sanyuan Chen, Yu Wu, Chengyi Wang, Zhengyang Chen, Zhuo Chen, Shujie Liu, Jian Wu, Yao Qian, Furu Wei, Jinyu Li, Xiangzhan Yu

**Abstract**

*Self-supervised learning (SSL) is a long-standing goal for speech processing, since it utilizes large-scale unlabeled data and avoids extensive human labeling. Recent years witness great successes in applying self-supervised learning in speech recognition, while limited exploration was attempted in applying SSL for modeling speaker characteristics. In this paper, we aim to improve the existing SSL framework for speaker representation learning. Two methods are introduced for enhancing the unsupervised speaker information extraction. First, we apply the multi-task learning to the current SSL framework, where we integrate the utterance-wise contrastive loss with the SSL objective function. Second, for better speaker discrimination, we propose an utterance mixing strategy for data augmentation, where additional overlapped utterances are created unsupervisely and incorporate during training. We integrate the proposed methods into the HuBERT framework. Experiment results on SUPERB benchmark show that the proposed system achieves state-of-the-art performance in universal representation learning, especially for speaker identification oriented tasks. An ablation study is performed verifying the efficacy of each proposed method. Finally, we scale up training dataset to 94 thousand hours public audio data and achieve further performance improvement in all SUPERB tasks..*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech-SAT.

# Fine-tuning details

The model is fine-tuned on the [VoxCeleb1 dataset](https://www.robots.ox.ac.uk/~vgg/data/voxceleb/vox1.html) using an X-Vector head with an Additive Margin Softmax loss

[X-Vectors: Robust DNN Embeddings for Speaker Recognition](https://www.danielpovey.com/files/2018_icassp_xvectors.pdf)

# Usage

## Speaker Verification

```python

from transformers import Wav2Vec2FeatureExtractor, UniSpeechSatForXVector

from datasets import load_dataset

import torch

dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained('microsoft/unispeech-sat-large-sv')

model = UniSpeechSatForXVector.from_pretrained('microsoft/unispeech-sat-large-sv')

# audio files are decoded on the fly

inputs = feature_extractor(dataset[:2]["audio"]["array"], return_tensors="pt")

embeddings = model(**inputs).embeddings

embeddings = torch.nn.functional.normalize(embeddings, dim=-1).cpu()

# the resulting embeddings can be used for cosine similarity-based retrieval

cosine_sim = torch.nn.CosineSimilarity(dim=-1)

similarity = cosine_sim(embeddings[0], embeddings[1])

threshold = 0.89 # the optimal threshold is dataset-dependent

if similarity < threshold:

print("Speakers are not the same!")

```

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

|

microsoft/unispeech-sat-base-sv | microsoft | 2021-12-17T18:11:05Z | 200 | 0 | transformers | [

"transformers",

"pytorch",

"unispeech-sat",

"audio-xvector",

"speech",

"en",

"dataset:librispeech_asr",

"arxiv:2110.05752",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- en

datasets:

- librispeech_asr

tags:

- speech

---

# UniSpeech-SAT-Base for Speaker Verification

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The model was pretrained on 16kHz sampled speech audio with utterance and speaker contrastive loss. When using the model, make sure that your speech input is also sampled at 16kHz.

The model was pre-trained on:

- 960 hours of [LibriSpeech](https://huggingface.co/datasets/librispeech_asr)

[Paper: UNISPEECH-SAT: UNIVERSAL SPEECH REPRESENTATION LEARNING WITH SPEAKER

AWARE PRE-TRAINING](https://arxiv.org/abs/2110.05752)

Authors: Sanyuan Chen, Yu Wu, Chengyi Wang, Zhengyang Chen, Zhuo Chen, Shujie Liu, Jian Wu, Yao Qian, Furu Wei, Jinyu Li, Xiangzhan Yu

**Abstract**

*Self-supervised learning (SSL) is a long-standing goal for speech processing, since it utilizes large-scale unlabeled data and avoids extensive human labeling. Recent years witness great successes in applying self-supervised learning in speech recognition, while limited exploration was attempted in applying SSL for modeling speaker characteristics. In this paper, we aim to improve the existing SSL framework for speaker representation learning. Two methods are introduced for enhancing the unsupervised speaker information extraction. First, we apply the multi-task learning to the current SSL framework, where we integrate the utterance-wise contrastive loss with the SSL objective function. Second, for better speaker discrimination, we propose an utterance mixing strategy for data augmentation, where additional overlapped utterances are created unsupervisely and incorporate during training. We integrate the proposed methods into the HuBERT framework. Experiment results on SUPERB benchmark show that the proposed system achieves state-of-the-art performance in universal representation learning, especially for speaker identification oriented tasks. An ablation study is performed verifying the efficacy of each proposed method. Finally, we scale up training dataset to 94 thousand hours public audio data and achieve further performance improvement in all SUPERB tasks..*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech-SAT.

# Fine-tuning details

The model is fine-tuned on the [VoxCeleb1 dataset](https://www.robots.ox.ac.uk/~vgg/data/voxceleb/vox1.html) using an X-Vector head with an Additive Margin Softmax loss

[X-Vectors: Robust DNN Embeddings for Speaker Recognition](https://www.danielpovey.com/files/2018_icassp_xvectors.pdf)

# Usage

## Speaker Verification

```python

from transformers import Wav2Vec2FeatureExtractor, UniSpeechSatForXVector

from datasets import load_dataset

import torch

dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained('microsoft/unispeech-sat-base-sv')

model = UniSpeechSatForXVector.from_pretrained('microsoft/unispeech-sat-base-sv')

# audio files are decoded on the fly

inputs = feature_extractor(dataset[:2]["audio"]["array"], return_tensors="pt")

embeddings = model(**inputs).embeddings

embeddings = torch.nn.functional.normalize(embeddings, dim=-1).cpu()

# the resulting embeddings can be used for cosine similarity-based retrieval

cosine_sim = torch.nn.CosineSimilarity(dim=-1)

similarity = cosine_sim(embeddings[0], embeddings[1])

threshold = 0.86 # the optimal threshold is dataset-dependent

if similarity < threshold:

print("Speakers are not the same!")

```

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

|

osanseviero/fastai_cat_vs_dog | osanseviero | 2021-12-17T14:27:39Z | 32 | 4 | generic | [

"generic",

"image-classification",

"region:us"

] | image-classification | 2022-03-02T23:29:05Z | ---

tags:

- image-classification

library_name: generic

---

# Dog vs Cat Image Classification with FastAI CNN

Training is based in FastAI [Quick Start](https://docs.fast.ai/quick_start.html). Example training

## Training

The model was trained as follows

```python

path = untar_data(URLs.PETS)/'images'

def is_cat(x): return x[0].isupper()

dls = ImageDataLoaders.from_name_func(

path, get_image_files(path), valid_pct=0.2, seed=42,

label_func=is_cat, item_tfms=Resize(224))

learn = cnn_learner(dls, resnet34, metrics=error_rate)

learn.fine_tune(1)

``` |

Rocketknight1/gbert-base-germaner | Rocketknight1 | 2021-12-17T14:04:59Z | 5 | 1 | transformers | [

"transformers",

"tf",

"tensorboard",

"bert",

"token-classification",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2022-03-02T23:29:04Z | ---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: Rocketknight1/gbert-base-germaner

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Rocketknight1/gbert-base-germaner

This model is a fine-tuned version of [deepset/gbert-base](https://huggingface.co/deepset/gbert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0340

- Validation Loss: 0.0881

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 4176, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.1345 | 0.0865 | 0 |

| 0.0550 | 0.0878 | 1 |

| 0.0340 | 0.0881 | 2 |

### Framework versions

- Transformers 4.15.0.dev0

- TensorFlow 2.6.0

- Datasets 1.16.2.dev0

- Tokenizers 0.10.3

|

patrickvonplaten/wavlm-libri-clean-100h-large | patrickvonplaten | 2021-12-17T13:40:58Z | 10,035 | 2 | transformers | [

"transformers",