modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

kwang1993/wav2vec2-base-timit-demo | kwang1993 | 2021-12-21T04:54:44Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | https://huggingface.co/blog/fine-tune-wav2vec2-english

Use the processor from https://huggingface.co/facebook/wav2vec2-base |

vuiseng9/pegasus-arxiv | vuiseng9 | 2021-12-21T02:23:21Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"pegasus",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | This model was developed with transformers v4.13, with a minor patch in this [fork](https://github.com/vuiseng9/transformers/tree/pegasus-v4p13).

# Setup

```bash

git clone https://github.com/vuiseng9/transformers

cd transformers

git checkout pegasus-v4p13 && git reset --hard 41eeb07

# installation, set summarization dependency

# . . .

```

# Train

```bash

#!/usr/bin/env bash
export CUDA_VISIBLE_DEVICES=0,1,2,3
NEPOCH=10
RUNID=pegasus-arxiv-${NEPOCH}eph-run1
OUTDIR=/data1/vchua/pegasus-hf4p13/pegasus-ft/${RUNID}
mkdir -p "$OUTDIR"
# Launch fine-tuning in the background; stdout and stderr go to run.log
python run_summarization.py \
    --model_name_or_path google/pegasus-large \
    --dataset_name ccdv/arxiv-summarization \
    --do_train \
    --adafactor \
    --learning_rate 8e-4 \
    --label_smoothing_factor 0.1 \
    --num_train_epochs $NEPOCH \
    --per_device_train_batch_size 2 \
    --do_eval \
    --per_device_eval_batch_size 2 \
    --num_beams 8 \
    --max_source_length 1024 \
    --max_target_length 256 \
    --evaluation_strategy steps \
    --eval_steps 10000 \
    --save_strategy steps \
    --save_steps 5000 \
    --logging_steps 1 \
    --overwrite_output_dir \
    --run_name $RUNID \
    --output_dir "$OUTDIR" > "$OUTDIR/run.log" 2>&1 &

```

# Eval

```bash

#!/usr/bin/env bash
export CUDA_VISIBLE_DEVICES=3
DT=$(date +%F_%H-%M)
RUNID=pegasus-arxiv-${DT}
OUTDIR=/data1/vchua/pegasus-hf4p13/pegasus-eval/${RUNID}
mkdir -p "$OUTDIR"
# Run prediction on the test split in the background; output goes to run.log
python run_summarization.py \
    --model_name_or_path vuiseng9/pegasus-arxiv \
    --dataset_name ccdv/arxiv-summarization \
    --max_source_length 1024 \
    --max_target_length 256 \
    --do_predict \
    --per_device_eval_batch_size 8 \
    --predict_with_generate \
    --num_beams 8 \
    --overwrite_output_dir \
    --run_name $RUNID \
    --output_dir "$OUTDIR" > "$OUTDIR/run.log" 2>&1 &

```

Although fine-tuning is carried out for 5 epochs, this model is the checkpoint (@150000 steps, 5.91 epochs, 34 hrs) with the lowest eval loss during training. Running test/predict with this checkpoint should give the results below. Note that we observed the model at 80000 steps to be close to the published result from HF.
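
As a rough sanity check on the checkpoint figures above, the step and epoch counters can be related to the batch settings in the training script. This is only a sketch: it assumes plain data-parallel training on the four visible GPUs with no gradient accumulation (the script sets none), and the helper name `samples_per_epoch` is hypothetical.

```python
def samples_per_epoch(steps: int, epochs: float, per_device_batch: int, num_devices: int) -> float:
    """Estimate training examples per epoch from a checkpoint's step/epoch counters."""
    steps_per_epoch = steps / epochs
    # Each optimizer step consumes one batch on every device.
    return steps_per_epoch * per_device_batch * num_devices


# Values taken from the training script and the checkpoint note above.
estimate = samples_per_epoch(steps=150_000, epochs=5.91, per_device_batch=2, num_devices=4)
print(f"~{estimate:,.0f} training examples per epoch")
```

Under these assumptions the estimate comes out at roughly 203k examples per epoch, which can be compared against the size of the training split actually used.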

```

***** predict metrics *****

predict_gen_len = 210.0925

predict_loss = 1.7192

predict_rouge1 = 46.1383

predict_rouge2 = 19.1393

predict_rougeL = 27.7573

predict_rougeLsum = 41.583

predict_runtime = 2:40:25.86

predict_samples = 6440

predict_samples_per_second = 0.669

predict_steps_per_second = 0.084

``` |

Ayham/distilbert_gpt2_summarization_xsum | Ayham | 2021-12-20T20:31:56Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: distilbert_gpt2_summarization_xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_gpt2_summarization_xsum

This model is a fine-tuned version of [](https://huggingface.co/) on the xsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

quarter100/ko-boolq-model | quarter100 | 2021-12-20T13:23:04Z | 5 | 1 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | Labels: "YES": 1, "NO": 0, "No Answer": 2.

Fine-tuned from klue/roberta-large. |

patrickvonplaten/wavlm-libri-clean-100h-base-plus | patrickvonplaten | 2021-12-20T12:59:01Z | 14,635 | 3 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wavlm",

"automatic-speech-recognition",

"librispeech_asr",

"generated_from_trainer",

"wavlm_libri_finetune",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

tags:

- automatic-speech-recognition

- librispeech_asr

- generated_from_trainer

- wavlm_libri_finetune

model-index:

- name: wavlm-libri-clean-100h-base-plus

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wavlm-libri-clean-100h-base-plus

This model is a fine-tuned version of [microsoft/wavlm-base-plus](https://huggingface.co/microsoft/wavlm-base-plus) on the LIBRISPEECH_ASR - CLEAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0819

- Wer: 0.0683
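
For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between reference and hypothesis divided by the number of reference words. The sketch below is a minimal single-utterance illustration, not the evaluation code used for this card; corpus-level tools typically sum edits and reference words over all utterances before dividing. The function name is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # At the start of row i, prev[j] is the distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# One deleted word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

A WER of 0.0683 therefore corresponds to roughly seven word errors per hundred reference words.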

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 32

- total_eval_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3.0

- mixed_precision_training: Native AMP
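
The total batch sizes in the list above follow from the per-device sizes and the device count. A minimal sketch of that arithmetic, with a hypothetical helper name and gradient accumulation assumed to be 1 because the card lists none:

```python
def total_batch_size(per_device: int, num_devices: int = 1, grad_accum_steps: int = 1) -> int:
    """Effective number of examples contributing to one optimizer step."""
    return per_device * num_devices * grad_accum_steps


# train_batch_size=4 on 8 devices, as listed above
print(total_batch_size(per_device=4, num_devices=8))
```

The same product applies to evaluation here, since eval_batch_size is also 4 per device.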

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 2.8877 | 0.34 | 300 | 2.8649 | 1.0 |

| 0.2852 | 0.67 | 600 | 0.2196 | 0.1830 |

| 0.1198 | 1.01 | 900 | 0.1438 | 0.1273 |

| 0.0906 | 1.35 | 1200 | 0.1145 | 0.1035 |

| 0.0729 | 1.68 | 1500 | 0.1055 | 0.0955 |

| 0.0605 | 2.02 | 1800 | 0.0936 | 0.0859 |

| 0.0402 | 2.35 | 2100 | 0.0885 | 0.0746 |

| 0.0421 | 2.69 | 2400 | 0.0848 | 0.0700 |

### Framework versions

- Transformers 4.15.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.16.2.dev0

- Tokenizers 0.10.3

|

patrickvonplaten/wav2vec2-common_voice-tr-demo | patrickvonplaten | 2021-12-20T12:54:39Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"speech-recognition",

"common_voice",

"generated_from_trainer",

"tr",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- tr

license: apache-2.0

tags:

- speech-recognition

- common_voice

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-common_voice-tr-demo

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-common_voice-tr-demo

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on the COMMON_VOICE - TR dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3856

- Wer: 0.3556

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 15.0

- mixed_precision_training: Native AMP
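
To illustrate what a linear scheduler with 500 warmup steps does to the learning rate listed above, here is a minimal sketch: the rate ramps linearly from 0 to the base value during warmup, then decays linearly to 0 at the final step. The function name is hypothetical, and `TOTAL_STEPS` is an assumed illustrative value, not a figure reported by this card.

```python
def linear_warmup_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 3e-4       # learning_rate from the list above
WARMUP_STEPS = 500   # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 1650   # assumption for illustration only

for step in (0, 250, 500, 1075, 1650):
    print(step, linear_warmup_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```

With these settings the peak rate is reached exactly at step 500 and is halved midway through the decay phase.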

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.7391 | 0.92 | 100 | 3.5760 | 1.0 |

| 2.927 | 1.83 | 200 | 3.0796 | 0.9999 |

| 0.9009 | 2.75 | 300 | 0.9278 | 0.8226 |

| 0.6529 | 3.67 | 400 | 0.5926 | 0.6367 |

| 0.3623 | 4.59 | 500 | 0.5372 | 0.5692 |

| 0.2888 | 5.5 | 600 | 0.4407 | 0.4838 |

| 0.285 | 6.42 | 700 | 0.4341 | 0.4694 |

| 0.0842 | 7.34 | 800 | 0.4153 | 0.4302 |

| 0.1415 | 8.26 | 900 | 0.4317 | 0.4136 |

| 0.1552 | 9.17 | 1000 | 0.4145 | 0.4013 |

| 0.1184 | 10.09 | 1100 | 0.4115 | 0.3844 |

| 0.0556 | 11.01 | 1200 | 0.4182 | 0.3862 |

| 0.0851 | 11.93 | 1300 | 0.3985 | 0.3688 |

| 0.0961 | 12.84 | 1400 | 0.4030 | 0.3665 |

| 0.0596 | 13.76 | 1500 | 0.3880 | 0.3631 |

| 0.0917 | 14.68 | 1600 | 0.3878 | 0.3582 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.12.1

- Tokenizers 0.10.3

|

patrickvonplaten/wav2vec2-librispeech-clean-100h-demo-dist | patrickvonplaten | 2021-12-20T12:53:43Z | 87 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"speech-recognition",

"librispeech_asr",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- speech-recognition

- librispeech_asr

- generated_from_trainer

model-index:

- name: wav2vec2-librispeech-clean-100h-demo-dist

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-librispeech-clean-100h-demo-dist

This model is a fine-tuned version of [facebook/wav2vec2-large-lv60](https://huggingface.co/facebook/wav2vec2-large-lv60) on the LIBRISPEECH_ASR - CLEAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0572

- Wer: 0.0417

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 32

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.399 | 0.11 | 100 | 3.6153 | 1.0 |

| 2.8892 | 0.22 | 200 | 2.8963 | 1.0 |

| 2.8284 | 0.34 | 300 | 2.8574 | 1.0 |

| 0.7347 | 0.45 | 400 | 0.6158 | 0.4850 |

| 0.1138 | 0.56 | 500 | 0.2038 | 0.1560 |

| 0.248 | 0.67 | 600 | 0.1274 | 0.1024 |

| 0.2586 | 0.78 | 700 | 0.1108 | 0.0876 |

| 0.0733 | 0.9 | 800 | 0.0936 | 0.0762 |

| 0.044 | 1.01 | 900 | 0.0834 | 0.0662 |

| 0.0393 | 1.12 | 1000 | 0.0792 | 0.0622 |

| 0.0941 | 1.23 | 1100 | 0.0769 | 0.0627 |

| 0.036 | 1.35 | 1200 | 0.0731 | 0.0603 |

| 0.0768 | 1.46 | 1300 | 0.0713 | 0.0559 |

| 0.0518 | 1.57 | 1400 | 0.0686 | 0.0537 |

| 0.0815 | 1.68 | 1500 | 0.0639 | 0.0515 |

| 0.0603 | 1.79 | 1600 | 0.0636 | 0.0500 |

| 0.056 | 1.91 | 1700 | 0.0609 | 0.0480 |

| 0.0265 | 2.02 | 1800 | 0.0621 | 0.0465 |

| 0.0496 | 2.13 | 1900 | 0.0607 | 0.0449 |

| 0.0436 | 2.24 | 2000 | 0.0591 | 0.0446 |

| 0.0421 | 2.35 | 2100 | 0.0590 | 0.0428 |

| 0.0641 | 2.47 | 2200 | 0.0603 | 0.0443 |

| 0.0466 | 2.58 | 2300 | 0.0580 | 0.0429 |

| 0.0132 | 2.69 | 2400 | 0.0574 | 0.0423 |

| 0.0073 | 2.8 | 2500 | 0.0586 | 0.0417 |

| 0.0021 | 2.91 | 2600 | 0.0574 | 0.0412 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.12.1

- Tokenizers 0.10.3

|

patrickvonplaten/hubert-librispeech-clean-100h-demo-dist | patrickvonplaten | 2021-12-20T12:53:35Z | 10 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"hubert",

"automatic-speech-recognition",

"speech-recognition",

"librispeech_asr",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- speech-recognition

- librispeech_asr

- generated_from_trainer

model-index:

- name: hubert-librispeech-clean-100h-demo-dist

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hubert-librispeech-clean-100h-demo-dist

This model is a fine-tuned version of [facebook/hubert-large-ll60k](https://huggingface.co/facebook/hubert-large-ll60k) on the LIBRISPEECH_ASR - CLEAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0984

- Wer: 0.0883

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 32

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 2.9031 | 0.11 | 100 | 2.9220 | 1.0 |

| 2.6437 | 0.22 | 200 | 2.6268 | 1.0 |

| 0.3934 | 0.34 | 300 | 0.4860 | 0.4182 |

| 0.3531 | 0.45 | 400 | 0.3088 | 0.2894 |

| 0.2255 | 0.56 | 500 | 0.2568 | 0.2426 |

| 0.3379 | 0.67 | 600 | 0.2073 | 0.2011 |

| 0.2419 | 0.78 | 700 | 0.1849 | 0.1838 |

| 0.2128 | 0.9 | 800 | 0.1662 | 0.1690 |

| 0.1341 | 1.01 | 900 | 0.1600 | 0.1541 |

| 0.0946 | 1.12 | 1000 | 0.1431 | 0.1404 |

| 0.1643 | 1.23 | 1100 | 0.1373 | 0.1304 |

| 0.0663 | 1.35 | 1200 | 0.1293 | 0.1307 |

| 0.162 | 1.46 | 1300 | 0.1247 | 0.1266 |

| 0.1433 | 1.57 | 1400 | 0.1246 | 0.1262 |

| 0.1581 | 1.68 | 1500 | 0.1219 | 0.1154 |

| 0.1036 | 1.79 | 1600 | 0.1127 | 0.1081 |

| 0.1352 | 1.91 | 1700 | 0.1087 | 0.1040 |

| 0.0471 | 2.02 | 1800 | 0.1085 | 0.1005 |

| 0.0945 | 2.13 | 1900 | 0.1066 | 0.0973 |

| 0.0843 | 2.24 | 2000 | 0.1102 | 0.0964 |

| 0.0774 | 2.35 | 2100 | 0.1079 | 0.0940 |

| 0.0952 | 2.47 | 2200 | 0.1056 | 0.0927 |

| 0.0635 | 2.58 | 2300 | 0.1026 | 0.0920 |

| 0.0665 | 2.69 | 2400 | 0.1012 | 0.0905 |

| 0.034 | 2.8 | 2500 | 0.1009 | 0.0900 |

| 0.0251 | 2.91 | 2600 | 0.0993 | 0.0883 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.12.1

- Tokenizers 0.10.3

|

patrickvonplaten/sew-mid-100k-librispeech-clean-100h-ft | patrickvonplaten | 2021-12-20T12:53:26Z | 11 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"sew",

"automatic-speech-recognition",

"librispeech_asr",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- automatic-speech-recognition

- librispeech_asr

- generated_from_trainer

model-index:

- name: sew-mid-100k-librispeech-clean-100h-ft

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sew-mid-100k-librispeech-clean-100h-ft

This model is a fine-tuned version of [asapp/sew-mid-100k](https://huggingface.co/asapp/sew-mid-100k) on the LIBRISPEECH_ASR - CLEAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1976

- Wer: 0.1665

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 32

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.4274 | 0.11 | 100 | 4.1419 | 1.0 |

| 2.9657 | 0.22 | 200 | 3.1203 | 1.0 |

| 2.9069 | 0.34 | 300 | 3.0107 | 1.0 |

| 2.8666 | 0.45 | 400 | 2.8960 | 1.0 |

| 1.4535 | 0.56 | 500 | 1.4062 | 0.8664 |

| 0.6821 | 0.67 | 600 | 0.5530 | 0.4930 |

| 0.4827 | 0.78 | 700 | 0.4122 | 0.3630 |

| 0.4485 | 0.9 | 800 | 0.3597 | 0.3243 |

| 0.2666 | 1.01 | 900 | 0.3104 | 0.2790 |

| 0.2378 | 1.12 | 1000 | 0.2913 | 0.2613 |

| 0.2516 | 1.23 | 1100 | 0.2702 | 0.2452 |

| 0.2456 | 1.35 | 1200 | 0.2619 | 0.2338 |

| 0.2392 | 1.46 | 1300 | 0.2466 | 0.2195 |

| 0.2117 | 1.57 | 1400 | 0.2379 | 0.2092 |

| 0.1837 | 1.68 | 1500 | 0.2295 | 0.2029 |

| 0.1757 | 1.79 | 1600 | 0.2240 | 0.1949 |

| 0.1626 | 1.91 | 1700 | 0.2195 | 0.1927 |

| 0.168 | 2.02 | 1800 | 0.2137 | 0.1853 |

| 0.168 | 2.13 | 1900 | 0.2123 | 0.1839 |

| 0.1576 | 2.24 | 2000 | 0.2095 | 0.1803 |

| 0.1756 | 2.35 | 2100 | 0.2075 | 0.1776 |

| 0.1467 | 2.47 | 2200 | 0.2049 | 0.1754 |

| 0.1702 | 2.58 | 2300 | 0.2013 | 0.1722 |

| 0.177 | 2.69 | 2400 | 0.1993 | 0.1701 |

| 0.1417 | 2.8 | 2500 | 0.1983 | 0.1688 |

| 0.1302 | 2.91 | 2600 | 0.1977 | 0.1678 |

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.13.4.dev0

- Tokenizers 0.10.3

|

MMG/bert-base-spanish-wwm-cased-finetuned-sqac-finetuned-squad2-es | MMG | 2021-12-20T08:10:24Z | 23 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"es",

"dataset:squad_es",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- squad_es

model-index:

- name: bert-base-spanish-wwm-cased-finetuned-sqac-finetuned-squad2-es

results: []

language:

- es

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-spanish-wwm-cased-finetuned-sqac-finetuned-squad2-es

This model is a fine-tuned version of [MMG/bert-base-spanish-wwm-cased-finetuned-sqac](https://huggingface.co/MMG/bert-base-spanish-wwm-cased-finetuned-sqac) on the squad_es dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2584

- {'exact': 63.358070500927646, 'f1': 70.22498384623977}
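
The `exact` and `f1` figures above are percentages averaged over evaluation examples. As a rough illustration of how such per-example scores are computed, the sketch below uses simplified normalization (lowercasing and whitespace splitting only); the official SQuAD scripts apply further normalization, so this is an assumption-laden approximation rather than the evaluation code behind these numbers. Function names are hypothetical.

```python
from collections import Counter


def exact_match(prediction: str, truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(prediction.strip().lower() == truth.strip().lower())


def token_f1(prediction: str, truth: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = prediction.lower().split()
    true_tokens = truth.lower().split()
    if not pred_tokens or not true_tokens:
        # Two empty answers count as a match (the "no answer" case); otherwise a miss.
        return float(pred_tokens == true_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("en Madrid", "Madrid"), token_f1("en Madrid", "Madrid"))
```

A partially correct span such as the one in the example scores 0 on exact match but still earns partial F1 credit, which is why F1 is the higher of the two reported values.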

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Amalq/roberta-base-finetuned-schizophreniaReddit2 | Amalq | 2021-12-20T05:41:28Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"fill-mask",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: roberta-base-finetuned-schizophreniaReddit2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-schizophreniaReddit2

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7785
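
If the reported loss is the mean cross-entropy in nats over masked tokens (an assumption; the card does not say), it can be converted to a perplexity by exponentiation. A minimal sketch:

```python
import math

eval_loss = 1.7785  # evaluation loss reported above
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.2f}")
```

Under that assumption the evaluation loss corresponds to a perplexity of about 5.9.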

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 490 | 1.8093 |

| 1.9343 | 2.0 | 980 | 1.7996 |

| 1.8856 | 3.0 | 1470 | 1.7966 |

| 1.8552 | 4.0 | 1960 | 1.7844 |

| 1.8267 | 5.0 | 2450 | 1.7839 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

microsoft/unispeech-1350-en-168-es-ft-1h | microsoft | 2021-12-19T23:01:13Z | 33 | 0 | transformers | [

"transformers",

"pytorch",

"unispeech",

"automatic-speech-recognition",

"audio",

"es",

"dataset:common_voice",

"arxiv:2101.07597",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- es

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

---

# UniSpeech-Large-plus Spanish

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The large model was pretrained on 16 kHz sampled speech audio and phonetic labels and subsequently fine-tuned on 1 h of Spanish phonemes.

When using the model, make sure that your speech input is also sampled at 16 kHz and that your text is converted into a sequence of phonemes.

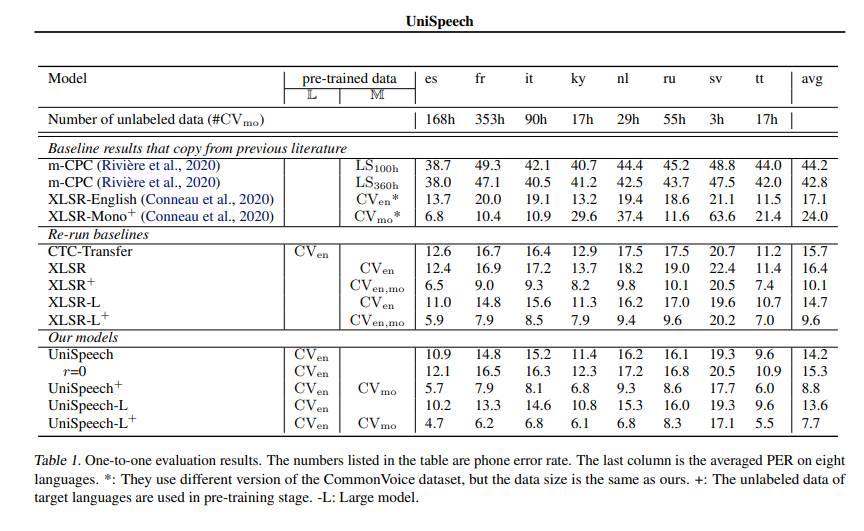

[Paper: UniSpeech: Unified Speech Representation Learning

with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597)

Authors: Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang

**Abstract**

*In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech.

# Usage

This is a speech model that has been fine-tuned on phoneme classification.

## Inference

```python

import torch

from datasets import load_dataset

from transformers import AutoModelForCTC, AutoProcessor

import torchaudio.functional as F

model_id = "microsoft/unispeech-1350-en-168-es-ft-1h"

sample = next(iter(load_dataset("common_voice", "es", split="test", streaming=True)))

resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), 48_000, 16_000).numpy()

model = AutoModelForCTC.from_pretrained(model_id)

processor = AutoProcessor.from_pretrained(model_id)

input_values = processor(resampled_audio, return_tensors="pt").input_values

with torch.no_grad():
    logits = model(input_values).logits

prediction_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(prediction_ids)

# -> gives:
# b j e n i k e ɾ ɾ e ɣ a l o a s a β ɾ i ɾ p ɾ i m e ɾ o
# for: Bien . ¿ y qué regalo vas a abrir primero ?

```
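
The argmax-then-decode step in the snippet above is greedy CTC decoding: consecutive repeated predictions are merged, then blank tokens are dropped. The sketch below shows that collapse on plain integer IDs. It is an illustration only; the function name is hypothetical and `blank_id=0` is an assumption, since the actual blank index depends on the model's tokenizer.

```python
from itertools import groupby


def ctc_greedy_collapse(frame_ids, blank_id=0):
    """Merge consecutive duplicate IDs, then remove blanks."""
    merged = [token for token, _ in groupby(frame_ids)]
    return [token for token in merged if token != blank_id]


# Frame-level argmax IDs; the blank between the two 5s keeps them as separate tokens.
print(ctc_greedy_collapse([0, 5, 5, 0, 5, 7, 7, 0]))
```

Merging before removing blanks matters: a blank between two identical IDs is what lets the decoder emit the same token twice in a row.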

# Contribution

The model was contributed by [cywang](https://huggingface.co/cywang) and [patrickvonplaten](https://huggingface.co/patrickvonplaten).

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

# Official Results

See *UniSpeech-L^{+}* - *es*:

|

oseibrefo/distilbert-base-uncased-finetuned-cola | oseibrefo | 2021-12-19T19:40:54Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- matthews_correlation

model-index:

- name: distilbert-base-uncased-finetuned-cola

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

args: cola

metrics:

- name: Matthews Correlation

type: matthews_correlation

value: 0.5497693861041112

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7595

- Matthews Correlation: 0.5498
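
For a binary task such as CoLA, the Matthews correlation coefficient can be computed directly from confusion-matrix counts. The sketch below is a minimal illustration with made-up counts, not the evaluation code used for this card; the function name is hypothetical.

```python
import math


def matthews_corrcoef_binary(tp: int, tn: int, fp: int, fn: int) -> float:
    """MCC from binary confusion-matrix counts; 0.0 when the denominator vanishes."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


# Hypothetical counts, for illustration only.
print(matthews_corrcoef_binary(tp=6, tn=3, fp=1, fn=2))
```

The score ranges from -1 (total disagreement) through 0 (no better than chance) to 1 (perfect prediction), which makes it less sensitive to class imbalance than accuracy.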

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5275 | 1.0 | 535 | 0.5411 | 0.4254 |

| 0.3498 | 2.0 | 1070 | 0.4973 | 0.5183 |

| 0.2377 | 3.0 | 1605 | 0.6180 | 0.5079 |

| 0.175 | 4.0 | 2140 | 0.7595 | 0.5498 |

| 0.1322 | 5.0 | 2675 | 0.8412 | 0.5370 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

addy88/wav2vec2-assamese-stt | addy88 | 2021-12-19T16:55:56Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor
import argparse


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-assamese-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-assamese-stt")
    # load audio
    audio_input, sample_rate = sf.read(wav_file)
    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values
    # INFERENCE
    # retrieve logits & take argmax (no gradients needed at inference time)
    with torch.no_grad():
        logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)
    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-bhojpuri-stt | addy88 | 2021-12-19T16:48:06Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor
import argparse


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-bhojpuri-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-bhojpuri-stt")
    # load audio
    audio_input, sample_rate = sf.read(wav_file)
    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values
    # INFERENCE
    # retrieve logits & take argmax (no gradients needed at inference time)
    with torch.no_grad():
        logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)
    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-marathi-stt | addy88 | 2021-12-19T16:31:22Z | 21 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor
import argparse


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-marathi-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-marathi-stt")
    # load audio
    audio_input, sample_rate = sf.read(wav_file)
    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values
    # INFERENCE
    # retrieve logits & take argmax (no gradients needed at inference time)
    with torch.no_grad():
        logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)
    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec-odia-stt | addy88 | 2021-12-19T15:56:01Z | 6 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor
import argparse


def parse_transcription(wav_file):
    # load pretrained model (ID matches this card's modelId, addy88/wav2vec-odia-stt)
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec-odia-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec-odia-stt")
    # load audio
    audio_input, sample_rate = sf.read(wav_file)
    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values
    # INFERENCE
    # retrieve logits & take argmax (no gradients needed at inference time)
    with torch.no_grad():
        logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)
    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-rajsthani-stt | addy88 | 2021-12-19T15:52:16Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-rajsthani-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-rajsthani-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-urdu-stt | addy88 | 2021-12-19T15:47:47Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-urdu-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-urdu-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-tamil-stt | addy88 | 2021-12-19T15:43:45Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-tamil-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-tamil-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-telugu-stt | addy88 | 2021-12-19T15:39:58Z | 1,020 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-telugu-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-telugu-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-nepali-stt | addy88 | 2021-12-19T15:36:06Z | 4 | 1 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-nepali-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-nepali-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

nguyenvulebinh/envibert | nguyenvulebinh | 2021-12-19T14:20:51Z | 26 | 5 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"exbert",

"vi",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: vi

tags:

- exbert

license: cc-by-nc-4.0

---

# RoBERTa for Vietnamese and English (envibert)

This RoBERTa variant was trained on 100GB of text (50GB of Vietnamese and 50GB of English), hence the name ***envibert***. The architecture is customized for production use, so the model contains only 70M parameters.

## Usage

```python

from transformers import RobertaModel

from transformers.file_utils import cached_path, hf_bucket_url

from importlib.machinery import SourceFileLoader

import os

cache_dir='./cache'

model_name='nguyenvulebinh/envibert'

def download_tokenizer_files():
    # fetch the custom tokenizer files into cache_dir if they are not there yet
    resources = ['envibert_tokenizer.py', 'dict.txt', 'sentencepiece.bpe.model']
    for item in resources:
        if not os.path.exists(os.path.join(cache_dir, item)):
            tmp_file = hf_bucket_url(model_name, filename=item)
            tmp_file = cached_path(tmp_file,cache_dir=cache_dir)
            os.rename(tmp_file, os.path.join(cache_dir, item))

download_tokenizer_files()

tokenizer = SourceFileLoader("envibert.tokenizer", os.path.join(cache_dir,'envibert_tokenizer.py')).load_module().RobertaTokenizer(cache_dir)

model = RobertaModel.from_pretrained(model_name,cache_dir=cache_dir)

# Encode text

text_input = 'Đại học Bách Khoa Hà Nội .'

text_ids = tokenizer(text_input, return_tensors='pt').input_ids

# tensor([[ 0, 705, 131, 8751, 2878, 347, 477, 5, 2]])

# Extract features

text_features = model(text_ids)

text_features['last_hidden_state'].shape

# torch.Size([1, 9, 768])

len(text_features['hidden_states'])

# 7

```
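
The `last_hidden_state` above holds one 768-dimensional vector per token. When a single vector per sentence is needed, one common option is to average the token vectors while ignoring padding. This is an assumption for illustration, not something the model card prescribes. The sketch below uses plain Python lists and toy sizes; `mean_pool` is a hypothetical helper, not part of the envibert repository.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose mask entry is 1 (hypothetical helper)."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                total[i] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [value / count for value in total]


# Toy example: 3 tokens with 2 features each; the last token is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
sentence_vector = mean_pool(tokens, mask)
print(sentence_vector)
```

With real model output the same idea applies along the token axis of the `[1, 9, 768]` tensor.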

### Citation

```text

@inproceedings{nguyen20d_interspeech,

author={Thai Binh Nguyen and Quang Minh Nguyen and Thi Thu Hien Nguyen and Quoc Truong Do and Chi Mai Luong},

title={{Improving Vietnamese Named Entity Recognition from Speech Using Word Capitalization and Punctuation Recovery Models}},

year=2020,

booktitle={Proc. Interspeech 2020},

pages={4263--4267},

doi={10.21437/Interspeech.2020-1896}

}

```

**Please CITE** our repo when it is used to help produce published results or is incorporated into other software.

# Contact

[email protected]

[Follow @nguyenvulebinh on Twitter](https://twitter.com/intent/follow?screen_name=nguyenvulebinh) |

addy88/wav2vec2-sanskrit-stt | addy88 | 2021-12-19T13:38:52Z | 264 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-sanskrit-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-sanskrit-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

addy88/wav2vec2-kannada-stt | addy88 | 2021-12-19T13:35:26Z | 248 | 1 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ## Usage

The model can be used directly (without a language model) as follows:

```python

import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def parse_transcription(wav_file):
    # load pretrained model
    processor = Wav2Vec2Processor.from_pretrained("addy88/wav2vec2-kannada-stt")
    model = Wav2Vec2ForCTC.from_pretrained("addy88/wav2vec2-kannada-stt")

    # load audio
    audio_input, sample_rate = sf.read(wav_file)

    # pad input values and return pt tensor
    input_values = processor(audio_input, sampling_rate=sample_rate, return_tensors="pt").input_values

    # INFERENCE
    # retrieve logits & take argmax
    logits = model(input_values).logits
    predicted_ids = torch.argmax(logits, dim=-1)

    # transcribe
    transcription = processor.decode(predicted_ids[0], skip_special_tokens=True)
    print(transcription)

``` |

new5558/simcse-model-wangchanberta-base-att-spm-uncased | new5558 | 2021-12-19T13:01:31Z | 80 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"camembert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2022-03-02T23:29:05Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# new5558/simcse-model-wangchanberta-base-att-spm-uncased

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

def cls_pooling(model_output, attention_mask):
    # keep only the first ([CLS]) token embedding of every sequence
    return model_output[0][:,0]

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

model = AutoModel.from_pretrained('new5558/simcse-model-wangchanberta-base-att-spm-uncased')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, cls pooling.

sentence_embeddings = cls_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
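
Once sentence embeddings are available, semantic search usually comes down to ranking candidates by cosine similarity to a query embedding. The following is a minimal, framework-free sketch of that step. The vectors are made-up toy values and `rank_by_similarity` is a hypothetical helper, not part of sentence-transformers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, candidates):
    """Return candidate indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, c) for c in candidates]
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


# Toy 3-dimensional "embeddings"; real ones from this model have 768 dimensions.
query = [1.0, 0.0, 1.0]
candidates = [[0.0, 1.0, 0.0], [2.0, 0.0, 2.0], [1.0, 1.0, 0.0]]
ranking = rank_by_similarity(query, candidates)
print(ranking)
```

In practice the rows of `sentence_embeddings` (converted to lists, or compared with a tensor library) would take the place of the toy vectors.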

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=new5558/simcse-model-wangchanberta-base-att-spm-uncased)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 5125 with parameters:

```

{'batch_size': 256, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```
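
To make the loss settings above concrete, here is a rough sketch of how a multiple-negatives ranking loss is usually formulated: every anchor is scored against all positives in the batch with scaled cosine similarity, and cross-entropy pushes the matching pair above the in-batch negatives. This is a plain-Python illustration under that assumption, not the library's implementation; the function names are hypothetical.

```python
import math


def cos_sim(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where positives[i] is the correct match for anchors[i]."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [scale * cos_sim(anchor, p) for p in positives]
        # numerically stable log-sum-exp over the whole batch
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(sum(math.exp(v - max_logit) for v in logits))
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]   # each anchor matches its own positive
swapped = [[0.0, 1.0], [1.0, 0.0]]   # each anchor matches the other positive
loss_aligned = multiple_negatives_ranking_loss(anchors, aligned)
loss_swapped = multiple_negatives_ranking_loss(anchors, swapped)
print(loss_aligned, loss_swapped)
```

The `scale` factor sharpens the softmax: with unit-range cosine scores, a value of 20 lets a well-separated pair drive the loss close to zero.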

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 1e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```
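
One detail in these settings is worth checking numerically: `warmup_steps` (10000) is larger than the number of training steps implied by a DataLoader of length 5125 and a single epoch. Assuming `WarmupLinear` means linear warmup followed by linear decay, and that `steps_per_epoch: null` falls back to the DataLoader length, the learning rate would never reach its nominal value. The sketch below works through that arithmetic; `warmup_linear_factor` is a hypothetical helper written for this illustration.

```python
def warmup_linear_factor(step, warmup_steps, total_steps):
    """Multiplier applied to the base learning rate at a given optimizer step."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


BASE_LR = 1e-05
WARMUP_STEPS = 10000
TOTAL_STEPS = 5125 * 1  # DataLoader length times epochs, both taken from the card

peak_factor = max(
    warmup_linear_factor(step, WARMUP_STEPS, TOTAL_STEPS) for step in range(TOTAL_STEPS)
)
peak_lr = BASE_LR * peak_factor
print(peak_factor, peak_lr)
```

Under these assumptions training stops while the schedule is still warming up, so the highest learning rate actually used is roughly half of `lr`.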

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 32, 'do_lower_case': False}) with Transformer model: CamembertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

rlagusrlagus123/XTC4096 | rlagusrlagus123 | 2021-12-19T11:19:34Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

tags:

- conversational

---

---

12 epochs, batch size 4, gradient accumulation steps 1, tail 4096.

This seems to be the optimal setup. |

NbAiLabArchive/test_w5_long_roberta_tokenizer | NbAiLabArchive | 2021-12-19T10:36:40Z | 41 | 0 | transformers | [

"transformers",

"pytorch",

"jax",

"tensorboard",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:04Z | Just for performing some experiments. Do not use. |

shreyasgite/wav2vec2-large-xls-r-300m-dementianet | shreyasgite | 2021-12-19T09:11:16Z | 78 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: wav2vec2-large-xls-r-300m-dementianet

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-dementianet

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on an unspecified dataset (the auto-generated card records it as `None`).

It achieves the following results on the evaluation set:

- Loss: 1.3430

- Accuracy: 0.4062

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 22

- mixed_precision_training: Native AMP
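
The relationship between the batch-size entries above can be checked with a little arithmetic. The sketch assumes a single training device, which the card does not state, and reads the steps-per-epoch figure off the training results table (step 120 is reached at epoch 10.0). `effective_batch_size` is a hypothetical helper.

```python
def effective_batch_size(per_device_batch_size, accumulation_steps, num_devices=1):
    """Number of samples that contribute to one optimizer step."""
    return per_device_batch_size * accumulation_steps * num_devices


# Values from the hyperparameter list; num_devices=1 is an assumption.
total_train_batch_size = effective_batch_size(8, 2, num_devices=1)

# Step 120 at epoch 10.0 implies 12 optimizer steps per epoch.
steps_per_epoch = 120 / 10.0
max_samples_per_epoch = steps_per_epoch * total_train_batch_size
print(total_train_batch_size, steps_per_epoch, max_samples_per_epoch)
```

The result matches the reported `total_train_batch_size` of 16 and suggests a training set of at most about 192 examples, which helps put the flat accuracy curve in context.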

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 1.3845 | 3.33 | 40 | 1.3556 | 0.3125 |
| 1.3659 | 6.67 | 80 | 1.3602 | 0.3125 |
| 1.3619 | 10.0 | 120 | 1.3569 | 0.3125 |
| 1.3575 | 13.33 | 160 | 1.3509 | 0.3125 |
| 1.3356 | 16.67 | 200 | 1.3599 | 0.3125 |
| 1.3166 | 20.0 | 240 | 1.3430 | 0.4062 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Ayham/roberta_gpt2_summarization_xsum | Ayham | 2021-12-19T06:35:43Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: roberta_gpt2_summarization_xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta_gpt2_summarization_xsum

This model was fine-tuned on the xsum dataset. The auto-generated card leaves the base checkpoint unnamed (the original link was empty).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Ayham/xlnet_gpt2_summarization_xsum | Ayham | 2021-12-19T04:50:11Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"generated_from_trainer",

"dataset:xsum",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:04Z | ---

tags:

- generated_from_trainer

datasets:

- xsum

model-index:

- name: xlnet_gpt2_summarization_xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlnet_gpt2_summarization_xsum

This model was fine-tuned on the xsum dataset. The auto-generated card leaves the base checkpoint unnamed (the original link was empty).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

yerevann/x-r-hy | yerevann | 2021-12-19T03:19:04Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2-large-xls-r-2b-armenian-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-2b-armenian-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-2b](https://huggingface.co/facebook/wav2vec2-xls-r-2b) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5166

- Wer: 0.7397
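
For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between the reference and the predicted transcript, divided by the number of reference words. The sketch below is a minimal single-utterance version in plain Python. It is an illustration only; the evaluation code actually used for this model is not shown in the card, and corpus-level WER is normally computed over all utterances together.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] holds the edit distance between ref[:i-1] and hyp[:j]
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over six reference words.
example_wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(example_wer)
```

A WER of 0.7397 therefore means that, relative to the reference length, roughly three out of four words need an edit to recover the reference transcript.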

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 120

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:------:|:-----:|:---------------:|:------:|
| 3.7057 | 2.38 | 200 | 0.7731 | 0.8091 |
| 0.5797 | 4.76 | 400 | 0.8279 | 0.7804 |
| 0.4341 | 7.14 | 600 | 1.0343 | 0.8285 |
| 0.3135 | 9.52 | 800 | 1.0551 | 0.8066 |
| 0.2409 | 11.9 | 1000 | 1.0686 | 0.7897 |
| 0.1998 | 14.29 | 1200 | 1.1329 | 0.7766 |
| 0.1729 | 16.67 | 1400 | 1.3234 | 0.8567 |
| 0.1533 | 19.05 | 1600 | 1.2432 | 0.8160 |
| 0.1354 | 21.43 | 1800 | 1.2780 | 0.7954 |
| 0.12 | 23.81 | 2000 | 1.2228 | 0.8054 |
| 0.1175 | 26.19 | 2200 | 1.3484 | 0.8129 |
| 0.1141 | 28.57 | 2400 | 1.2881 | 0.9130 |
| 0.1053 | 30.95 | 2600 | 1.1972 | 0.7910 |
| 0.0954 | 33.33 | 2800 | 1.3702 | 0.8048 |
| 0.0842 | 35.71 | 3000 | 1.3963 | 0.7960 |
| 0.0793 | 38.1 | 3200 | 1.4690 | 0.7991 |
| 0.0707 | 40.48 | 3400 | 1.5045 | 0.8085 |
| 0.0745 | 42.86 | 3600 | 1.4749 | 0.8004 |
| 0.0693 | 45.24 | 3800 | 1.5047 | 0.7960 |
| 0.0646 | 47.62 | 4000 | 1.4216 | 0.7997 |
| 0.0555 | 50.0 | 4200 | 1.4676 | 0.8029 |
| 0.056 | 52.38 | 4400 | 1.4273 | 0.8104 |
| 0.0465 | 54.76 | 4600 | 1.3999 | 0.7841 |
| 0.046 | 57.14 | 4800 | 1.6130 | 0.8473 |
| 0.0404 | 59.52 | 5000 | 1.5586 | 0.7841 |
| 0.0403 | 61.9 | 5200 | 1.3959 | 0.7653 |
| 0.0404 | 64.29 | 5400 | 1.5318 | 0.8041 |
| 0.0365 | 66.67 | 5600 | 1.5300 | 0.7854 |
| 0.0338 | 69.05 | 5800 | 1.5051 | 0.7885 |
| 0.0307 | 71.43 | 6000 | 1.5647 | 0.7935 |
| 0.0235 | 73.81 | 6200 | 1.4919 | 0.8154 |
| 0.0268 | 76.19 | 6400 | 1.5259 | 0.8060 |
| 0.0275 | 78.57 | 6600 | 1.3985 | 0.7897 |
| 0.022 | 80.95 | 6800 | 1.5515 | 0.8154 |
| 0.017 | 83.33 | 7000 | 1.5737 | 0.7647 |
| 0.0205 | 85.71 | 7200 | 1.4876 | 0.7572 |
| 0.0174 | 88.1 | 7400 | 1.6331 | 0.7829 |
| 0.0188 | 90.48 | 7600 | 1.5108 | 0.7685 |
| 0.0134 | 92.86 | 7800 | 1.7125 | 0.7866 |
| 0.0125 | 95.24 | 8000 | 1.6042 | 0.7635 |
| 0.0133 | 97.62 | 8200 | 1.4608 | 0.7478 |
| 0.0272 | 100.0 | 8400 | 1.4784 | 0.7309 |
| 0.0133 | 102.38 | 8600 | 1.4471 | 0.7459 |
| 0.0094 | 104.76 | 8800 | 1.4852 | 0.7272 |
| 0.0103 | 107.14 | 9000 | 1.5679 | 0.7409 |
| 0.0088 | 109.52 | 9200 | 1.5090 | 0.7309 |
| 0.0077 | 111.9 | 9400 | 1.4994 | 0.7290 |
| 0.0068 | 114.29 | 9600 | 1.5008 | 0.7340 |
| 0.0054 | 116.67 | 9800 | 1.5166 | 0.7390 |
| 0.0052 | 119.05 | 10000 | 1.5166 | 0.7397 |

### Framework versions

- Transformers 4.14.1

- Pytorch 1.10.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

zaccharieramzi/UNet-OASIS | zaccharieramzi | 2021-12-19T02:07:02Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # UNet-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil brain retrospective data from the OASIS database at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.unet import unet

model = unet(n_layers=4, layers_n_channels=[16, 32, 64, 128], layers_n_non_lins=2)

# adjust this path to wherever the downloaded model_weights.h5 file is stored
model.load_weights('UNet-fastmri/model_weights.h5')

```

Using the model is then as simple as:

```python

model(zero_filled_recon)

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 29.8

- SSIM: 0.847
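
As a reminder of what the PSNR figure measures, the sketch below computes peak signal-to-noise ratio for two small images in plain Python. The choice of `data_range` (here the dynamic range of the reference image) is an assumption; the exact convention used in the benchmark is not given in this card, and `psnr` is a hypothetical helper rather than the repository's evaluation code.

```python
import math


def psnr(reference, reconstruction, data_range=None):
    """Peak signal-to-noise ratio in dB for two same-sized 2D images (lists of rows)."""
    ref = [value for row in reference for value in row]
    rec = [value for row in reconstruction for value in row]
    if len(ref) != len(rec):
        raise ValueError("images must have the same number of pixels")
    if data_range is None:
        # assumption: use the dynamic range of the reference image
        data_range = max(ref) - min(ref)
    mse = sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / mse)


reference = [[0.0, 1.0], [1.0, 0.0]]
reconstruction = [[0.1, 0.9], [0.9, 0.1]]
value = psnr(reference, reconstruction)
print(round(value, 3))
```

Because PSNR is logarithmic, a gain of a few dB between reconstruction networks corresponds to a substantial drop in mean squared error.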

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/KIKI-net-OASIS | zaccharieramzi | 2021-12-19T01:59:51Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # KIKI-net-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil brain retrospective data from the OASIS database at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.kiki_sep import full_kiki_net

from fastmri_recon.models.utils.non_linearities import lrelu

model = full_kiki_net(n_convs=16, n_filters=48, activation=lrelu)

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([
    kspace,  # shape: [n_slices, n_rows, n_cols, 1]
    mask,  # shape: [n_slices, n_rows, n_cols]
])

```
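
The `mask` input marks which k-space samples were acquired. As a rough illustration of what an acceleration factor of 4 means, the sketch below builds a simplified mask that keeps every fourth column, using nested Python lists with the `[n_slices, n_rows, n_cols]` layout noted above. This is an assumption made for illustration: the card does not describe how the repository generates its masks, `equispaced_column_mask` is a hypothetical helper, and the real model expects tensors rather than lists.

```python
def equispaced_column_mask(n_slices, n_rows, n_cols, acceleration=4):
    """Build a [n_slices, n_rows, n_cols] mask that keeps every `acceleration`-th column."""
    column_kept = [1.0 if col % acceleration == 0 else 0.0 for col in range(n_cols)]
    # the same column pattern is repeated for every row and every slice
    return [[list(column_kept) for _ in range(n_rows)] for _ in range(n_slices)]


example_mask = equispaced_column_mask(n_slices=2, n_rows=4, n_cols=8)
sampled_fraction = sum(example_mask[0][0]) / len(example_mask[0][0])
print(example_mask[0][0], sampled_fraction)
```

Keeping one column in four means only a quarter of the k-space lines are measured, which is where the fourfold acceleration comes from.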

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 30.08

- SSIM: 0.853

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/CascadeNet-OASIS | zaccharieramzi | 2021-12-19T01:47:21Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # CascadeNet-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single coil brain retrospective data from the OASIS database at acceleration factor 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

After cloning the repo, `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, you can install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

from fastmri_recon.models.functional_models.cascading import cascade_net

model = cascade_net()

model.load_weights('model_weights.h5')

```

Using the model is then as simple as:

```python

model([
    kspace,  # shape: [n_slices, n_rows, n_cols, 1]
    mask,  # shape: [n_slices, n_rows, n_cols]
])

```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 32.0

- SSIM: 0.887

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/CascadeNet-fastmri | zaccharieramzi | 2021-12-19T01:43:27Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # CascadeNet-fastmri

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This model can be used to reconstruct single coil fastMRI data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct single-coil knee data from a Siemens scanner at an acceleration factor of 4.

It cannot be used on multi-coil data.
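
To make "acceleration factor 4" concrete, the sketch below draws a random column mask that keeps roughly one quarter of the k-space columns, with a fully sampled central band. The function name and the `center_fraction=0.08` default (the value commonly paired with 4x acceleration in fastMRI tooling) are assumptions; this card does not state the exact sampling scheme used for training:

```python
import numpy as np

def random_column_mask(n_cols, acceleration=4, center_fraction=0.08, seed=0):
    """Return a 1D boolean mask over k-space columns.

    A central band is always kept; the remaining columns are kept at random
    so that about n_cols / acceleration columns are sampled in expectation.
    """
    rng = np.random.default_rng(seed)
    n_center = int(round(n_cols * center_fraction))
    # Probability for the outer columns so the expected total matches the target.
    prob = (n_cols / acceleration - n_center) / (n_cols - n_center)
    mask = rng.random(n_cols) < prob
    # Force the central band to be fully sampled.
    start = (n_cols - n_center) // 2
    mask[start:start + n_center] = True
    return mask

mask = random_column_mask(368)
print(mask.sum(), "of", mask.size, "columns sampled")
```

The 1D mask can be broadcast along the row axis to obtain the `[n_slices, n_rows, n_cols]` mask the model expects.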

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

Clone the repo with `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, then install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

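```python
from fastmri_recon.models.functional_models.cascading import cascade_net

# Build the network with its default arguments.
model = cascade_net()
# Load the pretrained weights from the downloaded .h5 file.
model.load_weights('model_weights.h5')
```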

Using the model is then as simple as:

```python
model([
    kspace,  # shape: [n_slices, n_rows, n_cols, 1]
    mask,  # shape: [n_slices, n_rows, n_cols]
])
```

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [fastMRI dataset](https://fastmri.org/dataset/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for knee data.

This section is WIP.

## Evaluation results

This model was evaluated using the [fastMRI dataset](https://fastmri.org/dataset/).

| Metric | PD | PDFS |
|-----------|-------|--------|
| PSNR (dB) | 33.98 | 29.88 |
| SSIM | 0.811 | 0.6251 |

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/PDNet-OASIS | zaccharieramzi | 2021-12-19T01:37:49Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # PDNet-OASIS

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- OASIS

---

This model can be used to reconstruct single-coil OASIS data with an acceleration factor of 4.

## Model description

For more details, see https://www.mdpi.com/2076-3417/10/5/1816.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct retrospectively undersampled single-coil brain data from the OASIS database at an acceleration factor of 4.

It cannot be used on multi-coil data.

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

Clone the repo with `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, then install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

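```python
from fastmri_recon.models.functional_models.pdnet import pdnet

# Build the network with its default arguments.
model = pdnet()
# Load the pretrained weights from the downloaded .h5 file.
model.load_weights('model_weights.h5')
```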

Using the model is then as simple as:

```python
model([
    kspace,  # shape: [n_slices, n_rows, n_cols, 1]
    mask,  # shape: [n_slices, n_rows, n_cols]
])
```
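
A zero-filled reconstruction, i.e. the inverse FFT of the undersampled k-space, is a common baseline to compare a network output against. The sketch below assumes the input shapes listed above and an orthonormal, uncentered FFT convention; the function name and the convention are illustrative and not taken from the repository:

```python
import numpy as np

def zero_filled_reconstruction(kspace, mask):
    """Magnitude image from masked k-space via an inverse 2D FFT.

    kspace: complex array of shape [n_slices, n_rows, n_cols, 1]
    mask:   array of shape [n_slices, n_rows, n_cols]
    """
    # Drop the channel axis and zero out the unsampled locations.
    masked = kspace[..., 0] * mask
    # Assumed convention: orthonormal scaling, no fftshift.
    image = np.fft.ifft2(masked, axes=(-2, -1), norm="ortho")
    # Return the magnitude with the channel axis restored.
    return np.abs(image)[..., None]
```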

## Limitations and bias

The limitations and bias of this model have not been properly investigated.

## Training data

This model was trained using the [OASIS dataset](https://www.oasis-brains.org/).

## Training procedure

The training procedure is described in https://www.mdpi.com/2076-3417/10/5/1816 for brain data.

This section is WIP.

## Evaluation results

This model was evaluated using the [OASIS dataset](https://www.oasis-brains.org/).

- PSNR: 33.22

- SSIM: 0.910

## Bibtex entry

```

@article{ramzi2020benchmarking,

title={Benchmarking MRI reconstruction neural networks on large public datasets},

author={Ramzi, Zaccharie and Ciuciu, Philippe and Starck, Jean-Luc},

journal={Applied Sciences},

volume={10},

number={5},

pages={1816},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

```

|

zaccharieramzi/NCPDNet-multicoil-spiral | zaccharieramzi | 2021-12-19T01:01:43Z | 0 | 0 | null | [

"region:us"

] | null | 2022-03-02T23:29:05Z | # NCPDNet-multicoil-spiral

---

tags:

- TensorFlow

- MRI reconstruction

- MRI

datasets:

- fastMRI

---

This is a non-Cartesian multicoil MRI reconstruction model for spiral trajectories at acceleration factor 4.

The model uses 10 iterations and a small vanilla CNN.

## Model description

For more details, see https://hal.inria.fr/hal-03188997.

This section is WIP.

## Intended uses and limitations

This model can be used to reconstruct multicoil knee data from a Siemens scanner at an acceleration factor of 4 in a spiral acquisition setting.
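
To illustrate what a spiral acquisition looks like in k-space, here is a minimal sketch of an Archimedean spiral with coordinates laid out as `[2, n_points]`. The function name, the number of turns, and the normalization to a radius of π are assumptions; the trajectory actually used to train this model is defined in the repository and may differ (for example in interleaving or sampling density):

```python
import numpy as np

def spiral_trajectory(n_points=1024, n_turns=16, k_max=np.pi):
    """Archimedean spiral k-space coordinates, shape [2, n_points]."""
    t = np.linspace(0.0, 1.0, n_points)
    radius = k_max * t                 # radius grows linearly from 0 to k_max
    angle = 2.0 * np.pi * n_turns * t  # n_turns full rotations
    coords = np.stack([radius * np.cos(angle), radius * np.sin(angle)])
    return coords.astype(np.float32)

traj = spiral_trajectory()
print(traj.shape)
```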

## How to use

This model can be loaded using the following repo: https://github.com/zaccharieramzi/fastmri-reproducible-benchmark.

Clone the repo with `git clone https://github.com/zaccharieramzi/fastmri-reproducible-benchmark`, then install the package via `pip install fastmri-reproducible-benchmark`.

The framework is TensorFlow.

You can initialize and load the model weights as follows:

```python

import tensorflow as tf

from fastmri_recon.models.subclassed_models.ncpdnet import NCPDNet

model = NCPDNet(

multicoil=True,

im_size=(640, 400),

dcomp=True,

refine_smaps=True,

)

kspace_shape = 1

inputs = [

tf.zeros([1, 1, kspace_shape, 1], dtype=tf.complex64),

tf.zeros([1, 2, kspace_shape], dtype=tf.float32),

tf.zeros([1, 1, 640, 320], dtype=tf.complex64),