modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

vineetsharma/ppo-LunarLander-v2 | vineetsharma | 2023-07-11T11:35:07Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T11:34:45Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 281.79 +/- 14.76

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Penbloom/Penbloom_semirealmix | Penbloom | 2023-07-11T11:34:24Z | 0 | 0 | null | [

"musclar",

"korean",

"license:openrail",

"region:us"

] | null | 2023-04-08T14:59:05Z | ---

license: openrail

tags:

- musclar

- korean

---

## Model Detail & Merge Recipes

Penbloom_semirealmix aims to create muscular girls with nice skin texture and detailed clothes. This is a `merge` model.

## Source model

[Civitai:Beenyou|Stable Diffusion Checkpoint](https://civitai.com/models/27688/beenyou)

[⚠NSFW][Civitai:饭特稀|Stable Diffusion Checkpoint](https://civitai.com/models/18427/v08)

### Penbloom_semirealmix_v1.0 |

vvasanth/falcon7b-finetune-test-220623_1 | vvasanth | 2023-07-11T11:31:41Z | 0 | 0 | null | [

"text-generation",

"license:apache-2.0",

"region:us"

] | text-generation | 2023-07-04T11:51:13Z | ---

license: apache-2.0

pipeline_tag: text-generation

--- |

gsaivinay/wizard-vicuna-13B-SuperHOT-8K-fp16 | gsaivinay | 2023-07-11T11:24:54Z | 11 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-07-11T11:22:25Z | ---

inference: false

license: other

duplicated_from: TheBloke/wizard-vicuna-13B-SuperHOT-8K-fp16

---

<!-- header start -->

<div style="width: 100%;">

Cloned from TheBloke repo

</div>

<!-- header end -->

# June Lee's Wizard Vicuna 13B fp16

These are fp16 PyTorch-format model files for [June Lee's Wizard Vicuna 13B](https://huggingface.co/TheBloke/wizard-vicuna-13B-HF) merged with [Kaio Ken's SuperHOT 8K](https://huggingface.co/kaiokendev/superhot-13b-8k-no-rlhf-test).

[Kaio Ken's SuperHOT 13b LoRA](https://huggingface.co/kaiokendev/superhot-13b-8k-no-rlhf-test) is merged on to the base model, and then 8K context can be achieved during inference by using `trust_remote_code=True`.

Note that `config.json` has been set to a sequence length of 8192. This can be modified to 4096 if you want to try with a smaller sequence length.

## Repositories available

* [4-bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-SuperHOT-8K-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGML models for CPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-SuperHOT-8K-GGML)

* [Unquantised SuperHOT fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/TheBloke/wizard-vicuna-13B-SuperHOT-8K-fp16)

* [Unquantised base fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/junelee/wizard-vicuna-13b)

## How to use this model from Python code

First make sure you have Einops installed:

```

pip3 install einops

```

Then run the following code. `config.json` defaults to a sequence length of 8192, but you can also configure this in your Python code.

The provided modelling code, activated with `trust_remote_code=True`, automatically sets the `scale` parameter from the configured `max_position_embeddings`. For example, for 8192, `scale` is set to `4`.

```python

from transformers import AutoConfig, AutoTokenizer, AutoModelForCausalLM, pipeline

model_name_or_path = "gsaivinay/wizard-vicuna-13B-SuperHOT-8K-fp16"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=True)

config = AutoConfig.from_pretrained(model_name_or_path, trust_remote_code=True)

# Change this to the sequence length you want

config.max_position_embeddings = 8192

model = AutoModelForCausalLM.from_pretrained(model_name_or_path,

config=config,

trust_remote_code=True,

device_map='auto')

# Note: check that this prompt template is correct for this model!

prompt = "Tell me about AI"

prompt_template=f'''USER: {prompt}

ASSISTANT:'''

print("\n\n*** Generate:")

input_ids = tokenizer(prompt_template, return_tensors='pt').input_ids.cuda()

# do_sample=True is needed for temperature to take effect
output = model.generate(inputs=input_ids, do_sample=True, temperature=0.7, max_new_tokens=512)

print(tokenizer.decode(output[0]))

# Inference can also be done using transformers' pipeline

print("*** Pipeline:")

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=512,

do_sample=True,

temperature=0.7,

top_p=0.95,

repetition_penalty=1.15

)

print(pipe(prompt_template)[0]['generated_text'])

```

## Using other UIs: monkey patch

Provided in the repo is `llama_rope_scaled_monkey_patch.py`, written by @kaiokendev.

It can theoretically be added to any Python UI or custom code to achieve the same result as `trust_remote_code=True`. I have not tested this, and it should be superseded by using `trust_remote_code=True`, but I include it for completeness and for interest.

# Original model card: Kaio Ken's SuperHOT 8K

### SuperHOT Prototype 2 w/ 8K Context

This is a second prototype of SuperHOT, this time 30B with 8K context and no RLHF, using the same technique described in [the github blog](https://kaiokendev.github.io/til#extending-context-to-8k).

Tests have shown that the model does indeed leverage the extended context at 8K.

You will need to **use either the monkeypatch** or, if you are already using the monkeypatch, **change the scaling factor to 0.25 and the maximum sequence length to 8192**
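The two numbers quoted in this card (a `scale` of `4` for 8192 tokens, and a monkeypatch scaling factor of 0.25) appear to be reciprocals of each other. The sketch below illustrates that relationship only. It assumes a native context length of 2048 tokens for the base LLaMA model and plain linear position interpolation; `BASE_CONTEXT`, `rope_scale` and `interpolated_positions` are hypothetical names, not identifiers from the repo's modelling code or the monkeypatch.

```python
# Illustrative sketch only: BASE_CONTEXT and both helper names are assumptions,
# not identifiers from the repo's modelling code or the monkeypatch.
BASE_CONTEXT = 2048  # assumed native context length of the base LLaMA model


def rope_scale(max_position_embeddings: int) -> float:
    """Return how many times the context is stretched (e.g. 4 for 8192)."""
    return max_position_embeddings / BASE_CONTEXT


def interpolated_positions(seq_len: int, scale: float) -> list[float]:
    """Compress position indices so they stay inside the trained range."""
    return [position / scale for position in range(seq_len)]


scale = rope_scale(8192)
factor = 1 / scale
positions = interpolated_positions(8192, scale)

print(scale)           # 4.0
print(factor)          # 0.25
print(max(positions))  # 2047.75, still below BASE_CONTEXT
```

Under these assumptions, dropping the sequence length to 4096 would give a scale of 2 and a factor of 0.5.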

#### Looking for Merged & Quantized Models?

- 30B 4-bit CUDA: [tmpupload/superhot-30b-8k-4bit-safetensors](https://huggingface.co/tmpupload/superhot-30b-8k-4bit-safetensors)

- 30B 4-bit CUDA 128g: [tmpupload/superhot-30b-8k-4bit-128g-safetensors](https://huggingface.co/tmpupload/superhot-30b-8k-4bit-128g-safetensors)

#### Training Details

I trained the LoRA with the following configuration:

- 1200 samples (~400 samples over 2048 sequence length)

- learning rate of 3e-4

- 3 epochs

- The exported modules are:

    - q_proj

    - k_proj

    - v_proj

    - o_proj

- no bias

- Rank = 4

- Alpha = 8

- no dropout

- weight decay of 0.1

- AdamW beta1 of 0.9 and beta2 0.99, epsilon of 1e-5

- Trained on 4-bit base model

# Original model card: June Lee's Wizard Vicuna 13B

# Wizard-Vicuna-13B-HF

This is a float16 HF format repo for [junelee's wizard-vicuna 13B](https://huggingface.co/junelee/wizard-vicuna-13b).

June Lee's repo was also HF format. The reason I've made this is that the original repo was in float32, meaning it required 52GB disk space, VRAM and RAM.

This model was converted to float16 to make it easier to load and manage.
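The 52GB figure follows from simple arithmetic on the parameter count. Here is a rough sketch, assuming exactly 13 billion parameters and decimal gigabytes (both are approximations, and `checkpoint_size_gb` is a hypothetical helper):

```python
# Back-of-the-envelope estimate; the exact parameter count is an assumption.
PARAMETERS = 13_000_000_000
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def checkpoint_size_gb(dtype: str) -> float:
    """Approximate size of the weights in decimal gigabytes."""
    return PARAMETERS * BYTES_PER_PARAM[dtype] / 1e9


print(checkpoint_size_gb("float32"))  # 52.0
print(checkpoint_size_gb("float16"))  # 26.0
```

Halving the bytes per parameter halves the disk, RAM and VRAM needed to hold the weights.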

## Repositories available

* [4bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GPTQ).

* [4bit and 5bit GGML models for CPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GGML).

* [float16 HF format model for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-HF).

# Original WizardVicuna-13B model card

Github page: https://github.com/melodysdreamj/WizardVicunaLM

# WizardVicunaLM

### Wizard's dataset + ChatGPT's conversation extension + Vicuna's tuning method

I am a big fan of the ideas behind WizardLM and VicunaLM. I particularly like the idea of WizardLM handling the dataset itself more deeply and broadly, as well as VicunaLM overcoming the limitations of single-turn conversations by introducing multi-round conversations. As a result, I combined these two ideas to create WizardVicunaLM. This project is highly experimental and designed for proof of concept, not for actual usage.

## Benchmark

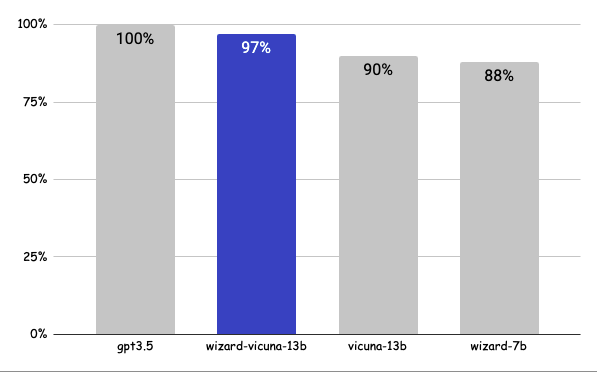

### Approximately 7% performance improvement over VicunaLM

### Detail

The questions presented here are not from rigorous tests, but rather, I asked a few questions and requested GPT-4 to score them. The models compared were ChatGPT 3.5, WizardVicunaLM, VicunaLM, and WizardLM, in that order.

| | gpt3.5 | wizard-vicuna-13b | vicuna-13b | wizard-7b | link |

|-----|--------|-------------------|------------|-----------|----------|

| Q1 | 95 | 90 | 85 | 88 | [link](https://sharegpt.com/c/YdhIlby) |

| Q2 | 95 | 97 | 90 | 89 | [link](https://sharegpt.com/c/YOqOV4g) |

| Q3 | 85 | 90 | 80 | 65 | [link](https://sharegpt.com/c/uDmrcL9) |

| Q4 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/XBbK5MZ) |

| Q5 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/AQ5tgQX) |

| Q6 | 92 | 85 | 87 | 88 | [link](https://sharegpt.com/c/eVYwfIr) |

| Q7 | 95 | 90 | 85 | 92 | [link](https://sharegpt.com/c/Kqyeub4) |

| Q8 | 90 | 85 | 75 | 70 | [link](https://sharegpt.com/c/M0gIjMF) |

| Q9 | 92 | 85 | 70 | 60 | [link](https://sharegpt.com/c/fOvMtQt) |

| Q10 | 90 | 80 | 75 | 85 | [link](https://sharegpt.com/c/YYiCaUz) |

| Q11 | 90 | 85 | 75 | 65 | [link](https://sharegpt.com/c/HMkKKGU) |

| Q12 | 85 | 90 | 80 | 88 | [link](https://sharegpt.com/c/XbW6jgB) |

| Q13 | 90 | 95 | 88 | 85 | [link](https://sharegpt.com/c/JXZb7y6) |

| Q14 | 94 | 89 | 90 | 91 | [link](https://sharegpt.com/c/cTXH4IS) |

| Q15 | 90 | 85 | 88 | 87 | [link](https://sharegpt.com/c/GZiM0Yt) |

| Avg | 91 | 88 | 82 | 80 | |
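The last row of the table and the "approximately 7%" headline can both be reproduced from the per-question scores. The sketch below assumes the final row is a plain arithmetic mean and that the improvement is the ratio of the two means; the card does not state either explicitly.

```python
# Scores copied from the table above, one list per model, Q1 to Q15.
scores = {
    "gpt3.5": [95, 95, 85, 90, 90, 92, 95, 90, 92, 90, 90, 85, 90, 94, 90],
    "wizard-vicuna-13b": [90, 97, 90, 85, 85, 85, 90, 85, 85, 80, 85, 90, 95, 89, 85],
    "vicuna-13b": [85, 90, 80, 80, 80, 87, 85, 75, 70, 75, 75, 80, 88, 90, 88],
    "wizard-7b": [88, 89, 65, 75, 75, 88, 92, 70, 60, 85, 65, 88, 85, 91, 87],
}

# Mean score per model; these round to 91, 88, 82 and 80.
means = {model: sum(values) / len(values) for model, values in scores.items()}
for model, mean in means.items():
    print(f"{model}: {mean:.1f}")

# Relative gain of wizard-vicuna-13b over vicuna-13b.
improvement = means["wizard-vicuna-13b"] / means["vicuna-13b"] - 1
print(f"improvement over vicuna-13b: {improvement:.1%}")
```

With only 15 questions scored by GPT-4, the result is indicative rather than a rigorous benchmark, as the card itself notes.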

## Principle

We adopted the approach of WizardLM, which is to extend a single problem more in-depth. However, instead of using individual instructions, we expanded it using Vicuna's conversation format and applied Vicuna's fine-tuning techniques.

Turning a single command into a rich conversation is what we've done [here](https://sharegpt.com/c/6cmxqq0).

After creating the training data, I later trained it according to the Vicuna v1.1 [training method](https://github.com/lm-sys/FastChat/blob/main/scripts/train_vicuna_13b.sh).

## Detailed Method

First, we explore and expand various areas in the same topic using the 7K conversations created by WizardLM. However, we made it in a continuous conversation format instead of the instruction format. That is, it starts with WizardLM's instruction, and then expands into various areas in one conversation using ChatGPT 3.5.

After that, we applied the following model using Vicuna's fine-tuning format.

## Training Process

Trained with 8 A100 GPUs for 35 hours.

## Weights

You can find the [dataset](https://huggingface.co/datasets/junelee/wizard_vicuna_70k) we used for training and the [13b model](https://huggingface.co/junelee/wizard-vicuna-13b) on Hugging Face.

## Conclusion

If we extend the conversation to gpt4 32K, we can expect a dramatic improvement, as we can generate 8x more, more accurate and richer conversations.

## License

The model is licensed under the LLaMA model license, and the dataset is licensed under the terms of OpenAI because it uses ChatGPT. Everything else is free.

## Author

[JUNE LEE](https://github.com/melodysdreamj) - He is active in Songdo Artificial Intelligence Study and GDG Songdo.

|

openwaifu/SoVits-VC-Chtholly-Nota-Seniorious-0.1 | openwaifu | 2023-07-11T11:19:42Z | 1 | 0 | transformers | [

"transformers",

"anime",

"audio",

"tts",

"voice conversion",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | 2023-04-17T12:07:10Z | ---

license: mit

tags:

- anime

- audio

- tts

- voice conversion

---

Origin (Generated From TTS):

<audio controls src="https://s3.amazonaws.com/moonup/production/uploads/62d3a59dc72c791b23918293/neVwV9PEc0gGylrEup2Kn.wav"></audio>

Converted (Using SoVits Chtholly-VC)

<audio controls src="https://s3.amazonaws.com/moonup/production/uploads/62d3a59dc72c791b23918293/oKNg3kVgAb7utyGCZa8f9.wav"></audio> |

1aurent/CartPole-v1 | 1aurent | 2023-07-11T11:15:03Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T10:42:02Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 498.08 +/- 19.05

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

jwu323/origin-llama-7b | jwu323 | 2023-07-11T11:06:24Z | 8 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-11T09:17:15Z | This contains the original weights for the LLaMA-7b model.

This model is under a non-commercial license (see the LICENSE file).

You should only use this repository if you have been granted access to the model by filling out [this form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) but either lost your copy of the weights or had trouble converting them to the Transformers format.

[According to this comment](https://github.com/huggingface/transformers/issues/21681#issuecomment-1436552397), dtype of a model in PyTorch is always float32, regardless of the dtype of the checkpoint you saved. If you load a float16 checkpoint in a model you create (which is in float32 by default), the dtype that is kept at the end is the dtype of the model, not the dtype of the checkpoint. |

digiplay/BasilKorea_v2 | digiplay | 2023-07-11T11:00:49Z | 315 | 2 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-11T10:27:11Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

|

ashnrk/textual_inversion_pasture | ashnrk | 2023-07-11T10:54:44Z | 5 | 0 | diffusers | [

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"textual_inversion",

"base_model:stabilityai/stable-diffusion-2-1",

"base_model:adapter:stabilityai/stable-diffusion-2-1",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-11T09:52:17Z |

---

license: creativeml-openrail-m

base_model: stabilityai/stable-diffusion-2-1

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- textual_inversion

inference: true

---

# Textual inversion text2image fine-tuning - ashnrk/textual_inversion_pasture

These are textual inversion adaption weights for stabilityai/stable-diffusion-2-1. You can find some example images in the following.

|

nickw9/ppo-LunarLander-v2 | nickw9 | 2023-07-11T10:48:56Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T10:48:37Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: ppo

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 256.15 +/- 10.89

name: mean_reward

verified: false

---

# **ppo** Agent playing **LunarLander-v2**

This is a trained model of a **ppo** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

digiplay/RealEpicMajicRevolution_v1 | digiplay | 2023-07-11T10:42:18Z | 393 | 1 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-11T09:48:27Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info:

https://civitai.com/models/107185/real-epic-majic-revolution

Original Author's DEMO images :

|

Winmodel/ML-Agents-Pyramids | Winmodel | 2023-07-11T10:36:07Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"Pyramids",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] | reinforcement-learning | 2023-07-11T10:36:05Z | ---

library_name: ml-agents

tags:

- Pyramids

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how ML-Agents works:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: Winmodel/ML-Agents-Pyramids

3. Step 2: Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

mort1k/q-FrozenLake-v1-4x4-noSlippery | mort1k | 2023-07-11T10:35:42Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T10:35:41Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="mort1k/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
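For readers unfamiliar with what such a Q-table contains, here is a self-contained toy sketch of tabular Q-learning on a non-slippery 4x4 grid. It does not load this repository's `q-learning.pkl`. The grid layout is assumed to mirror the default 4x4 FrozenLake map, and the hyperparameters and the `step`, `train` and `evaluate` helpers are hypothetical choices made for illustration.

```python
import random

# Assumed layout: mirrors the default 4x4 FrozenLake map (S start, F frozen,
# H hole, G goal). This toy environment is a stand-in, not the Gym one.
GRID = ["SFFF", "FHFH", "FFFH", "HFFG"]
MOVES = {0: (0, -1), 1: (1, 0), 2: (0, 1), 3: (-1, 0)}  # left, down, right, up


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Deterministic (non-slippery) transition: (next_state, reward, done)."""
    row, col = divmod(state, 4)
    d_row, d_col = MOVES[action]
    row = min(max(row + d_row, 0), 3)
    col = min(max(col + d_col, 0), 3)
    tile = GRID[row][col]
    return row * 4 + col, float(tile == "G"), tile in "HG"


def train(episodes: int = 2000, lr: float = 0.7, gamma: float = 0.95,
          epsilon: float = 0.3, max_steps: int = 100) -> list[list[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(0)
    qtable = [[0.0] * 4 for _ in range(16)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.randrange(4)
            else:
                action = max(range(4), key=lambda a: qtable[state][a])
            next_state, reward, done = step(state, action)
            target = reward + gamma * max(qtable[next_state])
            qtable[state][action] += lr * (target - qtable[state][action])
            state = next_state
            if done:
                break
    return qtable


def evaluate(qtable: list[list[float]], episodes: int = 100,
             max_steps: int = 100) -> tuple[float, float]:
    """Run the greedy policy and return (mean_reward, std_reward)."""
    rewards = []
    for _ in range(episodes):
        state, total = 0, 0.0
        for _ in range(max_steps):
            action = max(range(4), key=lambda a: qtable[state][a])
            state, reward, done = step(state, action)
            total += reward
            if done:
                break
        rewards.append(total)
    mean = sum(rewards) / len(rewards)
    std = (sum((r - mean) ** 2 for r in rewards) / len(rewards)) ** 0.5
    return mean, std


mean_reward, std_reward = evaluate(train())
print(f"mean_reward: {mean_reward:.2f} +/- {std_reward:.2f}")
```

Because both the environment and the greedy policy are deterministic, every evaluation episode yields the same return, which is why a metric such as `1.00 +/- 0.00` has zero spread.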

|

F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5 | F-Haru | 2023-07-11T10:29:25Z | 1 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2023-07-11T09:35:14Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

This model was first fine-tuned using only negative ja-en and en-ja pairs whose cosine similarity is at least 0.9 or at most 0.4, and was then knowledge-distilled with paraphrase-mpnet-base-v2 as the teacher model.

# F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

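tokenizer = AutoTokenizer.from_pretrained('F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5')

model = AutoModel.from_pretrained('F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5')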

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
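To see what the attention mask contributes to the pooling step, here is a toy version of masked mean pooling using plain Python lists instead of tensors. The numbers are made up for the example, and `masked_mean` is a hypothetical helper rather than part of any library.

```python
# Toy illustration with plain lists; the numbers are made up for the example.
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0."""
    dim = len(token_embeddings[0])
    kept = max(sum(attention_mask), 1e-9)  # same guard as torch.clamp(min=1e-9)
    return [
        sum(vec[i] * m for vec, m in zip(token_embeddings, attention_mask)) / kept
        for i in range(dim)
    ]


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is padding
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

Without the mask, the padding row would pull the average far away from the two real tokens.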

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1686 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MSELoss.MSELoss`

Parameters of the fit()-Method:

```

{

"epochs": 3,

"evaluation_steps": 1000,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"eps": 1e-06,

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```
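One consequence of these settings is worth checking: the warmup is longer than the whole run. The sketch below assumes one optimizer step per batch and that `WarmupLinear` ramps linearly up to the peak learning rate and then decays linearly to zero. `warmup_linear_factor` is a hypothetical re-implementation of that shape, not the library's own function.

```python
# Sketch of a linear warmup/decay multiplier; all values come from the card,
# the function name is hypothetical.
def warmup_linear_factor(step: int, warmup_steps: int, total_steps: int) -> float:
    """Multiplier applied to the peak learning rate at a given step."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


steps_per_epoch = 1686   # DataLoader length
epochs = 3
warmup_steps = 10_000
peak_lr = 2e-05

total_steps = steps_per_epoch * epochs
final_lr = peak_lr * warmup_linear_factor(total_steps, warmup_steps, total_steps)

print(total_steps)                 # 5058
print(total_steps < warmup_steps)  # True
print(f"{final_lr:.3e}")           # 1.012e-05
```

Under these assumptions the learning rate is still ramping up when training ends and reaches only about half of the configured `lr`.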

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 514, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

miki-kawa/huggingdatavit-base-beans | miki-kawa | 2023-07-11T10:22:59Z | 193 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:beans",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-07-11T09:55:51Z | ---

license: apache-2.0

tags:

- image-classification

- generated_from_trainer

datasets:

- beans

metrics:

- accuracy

model-index:

- name: huggingdatavit-base-beans

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: beans

type: beans

config: default

split: validation

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9924812030075187

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# huggingdatavit-base-beans

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0356

- Accuracy: 0.9925

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.1059 | 1.54 | 100 | 0.0356 | 0.9925 |

| 0.0256 | 3.08 | 200 | 0.0663 | 0.9774 |
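The logged numbers allow a quick sanity check. The sketch below recovers the fraction behind the reported accuracy and the approximate number of steps per epoch. Reading the denominator as the size of the validation split is an inference from the numbers, not something the card states.

```python
from fractions import Fraction

# Values copied from the card; the interpretation is an inference, not a
# statement from the author.
reported_accuracy = 0.9924812030075187
as_fraction = Fraction(reported_accuracy).limit_denominator(1000)
print(as_fraction)  # 132/133

# The second row (0.9774) is consistent with two more misclassified images.
print(round(130 / 133, 4))  # 0.9774

# Step 100 was logged at epoch 1.54, so one epoch is about 65 optimizer steps.
steps_per_epoch = round(100 / 1.54)
print(steps_per_epoch)  # 65

# With train_batch_size 16, that bounds the training set size.
print(steps_per_epoch * 16)  # 1040 (upper bound if the last batch is partial)
```

Note that the best validation loss and accuracy were reached at step 100, while the step-200 checkpoint is slightly worse on both.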

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.1+cu117

- Datasets 2.13.0

- Tokenizers 0.11.0

|

Krish23/Tujgc | Krish23 | 2023-07-11T10:22:51Z | 0 | 0 | null | [

"license:cc-by-nc-sa-2.0",

"region:us"

] | null | 2023-07-11T10:22:51Z | ---

license: cc-by-nc-sa-2.0

---

|

bofenghuang/vigogne-7b-instruct | bofenghuang | 2023-07-11T10:18:13Z | 1,493 | 23 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"LLM",

"fr",

"license:openrail",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-03-22T21:36:45Z | ---

license: openrail

language:

- fr

pipeline_tag: text-generation

library_name: transformers

tags:

- llama

- LLM

inference: false

---

<p align="center" width="100%">

<img src="https://huggingface.co/bofenghuang/vigogne-7b-instruct/resolve/main/vigogne_logo.png" alt="Vigogne" style="width: 40%; min-width: 300px; display: block; margin: auto;">

</p>

# Vigogne-7B-Instruct: A French Instruction-following LLaMA Model

Vigogne-7B-Instruct is a LLaMA-7B model fine-tuned to follow instructions in French.

For more information, please visit the Github repo: https://github.com/bofenghuang/vigogne

**Usage and License Notices**: Same as [Stanford Alpaca](https://github.com/tatsu-lab/stanford_alpaca), Vigogne is intended and licensed for research use only. The dataset is CC BY NC 4.0 (allowing only non-commercial use) and models trained using the dataset should not be used outside of research purposes.

## Changelog

All versions are available in branches.

- **V1.0**: Initial release, trained on the translated Stanford Alpaca dataset.

- **V1.1**: Improved translation quality of the Stanford Alpaca dataset.

- **V2.0**: Expanded training dataset to 224k for better performance.

- **V3.0**: Further expanded training dataset to 262k for improved results.

## Usage

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

from vigogne.preprocess import generate_instruct_prompt

model_name_or_path = "bofenghuang/vigogne-7b-instruct"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, padding_side="right", use_fast=False)

model = AutoModelForCausalLM.from_pretrained(model_name_or_path, torch_dtype=torch.float16, device_map="auto")

user_query = "Expliquez la différence entre DoS et phishing."

prompt = generate_instruct_prompt(user_query)

input_ids = tokenizer(prompt, return_tensors="pt")["input_ids"].to(model.device)

input_length = input_ids.shape[1]

generated_outputs = model.generate(

input_ids=input_ids,

generation_config=GenerationConfig(

temperature=0.1,

do_sample=True,

repetition_penalty=1.0,

max_new_tokens=512,

),

return_dict_in_generate=True,

)

generated_tokens = generated_outputs.sequences[0, input_length:]

generated_text = tokenizer.decode(generated_tokens, skip_special_tokens=True)

print(generated_text)

```

You can also run inference with this model using the following Google Colab Notebook.

<a href="https://colab.research.google.com/github/bofenghuang/vigogne/blob/main/notebooks/infer_instruct.ipynb" target="_blank"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

## Limitations

Vigogne is still under development, and there are many limitations that have to be addressed. Please note that it is possible that the model generates harmful or biased content, incorrect information or generally unhelpful answers.

|

ivivnov/ppo-LunarLander-v2 | ivivnov | 2023-07-11T09:56:04Z | 4 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T09:55:46Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 270.61 +/- 15.26

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

pierre-loic/climate-news-articles | pierre-loic | 2023-07-11T09:53:58Z | 111 | 1 | transformers | [

"transformers",

"pytorch",

"safetensors",

"flaubert",

"text-classification",

"license:cc",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-06-27T08:18:17Z | ---

license: cc

widget:

- text: "Nouveaux records d’émissions de CO₂ du secteur énergétique en 2022, selon une étude"

- text: "Climat et énergie : les objectifs de l’Union européenne pour 2030 ont du « plomb dans l’aile »"

- text: "Municipales à Paris : Emmanuel Grégoire « se prépare méthodiquement » pour l’après Hidalgo"

---

# 🌍 Detecting French press articles that cover climate-related topics

*🇬🇧 / 🇺🇸: this model is trained only on French data, and the original card was written in French. The goal of the model is to classify French newspaper headlines into two categories: about climate or not.*

## 🗺️ Context

This classifier for **French press article headlines** was built for the association [Data for good](https://dataforgood.fr/) in Grenoble, and more specifically for the association [Quota climat](https://www.quotaclimat.org/).

The goal of this model is to determine whether a **press article** covers the **topic of climate** based on its **headline** alone. The task is difficult because the algorithm **has no access to the content** of the articles. Nevertheless, language models based on [transformers](https://fr.wikipedia.org/wiki/Transformeur), and in particular those built on a [BERT](https://fr.wikipedia.org/wiki/BERT_(mod%C3%A8le_de_langage)) architecture, give interesting results. We studied the **two main models** based on this architecture and trained on **French corpora**: [FlauBERT](https://hal.science/hal-02784776v3/document) and [CamemBERT](https://camembert-model.fr/).

## 📋 Using the final model

The final model presented here is obviously **not perfect** and has **biases**. Some of its decisions are debatable, which stems from how the **scope** of the notion of **climate** is defined.

There are **two ways** to test the model with Python:

- Either by **downloading the model** with the Python library [transformers](https://pypi.org/project/transformers/)

To test the model, install the Python library [transformers](https://pypi.org/project/transformers/) in a virtual environment and run the following code:

```python

from transformers import pipeline

pipe = pipeline("text-classification", model="pierre-loic/climate-news-articles")

sentence = "Guerre en Ukraine, en direct : le président allemand appelle à ne pas « bloquer » Washington pour la livraison d’armes à sous-munitions"

print(pipe(sentence))

```

```

[{'label': 'NE TRAITE PAS DU CLIMAT', 'score': 0.6566330194473267}]

```

- Or by calling the Hugging Face **API** with the Python library [requests](https://pypi.org/project/requests/)

To call the Hugging Face **API**, you need a **token**, which you can retrieve from your personal account page. Then simply run the following code:

```python

import requests

API_URL = "https://api-inference.huggingface.co/models/pierre-loic/climate-news-articles"

headers = {"Authorization": "Bearer xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"}

def query(payload):

    response = requests.post(API_URL, headers=headers, json=payload)

    return response.json()

output = query({

"inputs": "Canicule : deux nouveaux départements du Sud-Est placés en vigilance orange lundi",

})

print(output)

```

```

[[{'label': 'TRAITE DU CLIMAT', 'score': 0.511335015296936}, {'label': 'NE TRAITE PAS DU CLIMAT', 'score': 0.48866504430770874}]]

```
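The API response above is a nested list: one inner list per input, each holding one label/score dictionary per class. A minimal sketch of how the top prediction could be picked out of it; the helper name `top_label` is hypothetical, and the sample response is copied from the output above.

```python
def top_label(api_output):
    """Return the (label, score) pair with the highest score for the first input."""
    candidates = api_output[0]  # predictions for the first (and only) input
    best = max(candidates, key=lambda item: item["score"])
    return best["label"], best["score"]


# Sample response copied from the output shown above.
sample = [[
    {"label": "TRAITE DU CLIMAT", "score": 0.511335015296936},
    {"label": "NE TRAITE PAS DU CLIMAT", "score": 0.48866504430770874},
]]

label, score = top_label(sample)
print(f"{label} ({score:.3f})")
```

With two scores this close to 0.5, the prediction is probably best treated as low-confidence rather than as a firm decision.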

## 🔎 Details of the training work

### Methodology

Several approaches were explored before arriving at the final model:

- The **first approach** we studied was to have [ChatGPT](https://openai.com/blog/chatgpt) classify press article headlines as "climate" or "not climate" through [prompt engineering](https://en.wikipedia.org/wiki/Prompt_engineering). The results were fairly interesting, but the model sometimes failed on very simple cases.

- The **second approach** was to vectorize the words of the headlines with Tf-Idf and use a classification model ([logistic regression](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) and [random forest](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html)). The results were slightly better than a dummy classifier (which always predicts the majority class "Climate").

- The **third approach** was to vectorize the headlines with a [BERT](https://fr.wikipedia.org/wiki/BERT_(mod%C3%A8le_de_langage))-type model ([camemBERT](https://camembert-model.fr/), trained only on a French-language corpus) and then apply a classification model ([logistic regression](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html) and [random forest](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html)) to the embeddings. The results were interesting.

- The **fourth approach** (the one retained for this model) is to fine-tune a BERT model (FlauBERT or CamemBERT) for the classification task.
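To make the Tf-Idf step of the second approach more concrete, here is a minimal pure-Python sketch. It assumes whitespace tokenization and the smoothed inverse document frequency `ln((1 + n) / (1 + df)) + 1`; it skips the vector normalization that library implementations usually apply. The function name `tfidf` and the three sample headlines are illustrative, not taken from the project.

```python
import math
from collections import Counter


def tfidf(titles):
    """Return one {term: weight} dict per title (raw term count times smoothed idf)."""
    docs = [title.lower().split() for title in titles]
    n_docs = len(docs)
    # Document frequency: number of titles that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: count * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in counts.items()
        })
    return vectors


# Illustrative headlines (hypothetical, lower-cased for simplicity).
titles = [
    "canicule vigilance orange",
    "canicule record de chaleur",
    "municipales à paris",
]
vectors = tfidf(titles)
print(vectors[0])
```

Terms that occur in fewer headlines (here "orange") receive a higher weight than terms shared across headlines (here "canicule"), which is the signal a downstream classifier would work with.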

### Data

The data comes from **French press article headlines** collected over several months. We labelled about **2000 of these headlines** to train the model.

### Final model

The selected model is a FlauBERT model **fine-tuned** for **press article classification**. The **training data** was **undersampled** to balance the classes.
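Random undersampling can be sketched in a few lines. This is only an illustration under the assumption that every class is cut down to the size of the rarest one; the card does not describe the exact procedure, and the function name `undersample` and the toy examples are hypothetical.

```python
import random


def undersample(examples, seed=0):
    """Randomly drop examples so that every class keeps as many as the rarest class."""
    by_label = {}
    for text, label in examples:
        by_label.setdefault(label, []).append((text, label))
    smallest = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    balanced = []
    for items in by_label.values():
        balanced.extend(rng.sample(items, smallest))
    rng.shuffle(balanced)
    return balanced


# Toy data: 8 headlines of one class, 3 of the other.
examples = [(f"titre climat {i}", "climat") for i in range(8)]
examples += [(f"titre autre {i}", "pas climat") for i in range(3)]
balanced = undersample(examples)
print(len(balanced))
```

The trade-off is that part of the labelled majority-class data is discarded, which matters when only about 2000 headlines are available.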

### Possible improvements

To **improve the model**, it could be useful to **add more data** from the areas where the model **makes the most mistakes**. |

ashnrk/textual_inversion_industrial | ashnrk | 2023-07-11T09:52:03Z | 4 | 0 | diffusers | [

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"textual_inversion",

"base_model:stabilityai/stable-diffusion-2-1",

"base_model:adapter:stabilityai/stable-diffusion-2-1",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-07-11T08:49:45Z |

---

license: creativeml-openrail-m

base_model: stabilityai/stable-diffusion-2-1

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- textual_inversion

inference: true

---

# Textual inversion text2image fine-tuning - ashnrk/textual_inversion_industrial

These are textual inversion adaption weights for stabilityai/stable-diffusion-2-1. You can find some example images in the following.

|

Winmodel/ML-Agents-SnowballTarget | Winmodel | 2023-07-11T09:47:03Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"SnowballTarget",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-SnowballTarget",

"region:us"

] | reinforcement-learning | 2023-07-11T09:47:02Z | ---

library_name: ml-agents

tags:

- SnowballTarget

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SnowballTarget

---

# **ppo** Agent playing **SnowballTarget**

This is a trained model of a **ppo** agent playing **SnowballTarget**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how ML-Agents works:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: Winmodel/ML-Agents-SnowballTarget

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

jalaluddin94/xlmr-nli-indoindo | jalaluddin94 | 2023-07-11T09:44:18Z | 161 | 0 | transformers | [

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-07-11T05:51:03Z | ---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: xlmr-nli-indoindo

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlmr-nli-indoindo

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6699

- Accuracy: 0.7701

- Precision: 0.7701

- Recall: 0.7701

- F1: 0.7693

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-06

- train_batch_size: 6

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 6
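The `linear` scheduler with `lr_scheduler_warmup_ratio: 0.1` ramps the learning rate up from zero and then decays it linearly back to zero. A minimal sketch of that shape, assuming the warmup length is the warmup ratio times the total number of optimizer steps, rounded up (the rounding is an assumption), and taking the total of 10332 steps from the last row of the results table below. The function name `linear_schedule_lr` is hypothetical.

```python
import math


def linear_schedule_lr(step, total_steps, base_lr, warmup_ratio):
    """Learning rate at `step`: linear warmup, then linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    decay_steps = max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / decay_steps)


# Start, mid-warmup, peak, mid-decay and end of training.
for step in (0, 517, 1034, 5683, 10332):
    lr = linear_schedule_lr(step, total_steps=10332, base_lr=3e-06, warmup_ratio=0.1)
    print(step, lr)
```

Under these assumptions the peak learning rate of 3e-06 is reached after roughly the first tenth of training and is only held for a single step.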

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |
|:-------------:|:-----:|:-----:|:---------------:|:--------:|:---------:|:------:|:------:|
| 1.0444 | 1.0 | 1722 | 0.8481 | 0.6463 | 0.6463 | 0.6463 | 0.6483 |
| 0.7958 | 2.0 | 3444 | 0.7483 | 0.7369 | 0.7369 | 0.7369 | 0.7353 |
| 0.7175 | 3.0 | 5166 | 0.6812 | 0.7579 | 0.7579 | 0.7579 | 0.7576 |
| 0.66 | 4.0 | 6888 | 0.6293 | 0.7679 | 0.7679 | 0.7679 | 0.7674 |
| 0.6056 | 5.0 | 8610 | 0.6459 | 0.7651 | 0.7651 | 0.7651 | 0.7640 |
| 0.5769 | 6.0 | 10332 | 0.6699 | 0.7701 | 0.7701 | 0.7701 | 0.7693 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

F-Haru/09-04-MarginMSELoss-finetuning-7-5 | F-Haru | 2023-07-11T09:43:47Z | 2 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2023-07-11T08:30:56Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

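This model was fine-tuned using only negatives whose ja-en and en-ja cosine similarity was 0.9 or higher, or 0.4 or lower.

The model obtained by applying knowledge distillation afterwards is available in the other model repository.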

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

    token_embeddings = model_output[0] #First element of model_output contains all token embeddings

    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
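To show what the mean-pooling step computes without any tensor library, here is a small pure-Python sketch for a single sentence. It mirrors the logic of `mean_pooling` above (sum the token vectors where the attention mask is 1, divide by the number of real tokens); the function name `mean_pool` and the toy numbers are illustrative.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors of one sentence, ignoring padding positions."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:  # mask value 1 marks a real token, 0 marks padding
            for i, value in enumerate(vector):
                summed[i] += value
    # Same guard against division by zero as torch.clamp(..., min=1e-9).
    n_real = max(sum(attention_mask), 1e-9)
    return [value / n_real for value in summed]


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is padding
mask = [1, 1, 0]
pooled = mean_pool(tokens, mask)
print(pooled)  # → [2.0, 3.0]
```

The padding row has no influence on the result, which is why the attention mask has to be taken into account for correct averaging.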

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 11912 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MarginMSELoss.MarginMSELoss`
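As a rough illustration of what a margin-MSE objective measures, the sketch below computes the mean squared error between the student's score margin (query-positive score minus query-negative score) and a target margin supplied by a teacher. It assumes dot-product scoring and uses toy vectors; it is not the library's implementation, and the names `dot` and `margin_mse` are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def margin_mse(queries, positives, negatives, teacher_margins):
    """Mean squared error between student and teacher score margins."""
    errors = []
    for q, p, n, target in zip(queries, positives, negatives, teacher_margins):
        student_margin = dot(q, p) - dot(q, n)
        errors.append((student_margin - target) ** 2)
    return sum(errors) / len(errors)


# Toy embeddings: two (query, positive, negative) triplets.
queries = [[1.0, 0.0], [0.0, 1.0]]
positives = [[2.0, 0.0], [0.0, 3.0]]
negatives = [[1.0, 0.0], [0.0, 1.0]]
teacher_margins = [1.0, 3.0]

loss = margin_mse(queries, positives, negatives, teacher_margins)
print(loss)  # → 0.5
```

The first triplet already matches the teacher margin, so the whole loss comes from the second one, where the student margin is 2.0 against a target of 3.0.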

Parameters of the fit()-Method:

```

{

"epochs": 3,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 1000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 510, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

jordyvl/vit-small_rvl_cdip_100_examples_per_class_kd_CEKD_t5.0_a0.9 | jordyvl | 2023-07-11T09:39:46Z | 161 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2023-07-11T08:25:41Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: vit-small_rvl_cdip_100_examples_per_class_kd_CEKD_t5.0_a0.9

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-small_rvl_cdip_100_examples_per_class_kd_CEKD_t5.0_a0.9

This model is a fine-tuned version of [WinKawaks/vit-small-patch16-224](https://huggingface.co/WinKawaks/vit-small-patch16-224) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2897

- Accuracy: 0.635

- Brier Loss: 0.5186

- Nll: 2.9908

- F1 Micro: 0.635

- F1 Macro: 0.6391

- Ece: 0.1984

- Aurc: 0.1511
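The Brier loss and ECE reported above are calibration metrics. The card does not say which exact definitions were used, so the following is only a sketch under common assumptions: a multi-class Brier score summed over classes and averaged over samples, and an expected calibration error over ten equal-width confidence bins. The function names and the toy predictions are hypothetical.

```python
def brier_score(probs, labels):
    """Mean over samples of the squared distance between probabilities and the one-hot label."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += sum((p_k - (1.0 if k == y else 0.0)) ** 2 for k, p_k in enumerate(p))
    return total / len(probs)


def expected_calibration_error(probs, labels, n_bins=10):
    """Weighted average gap between confidence and accuracy per confidence bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        confidence = max(p)
        correct = 1.0 if p.index(confidence) == y else 0.0
        index = min(int(confidence * n_bins), n_bins - 1)
        bins[index].append((confidence, correct))
    ece = 0.0
    for items in bins:
        if items:
            avg_conf = sum(c for c, _ in items) / len(items)
            accuracy = sum(a for _, a in items) / len(items)
            ece += len(items) / len(probs) * abs(avg_conf - accuracy)
    return ece


# Toy predictions for a two-class problem.
probs = [[0.95, 0.05], [0.65, 0.35], [0.15, 0.85], [0.55, 0.45]]
labels = [0, 1, 1, 0]
print(brier_score(probs, labels))
print(expected_calibration_error(probs, labels))
```

Lower is better for both metrics: the Brier score penalizes confident mistakes, while ECE measures how far stated confidence is from observed accuracy.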

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Brier Loss | Nll | F1 Micro | F1 Macro | Ece | Aurc |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:----------:|:-------:|:--------:|:--------:|:------:|:------:|

| No log | 1.0 | 25 | 2.8799 | 0.12 | 0.9317 | 15.6566 | 0.12 | 0.1217 | 0.1503 | 0.8678 |

| No log | 2.0 | 50 | 2.2166 | 0.395 | 0.7576 | 9.4150 | 0.395 | 0.3645 | 0.2155 | 0.3726 |

| No log | 3.0 | 75 | 1.7821 | 0.505 | 0.6346 | 5.5305 | 0.505 | 0.4975 | 0.1755 | 0.2454 |

| No log | 4.0 | 100 | 1.6660 | 0.5275 | 0.6038 | 4.9669 | 0.5275 | 0.5333 | 0.1684 | 0.2324 |

| No log | 5.0 | 125 | 1.6118 | 0.54 | 0.5943 | 4.8266 | 0.54 | 0.5233 | 0.1947 | 0.2249 |

| No log | 6.0 | 150 | 1.7108 | 0.5275 | 0.6168 | 4.4308 | 0.5275 | 0.5247 | 0.2018 | 0.2418 |

| No log | 7.0 | 175 | 1.6465 | 0.5825 | 0.5721 | 4.8918 | 0.5825 | 0.5614 | 0.1887 | 0.1995 |

| No log | 8.0 | 200 | 1.6441 | 0.565 | 0.6040 | 4.2349 | 0.565 | 0.5591 | 0.1933 | 0.2216 |

| No log | 9.0 | 225 | 1.7054 | 0.565 | 0.6054 | 4.6348 | 0.565 | 0.5649 | 0.1845 | 0.2033 |

| No log | 10.0 | 250 | 1.6724 | 0.5375 | 0.6191 | 4.3502 | 0.5375 | 0.5257 | 0.1991 | 0.2223 |

| No log | 11.0 | 275 | 1.5397 | 0.57 | 0.5757 | 4.1311 | 0.57 | 0.5715 | 0.2079 | 0.1936 |

| No log | 12.0 | 300 | 1.7636 | 0.55 | 0.6394 | 5.0515 | 0.55 | 0.5376 | 0.2252 | 0.2268 |

| No log | 13.0 | 325 | 1.6080 | 0.575 | 0.5997 | 4.2707 | 0.575 | 0.5515 | 0.2048 | 0.1887 |

| No log | 14.0 | 350 | 1.7572 | 0.575 | 0.6205 | 4.6140 | 0.575 | 0.5705 | 0.2203 | 0.2342 |

| No log | 15.0 | 375 | 1.5604 | 0.58 | 0.5872 | 3.8633 | 0.58 | 0.5762 | 0.2089 | 0.1866 |

| No log | 16.0 | 400 | 1.6440 | 0.585 | 0.6042 | 4.2508 | 0.585 | 0.5940 | 0.2253 | 0.2182 |

| No log | 17.0 | 425 | 1.6117 | 0.5825 | 0.6057 | 4.2511 | 0.5825 | 0.5732 | 0.2299 | 0.1947 |

| No log | 18.0 | 450 | 1.5597 | 0.605 | 0.5732 | 4.4755 | 0.605 | 0.6028 | 0.2101 | 0.1721 |

| No log | 19.0 | 475 | 1.4177 | 0.6325 | 0.5429 | 3.4771 | 0.6325 | 0.6319 | 0.1930 | 0.1786 |

| 0.5354 | 20.0 | 500 | 1.5745 | 0.56 | 0.6076 | 3.6058 | 0.56 | 0.5643 | 0.2265 | 0.1898 |

| 0.5354 | 21.0 | 525 | 1.4907 | 0.6125 | 0.5682 | 3.9837 | 0.6125 | 0.6184 | 0.1981 | 0.1810 |

| 0.5354 | 22.0 | 550 | 1.4494 | 0.5925 | 0.5677 | 3.2864 | 0.5925 | 0.5906 | 0.2187 | 0.1670 |

| 0.5354 | 23.0 | 575 | 1.5608 | 0.62 | 0.5830 | 4.0132 | 0.62 | 0.6029 | 0.2286 | 0.1808 |

| 0.5354 | 24.0 | 600 | 1.5038 | 0.58 | 0.5957 | 3.6519 | 0.58 | 0.5956 | 0.2321 | 0.1879 |

| 0.5354 | 25.0 | 625 | 1.4094 | 0.615 | 0.5554 | 3.0313 | 0.615 | 0.6102 | 0.2180 | 0.1689 |

| 0.5354 | 26.0 | 650 | 1.4485 | 0.62 | 0.5712 | 3.3326 | 0.62 | 0.6181 | 0.2138 | 0.1729 |

| 0.5354 | 27.0 | 675 | 1.4156 | 0.6225 | 0.5621 | 3.2257 | 0.6225 | 0.6239 | 0.2158 | 0.1718 |

| 0.5354 | 28.0 | 700 | 1.3729 | 0.6275 | 0.5476 | 3.1300 | 0.6275 | 0.6285 | 0.2078 | 0.1620 |

| 0.5354 | 29.0 | 725 | 1.3671 | 0.6275 | 0.5337 | 3.4625 | 0.6275 | 0.6285 | 0.2177 | 0.1586 |

| 0.5354 | 30.0 | 750 | 1.3263 | 0.63 | 0.5380 | 3.2177 | 0.63 | 0.6338 | 0.2063 | 0.1577 |

| 0.5354 | 31.0 | 775 | 1.2991 | 0.6225 | 0.5223 | 3.0482 | 0.6225 | 0.6238 | 0.1940 | 0.1525 |

| 0.5354 | 32.0 | 800 | 1.3227 | 0.6325 | 0.5333 | 2.9622 | 0.6325 | 0.6351 | 0.1906 | 0.1554 |

| 0.5354 | 33.0 | 825 | 1.3077 | 0.63 | 0.5298 | 3.2060 | 0.63 | 0.6338 | 0.1933 | 0.1555 |

| 0.5354 | 34.0 | 850 | 1.3036 | 0.6225 | 0.5269 | 3.0431 | 0.6225 | 0.6242 | 0.1996 | 0.1535 |

| 0.5354 | 35.0 | 875 | 1.3057 | 0.6275 | 0.5263 | 2.9651 | 0.6275 | 0.6291 | 0.2023 | 0.1538 |

| 0.5354 | 36.0 | 900 | 1.2992 | 0.6275 | 0.5247 | 2.9748 | 0.6275 | 0.6289 | 0.1961 | 0.1518 |

| 0.5354 | 37.0 | 925 | 1.3001 | 0.6325 | 0.5252 | 2.9784 | 0.6325 | 0.6347 | 0.1978 | 0.1531 |

| 0.5354 | 38.0 | 950 | 1.2990 | 0.63 | 0.5229 | 2.9014 | 0.63 | 0.6327 | 0.1981 | 0.1524 |

| 0.5354 | 39.0 | 975 | 1.2995 | 0.6325 | 0.5246 | 2.9776 | 0.6325 | 0.6354 | 0.1946 | 0.1533 |

| 0.0336 | 40.0 | 1000 | 1.2945 | 0.6275 | 0.5226 | 2.9029 | 0.6275 | 0.6302 | 0.1965 | 0.1523 |

| 0.0336 | 41.0 | 1025 | 1.3023 | 0.63 | 0.5247 | 3.0515 | 0.63 | 0.6341 | 0.2044 | 0.1534 |

| 0.0336 | 42.0 | 1050 | 1.2990 | 0.635 | 0.5239 | 3.0673 | 0.635 | 0.6381 | 0.1952 | 0.1516 |

| 0.0336 | 43.0 | 1075 | 1.2962 | 0.635 | 0.5213 | 3.0585 | 0.635 | 0.6378 | 0.2055 | 0.1523 |

| 0.0336 | 44.0 | 1100 | 1.2991 | 0.625 | 0.5229 | 2.9801 | 0.625 | 0.6278 | 0.1954 | 0.1532 |

| 0.0336 | 45.0 | 1125 | 1.2949 | 0.6375 | 0.5222 | 3.0564 | 0.6375 | 0.6419 | 0.2027 | 0.1519 |

| 0.0336 | 46.0 | 1150 | 1.2989 | 0.6275 | 0.5228 | 3.0737 | 0.6275 | 0.6308 | 0.2075 | 0.1529 |

| 0.0336 | 47.0 | 1175 | 1.2902 | 0.6325 | 0.5201 | 3.0606 | 0.6325 | 0.6360 | 0.2099 | 0.1516 |

| 0.0336 | 48.0 | 1200 | 1.2971 | 0.6275 | 0.5217 | 3.0829 | 0.6275 | 0.6305 | 0.1882 | 0.1518 |

| 0.0336 | 49.0 | 1225 | 1.2913 | 0.63 | 0.5212 | 2.9853 | 0.63 | 0.6332 | 0.1928 | 0.1524 |

| 0.0336 | 50.0 | 1250 | 1.2917 | 0.63 | 0.5205 | 2.9850 | 0.63 | 0.6336 | 0.1910 | 0.1518 |

| 0.0336 | 51.0 | 1275 | 1.2928 | 0.63 | 0.5208 | 3.0579 | 0.63 | 0.6330 | 0.2020 | 0.1528 |

| 0.0336 | 52.0 | 1300 | 1.2941 | 0.635 | 0.5205 | 3.0647 | 0.635 | 0.6383 | 0.1919 | 0.1515 |

| 0.0336 | 53.0 | 1325 | 1.2930 | 0.635 | 0.5207 | 3.0637 | 0.635 | 0.6384 | 0.1868 | 0.1518 |

| 0.0336 | 54.0 | 1350 | 1.2918 | 0.63 | 0.5203 | 3.0628 | 0.63 | 0.6335 | 0.1986 | 0.1519 |

| 0.0336 | 55.0 | 1375 | 1.2894 | 0.635 | 0.5198 | 2.9874 | 0.635 | 0.6383 | 0.2026 | 0.1514 |

| 0.0336 | 56.0 | 1400 | 1.2913 | 0.63 | 0.5203 | 3.0691 | 0.63 | 0.6337 | 0.2045 | 0.1519 |

| 0.0336 | 57.0 | 1425 | 1.2923 | 0.6325 | 0.5205 | 2.9869 | 0.6325 | 0.6358 | 0.1962 | 0.1522 |

| 0.0336 | 58.0 | 1450 | 1.2927 | 0.6375 | 0.5199 | 3.0734 | 0.6375 | 0.6408 | 0.1905 | 0.1514 |

| 0.0336 | 59.0 | 1475 | 1.2931 | 0.6325 | 0.5204 | 3.0607 | 0.6325 | 0.6353 | 0.1980 | 0.1520 |

| 0.0236 | 60.0 | 1500 | 1.2911 | 0.6325 | 0.5199 | 3.0664 | 0.6325 | 0.6359 | 0.1875 | 0.1517 |

| 0.0236 | 61.0 | 1525 | 1.2901 | 0.635 | 0.5195 | 2.9877 | 0.635 | 0.6386 | 0.1907 | 0.1516 |

| 0.0236 | 62.0 | 1550 | 1.2913 | 0.635 | 0.5192 | 3.0655 | 0.635 | 0.6383 | 0.1971 | 0.1515 |

| 0.0236 | 63.0 | 1575 | 1.2920 | 0.635 | 0.5201 | 3.0044 | 0.635 | 0.6379 | 0.1991 | 0.1514 |

| 0.0236 | 64.0 | 1600 | 1.2911 | 0.635 | 0.5192 | 3.0654 | 0.635 | 0.6380 | 0.1848 | 0.1509 |

| 0.0236 | 65.0 | 1625 | 1.2924 | 0.635 | 0.5196 | 3.1438 | 0.635 | 0.6379 | 0.1969 | 0.1515 |

| 0.0236 | 66.0 | 1650 | 1.2901 | 0.635 | 0.5191 | 2.9928 | 0.635 | 0.6392 | 0.1978 | 0.1507 |

| 0.0236 | 67.0 | 1675 | 1.2911 | 0.6325 | 0.5189 | 3.0662 | 0.6325 | 0.6359 | 0.1896 | 0.1517 |

| 0.0236 | 68.0 | 1700 | 1.2911 | 0.6375 | 0.5193 | 2.9932 | 0.6375 | 0.6404 | 0.2017 | 0.1507 |

| 0.0236 | 69.0 | 1725 | 1.2893 | 0.635 | 0.5189 | 2.9907 | 0.635 | 0.6391 | 0.1951 | 0.1511 |

| 0.0236 | 70.0 | 1750 | 1.2913 | 0.6325 | 0.5195 | 2.9919 | 0.6325 | 0.6362 | 0.1955 | 0.1513 |

| 0.0236 | 71.0 | 1775 | 1.2899 | 0.635 | 0.5188 | 2.9899 | 0.635 | 0.6386 | 0.2049 | 0.1511 |

| 0.0236 | 72.0 | 1800 | 1.2912 | 0.635 | 0.5192 | 2.9914 | 0.635 | 0.6379 | 0.1924 | 0.1513 |

| 0.0236 | 73.0 | 1825 | 1.2898 | 0.6325 | 0.5188 | 2.9901 | 0.6325 | 0.6367 | 0.2059 | 0.1511 |

| 0.0236 | 74.0 | 1850 | 1.2902 | 0.635 | 0.5190 | 2.9918 | 0.635 | 0.6391 | 0.2069 | 0.1511 |

| 0.0236 | 75.0 | 1875 | 1.2904 | 0.635 | 0.5191 | 2.9916 | 0.635 | 0.6391 | 0.1969 | 0.1511 |

| 0.0236 | 76.0 | 1900 | 1.2905 | 0.635 | 0.5191 | 2.9899 | 0.635 | 0.6391 | 0.1969 | 0.1512 |

| 0.0236 | 77.0 | 1925 | 1.2904 | 0.635 | 0.5191 | 2.9917 | 0.635 | 0.6391 | 0.1926 | 0.1511 |

| 0.0236 | 78.0 | 1950 | 1.2899 | 0.635 | 0.5188 | 2.9909 | 0.635 | 0.6391 | 0.2010 | 0.1510 |

| 0.0236 | 79.0 | 1975 | 1.2900 | 0.635 | 0.5188 | 2.9908 | 0.635 | 0.6391 | 0.2034 | 0.1511 |

| 0.0233 | 80.0 | 2000 | 1.2900 | 0.635 | 0.5188 | 2.9910 | 0.635 | 0.6391 | 0.1967 | 0.1511 |

| 0.0233 | 81.0 | 2025 | 1.2900 | 0.635 | 0.5188 | 2.9911 | 0.635 | 0.6391 | 0.2002 | 0.1511 |

| 0.0233 | 82.0 | 2050 | 1.2901 | 0.635 | 0.5189 | 2.9909 | 0.635 | 0.6391 | 0.1993 | 0.1511 |

| 0.0233 | 83.0 | 2075 | 1.2900 | 0.635 | 0.5188 | 2.9906 | 0.635 | 0.6391 | 0.1937 | 0.1511 |

| 0.0233 | 84.0 | 2100 | 1.2901 | 0.635 | 0.5189 | 2.9917 | 0.635 | 0.6391 | 0.2026 | 0.1511 |

| 0.0233 | 85.0 | 2125 | 1.2899 | 0.635 | 0.5188 | 2.9905 | 0.635 | 0.6391 | 0.1993 | 0.1512 |

| 0.0233 | 86.0 | 2150 | 1.2897 | 0.635 | 0.5187 | 2.9906 | 0.635 | 0.6391 | 0.1976 | 0.1511 |

| 0.0233 | 87.0 | 2175 | 1.2899 | 0.635 | 0.5188 | 2.9905 | 0.635 | 0.6391 | 0.1980 | 0.1511 |

| 0.0233 | 88.0 | 2200 | 1.2897 | 0.635 | 0.5187 | 2.9911 | 0.635 | 0.6391 | 0.1957 | 0.1511 |

| 0.0233 | 89.0 | 2225 | 1.2899 | 0.635 | 0.5187 | 2.9910 | 0.635 | 0.6391 | 0.1970 | 0.1511 |

| 0.0233 | 90.0 | 2250 | 1.2898 | 0.635 | 0.5187 | 2.9905 | 0.635 | 0.6391 | 0.1988 | 0.1512 |

| 0.0233 | 91.0 | 2275 | 1.2897 | 0.635 | 0.5187 | 2.9908 | 0.635 | 0.6391 | 0.1961 | 0.1511 |

| 0.0233 | 92.0 | 2300 | 1.2898 | 0.635 | 0.5187 | 2.9908 | 0.635 | 0.6391 | 0.1966 | 0.1511 |

| 0.0233 | 93.0 | 2325 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.1984 | 0.1511 |

| 0.0233 | 94.0 | 2350 | 1.2898 | 0.635 | 0.5187 | 2.9907 | 0.635 | 0.6391 | 0.2009 | 0.1511 |

| 0.0233 | 95.0 | 2375 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.2023 | 0.1511 |

| 0.0233 | 96.0 | 2400 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.1985 | 0.1511 |

| 0.0233 | 97.0 | 2425 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.1984 | 0.1511 |

| 0.0233 | 98.0 | 2450 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.1985 | 0.1511 |

| 0.0233 | 99.0 | 2475 | 1.2897 | 0.635 | 0.5186 | 2.9909 | 0.635 | 0.6391 | 0.1984 | 0.1511 |

| 0.0232 | 100.0 | 2500 | 1.2897 | 0.635 | 0.5186 | 2.9908 | 0.635 | 0.6391 | 0.1984 | 0.1511 |

### Framework versions

- Transformers 4.28.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.12.0

- Tokenizers 0.12.1

|

dsfsi/ss-en-m2m100-gov | dsfsi | 2023-07-11T09:39:30Z | 112 | 1 | transformers | [

"transformers",

"pytorch",

"safetensors",

"m2m_100",

"text2text-generation",

"m2m100",

"translation",

"africanlp",

"african",

"siswati",

"ss",

"en",

"arxiv:2303.03750",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2023-05-22T08:46:05Z | ---

license: cc-by-4.0

language:

- ss

- en

pipeline_tag: text2text-generation

tags:

- m2m100

- translation

- africanlp

- african

- siswati

---

# [ss-en] Siswati to English Translation Model based on M2M100 and The South African Gov-ZA multilingual corpus

Model created from Siswati-English aligned sentences from [The South African Gov-ZA multilingual corpus](https://github.com/dsfsi/gov-za-multilingual)

The data set contains cabinet statements from the South African government, maintained by the Government Communication and Information System (GCIS). Data was scraped from the government's website: https://www.gov.za/cabinet-statements

## Authors

- Vukosi Marivate - [@vukosi](https://twitter.com/vukosi)

- Matimba Shingange

- Richard Lastrucci

- Isheanesu Joseph Dzingirai

- Jenalea Rajab

## BibTeX entry and citation info

```

@inproceedings{lastrucci-etal-2023-preparing,

title = "Preparing the Vuk{'}uzenzele and {ZA}-gov-multilingual {S}outh {A}frican multilingual corpora",

author = "Richard Lastrucci and Isheanesu Dzingirai and Jenalea Rajab and Andani Madodonga and Matimba Shingange and Daniel Njini and Vukosi Marivate",

booktitle = "Proceedings of the Fourth workshop on Resources for African Indigenous Languages (RAIL 2023)",

month = may,

year = "2023",

address = "Dubrovnik, Croatia",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.rail-1.3",

pages = "18--25"

}

```

[Paper - Preparing the Vuk'uzenzele and ZA-gov-multilingual South African multilingual corpora](https://arxiv.org/abs/2303.03750) |

subandwho/trial3 | subandwho | 2023-07-11T09:27:02Z | 3 | 0 | peft | [

"peft",

"region:us"

] | null | 2023-07-11T09:26:58Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32
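For readers who want to reuse these settings, the values listed above can be collected into a plain Python dictionary. This is a sketch only: the keys are copied from the list, the compute dtype is kept as a string, and the assumption that they can be passed on as keyword arguments to a quantization config object in your own setup is not confirmed by this card.

```python
# Values copied from the quantization config listed above.
quantization_kwargs = {
    "load_in_8bit": True,
    "load_in_4bit": False,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "fp4",
    "bnb_4bit_use_double_quant": False,
    "bnb_4bit_compute_dtype": "float32",  # kept as a string (assumption)
}

# Sanity check: 8-bit and 4-bit loading should not both be enabled.
assert not (quantization_kwargs["load_in_8bit"] and quantization_kwargs["load_in_4bit"])

for key, value in quantization_kwargs.items():
    print(f"{key} = {value!r}")
```

Since `load_in_8bit` is enabled and `load_in_4bit` is not, the `bnb_4bit_*` entries presumably had no effect during this training run.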

### Framework versions

- PEFT 0.4.0.dev0

|

RogerB/KinyaBERT-small-finetuned-kintweetsA | RogerB | 2023-07-11T09:18:46Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2023-07-11T09:18:09Z | ---

tags:

- generated_from_trainer

model-index:

- name: KinyaBERT-small-finetuned-kintweetsA

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# KinyaBERT-small-finetuned-kintweetsA

This model is a fine-tuned version of [jean-paul/KinyaBERT-small](https://huggingface.co/jean-paul/KinyaBERT-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 4.8590
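For a fill-mask model, an evaluation loss is often easier to read as a perplexity. A minimal sketch, assuming the reported loss is a mean cross-entropy in nats over the masked tokens (the card does not state this explicitly):

```python
import math

eval_loss = 4.8590  # evaluation loss reported above
perplexity = math.exp(eval_loss)  # perplexity = exp(mean cross-entropy)
print(f"perplexity: {perplexity:.1f}")
```

Under that assumption, a lower loss translates into an exponentially lower perplexity, so small loss differences between checkpoints can matter more than they appear to.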

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 5.2458 | 1.0 | 60 | 5.0038 |
| 5.0197 | 2.0 | 120 | 5.1308 |
| 4.8906 | 3.0 | 180 | 4.8419 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

ntranphong/my_setfit_model_redweasel | ntranphong | 2023-07-11T09:12:07Z | 4 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"mpnet",

"setfit",

"text-classification",

"arxiv:2209.11055",

"license:apache-2.0",

"region:us"

] | text-classification | 2023-07-11T09:11:23Z | ---

license: apache-2.0

tags:

- setfit

- sentence-transformers

- text-classification

pipeline_tag: text-classification

---

# ntranphong/my_setfit_model_redweasel

This is a [SetFit model](https://github.com/huggingface/setfit) that can be used for text classification. The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.
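The contrastive fine-tuning in step 1 relies on sentence pairs built from the few labelled examples. The sketch below shows one simple way such pairs could be generated, assuming every pair of examples is enumerated and labelled 1.0 when the two texts share a class and 0.0 otherwise; the actual library may sample pairs differently. The function name `make_pairs`, the class names and the second example sentence are illustrative.

```python
from itertools import combinations


def make_pairs(examples):
    """Build (text_a, text_b, target) triples: 1.0 for same label, 0.0 otherwise."""
    pairs = []
    for (text_a, label_a), (text_b, label_b) in combinations(examples, 2):
        pairs.append((text_a, text_b, 1.0 if label_a == label_b else 0.0))
    return pairs


# Toy few-shot set with hypothetical class names.
few_shot = [
    ("i loved the spiderman movie!", "positive"),
    ("what a great film", "positive"),
    ("pineapple on pizza is the worst", "negative"),
]
pairs = make_pairs(few_shot)
for text_a, text_b, target in pairs:
    print(target, "|", text_a, "|", text_b)
```

Because the number of pairs grows quadratically with the number of examples, even a handful of labelled sentences per class yields a usable amount of contrastive training data.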

## Usage

To use this model for inference, first install the SetFit library:

```bash

python -m pip install setfit

```

You can then run inference as follows:

```python

from setfit import SetFitModel

# Download from Hub and run inference

model = SetFitModel.from_pretrained("ntranphong/my_setfit_model_redweasel")

# Run inference

preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

```

## BibTeX entry and citation info

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

|

Bluishoul/grimoire-model | Bluishoul | 2023-07-11T08:55:00Z | 0 | 0 | transformers | [

"transformers",

"text-classification",

"dataset:Open-Orca/OpenOrca",

"doi:10.57967/hf/0873",

"license:openrail",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-06-30T02:45:26Z | ---

license: openrail

pipeline_tag: text-classification

library_name: transformers

datasets:

- Open-Orca/OpenOrca

--- |

zhundred/SpaceInvadersNoFrameskip-v4 | zhundred | 2023-07-11T08:52:32Z | 9 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | reinforcement-learning | 2023-07-11T08:52:02Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 415.00 +/- 187.28

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```bash

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga zhundred -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```bash

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga zhundred -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```bash

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga zhundred

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
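To make the exploration settings above more concrete, here is a minimal sketch of a linear epsilon-greedy schedule of the kind DQN uses. It assumes a starting epsilon of 1.0 (not listed in this card; it is the Stable-Baselines3 default for `exploration_initial_eps`), and `epsilon_at` is a hypothetical helper name, not part of the library.

```python
def epsilon_at(step, n_timesteps=1_000_000, exploration_fraction=0.1,
               initial_eps=1.0, final_eps=0.01):
    """Linearly anneal epsilon over the first `exploration_fraction` of training.

    initial_eps=1.0 is an assumption; the other values come from the
    hyperparameters listed in this card.
    """
    decay_steps = exploration_fraction * n_timesteps
    if step >= decay_steps:
        return final_eps
    return initial_eps + (final_eps - initial_eps) * step / decay_steps


for step in (0, 50_000, 100_000, 500_000):
    print(step, round(epsilon_at(step), 4))
```

With these values the decay window is 0.1 × 1,000,000 = 100,000 steps, which matches `learning_starts`, so epsilon would reach its floor of 0.01 at about the point where gradient updates begin.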

## Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

NasimB/gpt2-concat-all-mod-datasets1-rarity-all-iorder-c13k | NasimB | 2023-07-11T08:45:58Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-07-11T07:01:32Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-concat-all-mod-datasets1-rarity-all-iorder-c13k

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-concat-all-mod-datasets1-rarity-all-iorder-c13k

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.3983
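For a more intuitive reading of this number, the loss can be converted to perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which is the usual convention for causal language models but is not stated in this card.

```python
import math

# Evaluation loss reported in this card.
eval_loss = 4.3983

# Perplexity is the exponential of the mean per-token cross-entropy
# (assumption: the loss is measured in nats).
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.1f}")
```

Under that assumption the model's perplexity on the evaluation set is roughly 81.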

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP
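The learning-rate settings above (peak 0.0005, cosine schedule, 1000 warmup steps) can be sketched as follows. This is a plain-Python approximation of a linear-warmup, half-cosine decay schedule; `lr_at` is a hypothetical helper, and `total_steps=9500` is an assumed value for illustration only, since the card does not state the exact number of training steps.

```python
import math


def lr_at(step, total_steps, base_lr=5e-4, warmup_steps=1000):
    """Linear warmup to base_lr, then cosine decay to zero at total_steps."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * min(progress, 1.0)))


TOTAL_STEPS = 9500  # assumption, not taken from the card
for step in (0, 500, 1000, 5250, 9500):
    print(step, f"{lr_at(step, TOTAL_STEPS):.6f}")
```

The rate climbs linearly during warmup, peaks at step 1000, and then falls smoothly, so the late-epoch updates in the results table are made with a much smaller step size than the early ones.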

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 6.7811 | 0.32 | 500 | 5.6598 |
| 5.4368 | 0.63 | 1000 | 5.2297 |
| 5.0819 | 0.95 | 1500 | 4.9819 |
| 4.8064 | 1.27 | 2000 | 4.8391 |
| 4.6653 | 1.58 | 2500 | 4.7273 |
| 4.5682 | 1.9 | 3000 | 4.6197 |
| 4.3541 | 2.22 | 3500 | 4.5701 |
| 4.2704 | 2.53 | 4000 | 4.5079 |
| 4.2264 | 2.85 | 4500 | 4.4351 |
| 4.051 | 3.17 | 5000 | 4.4290 |
| 3.9415 | 3.49 | 5500 | 4.3896 |
| 3.9311 | 3.8 | 6000 | 4.3596 |
| 3.8035 | 4.12 | 6500 | 4.3598 |
| 3.6487 | 4.44 | 7000 | 4.3523 |
| 3.6387 | 4.75 | 7500 | 4.3363 |
| 3.5857 | 5.07 | 8000 | 4.3408 |
| 3.4463 | 5.39 | 8500 | 4.3415 |
| 3.4459 | 5.7 | 9000 | 4.3420 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

KennethTM/gpt2-small-danish | KennethTM | 2023-07-11T08:37:00Z | 193 | 3 | transformers | [

"transformers",

"pytorch",

"safetensors",

"gpt2",

"text-generation",

"da",

"dataset:oscar",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-06-17T17:51:54Z | ---

datasets:

- oscar

language:

- da

widget:

- text: Der var engang

---

# What is this?

A small GPT-2 model (124M parameters) for Danish text generation. The model was not pre-trained from scratch; it was adapted from the English version.

# How to use

Test the model using the pipeline from the [🤗 Transformers](https://github.com/huggingface/transformers) library:

```python

from transformers import pipeline

generator = pipeline("text-generation", model = "KennethTM/gpt2-small-danish")

text = generator("Manden arbejdede som")

print(text[0]["generated_text"])

```

Or load it using the Auto* classes:

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("KennethTM/gpt2-small-danish")

model = AutoModelForCausalLM.from_pretrained("KennethTM/gpt2-small-danish")

```

# Model training