modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-07-15 18:28:48

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 522

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-07-15 18:28:34

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

google/byt5-small | google | 2023-01-24T16:36:59Z | 1,451,674 | 64 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"multilingual",

"af",

"am",

"ar",

"az",

"be",

"bg",

"bn",

"ca",

"ceb",

"co",

"cs",

"cy",

"da",

"de",

"el",

"en",

"eo",

"es",

"et",

"eu",

"fa",

"fi",

"fil",

"fr",

"fy",

"ga",

"gd",

"gl",

"gu",

"ha",

"haw",

"hi",

"hmn",

"ht",

"hu",

"hy",

"ig",

"is",

"it",

"iw",

"ja",

"jv",

"ka",

"kk",

"km",

"kn",

"ko",

"ku",

"ky",

"la",

"lb",

"lo",

"lt",

"lv",

"mg",

"mi",

"mk",

"ml",

"mn",

"mr",

"ms",

"mt",

"my",

"ne",

"nl",

"no",

"ny",

"pa",

"pl",

"ps",

"pt",

"ro",

"ru",

"sd",

"si",

"sk",

"sl",

"sm",

"sn",

"so",

"sq",

"sr",

"st",

"su",

"sv",

"sw",

"ta",

"te",

"tg",

"th",

"tr",

"uk",

"und",

"ur",

"uz",

"vi",

"xh",

"yi",

"yo",

"zh",

"zu",

"dataset:mc4",

"arxiv:1907.06292",

"arxiv:2105.13626",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- ht

- hu

- hy

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- no

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- und

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

datasets:

- mc4

license: apache-2.0

---

# ByT5 - Small

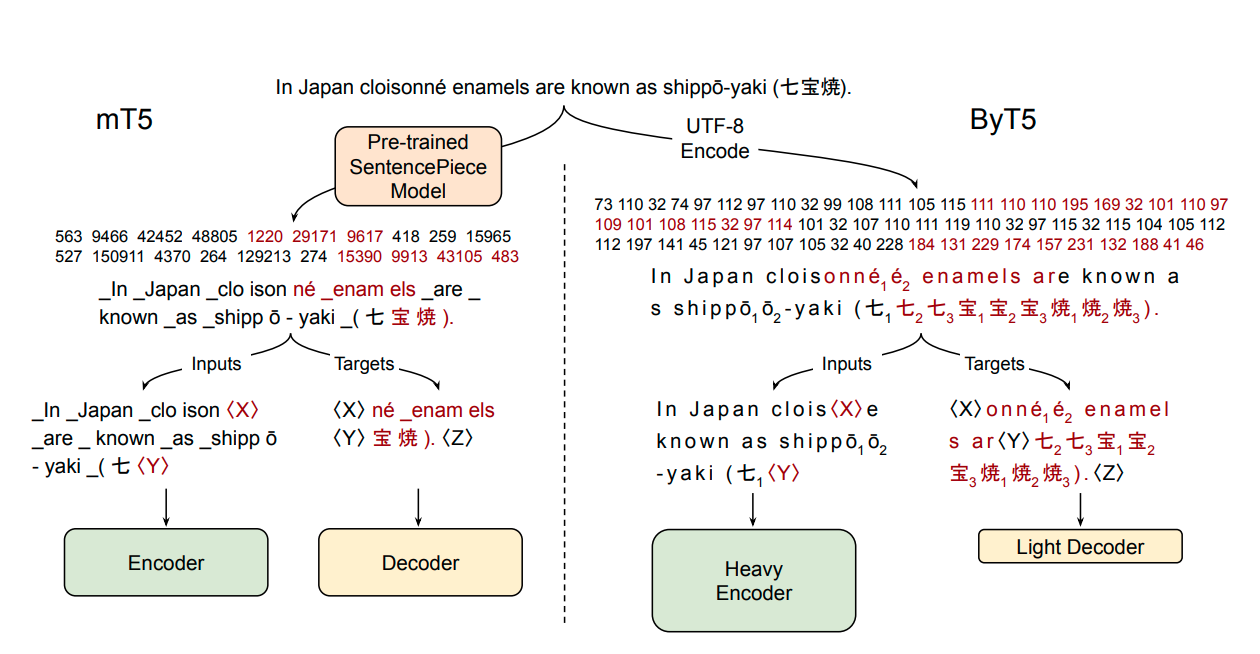

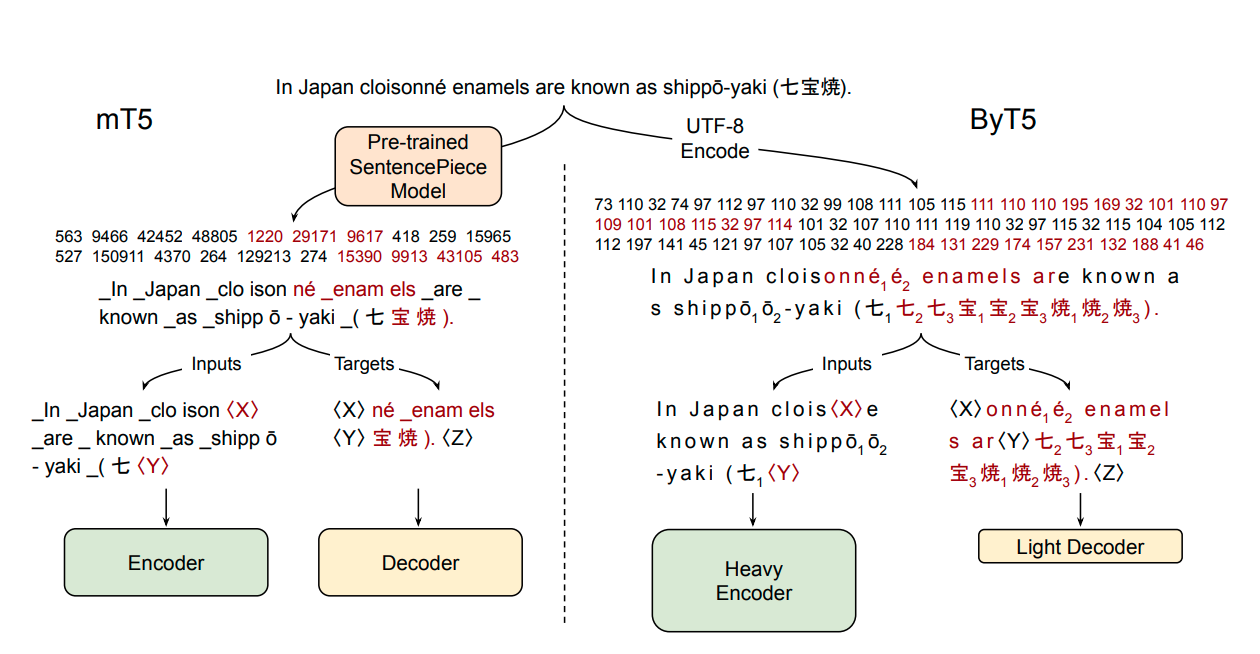

ByT5 is a tokenizer-free version of [Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) and generally follows the architecture of [MT5](https://huggingface.co/google/mt5-small).

ByT5 was only pre-trained on [mC4](https://www.tensorflow.org/datasets/catalog/c4#c4multilingual) excluding any supervised training with an average span-mask of 20 UTF-8 characters. Therefore, this model has to be fine-tuned before it is useable on a downstream task.

ByT5 works especially well on noisy text data,*e.g.*, `google/byt5-small` significantly outperforms [mt5-small](https://huggingface.co/google/mt5-small) on [TweetQA](https://arxiv.org/abs/1907.06292).

Paper: [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626)

Authors: *Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel*

## Example Inference

ByT5 works on raw UTF-8 bytes and can be used without a tokenizer:

```python

from transformers import T5ForConditionalGeneration

import torch

model = T5ForConditionalGeneration.from_pretrained('google/byt5-small')

input_ids = torch.tensor([list("Life is like a box of chocolates.".encode("utf-8"))]) + 3 # add 3 for special tokens

labels = torch.tensor([list("La vie est comme une boîte de chocolat.".encode("utf-8"))]) + 3 # add 3 for special tokens

loss = model(input_ids, labels=labels).loss # forward pass

```

For batched inference & training it is however recommended using a tokenizer class for padding:

```python

from transformers import T5ForConditionalGeneration, AutoTokenizer

model = T5ForConditionalGeneration.from_pretrained('google/byt5-small')

tokenizer = AutoTokenizer.from_pretrained('google/byt5-small')

model_inputs = tokenizer(["Life is like a box of chocolates.", "Today is Monday."], padding="longest", return_tensors="pt")

labels = tokenizer(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."], padding="longest", return_tensors="pt").input_ids

loss = model(**model_inputs, labels=labels).loss # forward pass

```

## Abstract

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. Encoding text as a sequence of tokens requires a tokenizer, which is typically created as an independent artifact from the model. Token-free models that instead operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We carefully characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.

|

google/byt5-base | google | 2023-01-24T16:36:53Z | 32,951 | 21 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"multilingual",

"af",

"am",

"ar",

"az",

"be",

"bg",

"bn",

"ca",

"ceb",

"co",

"cs",

"cy",

"da",

"de",

"el",

"en",

"eo",

"es",

"et",

"eu",

"fa",

"fi",

"fil",

"fr",

"fy",

"ga",

"gd",

"gl",

"gu",

"ha",

"haw",

"hi",

"hmn",

"ht",

"hu",

"hy",

"ig",

"is",

"it",

"iw",

"ja",

"jv",

"ka",

"kk",

"km",

"kn",

"ko",

"ku",

"ky",

"la",

"lb",

"lo",

"lt",

"lv",

"mg",

"mi",

"mk",

"ml",

"mn",

"mr",

"ms",

"mt",

"my",

"ne",

"nl",

"no",

"ny",

"pa",

"pl",

"ps",

"pt",

"ro",

"ru",

"sd",

"si",

"sk",

"sl",

"sm",

"sn",

"so",

"sq",

"sr",

"st",

"su",

"sv",

"sw",

"ta",

"te",

"tg",

"th",

"tr",

"uk",

"und",

"ur",

"uz",

"vi",

"xh",

"yi",

"yo",

"zh",

"zu",

"dataset:mc4",

"arxiv:1907.06292",

"arxiv:2105.13626",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- ht

- hu

- hy

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- no

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- und

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

datasets:

- mc4

license: apache-2.0

---

# ByT5 - Base

ByT5 is a tokenizer-free version of [Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) and generally follows the architecture of [MT5](https://huggingface.co/google/mt5-base).

ByT5 was only pre-trained on [mC4](https://www.tensorflow.org/datasets/catalog/c4#c4multilingual) excluding any supervised training with an average span-mask of 20 UTF-8 characters. Therefore, this model has to be fine-tuned before it is useable on a downstream task.

ByT5 works especially well on noisy text data,*e.g.*, `google/byt5-base` significantly outperforms [mt5-base](https://huggingface.co/google/mt5-base) on [TweetQA](https://arxiv.org/abs/1907.06292).

Paper: [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626)

Authors: *Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel*

## Example Inference

ByT5 works on raw UTF-8 bytes and can be used without a tokenizer:

```python

from transformers import T5ForConditionalGeneration

import torch

model = T5ForConditionalGeneration.from_pretrained('google/byt5-base')

input_ids = torch.tensor([list("Life is like a box of chocolates.".encode("utf-8"))]) + 3 # add 3 for special tokens

labels = torch.tensor([list("La vie est comme une boîte de chocolat.".encode("utf-8"))]) + 3 # add 3 for special tokens

loss = model(input_ids, labels=labels).loss # forward pass

```

For batched inference & training it is however recommended using a tokenizer class for padding:

```python

from transformers import T5ForConditionalGeneration, AutoTokenizer

model = T5ForConditionalGeneration.from_pretrained('google/byt5-base')

tokenizer = AutoTokenizer.from_pretrained('google/byt5-base')

model_inputs = tokenizer(["Life is like a box of chocolates.", "Today is Monday."], padding="longest", return_tensors="pt")

labels = tokenizer(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."], padding="longest", return_tensors="pt").input_ids

loss = model(**model_inputs, labels=labels).loss # forward pass

```

## Abstract

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. Encoding text as a sequence of tokens requires a tokenizer, which is typically created as an independent artifact from the model. Token-free models that instead operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We carefully characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.

|

google/bigbird-pegasus-large-bigpatent | google | 2023-01-24T16:36:44Z | 785 | 39 | transformers | [

"transformers",

"pytorch",

"bigbird_pegasus",

"text2text-generation",

"summarization",

"en",

"dataset:big_patent",

"arxiv:2007.14062",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| summarization | 2022-03-02T23:29:05Z | ---

language: en

license: apache-2.0

datasets:

- big_patent

tags:

- summarization

---

# BigBirdPegasus model (large)

BigBird, is a sparse-attention based transformer which extends Transformer based models, such as BERT to much longer sequences. Moreover, BigBird comes along with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

BigBird was introduced in this [paper](https://arxiv.org/abs/2007.14062) and first released in this [repository](https://github.com/google-research/bigbird).

Disclaimer: The team releasing BigBird did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

BigBird relies on **block sparse attention** instead of normal attention (i.e. BERT's attention) and can handle sequences up to a length of 4096 at a much lower compute cost compared to BERT. It has achieved SOTA on various tasks involving very long sequences such as long documents summarization, question-answering with long contexts.

## How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BigBirdPegasusForConditionalGeneration, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("google/bigbird-pegasus-large-bigpatent")

# by default encoder-attention is `block_sparse` with num_random_blocks=3, block_size=64

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-bigpatent")

# decoder attention type can't be changed & will be "original_full"

# you can change `attention_type` (encoder only) to full attention like this:

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-bigpatent", attention_type="original_full")

# you can change `block_size` & `num_random_blocks` like this:

model = BigBirdPegasusForConditionalGeneration.from_pretrained("google/bigbird-pegasus-large-bigpatent", block_size=16, num_random_blocks=2)

text = "Replace me by any text you'd like."

inputs = tokenizer(text, return_tensors='pt')

prediction = model.generate(**inputs)

prediction = tokenizer.batch_decode(prediction)

```

## Training Procedure

This checkpoint is obtained after fine-tuning `BigBirdPegasusForConditionalGeneration` for **summarization** on [big_patent](https://huggingface.co/datasets/big_patent) dataset.

## BibTeX entry and citation info

```tex

@misc{zaheer2021big,

title={Big Bird: Transformers for Longer Sequences},

author={Manzil Zaheer and Guru Guruganesh and Avinava Dubey and Joshua Ainslie and Chris Alberti and Santiago Ontanon and Philip Pham and Anirudh Ravula and Qifan Wang and Li Yang and Amr Ahmed},

year={2021},

eprint={2007.14062},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

google/bert2bert_L-24_wmt_de_en | google | 2023-01-24T16:35:54Z | 843 | 8 | transformers | [

"transformers",

"pytorch",

"encoder-decoder",

"text2text-generation",

"translation",

"en",

"de",

"dataset:wmt14",

"arxiv:1907.12461",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| translation | 2022-03-02T23:29:05Z | ---

language:

- en

- de

license: apache-2.0

datasets:

- wmt14

tags:

- translation

---

# bert2bert_L-24_wmt_de_en EncoderDecoder model

The model was introduced in

[this paper](https://arxiv.org/abs/1907.12461) by Sascha Rothe, Shashi Narayan, Aliaksei Severyn and first released in [this repository](https://tfhub.dev/google/bertseq2seq/bert24_de_en/1).

The model is an encoder-decoder model that was initialized on the `bert-large` checkpoints for both the encoder

and decoder and fine-tuned on German to English translation on the WMT dataset, which is linked above.

Disclaimer: The model card has been written by the Hugging Face team.

## How to use

You can use this model for translation, *e.g.*

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("google/bert2bert_L-24_wmt_de_en", pad_token="<pad>", eos_token="</s>", bos_token="<s>")

model = AutoModelForSeq2SeqLM.from_pretrained("google/bert2bert_L-24_wmt_de_en")

sentence = "Willst du einen Kaffee trinken gehen mit mir?"

input_ids = tokenizer(sentence, return_tensors="pt", add_special_tokens=False).input_ids

output_ids = model.generate(input_ids)[0]

print(tokenizer.decode(output_ids, skip_special_tokens=True))

# should output

# Want to drink a kaffee go with me? .

```

|

facebook/xglm-7.5B | facebook | 2023-01-24T16:35:48Z | 4,749 | 57 | transformers | [

"transformers",

"pytorch",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"region:us"

]

| text-generation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- en

- ru

- zh

- de

- es

- fr

- ja

- it

- pt

- el

- ko

- fi

- id

- tr

- ar

- vi

- th

- bg

- ca

- hi

- et

- bn

- ta

- ur

- sw

- te

- eu

- my

- ht

- qu

license: mit

thumbnail: https://huggingface.co/front/thumbnails/facebook.png

inference: false

---

# XGLM-7.5B

XGLM-7.5B is a multilingual autoregressive language model (with 7.5 billion parameters) trained on a balanced corpus of a diverse set of languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-7.5B is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-7.5B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-7.5B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-7.5B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

``` |

facebook/xglm-564M | facebook | 2023-01-24T16:35:45Z | 15,028 | 51 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"region:us"

]

| text-generation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- en

- ru

- zh

- de

- es

- fr

- ja

- it

- pt

- el

- ko

- fi

- id

- tr

- ar

- vi

- th

- bg

- ca

- hi

- et

- bn

- ta

- ur

- sw

- te

- eu

- my

- ht

- qu

license: mit

thumbnail: https://huggingface.co/front/thumbnails/facebook.png

inference: false

---

# XGLM-564M

XGLM-564M is a multilingual autoregressive language model (with 564 million parameters) trained on a balanced corpus of a diverse set of 30 languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-564M is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-564M development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-564M")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-564M")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

``` |

facebook/xglm-2.9B | facebook | 2023-01-24T16:35:40Z | 557 | 8 | transformers | [

"transformers",

"pytorch",

"xglm",

"text-generation",

"multilingual",

"en",

"ru",

"zh",

"de",

"es",

"fr",

"ja",

"it",

"pt",

"el",

"ko",

"fi",

"id",

"tr",

"ar",

"vi",

"th",

"bg",

"ca",

"hi",

"et",

"bn",

"ta",

"ur",

"sw",

"te",

"eu",

"my",

"ht",

"qu",

"arxiv:2112.10668",

"license:mit",

"autotrain_compatible",

"region:us"

]

| text-generation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- en

- ru

- zh

- de

- es

- fr

- ja

- it

- pt

- el

- ko

- fi

- id

- tr

- ar

- vi

- th

- bg

- ca

- hi

- et

- bn

- ta

- ur

- sw

- te

- eu

- my

- ht

- qu

license: mit

thumbnail: https://huggingface.co/front/thumbnails/facebook.png

inference: false

---

# XGLM-2.9B

XGLM-2.9B is a multilingual autoregressive language model (with 2.9 billion parameters) trained on a balanced corpus of a diverse set of languages totaling 500 billion sub-tokens. It was introduced in the paper [Few-shot Learning with Multilingual Language Models](https://arxiv.org/abs/2112.10668) by Xi Victoria Lin\*, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li\* (\*Equal Contribution). The original implementation was released in [this repository](https://github.com/pytorch/fairseq/tree/main/examples/xglm).

## Training Data Statistics

The training data statistics of XGLM-2.9B is shown in the table below.

| ISO-639-1| family | name | # tokens | ratio | ratio w/ lowRes upsampling |

|:--------|:-----------------|:------------------------|-------------:|------------:|-------------:|

| en | Indo-European | English | 803526736124 | 0.489906 | 0.3259 |

| ru | Indo-European | Russian | 147791898098 | 0.0901079 | 0.0602 |

| zh | Sino-Tibetan | Chinese | 132770494630 | 0.0809494 | 0.0483 |

| de | Indo-European | German | 89223707856 | 0.0543992 | 0.0363 |

| es | Indo-European | Spanish | 87303083105 | 0.0532282 | 0.0353 |

| fr | Indo-European | French | 77419639775 | 0.0472023 | 0.0313 |

| ja | Japonic | Japanese | 66054364513 | 0.040273 | 0.0269 |

| it | Indo-European | Italian | 41930465338 | 0.0255648 | 0.0171 |

| pt | Indo-European | Portuguese | 36586032444 | 0.0223063 | 0.0297 |

| el | Indo-European | Greek (modern) | 28762166159 | 0.0175361 | 0.0233 |

| ko | Koreanic | Korean | 20002244535 | 0.0121953 | 0.0811 |

| fi | Uralic | Finnish | 16804309722 | 0.0102455 | 0.0681 |

| id | Austronesian | Indonesian | 15423541953 | 0.00940365 | 0.0125 |

| tr | Turkic | Turkish | 12413166065 | 0.00756824 | 0.0101 |

| ar | Afro-Asiatic | Arabic | 12248607345 | 0.00746791 | 0.0099 |

| vi | Austroasiatic | Vietnamese | 11199121869 | 0.00682804 | 0.0091 |

| th | Tai–Kadai | Thai | 10842172807 | 0.00661041 | 0.044 |

| bg | Indo-European | Bulgarian | 9703797869 | 0.00591635 | 0.0393 |

| ca | Indo-European | Catalan | 7075834775 | 0.0043141 | 0.0287 |

| hi | Indo-European | Hindi | 3448390110 | 0.00210246 | 0.014 |

| et | Uralic | Estonian | 3286873851 | 0.00200399 | 0.0133 |

| bn | Indo-European | Bengali, Bangla | 1627447450 | 0.000992245 | 0.0066 |

| ta | Dravidian | Tamil | 1476973397 | 0.000900502 | 0.006 |

| ur | Indo-European | Urdu | 1351891969 | 0.000824241 | 0.0055 |

| sw | Niger–Congo | Swahili | 907516139 | 0.000553307 | 0.0037 |

| te | Dravidian | Telugu | 689316485 | 0.000420272 | 0.0028 |

| eu | Language isolate | Basque | 105304423 | 6.42035e-05 | 0.0043 |

| my | Sino-Tibetan | Burmese | 101358331 | 6.17976e-05 | 0.003 |

| ht | Creole | Haitian, Haitian Creole | 86584697 | 5.27902e-05 | 0.0035 |

| qu | Quechuan | Quechua | 3236108 | 1.97304e-06 | 0.0001 |

## Model card

For intended usage of the model, please refer to the [model card](https://github.com/pytorch/fairseq/blob/main/examples/xglm/model_card.md) released by the XGLM-2.9B development team.

## Example (COPA)

The following snippet shows how to evaluate our models (GPT-3 style, zero-shot) on the Choice of Plausible Alternatives (COPA) task, using examples in English, Chinese and Hindi.

```python

import torch

import torch.nn.functional as F

from transformers import XGLMTokenizer, XGLMForCausalLM

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-2.9B")

model = XGLMForCausalLM.from_pretrained("facebook/xglm-2.9B")

data_samples = {

'en': [

{

"premise": "I wanted to conserve energy.",

"choice1": "I swept the floor in the unoccupied room.",

"choice2": "I shut off the light in the unoccupied room.",

"question": "effect",

"label": "1"

},

{

"premise": "The flame on the candle went out.",

"choice1": "I blew on the wick.",

"choice2": "I put a match to the wick.",

"question": "cause",

"label": "0"

}

],

'zh': [

{

"premise": "我想节约能源。",

"choice1": "我在空着的房间里扫了地板。",

"choice2": "我把空房间里的灯关了。",

"question": "effect",

"label": "1"

},

{

"premise": "蜡烛上的火焰熄灭了。",

"choice1": "我吹灭了灯芯。",

"choice2": "我把一根火柴放在灯芯上。",

"question": "cause",

"label": "0"

}

],

'hi': [

{

"premise": "M te vle konsève enèji.",

"choice1": "Mwen te fin baleye chanm lib la.",

"choice2": "Mwen te femen limyè nan chanm lib la.",

"question": "effect",

"label": "1"

},

{

"premise": "Flam bouji a te etenn.",

"choice1": "Mwen te soufle bouji a.",

"choice2": "Mwen te limen mèch bouji a.",

"question": "cause",

"label": "0"

}

]

}

def get_logprobs(prompt):

inputs = tokenizer(prompt, return_tensors="pt")

input_ids, output_ids = inputs["input_ids"], inputs["input_ids"][:, 1:]

outputs = model(**inputs, labels=input_ids)

logits = outputs.logits

logprobs = torch.gather(F.log_softmax(logits, dim=2), 2, output_ids.unsqueeze(2))

return logprobs

# Zero-shot evaluation for the Choice of Plausible Alternatives (COPA) task.

# A return value of 0 indicates that the first alternative is more plausible,

# while 1 indicates that the second alternative is more plausible.

def COPA_eval(prompt, alternative1, alternative2):

lprob1 = get_logprobs(prompt + "\n" + alternative1).sum()

lprob2 = get_logprobs(prompt + "\n" + alternative2).sum()

return 0 if lprob1 > lprob2 else 1

for lang in data_samples_long:

for idx, example in enumerate(data_samples_long[lang]):

predict = COPA_eval(example["premise"], example["choice1"], example["choice2"])

print(f'{lang}-{idx}', predict, example['label'])

# en-0 1 1

# en-1 0 0

# zh-0 1 1

# zh-1 0 0

# hi-0 1 1

# hi-1 0 0

``` |

facebook/wmt21-dense-24-wide-x-en | facebook | 2023-01-24T16:35:35Z | 26 | 13 | transformers | [

"transformers",

"pytorch",

"m2m_100",

"text2text-generation",

"translation",

"wmt21",

"multilingual",

"ha",

"is",

"ja",

"cs",

"ru",

"zh",

"de",

"en",

"arxiv:2108.03265",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| translation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- ha

- is

- ja

- cs

- ru

- zh

- de

- en

license: mit

tags:

- translation

- wmt21

---

# WMT 21 X-En

WMT 21 X-En is a 4.7B multilingual encoder-decoder (seq-to-seq) model trained for one-to-many multilingual translation.

It was introduced in this [paper](https://arxiv.org/abs/2108.03265) and first released in [this](https://github.com/pytorch/fairseq/tree/main/examples/wmt21) repository.

The model can directly translate text from 7 languages: Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de) to English.

To translate into a target language, the target language id is forced as the first generated token.

To force the target language id as the first generated token, pass the `forced_bos_token_id` parameter to the `generate` method.

*Note: `M2M100Tokenizer` depends on `sentencepiece`, so make sure to install it before running the example.*

To install `sentencepiece` run `pip install sentencepiece`

Since the model was trained with domain tags, you should prepend them to the input as well.

* "wmtdata newsdomain": Use for sentences in the news domain

* "wmtdata otherdomain": Use for sentences in all other domain

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("facebook/wmt21-dense-24-wide-x-en")

tokenizer = AutoTokenizer.from_pretrained("facebook/wmt21-dense-24-wide-x-en")

# translate German to English

tokenizer.src_lang = "de"

inputs = tokenizer("wmtdata newsdomain Ein Modell für viele Sprachen", return_tensors="pt")

generated_tokens = model.generate(**inputs)

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "A model for many languages"

# translate Icelandic to English

tokenizer.src_lang = "is"

inputs = tokenizer("wmtdata newsdomain Ein fyrirmynd fyrir mörg tungumál", return_tensors="pt")

generated_tokens = model.generate(**inputs)

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "One model for many languages"

```

See the [model hub](https://huggingface.co/models?filter=wmt21) to look for more fine-tuned versions.

## Languages covered

English (en), Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de)

## BibTeX entry and citation info

```

@inproceedings{tran2021facebook

title={Facebook AI’s WMT21 News Translation Task Submission},

author={Chau Tran and Shruti Bhosale and James Cross and Philipp Koehn and Sergey Edunov and Angela Fan},

booktitle={Proc. of WMT},

year={2021},

}

``` |

facebook/wmt21-dense-24-wide-en-x | facebook | 2023-01-24T16:35:31Z | 41 | 37 | transformers | [

"transformers",

"pytorch",

"m2m_100",

"text2text-generation",

"translation",

"wmt21",

"multilingual",

"ha",

"is",

"ja",

"cs",

"ru",

"zh",

"de",

"en",

"arxiv:2108.03265",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| translation | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- ha

- is

- ja

- cs

- ru

- zh

- de

- en

license: mit

tags:

- translation

- wmt21

---

# WMT 21 En-X

WMT 21 En-X is a 4.7B multilingual encoder-decoder (seq-to-seq) model trained for one-to-many multilingual translation.

It was introduced in this [paper](https://arxiv.org/abs/2108.03265) and first released in [this](https://github.com/pytorch/fairseq/tree/main/examples/wmt21) repository.

The model can directly translate English text into 7 other languages: Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de).

To translate into a target language, the target language id is forced as the first generated token.

To force the target language id as the first generated token, pass the `forced_bos_token_id` parameter to the `generate` method.

*Note: `M2M100Tokenizer` depends on `sentencepiece`, so make sure to install it before running the example.*

To install `sentencepiece` run `pip install sentencepiece`

Since the model was trained with domain tags, you should prepend them to the input as well.

* "wmtdata newsdomain": Use for sentences in the news domain

* "wmtdata otherdomain": Use for sentences in all other domain

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model = AutoModelForSeq2SeqLM.from_pretrained("facebook/wmt21-dense-24-wide-en-x")

tokenizer = AutoTokenizer.from_pretrained("facebook/wmt21-dense-24-wide-en-x")

inputs = tokenizer("wmtdata newsdomain One model for many languages.", return_tensors="pt")

# translate English to German

generated_tokens = model.generate(**inputs, forced_bos_token_id=tokenizer.get_lang_id("de"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "Ein Modell für viele Sprachen."

# translate English to Icelandic

generated_tokens = model.generate(**inputs, forced_bos_token_id=tokenizer.get_lang_id("is"))

tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

# => "Ein fyrirmynd fyrir mörg tungumál."

```

See the [model hub](https://huggingface.co/models?filter=wmt21) to look for more fine-tuned versions.

## Languages covered

English (en), Hausa (ha), Icelandic (is), Japanese (ja), Czech (cs), Russian (ru), Chinese (zh), German (de)

## BibTeX entry and citation info

```

@inproceedings{tran2021facebook

title={Facebook AI’s WMT21 News Translation Task Submission},

author={Chau Tran and Shruti Bhosale and James Cross and Philipp Koehn and Sergey Edunov and Angela Fan},

booktitle={Proc. of WMT},

year={2021},

}

``` |

facebook/wav2vec2-xls-r-2b-22-to-16 | facebook | 2023-01-24T16:35:01Z | 32 | 14 | transformers | [

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"speech",

"xls_r",

"xls_r_translation",

"multilingual",

"fr",

"de",

"es",

"ca",

"it",

"ru",

"zh",

"pt",

"fa",

"et",

"mn",

"nl",

"tr",

"ar",

"sv",

"lv",

"sl",

"ta",

"ja",

"id",

"cy",

"en",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- fr

- de

- es

- ca

- it

- ru

- zh

- pt

- fa

- et

- mn

- nl

- tr

- ar

- sv

- lv

- sl

- ta

- ja

- id

- cy

- en

datasets:

- common_voice

- multilingual_librispeech

- covost2

tags:

- speech

- xls_r

- automatic-speech-recognition

- xls_r_translation

pipeline_tag: automatic-speech-recognition

license: apache-2.0

widget:

- example_title: Swedish

src: https://cdn-media.huggingface.co/speech_samples/cv_swedish_1.mp3

- example_title: Arabic

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ar_19058308.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: German

src: https://cdn-media.huggingface.co/speech_samples/common_voice_de_17284683.mp3

- example_title: French

src: https://cdn-media.huggingface.co/speech_samples/common_voice_fr_17299386.mp3

- example_title: Indonesian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_id_19051309.mp3

- example_title: Italian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_it_17415776.mp3

- example_title: Japanese

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ja_19482488.mp3

- example_title: Mongolian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_mn_18565396.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: Turkish

src: https://cdn-media.huggingface.co/speech_samples/common_voice_tr_17341280.mp3

- example_title: Catalan

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ca_17367522.mp3

- example_title: English

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

---

# Wav2Vec2-XLS-R-2B-22-16 (XLS-R-Any-to-Any)

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-2b`**](https://huggingface.co/facebook/wav2vec2-xls-r-2b) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on `{input_lang}` -> `{output_lang}` translation pairs

of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from the following spoken languages `{input_lang}` to the following written languages `{output_lang}`:

`{input_lang}` -> `{output_lang}`

with `{input_lang}` one of:

{`en`, `fr`, `de`, `es`, `ca`, `it`, `ru`, `zh-CN`, `pt`, `fa`, `et`, `mn`, `nl`, `tr`, `ar`, `sv-SE`, `lv`, `sl`, `ta`, `ja`, `id`, `cy`}

and `{output_lang}`:

{`en`, `de`, `tr`, `fa`, `sv-SE`, `mn`, `zh-CN`, `cy`, `ca`, `sl`, `et`, `id`, `ar`, `ta`, `lv`, `ja`}

## Usage

### Demo

The model can be tested on [**this space**](https://huggingface.co/spaces/facebook/XLS-R-2B-22-16).

You can select the target language, record some audio in any of the above mentioned input languages,

and then sit back and see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline. By default, the checkpoint will

translate spoken English to written German. To change the written target language,

you need to pass the correct `forced_bos_token_id` to `generate(...)` to condition

the decoder on the correct target language.

To select the correct `forced_bos_token_id` given your choosen language id, please make use

of the following mapping:

```python

MAPPING = {

"en": 250004,

"de": 250003,

"tr": 250023,

"fa": 250029,

"sv": 250042,

"mn": 250037,

"zh": 250025,

"cy": 250007,

"ca": 250005,

"sl": 250052,

"et": 250006,

"id": 250032,

"ar": 250001,

"ta": 250044,

"lv": 250017,

"ja": 250012,

}

```

As an example, if you would like to translate to Swedish, you can do the following:

```python

from datasets import load_dataset

from transformers import pipeline

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-2b-22-to-16", feature_extractor="facebook/wav2vec2-xls-r-2b-22-to-16")

translation = asr(audio_file, forced_bos_token_id=forced_bos_token_id)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-2b-22-to-16")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-2b-22-to-16")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"], forced_bos_token_id=forced_bos_token)

transcription = processor.batch_decode(generated_ids)

```

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-300m-en-to-15)

- [Wav2Vec2-XLS-R-1B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-1b-en-to-15)

- [Wav2Vec2-XLS-R-2B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-2b-en-to-15)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

facebook/wav2vec2-xls-r-2b-21-to-en | facebook | 2023-01-24T16:34:58Z | 24 | 5 | transformers | [

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"speech",

"xls_r",

"xls_r_translation",

"multilingual",

"fr",

"de",

"es",

"ca",

"it",

"ru",

"zh",

"pt",

"fa",

"et",

"mn",

"nl",

"tr",

"ar",

"sv",

"lv",

"sl",

"ta",

"ja",

"id",

"cy",

"en",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"arxiv:2111.09296",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- fr

- de

- es

- ca

- it

- ru

- zh

- pt

- fa

- et

- mn

- nl

- tr

- ar

- sv

- lv

- sl

- ta

- ja

- id

- cy

- en

datasets:

- common_voice

- multilingual_librispeech

- covost2

tags:

- speech

- xls_r

- automatic-speech-recognition

- xls_r_translation

pipeline_tag: automatic-speech-recognition

license: apache-2.0

widget:

- example_title: Swedish

src: https://cdn-media.huggingface.co/speech_samples/cv_swedish_1.mp3

- example_title: Arabic

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ar_19058308.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: German

src: https://cdn-media.huggingface.co/speech_samples/common_voice_de_17284683.mp3

- example_title: French

src: https://cdn-media.huggingface.co/speech_samples/common_voice_fr_17299386.mp3

- example_title: Indonesian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_id_19051309.mp3

- example_title: Italian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_it_17415776.mp3

- example_title: Japanese

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ja_19482488.mp3

- example_title: Mongolian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_mn_18565396.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: Turkish

src: https://cdn-media.huggingface.co/speech_samples/common_voice_tr_17341280.mp3

- example_title: Catalan

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ca_17367522.mp3

- example_title: English

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

---

# Wav2Vec2-XLS-R-2b-21-EN

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-2b`**](https://huggingface.co/facebook/wav2vec2-xls-r-2b) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on 21 `{lang}` -> `en` translation pairs of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from the following spoken languages `{lang}` -> `en` (English):

{`fr`, `de`, `es`, `ca`, `it`, `ru`, `zh-CN`, `pt`, `fa`, `et`, `mn`, `nl`, `tr`, `ar`, `sv-SE`, `lv`, `sl`, `ta`, `ja`, `id`, `cy`} -> `en`

For more information, please refer to Section *5.1.2* of the [official XLS-R paper](https://arxiv.org/abs/2111.09296).

## Usage

### Demo

The model can be tested directly on the speech recognition widget on this model card!

Simple record some audio in one of the possible spoken languages or pick an example audio file to see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline

```python

from datasets import load_dataset

from transformers import pipeline

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-2b-21-to-en", feature_extractor="facebook/wav2vec2-xls-r-2b-21-to-en")

translation = asr(audio_file)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-2b-21-to-en")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-2b-21-to-en")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

transcription = processor.batch_decode(generated_ids)

```

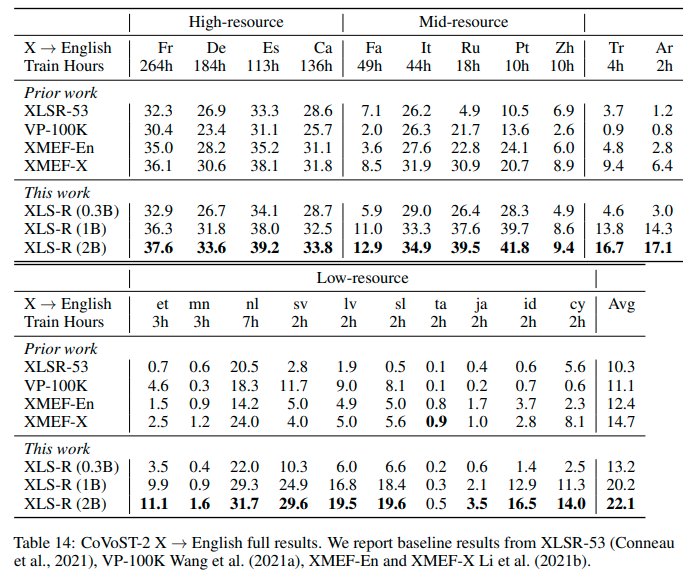

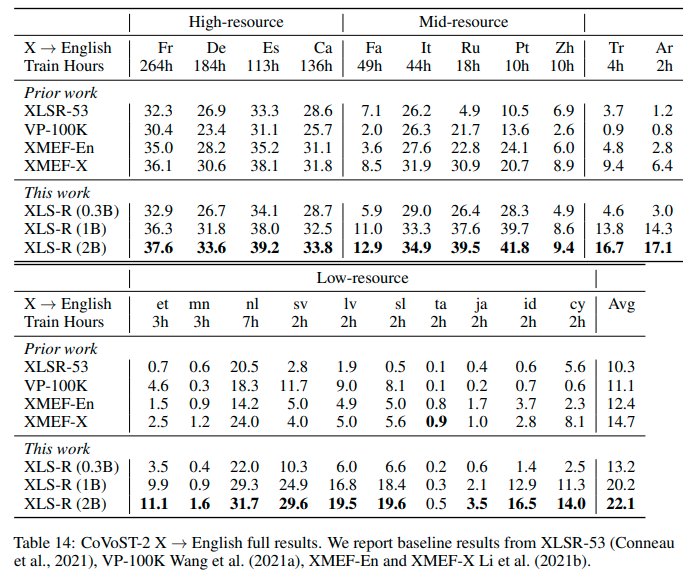

## Results `{lang}` -> `en`

See the row of **XLS-R (2B)** for the performance on [Covost2](https://huggingface.co/datasets/covost2) for this model.

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-300m-21-to-en)

- [Wav2Vec2-XLS-R-1B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-1b-21-to-en)

- [Wav2Vec2-XLS-R-2B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-2b-21-to-en)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

facebook/wav2vec2-xls-r-1b-21-to-en | facebook | 2023-01-24T16:34:50Z | 381 | 3 | transformers | [

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"speech",

"xls_r",

"xls_r_translation",

"multilingual",

"fr",

"de",

"es",

"ca",

"it",

"ru",

"zh",

"pt",

"fa",

"et",

"mn",

"nl",

"tr",

"ar",

"sv",

"lv",

"sl",

"ta",

"ja",

"id",

"cy",

"en",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"arxiv:2111.09296",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- multilingual

- fr

- de

- es

- ca

- it

- ru

- zh

- pt

- fa

- et

- mn

- nl

- tr

- ar

- sv

- lv

- sl

- ta

- ja

- id

- cy

- en

datasets:

- common_voice

- multilingual_librispeech

- covost2

tags:

- speech

- xls_r

- automatic-speech-recognition

- xls_r_translation

pipeline_tag: automatic-speech-recognition

license: apache-2.0

widget:

- example_title: Swedish

src: https://cdn-media.huggingface.co/speech_samples/cv_swedish_1.mp3

- example_title: Arabic

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ar_19058308.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: German

src: https://cdn-media.huggingface.co/speech_samples/common_voice_de_17284683.mp3

- example_title: French

src: https://cdn-media.huggingface.co/speech_samples/common_voice_fr_17299386.mp3

- example_title: Indonesian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_id_19051309.mp3

- example_title: Italian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_it_17415776.mp3

- example_title: Japanese

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ja_19482488.mp3

- example_title: Mongolian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_mn_18565396.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

- example_title: Russian

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ru_18849022.mp3

- example_title: Turkish

src: https://cdn-media.huggingface.co/speech_samples/common_voice_tr_17341280.mp3

- example_title: Catalan

src: https://cdn-media.huggingface.co/speech_samples/common_voice_ca_17367522.mp3

- example_title: English

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

- example_title: Dutch

src: https://cdn-media.huggingface.co/speech_samples/common_voice_nl_17691471.mp3

---

# Wav2Vec2-XLS-R-2b-21-EN

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-1b`**](https://huggingface.co/facebook/wav2vec2-xls-r-1b) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on 21 `{lang}` -> `en` translation pairs of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from the following spoken languages `{lang}` -> `en` (English):

{`fr`, `de`, `es`, `ca`, `it`, `ru`, `zh-CN`, `pt`, `fa`, `et`, `mn`, `nl`, `tr`, `ar`, `sv-SE`, `lv`, `sl`, `ta`, `ja`, `id`, `cy`} -> `en`

For more information, please refer to Section *5.1.2* of the [official XLS-R paper](https://arxiv.org/abs/2111.09296).

## Usage

### Demo

The model can be tested directly on the speech recognition widget on this model card!

Simple record some audio in one of the possible spoken languages or pick an example audio file to see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline

```python

from datasets import load_dataset

from transformers import pipeline

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-1b-21-to-en", feature_extractor="facebook/wav2vec2-xls-r-1b-21-to-en")

translation = asr(audio_file)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-1b-21-to-en")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-1b-21-to-en")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

transcription = processor.batch_decode(generated_ids)

```

## Results `{lang}` -> `en`

See the row of **XLS-R (1B)** for the performance on [Covost2](https://huggingface.co/datasets/covost2) for this model.

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-300m-21-to-en)

- [Wav2Vec2-XLS-R-1B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-1b-21-to-en)

- [Wav2Vec2-XLS-R-2B-21-EN](https://huggingface.co/facebook/wav2vec2-xls-r-2b-21-to-en)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

facebook/s2t-wav2vec2-large-en-tr | facebook | 2023-01-24T16:32:38Z | 46 | 3 | transformers | [

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"audio",

"speech-translation",

"speech2text2",

"en",

"tr",

"dataset:covost2",

"dataset:librispeech_asr",

"arxiv:2104.06678",

"license:mit",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- en

- tr

datasets:

- covost2

- librispeech_asr

tags:

- audio

- speech-translation

- automatic-speech-recognition

- speech2text2

license: mit

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Common Voice 1

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_99989.mp3

- example_title: Common Voice 2

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_99986.mp3

- example_title: Common Voice 3

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_99987.mp3

---

# S2T2-Wav2Vec2-CoVoST2-EN-TR-ST

`s2t-wav2vec2-large-en-tr` is a Speech to Text Transformer model trained for end-to-end Speech Translation (ST).

The S2T2 model was proposed in [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/pdf/2104.06678.pdf) and officially released in

[Fairseq](https://github.com/pytorch/fairseq/blob/6f847c8654d56b4d1b1fbacec027f47419426ddb/fairseq/models/wav2vec/wav2vec2_asr.py#L266).

## Model description

S2T2 is a transformer-based seq2seq (speech encoder-decoder) model designed for end-to-end Automatic Speech Recognition (ASR) and Speech

Translation (ST). It uses a pretrained [Wav2Vec2](https://huggingface.co/transformers/model_doc/wav2vec2.html) as the encoder and a transformer-based decoder. The model is trained with standard autoregressive cross-entropy loss and generates the translations autoregressively.

## Intended uses & limitations

This model can be used for end-to-end English speech to Turkish text translation.

See the [model hub](https://huggingface.co/models?filter=speech2text2) to look for other S2T2 checkpoints.

### How to use

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline

```python

from datasets import load_dataset

from transformers import pipeline

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

asr = pipeline("automatic-speech-recognition", model="facebook/s2t-wav2vec2-large-en-tr", feature_extractor="facebook/s2t-wav2vec2-large-en-tr")

translation = asr(librispeech_en[0]["file"])

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoder

from datasets import load_dataset

import soundfile as sf

model = SpeechEncoderDecoder.from_pretrained("facebook/s2t-wav2vec2-large-en-tr")

processor = Speech2Text2Processor.from_pretrained("facebook/s2t-wav2vec2-large-en-tr")

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

ds = ds.map(map_to_array)

inputs = processor(ds["speech"][0], sampling_rate=16_000, return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

transcription = processor.batch_decode(generated_ids)

```

## Evaluation results

CoVoST-V2 test results for en-tr (BLEU score): **17.5**

For more information, please have a look at the [official paper](https://arxiv.org/pdf/2104.06678.pdf) - especially row 10 of Table 2.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2104-06678,

author = {Changhan Wang and

Anne Wu and

Juan Miguel Pino and

Alexei Baevski and

Michael Auli and

Alexis Conneau},

title = {Large-Scale Self- and Semi-Supervised Learning for Speech Translation},

journal = {CoRR},

volume = {abs/2104.06678},

year = {2021},

url = {https://arxiv.org/abs/2104.06678},

archivePrefix = {arXiv},

eprint = {2104.06678},

timestamp = {Thu, 12 Aug 2021 15:37:06 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2104-06678.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

facebook/s2t-wav2vec2-large-en-ca | facebook | 2023-01-24T16:32:32Z | 6 | 2 | transformers | [

"transformers",

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"audio",

"speech-translation",

"speech2text2",

"en",

"ca",

"dataset:covost2",

"dataset:librispeech_asr",

"arxiv:2104.06678",

"license:mit",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- en

- ca

datasets:

- covost2

- librispeech_asr

tags:

- audio

- speech-translation

- automatic-speech-recognition

- speech2text2

license: mit

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Common Voice 1

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

- example_title: Common Voice 2

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_99989.mp3

- example_title: Common Voice 3

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_9999.mp3

---

# S2T2-Wav2Vec2-CoVoST2-EN-CA-ST

`s2t-wav2vec2-large-en-ca` is a Speech to Text Transformer model trained for end-to-end Speech Translation (ST).

The S2T2 model was proposed in [Large-Scale Self- and Semi-Supervised Learning for Speech Translation](https://arxiv.org/pdf/2104.06678.pdf) and officially released in

[Fairseq](https://github.com/pytorch/fairseq/blob/6f847c8654d56b4d1b1fbacec027f47419426ddb/fairseq/models/wav2vec/wav2vec2_asr.py#L266).

## Model description

S2T2 is a transformer-based seq2seq (speech encoder-decoder) model designed for end-to-end Automatic Speech Recognition (ASR) and Speech

Translation (ST). It uses a pretrained [Wav2Vec2](https://huggingface.co/transformers/model_doc/wav2vec2.html) as the encoder and a transformer-based decoder. The model is trained with standard autoregressive cross-entropy loss and generates the translations autoregressively.

## Intended uses & limitations

This model can be used for end-to-end English speech to Catalan text translation.

See the [model hub](https://huggingface.co/models?filter=speech2text2) to look for other S2T2 checkpoints.

### How to use

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline

```python

from datasets import load_dataset

from transformers import pipeline

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

asr = pipeline("automatic-speech-recognition", model="facebook/s2t-wav2vec2-large-en-ca", feature_extractor="facebook/s2t-wav2vec2-large-en-ca")

translation = asr(librispeech_en[0]["file"])

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoder

from datasets import load_dataset

import soundfile as sf

model = SpeechEncoderDecoder.from_pretrained("facebook/s2t-wav2vec2-large-en-ca")

processor = Speech2Text2Processor.from_pretrained("facebook/s2t-wav2vec2-large-en-ca")

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

ds = ds.map(map_to_array)

inputs = processor(ds["speech"][0], sampling_rate=16_000, return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"])

transcription = processor.batch_decode(generated_ids)

```

## Evaluation results

CoVoST-V2 test results for en-ca (BLEU score): **34.1**

For more information, please have a look at the [official paper](https://arxiv.org/pdf/2104.06678.pdf) - especially row 10 of Table 2.