modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-07-16 06:27:54) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 522 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-07-16 06:27:41) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

dhmeltzer/ppo-pyramid | dhmeltzer | 2023-02-01T04:47:43Z | 0 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

]

| reinforcement-learning | 2023-02-01T04:43:06Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

library_name: ml-agents

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Write your model_id: dhmeltzer/ppo-pyramid

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

nlp04/sentiment_roberta_large_with_diary | nlp04 | 2023-02-01T04:20:53Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-02-01T02:39:43Z | ---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:
- name: sentiment_roberta_large_with_diary
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sentiment_roberta_large_with_diary

This model is a fine-tuned version of [klue/roberta-large](https://huggingface.co/klue/roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5671

- Micro f1 score: 80.0000

- Auprc: 77.0282

- Accuracy: 0.8

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1.0

- mixed_precision_training: Native AMP
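The list above implies two derived quantities: the effective batch size (16 × 4 = 64, matching `total_train_batch_size`) and a learning rate that ramps up over the first 10% of steps and then decays linearly. The sketch below illustrates both under stated assumptions: the function names are hypothetical, the `total_steps` value of 1000 is made up for the example, and the warmup-step rounding may differ from what the Trainer actually does.

```python
def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Samples contributing to one optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def linear_lr_with_warmup(step, total_steps, base_lr, warmup_ratio):
    """Linear warmup to base_lr, then linear decay to zero (illustrative)."""
    warmup_steps = int(total_steps * warmup_ratio)
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(total_steps - warmup_steps, 1)
    return base_lr * max(0.0, (total_steps - step) / remaining)


# Values from the hyperparameter list; total_steps=1000 is an assumption.
print(effective_batch_size(16, 4))
print(linear_lr_with_warmup(50, 1000, 1e-05, 0.1))
```

With these inputs the learning rate peaks at `base_lr` when warmup ends and reaches zero at the final step.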

### Training results

| Training Loss | Epoch | Step | Validation Loss | Micro f1 score | Auprc | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------------:|:-------:|:--------:|

| 1.6198 | 0.13 | 100 | 1.3872 | 48.9362 | 55.5743 | 0.4894 |

| 0.6603 | 0.26 | 200 | 0.9249 | 65.9574 | 62.8759 | 0.6596 |

| 0.5387 | 0.4 | 300 | 0.7262 | 73.1915 | 71.1936 | 0.7319 |

| 0.4801 | 0.53 | 400 | 0.6623 | 74.0426 | 68.8606 | 0.7404 |

| 0.4597 | 0.66 | 500 | 0.6092 | 76.1702 | 75.7346 | 0.7617 |

| 0.4217 | 0.79 | 600 | 0.5929 | 78.7234 | 76.8709 | 0.7872 |

| 0.4148 | 0.93 | 700 | 0.5671 | 80.0000 | 77.0282 | 0.8 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

BachNgoH/q-FrozenLake-v1-4x4-noSlippery | BachNgoH | 2023-02-01T04:04:45Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-02-01T04:04:43Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:
- name: q-FrozenLake-v1-4x4-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-4x4-no_slippery
      type: FrozenLake-v1-4x4-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="BachNgoH/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
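The snippet above relies on a `load_from_hub` helper and on `gym`, neither of which the card defines or imports. As a rough sketch only, assuming the pickle holds a dict with an `env_id` string and a `qtable` of per-state action values (both key names are assumptions, as are the helper names `load_model` and `greedy_action`), a locally downloaded file could be read and queried like this:

```python
import pickle


def load_model(path):
    """Read a pickled model dict from a local file (hypothetical helper)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def greedy_action(qtable, state):
    """Return the index of the highest-valued action for a state."""
    row = list(qtable[state])
    return max(range(len(row)), key=lambda a: row[a])


# Toy stand-in for a downloaded model: 2 states, 4 actions each.
toy_model = {
    "env_id": "FrozenLake-v1",
    "qtable": [[0.0, 0.5, 0.1, 0.0], [0.2, 0.0, 0.0, 0.9]],
}
actions = [greedy_action(toy_model["qtable"], s) for s in range(2)]
print(actions)
```

Unpickling executes arbitrary code, so `load_model` should only be pointed at files from a source you trust.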

|

gaarsmu/atari_invaders_1M | gaarsmu | 2023-02-01T04:00:07Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-02-01T03:59:34Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: DQN
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: SpaceInvadersNoFrameskip-v4
      type: SpaceInvadersNoFrameskip-v4
    metrics:
    - type: mean_reward
      value: 390.00 +/- 139.71
      name: mean_reward
      verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga gaarsmu -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga gaarsmu -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga gaarsmu

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
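To make `exploration_fraction` and `exploration_final_eps` concrete, the sketch below computes a linearly annealed epsilon. It assumes the starting epsilon is 1.0 (not listed in the hyperparameters above) and uses a hypothetical function name; it is an illustration of the idea, not the library's code.

```python
def linear_epsilon(step, total_steps, exploration_fraction, final_eps, initial_eps=1.0):
    """Anneal epsilon linearly over the first `exploration_fraction` of training."""
    progress = step / total_steps
    if progress >= exploration_fraction:
        return final_eps
    return initial_eps + (progress / exploration_fraction) * (final_eps - initial_eps)


# Values from the hyperparameters above; initial_eps=1.0 is an assumption.
for step in (0, 50_000, 100_000, 500_000):
    print(step, linear_epsilon(step, 1_000_000, 0.1, 0.01))
```

Under these assumptions the annealing would finish at step 100,000 (0.1 × 1,000,000), which coincides with `learning_starts`.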

|

irow/dual-bert-IR | irow | 2023-02-01T03:58:59Z | 0 | 0 | null | [

"en",

"dataset:BeIR/msmarco",

"region:us"

]

| null | 2023-01-26T23:55:54Z | ---

datasets:

- BeIR/msmarco

language:

- en

---

This model uses two BERT models fine-tuned on a contrastive learning objective.

One is responsible for short queries, and the other for longer documents that contain the answer to the query.

After encoding many documents, one may perform a nearest-neighbor search with the query encoding to fetch the most relevant document.
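The retrieval step can be sketched as a brute-force nearest-neighbor search. This is a minimal illustration with hand-made toy vectors standing in for the two encoders' outputs; the use of cosine similarity and the function names are assumptions, since the card does not say which distance `inference.py` uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_document(query_vec, doc_vecs):
    """Return (index, score) of the document most similar to the query."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, scores[best]


# Toy encodings; real ones would come from the document and query encoders.
doc_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query_vec = [0.9, 0.1, 0.0]
best_index, best_score = nearest_document(query_vec, doc_vecs)
print(best_index, round(best_score, 3))
```

For large collections an approximate nearest-neighbor index would usually replace the linear scan.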

Take a look at inference.py to see how to perform inference. |

sschet/ner-disease-ncbi-bionlp-bc5cdr-pubmed | sschet | 2023-02-01T03:44:41Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"token-classification",

"ner",

"ncbi",

"disease",

"pubmed",

"bioinfomatics",

"en",

"dataset:ncbi-disease",

"dataset:bc5cdr",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T02:52:04Z | ---

language:

- en

tags:

- ner

- ncbi

- disease

- pubmed

- bioinfomatics

license: apache-2.0

datasets:

- ncbi-disease

- bc5cdr

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

widget:

- text: "Hepatocyte nuclear factor 4 alpha (HNF4α) is regulated by different promoters to generate two isoforms, one of which functions as a tumor suppressor. Here, the authors reveal that induction of the alternative isoform in hepatocellular carcinoma inhibits the circadian clock by repressing BMAL1, and the reintroduction of BMAL1 prevents HCC tumor growth."

---

# NER to find Diseases

> The model was trained on the ncbi-disease and BC5CDR datasets and builds on this [pubmed-pretrained roberta model](/raynardj/roberta-pubmed).

All the labels (the possible token classes):

```json

{"label2id": {
    "O": 0,
    "Disease": 1
  }
}

```

Note that we removed the 'B-', 'I-', etc. prefixes from the data labels.🗡

## This is the template we suggest for using the model

```python

from transformers import pipeline

PRETRAINED = "raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed"

ner = pipeline(task="ner",model=PRETRAINED, tokenizer=PRETRAINED)

ner("Your text", aggregation_strategy="first")

```

And here is how to merge consecutive tokens in the output ⭐️

```python

import pandas as pd
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(PRETRAINED)


def clean_output(outputs):
    results = []
    current = []
    last_idx = 0
    # make to sub group by position
    for output in outputs:
        if output["index"]-1==last_idx:
            current.append(output)
        else:
            results.append(current)
            current = [output, ]
        last_idx = output["index"]
    if len(current)>0:
        results.append(current)

    # from tokens to string
    strings = []
    for c in results:
        tokens = []
        starts = []
        ends = []
        for o in c:
            tokens.append(o['word'])
            starts.append(o['start'])
            ends.append(o['end'])

        new_str = tokenizer.convert_tokens_to_string(tokens)
        if new_str!='':
            strings.append(dict(
                word=new_str,
                start = min(starts),
                end = max(ends),
                entity = c[0]['entity']
            ))
    return strings


def entity_table(pipeline, **pipeline_kw):
    if "aggregation_strategy" not in pipeline_kw:
        pipeline_kw["aggregation_strategy"] = "first"
    def create_table(text):
        return pd.DataFrame(
            clean_output(
                pipeline(text, **pipeline_kw)
            )
        )
    return create_table


# will return a dataframe
entity_table(ner)(YOUR_VERY_CONTENTFUL_TEXT)

```

> Check out our NER models for:

* [gene and gene products](/raynardj/ner-gene-dna-rna-jnlpba-pubmed)

* [chemical substance](/raynardj/ner-chemical-bionlp-bc5cdr-pubmed)

* [disease](/raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed) |

sschet/scibert_scivocab_uncased-finetuned-ner | sschet | 2023-02-01T03:44:18Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"Named Entity Recognition",

"SciBERT",

"Adverse Effect",

"Drug",

"Medical",

"en",

"dataset:ade_corpus_v2",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T02:41:03Z | ---

language:

- en

tags:

- Named Entity Recognition

- SciBERT

- Adverse Effect

- Drug

- Medical

datasets:

- ade_corpus_v2

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

widget:

- text: "Abortion, miscarriage or uterine hemorrhage associated with misoprostol (Cytotec), a labor-inducing drug."
  example_title: "Abortion, miscarriage, ..."
- text: "Addiction to many sedatives and analgesics, such as diazepam, morphine, etc."
  example_title: "Addiction to many..."
- text: "Birth defects associated with thalidomide"
  example_title: "Birth defects associated..."
- text: "Bleeding of the intestine associated with aspirin therapy"
  example_title: "Bleeding of the intestine..."
- text: "Cardiovascular disease associated with COX-2 inhibitors (i.e. Vioxx)"
  example_title: "Cardiovascular disease..."

---

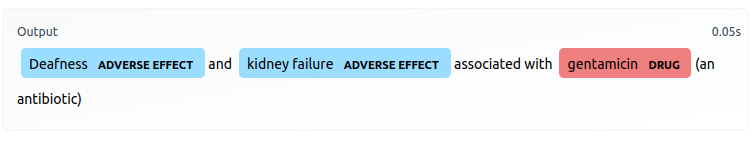

This is a SciBERT-based model fine-tuned to perform Named Entity Recognition for drug names and adverse drug effects.

This model classifies input tokens into one of five classes:

- `B-DRUG`: beginning of a drug entity

- `I-DRUG`: within a drug entity

- `B-EFFECT`: beginning of an AE entity

- `I-EFFECT`: within an AE entity

- `O`: outside either of the above entities
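To illustrate how the five tags above combine into entities, here is a small sketch that groups per-token BIO tags into labeled spans. The function name is hypothetical and the tags in the example are hand-written for illustration; they are not actual model output.

```python
def bio_to_spans(tokens, tags):
    """Group tokens into (label, text) spans according to their BIO tags."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        prefix, _, label = tag.partition("-")
        if prefix == "B" or (prefix == "I" and label != current_type):
            # A new entity starts: flush the previous one, if any.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = label, [token]
        elif prefix == "I":
            current_tokens.append(token)
        else:  # "O": outside any entity
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_tokens:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = ["bleeding", "of", "the", "intestine", "associated", "with", "aspirin", "therapy"]
tags = ["B-EFFECT", "I-EFFECT", "I-EFFECT", "I-EFFECT", "O", "O", "B-DRUG", "O"]
spans = bio_to_spans(tokens, tags)
print(spans)
```

An `I-` tag that does not continue an open entity of the same type is treated here as the start of a new span, which is one common convention among several.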

To get started using this model for inference, simply set up an NER `pipeline` like below:

```python

from transformers import (AutoModelForTokenClassification,
                          AutoTokenizer,
                          pipeline,
                          )

model_checkpoint = "jsylee/scibert_scivocab_uncased-finetuned-ner"

model = AutoModelForTokenClassification.from_pretrained(
    model_checkpoint, num_labels=5,
    id2label={0: 'O', 1: 'B-DRUG', 2: 'I-DRUG', 3: 'B-EFFECT', 4: 'I-EFFECT'}
)

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

model_pipeline = pipeline(task="ner", model=model, tokenizer=tokenizer)

print(model_pipeline("Abortion, miscarriage or uterine hemorrhage associated with misoprostol (Cytotec), a labor-inducing drug."))

```

SciBERT: https://huggingface.co/allenai/scibert_scivocab_uncased

Dataset: https://huggingface.co/datasets/ade_corpus_v2

|

sschet/bert-large-uncased_med-ner | sschet | 2023-02-01T03:42:17Z | 30 | 3 | transformers | [

"transformers",

"pytorch",

"jax",

"bert",

"token-classification",

"en",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T02:02:56Z | ---

language:

- en

datasets:

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

---

A Named Entity Recognition model for medication entities (`medication name`, `dosage`, `duration`, `frequency`, `reason`).

The model has been trained on the i2b2 (now n2c2) dataset for the 2009 - Medication task. Please visit the n2c2 site to request access to the dataset. |

sschet/bern2-ner | sschet | 2023-02-01T03:41:57Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"roberta",

"en",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"endpoints_compatible",

"region:us"

]

| null | 2023-02-01T01:41:08Z | ---

language:

- en

datasets:

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

---

|

sschet/ner-gene-dna-rna-jnlpba-pubmed | sschet | 2023-02-01T03:41:37Z | 9 | 4 | transformers | [

"transformers",

"pytorch",

"roberta",

"token-classification",

"ner",

"gene",

"protein",

"rna",

"bioinfomatics",

"en",

"dataset:jnlpba",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T01:31:59Z | ---

language:

- en

tags:

- ner

- gene

- protein

- rna

- bioinfomatics

license: apache-2.0

datasets:

- jnlpba

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

widget:

- text: "It consists of 25 exons encoding a 1,278-amino acid glycoprotein that is composed of 13 transmembrane domains"

---

# NER to find Gene & Gene products

> The model was trained on the jnlpba dataset and builds on this [pubmed-pretrained roberta model](/raynardj/roberta-pubmed).

All the labels (the possible token classes):

```json

{"label2id": {

"DNA": 2,

"O": 0,

"RNA": 5,

"cell_line": 4,

"cell_type": 3,

"protein": 1

}

}

```

Note that we removed the 'B-', 'I-', etc. prefixes from the data labels.🗡

## This is the template we suggest for using the model

```python

from transformers import pipeline

PRETRAINED = "raynardj/ner-gene-dna-rna-jnlpba-pubmed"

ner = pipeline(task="ner",model=PRETRAINED, tokenizer=PRETRAINED)

ner("Your text", aggregation_strategy="first")

```

And here is how to merge consecutive tokens in the output ⭐️

```python

import pandas as pd
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(PRETRAINED)


def clean_output(outputs):
    results = []
    current = []
    last_idx = 0
    # make to sub group by position
    for output in outputs:
        if output["index"]-1==last_idx:
            current.append(output)
        else:
            results.append(current)
            current = [output, ]
        last_idx = output["index"]
    if len(current)>0:
        results.append(current)

    # from tokens to string
    strings = []
    for c in results:
        tokens = []
        starts = []
        ends = []
        for o in c:
            tokens.append(o['word'])
            starts.append(o['start'])
            ends.append(o['end'])

        new_str = tokenizer.convert_tokens_to_string(tokens)
        if new_str!='':
            strings.append(dict(
                word=new_str,
                start = min(starts),
                end = max(ends),
                entity = c[0]['entity']
            ))
    return strings


def entity_table(pipeline, **pipeline_kw):
    if "aggregation_strategy" not in pipeline_kw:
        pipeline_kw["aggregation_strategy"] = "first"
    def create_table(text):
        return pd.DataFrame(
            clean_output(
                pipeline(text, **pipeline_kw)
            )
        )
    return create_table


# will return a dataframe
entity_table(ner)(YOUR_VERY_CONTENTFUL_TEXT)

```

> Check out our NER models for:

* [gene and gene products](/raynardj/ner-gene-dna-rna-jnlpba-pubmed)

* [chemical substance](/raynardj/ner-chemical-bionlp-bc5cdr-pubmed)

* [disease](/raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed) |

sschet/biobert_genetic_ner | sschet | 2023-02-01T03:40:52Z | 8 | 2 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"NER",

"Biomedical",

"Genetics",

"en",

"dataset:JNLPBA",

"dataset:BC2GM",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T01:09:47Z | ---

language: "en"

license: apache-2.0

tags:

- token-classification

- NER

- Biomedical

- Genetics

datasets:

- JNLPBA

- BC2GM

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

---

BioBERT model fine-tuned on the NER task with the JNLPBA and BC2GM corpora for genetic class entities.

This was fine-tuned in order to use it in a BioNER/BioNEN system which is available at: https://github.com/librairy/bio-ner |

sschet/biobert_diseases_ner | sschet | 2023-02-01T03:40:32Z | 4 | 1 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"NER",

"Biomedical",

"Diseases",

"en",

"dataset:BC5CDR-diseases",

"dataset:ncbi_disease",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T00:59:17Z | ---

language: "en"

license: apache-2.0

tags:

- token-classification

- NER

- Biomedical

- Diseases

datasets:

- BC5CDR-diseases

- ncbi_disease

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

---

BioBERT model fine-tuned on the NER task with the BC5CDR-diseases and NCBI-diseases corpora.

This was fine-tuned in order to use it in a BioNER/BioNEN system which is available at: https://github.com/librairy/bio-ner |

sschet/biobert_chemical_ner | sschet | 2023-02-01T03:40:10Z | 10 | 5 | transformers | [

"transformers",

"pytorch",

"tf",

"bert",

"token-classification",

"NER",

"Biomedical",

"Chemicals",

"en",

"dataset:BC5CDR-chemicals",

"dataset:BC4CHEMD",

"dataset:tner/bc5cdr",

"dataset:commanderstrife/jnlpba",

"dataset:bc2gm_corpus",

"dataset:drAbreu/bc4chemd_ner",

"dataset:linnaeus",

"dataset:chintagunta85/ncbi_disease",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-02-01T00:37:59Z | ---

language: en

tags:

- token-classification

- NER

- Biomedical

- Chemicals

datasets:

- BC5CDR-chemicals

- BC4CHEMD

- tner/bc5cdr

- commanderstrife/jnlpba

- bc2gm_corpus

- drAbreu/bc4chemd_ner

- linnaeus

- chintagunta85/ncbi_disease

license: apache-2.0

---

BioBERT model fine-tuned on the NER task with the BC5CDR-chemicals and BC4CHEMD corpora.

This was fine-tuned in order to use it in a BioNER/BioNEN system which is available at: https://github.com/librairy/bio-ner |

RinTrin/climate-tech-0.8605 | RinTrin | 2023-02-01T03:26:24Z | 1 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2023-02-01T03:26:16Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 250 with parameters:

```

{'batch_size': 32, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`
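As an illustration of what this loss measures, the sketch below computes the mean squared error between the cosine similarity of each embedding pair and its gold similarity label. It assumes the default mean-squared-error objective, uses plain Python lists instead of tensors, and the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between predicted cosine similarity and gold labels."""
    errors = [(cosine(u, v) - y) ** 2 for (u, v), y in zip(pairs, labels)]
    return sum(errors) / len(errors)


# Toy embedding pairs: one identical pair, one orthogonal pair.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
perfect_loss = cosine_similarity_loss(pairs, [1.0, 0.0])
wrong_loss = cosine_similarity_loss(pairs, [0.0, 0.0])
print(perfect_loss, wrong_loss)
```

The loss is zero when every pair's cosine similarity equals its label and grows as the predicted similarities drift away from the labels.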

Parameters of the fit()-Method:

```

{

"epochs": 2,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 500,

"warmup_steps": 50,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Normalize()

)

```
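The `Pooling` (mean over tokens) and `Normalize` stages listed above can be sketched in a few lines. This is a simplified illustration on plain Python lists with hypothetical function names; the real modules operate on batched tensors.

```python
import math


def mean_pool(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0 (padding)."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for i, x in enumerate(vec):
                total[i] += x
    count = max(count, 1)  # avoid division by zero for an all-padding input
    return [t / count for t in total]


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


# Two real tokens and one padding token (toy 2-dimensional embeddings).
token_embeddings = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
attention_mask = [1, 1, 0]
pooled = mean_pool(token_embeddings, attention_mask)
sentence_vec = l2_normalize(pooled)
print(pooled, sentence_vec)
```

Because the output is unit length, the dot product of two such sentence vectors equals their cosine similarity.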

## Citing & Authors

<!--- Describe where people can find more information --> |

tehqikness/manda2 | tehqikness | 2023-02-01T02:59:42Z | 2 | 1 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-02-01T02:56:23Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### manda2 Dreambooth model trained by tehqikness with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

swl-models/lora-pad-colossus-omega | swl-models | 2023-02-01T02:44:47Z | 0 | 1 | null | [

"license:creativeml-openrail-m",

"region:us"

]

| null | 2023-02-01T02:39:02Z | ---

license: creativeml-openrail-m

---

|

Someman/bird-danphe | Someman | 2023-02-01T02:38:23Z | 0 | 0 | diffusers | [

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"wildcard",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-01-04T16:50:46Z | ---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- wildcard

widget:

- text: a big dog with danphe face

---

# DreamBooth model for the bird concept trained by Someman on the Someman/danphe dataset.

This is a Stable Diffusion model fine-tuned on the bird concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of bird danphe**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `danphe` images for the wildcard theme.

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('Someman/bird-danphe')

# Pass a prompt; the instance prompt from this card is used here
image = pipeline('a photo of bird danphe').images[0]

image

```

|

Someman/sitar-psych | Someman | 2023-02-01T02:38:06Z | 1 | 0 | diffusers | [

"diffusers",

"pytorch",

"stable-diffusion",

"text-to-image",

"diffusion-models-class",

"dreambooth-hackathon",

"wildcard",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-01-05T06:04:10Z | ---

license: creativeml-openrail-m

tags:

- pytorch

- diffusers

- stable-diffusion

- text-to-image

- diffusion-models-class

- dreambooth-hackathon

- wildcard

widget:

- text: Paint a sitar surrounded by objects and symbols that reflect the cultural
    and artistic influences of Salvador Dali

---

# DreamBooth model for the instrument concept trained by Someman on the Someman/Sitar dataset.

This is a Stable Diffusion model fine-tuned on the instrument concept with DreamBooth. It can be used by modifying the `instance_prompt`: **a photo of sitar**

This model was created as part of the DreamBooth Hackathon 🔥. Visit the [organisation page](https://huggingface.co/dreambooth-hackathon) for instructions on how to take part!

## Description

This is a Stable Diffusion model fine-tuned on `sitar` images for the wildcard theme.

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('Someman/sitar-psych')

# Pass a prompt; the instance prompt from this card is used here
image = pipeline('a photo of sitar').images[0]

image

```

|

muhtasham/small-mlm-glue-rte-custom-tokenizer-expand-vocab | muhtasham | 2023-02-01T02:07:34Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-01T01:42:44Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: small-mlm-glue-rte-custom-tokenizer-expand-vocab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# small-mlm-glue-rte-custom-tokenizer-expand-vocab

This model is a fine-tuned version of [google/bert_uncased_L-4_H-512_A-8](https://huggingface.co/google/bert_uncased_L-4_H-512_A-8) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.9781

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 5.366 | 1.6 | 500 | 4.5434 |

| 4.5071 | 3.21 | 1000 | 4.1161 |

| 4.0413 | 4.81 | 1500 | 3.7831 |

| 3.6922 | 6.41 | 2000 | 3.4965 |

| 3.4951 | 8.01 | 2500 | 3.3765 |

| 3.2834 | 9.62 | 3000 | 3.1673 |

| 3.1036 | 11.22 | 3500 | 3.1155 |

| 2.9958 | 12.82 | 4000 | 3.0351 |

| 2.8456 | 14.42 | 4500 | 2.9650 |

| 2.6968 | 16.03 | 5000 | 2.9781 |
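Masked-language-model losses like those in the table are often easier to read as perplexities. The sketch below converts a loss to perplexity, assuming the reported validation loss is a mean cross-entropy in nats (the card does not state this explicitly); the function name is hypothetical.

```python
import math


def perplexity(mean_cross_entropy):
    """Perplexity is the exponential of the mean cross-entropy (in nats)."""
    return math.exp(mean_cross_entropy)


# Validation losses taken from the first and last rows of the table.
first_ppl = perplexity(4.5434)
final_ppl = perplexity(2.9781)
print(round(first_ppl, 2), round(final_ppl, 2))
```

Under that assumption, the drop in validation loss over training corresponds to a several-fold reduction in perplexity.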

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

muhtasham/small-mlm-glue-qqp-from-scratch-custom-tokenizer-expand-vocab | muhtasham | 2023-02-01T01:52:33Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-01T01:19:03Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: small-mlm-glue-qqp-from-scratch-custom-tokenizer-expand-vocab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# small-mlm-glue-qqp-from-scratch-custom-tokenizer-expand-vocab

This model is a fine-tuned version of [google/bert_uncased_L-4_H-512_A-8](https://huggingface.co/google/bert_uncased_L-4_H-512_A-8) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.2614

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 7.3866 | 0.4 | 500 | 6.2514 |

| 6.1051 | 0.8 | 1000 | 5.9205 |

| 5.7552 | 1.2 | 1500 | 5.7346 |

| 5.5838 | 1.6 | 2000 | 5.6074 |

| 5.5191 | 2.0 | 2500 | 5.5317 |

| 5.4095 | 2.4 | 3000 | 5.4497 |

| 5.3784 | 2.8 | 3500 | 5.4039 |

| 5.2816 | 3.2 | 4000 | 5.3645 |

| 5.2523 | 3.6 | 4500 | 5.3406 |

| 5.2021 | 4.0 | 5000 | 5.2614 |
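
The Epoch and Step columns together hint at the size of the training set. The sketch below is a rough estimate only: it assumes a single device and no gradient accumulation (neither is listed in the hyperparameters), so that each step consumes exactly `train_batch_size` examples. The helper name `steps_per_epoch` is illustrative.

```python
def steps_per_epoch(step: int, epoch: float) -> float:
    """Optimizer steps needed to complete one epoch, from one logged row."""
    return step / epoch

# Last row of the table above: step 5000 was logged at epoch 4.0.
per_epoch = steps_per_epoch(5000, 4.0)

# Assumption: one device, no gradient accumulation, train_batch_size = 32.
approx_examples = per_epoch * 32

print(per_epoch, approx_examples)
```

Because the last batch of an epoch may be partial and the Epoch column is rounded, the figure is an approximation rather than an exact count.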

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

swl-models/toooajk-yagurumagiku-v3-dreambooth | swl-models | 2023-02-01T01:47:41Z | 2,445 | 0 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-02-01T01:26:25Z | ---

license: creativeml-openrail-m

language:

- en

library_name: diffusers

pipeline_tag: text-to-image

tags:

- stable-diffusion

- stable-diffusion-diffusers

---

|

ongkn/smtn_ppo-Huggy | ongkn | 2023-02-01T01:45:38Z | 4 | 0 | ml-agents | [

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

]

| reinforcement-learning | 2023-02-01T01:45:31Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: ongkn/smtn_ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

swl-models/toooajk-yagurumagiku-v3-hypernet | swl-models | 2023-02-01T01:34:44Z | 0 | 0 | null | [

"license:creativeml-openrail-m",

"region:us"

]

| null | 2023-02-01T01:27:00Z | ---

license: creativeml-openrail-m

---

|

swl-models/xiaolxl-guofeng-v3 | swl-models | 2023-02-01T01:27:23Z | 5,596 | 14 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-01-31T15:46:10Z | ---

license: creativeml-openrail-m

language:

- en

library_name: diffusers

pipeline_tag: text-to-image

tags:

- stable-diffusion

- stable-diffusion-diffusers

duplicated_from: xiaolxl/GuoFeng3

---

# Introduction - GuoFeng3

Welcome to the GuoFeng3 model (TIP: the name of this version has been slightly adjusted). This is a model in an ornate ancient Chinese style, which can also be described as a model for ancient-style game characters, with a 2.5D look. The third generation is much easier to get started with and adds scene elements and male ancient-style characters. In addition, so that the model adapts better to other tags, elements of other styles have been added. This generation repairs broken faces and hands to a certain extent, and the size of the training material has been raised to 1024 on the longest side.

# Installation

1. Put the GuoFeng3.ckpt model into the SD directory.

2. This model comes with a VAE. If your program does not support it, remember to select any VAE file; otherwise the images will come out gray.

# How to use

**TIP: After a day of testing, we found that many characters may come out with red eyes; try adding red eyes to the negative words. If the colors are too vivid, try lowering CFG.**

Simple: the third generation is much easier to get started with.

- **Keywords:**

```

best quality, masterpiece, highres, 1girl,china dress,Beautiful face

```

- **Negative words:**

```

NSFW, lowres,bad anatomy,bad hands, text, error, missing fingers,extra digit, fewer digits, cropped, worstquality, low quality, normal quality,jpegartifacts,signature, watermark, username,blurry,bad feet

```

---

Advanced: if you want the picture to be as good as possible, try the following configuration:

- Sampling steps: **50**

- Sampler: **DPM++ SDE Karras or DDIM**

- The size of the picture should be at least **1024**

- CFG: **4-6**

- **Better negative words (thanks to the group members who provided them):**

```

(((simple background))),monochrome ,lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, lowres, bad anatomy, bad hands, text, error, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, ugly,pregnant,vore,duplicate,morbid,mut ilated,tran nsexual, hermaphrodite,long neck,mutated hands,poorly drawn hands,poorly drawn face,mutation,deformed,blurry,bad anatomy,bad proportions,malformed limbs,extra limbs,cloned face,disfigured,gross proportions, (((missing arms))),(((missing legs))), (((extra arms))),(((extra legs))),pubic hair, plump,bad legs,error legs,username,blurry,bad feet

```

- **If you want richer elements, you can add the following keywords:**

```

Beautiful face,

hair ornament, solo,looking at viewer,smile,closed mouth,lips

china dress,dress,hair ornament, necklace, jewelry, long hair, earrings, chinese clothes,

architecture,east asian architecture,building,outdoors,rooftop,city,cityscape

```

# Examples

(You can find the original images in the file list and load them into the WebUI to view the keywords and other information.)

<img src=https://huggingface.co/xiaolxl/GuoFeng3/resolve/main/examples/e1.png>

<img src=https://huggingface.co/xiaolxl/GuoFeng3/resolve/main/examples/e2.png>

<img src=https://huggingface.co/xiaolxl/GuoFeng3/resolve/main/examples/e3.png>

<img src=https://huggingface.co/xiaolxl/GuoFeng3/resolve/main/examples/e4.png> |

muhtasham/small-mlm-glue-qnli-from-scratch-custom-tokenizer-expand-vocab | muhtasham | 2023-02-01T01:16:52Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-01T00:38:03Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: small-mlm-glue-qnli-from-scratch-custom-tokenizer-expand-vocab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# small-mlm-glue-qnli-from-scratch-custom-tokenizer-expand-vocab

This model is a fine-tuned version of [google/bert_uncased_L-4_H-512_A-8](https://huggingface.co/google/bert_uncased_L-4_H-512_A-8) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 6.0244

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 7.6626 | 0.4 | 500 | 6.7278 |

| 6.5829 | 0.8 | 1000 | 6.5388 |

| 6.4637 | 1.2 | 1500 | 6.3776 |

| 6.3446 | 1.6 | 2000 | 6.2966 |

| 6.2664 | 2.0 | 2500 | 6.2288 |

| 6.2043 | 2.4 | 3000 | 6.1839 |

| 6.1615 | 2.8 | 3500 | 6.1349 |

| 6.1085 | 3.2 | 4000 | 6.0839 |

| 6.0625 | 3.6 | 4500 | 6.0543 |

| 6.0432 | 4.0 | 5000 | 6.0244 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

PeterDerLustige/dqn-SpaceInvadersNoFrameskip-v4 | PeterDerLustige | 2023-02-01T01:09:05Z | 3 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-02-01T01:08:26Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: DQN
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: SpaceInvadersNoFrameskip-v4
      type: SpaceInvadersNoFrameskip-v4
    metrics:
    - type: mean_reward
      value: 640.50 +/- 283.52
      name: mean_reward
      verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga PeterDerLustige -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga PeterDerLustige -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga PeterDerLustige

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 10000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
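
The `exploration_fraction` and `exploration_final_eps` entries describe a linearly annealed epsilon-greedy schedule. The sketch below shows how those two values interact with `n_timesteps`. The starting value of 1.0 is not listed in the card and is assumed here, and the function name `epsilon_at` is illustrative rather than part of any library.

```python
def epsilon_at(step: int,
               total_timesteps: int = 10_000_000,
               exploration_fraction: float = 0.1,
               initial_eps: float = 1.0,  # assumption: not listed in the card
               final_eps: float = 0.01) -> float:
    """Linearly anneal epsilon over the first `exploration_fraction` of training."""
    progress = step / (exploration_fraction * total_timesteps)
    if progress >= 1.0:
        # Annealing is finished; epsilon stays at its final value.
        return final_eps
    return initial_eps + progress * (final_eps - initial_eps)

for step in (0, 500_000, 1_000_000, 5_000_000):
    print(step, round(epsilon_at(step), 4))
```

Under these assumptions the agent stops annealing after the first million steps and acts almost greedily for the remaining 90% of training.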

|

muhtasham/small-mlm-glue-qnli-custom-tokenizer-expand-vocab | muhtasham | 2023-02-01T01:05:35Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-01T00:23:19Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: small-mlm-glue-qnli-custom-tokenizer-expand-vocab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# small-mlm-glue-qnli-custom-tokenizer-expand-vocab

This model is a fine-tuned version of [google/bert_uncased_L-4_H-512_A-8](https://huggingface.co/google/bert_uncased_L-4_H-512_A-8) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.0716

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 5.5339 | 0.4 | 500 | 4.7224 |

| 4.6477 | 0.8 | 1000 | 4.3242 |

| 4.3146 | 1.2 | 1500 | 3.9988 |

| 4.0046 | 1.6 | 2000 | 3.7777 |

| 3.7942 | 2.0 | 2500 | 3.5976 |

| 3.5684 | 2.4 | 3000 | 3.4426 |

| 3.4406 | 2.8 | 3500 | 3.3275 |

| 3.332 | 3.2 | 4000 | 3.2361 |

| 3.1941 | 3.6 | 4500 | 3.1616 |

| 3.0981 | 4.0 | 5000 | 3.0716 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

Botnoi/wav2vec2-xls-r-300m-th-v7_0 | Botnoi | 2023-02-01T00:57:16Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2023-01-31T10:01:09Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:
- name: wav2vec2-xls-r-300m-th-v7_0
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-xls-r-300m-th-v7_0

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4099

- Wer: 0.9988

- Cer: 0.7861

- Clean Cer: 0.7617

- Learning Rate: 0.0000
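
For readers unfamiliar with the Wer and Cer figures, both are edit distances normalised by the reference length, computed over words and characters respectively. The sketch below is a generic illustration, not the evaluation code behind this card. The function names are hypothetical, and splitting on whitespace is an assumption that may not match how words were segmented here.

```python
def edit_distance(ref: list, hyp: list) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edits divided by reference word count."""
    ref = reference.split()
    return edit_distance(ref, hypothesis.split()) / len(ref)

def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edits divided by reference length."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)

print(wer("the cat sat", "the bat sat"), cer("abc", "abd"))
```

Because one wrong character makes the whole word wrong, WER is typically much higher than CER on the same output, which is the pattern the figures above show.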

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10

- mixed_precision_training: Native AMP
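
The batch-size and scheduler entries above can be tied together with a short sketch. It assumes a single device, and it assumes the linear scheduler ramps up from zero during warm-up and then decays linearly to zero. The `total_steps` value is a rough estimate, not a figure from the card, and the function name is illustrative.

```python
def linear_warmup_decay(step: int,
                        peak_lr: float = 5e-05,
                        warmup_steps: int = 500,
                        total_steps: int = 12_450) -> float:  # total_steps is an estimate
    """Learning rate under linear warm-up followed by linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)

# train_batch_size * gradient_accumulation_steps, assuming one device.
effective_batch = 16 * 4

for step in (0, 250, 500, 6_000, 12_450):
    print(step, f"{linear_warmup_decay(step):.2e}")
print("effective batch size:", effective_batch)
```

Every value this schedule produces is at most 5e-05, so a learning rate logged with four decimal places would mostly print as 0.0000. That is probably a display-precision effect rather than a sign that the rate was actually zero.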

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer | Clean Cer | Learning Rate |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:---------:|:------:|

| 8.5484 | 0.4 | 500 | 3.6234 | 1.0 | 1.0 | 1.0 | 0.0000 |

| 3.2275 | 0.8 | 1000 | 2.2960 | 0.9998 | 0.7081 | 0.6540 | 0.0000 |

| 0.9955 | 1.2 | 1500 | 1.2224 | 0.9549 | 0.4327 | 0.3756 | 0.0000 |

| 0.66 | 1.61 | 2000 | 0.9559 | 0.9232 | 0.3651 | 0.3040 | 0.0000 |

| 0.546 | 2.01 | 2500 | 0.9207 | 0.9481 | 0.3585 | 0.2826 | 0.0000 |

| 0.4459 | 2.41 | 3000 | 0.7701 | 0.8693 | 0.2940 | 0.2383 | 0.0000 |

| 0.4041 | 2.81 | 3500 | 0.7756 | 0.8224 | 0.2949 | 0.2634 | 0.0000 |

| 0.3637 | 3.21 | 4000 | 0.6015 | 0.7015 | 0.2064 | 0.1807 | 0.0000 |

| 0.334 | 3.61 | 4500 | 0.5615 | 0.6675 | 0.1907 | 0.1638 | 0.0000 |

| 0.3283 | 4.02 | 5000 | 0.6205 | 0.7073 | 0.2092 | 0.1803 | 0.0000 |

| 0.3762 | 4.42 | 5500 | 0.7517 | 0.6366 | 0.1778 | 0.1600 | 0.0000 |

| 0.4954 | 4.82 | 6000 | 0.9374 | 0.7073 | 0.2023 | 0.1735 | 0.0000 |

| 0.5568 | 5.22 | 6500 | 0.8859 | 0.7027 | 0.1982 | 0.1666 | 0.0000 |

| 0.6756 | 5.62 | 7000 | 1.0252 | 0.6802 | 0.1920 | 0.1628 | 0.0000 |

| 0.7752 | 6.02 | 7500 | 1.1259 | 0.7657 | 0.2309 | 0.1908 | 0.0000 |

| 0.8305 | 6.43 | 8000 | 1.3857 | 0.9029 | 0.3252 | 0.2668 | 0.0000 |

| 1.7385 | 6.83 | 8500 | 3.2320 | 0.9998 | 0.9234 | 0.9114 | 0.0000 |

| 2.7839 | 7.23 | 9000 | 3.3238 | 0.9999 | 0.9400 | 0.9306 | 0.0000 |

| 2.8307 | 7.63 | 9500 | 3.2678 | 0.9998 | 0.9167 | 0.9053 | 0.0000 |

| 2.7672 | 8.03 | 10000 | 3.2435 | 0.9995 | 0.8992 | 0.8867 | 0.0000 |

| 2.7426 | 8.43 | 10500 | 3.2396 | 0.9995 | 0.8720 | 0.8561 | 0.0000 |

| 2.7608 | 8.84 | 11000 | 3.2689 | 0.9993 | 0.8399 | 0.8202 | 0.0000 |

| 2.8195 | 9.24 | 11500 | 3.3283 | 0.9989 | 0.8084 | 0.7865 | 0.0000 |

| 2.9044 | 9.64 | 12000 | 3.4099 | 0.9988 | 0.7861 | 0.7617 | 0.0000 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

ongkn/smtn_test-deeprl-course | ongkn | 2023-02-01T00:46:01Z | 2 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-02-01T00:45:36Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 242.84 +/- 19.19
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Jorgeleon/jleonart | Jorgeleon | 2023-02-01T00:45:48Z | 5 | 0 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-02-01T00:34:15Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### jleonart Dreambooth model trained by Jorgeleon with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

swl-models/wind-v2 | swl-models | 2023-02-01T00:43:50Z | 31 | 0 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"arxiv:1910.09700",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-01-31T14:31:44Z | ---

license: creativeml-openrail-m

language:

- en

library_name: diffusers

pipeline_tag: text-to-image

tags:

- stable-diffusion

- stable-diffusion-diffusers

---

# Model Card for Wind v2

<!-- Provide a quick summary of what the model is/does. -->

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** 9

- **Shared by:** SWL Models

- **Model type:** Stable Diffusion Checkpoint

- **Language(s) (NLP):** English

- **License:** CreativeML Open RAIL-M

## Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

## Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

## Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

## Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

# Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

## Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

## Training Procedure [optional]

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

### Preprocessing

[More Information Needed]

### Speeds, Sizes, Times

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

## Testing Data, Factors & Metrics

### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

## Results

[More Information Needed]

### Summary

# Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

# Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

# Technical Specifications [optional]

## Model Architecture and Objective

[More Information Needed]

## Compute Infrastructure

[More Information Needed]

### Hardware

[More Information Needed]

### Software

[More Information Needed]

# Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

# Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

# More Information [optional]

[More Information Needed]

# Model Card Authors [optional]

[More Information Needed]

# Model Card Contact

[More Information Needed]

|

muhtasham/tiny-mlm-glue-wnli-custom-tokenizer-expand-vocab | muhtasham | 2023-02-01T00:41:23Z | 3 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-01T00:27:48Z | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: tiny-mlm-glue-wnli-custom-tokenizer-expand-vocab
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tiny-mlm-glue-wnli-custom-tokenizer-expand-vocab

This model is a fine-tuned version of [google/bert_uncased_L-2_H-128_A-2](https://huggingface.co/google/bert_uncased_L-2_H-128_A-2) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6887

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 4.719 | 6.25 | 500 | 3.7847 |

| 4.0062 | 12.5 | 1000 | 3.4790 |

| 3.5607 | 18.75 | 1500 | 3.3417 |

| 3.3 | 25.0 | 2000 | 2.9815 |

| 3.0174 | 31.25 | 2500 | 2.7710 |

| 2.8668 | 37.5 | 3000 | 2.5395 |

| 2.6312 | 43.75 | 3500 | 2.4257 |

| 2.4138 | 50.0 | 4000 | 1.9615 |

| 2.2602 | 56.25 | 4500 | 2.1444 |

| 2.1614 | 62.5 | 5000 | 1.6887 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

RinTrin/climate-tech-test | RinTrin | 2023-02-01T00:40:37Z | 2 | 0 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"en",

"dataset:s2orc",

"dataset:flax-sentence-embeddings/stackexchange_xml",

"dataset:ms_marco",

"dataset:gooaq",

"dataset:yahoo_answers_topics",

"dataset:code_search_net",

"dataset:search_qa",

"dataset:eli5",

"dataset:snli",

"dataset:multi_nli",

"dataset:wikihow",

"dataset:natural_questions",

"dataset:trivia_qa",

"dataset:embedding-data/sentence-compression",

"dataset:embedding-data/flickr30k-captions",

"dataset:embedding-data/altlex",

"dataset:embedding-data/simple-wiki",

"dataset:embedding-data/QQP",

"dataset:embedding-data/SPECTER",

"dataset:embedding-data/PAQ_pairs",

"dataset:embedding-data/WikiAnswers",

"arxiv:1904.06472",

"arxiv:2102.07033",

"arxiv:2104.08727",

"arxiv:1704.05179",

"arxiv:1810.09305",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2023-02-01T00:40:29Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

language: en

license: apache-2.0

datasets:

- s2orc

- flax-sentence-embeddings/stackexchange_xml

- ms_marco

- gooaq

- yahoo_answers_topics

- code_search_net

- search_qa

- eli5

- snli

- multi_nli

- wikihow

- natural_questions

- trivia_qa

- embedding-data/sentence-compression

- embedding-data/flickr30k-captions

- embedding-data/altlex

- embedding-data/simple-wiki

- embedding-data/QQP

- embedding-data/SPECTER

- embedding-data/PAQ_pairs

- embedding-data/WikiAnswers

---

# all-MiniLM-L6-v2

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 384-dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

embeddings = model.encode(sentences)

print(embeddings)

```
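
Once sentences are embedded, semantic search reduces to ranking vectors by cosine similarity. The sketch below uses made-up 3-dimensional vectors in place of real 384-dimensional embeddings, so it runs without downloading the model. The names `query`, `corpus`, `doc_a` and `doc_b` are purely illustrative.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors standing in for real sentence embeddings.
query = [1.0, 0.0, 1.0]
corpus = {"doc_a": [0.9, 0.1, 1.1], "doc_b": [-1.0, 0.5, 0.0]}

# Rank corpus entries by similarity to the query and keep the best one.
best = max(corpus, key=lambda name: cosine_similarity(query, corpus[name]))
print(best)
```

With real embeddings, the same ranking step would be applied to the rows returned by `model.encode`.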

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

import torch.nn.functional as F

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')

model = AutoModel.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

# Normalize embeddings

sentence_embeddings = F.normalize(sentence_embeddings, p=2, dim=1)

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/all-MiniLM-L6-v2)

------

## Background

The project aims to train sentence embedding models on very large sentence-level datasets using a self-supervised contrastive learning objective. We used the pretrained [`nreimers/MiniLM-L6-H384-uncased`](https://huggingface.co/nreimers/MiniLM-L6-H384-uncased) model and fine-tuned it on a dataset of 1B sentence pairs. We use a contrastive learning objective: given a sentence from the pair, the model should predict which of a set of randomly sampled other sentences was actually paired with it in our dataset.

We developed this model during the [Community week using JAX/Flax for NLP & CV](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104), organized by Hugging Face, as part of the project [Train the Best Sentence Embedding Model Ever with 1B Training Pairs](https://discuss.huggingface.co/t/train-the-best-sentence-embedding-model-ever-with-1b-training-pairs/7354). We benefited from efficient hardware infrastructure to run the project: 7 TPUs v3-8, as well as input from members of Google's Flax, JAX, and Cloud teams on efficient deep learning frameworks.

## Intended uses

Our model is intended to be used as a sentence and short paragraph encoder. Given an input text, it outputs a vector that captures the semantic information. The sentence vector may be used for information retrieval, clustering, or sentence similarity tasks.

By default, input text longer than 256 word pieces is truncated.
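As a rough illustration of the sentence similarity and retrieval use cases, the sketch below ranks candidate vectors by cosine similarity to a query vector. It is dependency-free and uses toy 3-dimensional vectors in place of real model embeddings; the helper names `cosine_similarity` and `rank_by_similarity` are hypothetical and not part of any library.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rank_by_similarity(query_vec, corpus_vecs):
    """Return corpus indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, vec) for vec in corpus_vecs]
    return sorted(range(len(corpus_vecs)), key=lambda i: scores[i], reverse=True)

# Toy vectors standing in for real sentence embeddings (assumption).
query = [1.0, 0.0, 0.0]
corpus = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [-1.0, 0.0, 0.0]]
ranking = rank_by_similarity(query, corpus)
print(ranking)
```

When embeddings are already L2-normalized, as in the usage snippet earlier in this card, the cosine similarity reduces to a plain dot product.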

## Training procedure

### Pre-training

We use the pretrained [`nreimers/MiniLM-L6-H384-uncased`](https://huggingface.co/nreimers/MiniLM-L6-H384-uncased) model. Please refer to the model card for more detailed information about the pre-training procedure.

### Fine-tuning

We fine-tune the model using a contrastive objective. Formally, we compute the cosine similarity for each possible sentence pair in the batch. We then apply the cross-entropy loss by comparing with the true pairs.
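The objective described above can be sketched in plain Python as follows. This is a minimal illustration, not the code from `train_script.py`: the `scale` factor applied to the similarities and the one-directional form of the loss are assumptions, and the function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def in_batch_contrastive_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where positives[i] is the true pair of anchors[i].

    Every other positives[j] (j != i) in the batch acts as a negative.
    The scale value is an assumption made for this sketch.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        # Scaled cosine similarity of this anchor to every candidate in the batch.
        logits = [scale * cosine(anchor, p) for p in positives]
        # Numerically stable log-sum-exp over the candidates.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the true pair (index i) as the target class.
        losses.append(log_z - logits[i])
    return sum(losses) / len(losses)

# Toy batch: correctly aligned pairs should give a much lower loss
# than the same vectors paired in the wrong order.
anchors = [[1.0, 0.0], [0.0, 1.0]]
loss_aligned = in_batch_contrastive_loss(anchors, [[1.0, 0.0], [0.0, 1.0]])
loss_shuffled = in_batch_contrastive_loss(anchors, [[0.0, 1.0], [1.0, 0.0]])
```

Larger batches supply more in-batch negatives per anchor, which is one reason this kind of objective is usually trained with large batch sizes.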

#### Hyper parameters

We trained our model on a TPU v3-8. We trained the model for 100k steps using a batch size of 1024 (128 per TPU core). We used a learning rate warm-up of 500 steps. The sequence length was limited to 128 tokens. We used the AdamW optimizer with a 2e-5 learning rate. The full training script is available in this repository: `train_script.py`.
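The numbers above can be checked with a few lines of arithmetic, together with a sketch of a warm-up schedule. The linear shape of the warm-up and the constant rate afterwards are assumptions made for illustration; the actual schedule is defined in `train_script.py`.

```python
PEAK_LR = 2e-5         # learning rate stated above
WARMUP_STEPS = 500     # warm-up length stated above
TOTAL_STEPS = 100_000  # training steps stated above
GLOBAL_BATCH = 1024    # batch size stated above
TPU_CORES = 8          # a TPU v3-8 has 8 cores

def learning_rate(step):
    """Linear warm-up to PEAK_LR, then constant (schedule shape is an assumption)."""
    if step < WARMUP_STEPS:
        return PEAK_LR * (step + 1) / WARMUP_STEPS
    return PEAK_LR

# Per-core batch size and total number of training examples processed.
per_core_batch = GLOBAL_BATCH // TPU_CORES
examples_seen = GLOBAL_BATCH * TOTAL_STEPS
print(per_core_batch, examples_seen)
```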

#### Training data

We use the concatenation of multiple datasets to fine-tune our model. The total number of sentence pairs is above 1 billion. We sampled each dataset with a weighted probability; the configuration is detailed in the `data_config.json` file.
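Weighted sampling across datasets can be sketched as below. The dataset names and weights are hypothetical placeholders, not the values from `data_config.json`, and the helper `sample_dataset_names` is illustrative only.

```python
import random

# Hypothetical weights for illustration; the real values live in data_config.json.
dataset_weights = {"reddit": 5, "s2orc": 3, "wikianswers": 2}

def sample_dataset_names(weights, k, seed=0):
    """Draw k dataset names with probability proportional to their weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=k)

draws = sample_dataset_names(dataset_weights, k=10_000)
# Empirical share of each dataset among the draws.
share = {name: draws.count(name) / len(draws) for name in dataset_weights}
```

With these placeholder weights, roughly half of the draws come from the first dataset, since its weight is half of the total.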

| Dataset | Paper | Number of training tuples |

|--------------------------------------------------------|:----------------------------------------:|:--------------------------:|

| [Reddit comments (2015-2018)](https://github.com/PolyAI-LDN/conversational-datasets/tree/master/reddit) | [paper](https://arxiv.org/abs/1904.06472) | 726,484,430 |

| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Abstracts) | [paper](https://aclanthology.org/2020.acl-main.447/) | 116,288,806 |

| [WikiAnswers](https://github.com/afader/oqa#wikianswers-corpus) Duplicate question pairs | [paper](https://doi.org/10.1145/2623330.2623677) | 77,427,422 |

| [PAQ](https://github.com/facebookresearch/PAQ) (Question, Answer) pairs | [paper](https://arxiv.org/abs/2102.07033) | 64,371,441 |

| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Titles) | [paper](https://aclanthology.org/2020.acl-main.447/) | 52,603,982 |

| [S2ORC](https://github.com/allenai/s2orc) (Title, Abstract) | [paper](https://aclanthology.org/2020.acl-main.447/) | 41,769,185 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Body) pairs | - | 25,316,456 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title+Body, Answer) pairs | - | 21,396,559 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Answer) pairs | - | 21,396,559 |

| [MS MARCO](https://microsoft.github.io/msmarco/) triplets | [paper](https://doi.org/10.1145/3404835.3462804) | 9,144,553 |

| [GOOAQ: Open Question Answering with Diverse Answer Types](https://github.com/allenai/gooaq) | [paper](https://arxiv.org/pdf/2104.08727.pdf) | 3,012,496 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 1,198,260 |

| [Code Search](https://huggingface.co/datasets/code_search_net) | - | 1,151,414 |

| [COCO](https://cocodataset.org/#home) Image captions | [paper](https://link.springer.com/chapter/10.1007%2F978-3-319-10602-1_48) | 828,395 |

| [SPECTER](https://github.com/allenai/specter) citation triplets | [paper](https://doi.org/10.18653/v1/2020.acl-main.207) | 684,100 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Question, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 681,164 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Question) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 659,896 |

| [SearchQA](https://huggingface.co/datasets/search_qa) | [paper](https://arxiv.org/abs/1704.05179) | 582,261 |

| [Eli5](https://huggingface.co/datasets/eli5) | [paper](https://doi.org/10.18653/v1/p19-1346) | 325,475 |

| [Flickr 30k](https://shannon.cs.illinois.edu/DenotationGraph/) | [paper](https://transacl.org/ojs/index.php/tacl/article/view/229/33) | 317,695 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles) | - | 304,525 |

| AllNLI ([SNLI](https://nlp.stanford.edu/projects/snli/) and [MultiNLI](https://cims.nyu.edu/~sbowman/multinli/)) | [paper SNLI](https://doi.org/10.18653/v1/d15-1075), [paper MultiNLI](https://doi.org/10.18653/v1/n18-1101) | 277,230 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (bodies) | - | 250,519 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles+bodies) | - | 250,460 |

| [Sentence Compression](https://github.com/google-research-datasets/sentence-compression) | [paper](https://www.aclweb.org/anthology/D13-1155/) | 180,000 |

| [Wikihow](https://github.com/pvl/wikihow_pairs_dataset) | [paper](https://arxiv.org/abs/1810.09305) | 128,542 |

| [Altlex](https://github.com/chridey/altlex/) | [paper](https://aclanthology.org/P16-1135.pdf) | 112,696 |

| [Quora Question Triplets](https://quoradata.quora.com/First-Quora-Dataset-Release-Question-Pairs) | - | 103,663 |