| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755603918

|

mang3dd

| 2025-08-19T12:12:14Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:12:10Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).
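The BlockAssist repository names in this listing share a visible pattern: `<author>/blockassist-bc-<three_word_name>_<digits>`, where the three-word name also appears as a space-separated tag. The following is a minimal sketch of splitting such an ID into its parts. The helper name `parse_blockassist_id` is hypothetical, and reading the trailing digits as a Unix timestamp is an assumption that the card does not confirm.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class BlockAssistId(NamedTuple):
    author: str
    name_tag: str  # e.g. "tangled slithering alligator"
    suffix: int    # trailing digits; ASSUMED to be a Unix timestamp


def parse_blockassist_id(model_id: str) -> BlockAssistId:
    """Split '<author>/blockassist-bc-<name>_<digits>' into its parts."""
    author, repo = model_id.split("/", 1)
    prefix = "blockassist-bc-"
    if not repo.startswith(prefix):
        raise ValueError(f"not a BlockAssist repo name: {repo!r}")
    # Everything after the last underscore is the numeric suffix.
    name, _, digits = repo[len(prefix):].rpartition("_")
    return BlockAssistId(author, name.replace("_", " "), int(digits))


parsed = parse_blockassist_id(
    "mang3dd/blockassist-bc-tangled_slithering_alligator_1755603918"
)
print(parsed.author, "|", parsed.name_tag)
# Only meaningful if the suffix really is a Unix timestamp (assumption).
print(datetime.fromtimestamp(parsed.suffix, tz=timezone.utc).isoformat())
```

The recovered `name_tag` can be compared against the row's `tags` list as a quick consistency check.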

|

lilTAT/blockassist-bc-gentle_rugged_hare_1755605480

|

lilTAT

| 2025-08-19T12:11:51Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"gentle rugged hare",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:11:47Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- gentle rugged hare

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

sefcee/VIDEO.18.Orginal-Uppal-Farm-Girl-Viral-Video-Link.New.full.videos.Uppal.Farm.Girl

|

sefcee

| 2025-08-19T12:11:29Z

| 0

| 0

| null |

[

"region:us"

] | null | 2025-08-19T12:10:07Z

|

<a href="https://allyoutubers.com/Orginal-Uppal-Farm-Girl-Viral-Video"> 🌐 VIDEO.18.Orginal-Uppal-Farm-Girl-Viral-Video-Link.New.full.videos.Uppal.Farm.Girl

🔴 ➤►DOWNLOAD👉👉🟢 ➤ <a href="https://allyoutubers.com/Orginal-Uppal-Farm-Girl-Viral-Video"> 🌐 VIDEO.18.Orginal-Uppal-Farm-Girl-Viral-Video-Link.New.full.videos.Uppal.Farm.Girl


|

LBST/t10_pick_and_place_smolvla_013000

|

LBST

| 2025-08-19T12:11:26Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-013000",

"region:us"

] |

robotics

| 2025-08-19T12:11:21Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-013000

---

# T08 Pick and Place Policy - Checkpoint 013000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 013000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 013000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_013000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```
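The checkpoint repositories in this listing differ only in a zero-padded six-digit step suffix, so the evaluation command can be generated per checkpoint. The sketch below assumes that naming pattern holds for every step. The helper names `checkpoint_repo_id` and `eval_command` are hypothetical, and the flags are copied from the usage command in the card, not verified against a specific LeRobot version.

```python
import shlex


def checkpoint_repo_id(step: int,
                       owner: str = "LBST",
                       stem: str = "t10_pick_and_place_smolvla") -> str:
    """Build the Hub repo ID for a checkpoint, zero-padding the step to 6 digits."""
    return f"{owner}/{stem}_{step:06d}"


def eval_command(step: int, env_type: str,
                 n_episodes: int = 10, device: str = "cuda") -> list:
    """Assemble the argument list for the eval command shown in the card."""
    return [
        "python", "-m", "lerobot.scripts.eval",
        f"--policy.path={checkpoint_repo_id(step)}",
        f"--env.type={env_type}",
        f"--eval.n_episodes={n_episodes}",
        f"--policy.device={device}",
    ]


# "my_env" is a placeholder; substitute the environment type you actually use.
cmd = eval_command(13000, "my_env")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting issues when looping over several checkpoints.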

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 013000*

|

LBST/t10_pick_and_place_smolvla_012000

|

LBST

| 2025-08-19T12:11:01Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-012000",

"region:us"

] |

robotics

| 2025-08-19T12:10:54Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-012000

---

# T08 Pick and Place Policy - Checkpoint 012000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 012000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 012000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_012000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 012000*

|

pempekmangedd/blockassist-bc-patterned_sturdy_dolphin_1755603799

|

pempekmangedd

| 2025-08-19T12:11:00Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"patterned sturdy dolphin",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:10:57Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- patterned sturdy dolphin

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

SirAB/Dolphin-gemma2-2b-finetuned-v2

|

SirAB

| 2025-08-19T12:11:00Z

| 29

| 1

|

transformers

|

[

"transformers",

"safetensors",

"gemma2",

"text-generation",

"text-generation-inference",

"unsloth",

"conversational",

"en",

"base_model:SirAB/Dolphin-gemma2-2b-finetuned-v2",

"base_model:finetune:SirAB/Dolphin-gemma2-2b-finetuned-v2",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-06-10T09:34:21Z

|

---

base_model: SirAB/Dolphin-gemma2-2b-finetuned-v2

tags:

- text-generation-inference

- transformers

- unsloth

- gemma2

license: apache-2.0

language:

- en

---

# Uploaded finetuned model

- **Developed by:** SirAB

- **License:** apache-2.0

- **Finetuned from model:** SirAB/Dolphin-gemma2-2b-finetuned-v2

This gemma2 model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

Prerna43/distilbert-base-uncased-lora-text-classification

|

Prerna43

| 2025-08-19T12:10:33Z

| 0

| 0

|

peft

|

[

"peft",

"tensorboard",

"safetensors",

"base_model:adapter:distilbert-base-uncased",

"lora",

"transformers",

"base_model:distilbert/distilbert-base-uncased",

"base_model:adapter:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"region:us"

] | null | 2025-08-19T12:03:59Z

|

---

library_name: peft

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- base_model:adapter:distilbert-base-uncased

- lora

- transformers

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-lora-text-classification

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-lora-text-classification

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6438

- Accuracy: 0.887

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 10
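To make the `linear` scheduler entry concrete, here is a minimal sketch of a linear learning-rate decay starting from the listed `learning_rate` of 2e-05. It assumes zero warmup steps (the card lists none) and a planned total of 2500 optimizer steps, taken from the 250 steps per epoch visible in the training log times the configured 10 epochs. The function name `linear_lr` is hypothetical, and this is an illustration of the schedule shape, not the Trainer's own code.

```python
def linear_lr(step: int, total_steps: int,
              base_lr: float = 2e-5, warmup_steps: int = 0) -> float:
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# ASSUMPTION: 250 steps per epoch x 10 configured epochs = 2500 total steps.
TOTAL_STEPS = 250 * 10

for step in (0, 250, 1000, 2500):
    print(step, linear_lr(step, TOTAL_STEPS))
```

Under these assumptions the rate falls by the same amount every step and reaches zero only at the final planned step.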

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| No log | 1.0 | 250 | 0.5865 | 0.891 |
| 0.051 | 2.0 | 500 | 0.6101 | 0.888 |
| 0.051 | 3.0 | 750 | 0.6309 | 0.889 |
| 0.1059 | 4.0 | 1000 | 0.6438 | 0.887 |
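The evaluation figures quoted at the top of this card (loss 0.6438, accuracy 0.887) match the last logged row, not the row with the lowest validation loss. The sketch below re-enters the logged rows by hand and compares the last row with the best one. The tuple layout and variable names are illustrative choices, not part of the card.

```python
# (epoch, step, validation_loss, accuracy), copied from the training results table.
rows = [
    (1.0, 250, 0.5865, 0.891),
    (2.0, 500, 0.6101, 0.888),
    (3.0, 750, 0.6309, 0.889),
    (4.0, 1000, 0.6438, 0.887),
]

last = rows[-1]
best_by_loss = min(rows, key=lambda r: r[2])  # lowest validation loss
best_by_acc = max(rows, key=lambda r: r[3])   # highest accuracy

print("last logged row:       ", last)
print("lowest validation loss:", best_by_loss)
print("highest accuracy:      ", best_by_acc)
```

On these four rows, both selection criteria pick epoch 1, and validation loss rises at every later epoch.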

### Framework versions

- PEFT 0.17.0

- Transformers 4.55.2

- Pytorch 2.6.0+cu124

- Datasets 4.0.0

- Tokenizers 0.21.4

|

katanyasekolah/blockassist-bc-silky_sprightly_cassowary_1755603621

|

katanyasekolah

| 2025-08-19T12:10:15Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"silky sprightly cassowary",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:10:11Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- silky sprightly cassowary

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

LBST/t10_pick_and_place_smolvla_010000

|

LBST

| 2025-08-19T12:10:09Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-010000",

"region:us"

] |

robotics

| 2025-08-19T12:10:02Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-010000

---

# T08 Pick and Place Policy - Checkpoint 010000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 010000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 010000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_010000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 010000*

|

lisaozill03/blockassist-bc-rugged_prickly_alpaca_1755603876

|

lisaozill03

| 2025-08-19T12:10:02Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"rugged prickly alpaca",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:09:58Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- rugged prickly alpaca

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

LBST/t10_pick_and_place_smolvla_009000

|

LBST

| 2025-08-19T12:09:43Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-009000",

"region:us"

] |

robotics

| 2025-08-19T12:09:36Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-009000

---

# T08 Pick and Place Policy - Checkpoint 009000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 009000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 009000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_009000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 009000*

|

LBST/t10_pick_and_place_smolvla_008000

|

LBST

| 2025-08-19T12:09:16Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-008000",

"region:us"

] |

robotics

| 2025-08-19T12:09:09Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-008000

---

# T08 Pick and Place Policy - Checkpoint 008000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 008000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 008000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_008000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 008000*

|

p1m2/falcon-7b-sharded-bf16-finetuned-mental-health-conversational

|

p1m2

| 2025-08-19T12:08:49Z

| 0

| 0

|

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"generated_from_trainer",

"trl",

"sft",

"base_model:ybelkada/falcon-7b-sharded-bf16",

"base_model:finetune:ybelkada/falcon-7b-sharded-bf16",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T10:13:06Z

|

---

base_model: ybelkada/falcon-7b-sharded-bf16

library_name: transformers

model_name: falcon-7b-sharded-bf16-finetuned-mental-health-conversational

tags:

- generated_from_trainer

- trl

- sft

licence: license

---

# Model Card for falcon-7b-sharded-bf16-finetuned-mental-health-conversational

This model is a fine-tuned version of [ybelkada/falcon-7b-sharded-bf16](https://huggingface.co/ybelkada/falcon-7b-sharded-bf16).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="p1m2/falcon-7b-sharded-bf16-finetuned-mental-health-conversational", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/parammehta123/huggingface/runs/w637538i)

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.2

- Pytorch: 2.6.0+cu124

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

Dejiat/blockassist-bc-savage_unseen_bobcat_1755605258

|

Dejiat

| 2025-08-19T12:08:27Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"savage unseen bobcat",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:08:20Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- savage unseen bobcat

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Unlearning/early-unlearning-weak-filter-ga-1-in-209-ga-lr-scale-0_001-gclip-0_5

|

Unlearning

| 2025-08-19T12:07:52Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"gpt_neox",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-11T15:07:18Z

|

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

LBST/t10_pick_and_place_smolvla_004000

|

LBST

| 2025-08-19T12:07:36Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-004000",

"region:us"

] |

robotics

| 2025-08-19T12:07:29Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-004000

---

# T08 Pick and Place Policy - Checkpoint 004000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 004000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 004000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_004000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 004000*

|

LBST/t10_pick_and_place_smolvla_003000

|

LBST

| 2025-08-19T12:07:09Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-003000",

"region:us"

] |

robotics

| 2025-08-19T12:07:04Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-003000

---

# T08 Pick and Place Policy - Checkpoint 003000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 003000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 003000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_003000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 003000*

|

VoilaRaj/80_cGooIB

|

VoilaRaj

| 2025-08-19T12:06:45Z

| 0

| 0

| null |

[

"safetensors",

"any-to-any",

"omega",

"omegalabs",

"bittensor",

"agi",

"license:mit",

"region:us"

] |

any-to-any

| 2025-08-19T12:02:53Z

|

---

license: mit

tags:

- any-to-any

- omega

- omegalabs

- bittensor

- agi

---

This is an Any-to-Any model checkpoint for the OMEGA Labs x Bittensor Any-to-Any subnet.

Check out the [git repo](https://github.com/omegalabsinc/omegalabs-anytoany-bittensor) and find OMEGA on X: [@omegalabsai](https://x.com/omegalabsai).

|

LBST/t10_pick_and_place_smolvla_002000

|

LBST

| 2025-08-19T12:06:44Z

| 0

| 0

|

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"pick-and-place",

"smolvla",

"checkpoint-002000",

"region:us"

] |

robotics

| 2025-08-19T12:06:20Z

|

---

library_name: lerobot

tags:

- robotics

- pick-and-place

- smolvla

- checkpoint-002000

---

# T08 Pick and Place Policy - Checkpoint 002000

This model is a checkpoint from the training of a pick-and-place policy using the SmolVLA architecture.

## Model Details

- **Checkpoint**: 002000

- **Architecture**: SmolVLA

- **Task**: Pick and Place (T08)

- **Training Step**: 002000

## Usage

You can evaluate this model using LeRobot:

```bash
python -m lerobot.scripts.eval \
  --policy.path=LBST/t10_pick_and_place_smolvla_002000 \
  --env.type=<your_environment> \
  --eval.n_episodes=10 \
  --policy.device=cuda
```

## Files

- `config.json`: Policy configuration

- `model.safetensors`: Model weights in SafeTensors format

- `train_config.json`: Complete training configuration for reproducibility

## Parent Repository

This checkpoint was extracted from: [LBST/t10_pick_and_place_files](https://huggingface.co/LBST/t10_pick_and_place_files)

---

*Generated automatically from checkpoint 002000*

|

quantumxnode/blockassist-bc-dormant_peckish_seahorse_1755603595

|

quantumxnode

| 2025-08-19T12:06:13Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"dormant peckish seahorse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T12:06:10Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- dormant peckish seahorse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Kumo2023/nupurbro

|

Kumo2023

| 2025-08-19T12:05:27Z

| 0

| 0

|

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-08-19T10:59:59Z

|

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: TOK

---

# Nupurbro

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using AI toolkit: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `TOK` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py
import replicate

input = {
    "prompt": "TOK",
    "lora_weights": "https://huggingface.co/Kumo2023/nupurbro/resolve/main/lora.safetensors"
}

output = replicate.run(
    "black-forest-labs/flux-dev-lora",
    input=input
)

# Write each returned image to its own file.
for index, item in enumerate(output):
    with open(f"output_{index}.webp", "wb") as file:
        file.write(item.read())
```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('Kumo2023/nupurbro', weight_name='lora.safetensors')

image = pipeline('TOK').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Training details

- Steps: 6000

- Learning rate: 0.0004

- LoRA rank: 16

## Contribute your own examples

You can use the [community tab](https://huggingface.co/Kumo2023/nupurbro/discussions) to add images that show off what you’ve made with this LoRA.

|

haihp02/pdfreeee-biggerb

|

haihp02

| 2025-08-19T12:05:13Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"trl",

"dpo",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T12:04:12Z

|

---

library_name: transformers

tags:

- trl

- dpo

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

m-muraki/Qwen3-Coder-30B-A3B-Instruct-FP8

|

m-muraki

| 2025-08-19T12:03:40Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"qwen3_moe",

"text-generation",

"conversational",

"arxiv:2505.09388",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"fp8",

"region:us"

] |

text-generation

| 2025-08-19T12:02:47Z

|

---

library_name: transformers

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen3-Coder-30B-A3B-Instruct-FP8/blob/main/LICENSE

pipeline_tag: text-generation

---

# Qwen3-Coder-30B-A3B-Instruct-FP8

<a href="https://chat.qwen.ai/" target="_blank" style="margin: 2px;">

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

</a>

## Highlights

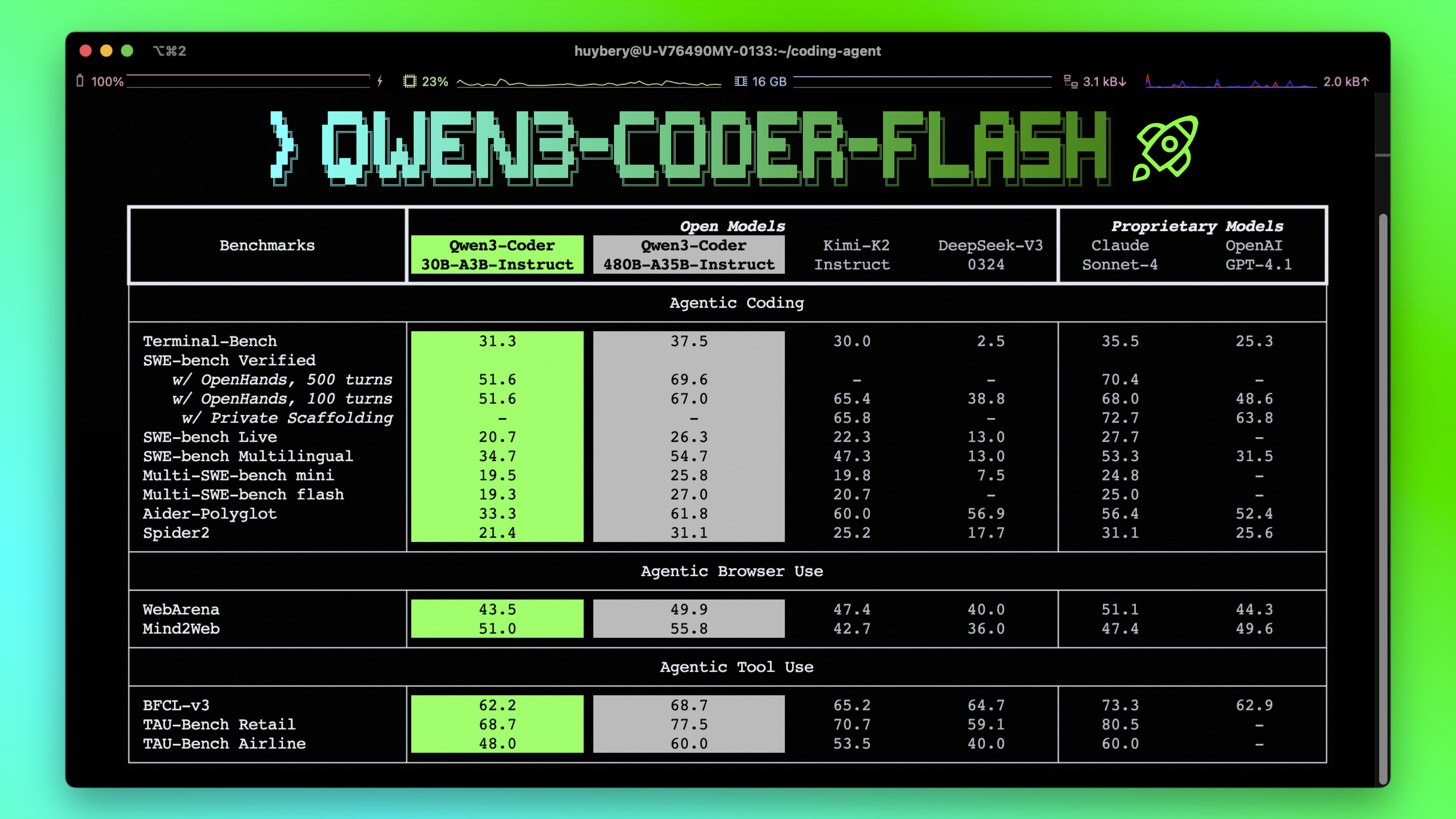

**Qwen3-Coder** is available in multiple sizes. Today, we're excited to introduce **Qwen3-Coder-30B-A3B-Instruct-FP8**. This streamlined model maintains impressive performance and efficiency, featuring the following key enhancements:

- **Significant Performance** among open models on **Agentic Coding**, **Agentic Browser-Use**, and other foundational coding tasks.

- **Long-context Capabilities** with native support for **256K** tokens, extendable up to **1M** tokens using Yarn, optimized for repository-scale understanding.

- **Agentic Coding** support for most platforms, such as **Qwen Code** and **CLINE**, featuring a specially designed function call format.

## Model Overview

**Qwen3-Coder-30B-A3B-Instruct-FP8** has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining & Post-training

- Number of Parameters: 30.5B in total and 3.3B activated

- Number of Layers: 48

- Number of Attention Heads (GQA): 32 for Q and 4 for KV

- Number of Experts: 128

- Number of Activated Experts: 8

- Context Length: **262,144 natively**.
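The ratios implied by the figures above can be checked with a few lines of arithmetic. This is only a sketch that uses the numbers listed in this card; the variable names are illustrative.

```python
# Figures copied from the Model Overview list above.
total_params_b = 30.5   # total parameters, in billions
active_params_b = 3.3   # activated parameters per token, in billions
num_experts = 128
active_experts = 8
q_heads = 32
kv_heads = 4

# Grouped-query attention: how many query heads share one KV head.
q_per_kv = q_heads // kv_heads

# Share of experts and of parameters that are active for a single token.
expert_fraction = active_experts / num_experts
param_fraction = active_params_b / total_params_b

print(q_per_kv)                  # 8
print(f"{expert_fraction:.2%}")  # 6.25%
print(f"{param_fraction:.1%}")   # 10.8%
```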

**NOTE: This model supports only non-thinking mode and does not generate ``<think></think>`` blocks in its output. Meanwhile, specifying `enable_thinking=False` is no longer required.**

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our [blog](https://qwenlm.github.io/blog/qwen3-coder/), [GitHub](https://github.com/QwenLM/Qwen3-Coder), and [Documentation](https://qwen.readthedocs.io/en/latest/).

## Quickstart

We advise you to use the latest version of `transformers`.

With `transformers<4.51.0`, you will encounter the following error:

```

KeyError: 'qwen3_moe'

```

The following code snippet illustrates how to use the model to generate content based on given inputs.

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Coder-30B-A3B-Instruct-FP8"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# prepare the model input
prompt = "Write a quick sort algorithm."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=65536
)
# keep only the newly generated tokens (drop the prompt tokens)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("content:", content)

```

**Note: If you encounter out-of-memory (OOM) issues, consider reducing the context length to a shorter value, such as `32,768`.**

## Note on FP8

For convenience and performance, we provide an `fp8`-quantized model checkpoint, whose name ends with `-FP8`. The quantization method is fine-grained `fp8` quantization with a block size of 128. You can find more details in the `quantization_config` field in `config.json`.

You can use the Qwen3-Coder-30B-A3B-Instruct-FP8 model with several inference frameworks, including `transformers`, `sglang`, and `vllm`, in the same way as the original bfloat16 model.

However, please pay attention to the following known issues:

- `transformers`:

- there are currently issues with the "fine-grained fp8" method in `transformers` for distributed inference. You may need to set the environment variable `CUDA_LAUNCH_BLOCKING=1` if multiple devices are used in inference.
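A minimal sketch of applying this workaround from Python rather than from the shell. It assumes the variable should be in place before any CUDA work starts, so it is set ahead of the framework imports; whether the workaround is needed at all depends on your setup.

```python
import os

# Set the workaround before importing torch/transformers so that it is
# already in the environment when CUDA is initialized.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

# ... then import transformers and load the model as in the Quickstart.
print(os.environ["CUDA_LAUNCH_BLOCKING"])
```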

## Agentic Coding

Qwen3-Coder excels in tool calling capabilities.

You can define or use any tools, as in the following example.

```python

from openai import OpenAI


# Your tool implementation (the argument name matches the schema below)
def square_the_number(input_num: float) -> float:
    return input_num ** 2


# Define tools
tools = [
    {
        "type": "function",
        "function": {
            "name": "square_the_number",
            "description": "Output the square of the number.",
            "parameters": {
                "type": "object",
                "required": ["input_num"],
                "properties": {
                    "input_num": {
                        "type": "number",
                        "description": "input_num is a number that will be squared",
                    }
                },
            },
        },
    }
]

# Define LLM
client = OpenAI(
    # Use a custom endpoint compatible with the OpenAI API
    base_url="http://localhost:8000/v1",  # api_base
    api_key="EMPTY",
)

messages = [{"role": "user", "content": "square the number 1024"}]

completion = client.chat.completions.create(
    messages=messages,
    model="Qwen3-Coder-30B-A3B-Instruct-FP8",
    max_tokens=65536,
    tools=tools,
)

print(completion.choices[0])

```
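Once the model answers with a tool call, the caller still has to run the tool. The sketch below shows one way to dispatch such a call, assuming the response follows the OpenAI chat-completions shape in which each tool call carries a function name and a JSON-encoded argument string. `TOOL_REGISTRY` and `run_tool_call` are hypothetical helper names, not part of any library, and the call is simulated so the snippet runs without a server.

```python
import json


def square_the_number(input_num: float) -> float:
    return input_num ** 2


# Hypothetical registry mapping tool names to local Python callables.
TOOL_REGISTRY = {"square_the_number": square_the_number}


def run_tool_call(name: str, arguments_json: str):
    """Look up the tool by name and call it with the decoded JSON arguments."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(arguments_json)
    return TOOL_REGISTRY[name](**kwargs)


# Simulated tool call; with a live server these two values would come from
# completion.choices[0].message.tool_calls[0].function (.name / .arguments).
result = run_tool_call("square_the_number", '{"input_num": 1024}')
print(result)  # 1048576
```

Keeping the registry explicit means the model can only trigger functions that were deliberately exposed.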

## Best Practices

To achieve optimal performance, we recommend the following settings:

1. **Sampling Parameters**:

- We suggest using `temperature=0.7`, `top_p=0.8`, `top_k=20`, `repetition_penalty=1.05`.

2. **Adequate Output Length**: We recommend using an output length of 65,536 tokens for most queries, which is adequate for instruct models.
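As a sketch, the recommended settings can be collected into keyword arguments for `model.generate` from the Quickstart. The `generation_kwargs` helper is a hypothetical name, and `do_sample=True` is an assumption added here because the sampling parameters only take effect when sampling is enabled.

```python
# Recommended sampling settings from this section.
RECOMMENDED_SAMPLING = {
    "do_sample": True,  # assumption: enable sampling so the values below apply
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "repetition_penalty": 1.05,
}


def generation_kwargs(max_new_tokens: int = 65536) -> dict:
    """Return a fresh dict of generate() keyword arguments."""
    return {**RECOMMENDED_SAMPLING, "max_new_tokens": max_new_tokens}


kwargs = generation_kwargs()
# Usage with the Quickstart objects:
#   generated_ids = model.generate(**model_inputs, **kwargs)
print(kwargs)
```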

### Citation

If you find our work helpful, feel free to cite us.

```

@misc{qwen3technicalreport,

title={Qwen3 Technical Report},

author={Qwen Team},

year={2025},

eprint={2505.09388},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.09388},

}

```

|

llm-slice/blm-gpt2s-90M-s42_901M-s42_submission

|

llm-slice

| 2025-08-19T12:03:13Z

| 993

| 0

| null |

[

"safetensors",

"gpt2",

"interaction",

"babylm-submission",

"babylm-2025",

"en",

"arxiv:2405.09605",

"arxiv:2411.07990",

"region:us"

] | null | 2025-08-15T08:47:53Z

|

---

language:

- en

tags:

- interaction

- babylm-submission

- babylm-2025

---

# Model Card for BabyLM submission to the Interaction Track

<!-- Provide a quick summary of what the model is/does. [Optional] -->

A 124M model with the GPT-2 architecture trained with the next token prediction loss for 10 epochs (~900 M words) **on 90% of the BabyLM corpus** and an additional **1 M words of PPO RL** training as submission for the Interaction track of the 2025 BabyLM challenge.

This model card is based on the model card of the BabyLM [100M GPT-2 baseline](https://huggingface.co/BabyLM-community/babylm-baseline-100m-gpt2/edit/main/README.md).

# Table of Contents

- [Model Card for Storytelling Submission Model](#model-card-for--model_id-)

- [Table of Contents](#table-of-contents)

- [Model Details](#model-details)

- [Model Description](#model-description)

- [Uses](#uses)

- [Training Details](#training-details)

- [Training Data](#training-data)

- [Hyperparameters](#hyperparameters)

- [Training Procedure](#training-procedure)

- [Size and Checkpoints](#size-and-checkpoints)

- [Evaluation](#evaluation)

- [Testing Data & Metrics](#testing-data-factors--metrics)

- [Testing Data](#testing-data)

- [Metrics](#metrics)

- [Results](#results)

- [Technical Specifications](#technical-specifications-optional)

- [Model Architecture and Objective](#model-architecture-and-objective)

- [Compute Infrastructure](#compute-infrastructure)

- [Hardware](#hardware)

- [Software](#software)

- [Training Time](#training-time)

- [Citation](#citation)

- [Model Card Authors](#model-card-authors-optional)

- [Bibliography](#bibliography)

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is/does. -->

This is the RL storytelling model, based on a [pretrained GPT-2 model](https://huggingface.co/llm-slice/blm-gpt2s-90M-s42), for the Interaction Track of the 2025 BabyLM challenge.

- **Developed by:** Jonas Mayer Martins, Ali Hamza Bashir, Muhammad Rehan Khalid

- **Model type:** Causal language model

- **Language(s) (NLP):** eng

- **Resources for more information:**

- [GitHub Repo](https://github.com/malihamza/babylm-interactive-learning)

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

This is a pre-trained language model.

It can be used to evaluate tasks in a zero-shot manner and also can be fine-tuned for downstream tasks.

It can be used for language generation but given its small size and low number of words trained on, do not expect LLM-level performance.

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

We used the BabyLM 100M (Strict) dataset for training. **We trained the tokenizer and model on a randomly selected 90% of the corpus**, which is composed of the following:

| Source | Weight | Domain | Citation | Website | License |

| --- | --- | --- | --- | --- | --- |

| BNC | 8% | Dialogue | BNC Consortium (2007) | [link](http://www.natcorp.ox.ac.uk/) | [link](http://www.natcorp.ox.ac.uk/docs/licence.html) <sup>1</sup> |

| CHILDES | 29% | Dialogue, Child-Directed | MacWhinney (2000) | | [link](https://talkbank.org/share/rules.html) |

| Project Gutenberg | 26% | Fiction, Nonfiction | Gerlach & Font-Clos (2020) | [link](https://github.com/pgcorpus/gutenberg) | [link](https://www.gutenberg.org/policy/license.html) |

| OpenSubtitles | 20% | Dialogue, Scripted | Lison & Tiedemann (2016) | [link](https://opus.nlpl.eu/OpenSubtitles-v2018.php) | Open source |

| Simple English Wikipedia | 15% | Nonfiction | -- | [link](https://dumps.wikimedia.org/simplewiki/20221201/) | [link](https://dumps.wikimedia.org/legal.html) |

| Switchboard | 1% | Dialogue | Godfrey et al. (1992), Stolcke et al., (2000) | [link](http://compprag.christopherpotts.net/swda.html) | [link](http://compprag.christopherpotts.net/swda.html) |

<sup>1</sup> Our distribution of part of the BNC Texts is permitted under the fair dealings provision of copyright law (see term (2g) in the BNC license).

## Hyperparameters PPO RL training

| **Parameter** | **Value** |

|----------------------------------|---------------------|

| Student context length | 512 |

| seed | 42 |

| batch size | 360 |

| Student sampling temperature | 1 |

| top_k | 0 |

| top_p | 1 |

| max_new_tokens (student) | 90 |

| Teacher model | Llama 3.1 8B Instr. |

| Teacher context length | 1024 |

| max_new_tokens (teacher) | 6 |

| gradient_accumulation_steps | 1 |

| adap_kl_ctrl | True |

| init_kl_coef | 0.2 |

| learning_rate | 1×10⁻⁶ |

| Student input limit | 1 M words |
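The `adap_kl_ctrl` and `init_kl_coef` settings refer to an adaptive KL penalty. The sketch below shows the proportional controller commonly used for this in PPO fine-tuning of language models. Only `init_kl_coef` and the batch size come from the table; the `target` and `horizon` values are assumptions, and whether the authors' training code uses exactly this rule is not stated in the card.

```python
class AdaptiveKLController:
    """Proportional controller that nudges the KL coefficient toward a target KL."""

    def __init__(self, init_kl_coef: float, target: float, horizon: int):
        self.value = init_kl_coef
        self.target = target
        self.horizon = horizon

    def update(self, current_kl: float, n_steps: int) -> float:
        # Relative error, clipped so a single update changes the coefficient slowly.
        error = max(-0.2, min(0.2, current_kl / self.target - 1.0))
        self.value *= 1.0 + error * n_steps / self.horizon
        return self.value


# init_kl_coef=0.2 and n_steps=360 (batch size) are from the table;
# target and horizon are assumed values for illustration.
ctrl = AdaptiveKLController(init_kl_coef=0.2, target=6.0, horizon=10_000)
new_coef = ctrl.update(current_kl=12.0, n_steps=360)
print(round(new_coef, 5))  # 0.20144
```

When the observed KL is above the target, the coefficient grows and the policy is pulled back toward the reference model; when it is below, the penalty relaxes.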

## Hyperparameters Pretraining

| Hyperparameter | Value |

| --- | --- |

| Number of epochs | 10 |

| Datapoint length | 512 |

| Batch size | 16 |

| Gradient accumulation steps | 4 |

| Learning rate | 0.0005 |

| Number of steps | 211650 |

| Warmup steps | 2116 |

| Gradient clipping | 1 |

| Optimizer | AdamW |

| Optimizer Beta_1 | 0.9 |

| Optimizer Beta_2 | 0.999 |

| Optimizer Epsilon | 10<sup>-8</sup>|

| Tokenizer | BytePairBPE |

| Vocab Size | 16000 |
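A quick arithmetic check on the table above. This sketch assumes that the batch size is counted per micro-step on a single process and that every datapoint is a full 512-token sequence; neither is stated in the card.

```python
batch_size = 16
grad_accum_steps = 4
datapoint_length = 512
num_steps = 211_650
warmup_steps = 2_116

# Sequences and tokens contributing to one optimizer update (under the assumptions above).
sequences_per_update = batch_size * grad_accum_steps
tokens_per_update = sequences_per_update * datapoint_length

# Fraction of training spent in learning-rate warmup.
warmup_fraction = warmup_steps / num_steps

print(sequences_per_update)      # 64
print(tokens_per_update)         # 32768
print(f"{warmup_fraction:.2%}")  # 1.00%
```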

## Training Procedure

The model is trained with next token prediction loss for 10 epochs.

### Size and checkpoints

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

The model has 124M parameters.

In total we train on around 901 M words and provide multiple checkpoints from the training.

Specifically, we provide:

- Checkpoints every 1 M words for the first 10 M words

- Checkpoints every 10 M words for the first 100 M words

- Checkpoints every 100 M words until 900 M words

- Checkpoints every 100 K words until 901 M words
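The schedule above can be enumerated programmatically. This is a sketch under the assumption that each range includes its end point and that checkpoints falling on a shared boundary (for example 10 M words) are counted once; the exact set of published checkpoints may differ.

```python
def checkpoint_schedule():
    """Return the sorted word counts at which checkpoints are assumed to exist."""
    K = 1_000
    M = 1_000_000
    points = set()
    points.update(range(1 * M, 10 * M + 1, 1 * M))        # every 1 M up to 10 M
    points.update(range(10 * M, 100 * M + 1, 10 * M))     # every 10 M up to 100 M
    points.update(range(100 * M, 900 * M + 1, 100 * M))   # every 100 M up to 900 M
    points.update(range(900 * M, 901 * M + 1, 100 * K))   # every 100 K up to 901 M
    return sorted(points)


schedule = checkpoint_schedule()
print(len(schedule))               # 37
print(schedule[0], schedule[-1])   # 1000000 901000000
```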

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

This model is evaluated in two ways:

1. We do zero-shot evaluation on 7 tasks.

2. We do fine-tuning on a subset of the (Super)GLUE tasks (Wang et al., ICLR 2019; Wang et al., NeurIPS 2019).

## Testing Data & Metrics

### Testing Data

<!-- This should link to a Data Card if possible. -->

For the BLiMP, BLiMP supplement, and EWoK tasks, we use a filtered version of the dataset to only include examples with words found in the BabyLM dataset.

For the finetuning tasks, we both filter and sample down to a maximum of 10 000 training examples.

*Validation Data*

*Zero-shot Tasks*

- **BLiMP**: The Benchmark of Linguistic Minimal Pairs evaluates the model's linguistic ability by seeing if it can recognize the grammatically correct sentence from a pair of minimally different sentences. It tests various grammatical phenomena. (Warstadt et al., TACL 2020)

- **BLiMP Supplement**: A supplement to BLiMP introduced in the first edition of the BabyLM challenge. More focused on dialogue and questions. (Warstadt et al., CoNLL-BabyLM 2023)

- **EWoK**: Works similarly to BLiMP but looks at the model's internal world knowledge, testing whether a model has both physical and social knowledge. (Ivanova et al., 2024)

- **Eye Tracking and Self-paced Reading**: Looks at whether the model can mimic the eye tracking and reading times of a human, using the surprisal of a word as a proxy for the time spent reading it. (de Varda et al., BRM 2024)

- **Entity Tracking**: Checks whether a model can keep track of the changes to the states of entities as text/dialogue unfolds. (Kim & Schuster, ACL 2023)

- **WUGs**: Tests morphological generalization in LMs through an adjective nominalization and past tense task. (Hofmann et al., 2024) (Weissweiler et al., 2023)

- **COMPS**: Property knowledge. (Misra et al., 2023)
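For the reading-time tasks in the list above, surprisal is the negative log-probability a model assigns to a word given its context. A minimal sketch, assuming base-2 logarithms (bits) and made-up probabilities; the actual evaluation pipeline may use a different base or aggregate over subword tokens.

```python
import math


def surprisal(prob: float) -> float:
    """Surprisal in bits of an event with probability `prob`."""
    if not 0.0 < prob <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(prob)


# Illustrative (made-up) next-word probabilities: rarer words are more surprising.
for word, p in [("the", 0.5), ("cat", 0.125), ("zygote", 0.0009765625)]:
    print(word, surprisal(p))
```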

*Finetuning Tasks*

- **BoolQ**: A yes/no QA dataset with unprompted and unconstrained questions. (Clark et al., NAACL 2019)

- **MNLI**: The Multi-Genre Natural Language Inference corpus tests the language understanding of a model by seeing whether it can recognize textual entailment. (Williams et al., NAACL 2018)

- **MRPC**: The Microsoft Research Paraphrase Corpus contains pairs of sentences that are either paraphrases (semantically equivalent to each other) or unrelated. (Dolan & Brockett, IJCNLP 2005)

- **QQP**<sup>2</sup>: Similarly to MRPC, the Quora Question Pairs corpus tests the model's ability to determine whether a pair of questions are semantically similar to each other. These questions are sourced from Quora.

- **MultiRC**: The Multi-Sentence Reading Comprehension corpus is a QA task that evaluates the model's ability to pick the correct answer from a list of answers given a question and context paragraph. In this version, the data is changed to a binary classification task judging whether the answer to a (question, context) pair is correct. (Khashabi et al., NAACL 2018)

- **RTE**: Similarly, the Recognizing Textual Entailment task tests the model's ability to recognize textual entailment. (Dagan et al., Springer 2006; Bar et al., 2006; Giampiccolo et al., 2007; Bentivogli et al., TAC 2009)

- **WSC**: The Winograd Schema Challenge tests the model's ability to do coreference resolution on sentences with a pronoun and a list of noun phrases found in the sentence. This version edits it to be a binary classification on examples consisting of a pronoun and noun phrase. (Levesque et al., PKRR 2012)

<sup>2</sup> https://www.quora.com/profile/Ricky-Riche-2/First-Quora-Dataset-Release-Question-Pairs

### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

The metrics used to evaluate the model are the following:

- Zero-shot

- Accuracy on predicting the correct completion/sentence for BLiMP, BLiMP Supplement, EWoK, Entity Tracking, and WUGs

- Change in R^2 prediction from baseline for Eye Tracking (with no spillover) and Self-paced Reading (1-word spillover)

- Finetuning

- 3 class Accuracy for MNLI

- Binary Accuracy for BoolQ, MultiRC, and WSC

- F1-score for MRPC and QQP

The metrics were chosen based on the advice of the papers the tasks come from.
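The accuracy and F1 metrics above can be sketched in plain Python. For minimal-pair tasks such as BLiMP, a prediction counts as correct when the model scores the acceptable sentence higher than the unacceptable one. The scores and labels below are made up for illustration, and the function names are hypothetical.

```python
def minimal_pair_accuracy(score_pairs):
    """Fraction of (good_score, bad_score) pairs where the good sentence scores higher."""
    correct = sum(1 for good, bad in score_pairs if good > bad)
    return correct / len(score_pairs)


def binary_f1(y_true, y_pred):
    """F1 score for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up sentence log-probabilities: (acceptable, unacceptable).
pairs = [(-12.3, -15.1), (-20.0, -18.5), (-9.7, -11.2), (-14.0, -14.9)]
accuracy = minimal_pair_accuracy(pairs)
f1 = binary_f1([1, 1, 0, 1, 0], [1, 0, 0, 1, 1])
print(accuracy)  # 0.75
print(f1)
```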

### Hyperparameters

| Hyperparameter | MNLI, RTE, QQP, MRPC, BoolQ, MultiRC | WSC |

| --- | --- | --- |

| Learning Rate | 3\*10<sup>-5</sup> | 3\*10<sup>-5</sup> |

| Batch Size | 16 | 16 |

| Epochs | 10 | 30 |

| Weight decay | 0.01 | 0.01 |

| Optimizer | AdamW | AdamW |

| Scheduler | cosine | cosine |

| Warmup percentage | 6% | 6% |

| Dropout | 0.1 | 0.1 |

## Results

We compare our two models (**900M-pre** and **900M-RL**) against two official baselines from the 2025 BabyLM Challenge<sup>1</sup> (**1000M-pre** and **SimPO**):

- **1000M-pre:** The standard *pretraining* baseline, using a GPT-2-small model trained on 100M unique words from the BabyLM dataset (10 epochs, next-word prediction).

- **SimPO:** A baseline first trained for 7 epochs with next-word prediction, then 2 epochs *interleaving* prediction and reinforcement learning. Here, the RL reward encourages the student to generate completions similar to the teacher’s output.

- **900M-pre:** Our model, using the same GPT-2-small architecture, pretrained on 90% of the BabyLM dataset (yielding approximately 91M unique words, 10 epochs).

- **900M-RL:** Our model after additional PPO-based reinforcement learning with the teacher, using about 1M words as input for the interactive (RL) phase.

---

### Evaluation Results

| **Task** | **1000M-pre** | **SimPO** | **900M-pre** | **900M-RL** |

|:------------- | ------------: | ---------:| ------------:| -----------:|

| BLiMP | 74.88 | 72.16 | 77.52 | **77.53** |

| Suppl. | **63.32** | 61.22 | 56.62 | 56.72 |

| EWOK | 51.67 | **51.92** | 51.36 | 51.41 |

| COMPS | **56.17** | 55.05 | 55.20 | 55.18 |

| ET | 31.51 | 28.06 | 30.34 | **33.11** |

| GLUE | 52.18 | 50.35 | **53.14** | 52.46 |
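To make the effect of the RL phase easier to read off, the following sketch computes the difference between the 900M-RL and 900M-pre columns of the table above. The numbers are copied from the table; the variable names are illustrative.

```python
# (900M-pre, 900M-RL) scores copied from the evaluation table.
scores = {
    "BLiMP": (77.52, 77.53),
    "Suppl.": (56.62, 56.72),
    "EWOK": (51.36, 51.41),
    "COMPS": (55.20, 55.18),
    "ET": (30.34, 33.11),
    "GLUE": (53.14, 52.46),
}

# Positive values mean the RL phase improved the score.
deltas = {task: round(rl - pre, 2) for task, (pre, rl) in scores.items()}

for task, delta in deltas.items():
    print(f"{task:7s} {delta:+.2f}")
```

Under these numbers, the largest change is on the eye-tracking task (+2.77), while the remaining tasks move by less than one point in either direction.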

#### Model descriptions:

- **1000M-pre:** Baseline pretrained on 100M words (BabyLM challenge baseline).

- **SimPO:** Baseline using a hybrid of pretraining and RL with a similarity-based reward.

- **900M-pre:** Our GPT-2-small model, pretrained on 90M words (similar settings as baseline, but less data).

- **900M-RL:** The same model as 900M-pre, further trained with PPO using teacher feedback on 1M words of input.


See: [BabyLM Challenge](https://huggingface.co/BabyLM-community) for the baselines.

# Technical Specifications

### Hardware

- 4 A100 GPUs were used to train this model.

### Software

PyTorch

### Training Time

The model took 20 hours to train and consumed 53560 core hours (with 4 GPUs and 32 CPUs).

# Citation

```latex

@misc{MayerMartinsBKB2025,

title={ToDo},

author={Jonas Mayer Martins, Ali Hamza Bashir, Muhammad Rehan Khalid, Lisa Beinborn},

year={2025},

eprint={2502.TODO},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={ToDo},

}

```

# Model Card Authors

Jonas Mayer Martins

# Bibliography

[GLUE: A multi-task benchmark and analysis platform for natural language understanding](https://openreview.net/pdf?id=rJ4km2R5t7) (Wang et al., ICLR 2019)

[SuperGLUE: A Stickier Benchmark for General-Purpose Language Understanding Systems](https://proceedings.neurips.cc/paper_files/paper/2019/file/4496bf24afe7fab6f046bf4923da8de6-Paper.pdf) (Wang et al., NeurIPS 2019)

[BLiMP: The Benchmark of Linguistic Minimal Pairs for English](https://aclanthology.org/2020.tacl-1.25/) (Warstadt et al., TACL 2020)

[Findings of the BabyLM Challenge: Sample-Efficient Pretraining on Developmentally Plausible Corpora](https://aclanthology.org/2023.conll-babylm.1/) (Warstadt et al., CoNLL-BabyLM 2023)

[🌏 Elements of World Knowledge (EWoK): A cognition-inspired framework for evaluating basic world knowledge in language models](https://arxiv.org/pdf/2405.09605v1) (Ivanova et al., 2024)

[Cloze probability, predictability ratings, and computational estimates for 205 English sentences, aligned with existing EEG and reading time data](https://link.springer.com/article/10.3758/s13428-023-02261-8) (de Varda et al., BRM 2024)

[Entity Tracking in Language Models](https://aclanthology.org/2023.acl-long.213/) (Kim & Schuster, ACL 2023)

[Derivational Morphology Reveals Analogical Generalization in Large Language Models](https://arxiv.org/pdf/2411.07990) (Hofmann et al., 2024)

[Automatically Constructing a Corpus of Sentential Paraphrases](https://aclanthology.org/I05-5002/) (Dolan & Brockett, IJCNLP 2005)

[A Broad-Coverage Challenge Corpus for Sentence Understanding through Inference](https://aclanthology.org/N18-1101/) (Williams et al., NAACL 2018)

[The Winograd Schema Challenge](http://dl.acm.org/citation.cfm?id=3031843.3031909) (Levesque et al., PKRR 2012)

[The PASCAL Recognising Textual Entailment Challenge](https://link.springer.com/chapter/10.1007/11736790_9) (Dagan et al., Springer 2006)

[The Second PASCAL Recognising Textual Entailment Challenge]() (Bar et al., 2006)

[The Third PASCAL Recognizing Textual Entailment Challenge](https://aclanthology.org/W07-1401/) (Giampiccolo et al., 2007)

[The Fifth PASCAL Recognizing Textual Entailment Challenge](https://tac.nist.gov/publications/2009/additional.papers/RTE5_overview.proceedings.pdf) (Bentivogli et al., TAC 2009)

[BoolQ: Exploring the Surprising Difficulty of Natural Yes/No Questions](https://aclanthology.org/N19-1300/) (Clark et al., NAACL 2019)

[Looking Beyond the Surface: A Challenge Set for Reading Comprehension over Multiple Sentences](https://aclanthology.org/N18-1023/) (Khashabi et al., NAACL 2018)

|

premrajreddy/Home-TinyLlama-1.1B-HomeAssist-GGUF

|

premrajreddy

| 2025-08-19T12:02:08Z

| 0

| 0

| null |

[

"safetensors",

"gguf",

"llama",

"home-assistant",

"voice-assistant",

"automation",

"assistant",

"home",

"text-generation",

"conversational",

"en",

"dataset:acon96/Home-Assistant-Requests",

"base_model:TinyLlama/TinyLlama-1.1B-Chat-v1.0",

"base_model:finetune:TinyLlama/TinyLlama-1.1B-Chat-v1.0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T02:13:44Z

|

---

language: en

license: apache-2.0

tags:

- home-assistant

- voice-assistant

- automation

- assistant

- home

pipeline_tag: text-generation

datasets:

- acon96/Home-Assistant-Requests

base_model:

- TinyLlama/TinyLlama-1.1B-Chat-v1.0

base_model_relation: finetune

---

# 🏠 TinyLLaMA-1.1B Home Assistant Voice Model

This model is a **fine-tuned version** of [TinyLlama/TinyLlama-1.1B-Chat-v1.0](https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0), trained with [acon96/Home-Assistant-Requests](https://huggingface.co/datasets/acon96/Home-Assistant-Requests).

It is designed to act as a **voice-controlled smart home assistant** that takes natural language instructions and outputs **Home Assistant commands**.

---

## ✨ Features

- Converts **natural language voice commands** into Home Assistant automation calls.

- Produces **friendly confirmations** and **structured JSON service commands**.

- Lightweight (1.1B parameters) – runs efficiently on CPUs, GPUs, and via **Ollama** with quantization.

---

## 🔧 Example Usage (Transformers)

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("premrajreddy/tinyllama-1.1b-home-llm")

model = AutoModelForCausalLM.from_pretrained("premrajreddy/tinyllama-1.1b-home-llm")

query = "turn on the kitchen lights"

inputs = tokenizer(query, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=80)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```

|

musdbi/bpce_model

|

musdbi

| 2025-08-19T12:00:37Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"llama",

"trl",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T12:00:27Z

|

---

base_model: unsloth/meta-llama-3.1-8b-instruct-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** musdbi

- **License:** apache-2.0

- **Finetuned from model :** unsloth/meta-llama-3.1-8b-instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

swiptit/blockassist-bc-polished_armored_mandrill_1755604721

|

swiptit

| 2025-08-19T11:59:23Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"polished armored mandrill",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T11:59:19Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- polished armored mandrill

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

vg-sentry/Qwen-Qwen2.5-Coder-7B-Instruct-sentry-v1

|

vg-sentry

| 2025-08-19T11:59:11Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"generated_from_trainer",

"sft",

"trl",

"unsloth",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T11:07:28Z

|

---

base_model: unsloth/qwen2.5-coder-7b-instruct-bnb-4bit

library_name: transformers

model_name: Qwen-Qwen2.5-Coder-7B-Instruct-sentry-v1

tags:

- generated_from_trainer

- sft

- trl

- unsloth

licence: license

---

# Model Card for Qwen-Qwen2.5-Coder-7B-Instruct-sentry-v1

This model is a fine-tuned version of [unsloth/qwen2.5-coder-7b-instruct-bnb-4bit](https://huggingface.co/unsloth/qwen2.5-coder-7b-instruct-bnb-4bit).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="vg-sentry/Qwen-Qwen2.5-Coder-7B-Instruct-sentry-v1", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.2

- Pytorch: 2.6.0+cu124

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

indoempatnol/blockassist-bc-fishy_wary_swan_1755602935

|

indoempatnol

| 2025-08-19T11:56:18Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"fishy wary swan",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T11:56:14Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- fishy wary swan

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Sayemahsjn/blockassist-bc-playful_feline_octopus_1755603521

|

Sayemahsjn

| 2025-08-19T11:55:30Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"playful feline octopus",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T11:55:26Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- playful feline octopus

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

kojeklollipop/blockassist-bc-spotted_amphibious_stork_1755602793

|

kojeklollipop

| 2025-08-19T11:54:00Z

| 0

| 0

| null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"spotted amphibious stork",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T11:53:57Z

|

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- spotted amphibious stork

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

lakelee/RLB_MLP_BC_v4.20250819.18.1

|

lakelee

| 2025-08-19T11:53:49Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"mlp_swiglu",

"generated_from_trainer",

"base_model:lakelee/RLB_MLP_BC_v4.20250819.18",

"base_model:finetune:lakelee/RLB_MLP_BC_v4.20250819.18",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T11:09:01Z

|

---

library_name: transformers

base_model: lakelee/RLB_MLP_BC_v4.20250819.18

tags:

- generated_from_trainer

model-index:

- name: RLB_MLP_BC_v4.20250819.18.1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# RLB_MLP_BC_v4.20250819.18.1

This model is a fine-tuned version of [lakelee/RLB_MLP_BC_v4.20250819.18](https://huggingface.co/lakelee/RLB_MLP_BC_v4.20250819.18) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: adamw_torch_fused with betas=(0.9, 0.99), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 100

- num_epochs: 1.0
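As a rough illustration of what the `cosine` scheduler with 100 warmup steps does to the learning rate listed above, the sketch below computes the rate at a given step using linear warmup followed by a half-cosine decay. The total number of training steps is not stated in this card, so `TOTAL_STEPS` is a hypothetical value chosen only for the example, and `cosine_lr` is an illustrative helper, not part of the Trainer API.

```python
import math

BASE_LR = 2e-4       # learning_rate from the hyperparameter list
WARMUP_STEPS = 100   # lr_scheduler_warmup_steps from the hyperparameter list


def cosine_lr(step, total_steps, base_lr=BASE_LR, warmup_steps=WARMUP_STEPS):
    """Learning rate at `step`: linear warmup, then half-cosine decay to zero."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr over the warmup steps.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup schedule that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Hypothetical total step count; the card does not report it.
TOTAL_STEPS = 1000

if __name__ == "__main__":
    for step in (0, 50, 100, 550, 1000):
        print(step, cosine_lr(step, TOTAL_STEPS))
```

Under these assumptions the rate peaks at the configured 0.0002 exactly when warmup ends and falls to half that value midway through the decay phase.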

### Training results

### Framework versions

- Transformers 4.55.2

- Pytorch 2.8.0+cu128

- Tokenizers 0.21.4

|

burmeai/burme-v1

|

burmeai

| 2025-08-19T11:51:20Z

| 0

| 0

| null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-19T11:51:20Z

|

---

license: apache-2.0

---

|

AXERA-TECH/Qwen2.5-0.5B-Instruct-CTX-Int8

|

AXERA-TECH

| 2025-08-19T11:51:10Z

| 10

| 0

|

transformers

|

[

"transformers",

"Qwen",

"Qwen2.5-0.5B-Instruct",

"Qwen2.5-0.5B-Instruct-GPTQ-Int8",

"GPTQ",

"en",

"base_model:Qwen/Qwen2.5-0.5B-Instruct-GPTQ-Int8",

"base_model:finetune:Qwen/Qwen2.5-0.5B-Instruct-GPTQ-Int8",

"license:bsd-3-clause",

"endpoints_compatible",

"region:us"

] | null | 2025-06-03T07:41:28Z

|

---

library_name: transformers

license: bsd-3-clause

base_model:

- Qwen/Qwen2.5-0.5B-Instruct-GPTQ-Int8

tags:

- Qwen

- Qwen2.5-0.5B-Instruct

- Qwen2.5-0.5B-Instruct-GPTQ-Int8

- GPTQ

language:

- en

---

# Qwen2.5-0.5B-Instruct-GPTQ-Int8

This version of Qwen2.5-0.5B-Instruct-GPTQ-Int8 has been converted to run on the Axera NPU using **w8a16** quantization.

This model has been optimized with the following LoRA:

Compatible with Pulsar2 version 4.2 (not yet released).

## Conversion tool links

If you are interested in model conversion, you can try exporting the axmodel from the original repo: https://huggingface.co/Qwen/Qwen2.5-0.5B-Instruct-GPTQ-Int8

[Pulsar2 Link, How to Convert LLM from Huggingface to axmodel](https://pulsar2-docs.readthedocs.io/en/latest/appendix/build_llm.html)

[AXera NPU LLM Runtime](https://github.com/AXERA-TECH/ax-llm)

## Supported platforms

- AX650
  - AX650N DEMO Board
  - [M4N-Dock(爱芯派Pro)](https://wiki.sipeed.com/hardware/zh/maixIV/m4ndock/m4ndock.html)
  - [M.2 Accelerator card](https://axcl-docs.readthedocs.io/zh-cn/latest/doc_guide_hardware.html)
- AX630C
  - *in development*

|Chips|w8a16|w4a16|

|--|--|--|

|AX650| 30 tokens/sec| TBD |

## How to use

Download all files from this repository to the device.

```

root@ax650:/mnt/qtang/llm-test/qwen2.5-0.5b-ctx# tree -L 1

.

|-- main_ax650

|-- main_axcl_aarch64

|-- main_axcl_x86

|-- post_config.json

|-- qwen2.5-0.5b-gptq-int8-ctx-ax630c

|-- qwen2.5-0.5b-gptq-int8-ctx-ax650

|-- qwen2.5_tokenizer

|-- qwen2.5_tokenizer_uid.py

|-- run_qwen2.5_0.5b_gptq_int8_ctx_ax630c.sh

`-- run_qwen2.5_0.5b_gptq_int8_ctx_ax650.sh

3 directories, 7 files

```

#### Start the Tokenizer service

```

root@ax650:/mnt/qtang/llm-test/qwen2.5-0.5b-ctx# python3 qwen2.5_tokenizer_uid.py

Server running at http://0.0.0.0:12345

```
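Before launching inference it can help to confirm that the tokenizer service is actually listening. The snippet below is a minimal sketch that only checks whether a TCP connection to the port shown in the log above (12345) succeeds. It assumes the service runs on the same machine; it does not exercise the service's HTTP API, which is not documented here. The function name `tokenizer_service_reachable` is hypothetical.

```python
import socket


def tokenizer_service_reachable(host="127.0.0.1", port=12345, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError if the port is closed or unreachable.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if tokenizer_service_reachable():
        print("Tokenizer service is reachable on port 12345")
    else:
        print("Tokenizer service is not reachable; start qwen2.5_tokenizer_uid.py first")
```

A successful connection only shows that something is listening on the port, so the startup log of the run script remains the authoritative check.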

#### Inference with AX650 Host, such as M4N-Dock(爱芯派Pro) or AX650N DEMO Board

Open another terminal and run `run_qwen2.5_0.5b_gptq_int8_ctx_ax650.sh`:

```

root@ax650:/mnt/qtang/llm-test/qwen2.5-0.5b-ctx# ./run_qwen2.5_0.5b_gptq_int8_ctx_ax650.sh

[I][ Init][ 110]: LLM init start

[I][ Init][ 34]: connect http://127.0.0.1:12345 ok

[I][ Init][ 57]: uid: cdeaf62e-0243-4dc9-b557-23a7c1ba7da1

bos_id: -1, eos_id: 151645

100% | ████████████████████████████████ | 27 / 27 [12.35s<12.35s, 2.19 count/s] init post axmodel ok,remain_cmm(3960 MB)

[I][ Init][ 188]: max_token_len : 2560

[I][ Init][ 193]: kv_cache_size : 128, kv_cache_num: 2560

[I][ Init][ 201]: prefill_token_num : 128

[I][ Init][ 205]: grp: 1, prefill_max_token_num : 1

[I][ Init][ 205]: grp: 2, prefill_max_token_num : 128

[I][ Init][ 205]: grp: 3, prefill_max_token_num : 512

[I][ Init][ 205]: grp: 4, prefill_max_token_num : 1024

[I][ Init][ 205]: grp: 5, prefill_max_token_num : 1536

[I][ Init][ 205]: grp: 6, prefill_max_token_num : 2048

[I][ Init][ 209]: prefill_max_token_num : 2048