modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

facebook/convnext-large-384

|

55a95313fa52934fa7b4d8e646aa0ac574eda4ef

|

2022-02-26T12:16:55.000Z

|

[

"pytorch",

"tf",

"convnext",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2201.03545",

"transformers",

"vision",

"license:apache-2.0"

] |

image-classification

| false |

facebook

| null |

facebook/convnext-large-384

| 85 | null |

transformers

| 4,900 |

---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# ConvNeXT (large-sized model)

ConvNeXT model trained on ImageNet-1k at resolution 384x384. It was introduced in the paper [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545) by Liu et al. and first released in [this repository](https://github.com/facebookresearch/ConvNeXt).

Disclaimer: The team releasing ConvNeXT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

ConvNeXT is a pure convolutional model (ConvNet), inspired by the design of Vision Transformers, that claims to outperform them. The authors started from a ResNet and "modernized" its design by taking the Swin Transformer as inspiration.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=convnext) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import ConvNextFeatureExtractor, ConvNextForImageClassification

import torch

from datasets import load_dataset

dataset = load_dataset("huggingface/cats-image")

image = dataset["test"]["image"][0]

feature_extractor = ConvNextFeatureExtractor.from_pretrained("facebook/convnext-large-384")

model = ConvNextForImageClassification.from_pretrained("facebook/convnext-large-384")

inputs = feature_extractor(image, return_tensors="pt")

with torch.no_grad():

logits = model(**inputs).logits

# model predicts one of the 1000 ImageNet classes

predicted_label = logits.argmax(-1).item()

print(model.config.id2label[predicted_label]),

```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/convnext).

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2201-03545,

author = {Zhuang Liu and

Hanzi Mao and

Chao{-}Yuan Wu and

Christoph Feichtenhofer and

Trevor Darrell and

Saining Xie},

title = {A ConvNet for the 2020s},

journal = {CoRR},

volume = {abs/2201.03545},

year = {2022},

url = {https://arxiv.org/abs/2201.03545},

eprinttype = {arXiv},

eprint = {2201.03545},

timestamp = {Thu, 20 Jan 2022 14:21:35 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-2201-03545.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

facebook/wav2vec2-xls-r-300m-en-to-15

|

eca5ea600a8570ea1744fe5bd13f8b1ce505a656

|

2022-05-26T22:27:20.000Z

|

[

"pytorch",

"speech-encoder-decoder",

"automatic-speech-recognition",

"multilingual",

"en",

"de",

"tr",

"fa",

"sv",

"mn",

"zh",

"cy",

"ca",

"sl",

"et",

"id",

"ar",

"ta",

"lv",

"ja",

"dataset:common_voice",

"dataset:multilingual_librispeech",

"dataset:covost2",

"arxiv:2111.09296",

"transformers",

"speech",

"xls_r",

"xls_r_translation",

"license:apache-2.0"

] |

automatic-speech-recognition

| false |

facebook

| null |

facebook/wav2vec2-xls-r-300m-en-to-15

| 85 | null |

transformers

| 4,901 |

---

language:

- multilingual

- en

- de

- tr

- fa

- sv

- mn

- zh

- cy

- ca

- sl

- et

- id

- ar

- ta

- lv

- ja

datasets:

- common_voice

- multilingual_librispeech

- covost2

tags:

- speech

- xls_r

- xls_r_translation

- automatic-speech-recognition

pipeline_tag: automatic-speech-recognition

license: apache-2.0

widget:

- example_title: English

src: https://cdn-media.huggingface.co/speech_samples/common_voice_en_18301577.mp3

---

# Wav2Vec2-XLS-R-300M-EN-15

Facebook's Wav2Vec2 XLS-R fine-tuned for **Speech Translation.**

This is a [SpeechEncoderDecoderModel](https://huggingface.co/transformers/model_doc/speechencoderdecoder.html) model.

The encoder was warm-started from the [**`facebook/wav2vec2-xls-r-300m`**](https://huggingface.co/facebook/wav2vec2-xls-r-300m) checkpoint and

the decoder from the [**`facebook/mbart-large-50`**](https://huggingface.co/facebook/mbart-large-50) checkpoint.

Consequently, the encoder-decoder model was fine-tuned on 15 `en` -> `{lang}` translation pairs of the [Covost2 dataset](https://huggingface.co/datasets/covost2).

The model can translate from spoken `en` (Engish) to the following written languages `{lang}`:

`en` -> {`de`, `tr`, `fa`, `sv-SE`, `mn`, `zh-CN`, `cy`, `ca`, `sl`, `et`, `id`, `ar`, `ta`, `lv`, `ja`}

For more information, please refer to Section *5.1.1* of the [official XLS-R paper](https://arxiv.org/abs/2111.09296).

## Usage

### Demo

The model can be tested on [**this space**](https://huggingface.co/spaces/facebook/XLS-R-300m-EN-15).

You can select the target language, record some audio in English,

and then sit back and see how well the checkpoint can translate the input.

### Example

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

You can use the model directly via the ASR pipeline. By default, the checkpoint will

translate spoken English to written German. To change the written target language,

you need to pass the correct `forced_bos_token_id` to `generate(...)` to condition

the decoder on the correct target language.

To select the correct `forced_bos_token_id` given your choosen language id, please make use

of the following mapping:

```python

MAPPING = {

"de": 250003,

"tr": 250023,

"fa": 250029,

"sv": 250042,

"mn": 250037,

"zh": 250025,

"cy": 250007,

"ca": 250005,

"sl": 250052,

"et": 250006,

"id": 250032,

"ar": 250001,

"ta": 250044,

"lv": 250017,

"ja": 250012,

}

```

As an example, if you would like to translate to Swedish, you can do the following:

```python

from datasets import load_dataset

from transformers import pipeline

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

# replace following lines to load an audio file of your choice

librispeech_en = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

audio_file = librispeech_en[0]["file"]

asr = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-xls-r-300m-en-to-15", feature_extractor="facebook/wav2vec2-xls-r-300m-en-to-15")

translation = asr(audio_file, forced_bos_token_id=forced_bos_token_id)

```

or step-by-step as follows:

```python

import torch

from transformers import Speech2Text2Processor, SpeechEncoderDecoderModel

from datasets import load_dataset

model = SpeechEncoderDecoderModel.from_pretrained("facebook/wav2vec2-xls-r-300m-en-to-15")

processor = Speech2Text2Processor.from_pretrained("facebook/wav2vec2-xls-r-300m-en-to-15")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# select correct `forced_bos_token_id`

forced_bos_token_id = MAPPING["sv"]

inputs = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["array"]["sampling_rate"], return_tensors="pt")

generated_ids = model.generate(input_ids=inputs["input_features"], attention_mask=inputs["attention_mask"], forced_bos_token_id=forced_bos_token)

transcription = processor.batch_decode(generated_ids)

```

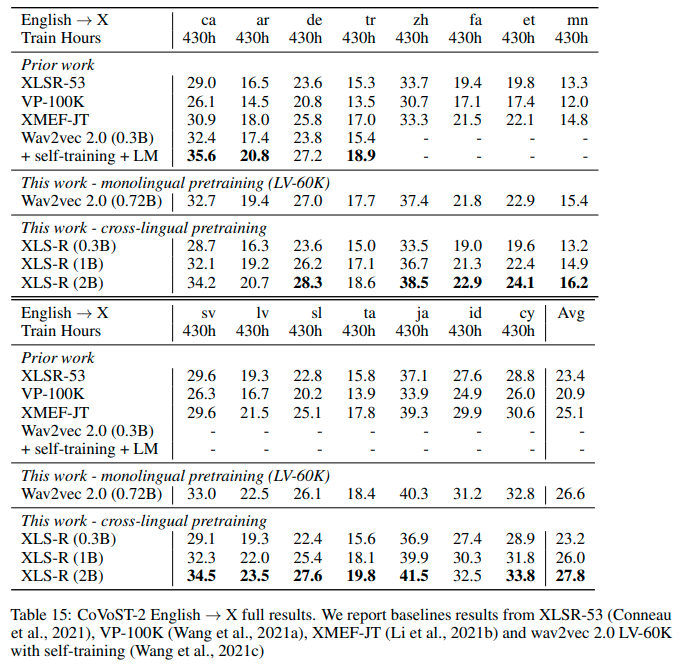

## Results `en` -> `{lang}`

See the row of **XLS-R (0.3B)** for the performance on [Covost2](https://huggingface.co/datasets/covost2) for this model.

## More XLS-R models for `{lang}` -> `en` Speech Translation

- [Wav2Vec2-XLS-R-300M-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-300m-en-to-15)

- [Wav2Vec2-XLS-R-1B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-1b-en-to-15)

- [Wav2Vec2-XLS-R-2B-EN-15](https://huggingface.co/facebook/wav2vec2-xls-r-2b-en-to-15)

- [Wav2Vec2-XLS-R-2B-22-16](https://huggingface.co/facebook/wav2vec2-xls-r-2b-22-to-16)

|

hfl/chinese-electra-small-discriminator

|

f59e9653eb2382d76ca1a45b782897158d828f26

|

2021-03-03T01:39:00.000Z

|

[

"pytorch",

"tf",

"electra",

"zh",

"arxiv:2004.13922",

"transformers",

"license:apache-2.0"

] | null | false |

hfl

| null |

hfl/chinese-electra-small-discriminator

| 85 | 1 |

transformers

| 4,902 |

---

language:

- zh

license: "apache-2.0"

---

**Please use `ElectraForPreTraining` for `discriminator` and `ElectraForMaskedLM` for `generator` if you are re-training these models.**

## Chinese ELECTRA

Google and Stanford University released a new pre-trained model called ELECTRA, which has a much compact model size and relatively competitive performance compared to BERT and its variants.

For further accelerating the research of the Chinese pre-trained model, the Joint Laboratory of HIT and iFLYTEK Research (HFL) has released the Chinese ELECTRA models based on the official code of ELECTRA.

ELECTRA-small could reach similar or even higher scores on several NLP tasks with only 1/10 parameters compared to BERT and its variants.

This project is based on the official code of ELECTRA: [https://github.com/google-research/electra](https://github.com/google-research/electra)

You may also interested in,

- Chinese BERT series: https://github.com/ymcui/Chinese-BERT-wwm

- Chinese ELECTRA: https://github.com/ymcui/Chinese-ELECTRA

- Chinese XLNet: https://github.com/ymcui/Chinese-XLNet

- Knowledge Distillation Toolkit - TextBrewer: https://github.com/airaria/TextBrewer

More resources by HFL: https://github.com/ymcui/HFL-Anthology

## Citation

If you find our resource or paper is useful, please consider including the following citation in your paper.

- https://arxiv.org/abs/2004.13922

```

@inproceedings{cui-etal-2020-revisiting,

title = "Revisiting Pre-Trained Models for {C}hinese Natural Language Processing",

author = "Cui, Yiming and

Che, Wanxiang and

Liu, Ting and

Qin, Bing and

Wang, Shijin and

Hu, Guoping",

booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.findings-emnlp.58",

pages = "657--668",

}

```

|

michaelbenayoun/vit-base-beans

|

b724a7366b1467f6f5e53b978b44c4c08d6c23cc

|

2021-12-17T09:17:23.000Z

|

[

"pytorch",

"tensorboard",

"vit",

"image-classification",

"dataset:cifar10",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

image-classification

| false |

michaelbenayoun

| null |

michaelbenayoun/vit-base-beans

| 85 | null |

transformers

| 4,903 |

---

license: apache-2.0

tags:

- image-classification

- generated_from_trainer

datasets:

- cifar10

metrics:

- accuracy

model-index:

- name: vit-base-beans

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: cifar10

type: cifar10

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.6224

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-beans

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the cifar10 dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1333

- Accuracy: 0.6224

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 1337

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.1678 | 0.02 | 100 | 2.1333 | 0.6224 |

### Framework versions

- Transformers 4.15.0.dev0

- Pytorch 1.10.0

- Datasets 1.16.2.dev0

- Tokenizers 0.10.2

|

mrm8488/bert2bert_shared-spanish-finetuned-muchocine-review-summarization

|

266f7ece730f283e5f979c18056f53fc4896a31f

|

2021-05-07T09:26:36.000Z

|

[

"pytorch",

"encoder-decoder",

"text2text-generation",

"es",

"transformers",

"summarization",

"films",

"cinema",

"autotrain_compatible"

] |

summarization

| false |

mrm8488

| null |

mrm8488/bert2bert_shared-spanish-finetuned-muchocine-review-summarization

| 85 | null |

transformers

| 4,904 |

---

tags:

- summarization

- films

- cinema

language: es

widget:

- text: "Es la película que con más ansia he esperado, dado el precedente de las dos anteriores entregas, esta debía ser la joya de la corona, el mejor film jamás realizado… Pero cuando salí del cine estaba decepcionado, me leí el libro antes de ver la película (cosa que no hice con las otras dos) y sentí que Peter me falló. Le faltaba algo, habían obviado demasiadas cosas y no salía Saruman, algo incomprensible dada la importancia que se le dio en las anteriores películas. La película parecía incompleta y realmente lo estaba. Me pareció la peor de la trilogía. Volví a ver el film y esta vez en su versión extendida y mentalizado ya de que no podía ser igual que el libro y mi opinión cambio. Es la mejor de la trilogía en todos los aspectos y para mi gusto el mejor film que jamás se ha hecho. A pesar de sus casi 240 minutos el ritmo no decae sino que aumenta en algo que solo lo he visto hacer a Peter Jackson, las palabras de Tolkien cobra vida y con gran lirismo el film avanza hacía su clímax final. La impecable banda sonora te transporta los sentimientos que el maestro Jackson te quiere transmitir. Aquella noche de 2003 en el teatro Kodak de L.A los oscars dieron justicia a una trilogía que injustamente fue tratada hasta esa noche. En conjunto gano 17 oscars, pero en mi opinión se quedaron bastante cortos. El tiempo pondrá a esta trilogía como clásico imperecedero, una lección de cómo realizar una superproducción, los mensajes que transmiten, los bellos escenarios que presentan, un cuento al fin y al cabo pero convertido en obra de arte. Genialidad en todos los sentidos, no os dejéis engañar por los que duramente critican a esta trilogía y sino mirar lo que ellos llaman buen cine…"

---

|

nates-test-org/convit_base

|

ec61081b76678a0c3c53d4d6bd64305598a6da09

|

2021-10-29T04:40:37.000Z

|

[

"pytorch",

"timm",

"image-classification"

] |

image-classification

| false |

nates-test-org

| null |

nates-test-org/convit_base

| 85 | null |

timm

| 4,905 |

---

tags:

- image-classification

- timm

library_tag: timm

---

# Model card for convit_base

|

speechbrain/urbansound8k_ecapa

|

ac8b73ec8c5fd158447654a33101fe236e048bd9

|

2022-05-30T14:34:29.000Z

|

[

"en",

"dataset:Urbansound8k",

"arxiv:2106.04624",

"speechbrain",

"embeddings",

"Sound",

"Keywords",

"Keyword Spotting",

"pytorch",

"ECAPA-TDNN",

"TDNN",

"Command Recognition",

"audio-classification",

"license:apache-2.0"

] |

audio-classification

| false |

speechbrain

| null |

speechbrain/urbansound8k_ecapa

| 85 | 3 |

speechbrain

| 4,906 |

---

language: "en"

thumbnail:

tags:

- speechbrain

- embeddings

- Sound

- Keywords

- Keyword Spotting

- pytorch

- ECAPA-TDNN

- TDNN

- Command Recognition

- audio-classification

license: "apache-2.0"

datasets:

- Urbansound8k

metrics:

- Accuracy

---

<iframe src="https://ghbtns.com/github-btn.html?user=speechbrain&repo=speechbrain&type=star&count=true&size=large&v=2" frameborder="0" scrolling="0" width="170" height="30" title="GitHub"></iframe>

<br/><br/>

# Sound Recognition with ECAPA embeddings on UrbanSoudnd8k

This repository provides all the necessary tools to perform sound recognition with SpeechBrain using a model pretrained on UrbanSound8k.

You can download the dataset [here](https://urbansounddataset.weebly.com/urbansound8k.html)

The provided system can recognize the following 10 keywords:

```

dog_bark, children_playing, air_conditioner, street_music, gun_shot, siren, engine_idling, jackhammer, drilling, car_horn

```

For a better experience, we encourage you to learn more about

[SpeechBrain](https://speechbrain.github.io). The given model performance on the test set is:

| Release | Accuracy 1-fold (%)

|:-------------:|:--------------:|

| 04-06-21 | 75.5 |

## Pipeline description

This system is composed of a ECAPA model coupled with statistical pooling. A classifier, trained with Categorical Cross-Entropy Loss, is applied on top of that.

## Install SpeechBrain

First of all, please install SpeechBrain with the following command:

```

pip install speechbrain

```

Please notice that we encourage you to read our tutorials and learn more about

[SpeechBrain](https://speechbrain.github.io).

### Perform Sound Recognition

```python

import torchaudio

from speechbrain.pretrained import EncoderClassifier

classifier = EncoderClassifier.from_hparams(source="speechbrain/urbansound8k_ecapa", savedir="pretrained_models/gurbansound8k_ecapa")

out_prob, score, index, text_lab = classifier.classify_file('speechbrain/urbansound8k_ecapa/dog_bark.wav')

print(text_lab)

```

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling *classify_file* if needed. Make sure your input tensor is compliant with the expected sampling rate if you use *encode_batch* and *classify_batch*.

### Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

### Training

The model was trained with SpeechBrain (8cab8b0c).

To train it from scratch follows these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```

cd recipes/UrbanSound8k/SoundClassification

python train.py hparams/train_ecapa_tdnn.yaml --data_folder=your_data_folder

```

You can find our training results (models, logs, etc) [here](https://drive.google.com/drive/folders/1sItfg_WNuGX6h2dCs8JTGq2v2QoNTaUg?usp=sharing).

### Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

#### Referencing ECAPA

```@inproceedings{DBLP:conf/interspeech/DesplanquesTD20,

author = {Brecht Desplanques and

Jenthe Thienpondt and

Kris Demuynck},

editor = {Helen Meng and

Bo Xu and

Thomas Fang Zheng},

title = {{ECAPA-TDNN:} Emphasized Channel Attention, Propagation and Aggregation

in {TDNN} Based Speaker Verification},

booktitle = {Interspeech 2020},

pages = {3830--3834},

publisher = {{ISCA}},

year = {2020},

}

```

#### Referencing UrbanSound

```@inproceedings{Salamon:UrbanSound:ACMMM:14,

Author = {Salamon, J. and Jacoby, C. and Bello, J. P.},

Booktitle = {22nd {ACM} International Conference on Multimedia (ACM-MM'14)},

Month = {Nov.},

Pages = {1041--1044},

Title = {A Dataset and Taxonomy for Urban Sound Research},

Year = {2014}}

```

# **Citing SpeechBrain**

Please, cite SpeechBrain if you use it for your research or business.

```bibtex

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

|

uer/t5-v1_1-small-chinese-cluecorpussmall

|

4695f4326f388432eed807b081822a18795ec17d

|

2022-07-15T08:22:12.000Z

|

[

"pytorch",

"tf",

"jax",

"mt5",

"text2text-generation",

"zh",

"dataset:CLUECorpusSmall",

"arxiv:1909.05658",

"transformers",

"autotrain_compatible"

] |

text2text-generation

| false |

uer

| null |

uer/t5-v1_1-small-chinese-cluecorpussmall

| 85 | null |

transformers

| 4,907 |

---

language: zh

datasets: CLUECorpusSmall

widget:

- text: "作为电子extra0的平台,京东绝对是领先者。如今的刘强extra1已经是身价过extra2的老板。"

---

# Chinese T5 Version 1.1

## Model description

This is the set of Chinese T5 Version 1.1 models pre-trained by [UER-py](https://github.com/dbiir/UER-py/), which is introduced in [this paper](https://arxiv.org/abs/1909.05658).

**Version 1.1**

Chinese T5 Version 1.1 includes the following improvements compared to our Chinese T5 model:

- GEGLU activation in feed-forward hidden layer, rather than ReLU

- Dropout was turned off in pre-training

- no parameter sharing between embedding and classifier layer

You can download the set of Chinese T5 Version 1.1 models either from the [UER-py Modelzoo page](https://github.com/dbiir/UER-py/wiki/Modelzoo), or via HuggingFace from the links below:

| | Link |

| ----------------- | :----------------------------: |

| **T5-v1_1-Small** | [**L=8/H=512 (Small)**][small] |

| **T5-v1_1-Base** | [**L=12/H=768 (Base)**][base] |

In T5 Version 1.1, spans of the input sequence are masked by so-called sentinel token. Each sentinel token represents a unique mask token for the input sequence and should start with `<extra_id_0>`, `<extra_id_1>`, … up to `<extra_id_99>`. However, `<extra_id_xxx>` is separated into multiple parts in Huggingface's Hosted inference API. Therefore, we replace `<extra_id_xxx>` with `extraxxx` in vocabulary and BertTokenizer regards `extraxxx` as one sentinel token.

## How to use

You can use this model directly with a pipeline for text2text generation (take the case of T5-v1_1-Small):

```python

>>> from transformers import BertTokenizer, MT5ForConditionalGeneration, Text2TextGenerationPipeline

>>> tokenizer = BertTokenizer.from_pretrained("uer/t5-v1_1-small-chinese-cluecorpussmall")

>>> model = MT5ForConditionalGeneration.from_pretrained("uer/t5-v1_1-small-chinese-cluecorpussmall")

>>> text2text_generator = Text2TextGenerationPipeline(model, tokenizer)

>>> text2text_generator("中国的首都是extra0京", max_length=50, do_sample=False)

[{'generated_text': 'extra0 北 extra1 extra2 extra3 extra4 extra5'}]

```

## Training data

[CLUECorpusSmall](https://github.com/CLUEbenchmark/CLUECorpus2020/) is used as training data.

## Training procedure

The model is pre-trained by [UER-py](https://github.com/dbiir/UER-py/) on [Tencent Cloud](https://cloud.tencent.com/). We pre-train 1,000,000 steps with a sequence length of 128 and then pre-train 250,000 additional steps with a sequence length of 512. We use the same hyper-parameters on different model sizes.

Taking the case of T5-v1_1-Small

Stage1:

```

python3 preprocess.py --corpus_path corpora/cluecorpussmall.txt \

--vocab_path models/google_zh_with_sentinel_vocab.txt \

--dataset_path cluecorpussmall_t5-v1_1_seq128_dataset.pt \

--processes_num 32 --seq_length 128 \

--dynamic_masking --data_processor t5

```

```

python3 pretrain.py --dataset_path cluecorpussmall_t5-v1_1_seq128_dataset.pt \

--vocab_path models/google_zh_with_sentinel_vocab.txt \

--config_path models/t5-v1_1/small_config.json \

--output_model_path models/cluecorpussmall_t5-v1_1_small_seq128_model.bin \

--world_size 8 --gpu_ranks 0 1 2 3 4 5 6 7 \

--total_steps 1000000 --save_checkpoint_steps 100000 --report_steps 50000 \

--learning_rate 1e-3 --batch_size 64 \

--span_masking --span_geo_prob 0.3 --span_max_length 5

```

Stage2:

```

python3 preprocess.py --corpus_path corpora/cluecorpussmall.txt \

--vocab_path models/google_zh_with_sentinel_vocab.txt \

--dataset_path cluecorpussmall_t5-v1_1_seq512_dataset.pt \

--processes_num 32 --seq_length 512 \

--dynamic_masking --data_processor t5

```

```

python3 pretrain.py --dataset_path cluecorpussmall_t5-v1_1_seq512_dataset.pt \

--pretrained_model_path models/cluecorpussmall_t5-v1_1_small_seq128_model.bin-1000000 \

--vocab_path models/google_zh_with_sentinel_vocab.txt \

--config_path models/t5-v1_1/small_config.json \

--output_model_path models/cluecorpussmall_t5-v1_1_small_seq512_model.bin \

--world_size 8 --gpu_ranks 0 1 2 3 4 5 6 7 \

--total_steps 250000 --save_checkpoint_steps 50000 --report_steps 10000 \

--learning_rate 5e-4 --batch_size 16 \

--span_masking --span_geo_prob 0.3 --span_max_length 5

```

Finally, we convert the pre-trained model into Huggingface's format:

```

python3 scripts/convert_t5_from_uer_to_huggingface.py --input_model_path cluecorpussmall_t5_small_seq512_model.bin-250000 \

--output_model_path pytorch_model.bin \

--layers_num 8 \

--type t5-v1_1

```

### BibTeX entry and citation info

```

@article{2020t5,

title = {Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer},

author = {Colin Raffel and Noam Shazeer and Adam Roberts and Katherine Lee and Sharan Narang and Michael Matena and Yanqi Zhou and Wei Li and Peter J. Liu},

journal = {Journal of Machine Learning Research},

pages = {1-67},

year = {2020}

}

@article{zhao2019uer,

title={UER: An Open-Source Toolkit for Pre-training Models},

author={Zhao, Zhe and Chen, Hui and Zhang, Jinbin and Zhao, Xin and Liu, Tao and Lu, Wei and Chen, Xi and Deng, Haotang and Ju, Qi and Du, Xiaoyong},

journal={EMNLP-IJCNLP 2019},

pages={241},

year={2019}

}

```

[small]:https://huggingface.co/uer/t5-v1_1-small-chinese-cluecorpussmall

[base]:https://huggingface.co/uer/t5-v1_1-base-chinese-cluecorpussmall

|

wietsedv/wav2vec2-large-xlsr-53-frisian

|

8c02c12454880a55cb031de9744245649fd0e70f

|

2021-03-28T20:09:35.000Z

|

[

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"fy-NL",

"dataset:common_voice",

"transformers",

"audio",

"speech",

"xlsr-fine-tuning-week",

"license:apache-2.0",

"model-index"

] |

automatic-speech-recognition

| false |

wietsedv

| null |

wietsedv/wav2vec2-large-xlsr-53-frisian

| 85 | null |

transformers

| 4,908 |

---

language: fy-NL

datasets:

- common_voice

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: Frisian XLSR Wav2Vec2 Large 53 by Wietse de Vries

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice fy-NL

type: common_voice

args: fy-NL

metrics:

- name: Test WER

type: wer

value: 16.25

---

# Wav2Vec2-Large-XLSR-53-Frisian

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Frisian using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "fy-NL", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("wietsedv/wav2vec2-large-xlsr-53-frisian")

model = Wav2Vec2ForCTC.from_pretrained("wietsedv/wav2vec2-large-xlsr-53-frisian")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def speech_file_to_array_fn(batch):

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Frisian test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "fy-NL", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("wietsedv/wav2vec2-large-xlsr-53-frisian")

model = Wav2Vec2ForCTC.from_pretrained("wietsedv/wav2vec2-large-xlsr-53-frisian")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\'\“\%\‘\”]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def speech_file_to_array_fn(batch):

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def evaluate(batch):

inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["pred_strings"] = processor.batch_decode(pred_ids)

return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 16.25 %

## Training

The Common Voice `train` and `validation` datasets were used for training.

|

yuvraj/summarizer-cnndm

|

4dfc2c9a7a656985c0b7415509cfdd26550e8306

|

2020-12-11T22:04:58.000Z

|

[

"pytorch",

"bart",

"text2text-generation",

"en",

"transformers",

"summarization",

"autotrain_compatible"

] |

summarization

| false |

yuvraj

| null |

yuvraj/summarizer-cnndm

| 85 | null |

transformers

| 4,909 |

---

language: "en"

tags:

- summarization

---

# Summarization

## Model description

BartForConditionalGeneration model fine tuned for summarization on 10000 samples from the cnn-dailymail dataset

## How to use

PyTorch model available

```python

from transformers import AutoTokenizer, AutoModelWithLMHead, pipeline

tokenizer = AutoTokenizer.from_pretrained("yuvraj/summarizer-cnndm")

AutoModelWithLMHead.from_pretrained("yuvraj/summarizer-cnndm")

summarizer = pipeline('summarization', model=model, tokenizer=tokenizer)

summarizer("<Text to be summarized>")

## Limitations and bias

Trained on a small dataset

|

zhiheng-huang/bert-base-uncased-embedding-relative-key-query

|

a3126b4d74e3edf3eea4280d186ac7ad4dbc4753

|

2021-05-20T09:45:59.000Z

|

[

"pytorch",

"jax",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] |

fill-mask

| false |

zhiheng-huang

| null |

zhiheng-huang/bert-base-uncased-embedding-relative-key-query

| 85 | null |

transformers

| 4,910 |

Entry not found

|

kevinjesse/graphcodebert-MT4TS

|

ee7bbf8909df6ab4b82e3229dfff0b5b4c9cc8c0

|

2022-03-09T11:39:30.000Z

|

[

"pytorch",

"roberta",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

| false |

kevinjesse

| null |

kevinjesse/graphcodebert-MT4TS

| 85 | null |

transformers

| 4,911 |

Entry not found

|

hafidber/rare-puppers

|

aba4ab1fac86f7f7bb5958dd7e8c12ce14ec051b

|

2022-04-06T17:53:06.000Z

|

[

"pytorch",

"tensorboard",

"vit",

"image-classification",

"transformers",

"huggingpics",

"model-index"

] |

image-classification

| false |

hafidber

| null |

hafidber/rare-puppers

| 85 | null |

transformers

| 4,912 |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: rare-puppers

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.9552238583564758

---

# rare-puppers

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

#### corgi

#### samoyed

#### shiba inu

|

malteos/gpt2-wechsel-german-ds-meg

|

6961d2febd4a803e925a3acc7034548f32e6bc5c

|

2022-05-05T19:41:22.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"de",

"transformers",

"license:mit"

] |

text-generation

| false |

malteos

| null |

malteos/gpt2-wechsel-german-ds-meg

| 85 | null |

transformers

| 4,913 |

---

license: mit

language: de

widget:

- text: "In einer schockierenden Entdeckung fanden Wissenschaftler eine Herde Einhörner, die in einem abgelegenen, zuvor unerforschten Tal in den Anden lebten."

---

# Replication of [gpt2-wechsel-german](https://huggingface.co/benjamin/gpt2-wechsel-german)

- trained with [BigScience's DeepSpeed-Megatron-LM code base](https://github.com/bigscience-workshop/Megatron-DeepSpeed)

- 22hrs on 4xA100 GPUs (~ 80 TFLOPs / GPU)

- stopped after 100k steps

- less than a single epoch on `oscar_unshuffled_deduplicated_de` (excluding validation set; original model was trained for 75 epochs on less data)

- bf16

- zero stage 1

- tp/pp = 1

## Evaluation

| Model | PPL |

|---|---|

| `gpt2-wechsel-german-ds-meg` | **26.4** |

| `gpt2-wechsel-german` | 26.8 |

| `gpt2` (retrained from scratch) | 27.63 |

## License

MIT

|

ajtamayoh/NER_EHR_Spanish_model_Mulitlingual_BERT

|

8ff6b1072af538faea974122ed66e9870d9c1ec2

|

2022-06-14T16:29:31.000Z

|

[

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] |

token-classification

| false |

ajtamayoh

| null |

ajtamayoh/NER_EHR_Spanish_model_Mulitlingual_BERT

| 85 | null |

transformers

| 4,914 |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: NER_EHR_Spanish_model_Mulitlingual_BERT

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# NER_EHR_Spanish_model_Mulitlingual_BERT

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the DisTEMIST shared task 2022 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2603

- Precision: 0.5637

- Recall: 0.5801

- F1: 0.5718

- Accuracy: 0.9534

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 7

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 71 | 0.2060 | 0.5017 | 0.5540 | 0.5266 | 0.9496 |

| No log | 2.0 | 142 | 0.2163 | 0.5363 | 0.5433 | 0.5398 | 0.9495 |

| No log | 3.0 | 213 | 0.2245 | 0.5521 | 0.5356 | 0.5438 | 0.9514 |

| No log | 4.0 | 284 | 0.2453 | 0.5668 | 0.5985 | 0.5822 | 0.9522 |

| No log | 5.0 | 355 | 0.2433 | 0.5657 | 0.5579 | 0.5617 | 0.9530 |

| No log | 6.0 | 426 | 0.2553 | 0.5762 | 0.5762 | 0.5762 | 0.9536 |

| No log | 7.0 | 497 | 0.2603 | 0.5637 | 0.5801 | 0.5718 | 0.9534 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.2.0

- Tokenizers 0.12.1

|

malmarjeh/mbert2mbert-arabic-text-summarization

|

d575c9342bd733da408d31286672ec2fbe568b63

|

2022-06-29T12:54:31.000Z

|

[

"pytorch",

"encoder-decoder",

"text2text-generation",

"ar",

"transformers",

"Multilingual BERT",

"BERT2BERT",

"MSA",

"Arabic Text Summarization",

"Arabic News Title Generation",

"Arabic Paraphrasing",

"autotrain_compatible"

] |

text2text-generation

| false |

malmarjeh

| null |

malmarjeh/mbert2mbert-arabic-text-summarization

| 85 | null |

transformers

| 4,915 |

---

language:

- ar

tags:

- Multilingual BERT

- BERT2BERT

- MSA

- Arabic Text Summarization

- Arabic News Title Generation

- Arabic Paraphrasing

---

# An Arabic abstractive text summarization model

A BERT2BERT-based model whose parameters are initialized with mBERT weights and which has been fine-tuned on a dataset of 84,764 paragraph-summary pairs.

More details on the fine-tuning of this model will be released later.

The model can be used as follows:

```python

from transformers import BertTokenizer, AutoModelForSeq2SeqLM, pipeline

from arabert.preprocess import ArabertPreprocessor

model_name="malmarjeh/mbert2mbert-arabic-text-summarization"

preprocessor = ArabertPreprocessor(model_name="")

tokenizer = BertTokenizer.from_pretrained(model_name)

model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

pipeline = pipeline("text2text-generation",model=model,tokenizer=tokenizer)

text = "شهدت مدينة طرابلس، مساء أمس الأربعاء، احتجاجات شعبية وأعمال شغب لليوم الثالث على التوالي، وذلك بسبب تردي الوضع المعيشي والاقتصادي. واندلعت مواجهات عنيفة وعمليات كر وفر ما بين الجيش اللبناني والمحتجين استمرت لساعات، إثر محاولة فتح الطرقات المقطوعة، ما أدى إلى إصابة العشرات من الطرفين."

text = preprocessor.preprocess(text)

result = pipeline(text,

pad_token_id=tokenizer.eos_token_id,

num_beams=3,

repetition_penalty=3.0,

max_length=200,

length_penalty=1.0,

no_repeat_ngram_size = 3)[0]['generated_text']

result

>>> 'احتجاجات في طرابلس على خلفية مواجهات عنيفة بين الجيش اللبناني والمحتجين'

```

## Contact:

**Mohammad Bani Almarjeh**: [Linkedin](https://www.linkedin.com/in/mohammad-bani-almarjeh/) | <[email protected]>

|

gustavhartz/roberta-base-cuad-finetuned

|

b801aecb08c8e28c07b03e162226d93e804b6661

|

2022-06-27T11:58:31.000Z

|

[

"pytorch",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

] |

question-answering

| false |

gustavhartz

| null |

gustavhartz/roberta-base-cuad-finetuned

| 85 | null |

transformers

| 4,916 |

# Finetuned legal contract review QA model based 👩⚖️ 📑

Best model presented in the master thesis [*Exploring CUAD using RoBERTa span-selection QA models for legal contract review*](https://github.com/gustavhartz/transformers-legal-tasks) for QA on the Contract Understanding Atticus Dataset. Full training logic and associated thesis available through link.

Outperform the most popular HF cuad model [Rakib/roberta-base-on-cuad](hello.com) and is the best model for CUAD on Hugging Face 26/06/2022

| **Model name** | **Top 1 Has Ans F1** | **Top 3 Has Ans F1** |

|-----------------------------------------|----------------------|----------------------|

| gustavhartz/roberta-base-cuad-finetuned | 85.68 | 94.06 |

| Rakib/roberta-base-on-cuad | 81.26 | 92.48 |

For questions etc. go through the Github repo :)

### Citation

If you found the code of thesis helpful you can please cite it :)

```

@thesis{ha2022,

author = {Hartz, Gustav Selfort},

title = {Exploring CUAD using RoBERTa span-selection QA models for legal contract review},

language = {English},

format = {thesis},

year = {2022},

publisher = {DTU Department of Applied Mathematics and Computer Science}

}

```

|

alistairmcleay/UBAR-distilgpt2

|

f5d4fa573db863e37138ea73cb37e61a294850a9

|

2022-06-26T14:10:29.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"license:wtfpl"

] |

text-generation

| false |

alistairmcleay

| null |

alistairmcleay/UBAR-distilgpt2

| 85 | null |

transformers

| 4,917 |

---

license: wtfpl

---

|

ebelenwaf/canbert

|

f1bcf409d524839b6ab581dcc93f9635e5311e8e

|

2022-07-17T03:39:02.000Z

|

[

"pytorch",

"tensorboard",

"roberta",

"fill-mask",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

] |

fill-mask

| false |

ebelenwaf

| null |

ebelenwaf/canbert

| 85 | null |

transformers

| 4,918 |

---

tags:

- generated_from_trainer

model-index:

- name: canbert

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# canbert

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.21.0.dev0

- Pytorch 1.12.0+cu113

- Tokenizers 0.12.1

|

tinkoff-ai/ruDialoGPT-small

|

aaf0936bedf44fcc834ee1ae91372af02f69f280

|

2022-07-19T20:27:35.000Z

|

[

"pytorch",

"gpt2",

"ru",

"arxiv:2001.09977",

"transformers",

"conversational",

"license:mit",

"text-generation"

] |

text-generation

| false |

tinkoff-ai

| null |

tinkoff-ai/ruDialoGPT-small

| 85 | null |

transformers

| 4,919 |

---

license: mit

pipeline_tag: text-generation

widget:

- text: "@@ПЕРВЫЙ@@ привет @@ВТОРОЙ@@ привет @@ПЕРВЫЙ@@ как дела? @@ВТОРОЙ@@"

example_title: "how r u"

- text: "@@ПЕРВЫЙ@@ что ты делал на выходных? @@ВТОРОЙ@@"

example_title: "wyd"

language:

- ru

tags:

- conversational

---

This generation model is based on [sberbank-ai/rugpt3small_based_on_gpt2](https://huggingface.co/sberbank-ai/rugpt3small_based_on_gpt2). It's trained on large corpus of dialog data and can be used for buildning generative conversational agents

The model was trained with context size 3

On a private validation set we calculated metrics introduced in [this paper](https://arxiv.org/pdf/2001.09977.pdf):

- Sensibleness: Crowdsourcers were asked whether model's response makes sense given the context

- Specificity: Crowdsourcers were asked whether model's response is specific for given context, in other words we don't want our model to give general and boring responses

- SSA which is the average of two metrics above (Sensibleness Specificity Average)

| | sensibleness | specificity | SSA |

|:----------------------------------------------------|---------------:|--------------:|------:|

| [tinkoff-ai/ruDialoGPT-small](https://huggingface.co/tinkoff-ai/ruDialoGPT-small) | 0.64 | 0.5 | 0.57 |

| [tinkoff-ai/ruDialoGPT-medium](https://huggingface.co/tinkoff-ai/ruDialoGPT-medium) | 0.78 | 0.69 | 0.735 |

How to use:

```python

import torch

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained('tinkoff-ai/ruDialoGPT-small')

model = AutoModelWithLMHead.from_pretrained('tinkoff-ai/ruDialoGPT-small')

inputs = tokenizer('@@ПЕРВЫЙ@@ привет @@ВТОРОЙ@@ привет @@ПЕРВЫЙ@@ как дела? @@ВТОРОЙ@@', return_tensors='pt')

generated_token_ids = model.generate(

**inputs,

top_k=10,

top_p=0.95,

num_beams=3,

num_return_sequences=3,

do_sample=True,

no_repeat_ngram_size=2,

temperature=1.2,

repetition_penalty=1.2,

length_penalty=1.0,

eos_token_id=50257,

max_new_tokens=40

)

context_with_response = [tokenizer.decode(sample_token_ids) for sample_token_ids in generated_token_ids]

context_with_response

```

|

nvidia/stt_ca_conformer_transducer_large

|

b07d20c46f0610ba8051db55674fcc36d438b7d7

|

2022-07-22T18:34:11.000Z

|

[

"nemo",

"ca",

"dataset:mozilla-foundation/common_voice_9_0",

"arxiv:2005.08100",

"automatic-speech-recognition",

"speech",

"audio",

"Transducer",

"Conformer",

"Transformer",

"pytorch",

"NeMo",

"hf-asr-leaderboard",

"license:cc-by-4.0",

"model-index"

] |

automatic-speech-recognition

| false |

nvidia

| null |

nvidia/stt_ca_conformer_transducer_large

| 85 | 1 |

nemo

| 4,920 |

---

language:

- ca

library_name: nemo

datasets:

- mozilla-foundation/common_voice_9_0

thumbnail: null

tags:

- automatic-speech-recognition

- speech

- audio

- Transducer

- Conformer

- Transformer

- pytorch

- NeMo

- hf-asr-leaderboard

license: cc-by-4.0

model-index:

- name: stt_ca_conformer_transducer_large

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Mozilla Common Voice 9.0

type: mozilla-foundation/common_voice_9_0

config: ca

split: test

args:

language: ca

metrics:

- name: Test WER

type: wer

value: 3.85

---

# NVIDIA Conformer-Transducer Large (Catalan)

<style>

img {

display: inline;

}

</style>

| [](#model-architecture)

| [](#model-architecture)

| [](#datasets)

This model transcribes speech into lowercase Catalan alphabet including spaces, dashes and apostrophes, and is trained on around 1023 hours of Catalan speech data.

It is a non-autoregressive "large" variant of Conformer, with around 120 million parameters.

See the [model architecture](#model-architecture) section and [NeMo documentation](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html#conformer-transducer) for complete architecture details.

## Usage

The model is available for use in the NeMo toolkit [3], and can be used as a pre-trained checkpoint for inference or for fine-tuning on another dataset.

To train, fine-tune or play with the model you will need to install [NVIDIA NeMo](https://github.com/NVIDIA/NeMo). We recommend you install it after you've installed latest PyTorch version.

```

pip install nemo_toolkit['all']

```

### Automatically instantiate the model

```python

import nemo.collections.asr as nemo_asr

asr_model = nemo_asr.models.EncDecRNNTBPEModel.from_pretrained("nvidia/stt_ca_conformer_transducer_large")

```

### Transcribing using Python

First, let's get a sample

```

wget https://dldata-public.s3.us-east-2.amazonaws.com/2086-149220-0033.wav

```

Then simply do:

```

asr_model.transcribe(['2086-149220-0033.wav'])

```

### Transcribing many audio files

```shell

python [NEMO_GIT_FOLDER]/examples/asr/transcribe_speech.py

pretrained_name="nvidia/stt_ca_conformer_transducer_large"

audio_dir="<DIRECTORY CONTAINING AUDIO FILES>"

```

### Input

This model accepts 16 kHz mono-channel Audio (wav files) as input.

### Output

This model provides transcribed speech as a string for a given audio sample.

## Model Architecture

Conformer-Transducer model is an autoregressive variant of Conformer model [1] for Automatic Speech Recognition which uses Transducer loss/decoding. You may find more info on the detail of this model here: [Conformer-Transducer Model](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html).

## Training

The NeMo toolkit [3] was used for training the models for over several hundred epochs. These model are trained with this [example script](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/asr_transducer/speech_to_text_rnnt_bpe.py) and this [base config](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/conf/conformer/conformer_transducer_bpe.yaml).

The tokenizers for these models were built using the text transcripts of the train set with this [script](https://github.com/NVIDIA/NeMo/blob/main/scripts/tokenizers/process_asr_text_tokenizer.py).

The vocabulary we use contains 44 characters:

```python

[' ', "'", '-', 'a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z', '·', 'à', 'á', 'ç', 'è', 'é', 'í', 'ï', 'ñ', 'ò', 'ó', 'ú', 'ü', 'ı', '–', '—']

```

Full config can be found inside the .nemo files.

### Datasets

All the models in this collection are trained on MCV-9.0 Catalan dataset, which contains around 1203 hours training, 28 hours of development and 27 hours of testing speech audios.

## Performance

The list of the available models in this collection is shown in the following table. Performances of the ASR models are reported in terms of Word Error Rate (WER%) with greedy decoding.

| Version | Tokenizer | Vocabulary Size | Dev WER| Test WER| Train Dataset |

|---------|-----------------------|-----------------|--------|---------|-----------------|

| 1.11.0 | SentencePiece Unigram | 128 |4.43 | 3.85 | MCV-9.0 Train set|

## Limitations

Since this model was trained on publicly available speech datasets, the performance of this model might degrade for speech which includes technical terms, or vernacular that the model has not been trained on. The model might also perform worse for accented speech.

## Deployment with NVIDIA Riva

[NVIDIA Riva](https://developer.nvidia.com/riva), is an accelerated speech AI SDK deployable on-prem, in all clouds, multi-cloud, hybrid, on edge, and embedded.

Additionally, Riva provides:

* World-class out-of-the-box accuracy for the most common languages with model checkpoints trained on proprietary data with hundreds of thousands of GPU-compute hours

* Best in class accuracy with run-time word boosting (e.g., brand and product names) and customization of acoustic model, language model, and inverse text normalization

* Streaming speech recognition, Kubernetes compatible scaling, and enterprise-grade support

Although this model isn’t supported yet by Riva, the [list of supported models is here](https://huggingface.co/models?other=Riva).

Check out [Riva live demo](https://developer.nvidia.com/riva#demos).

## References

- [1] [Conformer: Convolution-augmented Transformer for Speech Recognition](https://arxiv.org/abs/2005.08100)

- [2] [Google Sentencepiece Tokenizer](https://github.com/google/sentencepiece)

- [3] [NVIDIA NeMo Toolkit](https://github.com/NVIDIA/NeMo)

|

Akashpb13/Swahili_xlsr

|

8aa8e010d6a3f1f049fa8fefd8ecc4de57ef00fe

|

2022-03-23T18:28:25.000Z

|

[

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"sw",

"dataset:mozilla-foundation/common_voice_8_0",

"transformers",

"generated_from_trainer",

"hf-asr-leaderboard",

"model_for_talk",

"mozilla-foundation/common_voice_8_0",

"robust-speech-event",

"license:apache-2.0",

"model-index"

] |

automatic-speech-recognition

| false |

Akashpb13

| null |

Akashpb13/Swahili_xlsr

| 84 | null |

transformers

| 4,921 |

---

language:

- sw

license: apache-2.0

tags:

- automatic-speech-recognition

- generated_from_trainer

- hf-asr-leaderboard

- model_for_talk

- mozilla-foundation/common_voice_8_0

- robust-speech-event

- sw

datasets:

- mozilla-foundation/common_voice_8_0

model-index:

- name: Akashpb13/Swahili_xlsr

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 8

type: mozilla-foundation/common_voice_8_0

args: sw

metrics:

- name: Test WER

type: wer

value: 0.11763625454589981

- name: Test CER

type: cer

value: 0.02884228669922436

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Robust Speech Event - Dev Data

type: speech-recognition-community-v2/dev_data

args: kmr

metrics:

- name: Test WER

type: wer

value: 0.11763625454589981

- name: Test CER

type: cer

value: 0.02884228669922436

---

# Akashpb13/Swahili_xlsr

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the MOZILLA-FOUNDATION/COMMON_VOICE_7_0 - hu dataset.

It achieves the following results on the evaluation set (which is 10 percent of train data set merged with dev datasets):

- Loss: 0.159032

- Wer: 0.187934

## Model description

"facebook/wav2vec2-xls-r-300m" was finetuned.

## Intended uses & limitations

More information needed

## Training and evaluation data

Training data -

Common voice Hausa train.tsv and dev.tsv

Only those points were considered where upvotes were greater than downvotes and duplicates were removed after concatenation of all the datasets given in common voice 7.0

## Training procedure

For creating the training dataset, all possible datasets were appended and 90-10 split was used.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.000096

- train_batch_size: 16

- eval_batch_size: 16

- seed: 13

- gradient_accumulation_steps: 2

- lr_scheduler_type: cosine_with_restarts

- lr_scheduler_warmup_steps: 500

- num_epochs: 80

- mixed_precision_training: Native AMP

### Training results

| Step | Training Loss | Validation Loss | Wer |

|------|---------------|-----------------|----------|

| 500 | 4.810000 | 2.168847 | 0.995747 |

| 1000 | 0.564200 | 0.209411 | 0.303485 |

| 1500 | 0.217700 | 0.153959 | 0.239534 |

| 2000 | 0.150700 | 0.139901 | 0.216327 |

| 2500 | 0.119400 | 0.137543 | 0.208828 |

| 3000 | 0.099500 | 0.140921 | 0.203045 |

| 3500 | 0.087100 | 0.138835 | 0.199649 |

| 4000 | 0.074600 | 0.141297 | 0.195844 |

| 4500 | 0.066600 | 0.148560 | 0.194127 |

| 5000 | 0.060400 | 0.151214 | 0.194388 |

| 5500 | 0.054400 | 0.156072 | 0.192187 |

| 6000 | 0.051100 | 0.154726 | 0.190322 |

| 6500 | 0.048200 | 0.159847 | 0.189538 |

| 7000 | 0.046400 | 0.158727 | 0.188307 |

| 7500 | 0.046500 | 0.159032 | 0.187934 |

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.0+cu102

- Datasets 1.18.3

- Tokenizers 0.10.3

#### Evaluation Commands

1. To evaluate on `mozilla-foundation/common_voice_8_0` with split `test`

```bash

python eval.py --model_id Akashpb13/Swahili_xlsr --dataset mozilla-foundation/common_voice_8_0 --config sw --split test

```

|

Frodnar/bee-likes

|

58dd1beead6c58c97840cae6cbd6c1ee298056f0

|

2021-07-02T14:47:21.000Z

|

[

"pytorch",

"tensorboard",

"vit",

"image-classification",

"transformers",

"huggingpics",

"model-index"

] |

image-classification

| false |

Frodnar

| null |

Frodnar/bee-likes

| 84 | null |

transformers

| 4,922 |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: bee-likes

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.8333333134651184

---

# bee-likes

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

#### bee

#### hoverfly

#### wasp

|

HScomcom/gpt2-MyLittlePony

|

30475d509fbf395c2a120673a3886f47bc3e4731

|

2021-05-21T10:09:36.000Z

|

[

"pytorch",

"jax",

"gpt2",

"text-generation",

"transformers"

] |

text-generation

| false |

HScomcom

| null |

HScomcom/gpt2-MyLittlePony

| 84 | 1 |

transformers

| 4,923 |

The model that generates the My little pony script

Fine tuning data: [Kaggle](https://www.kaggle.com/liury123/my-little-pony-transcript?select=clean_dialog.csv)

API page: [Ainize](https://ainize.ai/fpem123/GPT2-MyLittlePony)

Demo page: [End point](https://master-gpt2-my-little-pony-fpem123.endpoint.ainize.ai/)

### Model information

Base model: gpt-2 large

Epoch: 30

Train runtime: 4943.9641 secs

Loss: 0.0291

###===Teachable NLP===

To train a GPT-2 model, write code and require GPU resources, but can easily fine-tune and get an API to use the model here for free.

Teachable NLP: [Teachable NLP](https://ainize.ai/teachable-nlp)

Tutorial: [Tutorial](https://forum.ainetwork.ai/t/teachable-nlp-how-to-use-teachable-nlp/65?utm_source=community&utm_medium=huggingface&utm_campaign=model&utm_content=teachable%20nlp)

|

Helsinki-NLP/opus-mt-ja-nl

|

c2caa48f4d8dc2123fbab467998cd32fe4d79a17

|

2020-08-21T14:42:47.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"ja",

"nl",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-ja-nl

| 84 | null |

transformers

| 4,924 |

---

language:

- ja

- nl

tags:

- translation

license: apache-2.0

---

### jpn-nld

* source group: Japanese

* target group: Dutch

* OPUS readme: [jpn-nld](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/jpn-nld/README.md)

* model: transformer-align

* source language(s): jpn jpn_Hani jpn_Hira jpn_Kana jpn_Latn

* target language(s): nld

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/jpn-nld/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/jpn-nld/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/jpn-nld/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.jpn.nld | 34.7 | 0.534 |

### System Info:

- hf_name: jpn-nld

- source_languages: jpn

- target_languages: nld

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/jpn-nld/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['ja', 'nl']

- src_constituents: {'jpn_Hang', 'jpn', 'jpn_Yiii', 'jpn_Kana', 'jpn_Hani', 'jpn_Bopo', 'jpn_Latn', 'jpn_Hira'}

- tgt_constituents: {'nld'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/jpn-nld/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/jpn-nld/opus-2020-06-17.test.txt

- src_alpha3: jpn

- tgt_alpha3: nld

- short_pair: ja-nl

- chrF2_score: 0.534

- bleu: 34.7

- brevity_penalty: 0.938

- ref_len: 25849.0

- src_name: Japanese

- tgt_name: Dutch

- train_date: 2020-06-17

- src_alpha2: ja

- tgt_alpha2: nl

- prefer_old: False

- long_pair: jpn-nld

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41

|

Helsinki-NLP/opus-mt-ko-ru

|

2050b5c2ba3981c9b135ff58febaca447efc75ad

|

2020-08-21T14:42:47.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"ko",

"ru",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-ko-ru

| 84 | null |

transformers

| 4,925 |

---

language:

- ko

- ru

tags:

- translation

license: apache-2.0

---

### kor-rus

* source group: Korean

* target group: Russian

* OPUS readme: [kor-rus](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/kor-rus/README.md)

* model: transformer-align

* source language(s): kor_Hang kor_Latn

* target language(s): rus

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/kor-rus/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/kor-rus/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/kor-rus/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.kor.rus | 30.3 | 0.514 |

### System Info:

- hf_name: kor-rus

- source_languages: kor

- target_languages: rus

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/kor-rus/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['ko', 'ru']

- src_constituents: {'kor_Hani', 'kor_Hang', 'kor_Latn', 'kor'}

- tgt_constituents: {'rus'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/kor-rus/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/kor-rus/opus-2020-06-17.test.txt

- src_alpha3: kor

- tgt_alpha3: rus

- short_pair: ko-ru

- chrF2_score: 0.514

- bleu: 30.3

- brevity_penalty: 0.961

- ref_len: 1382.0

- src_name: Korean

- tgt_name: Russian

- train_date: 2020-06-17

- src_alpha2: ko

- tgt_alpha2: ru

- prefer_old: False

- long_pair: kor-rus

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41

|

Helsinki-NLP/opus-mt-swc-fr

|

831ee59763b34c0bf2ecf27bcf966e74f16b2363

|

2021-09-11T10:47:54.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"swc",

"fr",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-swc-fr

| 84 | null |

transformers

| 4,926 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-swc-fr

* source languages: swc

* target languages: fr

* OPUS readme: [swc-fr](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/swc-fr/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/swc-fr/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/swc-fr/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/swc-fr/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.swc.fr | 28.6 | 0.470 |

|

JuliusAlphonso/distilbert-plutchik

|

034821c7b7614e228473d28b35ea937d5e1341e6

|

2021-06-19T22:06:23.000Z

|

[

"pytorch",

"distilbert",

"text-classification",

"transformers"

] |

text-classification

| false |

JuliusAlphonso

| null |

JuliusAlphonso/distilbert-plutchik

| 84 | 1 |

transformers

| 4,927 |

Labels are based on Plutchik's model of emotions and may be combined:

|

KoichiYasuoka/bert-base-japanese-upos

|

e9077f5b327b42da14e3c5d60f529331a68f9eed

|

2022-05-23T21:50:57.000Z

|

[

"pytorch",

"bert",

"token-classification",

"ja",

"dataset:universal_dependencies",

"transformers",

"japanese",

"pos",

"wikipedia",

"dependency-parsing",

"license:cc-by-sa-4.0",

"autotrain_compatible"

] |

token-classification

| false |

KoichiYasuoka

| null |

KoichiYasuoka/bert-base-japanese-upos

| 84 | 1 |

transformers

| 4,928 |

---

language:

- "ja"

tags:

- "japanese"

- "token-classification"

- "pos"

- "wikipedia"

- "dependency-parsing"

datasets:

- "universal_dependencies"

license: "cc-by-sa-4.0"

pipeline_tag: "token-classification"

widget:

- text: "国境の長いトンネルを抜けると雪国であった。"

---

# bert-base-japanese-upos

## Model Description

This is a BERT model pre-trained on Japanese Wikipedia texts for POS-tagging and dependency-parsing, derived from [bert-base-japanese-char-extended](https://huggingface.co/KoichiYasuoka/bert-base-japanese-char-extended). Every short-unit-word is tagged by [UPOS](https://universaldependencies.org/u/pos/) (Universal Part-Of-Speech).

## How to Use

```py

import torch

from transformers import AutoTokenizer,AutoModelForTokenClassification

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/bert-base-japanese-upos")

model=AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/bert-base-japanese-upos")

s="国境の長いトンネルを抜けると雪国であった。"

p=[model.config.id2label[q] for q in torch.argmax(model(tokenizer.encode(s,return_tensors="pt"))["logits"],dim=2)[0].tolist()[1:-1]]

print(list(zip(s,p)))

```

or

```py

import esupar

nlp=esupar.load("KoichiYasuoka/bert-base-japanese-upos")

print(nlp("国境の長いトンネルを抜けると雪国であった。"))

```

## See Also

[esupar](https://github.com/KoichiYasuoka/esupar): Tokenizer POS-tagger and Dependency-parser with BERT/RoBERTa models

|

arbml/wav2vec2-large-xlsr-dialect-classification

|

7d2493de4078502088aebc858be597c98d2cb31d

|

2021-07-05T18:15:15.000Z

|

[

"pytorch",

"jax",

"wav2vec2",

"transformers"

] | null | false |

arbml

| null |

arbml/wav2vec2-large-xlsr-dialect-classification

| 84 | null |

transformers

| 4,929 |

Entry not found

|

andrejmiscic/simcls-scorer-cnndm

|

87f46dbe5b7337432287c0460b614ee0c8ec21c3

|

2021-10-16T20:39:39.000Z

|

[

"pytorch",

"roberta",

"feature-extraction",

"en",

"dataset:cnn_dailymail",

"arxiv:2106.01890",

"arxiv:1602.06023",

"transformers",

"simcls"

] |

feature-extraction

| false |

andrejmiscic

| null |

andrejmiscic/simcls-scorer-cnndm

| 84 | null |

transformers

| 4,930 |

---

language:

- en

tags:

- simcls

datasets:

- cnn_dailymail

---

# SimCLS

SimCLS is a framework for abstractive summarization presented in [SimCLS: A Simple Framework for Contrastive Learning of Abstractive Summarization](https://arxiv.org/abs/2106.01890).

It is a two-stage approach consisting of a *generator* and a *scorer*. In the first stage, a large pre-trained model for abstractive summarization (the *generator*) is used to generate candidate summaries, whereas, in the second stage, the *scorer* assigns a score to each candidate given the source document. The final summary is the highest-scoring candidate.

This model is the *scorer* trained for summarization of CNN/DailyMail ([paper](https://arxiv.org/abs/1602.06023), [datasets](https://huggingface.co/datasets/cnn_dailymail)). It should be used in conjunction with [facebook/bart-large-cnn](https://huggingface.co/facebook/bart-large-cnn). See [our Github repository](https://github.com/andrejmiscic/simcls-pytorch) for details on training, evaluation, and usage.

## Usage

```bash

git clone https://github.com/andrejmiscic/simcls-pytorch.git

cd simcls-pytorch

pip3 install torch torchvision torchaudio transformers sentencepiece

```

```python

from src.model import SimCLS, GeneratorType

summarizer = SimCLS(generator_type=GeneratorType.Bart,

generator_path="facebook/bart-large-cnn",

scorer_path="andrejmiscic/simcls-scorer-cnndm")

article = "This is a news article."

summary = summarizer(article)

print(summary)

```

### Results