| modelId | sha | lastModified | tags | pipeline_tag | private | author | config | id | downloads | likes | library_name | __index_level_0__ | readme |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

mboth/distil-eng-quora-sentence | 0432d0fa4c983b9ecd6b95613882406eb273216d | 2021-07-09T06:00:21.000Z | ["pytorch", "distilbert", "feature-extraction", "sentence-transformers", "sentence-similarity", "transformers"] | sentence-similarity | false | mboth | null | mboth/distil-eng-quora-sentence | 4 | 1 | sentence-transformers | 18,800 | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# mboth/distil-eng-quora-sentence

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.
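
Semantic search and clustering with these embeddings usually compare vectors by cosine similarity. The sketch below shows that comparison in plain Python. The three-dimensional toy vectors are made-up stand-ins for the model's 768-dimensional outputs. `rank_by_cosine` is a hypothetical helper and not part of sentence-transformers, which ships its own utilities (e.g. `sentence_transformers.util.cos_sim`).

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_a * norm_b)


def rank_by_cosine(query: Sequence[float], corpus: List[Sequence[float]]) -> List[Tuple[int, float]]:
    """Return (corpus_index, score) pairs, most similar first (hypothetical helper)."""
    scores = [(i, cosine_similarity(query, vec)) for i, vec in enumerate(corpus)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real sentence embeddings
query = [1.0, 0.0, 1.0]
corpus = [[0.0, 1.0, 0.0], [1.0, 0.1, 0.9], [-1.0, 0.0, -1.0]]
print(rank_by_cosine(query, corpus))
```

With real embeddings, `query` would be `model.encode(...)` of the search text and `corpus` the encoded documents.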


## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('mboth/distil-eng-quora-sentence')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('mboth/distil-eng-quora-sentence')
model = AutoModel.from_pretrained('mboth/distil-eng-quora-sentence')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```
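The `mean_pooling` function above averages only the token vectors whose attention-mask entry is 1, so padding does not dilute the sentence embedding. The plain-Python sketch below writes out the same arithmetic on made-up 2-dimensional token vectors to make the masking explicit. `mean_pool` is an illustrative helper and is not part of either library.

```python
from typing import List


def mean_pool(token_embeddings: List[List[List[float]]],
              attention_mask: List[List[int]]) -> List[List[float]]:
    """Masked mean over tokens for each sentence in a batch.

    token_embeddings: [batch][tokens][dim]; attention_mask: [batch][tokens] of 0/1.
    """
    pooled = []
    for tokens, mask in zip(token_embeddings, attention_mask):
        dim = len(tokens[0])
        summed = [0.0] * dim
        for vec, m in zip(tokens, mask):
            for d in range(dim):
                summed[d] += vec[d] * m  # padded tokens (m == 0) contribute nothing
        count = max(sum(mask), 1e-9)  # same guard as torch.clamp(..., min=1e-9)
        pooled.append([s / count for s in summed])
    return pooled


# Two "sentences"; the second ends in a padding token with large junk values
embeddings = [
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    [[1.0, 1.0], [3.0, 3.0], [100.0, 100.0]],
]
mask = [[1, 1, 1], [1, 1, 0]]
print(mean_pool(embeddings, mask))
```

The padded position in the second sentence is ignored, so its junk values do not affect the result.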

## Evaluation Results


For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=mboth/distil-eng-quora-sentence)

## Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: DistilBertModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)
```

## Citing & Authors

<!--- Describe where people can find more information --> |

meghanabhange/Hinglish-Bert-Class | 30fe74ce635c67976b7fee2b9c8e4f03dac60c65 | 2021-05-19T23:12:59.000Z | ["pytorch", "jax", "bert", "text-classification", "transformers"] | text-classification | false | meghanabhange | null | meghanabhange/Hinglish-Bert-Class | 4 | null | transformers | 18,801 | Entry not found |

mhu-coder/ConvTasNet_Libri1Mix_enhsingle | a7275d944176c8969495fe611c32520004aa070b | 2021-09-23T16:10:04.000Z | ["pytorch", "dataset:libri1mix", "dataset:enh_single", "asteroid", "audio", "ConvTasNet", "audio-to-audio", "license:cc-by-sa-4.0"] | audio-to-audio | false | mhu-coder | null | mhu-coder/ConvTasNet_Libri1Mix_enhsingle | 4 | 1 | asteroid | 18,802 | ---

tags:

- asteroid

- audio

- ConvTasNet

- audio-to-audio

datasets:

- libri1mix

- enh_single

license: cc-by-sa-4.0

---

## Asteroid model `mhu-coder/ConvTasNet_Libri1Mix_enhsingle`

Imported from [Zenodo](https://zenodo.org/record/4301955#.X9cj98Jw0bY)

### Description:

This model was trained by Mathieu Hu using the librimix/ConvTasNet recipe in [Asteroid](https://github.com/asteroid-team/asteroid).

It was trained on the `enh_single` task of the Libri1Mix dataset.

### Training config:

```yaml
data:
  n_src: 1
  sample_rate: 16000
  segment: 3
  task: enh_single
  train_dir: data/wav16k/min/train-100
  valid_dir: data/wav16k/min/dev
filterbank:
  kernel_size: 16
  n_filters: 512
  stride: 8
main_args:
  exp_dir: exp/train_convtasnet_f34664b9
  help: None
masknet:
  bn_chan: 128
  hid_chan: 512
  mask_act: relu
  n_blocks: 8
  n_repeats: 3
  n_src: 1
  skip_chan: 128
optim:
  lr: 0.001
  optimizer: adam
  weight_decay: 0.0
positional arguments:
training:
  batch_size: 2
  early_stop: True
  epochs: 200
  half_lr: True
  num_workers: 4
```
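The `filterbank` and `masknet` values above fix the model's time resolution. The sketch below works through that arithmetic under two stated assumptions. First, the encoder is treated as an unpadded 1-D convolution. Second, each TCN block is assumed to use a kernel of size 3 with a dilation that doubles per block, as in the Conv-TasNet design. The convolution kernel size is not listed in this config. Both helper names are hypothetical.

```python
def encoder_frames(num_samples: int, kernel_size: int, stride: int) -> int:
    """Frames produced by an unpadded 1-D conv encoder (assumption: no padding)."""
    return (num_samples - kernel_size) // stride + 1


def tcn_receptive_field(n_blocks: int, n_repeats: int, conv_kernel_size: int = 3) -> int:
    """Receptive field, in encoder frames, of stacked dilated conv blocks.

    Block i in each repeat uses dilation 2**i, adding (k - 1) * 2**i frames.
    """
    per_repeat = sum((conv_kernel_size - 1) * 2 ** i for i in range(n_blocks))
    return 1 + n_repeats * per_repeat


sample_rate = 16000                # data.sample_rate
segment_samples = 3 * sample_rate  # data.segment = 3 seconds
kernel_size, stride = 16, 8        # filterbank.kernel_size, filterbank.stride

print("window (ms):", 1000 * kernel_size / sample_rate)  # 1.0
print("hop (ms):", 1000 * stride / sample_rate)  # 0.5
print("frames per training segment:", encoder_frames(segment_samples, kernel_size, stride))

rf_frames = tcn_receptive_field(n_blocks=8, n_repeats=3)
# Receptive field in samples = (frames - 1) * stride + kernel_size
rf_seconds = ((rf_frames - 1) * stride + kernel_size) / sample_rate
print("TCN receptive field:", rf_frames, "frames,", rf_seconds, "s")
```

Under these assumptions, the separator sees well under one second of context around each frame. A different kernel size or encoder padding changes the numbers.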

### Results:

```yaml

si_sdr: 13.938355526049932

si_sdr_imp: 10.488574220190232

sdr: 14.567380104207393

sdr_imp: 11.064717304994337

sir: inf

sir_imp: nan

sar: 14.567380104207393

sar_imp: 11.064717304994337

stoi: 0.9201010933251715

stoi_imp: 0.1241812697846321

```
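For reference, scale-invariant SDR works by projecting the estimate onto the reference. It then compares the energy of that projection with the energy of the residual. Below is a minimal plain-Python sketch of the common zero-mean variant, using made-up toy signals.

The `_imp` rows are read here as the metric on the estimate minus the metric on the unprocessed mixture. This reading is an assumption about how the numbers were produced. It would put the mixture's SI-SDR at about 13.94 - 10.49 = 3.45 dB. It is also consistent with `sir: inf` giving `sir_imp: nan`, since inf - inf is undefined.

```python
import math
from typing import Sequence


def si_sdr(estimate: Sequence[float], reference: Sequence[float]) -> float:
    """Scale-invariant SDR in dB (zero-mean variant)."""
    est_mean = sum(estimate) / len(estimate)
    ref_mean = sum(reference) / len(reference)
    est = [x - est_mean for x in estimate]
    ref = [y - ref_mean for y in reference]
    # Optimal scaling of the reference: alpha = <est, ref> / ||ref||^2
    alpha = sum(e * r for e, r in zip(est, ref)) / sum(r * r for r in ref)
    target = [alpha * r for r in ref]
    noise = [e - t for e, t in zip(est, target)]
    return 10 * math.log10(sum(t * t for t in target) / sum(n * n for n in noise))


def si_sdr_improvement(estimate, mixture, reference) -> float:
    """Improvement of the estimate over the unprocessed mixture, in dB (assumed definition)."""
    return si_sdr(estimate, reference) - si_sdr(mixture, reference)


reference = [1.0, -1.0, 1.0, -1.0]
mixture = [1.5, -0.5, 0.5, -1.5]   # reference plus strong made-up "noise"
estimate = [1.1, -0.9, 0.9, -1.1]  # reference plus weak made-up "noise"
print(round(si_sdr(estimate, reference), 2))
print(round(si_sdr_improvement(estimate, mixture, reference), 2))
```

Because of the projection step, rescaling the estimate leaves the score unchanged.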

### License notice:

This work "ConvTasNet_Libri1Mix_enhsingle" is a derivative of [CSR-I (WSJ0) Complete](https://catalog.ldc.upenn.edu/LDC93S6A) by [LDC](https://www.ldc.upenn.edu/), used under [LDC User Agreement for Non-Members](https://catalog.ldc.upenn.edu/license/ldc-non-members-agreement.pdf) (Research only).

"ConvTasNet_Libri1Mix_enhsingle" is licensed under [Attribution-ShareAlike 3.0 Unported](https://creativecommons.org/licenses/by-sa/3.0/) by Mathieu Hu.

|

michaelrglass/dpr-ctx_encoder-multiset-base-kgi0-zsre | ab012f489c29f6c4edd8317b548a6845f222700f | 2021-04-20T18:21:38.000Z | ["pytorch", "dpr", "transformers"] | null | false | michaelrglass | null | michaelrglass/dpr-ctx_encoder-multiset-base-kgi0-zsre | 4 | null | transformers | 18,803 | Entry not found |

microsoft/deberta-xxlarge-v2-mnli | 095b3cb5ae735180e64199fe9b0f7b7015553a32 | 2021-02-11T02:05:00.000Z | ["pytorch", "deberta-v2", "en", "transformers", "deberta", "license:mit"] | null | false | microsoft | null | microsoft/deberta-xxlarge-v2-mnli | 4 | null | transformers | 18,804 | ---

language: en

tags: deberta

thumbnail: https://huggingface.co/front/thumbnails/microsoft.png

license: mit

---

## DeBERTa: Decoding-enhanced BERT with Disentangled Attention

## This model is DEPRECATED; please use [DeBERTa-V2-XXLarge-MNLI](https://huggingface.co/microsoft/deberta-v2-xxlarge-mnli) instead.

|

microsoft/unispeech-1350-en-168-es-ft-1h | e64faf70af36fe556a1916981a06c5dbccba2a09 | 2021-12-19T23:01:13.000Z | ["pytorch", "unispeech", "automatic-speech-recognition", "es", "dataset:common_voice", "arxiv:2101.07597", "transformers", "audio"] | automatic-speech-recognition | false | microsoft | null | microsoft/unispeech-1350-en-168-es-ft-1h | 4 | null | transformers | 18,805 | ---

language:

- es

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

---

# UniSpeech-Large-plus Spanish

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The large model was pretrained on 16 kHz sampled speech audio and phonetic labels and subsequently fine-tuned on 1 hour of Spanish phonemes.

When using the model, make sure that your speech input is also sampled at 16 kHz and that your text is converted into a sequence of phonemes.

[Paper: UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597)

Authors: Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang

**Abstract**

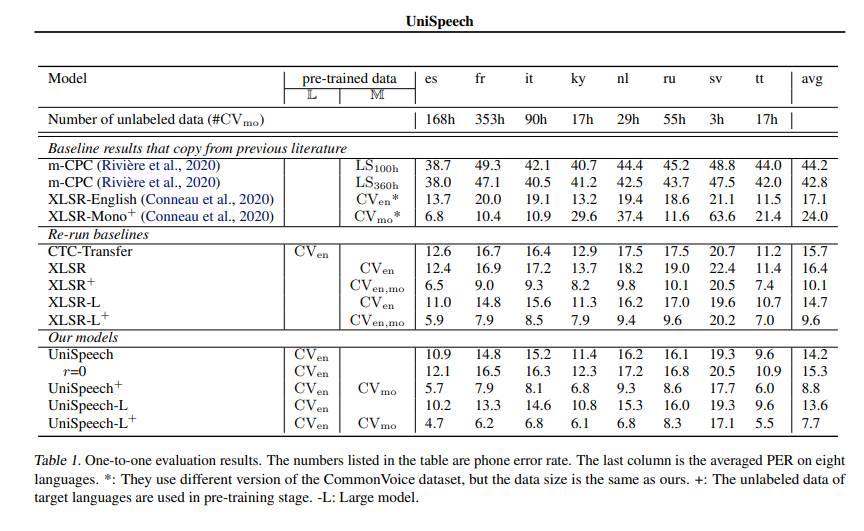

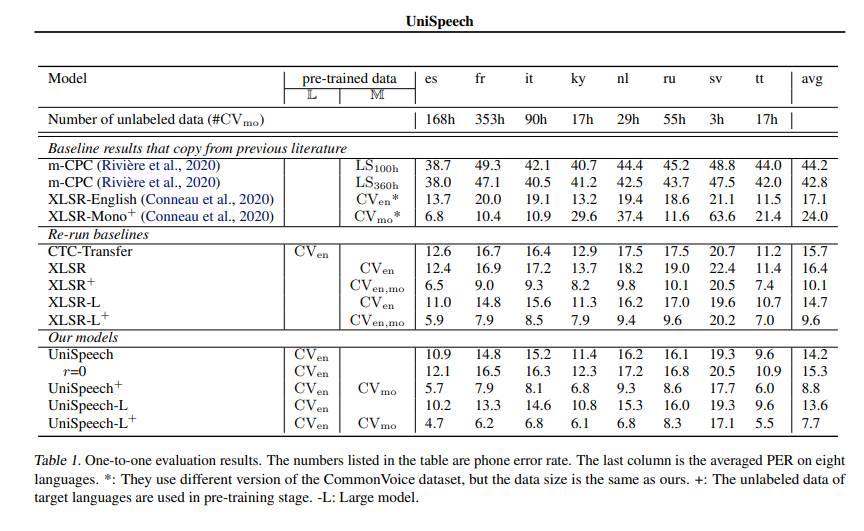

*In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech.

# Usage

This is a speech model that has been fine-tuned on phoneme classification.

## Inference

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForCTC, AutoProcessor
import torchaudio.functional as F

model_id = "microsoft/unispeech-1350-en-168-es-ft-1h"

sample = next(iter(load_dataset("common_voice", "es", split="test", streaming=True)))
resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), 48_000, 16_000).numpy()

model = AutoModelForCTC.from_pretrained(model_id)
processor = AutoProcessor.from_pretrained(model_id)

input_values = processor(resampled_audio, return_tensors="pt").input_values

with torch.no_grad():
    logits = model(input_values).logits

prediction_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(prediction_ids)

# -> gives:
# b j e n i k e ɾ ɾ e ɣ a l o a s a β ɾ i ɾ p ɾ i m e ɾ o'
# for: Bien . ¿ y qué regalo vas a abrir primero ?
```
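`processor.batch_decode` turns the frame-wise argmax ids into phonemes. Conceptually, greedy CTC decoding merges consecutive repeated ids and then drops the blank token. Below is a plain-Python sketch of that rule. The blank id `0` and the tiny id-to-phoneme table are hypothetical and are not this model's actual vocabulary.

```python
from typing import Dict, List


def ctc_greedy_collapse(frame_ids: List[int], blank_id: int = 0) -> List[int]:
    """Merge consecutive duplicates, then remove blanks (greedy CTC decoding)."""
    collapsed = []
    previous = None
    for idx in frame_ids:
        if idx != previous and idx != blank_id:
            collapsed.append(idx)
        previous = idx
    return collapsed


# Hypothetical vocabulary, for illustration only
vocab: Dict[int, str] = {1: "b", 2: "j", 3: "e", 4: "n"}

frames = [0, 1, 1, 0, 2, 3, 3, 0, 3, 4, 0]  # made-up per-frame argmax ids
ids = ctc_greedy_collapse(frames)
print(" ".join(vocab[i] for i in ids))
```

A blank between two identical ids (the `3, 0, 3` above) is what lets CTC emit a genuinely repeated phoneme.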

# Contribution

The model was contributed by [cywang](https://huggingface.co/cywang) and [patrickvonplaten](https://huggingface.co/patrickvonplaten).

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

# Official Results

See *UniSpeech-L^{+}* - *es*:

|

microsoft/unispeech-1350-en-17h-ky-ft-1h | 47f2558e5235c1ca19376d3fee08564055f29626 | 2021-12-19T23:00:00.000Z | ["pytorch", "unispeech", "automatic-speech-recognition", "ky", "dataset:common_voice", "arxiv:2101.07597", "transformers", "audio"] | automatic-speech-recognition | false | microsoft | null | microsoft/unispeech-1350-en-17h-ky-ft-1h | 4 | null | transformers | 18,806 | ---

language:

- ky

datasets:

- common_voice

tags:

- audio

- automatic-speech-recognition

---

# UniSpeech-Large-plus Kyrgyz

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The large model was pretrained on 16 kHz sampled speech audio and phonetic labels and subsequently fine-tuned on 1 hour of Kyrgyz phonemes.

When using the model, make sure that your speech input is also sampled at 16 kHz and that your text is converted into a sequence of phonemes.

[Paper: UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597)

Authors: Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang

**Abstract**

*In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech.

# Usage

This is a speech model that has been fine-tuned on phoneme classification.

## Inference

```python
import torch
from datasets import load_dataset
from transformers import AutoModelForCTC, AutoProcessor
import torchaudio.functional as F

model_id = "microsoft/unispeech-1350-en-17h-ky-ft-1h"

sample = next(iter(load_dataset("common_voice", "ky", split="test", streaming=True)))
resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), 48_000, 16_000).numpy()

model = AutoModelForCTC.from_pretrained(model_id)
processor = AutoProcessor.from_pretrained(model_id)

input_values = processor(resampled_audio, return_tensors="pt").input_values

with torch.no_grad():
    logits = model(input_values).logits

prediction_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(prediction_ids)
```
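The abstract mentions phone error rates. In the usual definition, PER is the edit distance between the predicted and reference phoneme sequences divided by the reference length. Below is a minimal plain-Python sketch for scoring transcriptions such as `transcription` against reference phonemes. The phoneme strings are made up, and `phone_error_rate` is a hypothetical helper.

```python
from typing import List


def edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Levenshtein distance (substitutions, insertions, deletions all cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (0 if phonemes match)
            )
        prev = curr
    return prev[-1]


def phone_error_rate(reference: str, hypothesis: str) -> float:
    """PER for space-separated phoneme strings."""
    ref, hyp = reference.split(), hypothesis.split()
    return edit_distance(ref, hyp) / max(len(ref), 1)


print(phone_error_rate("s a l a m", "s a l m"))  # one deletion out of five phonemes
```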

# Contribution

The model was contributed by [cywang](https://huggingface.co/cywang) and [patrickvonplaten](https://huggingface.co/patrickvonplaten).

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

# Official Results

See *UniSpeech-L^{+}* - *ky*:

|

microsoft/unispeech-sat-large-sd | 1e451cf8fdaa17c25d2a08d70511a06b22488e40 | 2021-12-17T18:42:36.000Z | ["pytorch", "unispeech-sat", "audio-frame-classification", "en", "arxiv:1912.07875", "arxiv:2106.06909", "arxiv:2101.00390", "arxiv:2110.05752", "transformers", "speech"] | null | false | microsoft | null | microsoft/unispeech-sat-large-sd | 4 | null | transformers | 18,807 | ---

language:

- en

datasets:

tags:

- speech

---

# UniSpeech-SAT-Large for Speaker Diarization

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

The model was pretrained on 16kHz sampled speech audio with utterance and speaker contrastive loss. When using the model, make sure that your speech input is also sampled at 16kHz.

The model was pre-trained on:

- 60,000 hours of [Libri-Light](https://arxiv.org/abs/1912.07875)

- 10,000 hours of [GigaSpeech](https://arxiv.org/abs/2106.06909)

- 24,000 hours of [VoxPopuli](https://arxiv.org/abs/2101.00390)

[Paper: UNISPEECH-SAT: UNIVERSAL SPEECH REPRESENTATION LEARNING WITH SPEAKER AWARE PRE-TRAINING](https://arxiv.org/abs/2110.05752)

Authors: Sanyuan Chen, Yu Wu, Chengyi Wang, Zhengyang Chen, Zhuo Chen, Shujie Liu, Jian Wu, Yao Qian, Furu Wei, Jinyu Li, Xiangzhan Yu

**Abstract**

*Self-supervised learning (SSL) is a long-standing goal for speech processing, since it utilizes large-scale unlabeled data and avoids extensive human labeling. Recent years witness great successes in applying self-supervised learning in speech recognition, while limited exploration was attempted in applying SSL for modeling speaker characteristics. In this paper, we aim to improve the existing SSL framework for speaker representation learning. Two methods are introduced for enhancing the unsupervised speaker information extraction. First, we apply the multi-task learning to the current SSL framework, where we integrate the utterance-wise contrastive loss with the SSL objective function. Second, for better speaker discrimination, we propose an utterance mixing strategy for data augmentation, where additional overlapped utterances are created unsupervisely and incorporate during training. We integrate the proposed methods into the HuBERT framework. Experiment results on SUPERB benchmark show that the proposed system achieves state-of-the-art performance in universal representation learning, especially for speaker identification oriented tasks. An ablation study is performed verifying the efficacy of each proposed method. Finally, we scale up training dataset to 94 thousand hours public audio data and achieve further performance improvement in all SUPERB tasks..*

The original model can be found under https://github.com/microsoft/UniSpeech/tree/main/UniSpeech-SAT.

# Fine-tuning details

The model is fine-tuned on the [LibriMix dataset](https://github.com/JorisCos/LibriMix) using just a linear layer for mapping the network outputs.

# Usage

## Speaker Diarization

```python

from transformers import Wav2Vec2FeatureExtractor, UniSpeechSatForAudioFrameClassification

from datasets import load_dataset

import torch

dataset = load_dataset("hf-internal-testing/librispeech_asr_demo", "clean", split="validation")

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained('microsoft/unispeech-sat-large-sd')

model = UniSpeechSatForAudioFrameClassification.from_pretrained('microsoft/unispeech-sat-large-sd')

# audio file is decoded on the fly

inputs = feature_extractor(dataset[0]["audio"]["array"], return_tensors="pt")

logits = model(**inputs).logits

probabilities = torch.sigmoid(logits[0])

# labels is a multi-hot array of shape (num_frames, num_speakers): several speakers can be active in the same frame

labels = (probabilities > 0.5).long()

```
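The `labels` tensor is frame-level. To obtain speaker turns, consecutive active frames can be merged into segments. Below is a plain-Python sketch that works on `labels.tolist()` (here replaced by toy labels). It assumes a frame hop of 20 ms, which is typical for wav2vec 2.0-style encoders at 16 kHz but should be checked against the model configuration. `frames_to_segments` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

FRAME_HOP_S = 0.02  # assumption: 20 ms per output frame


def frames_to_segments(labels: List[List[int]],
                       hop_s: float = FRAME_HOP_S) -> Dict[int, List[Tuple[float, float]]]:
    """Turn a (num_frames, num_speakers) 0/1 matrix into per-speaker (start, end) times in seconds."""
    num_speakers = len(labels[0]) if labels else 0
    segments: Dict[int, List[Tuple[float, float]]] = {s: [] for s in range(num_speakers)}
    for spk in range(num_speakers):
        start = None
        for t, row in enumerate(labels):
            if row[spk] and start is None:
                start = t  # speaker becomes active
            elif not row[spk] and start is not None:
                segments[spk].append((start * hop_s, t * hop_s))
                start = None
        if start is not None:  # still active at the last frame
            segments[spk].append((start * hop_s, len(labels) * hop_s))
    return segments


# Toy output: speaker 0 talks first, speaker 1 overlaps at frame 2 and then continues
toy_labels = [[1, 0], [1, 0], [1, 1], [0, 1], [0, 1], [0, 0]]
print(frames_to_segments(toy_labels))
```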

# License

The official license can be found [here](https://github.com/microsoft/UniSpeech/blob/main/LICENSE)

|

midas/gupshup_h2e_mbart | b0e78f67817f90377719b4f12cba4186b816ec69 | 2021-11-14T02:08:45.000Z | ["pytorch", "mbart", "text2text-generation", "arxiv:1910.04073", "transformers", "autotrain_compatible"] | text2text-generation | false | midas | null | midas/gupshup_h2e_mbart | 4 | null | transformers | 18,808 | # Gupshup

GupShup: Summarizing Open-Domain Code-Switched Conversations EMNLP 2021

Paper: [https://aclanthology.org/2021.emnlp-main.499.pdf](https://aclanthology.org/2021.emnlp-main.499.pdf)

Github: [https://github.com/midas-research/gupshup](https://github.com/midas-research/gupshup)

### Dataset

Please request the Gupshup data using [this Google form](https://docs.google.com/forms/d/1zvUk7WcldVF3RCoHdWzQPzPprtSJClrnHoIOYbzaJEI/edit?ts=61381ec0).

The dataset is available for `Hinglish Dialogues to English Summarization` (h2e) and `English Dialogues to English Summarization` (e2e). For each task, the dialogues (conversations) are stored in files with the `.source` extension (e.g. `train.source`) and the summaries in files with the `.target` extension (e.g. `train.target`). Pass the `.source` file to the `input_path` argument and the `.target` file to the `reference_path` argument of the scripts.
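
The snippet below sketches one way to consume the `.source`/`.target` pairing: it zips the two files line by line. It assumes, though this is not stated here, that each line holds exactly one dialogue or summary and that both files have the same number of lines. `load_pairs` is a hypothetical helper and is not part of the Gupshup scripts. The demo dialogues are made up.

```python
import tempfile
from pathlib import Path
from typing import List, Tuple


def load_pairs(source_path: str, target_path: str) -> List[Tuple[str, str]]:
    """Pair each dialogue in a .source file with the summary on the same line of the .target file."""
    sources = Path(source_path).read_text(encoding="utf-8").splitlines()
    targets = Path(target_path).read_text(encoding="utf-8").splitlines()
    if len(sources) != len(targets):
        raise ValueError(f"{len(sources)} dialogues but {len(targets)} summaries")
    return list(zip(sources, targets))


# Demo with two tiny made-up files
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "test.source"
    tgt = Path(tmp) / "test.target"
    src.write_text("Ravi: kal movie chalein? Priya: haan, pakka!\n"
                   "Aman: kya tum meeting mein aaoge? Neha: nahi, busy hoon.\n", encoding="utf-8")
    tgt.write_text("Ravi and Priya plan to watch a movie tomorrow.\n"
                   "Neha tells Aman she is busy and will not attend the meeting.\n", encoding="utf-8")
    pairs = load_pairs(str(src), str(tgt))

print(pairs)
```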

## Models

All model weights are available on the Hugging Face model hub. You can either download these weights locally and pass their path to the `model_name` argument of the scripts, or pass the provided alias to `model_name` directly, in which case the scripts download the weights automatically.

Model names follow the `gupshup_TASK_MODEL` pattern, where TASK is `h2e` or `e2e` and MODEL is `mbart`, `pegasus`, etc., as listed below.
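
The naming pattern can be expressed as a small helper. This is an illustrative sketch: `gupshup_alias` is hypothetical, and the allowed tasks and model suffixes are taken from the alias tables in this section.

```python
TASKS = {"h2e", "e2e"}
MODELS = {"mbart", "pegasus", "t5_mtl", "t5", "bart", "gpt"}


def gupshup_alias(task: str, model: str) -> str:
    """Build a Hugging Face Hub alias following the gupshup_TASK_MODEL pattern."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASKS)}")
    if model not in MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {sorted(MODELS)}")
    return f"midas/gupshup_{task}_{model}"


print(gupshup_alias("h2e", "mbart"))
```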

**1. Hinglish Dialogues to English Summary (h2e)**

| Model | Huggingface Alias |
|---------|-------------------------------------------------------------------------------|
| mBART | [midas/gupshup_h2e_mbart](https://huggingface.co/midas/gupshup_h2e_mbart) |
| PEGASUS | [midas/gupshup_h2e_pegasus](https://huggingface.co/midas/gupshup_h2e_pegasus) |
| T5 MTL | [midas/gupshup_h2e_t5_mtl](https://huggingface.co/midas/gupshup_h2e_t5_mtl) |
| T5 | [midas/gupshup_h2e_t5](https://huggingface.co/midas/gupshup_h2e_t5) |
| BART | [midas/gupshup_h2e_bart](https://huggingface.co/midas/gupshup_h2e_bart) |
| GPT-2 | [midas/gupshup_h2e_gpt](https://huggingface.co/midas/gupshup_h2e_gpt) |

**2. English Dialogues to English Summary (e2e)**

| Model | Huggingface Alias |
|---------|-------------------------------------------------------------------------------|
| mBART | [midas/gupshup_e2e_mbart](https://huggingface.co/midas/gupshup_e2e_mbart) |
| PEGASUS | [midas/gupshup_e2e_pegasus](https://huggingface.co/midas/gupshup_e2e_pegasus) |
| T5 MTL | [midas/gupshup_e2e_t5_mtl](https://huggingface.co/midas/gupshup_e2e_t5_mtl) |
| T5 | [midas/gupshup_e2e_t5](https://huggingface.co/midas/gupshup_e2e_t5) |
| BART | [midas/gupshup_e2e_bart](https://huggingface.co/midas/gupshup_e2e_bart) |
| GPT-2 | [midas/gupshup_e2e_gpt](https://huggingface.co/midas/gupshup_e2e_gpt) |

## Inference

### Using command line

1. Clone this repo and create a Python virtual environment (https://docs.python.org/3/library/venv.html), then install the required packages:

```
git clone https://github.com/midas-research/gupshup.git
cd gupshup
pip install -r requirements.txt
```

2. The `run_eval.py` script takes the following arguments:

* **model_name**: Path or alias of one of our models available on Hugging Face, as listed above.
* **input_path**: Source file, i.e. the path to the file containing the conversations to be summarized.
* **save_path**: File path where the summaries generated by the model are saved.
* **reference_path**: Target file, i.e. the path to the file containing the reference summaries used to calculate metrics.
* **score_path**: File path where the scores are saved.
* **bs**: Batch size.
* **device**: CUDA devices to use.

Please make sure you have downloaded the Gupshup dataset using the Google form above and provide the correct paths to these files in the `input_path` and `reference_path` arguments. Alternatively, you can simply put `test.source` and `test.target` in the `data/h2e/` (Hinglish to English) or `data/e2e/` (English to English) folder. For example, to generate English summaries from Hinglish dialogues using the mBART model, run the following command:

```
python run_eval.py \
    --model_name midas/gupshup_h2e_mbart \
    --input_path data/h2e/test.source \
    --save_path generated_summary.txt \
    --reference_path data/h2e/test.target \
    --score_path scores.txt \
    --bs 8
```

As another example, to generate English summaries from English dialogues using the PEGASUS model, run:

```
python run_eval.py \
    --model_name midas/gupshup_e2e_pegasus \
    --input_path data/e2e/test.source \
    --save_path generated_summary.txt \
    --reference_path data/e2e/test.target \
    --score_path scores.txt \
    --bs 8
```

Please open an issue if you have any difficulty replicating the results.

### References

Please cite [[1]](https://arxiv.org/abs/1910.04073) if you found the resources in this repository useful.

[1] Mehnaz, Laiba, Debanjan Mahata, Rakesh Gosangi, Uma Sushmitha Gunturi, Riya Jain, Gauri Gupta, Amardeep Kumar, Isabelle G. Lee, Anish Acharya, and Rajiv Shah. [*GupShup: Summarizing Open-Domain Code-Switched Conversations*](https://aclanthology.org/2021.emnlp-main.499.pdf)

```

@inproceedings{mehnaz2021gupshup,

title={GupShup: Summarizing Open-Domain Code-Switched Conversations},

author={Mehnaz, Laiba and Mahata, Debanjan and Gosangi, Rakesh and Gunturi, Uma Sushmitha and Jain, Riya and Gupta, Gauri and Kumar, Amardeep and Lee, Isabelle G and Acharya, Anish and Shah, Rajiv},

booktitle={Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing},

pages={6177--6192},

year={2021}

}

```

|

midas/gupshup_h2e_t5 | ab85f7ba5e9f4c67cd55bd64257d789f37d23b01 | 2021-11-14T02:09:33.000Z | ["pytorch", "t5", "text2text-generation", "arxiv:1910.04073", "transformers", "autotrain_compatible"] | text2text-generation | false | midas | null | midas/gupshup_h2e_t5 | 4 | null | transformers | 18,809 | # Gupshup

GupShup: Summarizing Open-Domain Code-Switched Conversations EMNLP 2021

Paper: [https://aclanthology.org/2021.emnlp-main.499.pdf](https://aclanthology.org/2021.emnlp-main.499.pdf)

Github: [https://github.com/midas-research/gupshup](https://github.com/midas-research/gupshup)

### Dataset

Please request the Gupshup data using [this Google form](https://docs.google.com/forms/d/1zvUk7WcldVF3RCoHdWzQPzPprtSJClrnHoIOYbzaJEI/edit?ts=61381ec0).

The dataset is available for `Hinglish Dialogues to English Summarization` (h2e) and `English Dialogues to English Summarization` (e2e). For each task, the dialogues (conversations) are stored in files with the `.source` extension (e.g. `train.source`) and the summaries in files with the `.target` extension (e.g. `train.target`). Pass the `.source` file to the `input_path` argument and the `.target` file to the `reference_path` argument of the scripts.

## Models

All model weights are available on the Hugging Face model hub. You can either download these weights locally and pass their path to the `model_name` argument of the scripts, or pass the provided alias to `model_name` directly, in which case the scripts download the weights automatically.

Model names follow the `gupshup_TASK_MODEL` pattern, where TASK is `h2e` or `e2e` and MODEL is `mbart`, `pegasus`, etc., as listed below.

**1. Hinglish Dialogues to English Summary (h2e)**

| Model | Huggingface Alias |
|---------|-------------------------------------------------------------------------------|
| mBART | [midas/gupshup_h2e_mbart](https://huggingface.co/midas/gupshup_h2e_mbart) |
| PEGASUS | [midas/gupshup_h2e_pegasus](https://huggingface.co/midas/gupshup_h2e_pegasus) |
| T5 MTL | [midas/gupshup_h2e_t5_mtl](https://huggingface.co/midas/gupshup_h2e_t5_mtl) |
| T5 | [midas/gupshup_h2e_t5](https://huggingface.co/midas/gupshup_h2e_t5) |
| BART | [midas/gupshup_h2e_bart](https://huggingface.co/midas/gupshup_h2e_bart) |
| GPT-2 | [midas/gupshup_h2e_gpt](https://huggingface.co/midas/gupshup_h2e_gpt) |

**2. English Dialogues to English Summary (e2e)**

| Model | Huggingface Alias |
|---------|-------------------------------------------------------------------------------|
| mBART | [midas/gupshup_e2e_mbart](https://huggingface.co/midas/gupshup_e2e_mbart) |
| PEGASUS | [midas/gupshup_e2e_pegasus](https://huggingface.co/midas/gupshup_e2e_pegasus) |
| T5 MTL | [midas/gupshup_e2e_t5_mtl](https://huggingface.co/midas/gupshup_e2e_t5_mtl) |
| T5 | [midas/gupshup_e2e_t5](https://huggingface.co/midas/gupshup_e2e_t5) |
| BART | [midas/gupshup_e2e_bart](https://huggingface.co/midas/gupshup_e2e_bart) |
| GPT-2 | [midas/gupshup_e2e_gpt](https://huggingface.co/midas/gupshup_e2e_gpt) |

## Inference

### Using command line

1. Clone this repo and create a Python virtual environment (https://docs.python.org/3/library/venv.html), then install the required packages:

```
git clone https://github.com/midas-research/gupshup.git
cd gupshup
pip install -r requirements.txt
```

2. The `run_eval.py` script takes the following arguments:

* **model_name**: Path or alias of one of our models available on Hugging Face, as listed above.
* **input_path**: Source file, i.e. the path to the file containing the conversations to be summarized.
* **save_path**: File path where the summaries generated by the model are saved.
* **reference_path**: Target file, i.e. the path to the file containing the reference summaries used to calculate metrics.
* **score_path**: File path where the scores are saved.
* **bs**: Batch size.
* **device**: CUDA devices to use.

Please make sure you have downloaded the Gupshup dataset using the Google form above and provide the correct paths to these files in the `input_path` and `reference_path` arguments. Alternatively, you can simply put `test.source` and `test.target` in the `data/h2e/` (Hinglish to English) or `data/e2e/` (English to English) folder. For example, to generate English summaries from Hinglish dialogues using the mBART model, run the following command:

```
python run_eval.py \
    --model_name midas/gupshup_h2e_mbart \
    --input_path data/h2e/test.source \
    --save_path generated_summary.txt \
    --reference_path data/h2e/test.target \
    --score_path scores.txt \
    --bs 8
```

As another example, to generate English summaries from English dialogues using the PEGASUS model, run:

```
python run_eval.py \
    --model_name midas/gupshup_e2e_pegasus \
    --input_path data/e2e/test.source \
    --save_path generated_summary.txt \
    --reference_path data/e2e/test.target \
    --score_path scores.txt \
    --bs 8
```
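The same command can also be assembled programmatically, for example to evaluate this T5 checkpoint (`midas/gupshup_h2e_t5`). The sketch below only builds the argument list from the flags documented above. `build_run_eval_cmd` is a hypothetical helper, and it assumes `run_eval.py` is run from the repository root, as in the examples.

```python
import shlex
from typing import List


def build_run_eval_cmd(model_name: str, task: str, batch_size: int = 8,
                       save_path: str = "generated_summary.txt",
                       score_path: str = "scores.txt") -> List[str]:
    """Build the run_eval.py argument list for a task folder under data/ (h2e or e2e)."""
    return [
        "python", "run_eval.py",
        "--model_name", model_name,
        "--input_path", f"data/{task}/test.source",
        "--save_path", save_path,
        "--reference_path", f"data/{task}/test.target",
        "--score_path", score_path,
        "--bs", str(batch_size),
    ]


cmd = build_run_eval_cmd("midas/gupshup_h2e_t5", "h2e")
print(shlex.join(cmd))  # pass `cmd` to subprocess.run(cmd, check=True) to execute it
```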

Please open an issue if you have any difficulty replicating the results.

### References

Please cite [[1]](https://arxiv.org/abs/1910.04073) if you found the resources in this repository useful.

[1] Mehnaz, Laiba, Debanjan Mahata, Rakesh Gosangi, Uma Sushmitha Gunturi, Riya Jain, Gauri Gupta, Amardeep Kumar, Isabelle G. Lee, Anish Acharya, and Rajiv Shah. [*GupShup: Summarizing Open-Domain Code-Switched Conversations*](https://aclanthology.org/2021.emnlp-main.499.pdf)

```

@inproceedings{mehnaz2021gupshup,

title={GupShup: Summarizing Open-Domain Code-Switched Conversations},

author={Mehnaz, Laiba and Mahata, Debanjan and Gosangi, Rakesh and Gunturi, Uma Sushmitha and Jain, Riya and Gupta, Gauri and Kumar, Amardeep and Lee, Isabelle G and Acharya, Anish and Shah, Rajiv},

booktitle={Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing},

pages={6177--6192},

year={2021}

}

```

|

milyiyo/minilm-finetuned-emotion | fb6005acdd6f3ab7088dec77ef0298616a41ba16 | 2022-01-16T00:37:00.000Z | ["pytorch", "tensorboard", "bert", "text-classification", "dataset:emotion", "transformers", "generated_from_trainer", "license:mit", "model-index"] | text-classification | false | milyiyo | null | milyiyo/minilm-finetuned-emotion | 4 | 1 | transformers | 18,810 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- f1

model-index:
- name: minilm-finetuned-emotion
  results:
  - task:
      name: Text Classification
      type: text-classification
    dataset:
      name: emotion
      type: emotion
      args: default
    metrics:
    - name: F1
      type: f1
      value: 0.931192

---

Base model: [microsoft/MiniLM-L12-H384-uncased](https://huggingface.co/microsoft/MiniLM-L12-H384-uncased)

Dataset: [emotion](https://huggingface.co/datasets/emotion)

These are the results on the evaluation set:

| Attribute | Value |
| ------------------ | -------- |
| Training Loss | 0.163100 |
| Validation Loss | 0.192153 |
| F1 | 0.931192 |
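
The card does not say how the multi-class F1 was averaged. As a sketch only, the code below assumes a support-weighted average over the emotion classes and computes it in plain Python with toy labels. Macro averaging is the other common choice and can give a different number.

```python
from collections import Counter
from typing import Sequence


def weighted_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Per-class F1 averaged with weights equal to each class's support in y_true."""
    support = Counter(y_true)
    total = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        total += f1 * count
    return total / len(y_true)


print(round(weighted_f1([0, 0, 1, 1], [0, 1, 1, 1]), 4))
```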

|

mimi/Waynehills-NLP-doogie | 4e1ec489b96e2feb40a3a1586bb6d03cd3f7a6b2 | 2022-01-06T08:02:38.000Z | ["pytorch", "tensorboard", "t5", "text2text-generation", "transformers", "generated_from_trainer", "model-index", "autotrain_compatible"] | text2text-generation | false | mimi | null | mimi/Waynehills-NLP-doogie | 4 | null | transformers | 18,811 | ---

tags:

- generated_from_trainer

model-index:
- name: Waynehills-NLP-doogie
  results: []

---


# Waynehills-NLP-doogie

This model is a fine-tuned version of [KETI-AIR/ke-t5-base-ko](https://huggingface.co/KETI-AIR/ke-t5-base-ko) on an unspecified dataset (recorded by the Trainer as `None`).

It achieves the following results on the evaluation set:

- Loss: 2.9188

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 10

- num_epochs: 5
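With `lr_scheduler_type: linear` and 10 warmup steps, the learning rate ramps up linearly from 0 to 2e-05 and then decays linearly to 0 at the final step. The sketch below reproduces that shape as a plain function. It assumes the 80,000 total optimizer steps shown in the training results table, and it mirrors (but does not call) the linear-with-warmup schedule used by the `transformers` Trainer.

```python
def linear_warmup_lr(step: int, base_lr: float = 2e-5,
                     warmup_steps: int = 10, total_steps: int = 80_000) -> float:
    """Learning rate at an optimizer step: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Warmup phase: scale linearly from 0 up to base_lr
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: scale linearly from base_lr down to 0 at total_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 5, 10, 40_005, 80_000):
    print(step, linear_warmup_lr(step))
```

Halfway through the decay phase (step 40,005), the rate is half of the peak value.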

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 28.2167 | 0.06 | 1000 | 9.7030 |

| 10.4479 | 0.12 | 2000 | 7.5450 |

| 8.0306 | 0.19 | 3000 | 6.1969 |

| 6.503 | 0.25 | 4000 | 5.3015 |

| 5.5406 | 0.31 | 5000 | 4.6363 |

| 4.7299 | 0.38 | 6000 | 4.0431 |

| 3.9263 | 0.44 | 7000 | 3.6313 |

| 3.4111 | 0.5 | 8000 | 3.4830 |

| 3.0517 | 0.56 | 9000 | 3.3294 |

| 2.7524 | 0.62 | 10000 | 3.2077 |

| 2.5402 | 0.69 | 11000 | 3.1094 |

| 2.3228 | 0.75 | 12000 | 3.1099 |

| 2.1513 | 0.81 | 13000 | 3.0284 |

| 2.0418 | 0.88 | 14000 | 3.0155 |

| 1.8875 | 0.94 | 15000 | 3.0241 |

| 1.756 | 1.0 | 16000 | 3.0165 |

| 1.6489 | 1.06 | 17000 | 2.9849 |

| 1.5788 | 1.12 | 18000 | 2.9496 |

| 1.5368 | 1.19 | 19000 | 2.9500 |

| 1.4467 | 1.25 | 20000 | 3.0133 |

| 1.381 | 1.31 | 21000 | 2.9631 |

| 1.3451 | 1.38 | 22000 | 3.0159 |

| 1.2917 | 1.44 | 23000 | 2.9906 |

| 1.2605 | 1.5 | 24000 | 3.0006 |

| 1.2003 | 1.56 | 25000 | 2.9797 |

| 1.1987 | 1.62 | 26000 | 2.9253 |

| 1.1703 | 1.69 | 27000 | 3.0044 |

| 1.1474 | 1.75 | 28000 | 2.9216 |

| 1.0816 | 1.81 | 29000 | 2.9645 |

| 1.0709 | 1.88 | 30000 | 3.0439 |

| 1.0476 | 1.94 | 31000 | 3.0844 |

| 1.0645 | 2.0 | 32000 | 2.9434 |

| 1.0204 | 2.06 | 33000 | 2.9386 |

| 0.9901 | 2.12 | 34000 | 3.0452 |

| 0.9911 | 2.19 | 35000 | 2.9798 |

| 0.9706 | 2.25 | 36000 | 2.9919 |

| 0.9461 | 2.31 | 37000 | 3.0279 |

| 0.9577 | 2.38 | 38000 | 2.9615 |

| 0.9466 | 2.44 | 39000 | 2.9988 |

| 0.9486 | 2.5 | 40000 | 2.9133 |

| 0.9201 | 2.56 | 41000 | 3.0004 |

| 0.896 | 2.62 | 42000 | 2.9626 |

| 0.8893 | 2.69 | 43000 | 2.9667 |

| 0.9028 | 2.75 | 44000 | 2.9543 |

| 0.897 | 2.81 | 45000 | 2.8760 |

| 0.8664 | 2.88 | 46000 | 2.9894 |

| 0.8719 | 2.94 | 47000 | 2.8456 |

| 0.8491 | 3.0 | 48000 | 2.9713 |

| 0.8402 | 3.06 | 49000 | 2.9738 |

| 0.8484 | 3.12 | 50000 | 2.9361 |

| 0.8304 | 3.19 | 51000 | 2.8945 |

| 0.8208 | 3.25 | 52000 | 2.9625 |

| 0.8074 | 3.31 | 53000 | 3.0054 |

| 0.8226 | 3.38 | 54000 | 2.9405 |

| 0.8185 | 3.44 | 55000 | 2.9047 |

| 0.8352 | 3.5 | 56000 | 2.9016 |

| 0.8289 | 3.56 | 57000 | 2.9490 |

| 0.7918 | 3.62 | 58000 | 2.9621 |

| 0.8212 | 3.69 | 59000 | 2.9341 |

| 0.7955 | 3.75 | 60000 | 2.9167 |

| 0.7724 | 3.81 | 61000 | 2.9409 |

| 0.8169 | 3.88 | 62000 | 2.8925 |

| 0.7862 | 3.94 | 63000 | 2.9314 |

| 0.803 | 4.0 | 64000 | 2.9271 |

| 0.7595 | 4.06 | 65000 | 2.9263 |

| 0.7931 | 4.12 | 66000 | 2.9400 |

| 0.7759 | 4.19 | 67000 | 2.9501 |

| 0.7859 | 4.25 | 68000 | 2.9133 |

| 0.805 | 4.31 | 69000 | 2.8785 |

| 0.7649 | 4.38 | 70000 | 2.9060 |

| 0.7692 | 4.44 | 71000 | 2.8868 |

| 0.7692 | 4.5 | 72000 | 2.9045 |

| 0.7798 | 4.56 | 73000 | 2.8951 |

| 0.7812 | 4.62 | 74000 | 2.9068 |

| 0.7533 | 4.69 | 75000 | 2.9129 |

| 0.7527 | 4.75 | 76000 | 2.9157 |

| 0.7652 | 4.81 | 77000 | 2.9053 |

| 0.7633 | 4.88 | 78000 | 2.9190 |

| 0.7437 | 4.94 | 79000 | 2.9251 |

| 0.7653 | 5.0 | 80000 | 2.9188 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.10.0+cu111

- Datasets 1.5.0

- Tokenizers 0.10.3

|

mklucifer/DialoGPT-medium-DEADPOOL | 0550da6f5f3e109d635bbe8da5e87b2df05d7d38 | 2021-10-27T15:10:16.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | false | mklucifer | null | mklucifer/DialoGPT-medium-DEADPOOL | 4 | null | transformers | 18,812 | ---

tags:

- conversational

---

# DEADPOOL DialoGPT Model |

ml6team/distilbert-base-dutch-cased-toxic-comments | edf505d15afb6de3dbe994f13337c723495a4057 | 2022-01-20T08:21:12.000Z | [

"pytorch",

"distilbert",

"text-classification",

"nl",

"transformers",

"license:apache-2.0"

] | text-classification | false | ml6team | null | ml6team/distilbert-base-dutch-cased-toxic-comments | 4 | 5 | transformers | 18,813 | ---

language:

- nl

tags:

- text-classification

- pytorch

widget:

- text: "Ik heb je lief met heel mijn hart"

example_title: "Non toxic comment 1"

- text: "Dat is een goed punt, zo had ik het nog niet bekeken."

example_title: "Non toxic comment 2"

- text: "Wat de fuck zei je net tegen me, klootzak?"

example_title: "Toxic comment 1"

- text: "Rot op, vuile hoerenzoon."

example_title: "Toxic comment 2"

license: apache-2.0

metrics:

- Accuracy
- F1 Score
- Recall
- Precision

---

# distilbert-base-dutch-toxic-comments

## Model description:

This model was created to detect toxic or potentially harmful comments.

For this model, we fine-tuned the multilingual DistilBERT model [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) on a Dutch translation of the [Jigsaw Toxicity dataset](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge).

The original English dataset was translated into Dutch with the [MarianMT model](https://huggingface.co/Helsinki-NLP/opus-mt-en-nl).

The model was trained for 2 epochs on 90% of the dataset with the following arguments:

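```python
from transformers import TrainingArguments

training_args = TrainingArguments(
    output_dir="./results",  # hypothetical path: output_dir is required but not given in the original
    learning_rate=3e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    gradient_accumulation_steps=4,
    load_best_model_at_end=True,
    metric_for_best_model="recall",
    num_train_epochs=2,  # `epochs` is not a TrainingArguments parameter
    evaluation_strategy="steps",  # newer transformers releases call this `eval_strategy`
    save_strategy="steps",
    save_total_limit=10,
    logging_steps=100,
    eval_steps=250,
    save_steps=250,
    weight_decay=0.001,
    report_to="wandb",
)
```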
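With gradient accumulation, gradients from several forward/backward passes are accumulated before each optimizer step, so the optimizer sees a larger batch than `per_device_train_batch_size`. Here is a quick calculation, assuming training ran on a single device (the card does not state the number of devices):

```python
def effective_batch_size(per_device: int, accumulation_steps: int, num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer step."""
    return per_device * accumulation_steps * num_devices


# Values from the TrainingArguments above; num_devices=1 is an assumption
print(effective_batch_size(per_device=16, accumulation_steps=4))  # 64
```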

## Model Performance:

The model was evaluated on the remaining 10% of the dataset, which was held out as the test set.

| Accuracy (%) | F1 Score (%) | Recall (%) | Precision (%) |
| --- | --- | --- | --- |
| 95.75 | 78.88 | 77.23 | 80.61 |

## Dataset:

Unfortunately, we cannot open-source the dataset because we are bound by the license of the underlying Jigsaw data.

|

mmcquade11/autonlp-imdb-test-21134453 | 357f7eb72db04932822cc51da75b19199ebd1ca4 | 2021-10-18T17:47:59.000Z | [

"pytorch",

"roberta",

"text-classification",

"en",

"dataset:mmcquade11/autonlp-data-imdb-test",

"transformers",

"autonlp",

"co2_eq_emissions"

] | text-classification | false | mmcquade11 | null | mmcquade11/autonlp-imdb-test-21134453 | 4 | null | transformers | 18,814 | ---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- mmcquade11/autonlp-data-imdb-test

co2_eq_emissions: 38.102565360610484

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 21134453

- CO2 Emissions (in grams): 38.102565360610484

## Validation Metrics

- Loss: 0.172550767660141

- Accuracy: 0.9355

- Precision: 0.9362853135644159

- Recall: 0.9346

- AUC: 0.98267064

- F1: 0.9354418977079372
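F1 is the harmonic mean of precision and recall, so the reported values can be cross-checked against each other. Here is a small sketch using the precision and recall listed above:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(0.9362853135644159, 0.9346)
print(round(f1, 6))  # 0.935442, consistent with the reported F1
```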

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/mmcquade11/autonlp-imdb-test-21134453

```
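For environments without cURL, the same request can be assembled with Python's standard library. This sketch only builds the request object. `YOUR_API_KEY` is a placeholder, and the endpoint is copied from the cURL command above; the hosted Inference API URL may have changed since this card was written.

```python
import json
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/mmcquade11/autonlp-imdb-test-21134453"
API_KEY = "YOUR_API_KEY"  # placeholder, as in the cURL example


def build_request(text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the cURL call."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_request("I love AutoNLP")
# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```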

Or use the Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("mmcquade11/autonlp-imdb-test-21134453", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("mmcquade11/autonlp-imdb-test-21134453", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` |

mofawzy/gpt-2-goodreads-ar | e00809551e44401ba29eb27d647d504bfbe78404 | 2021-05-23T09:53:17.000Z | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"transformers"

] | text-generation | false | mofawzy | null | mofawzy/gpt-2-goodreads-ar | 4 | null | transformers | 18,815 | ### Generate Arabic review sentences with GPT-2 Medium.

#### Load model

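```python
# AutoModelWithLMHead is deprecated; AutoModelForCausalLM loads GPT-2 with its language-modeling head
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("mofawzy/gpt-2-medium-ar")
model = AutoModelForCausalLM.from_pretrained("mofawzy/gpt-2-medium-ar")
```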

### Eval:

```

***** eval metrics *****

epoch = 20.0

eval_loss = 1.7798

eval_mem_cpu_alloc_delta = 3MB

eval_mem_cpu_peaked_delta = 0MB

eval_mem_gpu_alloc_delta = 0MB

eval_mem_gpu_peaked_delta = 7044MB

eval_runtime = 0:03:03.37

eval_samples = 527

eval_samples_per_second = 2.874

perplexity = 5.9285

```
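For a causal language model trained with cross-entropy loss, the reported perplexity is the exponential of the mean evaluation loss (in nats). Here is a check against the `eval_loss` above. Because the loss is rounded to four decimals, the match is only approximate.

```python
import math

eval_loss = 1.7798  # mean cross-entropy per token, from the eval metrics above
perplexity = math.exp(eval_loss)
print(f"{perplexity:.4f}")
```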

#### Notebook:

https://colab.research.google.com/drive/1P0Raqrq0iBLNH87DyN9j0SwWg4C2HubV?usp=sharing

|

mohammed/wav2vec2-large-xlsr-arabic | acbe8be2e88637e63fc31d199de81b989e982600 | 2021-07-06T12:52:15.000Z | [

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"ar",

"dataset:common_voice",

"dataset:arabic_speech_corpus",

"transformers",

"audio",

"speech",

"xlsr-fine-tuning-week",

"license:apache-2.0",

"model-index"

] | automatic-speech-recognition | false | mohammed | null | mohammed/wav2vec2-large-xlsr-arabic | 4 | 2 | transformers | 18,816 | ---

language: ar

datasets:

- common_voice

- arabic_speech_corpus

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- speech

- xlsr-fine-tuning-week

license: apache-2.0

model-index:

- name: Mohammed XLSR Wav2Vec2 Large 53

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice ar

type: common_voice

args: ar

metrics:

- name: Test WER

type: wer

value: 36.699

- name: Validation WER

type: wer

value: 36.699

---

# Wav2Vec2-Large-XLSR-53-Arabic

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

on Arabic using the `train` splits of [Common Voice](https://huggingface.co/datasets/common_voice)

and [Arabic Speech Corpus](https://huggingface.co/datasets/arabic_speech_corpus).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

%%capture

!pip install datasets

!pip install transformers==4.4.0

!pip install torchaudio

!pip install jiwer

!pip install tnkeeh

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "ar", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("mohammed/wav2vec2-large-xlsr-arabic")

model = Wav2Vec2ForCTC.from_pretrained("mohammed/wav2vec2-large-xlsr-arabic")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("The predicted sentence is: ", processor.batch_decode(predicted_ids))

print("The original sentence is:", test_dataset["sentence"][:2])

```

The output is:

```

The predicted sentence is : ['ألديك قلم', 'ليست نارك مكسافة على هذه الأرض أبعد من يوم أمس']

The original sentence is: ['ألديك قلم ؟', 'ليست هناك مسافة على هذه الأرض أبعد من يوم أمس.']

```

## Evaluation

The model can be evaluated as follows on the Arabic test data of Common Voice:

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

# Dictionary mapping diacritics, punctuation and other unwanted strings to their replacements (mostly empty)

dict = {

'ِ': '',

'ُ': '',

'ٓ': '',

'ٰ': '',

'ْ': '',

'ٌ': '',

'ٍ': '',

'ً': '',

'ّ': '',

'َ': '',

'~': '',

',': '',

'ـ': '',

'—': '',

'.': '',

'!': '',

'-': '',

';': '',

':': '',

'\'': '',

'"': '',

'☭': '',

'«': '',

'»': '',

'؛': '',

'ـ': '',

'_': '',

'،': '',

'“': '',

'%': '',

'‘': '',

'”': '',

'�': '',

'_': '',

',': '',

'?': '',

'#': '',

'‘': '',

'.': '',

'؛': '',

'get': '',

'؟': '',

' ': ' ',

'\'ۖ ': '',

'\'': '',

'\'ۚ' : '',

' \'': '',

'31': '',

'24': '',

'39': ''

}

# Replace all dictionary keys in a single pass with one regex alternation
def remove_special_characters(batch):
    # Create a regular expression from the dictionary keys
    regex = re.compile("(%s)" % "|".join(map(re.escape, dict.keys())))
    # For each match, look up the corresponding value in the dictionary
    batch["sentence"] = regex.sub(lambda mo: dict[mo.group(0)], batch["sentence"])
    return batch

test_dataset = load_dataset("common_voice", "ar", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("mohammed/wav2vec2-large-xlsr-arabic")

model = Wav2Vec2ForCTC.from_pretrained("mohammed/wav2vec2-large-xlsr-arabic")

model.to("cuda")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

test_dataset = test_dataset.map(remove_special_characters)

# Run batched inference on the GPU and decode the predicted transcriptions
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 36.699%
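WER counts the word-level substitutions, deletions and insertions needed to turn a prediction into its reference, divided by the number of reference words. The sketch below is a minimal pure-Python version based on word-level edit distance. It is not the `load_metric("wer")` implementation itself. It aggregates over a corpus by summing errors and reference words, which, as far as we know, is also how that metric combines sentences. The example pairs are the two sentences from the usage output above, with the trailing punctuation removed by hand.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Minimum word substitutions + deletions + insertions (Levenshtein distance on words)."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j]: distance between the reference words seen so far and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of a hypothesis word
                prev[j - 1] + (r != h),  # substitution (free when the words match)
            )
        prev = curr
    return prev[-1]


def corpus_wer(references: list[str], hypotheses: list[str]) -> float:
    """Total word errors divided by total reference words."""
    errors = sum(word_errors(r, h) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return errors / words


references = ["ألديك قلم", "ليست هناك مسافة على هذه الأرض أبعد من يوم أمس"]
hypotheses = ["ألديك قلم", "ليست نارك مكسافة على هذه الأرض أبعد من يوم أمس"]
print(f"WER: {100 * corpus_wer(references, hypotheses):.2f}%")
```

The second sentence contains two substituted words out of 12 reference words in total, so this toy WER is 2/12.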

## Future Work

One can use *data augmentation*, *transliteration*, or *attention_mask* to increase the accuracy.

|

mohsenfayyaz/bert-base-cased-toxicity | 20449e48fc48c477568c72f12b6159d86290ca43 | 2021-05-19T23:39:41.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | mohsenfayyaz | null | mohsenfayyaz/bert-base-cased-toxicity | 4 | null | transformers | 18,817 | Entry not found |

mohsenfayyaz/bert-base-uncased-offenseval2019-unbalanced | e2c36709fcd98f5761b8d3396354d0e515467ccf | 2021-05-19T23:41:35.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | mohsenfayyaz | null | mohsenfayyaz/bert-base-uncased-offenseval2019-unbalanced | 4 | null | transformers | 18,818 | Entry not found |

mohsenfayyaz/bert-base-uncased-toxicity-a | bc046871daaaba8d8c2ce7a10b2b4d7eb0ea46e2 | 2021-05-19T23:44:37.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | mohsenfayyaz | null | mohsenfayyaz/bert-base-uncased-toxicity-a | 4 | null | transformers | 18,819 | Entry not found |

mohsenfayyaz/xlnet-base-cased-offenseval2019-downsample | bd015b15113ade3ce9ebfbd7617a7d6ac898f973 | 2021-05-04T13:58:20.000Z | [

"pytorch",

"xlnet",

"text-classification",

"transformers"

] | text-classification | false | mohsenfayyaz | null | mohsenfayyaz/xlnet-base-cased-offenseval2019-downsample | 4 | null | transformers | 18,820 | Entry not found |

mollypak/bert-model-baby | 479f6cf68275cc5d09c3bf31fab1d57ab2e1407f | 2021-11-26T13:30:51.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers"

] | text-classification | false | mollypak | null | mollypak/bert-model-baby | 4 | null | transformers | 18,821 | Entry not found |

momo/distilbert-base-uncased-finetuned-ner | 9a242b287e56e5a2a963b662f5aabb2a0f37cf11 | 2021-11-28T17:15:36.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"dataset:conll2003",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | token-classification | false | momo | null | momo/distilbert-base-uncased-finetuned-ner | 4 | null | transformers | 18,822 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9262123053131559

- name: Recall

type: recall

value: 0.9380243875153821

- name: F1

type: f1

value: 0.9320809248554913

- name: Accuracy

type: accuracy

value: 0.9839547555880344

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0617

- Precision: 0.9262

- Recall: 0.9380

- F1: 0.9321

- Accuracy: 0.9840

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
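The step counts in the training results follow from the dataset size and the batch size. This calculation assumes the Hugging Face `conll2003` train split of 14,041 sentences, a single device and no gradient accumulation; the card states none of these explicitly. Under those assumptions the Trainer takes one optimizer step per batch and keeps the final partial batch:

```python
import math

train_examples = 14_041  # assumed size of the conll2003 train split
batch_size = 16          # train_batch_size from the hyperparameters above
num_epochs = 3

steps_per_epoch = math.ceil(train_examples / batch_size)
total_steps = steps_per_epoch * num_epochs
print(steps_per_epoch, total_steps)  # 878 2634
```

These values match the Step column of the results table (878, 1756, 2634).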

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.2465 | 1.0 | 878 | 0.0727 | 0.9175 | 0.9199 | 0.9187 | 0.9808 |
| 0.0527 | 2.0 | 1756 | 0.0610 | 0.9245 | 0.9361 | 0.9303 | 0.9834 |
| 0.0313 | 3.0 | 2634 | 0.0617 | 0.9262 | 0.9380 | 0.9321 | 0.9840 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.8.0

- Datasets 1.16.1

- Tokenizers 0.10.3

|

monologg/kocharelectra-base-finetuned-goemotions | 6aad036b0a14d69fc4dce14d18eca945e4456925 | 2020-05-29T12:52:27.000Z | [

"pytorch",

"electra",

"transformers"

] | null | false | monologg | null | monologg/kocharelectra-base-finetuned-goemotions | 4 | null | transformers | 18,823 | Entry not found |

monologg/kocharelectra-base-generator | 019d26cdd8791eb28e2b67ff956b0fb441db4ffd | 2020-05-27T17:35:59.000Z | [

"pytorch",

"electra",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | monologg | null | monologg/kocharelectra-base-generator | 4 | null | transformers | 18,824 | Entry not found |

monologg/kocharelectra-small-finetuned-goemotions | e77adc04b5143e5989f09adff8fce8ac47d090b1 | 2020-05-29T12:56:37.000Z | [

"pytorch",

"electra",

"transformers"

] | null | false | monologg | null | monologg/kocharelectra-small-finetuned-goemotions | 4 | null | transformers | 18,825 | Entry not found |

monologg/koelectra-base-finetuned-goemotions | c505c12811b558c574420afdaa74aac8b3c31421 | 2020-05-18T20:19:16.000Z | [

"pytorch",

"electra",

"transformers"

] | null | false | monologg | null | monologg/koelectra-base-finetuned-goemotions | 4 | null | transformers | 18,826 | Entry not found |

monsoon-nlp/dv-muril | b67f178d9b0545a7652aad32f08d4ac2b5df7dca | 2021-05-20T00:01:51.000Z | [

"pytorch",

"jax",

"bert",

"fill-mask",

"dv",

"transformers",

"autotrain_compatible"

] | fill-mask | false | monsoon-nlp | null | monsoon-nlp/dv-muril | 4 | null | transformers | 18,827 | ---

language: dv

---

# dv-muril

This is an experiment in transfer learning that inserts Dhivehi word and word-piece tokens into Google's MuRIL model.

This BERT-based model currently performs better than dv-wave ELECTRA on the Maldivian News Classification task: https://github.com/Sofwath/DhivehiDatasets

## Training

- Start with MuRIL (similar to mBERT) with no Thaana vocabulary

- Based on PanLex dictionaries, attach 1,100 Dhivehi words to Malayalam or English embeddings

- Add remaining words and word-pieces from BertWordPieceTokenizer / vocab.txt

- Continue BERT pretraining
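The second step, giving new Dhivehi tokens the embeddings of their dictionary translations, can be sketched as copying rows of the input embedding matrix. Everything here is hypothetical. The toy vocabulary, the two-dimensional vectors and the `translations` mapping stand in for MuRIL's real vocabulary and the PanLex entries, and the card does not say exactly how the attachment was implemented.

```python
from typing import Dict, List


def attach_translated_tokens(
    vocab: Dict[str, int],
    embeddings: List[List[float]],
    translations: Dict[str, str],
) -> None:
    """Append each new word to the vocab, initialising its embedding from its translation's row."""
    for new_word, known_word in translations.items():
        if new_word in vocab or known_word not in vocab:
            continue  # skip words already present, or translations missing from the vocab
        vocab[new_word] = len(embeddings)  # index of the row about to be appended
        embeddings.append(list(embeddings[vocab[known_word]]))  # copy the row, don't alias it


# Hypothetical toy data: an English vocab with 2-d embeddings
vocab = {"[PAD]": 0, "water": 1, "fish": 2}
embeddings = [[0.0, 0.0], [0.1, 0.9], [0.7, 0.3]]
translations = {"ފެން": "water", "މަސް": "fish"}  # Dhivehi -> English (illustrative)
attach_translated_tokens(vocab, embeddings, translations)
print(vocab)
```

Copying rather than aliasing keeps the new rows independent, so continued pretraining can move them away from their English starting points.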

## Performance

- mBERT: 52%

- dv-wave (ELECTRA, 30k vocab): 89%

- dv-muril (10k vocab) before BERT pretraining step: 89.8%

- previous dv-muril (30k vocab): 90.7%

- dv-muril (10k vocab): 91.6%

Colab notebook:

https://colab.research.google.com/drive/113o6vkLZRkm6OwhTHrvE0x6QPpavj0fn

|

monsoon-nlp/dv-wave | 2ba732ad89d9ead004e5fdc9941ddc829c2c6524 | 2020-12-11T21:51:38.000Z | [

"pytorch",

"tf",

"electra",

"dv",

"transformers"

] | null | false | monsoon-nlp | null | monsoon-nlp/dv-wave | 4 | null | transformers | 18,828 | ---

language: dv

---

# dv-wave

This is a second attempt at a Dhivehi language model trained with

Google Research's [ELECTRA](https://github.com/google-research/electra).

Tokenization and pre-training Colab: https://colab.research.google.com/drive/1ZJ3tU9MwyWj6UtQ-8G7QJKTn-hG1uQ9v?usp=sharing

Using SimpleTransformers to classify news: https://colab.research.google.com/drive/1KnyQxRNWG_yVwms_x9MUAqFQVeMecTV7?usp=sharing

V1: similar performance to mBERT on the news classification task after fine-tuning for 3 epochs (52%)

V2: fixed the tokenizer settings `do_lower_case=False` and `strip_accents=False` to preserve Dhivehi vowel signs

V2 news classification: dv-wave 89% vs. mBERT 52%
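`strip_accents=False` matters because BERT-style basic tokenizers remove accents by applying Unicode NFD normalization and then dropping every character in category `Mn` (non-spacing mark). Thaana vowel signs (fili) are `Mn` characters, so accent stripping deletes every vowel. The following standard-library sketch re-implements that stripping step; it is not the tokenizer's own code:

```python
import unicodedata


def strip_accents(text: str) -> str:
    """NFD-normalize, then drop non-spacing combining marks (category Mn)."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


word = "\u078b\u07a8\u0788\u07ac\u0780\u07a8"  # ދިވެހި ("Dhivehi"): three consonants, each with a vowel sign
stripped = strip_accents(word)
print(len(word), len(stripped))  # 6 3: only the consonants survive
print(strip_accents("rápido"))   # rapido
```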

## Corpus

Trained on @Sofwath's 307MB corpus of Dhivehi text: https://github.com/Sofwath/DhivehiDatasets - this repo also contains the news classification task CSV

[OSCAR](https://oscar-corpus.com/) was considered but has not been added to pretraining; as of

this writing their web crawl has 126MB of Dhivehi text (79MB deduped).

## Vocabulary

The vocabulary is included as `vocab.txt` in the upload; `vocab_size` is 29874.

|

moshew/bert-small-aug-sst2-distilled | 499849730805e6d6dba5eab7ba5c5bac42b18be7 | 2022-02-23T11:12:18.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

] | text-classification | false | moshew | null | moshew/bert-small-aug-sst2-distilled | 4 | null | transformers | 18,829 | Accuracy = 92 |

moussaKam/frugalscore_medium_roberta_bert-score | b73f9b7a9466c99166673f4ec146085f23bb4973 | 2022-02-01T10:51:17.000Z | [

"pytorch",

"bert",

"text-classification",

"arxiv:2110.08559",

"transformers"

] | text-classification | false | moussaKam | null | moussaKam/frugalscore_medium_roberta_bert-score | 4 | null | transformers | 18,830 | # FrugalScore

FrugalScore is an approach to learning a fixed, low-cost version of any expensive NLG metric while retaining most of its original performance.

Paper: https://arxiv.org/abs/2110.08559?context=cs

Project github: https://github.com/moussaKam/FrugalScore

The pretrained checkpoints presented in the paper:

| FrugalScore | Student | Teacher | Method |
|----------------------------------------------------|-------------|----------------|------------|
| [moussaKam/frugalscore_tiny_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_bert-base_bert-score) | BERT-tiny | BERT-Base | BERTScore |
| [moussaKam/frugalscore_small_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_small_bert-base_bert-score) | BERT-small | BERT-Base | BERTScore |
| [moussaKam/frugalscore_medium_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_bert-base_bert-score) | BERT-medium | BERT-Base | BERTScore |
| [moussaKam/frugalscore_tiny_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_roberta_bert-score) | BERT-tiny | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_small_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_small_roberta_bert-score) | BERT-small | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_medium_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_roberta_bert-score) | BERT-medium | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_tiny_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_deberta_bert-score) | BERT-tiny | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_small_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_small_deberta_bert-score) | BERT-small | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_medium_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_deberta_bert-score) | BERT-medium | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_tiny_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_tiny_bert-base_mover-score) | BERT-tiny | BERT-Base | MoverScore |
| [moussaKam/frugalscore_small_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_small_bert-base_mover-score) | BERT-small | BERT-Base | MoverScore |
| [moussaKam/frugalscore_medium_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_medium_bert-base_mover-score) | BERT-medium | BERT-Base | MoverScore | |

moussaKam/frugalscore_small_bert-base_mover-score | 3437e3adb217131a1af4c38127649b9f1f00cf14 | 2022-05-11T11:05:28.000Z | [

"pytorch",

"bert",

"text-classification",

"arxiv:2110.08559",

"transformers"

] | text-classification | false | moussaKam | null | moussaKam/frugalscore_small_bert-base_mover-score | 4 | null | transformers | 18,831 | # FrugalScore

FrugalScore is an approach to learning a fixed, low-cost version of any expensive NLG metric while retaining most of its original performance.

Paper: https://arxiv.org/abs/2110.08559?context=cs

Project github: https://github.com/moussaKam/FrugalScore

The pretrained checkpoints presented in the paper:

| FrugalScore | Student | Teacher | Method |
|----------------------------------------------------|-------------|----------------|------------|
| [moussaKam/frugalscore_tiny_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_bert-base_bert-score) | BERT-tiny | BERT-Base | BERTScore |
| [moussaKam/frugalscore_small_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_small_bert-base_bert-score) | BERT-small | BERT-Base | BERTScore |
| [moussaKam/frugalscore_medium_bert-base_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_bert-base_bert-score) | BERT-medium | BERT-Base | BERTScore |
| [moussaKam/frugalscore_tiny_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_roberta_bert-score) | BERT-tiny | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_small_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_small_roberta_bert-score) | BERT-small | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_medium_roberta_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_roberta_bert-score) | BERT-medium | RoBERTa-Large | BERTScore |
| [moussaKam/frugalscore_tiny_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_tiny_deberta_bert-score) | BERT-tiny | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_small_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_small_deberta_bert-score) | BERT-small | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_medium_deberta_bert-score](https://huggingface.co/moussaKam/frugalscore_medium_deberta_bert-score) | BERT-medium | DeBERTa-XLarge | BERTScore |
| [moussaKam/frugalscore_tiny_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_tiny_bert-base_mover-score) | BERT-tiny | BERT-Base | MoverScore |
| [moussaKam/frugalscore_small_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_small_bert-base_mover-score) | BERT-small | BERT-Base | MoverScore |
| [moussaKam/frugalscore_medium_bert-base_mover-score](https://huggingface.co/moussaKam/frugalscore_medium_bert-base_mover-score) | BERT-medium | BERT-Base | MoverScore | |

mrm8488/RuPERTa-base-finetuned-spa-constitution | 2c2175a87fbdeef84c54e432495c32b74d4f79f0 | 2021-05-20T18:12:03.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | mrm8488 | null | mrm8488/RuPERTa-base-finetuned-spa-constitution | 4 | null | transformers | 18,832 | Entry not found |

mrm8488/bert-mini-wrslb-finetuned-squadv1 | a68eaa0039c5d956aa5740c5d5afe3afd5d1e227 | 2021-05-20T00:26:56.000Z | [

"pytorch",

"jax",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

] | question-answering | false | mrm8488 | null | mrm8488/bert-mini-wrslb-finetuned-squadv1 | 4 | null | transformers | 18,833 | Entry not found |

mrm8488/bert-small-wrslb-finetuned-squadv1 | e7aeb86ed58911ba91ce824e24207fe2a1b6d091 | 2021-05-20T00:34:10.000Z | [

"pytorch",

"jax",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

] | question-answering | false | mrm8488 | null | mrm8488/bert-small-wrslb-finetuned-squadv1 | 4 | null | transformers | 18,834 | Entry not found |

mrm8488/electricidad-small-discriminator | 2c4c04c7cd445ea978938e9618229fad7de2cbea | 2022-03-30T20:44:50.000Z | [

"pytorch",

"electra",

"pretraining",

"es",

"dataset:large_spanish_corpus",

"transformers",

"Spanish",

"Electra"

] | null | false | mrm8488 | null | mrm8488/electricidad-small-discriminator | 4 | 3 | transformers | 18,835 | ---

language: es

thumbnail: https://i.imgur.com/uxAvBfh.png

tags:

- Spanish

- Electra

datasets:

- large_spanish_corpus

---

## ELECTRICIDAD: The Spanish Electra [Imgur](https://imgur.com/uxAvBfh)

**ELECTRICIDAD** is a small Electra-like model (the discriminator in this case) trained on a [Large Spanish Corpus](https://github.com/josecannete/spanish-corpora) (aka BETO's corpus).

As mentioned in the original [paper](https://openreview.net/pdf?id=r1xMH1BtvB):

**ELECTRA** is a new method for self-supervised language representation learning. It can be used to pre-train transformer networks using relatively little compute. ELECTRA models are trained to distinguish "real" input tokens vs "fake" input tokens generated by another neural network, similar to the discriminator of a [GAN](https://arxiv.org/pdf/1406.2661.pdf). At small scale, ELECTRA achieves strong results even when trained on a single GPU. At large scale, ELECTRA achieves state-of-the-art results on the [SQuAD 2.0](https://rajpurkar.github.io/SQuAD-explorer/) dataset.

For a detailed description and experimental results, please refer to the paper [ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators](https://openreview.net/pdf?id=r1xMH1BtvB).

## Model details ⚙

| Param | Value |
|-----|--------|
| Layers | 12 |
| Hidden | 256 |
| Params | 14M |

## Evaluation metrics (for discriminator) 🧾

| Metric | Score |
|-------|---------|
| Accuracy | 0.94 |
| Precision | 0.76 |
| AUC | 0.92 |

## Benchmarks 🔨

WIP 🚧

## How to use the discriminator in `transformers`

```python
from transformers import ElectraForPreTraining, ElectraTokenizerFast
import torch

discriminator = ElectraForPreTraining.from_pretrained("mrm8488/electricidad-small-discriminator")
tokenizer = ElectraTokenizerFast.from_pretrained("mrm8488/electricidad-small-discriminator")

sentence = "el zorro rojo es muy rápido"
fake_sentence = "el zorro rojo es muy ser"  # "rápido" replaced by an implausible token

# Tokenize and encode the corrupted sentence (not the original one)
fake_tokens = tokenizer.tokenize(fake_sentence)
fake_inputs = tokenizer.encode(fake_sentence, return_tensors="pt")

with torch.no_grad():
    discriminator_outputs = discriminator(fake_inputs)

# A positive logit means "replaced": map it to 1, otherwise 0
predictions = torch.round((torch.sign(discriminator_outputs.logits) + 1) / 2)

# Print the tokens, then one prediction per token; [1:-1] drops [CLS] and [SEP]
for token in fake_tokens:
    print("%7s" % token, end="")
print()
for prediction in predictions.squeeze(0).tolist()[1:-1]:
    print("%7s" % int(prediction), end="")
print()

# Output:
#      el  zorro   rojo     es    muy    ser
#       0      0      0      0      0      1
```

As you can see, there is a **1** at the position of the fake token (**ser**), so the model detected it. It works! 🎉
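The expression `torch.round((torch.sign(logit) + 1) / 2)` maps a positive logit to 1 ("replaced") and a negative one to 0. For a logit of exactly zero it gives round(0.5), and since rounding is half-to-even this yields 0. In effect it is the same as testing `logit > 0`. Here is a dependency-free sketch of that mapping, using made-up logits:

```python
def logit_to_label(logit: float) -> int:
    """Replicate round((sign(logit) + 1) / 2) for a single discriminator logit."""
    sign = (logit > 0) - (logit < 0)  # -1, 0 or 1
    return int(round((sign + 1) / 2))  # Python's round() also rounds half to even


logits = [-3.2, -0.4, 0.0, 0.8, 5.1]  # illustrative values, not real model output
labels = [logit_to_label(x) for x in logits]
print(labels)  # [0, 0, 0, 1, 1]
```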

[Electricidad-small fine-tuned models](https://huggingface.co/models?search=electricidad-small)

## Acknowledgments

I thank the [🤗/transformers team](https://github.com/huggingface/transformers) for answering my questions and Google for helping me with the [TensorFlow Research Cloud](https://www.tensorflow.org/tfrc) program.

## Citation

If you want to cite this model you can use this:

```bibtex

@misc{mromero2020electricidad-small-discriminator,
  title={Spanish Electra (small) by Manuel Romero},
  author={Romero, Manuel},
  publisher={Hugging Face},
  journal={Hugging Face Hub},
  howpublished={\url{https://huggingface.co/mrm8488/electricidad-small-discriminator}},
  year={2020}
}

```

> Created by [Manuel Romero/@mrm8488](https://twitter.com/mrm8488)

> Made with <span style="color: #e25555;">♥</span> in Spain

|

mrm8488/scibert_scivocab-finetuned-CORD19 | 32d24a040bfbfe2558723fc76b7c18f61b9cc3a2 | 2021-05-20T00:48:35.000Z | [

"pytorch",

"jax",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | mrm8488 | null | mrm8488/scibert_scivocab-finetuned-CORD19 | 4 | null | transformers | 18,836 | Entry not found |

mrm8488/t5-base-finetuned-math-linear-algebra-2d | 461e682b5f0577f892ed1373cdb3d0e626466585 | 2020-08-19T16:39:10.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | mrm8488 | null | mrm8488/t5-base-finetuned-math-linear-algebra-2d | 4 | null | transformers | 18,837 | Entry not found |

mrshu/wav2vec2-large-xlsr-slovene | f935524ed90c6bd485855aadaa150f028fbc47b6 | 2021-07-06T13:25:51.000Z | [

"pytorch",

"jax",

"wav2vec2",

"automatic-speech-recognition",

"sl",

"dataset:common_voice",

"transformers",

"audio",

"speech",

"xlsr-fine-tuning-week",

"license:apache-2.0",

"model-index"

] | automatic-speech-recognition | false | mrshu | null | mrshu/wav2vec2-large-xlsr-slovene | 4 | null | transformers | 18,838 | ---

language: sl
datasets:
- common_voice
tags:
- audio
- automatic-speech-recognition
- speech
- xlsr-fine-tuning-week
license: apache-2.0
model-index:
- name: XLSR Wav2Vec2 Slovene
  results:
  - task:
      name: Speech Recognition
      type: automatic-speech-recognition
    dataset:
      name: Common Voice sl
      type: common_voice
      args: sl
    metrics:
    - name: Test WER
      type: wer
      value: 36.97

---

# Wav2Vec2-Large-XLSR-53-Slovene

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Slovene using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "sl", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("mrshu/wav2vec2-large-xlsr-slovene")
model = Wav2Vec2ForCTC.from_pretrained("mrshu/wav2vec2-large-xlsr-slovene")

# Resample from 48 kHz to the 16 kHz the model expects
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

# Greedy decoding: most likely token per audio frame
predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])

```
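The `argmax` + `processor.batch_decode` step above performs greedy CTC decoding. The plain-Python sketch below shows roughly what that does: collapse repeated frame ids, drop the CTC blank, and turn the word delimiter into spaces. The toy vocabulary and frame ids are hypothetical. Treating `"<pad>"` as the blank and `"|"` as the word delimiter is an assumption based on the usual Wav2Vec2 CTC tokenizer setup; the real vocabulary lives in the model's tokenizer.

```python
# Simplified sketch of greedy CTC decoding (not the library's implementation).
from itertools import groupby

VOCAB = ["<pad>", "|", "d", "a", "n", "j", "e"]  # hypothetical toy vocabulary
BLANK_ID = 0        # assumed: "<pad>" acts as the CTC blank
DELIMITER = "|"     # assumed: "|" marks word boundaries


def greedy_ctc_decode(frame_ids):
    """Collapse repeated ids, drop blanks, then map the delimiter to spaces."""
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    chars = [VOCAB[i] for i in collapsed if i != BLANK_ID]
    return "".join(chars).replace(DELIMITER, " ").strip()


# Per-frame argmax ids, e.g. one row of torch.argmax(logits, dim=-1)
frames = [2, 2, 0, 3, 4, 4, 0, 1, 0, 5, 5, 6, 6]
print(greedy_ctc_decode(frames))  # dan je
```

The blank is what lets CTC emit genuinely doubled letters: `[3, 0, 3]` decodes to `"aa"`, while `[3, 3]` collapses to `"a"`.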

## Evaluation

The model can be evaluated as follows on the Slovene test data of Common Voice.

```python

import re

import torch
import torchaudio
from datasets import load_dataset, load_metric
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "sl", split="test")
wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("mrshu/wav2vec2-large-xlsr-slovene")
model = Wav2Vec2ForCTC.from_pretrained("mrshu/wav2vec2-large-xlsr-slovene")
model.to("cuda")

# Punctuation removed from the reference transcripts before scoring
chars_to_ignore_regex = r'[\,\?\.\!\-\;\:\"\“\%\‘\”\�\«\»\)\(\„\'\–\’\—]'
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# Normalize the transcripts and read the audio files as 16 kHz arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference on the GPU and decode the predicted ids to text
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 36.97 % WER
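Word error rate counts word-level substitutions, deletions and insertions and divides by the number of reference words. The stdlib-only sketch below computes it with a Levenshtein distance over words. It aggregates errors across the whole corpus, which is assumed here to match how the `wer` metric above is computed; treat it as an approximation, not the metric's actual implementation. The sentences are hypothetical examples.

```python
def word_error_rate(references, predictions):
    """Corpus WER = (substitutions + deletions + insertions) / reference words."""
    errors = 0
    total = 0
    for ref, hyp in zip(references, predictions):
        r, h = ref.split(), hyp.split()
        # prev[j]: edit distance between the ref words seen so far and the first j hyp words
        prev = list(range(len(h) + 1))
        for i in range(1, len(r) + 1):
            curr = [i] + [0] * len(h)
            for j in range(1, len(h) + 1):
                cost = 0 if r[i - 1] == h[j - 1] else 1
                curr[j] = min(
                    prev[j] + 1,         # deletion
                    curr[j - 1] + 1,     # insertion
                    prev[j - 1] + cost,  # substitution or match
                )
            prev = curr
        errors += prev[-1]
        total += len(r)
    return errors / total


refs = ["dober dan", "kako si danes"]   # hypothetical reference transcripts
preds = ["dober dan", "kako si"]        # hypothetical model output (one deletion)
print("WER: {:.2f}".format(100 * word_error_rate(refs, preds)))  # WER: 20.00
```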

## Training

The Common Voice `train` and `validation` splits were used for training.

The script used for training can be found [here](https://colab.research.google.com/drive/14uahdilysnFsiYniHxY9fyKjFGuYQe7p).

|

mse30/bart-base-finetuned-multinews | 3562810841c50072d210440e5624230687b5b9ee | 2021-10-09T03:19:09.000Z | [

"pytorch",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | mse30 | null | mse30/bart-base-finetuned-multinews | 4 | null | transformers | 18,839 | Entry not found |

muhtasham/autonlp-Doctor_DE-24595545 | a46f143e53582e6376263fdb77d627bebbd70188 | 2021-10-22T11:59:58.000Z | [

"pytorch",

"bert",

"text-classification",

"de",

"dataset:muhtasham/autonlp-data-Doctor_DE",

"transformers",

"autonlp",

"co2_eq_emissions"

] | text-classification | false | muhtasham | null | muhtasham/autonlp-Doctor_DE-24595545 | 4 | null | transformers | 18,840 | ---

tags: autonlp

language: de

widget:

- text: "I love AutoNLP 🤗"

datasets:

- muhtasham/autonlp-data-Doctor_DE

co2_eq_emissions: 203.30658367993382

---

# Model Trained Using AutoNLP

- Problem type: Single Column Regression

- Model ID: 24595545

- CO2 Emissions (in grams): 203.30658367993382

## Validation Metrics

- Loss: 0.30214861035346985

- MSE: 0.30214861035346985

- MAE: 0.25911855697631836

- R2: 0.8455587614373526

- RMSE: 0.5496804714202881

- Explained Variance: 0.8476610779762268
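These metrics are related. The reported RMSE (0.5497) is the square root of the reported MSE (0.3021), and Loss equals MSE, which suggests (but the card does not confirm) an MSE training objective. The stdlib-only sketch below shows the standard definitions on hypothetical toy targets and predictions; it is not AutoNLP's evaluation code.

```python
import math


def regression_metrics(y_true, y_pred):
    """Standard regression metrics (population variance, as in common libraries)."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(r * r for r in residuals) / n
    mae = sum(abs(r) for r in residuals) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = mse * n
    # Explained variance ignores a constant bias in the residuals; R2 does not
    mean_res = sum(residuals) / n
    var_res = sum((r - mean_res) ** 2 for r in residuals) / n
    return {
        "MSE": mse,
        "MAE": mae,
        "RMSE": math.sqrt(mse),
        "R2": 1 - ss_res / ss_tot,
        "Explained Variance": 1 - var_res / (ss_tot / n),
    }


y_true = [1.0, 2.0, 3.0, 4.0]  # hypothetical targets
y_pred = [1.5, 2.0, 2.5, 4.0]  # hypothetical predictions
for name, value in regression_metrics(y_true, y_pred).items():
    print(f"{name}: {value:.4f}")
```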

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/muhtasham/autonlp-Doctor_DE-24595545

```
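The same HTTP request can also be assembled with Python's standard library. This is a sketch that only mirrors the cURL command above. `build_request` is a hypothetical helper, `YOUR_API_KEY` is a placeholder, and the shape of the JSON response is not documented in this card.

```python
import json
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/muhtasham/autonlp-Doctor_DE-24595545"


def build_request(text, api_key):
    """Build (but do not send) the same POST request as the cURL example."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(API_URL, data=payload, headers=headers, method="POST")


req = build_request("I love AutoNLP", "YOUR_API_KEY")
# To actually send it (requires network access and a valid key):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```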

Or load the model directly with the `transformers` Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("muhtasham/autonlp-Doctor_DE-24595545", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("muhtasham/autonlp-Doctor_DE-24595545", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` |