modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Team-PIXEL/pixel-base-finetuned-qnli | 066279a64f5529a4527e63b40bbcee9fa3e8f221 | 2022-07-15T02:52:20.000Z | [

"pytorch",

"pixel",

"text-classification",

"en",

"dataset:glue",

"transformers",

"generated_from_trainer",

"model-index"

] | text-classification | false | Team-PIXEL | null | Team-PIXEL/pixel-base-finetuned-qnli | 5 | null | transformers | 17,600 | ---

language:

- en

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

model-index:

- name: pixel-base-finetuned-qnli

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: GLUE QNLI

type: glue

args: qnli

metrics:

- name: Accuracy

type: accuracy

value: 0.8859600951857953

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pixel-base-finetuned-qnli

This model is a fine-tuned version of [Team-PIXEL/pixel-base](https://huggingface.co/Team-PIXEL/pixel-base) on the GLUE QNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9503

- Accuracy: 0.8860

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- training_steps: 15000

- mixed_precision_training: Apex, opt level O1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.5451 | 0.31 | 500 | 0.5379 | 0.7282 |

| 0.4451 | 0.61 | 1000 | 0.3846 | 0.8318 |

| 0.4567 | 0.92 | 1500 | 0.3543 | 0.8525 |

| 0.3558 | 1.22 | 2000 | 0.3294 | 0.8638 |

| 0.3324 | 1.53 | 2500 | 0.3221 | 0.8666 |

| 0.3434 | 1.83 | 3000 | 0.2976 | 0.8774 |

| 0.2573 | 2.14 | 3500 | 0.3193 | 0.8750 |

| 0.2411 | 2.44 | 4000 | 0.3044 | 0.8794 |

| 0.253 | 2.75 | 4500 | 0.2932 | 0.8834 |

| 0.1653 | 3.05 | 5000 | 0.3364 | 0.8841 |

| 0.1662 | 3.36 | 5500 | 0.3348 | 0.8797 |

| 0.1816 | 3.67 | 6000 | 0.3440 | 0.8869 |

| 0.1699 | 3.97 | 6500 | 0.3453 | 0.8845 |

| 0.1027 | 4.28 | 7000 | 0.4277 | 0.8810 |

| 0.0987 | 4.58 | 7500 | 0.4590 | 0.8832 |

| 0.0974 | 4.89 | 8000 | 0.4311 | 0.8783 |

| 0.0669 | 5.19 | 8500 | 0.5214 | 0.8819 |

| 0.0583 | 5.5 | 9000 | 0.5776 | 0.8850 |

| 0.065 | 5.8 | 9500 | 0.5646 | 0.8821 |

| 0.0381 | 6.11 | 10000 | 0.6252 | 0.8796 |

| 0.0314 | 6.41 | 10500 | 0.7222 | 0.8801 |

| 0.0453 | 6.72 | 11000 | 0.6951 | 0.8823 |

| 0.0264 | 7.03 | 11500 | 0.7620 | 0.8828 |

| 0.0215 | 7.33 | 12000 | 0.8160 | 0.8834 |

| 0.0176 | 7.64 | 12500 | 0.8583 | 0.8828 |

| 0.0245 | 7.94 | 13000 | 0.8484 | 0.8867 |

| 0.0124 | 8.25 | 13500 | 0.8927 | 0.8836 |

| 0.0112 | 8.55 | 14000 | 0.9368 | 0.8827 |

| 0.0154 | 8.86 | 14500 | 0.9405 | 0.8860 |

| 0.0046 | 9.16 | 15000 | 0.9503 | 0.8860 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 2.0.0

- Tokenizers 0.11.6

|

huggingtweets/thomastrainrek | d8e268125fe164d667b22ccef939c34cf0c1d604 | 2022-07-17T02:03:59.000Z | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] | text-generation | false | huggingtweets | null | huggingtweets/thomastrainrek | 5 | null | transformers | 17,601 | ---

language: en

thumbnail: http://www.huggingtweets.com/thomastrainrek/1658023434881/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1321337599332593664/tqNLm-HD_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">thomas the trainwreck</div>

<div style="text-align: center; font-size: 14px;">@thomastrainrek</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from thomas the trainwreck.

| Data | thomas the trainwreck |

| --- | --- |

| Tweets downloaded | 1454 |

| Retweets | 34 |

| Short tweets | 40 |

| Tweets kept | 1380 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/15e6z8cg/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @thomastrainrek's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2967djo2) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2967djo2/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/thomastrainrek')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

JoonJoon/koelectra-base-v3-discriminator-finetuned-ner | 29e2af0578469824a76ee16a4f590ff7df003ccc | 2022-07-15T06:43:05.000Z | [

"pytorch",

"electra",

"token-classification",

"dataset:klue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | token-classification | false | JoonJoon | null | JoonJoon/koelectra-base-v3-discriminator-finetuned-ner | 5 | null | transformers | 17,602 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- klue

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: koelectra-base-v3-discriminator-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: klue

type: klue

args: ner

metrics:

- name: Precision

type: precision

value: 0.6665182546749777

- name: Recall

type: recall

value: 0.7350073648032546

- name: F1

type: f1

value: 0.6990893625537877

- name: Accuracy

type: accuracy

value: 0.9395764497172635

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# koelectra-base-v3-discriminator-finetuned-ner

This model is a fine-tuned version of [monologg/koelectra-base-v3-discriminator](https://huggingface.co/monologg/koelectra-base-v3-discriminator) on the klue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1957

- Precision: 0.6665

- Recall: 0.7350

- F1: 0.6991

- Accuracy: 0.9396

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 438 | 0.2588 | 0.5701 | 0.6655 | 0.6141 | 0.9212 |

| 0.4333 | 2.0 | 876 | 0.2060 | 0.6671 | 0.7134 | 0.6895 | 0.9373 |

| 0.1944 | 3.0 | 1314 | 0.1957 | 0.6665 | 0.7350 | 0.6991 | 0.9396 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.0+cu102

- Datasets 1.14.0

- Tokenizers 0.10.3

|

jinwooChoi/hjw_small_25_32_0.0001 | 1a129cd10e36943e4395b70e1e439e74419667d6 | 2022-07-15T07:32:27.000Z | [

"pytorch",

"electra",

"text-classification",

"transformers"

] | text-classification | false | jinwooChoi | null | jinwooChoi/hjw_small_25_32_0.0001 | 5 | null | transformers | 17,603 | Entry not found |

darragh/swinunetr-btcv-base | 2a60a8f819994b0210038531994703c7c7bd8e21 | 2022-07-15T21:01:42.000Z | [

"pytorch",

"en",

"dataset:BTCV",

"transformers",

"btcv",

"medical",

"swin",

"license:apache-2.0"

] | null | false | darragh | null | darragh/swinunetr-btcv-base | 5 | null | transformers | 17,604 | ---

language: en

tags:

- btcv

- medical

- swin

license: apache-2.0

datasets:

- BTCV

---

# Model Overview

This repository contains the code for Swin UNETR [1,2]. Swin UNETR is the state-of-the-art on Medical Segmentation

Decathlon (MSD) and Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset. In [1], a novel methodology is devised for pre-training Swin UNETR backbone in a self-supervised

manner. We provide the option for training Swin UNETR by fine-tuning from pre-trained self-supervised weights or from scratch.

The source repository for the training of these models can be found [here](https://github.com/Project-MONAI/research-contributions/tree/main/SwinUNETR/BTCV).

# Installing Dependencies

Dependencies for training and inference can be installed using the model requirements :

``` bash

pip install -r requirements.txt

```

# Intended uses & limitations

You can use the raw model for dicom segmentation, but it's mostly intended to be fine-tuned on a downstream task.

Note that this model is primarily aimed at being fine-tuned on tasks which segment CAT scans or MRIs on images in dicom format. Dicom meta data mostly differs across medical facilities, so if applying to a new dataset, the model should be finetuned.

# How to use

To install necessary dependencies, run the below in bash.

```

git clone https://github.com/darraghdog/Project-MONAI-research-contributions pmrc

pip install -r pmrc/requirements.txt

cd pmrc/SwinUNETR/BTCV

```

To load the model from the hub.

```

>>> from swinunetr import SwinUnetrModelForInference

>>> model = SwinUnetrModelForInference.from_pretrained('darragh/swinunetr-btcv-tiny')

```

# Limitations and bias

The training data used for this model is specific to CAT scans from certain health facilities and machines. Data from other facilities may difffer in image distributions, and may require finetuning of the models for best performance.

# Evaluation results

We provide several pre-trained models on BTCV dataset in the following.

<table>

<tr>

<th>Name</th>

<th>Dice (overlap=0.7)</th>

<th>Dice (overlap=0.5)</th>

<th>Feature Size</th>

<th># params (M)</th>

<th>Self-Supervised Pre-trained </th>

</tr>

<tr>

<td>Swin UNETR/Base</td>

<td>82.25</td>

<td>81.86</td>

<td>48</td>

<td>62.1</td>

<td>Yes</td>

</tr>

<tr>

<td>Swin UNETR/Small</td>

<td>79.79</td>

<td>79.34</td>

<td>24</td>

<td>15.7</td>

<td>No</td>

</tr>

<tr>

<td>Swin UNETR/Tiny</td>

<td>72.05</td>

<td>70.35</td>

<td>12</td>

<td>4.0</td>

<td>No</td>

</tr>

</table>

# Data Preparation

The training data is from the [BTCV challenge dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/217752).

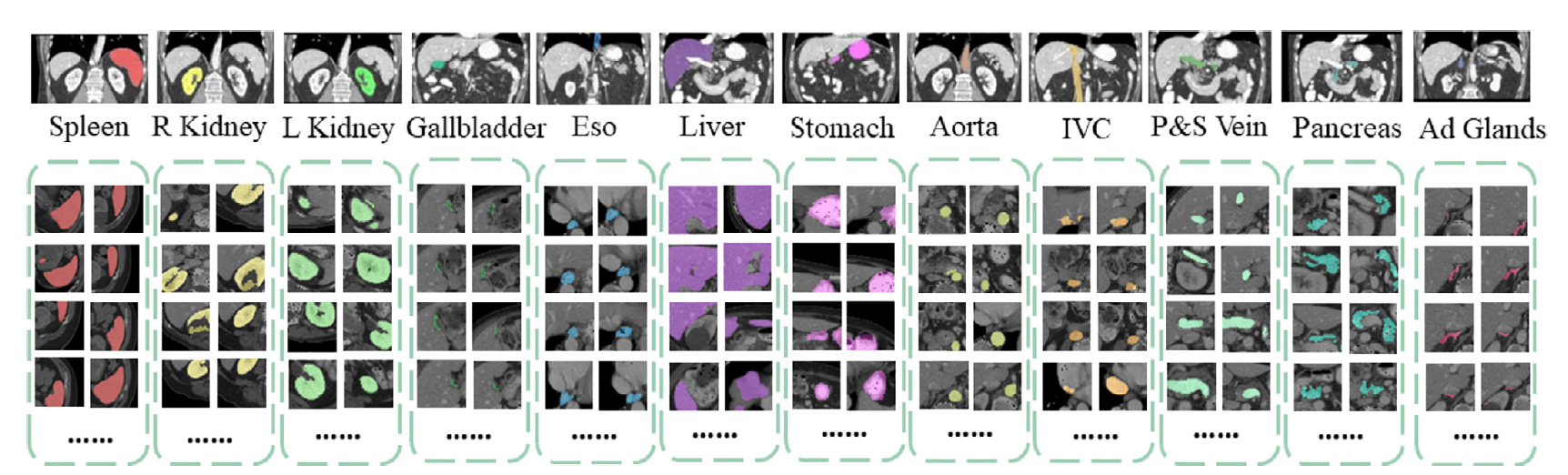

- Target: 13 abdominal organs including 1. Spleen 2. Right Kidney 3. Left Kideny 4.Gallbladder 5.Esophagus 6. Liver 7. Stomach 8.Aorta 9. IVC 10. Portal and Splenic Veins 11. Pancreas 12.Right adrenal gland 13.Left adrenal gland.

- Task: Segmentation

- Modality: CT

- Size: 30 3D volumes (24 Training + 6 Testing)

# Training

See the source repository [here](https://github.com/Project-MONAI/research-contributions/tree/main/SwinUNETR/BTCV) for information on training.

# BibTeX entry and citation info

If you find this repository useful, please consider citing the following papers:

```

@inproceedings{tang2022self,

title={Self-supervised pre-training of swin transformers for 3d medical image analysis},

author={Tang, Yucheng and Yang, Dong and Li, Wenqi and Roth, Holger R and Landman, Bennett and Xu, Daguang and Nath, Vishwesh and Hatamizadeh, Ali},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={20730--20740},

year={2022}

}

@article{hatamizadeh2022swin,

title={Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images},

author={Hatamizadeh, Ali and Nath, Vishwesh and Tang, Yucheng and Yang, Dong and Roth, Holger and Xu, Daguang},

journal={arXiv preprint arXiv:2201.01266},

year={2022}

}

```

# References

[1]: Tang, Y., Yang, D., Li, W., Roth, H.R., Landman, B., Xu, D., Nath, V. and Hatamizadeh, A., 2022. Self-supervised pre-training of swin transformers for 3d medical image analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 20730-20740).

[2]: Hatamizadeh, A., Nath, V., Tang, Y., Yang, D., Roth, H. and Xu, D., 2022. Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images. arXiv preprint arXiv:2201.01266.

|

Jinchen/roberta-base-finetuned-mrpc | e1e575856446d6d7f99499bcd8288732da817d87 | 2022-07-15T13:15:54.000Z | [

"pytorch",

"roberta",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

] | text-classification | false | Jinchen | null | Jinchen/roberta-base-finetuned-mrpc | 5 | null | transformers | 17,605 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

- f1

model-index:

- name: roberta-base-finetuned-mrpc

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-mrpc

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2891

- Accuracy: 0.8925

- F1: 0.9228

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 16

- total_train_batch_size: 64

- total_eval_batch_size: 20

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- training precision: Mixed Precision

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.5998 | 1.0 | 57 | 0.5425 | 0.74 | 0.8349 |

| 0.5058 | 2.0 | 114 | 0.3020 | 0.875 | 0.9084 |

| 0.3316 | 3.0 | 171 | 0.2891 | 0.8925 | 0.9228 |

| 0.1617 | 4.0 | 228 | 0.2937 | 0.8825 | 0.9138 |

| 0.3161 | 5.0 | 285 | 0.3193 | 0.8875 | 0.9171 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cpu

- Datasets 2.3.2

- Tokenizers 0.12.1

|

clevrly/roberta-large-mnli-fer-finetuned | 0c7894eae6933bbbf3858723b33d8092805b7093 | 2022-07-22T20:30:58.000Z | [

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

] | text-classification | false | clevrly | null | clevrly/roberta-large-mnli-fer-finetuned | 5 | null | transformers | 17,606 | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: roberta-large-mnli-fer-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-large-mnli-fer-finetuned

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6940

- Accuracy: 0.5005

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.7049 | 1.0 | 554 | 0.6895 | 0.5750 |

| 0.6981 | 2.0 | 1108 | 0.7054 | 0.5005 |

| 0.7039 | 3.0 | 1662 | 0.6936 | 0.5005 |

| 0.6976 | 4.0 | 2216 | 0.6935 | 0.4995 |

| 0.6991 | 5.0 | 2770 | 0.6940 | 0.5005 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Someman/xlm-roberta-base-finetuned-panx-de | cf83a59d0a275fc21ddbc23ecf7691346161c1c8 | 2022-07-16T05:50:27.000Z | [

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"dataset:xtreme",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | token-classification | false | Someman | null | Someman/xlm-roberta-base-finetuned-panx-de | 5 | null | transformers | 17,607 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8640345886904085

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1426

- F1: 0.8640

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2525 | 1.0 | 787 | 0.1795 | 0.8184 |

| 0.1283 | 2.0 | 1574 | 0.1402 | 0.8468 |

| 0.08 | 3.0 | 2361 | 0.1426 | 0.8640 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Someman/xlm-roberta-base-finetuned-panx-de-fr | bfdd3666597b998a34362838d77d958238e22ffe | 2022-07-16T07:25:13.000Z | [

"pytorch",

"xlm-roberta",

"token-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | token-classification | false | Someman | null | Someman/xlm-roberta-base-finetuned-panx-de-fr | 5 | null | transformers | 17,608 | ---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1717

- F1: 0.8601

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2889 | 1.0 | 1073 | 0.1945 | 0.8293 |

| 0.1497 | 2.0 | 2146 | 0.1636 | 0.8476 |

| 0.093 | 3.0 | 3219 | 0.1717 | 0.8601 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Konstantine4096/bart-pizza-5K | 4fbd492c8cbed6ef5cfd65b124f9c7f5e125d210 | 2022-07-16T22:26:21.000Z | [

"pytorch",

"bart",

"text2text-generation",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | text2text-generation | false | Konstantine4096 | null | Konstantine4096/bart-pizza-5K | 5 | null | transformers | 17,609 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bart-pizza-5K

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-pizza-5K

This model is a fine-tuned version of [facebook/bart-base](https://huggingface.co/facebook/bart-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1688

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.0171 | 1.6 | 500 | 0.1688 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

MMVos/distilbert-base-uncased-finetuned-squad | 7e229c745a8c6aea4b1ce74f972bd69a0b57ae18 | 2022-07-18T12:16:01.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"dataset:squad_v2",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | question-answering | false | MMVos | null | MMVos/distilbert-base-uncased-finetuned-squad | 5 | null | transformers | 17,610 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad_v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4214

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.1814 | 1.0 | 8235 | 1.2488 |

| 0.9078 | 2.0 | 16470 | 1.3127 |

| 0.7439 | 3.0 | 24705 | 1.4214 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

davanstrien/vit-base-patch16-224-in21k-fine-tuned | d71d5458c6c0f6986cd74848f1d8758cd69de070 | 2022-07-20T17:18:39.000Z | [

"pytorch",

"tensorboard",

"vit",

"transformers"

] | null | false | davanstrien | null | davanstrien/vit-base-patch16-224-in21k-fine-tuned | 5 | null | transformers | 17,611 | Entry not found |

Kayvane/distilbert-base-uncased-wandb-week-3-complaints-classifier-256 | 88ba91c7588f1f13846521731b7e3f6dd0083f70 | 2022-07-19T06:29:12.000Z | [

"pytorch",

"distilbert",

"text-classification",

"dataset:consumer-finance-complaints",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | Kayvane | null | Kayvane/distilbert-base-uncased-wandb-week-3-complaints-classifier-256 | 5 | null | transformers | 17,612 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- consumer-finance-complaints

metrics:

- accuracy

- f1

- recall

- precision

model-index:

- name: distilbert-base-uncased-wandb-week-3-complaints-classifier-256

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: consumer-finance-complaints

type: consumer-finance-complaints

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8234544620559604

- name: F1

type: f1

value: 0.8176243580045963

- name: Recall

type: recall

value: 0.8234544620559604

- name: Precision

type: precision

value: 0.8171438106054644

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-wandb-week-3-complaints-classifier-256

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the consumer-finance-complaints dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5453

- Accuracy: 0.8235

- F1: 0.8176

- Recall: 0.8235

- Precision: 0.8171

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.097565552226687e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 256

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Recall | Precision |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:------:|:---------:|

| 0.6691 | 0.61 | 1500 | 0.6475 | 0.7962 | 0.7818 | 0.7962 | 0.7875 |

| 0.5361 | 1.22 | 3000 | 0.5794 | 0.8161 | 0.8080 | 0.8161 | 0.8112 |

| 0.4659 | 1.83 | 4500 | 0.5453 | 0.8235 | 0.8176 | 0.8235 | 0.8171 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Ghostwolf/wav2vec2-large-xlsr-hindi | 757c05bcb11267d09a942180aeb1dd77f35bbb69 | 2022-07-26T16:48:59.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"transformers"

] | automatic-speech-recognition | false | Ghostwolf | null | Ghostwolf/wav2vec2-large-xlsr-hindi | 5 | null | transformers | 17,613 | |

RJ3vans/DeBERTaCMV1spanTagger | 054c74e31ea15fb78b7745cdc13c5d70158081a4 | 2022-07-19T16:24:58.000Z | [

"pytorch",

"deberta-v2",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | RJ3vans | null | RJ3vans/DeBERTaCMV1spanTagger | 5 | null | transformers | 17,614 | Entry not found |

abecode/t5-base-finetuned-emo20q-classification | 1ae994837532f5c27c347d90524050346e34e59d | 2022-07-19T18:56:13.000Z | [

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | text2text-generation | false | abecode | null | abecode/t5-base-finetuned-emo20q-classification | 5 | null | transformers | 17,615 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: t5-base-finetuned-emo20q-classification

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-finetuned-emo20q-classification

This model is a fine-tuned version of [t5-base](https://huggingface.co/t5-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3759

- Rouge1: 70.3125

- Rouge2: 0.0

- Rougel: 70.2083

- Rougelsum: 70.2083

- Gen Len: 2.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 280 | 0.3952 | 68.3333 | 0.0 | 68.2292 | 68.2812 | 2.0 |

| 0.7404 | 2.0 | 560 | 0.3774 | 70.1042 | 0.0 | 70.1042 | 70.1042 | 2.0 |

| 0.7404 | 3.0 | 840 | 0.3759 | 70.3125 | 0.0 | 70.2083 | 70.2083 | 2.0 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

Siyong/MT_RN | 554b6a3111d14b1d3df95c9e46b89fbbfdfea1e8 | 2022-07-20T01:36:09.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | automatic-speech-recognition | false | Siyong | null | Siyong/MT_RN | 5 | null | transformers | 17,616 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: run1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# run1

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6666

- Wer: 0.6375

- Cer: 0.3170

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 2000

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|

| 1.0564 | 2.36 | 2000 | 2.3456 | 0.9628 | 0.5549 |

| 0.5071 | 4.73 | 4000 | 2.0652 | 0.9071 | 0.5115 |

| 0.3952 | 7.09 | 6000 | 2.3649 | 0.9108 | 0.4628 |

| 0.3367 | 9.46 | 8000 | 1.7615 | 0.8253 | 0.4348 |

| 0.2765 | 11.82 | 10000 | 1.6151 | 0.7937 | 0.4087 |

| 0.2493 | 14.18 | 12000 | 1.4976 | 0.7881 | 0.3905 |

| 0.2318 | 16.55 | 14000 | 1.6731 | 0.8160 | 0.3925 |

| 0.2074 | 18.91 | 16000 | 1.5822 | 0.7658 | 0.3913 |

| 0.1825 | 21.28 | 18000 | 1.5442 | 0.7361 | 0.3704 |

| 0.1824 | 23.64 | 20000 | 1.5988 | 0.7621 | 0.3711 |

| 0.1699 | 26.0 | 22000 | 1.4261 | 0.7119 | 0.3490 |

| 0.158 | 28.37 | 24000 | 1.7482 | 0.7658 | 0.3648 |

| 0.1385 | 30.73 | 26000 | 1.4103 | 0.6784 | 0.3348 |

| 0.1199 | 33.1 | 28000 | 1.5214 | 0.6636 | 0.3273 |

| 0.116 | 35.46 | 30000 | 1.4288 | 0.7212 | 0.3486 |

| 0.1071 | 37.83 | 32000 | 1.5344 | 0.7138 | 0.3411 |

| 0.1007 | 40.19 | 34000 | 1.4501 | 0.6691 | 0.3237 |

| 0.0943 | 42.55 | 36000 | 1.5367 | 0.6859 | 0.3265 |

| 0.0844 | 44.92 | 38000 | 1.5321 | 0.6599 | 0.3273 |

| 0.0762 | 47.28 | 40000 | 1.6721 | 0.6264 | 0.3142 |

| 0.0778 | 49.65 | 42000 | 1.6666 | 0.6375 | 0.3170 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu113

- Datasets 2.0.0

- Tokenizers 0.12.1

|

commanderstrife/bc2gm_corpus-Bio_ClinicalBERT-finetuned-ner | a4367803bb4a087c0b8e0eac15862b08e0ad2697 | 2022-07-20T02:51:04.000Z | [

"pytorch",

"bert",

"token-classification",

"dataset:bc2gm_corpus",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | token-classification | false | commanderstrife | null | commanderstrife/bc2gm_corpus-Bio_ClinicalBERT-finetuned-ner | 5 | null | transformers | 17,617 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- bc2gm_corpus

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bc2gm_corpus-Bio_ClinicalBERT-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: bc2gm_corpus

type: bc2gm_corpus

args: bc2gm_corpus

metrics:

- name: Precision

type: precision

value: 0.7853881278538812

- name: Recall

type: recall

value: 0.8158102766798419

- name: F1

type: f1

value: 0.8003101977510663

- name: Accuracy

type: accuracy

value: 0.9758965601366187

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bc2gm_corpus-Bio_ClinicalBERT-finetuned-ner

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the bc2gm_corpus dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1505

- Precision: 0.7854

- Recall: 0.8158

- F1: 0.8003

- Accuracy: 0.9759

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0981 | 1.0 | 782 | 0.0712 | 0.7228 | 0.7948 | 0.7571 | 0.9724 |

| 0.0509 | 2.0 | 1564 | 0.0687 | 0.7472 | 0.8199 | 0.7818 | 0.9746 |

| 0.0121 | 3.0 | 2346 | 0.0740 | 0.7725 | 0.8011 | 0.7866 | 0.9747 |

| 0.0001 | 4.0 | 3128 | 0.1009 | 0.7618 | 0.8251 | 0.7922 | 0.9741 |

| 0.0042 | 5.0 | 3910 | 0.1106 | 0.7757 | 0.8185 | 0.7965 | 0.9754 |

| 0.0015 | 6.0 | 4692 | 0.1182 | 0.7812 | 0.8111 | 0.7958 | 0.9758 |

| 0.0001 | 7.0 | 5474 | 0.1283 | 0.7693 | 0.8275 | 0.7973 | 0.9753 |

| 0.0072 | 8.0 | 6256 | 0.1376 | 0.7863 | 0.8158 | 0.8008 | 0.9762 |

| 0.0045 | 9.0 | 7038 | 0.1468 | 0.7856 | 0.8180 | 0.8015 | 0.9761 |

| 0.0 | 10.0 | 7820 | 0.1505 | 0.7854 | 0.8158 | 0.8003 | 0.9759 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

huggingartists/rage-against-the-machine | 091efabcc80a94165cd155146cbc77a31804b783 | 2022-07-20T04:23:50.000Z | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"dataset:huggingartists/rage-against-the-machine",

"transformers",

"huggingartists",

"lyrics",

"lm-head",

"causal-lm"

] | text-generation | false | huggingartists | null | huggingartists/rage-against-the-machine | 5 | null | transformers | 17,618 | ---

language: en

datasets:

- huggingartists/rage-against-the-machine

tags:

- huggingartists

- lyrics

- lm-head

- causal-lm

widget:

- text: "I am"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:DISPLAY_1; margin-left: auto; margin-right: auto; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://images.genius.com/2158957823960c84c7890b8fa5e6d479.1000x1000x1.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 HuggingArtists Model 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Rage Against the Machine</div>

<a href="https://genius.com/artists/rage-against-the-machine">

<div style="text-align: center; font-size: 14px;">@rage-against-the-machine</div>

</a>

</div>

I was made with [huggingartists](https://github.com/AlekseyKorshuk/huggingartists).

Create your own bot based on your favorite artist with [the demo](https://colab.research.google.com/github/AlekseyKorshuk/huggingartists/blob/master/huggingartists-demo.ipynb)!

## How does it work?

To understand how the model was developed, check the [W&B report](https://wandb.ai/huggingartists/huggingartists/reportlist).

## Training data

The model was trained on lyrics from Rage Against the Machine.

Dataset is available [here](https://huggingface.co/datasets/huggingartists/rage-against-the-machine).

And can be used with:

```python

from datasets import load_dataset

dataset = load_dataset("huggingartists/rage-against-the-machine")

```

[Explore the data](https://wandb.ai/huggingartists/huggingartists/runs/2lbi7kzi/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on Rage Against the Machine's lyrics.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/huggingartists/huggingartists/runs/10r0sf3w) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/huggingartists/huggingartists/runs/10r0sf3w/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingartists/rage-against-the-machine')

generator("I am", num_return_sequences=5)

```

Or with Transformers library:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("huggingartists/rage-against-the-machine")

model = AutoModelWithLMHead.from_pretrained("huggingartists/rage-against-the-machine")

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Aleksey Korshuk*

[](https://github.com/AlekseyKorshuk)

[](https://twitter.com/intent/follow?screen_name=alekseykorshuk)

[](https://t.me/joinchat/_CQ04KjcJ-4yZTky)

For more details, visit the project repository.

[](https://github.com/AlekseyKorshuk/huggingartists)

|

lqdisme/test_squad | 9f2d7e3235da7299bdbce33e9e5deb8dac823bc6 | 2022-07-20T04:27:06.000Z | [

"pytorch",

"distilbert",

"feature-extraction",

"transformers"

] | feature-extraction | false | lqdisme | null | lqdisme/test_squad | 5 | null | transformers | 17,619 | Entry not found |

ryo0634/luke-base-comp-umls | 04b2e898e2f6f0bec9f74048f87b90a9c7221d0f | 2022-07-20T05:38:59.000Z | [

"pytorch",

"luke",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | ryo0634 | null | ryo0634/luke-base-comp-umls | 5 | null | transformers | 17,620 | Entry not found |

jordyvl/biobert-base-cased-v1.2_ncbi_disease-lowC-sm-first-ner | 2e2a35f2d4bd5f914ca4666d038fa4cb4c5e7087 | 2022-07-20T08:49:02.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | jordyvl | null | jordyvl/biobert-base-cased-v1.2_ncbi_disease-lowC-sm-first-ner | 5 | null | transformers | 17,621 | Entry not found |

tianying/bert-finetuned-ner | 4ecd1ad44a9b45d96f860c7a073323fdae4b5b02 | 2022-07-20T13:58:10.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | tianying | null | tianying/bert-finetuned-ner | 5 | null | transformers | 17,622 | Entry not found |

liton10/mt5-small-finetuned-amazon-en-es | bfb3714d65045c5f051eefe8e916d0b87c78c107 | 2022-07-20T10:03:33.000Z | [

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"transformers",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | summarization | false | liton10 | null | liton10/mt5-small-finetuned-amazon-en-es | 5 | null | transformers | 17,623 | ---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mt5-small-finetuned-amazon-en-es

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-amazon-en-es

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 9.2585

- Rouge1: 6.1835

- Rouge2: 0.0

- Rougel: 5.8333

- Rougelsum: 6.1835

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|

| 24.1065 | 1.0 | 11 | 32.7123 | 7.342 | 1.5385 | 7.1515 | 7.342 |

| 22.6474 | 2.0 | 22 | 19.7137 | 6.1039 | 0.0 | 5.7143 | 6.1039 |

| 16.319 | 3.0 | 33 | 12.8543 | 6.1039 | 0.0 | 5.7143 | 6.1039 |

| 16.3224 | 4.0 | 44 | 10.1929 | 5.9524 | 0.0 | 5.7143 | 5.9524 |

| 15.0599 | 5.0 | 55 | 9.9186 | 5.9524 | 0.0 | 5.7143 | 5.9524 |

| 14.6053 | 6.0 | 66 | 9.3235 | 6.1835 | 0.0 | 5.8333 | 6.1835 |

| 14.4345 | 7.0 | 77 | 9.1621 | 6.1835 | 0.0 | 5.8333 | 6.1835 |

| 13.7973 | 8.0 | 88 | 9.2585 | 6.1835 | 0.0 | 5.8333 | 6.1835 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

jordyvl/biobert-base-cased-v1.2_ncbi_disease-CRF-first-ner | 2743f53bcf91adb11b6498e15d6be157218180f4 | 2022-07-20T14:09:50.000Z | [

"pytorch",

"tensorboard",

"bert",

"transformers"

] | null | false | jordyvl | null | jordyvl/biobert-base-cased-v1.2_ncbi_disease-CRF-first-ner | 5 | null | transformers | 17,624 | Entry not found |

Lvxue/distilled_test_0.99_formal | 76de46cb5e250490cd0f1dd585e4da81623d6e37 | 2022-07-22T20:00:29.000Z | [

"pytorch",

"mt5",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | Lvxue | null | Lvxue/distilled_test_0.99_formal | 5 | null | transformers | 17,625 | Entry not found |

ckadam15/distilbert-base-uncased-finetuned-squad | 389d2d4549011487e254c605f3390fc893bced15 | 2022-07-25T16:04:57.000Z | [

"pytorch",

"distilbert",

"question-answering",

"transformers",

"autotrain_compatible"

] | question-answering | false | ckadam15 | null | ckadam15/distilbert-base-uncased-finetuned-squad | 5 | null | transformers | 17,626 | Entry not found |

furrutiav/beto_coherence_v2 | 639f8320999464f2b729f4d37c462e873980acde | 2022-07-21T20:23:27.000Z | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | false | furrutiav | null | furrutiav/beto_coherence_v2 | 5 | null | transformers | 17,627 | Entry not found |

gary109/ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53 | 3468f5bec770d7eadb63aa5f6928ad27afa47433 | 2022-07-27T03:22:01.000Z | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"transformers",

"gary109/AI_Light_Dance",

"generated_from_trainer",

"model-index"

] | automatic-speech-recognition | false | gary109 | null | gary109/ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53 | 5 | null | transformers | 17,628 | ---

tags:

- automatic-speech-recognition

- gary109/AI_Light_Dance

- generated_from_trainer

model-index:

- name: ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53

This model is a fine-tuned version of [gary109/ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53](https://huggingface.co/gary109/ai-light-dance_singing3_ft_pretrain2_wav2vec2-large-xlsr-53) on the GARY109/AI_LIGHT_DANCE - ONSET-SINGING3 dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4279

- Wer: 1.0087

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 100.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.209 | 1.0 | 72 | 2.5599 | 0.9889 |

| 1.3395 | 2.0 | 144 | 2.7188 | 0.9877 |

| 1.2695 | 3.0 | 216 | 2.9989 | 0.9709 |

| 1.2818 | 4.0 | 288 | 3.2352 | 0.9757 |

| 1.2389 | 5.0 | 360 | 3.6867 | 0.9783 |

| 1.2368 | 6.0 | 432 | 3.3189 | 0.9811 |

| 1.2307 | 7.0 | 504 | 3.0786 | 0.9657 |

| 1.2607 | 8.0 | 576 | 2.9720 | 0.9677 |

| 1.2584 | 9.0 | 648 | 2.5613 | 0.9702 |

| 1.2266 | 10.0 | 720 | 2.6937 | 0.9610 |

| 1.262 | 11.0 | 792 | 3.9060 | 0.9745 |

| 1.2361 | 12.0 | 864 | 3.6138 | 0.9718 |

| 1.2348 | 13.0 | 936 | 3.4838 | 0.9745 |

| 1.2715 | 14.0 | 1008 | 3.3128 | 0.9751 |

| 1.2505 | 15.0 | 1080 | 3.2015 | 0.9710 |

| 1.211 | 16.0 | 1152 | 3.4709 | 0.9709 |

| 1.2067 | 17.0 | 1224 | 3.0566 | 0.9673 |

| 1.2536 | 18.0 | 1296 | 2.5479 | 0.9789 |

| 1.2297 | 19.0 | 1368 | 2.8307 | 0.9710 |

| 1.1949 | 20.0 | 1440 | 3.4112 | 0.9777 |

| 1.2181 | 21.0 | 1512 | 2.6784 | 0.9682 |

| 1.195 | 22.0 | 1584 | 3.0395 | 0.9639 |

| 1.2047 | 23.0 | 1656 | 3.1935 | 0.9726 |

| 1.2306 | 24.0 | 1728 | 3.2649 | 0.9723 |

| 1.199 | 25.0 | 1800 | 3.1378 | 0.9645 |

| 1.1945 | 26.0 | 1872 | 2.8143 | 0.9596 |

| 1.19 | 27.0 | 1944 | 3.5174 | 0.9787 |

| 1.1976 | 28.0 | 2016 | 2.9666 | 0.9594 |

| 1.2229 | 29.0 | 2088 | 2.8672 | 0.9589 |

| 1.1548 | 30.0 | 2160 | 2.6568 | 0.9627 |

| 1.169 | 31.0 | 2232 | 2.8799 | 0.9654 |

| 1.1857 | 32.0 | 2304 | 2.8691 | 0.9625 |

| 1.1862 | 33.0 | 2376 | 2.8251 | 0.9555 |

| 1.1721 | 34.0 | 2448 | 3.5968 | 0.9726 |

| 1.1293 | 35.0 | 2520 | 3.4130 | 0.9651 |

| 1.1513 | 36.0 | 2592 | 2.8804 | 0.9630 |

| 1.1537 | 37.0 | 2664 | 2.5824 | 0.9575 |

| 1.1818 | 38.0 | 2736 | 2.8443 | 0.9613 |

| 1.1835 | 39.0 | 2808 | 2.6431 | 0.9619 |

| 1.1457 | 40.0 | 2880 | 2.9254 | 0.9639 |

| 1.1591 | 41.0 | 2952 | 2.8194 | 0.9561 |

| 1.1284 | 42.0 | 3024 | 2.6432 | 0.9806 |

| 1.1602 | 43.0 | 3096 | 2.4279 | 1.0087 |

| 1.1556 | 44.0 | 3168 | 2.5040 | 1.0030 |

| 1.1256 | 45.0 | 3240 | 3.1641 | 0.9608 |

| 1.1256 | 46.0 | 3312 | 2.9522 | 0.9677 |

| 1.1211 | 47.0 | 3384 | 2.6318 | 0.9580 |

| 1.1142 | 48.0 | 3456 | 2.7298 | 0.9533 |

| 1.1237 | 49.0 | 3528 | 2.5442 | 0.9673 |

| 1.0976 | 50.0 | 3600 | 2.7767 | 0.9610 |

| 1.1154 | 51.0 | 3672 | 2.6849 | 0.9646 |

| 1.1012 | 52.0 | 3744 | 2.5384 | 0.9621 |

| 1.1077 | 53.0 | 3816 | 2.4505 | 1.0067 |

| 1.0936 | 54.0 | 3888 | 2.5847 | 0.9687 |

| 1.0772 | 55.0 | 3960 | 2.4575 | 0.9761 |

| 1.092 | 56.0 | 4032 | 2.4889 | 0.9802 |

| 1.0868 | 57.0 | 4104 | 2.5885 | 0.9664 |

| 1.0979 | 58.0 | 4176 | 2.6370 | 0.9607 |

| 1.094 | 59.0 | 4248 | 2.6195 | 0.9605 |

| 1.0745 | 60.0 | 4320 | 2.5346 | 0.9834 |

| 1.1057 | 61.0 | 4392 | 2.6879 | 0.9603 |

| 1.0722 | 62.0 | 4464 | 2.5426 | 0.9735 |

| 1.0731 | 63.0 | 4536 | 2.8259 | 0.9535 |

| 1.0862 | 64.0 | 4608 | 2.7632 | 0.9559 |

| 1.0396 | 65.0 | 4680 | 2.5401 | 0.9807 |

| 1.0581 | 66.0 | 4752 | 2.6977 | 0.9687 |

| 1.0647 | 67.0 | 4824 | 2.6968 | 0.9694 |

| 1.0549 | 68.0 | 4896 | 2.6439 | 0.9807 |

| 1.0607 | 69.0 | 4968 | 2.6822 | 0.9771 |

| 1.05 | 70.0 | 5040 | 2.7011 | 0.9607 |

| 1.042 | 71.0 | 5112 | 2.5766 | 0.9713 |

| 1.042 | 72.0 | 5184 | 2.5720 | 0.9747 |

| 1.0594 | 73.0 | 5256 | 2.7176 | 0.9704 |

| 1.0425 | 74.0 | 5328 | 2.7458 | 0.9614 |

| 1.0199 | 75.0 | 5400 | 2.5906 | 0.9987 |

| 1.0198 | 76.0 | 5472 | 2.5534 | 1.0087 |

| 1.0193 | 77.0 | 5544 | 2.5421 | 0.9933 |

| 1.0379 | 78.0 | 5616 | 2.5139 | 0.9994 |

| 1.025 | 79.0 | 5688 | 2.4850 | 1.0313 |

| 1.0054 | 80.0 | 5760 | 2.5803 | 0.9814 |

| 1.0218 | 81.0 | 5832 | 2.5696 | 0.9867 |

| 1.0177 | 82.0 | 5904 | 2.6011 | 1.0065 |

| 1.0094 | 83.0 | 5976 | 2.6166 | 0.9855 |

| 1.0202 | 84.0 | 6048 | 2.5557 | 1.0204 |

| 1.0148 | 85.0 | 6120 | 2.6118 | 1.0033 |

| 1.0117 | 86.0 | 6192 | 2.5671 | 1.0120 |

| 1.0195 | 87.0 | 6264 | 2.5443 | 1.0041 |

| 1.0114 | 88.0 | 6336 | 2.5627 | 1.0049 |

| 1.0074 | 89.0 | 6408 | 2.5670 | 1.0255 |

| 0.9883 | 90.0 | 6480 | 2.5338 | 1.0306 |

| 1.0112 | 91.0 | 6552 | 2.5615 | 1.0142 |

| 0.9986 | 92.0 | 6624 | 2.5566 | 1.0415 |

| 0.9939 | 93.0 | 6696 | 2.5728 | 1.0287 |

| 0.9954 | 94.0 | 6768 | 2.5617 | 1.0138 |

| 0.9643 | 95.0 | 6840 | 2.5890 | 1.0145 |

| 0.9892 | 96.0 | 6912 | 2.5918 | 1.0119 |

| 0.983 | 97.0 | 6984 | 2.5862 | 1.0175 |

| 0.988 | 98.0 | 7056 | 2.5873 | 1.0147 |

| 0.9908 | 99.0 | 7128 | 2.5973 | 1.0073 |

| 0.9696 | 100.0 | 7200 | 2.5938 | 1.0156 |

### Framework versions

- Transformers 4.21.0.dev0

- Pytorch 1.9.1+cu102

- Datasets 2.3.3.dev0

- Tokenizers 0.12.1

|

huggingtweets/hotwingsuk | b3b6666d4a33270169525ff28f135fcfcc34e3cf | 2022-07-22T03:26:48.000Z | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] | text-generation | false | huggingtweets | null | huggingtweets/hotwingsuk | 5 | null | transformers | 17,629 | ---

language: en

thumbnail: http://www.huggingtweets.com/hotwingsuk/1658460403599/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1280474754214957056/GKqk3gAm_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">HotWings</div>

<div style="text-align: center; font-size: 14px;">@hotwingsuk</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from HotWings.

| Data | HotWings |

| --- | --- |

| Tweets downloaded | 2057 |

| Retweets | 69 |

| Short tweets | 258 |

| Tweets kept | 1730 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3opu8h6o/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @hotwingsuk's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/bzf76pmf) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/bzf76pmf/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/hotwingsuk')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

sudo-s/exper5_mesum5 | 97e53fb419c9c283bba70e7520acb1e0ad4387c3 | 2022-07-22T15:29:30.000Z | [

"pytorch",

"tensorboard",

"vit",

"image-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | image-classification | false | sudo-s | null | sudo-s/exper5_mesum5 | 5 | null | transformers | 17,630 | ---

license: apache-2.0

tags:

- image-classification

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: exper5_mesum5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# exper5_mesum5

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the sudo-s/herbier_mesuem5 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0181

- Accuracy: 0.8142

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 16

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 4.7331 | 0.23 | 100 | 4.7080 | 0.1130 |

| 4.4246 | 0.47 | 200 | 4.4573 | 0.1598 |

| 4.2524 | 0.7 | 300 | 4.2474 | 0.2 |

| 4.0881 | 0.93 | 400 | 4.0703 | 0.2290 |

| 3.8605 | 1.16 | 500 | 3.9115 | 0.2763 |

| 3.7434 | 1.4 | 600 | 3.7716 | 0.3349 |

| 3.5978 | 1.63 | 700 | 3.6375 | 0.3544 |

| 3.5081 | 1.86 | 800 | 3.5081 | 0.3840 |

| 3.2616 | 2.09 | 900 | 3.3952 | 0.4308 |

| 3.2131 | 2.33 | 1000 | 3.2817 | 0.4509 |

| 3.1369 | 2.56 | 1100 | 3.1756 | 0.4710 |

| 3.0726 | 2.79 | 1200 | 3.0692 | 0.5107 |

| 2.8159 | 3.02 | 1300 | 2.9734 | 0.5308 |

| 2.651 | 3.26 | 1400 | 2.8813 | 0.5728 |

| 2.6879 | 3.49 | 1500 | 2.7972 | 0.5781 |

| 2.5625 | 3.72 | 1600 | 2.7107 | 0.6012 |

| 2.4156 | 3.95 | 1700 | 2.6249 | 0.6237 |

| 2.3557 | 4.19 | 1800 | 2.5475 | 0.6302 |

| 2.2496 | 4.42 | 1900 | 2.4604 | 0.6556 |

| 2.1933 | 4.65 | 2000 | 2.3963 | 0.6456 |

| 2.0341 | 4.88 | 2100 | 2.3327 | 0.6858 |

| 1.793 | 5.12 | 2200 | 2.2500 | 0.6858 |

| 1.8131 | 5.35 | 2300 | 2.1950 | 0.6935 |

| 1.8358 | 5.58 | 2400 | 2.1214 | 0.7136 |

| 1.8304 | 5.81 | 2500 | 2.0544 | 0.7130 |

| 1.602 | 6.05 | 2600 | 1.9998 | 0.7325 |

| 1.5487 | 6.28 | 2700 | 1.9519 | 0.7308 |

| 1.4782 | 6.51 | 2800 | 1.8918 | 0.7361 |

| 1.4397 | 6.74 | 2900 | 1.8359 | 0.7544 |

| 1.3278 | 6.98 | 3000 | 1.7930 | 0.7485 |

| 1.4241 | 7.21 | 3100 | 1.7463 | 0.7574 |

| 1.3319 | 7.44 | 3200 | 1.7050 | 0.7663 |

| 1.2584 | 7.67 | 3300 | 1.6436 | 0.7686 |

| 1.088 | 7.91 | 3400 | 1.6128 | 0.7751 |

| 1.0303 | 8.14 | 3500 | 1.5756 | 0.7757 |

| 1.0075 | 8.37 | 3600 | 1.5306 | 0.7822 |

| 0.976 | 8.6 | 3700 | 1.4990 | 0.7858 |

| 0.9363 | 8.84 | 3800 | 1.4619 | 0.7781 |

| 0.8869 | 9.07 | 3900 | 1.4299 | 0.7899 |

| 0.8749 | 9.3 | 4000 | 1.3930 | 0.8018 |

| 0.7958 | 9.53 | 4100 | 1.3616 | 0.8065 |

| 0.7605 | 9.77 | 4200 | 1.3367 | 0.7982 |

| 0.7642 | 10.0 | 4300 | 1.3154 | 0.7911 |

| 0.6852 | 10.23 | 4400 | 1.2894 | 0.8 |

| 0.667 | 10.47 | 4500 | 1.2623 | 0.8148 |

| 0.6119 | 10.7 | 4600 | 1.2389 | 0.8095 |

| 0.6553 | 10.93 | 4700 | 1.2180 | 0.8053 |

| 0.5725 | 11.16 | 4800 | 1.2098 | 0.8036 |

| 0.567 | 11.4 | 4900 | 1.1803 | 0.8083 |

| 0.4941 | 11.63 | 5000 | 1.1591 | 0.8107 |

| 0.4562 | 11.86 | 5100 | 1.1471 | 0.8024 |

| 0.5155 | 12.09 | 5200 | 1.1272 | 0.8172 |

| 0.5062 | 12.33 | 5300 | 1.1206 | 0.8095 |

| 0.4552 | 12.56 | 5400 | 1.1030 | 0.8142 |

| 0.4553 | 12.79 | 5500 | 1.0918 | 0.8148 |

| 0.4055 | 13.02 | 5600 | 1.0837 | 0.8118 |

| 0.4484 | 13.26 | 5700 | 1.0712 | 0.8148 |

| 0.3635 | 13.49 | 5800 | 1.0657 | 0.8124 |

| 0.4054 | 13.72 | 5900 | 1.0543 | 0.8124 |

| 0.3201 | 13.95 | 6000 | 1.0508 | 0.8148 |

| 0.3448 | 14.19 | 6100 | 1.0409 | 0.8166 |

| 0.3591 | 14.42 | 6200 | 1.0371 | 0.8142 |

| 0.3606 | 14.65 | 6300 | 1.0345 | 0.8160 |

| 0.3633 | 14.88 | 6400 | 1.0281 | 0.8136 |

| 0.373 | 15.12 | 6500 | 1.0259 | 0.8124 |

| 0.3417 | 15.35 | 6600 | 1.0215 | 0.8112 |

| 0.3429 | 15.58 | 6700 | 1.0204 | 0.8148 |

| 0.3509 | 15.81 | 6800 | 1.0181 | 0.8142 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ai4bharat/IndicXLMv2-alpha-QA | e9b34813364166204fa9f2aa5a5b3b2f8b0da389 | 2022-07-22T14:22:58.000Z | [

"pytorch",

"roberta",

"question-answering",

"transformers",

"autotrain_compatible"

] | question-answering | false | ai4bharat | null | ai4bharat/IndicXLMv2-alpha-QA | 5 | null | transformers | 17,631 | Entry not found |

cyr19/distilbert-base-uncased_2-epochs-squad | fb53da82b07f65ca8150147a205431731d9c90db | 2022-07-22T16:17:30.000Z | [

"pytorch",

"distilbert",

"question-answering",

"transformers",

"autotrain_compatible"

] | question-answering | false | cyr19 | null | cyr19/distilbert-base-uncased_2-epochs-squad | 5 | null | transformers | 17,632 | learning_rate:

- 1e-5

train_batchsize:

- 16

epochs:

- 2

weight_decay

- 0.01

optimizer

- Adam

datasets:

- squad

metrics

- EM:10.307414104882

- F1:42.10389032370503

|

huggingtweets/aoc-kamalaharris | 05ad2c1d3bbbdbe0a17a284e54fcba435c4014bd | 2022-07-23T04:44:34.000Z | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] | text-generation | false | huggingtweets | null | huggingtweets/aoc-kamalaharris | 5 | null | transformers | 17,633 | ---

language: en

thumbnail: http://www.huggingtweets.com/aoc-kamalaharris/1658551469874/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1377062766314467332/2hyqngJz_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/923274881197895680/AbHcStkl_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Kamala Harris & Alexandria Ocasio-Cortez</div>

<div style="text-align: center; font-size: 14px;">@aoc-kamalaharris</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Kamala Harris & Alexandria Ocasio-Cortez.

| Data | Kamala Harris | Alexandria Ocasio-Cortez |

| --- | --- | --- |

| Tweets downloaded | 3206 | 3245 |

| Retweets | 829 | 1264 |

| Short tweets | 8 | 126 |

| Tweets kept | 2369 | 1855 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1fpjb3ip/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @aoc-kamalaharris's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/wftrlnh5) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/wftrlnh5/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/aoc-kamalaharris')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

huggingtweets/kremlinrussia_e | e48343e61aa78d240c67d0b316622e91bac48fac | 2022-07-23T05:48:32.000Z | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] | text-generation | false | huggingtweets | null | huggingtweets/kremlinrussia_e | 5 | null | transformers | 17,634 | ---

language: en

thumbnail: http://www.huggingtweets.com/kremlinrussia_e/1658555307462/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/501717583846842368/psd9aFLl_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">President of Russia</div>

<div style="text-align: center; font-size: 14px;">@kremlinrussia_e</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from President of Russia.

| Data | President of Russia |

| --- | --- |

| Tweets downloaded | 3197 |

| Retweets | 1 |

| Short tweets | 38 |

| Tweets kept | 3158 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1nplalk6/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @kremlinrussia_e's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/3jz3samc) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/3jz3samc/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/kremlinrussia_e')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

planhanasan/test-trainer | 6f8a5204d700486a3493645adcfb6506328d9dcd | 2022-07-27T00:09:44.000Z | [

"pytorch",

"tensorboard",

"camembert",

"fill-mask",

"ja",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | fill-mask | false | planhanasan | null | planhanasan/test-trainer | 5 | null | transformers | 17,635 | ---

license: apache-2.0

language:

- ja

tags:

- generated_from_trainer

datasets:

- glue

model-index:

- name: test-trainer