full_name | url | description | readme |

|---|---|---|---|

overflowy/chat-key

|

https://github.com/overflowy/chat-key

|

Supercharge your productivity with ChatGPT and AutoHotkey 🚀

|

<p align="center"><img src="assets/logo.png" alt="ChatKey Logo"></p>

<p align="center">

<a href="https://opensource.org/licenses/MIT">

<img src="https://img.shields.io/badge/license-MIT-green.svg" alt="License: MIT">

</a>

<a href="https://github.com/overflowy/chat-key/releases/latest">

<img src="https://img.shields.io/github/v/release/overflowy/chat-key?logo=github" alt="Latest Release">

</a>

<a href="https://github.com/overflowy/chat-key/releases/latest">

<img src="https://img.shields.io/github/downloads/overflowy/chat-key/total.svg?logo=github" alt="Total Downloads">

</a>

<a href="https://www.autohotkey.com">

<img src="https://img.shields.io/badge/powered_by-AutoHotkey-orange?logo=AutoHotkey" alt="Powered By: AutoHotkey">

</a>

</p>

## About

ChatKey is a small tool that enables you to use your own ChatGPT/GPT-4 prompts in any application that supports text input.

<p align="center">

<a href="https://github.com/overflowy/chat-key/releases/latest">

<img src="assets/screenshot.png" alt="Screenshot">

</a>

</p>

## Usage

1. Please ensure that you have configured the OPENAI_TOKEN environment variable with your API key

2. Download the [latest release](https://github.com/overflowy/chat-key/releases/latest)

3. Extract all files from the zip

4. Run `ChatKey.exe`

5. Start typing in any application that supports text input

6. Select the text to use as input for the prompt

7. Press the hotkey to show the popup menu (default: `Alt + .`).

8. Select the prompt from the popup menu

9. Wait for the response to be generated

10. Review the generated response and press `Enter`

## Configuration

To configure ChatKey, you can edit the [`config.ini`](config.ini) file provided.

### General settings

| Key | Description | Default |

| ------------------- | --------------------------------------------------------- | --------- |

| `popup_menu_hotkey` | The hotkey to show the popup menu | `Alt + .` |

| `replace_text` | Whether to replace the selected text with the response | `0` |

| `show_notification` | Whether to show a notification when generating a response | `1` |

| `max_input_length` | The maximum length of input text (0 = unlimited) | `0` |

### Adding prompts

To add new prompts, you must include a new section in the [`config.ini`](config.ini) file. For instance, if you wish to include a prompt for translating text to French, you can achieve this by appending the following section to the configuration file:

```ini

[prompt_translate_to_french]

name = Translate to French

shortcut = t

system_prompt = "I want you to act as a French translator. I will say something in any language and you will translate it to French. The first thing I want you to translate is:"

temperature = 0.2

model = gpt-3.5-turbo

```

To ensure that the newly added prompt is available in the popup menu, it must be included in the `[popup_menu]` section. Additionally, if you have already configured multiple prompts, you can tidy up the popup menu by utilizing `---` as a separator.

```ini

[popup_menu]

---

prompt_translate_to_french

```

The changes are applied automatically; there's no need to restart ChatKey (the only exception to this rule is the global `popup_menu_hotkey`).
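As a rough illustration of how a prompt section and the `[popup_menu]` section fit together, the sketch below parses an equivalent configuration with Python's `configparser` and resolves the menu entries in order. ChatKey itself is written in AutoHotkey, so this is not its implementation; the `load_menu` helper and the `CONFIG_TEXT` sample are hypothetical.

```python
import configparser

# Sample configuration mirroring the sections shown above (illustrative only).
CONFIG_TEXT = """
[popup_menu]
---
prompt_translate_to_french

[prompt_translate_to_french]
name = Translate to French
shortcut = t
temperature = 0.2
model = gpt-3.5-turbo
"""


def load_menu(text):
    """Return popup menu entries in order; None marks a `---` separator."""
    # allow_no_value lets bare keys such as `---` appear without `= value`.
    parser = configparser.ConfigParser(allow_no_value=True)
    parser.read_string(text)
    menu = []
    for key in parser["popup_menu"]:
        if key == "---":
            menu.append(None)
        else:
            section = parser[key]
            menu.append((section["name"], section["shortcut"]))
    return menu


menu = load_menu(CONFIG_TEXT)
print(menu)
```

Note that `configparser` in its default strict mode rejects repeated keys, so a menu with several `---` separators would need different handling in this sketch.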

### Prompt settings

You can individually configure the parameters of each prompt. If keys with default values are omitted, the default values will be used instead.

| Key | Description | Default |

| ------------------- | -------------------------------------------------------------------------------------------------------- | --------- |

| `name` | The name of the prompt that will be displayed in the popup menu | |

| `shortcut` | The shortcut key to select the prompt from the popup menu | |

| `system_content` | The prompt that will be used to generate the response (required) | |

| `model` | The model to use when generating the response, more info [here](https://platform.openai.com/docs/models) | `gpt-3.5` |

| `temperature` | The temperature to use when generating the response (0.0 - 2.0) | `0.7` |

| `top_p` | The top_p to use when generating the response (0.0 - 1.0) | |

| `presence_penalty` | Increase the model's likelihood to talk about new topics (-2.0 - 2.0) | |

| `frequency_penalty` | Decrease the model's likelihood to repeat the same line verbatim (-2.0 - 2.0) | |
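The parameter names in the table match fields of OpenAI's Chat Completions request body, so the settings presumably map onto a request roughly as sketched below. This is an assumption about the mapping, not ChatKey's actual code: `build_payload` is a hypothetical helper, the defaults are copied from the table, and nothing is sent over the network.

```python
import json

# Defaults as listed in the table; keys without a default are simply omitted.
DEFAULTS = {"model": "gpt-3.5", "temperature": 0.7}
OPTIONAL_KEYS = ("top_p", "presence_penalty", "frequency_penalty")


def build_payload(prompt, selected_text):
    """Build a chat-completions style request body (hypothetical helper)."""
    payload = {
        "model": prompt.get("model", DEFAULTS["model"]),
        "temperature": float(prompt.get("temperature", DEFAULTS["temperature"])),
        "messages": [
            # The configured prompt becomes the system message,
            # the selected text becomes the user message.
            {"role": "system", "content": prompt["system_content"]},
            {"role": "user", "content": selected_text},
        ],
    }
    for key in OPTIONAL_KEYS:
        if key in prompt:
            payload[key] = float(prompt[key])
    return payload


prompt = {
    "system_content": "Translate the following text to French:",
    "temperature": "0.2",
    "model": "gpt-3.5-turbo",
}
payload = build_payload(prompt, "Good morning")
print(json.dumps(payload, indent=2))
```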

## Acknowledgements

- [cocobelgica](https://github.com/cocobelgica) for the JSON lib

- [teadrinker](https://www.autohotkey.com/boards/viewtopic.php?t=113529) for the HBitmapFromResource lib

## License

The code in this repository is licensed under the MIT License. See [LICENSE](LICENSE) for more information.

|

MrTalentDev/go-bitcoin-parser

|

https://github.com/MrTalentDev/go-bitcoin-parser

| null |

Bitcoin Blockchain Parser

------

This repository contains the Go code of a Bitcoin blockchain parser. You can use the CLI

to interact with the parser or use it as a library.

Contents

--------

- [Feature](#feature)

- [Setup](#setup)

- [Example](#example)

- [Test](#test)

Feature

--------

- Deserialize block (provide raw data)

- Deserialize transaction (provide raw data)

- Get Block by hash (provide bitcoin blk folder and index folder)

- Get Block by height (provide bitcoin blk folder and index folder)

- Get unorder blocks (provide bitcoin blk folder)

- Get order blocks (provide bitcoin blk folder and index folder)

- Get last height (provide bitcoin blk folder and index folder)

Setup

------

## Setup config

The config sets `blockpath` and `indexpath`. If you only want to deserialize a block or transaction,

you don't need to set a config. If you want to use functions that involve blk files, you need to set

the config in a file or specify the paths with CLI flags.

### config path

Environment variable: BITCOIN_PARSER_CONFIG_PATH

If the environment variable is not set, the default path is parser.conf in the current folder.

### parser.conf

#### Example

```

blockpath=./test-data/blocks

indexpath=./test-data/index

```
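The lookup order described above (environment variable first, then `parser.conf` in the current folder) can be sketched as follows. The parser itself is written in Go; this Python version only illustrates the behaviour, and both helper names are hypothetical.

```python
import os


def resolve_config_path(env=os.environ):
    """Return the env-provided path if set, else parser.conf in the current folder."""
    return env.get("BITCOIN_PARSER_CONFIG_PATH", "parser.conf")


def parse_config(text):
    """Parse simple key=value lines, skipping blank or malformed lines."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


example = "blockpath=./test-data/blocks\nindexpath=./test-data/index\n"
print(resolve_config_path({}))
print(parse_config(example))
```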

## Build

```shell script

go get github.com/syndtr/goleveldb/leveldb

go get golang.org/x/crypto/ripemd160

go build

```

## Run

```shell script

./bitcoin-parser

```

Example

-------

## Deserialize block

### code

```go

var BlockTestBytes = []byte{

0x01,0x00,0x00,0x00,0x6f,0xe2,0x8c,0x0a,

0xb6,0xf1,0xb3,0x72,0xc1,0xa6,0xa2,0x46,

0xae,0x63,0xf7,0x4f,0x93,0x1e,0x83,0x65,

0xe1,0x5a,0x08,0x9c,0x68,0xd6,0x19,0x00,

0x00,0x00,0x00,0x00,0x98,0x20,0x51,0xfd,

0x1e,0x4b,0xa7,0x44,0xbb,0xbe,0x68,0x0e,

0x1f,0xee,0x14,0x67,0x7b,0xa1,0xa3,0xc3,

0x54,0x0b,0xf7,0xb1,0xcd,0xb6,0x06,0xe8,

0x57,0x23,0x3e,0x0e,0x61,0xbc,0x66,0x49,

0xff,0xff,0x00,0x1d,0x01,0xe3,0x62,0x99,

0x01,0x01,0x00,0x00,0x00,0x01,0x00,0x00,

0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,

0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,

0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,

0x00,0x00,0x00,0x00,0x00,0x00,0xff,0xff,

0xff,0xff,0x07,0x04,0xff,0xff,0x00,0x1d,

0x01,0x04,0xff,0xff,0xff,0xff,0x01,0x00,

0xf2,0x05,0x2a,0x01,0x00,0x00,0x00,0x43,

0x41,0x04,0x96,0xb5,0x38,0xe8,0x53,0x51,

0x9c,0x72,0x6a,0x2c,0x91,0xe6,0x1e,0xc1,

0x16,0x00,0xae,0x13,0x90,0x81,0x3a,0x62,

0x7c,0x66,0xfb,0x8b,0xe7,0x94,0x7b,0xe6,

0x3c,0x52,0xda,0x75,0x89,0x37,0x95,0x15,

0xd4,0xe0,0xa6,0x04,0xf8,0x14,0x17,0x81,

0xe6,0x22,0x94,0x72,0x11,0x66,0xbf,0x62,

0x1e,0x73,0xa8,0x2c,0xbf,0x23,0x42,0xc8,

0x58,0xee,0xac,0x00,0x00,0x00,0x00,

}

block, err := DeserializeBlock(BlockTestBytes, &MainnetParams)

if err != nil {

t.Errorf("DeserializeBlock error:%s", err)

}

str, err := block.String()

if err != nil {

t.Errorf("block to string error:%s", err)

}

fmt.Println(str)

```

### cli

```shell script

./bitcoin-parser deserializeblock -net mainnet -raw 010000006fe28c0ab6f1b372c1a6a246ae63f74f931e8365e15a089c68d6190000000000982051fd1e4ba744bbbe680e1fee14677ba1a3c3540bf7b1cdb606e857233e0e61bc6649ffff001d01e362990101000000010000000000000000000000000000000000000000000000000000000000000000ffffffff0704ffff001d0104ffffffff0100f2052a0100000043410496b538e853519c726a2c91e61ec11600ae1390813a627c66fb8be7947be63c52da7589379515d4e0a604f8141781e62294721166bf621e73a82cbf2342c858eeac00000000

```

### output

```json

{

"hash": "00000000839a8e6886ab5951d76f411475428afc90947ee320161bbf18eb6048",

"blockHeader": {

"version": 1,

"prevBlockHeaderHash": "000000000019d6689c085ae165831e934ff763ae46a2a6c172b3f1b60a8ce26f",

"merkleRootHash": "0e3e2357e806b6cdb1f70b54c3a3a17b6714ee1f0e68bebb44a74b1efd512098",

"time": 1231469665,

"nbits": 486604799,

"nonce": 2573394689

},

"txnCount": 1,

"txns": [

{

"hash": "0e3e2357e806b6cdb1f70b54c3a3a17b6714ee1f0e68bebb44a74b1efd512098",

"version": 1,

"txinCount": 1,

"txin": [

{

"hash": "0000000000000000000000000000000000000000000000000000000000000000",

"index": 4294967295,

"scriptBytes": 7,

"signatureScript": "04ffff001d0104",

"sequence": 4294967295

}

],

"txOutCount": 1,

"txOut": [

{

"value": 5000000000,

"pkScriptBytes": 67,

"pkScript": {

"pkscript": "410496b538e853519c726a2c91e61ec11600ae1390813a627c66fb8be7947be63c52da7589379515d4e0a604f8141781e62294721166bf621e73a82cbf2342c858eeac",

"pops": [

{

"opcode": {

"value": "41",

"name": "OP_DATA_65"

},

"data": "0496b538e853519c726a2c91e61ec11600ae1390813a627c66fb8be7947be63c52da7589379515d4e0a604f8141781e62294721166bf621e73a82cbf2342c858ee"

},

{

"opcode": {

"value": "ac",

"name": "OP_CHECKSIG"

},

"data": ""

}

],

"stype": "PubKey",

"addresses": [

"12c6DSiU4Rq3P4ZxziKxzrL5LmMBrzjrJX"

]

}

}

],

"witness": null,

"lockTime": 0

}

]

}

```
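To show where the `blockHeader` fields come from, the sketch below decodes the first 80 bytes of the same raw block independently of this library. It assumes the standard Bitcoin header layout (little-endian integers, hashes displayed byte-reversed, block hash as double SHA-256 of the header); `parse_header` is a hypothetical helper, not part of the parser's API.

```python
import hashlib
import struct

# First 80 bytes (160 hex characters) of the raw block used above.
RAW_HEADER_HEX = (
    "01000000"
    "6fe28c0ab6f1b372c1a6a246ae63f74f931e8365e15a089c68d6190000000000"
    "982051fd1e4ba744bbbe680e1fee14677ba1a3c3540bf7b1cdb606e857233e0e"
    "61bc6649"
    "ffff001d"
    "01e36299"
)


def parse_header(raw):
    """Decode an 80-byte block header into the fields shown in the JSON output."""
    version, prev, merkle, timestamp, nbits, nonce = struct.unpack(
        "<I32s32sIII", raw[:80]
    )
    # The block hash is the double SHA-256 of the header, shown byte-reversed.
    digest = hashlib.sha256(hashlib.sha256(raw[:80]).digest()).digest()
    return {
        "hash": digest[::-1].hex(),
        "version": version,
        "prevBlockHeaderHash": prev[::-1].hex(),
        "merkleRootHash": merkle[::-1].hex(),
        "time": timestamp,
        "nbits": nbits,
        "nonce": nonce,
    }


header = parse_header(bytes.fromhex(RAW_HEADER_HEX))
for name, value in header.items():
    print(name, value)
```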

## Deserialize Transaction

### code

```go

var transactionTestBytes = []byte{

0x01,0x00,0x00,0x00,0x00,0x01,0x01,0x9b,

0x34,0xe2,0x9c,0x56,0x2d,0xe2,0x0e,0xf3,

0x26,0x68,0x2f,0xa5,0x82,0x67,0x98,0x47,

0xa4,0xdc,0x4a,0xc7,0xd5,0x26,0x4d,0x32,

0x28,0xa1,0x7c,0x8f,0xef,0x91,0x0c,0x01,

0x00,0x00,0x00,0x00,0xff,0xff,0xff,0xff,

0x01,0x16,0xaa,0x0f,0x00,0x00,0x00,0x00,

0x00,0x16,0x00,0x14,0x52,0x15,0x83,0x08,

0xca,0x2e,0x51,0x49,0xd9,0x39,0x73,0x10,

0xec,0x1f,0xe2,0xa4,0xf8,0x8a,0xfb,0x07,

0x02,0x48,0x30,0x45,0x02,0x21,0x00,0xce,

0x94,0x6d,0x85,0x63,0x0e,0x5d,0x4b,0xb2,

0x27,0xf6,0x96,0x78,0xfa,0xff,0x2d,0x39,

0x7a,0xdb,0x25,0x61,0x7c,0xc9,0xb4,0xa5,

0x32,0x3f,0x4b,0x76,0x01,0x21,0xe8,0x02,

0x20,0x4d,0x05,0x0a,0x9c,0x08,0x83,0x30,

0xf2,0x36,0xae,0xc8,0x80,0x75,0xde,0x5c,

0xa6,0x31,0xa5,0xb7,0xa3,0xae,0xa6,0x92,

0x37,0x1d,0x0f,0x99,0xc9,0x62,0xd7,0x8d,

0x85,0x01,0x21,0x03,0x2c,0xc6,0x3e,0xba,

0x4d,0x1f,0x35,0xb6,0x38,0xe7,0x36,0x86,

0xf7,0x68,0xc2,0xc9,0x63,0x99,0xe6,0x9d,

0xc7,0x3f,0xcc,0x39,0xfe,0x30,0xb5,0x43,

0xed,0x85,0x9c,0x19,0x00,0x00,0x00,0x00,

}

tx, _, err := DesserializeTransaction(transactionTestBytes, &MainnetParams)

if err != nil {

t.Errorf("TestDesserializeTransaction error: %s", err)

}

str, err := tx.String()

if err != nil {

t.Errorf("transaction to string error:%s", err)

}

fmt.Println(str)

```

### cli

```shell script

./bitcoin-parser deserializetransaction -net mainnet -raw 010000000001019b34e29c562de20ef326682fa582679847a4dc4ac7d5264d3228a17c8fef910c0100000000ffffffff0116aa0f000000000016001452158308ca2e5149d9397310ec1fe2a4f88afb0702483045022100ce946d85630e5d4bb227f69678faff2d397adb25617cc9b4a5323f4b760121e802204d050a9c088330f236aec88075de5ca631a5b7a3aea692371d0f99c962d78d850121032cc63eba4d1f35b638e73686f768c2c96399e69dc73fcc39fe30b543ed859c1900000000

```

### output

```json

{

"hash": "ff857d64d03182391620a933fc8477372af4bac3a3f737883a57882d7c7d8f36",

"version": 1,

"txinCount": 1,

"txin": [

{

"hash": "9b34e29c562de20ef326682fa582679847a4dc4ac7d5264d3228a17c8fef910c",

"index": 1,

"scriptBytes": 0,

"signatureScript": "",

"sequence": 4294967295

}

],

"txOutCount": 1,

"txOut": [

{

"value": 1026582,

"pkScriptBytes": 22,

"pkScript": {

"pkscript": "001452158308ca2e5149d9397310ec1fe2a4f88afb07",

"pops": [

{

"opcode": {

"value": "00",

"name": "OP_0"

},

"data": ""

},

{

"opcode": {

"value": "14",

"name": "OP_DATA_20"

},

"data": "52158308ca2e5149d9397310ec1fe2a4f88afb07"

}

],

"stype": "WitnessPubKeyHash",

"addresses": [

"bc1q2g2cxzx29eg5nkfewvgwc8lz5nug47c8ta5ene"

]

}

}

],

"witness": [

{

"WitnessStackCount": 2,

"WitnessScript": [

{

"WitnessScriptBytes": 72,

"WitnessScript": "3045022100ce946d85630e5d4bb227f69678faff2d397adb25617cc9b4a5323f4b760121e802204d050a9c088330f236aec88075de5ca631a5b7a3aea692371d0f99c962d78d8501"

},

{

"WitnessScriptBytes": 33,

"WitnessScript": "032cc63eba4d1f35b638e73686f768c2c96399e69dc73fcc39fe30b543ed859c19"

}

]

}

],

"lockTime": 0

}

```
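The counts and lengths in this output follow from the segwit transaction serialization. The sketch below walks the same raw bytes under the assumption of the standard layout (version, `00 01` marker and flag, inputs, outputs, witness stacks, lock time). `Reader` and `parse_tx` are hypothetical helpers written for this illustration, not the library's API.

```python
# Same raw transaction as above, split at field boundaries for readability.
RAW_TX_HEX = (
    "01000000" "0001" "01"
    "9b34e29c562de20ef326682fa582679847a4dc4ac7d5264d3228a17c8fef910c"
    "01000000" "00" "ffffffff"
    "01" "16aa0f0000000000" "16"
    "001452158308ca2e5149d9397310ec1fe2a4f88afb07"
    "02" "48"
    "3045022100ce946d85630e5d4bb227f69678faff2d397adb25617cc9b4a5323f4b760121e802204d050a9c088330f236aec88075de5ca631a5b7a3aea692371d0f99c962d78d8501"
    "21" "032cc63eba4d1f35b638e73686f768c2c96399e69dc73fcc39fe30b543ed859c19"
    "00000000"
)


class Reader:
    """Sequential reader over a bytes object."""

    def __init__(self, data):
        self.data, self.pos = data, 0

    def take(self, n):
        chunk = self.data[self.pos:self.pos + n]
        self.pos += n
        return chunk

    def uint(self, n):
        return int.from_bytes(self.take(n), "little")

    def varint(self):
        # Compact-size integer: one byte, or a prefix followed by 2, 4 or 8 bytes.
        first = self.uint(1)
        if first < 0xFD:
            return first
        return self.uint({0xFD: 2, 0xFE: 4, 0xFF: 8}[first])


def parse_tx(raw):
    r = Reader(raw)
    tx = {"version": r.uint(4), "segwit": raw[4:6] == b"\x00\x01"}
    if tx["segwit"]:
        r.take(2)  # skip marker and flag
    tx["txin"] = []
    for _ in range(r.varint()):
        r.take(32)  # previous transaction hash, not decoded in this sketch
        index = r.uint(4)
        script = r.take(r.varint())
        sequence = r.uint(4)
        tx["txin"].append(
            {"index": index, "scriptBytes": len(script), "sequence": sequence}
        )
    tx["txOut"] = []
    for _ in range(r.varint()):
        value = r.uint(8)
        pk_script = r.take(r.varint())
        tx["txOut"].append({"value": value, "pkScript": pk_script.hex()})
    tx["witness"] = []
    if tx["segwit"]:
        for _ in tx["txin"]:  # one witness stack per input
            count = r.varint()
            tx["witness"].append([r.take(r.varint()) for _ in range(count)])
    tx["lockTime"] = r.uint(4)
    return tx


tx = parse_tx(bytes.fromhex(RAW_TX_HEX))
print(tx["txOut"][0]["value"], [len(item) for item in tx["witness"][0]])
```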

## Get Block by hash

### cli

```shell script

./bitcoin-parser -hash 00000000000000322cee8a774b3bc50bd304f92ee63a7d8c7c01bc9e71f182ae -indexpath ./test-data/index -blockpath ./test-data/blocks

```

## Get Block by height

### cli

```shell script

./bitcoin-parser getblockbyheight -height 131301 -indexpath ./test-data/index -blockpath ./test-data/blocks

```

## Get unorder blocks

Blocks in blk files are not stored in order. This command shows the blocks in their original order in the blk files.

### cli

```shell script

./bitcoin-parser getunorderblocks -start 0 -end 999999 -name 2 -blockpath ./test-data/blocks

```
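For context, a blk file is commonly described as a sequence of records, each made of a 4-byte network magic, a 4-byte little-endian length, and the serialized block. Blocks are appended as they arrive, which is why file order differs from height order. The sketch below iterates over such records using an in-memory stand-in; it assumes that layout and the mainnet magic `f9beb4d9`, and `iter_raw_blocks` is a hypothetical helper rather than this parser's code.

```python
import io
import struct

MAINNET_MAGIC = bytes.fromhex("f9beb4d9")


def iter_raw_blocks(stream):
    """Yield raw block payloads in the order they are stored in the file."""
    while True:
        prefix = stream.read(8)
        if len(prefix) < 8:
            return  # end of file
        magic = prefix[:4]
        size = struct.unpack("<I", prefix[4:])[0]
        if magic != MAINNET_MAGIC:
            return  # padding or unknown data: this sketch simply stops
        yield stream.read(size)


# Two placeholder payloads stand in for serialized blocks.
fake_file = io.BytesIO(
    MAINNET_MAGIC + struct.pack("<I", 3) + b"abc"
    + MAINNET_MAGIC + struct.pack("<I", 2) + b"de"
)
blocks = list(iter_raw_blocks(fake_file))
print(blocks)
```

Ordering by height additionally needs the block index, which matches the extra `-indexpath` flag required by the ordered variant.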

## Get order blocks

This command shows the blocks in blk files ordered by height.

### cli

```shell script

./bitcoin-parser getorderblocks -start 0 -end 999999 -name 2 -indexpath ./test-data/index -blockpath ./test-data/blocks

```

## Get last height

### cli

```shell script

./bitcoin-parser getlastheight -indexpath ./test-data/index -blockpath ./test-data/blocks

```

Test

-----

For more use cases, see the test cases.

```shell script

go test

```

|

AI-Club-IIT-Madras/Summer_School_2023

|

https://github.com/AI-Club-IIT-Madras/Summer_School_2023

|

AI Club IIT Madras Summer School 2023

|

# AI Club Summer School 2023

This repository contains all the slides/notes used during the Summer School conducted at IIT Madras.

All the session recordings are present in this YouTube playlist - [Link](https://youtube.com/playlist?list=PLWkFppvOIj_RK8MBQEgipsDvahLiQJYQH)

## Tasks

| Task | Description | Link | Deadline |

| :--- | :---------- | :--- | :------- |

| 1 | Data Visualization Challenge | [Task 1](/Task_1/) | July 15th 2023, 11:59 PM |

| 2 | Kaggle Contest | [Task 2](/Task_2/), [Kaggle](https://www.kaggle.com/t/382449f886764755bb9259c9e75d374d) | July 27th 2023, 11:59 PM |

## Sessions

| Session | Date | Topic | Session Notes/Slides | Recording |

| :-----: | :------------ | :-------------------------------------------------- | :------------------- | :------------- |

| 1 | July 7th 2023 | Intro to Python, Numpy, Pandas and Matplotlib | [Session 1](/Session_1/) | [Recording](https://www.youtube.com/watch?v=A2U0c21IsfA) |

| 2 | July 10th 2023 | Linear, Logistic and Polynomial Regression | [Session 2](/Session_2/) | [Recording](https://www.youtube.com/live/eEGmz0Tm1Ck?feature=share) |

| 3 | July 12th 2023 | KNNs, SVMs, Naive Bayes Classifiers | [Session 3](/Session_3/) | [Recording](https://www.youtube.com/live/m7VHg2an9yg?feature=share) |

| 4 | July 14th 2023 | Decision Trees, Random Forest and Ensemble methods | [Session 4](/Session_4/) | [Recording](https://youtube.com/live/YczMERvNpPA) |

| 5 | July 17th 2023 | Intro to Deep Learning, Neural Networks, Pytorch basics | [Session 5](/Session_5/) | [Recording](https://www.youtube.com/watch?v=ObmItJBoImI&list=PLWkFppvOIj_RK8MBQEgipsDvahLiQJYQH&index=5) |

| 6 | July 19th 2023 | Add-ons to improve model performance: Data preprocessing, Feature Engineering, Regularization, Optimizers| [Session 6](/Session_6/) | [Recording](https://www.youtube.com/watch?v=Ia_v6VbdEDU&list=PLWkFppvOIj_RK8MBQEgipsDvahLiQJYQH&index=7) |

| 7 | July 21st 2023 | CNNs (Theory and Implementation) | [Session 7](/Session_7/) | [Recording](https://www.youtube.com/watch?v=h45fwxvresA&list=PLWkFppvOIj_RK8MBQEgipsDvahLiQJYQH&index=9) |

| 8 | July 24th 2023 | Object Detection - Haar Cascade, HOGs, RCNN, YOLO | [Session 8](/Session_8/) | [Recording](https://www.youtube.com/watch?v=aDmyoCaFQe0&list=PLWkFppvOIj_RK8MBQEgipsDvahLiQJYQH&index=10) |

| 9 | July 26th 2023 | Intro to Reinforcement Learning | [Session 9](/Session_9/) | [Recording](https://youtu.be/L57sTJfZfO0) |

|

ksyncpro/lol-bot

|

https://github.com/ksyncpro/lol-bot

|

An account leveling bot for League of Legends written in Python.

|

## Welcome to lol bot

- This is a python bot that uses the local League Client API to start games and plays them with a simple but effective game loop.

- This bot has leveled up [thousands of league accounts](https://www.playerauctions.com/lol-account/) and is still going strong.

- No pixel botting and/or image recognition makes it easy to run on any setup.

<br>

<p align="left">

<img src="https://user-images.githubusercontent.com/32341824/231916860-8cdaa0bb-c808-48f7-8afe-5cd151501a98.gif">

</p>

## Current Features

- Start League and Login

- Start a Beginner Bot Game

- Buy items and somewhat intelligently push mid until the game is over

- End game, earn experience, collect rewards

- Loop 🥡🧋

## Requirements

- [League of Legends](https://signup.leagueoflegends.com/en-us/signup/download)

- [League of Legends Account](https://signup.leagueoflegends.com/en-us/signup/index)

- [Python >= 3.10](https://www.python.org/downloads/)

## Setup

- Ensure Python is added to your PATH, check "Add Python to PATH" when installing

- Clone/Download the repo

- Download [extra RAM](https://downloadmoreram.com/) (only if needed)

- Run Installer.bat or ```pip install -r requirements.txt```

## Optional Configuration

- For non-standard league install paths, update the league_dir in constants.py

- To continuously level new league accounts:

  - Implement account.py to connect to your database of league accounts

  - Make sure that "stay signed in" is not checked when league of legends starts.

  - This allows the bot to log out of league by closing it, get new account credentials, restart league, log in with the new credentials, and start another leveling loop, cycling indefinitely

- To level accounts past level 30 or play in different game types update game data variables in constants.py

## Disclaimer

Another LoL Bot isn’t endorsed by Riot Games and doesn’t reflect the views or opinions of Riot Games or anyone officially involved in producing or managing League of Legends. League of Legends and Riot Games are trademarks or registered trademarks of Riot Games, Inc. League of Legends © Riot Games, Inc.

This software works with other programs/services (League of Legends) so you take full responsibility for breaking their Terms of Service and full responsibility for the accounts that you’re using with this bot and agree to use it at your own risk.

|

nalgeon/sqlpkg

|

https://github.com/nalgeon/sqlpkg

|

SQLite package registry

|

# The (unofficial) SQLite package registry

Given the vast amount of SQLite extensions, it's only logical to have a package registry. So here it is.

The registry contains only package metadata (specs). Package binaries and sources are located elsewhere (usually in the package developer's repositories).

Here is what you can do from here:

- Search the registry at [sqlpkg.org](https://sqlpkg.org/).

- Install extensions using the [sqlpkg](https://github.com/nalgeon/sqlpkg-cli) package manager.

- Add your own package to the registry.

Note that you don't have to add your package to the registry for `sqlpkg` to work with it — it will work just fine with your own repo (see the `sqlpkg` documentation for details). Adding your package to the registry is completely optional.

[**Subscribe**](https://antonz.org/subscribe/) for updates 🚀

|

declare-lab/flacuna

|

https://github.com/declare-lab/flacuna

|

Flacuna was developed by fine-tuning Vicuna on Flan-mini, a comprehensive instruction collection encompassing various tasks. Vicuna is already an excellent writing assistant, and the intention behind Flacuna was to enhance Vicuna's problem-solving capabilities. To achieve this, we curated a dedicated instruction dataset called Flan-mini.

|

# Flacuna: A Vicuna made of Flan

[Paper](https://arxiv.org/abs/2307.02053) | [Model](https://huggingface.co/declare-lab/flacuna-13b-v1.0) | [Dataset](https://huggingface.co/datasets/declare-lab/flan-mini)

📣 We still have numerous experiments awaiting completion (details are [here](https://arxiv.org/abs/2307.02053)), requiring additional computing resources in our lab. If any industry professionals reading this are willing to provide assistance, please feel free to reach out to us at [email protected].

<p align="center">

<img src="https://declare-lab.net/assets/images/logos/flacuna5.png" alt="Image" width="200" height="335">

</p>

Flacuna was developed by fine-tuning Vicuna on Flan-mini, a comprehensive instruction collection encompassing various tasks. Vicuna is already an excellent writing assistant, and the intention behind Flacuna was to enhance Vicuna's problem-solving capabilities. To achieve this, we curated a dedicated instruction dataset called Flan-mini.

| Dataset Name | Source | Dataset Size |

|-----------------------------|------------------------|--------------|

| Flan2021 | Flan | 388K |

| Public Pool of Prompts | Flan | 320K |

| Natural instructions v2 | Flan | 200K |

| CoT | Flan | 100K |

| Code Search | HF/code_search_net | 100K |

| Code Contest | HF/deepmind/code_contests | 50K |

| Apps | HF/codeparrot/apps | 50K |

| GPT4-Alpaca | GPT-4 | 52K |

| Code-Alpaca | ChatGPT | 20K |

| ShareGPT | ChatGPT | 60K |

| Total | - | 1.34M |

## Quickstart Guide

```python

from flacuna import FLACUNA

model = FLACUNA("declare-lab/flacuna-13b-v1.0")

prompt = (

"A chat between a curious user and an artificial intelligence assistant. "

"The assistant gives helpful, detailed, and polite answers to the user's questions. "

"USER: You are tasked to demonstrate your writing skills in professional or work settings for the following question.\n"

"Can you help me write a speech for a graduation ceremony, inspiring and motivating the graduates to pursue their dreams and make a positive impact on the world?\n"

"Output: ASSISTANT: "

)

decoded = model.generate(prompt)

print(decoded)

```

The output is the following:

```

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: You are tasked to demonstrate your writing skills in professional or work settings for the following question.

Can you help me write a speech for a graduation ceremony, inspiring and motivating the graduates to pursue their dreams and make a positive impact on the world?

Output: ASSISTANT: Dear Graduates,

It is my honor to stand before you here today, to celebrate your achievements and to acknowledge your hard work and dedication that you have shown here at the University. I am proud of each one of you, for all that you have accomplished thus far, and for the potential that lies within each of you.

As you move on from this stage, I want to remind you that your journey has just begun. The real world awaits you, and with it, comes a myriad of opportunities and challenges. But I believe in each one of you, and I know that you are more than capable of facing whatever lies ahead.

You are the future of this world, and I have no doubt that you will make a positive impact on it, in whatever field you choose to pursue. Whether it is in science, technology, education, healthcare, or any other field, your contributions will be valuable and meaningful, and will make a difference in the lives of others.

So, as you move forward, I urge you to stay true to your passions, to pursue your dreams, and to never give up on what you believe in. Remember, it is not the obstacles that define us, but how we overcome them that truly matters.

You have the power to change the world, and I have no doubt that you will. So, go out there, and make the most of every opportunity that comes your way. Make a difference, and leave your mark on this world.

Congratulations once again, and best of luck in all that lies ahead.

Thank you.

```

## Problem Solving Ability

As a result of this fine-tuning process, Flacuna exhibited notable performance improvements in problem-solving across multiple benchmark datasets, both in few-shot and zero-shot settings.

| **Model** | **Size** | **MMLU (5-shot)** | **BBH (3-shot)** | **DROP (3-shot)** | **CRASS (3-shot)** | **HumanEval (0-shot)** | **Avg.** |

| --- | --- | --- | --- | --- | --- | --- | --- |

| StableVicuna | 13B | 49.2 (+3.0) | 37.5 (+0.4) | 34.3 (-1.0) | 67.5 (+8.7) | 15.9 (+2.5) | 40.9 (+2.7) |

| Vicuna | 13B | 50.6 (+4.5) | 37.6 (+0.5) | 32.6 (-3.0) | 60.9 (+2.1) | 11.6 (-1.8) | 38.7 (+0.6) |

| Flacuna | 13B | 51.1 (+5.0) | 39.3 (+2.2) | 43.6 (+8.0) | 74.1 (+15.3) | 11.0 (-2.4) | 43.8 (+5.6) |

| **Model** | **Size** | **MMLU (0-shot)** | **BBH (0-shot)** | **CRASS (0-shot)** |

| --- | --- | --- | --- | --- |

| StableVicuna | 13B | 47.5 | 18.5 | 64.2 |

| Vicuna | 13B | 48.3 | 28.3 | 65.7 |

| Flacuna | 13B | 49.4 | 32.5 | 67.9 |

Flacuna is a 13B checkpoint of LLaMA. During training, we employed a maximum input sequence length of 1280 and utilized LoRA for parameter-efficient fine-tuning.

## Chatbot / Writing Assistant

While Flacuna primarily excels in problem-solving tasks, we made efforts to maintain the impressive writing and chatting ability of Vicuna. To achieve this, we incorporated conversational datasets generated by GPT-4, such as GPT-4-Alpaca and ShareGPT, into the Flan-mini collection.

To use Flacuna as a chatbot or writing assistant, we recommend you use the following template:

```

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: {definition of the task}.\n\n

{question}\n

Output: ASSISTANT:

```
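A small helper can fill this template programmatically. The sketch below is illustrative only: `build_prompt` is a hypothetical function, and the trailing space after `ASSISTANT:` follows the Quickstart example rather than anything stated explicitly for the template.

```python
SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions. "
)


def build_prompt(task_definition, question):
    """Fill the recommended chat template (hypothetical helper)."""
    return f"{SYSTEM}USER: {task_definition}.\n\n{question}\nOutput: ASSISTANT: "


prompt = build_prompt(
    "You are tasked to demonstrate your writing skills in professional "
    "or work settings for the following question",
    "Can you help me write a short thank-you note to a colleague?",
)
print(prompt)
```

The resulting string can be passed to `model.generate(prompt)` as in the Quickstart Guide.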

**Please note that we still recommend using Vicuna as your preferred Chatbot or Writing Assistant, over Flacuna. Flacuna's primary strength lies in problem-solving tasks, making it ideal for such applications.**

The following table presents the writing performance of Flacuna on the IMPACT dataset, which is a component of the InstructEval evaluation suite. The generated responses have been evaluated by ChatGPT, and their relevance and coherence have been scored on a scale of 1 to 5.

| **Model** | **Size** | **Informative Rel.** | **Informative Coh.** | **Professional Rel.** | **Professional Coh.** | **Argumentative Rel.** | **Argumentative Coh.** | **Creative Rel.** | **Creative Coh.** | **Avg. Rel.** | **Avg. Coh.** |

| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |

| ChatGPT | - | 3.34 | 3.98 | 3.88 | 3.96 | 3.96 | 3.82 | 3.92 | 3.94 | 3.78 | 3.93 |

| Flan-Alpaca | 11B | 3.56 | 3.46 | 3.54 | 3.70 | 3.22 | 3.28 | 3.70 | 3.40 | 3.51 | 3.46 |

| Flan-T5 | 11B | 2.64 | 3.24 | 2.62 | 3.22 | 2.54 | 3.40 | 2.50 | 2.72 | 2.58 | 3.15 |

| Dolly-V2 | 12B | 3.54 | 3.64 | 2.96 | 3.74 | 3.66 | 3.20 | 3.02 | 3.18 | 3.30 | 3.44 |

| StableVicuna | 13B | 3.54 | 3.64 | 2.96 | 3.74 | 3.30 | 3.20 | 3.02 | 3.18 | 3.21 | 3.44 |

| Vicuna | 13B | 3.60 | 3.96 | 3.74 | 3.82 | 3.82 | 3.56 | 3.82 | 3.92 | 3.75 | 3.82 |

| Flacuna | 13B | 3.02 | 3.42 | 3.48 | 3.52 | 3.38 | 3.02 | 3.92 | 3.80 | 3.45 | 3.44 |

## Training Flacuna

Navigate to the `data` directory and download the Flan-Mini dataset:

```bash

cd data

wget https://huggingface.co/datasets/declare-lab/flan-mini/resolve/main/flan_mini.json.zip

unzip flan_mini.json.zip

cd ..

```

You can then use the `train.sh` script for fine-tuning Vicuna on the Flan-Mini dataset:

```bash

bash train.sh

```

## Citation

```bibtex

@misc{ghosal2023flacuna,

title={Flacuna: Unleashing the Problem Solving Power of Vicuna using FLAN Fine-Tuning},

author={Deepanway Ghosal and Yew Ken Chia and Navonil Majumder and Soujanya Poria},

year={2023},

eprint={2307.02053},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

fishzjp/FileTools

|

https://github.com/fishzjp/FileTools

|

A tool for generating files of any size

|

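# Background

During software development and system testing, it is often necessary to test how a system behaves and copes when disk space is full. Such test scenarios help developers and testers evaluate the robustness and performance of a system in a resource-constrained environment.

However, manually creating large files to fill up a disk is tedious and time-consuming. To simplify this process and improve efficiency, I developed a file generation tool that creates files of any size on demand to simulate a full disk.

# Features

1. User-friendly interface: The tool uses the PyQt5 library to provide a graphical interface that is intuitive and convenient to operate. The interface offers input fields for the folder path, file name, and file size, along with Browse and Generate buttons, so users can easily choose a path and set the file parameters.

2. Fast file writing: files of any size are written within seconds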
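The README does not say how the tool writes large files this quickly. One common technique, shown here purely as an assumption, is to set the file length directly instead of writing every byte; `create_file` is a hypothetical helper, not the tool's code.

```python
import os
import tempfile


def create_file(path, size_bytes):
    """Create a file of exactly size_bytes without writing its contents."""
    with open(path, "wb") as handle:
        # truncate() extends the file to the requested length; the gap reads as zeros.
        handle.truncate(size_bytes)


target = os.path.join(tempfile.mkdtemp(), "filler.bin")
create_file(target, 10 * 1024 * 1024)  # 10 MiB
print(os.path.getsize(target))
```

On filesystems that support sparse files, a file created this way may report its full size while occupying little physical space, so a genuine disk-full test may need real data written instead.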

# Packaging

### The program is packaged with pyinstaller, with upx compression applied during packaging. The packaging commands are as follows

```

# Packaging command with upx compression. Note: replace the --upx-dir= value with your local upx installation path

pyinstaller --onefile --add-data "icon.png;." --add-data "SmileySans-Oblique.ttf;." --add-data "style.qss;." --noconsole --upx-dir=D:\code\file_tools\tools\upx-4.0.2-win64\upx-4.0.2-win64 file_tools.py

# Packaging command without upx compression

pyinstaller --onefile --add-data "icon.png;." --add-data "SmileySans-Oblique.ttf;." --add-data "style.qss;." --noconsole file_tools.py

```

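# Tool interface

# Download

https://github.com/fishzjp/FileTools/releases

# Font

The tool uses the Smiley Sans (得意黑) font: https://github.com/atelier-anchor/smiley-sans

# WeChat official account

<br> I hope this optimization helps. If anything else needs improvement, please let me know.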

|

wasdwasd0105/PicoW-usb2bt-audio

|

https://github.com/wasdwasd0105/PicoW-usb2bt-audio

|

Transform your Raspberry Pi Pico W into a USB to Bluetooth Audio Adapter.

|

# PicoW USB Audio to Bluetooth Adapter

The Pico W USB Audio to Bluetooth Adapter transforms your Raspberry Pi Pico W into a high-quality, hassle-free audio streaming device.

It allows any device with a USB input, including MacOS, Windows, Linux, Nintendo Switch, PS4/PS5, and more, to gain Bluetooth Audio capability.

With Pico W Adapter, you can easily transmit audio wirelessly from your USB audio source to your Bluetooth headphones or speaker, enhancing your listening experience.

To make sure we can keep working on free and open-source projects like this,

**please consider becoming a [:heart: Sponsor via PayPal](https://www.paypal.com/donate/?business=UZAK3WFV233ML&no_recurring=0&item_name=Help+me+build+more+project%21&currency_code=USD) or support us via [:coffee: Ko-fi](https://ko-fi.com/wasdwasd0105).**

<p align="center">

<img alt="Logo" src="logo.png" width="200">

</p>

### Driver-Free Setup

Setting up PicoW requires no driver or software installation. Simply plug the Pico W into your device's USB port, set your Bluetooth headphones or speakers to pairing mode, and an automatic connection will be made.

### Bluetooth SBC Codec

Pico W Bluetooth Adapter utilizes the SBC codec to deliver high-quality audio. It can stream in stereo.

### Video demo

[Watch the video demo](http://www.youtube.com/watch?v=Dilagi7l4xc)

## Installation

Installing the Pico W USB Audio to Bluetooth Adapter firmware involves flashing a UF2 (USB Flashing Format) file onto your Raspberry Pi Pico. Follow these steps:

1. **Download the UF2 file:** You can find the latest firmware for the PicoW USB Audio to Bluetooth Adapter at the [releases page](https://github.com/wasdwasd0105/PicoW-usb2bt-audio/releases) of the GitHub repository. Download the `.uf2` file from the latest release.

2. **Connect the Pico to your computer:** First, ensure that your Pico is not connected to your computer. Then, hold down the 'BOOTSEL' button on the Pico while you plug it into your computer using a micro USB cable. It will appear on your computer as a mass storage device (like a USB flash drive).

3. **Copy the UF2 file:** Simply drag and drop (or copy and paste) the downloaded UF2 file onto the Pico.

4. **Reset the Pico:** Once the UF2 file has been copied onto the Pico, it will automatically reset and start running the new firmware.

## Usage

Using the PicoW USB Audio to Bluetooth Adapter is a straightforward process. Here are the steps to follow:

1. **Connect your Pico W to your audio source device:** Use a USB cable to connect your Raspberry Pi Pico W to the device that you want to stream audio from.

2. **Set the audio output on your source device:** On your audio source device, go to your sound settings and change the audio output device to `USB Bluetooth Audio`.

3. **Pairing a new device:** To pair a new device, long-press the 'BOOTSEL' button on the Pico W and release it; the LED will blink fast. Then put the new Bluetooth device into pairing mode. The Pico W will automatically connect to it.

4. **Start playing audio:** Once everything is set up, you can start playing audio from your source device. The audio will be streamed to your Bluetooth device via the Pico W.

5. **Reconnecting a device:** You can reconnect the headphones by short-pressing the 'BOOTSEL' button.

## Green LED light Status Indicator

1. **Blinking Slow (1s):** When the Green LED light is blinking, it indicates that audio is currently streaming.

2. **Blinking Fast (0.5s):** It means that the PicoW Adapter is in pairing mode.

3. **On (Steady Light):** It means that the PicoW Adapter is on standby. Short-press the 'BOOTSEL' button to reconnect the last saved device.

## Compile & Debug

In order to compile the PicoW USB Audio to Bluetooth Adapter firmware from source code, you need to follow these steps:

1. **Prepare your environment:** Make sure that you have a working development environment for Raspberry Pi Pico projects. This includes having the required compiler and tools installed. You may refer to the [Getting started with Raspberry Pi Pico](https://www.raspberrypi.org/documentation/rp2040/getting-started/) guide for detailed instructions.

2. **Set environment variables:** Before you can build the project, you need to set two environment variables: `PICO_SDK_PATH` and `PICO_EXTRAS_PATH`. For example:

```bash

export PICO_SDK_PATH=~/pico-sdk

export PICO_EXTRAS_PATH=~/pico-extras

```

3. **Build the project:** After setting up your environment, navigate to the project directory in a terminal and run the provided build script:

```bash

./build.sh

```

This script should compile the project and produce a UF2 firmware file that you can flash onto your Pico W.

4. **Debug serial input/output:** You can use UART to view the debug output. Connect GPIO 0 and GPIO 1 as TX and RX. To enable BTstack's serial input, uncomment `HAVE_BTSTACK_STDIN` in `btstack_config.h`.
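
Before running the build script, it can help to confirm that the two environment variables from step 2 are set and point at real directories. The following is a minimal sketch; `check_dir_var` and `check_pico_env` are hypothetical helper names and are not part of this project.

```bash
# Report whether a named variable holds the path of an existing directory.
check_dir_var() {
  local name="$1" value="$2"
  if [ -z "$value" ]; then
    echo "missing: $name is not set" >&2
    return 1
  fi
  if [ ! -d "$value" ]; then
    echo "missing: $name points to a non-existent directory: $value" >&2
    return 1
  fi
}

# Check both variables so that every problem is reported in one run.
check_pico_env() {
  local status=0
  check_dir_var PICO_SDK_PATH "${PICO_SDK_PATH:-}" || status=1
  check_dir_var PICO_EXTRAS_PATH "${PICO_EXTRAS_PATH:-}" || status=1
  return "$status"
}
```

A possible use is `check_pico_env && ./build.sh`, so the build only starts once both paths resolve.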

## Acknowledgments

This project wouldn't have been possible without the foundational work provided by the following projects:

1. [usb-sound-card](https://github.com/raspberrypi/pico-playground/tree/master/apps/usb_sound_card): It served as a valuable reference for handling USB audio data with the Raspberry Pi Pico.

2. [a2dp_source_demo](https://github.com/bluekitchen/btstack/blob/master/example/a2dp_source_demo.c): The Advanced Audio Distribution Profile (A2DP) source demo provided by the BTstack.

## License

This project is licensed under the terms of the Apache License 2.0.

|

tomquirk/threads-api

|

https://github.com/tomquirk/threads-api

|

Threads by Instagram API for Python

|

# threads-api

Threads by Instagram API for Python

Just chill. Coming soon

|

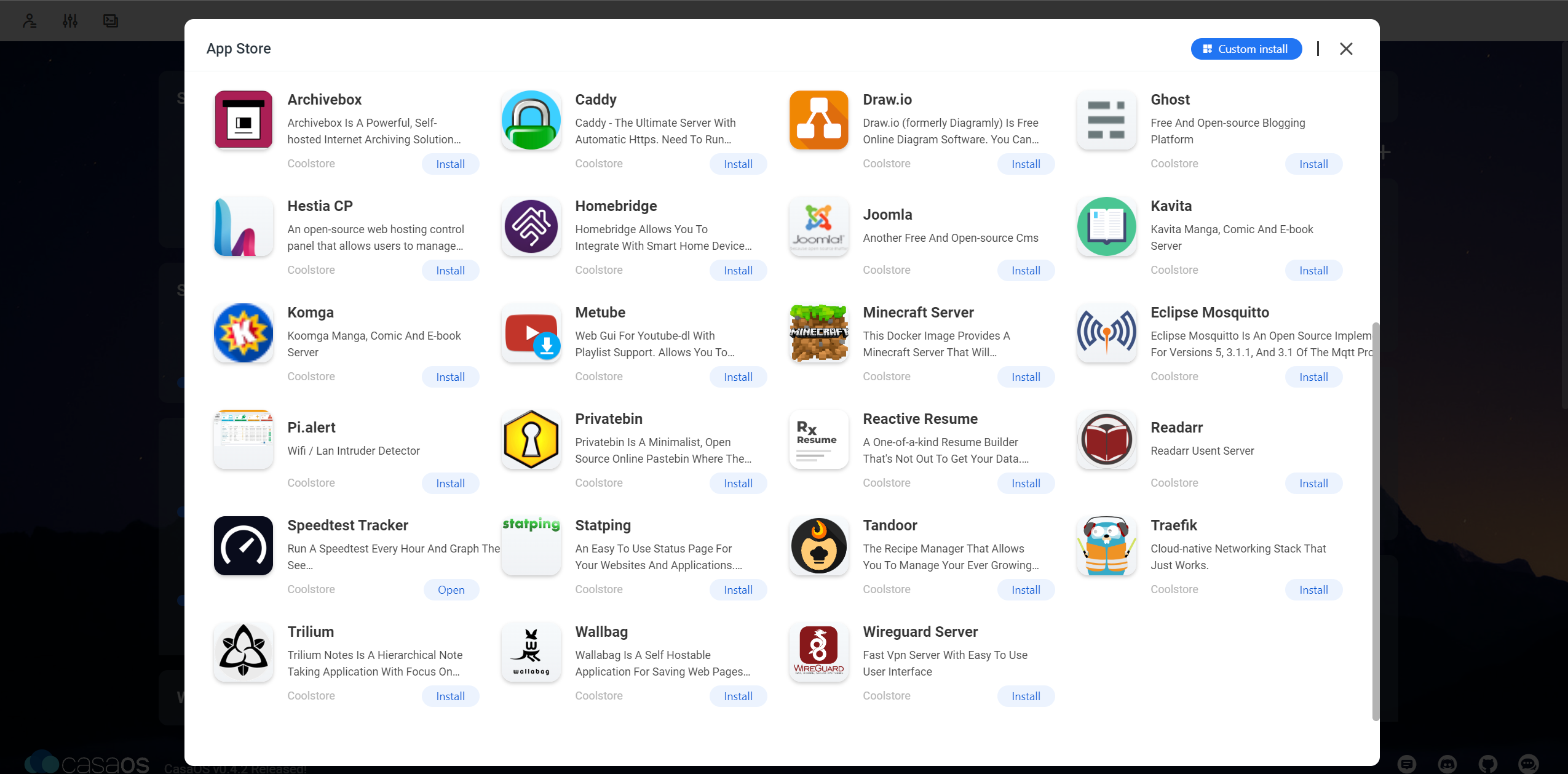

WisdomSky/CasaOS-Coolstore

|

https://github.com/WisdomSky/CasaOS-Coolstore

|

Just another CasaOS third-party Appstore with ❄Cool❄ apps.

|

# CasaOS Coolstore

Just a cool appstore. ⛄

---

#### ☕ Treat WisdomSky to a cup of coffee...

[Donate via PayPal](https://www.paypal.com/cgi-bin/webscr?cmd=_s-xclick&hosted_button_id=RYAFEWHVZCZ62)

---

## 📃 Table of Contents

- [Introduction](#-introduction)

- [Installation](#-installation)

- [List of Applications](#-list-of-applications)

- [Frequently Asked Questions / FAQs](#-frequently-asked-questions)

- [How to Upgrade CasaOS](#-how-to-upgrade-casaos)

- ["Error 404: Not Found" during install](#-error-404-not-found-during-install)

- [How to uninstall the CasaOS Coolstore](#-how-to-uninstall-the-casaos-coolstore)

- [Request an App](#-request-an-app)

- [Contributing](#-contributing)

---

## 🔥 Introduction

Just another CasaOS third-party Appstore with ❄**Cool**❄ apps.

---

## ✅ Installation

Run the following command to install the appstore:

```bash

casaos-cli app-management register app-store https://casaos-appstore.paodayag.dev/coolstore.zip

```

> **NOTE: Custom Appstore is only supported on CasaOS version [0.4.4](https://blog.casaos.io/blog/23.html) and above. How to upgrade? [Click here](#-how-to-upgrade-casaos)**

---

## 🛠 List of Applications

| Application | Version | Description |

| --- | --- | --- |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/archivebox.png" width="15"/> [Archivebox](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/archivebox) | undefined | Archivebox Is A Powerful, Self-hosted Internet Archiving Solution To Collect, Save, And View Sites You Want To Preserve Offline. |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/caddy.png" width="15"/> [Caddy](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/caddy) | 2.7 | Caddy - The Ultimate Server With Automatic Https. Need To Run Tools/install_caddy.sh Before Installing The Template. |

| <img src="https://cdn.jsdelivr.net/gh/WisdomSky/CasaOS-Coolstore@main/Apps/casaos-cloudflared/icon.png" width="15"/> [Cloudflared](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/casaos-cloudflared) | 2023.7.3 | A tunneling daemon by Cloudflare that safely exposes your web servers into the internet. |

| <img src="https://raw.githubusercontent.com/jgraph/drawio/dev/src/main/webapp/images/logo.png" width="15"/> [Draw.io](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/draw.io) | undefined | Draw.io (formerly Diagramly) Is Free Online Diagram Software. You Can Use It As A Flowchart Maker, Network Diagram Software, To Create Uml Online, As An Er Diagram Tool, To Design Database Schema, To Build Bpmn Online, As A Circuit Diagram Maker, And More. Draw.io Can Import .vsdx, Gliffy™ And Lucidchart™ Files. |

| <img src="https://portainer-io-assets.sfo2.digitaloceanspaces.com/logos/ghost.png" width="15"/> [Ghost](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/ghost) | undefined | Free And Open-source Blogging Platform |

| <img src="https://raw.githubusercontent.com/hestiacp/hestiacp/main/web/images/logo.png" width="15"/> [Hestia CP](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/hestiacp) | undefined | An open-source web hosting control panel that allows users to manage their websites, domains, email accounts, databases, and more. It provides a user-friendly interface for managing web hosting environments and simplifies various administrative tasks. |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/homebridge.png" width="15"/> [Homebridge](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/homebridge) | undefined | Homebridge Allows You To Integrate With Smart Home Devices That Do Not Natively Support Homekit. There Are Over 2,000 Homebridge Plugins Supporting Thousands Of Different Smart Accessories. |

| <img src="https://immich.app/img/logo.png" width="15"/> [Immich](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/immich) | v1.68.0 | Self-hosted photos and videos backup tool |

| <img src="https://portainer-io-assets.sfo2.digitaloceanspaces.com/logos/joomla.png" width="15"/> [Joomla](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/joomla) | undefined | Another Free And Open-source Cms |

| <img src="https://raw.githubusercontent.com/Kareadita/Kavita/main/UI/Web/src/assets/images/logo.png" width="15"/> [Kavita](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/kavita) | undefined | Kavita Manga, Comic And E-book Server |

| <img src="https://raw.githubusercontent.com/gotson/komga/master/.github/readme-images/app-icon.png" width="15"/> [Komga](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/komga) | undefined | Komga Manga, Comic And E-book Server |

| <img src="https://raw.githubusercontent.com/SelfhostedPro/selfhosted_templates/master/Images/ytdlm.png" width="15"/> [Metube](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/metube-youtubedl) | undefined | Web Gui For Youtube-dl With Playlist Support. Allows You To Download Videos From Youtube And Dozens Of Other Sites (https://ytdl-org.github.io/youtube-dl/supportedsites.html). |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/minecraft.png" width="15"/> [Minecraft Server](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/minecraft) | undefined | This Docker Image Provides A Minecraft Server That Will Automatically Download The Latest Stable Version At Startup. You Can Also Run/upgrade To Any Specific Version Or The Latest Snapshot. See The Versions Section Below For More Information. |

| <img src="https://raw.githubusercontent.com/docker-library/docs/757578e3a44e5460a8a11d32a81776f8b74231a9/eclipse-mosquitto/logo.png" width="15"/> [Eclipse Mosquitto](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/mosquitto) | undefined | Eclipse Mosquitto Is An Open Source Implementation Of A Server For Versions 5, 3.1.1, And 3.1 Of The Mqtt Protocol. You Will Need To Create /portainer/files/appdata/config/mosquitto/config/mosquitto.conf And, If Needed, Your Password File. Have A Look At https://mosquitto.org/man/mosquitto_passwd-1.html |

| <img src="https://raw.githubusercontent.com/pucherot/Pi.Alert/main/docs/img/1_devices.jpg" width="15"/> [Pi.alert](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/pi-alert) | undefined | Wifi / Lan Intruder Detector |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/privatebin.png" width="15"/> [Privatebin](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/privatebin) | undefined | Privatebin Is A Minimalist, Open Source Online Pastebin Where The Server Has Zero Knowledge Of Pasted Data! |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/reactiveresume.png" width="15"/> [Reactive Resume](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/reactive-resume) | undefined | A One-of-a-kind Resume Builder That's Not Out To Get Your Data. Completely Secure, Customizable, Portable, Open-source And Free Forever. |

| <img src="https://raw.githubusercontent.com/Readarr/Readarr/develop/Logo/128.png" width="15"/> [Readarr](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/readerr) | nightly-0.3.1.2095 | Readarr Usenet Server |

| <img src="https://static.cdnlogo.com/logos/r/32/rocket-chat.svg" width="15"/> [Rocket.Chat](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/rocket-chat) | undefined | Rocket.Chat is a customizable open source communications platform for organizations with high standards of data protection. |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/speedtest-tracker.png" width="15"/> [Speedtest Tracker](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/speedtest-tracker) | undefined | Run A Speedtest Every Hour And Graph The Results. See Https://hub.docker.com/r/henrywhitaker3/speedtest-tracker/tags For Arch Options |

| <img src="https://raw.githubusercontent.com/xneo1/portainer_templates/master/Images/statping.png" width="15"/> [Statping](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/statping) | undefined | An Easy To Use Status Page For Your Websites And Applications. Statping Will Automatically Fetch The Application And Render A Beautiful Status Page With Tons Of Features For You To Build An Even Better Status Page. |

| <img src="https://docs.tandoor.dev/logo_color.svg" width="15"/> [Tandoor](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/tandoor) | undefined | The Recipe Manager That Allows You To Manage Your Ever Growing Collection Of Digital Recipes. |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/traefik.png" width="15"/> [Traefik](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/traefik) | undefined | Cloud-native Networking Stack That Just Works. |

| <img src="https://www.saashub.com/images/app/service_logos/55/2901389fab77/large.png?1561117248" width="15"/> [Trilium](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/trilium) | undefined | Trilium Notes Is A Hierarchical Note Taking Application With Focus On Building Large Personal Knowledge Bases |

| <img src="https://raw.githubusercontent.com/jake-walker/selfhosted_templates/wallabag/Images/wallabag.png" width="15"/> [Wallabag](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/wallabag) | undefined | Wallabag Is A Self Hostable Application For Saving Web Pages: Save And Classify Articles. Read Them Later. Freely. |

| <img src="https://raw.githubusercontent.com/pi-hosted/pi-hosted/master/images/wireguard.png" width="15"/> [Wireguard Server](https://github.com/WisdomSky/CasaOS-Coolstore/tree/main/Apps/wg-easy) | 7 | Fast Vpn Server With Easy To Use User Interface |

## 💡 Frequently Asked Questions

### 👉 How to Upgrade CasaOS

Run the following command:

    curl -fsSL https://get.casaos.io/update/v0.4.4-alpha | sudo bash

### 👉 Error 404 Not Found during install

This can happen when CasaOS is running on a port other than the default port `80`. Add the `-u` flag at the end to tell the command which port CasaOS is running on:

```bash

casaos-cli app-management register app-store https://casaos-appstore.paodayag.dev/coolstore.zip -u "localhost:<my-casa-os-port>"

```

Replace `<my-casa-os-port>` with the port where your CasaOS is running. For example, if CasaOS is running on port 99:

```bash

casaos-cli app-management register app-store https://casaos-appstore.paodayag.dev/coolstore.zip -u "localhost:99"

```
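
The choice between the two forms of the command can be sketched as a small helper that only prints the command for a given port, so it can be reviewed before it is run. `coolstore_register_cmd` is a hypothetical name used for illustration; the command text itself is taken from the examples above.

```bash
COOLSTORE_URL="https://casaos-appstore.paodayag.dev/coolstore.zip"

# Print the register command for the given CasaOS port (default: 80).
# The -u flag is only appended when the port differs from the default.
coolstore_register_cmd() {
  local port="${1:-80}"
  case "$port" in
    *[!0-9]*) echo "error: port must be a number: $port" >&2; return 1 ;;
  esac
  if [ "$port" = "80" ]; then
    echo "casaos-cli app-management register app-store $COOLSTORE_URL"
  else
    echo "casaos-cli app-management register app-store $COOLSTORE_URL -u \"localhost:$port\""
  fi
}
```

Calling `coolstore_register_cmd 99` prints the same command as the port 99 example above; the helper never executes `casaos-cli` itself.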

### 👉 How to uninstall the CasaOS Coolstore

Get the assigned ID of the Coolstore:

    casaos-cli app-management list app-stores

Unregister the CasaOS Coolstore:

    casaos-cli app-management unregister app-store <coolstore-id>

> NOTE: Replace `<coolstore-id>` with the corresponding ID of the CasaOS Coolstore.

---

### 🎈 Request an App

You can request an app to be added into the list by submitting a request [here](https://github.com/WisdomSky/CasaOS-Coolstore/issues/new?assignees=&labels=app-request&projects=&template=app-request.md&title=%5BApp+Request%5D+%3CApp+Name+Here%3E).

---

### 💙 Contributing

> ☠ WARNING: Please refrain from submitting PRs into this repository.

You can contribute changes or updates to this Appstore through [CasaOS-Cool-Appstore-Generator](https://github.com/WisdomSky/CasaOS-Cool-Appstore-Generator).

|

PABannier/bark.cpp

|

https://github.com/PABannier/bark.cpp

|

Port of Suno AI's Bark in C/C++ for fast inference

|

# bark.cpp (coming soon!)

Inference of SunoAI's bark model in pure C/C++ using [ggml](https://github.com/ggerganov/ggml).

## Description

The main goal of `bark.cpp` is to synthesize audio from a textual input with the [Bark](https://github.com/suno-ai/bark) model.

Bark has essentially 4 components:

- [x] Semantic model to encode the text input

- [x] Coarse model

- [x] Fine model

- [ ] Encoder (quantizer + decoder) to generate the waveform from the tokens

## Roadmap

- [ ] Quantization

- [ ] FP16

- [ ] Swift package for iOS devices

|

Overdrive141/vite-shadcnui-boilerplate

|

https://github.com/Overdrive141/vite-shadcnui-boilerplate

|

A boilerplate React Project with ShadcnUI, Tailwind CSS, powered by Vite that supports both JS & TS.

|

<h1 align="center">Vite React Project Boilerplate (TS/JS) (ShadcnUI & Tailwind)</h1>

<p>

<img alt="Version" src="https://img.shields.io/badge/version-0.0.0-blue.svg?cacheSeconds=2592000" />

<a href="#" target="_blank">

<img alt="License: MIT" src="https://img.shields.io/badge/License-MIT-yellow.svg" />

</a>

<a href="https://twitter.com/FarhanSoftware" target="_blank">

<img alt="Twitter: FarhanSoftware" src="https://img.shields.io/twitter/follow/FarhanSoftware.svg?style=social" />

</a>

</p>

## Main configurations

- React 18 that supports both TypeScript & JavaScript out of the box

- You can use absolute imports, using `@` as `src` directory

- Support for [ShadcnUI](https://ui.shadcn.com) & Tailwind CSS

## Usage

Install the dependencies:

```sh

yarn install   # or: npm install

```

Run dev server:

```sh

yarn dev   # or: npm run dev

```

## Production version

To generate the production version, you can run:

```sh

yarn build   # or: npm run build

```

All the files you need to deploy will be located in the `dist` directory.
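
Since every command above works with either yarn or npm, a script can pick one automatically. The sketch below assumes the usual convention that a `yarn.lock` file marks a yarn-managed project and falls back to npm otherwise; `pick_pkg_manager` is a hypothetical helper name, and which lockfile this boilerplate actually ships is not stated here.

```bash
# Print "yarn" if the project directory contains a yarn.lock, else "npm".
pick_pkg_manager() {
  local project_dir="${1:-.}"
  if [ -f "$project_dir/yarn.lock" ]; then
    echo "yarn"
  else
    echo "npm"
  fi
}
```

Because both tools accept the `run <script>` form, `"$(pick_pkg_manager)" run build` should produce the production build with whichever tool was selected.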

## Author

👤 **Farhan H**

* Website: https://farhanh.dev

* Twitter: [@FarhanSoftware](https://twitter.com/FarhanSoftware)

## 🤝 Contributing

Contributions, issues and feature requests are welcome!<br />Feel free to report them on the issues page.

|

ManeraKai/fediredirect

|

https://github.com/ManeraKai/fediredirect

| null |

# FediRedirect

A browser extension that redirects you from a fediverse instance to your favorite instance. Here's a [demo](https://youtu.be/96wBlCna8I4).

<a href="https://addons.mozilla.org/firefox/addon/fediredirect/">

<img src ="assets/badge-amo.png" height=60 >

</a>

It currently supports:

- [Mastodon](https://joinmastodon.org)

- [Soapbox](https://soapbox.pub)

- [Lemmy](https://join-lemmy.org)

- [Calckey](https://calckey.org)

- [Peertube](https://joinpeertube.org)

- [Pleroma](https://pleroma.social)

|

StepL7/interpark-ticket

|

https://github.com/StepL7/interpark-ticket

|

Python ticket-grabbing script

|

# interpark-ticket

A Python ticket-grabbing script.

The code is currently quite customized: it only grabs tickets for Seventeen.

|

keep-starknet-strange/madara-infra

|

https://github.com/keep-starknet-strange/madara-infra

|

Infrastructure related stuff for Madara.

|

<div align="center">

<img src="images/readme-header.png" height="256">

<h2>🏗 Madara: Infrastructure</h2>

<h6>Main repository for handling the infrastructure efforts for Madara</h6>

<a href="https://github.com/keep-starknet-strange/madara">

<img src="https://img.shields.io/badge/Official%20Repository-Madara-red"/>

</a>

</div>

## Table of Contents

- [Disclaimer](#disclaimer)

- [Getting Started](#getting-started)

- [Dependencies](#dependencies)

- [Local Testnet](#local-testnet)

- [Docker/Kubernetes](#docker-kubernetes)

- [Ansible](#ansible)

- [Terraform](#terraform)

- [Scripts](#scripts)

- [Starknet Stack](#starknet-stack)

- [Apibara](/starknet-stack#apibara)

- [Kakarot](/starknet-stack#kakarot)

- [Madara](/starknet-stack#madara)

- [Madara App](/starknet-stack#madara-app)

- [Madara Explorer](/starknet-stack#madara-explorer)

- [Madara Swap](/starknet-stack#madara-swap)

- [Madara Telemetry](/starknet-stack#telemetry)

- [Starkcet](/starknet-stack#starkcet)

- [Starken](/starknet-stack#starken)

- [Starksheet](/starknet-stack#starksheet)

## ⚠️ Disclaimer

Hey there! Just a heads-up: while we've put in the effort, we can't promise everything here's perfect. Using the tools or guides in this repo? Be aware they can deploy or undeploy things automatically, which might slap extra charges on your cloud bills. Always double-check your actions, and remember to keep backups. Dive in at your own risk!

## 🚀 Getting Started

Welcome to the Madara: Infrastructure repository! If you're keen to set up, manage, or fiddle with Madara's infrastructure, you're in the right place. This guide will walk you through the initial steps to get up and running. Before diving into the deep end, ensure you've got the necessary tools and permissions.

### Dependencies

1. Git: This one's a no-brainer. You'll need Git to clone and manage versions of this repository.

2. Docker: We use containerization a lot here, so ensure Docker's installed and ready.

3. Extra Tools: Some deployments might need specific CLI tools, like Ansible or Kubernetes. Keep them handy!

📌 Note: This is just a starter pack. As you dive into specific sections, there might be more tools or configurations needed. Always refer to the relevant documentation.

## Local Testnet

## Docker/Kubernetes

## Ansible

## Terraform

## Scripts

## Starknet Stack

Starknet, at its core, is a robust and dynamic ecosystem. The stack we've assembled here is a collection of services and tools designed to synergize and empower your Madara endeavors. You can check more information on the [Starknet Stack README](/starknet-stack).

|

msdirtbag/mcspattack

|

https://github.com/msdirtbag/mcspattack

|

ATT&CK Navigator Layer for Microsoft Cloud Security Platforms

|

# mcspattack

ATT&CK Navigator Layer for Microsoft Cloud Security Platforms

|

oskarek/swift-json-parsing

|

https://github.com/oskarek/swift-json-parsing

|

JSON decoding and encoding, using the swift-parsing library

|

# JSONParsing

A library for decoding and encoding json, built on top of @pointfreeco's [Parsing](https://github.com/pointfreeco/swift-parsing) library.

* [Introduction](#introduction)

* [Quick start](#quick-start)

* [Motivation - why not use Codable?](#motivation---why-not-use-codable)

* [The `JSONValue` type](#the-jsonvalue-type)

* [The JSON parsers](#the-json-parsers)

* [Null](#null)

* [JSONBoolean](#jsonboolean)

* [JSONNumber](#jsonnumber)

* [JSONString](#jsonstring)

* [JSONArray](#jsonarray)

* [JSONObject](#jsonobject)

* [Field](#field)

* [OptionalField](#optionalfield)

* [Integration with Codable](#integration-with-codable)

* [Benchmarks](#benchmarks)

* [Installation](#installation)

---

## Introduction

As mentioned above, this library is built using the [Parsing](https://github.com/pointfreeco/swift-parsing) library, which is a library that provides a consistent story for writing _parsing_ code in Swift, that is, code that turns some _unstructured data_ into more _structured data_. You do that by constructing _parsers_ that are generic over both the (unstructured) _input_ and the (structured) _output_. What's really great is the fact that these parsers can be made _invertible_ (or bidirectional), meaning they can also turn structured data _back_ into unstructured data, referred to as _printing_.

The *JSONParsing* library provides predefined parsers tuned specifically for when the _input is json_, giving you a convenient way of writing parsers capable of parsing (decoding) and printing (encoding) json. This style of dealing with json has a number of benefits compared to the *Codable* abstraction. More about that in the [Motivation](#motivation---why-not-use-codable) section.

## Quick start

Let's see what it looks like to decode and encode json data using this library. Imagine, for example, you have json describing a movie:

```swift

let json = """

{

"title": "Interstellar",

"release_year": 2014,

"director": "Christopher Nolan",

"stars": [

"Matthew McConaughey",

"Anne Hathaway",

"Jessica Chastain"

],

"poster_url": "https://www.themoviedb.org/t/p/w1280/gEU2QniE6E77NI6lCU6MxlNBvIx.jpg",

"added_to_favorites": true

}

""".data(using: .utf8)!

```

First, we define a corresponding `Movie` type:

```swift

struct Movie {

let title: String

let releaseYear: Int

let director: String

let stars: [String]

let posterUrl: URL?

let addedToFavorites: Bool

}

```

Then, we can create a _JSON parser_, to handle the decoding of the json into this new data type:

```swift

extension Movie {

static var jsonParser: some JSONParserPrinter<Self> {

ParsePrint(.memberwise(Self.init)) {

Field("title") { String.jsonParser() }

Field("release_year") { Int.jsonParser() }

Field("director") { String.jsonParser() }

Field("stars") {

JSONArray { String.jsonParser() }

}

OptionalField("poster_url") { URL.jsonParser() }

Field("added_to_favorites") { Bool.jsonParser() }

}

}

}

```

Now, the `Movie.jsonParser` can be used to decode json data into `Movie` instances:

```swift

let decodedMovie = try Movie.jsonParser.decode(json)

print(decodedMovie)

// Movie(title: "Interstellar", releaseYear: 2014, director: "Christopher Nolan", stars: ["Matthew McConaughey", "Anne Hathaway", "Jessica Chastain"], posterUrl: Optional(https://www.themoviedb.org/t/p/w1280/gEU2QniE6E77NI6lCU6MxlNBvIx.jpg), addedToFavorites: true)

```

But what's even cooler is that the very same parser, without any extra work, can also be used to _encode_ movie values into json:

```swift

let jokerMovie = Movie(

title: "Joker",

releaseYear: 2019,

director: "Todd Phillips",

stars: ["Joaquin Phoenix", "Robert De Niro"],

posterUrl: URL(string: "https://www.themoviedb.org/t/p/w1280/udDclJoHjfjb8Ekgsd4FDteOkCU.jpg")!,

addedToFavorites: true

)

let jokerJson = try Movie.jsonParser.encode(jokerMovie)

print(String(data: jokerJson, encoding: .utf8)!)

// {"added_to_favorites":true,"director":"Todd Phillips","poster_url":"https://www.themoviedb.org/t/p/w1280/udDclJoHjfjb8Ekgsd4FDteOkCU.jpg","release_year":2019,"stars":["Joaquin Phoenix","Robert De Niro"],"title":"Joker"}

```

More information about the building blocks for constructing the JSON parsers can be found under the [The JSON parsers](#the-json-parsers) section.

## Motivation - why not use Codable?

The default way to work with JSON in Swift is with Apple's own Codable framework. While it is a powerful abstraction, it does have some drawbacks and limitations. Let's explore some of them and see how the JSONParsing library addresses these issues.

### More than one JSON representation

One limitation of the Codable framework is that any given type can only have _one_ way of being represented as JSON. To work around this limitation, one common approach is to introduce wrapper types that wrap a value of the result type and have a custom Decodable implementation. Then, when decoding the type, you first decode to the wrapper type and then extract the underlying value. While this approach works, it's cumbersome to introduce a new type solely for handling JSON decoding. Moreover, the wrapper type needs to be explicitly used whenever you want to decode to the underlying type with that specific decoding strategy.

As an example, let's consider the following type representing an RGB color:

```swift

struct RGBColor {

let red: Int

let green: Int

let blue: Int

}

```

What would be the corresponding json representation for this type? Would it be something like:

```json

{

"red": 205,

"green": 99,

"blue": 138

}

```

Or perhaps:

```json

"205,99,138"

```

The truth is, both representations are reasonable (as well as many other possibilities), and it's possible that you might have one API endpoint returning RGB colors in the first format, and another in the second format. But when using Codable, you would have to choose one of the formats to be the one used for the `RGBColor` type. To handle both variants, you would have to define two separate types, something like `RGBColorWithObjectRepresentation` and `RGBColorWithStringRepresentation`, and conform both of them to `Codable`, with the different decoding/encoding strategies.

Using the *JSONParsing* library, you can easily just create two separate parsers, one for each alternative:

```swift

extension RGBColor {

static var jsonParserForObjectRepresentation: some JSONParserPrinter<Self> {

ParsePrint(.memberwise(Self.init)) {

Field("red") { Int.jsonParser() }

Field("green") { Int.jsonParser() }

Field("blue") { Int.jsonParser() }

}

}

static var jsonParserForStringRepresentation: some JSONParserPrinter<Self> {

ParsePrint(.memberwise(Self.init)) {

JSONString {

Int.parser()

","

Int.parser()

","

Int.parser()

}

}

}

}

```

And now you can use whichever suits best in the given situation:

```swift

// in one place in the app

let colorJson1 = """

{

"red": 205,

"green": 99,

"blue": 138

}

""".data(using: .utf8)!

// decode

let color1 = try RGBColor.jsonParserForObjectRepresentation.decode(colorJson1)

print(color1)

// RGBColor(red: 205, green: 99, blue: 138)

// encode

let newColorJson1 = try RGBColor.jsonParserForObjectRepresentation.encode(color1)

print(String(data: newColorJson1, encoding: .utf8)!)

// {"blue":138,"green":99,"red":205}

// in another place in the app

let colorJson2 = """

"55,190,25"

""".data(using: .utf8)!

// decode

let color2 = try RGBColor.jsonParserForStringRepresentation.decode(colorJson2)

print(color2)

// RGBColor(red: 55, green: 190, blue: 25)

// encode

let newColorJson2 = try RGBColor.jsonParserForStringRepresentation.encode(color2)

print(String(data: newColorJson2, encoding: .utf8)!)

// "55,190,25"

```

If you want, you could even define a configurable function, dealing with both variants in the same place:

```swift

extension RGBColor {

static func jsonParser(useStringRepresentation: Bool = false) -> some JSONParserPrinter<Self> {

ParsePrint(.memberwise(Self.init)) {

if useStringRepresentation {

JSONString {

Int.parser()

","

Int.parser()

","

Int.parser()

}

} else {

Field("red") { Int.jsonParser() }

Field("green") { Int.jsonParser() }

Field("blue") { Int.jsonParser() }

}

}

}

}

try RGBColor.jsonParser(useStringRepresentation: false).decode(colorJson1)

// RGBColor(red: 205, green: 99, blue: 138)

try RGBColor.jsonParser(useStringRepresentation: true).decode(colorJson2)

// RGBColor(red: 55, green: 190, blue: 25)

```

#### The `Date` type

Perhaps the most common way to run into the limitation of a type only being able to have one single `Codable` conformance, is when dealing with the `Date` type. In fact, it's so common, that the Codable framework even provides a special way of managing how `Date` types are decoded/encoded, through the `dateDecodingStrategy`/`dateEncodingStrategy` properties available on `JSONDecoder` and `JSONEncoder`, respectively. While this does work, it's a little weird to have special handling for _one_ specific type, that looks nothing like how you deal with all the other types. Also, having the configuration on the Encoder/Decoder types, means you can't have more than one date format in the same json object.

With *JSONParsing* on the other hand, the `Date` type doesn't have to be handled as an exception. We saw above with the `RGBColor` type, that we can just create a parser that matches the required representation that is used in the JSON API. The library also extends the `Date` type with a static `jsonParser(formatter:)` method, which allows constructing a json parser that decodes/encodes dates according to a given `DateFormatter`:

```swift

let json = """

{

"date1": "1998-11-20",

"date2": "2021-06-01T13:09:09Z"

}

""".data(using: .utf8)!

struct MyType {

let date1: Date

let date2: Date

}

let basicFormatter = DateFormatter()

basicFormatter.dateFormat = "yyyy-MM-dd"

let isoFormatter = DateFormatter()

isoFormatter.dateFormat = "yyyy-MM-dd'T'HH':'mm':'ss'Z'"

extension MyType {

static var jsonParser: some JSONParserPrinter<Self> {

ParsePrint(.memberwise(Self.init)) {

Field("date1") { Date.jsonParser(formatter: basicFormatter) }

Field("date2") { Date.jsonParser(formatter: isoFormatter) }

}

}

}

let parsedValue = try MyType.jsonParser.decode(json)

print(parsedValue)

// MyType(date1: 1998-11-20 00:00:00 +0000, date2: 2021-06-01 13:09:09 +0000)

let encodedJson = try MyType.jsonParser.encode(parsedValue)

print(String(data: encodedJson, encoding: .utf8)!)

// {"date1":"1998-11-20","date2":"2021-06-01T13:09:09Z"}

```

### Decoding and encoding logic out of sync

Codable has the really cool feature of being able to automatically synthesize the decoding and encoding implementations for Swift types, thanks to integration with the Swift compiler. Unfortunately, in practice, the automatically synthesized implementations will often not be correct for your use case, because they assume that your json data and your Swift data types _exactly_ match each other in structure. This will often not be the case, for various reasons. First, you might be dealing with JSON APIs that you don't own yourself and that therefore might deliver data in a format that isn't ideal for your use case. But even if you do own the API code, it might be used by multiple platforms, which means you can't tailor it specifically to work perfectly with your Swift code. Also, Swift has some features, such as enums, that simply _can't_ be expressed equivalently in json.

So in practice, when using Codable, you will often have to implement the decoding and encoding logic manually. And the problem in that situation, is that they have to be implemented _separately_. This means that, whenever the expected json format changes in any way, you have to remember to update both the `init(from:)` (decoding) and the `encode(to:)` (encoding) implementations accordingly.

With *JSONParsing* on the other hand, you can write a single json parser that can take care of both the decoding and the encoding (as was shown in the [Quick start](#quick-start) section). What this means is that you are guaranteed to always have the two transformations kept in sync as your json API evolves.
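
To make the risk concrete, here is a minimal sketch of how hand-written Codable implementations can drift apart. The `User` type and its key names are hypothetical and only serve as an illustration: the decoder was updated to read `"full_name"`, but the encoder still writes `"fullName"`, and the compiler accepts both.

```swift
import Foundation

// Hypothetical type whose two Codable halves have drifted apart.
struct User: Codable, Equatable {
  var fullName: String

  enum DecodingKeys: String, CodingKey { case fullName = "full_name" }
  enum EncodingKeys: String, CodingKey { case fullName = "fullName" }

  init(fullName: String) { self.fullName = fullName }

  // Decoding expects the key "full_name" ...
  init(from decoder: Decoder) throws {
    let container = try decoder.container(keyedBy: DecodingKeys.self)
    fullName = try container.decode(String.self, forKey: .fullName)
  }

  // ... but encoding still writes the key "fullName".
  func encode(to encoder: Encoder) throws {
    var container = encoder.container(keyedBy: EncodingKeys.self)
    try container.encode(fullName, forKey: .fullName)
  }
}

// Encodes a value and immediately decodes the result again.
func roundTrip(_ user: User) throws -> User {
  let data = try JSONEncoder().encode(user)
  return try JSONDecoder().decode(User.self, from: data)
}

// The round trip throws, because the decoder cannot find "full_name".
let result = try? roundTrip(User(fullName: "Ada Lovelace"))
```

Nothing flags the mismatch at compile time; it only surfaces at runtime, when the type fails to decode its own output.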

### Custom String parsing

Recall how we previously defined a json parser for the `RGBColor` type, where the json representation was a comma separated string. It looked like this:

```swift
extension RGBColor {
  static var jsonParserForStringRepresentation: some JSONParserPrinter<Self> {
    ParsePrint(.memberwise(Self.init)) {
      JSONString {
        Int.parser()
        ","
        Int.parser()
        ","
        Int.parser()
      }
    }
  }
}

let colorJson = """
"55,190,25"
""".data(using: .utf8)!

let color = try RGBColor.jsonParserForStringRepresentation.decode(colorJson)
print(color)
// RGBColor(red: 55, green: 190, blue: 25)

let newColorJson = try RGBColor.jsonParserForStringRepresentation.encode(color)
print(String(data: newColorJson, encoding: .utf8)!)
// "55,190,25"
```

In that example, it was used to highlight the fact that we can handle different json representations for the same type. However, it actually also shows off _another_ great thing about the library, which is how its integration with the *Parsing* library makes it very convenient to deal with types whose json representation requires custom String transformations.

Let's try to accomplish the same thing using Codable:

```swift
extension RGBColor: Decodable {
  init(from decoder: Decoder) throws {
    let container = try decoder.singleValueContainer()
    let stringValue = try container.decode(String.self)
    self.red = ???
    self.green = ???
    self.blue = ???
  }
}
```

How do we get the rgb components from the decoded String? The Codable abstraction doesn't really provide a general answer to this. We could of course use the *Parsing* library here if we want:

```swift
extension RGBColor: Decodable {
  init(from decoder: Decoder) throws {
    let container = try decoder.singleValueContainer()
    let stringValue = try container.decode(String.self)
    self = try Parse(Self.init) {
      Int.parser()
      ","
      Int.parser()
      ","
      Int.parser()
    }
    .parse(stringValue)
  }
}
```

But it's not as seamlessly integrated into the rest of the code as it was in the *JSONParsing* example; for instance, we are forced to call the `parse` method manually. And again, this is only half of the equation: we still have to deal with the encoding, which has to be implemented on its own.
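
For completeness, here is a rough sketch of what both halves could look like with plain Codable and no parsing library at all. This is one possible hand-written approach, not code from the library; the `RGBColor` definition is repeated so the snippet stands on its own, and the `roundTrip` helper is hypothetical. Note that the string format is now spelled out twice, once for splitting and once for joining:

```swift
import Foundation

struct RGBColor: Equatable {
  let red: Int
  let green: Int
  let blue: Int
}

extension RGBColor: Codable {
  // Decoding: split the string on commas and convert each part to an Int.
  init(from decoder: Decoder) throws {
    let container = try decoder.singleValueContainer()
    let stringValue = try container.decode(String.self)
    let parts = stringValue.split(separator: ",", omittingEmptySubsequences: false)
    let values = parts.compactMap { Int($0) }
    guard parts.count == 3, values.count == 3 else {
      throw DecodingError.dataCorruptedError(
        in: container,
        debugDescription: "Expected \"red,green,blue\" but found \"\(stringValue)\"."
      )
    }
    self.init(red: values[0], green: values[1], blue: values[2])
  }

  // Encoding: rebuild the same comma-separated format by hand.
  func encode(to encoder: Encoder) throws {
    var container = encoder.singleValueContainer()
    try container.encode("\(red),\(green),\(blue)")
  }
}

// Decodes an array of colors and encodes it back to a JSON string.
func roundTrip(_ json: String) throws -> String {
  let colors = try JSONDecoder().decode([RGBColor].self, from: Data(json.utf8))
  let data = try JSONEncoder().encode(colors)
  return String(decoding: data, as: UTF8.self)
}

let output = try? roundTrip(#"["55,190,25"]"#)
```

If the separator or the component order ever changes, both `init(from:)` and `encode(to:)` have to be edited in step.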

### JSON with alternative representations

Imagine that you are working with an API that delivers a list of ingredients in the following format:

```swift
let ingredientsJson = """
[
  {
    "name": "milk",
    "amount": {
      "value": 2,
      "unit": "dl"
    }
  },
  {
    "name": "salt",
    "amount": "a pinch"
  }
]
""".data(using: .utf8)!
```

As you can see, the `amount` can _either_ be expressed as a combination of a value and a unit, _or_ a string. In Swift, this is most naturally represented using an enum:

```swift
struct Ingredient {
  enum Amount {
    case exact(value: Int, unit: String)
    case freetext(String)
  }

  let name: String
  let amount: Amount
}
```

In this situation, we cannot get a suitable synthesized conformance to `Codable` for the `Amount` type, so we have no choice but to implement the methods ourselves. Let's do the `Decodable` conformance:

```swift
extension Ingredient.Amount: Decodable {
  enum CodingKeys: CodingKey {
    case unit
    case value
  }

  init(from decoder: Decoder) throws {
    do {
      let container = try decoder.singleValueContainer()
      self = .freetext(try container.decode(String.self))
    } catch {
      let container = try decoder.container(keyedBy: CodingKeys.self)
      let value = try container.decode(Int.self, forKey: .value)
      let unit = try container.decode(String.self, forKey: .unit)
      self = .exact(value: value, unit: unit)
    }
  }
}
```

For the `Ingredient` type we can just use the automatically synthesized conformance:

```swift
extension Ingredient: Decodable {}
```

Now we can use a `JSONDecoder` to decode the `ingredientsJson` into a list of `Ingredient`:

```swift
let ingredients = try JSONDecoder().decode([Ingredient].self, from: ingredientsJson)
print(ingredients)
// [Ingredient(name: "milk", amount: Ingredient.Amount.exact(value: 2, unit: "dl")), Ingredient(name: "salt", amount: Ingredient.Amount.freetext("a pinch"))]
```

So that works. We did have to create an explicit `CodingKeys` type as well as two separate `containers` for handling the two cases, which is a little bit of extra boilerplate, but it's not too bad. But there is actually a more fundamental problem here. To see that, let's modify the json input like this:

```diff
 [
   ...
   {
     "name": "salt",
-    "amount": "a pinch"
+    "amount": 3
   }
 ]
 """.data(using: .utf8)!
```

So the amount is now just a number, which is not allowed. When we try to decode the list, we get an error:

```swift
do {
  let ingredients = try JSONDecoder().decode([Ingredient].self, from: ingredientsJson)
} catch {
  print(error)
  // typeMismatch(Swift.Dictionary<Swift.String, Any>, Swift.DecodingError.Context(codingPath: [_JSONKey(stringValue: "Index 1", intValue: 1), CodingKeys(stringValue: "amount", intValue: nil)], debugDescription: "Expected to decode Dictionary<String, Any> but found a number instead.", underlyingError: nil))
}
```

The error message isn't very easy to read, but hidden in there is the message: `"Expected to decode Dictionary<String, Any> but found a number instead."`. Judging by this error, it would seem that the only valid type of value for the `amount` field is a nested json object. But we know that there is actually a second valid option, namely a string. That information got lost when the error was created, because of our (arbitrary) choice in the `init(from:)` to _first_ try to decode the value as a string and then, if that fails, try the other alternative. If we had written it in the other order, our error message would instead have said `"Expected to decode String but found a number instead."`. Either way, we are missing the fact that we have _multiple valid choices_.
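
With plain Codable, the only way to mention both alternatives is to build the error by hand. The following is a rough sketch of that workaround, not a recommended pattern; it uses a simplified top-level `Amount` enum as a stand-in for `Ingredient.Amount`, and the wording of the error message and the `decodeAmounts` helper are made up for this example:

```swift
import Foundation

// Simplified stand-in for Ingredient.Amount.
enum Amount: Equatable {
  case exact(value: Int, unit: String)
  case freetext(String)
}

extension Amount: Decodable {
  enum CodingKeys: CodingKey { case unit, value }

  init(from decoder: Decoder) throws {
    // First alternative: a plain string.
    if let text = try? decoder.singleValueContainer().decode(String.self) {
      self = .freetext(text)
      return
    }
    // Second alternative: an object. Once we know it is an object,
    // errors about its individual fields are allowed to propagate.
    if let container = try? decoder.container(keyedBy: CodingKeys.self) {
      self = .exact(
        value: try container.decode(Int.self, forKey: .value),
        unit: try container.decode(String.self, forKey: .unit)
      )
      return
    }
    // Neither matched: describe both valid shapes in one hand-written error.
    throw DecodingError.typeMismatch(
      Amount.self,
      DecodingError.Context(
        codingPath: decoder.codingPath,
        debugDescription: "Expected a string or an object with \"value\" and \"unit\"."
      )
    )
  }
}

func decodeAmounts(_ json: String) throws -> [Amount] {
  try JSONDecoder().decode([Amount].self, from: Data(json.utf8))
}

var message = ""
do {
  _ = try decodeAmounts("[3]")
} catch let DecodingError.typeMismatch(_, context) {
  message = context.debugDescription
} catch {
  message = "\(error)"
}
```

This works, but the list of alternatives now lives in two places (the decoding branches and the error text), and every type with alternative representations needs the same treatment.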

So let's see how the JSONParsing library handles this kind of situation! Instead of conforming the types to `Decodable`, let's write _JSON parsers_ for them.

```swift
extension Ingredient.Amount {
  static var jsonParser: some JSONParserPrinter<Self> {
    OneOf {
      ParsePrint(.case(Self.exact)) {
        Field("value") { Int.jsonParser() }
        Field("unit") { String.jsonParser() }
      }

      ParsePrint(.case(Self.freetext)) {
        String.jsonParser()
      }
    }
  }
}

extension Ingredient {
  static var jsonParser: some JSONParserPrinter<Self> {
    ParsePrint(.memberwise(Self.init)) {
      Field("name") { String.jsonParser() }
      Field("amount") { Amount.jsonParser }
    }
  }
}
```

We make use of the `OneOf` parser from the *Parsing* library, which runs a number of parsers in order until one succeeds; if none of them succeeds, their errors are accumulated. Let's try decoding the same json as before, and see what is printed[^1]:

```swift
do {
  let ingredients = try JSONArray { Ingredient.jsonParser }.decode(ingredientsJson)
} catch {
  print(error)
  // At [index 1]/"amount":
  // error: multiple failures occurred
  //
  // error: Expected an object (containing the key "value"), but found:
  // 3
  //
  // Expected a string, but found:
  // 3
}
```

As you can see, _both_ possibilities are now mentioned in the printed error message. Also, as a bonus, the error message is _a lot_ easier to read.

This also serves as a glimpse at what printed errors look like when using this library. They always have basically the same layout as what you see above: a path describing where something went wrong, and then a more detailed description of _what_ went wrong. All in an easily readable format.