| full_name | url | description | readme |
|---|---|---|---|

kaixindelele/ChatSensitiveWords

|

https://github.com/kaixindelele/ChatSensitiveWords

|

Uses an LLM plus a sensitive-word lexicon to automatically determine whether text contains sensitive words.

|

# ChatSensitiveWords

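Uses an LLM plus a sensitive-word lexicon to automatically determine whether text contains sensitive words. It is already live on [chatpaper](https://chatwithpaper.org)!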

# Motivation:

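On [chatpaper](https://chatwithpaper.org), we provide the full functionality of [Academic GPT](https://github.com/binary-husky/gpt_academic) for free. It includes a hidden chat feature. We want to give everyone an academic chat interface, but we cannot stop people's curiosity, and a very small number of users deliberately attack our output.

We therefore had to ship sensitive-word detection urgently, targeting politically sensitive and pornographic terms in particular.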

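# Approach:

1. Use a traditional sensitive-word lexicon to check whether the input contains sensitive words.

2. If it does, let the chat model judge the semantics to catch false positives.

3. Sensitive-word detection on the model's output (TODO).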
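The two-stage check above can be sketched in Python as follows. This is a minimal illustration, not the repo's code. `find_sensitive_words`, `is_sensitive` and the `llm_judge` callback are hypothetical names; in practice the callback would wrap the chat-model call and its prompt. Plain substring matching keeps the sketch short. A large lexicon would usually be matched with a trie or an Aho-Corasick automaton instead.

```python
from typing import Callable, Iterable, List

# Hypothetical signature for the LLM step: it receives the text and the lexicon
# hits, and returns True if the model judges the text to be genuinely sensitive.
LLMJudge = Callable[[str, List[str]], bool]


def find_sensitive_words(text: str, lexicon: Iterable[str]) -> List[str]:
    """Stage 1: plain substring lookup against the sensitive-word lexicon."""
    return [word for word in lexicon if word and word in text]


def is_sensitive(text: str, lexicon: Iterable[str], llm_judge: LLMJudge) -> bool:
    """Stage 2: only call the (slower) LLM when the lexicon reports a hit."""
    hits = find_sensitive_words(text, lexicon)
    if not hits:
        return False  # fast path: no lexicon hit, so no LLM call
    # The LLM reviews the full context to filter out false positives
    return llm_judge(text, hits)
```

Because the LLM is only consulted when the lexicon reports a hit, inputs without any hit skip the slower second stage entirely.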

# Usage:

1. pip install -r requirements.txt

2. Replace the API key.

3. Check that the sensitive-word lexicon path is correct.

# Preliminary results:

<details><summary><code><b>Screenshots of some interesting cases</b></code></summary>

</details>

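# Time cost analysis:

Without sensitive words: about 0.2 s.

With sensitive words: about 0.2 + 0.8 s.

You are welcome to test and evaluate it locally.

**Please do NOT test it on our academic version!**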

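# Come and play with us

The traditional lexicon-based detection is not polished yet, and the chat prompt has not been fully tuned.

Stars and pull requests with new features are welcome!

# If this helps you build your own site, tips are welcome. Thanks for your support!

## Please don't package this and sell it. If you do, share some of the money with me...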

# Starchart

[](https://star-history.com/#kaixindelele/ChatSensitiveWords&Date)

## Contributors

<a href="https://github.com/kaixindelele/ChatSensitiveWords/graphs/contributors">

<img src="https://contrib.rocks/image?repo=kaixindelele/ChatSensitiveWords" />

</a>

## Citation:

Please cite the repo if you use the data or code in this repo.

```

@misc{ChatPaper,

author={Yongle Luo},

title = {ChatSensitiveWords: Use LLM and sensitive word library to check sensitive input text.},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/kaixindelele/ChatSensitiveWords}},

}

```

|

Xposed-Modules-Repo/com.unexpected.th

|

https://github.com/Xposed-Modules-Repo/com.unexpected.th

|

Telegram Helper

|

# Telegram Helper

- Telegram Helper

# Note

- This project is no longer maintained.

---

|

verytinydever/react-copy-mailto

|

https://github.com/verytinydever/react-copy-mailto

| null |

## react-copy-mailto

<!-- ALL-CONTRIBUTORS-BADGE:START - Do not remove or modify this section -->

[](#contributors-)

<!-- ALL-CONTRIBUTORS-BADGE:END -->

[](https://badge.fury.io/js/react-copy-mailto)

A fully customizable React component for copying email from `mailto` links.

## Motivation

The one thing we can all agree on is that we hate it when the default mail app pops up after clicking a `mailto` link. Most of the time we just want to copy the email address, and that's where this module comes into play. Big shout-out to [Kuldar](https://twitter.com/kkuldar), whose tweet [thread](https://twitter.com/kkuldar/status/1270736717939716097) inspired us to build this.

## Demo

## Installation and Usage

The easiest way to use this library is to install it via yarn or npm:

```

yarn add react-copy-mailto

```

or

```

npm install react-copy-mailto

```

Then just use it in your app:

```jsx

import React from "react";
import CopyMailTo from "react-copy-mailto";

const YourComponent = () => (
  <div>
    <CopyMailTo email="[email protected]" />
  </div>
);

```

## Props

You can customize almost every aspect of this component with the props below; **email is the only required prop**.

| Name | Type | Default | Description |
|:-: |--- |--- |--- |
| email | string | none | The email to be copied. |
| children | ReactNode | null | Use this if you want to use some custom component inside the anchor tag. |
| defaultTooltip | string | "Copy email address" | Text shown in the tooltip when the user hovers over the link. |
| copiedTooltip | string | "Copied to clipboard!" | Text shown in the tooltip when the user clicks on the link and the text is copied to clipboard. |
| containerStyles | style object | none | The styles to be applied to the container. |
| tooltipStyles | style object | none | The styles to be applied to the tooltip. |
| anchorStyles | style object | none | The styles to be applied to the anchor. |

## Development

- Install the dependencies

```

yarn

```

- Run the example on the development server

```

yarn demo:dev

```

## Contributing

[](https://github.com/devfolioco/react-copy-mailto/issues) [](https://github.com/devfolioco/react-copy-mailto/pulls)

Feel free to open [issues](https://github.com/devfolioco/react-copy-mailto/issues/new/choose) and [pull requests](https://github.com/devfolioco/react-copy-mailto/pulls)!

## License

[](https://github.com/devfolioco/react-copy-mailto/blob/master/LICENSE)

## Contributors ✨

Thanks goes to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

<!-- ALL-CONTRIBUTORS-LIST:START - Do not remove or modify this section -->

<!-- prettier-ignore-start -->

<!-- markdownlint-disable -->

<table>

<tr>

<td align="center"><a href="http://prateeksurana.me"><img src="https://avatars3.githubusercontent.com/u/21277179?v=4" width="100px;" alt=""/><br /><sub><b>Prateek Surana</b></sub></a><br /><a href="https://github.com/devfolioco/react-copy-mailto/commits?author=prateek3255" title="Code">💻</a> <a href="#design-prateek3255" title="Design">🎨</a> <a href="#content-prateek3255" title="Content">🖋</a> <a href="https://github.com/devfolioco/react-copy-mailto/commits?author=prateek3255" title="Documentation">📖</a></td>

<td align="center"><a href="http://ankiiitraj.github.io"><img src="https://avatars2.githubusercontent.com/u/48787278?v=4" width="100px;" alt=""/><br /><sub><b>Ankit Raj</b></sub></a><br /><a href="#tool-ankiiitraj" title="Tools">🔧</a></td>

</tr>

</table>

<!-- markdownlint-enable -->

<!-- prettier-ignore-end -->

<!-- ALL-CONTRIBUTORS-LIST:END -->

This project follows the [all-contributors](https://github.com/all-contributors/all-contributors) specification. Contributions of any kind welcome!

|

nicholaschiasson/fcidr

|

https://github.com/nicholaschiasson/fcidr

|

Fragmented Classless Inter-Domain Routing (FCIDR)

|

# fcidr

[](https://crates.io/crates/fcidr)

Fragmented Classless Inter-Domain Routing (FCIDR)

A library exposing a data structure to represent a set of CIDR ranges as well as an interface to compute set operations over CIDRs.

This data structure can be applied, for example, in configuring firewalls that *implicitly deny* (AWS Security Groups) using a rule set that explicitly expresses rules for both allow and deny.

> **Note**

> Currently, only IPv4 is supported. IPv6 support is tracked by [#6](https://github.com/nicholaschiasson/fcidr/issues/6).

## CLI

This project also publishes a binary application for use on the command line to support composing chains of set operations on CIDRs by reading from standard input.

### Installation

For now, crates.io is the only place this is being distributed.

```

cargo install fcidr

```

### Usage

```

Fragmented Classless Inter-Domain Routing (FCIDR)

Usage: fcidr [CIDR] <COMMAND>

Commands:
  complement  Compute the complement of the input CIDR(s) [aliases: !, not]
  difference  Compute the set difference between the input CIDR(s) and another CIDR [aliases: -, exclude, minus]
  union       Compute the set union of the input CIDR(s) and another CIDR [aliases: +, include, plus]
  help        Print this message or the help of the given subcommand(s)

Arguments:
  [CIDR]  The input CIDR range and first operand to the computation. If omitted, input is taken from stdin. In this way, multiple computations can be chained together

Options:
  -h, --help     Print help
  -V, --version  Print version

```

### Example

```

fcidr 10.0.0.0/8 difference 10.0.64.0/20 | fcidr difference 10.0.82.0/24 | fcidr union 10.0.82.74/31
10.0.0.0/18
10.0.80.0/23
10.0.82.74/31
10.0.83.0/24
10.0.84.0/22
10.0.88.0/21
10.0.96.0/19
10.0.128.0/17
10.1.0.0/16
10.2.0.0/15
10.4.0.0/14
10.8.0.0/13
10.16.0.0/12
10.32.0.0/11
10.64.0.0/10
10.128.0.0/9

```

```

fcidr 10.0.0.0/8 difference 10.0.64.0/20 | fcidr difference 10.0.82.0/24 | fcidr union 10.0.82.74/31 | fcidr complement
0.0.0.0/5
8.0.0.0/7
10.0.64.0/20
10.0.82.0/26
10.0.82.64/29
10.0.82.72/31
10.0.82.76/30
10.0.82.80/28
10.0.82.96/27
10.0.82.128/25
11.0.0.0/8
12.0.0.0/6
16.0.0.0/4
32.0.0.0/3
64.0.0.0/2
128.0.0.0/1

```

Alternative concise syntax:

```

fcidr 10.0.0.0/8 + 127.0.0.0/16 | fcidr - 10.64.0.0/16 | fcidr !
0.0.0.0/5
8.0.0.0/7
10.64.0.0/16
11.0.0.0/8
12.0.0.0/6
16.0.0.0/4
32.0.0.0/3
64.0.0.0/3
96.0.0.0/4
112.0.0.0/5
120.0.0.0/6
124.0.0.0/7
126.0.0.0/8
127.1.0.0/16
127.2.0.0/15
127.4.0.0/14
127.8.0.0/13
127.16.0.0/12
127.32.0.0/11
127.64.0.0/10
127.128.0.0/9
128.0.0.0/1

```
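fcidr itself is a Rust crate. The Python sketch below is not its implementation. It is only a way to cross-check the set semantics of the three subcommands using Python's standard `ipaddress` module, IPv4 only. `union`, `difference` and `complement` are hypothetical helper names that mirror the CLI subcommands. Each result is re-collapsed into a minimal sorted list of CIDRs, so chaining the calls from the first example should yield the same 16 CIDRs listed there.

```python
import ipaddress
from typing import Iterable, List

Net = ipaddress.IPv4Network


def union(nets: Iterable[Net], other: Net) -> List[Net]:
    """Add `other` to the set and re-collapse into the minimal list of CIDRs."""
    return list(ipaddress.collapse_addresses(list(nets) + [other]))


def difference(nets: Iterable[Net], other: Net) -> List[Net]:
    """Remove every address in `other` from the set."""
    result: List[Net] = []
    for net in nets:
        if not net.overlaps(other):
            result.append(net)  # disjoint: keep unchanged
        elif other.supernet_of(net):
            continue  # `net` lies entirely inside `other`: drop it
        else:
            # Overlapping CIDRs are always nested, so `other` is inside `net`:
            # split `net` into the blocks that surround `other`.
            result.extend(net.address_exclude(other))
    return list(ipaddress.collapse_addresses(result))


def complement(nets: Iterable[Net]) -> List[Net]:
    """Everything in 0.0.0.0/0 that is not in the set."""
    result: List[Net] = [ipaddress.ip_network("0.0.0.0/0")]
    for net in nets:
        result = difference(result, net)
    return result


if __name__ == "__main__":
    # Same chain as the first CLI example above
    nets = [ipaddress.ip_network("10.0.0.0/8")]
    nets = difference(nets, ipaddress.ip_network("10.0.64.0/20"))
    nets = difference(nets, ipaddress.ip_network("10.0.82.0/24"))
    nets = union(nets, ipaddress.ip_network("10.0.82.74/31"))
    print("\n".join(str(n) for n in nets))
```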

|

junhoyeo/react-threads

|

https://github.com/junhoyeo/react-threads

|

Embed Static Threads in your React/Next.js application. UI components for Meta's Threads.

|

# react-threads

[](https://www.npmjs.com/package/react-threads) [](https://github.com/junhoyeo/react-threads/blob/main/license) [](https://prettier.io)

> Embed Static Threads in your React/Next.js application. UI components for Meta's Threads. _Powered by **[junhoyeo/threads-api](https://github.com/junhoyeo/threads-api)**._

[](https://react-threads.vercel.app)

## Demo

> **Warning**<br/>

> Vercel Deployment is currently sometimes unstable. 🏴☠️

[](https://react-threads.vercel.app/CuUoEcbRFma)

## 📦 Setup

First, install `react-threads` with its dependencies.

```bash

yarn add next react-threads threads-api
yarn add -D tailwindcss postcss autoprefixer

```

Add the path to `react-threads` to the [content sources](https://tailwindcss.com/docs/content-configuration) of your Tailwind configuration file (`tailwind.config.js`).

```js

// tailwind.config.js
/** @type {import('tailwindcss').Config} */
module.exports = {
  content: [
    './src/**/*.{ts,tsx}',
    // path to `react-threads`
    'node_modules/react-threads/**/*',
  ],
};

```

Set [`images.remotePatterns` in your app's `next.config.js`](https://nextjs.org/docs/pages/api-reference/components/image#remotepatterns). We use `next/image` to proxy images under the hood.

```js

// next.config.js
/** @type {import('next').NextConfig} */
const nextConfig = {
  images: {
    remotePatterns: [
      {
        protocol: 'https',
        hostname: '**.cdninstagram.com',
      },
      {
        protocol: 'https',
        hostname: '**.fbcdn.net',
      },
    ],
  },
};

module.exports = nextConfig;

```

## 🚀 Usage

```ts

import { GetStaticProps, NextPage } from 'next';
import { BannerCTA, Thread } from 'react-threads';
import { Thread as ThreadPost, ThreadsAPI } from 'threads-api';

const threadsAPI = new ThreadsAPI();

type Props = {
  threadID: string;
  thread: ThreadPost;
};

export const getStaticProps: GetStaticProps<Props, { threadId: string }> = async (context) => {
  try {
    const threadID = context.params?.threadId;
    if (!threadID) {
      console.log('[!] Thread ID not provided');
      return { notFound: true };
    }

    const postID = threadsAPI.getPostIDfromThreadID(threadID);
    if (!postID) {
      console.log(
        '[!] Post ID not found with provided Thread ID (in threadsAPI.getPostIDfromThreadID):',
        threadID,
      );
      return { notFound: true };
    }

    const thread = await threadsAPI.getThreads(postID);
    const { containing_thread } = thread;

    return {
      props: {
        threadID,
        thread: containing_thread,
      },
      revalidate: 10,
    };
  } catch (err) {
    console.error('[*] Error fetching Thread', err);
    throw err;
  }
};

export async function getStaticPaths() {
  return {
    paths: [],
    fallback: true,
  };
}

```

## 🏴☠️ Useful Building Blocks

- Looking for an API client?
  - **[junhoyeo/threads-api](https://github.com/junhoyeo/threads-api)**
- Using [_Private Git Submodules_](https://github.com/orgs/vercel/discussions/44) when deploying with Vercel?
  - **[junhoyeo/vercel-submodules](https://github.com/junhoyeo/vercel-submodules)**

## 🏴☠️ Inspired by 🤍

- [vercel-labs/react-tweet](https://github.com/vercel-labs/react-tweet)

- [transitive-bullshit/react-static-tweets](https://github.com/transitive-bullshit/react-static-tweets)

- [zernonia/tweetic](https://github.com/zernonia/tweetic)

## License

<p align="center">

<a href="https://github.com/junhoyeo">

<img src="https://github.com/junhoyeo/threads-api/raw/main/.github/labtocat.png" width="256" height="256">

</a>

</p>

<p align="center">

<strong>MIT © <a href="https://github.com/junhoyeo">Junho Yeo</a></strong>

</p>

If you find this project intriguing, **please consider starring it(⭐)** or following me on [GitHub](https://github.com/junhoyeo) (I wouldn't say [Threads](https://www.threads.net/@_junhoyeo)). I code 24/7 and ship mind-breaking things on a regular basis, so your support definitely won't be in vain.

|

surqing/-Autox.js-

|

https://github.com/surqing/-Autox.js-

|

Install autox.js on an Android phone and run this project's script to automatically monitor Damai and grab concert tickets.

|

# -Autox.js-

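Install [autox.js](https://github.com/kkevsekk1/AutoX) on an Android phone and run this project's script to automatically monitor Damai and grab concert tickets.

## Other errors and exceptions that occur during real ticket grabbing are not handled; interested contributors are welcome to keep improving it

### Grabbing tickets is very hard, so don't get your hopes up; this script is only meant for learning Autox.js

# How to use

1. Install a version of Autox.js that suits your Android phone.

2. Create a new .js script file in Autox.js.

3. Copy the latest version of this script into the .js file.

4. Modify the script as described in its comments.

5. Open the page where the tickets will go on sale.

6. Return to the script and tap Run.

# Disclaimer

This script is a personal learning project only. Do not use it for any other purpose!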

|

VolkanSah/The-Code-Interpreter-in-OpenAI-ChatGPT

|

https://github.com/VolkanSah/The-Code-Interpreter-in-OpenAI-ChatGPT

|

The code interpreter is a tool developed by OpenAI to execute programming code in an interactive environment. It is capable of running Python code and displaying the results in real-time.

|

# Exploring the Code Interpreter in OpenAI ChatGPT 4

The code interpreter is an advanced feature of OpenAI's ChatGPT that brings a new level of interactivity to the AI model. It is designed to execute Python code in a sandboxed environment and provide real-time results, making it a powerful tool for a wide range of tasks, from mathematical computations to data analysis, and from code prototyping to teaching and learning Python programming interactively. While its functionality has certain limitations for security reasons, it opens up a whole new set of possibilities for how users can interact with ChatGPT.

## Table of Contents

- [What is the Code Interpreter?](#what-is-the-code-interpreter)

- [What is the Code Interpreter used for?](#what-is-the-code-interpreter-used-for)

- [How can ChatGPT assist with programming?](#how-can-chatgpt-assist-with-programming)

- [What are the limitations?](#what-are-the-limitations)

- [What are the benefits?](#what-are-the-benefits)

- [Data Storage](#data-storage)

- [Detailed Explanation of the Data Storage](#detailed-explanation-of-the-data-storage)

- [Working with Images](#working-with-images)

- [How to enable Code Interpreter?](settings-ci.png)

## The Code Interpreter in OpenAI ChatGPT

### What is the Code Interpreter?

The code interpreter is a tool developed by OpenAI to execute programming code in an interactive environment. It is capable of running Python code and displaying the results in real-time.

### What is the Code Interpreter used for?

The code interpreter can be used for a variety of tasks, including:

- Performing complex mathematical calculations

- Analyzing and visualizing data

- Prototyping and debugging Python code

- Interactive learning and practicing Python programming

### How can ChatGPT assist with programming?

ChatGPT can generate, review, and debug code based on the provided requirements. It can also assist in structuring code and provide suggestions for improvements. Moreover, it can explain complex programming concepts and assist in solving coding problems.

### What are the limitations?

While the code interpreter is a powerful tool, it has certain limitations:

- It does not have access to the internet. This means it cannot make external requests.

- It runs in an isolated environment and does not have access to the operating system or its resources.

- Code execution that takes longer than 120 seconds is automatically stopped.

- It can read and write files only in a special location, '/mnt/data'.

Despite these limitations, the code interpreter is a versatile tool that can greatly assist programmers of all skill levels.

### What are the benefits?

The code interpreter offers several benefits:

- It provides a safe environment to run code without the risk of affecting the operating system or data.

- It allows for real-time interaction with the code, providing immediate feedback.

- It can assist in learning Python programming and improving coding skills.

- It can handle a variety of tasks, from simple calculations to data analysis and visualization.

## Data Storage

The code interpreter has access to a special directory, '/mnt/data', where it can read and write files. This can be used for operations that need to save or load data, like writing logs, saving plots, or loading data for analysis. However, no other locations on the filesystem can be accessed.

## Detailed Explanation of the Data Storage

The '/mnt/data' directory is a special storage location that the code interpreter can access to read and write files. This is especially useful for operations that require persistent storage or the exchange of data between different code executions.

Here are some ways you can use the '/mnt/data' directory:

1. **Saving and Loading Data Files:** If you're working with data in formats like .csv, .json, .txt, etc., you can read from and write to these files directly in this directory. For instance, to write a list of numbers to a .txt file, you would do:

```python

# Write the numbers 0-9 to a text file, one per line
with open('/mnt/data/numbers.txt', 'w') as file:
    for num in range(10):
        file.write(str(num) + '\n')

```

To read the file, you would do:

```python

with open('/mnt/data/numbers.txt', 'r') as file:
    # Each entry keeps its trailing '\n'; use int(line) to turn it back into a number
    numbers = file.readlines()

```

2. **Storing Logs:** If you're running code that generates logs (like debugging information, progress of a task, etc.), you can write these logs to a file in '/mnt/data'.

```python

# Open in append mode ('a') so earlier log messages are not overwritten
with open('/mnt/data/log.txt', 'a') as file:
    file.write('This is a log message.\n')

```

3. **Saving Plots and Images:** If you're generating plots or other images with your code, you can save them to '/mnt/data' as .png, .jpg, or other image formats. For instance, if you're using matplotlib to create a plot, you can save it with:

```python

import matplotlib.pyplot as plt

plt.plot([0, 1, 2, 3, 4], [0, 1, 4, 9, 16])

plt.savefig('/mnt/data/plot.png')

```

You can then download the image file directly from the generated sandbox link.

Remember, any file operations need to be done using the '/mnt/data' path. The code interpreter does not have access to any other locations on the filesystem.
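The "exchange of data between different code executions" mentioned above can be sketched with a small JSON file. This is an illustration, not a feature of the code interpreter: `save_state`, `load_state` and `state.json` are hypothetical names, and the `directory` parameter exists only so the helpers can be tried outside the sandbox. The sketch assumes, as described above, that files in '/mnt/data' stay in place between executions.

```python
import json
from pathlib import Path
from typing import Any, Dict, Union

# Assumption: /mnt/data is the writable directory described above
DATA_DIR = Path("/mnt/data")


def save_state(state: Dict[str, Any], name: str = "state.json",
               directory: Union[str, Path] = DATA_DIR) -> Path:
    """Serialize `state` to JSON so a later code execution can pick it up."""
    path = Path(directory) / name
    path.write_text(json.dumps(state, indent=2))
    return path


def load_state(name: str = "state.json",
               directory: Union[str, Path] = DATA_DIR) -> Dict[str, Any]:
    """Return the saved state, or an empty dict if nothing was saved yet."""
    path = Path(directory) / name
    if not path.exists():
        return {}
    return json.loads(path.read_text())
```

For example, one execution could call `save_state({"processed_rows": 500})` and a later one could resume with `load_state().get("processed_rows", 0)`.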

## Working with Images

With the help of various Python libraries such as PIL (Python Imaging Library), OpenCV, and matplotlib, a variety of operations can be performed on images. Here are some examples:

1. **Displaying Image:** Display an image.

```python

from PIL import Image

import matplotlib.pyplot as plt

# Open the image file

img = Image.open('/mnt/data/your_image.jpg')

# Display the image

plt.imshow(img)

plt.axis('off') # Turn off the axis

plt.show()

```

2. **Resizing Image:** Change the size of an image, enlarge or shrink it.

```python

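# Resize the image opened in step 1; here, to half its original size
new_width, new_height = img.width // 2, img.height // 2
img_resized = img.resize((new_width, new_height))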

```

3. **Rotating or Flipping Image:** Rotate an image or flip it horizontally or vertically.

```python

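# Rotate the image counterclockwise by `angle` degrees;
# expand=True enlarges the canvas so the corners are not clipped
angle = 45
img_rotated = img.rotate(angle, expand=True)

# Flip the image horizontally (use Image.FLIP_TOP_BOTTOM for a vertical flip)
img_flipped = img.transpose(Image.FLIP_LEFT_RIGHT)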

```

4. **Color Conversions:** Convert an image to grayscale or change the color mode.

```python

# Convert the image to grayscale

img_gray = img.convert('L')

```

5. **Adjusting Brightness, Contrast, and Saturation:** Adjust the brightness, contrast, or saturation of an image.

```python

from PIL import ImageEnhance

# Increase the brightness

enhancer = ImageEnhance.Brightness(img)

img_brighter = enhancer.enhance(1.5)

```

6. **Applying Filters:** Apply different types of filters, like Gaussian blur, edge detection, etc.

```python

from PIL import ImageFilter

# Apply a filter

img_blurred = img.filter(ImageFilter.GaussianBlur(radius=5))

```

7. **Image Analysis:** Perform simple image analysis, like calculating the histogram.

```python

# Get the histogram

hist = img.histogram()

```

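8. **Merging Bands:** Combine single-band images of the same size into one multi-band image, for example to reorder the color channels.

```python
# Split the image into its R, G and B bands (each one a mode "L" image)
r, g, b = img.convert('RGB').split()

# Merge the bands back in a different order to swap the red and blue channels
img_merged = Image.merge('RGB', (b, g, r))
```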

Please note that while these operations can be performed at a technical level, the AI model cannot make aesthetic or creative decisions. Any changes it makes to an image are based on technical instructions, not on creative or artistic considerations.
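The histogram from step 7 comes back as one flat list. According to Pillow's documentation, it holds 256 counts per band, with the bands concatenated (this applies to 8-bit modes such as "L" and "RGB"). The sketch below is a small worked example of using that list: it computes the mean pixel value of each band. `band_means` is a hypothetical helper. It works on the plain list, so it can be tried without an image file.

```python
from typing import List


def band_means(hist: List[int], bands: int = 1) -> List[float]:
    """Mean pixel value per band from a Pillow-style histogram.

    Assumes 256 bins per band, bands concatenated (e.g. R then G then B).
    """
    if len(hist) != 256 * bands:
        raise ValueError("expected 256 counts per band")
    means = []
    for b in range(bands):
        counts = hist[256 * b: 256 * (b + 1)]
        total = sum(counts)
        # Weighted average of the pixel values 0..255 by their counts
        means.append(sum(v * c for v, c in enumerate(counts)) / total if total else 0.0)
    return means
```

With the image from step 1, `band_means(img.histogram(), bands=len(img.getbands()))` returns one mean per band.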

### Contributing

Contributions are welcome! Please feel free to submit a pull request.

## [❤️](https://jugendamt-deutschland.de) Thank you for your support!

If you appreciate my work, please consider supporting me:

- Become a Sponsor: [Link to my sponsorship page](https://github.com/sponsors/volkansah)

- :star: my projects: Starring projects on GitHub helps increase their visibility and can help others find my work.

- Follow me: Stay updated with my latest projects and releases.

### 👣 other GPT stuff

- [Link to ChatGPT Shellmaster](https://github.com/VolkanSah/ChatGPT-ShellMaster/)

- [GPT-Security-Best-Practices](https://github.com/VolkanSah/GPT-Security-Best-Practices)

- [OpenAI cost calculator](https://github.com/VolkanSah/OpenAI-Cost-Calculator)

- [GPT over CLI](https://github.com/VolkanSah/GPT-over-CLI)

- [Secure Implementation of Artificial Intelligence (AI)](https://github.com/VolkanSah/Implementing-AI-Systems-Whitepaper)

- [Comments Reply with GPT (davinci3)](https://github.com/VolkanSah/GPT-Comments-Reply-WordPress-Plugin)

- [Basic GPT Webinterface](https://github.com/VolkanSah/GPT-API-Integration-in-HTML-CSS-with-JS-PHP)

### Credits

- [Volkan Kücükbudak //NCF](https://github.com/volkansah)

- and OpenAI's ChatGPT4 with Code Interpreter for providing interactive coding assistance and insights.

|

weiwosuoai/WeBlog

|

https://github.com/weiwosuoai/WeBlog

|

📗 A Spring Boot + Vue 3.2 + Vite blog with separated frontend and backend~ Thanks for leaving a Star~

|

# WeBlog

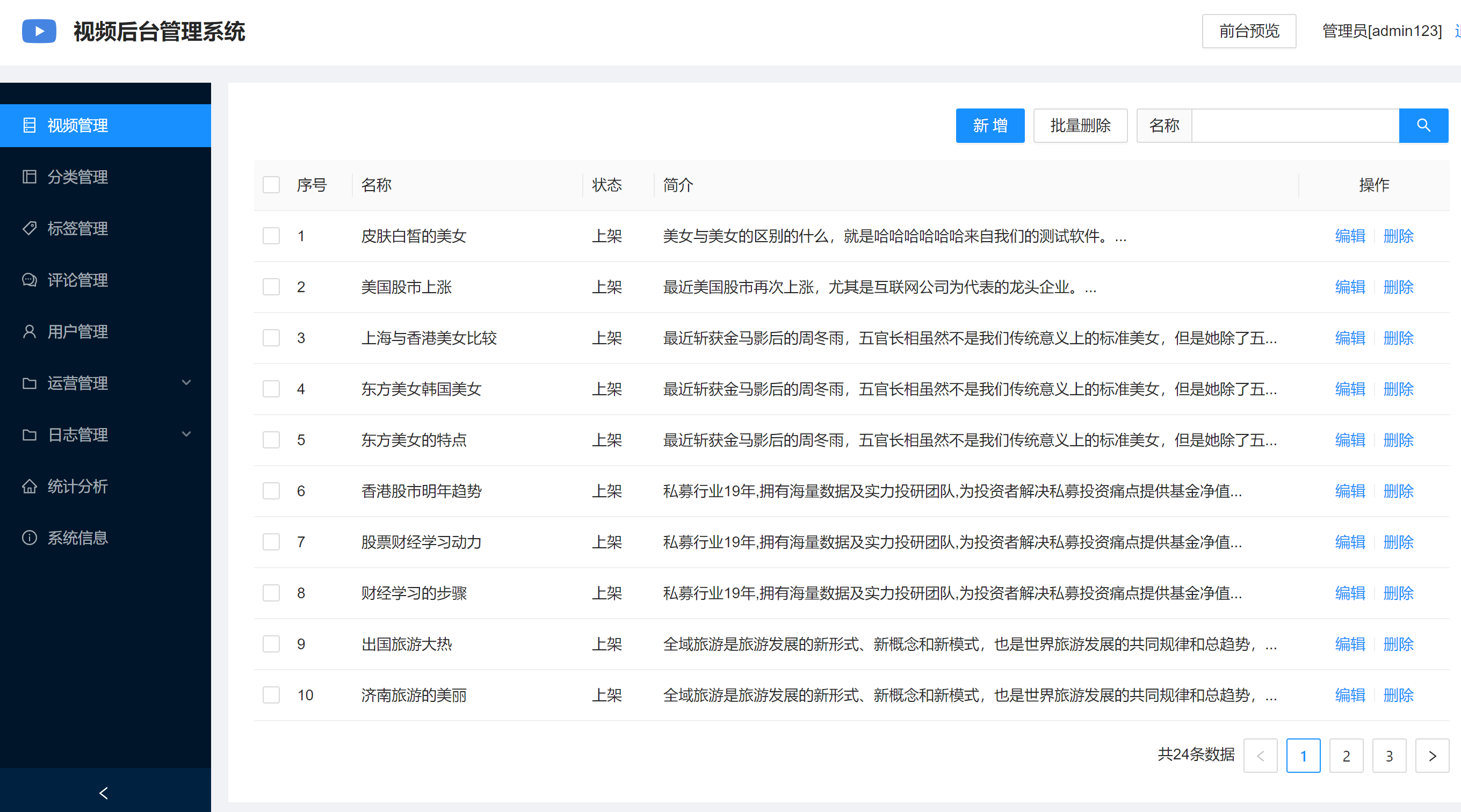

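## Introduction

A blog with separated frontend and backend, built with Spring Boot + Vue 3.2 + Vite 4.3.

- The backend uses Spring Boot, Mybatis Plus, MySQL, Spring Security, JWT, Minio, Guava, etc.;

- The admin panel is built entirely by hand with Vue 3.2 + Vite + Element Plus, without any admin framework;

- Supports publishing and editing posts in Markdown, and managing post categories and tags;

- Supports configuring basic blog information and linking to social media profiles;

- Supports dashboard statistics, including an Echarts heat map of post publishing and PV (page view) statistics;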

## Links

- GitHub: [https://github.com/weiwosuoai/WeBlog](https://github.com/weiwosuoai/WeBlog)

- Gitee: [https://gitee.com/AllenJiang/WeBlog](https://gitee.com/AllenJiang/WeBlog)

- Demo: http://118.31.41.16/

- Guest account: test

- Guest password: test

> PS: The demo server has a very low-spec configuration and little bandwidth. If it loads slowly, you can deploy it locally instead~

## Demo Screenshots

### Login Page

### Dashboard

### Article Management

### Writing a Post

### Home Page

### Post Details

### Archive Page

## Features

### Front end

| Feature | Done |
| ----------- | -------- |
| Home page | ✅ |
| Category list | ✅ |
| Tag list | ✅ |
| Post details | ✅ |
| Site search | TODO |
| Knowledge base (Wiki) | TODO |
| Post comments | TODO |

### Admin

| Feature | Done |
| ---------- | -------- |
| Admin login page | ✅ |
| Dashboard | ✅ |
| Article management | ✅ |
| Category management | ✅ |
| Tag management | ✅ |
| Blog settings | ✅ |
| Comment management | TODO |

## Modules

| Project | Description |
| ----------------- | ------------------------------------------------ |
| weblog-springboot | Backend project |
| weblog-vue3 | Frontend project |
| sql | Database initialization scripts (table schemas and initial data) |

### Backend Modules

| Module | Description |
| -------------------- | -------------------- |
| weblog-module-admin | Blog admin module |
| weblog-module-common | Common module |
| weblog-module-jwt | JWT authentication and authorization module |
| weblog-web | Blog front end (application entry point) |

## Tech Stack

### Backend

| Framework | Description | Version | Notes |
| ------------------- | ------------------------ | ----------- | ------------------------------------------ |
| JDK | Java Development Kit | 1.8 | Currently the mainstream version in enterprise projects |
| Spring Boot | Web application framework | 2.6.3 | |
| Maven | Build tool | 3.6.3 | Mainstream build tool in enterprises |
| MySQL | Database | 5.7 | |
| Mybatis Plus | Enhanced MyBatis persistence framework | 3.5.2 | |
| HikariCP | Database connection pool | 4.0.3 | Spring Boot's built-in connection pool, reputed to be the fastest |
| Spring Security | Security framework | 2.6.3 | |
| JWT | JSON Web Token | 0.11.2 | |
| Lombok | Eliminates boilerplate code | 1.18.22 | |
| Jackson | JSON library | 2.13.1 | |
| Hibernate Validator | Parameter validation | 6.2.0.Final | |
| Logback | Logging | 1.2.10 | |
| Guava | Google's open-source utility library | 18.0 | |
| p6spy | Dynamic (SQL) monitoring framework | 3.9.1 | |
| Minio | Object storage | 8.2.1 | Stores the images used in blog posts |
| flexmark | Markdown parsing | 0.62.2 | |

### Frontend

| Framework | Description | Version |
| ------------ | ------------------------------- | ------- |
| Node | JavaScript runtime | 18.15.0 |
| Vue 3 | Progressive JavaScript framework | 3.2.47 |
| Vite | Frontend build tool | 4.3.9 |
| Element Plus | Vue 3 component library open-sourced by Ele.me | 2.3.3 |
| vue-router | Vue router | 4.1.6 |
| vuex | State management | 4.0.2 |
| md-editor-v3 | Markdown editor component | 3.0.1 |
| windicss | Utility CSS framework | 3.5.6 |
| axios | Promise-based HTTP client | 1.3.5 |
| Echarts | Data visualization chart library open-sourced by Baidu | 5.4.2 |

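## Database Initialization Scripts

The initialization scripts are located in the `sql` module, laid out as follows:

When deploying, first create a database named `weblog`, then run `schema.sql` and `data.sql` in that order:

- `schema.sql`: table schema script;

- `data.sql`: initial data script, e.g. login user information and basic blog settings;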

|

horsefacts/trading-days

|

https://github.com/horsefacts/trading-days

|

A Uniswap v4 hook that reverts when markets are closed in New York.

|

# 🗽 trading-days

A Uniswap v4 hook that reverts when markets are closed in New York.

## Views

- `isCoreTradingHours`: Return `true` between 9:30 AM and 4:00 PM ET.

- `isTradingDay`: Return `true` Mon-Fri, if it's not a NYSE holiday.

- `isHoliday`: Return `true` if it's a NYSE holiday.

- `getHoliday`: Get the current holiday (see the `Holiday` enum).

- `isDST`: Return `true` if it's Daylight Savings Time in New York.

- `time`: Adjust `block.timestamp` so that UTC date calculations are localized to US Eastern Time. Subtracts either 4 or 5 hours, depending on whether it's DST.

- `marketIsOpen`: Return `true` if the market is currently open.

- `state`: Get the current state of the market, one of:

- `HOLIDAY`

- `WEEKEND`

- `AFTER_HOURS`

- `OPEN`
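The README does not show the contract's Solidity code. The Python sketch below only illustrates how the four `state` values could be derived from a timestamp that has already been localized to US Eastern Time, as the `time` view does. `market_state` and the `is_holiday` callback are hypothetical. Checking holidays before weekends, and treating 4:00 PM itself as after hours, are both assumptions.

```python
from datetime import datetime, time
from typing import Callable

# Core trading hours from the README: 9:30 AM to 4:00 PM US Eastern Time
OPEN_AT = time(9, 30)
CLOSE_AT = time(16, 0)


def market_state(local: datetime, is_holiday: Callable[[datetime], bool]) -> str:
    """Classify a naive datetime that is already expressed in US Eastern Time."""
    if is_holiday(local):
        return "HOLIDAY"
    if local.weekday() >= 5:  # Saturday = 5, Sunday = 6
        return "WEEKEND"
    if not (OPEN_AT <= local.time() < CLOSE_AT):
        return "AFTER_HOURS"
    return "OPEN"
```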

## Errors

- `ClosedForHoliday(Holiday holiday)`: Markets are closed for a [NYSE holiday](https://www.nyse.com/markets/hours-calendars). Error data includes a Holiday enum, one of:

- `NEW_YEARS_DAY`

- `MARTIN_LUTHER_KING_JR_DAY`

- `WASHINGTONS_BIRTHDAY`

- `GOOD_FRIDAY`

- `MEMORIAL_DAY`

- `JUNETEENTH_NATIONAL_INDEPENDENCE_DAY`

- `INDEPENDENCE_DAY`

- `LABOR_DAY`

- `THANKSGIVING_DAY`

- `CHRISTMAS_DAY`

- `NEW_YEARS_DAY_OBSERVED`

- `ClosedForWeekend`: Markets are closed for the weekend.

- `AfterHours`: Markets are closed on weekdays before 9:30 AM and after 4:00 PM ET.

## Events

- `DingDingDing`: If you perform the first swap of the day, you get to ring the opening bell!

## Deployments

| Network | TradingView |
| ------- | ----------------------------------------------------------------------------------------------------------------------------------- |
| Goerli | [`0x000000006016c17169c289346015b3c9d42a1218`](https://goerli.etherscan.io/address/0x000000006016c17169c289346015b3c9d42a1218#code) |
| Mainnet | [`0x000000006016c17169c289346015b3c9d42a1218`](https://etherscan.io/address/0x000000006016c17169c289346015b3c9d42a1218#code) |

## Technical Details

The NYSE holiday calendar and Daylight Savings start/end timestamps are stored as [data contracts](https://github.com/dragonfly-xyz/useful-solidity-patterns/tree/main/patterns/big-data-storage).

NYSE holidays were precalculated through 2123 using the Python [holidays](https://pypi.org/project/holidays/) package. Each 13-byte sequence encodes one year, which includes up to 11 holidays. Each holiday is encoded as 9 bits, 4 for the month and 5 for the day. A year may have 9, 10, or 11 holidays, depending on whether New Year's Day of the next year falls on a Saturday.
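The bit budget works out as follows: 11 holidays × 9 bits = 99 bits, which fits in 13 bytes (104 bits). The README does not specify the bit layout. This sketch therefore assumes that holidays are packed most-significant-bit first in calendar order, that the 5 leftover bits are zero padding at the end, and that an all-zero 9-bit slot marks an unused entry. `encode_year` and `decode_year` are hypothetical helpers, not the repo's code.

```python
from typing import List, Tuple

BYTES_PER_YEAR = 13
MAX_HOLIDAYS = 11
DAY_BITS = 5                         # day 1..31
BITS_PER_HOLIDAY = 4 + DAY_BITS      # 4 bits month (1..12) + 5 bits day
PADDING_BITS = BYTES_PER_YEAR * 8 - MAX_HOLIDAYS * BITS_PER_HOLIDAY  # 104 - 99 = 5


def encode_year(holidays: List[Tuple[int, int]]) -> bytes:
    """Pack up to 11 (month, day) pairs into 13 bytes (assumed layout)."""
    if len(holidays) > MAX_HOLIDAYS:
        raise ValueError("at most 11 holidays per year")
    value = 0
    for i in range(MAX_HOLIDAYS):
        if i < len(holidays):
            month, day = holidays[i]
            if not (1 <= month <= 12 and 1 <= day <= 31):
                raise ValueError(f"invalid date: {month}/{day}")
        else:
            month, day = 0, 0  # empty slot
        value = (value << BITS_PER_HOLIDAY) | (month << DAY_BITS) | day
    value <<= PADDING_BITS  # left-align the 99 payload bits
    return value.to_bytes(BYTES_PER_YEAR, "big")


def decode_year(chunk: bytes) -> List[Tuple[int, int]]:
    """Inverse of encode_year; empty (all-zero) slots are skipped."""
    value = int.from_bytes(chunk, "big") >> PADDING_BITS
    holidays = []
    for i in range(MAX_HOLIDAYS):
        shift = (MAX_HOLIDAYS - 1 - i) * BITS_PER_HOLIDAY
        field = (value >> shift) & ((1 << BITS_PER_HOLIDAY) - 1)
        month, day = field >> DAY_BITS, field & ((1 << DAY_BITS) - 1)
        if month:
            holidays.append((month, day))
    return holidays
```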

The start and end timestamps for Daylight Savings were precalculated through 2123 using the `calculate_dst.py` script in this repo. The data contract stores each start/end pair as an 8-byte sequence, encoding two 32-bit values representing seconds since Jan 1, 2023. These represent the exact start and end timestamps of Daylight Savings Time in New York, according to current [DST rules](https://www.nist.gov/pml/time-and-frequency-division/popular-links/daylight-saving-time-dst). That is, DST starts at 2am local time on the second Sunday of March and ends at 2am local time on the first Sunday of November.
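The DST rule quoted above can be turned into timestamps with the Python standard library. This is a sketch, not the repo's `calculate_dst.py`. `nth_sunday`, `dst_bounds_utc`, `pack_dst` and `eastern_timestamp` are hypothetical names. Two details are assumptions: that "Jan 1, 2023" means midnight UTC, and that the 8-byte pair is stored big-endian. `eastern_timestamp` follows the `time` view's description: subtract 4 hours during DST, otherwise 5.

```python
import calendar
import struct
from datetime import date, datetime, timedelta, timezone
from typing import Tuple

# Unix timestamp of 2023-01-01T00:00:00Z (assumed reference point)
EPOCH_2023 = calendar.timegm((2023, 1, 1, 0, 0, 0))


def nth_sunday(year: int, month: int, n: int) -> date:
    """Date of the n-th Sunday of the given month."""
    first = date(year, month, 1)
    days_to_sunday = (6 - first.weekday()) % 7  # weekday(): Monday=0 ... Sunday=6
    return first + timedelta(days=days_to_sunday + 7 * (n - 1))


def dst_bounds_utc(year: int) -> Tuple[int, int]:
    """Unix timestamps at which DST starts and ends in New York."""
    start = nth_sunday(year, 3, 2)  # second Sunday of March
    end = nth_sunday(year, 11, 1)   # first Sunday of November
    # 2:00 EST (UTC-5) is 07:00 UTC; 2:00 EDT (UTC-4) is 06:00 UTC
    start_ts = calendar.timegm((start.year, start.month, start.day, 7, 0, 0))
    end_ts = calendar.timegm((end.year, end.month, end.day, 6, 0, 0))
    return start_ts, end_ts


def pack_dst(year: int) -> bytes:
    """8 bytes: two unsigned 32-bit offsets from EPOCH_2023 (big-endian assumed)."""
    start_ts, end_ts = dst_bounds_utc(year)
    return struct.pack(">II", start_ts - EPOCH_2023, end_ts - EPOCH_2023)


def eastern_timestamp(ts: int) -> int:
    """Shift a UTC timestamp so that UTC date math yields New York local time."""
    year = datetime.fromtimestamp(ts, tz=timezone.utc).year
    start_ts, end_ts = dst_bounds_utc(year)
    return ts - (4 if start_ts <= ts < end_ts else 5) * 3600
```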

## Acknowledgments

Inspired by [Mariano's](https://github.com/nanexcool) legendary "office hours" modifier:

<a href="https://twitter.com/nanexcool/status/1259623747339849729" target="_blank">

<img src="./img/office_hours.png" width=480px />

</a>

Thanks to:

- [saucepoint](https://twitter.com/saucepoint) for their [v4 hooks template](https://github.com/saucepoint/v4-template)

- [BokkyPooBah](https://twitter.com/BokkyPooBah) for [BokkyPooBahsDateTimeLibrary](https://github.com/bokkypoobah/BokkyPooBahsDateTimeLibrary)

|

Ambershawl656/AM

|

https://github.com/Ambershawl656/AM

| null |

Real Time Operating System Lessons using Arduino and The FreeRTOS Kernel

Topics: learning, arduino, examples, atmel, freertos, learning-exercise, arduino-boards, arduino-platform, example-project, arduino-uno, rtos, arduino-mega, example-repo, example-codes

# AM

|

mierenhoop/advent-of-beans

|

https://github.com/mierenhoop/advent-of-beans

| null |

# Advent of beans

Advent of code clone built with [Redbean](https://redbean.dev/).

## Status

*I will pause development on this project because it is currently in a working state. I did not plan to create actual puzzles and host an event, therefore adding new features might be a waste of time. If anyone is interested in using this project for hosting their event, create a github issue or contact me so I can help you get it set up.*

As of the time of writing it has the following features:

* Page listing all the puzzles

* Puzzle can have starting time

* Puzzle inputs are stored in buckets, some users will share a bucket

* Wrong answer submit timeout

* Leaderboard for everything & individual puzzles

* Silver and gold stars

* User profile page

* Github integration

* Cached remote resources to enable use in private networks

* All-in-one binary with control of the database via the command line

* No javascript

Todo list:

* Rate limiting/DDOS protection

* Support multiple events

|

Venusdev2113/javascript-serveral-animation-effect

|

https://github.com/Venusdev2113/javascript-serveral-animation-effect

|

This project includes many animation effects.

|

# javascript-serveral-animation-effect

This project includes many animation effects.

|

lablab-ai/Google-VertexAI-FastAPI

|

https://github.com/lablab-ai/Google-VertexAI-FastAPI

|

Simple boilerplate to get started with Generative AI models from Google Vertex AI based on FastAPI

|

# Getting started with Vertex AI Generative AI

## Before you begin

This is a simple starter boilerplate that gives you a basic FastAPI setup with a few endpoints. It is meant to be used as a starting point for your own projects.

### Clone and install dependencies

In your terminal, run the following commands:

```

git clone git@github.com:lablab-ai/Google-VertexAI-FastAPI.git

cd Google-VertexAI-FastAPI

cd app

pip install -r requirements.txt

```

### Update the project auth

To use the Vertex AI SDK, you need to set up project authentication with a service account.

In the `app` folder, create a file named `service_account.json`. If it doesn't exist yet, you can create it by running the following command in your terminal:

`touch service_account.json`

Paste the contents of your service account JSON file into `service_account.json`. It should look like this:

```

{

"type": "service_account",

"project_id": "YOUR_PROJECT_ID",

"private_key_id": "YOUR_PRIVATE_KEY_ID",

"private_key": "YOUR_PRIVATE_KEY",

"client_email": "YOUR_CLIENT_EMAIL",

"client_id": "YOUR_CLIENT_ID",

"auth_uri": "YOUR_AUTH_URI",

"token_uri": "YOUR_TOKEN_URI",

"auth_provider_x509_cert_url": "YOUR_AUTH_PROVIDER_X509_CERT_URL",

"client_x509_cert_url": "YOUR_CLIENT_X509_CERT_URL",

"universe_domain": "YOUR_UNIVERSE_DOMAIN"

}

```

You can find your service account JSON file in the Vertex AI console under `Settings > Service account`. If you are taking part in the Google Vertex AI hackathon, lablab.ai provides it to you.
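Before starting the server, it can help to check that the pasted file is complete. The sketch below is an optional helper, not part of this boilerplate. `service_account_problems` is a hypothetical name, and `EXPECTED_FIELDS` is copied from the template above. It flags fields that are missing, empty, or still hold a `YOUR_...` placeholder. If your key file legitimately lacks a field such as `universe_domain`, remove it from the tuple.

```python
import json
from pathlib import Path
from typing import List, Union

# Field names copied from the service_account.json template above
EXPECTED_FIELDS = (
    "type", "project_id", "private_key_id", "private_key", "client_email",
    "client_id", "auth_uri", "token_uri", "auth_provider_x509_cert_url",
    "client_x509_cert_url", "universe_domain",
)


def service_account_problems(path: Union[str, Path] = "service_account.json") -> List[str]:
    """Return a list of problems; an empty list means the file looks complete."""
    data = json.loads(Path(path).read_text())
    problems = []
    for field in EXPECTED_FIELDS:
        value = data.get(field)
        if not value:
            problems.append(f"missing or empty: {field}")
        elif isinstance(value, str) and value.startswith("YOUR_"):
            problems.append(f"placeholder not replaced: {field}")
    if data.get("type") not in (None, "service_account"):
        problems.append('"type" should be "service_account"')
    return problems
```

Running `print(service_account_problems())` from the `app` directory prints an empty list once every field has been filled in.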

### Start the server and test

Once you have installed the dependencies, you can start the server by running: `uvicorn main:app --reload --port 8080` in the `app` directory.

When the server is running, you can test it by going to `http://localhost:8080/docs` in your browser. You should see the Swagger UI where you can test the endpoints.

Good luck, and don't forget to star this repo if you like it!

**Thank you** for reading! If you enjoyed this tutorial, you can find more and continue reading

[on our tutorial page](https://lablab.ai/t/)

---


## Join the LabLab Discord

On the lablab Discord, we discuss this repo and many other topics related to artificial intelligence! Check out upcoming [Artificial Intelligence Hackathons](https://lablab.ai) events.


|

DeepGraphLearning/ProtST

|

https://github.com/DeepGraphLearning/ProtST

|

Camera-ready repo for ProtST

|

# ProtST: Multi-Modality Learning of Protein Sequences and Biomedical Texts

ProtST is an advanced pretraining framework for protein sequence understanding and prediction, as introduced in our [ICML2023 oral paper](https://arxiv.org/abs/2301.12040). It is designed to enhance protein sequence pre-training and understanding by integrating protein functions and other important properties through biomedical texts.

The effectiveness and superiority of ProtST-induced PLMs over previous ones are demonstrated on diverse downstream representation learning tasks and zero-shot predictions. ProtST also enables functional protein retrieval from large-scale databases, even without any function annotation.

# Installation #

You may install the dependencies of TorchProtein and ProtST as below.

Generally, they work with Python 3.7/3.8 and PyTorch version >= 1.8.0.

```bash

conda create -n protein python=3.9

conda activate protein

conda install pytorch==2.0.0 pytorch-cuda=11.7 -c pytorch -c nvidia

conda install torchdrug pytorch-sparse pytorch-scatter pytorch-cluster -c pytorch -c pyg -c milagraph

conda install scikit-learn pandas decorator ipython networkx tqdm matplotlib -y

conda install fair-esm transformers easydict pyyaml lmdb -c conda-forge

```

# Pre-trained Model Zoo

| Model | Config | Ckpt |
|:---:|:---:|:---:|
| ProtST-ESM-1b | [config](config/pretrain/pretrain_esm.yaml) | [ckpt](https://protsl.s3.us-east-2.amazonaws.com/checkpoints/protst_esm1b.pth) |
| ProtST-ESM-2 | [config](config/pretrain/pretrain_esm.yaml) | [ckpt](https://protsl.s3.us-east-2.amazonaws.com/checkpoints/protst_esm2.pth) |
| ProtST-ProtBert | [config](config/pretrain/pretrain_protbert.yaml) | [ckpt](https://protsl.s3.us-east-2.amazonaws.com/checkpoints/protst_protbert.pth) |

# Usage

To reproduce all the experiments in ProtST, we provide the necessary configuration files at `config/.../*.yaml`, organized by dataset, model architecture, and hyperparameters. When running an experiment, pass the configuration file with the `--config` argument, along with every argument marked by `{{ }}` in that configuration file.
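The `{{ }}` markers behave like template variables. As an illustration only, here is a minimal standard-library sketch of how such placeholders could be detected and filled from command-line values. The helper names and the sample config text are hypothetical, and ProtST's own scripts may handle this differently (for example, with a templating library).

```python
import re

# Matches "{{ name }}" with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_placeholders(config_text: str) -> list:
    """Names of all placeholders in the config, sorted and de-duplicated."""
    return sorted(set(PLACEHOLDER.findall(config_text)))


def fill_placeholders(config_text: str, values: dict) -> str:
    """Substitute every placeholder, failing loudly if a value is missing."""
    missing = [name for name in find_placeholders(config_text) if name not in values]
    if missing:
        raise ValueError(f"missing required arguments: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), config_text)


# Hypothetical config fragment, not copied from ProtST.
template = "checkpoint: {{ checkpoint }}\ndataset: {{dataset}}\n"
print(fill_placeholders(template, {"checkpoint": "model.pth", "dataset": "BinaryLocalization"}))
```

Failing on a missing value mirrors the README's requirement that every `{{ }}` argument be supplied on the command line.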

Note that all datasets are downloaded automatically by the code. If you are using a cluster without Internet access, run `python ./script/prepare_all_datasets.py` to cache the datasets in advance.

## Pre-training

By default, we pretrain three different PLM backbones (ESM-1b, ESM-2 and ProtBert) on 4 V100 GPUs with the following commands. Two versions of the text encoder are available: PubMedBERT trained only on abstracts (`PubMedBERT-abs`) and PubMedBERT trained on full papers (`PubMedBERT-full`).

```

alias python4proc='python -m torch.distributed.launch --nproc_per_node=4'

# pretrain ESM-1b

python4proc script/run_pretrain.py --config ./config/pretrain/pretrain_esm.yaml --protein_model ESM-1b --text_model PubMedBERT-abs

# pretrain ESM-2

python4proc script/run_pretrain.py --config ./config/pretrain/pretrain_esm.yaml --protein_model ESM-2-650M --text_model PubMedBERT-abs

# pretrain ProtBert

python4proc script/run_pretrain.py --config ./config/pretrain/pretrain_protbert.yaml --text_model PubMedBERT-abs

```

## Downstream Tasks: Representation Learning

For representation learning, we verify our pre-trained multimodal PLMs on 11 standard benchmarks for protein localization prediction, fitness landscape prediction and protein function annotation, under both fix-encoder learning and full-model tuning settings.

We refer to the path of the pre-trained checkpoint as `PRETRAIN_CHECKPOINT`. The configuration files for each PLM backbone are in `./config/downstream_task/.../*.yaml`. The examples below use ProtST-enhanced ESM-1b.

### Protein Localization Prediction

For binary localization prediction, you can run as below to perform fix-encoder learning and full-model tuning, respectively:

```

# fix-encoder learning

python4proc ./script/run_downstream.py --config ./config/downstream_task/PretrainESM/localization_fix.yaml --checkpoint $PRETRAIN_CHECKPOINT --dataset BinaryLocalization --num_class 2

# full-model tuning

python4proc ./script/run_downstream.py --config ./config/downstream_task/PretrainESM/localization_tune.yaml --checkpoint $PRETRAIN_CHECKPOINT --dataset BinaryLocalization --num_class 2

```

**Note that** subcellular localization prediction can be performed in a similar way (see `./config` for details).

### Fitness Landscape Prediction

For Beta-Lactamase fitness prediction, you can run as below to perform fix-encoder learning and full-model tuning, respectively:

```

# fix-encoder learning

python4proc ./script/run_downstream.py --config ./config/downstream_task/PretrainESM/fitness_fix.yaml --checkpoint $PRETRAIN_CHECKPOINT --dataset BetaLactamase --batch_size 32

# full-model tuning

python4proc ./script/run_downstream.py --config ./config/downstream_task/PretrainESM/fitness_tune.yaml --checkpoint $PRETRAIN_CHECKPOINT --dataset BetaLactamase --batch_size 6

```

**Note that** Fluorescence, Stability, AAV and Thermostability prediction can be performed in a similar way (see `./config` for details).

### Protein Function Annotation

For Enzyme Commission (EC) number prediction, you can run as below to perform full-model tuning:

```

python4proc ./script/run_downstream.py --config ./config/downstream_task/PretrainESM/annotation_tune.yaml --checkpoint $PRETRAIN_CHECKPOINT --dataset td_datasets.EnzymeCommission --branch null

```

**Note that** Gene Ontology (GO) term prediction for the Molecular Function (MF), Biological Process (BP) and Cellular Component (CC) branches can be performed in a similar way (see `./config` for details).

## Downstream Tasks: Zero-shot Protein Classification

### Zero-shot Predictors

ProtST supports zero-shot protein classification, which requires no labeled proteins. It works by comparing the representation similarity between a query protein and every label. This is possible because ProtST aligns the representation spaces of protein sequences and label descriptions.

We demonstrate on zero-shot subcellular localization prediction and zero-shot reaction classification with ProtST-enhanced ESM-1b. We have also explored different prompt templates and description fields as listed in `./data/zero_shot_classification/`.

```

# Subcellular Localization Prediction

python ./script/run_zero_shot.py --config ./config/zero_shot/PretrainESM/zero_shot.yaml --checkpoint $PRETRAIN_CHECKPOINT --prompt_label ./data/zero_shot_classification/subloc_name.tsv --dataset SubcellularLocalization --field "['name']"

# Reaction Classification

python ./script/run_zero_shot.py --config ./config/zero_shot/PretrainESM/zero_shot.yaml --checkpoint $PRETRAIN_CHECKPOINT --prompt_label ./data/zero_shot_classification/reaction_name.tsv --dataset Reaction --field "['name']"

```
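Here is a minimal pure-Python sketch that makes the idea concrete: zero-shot prediction by representation similarity. It assumes you already have one embedding for the query protein and one embedding per label description (the toy vectors below are made up). This is not ProtST's implementation, which works on model outputs and may use a different scoring function.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_predict(protein_embedding, label_embeddings):
    """Return (best_label_index, scores), scoring each label by similarity."""
    scores = [cosine_similarity(protein_embedding, e) for e in label_embeddings]
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, scores


# Toy example: three hypothetical label embeddings in a shared 3-d space.
labels = ["nucleus", "cytoplasm", "membrane"]
label_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
best, scores = zero_shot_predict([0.2, 0.9, 0.1], label_embeddings)
print(labels[best])  # cytoplasm
```

No labeled proteins appear anywhere. The only "training signal" is the alignment between the protein and text embedding spaces.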

### Few-shot and Non-parametric Baselines

ProtST-induced zero-shot classifiers are more data-efficient than various few-shot and non-parametric classifiers. You can run these baselines as below:

```

# few-shot classifiers

## Subcellular Localization Prediction

python ./script/run_few_shot.py --config ./config/few_shot/PretrainESM/few_shot.yaml --dataset SubcellularLocalization --num_class 10 --checkpoint $PRETRAIN_CHECKPOINT

## Reaction Classification

python ./script/run_few_shot.py --config ./config/few_shot/PretrainESM/few_shot.yaml --dataset Reaction --num_class 384 --checkpoint $PRETRAIN_CHECKPOINT

# non-parametric few-shot classifiers

## Subcellular Localization Prediction

python ./script/run_few_shot_nonparam.py --config ./config/few_shot/PretrainESM/few_shot.yaml --dataset SubcellularLocalization --num_class 10 --checkpoint $PRETRAIN_CHECKPOINT

## Reaction Classification

python ./script/run_few_shot_nonparam.py --config ./config/few_shot/PretrainESM/few_shot.yaml --dataset Reaction --num_class 384 --checkpoint $PRETRAIN_CHECKPOINT

```

### Predictor Ensemble

We also show that ProtST-based zero-shot predictors can improve the performance of supervised learning models through ensembling. Use the following scripts to build the ensembles, where `SUPERVISED_CHECKPOINT` refers to a checkpoint obtained by supervised learning on the downstream task.

```

## Subcellular Localization Prediction

python ./script/run_supervised_with_zero.py -sc ./config/downstream_task/PretrainESM/localization_fix.yaml -zc ./config/zero_shot/zero_shot.yaml --dataset SubcellularLocalization --num_class 10 --prompt_label ./data/zero_shot_classification/subloc_name.tsv --field "['name']" --checkpoint $PRETRAIN_CHECKPOINT --supervised_checkpoint $SUPERVISED_CHECKPOINT

## Reaction Classification

python ./script/run_supervised_with_zero.py -sc ./config/downstream_task/PretrainESM/reaction_tune.yaml -zc ./config/zero_shot/zero_shot.yaml --dataset Reaction --num_class 384 --prompt_label ./data/zero_shot_classification/reaction_name.tsv --field "['name']" --checkpoint $PRETRAIN_CHECKPOINT --supervised_checkpoint $SUPERVISED_CHECKPOINT

```

## Downstream Tasks: Text to Protein Retrieval

We illustrate the capability of ProtST-ESM-1b on retrieving functional proteins as below, where no function annotation is required:

```

python ./script/run_t2p_retrieval.py --config ./config/t2p_retrieval/go_mf.yaml --checkpoint $PRETRAIN_CHECKPOINT

```

# License

This codebase is released under the Apache License 2.0 as in the [LICENSE](LICENSE) file.

# Citation

If you find this project helpful, please cite our paper:

```

@article{xu2023protst,

title={ProtST: Multi-Modality Learning of Protein Sequences and Biomedical Texts},

author={Xu, Minghao and Yuan, Xinyu and Miret, Santiago and Tang, Jian},

journal={arXiv preprint arXiv:2301.12040},

year={2023}

}

```

# Contact

For any questions or issues, please open an issue or contact Minghao Xu and Xinyu Yuan.

|

CarlJamison/cosmic-air-host

|

https://github.com/CarlJamison/cosmic-air-host

|

Store entire html pages in a single url

|

Compresses and creates a link for simple, self-contained html sites.

Imagine a scenario where you have a quick proof of concept or cool visual that you want to show to someone, but don't feel like hosting it anywhere. For example, something like [this](https://carljamison.github.io/TimeKiller/). You can use this project to create a link, where all the scripts and styling are stored in the url. In the above case, [this](https://air-host.azurewebsites.net/h/sVW7W_iNhj_V0Kk0bgxNKHlJjkYdGq560n0qMqkXZWLthxxIRrElRPoKsz_vsd2Agm9Tvu2DwQ_b_bPz6vlYCC_WPKj7BNvOJBnlpzQD1eelSZ0_XodZ9s4t-SU-l7vaji4kGegkw1lmM_jFaN9vORiTsNI_-c0tG9sbM_gdwu_qR3hfMlYoRSe49XKrNab4ppvsoJ6-Dlnm4RTH9-Mr6d399PZ-GEG1Pjr-OHz4x-fP375Or6BowMZje_uf3uktmUHMlzFeTGr9pVnVCK7gmojPC_-pvKsK6XdS4AE7FLIR7n_PU2K5YXGDdxJyb1l6WJZlOxA2YH-9FzTWJGgODFkIF05kTt9ozCN1OEHJIYOpGOJTfZJxGvmoJ0MGZU40C5SH7lxGB22WDdh4AqWIKTd1pV_Kv4ufXJqMkWybrxgrqs82qKsq5TbbeCyjInFawdYa1bEP_gqzdcI2_c2PWhVSgO1KMSRYbarK8IZQ9ZdMLHWm2u4sBfChz2oJ33pG00wXKVPrEjXDMmtteVpYv2VrlbXgsXFRjBHuTEU1A8qc2ViMDTvIahgz4Inm7kyQlgp3NcUTrVh_5_qyc4d37I1HAHi_V5psCfB8qVJCcfgkbgDETy3b8GGWLarA_Li2t-L78XsmbFEMaXw2eW5EV0IhPYqoDWQO71TMNsIARmcpNniehkLB9ISnCX4C3znfIUCiF3Hb1GhDDhkmri47PgIF1T8AgucldgDudVBy-B-GYX7ItzzKK0KRHN1YYWQrDnSwHL5giKEfQ8UXbemeiyrY-TOabeP4yQ5BGcHIImG6nIMUIkG7BZYBYiYOOEqvuQYap2oJMPHfCP15MMqe4hJImyOJhUGDFlDvD3C3h7yxVcurSMCp-oieN7kSyBMwwg1xCjU6CLKlFEzybCAe-5qbaPdbolRlb3fbPKzfSTBhwKjGonJKVVr0Lmqong3zN_eDfOhuuhlUA85RNiqIXF5icV1dCYgjcnb79kqZ5ZCcVtDAbQkLeoUTSwqgx3ImwYWpIqxqAq20UBHzhFdpYEb3iywjxBxikNjadjjo3mDryJ6wD1teO-_IFbeU1cjCEr4rfZtUxv_i-r0RFU5rfh__FFleKMn6XRasOI-37CEP8RZwtfQlXxKxWgNapDNGj90BnMD0jsVGYHrI3J5KnIPVlcgarffmkGeaUxvEhqqCHOAt7UAnSPgfI4gjCOfaBpzOFDRfUO7lfzXSt4x8kvS8d-coGPz3gmmJoCOQh6dnFbKQOL60cnJpcxt2HVO7WBbIolCpDQAg5mmAzPGhjDR1UIYWgA9hWyxO7Yu3bJcI9hJVS8fcZ3WBJq82rF0b3ktYFJawkAj552mc0QGvcxEhgoTG8oh16A9GqzNDFFug5iaR9KoeisRs4A5bhZmRjUG3k5e6LcHUV0yEAM5DYTrIsXlwOADOQlgA1OpBhxX94XBVIwKc1394AqyFj2-cbSOGkowe-HPvOjCLFJePFLUFosfju32-h_OYWi5NrbeI5B5rkk6K17hNXnYAjOalZIHNgfnlu8xXv7Xvgif4APL_f7NyIP5ObnoIT3yYD1Vaz3yfO847vqeZ-acalW1SQczVM830C
1nG0zfw1iDV2RaPfoglnL5D8p4-45SCwAA) would be the resulting link.

This is obviously only useful in specific, limited scenarios. If the HTML is not self-contained, or if it references scripts, styles, images, etc. that it can't access, then this won't work. It also won't work if the HTML is too complex. It is intended for small, quick projects that might not be worth hosting anywhere.
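The core idea of storing a page in the URL can be sketched in a few lines of Python. This sketch illustrates the general technique and is not this project's actual encoding. It compresses the HTML with zlib and encodes the bytes as URL-safe base64, and `https://example.com/h/` is a placeholder host. A larger page produces a longer URL, which is why the approach only suits small pages.

```python
import base64
import zlib

BASE_URL = "https://example.com/h/"  # placeholder host, not the real service


def html_to_url(html: str) -> str:
    """Compress the page and pack it into a URL-safe path segment."""
    compressed = zlib.compress(html.encode("utf-8"), 9)
    token = base64.urlsafe_b64encode(compressed).rstrip(b"=").decode("ascii")
    return BASE_URL + token


def url_to_html(url: str) -> str:
    """Reverse html_to_url: restore base64 padding, decode, then decompress."""
    token = url[len(BASE_URL):]
    padded = token + "=" * (-len(token) % 4)
    return zlib.decompress(base64.urlsafe_b64decode(padded)).decode("utf-8")


page = "<!doctype html><title>Demo</title><script>document.write('hi')</script>"
link = html_to_url(page)
print(len(page), len(link))
```

The padding characters are stripped because `=` has a special meaning in query strings, and they can be recomputed from the token length when decoding.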

|

luolin-ai/FastGPT-Enterprise-WeChatbot

|

https://github.com/luolin-ai/FastGPT-Enterprise-WeChatbot

|

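A bot built on a WeCom GPT knowledge base that automatically replies to messages received in WeCom (Enterprise WeChat). It handles both private and group chats, and it remembers its conversations with users so that its replies fit the context. You can also set a whitelist to control which users or groups the bot interacts with. To connect your own knowledge-base website, contact WX: aiwis99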

|

# FastGPT-Enterprise-WeChatbot

# Enterprise-WeChat-GPTbot

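A WeChat bot for WeCom (Enterprise WeChat) external groups, with FastGPT support

# WeCom bot tutorial:

1. Download WeCom (Enterprise WeChat) version 4.0.8.6027 for this project: https://drive.weixin.qq.com/s?k=ANMA2gc2ACsWAjgBOT

To connect your own knowledge-base website, contact WX: gojiberrys

2. Configuration:

Open https://fastgpt.run/ and click to create an AI.

On the site, click to create a new app, then click to generate a key.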
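The whitelist and chat-memory features in the project description can be illustrated with a small, self-contained sketch. Everything here is a hypothetical design for illustration, not this project's code or FastGPT's API. That includes the `ChatGate` class, its parameters, and the choice to key memory by group for group chats and by user for private chats.

```python
from collections import defaultdict, deque
from typing import Optional


class ChatGate:
    """Decides whether to reply and keeps a short per-conversation history."""

    def __init__(self, allowed_users, allowed_groups, max_messages=10):
        self.allowed_users = set(allowed_users)
        self.allowed_groups = set(allowed_groups)
        # Once max_messages is reached, the oldest message is dropped automatically.
        self._history = defaultdict(lambda: deque(maxlen=max_messages))

    def should_reply(self, sender: str, group: Optional[str] = None) -> bool:
        """Group messages are gated by the group whitelist, private ones by the user whitelist."""
        if group is not None:
            return group in self.allowed_groups
        return sender in self.allowed_users

    @staticmethod
    def conversation_id(sender: str, group: Optional[str] = None) -> str:
        """One shared memory per group chat, one per private chat."""
        return f"group:{group}" if group is not None else f"user:{sender}"

    def remember(self, conversation: str, role: str, text: str) -> None:
        self._history[conversation].append({"role": role, "content": text})

    def context(self, conversation: str) -> list:
        """Recent messages to send along with the next request to the model."""
        return list(self._history[conversation])
```

Bounding the history keeps each request small, at the cost of forgetting older turns.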

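# Scan the QR code to join the discussion group

Hello everyone! I'm very happy to announce that I have successfully completed my code project! Through persistent effort and teamwork, we have built a smarter WeCom chatbot.

Along the way, I realized that we need some financial support to further improve the project and provide a better user experience. So I'd like to ask for sponsorship. The funds will be used to buy necessary resources, improve the code, and add features, so that we can provide the best possible service.

As a sponsor, you will receive the following privileges and rewards:

Early access to new features and updates: you will be among the first to try the project's latest features and improvements.

Participation in project decisions and feature discussions: your voice and opinions are vital to the project's development, and you will be invited to take part in project decisions and feature discussions.

Special thanks and recognition: as a sponsor, you will receive our special thanks and recognition, and your support will be specifically mentioned in the project.

If you would like to support me and my project, you can sponsor it in the following ways:

Whether or not you can sponsor, I am very grateful for your interest in and support of my project. If you have any questions, suggestions, or comments, feel free to reach out to me. Let's work together to make the project a success!

Thank you all! Whether or not you can sponsor, your support means a lot to me. Let's watch this project grow and improve together!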

|

nickrosh/evol-teacher

|

https://github.com/nickrosh/evol-teacher

|

Open Source WizardCoder Dataset

|

# Evol-Teacher: Recreating WizardCoder

This repo implements the code-generation instruction process described in the [WizardCoder Paper](https://arxiv.org/pdf/2306.08568.pdf). WizardCoder is currently one of the most performant code generation models, beaten only by ChatGPT. This process takes the Code Alpaca 20k dataset and evolves each instruction through a randomly chosen evolution prompt to increase its complexity. The prompts range from increasing time/space complexity, to adding requirements, to adding erroneous code to improve robustness, and so on. This is done three times, with pruning and post-processing to remove unwanted instructions and responses. Iteratively adding complexity produces higher-quality, more in-depth instructions than Alpaca methods usually generate. As with WizardCoder and WizardLM, this can lead to strong performance that comes very close to that of RLHF models.

`generate_evol.py` allows you to generate an Evolution-Instruct dataset from any instruction dataset in the format `Instruction`/`Response`. Alpaca style datasets that contain `input` fields can be converted to Evolution format with `convert_alpaca_to_evol()`. The high level overview of the evolution process is as follows:

1. A seed instruction is taken and evolved with a randomly chosen evolution prompt using GPT-3.5.

2. Responses to each of these newly evolved prompts are also generated with GPT-3.5.

3. Poor quality instructions and responses are pruned and also prevented from further evolution.

4. This evolution process repeats M times. In the paper and the default value in this repo, M=3.
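The four steps above can be summarized as a loop. The sketch below is a hypothetical outline, not `generate_evol.py`. The `evolve`, `respond`, and `is_poor` callables stand in for the GPT-3.5 calls and the pruning rules, the prompt labels are placeholders, and the `instruction`/`response` field names are assumptions.

```python
import random
from typing import Callable, Dict, List, Optional

Example = Dict[str, str]

# Placeholder labels for the kinds of evolution prompts described above.
EVOLUTION_PROMPTS = [
    "increase time/space complexity",
    "add more requirements",
    "add erroneous code as a misleading reference",
]


def evolve_dataset(
    seed: List[Example],
    evolve: Callable[[str, str], str],   # (evolution prompt, instruction) -> new instruction
    respond: Callable[[str], str],       # instruction -> response
    is_poor: Callable[[Example], bool],  # pruning rule
    rounds: int = 3,                     # M in the steps above
    rng: Optional[random.Random] = None,
) -> List[List[Example]]:
    """Return [seed, generation 1, ..., generation M]."""
    rng = rng or random.Random(0)
    generations = [seed]
    current = seed
    for _ in range(rounds):
        next_generation = []
        for example in current:
            prompt = rng.choice(EVOLUTION_PROMPTS)
            instruction = evolve(prompt, example["instruction"])
            candidate = {"instruction": instruction, "response": respond(instruction)}
            # Pruned candidates are dropped and are not evolved further.
            if not is_poor(candidate):
                next_generation.append(candidate)
        generations.append(next_generation)
        current = next_generation
    return generations
```

Keeping each generation separate matches the repo's note that the evolutions are saved individually for later analysis. With no pruning, a 20k seed set and M = 3 yields at most 20k × (1 + 3) = 80k pairs.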

As described in the paper, I performed this process on the full 20k Code Alpaca dataset with three evolutions, resulting in a total of 80k instruction-response pairs. Over 120,000 API calls were made to OpenAI to create this dataset, and due to the rate limit, it took around three days to complete.

## Getting the full 80k Dataset

The full dataset can be found on [HuggingFace Hub](https://huggingface.co/datasets/nickrosh/Evol-Instruct-Code-80k-v1).

You can also easily recreate the full dataset by running `merge_evolutions(output_dir="./data/EvolInstruct-Code-80k/")` within `generate_evol.py`. This will merge the seed dataset and the three evolutions. The evolutions are saved here separately for later analysis on generational differences.

## Fine Tuning

We can instruct-tune a model using this dataset very similarly to Alpaca tuning. Simply run `train.py` with your desired parameters. If you set the model max length to 512, it will have a much smaller memory footprint and you will be able to train faster. I instruct-tuned [ReplitLM](https://github.com/replit/ReplitLM) on the full 80k dataset using the following parameters:

```bash
python train.py \
    --model_name_or_path replit/replit-code-v1-3b \
    --data_path ./data/EvolInstruct-Code-80k/EvolInstruct-Code-80k.json \
    --output_dir ./checkpoints \
    --num_train_epochs 3 \
    --per_device_train_batch_size 1 \
    --gradient_accumulation_steps 8 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 50 \
    --save_total_limit 2 \
    --learning_rate 2e-5 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --lr_scheduler_type "cosine" \
    --logging_steps 1 \
    --model_max_length 2000 \
    --bf16 True \
    --tf32 True
```

The instruction tuned version of the model can be found on [HuggingFace Hub](https://huggingface.co/nickrosh/Evol-Replit-v1)

## Evaluation

With greedy decoding, this model achieves a HumanEval pass@1 score of 31.1%, up from 21% for the base ReplitLM. Note that this is not the same foundation model as the original WizardCoder, which was fine-tuned on top of StarCoder, a model that scores 34% out of the box. To run HumanEval on this model, follow the package installation instructions in the HumanEval repo and then run the following script with `sh human_eval.sh`.

```bash

#!/bin/sh

model="./checkpoints" # Path to your model

temp=0.0

max_len=2048

pred_num=1

num_seqs_per_iter=1

output_path=preds/T${temp}_N${pred_num}

mkdir -p ${output_path}

echo 'Output path: '$output_path

echo 'Model to eval: '$model

# Default Sampling: temp=0.2, pred_num=200, num_seqs_per_iter=2

# CUDA_VISIBLE_DEVICES=0 python humaneval_gen.py --model ${model} \

# --temperature ${temp} --num_seqs_per_iter ${num_seqs_per_iter} --N ${pred_num} \

# --max_len ${max_len} --output_path ${output_path}

# Greedy Decoding: Also set temp=0.0, pred_num=1, and num_seqs_per_iter=1

CUDA_VISIBLE_DEVICES=0 python humaneval_gen.py --model ${model} \
    --temperature ${temp} --num_seqs_per_iter ${num_seqs_per_iter} --N ${pred_num} \
    --max_len ${max_len} --output_path ${output_path} --greedy_decode

output_path=preds/T${temp}_N${pred_num}

echo 'Output path: '$output_path

python process_humaneval.py --path ${output_path} --out_path ${output_path}.jsonl --add_prompt

evaluate_functional_correctness ${output_path}.jsonl

```

Please refer to the WizardCoder repo for an example of how to run HumanEval on multiple GPUs.
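For reference, HumanEval scores are usually reported with the unbiased pass@k estimator from the HumanEval paper (Chen et al., 2021, "Evaluating Large Language Models Trained on Code"). Given n samples per problem of which c pass, pass@k = 1 - C(n-c, k) / C(n, k), averaged over problems. Under greedy decoding (n = k = 1), this reduces to the fraction of problems solved. Here is a minimal standard-library sketch of that formula:

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k for one problem: n samples, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Greedy decoding: one sample per problem, so pass@1 is just the solve rate.
print(mean_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 0)], k=1))  # 0.5
```

The direct binomial form is fine for the sample counts used here. For very large n, a product form avoids huge intermediate integers.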

## Citation

Thanks to the original WizardCoder team

```

@misc{luo2023wizardcoder,

title={WizardCoder: Empowering Code Large Language Models with Evol-Instruct},

author={Ziyang Luo and Can Xu and Pu Zhao and Qingfeng Sun and Xiubo Geng and Wenxiang Hu and Chongyang Tao and Jing Ma and Qingwei Lin and Daxin Jiang},

year={2023},

eprint={2306.08568},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

And thanks to the original Alpaca team.

```

@misc{alpaca,

author = {Rohan Taori and Ishaan Gulrajani and Tianyi Zhang and Yann Dubois and Xuechen Li and Carlos Guestrin and Percy Liang and Tatsunori B. Hashimoto },

title = {Stanford Alpaca: An Instruction-following LLaMA model},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/tatsu-lab/stanford_alpaca}},

}

```

And thanks to sahil280114 for the CodeAlpaca project and seed dataset.

```

@misc{codealpaca,

author = {Sahil Chaudhary},

title = {Code Alpaca: An Instruction-following LLaMA model for code generation},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/sahil280114/codealpaca}},

}

```

Naturally, you should also cite the original LLaMA paper [1] and the Self-Instruct paper [2].

Also thanks to [Teknium1](https://github.com/teknium1/stanford_alpaca-replit) for the Replit training script, which I modified to accept Evol-Instruct formatted input.

|

juri/Parse3339

|

https://github.com/juri/Parse3339

|

A fast pure Swift RFC 3339 timestamp parser

|


# Parse3339

Parse3339 is a fast [RFC 3339] time stamp parser written in pure Swift.

RFC 3339 specifies the commonly used subset of ISO 8601 suitable for time stamps. This parser restricts the subset even further. The following are the formats supported by Parse3339:

- `2023-07-09T13:14:00+03:00`

- `2023-07-09T13:14:00.2+03:00`

- `2023-07-09T13:14:00Z`

- `2023-07-09T13:14:00.2Z`

There's nothing to configure, and everything is in a single file, in case you want to copy it over instead of using it as a package.

[RFC 3339]: https://www.rfc-editor.org/rfc/rfc3339

## Usage

```swift

import Parse3339

let s = "2023-07-09T13:14:00+03:00"

guard let parts = Parse3339.parse(s) else {

return

}

let date = parts.date

print(date.timeIntervalSinceReferenceDate)

// output: 710590440.0

```
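The expected output above can be cross-checked with a rough Python equivalent of the "standard Unix functions" approach for the four supported shapes. This is an illustrative sketch, not a port of Parse3339, and it does not validate field ranges. The `REFERENCE_DATE_OFFSET` constant is the number of seconds between the Unix epoch and Foundation's reference date (2001-01-01T00:00:00Z).

```python
import calendar
import re
from typing import Optional

# Date, "T", time, optional fraction, then "Z" or a +hh:mm / -hh:mm offset.
RFC3339_SUBSET = re.compile(
    r"(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})(\.\d+)?(Z|[+-]\d{2}:\d{2})"
)

# Seconds from 1970-01-01T00:00:00Z to 2001-01-01T00:00:00Z.
REFERENCE_DATE_OFFSET = 978_307_200


def parse_unix_seconds(s: str) -> Optional[float]:
    """Return seconds since the Unix epoch, or None if s is not one of the four shapes."""
    m = RFC3339_SUBSET.fullmatch(s)
    if m is None:
        return None
    year, month, day, hour, minute, second = (int(g) for g in m.groups()[:6])
    # timegm interprets the fields as UTC and does not range-check them.
    seconds = calendar.timegm((year, month, day, hour, minute, second)) + float(m.group(7) or 0)
    zone = m.group(8)
    if zone != "Z":
        sign = 1 if zone[0] == "+" else -1
        offset = sign * (int(zone[1:3]) * 3600 + int(zone[4:6]) * 60)
        seconds -= offset  # local = UTC + offset, so UTC = local - offset
    return seconds


print(parse_unix_seconds("2023-07-09T13:14:00+03:00") - REFERENCE_DATE_OFFSET)  # 710590440.0
```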

There's a helper function you can use with Foundation's `JSONDecoder`:

```swift

import Parse3339

let decoder = JSONDecoder()

decoder.dateDecodingStrategy = .custom(Parse3339.parseFromDecoder(_:))

```

For `Package.swift` snippets and documentation, visit the [Swift Package Index page](https://swiftpackageindex.com/juri/Parse3339).

## Speed and memory usage

Parse3339 is pleasantly fast and stingy with memory usage. The package has benchmarks:

```sh

env PARSE3339_BENCHMARK=1 swift package benchmark --target ParserBenchmarks

```

It has benchmarks that parse the same string using Foundation's `DateFormatter`, Foundation's `ISO8601DateFormatter`, Parse3339 creating a `Date` with Foundation's `DateComponents` and `Calendar`, and Parse3339 creating a `Date` with standard Unix functions.

Output from one run:

```
Parse with DateFormatter
╒════════════════════════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╕
│ Metric                     │      p0 │     p25 │     p50 │     p75 │     p90 │     p99 │    p100 │ Samples │
╞════════════════════════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╡
│ Malloc (total)             │     275 │     275 │     275 │     275 │     275 │     275 │     279 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Memory (resident peak) (M) │      11 │      15 │      19 │      23 │      25 │      27 │      27 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Throughput (# / s) (K)     │      17 │      16 │      16 │      16 │      15 │      12 │       1 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (total CPU) (ns)      │   59084 │   59967 │   60255 │   61887 │   64927 │   82175 │  236750 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (wall clock) (ns)     │   58500 │   59391 │   59647 │   61279 │   64255 │   83327 │  513167 │  100000 │
╘════════════════════════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╛

Parse with ISO8601DateFormatter
╒════════════════════════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╕
│ Metric                     │      p0 │     p25 │     p50 │     p75 │     p90 │     p99 │    p100 │ Samples │
╞════════════════════════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╡
│ Malloc (total)             │     496 │     496 │     496 │     496 │     496 │     496 │     497 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Memory (resident peak) (K) │    9764 │    9781 │    9781 │    9781 │    9781 │    9781 │    9781 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Throughput (# / s) (K)     │      11 │      10 │      10 │      10 │      10 │       7 │       0 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (total CPU) (ns)      │   91375 │   92351 │   92799 │   95551 │   99519 │  123007 │  542458 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (wall clock) (ns)     │   90792 │   91711 │   92159 │   94911 │   98879 │  127487 │ 3553542 │  100000 │
╘════════════════════════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╛

Parse with Parse3339 (DateComponents)
╒════════════════════════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╕
│ Metric                     │      p0 │     p25 │     p50 │     p75 │     p90 │     p99 │    p100 │ Samples │
╞════════════════════════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╡
│ Malloc (total)             │      64 │      64 │      64 │      64 │      64 │      64 │      67 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Memory (resident peak) (M) │       9 │      41 │      73 │     105 │     124 │     136 │     137 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Throughput (# / s) (K)     │      43 │      42 │      42 │      41 │      38 │      29 │       4 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (total CPU) (ns)      │   23416 │   23999 │   24223 │   25007 │   26463 │   34751 │  206791 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (wall clock) (ns)     │   22833 │   23423 │   23631 │   24383 │   25807 │   34335 │  211458 │  100000 │
╘════════════════════════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╛

Parse with Parse3339 (Unix time)
╒════════════════════════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╤═════════╕
│ Metric                     │      p0 │     p25 │     p50 │     p75 │     p90 │     p99 │    p100 │ Samples │
╞════════════════════════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╪═════════╡
│ Malloc (total)             │       0 │       0 │       0 │       0 │       0 │       0 │       0 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Memory (resident peak) (K) │    7831 │    7852 │    7864 │    7864 │    7864 │    7864 │    7864 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Throughput (# / s) (K)     │     263 │     252 │     247 │     242 │     233 │     183 │      17 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (total CPU) (ns)      │    4333 │    4503 │    4543 │    4667 │    4875 │    6543 │   48584 │  100000 │
├────────────────────────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼─────────┤
│ Time (wall clock) (ns)     │    3791 │    3959 │    4041 │    4127 │    4291 │    5459 │   56625 │  100000 │
╘════════════════════════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╧═════════╛
```

|

jinhoyoo/summarize_youtube_video_lecture

|

https://github.com/jinhoyoo/summarize_youtube_video_lecture

|

Summarize a YouTube video's transcript using the chapters configured by its creator

|

# Youtube Script Summarizer

## Overview

Youtube Script Summarizer is a tool that summarizes YouTube video transcripts chapter by chapter, using the chapters defined by the video's creator. It uses the youtube-transcript-api, langchain, and OpenAI packages to do this.

## How it works

The youtube-transcript-api package is used to extract the script from a YouTube video. The script is then processed using the langchain package to identify the language and translate it if necessary. Finally, the OpenAI package is used to generate a summary of the script for each chapter created.

## Usage

0. Clone this project and set up Python 3.11 or later.

1. Install the dependencies

``` bash

$ pip install -r requirements.txt

```

2. Open `summary.ipynb`

- Create `.env` file and set `OPENAI_API_KEY` variable.

- Edit the variables in the "Input variables" cell according to your requirements.

- Provide the YouTube video ID (`youtube_video_id`) for which you want to generate a summary.

- Specify the subtitle language (`language`).

- Copy and paste the time stamps and chapter names from the YouTube video description into the `chapter_part_in_description` variable.

``` Python

# Youtube video ID

youtube_video_id="MZQ6bc6mPAE"

# Language of subtitles

language = "ko"

# There is no official way to get chapters automatically,

# so copy and paste the timestamps and chapter titles from the YouTube video description.

chapter_part_in_description = """

00:00 시작

05:24 해먹을 결심: 탄핵해야 하는 이유

10:55 댓글 읽어보기

24:27 인사조직론이란 무엇인가?

33:52 (게르만 모형) 왜 직무가 중요한가?

43:11 추미애의 직무인식

49:16 직무의 존재목적, 칸트의 인간관과 경영학적 응용

1:04:40 성과책임의 사회적 의미 (참고)직무의 3대 구성요소

1:06:05 직무개념의 부재

1:07:44 주진우와 양향자

1:11:52 추미애의 고백과 진심

1:35:09 왜 역량인가?

1:35:47 역량의 개념에 대한 이해

1:38:05 《성취예측모형》 프레임워크와 역량사전

1:41:48 진실한 리더십과 인재평가의 프레임워크

1:43:59 DANO 경영플랫폼 운용_리더십이란 무엇인가?

1:49:42 추미애에 대한 오해의 프레임과 추미애의 비전은 무엇인가?

1:53:21 이재명과 추미애 vs. 이낙연과 김진표

1:55:52 푸른 하늘을(김수영 시인, 1960.06.15.)

1:58:57 정리

"""

```

3. Run the notebook to generate `markdown_note.md`.

- This file has the script and summary for each chapter.
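The chapter handling can be illustrated with a short sketch. It parses the pasted description into (start, title) pairs, then assigns each transcript segment to the chapter it starts in. This is a hypothetical outline, not the notebook's code. The segment shape (`{"start": seconds, "text": ...}`) is an assumption based on what youtube-transcript-api has typically returned. The sketch drops segments that begin before the first chapter, which is one reason to start the chapter list at zero.

```python
import bisect
import re
from typing import Dict, List, Tuple

# "MM:SS Title" or "H:MM:SS Title", as in the chapter list above.
CHAPTER_LINE = re.compile(r"^\s*(\d+(?::\d{2}){1,2})\s+(.+?)\s*$")


def to_seconds(timestamp: str) -> int:
    """Convert a timestamp to seconds, e.g. '05:24' -> 324 and '1:04:40' -> 3880."""
    seconds = 0
    for part in timestamp.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def parse_chapters(description: str) -> List[Tuple[int, str]]:
    """Extract (start_seconds, title) pairs, ignoring blank or non-matching lines."""
    chapters = []
    for line in description.splitlines():
        m = CHAPTER_LINE.match(line)
        if m:
            chapters.append((to_seconds(m.group(1)), m.group(2)))
    return sorted(chapters)


def group_by_chapter(segments: List[Dict], chapters: List[Tuple[int, str]]) -> List[Tuple[str, str]]:
    """Join segment texts per chapter; segments before the first chapter are dropped."""
    starts = [start for start, _ in chapters]
    texts: List[List[str]] = [[] for _ in chapters]
    for segment in segments:
        i = bisect.bisect_right(starts, segment["start"]) - 1
        if i >= 0:
            texts[i].append(segment["text"])
    return [(title, " ".join(parts)) for (_, title), parts in zip(chapters, texts)]
```

Each joined chapter text could then be passed to the summarization step on its own.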

## Troubleshooting

- Please define '00:00:00' as the first chapter's start time in `chapter_part_in_description`.

## FAQ

- To-Do

|

Moexin/IPTV

|

https://github.com/Moexin/IPTV

|

MyIPTV

|

Auto Update IPTV in 2023-08-02 08:42:04 CST

|

renegadevi/nuxt-boilerplate

|

https://github.com/renegadevi/nuxt-boilerplate

|

A ready to use Nuxt 3 boilerplate. (w/ Tailwind, i18n, Pinia, GDPR, Dark mode, TypeScript etc.)

|

# Nuxt Boilerplate

A ready to use Nuxt 3 boilerplate

**Demo**: https://nuxt-boilerplate.onrender.com/

## Setup

### Prerequisites:

- [pnpm](https://pnpm.io/)

- [mkcert](https://github.com/FiloSottile/mkcert) (Optional localhost HTTPS)

### Install dependencies:

```bash

pnpm install

```

### Optional: `.env`

```ini

VITE_BASE_URL="https://localhost:3000/"

```

### Optional: Generate certificate for HTTPS for localhost

```bash

mkdir -p certs

cd certs

mkcert localhost

```

## Start local server

### Start development server

```bash

# HTTP

pnpm run dev

# HTTPS

pnpm run dev-https

```

### Start production build server

```bash

# HTTP

pnpm run build && pnpm run preview

# HTTPS

pnpm run build && pnpm run preview-https

```

Check out the [deployment documentation](https://nuxt.com/docs/getting-started/deployment) for more information.

## Nuxt Modules included:

- [eslint](https://nuxt.com/modules/eslint)

- [pinia](https://nuxt.com/modules/pinia)

- [tailwindcss](https://nuxt.com/modules/tailwindcss)

- [i18n](https://nuxt.com/modules/i18n)

- [device](https://nuxt.com/modules/device)

- [devtools](https://nuxt.com/modules/devtools)

- [image](https://nuxt.com/modules/image)

- [google-fonts](https://nuxt.com/modules/google-fonts)

- [color-mode](https://nuxt.com/modules/color-mode)

- [icon](https://nuxt.com/modules/icon)

- [cookie-control](https://nuxt.com/modules/cookie-control)

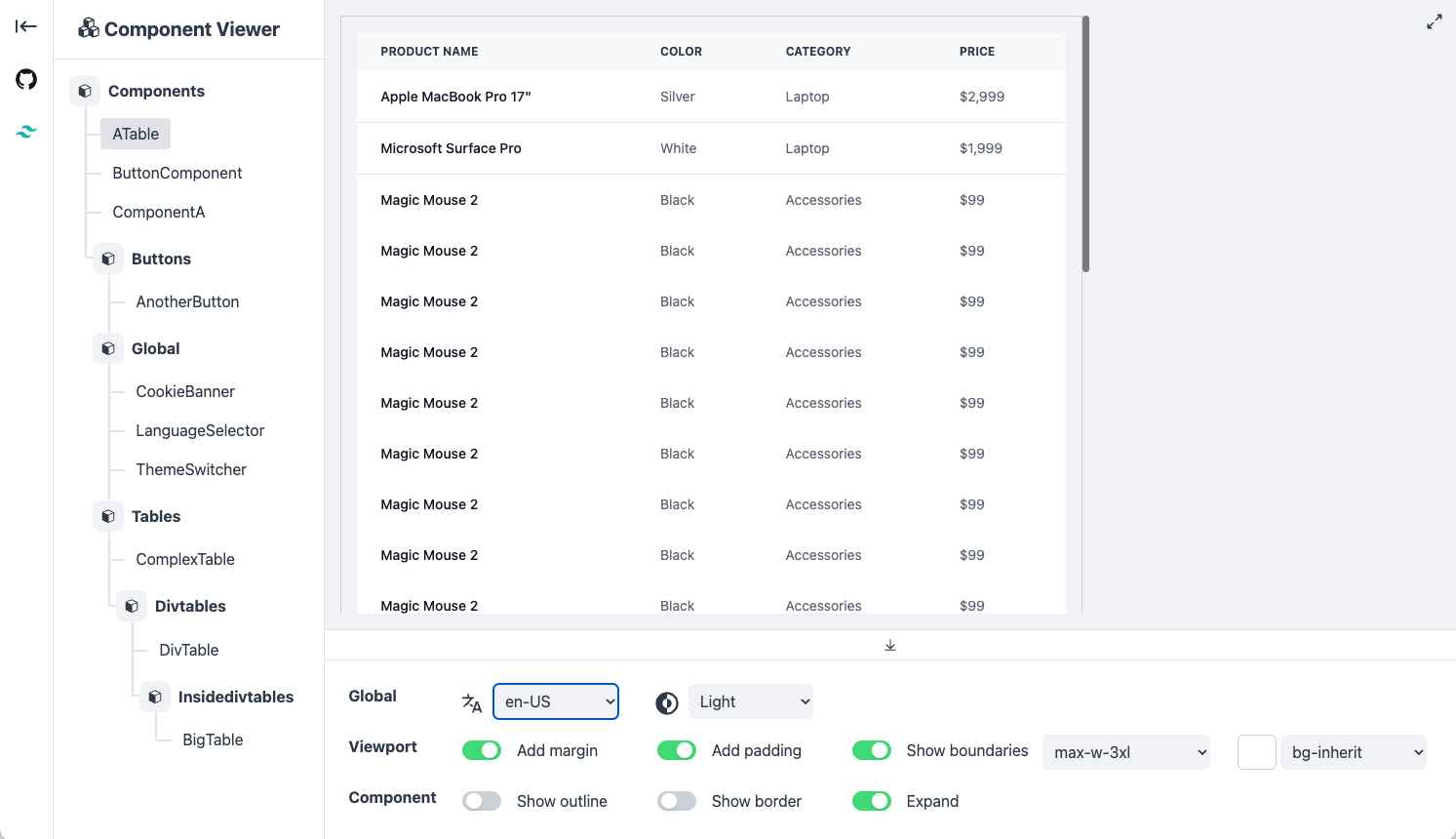

## Optional: Component Viewer

If you are looking for a component viewer while building and designing components, I made this extension for Nuxt. Drop `_components.vue` into the `pages` directory. Make sure `layouts` contains a `blank.vue` that holds only a `<slot />`, then go to `/_components`.

https://github.com/renegadevi/nuxt-component-viewer

|

NowScott/IndWebIndex

|

https://github.com/NowScott/IndWebIndex

|

Individual Web Index.

|

# Individual Web Index

[![data update][data-update-image]][data-update-url]

[![GitHub stars][stars-image]][stars-url]

[![GitHub forks][forks-image]][forks-url]

[![license GPL-3.0][license-image]][license-url]

[![commit-count-permonth][commit-image]][commit-url]

[![contributors-count][contributors-image]][contributors-url]

[![web][web-image]][web-url]

[![web-en][web-en-image]][web-en-url]

## Star History

[![Star History Chart][stars-history-image]][stars-url]

[data-update-url]:https://github.com/NowScott/web_database/actions/workflows/scraping.yml "Data update"

[data-update-image]:https://img.shields.io/github/actions/workflow/status/NowScott/IndWebIndex/scraping.yml?label=data%20update

[stars-url]:https://github.com/NowScott/IndWebIndex/stargazers "Stars"

[stars-image]: https://img.shields.io/github/stars/NowScott/IndWebIndex?label=Star

[forks-url]: https://github.com/NowScott/IndWebIndex/forks "Forks"

[forks-image]: https://img.shields.io/github/forks/NowScott/IndWebIndex?label=Fork

[license-url]: https://opensource.org/license/gpl-3-0/ "License"

[license-image]: https://img.shields.io/github/license/NowScott/IndWebIndex

[commit-url]:https://github.com/NowScott/IndWebIndex/commits/main "Commits"

[commit-image]:https://img.shields.io/github/commit-activity/m/NowScott/IndWebIndex

[contributors-url]:https://github.com/NowScott/IndWebIndex/graphs/contributors "Contributors"

[contributors-image]:https://img.shields.io/github/contributors/NowScott/IndWebIndex

[web-url]:https://www.nowscott.top "Chinese web page"

[web-image]:https://img.shields.io/badge/%E7%BD%91%E9%A1%B5%E9%A2%84%E8%A7%88-%E4%B8%AD%E6%96%87-blue

[web-en-url]:https://www.nowscott.top/en "English Web"

[web-en-image]:https://img.shields.io/badge/Preview-EN-blue

[stars-history-image]:https://api.star-history.com/svg?repos=NowScott/IndWebIndex&type=Date

## 1. Purpose

In the early days of the internet, there were websites known as yellow pages, which indexed many other websites. Over time, they gradually gave way to search engines.

However, search engine results are often imprecise when you are looking for one specific website. That is why I still believe everyone needs their own yellow pages.

A personal yellow pages would collect frequently used websites. It would also let you add tags and short descriptions to each one, which makes simple searching and browsing possible.

In the end, I named this personal yellow pages "Individual Web Index."
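The tag-and-description idea maps onto a very small data structure. Below is a minimal Python sketch. It assumes each entry has a name, URL, description, and tag list. The `Site` fields and the `search`/`by_tag` helpers are hypothetical. They are not the site's real schema, which lives in a Notion database.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Site:
    """One entry in a personal web index (hypothetical fields)."""
    name: str
    url: str
    description: str = ""
    tags: List[str] = field(default_factory=list)


def search(sites: List[Site], query: str) -> List[Site]:
    """Searching: case-insensitive substring match on name, description, and tags."""
    q = query.lower()
    return [
        s for s in sites
        if q in s.name.lower()
        or q in s.description.lower()
        or any(q in tag.lower() for tag in s.tags)
    ]


def by_tag(sites: List[Site], tag: str) -> List[Site]:
    """Browsing: every site that carries exactly this tag."""
    return [s for s in sites if tag in s.tags]
```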

## 2. Website iterations

Since I first had this idea, the website has gone through many iterations, and its URL has changed several times. The current and final site is [nowscott.top](https://nowscott.top).

At first, I wrote the data file by hand. Every time I wanted to update the content, I had to open the project's source, edit the data file, and then push it to GitHub. This was quite inconvenient.

So in the latest version, I store the data in a Notion database and retrieve it through the Notion API. The next section explains how this works.

## 3. How the Notion API is used

(The code for fetching data from Notion is not included in this project.)

The idea came from a video by a Bilibili uploader:

[【S1E3】Writing a simple API with Notion as a database](https://www.bilibili.com/video/BV1gF411E7pV/?share_source=copy_web&vd_source=98c7014c35363c157a4fba4929dbda77)

In this video, I learned how to use the Notion API to fetch data from Notion and display it on a web page. If you have any questions, you can watch the video for more information.

In short, I used Netlify to deploy a service that runs every time someone visits the deployed domain. The service retrieves the data from Notion and returns it to the frontend, which then displays it on the page.

This sounds great, right? However, this simple round trip can take as long as 7 to 8 seconds. That does not fit the goal of a fast-loading personal website, so I made further improvements here.

## 4. Optimizations

I cannot change how fast the Notion API responds, but I can change how the data is fetched. I keep editing the data in Notion, where it is convenient, and I also store a copy of the data in a location that is fast to access. The website fetches this stored copy instead of querying Notion on every visit, which resolves the slow loading.

When the data in Notion changes, this copy is not updated immediately, because it has no direct connection to Notion. To address this, I use GitHub Actions to refresh the stored copy on a regular schedule. The site's data therefore stays current to within one update interval.
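The fetch-once, serve-many idea can be sketched in a few lines of Python. This is a minimal illustration that rests on several assumptions:

- `fetch` stands in for the slow Notion query.
- The snapshot is a local JSON file.
- `refresh_snapshot` is the step a scheduled job (GitHub Actions in this project) would run.

None of these names come from the project, and its fetching code is not included.

```python
import json
import time
from pathlib import Path
from typing import Callable, List


def refresh_snapshot(fetch: Callable[[], List[dict]], path: Path) -> None:
    """Slow path, run on a schedule: call the data source and overwrite the stored copy."""
    records = fetch()
    tmp = path.with_suffix(".tmp")
    tmp.write_text(
        json.dumps({"updated_at": time.time(), "records": records}),
        encoding="utf-8",
    )
    # Swap the new file into place so readers never see a half-written snapshot.
    tmp.replace(path)


def load_snapshot(path: Path) -> List[dict]:
    """Fast path, run on every page load: read only the stored copy, never the source."""
    return json.loads(path.read_text(encoding="utf-8"))["records"]
```

Page loads then cost one file read, while the slow API call happens only when the scheduled job runs.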

## 5. Final notes

In summary, this project is a web-based personal website index that loads quickly and refreshes its data on a regular schedule. Keeping the data in Notion makes modifications easy, and serving a stored copy keeps browsing fast. Thank you for your interest in this project.

If you have any questions or inquiries regarding this project, you can contact me via email at: [<i class="fa fa-envelope-o"></i> [email protected]](mailto:[email protected])

|

flare-ai/customGPT-template

|

https://github.com/flare-ai/customGPT-template

|

Mercury - Train your own custom GPT. Chat with any file, or website.

|

<h1 align="center">Mercury</h1>

<h4 align="center">

<img width="800" src="https://github.com/jacobvo888/readme-assets/blob/main/merc-search.png" alt="">

</h4>

<h4 align="center">

<img width="200" src="https://github.com/jacobvo888/readme-assets/blob/main/logo-down-indigo.webp" alt="">

</h4>

## Chat with any Document or Website

> Train your own custom GPT

- Train on specific websites that you define

- Train on documents you upload

- Builds on the ongoing conversation using chat history

- Cites sources

- [Perplexity](https://www.perplexity.ai/)-style UI

<h2 align="center">

<br>

<img width="1000" src="https://github.com/jacobvo888/readme-assets/blob/main/merc-read-1.png" alt="">

</h2>

#### Supported Files

- [x] .pdf

- [x] .docx

- [x] .md

- [x] .txt

- [x] .png

- [x] .jpg

- [x] .html

- [x] .json

#### Coming Soon

- [ ] .csv

- [ ] .pptx

- [ ] Notion

- [ ] Next.js 13 app directory

- [ ] Vercel AI SDK

## Train

#### 1. Upload: `/api/embed-file`

- The uploaded file is cleaned to plain text and split into 1000-character documents.

- OpenAI's embedding API is used to generate embeddings for each document using the "text-embedding-ada-002" model.

- The embeddings are stored in a Pinecone namespace.
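Below is a minimal Python sketch of the splitting step, assuming plain fixed-size character windows. The README does not say whether Mercury's chunks overlap or respect word boundaries, and this sketch does neither. `split_text` is a hypothetical helper, not the repo's code.

```python
from typing import List


def split_text(text: str, chunk_size: int = 1000) -> List[str]:
    """Split cleaned plain text into consecutive pieces of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    # Collapse runs of whitespace left over from file-to-text conversion.
    text = " ".join(text.split())
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
```

Each resulting piece would then be sent to the embedding model as one document.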

#### 2. Scrape: `/api/embed-webpage`

- Web pages are scraped using [cheerio](https://github.com/cheeriojs/cheerio), cleaned to plain text, and split into 1000-character documents.

- OpenAI's embedding API is used to generate embeddings for each document using the "text-embedding-ada-002" model.

- The embeddings are stored in a Pinecone namespace.
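The repo uses cheerio (JavaScript) for scraping. Purely as an illustration, the same "HTML to plain text" step can be sketched with Python's standard-library `html.parser`, which stands in for cheerio here. `html_to_text` is a hypothetical helper. It drops `<script>`/`<style>` contents and collapses whitespace.

```python
from html.parser import HTMLParser
from typing import List


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of non-visible elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    """Return the page's visible text as a single whitespace-normalized string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())
```

The output would then go through the same splitting and embedding steps as an uploaded file.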

<h4 align="center">

<br>

<img width="1000" src="https://github.com/jacobvo888/readme-assets/blob/main/merc-dark-train.png" alt="">

</h4>

## Query

#### Responding to queries: `/api/query`

- A single embedding is generated from the user prompt.

- The embedding is used to perform a similarity search against the vector database.

- The results of the similarity search are used to construct a prompt for GPT-3.

- The GPT-3 response is then streamed back to the user.
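In Mercury, the similarity search runs in Pinecone. To show what that step computes, here is a brute-force, in-memory stand-in in Python. It assumes cosine similarity and uses a hypothetical prompt template. Neither `top_k` nor `build_prompt` is Mercury's actual code.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float], docs: List[Tuple[str, List[float]]], k: int = 3) -> List[str]:
    """Return the texts of the k stored documents most similar to the query embedding."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, contexts: List[str]) -> str:
    """Number each retrieved chunk so the model can cite it (hypothetical template)."""
    sources = "\n\n".join(f"[{i + 1}] {c}" for i, c in enumerate(contexts))
    return (
        "Answer the question using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}\nAnswer:"
    )
```

Numbering the sources in the prompt is one simple way to support the "Cites sources" feature listed above, though the repo may do it differently.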

<h4 align="center">

<br>

<img width="1000" src="https://github.com/jacobvo888/readme-assets/blob/main/merc-2.gif" alt="">

</h4>

## Getting Started

### 1. Clone Repo and Install Deps