id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

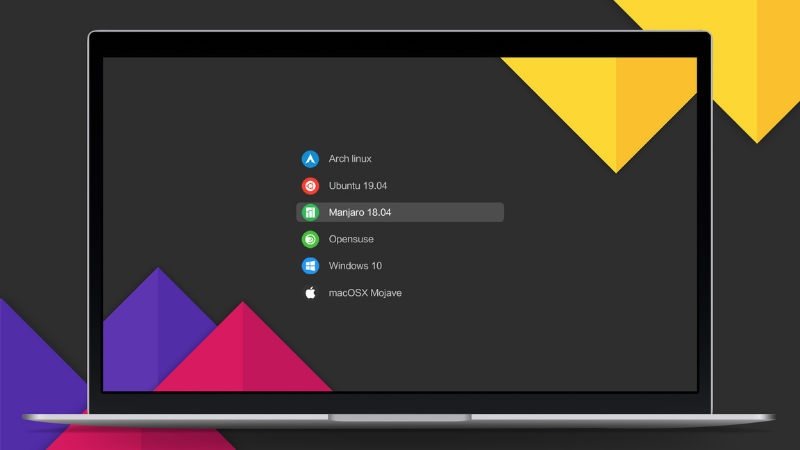

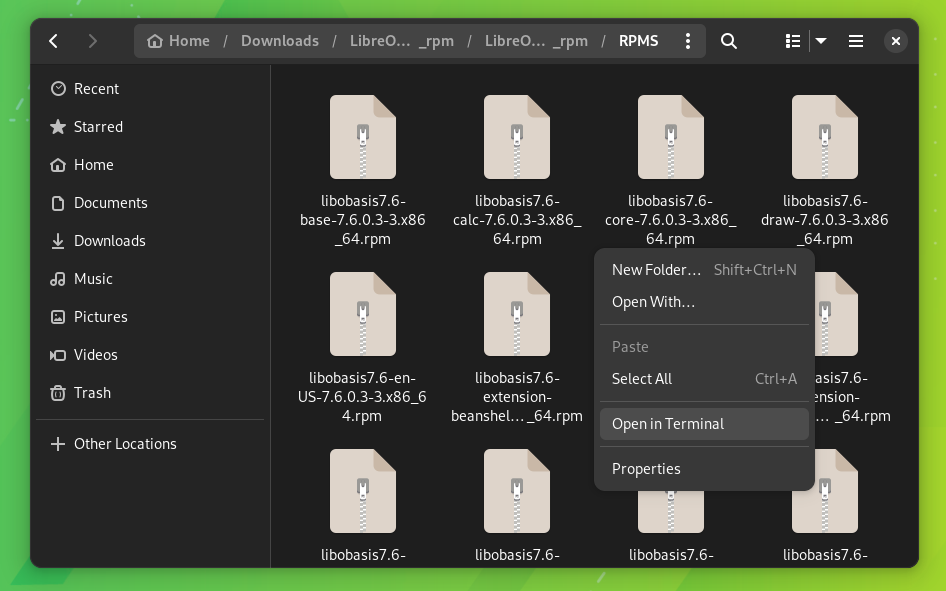

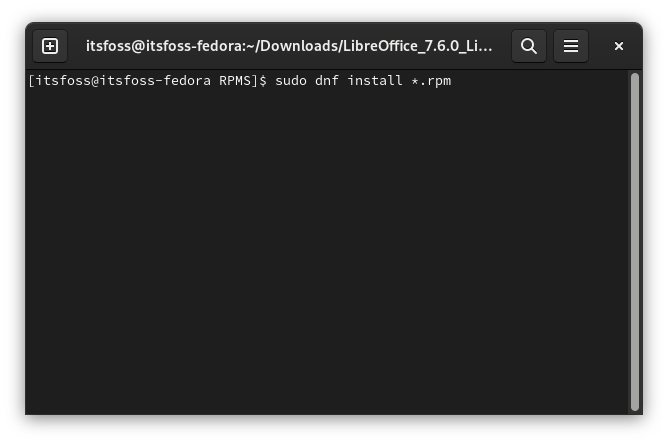

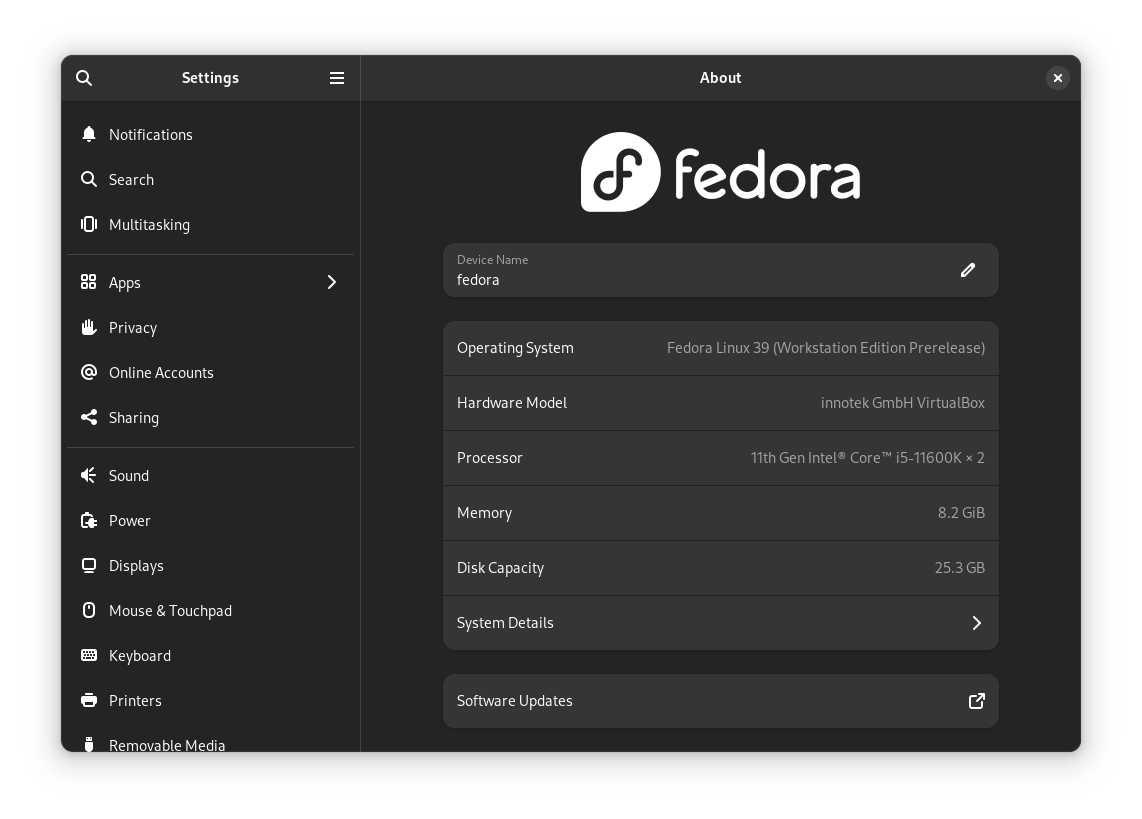

16,050 | Fedora Linux 的家族(一):官方版本 | https://fedoramagazine.org/fedora-linux-editions-part-1-official-editions/ | 2023-07-31T09:14:00 | [

"Fedora"

] | https://linux.cn/article-16050-1.html |

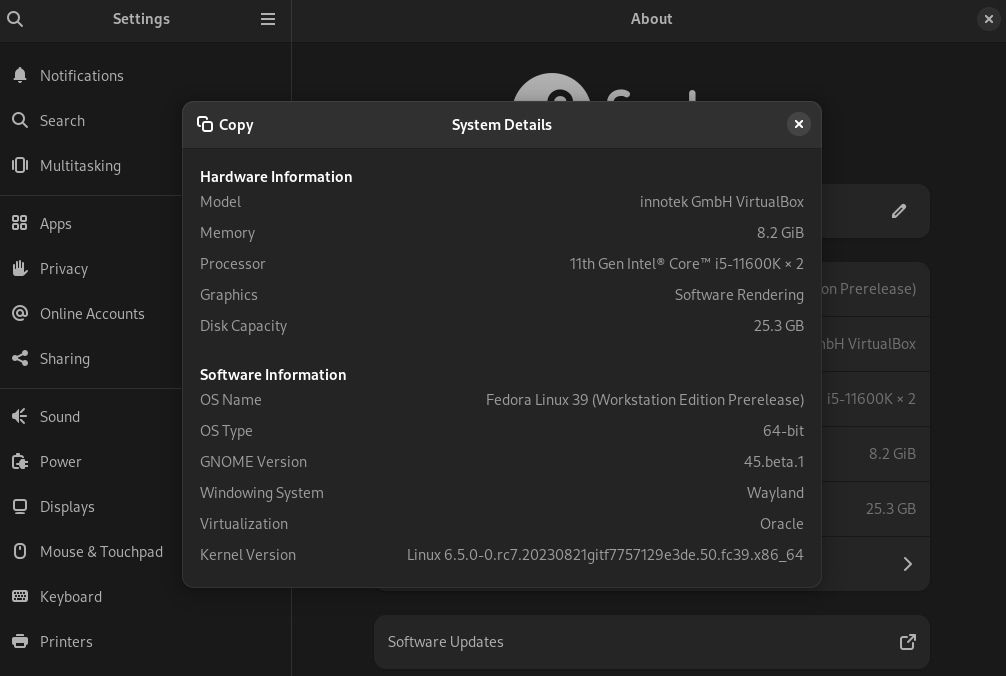

Fedora Linux 提供了多个变体以满足你的需求。你可以在我之前的文章《[Fedora Linux 的各种版本](/article-15003-1.html)》中找到所有 Fedora Linux 变体的概述。本文将对 Fedora Linux 官方版本进行更详细的介绍。共有五个 <ruby> 版本 <rt> Edition </rt></ruby>: Fedora Workstation、Fedora Server、Fedora IoT、Fedora CoreOS 和 Fedora Silverblue。Fedora Linux 下载页面目前显示其中三个为 *官方* 版本,另外两个为 *新兴* 版本。本文将涵盖所有五个版本。

### Fedora Workstation

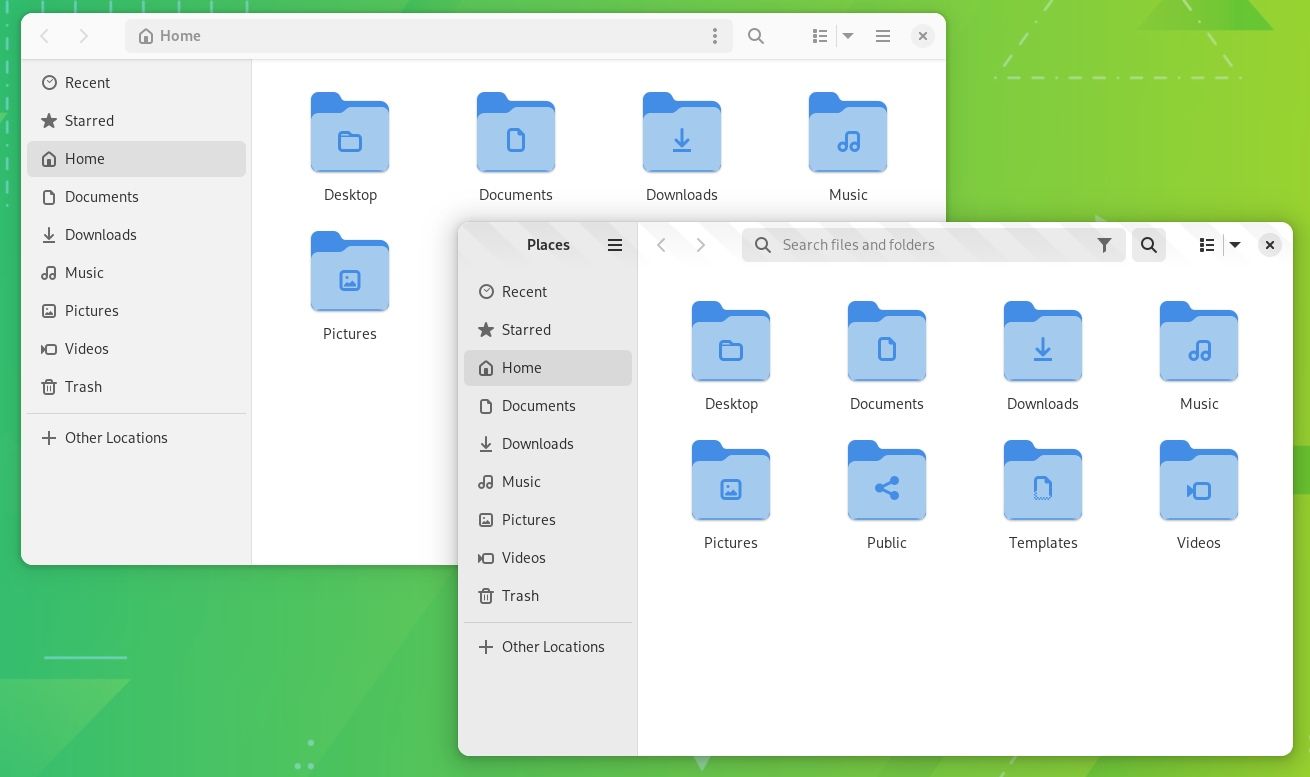

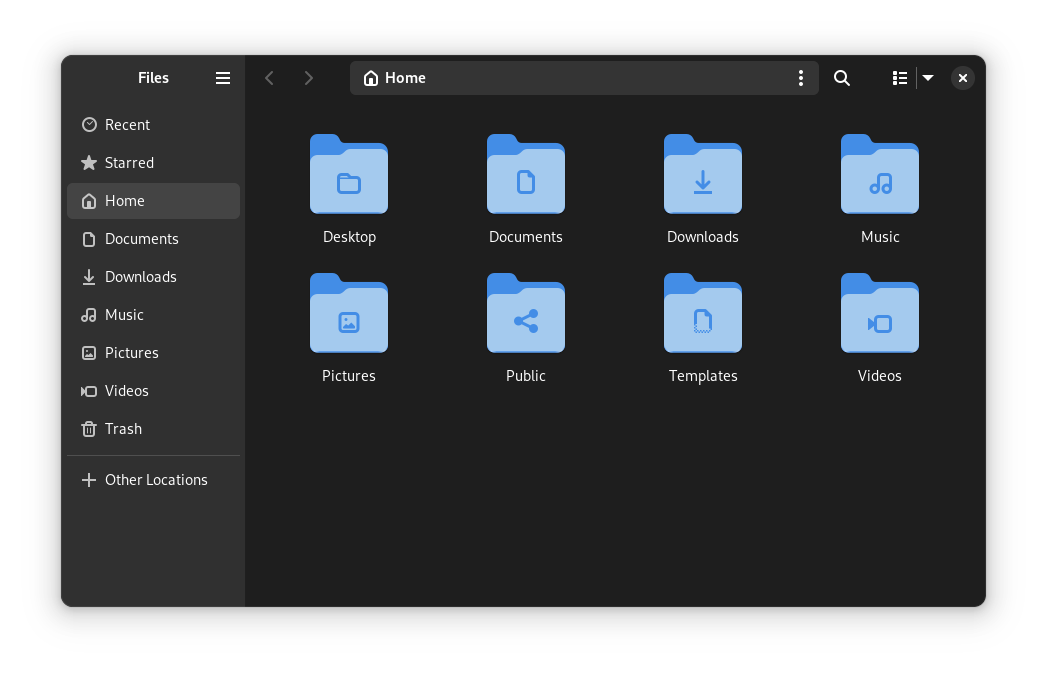

如果你是笔记本电脑或台式计算机用户,则 Fedora Workstation 是适合你的操作系统。Fedora Workstation 非常易于使用。你可以用它满足日常工作、教育、爱好等需求。例如,你可以使用它创建文档,制作演示文稿,上网冲浪,处理图像,编辑视频等等。

这个 Fedora Linux 版本默认使用 GNOME 桌面环境。你可以使用这种环境舒适地工作和进行各种活动。你还可以根据个人喜好自定义 Fedora Workstation 的外观,让你在使用过程中更加舒适。如果你是 Fedora Workstation 的新用户,你可以阅读我之前的文章 [在安装 Fedora Workstation 之后要做的事](https://fedoramagazine.org/things-to-do-after-installing-fedora-34-workstation/)。通过该文章,你将更容易上手 Fedora Workstation。

更多信息请参阅以下链接:

>

> **[Fedora Workstation](https://getfedora.org/en/workstation/)**

>

>

>

### Fedora Server

许多公司需要自己的服务器来支持基础设施。Fedora Server 版操作系统配备了一个强大的基于 Web 的管理界面称为 Cockpit,具有现代化的外观。Cockpit 可以让你轻松查看和监控系统性能和状态。

Fedora Server 包含一些开源世界中的最新技术,并得到一个活跃的社区的支持。它非常稳定可靠。然而,并不保证 Fedora 社区中的任何人都能够在你遇到问题时提供帮助。如果你运行的是关键任务的应用程序,并且可能需要技术支持,你可能要考虑使用 [Red Hat Enterprise Linux](https://www.redhat.com/en/technologies/linux-platforms/enterprise-linux)。

更多信息请访问以下链接:

>

> **[Fedora Server](https://getfedora.org/en/server/)**

>

>

>

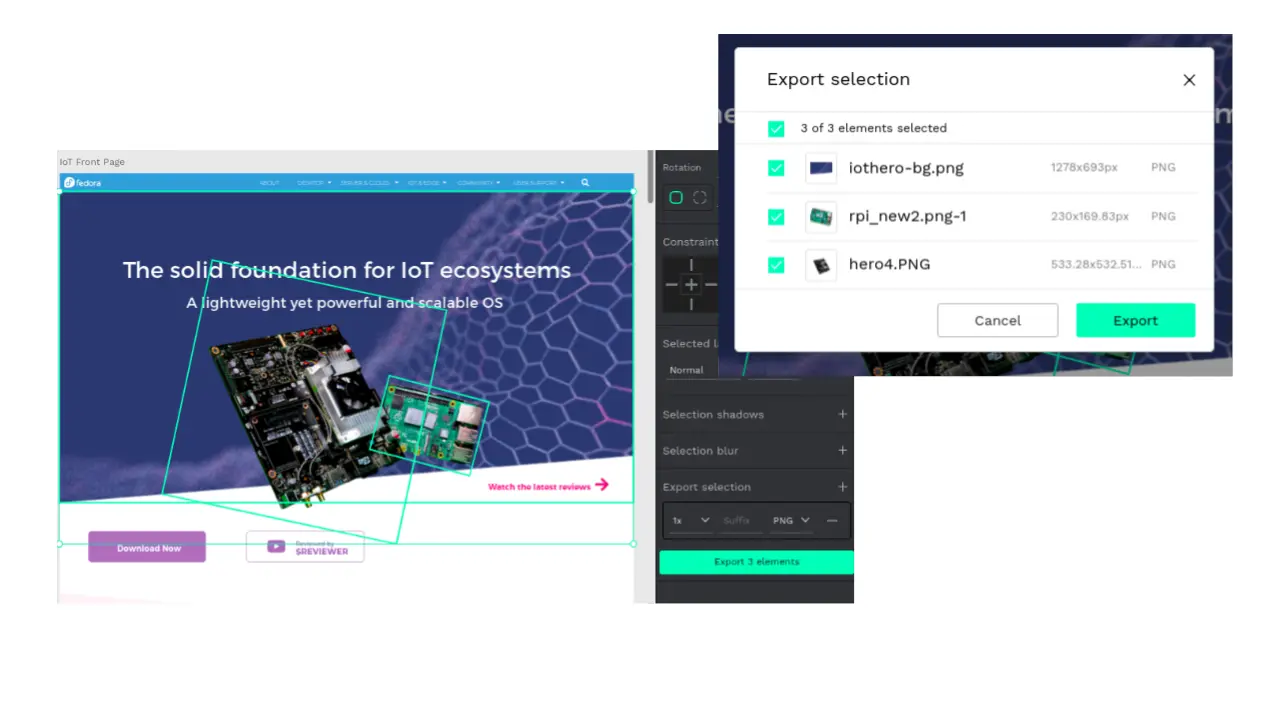

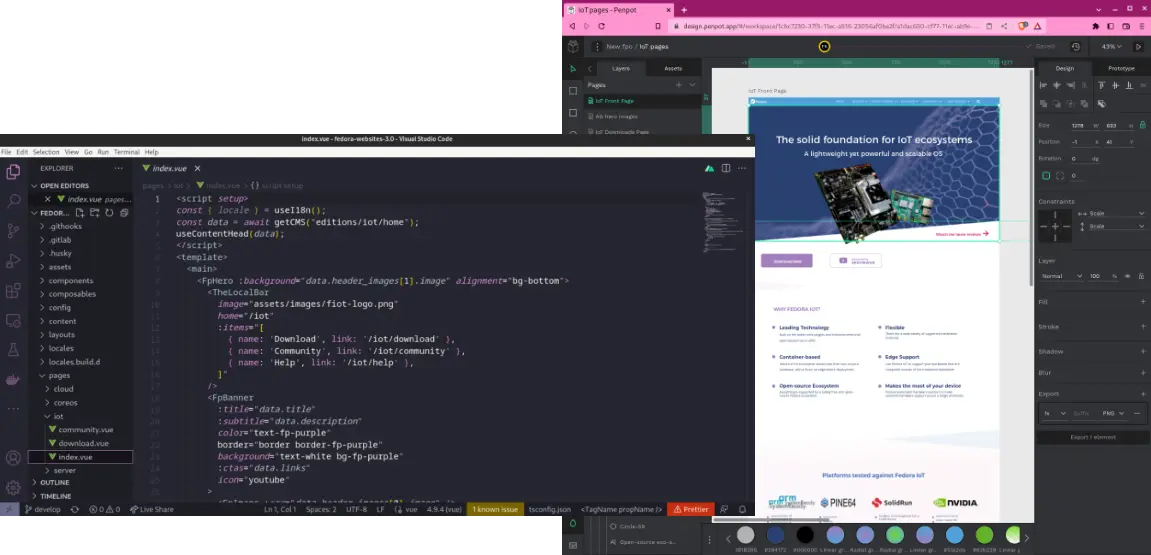

### Fedora IoT

为物联网设备专门设计的操作系统越来越受欢迎。Fedora IoT 就是为了应对这一需求而创建的操作系统。Fedora IoT 是一个不可变操作系统,使用 OSTree 技术来进行原子更新。该操作系统专注于物联网设备的安全性,这非常重要。Fedora IoT 支持多种架构。它还配备了一个基于 Web 的配置控制台,因此可以在不需要键盘、鼠标或监视器物理连接到设备的情况下进行远程配置。

更多信息请访问以下链接:

>

> **[Fedora IoT](https://getfedora.org/en/iot/)**

>

>

>

### Fedora CoreOS

Fedora CoreOS 是一个面向容器的操作系统。该操作系统用于在任何环境中安全可靠地运行应用程序。它设计用于集群,但也可以作为独立系统运行。该操作系统与 Linux 容器配置具有高度兼容性。

更多信息请访问以下链接:

>

> **[Fedora CoreOS](https://getfedora.org/en/coreos?stream=stable)**

>

>

>

### Fedora Silverblue

这个版本是 Fedora Workstation 的一个变体,界面并没有太大区别。但是,Fedora Silverblue 是一个不可变的操作系统,采用以容器为中心的工作流程。这意味着每个安装的副本与同一版本的其他副本完全相同。其目标是使其更加稳定,更少出现错误,并更容易进行测试和开发。

更多信息请访问以下链接:

>

> **[Fedora Silverblue](https://silverblue.fedoraproject.org/)**

>

>

>

### 结论

Fedora Linux 的每个版本都有不同的目的。多个版本的可用性可以帮助你获得适合你需求的操作系统。本文讨论的 Fedora Linux 版本是在 Fedora Linux 的主要下载页面上提供的操作系统。你可以在 <https://getfedora.org/> 找到下载链接和更完整的文档说明。

*(题图:MJ/90ffba71-aee2-4429-a846-41f06997792c)*

---

via: <https://fedoramagazine.org/fedora-linux-editions-part-1-official-editions/>

作者:[Arman Arisman](https://fedoramagazine.org/author/armanwu/) 选题:[lkxed](https://github.com/lkxed) 译者:ChatGPT 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Fedora Linux provides several variants to meet your needs. You can find an overview of all the Fedora Linux variants in my previous article [Introduce the different Fedora Linux editions](https://fedoramagazine.org/introduce-the-different-fedora-linux-editions/). This article will go into a little more detail about the Fedora Linux official editions. There are five *editions* — Fedora Workstation, Fedora Server, Fedora IoT, Fedora CoreOS, and Fedora Silverblue. The Fedora Linux download page currently shows that three of these are *official* editions and the remaining two are *emerging* editions. This article will cover all five editions.

## Fedora Workstation

If you are a laptop or desktop computer user, then Fedora Workstation is the right operating system for you. Fedora workstation is very easy to use. You can use this for daily needs such as work, education, hobbies, and more. For example, you can use it to create documents, make presentations, surf the internet, manipulate images, edit videos, and many other things.

This Fedora Linux edition comes with the GNOME Desktop Environment by default. You can work and do activities comfortably using this appearance concept. You can also customize the appearance of this Fedora Workstation according to your preferences, so you will be more comfortable using it. If you are a new Fedora Workstation user, you can read my previous article [Things to do after installing Fedora 34 Workstation](https://fedoramagazine.org/things-to-do-after-installing-fedora-34-workstation/). Through the article, you will find it easier to start with Fedora Workstation.

More information is available at this link: [https://getfedora.org/en/workstation/](https://getfedora.org/en/workstation/)

## Fedora Server

Many companies require their own servers to support their infrastructure. The Fedora Server edition operating system comes with a powerful web-based management interface called Cockpit that has a modern look. Cockpit enables you to easily view and monitor system performance and status.

Fedora Server includes some of the latest technology in the open source world and it is backed by an active community. It is very stable and reliable. However, there is no *guarantee* that anyone from the Fedora community will be available or able to help if you encounter problems. If you are running mission critical applications and you might require technical support, you might want to consider [Red Hat Enterprise Linux](https://www.redhat.com/en/technologies/linux-platforms/enterprise-linux) instead.

More information is available at this link: [https://getfedora.org/en](https://getfedora.org/en/server/)[/server/](https://getfedora.org/en/server/)

## Fedora IoT

Operating systems designed specifically for IoT devices have become popular. Fedora IoT is an operating system created in response to this. Fedora IoT is an immutable operating system that uses OSTree Technology with atomic updates. This operating system focuses on security which is very important for IoT devices. Fedora IoT has support for multiple architectures. It also comes with a web-based configuration console so that it can be configured remotely without requiring that a keyboard, mouse or monitor be physically connected to the device.

More information is available at this link: [https://getfedora.org/en/iot/](https://getfedora.org/en/iot/)

## Fedora CoreOS

Fedora CoreOS is a container-focused operating system. This operating system is used to run applications safely and reliably in any environment. It is designed for clusters but can also be run as a standalone system. This operating system has high compatibility with Linux Container configurations.

More information is available at this link: [https://getfedora.org/en/coreos/](https://getfedora.org/en/coreos?stream=stable)

## Fedora Silverblue

This edition is a variant of Fedora Workstation with an interface that is not much different. However, the difference is that Fedora Silverblue is an immutable operating system with a container-centric workflow. This means that each installation is exactly the same as another installation of the same version. The goal is to make it more stable, less prone to bugs, and easier to test and develop.

More information is available at this link: [https://silverblue.fedoraproject.org/](https://silverblue.fedoraproject.org/)

## Conclusion

Each edition of Fedora Linux has a different purpose. The availability of several editions can help you to get an operating system that suits your needs. The Fedora Linux editions discussed in this article are the operating systems available on the main download page for Fedora Linux. You can find download links and more complete documentation at [https://getfedora.org/](https://getfedora.org/).

## Alan W Shrock

Did you forget about Fedora Kinoite?

## Jesse

Isn’t Fedora Kinoite the Silverblue Edition but using KDE Plasma? KDE Plasma is one of the Fedora Spins, but not an official Fedora Release.

## Peter Boy

A good article that comes just at the right time for us. The docs team is currently planning a restructuring of the Fedora user documentation, away from a release related structure to a structuring according to the variants (see https://communityblog.fedoraproject.org/fedora-docs-is-about-to-change-significantly-check-it-out-still-in-statu-nascendi/) . The article helps to get a clearer view of this.

@Arman Arisman, maybe you could help us to describe in the docs the peculiarities of the variants briefly and accurately?

I am very aware of how difficult it is to describe the variants briefly and succinctly and, given the variety of characteristics, to hit on the most important features. Some things do come up short for me.

For example, the problem of a reliable, quasi enforceable support affects all variants, not only Server where this is explicitly stated. It is certainly especially important for professional server use, perhaps more important than for Workstations? But isn’t it just as important for e.g. CoreOS? Isn’t it equally important for all server variants? Or what makes it less important for one or the other?

Or the Workstation – Silverblue relationship. Is Silverblue really “more stable” than Workstation? Are there facts and figures that prove this? I would really love to know. And if it is, at what “cost”? Experience shows that “nothing is for free”. What can I perhaps no longer do or only with additional effort? What are possible criteria to decide between the two?

## Nelson

Regarding Workstation/Silverblue – its additional stability comes down to it being an immutable OS (much like IoT/CoreOS).

Not only it relies on atomic updates (OSTree acting as a “git for binaries”), everything in the /usr folder of the system is mounted as read-only; so it isn’t possible by default to simply edit a specific binary (example, SSH) unbeknownst to the system admin(s) – it requires either unlocking the current deployment (making it mutable) or overlaying an additional package to replace it. Those actions are, in turn, traced by OSTree and can be easily reverted, thus providing a safer, more reliable and reproducible environment to work with.

## Peter Boy

Yes, I know. That’s the theory. What about the empirics? Once in a time, Unix systems used to mount /usr read only for the same effect (regarding modification, not the other features). Over time, there was no additional security in addition to all the other security measurement introduced over the last decades.

## Gregory Bartholomew

Hi Peter. FYI, I (the editor) was the one who suggested the additional wording about RHEL being available if you wanted better support in the Fedora Server section. To my knowledge, Red Hat doesn’t offer paid-support for Fedora CoreOS. What made it worth mention for Fedora Server but not for the others was that there was another/better option. Just my 2¢. 🙂

## Peter Boy

Yeah, the other way around as I read it. There is a kind of update option. This is a distinguishing feature, indeed. And it is one of the practically most important features.

## Matthew Phillips

The comment recommending RHEL to server users made sense to me. People want fresher software for their personal computers, and often don’t mind doing a little troubleshooting if something breaks or is buggy. But for servers its often the other way around… you can’t even force some people to make updates, if it works they’d rather not touch it.

There is another option for CoreOS too, Red Hat Atomic. Uses rpm-ostree and everything.

## Jonatas Esteves

Just want to leave a heads-up to all the tinkerers out there that Silverblue is almost ready, it’s safe to make the jump! I’ve been on it since F35 and I’m very happy with it, and convinced this is the future. I wouldn’t install it on “grandpa’s PC” yet, but for a techie person, it’s time to be on the next-big-thing.

## Edd

Genial Buen contenido

## Kodden Tecnologia

Great post, Im in doubt about Fedora Silverblue, it’s worth install on workstation? Thanks

## SigmaSquadron

Absolutely. Silverblue is stable, and implements many technolgies considered the be the future of the Linux userland.

## Vijay

One of the good article to read about Open source development.

I would like to share it wilth my connection.

## Stephen

I have been running Fedora Silverblue since Fedora Linux 27 as my daily driver and wouldn’t turn back to traditional Workstation.

## Matthew Phillips

Just my opinion, but it’s getting to be the time where Silverblue and CoreOS should be official editions and not ’emerging’. I’ve been using Silverblue since F30 I believe… it was good enough to stay on back then and has only aged like a fine wine.

When I first read this article and was reminded of the emerging label, the thought “maybe I should be using the official edition until Silverblue is considered official” momentarily went through my head. The emerging label makes it sound a bit like a project which could be dropped Google-style, even if the Fedora Council wouldn’t do that.

## Abdul Hameed

I have been using Fedora since Fedora 2.

Today, I am using Fedora workstation 36 .

Your Post is very useful about other editions of Fedora. I like only Fedora Workstation. So I had no more knowledge about other editions, but today, I read your post and now I have useful knowledge.

Thanks.

## Ravi

I am using Fedora 36 KDE spin, does upgrading to future release work as reliably as official edition(Workstation) if proper upgrading procedure is followed?

Fedora 36 KDE is excellent so far.

## SigmaSquadron

Fedora KDE is a spin. It’s produced by the Fedora KDE Special Interest Group, but should be as reliable as official editions.

## eswgftwretgewr

We need

Fedora Hurd

Fedora Serenity

Fedora Minix

## Greg Zeng

Fedora was frightening in my tests. Wayland display server is default. However Wayland will not work with dinner applications.

Gnome display environment is too innovative, moving very far away from there usual Windows interface.

BTRFS is often recommended by Fedora, but it is not widely supported perhaps. Utilities for BTRFS seen lacking or hard to use.

## Peter Boy

You are not alone in this assessment. But, have you also tried Gnome Classic? That is now very mature, and you can effectively work with it again (not as flexible as Gnome 2, but it’s ok). You can select it in the login screen.

There was a discussion about it. It is the eternal belief in progress that whatever is new and advanced is thought to be always useful and practical. Nevertheless, the Anaconda installation program offers the option to configure another file system. Use the custom option in Installation target.

Shh! Some users install the Server Edition and a GUI of their choice on top of it (see the use case in their documentation). But that’s not really how it’s meant to be used. 🙂

## makosol

If you want a desktop that is way more Windows-like, I recommend you to use the Fedora KDE spin.

## SigmaSquadron

I’m not sure what a dinner application is, but XWayland does work with almost all X11 applications. It even works well for gaming, with only very legacy applications being left behind, but in those cases, you can just switch to an X11 session. The benefits Wayland brings are too great to simply ignore in favour of X, and are worth the occasional broken application.

GNOME is its own thing. You could just use a spin, and its not a bad thing that it moves away from Windows. Breaking convention in favour of new, good ideas is a good thing.

About BTRFS, it sounds like you just have little experience with it. The default btrfs-progs tools does everything the ext4 tools do, and so much more. On ext4, you’ll often need external tools, such as testdisk, to do simple recovery tasks, but on btrfs, it’s a simple

away.

Also, BTRFS is widely supported on enterprise environments. SUSE is a major contender for Enterprise Linux, and they use BTRFS by default.

## Arthur Bishop

Fedora is like a restaurant with 5 Michelin stars. I have a lot of fun with MATE DESKTOP anyway my computer is weak it’s hard for him to run something else Thank God there is MATE with Fedora

## Matt

MATE is a wonderful desktop, I use it on all the distros I test. Give yourself that 2010-era Fedora 14 look!

## Kevin "G"

This comment I don’t think has much to do with the topic, but that graphic at the top (official editions), should be incorporated in my view to the banner that shows up when you first boot Fedora. That powder blue and the Fedora logo, give it a much nice “entrance screen” imo. Again, sorry this doesn’t relate to the topic at hand, but I had to say something. I love Fedora, and I love how it’s starting to look. Thank you for your time and reading.

## Kevin "G"

Have the welcome banner be the same as above, with powder blue and white letters that read…

Fedora Linux-Workstation Edition-36 (*in white letters, would change )

Fedora logo right side, as you can tell I really like the banner someone made, and I think with the black or dark of Gnome, and the powder blue with white letters giving it a Fedora primary universal look would be very nice looking. But that’s just my opinion. I tend to think Fedora should have a universal look, that users can change if they want to.

## Gregory Bartholomew

FYI, in this case, the cover image was made by the author of the article.

Also, if you want to recommend enhancements to Fedora’s Workstation edition, you might have better luck reaching the right people by posting your comment here: https://discussion.fedoraproject.org/tag/workstation

## Kevin "G"

yeah I know it was created by the author, but it would look great in distro, IMO. Thanks for the link. |

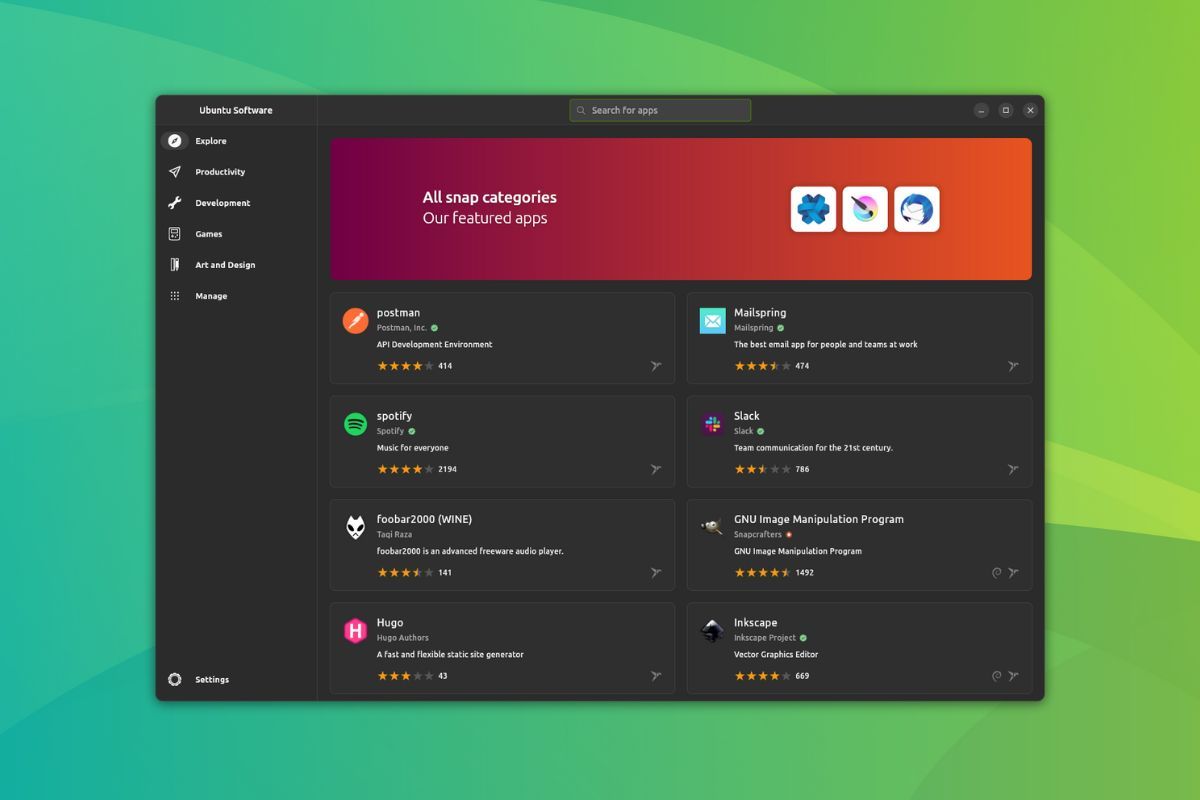

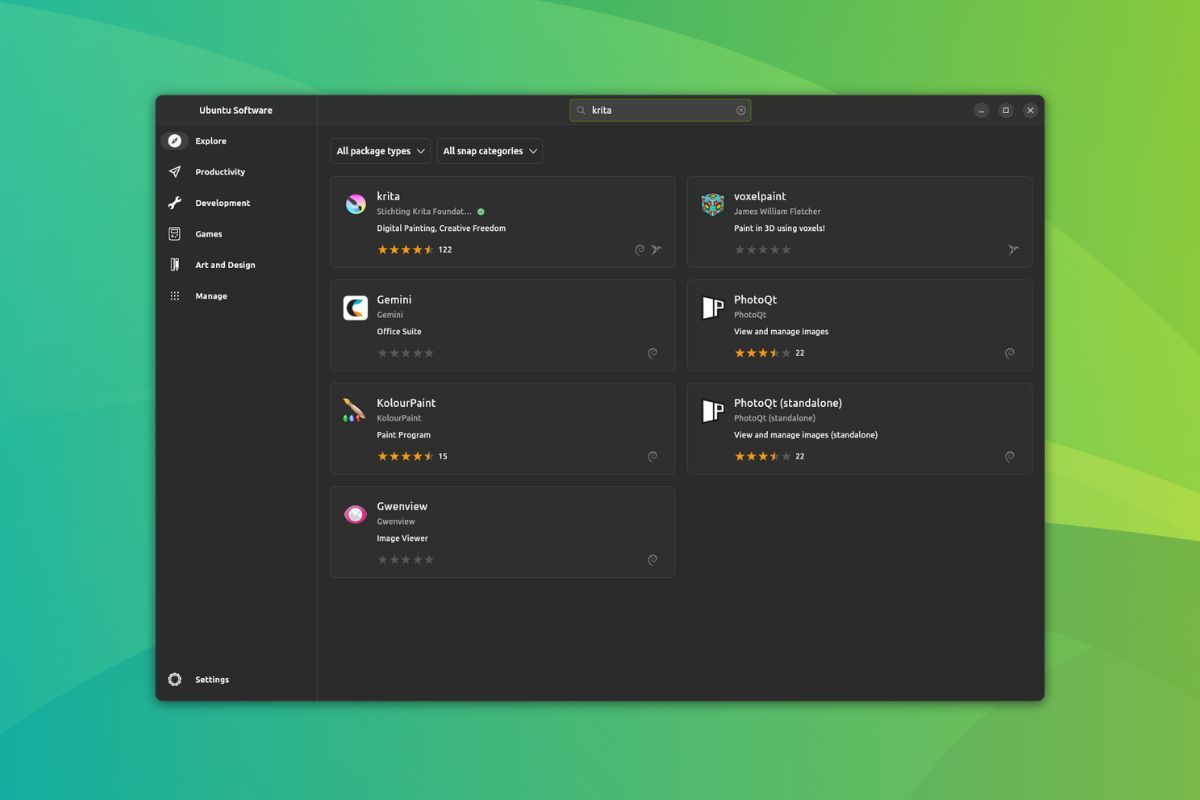

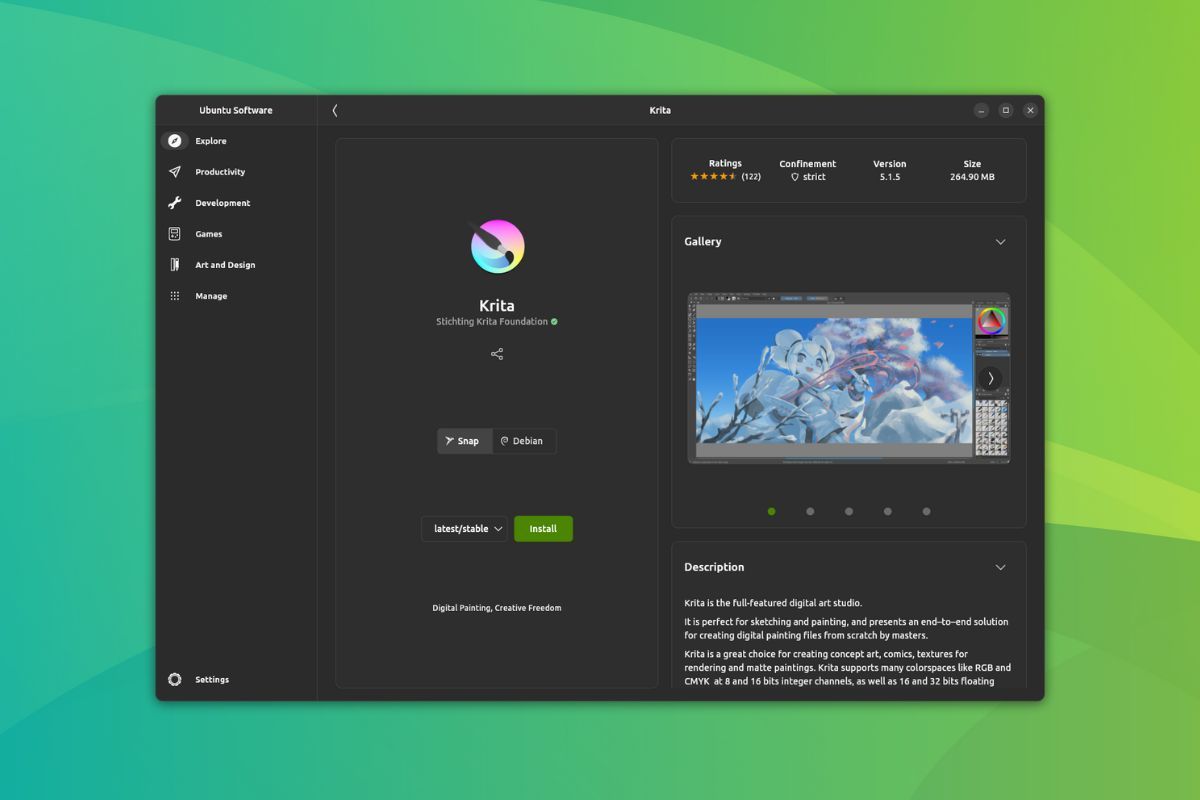

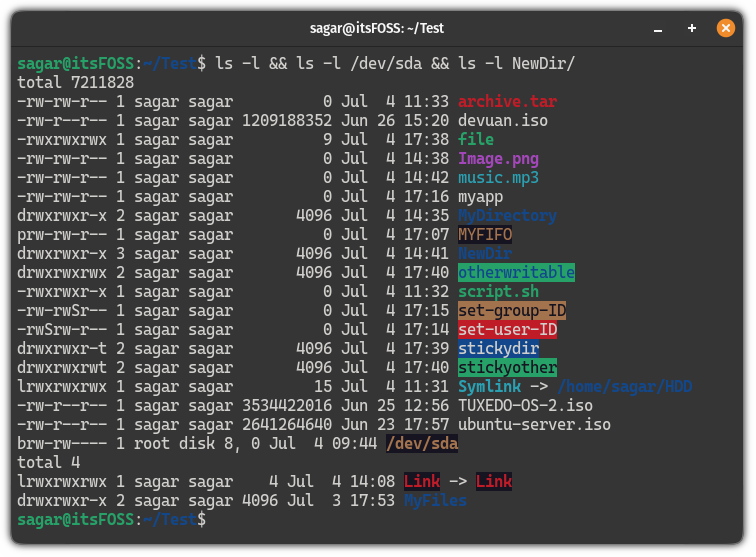

16,053 | 终端基础:在 Linux 中复制文件和目录 | https://itsfoss.com/copy-files-directory-linux/ | 2023-07-31T22:08:49 | [

"终端"

] | https://linux.cn/article-16053-1.html |

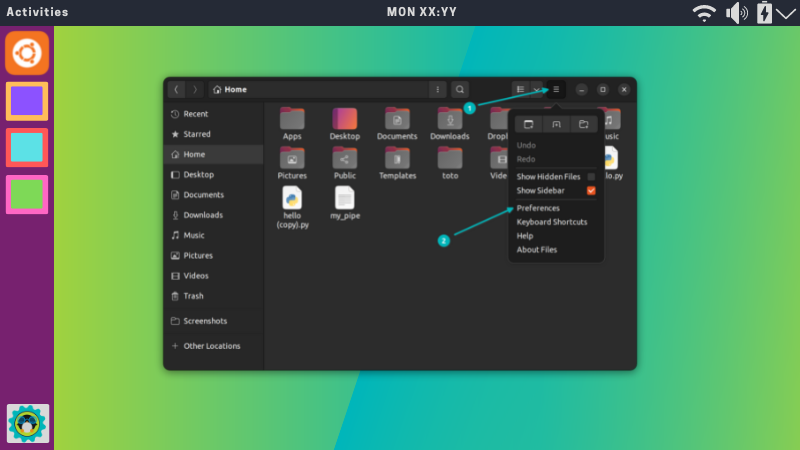

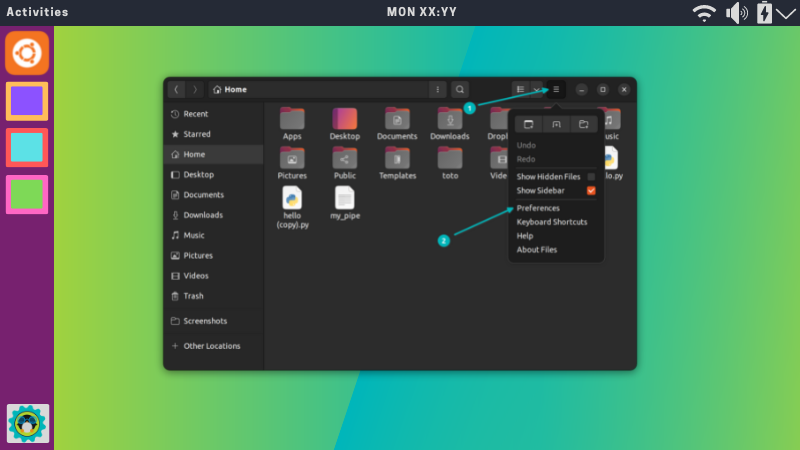

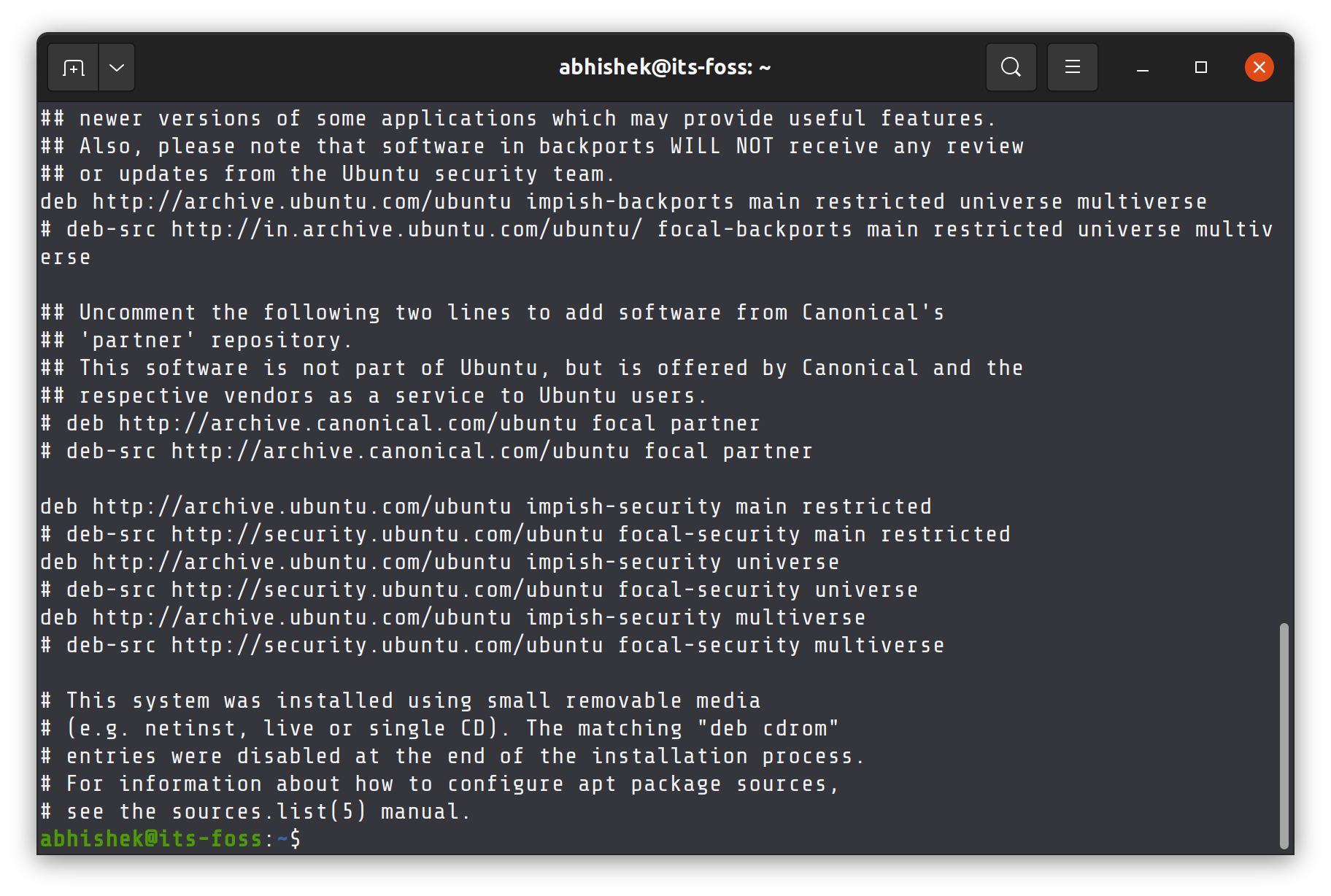

>

> 在终端基础知识系列的这一部分中,学习如何在 Linux 中使用命令行复制文件和目录。

>

>

>

复制文件是你经常执行的最基本但最重要的任务之一。

Linux 有一个专门的 `cp` 命令用于复制文件和目录(文件夹)。

在终端基础知识系列的这一部分中,你将学习在终端中复制文件和文件夹。

>

> ? 回想一下,以下是你迄今为止在本终端基础知识系列中所学到的内容:

>

>

> * [更改目录](https://itsfoss.com/change-directories/)

> * [创建新目录](/article-15595-1.html)

> * [列出目录内容](https://itsfoss.com/list-directory-content/)

> * [创建文件](/article-15643-1.html)

> * [读取文件](https://itsfoss.com/view-file-contents/)

> * [删除文件和目录](/article-15809-1.html)

>

>

>

让我们继续该系列的第七章。

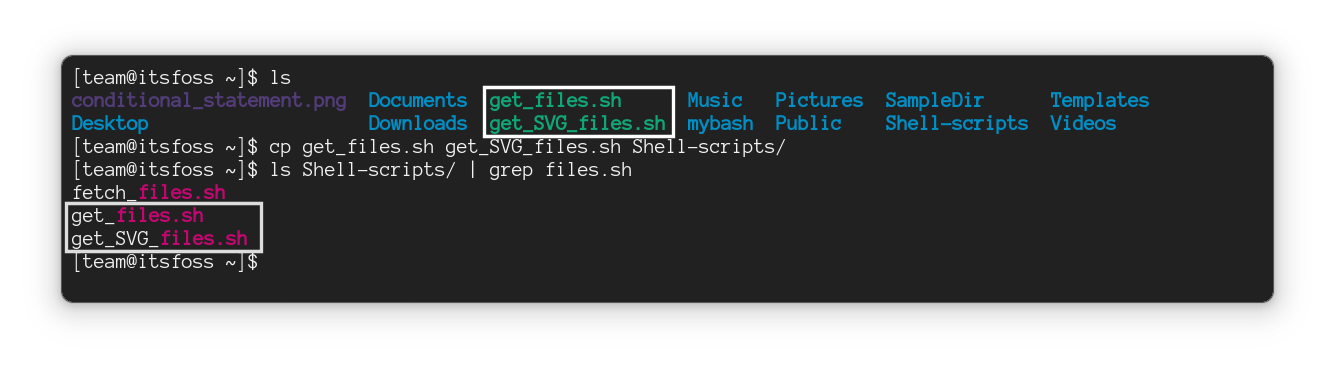

### 在 Linux 命令行中复制文件

让我向你展示一些复制文件的示例。

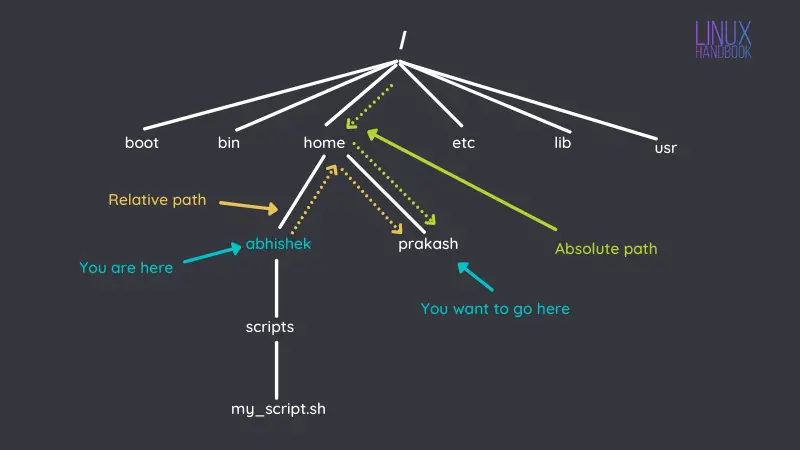

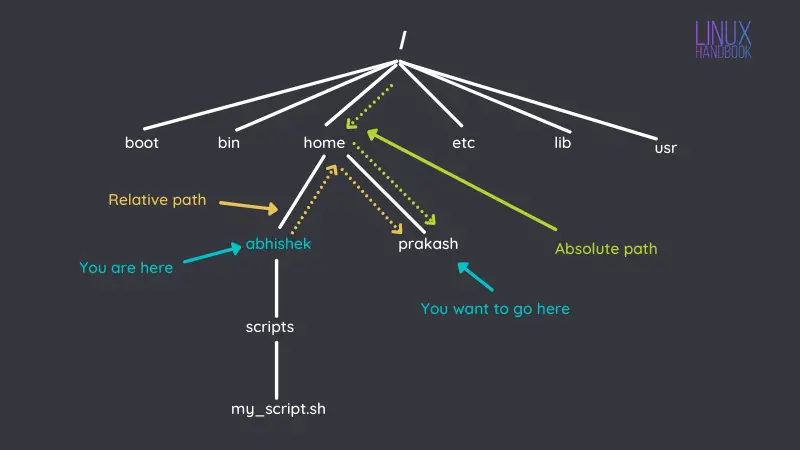

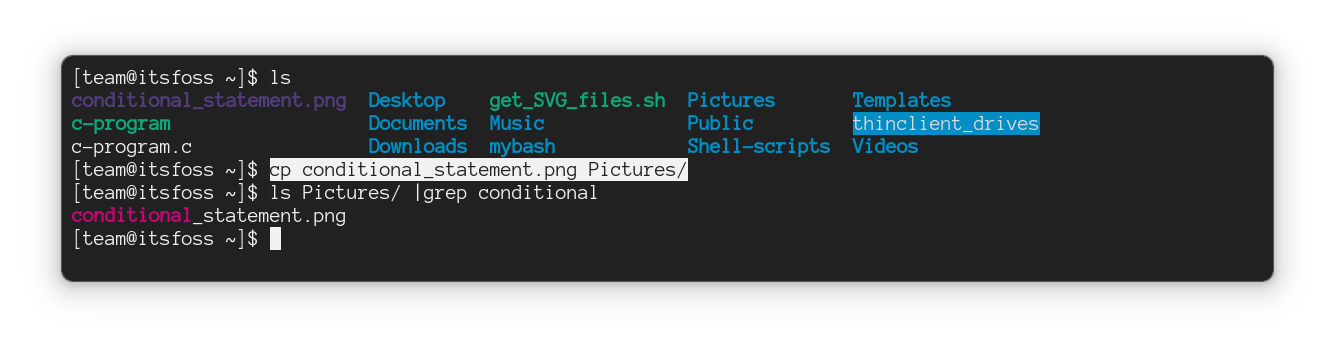

#### 将文件复制到另一个目录

要将一个文件复制到另一目录,你所要做的就是遵循给定的命令语法:

```

cp 源文件 目标目录

```

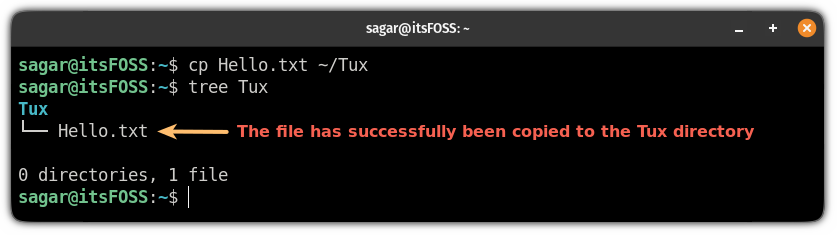

例如,在这里,我将名为 `Hello.txt` 的文件复制到名为 `Tux` 的目录中:

正如你所看到的,文件已成功复制到 `Tux` 目录中。

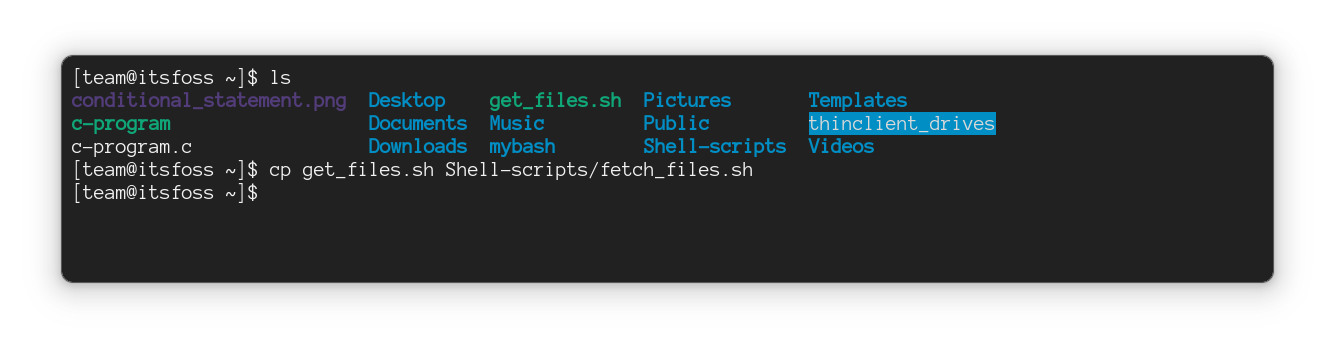

#### 复制文件但重命名

你可以选择在复制文件时重命名该文件。只需为“目标文件”指定一个不同的名称即可。

```

cp 源文件 改名的文件

```

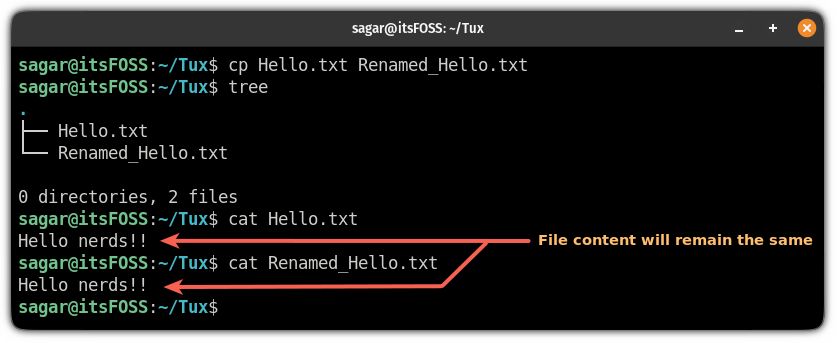

作为参考,在这里,我将名为 `Hello.txt` 的文件复制到同一目录,并将其重命名为 `Renamed_Hello.txt`:

为什么要这么做? 比如说,你必须编辑配置文件。一个好的做法是在编辑配置文件之前在同一位置对其进行备份。这样,如果事情没有按计划进行,你可以恢复到旧配置。

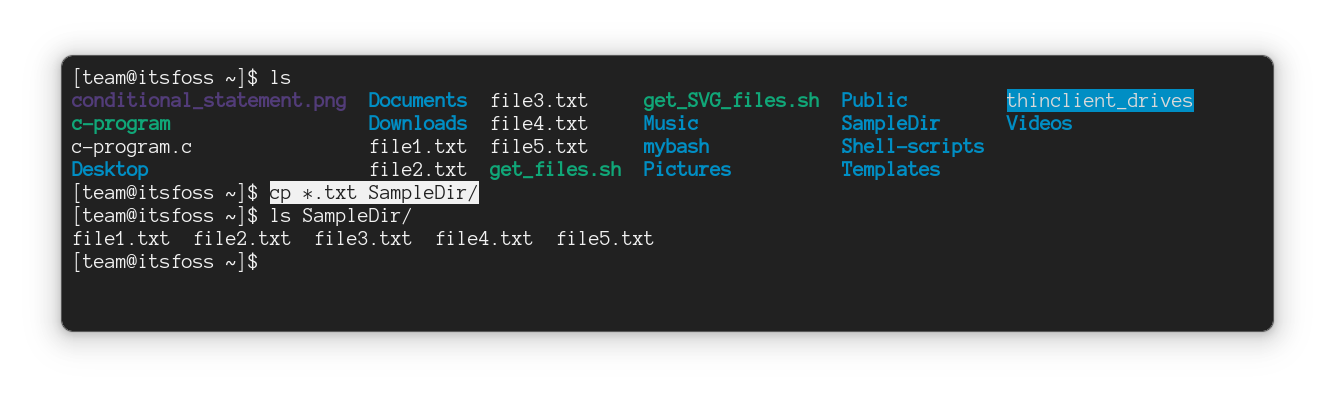

#### 将多个文件复制到另一个位置

要将多个文件复制到另一个目录,请按以下方式执行命令:

```

cp File1 File2 File3 FileN Target_directory

```

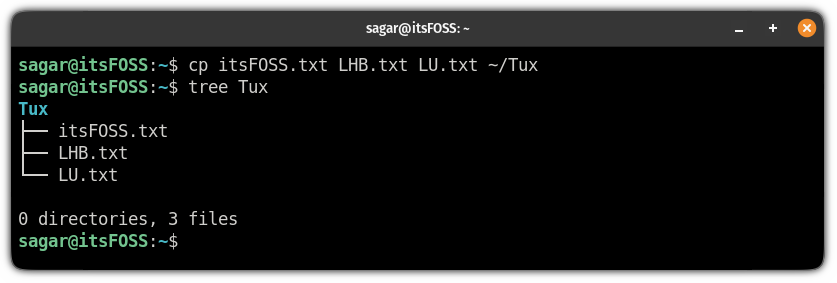

在这里,我将多个文件复制到新位置。

>

> ? 当你复制多个文件时,仅使用 `cp` 命令无法重命名它们。

>

>

>

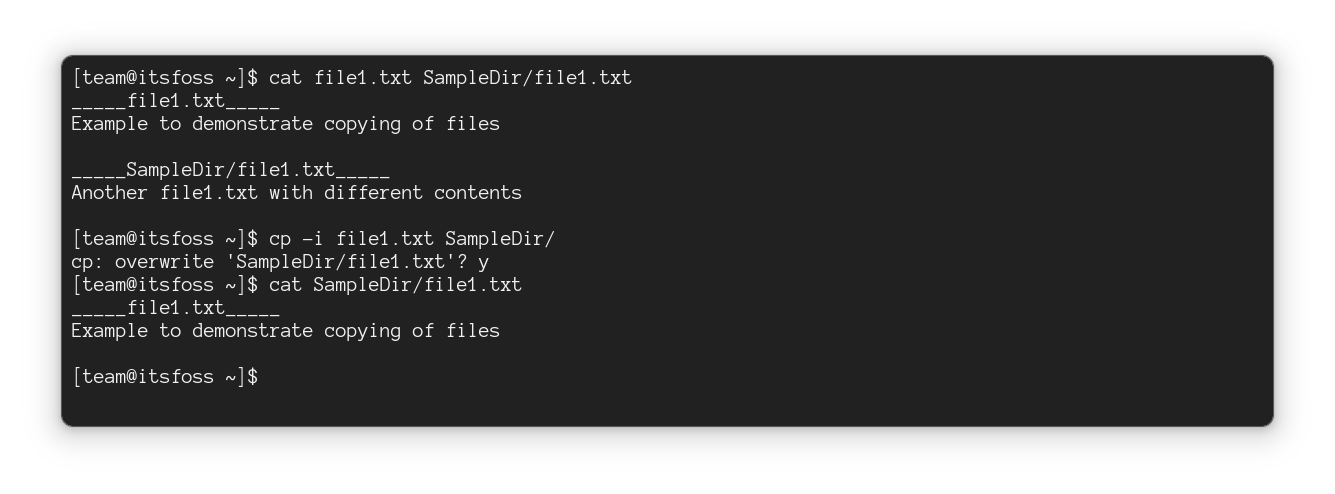

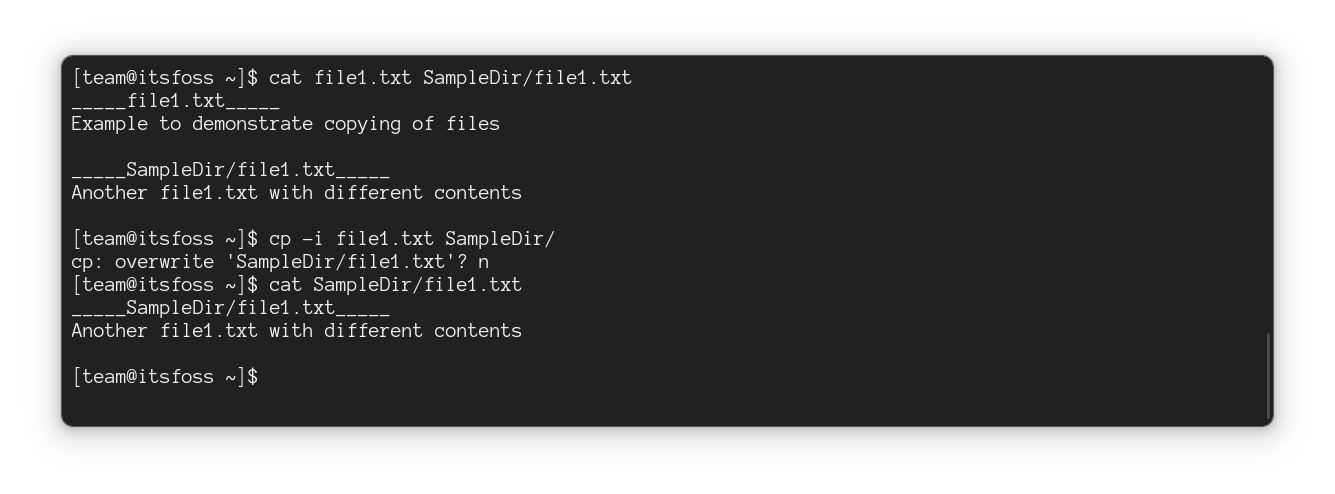

#### 复制时处理重复文件

默认情况下,如果目标目录中存在同名文件,`cp` 命令将覆盖该文件。

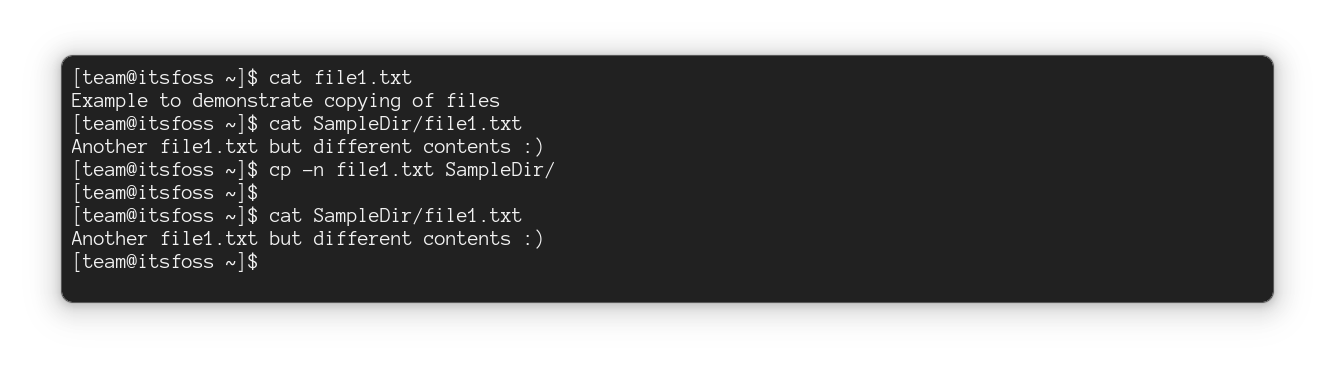

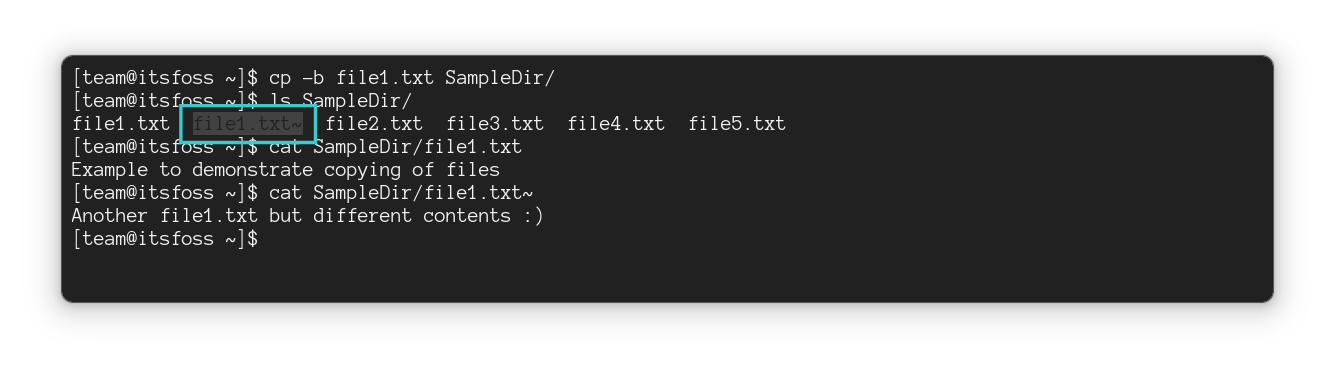

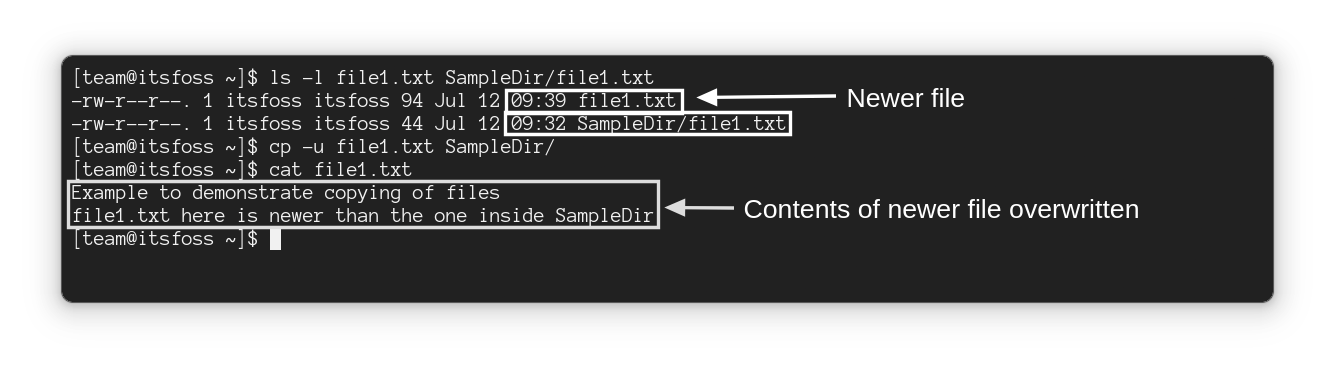

为了避免覆盖,你可以在 cp 命令中使用 `-n` 选项,它不会覆盖现有文件:

```

cp -n 源文件 目标目录

```

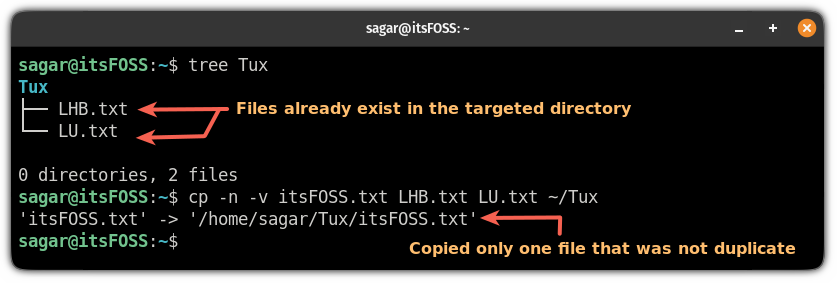

例如,在这里,我尝试复制目标目录中已有的两个文件,并使用 `-v` 选项来展示该命令正在执行的操作:

```

cp -n -v itsFOSS.txt LHB.txt LU.txt ~/Tux

```

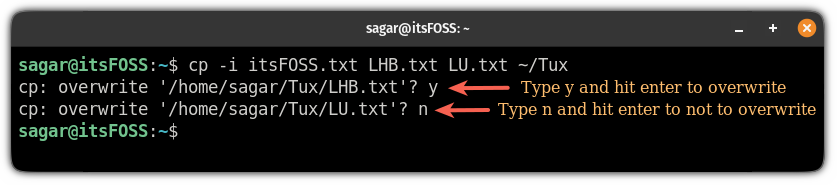

#### 交互式复制文件

但是,当你想要覆盖某些文件,而某些文件应该保持不变时该怎么办?

好吧,你可以使用 `-i` 选项在交互模式下使用 `cp` 命令,它每次都会询问你是否应该覆盖该文件:

```

cp -i 源文件 目标目录

```

>

> ?️ 自己练习上述所有示例。你已经了解如何创建文件和文件夹,因此请重新创建所有内容。

>

>

>

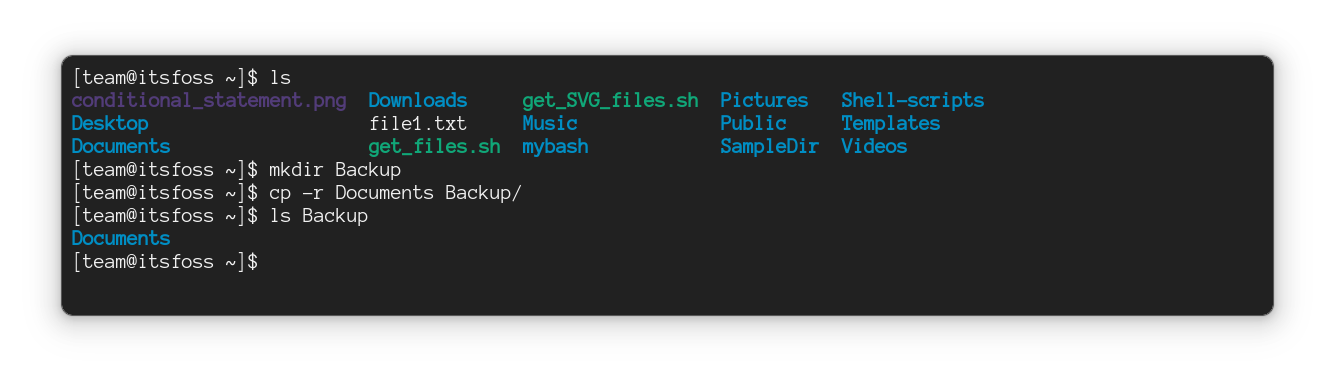

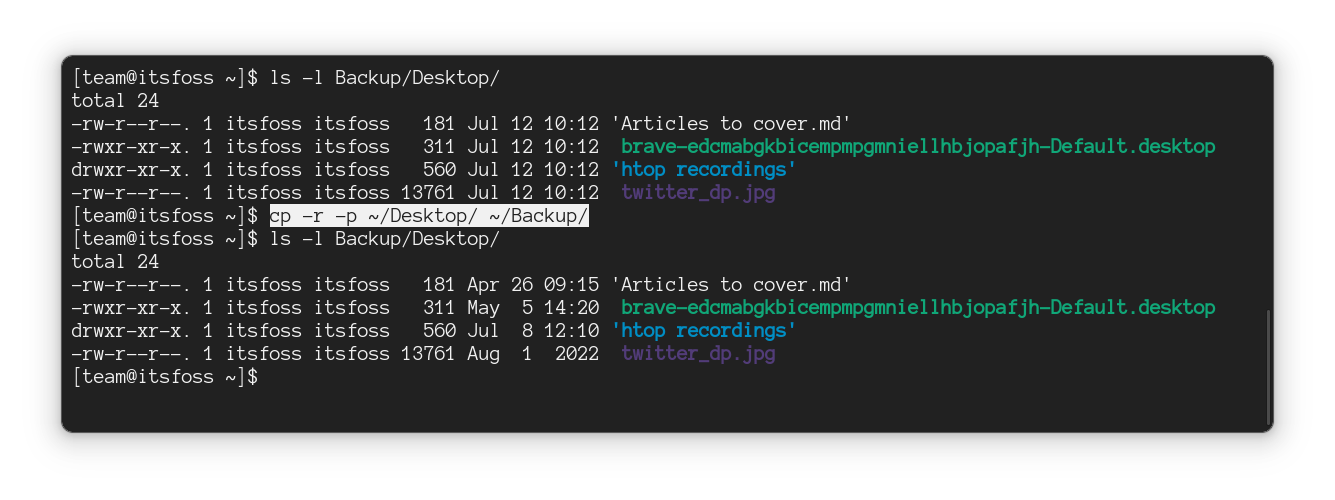

### 在 Linux 命令行中复制目录

`mkdir` 命令用于创建新目录,`rmdir` 命令用于删除(空)目录。但没有用于复制目录的 `cpdir` 命令。

你必须使用相同的 `cp` 命令,但使用递归选项 `-r` 将目录及其所有内容复制到另一个位置:

```

cp -r 源目录 目标目录

```

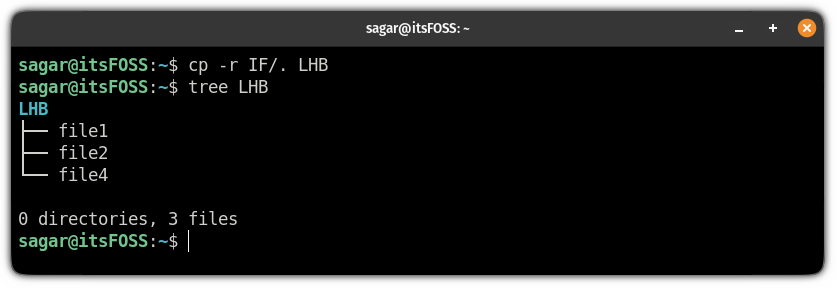

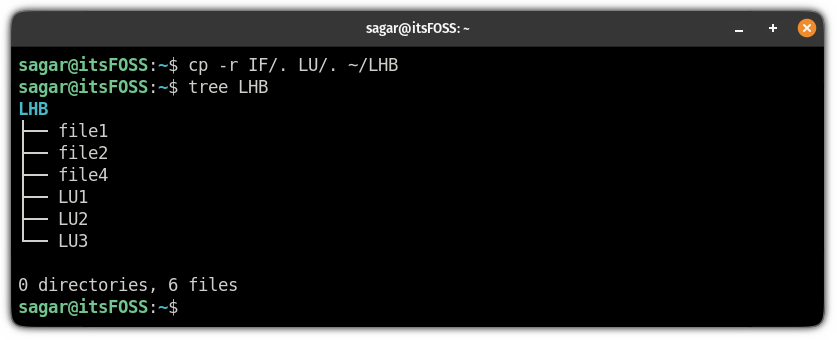

例如,在这里,我将名为 `IF` 的目录复制到 `LHB`:

但它复制了整个目录。?

那么,当你只想复制目录内容而不是目录本身时该怎么办?

你可以执行以下操作:

#### 仅复制目录的内容(不是目录)

要仅复制目录的内容,而不复制目录本身,请在源目录名称的末尾附加 `/.`:

```

cp -r 源目录/. 目标目录

```

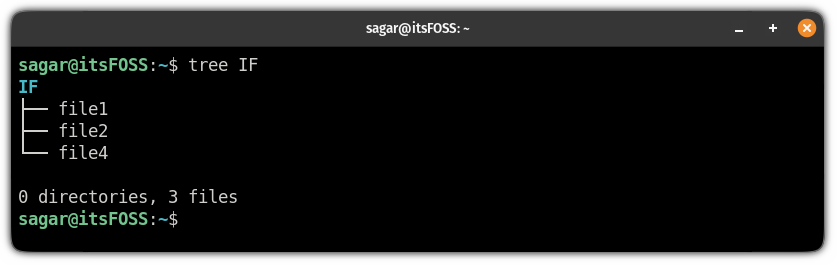

在这里,我想复制名为 `IF` 的目录的内容,其中包含以下三个文件:

我将执行以下命令将 `IF` 目录的文件内容复制到 `LHB`:

```

cp -r IF/. LHB

```

你还可以在此处使用 `源目录/*` 。

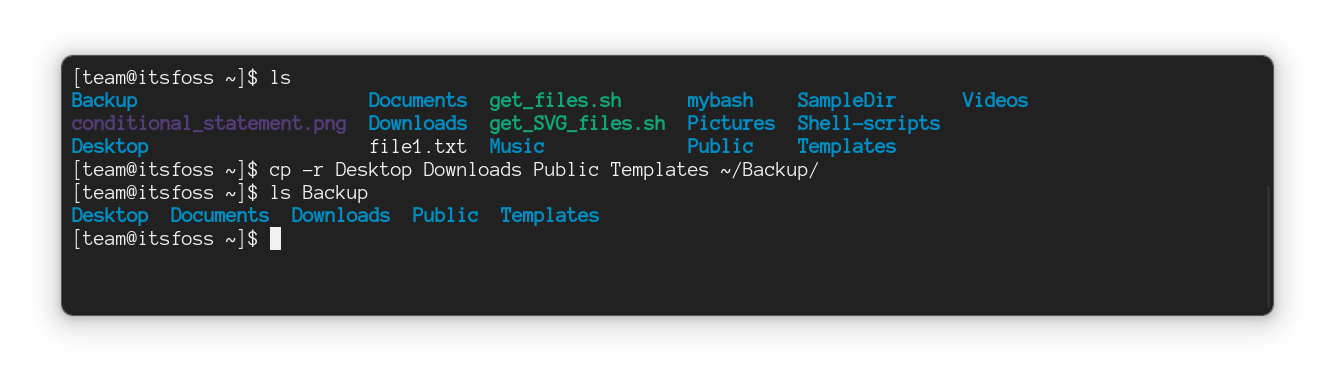

#### 复制多个目录

要复制多个目录,你必须按以下方式执行命令:

```

cp -r 目录1 目录2 目录3 目录N 目标目录

```

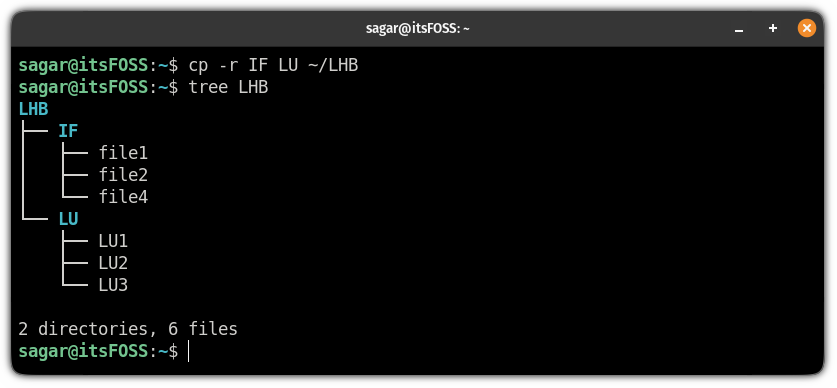

例如,在这里,我将两个名为 `IF` 和 `LU` 的目录复制到 `LHB`:

```

cp -r IF LU ~/LHB

```

当你想要从多个目录复制文件但不复制目录本身时,你可以执行相同的操作:

```

cp -r 目录1/. 目录2/. 目录3/. 目录N/. 目标目录

```

>

> ?️ 你还可以像重命名文件一样重命名目录。

>

>

>

### 测试你的知识

现在,让我们看看你对到目前为止所学到的知识还记得多少。

* 创建一个名为 `copy_practice` 的目录。

* 将文件 `/etc/services` 复制到这个新创建的文件夹。

* 在此目录下创建一个名为 `secrets` 的文件夹,并将文件 `/etc/passwd` 和 `/etc/services` 复制到其中。

* 将 `copy_practice` 中的 `services` 文件复制到 `secrets` 文件夹中,但不要覆盖它。

* 将 `secrets` 文件夹复制到你的主目录。

* 删除 `secrets` 和 `copy_practice` 目录。

这会给你一些练习。

到目前为止进展顺利。你已经学到了很多东西。在下一章中,你将了解如何使用 `mv` 命令移动文件和文件夹。

---

via: <https://itsfoss.com/copy-files-directory-linux/>

作者:[Sagar Sharma](https://itsfoss.com/author/sagar/) 选题:[lkxed](https://github.com/lkxed/) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | # Chapter 7: Copy Files and Directories in Linux

Learn how to copy files and directories in Linux using the command line in this part of the Terminal Basics series.

Copying files is one of the most basic yet crucial tasks you will be doing regularly.

Linux has a dedicated cp command for copying both files and directories (folders).

In this part of the Terminal Basics series, you'll learn to copy files and folders in the terminal.

-

[Change directories](https://itsfoss.com/change-directories/)

-

[Make new directories](https://itsfoss.com/make-directories/)

-

[List directory contents](https://itsfoss.com/list-directory-content/)

-

[Create files](https://itsfoss.com/create-files/)

-

[Reading files](https://itsfoss.com/view-file-contents/)

-

[Removing files and directories](https://itsfoss.com/delete-files-folders-linux/)

Let's go on with the seventh chapter in the series.

## Copying files in Linux command line

Let me show you a few examples of copying files.

### Copy a file to another directory

To copy one file to another directory, all you have to do is follow the given command syntax:

`cp Source_file Destination_directory`

For example, here, I have copied a file named `Hello.txt`

to the directory named `Tux`

:

And as you can see, the file has successfully been copied to the Tux directory.

### Copy the file but rename it

You can choose to rename the file while copying it. Just give a different name to the 'target file'.

`cp Source_file Renamed_file`

For reference, here, I have copied a file named `Hello.txt`

to the same directory by renaming it to `Renamed_Hello.txt`

:

Why would you do that? Say, you have to edit a config file. A good practice is to make a backup of the config file in the same location before editing it. This way, you can revert to the old configuration if things don't go as planned.

### Copy multiple files to another location

To copy multiple files to another directory, execute the command in the following fashion:

`cp File1 File2 File3 FileN Target_directory`

Here, I copy multiple files to a new location.

### Deal with duplicate files while copying

By default, the cp command will override the file if a file with the same name exists in the target directory.

To avoid overriding, you can use the `-n`

option with the cp command, and it won't override the existing files:

`cp -n Source_File Destination_directory`

For example, here, I have tried to copy two files that were already there in my targeted directory and used `-v`

option to showcase what is being done by the command:

`cp -n -v itsFOSS.txt LHB.txt LU.txt ~/Tux`

### Interactively copy files

But what about when you want to override some files, whereas some should be kept intact?

Well, you can use the cp command in the interactive mode using the `-i`

option, and it will ask you each time whether the file should be overridden or not:

`cp -i Source_file Destination_directory`

## Copy directories in Linux command line

There is mkdir command to make new directories, rmdir to remove (empty) directories. But there is no cpdir command for copying directories.

You'll have to use the same cp command but with the recursive option `-r`

to copy a directory with all its content to another location:

`cp -r Source_dir Target_dir`

For example, here, I have copied a directory named `IF`

to `LHB`

:

But it copied the entire directory 🤨

So, what do you do when you only want to copy the directory's contents, not the directory itself?

Here's what you can do:

### Copy only the contents of a directory (not the directory)

To copy only the contents of the directory, not the directory itself, you append `/.`

at the end of the source directory's name:

`cp -r Source_directory/. Destination_directory`

Here, I want to copy the contents of a directory named `IF`

which contains the following three files:

And I will execute the following command to copy the file contents of the `IF`

directory to `LHB`

:

`cp -r IF/. LHB`

You can also use Source_directory/* here.

### Copy multiple directories

To copy multiple directories, you will have to execute the command in the following way:

`cp -r Dir1 Dir2 Dir3 DirN Destiniation_directory`

For example, here, I have copied two directories named `IF`

and `LU`

to the `LHB`

:

`cp -r IF LU ~/LHB`

You can do the same when you want to copy files from multiple directories but not the directory itself:

`cp -r Dir1/. Dir2/. Dir3/. DirN/. Destination_directory`

## 📝 Test your knowledge

Now, let's see how much you remember the lessons learned so far.

- Create a directory called copy_practice

- Copy the file /etc/services to this newly created folder

- Create a folder named secrets under this directory and copy files /etc/passwd and /etc/services in it

- Copy the services file in copy_practice to the secrets folder but don't overwrite it

- Copy the secrets folder to your home directory

- Delete the secrets and copy_practice directories

That would give you some practice.

It's going well so far. You have learned quite a few things. In the next chapter, you'll see about [moving files and folders with mv command](https://itsfoss.com/move-files-linux/). |

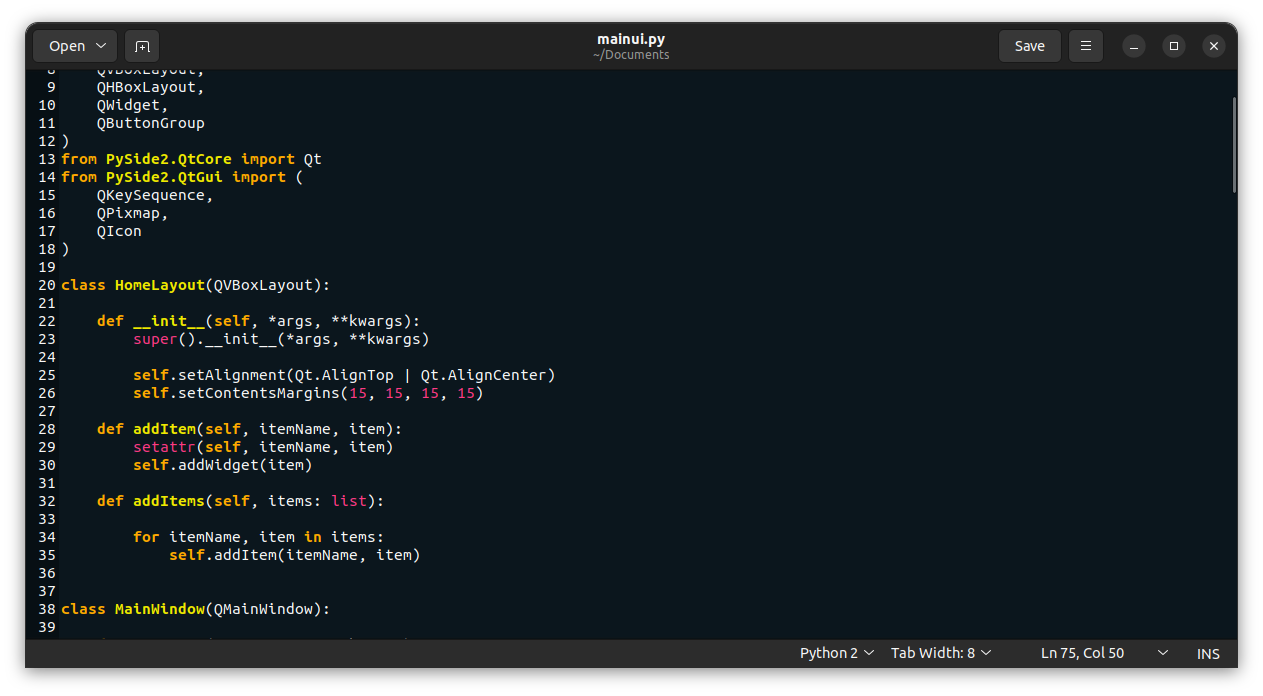

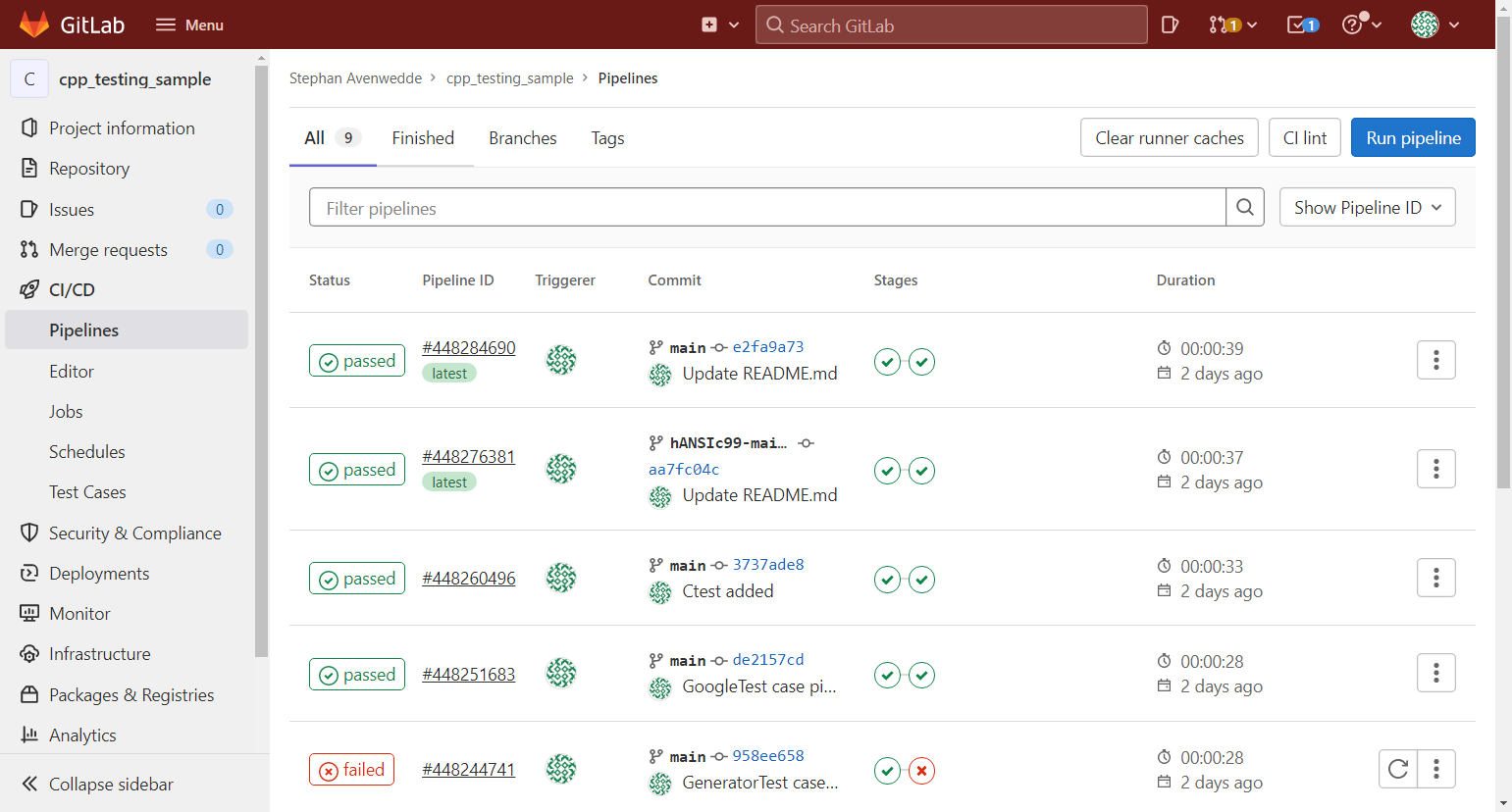

16,055 | 使用 GoogleTest 和 CTest 进行单元测试 | https://opensource.com/article/22/1/unit-testing-googletest-ctest | 2023-08-02T11:15:50 | [

"单元测试"

] | /article-16055-1.html |

>

> 进行单元测试可以提高代码质量,并且它不会打断你的工作流。

>

>

>

本文是 [使用 CMake 和 VSCodium 设置一个构建系统](/article-14249-1.html) 的后续文章。

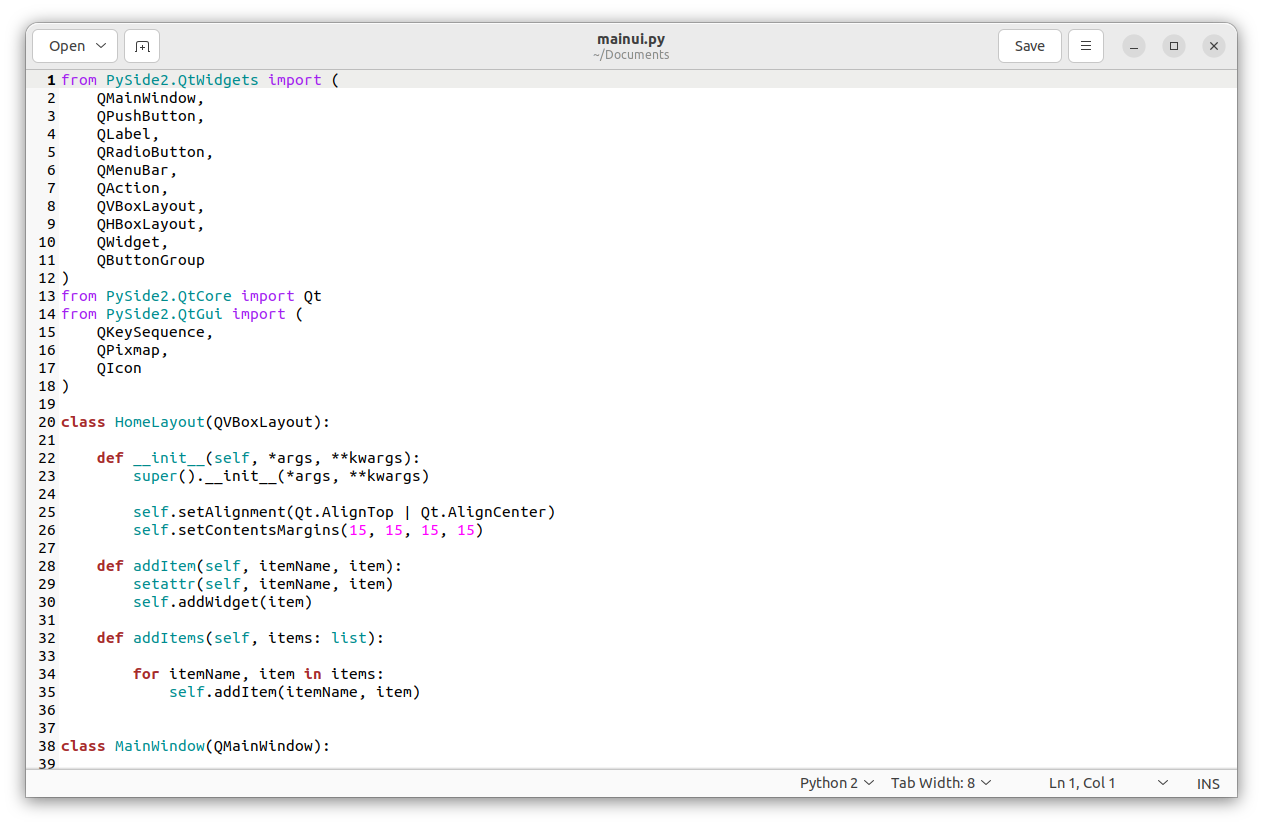

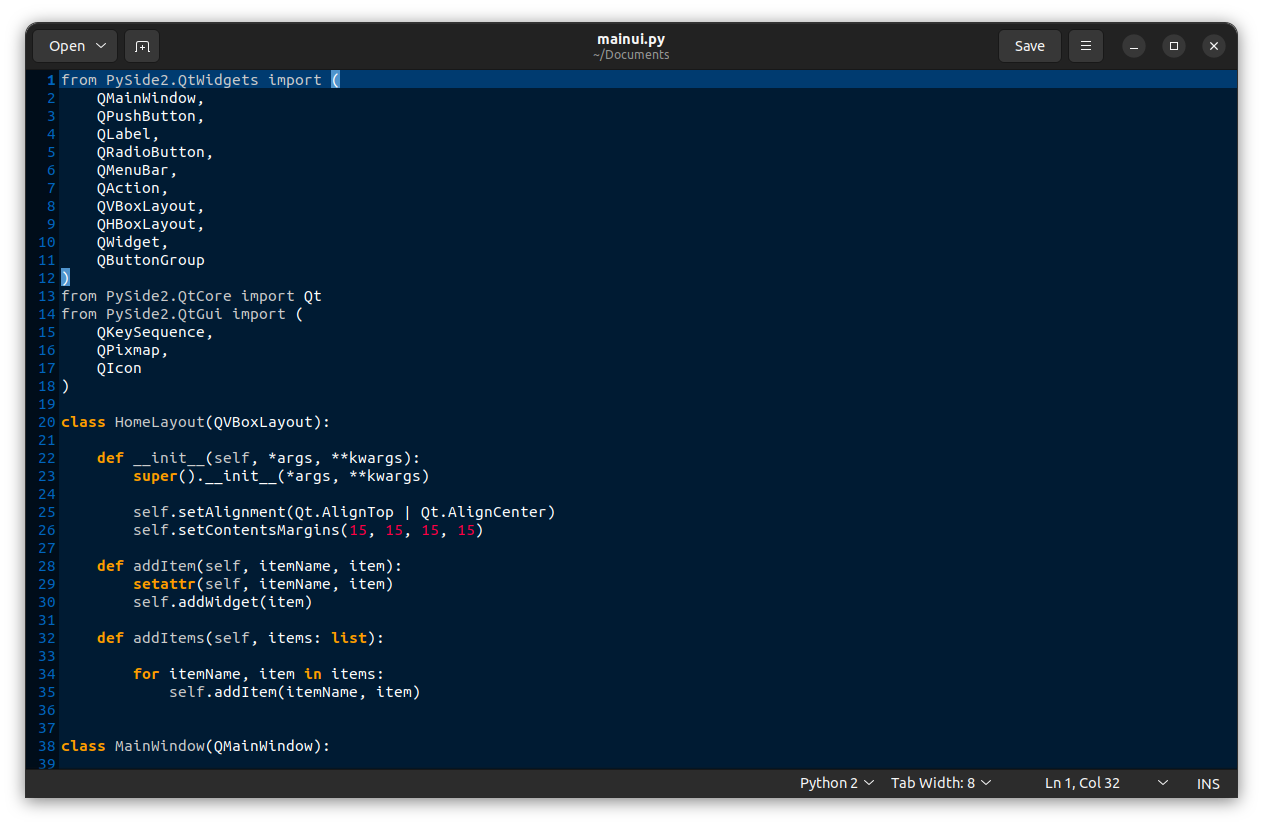

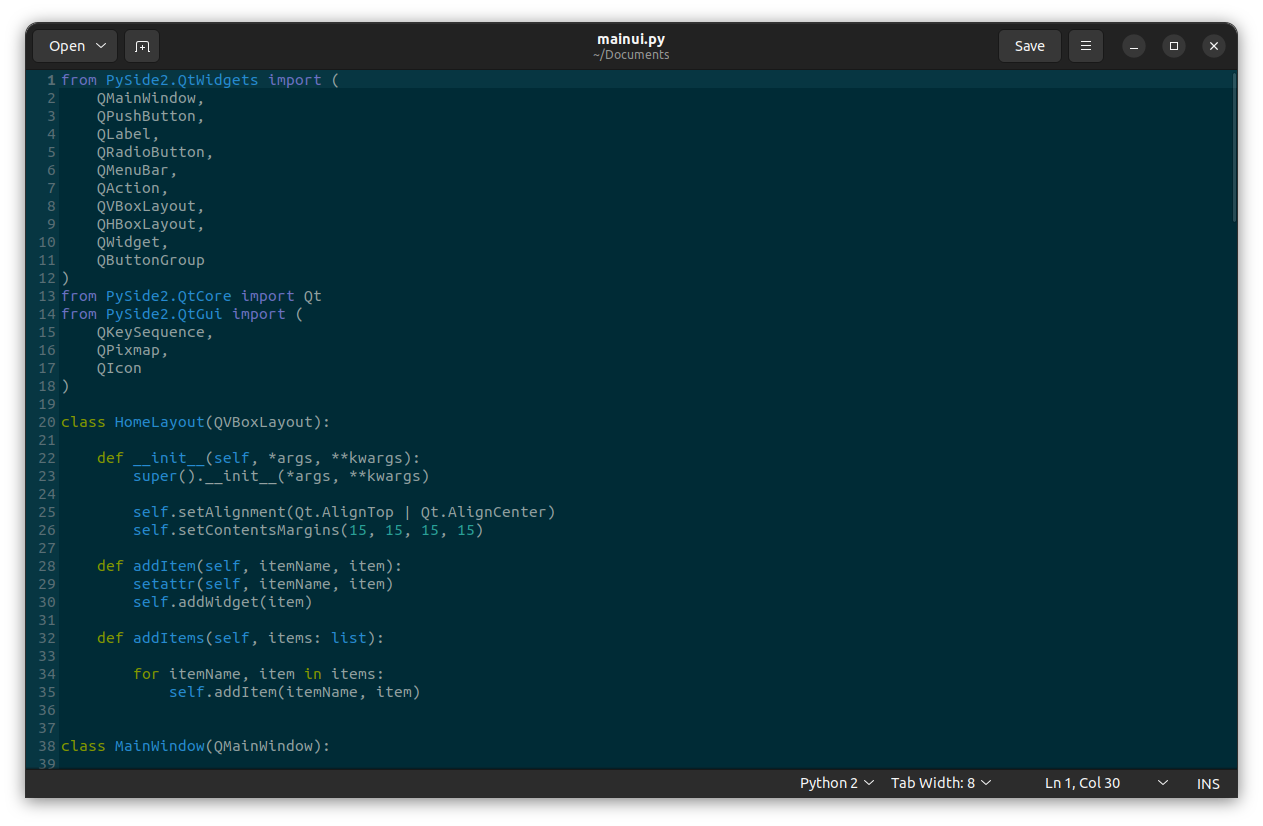

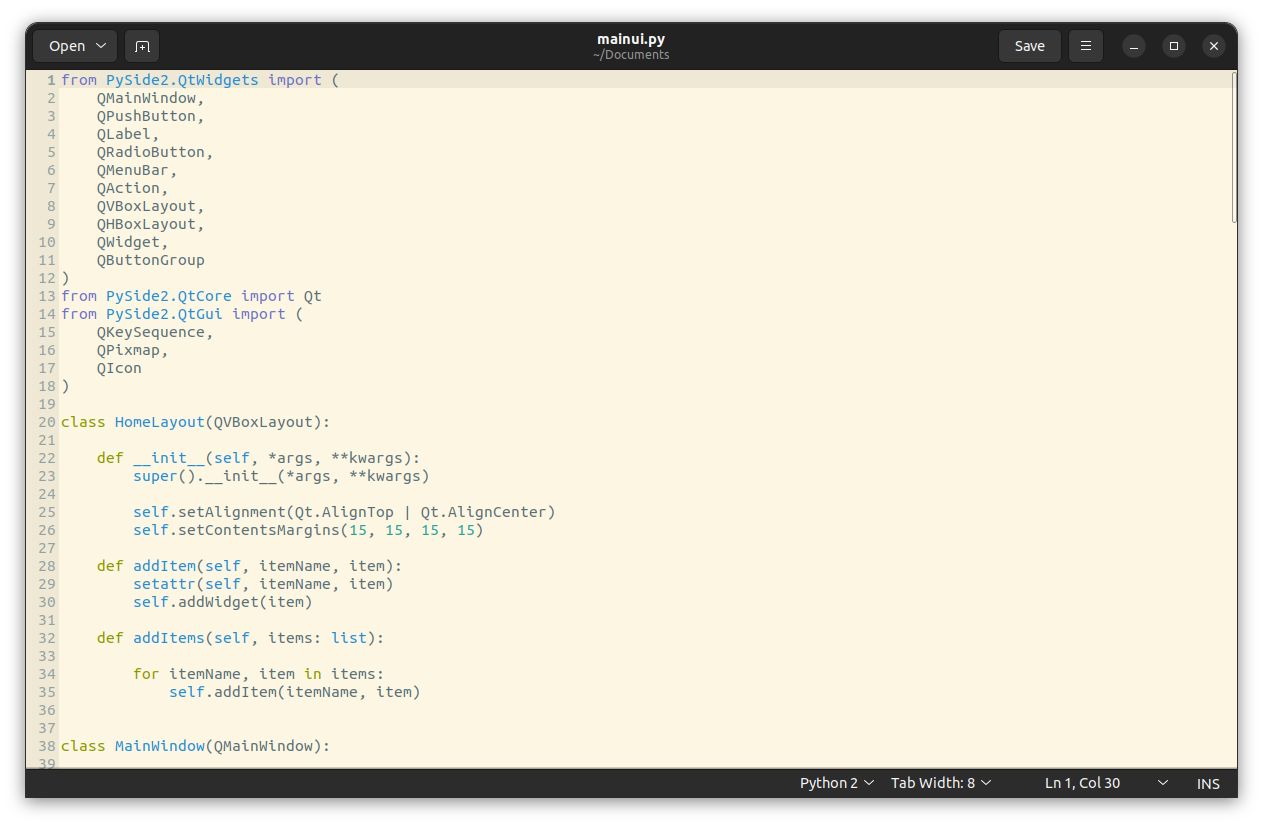

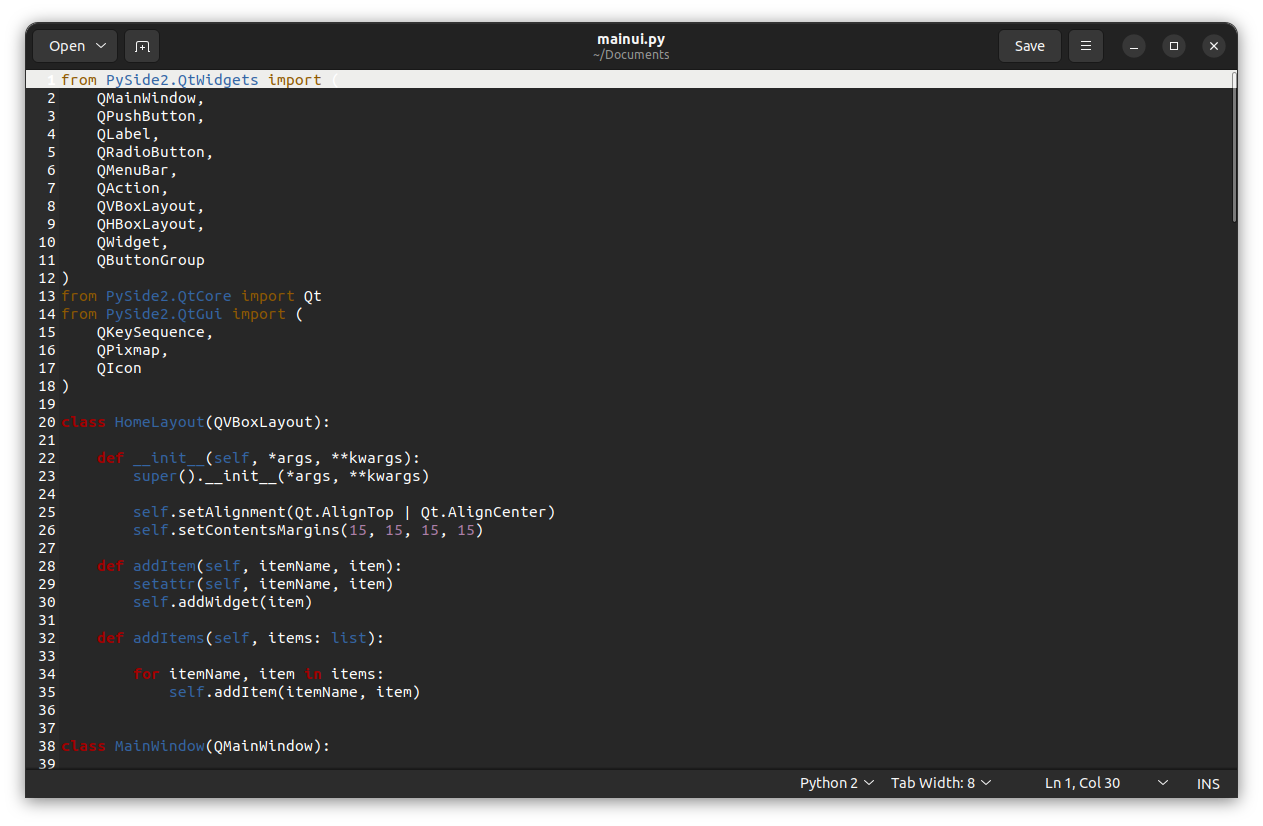

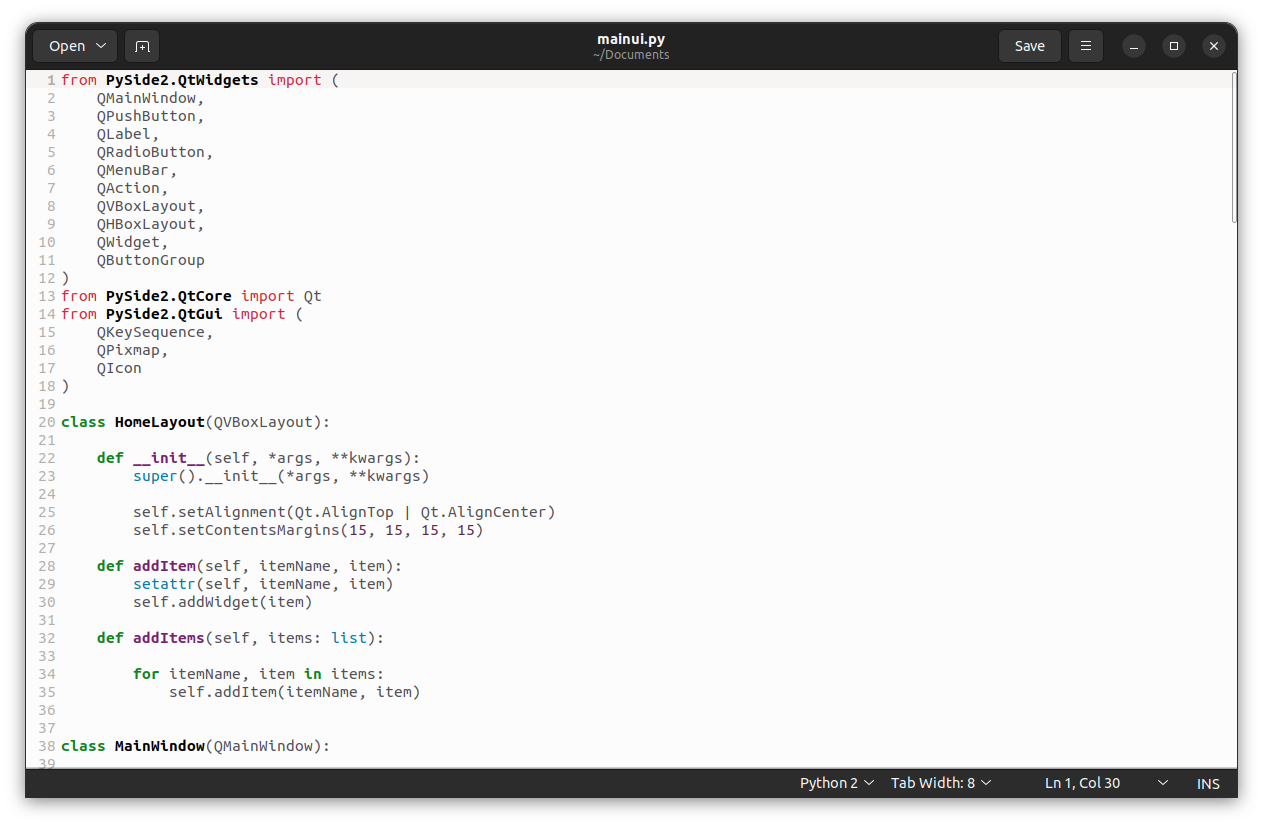

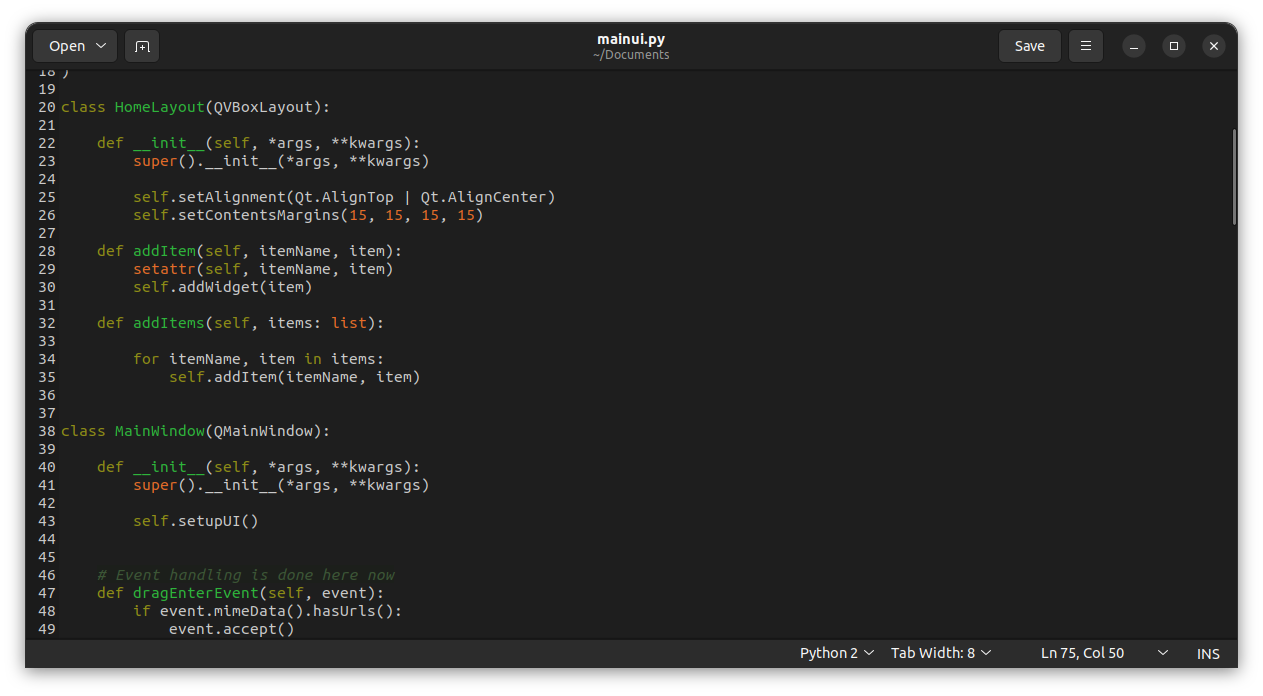

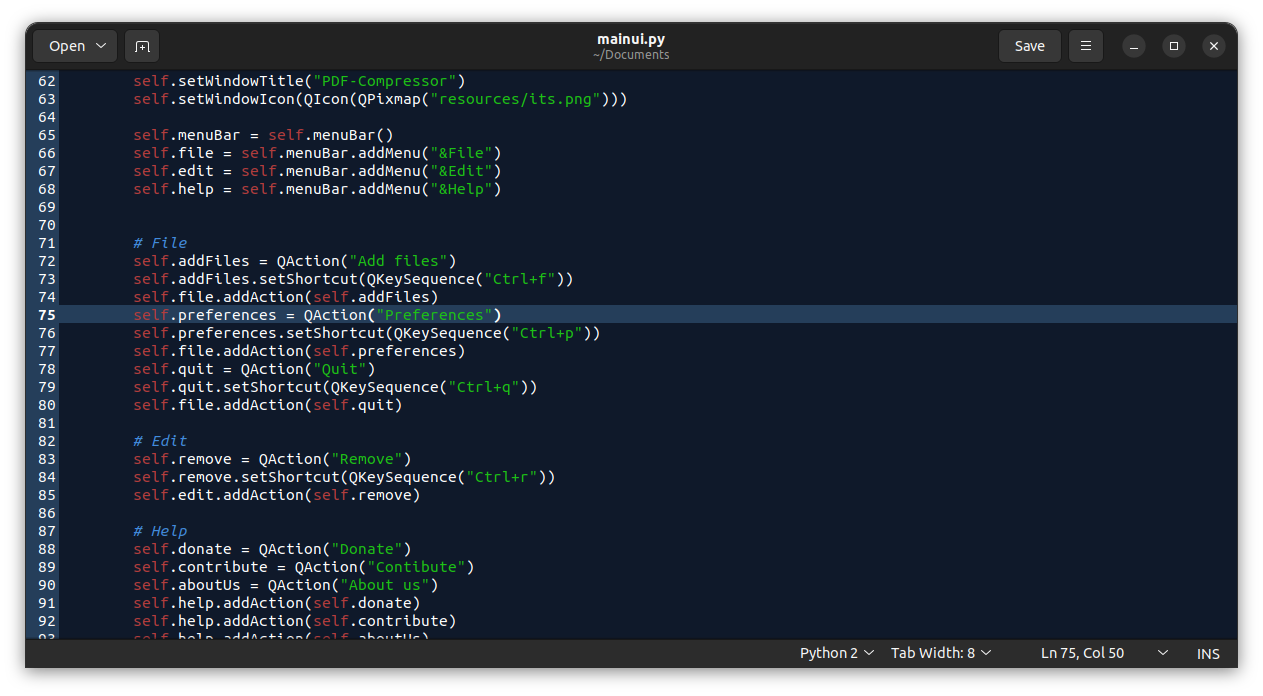

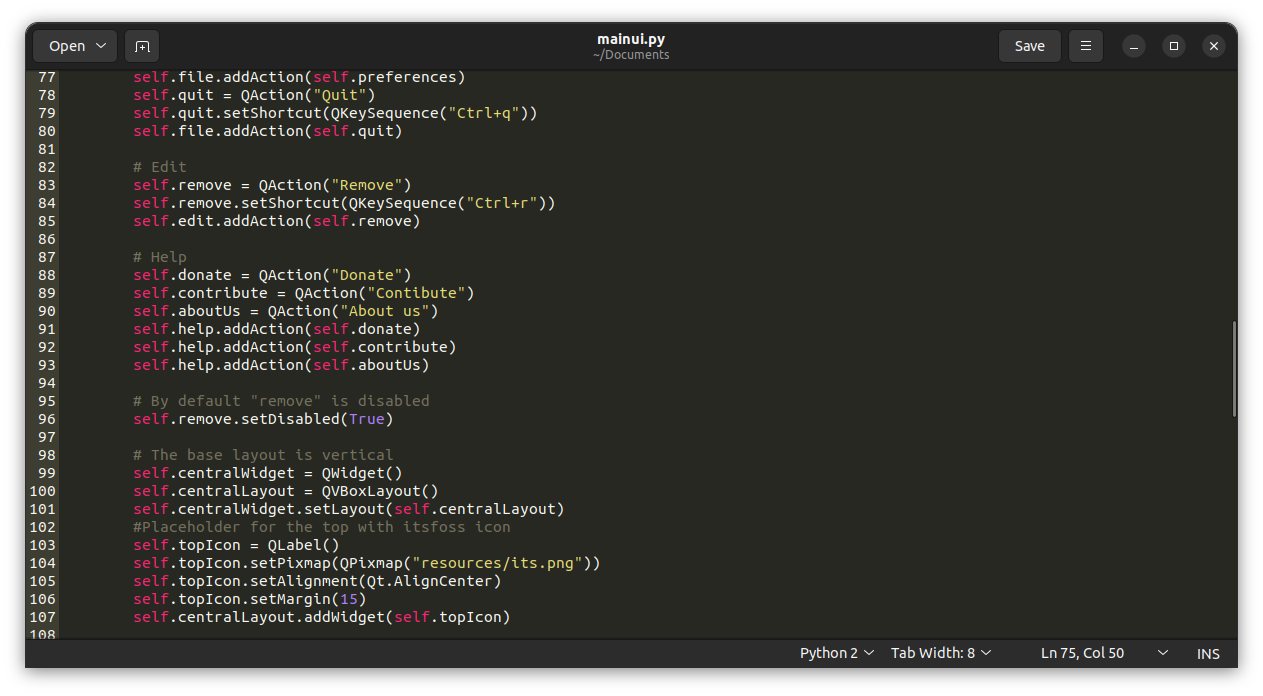

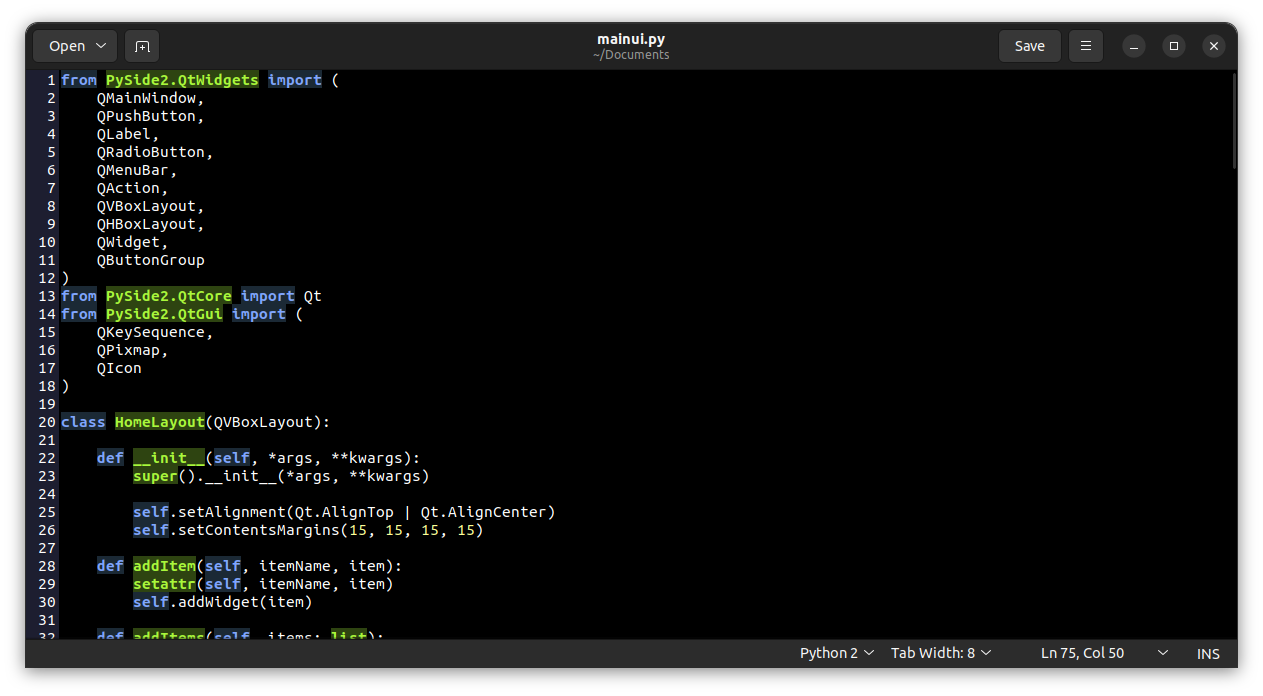

在上一篇文章中我介绍了基于 [VSCodium](https://vscodium.com/) 和 [CMake](https://cmake.org/) 配置构建系统。本文我将介绍如何通过 [GoogleTest](https://github.com/google/googletest) 和 [CTest](https://cmake.org/cmake/help/latest/manual/ctest.1.html) 将单元测试集成到这个构建系统中。

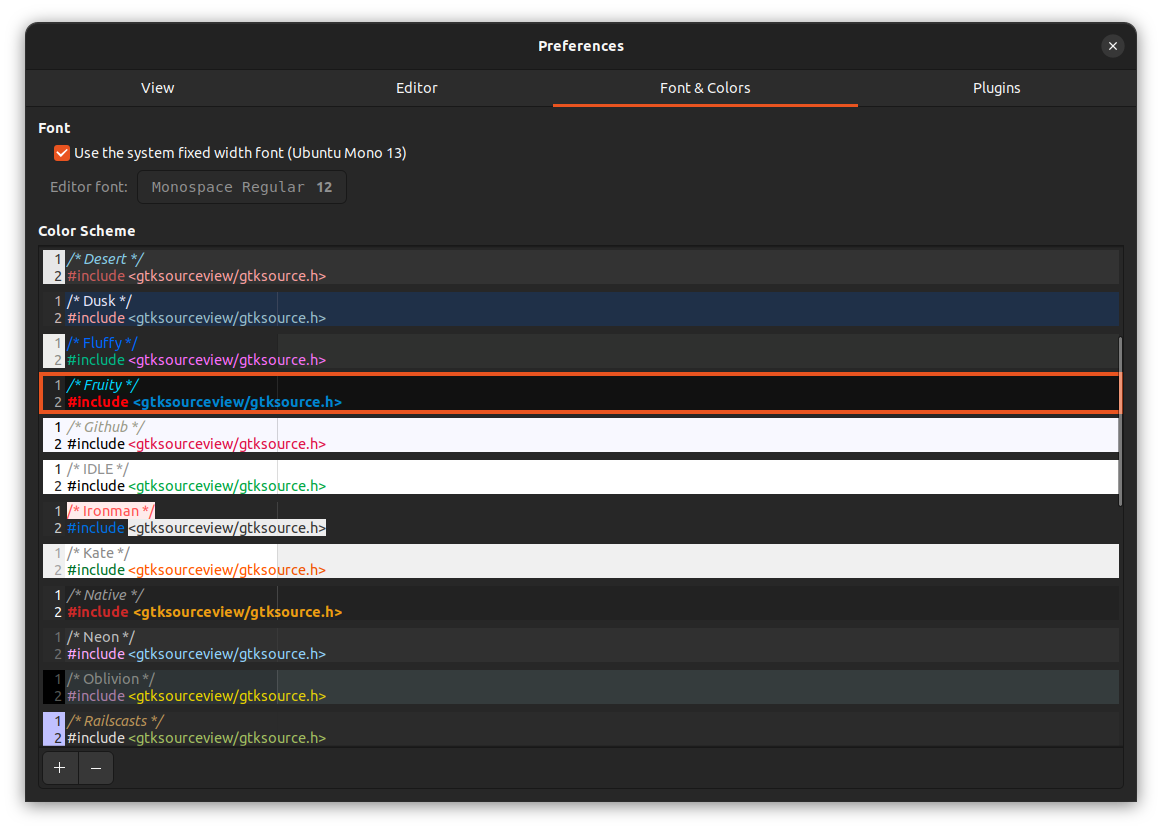

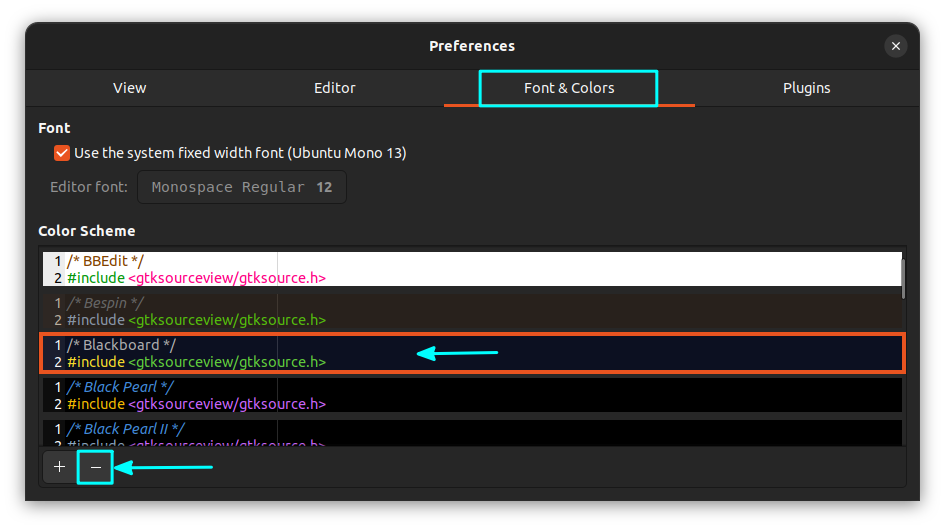

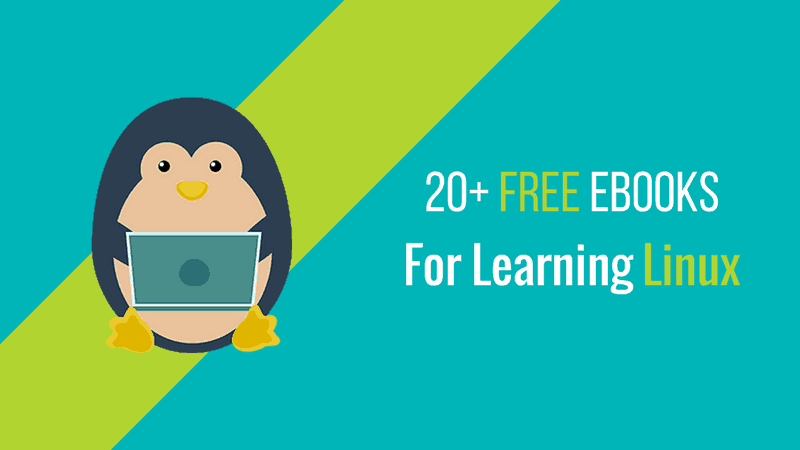

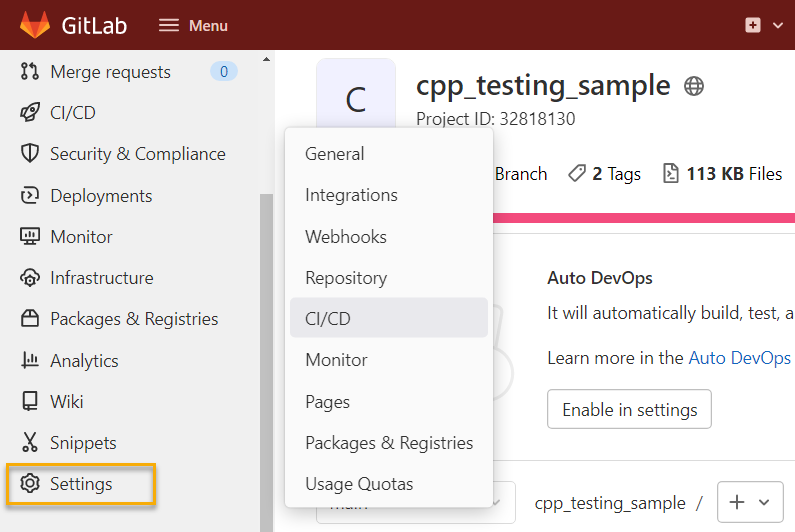

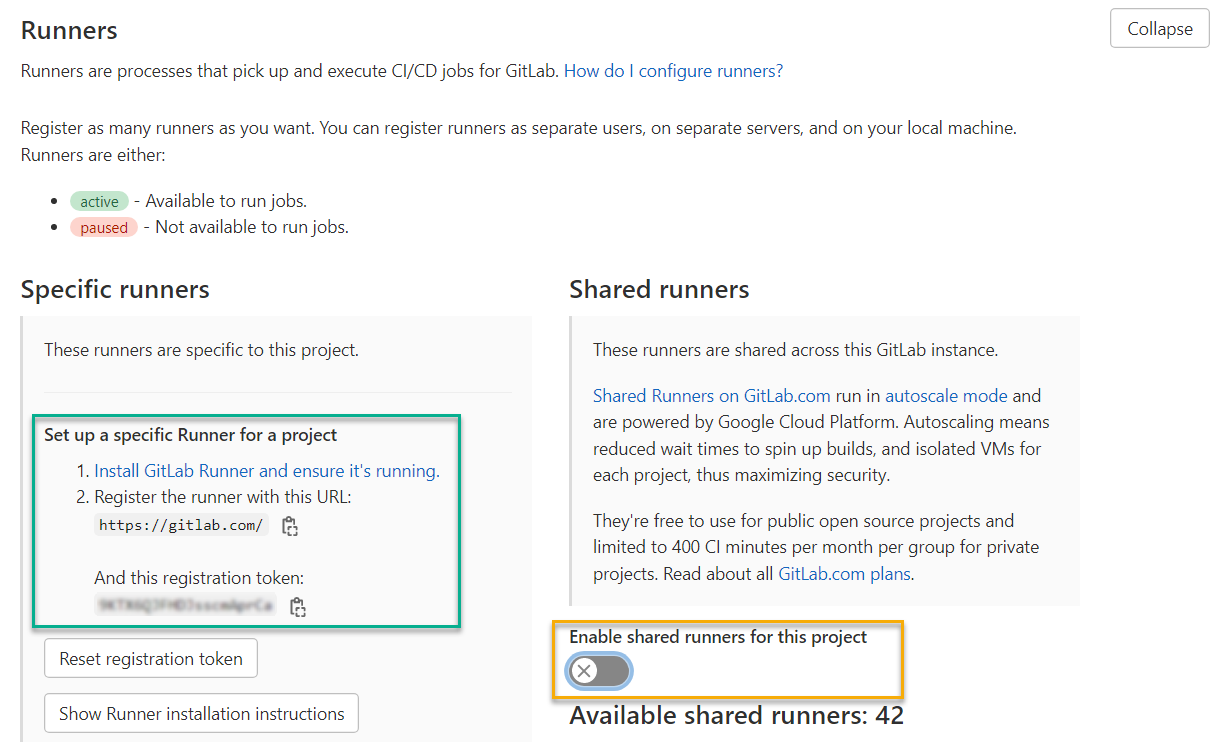

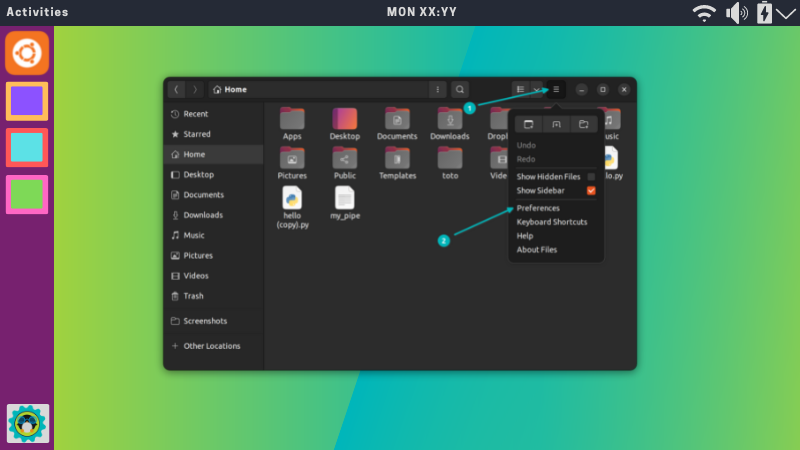

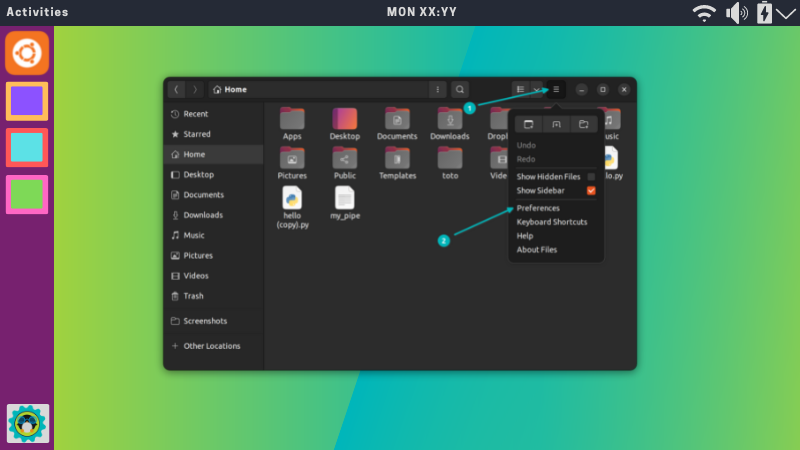

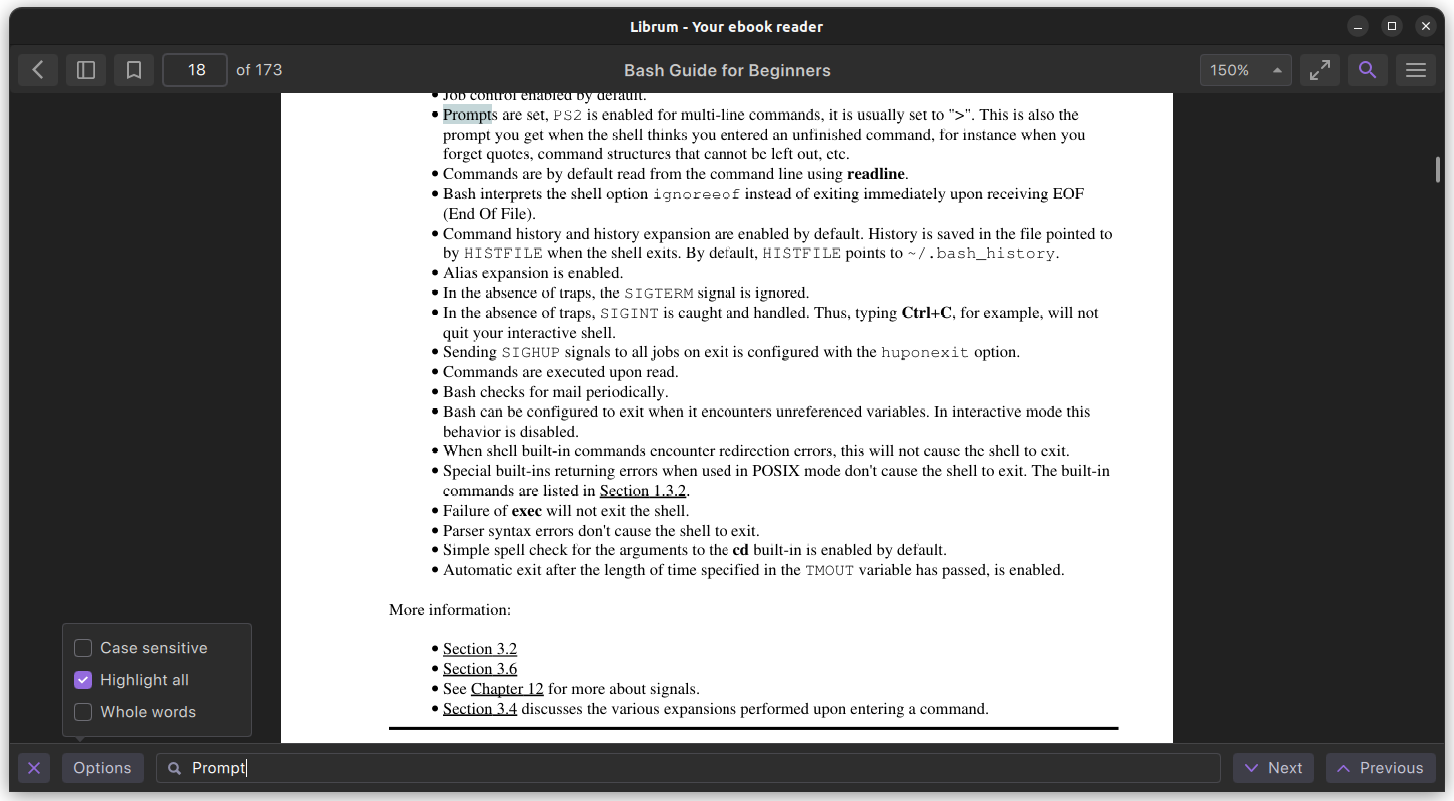

首先克隆 [这个仓库](https://github.com/hANSIc99/cpp_testing_sample),用 VSCodium 打开,切换到 `devops_2` 标签。你可以通过点击 `main` 分支符号(红框处),然后选择 `devops_2` 标签(黄框处)来进行切换:

或者你可以通过命令行来切换:

```

$ git checkout tags/devops_2

```

### GoogleTest

GoogleTest 是一个平台无关的开源 C++ 测试框架。单元测试是用来验证单个逻辑单元的行为的。尽管 GoogleTest 并不是专门用于单元测试的,我将用它对 `Generator` 库进行单元测试。

在 GoogleTest 中,测试用例是通过断言宏来定义的。断言可能产生以下结果:

* *成功*: 测试通过。

* *非致命失败*: 测试失败,但测试继续。

* *致命失败*: 测试失败,且测试终止。

致命断言和非致命断言通过不同的宏来区分:

* `ASSERT_*`: 致命断言,失败时终止。

* `EXPECT_*`: 非致命断言,失败时不终止。

谷歌推荐使用 `EXPECT_*` 宏,因为当测试中包含多个的断言时,它允许继续执行。断言有两个参数:第一个参数是测试分组的名称,第二个参数是测试自己的名称。`Generator` 只定义了 `generate(...)` 函数,所以本文中所有的测试都属于同一个测试组:`GeneratorTest`。

针对 `generate(...)` 函数的测试可以从 [GeneratorTest.cpp](https://github.com/hANSIc99/cpp_testing_sample/blob/main/Generator/GeneratorTest.cpp) 中找到。

#### 引用一致性检查

[generate(...)](https://github.com/hANSIc99/cpp_testing_sample/blob/main/Generator/Generator.cpp) 函数有一个 [std::stringstream](https://en.cppreference.com/w/cpp/io/basic_stringstream) 的引用作为输入参数,并且它也将这个引用作为返回值。第一个测试就是检查输入的引用和返回的引用是否一致。

```

TEST(GeneratorTest, ReferenceCheck){

const int NumberOfElements = 10;

std::stringstream buffer;

EXPECT_EQ(

std::addressof(buffer),

std::addressof(Generator::generate(buffer, NumberOfElements))

);

}

```

在这个测试中我使用 [std::addressof](https://en.cppreference.com/w/cpp/memory/addressof) 来获取对象的地址,并用 `EXPECT_EQ` 来比较输入对象和返回对象是否是同一个。

#### 检查元素个数

本测试检查作为输入的 `std::stringstream` 引用中的元素个数与输入参数中指定的个数是否相同。

```

TEST(GeneratorTest, NumberOfElements){

const int NumberOfElements = 50;

int nCalcNoElements = 0;

std::stringstream buffer;

Generator::generate(buffer, NumberOfElements);

std::string s_no;

while(std::getline(buffer, s_no, ' ')) {

nCalcNoElements++;

}

EXPECT_EQ(nCalcNoElements, NumberOfElements);

}

```

#### 乱序重排

本测试检查随机化引擎是否工作正常。如果连续调用两次 `generate` 函数,应该得到的是两个不同的结果。

```

TEST(GeneratorTest, Shuffle){

const int NumberOfElements = 50;

std::stringstream buffer_A;

std::stringstream buffer_B;

Generator::generate(buffer_A, NumberOfElements);

Generator::generate(buffer_B, NumberOfElements);

EXPECT_NE(buffer_A.str(), buffer_B.str());

}

```

#### 求和校验

与前面的测试相比,这是一个大体量的测试。它检查 1 到 n 的数值序列的和与乱序重排后的序列的和是否相等。 `generate(...)` 函数应该生成一个 1 到 n 的乱序的序列,这个序列的和应当是不变的。

```

TEST(GeneratorTest, CheckSum){

const int NumberOfElements = 50;

int nChecksum_in = 0;

int nChecksum_out = 0;

std::vector<int> vNumbersRef(NumberOfElements); // Input vector

std::iota(vNumbersRef.begin(), vNumbersRef.end(), 1); // Populate vector

// Calculate reference checksum

for(const int n : vNumbersRef){

nChecksum_in += n;

}

std::stringstream buffer;

Generator::generate(buffer, NumberOfElements);

std::vector<int> vNumbersGen; // Output vector

std::string s_no;

// Read the buffer back back to the output vector

while(std::getline(buffer, s_no, ' ')) {

vNumbersGen.push_back(std::stoi(s_no));

}

// Calculate output checksum

for(const int n : vNumbersGen){

nChecksum_out += n;

}

EXPECT_EQ(nChecksum_in, nChecksum_out);

}

```

你可以像对一般 C++ 程序一样调试这些测试。

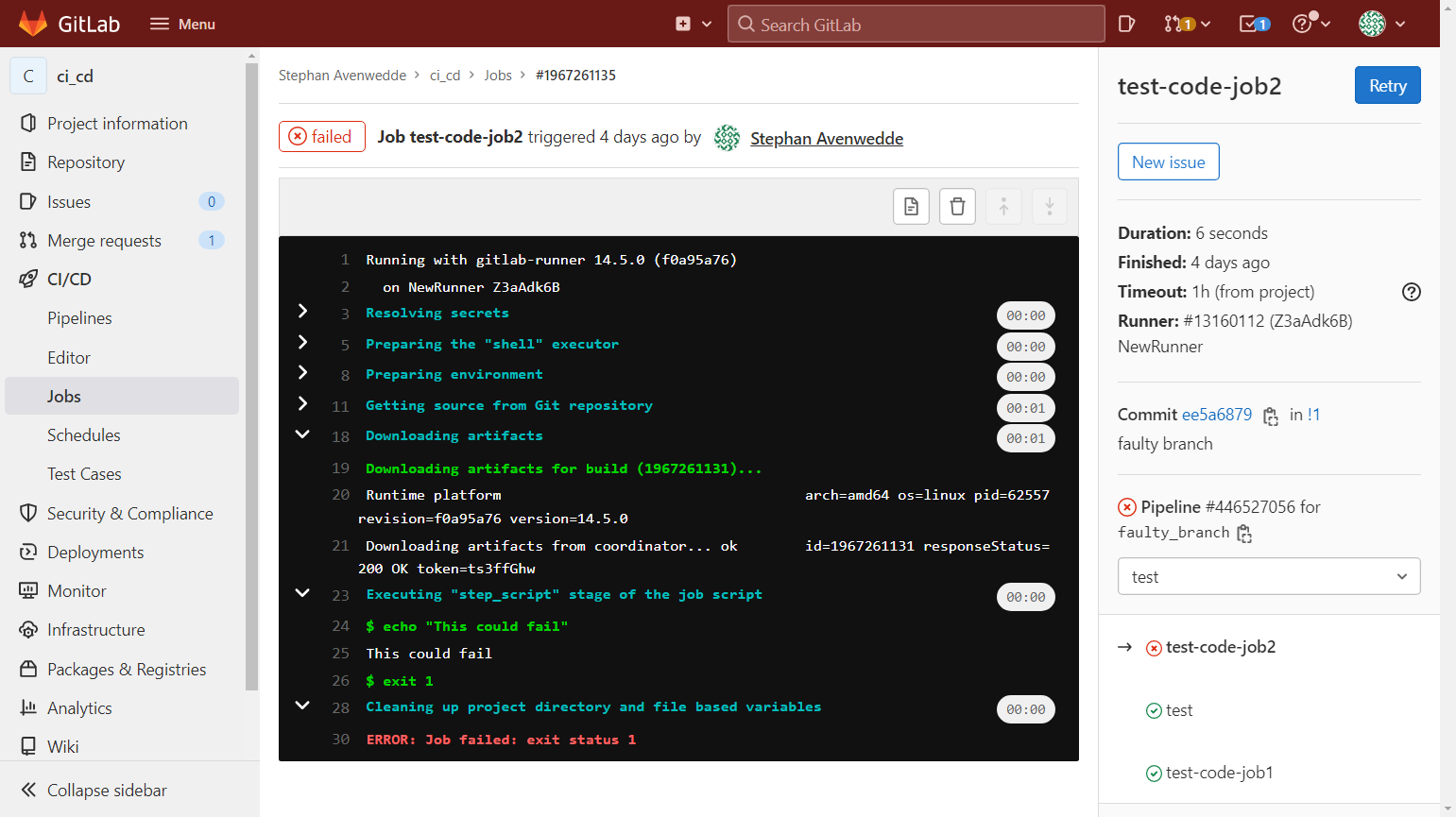

### CTest

除了嵌入到代码中的测试之外,[CTest](https://cmake.org/cmake/help/latest/manual/ctest.1.html) 提供了可执行程序的测试方式。简而言之就是通过给可执行程序传入特定的参数,然后用 [正则表达式](https://en.wikipedia.org/wiki/Regular_expression) 对它的输出进行匹配检查。通过这种方式可以很容易检查程序对于不正确的命令行参数的反应。这些测试定义在顶层的 [CMakeLists.txt](https://github.com/hANSIc99/cpp_testing_sample/blob/main/CMakeLists.txt) 文件中。下面我详细介绍 3 个测试用例:

#### 参数正常

如果输入参数是一个正整数,程序应该输出应该是一个数列:

```

add_test(NAME RegularUsage COMMAND Producer 10)

set_tests_properties(RegularUsage

PROPERTIES PASS_REGULAR_EXPRESSION "^[0-9 ]+"

)

```

#### 没有提供参数

如果没有传入参数,程序应该立即退出并提示错误原因:

```

add_test(NAME NoArg COMMAND Producer)

set_tests_properties(NoArg

PROPERTIES PASS_REGULAR_EXPRESSION "^Enter the number of elements as argument"

)

```

#### 参数错误

当传入的参数不是整数时,程序应该退出并报错。比如给 `Producer` 传入参数 `ABC`:

```

add_test(NAME WrongArg COMMAND Producer ABC)

set_tests_properties(WrongArg

PROPERTIES PASS_REGULAR_EXPRESSION "^Error: Cannot parse"

)

```

#### 执行测试

可以使用 `ctest -R Usage -VV` 命令来执行测试。这里给 `ctest` 的命令行参数:

* `-R <测试名称>` : 执行单个测试

* `-VV`:打印详细输出

测试执行结果如下:

```

$ ctest -R Usage -VV

UpdatecTest Configuration from :/home/stephan/Documents/cpp_testing sample/build/DartConfiguration.tcl

UpdateCTestConfiguration from :/home/stephan/Documents/cpp_testing sample/build/DartConfiguration.tcl

Test project /home/stephan/Documents/cpp_testing sample/build

Constructing a list of tests

Done constructing a list of tests

Updating test list for fixtures

Added 0 tests to meet fixture requirements

Checking test dependency graph...

Checking test dependency graph end

```

在这里我执行了名为 `Usage` 的测试。

它以无参数的方式调用 `Producer`:

```

test 3

Start 3: Usage

3: Test command: /home/stephan/Documents/cpp testing sample/build/Producer

```

输出不匹配 `[^[0-9]+]` 的正则模式,测试未通过。

```

3: Enter the number of elements as argument

1/1 test #3. Usage ................

Failed Required regular expression not found.

Regex=[^[0-9]+]

0.00 sec round.

0% tests passed, 1 tests failed out of 1

Total Test time (real) =

0.00 sec

The following tests FAILED:

3 - Usage (Failed)

Errors while running CTest

$

```

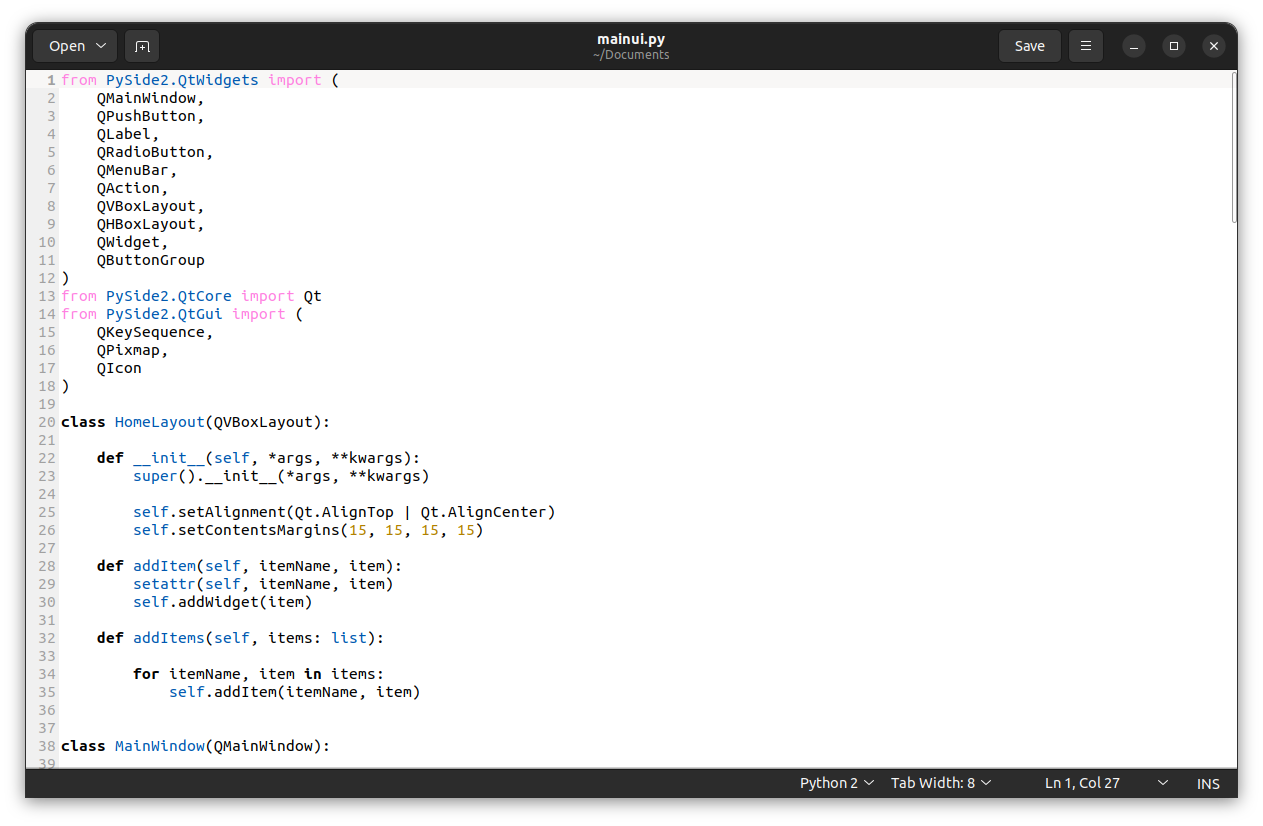

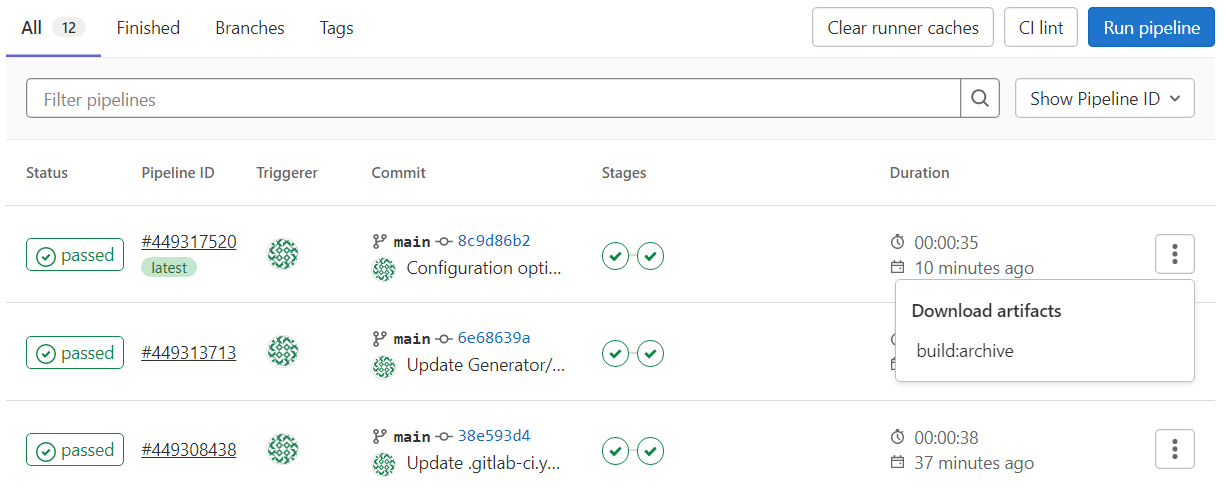

如果想要执行所有测试(包括那些用 GoogleTest 生成的),切换到 `build` 目录中,然后运行 `ctest` 即可:

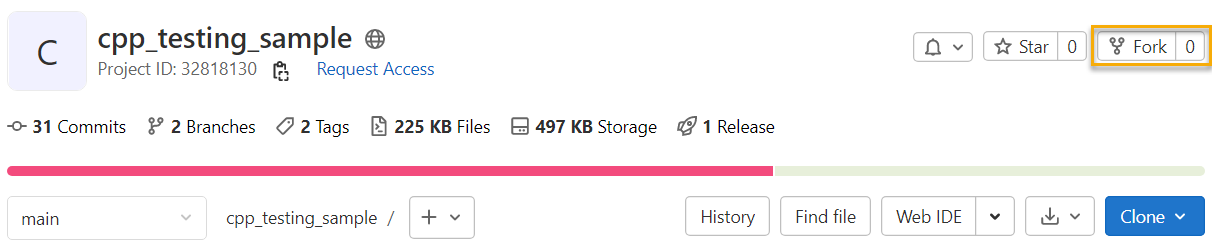

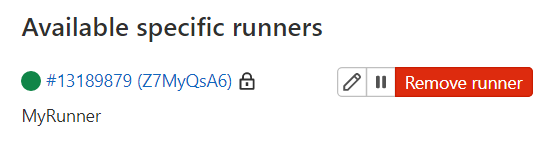

在 VSCodium 中可以通过点击信息栏的黄框处来调用 CTest。如果所有测试都通过了,你会看到如下输出:

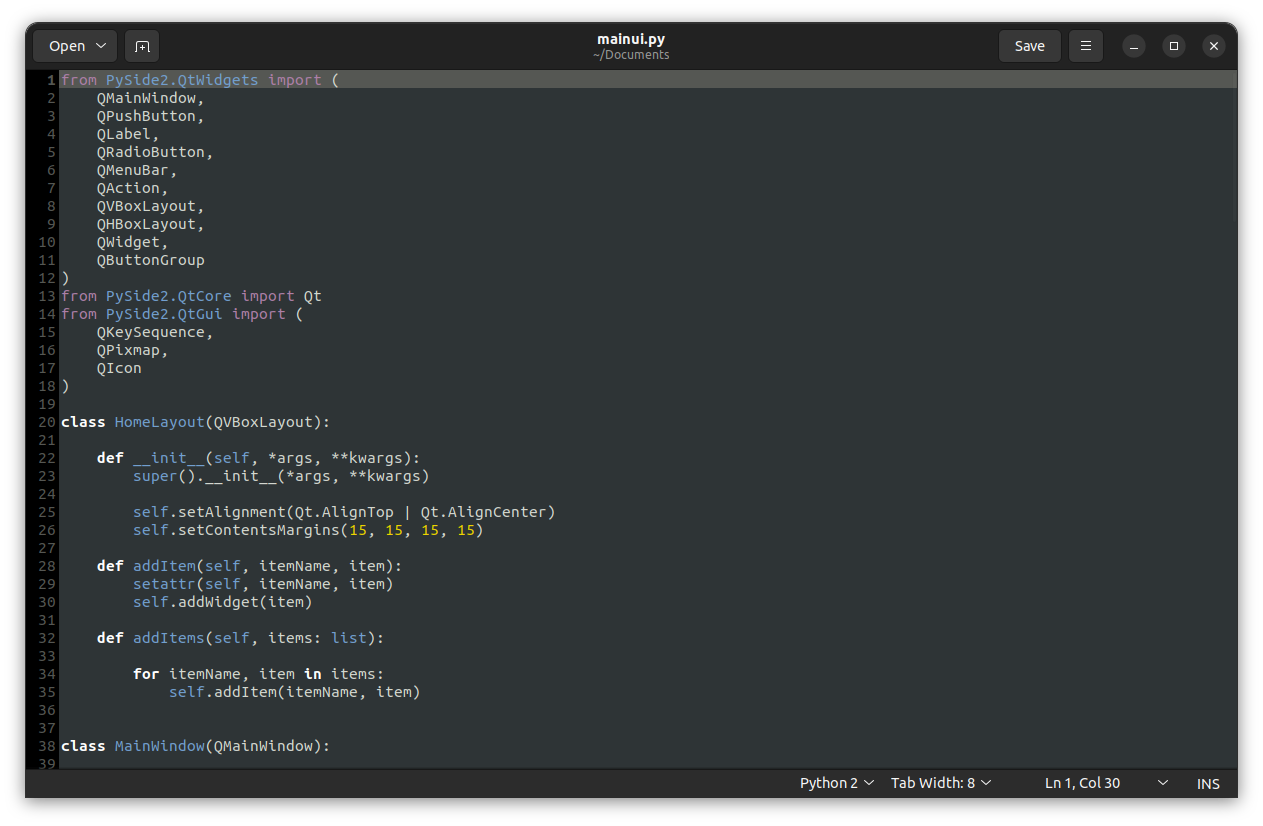

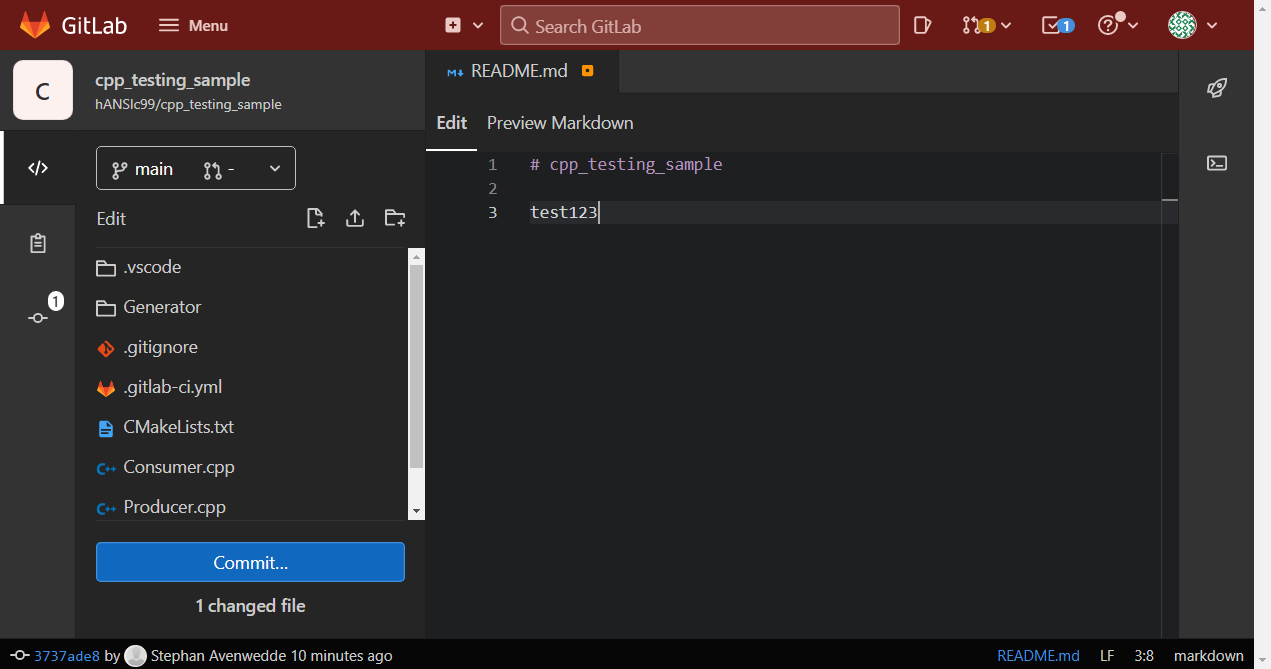

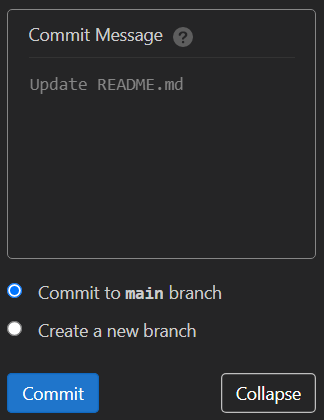

### 使用 Git 钩子进行自动化测试

目前为止,运行测试是开发者需要额外执行的步骤,那些不能通过测试的代码仍然可能被提交和推送到代码仓库中。利用 [Git 钩子](https://git-scm.com/book/en/v2/Customizing-Git-Git-Hooks) 可以自动执行测试,从而防止有瑕疵的代码被提交。

切换到 `.git/hooks` 目录,创建 `pre-commit` 文件,复制粘贴下面的代码:

```

#!/usr/bin/sh

(cd build; ctest --output-on-failure -j6)

```

然后,给文件增加可执行权限:

```

$ chmod +x pre-commit

```

这个脚本会在提交之前调用 CTest 进行测试。如果有测试未通过,提交过程就会被终止:

只有所有测试都通过了,提交过程才会完成:

这个机制也有一个漏洞:可以通过 `git commit --no-verify` 命令绕过测试。解决办法是配置构建服务器,这能保证只有正常工作的代码才能被提交,但这又是另一个话题了。

### 总结

本文提到的技术实施简单,并且能够帮你快速发现代码中的问题。做单元测试可以提高代码质量,同时也不会打断你的工作流。GoogleTest 框架提供了丰富的特性以应对各种测试场景,文中我所提到的只是一小部分而已。如果你想进一步了解 GoogleTest,我推荐你阅读 [GoogleTest Primer](https://google.github.io/googletest/primer.html)。

*(题图:MJ/f212ce43-b60b-4005-b70d-8384f2ba5860)*

---

via: <https://opensource.com/article/22/1/unit-testing-googletest-ctest>

作者:[Stephan Avenwedde](https://opensource.com/users/hansic99) 选题:[lujun9972](https://github.com/lujun9972) 译者:[toknow-gh](https://github.com/toknow-gh) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

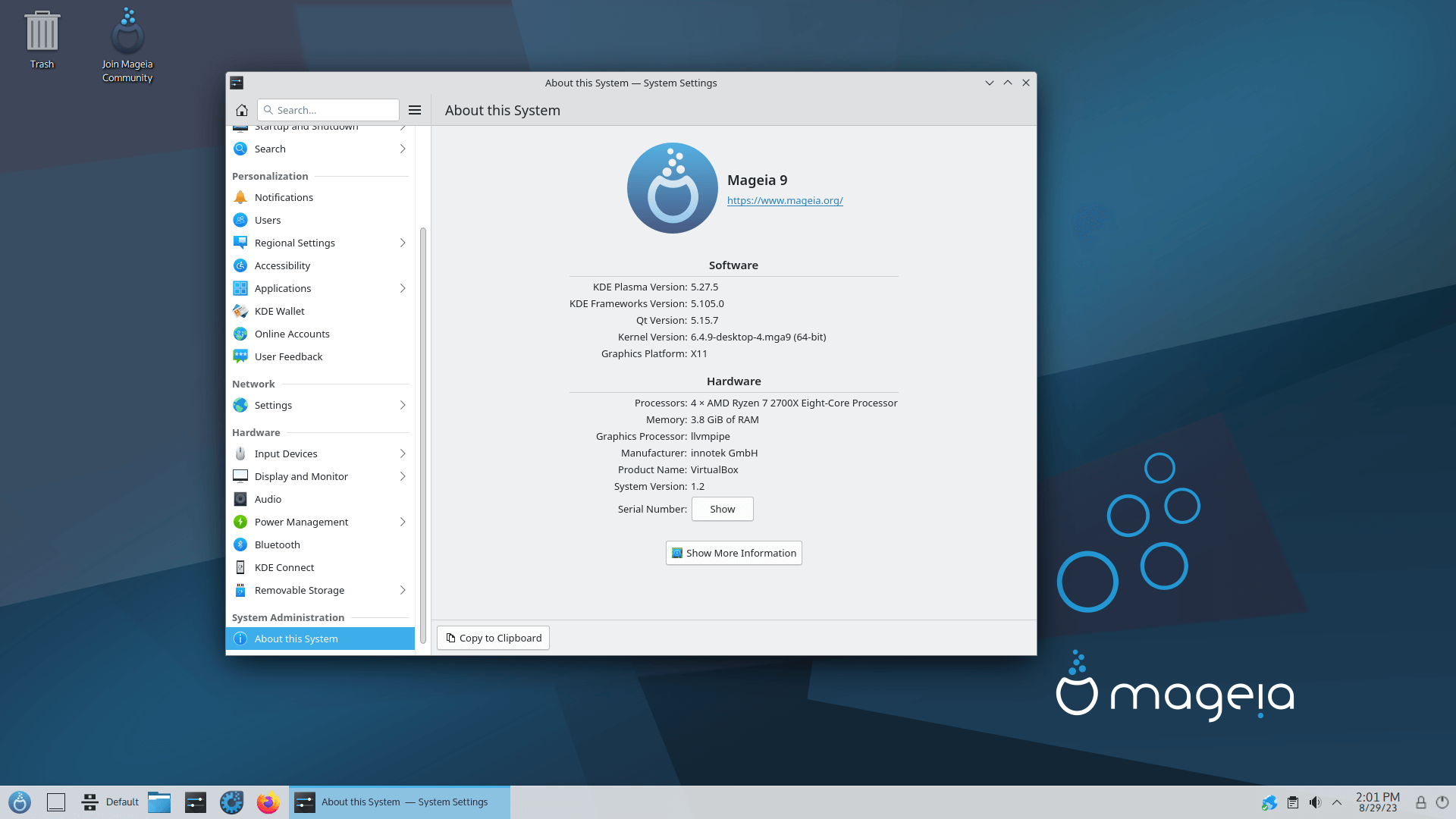

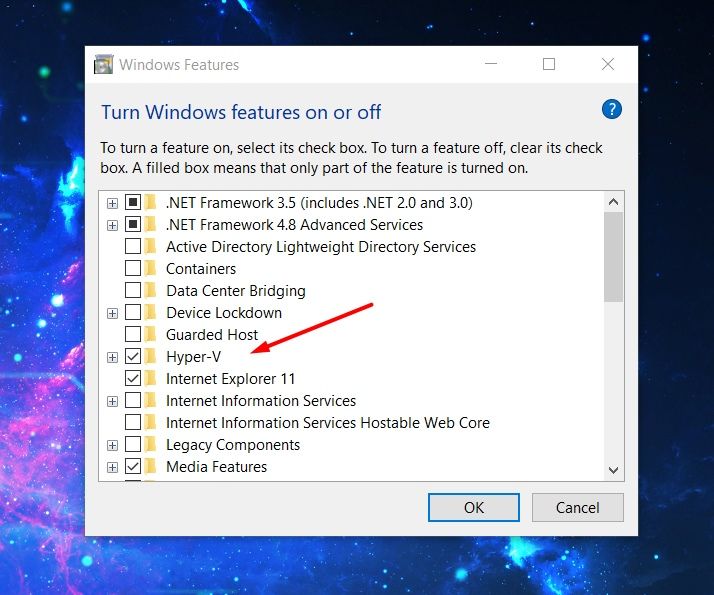

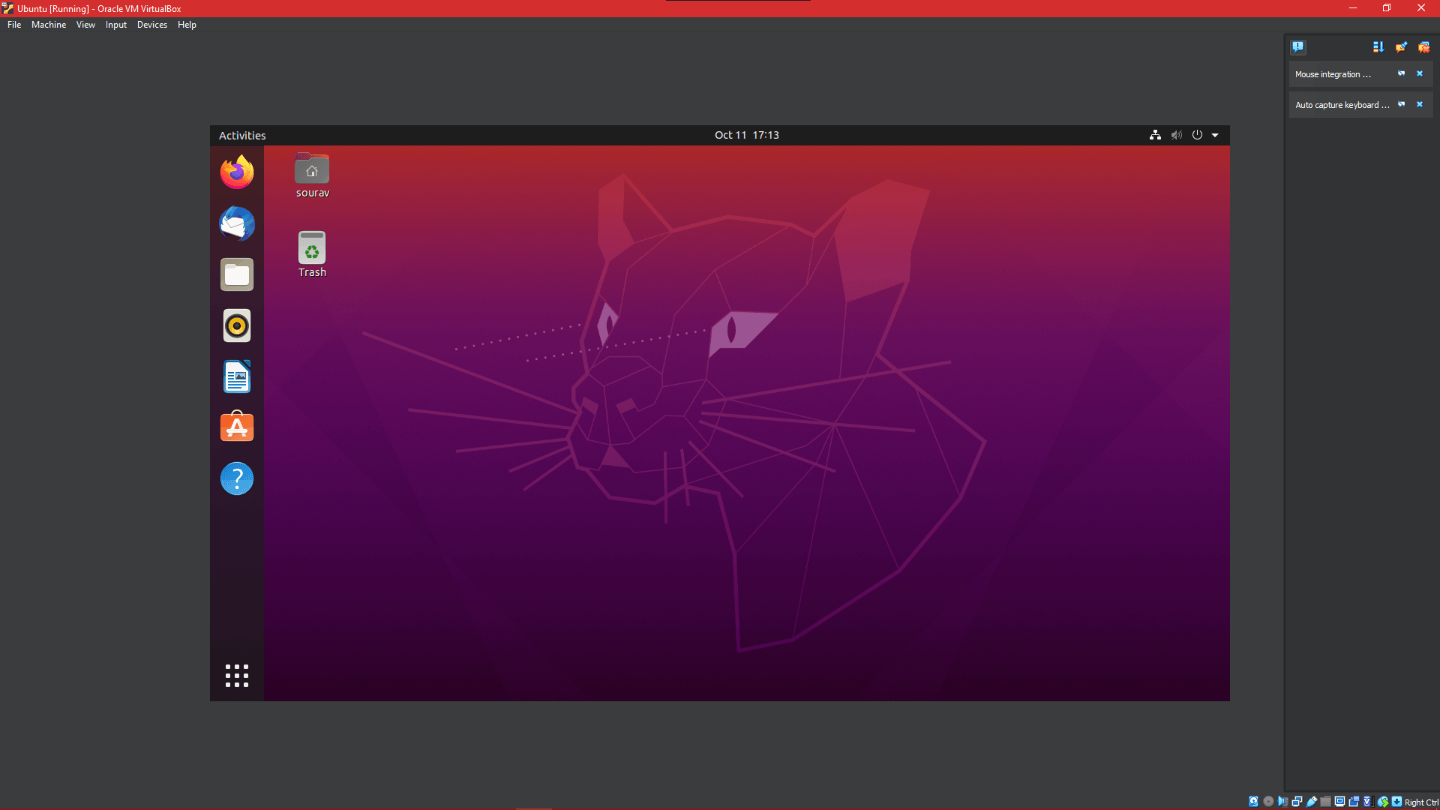

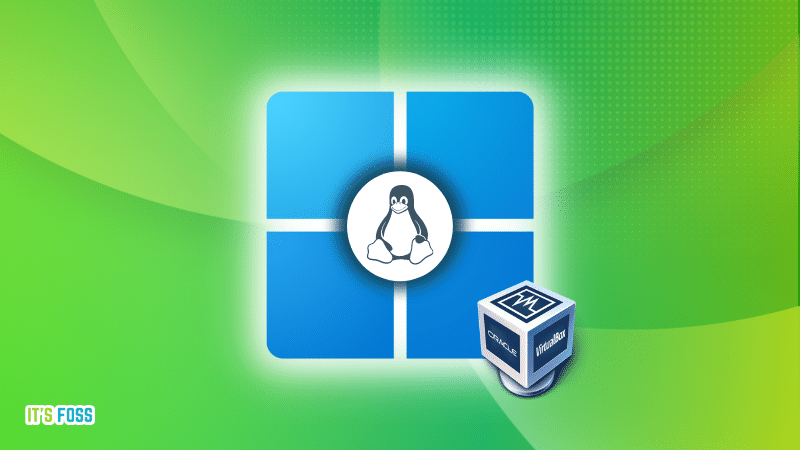

16,056 | 如何访问虚拟机中的 GRUB 菜单 | https://itsfoss.com/access-grub-virtual-machine/ | 2023-08-02T11:30:00 | [

"虚拟机",

"GRUB"

] | https://linux.cn/article-16056-1.html |

>

> 需要在虚拟机中访问 GRUB 菜单吗?以下是做法。

>

>

>

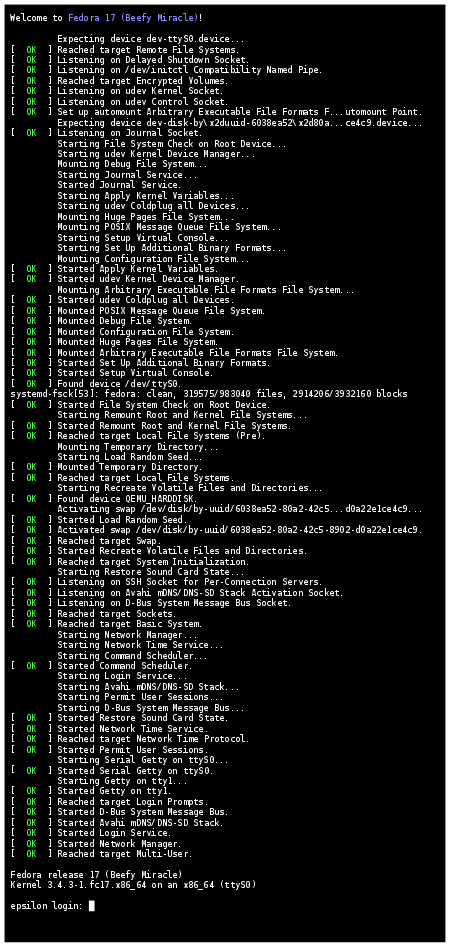

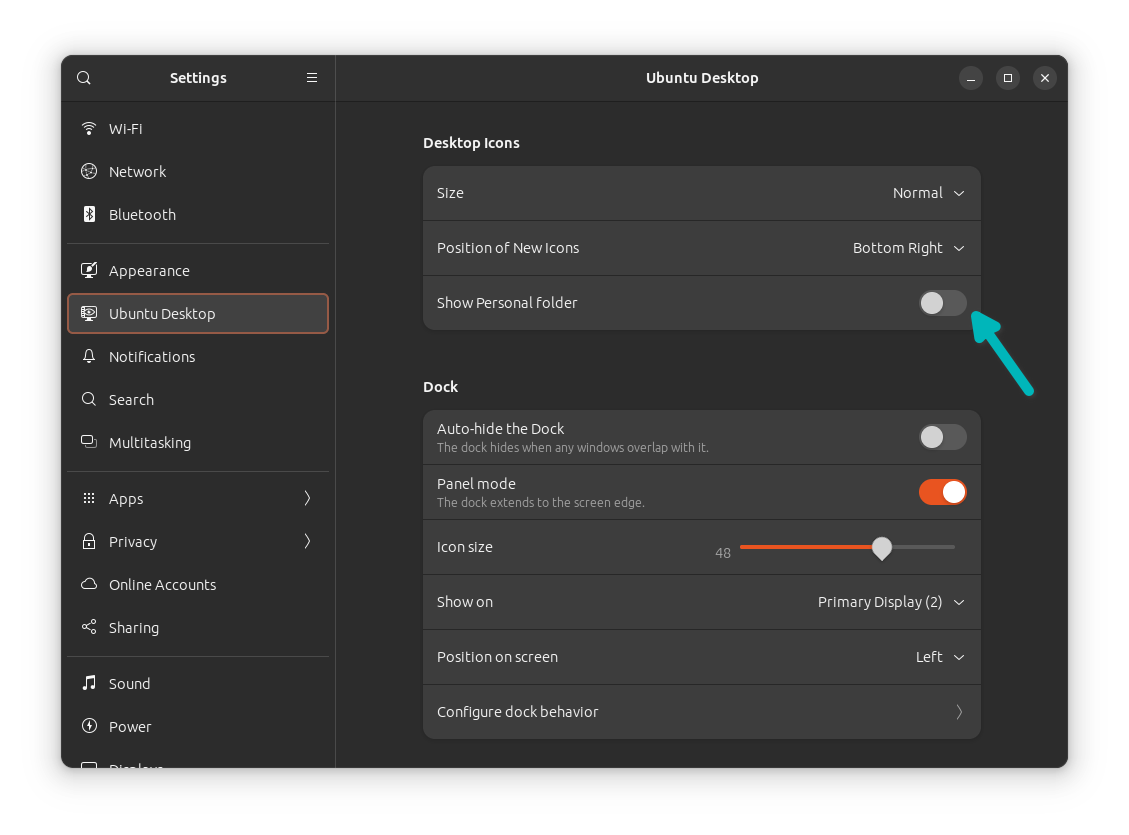

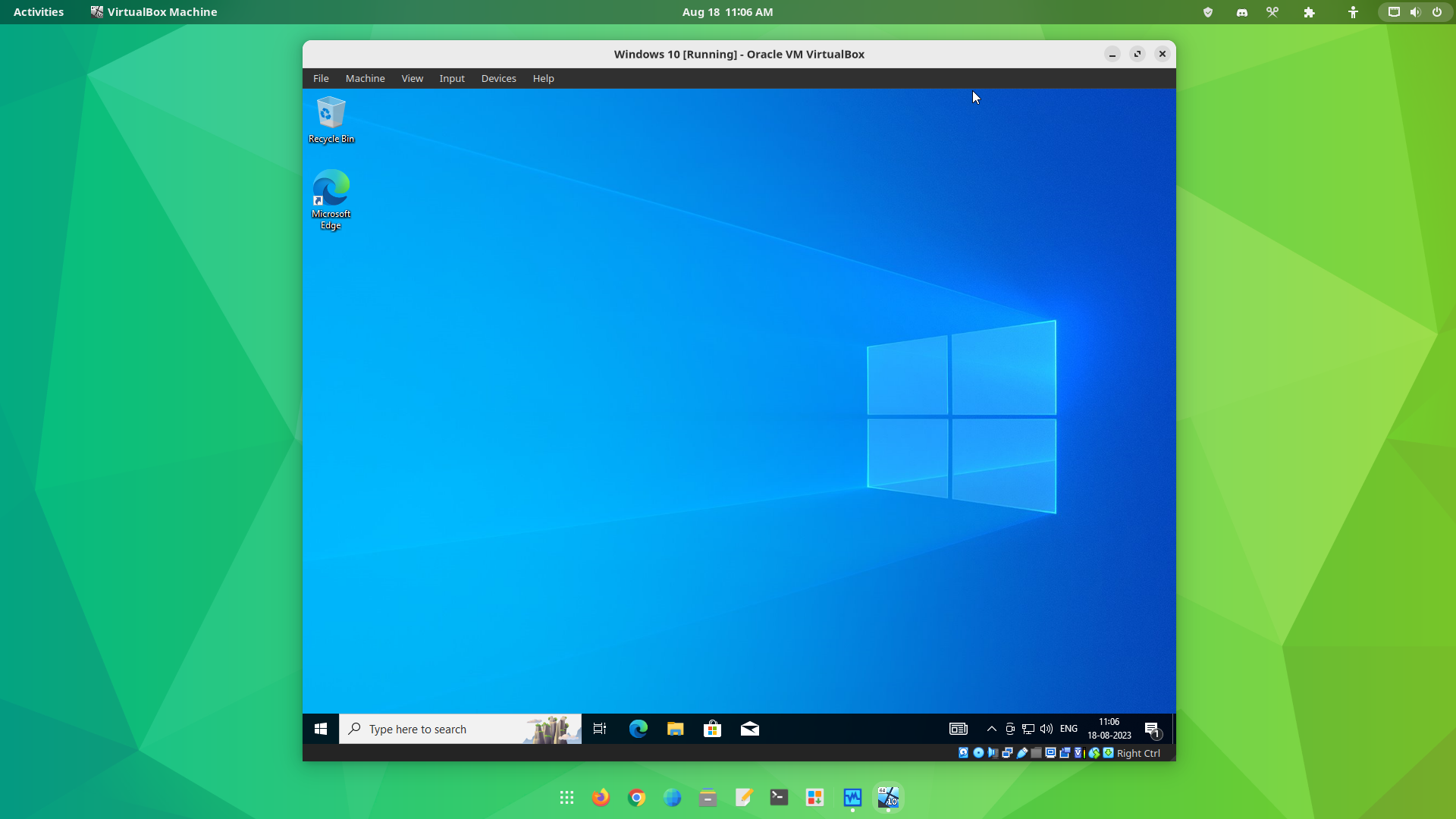

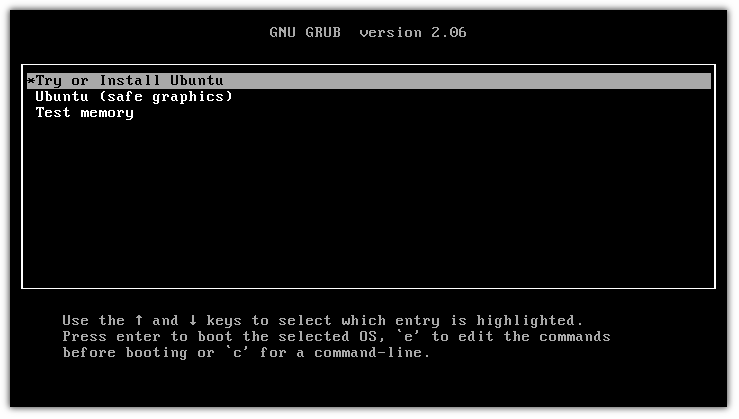

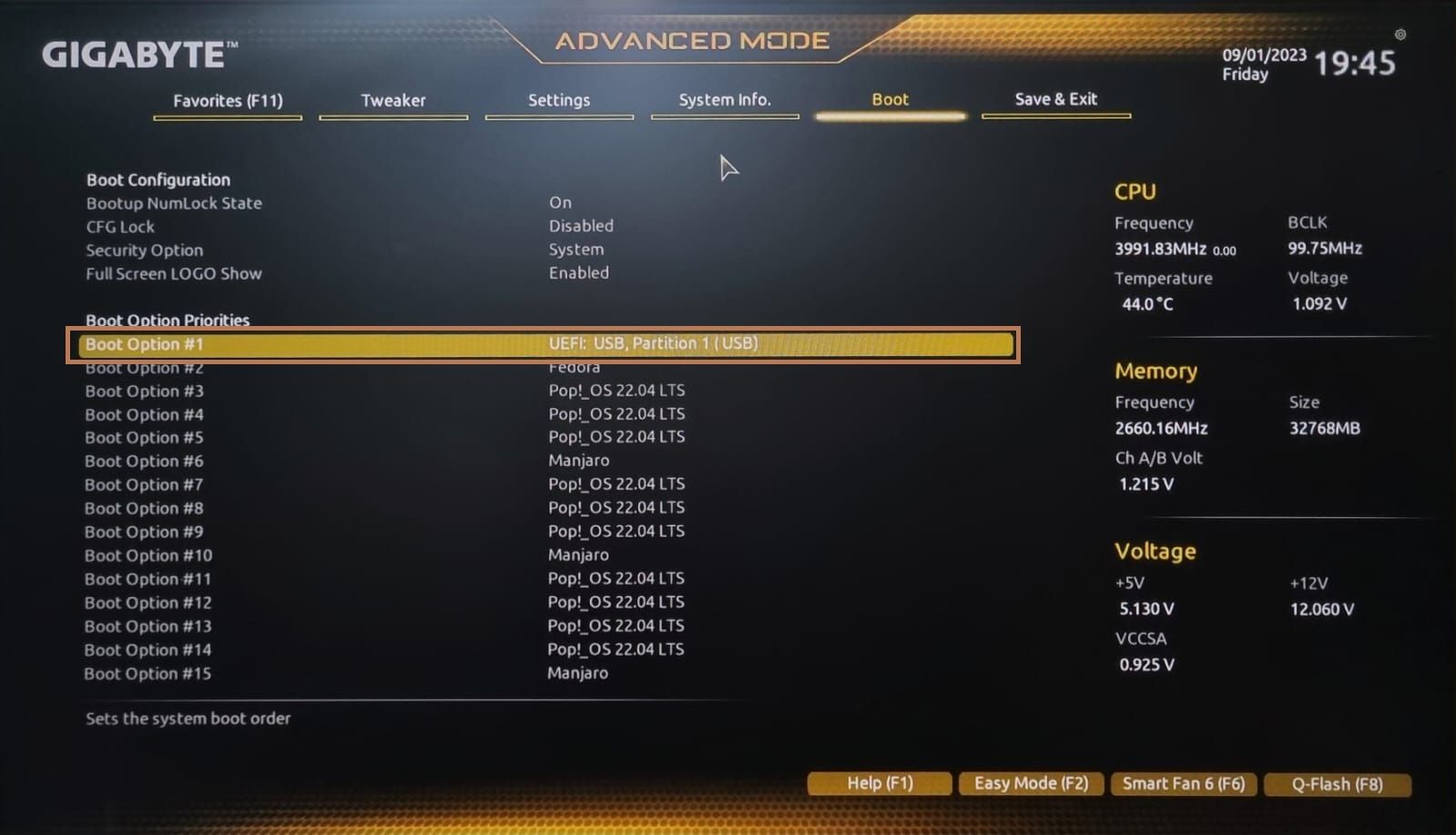

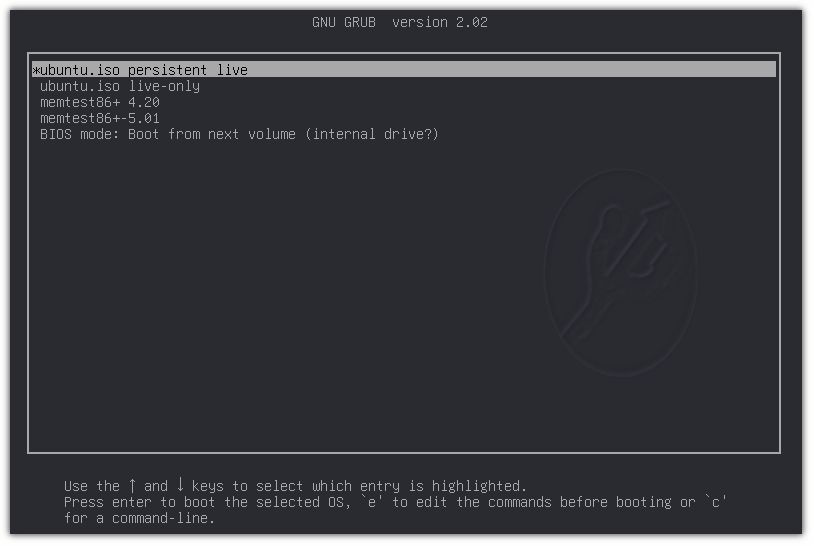

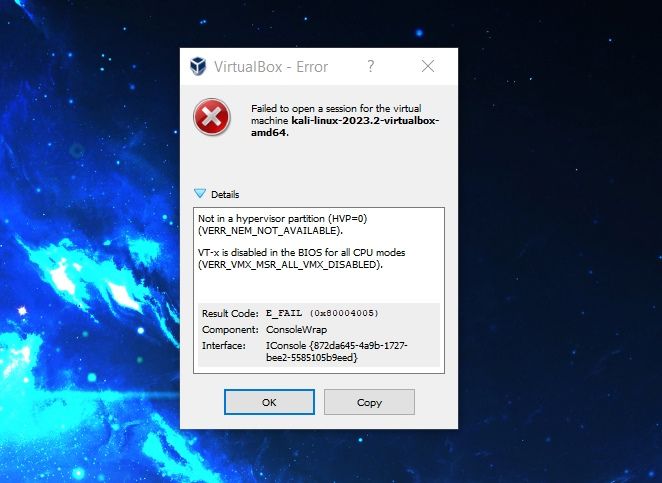

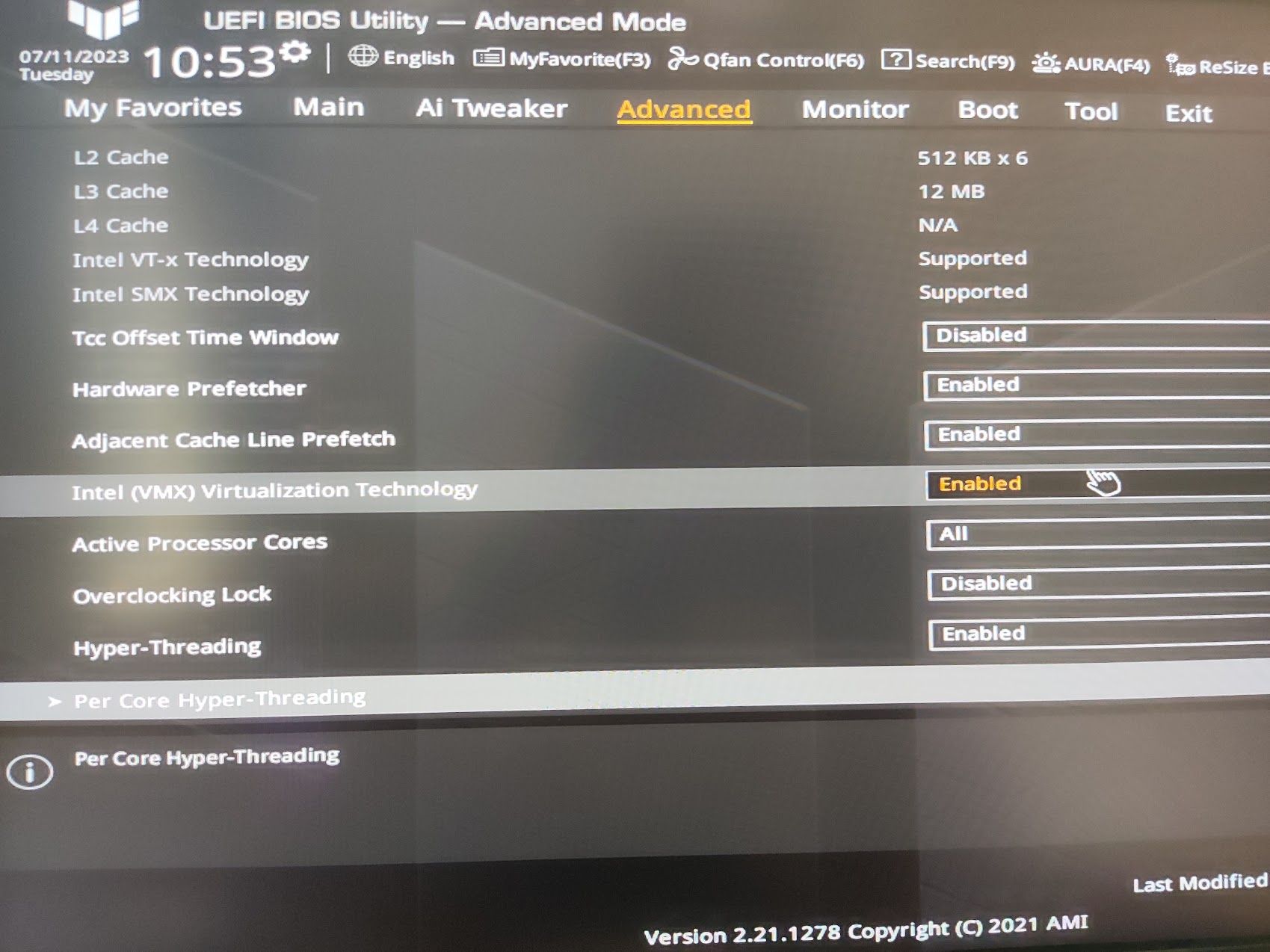

大多数现代虚拟机都配置为跳过 [GRUB 引导加载程序](https://itsfoss.com/what-is-grub/) 以获得无缝体验。

但是,你有时可能需要访问 GRUB 菜单。例如,如果你想切换回旧内核或进入恢复模式以 [重置密码](https://itsfoss.com/how-to-hack-ubuntu-password/)。

>

> ? TLDR:重启虚拟机并在再次启动时按住 `Shift` 键。这将为你提供 GRUB 菜单。

>

>

>

在这篇简短的文章中,我将向你展示两种访问虚拟机中运行的 Linux 中的 GRUB 菜单的方法:

* 临时方案(当你需要访问 GRUB 一两次时)

* 永久方案(每次启动时都会显示 GRUB)

由于大多数用户不会每天与 GRUB 交互,因此我将从一个临时解决方案开始,你可以无需任何调整即可访问 GRUB。

>

> ? 我在此处的教程中使用了 Ubuntu,但这些步骤也适用于其他 Linux 发行版。

>

>

>

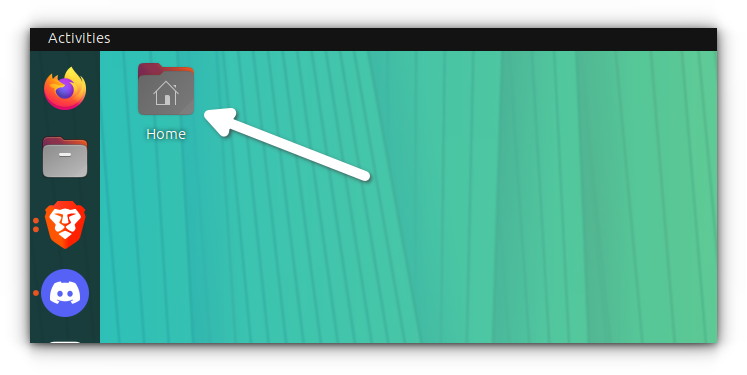

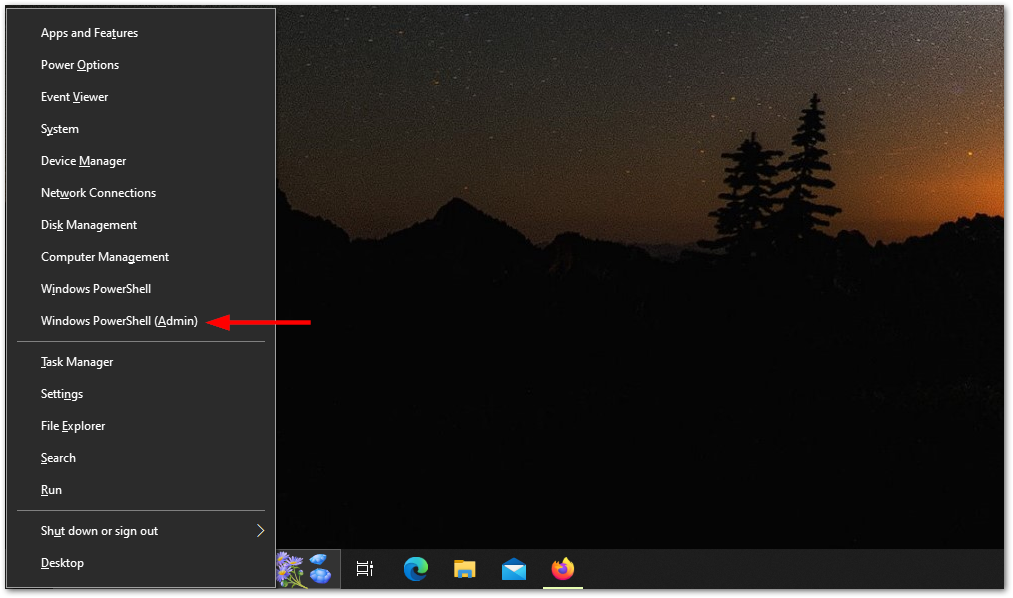

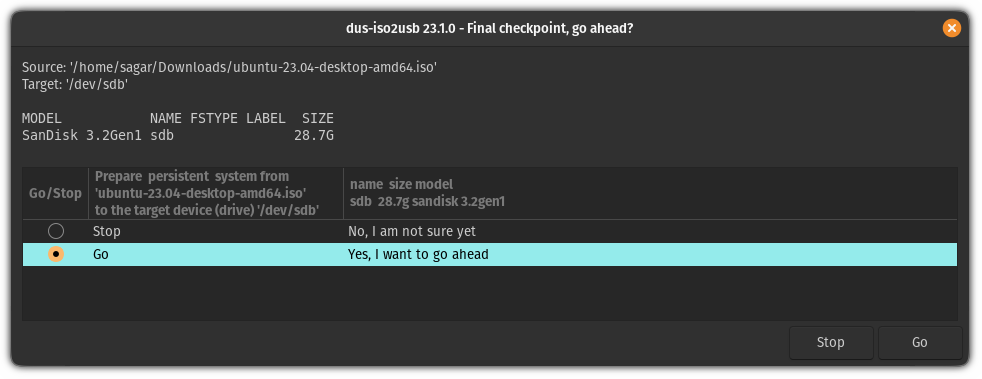

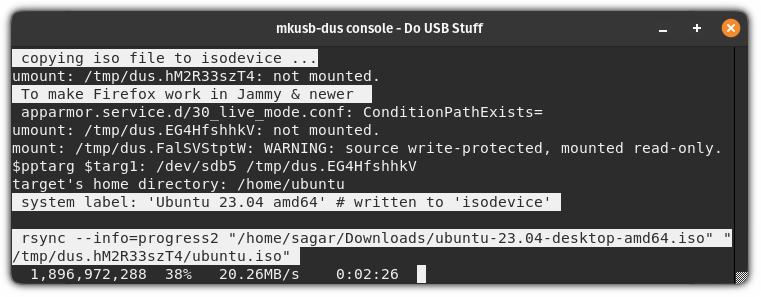

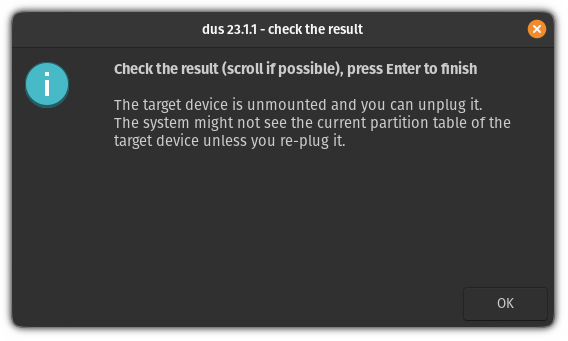

### 在虚拟机中访问 GRUB 引导加载程序(快速方式)

如果你偶尔想访问 GRUB,这应该是最好的方法,因为它不需要任何配置。

只需重新启动系统并按住 `shift` 键即可。

就是这样!

你将拥有没有任何时间限制的 GRUB 菜单:

很简单的方法。不是吗?

但它仅适用于特定的启动。那么如果你想在每次启动时都进入 GRUB 该怎么办呢? 请参考下面的方法。

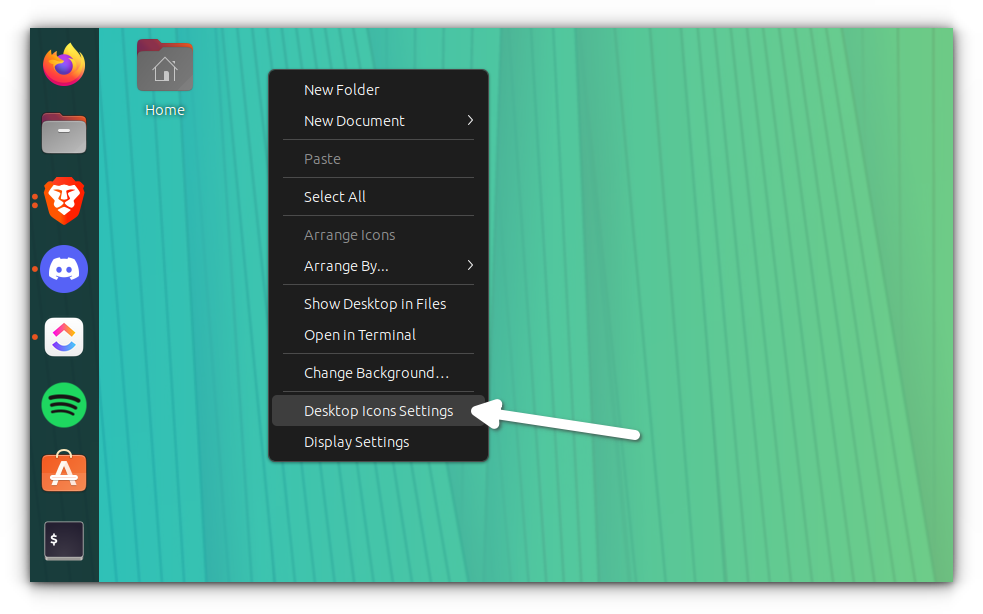

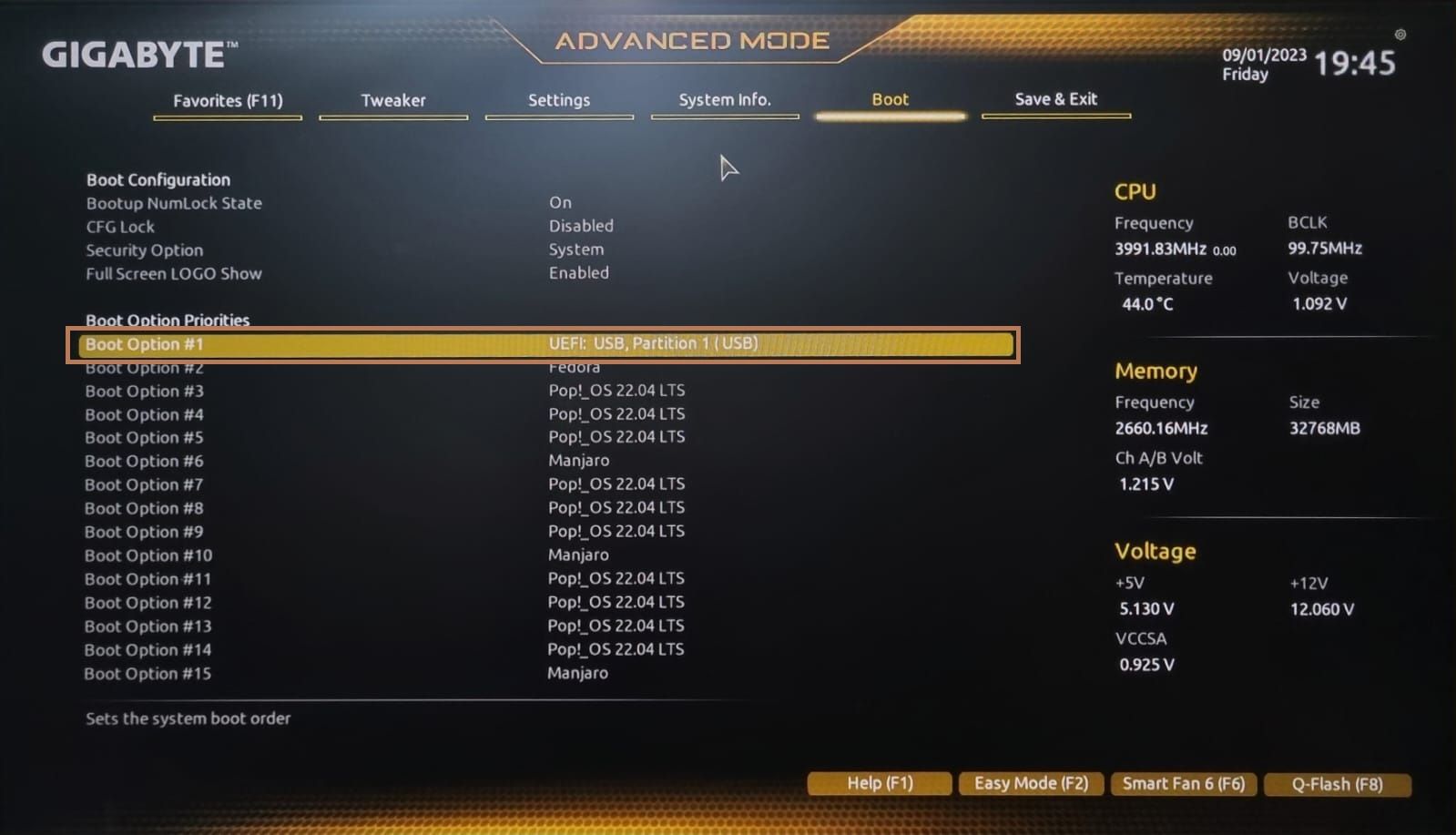

### 永久在虚拟机中启用 GRUB 菜单(如果你愿意)

>

> ? 此方法需要在命令行中更改 GRUB 配置文件。请确保你能够轻松地在终端中进行编辑。

>

>

>

如果你需要处理 GRUB 菜单来访问其他操作系统或经常更改 [从旧内核启动](https://itsfoss.com/boot-older-kernel-default/),那么此方法非常适合你。

要使 GRUB 在每次引导时都可访问,你必须在配置文件中进行更改。

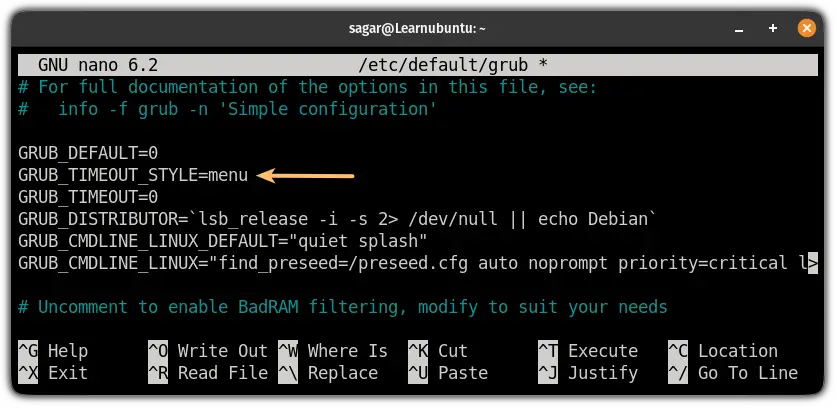

首先,使用以下命令打开 GRUB 配置文件:

```

sudo nano /etc/default/grub

```

在这里,将 `GRUB_TIMEOUT_STYLE=hidden` 更改为 `GRUB_TIMEOUT_STYLE=menu`:

接下来,在同一个配置文件中,指定你希望 GRUB 显示的秒数。

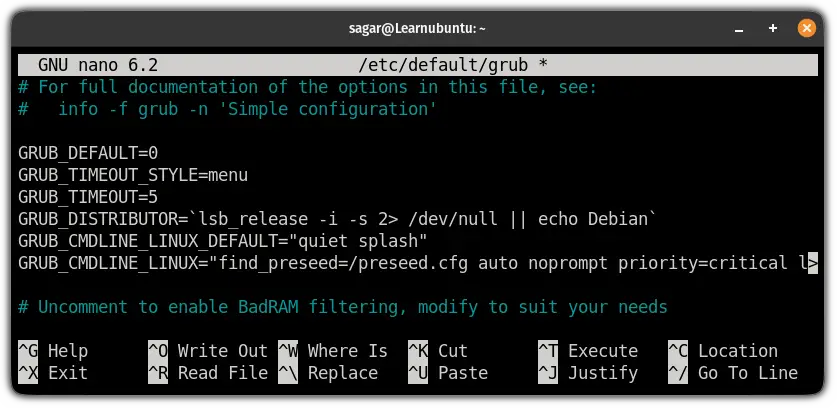

我建议 5 秒,因为它似乎在太长和太短之间取得了平衡(是的,非常相关):

```

GRUB_TIMEOUT=5

```

最后,你可以 [保存更改并退出 nano](https://linuxhandbook.com/nano-save-exit/) 编辑器。

要激活对配置文件所做的更改,请使用以下命令更新 GRUB:

```

sudo update-grub

```

就是这样。重启系统,GRUB 应该会显示 5 秒钟。

### 将 GRUB 主题化如何?

大多数 Linux 发行版都会使用 GRUB 引导加载程序,因为它的配置非常简单,而且能完成工作。

但在默认情况下,除了黑色背景和纯文本外,它没什么样式。因此,我们制作了一份指南,教你如何让它看起来更漂亮:

>

> **[定制 GRUB 以获得更好的体验](https://itsfoss.com/customize-grub-linux/)**

>

>

>

希望本指南对你有所帮助,如果你有任何疑问,请在评论中告诉我。

*(题图:MJ/f75daf37-20c2-4bae-8a68-dc6a82ad0d61)*

---

via: <https://itsfoss.com/access-grub-virtual-machine/>

作者:[Sagar Sharma](https://itsfoss.com/author/sagar/) 选题:[lkxed](https://github.com/lkxed/) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

Most modern VMs are configured to skip the [GRUB bootloader](https://itsfoss.com/what-is-grub/) for a seamless experience.

However, you might need to access the GRUB menu at times. For example, if you want to switch back to the older kernel or get into recovery mode for [resetting the password](https://itsfoss.com/how-to-hack-ubuntu-password/).

Reboot your VM and keep the Shift key pressed when it is booting up again. This should give you the GRUB menu.

In this quick article, I will be showing you two ways to access the GRUB menu in Linux running in a virtual machine:

- A temporary solution (when you have to access GRUB once or twice)

- A permanent solution (will show GRUB at every boot)

As most of the users are not going to interact with the grub on a daily basis, I will start with a temporary solution in which you can access the grub without any tweaks.

I have used Ubuntu in the tutorial here but the steps should be valid for other Linux distributions as well.

## Access the GRUB bootloader in VM (Quick way)

If you want to access the GRUB occasionally, this is supposed to be the best way as it does not require any configuration.

Just reboot your system and keep the `shift`

key pressed.

That's it!

You will have your grub menu without any time limit:

Pretty simple way. Isn't it?

But it will work for that specific boot only. So what if you want to have the grub on every boot? Refer to the given method.

## Enable Grub menu in virtual machines permanently (if you want to)

This method requires changing Grub config file in the command line. Please ensure that you are comfortable doing the edits in the terminal.

If you have to deal with the grub menu to access the other operating systems or change[ boot from the older kernels](https://itsfoss.com/boot-older-kernel-default/) often, this method is just for you.

To make the grub accessible at every boot, you must make changes in the configuration file.

First, open the grub config file using the following command:

`sudo nano /etc/default/grub`

Here, change the `GRUB_TIMEOUT_STYLE=hidden`

to the `GRUB_TIMEOUT_STYLE=menu`

:

Next, in the same config file, specify for how many seconds you want the grub to be displayed.

I would recommend 5 seconds as it seems to balance between not too long and short (yep, quite relatable):

`GRUB_TIMEOUT=5`

And finally, you can [save the changes and exit from the nano](https://linuxhandbook.com/nano-save-exit/) text editor.

To activate the changes you made to the config file, update the grub using the following command:

`sudo update-grub`

That's it. Reboot your system and the grub should be there for 5 seconds.

## How about theming GRUB?

You will get the grub bootloader in most of the Linux distros as it is quite simple to configure and gets the job done.

But by default, it's nothing apart from the black background and plain text. So we made a guide on how you can make it look dope:

[Customize Grub to Get a Better Experience With LinuxCouple of Grub configuration tweaks to get better experience with multi-boot Linux system using Grub Customizer GUI tool.](https://itsfoss.com/customize-grub-linux/)

I hope you will find this guide helpful and if you have any queries, let me know in the comments. |

16,058 | Fedora Linux 的家族(二):定制版 | https://fedoramagazine.org/fedora-linux-editions-part-2-spins/ | 2023-08-02T23:35:31 | [

"Fedora"

] | https://linux.cn/article-16058-1.html |

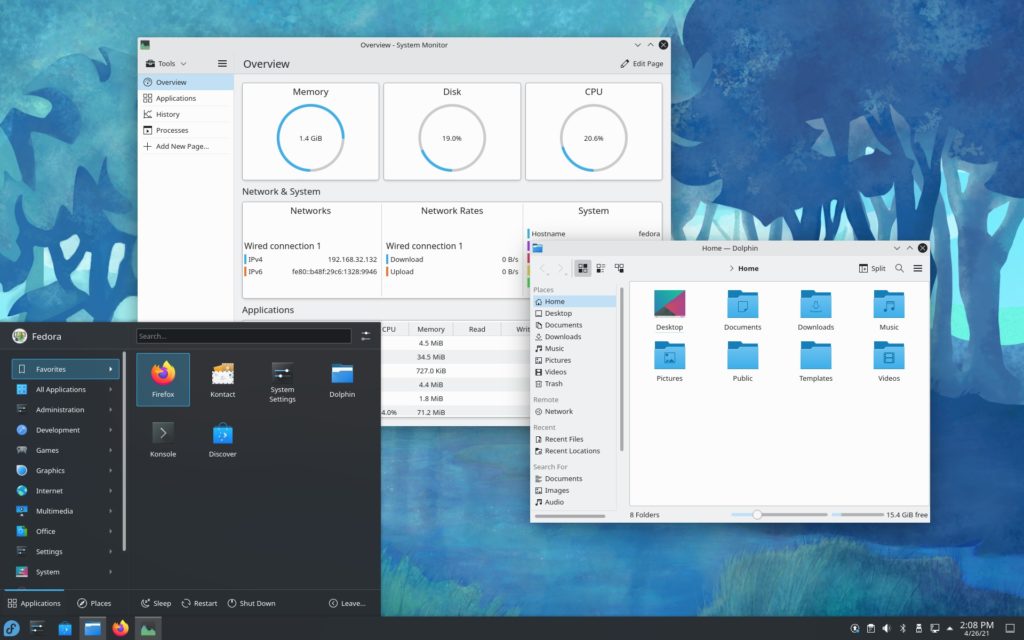

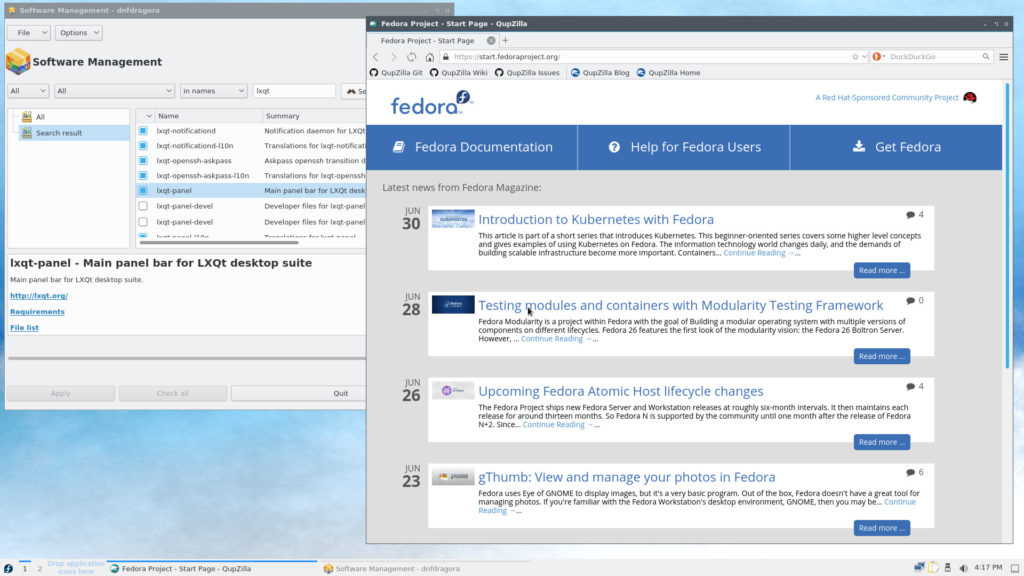

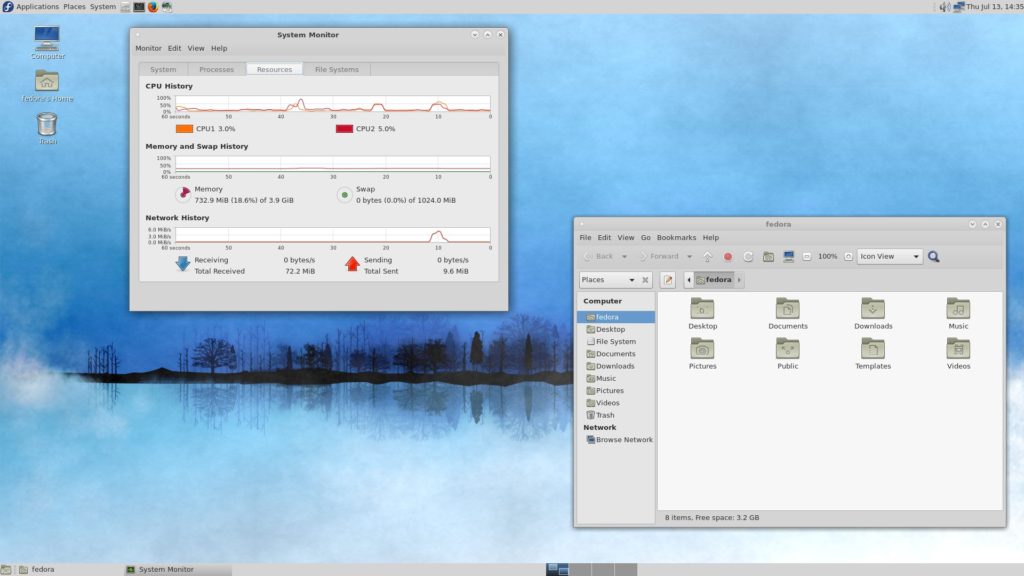

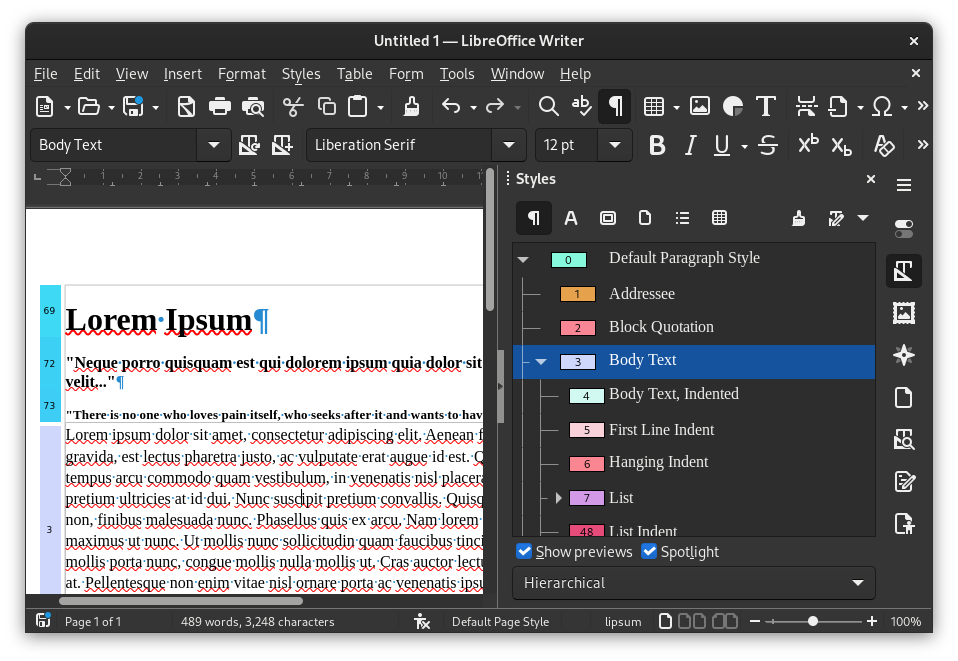

使用 Linux 的一个好处是可以选择多种不同的桌面环境。Fedora Linux 官方的 Workstation 版本默认使用 GNOME 作为桌面环境,但是你可以通过 Fedora <ruby> 定制版 <rt> Spin </rt></ruby> 选择另一个桌面环境作为默认环境。本文将更详细地介绍 Fedora Linux 定制版。你可以在我之前的文章《[Fedora Linux 的各种版本](/article-15003-1.html)》中找到所有 Fedora Linux 变体的概述。

### KDE Plasma 桌面

这个 Fedora Linux 定制版使用 KDE Plasma 作为默认的桌面环境。KDE Plasma 是一个优雅的桌面环境,非常易于定制。因此,你可以根据自己的喜好自由地定制桌面的外观。你可以定制你喜欢的主题,安装所需的小部件,更换图标、字体,根据个人喜好定制面板,并从社区安装各种扩展功能。

Fedora Linux KDE Plasma 桌面预装了许多常用的应用程序。你可以使用 Firefox、Kontact、Telepathy、KTorrent 和 KGet 上网。LibreOffice、Okular、Dolphin 和 Ark 可满足你的办公需求。而针对多媒体需求,该版本提供了 Elisa、Dragon Player、K3B 和 GwenView 等多个应用程序。

更多信息请参考以下链接:

>

> **[Fedora Linux KDE Plasma 桌面](https://spins.fedoraproject.org/en/kde/)**

>

>

>

### XFCE 桌面

这个版本非常适合那些希望在外观定制和性能之间取得平衡的用户。XFCE 本身被设计为快速轻巧,但仍具有吸引人的外观。这个桌面环境逐渐受到老旧设备用户的欢迎。

Fedora Linux XFCE 安装了多种适合日常需求的应用程序,包括 Firefox、Pidgin、Gnumeric、AbiWord、Ristretto、Parole 等。Fedora Linux XFCE 还提供了一个系统设置菜单,让你更轻松地配置 Fedora Linux。

更多信息请访问此链接:

>

> **[Fedora Linux XFCE 桌面](https://spins.fedoraproject.org/en/xfce/)**

>

>

>

### LXQT 桌面

这个版本带有一个轻量级的 Qt 桌面环境,专注于现代经典的桌面体验,而不会降低系统性能。这个 Fedora Linux 版本包含基于 Qt5 工具包的应用程序,并采用 Breeze 主题。你可以通过内置的应用程序如 QupZilla、QTerminal、FeatherPad、qpdfview、Dragon Player 等来进行各种日常活动。

更多信息请访问此链接:

>

> **[Fedora Linux LXQT 桌面](https://spins.fedoraproject.org/en/lxqt/)**

>

>

>

### MATE-Compiz 桌面

Fedora Linux MATE Compiz 桌面是 MATE 和 Compiz Fusion 的组合。MATE 桌面环境使这个 Fedora Linux 版本能够通过优先考虑生产力和性能来发挥最佳效果。同时,Compiz Fusion 使用 Emerald 和 GTK+ 主题为系统提供了美观的 3D 外观。这个 Fedora Linux 版本还配备了各种流行的应用程序,如 Firefox、LibreOffice、Parole、FileZilla 等。

更多信息请访问此链接:

>

> **[Fedora Linux MATE Compiz 桌面](https://spins.fedoraproject.org/en/mate-compiz/)**

>

>

>

### Cinnamon 桌面

由于其用户友好的界面,Fedora Linux Cinnamon 桌面非常适合那些可能对 Linux 操作系统不太熟悉的用户。你可以轻松理解如何使用这个 Fedora Linux 版本。这个定制版内置了各种准备好供你日常使用的应用程序,如 Firefox、Pidgin、GNOME Terminal、LibreOffice、Thunderbird、Shotwell 等。你可以使用 Cinnamon 设置应用来配置你的操作系统。

更多信息请访问此链接:

>

> **[Fedora Linux Cinnamon 桌面](https://spins.fedoraproject.org/en/cinnamon/)**

>

>

>

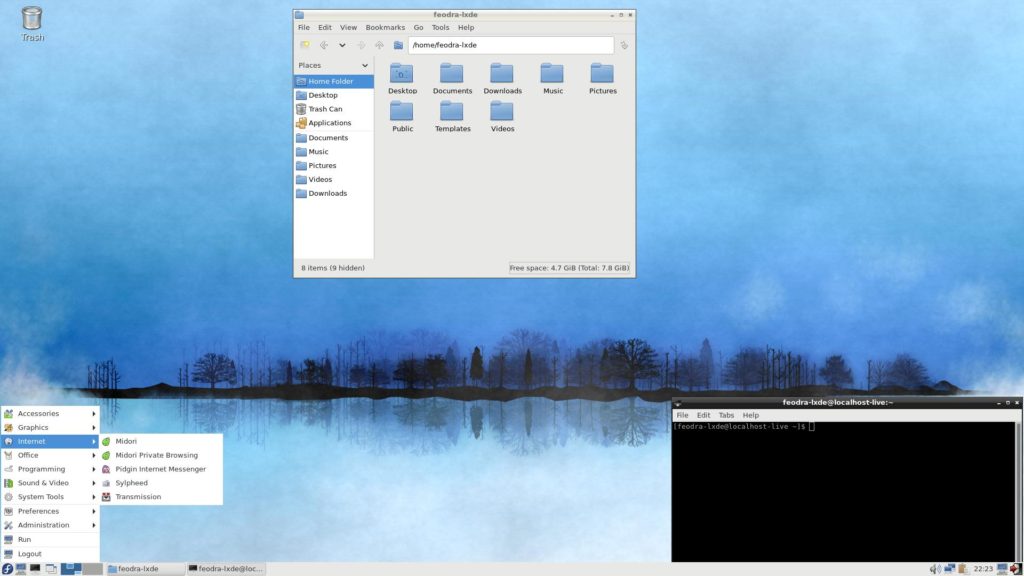

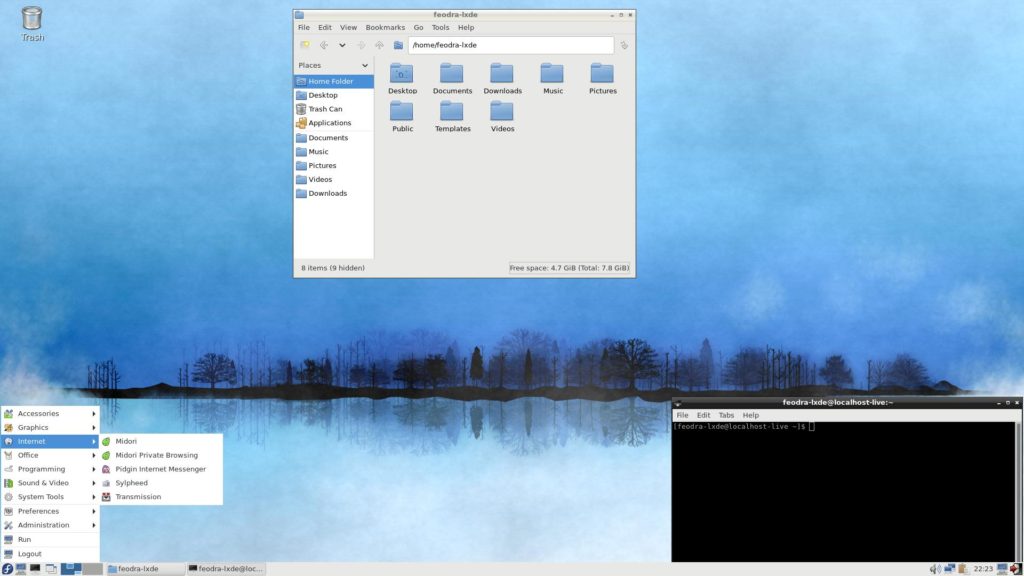

### LXDE 桌面

Fedora Linux LXDE 桌面拥有一个快速运行的桌面环境,但设计旨在保持低资源使用。这个定制版专为低配置硬件设计,如上网本、移动设备和旧电脑。Fedora Linux LXDE 配备了轻量级和流行的应用程序,如 Midori、AbiWord、Osmo、Sylpheed 等。

更多信息请访问此链接:

>

> **[Fedora Linux LXDE 桌面](https://spins.fedoraproject.org/en/lxde/)**

>

>

>

### SoaS 桌面

SoaS 是 “Sugar on a Stick” 的缩写。Fedora Linux Sugar 桌面是一个面向儿童的学习平台,因此它具有非常简单的界面,易于儿童理解。这里的 “stick” 是指一个随身驱动器或存储“棒”。这意味着这个操作系统体积紧凑,可以完全安装在一个随身 U 盘上。学童可以将他们的操作系统携带在随身 U 盘上,这样他们可以在家、学校、图书馆和其他地方轻松使用。Fedora Linux SoaS 拥有各种有趣的儿童学习应用程序,如 Browse、Get Books、Read、Turtle Blocks、Pippy、Paint、Write、Labyrinth、Physic 和 FotoToon。

更多信息请访问此链接:

>

> **[Fedora Linux Sugar 桌面](https://spins.fedoraproject.org/en/soas/)**

>

>

>

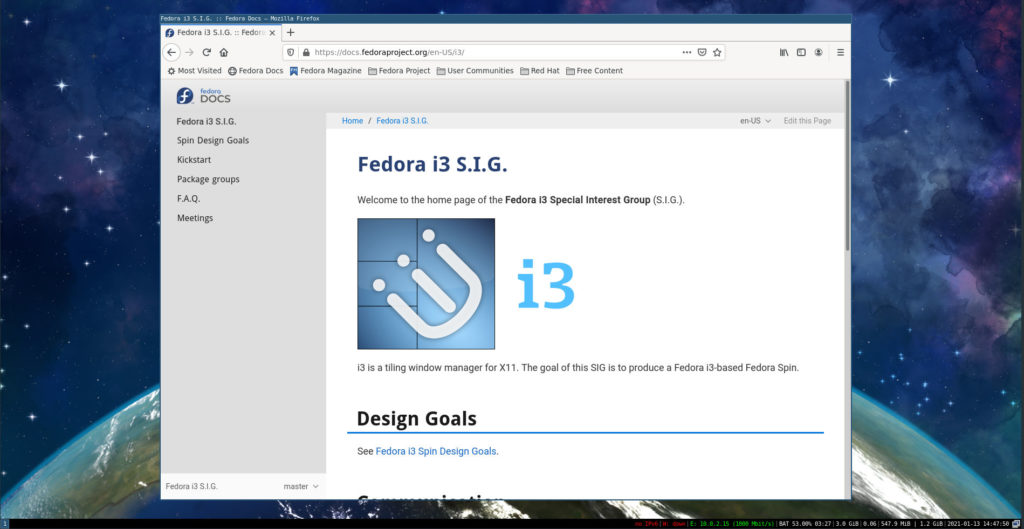

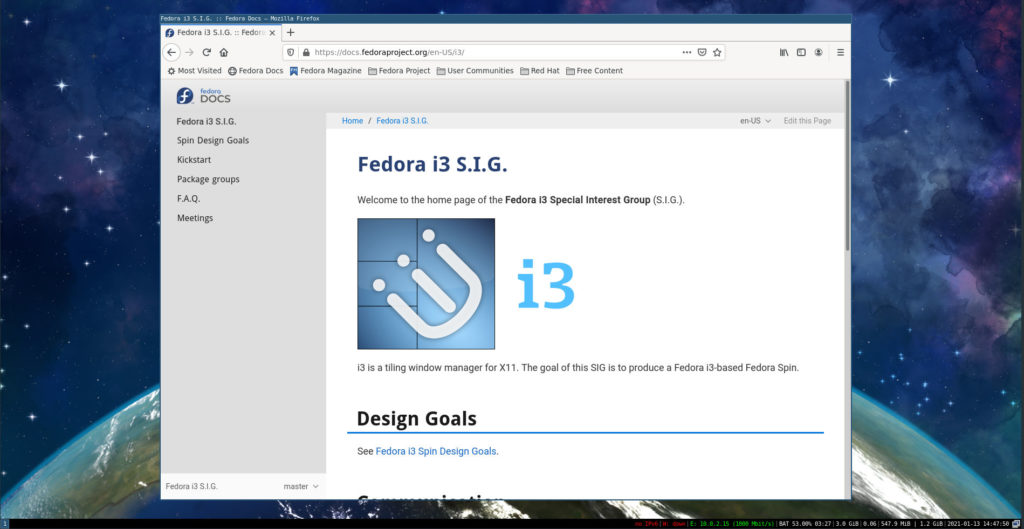

### i3 Tiling WM

Fedora Linux 的 i3 Tiling WM 定制版与其他不太相同。这个 Fedora Linux 定制版不使用桌面环境,而只使用窗口管理器。所使用的窗口管理器是 i3,它是 Linux 用户中非常受欢迎的平铺式窗口管理器。Fedora i3 定制版适用于那些更注重使用键盘进行交互而非鼠标或触摸板的用户。这个 Fedora Linux 定制版配备了各种应用程序,如 Firefox、NM Applet、brightlight、azote、htop、mousepad 和 Thunar。

更多信息请访问此链接:

>

> **[Fedora Linux i3 Tiling WM](https://spins.fedoraproject.org/en/i3/)**

>

>

>

### 结论

Fedora Linux 通过 Fedora Linux 定制版提供了大量的桌面环境选择。你只需选择一个 Fedora 定制版,即可享受你选择的桌面环境以及其内置的即用应用程序。你可以在 <https://spins.fedoraproject.org/> 找到关于 Fedora 定制版的完整信息。

*(题图:MJ/6e7ea0c7-ccbe-4664-af60-61345a7c6617)*

---

via: <https://fedoramagazine.org/fedora-linux-editions-part-2-spins/>

作者:[Arman Arisman](https://fedoramagazine.org/author/armanwu/) 选题:[lkxed](https://github.com/lkxed) 译者:ChatGPT 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | One of the nice things about using Linux is the wide choice of desktop environments. Fedora Linux official Worksation edition comes with GNOME as default desktop environment, but you can choose another desktop environment as default via Fedora Spins. This article will go into a little more detail about the Fedora Linux Spins. You can find an overview of all the Fedora Linux variants in my previous article [Introduce the different Fedora Linux editions](https://fedoramagazine.org/introduce-the-different-fedora-linux-editions/).

## KDE Plasma Desktop

This Fedora Linux comes with KDE Plasma as the default desktop environment. KDE Plasma is an elegant desktop environment that is very easy to customize. Therefore, you can freely and easily change the appearance of your desktop as you wish. You can customize your favorite themes, install the widgets you want, change icons, change fonts, customize panels according to your preferences, and install various extensions from the community.

Fedora Linux KDE Plasma Desktop is installed with a variety of ready-to-use applications. You’re ready to go online with Firefox, Kontact, Telepathy, KTorrent, and KGet. LibreOffice, Okular, Dolphic, and Ark are ready to use for your office needs. Your multimedia needs will be met with several applications such as Elisa, Dragon Player, K3B, and GwenView.

More information is available at this link: [https://spins.fedoraproject.org/en/kde/](https://spins.fedoraproject.org/en/kde/)

## XFCE Desktop

This version is perfect for those who want a balance between ease of customizing appearance and performance. XFCE itself is made to be fast and light, but still has an attractive appearance. This desktop environment is becoming popular for those with older devices.

Fedora Linux XFCE is installed with various applications that suit your daily needs. These applications are Firefox, Pidgin, Gnumeric, AbiWord, Ristretto, Parole, etc. Fedora Linux XFCE also already has a System Settings menu to make it easier for you to configure your Fedora Linux.

More information is available at this link: [https://spins.fedoraproject.org/en/xfce/](https://spins.fedoraproject.org/en/xfce/)

## LXQT Desktop

This spin comes with a lightweight Qt desktop environment, and focuses on modern classic desktops without slowing down the system. This version of Fedora Linux includes applications based on the Qt5 toolkit and is Breeze themed. You will be ready to carry out various daily activities with built-in applications, such as QupZilla, QTerminal, FeatherPad, qpdfview, Dragon Player, etc.

More information is available at this link: [https://spins.fedoraproject.org/en/lxqt/](https://spins.fedoraproject.org/en/lxqt/)

## MATE-Compiz Desktop

Fedora Linux MATE Compiz Desktop is a combination of MATE and Compiz Fusion. MATE desktop allows this version of Fedora Linux to work optimally by prioritizing productivity and performance. At the same time Compiz Fusion provides a beautiful 3D look with Emerald and GTK + themes. This Fedora Linux is also equipped with various popular applications, such as Firefox, LibreOffice, Parole, FileZilla, etc.

More information is available at this link: [https://spins.fedoraproject.org/en/mate-compiz/](https://spins.fedoraproject.org/en/mate-compiz/)

## Cinnamon Desktop

Because of its user-friendly interface, Fedora Linux Cinnamon Desktop is perfect for those who may be new to the Linux operating system. You can easily understand how to use this version of Fedora Linux. This spin has built-in applications that are ready to use for your daily needs, such as Firefox, Pidgin, GNOME Terminal, LibreOffice, Thunderbird, Shotwell, etc. You can use Cinnamon Settings to configure your operating system.

More information is available at this link: [https://spins.fedoraproject.org/en/cinnamon/](https://spins.fedoraproject.org/en/cinnamon/)

## LXDE Desktop

Fedora Linux LXDE Desktop has a desktop environment that performs fast but is designed to keep resource usage low. This spin is designed for low-spec hardware, such as netbooks, mobile devices, and older computers. Fedora Linux LXDE has lightweight and popular applications, such as Midori, AbiWord, Osmo, Sylpheed, etc.

More information is available at this link: [https://spins.fedoraproject.org/en/lxde/](https://spins.fedoraproject.org/en/lxde/)

## SoaS Desktop

SoaS stands for Sugar on a Stick. Fedora Linux Sugar Desktop is a learning platform for children, so it has a very simple interface that is easy for children to understand. The word “stick” in this context refers to a thumb drive or memory “stick”. This means this OS has a compact size and can be completely installed on a thumb drive. Schoolchildren can carry their OS on a thumb drive, so they can use it easily at home, school, library, and elsewhere. Fedora Linux SoaS has a variety of interesting learning applications for children, such as Browse, Get Books, Read, Turtle Blocks, Pippy, Paint, Write, Labyrinth, Physic, and FotoToon.

More information is available at this link: [https://spins.fedoraproject.org/en/soas/](https://spins.fedoraproject.org/en/soas/)

## i3 Tiling WM

The i3 Tiling WM spin of Fedora Linux is a bit different from the others. This Fedora Linux spin does not use a desktop environment, but only uses a window manager. The window manager used is i3, which is a very popular tiling window manager among Linux users. Fedora i3 Spin is intended for those who focus on interacting using a keyboard rather than pointing devices, such as a mouse or touchpad. This spin of Fedora Linux is equipped with various applications, such as Firefox, NM Applet, brightlight, azote, htop, mousepad, and Thunar.

More information is available at this link: [https://spins.fedoraproject.org/en/i3/](https://spins.fedoraproject.org/en/i3/)

## Conclusion

Fedora Linux provides a large selection of desktop environments through Fedora Linux Spins. You can simply choose one of the Fedora Spins, and immediately enjoy Fedora Linux with the desktop environment of your choice along with its ready-to-use built-in applications. You can find complete information about Fedora Spins at [https://spins.fedoraproject.org/](https://spins.fedoraproject.org/).

## Darvond

I like Spins. They don’t confuse the user (especially the end user) into thinking that they’re disparate editions, but rather different starting points.

No thanks to Ubuntu and the many ___ntu editions, has that been a fine mess to explain over the years.## james

Great article, covers all the usual wms.

Will you cover fedora labs? I just downloaded comp-neuro and installed it in a vm and it had absolutely no installed applications, other than a base installation of fedora 36. No neuron, no brian, nothing… very disappointed

## james

it was my mistake, the packages were present after all.

## Cliff

The spins are grab and install then do. I had a major hard ware failure. Was back up running in just under 2 hours. Thanks to the spins.

## Hal Kristjan Stefansson

Thx for all the years together!! <3

I’ve used KDE for years now, and it’s awesome, but I’ll add I do miss the CUBE

love&light

Kristjan

## Vilius

Hi,

Does MATLAB install in the same way across all of the mentioned spins?

Can I have desktop shortcuts/icons on the original Fedora, i.e. GNOME?

Thanks a lot

## tsyang

Do you mean Octave, the freeware version of Matlab?

You can install it just like other package.

Open a terminal, then use this command

$ sudo dnf install octave

## robin

yes it does. But there is a carvet with fedora using a newer version of freetype and cairo. you have to make matlab default to system libraries for these and also for libstdc++ to be able to use your gpu.

## Richard Miller

What screenreader is used?

## Marcus

What’s the difference between “Fedora Spins” and “Fedora Labs”?

## Richard England

I refer you to the first article in Arman’s series:

https://fedoramagazine.org/introduce-the-different-fedora-linux-editions/

## Muhammed Yasin Özsaraç

Very good article. Thanks Arman !

## jtad

Don’t forget swaywm – along with i3 the best tiled wm for wayland. On Fedora, the version is almost the most up-to-date, and the repositories also contain up-to-date versions of the wayland utility like grim, fuzzel, and terminals like foot

## Sam

Thank you |

16,059 | Linux 中退出码的含义 | https://itsfoss.com/linux-exit-codes/ | 2023-08-03T15:50:00 | [

"退出码"

] | https://linux.cn/article-16059-1.html |

>

> 揭开 Linux 中退出码的神秘面纱。了解什么是退出码,以及为什么和如何使用它们。

>

>

>

退出码(退出状态)可以告诉我们最后一次执行的命令的状态。在命令结束以后,我们可以知道命令是成功完成的还是以错误结束的。

**其基本思想是,程序返回退出代码 `0` 时表示执行成功,没有问题。代码 `1` 或 `0` 以外的任何代码都被视为不成功。**

退出码除了 0 和 1 外还有很多值,我将在本文介绍它们。

### Linux Shell 中的各种退出码

我们来快速了解一下 Linux Shell 中的主要退出码:

| 退出码 | 解释 |

| --- | --- |

| `0` | 命令成功执行 |

| `1` | 通用错误代码 |

| `2` | 命令(或参数)使用不当 |

| `126` | 权限被拒绝(或)无法执行 |

| `127` | 未找到命令,或 `PATH` 错误 |

| `128+n` | 命令被信号从外部终止,或遇到致命错误 |

| `130` | 通过 `Ctrl+C` 或 `SIGINT` 终止(*终止代码 2 或键盘中断*) |

| `143` | 通过 `SIGTERM` 终止(*默认终止*) |

| `255/*` | 退出码超过了 0-255 的范围,因此重新计算(LCTT 译注:超过 255 后,用退出码对 256 取模) |

>

> ? `130`(`SIGINT` 或 `^C`)和 `143`(`SIGTERM`)等终止信号是非常典型的,它们属于 `128+n` 信号,其中 `n` 代表终止码。

>

>

>

在简单了解了退出码之后,我们来看看它们的用法。

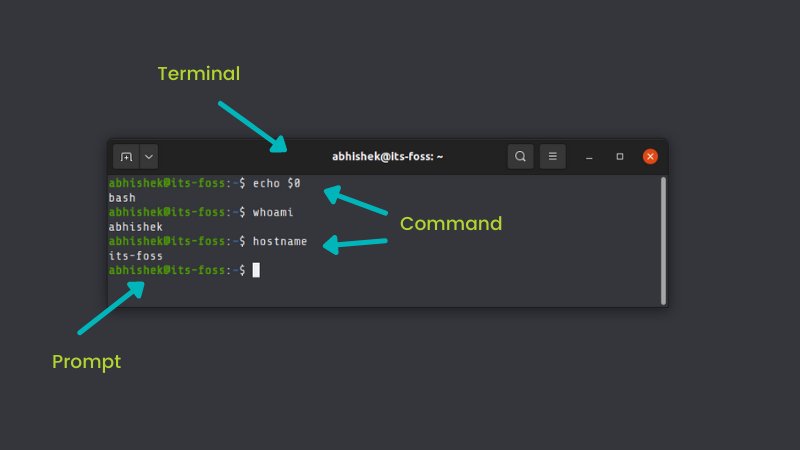

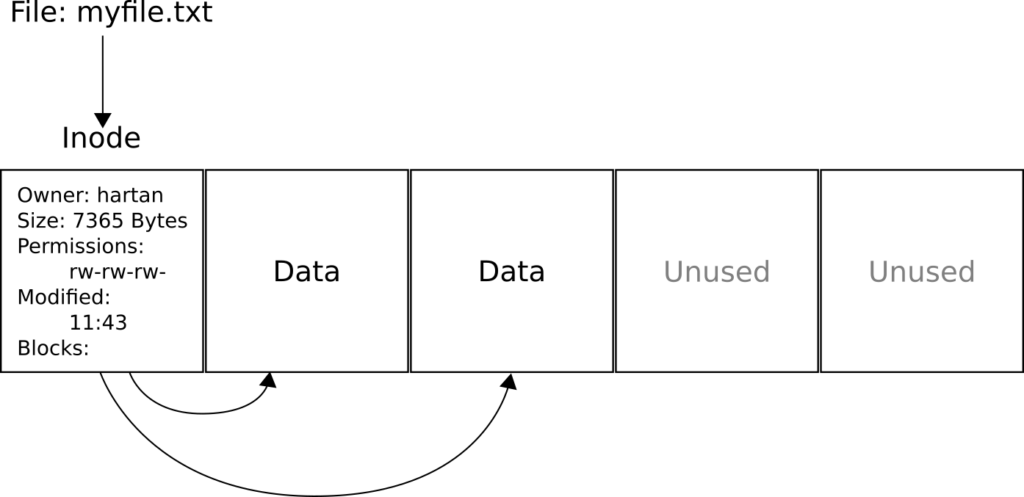

### 获取退出码

前一个命令执行的退出码存储在 [特殊变量](https://linuxhandbook.com:443/bash-special-variables/) `$?` 中。你可以通过运行以下命令来获取:

```

echo $?

```

我们在所有演示中都将使用它来获取退出代码。

请注意,`exit` 命令支持以带着前一条命令相同的退出码退出。

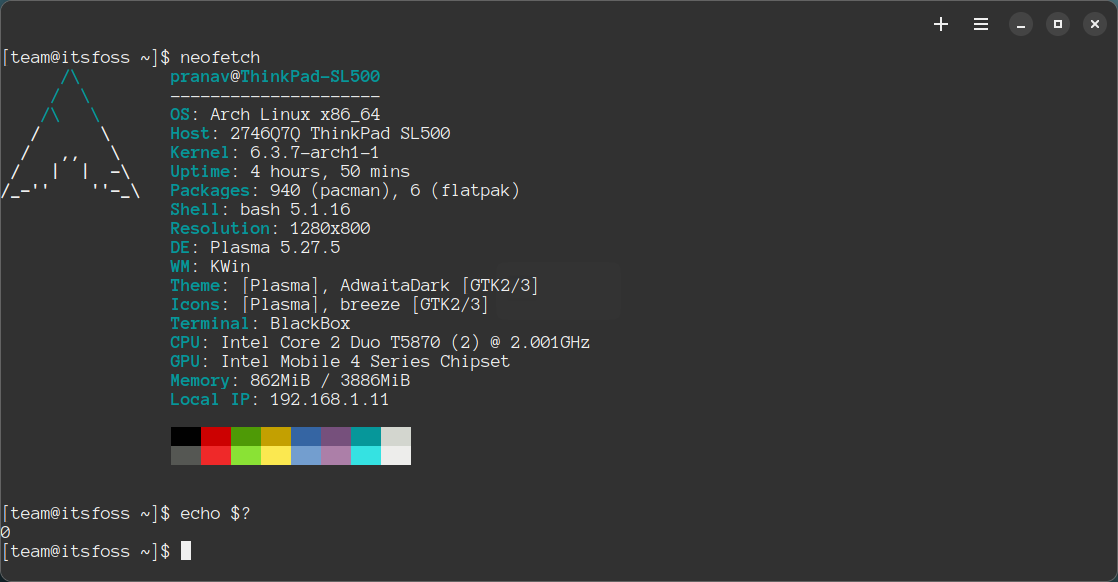

### 退出码 0

退出码 `0` 表示命令执行无误,这是完成命令的理想状态。

例如,我们运行这样一条基本命令

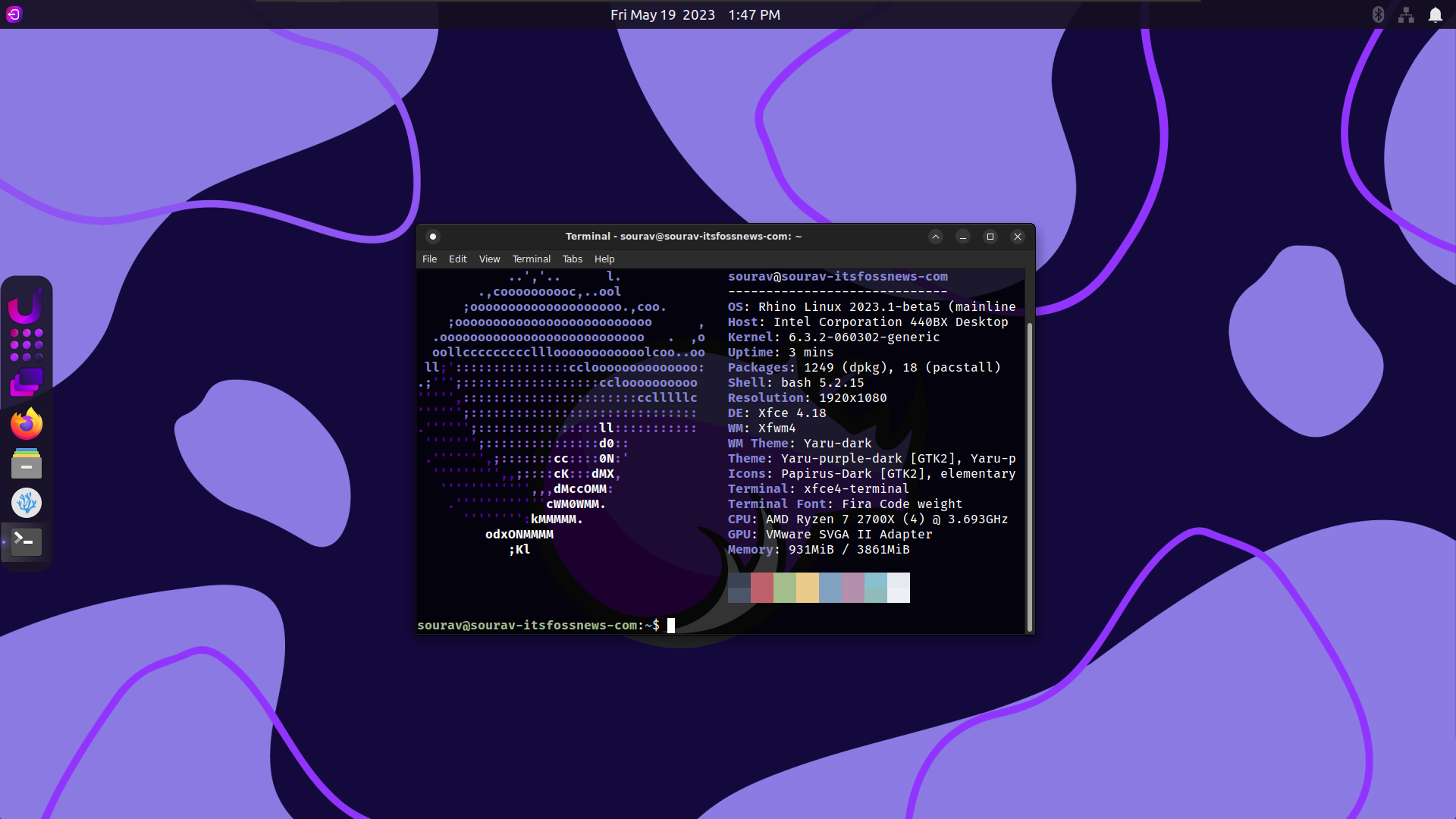

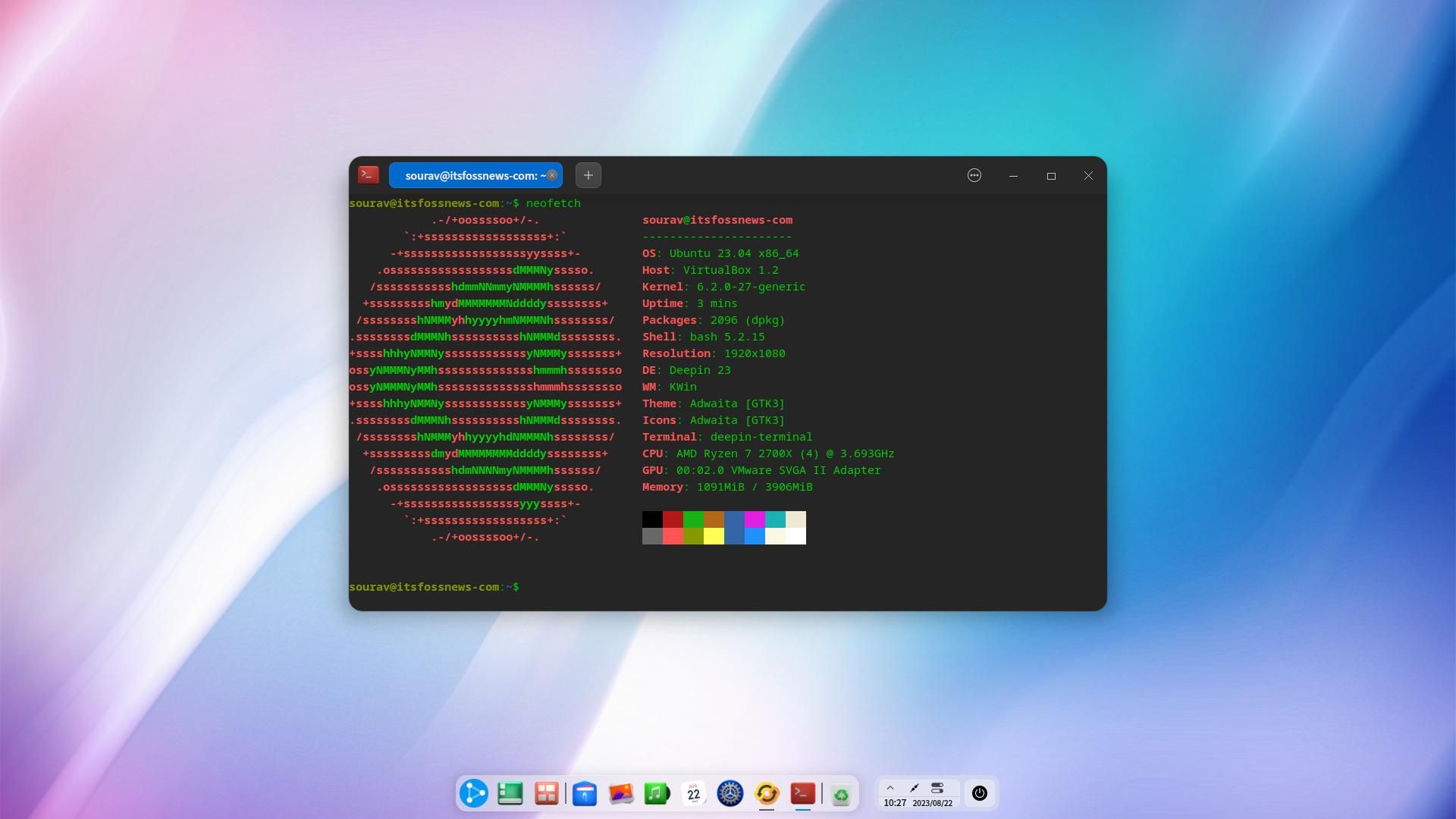

```

neofetch

echo $?

```

这个退出码 `0` 表示特定命令已成功执行,仅此而已。让我们再演示几个例子。

你可以尝试 [终止一个进程](https://itsfoss.com/how-to-find-the-process-id-of-a-program-and-kill-it-quick-tip/);它也会返回代码 `0`。

```

pkill lxappearance

```

查看文件内容也会返回退出码 0,这**仅**意味着 `cat` 命令执行成功。

### 退出码 1

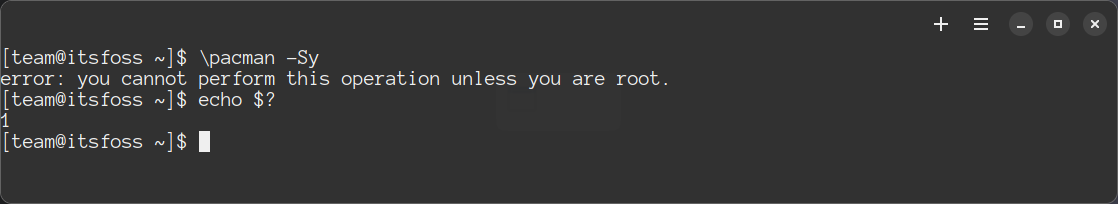

退出码 `1` 也很常见。它通常表示命令以一般错误结束。

例如,在没有 sudo 权限的情况下使用 [软件包管理器](https://itsfoss.com/package-manager/),就会返回代码 `1`。在 Arch Linux 中,如果我运行下面的命令:

```

pacman -Sy

```

它会返回 `1`, 表示上一条命令运行出错。

>

> ? 如果你在基于 Ubuntu 的发行版中尝试这样做(不使用 `sudo` 执行 `apt update`),运行后会得到错误码 `100`,表示你是在没有权限的情况下运行 `apt`。`100` 不是标准错误码,而是 `apt` 特有的错误码。

>

>

>

虽然这是一般的理解,但我们也可以将其解释为 “不被允许的操作”。

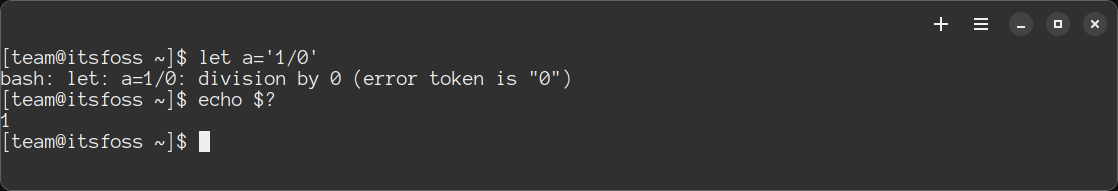

除以 `0` 等操作也会返回错误码 `1`。

### 退出码 2

这个退出码出现在当执行的命令有语法错误时。滥用命令参数也会导致此错误。

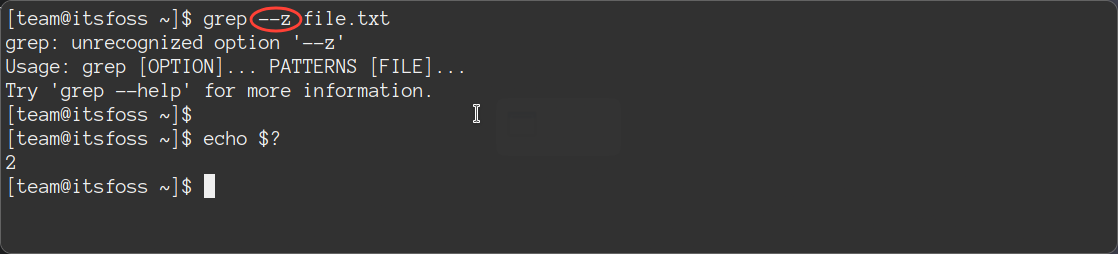

一般来说,它表示由于使用不当,命令无法执行。

例如,我在一个本应只有一个连字符的选项上添加了两个连字符,那么此时会出现退出码 2。

```

grep --z file.txt

```

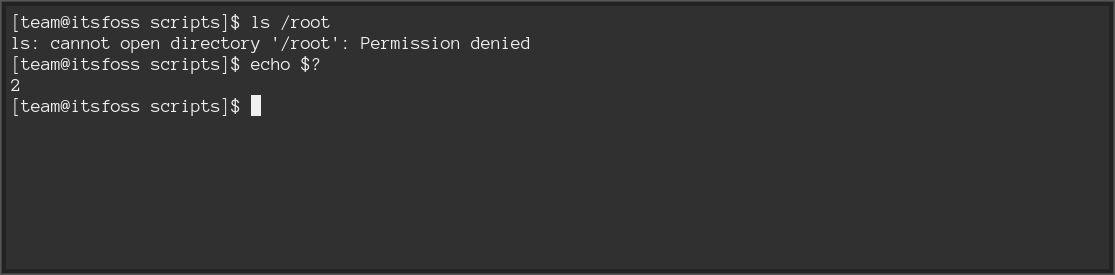

当权限被拒绝时,比如访问 `/root` 文件夹,就会出现错误码 `2`。

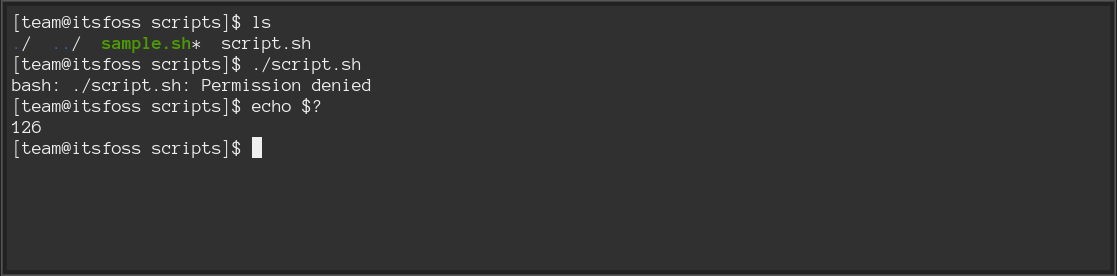

### 退出码 126

126 是一个特殊的退出码,它用于表示命令或脚本因权限错误而未被执行。

当你尝试执行没有执行权限的 Shell 脚本时,就会出现这个错误。

请注意,该退出码只出现在没有足够权限的脚本或命令的“*执行*”中,这与一般的**权限被拒绝**错误不同。

因此,不要把它与你之前看到的退出码为 `2` 的示例混淆。在那个示例中,运行的是 `ls` 命令,权限问题出自它试图执行的目录。而本例中权限问题来自脚本本身。

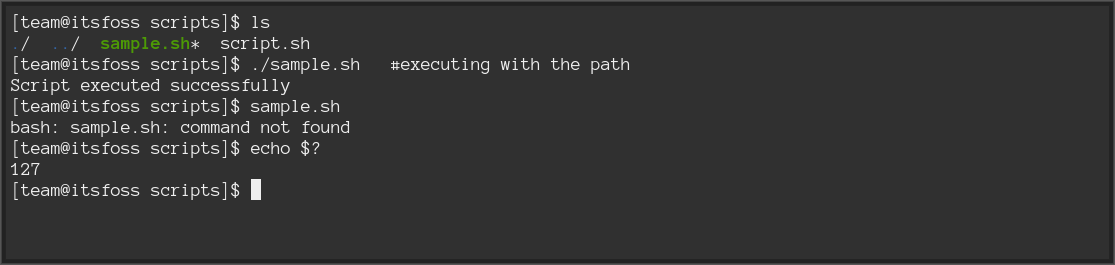

### 退出码 127

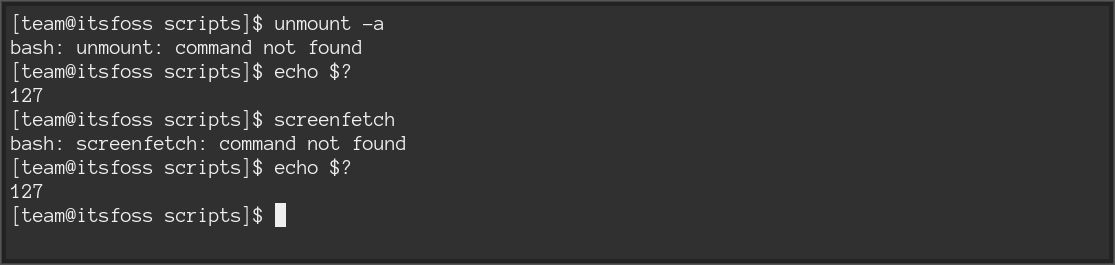

这是另一个常见的退出码。退出码 `127` 指的是“[未找到命令](https://itsfoss.com/bash-command-not-found/)”。它通常发生在执行的命令有错别字或所需的可执行文件不在 `$PATH` 变量中时。

例如,当我尝试执行一个不带路径的脚本时,经常会看到这个错误。

当你想运行的可执行文件不在 `$PATH` 变量中时,也会出现退出码 `127`。你可以通过 [在 PATH 变量中添加命令的目录](https://itsfoss.com/add-directory-to-path-linux/) 来纠正这种情况。

当你输入不存在的命令时,也会得到这样的退出码。

### 退出码 128+n 系列

当应用程序或命令因致命错误而终止或执行失败时,将产生 128 系列退出码(`128+n`),其中 `n` 为信号编号。

`n` 包括所有类型的终止代码,如 `SIGTERM`、`SIGKILL` 等。

#### 退出码 130 或 SIGINT

在通过终止信号 `2` 或按下 `Ctrl+C` 中断进程时,会发出 `SIGINT`(键盘中断信号)。

因为终止信号是 `2`,所以我们得到的退出码是 `130`(128+2)。下面的视频演示了 `lxappearance` 的中断信号。

#### 退出码 137 或 SIGKILL

`SIGKILL`(立即终止信号)表示终止信号 `9`。这是终止应用程序时最不应该使用的方法。

因为终止信号为 `9`,因此我们得到的退出代码为 `137`(128+9)。

#### 退出码 143 或 SIGTERM

`SIGTERM` 是进程在未指定参数的情况下被杀死时的默认行为。

`SIGTERM` 的终止信号为 `15`,因此该信号的退出码为 `143`(128+15)。

还有一些你以前可能不知道的终止信号,它们也有自己类似的退出码。你可以在这里查看它们:

>

> ? 请注意,如果进程在启动它的同一会话中终止,这些信号可能不会出现。如果要重现这些信号,请从不同的 shell 终止。

>

>

> 就个人而言,信号 `128` 是无法重现的。

>

>

>

### 当退出码超过了 255 会怎样?

最新版本的 Bash 甚至保留了超过 255 的原始退出码的值,但一般来说,如果代码超过 255,就会被重新计算。

也就是说,代码 `256` 会变成 `0`,`257` 会变成 `1`,`383` 会变成 `127`,以此类推。为确保更好的兼容性,请将退出码保持在 `0` 至 `255` 之间。

### 结语

希望你对 Linux Shell 中的退出码有所了解。在排查各种问题时,使用它们会非常方便。

如果你要在 Shell 脚本中使用这些代码,请确保你了解每个代码的含义,以便更容易地排除故障。

这就是本文的全部内容。如有遗漏,请在评论区告诉我。

*(题图:MJ/719ff711-1b9f-4aa9-a82e-980704acbdd8)*

---

via: <https://itsfoss.com/linux-exit-codes/>

作者:[Pranav Krishna](https://itsfoss.com/author/pranav/) 选题:[lkxed](https://github.com/lkxed/) 译者:[lxbwolf](https://github.com/lxbwolf) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

An exit code or exit status tells us about the status of the last executed command. Whether the command was completed successfully or ended with an error. This is obtained after the command terminates.

**The basic ideology is that programs return the exit code 0 to indicate that it executed successfully without issues. Code 1 or anything other than 0 is considered unsuccessful. **

There are many more exit codes other than 0 and 1, which I'll cover in this article.

## Various exit codes in Linux shell

Let us take a quick look at the prominent exit codes in the Linux shell:

Exit code | Meaning of the code |

---|---|

`0` |

Command executed with no errors |

`1` |

Code for generic errors |

`2` |

Incorrect command (or argument) usage |

`126` |

Permission denied (or) unable to execute |

`127` |

Command not found, or PATH error |

`128+n` |

Command terminated externally by passing signals, or it encountered a fatal error |

`130` |

Termination by Ctrl+C or SIGINT (termination code 2 or keyboard interrupt) |

`143` |

Termination by SIGTERM (default termination) |

`255/*` |

Exit code exceeded the range 0-255, hence wrapped up |

The termination signals like `130`

(SIGINT or `^C`

) and `143`

(SIGTERM) are prominent, which are just `128+n`

signals with `n`

standing for the termination code.

Now that you are briefly familiar with the exit codes let's see about their usage.

## Retrieving the exit code

The exit code of the previously executed command is stored in the [special variable](https://linuxhandbook.com/bash-special-variables/) `$?`

. You can retrieve the exit status by running:

`echo $?`

This will be used in all our demonstrations to retrieve the exit code.

Note that the *exit* command supports carrying the same exit code of the previous command executed.

## Exit code 0

Exit code `0`

means that the command is executed without errors. This is ideally the best case for the completion of commands.

For example, let us run a basic command like this

```

neofetch

echo $?

```

This exit code `0`

means that the particular command was executed successfully, nothing more or less. Let us demonstrate some more examples.

You may try [killing a process](https://itsfoss.com/how-to-find-the-process-id-of-a-program-and-kill-it-quick-tip/); it will also return the code `0`

.

`pkill lxappearance`

Viewing a file's contents will also return an exit code 0, which implies **only** that the 'cat' command executed successfully.

## Exit code 1

Exit code `1`

is also a common one. It generally means the command terminated with a generic error.

For example, using the [package manager](https://itsfoss.com/package-manager/) without sudo permissions results in code 1. In Arch Linux, if I try this:

`pacman -Sy `

It will give me exist code as 1 meaning the last command resulted in error.

If you try this in Ubuntu-based distros (`apt update`

without sudo), you get 100 as an error code for running 'apt' without permissions. This is not a standardized error code, but one specific to apt.

While this is a general understanding, we can also interpret this as "operation impermissible".

Operations like dividing by zero also result in code 1.

## Exit code 2

This exit code is given out when the command executed has a syntax error. Misusing the arguments of commands also results in this error.

It generally suggests that the command could not execute due to incorrect usage.

For example, I added two hyphens to an option that's supposed to have one hyphen. Code 2 was given out.

`grep --z file.txt`

When permission is denied, like accessing the /root folder, you get error code 2.

## Exit code 126

126 is a peculiar exit code since it is used to indicate a command or script was not executed due to a permission error.

This error can be found when you try executing a shell script without giving execution permissions.

Note that this exit code appears only for the '*execution*' of scripts/commands without sufficient permissions, which is different from a generic Permission Denied error.

So, on't confuse it with the previous example you saw with exit code 2. There, ls command ran and the permission issue came with the directory it was trying to execute. Here, the permission issues came from the script itself.

## Exit code 127

This is another common one. Exit code 127 refers to "[command not found](https://itsfoss.com/bash-command-not-found/)". It usually occurs when there's a typo in the command executed or the required executable is not in the $PATH variable.

For example, I often see this error when I try executing a script without its path.

Or when the executable file you're trying to run, is not listed in the `$PATH`

variable. You can rectify this by [adding the parent directory to the PATH variable](https://itsfoss.com/add-directory-to-path-linux/).

[How to Add a Directory to PATH in LinuxLearn all the essential steps about adding a directory to the PATH in Linux and making those changes permanently.](https://itsfoss.com/add-directory-to-path-linux/)

You'll also get this exit code when you type commands that do not exist.

*is not a command, and*

*Unmount**is not installed*

*Screenfetch*## Exit code series 128+n

When an application or command is terminated or its execution fails due to a fatal error, the adjacent code to 128 is produced (128+n), where n is the signal number.

This includes all types of termination codes, like `SIGTERM`

, `SIGKILL`

, etc that apply to the value 'n' here.

### Code 130 or SIGINT

`SIGINT`

or **Sig**nal for Keyboard ** Int**errupt is induced by interrupting the process by termination signal 2, or by Ctrl+C.

Since the termination signal is 2, we get a code 130 (128+2). Here's a video demonstrating the interrupt signal for `lxappearance`

.

SIGINT(2) termination or Keyboard Interrupt (^C) that gives code 130

### Code 137 or SIGKILL

The `SIGKILL`

termination **sig**nal that **kill**s the process instantly has a termination signal 9. This is the last method one should use while terminating an application.

The exit code thrown is 137 since the termination signal is 9 (128+9).

SIGKILL(9) termination that gives code 137

### Code 143 or SIGTERM

`SIGTERM`

or **Sig**nal to **Term**inate is the default behavior when a process is killed without specifying arguments.

The termination code for SIGTERM is 15, hence this signal gets an exit code of 143 (128+15).

SIGTERM(15) termination that gives code 143

There are other termination signals that you may not have known before; they too have their own exit codes similar to these. You can check them out here:

[How to use SIGINT and other Termination Signals in LinuxTerminating executing process is more than just kill -9. Here are some of the prominent termination signals and their usage.](https://linuxhandbook.com/termination-signals/)

Note that these signals may not appear if terminated from the same session from which the process was started. If you're reproducing these, terminate from a different shell.

On a personal note, signal 128 was impossible to reproduce.

## What if the code exceeds 255?

Recent versions of Bash retain the original exit code value even beyond 255, but generally, if the code exceeds 255, then it is wrapped up.

That is, code 256 becomes '0', 257 becomes '1', 383 becomes '127', and so on and so forth. To ensure better compatibility, keep the exit codes between 0 and 255.

## Wrapping up

I hope you learned something about the exit codes in the Linux shell. Using them can come in handy for troubleshooting various issues.

If you are using these codes in a shell script, make sure you understand the meaning of each code in order to make it easier for troubleshooting.

In case you need a reference, check out the Bash series here:

[Bash Basics #1: Create and Run Your First Bash Shell ScriptStart learning bash scripting with this new series. Create and run your first bash shell script in the first chapter.](https://itsfoss.com/create-bash-script/)

That's all about the article. Feel free to let me know in the comments section if I have missed anything. |

16,061 | 你的旧电脑是 “过时” 了,还是使用 Linux 的机会? | https://opensource.com/article/22/10/obsolete-computer-linux-opportunity | 2023-08-04T09:37:44 | [

"旧电脑"

] | /article-16061-1.html |

>

> 很多时候,老旧的电脑往往被打上 “过时” 的标签。Linux 改变了这一现状。翻新旧电脑,让它重新为需要它的人所用。

>

>

>

你可能经常听到有人说某个电脑、平板电脑或智能手机 “过时了”。当你听到这样的说法时,花一分钟问问自己:“这个人说的是个人观点还是事实?”

很多时候他们的陈述是主观观点。让我解释一下为什么。

当有人说一台电脑 “过时” 时,他们往往是从自己的角度出发的。因此,如果你是一名专业技术人员,一台使用了五年的电脑可能确实已经过时。但对于一个逃离战争或饥荒的难民家庭来说,这台五年前的电脑过时了吗?可能不会。对你来说过时的电脑,对别人来说可能是梦寐以求的电脑。

### 我是如何用 Linux 翻新旧电脑的

我在这方面有些经验。在过去的 25 年里,我一直把老旧电脑带给那些没有电脑的人。我的一名二年级学生,由祖母抚养长大,五年前从斯坦福大学毕业了。另一位学生,我在 2007 年给他送去了一台布满灰尘的 Windows XP 台式机,去年她从耶鲁大学毕业。这两名学生都利用捐赠的电脑实现了自我发展。后一位学生在中学毕业前,打字速度就超过了每分钟 50 个单词。我把捐赠电脑送到她家时,她还在读三年级,当时她的家庭还负担不起网络服务。因此,她有效地利用时间学习触摸打字技巧。我在这个 [YouTube 视频](https://www.youtube.com/watch?v=Ao_vOijz14U) 中记录了她的故事。

我再分享一件连我自己都难以相信的轶事。几年前,我在 eBay 上花 20 美元买了一台戴尔笔记本电脑。这台笔记本电脑是 2002 年的顶级笔记本电脑。我在上面安装了 Linux Mint,并添加了一个 USB WiFi 适配器,这台笔记本电脑就这样重生了。我把这个故事记录在 YouTube 视频中,名为 “[我的 20 美元 eBay 笔记本电脑](https://www.youtube.com/watch?v=UZiN6nm-PUU)”。

在视频中,你可以看到这台笔记本电脑正在上网。它的速度并不快,但比我们在 20 世纪 90 年代末使用的拨号上网电脑要快得多。我会将其描述为功能正常。有人可以用这台 2002 年的笔记本电脑撰写博士论文。论文读起来就像用昨天发布的电脑写的一样好。这台笔记本电脑应该摆放在公共场所,让人们近距离看到 2002 年的电脑仍然可以使用。眼见为实。这难道不是真理吗?

那么 2008 年、2009 年和 2010 年那些著名的 “上网本” 怎么样?它们肯定已经过时了吧?没那么快了吧!如果你在它们身上安装 32 位 Linux,它们就可以使用最新版本的 Chromium 网页浏览器上网冲浪,而 Chromium 浏览器仍然支持 32 位操作系统(不过谷歌 Chrome 浏览器不再支持 32 位操作系统)。使用这些上网本的学生可以观看<ruby> 可汗学院 <rt> Khan Academy </rt></ruby>的视频,使用<ruby> 谷歌文档 <rt> Google Docs </rt></ruby>提高写作能力。将这些上网本连接到更大的液晶屏幕上,学生就可以使用 [LibreOffice Draw](https://opensource.com/tags/libreoffice) 或 [Inkscape](https://opensource.com/downloads/inkscape-cheat-sheet) 这两个我最喜欢的开源图形程序来提高技能。如果你感兴趣,我有一个 [使用 Linux 重振上网本的视频](https://www.youtube.com/watch?v=GBYEclpvyGQ)。上网本也非常适合邮寄到海外,比如利比里亚的学校、海地的医院、索马里的食品分发点,或者其他任何捐赠技术可以发挥巨大作用的地方。

你知道翻新的上网本在哪里会受到欢迎吗?在那些向乌克兰难民敞开心扉和家园的社区。他们在尽自己的一份力量,我们也应该尽自己的一份力量。

### 开源让老旧电脑重获新生

许多技术专业人士生活在特权的泡沫中。当他们宣称某项技术 “过时” 时,可能并没有意识到他们把这种观点当作事实所造成的伤害。不了解开源如何让旧电脑重新焕发生机的人,正在宣判这些电脑的死刑。面对这种情况,我不会袖手旁观。你也不应该袖手旁观。

对于宣称电脑过时的人,一个简单的回答是:“有时旧电脑可以重获新生。我听说开源就是一种方法。”

如果你很了解对方,不妨分享本文列出的一些 YouTube 视频链接。如果有机会,请花点时间去见见那些无法获得所需技术的个人或家庭。这次会面将以你意想不到的方式丰富你的生活。

*(题图:MJ/cfd05206-dae4-4b14-a50c-38f2da342c95)*

---

via: <https://opensource.com/article/22/10/obsolete-computer-linux-opportunity>

作者:[Phil Shapiro](https://opensource.com/users/pshapiro) 选题:[lkxed](https://github.com/lkxed) 译者:[wcjjdlhws](https://github.com/wcjjdlhws) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

16,062 | 2023 年最佳 Linux 视频编辑软件 | https://www.debugpoint.com/linux-video-editors/ | 2023-08-04T10:35:00 | [

"视频编辑"

] | /article-16062-1.html |

>

> 我们列出了最佳的 Linux 视频编辑器选项。请看一下。

>

>

>

视频编辑软件的确可能很昂贵,尤其是像 Adobe Premiere Pro 这样更高级的选项。但好消息是,有很多自由开源的视频编辑器可供选择。这些替代方案提供了出色的功能,而不需要付费。在这里,我们编制了一个由十个这样的免费 Linux 视频编辑器组成的列表,它们可能非常适合你的特定需求:

### 2023 年最佳 Linux 视频编辑器

#### 1、Blender

Blender 是这个列表中功能最强大的视频编辑器之一。值得注意的是,它是自由开源软件,同时也是一个 3D 建模工具。使用 Blender,你可以制作令人印象深刻的电影、动画电影、模拟以及进行动作追踪。

其多功能性使其成为许多用户的首选,包括工作室、个人艺术家、专业人士、爱好者、科学家、学生、视觉效果专家、动画师、游戏艺术家、改装者等等。

庞大的全球社区不断支持和丰富这个强大的编辑器,有众多贡献者不断增强其功能和能力。然而,由于它提供了一整套工具,新手可能需要一些时间来有效利用其全部潜能。但一旦掌握了它的功能,你将会对它能释放出无限创意而感到惊讶。

它的功能列表十分庞大,但以下是一些值得注意的功能:

* 建模

* 雕刻

* 动画与绑定

* <ruby> 油笔 <rt> Grease Pencil </rt></ruby>

* 渲染

* 模拟

* 视频编辑

* 脚本编写

* 视觉效果

* 用户界面

* 工作流程

>

> **[下载 Blender](https://www.blender.org/download/)**

>

>

>

#### 2、Lightworks

体验 [Lightworks](https://www.lwks.com/),一款多功能的<ruby> 免费增值 <rt> freemium </rt></ruby>的视频编辑器,可帮助你编辑社交媒体视频、4K 质量甚至完整的电影。非凡的是,这款非线性视频编辑器已在好莱坞电影编辑领域打下了自己的烙印,证明了其专业能力。

请记住,尽管免费版提供了丰富的工具和功能,但某些专业功能可能仅在付费版本中提供。

因此,无论你是渴望成为社交媒体影响者、专业电影制片人,还是喜欢制作高质量视频的人,Lightworks 可能成为你在视频编辑世界释放创造力的通行证。

以下是其功能的快速概述:

* 简单直观的用户界面

* 访问出色的免版税音频和视频内容

* 轻松进行时间轴编辑和修剪

* 用于 4K 的低分辨率代理工作流程

* 实时即用的音频和视频特效

* 导出适用于 YouTube/Vimeo、SD/HD 的视频,高达 4K

* 广泛的文件格式支持,包括可变帧率媒体

* 使用高达 32 位 GPU 精度和直方图工具专业地调整序列

>

> **[下载 Lightworks](https://lwks.com/)**

>

>

>

#### 3、Shotcut

介绍一下 [Shotcut](https://shotcut.org/),这是一款出色的自由开源的视频编辑器,能够在各种平台上无缝运行。Shotcut 具备许多功能,为用户带来了很多便利。其中一个突出的特点是其对各种格式的出色支持,让你可以轻松处理各种媒体文件。它的独特之处在于本地时间轴编辑,意味着你可以直接编辑文件,而无需进行耗时的导入操作。

Shotcut 的功能远不止于此;它还支持 Blackmagic Design,为你提供专业的输入和预览监控。无论你是内容创作者还是电影制片人,能够使用 4K 分辨率进行工作是 Shotcut 提供的巨大优势。你可以轻松使用该编辑器捕捉音频、视频甚至是摄像头画面。

Shotcut 的显著功能包括:

* 支持 4K 分辨率

* 音频、视频和摄像头捕捉

* 广泛的文件格式支持

* 4K 分辨率支持

* 插件

* 音频和视频滤镜

>

> **[下载 Shotcut](https://shotcut.org/download/)**

>

>

>

#### 4、Avidemux

对于初学者或仅作为业余爱好者的视频编辑者来说,Avidemux 是一个完美的选择。这款自由开源的视频编辑器专为简单的任务设计,如剪辑、应用滤镜和编码。它拥有用户友好的界面,为刚开始学习编辑的人提供流畅且易于操作的体验。

Avidemux 使用简单,并且支持广泛的文件格式,确保与各种媒体类型兼容。如果你有一些重复的任务或特定的工作流程,Avidemux 的项目和作业队列功能可以帮助你自动化编辑过程。

此外,Avidemux 还提供强大的脚本功能,适用于喜欢深入技术细节的用户,可以根据个人喜好自定义和微调编辑任务。

然而,需要注意的是,Avidemux 可能缺少一些其他专业级视频编辑器中的高级功能和复杂性。然而,对于初学者、学习者和业余爱好者来说,Avidemux 是一个直观且免费的解决方案,非常适合开始视频编辑之旅,为你的创作努力铺平道路。

>

> **[下载 Avidemux](https://avidemux.sourceforge.net/download.html)**

>

>

>

#### 5、HitFilm Express

寻找一个免费且出色的视频编辑器?试试 [HitFilm Express](https://fxhome.com/hitfilm-express),这是一款顶级的免费编辑软件。顾名思义,“express” 版本在功能上毫不逊色,非常适合初学者、YouTube 创作者和有抱负的电影制作者。有一支专业的开发团队支持,你可以期待无缝而精细的编辑体验。

HitFilm Express 集成了大量功能,让你释放创造力,制作出令人印象深刻的视频。无论你是刚开始视频编辑之旅还是希望提升你的 YouTube 内容,该软件提供了工具和灵活性,让你的想象变为现实。

然而,需要注意的是,目前 HitFilm Express 仅与 Windows 和 Mac 系统兼容。目前还没有 Linux 版本可用。开发团队根据用户群体做出了这个决定,因为在目前情况下,支持 Linux 可能在经济上不可行。(LCTT 译注:这个是怎么混进来的!)

>

> **[下载 HitFilm Express](https://fxhome.com/hitfilm-express)**

>

>

>

#### 6、DaVinci Resolve

DaVinci Resolve 是专业视频编辑的代表,具备精湛处理 8K 编辑的令人印象深刻能力。这款强大的软件跨越多个平台,使得 Linux、Mac 和 Windows 用户都能使用。然而,需要了解的是,DaVinci Resolve 是专有的商业软件。

凭借其全面的功能和出色的性能,DaVinci Resolve 是视频编辑爱好者和行业专业人士的强大选择。该软件提供了附加付费功能的工作室版本,包括许多插件和附加组件,以增强你的编辑能力。

如果你不想花费一分钱就能入门,也不必担心 - DaVinci Resolve 还提供免费版本。对于许多普通用户来说,免费版本已经完全足够满足他们的视频编辑需求。即使是免费版本,凭借其强大的工具和功能,也提供了丰富的选项,可以创建令人惊艳的视频,展现创意的想象力。

以下是一些主要功能的简介:

* 双时间轴

* 源磁带

* 专用修剪界面

* 智能编辑模式

* 快速回顾

* 变换、变速和稳定

* 快速导出

* 媒体导入

* 便携编辑

* 自定义时间轴设置

* 调整剪辑

* 人脸识别

* 速度加速变速

* 图像稳定

* 关键帧曲线编辑器

* 磁带式音频刮动

* 更快、更智能的编码

>

> **[下载 DaVinci Resolve](https://www.blackmagicdesign.com/in/products/davinciresolve)**

>

>

>

#### 7、OpenShot

如果你正在寻找一个简单强大、具有用户友好界面的免费视频编辑软件,那么 [OpenShot](https://www.openshot.org/) 是你的不二选择。这款出色的软件以简洁为设计理念,确保即使是初次接触视频编辑的人也能轻松上手。OpenShot 的直观设计提供了低学习曲线,适用于各个层次的用户。

最棒的是,OpenShot 适用于 Windows、Linux 和 Mac 等各种操作系统。因此,无论你喜欢哪个平台,都可以享受到 OpenShot 视频编辑能力带来的便利和强大功能。

因此,如果你正在寻找一个将用户友好功能与强大编辑能力相结合的免费视频编辑软件,OpenShot 应该是你的首选。拥抱其简洁性,投入编辑过程,轻松看到你的视频项目焕发生机。

>

> **[下载 OpenShot 视频编辑器](https://www.debugpoint.com)**

>

>

>

#### 8、KDenlive

认识一下 [KDenlive](https://kdenlive.org/),这是一款经过 15 年开发的经验丰富的视频编辑器。作为一款自由开源的应用程序,KDenlive 是社区合作和创新力量的典范。它基于 QT 框架构建,在 FFMpeg、frei0r、movie、ladspa 和 sox 等一流框架的支持下,确保了无缝可靠的编辑体验。

KDenlive 面向广泛的用户群体,非常适合想要尝试视频编辑的普通用户。它的独特之处在于考虑周到地包含了高级功能,实现了功能和易用性的完美平衡。你将找到丰富的工具,可以增强你的视频项目,而无需过多学习复杂的技术知识。

使用 KDenlive,你可以释放创造力,而无需被复杂的过程所困扰。这款编辑器使你能够制作引人入胜的视频,而无需掌握过多的技术知识。

以下是一些其功能的概述:

* 多轨视频编辑

* 使用任何音频/视频格式

* 可配置的界面和快捷键

* 使用带有 2D 标题的标题工具

* 多种效果和转场

* 音频和视频范围检测

* 代理编辑

* 自动备份

* 可在界面直接下载在线资源

* 时间轴预览

* 可关键帧化的效果

* 主题界面

>

> **[下载 KDenlive](https://kdenlive.org/en/download/)**

>

>

>

#### 9、Flowblade

“快速、精确、稳定” - 这正是最能概括 [Flowblade](https://jliljebl.github.io/flowblade/) 的标语,这是一款非线性视频编辑器,旨在以最高效的方式满足目标用户的需求。通过提供一种无缝快速的编辑体验,保证每个细节的精确性,并为你的创意努力提供稳定的平台,这款编辑器兑现了它的承诺。

Flowblade 在功能方面毫不保留。它配备了一套全面的工具,以促进你的编辑过程。从简化工作流程的编辑工具到平滑排序的时间轴功能,该编辑器应有尽有。

为了进一步提高你的编辑效率,Flowblade 包含了一个范围记录,方便你管理剪辑并精确选择所需的片段。此外,它支持代理编辑,这是一个有价值的功能,可在处理高分辨率媒体时优化性能。

以下是一些其功能的概述:

* 编辑工具

* 时间轴功能

* 合成器

* 滤镜

* 范围记录

* 代理编辑

* 批量渲染队列

* G'mic 特效工具

* 音频混音器

* 媒体链接处理

* 标题制作

* 其它功能

* 渲染

* 支持 MLT 视频和音频编解码器

该软件只适用于 Linux 系统,不适用于 Windows 或 Mac。

>

> **[下载 Flowblade](https://jliljebl.github.io/flowblade/download.html)**

>

>

>

#### 10、Olive

介绍一下 [Olive](https://www.olivevideoeditor.org/),这是一款非常出色的免费非线性视频编辑器,旨在成为高端专业视频编辑软件的全功能替代品。目前 Olive 处于活跃开发阶段,处于 ALPHA 阶段。尽管处于早期阶段,这个编辑器已经引起了广泛关注,并且已经在使用 Olive 进行视频创作的用户中表现出色。

凭借其雄心勃勃的目标和不断壮大的社区,Olive 展示出了成为视频编辑领域强大竞争对手的潜力。已经有用户开始使用这个新兴编辑器制作视频,他们的经验展示了它所具备的能力。

你可以通过下面的链接在 Windows、Linux 和 Mac 上下载。

>

> **[下载 Olive](https://www.olivevideoeditor.org/download.php)**

>

>

>

### 总结

在这个汇编中,我们重点介绍了 Linux 上的十大专业视频编辑器,为不同的编辑需求提供了多样化的选择。某些编辑器在熟练使用时甚至可以媲美甚至取代高价专业软件。

例如,Blender、KDenlive 和 DaVinci Resolve 是创建专业级视频甚至完整电影的出色选择。每个编辑器都具有独特的优势和功能,使它们成为有抱负的电影制作者和内容创作者的可靠工具。

现在轮到你了!你对这个 Linux 视频编辑器列表有何看法?你还有其他喜欢推荐的编辑器吗?请在评论框中告诉我。

*图片来源:各应用程序。*

*(题图:MJ/9b567ebc-e5ed-4cdc-a023-30bbdfa564e8)*

---

via: <https://www.debugpoint.com/linux-video-editors/>

作者:[Arindam](https://www.debugpoint.com/author/admin1/) 选题:[lkxed](https://github.com/lkxed/) 译者:ChatGPT 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | ('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')) | null |

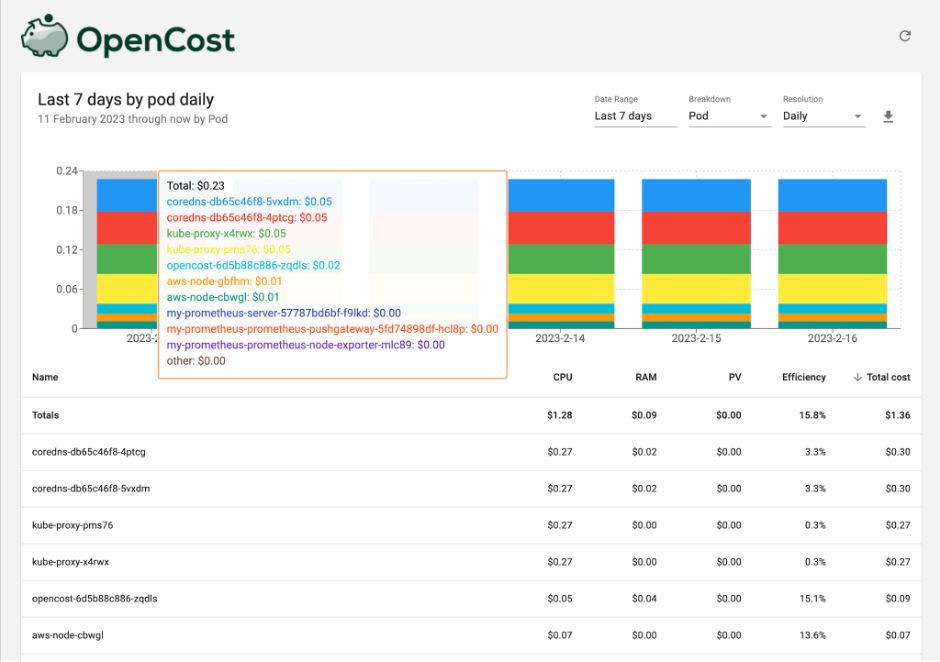

16,064 | 分步指南:安装和访问 Kubernetes 仪表板 | https://www.linuxtechi.com/how-to-install-kubernetes-dashboard/ | 2023-08-05T13:25:00 | [

"Kubernetes"

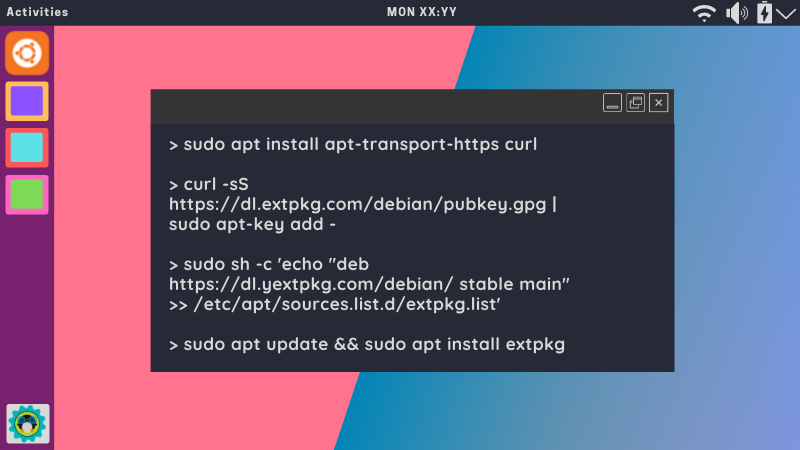

] | https://linux.cn/article-16064-1.html |

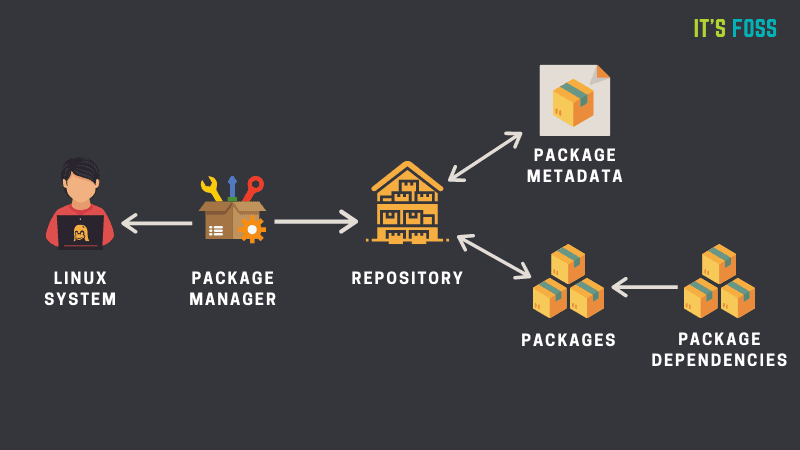

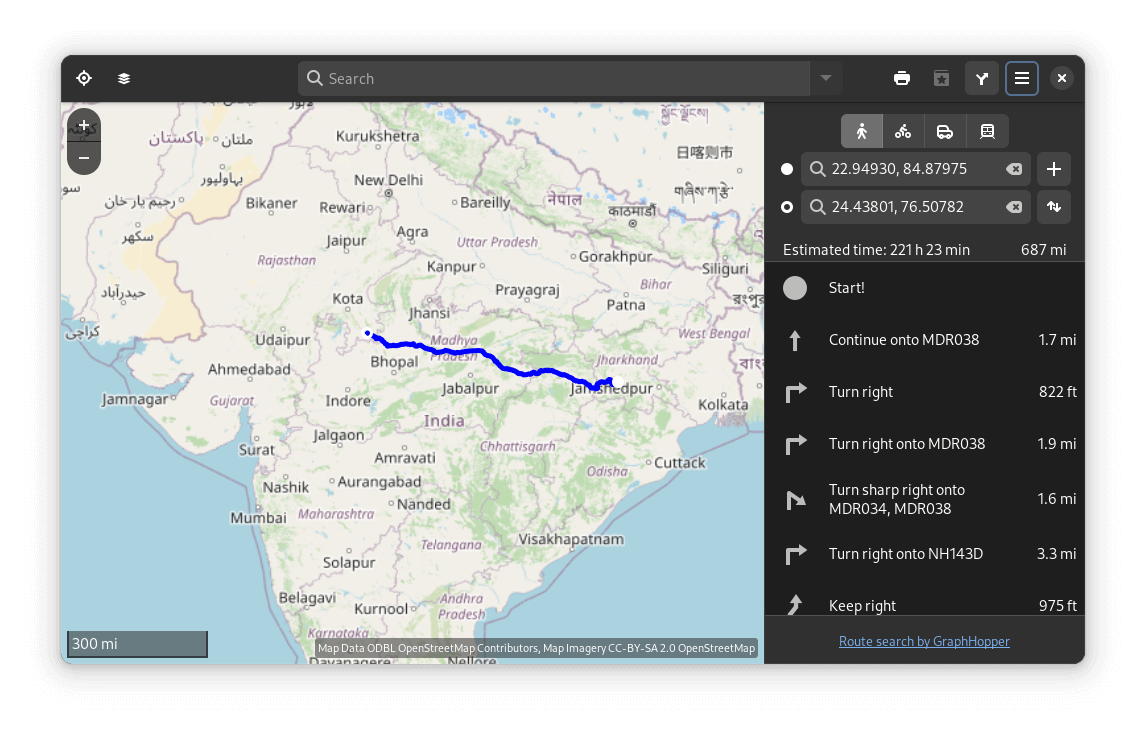

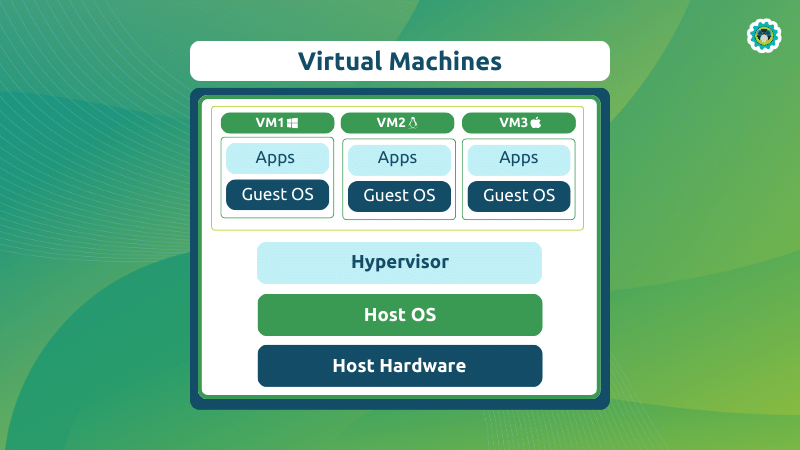

Kubernetes 是一个开源容器编排平台,已成为大规模管理容器化应用的首选解决方案。虽然 Kubernetes 提供了强大的命令行工具来管理集群,但有时可视化界面可以使监控和管理部署变得更加容易。Kubernetes 仪表板是一个基于 Web 的用户界面,可让你可视化 Kubernetes 集群并与之交互。

在这篇博文中,我们将逐步引导你完成安装和访问 Kubernetes Dashboard 的过程,使你能够简化 Kubernetes 管理任务。

先决条件:

在安装 Kubernetes Dashboard 之前,请确保你有一个正在运行的 Kubernetes 集群并具有必要的管理访问权限。

### 安装 Kubernetes 仪表板

为集群安装 Kubernetes 仪表板的简单方法是通过 Helm Chart。Kubernetes 仪表板现在依赖于 cert-manager 和 nginx-ingress-controller。幸运的是,可以使用 Helm Chart 自动安装这些依赖项。但是,如果你已经安装了这些组件,则可以在安装 Chart 时通过设置标志 `–set=nginx.enabled=false` 和 `–set=cert-manager.enabled=false` 来禁用它们的安装。

事不宜迟,让我们进入安装步骤。

#### 1)安装 Helm

使用终端或命令提示符访问集群的主节点。如果没有安装,请安装 helm。运行以下命令。

```

$ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

$ chmod 700 get_helm.sh

$ ./get_helm.sh

```

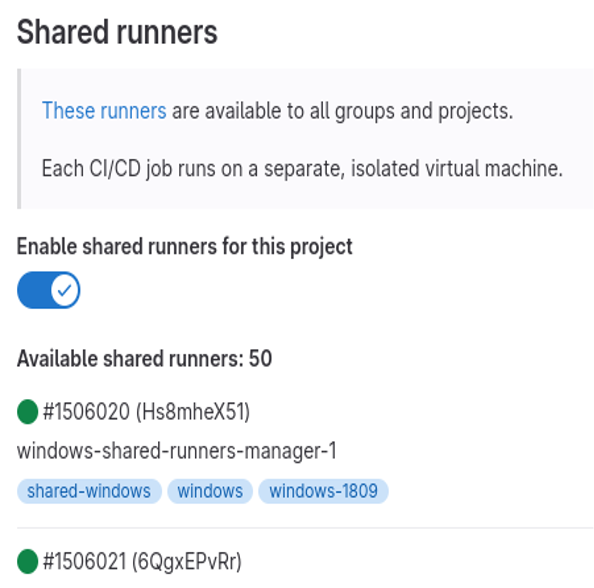

#### 2)添加 Kubernetes 仪表板 Helm 仓库

运行以下 `helm` 命令来添加仪表板仓库:

```

$ helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

$ helm repo list

```

#### 3)安装 Kubernetes 仪表板

要使用 `helm` 安装 Kubernetes 仪表板,请运行以下命令:

```

$ helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

```

上面的输出确认仪表板已部署在 `Kubernetes-dashboard` 命名空间中。因此,要访问仪表板,请运行:

```

$ kubectl -n kubernetes-dashboard port-forward svc/kubernetes-dashboard-nginx-controller 8443:443

```

现在,打开运行上述命令的系统的 Web 浏览器,输入以下 URL:

```

https://localhost:8443

```