id | title | fromurl | date | tags | permalink | content | fromurl_status | status_msg | from_content |

|---|---|---|---|---|---|---|---|---|---|

11,771 | 我的树莓派项目回顾 | https://opensource.com/article/20/1/raspberry-pi-best | 2020-01-11T09:18:12 | [

"树莓派"

] | https://linux.cn/article-11771-1.html |

>

> 看看我在好玩、能学习、有用处的树莓派上做了些什么。

>

>

>

无论是从历史上,还是从理论上讲,当时钟走到一个十年份第一年的 1 月 1 日的午夜 0 点时,就开始了一个十年或一个世纪或一个千年纪元。例如,20 世纪始于 1901 年 1 月 1 日,而不是 1900 年 1 月 1 日。原因很简单:现代日历中没有 0 年,因此这些时间段始于 1 年(使用公历)。但这不是我们在口语上和文化上指代时间段的方式。例如,当我们提到 80 年代的音乐或电影时,我们所说的是 1980 年至 1989 年。

我可以说,最近过去的 21 世纪 10 年代是云计算、社交媒体、移动技术和物联网(IoT)的十年,这其中就包括[树莓派](https://www.raspberrypi.org/)。最近,《时代》杂志将树莓派称为[过去十年中 10 个最佳小玩意](https://time.com/5745302/best-gadgets-of-the-2010s-decade/?utm_source=reddit.com)之一。我非常同意这点。

最初的树莓派于 2012 年推出,我过了几年才使用上了它。不过从那以后,我在家中做了许多有趣的教育项目,还在 Opensource.com 中记录了一些。

### 圣诞灯三部曲

三年来,我写了三篇探讨如何使用树莓派和开源项目 LightShowPi 控制圣诞灯的文章。第一篇文章《[用树莓派创建你自己的音乐灯光秀](https://opensource.com/life/15/2/music-light-show-with-raspberry-pi)》,将非常基础的电子开发入门与圣诞灯光秀的搭建结合在一起。第二篇文章《[使用树莓派 SSH 进入你的圣诞树](https://opensource.com/life/15/12/ssh-your-christmas-tree-raspberry-pi)》,稍微深入地介绍了通过远程管理和电子按钮控制灯光的方法。三部曲的最后一章《[用树莓派设置假期心情](https://opensource.com/article/18/12/lightshowpi-raspberry-pi)》,回顾了上一年 LightShowPi 项目中引入的更改。

### DIY 项目

多年来,我已经将树莓派变成了几种有用的设备。有一次,我将树莓派变成了 [Pi MusicBox 音乐播放设备](https://opensource.com/life/15/3/pi-musicbox-guide),它可以让你在 Web 界面中导入你喜欢的音乐流并在房间中播放。

将树莓派做成[移动视频录制设备](https://opensource.com/life/15/9/turning-raspberry-pi-portable-streaming-camera)是另一个 DIY 项目。它需要一些额外的硬件,例如触摸屏、树莓派摄像头和电池组,但是它工作得很好。这个设备的最大缺点之一是当时树莓派的可用内存很小。我认为如果我将它重新配置在具有 4GB 内存的树莓派 4 上,那么这款便携式摄像机的功能可能会更好。这可能是一个会在 2020 年重新打造的项目。

我做的另一个小项目[客厅的数字时钟](https://opensource.com/article/17/7/raspberry-pi-clock)使用了 Adafruit PiTFT 小屏幕。尽管这个项目很简单,但它可能是我使用时间最长的项目。那个时钟在我的电视机上呆了一年多,一天 24 小时不间断运行,直到屏幕烧坏为止。

### 圆周率日系列

最后但并非最不重要的一点是,在 2019 年的圆周率日(3 月 14 日)之前,我在 [14 天内发布了 14 篇文章](https://opensource.com/article/19/3/happy-pi-day)。这是迄今为止我完成过的最具挑战性的写作项目,但是它使我能够涵盖许多不同的主题,并希望使读者对树莓派的丰富功能有更多的了解。

### 走向未来

我不认识树莓派基金会中的任何人,因此我不了解它的任何路线图和未来计划。我可以(但我不会)推测品牌及其设备的未来,它们让世界各地这么多不同社会地位的人扩展他们对计算机科学、电子和开源开发的知识。我希望基金会的管理层能够忠于其愿景和使命,并继续为世界各地的人们提供价格合理的技术。

21 世纪 10 年代过去了,那是多么甜蜜的十年。对我来说,它闻起来就像树莓派馅饼。

---

via: <https://opensource.com/article/20/1/raspberry-pi-best>

作者:[Anderson Silva](https://opensource.com/users/ansilva) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Historically and theoretically speaking, a decade, a century, or a millennium starts when the clock turns midnight on January 1 of the year one of its decimal order. For example, the 20th century started on January 1, 1901, not on January 1, 1900. The reason for this is simple: there is no year 0 in our modern calendar, so these periods of time start on year 1 (using the Gregorian calendar). But that's not how we refer to time periods colloquially and culturally; for example, when we mention '80s music or movies, we're talking about the period from 1980 to 1989.

The recently passed 2010s, I could argue, was the decade of the cloud, social media, mobile technology, and the Internet of Things (IoT), which includes the [Raspberry Pi](https://www.raspberrypi.org/). Recently, *Time* magazine called the Raspberry Pi one of the [10 best gadgets of the past decade](https://time.com/5745302/best-gadgets-of-the-2010s-decade/?utm_source=reddit.com). And I very much agree.

The original Raspberry Pi launched in 2012, and it took me a couple of years to jump on the bandwagon and ride on the Pi-train. But since then, I have built many fun educational projects at home and even documented a few of them for Opensource.com.

## The Christmas light trilogy

I wrote three articles over three years that explored controlling Christmas lights with the Raspberry Pi and the open source project LightShowPi. The first article, [*Create your own musical light show with Raspberry Pi*](https://opensource.com/life/15/2/music-light-show-with-raspberry-pi), was a mix between a very basic introduction to electronics development and building a Christmas light show. The second article, [*SSH into your Christmas tree with Raspberry Pi*](https://opensource.com/life/15/12/ssh-your-christmas-tree-raspberry-pi), went a little deeper into controlling the lights with things such as remote management and electronic buttons. The last chapter of the trilogy, [*Set the holiday mood with your Raspberry Pi*](https://opensource.com/article/18/12/lightshowpi-raspberry-pi), reviewed changes introduced in the LightShowPi project in the prior year.

## DIY projects

Over the years, I've turned my Raspberry Pi into several useful devices. One time, I turned the Pi into a [music-playing device with the Pi MusicBox](https://opensource.com/life/15/3/pi-musicbox-guide), which allows you to plug your favorite music streaming services into a common web interface and play your favorite tunes around the house.

Making the Raspberry Pi into a [mobile video recording device](https://opensource.com/life/15/9/turning-raspberry-pi-portable-streaming-camera) was another DIY project I put together. It required a few extra pieces of hardware, like a touchscreen, a Pi Camera, and a battery pack, but it worked. One of the biggest drawbacks of this setup was the small amount of memory available on the Pi at the time. I think the capabilities of this little portable camera could be a lot better if I re-did it on a Raspberry Pi 4 with 4GB of RAM. This may be a project to revisit in 2020.

Another small project I built, a [digital clock for my living room](https://opensource.com/article/17/7/raspberry-pi-clock), used the small Adafruit PiTFT screen. As simple as this project was, it probably was the one that I used the longest. That clock stood by my TV set for over a year, running 24 hours a day… until the day the screen burned out.

## The Pi Day series

Last but not least, in 2019, I contributed [14 articles in 14 days](https://opensource.com/article/19/3/happy-pi-day) leading up to Pi Day (March 14). This was by far the most challenging writing project I've ever done, but it allowed me to cover many different topics and hopefully expose readers to even more ideas about the versatility of the Raspberry Pi.

## To infinity and beyond

I don't know anyone in the Raspberry Pi Foundation, so I don't have any special access to its roadmaps and future plans. I could (but I won't) speculate on what the future holds for the brand and its devices that have allowed so many people of different social statuses around the world to expand their knowledge about computer science, electronics, and open source development. All I hope is for the foundation's management to stay true to its vision and mission and continue making affordable technology for people everywhere.

The 2010s are over, and what a sweet decade it was. To me, it smelled like pie, raspberry pie.

|

11,773 | 如何借助 Django 来编写一个 Python Web API | https://opensource.com/article/19/11/python-web-api-django | 2020-01-11T14:25:00 | [

"Python",

"Django"

] | https://linux.cn/article-11773-1.html |

>

> Django 是 Python API 开发中最流行的框架之一,在这个教程中,我们来学习如何使用它。

>

>

>

[Django](https://www.djangoproject.com/) 是所有 Web 框架中最全面的,也是最受欢迎的一个。自 2005 年以来,其流行度大幅上升。

Django 是由 Django 软件基金会维护,并且获得了社区的大力支持,在全球拥有超过 11,600 名成员。在 Stack Overflow 上,约有 191,000 个带 Django 标签的问题。Spotify、YouTube 和 Instagram 等都使用 Django 来构建应用程序和数据管理。

本文演示了一个简单的 API,通过它可以使用 HTTP 协议的 GET 方法来从服务器获取数据。

### 构建一个项目

首先,为你的 Django 应用程序创建一个目录结构,你可以在系统的任何位置创建:

```

$ mkdir myproject

$ cd myproject

```

然后,在项目目录中创建一个虚拟环境来隔离本地包依赖关系:

```

$ python3 -m venv env

$ source env/bin/activate

```

在 Windows 上,使用命令 `env\Scripts\activate` 来激活虚拟环境。

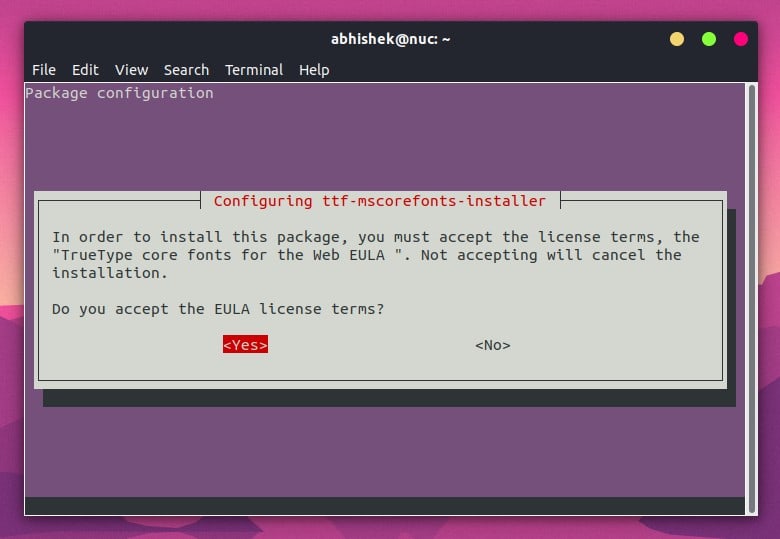

### 安装 Django 和 Django REST framework

然后,安装 Django 和 Django REST 模块:

```

$ pip3 install django

$ pip3 install djangorestframework

```

### 实例化一个新的 Django 项目

现在你的应用程序已经有了一个工作环境,你必须实例化一个新的 Django 项目。与 [Flask](https://opensource.com/article/19/11/python-web-api-flask) 这样微框架不同的是,Django 有专门的命令来创建(注意第一条命令后的 `.` 字符)。

```

$ django-admin startproject tutorial .

$ cd tutorial

$ django-admin startapp quickstart

```

Django 使用数据库来管理后端,所以你应该在开始开发之前同步数据库,数据库可以通过 `manage.py` 脚本管理,它是在你运行 `django-admin` 命令时创建的。因为你现在在 `tutorial` 目录,所以使用 `../` 符号来运行脚本,它位于上一层目录:

```

$ python3 ../manage.py makemigrations

No changes detected

$ python3 ../manage.py migrate

Operations to perform:

Apply all migrations: admin, auth, contenttypes, sessions

Running migrations:

Applying contenttypes.0001_initial... OK

Applying auth.0001_initial... OK

Applying admin.0001_initial... OK

Applying admin.0002_logentry_remove_auto_add... OK

Applying admin.0003_logentry_add_action_flag_choices... OK

Applying contenttypes.0002_remove_content_type_name... OK

Applying auth.0002_alter_permission_name_max_length... OK

Applying auth.0003_alter_user_email_max_length... OK

Applying auth.0004_alter_user_username_opts... OK

Applying auth.0005_alter_user_last_login_null... OK

Applying auth.0006_require_contenttypes_0002... OK

Applying auth.0007_alter_validators_add_error_messages... OK

Applying auth.0008_alter_user_username_max_length... OK

Applying auth.0009_alter_user_last_name_max_length... OK

Applying auth.0010_alter_group_name_max_length... OK

Applying auth.0011_update_proxy_permissions... OK

Applying sessions.0001_initial... OK

```

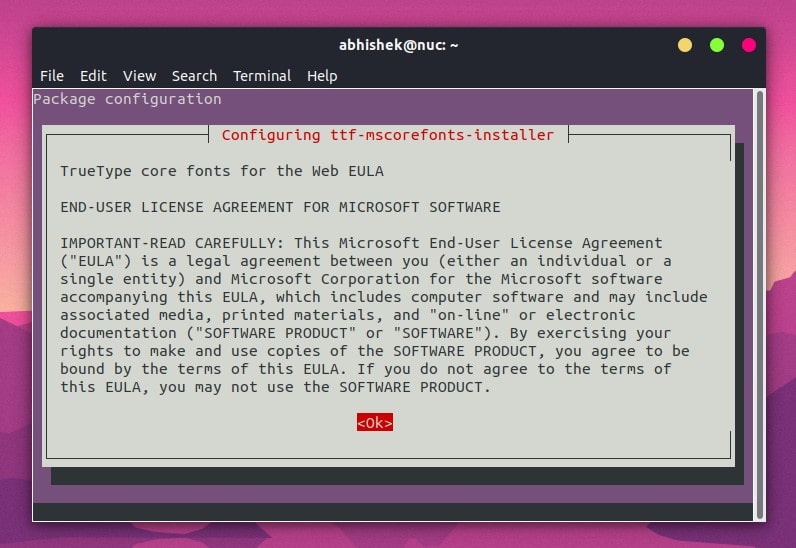

### 在 Django 中创建用户

创建一个名为 `admin`,示例密码为 `password123` 的初始用户:

```

$ python3 ../manage.py createsuperuser \

--email [email protected] \

--username admin

```

在提示时创建密码。

### 在 Django 中实现序列化和视图

为了使 Django 能够将信息传递给 HTTP GET 请求,必须将信息对象转化为有效的响应数据。Django 为此实现了“序列化类” `serializers`。

在你的项目中,创建一个名为 `quickstart/serializers.py` 的新模块,使用它来定义一些序列化器,模块将用于数据展示:

```

from django.contrib.auth.models import User, Group
from rest_framework import serializers


class UserSerializer(serializers.HyperlinkedModelSerializer):
    class Meta:
        model = User
        fields = ['url', 'username', 'email', 'groups']


class GroupSerializer(serializers.HyperlinkedModelSerializer):
    class Meta:
        model = Group
        fields = ['url', 'name']

```

Django 中的[视图](https://docs.djangoproject.com/en/2.2/topics/http/views/)是一个接受 Web 请求并返回 Web 响应的函数。响应可以是 HTML、HTTP 重定向、HTTP 错误、JSON 或 XML 文档、图像或 TAR 文件,或者可以是从 Internet 获得的任何其他内容。要创建视图,打开 `quickstart/views.py` 并输入以下代码。该文件已经存在,并且其中包含一些示例文本,保留这些文本并将以下代码添加到文件中:

```

from django.contrib.auth.models import User, Group
from rest_framework import viewsets
from tutorial.quickstart.serializers import UserSerializer, GroupSerializer


class UserViewSet(viewsets.ModelViewSet):
    """
    API 允许查看或编辑用户
    """
    queryset = User.objects.all().order_by('-date_joined')
    serializer_class = UserSerializer


class GroupViewSet(viewsets.ModelViewSet):
    """
    API 允许查看或编辑组
    """
    queryset = Group.objects.all()
    serializer_class = GroupSerializer

```

### 使用 Django 生成 URL

现在,你可以生成 URL 以便人们可以访问你刚起步的 API。在文本编辑器中打开 `urls.py` 并将默认示例代码替换为以下代码:

```

from django.urls import include, path
from rest_framework import routers
from tutorial.quickstart import views

router = routers.DefaultRouter()
router.register(r'users', views.UserViewSet)
router.register(r'groups', views.GroupViewSet)

# 使用自动路由 URL
# 还有登录 URL
urlpatterns = [
    path('', include(router.urls)),
    path('api-auth/', include('rest_framework.urls', namespace='rest_framework'))
]

```

### 调整你的 Django 项目设置

这个示例项目的设置模块存储在 `tutorial/settings.py` 中,因此在文本编辑器中将其打开,然后在 `INSTALLED_APPS` 列表的末尾添加 `rest_framework`:

```

INSTALLED_APPS = [
    ...
    'rest_framework',
]

```

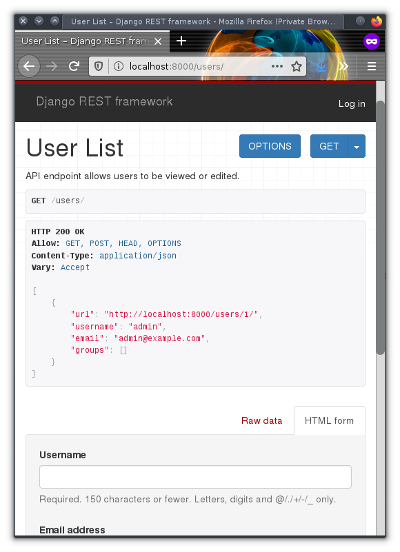

### 测试 Django API

现在,你可以测试构建的 API。首先,从命令行启动内置服务器:

```

$ python3 manage.py runserver

```

你可以通过使用 `curl` 导航至 URL `http://localhost:8000/users` 来访问 API:

```

$ curl --get http://localhost:8000/users/?format=json

[{"url":"http://localhost:8000/users/1/?format=json","username":"admin","email":"[email protected]","groups":[]}]

```

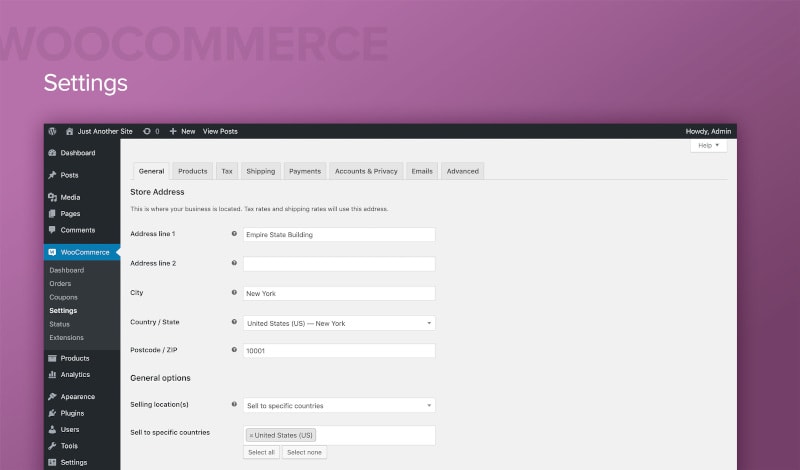

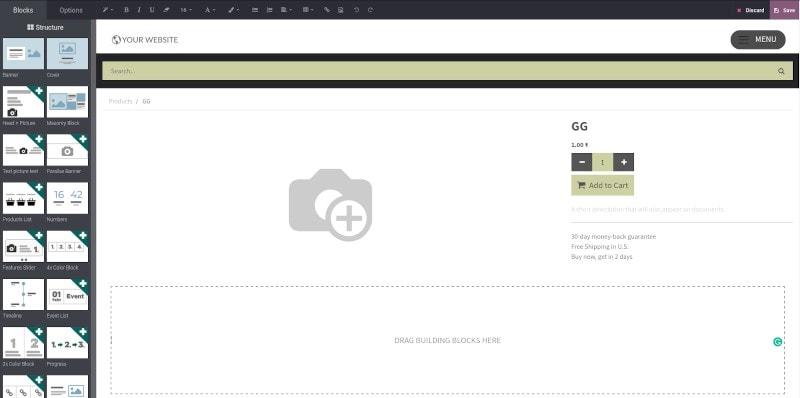

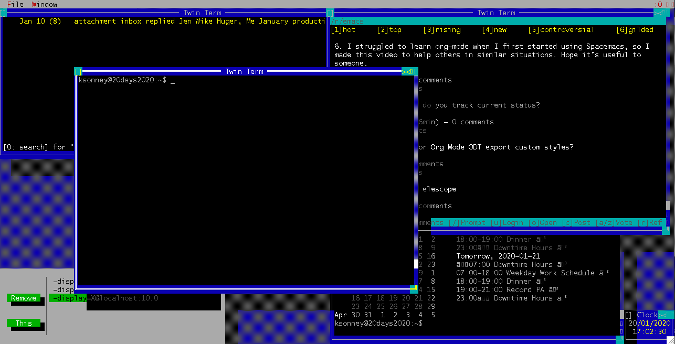

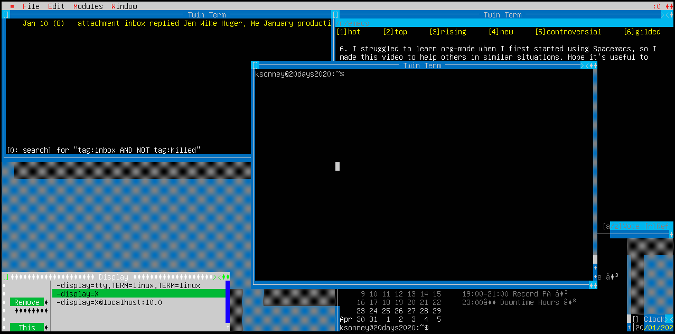

使用 Firefox 或你选择的[开源浏览器](https://opensource.com/article/19/7/open-source-browsers):

有关使用 Django 和 Python 的 RESTful API 的更多深入知识,参考出色的 [Django 文档](https://docs.djangoproject.com/en/2.2)。

### 为什么要使用 Django?

Django 的主要优点:

1. Django 社区的规模正在不断扩大,因此即使你做一个复杂项目,也会有大量的指导资源。

2. 默认包含模板、路由、表单、身份验证和管理工具等功能,你不必寻找外部工具,也不必担心第三方工具会引入兼容性问题。

3. 用户、循环和条件的简单结构使你可以专注于编写代码。

4. 这是一个成熟且经过优化的框架,它非常快速且可靠。

Django 的主要缺点:

1. Django 很复杂!从开发人员的角度来看,它可能比简单的框架更难学。

2. Django 有一个很大的生态系统。一旦你熟悉它,这会很棒,但是当你深入学习时,它可能会令人感到无所适从。

对你的应用程序或 API 来说,Django 是绝佳选择。下载并熟悉它,开始开发一个迷人的项目!

---

via: <https://opensource.com/article/19/11/python-web-api-django>

作者:[Rachel Waston](https://opensource.com/users/rachelwaston) 选题:[lujun9972](https://github.com/lujun9972) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | [Django](https://www.djangoproject.com/) is the comprehensive web framework by which all other frameworks are measured. One of the most popular names in Python API development, Django has surged in popularity since its start in 2005.

Django is maintained by the Django Software Foundation and has experienced great community support, with over 11,600 members worldwide. On Stack Overflow, Django has around 191,000 tagged questions. Websites like Spotify, YouTube, and Instagram rely on Django for application and data management.

This article demonstrates a simple API to fetch data from a server using the GET method of the HTTP protocol.

## Set up a project

First, create a structure for your Django application; you can do this at any location on your system:

```

$ mkdir myproject

$ cd myproject

```

Then, create a virtual environment to isolate package dependencies locally within the project directory:

```

$ python3 -m venv env

$ source env/bin/activate

```

On Windows, use the command **env\Scripts\activate** to activate your Python virtual environment.
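To confirm from inside Python that the virtual environment is really active, one option is to compare `sys.prefix` with `sys.base_prefix`. This is only a small sketch and not part of the original tutorial; the helper name `in_virtualenv` is made up for illustration.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```

If this reports `False` after running the activate command, the shell is probably still using the system interpreter.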

## Install Django and the Django REST framework

Next, install the Python modules for Django and Django REST:

```

$ pip3 install django

$ pip3 install djangorestframework

```

## Instantiate a new Django project

Now that you have a work environment for your app, you must instantiate a new Django project. Unlike a minimal framework like [Flask](https://opensource.com/article/19/11/python-web-api-flask), Django includes dedicated commands for this process (note the trailing **.** character in the first command):

```

$ django-admin startproject tutorial .

$ cd tutorial

$ django-admin startapp quickstart

```

Django uses a database as its backend, so you should sync your database before beginning development. The database can be managed with the **manage.py** script that was created when you ran the **django-admin** command. Because you're currently in the **tutorial** directory, use the **../** notation to run the script, located one directory up:

```

$ python3 ../manage.py makemigrations

No changes detected

$ python3 ../manage.py migrate

Operations to perform:

Apply all migrations: admin, auth, contenttypes, sessions

Running migrations:

Applying contenttypes.0001_initial... OK

Applying auth.0001_initial... OK

Applying admin.0001_initial... OK

Applying admin.0002_logentry_remove_auto_add... OK

Applying admin.0003_logentry_add_action_flag_choices... OK

Applying contenttypes.0002_remove_content_type_name... OK

Applying auth.0002_alter_permission_name_max_length... OK

Applying auth.0003_alter_user_email_max_length... OK

Applying auth.0004_alter_user_username_opts... OK

Applying auth.0005_alter_user_last_login_null... OK

Applying auth.0006_require_contenttypes_0002... OK

Applying auth.0007_alter_validators_add_error_messages... OK

Applying auth.0008_alter_user_username_max_length... OK

Applying auth.0009_alter_user_last_name_max_length... OK

Applying auth.0010_alter_group_name_max_length... OK

Applying auth.0011_update_proxy_permissions... OK

Applying sessions.0001_initial... OK

```

## Create users in Django

Create an initial user named **admin** with the example password of **password123**:

```

$ python3 ../manage.py createsuperuser \

--email [email protected] \

--username admin

```

Create a password when you're prompted.

## Implement serializers and views in Django

For Django to be able to pass information over to an HTTP GET request, the information object must be translated into valid response data. Django implements **serializers** for this.

In your project, define some serializers by creating a new module named **quickstart/serializers.py**, which you'll use for data representations:

```

from django.contrib.auth.models import User, Group
from rest_framework import serializers


class UserSerializer(serializers.HyperlinkedModelSerializer):
    class Meta:
        model = User
        fields = ['url', 'username', 'email', 'groups']


class GroupSerializer(serializers.HyperlinkedModelSerializer):
    class Meta:
        model = Group
        fields = ['url', 'name']

```
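To make the idea of serialization concrete without any framework, here is a minimal standard-library sketch of the same step: turning an object into plain data and then into JSON. It is not how Django REST framework implements its serializers. `DemoUser`, `serialize_user`, and the `example.invalid` address are hypothetical names chosen for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DemoUser:
    """Hypothetical stand-in for a user record; not Django's User model."""
    username: str
    email: str
    groups: list = field(default_factory=list)


def serialize_user(user: DemoUser) -> str:
    """Convert the object to a plain dict, then to a JSON string."""
    return json.dumps(asdict(user))


if __name__ == "__main__":
    print(serialize_user(DemoUser("admin", "admin@example.invalid")))
```

A framework serializer adds much more on top of this (field selection, validation, hyperlinks between resources), but the core job is the same translation from objects to response data.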

A [view](https://docs.djangoproject.com/en/2.2/topics/http/views/) in Django is a function that takes a web request and returns a web response. The response can be HTML, or an HTTP redirect, or an HTTP error, a JSON or XML document, an image or TAR file, or anything else you can get over the internet. To create a view, open **quickstart/views.py** and enter the following code. This file already exists and has some boilerplate text in it, so keep that and append this text to the file:

```

from django.contrib.auth.models import User, Group
from rest_framework import viewsets
from tutorial.quickstart.serializers import UserSerializer, GroupSerializer


class UserViewSet(viewsets.ModelViewSet):
    """
    API endpoint allows users to be viewed or edited.
    """
    queryset = User.objects.all().order_by('-date_joined')
    serializer_class = UserSerializer


class GroupViewSet(viewsets.ModelViewSet):
    """
    API endpoint allows groups to be viewed or edited.
    """
    queryset = Group.objects.all()
    serializer_class = GroupSerializer

```

## Generate URLs with Django

Now you can generate URLs so people can access your fledgling API. Open **urls.py** in a text editor and replace the default sample code with this code:

```

from django.urls import include, path
from rest_framework import routers
from tutorial.quickstart import views

router = routers.DefaultRouter()
router.register(r'users', views.UserViewSet)
router.register(r'groups', views.GroupViewSet)

# Use automatic URL routing
# Can also include login URLs for the browsable API
urlpatterns = [
    path('', include(router.urls)),
    path('api-auth/', include('rest_framework.urls', namespace='rest_framework'))
]

```
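As a rough mental model of what registering a prefix with the router gives you, the sketch below lists the two URL shapes that matter for this tutorial: a list endpoint and a per-object detail endpoint. It is a deliberate simplification written for illustration, not the router's actual code; `routes_for` is a hypothetical helper and `<pk>` is only a placeholder for an object's primary key.

```python
def routes_for(prefix: str, basename: str) -> dict:
    """Return simplified name -> URL shapes for one registered prefix."""
    return {
        # Collection endpoint, e.g. the list of all users.
        f"{basename}-list": f"/{prefix}/",
        # Single-object endpoint, addressed by primary key.
        f"{basename}-detail": f"/{prefix}/<pk>/",
    }


if __name__ == "__main__":
    for prefix, basename in (("users", "user"), ("groups", "group")):
        for name, url in routes_for(prefix, basename).items():
            print(name, url)
```

This is why the test request later in the article can target `/users/` and why the response links to `/users/1/`.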

## Adjust your Django project settings

The settings module for this example project is stored in **tutorial/settings.py**, so open that in a text editor and add **rest_framework** to the end of the **INSTALLED_APPS** list:

```

INSTALLED_APPS = [
    ...
    'rest_framework',
]

```

## Test your Django API

You're now ready to test the API you've built. First, start up the built-in server from the command line:

`$ python3 manage.py runserver`

You can access your API by navigating to the URL **http://localhost:8000/users** using **curl**:

```

$ curl --get http://localhost:8000/users/?format=json

[{"url":"http://localhost:8000/users/1/?format=json","username":"admin","email":"[email protected]","groups":[]}]

```
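The response is a JSON list with one object per user, so a client can decode it in a few lines. The sketch below assumes a response body of the same shape as the output above; `SAMPLE_BODY` and the `usernames` helper are illustrative, and the email value is a placeholder rather than real data.

```python
import json

# Hypothetical response body, shaped like the curl output above.
SAMPLE_BODY = (
    '[{"url": "http://localhost:8000/users/1/?format=json", '
    '"username": "admin", "email": "admin@example.invalid", "groups": []}]'
)


def usernames(body: str) -> list:
    """Extract the username of every user object in a JSON list response."""
    return [user["username"] for user in json.loads(body)]


if __name__ == "__main__":
    print(usernames(SAMPLE_BODY))
```

Against a running development server, the body could be read with the standard library's `urllib.request.urlopen` instead of a hard-coded string.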

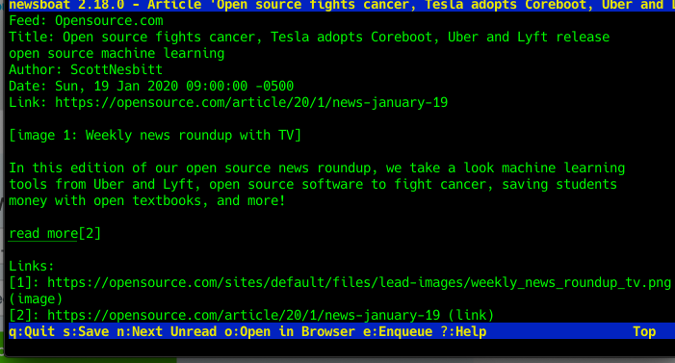

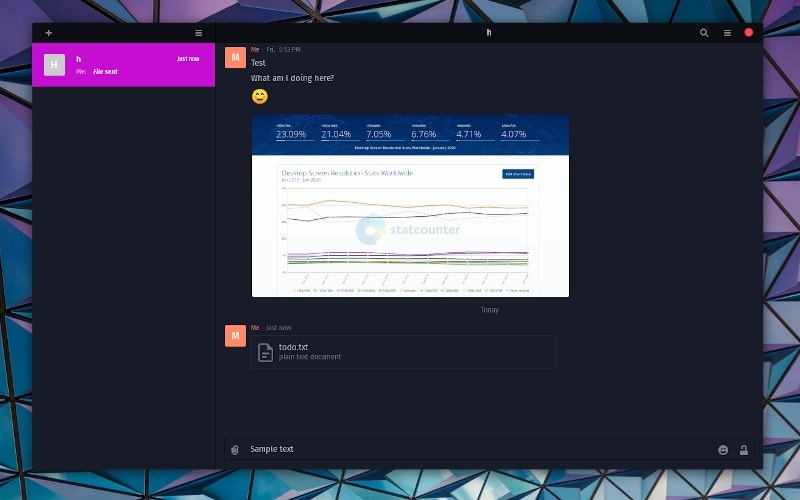

Or use Firefox or the [open source web browser](https://opensource.com/article/19/7/open-source-browsers) of your choice:

For more in-depth knowledge about RESTful APIs using Django and Python, see the excellent [Django documentation](https://docs.djangoproject.com/en/2.2).

## Why should I use Django?

The major benefits of Django:

- The size of the Django community is ever-growing, so you have lots of resources for guidance, even on a complicated project.

- Features like templating, routing, forms, authentication, and management tools are included by default. You don't have to hunt for external tools or worry about third-party tools introducing compatibility issues.

- Simple constructs for users, loops, and conditions allow you to focus on writing code.

- It's a mature and optimized framework that is extremely fast and reliable.

The major drawbacks of Django are:

- Django is complex! From a developer's point of view, Django can be trickier to learn than a simpler framework.

- There's a big ecosystem around Django. This is great once you're comfortable with Django, but it can be overwhelming when you're still learning.

Django is a great option for your application or API. Download it, get familiar with it, and start developing an amazing project!

|

11,774 | Sweet Home 3D 开放源码室内设计 | https://opensource.com/article/19/10/interior-design-sweet-home-3d | 2020-01-12T09:28:12 | [

"室内设计"

] | /article-11774-1.html |

>

> Try out furniture layouts, color schemes, and more in a virtual world before you go shopping in the real one.

>

>

>

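There are three schools of thought on how to go about decorating a room:

1. Buy a bunch of furniture and cram it into the room.

2. Take careful measurements of each piece of furniture, calculate the theoretical capacity of the room, then cram it all in, ignoring the fact that you have placed a bookshelf on top of your bed.

3. Use a computer for pre-visualization.

In the past, I practiced a little-known fourth approach: don't have furniture. However, since I became a remote worker, I have found that a home office needs a few conveniences, such as a desk and a chair, a bookshelf for reference books and technical manuals, and so on. Therefore, I have been formulating a plan to populate my living and working space with actual furniture, made of actual wood rather than milk crates (or glue and sawdust, for that matter), with an emphasis on *plan*. The last thing I want is to bring home a treasure from a thrift store only to discover that it doesn't fit through the door or that it is far too big next to another piece of furniture.

It's time to do what the professionals do. It's time to pre-visualize.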

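### Open source interior design

[Sweet Home 3D](http://www.sweethome3d.com/) is an open source (GPLv2) interior design application that helps you draw your home's floor plan and then define, resize, and arrange furniture. You can do all of this with precise measurements, down to fractions of a centimeter, without doing any math and using only simple drag-and-drop operations. When you're done, you can view the results in 3D. Planning the interior design of your home in Sweet Home 3D is about as easy as creating a basic table in a word processor.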

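### Installing

Sweet Home 3D is a [Java](https://opensource.com/resources/java) application, so it is cross-platform. It runs on any operating system that can run Java, which includes Linux, Windows, macOS, and BSD. Regardless of your operating system, you can [download](http://www.sweethome3d.com/download.jsp) the application from the website.

* On Linux, [untar](https://opensource.com/article/17/7/how-unzip-targz-file) the archive. Right-click the `SweetHome3D` file and select **Properties**. In the **Permissions** tab, grant the file executable permission.

* On macOS and Windows, expand the archive and launch the application. You must grant it permission to run on your system when prompted.

On Linux, you can also install Sweet Home 3D as a Snap package, provided you have **snapd** installed and enabled.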

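### Measures of success

First things first: break out your measuring tape. To get the most out of Sweet Home 3D, you must know the actual dimensions of the living space you are planning for. You may or may not need to measure down to the millimeter or 1/16 of an inch; you know best how much variance you can tolerate. But you must get the basic dimensions, including measurements of walls, windows, and doors.

Use your best judgment and common sense. For instance, when measuring doors, include the door frame; while it is not technically part of the *door* itself, it is probably part of the wall space that you don't want to cover with furniture.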

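### Creating a room

When you first launch Sweet Home 3D, it opens a blank canvas in its default viewing mode, with a blueprint view in the top panel and a 3D rendering in the bottom panel. On my [Slackware](http://www.slackware.com/) desktop computer this works very well, but my desktop is also my video editing and gaming computer, so it has a great graphics card for 3D rendering. On my laptop, this view mode is very slow. For the best performance (especially on a computer that is not dedicated to 3D rendering), go to the **3D View** menu at the top of the window and select **Virtual Visit**. This view mode renders your work from a ground-level view based on the position of a virtual visitor. That means you get to control what is rendered and when.

It makes sense to switch to this view regardless of how powerful your computer is, because an aerial 3D rendering doesn't give you much more useful detail than the blueprint plan does. Once you have changed the view mode, you can start designing.

The first step is to define the walls of your home. This is done with the **Create Walls** tool, found to the right of the **Hand** icon in the top toolbar. Drawing walls is simple: click where you want a wall to begin, click to anchor it, and keep clicking to place anchors until your room is complete.

Once you have closed the walls, press `Esc` to exit the tool.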

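#### Defining a room

Sweet Home 3D is flexible about how you create walls. You can draw the outer boundary of your house first and then subdivide the interior, or you can draw each room as conjoined "containers" that ultimately form the footprint of your house. This flexibility is possible because, in real life and in Sweet Home 3D, walls don't always define a room. To define a room, use the **Create Rooms** button to the right of the **Create Walls** button in the top toolbar.

If the room's floor space is defined by four walls, then all you need to do to define that enclosure as a room is double-click within the four walls. Sweet Home 3D defines the space as a room and provides you with its area in feet or meters, depending on your preference.

For irregular rooms, you must manually define each corner of the room with a click. Depending on the complexity of the room's shape, you may have to experiment to find out whether you need to work clockwise or counterclockwise from your origin point to avoid odd Möbius-strip flooring. Generally, however, defining the floor space of a room is straightforward.

After you have given the room a floor, you can change to the **Arrow** tool and double-click on the room to give it a name. You can also set the color and texture of the flooring, walls, ceiling, and baseboards.

None of this is rendered in your blueprint view by default. To enable room rendering in your blueprint panel, go to the **File** menu and select **Preferences**. In the **Preferences** panel, set **Room rendering in plan** to **Floor color or texture**.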

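### Doors and windows

Once you have finished the basic floor plan, you can switch to the **Arrow** tool for good.

You can find doors and windows in the left column of Sweet Home 3D, in the **Doors and Windows** category. You have many choices, so pick whatever is closest to what you have in your home.

To place a door or window into your plan, drag it onto the appropriate wall in your blueprint panel. To adjust its position and size, double-click the door or window.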

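### Adding furniture

With the base plan complete, the part of the job that feels like work is over! From this point onward, you can play with furniture arrangements and other decor.

You can find furniture in the left column, organized by the room each item is intended for. You can drag any item into your blueprint plan and control its orientation and size with the tools that appear when you hover your mouse over the item. Double-click on any item to adjust its color and finish.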

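### Visiting and exporting

To see what your future home will look like, drag the "person" icon in your blueprint view into a room.

You can strike your own balance between realism and simply getting a feel for the space; your imagination is your only limit. You can get additional assets to add to your home from the Sweet Home 3D [download page](http://www.sweethome3d.com/download.jsp). You can even create your own furniture and textures with the **Library Editor** applications, which are available to download from the project's website.

Sweet Home 3D can export your blueprint plan to SVG format for use in [Inkscape](http://inkscape.org), and it can export your 3D model to OBJ format for use in [Blender](http://blender.org). To export your blueprint, go to the **Plan** menu and select **Export to SVG format**. To export a 3D model, go to the **3D View** menu and select **Export to OBJ format**.

You can also take "snapshots" of your home so that you can revisit your ideas without opening Sweet Home 3D. To create a snapshot, go to the **3D View** menu and select **Create Photo**. The snapshot is rendered from the perspective of the person icon in the blueprint view, so adjust this as required, then click the **Create** button in the **Create Photo** window. If you're happy with the photo, click **Save**.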

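### Home sweet home

There are many more features in Sweet Home 3D. You can add a sky and a lawn, position lights for your photos, set the ceiling height, add another level to your house, and much more. Whether you are planning for a flat you are renting, a house you are buying, or a house that doesn't exist (yet), Sweet Home 3D is an easy and engaging application that can help you make better purchasing choices when you are rushing around for furniture, so you can finally stop eating breakfast at the kitchen counter and working while crouched on the floor.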

---

via: <https://opensource.com/article/19/10/interior-design-sweet-home-3d>

Author: [Seth Kenlon](https://opensource.com/users/seth) Topic selection: [lujun9972](https://github.com/lujun9972) Translator: [robsean](https://github.com/robsean) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

11,776 | 这些简单的技巧使 VLC 更加出色 | https://itsfoss.com/simple-vlc-tips/ | 2020-01-13T09:06:00 | [

"VLC"

] | https://linux.cn/article-11776-1.html |

如果 [VLC](https://www.videolan.org/) 不是最好的播放器,那它也是[最好的开源视频播放器](https://itsfoss.com/video-players-linux/)之一。大多数人不知道的是,它不仅仅是视频播放器。

你可以进行许多复杂的任务,如直播视频、捕捉设备等。只需打开菜单,你就可以看到它有多少选项。

我们有一个详细的教程,讨论一些[专业的 VLC 技巧](https://itsfoss.com/vlc-pro-tricks-linux/),但这些对于普通用户太复杂。

这就是为什么我要写另一篇文章的原因,来向你展示一些可以在 VLC 中使用的简单技巧。

### 使用这些简单技巧让 VLC 做更多事

让我们看看除了播放视频文件之外,你还可以使用 VLC 做什么。

#### 1、使用 VLC 观看 YouTube 视频

如果你不想在 [YouTube](https://www.youtube.com/) 上观看令人讨厌的广告,或者只想体验没有打扰地观看 YouTube 视频,你可以使用 VLC。

是的,在 VLC 上流式传输 YouTube 视频是非常容易的。

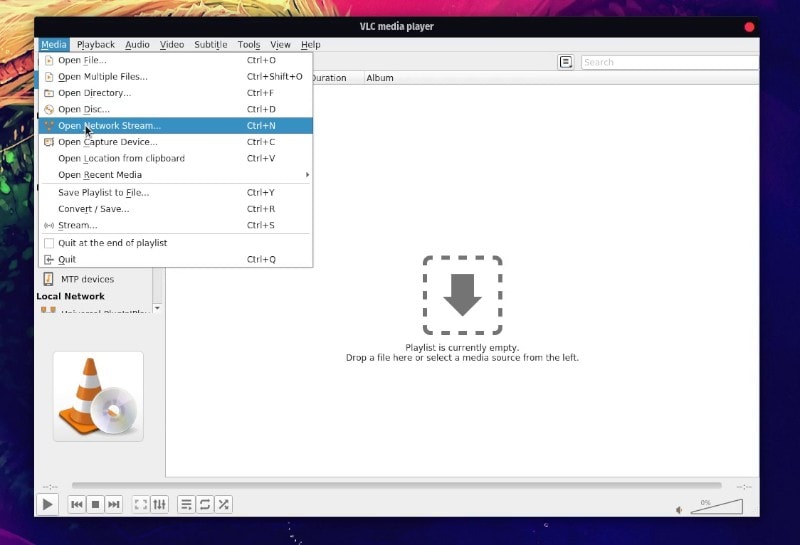

只需启动 VLC 播放器,前往媒体设置,然后单击 “Open Network Stream” 或使用快捷方式 `CTRL + N`。

接下来,你只需要粘贴要观看的视频的 URL。有一些选项可以调整,但通常你无需担心这些。如果你好奇,你可以点击 “Advanced options” 来探索。

你还可以通过这种方式向 YouTube 视频添加字幕。然而,[一个更简单的带字幕观看 Youtube 视频的办法是使用 Penguin 字幕播放器](https://itsfoss.com/penguin-subtitle-player/)。

#### 2、将视频转换为不同格式

你可以[在 Linux 命令行使用 ffmpeg 转换视频](https://itsfoss.com/ffmpeg/)。你还可以使用图形工具,如 [HandBrake 转换视频格式](https://itsfoss.com/handbrake/)。

但是,如果你不想用一个单独的应用来转码视频,你可以使用 VLC 播放器来完成该工作。

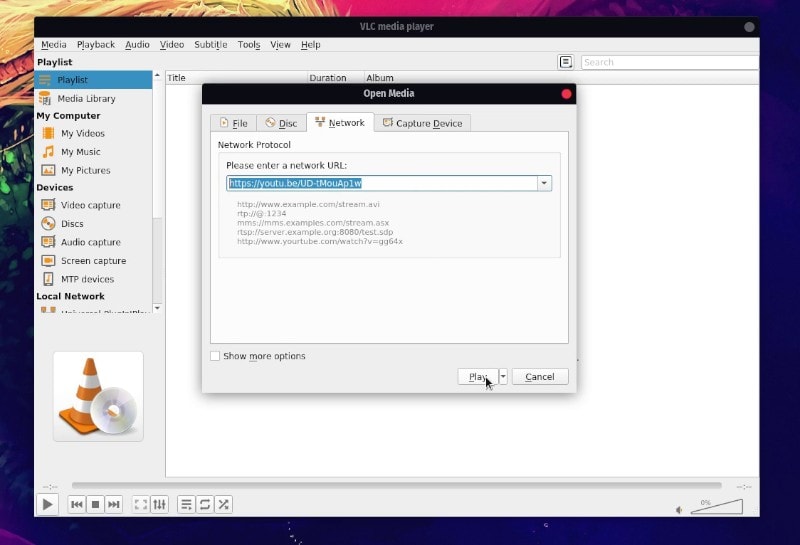

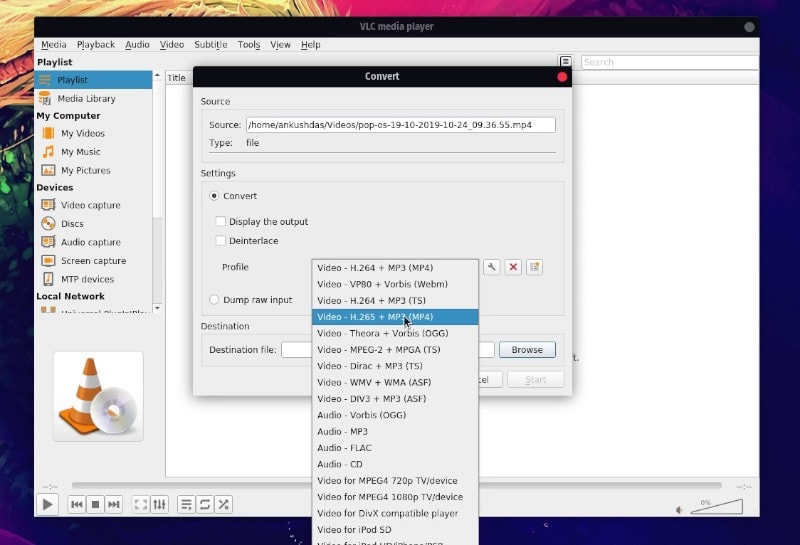

为此,只需点击 VLC 上的媒体选项,然后单击 “Convert/Save”,或者在 VLC 播放器处于活动状态时按下快捷键 `CTRL + R`。接下来,你需要从计算机/硬盘或者 URL 导入你想保存/转换的视频。

不管是什么来源,只需选择文件后点击 “Convert/Save” 按钮。你现在会看到另外一个窗口可以更改 “Profile” 设置。点击并选择你想转换的格式(并保存)。

你还可以在转换之前通过在屏幕底部设置目标文件夹来更改转换文件的存储路径。

#### 3、从源录制音频/视频

你是否想在 VLC 播放器中录制正在播放的音频/视频?

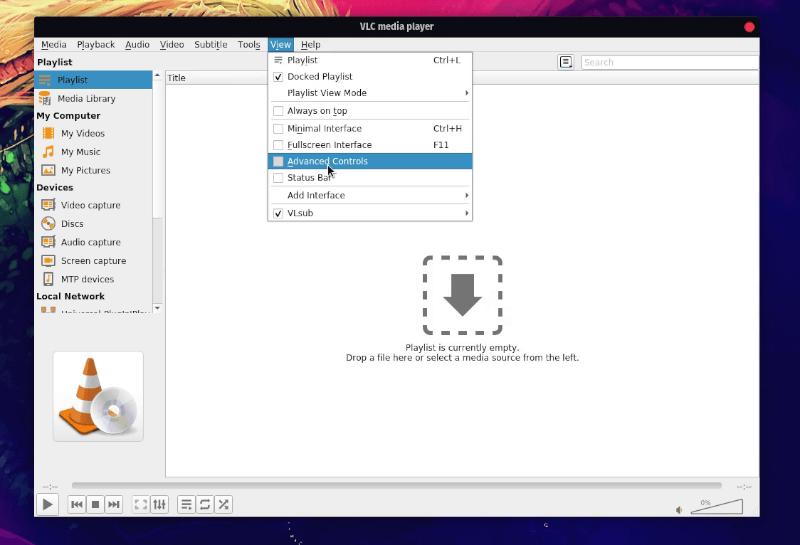

如果是的话,有一个简单的解决方案。只需通过 “View”,然后点击 “Advanced Controls”。

完成后,你会看到一个新按钮(包括 VLC 播放器中的红色录制按钮)。

#### 4、自动下载字幕

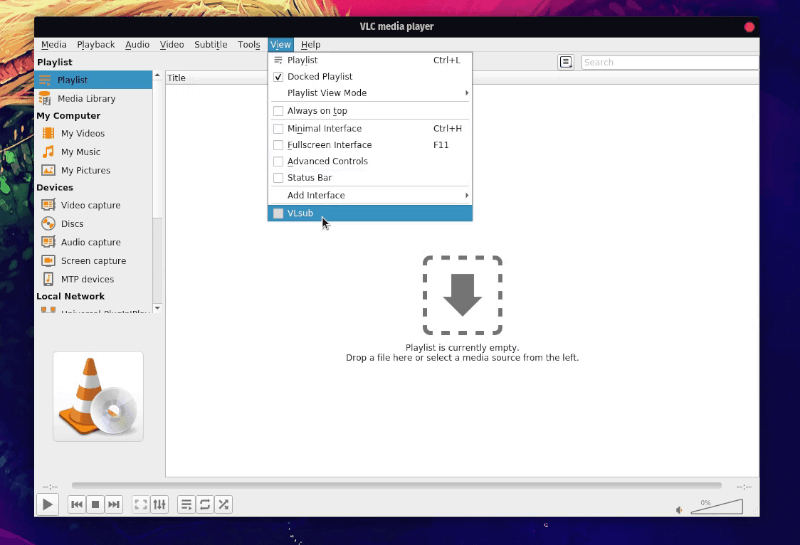

是的,你可以[使用 VLC 自动下载字幕](https://itsfoss.com/download-subtitles-automatically-vlc-media-player-ubuntu/)。你甚至不必在单独的网站上查找字幕。你只需点击 “View”->“VLSub”。

默认情况下,它是禁用的,因此当你单击该选项时,它会被激活,并允许你搜索/下载想要的字幕。

[VLC 还能让你使用简单的键盘快捷键同步字幕](https://itsfoss.com/how-to-synchronize-subtitles-with-movie-quick-tip/)。

#### 5、截图

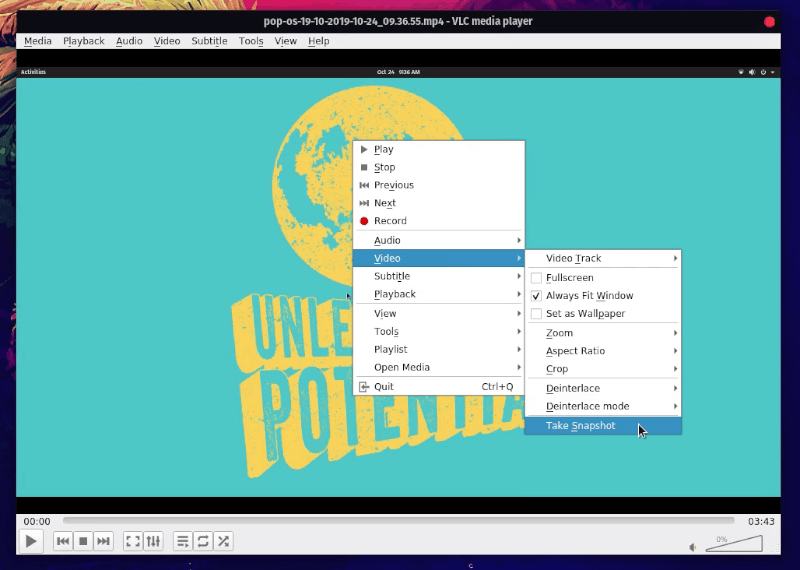

你可以在观看视频时使用 VLC 获取一些视频的截图/图像。你只需在视频播放/暂停时右击播放器,你会看到一组选项,点击 “Video”->“Take Snapshot”。

如果安装了旧版本,你可能在右键时看到截图选项。

#### 额外技巧:给视频添加音频/视频效果

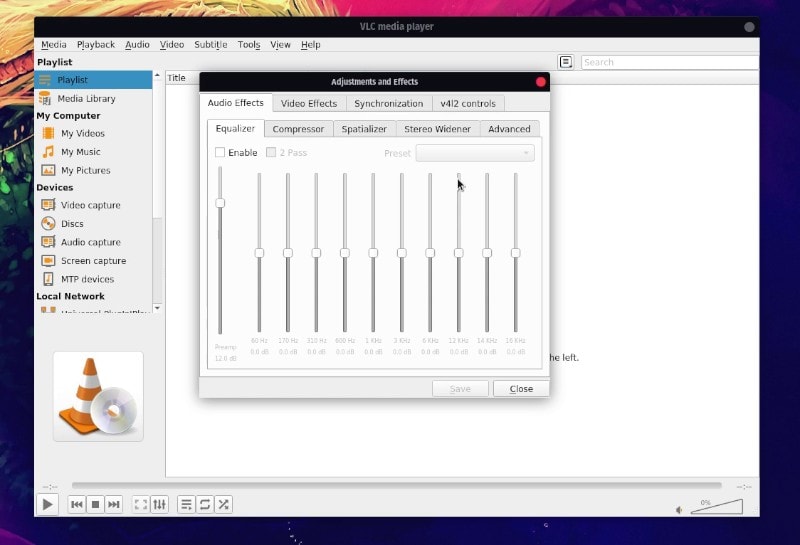

在菜单中,进入 “Tools” 选项。单击 “Effects and Filters”,或者在 VLC 播放器窗口中按 `CTRL + E` 打开选项。

好了,你可以观察你给视频添加的音频和视频效果了。你也许无法实时看到效果,因此你需要调整并保存来看发生了什么。

我建议在修改视频之前保存一份原始视频备份。

#### 你最喜欢的 VLC 技巧是什么?

我分享了一些我最喜欢的 VLC 技巧。你知道什么你经常使用的很酷的 VLC 技巧吗?为什么不和我们分享呢?我可以把它添加到列表中。

---

via: <https://itsfoss.com/simple-vlc-tips/>

作者:[Ankush Das](https://itsfoss.com/author/ankush/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

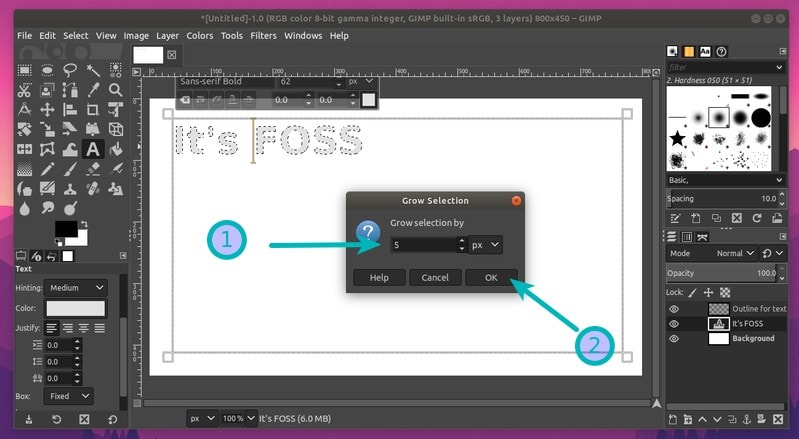

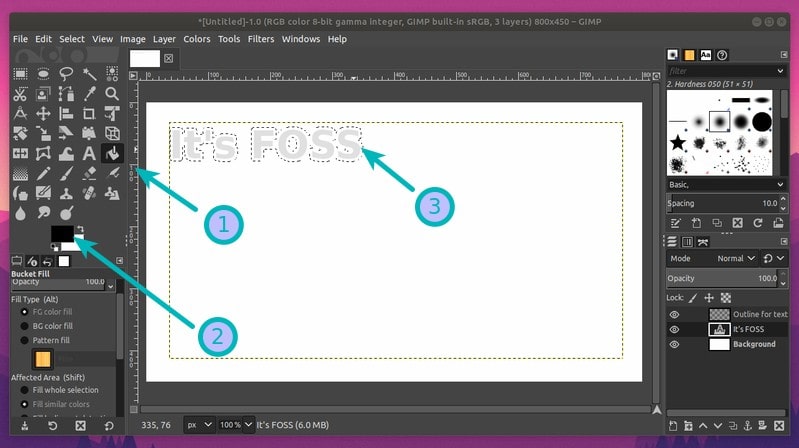

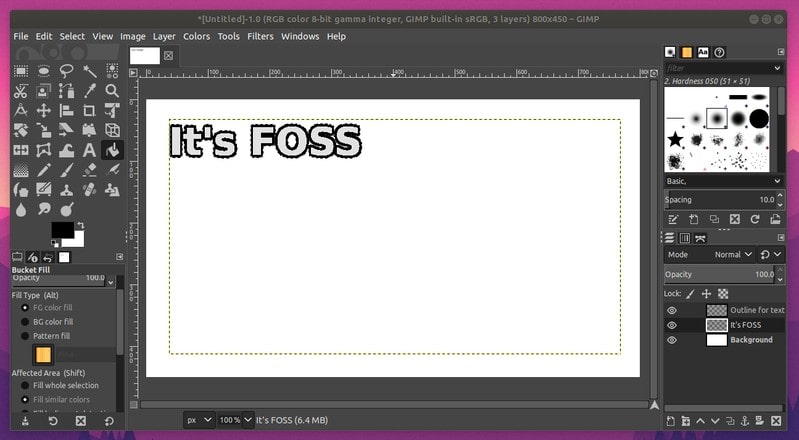

| 200 | OK | [VLC](https://www.videolan.org/) is one of the [best open source video players](https://itsfoss.com/video-players-linux/), if not the best. What most people don’t know about it is that it is a lot more than just a video player.

You can do a lot of complex tasks like broadcasting live videos, capturing devices etc. Just open its menu and you’ll see how many options it has.

It’s FOSS has a detailed tutorial discussing some of the [pro VLC tricks](https://itsfoss.com/vlc-pro-tricks-linux/) but those are way too complicated for normal users.

This is why I am writing another article to show you some of the simple tips that you can use with VLC.

## Do more with VLC with these simple tips

Let’s see what you can do with VLC other than just playing a video file.

### 1. Watch YouTube videos with VLC

If you do not want to watch the annoying advertisements on [YouTube](https://www.youtube.com/) or simply want a distraction-free experience for watching a YouTube video, you can use VLC.

Yes, it is very easy to stream a YouTube video on VLC.

Simply launch the VLC player, head to the Media settings and click on “**Open Network Stream**” or **CTRL + N** as a shortcut to that.

Next, you just have to paste the URL of the video that you want to watch. There are some options to tweak – usually, you should not bother using them. But, if you are curious you can click on the “**Advanced options**” to explore.

You can also add subtitles to the YouTube videos this way. However, an easier way to [watch YouTube or any online video with subtitles is using Penguin subtitle player](https://itsfoss.com/penguin-subtitle-player/).

### 2. Convert videos to different formats

You can [use ffmpeg to convert videos in Linux command line](https://itsfoss.com/ffmpeg/). You can also use a graphical tool like [HandBrake to convert video formats](https://itsfoss.com/handbrake/).

But if you do not want a separate app to transcode videos, you can use VLC media player to get the job done.

To do that, just head on to the Media option on VLC and then click on “**Convert/Save**” or press CTRL + R as a shortcut to get there while you have VLC media player active.

Next, you will need to either import the video from your computer/disk or paste the URL of the video that you want to save/convert.

Whatever your input source is – just hit the “**Convert/Save**” button after selecting the file.

Now, you will find another window that gives you the option to change the “**Profile**” from the settings. Click on it and choose a format that you’d like the video to be converted to (and saved).

You can also change the storage path for the converted file by setting the destination folder at the bottom of the screen before converting it.

### 3. Record Audio/Video From Source

Do you want to record the audio/video you’re playing on VLC Media Player?

If yes, there’s an easy solution to that. Simply go to **View** and click on “**Advanced Controls**”.

Once you do that, you should see new buttons in your VLC player, including a red record button.

### 4. Download subtitles automatically

Yes, you can [automatically download subtitles with VLC](https://itsfoss.com/download-subtitles-automatically-vlc-media-player-ubuntu/). You do not even have to look for it on a separate website. You just have to navigate your way to **View->VLSub**.

By default, it is deactivated, so when you click on the option it gets activated and lets you search/download the subtitles you wanted.

[VLC also lets you synchronize the subtitles](https://itsfoss.com/how-to-synchronize-subtitles-with-movie-quick-tip/) with simple keyboard shortcuts.

### 5. Take A Snapshot

With VLC, you can get some screenshots/images of the video while watching it.

Just right-click on the player while the video is playing or paused. You will see a bunch of options; navigate through **Video->Take Snapshot**.

If you have an old version installed, you might observe the snapshot option right after performing a right-click.

### Bonus Tip: Add Audio/Video Effects to a video

From the menu, go to “**Tools**” and click “**Effects and Filters**”, or simply press **CTRL + E** from the VLC player window.

Here you will find audio and video effects that you can apply to your video. You may not see every change in real time, so you may have to tweak a setting and save it to see the result.

I suggest keeping a backup of the original video before you modify it.

### What’s your favorite VLC tip?

I shared some of my favourite VLC tips. Do you know a cool tip that you use regularly with VLC? Why not share it with us? I may add it to the list here. |

11,778 | Lessons learned from concurrent programming in Go | https://opensource.com/article/19/12/go-common-pitfalls | 2020-01-13T15:12:00 | [

"Go",

"concurrency"

] | https://linux.cn/article-11778-1.html |

> Avoid future headaches with concurrent processing by learning how to spot its pitfalls.

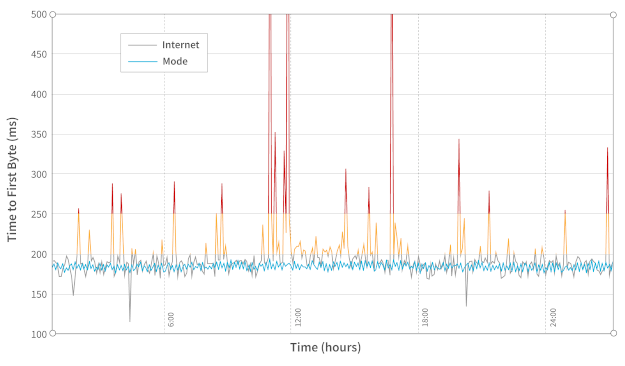

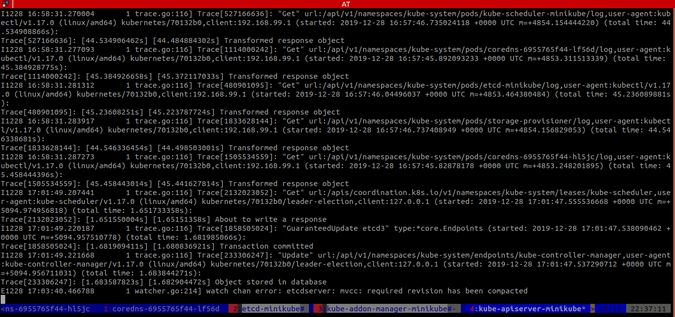

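When you work with complex distributed systems, you will often need concurrent processing. At [Mode.net](http://mode.net), we deal daily with real-time, fast, and resilient software. Building a global private network that dynamically routes packets at the millisecond scale would not be possible without a highly concurrent system. This dynamic routing is based on the state of the network and, while there are many parameters to consider, our focus is on link metrics. In our context, a link metric can be anything related to the status or current properties of a network link (for example, link latency).

### Concurrent probing for link metrics

Our dynamic routing algorithm, [H.A.L.O.](https://people.ece.cornell.edu/atang/pub/15/HALO_ToN.pdf) (Hop-by-Hop Adaptive Link-State Optimal Routing), relies partially on link metrics to compute its routing table. Those metrics are collected by an independent component that sits on each PoP (Point of Presence). PoPs are machines that represent a single routing entity in our network; they are connected by links and spread across multiple locations in our network topology. This component probes neighboring machines using network packets, and the neighbors bounce the probes back. Link latency can be derived from the received probes. Because each PoP has more than one neighbor, the task is intrinsically concurrent: we need to measure the latency of each neighboring link in real time. We can't afford sequential processing; each probe must be processed as soon as possible to compute this metric.

### Sequence numbers and resets: A reordering situation

Our probing components exchange packets and rely on sequence numbers for packet processing. This aims to avoid processing duplicate or out-of-order packets. Our first implementation relied on a special sequence number, 0, to reset sequence numbers. This number was used only when a component initialized. The main problem was that we assumed an increasing sequence number that always started at 0. After a component restarted, packets could be reordered, and a packet could easily replace the sequence number with a value that had been in use before the reset. This meant that subsequent packets would be ignored until the sequence number caught up with the value in use just before the reset.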

### UDP 握手和有限状态机

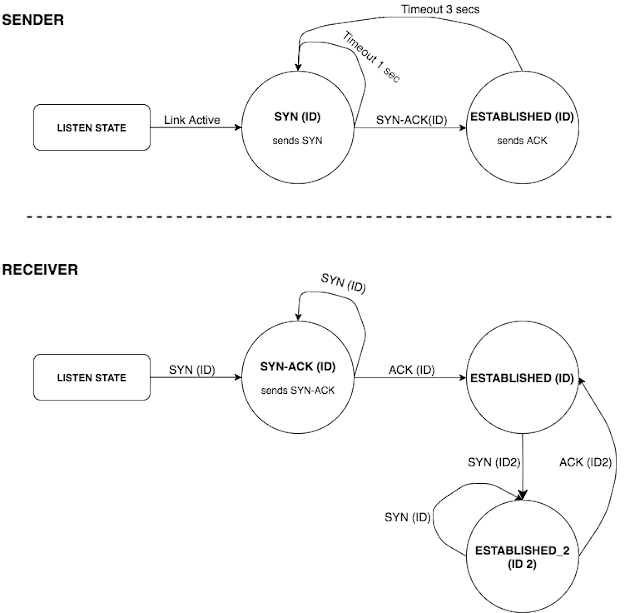

这里的问题是该组件重启前后的序列号是否一致。有几种方法可以解决这个问题,经过讨论,我们选择了实现一个带有清晰状态定义的三步握手协议。这个握手过程在初始化时通过链接建立会话。这样可以确保节点通过同一个会话进行通信且使用了适当的序列号。

为了正确实现这个过程,我们必须定义一个有清晰状态和过渡的有限状态机。这样我们就可以正确管理握手过程中的所有极端情况。

会话 ID 由握手的初始化程序生成。一个完整的交换顺序如下:

1. 发送者发送一个 `SYN(ID)` 数据包。

2. 接收者存储接收到的 `ID` 并发送一个 `SYN-ACK(ID)`。

3. 发送者接收到 `SYN-ACK(ID)` 并发送一个 `ACK(ID)`。它还发送一个从序列号 0 开始的数据包。

4. 接收者检查最后接收到的 `ID`,如果 ID 匹配,则接受 `ACK(ID)`。它还开始接受序列号为 0 的数据包。

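### Handling state timeouts

Basically, in each state you need to handle at most three types of events: link events, packet events, and timeout events. These events show up concurrently, so you have to handle concurrency properly.

* Link events are link-up or link-down updates, which respectively initiate a link session or break an existing one.

* Packet events are control packets (`SYN`/`SYN-ACK`/`ACK`) or just probe responses.

* Timeout events are triggered after a scheduled timeout expires for the current session state.

The main challenge here is how to handle concurrent timeout expirations and other events. This is where it is easy to fall into the traps of deadlocks and race conditions.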

### A first approach

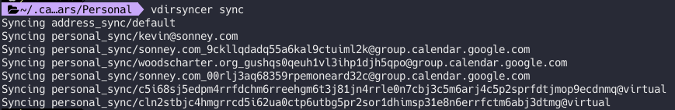

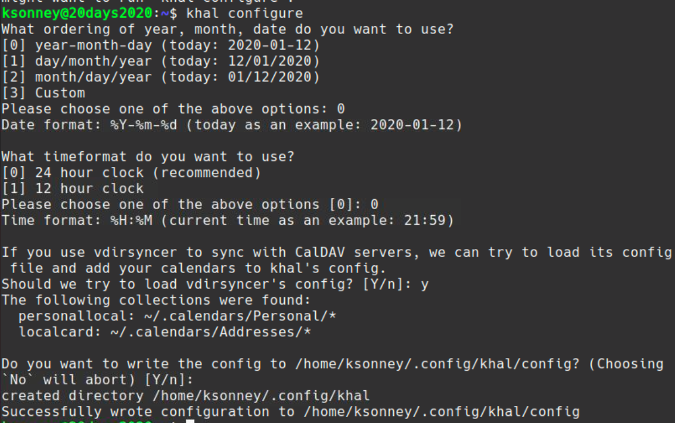

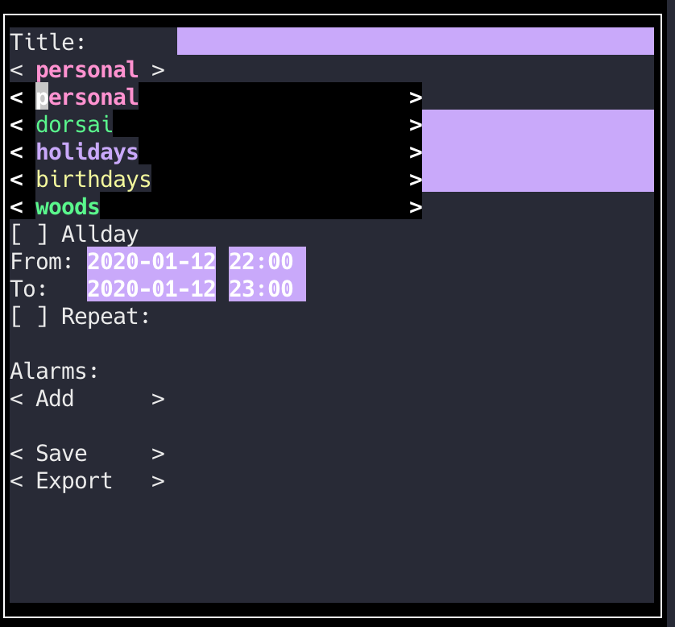

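The language used for this project is [Golang](https://golang.org/). It provides native synchronization mechanisms, such as built-in channels and locks, and can spin up lightweight threads for concurrent processing.

*Gophers hacking together*

You can start by designing two structures that represent our session and timeout handlers.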

```
type Session struct {
    State    SessionState
    Id       SessionId
    RemoteIp string
}

type TimeoutHandler struct {
    callback func(Session)
    session  Session
    duration int
    timer    *time.Timer // Timer type from the standard "time" package
}
```

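`Session` identifies the connection session, with fields for the session ID, the neighboring link's IP, and the current session state.

`TimeoutHandler` holds the callback function, the session it should run for, the duration, and a pointer to the scheduled timer.

A global map stores the scheduled `TimeoutHandler` for each neighboring link session.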

```
// global map: one scheduled timeout handler per session
var sessionTimeout = make(map[Session]*TimeoutHandler)
```

Registering and canceling a timeout is done with the following methods:

```
// schedules the timeout callback function.
func (timeout *TimeoutHandler) Register() {
    timeout.timer = time.AfterFunc(time.Duration(timeout.duration)*time.Second, func() {
        timeout.callback(timeout.session)
    })
}

func (timeout *TimeoutHandler) Cancel() {
    if timeout.timer == nil {
        return
    }
    timeout.timer.Stop()
}
```

To create and store timeouts, you can use a function like the following:

```
func CreateTimeoutHandler(callback func(Session), session Session, duration int) *TimeoutHandler {
    if sessionTimeout[session] == nil {
        sessionTimeout[session] = new(TimeoutHandler)
    }

    timeout := sessionTimeout[session]
    timeout.session = session
    timeout.callback = callback
    timeout.duration = duration
    return timeout
}
```

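Once the timeout handler is created and registered, it runs the callback after `duration` seconds have elapsed. However, some events require you to reschedule a timeout handler (as in the `SYN` state, every 3 seconds).

For that, you can have the callback reschedule a new timeout: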

```
func synCallback(session Session) {
    sendSynPacket(session)

    // reschedules the same callback.
    newTimeout := CreateTimeoutHandler(synCallback, session, SYN_TIMEOUT_DURATION)
    newTimeout.Register()

    sessionTimeout[session] = newTimeout
}
```

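This callback reschedules itself in a new timeout handler and updates the global `sessionTimeout` map.

### Data race and references

Your solution is ready. One simple test is to check that a timeout callback runs after its timer expires. To do this, register a timeout, sleep for `duration` seconds, and then check whether the callback's actions were carried out. After the test runs, it is a good idea to cancel the scheduled timeout (because it reschedules itself) so that it has no side effects on later tests.

Surprisingly, this simple test found a bug in the solution. Canceling timeouts with the `Cancel` method was just not doing its job. The following order of events causes a data race:

1. You have one scheduled timeout handler.

2. Thread 1:

   1. You receive a control packet, and you now want to cancel the registered timeout and move on to the next session state (for example, you received a `SYN-ACK` after sending a `SYN`).

   2. You call `timeout.Cancel()`, which calls `timer.Stop()`. (Note that stopping a Golang timer doesn't prevent an already expired timer from running.)

3. Thread 2:

   1. Right before that cancel call, the timer expired, and the callback was about to execute.

   2. The callback executes; it schedules a new timeout and updates the global map.

4. Thread 1:

   1. It transitions to a new session state and registers a new timeout, updating the global map.

Both threads update the timeout map concurrently. The end result is that you fail to cancel the registered timeout, and you also lose the reference to the timeout rescheduled by thread 2. This leaves a handler that keeps executing and rescheduling for a while, causing unwanted behavior.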

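### When locking is not enough

Using locks doesn't fix the issue completely either. If you take a lock before processing any event and before executing a callback, it still doesn't prevent an expired callback from running: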

```
func (timeout *TimeoutHandler) Register() {
    timeout.timer = time.AfterFunc(time.Duration(timeout.duration)*time.Second, func() {
        stateLock.Lock()
        defer stateLock.Unlock()

        timeout.callback(timeout.session)
    })
}
```

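The difference now is that updates to the global map are synchronized, but this still doesn't prevent the callback from running after you call `timeout.Cancel()`. That happens when the scheduled timer has expired but hasn't grabbed the lock yet. You again lose the reference to one of the registered timeouts.

### Using cancellation channels

Instead of relying on Golang's `timer.Stop()`, which doesn't prevent an expired timer from executing, you can use cancellation channels.

This is a slightly different approach. You no longer reschedule recursively through callbacks; instead, you register an infinite loop that waits for cancellation signals or timeout events.

The new `Register()` spawns a goroutine that runs your callback after a timeout and schedules a new timeout after the previous one has executed. A cancellation channel is returned to the caller to control when the loop should stop.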

```
func (timeout *TimeoutHandler) Register() chan struct{} {
    // Assumes TimeoutHandler also has a `cancelChan chan struct{}` field,
    // which Cancel() below reads.
    cancelChan := make(chan struct{})
    timeout.cancelChan = cancelChan

    go func() {
        select {
        case <-cancelChan:
            return
        case <-time.After(time.Duration(timeout.duration) * time.Second):
            func() {
                stateLock.Lock()
                defer stateLock.Unlock()

                timeout.callback(timeout.session)
            }()
        }
    }()

    return cancelChan
}

func (timeout *TimeoutHandler) Cancel() {
    if timeout.cancelChan == nil {
        return
    }
    timeout.cancelChan <- struct{}{}
}
```

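This approach gives you a cancellation channel for every timeout you register. A cancel call sends an empty struct to the channel and triggers the cancellation. However, this doesn't resolve the previous issue: the timeout can expire right before you cancel over the channel and before the timeout thread grabs the lock.

The solution is to check the cancellation channel inside the timeout scope **after** you grab the lock.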

```
        case <-time.After(time.Duration(timeout.duration) * time.Second):
            func() {
                stateLock.Lock()
                defer stateLock.Unlock()

                select {
                case <-timeout.cancelChan:
                    return
                default:
                    timeout.callback(timeout.session)
                }
            }()
        }
```

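Finally, this guarantees that the callback runs only after you grab the lock and only if no cancellation was triggered.

### Beware of deadlocks

This solution seems to work; however, there is one hidden pitfall: [deadlocks](https://en.wikipedia.org/wiki/Deadlock).

Read the code above again and try to find it yourself. Think about concurrent calls to any of the methods described.

The problem is with the cancellation channel itself. We made it an unbuffered channel, which means that sending is a blocking call. When you call cancel on a timeout handler, you proceed only once that handler has been canceled. The problem arises when there are multiple calls on the same cancellation channel, because a cancel request is consumed only once. This can easily happen if concurrent events, such as a link-down event and a control packet event, cancel the same timeout handler. The result is a deadlock that can bring the application to a halt.

*Is anyone listening?*

(Used with permission from Trevor Forrey.)

The solution is to make the channel buffered with a capacity of at least one, so that sends don't always block, and also to make the send explicitly non-blocking in case of concurrent calls. This guarantees that the cancellation is sent once and doesn't block subsequent cancel calls.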

```
func (timeout *TimeoutHandler) Cancel() {
    if timeout.cancelChan == nil {
        return
    }

    select {
    case timeout.cancelChan <- struct{}{}:
    default:
        // can’t send on the channel, someone has already requested the cancellation.
    }
}
```

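### Conclusion

You learned in practice how common mistakes show up when working with concurrent code. Because these issues are non-deterministic, they are hard to detect even with extensive testing. Here are the three main problems we hit in our initial implementation.

#### Updating shared data without synchronization

This seems like an obvious one, but it is hard to spot when the concurrent updates happen in different places. The result is a data race, in which some of several updates to the same data are lost because one update overwrites another. In our case, we were concurrently updating the scheduled timeout reference in the same shared map. (Interestingly, if Go detects a concurrent read and write on the same map object, it throws a fatal error; you can also try running Go's [data race detector](https://golang.org/doc/articles/race_detector.html).) This eventually results in losing a timeout reference and being unable to cancel that timeout. Always remember to use locks when they are needed.

*Don't forget to synchronize the gophers' work*

#### Missing condition checks

Condition checks are needed when you can't rely on lock exclusivity alone. Our situation is a bit different, but the core idea is the same as [condition variables](https://en.wikipedia.org/wiki/Monitor_%28synchronization%29#Condition_variables). Imagine the classic scenario of one producer and multiple consumers working with a shared queue. The producer adds an item to the queue and wakes up all the consumers. The wake-up call means that data is available in the queue and, because the queue is shared, consumers must synchronize access through a lock. Every consumer has a chance to grab the lock; however, each one still needs to check whether there are items in the queue. A condition check is needed because you don't know the state of the queue at the moment you grab the lock.

In our example, the timeout handler got a "wake-up" call from a timer expiration, but it still needed to check whether a cancel signal had been sent before it could go ahead with the callback.

*Condition checks might be needed if you wake up multiple gophers*

#### Deadlocks

This happens when a thread is stuck waiting indefinitely for a wake-up signal that never arrives. Deadlocks can completely kill your application by halting your entire program.

In our case, this happened because of multiple sends to an unbuffered, blocking channel. A send on such a channel returns only after a receive is done on the same channel. Our timeout thread loop promptly received signals on the cancellation channel; however, after receiving the first signal, it broke out of the loop and never read from the channel again. The remaining callers were stuck forever. To avoid this situation, you need to think through your code carefully, handle blocking calls with care, and make sure thread starvation doesn't happen. The fix in our example was to make the cancellation call non-blocking, since we didn't need a blocking call.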

---

via: <https://opensource.com/article/19/12/go-common-pitfalls>

Author: [Eduardo Ferreira](https://opensource.com/users/edufgf) Topic selection: [lujun9972](https://github.com/lujun9972) Translator: [lxbwolf](https://github.com/lxbwolf) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 200 | OK | When you are working with complex distributed systems, you will likely come across the need for concurrent processing. At [Mode.net](http://mode.net), we deal daily with real-time, fast and resilient software. Building a global private network that dynamically routes packets at the millisecond scale wouldn’t be possible without a highly concurrent system. This dynamic routing is based on the state of the network and, while there are many parameters to consider here, our focus is on link [metrics](https://en.wikipedia.org/wiki/Metrics_%28networking%29). In our context, link metrics can be anything related to the status or current properties of a network link (e.g.: link latency).

## Concurrent probing for link metrics

[H.A.L.O.](https://people.ece.cornell.edu/atang/pub/15/HALO_ToN.pdf) (Hop-by-Hop Adaptive Link-State Optimal Routing), our dynamic routing algorithm, relies partially on link metrics to compute its routing table. Those metrics are collected by an independent component that sits on each [PoP](https://en.wikipedia.org/wiki/Point_of_presence) (Point of Presence). PoPs are machines that represent a single routing entity in our networks, connected by links and spread around multiple locations shaping our network. This component probes neighboring machines using network packets, and those neighbors will bounce back the initial probe. Link latency values can be derived from the received probes. Because each PoP has more than one neighbor, the nature of such a task is intrinsically concurrent: we need to measure latency for each neighboring link in real-time. We can’t afford sequential processing; each probe must be processed as soon as possible in order to compute this metric.

## Sequence numbers and resets: A reordering situation

Our probing component exchanges packets and relies on sequence numbers for packet processing. This aims to avoid processing duplicate or out-of-order packets. Our first implementation relied on a special sequence number 0 to reset sequence numbers. Such a number was only used during initialization of a component. The main problem was that we were considering an increasing sequence number value that always started at 0. After the component restarts, packet reordering could happen, and a packet could easily replace the sequence number with the value that was being used before the reset. This meant that the following packets would be ignored until the sequence number reached the value that was in use just before the reset.

## UDP handshake and finite state machine

The problem here was proper agreement of a sequence number after a component restarts. There are a few ways to handle this and, after discussing our options, we chose to implement a 3-way handshake protocol with a clear definition of states. This handshake establishes sessions over links during initialization. This guarantees that nodes are communicating over the same session and using the appropriate sequence number for it.

To properly implement this, we have to define a finite state machine with clear states and transitions. This allows us to properly manage all corner cases for the handshake formation.

Session IDs are generated by the handshake initiator. A full exchange sequence is as follows:

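- The sender sends out a **SYN (ID)**.

- The receiver stores the received **ID** and sends a **SYN-ACK (ID)**.

- The sender receives the **SYN-ACK (ID)** and sends out an **ACK (ID)**. It also starts sending packets beginning with sequence number 0.

- The receiver checks the last received **ID** and accepts the **ACK (ID)** if the ID matches. It also starts accepting packets beginning with sequence number 0.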

## Handling state timeouts

Basically, at each state, you need to handle, at most, three types of events: link events, packet events, and timeout events. And those events show up concurrently, so here you have to handle concurrency properly.

- Link events are either link up or link down updates. This can either initiate a link session or break an existing session.

- Packet events are control packets **(SYN/SYN-ACK/ACK)** or just probe responses.

- Timeout events are the ones triggered after a scheduled timeout expires for the current session state.

The main challenge here is how to handle concurrent timeout expiration and other events. And this is where one can easily fall into the traps of deadlocks and race conditions.

## A first approach

The language used for this project is [Golang](https://golang.org/). It does provide native synchronization mechanisms such as native channels and locks and is able to spin lightweight threads for concurrent processing.

Gophers hacking together

by Ashley McNamara, CC BY-NC-SA 4.0

You can start by designing structures that represent our **Session** and **Timeout Handlers**.

```
type Session struct {
    State    SessionState
    Id       SessionId
    RemoteIp string
}

type TimeoutHandler struct {
    callback func(Session)
    session  Session
    duration int
    timer    *time.Timer // Timer type from the standard "time" package
}
```

**Session** identifies the connection session, with the session ID, neighboring link IP, and the current session state.

**TimeoutHandler** holds the callback function, the session for which it should run, the duration, and a pointer to the scheduled timer.

There is a global map that will store, per neighboring link session, the scheduled timeout handler.

`sessionTimeout map[Session]*TimeoutHandler`

Registering and canceling a timeout is achieved by the following methods:

```
// schedules the timeout callback function.
func (timeout *TimeoutHandler) Register() {
    timeout.timer = time.AfterFunc(time.Duration(timeout.duration)*time.Second, func() {
        timeout.callback(timeout.session)
    })
}

func (timeout *TimeoutHandler) Cancel() {
    if timeout.timer == nil {
        return
    }
    timeout.timer.Stop()
}
```

For the timeouts creation and storage, you can use a method like the following:

```
func CreateTimeoutHandler(callback func(Session), session Session, duration int) *TimeoutHandler {
    if sessionTimeout[session] == nil {
        sessionTimeout[session] = new(TimeoutHandler)
    }

    timeout := sessionTimeout[session]
    timeout.session = session
    timeout.callback = callback
    timeout.duration = duration
    return timeout
}
```

Once the timeout handler is created and registered, it runs the callback after *duration* seconds have elapsed. However, some events will require you to reschedule a timeout handler (as it happens at **SYN** state — every 3 seconds).

For that, you can have the callback rescheduling a new timeout:

```
func synCallback(session Session) {
    sendSynPacket(session)

    // reschedules the same callback.
    newTimeout := CreateTimeoutHandler(synCallback, session, SYN_TIMEOUT_DURATION)
    newTimeout.Register()

    sessionTimeout[session] = newTimeout
}
```

This callback reschedules itself in a new timeout handler and updates the global **sessionTimeout** map.

## Data race and references

Your solution is ready. One simple test is to check that a timeout callback is executed after the timer has expired. To do this, register a timeout, sleep for its duration, and then check whether the callback actions were done. After the test is executed, it is a good idea to cancel the scheduled timeout (as it reschedules), so it won’t have side effects between tests.

Surprisingly, this simple test found a bug in the solution. Canceling timeouts using the cancel method was just not doing its job. The following order of events would cause a data race condition:

- You have one scheduled timeout handler.

- Thread 1:

  a) You receive a control packet, and you now want to cancel the registered timeout and move on to the next session state (e.g., you received a **SYN-ACK** after sending a **SYN**).

  b) You call **timeout.Cancel()**, which calls **timer.Stop()**. (Note that a Golang timer stop doesn’t prevent an already expired timer from running.)

- Thread 2:

  a) Right before that cancel call, the timer has expired, and the callback was about to execute.

  b) The callback is executed; it schedules a new timeout and updates the global map.

- Thread 1:

  a) Transitions to a new session state and registers a new timeout, updating the global map.

Both threads were updating the timeout map concurrently. The end result is that you failed to cancel the registered timeout, and then you also lost the reference to the rescheduled timeout done by thread 2. This results in a handler that keeps executing and rescheduling for a while, doing unwanted behavior.

## When locking is not enough

Using locks also doesn’t fix the issue completely. If you add locks before processing any event and before executing a callback, it still doesn’t prevent an expired callback from running:

```
func (timeout *TimeoutHandler) Register() {
    timeout.timer = time.AfterFunc(time.Duration(timeout.duration)*time.Second, func() {
        stateLock.Lock()
        defer stateLock.Unlock()

        timeout.callback(timeout.session)
    })
}
```

The difference now is that the updates in the global map are synchronized, but this doesn’t prevent the callback from running after you call **timeout.Cancel()**. This is the case if the scheduled timer expired but didn’t grab the lock yet. You would again lose the reference to one of the registered timeouts.

## Using cancellation channels

Instead of relying on Golang’s **timer.Stop()**, which doesn’t prevent an expired timer from executing, you can use cancellation channels.

It is a slightly different approach. Now you won’t do a recursive re-scheduling through callbacks; instead, you register an infinite loop that waits for cancellation signals or timeout events.

The new **Register()** spawns a new goroutine that runs your callback after a timeout and schedules a new timeout after the previous one has been executed. A cancellation channel is returned to the caller to control when the loop should stop.

```
func (timeout *TimeoutHandler) Register() chan struct{} {
    // Assumes TimeoutHandler also has a `cancelChan chan struct{}` field,
    // which Cancel() below reads.
    cancelChan := make(chan struct{})
    timeout.cancelChan = cancelChan

    go func() {
        select {
        case <-cancelChan:
            return
        case <-time.After(time.Duration(timeout.duration) * time.Second):
            func() {
                stateLock.Lock()
                defer stateLock.Unlock()

                timeout.callback(timeout.session)
            }()
        }
    }()

    return cancelChan
}

func (timeout *TimeoutHandler) Cancel() {
    if timeout.cancelChan == nil {
        return
    }
    timeout.cancelChan <- struct{}{}
}
```

This approach gives you a cancellation channel for each timeout you register. A cancel call sends an empty struct to the channel and triggers the cancellation. However, this doesn’t resolve the previous issue; the timeout can expire right before you call cancel over the channel, and before the lock is grabbed by the timeout thread.

The solution here is to check the cancellation channel inside the timeout scope **after** you grab the lock.

```
        case <-time.After(time.Duration(timeout.duration) * time.Second):
            func() {
                stateLock.Lock()
                defer stateLock.Unlock()

                select {
                case <-timeout.cancelChan:
                    return
                default:
                    timeout.callback(timeout.session)
                }
            }()
        }
```

Finally, this guarantees that the callback is only executed after you grab the lock and no cancellation was triggered.

## Beware of deadlocks

This solution seems to work; however, there is one hidden pitfall here: [deadlocks](https://en.wikipedia.org/wiki/Deadlock).

Please read the code above again and try to find it yourself. Think of concurrent calls to any of the methods described.

The last problem here is with the cancellation channel itself. We made it an unbuffered channel, which means that sending is a blocking call. Once you call cancel in a timeout handler, you only proceed once that handler is canceled. The problem here is when you have multiple calls to the same cancelation channel, where a cancel request is only consumed once. And this can easily happen if concurrent events were to cancel the same timeout handler, like a link down or control packet event. This results in a deadlock situation, possibly bringing the application to a halt.

Is anyone listening?

By Trevor Forrey. Used with permission.

The solution here is to at least make the channel buffered by one, so sends are not always blocking, and also explicitly make the send non-blocking in case of concurrent calls. This guarantees the cancellation is sent once and won’t block the subsequent cancel calls.

```
func (timeout *TimeoutHandler) Cancel() {
    if timeout.cancelChan == nil {
        return
    }

    select {
    case timeout.cancelChan <- struct{}{}:
    default:
        // can’t send on the channel, someone has already requested the cancellation.
    }
}
```

## Conclusion

You learned in practice how common mistakes can show up while working with concurrent code. Due to their non-deterministic nature, those issues can go easily undetected, even with extensive testing. Here are the three main problems we encountered in the initial implementation.

### Updating shared data without synchronization

This seems like an obvious one, but it’s actually hard to spot if your concurrent updates happen in different locations. The result is a data race, where multiple updates to the same data can cause update loss because one update overrides another. In our case, we were updating the scheduled timeout reference on the same shared map. (Interestingly, if Go detects a concurrent read/write on the same map object, it throws a fatal error; you can also try running Go’s [data race detector](https://golang.org/doc/articles/race_detector.html).) This eventually results in losing a timeout reference and making it impossible to cancel that given timeout. Always remember to use locks when they are needed.

don’t forget to synchronize gophers’ work

### Missing condition checks

Condition checks are needed in situations where you can’t rely only on the lock exclusivity. Our situation is a bit different, but the core idea is the same as [condition variables](https://en.wikipedia.org/wiki/Monitor_%28synchronization%29#Condition_variables). Imagine a classic situation where you have one producer and multiple consumers working with a shared queue. A producer can add one item to the queue and wake up all consumers. The wake-up call means that some data is available at the queue, and because the queue is shared, access must be synchronized through a lock. Every consumer has a chance to grab the lock; however, you still need to check if there are items in the queue. A condition check is needed because you don’t know the queue status by the time you grab the lock.

In our example, the timeout handler got a ‘wake up’ call from a timer expiration, but it still needed to check if a cancel signal was sent to it before it could proceed with the callback execution.

Condition checks might be needed if you wake up multiple gophers

### Deadlocks

This happens when one thread is stuck, waiting indefinitely for a signal to wake up, but this signal will never arrive. Those can completely kill your application by halting your entire program execution.

In our case, this happened due to multiple send calls to a non-buffered and blocking channel. This meant that the send call would only return after a receive is done on the same channel. Our timeout thread loop was promptly receiving signals on the cancellation channel; however, after the first signal is received, it would break off the loop and never read from that channel again. The remaining callers are stuck forever. To avoid this situation, you need to carefully think through your code, handle blocking calls with care, and guarantee that thread starvation doesn’t happen. The fix in our example was to make the cancellation calls non-blocking—we didn’t need a blocking call for our needs.

|

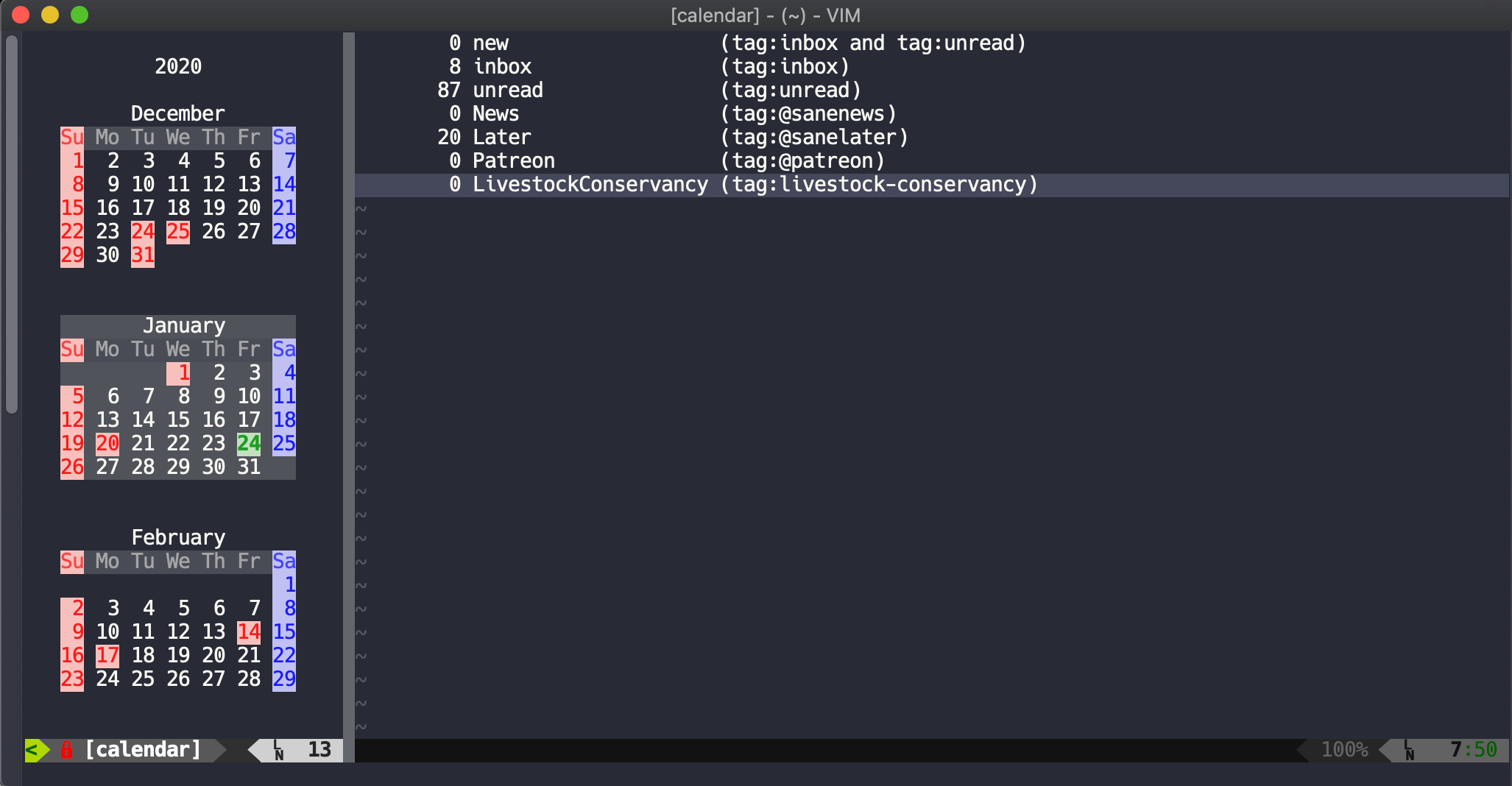

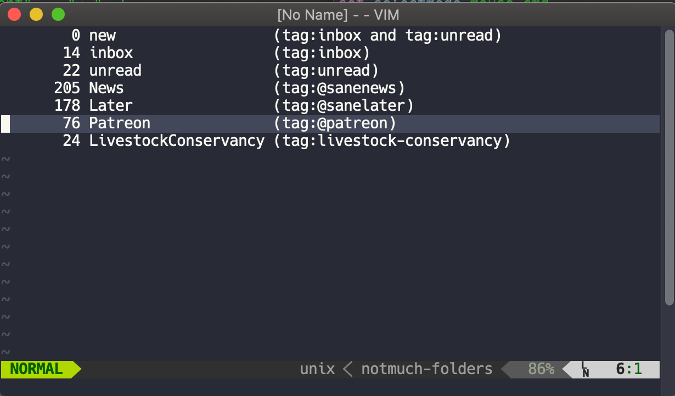

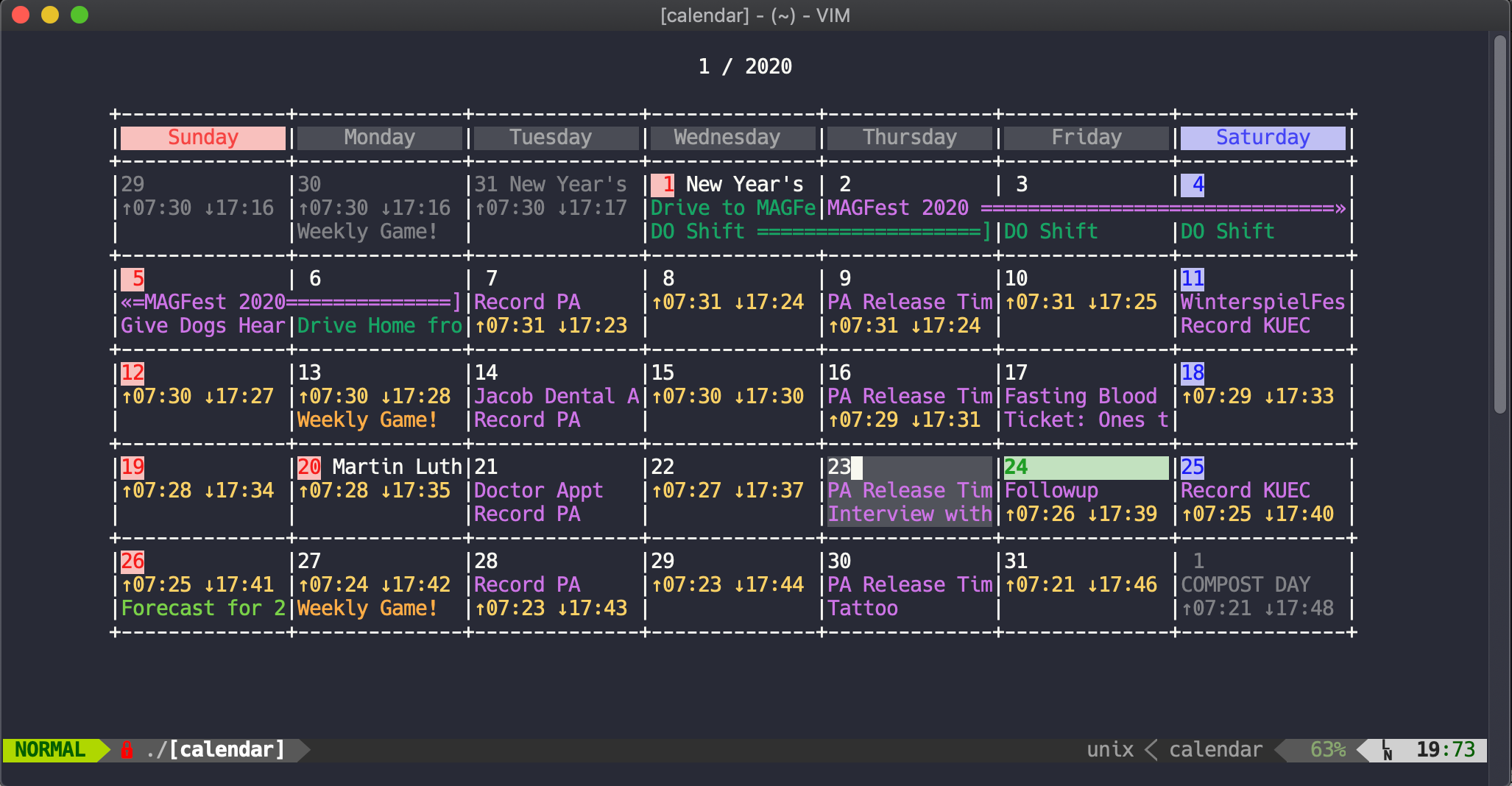

11,779 | PaperWM: tiled window management under GNOME | https://jvns.ca/blog/2020/01/05/paperwm/ | 2020-01-13T21:29:00 | [

"window manager"

] | https://linux.cn/article-11779-1.html |

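When I started using Linux on my personal computer, the first thing that excited me was lightweight window managers, largely because my laptop at the time had only 32MB of RAM and nothing else would run.

Then I got into tiling window managers like [xmonad](https://xmonad.org/)! I could manage my windows with the keyboard! They were so fast! I could configure xmonad by writing a Haskell program! I could customize everything in all kinds of fun ways (like using [dmenu](https://wiki.archlinux.org/index.php/Dmenu) as a launcher)! Over the years I used 3 or 4 different tiling window managers, and they were all fun.

About 6 years ago I decided that configuring a tiling window manager wasn't fun for me anymore, so I switched to Gnome, the Ubuntu desktop environment. (Now my laptop has 500 times as much RAM, so this is a lot faster :) )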

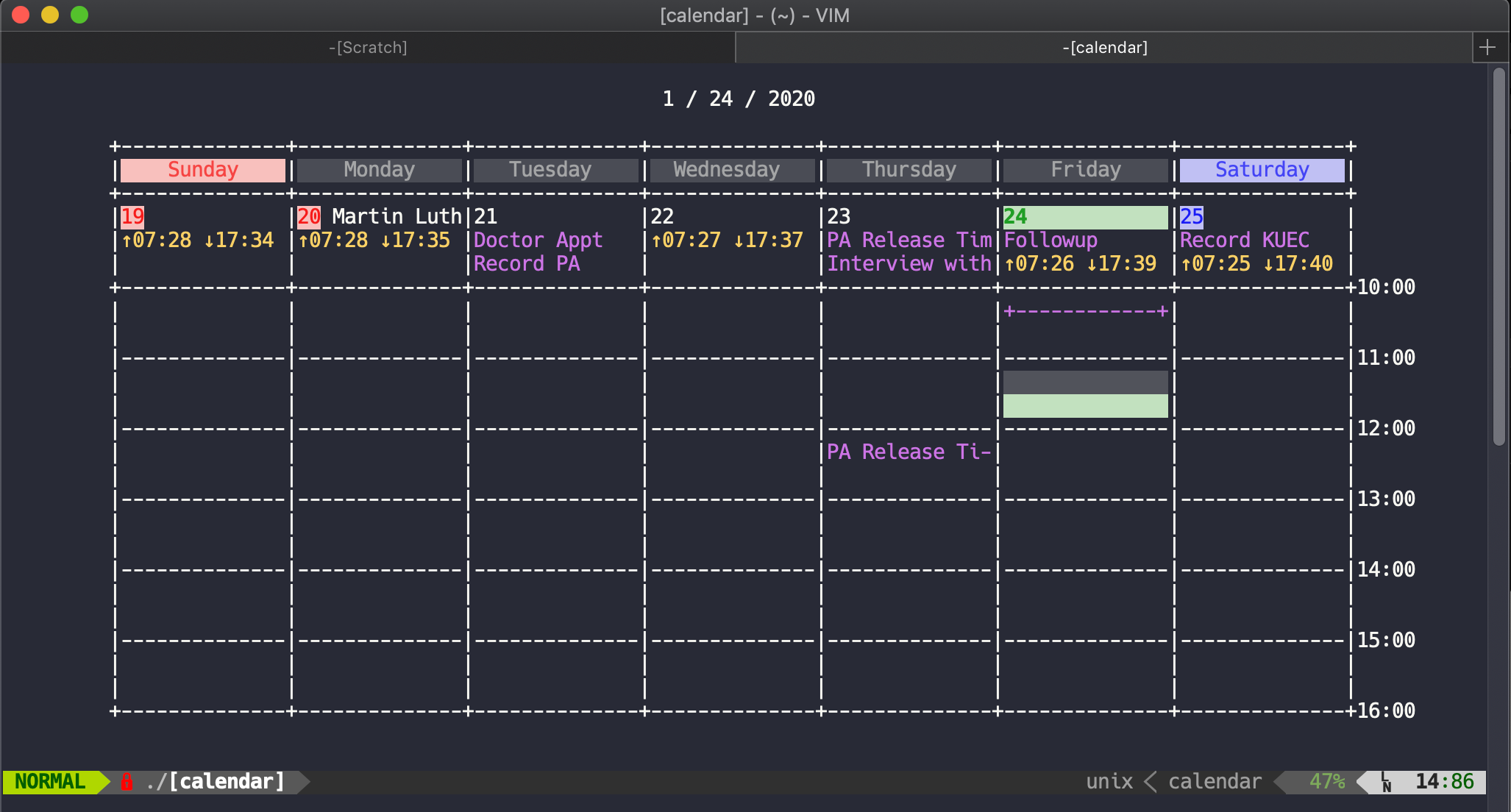

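I used Gnome for a long time, but I still kind of missed tiling window managers. Six months ago, a friend told me about [PaperWM](https://github.com/paperwm/PaperWM), which lets you tile your windows in Gnome! I installed it immediately and have been using it ever since.

### PaperWM: tiling window management for Gnome

The basic idea of [PaperWM](https://github.com/paperwm/PaperWM) is: you want to keep using Gnome (because all kinds of things just work in Gnome), but you also wish you had a tiling window manager.

It is a Gnome extension (rather than a standalone window manager), and it is written in JavaScript.

### “Paper” means all of your windows are in a line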

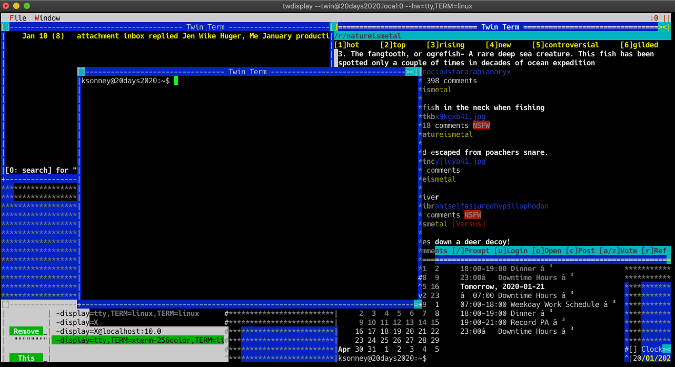

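PaperWM's main idea is to arrange all of your windows in a single line, which is actually quite different from traditional tiling window managers, where you can tile windows any way you like. Here is a gif of me switching between and resizing a few windows while writing this blog post (a browser and two terminal windows):

PaperWM's Github README links to this video: <http://10gui.com/video/>, which describes a similar “linear window manager”.

I had never heard of this way of organizing windows before, but I like how simple it is. If I'm looking for a particular window, I just move left or right until I find it.

### Everything I do in PaperWM

There are lots of other features, but these are the ones I use:

* Move left and right between windows (`Super + ,`, `Super + .`)

* Move a window left or right in the ordering (`Super+Shift+,`, `Super+Shift+.`)

* Make a window full screen (`Super + f`)

* Make a window smaller (`Super + r`)

### I like tools that I don't have to configure

I've been using PaperWM on my laptop for 6 months, and I really like it! Even though it is configurable (by writing a JavaScript configuration file), I really appreciate that it does what I want out of the box, without my having to research how to configure it.

The [fish shell](https://jvns.ca/blog/2017/04/23/the-fish-shell-is-awesome/) is another delightful tool like that. I basically don't configure fish at all (except to set environment variables and the like), and I really like its default behavior.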

---

via: <https://jvns.ca/blog/2020/01/05/paperwm/>

Author: [Julia Evans](https://jvns.ca/) Topic selection: [lujun9972](https://github.com/lujun9972) Translator: [geekpi](https://github.com/geekpi) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 200 | OK | null |

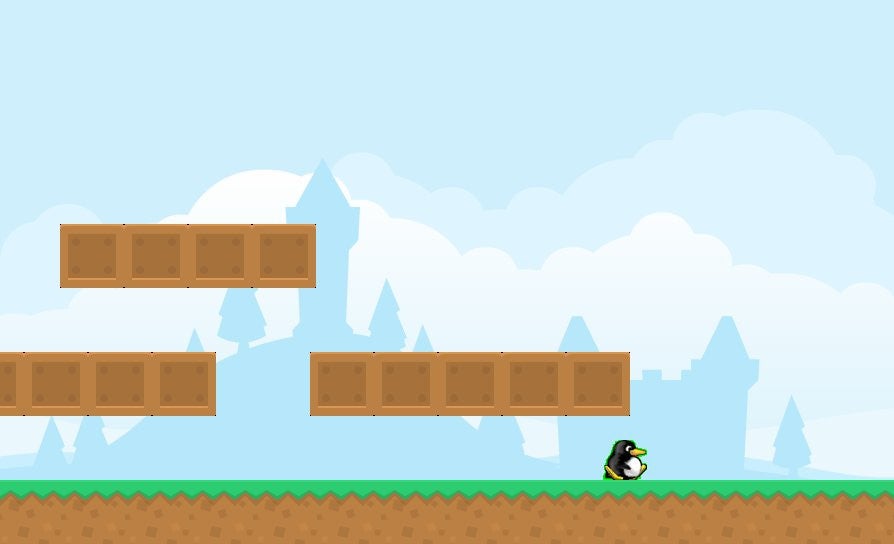

11,780 | Simulate gravity in your Python game | https://opensource.com/article/19/11/simulate-gravity-python | 2020-01-13T22:39:00 | [

"Pygame",

"gravity"

] | https://linux.cn/article-11780-1.html |

> Learn how to program video games with Python's Pygame module and start manipulating gravity.

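The real world is full of movement and life. Physics is what makes real life so busy and dynamic. Physics is the way matter moves through space. Because a video game world has no matter, it also has no physics, so game programmers have to *simulate* physics.

For most video games, there are basically only two aspects of physics that matter: gravity and collision.

You implemented some collision detection when you [added an enemy](/article-10883-1.html) to your game, but this article adds more, because gravity requires collision detection. Think about why gravity might involve collisions. If you can't think of any reasons, don't worry; it will become apparent as you work through the sample code.

Gravity in the real world is the tendency of objects with mass to be drawn toward one another. The larger the object (the more mass it has), the more gravitational influence it exerts. In video game physics, you don't have to create objects massive enough to justify a gravitational pull; you can simply program a tendency for objects to fall toward the presumed largest object, the game world itself.

### Adding a gravity function

Remember that your player already has a property that determines motion. Use this property to pull the player sprite toward the bottom of the screen.

In Pygame, higher numbers are closer to the bottom edge of the screen.

In the real world, gravity affects everything. In platformers, however, gravity is selective: if you added gravity to your entire game world, all of your platforms would fall to the ground. Instead, you add gravity only to your player and enemy sprites.

First, add a `gravity` function to your `Player` class: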

```
    def gravity(self):
        self.movey += 3.2  # how fast the player falls
```

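This is a simple function. First, you set your player in vertical motion, regardless of whether your player wants to move. In other words, you have programmed your player to always be falling. That's basically gravity.

For the gravity function to have an effect, you must call it in your main loop. That way, Python applies the falling motion to your player once every clock tick.

In this code, add the first line to your loop: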

```
    player.gravity()  # check gravity
    player.update()
```

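Launch your game to see what happens. Look sharp, because it happens fast: your player falls out of the sky, right off your game screen.

Your gravity simulation is working, but maybe too well.

As an experiment, try changing the rate at which your player falls.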
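To get a feel for how the fall speed affects the result before changing it in the game, the arithmetic can be sketched without Pygame. The snippet below is only an illustration: `FallingBody` and `frames_to_fall` are hypothetical names that do not appear in the game code, and the sketch uses plain floats, whereas a Pygame `Rect` stores integer coordinates, so the numbers in the game may differ slightly.

```python
# Pure-Python sketch (no Pygame needed). FallingBody is a hypothetical
# stand-in for the Player sprite so the per-frame arithmetic can be inspected.
class FallingBody:
    def __init__(self, fall_speed=3.2):
        self.y = 0.0                  # vertical position; larger means lower on screen
        self.movey = 0.0              # vertical velocity in pixels per frame
        self.fall_speed = fall_speed  # amount added to the velocity each frame

    def gravity(self):
        self.movey += self.fall_speed  # velocity grows every frame

    def update(self):
        self.y += self.movey           # position changes by the current velocity


def frames_to_fall(distance, fall_speed=3.2):
    """Count the frames needed before the body has fallen past `distance`."""
    body = FallingBody(fall_speed)
    frames = 0
    while body.y <= distance:
        body.gravity()   # same call order as the main loop: gravity, then update
        body.update()
        frames += 1
    return frames
```

With the default of 3.2, `frames_to_fall(720)` returns 21, roughly half a second at the 40 frames per second used in this series; with a fall speed of 1.0 it returns 38. Because the velocity grows every frame, the distance covered grows much faster than the frame count.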

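### Adding a floor to gravity

The problem with your character falling off the world is that your game has no way of detecting it. In some games, if a player falls off the world, the sprite is deleted and respawned somewhere new. In other games, the player loses points or a life. Whatever you want to happen when a player falls off the world, you have to be able to detect when the player disappears offscreen.

In Python, to check for a condition, you can use an `if` statement.

You must check to see **if** your player is falling and how far your player has fallen. If your player falls so far that it reaches the bottom of the screen, then you can do *something*. To keep things simple, set the position of the player sprite to one tile height (`ty`, 64 pixels in this series) above the bottom edge.

Make your `gravity` function look like this: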

```
    def gravity(self):
        self.movey += 3.2  # how fast the player falls

        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty
```

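Then launch your game. Your sprite still falls, but it stops at the bottom of the screen. You may not be able to *see* your sprite behind the ground layer, though. An easy fix is to make your sprite bounce higher by adding another `-ty` to its new Y position after it hits the bottom of the game world: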

```
    def gravity(self):
        self.movey += 3.2  # how fast the player falls

        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty-ty
```

Now your player bounces at the bottom of the screen, just on top of your ground sprites.
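The bouncing follows from the arithmetic: once the sprite is reset above the floor, gravity starts pulling it down again until it drops below the screen edge once more. Here is a Pygame-free sketch of that cycle, under the assumption that `WORLDY` and `TY` mirror the `worldy` and `ty` values from the full listing; `apply_gravity` and `simulate` are hypothetical helper names used only here.

```python
WORLDY = 720  # screen height, mirroring `worldy` in the full listing
TY = 64       # tile height, mirroring `ty` in the full listing


def apply_gravity(y, movey, fall_speed=3.2):
    """One gravity step: speed up, then reset above the floor if offscreen."""
    movey += fall_speed
    if y > WORLDY and movey >= 0:
        movey = 0
        y = WORLDY - TY - TY
    return y, movey


def simulate(frames):
    """Return the vertical position after each frame, starting at the top."""
    y, movey = 0.0, 0.0
    history = []
    for _ in range(frames):
        y, movey = apply_gravity(y, movey)  # player.gravity()
        y += movey                          # the vertical part of player.update()
        history.append(y)
    return history
```

In this float-based sketch, the position passes the bottom edge, resets to 592, and then falls for several more frames before the next reset. That repeated fall and reset is the bounce you see on screen until ground collision is added.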

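What your player really needs is a way to fight gravity. The problem with gravity is that you can't fight it unless you have something to push off of. So, in the next article, you'll add ground and platform collision and the ability to jump. In the meantime, try applying gravity to the enemy sprite.

Here's all the code so far: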

```

#!/usr/bin/env python3

# draw a world

# add a player and player control

# add player movement

# add enemy and basic collision

# add platform

# add gravity

# GNU All-Permissive License

# Copying and distribution of this file, with or without modification,

# are permitted in any medium without royalty provided the copyright

# notice and this notice are preserved. This file is offered as-is,

# without any warranty.

import pygame

import sys

import os

'''

Objects

'''

class Platform(pygame.sprite.Sprite):

# x location, y location, img width, img height, img file

def __init__(self,xloc,yloc,imgw,imgh,img):

pygame.sprite.Sprite.__init__(self)

self.image = pygame.image.load(os.path.join('images',img)).convert()

self.image.convert_alpha()

self.rect = self.image.get_rect()

self.rect.y = yloc

self.rect.x = xloc

class Player(pygame.sprite.Sprite):

'''

Spawn a player

'''

def __init__(self):

pygame.sprite.Sprite.__init__(self)

self.movex = 0

self.movey = 0

self.frame = 0

self.health = 10

self.score = 1

self.images = []

for i in range(1,9):

img = pygame.image.load(os.path.join('images','hero' + str(i) + '.png')).convert()

img.convert_alpha()

img.set_colorkey(ALPHA)

self.images.append(img)

self.image = self.images[0]

self.rect = self.image.get_rect()

def gravity(self):

self.movey += 3.2 # how fast player falls

if self.rect.y > worldy and self.movey >= 0:

self.movey = 0

self.rect.y = worldy-ty-ty

def control(self,x,y):

'''

control player movement

'''

self.movex += x

self.movey += y

def update(self):

'''

Update sprite position

'''

self.rect.x = self.rect.x + self.movex

self.rect.y = self.rect.y + self.movey

# moving left

if self.movex < 0:

self.frame += 1

if self.frame > ani*3:

self.frame = 0

self.image = self.images[self.frame//ani]

# moving right

if self.movex > 0:

self.frame += 1

if self.frame > ani*3:

self.frame = 0

self.image = self.images[(self.frame//ani)+4]

# collisions

enemy_hit_list = pygame.sprite.spritecollide(self, enemy_list, False)

for enemy in enemy_hit_list:

self.health -= 1

print(self.health)

ground_hit_list = pygame.sprite.spritecollide(self, ground_list, False)

for g in ground_hit_list:

self.health -= 1

print(self.health)

class Enemy(pygame.sprite.Sprite):

'''

Spawn an enemy

'''

def __init__(self,x,y,img):

pygame.sprite.Sprite.__init__(self)

self.image = pygame.image.load(os.path.join('images',img))

#self.image.convert_alpha()

#self.image.set_colorkey(ALPHA)

self.rect = self.image.get_rect()

self.rect.x = x

self.rect.y = y

self.counter = 0

def move(self):

'''

enemy movement

'''

distance = 80

speed = 8

if self.counter >= 0 and self.counter <= distance:

self.rect.x += speed

elif self.counter >= distance and self.counter <= distance*2:

self.rect.x -= speed

else:

self.counter = 0

self.counter += 1

class Level():

def bad(lvl,eloc):

if lvl == 1:

enemy = Enemy(eloc[0],eloc[1],'yeti.png') # spawn enemy

enemy_list = pygame.sprite.Group() # create enemy group

enemy_list.add(enemy) # add enemy to group

if lvl == 2:

print("Level " + str(lvl) )

return enemy_list

def loot(lvl,lloc):

print(lvl)

def ground(lvl,gloc,tx,ty):

ground_list = pygame.sprite.Group()

i=0

if lvl == 1:

while i < len(gloc):

ground = Platform(gloc[i],worldy-ty,tx,ty,'ground.png')

ground_list.add(ground)

i=i+1

if lvl == 2:

print("Level " + str(lvl) )

return ground_list

def platform(lvl,tx,ty):

plat_list = pygame.sprite.Group()

ploc = []

i=0

if lvl == 1:

ploc.append((0,worldy-ty-128,3))

ploc.append((300,worldy-ty-256,3))

ploc.append((500,worldy-ty-128,4))

while i < len(ploc):

j=0

while j <= ploc[i][2]:

plat = Platform((ploc[i][0]+(j*tx)),ploc[i][1],tx,ty,'ground.png')

plat_list.add(plat)

j=j+1

print('run' + str(i) + str(ploc[i]))

i=i+1

if lvl == 2:

print("Level " + str(lvl) )

return plat_list

'''
Setup
'''
worldx = 960
worldy = 720

fps = 40 # frame rate
ani = 4 # animation cycles
clock = pygame.time.Clock()
pygame.init()
main = True

BLUE = (25,25,200)
BLACK = (23,23,23)
WHITE = (254,254,254)
ALPHA = (0,255,0)

world = pygame.display.set_mode([worldx,worldy])
backdrop = pygame.image.load(os.path.join('images','stage.png')).convert()
backdropbox = world.get_rect()
player = Player() # spawn player
player.rect.x = 0
player.rect.y = 0
player_list = pygame.sprite.Group()
player_list.add(player)
steps = 10 # how fast to move

eloc = []
eloc = [200,20]
gloc = []
#gloc = [0,630,64,630,128,630,192,630,256,630,320,630,384,630]
tx = 64 #tile size
ty = 64 #tile size

i=0
while i <= (worldx/tx)+tx:
    gloc.append(i*tx)
    i=i+1

enemy_list = Level.bad( 1, eloc )
ground_list = Level.ground( 1,gloc,tx,ty )
plat_list = Level.platform( 1,tx,ty )

'''
Main loop
'''
while main == True:
    for event in pygame.event.get():
        if event.type == pygame.QUIT:
            pygame.quit(); sys.exit()
            main = False

        if event.type == pygame.KEYDOWN:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                print("LEFT")
                player.control(-steps,0)
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                print("RIGHT")
                player.control(steps,0)
            if event.key == pygame.K_UP or event.key == ord('w'):
                print('jump')

        if event.type == pygame.KEYUP:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                player.control(steps,0)
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                player.control(-steps,0)
            if event.key == pygame.K_UP or event.key == ord('w'):
                print('jump')

            if event.key == ord('q'):
                pygame.quit()
                sys.exit()
                main = False

    world.blit(backdrop, backdropbox)
    player.gravity() # check gravity
    player.update()
    player_list.draw(world)
    enemy_list.draw(world)
    ground_list.draw(world)
    plat_list.draw(world)
    for e in enemy_list:
        e.move()
    pygame.display.flip()
    clock.tick(fps)

```

---

This is part 7 in an ongoing series about creating video games in [Python 3](https://www.python.org/) using the [Pygame](https://www.pygame.org) module. Previous articles are:

* [Learn how to program in Python by building a simple dice game](/article-9071-1.html)

* [Build a game framework with Python using the Pygame module](/article-10850-1.html)

* [How to add a player to your Python game](/article-10858-1.html)

* [Using Pygame to move your game character around](/article-10874-1.html)

* [How to add an enemy to your Python game](/article-10883-1.html)

* [Put platforms in a Pygame game](/article-10902-1.html)

---

via: <https://opensource.com/article/19/11/simulate-gravity-python>

Author: [Seth Kenlon](https://opensource.com/users/seth) Topic selection: [lujun9972](https://github.com/lujun9972) Translator: [robsean](https://github.com/robsean) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

| 200 | OK | The real world is full of movement and life. The thing that makes the real world so busy and dynamic is physics. Physics is the way matter moves through space. Since a video game world has no matter, it also has no physics, so game programmers have to *simulate* physics.

In terms of most video games, there are basically only two aspects of physics that are important: gravity and collision.

You implemented some collision detection when you [added an enemy](https://opensource.com/article/18/5/pygame-enemy) to your game, but this article adds more because gravity requires collision detection. Think about why gravity might involve collisions. If you can't think of any reasons, don't worry—it'll become apparent as you work through the sample code.

Gravity in the real world is the tendency for objects with mass to be drawn toward one another. The more massive the object, the more gravitational influence it exerts. In video game physics, you don't have to create objects with mass great enough to justify a gravitational pull; you can just program a tendency for objects to fall toward the presumed largest object in the video game world: the world itself.

## Adding a gravity function

Remember that your player already has a property to determine motion. Use this property to pull the player sprite toward the bottom of the screen.

In Pygame, higher numbers are closer to the bottom edge of the screen.

In the real world, gravity affects everything. In platformers, however, gravity is selective—if you add gravity to your entire game world, all of your platforms would fall to the ground. Instead, you add gravity just to your player and enemy sprites.

First, add a **gravity** function in your **Player** class:

```

    def gravity(self):
        self.movey += 3.2 # how fast player falls

```

This is a simple function. It sets your player in vertical motion, whether your player wants to be in motion or not. Because **movey** grows by 3.2 every time the function runs, the fall also gets faster with each call. In other words, you have programmed your player to always be falling. That's basically gravity.

For the gravity function to have an effect, you must call it in your main loop. This way, Python applies the falling motion to your player once every clock tick.

In this code, add the first line to your loop:

```

    player.gravity() # check gravity
    player.update()

```

Launch your game to see what happens. Look sharp, because it happens fast: your player falls out of the sky, right off your game screen.

Your gravity simulation is working, but maybe too well.

As an experiment, try changing the rate at which your player falls.
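To get a feel for what the fall rate does, here is a minimal sketch in plain Python (no Pygame needed). The `simulate_fall` function is a hypothetical helper, not part of the game. It assumes the same order as the main loop: `gravity()` adds the rate to `movey`, then `update()` adds `movey` to the Y position.

```python
def simulate_fall(rate, ticks, start_y=0):
    """Return the sprite's Y position after each clock tick."""
    y = start_y
    movey = 0
    positions = []
    for _ in range(ticks):
        movey += rate   # what gravity() does to movey
        y += movey      # what update() does to rect.y
        positions.append(y)
    return positions


# Compare a gentle fall rate with the article's rate over five ticks.
print(simulate_fall(1, 5))
print(simulate_fall(3.2, 5))
```

Because the speed keeps growing, the distance covered per tick grows too. Under these assumptions, a sprite starting at Y position 0 with a rate of 3.2 passes the bottom of a 720-pixel window after 21 ticks, which is about half a second at 40 frames per second.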

## Adding a floor to gravity

The problem with your character falling off the world is that there's no way for your game to detect it. In some games, if a player falls off the world, the sprite is deleted and respawned somewhere new. In other games, the player loses points or a life. Whatever you want to happen when a player falls off the world, you have to be able to detect when the player disappears offscreen.

In Python, to check for a condition, you can use an **if** statement.

You must check to see **if** your player is falling and how far your player has fallen. If your player falls so far that it reaches the bottom of the screen, then you can do *something*. To keep things simple, set the position of the player sprite to one tile height (**ty** pixels) above the bottom edge.

Make your **gravity** function look like this:

```

    def gravity(self):
        self.movey += 3.2 # how fast player falls

        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty

```

Then launch your game. Your sprite still falls, but it stops at the bottom of the screen. You may not be able to *see* your sprite behind the ground layer, though. An easy fix is to make your player sprite bounce higher by adding another **-ty** to its new Y position after it hits the bottom of the game world:

```

    def gravity(self):
        self.movey += 3.2 # how fast player falls

        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty-ty

```

Now your player bounces at the bottom of the screen, just behind your ground sprites.
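The bounce can be traced without opening a game window. The sketch below is a stand-in under stated assumptions: `gravity_step` is a hypothetical function that folds the `gravity()` check and the vertical part of `update()` into one step, and it uses plain numbers instead of a sprite's `rect`. The defaults mirror the values used in this series (`worldy = 720`, `ty = 64`).

```python
def gravity_step(y, movey, worldy=720, ty=64, rate=3.2):
    """Advance one clock tick and return the new (y, movey)."""
    movey += rate                     # always falling
    if y > worldy and movey >= 0:     # dropped below the bottom edge
        movey = 0                     # stop the fall
        y = worldy - ty - ty          # pop back up two tiles
    y += movey                        # vertical part of update()
    return y, movey


# Start just above the bottom edge and watch the sprite reset.
y, movey = 700, 0
for tick in range(6):
    y, movey = gravity_step(y, movey, rate=4)
    print(tick, y, movey)
```

Each time the sprite drops below `worldy`, it is put back two tiles above the bottom edge with zero speed and then starts falling again, which is the bounce you see on screen.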

What your player really needs is a way to fight gravity. The problem with gravity is, you can't fight it unless you have something to push off of. So, in the next article, you'll add ground and platform collision and the ability to jump. In the meantime, try applying gravity to the enemy sprite.
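One possible way to approach the enemy experiment is sketched below in plain Python. Everything here is an assumption for illustration: `GravityMixin` and `DemoEnemy` are hypothetical names, and the **Enemy** class in this series has no **movey** attribute yet, so the stand-in adds one. In the real game you would put the same `gravity` logic on your **Enemy** sprite and apply `movey` to `self.rect.y`.

```python
class GravityMixin:
    """Hypothetical helper: gives any sprite-like object a gravity() method."""
    fall_rate = 3.2  # same rate the article uses for the player

    def gravity(self):
        # Assumes the object already has a movey attribute.
        self.movey += self.fall_rate


class DemoEnemy(GravityMixin):
    """Plain-Python stand-in for an enemy sprite (no Pygame needed)."""

    def __init__(self, x, y):
        self.x = x
        self.y = y
        self.movey = 0  # vertical speed, added for this sketch

    def update(self):
        self.y += self.movey  # apply the vertical speed


enemies = [DemoEnemy(200, 20), DemoEnemy(400, 100)]
for tick in range(3):
    for e in enemies:
        e.gravity()  # same call order as player.gravity(); player.update()
        e.update()

for e in enemies:
    print(e.x, round(e.y, 1))
```

Without a floor check like the one in the player's **gravity** function, these enemies would fall off the world too, so the same **if** test applies.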

Here's all the code so far:

```

#!/usr/bin/env python3
# by Seth Kenlon

# GPLv3
# This program is free software: you can redistribute it and/or
# modify it under the terms of the GNU General Public License as
# published by the Free Software Foundation, either version 3 of the
# License, or (at your option) any later version.
#
# This program is distributed in the hope that it will be useful, but
# WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU
# General Public License for more details.
#
# You should have received a copy of the GNU General Public License
# along with this program. If not, see <http://www.gnu.org/licenses/>.

import pygame
import sys
import os

'''
Variables
'''

worldx = 960
worldy = 720
fps = 40
ani = 4
world = pygame.display.set_mode([worldx, worldy])

BLUE = (25, 25, 200)
BLACK = (23, 23, 23)
WHITE = (254, 254, 254)
ALPHA = (0, 255, 0)

'''
Objects
'''

# x location, y location, img width, img height, img file
class Platform(pygame.sprite.Sprite):
    def __init__(self, xloc, yloc, imgw, imgh, img):
        pygame.sprite.Sprite.__init__(self)
        self.image = pygame.image.load(os.path.join('images', img)).convert()
        self.image.convert_alpha()
        self.image.set_colorkey(ALPHA)
        self.rect = self.image.get_rect()
        self.rect.y = yloc
        self.rect.x = xloc

class Player(pygame.sprite.Sprite):
    """
    Spawn a player
    """

    def __init__(self):
        pygame.sprite.Sprite.__init__(self)
        self.movex = 0
        self.movey = 0
        self.frame = 0
        self.health = 10
        self.images = []
        for i in range(1, 5):
            img = pygame.image.load(os.path.join('images', 'hero' + str(i) + '.png')).convert()
            img.convert_alpha()
            img.set_colorkey(ALPHA)
            self.images.append(img)
            self.image = self.images[0]
            self.rect = self.image.get_rect()

    def gravity(self):
        self.movey += 3.2
        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty-ty

    def control(self, x, y):
        """
        control player movement
        """
        self.movex += x
        self.movey += y

    def update(self):
        """
        Update sprite position
        """
        self.rect.x = self.rect.x + self.movex
        self.rect.y = self.rect.y + self.movey

        # moving left
        if self.movex < 0:
            self.frame += 1
            if self.frame > 3 * ani:
                self.frame = 0
            self.image = pygame.transform.flip(self.images[self.frame // ani], True, False)

        # moving right
        if self.movex > 0:
            self.frame += 1
            if self.frame > 3 * ani:
                self.frame = 0
            self.image = self.images[self.frame // ani]

        hit_list = pygame.sprite.spritecollide(self, enemy_list, False)
        for enemy in hit_list:
            self.health -= 1
            print(self.health)

class Enemy(pygame.sprite.Sprite):

"""