id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

11,456 | 7 个实用的操作 Bash 历史记录的快捷方式 | https://opensource.com/article/19/10/bash-history-shortcuts | 2019-10-14T11:16:27 | [

"Bash",

"历史记录"

] | https://linux.cn/article-11456-1.html |

>

> 这些必不可少的 Bash 快捷键可在命令行上节省时间。

>

>

>

大多数介绍 Bash 历史记录的指南都详尽地列出了全部可用的快捷方式。这样做的问题是,你会对每个快捷方式都浅尝辄止,然后在尝试了那么多的快捷方式后就搞得目不暇接。而在开始工作时它们就全被丢在脑后,只记住了刚开始使用 Bash 时学到的 [!! 技巧](https://opensource.com/article/18/5/bash-tricks)。这些技巧大多数从未进入记忆当中。

本文概述了我每天实际使用的快捷方式。它基于我的书《[Bash 学习,艰难之旅](https://leanpub.com/learnbashthehardway)》中的某些内容(你可以阅读其中的[样章](https://leanpub.com/learnbashthehardway/read_sample)以了解更多信息)。

当人们看到我使用这些快捷方式时,他们经常问我:“你做了什么!?” 学习它们只需付出很少的精力或智力,但是要真正的学习它们,我建议每周用一天学一个,然后下次再继续学习一个。值得花时间让它们落在你的指尖下,因为从长远来看,节省的时间将很重要。

### 1、最后一个参数:`!$`

如果你仅想从本文中学习一种快捷方式,那就是这个。它会将最后一个命令的最后一个参数替换到你的命令行中。

看看这种情况:

```

$ mv /path/to/wrongfile /some/other/place

mv: cannot stat '/path/to/wrongfile': No such file or directory

```

啊哈,我在命令中写了错误的文件名 “wrongfile”,我应该用正确的文件名 “rightfile” 代替。

你可以重新键入上一个命令,并用 “rightfile” 完全替换 “wrongfile”。但是,你也可以键入:

```

$ mv /path/to/rightfile !$

mv /path/to/rightfile /some/other/place

```

这个命令也可以奏效。

在 Bash 中还有其他方法可以通过快捷方式实现相同的目的,但是重用上一个命令的最后一个参数的这种技巧是我最常使用的。

### 2、第 n 个参数:`!:2`

是不是干过像这样的事情:

```

$ tar -cvf afolder afolder.tar

tar: failed to open

```

像许多其他人一样,我也经常搞错 `tar`(和 `ln`)的参数顺序。

当你搞混了参数,你可以这样:

```

$ !:0 !:1 !:3 !:2

tar -cvf afolder.tar afolder

```

这样就不会出丑了。

上一个命令的各个参数的索引是从零开始的,并且可以用 `!:` 之后跟上该索引数字代表各个参数。

显然,你也可以使用它来重用上一个命令中的特定参数,而不是所有参数。

### 3、全部参数:`!:1-$`

假设我运行了类似这样的命令:

```

$ grep '(ping|pong)' afile

```

参数是正确的。然而,我想在文件中匹配 “ping” 或 “pong”,但我使用的是 `grep` 而不是 `egrep`。

我开始输入 `egrep`,但是我不想重新输入其他参数。因此,我可以使用 `!:1-$` 快捷方式来调取上一个命令的所有参数,从第二个(记住它们的索引从零开始,因此是 `1`)到最后一个(由 `$` 表示)。

```

$ egrep !:1-$

egrep '(ping|pong)' afile

ping

```

你不用必须用 `1-$` 选择全部参数;你也可以选择一个子集,例如 `1-2` 或 `3-9` (如果上一个命令中有那么多参数的话)。

### 4、倒数第 n 行的最后一个参数:`!-2:$`

当我输错之后马上就知道该如何更正我的命令时,上面的快捷键非常有用,但是我经常在原来的命令之后运行别的命令,这意味着上一个命令不再是我所要引用的命令。

例如,还是用之前的 `mv` 例子,如果我通过 `ls` 检查文件夹的内容来纠正我的错误:

```

$ mv /path/to/wrongfile /some/other/place

mv: cannot stat '/path/to/wrongfile': No such file or directory

$ ls /path/to/

rightfile

```

我就不能再使用 `!$` 快捷方式了。

在这些情况下,我可以在 `!` 之后插入 `-n`:(其中 `n` 是要在历史记录中回溯的命令条数),以从较旧的命令取得最后的参数:

```

$ mv /path/to/rightfile !-2:$

mv /path/to/rightfile /some/other/place

```

同样,一旦你学会了它,你可能会惊讶于你需要使用它的频率。

### 5、进入文件夹:`!$:h`

从表面上看,这个看起来不太有用,但我每天要用它几十次。

想象一下,我运行的命令如下所示:

```

$ tar -cvf system.tar /etc/system

tar: /etc/system: Cannot stat: No such file or directory

tar: Error exit delayed from previous errors.

```

我可能要做的第一件事是转到 `/etc` 文件夹,查看其中的内容并找出我做错了什么。

我可以通过以下方法来做到这一点:

```

$ cd !$:h

cd /etc

```

这是说:“获取上一个命令的最后一个参数(`/etc/system`),并删除其最后的文件名部分,仅保留 `/ etc`。”

### 6、当前行:`!#:1`

多年以来,在我最终找到并学会之前,我有时候想知道是否可以在当前行引用一个参数。我多希望我能早早学会这个快捷方式。我经常常使用它制作备份文件:

```

$ cp /path/to/some/file !#:1.bak

cp /path/to/some/file /path/to/some/file.bak

```

但当我学会之后,它很快就被下面的快捷方式替代了……

### 7、搜索并替换:`!!:gs`

这将搜索所引用的命令,并将前两个 `/` 之间的字符替换为后两个 `/` 之间的字符。

假设我想告诉别人我的 `s` 键不起作用,而是输出了 `f`:

```

$ echo my f key doef not work

my f key doef not work

```

然后我意识到这里出现的 `f` 键都是错的。要将所有 `f` 替换为 `s`,我可以输入:

```

$ !!:gs/f /s /

echo my s key does not work

my s key does not work

```

它不只对单个字符起作用。我也可以替换单词或句子:

```

$ !!:gs/does/did/

echo my s key did not work

my s key did not work

```

### 测试一下

为了向你展示如何组合这些快捷方式,你知道这些命令片段将输出什么吗?

```

$ ping !#:0:gs/i/o

$ vi /tmp/!:0.txt

$ ls !$:h

$ cd !-2:$:h

$ touch !$!-3:$ !! !$.txt

$ cat !:1-$

```

### 总结

对于日常的命令行用户,Bash 可以作为快捷方式的优雅来源。虽然有成千上万的技巧要学习,但这些是我经常使用的最喜欢的技巧。

如果你想更深入地了解 Bash 可以教给你的全部知识,请买本我的书,《[Bash 学习,艰难之旅](https://leanpub.com/learnbashthehardway)》,或查看我的在线课程《[精通 Bash shell](https://www.educative.io/courses/master-the-bash-shell)》。

---

本文最初发布在 Ian 的博客 [Zwischenzugs.com](https://zwischenzugs.com/2019/08/25/seven-god-like-bash-history-shortcuts-you-will-actually-use/) 上,并经允许重复发布。

---

via: <https://opensource.com/article/19/10/bash-history-shortcuts>

作者:[Ian Miell](https://opensource.com/users/ianmiell) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Most guides to Bash history shortcuts exhaustively list every single one available. The problem with that is I would use a shortcut once, then glaze over as I tried out all the possibilities. Then I'd move onto my working day and completely forget them, retaining only the well-known [ !! trick](https://opensource.com/article/18/5/bash-tricks) I learned when I first started using Bash.

So most of them were never committed to memory.

This article outlines the shortcuts I *actually use* every day. It is based on some of the contents of my book, [ Learn Bash the hard way](https://leanpub.com/learnbashthehardway); (you can read a

[preview](https://leanpub.com/learnbashthehardway/read_sample)of it to learn more).

When people see me use these shortcuts, they often ask me, "What did you do there!?" There's minimal effort or intelligence required, but to really learn them, I recommend using one each day for a week, then moving to the next one. It's worth taking your time to get them under your fingers, as the time you save will be significant in the long run.

## 1. The "last argument" one: !$

If you only take one shortcut from this article, make it this one. It substitutes in the last argument of the last command into your line.

Consider this scenario:

```

$ mv /path/to/wrongfile /some/other/place

mv: cannot stat '/path/to/wrongfile': No such file or directory

```

Ach, I put the **wrongfile** filename in my command. I should have put **rightfile** instead.

You might decide to retype the last command and replace wrongfile with rightfile completely. Instead, you can type:

```

$ mv /path/to/rightfile !$

mv /path/to/rightfile /some/other/place

```

and the command will work.

There are other ways to achieve the same thing in Bash with shortcuts, but this trick of reusing the last argument of the last command is one I use the most.

## 2. The "*n*th argument" one: !:2

Ever done anything like this?

```

$ tar -cvf afolder afolder.tar

tar: failed to open

```

Like many others, I get the arguments to **tar** (and **ln**) wrong more often than I would like to admit.

When you mix up arguments like that, you can run:

```

$ !:0 !:1 !:3 !:2

tar -cvf afolder.tar afolder

```

and your reputation will be saved.

The last command's items are zero-indexed and can be substituted in with the number after the **!:**.

Obviously, you can also use this to reuse specific arguments from the last command rather than all of them.

## 3. The "all the arguments" one: !:1-$

Imagine I run a command like:

`$ grep '(ping|pong)' afile`

The arguments are correct; however, I want to match **ping** or **pong** in a file, but I used **grep** rather than **egrep**.

I start typing **egrep**, but I don't want to retype the other arguments. So I can use the **!:1$** shortcut to ask for all the arguments to the previous command from the second one (remember they’re zero-indexed) to the last one (represented by the **$** sign).

```

$ egrep !:1-$

egrep '(ping|pong)' afile

ping

```

You don't need to pick **1-$**; you can pick a subset like **1-2** or **3-9** (if you had that many arguments in the previous command).

## 4. The "last but *n*" one: !-2:$

The shortcuts above are great when I know immediately how to correct my last command, but often I run commands *after* the original one, which means that the last command is no longer the one I want to reference.

For example, using the **mv** example from before, if I follow up my mistake with an **ls** check of the folder's contents:

```

$ mv /path/to/wrongfile /some/other/place

mv: cannot stat '/path/to/wrongfile': No such file or directory

$ ls /path/to/

rightfile

```

I can no longer use the **!$** shortcut.

In these cases, I can insert a **- n:** (where

*is the number of commands to go back in the history) after the*

**n****!**to grab the last argument from an older command:

```

$ mv /path/to/rightfile !-2:$

mv /path/to/rightfile /some/other/place

```

Again, once you learn it, you may be surprised at how often you need it.

## 5. The "get me the folder" one: !$:h

This one looks less promising on the face of it, but I use it dozens of times daily.

Imagine I run a command like this:

```

$ tar -cvf system.tar /etc/system

tar: /etc/system: Cannot stat: No such file or directory

tar: Error exit delayed from previous errors.

```

The first thing I might want to do is go to the **/etc** folder to see what's in there and work out what I've done wrong.

I can do this at a stroke with:

```

$ cd !$:h

cd /etc

```

This one says: "Get the last argument to the last command (**/etc/system**) and take off its last filename component, leaving only the **/etc**."

## 6. The "the current line" one: !#:1

For years, I occasionally wondered if I could reference an argument on the current line before finally looking it up and learning it. I wish I'd done so a long time ago. I most commonly use it to make backup files:

```

$ cp /path/to/some/file !#:1.bak

cp /path/to/some/file /path/to/some/file.bak

```

but once under the fingers, it can be a very quick alternative to …

## 7. The "search and replace" one: !!:gs

This one searches across the referenced command and replaces what's in the first two **/** characters with what's in the second two.

Say I want to tell the world that my **s** key does not work and outputs **f** instead:

```

$ echo my f key doef not work

my f key doef not work

```

Then I realize that I was just hitting the **f** key by accident. To replace all the **f**s with **s**es, I can type:

```

$ !!:gs/f /s /

echo my s key does not work

my s key does not work

```

It doesn't work only on single characters; I can replace words or sentences, too:

```

$ !!:gs/does/did/

echo my s key did not work

my s key did not work

```

## Test them out

Just to show you how these shortcuts can be combined, can you work out what these toenail clippings will output?

```

$ ping !#:0:gs/i/o

$ vi /tmp/!:0.txt

$ ls !$:h

$ cd !-2:h

$ touch !$!-3:$ !! !$.txt

$ cat !:1-$

```

**Conclusion**

Bash can be an elegant source of shortcuts for the day-to-day command-line user. While there are thousands of tips and tricks to learn, these are my favorites that I frequently put to use.

If you want to dive even deeper into all that Bash can teach you, pick up my book, [ Learn Bash the hard way](https://leanpub.com/learnbashthehardway) or check out my online course,

[Master the Bash shell](https://www.educative.io/courses/master-the-bash-shell).

*This article was originally posted on Ian's blog, Zwischenzugs.com, and is reused with permission.*

## 12 Comments |

11,458 | 如何在 Centos 8 / RHEL 8 上安装和配置 VNC 服务器 | https://www.linuxtechi.com/install-configure-vnc-server-centos8-rhel8/ | 2019-10-14T12:02:00 | [

"VNC"

] | https://linux.cn/article-11458-1.html |

VNC(<ruby> 虚拟网络计算 <rt> Virtual Network Computing </rt></ruby>)服务器是基于 GUI 的桌面共享平台,它可让你访问远程桌面计算机。在 Centos 8 和 RHEL 8 系统中,默认未安装 VNC 服务器,它需要手动安装。在本文中,我们将通过简单的分步指南,介绍如何在 Centos 8 / RHEL 8 上安装 VNC 服务器。

### 在 Centos 8 / RHEL 8 上安装 VNC 服务器的先决要求

要在你的系统中安装 VNC 服务器,请确保你的系统满足以下要求:

* CentOS 8 / RHEL 8

* GNOME 桌面环境

* root 用户权限

* DNF / YUM 软件包仓库

### 在 Centos 8 / RHEL 8 上安装 VNC 服务器的分步指导

#### 步骤 1)安装 GNOME 桌面环境

在 CentOS 8 / RHEL 8 中安装 VNC 服务器之前,请确保已安装了桌面环境(DE)。如果已经安装了 GNOME 桌面或安装了 GUI 支持,那么可以跳过此步骤。

在 CentOS 8 / RHEL 8 中,GNOME 是默认的桌面环境。如果你的系统中没有它,请使用以下命令进行安装:

```

[root@linuxtechi ~]# dnf groupinstall "workstation"

或者

[root@linuxtechi ~]# dnf groupinstall "Server with GUI

```

成功安装上面的包后,请运行以下命令启用图形模式:

```

[root@linuxtechi ~]# systemctl set-default graphical

```

现在重启系统,进入 GNOME 登录页面(LCTT 译注:你可以通过切换运行态来进入图形界面)。

```

[root@linuxtechi ~]# reboot

```

重启后,请取消注释 `/etc/gdm/custom.conf` 中的 `WaylandEnable=false`,以使通过 vnc 进行的远程桌面会话请求由 GNOME 桌面的 xorg 处理,来代替 Wayland 显示管理器。

注意: Wayland 是 GNOME 中的默认显示管理器 (GDM),并且未配置用于处理 X.org 等远程渲染的 API。

#### 步骤 2)安装 VNC 服务器(tigervnc-server)

接下来,我们将安装 VNC 服务器,有很多 VNC 服务器可以选择,出于安装目的,我们将安装 `TigerVNC 服务器`。它是最受欢迎的 VNC 服务器之一,并且高性能还独立于平台,它使用户可以轻松地与远程计算机进行交互。

现在,使用以下命令安装 TigerVNC 服务器:

```

[root@linuxtechi ~]# dnf install tigervnc-server tigervnc-server-module -y

```

#### 步骤 3)为本地用户设置 VNC 密码

假设我们希望用户 `pkumar` 使用 VNC 进行远程桌面会话,然后切换到该用户并使用 `vncpasswd` 命令设置其密码,

```

[root@linuxtechi ~]# su - pkumar

[root@linuxtechi ~]$ vncpasswd

Password:

Verify:

Would you like to enter a view-only password (y/n)? n

A view-only password is not used

[root@linuxtechi ~]$

[root@linuxtechi ~]$ exit

logout

[root@linuxtechi ~]#

```

#### 步骤 4)设置 VNC 服务器配置文件

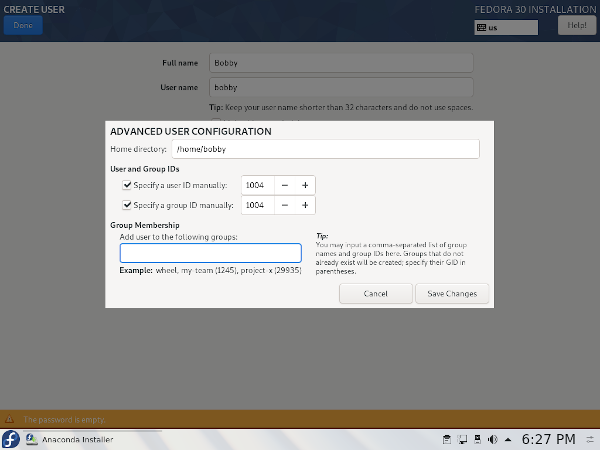

下一步是配置 VNC 服务器配置文件。创建含以下内容的 `/etc/systemd/system/[email protected]`,以便为上面的本地用户 `pkumar` 启动 tigervnc-server 的服务。

```

[root@linuxtechi ~]# vim /etc/systemd/system/[email protected]

[Unit]

Description=Remote Desktop VNC Service

After=syslog.target network.target

[Service]

Type=forking

WorkingDirectory=/home/pkumar

User=pkumar

Group=pkumar

ExecStartPre=/bin/sh -c '/usr/bin/vncserver -kill %i > /dev/null 2>&1 || :'

ExecStart=/usr/bin/vncserver -autokill %i

ExecStop=/usr/bin/vncserver -kill %i

[Install]

WantedBy=multi-user.target

```

保存并退出文件,

注意:替换上面文件中的用户名为你自己的。

默认情况下,VNC 服务器在 tcp 端口 5900+n 上监听,其中 n 是显示端口号,如果显示端口号为 “1”,那么 VNC 服务器将在 TCP 端口 5901 上监听其请求。

#### 步骤 5)启动 VNC 服务并允许防火墙中的端口

我将显示端口号设置为 1,因此请使用以下命令在显示端口号 “1” 上启动并启用 vnc 服务,

```

[root@linuxtechi ~]# systemctl daemon-reload

[root@linuxtechi ~]# systemctl start vncserver@:1.service

[root@linuxtechi ~]# systemctl enable vncserver@:1.service

Created symlink /etc/systemd/system/multi-user.target.wants/vncserver@:1.service → /etc/systemd/system/[email protected].

[root@linuxtechi ~]#

```

使用下面的 `netstat` 或 `ss` 命令来验证 VNC 服务器是否开始监听 5901 上的请求,

```

[root@linuxtechi ~]# netstat -tunlp | grep 5901

tcp 0 0 0.0.0.0:5901 0.0.0.0:* LISTEN 8169/Xvnc

tcp6 0 0 :::5901 :::* LISTEN 8169/Xvnc

[root@linuxtechi ~]# ss -tunlp | grep -i 5901

tcp LISTEN 0 5 0.0.0.0:5901 0.0.0.0:* users:(("Xvnc",pid=8169,fd=6))

tcp LISTEN 0 5 [::]:5901 [::]:* users:(("Xvnc",pid=8169,fd=7))

[root@linuxtechi ~]#

```

使用下面的 `systemctl` 命令验证 VNC 服务器的状态,

```

[root@linuxtechi ~]# systemctl status vncserver@:1.service

```

上面命令的输出确认在 tcp 端口 5901 上成功启动了 VNC。使用以下命令在系统防火墙中允许 VNC 服务器端口 “5901”,

```

[root@linuxtechi ~]# firewall-cmd --permanent --add-port=5901/tcp

success

[root@linuxtechi ~]# firewall-cmd --reload

success

[root@linuxtechi ~]#

```

#### 步骤 6)连接到远程桌面会话

现在,我们已经准备就绪,可以查看远程桌面连接是否正常工作。要访问远程桌面,请在 Windows / Linux 工作站中启动 VNC Viewer,然后输入 VNC 服务器的 IP 地址和端口号,然后按回车。

接下来,它将询问你的 VNC 密码。输入你先前为本地用户创建的密码,然后单击 “OK” 继续。

现在你可以看到远程桌面,

就是这样,你已经在 Centos 8 / RHEL 8 中成功安装了 VNC 服务器。

### 总结

希望这篇在 Centos 8 / RHEL 8 上安装 VNC 服务器的分步指南为你提供了轻松设置 VNC 服务器并访问远程桌面的所有信息。请在下面的评论栏中提供你的意见和建议。下篇文章再见。谢谢再见!!!

---

via: <https://www.linuxtechi.com/install-configure-vnc-server-centos8-rhel8/>

作者:[Pradeep Kumar](https://www.linuxtechi.com/author/pradeep/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | A **VNC** (Virtual Network Computing) Server is a GUI based desktop sharing platform that allows you to access remote desktop machines. In **Centos 8** and **RHEL 8** systems, VNC servers are not installed by default and need to be installed manually. In this article, we’ll look at how to install VNC Server on CentOS 8 / RHEL 8 systems with a simple step-by-step installation guide.

#### Prerequisites to Install VNC Server on Centos 8 / RHEL 8

To install VNC Server in your system, make sure you have the following requirements readily available on your system:

- CentOS 8 / RHEL 8

- GNOME Desktop Environment

- Root access

- DNF / YUM Package repositories

#### Step by Step Guide to Install VNC Server on Centos 8 / RHEL 8

#### Step 1) Install GNOME Desktop environment

Before installing VNC Server in your CentOS 8 / RHEL 8, make sure you have a desktop Environment (DE) installed. In case GNOME desktop is already installed or you have installed your server with gui option then you can skip this step.

In CentOS 8 / RHEL 8, GNOME is the default desktop environment. if you don’t have it in your system, install it using the following command:

[root@linuxtechi ~]# dnf groupinstall "workstation" Or [root@linuxtechi ~]# dnf groupinstall "Server with GUI"

Once the above packages are installed successfully then run the following command to enable the graphical mode

[root@linuxtechi ~]# systemctl set-default graphical

Now reboot the system so that we get GNOME login screen.

[root@linuxtechi ~]# reboot

Once the system is rebooted successfully uncomment the line “**WaylandEnable=false**” from the file “**/etc/gdm/custom.conf**” so that remote desktop session request via vnc is handled by xorg of GNOME desktop in place of wayland display manager.

**Note:** Wayland is the default display manager (GDM) in GNOME and it not is configured to handled remote rendering API like X.org

VNC server will not work properly if SElinux is enabled on your system, as of now work around is to disable it using following commands,

[root@linuxtechi ~]# setenforce 0 [root@linuxtechi ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

#### Step 2) Install VNC Server (tigervnc-server)

Next we’ll install the VNC Server, there are lot of VNC Servers available, and for installation purposes, we’ll be installing **TigerVNC Server**. It is one of the most popular VNC Server and a high-performance and platform-independent VNC that allows users to interact with remote machines easily.

Now install TigerVNC Server using the following command:

[root@linuxtechi ~]# dnf install tigervnc-server tigervnc-server-module -y

#### Step 3) Set VNC Password for Local User

Let’s assume we want ‘pkumar’ user to use VNC for remote desktop session, then switch to the user and set its password using vncpasswd command,

[root@linuxtechi ~]# su - pkumar [pkumar@linuxtechi ~]$ vncpasswd Password: Verify: Would you like to enter a view-only password (y/n)? n A view-only password is not used [pkumar@linuxtechi ~]$ [pkumar@linuxtechi ~]$ exit logout [root@linuxtechi ~]#

#### Step 4) Setup VNC Server Configuration File

Next step is to configure VNC Server Configuration file. Create a file “**/etc/systemd/system/ [email protected]**” with the following content so that tigervnc-server’s service started for above local user “pkumar”.

[root@linuxtechi ~]# vim /etc/systemd/system/[[email protected]][Unit] Description=Remote desktop service (VNC) After=syslog.target network.target [Service] Type=forking ExecStartPre=/bin/sh -c '/usr/bin/vncserver -kill %i > /dev/null 2>&1 || :' ExecStart=/sbin/runuser -l pkumar -c "/usr/bin/vncserver %i -geometry 1280x1024" PIDFile=/home/pkumar/.vnc/%H%i.pid ExecStop=/bin/sh -c '/usr/bin/vncserver -kill %i > /dev/null 2>&1 || :' [Install] WantedBy=multi-user.target

Save and exit the file,

**Note: **Replace the user name in above file which suits to your setup.

By default, VNC server listen on tcp port 5900+n, where n is the display number, if the display number is “1” then VNC server will listen its request on TCP port 5901.

#### Step 5) Start VNC Service and allow port in firewall

I am using display number as 1, so use the following commands to start and enable vnc service on display number “1”,

[root@linuxtechi ~]# systemctl daemon-reload [root@linuxtechi ~]# systemctl start vncserver@:1.service [root@linuxtechi ~]# systemctl enable vncserver@:1.service Created symlink /etc/systemd/system/multi-user.target.wants/vncserver@:1.service → /etc/systemd/system/[[email protected]]. [root@linuxtechi ~]#

Use below **netstat** or **ss** command to verify whether VNC server start listening its request on 5901,

[root@linuxtechi ~]# netstat -tunlp | grep 5901 tcp 0 0 0.0.0.0:5901 0.0.0.0:* LISTEN 8169/Xvnc tcp6 0 0 :::5901 :::* LISTEN 8169/Xvnc [root@linuxtechi ~]# ss -tunlp | grep -i 5901 tcp LISTEN 0 5 0.0.0.0:5901 0.0.0.0:* users:(("Xvnc",pid=8169,fd=6)) tcp LISTEN 0 5 [::]:5901 [::]:* users:(("Xvnc",pid=8169,fd=7)) [root@linuxtechi ~]#

Use below systemctl command to verify the status of VNC server,

[root@linuxtechi ~]# systemctl status vncserver@:1.service

Above command’s output confirms that VNC is started successfully on port tcp port 5901. Use the following command allow VNC Server port “5901” in os firewall,

[root@linuxtechi ~]# firewall-cmd --permanent --add-port=5901/tcp success [root@linuxtechi ~]# firewall-cmd --reload success [root@linuxtechi ~]#

#### Step 6) Connect to Remote Desktop Session

Now we are all set to see if the remote desktop connection is working. To access the remote desktop, Start the VNC Viewer from your Windows / Linux workstation and enter your **VNC server IP Address** and **Port Number** and then hit enter

Next, it will ask for your VNC password. Enter the password that you have created earlier for your local user and click OK to continue

Now you can see the remote desktop,

That’s it, you’ve successfully installed VNC Server in Centos 8 / RHEL 8.

**Conclusion**

Hope the step-by-step guide to install VNC server on Centos 8 / RHEL 8 has provided you with all the information to easily setup VNC Server and access remote desktops. Please provide your comments and suggestion in the feedback section below. See you in the next article…Until then a big THANK YOU and BYE for now!!!

Read Also : [How to Configure Rsyslog Server in CentOS 8 / RHEL 8](https://www.linuxtechi.com/configure-rsyslog-server-centos-8-rhel-8/)

Bill SanfordAfter you install the BaseOS of RHEL 8 and add the repo files, yum update -y

After you install the workstation or server: yum install gnome* -y

Setting Graphical Login as Default – As root: systemctl set-default graphical.target and reboot.

To enable and use tigervnc, in the following steps:

yum install tigervnc-server -y

semodule -i /usr/share/selinux/packages/vncsession.pp

restorecon /usr/sbin/vncsession /usr/libexec/vncsession-start

If you already have VNC installed, you need to remove “$HOME/.vnc folder” if it exists and recreate again with vncpasswd. This is needed to have “.vnc” folder with correct SELinux context.

Alternatively you can use: restorecon -RFv $HOME/.vnc

vim /etc/tigervnc/vncserver-config-defaults and add “session=gnome”

You can also set other options here. See manual page of Xvnc.

NOTE: You can also have configuration which is specific for a given user, to do that just modify “$HOME/.vnc/config”. You can copy content of “/etc/tigervnc/vncserver-config-defaults” there and do your own modifications.

vim /etc/tigervnc/vncserver.users

Added “:2=test” If you want to add more users, just realize you need to start each instance separately.

NOTE: Spaces are a no-no

NOTE: Example is: :2= and there should be examples in the file for existing users

systemctl start vncserver@:2.service

Connect to the VNCServer

vncviewer or :2

Example: vncviewer 10.10.10.1:2

BillThat last bit about connecting should be

vncviewer or :2

Example: vncviewer 10.10.10.1:2

RomanFollowed your tutorial, but systemctl could not start the service.

Error message was ‘geometry 1280×1024 is invalid’

RobinI have the same situation.

ChrisThe x in the example service file isn’t a proper x when you copy and paste the text. Replace the × with an x and it will then give you a new error with SELinux because the first instruction on disabling SELinux doesn’t actually disable it. This tutorial is rife with FAILS.

Pradeep KumarThanks Chris, I have fixed the letter x as you mentioned in comments. I hope it helps our readers.

LuisAnyone display Black Screen?

Chanme too. did you solve it?

Chan KimI followed it, but when I type ‘netstat -tunlp | grep 5901’ there is no entry shown.

actually the vnc server doesn’t respond. What’s wrong?

ArashGUYS! I found the black screen symptom here!

Make sure the content doesn’t change while copying and pasting which happens for encoding issues or other problems.

That happened to me and the text of geometry 1280×1024 changed to 1280 ^1024. Fix it and reload the daemon and start VNC server again!

CarlI can not start the VNC server which complains geometry 1280×1024.

[root@m2-mapreng-vm167100 ~]# systemctl start vncserver@:1.service

Job for vncserver@:1.service failed because the control process exited with error code.

See “systemctl status vncserver@:1.service” and “journalctl -xe” for details.

Oct 28 09:59:46 m2-mapreng-vm167100 runuser[127322]: vncserver: geometry 1280×1024 is invalid

If I change the ‘x’ between the two number to lower case ‘x’ the VNC server started OK.

[root@m2-mapreng-vm167100 ~]# systemctl status vncserver@:1.service

● vncserver@:1.service – Remote desktop service (VNC)

Loaded: loaded (/etc/systemd/system/[email protected]; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-10-28 10:20:23 PDT; 42s ago

Main PID: 150965 (Xvnc)

Tasks: 0 (limit: 152821)

Memory: 320.0K

CGroup: /system.slice/system-vncserver.slice/vncserver@:1.service

‣ 150965 /usr/bin/Xvnc :1 -auth /root/.Xauthority -desktop m2-mapreng-vm167100:1 (root) -fp catalogue:/etc/X11/fontpath.d -geometry 1280×1024 -pn -rfbauth /root/.vnc/passwd -rfbport 5901 -rfbwait 300>

Oct 28 10:20:20 m2-mapreng-vm167100 systemd[1]: Starting Remote desktop service (VNC)…

Oct 28 10:20:23 m2-mapreng-vm167100 systemd[1]: Started Remote desktop service (VNC).

However, when connect with VNC viewer, after enter password etc, I got black screen.

Mike Landismy \ got excised… the post should have read…

I’m still experimenting so take this with a grain of salt, but I’ve found that you need to start the daemon with…

“systemctl start vncserver_\@:\.service” to match the name of its config file in /etc/systemd/system/, e.g. /etc/systemd/system/vncserver_\@:\.service

the default resolution of port 5900 (n=0) is 16x1024x768

per: ‘https://wiki.centos.org/HowTos/VNC-Server’

There will also be a /etc/systemd/system/[email protected] config file that is not specific to a user or port.

if you want to change the display geometry (e.g. to 1280×1024), you can do something like…

VNCSERVERARGS[]=”-geometry 1280×1024 -localhost”‘

Lokesh KHi,

How can I authenticate using unixpwd (PAM Authentication), We no need to manage two passwords for user.

System Password and VNC passwd have to be same and if we change the system password vnc should also update.

Please help

Regards,

Lokesh |

11,460 | Google Analytics 的一些用法介绍 | https://opensourceforu.com/2019/10/all-that-you-can-do-with-google-analytics-and-more/ | 2019-10-14T17:49:51 | [

"跟踪"

] | https://linux.cn/article-11460-1.html |

Google Analytics (GA)这个最流行的用户活动追踪工具我们或多或少都听说过甚至使用过,但它的用途并不仅仅限于对页面访问的追踪。作为一个既实用又流行的工具,它已经受到了广泛的欢迎,因此我们将要在下文中介绍如何在各种 Angular 和 React 单页应用中使用 Google Analytics。

这篇文章源自这样一个问题:如何对单页应用中的页面访问进行跟踪?

通常来说,有很多的方法可以解决这个问题,在这里我们只讨论其中的一种方法。下面我会使用 Angular 来写出对应的实现,如果你使用的是 React,相关的用法和概念也不会有太大的差别。接下来就开始吧。

### 准备好应用程序

首先需要有一个<ruby> 追踪 ID <rt> tracking ID </rt></ruby>。在开始编写业务代码之前,要先准备好一个追踪 ID,通过这个唯一的标识,Google Analytics 才能识别出某个点击或者某个页面访问是来自于哪一个应用。

按照以下的步骤:

1. 访问 <https://analytics.google.com>;

2. 提交指定信息以完成注册,并确保可用于 Web 应用,因为我们要开发的正是一个 Web 应用;

3. 同意相关的条款,生成一个追踪 ID;

4. 保存好追踪 ID。

追踪 ID 拿到了,就可以开始编写业务代码了。

### 添加 analytics.js 脚本

Google 已经帮我们做好了接入之前需要做的所有事情,接下来就是我们的工作了。不过我们要做的也很简单,只需要把下面这段脚本添加到应用的 `index.html` 里,就可以了:

```

<script>

(function(i,s,o,g,r,a,m){i['GoogleAnalyticsObject']=r;i[r]=i[r]||function(){

(i[r].q=i[r].q||[]).push(arguments)},i[r].l=1*new Date();a=s.createElement(o),

m=s.getElementsByTagName(o)[0];a.async=1;a.src=g;m.parentNode.insertBefore(a,m)

})(window,document,'script','https://www.google-analytics.com/analytics.js','ga');

</script>

```

现在我们来看一下 Google Analytics 是如何在应用程序中初始化的。

### 创建追踪器

首先创建一个应用程序的追踪器。在 `app.component.ts` 中执行以下两个步骤:

1. 声明一个名为 `ga`,类型为 `any` 的全局变量(在 Typescript 中需要制定变量类型);

2. 将下面一行代码加入到 `ngInInit()` 中。

```

ga('create', <你的追踪 ID>, 'auto');

```

这样就已经成功地在应用程序中初始化了一个 Google Analytics 的追踪器了。由于追踪器的初始化是在 `OnInit()` 函数中执行的,因此每当应用程序启动,追踪器就会启动。

### 在单页应用中记录页面访问情况

我们需要实现的是记录<ruby> 访问路由 <rt> route-visits </rt></ruby>。

如何记录用户在一个应用中不同部分的访问,这是一个难点。从功能上来看,单页应用中的路由对应了传统多页面应用中各个页面之间的跳转,因此我们需要记录访问路由。要做到这一点尽管不算简单,但仍然是可以实现的。在 `app.component.ts` 的 `ngOnInit()` 函数中添加以下的代码片段:

```

import { Router, NavigationEnd } from '@angular/router';

...

constructor(public router: Router) {}

...

this.router.events.subscribe(

event => {

if (event instanceof NavigationEnd) {

ga('set', 'page', event.urlAfterRedirects);

ga('send', { hitType: 'pageview', hitCallback: () => { this.pageViewSent = true; }});

}

}

);

```

神奇的是,只需要这么几行代码,就实现了 Angular 应用中记录页面访问情况的功能。

这段代码实际上做了以下几件事情:

1. 从 Angular Router 中导入了 `Router`、`NavigationEnd`;

2. 通过构造函数中将 `Router` 添加到组件中;

3. 然后订阅 `router` 事件,也就是由 Angular Router 发出的所有事件;

4. 只要产生了一个 `NavigationEnd` 事件实例,就将路由和目标作为一个页面访问进行记录。

这样,只要使用到了页面路由,就会向 Google Analytics 发送一条页面访问记录,在 Google Analytics 的在线控制台中可以看到这些记录。

类似地,我们可以用相同的方式来记录除了页面访问之外的活动,例如某个界面的查看次数或者时长等等。只要像上面的代码那样使用 `hitCallBack()` 就可以在有需要收集的数据的时候让应用程序作出反应,这里我们做的事情仅仅是把一个变量的值设为 `true`,但实际上 `hitCallBack()` 中可以执行任何代码。

### 追踪用户交互活动

除了页面访问记录之外,Google Analytics 还经常被用于追踪用户的交互活动,例如某个按钮的点击情况。“提交按钮被用户点击了多少次?”,“产品手册会被经常查阅吗?”这些都是 Web 应用程序的产品评审会议上的常见问题。这一节我们将会介绍如何实现这些数据的统计。

#### 按钮点击

设想这样一种场景,需要统计到应用程序中某个按钮或链接被点击的次数,这是一个和注册之类的关键动作关系最密切的数据指标。下面我们来举一个例子:

假设应用程序中有一个“感兴趣”按钮,用于显示即将推出的活动,你希望通过统计这个按钮被点击的次数来推测有多少用户对此感兴趣。那么我们可以使用以下的代码来实现这个功能:

```

...

params = {

eventCategory:

'Button'

,

eventAction:

'Click'

,

eventLabel:

'Show interest'

,

eventValue:

1

};

showInterest() {

ga('send', 'event', this.params);

}

...

```

现在看下这段代码实际上做了什么。正如前面说到,当我们向 Google Analytics 发送数据的时候,Google Analytics 就会记录下来。因此我们可以向 `send()` 方法传递不同的参数,以区分不同的事件,例如两个不同按钮的点击记录。

1、首先我们定义了一个 `params` 对象,这个对象包含了以下几个字段:

1. `eventCategory` – 交互发生的对象,这里对应的是按钮(button)

2. `eventAction` – 发生的交互的类型,这里对应的是点击(click)

3. `eventLabel` – 交互动作的标识,这里对应的是这个按钮的内容,也就是“感兴趣”

4. `eventValue` – 与每个发生的事件实例相关联的值

由于这个例子中是要统计点击了“感兴趣”按钮的用户数,因此我们把 `eventValue` 的值定为 1。

2、对象构造完成之后,下一步就是将 `params` 对象作为请求负载发送到 Google Analytics,而这一步是通过事件绑定将 `showInterest()` 绑定在按钮上实现的。这也是使用 Google Analytics 追踪中最常用的发送事件方法。

至此,Google Analytics 就可以通过记录按钮的点击次数来统计感兴趣的用户数了。

#### 追踪社交活动

Google Analytics 还可以通过应用程序追踪用户在社交媒体上的互动。其中一种场景就是在应用中放置类似 Facebook 的点赞按钮,下面我们来看看如何使用 Google Analytics 来追踪这一行为。

```

...

fbLikeParams = {

socialNetwork:

'Facebook',

socialAction:

'Like',

socialTarget:

'https://facebook.com/mypage'

};

...

fbLike() {

ga('send', 'social', this.fbLikeParams);

}

```

如果你觉得这段代码似曾相识,那是因为它确实跟上面统计“感兴趣”按钮点击次数的代码非常相似。下面我们继续看其中每一步的内容:

1、构造发送的数据负载,其中包括以下字段:

1. `socialNetwork` – 交互发生的社交媒体,例如 Facebook、Twitter 等等

2. `socialAction` – 发生的交互类型,例如点赞、发表推文、分享等等

3. `socialTarget` – 交互的目标 URL,一般是社交媒体账号的主页

2、下一步是增加一个函数来发送整个交互记录。和统计按钮点击数量时相比,这里使用 `send()` 的方式有所不同。另外,我们还需要把这个函数绑定到已有的点赞按钮上。

在追踪用户交互方面,Google Analytics 还可以做更多的事情,其中最重要的一种是针对异常的追踪,这让我们可以通过 Google Analytics 来追踪应用程序中出现的错误和异常。在本文中我们就不赘述这一点了,但我们鼓励读者自行探索。

### 用户识别

#### 隐私是一项权利,而不是奢侈品

Google Analytics 除了可以记录很多用户的操作和交互活动之外,这一节还将介绍一个不太常见的功能,就是可以控制是否对用户的身份进行追踪。

#### Cookies

Google Analytics 追踪用户活动的方式是基于 Cookies 的,因此我们可以自定义 Cookies 的名称以及一些其它的内容,请看下面这段代码:

```

trackingID =

'UA-139883813-1'

;

cookieParams = {

cookieName: 'myGACookie',

cookieDomain: window.location.hostname,

cookieExpires: 604800

};

...

ngOnInit() {

ga('create', this.trackingID, this.cookieParams);

...

}

```

在上面这段代码中,我们设置了 Google Analytics Cookies 的名称、域以及过期时间,这就让我们能够将不同网站或 Web 应用的 Cookies 区分开来。因此我们需要为我们自己的应用程序的 Google Analytics 追踪器的 Cookies 设置一个自定义的标识1,而不是一个自动生成的随机标识。

#### IP 匿名

在某些场景下,我们可能不需要知道应用程序的流量来自哪里。例如对于一个按钮点击的追踪器,我们只需要关心按钮的点击量,而不需要关心点击者的地理位置。在这种场景下,Google Analytics 允许我们只追踪用户的操作行为,而不获取到用户的 IP 地址。

```

ipParams = {

anonymizeIp: true

};

...

ngOnInit() {

...

ga('set', this.ipParams);

...

}

```

在上面这段代码中,我们将 Google Analytics 追踪器的 `abibymizeIp` 参数设置为 `true`。这样用户的 IP 地址就不会被 Google Analytics 所追踪,这可以让用户知道自己的隐私正在被保护。

#### 不被跟踪

还有些时候用户可能不希望自己的行为受到追踪,而 Google Analytics 也允许这样的需求。因此也存在让用户不被追踪的选项。

```

...

optOut() {

window['ga-disable-UA-139883813-1'] = true;

}

...

```

`optOut()` 是一个自定义函数,它可以禁用页面中的 Google Analytics 追踪,我们可以使用按钮或复选框上得事件绑定来使用这一个功能,这样用户就可以选择是否会被 Google Analytics 追踪。

在本文中,我们讨论了 Google Analytics 集成到单页应用时的难点,并探索出了一种相关的解决方法。我们还了解到了如何在单页应用中追踪页面访问和用户交互,例如按钮点击、社交媒体活动等。

最后,我们还了解到 Google Analytics 为用户提供了保护隐私的功能,尤其是用户的一些隐私数据并不需要参与到统计当中的时候。而用户也可以选择完全不受到 Google Analytics 的追踪。除此以外,Google Analytics 还可以做到很多其它的事情,这就需要我们继续不断探索了。

---

via: <https://opensourceforu.com/2019/10/all-that-you-can-do-with-google-analytics-and-more/>

作者:[Ashwin Sathian](https://opensourceforu.com/author/ashwin-sathian/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[HankChow](https://github.com/HankChow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

11,461 | 区块链 2.0:Hyperledger Fabric 介绍(十) | https://www.ostechnix.com/blockchain-2-0-introduction-to-hyperledger-fabric/ | 2019-10-15T08:41:42 | [

"Fabric"

] | https://linux.cn/article-11461-1.html |

### Hyperledger Fabric

[Hyperledger 项目](https://www.ostechnix.com/blockchain-2-0-an-introduction-to-hyperledger-project-hlp/) 是一个伞形组织,包括许多正在开发的不同模块和系统。在这些子项目中,最受欢迎的是 “Hyperledger Fabric”。这篇博文将探讨一旦区块链系统开始大量使用到主流,将使 Fabric 在不久的将来成为几乎不可或缺的功能。最后,我们还将快速了解开发人员和爱好者们需要了解的有关 Hyperledger Fabric 技术的知识。

### 起源

按照 Hyperledger 项目的常规方式,Fabric 由其核心成员之一 IBM “捐赠”给该组织,而 IBM 以前是该组织的主要开发者。由 IBM 共享的这个技术平台在 Hyperledger 项目中进行了联合开发,来自 100 多个成员公司和机构为之做出了贡献。

目前,Fabric 正处于 LTS 版本的 v1.4,该版本已经发展很长一段时间,并且被视为企业管理业务数据的解决方案。Hyperledger 项目的核心愿景也必然会渗透到 Fabric 中。Hyperledger Fabric 系统继承了所有企业级的可扩展功能,这些功能已深深地刻入到 Hyperledger 组织旗下所有的项目当中。

### Hyperledger Fabric 的亮点

Hyperledger Fabric 提供了多种功能和标准,这些功能和标准围绕着支持快速开发和模块化体系结构的使命而构建。此外,与竞争对手(主要是瑞波和[以太坊](https://www.ostechnix.com/blockchain-2-0-what-is-ethereum/))相比,Fabric 明确用于封闭和[许可区块链](https://www.ostechnix.com/blockchain-2-0-public-vs-private-blockchain-comparison/)。它们的核心目标是开发一套工具,这些工具将帮助区块链开发人员创建定制的解决方案,而不是创建独立的生态系统或产品。

Hyperledger Fabric 的一些亮点如下:

#### 许可区块链系统

这是一个 Hyperledger Fabric 与其他平台(如以太坊和瑞波)差异很大的地方。默认情况下,Fabric 是一种旨在实现私有许可的区块链的工具。此类区块链不能被所有人访问,并且其中致力于达成共识或验证交易的节点将由中央机构进行选择。这对于某些应用(例如银行和保险)可能很重要,在这些应用中,交易必须由中央机构而不是参与者来验证。

#### 机密和受控的信息流

Fabric 内置了权限系统,该权限系统将视情况限制特定组或某些个人中的信息流。与公有区块链不同,在公有区块链中,任何运行节点的人都可以对存储在区块链中的数据进行复制和选择性访问,而 Fabric 系统的管理员可以选择谁能访问共享的信息,以及访问的方式。与现有竞争产品相比,它还有以更好的安全性标准对存储的数据进行加密的子系统。

#### 即插即用架构

Hyperledger Fabric 具有即插即用类型的体系结构。可以选择实施系统的各个组件,而开发人员看不到用处的系统组件可能会被废弃。Fabric 采取高度模块化和可定制的方式进行开发,而不是一种与其竞争对手采用的“一种方法适应所有需求”的方式。对于希望快速构建精益系统的公司和公司而言,这尤其有吸引力。这与 Fabric 和其它 Hyperledger 组件的互操作性相结合,意味着开发人员和设计人员现在可以使用各种标准化工具,而不必从其他来源提取代码并随后进行集成。它还提供了一种相当可靠的方式来构建健壮的模块化系统。

#### 智能合约和链码

运行在区块链上的分布式应用程序称为[智能合约](https://www.ostechnix.com/blockchain-2-0-explaining-smart-contracts-and-its-types/)。虽然智能合约这个术语或多或少与以太坊平台相关联,但<ruby> 链码 <rt> chaincode </rt></ruby>是 Hyperledger 阵营中为其赋予的名称。链码应用程序除了拥有 DApp 中有的所有优点之外,使 Hyperledger 与众不同的是,该应用程序的代码可以用多种高级编程语言编写。它本身支持 [Go](https://www.ostechnix.com/install-go-language-linux/) 和 JavaScript,并且在与适当的编译器模块集成后还支持许多其它编程语言。尽管这一事实在此时可能并不代表什么,但这意味着,如果可以将现有人才用于正在进行的涉及区块链的项目,从长远来看,这有可能为公司节省数十亿美元的人员培训和管理费用。开发人员可以使用自己喜欢的语言进行编码,从而在 Hyperledger Fabric 上开始构建应用程序,而无需学习或培训平台特定的语言和语法。这提供了 Hyperledger Fabric 当前竞争对手无法提供的灵活性。

### 总结

* Hyperledger Fabric 是一个后端驱动程序平台,是一个主要针对需要区块链或其它分布式账本技术的集成项目。因此,除了次要的脚本功能外,它不提供任何面向用户的服务。(认可以为它更像是一种脚本语言。)

* Hyperledger Fabric 支持针对特定用例构建侧链。如果开发人员希望将一组用户或参与者隔离到应用程序的特定部分或功能,则可以通过侧链来实现。侧链是衍生自主要父代的区块链,但在其初始块之后形成不同的链。产生新链的块将不受新链进一步变化的影响,即使将新信息添加到原始链中,新链也将保持不变。此功能将有助于扩展正在开发的平台,并引入用户特定的和案例特定的处理功能。

* 前面的功能还意味着并非所有用户都会像通常对公有链所期望的那样拥有区块链中所有数据的“精确”副本。参与节点将具有仅与之相关的数据副本。例如,假设有一个类似于印度的 PayTM 的应用程序,该应用程序具有钱包功能以及电子商务功能。但是,并非所有的钱包用户都使用 PayTM 在线购物。在这种情况下,只有活跃的购物者将在 PayTM 电子商务网站上拥有相应的交易链,而钱包用户将仅拥有存储钱包交易的链的副本。这种灵活的数据存储和检索体系结构在扩展时非常重要,因为大量的单链区块链已经显示出会增加处理交易的前置时间。这样可以保持链的精简和分类。

我们将在以后的文章中详细介绍 Hyperledger Project 下的其他模块。

---

via: <https://www.ostechnix.com/blockchain-2-0-introduction-to-hyperledger-fabric/>

作者:[sk](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

11,462 | 在 Linux 上以树状查看文件和进程 | https://www.networkworld.com/article/3444589/viewing-files-and-processes-as-trees-on-linux.html | 2019-10-15T09:32:22 | [

"树状"

] | https://linux.cn/article-11462-1.html |

>

> 介绍三个 Linux 命令:ps、pstree 和 tree 以类似树的格式查看文件和进程。

>

>

>

[Linux](https://www.networkworld.com/article/3215226/what-is-linux-uses-featres-products-operating-systems.html) 提供了一些方便的命令,用于以树状分支形式查看文件和进程,从而易于查看它们之间的关系。在本文中,我们将介绍 `ps`、`pstree` 和 `tree` 命令以及它们提供的一些选项,这些选项可帮助你将注意力集中在要查看的内容上。

### ps

我们用来列出进程的 `ps` 命令有一些有趣的选项,但是很多人从来没有利用过。虽然常用的 `ps -ef` 提供了正在运行的进程的完整列表,但是 `ps -ejH` 命令增加了一个不错的效果。它缩进了相关的进程以使这些进程之间的关系在视觉上更加清晰——就像这个片段:

```

$ ps -ejH

PID PGID SID TTY TIME CMD

...

1396 1396 1396 ? 00:00:00 sshd

28281 28281 28281 ? 00:00:00 sshd

28409 28281 28281 ? 00:00:00 sshd

28410 28410 28410 pts/0 00:00:00 bash

30968 30968 28410 pts/0 00:00:00 ps

```

可以看到,正在运行的 `ps` 进程是在 `bash` 中运行的,而 `bash` 是在 ssh 会话中运行的。

`-exjf` 选项字符串提供了类似的视图,但是带有一些其它细节和符号以突出显示进程的层次结构性质:

```

$ ps -exjf

PPID PID PGID SID TTY TPGID STAT UID TIME COMMAND

...

1 1396 1396 1396 ? -1 Ss 0 0:00 /usr/sbin/sshd -D

1396 28281 28281 28281 ? -1 Ss 0 0:00 \_ sshd: shs [priv]

28281 28409 28281 28281 ? -1 S 1000 0:00 \_ sshd: shs@pts/0

28409 28410 28410 28410 pts/0 31028 Ss 1000 0:00 \_ -bash

28410 31028 31028 28410 pts/0 31028 R+ 1000 0:00 \_ ps axjf

```

命令中使用的这些选项表示:

```

-e 选择所有进程

-j 使用工作格式

-f 提供完整格式列表

-H 分层显示进程(如,树状格式)

-x 取消“必须与 tty 相关联”的限制

```

同时,该命令也有一个 `--forest` 选项提供了类似的视图。

```

$ ps -ef --forest

UID PID PPID C STIME TTY TIME CMD

...

root 1396 1 0 Oct08 ? 00:00:00 /usr/sbin/sshd -D

root 28281 1396 0 12:55 ? 00:00:00 \_ sshd: shs [priv]

shs 28409 28281 0 12:56 ? 00:00:00 \_ sshd: shs@pts/0

shs 28410 28409 0 12:56 pts/0 00:00:00 \_ -bash

shs 32351 28410 0 14:39 pts/0 00:00:00 \_ ps -ef --forest

```

注意,这些示例只是这些命令如何使用的示例。你可以选择最适合你的进程视图的任何选项组合。

### pstree

使用 `pstree` 命令可以获得类似的进程视图。尽管 `pstree` 具备了许多选项,但是该命令本身就提供了非常有用的显示。注意,许多父子进程关系显示在单行而不是后续行上。

```

$ pstree

...

├─sshd───sshd───sshd───bash───pstree

├─systemd─┬─(sd-pam)

│ ├─at-spi-bus-laun─┬─dbus-daemon

│ │ └─3*[{at-spi-bus-laun}]

│ ├─at-spi2-registr───2*[{at-spi2-registr}]

│ ├─dbus-daemon

│ ├─ibus-portal───2*[{ibus-portal}]

│ ├─pulseaudio───2*[{pulseaudio}]

│ └─xdg-permission-───2*[{xdg-permission-}]

```

通过 `-n` 选项,`pstree` 以数值(按进程 ID)顺序显示进程:

```

$ pstree -n

systemd─┬─systemd-journal

├─systemd-udevd

├─systemd-timesyn───{systemd-timesyn}

├─systemd-resolve

├─systemd-logind

├─dbus-daemon

├─atopacctd

├─irqbalance───{irqbalance}

├─accounts-daemon───2*[{accounts-daemon}]

├─acpid

├─rsyslogd───3*[{rsyslogd}]

├─freshclam

├─udisksd───4*[{udisksd}]

├─networkd-dispat

├─ModemManager───2*[{ModemManager}]

├─snapd───10*[{snapd}]

├─avahi-daemon───avahi-daemon

├─NetworkManager───2*[{NetworkManager}]

├─wpa_supplicant

├─cron

├─atd

├─polkitd───2*[{polkitd}]

├─colord───2*[{colord}]

├─unattended-upgr───{unattended-upgr}

├─sshd───sshd───sshd───bash───pstree

```

使用 `pstree` 时可以考虑的一些选项包括 `-a`(包括命令行参数)和 `-g`(包括进程组)。

以下是一些简单的示例(片段)。

命令 `pstree -a` 的输出内容:

```

└─wpa_supplicant -u -s -O /run/wpa_supplicant

```

命令 `pstree -g` 的输出内容:

```

├─sshd(1396)───sshd(28281)───sshd(28281)───bash(28410)───pstree(1115)

```

### tree

虽然 `tree` 命令听起来与 `pstree` 非常相似,但这是用于查看文件而非进程的命令。它提供了一个漂亮的树状目录和文件视图。

如果你使用 `tree` 命令查看 `/proc` 目录,你显示的开头部分将类似于这个:

```

$ tree /proc

/proc

├── 1

│ ├── attr

│ │ ├── apparmor

│ │ │ ├── current

│ │ │ ├── exec

│ │ │ └── prev

│ │ ├── current

│ │ ├── display

│ │ ├── exec

│ │ ├── fscreate

│ │ ├── keycreate

│ │ ├── prev

│ │ ├── smack

│ │ │ └── current

│ │ └── sockcreate

│ ├── autogroup

│ ├── auxv

│ ├── cgroup

│ ├── clear_refs

│ ├── cmdline

...

```

如果以 root 权限运行这条命令(`sudo tree /proc`),你将会看到更多详细信息,因为 `/proc` 目录的许多内容对于普通用户而言是无法访问的。

命令 `tree -d` 将会限制仅显示目录。

```

$ tree -d /proc

/proc

├── 1

│ ├── attr

│ │ ├── apparmor

│ │ └── smack

│ ├── fd [error opening dir]

│ ├── fdinfo [error opening dir]

│ ├── map_files [error opening dir]

│ ├── net

│ │ ├── dev_snmp6

│ │ ├── netfilter

│ │ └── stat

│ ├── ns [error opening dir]

│ └── task

│ └── 1

│ ├── attr

│ │ ├── apparmor

│ │ └── smack

...

```

使用 `-f` 选项,`tree` 命令会显示完整的路径。

```

$ tree -f /proc

/proc

├── /proc/1

│ ├── /proc/1/attr

│ │ ├── /proc/1/attr/apparmor

│ │ │ ├── /proc/1/attr/apparmor/current

│ │ │ ├── /proc/1/attr/apparmor/exec

│ │ │ └── /proc/1/attr/apparmor/prev

│ │ ├── /proc/1/attr/current

│ │ ├── /proc/1/attr/display

│ │ ├── /proc/1/attr/exec

│ │ ├── /proc/1/attr/fscreate

│ │ ├── /proc/1/attr/keycreate

│ │ ├── /proc/1/attr/prev

│ │ ├── /proc/1/attr/smack

│ │ │ └── /proc/1/attr/smack/current

│ │ └── /proc/1/attr/sockcreate

...

```

分层显示通常可以使进程和文件之间的关系更容易理解。可用选项的数量很多,而你总可以找到一些视图,帮助你查看所需的内容。

---

via: <https://www.networkworld.com/article/3444589/viewing-files-and-processes-as-trees-on-linux.html>

作者:[Sandra Henry-Stocker](https://www.networkworld.com/author/Sandra-Henry_Stocker/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[laingke](https://github.com/laingke) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

11,464 | Linux 内核测试的生命周期 | https://opensource.com/article/19/8/linux-kernel-testing | 2019-10-16T10:19:43 | [

"内核"

] | /article-11464-1.html |

>

> 内核持续集成(CKI)项目旨在防止错误进入 Linux 内核。

>

>

>

在 [Linux 内核的持续集成测试](https://opensource.com/article/19/6/continuous-kernel-integration-linux) 一文中,我介绍了 <ruby> <a href="https://cki-project.org/"> 内核持续集成 </a> <rt> Continuous Kernel Integration </rt></ruby>(CKI)项目及其使命:改变内核开发人员和维护人员的工作方式。本文深入探讨了该项目的某些技术方面,以及这所有的部分是如何组合在一起的。

### 从一次更改开始

内核中每一项令人兴奋的功能、改进和错误都始于开发人员提出的更改。这些更改出现在各个内核存储库的大量邮件列表中。一些存储库关注内核中的某些子系统,例如存储或网络,而其它存储库关注内核的更多方面。 当开发人员向内核提出更改或补丁集时,或者维护者在存储库本身中进行更改时,CKI 项目就会付诸行动。

CKI 项目维护的触发器用于监视这些补丁集并采取措施。诸如 [Patchwork](https://github.com/getpatchwork/patchwork) 之类的软件项目通过将多个补丁贡献整合为单个补丁系列,使此过程变得更加容易。补丁系列作为一个整体历经 CKI 系统,并可以针对该系列发布单个报告。

其他触发器可以监视存储库中的更改。当内核维护人员合并补丁集、还原补丁或创建新标签时,就会触发。测试这些关键的更改可确保开发人员始终具有坚实的基线,可以用作编写新补丁的基础。

所有这些更改都会进入 GitLab CI 管道,并历经多个阶段和多个系统。

### 准备构建

首先要准备好要编译的源代码。这需要克隆存储库、打上开发人员建议的补丁集,并生成内核配置文件。这些配置文件具有成千上万个用于打开或关闭功能的选项,并且配置文件在不同的系统体系结构之间差异非常大。 例如,一个相当标准的 x86\_64 系统在其配置文件中可能有很多可用选项,但是 s390x 系统(IBM zSeries 大型机)的选项可能要少得多。在该大型机上,某些选项可能有意义,但在消费类笔记本电脑上没有任何作用。

内核进一步转换为源代码工件。该工件包含整个存储库(已打上补丁)以及编译所需的所有内核配置文件。 上游内核会打包成压缩包,而 Red Hat 的内核会生成下一步所用的源代码 RPM 包。

### 成堆的编译

编译内核会将源代码转换为计算机可以启动和使用的代码。配置文件描述了要构建的内容,内核中的脚本描述了如何构建它,系统上的工具(例如 GCC 和 glibc)完成构建。此过程需要一段时间才能完成,但是 CKI 项目需要针对四种体系结构快速完成:aarch64(64 位 ARM)、ppc64le(POWER)、s390x(IBM zSeries)和 x86\_64。重要的是,我们必须快速编译内核,以便使工作任务不会积压,而开发人员可以及时收到反馈。

添加更多的 CPU 可以大大提高速度,但是每个系统都有其局限性。CKI 项目在 OpenShift 的部署环境中的容器内编译内核;尽管 OpenShift 可以实现高伸缩性,但在部署环境中的可用 CPU 仍然是数量有限的。CKI 团队分配了 20 个虚拟 CPU 来编译每个内核。涉及到四个体系结构,这就涨到了 80 个 CPU!

另一个速度的提高来自 [ccache](https://ccache.dev/) 工具。内核开发进展迅速,但是即使在多个发布版本之间,内核的大部分仍保持不变。ccache 工具进行编译期间会在磁盘上缓存已构建的对象(整个内核的一小部分)。稍后再进行另一个内核编译时,ccache 会查找以前看到的内核的未更改部分。ccache 会从磁盘中提取缓存的对象并重新使用它。这样可以加快编译速度并降低总体 CPU 使用率。现在,耗时 20 分钟编译的内核在不到几分钟的时间内就完成了。

### 测试时间

内核进入最后一步:在真实硬件上进行测试。每个内核都使用 Beaker 在其原生体系结构上启动,并且开始无数次的测试以发现问题。一些测试会寻找简单的问题,例如容器问题或启动时的错误消息。其他测试则深入到各种内核子系统中,以查找系统调用、内存分配和线程中的回归问题。

大型测试框架,例如 [Linux Test Project](https://linux-test-project.github.io)(LTP),包含了大量测试,这些测试在内核中寻找麻烦的回归问题。其中一些回归问题可能会回滚关键的安全修复程序,并且进行测试以确保这些改进仍保留在内核中。

测试完成后,关键的一步仍然是:报告。内核开发人员和维护人员需要一份简明的报告,准确地告诉他们哪些有效、哪些无效以及如何获取更多信息。每个 CKI 报告都包含所用源代码、编译参数和测试输出的详细信息。该信息可帮助开发人员知道从哪里开始寻找解决问题的方法。此外,它还可以帮助维护人员在漏洞进入内核存储库之前知道何时需要保留补丁集以进行其他查看。

### 总结

CKI 项目团队通过向内核开发人员和维护人员提供及时、自动的反馈,努力防止错误进入 Linux 内核。这项工作通过发现导致内核错误、安全性问题和性能问题等易于找到的问题,使他们的工作更加轻松。

---

via: <https://opensource.com/article/19/8/linux-kernel-testing>

作者:[Major Hayden](https://opensource.com/users/mhaydenhttps://opensource.com/users/mhaydenhttps://opensource.com/users/marcobravohttps://opensource.com/users/mhayden) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

11,467 | 在 Linux 上安装 VMware 工具 | https://itsfoss.com/install-vmware-tools-linux | 2019-10-16T12:06:00 | [

"VMware"

] | https://linux.cn/article-11467-1.html |

>

> VMware 工具通过允许你共享剪贴板和文件夹以及其他东西来提升你的虚拟机体验。了解如何在 Ubuntu 和其它 Linux 发行版上安装 VMware 工具。

>

>

>

在先前的教程中,你学习了[在 Ubuntu 上安装 VMware 工作站](https://itsfoss.com/install-vmware-player-ubuntu-1310/)。你还可以通过安装 VMware 工具进一步提升你的虚拟机功能。

如果你已经在 VMware 上安装了一个访客机系统,你必须要注意 [VMware 工具](https://kb.vmware.com/s/article/340)的要求 —— 尽管并不完全清楚到底有什么要求。

在本文中,我们将要强调 VMware 工具的重要性、所提供的特性,以及在 Ubuntu 和其它 Linux 发行版上安装 VMware 工具的方法。

### VMware 工具:概览及特性

出于显而易见的理由,虚拟机(你的访客机系统)并不能做到与宿主机上的表现完全一致。在其性能和操作上会有特定的限制。那就是为什么引入 VMware 工具的原因。

VMware 工具以一种高效的形式在提升了其性能的同时,也可以帮助管理访客机系统。

#### VMware 工具到底负责什么?

你大致知道它可以做什么,但让我们探讨一下细节:

* 同步访客机系统与宿主机系统间的时间以简化操作

* 提供从宿主机系统向访客机系统传递消息的能力。比如说,你可以复制文字到剪贴板,并将它轻松粘贴到你的访客机系统

* 在访客机系统上启用声音

* 提升访客机视频分辨率

* 修正错误的网络速度数据

* 减少不合适的色深

在访客机系统上安装了 VMware 工具会给它带来显著改变,但是它到底包含了什么特性才解锁或提升这些功能的呢?让我们来看看……

#### VMware 工具:核心特性细节

如果你不想知道它包含什么来启用这些功能的话,你可以跳过这部分。但是为了好奇的读者,让我们简短地讨论它一下:

**VMware 设备驱动:** 它具体取决于操作系统。大多数主流操作系统都默认包含了设备驱动,因此你不必另外安装它。这主要涉及到内存控制驱动、鼠标驱动、音频驱动、网卡驱动、VGA 驱动以及其它。

**VMware 用户进程:** 这是这里真正有意思的地方。通过它你获得了在访客机和宿主机间复制粘贴和拖拽的能力。基本上,你可以从宿主机复制粘贴文本到虚拟机,反之亦然。

你同样也可以拖拽文件。此外,在你未安装 SVGA 驱动时它会启用鼠标指针的释放/锁定。

**VMware 工具生命周期管理:** 嗯,我们会在下面看看如何安装 VMware 工具,但是这个特性帮你在虚拟机中轻松安装/升级 VMware 工具。

**共享文件夹**:除了这些。VMware 工具同样允许你在访客机与宿主机系统间共享文件夹。

当然,它的效果同样取决于访客机系统。例如在 Windows 上你通过 Unity 模式运行虚拟机上的程序并从宿主机系统上操作它。

### 如何在 Ubuntu 和其它 Linux 发行版上安装 VMware 工具

**注意:** 对于 Linux 操作系统,你应该已经安装好了“Open VM 工具”,大多数情况下免除了额外安装 VMware 工具的需要。

大部分时候,当你安装了访客机系统时,如果操作系统支持 [Easy Install](https://docs.vmware.com/en/VMware-Workstation-Player-for-Linux/15.0/com.vmware.player.linux.using.doc/GUID-3F6B9D0E-6CFC-4627-B80B-9A68A5960F60.html) 的话你会收到软件更新或弹窗告诉你要安装 VMware 工具。

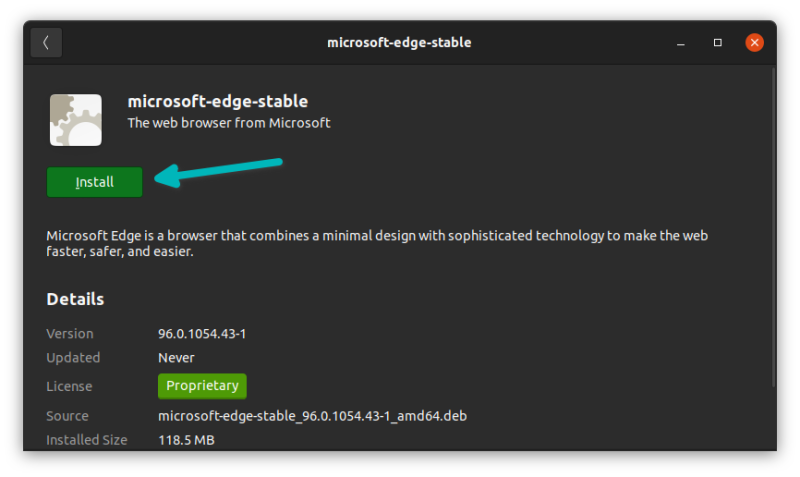

Windows 和 Ubuntu 都支持 Easy Install。因此如果你使用 Windows 作为你的宿主机或尝试在 Ubuntu 上安装 VMware 工具,你应该会看到一个和弹窗消息差不多的选项来轻松安装 VMware 工具。这是它应该看起来的样子:

这是搞定它最简便的办法。因此当你配置虚拟机时确保你有一个通畅的网络连接。

如果你没收到任何弹窗或者选项来轻松安装 VMware 工具。你需要手动安装它。以下是如何去做:

1. 运行 VMware Workstation Player。

2. 从菜单导航至 “Virtual Machine -> Install VMware tools”。如果你已经安装了它并想修复安装,你会看到 “Re-install VMware tools” 这一选项出现。

3. 一旦你点击了,你就会看到一个虚拟 CD/DVD 挂载在访客机系统上。

4. 打开该 CD/DVD,并复制粘贴那个 tar.gz 文件到任何你选择的区域并解压,这里我们选择“桌面”作为解压目的地。

5. 在解压后,运行终端并通过输入以下命令导航至里面的文件夹:

```

cd Desktop/VMwareTools-10.3.2-9925305/vmware-tools-distrib

```

你需要检查文件夹与路径名,这取决于版本与解压目的地,名字可能会改变。

用你的存储位置(如“下载”)替换“桌面”,如果你安装的也是 10.3.2 版本,其它的保持一样即可。

6. 现在仅需输入以下命令开始安装:

```

sudo ./vmware-install.pl -d

```

你会被询问密码以获得安装权限,输入密码然后应当一切都搞定了。

到此为止了,你搞定了。这系列步骤应当适用于几乎大部分基于 Ubuntu 的访客机系统。如果你想要在 Ubuntu 服务器上或其它系统安装 VMware 工具,步骤应该类似。

### 总结

在 Ubuntu Linux 上安装 VMware 工具应该挺简单。除了简单办法,我们也详述了手动安装的方法。如果你仍需帮助或者对安装有任何建议,在评论区评论让我们知道。

---

via: <https://itsfoss.com/install-vmware-tools-linux>

作者:[Ankush Das](https://itsfoss.com/author/ankush/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[tomjlw](https://github.com/tomjlw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

11,468 | 变异测试是测试驱动开发(TDD)的演变 | https://opensource.com/article/19/8/mutation-testing-evolution-tdd | 2019-10-17T09:44:35 | [

"TDD",

"DevOps"

] | /article-11468-1.html |

>

> 测试驱动开发技术是根据大自然的运作规律创建的,变异测试自然成为 DevOps 演变的下一步。

>

>

>

在 “[故障是无懈可击的开发运维中的一个特点](https://opensource.com/article/19/7/failure-feature-blameless-devops)”,我讨论了故障在通过征求反馈来交付优质产品的过程中所起到的重要作用。敏捷 DevOps 团队就是用故障来指导他们并推动开发进程的。<ruby> <a href="https://en.wikipedia.org/wiki/Test-driven_development"> 测试驱动开发 </a> <rt> Test-driven development </rt></ruby>(TDD)是任何敏捷 DevOps 团队评估产品交付的[必要条件](https://www.merriam-webster.com/dictionary/conditio%20sine%20qua%20non)。以故障为中心的 TDD 方法仅在与可量化的测试配合使用时才有效。

TDD 方法仿照大自然是如何运作的以及自然界在进化博弈中是如何产生赢家和输家为模型而建立的。

### 自然选择

1859 年,<ruby> <a href="https://en.wikipedia.org/wiki/Charles_Darwin"> 查尔斯·达尔文 </a> <rt> Charles Darwin </rt></ruby>在他的《<ruby> <a href="https://en.wikipedia.org/wiki/On_the_Origin_of_Species"> 物种起源 </a> <rt> On the Origin of Species </rt></ruby>》一书中提出了进化论学说。达尔文的论点是,自然变异是由生物个体的自发突变和环境压力共同造成的。环境压力淘汰了适应性较差的生物体,而有利于其他适应性强的生物的发展。每个生物体的染色体都会发生变异,而这些自发的变异会携带给下一代(后代)。然后在自然选择下测试新出现的变异性 —— 当下存在的环境压力是由变异性的环境条件所导致的。

这张简图说明了调整适应环境条件的过程。

*图1. 不同的环境压力导致自然选择下的不同结果。图片截图来源于[理查德·道金斯的一个视频](https://www.youtube.com/watch?v=MgK5Rf7qFaU)。*

该图显示了一群生活在自己栖息地的鱼。栖息地各不相同(海底或河床底部的砾石颜色有深有浅),每条鱼长的也各不相同(鱼身图案和颜色也有深有浅)。

这张图还显示了两种情况(即环境压力的两种变化):

1. 捕食者在场

2. 捕食者不在场

在第一种情况下,在砾石颜色衬托下容易凸显出来的鱼被捕食者捕获的风险更高。当砾石颜色较深时,浅色鱼的数量会更少一些。反之亦然,当砾石颜色较浅时,深色鱼的数量会更少。

在第二种情况下,鱼完全放松下来进行交配。在没有捕食者和没有交配仪式的情况下,可以预料到相反的结果:在砾石背景下显眼的鱼会有更大的机会被选来交配并将其特性传递给后代。

### 选择标准

变异性在进行选择时,绝不是任意的、反复无常的、异想天开的或随机的。选择过程中的决定性因素通常是可以度量的。该决定性因素通常称为测试或目标。

一个简单的数学例子可以说明这一决策过程。(在该示例中,这种选择不是由自然选择决定的,而是由人为选择决定。)假设有人要求你构建一个小函数,该函数将接受一个正数,然后计算该数的平方根。你将怎么做?

敏捷 DevOps 团队的方法是快速验证失败。谦虚一点,先承认自己并不真的知道如何开发该函数。这时,你所知道的就是如何描述你想做的事情。从技术上讲,你已准备好进行单元测试。

“<ruby> 单元测试 <rt> unit test </rt></ruby>”描述了你的具体期望结果是什么。它可以简单地表述为“给定数字 16,我希望平方根函数返回数字 4”。你可能知道 16 的平方根是 4。但是,你不知道一些较大数字(例如 533)的平方根。

但至少,你已经制定了选择标准,即你的测试或你的期望值。

### 进行故障测试

[.NET Core](https://dotnet.microsoft.com/) 平台可以演示该测试。.NET 通常使用 xUnit.net 作为单元测试框架。(要跟随进行这个代码示例,请安装 .NET Core 和 xUnit.net。)

打开命令行并创建一个文件夹,在该文件夹实现平方根解决方案。例如,输入:

```

mkdir square_root

```

再输入:

```

cd square_root

```

为单元测试创建一个单独的文件夹:

```

mkdir unit_tests

```

进入 `unit_tests` 文件夹下(`cd unit_tests`),初始化 xUnit 框架:

```

dotnet new xunit

```

现在,转到 `square_root` 下, 创建 `app` 文件夹:

```

mkdir app

cd app

```

如果有必要的话,为你的代码创建一个脚手架:

```

dotnet new classlib

```

现在打开你最喜欢的编辑器开始编码!

在你的代码编辑器中,导航到 `unit_tests` 文件夹,打开 `UnitTest1.cs`。

将 `UnitTest1.cs` 中自动生成的代码替换为:

```

using System;

using Xunit;

using app;

namespace unit_tests{

public class UnitTest1{

Calculator calculator = new Calculator();

[Fact]

public void GivenPositiveNumberCalculateSquareRoot(){

var expected = 4;

var actual = calculator.CalculateSquareRoot(16);

Assert.Equal(expected, actual);

}

}

}

```

该单元测试描述了变量的**期望值**应该为 4。下一行描述了**实际值**。建议通过将输入值发送到称为`calculator` 的组件来计算**实际值**。对该组件的描述是通过接收数值来处理`CalculateSquareRoot` 信息。该组件尚未开发。但这并不重要,我们在此只是描述期望值。

最后,描述了触发消息发送时发生的情况。此时,判断**期望值**是否等于**实际值**。如果是,则测试通过,目标达成。如果**期望值**不等于**实际值**,则测试失败。

接下来,要实现称为 `calculator` 的组件,在 `app` 文件夹中创建一个新文件,并将其命名为`Calculator.cs`。要实现计算平方根的函数,请在此新文件中添加以下代码:

```

namespace app {

public class Calculator {

public double CalculateSquareRoot(double number) {

double bestGuess = number;

return bestGuess;

}

}

}

```

在测试之前,你需要通知单元测试如何找到该新组件(`Calculator`)。导航至 `unit_tests` 文件夹,打开 `unit_tests.csproj` 文件。在 `<ItemGroup>` 代码块中添加以下代码:

```

<ProjectReference Include="../app/app.csproj" />

```

保存 `unit_test.csproj` 文件。现在,你可以运行第一个测试了。

切换到命令行,进入 `unit_tests` 文件夹。运行以下命令:

```

dotnet test

```

运行单元测试,会输出以下内容:

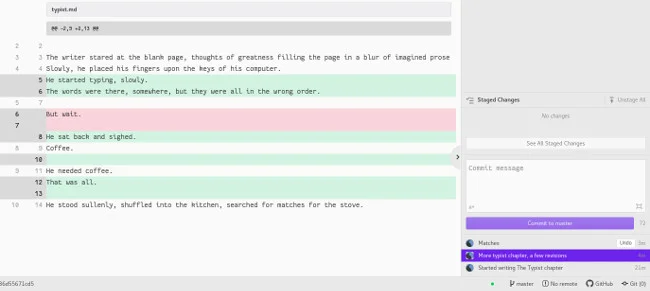

*图2. 单元测试失败后 xUnit 的输出结果*

正如你所看到的,单元测试失败了。期望将数字 16 发送到 `calculator` 组件后会输出数字 4,但是输出(`Actual`)的是 16。

恭喜你!创建了第一个故障。单元测试为你提供了强有力的反馈机制,敦促你修复故障。

### 修复故障

要修复故障,你必须要改进 `bestGuess`。当下,`bestGuess` 仅获取函数接收的数字并返回。这不够好。

但是,如何找到一种计算平方根值的方法呢? 我有一个主意 —— 看一下大自然母亲是如何解决问题的。

### 效仿大自然的迭代

在第一次(也是唯一的)尝试中要得出正确值是非常难的(几乎不可能)。你必须允许自己进行多次尝试猜测,以增加解决问题的机会。允许多次尝试的一种方法是进行迭代。

要迭代,就要将 `bestGuess` 值存储在 `previousGuess` 变量中,转换 `bestGuess` 的值,然后比较两个值之间的差。如果差为 0,则说明问题已解决。否则,继续迭代。

这是生成任何正数的平方根的函数体:

```

double bestGuess = number;

double previousGuess;

do {

previousGuess = bestGuess;

bestGuess = (previousGuess + (number/previousGuess))/2;

} while((bestGuess - previousGuess) != 0);

return bestGuess;

```

该循环(迭代)将 `bestGuess` 值集中到设想的解决方案。现在,你精心设计的单元测试通过了!

*图 3. 单元测试通过了。*

### 迭代解决了问题

正如大自然母亲解决问题的方法,在本练习中,迭代解决了问题。增量方法与逐步改进相结合是获得满意解决方案的有效方法。该示例中的决定性因素是具有可衡量的目标和测试。一旦有了这些,就可以继续迭代直到达到目标。

### 关键点!

好的,这是一个有趣的试验,但是更有趣的发现来自于使用这种新创建的解决方案。到目前为止,`bestGuess` 从开始一直把函数接收到的数字作为输入参数。如果更改 `bestGuess` 的初始值会怎样?

为了测试这一点,你可以测试几种情况。 首先,在迭代多次尝试计算 25 的平方根时,要逐步细化观察结果:

*图 4. 通过迭代来计算 25 的平方根。*

以 25 作为 `bestGuess` 的初始值,该函数需要八次迭代才能计算出 25 的平方根。但是,如果在设计 `bestGuess` 初始值上犯下荒谬的错误,那将怎么办? 尝试第二次,那 100 万可能是 25 的平方根吗? 在这种明显错误的情况下会发生什么?你写的函数是否能够处理这种低级错误。

直接来吧。回到测试中来,这次以一百万开始:

*图 5. 在计算 25 的平方根时,运用逐步求精法,以 100 万作为 bestGuess 的初始值。*

哇! 以一个荒谬的数字开始,迭代次数仅增加了两倍(从八次迭代到 23 次)。增长幅度没有你直觉中预期的那么大。

### 故事的寓意

啊哈! 当你意识到,迭代不仅能够保证解决问题,而且与你的解决方案的初始猜测值是好是坏也没有关系。 不论你最初理解得多么不正确,迭代过程以及可衡量的测试/目标,都可以使你走上正确的道路并得到解决方案。

图 4 和 5 显示了陡峭而戏剧性的燃尽图。一个非常错误的开始,迭代很快就产生了一个绝对正确的解决方案。

简而言之,这种神奇的方法就是敏捷 DevOps 的本质。

### 回到一些更深层次的观察

敏捷 DevOps 的实践源于人们对所生活的世界的认知。我们生活的世界存在不确定性、不完整性以及充满太多的困惑。从科学/哲学的角度来看,这些特征得到了<ruby> <a href="https://en.wikipedia.org/wiki/Uncertainty_principle"> 海森堡的不确定性原理 </a> <rt> Heisenberg’s Uncertainty Principle </rt></ruby>(涵盖不确定性部分),<ruby> <a href="https://en.wikipedia.org/wiki/Tractatus_Logico-Philosophicus"> 维特根斯坦的逻辑论哲学 </a> <rt> Wittgenstein’s Tractatus Logico-Philosophicus </rt></ruby>(歧义性部分),<ruby> <a href="https://en.wikipedia.org/wiki/G%C3%B6del%27s_incompleteness_theorems"> 哥德尔的不完全性定理 </a> <rt> Gödel’s incompleteness theorems </rt></ruby>(不完全性方面)以及<ruby> <a href="https://en.wikipedia.org/wiki/Second_law_of_thermodynamics"> 热力学第二定律 </a> <rt> Second Law of Thermodynamics </rt></ruby>(无情的熵引起的混乱)的充分证明和支持。

简而言之,无论你多么努力,在尝试解决任何问题时都无法获得完整的信息。因此,放下傲慢的姿态,采取更为谦虚的方法来解决问题对我们会更有帮助。谦卑会给为你带来巨大的回报,这个回报不仅是你期望的一个解决方案,还会有它的副产品。

### 总结

大自然在不停地运作,这是一个持续不断的过程。大自然没有总体规划。一切都是对先前发生的事情的回应。 反馈循环是非常紧密的,明显的进步/倒退都是逐步实现的。大自然中随处可见,任何事物的都在以一种或多种形式逐步完善。

敏捷 DevOps 是工程模型逐渐成熟的一个非常有趣的结果。DevOps 基于这样的认识,即你所拥有的信息总是不完整的,因此你最好谨慎进行。获得可衡量的测试(例如,假设、可测量的期望结果),进行简单的尝试,大多数情况下可能失败,然后收集反馈,修复故障并继续测试。除了同意每个步骤都必须要有可衡量的假设/测试之外,没有其他方法。

在本系列的下一篇文章中,我将仔细研究变异测试是如何提供及时反馈来推动实现结果的。

---

via: <https://opensource.com/article/19/8/mutation-testing-evolution-tdd>

作者:[Alex Bunardzic](https://opensource.com/users/alex-bunardzic) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Morisun029](https://github.com/Morisun029) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

11,469 | 命令行技巧:使用 find 和 xargs 查找和处理文件 | https://fedoramagazine.org/command-line-quick-tips-locate-and-process-files-with-find-and-xargs/ | 2019-10-17T13:00:00 | [

"find",

"xargs"

] | https://linux.cn/article-11469-1.html |

`find` 是日常工具箱中功能强大、灵活的命令行程序之一。它如它名字所暗示的:查找符合你指定条件的文件和目录。借助 `-exec` 或 `-delete` 之类的参数,你可以让它对找到的文件进行操作。

在[命令行提示](https://fedoramagazine.org/?s=command+line+quick+tips)系列的这一期中,你将会看到 `find` 命令的介绍,并学习如何使用内置命令或使用 `xargs` 命令处理文件。

### 查找文件

`find` 至少要加上查找的路径。例如,此命令将查找(并打印)系统上的每个文件:

```

find /

```

由于一切皆文件,因此你会看到大量的输出。这可能无法帮助你找到所需的内容。你可以更改路径参数缩小范围,但这实际上并没有比使用 `ls` 命令更好。因此,你需要考虑要查找的内容。

也许你想在家目录中查找所有 JPEG 文件。 `-name` 参数允许你将结果限制为与给定模式匹配的文件。

```

find ~ -name '*jpg'

```

但是等等!如果其中一些扩展名是大写怎么办? `-iname` 类似于 `-name`,但不区分大小写:

```

find ~ -iname '*jpg'

```

很好!但是 8.3 命名方案出自 1985 年。某些图片的扩展名可能是 .jpeg。幸运的是,我们可以将模式使用“或”(`-o`)进行组合。括号需要转义,以便使 `find` 命令而不是 shell 程序尝试解释它们。

```

find ~ \( -iname 'jpeg' -o -iname 'jpg' \)

```

更进一步。如果你有一些以 `jpg` 结尾的目录怎么办?(我不懂你为什么将目录命名为 `bucketofjpg` 而不是 `pictures`?)我们可以加上 `-type` 参数来仅查找文件:

```

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f

```

或者,也许你想找到那些名字奇怪的目录,以便之后可以重命名它们:

```

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type d

```

最近你拍摄了很多照片,因此使用 `-mtime`(修改时间)将范围缩小到最近一周修改过的文件。 `-7` 表示 7 天或更短时间内修改的所有文件。

```

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f -mtime -7

```

### 使用 xargs 进行操作

`xargs` 命令从标准输入流中获取参数,并基于它们执行命令。继续使用上一节中的示例,假设你要将上周修改过的家目录中的所有 JPEG 文件复制到 U 盘,以便插到电子相册上。假设你已经将 U 盘挂载到 `/media/photo_display`。

```

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f -mtime -7 -print0 | xargs -0 cp -t /media/photo_display

```

这里的 `find` 命令与以前的版本略有不同。`-print0` 命令让输出有一些更改:它不使用换行符,而是添加了一个 `null` 字符。`xargs` 的 `-0`(零)选项可调整解析以达到预期效果。这很重要,不然对包含空格、引号或其他特殊字符的文件名执行操作可能无法按预期进行。对文件采取任何操作时,都应使用这些选项。

`cp` 命令的 `-t` 参数很重要,因为 `cp` 通常要求目的地址在最后。你可以不使用 `xargs` 而使用 `find` 的 `-exec` 执行此操作,但是 `xargs` 的方式会更快,尤其是对于大量文件,因为它会单次调用 `cp`。

### 了解更多

这篇文章仅仅是 `find` 可以做的事情的表面。 `find` 支持基于权限、所有者、访问时间等的测试。它甚至可以将搜索路径中的文件与其他文件进行比较。将测试与布尔逻辑相结合,可以为你提供惊人的灵活性,以精确地找到你要查找的文件。使用内置命令或管道传递给 `xargs`,你可以快速处理大量文件。

---

via: <https://fedoramagazine.org/command-line-quick-tips-locate-and-process-files-with-find-and-xargs/>

作者:[Ben Cotton](https://fedoramagazine.org/author/bcotton/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **find** is one of the more powerful and flexible command-line programs in the daily toolbox. It does what the name suggests: it finds files and directories that match the conditions you specify. And with arguments like **-exec** or **-delete**, you can have find take action on what it… finds.

In this installment of the [Command Line Quick Tips](https://fedoramagazine.org/?s=command+line+quick+tips) series, you’ll get an introduction to the **find** command and learn how to use it to process files with built-in commands or the **xargs** command.

## Finding files

At a minimum, **find** takes a path to find things in. For example, this command will find (and print) every file on the system:

find /

And since everything is a file, you will get a lot of output to sort through. This probably doesn’t help you locate what you’re looking for. You can change the path argument to narrow things down a bit, but it’s still not really any more helpful than using the **ls** command. So you need to think about what you’re trying to locate.

Perhaps you want to find all the JPEG files in your home directory. The **-name** argument allows you to restrict your results to files that match the given pattern.

find ~ -name '*jpg'

But wait! What if some of them have an uppercase extension? **-iname** is like **-name**, but it is case-insensitive:

find ~ -iname '*jpg'

Great! But the 8.3 name scheme is so 1985. Some of the pictures might have a .jpeg extension. Fortunately, we can combine patterns with an “or,” represented by **-o**. The parentheses are escaped so that the shell doesn’t try to interpret them instead of the **find** command.

find ~ \( -iname '*jpeg' -o -iname '*jpg' \)

We’re getting closer. But what if you have some directories that end in jpg? (Why you named a directory **bucketofjpg** instead of **pictures** is beyond me.) We can modify our command with the **-type** argument to look only for files:

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f

Or maybe you’d like to find those oddly named directories so you can rename them later:

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type d

It turns out you’ve been taking a lot of pictures lately, so narrow this down to files that have changed in the last week with **-mtime** (modification time). The **-7** means all files modified in 7 days or fewer.

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f -mtime -7

## Taking action with xargs

The **xargs** command takes arguments from the standard input stream and executes a command based on them. Sticking with the example in the previous section, let’s say you want to copy all of the JPEG files in your home directory that have been modified in the last week to a thumb drive that you’ll attach to a digital photo display. Assume you already have the thumb drive mounted as */media/photo_display*.

find ~ \( -iname '*jpeg' -o -iname '*jpg' \) -type f -mtime -7 -print0 | xargs -0 cp -t /media/photo_display

The **find** command is slightly modified from the previous version. The **-print0** command makes a subtle change on how the output is written: instead of using a newline, it adds a null character. The** -0** (zero) option to **xargs** adjusts the parsing to expect this. This is important because otherwise actions on file names that contain spaces, quotes, or other special characters may not work as expected. You should use these options whenever you’re taking action on files.

The **-t** argument to **cp** is important because **cp** normally expects the destination to come last. You can do this without **xargs** using **find**‘s **-exec** command, but the **xargs** method will be faster, especially with a large number of files, because it will run as a single invocation of **cp**.

## Find out more

This post only scratches the surface of what **find** can do. **find** supports testing based on permissions, ownership, access time, and much more. It can even compare the files in the search path to other files. Combining tests with Boolean logic can give you incredible flexibility to find exactly the files you’re looking for. With build in commands or piping to **xargs**, you can quickly process a large set of files.

*Portions of this article were previously published on Opensource.com.*

*Photo by*

*Warren Wong**on*.

## Seirdy

For a faster alternative, try fd 0

It’s listed as “fd-find” in Fedora’s repos.

## pamsu

I think it would be good if articles like these had their own subsection.

## Stevko

GNU find has two versions of exec. One of them ends with ; and one with +. The second one is what you may want instead of xargs.

Also, since Unix (and Linux) decided to have almost no restriction on filenames, it means that filenames can contain newlines and this will break. Use -print0 in find and -0 in xargs.

## Peder

Yes, ” time find . ( -iname ‘

jpg’ -o -iname ‘jpeg’ ) -type f -mtime -7 -exec cp -t Pics -a {} +” works just as well as the xargs version.It also handles apostrophes and newlines, is less to type but just as fast and doesn’t make you have to remember any -print0 | -0 flags.

## Peder

The forum ate my backslashes!

The -exec command should look like this, I hope : time find . \( -iname ‘

jpg’ -o -iname ‘jpeg’ \) -type f -mtime -7 -exec cp -t Pics {} \+With a backslash before each parenthesis and one before the +

## Elliott

From the POSIX standard for xargs:

“… if xargs is used to bundle the output of commands like find dir -print or ls into commands to be executed, unexpected results are likely if any filenames contain , , or quoting characters. This can be solved by using find to call a script that converts each file found into a quoted string that is then piped to xargs, but in most cases it is preferable just to have find do the argument aggregation itself by using -exec with a ‘+’ terminator instead of ‘;’.”

Also, the xargs -0 option is not in the POSIX standard so it may not be available, depending on the environment.

## Pedro

Simply amazing 🙂

## kilgoretrout

One major limiting factor with xargs is that it will refuse to process files with a single quote like “Dec. ’97” or “Jerry’s Kids”. If it encounters such a file, it will spit out an “unmatched single quote” error message and stop in its tracks. I found this out the hard way and started using exec with the find command instead of xargs.

## Ben Cotton

The -0 (zero) option will correct this. From the manual page:

I’ll update the example to include this since, as you said, it is a lesson that can be learned painfully.

## ernesto

kudos for demystifying two daunting but important commands to all shell new commers.

there are so many things you can do with ‘find’, perhaps another article show casing these features?

i do a agree with another poster, all these command line articles require a section of their own for easier access/browsing in the future 😀

## Robin

Interesting, I was looking for a way to convert all my .xls files to .ods few days ago and that will work just fine associated with LibreOffice CLI.

## chris

I much more prefer to use something of the form:

ll *.jpg| gawk ‘{system(“command_line_execution.sh “$9)}’

its simpler for me I just never tried to use xargs or find as you have to escape everthing differently

## Göran Uddeborg

Gawkor shell scripts could be used in place ofxargs, but you can’t replace all the testing features offindwithls. Try to find all JPEG files on any level below the current directory, and I think you will have problems. 🙂 When done, try to find only the new ones! 🙂## Chris

That would be ls -Rl | grep “.jpg” ….

Or you could use

ls -Rl | gawk ‘if ($9 ~ /.jpg/) {system(“bash_command.sh “$1)}’

Either way I’m just not to hip to find and the exec option. I use find all the time, and you could actually use find then pipe into gawk’s system function.

## Göran Uddeborg

ls -Rlwould not give you the full path to the file. You would have to use a longergawkscript which also records the path when the pattern fromlslists it, and combine the two when you find a match. All of whichfindwould give you for free.## Chris

As far as finding new ones, I guess I’m lost on what you mean by new ones? Do you mean past a certain date? Again I don’t have any problems with find other than the exec option. It has wierd escaping, and from what I have used of it only allows for one argument. For instance what if you wanted to mv all of those jpgs but change the name and have the name some derivative of the first name. It gets complicated and gawk system allows you to use piped in input with as many Fields as you want and put them wherever you want in the cli execution.

## Göran Uddeborg

With finding new ones, I did indeed mean files modified recently. That was an example used in the article.

As for renaming in more complicated ways, I might also use a script, possibly combined with the various

printoptions offind. But I would still call it directly via -exec and/orxargs, rather than going viasystemingawk.## chris

I think you fail to see the point of my original comment, and are trying to sell me (or someone else) on find. I use find and understand how to use it, I just feel that having a fundamental understanding of a few things and you can accomplish most tasks anyways you would like.

for instance, you can change ownership permissions either,

ie: chmod 777 exec.sh

or: chmod o+rwx,g+rwx,u+rwx exec.sh

two ways to skin a cat and for some one way is easier than the other or prefered.

## Göran Uddeborg

I was trying to show how your examples could not achieve much of what

finddoes. When it could, it made things more complicated, in my opinion.But maybe I don’t get your point. Of course, everyone should do things the way he or she prefers.

## Paul W. Frields

This thread helps to illustrate there are many ways for doing things, and they will achieve some common and some divergent goals sometimes. It would be preferable to move on since the thread value is diminishing. Thanks! — ed.

## Göran Uddeborg

The globbing stars were lost in the first example with disjunction (-o).

## Paul W. Frields

Thanks, fixed! |

11,470 | 新手教程:如何在 Linux 下解压 Zip 文件 | https://itsfoss.com/unzip-linux/ | 2019-10-17T13:05:00 | [

"unzip",

"zip"

] | https://linux.cn/article-11470-1.html |

>

> 本文将会向你展示如何在 Ubuntu 和其他 Linux 发行版本上解压文件。终端和图形界面的方法都会讨论。

>

>

>

[Zip](https://en.wikipedia.org/wiki/Zip_(file_format)) 是一种创建压缩存档文件的最普通、最流行的方法。它也是一种古老的文件归档文件格式,这种格式创建于 1989 年。由于它的广泛使用,你会经常遇见 zip 文件。

在更早的一份教程里,我介绍了[如何在 Linux 上用 zip 压缩一个文件夹](https://itsfoss.com/linux-zip-folder/)。在这篇面向初学者的快速教程中,我会介绍如何在 Linux 上解压文件。

先决条件:检查你是否安装了 `unzip`。

为了解压 zip 归档文件,你必须在你的系统上安装了 unzip 软件包。大多数现代的的 Linux 发行版本提供了解压 zip 文件的支持,但是对这些 zip 文件进行校验以避免以后出现损坏总是没有坏处的。

在基于 [Unbutu](https://ubuntu.com/) 和 [Debian](https://www.debian.org/) 的发行版上,你能够使用下面的命令来安装 `unzip`。如果你已经安装了,你会被告知已经被安装。

```

sudo apt install unzip

```

一旦你能够确认你的系统中安装了 `unzip`,你就可以通过 `unzip` 来解压 zip 归档文件。

你也能够使用命令行或者图形工具来达到目的,我会向你展示两种方法:

### 使用命令行解压文件

在 Linux 下使用 `unzip` 命令是非常简单的。在你放 zip 文件的目录,用下面的命令:

```

unzip zipped_file.zip

```

你可以给 zip 文件提供解压路径而不是解压到当前所在路径。你会在终端输出中看到提取的文件:

```

unzip metallic-container.zip -d my_zip

Archive: metallic-container.zip

inflating: my_zip/625993-PNZP34-678.jpg

inflating: my_zip/License free.txt

inflating: my_zip/License premium.txt

```

上面的命令有一个小问题。它会提取 zip 文件中所有的内容到现在的文件夹。你会在当前文件夹下留下一堆没有组织的文件,这不是一件很好的事情。

#### 解压到文件夹下

在 Linux 命令行下,对于把文件解压到一个文件夹下是一个好的做法。这种方式下,所有的提取文件都会被存储到你所指定的文件夹下。如果文件夹不存在,会创建该文件夹。

```

unzip zipped_file.zip -d unzipped_directory

```

现在 `zipped_file.zip` 中所有的内容都会被提取到 `unzipped_directory` 中。

由于我们在讨论好的做法,这里有另一个注意点,我们可以查看压缩文件中的内容而不用实际解压。

#### 查看压缩文件中的内容而不解压压缩文件

```

unzip -l zipped_file.zip

```

下面是该命令的输出:

```

unzip -l metallic-container.zip

Archive: metallic-container.zip

Length Date Time Name

--------- ---------- ----- ----

6576010 2019-03-07 10:30 625993-PNZP34-678.jpg

1462 2019-03-07 13:39 License free.txt

1116 2019-03-07 13:39 License premium.txt

--------- -------

6578588 3 files

```

在 Linux 下,还有些 `unzip` 的其它用法,但我想你现在已经对在 Linux 下使用解压文件有了足够的了解。

### 使用图形界面来解压文件

如果你使用桌面版 Linux,那你就不必总是使用终端。在图形化的界面下,我们又要如何解压文件呢? 我使用的是 [GNOME 桌面](https://gnome.org/),不过其它桌面版 Linux 发行版也大致相同。

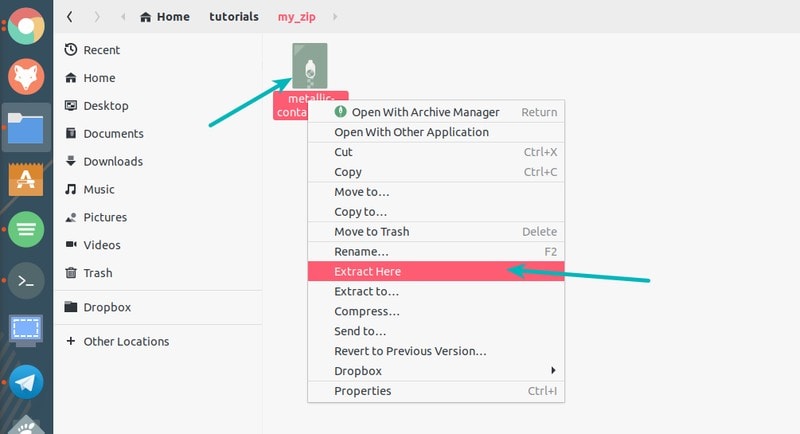

打开文件管理器,然后跳转到 zip 文件所在的文件夹下。在文件上点击鼠标右键,你会在弹出的窗口中看到 “提取到这里”,选择它。

与 `unzip` 命令不同,这个提取选项会创建一个和压缩文件名相同的文件夹(LCTT 译注:文件夹没有 `.zip` 扩展名),并且把压缩文件中的所有内容存储到创建的文件夹下。相对于 `unzip` 命令的默认行为是将压缩文件提取到当前所在的文件下,图形界面的解压对于我来说是一件非常好的事情。

这里还有一个选项“提取到……”,你可以选择特定的文件夹来存储提取的文件。

你现在知道如何在 Linux 解压文件了。你也许还对学习[在 Linux 下使用 7zip](https://itsfoss.com/use-7zip-ubuntu-linux/) 感兴趣?

---

via: <https://itsfoss.com/unzip-linux/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[octopus](https://github.com/singledo) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

[Zip](https://en.wikipedia.org/wiki/Zip_(file_format)) is one of the most common and most popular ways to create compressed archive files. It is also one of the older archive file formats that were created in 1989. Since it is widely used, you’ll regularly come across a zip file.

In an earlier tutorial, I showed [how to zip a folder in Linux](https://itsfoss.com/linux-zip-folder/). In this quick tutorial for beginners, I’ll show you how to unzip files in Linux.

**Prerequisite: Verify if you have unzip installed**

In order to unzip a zip archive file, you must have the unzip package installed in your system. Most modern Linux distributions come with unzip support but there is no harm in verifying it to avoid bad surprises later.

In a terminal, use the following command:

`unzip --version`

If it gives you some details, you have unzip installed already. If you see an ‘unzip command not found’ error, you have to install.

In [Ubuntu](https://ubuntu.com/) and [Debian](https://www.debian.org/) based distributions, you can use the command below to install unzip.

`sudo apt install unzip`

Once you have made sure that your system has unzip support, it’s time to unzip a zip file in Linux.

You can use both the command line and GUI for this purpose and I’ll show you both methods.

- Unzip files in Linux terminal

- Unzip files in Ubuntu via GUI

## Unzip files in Linux command line

Using unzip command in Linux is absolutely simple. In the directory, where you have the zip file, use this command:

`unzip zipped_file.zip`

You can also provide the path to the zip file instead of going to the directory. You’ll see extracted files in the output:

```

unzip metallic-container.zip -d my_zip

Archive: metallic-container.zip

inflating: my_zip/625993-PNZP34-678.jpg

inflating: my_zip/License free.txt

inflating: my_zip/License premium.txt

```

There is a slight problem with the above command. It will extract all the contents of the zip file in the current directory. That’s not a pretty thing to do because you’ll have a handful of files leaving the current directory unorganized.

### Unzip to a specific directory

A good practice is to unzip to directory in Linux command line. This way, all the extracted files are stored in the directory you specified. If the directory doesn’t exist, it will create one.

`unzip zipped_file.zip -d unzipped_directory`

Now all the contents of the zipped_file.zip will be extracted to unzipped_directory.