id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

10,848 | TLP:一个可以延长 Linux 笔记本电池寿命的高级电源管理工具 | https://www.2daygeek.com/tlp-increase-optimize-linux-laptop-battery-life/ | 2019-05-13T09:44:00 | [

"电池",

"笔记本",

"TPL"

] | https://linux.cn/article-10848-1.html |

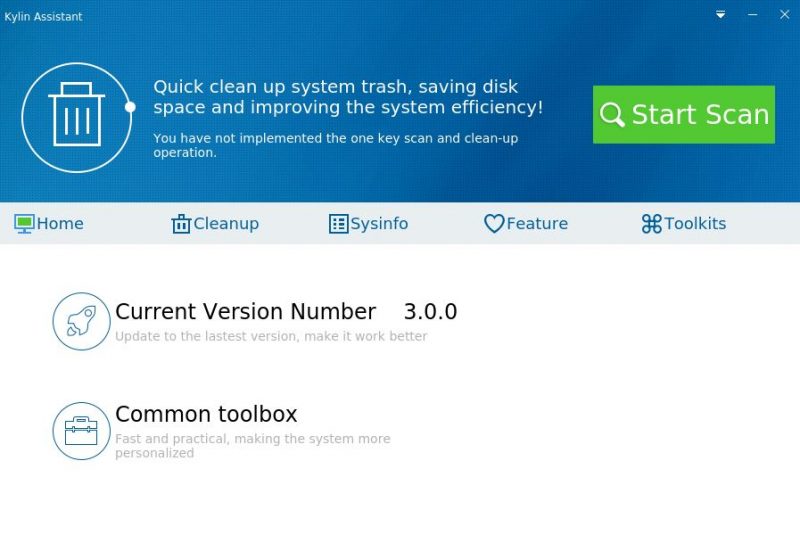

笔记本电池是针对 Windows 操作系统进行了高度优化的,当我在笔记本电脑中使用 Windows 操作系统时,我已经意识到这一点,但对于 Linux 来说却不一样。

多年来,Linux 在电池优化方面取得了很大进步,但我们仍然需要做一些必要的事情来改善 Linux 中笔记本电脑的电池寿命。

当我考虑延长电池寿命时,我没有多少选择,但我觉得 TLP 对我来说是一个更好的解决方案,所以我会继续使用它。

在本教程中,我们将详细讨论 TLP 以延长电池寿命。

我们之前在我们的网站上写过三篇关于 Linux [笔记本电池节电工具](https://www.2daygeek.com/check-laptop-battery-status-and-charging-state-in-linux-terminal/) 的文章:[PowerTOP](https://www.2daygeek.com/powertop-monitors-laptop-battery-usage-linux/) 和 [电池充电状态](https://www.2daygeek.com/monitor-laptop-battery-charging-state-linux/)。

### TLP

[TLP](https://linrunner.de/en/tlp/docs/tlp-linux-advanced-power-management.html) 是一款自由开源的高级电源管理工具,可在不进行任何配置更改的情况下延长电池寿命。

由于它的默认配置已针对电池寿命进行了优化,因此你可能只需要安装,然后就忘记它吧。

此外,它可以高度定制化,以满足你的特定要求。TLP 是一个具有自动后台任务的纯命令行工具。它不包含GUI。

TLP 适用于各种品牌的笔记本电脑。设置电池充电阈值仅适用于 IBM/Lenovo ThinkPad。

所有 TLP 设置都存储在 `/etc/default/tlp` 中。其默认配置提供了开箱即用的优化的节能设置。

以下 TLP 设置可用于自定义,如果需要,你可以相应地进行必要的更改。

### TLP 功能

* 内核笔记本电脑模式和脏缓冲区超时

* 处理器频率调整,包括 “turbo boost”/“turbo core”

* 限制最大/最小的 P 状态以控制 CPU 的功耗

* HWP 能源性能提示

* 用于多核/超线程的功率感知进程调度程序

* 处理器性能与节能策略(`x86_energy_perf_policy`)

* 硬盘高级电源管理级别(APM)和降速超时(按磁盘)

* AHCI 链路电源管理(ALPM)与设备黑名单

* PCIe 活动状态电源管理(PCIe ASPM)

* PCI(e) 总线设备的运行时电源管理

* Radeon 图形电源管理(KMS 和 DPM)

* Wifi 省电模式

* 关闭驱动器托架中的光盘驱动器

* 音频省电模式

* I/O 调度程序(按磁盘)

* USB 自动暂停,支持设备黑名单/白名单(输入设备自动排除)

* 在系统启动和关闭时启用或禁用集成的 wifi、蓝牙或 wwan 设备

* 在系统启动时恢复无线电设备状态(从之前的关机时的状态)

* 无线电设备向导:在网络连接/断开和停靠/取消停靠时切换无线电

* 禁用 LAN 唤醒

* 挂起/休眠后恢复集成的 WWAN 和蓝牙状态

* 英特尔处理器的动态电源降低 —— 需要内核和 PHC-Patch 支持

* 电池充电阈值 —— 仅限 ThinkPad

* 重新校准电池 —— 仅限 ThinkPad

### 如何在 Linux 上安装 TLP

TLP 包在大多数发行版官方存储库中都可用,因此,使用发行版的 [包管理器](https://www.2daygeek.com/category/package-management/) 来安装它。

对于 Fedora 系统,使用 [DNF 命令](https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/) 安装 TLP。

```

$ sudo dnf install tlp tlp-rdw

```

ThinkPad 需要一些附加软件包。

```

$ sudo dnf install https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-$(rpm -E %fedora).noarch.rpm

$ sudo dnf install http://repo.linrunner.de/fedora/tlp/repos/releases/tlp-release.fc$(rpm -E %fedora).noarch.rpm

$ sudo dnf install akmod-tp_smapi akmod-acpi_call kernel-devel

```

安装 smartmontool 以显示 tlp-stat 中 S.M.A.R.T. 数据。

```

$ sudo dnf install smartmontools

```

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令](https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/) 或 [APT 命令](https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/) 安装 TLP。

```

$ sudo apt install tlp tlp-rdw

```

ThinkPad 需要一些附加软件包。

```

$ sudo apt-get install tp-smapi-dkms acpi-call-dkms

```

安装 smartmontool 以显示 tlp-stat 中 S.M.A.R.T. 数据。

```

$ sudo apt-get install smartmontools

```

当基于 Ubuntu 的系统的官方软件包过时时,请使用以下 PPA 存储库,该存储库提供最新版本。运行以下命令以使用 PPA 安装 TLP。

```

$ sudo add-apt-repository ppa:linrunner/tlp

$ sudo apt-get update

$ sudo apt-get install tlp

```

对于基于 Arch Linux 的系统,使用 [Pacman 命令](https://www.2daygeek.com/pacman-command-examples-manage-packages-arch-linux-system/) 安装 TLP。

```

$ sudo pacman -S tlp tlp-rdw

```

ThinkPad 需要一些附加软件包。

```

$ pacman -S tp_smapi acpi_call

```

安装 smartmontool 以显示 tlp-stat 中 S.M.A.R.T. 数据。

```

$ sudo pacman -S smartmontools

```

对于基于 Arch Linux 的系统,在启动时启用 TLP 和 TLP-Sleep 服务。

```

$ sudo systemctl enable tlp.service

$ sudo systemctl enable tlp-sleep.service

```

对于基于 Arch Linux 的系统,你还应该屏蔽以下服务以避免冲突,并确保 TLP 的无线电设备切换选项的正确操作。

```

$ sudo systemctl mask systemd-rfkill.service

$ sudo systemctl mask systemd-rfkill.socket

```

对于 RHEL/CentOS 系统,使用 [YUM 命令](https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/) 安装 TLP。

```

$ sudo yum install tlp tlp-rdw

```

安装 smartmontool 以显示 tlp-stat 中 S.M.A.R.T. 数据。

```

$ sudo yum install smartmontools

```

对于 openSUSE Leap 系统,使用 [Zypper 命令](https://www.2daygeek.com/zypper-command-examples-manage-packages-opensuse-system/) 安装 TLP。

```

$ sudo zypper install TLP

```

安装 smartmontool 以显示 tlp-stat 中 S.M.A.R.T. 数据。

```

$ sudo zypper install smartmontools

```

成功安装 TLP 后,使用以下命令启动服务。

```

$ systemctl start tlp.service

```

### 使用方法

#### 显示电池信息

```

$ sudo tlp-stat -b

或

$ sudo tlp-stat --battery

```

```

--- TLP 1.1 --------------------------------------------

+++ Battery Status

/sys/class/power_supply/BAT0/manufacturer = SMP

/sys/class/power_supply/BAT0/model_name = L14M4P23

/sys/class/power_supply/BAT0/cycle_count = (not supported)

/sys/class/power_supply/BAT0/energy_full_design = 60000 [mWh]

/sys/class/power_supply/BAT0/energy_full = 48850 [mWh]

/sys/class/power_supply/BAT0/energy_now = 48850 [mWh]

/sys/class/power_supply/BAT0/power_now = 0 [mW]

/sys/class/power_supply/BAT0/status = Full

Charge = 100.0 [%]

Capacity = 81.4 [%]

```

#### 显示磁盘信息

```

$ sudo tlp-stat -d

或

$ sudo tlp-stat --disk

```

```

--- TLP 1.1 --------------------------------------------

+++ Storage Devices

/dev/sda:

Model = WDC WD10SPCX-24HWST1

Firmware = 02.01A02

APM Level = 128

Status = active/idle

Scheduler = mq-deadline

Runtime PM: control = on, autosuspend_delay = (not available)

SMART info:

4 Start_Stop_Count = 18787

5 Reallocated_Sector_Ct = 0

9 Power_On_Hours = 606 [h]

12 Power_Cycle_Count = 1792

193 Load_Cycle_Count = 25775

194 Temperature_Celsius = 31 [°C]

+++ AHCI Link Power Management (ALPM)

/sys/class/scsi_host/host0/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host1/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host2/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host3/link_power_management_policy = med_power_with_dipm

+++ AHCI Host Controller Runtime Power Management

/sys/bus/pci/devices/0000:00:17.0/ata1/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata2/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata3/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata4/power/control = on

```

#### 显示 PCI 设备信息

```

$ sudo tlp-stat -e

或

$ sudo tlp-stat --pcie

```

```

$ sudo tlp-stat -e

or

$ sudo tlp-stat --pcie

--- TLP 1.1 --------------------------------------------

+++ Runtime Power Management

Device blacklist = (not configured)

Driver blacklist = amdgpu nouveau nvidia radeon pcieport

/sys/bus/pci/devices/0000:00:00.0/power/control = auto (0x060000, Host bridge, skl_uncore)

/sys/bus/pci/devices/0000:00:01.0/power/control = auto (0x060400, PCI bridge, pcieport)

/sys/bus/pci/devices/0000:00:02.0/power/control = auto (0x030000, VGA compatible controller, i915)

/sys/bus/pci/devices/0000:00:14.0/power/control = auto (0x0c0330, USB controller, xhci_hcd)

......

```

#### 显示图形卡信息

```

$ sudo tlp-stat -g

或

$ sudo tlp-stat --graphics

```

```

--- TLP 1.1 --------------------------------------------

+++ Intel Graphics

/sys/module/i915/parameters/enable_dc = -1 (use per-chip default)

/sys/module/i915/parameters/enable_fbc = 1 (enabled)

/sys/module/i915/parameters/enable_psr = 0 (disabled)

/sys/module/i915/parameters/modeset = -1 (use per-chip default)

```

#### 显示处理器信息

```

$ sudo tlp-stat -p

或

$ sudo tlp-stat --processor

```

```

--- TLP 1.1 --------------------------------------------

+++ Processor

CPU model = Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors = performance powersave

/sys/devices/system/cpu/cpu0/cpufreq/scaling_min_freq = 800000 [kHz]

/sys/devices/system/cpu/cpu0/cpufreq/scaling_max_freq = 3500000 [kHz]

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_preference = balance_power

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

......

/sys/devices/system/cpu/intel_pstate/min_perf_pct = 22 [%]

/sys/devices/system/cpu/intel_pstate/max_perf_pct = 100 [%]

/sys/devices/system/cpu/intel_pstate/no_turbo = 0

/sys/devices/system/cpu/intel_pstate/turbo_pct = 33 [%]

/sys/devices/system/cpu/intel_pstate/num_pstates = 28

x86_energy_perf_policy: program not installed.

/sys/module/workqueue/parameters/power_efficient = Y

/proc/sys/kernel/nmi_watchdog = 0

+++ Undervolting

PHC kernel not available.

```

#### 显示系统数据信息

```

$ sudo tlp-stat -s

或

$ sudo tlp-stat --system

```

```

--- TLP 1.1 --------------------------------------------

+++ System Info

System = LENOVO Lenovo ideapad Y700-15ISK 80NV

BIOS = CDCN35WW

Release = "Manjaro Linux"

Kernel = 4.19.6-1-MANJARO #1 SMP PREEMPT Sat Dec 1 12:21:26 UTC 2018 x86_64

/proc/cmdline = BOOT_IMAGE=/boot/vmlinuz-4.19-x86_64 root=UUID=69d9dd18-36be-4631-9ebb-78f05fe3217f rw quiet resume=UUID=a2092b92-af29-4760-8e68-7a201922573b

Init system = systemd

Boot mode = BIOS (CSM, Legacy)

+++ TLP Status

State = enabled

Last run = 11:04:00 IST, 596 sec(s) ago

Mode = battery

Power source = battery

```

#### 显示温度和风扇速度信息

```

$ sudo tlp-stat -t

或

$ sudo tlp-stat --temp

```

```

--- TLP 1.1 --------------------------------------------

+++ Temperatures

CPU temp = 36 [°C]

Fan speed = (not available)

```

#### 显示 USB 设备数据信息

```

$ sudo tlp-stat -u

或

$ sudo tlp-stat --usb

```

```

--- TLP 1.1 --------------------------------------------

+++ USB

Autosuspend = disabled

Device whitelist = (not configured)

Device blacklist = (not configured)

Bluetooth blacklist = disabled

Phone blacklist = disabled

WWAN blacklist = enabled

Bus 002 Device 001 ID 1d6b:0003 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 3.0 root hub (hub)

Bus 001 Device 003 ID 174f:14e8 control = auto, autosuspend_delay_ms = 2000 -- Syntek (uvcvideo)

......

```

#### 显示警告信息

```

$ sudo tlp-stat -w

或

$ sudo tlp-stat --warn

```

```

--- TLP 1.1 --------------------------------------------

No warnings detected.

```

#### 状态报告及配置和所有活动的设置

```

$ sudo tlp-stat

```

```

--- TLP 1.1 --------------------------------------------

+++ Configured Settings: /etc/default/tlp

TLP_ENABLE=1

TLP_DEFAULT_MODE=AC

TLP_PERSISTENT_DEFAULT=0

DISK_IDLE_SECS_ON_AC=0

DISK_IDLE_SECS_ON_BAT=2

MAX_LOST_WORK_SECS_ON_AC=15

MAX_LOST_WORK_SECS_ON_BAT=60

......

+++ System Info

System = LENOVO Lenovo ideapad Y700-15ISK 80NV

BIOS = CDCN35WW

Release = "Manjaro Linux"

Kernel = 4.19.6-1-MANJARO #1 SMP PREEMPT Sat Dec 1 12:21:26 UTC 2018 x86_64

/proc/cmdline = BOOT_IMAGE=/boot/vmlinuz-4.19-x86_64 root=UUID=69d9dd18-36be-4631-9ebb-78f05fe3217f rw quiet resume=UUID=a2092b92-af29-4760-8e68-7a201922573b

Init system = systemd

Boot mode = BIOS (CSM, Legacy)

+++ TLP Status

State = enabled

Last run = 11:04:00 IST, 684 sec(s) ago

Mode = battery

Power source = battery

+++ Processor

CPU model = Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver = intel_pstate

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor = powersave

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors = performance powersave

......

/sys/devices/system/cpu/intel_pstate/min_perf_pct = 22 [%]

/sys/devices/system/cpu/intel_pstate/max_perf_pct = 100 [%]

/sys/devices/system/cpu/intel_pstate/no_turbo = 0

/sys/devices/system/cpu/intel_pstate/turbo_pct = 33 [%]

/sys/devices/system/cpu/intel_pstate/num_pstates = 28

x86_energy_perf_policy: program not installed.

/sys/module/workqueue/parameters/power_efficient = Y

/proc/sys/kernel/nmi_watchdog = 0

+++ Undervolting

PHC kernel not available.

+++ Temperatures

CPU temp = 42 [°C]

Fan speed = (not available)

+++ File System

/proc/sys/vm/laptop_mode = 2

/proc/sys/vm/dirty_writeback_centisecs = 6000

/proc/sys/vm/dirty_expire_centisecs = 6000

/proc/sys/vm/dirty_ratio = 20

/proc/sys/vm/dirty_background_ratio = 10

+++ Storage Devices

/dev/sda:

Model = WDC WD10SPCX-24HWST1

Firmware = 02.01A02

APM Level = 128

Status = active/idle

Scheduler = mq-deadline

Runtime PM: control = on, autosuspend_delay = (not available)

SMART info:

4 Start_Stop_Count = 18787

5 Reallocated_Sector_Ct = 0

9 Power_On_Hours = 606 [h]

12 Power_Cycle_Count = 1792

193 Load_Cycle_Count = 25777

194 Temperature_Celsius = 31 [°C]

+++ AHCI Link Power Management (ALPM)

/sys/class/scsi_host/host0/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host1/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host2/link_power_management_policy = med_power_with_dipm

/sys/class/scsi_host/host3/link_power_management_policy = med_power_with_dipm

+++ AHCI Host Controller Runtime Power Management

/sys/bus/pci/devices/0000:00:17.0/ata1/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata2/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata3/power/control = on

/sys/bus/pci/devices/0000:00:17.0/ata4/power/control = on

+++ PCIe Active State Power Management

/sys/module/pcie_aspm/parameters/policy = powersave

+++ Intel Graphics

/sys/module/i915/parameters/enable_dc = -1 (use per-chip default)

/sys/module/i915/parameters/enable_fbc = 1 (enabled)

/sys/module/i915/parameters/enable_psr = 0 (disabled)

/sys/module/i915/parameters/modeset = -1 (use per-chip default)

+++ Wireless

bluetooth = on

wifi = on

wwan = none (no device)

hci0(btusb) : bluetooth, not connected

wlp8s0(iwlwifi) : wifi, connected, power management = on

+++ Audio

/sys/module/snd_hda_intel/parameters/power_save = 1

/sys/module/snd_hda_intel/parameters/power_save_controller = Y

+++ Runtime Power Management

Device blacklist = (not configured)

Driver blacklist = amdgpu nouveau nvidia radeon pcieport

/sys/bus/pci/devices/0000:00:00.0/power/control = auto (0x060000, Host bridge, skl_uncore)

/sys/bus/pci/devices/0000:00:01.0/power/control = auto (0x060400, PCI bridge, pcieport)

/sys/bus/pci/devices/0000:00:02.0/power/control = auto (0x030000, VGA compatible controller, i915)

......

+++ USB

Autosuspend = disabled

Device whitelist = (not configured)

Device blacklist = (not configured)

Bluetooth blacklist = disabled

Phone blacklist = disabled

WWAN blacklist = enabled

Bus 002 Device 001 ID 1d6b:0003 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 3.0 root hub (hub)

Bus 001 Device 003 ID 174f:14e8 control = auto, autosuspend_delay_ms = 2000 -- Syntek (uvcvideo)

Bus 001 Device 002 ID 17ef:6053 control = on, autosuspend_delay_ms = 2000 -- Lenovo (usbhid)

Bus 001 Device 004 ID 8087:0a2b control = auto, autosuspend_delay_ms = 2000 -- Intel Corp. (btusb)

Bus 001 Device 001 ID 1d6b:0002 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 2.0 root hub (hub)

+++ Battery Status

/sys/class/power_supply/BAT0/manufacturer = SMP

/sys/class/power_supply/BAT0/model_name = L14M4P23

/sys/class/power_supply/BAT0/cycle_count = (not supported)

/sys/class/power_supply/BAT0/energy_full_design = 60000 [mWh]

/sys/class/power_supply/BAT0/energy_full = 51690 [mWh]

/sys/class/power_supply/BAT0/energy_now = 50140 [mWh]

/sys/class/power_supply/BAT0/power_now = 12185 [mW]

/sys/class/power_supply/BAT0/status = Discharging

Charge = 97.0 [%]

Capacity = 86.2 [%]

```

---

via: <https://www.2daygeek.com/tlp-increase-optimize-linux-laptop-battery-life/>

作者:[Magesh Maruthamuthu](https://www.2daygeek.com/author/magesh/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 404 | Not Found | null |

10,850 | 使用 Python 和 Pygame 模块构建一个游戏框架 | https://opensource.com/article/17/12/game-framework-python | 2019-05-13T21:51:36 | [

"Python",

"Pygame"

] | https://linux.cn/article-10850-1.html |

>

> 这系列的第一篇通过创建一个简单的骰子游戏来探究 Python。现在是来从零制作你自己的游戏的时间。

>

>

>

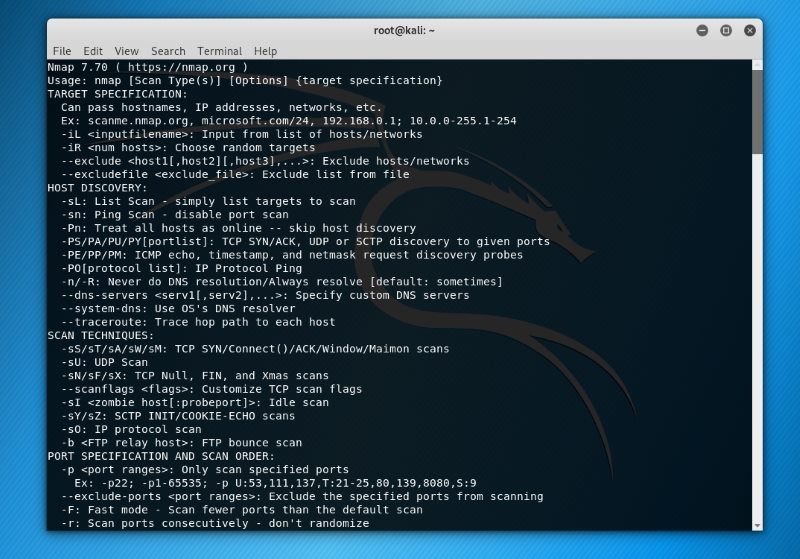

在我的[这系列的第一篇文章](/article-9071-1.html) 中, 我已经讲解如何使用 Python 创建一个简单的、基于文本的骰子游戏。这次,我将展示如何使用 Python 模块 Pygame 来创建一个图形化游戏。它将需要几篇文章才能来得到一个确实做成一些东西的游戏,但是到这系列的结尾,你将更好地理解如何查找和学习新的 Python 模块和如何从其基础上构建一个应用程序。

在开始前,你必须安装 [Pygame](http://www.pygame.org/wiki/about)。

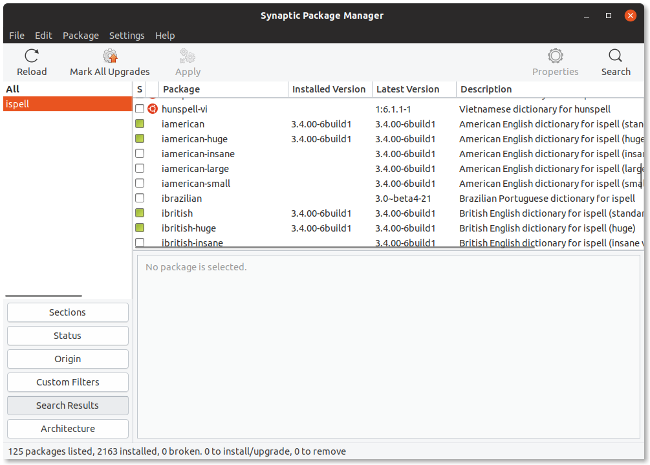

### 安装新的 Python 模块

有几种方法来安装 Python 模块,但是最通用的两个是:

* 从你的发行版的软件存储库

* 使用 Python 的软件包管理器 `pip`

两个方法都工作的很好,并且每一个都有它自己的一套优势。如果你是在 Linux 或 BSD 上开发,可以利用你的发行版的软件存储库来自动和及时地更新。

然而,使用 Python 的内置软件包管理器可以给予你控制更新模块时间的能力。而且,它不是特定于操作系统的,这意味着,即使当你不是在你常用的开发机器上时,你也可以使用它。`pip` 的其它的优势是允许本地安装模块,如果你没有正在使用的计算机的管理权限,这是有用的。

### 使用 pip

如果 Python 和 Python3 都安装在你的系统上,你想使用的命令很可能是 `pip3`,它用来区分 Python 2.x 的 `pip` 的命令。如果你不确定,先尝试 `pip3`。

`pip` 命令有些像大多数 Linux 软件包管理器一样工作。你可以使用 `search` 搜索 Python 模块,然后使用 `install` 安装它们。如果你没有你正在使用的计算机的管理权限来安装软件,你可以使用 `--user` 选项来仅仅安装模块到你的家目录。

```

$ pip3 search pygame

[...]

Pygame (1.9.3) - Python Game Development

sge-pygame (1.5) - A 2-D game engine for Python

pygame_camera (0.1.1) - A Camera lib for PyGame

pygame_cffi (0.2.1) - A cffi-based SDL wrapper that copies the pygame API.

[...]

$ pip3 install Pygame --user

```

Pygame 是一个 Python 模块,这意味着它仅仅是一套可以使用在你的 Python 程序中的库。换句话说,它不是一个像 [IDLE](https://en.wikipedia.org/wiki/IDLE) 或 [Ninja-IDE](http://ninja-ide.org/) 一样可以让你启动的程序。

### Pygame 新手入门

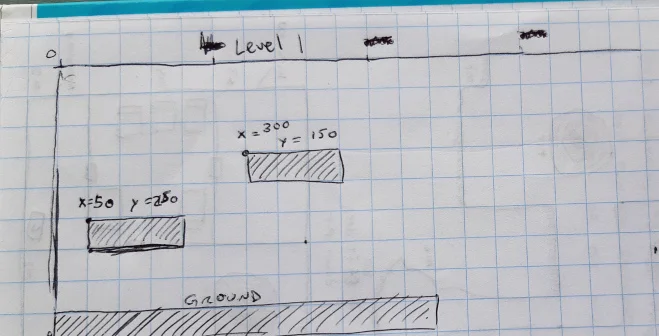

一个电子游戏需要一个背景设定:故事发生的地点。在 Python 中,有两种不同的方法来创建你的故事背景:

* 设置一种背景颜色

* 设置一张背景图片

你的背景仅是一张图片或一种颜色。你的电子游戏人物不能与在背景中的东西相互作用,因此,不要在后面放置一些太重要的东西。它仅仅是设置装饰。

### 设置你的 Pygame 脚本

要开始一个新的 Pygame 工程,先在计算机上创建一个文件夹。游戏的全部文件被放在这个目录中。在你的工程文件夹内部保持所需要的所有的文件来运行游戏是极其重要的。

一个 Python 脚本以文件类型、你的姓名,和你想使用的许可证开始。使用一个开放源码许可证,以便你的朋友可以改善你的游戏并与你一起分享他们的更改:

```

#!/usr/bin/env python3

# by Seth Kenlon

## GPLv3

# This program is free software: you can redistribute it and/or

# modify it under the terms of the GNU General Public License as

# published by the Free Software Foundation, either version 3 of the

# License, or (at your option) any later version.

#

# This program is distributed in the hope that it will be useful, but

# WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

# General Public License for more details.

#

# You should have received a copy of the GNU General Public License

# along with this program. If not, see <http://www.gnu.org/licenses/>.

```

然后,你告诉 Python 你想使用的模块。一些模块是常见的 Python 库,当然,你想包括一个你刚刚安装的 Pygame 模块。

```

import pygame # 加载 pygame 关键字

import sys # 让 python 使用你的文件系统

import os # 帮助 python 识别你的操作系统

```

由于你将用这个脚本文件做很多工作,在文件中分成段落是有帮助的,以便你知道在哪里放代码。你可以使用块注释来做这些,这些注释仅在看你的源文件代码时是可见的。在你的代码中创建三个块。

```

'''

Objects

'''

# 在这里放置 Python 类和函数

'''

Setup

'''

# 在这里放置一次性的运行代码

'''

Main Loop

'''

# 在这里放置游戏的循环代码指令

```

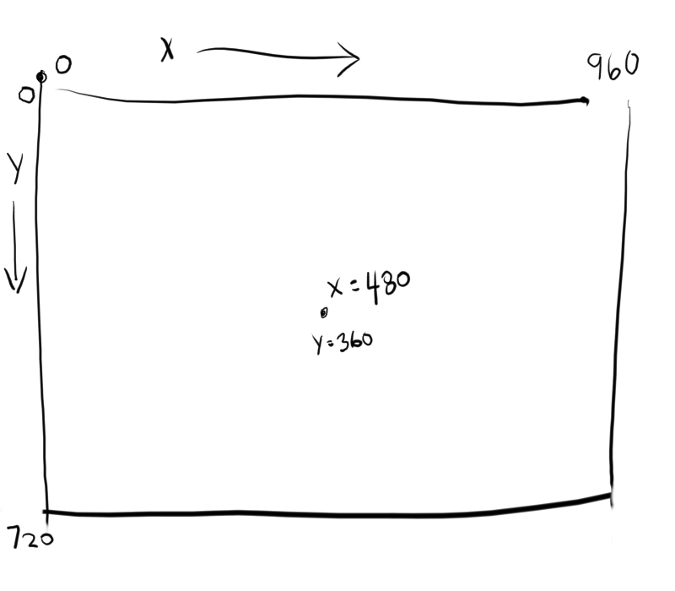

接下来,为你的游戏设置窗口大小。注意,不是每一个人都有大计算机屏幕,所以,最好使用一个适合大多数人的计算机的屏幕大小。

这里有一个方法来切换全屏模式,很多现代电子游戏都会这样做,但是,由于你刚刚开始,简单起见仅设置一个大小即可。

```

'''

Setup

'''

worldx = 960

worldy = 720

```

在脚本中使用 Pygame 引擎前,你需要一些基本的设置。你必须设置帧频,启动它的内部时钟,然后开始 (`init`)Pygame 。

```

fps = 40 # 帧频

ani = 4 # 动画循环

clock = pygame.time.Clock()

pygame.init()

```

现在你可以设置你的背景。

### 设置背景

在你继续前,打开一个图形应用程序,为你的游戏世界创建一个背景。在你的工程目录中的 `images` 文件夹内部保存它为 `stage.png` 。

这里有一些你可以使用的自由图形应用程序。

* [Krita](http://krita.org) 是一个专业级绘图素材模拟器,它可以被用于创建漂亮的图片。如果你对创建电子游戏艺术作品非常感兴趣,你甚至可以购买一系列的[游戏艺术作品教程](https://gumroad.com/l/krita-game-art-tutorial-1)。

* [Pinta](https://pinta-project.com/pintaproject/pinta/releases) 是一个基本的,易于学习的绘图应用程序。

* [Inkscape](http://inkscape.org) 是一个矢量图形应用程序。使用它来绘制形状、线、样条曲线和贝塞尔曲线。

你的图像不必很复杂,你可以以后回去更改它。一旦有了它,在你文件的 Setup 部分添加这些代码:

```

world = pygame.display.set_mode([worldx,worldy])

backdrop = pygame.image.load(os.path.join('images','stage.png').convert())

backdropbox = world.get_rect()

```

如果你仅仅用一种颜色来填充你的游戏的背景,你需要做的就是:

```

world = pygame.display.set_mode([worldx,worldy])

```

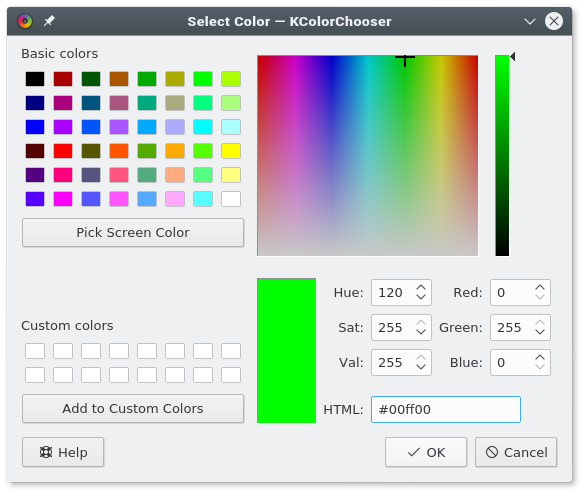

你也必须定义颜色以使用。在你的 Setup 部分,使用红、绿、蓝 (RGB) 的值来创建一些颜色的定义。

```

'''

Setup

'''

BLUE = (25,25,200)

BLACK = (23,23,23 )

WHITE = (254,254,254)

```

至此,你理论上可以启动你的游戏了。问题是,它可能仅持续了一毫秒。

为证明这一点,保存你的文件为 `your-name_game.py`(用你真实的名称替换 `your-name`)。然后启动你的游戏。

如果你正在使用 IDLE,通过选择来自 “Run” 菜单的 “Run Module” 来运行你的游戏。

如果你正在使用 Ninja,在左侧按钮条中单击 “Run file” 按钮。

你也可以直接从一个 Unix 终端或一个 Windows 命令提示符中运行一个 Python 脚本。

```

$ python3 ./your-name_game.py

```

如果你正在使用 Windows,使用这命令:

```

py.exe your-name_game.py

```

启动它,不过不要期望很多,因为你的游戏现在仅仅持续几毫秒。你可以在下一部分中修复它。

### 循环

除非另有说明,一个 Python 脚本运行一次并仅一次。近来计算机的运行速度是非常快的,所以你的 Python 脚本运行时间会少于 1 秒钟。

为强制你的游戏来处于足够长的打开和活跃状态来让人看到它(更不要说玩它),使用一个 `while` 循环。为使你的游戏保存打开,你可以设置一个变量为一些值,然后告诉一个 `while` 循环只要变量保持未更改则一直保存循环。

这经常被称为一个“主循环”,你可以使用术语 `main` 作为你的变量。在你的 Setup 部分的任意位置添加代码:

```

main = True

```

在主循环期间,使用 Pygame 关键字来检查键盘上的按键是否已经被按下或释放。添加这些代码到你的主循环部分:

```

'''

Main loop

'''

while main == True:

for event in pygame.event.get():

if event.type == pygame.QUIT:

pygame.quit(); sys.exit()

main = False

if event.type == pygame.KEYDOWN:

if event.key == ord('q'):

pygame.quit()

sys.exit()

main = False

```

也是在你的循环中,刷新你世界的背景。

如果你使用一个图片作为背景:

```

world.blit(backdrop, backdropbox)

```

如果你使用一种颜色作为背景:

```

world.fill(BLUE)

```

最后,告诉 Pygame 来重新刷新屏幕上的所有内容,并推进游戏的内部时钟。

```

pygame.display.flip()

clock.tick(fps)

```

保存你的文件,再次运行它来查看你曾经创建的最无趣的游戏。

退出游戏,在你的键盘上按 `q` 键。

在这系列的 [下一篇文章](https://opensource.com/article/17/12/program-game-python-part-3-spawning-player) 中,我将向你演示,如何加强你当前空空如也的游戏世界,所以,继续学习并创建一些将要使用的图形!

---

via: <https://opensource.com/article/17/12/game-framework-python>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[robsean](https://github.com/robsean) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

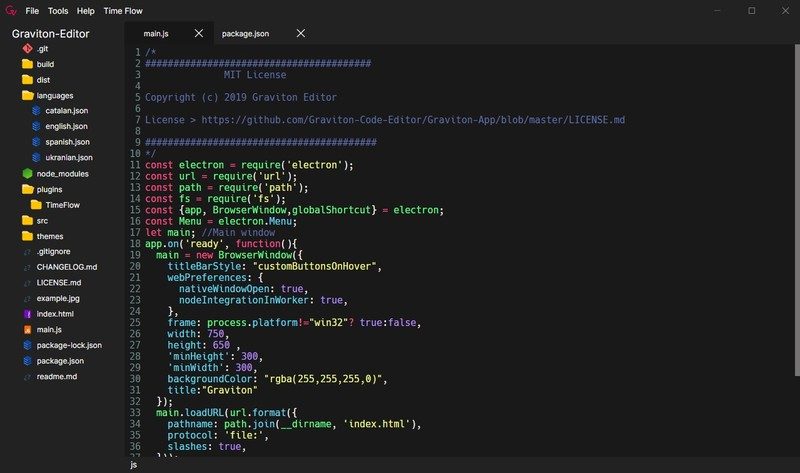

| 200 | OK | In my [first article in this series](https://opensource.com/article/17/10/python-101), I explained how to use Python to create a simple, text-based dice game. You also used the Turtle module to generate some simple graphics. In this article, you start using a module called Pygame to create a game with graphics.

The Turtle module is included with Python by default. Anyone who's got Python installed also has Turtle. The same is not true for an advanced module like Pygame. Because it's a specialized code library, you must install Pygame yourself. Modern Python development uses the concept of virtual *environments*, which provides your Python code its own space to run in, and also helps manage which code libraries your application uses. This ensures that when you pass your Python application to another user to play, you know exactly what they need to install to make it work.

You can manage your Python virtual environment manually, or you can let your IDE help you. For now, you can let PyCharm do all the work. If you're not using PyCharm, [read László Kiss Kollár's article about managing Python packages](https://opensource.com/article/19/4/managing-python-packages).

## Getting started with Pygame

Pygame is a library, or *Python module*. It's a collection of common code that prevents you from having to reinvent the wheel with every new game you write. You've already used the Turtle module, and you can imagine how complex that could have been if you'd had to write the code to create a pen before drawing with it. Pygame offers similar advantages, but for video games.

A video game needs a setting, a world in which it takes place. In Pygame, there are two different ways to create your setting:

- Set a background color

- Set a background image

Either way, your background is only an image or a color. Your video game characters can't interact with things in the background, so don't put anything too important back there. It's just set dressing.

## Setting up your first Pygame script

To start a new Python project, you would normally create a new folder on your computer and place all your game files go into this directory. It's vitally important that you keep all the files needed to run your game inside of your project folder.

PyCharm (or whatever IDE you use) can do this for you.

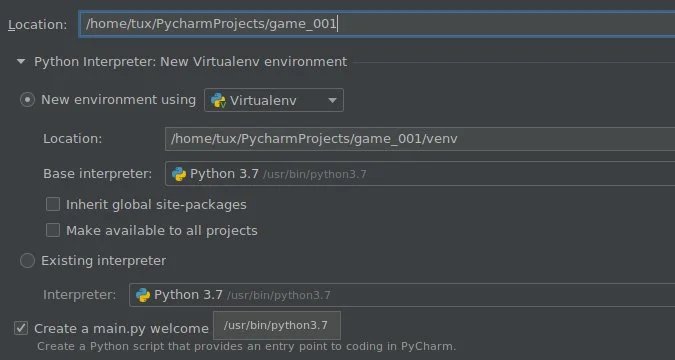

To create a new project in PyCharm, navigate to the **File** menu and select **New Project**. In the window that appears, enter a name for your project (such as **game_001**.) Notice that this project is saved into a special **PycharmProjects** folder in your home directory. This ensures that all the files your game needs stays in one place.

When creating a new project, let PyCharm create a new environment using Virtualenv, and accept all defaults. Enable the option to create a **main.py** file (and to set up some important defaults.)

opensource.com

After you've clicked the **Create** button, a new project appears in your PyCharm window. The project consists of a virtual environment (the **venv** directory listed in the left column) and a demo file called **main.py**.

Delete all the contents of **main.py** so you can enter your own custom code. A Python script starts with the file type, your name, and the license you want to use. Use an open source license so your friends can improve your game and share their changes with you:

```

#!/usr/bin/env python3

# by Seth Kenlon

## GPLv3

# This program is free software: you can redistribute it and/or

# modify it under the terms of the GNU General Public License as

# published by the Free Software Foundation, either version 3 of the

# License, or (at your option) any later version.

#

# This program is distributed in the hope that it will be useful, but

# WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

# General Public License for more details.

#

# You should have received a copy of the GNU General Public License

# along with this program. If not, see <http://www.gnu.org/licenses/>.

```

Then tell Python what modules you want to use. In this code sample, you don't have to type the # character or anything after it on each line. The # character in Python represents a *comment*, notes in code left for yourself and other coders.

```

import pygame # load pygame keywords

import sys # let python use your file system

import os # help python identify your OS

```

Notice that PyCharm doesn't understand what Pygame is, and underlines it to mark it as an error. This is because Pygame, unlike sys and os, isn't included with Python by default. You need to include Pygame in your project directory before you can use it successfully in code. To include it, hover your mouse over the word **pygame** until you see a notification popup explaining the error.

Click the **Install package pygame** link in the alert box, and wait for PyCharm to install Pygame into your virtual environment.

Once it's installed, the error disappears.

Without an IDE like PyCharm, you can install Pygame into your virtual environment manually using the **pip **command.

## Code sections

Because you'll be working a lot with this script file, it helps to make sections within the file so you know where to put stuff. You do this with block comments, which are comments that are visible only when looking at your source code. Create four blocks in your code. These are all comments that Python ignores, but they're good placeholders for you as you follow along with these lessons. I still use placeholders when I code, because it helps me organize and plan.

```

'''

Variables

'''

# put variables here

'''

Objects

'''

# put Python classes and functions here

'''

Setup

'''

# put run-once code here

'''

Main Loop

'''

# put game loop here

```

Next, set the window size for your game. Keep in mind that not everyone has a big computer screen, so it's best to use a screen size that fits on "most" people's computers.

There is a way to toggle full-screen mode, the way many modern video games do, but since you're just starting out, keep it simple and just set one size.

```

'''

Variables

'''

worldx = 960

worldy = 720

```

The Pygame engine requires some basic setup before you can use it in a script. You must set the frame rate, start its internal clock, and start (using the keyword `init`

, for *initialize*) Pygame.

Add these variables:

```

fps = 40 # frame rate

ani = 4 # animation cycles

```

Add instructions to start Pygame's internal clock in the Setup section:

```

'''

Setup

'''

clock = pygame.time.Clock()

pygame.init()

```

Now you can set your background.

## Setting the background

Before you continue, open a graphics application and create a background for your game world. Save it as `stage.png`

inside a folder called `images`

in your project directory. If you need a starting point, you can download a set of [Creative Commons](https://opensource.com/article/20/1/what-creative-commons) backgrounds from [kenny.nl](https://kenney.nl/assets/background-elements-redux).

There are several free graphic applications you can use to create, resize, or modify graphics for your games.

[Pinta](https://pinta-project.com/pintaproject/pinta/releases)is a basic, easy to learn paint application.[Krita](http://krita.org)is a professional-level paint materials emulator that can be used to create beautiful images. If you're very interested in creating art for video games, you can even purchase a series of online[game art tutorials](https://gumroad.com/l/krita-game-art-tutorial-1).[Inkscape](http://inkscape.org)is a vector graphics application. Use it to draw with shapes, lines, splines, and Bézier curves.

Your graphic doesn't have to be complex, and you can always go back and change it later. Once you have a background, add this code in the setup section of your file:

```

world = pygame.display.set_mode([worldx,worldy])

backdrop = pygame.image.load(os.path.join('images','stage.png'))

backdropbox = world.get_rect()

```

If you're just going to fill the background of your game world with a color, all you need is:

`world = pygame.display.set_mode([worldx, worldy])`

You also must define a color to use. In your setup section, create some color definitions using values for red, green, and blue (RGB).

```

'''

Variables

'''

BLUE = (25, 25, 200)

BLACK = (23, 23, 23)

WHITE = (254, 254, 254)

```

## Look out for errors

PyCharm is strict, and that's pretty typical for programming. Syntax is paramount! As you enter your code into PyCharm, you see warnings and errors. The warnings are yellow and the errors are red.

PyCharm can be over-zealous sometimes, though, so it's usually safe to ignore warnings. You may be violating the "Python style", but these are subtle conventions that you'll learn in time. Your code will still run as expected.

Errors, on the other hand, prevent your code (and sometimes PyCharm) from doing what you expect. For instance, PyCharm is very insistent that there's a newline character at the end of the last line of code, so you **must** press **Enter** or **Return** on your keyboard at the end of your code. If you don't, PyCharm quietly refuses to run your code.

## Running the game

At this point, you could theoretically start your game. The problem is, it would only last for a millisecond.

To prove this, save and then run your game.

If you are using IDLE, run your game by selecting `Run Module`

from the Run menu.

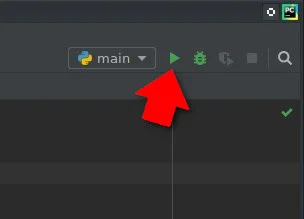

If you are using PyCharm, click the `Run file`

button in the top right toolbar.

opensource.com

You can also run a Python script straight from a Unix terminal or a Windows command prompt, as long as you're in your virtual environment.

However you launch it, don't expect much, because your game only lasts a few milliseconds right now. You can fix that in the next section.

## Looping

Unless told otherwise, a Python script runs once and only once. Computers are very fast these days, so your Python script runs in less than a second.

To force your game to stay open and active long enough for someone to see it (let alone play it), use a `while`

loop. To make your game remain open, you can set a variable to some value, then tell a `while`

loop to keep looping for as long as the variable remains unchanged.

This is often called a "main loop," and you can use the term `main`

as your variable. Add this anywhere in your Variables section:

`main = True`

During the main loop, use Pygame keywords to detect if keys on the keyboard have been pressed or released. Add this to your main loop section:

```

'''

Main loop

'''

while main:

for event in pygame.event.get():

if event.type == pygame.QUIT:

pygame.quit()

try:

sys.exit()

finally:

main = False

if event.type == pygame.KEYDOWN:

if event.key == ord('q'):

pygame.quit()

try:

sys.exit()

finally:

main = False

```

Be sure to press **Enter** or **Return** after the final line of your code so there's an empty line at the bottom of your file.

Also in your main loop, refresh your world's background.

If you are using an image for the background:

`world.blit(backdrop, backdropbox)`

If you are using a color for the background:

`world.fill(BLUE)`

Finally, tell Pygame to refresh everything on the screen and advance the game's internal clock.

```

pygame.display.flip()

clock.tick(fps)

```

Save your file, and run it again to see the most boring game ever created.

To quit the game, press `q`

on your keyboard.

## Freeze your Python environment

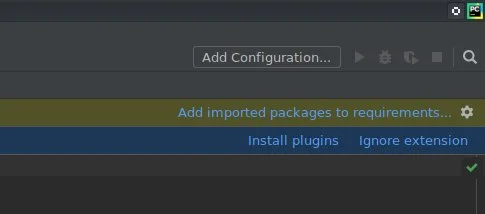

PyCharm is managing your code libraries, but your users aren't going to run your game from PyCharm. Just as you save your code file, you also need to preserve your virtual environment.

Go to the **Tools** menu and select **Sync Python Requirements**. This saves your library dependencies to a special file called **requirements.txt**. The first time you sync your requirements, PyCharm prompts you to install a plugin and to add dependencies. Click to accept these offers.

opensource.com

A **requirements.txt** is generated for you, and placed into your project directory.

## Code

Here's what your code should look like so far:

```

#!/usr/bin/env python3

# by Seth Kenlon

# GPLv3

# This program is free software: you can redistribute it and/or

# modify it under the terms of the GNU General Public License as

# published by the Free Software Foundation, either version 3 of the

# License, or (at your option) any later version.

#

# This program is distributed in the hope that it will be useful, but

# WITHOUT ANY WARRANTY; without even the implied warranty of

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

# General Public License for more details.

#

# You should have received a copy of the GNU General Public License

# along with this program. If not, see <http://www.gnu.org/licenses/>.

import pygame

import sys

import os

'''

Variables

'''

worldx = 960

worldy = 720

fps = 40 # frame rate

ani = 4 # animation cycles

main = True

BLUE = (25, 25, 200)

BLACK = (23, 23, 23)

WHITE = (254, 254, 254)

'''

Objects

'''

# put Python classes and functions here

'''

Setup

'''

clock = pygame.time.Clock()

pygame.init()

world = pygame.display.set_mode([worldx, worldy])

backdrop = pygame.image.load(os.path.join('images', 'stage.png'))

backdropbox = world.get_rect()

'''

Main Loop

'''

while main:

for event in pygame.event.get():

if event.type == pygame.QUIT:

pygame.quit()

try:

sys.exit()

finally:

main = False

if event.type == pygame.KEYDOWN:

if event.key == ord('q'):

pygame.quit()

try:

sys.exit()

finally:

main = False

world.blit(backdrop, backdropbox)

pygame.display.flip()

clock.tick(fps)

```

## What to do next

In the [next article](https://opensource.com/article/17/12/program-game-python-part-3-spawning-player) of this series, I'll show you how to add to your currently empty game world, so start creating or finding some graphics to use!

## 6 Comments |

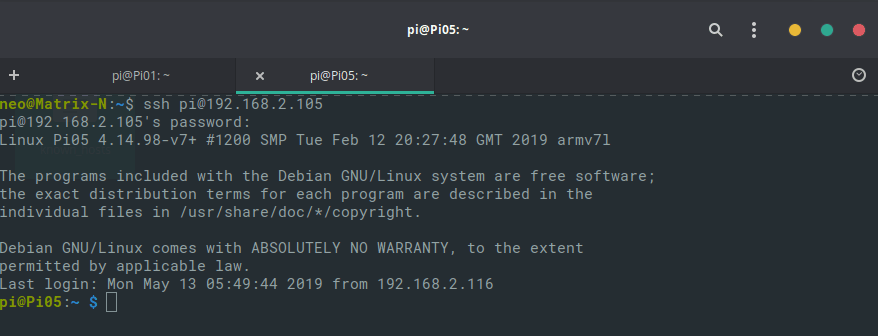

10,851 | 如何在 Linux 中创建 SSH 别名 | https://www.ostechnix.com/how-to-create-ssh-alias-in-linux/ | 2019-05-13T22:29:35 | [

"SSH",

"别名"

] | https://linux.cn/article-10851-1.html |

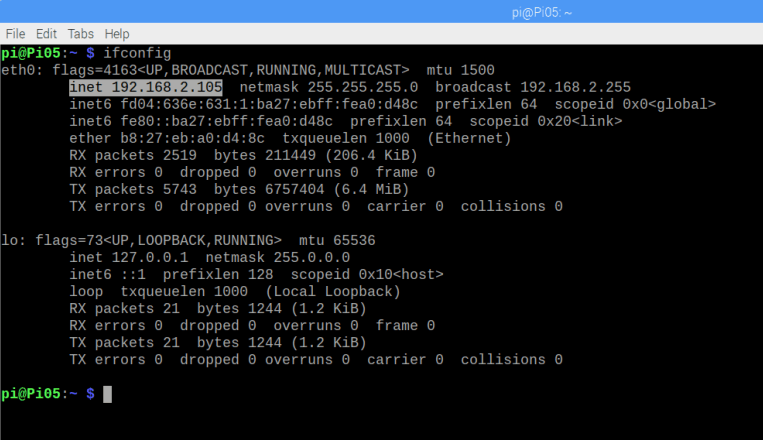

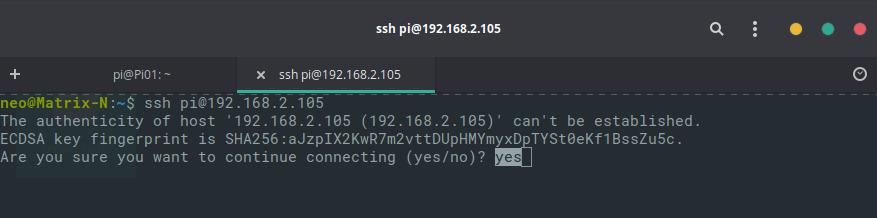

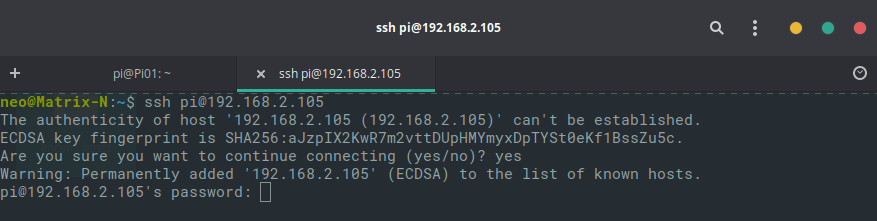

如果你经常通过 SSH 访问许多不同的远程系统,这个技巧将为你节省一些时间。你可以通过 SSH 为频繁访问的系统创建 SSH 别名,这样你就不必记住所有不同的用户名、主机名、SSH 端口号和 IP 地址等。此外,它避免了在 SSH 到 Linux 服务器时重复输入相同的用户名、主机名、IP 地址、端口号。

### 在 Linux 中创建 SSH 别名

在我知道这个技巧之前,我通常使用以下任意一种方式通过 SSH 连接到远程系统。

使用 IP 地址:

```

$ ssh 192.168.225.22

```

或使用端口号、用户名和 IP 地址:

```

$ ssh -p 22 [email protected]

```

或使用端口号、用户名和主机名:

```

$ ssh -p 22 [email protected]

```

这里

* `22` 是端口号,

* `sk` 是远程系统的用户名,

* `192.168.225.22` 是我远程系统的 IP,

* `server.example.com` 是远程系统的主机名。

我相信大多数 Linux 新手和(或一些)管理员都会以这种方式通过 SSH 连接到远程系统。但是,如果你通过 SSH 连接到多个不同的系统,记住所有主机名或 IP 地址,还有用户名是困难的,除非你将它们写在纸上或者将其保存在文本文件中。别担心!这可以通过为 SSH 连接创建别名(或快捷方式)轻松解决。

我们可以用两种方法为 SSH 命令创建别名。

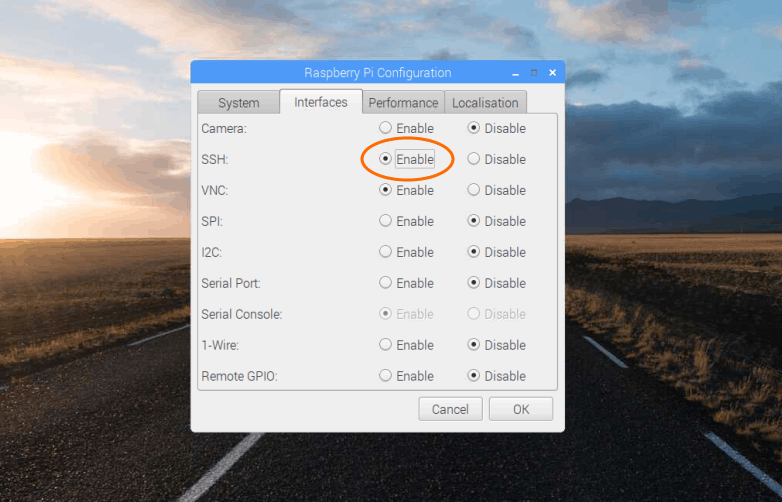

#### 方法 1 – 使用 SSH 配置文件

这是我创建别名的首选方法。

我们可以使用 SSH 默认配置文件来创建 SSH 别名。为此,编辑 `~/.ssh/config` 文件(如果此文件不存在,只需创建一个):

```

$ vi ~/.ssh/config

```

添加所有远程主机的详细信息,如下所示:

```

Host webserver

HostName 192.168.225.22

User sk

Host dns

HostName server.example.com

User root

Host dhcp

HostName 192.168.225.25

User ostechnix

Port 2233

```

*使用 SSH 配置文件在 Linux 中创建 SSH 别名*

将 `Host`、`Hostname`、`User` 和 `Port` 配置的值替换为你自己的值。添加所有远程主机的详细信息后,保存并退出该文件。

现在你可以使用以下命令通过 SSH 进入系统:

```

$ ssh webserver

$ ssh dns

$ ssh dhcp

```

就是这么简单!

看看下面的截图。

*使用 SSH 别名访问远程系统*

看到了吗?我只使用别名(例如 `webserver`)来访问 IP 地址为 `192.168.225.22` 的远程系统。

请注意,这只使用于当前用户。如果要为所有用户(系统范围内)提供别名,请在 `/etc/ssh/ssh_config` 文件中添加以上行。

你还可以在 SSH 配置文件中添加许多其他内容。例如,如果你[已配置基于 SSH 密钥的身份验证](https://www.ostechnix.com/configure-ssh-key-based-authentication-linux/),说明 SSH 密钥文件的位置,如下所示:

```

Host ubuntu

HostName 192.168.225.50

User senthil

IdentityFIle ~/.ssh/id_rsa_remotesystem

```

确保已使用你自己的值替换主机名、用户名和 SSH 密钥文件路径。

现在使用以下命令连接到远程服务器:

```

$ ssh ubuntu

```

这样,你可以添加希望通过 SSH 访问的任意多台远程主机,并使用别名快速访问它们。

#### 方法 2 – 使用 Bash 别名

这是创建 SSH 别名的一种应急变通的方法,可以加快通信的速度。你可以使用 [alias 命令](https://www.ostechnix.com/the-alias-and-unalias-commands-explained-with-examples/)使这项任务更容易。

打开 `~/.bashrc` 或者 `~/.bash_profile` 文件:

```

alias webserver='ssh [email protected]'

alias dns='ssh [email protected]'

alias dhcp='ssh [email protected] -p 2233'

alias ubuntu='ssh [email protected] -i ~/.ssh/id_rsa_remotesystem'

```

再次确保你已使用自己的值替换主机、主机名、端口号和 IP 地址。保存文件并退出。

然后,使用命令应用更改:

```

$ source ~/.bashrc

```

或者

```

$ source ~/.bash_profile

```

在此方法中,你甚至不需要使用 `ssh 别名` 命令。相反,只需使用别名,如下所示。

```

$ webserver

$ dns

$ dhcp

$ ubuntu

```

这两种方法非常简单,但对于经常通过 SSH 连接到多个不同系统的人来说非常有用,而且非常方便。使用适合你的上述任何一种方法,通过 SSH 快速访问远程 Linux 系统。

建议阅读:

* [允许或拒绝 SSH 访问 Linux 中的特定用户或组](https://www.ostechnix.com/allow-deny-ssh-access-particular-user-group-linux/)

* [如何在 Linux 上 SSH 到特定目录](https://www.ostechnix.com/how-to-ssh-into-a-particular-directory-on-linux/)

* [如何在 Linux 中断开 SSH 会话](https://www.ostechnix.com/how-to-stop-ssh-session-from-disconnecting-in-linux/)

* [4 种方式在退出 SSH 会话后保持命令运行](https://www.ostechnix.com/4-ways-keep-command-running-log-ssh-session/)

* [SSLH – 共享相同端口的 HTTPS 和 SSH](https://www.ostechnix.com/sslh-share-port-https-ssh/)

目前这就是全部了,希望它对你有帮助。更多好东西要来了,敬请关注!

干杯!

---

via: <https://www.ostechnix.com/how-to-create-ssh-alias-in-linux/>

作者:[sk](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,852 | 使用 Python 进行社交媒体情感分析入门 | https://opensource.com/article/19/4/social-media-sentiment-analysis-python | 2019-05-14T00:30:14 | [

"情感",

"NLP"

] | https://linux.cn/article-10852-1.html |

>

> 学习自然语言处理的基础知识并探索两个有用的 Python 包。

>

>

>

自然语言处理(NLP)是机器学习的一种,它解决了口语或书面语言和计算机辅助分析这些语言之间的相关性。日常生活中我们经历了无数的 NLP 创新,从写作帮助和建议到实时语音翻译,还有口译。

本文研究了 NLP 的一个特定领域:情感分析。重点是确定输入语言的积极、消极或中性性质。本部分将解释 NLP 和情感分析的背景,并探讨两个开源的 Python 包。[第 2 部分](https://opensource.com/article/19/4/social-media-sentiment-analysis-python-part-2)将演示如何开始构建自己的可扩展情感分析服务。

在学习情感分析时,对 NLP 有一个大体了解是有帮助的。本文不会深入研究数学本质。相反,我们的目标是阐明 NLP 中的关键概念,这些概念对于将这些方法实际结合到你的解决方案中至关重要。

### 自然语言和文本数据

合理的起点是从定义开始:“什么是自然语言?”它是我们人类相互交流的方式,沟通的主要方式是口语和文字。我们可以更进一步,只关注文本交流。毕竟,生活在 Siri、Alexa 等无处不在的时代,我们知道语音是一组与文本无关的计算。

### 数据前景和挑战

我们只考虑使用文本数据,我们可以对语言和文本做什么呢?首先是语言,特别是英语,除了规则还有很多例外,含义的多样性和语境差异,这些都可能使人类口译员感到困惑,更不用说计算机翻译了。在小学,我们学习文章和标点符号,通过讲母语,我们获得了寻找直觉上表示唯一意义的词的能力。比如,出现诸如 “a”、“the” 和 “or” 之类的文章,它们在 NLP 中被称为*停止词*,因为传统上 NLP 算法是在一个序列中找到这些词时意味着搜索停止。

由于我们的目标是自动将文本分类为情感类,因此我们需要一种以计算方式处理文本数据的方法。因此,我们必须考虑如何向机器表示文本数据。众所周知,利用和解释语言的规则很复杂,输入文本的大小和结构可能会有很大差异。我们需要将文本数据转换为数字数据,这是机器和数学的首选方式。这种转变属于*特征提取*的范畴。

在提取输入文本数据的数字表示形式后,一个改进可能是:给定一个文本输入体,为上面列出的文章确定一组向量统计数据,并根据这些数据对文档进行分类。例如,过多的副词可能会使撰稿人感到愤怒,或者过度使用停止词可能有助于识别带有内容填充的学期论文。诚然,这可能与我们情感分析的目标没有太大关系。

### 词袋

当你评估一个文本陈述是积极还是消极的时候,你使用哪些上下文来评估它的极性?(例如,文本中是否具有积极的、消极的或中性的情感)一种方式是隐含形容词:被称为 “disgusting”(恶心) 的东西被认为是消极的,但如果同样的东西被称为 “beautiful”(漂亮),你会认为它是积极的。从定义上讲,俗语给人一种熟悉感,通常是积极的,而脏话可能是敌意的表现。文本数据也可以包括表情符号,它带有固定的情感。

理解单个单词的极性影响为文本的<ruby> <a href="https://en.wikipedia.org/wiki/Bag-of-words_model"> 词袋 </a> <rt> bag-of-words </rt></ruby>(BoW)模型提供了基础。它分析一组单词或词汇表,并提取关于这些单词在输入文本中是否存在的度量。词汇表是通过处理已知极性的文本形成称为*标记的训练数据*。从这组标记数据中提取特征,然后分析特征之间的关系,并将标记与数据关联起来。

“词袋”这个名称说明了它的用途:即不考虑空间位置或上下文的的单个词。词汇表通常是由训练集中出现的所有单词构建的,训练后往往会被修剪。如果在训练之前没有清理停止词,那么停止词会因为其高频率和低语境而被移除。很少使用的单词也可以删除,因为缺乏为一般输入实例提供的信息。

但是,重要的是要注意,你可以(并且应该)进一步考虑单词在单个训练数据实例之外的情形,这称为<ruby> <a href="https://en.wikipedia.org/wiki/Tf%E2%80%93idf#Term_frequency"> 词频 </a> <rt> term frequency </rt></ruby>(TF)。你还应该考虑输入数据在所有训练实例中的单词计数,通常,出现在所有文档中的低频词更重要,这被称为<ruby> <a href="https://en.wikipedia.org/wiki/Tf%E2%80%93idf#Inverse_document_frequency"> 逆文本频率指数 </a> <rt> inverse document frequency </rt></ruby>(IDF)。这些指标一定会在本主题系列的其他文章和软件包中提及,因此了解它们会有所帮助。

词袋在许多文档分类应用程序中很有用。然而,在情感分析中,当缺乏情境意识的问题被利用时,事情就可以解决。考虑以下句子:

* 我们不喜欢这场战争。

* 我讨厌下雨天,好事是今天是晴天。

* 这不是生死攸关的问题。

这些短语的情感对于人类口译员来说是有难度的,而且通过严格关注单个词汇的实例,对于机器翻译来说也是困难的。

在 NLP 中也可以使用称为 “n-grams” 的单词分组。一个二元组考虑两个相邻单词组成的组而不是(或除了)单个词袋。这应该可以缓解诸如上述“不喜欢”之类的情况,但由于缺乏语境意思,它仍然是个问题。此外,在上面的第二句中,下半句的情感语境可以被理解为否定前半部分。因此,这种方法中也会丢失上下文线索的空间局部性。从实用角度来看,使问题复杂化的是从给定输入文本中提取的特征的稀疏性。对于一个完整的大型词汇表,每个单词都有一个计数,可以将其视为一个整数向量。大多数文档的向量中都有大量的零计数向量,这给操作增加了不必要的空间和时间复杂度。虽然已经提出了许多用于降低这种复杂性的简便方法,但它仍然是一个问题。

### 词嵌入

<ruby> 词嵌入 <rt> Word embedding </rt></ruby>是一种分布式表示,它允许具有相似含义的单词具有相似的表示。这是基于使用实值向量来与它们周围相关联。重点在于使用单词的方式,而不仅仅是它们的存在与否。此外,词嵌入的一个巨大实用优势是它们关注于密集向量。通过摆脱具有相应数量的零值向量元素的单词计数模型,词嵌入在时间和存储方面提供了一个更有效的计算范例。

以下是两个优秀的词嵌入方法。

#### Word2vec

第一个是 [Word2vec](https://en.wikipedia.org/wiki/Word2vec),它是由 Google 开发的。随着你对 NLP 和情绪分析研究的深入,你可能会看到这种嵌入方法。它要么使用一个<ruby> 连续的词袋 <rt> continuous bag of words </rt></ruby>(CBOW),要么使用一个连续 skip-gram 模型。在 CBOW 中,一个单词的上下文是在训练中根据围绕它的单词来学习的。连续 skip-gram 学习倾向于围绕给定的单词学习单词。虽然这可能超出了你需要解决的问题,但是如果你曾经面对必须生成自己的词嵌入情况,那么 Word2vec 的作者就提倡使用 CBOW 方法来提高速度并评估频繁的单词,而 skip-gram 方法更适合嵌入稀有单词更重要的嵌入。

#### GloVe

第二个是<ruby> <a href="https://en.wikipedia.org/wiki/GloVe_(machine_learning)"> 用于词表示的全局向量 </a> <rt> Global Vectors for Word Representation </rt></ruby>(GloVe),它是斯坦福大学开发的。它是 Word2vec 方法的扩展,试图通过将经典的全局文本统计特征提取获得的信息与 Word2vec 确定的本地上下文信息相结合。实际上,在一些应用程序中,GloVe 性能优于 Word2vec,而在另一些应用程序中则不如 Word2vec。最终,用于词嵌入的目标数据集将决定哪种方法最优。因此,最好了解它们的存在性和高级机制,因为你很可能会遇到它们。

#### 创建和使用词嵌入

最后,知道如何获得词嵌入是有用的。在第 2 部分中,你将看到我们通过利用社区中其他人的实质性工作,站到了巨人的肩膀上。这是获取词嵌入的一种方法:即使用现有的经过训练和验证的模型。实际上,有无数的模型适用于英语和其他语言,一定会有一种模型可以满足你的应用程序,让你开箱即用!

如果没有的话,就开发工作而言,另一个极端是培训你自己的独立模型,而不考虑你的应用程序。实质上,你将获得大量标记的训练数据,并可能使用上述方法之一来训练模型。即使这样,你仍然只是在理解你输入文本数据。然后,你需要为你应用程序开发一个特定的模型(例如,分析软件版本控制消息中的情感价值),这反过来又需要自己的时间和精力。

你还可以对针对你的应用程序的数据训练一个词嵌入,虽然这可以减少时间和精力,但这个词嵌入将是特定于应用程序的,这将会降低它的可重用性。

### 可用的工具选项

考虑到所需的大量时间和计算能力,你可能想知道如何才能找到解决问题的方法。的确,开发可靠模型的复杂性可能令人望而生畏。但是,有一个好消息:已经有许多经过验证的模型、工具和软件库可以为我们提供所需的大部分内容。我们将重点关注 [Python](https://www.python.org/),因为它为这些应用程序提供了大量方便的工具。

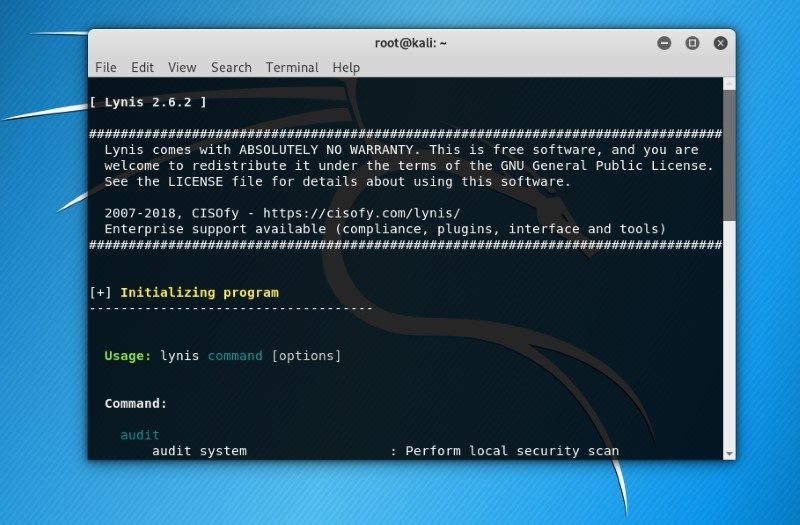

#### SpaCy

[SpaCy](https://pypi.org/project/spacy/) 提供了许多用于解析输入文本数据和提取特征的语言模型。它经过了高度优化,并被誉为同类中最快的库。最棒的是,它是开源的!SpaCy 会执行标识化、词性分类和依赖项注释。它包含了用于执行此功能的词嵌入模型,还有用于为超过 46 种语言的其他特征提取操作。在本系列的第二篇文章中,你将看到它如何用于文本分析和特征提取。

#### vaderSentiment

[vaderSentiment](https://pypi.org/project/vaderSentiment/) 包提供了积极、消极和中性情绪的衡量标准。正如 [原论文](http://comp.social.gatech.edu/papers/icwsm14.vader.hutto.pdf) 的标题(《VADER:一个基于规则的社交媒体文本情感分析模型》)所示,这些模型是专门为社交媒体文本数据开发和调整的。VADER 接受了一组完整的人类标记过的数据的训练,包括常见的表情符号、UTF-8 编码的表情符号以及口语术语和缩写(例如 meh、lol、sux)。

对于给定的输入文本数据,vaderSentiment 返回一个极性分数百分比的三元组。它还提供了一个单个的评分标准,称为 *vaderSentiment 复合指标*。这是一个在 `[-1, 1]` 范围内的实值,其中对于分值大于 `0.05` 的情绪被认为是积极的,对于分值小于 `-0.05` 的被认为是消极的,否则为中性。

在[第 2 部分](https://opensource.com/article/19/4/social-media-sentiment-analysis-python-part-2)中,你将学习如何使用这些工具为你的设计添加情感分析功能。

---

via: <https://opensource.com/article/19/4/social-media-sentiment-analysis-python>

作者:[Michael McCune](https://opensource.com/users/elmiko/users/jschlessman) 选题:[lujun9972](https://github.com/lujun9972) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Natural language processing (NLP) is a type of machine learning that addresses the correlation between spoken/written languages and computer-aided analysis of those languages. We experience numerous innovations from NLP in our daily lives, from writing assistance and suggestions to real-time speech translation and interpretation.

This article examines one specific area of NLP: sentiment analysis, with an emphasis on determining the positive, negative, or neutral nature of the input language. This part will explain the background behind NLP and sentiment analysis and explore two open source Python packages. [Part 2](https://opensource.com/article/19/4/social-media-sentiment-analysis-python-part-2) will demonstrate how to begin building your own scalable sentiment analysis services.

When learning sentiment analysis, it is helpful to have an understanding of NLP in general. This article won't dig into the mathematical guts, rather our goal is to clarify key concepts in NLP that are crucial to incorporating these methods into your solutions in practical ways.

## Natural language and text data

A reasonable place to begin is defining: "What is natural language?" It is the means by which we, as humans, communicate with one another. The primary modalities for communication are verbal and text. We can take this a step further and focus solely on text communication; after all, living in an age of pervasive Siri, Alexa, etc., we know speech is a group of computations away from text.

## Data landscape and challenges

Limiting ourselves to textual data, what can we say about language and text? First, language, particularly English, is fraught with exceptions to rules, plurality of meanings, and contextual differences that can confuse even a human interpreter, let alone a computational one. In elementary school, we learn articles of speech and punctuation, and from speaking our native language, we acquire intuition about which words have less significance when searching for meaning. Examples of the latter would be articles of speech such as "a," "the," and "or," which in NLP are referred to as *stop words*, since traditionally an NLP algorithm's search for meaning stops when reaching one of these words in a sequence.

Since our goal is to automate the classification of text as belonging to a sentiment class, we need a way to work with text data in a computational fashion. Therefore, we must consider how to represent text data to a machine. As we know, the rules for utilizing and interpreting language are complicated, and the size and structure of input text can vary greatly. We'll need to transform the text data into numeric data, the form of choice for machines and math. This transformation falls under the area of *feature extraction*.

Upon extracting numeric representations of input text data, one refinement might be, given an input body of text, to determine a set of quantitative statistics for the articles of speech listed above and perhaps classify documents based on them. For example, a glut of adverbs might make a copywriter bristle, or excessive use of stop words might be helpful in identifying term papers with content padding. Admittedly, this may not have much bearing on our goal of sentiment analysis.

## Bag of words

When you assess a text statement as positive or negative, what are some contextual clues you use to assess its polarity (i.e., whether the text has positive, negative, or neutral sentiment)? One way is connotative adjectives: something called "disgusting" is viewed as negative, but if the same thing were called "beautiful," you would judge it as positive. Colloquialisms, by definition, give a sense of familiarity and often positivity, whereas curse words could be a sign of hostility. Text data can also include emojis, which carry inherent sentiments.

Understanding the polarity influence of individual words provides a basis for the [ bag-of-words](https://en.wikipedia.org/wiki/Bag-of-words_model) (BoW) model of text. It considers a set of words or vocabulary and extracts measures about the presence of those words in the input text. The vocabulary is formed by considering text where the polarity is known, referred to as

*labeled training data*. Features are extracted from this set of labeled data, then the relationships between the features are analyzed and labels are associated with the data.

The name "bag of words" illustrates what it utilizes: namely, individual words without consideration of spatial locality or context. A vocabulary typically is built from all words appearing in the training set, which tends to be pruned afterward. Stop words, if not cleaned prior to training, are removed due to their high frequency and low contextual utility. Rarely used words can also be removed, given the lack of information they provide for general input cases.

It is important to note, however, that you can (and should) go further and consider the appearance of words beyond their use in an individual instance of training data, or what is called [ term frequency](https://en.wikipedia.org/wiki/Tf%E2%80%93idf#Term_frequency) (TF). You should also consider the counts of a word through all instances of input data; typically the infrequency of words among all documents is notable, which is called the

[(IDF). These metrics are bound to be mentioned in other articles and software packages on this subject, so having an awareness of them can only help.](https://en.wikipedia.org/wiki/Tf%E2%80%93idf#Inverse_document_frequency)

*inverse document frequency*BoW is useful in a number of document classification applications; however, in the case of sentiment analysis, things can be gamed when the lack of contextual awareness is leveraged. Consider the following sentences:

- We are not enjoying this war.

- I loathe rainy days, good thing today is sunny.

- This is not a matter of life and death.

The sentiment of these phrases is questionable for human interpreters, and by strictly focusing on instances of individual vocabulary words, it's difficult for a machine interpreter as well.

Groupings of words, called *n-grams*, can also be considered in NLP. A bigram considers groups of two adjacent words instead of (or in addition to) the single BoW. This should alleviate situations such as "not enjoying" above, but it will remain open to gaming due to its loss of contextual awareness. Furthermore, in the second sentence above, the sentiment context of the second half of the sentence could be perceived as negating the first half. Thus, spatial locality of contextual clues also can be lost in this approach. Complicating matters from a pragmatic perspective is the sparsity of features extracted from a given input text. For a thorough and large vocabulary, a count is maintained for each word, which can be considered an integer vector. Most documents will have a large number of zero counts in their vectors, which adds unnecessary space and time complexity to operations. While a number of clever approaches have been proposed for reducing this complexity, it remains an issue.

## Word embeddings

Word embeddings are a distributed representation that allows words with a similar meaning to have a similar representation. This is based on using a real-valued vector to represent words in connection with the company they keep, as it were. The focus is on the manner that words are used, as opposed to simply their existence. In addition, a huge pragmatic benefit of word embeddings is their focus on dense vectors; by moving away from a word-counting model with commensurate amounts of zero-valued vector elements, word embeddings provide a more efficient computational paradigm with respect to both time and storage.

Following are two prominent word embedding approaches.

### Word2vec

The first of these word embeddings, [Word2vec](https://en.wikipedia.org/wiki/Word2vec), was developed at Google. You'll probably see this embedding method mentioned as you go deeper in your study of NLP and sentiment analysis. It utilizes either a *continuous bag of words* (CBOW) or a *continuous skip-gram* model. In CBOW, a word's context is learned during training based on the words surrounding it. Continuous skip-gram learns the words that tend to surround a given word. Although this is more than what you'll probably need to tackle, if you're ever faced with having to generate your own word embeddings, the author of Word2vec advocates the CBOW method for speed and assessment of frequent words, while the skip-gram approach is better suited for embeddings where rare words are more important.

### GloVe

The second word embedding, [ Global Vectors for Word Representation](https://en.wikipedia.org/wiki/GloVe_(machine_learning)) (GloVe), was developed at Stanford. It's an extension to the Word2vec method that attempts to combine the information gained through classical global text statistical feature extraction with the local contextual information determined by Word2vec. In practice, GloVe has outperformed Word2vec for some applications, while falling short of Word2vec's performance in others. Ultimately, the targeted dataset for your word embedding will dictate which method is optimal; as such, it's good to know the existence and high-level mechanics of each, as you'll likely come across them.

### Creating and using word embeddings

Finally, it's useful to know how to obtain word embeddings; in part 2, you'll see that we are standing on the shoulders of giants, as it were, by leveraging the substantial work of others in the community. This is one method of acquiring a word embedding: namely, using an existing trained and proven model. Indeed, myriad models exist for English and other languages, and it's possible that one does what your application needs out of the box!

If not, the opposite end of the spectrum in terms of development effort is training your own standalone model without consideration of your application. In essence, you would acquire substantial amounts of labeled training data and likely use one of the approaches above to train a model. Even then, you are still only at the point of acquiring understanding of your input-text data; you then need to develop a model specific for your application (e.g., analyzing sentiment valence in software version-control messages) which, in turn, requires its own time and effort.

You also could train a word embedding on data specific to your application; while this could reduce time and effort, the word embedding would be application-specific, which would reduce reusability.

## Available tooling options

You may wonder how you'll ever get to a point of having a solution for your problem, given the intensive time and computing power needed. Indeed, the complexities of developing solid models can be daunting; however, there is good news: there are already many proven models, tools, and software libraries available that may provide much of what you need. We will focus on [Python](https://www.python.org/), which conveniently has a plethora of tooling in place for these applications.

### SpaCy

[SpaCy](https://pypi.org/project/spacy/) provides a number of language models for parsing input text data and extracting features. It is highly optimized and touted as the fastest library of its kind. Best of all, it's open source! SpaCy performs tokenization, parts-of-speech classification, and dependency annotation. It contains word embedding models for performing this and other feature extraction operations for over 46 languages. You will see how it can be used for text analysis and feature extraction in the second article in this series.

### vaderSentiment

The [vaderSentiment](https://pypi.org/project/vaderSentiment/) package provides a measure of positive, negative, and neutral sentiment. As the [original paper](http://comp.social.gatech.edu/papers/icwsm14.vader.hutto.pdf)'s title ("VADER: A Parsimonious Rule-based Model for Sentiment Analysis of Social Media Text") indicates, the models were developed and tuned specifically for social media text data. VADER was trained on a thorough set of human-labeled data, which included common emoticons, UTF-8 encoded emojis, and colloquial terms and abbreviations (e.g., meh, lol, sux).

For given input text data, vaderSentiment returns a 3-tuple of polarity score percentages. It also provides a single scoring measure, referred to as *vaderSentiment's compound metric*. This is a real-valued measurement within the range **[-1, 1]** wherein sentiment is considered positive for values greater than **0.05**, negative for values less than **-0.05**, and neutral otherwise.

In [part 2](https://opensource.com/article/19/4/social-media-sentiment-analysis-python-part-2), you will learn how to use these tools to add sentiment analysis capabilities to your designs.

## Comments are closed. |

10,854 | 将 Fedora 29 升级到 Fedora 30 | https://fedoramagazine.org/upgrading-fedora-29-to-fedora-30/ | 2019-05-14T12:51:00 | [

"Fedora"

] | https://linux.cn/article-10854-1.html |

Fedora 30 [已经发布了](https://fedoramagazine.org/announcing-fedora-30/)。你可能希望将系统升级到最新版本的 Fedora。Fedora 工作站版本有图形化升级的方法。另外,Fedora 也提供了一个命令行方法,用于将 Fedora 29 升级到 Fedora 30。

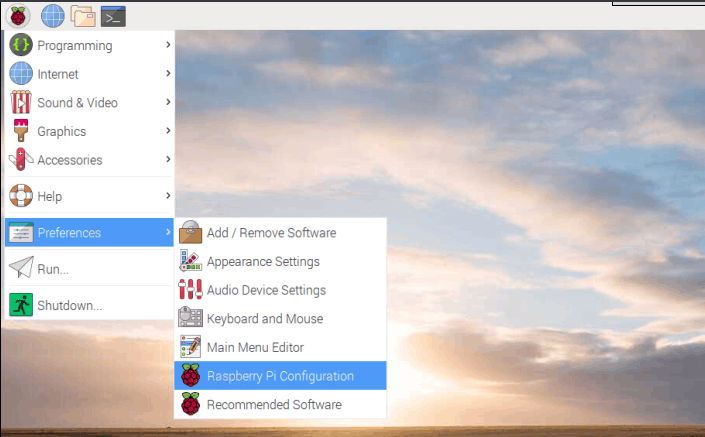

### 将 Fedora 29 工作站版本升级到 Fedora 30

在发布不久后,桌面会显示一条通知告诉你可以升级。你可以单击通知启动 “GNOME 软件” 应用。或者你可以从 GNOME Shell 中选择“软件”。

在 “GNOME 软件” 中选择*更新*选项卡,你会看到一个页面通知你可以更新 Fedora 30。

如果你在屏幕上看不到任何内容,请尝试点击左上角的重新加载按钮。发布后,所有系统都可能需要一段时间才能看到可用的升级。

选择“下载”获取升级包。你可以继续做其他的事直到下载完成。然后使用 “GNOME 软件” 重启系统并应用升级。升级需要时间,因此你可以喝杯咖啡,稍后再回来。

### 使用命令行

如果你过去升级过 Fedora 版本,你可能熟悉 `dnf upgrade` 插件。这是从 Fedora 29 升级到 Fedora 30 的推荐和支持的方式。使用这个插件将使你的 Fedora 30 升级简单易行。

#### 1、更新软件并备份系统

在你执行任何操作之前,你需要确保在开始升级之前拥有 Fedora 29 的最新软件。要更新软件,请使用 “GNOME 软件” 或在终端中输入以下命令。

```

sudo dnf upgrade --refresh

```

此外,请确保在继续之前备份系统。关于备份的帮助,请参阅 Fedora Magazine 上的[备份系列](https://fedoramagazine.org/taking-smart-backups-duplicity/)。

#### 2、安装 DNF 插件

接下来,打开终端并输入以下命令来安装插件:

```

sudo dnf install dnf-plugin-system-upgrade

```

#### 3、使用 DNF 开始更新

现在你的系统是最新的,完成了备份,并且已安装 DNF 插件,你可以在终端中使用以下命令开始升级:

```

sudo dnf system-upgrade download --releasever=30

```

此命令将开始在本地下载所有升级文件以准备升级。如果你因为没有更新包、错误的依赖,或过时的包在升级时遇到问题,请在输入上面的命令时添加 `-- allowerasing` 标志。这将允许 DNF 删除可能阻止系统升级的软件包。

#### 4、重启并升级

当前面的命令完成下载所有升级文件后,你的系统就可以重启了。要将系统引导至升级过程,请在终端中输入以下命令:

```

sudo dnf system-upgrade reboot

```

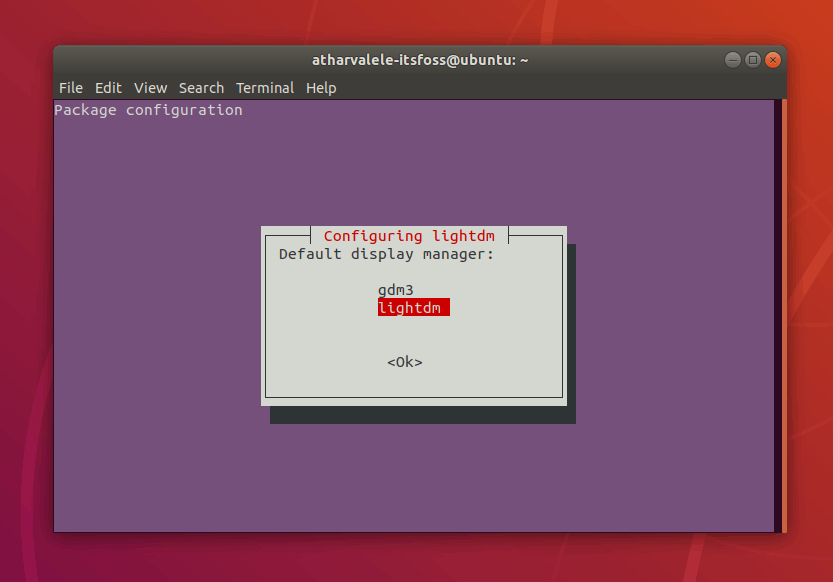

此后你的系统将重启。在许多版本之前,`fedup` 工具将在内核选择/引导页面上创建一个新选项。使用 `dnf-plugin-system-upgrade` 包,你的系统将使用当前 Fedora 29 安装的内核重启。这个是正常的。在内核选择页面后不久,系统开始升级过程。

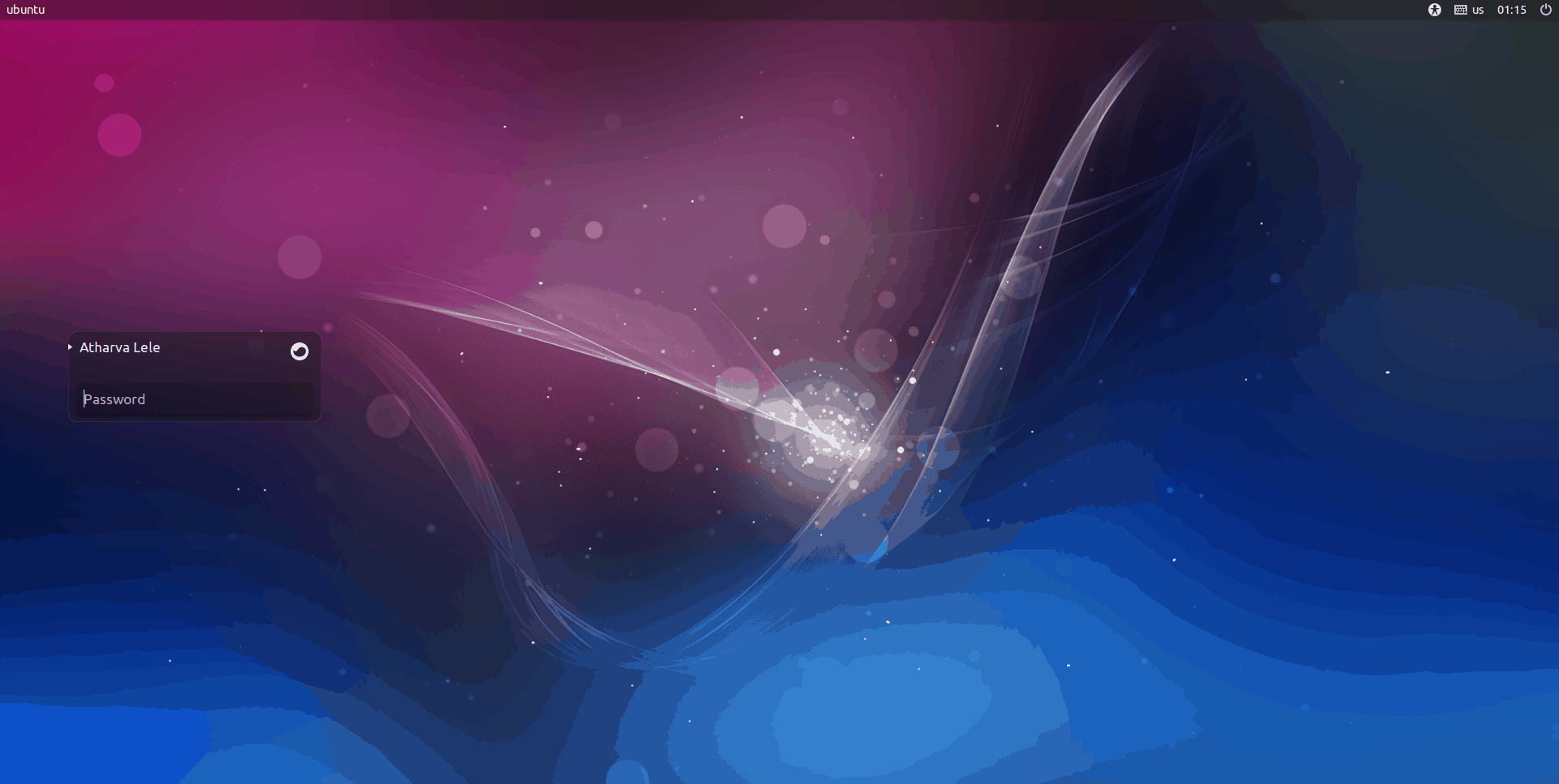

现在可以休息一下了!完成后你的系统将重启,你就可以登录新升级的 Fedora 30 了。

### 解决升级问题

升级系统时偶尔可能会出现意外问题。如果你遇到任何问题,请访问 [DNF 系统升级的维基页面](https://fedoraproject.org/wiki/DNF_system_upgrade#Resolving_post-upgrade_issues),以获取有关出现问题时的故障排除的更多信息。

如果你在升级时遇到问题并在系统上安装了第三方仓库,那么可能需要在升级时禁用这些仓库。有关 Fedora 对未提供仓库的支持,请与仓库的提供商联系。

---

via: <https://fedoramagazine.org/upgrading-fedora-29-to-fedora-30/>

作者:[Ryan Lerch](https://fedoramagazine.org/author/ryanlerch/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Fedora 30 i[s available now](https://fedoramagazine.org/announcing-fedora-30/). You’ll likely want to upgrade your system to the latest version of Fedora. Fedora Workstation has a graphical upgrade method. Alternatively, Fedora offers a command-line method for upgrading Fedora 29 to Fedora 30.

## Upgrading Fedora 29 Workstation to Fedora 30

Soon after release time, a notification appears to tell you an upgrade is available. You can click the notification to launch the **GNOME Software** app. Or you can choose Software from GNOME Shell.

Choose the *Updates* tab in GNOME Software and you should see a screen informing you that Fedora 30 is Now Available.

If you don’t see anything on this screen, try using the reload button at the top left. It may take some time after release for all systems to be able to see an upgrade available.

Choose *Download* to fetch the upgrade packages. You can continue working until you reach a stopping point, and the download is complete. Then use GNOME Software to restart your system and apply the upgrade. Upgrading takes time, so you may want to grab a coffee and come back to the system later.

## Using the command line

If you’ve upgraded from past Fedora releases, you are likely familiar with the *dnf upgrade* plugin. This method is the recommended and supported way to upgrade from Fedora 29 to Fedora 30. Using this plugin will make your upgrade to Fedora 30 simple and easy.

#### 1. Update software and back up your system

Before you do anything, you will want to make sure you have the latest software for Fedora 29 before beginning the upgrade process. To update your software, use *GNOME Software* or enter the following command in a terminal.

sudo dnf upgrade --refresh

Additionally, make sure you back up your system before proceeding. For help with taking a backup, see [the backup series](https://fedoramagazine.org/taking-smart-backups-duplicity/) on the Fedora Magazine.

#### 2. Install the DNF plugin

Next, open a terminal and type the following command to install the plugin:

sudo dnf install dnf-plugin-system-upgrade

#### 3. Start the update with DNF

Now that your system is up-to-date, backed up, and you have the DNF plugin installed, you can begin the upgrade by using the following command in a terminal:

sudo dnf system-upgrade download --releasever=30

This command will begin downloading all of the upgrades for your machine locally to prepare for the upgrade. If you have issues when upgrading because of packages without updates, broken dependencies, or retired packages, add the *‐‐allowerasing* flag when typing the above command. This will allow DNF to remove packages that may be blocking your system upgrade.

#### 4. Reboot and upgrade

Once the previous command finishes downloading all of the upgrades, your system will be ready for rebooting. To boot your system into the upgrade process, type the following command in a terminal:

sudo dnf system-upgrade reboot

Your system will restart after this. Many releases ago, the *fedup* tool would create a new option on the kernel selection / boot screen. With the *dnf-plugin-system-upgrade* package, your system reboots into the current kernel installed for Fedora 29; this is normal. Shortly after the kernel selection screen, your system begins the upgrade process.

Now might be a good time for a coffee break! Once it finishes, your system will restart and you’ll be able to log in to your newly upgraded Fedora 30 system.

## Resolving upgrade problems

On occasion, there may be unexpected issues when you upgrade your system. If you experience any issues, please visit the [DNF system upgrade wiki page](https://fedoraproject.org/wiki/DNF_system_upgrade#Resolving_post-upgrade_issues) for more information on troubleshooting in the event of a problem.

If you are having issues upgrading and have third-party repositories installed on your system, you may need to disable these repositories while you are upgrading. For support with repositories not provided by Fedora, please contact the providers of the repositories.

## Punch- to

Better to backup your data and install Fedora 30, reinstall your apps and data. It takes a very long time to update.

## Stephen Snow

I have used the update method since Fedora 19 with no issue ever. The dnf upgrade plugin is even better than

was, again upgrades of the released Fedora have never left me with a broken system. I think the update is on par with the length of time to backup your data, and do a bare metal install, overwriting everything. However, I haven’t timed both to get a definitive answer. I can imagine the upgrade would need to do more dependency checking, which of course you would want, to ensure your system started up as you had it when beginning the upgrade.

## Gary Traverson

How long is “very” – My upgrade appears to be stuck around step 81 of 5757 (xemacs-filesystem)

## Michael Williamson

It took me about 15 minutes to download the updates, then I rebooted for the upgrade before lunch and had F30 when I got back.

## nathan

Upgrade to me about 10-15 minutes on my Thinkpad X380 Yoga. No problems at all.

## Leslie Satenstein

Hi Robin,.

Do you have an idea why the plugin is not integrated automatically into dnf? The plugin is actually dormant until invoked. It being integrated into dnf in the current version or the new version would make upgrades actions easier for users.

The following is off topic, but I see the upgrade responsibility being assigned to Anaconda. I envision the user launching Anaconda and clicking a checkbox to indicate a new installation or upgrade. It seems to me that it being within Anaconda fits in with Fedora upgrade management.

By the way, Fedora 30 is GREAT!!

## Erick

How do you see it?? its faster??

## simon

Because you need to install the command mediawriter and install the version, use media wrighted to pase a custom image on a flash drive. no need to make it bootable, mediawrighter does that for you.

## Leslie Satenstein

Fedora 3o includes a new user interface Deepin”.

I have been using it since it was made available. It is very good. I like it as much as I like GNOME. It is an up and coming winner interface.

## Robert

Step 1 has a typo that states “Before you do anything, you will want to make sure you have the latest software for Fedora 39 before beginning the upgrade process.” I’m sure the author meant 29.

## Markus

had to recover my grub config as described in the ‘common F30 bugs’ document. It’s a i686 VM running in virtualbox with legacy boot.

First install was F27. So I guess all i686 fedora installations might not boot after ‘dnf system-upgrade reboot’

## Dave Hugh

Thanks for the post. I upgraded both my personal laptop and a DIY wireless router from 29 to 30 without issue.

(PS In “1” did you mean 29 as opposed to 39?)

## Parveen

Yes.

## Alan Olsen

If you have downloaded extensions from extensions.gnome.org, you will want to disable them and then update them after the upgrade. Some of them cause the gnome-shell to crash, which prevents you from logging in.

The extensions are in the users home directory in .local/share/gnome-shell/extensions

## John Smith

That is a useful tip.

Two points about this:

1) It would be nice if the most common Gnome extensions would be integrated better. For example, before upgrading to F30, I used a nice-and-easy audio extension which allowed me to quickly change from headphones to monitor speaker. After upgrading, it disappeared, too bad! Changing speakers/headphones is in my opinion too “clumsy” with the default settings (too many clicks, takes too long).

2) It would be nice if Gnome shell does not hang/crash when an extension misbehaves. Sometimes, switching between Xorg and Wayland (login screen option) fixes such problems. That should be improved: There should be a clear feedback message for the user what the problem is and the crashing extension should be switched off automatically. After all, I assume most Gnome users will use extensions and are so all at risk.

## Brian Vaughan

I wish I’d seen this tip before I spent several hours trying to figure out why gnome-shell kept core-dumping.

## km

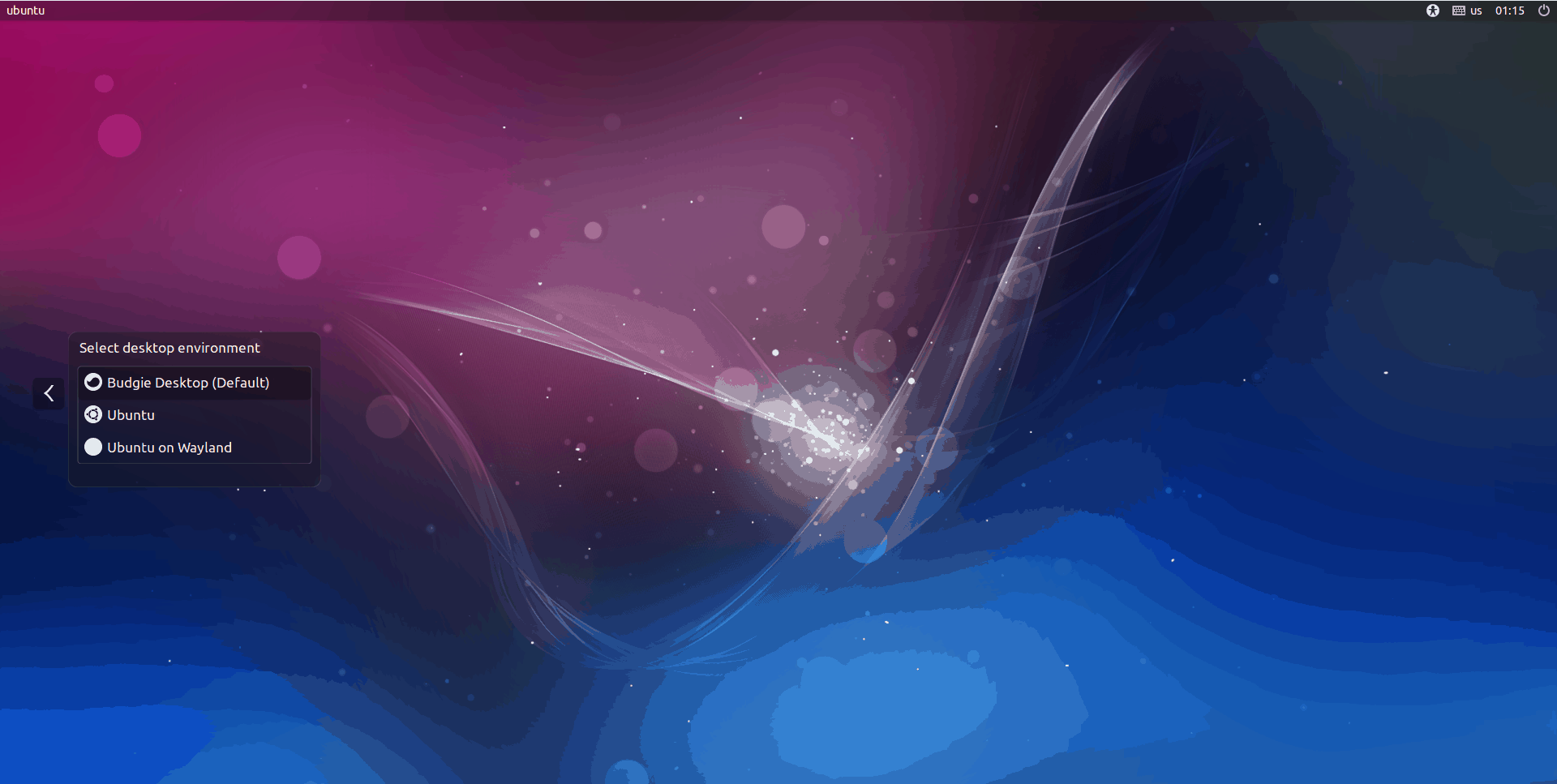

If do not disable Gnome extensions and have problems getting the desktop to appear, consider using another desktop such as KDE, Mate Cinammon. Cfter logging in change to the terminal interface using ctl+alt+F2, then

sudo dnf group install “KDE Plasma Workspaces”

then reboot and choose an alternate desktop when logging in.

More information for older Fedora versions is at:

https://computingforgeeks.com/install-kde-plasma-environment-on-fedora-29-fedora-28/

https://fedoramag.wpengine.com/installing-another-desktop/

## Greg

I could not login to Gnome Shell after upgrade to F30 too. I logged in via ssh (you can also via vt) and run:

$ gsettings set org.gnome.shell disable-user-extensions true

Then I was able to login to Gnome.

It would be nice if Gnome/Fedora would ignore and auto-disable crashing and misbehaving extensions.

## Gayland Gump

Greg, I much appreciate your sharing this. I’d used the remove the .config directory with root to solve what I believe was the same problem. Consequently, considerable loss of my prior configuration was effected. Since I had saved my original .config directory, I was able to restore it in place of the newly created .config directory, apply your fix and recovered my prior setup. I concur with your wish to the developers. Thanks again.

## David Nowak

The GNOME interface hangs upon bootup on my Raspberry Pi 3b/3b+. I am not a programmer, but would it not be better to develop a separate video interface module (container, I think they call them) which when added to the upgraded server code create an image could be a repeatable bootable from release to release?

## Boris

Source code pro regular 10? lol why?

## Pawan Kumar

After upgrading to fedora 30, my broadcom wifi stopped working.

I have reinstalled the broadcom driver from rpm fusion, still it is not working.

## Stephen Snow

You should definitely check out if this has been answered here https://ask.fedoraproject.org/

Since that is the place to start for issues with using fedora.

## David

Ever miss the old days when you needed ndiswrapper to get Broadcom wifi to work? Or alternate kernel drivers? You haven’t lived until you’ve done that.

## Peter Braet

Same here, no more wifi BCM43142, and I can’t find it anymore on the old “Ask Fedora” either. Reinstalled everything, doesn’t work. Where is “Howto install BCM 43142” gone?

## Peter Braet

OK, got it back by reinstalling akmods akmod-wl and broadcom-wl, but had to do ‘$ sudo systemctl enable NetworkManager’ and ‘sudo systemctl reboot’ The connection is restored, but the WiFi icon is still missing.

## John Smith

I have same problem, my Broadcom BCM4360 worked fine in previous Fedoras, but stopped in F30. Hope to find tips in the magazine how to fix this.

## Peter Braet

Will Fedora please explain howto build (f30-candidate, wpa_supplicant-2.8-1.fc30.bz1703745rebuild.src.rpm)? That is the solution according to “Ask Fedora”, something from Koji, I don’t even know what that is. Please Fedora, explain howto do this and make broadcom work, because the solutions I tried only worked temporally.

## Jonathon Poppleton

You can download the binary rpm and right click to install it in gnome. You don’t need to build the source rpm.

RPM:

https://kojipkgs.fedoraproject.org//packages/wpa_supplicant/2.8/1.fc30/x86_64/wpa_supplicant-2.8-1.fc30.x86_64.rpm

To install using the terminal:

[jonathon@here ~]$ cd Downloads

[jonathon@here ~]$ wget https://kojipkgs.fedoraproject.org//packages/wpa_supplicant/2.8/1.fc30/x86_64/wpa_supplicant-2.8-1.fc30.x86_64.rpm

–2019-05-09 22:09:49– https://kojipkgs.fedoraproject.org//packages/wpa_supplicant/2.8/1.fc30/x86_64/wpa_supplicant-2.8-1.fc30.x86_64.rpm

Resolving kojipkgs.fedoraproject.org (kojipkgs.fedoraproject.org)… 209.132.181.15, 209.132.181.16

Connecting to kojipkgs.fedoraproject.org (kojipkgs.fedoraproject.org)|209.132.181.15|:443… connected.

HTTP request sent, awaiting response… 200 OK

Length: 1982324 (1.9M) [application/x-rpm]

Saving to: ‘wpa_supplicant-2.8-1.fc30.x86_64.rpm’

wpa_supplicant-2.8- 100%[===================>] 1.89M 1.33MB/s in 1.4s

2019-05-09 22:09:52 (1.33 MB/s) – ‘wpa_supplicant-2.8-1.fc30.x86_64.rpm’ saved [1982324/1982324]

[jonathon@here Downloads]$ su -c “dnf install wpa_supplicant-2.8-1.fc30.x86_64.rpm”

Password:

Last metadata expiration check: 0:20:47 ago on Thu 09 May 2019 21:50:54 AEST.

Dependencies resolved.

Package Architecture Version Repository Size

Upgrading:

wpa_supplicant x86_64 1:2.8-1.fc30 @commandline 1.9 M

Transaction Summary

Upgrade 1 Package

Total size: 1.9 M

Is this ok [y/N]:

If you want to build the source rpm let me know.

## Jonathon Poppleton

This is how i used wpa supplicant in the past (no guarantees it will work since i am using a pc now):

Obtain the name of your wifi interface:

ip link

Take note of wifi interface:

eg wlo1

Stop network manager:

su -c “systemctl stop NetworkManager”

Set SSID and password:

su -c “wpa_passphrase MYROUTER > /etc/wpa_supplicant/wpa_supplicant.conf

mypassphrase”

Enable wpa_supplicant:

su -c “wpa_supplicant -B -iwlo1 -c/etc/wpa_supplicant/wpa_supplicant.conf”

Obtain ip address:

su -c “dhcpcd wlo1”

Check ip address:

ping -c 3 http://www.google.com

## Erich Küster