| id | title | fromurl | date | tags | permalink | content | fromurl_status | status_msg | from_content |
|---|---|---|---|---|---|---|---|---|---|

8,773 | Reactive Programming vs. Reactive Systems | https://www.oreilly.com/ideas/reactive-programming-vs-reactive-systems | 2017-08-12T18:30:00 | [

"Reactive"

] | https://linux.cn/article-8773-1.html |

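> Amid a sea of perpetual confusion and inflated expectations, find solid ground on a small island of simple reactive design principles.
>
> Download Konrad Malawski's free ebook [Why Reactive? Foundational Principles for Enterprise Adoption](http://www.oreilly.com/programming/free/why-reactive.csp?intcmp=il-webops-free-product-na_new_site_reactive_programming_vs_reactive_systems_text_cta) to dive deeper into the technologies and benefits of Reactive.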

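Since co-authoring the [Reactive Manifesto](http://www.reactivemanifesto.org/) in 2013, we have watched Reactive grow from a virtually unknown technique for building software, used only in fringe projects at a handful of companies, into part of the overall platform strategy of several big players in the middleware field. This article aims to define and clarify the different aspects of Reactive by comparing writing code in a *reactive programming* style with designing *reactive systems* as a cohesive whole.

### Reactive Is a Set of Design Principles

One sign of Reactive's recent success is that "reactive" has become a buzzword, attached to many different things and people, often alongside words like "streaming", "lightweight", and "real-time".

Consider an analogy: when we look at a sports team (say, a baseball or basketball team), we usually see it as a combination of individual players, yet when those players fail to click and cannot work together effectively as a team, they lose to a "weaker" team. From the perspective of this article, Reactive is a set of design principles: a way of thinking about systems architecture and design in a distributed environment, where implementation techniques, tooling, and design patterns are merely components of a larger whole, a system.

The analogy illustrates the difference between carelessly piecing together a bunch of software (however good each piece may be on its own) and a reactive system. In a reactive system, it is *the interaction between the individual parts* that makes all the difference: the parts can operate independently, yet act in concert to achieve the intended result.

*A reactive system* is an architectural style that allows multiple individual applications to coalesce into a single unit, reacting to their surroundings while remaining aware of each other. This can manifest as the ability to scale up/down and load balance, and even to take some of these steps proactively.

It is possible to write a single application in a reactive style (that is, using reactive programming); however, that is merely one piece of the puzzle. Although each of the aspects above may seem to qualify as "reactive", on their own they do not make a *system* reactive.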

When people talk about "reactive" in the context of software development and design, they generally mean one of three things:

* Reactive systems (architecture and design)

* Reactive programming (declarative, event-based)

* Functional reactive programming (FRP)

We will examine what each of these practices and techniques means, with emphasis on the first two. More specifically, we will discuss when to use them, how they relate to each other, and what benefits you can expect from each, particularly in the context of building systems for multicore, cloud, and mobile architectures.

Let's start with functional reactive programming, and why we do not discuss it further in this article.

### Functional Reactive Programming (FRP)

Functional reactive programming, commonly called *FRP*, is the most frequently misunderstood of the three. FRP was [precisely defined](http://conal.net/papers/icfp97/) twenty years ago by Conal Elliott. Recently, however, the term has been used incorrectly<sup>1</sup> to describe technologies such as Elm and Bacon.js, as well as reactive extensions to other technologies (RxJava, Rx.NET, RxJS). Many libraries that claim to support FRP are in fact talking about *reactive programming*, so we will not discuss FRP further.

### Reactive Programming

Reactive programming, not to be confused with *functional reactive programming*, is a subset of asynchronous programming and a paradigm in which the availability of new information drives the logic forward, rather than having control flow driven by a thread-of-execution.

It supports decomposing the problem into multiple discrete steps, each of which can be executed in an asynchronous and non-blocking fashion, and then composed to produce a workflow whose inputs and outputs may be unbounded.

[Asynchronous](http://www.reactivemanifesto.org/glossary#Asynchronous) is defined by the Oxford Dictionary as "not existing or occurring at the same time". In our context it means that a message or event can occur at any time, possibly in the future. This is a very important technique in reactive programming because it allows for non-blocking execution: threads of execution competing for a shared resource do not have to wait by blocking (which would prevent the thread from doing anything else until the current work is done), and can instead do other useful work while the resource is occupied. Amdahl's Law<sup>2</sup> tells us that contention is the biggest enemy of scalability, so a reactive program should rarely, if ever, have to block.

Reactive programming is generally *event-driven*, in contrast to reactive systems, which are *message-driven*; the distinction between the two is explained later in this article.

The API for reactive programming libraries is generally either:

* Callback-based: anonymous, side-effecting callbacks are attached to event sources and are invoked when events pass through the dataflow chain.

* Declarative: through functional composition, usually using well-established combinators such as *map*, *filter*, and *fold*.
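To make the two API styles concrete, here is a minimal TypeScript sketch. `SimpleSource`, `subscribe`, `emit`, `map`, and `filter` are hypothetical names invented for illustration, not the API of any particular library: attaching a listener with `subscribe` is the callback-based style, while chaining `filter` and `map` is the declarative style.

```typescript
type Listener<T> = (value: T) => void;

// A hypothetical, synchronous event source (not a real library API).
class SimpleSource<T> {
  private listeners: Listener<T>[] = [];

  // Callback-based style: attach an anonymous, side-effecting callback.
  subscribe(listener: Listener<T>): void {
    this.listeners.push(listener);
  }

  // Push a value to every attached callback.
  emit(value: T): void {
    for (const listener of this.listeners) listener(value);
  }

  // Declarative style: derive a new source by composing a fixed combinator.
  map<U>(f: (value: T) => U): SimpleSource<U> {
    const out = new SimpleSource<U>();
    this.subscribe((value) => out.emit(f(value)));
    return out;
  }

  filter(predicate: (value: T) => boolean): SimpleSource<T> {
    const out = new SimpleSource<T>();
    this.subscribe((value) => {
      if (predicate(value)) out.emit(value);
    });
    return out;
  }
}

const clicks = new SimpleSource<number>();

// Callback-based: the callback performs a side effect for every event.
const seen: number[] = [];
clicks.subscribe((x) => {
  seen.push(x);
});

// Declarative: the pipeline is described by composing combinators.
const doubledEvens: number[] = [];
clicks
  .filter((x) => x % 2 === 0)
  .map((x) => x * 2)
  .subscribe((x) => {
    doubledEvens.push(x);
  });

[1, 2, 3, 4].forEach((x) => clicks.emit(x));
// seen is [1, 2, 3, 4]; doubledEvens is [4, 8]
```

Both styles still end in a callback; the declarative version moves the logic into composable stages, which nevertheless remain anonymous and not addressable.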

Most libraries provide a mix of these two styles, often adding stream-based operators such as windowing, counts, and triggers.

It is reasonable to say that reactive programming is related to [dataflow programming](https://en.wikipedia.org/wiki/Dataflow_programming), since the emphasis is on the *flow of data* rather than the *flow of control*.

Examples of programming abstractions that support this technique include:

* [Futures/Promises](https://en.wikipedia.org/wiki/Futures_and_promises): containers of a single value with many-read/single-write semantics, to which asynchronous transformations of the value can be added even if the value is not yet available.

* Streams, as in [Reactive Streams](http://reactive-streams.org/): unbounded flows of data processing that enable asynchronous, non-blocking, back-pressured transformation pipelines between a multitude of sources and destinations.

* [Dataflow variables](https://en.wikipedia.org/wiki/Oz_(programming_language)#Dataflow_variables_and_declarative_concurrency): single-assignment variables (memory cells) that can depend on input, procedures, or other cells, and are updated automatically when those change. A practical example is a spreadsheet: a change to the value of one cell ripples through every function that depends on it, producing new values downstream.
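The spreadsheet example can be sketched in a few lines of TypeScript. This is a deliberately simplified, hypothetical `Cell` class: unlike true single-assignment dataflow variables (as in Oz), an input cell here can be overwritten with `set`, and updates are propagated eagerly and depth-first, with no protection against redundant recomputation when dependencies form a diamond.

```typescript
// A hypothetical spreadsheet-style cell: it caches a value computed from
// other cells and pushes recomputation to the cells that depend on it.
class Cell {
  private readonly dependents: Cell[] = [];
  private current: number;

  constructor(private formula: () => number, inputs: Cell[] = []) {
    for (const input of inputs) input.dependents.push(this);
    this.current = formula();
  }

  get value(): number {
    return this.current;
  }

  // Replace this cell's formula with a constant (like typing into A1).
  set(newValue: number): void {
    this.formula = () => newValue;
    this.recompute();
  }

  // Recompute this cell, then ripple the change downstream.
  private recompute(): void {
    this.current = this.formula();
    for (const dependent of this.dependents) dependent.recompute();
  }
}

const a1 = new Cell(() => 1);
const a2 = new Cell(() => 2);
const total = new Cell(() => a1.value + a2.value, [a1, a2]);
const doubled = new Cell(() => total.value * 2, [total]);

a1.set(10);
// total.value is 12 and doubled.value is 24
```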

Popular libraries supporting reactive programming on the JVM include Akka Streams, Ratpack, Reactor, RxJava, and Vert.x, among others. These libraries implement the Reactive Streams specification, which is the standard for interoperability between reactive programming libraries on the JVM and, in its own words, "...an initiative to provide a standard for asynchronous stream processing with non-blocking back pressure."

The primary benefits of reactive programming are better utilization of computing resources on multicore and multi-CPU hardware, and better performance through fewer serialization points, as predicted by Amdahl's Law and, by extension, Gunther's Universal Scalability Law<sup>3</sup>.
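Both laws are simple enough to evaluate directly. The TypeScript sketch below uses the standard textbook forms: Amdahl's speedup `1 / ((1 - p) + p / n)` for a parallel fraction `p` on `n` processors, and Gunther's USL capacity `n / (1 + sigma * (n - 1) + kappa * n * (n - 1))`, where `sigma` models contention and `kappa` models coherency cost. The parameter values in the usage lines are arbitrary illustrations, not measurements.

```typescript
// Amdahl's law: speedup on n processors when a fraction p of the work
// can run in parallel and the remaining (1 - p) is serial.
function amdahlSpeedup(p: number, n: number): number {
  return 1 / ((1 - p) + p / n);
}

// Gunther's Universal Scalability Law: relative capacity on n processors.
// sigma: contention (serialization) penalty; kappa: coherency (crosstalk) penalty.
function uslCapacity(n: number, sigma: number, kappa: number): number {
  return n / (1 + sigma * (n - 1) + kappa * n * (n - 1));
}

// Even with 95% parallel work, speedup can never exceed 1 / 0.05 = 20.
const nearLimit = amdahlSpeedup(0.95, 1e6);

// With kappa > 0, adding processors past a peak reduces capacity.
const at32 = uslCapacity(32, 0.05, 0.001);
const at128 = uslCapacity(128, 0.05, 0.001);
// at128 < at32: "retrograde" scalability
```

Setting `kappa` to 0 reduces the USL to Amdahl's law with `sigma = 1 - p`, which is why the USL is described as an extension of it.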

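A secondary benefit is developer productivity. Traditional programming paradigms have all struggled to provide a straightforward and maintainable approach to asynchronous, non-blocking computation and I/O. Reactive programming solves most of these challenges, since it typically removes the need for explicit coordination between active components.

Where reactive programming really shines is in the creation of components and the composition of workflows. To take full advantage of asynchronous execution, it is crucial to include [back-pressure](http://www.reactivemanifesto.org/glossary#Back-Pressure) to avoid over-utilization, or more precisely, unbounded consumption of resources.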
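Back-pressure is easiest to see as demand signalling: the consumer states how many items it is ready for, and the producer never sends more. The TypeScript sketch below is modeled loosely on the `request(n)` idea from Reactive Streams, but its interfaces are simplified and hypothetical (synchronous, no error signal, no cancellation, not reentrancy-safe); it is not the actual Reactive Streams API.

```typescript
// Simplified, hypothetical interfaces inspired by Reactive Streams demand signalling.
interface Subscription {
  request(n: number): void;
}

interface Subscriber<T> {
  onSubscribe(subscription: Subscription): void;
  onNext(value: T): void;
  onComplete(): void;
}

// Emits the integers from..to, but never more than the subscriber has requested.
class RangePublisher {
  constructor(private readonly from: number, private readonly to: number) {}

  subscribe(subscriber: Subscriber<number>): void {
    let next = this.from;
    let demand = 0; // requested but not yet delivered
    let completed = false;
    subscriber.onSubscribe({
      request: (n: number) => {
        demand += n;
        while (demand > 0 && next <= this.to) {
          demand--;
          subscriber.onNext(next++);
        }
        if (next > this.to && !completed) {
          completed = true;
          subscriber.onComplete();
        }
      },
    });
  }
}

// A consumer that pulls items only when it is ready for them.
class CollectingSubscriber implements Subscriber<number> {
  received: number[] = [];
  done = false;
  subscription: Subscription | null = null;

  onSubscribe(subscription: Subscription): void {
    this.subscription = subscription;
  }
  onNext(value: number): void {
    this.received.push(value);
  }
  onComplete(): void {
    this.done = true;
  }
}

const consumer = new CollectingSubscriber();
new RangePublisher(1, 5).subscribe(consumer);

consumer.subscription!.request(2); // only 1 and 2 are delivered
const afterFirstRequest = consumer.received.slice();
consumer.subscription!.request(10); // the remaining 3, 4, 5, then completion
```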

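Even though reactive programming is very useful for building modern software, reasoning about a system at a higher level requires another tool: *reactive architecture*, the process of designing reactive systems. Furthermore, remember that there are many programming paradigms and reactive programming is only one of them; like any tool, it is not a silver bullet and is not intended for every use case.

### Event-Driven vs. Message-Driven

As mentioned above, reactive programming, which focuses on computation through ephemeral dataflow chains, tends to be *event-driven*, while reactive systems, which focus on resilience and elasticity through the communication and coordination of distributed systems, are [*message-driven*](http://www.reactivemanifesto.org/glossary#Message-Driven)<sup>4</sup> (also referred to as *messaging*).

The main difference between a message-driven system with long-lived addressable components and an event-driven dataflow model is that messages are inherently directed and events are not. A message has a clear, single destination, whereas an event is simply a piece of information waiting to be observed. In addition, messaging lends itself to asynchrony, because the sending and the reception of a message are decoupled from the sender and the receiver respectively.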
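The difference in addressing can be shown with two tiny TypeScript classes. Both are hypothetical illustrations, not any framework's API: with `Emitter`, listeners attach to an addressable *source* that does not know who, if anyone, is listening; with `Mailbox`, a message is sent to one addressable *recipient*, is queued, and is processed when the recipient gets to it.

```typescript
type Handler = (payload: string) => void;

// Event-driven: the *source* is addressable; listeners attach to it.
class Emitter {
  private readonly handlers: Handler[] = [];

  addListener(handler: Handler): void {
    this.handlers.push(handler);
  }

  // The emitter neither knows nor cares how many observers exist (maybe zero).
  fire(payload: string): void {
    for (const handler of this.handlers) handler(payload);
  }
}

// Message-driven: the *recipient* is addressable; messages are sent to it.
class Mailbox {
  private queue: string[] = [];

  constructor(readonly address: string) {}

  // The sender enqueues and returns immediately; nothing is processed yet.
  tell(message: string): void {
    this.queue.push(message);
  }

  // The recipient processes queued messages when it runs; otherwise it is dormant.
  processAll(handle: Handler): number {
    const batch = this.queue;
    this.queue = [];
    batch.forEach((message) => handle(message));
    return batch.length;
  }
}

const thermometer = new Emitter();
const observed: string[] = [];
thermometer.addListener((t) => {
  observed.push(`display:${t}`);
});
thermometer.addListener((t) => {
  observed.push(`logger:${t}`);
});
thermometer.fire("21C"); // both observers see the same event

const billing = new Mailbox("billing");
billing.tell("invoice-1"); // addressed to exactly one recipient
const processed: string[] = [];
const handledCount = billing.processAll((m) => {
  processed.push(m);
});
```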

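The glossary of the Reactive Manifesto defines the [conceptual difference](http://www.reactivemanifesto.org/glossary#Message-Driven) between the two:

> A message is an item of data that is sent to a specific destination. An event is a signal emitted by a component upon reaching a given state. In a message-driven system addressable recipients await the arrival of messages and react to them, otherwise lying dormant. In an event-driven system notification listeners are attached to the sources of events such that they are invoked when the event is emitted. This means that an event-driven system focuses on addressable event sources while a message-driven system concentrates on addressable recipients.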

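Distributed systems communicate by sending messages over the network, which forms the foundation of their communication; emitting events, by contrast, is local. It is common to build event-driven systems that span the network by sending messages that wrap events under the hood. This preserves the relative simplicity of the event-driven programming model in a distributed context, and can work very well for specialized, well-scoped use cases.

However, this is a trade-off: what you gain in the abstraction and simplicity of the programming model, you lose in control. Messaging forces us to embrace the reality and constraints of distributed systems (partial failures, failure detection, dropped/duplicated/reordered messages, eventual consistency, managing multiple concurrent realities, and so on) and to tackle them head-on, instead of hiding them behind a leaky abstraction and pretending that the network is not there, as has been done too many times in the past (e.g. EJB, [RPC](https://christophermeiklejohn.com/pl/2016/04/12/rpc.html), [CORBA](https://queue.acm.org/detail.cfm?id=1142044), and [XA](https://cs.brown.edu/courses/cs227/archives/2012/papers/weaker/cidr07p15.pdf)).

These differences in semantics and applicability have profound implications for application design, including *resilience*, *elasticity*, *mobility*, *location transparency*, and *management* of the complexity of distributed systems, which we explain later in this article.

In a reactive system, especially one that uses reactive programming, both events and messages will be present: one is a great tool for communication (messages), and the other is a great way of representing facts (events).

### Reactive Systems and Architecture

*Reactive systems*, as defined by the Reactive Manifesto, are a set of architectural design principles for building modern systems that are well prepared to meet the increasing demands that applications face today.

The principles of reactive systems are most definitely not new. They can be traced back to the seminal work of the 1970s and 1980s by Jim Gray and Pat Helland on the [Tandem System](http://www.hpl.hp.com/techreports/tandem/TR-86.2.pdf), and by Joe Armstrong and Robert Virding on [Erlang](http://erlang.org/download/armstrong_thesis_2003.pdf). However, these people were ahead of their time, and only in the last 5 to 10 years has the technology industry been forced to rethink current best practices for enterprise system development and learn to apply the hard-won reactive principles to today's world of multicore, cloud computing, and the Internet of Things.

The foundation of a reactive system is *message-passing*, which creates a temporal boundary between components that allows them to be decoupled in *time* (which enables concurrency) and in *space* (which enables distribution and mobility). This decoupling is a requirement for full [isolation](http://www.reactivemanifesto.org/glossary#Isolation) between components, and forms the basis for both *resilience* and *elasticity*.

### From Programs to Systems

The world is becoming increasingly interconnected. We are no longer building *programs* (end-to-end logic that calculates something for a single operator) so much as we are building *systems*.

Systems are complex by definition: each consists of multiple components, and each component (or one of its subcomponents) can itself be a system. This means that software increasingly depends on other software in order to work properly.

The systems we build today run on computers small and large, few and many, near each other or half a world away. At the same time, users' expectations have become harder and harder to meet, as everyday life increasingly depends on systems being available and running smoothly.

To deliver systems that users (and businesses) can depend on, those systems must be *responsive*: a correct response is of no use if it is not available when it is needed. To achieve this, we must make sure that responsiveness is maintained under failure (*resilience*) and under load (*elasticity*). To make that happen, we design these systems to be *message-driven*, and we call them *reactive systems*.

### The Resilience of Reactive Systems

Resilience is about responsiveness *under failure*. It is an inherent functional property of the system, something that has to be designed for, not something that can be bolted on after the fact. Resilience goes beyond fault tolerance: it is not about graceful degradation (even though that is a useful trait for a system) but about being able to fully recover from failure, to *self-heal*. This requires isolating components and containing failures, so that failures do not spread to neighboring components, which would otherwise often lead to catastrophic cascading failures.

So the key to building resilient, self-healing systems is to allow failures to be contained, reified as messages, and sent to other components (acting as supervisors), so that they can be managed from a safe context outside the failed component. Here, being message-driven is the enabler: it moves us away from strongly coupled, brittle, deeply nested synchronous call chains that everyone has long learned either to suffer through or to ignore. The idea is to decouple failure management from the call chain, freeing the client from the responsibility of handling the server's failures.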
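The following TypeScript sketch shows the shape of that idea under simplifying assumptions: a hypothetical `SupervisedWorker` catches failures at its own boundary and reports them to a `Supervisor` as a message instead of throwing them at its caller, and the supervisor applies a simple restart policy. Real supervision (in Erlang/OTP or Akka, for example) is asynchronous and offers several strategies; this only illustrates the direction in which failures flow.

```typescript
// A failure reified as a plain message.
interface FailureMessage {
  from: string;
  error: string;
}

class Supervisor {
  readonly failures: FailureMessage[] = [];
  private readonly children = new Map<string, SupervisedWorker>();

  spawn(id: string): SupervisedWorker {
    const worker = new SupervisedWorker(id, this);
    this.children.set(id, worker);
    return worker;
  }

  // Failures arrive as messages; recovery happens here, outside the failed worker.
  tell(message: FailureMessage): void {
    this.failures.push(message);
    this.children.get(message.from)?.restart();
  }
}

class SupervisedWorker {
  restarts = 0;

  constructor(readonly id: string, private readonly supervisor: Supervisor) {}

  // The caller never sees an exception: failures are contained and reported.
  handle(input: number): number | undefined {
    try {
      if (input < 0) throw new Error(`negative input ${input}`);
      return Math.sqrt(input);
    } catch (e) {
      this.supervisor.tell({ from: this.id, error: String(e) });
      return undefined;
    }
  }

  // Hypothetical restart: a real worker would reset its internal state here.
  restart(): void {
    this.restarts++;
  }
}

const supervisor = new Supervisor();
const worker = supervisor.spawn("sqrt-1");
const ok = worker.handle(16); // 4
const failed = worker.handle(-1); // undefined; the supervisor was notified
```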

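### The Elasticity of Reactive Systems

[Elasticity](http://www.reactivemanifesto.org/glossary#Elasticity) is about *responsiveness under load*: the throughput of a system scales up or down (as well as in or out) automatically to meet varying demand, as resources are proportionally added or removed. It is the essential element needed to take advantage of the promises of cloud computing: making systems resource-efficient, cost-efficient, environmentally friendly, and pay-per-use.

Systems need to be adaptive: they should allow intervention-less auto-scaling, replication of state and behavior, load balancing of communication, failover, and upgrades, without rewriting or even reconfiguring the system. The enabler for this is *location transparency*: the ability to scale the system in the same way, using the same programming abstractions, with the same semantics, *across all dimensions of scale*, from CPU cores to data centers.

As the Reactive Manifesto puts it:

> One key insight that simplifies this problem immensely is to realize that we are all doing distributed computing. This is true whether we are running our systems on a single node (with multiple independent CPUs communicating over the QPI link) or on a cluster of nodes (with independent machines communicating over the network). Embracing this fact means that there is no conceptual difference between scaling vertically on multicore or horizontally on the cluster. This decoupling in space [...], enabled through asynchronous message-passing, and decoupling of the runtime instances from their references is what we call location transparency.

So no matter where the recipient resides, we communicate with it in the same way. The only way to do this with equivalent semantics is through messaging.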
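Location transparency can be illustrated with a hypothetical `Ref` interface in TypeScript: the sender only ever calls `tell`, whether the recipient lives in the same process (`LocalRef`) or behind a serialization boundary (`SimulatedRemoteRef`, which merely round-trips each message through JSON as a stand-in for the network; no real networking happens). Delivery is synchronous here only to keep the sketch short; a real system would enqueue messages asynchronously.

```typescript
// What the sender sees: an address it can send messages to, nothing more.
interface Ref<M> {
  tell(message: M): void;
}

// Recipient in the same process: hand over the message object directly.
class LocalRef<M> implements Ref<M> {
  constructor(private readonly deliver: (message: M) => void) {}

  tell(message: M): void {
    this.deliver(message);
  }
}

// Stand-in for a remote recipient: the message crosses a serialization
// boundary, as it would on the wire. No actual network I/O is performed.
class SimulatedRemoteRef<M> implements Ref<M> {
  readonly wire: string[] = [];

  constructor(private readonly deliver: (message: M) => void) {}

  tell(message: M): void {
    const frame = JSON.stringify(message);
    this.wire.push(frame);
    this.deliver(JSON.parse(frame) as M);
  }
}

interface Greeting {
  text: string;
}

// The sending code is identical regardless of where each recipient lives.
function greetAll(recipients: Ref<Greeting>[], text: string): void {
  for (const recipient of recipients) recipient.tell({ text });
}

const inbox: string[] = [];
const local = new LocalRef<Greeting>((g) => {
  inbox.push(`local:${g.text}`);
});
const remote = new SimulatedRemoteRef<Greeting>((g) => {
  inbox.push(`remote:${g.text}`);
});
greetAll([local, remote], "hi");
```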

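### The Productivity of Reactive Systems

Since most systems are inherently complex, one of the most important concerns is making sure the system architecture causes as little loss of productivity as possible when developing and maintaining components, while keeping operational *accidental complexity* to a minimum.

This matters because over a system's lifecycle (if the system is not properly designed) it becomes harder and harder to maintain, and the time and effort needed to understand, localize, and fix problems keeps growing.

Reactive systems are the most *productive* systems architecture we know of (in the context of multicore, cloud, and mobile architectures):

* Isolation of failures places [bulkheads](http://skife.org/architecture/fault-tolerance/2009/12/31/bulkheads.html) between components (LCTT note: when a ship is damaged and takes on water, bulkheads keep the water from flowing from the damaged compartment into the others), preventing failures from cascading and thus limiting their scope and severity.

* Supervisor hierarchies provide multiple levels of defense paired with self-healing capabilities, which keep a large number of transient failures from ever incurring any operational cost to investigate.

* Message-passing and location transparency allow components to be taken offline and replaced or rerouted without affecting the end-user experience, reducing the cost of disruptions, their relative urgency, and the resources needed to diagnose and fix them.

* Replication reduces the risk of data loss and lessens the impact of failures on the availability of data retrieval and storage.

* Elasticity allows resources to be conserved as usage fluctuates, minimizing operational costs when load is low and minimizing the risk of outages or urgent investment in scalability when load increases.

Thus, reactive systems enable the creation of systems that cope well with failure and with load that varies over time, while keeping operational costs low.

### How Reactive Programming Relates to Reactive Systems

Reactive programming is a great technique for managing internal logic and dataflow transformation locally, within components, as a way of optimizing code clarity, performance, and resource efficiency. Reactive systems, being a set of architectural principles, put the emphasis on distributed communication and give us tools to tackle resilience and elasticity in distributed systems.

A common problem with using only reactive programming is that the tight coupling between computation stages in an event-driven, callback-based, or declarative program makes *resilience* harder to achieve, because its transformation chains are often ephemeral and its stages (the callbacks or combinators) are anonymous, that is, not addressable.

This means such a program usually handles success and failure internally without signaling them to the outside world. This lack of addressability makes recovery of individual stages harder, because it is typically unclear where exceptions should, or even could, be sent.

Another difference from the reactive systems approach is that pure reactive programming allows decoupling in *time*, but not in *space* (unless, as described above, it distributes the dataflow by passing messages over the network under the hood). As stated earlier, decoupling in time allows for *concurrency*, but it is decoupling in space that allows for *distribution* and *mobility* (making dynamic topologies possible, not only static ones), and these are essential requirements for *elasticity*.

A lack of location transparency makes it hard to scale out a program based solely on reactive programming techniques adaptively and elastically, because doing so requires layering additional tools on top, such as a message bus, a data grid, or bespoke network protocols. This is where the message-driven programming of reactive systems shines: it is a communication abstraction that keeps its programming model and semantics across all dimensions of scale, and therefore reduces system complexity and cognitive overhead.

A commonly cited problem with callback-based programming is that, although writing such programs may be comparatively easy, it can have real consequences in the long run.

For example, systems based on anonymous callbacks provide very little insight when you need to understand them, maintain them, or, most importantly, figure out what happened, where, and why during production outages or misbehavior.

Libraries and platforms designed for reactive systems (such as the [Akka](http://akka.io/) project and the [Erlang](https://www.erlang.org/) platform) learned this lesson long ago, and rely on long-lived addressable components that are easier to reason about. When a failure occurs, the component is uniquely identifiable along with the message that caused the failure. With addressability at the core of the component model, monitoring solutions have a *meaningful* way to present the data they gather, using the identities that are propagated.

Choosing a good programming paradigm, one that enforces things like addressability and failure management, has proven invaluable in production, because it is designed with the harshness of reality in mind: to *expect and embrace failure* rather than fight the lost cause of trying to prevent it.

All in all, reactive programming is a very useful implementation technique that can be used within a reactive architecture. Remember, though, that it only helps manage one part of the story: dataflow management through asynchronous, non-blocking execution, usually within a single node or service. Once there are multiple nodes, you need to start thinking hard about things like data consistency, cross-node communication, coordination, versioning, orchestration, failure management, separation of concerns and responsibilities, and so on; in other words, system architecture.

Therefore, to get the most value out of reactive programming, use it as one of the tools for building a reactive system. Building a reactive system takes more than abstracting away OS-specific resources and sprinkling a few asynchronous APIs and [circuit breakers](http://martinfowler.com/bliki/CircuitBreaker.html) on top of an existing legacy software stack. It means embracing the fact that you are building a distributed system made up of multiple services that all need to work together to provide a consistent and responsive experience, not only when things work as expected but also in the face of failure and under unpredictable load.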

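### Summary

Having seen the business gains that come from adopting Reactive, enterprises and middleware vendors alike are beginning to embrace it. In this article, we have described reactive systems as the end goal for enterprises (assuming the context of multicore, cloud, and mobile architectures), with reactive programming serving as one of the important tools for getting there.

Reactive programming offers developers productivity, through performance and resource efficiency, at the component level for internal logic and dataflow transformation. Reactive systems offer architects and DevOps practitioners productivity, through resilience and elasticity, at the system level for building *cloud native* and other large-scale distributed systems. We recommend combining reactive programming techniques with the design principles of reactive systems.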

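### Footnotes

> 1. See Conal Elliott, the inventor of FRP, in [this presentation](https://begriffs.com/posts/2015-07-22-essence-of-frp.html).
>
> 2. [Amdahl's Law](https://en.wikipedia.org/wiki/Amdahl%27s_law) shows that the theoretical speedup of a system is limited by its serial parts, which means the system can experience diminishing returns as new resources are added.
>
> 3. Neil Gunther's [Universal Scalability Law](http://www.perfdynamics.com/Manifesto/USLscalability.html) is an essential tool for understanding contention and coordination in concurrent and distributed systems. It shows that the cost of maintaining coherency can lead to negative results as new resources are added to a system.
>
> 4. Messaging can be either synchronous (requiring the sender and receiver to be available at the same time) or asynchronous (allowing them to be decoupled in time). Discussing the semantic differences is out of scope for this article.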

---

via: <https://www.oreilly.com/ideas/reactive-programming-vs-reactive-systems>

Authors: [Jonas Bonér](https://www.oreilly.com/people/e0b57-jonas-boner), [Viktor Klang](https://www.oreilly.com/people/f96106d4-4ce6-41d9-9d2b-d24590598fcd) Translator: [XLCYun](https://github.com/XLCYun) Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 301 | Moved Permanently | null |

8,774 | TypeScript: The Missing Introduction | https://toddmotto.com/typescript-the-missing-introduction | 2017-08-13T13:24:07 | [

"JavaScript",

"TypeScript"

] | https://linux.cn/article-8774-1.html |

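**The following is a guest post by James Henry ([@MrJamesHenry](https://twitter.com/MrJamesHenry)). I am a member of the ESLint core team and a TypeScript evangelist. I am working with Todd on [UltimateAngular](https://ultimateangular.com/courses) to bring you premium Angular and TypeScript courses.**

> The purpose of this article is to explain how we think about TypeScript and the role it plays in improving JavaScript development.
>
> We will also try to give accurate, plain-language definitions for the buzzwords surrounding types and compilation.

TypeScript has far more to offer than this article can cover. To learn more, read the [official documentation](http://www.typescriptlang.org/docs), or take the [TypeScript course on UltimateAngular](https://ultimateangular.com/courses#typescript) to go from beginner to TypeScript expert.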

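### Background

TypeScript is a surprisingly powerful tool, and it is genuinely easy to pick up.

However, TypeScript can seem more complex than JavaScript, because it may introduce us to a number of technical concepts about our JavaScript programs that we have never considered before.

As soon as we start talking about types, compilers, and so on, things can get confusing very quickly.

This article explains the many potentially confusing concepts you need to know to get started with TypeScript quickly, so that you can handle them with confidence.

### Key Concepts

Running our code in a web browser may give us some misconceptions about how it works: "It isn't compiled, right?", "I'm pretty sure there are no types involved..."

Interestingly, these statements are both correct and incorrect, depending on the context and how we define the concepts involved.

Our first task is to clear these up.

#### Is JavaScript an interpreted or a compiled language?

Traditionally, developers consider a language to be compiled when they have to compile their programs themselves before running them.

> In beginner's terms, compilation is the process of converting the high-level language code we write into a format that the machine actually runs.

In Go, for example, we can use the `go build` command-line tool to compile a .go file into a lower-level form of the code that can be executed directly.

```
# We manually compile our .go file into something we can run
# using the command line tool "go build"
go build ultimate-angular.go

# ...then we execute it!
./ultimate-angular
```

As JavaScript programmers (ignoring our love of newer build tools and module loaders for a moment), we do not have this fundamental compilation step in our day-to-day JavaScript development.

We write some JavaScript, put it in a `<script>` tag in the browser, and it runs (or we run it in a server environment such as Node.js).

**OK, so JavaScript isn't compiled, which means it must be an interpreted language, right?**

Well, what we can say for sure is that JavaScript is not something we compile ourselves. Before we come back to the question of how JavaScript gets compiled, let's briefly look at a simple example of an interpreted language.

> Executing code in an interpreted language is like reading a book: from top to bottom, line by line.

A well-known, classic example of an interpreted language is a bash script. The bash interpreter in our terminal reads our commands line by line and executes them.

Back to the question of whether JavaScript is interpreted or compiled: we need to keep the ideas of reading a program line by line and executing it line by line (the simplistic understanding of "interpreted") separate, rather than conflating them.

Take this code as an example:

```
hello();

function hello() {
  console.log("Hello");
}
```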

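This is genuine JavaScript that prints the word Hello, yet `hello()` is used before we have defined it. Simple line-by-line execution could not handle this, because the call to `hello()` on the first line would mean nothing until the function is declared further down.

This works in JavaScript because our code is actually compiled before it executes, by what is known as the "JavaScript engine" or an "implementation-specific compilation environment". How this compilation happens depends on the implementation (for example, Node.js and Chrome, which use the V8 engine, differ from Firefox, which uses SpiderMonkey).

We won't go any further into the subtleties of compiled versus interpreted execution here (the definitions above are good enough for our purposes).

> It is important to remember that the JavaScript code we write is already not the code our users actually execute, even when we simply put it in a `<script>` tag in HTML.

#### Run Time vs. Compile Time

Now that we understand that compiling and running are two distinct phases, the terms "Run Time" and "Compile Time" become much easier to understand.

Compile time is the phase in which the code in our editor or IDE is converted into another format.

Run time is the phase in which our program actually executes; for example, the `hello()` function above executes at run time.

#### The TypeScript Compiler

Now that we understand these key phases in a program's lifecycle, we can introduce the TypeScript compiler.

The TypeScript compiler is key to helping us write our code. For example, instead of putting our JavaScript straight into a `<script>` tag, we can first pass it through the TypeScript compiler, which gives us suggestions for improving our program before it runs.

> We can think of this new step as our own personal "compile time", which makes sure our program is written the way we intended before it ever reaches the main JavaScript engine.

This is similar to the Go example above, except that the TypeScript compiler only gives us hints based on how we have written our program; it does not turn the program into a low-level executable, it only produces plain JavaScript.

```
# One option for passing our source .ts file through the TypeScript
# compiler is to use the command line tool "tsc"
tsc ultimate-angular.ts

# ...this will produce a .js file of the same name
# i.e. ultimate-angular.js
```

The [official documentation](http://www.typescriptlang.org/docs) has many articles on integrating the TypeScript compiler into your existing workflow in various ways. These are beyond the scope of this article.

#### Dynamic vs. Static Typing

As with compiled vs. interpreted programs, the comparison between dynamic and static typing is highly ambiguous in existing material.

Let's start by reviewing how we understand types in JavaScript.

Here is our code:

```
var name = 'James';
var sum = 1 + 2;
```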

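How would we describe this code to someone else?

"We declared a variable `name`, which is assigned the **string** 'James', and then we declared a variable `sum`, which is assigned the result of adding the **number** 1 and the **number** 2."

Even in a program this simple, we have already used two of JavaScript's fundamental types: `String` and `Number`.

As with compilation above, we won't get bogged down in the academic details of types in programming languages; the key is to understand what types represent in JavaScript, and then extend that understanding to TypeScript.

From our nightly reading of the latest ECMAScript specification (LOL, JK - "wat's an ECMA?"), we learn that it makes extensive reference to JavaScript's types and their usage.

Quoting directly from the official specification:

> An ECMAScript language type corresponds to values that are directly manipulated by an ECMAScript programmer using the ECMAScript language.
>
> The ECMAScript language types are Undefined, Null, Boolean, String, Symbol, Number, and Object.

We can see that the JavaScript language has 7 official types, of which we have probably used 6 in almost every program we have ever written (Symbol was first introduced in ES2015, also known as ES6).

Now let's take a closer look at `name` and `sum` in the JavaScript code above.

We can take our `name` variable, which is currently assigned the string 'James', and reassign it to the current value of our second variable, `sum`, which is the number 3.

```
var name = 'James';
var sum = 1 + 2;

name = sum;
```

The `name` variable started out "holding" a string, but now it "holds" a number. This highlights a fundamental quality of variables and types in JavaScript:

The value 'James' is always of type string, but the `name` variable can be assigned a value of any type. The same is true of the `sum` assignment: the value 1 is always of type number, but the `sum` variable can be assigned any possible value.

> In JavaScript, it is values that have types; a variable can hold a value of any type at any time.

This also happens to be precisely the definition of a "dynamically typed language".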
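The same point can be checked at run time with JavaScript's `typeof` operator, shown here as TypeScript. Annotating the variable as `unknown` is our choice for this sketch: it lets a single TypeScript variable hold values of different types, as a plain JavaScript variable can, while `typeof` reports the type of whatever *value* it currently holds. Note that `typeof null` returns `"object"`, a long-standing quirk, so `typeof` does not map one-to-one onto the seven specification types.

```typescript
// One variable, two values of different types over its lifetime.
let holder: unknown = "James";
const firstType = typeof holder; // "string"
holder = 1 + 2;
const secondType = typeof holder; // "number"

// typeof reports the runtime type of each value.
const samples: unknown[] = ["James", 3, true, undefined, null, Symbol("id"), {}];
const runtimeTypes = samples.map((value) => typeof value);
// ["string", "number", "boolean", "undefined", "object", "symbol", "object"]
```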

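By contrast, we can think of a "statically typed language" as one in which we can (and often must) associate type information with a particular variable:

```
var name: string = 'James';
```

In this code, we can state our intention for the `name` variable more explicitly: we want it always to be used as a string.

And guess what? We have just seen our first TypeScript program.

When we *reflect* on our own code (no pun intended on "reflection" in the programming sense), we can conclude that even in a dynamic language like JavaScript, we almost always have a very clear intention for how our variables and function parameters will be used when we first define them. If those variables and parameters are later reassigned to values of a different type than the one we originally assigned, it is likely that something is not working the way we intended.

> As JavaScript developers, one huge benefit of TypeScript's static type annotations is that they let us express our intentions for our variables clearly.
>
> This improvement benefits not only the TypeScript compiler, but also our colleagues and our future selves when they try to understand our code. Code is meant to be read.

### TypeScript's Role in Our JavaScript Workflow

We have started to see why it is often said that "TypeScript is just JavaScript + static types". The `: string` on our `name` variable is what we call a "type annotation". It is used at compile time (in other words, when we pass our code through the TypeScript compiler) to make sure the rest of our code matches our original intention.

Let's look at our program again and add another explicit annotation, this time for our `sum` variable:

```
var name: string = 'James';
var sum: number = 1 + 2;

name = sum;
```

If we pass this code through the TypeScript compiler, we now get an error on the line `name = sum`: `Type 'number' is not assignable to type 'string'`. Our attempted "smuggling" has been caught, warning us that the code we are about to run may have a problem.

> Importantly, we can choose to ignore the TypeScript compiler's error if we want to carry on, because it is only a tool that gives us feedback on our JavaScript code before we ship it to our users.

The final JavaScript code that the TypeScript compiler outputs for us will be exactly the same as our original source above:

```
var name = 'James';
var sum = 1 + 2;

name = sum;
```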

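The type annotations have all been removed for us automatically, and we can now run our code.

> Note: in this example, the TypeScript compiler would give us exactly the same error even if we had not provided the explicit type annotations `: string` and `: number`.
>
> TypeScript is often able to infer the type of a variable from the way we use it!

#### Our Source File Is Our Document, TypeScript Is Our Spell Checker

A good analogy for TypeScript's relationship with our source code is the relationship between a spell checker and a document we write in Microsoft Word.

The two have three key things in common:

1. **It can tell us when something we have written is objectively and flatly wrong:**

   * *Spell checker*: "we have written a word that does not exist in the dictionary"

   * *TypeScript*: "we have referenced a symbol (e.g. a variable) that is not declared in our program"

2. **It can warn us that something we have written might be wrong:**

   * *Spell checker*: "the tool cannot fully infer the meaning of a particular clause and suggests rewriting it"

   * *TypeScript*: "the tool cannot fully infer the type of a particular variable and warns against using it this way"

3. **Our source can still be used for its original purpose, whether or not the tool reports errors:**

   * *Spell checker*: "even if your document has lots of spelling mistakes, you can still print it out and use it as a document"

   * *TypeScript*: "even if your source code has TypeScript errors, it will still produce JavaScript code that you can execute"

### TypeScript Is a Tool That Enables Other Tools

The TypeScript compiler is made up of several distinct parts, or phases. We will finish this article by looking at one of those parts, the Parser, which, on top of what TypeScript already does for us, gives us the opportunity to build other developer tools.

The result of the "parser step" of the compilation process is a so-called Abstract Syntax Tree, or AST for short.

#### What is an Abstract Syntax Tree (AST)?

We write our programs as plain text because that is the best way for us humans to interact with computers and get them to do what we want. We are not very good at writing complex data structures by hand!

However, plain text is actually a very tricky thing to work with inside a compiler. It may contain things that are unnecessary for the program to work, such as whitespace, or parts that are ambiguous.

For that reason, we want to convert our program into a data structure that maps out all of the so-called "tokens" we have used, and where each of them fits into our program.

This data structure is exactly what an AST is!

An AST can be represented in many different ways; let's use JSON to take a look.

We will start with this extremely basic source code:

```
var a = 1;
```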

The (simplified) output of the TypeScript compiler's Parser phase is the following AST:

```
{
  "pos": 0,
  "end": 10,
  "kind": 256,
  "text": "var a = 1;",
  "statements": [
    {
      "pos": 0,
      "end": 10,
      "kind": 200,
      "declarationList": {
        "pos": 0,
        "end": 9,
        "kind": 219,
        "declarations": [
          {
            "pos": 3,
            "end": 9,
            "kind": 218,
            "name": {
              "pos": 3,
              "end": 5,
              "text": "a"
            },
            "initializer": {
              "pos": 7,
              "end": 9,
              "kind": 8,
              "text": "1"
            }
          }
        ]
      }
    }
  ]
}
```

The objects in our AST are called nodes.
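To see how `pos` and `end` relate to the source text, here is a hypothetical, drastically simplified parser in TypeScript that only understands statements of the form `var <identifier> = <number>;`. It is not how the TypeScript compiler is implemented: it uses readable string `kind`s instead of numeric ones and skips the source-file and declaration-list levels. It does follow the same position convention as the AST above (a node's `pos` includes leading whitespace and `end` is exclusive), so it reproduces the 0/10, 3/9, 3/5, and 7/9 ranges shown there.

```typescript
interface AstNode {
  kind: string;
  pos: number; // start, including leading whitespace
  end: number; // exclusive end
  text?: string;
  children?: AstNode[];
}

interface Token {
  pos: number;
  end: number;
  text: string;
}

// Only handles `var <identifier> = <number>;`, with no error recovery.
function parseVarStatement(source: string): AstNode {
  let i = 0;

  // Skip spaces, then match an anchored pattern at the current position.
  function expect(pattern: RegExp): Token {
    const pos = i;
    while (source[i] === " ") i++;
    const match = pattern.exec(source.slice(i));
    if (match === null) throw new SyntaxError(`Unexpected input at offset ${i}`);
    i += match[0].length;
    return { pos, end: i, text: match[0] };
  }

  expect(/^var\b/);
  const name = expect(/^[A-Za-z_$][\w$]*/);
  expect(/^=/);
  const init = expect(/^\d+/);
  const declarationEnd = i;
  expect(/^;/);

  return {
    kind: "VariableStatement",
    pos: 0,
    end: i,
    children: [
      {
        kind: "VariableDeclaration",
        pos: name.pos,
        end: declarationEnd,
        children: [
          { kind: "Identifier", pos: name.pos, end: name.end, text: name.text },
          { kind: "NumericLiteral", pos: init.pos, end: init.end, text: init.text },
        ],
      },
    ],
  };
}

const ast = parseVarStatement("var a = 1;");
```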

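#### Example: Renaming Symbols in VS Code

Internally, the TypeScript compiler uses the AST produced by the Parser to power some very important things, such as the type checking that happens when we compile our programs.

But it doesn't stop there!

> We can use the AST to build our own tools on top of TypeScript, such as code beautifiers, code formatters, and analysis tools.

A great example of a tool built on top of this AST is the Language Server.

A deep dive into how the Language Server works is beyond the scope of this article, but one absolutely heavyweight feature it gives us while we write programs is "Rename Symbol".

Suppose we have the following source code:

```
// The name of the author is James
var first_name = 'James';
console.log(first_name);
```

After a code review and an appropriate pursuit of perfection, we decide to change our variable naming convention and use camelCase instead of the snake_case we are currently using.

In our code editors, we have long been able to select multiple occurrences of the same text and use multiple cursors to change them all at once.

But if we keep treating our program as plain text while doing this, we have fallen into a classic trap.

The word "name" in the comment, which we did not want to change, got caught up in our manual matching. We can see how risky it would be to change code this way in a real-world application!

As we learned above, when something like TypeScript generates an AST behind the scenes, we are no longer interacting with our program as plain text: each token has its own place in the AST, with a clear mapping.

We can right-click our `first_name` variable and use "Rename Symbol" directly in VS Code (TypeScript Language Server plugins are also available for other editors).

Excellent! Now our `first_name` variable is the only thing that changes, and if necessary the change even happens across multiple files in our project (as with exported and imported values)!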
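The difference between text-based and token-based renaming can be sketched in TypeScript. The hypothetical `renameIdentifier` function below is a toy tokenizer, not the Language Server's algorithm: it recognizes line comments, single-quoted strings, and identifiers, and replaces only identifier tokens that exactly match the old name. A real rename also resolves scopes and references across files, which this sketch does not attempt. To make the contrast visible, the sample input uses a comment that mentions `first_name` itself.

```typescript
// Toy tokenizer: a line comment, a single-quoted string, an identifier,
// or any other single character.
const TOKEN = /\/\/[^\n]*|'(?:[^'\\\n]|\\.)*'|[A-Za-z_$][\w$]*|[\s\S]/g;

function renameIdentifier(source: string, from: string, to: string): string {
  let result = "";
  TOKEN.lastIndex = 0;
  let match: RegExpExecArray | null;
  while ((match = TOKEN.exec(source)) !== null) {
    // Comments and strings are single tokens, so they can never equal `from`.
    result += match[0] === from ? to : match[0];
  }
  return result;
}

const original = [
  "// first_name holds the author's first name",
  "var first_name = 'James';",
  "console.log(first_name);",
].join("\n");

// Plain-text replacement also rewrites the comment.
const textReplaced = original.split("first_name").join("firstName");

// Token-based replacement only touches the identifier.
const tokenRenamed = renameIdentifier(original, "first_name", "firstName");
```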

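### Summary

Phew, we covered a lot of ground in this article.

We steered clear of the academic side and gave plain-language definitions for much of the terminology surrounding compilers and types.

We compared compiled vs. interpreted languages, run time vs. compile time, and dynamic vs. static typing, and looked at how Abstract Syntax Trees (ASTs) give us a better way to build tooling for our programs.

Importantly, we presented a way of thinking about TypeScript as a tool for our JavaScript development, and showed how even better tools can be built on top of it, such as Rename Symbol as a way of refactoring code.

Head over to UltimateAngular and start your journey from TypeScript beginner to expert!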

---

via: <https://toddmotto.com/typescript-the-missing-introduction>

Author: James Henry Translator: [MonkeyDEcho](https://github.com/MonkeyDEcho) Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 301 | Moved Permanently | null |

8,775 | How to Install Apache Hadoop on CentOS | https://www.unixmen.com.hijacked/setup-apache-hadoop-centos/ | 2017-08-13T21:05:34 | [

"Hadoop"

] | /article-8775-1.html |

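The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. Apache™ Hadoop® is open-source software for reliable, scalable, distributed computing.

The project includes these modules:

* Hadoop Common: the common utilities that support the other Hadoop modules.

* Hadoop Distributed File System (HDFS™): a distributed file system that provides high-throughput access to application data.

* Hadoop YARN: a framework for job scheduling and cluster resource management.

* Hadoop MapReduce: a YARN-based system for parallel processing of large data sets.

This article will help you install Hadoop on CentOS and configure a single-node Hadoop cluster, step by step.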

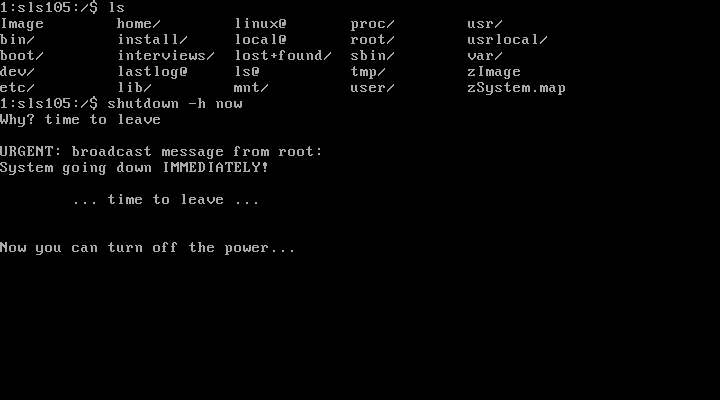

### Install Java

Before installing Hadoop, make sure Java is installed on your system. Use this command to check the installed Java version.

```
java -version

java version "1.7.0_75"
Java(TM) SE Runtime Environment (build 1.7.0_75-b13)
Java HotSpot(TM) 64-Bit Server VM (build 24.75-b04, mixed mode)
```

To install or update Java, follow the step-by-step instructions below.

The first step is to download the latest version of Java (JDK 7u79 in this guide) from the [official Oracle website](http://www.oracle.com/technetwork/java/javase/downloads/jdk7-downloads-1880260.html).

```
cd /opt/
wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/7u79-b15/jdk-7u79-linux-x64.tar.gz"
tar xzf jdk-7u79-linux-x64.tar.gz
```

Next, register the newer Java version with `alternatives` so that it can be selected. Use the following commands to do this.

```
cd /opt/jdk1.7.0_79/
alternatives --install /usr/bin/java java /opt/jdk1.7.0_79/bin/java 2
alternatives --config java
```

```
There are 3 programs which provide 'java'.

  Selection    Command
-----------------------------------------------
*  1           /opt/jdk1.7.0_60/bin/java
 + 2           /opt/jdk1.7.0_72/bin/java
   3           /opt/jdk1.7.0_79/bin/java

Enter to keep the current selection[+], or type selection number: 3 [Press Enter]
```

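You may also need to set the `javac` and `jar` command paths with the `alternatives` command.

```
alternatives --install /usr/bin/jar jar /opt/jdk1.7.0_79/bin/jar 2
alternatives --install /usr/bin/javac javac /opt/jdk1.7.0_79/bin/javac 2
alternatives --set jar /opt/jdk1.7.0_79/bin/jar
alternatives --set javac /opt/jdk1.7.0_79/bin/javac
```

The next step is to configure the environment variables. Use the following commands to set them. Note that `export` only affects the current shell session; to make these settings permanent, add the same lines to a startup file such as `~/.bashrc`.

Set the `JAVA_HOME` variable:

```
export JAVA_HOME=/opt/jdk1.7.0_79
```

Set the `JRE_HOME` variable:

```
export JRE_HOME=/opt/jdk1.7.0_79/jre
```

Set the `PATH` variable:

```
export PATH=$PATH:/opt/jdk1.7.0_79/bin:/opt/jdk1.7.0_79/jre/bin
```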

### Install Apache Hadoop

Once the Java environment is set up, you can start installing Apache Hadoop.

The first step is to create a system user account for the Hadoop installation.

```
useradd hadoop
passwd hadoop
```

Now you need to configure SSH keys for the `hadoop` user. Use the following commands to enable passwordless SSH login.

```
su - hadoop
ssh-keygen -t rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys
exit
```

Now download the latest available Hadoop release from the official website, [hadoop.apache.org](https://hadoop.apache.org/).

```
# Switch to the hadoop user so that ~ is /home/hadoop (matching HADOOP_HOME below)
su - hadoop
cd ~
wget http://apache.claz.org/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz
tar xzf hadoop-2.6.0.tar.gz
mv hadoop-2.6.0 hadoop
```

The next step is to set the environment variables that Hadoop uses.

Edit `~/.bashrc` and append the following lines to the end of the file.

```
export HADOOP_HOME=/home/hadoop/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
```

Apply the changes to the current environment.

```
source ~/.bashrc
```

Edit `$HADOOP_HOME/etc/hadoop/hadoop-env.sh` and set the `JAVA_HOME` environment variable.

```
export JAVA_HOME=/opt/jdk1.7.0_79/
```

Now let's start by configuring a basic single-node Hadoop cluster.

First, edit the Hadoop configuration files and make the following changes.

```
cd /home/hadoop/hadoop/etc/hadoop
```

Let's edit `core-site.xml`.

```
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

Next, edit `hdfs-site.xml`:

```
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>

  <property>
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>

  <property>
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>
```

Then edit `mapred-site.xml` (in Hadoop 2.x releases this file may only exist as `mapred-site.xml.template`; if so, copy the template to `mapred-site.xml` first):

```
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```

Finally, edit `yarn-site.xml`:

```
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```

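Now format the NameNode with the following command:

```
hdfs namenode -format
```

To start all Hadoop services, use the following commands:

```
cd /home/hadoop/hadoop/sbin/
start-dfs.sh
start-yarn.sh
```

To check whether all services started correctly, use the `jps` command:

```
jps
```

You should see output like the following. (With Hadoop 2.x and YARN, `start-yarn.sh` starts the `ResourceManager` and `NodeManager` daemons; the `JobTracker` and `TaskTracker` entries in this sample are Hadoop 1.x MapReduce daemons, so expect the two YARN daemons in their place.)

```
26049 SecondaryNameNode
25929 DataNode
26399 Jps
26129 JobTracker
26249 TaskTracker
25807 NameNode
```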
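If you want to automate this check, a small helper can compare the `jps` listing against the daemons you expect. The TypeScript sketch below is a hypothetical helper, not part of Hadoop; it assumes the `<pid> <MainClass>` line format shown above and, by default, the daemon names of a Hadoop 2.x single-node setup with YARN. The sample input uses made-up PIDs.

```typescript
// Daemons expected on a Hadoop 2.x single-node cluster with YARN (an assumption;
// adjust the list for your version and configuration).
const EXPECTED_DAEMONS = ["NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager"];

// Return the expected daemons that do not appear in the `jps` output.
function missingDaemons(jpsOutput: string, expected: string[] = EXPECTED_DAEMONS): string[] {
  const running = new Set<string>();
  for (const line of jpsOutput.split("\n")) {
    const [, mainClass] = line.trim().split(/\s+/); // "<pid> <MainClass>"
    if (mainClass) running.add(mainClass);
  }
  return expected.filter((daemon) => !running.has(daemon));
}

// Example listing with made-up PIDs.
const sampleListing = [
  "12001 NameNode",
  "12002 DataNode",
  "12003 SecondaryNameNode",
  "12004 ResourceManager",
  "12005 Jps",
].join("\n");

const missing = missingDaemons(sampleListing); // ["NodeManager"]
```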

You can now access the Hadoop services in your browser; the YARN ResourceManager web UI is at http://your-ip-address:8088/ .

![hadoop](http://www.unixmen.com/wp-content/uploads/2015/06/hadoop.png)

Thanks for reading!

---

via: [https://www.unixmen.com/setup-apache-hadoop-centos/](https://www.unixmen.com.hijacked/setup-apache-hadoop-centos/)

Author: [anismaj](https://www.unixmen.com/author/anis/) Translator: [geekpi](https://github.com/geekpi) Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| null | HTTPSConnectionPool(host='www.unixmen.com.hijacked', port=443): Max retries exceeded with url: /setup-apache-hadoop-centos/ (Caused by NameResolutionError("<urllib3.connection.HTTPSConnection object at 0x7b83275821d0>: Failed to resolve 'www.unixmen.com.hijacked' ([Errno -2] Name or service not known)")) | null |

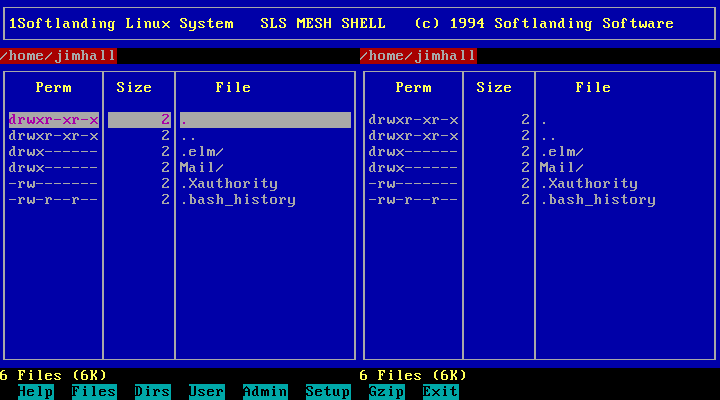

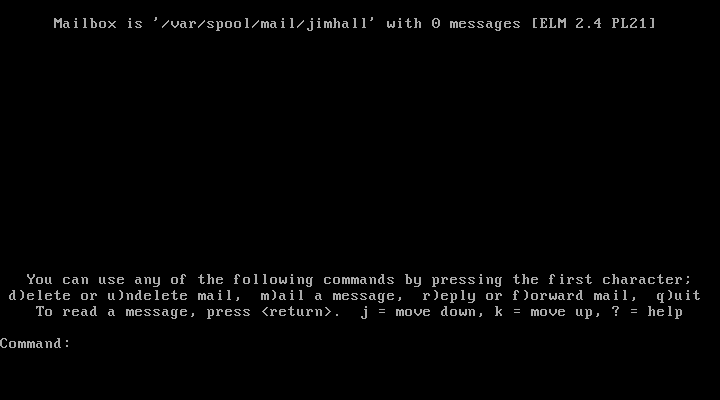

8,776 | 4 个 Linux 桌面上的轻量级图像浏览器 | https://opensource.com/article/17/7/4-lightweight-image-viewers-linux-desktop | 2017-08-14T11:48:00 | [

"图像"

] | https://linux.cn/article-8776-1.html |

> 当你需要的不仅仅是一个基本的图像浏览器,但又用不着一个完整的图像编辑器时,请看看这些程序。

像大多数人一样,你计算机上可能有些照片和其他图像。而且,像大多数人一样,你可能想要经常查看那些图像和照片。

而启动一个 [GIMP](https://www.gimp.org/) 或者 [Pinta](https://pinta-project.com/pintaproject/pinta/) 这样的图片编辑器对于简单的浏览图片来说太笨重了。

另一方面,大多数 Linux 桌面环境中包含的基本图像查看器可能不足以满足你的需要。如果你想要一些更多的功能,但仍然希望它是轻量级的,那么看看这四个 Linux 桌面中的图像查看器,如果还不能满足你的需要,还有额外的选择。

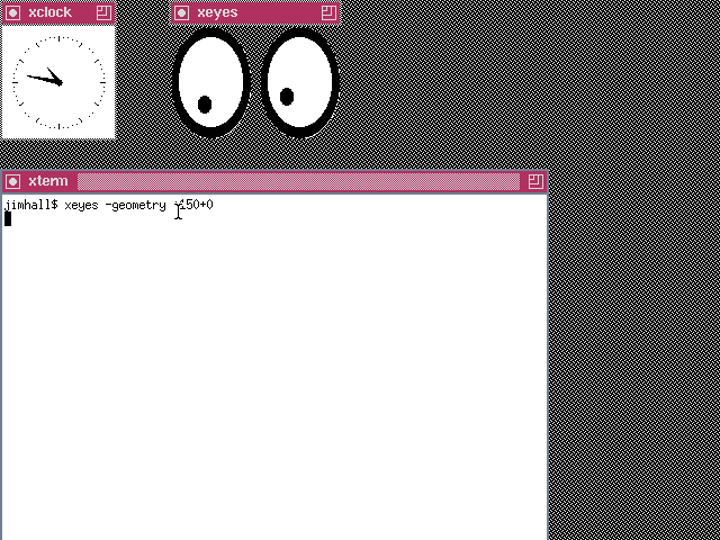

### Feh

[Feh](https://feh.finalrewind.org/) 是我以前在老旧计算机上最喜欢的软件。它简单、朴实、用起来很好。

你可以从命令行运行 Feh:只需将它指向一个图像或包含图像的文件夹即可。Feh 加载很快,你可以通过单击鼠标或使用键盘上的左右方向键来浏览图像。还有什么比这更简单的呢?

Feh 可能很轻量级,但它提供了一些选项。例如,你可以控制 Feh 的窗口是否具有边框,设置要查看的图像的最小和最大尺寸,并告诉 Feh 你想要从文件夹中的哪个图像开始浏览。
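如果想在脚本里调用 Feh,可以先拼出参数列表,再交给 `subprocess.run`。下面是一个示意,其中 `--borderless`、`--min-dimension`/`--max-dimension`(按尺寸筛选图像)和 `--start-at` 分别对应上文提到的三类选项。选项的确切语义请以你系统上的 `man feh` 为准。`feh_command`、`run_feh` 是为演示假设的函数名。

```python
import subprocess


def feh_command(target, borderless=False, min_dim=None, max_dim=None,
                start_at=None):
    """构造 feh 的参数列表;min_dim/max_dim 为 (宽, 高) 元组。"""
    cmd = ["feh"]
    if borderless:
        cmd.append("--borderless")                     # 窗口不带边框
    if min_dim:
        cmd += ["--min-dimension", "%dx%d" % min_dim]  # 只显示不小于该尺寸的图像
    if max_dim:
        cmd += ["--max-dimension", "%dx%d" % max_dim]  # 只显示不大于该尺寸的图像
    if start_at:
        cmd += ["--start-at", start_at]                # 从指定图像开始浏览
    cmd.append(target)                                 # 图像文件或文件夹
    return cmd


def run_feh(target, **options):
    """启动 feh 并等待它退出;要求系统已安装 feh。"""
    return subprocess.run(feh_command(target, **options))
```

使用参数列表而不是拼接字符串,可以避免文件名中的空格或特殊字符被 shell 误解析。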

*Feh 的使用*

### Ristretto

如果你将 Xfce 作为桌面环境,那么你会熟悉 [Ristretto](https://docs.xfce.org/apps/ristretto/start)。它很小、简单、并且非常有用。

怎么简单?你打开包含图像的文件夹,单击左侧的缩略图之一,然后单击窗口顶部的导航键浏览图像。Ristretto 甚至有幻灯片功能。

Ristretto 也可以做更多的事情。你可以使用它来保存你正在浏览的图像的副本,将该图像设置为桌面壁纸,甚至在另一个应用程序中打开它,例如,当你需要修改一下的时候。

*在 Ristretto 中浏览照片*

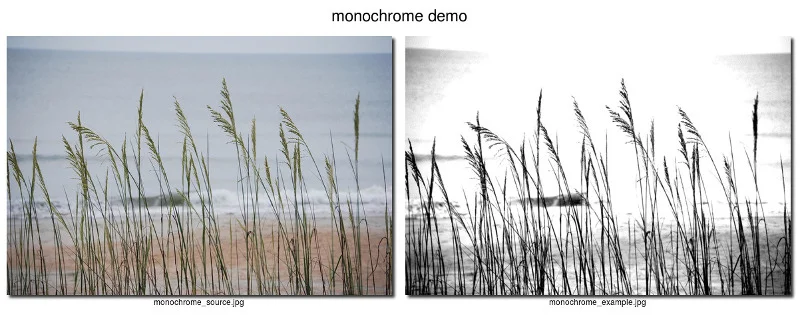

### Mirage

表面上,[Mirage](http://mirageiv.sourceforge.net/) 有点平常,没什么特色,但它能做其他优秀图片浏览器能做的事:打开图像,将它们缩放到窗口的宽度,并且可以使用键盘滚动浏览图像。它甚至可以播放幻灯片。

不过,Mirage 将让需要更多功能的人感到惊喜。除了其核心功能,Mirage 还可以调整图像大小和裁剪图像、截取屏幕截图、重命名图像,甚至生成文件夹中图像的 150 像素宽的缩略图。

如果这还不够,Mirage 还可以显示 [SVG 文件](https://en.wikipedia.org/wiki/Scalable_Vector_Graphics)。你甚至可以从[命令行](http://mirageiv.sourceforge.net/docs-advanced.html#cli)中运行。

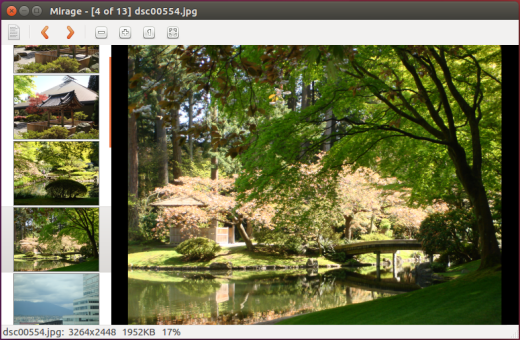

*使用 Mirage*

### Nomacs

[Nomacs](http://nomacs.org/) 显然是本文中最重量级的图像浏览器。不过它只是看起来臃肿,实际速度很快,而且易于使用。

Nomacs 不仅仅可以显示图像。你还可以查看和编辑图像的[元数据](https://iptc.org/standards/photo-metadata/photo-metadata/),向图像添加注释,并进行一些基本的编辑,包括裁剪、调整大小、并将图像转换为灰度。Nomacs 甚至可以截图。

一个有趣的功能是你可以在桌面上运行程序的两个实例,并在这些实例之间同步图像。当需要比较两个图像时,[Nomacs 文档](http://nomacs.org/synchronization/)中推荐这样做。你甚至可以通过局域网同步图像。我没有尝试通过网络进行同步,如果你做过可以分享下你的经验。

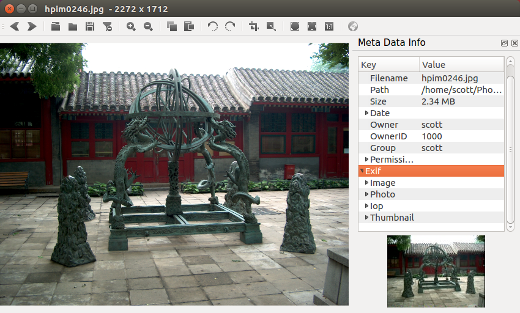

*Nomacs 中的照片及其元数据*

### 其他一些值得一看的浏览器

如果这四个图像浏览器不符合你的需求,这里还有其他一些你可能感兴趣的。

**[Viewnior](http://siyanpanayotov.com/project/viewnior/)** 自称是 “GNU/Linux 中的快速简单的图像查看器”,它很适合这个用途。它的界面干净整洁,Viewnior 甚至可以进行一些基本的图像处理。

如果你喜欢在命令行中使用,那么 **display** 可能是你需要的浏览器。 **[ImageMagick](https://www.imagemagick.org/script/display.php)** 和 **[GraphicsMagick](http://www.graphicsmagick.org/display.html)** 这两个图像处理软件包都有一个名为 display 的应用程序,这两个版本都有查看图像的基本和高级选项。

**[Geeqie](http://geeqie.org/)** 是更轻量、更快速的图像浏览器之一。但是,不要被它的简单所迷惑。它具备一些其它浏览器所缺乏的功能,比如元数据编辑和查看相机 RAW 图像格式。

**[Shotwell](https://wiki.gnome.org/Apps/Shotwell)** 是 GNOME 桌面的照片管理器。虽然它的功能不只是浏览图像,但 Shotwell 相当快速,非常适合显示照片和其他图形。

*在 Linux 桌面中你有最喜欢的一款轻量级图片浏览器么?请在评论区随意分享你的喜欢的浏览器。*

---

作者简介:

我是一名长期使用自由/开源软件的用户,并因为乐趣和收获写各种东西。我不会很严肃。你可以在这些网站上找到我:Twitter、Mastodon、GitHub。

via: <https://opensource.com/article/17/7/4-lightweight-image-viewers-linux-desktop>

作者:[Scott Nesbitt](https://opensource.com/users/scottnesbitt) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Like most people, you probably have more than a few photos and other images on your computer. And, like most people, you probably like to take a peek at those images and photos every so often.

Firing up an editor like [GIMP](https://www.gimp.org/) or [Pinta](https://pinta-project.com/pintaproject/pinta/) is overkill for simply viewing images.

On the other hand, the basic image viewer included with most Linux desktop environments might not be enough for your needs. If you want something with a few more features, but still want it to be lightweight, then take a closer look at these four image viewers for the Linux desktop, plus a handful of bonus options if they don't meet your needs.

## Feh

[Feh](https://feh.finalrewind.org/) is an old favorite from the days when I computed on older, slower hardware. It's simple, unadorned, and does what it's designed to do very well.

You drive Feh from the command line: just point it at an image or a folder containing images and away you go. Feh loads quickly, and you can scroll through a set of images with a mouse click or by using the left and right arrow keys on your keyboard. What could be simpler?

Feh might be light, but it offers some options. You can, for example, control whether Feh's window has a border, set the minimum and maximum sizes of the images you want to view, and tell Feh at which image in a folder you want to start viewing.


## Ristretto

If you've used Xfce as a desktop environment, you'll be familiar with [Ristretto](https://docs.xfce.org/apps/ristretto/start). It's small, simple, and very useful.

How simple? You open a folder containing images, click on one of the thumbnails on the left, and move through the images by clicking the navigation keys at the top of the window. Ristretto even has a slideshow feature.

Ristretto can do a bit more, too. You can use it to save a copy of an image you're viewing, set that image as your desktop wallpaper, and even open it in another application, for example, if you need to touch it up.


## Mirage

On the surface, [Mirage](http://mirageiv.sourceforge.net/) is kind of plain and nondescript. It does the same things just about every decent image viewer does: opens image files, scales them to the width of the window, and lets you scroll through a collection of images using your keyboard. It even runs slideshows.

Still, Mirage will surprise anyone who needs a little more from their image viewer. In addition to its core features, Mirage lets you resize and crop images, take screenshots, rename an image file, and even generate 150-pixel-wide thumbnails of the images in a folder.

If that wasn't enough, Mirage can display [SVG files](https://en.wikipedia.org/wiki/Scalable_Vector_Graphics). You can even drive it [from the command line](http://mirageiv.sourceforge.net/docs-advanced.html#cli).


## Nomacs

[Nomacs](http://nomacs.org/) is easily the heaviest of the image viewers described in this article. Its perceived bulk belies Nomacs' speed. It's quick and easy to use.

Nomacs does more than display images. You can also view and edit an image's [metadata](https://iptc.org/standards/photo-metadata/photo-metadata/), add notes to an image, and do some basic editing—including cropping, resizing, and converting the image to grayscale. Nomacs can even take screenshots.

One interesting feature is that you can run two instances of the application on your desktop and synchronize an image across those instances. The [Nomacs documentation](http://nomacs.org/synchronization/) recommends this when you need to compare two images. You can even synchronize an image across a local area network. I haven't tried synchronizing across a network, but please share your experiences if you have.


## A few other viewers worth looking at

If these four image viewers don't suit your needs, here are some others that might interest you.

**[Viewnior](http://siyanpanayotov.com/project/viewnior/)** bills itself as a "fast and simple image viewer for GNU/Linux," and it fits that bill nicely. Its interface is clean and uncluttered, and Viewnior can even do some basic image manipulation.

If the command line is more your thing, then **display** might be the viewer for you. Both the **[ImageMagick](https://www.imagemagick.org/script/display.php)** and **[GraphicsMagick](http://www.graphicsmagick.org/display.html)** image manipulation packages have an application named display, and both versions have basic and advanced options for viewing images.

**[Geeqie](http://geeqie.org/)** is one of the lighter and faster image viewers out there. Don't let its simplicity fool you, though. It packs features, like metadata editing and viewing camera RAW image formats, that other viewers lack.

**[Shotwell](https://wiki.gnome.org/Apps/Shotwell)** is the photo manager for the GNOME desktop. While it does more than just view images, Shotwell is quite speedy and does a great job of displaying photos and other graphics.

*Do you have a favorite lightweight image viewer for the Linux desktop? Feel free to share your preferences by leaving a comment.*

|

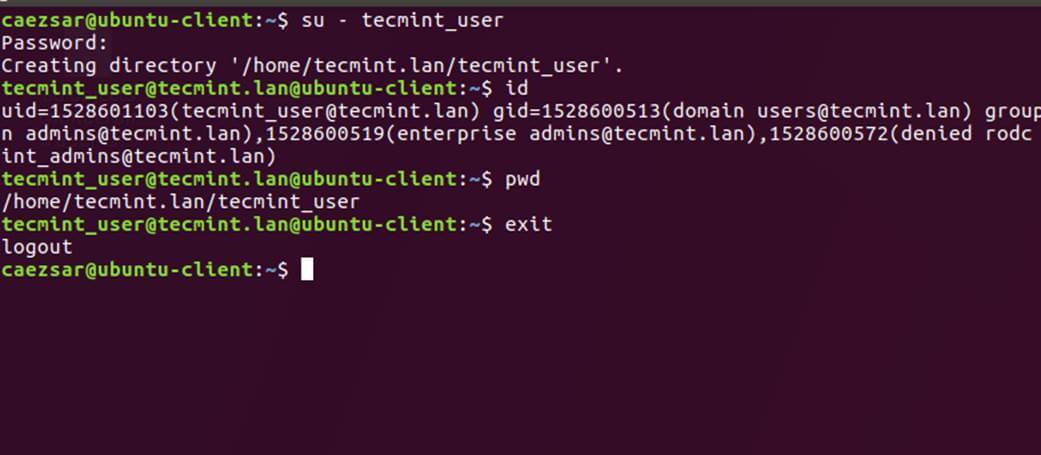

8,777 | Samba 系列(十四):在命令行中将 CentOS 7 与 Samba4 AD 集成 | https://www.tecmint.com/integrate-centos-7-to-samba4-active-directory/ | 2017-08-14T17:58:03 | [

"Samba"

] | https://linux.cn/article-8777-1.html |

本指南将向你介绍如何使用 Authconfig 在命令行中将无图形界面的 CentOS 7 服务器集成到 [Samba4 AD 域控制器](/article-8065-1.html)中。

这类设置提供了由 Samba 持有的单一集中式帐户数据库,允许 AD 用户通过网络基础设施对 CentOS 服务器进行身份验证。

#### 要求

1. [在 Ubuntu 上使用 Samba4 创建 AD 基础架构](/article-8065-1.html)

2. [CentOS 7.3 安装指南](/article-8048-1.html)

### 步骤 1:为 Samba4 AD DC 配置 CentOS

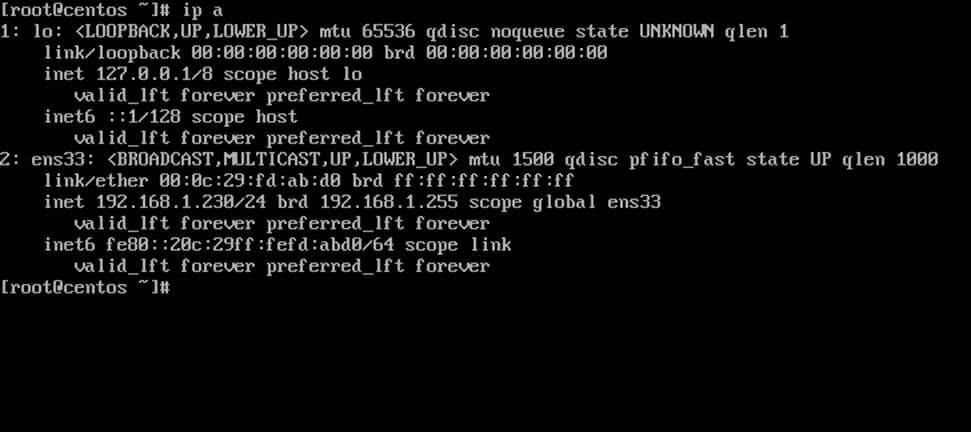

1、 在开始将 CentOS 7 服务器加入 Samba4 DC 之前,你需要确保网络接口被正确配置为通过 DNS 服务查询域。

运行 `ip address` 命令列出机器的网络接口,然后针对要编辑的网卡名称(如本例中的 ens33)运行 `nmtui-edit` 命令,如下所示。

```
# ip address
# nmtui-edit ens33
```

![列出网络接口](https://www.tecmint.com/wp-content/uploads/2017/07/List-Network-Interfaces.jpg)

*列出网络接口*

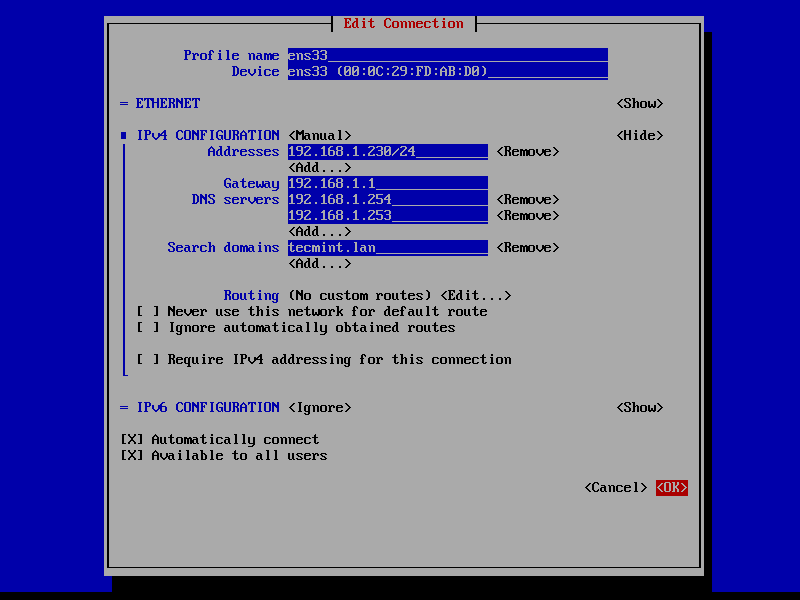

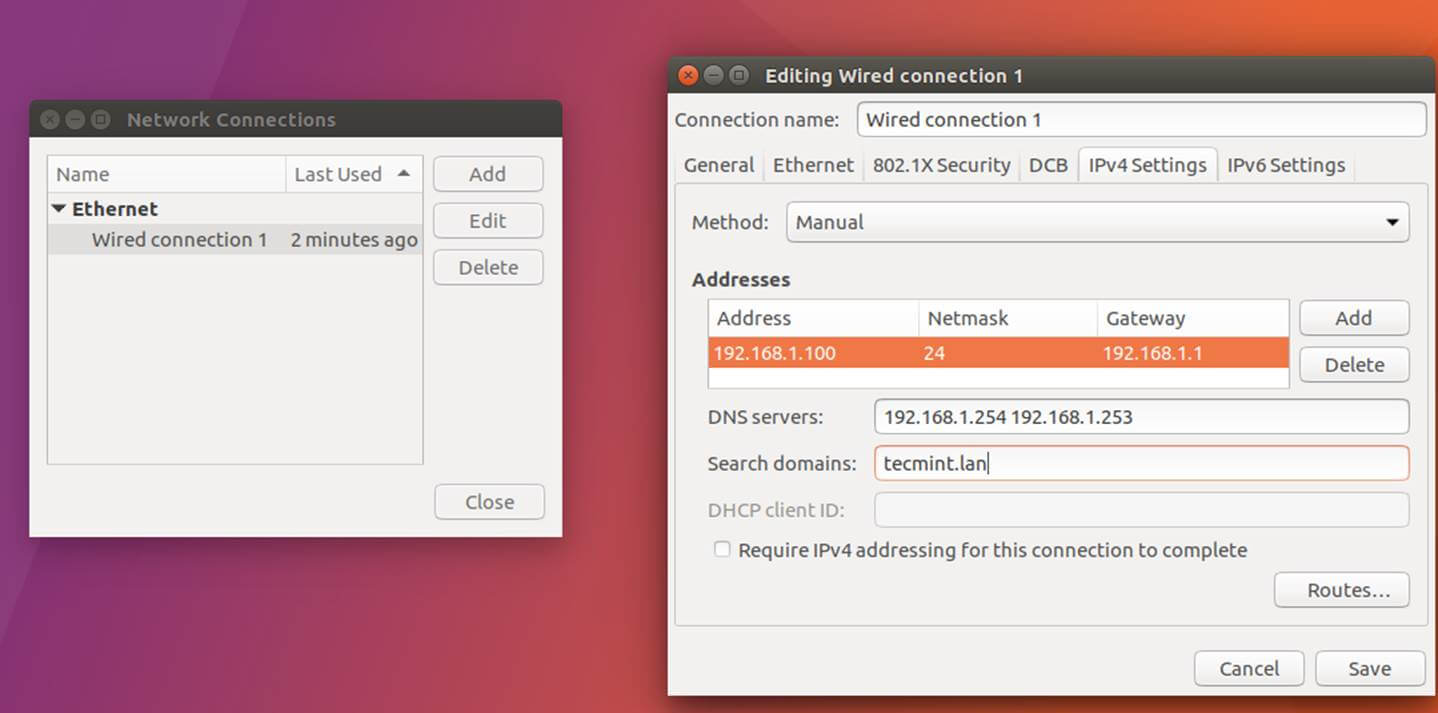

2、 打开网络接口进行编辑后,添加最适合你所在局域网的静态 IPv4 配置,并确保将 DNS 服务器设置为 Samba AD 域控制器的 IP 地址。

另外,在搜索域中追加你的域的名称,并使用 [TAB] 键跳到确定按钮来应用更改。

设置搜索域后,当你对域内的 DNS 记录只使用短名称时,DNS 解析会自动补全域名部分,得到完整的 FQDN。

![配置网络接口](https://www.tecmint.com/wp-content/uploads/2017/07/Configure-Network-Interface.png)

*配置网络接口*

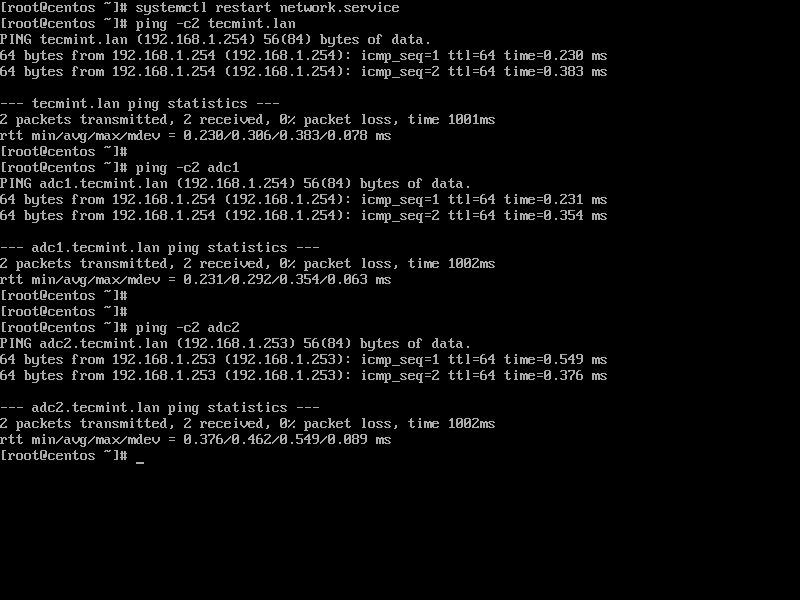

3、最后,重启网络守护进程以应用更改,并对域名和各域控制器的短名称执行一系列 ping 命令,测试 DNS 解析是否配置正确,如下所示。

```
# systemctl restart network.service
# ping -c2 tecmint.lan
# ping -c2 adc1
# ping -c2 adc2
```

![验证域上的 DNS 解析](https://www.tecmint.com/wp-content/uploads/2017/07/Verify-DNS-Resolution-on-Domain.png)

*验证域上的 DNS 解析*
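`ping` 同时检查了名称解析和网络连通性。如果只想确认 DNS 解析是否正常,也可以用 Python 标准库的 `socket.getaddrinfo` 写一个小检查。下面只是一个示意,`check_resolution` 是假设的函数名。

```python
import socket


def check_resolution(names):
    """尝试解析每个名称,返回 {名称: 已排序的 IP 地址列表};解析失败时为空列表。"""
    results = {}
    for name in names:
        try:
            infos = socket.getaddrinfo(name, None)
        except socket.gaierror:
            results[name] = []
            continue
        # 每项的 sockaddr(info[4])第一个元素是 IP 地址字符串;用集合去重
        results[name] = sorted({info[4][0] for info in infos})
    return results
```

例如调用 `check_resolution(["tecmint.lan", "adc1", "adc2"])`(主机名照抄上文)。如果某个名称对应的值为空列表,说明它解析失败,应回头检查网卡的 DNS 服务器和搜索域设置。短名称能否解析成功,取决于系统解析器是否应用了搜索域。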

4、 另外,使用下面的命令配置你的计算机主机名并重启机器应用更改。

```
# hostnamectl set-hostname your_hostname
# init 6
```

使用以下命令验证主机名是否正确配置。

```
# cat /etc/hostname
# hostname
```

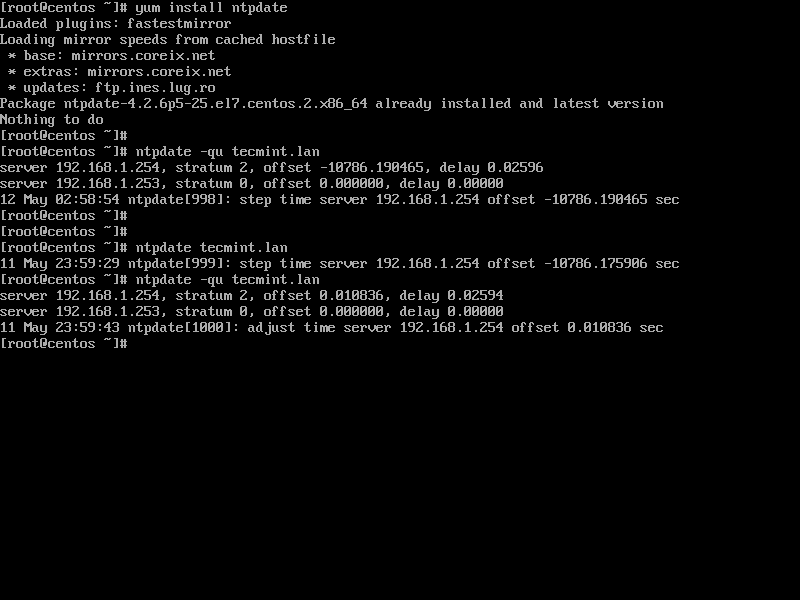

5、 最后,使用 root 权限运行以下命令,与 Samba4 AD DC 同步本地时间。

```
# yum install ntpdate
# ntpdate domain.tld
```

![与 Samba4 AD DC 同步时间](https://www.tecmint.com/wp-content/uploads/2017/07/Sync-Time-with-Samba4-AD-DC.png)

*与 Samba4 AD DC 同步时间*

### 步骤 2:将 CentOS 7 服务器加入到 Samba4 AD DC

6、 要将 CentOS 7 服务器加入到 Samba4 AD 中,请先用具有 root 权限的帐户在计算机上安装以下软件包。

```

# yum install authconfig samba-winbind samba-client samba-winbind-clients

```

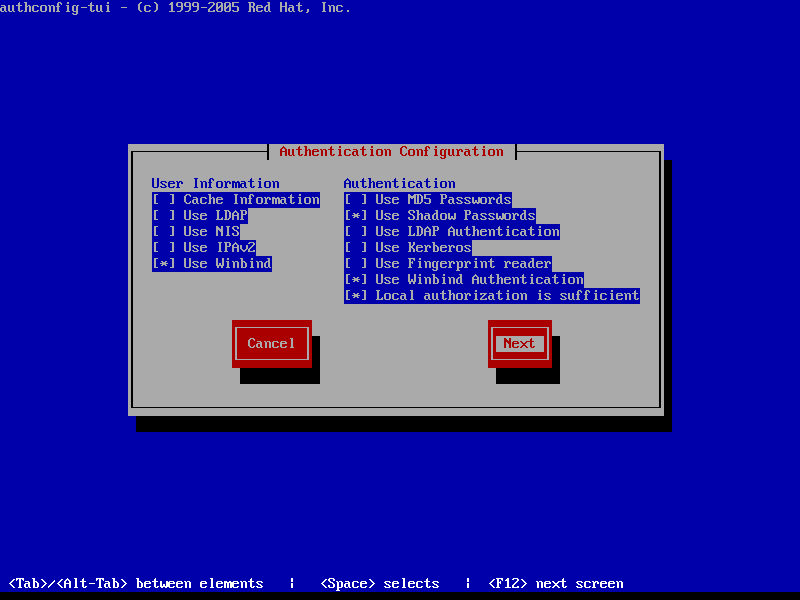

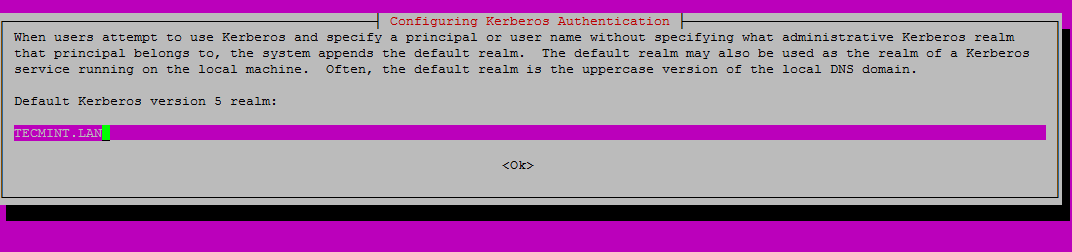

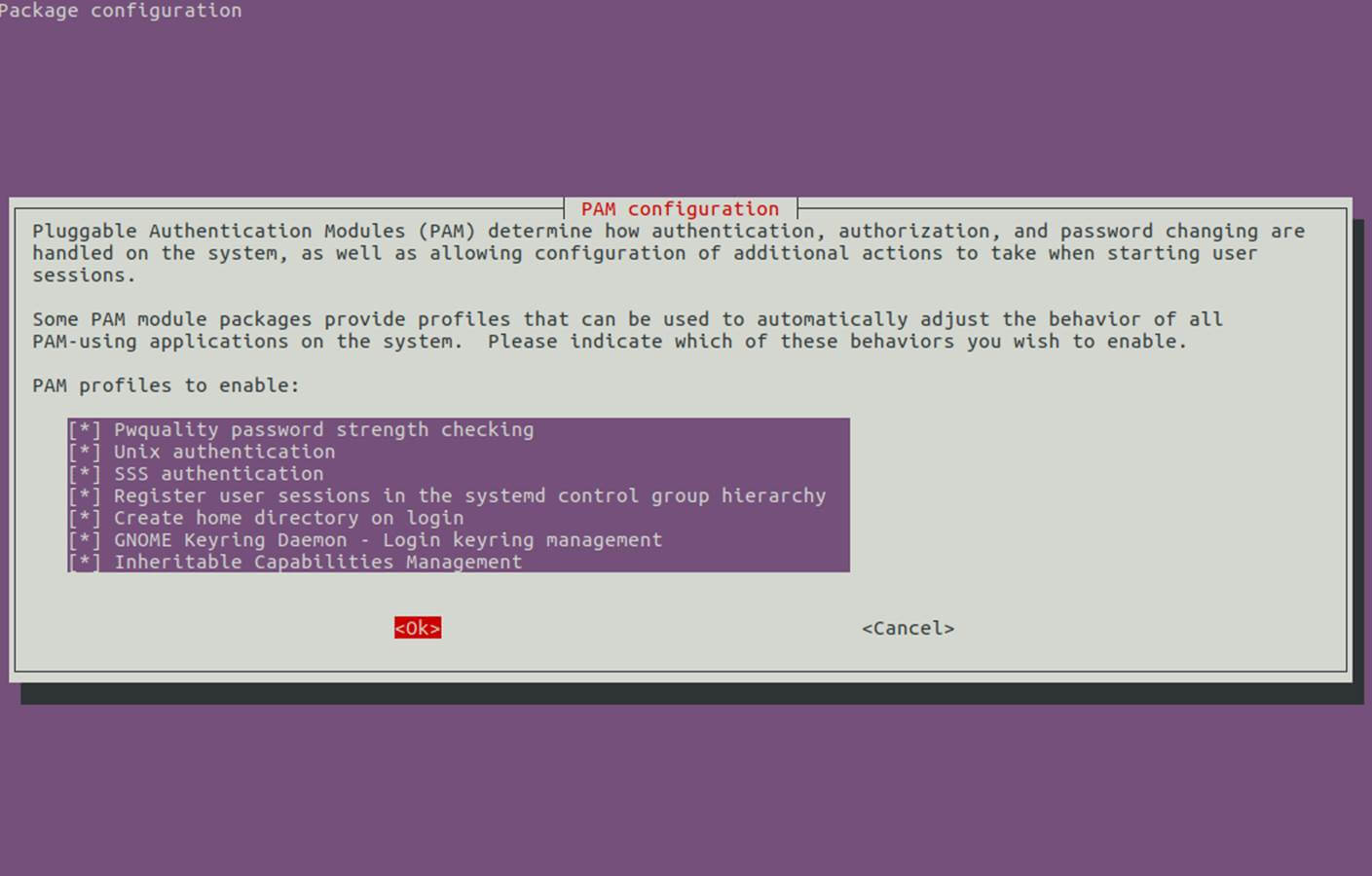

7、 为了将 CentOS 7 服务器与域控制器集成,可以使用 root 权限运行 `authconfig-tui`,并使用下面的配置。

```

# authconfig-tui

```

首屏选择:

* 在 User Information 中:
  + Use Winbind
* 在 Authentication 中使用[空格键]选择:
  + Use Shadow Password
  + Use Winbind Authentication
  + Local authorization is sufficient

![认证配置](https://www.tecmint.com/wp-content/uploads/2017/07/Authentication-Configuration.png)

*认证配置*

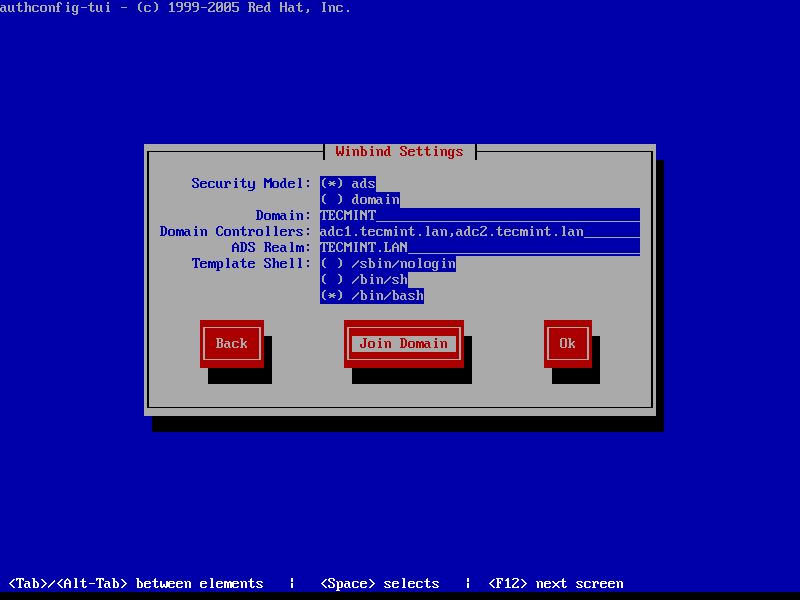

8、 点击 Next 进入 Winbind 设置界面并配置如下:

* Security Model: ads
* Domain = YOUR_DOMAIN(使用大写)
* Domain Controllers = 域控制器的 FQDN(有多台时用逗号分隔)
* ADS Realm = YOUR_DOMAIN.TLD
* Template Shell = /bin/bash

![Winbind 设置](https://www.tecmint.com/wp-content/uploads/2017/07/Winbind-Settings.png)

*Winbind 设置*

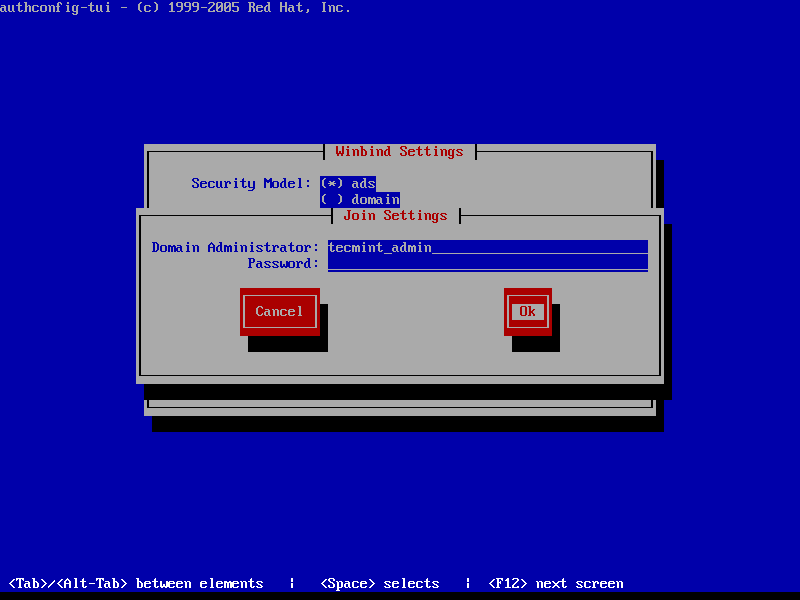

9、 要加入域,使用 [tab] 键跳到 “Join Domain” 按钮,然后按[回车]键加入域。

在下一个页面,添加具有提升权限的 Samba4 AD 帐户的凭据,以将计算机帐户加入 AD,然后单击 “OK” 应用设置并关闭提示。

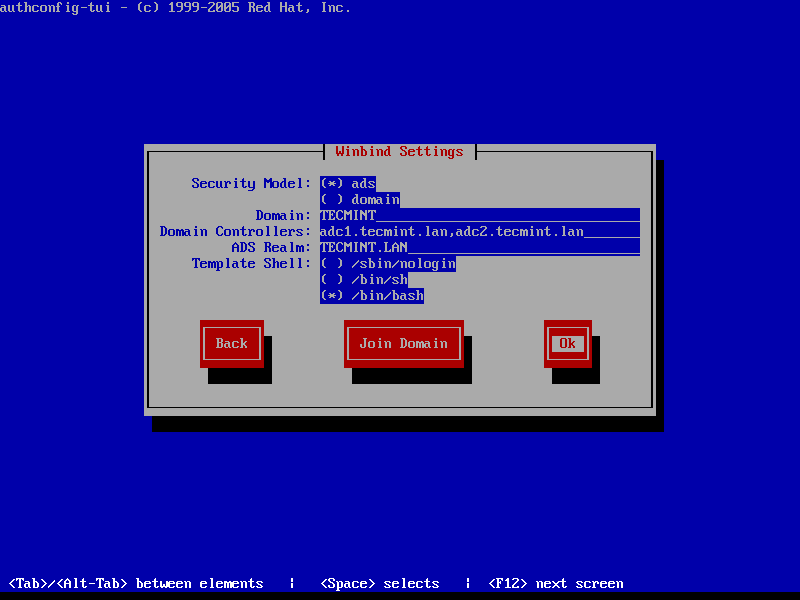

请注意,输入用户密码时屏幕上不会显示任何字符。在随后的界面中再次点击 OK,完成 CentOS 7 的域集成。

![加入域到 Samba4 AD DC](https://www.tecmint.com/wp-content/uploads/2017/07/Join-Domain-to-Samba4-AD-DC.png)

*加入域到 Samba4 AD DC*

![确认 Winbind 设置](https://www.tecmint.com/wp-content/uploads/2017/07/Confirm-Winbind-Settings.png)

*确认 Winbind 设置*

要强制将机器添加到特定的 Samba AD OU 中,请使用 hostname 命令获取计算机的完整名称,并使用机器名称在该 OU 中创建一个新的计算机对象。

向 Samba4 AD 中添加新对象的最佳方法,是在一台已加入域并[安装了 RSAT 工具](/article-8097-1.html)的 Windows 机器上使用 ADUC 工具。

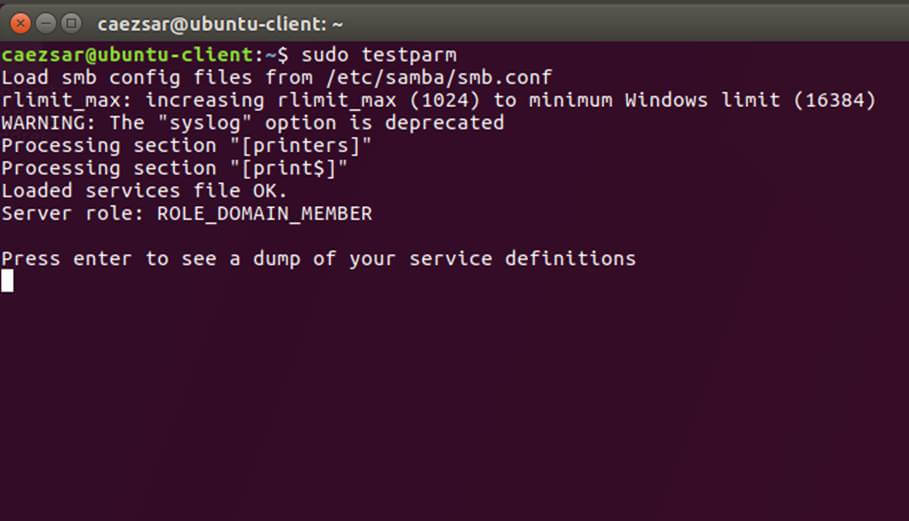

重要:加入域的另一种方法是使用 `authconfig` 命令行,它可以对集成过程进行广泛的控制。

但这种方法参数众多,很容易出错,如下面的命令所示。整个命令必须在一行中输入。

```
# authconfig --enablewinbind --enablewinbindauth --smbsecurity ads --smbworkgroup=YOUR_DOMAIN --smbrealm YOUR_DOMAIN.TLD --smbservers=adc1.yourdomain.tld --krb5realm=YOUR_DOMAIN.TLD --enablewinbindoffline --enablewinbindkrb5 --winbindtemplateshell=/bin/bash --winbindjoin=domain_admin_user --update --enablelocauthorize --savebackup=/backups
```

10、 机器加入域后,通过使用以下命令验证 winbind 服务是否正常运行。

```

# systemctl status winbind.service

```

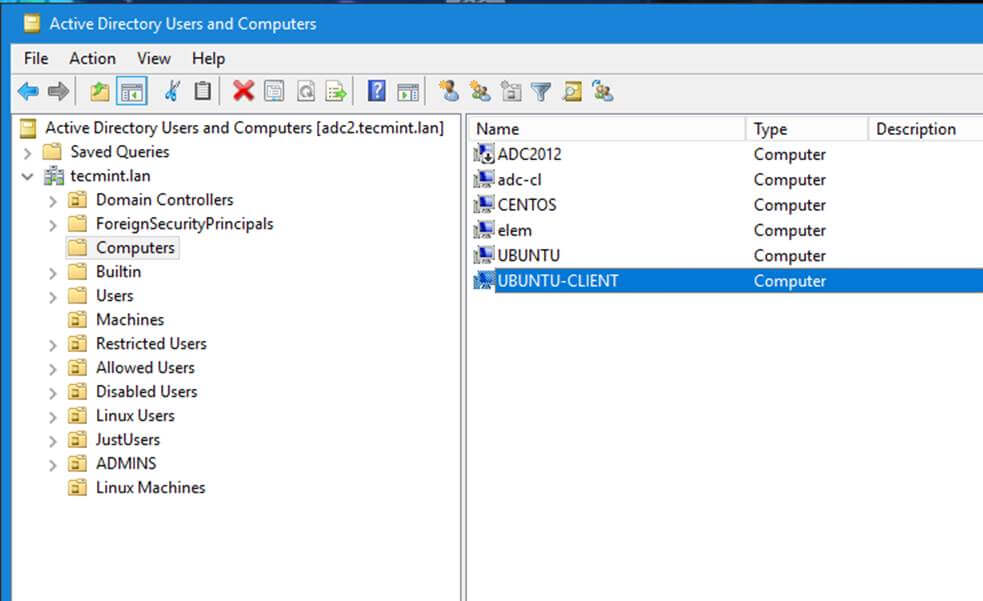

11、 接着检查 Samba4 AD 中是否成功创建了 CentOS 机器对象。在安装了 RSAT 工具的 Windows 机器上使用 AD 用户和计算机工具,进入你的域的 Computers 容器。右侧窗格中应该列出一个以你的 CentOS 7 服务器命名的新 AD 计算机帐户对象。

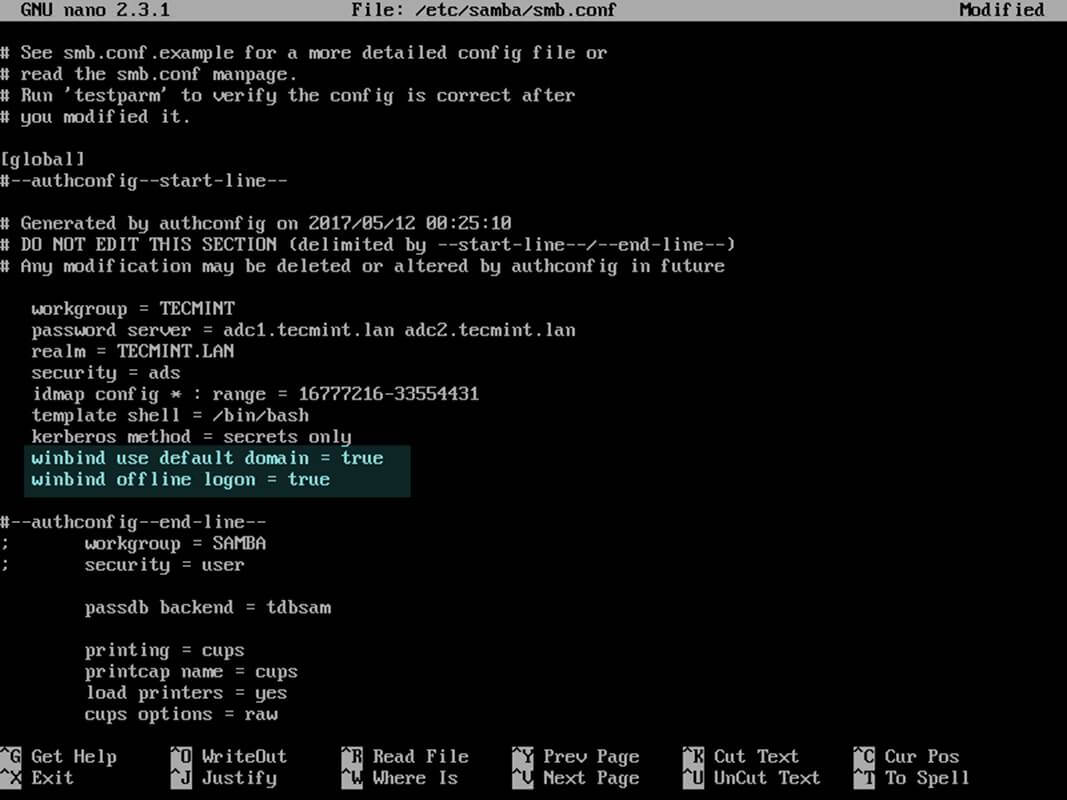

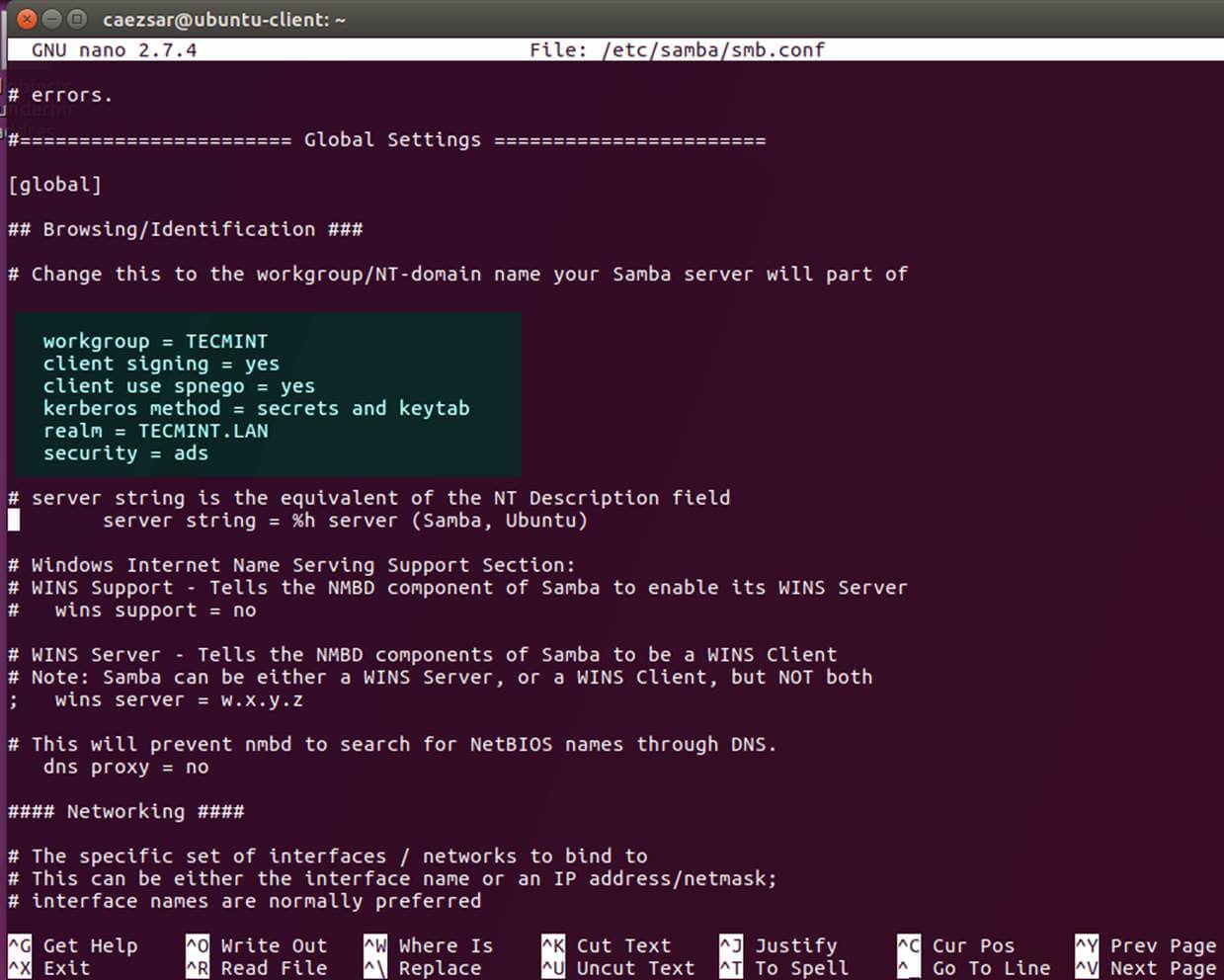

12、 最后,使用文本编辑器打开 samba 主配置文件(`/etc/samba/smb.conf`)来调整配置,并在 `[global]` 配置块的末尾附加以下行,如下所示:

```
winbind use default domain = true
winbind offline logon = true
```

![配置 Samba](https://www.tecmint.com/wp-content/uploads/2017/07/Configure-Samba.jpg)

*配置 Samba*

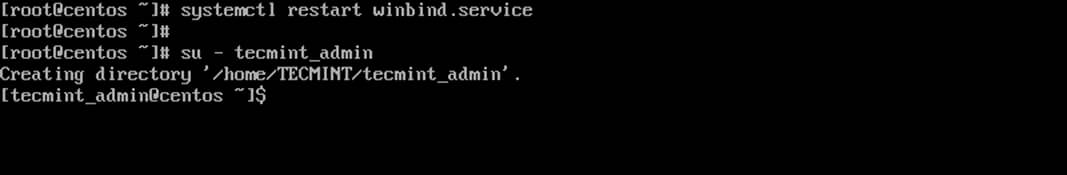

13、 为了在 AD 帐户首次登录时在机器上创建本地家目录,请运行以下命令:

```

# authconfig --enablemkhomedir --update

```

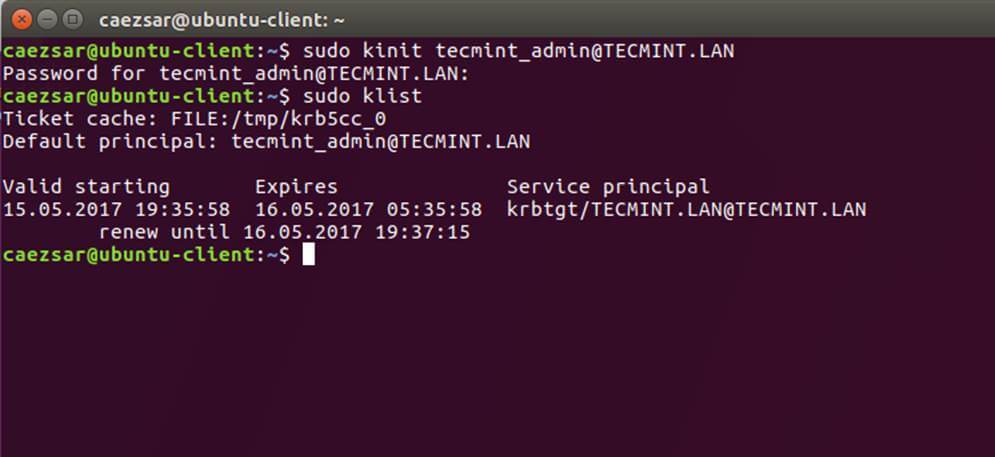

14、 最后,重启 Samba 守护进程使更改生效,并使用一个 AD 账户登陆验证域加入。AD 帐户的家目录应该会自动创建。

```
# systemctl restart winbind
# su - domain_account
```

![验证域加入](https://www.tecmint.com/wp-content/uploads/2017/07/Verify-Domain-Joining.jpg)

*验证域加入*

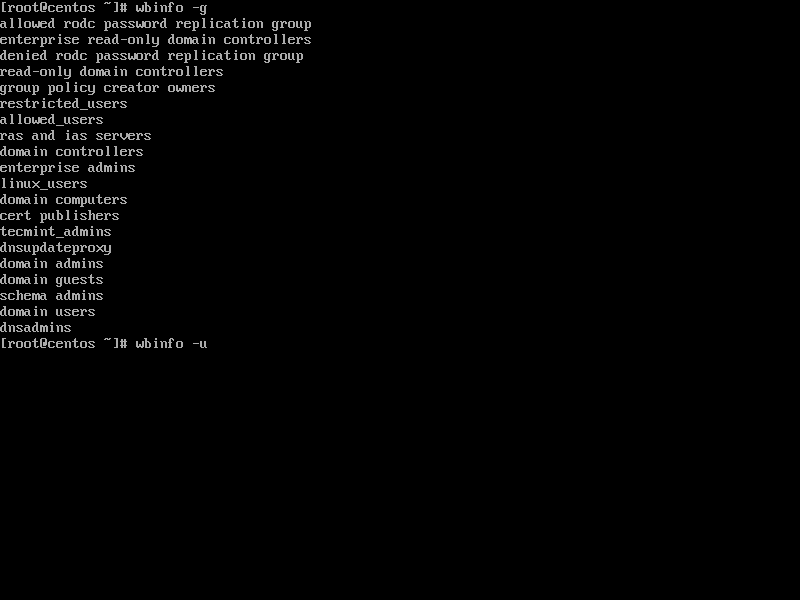

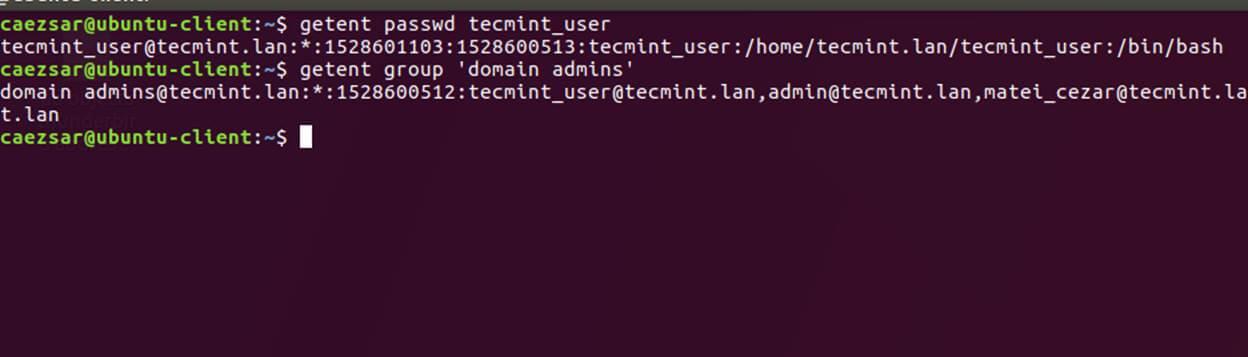

15、 通过以下命令之一列出域用户或域组。

```
# wbinfo -u
# wbinfo -g
```

![列出域用户和组](https://www.tecmint.com/wp-content/uploads/2017/07/List-Domain-Users-and-Groups.png)

*列出域用户和组*

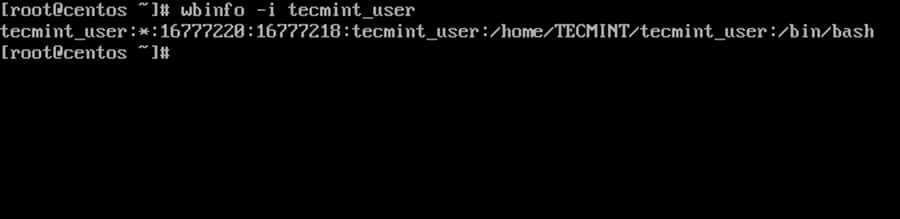

16、 要获取有关域用户的信息,请运行以下命令。

```

# wbinfo -i domain_user

```

![列出域用户信息](https://www.tecmint.com/wp-content/uploads/2017/07/List-Domain-User-Info.jpg)

*列出域用户信息*

17、 要显示域摘要信息,请使用以下命令。

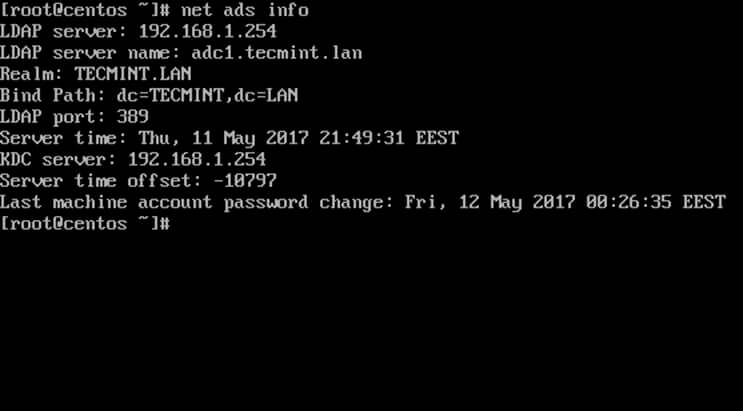

```

# net ads info

```

![列出域摘要](https://www.tecmint.com/wp-content/uploads/2017/07/List-Domain-Summary.jpg)

*列出域摘要*

### 步骤 3:使用 Samba4 AD DC 帐号登录CentOS

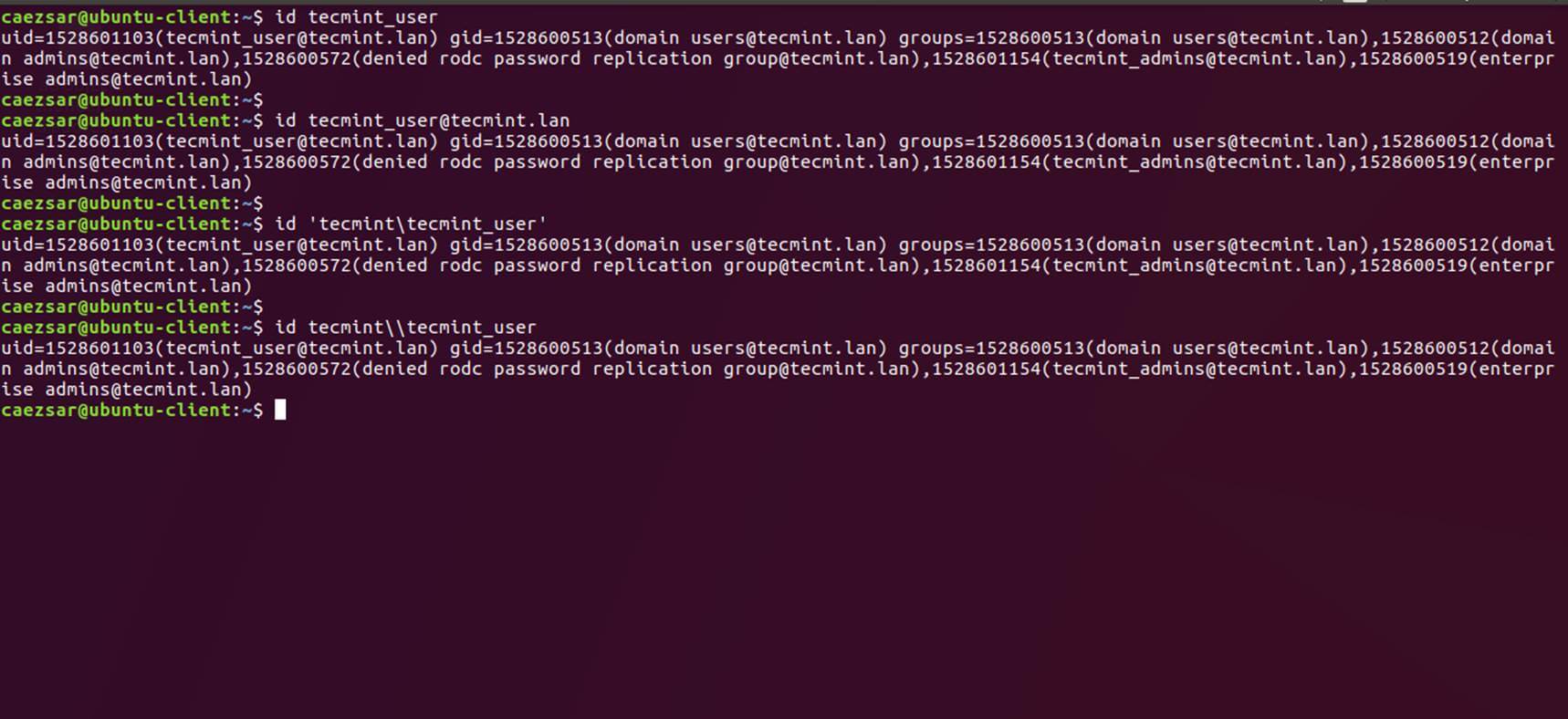

18、 要在 CentOS 中与域用户进行身份验证,请使用以下命令语法之一。

```
# su - 'domain\domain_user'
# su - domain\\domain_user
```

或者在 samba 配置文件中设置了 `winbind use default domain = true` 参数的情况下,使用下面的语法。

```
# su - domain_user
# su - [email protected]
```

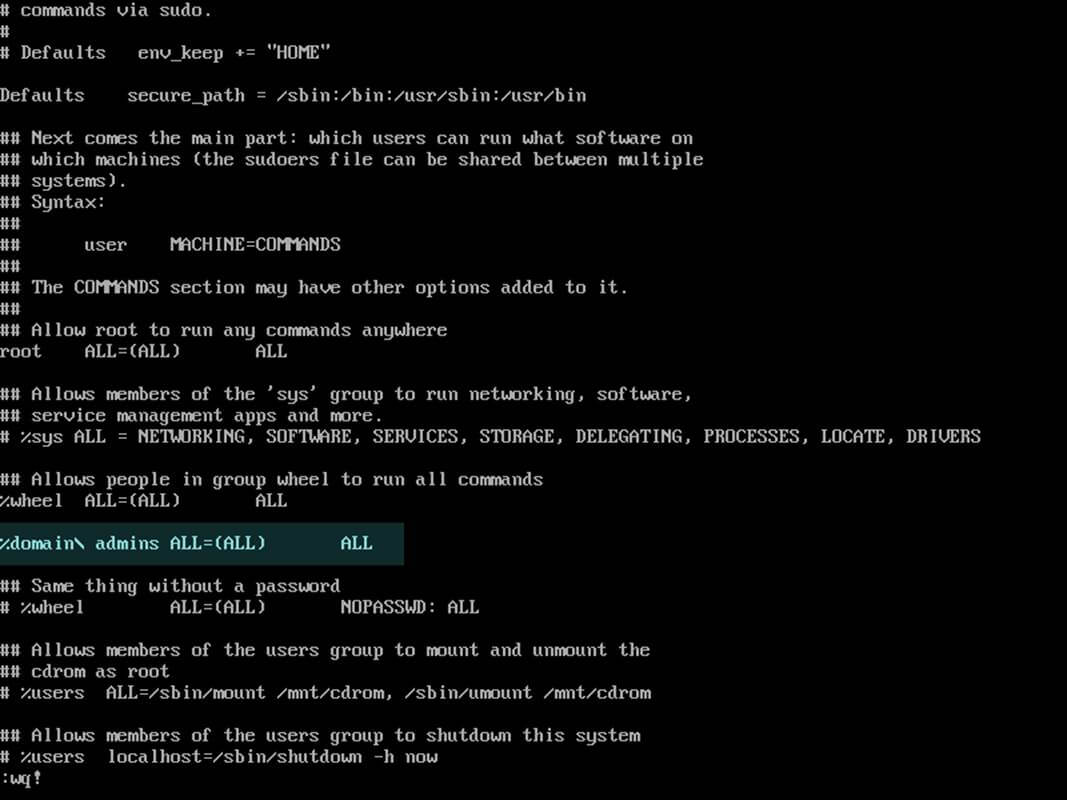

19、 要为域用户或组添加 root 权限,请使用 `visudo` 命令编辑 `sudoers` 文件,并添加以下截图所示的行。

```
YOUR_DOMAIN\\domain_username ALL=(ALL:ALL) ALL #For domain users
%YOUR_DOMAIN\\your_domain\ group ALL=(ALL:ALL) ALL #For domain groups
```

或者在 samba 配置文件中设置了 `winbind use default domain = true` 参数的情况下,使用下面的语法。

```
domain_username ALL=(ALL:ALL) ALL #For domain users
%your_domain\ group ALL=(ALL:ALL) ALL #For domain groups
```

![授予域用户 root 权限](https://www.tecmint.com/wp-content/uploads/2017/07/Grant-Root-Privileges-on-Domain-Users.jpg)

*授予域用户 root 权限*

20、 针对 Samba4 AD DC 的以下一系列命令也可用于故障排除:

```
# wbinfo -p #Ping domain
# wbinfo -n domain_account #Get the SID of a domain account
# wbinfo -t #Check trust relationship
```

21、 要离开该域,请使用具有提升权限的域帐户,针对你的域名运行以下命令。从 AD 中删除计算机帐户后,重启计算机,使其恢复到集成之前的状态。

```
# net ads leave -w DOMAIN -U domain_admin
# init 6
```

就是这样了!尽管此过程主要集中在将 CentOS 7 服务器加入到 Samba4 AD DC 中,但这里描述的相同步骤也适用于将 CentOS 服务器集成到 Microsoft Windows Server 2012 AD 中。

---

作者简介:

Matei Cezar - 我是一个电脑上瘾的家伙,开源和基于 linux 的系统软件的粉丝,在 Linux 发行版桌面、服务器和 bash 脚本方面拥有大约 4 年的经验。

---

via: <https://www.tecmint.com/integrate-centos-7-to-samba4-active-directory/>

作者:[Matei Cezar](https://www.tecmint.com/author/cezarmatei/) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | This guide will show you how you can integrate a **CentOS 7** Server with no Graphical User Interface to [Samba4 Active Directory Domain Controller](https://www.tecmint.com/install-samba4-active-directory-ubuntu/) from command line using Authconfig software.

This type of setup provides a single centralized account database held by Samba and allows the AD users to authenticate to CentOS server across the network infrastructure.

#### Requirements

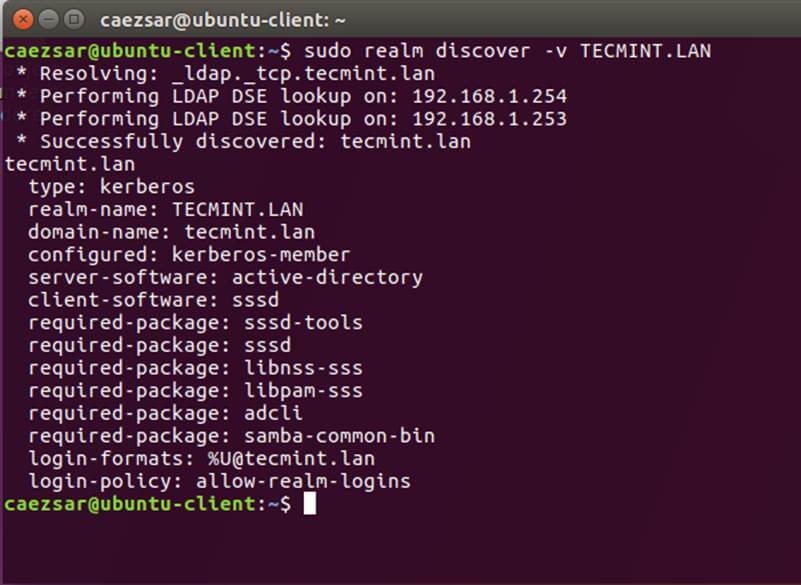

### Step 1: Configure CentOS for Samba4 AD DC

**1.** Before starting to join **CentOS 7** Server into a **Samba4 DC** you need to assure that the network interface is properly configured to query domain via DNS service.

Run [ip address](https://www.tecmint.com/ip-command-examples/) command to list your machine network interfaces and choose the specific NIC to edit by issuing **nmtui-edit** command against the interface name, such as **ens33** in this example, as illustrated below.

```
# ip address
# nmtui-edit ens33
```

**2.** Once the network interface is opened for editing, add the static IPv4 configurations best suited for your LAN and make sure you setup Samba AD Domain Controllers IP addresses for the DNS servers.

Also, append the name of your domain in the search domains field and navigate to the **OK** button using the **[TAB]** key to apply changes.

The search domains field ensures that the domain counterpart is automatically appended by DNS resolution (FQDN) when you use only a short name for a domain DNS record.

**3.** Finally, restart the network daemon to apply changes and test if DNS resolution is properly configured by issuing series of **ping** commands against the domain name and domain controllers short names as shown below.

```
# systemctl restart network.service
# ping -c2 tecmint.lan
# ping -c2 adc1
# ping -c2 adc2
```

**4.** Also, configure your machine hostname and reboot the machine to properly apply the settings by issuing the following commands.

```
# hostnamectl set-hostname your_hostname
# init 6
```

Verify if hostname was correctly applied with the below commands.

```
# cat /etc/hostname
# hostname
```

**5.** Finally, sync local time with Samba4 AD DC by issuing the below commands with root privileges.

```
# yum install ntpdate
# ntpdate domain.tld
```

### Step 2: Join CentOS 7 Server to Samba4 AD DC

**6.** To join CentOS 7 server to Samba4 Active Directory, first install the following packages on your machine from an account with root privileges.

# yum install authconfig samba-winbind samba-client samba-winbind-clients

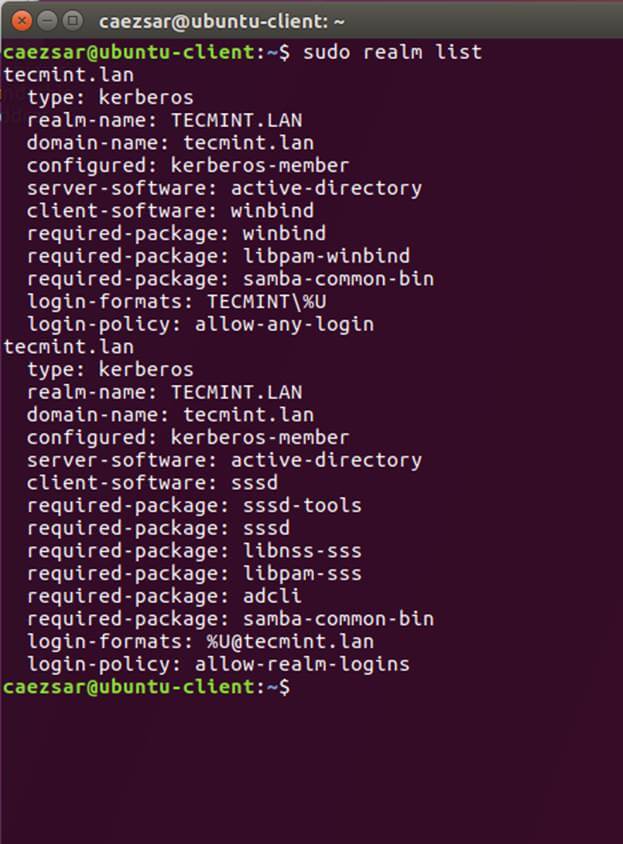

**7.** In order to integrate CentOS 7 server to a domain controller run **authconfig-tui** graphical utility with root privileges and use the below configurations as described below.

# authconfig-tui

At the first prompt screen choose:

- On **User Information**:
  - Use Winbind
- On the **Authentication** tab, select by pressing the **[Space]** key:
  - Use **Shadow Password**
  - Use **Winbind Authentication**
  - Local authorization is sufficient

**8.** Hit **Next** to continue to the Winbind Settings screen and configure as illustrated below:

- Security Model: **ads**
- Domain = **YOUR_DOMAIN** (use upper case)
- Domain Controllers = **domain machines FQDN** (comma separated if more than one)
- ADS Realm = **YOUR_DOMAIN.TLD**
- Template Shell = **/bin/bash**

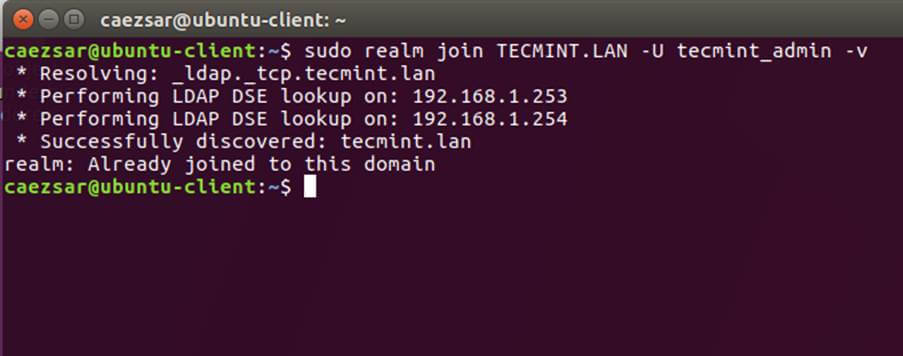

**9.** To perform domain joining navigate to **Join Domain** button using **[tab]** key and hit **[Enter]** key to join domain.

At the next screen prompt, add the credentials for a **Samba4 AD** account with elevated privileges to perform the machine account joining into AD and hit **OK** to apply settings and close the prompt.

Be aware that when you type the user password, the credentials won’t be shown in the password screen. On the remaining screen hit **OK** again to finish domain integration for CentOS 7 machine.

To force adding a machine into a specific **Samba AD Organizational Unit**, get your machine exact name using hostname command and create a new Computer object in that OU with the name of your machine.

The best way to add a new object into a Samba4 AD is by using **ADUC** tool from a Windows machine integrated into the domain with [RSAT tools installed](https://www.tecmint.com/manage-samba4-ad-from-windows-via-rsat/) on it.

**Important**: An alternate method of joining a domain is by using **authconfig** command line which offers extensive control over the integration process.

However, this method is prone to errors due to its numerous parameters, as illustrated in the command excerpt below. The command must be typed on a single long line.

```
# authconfig --enablewinbind --enablewinbindauth --smbsecurity ads --smbworkgroup=YOUR_DOMAIN --smbrealm YOUR_DOMAIN.TLD --smbservers=adc1.yourdomain.tld --krb5realm=YOUR_DOMAIN.TLD --enablewinbindoffline --enablewinbindkrb5 --winbindtemplateshell=/bin/bash --winbindjoin=domain_admin_user --update --enablelocauthorize --savebackup=/backups
```

**10.** After the machine has been joined to domain, verify if winbind service is up and running by issuing the below command.

# systemctl status winbind.service

**11.** Then, check if the CentOS machine object has been successfully created in Samba4 AD. Use the AD Users and Computers tool from a Windows machine with RSAT tools installed and navigate to your domain Computers container. A new AD computer account object with the name of your CentOS 7 server should be listed in the right pane.

**12.** Finally, tweak the configuration by opening samba main configuration file (**/etc/samba/smb.conf**) with a text editor and append the below lines at the end of the **[global]** configuration block as illustrated below:

```
winbind use default domain = true
winbind offline logon = true
```

**13.** In order to create local homes on the machine for AD accounts at their first logon run the below command.

# authconfig --enablemkhomedir --update

**14.** Finally, restart Samba daemon to reflect changes and verify domain joining by performing a logon on the server with an AD account. The home directory for the AD account should be automatically created.

```
# systemctl restart winbind
# su - domain_account
```

**15.** List the domain users or domain groups by issuing one of the following commands.

```
# wbinfo -u
# wbinfo -g
```

**16.** To get info about a domain user run the below command.

# wbinfo -i domain_user

**17.** To display summary domain info issue the following command.

# net ads info

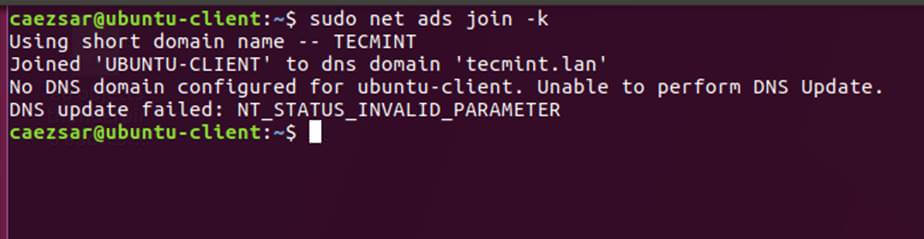

### Step 3: Login to CentOS with a Samba4 AD DC Account

**18.** To authenticate with a domain user in CentOS, use one of the following command line syntaxes.

```
# su - 'domain\domain_user'
# su - domain\\domain_user
```

Or use the below syntax in case winbind use default domain = true parameter is set to samba configuration file.

```
# su - domain_user
# su - [email protected]
```

**19.** In order to add root privileges for a domain user or group, edit **sudoers** file using **visudo** command and add the following lines as illustrated on the below screenshot.

```
YOUR_DOMAIN\\domain_username ALL=(ALL:ALL) ALL #For domain users
%YOUR_DOMAIN\\your_domain\ group ALL=(ALL:ALL) ALL #For domain groups
```

Or use the below excerpt in case winbind use default domain = true parameter is set to samba configuration file.

```
domain_username ALL=(ALL:ALL) ALL #For domain users
%your_domain\ group ALL=(ALL:ALL) ALL #For domain groups
```

**20.** The following series of commands against a Samba4 AD DC can also be useful for troubleshooting purposes:

```
# wbinfo -p #Ping domain
# wbinfo -n domain_account #Get the SID of a domain account
# wbinfo -t #Check trust relationship
```

**21.** To leave the domain run the following command against your domain name using a domain account with elevated privileges. After the machine account has been removed from the AD, reboot the machine to revert changes before the integration process.

```
# net ads leave -w DOMAIN -U domain_admin
# init 6
```

That’s all! Although this procedure is mainly focused on joining a CentOS 7 server to a Samba4 AD DC, the same steps described here are also valid for integrating a CentOS server into a Microsoft Windows Server 2012 Active Directory.

|

8,778 | OCI 发布容器运行时和镜像格式规范 V1.0 | https://blog.docker.com/2017/07/oci-release-of-v1-0-runtime-and-image-format-specifications/ | 2017-08-15T08:30:00 | [

"OCI",

"Docker"

] | https://linux.cn/article-8778-1.html |

7 月 19 日是<ruby> 开放容器计划 <rt> Open Container Initiative </rt></ruby>(OCI)的一个重要里程碑,OCI 发布了容器运行时和镜像规范的 1.0 版本,而 Docker 在这过去两年中一直充当着推动和引领的核心角色。我们的目标是为社区、客户以及更广泛的容器行业提供底层的标准。要了解这一里程碑的意义,我们先来看看 Docker 在开发容器技术行业标准方面的成长和发展历史。

### Docker 将运行时和镜像捐赠给 OCI 的历史回顾

Docker 的镜像格式和容器运行时在 2013 年作为开源项目发布后,迅速成为事实上的标准。我们认识到将其转交给中立管理机构管理,以加强创新和防止行业碎片化的重要性。我们与广泛的容器技术人员和行业领导者合作,成立了<ruby> 开放容器项目 <rt> Open Container Project </rt></ruby>来制定一套容器标准,并在 Linux 基金会的支持下,于 2015 年 6 月在 <ruby> Docker 大会 <rp> ( </rp> <rt> DockerCon </rt> <rp> ) </rp></ruby>上推出。该项目在那年夏天最终演变成为<ruby> 开放容器计划 <rt> Open Container Initiative </rt></ruby> (OCI)。

Docker 贡献了 runc ,这是从 Docker 员工 [Michael Crosby](https://github.com/crosbymichael) 的 libcontainer 项目中发展而来的容器运行时参考实现。 runc 是描述容器生命周期和运行时行为的运行时规范的基础。runc 被用在数千万个节点的生产环境中,这比任何其它代码库都要大一个数量级。runc 已经成为运行时规范的参考实现,并且随着项目的进展而不断发展。

在运行时规范制定工作开始近一年后,我们组建了一个新的工作组来制定镜像格式的规范。 Docker 将 Docker V2 镜像格式捐赠给 OCI 作为镜像规范的基础。通过这次捐赠,OCI 定义了构成容器镜像的数据结构(原始镜像)。定义容器镜像格式是一个至关重要的步骤,但它需要一个像 Docker 这样的平台通过定义和提供构建、管理和发布镜像的工具来实现它的价值。 例如,Dockerfile 等内容并不包括在 OCI 规范中。

### 开放容器标准化之旅

这些规范已经持续开发了两年。随着代码的重构,一些更小的项目从 runc 参考实现中拆分了出来,用于支持即将发布的认证测试工具。

有关 Docker 参与塑造 OCI 的详细信息,请参阅上面的时间轴,其中包括:创建 runc ,和社区一起更新、迭代运行时规范,创建 containerd 以便于将 runc 集成到 Docker 1.11 中,将 Docker V2 镜像格式贡献给 OCI 作为镜像格式规范的基础,并在 [containerd](https://containerd.io/) 中实现该规范,使得该核心容器运行时同时涵盖了运行时和镜像格式标准,最后将 containerd 捐赠给了<ruby> 云计算基金会 <rt> Cloud Native Computing Foundation </rt></ruby>(CNCF),并于本月发布了更新的 1.0 alpha 版本。

维护者 [Michael Crosby](https://github.com/crosbymichael) 和 [Stephen Day](https://github.com/stevvooe) 引导了这些规范的发展,并且为 v1.0 版本的实现提供了极大的帮助,另外 Alexander Morozov,Josh Hawn,Derek McGown 和 Aaron Lehmann 也贡献了代码,以及 Stephen Walli 参加了认证工作组。

Docker 仍然致力于推动容器标准化进程,在每个人都认可的层面建立起坚实的基础,使整个容器行业能够在依旧十分差异化的层面上进行创新。

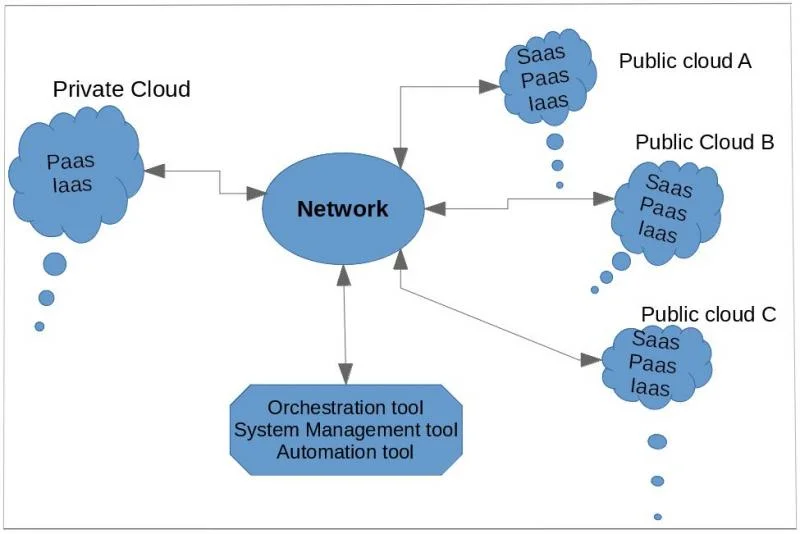

### 开放标准只是一小块拼图

Docker 是一个完整的平台,用于创建、管理、保护和编排容器以及镜像。该项目的愿景始终是成为行业标准的基础,支撑容器解决方案中的各个开源组件,也就是底层的“管道”(plumbing)。Docker 平台正位于这一层之上,为客户提供从开发到生产的安全的容器管理解决方案。

OCI 运行时和镜像规范成为一个可靠的标准基础,允许和鼓励多样化的容器解决方案,同时它们不限制产品创新或遏制主要开发者。打一个比方,TCP/IP、HTTP 和 HTML 成为过去 25 年来建立万维网的可靠标准,其他公司可以继续通过这些标准的新工具、技术和浏览器进行创新。 OCI 规范也为容器解决方案提供了类似的规范基础。

开源项目也在为产品开发提供组件方面发挥着作用。containerd 项目就使用了 OCI 的 runc 参考实现,它负责镜像的传输和存储、容器的运行和监控,以及支持存储和网络挂载等底层功能。containerd 项目已经被 Docker 捐赠给了 CNCF,与其他重要项目一起支持云计算解决方案。

Docker 使用了 containerd 和其它自己的核心开源基础设施组件,如 LinuxKit,InfraKit 和 Notary 等项目来构建和保护 Docker 社区版容器解决方案。正在寻找一个能提供容器管理、安全性、编排、网络和更多功能的完整容器平台的用户和组织可以了解下 Docker Enterprise Edition 。

> 这张图强调了 OCI 规范提供了一个由容器运行时实现的标准层:containerd 和 runc。 要组装一个完整的像 Docker 这样具有完整容器生命周期和工作流程的容器平台,需要和许多其他的组件集成在一起:管理基础架构的 InfraKit,提供操作系统的 LinuxKit,交付编排的 SwarmKit,确保安全性的 Notary。

### OCI 下一步该干什么

随着运行时和镜像规范的发布,我们应该庆祝开发者的努力。开放容器计划的下一个关键工作是提供认证计划,以验证实现者的产品和项目确实符合运行时和镜像规范。[认证工作组](https://github.com/opencontainers/certification) 已经制定了一个认证计划,它将配合一套正在开发中的[运行时](https://github.com/opencontainers/runtime-tools)和[镜像](https://github.com/opencontainers/image-tools)规范测试工具,展示各个实现与标准的符合程度。

同时,当前规范的开发者们正在考虑下一个最重要的容器技术领域。云计算基金会的通用容器网络接口开发工作已在进行中,支持镜像签名和分发的工作也在 OCI 的考虑之中。

除了 OCI 及其成员,Docker 仍然致力于推进容器技术的标准化。 OCI 的使命是为用户和公司提供在开发者工具、镜像分发、容器编排、安全、监控和管理等方面进行创新的基准。Docker 将继续引领创新,不仅提供提高生产力和效率的工具,而且还通过授权用户,合作伙伴和客户进行创新。

**在 Docker 了解更多关于 OCI 和开源的信息:**

* 阅读 [OCI 规范的误区](/article-8763-1.html)

* 访问 [开放容器计划的网站](https://www.opencontainers.org/join)

* 访问 [Moby 项目网站](http://mobyproject.org/)

* 参加 [DockerCon Europe 2017](https://europe-2017.dockercon.com/)

* 参加 [Moby Summit LA](https://www.eventbrite.com/e/moby-summit-los-angeles-tickets-35930560273)

(题图:vox-cdn.com)

---

作者简介:

Patrick Chanezon 是 Docker Inc. 技术人员。他的工作是帮助构建 Docker。他是一名程序员,也是一个讲故事的人(storyteller),他在 Netscape 和 Sun 工作了 10 年,又在 Google、VMware 和微软工作了 10 年。他的主要职业兴趣是为这些奇特的双边市场“平台”建立和推动网络效应。他曾在门户网站、广告、电商、社交、Web、分布式应用和云平台上工作过。有关更多信息,请访问 linkedin.com/in/chanezon 和他的推特 @chanezon。

---

via: <https://blog.docker.com/2017/07/oci-release-of-v1-0-runtime-and-image-format-specifications/>

作者:[Patrick Chanezon](https://blog.docker.com/author/chanezon/) 译者:[rieonke](https://github.com/rieonke) 校对:[校对者ID](https://github.com/%E6%A0%A1%E5%AF%B9%E8%80%85ID)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

8,780 | 学习用 Python 编程时要避免的 3 个错误 | https://opensource.com/article/17/6/3-things-i-did-wrong-learning-python | 2017-08-16T08:58:00 | [

"Python"

] | https://linux.cn/article-8780-1.html |

> 这些错误会造成很麻烦的问题,需要数小时才能解决。

当你做错事时,承认错误并不是一件容易的事,但是犯错是任何学习过程中的一部分,无论是学习走路,还是学习一种新的编程语言都是这样,比如学习 Python。

为了让初学 Python 的程序员避免犯同样的错误,以下列出了我学习 Python 时犯的三种错误。这些错误要么是我长期以来经常犯的,要么是造成了需要几个小时解决的麻烦。

年轻的程序员们可要注意了,这些错误是会浪费一下午的!

### 1、 可变数据类型作为函数定义中的默认参数

这看起来很合理,对吧?你写了一个小函数,比如,用来搜索当前页面上的链接,并可以选择将其附加到另一个提供的列表中。

```
def search_for_links(page, add_to=[]):
    new_links = page.search_for_links()
    add_to.extend(new_links)
    return add_to
```

从表面看,这像是十分正常的 Python 代码,事实上它也是,而且是可以运行的。但是,这里有个问题。如果我们给 `add_to` 参数提供了一个列表,它将按照我们预期的那样工作。但是,如果我们让它使用默认值,就会出现一些神奇的事情。

试试下面的代码:

```
def fn(var1, var2=[]):
    var2.append(var1)
    print(var2)

fn(3)
fn(4)
fn(5)
```

可能你认为我们将看到:

```
[3]
[4]
[5]
```

但实际上,我们看到的却是:

```
[3]
[3, 4]
[3, 4, 5]
```

为什么呢?因为每次调用使用的都是同一个列表。在 Python 中,当我们这样编写函数时,这个列表是作为函数定义的一部分被实例化的,而不是在每次运行函数时重新实例化。这意味着,除非我们提供另一个列表对象,否则这个函数会一直使用完全相同的列表对象:

```

fn(3, [4])

```

```

[4, 3]

```

答案正如我们所想的那样。要想得到这种结果,正确的方法是:

```
def fn(var1, var2=None):
    # 用 None 作哨兵值,每次调用时才创建新列表
    if var2 is None:
        var2 = []
    var2.append(var1)
    print(var2)
```
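下面用可运行的代码对比两种写法,`fn_buggy` 和 `fn_fixed` 是为演示起的名字。函数对象的 `__defaults__` 属性保存着默认参数值,通过它可以直接看到那个被反复修改的列表。这里用 `if var2 is None` 而不是 `if not var2`,是因为后者在调用者传入一个空列表时也会换成新列表,结果调用者自己的列表不会被修改。

```python
def fn_buggy(var1, var2=[]):
    # 默认列表只在 def 语句执行时创建一次,之后所有调用共享它
    var2.append(var1)
    return var2


def fn_fixed(var1, var2=None):
    # None 作哨兵值:只有调用者没传参数时才新建列表
    if var2 is None:
        var2 = []
    var2.append(var1)
    return var2
```

例如,连续调用 `fn_buggy(3)` 和 `fn_buggy(4)` 之后,`fn_buggy.__defaults__` 的值为 `([3, 4],)`;而 `fn_fixed` 每次调用都从一个新的空列表开始。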

或是在第一个例子中:

```
def search_for_links(page, add_to=None):
    # 每次调用时才创建新列表
    if add_to is None:
        add_to = []
    new_links = page.search_for_links()
    add_to.extend(new_links)
    return add_to
```

这样,列表就不再在模块加载(执行函数定义)时实例化,而是在每次运行函数时重新实例化。请注意,对于不可变数据类型,比如[**元组**](https://docs.python.org/2/library/functions.html?highlight=tuple#tuple)、[**字符串**](https://docs.python.org/2/library/string.html)、[**整型**](https://docs.python.org/2/library/functions.html#int),则无需考虑这种情况。因此,像下面这样的代码完全没有问题:

```
def func(message="my message"):
    print(message)
```

### 2、 可变数据类型作为类变量

这和上一个错误很相似。思考以下代码:

```
class URLCatcher(object):
    urls = []

    def add_url(self, url):
        self.urls.append(url)
```

这段代码看起来非常正常。我们有一个储存 URL 的对象。当我们调用 `add_url` 方法时,它会把给定的 URL 添加到存储中。看起来非常正确吧?让我们看看实际是怎样的:

```
a = URLCatcher()
a.add_url('http://www.google.com')
b = URLCatcher()
b.add_url('http://www.bbc.co.uk')
```

`b.urls`:

```
['http://www.google.com', 'http://www.bbc.co.uk']
```

`a.urls`:

```
['http://www.google.com', 'http://www.bbc.co.uk']
```

等等,怎么回事?!我们想的不是这样啊。我们实例化了两个单独的对象 `a` 和 `b`。把一个 URL 给了 `a`,另一个给了 `b`。这两个对象怎么会都有这两个 URL 呢?

这和第一个错例是同样的问题。创建类定义时,URL 列表将被实例化。该类所有的实例使用相同的列表。在有些时候这种情况是有用的,但大多数时候你并不想这样做。你希望每个对象有一个单独的储存。为此,我们修改代码为:

```
class URLCatcher(object):
    def __init__(self):
        self.urls = []

    def add_url(self, url):
        self.urls.append(url)
```

现在,当创建对象时,URL 列表被实例化。当我们实例化两个单独的对象时,它们将分别使用两个单独的列表。
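可以用 `is` 和 `vars()` 验证这两种写法的差别,因为 `vars(obj)` 只包含实例自己的属性,类属性不在其中。下面把两个版本放在一起对比,`SharedURLCatcher` 是为对比而起的名字。

```python
class SharedURLCatcher(object):
    urls = []  # 类属性:所有实例共享同一个列表

    def add_url(self, url):
        self.urls.append(url)


class URLCatcher(object):
    def __init__(self):
        self.urls = []  # 实例属性:每个对象都有自己的列表

    def add_url(self, url):
        self.urls.append(url)
```

对 `SharedURLCatcher` 的两个实例而言,`a.urls is b.urls` 为 `True`,且 `'urls' not in vars(a)`;换成 `URLCatcher` 后,每个实例的 `urls` 都出现在它自己的 `vars()` 中。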

### 3、 可变的分配错误

这个问题困扰了我一段时间。让我们做出一些改变,并使用另一种可变数据类型 - [**字典**](https://docs.python.org/2/library/stdtypes.html?highlight=dict#dict)。

```

a = {'1': "one", '2': 'two'}

```

现在,假设我们想把这个字典用在别的地方,且保持它的初始数据完整。

```
b = a
b['3'] = 'three'
```

简单吧?

现在,让我们看看原来那个我们不想改变的字典 `a`:

```

{'1': "one", '2': 'two', '3': 'three'}

```

哇等一下,我们再看看 **b**?

```

{'1': "one", '2': 'two', '3': 'three'}

```

等等,什么?有点乱……让我们回过头来,看看不可变类型在这种情况下会发生什么,例如一个**元组**:

```
c = (2, 3)
d = c
d = (4, 5)
```

现在 `c` 是 `(2, 3)`,而 `d` 是 `(4, 5)`。

结果正如我们所料。那么,在之前的例子中到底发生了什么?当使用可变类型时,其行为有点像 **C** 语言的一个指针。在上面的代码中,我们令 `b = a`,我们真正表达的意思是:`b` 成为 `a` 的一个引用。它们都指向 Python 内存中的同一个对象。听起来有些熟悉?那是因为这个问题与先前的相似。其实,这篇文章应该被称为「可变引发的麻烦」。
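用 `is` 可以直接看出两个名字是否指向同一个对象。下面的示意还说明了元组例子表现不同的另一个原因。`d = (4, 5)` 是把名字 `d` 重新绑定到一个新对象上,而 `b['3'] = 'three'` 则是原地修改 `a` 和 `b` 共同指向的那个字典。

```python
a = {'1': 'one', '2': 'two'}
b = a                    # 不复制:b 只是同一个字典的另一个名字
b['3'] = 'three'         # 原地修改,通过 a 也能看到
print(b is a)            # True:两个名字指向同一个对象

c = (2, 3)
d = c                    # 此时 d 和 c 也指向同一个元组
print(d is c)            # True
d = (4, 5)               # 把 d 重新绑定到新元组,c 不受影响
print(c, d)              # (2, 3) (4, 5)
```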

列表也会发生同样的事吗?是的。那么我们如何解决呢?这必须非常小心。如果我们真的需要复制一个列表进行处理,我们可以这样做:

```

b = a[:]

```

这会创建一个新列表,并把原列表中每个对象的引用复制进去。但是要注意:如果列表中的对象本身是可变的,我们得到的仍然是对它们的引用,而不是完整的副本。
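下面的小例子演示了切片复制为什么是“浅”复制:外层列表是新的,内层列表却仍然是共享的。

```python
a = [[1, 2], [3, 4]]
b = a[:]                     # 新的外层列表,元素仍是原来那两个内层列表
b.append([5, 6])             # 只改变 b 的外层列表,a 不受影响
b[0].append(99)              # 修改共享的内层列表,a 中也能看到
print(a)                     # [[1, 2, 99], [3, 4]]
print(b is a, b[0] is a[0])  # False True
```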

假设在一张纸上列清单。在原来的例子中相当于,A 某和 B 某正在看着同一张纸。如果有个人修改了这个清单,两个人都将看到相同的变化。当我们复制引用时,每个人现在有了他们自己的清单。但是,我们假设这个清单包括寻找食物的地方。如果“冰箱”是列表中的第一个,即使它被复制,两个列表中的条目也都指向同一个冰箱。所以,如果冰箱被 A 修改,吃掉了里面的大蛋糕,B 也将看到这个蛋糕的消失。这里没有简单的方法解决它。只要你记住它,并编写代码的时候,使用不会造成这个问题的方式。

字典以相同的方式工作,并且你可以通过以下方式创建一个昂贵副本:

```

b = a.copy()

```

再次说明,这只会创建一个新的字典,其中的条目仍指向原来那些对象。因此,如果我们有两个这样的字典,并且修改了字典 `a` 中某个键所指向的可变对象,那么在字典 `b` 中也会看到这些变化。
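把上面冰箱的比喻写成代码,大致如下。标准库的 `copy.deepcopy` 会递归复制嵌套的可变对象,是应对这种情况的一种选择,但代价是要复制更多数据,而大多数时候并不需要这样做。变量名 `fridge`、`shallow`、`deep` 都是为演示而取的。

```python
import copy

fridge = {'cake': 1}
a = {'fridge': fridge, 'owner': 'A'}

shallow = a.copy()             # 新字典,但 'fridge' 仍指向同一个内层字典
deep = copy.deepcopy(a)        # 递归复制:内层字典也是新的

shallow['owner'] = 'B'         # 重新绑定一个键,不影响 a
shallow['fridge']['cake'] = 0  # 吃掉共享冰箱里的蛋糕,a 也受影响

print(a['owner'], a['fridge']['cake'])  # A 0
print(deep['fridge']['cake'])           # 1
```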

可变数据类型的麻烦之处也正是它们的强大之处。以上这些都不是真正的缺陷,而是需要注意防范的问题。像第三个问题那样用昂贵的复制操作来解决,99% 的情况下都是不必要的。你或许应该修改程序,让它从一开始就不需要这些副本。

*Happy coding! Feel free to ask questions in the comments.*

---

About the author:

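Pete Savage - Peter is a passionate open source enthusiast who has been promoting and using open source products for the past ten years. He started out in the Ubuntu community, volunteering in many different areas, including audio production. Professionally, he began as a company systems administrator, spending most of his time managing and building data centers, and later became the principal test engineer for the CloudForms product at Red Hat.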

---

via: <https://opensource.com/article/17/6/3-things-i-did-wrong-learning-python>

Author: [Pete Savage](https://opensource.com/users/psav) Translator: [polebug](https://github.com/polebug) Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

| 200 | OK | It's never easy to admit when you do things wrong, but making errors is part of any learning process, from learning to walk to learning a new programming language, such as Python.

Here's a list of three things I got wrong when I was learning Python, presented so that newer Python programmers can avoid making the same mistakes. These are errors that either I got away with for a long time or that created big problems that took hours to solve.

Take heed young coders, some of these mistakes are afternoon wasters!

## 1. Mutable data types as default arguments in function definitions

It makes sense, right? You have a little function that, let's say, searches for links on a current page and optionally appends them to another supplied list.

```
def search_for_links(page, add_to=[]):
    new_links = page.search_for_links()
    add_to.extend(new_links)
    return add_to
```

On the face of it, this looks like perfectly normal Python, and indeed it is. It works. But there are issues with it. If we supply a list for the **add_to** parameter, it works as expected. If, however, we let it use the default, something interesting happens.

Try the following code:

```
def fn(var1, var2=[]):
    var2.append(var1)
    print(var2)

fn(3)
fn(4)
fn(5)
```

You may expect that we would see:

```
[3]
[4]
[5]
```

But we actually see this:

```
[3]
[3, 4]
[3, 4, 5]
```

Why? Well, you see, the same list is used each time. In Python, when we write the function like this, the list is instantiated as part of the function's definition. It is not instantiated each time the function is run. This means that the function keeps using the exact same list object again and again, unless of course we supply another one:

```
fn(3, [4])
```

```
[4, 3]
```

Just as expected. The correct way to achieve the desired result is:

```
def fn(var1, var2=None):
    if var2 is None:
        var2 = []
    var2.append(var1)
```
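There is one subtlety with the `None` default. A truthiness test such as `if not var2` is also true for an empty list passed in by the caller, so the function would quietly replace the caller's list with a new one. By contrast, `if var2 is None` is true only when no list was supplied. The sketch below compares the two checks side by side. The two function names are hypothetical and exist only for this comparison.

```python
def fn_falsy_check(var1, var2=None):
    if not var2:          # also true for a caller's empty list
        var2 = []
    var2.append(var1)
    return var2


def fn_none_check(var1, var2=None):
    if var2 is None:      # true only when no list was supplied
        var2 = []
    var2.append(var1)
    return var2


mine = []
fn_falsy_check(1, mine)
print(mine)               # []: the append went to a fresh list instead
fn_none_check(1, mine)
print(mine)               # [1]
print(fn_none_check(2))   # [2]: each call without a list gets a new one
print(fn_none_check(3))   # [3]
```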

Or, in our first example:

```
def search_for_links(page, add_to=None):
    if add_to is None:
        add_to = []
    new_links = page.search_for_links()
    add_to.extend(new_links)
    return add_to
```

This moves the instantiation from module load time so that it happens every time the function runs. Note that for immutable data types, like [tuples](https://docs.python.org/2/library/functions.html?highlight=tuple#tuple), [strings](https://docs.python.org/2/library/string.html), or [ints](https://docs.python.org/2/library/functions.html#int), this is not necessary. That means it is perfectly fine to do something like:

```
def func(message="my message"):
    print(message)
```
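You can watch the definition-time evaluation directly, because a function keeps its default values in its `__defaults__` attribute. This sketch re-creates the original `fn` example. It returns the list instead of printing it, so that the result can be inspected. The output shows the single default list growing across calls.

```python
def fn(var1, var2=[]):
    # var2 defaults to the one list that was created when `def` ran
    var2.append(var1)
    return var2


print(fn.__defaults__)               # ([],)
fn(3)
fn(4)
print(fn.__defaults__)               # ([3, 4],)
print(fn(5) is fn.__defaults__[0])   # True: the default list itself comes back
```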

## 2. Mutable data types as class variables

Hot on the heels of the last error is one that is very similar. Consider the following:

```
class URLCatcher(object):
    urls = []

    def add_url(self, url):
        self.urls.append(url)
```

This code looks perfectly normal. We have an object with a storage of URLs. When we call the **add_url** method, it adds a given URL to the store. Perfect right? Let's see it in action:

```
a = URLCatcher()
a.add_url('http://www.google.com')
b = URLCatcher()
b.add_url('http://www.bbc.co.uk')
```

**b.urls**

```
['http://www.google.com', 'http://www.bbc.co.uk']
```

**a.urls**

```
['http://www.google.com', 'http://www.bbc.co.uk']
```

Wait, what?! We didn't expect that. We instantiated two separate objects, **a** and **b**. Object **a** was given one URL and **b** the other. How is it that both objects have both URLs?

Turns out it's kinda the same problem as in the first example. The URLs list is instantiated when the class definition is created. All instances of that class use the same list. Now, there are some cases where this is advantageous, but the majority of the time you don't want to do this. You want each object to have a separate store. To do that, we would modify the code like:

```
class URLCatcher(object):
    def __init__(self):
        self.urls = []

    def add_url(self, url):
        self.urls.append(url)
```

Now the URLs list is instantiated when the object is created. When we instantiate two separate objects, they will be using two separate lists.
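To see why the first version misbehaved, this sketch repeats the class-attribute version of `URLCatcher` and checks identity with `is`. Both instances and the class itself reach the very same list. The reason is that `self.urls` finds no instance attribute and falls back to the class attribute.

```python
class URLCatcher(object):
    urls = []  # class attribute: one list shared by every instance

    def add_url(self, url):
        # No instance attribute named urls exists, so this resolves to URLCatcher.urls
        self.urls.append(url)


a = URLCatcher()
a.add_url('http://www.google.com')
b = URLCatcher()
b.add_url('http://www.bbc.co.uk')

print(a.urls is b.urls is URLCatcher.urls)  # True
print('urls' in vars(a))                    # False: nothing stored on the instance
print(URLCatcher.urls)  # ['http://www.google.com', 'http://www.bbc.co.uk']
```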

## 3. Mutable assignment errors

This one confused me for a while. Let's change gears a little and use another mutable datatype, the [ dict](https://docs.python.org/2/library/stdtypes.html?highlight=dict#dict).

```
a = {'1': "one", '2': 'two'}
```

Now let's assume we want to take that **dict** and use it someplace else, leaving the original intact.

```
b = a
b['3'] = 'three'
```

Simple eh?

Now let's look at our original dict, **a**, the one we didn't want to modify:

```
{'1': "one", '2': 'two', '3': 'three'}
```

Whoa, hold on a minute. What does **b** look like then?

```
{'1': "one", '2': 'two', '3': 'three'}
```

Wait, what? But… let's step back and see what happens with an immutable type, a **tuple** for instance:

```
c = (2, 3)
d = c
d = (4, 5)
```

Now **c** is:

**(2, 3)**

While **d** is:

**(4, 5)**

That functions as expected. So what happened in our example? When using mutable types, we get something that behaves a little more like a pointer from C. When we said **b = a** in the code above, what we really meant was that **b** now refers to the same object as **a**: both names point to the same object in Python's memory. Sound familiar? That's because it's similar to the previous problems. In fact, this post should really have been called "The Trouble with Mutables."

Does the same thing happen with lists? Yes. So how do we get around it? Well, we have to be very careful. If we really need to copy a list for processing, we can do so like:

```
b = a[:]
```

This will go through and copy a reference to each item in the list and place it in a new list. But be warned: If any objects in the list are mutable, we will again get references to those, rather than complete copies.

Imagine having a list on a piece of paper. In the original example, Person A and Person B are looking at the same piece of paper. If someone changes that list, both people will see the same changes. When we copy the references, each person now has their own list. But let's suppose that this list contains places to search for food. If "fridge" is first on the list, even when it is copied, both entries in both lists point to the same fridge. So if the fridge is modified by Person A, by, say, eating a large gateau, Person B will also see that the gateau is missing. There is no easy way around this. It is just something that you need to remember and code in a way that will not cause an issue.

Dicts function in the same way, and you can create this expensive copy by doing:

```
b = a.copy()
```

Again, this will only create a new dictionary pointing to the same entries that were present in the original. Thus, if we have two identical dicts and we modify a mutable object that is pointed to by a key in dict 'a', the same object in dict 'b' will also show those changes.

The trouble with mutable data types is that they are powerful. None of the above are real problems; they are things to keep in mind to prevent issues. The expensive copy operations presented as solutions in the third item are unnecessary 99% of the time. Your program can and probably should be modified so that those copies are not even required in the first place.

*Happy coding! And feel free to ask questions in the comments.*

|

8,782 | Linux Package Management Basics: apt, yum, dnf, and pkg | https://www.digitalocean.com/community/tutorials/package-management-basics-apt-yum-dnf-pkg | 2017-08-16T11:45:00 | [

"apt",

"package management",

"yum"

] | https://linux.cn/article-8782-1.html |

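### Introduction

Most modern Unix-like operating systems offer a centralized mechanism for finding and installing software. Software is usually kept in repositories and distributed in the form of packages. Working with packages is known as package management. Packages provide the basic components of an operating system, along with shared libraries, applications, services, and documentation.

Beyond installing software, a package management system also provides tools for upgrading packages that are already installed. Package repositories help to ensure that the code used on your system has been vetted, and that the installed versions of software have been approved by developers and package maintainers.

When configuring servers or development environments, it is often worth knowing what exists beyond the official repositories. Packages in the stable release of a distribution may be out of date, especially for new or rapidly changing software. Nevertheless, package management is a vital skill for both system administrators and developers, and the wealth of packaged software for the major Linux distributions is a tremendous resource.

This guide is intended as a quick introduction to the basics of finding, installing, and upgrading packages on a variety of Linux distributions, and to help you cross-reference these tasks between multiple systems.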

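### Package Management Systems: A Brief Overview

Most package systems are built around collections of package files. A package file is usually an archive that contains compiled binaries and other resources for the software, along with installation scripts. Package files also contain valuable metadata, including their dependencies: a list of the other packages required to install and run them.

While their functionality and benefits are broadly similar, packaging formats and tools vary by platform: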

| Operating system | Format | Tool(s) |
| --- | --- | --- |
| Debian | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |
| Ubuntu | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |
| CentOS | `.rpm` | `yum` |
| Fedora | `.rpm` | `dnf` |
| FreeBSD | Ports, `.txz` | `make`, `pkg` |

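Debian and its derivatives, such as Ubuntu, Linux Mint, and Raspbian, use the `.deb` package format. APT, the Advanced Package Tool, provides commands for most common operations: searching repositories, installing packages along with their dependencies, and managing upgrades. On the local system, the `dpkg` utility can also be used to install individual `deb` files. APT commands act as a front end to the lower-level `dpkg`, and sometimes call it directly.

Most recent releases of Debian derivatives include the `apt` command, which offers a concise, unified interface to common operations traditionally handled by the `apt-get` and `apt-cache` commands. Using it is optional, but it can simplify some tasks.

CentOS, Fedora, and other members of the Red Hat family use RPM files. In CentOS, `yum` is used to interact with both individual package files and repositories.

In recent versions of Fedora, `yum` has been replaced by `dnf`, a modernized fork that retains most of `yum`'s interface.

FreeBSD's binary package system is managed with the `pkg` command. FreeBSD also offers the Ports Collection, a local directory structure and set of tools that lets users fetch source code and then compile and install packages directly from source using Makefiles.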

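### Updating Package Lists

Most systems keep a local database of the packages available from the remote repositories, and it is best to update this database before installing or upgrading packages. As a partial exception, `yum` and `dnf` check for updates automatically before performing some operations, but you can update the database manually at any time.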

| System | Command |
| --- | --- |
| Debian / Ubuntu | `sudo apt-get update` |
| | `sudo apt update` |
| CentOS | `yum check-update` |
| Fedora | `dnf check-update` |
| FreeBSD Packages | `sudo pkg update` |
| FreeBSD Ports | `sudo portsnap fetch update` |
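To script the table above, one approach is to read the `ID` field (and, as a fallback, `ID_LIKE`) from the `os-release` file, which most current Linux distributions ship at `/etc/os-release`. The following is a minimal sketch under several assumptions: that file is available, each system reports the lowercase `ID` values used as keys below, and the helper names are hypothetical. It covers only the rows listed in the table.

```python
# Commands copied from the table above, keyed by the os-release ID they are
# assumed to correspond to (Debian/Ubuntu could equally use apt-get)
UPDATE_COMMANDS = {
    'debian': 'sudo apt update',
    'ubuntu': 'sudo apt update',
    'centos': 'yum check-update',
    'fedora': 'dnf check-update',
    'freebsd': 'sudo pkg update',
}


def parse_os_release(text):
    """Parse simple KEY=value lines; quotes are stripped, escapes are not handled."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith('#') or '=' not in line:
            continue
        key, value = line.split('=', 1)
        fields[key.strip()] = value.strip().strip('"\'')
    return fields


def update_command(os_release_text):
    """Return the package-list update command, or None for an unknown system."""
    fields = parse_os_release(os_release_text)
    # Try ID first, then any parent distributions listed in ID_LIKE
    candidates = [fields.get('ID', '')] + fields.get('ID_LIKE', '').split()
    for candidate in candidates:
        command = UPDATE_COMMANDS.get(candidate.lower())
        if command is not None:
            return command
    return None


def update_command_for_this_machine(path='/etc/os-release'):
    with open(path) as f:
        return update_command(f.read())


# Sample os-release snippets (made up for illustration)
print(update_command('NAME="Ubuntu"\nID=ubuntu\n'))              # sudo apt update
print(update_command('ID=linuxmint\nID_LIKE="ubuntu debian"\n'))  # sudo apt update
print(update_command('ID=arch\n'))                                # None
```

The `ID_LIKE` fallback is what allows a derivative such as Linux Mint to map to the Debian/Ubuntu row. A real script would still need to choose between `apt` and `apt-get`, and between packages and ports on FreeBSD.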

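### Upgrading Installed Packages

Without a package system, making sure that all of the installed software on a machine stays up to date would be an enormous undertaking: you would have to track upstream changes and security alerts for hundreds of different packages. A package manager doesn't solve every problem you might run into when upgrading software, but it does let you maintain most system components with a few commands.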