| hexsha | size | ext | lang | max_stars_repo_path | max_stars_repo_name | max_stars_repo_head_hexsha | max_stars_repo_licenses | max_stars_count | max_stars_repo_stars_event_min_datetime | max_stars_repo_stars_event_max_datetime | max_issues_repo_path | max_issues_repo_name | max_issues_repo_head_hexsha | max_issues_repo_licenses | max_issues_count | max_issues_repo_issues_event_min_datetime | max_issues_repo_issues_event_max_datetime | max_forks_repo_path | max_forks_repo_name | max_forks_repo_head_hexsha | max_forks_repo_licenses | max_forks_count | max_forks_repo_forks_event_min_datetime | max_forks_repo_forks_event_max_datetime | content | avg_line_length | max_line_length | alphanum_fraction | lid | lid_prob |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

c00ec213a3138c563e3b85d52c36243e4b8dabb2 | 8,060 | md | Markdown | _posts/SIST/2018-11-07-oracle-정리-04.md | younggeun0/younggeun0.github.io | 29926870832211f3e489e4c608349650a2e5482a | [

"MIT"

] | null | null | null | _posts/SIST/2018-11-07-oracle-정리-04.md | younggeun0/younggeun0.github.io | 29926870832211f3e489e4c608349650a2e5482a | [

"MIT"

] | null | null | null | _posts/SIST/2018-11-07-oracle-정리-04.md | younggeun0/younggeun0.github.io | 29926870832211f3e489e4c608349650a2e5482a | [

"MIT"

] | 1 | 2022-03-10T00:16:36.000Z | 2022-03-10T00:16:36.000Z | ---

layout: post

title: Oracle DBMS Notes 04

tags: [OracleDBMS]

excerpt: "ORACLE DBMS Notes - FUNCTIONS"

date: 2018-11-07

feature: https://github.com/younggeun0/younggeun0.github.io/blob/master/_posts/img/oracle/oracleImageFeature.jpg?raw=true

comments: true

---

## Oracle DBMS Notes 04 - FUNCTIONS

---

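### Functions

* Oracle provides a dummy table, **DUAL**, that is handy for trying out functions

- **pseudo table**

- It builds a column from the value you pass in and returns it in the query result

- If a user creates their own table named DUAL, the built-in dummy table can no longer be used

+ Removing the same-named table with DROP TABLE DUAL; makes it usable again right away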

---

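### String functions

~~~sql

-- LENGTH(string) returns the length of a string as a number
LENGTH('ABCD')
-- 4

-- UPPER(string) converts alphabetic characters to uppercase
UPPER('AbcD')
-- ABCD

-- LOWER(string) converts alphabetic characters to lowercase
LOWER('AbcD')
-- abcd

-- INITCAP(string) uppercases the first letter and lowercases the rest
INITCAP('name')
-- Name

-- If the string contains spaces, the first letter after each space is also uppercased
INITCAP('abcd ef ghi')
-- Abcd Ef Ghi

-- INSTR(string, search_string) returns the position of the search string
-- Oracle positions start at 1
INSTR('AbcDef', 'D')
-- 4
-- If the search string is not found, 0 is returned (languages such as Java return -1 in that case)

-- SUBSTR(string, start_position, length) cuts out part of a string
-- It extracts a child string (substring) from the parent string (superstring)
SUBSTR('ABCDEF', 2, 3)
-- BCD

-- If length is omitted, everything from start_position to the end is returned
SUBSTR('ABCDEF', 3)
-- CDEF

-- Three ways to strip spaces: leading (left), trailing (right), or both
-- Spaces in the middle can be removed with the REPLACE function!

-- TRIM(string) removes leading and trailing spaces
TRIM(' ABCDE ')
-- 'ABCDE'

-- LTRIM(string) removes leading spaces
LTRIM(' ABCDE ')
-- 'ABCDE '

-- RTRIM(string) removes trailing spaces
RTRIM(' ABCDE ')
-- ' ABCDE'

-- CONCAT(string, string_to_append) joins two strings
-- It does the same job as the concatenation operator (||)
-- Joining many strings gets complicated with CONCAT, so || is used more often
CONCAT('ABC', 'DEF')
-- ABCDEF

-- Padding: LPAD pads on the left, RPAD pads on the right
-- In these functions a Hangul character is counted as 2 bytes
-- LPAD(string, total_length, pad_string), RPAD(string, total_length, pad_string)
LPAD('ABCDE', 10, '#')
-- #####ABCDE

RPAD('ABCDE', 10, '$')
-- ABCDE$$$$$

-- Often used to build fixed-width strings

~~~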

---

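### Math functions

~~~sql

-- SIN(value), COS(value), TAN(value) return sine, cosine and tangent; rarely used

-- ABS(value) returns the absolute value
ABS(-5)
-- 5

-- ROUND(value, digits) rounds a value
-- Relative to the decimal point (e.g. 555.555): positive digits address the fractional part, negative digits the integer part
-- For the fractional part, digits is the number of decimal places shown after rounding
ROUND(5.555, 1)
-- 5.6

ROUND(5.555, 2)
-- 5.56

-- For the integer part, rounding happens at that digit
ROUND(555, -1)
-- 560

-- CEIL(value) always rounds up when the fractional part is not 0
CEIL(10.1)
-- 11

-- FLOOR(value) rounds down
FLOOR(10.8)
-- 10

-- TRUNC(value, digits) truncates the number at the given position
-- A positive digits value is the number of decimal places to keep; a negative value names the integer digit to cut
-- Fractional part: everything after that place is dropped
-- Integer part: that digit itself is dropped
TRUNC(555.555, 2)
-- 555.55

-- Truncate the ones digit
TRUNC(555.555, -1)
-- 550

-- Truncate everything after the decimal point
TRUNC(555.555, 0)
-- 555

~~~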

---

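### NULL conversion function

- NULL means that no value exists (it is not 0)

- **NULL is stored when a record is added (INSERT) without specifying the column**

+ CHAR/VARCHAR2: when the column is not specified or the value is ''

+ NUMBER/DATE: when the column is not specified

- **NVL(value, value to show when NULL)**

+ The value shown for a NULL column must have the same data type as the column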

~~~sql

NVL(age, 0)

-- Example of passing the wrong data type ('없음' means 'none')
NVL(age, '없음')

-- Oracle converts types implicitly, so a string that holds a number is fine
NVL(age, '0')

~~~

---

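### Conversion functions

- **TO_CHAR - converts non-character values (NUMBER, DATE) to character strings**

- **Number patterns**

+ , and . are printed at the specified positions

+ 0 - pads with 0 where there is no digit

+ 9 - prints only the digits that exist

- **Date patterns**

+ Used to view a date in the format you want

+ yyyy / yy - year; mm / mon / month - month; dd - day

+ am - AM/PM marker; hh - 12-hour clock; hh24 - 24-hour clock

+ mi - minutes

+ ss - seconds

+ day - day of the week (e.g. 목요일, Thursday); dy - abbreviated day of the week (e.g. 목, Thu)

+ q - quarter (1~4)

+ Caution! A pattern that is too long raises an error!

* When that happens, split the pattern and join the parts with the concatenation operator

- TO_DATE - converts non-date values to dates

+ Converts a string that is not a date into a date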

~~~sql

-- TO_CHAR(number, pattern)
TO_CHAR(2018, '0,000,000')
-- 0,002,018

TO_CHAR(2018, '9,999,999')
-- 2,018

SELECT TO_CHAR(2018.1025, '999999.999')
FROM DUAL;
-- 2018.103

-- From the employee table, query the employee number, name, hire date and salary
-- The salary is printed with commas, showing only the digits that exist
SELECT empno, ename, hiredate, TO_CHAR(sal, '999,999') sal
FROM emp;

-- Error! TO_CHAR returns a string; once it contains a comma (e.g. '1,600'),
-- Oracle cannot implicitly convert it back to a number for arithmetic
SELECT TO_CHAR(sal, '9,999')+100
FROM emp;

-- This works: do the arithmetic first, then format
-- SELECT TO_CHAR(sal+100, '9,999')

-- TO_CHAR(date, pattern)
TO_CHAR(SYSDATE, 'y')
-- 8

TO_CHAR(SYSDATE, 'yy')
-- 18

TO_CHAR(SYSDATE, 'yyyy')
-- 2018

TO_CHAR(SYSDATE, 'yyyyy')
-- 20188

-- Build whatever pattern you need
-- A date that was not entered with SYSDATE carries no time information (it is stored as midnight)
SELECT TO_CHAR(SYSDATE, 'yyyy-mm-dd am hh(hh24):mi:ss day dy q')
FROM DUAL;

SELECT SYSDATE
FROM DUAL;
-- Each tool displays SYSDATE differently; use TO_CHAR to get a consistent format

-- Literal text in a pattern (anything that is not a special character) must be wrapped in "
SELECT TO_CHAR(SYSDATE, 'yyyy"년" mm"월" dd"일"')
FROM DUAL;

-- A pattern that is too long raises an error!
SELECT TO_CHAR(SYSDATE, 'yyyy " 년 " mm " 월 " dd " 일 " hh24 " 시 " mi " 분 " ss " 초 "')
FROM DUAL;

-- In that case, split the pattern and join the pieces with || or CONCAT
SELECT TO_CHAR(SYSDATE, 'yyyy " 년 " mm " 월 " dd " 일 "')
|| TO_CHAR(SYSDATE, ' hh24 " 시 " mi " 분 " ss " 초 "')
FROM DUAL;

~~~

~~~sql

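TO_DATE(string, pattern)

-- The pattern is the same as the one used with TO_CHAR
-- The string is converted only if it is in a date format
TO_DATE('2018-10-25','yyyy-mm-dd')
-- 2018-10-25
-- The character data is converted to date data

-- To insert a date other than the current date, you can insert a date-formatted string
INSERT INTO class4_info(num, name, input_date)
VALUES(8, '양세찬', '2018-10-21');
-- Because the value was not inserted with SYSDATE, there is no time information

INSERT INTO class4_info(num, name, input_date)
VALUES(9, '양세형', TO_DATE('2018-10-22', 'yyyy-mm-dd'));
-- Even without TO_DATE the year, month and day are parsed correctly,
-- as long as the string matches the session's date format (NLS_DATE_FORMAT)

-- TO_CHAR must receive a date or a number (function arguments are type-sensitive)
-- Below, '2018-10-25' is a string; passing a string as the argument raises an error
SELECT TO_CHAR('2018-10-25', 'mm')
FROM DUAL;

-- To use it as a function argument, TO_DATE is required!
SELECT TO_CHAR(TO_DATE('2018-10-25', 'yyyy-mm-dd'), 'mm')
FROM DUAL;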

~~~

---

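### Conditional (comparison) functions

* DECODE

- Cannot be used directly in PL/SQL procedural code (only inside SQL statements)

- Comparison values and output values come in pairs, and several pairs can be given

- **If the column equals a comparison value, the paired output value is returned**

+ **If no comparison value matches, the last value is returned**

- Use it when the code to run for each comparison is short or simple!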

~~~sql

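DECODE(column_name, compare_value, output_value, compare_value, output_value, ..., default_output_value)

-- Suppose deptno can be 10, 20 or 30
-- This DECODE returns SI for 10, SM for 20, QA for 30, and Solution for anything else
DECODE(deptno, 10, 'SI',
20, 'SM',
30, 'QA', 'Solution')

-- From the employee table, query the employee number, name, salary and department name
-- The department name is derived from the department number as follows:
-- 10 - 개발부 (Development), 20 - 유지보수부 (Maintenance), 30 - 품질보증부 (Quality Assurance), anything else - 탁구부 (Table Tennis)
SELECT empno, ename, sal,
DECODE(deptno, 10, '개발부',
20, '유지보수부', 30, '품질보증부',
'탁구부') dname
FROM emp;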

~~~

* CASE

- Can be used in PL/SQL

- Used in the SELECT column list

- Used to run code depending on the value of a column

- **Use it when the code to run for each comparison is long or complex**

~~~sql

SELECT CASE column_name
WHEN compare_value THEN code_to_run
WHEN compare_value THEN code_to_run
WHEN ...
ELSE code_to_run_when_nothing_matches
END alias

-- Unlike DECODE, no commas are used! Be careful

-- The same problem as the DECODE example, queried with CASE
SELECT empno, ename, sal, deptno,
CASE deptno WHEN 10 THEN '개발부'
WHEN 20 THEN '유지보수부'
WHEN 30 THEN '품질보증부'
ELSE '탁구부'
END dname
FROM emp;

~~~

---

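### Aggregate functions

* Functions used to summarize the values of a column

- A single row is returned

* **Using them together with a column that returns multiple rows raises an error**

* **Aggregate functions cannot be used in the WHERE clause**

* Used together with GROUP BY, they return one aggregate per group

- COUNT(), SUM(), AVG(), MAX(), MIN() and others exist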

~~~sql

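SELECT COUNT(empno), ename
FROM emp;
-- Error! COUNT returns a single row while ename returns 14 rows
-- Oracle cannot tell which ename belongs next to COUNT(empno), so it raises an error

-- COUNT counts records
-- Rows where the column is NULL are not included in the count!
COUNT(column_name)

-- To count every record, including those that contain NULLs
COUNT(*)

-- SUM returns the sum of the column values
SUM(column_name)

-- AVG returns the average of the column values
AVG(column_name)

-- MAX returns the largest of the column values
MAX(column_name)

-- MIN returns the smallest of the column values
MIN(column_name)

-- Highest salary, lowest salary, and the difference between them
SELECT MAX(sal), MIN(sal), MAX(sal)-MIN(sal)
FROM emp;

-- Aggregate functions cannot be used in the WHERE clause
-- A subquery has to be used instead
-- From the employee table, query the employee number, name, salary and hire date
-- of employees who earn more than the average salary
SELECT empno, ename, sal, hiredate
FROM emp
WHERE sal > AVG(sal); -- Error!

-- Used together with GROUP BY, aggregate functions return one aggregate per group
-- Department number, head count, total salary, average salary, highest and lowest salary per department
SELECT deptno, COUNT(empno), SUM(sal), TRUNC(AVG(sal),2), MAX(sal), MIN(sal)
FROM emp
GROUP BY deptno;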

~~~
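
The failing `WHERE sal > AVG(sal)` query above can be rewritten with a subquery. As a minimal sketch, the snippet below uses Python's built-in `sqlite3` module as a stand-in for Oracle (an assumption made only so the example runs anywhere); the `emp` rows are made-up sample data, and the subquery itself is plain SQL that should carry over to Oracle unchanged.

```python
import sqlite3

# In-memory stand-in for the emp table; the rows are made-up sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE emp (empno INTEGER, ename TEXT, sal REAL)")
conn.executemany(
    "INSERT INTO emp VALUES (?, ?, ?)",
    [(1, "KIM", 1000), (2, "LEE", 2000), (3, "PARK", 3000), (4, "CHOI", 6000)],
)

# An aggregate placed directly in WHERE is rejected, just as in the notes above.
aggregate_in_where_failed = False
try:
    conn.execute("SELECT empno FROM emp WHERE sal > AVG(sal)")
except sqlite3.OperationalError:
    aggregate_in_where_failed = True


def above_average(connection):
    """Return (empno, ename, sal) for employees paid more than the average."""
    query = """
        SELECT empno, ename, sal
        FROM emp
        WHERE sal > (SELECT AVG(sal) FROM emp)  -- the subquery computes the average first
        ORDER BY empno
    """
    return connection.execute(query).fetchall()


rows = above_average(conn)
print(aggregate_in_where_failed, rows)
```

The inner `SELECT AVG(sal) FROM emp` produces a single value, so the outer `WHERE` only compares each row's `sal` against a plain number.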

---

### Date functions

* **ADD_MONTHS**

- Adds months to a date

* **MONTHS_BETWEEN**

- Difference between two dates in months

~~~sql

ADD_MONTHS(date, months_to_add)

MONTHS_BETWEEN(later_date, earlier_date)

~~~

~~~sql

-- Using + on a date adds days
SELECT SYSDATE+5
FROM DUAL;

-- Five months from now
SELECT ADD_MONTHS(SYSDATE,5)
FROM DUAL;

-- Number of months between now (2018-10-25) and 2019-05-25
SELECT MONTHS_BETWEEN('2019-05-25', SYSDATE)
FROM DUAL;

FROM DUAL;

~~~

---

[Homework solutions](https://github.com/younggeun0/SSangYoung/blob/master/dev/query/date181025/homework.sql) | 19.563107 | 121 | 0.61464 | kor_Hang | 1.000009 |

c0123f07af3a9bd68b268eb79469579272055111 | 1,993 | md | Markdown | README.md | theiskaa/highlightable | 2a5a89e3e844fa9124e1c75c4526b0d647176991 | [

"MIT"

] | 7 | 2021-08-17T19:19:25.000Z | 2022-03-26T20:31:55.000Z | README.md | theiskaa/highlightable | 2a5a89e3e844fa9124e1c75c4526b0d647176991 | [

"MIT"

] | 6 | 2021-10-18T05:42:08.000Z | 2022-03-21T22:30:05.000Z | README.md | theiskaa/highlightable | 2a5a89e3e844fa9124e1c75c4526b0d647176991 | [

"MIT"

] | null | null | null | <p align="center">

<br>

<img width="500" src="https://user-images.githubusercontent.com/59066341/129483451-4196e1bb-f094-4b3c-aefc-41d77aff8117.png" alt="Package Logo">

<br>

<br>

<a href="https://pub.dev/packages/field_suggestion">

<img src="https://img.shields.io/badge/Special%20Made%20for-FieldSuggestion-blue" alt="Special Made for FieldSuggestion"/>

</a>

<a href="https://github.com/theiskaa/highlightable-text/blob/main/LICENSE">

<img src="https://img.shields.io/badge/License-MIT-red.svg" alt="License: MIT"/>

</a>

<a href="https://github.com/theiskaa/highlightable-text/blob/main/CONTRIBUTING.md">

<img src="https://img.shields.io/badge/Contributions-Welcome-brightgreen" alt="CONTRIBUTING"/>

</a>

</p>

# Overview & Usage

The `actualText` and `highlightableWord` properties are required.

You can style `actualText` by providing `defaultStyle`, and you can style the highlighted text with the `highlightStyle` property.

The `highlightableWord` property can be either a string array or a single string with words separated by spaces.

You can enable word detection to highlight only complete matching words. See the "Custom usage" section for an example.

### Very basic usage

```dart

HighlightText(

'Hello World',

highlightableWord: 'hello',

),

```

<img width="200" alt="s1" src="https://user-images.githubusercontent.com/59066341/129080679-bfb97d11-93c5-4258-b271-0e0918e3bc22.png">

### Custom usage

```dart

HighlightText(

"Hello, Flutter!",

highlightable: "Flu, He",

caseSensitive: true, // Turn on case-sensitive.

detectWords: true, // Turn on full-word-only highlighting.

defaultStyle: TextStyle(

fontSize: 25,

color: Colors.black,

fontWeight: FontWeight.bold,

),

highlightStyle: TextStyle(

fontSize: 25,

letterSpacing: 2.5,

color: Colors.white,

backgroundColor: Colors.blue,

fontWeight: FontWeight.bold,

),

),

```

<img width="220" alt="stwo" src="https://user-images.githubusercontent.com/59066341/129483513-c379f0d6-d5ba-43e1-a2d7-0722aeb5dafa.png">

| 32.672131 | 145 | 0.724034 | eng_Latn | 0.289276 |

c0134cd244b03bfcadf875f179dce9c9e5742c58 | 10,455 | md | Markdown | _posts/2019-12-24-hottest-programming-languages-to-learn-in-2020.md | vamsikollipara/vamsikollipara.github.io | 64e65a20762820ae10a192137b2565e15345bc18 | [

"MIT"

] | null | null | null | _posts/2019-12-24-hottest-programming-languages-to-learn-in-2020.md | vamsikollipara/vamsikollipara.github.io | 64e65a20762820ae10a192137b2565e15345bc18 | [

"MIT"

] | null | null | null | _posts/2019-12-24-hottest-programming-languages-to-learn-in-2020.md | vamsikollipara/vamsikollipara.github.io | 64e65a20762820ae10a192137b2565e15345bc18 | [

"MIT"

] | null | null | null | ---

layout: post

title: "Hottest Programming Languages in 2020"

author: vk

categories: [Programming, Learning]

tags: [Java, Javascript, Python, Rust, Kotlin, Go, Dart, Typescript]

image: assets/images/post1/pro-lang.png

featured: true

---

The beginning of the 21st century saw a rise in demand for programming skills, and computer science and engineering became the most popular degree subjects among students. By the end of the century's second decade, however, software engineers are no longer the only ones who have this skill set. Every graduate, irrespective of background, is expected to know at least one of the programming languages most used by the companies recruiting them. Competition has reached the point where schools have started adding subjects such as C and Python to their curricula.

There is always a fresh set of people, from all backgrounds, coming forward to learn programming languages. Choosing the right one for a job and career can be daunting with around 700 programming languages to choose from (https://en.wikipedia.org/wiki/List_of_programming_languages).

To make it easier, we have come up with a comprehensive list of the most in-demand programming languages in 2020. Our analysis considers data from leading platforms such as GitHub and Stack Overflow.

## Front Runners

Let us have a look at the top programming languages based on their user base.

Yes, JavaScript continued its reign as the top programming language in 2019. But 2020 will see a new champion, and you guessed it right: that will be Python. In terms of community questions asked per month, Python overtook Java in 2019 and is now chasing JavaScript for the number one spot. The growing popularity of Node.js is not helping JavaScript either, because TypeScript is taking its share of UI development.

#### Python

Python is an interpreted, high-level, general-purpose programming language. It was created by Guido van Rossum and first released in 1991. Python's design philosophy emphasizes code readability, notably through its use of significant whitespace.

Since it is a general-purpose programming language, you can use it to develop both desktop and web applications. You can also use Python to develop complex scientific and numeric applications. It is designed with features that facilitate data analysis and visualization.

The latest boom in data science is clearly reflected in the growing popularity of Python, as it is the preferred language for data analytics, machine learning, data visualization and deep learning. No wonder Pandas is the most used tag among Python packages on Stack Overflow, followed by Django, Matplotlib (another data science package) and Flask.

Python is used at major tech companies for some of their major products. Some of the notable names include

+ Google

+ Microsoft

+ Amazon

+ Netflix

+ Facebook

2020 is expected to see major growth in the field of data science. If you want to be ready for a tasty challenge in 2020, train yourself in Python; it can be used to solve many everyday problems.

#### JavaScript

JavaScript, the programming language of the Internet.

JavaScript, often abbreviated as JS, is a high-level, just-in-time compiled, object-oriented programming language.

Alongside HTML and CSS, JavaScript is one of the core technologies of the World Wide Web. JavaScript enables interactive web pages and is an essential part of web applications. Most websites use it, and major web browsers have a dedicated JavaScript engine to execute it.

JavaScript was developed by Brendan Eich at Netscape. Later it became a standard in web development. It is now standardized as ECMAScript by Ecma International.

The birth of Node.js in 2009 gave JavaScript a big boost in server-side development.

Although there are similarities between JavaScript and Java, including language name, syntax, and respective standard libraries, the two languages are distinct and differ greatly in design.

It is no exaggeration to say that JavaScript is still the most widely used programming language, judged by its sheer user base. There are over 1.6 billion websites in the world, and JavaScript is used on 95% of them; that is a staggering 1.52 billion websites with JavaScript. JavaScript in its various forms is used in web development, server-side scripting, IoT and more.

2020 will not be any different for JavaScript, but the rise of TypeScript (a superset of JavaScript) will cause a small decline in its usage in web development. If you are thinking of venturing into web development, JavaScript is a must.

#### Java

Java is the Big Brother of programming languages when it comes to its popularity and wide user base. Since its inception in 1995 (24 years old, hence the Big Brother) it has been one of the most sought-after skill sets in the software industry. Java is one of the most widely taught programming languages in grad schools, which makes it popular in dev circles from a young age.

Java is a general-purpose programming language that is class-based, object-oriented, and designed to have as few implementation dependencies as possible.

Java still dominates various popularity rankings of programming languages, such as TIOBE and Octoverse. Java is not going anywhere. Given its wide range of applications, it is worth learning (in case you haven't already).

John Cook has an interesting post about his predictions for programming languages. According to that post, Java is going to outlive many other languages such as Go, C# and Python. Given that it is already 24 years old, that is quite a long run.

## People Champion

These programming languages are garnering a good response from the programming community. Adding these skills to a developer's arsenal will be handy in 2020.

#### RUST

Rust has been the "most loved programming language" in the Stack Overflow Developer Survey every year since 2016. When 83.5% of respondents on the world's biggest developer forum like it, there must be something good going on here.

Rust is a programming language developed by Mozilla. Rust is a multi-paradigm system programming language focused on safety, especially safe concurrency. Rust is syntactically like C++ but is designed to provide better memory safety while maintaining high performance.

*“Rust is one of the fastest growing languages on GitHub” – Octoverse report.*

#### Kotlin

Behold! The Java alternative is here. It is expected to win a major share of the mobile application development space.

Kotlin is a cross-platform, statically typed, general-purpose programming language with type inference. Kotlin is designed to interoperate fully with Java, and the JVM version of its standard library depends on the Java Class Library, but type inference allows its syntax to be more concise. Kotlin mainly targets the JVM, but also compiles to JavaScript or native code (via LLVM). Language development costs are borne by JetBrains, while the Kotlin Foundation protects the Kotlin trademark.

Since Android Studio 3.0, Kotlin has been included as an option alongside the standard Java compiler. The Android Kotlin compiler lets the user choose between targeting Java 6 or Java 8 compatible bytecode.

Its ability to create both Android (Kotlin/JVM) and iOS (Kotlin/Native) applications makes it a hot skill to master in 2020.

#### Dart

Dart is the fastest growing programming language on GitHub (its usage is observed to have grown by more than 500% year over year). Flutter is one of GitHub's most popular open source projects, and Dart is the language used to write Flutter apps.

Dart is a cross-platform programming language developed by Google. It can be used to build mobile, desktop, backend and web applications. Are you building cross-platform apps in 2020? Dart is worth a shot.

#### Typescript

TypeScript is an object-oriented, strictly typed superset of JavaScript from Microsoft. Yes, it transpiles into JavaScript. That is why some may dispute that it is a programming language in its own right, but TypeScript is seeing steady growth in its user base thanks to modern web UI frameworks like Angular.

You can agree or disagree, but the fact is that TypeScript is eating into JavaScript's market. UI development in 2020 is likely to see TypeScript's share grow further.

The growth of TypeScript and the drop in JavaScript are not a coincidence, as both operate mostly in the UI development space.

#### Go

Go is not only loved by people but is also one of the most wanted programming languages of 2019 (Stack Overflow Developer Survey 2019). It is mostly used for building large-scale and complex software.

Go, also known as Golang, is a statically typed, compiled programming language designed at Google by Robert Griesemer, Rob Pike, and Ken Thompson. Go is syntactically similar to C and offers the user-friendliness of dynamically typed, interpreted languages like Python, along with memory safety, garbage collection, structural typing, and CSP-style concurrency.

There are two major implementations:

+ Google's self-hosting compiler toolchain targeting multiple operating systems, mobile devices, and WebAssembly.

+ gccgo, a GCC frontend.

A third-party transpiler, GopherJS, compiles Go to JavaScript for front-end web development.

#### References:

Stack Overflow

+ *[`Stack Overflow Survey`](https://insights.stackoverflow.com/survey/2019#most-loved-dreaded-and-wanted)*

+ *[`Stack overflow Trends`](https://insights.stackoverflow.com/trends?tags=go%2Ckotlin%2Ctypescript%2Crust%2Cdart)*

GitHub

+ *[`GitHut 2.0`](https://madnight.github.io/githut/#/pull_requests/2019/3)*

+ *[`The State of the Octoverse`](https://octoverse.github.com/)*

Others

+ *[`Kotlin Lang`](https://play.kotlinlang.org/hands-on/Targeting%20iOS%20and%20Android%20with%20Kotlin%20Multiplatform/01_Introduction)*

+ *[`John cook about programming languages life expectancy`](https://www.johndcook.com/blog/2017/08/19/programming-language-life-expectancy/)* | 81.046512 | 559 | 0.798757 | eng_Latn | 0.998698 |

c0143c74fbc090010988eb8b1f1c5c57b2e67395 | 5,045 | md | Markdown | _posts/2019-10-10-designing-in-the-open.md | scotentSD/scotentSD.github.io | 92f3a11f4667e94241e7fbfec785c8789d9b1a78 | [

"MIT"

] | null | null | null | _posts/2019-10-10-designing-in-the-open.md | scotentSD/scotentSD.github.io | 92f3a11f4667e94241e7fbfec785c8789d9b1a78 | [

"MIT"

] | null | null | null | _posts/2019-10-10-designing-in-the-open.md | scotentSD/scotentSD.github.io | 92f3a11f4667e94241e7fbfec785c8789d9b1a78 | [

"MIT"

] | 2 | 2019-12-10T09:44:48.000Z | 2020-03-27T16:18:59.000Z | ---

layout: post

title: Designing in the open

author: David

---

The [Scottish Enterprise service design team](https://scotentsd.github.io/) is committed to designing in the open.

<!--more-->

## What does design in the open mean?

According to Brad Frost, [designing in the open](https://bradfrost.com/blog/post/designing-in-the-open/) means

>sharing your work and/or process publicly as you undertake a design project

It's not about making your code open source (although it could include that). It's about:

- Sharing bits of things as you build them

- Sharing versions of things as they evolve and change

- Sharing techniques and resources

- Sharing our experiences and stories

For us, designing in the open means sharing all the documents, artefacts, prototypes and anything else we generate publicly by default.

Unless there is a good reason not to - for example, if it contains personal or potentially sensitive information - we make it as widely available as we can as soon as possible. Often, that means our work is available while we are still working on it.

## Why design in the open?

Well, we're a public body, so why shouldn't the outputs of our work be available to the people who fund it?

But the process brings many benefits:

- Faster (potentially instantaneous) feedback

- Feedback from a wider group

- Generating buy-in from the community and stakeholders

- Demonstrating our commitment (and work in progress)

- Communicating all day, every day

- Reducing the cost of communication within the team, even if some work remotely

But mostly, it's just about being open, honest, and transparent. And we reduce our communication overhead because we don't need to tell people about what we've done if it is right in front of them any time they want it.

## Some history

Towards the end of 2008, both @numbat70 (Martin Kerr) and @david-obrien (writing) worked for Scottish Enterprise's web team.

We were asked by our director (this was like our boss's boss's boss's boss) if we could completely redesign, rewrite and rebuild scottish-enterprise.com by the 1st of April.

So, no pressure then. 3 months to completely tear down and rebuild a 5,000 page website.

But we did it. We had no time to contract anyone else to do it, so we did it ourselves.

We did a lot of things we would call agile now, though neither one of us was particularly aware of the term in 2008.

We put together a small, multi-disciplinary team. Mostly content writers; I did front end design and some development. @numbat70 did most of the back end dev.

We used [Gerry McGovern](https://twitter.com/gerrymcgovern)'s [top tasks](http://www.customercarewords.com/services/customer-top-task-identification/) methodology to identify user needs.

Most importantly, we all worked in the same small room. We talked, every day, about our work. We helped each other out. We didn't wait for anyone else to catch up. We wrote and coded and designed and built together, but independently.

No dependencies.

In the end, we got there. We redesigned, rewrote, rebuilt and shipped on 1 April 2009. We got a 10,000 page site down to <500 pages by focusing on user needs.

The results? Ambiguous. Analytics data was still mostly based on server logs in those days. Google Analytics was still a twinkle in my old mate Sergey's eye.

Stakeholders hated it. And everyone complained about "their" content going missing (even though "their" content had not, so far as we could tell, been read by anyone in over 6 months.)

### Then on Friday night we went to the Arches

That's just for SEO.

In truth, we started to blog at http://digital.scotentblog.co.uk/. It's a WordPress blog, dead simple, far away from our internal processes and controls. It just made things easier.

Important people (cf. boss's boss's boss's boss) actually read it. Which, er, surprised us.

Astonished is probably a better word.

Which is all good, but wasn't making a difference.

### Then we moved to the junkyard

AKA our old Paisley office. A beautiful early 20th century building with lovely art deco tiling in the wally close and the shonkiest interior ever.

We had the first floor. Half of it was off limits because, well, there were holes and stuff. And if you opened a window it might fall out onto the street and decapitate a passing buddy.

But it was great. Apart from the trains.

We were properly agile.

We had small teams and no communications overhead.

We had proper, actual, for real kanban boards that we actually stood in front of and talked about our work. Every day.

There was loads of wall-space, and plenty of whiteboards.

It felt easy, and it felt natural. It felt good.

We plastered that place with post-its and ideas. Some of them came to fruition, most didn't. Some of those that came to fruition worked, most didn't.

We tried stuff, we discarded the stuff that wasn't working, we improved the stuff that was.

And we got results.

| 47.149533 | 250 | 0.772052 | eng_Latn | 0.999652 |

c014594a7b8937db0094abf4c55baccfe86e4d2a | 1,917 | md | Markdown | CSP_Solver-master/README.md | magdaleenaaaa/TP-Sudoku | 55b2be19ab894c55346bd152ac902418e6af5b2a | [

"MIT"

] | 1 | 2021-01-04T19:07:11.000Z | 2021-01-04T19:07:11.000Z | CSP_Solver-master/README.md | magdaleenaaaa/TP-Sudoku | 55b2be19ab894c55346bd152ac902418e6af5b2a | [

"MIT"

] | null | null | null | CSP_Solver-master/README.md | magdaleenaaaa/TP-Sudoku | 55b2be19ab894c55346bd152ac902418e6af5b2a | [

"MIT"

] | 16 | 2020-01-14T14:09:42.000Z | 2020-02-04T11:57:26.000Z | CSP Solver

Hello everyone,

To run the program you need Python 3.4 or later installed. The files search.py, original.py and utils.py were taken from the original AIMA code (https://github.com/aimacode/aima-python) by Peter Norvig and serve as a baseline solver for general CSPs.

- original.py, utils.py and search.py are not needed to run main.py or test.py; they were added only to show the original solver's performance in docs/original_results.txt

- The sudokuCSP class creates the objects the general CSP solver needs (neighbors, variables, domains) to treat a Sudoku game as a constraint satisfaction problem

- The csp.py file contains code from original.py (the csp.py file of the AIMA code), slightly modified; it does not use rarely needed functions from other files (such as utils.py), and the methods that were modified are labelled with the comment "@modifié"

- The csp.py file contains algorithms for solving CSPs, such as backtracking with the 3 different inference methods, MRV and a few other methods.

- The class in gui.py contains code to create a graphical user interface for the game, letting the user choose a level (there are 6 different boards) and an inference method for backtracking

- The test.py file contains code to run tests for each inference algorithm on a Sudoku board (you can choose which board)

- The Main.py file contains a main method to run the GUI program
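
To make the neighbors, variables and domains mentioned in the list above more concrete, here is a minimal sketch of how a Sudoku could be described as a CSP. It is not the project's `sudokuCSP` class: the function name `build_sudoku_csp`, the cell naming (`A1` to `I9`) and the 81-character grid string are all assumptions made for illustration.

```python
from itertools import product

ROWS = "ABCDEFGHI"
COLS = "123456789"


def build_sudoku_csp(grid):
    """Build (variables, domains, neighbors) for a 9x9 Sudoku.

    grid is an 81-character string read row by row; any character that is
    not a digit 1-9 (for example '.') marks an empty cell.
    """
    if len(grid) != 81:
        raise ValueError("grid must contain exactly 81 characters")

    # One variable per cell, named by row letter and column digit.
    variables = [r + c for r, c in product(ROWS, COLS)]

    # A filled cell has a single-value domain; an empty cell may take 1..9.
    domains = {}
    for var, ch in zip(variables, grid):
        domains[var] = [int(ch)] if ch in "123456789" else list(range(1, 10))

    # Units are the groups of cells that must all hold different values.
    units = [[r + c for c in COLS] for r in ROWS]            # 9 rows
    units += [[r + c for r in ROWS] for c in COLS]           # 9 columns
    units += [[r + c for r in rs for c in cs]                # 9 boxes
              for rs in ("ABC", "DEF", "GHI")
              for cs in ("123", "456", "789")]

    # Two cells are neighbors when they share at least one unit.
    neighbors = {v: set() for v in variables}
    for unit in units:
        for v in unit:
            neighbors[v].update(u for u in unit if u != v)
    return variables, domains, neighbors


def different_values(a, value_a, b, value_b):
    """Binary constraint between neighbors: they may not share a value."""
    return value_a != value_b


sample_grid = "53..7...." + "." * 72
variables, domains, neighbors = build_sudoku_csp(sample_grid)
print(len(variables), domains["A1"], len(neighbors["A1"]))
```

A backtracking solver with inference would then only need these three structures plus the `different_values` constraint.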

Two files were added that show the difference between the original csp.py file from the AIMA code and this modified one (see modified_results.txt and original_results.txt).

Feel free to read the documentation for more information, or to send a message to Danush, Axel, Célia or me, Laura.

Happy reading, everyone

| 71 | 275 | 0.798122 | fra_Latn | 0.995407 |

c01470dbe1687dfa0737107d79afb5eda64b07a7 | 813 | md | Markdown | docs/api/@remirror/core-extensions/core-extensions.historyextensionoptions.getdispatch.md | jankeromnes/remirror | 95306cee4c76ee9fd7271a0ab6069f0a0a6803d9 | [

"MIT"

] | 1 | 2021-05-22T06:22:01.000Z | 2021-05-22T06:22:01.000Z | docs/api/@remirror/core-extensions/core-extensions.historyextensionoptions.getdispatch.md | jankeromnes/remirror | 95306cee4c76ee9fd7271a0ab6069f0a0a6803d9 | [

"MIT"

] | null | null | null | docs/api/@remirror/core-extensions/core-extensions.historyextensionoptions.getdispatch.md | jankeromnes/remirror | 95306cee4c76ee9fd7271a0ab6069f0a0a6803d9 | [

"MIT"

] | null | null | null | <!-- Do not edit this file. It is automatically generated by API Documenter. -->

[Home](./index.md) > [@remirror/core-extensions](./core-extensions.md) > [HistoryExtensionOptions](./core-extensions.historyextensionoptions.md) > [getDispatch](./core-extensions.historyextensionoptions.getdispatch.md)

## HistoryExtensionOptions.getDispatch() method

Provide a custom dispatch getter function for embedded editors

<b>Signature:</b>

```typescript

getDispatch?(): DispatchFunction;

```

<b>Returns:</b>

`DispatchFunction`

## Remarks

This is only needed when the extension is part of a child editor, e.g. `ImageCaptionEditor`. By passing in the `getDispatch` method, history actions can be dispatched into the parent editor, allowing them to propagate into the child editor.

| 36.954545 | 247 | 0.740467 | eng_Latn | 0.868851 |

c0155bd6702341b6d48e1c3190f85d42faa3276e | 352 | md | Markdown | vendor/tw-city-selector-master/docs/zipcode.md | mypetertw/ianphp-framework | c4265b3aeb68021b2816f5ab08eac3ec73d70bc9 | [

"MIT"

] | null | null | null | vendor/tw-city-selector-master/docs/zipcode.md | mypetertw/ianphp-framework | c4265b3aeb68021b2816f5ab08eac3ec73d70bc9 | [

"MIT"

] | null | null | null | vendor/tw-city-selector-master/docs/zipcode.md | mypetertw/ianphp-framework | c4265b3aeb68021b2816f5ab08eac3ec73d70bc9 | [

"MIT"

] | null | null | null | # Zip Code Table

<dl id="zipcode-list" class="zipcode-list">

<template v-for="(county, i) in counties">

<dt>{{ county }}</dt>

<dd>

<span v-for="(district, n) in districts[i][0]">

{{ district }}

{{ districts[i][1][n] }}

</span>

</dd>

</template>

</dl>

<script>

new Vue({

el: '#zipcode-list',

data: window.data

});

</script>

| 16.761905 | 48 | 0.534091 | eng_Latn | 0.362141 |

c015b4bae1552dbcad08d8d73b16177a58f46eac | 919 | md | Markdown | README.md | MHHukiewitz/bonfida-api-wrapper-python3 | c4db0c5ea1e294e0bb43bafa63c96c4d474b168a | [

"MIT"

] | 5 | 2021-03-08T07:21:02.000Z | 2021-12-12T01:09:39.000Z | README.md | MHHukiewitz/bonfida-api-wrapper-python3 | c4db0c5ea1e294e0bb43bafa63c96c4d474b168a | [

"MIT"

] | 2 | 2021-04-22T12:55:39.000Z | 2021-04-30T09:56:49.000Z | README.md | MHHukiewitz/bonfida-api-wrapper-python3 | c4db0c5ea1e294e0bb43bafa63c96c4d474b168a | [

"MIT"

] | 2 | 2021-04-27T15:39:17.000Z | 2021-04-30T22:24:08.000Z | # Bonfida-Trader

## Warning

This is an UNOFFICIAL wrapper for the Bonfida [HTTP API](https://docs.bonfida.com/), written in Python 3.7.

The library can be used to fetch market data or create third-party clients.

USE THIS WRAPPER AT YOUR OWN RISK; I AM NOT RESPONSIBLE FOR ANY LOSSES.

## Features

- Implementation of all [public](#) endpoints

## Donate

If useful, buy me a coffee?

- ETH: 0xA9D89A5CAf6480496ACC8F4096fE254F24329ef0 (brendanc.eth)

- SOL: AQBqATwRqbU8odBL3RCFovzLbHR13MuoF2v53QpmjEV3

## Installation

    $ git clone https://github.com/LeeChunHao2000/bonfida-api-wrapper-python3

- This wrapper requires [requests](https://github.com/psf/requests)

## Quickstart

This is an introduction on how to get started with the Bonfida client. First, make sure the Bonfida library is installed.

And then:

    from Bonfida.client import Client

    client = Client('', '')

### Version Logs

#### 2021-03-07

- Birth! | 23.564103 | 117 | 0.746464 | eng_Latn | 0.719986 |

c0160f8a3359376269038840d89fb9961a5cb747 | 887 | md | Markdown | docs/csharp/misc/cs1057.md | AlejandraHM/docs.es-es | 5f5b056e12f9a0bcccbbbef5e183657d898b9324 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/csharp/misc/cs1057.md | AlejandraHM/docs.es-es | 5f5b056e12f9a0bcccbbbef5e183657d898b9324 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/csharp/misc/cs1057.md | AlejandraHM/docs.es-es | 5f5b056e12f9a0bcccbbbef5e183657d898b9324 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Compiler Error CS1057

ms.date: 07/20/2015

f1_keywords:

- CS1057

helpviewer_keywords:

- CS1057

ms.assetid: 6f247cfd-6d26-43b8-98d9-0a6d7c115cad

ms.openlocfilehash: 3617d83b81894476dc7635b962d8c70462dc573f

ms.sourcegitcommit: 3d5d33f384eeba41b2dff79d096f47ccc8d8f03d

ms.translationtype: HT

ms.contentlocale: es-ES

ms.lasthandoff: 05/04/2018

ms.locfileid: "33304014"

---

# <a name="compiler-error-cs1057"></a>Compiler Error CS1057

'member': static classes cannot contain protected members

This error is generated when a protected member is declared inside a static class.

## <a name="example"></a>Example

The following example generates CS1057:

```csharp

// CS1057.cs

using System;

static class Class1
{
    protected static int x;   // CS1057

    public static void Main()
    {
    }
}

```

| 24.638889 | 88 | 0.720406 | spa_Latn | 0.663011 |

c0164d7a3154a66bd9113c36a453e5a6875fc171 | 38 | md | Markdown | README.md | Jonle/jonleServer | 12cf3a9ccffde8a29eb1ca3e9d8c038d165b08f9 | [

"Unlicense"

] | null | null | null | README.md | Jonle/jonleServer | 12cf3a9ccffde8a29eb1ca3e9d8c038d165b08f9 | [

"Unlicense"

] | null | null | null | README.md | Jonle/jonleServer | 12cf3a9ccffde8a29eb1ca3e9d8c038d165b08f9 | [

"Unlicense"

] | null | null | null | # jonleServer

My blog server - Node.js

| 12.666667 | 23 | 0.736842 | tur_Latn | 0.249483 |

c0167e849b8a8d78d7887e19b03e7795f1c064c2 | 30 | md | Markdown | 0x00-python-hello_world/README.md | Trice254/alx-higher_level_programming | b49b7adaf2c3faa290b3652ad703914f8013c67c | [

"MIT"

] | null | null | null | 0x00-python-hello_world/README.md | Trice254/alx-higher_level_programming | b49b7adaf2c3faa290b3652ad703914f8013c67c | [

"MIT"

] | null | null | null | 0x00-python-hello_world/README.md | Trice254/alx-higher_level_programming | b49b7adaf2c3faa290b3652ad703914f8013c67c | [

"MIT"

] | null | null | null | ## 0x00. Python - Hello, World | 30 | 30 | 0.666667 | eng_Latn | 0.364362 |

c017b8f84fc21a0c86343031fa47df06ec7e06eb | 2,425 | md | Markdown | examples/cool_app/README.md | romychs/gotk3 | 116e09e25f5e2b197e29e6ebbb06d889101bd2a7 | [

"0BSD"

] | 32 | 2018-03-14T15:08:34.000Z | 2020-12-22T19:25:18.000Z | examples/cool_app/README.md | romychs/gotk3 | 116e09e25f5e2b197e29e6ebbb06d889101bd2a7 | [

"0BSD"

] | 1 | 2018-03-25T16:25:15.000Z | 2018-04-04T13:05:19.000Z | examples/cool_app/README.md | romychs/gotk3 | 116e09e25f5e2b197e29e6ebbb06d889101bd2a7 | [

"0BSD"

] | 4 | 2018-03-25T16:21:13.000Z | 2021-03-28T08:27:18.000Z | Feature-rich GTK+3 app demo written in go

=========================================

The aim of this application is to connect GLIB/GTK+ components and widgets together

using helpful code patterns that meet the real needs of developers.

GTK+ 3.12 or higher must be installed; otherwise the app will not compile

(GtkPopover and GtkHeaderBar require a more recent GTK+3 installation).

Features of this demonstration:

1) All actions in the application are implemented via GLIB's GAction component, which works as the entry point

for any activity in the application. Advanced use of GAction relies on

"[actions with states and parameters](https://developer.gnome.org/GAction/)"

with seamless integration of such actions into UI menus.

2) New widgets such as GtkHeaderBar, GtkPopover and others are used.

3) A good example of a preference dialog with save/restore functionality.

4) Helpful code patterns are included: dialog and message box demos,

saving/restoring settings (via GSettings),

fullscreen mode on/off, stateful and stateless actions, and others.

Screenshots

-----------

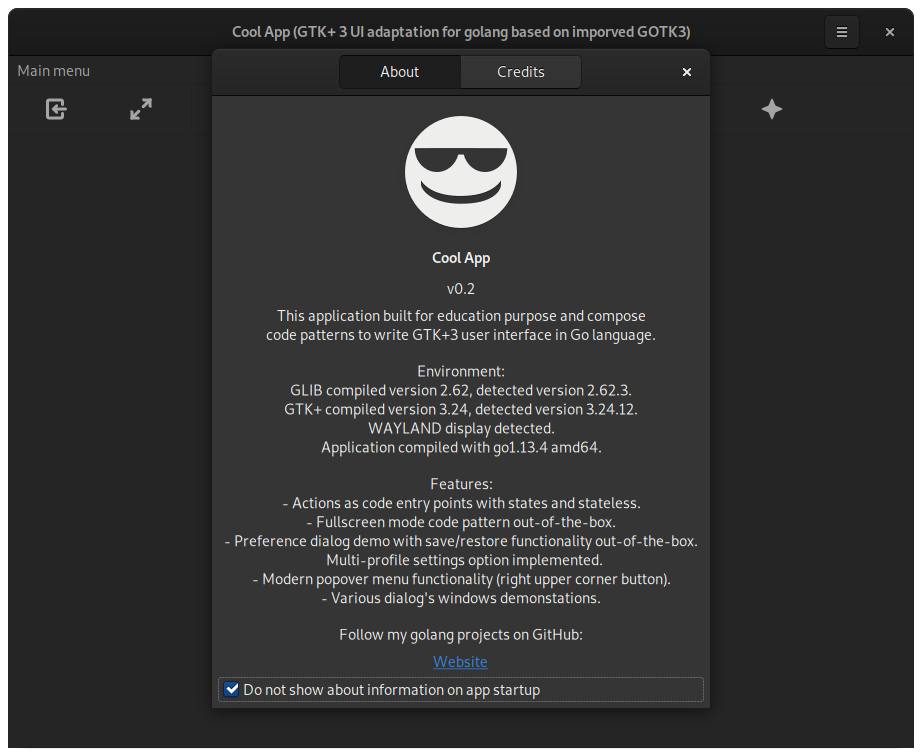

Main form with about dialog:

Main form and modern popover menu:

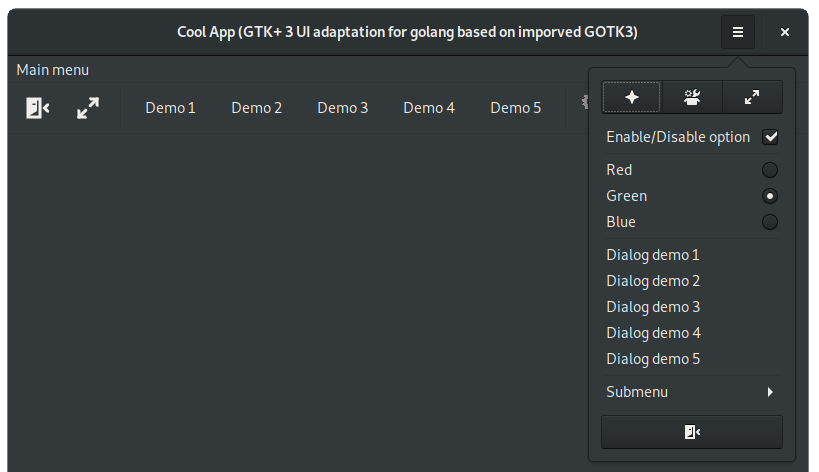

Main form and classic main menu:

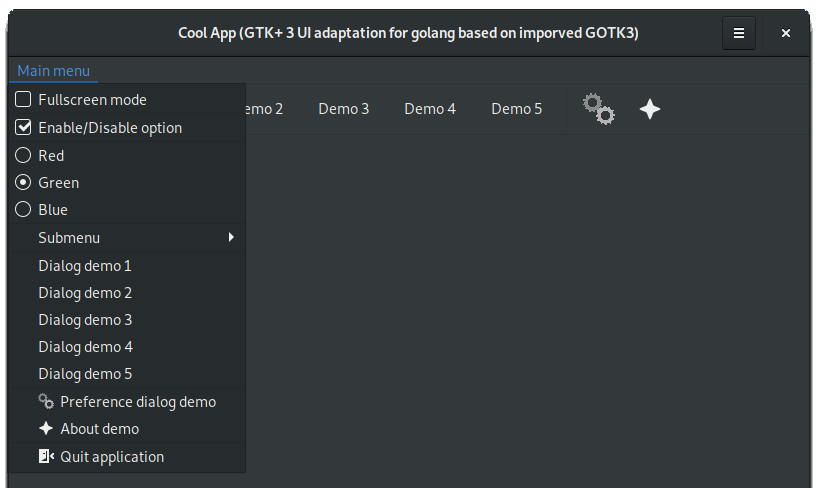

One of several dialog demos:

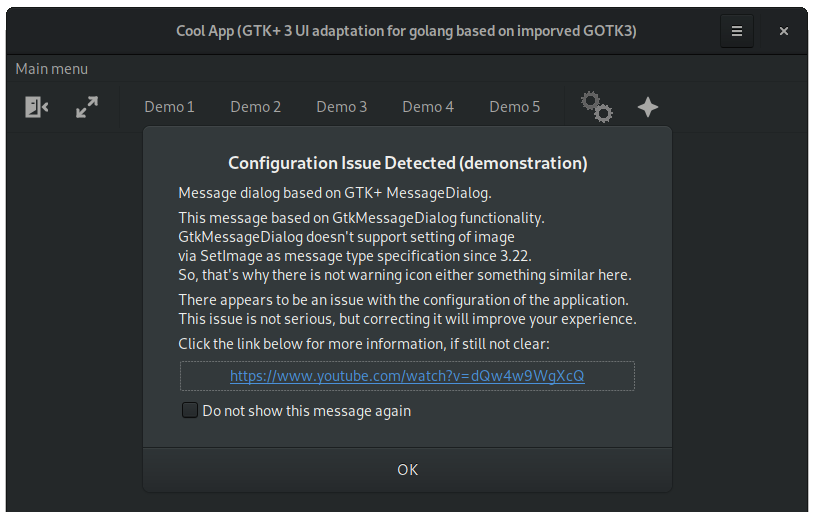

Preference dialog with save/restore settings functionality:

Installation

------------

Almost no action is needed; the main requirement is to have the GOTK3 library installed beforehand.

Still, for the "preference dialog" to work properly, the scripts `gs_install_schema.sh`/`gs_uninstall_schema.sh`

must be used to install/compile the [GLIB settings schema](https://developer.gnome.org/GSettings/).

To run the application, type `go run ./cool_app.go` in a console.

Additional recommendations

-------------------------

- Use the GNOME application `gtk3-icon-browser` to find the themed icons available on your Linux desktop installation.

Contact

-------

Please use [Github issue tracker](https://github.com/d2r2/gotk3/issues)

for filing bugs or feature requests.

| 41.810345 | 110 | 0.769072 | eng_Latn | 0.935475 |

c01a3973e369661d4a9d81458996edf296076fdd | 298 | md | Markdown | 2017/todo_2017.md | wiix/ccjava-overview | 42e525317417416f54801e585fdf1f6c42209fa2 | [

"Apache-2.0"

] | null | null | null | 2017/todo_2017.md | wiix/ccjava-overview | 42e525317417416f54801e585fdf1f6c42209fa2 | [

"Apache-2.0"

] | null | null | null | 2017/todo_2017.md | wiix/ccjava-overview | 42e525317417416f54801e585fdf1f6c42209fa2 | [

"Apache-2.0"

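] | null | null | null | # Goals to complete in 2017

### Todos

- Master certificate-based user management and secure transport.

- A multithreaded executor based on JavaFX (or WPF): a GUI program; shows execution status in real time; threads can be managed.

- An extensible permission framework based on the "user group" concept: annotations, support for data-level permissions, permission propagation management.

- Master OAuth 2.0 and build one real application with it.

- Use Netty to implement a centralized multi-node network service, and build a sample multi-user group chat application on it.

- Master one machine learning API.

- Master one face detection API.

- Complete a ticketing (work order) system.

- All front ends use RESTful API + Bootstrap + AngularJS[/Vue.js]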

| 21.285714 | 52 | 0.738255 | yue_Hant | 0.577437 |

c01aa049091f2f72cf280c694e8bfe22680e4f7e | 95 | md | Markdown | general-todos.md | hboutemy/incubator-wayang | a91bd3615432cd8f3bf44bd2f4b99b5d8df3cdba | [

"Apache-2.0"

] | null | null | null | general-todos.md | hboutemy/incubator-wayang | a91bd3615432cd8f3bf44bd2f4b99b5d8df3cdba | [

"Apache-2.0"

] | null | null | null | general-todos.md | hboutemy/incubator-wayang | a91bd3615432cd8f3bf44bd2f4b99b5d8df3cdba | [

"Apache-2.0"

] | null | null | null | # Here are all the To-Do's that are general

## TODO: Explain the structure of the scala folder | 31.666667 | 50 | 0.747368 | eng_Latn | 0.999537 |

c01b08c939c5612ddda0bbad260e42c33092dd25 | 22,090 | md | Markdown | config.md | jmhbnz/humacs | 063873150d1977203a34d9ca4a743040e02c1c75 | [

"BSD-2-Clause"

] | 10 | 2020-07-29T20:30:16.000Z | 2022-03-08T00:43:47.000Z | config.md | jmhbnz/humacs | 063873150d1977203a34d9ca4a743040e02c1c75 | [

"BSD-2-Clause"

] | 39 | 2020-07-29T01:35:46.000Z | 2021-08-29T23:59:13.000Z | config.md | jmhbnz/humacs | 063873150d1977203a34d9ca4a743040e02c1c75 | [

"BSD-2-Clause"

] | 7 | 2020-07-29T01:34:15.000Z | 2022-02-22T13:40:32.000Z | - [Humac Human Deets](#org19ea609)

- [Ergonomics](#orgfdecd2e)

- [Better Local Leaders](#org6ec54d3)

- [Use mouse scroll](#org6337bb1)

- [lispy vim](#org73d8410)

- [Consistency](#orgcbe28e3)

- [consistent paths](#org00d844c)

- [Appearance](#org7f80cdc)

- [Fonts](#org1556a9a)

- [Theme](#org018a0eb)

- [Indent](#orga40c35d)

- [LSP Behaviour](#orge6cf9b5)

- [Languages](#org1fc6b65)

- [Web](#org06cc1eb)

- [Go](#orgdd10467)

- [Vue](#org864ca0c)

- [Org](#org0586042)

- [Show properties when cycling through subtrees](#org8c25a2b)

- [ASCII colours on shell results](#orgb9be192)

- [Literate!](#org1d5d058)

- [SQL](#orgb686a95)

- [Go](#org09c422e)

- [Pairing](#org4bfcb1e)

- [Exporting](#orgd168ca6)

- [Sane Org Defaults](#org4911799)

- [Support Big Query](#org08572fb)

- [Snippets](#org07738c1)

- [org-mode](#org96ae34f)

- [Blog Property](#orgece52b0)

- [Dashboard](#org76a3931)

- [Banners](#org6ea8080)

- [user configs](#org15bf9e3)

- [init.el](#org501945e)

- [Patch for when using emacs 28+](#org9dd9a75)

- [Doom! block](#org73b79ac)

- [packages.el](#org6a2581d)

- [ii-packages](#org13860bd)

- [upstream](#orga8e224e)

[](spacemacs-config/banners/img/kubemacs.png)

<a id="org19ea609"></a>

# Humac Human Deets

On a sharing.io cluster, we should have these two env vars set…so we can personalize to the person who started the instance. Otherwise, they’re just a friend.

```elisp

(setq user-full-name (if (getenv "GIT_AUTHOR_NAME")

(getenv "GIT_AUTHOR_NAME")

"ii friend")

user-mail-address (if (getenv "GIT_COMMIT_EMAIL")

(getenv "GIT_COMMIT_EMAIL")

"ii*ii.ii"))

```

<a id="orgfdecd2e"></a>

# Ergonomics

<a id="org6ec54d3"></a>

## Better Local Leaders

I got used to using comma as the localleader key in Spacemacs, so I keep it.

```elisp

(setq doom-localleader-key ",")

```

<a id="org6337bb1"></a>

## Use mouse scroll

```elisp

(defun scroll-up-5-lines ()

"Scroll up 5 lines"

(interactive)

(scroll-up 5))

(defun scroll-down-5-lines ()

"Scroll down 5 lines"

(interactive)

(scroll-down 5))

(global-set-key (kbd "<mouse-4>") 'scroll-down-5-lines)

(global-set-key (kbd "<mouse-5>") 'scroll-up-5-lines)

```

<a id="org73d8410"></a>

## lispy vim

This sets up keybindings for manipulating parentheses with slurp and barf when in normal or visual mode.

```elisp

(map!

:map smartparens-mode-map

:nv ">" #'sp-forward-slurp-sexp

:nv "<" #'sp-forward-barf-sexp

:nv "}" #'sp-backward-barf-sexp

:nv "{" #'sp-backward-slurp-sexp)

```

<a id="orgcbe28e3"></a>

# Consistency

<a id="org00d844c"></a>

## consistent paths

If you are using a Mac, you can have problems running source blocks or using some language support, because the shell PATH isn’t found in Emacs. [exec-path-from-shell](https://github.com/purcell/exec-path-from-shell) is a solution for this.

```elisp

(when (memq window-system '(mac ns x)) (exec-path-from-shell-initialize))

```

<a id="org7f80cdc"></a>

# Appearance

<a id="org1556a9a"></a>

## Fonts

Doom exposes five (optional) variables for controlling fonts in Doom. Here are the three important ones:

```elisp

;(setq doom-font (font-spec :family "Source Code Pro" :size 10)

; ;; )(font-spec :family "Source Code Pro" :size 8 :weight 'semi-light)

; doom-serif-font (font-spec :family "Source Code Pro" :size 10)

; doom-variable-pitch-font (font-spec :family "Source Code Pro" :size 10)

; doom-unicode-font (font-spec :family "Input Mono Narrow" :size 12)

; doom-big-font (font-spec :family "Source Code Pro" :size 10))

```

<a id="org018a0eb"></a>

## Theme

```elisp

(setq doom-theme 'doom-gruvbox)

```

<a id="orga40c35d"></a>

## Indent

```elisp

(setq standard-indent 2)

```

<a id="orge6cf9b5"></a>

## LSP Behaviour

This brings over the LSP behaviour of Spacemacs, so working with code feels consistent across Emacs setups.

```elisp

(use-package! lsp-ui

:config

(setq lsp-navigation 'both)

(setq lsp-ui-doc-enable t)

(setq lsp-ui-doc-position 'top)

(setq lsp-ui-doc-alignment 'frame)

(setq lsp-ui-doc-use-childframe t)

(setq lsp-ui-doc-use-webkit t)

(setq lsp-ui-doc-delay 0.2)

(setq lsp-ui-doc-include-signature nil)

(setq lsp-ui-sideline-show-symbol t)

(setq lsp-ui-remap-xref-keybindings t)

(setq lsp-ui-sideline-enable t)

(setq lsp-prefer-flymake nil)

(setq lsp-print-io t))

```

<a id="org1fc6b65"></a>

# Languages

<a id="org06cc1eb"></a>

## Web

Auto-closing tags works differently depending on whether you are in a terminal or a GUI. We want consistent behaviour when editing any sort of web doc. I also like it to create a closing tag once I’ve started my opening tag, which is auto-close-style 2.

```elisp

(setq web-mode-enable-auto-closing t)

(setq-hook! web-mode web-mode-auto-close-style 2)

```

<a id="orgdd10467"></a>

## Go

Go is enabled, with LSP support in our [init.el](init.el). To get it working properly, though, you want to ensure you have all the go dependencies installed on your computer and your GOPATH set. It’s recommended you read the doom docs on golang, following all links to ensure your dependencies are up to date. [Go Docs](file:///Users/hh/humacs/doom-emacs/modules/lang/go/README.md)

I’ve had inconsistencies with having the GOPATH set on humacs boxes, so if we are in a humacs pod and GOPATH is not set, we explicitly set it.

```elisp

(when (and (getenv "HUMACS_PROFILE") (not (getenv "GOPATH")))

(setenv "GOPATH" (concat (getenv "HOME") "/go")))

```

<a id="org864ca0c"></a>

## Vue

Tried out vue-mode, but it was causing more problems than benefits and doesn’t seem to do much beyond what web-mode plus vue-lsp support would do. So, following [Gene Hack’s Blog Post](https://genehack.blog/2020/08/web-mode-eglot-vetur-vuejs-=-happy/), we’ll create our own mode that just inherits all of web-mode and adds lsp. This requires [vls](https://npmjs.com/vls) to be installed.

```elisp

(define-derived-mode ii-vue-mode web-mode "iiVue"

"A major mode derived from web-mode, for editing .vue files with LSP support.")

(add-to-list 'auto-mode-alist '("\\.vue\\'" . ii-vue-mode))

(add-hook 'ii-vue-mode-hook #'lsp!)

```

<a id="org0586042"></a>

# Org

Various settings specific to org-mode to satisfy our preferences

<a id="org8c25a2b"></a>

## Show properties when cycling through subtrees

This is an adjustment to the default hook, which hides drawers by default

```elisp

(setq org-cycle-hook

' (org-cycle-hide-archived-subtrees

org-cycle-show-empty-lines

org-optimize-window-after-visibility-change))

```

<a id="orgb9be192"></a>

## ASCII colours on shell results

```elisp

(defun ek/babel-ansi ()

(when-let ((beg (org-babel-where-is-src-block-result nil nil)))

(save-excursion

(goto-char beg)

(when (looking-at org-babel-result-regexp)

(let ((end (org-babel-result-end))

(ansi-color-context-region nil))

(ansi-color-apply-on-region beg end))))))

(add-hook 'org-babel-after-execute-hook 'ek/babel-ansi)

```

<a id="org1d5d058"></a>

# Literate!

<a id="orgb686a95"></a>

## SQL

```elisp

(setq org-babel-default-header-args:sql-mode

'((:results . "replace code")

(:product . "postgres")

(:wrap . "SRC example")))

```

<a id="org09c422e"></a>

## Go

```elisp

(setq org-babel-default-header-args:go

'((:results . "replace code")

(:wrap . "SRC example")))

```

<a id="org4bfcb1e"></a>

## Pairing

```elisp

(use-package! graphviz-dot-mode)

(use-package! sql)

(use-package! ii-utils)

(use-package! ii-pair)

(after! ii-pair

(osc52-set-cut-function)

)

;;(use-package! iterm)

;;(use-package! ob-tmate)

```

<a id="orgd168ca6"></a>

## Exporting

```elisp

(require 'ox-gfm)

```

<a id="org4911799"></a>

## Sane Org Defaults

In addition to the org defaults, we want to make sure our exports include results, but that we don’t try to run all our tmate commands again.

```elisp

(setq org-babel-default-header-args

'((:session . "none")

(:results . "replace code")

(:comments . "org")

(:exports . "both")

(:eval . "never-export")

(:tangle . "no")))

(setq org-babel-default-header-args:shell

'((:results . "output code verbatim replace")

(:wrap . "example")))

```

<a id="org08572fb"></a>

## Support Big Query

```elisp

(defun ii-sql-comint-bq (product options &optional buf-name)

"Create a bq shell in a comint buffer."

;; We may have 'options' like database later

;; but for the most part, ensure bq command works externally first

(sql-comint product options buf-name)

)

(defun ii-sql-bq (&optional buffer)

"Run bq by Google as an inferior process."

(interactive "P")

(sql-product-interactive 'bq buffer)

)

(after! sql

(sql-add-product 'bq "Google Big Query"

:free-software nil

;; :font-lock 'bqm-font-lock-keywords ; possibly later?

;; :syntax-alist 'bqm-mode-syntax-table ; invalid

:prompt-regexp "^[[:alnum:]-]+> "

;; I don't think we have a continuation prompt

;; but org-babel-execute:sql-mode requires it

;; otherwise re-search-forward errors on nil

;; when it requires a string

:prompt-cont-regexp "3a83b8c2z93c89889a4c98r2z34"

;; :prompt-length 9 ; can't precalculate this

:sqli-program "bq"

:sqli-login nil ; probably just need to preauth

:sqli-options '("shell" "--quiet" "--format" "pretty")

:sqli-comint-func 'ii-sql-comint-bq

)

)

```

<a id="org07738c1"></a>

# Snippets

These are helpful text expanders made with yasnippet

<a id="org96ae34f"></a>

## org-mode

<a id="orgece52b0"></a>

### Blog Property

Creates a property drawer with all the necessary info for our blog.

```snippet

# -*- snippet -*-

# name: blog

# key: <blog

# --

** ${1:Enter Title}

:PROPERTIES:

:EXPORT_FILE_NAME: ${1:$(downcase(replace-regexp-in-string " " "-" yas-text))}

:EXPORT_DATE: `(format-time-string "%Y-%m-%d")`

:EXPORT_HUGO_MENU: :menu "main"

:EXPORT_HUGO_CUSTOM_FRONT_MATTER: :summary "${2:No Summary Provided}"

:END:

${3:"Enter Tags"$(unless yas-modified-p (progn (counsel-org-tag)(kill-whole-line)))}

```

<a id="org76a3931"></a>

# Dashboard

<a id="org6ea8080"></a>

## Banners

```elisp

(setq

;; user-banners-dir

;; doom-dashboard-banner-file "img/kubemacs.png"

doom-dashboard-banner-dir (concat humacs-spacemacs-directory (convert-standard-filename "/banners/"))

doom-dashboard-banner-file "img/kubemacs.png"

fancy-splash-image (concat doom-dashboard-banner-dir doom-dashboard-banner-file)

)

```

<a id="org15bf9e3"></a>

# user configs

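Place your user config in `doom-config/users/<name>.org` under the humacs directory, where `<name>` is the value of `SHARINGIO_PAIR_USER` if it is set, and `USER` otherwise.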

```elisp

(defun pair-or-user-name ()

"Getenv SHARINGIO_PAIR_USER if it exists, else USER"

(if (getenv "SHARINGIO_PAIR_USER")

(getenv "SHARINGIO_PAIR_USER")

(getenv "USER")))

(setq humacs-doom-user-config (expand-file-name (concat humacs-directory "doom-config/users/" (pair-or-user-name) ".org")))

(if (file-exists-p humacs-doom-user-config)

(progn

(org-babel-load-file humacs-doom-user-config)

)

)

;; once all personal vars are set, reload the theme

(doom/reload-theme)

;; for some reason this isn't loading

;; and doesn't exist an config.org time

;; (doom-dashboard/open) ;; our default screen

```

<a id="org501945e"></a>

# init.el

:header-args:emacs-lisp+ :tangle init.el :header-args:elisp+ :results silent :tangle init.el

<a id="org9dd9a75"></a>

## Patch for when using emacs 28+

```elisp

;; patch to [email protected]

;; https://www.reddit.com/r/emacs/comments/kqd9wi/changes_in_emacshead2828050_break_many_packages/

(defmacro define-obsolete-function-alias ( obsolete-name current-name

&optional when docstring)

"Set OBSOLETE-NAME's function definition to CURRENT-NAME and mark it obsolete.

\(define-obsolete-function-alias \\='old-fun \\='new-fun \"22.1\" \"old-fun's doc.\")

is equivalent to the following two lines of code:

\(defalias \\='old-fun \\='new-fun \"old-fun's doc.\")

\(make-obsolete \\='old-fun \\='new-fun \"22.1\")

WHEN should be a string indicating when the function was first

made obsolete, for example a date or a release number.

See the docstrings of `defalias' and `make-obsolete' for more details."

(declare (doc-string 4)

(advertised-calling-convention

;; New code should always provide the `when' argument

(obsolete-name current-name when &optional docstring) "23.1"))

`(progn

(defalias ,obsolete-name ,current-name ,docstring)

(make-obsolete ,obsolete-name ,current-name ,when)))

```

<a id="org73b79ac"></a>

## Doom! block

```elisp

(doom! :input

;;chinese

;;japanese

:os

(tty +osc)

:completion

company ; the ultimate code completion backend

helm ; the *other* search engine for love and life

;;ido ; the other *other* search engine...

;;ivy ; a search engine for love and life

:ui

deft ; notational velocity for Emacs

doom ; what makes DOOM look the way it does

doom-dashboard ; a nifty splash screen for Emacs

doom-quit ; DOOM quit-message prompts when you quit Emacs

; fill-column ; a `fill-column' indicator

hl-todo ; highlight TODO/FIXME/NOTE/DEPRECATED/HACK/REVIEW

;;hydra

;;indent-guides ; highlighted indent columns

;minimap ; show a map of the code on the side

modeline ; snazzy, Atom-inspired modeline, plus API

;;nav-flash ; blink cursor line after big motions

;;neotree ; a project drawer, like NERDTree for vim

ophints ; highlight the region an operation acts on

(popup +defaults) ; tame sudden yet inevitable temporary windows

;; pretty-code ; ligatures or substitute text with pretty symbols

;;tabs ; a tab bar for Emacs

treemacs ; a project drawer, like neotree but cooler

unicode ; extended unicode support for various languages

window-select ; visually switch windows

vc-gutter ; vcs diff in the fringe

vi-tilde-fringe ; fringe tildes to mark beyond EOB

workspaces ; tab emulation, persistence & separate workspaces

zen ; distraction-free coding or writing

:editor

(evil +everywhere) ; come to the dark side, we have cookies

file-templates ; auto-snippets for empty files

fold ; (nigh) universal code folding

(format +onsave) ; automated prettiness

;;god ; run Emacs commands without modifier keys

;;lispy ; vim for lisp, for people who don't like vim

multiple-cursors ; editing in many places at once

;;objed ; text object editing for the innocent

;;parinfer ; turn lisp into python, sort of

;;rotate-text ; cycle region at point between text candidates

snippets ; my elves. They type so I don't have to

word-wrap ; soft wrapping with language-aware indent

:emacs

dired ; making dired pretty [functional]

electric ; smarter, keyword-based electric-indent

ibuffer ; interactive buffer management

(undo +tree) ; persistent, smarter undo for your inevitable mistakes

vc ; version-control and Emacs, sitting in a tree

:term

eshell ; the elisp shell that works everywhere

;;shell ; simple shell REPL for Emacs

;;term ; basic terminal emulator for Emacs

;vterm ; the best terminal emulation in Emacs

:checkers

syntax ; tasing you for every semicolon you forget

;;spell ; tasing you for misspelling mispelling

;;grammar ; tasing grammar mistake every you make

:tools

;;ansible

debugger ; FIXME stepping through code, to help you add bugs

direnv

docker

editorconfig ; let someone else argue about tabs vs spaces

ein ; tame Jupyter notebooks with emacs

(eval +overlay) ; run code, run (also, repls)

;;gist ; interacting with github gists

lookup ; navigate your code and its documentation

(lsp +peek)

macos ; MacOS-specific commands

magit ; a git porcelain for Emacs

make ; run make tasks from Emacs

pass ; password manager for nerds

;; pdf ; pdf enhancements

;;prodigy ; FIXME managing external services & code builders

rgb ; creating color strings

;;taskrunner ; taskrunner for all your projects

terraform ; infrastructure as code

tmux ; an API for interacting with tmux

;;upload ; map local to remote projects via ssh/ftp

:lang

;;agda ; types of types of types of types...

;;cc ; C/C++/Obj-C madness

clojure ; java with a lisp

;;common-lisp ; if you've seen one lisp, you've seen them all

;;coq ; proofs-as-programs

;;crystal ; ruby at the speed of c

;;csharp ; unity, .NET, and mono shenanigans

;;data ; config/data formats

;;(dart +flutter) ; paint ui and not much else

;;elixir ; erlang done right

;;elm ; care for a cup of TEA?

emacs-lisp ; drown in parentheses

;;erlang ; an elegant language for a more civilized age

;;ess ; emacs speaks statistics

;;faust ; dsp, but you get to keep your soul

;;fsharp ; ML stands for Microsoft's Language

;;fstar ; (dependent) types and (monadic) effects and Z3

;;gdscript ; the language you waited for

(go +lsp) ; the hipster dialect

;;(haskell +dante) ; a language that's lazier than I am

;;hy ; readability of scheme w/ speed of python

;;idris ;

json ; At least it ain't XML

;;(java +meghanada) ; the poster child for carpal tunnel syndrome

javascript ; all(hope(abandon(ye(who(enter(here))))))

;;julia ; a better, faster MATLAB

;;kotlin ; a better, slicker Java(Script)

latex ; writing papers in Emacs has never been so fun

;;lean

;;factor

;;ledger ; an accounting system in Emacs

lua ; one-based indices? one-based indices

markdown ; writing docs for people to ignore

;;nim ; python + lisp at the speed of c

;;nix ; I hereby declare "nix geht mehr!"

;;ocaml ; an objective camel

(org +present +pomodoro +pandoc +hugo); ; organize your plain life in plain text

;;php ; perl's insecure younger brother

;;plantuml ; diagrams for confusing people more

;;purescript ; javascript, but functional

python ; beautiful is better than ugly

;;qt ; the 'cutest' gui framework ever

racket ; a DSL for DSLs

;;raku ; the artist formerly known as perl6

;;rest ; Emacs as a REST client

;;rst ; ReST in peace

(ruby +rails) ; 1.step {|i| p "Ruby is #{i.even? ? 'love' : 'life'}"}

;;rust ; Fe2O3.unwrap().unwrap().unwrap().unwrap()

;;scala ; java, but good

;;scheme ; a fully conniving family of lisps

sh ; she sells {ba,z,fi}sh shells on the C xor

;;sml

;;solidity ; do you need a blockchain? No.

;;swift ; who asked for emoji variables?

;;terra ; Earth and Moon in alignment for performance.

web ; the tubes

yaml ; JSON, but readable

:email

;;(mu4e +gmail)

;;notmuch

;;(wanderlust +gmail)

:app

calendar

irc ; how neckbeards socialize

(rss +org) ; emacs as an RSS reader

;;twitter ; twitter client https://twitter.com/vnought

:config

;; literate ; don't use literate when manually tangling

(default +bindings +smartparens))

```

<a id="org6a2581d"></a>

# packages.el

:header-args:emacs-lisp+ :tangle packages.el :header-args:elisp+ :results silent :tangle packages.el

<a id="org13860bd"></a>

## ii-packages

```elisp

(package! ii-utils :recipe

(:host github

:branch "master"

:repo "ii/ii-utils"

:files ("*.el")))

(package! ii-pair :recipe

(:host github

:branch "main"

:repo "humacs/ii-pair"

:files ("*.el")))

```

<a id="orga8e224e"></a>

## upstream

```elisp

(package! sql)

(package! ob-sql-mode)

(package! ob-tmux)

(package! ox-gfm) ; org dispatch github flavoured markdown

(package! kubernetes)

(package! kubernetes-evil)

(package! exec-path-from-shell)

(package! tomatinho)

(package! graphviz-dot-mode)

(package! feature-mode)

(package! almost-mono-themes)

(package! graphviz-dot-mode)

```

| 32.485294 | 410 | 0.606428 | eng_Latn | 0.786726 |

c01b4c23398e0f7280f9c3826c408ba2b073b5b4 | 1,985 | md | Markdown | readme.md | XenotriX1337/TRPL-Interpreter | 976962090b2c2d0ade9d02567b83bd643e4ab9d2 | [

"MIT"

] | null | null | null | readme.md | XenotriX1337/TRPL-Interpreter | 976962090b2c2d0ade9d02567b83bd643e4ab9d2 | [

"MIT"

] | null | null | null | readme.md | XenotriX1337/TRPL-Interpreter | 976962090b2c2d0ade9d02567b83bd643e4ab9d2 | [

"MIT"

] | null | null | null | # The Reactive Programming Language

> This is the interpreter for TRPL

> The specification for the language itself can be found [here](https://github.com/XenotriX1337/TRPL/blob/draft/specification.md)

The Reactive Programming Language or TRPL is a language developed as part of a school project.

What makes this language different from others is that when a term is assigned to a variable, the term is not evaluated first; instead, the variable stores the term itself.

So, for example if you have this code:

``` js

var x = 5

var y = x + 2

x = 10

print y

```

most languages would output `7` whereas in TRPL this produces the output `12`.
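To picture this behaviour outside TRPL, here is a minimal Python sketch. It is only an illustration under stated assumptions: the `Var` class and its methods are hypothetical names invented for this example and say nothing about how the interpreter is actually implemented. Each variable stores an unevaluated term (a zero-argument callable) and evaluates it only when its value is requested.

```python
class Var:
    """Hypothetical reactive variable: stores a term, not a value."""

    def __init__(self, term):
        # term is a zero-argument callable that is evaluated on demand
        self.term = term

    def assign(self, term):
        # Re-assignment replaces the stored term
        self.term = term

    def value(self):
        # Evaluate the stored term at the moment the value is needed
        return self.term()


# Reactive version, mirroring the TRPL snippet above
x = Var(lambda: 5)
y = Var(lambda: x.value() + 2)  # y stores the term "x + 2", not the number 7
x.assign(lambda: 10)
result = y.value()
print(result)  # prints 12

# Eager version, as in most languages
x_eager = 5
y_eager = x_eager + 2  # evaluated immediately
x_eager = 10
print(y_eager)  # prints 7
```

The only difference between the two versions is when `x + 2` is evaluated: at assignment time in the eager version, and at read time in the reactive one.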

Now, you might be wondering if this can be useful or not and to be honest I have no clue.

So, you shouldn't think of TRPL as the main programming language for your next project but as an experiment to try to think differently.

## Getting Started

To use the interpreter, you will need to build it yourself.

### Linux

1. Clone this repository

2. Install **GNU/Bison** and **flex**

3. Create a `build` directory

4. Inside your `build` directory run `cmake ..`

5. Once the cmake configuration is done, compile with `make`

### Windows

I have not yet tried to compile this on Windows, but since there is no platform-specific code, the cmake file should work.

> If you are a Windows user and have built this project, I would appreciate it a lot if you could help me write this guide.

## Contributing

All contributions and suggestions are welcome.

For suggestions and bugs, please file an issue.

For direct contributions, please file a pull request.

> If you'd like to contribute to the language itself, I invite you to do so on the [Specification](https://github.com/xenotrix1337/TRPL) repository.

## Author

Tibo Clausen

[email protected]

## License

This project is licensed under the MIT License. For more information see the [LICENSE](https://github.com/XenotriX1337/TRPL-Interpreter/blob/master/LICENSE) file.

Sam Han Lee, Chairman, Natural Sciences Studies

VS

Ki Whan Kim, Moon Woo Lee, Hwoi Sim Jeong

Recently, both in the country and overseas, there has been a noticeable emergence of Chi *Tai-Chi* Meditation in many popular training methods used to cleanse the mind, address social issues, and bring about social discourse.

Today, the word vitality is used quite often in society, and we see many people practicing Tai-Chi or meditation to achieve it.

Chi is not visible to the human eye. As a result, many questions surround it, and it is difficult to understand even when one learns about it from a well-versed person.

Despite that, many individuals do not realize how risky their actions may be when they follow others’ advice without verifying its truth.

First, let’s find out what Chi refers to.

Chi refers to energy, a heightened feeling of ambience that is sensed without being seen. Chi can be further divided into two forms of this energy: ‘vitality’ and ‘lethargy’.

Vitality is live energy, the energy that keeps itself, while lethargy is pure energy, the energy that does not keep itself.

Additionally, vitality can be further categorized into two forms. The first form is the vitality contained in plant and animal foods, which is absorbed by various physical organs as the food is eaten and digested.

This type of vitality transforms into the energy the body needs to move from one location to another, and such ingested vitality becomes the source of energy for a person’s life activities.

As such, no notable side effects appear when the energy contained in a substance with form is ingested by a new subject, absorbed by that subject’s own substances, and converted into a source of energy for it.

However, the other kind of vitality, which is taken in through many training methods and is the subject of the question, is not energy that is absorbed by physical organs. Rather, it is energy that travels throughout the body and becomes the source of diseases and other issues.

The vitality that is ingested by eating objects, such as grains, plants, ascidians, fish, and meat, is absorbed immediately as its energy enters the body.

However, if people take in the vitality that is in the air, rather than through ingesting objects, the energy that enters the body will cause many diseases and problems.

The reason is that such vitality is a live energy, which keeps itself. Upon entering the body, instead of being absorbed, this energy travels throughout the body, preventing normal activities by causing breakages, and at times it overtakes one’s sense of awareness by attaching itself to the conscious system.

Therefore, applying the many trending methods, such as xx Tai-Chi, xx Meditation, Brain Respiration, xx Moral Technique, and xx Self Cultivation, in order to achieve vitality not only leads one into danger but can also lead to grave results, killing off one’s spirit as consciousness may be lost.

Some people claim that they were healed of diseases after absorbing Chi. But this is a very dangerous thing. Although on rare occasions it may heal diseases that modern medicine cannot, if someone feels comfort from absorbing Chi while fighting illness, the reality is that he feels that comfort because he has lost his awareness, which makes his spirit even more miserable.

Lethargy refers to the pure energy that does not hold itself.

Lethargy does not contain many nutrients or much energy. When we drink water, the energy contained in the water enters our body. This is lethargy.

As this type of Chi is very small, it does not provide much help during the activities of people or cells. That’s how small the energy is.

Although both vitality and lethargy are in the air, the majority of the energy that people absorb during normal breathing is lethargy. This lethargy never damages people’s health. No matter how much is absorbed, lethargy does not cause harm.

However, because the vitality in the air holds itself, it resists entering the human body easily. At the same time, if people absorb vitality without fearing it, great harm will come to the human world.

Through the media, you are most likely familiar with the issue associated with Falun Gong, a form of Tai-Chi. The practitioners are said to inhale the vitality in the air as they perform exercise-like poses.

People do not usually perform health exercises just because they are recommended. Nor do people readily follow instructions if they are asked to unite while doing health exercises.

Furthermore, when asked to spread health exercises and make them available because they are good things, people do not do so. Finally, no one will protest against the government should it prevent people from performing health exercises. No one will consider health exercises a subject of protest.

However, Falun Gong, a form of Tai-Chi, exercised its influence and pressed the Chinese government to legalize it. As a result, the Chinese government undertook an investigation into Falun Gong and ordered the protesting organization to disband.

Why is such an event occurring? As in Korea, a great many changes are taking place within Chinese society. What do these societal changes refer to?

They refer to this vitality exercise, or the act of absorbing spirits into people’s bodies.

It is easy to see that there are exercises that connect spirits and people everywhere in the world. If spirits come to control people, anything, including mob action, becomes possible. Many things that are beyond our common sense can occur.

Countless organizations that put Chi at the forefront are active in our society as well.

Recently, they have claimed that one can improve academic performance through brain respiration training. This is hard to understand through common sense.

A certain student, who had ranked in the middle of his class, improved his academic performance to the top of the class, vying for 1st or 2nd place, after starting brain respiration training. Dangerously, people who lack a prior understanding of reality can easily be drawn in by such claims.

How did that student come to excel in academics? When people run a high temperature, brain activity is hindered. In such conditions, even little things make one overly sensitive, and the ability to memorize deteriorates.

In other words, this is a phenomenon that occurs because blood does not flow easily to the brain due to some impairment in the blood vessels, either inside the chest or the head.

This phenomenon occurs in the back of the head: the rear brain weakens and causes anemia in the brain, which raises the temperature in that area. This in turn impedes brain activity, leading to a deterioration in the ability to accept reality and, as a result, a drop in academic performance.

In such a situation, resolving the problems that impede the student’s heart activity, or improving blood flow to the head by removing the problems that cause poor circulation, will allow the student to recover normal brain activity. From that point, depending on the student’s efforts, his grades will eventually improve.