| hexsha | size | ext | lang | max_stars_repo_path | max_stars_repo_name | max_stars_repo_head_hexsha | max_stars_repo_licenses | max_stars_count | max_stars_repo_stars_event_min_datetime | max_stars_repo_stars_event_max_datetime | max_issues_repo_path | max_issues_repo_name | max_issues_repo_head_hexsha | max_issues_repo_licenses | max_issues_count | max_issues_repo_issues_event_min_datetime | max_issues_repo_issues_event_max_datetime | max_forks_repo_path | max_forks_repo_name | max_forks_repo_head_hexsha | max_forks_repo_licenses | max_forks_count | max_forks_repo_forks_event_min_datetime | max_forks_repo_forks_event_max_datetime | content | avg_line_length | max_line_length | alphanum_fraction | lid | lid_prob |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

bdfb42c931f271f1ee53c5327a4184116d64978a | 97 | md | Markdown | README.md | Sarh/algorithms | 29fa8bc0a275684eb46cf5b0747483ffa7fcedbc | [

"Unlicense"

] | null | null | null | README.md | Sarh/algorithms | 29fa8bc0a275684eb46cf5b0747483ffa7fcedbc | [

"Unlicense"

] | 2 | 2016-04-27T22:10:36.000Z | 2016-04-27T22:11:16.000Z | README.md | Sarh/algorithms | 29fa8bc0a275684eb46cf5b0747483ffa7fcedbc | [

"Unlicense"

] | null | null | null | # algorithms

Implements the algorithms discussed in my Design & Analysis of Algorithms class.

| 32.333333 | 83 | 0.824742 | eng_Latn | 0.956547 |

bdfb966d10ace933af194e0c50b43d5f955a6703 | 2,876 | md | Markdown | CTFwriteups/YearList/2021.md | Angmar2722/Angmar2722.github.io | 6d79cfeeb6681b32d62caba167e14c2f4eeb2569 | [

"MIT"

] | null | null | null | CTFwriteups/YearList/2021.md | Angmar2722/Angmar2722.github.io | 6d79cfeeb6681b32d62caba167e14c2f4eeb2569 | [

"MIT"

] | null | null | null | CTFwriteups/YearList/2021.md | Angmar2722/Angmar2722.github.io | 6d79cfeeb6681b32d62caba167e14c2f4eeb2569 | [

"MIT"

] | 9 | 2021-06-30T07:57:08.000Z | 2022-01-03T09:22:35.000Z | ---

layout: page

title: 2021 Writeups

---

<hr/>

Below are the writeups for the CTFs I participated in during 2021 (my first year playing CTFs):

<br/>

| CTF Writeup | Weight | Team | Rank | Points |
| ------------- | --- | --- | ------ | -----: |
|[idek CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/idek2021/) | 24.52 | Social Engineering Experts | 13/235 | 15.840 |
|[Buckeye CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/buckeye2021/) | 24.74 | Social Engineering Experts | 7/505 | 19.386 |
|[Perfect Blue 2021](https://angmar2722.github.io/CTFwriteups/2021/pbctf2021/) | 24.90 | Social Engineering Experts | 32/210 | 5.552 |
|[CSAW Quals 2021](https://angmar2722.github.io/CTFwriteups/2021/csaw2021/) | 23.53 | Social Engineering Experts | 23/1216 | 15.276 |
|[Yauza CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/yauza2021/) | 21.84 | Social Engineering Experts | 9/227 | 14.452 |
|[Fword CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/fword2021/) | 24.42 | Isengard | 55/428 | 3.407 |
|[InCTF 2021](https://angmar2722.github.io/CTFwriteups/2021/inctf2021/) | 70.41 | Social Engineering Experts | 22/604 | 19.143 |
|[UIUCTF 2021](https://angmar2722.github.io/CTFwriteups/2021/uiuctf2021/) | 23.76 | Social Engineering Experts | 18/658 | 8.222 |
|[Google CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/google2021/) | 99.22 | Isengard | 80/379 | 8.435 |
|[Redpwn CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/redpwn2021/) | 32.61 | Isengard | 41/1418 | 9.532 |
|[HSCTF 8 2021](https://angmar2722.github.io/CTFwriteups/2021/hsctf2021/) | 24.50 | Isengard | 57/1165 | 15.190 |
|[Zh3r0 CTF 2021](https://angmar2722.github.io/CTFwriteups/2021/zh3r02021/) | 21.85 | Isengard | 48/509 | 2.893 |
|[ångstrom 2021](https://angmar2722.github.io/CTFwriteups/2021/actf2021/) | 46.09 | Isengard | 278/1245 | 6.340 |
|[UMassCTF 2021](https://angmar2722.github.io/CTFwriteups/2021/umass2021/) | 23.50 | Dog 1.2 | 46/660 | 8.474 |
|[Whitehacks 2021](https://angmar2722.github.io/CTFwriteups/2021/wh2021/) | N/A | N/A | 35/130 | N/A |

<br/>

Due to a lack of time, or because another CTF was running at the same time, I couldn't spend much time on some CTFs. Below is a link to solve scripts for assorted cryptography challenges that I solved during such CTFs:

- <a href="https://angmar2722.github.io/CTFwriteups/2021/randomCTFs2021/">2021 Random Crypto Solves in CTFs</a>

**Note:** Since I mainly specialise in cryptography challenges, a list of writeups for all the crypto challenges I solved during CTFs in 2021 can be found <a href="https://github.com/Angmar2722/Angmar2722.github.io/blob/master/CTFwriteups/CryptoWriteupsList/crypto2021.md" target="_blank">here</a>.

| 75.684211 | 338 | 0.718011 | eng_Latn | 0.409527 |

bdfbdd8d3a80afe04a3b71e340aea92f5daa0fef | 2,690 | md | Markdown | sdk-api-src/content/objidl/nf-objidl-imallocspy-postheapminimize.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | sdk-api-src/content/objidl/nf-objidl-imallocspy-postheapminimize.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | sdk-api-src/content/objidl/nf-objidl-imallocspy-postheapminimize.md | amorilio/sdk-api | 54ef418912715bd7df39c2561fbc3d1dcef37d7e | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

UID: NF:objidl.IMallocSpy.PostHeapMinimize

title: IMallocSpy::PostHeapMinimize (objidl.h)

description: Performs operations required after calling IMalloc::HeapMinimize.

helpviewer_keywords: ["IMallocSpy interface [COM]","PostHeapMinimize method","IMallocSpy.PostHeapMinimize","IMallocSpy::PostHeapMinimize","PostHeapMinimize","PostHeapMinimize method [COM]","PostHeapMinimize method [COM]","IMallocSpy interface","_com_imallocspy_postheapminimize","com.imallocspy_postheapminimize","objidl/IMallocSpy::PostHeapMinimize"]

old-location: com\imallocspy_postheapminimize.htm

tech.root: com

ms.assetid: 9d51c34e-6ed1-493d-8999-e67c4a60f6b6

ms.date: 12/05/2018

ms.keywords: IMallocSpy interface [COM],PostHeapMinimize method, IMallocSpy.PostHeapMinimize, IMallocSpy::PostHeapMinimize, PostHeapMinimize, PostHeapMinimize method [COM], PostHeapMinimize method [COM],IMallocSpy interface, _com_imallocspy_postheapminimize, com.imallocspy_postheapminimize, objidl/IMallocSpy::PostHeapMinimize

req.header: objidl.h

req.include-header:

req.target-type: Windows

req.target-min-winverclnt: Windows 2000 Professional [desktop apps only]

req.target-min-winversvr: Windows 2000 Server [desktop apps only]

req.kmdf-ver:

req.umdf-ver:

req.ddi-compliance:

req.unicode-ansi:

req.idl: ObjIdl.idl

req.max-support:

req.namespace:

req.assembly:

req.type-library:

req.lib:

req.dll:

req.irql:

targetos: Windows

req.typenames:

req.redist:

ms.custom: 19H1

f1_keywords:

- IMallocSpy::PostHeapMinimize

- objidl/IMallocSpy::PostHeapMinimize

dev_langs:

- c++

topic_type:

- APIRef

- kbSyntax

api_type:

- COM

api_location:

- ObjIdl.h

api_name:

- IMallocSpy.PostHeapMinimize

---

# IMallocSpy::PostHeapMinimize

## -description

Performs operations required after calling <a href="/windows/desktop/api/objidl/nf-objidl-imalloc-heapminimize">IMalloc::HeapMinimize</a>.

## -remarks

When a spy object implementing <a href="/windows/desktop/api/objidl/nn-objidl-imallocspy">IMallocSpy</a> is registered using the <a href="/windows/desktop/api/objbase/nf-objbase-coregistermallocspy">CoRegisterMallocSpy</a> function, COM calls this method immediately after any call to <a href="/windows/desktop/api/objidl/nf-objidl-imalloc-heapminimize">IMalloc::HeapMinimize</a>. This method is included for completeness and consistency; developers are not expected to implement significant functionality in it.

## -see-also

<a href="/windows/desktop/api/objidl/nf-objidl-imalloc-heapminimize">IMalloc::HeapMinimize</a>

<a href="/windows/desktop/api/objidl/nn-objidl-imallocspy">IMallocSpy</a>

<a href="/windows/desktop/api/objidl/nf-objidl-imallocspy-preheapminimize">IMallocSpy::PreHeapMinimize</a>

| 37.361111 | 517 | 0.798885 | eng_Latn | 0.351219 |

bdfd3b8a9f687f3e939578a2488e011d1c2c48a7 | 3,440 | md | Markdown | _posts/2019-11-14-dev-00000051-R-SVM.md | moreGet/moreGet.github.io | 281876948fe14ee3710b0c36f0a7edd6f0e9af12 | [

"MIT"

] | null | null | null | _posts/2019-11-14-dev-00000051-R-SVM.md | moreGet/moreGet.github.io | 281876948fe14ee3710b0c36f0a7edd6f0e9af12 | [

"MIT"

] | null | null | null | _posts/2019-11-14-dev-00000051-R-SVM.md | moreGet/moreGet.github.io | 281876948fe14ee3710b0c36f0a7edd6f0e9af12 | [

"MIT"

] | 1 | 2019-08-31T08:02:36.000Z | 2019-08-31T08:02:36.000Z | ---

date: 2019-11-14 22:00:00

layout: post

title: Machine learning with SVM (Support Vector Machine) in R-Studio

subtitle: Using ksvm from the kernlab package

description: "Current RStudio: Desktop 1.2.5001 (64-bit)"

image: ../assets/img/postsimg/R_main_00000003.png

optimized_image: ../assets/img/postsimg/R_op_00000003.png

category: coding

tags:

- R-Studio

author: thiagorossener

# image:760*400

# optimized_image:380*200

---

## Source files

To download, right-click the link and choose "Save link as".<br>

<a href="../assets/sources/S20191114.zip" class="btn btn-lg btn-outline">

S20191114.zip

</a>

<br>

<br>

<br>

## Main functions used

> install.packages("kernlab")

> library(kernlab)

> ksvm: to run the support vector machine algorithm, use the `ksvm()` function from the kernlab package.<br>

## Source code

```r

library(kernlab)

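data <- read.csv('bmi.csv', stringsAsFactors = TRUE)  # label must be a factor for classification (R >= 4.0 reads strings as character)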

head(data)

str(data)

summary(data)

dim(data)

colnames(data)

idx <- sample(1:nrow(data), 0.9 * nrow(data))

training <- data[idx, ]

testing <- data[-idx, ]

# kernel: specifies the kernel function.

model <- ksvm(label ~ ., data = training, kernel = 'vanilladot')

pred <- predict(model, testing)

table(pred, testing$label)

agreement <- pred == testing$label

prop.table(table(agreement))

model_rbf <- ksvm(label ~ ., data = training, kernel = 'rbfdot')

pred_rbf <- predict(model_rbf, testing)

table(pred_rbf, testing$label)

agreement_rbf <- pred_rbf == testing$label

prop.table(table(agreement_rbf))

########################################################

library(kernlab)

str(iris)

unique(iris$Species)

idx <- sample(1:nrow(iris), 0.6 * nrow(iris))

training <- iris[idx, ]

testing <- iris[-idx, ]

model <- ksvm(Species ~ ., data = training, kernel = 'vanilladot')

pred <- predict(model, testing)

prop.table(table(pred == testing$Species))

#######################################################

library(kernlab)

data <- read.csv('zoo_data.csv')

testing <- read.csv('zoo_testing.csv')

head(data)

str(data)

summary(data)

dim(data)

colnames(data)

model <- ksvm(type ~ ., data = data, kernel = 'rbfdot')

pred <- round(predict(model, testing), 0)

table(pred, testing$type)

agreement <- pred == testing$type

prop.table(table(agreement))

#########################################################

library(kernlab)

data <- read.csv('letterdata.csv', stringsAsFactors = TRUE)  # letter must be a factor for classification

head(data)

str(data)

summary(data)

dim(data)

colnames(data)

idx <- sample(1:nrow(data), 0.7 * nrow(data))

training <- data[idx, ]

testing <- data[-idx, ]

model <- ksvm(letter ~ ., data = training, kernel = 'rbfdot')

pred <- predict(model, testing)

table(pred, testing$letter)

prop.table(table(pred == testing$letter))

##############################################################

library(kernlab)

data <- read.csv('mushrooms.csv', stringsAsFactors = TRUE)  # type must be a factor for classification

head(data)

str(data)

summary(data)

dim(data)

colnames(data)

data <- data[, -17]

idx <- sample(1:nrow(data), 0.7 * nrow(data))

training <- data[idx, ]

testing <- data[-idx, ]

model <- ksvm(type ~ ., data = training, kernel = 'rbfdot')

pred <- predict(model, testing)

table(pred, testing$type)

prop.table(table(pred == testing$type))

##################################################################

library(kernlab)

data <- read.csv('../../R Basic Source/31.KNN/likelyhood.csv', stringsAsFactors = TRUE)  # likelyhood must be a factor for classification

head(data)

str(data)

summary(data)

dim(data)

colnames(data)

idx <- sample(1:nrow(data), 0.1 * nrow(data))

training <- data[idx, ]

testing <- data[-idx, ]

model <- ksvm(likelyhood ~ ., data = training, kernel = 'rbfdot')

pred <- predict(model, testing)

table(pred, testing$likelyhood)

prop.table(table(pred == testing$likelyhood))

``` | 20.848485 | 73 | 0.628488 | kor_Hang | 0.191521 |

bdfd8f70ee594b35c0347134ec711e1b4bc23053 | 1,418 | md | Markdown | README.md | onewheelskyward/lita-google-images | 70c29ef2f6daf105608c9a724be2e4d65e93313d | [

"MIT"

] | null | null | null | README.md | onewheelskyward/lita-google-images | 70c29ef2f6daf105608c9a724be2e4d65e93313d | [

"MIT"

] | null | null | null | README.md | onewheelskyward/lita-google-images | 70c29ef2f6daf105608c9a724be2e4d65e93313d | [

"MIT"

] | null | null | null | # lita-google-images


**lita-google-images** is a handler for [Lita](https://github.com/jimmycuadra/lita) that searches Google Images for images matching users' queries, and replies with links to them.

## Installation

Add lita-google-images to your Lita instance's Gemfile:

``` ruby

gem "lita-google-images"

```

## Configuration

### Optional attributes

* `safe_search` (String, Symbol) - The safe search setting to use when querying for images. Possible values are `:active`, `:moderate`, and `:off`. Default: `:active`.

### Example

``` ruby
Lita.configure do |config|
  config.handlers.google_images.safe_search = :off
end
```

## Usage

The following are all equivalent ways of asking Lita to search for an image of "carl the pug":

```

Lita: image carl the pug

Lita: image me carl the pug

Lita: img carl the pug

Lita: img me carl the pug

```

The forms that include "me" are for those coming from [Hubot](http://hubot.github.com/).
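The four commands above differ only in the verb (`image` or `img`) and an optional `me`. The Python sketch below shows one way a single pattern can accept all of them. The regular expression and the `parse_image_command` helper are hypothetical illustrations, not the handler's actual route (the handler itself is written in Ruby), and the sketch assumes the `Lita:` mention prefix has already been stripped from the message.

```python
import re
from typing import Optional

# Hypothetical route: "image" or "img", an optional "me", then the query.
# Illustrative only; this is not the handler's actual Ruby route.
IMAGE_COMMAND = re.compile(r"^(?:image|img)(?:\s+me)?\s+(?P<query>.+)$", re.IGNORECASE)


def parse_image_command(message: str) -> Optional[str]:
    """Return the search query if `message` is an image command, otherwise None.

    Assumes the "Lita:" mention prefix has already been removed.
    """
    match = IMAGE_COMMAND.match(message.strip())
    return match.group("query") if match else None


for text in ("image carl the pug", "image me carl the pug",
             "img carl the pug", "img me carl the pug"):
    print(parse_image_command(text))  # prints: carl the pug
```

Because the `me` group is optional and the regex engine backtracks, a query that merely starts with "me" (for example `image mexico city`) is still returned whole.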

## License

[MIT](http://opensource.org/licenses/MIT)

| 30.170213 | 179 | 0.741185 | eng_Latn | 0.409813 |

bdfe2abcf8b5016c9e5b1b743b3b9c69a80bf3e3 | 5,049 | md | Markdown | rancher/Networking/Networking-with-Flannel.md | kspnec/ContainerLabs | aee420cccc76949bd8478af7f95094fe43f4662f | [

"Apache-2.0"

] | 6 | 2021-11-11T02:56:58.000Z | 2022-02-02T07:16:54.000Z | rancher/Networking/Networking-with-Flannel.md | sangam14/CloudNativeLab | df26ee9d41e12df945cb4314fffc07d8896c6411 | [

"Apache-2.0"

] | null | null | null | rancher/Networking/Networking-with-Flannel.md | sangam14/CloudNativeLab | df26ee9d41e12df945cb4314fffc07d8896c6411 | [

"Apache-2.0"

] | null | null | null | ---

layout: default

title: Networking with Flannel

parent: Rancher Networking

nav_order: 10

---

# Networking with Flannel

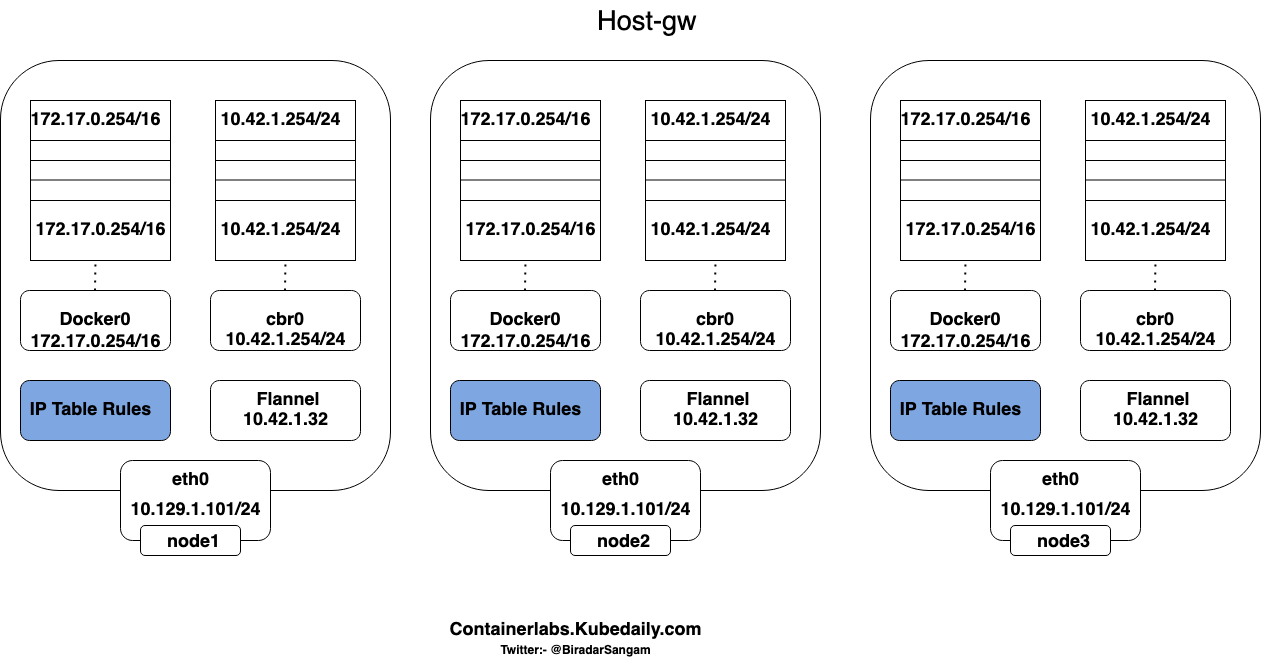

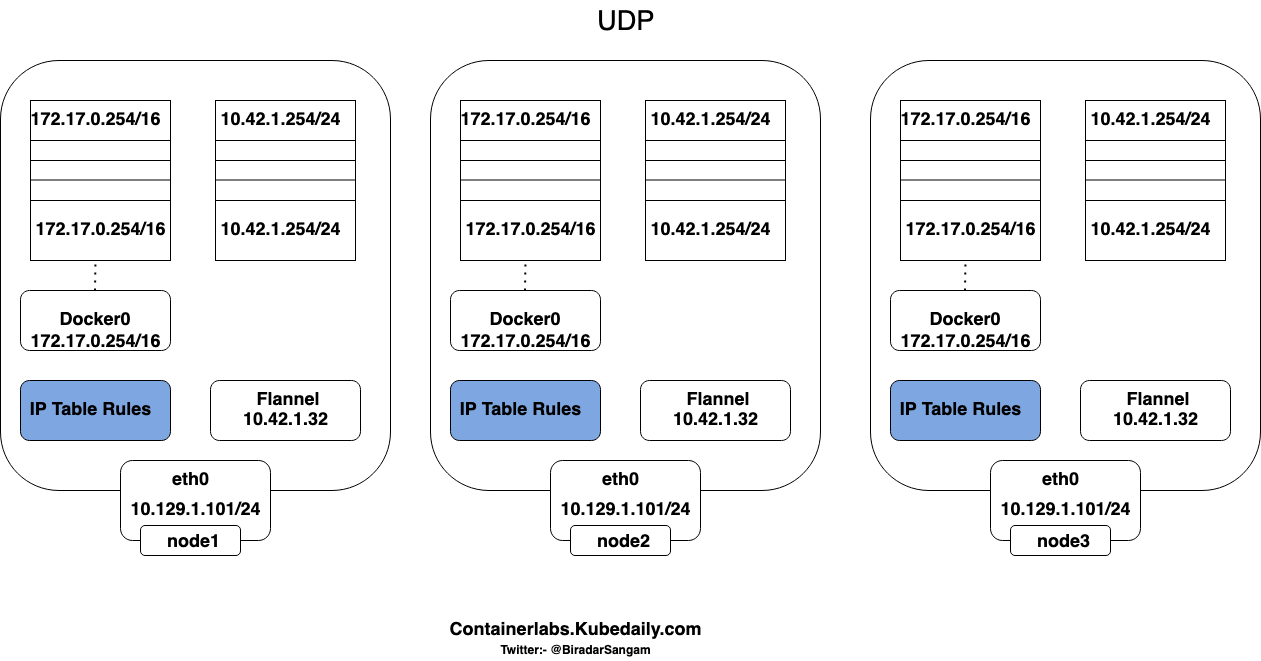

- Flannel is one of the most straightforward network providers for Kubernetes. It operates at Layer 3 and offloads the actual packet forwarding to a backend such as VxLAN or IPSec. It assigns one large network to the cluster as a whole and then allocates a portion (subnet) of that network to each host. Traffic between containers on the same host is routed locally by the host, and Flannel handles routing between hosts using one of its available backends.

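- Flannel uses etcd to store the map of which subnet is assigned to which host. This can be an external etcd deployment or the one that Kubernetes itself uses. When deployed with the `kube-flannel.yml` manifest, Flannel runs with `--kube-subnet-mgr` and keeps this mapping through the Kubernetes API instead of talking to etcd directly.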

- Flannel does not provide an implementation of the NetworkPolicy resource.
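Flannel's address scheme, one cluster-wide network carved into per-host subnets, can be sketched with Python's standard `ipaddress` module. The values are assumptions: `10.244.0.0/16` is the `Network` commonly set in the upstream `kube-flannel.yml`, and `/24` per host is flannel's usual default subnet length. Handing out subnets in address order is a simplification for illustration; it is not how flannel actually picks leases.

```python
import ipaddress
from typing import List


def allocate_host_subnets(cluster_cidr: str, hosts: List[str],
                          subnet_prefix: int = 24) -> dict:
    """Hand each host its own fixed-size slice of the cluster-wide network.

    Slices are assigned in address order, a simplification of what
    flannel's subnet manager does.
    """
    network = ipaddress.ip_network(cluster_cidr)
    free_subnets = network.subnets(new_prefix=subnet_prefix)  # lazy, in address order
    lease_map = {}  # stands in for the host -> subnet map flannel persists
    for host in hosts:
        subnet = next(free_subnets, None)
        if subnet is None:
            raise ValueError(f"{cluster_cidr} has no /{subnet_prefix} left for {host}")
        lease_map[host] = subnet
    return lease_map


for host, subnet in allocate_host_subnets("10.244.0.0/16", ["master", "node01", "node02"]).items():
    print(host, subnet)
```

With these inputs the three hosts receive `10.244.0.0/24`, `10.244.1.0/24` and `10.244.2.0/24`; a /16 leaves room for 256 such host subnets.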

# Running Flannel With Kubernetes

- Flannel Pods roll out as a DaemonSet, with one Pod on each host. To deploy Flannel within Kubernetes, apply the `kube-flannel.yml` manifest from the Flannel repository on GitHub:

```

kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml

```

- Once Flannel is running, it is not possible to change the network address space or the backend communication format without cluster downtime.

```

kubectl get pods --all-namespaces
NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
kube-system   coredns-66bff467f8-7lfpd                  1/1     Running   0          8m28s
kube-system   coredns-66bff467f8-mx4tq                  1/1     Running   0          8m28s
kube-system   etcd-master                               1/1     Running   0          8m37s
kube-system   katacoda-cloud-provider-58f89f7d9-lcghg   1/1     Running   5          8m28s
kube-system   kube-apiserver-master                     1/1     Running   0          8m37s
kube-system   kube-controller-manager-master            1/1     Running   0          8m37s
kube-system   kube-flannel-ds-amd64-brdvt               1/1     Running   1          8m20s
kube-system   kube-flannel-ds-amd64-ldt8g               1/1     Running   0          8m28s
kube-system   kube-keepalived-vip-tr9nf                 1/1     Running   0          8m10s
kube-system   kube-proxy-7gk5t                          1/1     Running   0          8m28s
kube-system   kube-proxy-dqn7c                          1/1     Running   0          8m20s
kube-system   kube-scheduler-master                     1/1     Running   0          8m37s

```

| Network Type | Backend | Key features |
|-------------- |--------- |------------------------------------------------------------------------------------ |
| Overlay | VxLAN | - Fast, but with no inter-host encryption<br>- Suitable for private/secure networks |
| Overlay | IPSec | - Encrypts traffic between hosts<br>- Suitable when traffic traverses the Internet |
| Non-overlay | Host-gw | - Good performance<br>- Cloud agnostic |
| Non-overlay | AWS VPC | - Good performance<br>- Limited to Amazon’s cloud |
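With the Host-gw backend, each node reaches every other node's Pod subnet through a plain IP route whose next hop is that node's own address, which is why the nodes need Layer 2 connectivity. The sketch below derives that route list for one node. The host names, node IPs and subnets are hypothetical, and the routes are rendered as `ip route replace` commands only for readability; flannel installs the routes itself.

```python
import ipaddress
from typing import Dict, List


def host_gw_routes(local_host: str, node_ips: Dict[str, str],
                   pod_subnets: Dict[str, str]) -> List[str]:
    """Routes one node needs under host-gw: each remote Pod subnet via that node's IP."""
    routes = []
    for host in sorted(pod_subnets):
        if host == local_host:
            continue  # local Pods are reached through the local bridge, not a gateway route
        subnet = ipaddress.ip_network(pod_subnets[host])  # validates the CIDR
        gateway = ipaddress.ip_address(node_ips[host])    # next hop must be L2-reachable
        routes.append(f"ip route replace {subnet} via {gateway}")
    return routes


node_ips = {"node01": "192.168.0.11", "node02": "192.168.0.12", "node03": "192.168.0.13"}
pod_subnets = {"node01": "10.244.1.0/24", "node02": "10.244.2.0/24", "node03": "10.244.3.0/24"}
for route in host_gw_routes("node01", node_ips, pod_subnets):
    print(route)
```

On `node01` this yields one route for `node02`'s subnet and one for `node03`'s; no encapsulation header is added, which is where the performance advantage over VxLAN comes from.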

# Flannel Backends

- VxLAN

  - VxLAN is the simplest of the officially supported backends for Flannel. Encapsulation happens within the kernel, so there is no additional overhead from moving data between kernel and user space.

  - The VxLAN backend creates a Flannel interface on every host. When a container on one node sends traffic to a different node, the packet goes from the container to the bridge interface in the host’s network namespace. From there, the bridge forwards it to the Flannel interface, because the kernel route table designates that interface as the target for the non-local portion of the overlay network. The Flannel network driver wraps the packet in a UDP packet and sends it to the target host.

  - Once the packet arrives at its destination, the process runs in reverse: the Flannel driver on the destination host unwraps the packet and sends it to the bridge interface, from which it reaches the destination Pod.

- Host-gw

  - The Host-gw backend provides better performance than VxLAN but requires Layer 2 connectivity between hosts. It works by creating IP routes to each remote subnet via the address of the machine that owns it.

  - Unlike VxLAN, no Flannel interface is created when using this backend. Instead, each node sends traffic directly to the destination node where the remote network is located.

  - This backend may require additional network configuration if used in a cloud provider where inter-host communication uses virtual switches.

- UDP

  - The UDP backend is insecure and should only be used for debugging or if the kernel does not support VxLAN.
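The VxLAN encapsulation step described above wraps each inner Ethernet frame in an 8-byte VXLAN header (RFC 7348) carried inside a UDP datagram. The following Python sketch builds that header and computes the resulting MTU budget. It assumes an IPv4 underlay and a 1500-byte link MTU, and uses VNI 1, which flannel's documentation lists as its default (check your version). It only constructs bytes; the kernel performs the real encapsulation.

```python
import struct

VXLAN_FLAG_VALID_VNI = 0x08  # the "I" flag from RFC 7348: the VNI field is valid
# Bytes added around each inner IP packet: outer IPv4 (20) + UDP (8)
# + VXLAN header (8) + the encapsulated inner Ethernet header (14).
VXLAN_IPV4_OVERHEAD = 20 + 8 + 8 + 14


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": flags byte, 3 zero bytes, then VNI shifted into the top 24 bits of a word
    return struct.pack("!B3xI", VXLAN_FLAG_VALID_VNI, vni << 8)


def encapsulate(inner_frame: bytes, vni: int = 1) -> bytes:
    """UDP payload sent between hosts: VXLAN header followed by the inner Ethernet frame."""
    return vxlan_header(vni) + inner_frame


def inner_mtu(link_mtu: int = 1500) -> int:
    """Largest inner IP packet that still fits once VXLAN-over-IPv4 overhead is added."""
    return link_mtu - VXLAN_IPV4_OVERHEAD


print(vxlan_header(1).hex())  # 0800000000000100
print(inner_mtu())            # 1450
```

The 50 bytes of overhead are why the `flannel.1` interface typically ends up with an MTU of 1450 on a standard 1500-byte network.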

| 65.571429 | 508 | 0.667657 | eng_Latn | 0.992687 |

bdfe95bf45099f07bdcb03a7ad7f49f730f240ad | 965 | md | Markdown | catalog/owari-to-hajimari-no-miles/en-US_owari-to-hajimari-no-miles.md | htron-dev/baka-db | cb6e907a5c53113275da271631698cd3b35c9589 | [

"MIT"

] | 3 | 2021-08-12T20:02:29.000Z | 2021-09-05T05:03:32.000Z | catalog/owari-to-hajimari-no-miles/en-US_owari-to-hajimari-no-miles.md | zzhenryquezz/baka-db | da8f54a87191a53a7fca54b0775b3c00f99d2531 | [

"MIT"

] | 8 | 2021-07-20T00:44:48.000Z | 2021-09-22T18:44:04.000Z | catalog/owari-to-hajimari-no-miles/en-US_owari-to-hajimari-no-miles.md | zzhenryquezz/baka-db | da8f54a87191a53a7fca54b0775b3c00f99d2531 | [

"MIT"

] | 2 | 2021-07-19T01:38:25.000Z | 2021-07-29T08:10:29.000Z | # Owari to Hajimari no Miles

- **type**: manga

- **chapters**: 12

- **original-name**: 終わりと始まりのマイルス

- **start-date**: 2006-11-06

- **end-date**: 2006-11-06

## Tags

- comedy

- fantasy

- slice-of-life

## Authors

- Kitoh, Mohiro (Story & Art)

## Synopsis

Set in a world where every object has its own spirit, the story follows Miles, a young woman who has the rare ability to communicate with those spirits. Working as a spirit medium, Miles helps people find the objects that are compatible to them. Assisting her is a mysterious man named Gikaku who's literally not from this world. Dubbed as the "god of destruction" Gikaku wields a terrible power which, as it seems, only Miles can contain. But for how long?

(Source: Kotonoha)

This series is on hiatus.

## Links

- [MyAnimeList](https://myanimelist.net/manga/13313/Owari_to_Hajimari_no_Miles)

| 29.242424 | 457 | 0.717098 | eng_Latn | 0.988148 |

bdfed87c6a844e8f3ca1cedbfe07e7adcf820233 | 796 | md | Markdown | docs/odbc/microsoft/scalar-function-limitations.md | sql-aus-hh/sql-docs.de-de | edfac31211cedb5d13440802f131a1e48934748a | [

"CC-BY-4.0",

"MIT"

] | 1 | 2022-02-25T18:10:29.000Z | 2022-02-25T18:10:29.000Z | docs/odbc/microsoft/scalar-function-limitations.md | sql-aus-hh/sql-docs.de-de | edfac31211cedb5d13440802f131a1e48934748a | [

"CC-BY-4.0",

"MIT"

] | null | null | null | docs/odbc/microsoft/scalar-function-limitations.md | sql-aus-hh/sql-docs.de-de | edfac31211cedb5d13440802f131a1e48934748a | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Scalar Function Limitations | Microsoft Docs

ms.custom: ''

ms.date: 01/19/2017

ms.prod: sql

ms.prod_service: connectivity

ms.reviewer: ''

ms.technology: connectivity

ms.topic: conceptual

helpviewer_keywords:

- ODBC desktop database drivers [ODBC]

- desktop database drivers [ODBC]

ms.assetid: 023d94b9-3ed6-46d3-9a66-f2872f505bbb

author: MightyPen

ms.author: genemi

manager: craigg

ms.openlocfilehash: 6bfb6ff3ba39400278db23931b4c9420e506c973

ms.sourcegitcommit: 61381ef939415fe019285def9450d7583df1fed0

ms.translationtype: MT

ms.contentlocale: de-DE

ms.lasthandoff: 10/01/2018

ms.locfileid: "47687088"

---

# <a name="scalar-function-limitations"></a>Scalar Function Limitations

Scalar functions are supported only by using the ODBC canonical format.

| 30.615385 | 80 | 0.819095 | deu_Latn | 0.286505 |

da009f049b24d2a99b65b38e31b884b97fc45bb8 | 15,415 | md | Markdown | articles/backup/restore-afs-cli.md | sonquer/azure-docs.pl-pl | d8159cf8e870e807bd64e58188d281461b291ea8 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/backup/restore-afs-cli.md | sonquer/azure-docs.pl-pl | d8159cf8e870e807bd64e58188d281461b291ea8 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | articles/backup/restore-afs-cli.md | sonquer/azure-docs.pl-pl | d8159cf8e870e807bd64e58188d281461b291ea8 | [

"CC-BY-4.0",

"MIT"

] | null | null | null | ---

title: Restore Azure file shares with the Azure CLI

description: Learn how to use the Azure CLI to restore backed-up Azure file shares in a Recovery Services vault

ms.topic: conceptual

ms.date: 01/16/2020

ms.openlocfilehash: 63b2be2fe24c1274ed1581b7b849de578c978842

ms.sourcegitcommit: fa6fe765e08aa2e015f2f8dbc2445664d63cc591

ms.translationtype: MT

ms.contentlocale: pl-PL

ms.lasthandoff: 02/01/2020

ms.locfileid: "76931049"

---

# <a name="restore-azure-file-shares-with-the-azure-cli"></a>Restore Azure file shares with the Azure CLI

The Azure command-line interface (CLI) provides a command-line experience for managing Azure resources. It's a great tool for building custom automation that uses Azure resources. This article explains how to restore an entire file share, or specific files, from a restore point created by [Azure Backup](https://docs.microsoft.com/azure/backup/backup-overview) by using the Azure CLI. You can also perform these steps with [Azure PowerShell](https://docs.microsoft.com/azure/backup/backup-azure-afs-automation) or in the [Azure portal](backup-afs.md).

By the end of this article, you'll know how to perform the following operations with the Azure CLI:

* View restore points for a backed-up Azure file share.

* Restore a full Azure file share.

* Restore individual files or folders.

[!INCLUDE [cloud-shell-try-it.md](../../includes/cloud-shell-try-it.md)]

To install and use the CLI locally, you must run Azure CLI version 2.0.18 or later. To find the CLI version, run `az --version`. If you need to install or upgrade, see [Install the Azure CLI](https://docs.microsoft.com/cli/azure/install-azure-cli?view=azure-cli-latest).

## <a name="prerequisites"></a>Prerequisites

This article assumes that you already have an Azure file share that's backed up by Azure Backup. If you don't have one, see [Back up Azure file shares with the CLI](backup-afs-cli.md) to configure backup for your file share. This article uses the following resources:

| File share | Storage account | Region | Details |
| ----------- | --------------- | ------ | ------------------------------------------------------------ |
| *azurefiles* | *afsaccount* | EastUS | Original source backed up by using Azure Backup |
| *azurefiles1* | *afaccount1* | EastUS | Destination used for alternate location recovery |

You can use a similar structure for your file shares to try out the different types of restores explained in this article.

## <a name="fetch-recovery-points-for-the-azure-file-share"></a>Fetch recovery points for the Azure file share

Use the [az backup recoverypoint list](https://docs.microsoft.com/cli/azure/backup/recoverypoint?view=azure-cli-latest#az-backup-recoverypoint-list) command to list all recovery points for the backed-up file share.

The following example fetches the list of recovery points for the *azurefiles* file share in the *afsaccount* storage account.

```azurecli-interactive

az backup recoverypoint list --vault-name azurefilesvault --resource-group azurefiles --container-name "StorageContainer;Storage;AzureFiles;afsaccount" --backup-management-type azurestorage --item-name "AzureFileShare;azurefiles" --workload-type azurefileshare --out table

```

You can also run the previous command by using the friendly names of the container and the item, provided that you supply the following two additional parameters:

* **--backup-management-type**: *azurestorage*

* **--workload-type**: *azurefileshare*

```azurecli-interactive

az backup recoverypoint list --vault-name azurefilesvault --resource-group azurefiles --container-name afsaccount --backup-management-type azurestorage --item-name azurefiles --workload-type azurefileshare --out table

```

The result set is a list of recovery points with time and consistency details for each restore point.

```output

Name Time Consistency

------------------ ------------------------- --------------------

932887541532871865 2020-01-05T07:08:23+00:00 FileSystemConsistent

932885927361238054 2020-01-05T07:08:10+00:00 FileSystemConsistent

932879614553967772 2020-01-04T21:33:04+00:00 FileSystemConsistent

```

The **Name** attribute in the output corresponds to the recovery point name, which can be used as the value of the **--rp-name** parameter in recovery operations.
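The table output above is meant for reading. If you want to script the next step, the following Python sketch picks the newest recovery point name from that table so it can be passed to `--rp-name`. It assumes the three-column layout shown above (Name, Time, Consistency, with no spaces inside values), and `latest_recovery_point` is a hypothetical helper; for real automation, JSON output (`--out json`) is easier to consume than parsed text.

```python
from datetime import datetime
from typing import Optional

# Sample taken from the `--out table` output shown above.
SAMPLE_TABLE = """
Name                Time                       Consistency
------------------  -------------------------  --------------------
932887541532871865  2020-01-05T07:08:23+00:00  FileSystemConsistent
932885927361238054  2020-01-05T07:08:10+00:00  FileSystemConsistent
932879614553967772  2020-01-04T21:33:04+00:00  FileSystemConsistent
"""


def latest_recovery_point(table_text: str) -> str:
    """Return the Name of the newest recovery point in `--out table` output."""
    newest_name: Optional[str] = None
    newest_time: Optional[datetime] = None
    for line in table_text.strip().splitlines()[2:]:  # skip the header and the dashed rule
        if not line.strip():
            continue
        name, time_text, _consistency = line.split()
        when = datetime.fromisoformat(time_text)  # offset-aware, e.g. +00:00
        if newest_time is None or when > newest_time:
            newest_name, newest_time = name, when
    if newest_name is None:
        raise ValueError("the table contains no recovery points")
    return newest_name


print(latest_recovery_point(SAMPLE_TABLE))  # 932887541532871865
```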

## <a name="full-share-recovery-by-using-the-azure-cli"></a>Full share recovery by using the Azure CLI

You can use this restore option to restore the complete file share to the original location or to an alternate location.

Define the following parameters to perform restore operations:

* **--container-name**: The name of the storage account that hosts the backed-up original file share. To retrieve the name or friendly name of your container, use the [az backup container list](https://docs.microsoft.com/cli/azure/backup/container?view=azure-cli-latest#az-backup-container-list) command.

* **--item-name**: The name of the backed-up original file share that you want to use for the restore operation. To retrieve the name or friendly name of your backup item, use the [az backup item list](https://docs.microsoft.com/cli/azure/backup/item?view=azure-cli-latest#az-backup-item-list) command.

### <a name="restore-a-full-share-to-the-original-location"></a>Restore a full share to the original location

When you restore to the original location, you don't need to specify any target-related parameters. You only need to provide **--resolve-conflict**.

The following example uses the [az backup restore restore-azurefileshare](https://docs.microsoft.com/cli/azure/backup/restore?view=azure-cli-latest#az-backup-restore-restore-azurefileshare) command with the restore mode set to *originallocation* to restore the *azurefiles* file share in its original location. It uses recovery point 932887541532871865, which was obtained in [Fetch recovery points for the Azure file share](#fetch-recovery-points-for-the-azure-file-share):

```azurecli-interactive

az backup restore restore-azurefileshare --vault-name azurefilesvault --resource-group azurefiles --rp-name 932887541532871865 --container-name "StorageContainer;Storage;AzureFiles;afsaccount" --item-name "AzureFileShare;azurefiles" --restore-mode originallocation --resolve-conflict overwrite --out table

```

```output

Name ResourceGroup

------------------------------------ ---------------

6a27cc23-9283-4310-9c27-dcfb81b7b4bb azurefiles

```

The **Name** attribute in the output corresponds to the name of the job that the backup service creates for your restore operation. To track the status of the job, use the [az backup job show](https://docs.microsoft.com/cli/azure/backup/job?view=azure-cli-latest#az-backup-job-show) command.

### <a name="restore-a-full-share-to-an-alternate-location"></a>Restore a full share to an alternate location

You can use this option to restore a file share to an alternate location and keep the original file share as is. Specify the following parameters for alternate location recovery:

* **--target-storage-account**: The storage account to which the backed-up content is restored. The target storage account must be in the same location as the vault.

* **--target-file-share**: The file share within the target storage account to which the backed-up content is restored.

* **--target-folder**: The folder under the file share to which data is restored. If the backed-up content is to be restored to the root folder, pass an empty string as the target folder.

* **--resolve-conflict**: What to do if there's a conflict with the restored data. Accepts **Overwrite** or **Skip**.

The following example uses [az backup restore restore-azurefileshare](https://docs.microsoft.com/cli/azure/backup/restore?view=azure-cli-latest#az-backup-restore-restore-azurefileshare) with the restore mode set to *alternatelocation* to restore the *azurefiles* file share in the *afsaccount* storage account to the *azurefiles1* file share in the *afaccount1* storage account.

```azurecli-interactive

az backup restore restore-azurefileshare --vault-name azurefilesvault --resource-group azurefiles --rp-name 932883129628959823 --container-name "StorageContainer;Storage;AzureFiles;afsaccount" --item-name "AzureFileShare;azurefiles" --restore-mode alternatelocation --target-storage-account afaccount1 --target-file-share azurefiles1 --target-folder restoredata --resolve-conflict overwrite --out table

```

```output

Name ResourceGroup

------------------------------------ ---------------

babeb61c-d73d-4b91-9830-b8bfa83c349a azurefiles

```

The **name** attribute in the output corresponds to the name of the job that the backup service creates for the restore operation. To track the status of the job, use the [az backup job show](https://docs.microsoft.com/cli/azure/backup/job?view=azure-cli-latest#az-backup-job-show) command.

## <a name="item-level-recovery"></a>Item-level recovery

Use this restore option to restore individual files or folders in the original or an alternate location.

Define the following parameters to perform restore operations:

* **--container-name**: The name of the storage account that hosts the backed-up original file share. To retrieve the name or friendly name of your container, use the [az backup container list](https://docs.microsoft.com/cli/azure/backup/container?view=azure-cli-latest#az-backup-container-list) command.

* **--item-name**: The name of the backed-up original file share you want to use for the restore operation. To retrieve the name or friendly name of your backed-up item, use the [az backup item list](https://docs.microsoft.com/cli/azure/backup/item?view=azure-cli-latest#az-backup-item-list) command.

Specify the following parameters for the items you want to recover:

* **--source-file-path**: The absolute path, as a string, of the file to be restored within the file share. This is the same path used in the [az storage file download](https://docs.microsoft.com/cli/azure/storage/file?view=azure-cli-latest#az-storage-file-download) or [az storage file show](https://docs.microsoft.com/cli/azure/storage/file?view=azure-cli-latest#az-storage-file-show) CLI commands.

* **--source-file-type**: Whether a directory or a file is selected. Accepts **Directory** or **File**.

* **--resolve-conflict**: Instruction if there's a conflict with the restored data. Accepts **Overwrite** or **Skip**.

### <a name="restore-individual-files-or-folders-to-the-original-location"></a>Restore individual files or folders to the original location

Use the [az backup restore restore-azurefiles](https://docs.microsoft.com/cli/azure/backup/restore?view=azure-cli-latest#az-backup-restore-restore-azurefiles) command with the restore mode set to *originallocation* to restore specific files or folders to their original location.

The following example restores the *RestoreTest.txt* file in its original location: the *azurefiles* file share.

```azurecli-interactive

az backup restore restore-azurefiles --vault-name azurefilesvault --resource-group azurefiles --rp-name 932881556234035474 --container-name "StorageContainer;Storage;AzureFiles;afsaccount" --item-name "AzureFileShare;azurefiles" --restore-mode originallocation --source-file-type file --source-file-path "Restore/RestoreTest.txt" --resolve-conflict overwrite --out table

```

```output

Name ResourceGroup

------------------------------------ ---------------

df4d9024-0dcb-4edc-bf8c-0a3d18a25319 azurefiles

```

The **name** attribute in the output corresponds to the name of the job that the backup service creates for the restore operation. To track the status of the job, use the [az backup job show](https://docs.microsoft.com/cli/azure/backup/job?view=azure-cli-latest#az-backup-job-show) command.

### <a name="restore-individual-files-or-folders-to-an-alternate-location"></a>Restore individual files or folders to an alternate location

To restore specific files or folders to an alternate location, use the [az backup restore restore-azurefiles](https://docs.microsoft.com/cli/azure/backup/restore?view=azure-cli-latest#az-backup-restore-restore-azurefiles) command with the restore mode set to *alternatelocation*, and specify the following target-related parameters:

* **--target-storage-account**: The storage account to which the backed-up content is restored. The target storage account must be in the same location as the vault.

* **--target-file-share**: The file share within the target storage account to which the backed-up content is restored.

* **--target-folder**: The folder under the file share to which data is restored. If the backed-up content is to be restored to the root folder, give the value as an empty string.

The following example restores the *RestoreTest.txt* file, originally in the *azurefiles* file share, to an alternate location: the *restoredata* folder in the *azurefiles1* file share hosted in the *afaccount1* storage account.

```azurecli-interactive

az backup restore restore-azurefiles --vault-name azurefilesvault --resource-group azurefiles --rp-name 932881556234035474 --container-name "StorageContainer;Storage;AzureFiles;afsaccount" --item-name "AzureFileShare;azurefiles" --restore-mode alternatelocation --target-storage-account afaccount1 --target-file-share azurefiles1 --target-folder restoredata --resolve-conflict overwrite --source-file-type file --source-file-path "Restore/RestoreTest.txt" --out table

```

```output

Name ResourceGroup

------------------------------------ ---------------

df4d9024-0dcb-4edc-bf8c-0a3d18a25319 azurefiles

```

The **name** attribute in the output corresponds to the name of the job that the backup service creates for the restore operation. To track the status of the job, use the [az backup job show](https://docs.microsoft.com/cli/azure/backup/job?view=azure-cli-latest#az-backup-job-show) command.

## <a name="next-steps"></a>Next steps

Learn how to [manage Azure file share backups with the Azure CLI](manage-afs-backup-cli.md).

| 87.090395 | 633 | 0.775997 | pol_Latn | 0.998775 |

da01132329df974bfd8e97dfc5c34c383f74c681 | 2,071 | md | Markdown | maths/squares/calculating_squares_of_ends_at_4.md | hygull/research | ad65ba6ac4cdef97aa1ece2769cff1bfd6027209 | [

"MIT"

] | null | null | null | maths/squares/calculating_squares_of_ends_at_4.md | hygull/research | ad65ba6ac4cdef97aa1ece2769cff1bfd6027209 | [

"MIT"

] | null | null | null | maths/squares/calculating_squares_of_ends_at_4.md | hygull/research | ad65ba6ac4cdef97aa1ece2769cff1bfd6027209 | [

"MIT"

] | null | null | null | # Calculating square of numbers with unit digit 4

### Parameters

N : Number whose square is to be calculated

u : unit digit of number(N)

t : number formed after excluding the unit digit

### Formula

```
R = (N * (t + 1)) - (3 + t * 6)
R = (N * t) + N - 3 - (t * 6)
R = t * (N - 6) + (N - 3)
R = t * (N - 6) + (N - 6) + 3
R = (N - 6) * (t + 1) + 3
```

```

Square(N) = Concat(R, 6)

```
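Why the formula works: writing N in terms of t (a short derivation added for clarity, using the same symbols N and t as above), N² always ends in 6 and the digits before that 6 are exactly R:

```latex
\begin{aligned}
N   &= 10t + 4 \\
N^2 &= 100t^2 + 80t + 16 = 10\,(10t^2 + 8t + 1) + 6 \\
R   &= (N - 6)(t + 1) + 3 = (10t - 2)(t + 1) + 3 = 10t^2 + 8t + 1
\end{aligned}
```

Hence N² = 10R + 6, which is what Concat(R, 6) expresses.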

# Base of the derivation

```python
>>> for number in range(4, 95, 10):
...     print(number, "^2 =", number**2)
...
4 ^2 = 16
14 ^2 = 196
24 ^2 = 576
34 ^2 = 1156
44 ^2 = 1936
54 ^2 = 2916
64 ^2 = 4096
74 ^2 = 5476
84 ^2 = 7056
94 ^2 = 8836
>>>
>>> 4 * 1 - 3
1
>>> 14 * 2 - 9
19
>>> 24 * 3 - 15
57
>>> 34 * 4 - 21
115
>>>
```

Or, using the formula **(N - 6) * (t + 1) + 3**:

```python
>>> for number in range(4, 95, 10):
...     print(number, "^2 =", number**2)
...
4 ^2 = 16
14 ^2 = 196
24 ^2 = 576
34 ^2 = 1156
44 ^2 = 1936
54 ^2 = 2916
64 ^2 = 4096
74 ^2 = 5476
84 ^2 = 7056
94 ^2 = 8836
>>>
>>> (4 - 6) * (0 + 1) + 3
1
>>> (14 - 6) * (1 + 1) + 3
19
>>> (24 - 6) * (2 + 1) + 3
57
>>> (34 - 6) * (3 + 1) + 3
115
>>> (44 - 6) * (4 + 1) + 3
193
>>> (54 - 6) * (5 + 1) + 3
291
>>> (64 - 6) * (6 + 1) + 3
409
>>> (74 - 6) * (7 + 1) + 3
547
>>> (84 - 6) * (8 + 1) + 3
705
>>> (94 - 6) * (9 + 1) + 3
883
>>> (104 - 6) * (10 + 1) + 3
1081
>>> (994 - 6) * (99 + 1) + 3
98803
>>>
>>> # Test
...
>>> 994**2
988036
>>> 34**2
1156
>>>
```
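The same trick can be wrapped in a small Python 3 function and checked against ordinary squaring. This is an illustrative sketch; the function name `square_ending_in_4` is my own, not part of the original notes:

```python
def square_ending_in_4(n: int) -> int:
    """Square a non-negative integer whose unit digit is 4 via R = (N - 6) * (t + 1) + 3."""
    if n < 0 or n % 10 != 4:
        raise ValueError("n must be a non-negative integer ending in 4")
    t = n // 10                  # number formed by dropping the unit digit
    r = (n - 6) * (t + 1) + 3    # always >= 1, so str(r) has no sign
    return int(str(r) + "6")     # Concat(R, 6)


for n in (4, 14, 164, 994):
    print(n, square_ending_in_4(n))
# 4 16
# 14 196
# 164 26896
# 994 988036
```

The string concatenation mirrors Concat(R, 6) literally; `10 * r + 6` would give the same result arithmetically.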

# Example

Calculate the square of 164.

```

164 x 164

=============

656

984x

164xx

=============

26896

```

Let's calculate the square of 164 using the formula (N - 6) * (t + 1) + 3:

```

Here

N = 164

t = 16

So,

R = (N - 6) * (t + 1) + 3

R = (164 - 6) * (16 + 1) + 3

R = 158 * 17 + 3

Finally

158 x 17

============

1106

158x

============

2686

R = 2686 + 3

R = 2689

Square(164) = Concat(R, 6)

Square(164) = Concat(2689, 6)

Square(164) = 26896

``` | 13.899329 | 70 | 0.404635 | yue_Hant | 0.395705 |

da01d84cc4b1a2464351d5263cbb32fb736ecda5 | 833 | md | Markdown | README.md | nyteshade/c-linkedlist | de677415d84fe84b885e23d05cfe1b86ba0794d6 | [

"MIT"

] | null | null | null | README.md | nyteshade/c-linkedlist | de677415d84fe84b885e23d05cfe1b86ba0794d6 | [

"MIT"

] | null | null | null | README.md | nyteshade/c-linkedlist | de677415d84fe84b885e23d05cfe1b86ba0794d6 | [

"MIT"

] | null | null | null | # c-linkedlist

Playing with LinkedLists in C

## Overview

While recently playing with older C compilers, I wanted a linked list that offered some of the niceties of arrays in more modern languages. I identified the key and value types I use most in C: a string (`char *`), a pointer (`void *`), an integer (`long`) and a decimal (`double`). I plan to add many more functions for things like searching and sorting, but this basis lets me get started.

## Tested On (Machines I own)

- [X] Apple MacBook Pro 15" 2019 (Intel)

- [ ] Apple MacBook Pro 14" 2022 (Apple Silicon)

- [ ] Apple PowerBook G3 Pismo (500 MHz)

- [ ] Commodore Amiga 500/600/1000/2000

- [ ] Commodore Amiga 1200/3000/4000

## Modern APIs Supported

- [X] ForEach

- [X] Filter

- [X] Map

- [X] Reduce

### More to Come

| 36.217391 | 440 | 0.719088 | eng_Latn | 0.988655 |

da03a828cd6f1216141bee18332c72cf82e4775b | 777 | md | Markdown | README.md | nobody48sheldor/fractales | 35eedb6477715c365afd58db46180f8daccaab8e | [

"MIT"

] | null | null | null | README.md | nobody48sheldor/fractales | 35eedb6477715c365afd58db46180f8daccaab8e | [

"MIT"

] | 1 | 2022-02-02T12:29:01.000Z | 2022-02-02T12:33:09.000Z | README.md | nobody48sheldor/fractales | 35eedb6477715c365afd58db46180f8daccaab8e | [

"MIT"

] | null | null | null | # Fractales

The Mandelbrot set and Julia sets.

## description

### languages :

- Python

- C++

### dependencies :

- Python :

- matplotlib

- numpy

- cmath (for the version written all in python)

- C++ :

- complex

### versions

- [C++] + [Python]

- computation in C++ for speed

- plot in python with matplotlib

- full [Python]

  - all in Python; easy to understand, but very slow
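Both fractals come from the same escape-time iteration z → z² + c: for the Mandelbrot set, z starts at 0 and c is the pixel; for a Julia set, c is fixed and z starts at the pixel. The sketch below illustrates that idea in plain Python. It is not this repository's code; `escape_time`, `ascii_mandelbrot`, the escape radius of 2 and the view window are my own choices:

```python
def escape_time(z: complex, c: complex, max_iter: int = 100) -> int:
    """Number of iterations of z -> z*z + c before |z| exceeds 2 (max_iter if it never does)."""
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def ascii_mandelbrot(width: int = 60, height: int = 24, max_iter: int = 50) -> str:
    """Render the Mandelbrot set as text: '#' for points that never escaped, '.' otherwise."""
    rows = []
    for j in range(height):
        y = 1.2 - 2.4 * j / (height - 1)
        row = ""
        for i in range(width):
            x = -2.1 + 2.7 * i / (width - 1)
            # Mandelbrot: start from z = 0 and use the pixel as c
            row += "#" if escape_time(0j, complex(x, y), max_iter) == max_iter else "."
        rows.append(row)
    return "\n".join(rows)


print(ascii_mandelbrot())
```

For a Julia set, call `escape_time(complex(x, y), c)` with a fixed `c` instead.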

## how to install and run

``` git clone https://github.com/nobody48sheldor/fractales ```

``` cd fractales/ ```

- [C++] + [Python]

``` sh compile.sh ``` (needs g++ compiler)

- full [Python]

``` cd full_python/ ```

``` python3 mandelbrot.py ``` or ``` python3 julia_set.py ```

## contribute

You can fork the code, update or modify it, and open a pull request.

| 18.5 | 62 | 0.643501 | eng_Latn | 0.926941 |

da042f8b38d65797a4aae6389f1c2fa856bfd0b3 | 2,941 | md | Markdown | _docs/Magento-V2-Connector/Uploading-Shipments-to-Magento-V2.md | chrishotchkiss/test | 3c83a28798bde3f8222c028815ef9fcae7a95490 | [

"MIT"

] | null | null | null | _docs/Magento-V2-Connector/Uploading-Shipments-to-Magento-V2.md | chrishotchkiss/test | 3c83a28798bde3f8222c028815ef9fcae7a95490 | [

"MIT"

] | null | null | null | _docs/Magento-V2-Connector/Uploading-Shipments-to-Magento-V2.md | chrishotchkiss/test | 3c83a28798bde3f8222c028815ef9fcae7a95490 | [

"MIT"

] | null | null | null | ---

slug: uploading-shipments-to-magento-v2

redirect_from: "/article/974-uploading-shipments-to-magento"

title: Uploading Shipments to Magento V2

---

This task will create new shipments for existing orders in Magento. See below for a sample input file.

The `<entity_id>` element is used to specify the ID of the order to create the shipment for. If no ID is specified, the task will use the `<external_id>` element in conjunction with Zynk's truth table to look up the ID of an order that has been previously processed by Zynk. If no external ID is provided, the `<increment_id>` element will be used to look up the order ID based on the increment ID of the order.

You can optionally provide a collection of `<item>` elements to ship specific items on the order. If no items are provided, the task will ship all remaining items on the order.

## Settings

### Connection

_Required_

The Magento V2 connection to use. See the [Connecting to Magento V2](connecting-to-magento-v2) article if you require more information on how to create/manage connections.

### Fail File

_Required_

The XML file to save failed shipment uploads to. The data will be written in the same format as the input file.

### Input File

_Required_

The XML file containing the shipments to upload to Magento.

### Success File

_Required_

The XML file to save successful shipment uploads to. The data will be written in the same format as the input file.

### Prevent Reprocessing

_Required_

Set to true to prevent the task from processing the same record more than once. This setting only works when an `<external_id>` element is provided in the XML.

### Store View Code

_Required_

The Magento store view code to perform the API calls against. Defaults to `all`.

### Zynk Settings

See [Common Task Settings](common-task-settings).

## Examples

A sample input file is shown below. This will create a shipment for the specified order, shipping one unit of `WSH12-32-Purple` and adding a tracking number and a comment.

```xml

<?xml version="1.0" encoding="utf-8"?>

<ArrayOfShipment xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema">

<Shipment>

<external_id>9814</external_id>

<order>

<!-- At least one of the following must be provided to identify the order to ship -->

<entity_id>4</entity_id>

<external_id>1</external_id>

<increment_id>000000004</increment_id>

</order>

<!-- If no items collection is provided, all items on the order will be shipped -->

<items>

<item>

<sku>WSH12-32-Purple</sku>

<qty>1</qty>

</item>

</items>

<tracks>

<track>

<track_number>abcd-1234</track_number>

<title>DHL Next Day Shipping</title>

<carrier_code>DHL</carrier_code>

</track>

</tracks>

<comment>

<comment>Leave by the door</comment>

<is_visible_on_front>1</is_visible_on_front>

</comment>

</Shipment>

</ArrayOfShipment>

```
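A minimal input is also possible. Going by the lookup rules described above, an order can be identified by its increment ID alone, and omitting `<items>` ships all remaining items on the order. The sketch below assumes the task accepts a `<Shipment>` containing only these elements (not verified against Zynk). Without a shipment-level `<external_id>`, Prevent Reprocessing has no effect.

```xml
<?xml version="1.0" encoding="utf-8"?>
<ArrayOfShipment xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <Shipment>
    <order>
      <!-- No entity_id or external_id, so the order is looked up by increment ID -->
      <increment_id>000000004</increment_id>
    </order>
    <!-- No items collection, so all remaining items on the order are shipped -->
  </Shipment>
</ArrayOfShipment>
```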

| 39.743243 | 411 | 0.723903 | eng_Latn | 0.990768 |

da04f0ea0411e1cc53d5ac1ee268bf75fedbbb56 | 1,419 | md | Markdown | 2020/10/14/2020-10-14 18:25.md | zhzhzhy/WeiBoHot_history | 32ce4800e63f26384abb17d43e308452c537c902 | [

"MIT"

] | 3 | 2020-07-14T14:54:15.000Z | 2020-08-21T06:48:24.000Z | 2020/10/14/2020-10-14 18:25.md | zhzhzhy/WeiBoHot_history | 32ce4800e63f26384abb17d43e308452c537c902 | [

"MIT"

] | null | null | null | 2020/10/14/2020-10-14 18:25.md | zhzhzhy/WeiBoHot_history | 32ce4800e63f26384abb17d43e308452c537c902 | [

"MIT"

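] | null | null | null | Data for 18:00, October 14, 2020

Status: 200

1. Student caught after deleting a classmate's junior-college-to-bachelor's application out of jealousy

Weibo heat: 4437075

2. Killer in the Nanjing Medical University female student murder case sentenced to death

Weibo heat: 2635936

3. Try it now

Weibo heat: 2482412

4. Tan Songyun's agent apologizes

Weibo heat: 2419704

5. Ma Li's Korean lookalike

Weibo heat: 2245112

6. Aiming is so thrilling

Weibo heat: 2048717

7. Li Yi suspended for one week

Weibo heat: 1667479

8. Dalian University of Technology reports death of a graduate student in a lab

Weibo heat: 1553053

9. Kunshan

Weibo heat: 1272929

10. Lianhua Mountain

Weibo heat: 1159409

11. Zhu Yilong's fansite photographer

Weibo heat: 1051450

12. Durex ad copy

Weibo heat: 835376

13. He Jiong and Liu Tao to host the Golden Eagle Festival

Weibo heat: 720121

14. Retired teacher forced to repay loans calls on students to return the money

Weibo heat: 558903

15. Denmark to cull at least 2.5 million mink

Weibo heat: 449552

16. iPhone 12 to support the BeiDou navigation and positioning system

Weibo heat: 449073

17. Former student becomes a teacher and sits across from their old teacher

Weibo heat: 422845

18. Turns out positive reviews can be more infuriating than negative ones

Weibo heat: 422789

19. Song Qian

Weibo heat: 416864

20. Medical workers use loudspeakers to urge residents to get nucleic acid tests

Weibo heat: 412708

21. Dalian University of Technology

Weibo heat: 409561

22. Jackson Yee's gaze

Weibo heat: 404690

23. Scene of sisters' failed blind date

Weibo heat: 402461

24. The boy who eats luosifen with his girlfriend

Weibo heat: 398804

25. China clearly defines "pengci" (staged-accident extortion) for the first time

Weibo heat: 389441

26. Beijing issues control measures for travelers entering from the Qingdao direction

Weibo heat: 382867

27. William Chan and Liu Shishi in the same shot

Weibo heat: 374541

28. Yang Yang's unretouched photos

Weibo heat: 365115

29. Is the iPhone 12 worth buying?

Weibo heat: 318345

30. South Korea to fine people nearly 600 yuan for not wearing masks

Weibo heat: 297250

31. This life is the "next life" you talked about in your last life

Weibo heat: 294353

32. Han Hong: I respect "traffic" celebrities

Weibo heat: 285631

33. Yu Shuxin: pulse like a 40-year-old man's, strong as an ox

Weibo heat: 270295

34. Never knew giraffes graze like this

Weibo heat: 265632

35. 40th anniversary of the Shenzhen Special Economic Zone

Weibo heat: 261116

36. Great Wall Broadband

Weibo heat: 260967

37. Jackson Wang's yellow headband look

Weibo heat: 260210

38. Stretching comes at a price

Weibo heat: 258848

39. Tiancai Xiaoxiongmao (Genius Little Panda)

Weibo heat: 247030

40. Jiangnan Baijingtu

Weibo heat: 244843

41. How funny can celebrities' replies to fans be?

Weibo heat: 237591

42. Sulli's fan forum commemorates Sulli

Weibo heat: 225527

43. Golden Eagle Goddess voting results

Weibo heat: 201176

44. US military aircraft conduct intensive close-in reconnaissance of China for three consecutive months

Weibo heat: 199531

45. Zhuge Liang "Wuling Immortal" skin optimization

Weibo heat: 188818

46. Beijing requires three nucleic acid tests for international arrivals entering Beijing

Weibo heat: 186416

47. The many faces of people getting injections

Weibo heat: 184267

48. Early-autumn hot-girl outfits

Weibo heat: 183613

49. Travelers entering Beijing from Qingdao must show a negative nucleic acid test taken within 7 days

Weibo heat: 172904

50. Zheng Shuang's honey-toned film-style photo shoot

Weibo heat: 163693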

| 6.955882 | 22 | 0.78365 | yue_Hant | 0.36972 |

da0a85426903a005c9dd3c51f0ca8ebe5cd52411 | 61 | md | Markdown | docs/zh-hans/components/comp_PSS/comp_PSSelectrical/BasicPassiveComp/index.md | CloudPSS/docs | 8bb06e23d55d1f6e1acd3dbe9638ad9c7e8f317c | [

"MIT"

] | 1 | 2021-07-30T14:25:55.000Z | 2021-07-30T14:25:55.000Z | docs/zh-hans/components/comp_PSS/comp_PSSelectrical/BasicPassiveComp/index.md | CloudPSS/docs | 8bb06e23d55d1f6e1acd3dbe9638ad9c7e8f317c | [

"MIT"

] | null | null | null | docs/zh-hans/components/comp_PSS/comp_PSSelectrical/BasicPassiveComp/index.md | CloudPSS/docs | 8bb06e23d55d1f6e1acd3dbe9638ad9c7e8f317c | [

"MIT"

] | 1 | 2021-11-03T00:31:55.000Z | 2021-11-03T00:31:55.000Z | ---

title: Basic Passive Components

order: 5000

redirect to: ./GND/index.md

--- | 12.2 | 27 | 0.655738 | eng_Latn | 0.38761 |

da0bcadf78de1da6c20f9738c89fd78734de8fa8 | 4,425 | md | Markdown | docs/custom-system-keyboard.zh-Hans.md | aonon/mongol-library | 2487318a9932eb401cf687dea841461e3562cd98 | [

"MIT"

] | 57 | 2017-03-08T09:02:38.000Z | 2021-09-15T07:24:53.000Z | docs/custom-system-keyboard.zh-Hans.md | aonon/mongol-library | 2487318a9932eb401cf687dea841461e3562cd98 | [

"MIT"

] | 6 | 2017-07-11T10:54:01.000Z | 2019-07-25T18:42:00.000Z | docs/custom-system-keyboard.zh-Hans.md | aonon/mongol-library | 2487318a9932eb401cf687dea841461e3562cd98 | [

"MIT"

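] | 18 | 2017-02-27T09:48:56.000Z | 2022-01-24T23:49:01.000Z | # How to create a custom system keyboard

This tutorial was tested with Android Studio 3.1 and mongol-library 1.1.0 (mongol-library must be version 1.1.0 or later).

## 1. New project

Create a new project and name it `Jianpan`.

## 2. Import mongol-library

Add the Mongolian component library to the dependencies in the build.gradle (Module: app) file:

```groovy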

implementation 'net.studymongolian:mongol-library:1.3.1'

```

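## 3. Custom keyboard layout

Create a Java file for your keyboard view class; it must extend `net.studymongolian.mongollibrary.Keyboard`. The simplest approach is to copy an existing keyboard and change it to the layout you want. See the following keyboard views for reference: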

- [KeyboardAeiou](https://github.com/suragch/mongol-library/blob/master/mongol-library/src/main/java/net/studymongolian/mongollibrary/KeyboardAeiou.java)

- [KeyboardQwerty](https://github.com/suragch/mongol-library/blob/master/mongol-library/src/main/java/net/studymongolian/mongollibrary/KeyboardQwerty.java)

- [KeyboardLatin](https://github.com/suragch/mongol-library/blob/master/mongol-library/src/main/java/net/studymongolian/mongollibrary/KeyboardLatin.java)

- [KeyboardCyrillic](https://github.com/suragch/mongol-library/blob/master/mongol-library/src/main/java/net/studymongolian/mongollibrary/KeyboardCyrillic.java)

- [CustomKeyboard](https://github.com/suragch/mongol-library/blob/master/demo-app/src/main/java/net/studymongolian/mongollibrarydemo/CustomKeyboard.java)

- [CustomKeyboardTwo](https://github.com/suragch/mongol-library/blob/master/demo-app/src/main/java/net/studymongolian/mongollibrarydemo/CustomKeyboardTwo.java)

Name it `WodeJianpan.java`.

## 4. Keyboard style

Create a new XML file in `res/layout` and name it `jianpan_yangshi.xml`, with the following content:

```xml

<?xml version="1.0" encoding="utf-8"?>

<net.studymongolian.mongollibrary.ImeContainer xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:background="#dbdbdb">

<com.example.jianpan.WodeJianpan

android:layout_width="match_parent"

android:layout_height="match_parent"

app:keyBorderColor="#000000"

app:keyBorderRadius="3dp"

app:keyBorderWidth="1px"

app:keyColor="#ffffff"

app:keyPressedColor="#b3b3b3"

app:keySpacing="3dp"

app:popupHighlightColor="#dbdbdb"

app:popupTextColor="#fe9a52"

app:primaryTextColor="#000000"

app:primaryTextSize="30sp"

app:secondaryTextColor="#b3b3b3" />

</net.studymongolian.mongollibrary.ImeContainer>

```

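**Notes**

- Change `com.example.jianpan.WodeJianpan` to your own package and class name.

- If you want to be able to switch keyboards, you can add more keyboard view classes.

## 5. InputMethodService class

Create a Java file named `WodeInputMethodService.java`. It must extend `InputMethodService` and implement the `ImeContainer.OnSystemImeListener` interface. Its content is as follows: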

```java
package com.example.jianpan;

import android.inputmethodservice.InputMethodService;
import android.view.LayoutInflater;
import android.view.View;
import android.view.inputmethod.InputConnection;
import android.view.inputmethod.InputMethodManager;

import net.studymongolian.mongollibrary.ImeContainer;

public class WodeInputMethodService extends InputMethodService implements ImeContainer.OnSystemImeListener {

    @Override
    public View onCreateInputView() {
        LayoutInflater inflater = getLayoutInflater();
        ImeContainer jianpan = (ImeContainer) inflater.inflate(R.layout.jianpan_yangshi, null, false);
        // Long-pressing the keyboard key lets the user switch to another system input method
        jianpan.showSystemKeyboardsOption("ᠰᠢᠰᠲ᠋ᠧᠮ");
        jianpan.setOnSystemImeListener(this);
        return jianpan;
    }

    // ImeContainer.OnSystemImeListener methods

    @Override
    public InputConnection getInputConnection() {
        return getCurrentInputConnection();
    }

    @Override
    public void onChooseNewSystemKeyboard() {
        InputMethodManager im = (InputMethodManager) getSystemService(INPUT_METHOD_SERVICE);
        if (im == null) return;
        im.showInputMethodPicker();
    }
}
```

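**Notes**

- If you don't want to use `ImeContainer`, or you want to control the keyboard input yourself, you can also return a `Keyboard` view from `onCreateInputView()`. In that case your `InputMethodService` must implement the `Keyboard.OnKeyboardListener` interface, which has a few more methods to implement.

## 6. Input method subtype

Create an XML file in `res/xml/` and name it `method.xml`, with the following content:

```xml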

<?xml version="1.0" encoding="utf-8"?>

<input-method

xmlns:android="http://schemas.android.com/apk/res/android">

<subtype

android:imeSubtypeMode="keyboard"/>

</input-method>

```

## 7. Declare the input method

Declare your input method in `AndroidManifest.xml`:

```xml

<application>

...

<service

android:name=".WodeInputMethodService"

android:label="自定义输入法"

android:permission="android.permission.BIND_INPUT_METHOD">

<intent-filter>

<action android:name="android.view.InputMethod"/>

</intent-filter>

<meta-data

android:name="android.view.im"

android:resource="@xml/method"/>

</service>

</application>

```

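The basic functionality of your keyboard is now complete, but you may want to add some settings or help to `MainActivity`.

## 8. Activate the input method

Users must enable your input method in the system settings.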

| 30.944056 | 159 | 0.734463 | yue_Hant | 0.174816 |

da0bd02e110a40029507ca63b2a2b34ba286cb40 | 642 | md | Markdown | NEWS.md | kullrich/MSA2dist | cdb453af04e4577cc08d9df82bb1583484e07b1f | [

"MIT"

] | 1 | 2021-12-23T00:14:16.000Z | 2021-12-23T00:14:16.000Z | NEWS.md | kullrich/MSA2dist | cdb453af04e4577cc08d9df82bb1583484e07b1f | [

"MIT"

] | null | null | null | NEWS.md | kullrich/MSA2dist | cdb453af04e4577cc08d9df82bb1583484e07b1f | [

"MIT"

] | null | null | null | # Changes in version 0.99.3 (2022-03-18)

## Major changes

## Minor improvements and bug fixes

* Fix RcppThread::LdFlags warning

# Changes in version 0.99.2 (2022-01-28)

## Major changes

## Minor improvements and bug fixes

* Added RcppThread::ProgressBar

# Changes in version 0.99.1 (2022-01-27)

## Major changes

* Changed version number to 0.99.2

## Minor improvements and bug fixes

* Changed URL links in DESCRIPTION

# Changes in version 0.99.0 (2021-12-22)

## Major changes

* Changed version number to 0.99.1

* Changed name from distSTRING to MSA2dist

* Submitted to Bioconductor

## Minor improvements and bug fixes

| 16.894737 | 44 | 0.73053 | eng_Latn | 0.974803 |

da0bda38f9062677f3556034724d9728fa671c8a | 218 | md | Markdown | _watches/M20191022_071511_TLP_2.md | Meteoros-Floripa/meteoros.floripa.br | 7d296fb8d630a4e5fec9ab1a3fb6050420fc0dad | [

"MIT"

] | 5 | 2020-01-22T17:44:06.000Z | 2020-01-26T17:57:58.000Z | _watches/M20191022_071511_TLP_2.md | Meteoros-Floripa/site | 764cf471d85a6b498873610e4f3b30efd1fd9fae | [

"MIT"

] | null | null | null | _watches/M20191022_071511_TLP_2.md | Meteoros-Floripa/site | 764cf471d85a6b498873610e4f3b30efd1fd9fae | [

"MIT"

] | 2 | 2020-05-19T17:06:27.000Z | 2020-09-04T00:00:43.000Z | ---

layout: watch

title: TLP2 - 22/10/2019 - M20191022_071511_TLP_2T.jpg

date: 2019-10-22 07:15:11

permalink: /2019/10/22/watch/M20191022_071511_TLP_2

capture: TLP2/2019/201910/20191021/M20191022_071511_TLP_2T.jpg

---

| 27.25 | 62 | 0.784404 | eng_Latn | 0.032539 |

da0cc25a9d0e52de0f616108d7761256098a6d07 | 4,282 | md | Markdown | README.md | thobbs/genartlib | 032f685b326ef56235e1dc1198cacf02264d3c39 | [

"MIT"

] | 159 | 2016-06-06T11:00:49.000Z | 2022-03-29T18:40:00.000Z | README.md | thobbs/genartlib | 032f685b326ef56235e1dc1198cacf02264d3c39 | [

"MIT"

] | 6 | 2017-11-01T16:12:30.000Z | 2021-06-28T20:48:06.000Z | README.md | thobbs/genartlib | 032f685b326ef56235e1dc1198cacf02264d3c39 | [

"MIT"

] | 14 | 2017-11-01T15:17:09.000Z | 2022-03-08T22:33:43.000Z | # genartlib

[Clojars Project](https://clojars.org/genartlib)

[cljdoc documentation](https://cljdoc.org/d/genartlib/genartlib/CURRENT)

<img src="dev-resources/ectogenesis-small.jpg" alt="Ectogenesis" title="Ectogenesis" align="right" width="250"/>

A Clojure library with simple utilities for creating generative artwork.

This library is built around [Quil](https://github.com/quil/quil), a Clojure wrapper around the [Processing](https://processing.org) framework. However, most of the functions are just mathematical utilities that could be used independently.

To see and read about my artwork, visit [tylerxhobbs.com](https://tylerxhobbs.com) or follow me on [Instagram](https://instagram.com/tylerxhobbs) or [Twitter](https://twitter.com/tylerxhobbs).

## Usage

To install, add this to your dependencies in `project.clj`:

```clojure

[genartlib "0.1.22"]

```

## Contents

View the [API Docs](https://cljdoc.org/d/genartlib/genartlib/CURRENT).

The genartlib library has the following tools:

### Project Template

Under project-template/, you'll find the basic setup that I use for every new generative art project. This is geared towards creating static images.

I also wrote a bit about [my development setup and how I use it](https://tylerxhobbs.com/essays/2015/using-quil-for-artwork).

### Algebra

The following algebra-ish functions are defined:

* `avg` - average

* `interpolate` / `interpolate-multi` - linear interpolation

* `rescale` - map from one range to another

* `line-intersection` - find the intersection of two lines

* `lines-intersection-point` - another way to find line intersections

* `slope` / `point-slope` - get the slope of a line

* `y-intercept` - get the y intercept point of a line

* `angle` / `point-angle` - get the angle between two points in radians

* `angular-coords` - calculate the offset location from a base point with angle and magnitude

* `point-dist` - distance between two points

### Geometry

* `polygon-contains-point?` - a fast test for checking if a point falls inside a polygon

* `rotate-polygon` - rotates around the average center of the poly

* `shrink-polygon` - shrink by a ratio

### Curves

* `chaikin-curve` - a curve-smoothing algorithm

* `chaikin-curve-retain-ends` - a variation that preserves the original end points

* `split-curve-by-step` - break up a curve into chunks with the given length

* `split-curve-into-parts` - break up a curve into chunks with equal length, given a number of parts to have

* `interpolate-curve` - find a point that is a given percentage along the length of a curve

* `line-simplification` - an implementation of the Ramer-Douglas-Peucker line simplification algorithm
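For readers unfamiliar with Chaikin's algorithm, the core corner-cutting step fits in a few lines of Clojure. This is not genartlib's implementation: `chaikin-step` and `chaikin` are hypothetical names, and the sketch assumes the classic 1/4 and 3/4 cut points on an open curve given as a seq of `[x y]` vectors.

```clojure
(defn chaikin-step
  "One round of Chaikin corner cutting: each segment [p1 p2] is replaced by
  the points 1/4 and 3/4 of the way from p1 to p2."
  [points]
  (mapcat (fn [[x1 y1] [x2 y2]]
            [[(+ (* 0.75 x1) (* 0.25 x2)) (+ (* 0.75 y1) (* 0.25 y2))]
             [(+ (* 0.25 x1) (* 0.75 x2)) (+ (* 0.25 y1) (* 0.75 y2))]])
          points
          (rest points)))

(defn chaikin
  "Apply chaikin-step the given number of times."
  [points iterations]
  (nth (iterate chaikin-step points) iterations))
```

Each pass cuts the original end points off the curve, which is the difference `chaikin-curve-retain-ends` is described as addressing.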

### Random

* `gauss` - sample a gaussian probability distribution

* `abs-gauss` - basically gauss + abs

* `triangular` - sample a triangular probability distribution

* `pareto-sampler` / `pareto-sample` - sample a pareto probability distribution

* `random-point-in-circle` - uniform sampling of points within a circle

* `odds` - returns true or false with the given probability

* `choice` - pick from a list of items with uniform probability

* `weighted-choice` - pick from a list of items, each with an assigned probability

* `repeatable-shuffle` - a version of shuffle that uses Processing's Random, in order to ensure repeatability with the same seed

### Plotter

* `sort-curves-for-plotting` - sorts a seq of curves in order to minimize plotter travel distance

### Utils

* `w` and `h` - shorthand for expressing a length or position in terms of percentage of the image width or height - good for using a pseudo-vector approach to creating images

* `set-color-mode` - set the color mode to HSV with ranges H [0, 360], S [0.0, 100.0], V [0.0, 100.0], alpha [0.0, 1.0]

* `in?` / `not-in?` - test if a seq contains an item

* `between?` - is a value inside an inclusive range?

* `enumerate` - turns a seq of items into a seq like ([0 item-0] [1 item-1] [2 item-2] ...)

* `zip` - combine two or more seqs into tuples

* `snap-to` - snap a value to a given window size, kind of like configurable rounding

* `vec-remove` - remove an item from a vector

## License

Copyright © Tyler Hobbs

Distributed under the MIT License.

| 44.14433 | 237 | 0.741709 | eng_Latn | 0.976494 |

da0d83eed10f21aa5beb9b9f287553c1da47a6e1 | 3,009 | md | Markdown | README.md | World-of-Cryptopups/useEOSHyperion | d197873866e298876e905f4fd7927aaa7f5eef31 | [

"MIT"

] | null | null | null | README.md | World-of-Cryptopups/useEOSHyperion | d197873866e298876e905f4fd7927aaa7f5eef31 | [

"MIT"

] | null | null | null | README.md | World-of-Cryptopups/useEOSHyperion | d197873866e298876e905f4fd7927aaa7f5eef31 | [

"MIT"

] | null | null | null | # useEOSHyperion

React hooks for [EOS Hyperion State API](https://github.com/eosrio/hyperion-history-api) data fetching.

This can be used with any supported EOSIO-based blockchains.

All of the hooks wrap `useSWR` from the [swr](https://swr.vercel.app) library.

## Install

```sh

npm install @cryptopuppie/useeoshyperion --save

```

## Usage

Using the hooks is simple and made to be similar to `useSWR` for easier use.

```jsx

import { useGetActions } from '@cryptopuppie/useeoshyperion'

export default function App() {

const { data } = useGetActions(

{ account: 'fckedupmyacc', limit: 5 },

'https://testnet.waxsweden.org'

)

return (

<div>

<h3>Actions</h3>

<ul>

{data?.actions.map((i) => (

<li key={i.trx_id}>

{i.trx_id} - {new Date(i.timestamp).toLocaleString()}

</li>

))}

</ul>

</div>

)

}

```

- **With a Provider**

If you don't want to set the API endpoint in every hook, you can use a provider.

```tsx

// Component.tsx

import { useGetActions } from '@cryptopuppie/useeoshyperion'

export default function Component() {

const { data } = useGetActions({ account: 'fckedupmyacc', limit: 5 })

return (

<div>

<h3>Actions</h3>

<ul>

{data?.actions.map((i) => (

<li key={i.trx_id}>

{i.trx_id} - {new Date(i.timestamp).toLocaleString()}

</li>

))}

</ul>

</div>

)

}

// App.tsx

import { UseHyperionProvider } from '@cryptopuppie/useeoshyperion'

import Component from './Component.tsx'

export default function App() {

return (

<UseHyperionProvider endpoint="https://testnet.waxsweden.org">

<Component />

</UseHyperionProvider>

)

}

```

- **Error handling**

All of the hooks return two error fields, `error` and `hasFailed`.

If `hasFailed` is true, `data` is null and `error` describes the failure; otherwise, `error` is null.

```jsx

import { useGetActions } from '@cryptopuppie/useeoshyperion'

export default function App() {

const { data, hasFailed, error } = useGetActions(

{ account: 'fckedupmyacc', limit: 5 },

'https://testnet.waxsweden.org'

)

if (hasFailed) {

return <p>{error.message}</p>

}

return (

<div>

<h3>Actions</h3>

<ul>

{data?.actions.map((i) => (

<li key={i.trx_id}>

{i.trx_id} - {new Date(i.timestamp).toLocaleString()}

</li>

))}

</ul>

</div>

)

}

```

### Hooks

All of the primary endpoints are implemented.

- Health
  - `useGetHealth`
- History
  - `useGetABISnapshot`
  - `useGetActions`
  - `useGetCreatedAccounts`
  - `useGetCreator`
  - `useGetDeltas`
  - `useGetSchedule`
  - `useGetTransaction`
- State
  - `useGetAccount`
  - `useGetKeyAccounts`
  - `useGetLinks`
  - `useGetProposals`
  - `useGetTokens`
  - `useGetVoters`
- Stats
  - `useGetMissedBlocks`
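The hook names line up with Hyperion's v2 REST endpoints, and the path segments match the categories above. Assuming each hook wraps the endpoint of the same name (this README does not state it explicitly), a lookup table like the following sketch can help you find the Hyperion documentation for each hook's parameters. `HOOK_PATHS` and `pathForHook` are illustrative, not part of the package.

```ts
// Assumed mapping from each hook to the Hyperion v2 path it wraps (not exported by the package).
const HOOK_PATHS: Record<string, string> = {
  useGetHealth: '/v2/health',
  useGetABISnapshot: '/v2/history/get_abi_snapshot',
  useGetActions: '/v2/history/get_actions',
  useGetCreatedAccounts: '/v2/history/get_created_accounts',
  useGetCreator: '/v2/history/get_creator',
  useGetDeltas: '/v2/history/get_deltas',
  useGetSchedule: '/v2/history/get_schedule',
  useGetTransaction: '/v2/history/get_transaction',
  useGetAccount: '/v2/state/get_account',
  useGetKeyAccounts: '/v2/state/get_key_accounts',
  useGetLinks: '/v2/state/get_links',
  useGetProposals: '/v2/state/get_proposals',
  useGetTokens: '/v2/state/get_tokens',
  useGetVoters: '/v2/state/get_voters',
  useGetMissedBlocks: '/v2/stats/get_missed_blocks',
}

// Looks up the assumed path for a hook name, failing loudly for unknown names.
function pathForHook(hook: string): string {
  const path = HOOK_PATHS[hook]
  if (path === undefined) {
    throw new Error(`Unknown hook: ${hook}`)
  }
  return path
}

console.log(pathForHook('useGetTokens')) // /v2/state/get_tokens
```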

##

**2022 | World of Cryptopups**

# Docker Tutorial with asp.net core

This tutorial accompanies [this post on dev.to](https://dev.to/schwamster/docker-tutorial-with-for-aspnet-core).

In this tutorial, you will learn how to build and run your first ASP.NET Core Docker image. We start off with a very short general introduction to Docker.

After that, we choose the "right" images. First, we create a Docker container that is responsible for building our source files: we copy the source files into the build container, and when the build is done, we copy the published project back to the host system and create a runtime image. Finally, we explore multi-stage builds, a handy addition that simplifies the build.

You will need to install [dotnet core](https://www.microsoft.com/net/core) and [docker](https://docs.docker.com/engine/installation/) on your machine before you begin this tutorial.

If you are running behind a proxy, some of the commands might not work, so be sure to check out the [proxy section](#proxy) below.

## The Dockerfile

If you already have basic knowledge of Docker, skip this introduction and go straight to [Choose an image](#choose_image).

You can run any of the many ready-to-use images on [hub.docker.com](https://hub.docker.com). For example, the following command runs `echo` on an instance of Debian, a popular Linux distribution:

```powershell
docker run debian echo "Welcome to Docker"
```

This might take a while the first time, since docker has to pull the image. A second run should start the command in a fraction of a second.

Instead of running a throwaway container, you can also use a container interactively:

```powershell
docker run -it debian /bin/bash
```

Check out the docker run reference to find out more: [docker run](https://docs.docker.com/engine/reference/run/)

You can exit the container by typing "exit" and hitting enter.

Besides running other people's images, you can also create your own. For that, you need a *Dockerfile*, which describes an image and all its dependencies as a series of steps.

We can start with a simple Dockerfile that extends our hello world example.

Create a new folder called cowsay and add a file called Dockerfile. Add the following content to the file:

```dockerfile
FROM debian
RUN apt-get update && apt-get install -y cowsay
ENTRYPOINT ["/usr/games/cowsay"]
```

In this Dockerfile we do the following:

1. Define the base image we want to use: debian.
2. Run a command in the image that updates the package lists and installs an app called cowsay.
3. Define which app to run when a container is started from the image.

For a full reference of the instructions available in a Dockerfile, see the [Dockerfile reference](https://docs.docker.com/engine/reference/builder/).

Now build the image by running the build command from the cowsay folder:

```powershell
docker build -t cowsay .
```

If this hangs and you are running behind a proxy, check out the [proxy section](#proxy).

Now that we have built our image, we can run it. Any arguments after the image name are passed to the entrypoint, cowsay:

```powershell
docker run cowsay "Welcome to Docker"
```

## Choose an image<a name="choose_image"></a>

Go to [hub.docker.com](https://hub.docker.com) and search for aspnetcore.

You will find many different choices. Unless there is a specific reason not to, I would opt for official images or images published by the companies involved. Two images provided by Microsoft are of interest here: one contains only the runtime, and the other also contains the SDK. See the following descriptions:

### ASP.NET Core Docker Image

This repository contains images for running **published** ASP.NET Core applications. These images use the microsoft/dotnet image as their base.

### ASP.NET Core Build Docker Image

This repository contains images that are used to **compile/publish** ASP.NET Core applications inside the container. This is different from compiling an ASP.NET Core application and then adding the compiled output to an image, which is what you would do when using the microsoft/aspnetcore image. These Dockerfiles use the microsoft/dotnet image as their base.

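## Create an ASP.NET Core project

Create a folder called docker-tutorial, navigate to it, and execute the following command. By default, `dotnet new` names the project after the folder, which is why the compiled assembly is later called docker-tutorial.dll: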

```powershell
dotnet new webapi
```

## First Build

Let's start simple: compile the app on our computer and then add the output to the runtime image.

Run the following commands in the root of your project:

```powershell
dotnet restore
dotnet publish -o ./publish
```

You should now have a publish folder that contains your compiled application.

Now create a new Dockerfile in the root of the application:

```dockerfile
FROM microsoft/aspnetcore:2.0
WORKDIR /app
COPY ./publish .
ENTRYPOINT ["dotnet", "docker-tutorial.dll"]
```

This Dockerfile copies the contents of the publish folder in the root of your project into the /app folder of the image and starts the app with `dotnet docker-tutorial.dll`.

Build the image:

```powershell
docker build -t docker-tutorial .
```

You can find out more about the build command [here](https://docs.docker.com/engine/reference/commandline/build/)

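Test the image. The `-p 8181:80` option maps port 8181 on the host to port 80 in the container, which is where the app listens:

```powershell
docker run -p 8181:80 docker-tutorial
```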

Now you can navigate to the hosted application: http://localhost:8181/api/values

You should get a response like this:

```json
["value1","value2"]
```
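To smoke-test the container from a script rather than a browser, it is enough to parse the response body and check its shape. This is a minimal sketch, assuming the response is the JSON array of strings shown above; `checkBody` and `isValuesResponse` are hypothetical helpers, and the commented-out `fetch` line assumes a runtime with a global `fetch`, such as Node 18 or later.

```ts
// Type guard: the response shown above is a JSON array of strings.
function isValuesResponse(body: unknown): body is string[] {
  return Array.isArray(body) && body.every((item) => typeof item === 'string')
}

// Parses a raw response body and rejects anything that is not a string array.
function checkBody(text: string): string[] {
  const parsed: unknown = JSON.parse(text)
  if (!isValuesResponse(parsed)) {
    throw new Error(`Unexpected response: ${text}`)
  }
  return parsed
}

// With a global fetch (e.g. Node 18+), the text could come from something like:
//   const text = await (await fetch('http://localhost:8181/api/values')).text()
console.log(checkBody('["value1","value2"]').join(', ')) // value1, value2
```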

Your Docker engine might not be reachable through localhost. If so, use the correct URL instead. If you are using the Docker Toolbox with docker-machine, you can get the IP with the following command:

```powershell
docker-machine ip default
```

## Compiling within the aspnetcore-build image

It is recommended to compile your project within a Docker image, since this produces a more reliable build pipeline: the build on the development machine works the same way as the build on the build server.

So let's create another Dockerfile called Dockerfile.build:

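```dockerfile
FROM microsoft/aspnetcore-build:2.0
WORKDIR /app

# Copy only the project file first, so the restore layer stays cached until it changes
COPY *.csproj .
RUN dotnet restore

# Copy the remaining source and publish a release build to /out
COPY . .
RUN dotnet publish --output /out/ --configuration Release
```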

The *COPY* instruction copies files from the host (more precisely, from the build context) into the image. Copying the .csproj file on its own, before the rest of the source, is deliberate.

Note what happens when you rebuild the image. If you don't change anything, every step is taken from the cache and nothing is rebuilt. If you change only code, the `COPY . .` and publish steps run again, but *dotnet restore* does not. *dotnet restore* only runs again when the .csproj file changes, for example when you add or update a dependency.

For a more detailed description of this layered build process, see [this article](https://docs.docker.com/engine/userguide/storagedriver/imagesandcontainers/).
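The caching behavior described above can be illustrated with a simplified model: each step's cache key combines the previous step's key, the instruction text and, for *COPY*, the content of the copied files, so a change invalidates that step and every step after it. This is a teaching model with hypothetical names (`Step`, `cacheKeys`, `stepsToRerun`); Docker's real cache logic is more involved.

```ts
// One Dockerfile instruction; `inputs` stands in for the content of the files it copies.
interface Step {
  instruction: string
  inputs?: string
}

// Simplified chained key: previous key + instruction + copied content (a stand-in for a hash).
function cacheKeys(steps: Step[]): string[] {
  const keys: string[] = []
  let previous = ''
  for (const step of steps) {
    previous = `${previous}|${step.instruction}|${step.inputs ?? ''}`
    keys.push(previous)
  }
  return keys
}

// Returns the instructions whose cache entries are invalidated between two builds.
function stepsToRerun(before: Step[], after: Step[]): string[] {
  const oldKeys = cacheKeys(before)
  const newKeys = cacheKeys(after)
  return after.filter((_, i) => newKeys[i] !== oldKeys[i]).map((step) => step.instruction)
}

// Models Dockerfile.build; `csproj` and `code` stand for file contents.
const build = (csproj: string, code: string): Step[] => [
  { instruction: 'FROM microsoft/aspnetcore-build:2.0' },
  { instruction: 'WORKDIR /app' },
  { instruction: 'COPY *.csproj .', inputs: csproj },
  { instruction: 'RUN dotnet restore' },
  { instruction: 'COPY . .', inputs: csproj + code },
  { instruction: 'RUN dotnet publish --output /out/ --configuration Release' },
]

// Only code changed: restore stays cached, the last two steps re-run.
console.log(stepsToRerun(build('deps-v1', 'code-v1'), build('deps-v1', 'code-v2')).join('\n'))
```

Changing the `csproj` argument instead invalidates everything from `COPY *.csproj .` onward, which is why restore runs again only when dependencies change.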

Before we build the build image, we need to add one more file so that the output of dotnet commands run on the host (dotnet restore/build/publish) does not interfere with the build context. See [this post](https://codefresh.io/blog/not-ignore-dockerignore/) for more information. Add a file called .dockerignore with the following content to the root of the project:

```txt
bin
obj
publish
```
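Conceptually, these entries remove matching paths from the build context before it is sent to the Docker daemon. The sketch below is a deliberately simplified model that only handles plain names like `bin`, `obj` and `publish`, matched against the first segment of each path relative to the context root; real `.dockerignore` files also support wildcards and `!` exceptions, which the hypothetical `filterContext` helper does not.

```ts
// Simplified model: plain names only, compared with the first segment of each path.
// Real .dockerignore files also support wildcards and ! exceptions; this does not.
function filterContext(paths: string[], ignoreLines: string[]): string[] {
  const names = ignoreLines
    .map((line) => line.trim())
    .filter((line) => line !== '' && line[0] !== '#') // skip blank lines and comments
  return paths.filter((path) => names.indexOf(path.split('/')[0]) === -1)
}

// Illustrative paths in the project folder after building on the host.
const contextPaths = [
  'docker-tutorial.csproj',
  'Program.cs',
  'Controllers/ValuesController.cs',
  'bin/Debug/netcoreapp2.0/docker-tutorial.dll',
  'obj/project.assets.json',
  'publish/docker-tutorial.dll',
]

// Only the project file and source files remain in the context.
console.log(filterContext(contextPaths, ['bin', 'obj', 'publish']).join('\n'))
```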

Now let's build the image. Note that we have to specify explicitly which Dockerfile to use with the `-f` option:

```powershell
docker build -f Dockerfile.build -t docker-tutorial-build .
```

We have now built the app in the image. We could run a container from that image, but first run the following command:

```powershell
docker image ls | sls docker-tutorial
```