id

int64 393k

2.82B

| repo

stringclasses 68

values | title

stringlengths 1

936

| body

stringlengths 0

256k

⌀ | labels

stringlengths 2

508

| priority

stringclasses 3

values | severity

stringclasses 3

values |

|---|---|---|---|---|---|---|

350,998,164 |

flutter

|

Color codes in error messages are probably escaped when using the iOS simulator

|

I'm adding ANSI color codes such as `\u001B[31;1m` (bright red) to error messages.

They render fine when using the Android emulator.

But with the iOS simulator, they seem to get escaped and appear as below:

`**\^[[31;1m**Material widget ancestor required by TextField widgets not found.**<…>**`

|

platform-ios,tool,customer: crowd,has reproducible steps,P3,found in release: 3.0,found in release: 3.1,team-ios,triaged-ios

|

medium

|

Critical

|

351,004,315 |

flutter

|

Binding a Flutter Canvas / PictureRecorder to a Texture?

|

I'm looking into rendering Flutter canvas graphics onto an Android surface from another plugin.

Currently I have the following:

Android flutter plugin that obtains an Android Surface.

- option 1: flutter app renders `Canvas`, save with `PictureRecorder`, copy RGBA bytes to plugin, draw to `Surface`

- option 2: flutter app sends messages to plugin to draw on an Android `Canvas`, draw to `Surface`

option 2 is significantly faster - but requires mirroring `Canvas` methods in the UI with plugin methods,

e.g.

```dart

// Draw UI

canvas

.. drawPaint(paint)

.. drawLine(pt1, pt2, paint);

// Render same thing to plugin

plugin

..drawPaint(paint);

..drawLine(pt1, ot2, paint);

```

In our case - we currently only need a subset of Canvas methods, so this isn't too burdensome for now.

Ideally, however, this could look something like this:

```dart

// Draw UI

canvas

.. drawPaint(paint)

.. drawLine(pt1, pt2, paint);

// Render same thing to plugin

canvas = new Canvas(new PictureRecorder(plugin.textureId))

..drawPaint(paint);

..drawLine(pt1, pt2, paint);

```

Is there a way to let a `PictureRecorder` record canvas operations to a texture?

Thanks!

|

c: new feature,engine,P2,team-engine,triaged-engine

|

low

|

Minor

|

351,010,357 |

go

|

cmd/cover: misleading coverage indicators for channel operations in 'select' statements

|

### What version of Go are you using (`go version`)?

go version go1.10.3 windows/amd64

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

set GOHOSTARCH=amd64

set GOHOSTOS=windows

### What did you do?

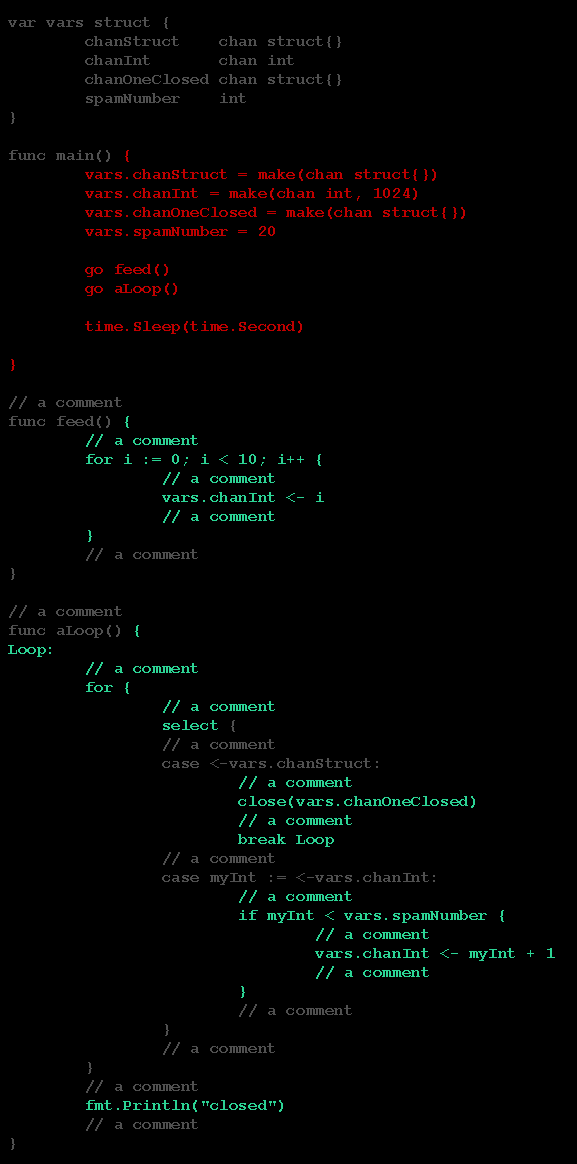

main.go:

```go

package main

import (

"fmt"

"time"

)

var vars struct {

chanStruct chan struct{}

chanInt chan int

chanOneClosed chan struct{}

spamNumber int

}

func main() {

vars.chanStruct = make(chan struct{})

vars.chanInt = make(chan int, 1024)

vars.chanOneClosed = make(chan struct{})

vars.spamNumber = 20

go feed()

go aLoop()

time.Sleep(time.Second)

close(vars.chanStruct)

select {

case <-vars.chanOneClosed:

}

time.Sleep(time.Second)

}

// a comment

func feed() {

// a comment

for i := 0; i < 10; i++ {

// a comment

vars.chanInt <- i

// a comment

}

// a comment

}

// a comment

func aLoop() {

Loop:

// a comment

for {

// a comment

select {

// a comment

case <-vars.chanStruct:

// a comment

close(vars.chanOneClosed)

// a comment

break Loop

// a comment

case myInt := <-vars.chanInt:

// a comment

if myInt < vars.spamNumber {

// a comment

vars.chanInt <- myInt + 1

// a comment

}

// a comment

}

// a comment

}

// a comment

fmt.Println("closed")

// a comment

}

```

main_test.go:

```go

package main

import (

"testing"

"time"

)

func TestALoop(t *testing.T) {

vars.chanStruct = make(chan struct{})

vars.chanInt = make(chan int, 1024)

vars.chanOneClosed = make(chan struct{})

vars.spamNumber = 20

go feed()

go aLoop()

time.Sleep(time.Second)

close(vars.chanStruct)

select {

case <-vars.chanOneClosed:

}

time.Sleep(time.Second)

}

```

Then run:

```

go test -coverprofile=coverage.out -v test

go tool cover -html=coverage.out

```

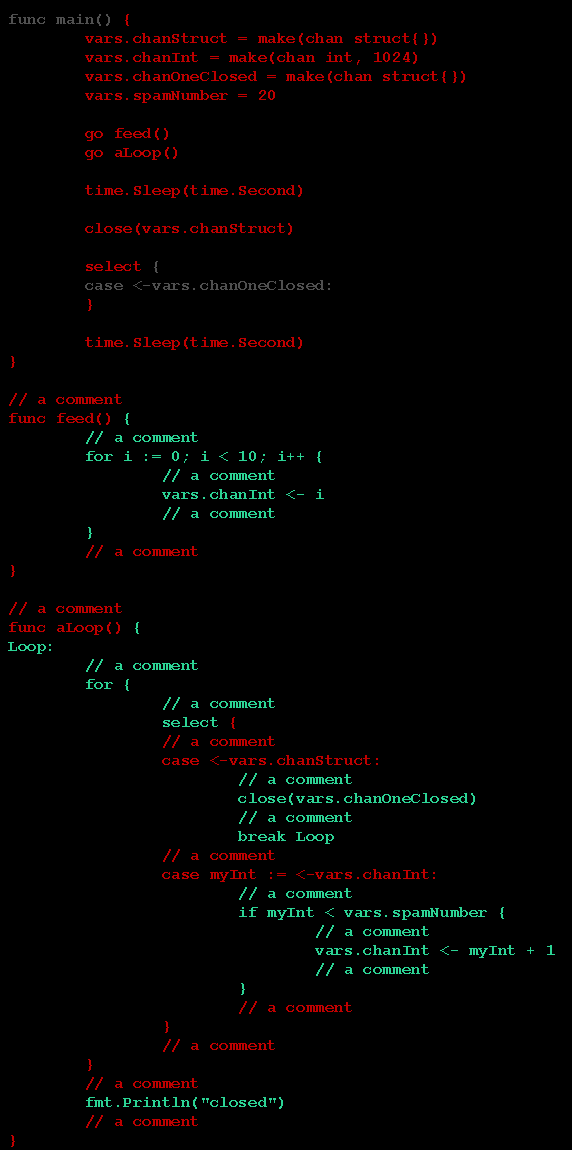

### What did you expect to see?

Coverage report with green and black, with only red in main, similar to this:

Note, remove the follow code from main to get coverage report to be "correct"

```go

close(vars.chanStruct)

select {

case <-vars.chanOneClosed:

}

time.Sleep(time.Second)

```

### What did you see instead?

Coverage report with red marks in comments, function, and case:

### Note:

This is similar to https://github.com/golang/go/issues/22545 but that one is only comments.

|

NeedsInvestigation,compiler/runtime

|

low

|

Minor

|

351,012,435 |

TypeScript

|

Cannot use type side of a namespace in JsDoc after `declare global ...` workaround for UMD globals

|

I hit this while writing an electron app using `checkJs` where you can both `require` code in (thus making your file a module), and there may also be script tags loading code in the HTML for the app. For example, by main page has the below as it is using D3. Thus the 'd3' object is available globally.

```html

<script src="node_modules/d3/dist/d3.js"></script>

<script src="./app.js"></script>

```

Trying to use the global D3 in my `app.js` however results in the error `'d3' refers to a UMD global, but the current file is a module...`, so I've added the common workaround below to avoid this via a .d.ts file.

```ts

import {default as _d3} from 'd3';

declare global {

// Make the global d3 from directly including the bundle via a script tag available as a global

const d3: typeof _d3;

}

```

When the above .d.ts code is present (and only when), JSDoc gives an error on trying to use types from the namespace, i.e. the below code

```js

/** @type {d3.DefaultArcObject} */

var x;

```

Results in the error `Namespace '"./@types/d3/index".d3' has no exported member 'DefaultArcObject'.` Yet the below TypeScript continues to work fine:

```ts

var x: d3.DefaultArcObject;

```

The below also continues to work fine in JavaScript, but is kind of ugly and a pain to have to repeat (especially if you need to use a lot of type arguments)

```js

/** @type {import('d3').DefaultArcObject} */

var x;

```

Personally I'd rather not have to do the .d.ts workaround at all and just be able to use the `d3` global in my modules (see the highly controversial #10178). That not being the case, JsDoc should be able to access the types still with the workaround in place.

|

Bug,Domain: JSDoc

|

low

|

Critical

|

351,018,556 |

rust

|

Rust should embed /DEFAULTLIB linker directives in staticlibs for pc-windows-msvc

|

This would significantly improve the user experience as they wouldn't have to call `--print native-static-libs` to figure out what libraries they need to link to and then tell their build system to link them. Instead the linker would just know to automatically link to those libraries.

Examples of issues that wouldn't exist with this:

https://github.com/rust-lang/rust/issues/52892

|

A-linkage,A-LLVM,T-compiler,O-windows-msvc

|

low

|

Minor

|

351,029,708 |

TypeScript

|

quick fix for merge duplicate import or export declaration

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Please read the FAQ first, especially the "Common Feature Requests" section.

-->

## Search Terms

quickfix, import, export

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

## Suggestion

provide a quickfix for merge two(or more) import or export declaration

<!-- A summary of what you'd like to see added or changed -->

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

it's useful for resolve git conflict

## Examples

```ts

// ====

import { a, b, c } from 'mod'

// ====

import { b, d, e } from 'mod'

// ====

```

keep each other for git conflict:

```ts

import { a, b, c } from 'mod'

import { b, d, e } from 'mod'

```

<!-- Show how this would be used and what the behavior would be -->

after merge:

```ts

import { a, b, c, d, e } from 'mod'

```

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

|

Suggestion,In Discussion,Domain: Refactorings,Experience Enhancement

|

low

|

Critical

|

351,040,720 |

pytorch

|

[Caffe2] Error C2375 when building DLL.

|

## Issue description

VS complains about `C2375` due to missing dllimport/dllexport.

```

caffe2\core\operator_schema.cc(403): error C2375: 'caffe2::operator <<': redefinition; different linkage

caffe2\core\db.cc(15): error C2375: 'caffe2::db::Caffe2DBRegistry': redefinition; different linkage

caffe2\core\blob_serialization.cc(323): error C2375: 'caffe2::BlobSerializerRegistry': redefinition; different linkage

caffe2\core\net.cc(22): error C2375: 'caffe2::NetRegistry': redefinition; different linkage

```

## System Info

- PyTorch or Caffe2: C2

- How you installed PyTorch (conda, pip, source): src

- Build command you used (if compiling from source): cmake

- OS: Win10

- PyTorch version: master

- VS version (if compiling from source): 2017

- CMake version: 3.12

|

caffe2

|

low

|

Critical

|

351,045,644 |

react

|

Umbrella: Chopping Block

|

I wanted to create a list of things whose existence makes React bigger and more complicated than necessary. This makes them more likely to need to be deprecated and actually removed in a future version. No clue of when this will happen and what the recommended upgrade path will be so don't take this issue as advice that you should move away from them until there's clear upgrade advice. You might make it worse by doing so.

(This has some overlap with https://github.com/facebook/react/issues/9475 but those seem more longer term.)

- [ ] __Unsafe Life Cycles without UNSAFE prefix__ - We'll keep the ones prefixed UNSAFE indefinitely but the original ones will likely be deprecated and removed.

- [ ] __Legacy context__ - `.contextTypes`, `.childContextTypes`, `getChildContext` - The old context is full of edge cases for when it is accidentally supposed to work and the way it is designed requires all React code to become slower just to support this feature.

- [ ] __String refs__ - This requires current owner to be exposed at runtime. While it is likely that some form of owner will remain, this particular semantics is likely not what we want out of it. So rather than having two owners, we should just remove this feature. It also requires an extra field on every ReactElement which is otherwise not needed.

- [ ] __Module pattern components__ - This is a little used feature that lets you return a class instance from a regular function without extending `React.Component`. This is not that useful. In practice the ecosystem has moved around ES class like usage, and other language compiling to JS tries to comply with that model as well. The existence of this feature means that we don't know that something is a functional component by just testing if it's a function that is not extending `React.Component`. Instead we have to do some extra feature testing for every functional component there is. It also prevents us from passing the ref as the second argument by default for all functional components without using `forwardRef` since that wouldn't be valid for class components.

- [ ] __Uncontrolled onInput__ - This is described in #9657. Because we support uncontrolled polyfilling of this event, we have to do pretty invasive operations to the DOM like attaching setters. This is all in support of imperative usage of the DOM which should be out-of-scope for React.

- [ ] __setState in componentDidCatch__ - Currently we support error recovery in `componentDidCatch` but once we support `getDerivedStateFromCatch` we might want to consider deprecating the old mechanism which automatically first commits null. The semantics of this are a bit weird and requires complicated code that we likely get wrong sometimes.

- [ ] __Context Object As Consumer__ - Right now it is possible to use the Context object as a Consumer render prop. That's an artifact of reusing the same object allocation but not documented. We'll want to deprecate that and make it the Provider instead.

- [ ] __No GC of not unmounted roots__ - This likely won't come with a warning. We'll just do it. It's not a breaking behavior other than memory usage. You have to call `unmountComponentAtNode` or that component won't be cleaned up. Almost always it is not cleaned up anyway since if you have at least one subscription that still holds onto it. Arguably this is not even a breaking change. #13293

- [ ] __unstable_renderSubtreeIntoContainer__ - This is replaced by Portals. It is already problematic since it can't be used in life-cycles but it also add lots of special case code to transfer the context. Since legacy context itself likely will be deprecated, this serves no purposes.

- [x] __ReactDOM.render with hydration__ - This has already been deprecated. This requires extra code and requires us to generate an extra attribute in the HTML to auto-select hydration. People should be using ReactDOM.hydrate instead. We just need to remove the old behavior and the attribute in ReactDOMServer.

- [ ] __Return value of `ReactDOM.render()`__ - We can't synchronously return an instance when inside a lifecycle/callback/effect, or in concurrent mode. Should use a ref instead.

- [ ] __All of `ReactDOM.render()`__ - Switch everyone over to `createRoot`, with an option to make `createRoot` sync.

|

Type: Umbrella,React Core Team

|

medium

|

Critical

|

351,053,410 |

vscode

|

Method separator

|

Issue Type: <b>Feature Request</b>

Please add method separator in class and shortcut for go back to previous Edit/last edit location ... Thanks

VS Code version: Code 1.26.0 (4e9361845dc28659923a300945f84731393e210d, 2018-08-13T16:20:44.170Z)

OS version: Darwin x64 17.7.0

<!-- generated by issue reporter -->

|

feature-request,editor-rendering

|

high

|

Critical

|

351,054,155 |

pytorch

|

[BUG]: unstable happend in saving model.

|

## Issue description

When I save my model, I met the errors.

My environment:

system: debian 8

python: python3

pytorch: 0.4.1

## Code

```python

def save_model(self, epoch):

# for example: save_path = '~/Documents/my_model_1.pth'.

save_path = self.model_prefix + '_' + str(epoch) + '.pth'

# note that my net: self.net is trained on GPU.

torch.save(self.net.state_dict(), save_path)

self.logging.info('Saved model in {}'.format(save_path))

```

## Error

```

Traceback (most recent call last):

File "train_network.py", line 64, in <module>

main()

File "train_network.py", line 60, in main

netutil.save_model(epoch)

File "/opt/tiger/reid/attribute_net/network.py", line 169, in save_model

torch.save(self.net.state_dict(), save_path)

File "/usr/local/lib/python3.6/site-packages/torch/serialization.py", line 209, in save

return _with_file_like(f, "wb", lambda f: _save(obj, f, pickle_module, pickle_protocol))

File "/usr/local/lib/python3.6/site-packages/torch/serialization.py", line 134, in _with_file_like

return body(f)

File "/usr/local/lib/python3.6/site-packages/torch/serialization.py", line 209, in <lambda>

return _with_file_like(f, "wb", lambda f: _save(obj, f, pickle_module, pickle_protocol))

File "/usr/local/lib/python3.6/site-packages/torch/serialization.py", line 288, in _save

serialized_storages[key]._write_file(f, _should_read_directly(f))

RuntimeError: Unknown error -1

```

|

module: serialization,triaged

|

low

|

Critical

|

351,134,598 |

go

|

x/mobile: manual declaration of uses-sdk in AndroidManifest.xml not supported

|

We need to set the minSdkVersion and targetSdkVersion in the AndroidManifest.xml file. But it won't build, gomobile will exit with err:

```

manual declaration of uses-sdk in AndroidManifest.xml not supported

```

minSdkVersion and targetSdkVersion is a commonly used in Android. If not set, app market like Google Play will refused to accept the app.

|

mobile

|

low

|

Major

|

351,137,812 |

three.js

|

GLTFExporter: Normal Texture handedness

|

GLTF Loader: Fix for handedness in Normal Texture:

https://github.com/mrdoob/three.js/pull/11825

https://github.com/KhronosGroup/glTF/issues/952

https://github.com/mrdoob/three.js/blob/master/examples/js/loaders/GLTFLoader.js#L2204

material.normalScale.y = - material.normalScale.y;

Given that the loader needs to adjust this, should the exporter be adjusting this as well?

Please do correct me if I'm wrong, but the current code does not seem to account for this.

if ( material.normalMap ) {

gltfMaterial.normalTexture = {

index: processTexture( material.normalMap )

};

if ( material.normalScale.x !== - 1 ) {

if ( material.normalScale.x !== material.normalScale.y ) {

console.warn( 'THREE.GLTFExporter: Normal scale components are different, ignoring Y and exporting X.' );

}

gltfMaterial.normalTexture.scale = material.normalScale.x;

}

}

##### Three.js version

- [x ] Dev

- [ ] r95

- [ ] ...

##### Browser

- [x] All of them

- [ ] Chrome

- [ ] Firefox

- [ ] Internet Explorer

##### OS

- [x] All of them

- [ ] Windows

- [ ] macOS

- [ ] Linux

- [ ] Android

- [ ] iOS

##### Hardware Requirements (graphics card, VR Device, ...)

|

Needs Investigation

|

low

|

Major

|

351,139,545 |

opencv

|

Run the official face-detection sample and find the problem

|

<!--

If you have a question rather than reporting a bug please go to http://answers.opencv.org where you get much faster responses.

If you need further assistance please read [How To Contribute](https://github.com/opencv/opencv/wiki/How_to_contribute).

Please:

* Read the documentation to test with the latest developer build.

* Check if other person has already created the same issue to avoid duplicates. You can comment on it if there already is an issue.

* Try to be as detailed as possible in your report.

* Report only one problem per created issue.

This is a template helping you to create an issue which can be processed as quickly as possible. This is the bug reporting section for the OpenCV library.

-->

##### System information (version)

<!-- Example

- OpenCV => 3.4

- Operating System / Platform => Android

- Compiler => cmake

-->

- OpenCV => 3.4

- Operating System / Platform => Android

- Compiler => cmake

##### Detailed description

<!-- your description -->

Run the official face-detection sample and find that the value detected by the face is incorrect. It is clear that the current screen has no face, but the detected rectangular frame is still displayed.

##### Steps to reproduce

code segment

mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2, // TODO: objdetect.CV_HAAR_SCALE_IMAGE

new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());

Rect[] facesArray = faces.toArray();

for (int i = 0; i < facesArray.length; i++)

Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

<!-- to add code example fence it with triple backticks and optional file extension

```.cpp

// C++ code example

```

or attach as .txt or .zip file

-->

|

incomplete

|

low

|

Critical

|

351,166,166 |

opencv

|

Unable to open video stream

|

<!--

If you have a question rather than reporting a bug please go to http://answers.opencv.org where you get much faster responses.

If you need further assistance please read [How To Contribute](https://github.com/opencv/opencv/wiki/How_to_contribute).

Please:

* Read the documentation to test with the latest developer build.

* Check if other person has already created the same issue to avoid duplicates. You can comment on it if there already is an issue.

* Try to be as detailed as possible in your report.

* Report only one problem per created issue.

This is a template helping you to create an issue which can be processed as quickly as possible. This is the bug reporting section for the OpenCV library.

-->

##### System information (version)

<!-- Example

-->

- OpenCV => 3.4.1

- Operating System / Platform => Ubuntu 16.04 arm64

- Compiler => gcc

##### Detailed description

Not able to open video stream using function cvCaptureFromCAM() in C language.

Gets following error:

```

[ WARN:0] cvCreateFileCaptureWithPreference: backend FFMPEG doesn't support legacy API anymore.

NvMMLiteOpen : Block : BlockType = 261

TVMR: NvMMLiteTVMRDecBlockOpen: 7907: NvMMLiteBlockOpen

NvMMLiteBlockCreate : Block : BlockType = 261

TVMR: cbBeginSequence: 1223: BeginSequence 1280x720, bVPR = 0

TVMR: LowCorner Frequency = 100000

TVMR: cbBeginSequence: 1622: DecodeBuffers = 5, pnvsi->eCodec = 4, codec = 0

TVMR: cbBeginSequence: 1693: Display Resolution : (1280x720)

TVMR: cbBeginSequence: 1694: Display Aspect Ratio : (1280x720)

TVMR: cbBeginSequence: 1762: ColorFormat : 5

TVMR: cbBeginSequence:1776 ColorSpace = NvColorSpace_YCbCr601

TVMR: cbBeginSequence: 1904: SurfaceLayout = 3

TVMR: cbBeginSequence: 2005: NumOfSurfaces = 12, InteraceStream = 0, InterlaceEnabled = 0, bSecure = 0, MVC = 0 Semiplanar = 1, bReinit = 1, BitDepthForSurface = 8 LumaBitDepth = 8, ChromaBitDepth = 8, ChromaFormat = 5

TVMR: cbBeginSequence: 2007: BeginSequence ColorPrimaries = 2, TransferCharacteristics = 2, MatrixCoefficients = 2

Allocating new output: 1280x720 (x 12), ThumbnailMode = 0

OPENMAX: HandleNewStreamFormat: 3464: Send OMX_EventPortSettingsChanged : nFrameWidth = 1280, nFrameHeight = 720

GStreamer-CRITICAL **: gst_query_set_position: assertion 'format == g_value_get_enum (gst_structure_id_get_value (s, GST_QUARK (FORMAT)))' failed

[ WARN:0] cvCreateFileCaptureWithPreference: backend GSTREAMER doesn't support legacy API anymore.

TVMR: TVMRFrameStatusReporting: 6369: Closing TVMR Frame Status Thread -------------

TVMR: TVMRVPRFloorSizeSettingThread: 6179: Closing TVMRVPRFloorSizeSettingThread -------------

TVMR: TVMRFrameDelivery: 6219: Closing TVMR Frame Delivery Thread -------------

TVMR: NvMMLiteTVMRDecBlockClose: 8105: Done

Failed to query video capabilities: Inappropriate ioctl for device

libv4l2: error getting capabilities: Inappropriate ioctl for device

VIDEOIO ERROR: V4L: device test.mp4: Unable to query number of channels

Couldn't connect to webcam.

: Bad file descriptor

Opened the streamAborted (core dumped)

```

|

wontfix,category: videoio

|

low

|

Critical

|

351,182,490 |

kubernetes

|

Kubelet doesn't support dynamic CPU offlining/onlining

|

<!-- This form is for bug reports and feature requests ONLY!

If you're looking for help check [Stack Overflow](https://stackoverflow.com/questions/tagged/kubernetes) and the [troubleshooting guide](https://kubernetes.io/docs/tasks/debug-application-cluster/troubleshooting/).

If the matter is security related, please disclose it privately via https://kubernetes.io/security/.

-->

**Is this a BUG REPORT or FEATURE REQUEST?**:

/kind bug

**What happened**:

Kubelet doesn't follow CPU hotplug events. For example, if I offline a CPU, guaranteed pods might still be assigned there even though there is no real capacity available. Also, the CPU manager static policy might try to assign containers to non-existent CPUs. This would lead to containers not starting, because the CRI cpuset assignment would fail.

**How to reproduce it (as minimally and precisely as possible)**:

On the master node check CPU capacity:

$ kubectl get node worker-node -o json | jq '.status | .capacity | .cpu'

"4"

On the worker node offline a CPU:

# echo 0 > /sys/devices/system/cpu/cpu2/online

On the master node check CPU capacity again:

$ kubectl get node worker-node -o json | jq '.status | .capacity | .cpu'

"4"

**What you expected to happen**:

The cpu capacity should have gone to 3.

**Anything else we need to know?**:

The correct way to fix this would be to listen to udev hotplug events from a netlink socket. When an event telling that a CPU has been added or removed is received, kubelet should do a few things:

1. Inform API server about the new capacity in NodeStatus message.

2. Inform CPU manager that a new topology must be loaded. The containers must be reassigned to CPUs, because either the default pool grew or some container lost a CPU on which it was assigned to.

CPU hotplug functionality is needed for some pretty special use cases. One of them is the possibility to (dynamically) disable SMT support by offlining a sibling core. I know that this bug is not something that a regular user will meet on daily basis, but since kubernetes manages CPU usage and CPU allocations, this is something that it should take into account.

I would be happy to take a look at fixing this bug if there is some sort of consensus that such a patch might be accepted in kubernetes.

**Environment**:

- Kubernetes version (use `kubectl version`):

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.1",

GitCommit:"b1b29978270dc22fecc592ac55d903350454310a", GitTreeState:"clean", BuildDate:"2018-07-17T18:53:20Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.1",

GitCommit:"b1b29978270dc22fecc592ac55d903350454310a", GitTreeState:"clean", BuildDate:"2018-07-17T18:43:26Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

- Cloud provider or hardware configuration: Local VMs

- OS (e.g. from /etc/os-release):

NAME=Fedora

VERSION="28 (Workstation Edition)"

- Kernel (e.g. `uname -a`):

4.17.12-200.fc28.x86_64

|

sig/node,kind/feature,needs-triage

|

medium

|

Critical

|

351,185,785 |

go

|

x/tools/present: fix rendering on mobile

|

Trying to follow a presentation on a mobile device is currently very difficult. Some of the issues include:

* Scrolling left or right sometimes doesn't move one slide; rather, it scrolls through the visible horizontal slides

* The screens tend to be tall, meaning that the text is too small by default.

* Trying to zoom in our out easily breaks the UI; one can end up with slides that are cut off.

Below is a screenshot I took after zooming in and out on a presentation of mine.

<img src="https://user-images.githubusercontent.com/3576549/44208641-de165680-a158-11e8-9a73-ce07db61b9f1.png" width="300">

My HTML/JS skills are limited, so I don't know if this would be a major rework for mobile, or just some tweaking to have it behave better on small/tall screens.

|

help wanted,NeedsFix,Tools

|

medium

|

Major

|

351,216,770 |

vue-element-admin

|

Is there any ways to set up a new small admin with another backend framwrok

|

So I use laravel node and nuxt js with node. I want to use this admin as an independent library to fit into other projects. How can I easily separate the components, all styles and use in any other project?

|

enhancement :star:

|

low

|

Minor

|

351,237,949 |

go

|

encoding/json, encoding/xml: update documentation to use embedded fields instead of anonymous fields.

|

This is a documentation issue.

The [json.Marshal documentation](https://tip.golang.org/pkg/encoding/json/#Marshal) refers to "anonymous struct fields," which the spec has been calling "embedded" struct fields since f8b4123613a2cb0c453726033a03a1968205ccae. This is confusing to readers who want to know how embedded struct fields are marshaled and aren't aware of the historic terminology.

Searching the codebase for "anonymous struct" suggests that the encoding/xml godoc has the same issue.

|

Documentation,help wanted,NeedsFix

|

low

|

Minor

|

351,239,760 |

pytorch

|

[Caffe2] Unable to use MPI rendezvous in Caffe2

|

## Issue description

Unable to use MPI rendezvous in Caffe2.

I understand that this information may not be sufficient for helping me out. Hence, I request you to ask to perform whatever steps that are required to get more information about the situation.

I am grateful for your help.

## Code example

Details:

For reproducibility, I am using a container made using the following the Dockerfile:

```

FROM nvidia/cuda:8.0-cudnn7-devel-ubuntu16.04

LABEL maintainer="[email protected]"

# caffe2 install with gpu support

RUN apt-get update && apt-get install -y --no-install-recommends \

build-essential \

cmake \

git \

libgflags-dev \

libgoogle-glog-dev \

libgtest-dev \

libiomp-dev \

libleveldb-dev \

liblmdb-dev \

libopencv-dev \

libprotobuf-dev \

libsnappy-dev \

protobuf-compiler \

python-dev \

python-numpy \

python-pip \

python-pydot \

python-setuptools \

python-scipy \

wget \

&& rm -rf /var/lib/apt/lists/*

RUN wget -q http://www.mpich.org/static/downloads/3.1.4/mpich-3.1.4.tar.gz \

&& tar xf mpich-3.1.4.tar.gz \

&& cd mpich-3.1.4 \

&& ./configure --disable-fortran --enable-fast=all,O3 --prefix=/usr \

&& make -j$(nproc) \

&& make install \

&& ldconfig \

&& cd .. \

&& rm -rf mpich-3.1.4 \

&& rm mpich-3.1.4.tar.gz

RUN pip install --no-cache-dir --upgrade pip==9.0.3 setuptools wheel

RUN pip install --no-cache-dir \

flask \

future \

graphviz \

hypothesis \

jupyter \

matplotlib \

numpy \

protobuf \

pydot \

python-nvd3 \

pyyaml \

requests \

scikit-image \

scipy \

setuptools \

six \

tornado

########## INSTALLATION STEPS ###################

RUN git clone --branch master --recursive https://github.com/pytorch/pytorch.git

RUN cd pytorch && mkdir build && cd build \

&& cmake .. \

-DCUDA_ARCH_NAME=Manual \

-DCUDA_ARCH_BIN="35 52 60 61" \

-DCUDA_ARCH_PTX="61" \

-DUSE_NNPACK=OFF \

-DUSE_ROCKSDB=OFF \

&& make -j"$(nproc)" install \

&& ldconfig \

&& make clean \

&& cd .. \

&& rm -rf build

ENV PYTHONPATH /usr/local

```

The command:

```

srun -N 4 -n 4 -C gpu \

shifter run --mpi load/library/caffe2_container_diff \

python resnet50_trainer.py \

--train_data=$SCRATCH/caffe2_notebooks/tutorial_data/resnet_trainer/imagenet_cars_boats_train \

--test_data=$SCRATCH/caffe2_notebooks/tutorial_data/resnet_trainer/imagenet_cars_boats_val \

--db_type=lmdb \

--num_shards=4 \

--num_gpu=1 \

--num_labels=2 \

--batch_size=2 \

--epoch_size=150 \

--num_epochs=2 \

--distributed_transport ibverbs \

--distributed_interface mlx5_0

```

The output/error:

```

srun: job 9059937 queued and waiting for resources

srun: job 9059937 has been allocated resources

E0816 14:14:20.081552 7042 init_intrinsics_check.cc:43] CPU feature avx is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.081637 7042 init_intrinsics_check.cc:43] CPU feature avx2 is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.081642 7042 init_intrinsics_check.cc:43] CPU feature fma is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.083420 6442 init_intrinsics_check.cc:43] CPU feature avx is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.083504 6442 init_intrinsics_check.cc:43] CPU feature avx2 is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.083509 6442 init_intrinsics_check.cc:43] CPU feature fma is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

INFO:resnet50_trainer:Running on GPUs: [0]

INFO:resnet50_trainer:Using epoch size: 144

INFO:resnet50_trainer:Running on GPUs: [0]

INFO:resnet50_trainer:Using epoch size: 144

E0816 14:14:20.087043 5987 init_intrinsics_check.cc:43] CPU feature avx is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.087126 5987 init_intrinsics_check.cc:43] CPU feature avx2 is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.087131 5987 init_intrinsics_check.cc:43] CPU feature fma is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

INFO:resnet50_trainer:Running on GPUs: [0]

INFO:resnet50_trainer:Using epoch size: 144

INFO:data_parallel_model:Parallelizing model for devices: [0]

INFO:data_parallel_model:Create input and model training operators

INFO:data_parallel_model:Model for GPU : 0

INFO:data_parallel_model:Parallelizing model for devices: [0]

INFO:data_parallel_model:Create input and model training operators

INFO:data_parallel_model:Model for GPU : 0

E0816 14:14:20.102372 11086 init_intrinsics_check.cc:43] CPU feature avx is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.102452 11086 init_intrinsics_check.cc:43] CPU feature avx2 is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

E0816 14:14:20.102457 11086 init_intrinsics_check.cc:43] CPU feature fma is present on your machine, but the Caffe2 binary is not compiled with it. It means you may not get the full speed of your CPU.

INFO:data_parallel_model:Parallelizing model for devices: [0]

INFO:data_parallel_model:Create input and model training operators

INFO:data_parallel_model:Model for GPU : 0

INFO:resnet50_trainer:Running on GPUs: [0]

INFO:resnet50_trainer:Using epoch size: 144

INFO:data_parallel_model:Parallelizing model for devices: [0]

INFO:data_parallel_model:Create input and model training operators

INFO:data_parallel_model:Model for GPU : 0

INFO:data_parallel_model:Adding gradient operators

INFO:data_parallel_model:Adding gradient operators

INFO:data_parallel_model:Adding gradient operators

INFO:data_parallel_model:Adding gradient operators

INFO:data_parallel_model:Add gradient all-reduces for SyncSGD

WARNING:data_parallel_model:Distributed broadcast of computed params is not implemented yet

INFO:data_parallel_model:Add gradient all-reduces for SyncSGD

INFO:data_parallel_model:Add gradient all-reduces for SyncSGD

WARNING:data_parallel_model:Distributed broadcast of computed params is not implemented yet

WARNING:data_parallel_model:Distributed broadcast of computed params is not implemented yet

INFO:data_parallel_model:Add gradient all-reduces for SyncSGD

WARNING:data_parallel_model:Distributed broadcast of computed params is not implemented yet

INFO:data_parallel_model:Post-iteration operators for updating params

INFO:data_parallel_model:Calling optimizer builder function

INFO:data_parallel_model:Post-iteration operators for updating params

INFO:data_parallel_model:Post-iteration operators for updating params

INFO:data_parallel_model:Calling optimizer builder function

INFO:data_parallel_model:Calling optimizer builder function

INFO:data_parallel_model:Post-iteration operators for updating params

INFO:data_parallel_model:Calling optimizer builder function

INFO:data_parallel_model:Add initial parameter sync

INFO:data_parallel_model:Add initial parameter sync

INFO:data_parallel_model:Add initial parameter sync

INFO:data_parallel_model:Add initial parameter sync

INFO:data_parallel_model:Creating barrier net

INFO:data_parallel_model:Creating barrier net

INFO:data_parallel_model:Creating barrier net

*** Aborted at 1534428860 (unix time) try "date -d @1534428860" if you are using GNU date ***

INFO:data_parallel_model:Creating barrier net

*** Aborted at 1534428860 (unix time) try "date -d @1534428860" if you are using GNU date ***

*** Aborted at 1534428860 (unix time) try "date -d @1534428860" if you are using GNU date ***

PC: @ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

PC: @ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

PC: @ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

*** SIGSEGV (@0x8) received by PID 5987 (TID 0x2aaaaaae5480) from PID 8; stack trace: ***

@ 0x2aaaaace4390 (unknown)

@ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

*** SIGSEGV (@0x8) received by PID 7042 (TID 0x2aaaaaae5480) from PID 8; stack trace: ***

@ 0x2aaaaace4390 (unknown)

@ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

*** Aborted at 1534428860 (unix time) try "date -d @1534428860" if you are using GNU date ***

*** SIGSEGV (@0x8) received by PID 6442 (TID 0x2aaaaaae5480) from PID 8; stack trace: ***

@ 0x2aaaaace4390 (unknown)

@ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

@ 0x2aaab0af78d3 std::_Function_handler<>::_M_invoke()

PC: @ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

@ 0x2aaab0af78d3 std::_Function_handler<>::_M_invoke()

@ 0x2aaab09e8094 caffe2::InferBlobShapesAndTypes()

@ 0x2aaab09e9659 caffe2::InferBlobShapesAndTypesFromMap()

@ 0x2aaab0af78d3 std::_Function_handler<>::_M_invoke()

@ 0x2aaab09e8094 caffe2::InferBlobShapesAndTypes()

@ 0x2aaab032588e _ZZN8pybind1112cpp_function10initializeIZN6caffe26python16addGlobalMethodsERNS_6moduleEEUlRKSt6vectorINS_5bytesESaIS7_EESt3mapINSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEES6_IlSaIlEESt4lessISI_ESaISt4pairIKSI_SK_EEEE36_S7_JSB_SR_EJNS_4nameENS_5scopeENS_7siblingEEEEvOT_PFT0_DpT1_EDpRKT2_ENUlRNS_6detail13function_callEE1_4_FUNES19_

@ 0x2aaab09e9659 caffe2::InferBlobShapesAndTypesFromMap()

@ 0x2aaab035273e pybind11::cpp_function::dispatcher()

@ 0x4bc3fa PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c16e7 PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x2aaab032588e _ZZN8pybind1112cpp_function10initializeIZN6caffe26python16addGlobalMethodsERNS_6moduleEEUlRKSt6vectorINS_5bytesESaIS7_EESt3mapINSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEES6_IlSaIlEESt4lessISI_ESaISt4pairIKSI_SK_EEEE36_S7_JSB_SR_EJNS_4nameENS_5scopeENS_7siblingEEEEvOT_PFT0_DpT1_EDpRKT2_ENUlRNS_6detail13function_callEE1_4_FUNES19_

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x2aaab09e8094 caffe2::InferBlobShapesAndTypes()

@ 0x4eb30f (unknown)

@ 0x4e5422 PyRun_FileExFlags

@ 0x4e3cd6 PyRun_SimpleFileExFlags

@ 0x493ae2 Py_Main

@ 0x2aaaaaf10830 __libc_start_main

@ 0x4933e9 _start

@ 0x2aaab09e9659 caffe2::InferBlobShapesAndTypesFromMap()

*** SIGSEGV (@0x8) received by PID 11086 (TID 0x2aaaaaae5480) from PID 8; stack trace: ***

@ 0x2aaab035273e pybind11::cpp_function::dispatcher()

@ 0x0 (unknown)

@ 0x4bc3fa PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c16e7 PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x2aaab032588e _ZZN8pybind1112cpp_function10initializeIZN6caffe26python16addGlobalMethodsERNS_6moduleEEUlRKSt6vectorINS_5bytesESaIS7_EESt3mapINSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEES6_IlSaIlEESt4lessISI_ESaISt4pairIKSI_SK_EEEE36_S7_JSB_SR_EJNS_4nameENS_5scopeENS_7siblingEEEEvOT_PFT0_DpT1_EDpRKT2_ENUlRNS_6detail13function_callEE1_4_FUNES19_

@ 0x2aaaaace4390 (unknown)

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4eb30f (unknown)

@ 0x4e5422 PyRun_FileExFlags

@ 0x4e3cd6 PyRun_SimpleFileExFlags

@ 0x493ae2 Py_Main

@ 0x2aaaaaf10830 __libc_start_main

@ 0x4933e9 _start

@ 0x2aaab0afb108 caffe2::ConvPoolOpBase<>::TensorInferenceForConv()

@ 0x0 (unknown)

@ 0x2aaab035273e pybind11::cpp_function::dispatcher()

@ 0x4bc3fa PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c16e7 PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4eb30f (unknown)

@ 0x4e5422 PyRun_FileExFlags

@ 0x4e3cd6 PyRun_SimpleFileExFlags

@ 0x493ae2 Py_Main

@ 0x2aaaaaf10830 __libc_start_main

@ 0x4933e9 _start

@ 0x0 (unknown)

@ 0x2aaab0af78d3 std::_Function_handler<>::_M_invoke()

@ 0x2aaab09e8094 caffe2::InferBlobShapesAndTypes()

@ 0x2aaab09e9659 caffe2::InferBlobShapesAndTypesFromMap()

@ 0x2aaab032588e _ZZN8pybind1112cpp_function10initializeIZN6caffe26python16addGlobalMethodsERNS_6moduleEEUlRKSt6vectorINS_5bytesESaIS7_EESt3mapINSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEES6_IlSaIlEESt4lessISI_ESaISt4pairIKSI_SK_EEEE36_S7_JSB_SR_EJNS_4nameENS_5scopeENS_7siblingEEEEvOT_PFT0_DpT1_EDpRKT2_ENUlRNS_6detail13function_callEE1_4_FUNES19_

@ 0x2aaab035273e pybind11::cpp_function::dispatcher()

@ 0x4bc3fa PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c16e7 PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4c1e6f PyEval_EvalFrameEx

@ 0x4b9ab6 PyEval_EvalCodeEx

@ 0x4eb30f (unknown)

@ 0x4e5422 PyRun_FileExFlags

@ 0x4e3cd6 PyRun_SimpleFileExFlags

@ 0x493ae2 Py_Main

@ 0x2aaaaaf10830 __libc_start_main

@ 0x4933e9 _start

@ 0x0 (unknown)

srun: error: nid06499: task 2: Segmentation fault

srun: Terminating job step 9059937.0

srun: error: nid06497: task 0: Segmentation fault

srun: error: nid06498: task 1: Segmentation fault

srun: error: nid06500: task 3: Segmentation fault

```

## System Info

- Caffe2:

- How you installed Caffe2 (conda, pip, source): Modified Dockerfile mentioned above

- CUDA/cuDNN version: 8.0/7.0

- GPU models and configuration: Cray XC40/XC50 supercomputer, uses SLURM!

|

caffe2

|

low

|

Critical

|

351,266,005 |

go

|

cmd/compile: split up SSA rewrite passes

|

For Go 1.12 I'd like to experiment with splitting up the rewrite rules for generic optimization, lowering and lowered optimization into more phases. This is a tracking issue for that experimentation. Ideas and feedback welcomed.

As we have added more and more optimizations the rules files have become increasingly large. This introduces a few problems. Firstly 'noopt' builds actually do a lot of optimizations, some of which are quite complex and may make programs much harder to debug. Secondly the ways that rules interact is becoming less clear. For example, adding a new set of rules might introduce 'dead ends' into the optimization passes, because, say, the author hasn't take into account special cases such as indexed versions of memory operations. Thirdly there are some rules which will only ever fire once. For example, the rules to explode small struct accesses('SSAable'). This is inefficient since they need to be re-checked every time the optimization pass is re-run (the optimization passes tend to run over and over again until a steady state is reached).

Here is a summary of the existing passes that use code generated from the rewrite rules (I've ignored the 32-bit passes for now):

* `opt`: first round of generic optimizations [mandatory][iterative]

* `decompose builtins`: split up compound operations, such as complex number operations, into individual operations [mandatory][single pass]

* `late opt`: repeat the generic optimizations after CSE etc. have run [mandatory][iterative]

* `lower`: generate and optimize machine specific operations [mandatory][iterative]

As a rough guide I see the phases looking something like this (will most likely change quite a lot):

Generic phases:

* `optimize initial`: generic optimizations targeting compound types (store-load forwarding etc.) and constant propagation [optional][iterative]

* `decompose compound types`: split up SSAable operations and generate Move and Zero operations etc. for non-SSAable operations [mandatory][single pass]

* `decompose builtins`: (might merge into the previous pass) [mandatory][single pass]

* `optimize main`: generic optimizations before the main optimization passes (CSE, BCE, etc.) [optional][iterative]

* `optimize final`: repeat the generic optimizations after the main optimization passes [optional][iterative]

Architecture specific phases (not all architectures will need all of these):

* `lower`: minimal rules needed to produce executable code [mandatory][iterative]

* `lowered optimize initial`: optimizations that can be applied in one pass (not sure if this will be needed) [optional][single pass]

* `lowered optimize main`: optimizations that need to be executed iteratively [optional][iterative]

* `lowered optimize final:` low priority optimizations that can be applied in one pass (or a small number of passes) such as indexed memory accesses and merging loads into instructions [optional][single pass (maybe iterative)]

I'm hoping the benefits of being able to reduce the number of rules and perhaps more efficient I-cache usage will make up for the increased number of passes. I'll need to experiment to see.

Most likely some rules will need to be duplicated in multiple passes (particularly the generic optimization passes). This will probably involve splitting the rules into more files and then re-combining them in individual passes (for example, constant propagation rules could get their own file and then be called from both the initial and main generic optimization passes).

There are some TODOs along these lines in the compiler source, but I couldn't find any existing issues, so apologies if this is a dup of another issue.

|

compiler/runtime

|

low

|

Critical

|

351,304,898 |

TypeScript

|

Prototype assignment of constructor nested inside a class confuses type resolution

|

From chrome-devtools-frontend, in ui/ListWidget.js:

```js

var UI = {}

UI.ListWidget = class { };

UI.ListWidget.Delegate = function() {};

UI.ListWidget.Delegate.prototype = {

renderItem() {},

};

/** @type {UI.ListWidget} */

var l = new UI.ListWidget();

```

**Expected behavior:**

The type of `l` is the same as `new UI.ListWidget()` — `UI.ListWidget`.

**Actual behavior:**

It's `{ renderItem() {} } & { renderItem() {} }` -- the structural type of UI.ListWidget.Delegate, duplicated. This also causes an assignability error from `new UI.ListWidget()`.

Note that this has been broken for some time; it's not a 3.1 regression.

|

Bug

|

low

|

Critical

|

351,417,363 |

vscode

|

Allow extensions to be installed for all users

|

I'm the instructor a college Python programming course. I'd love to adopt VS Code for this course, but the fact that extensions are installed on a per-user basis is a major problem. For education and enterprise markets, there needs to be support for pre-installing extensions for all users.

It's important that we be able to provide a ready-to-use environment to students on login. I have 50 students in my class. I can't afford to lose class time to making students install the Python extension each time they use a new computer or to help troubleshooting students when they have problems.

I've put together a very hackish workaround where I've created a batch file that installs the plugin and then launches VS Code. I've added shortcuts to this batch file, but it's messy and I fear brittle.

Please add a flag to code --install-extension that allows users to specify the extension is to be installed for all users.

FWIW, @rhyspaterson mentioned needing this same functionality in a comment on Issue #27972.

|

feature-request,extensions

|

high

|

Critical

|

351,418,074 |

pytorch

|

Bilinear interpolation behavior inconsistent with TF, CoreML and Caffe

|

## Issue description

Trying to compare and transfer models between Caffe, TF and Pytorch found difference in output of bilinear interpolations between all. Caffe is using depthwise transposed convolutions instead of straightforward resize, so it's easy to reimplement both in TF and Pytorch.

However, there is difference between output for TF and Pytorch with `align_corners=False`, which is default for both.

## Code example

```Python

img = cv2.resize(cv2.imread('./lenna.png')[:, :, ::-1], (256, 256))

img = img.reshape(1, 256, 256, 3).astype('float32') / 255.

img = tf.convert_to_tensor(img)

output_size = [512, 512]

output = tf.image.resize_bilinear(img, output_size, align_corners=True)

with tf.Session() as sess:

values = sess.run([output])

out_tf = values[0].astype('float32')[0]

img = img.reshape(1, 256, 256, 3).transpose(0, 3, 1, 2).astype('float32') / 255.

out_pt = nn.functional.interpolate(torch.from_numpy(nimg),

scale_factor=2,

mode='bilinear',

align_corners=True)

out_pt = out_pt.data.numpy().transpose(0, 2, 3, 1)[0]

print(np.max(np.abs(out_pt - out_tf)))

# output 5.6624413e-06

```

But

```Python

img = cv2.resize(cv2.imread('./lenna.png')[:, :, ::-1], (256, 256))

img = img.reshape(1, 256, 256, 3).astype('float32') / 255.

img = tf.convert_to_tensor(img)

output_size = [512, 512]

output = tf.image.resize_bilinear(img, output_size, align_corners=False)

with tf.Session() as sess:

values = sess.run([output])

out_tf = values[0].astype('float32')[0]

img = img.reshape(1, 256, 256, 3).transpose(0, 3, 1, 2).astype('float32') / 255.

out_pt = nn.functional.interpolate(torch.from_numpy(nimg),

scale_factor=2,

mode='bilinear',

align_corners=False)

out_pt = out_pt.data.numpy().transpose(0, 2, 3, 1)[0]

print(np.max(np.abs(out_pt - out_tf)))

# output 0.22745097

```

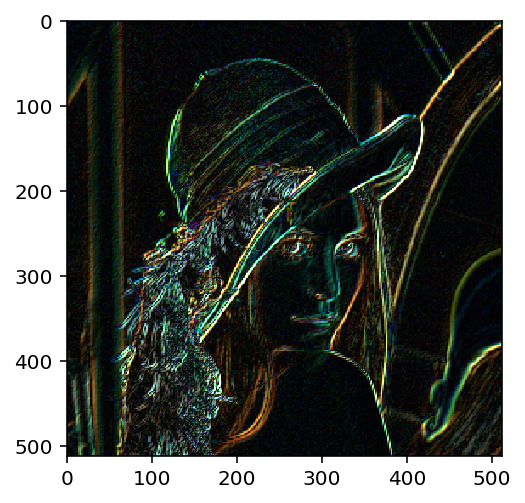

Output diff * 10:

Output of CoreML is consistent with TF, so it seems that there is a bug with implementation of bilinear interpolation with `align_corners=False` in Pytorch.

Diff is reproducible both on cpu and cuda with cudnn 7.1, cuda 9.1.

|

triaged,module: interpolation

|

low

|

Critical

|

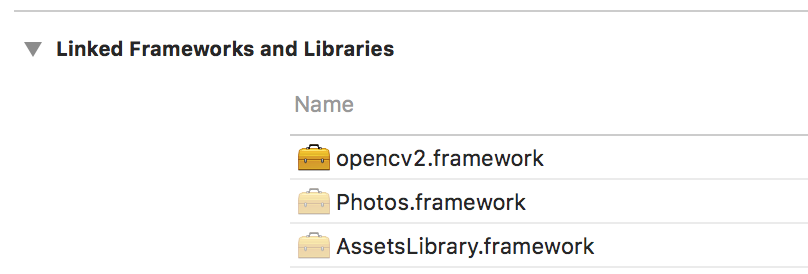

351,445,586 |

opencv

|

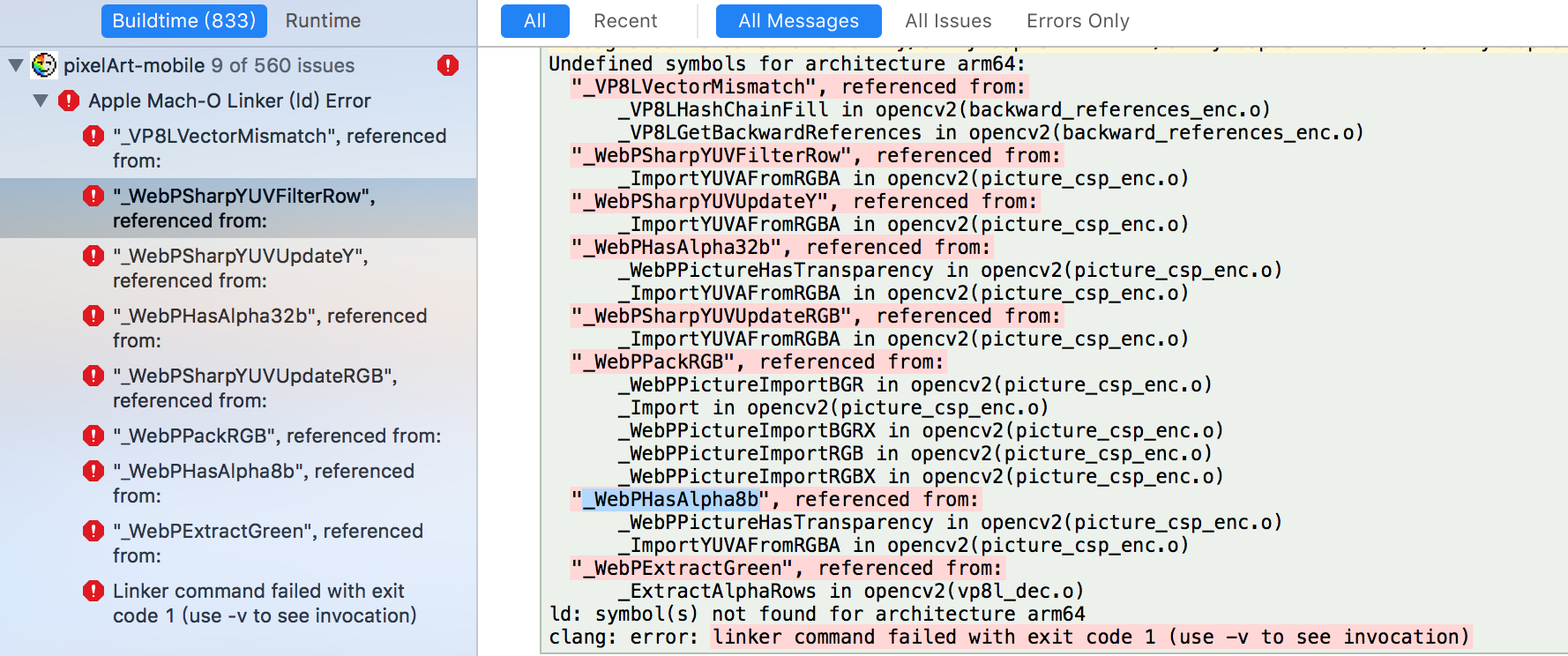

app link error with iOS framework

|

##### System information (version)

- OpenCV => 3.4.2

- Operating System / Platform => Mac OSX 10.13.6 (17G65)

- Compiler => XCode Version 9.4.1 (9F2000)

##### Detailed description

|

category: build/install,platform: ios/osx,needs investigation

|

low

|

Critical

|

351,544,821 |

pytorch

|

Request for better memory management

|

This issue is mainly about how to recover from an `out of memory` exception, previously posted in [forum](https://discuss.pytorch.org/t/whats-the-best-way-to-handle-exception-cuda-runtime-error-2-out-of-memory/11891).

Till now, it's not always possible to recover from OOM exception whether during train or inference. This will pose huge risk when you use pytorch in large-scale training or deployment.

Please pay attention to this problem, I really like pytorch anyway.

|

feature,module: memory usage,triaged

|

low

|

Critical

|

351,565,975 |

rust

|

Unrelated error report for trait bound checking

|

Given the following code:

```rust

use nalgebra::{Dynamic, MatrixMN};

use std::fmt::Debug;

trait CheckedType : Copy + Clone + Debug + Ord {}

struct Foo<T: CheckedType> {

matrix: MatrixMN<T, Dynamic, Dynamic>,

}

```

The compilers reports:

```

error[E0310]: the parameter type `T` may not live long enough

--> src/main.rs:9:5

|

8 | struct Foo<T: CheckedType> {

| -- help: consider adding an explicit lifetime bound `T: 'static`...

9 | matrix: MatrixMN<T, Dynamic, Dynamic>,

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

|

note: ...so that the type `T` will meet its required lifetime bounds

--> src/main.rs:9:5

|

9 | matrix: MatrixMN<T, Dynamic, Dynamic>,

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

```

Checking the definition of `nalgebra::MatrixMN`, it seems the trait bound needs to include `Scalar`, so if I add `nalgebra::Scalar` to the bound of `CheckedType`, then the code passes compile.

The error report for the original code seems to be completely unrelated to the actual fix. I suspect this is a compiler bug.

|

C-enhancement,A-diagnostics,T-compiler

|

low

|

Critical

|

351,598,174 |

go

|

x/text: gotext with french numbers above 1,000,000

|

Please answer these questions before submitting your issue. Thanks!

### What version of Go are you using (`go version`)?

go version go1.10.3 darwin/amd64

### Does this issue reproduce with the latest release?

YES

### What operating system and processor architecture are you using (`go env`)?

GOARCH="amd64"

GOBIN="/Users/christophe/go/bin"

GOCACHE="/Users/christophe/Library/Caches/go-build"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOOS="darwin"

GOPATH="/Users/christophe/go"

GORACE=""

GOROOT="/usr/local/Cellar/go/1.10.3/libexec"

GOTMPDIR=""

GOTOOLDIR="/usr/local/Cellar/go/1.10.3/libexec/pkg/tool/darwin_amd64"

GCCGO="gccgo"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/85/01ftf7594x1_01_ps0ypv76h0000gn/T/go-build291898772=/tmp/go-build -gno-record-gcc-switches -fno-common"

### What did you do?

In french, when expressing quantities, you have to distinguish between less than 1,000,000 and more. For example, one have to say:

* For numbers from 2 up to and including 999'999, we have to say/write:

> Il y a 999'999 habitants.

> Il y a 999'999 chiens.

* And for numbers from 1'000'000 and up, we have to say/write:

> Il y a 1'000'000 **d**'habitants.

> Il y a 1'000'000 **de** chiens.

With the following program:

```

package main

//go:generate gotext -srclang=en update -out=catalog/catalog.go -lang=fr,en

import (

"fmt"

_ "github.com/clamb/arcaciel/aws/scrapbook/catalog"

"golang.org/x/text/language"

"golang.org/x/text/message"

)

func main() {

tr := message.NewPrinter(language.French)

num := 999999

tr.Printf("There are %d dog(s)", num)

fmt.Println()

num = 1000000

tr.Printf("There are %d dog(s)", num)

fmt.Println()

num = 1000001

tr.Printf("There are %d dog(s)", num)

fmt.Println()

}

```

I tried using `many` (not sure how exactly about how to write this, but this is not the point):

```

{

"id": "There are {Num} dog(s)",

"message": "There are {Num} dog(s)",

"translation": {

"select": {

"feature": "plural",

"arg": "Num",

"cases": {

"=0": "Il n'y a pas de chien",

"one": "Il y a {Num} chien",

"many": "Il y a {Num} de chiens",

"other": "Il y a {Num} de chiens"

}

}

},

"placeholders": [{

"id": "Num",

"string": "%[1]d",

"type": "int",

"underlyingType": "int",

"argNum": 1,

"expr": "num"

}]

},

```

However `many` is not supported for language `fr`.

```

gotext: generation failed: error: plural: form "many" not supported for language "fr"

test.go:3: running "gotext": exit status 1

```

Then I tried using `"<1000000"`:

```

{

"id": "There are {Num} dog(s)",

"message": "There are {Num} dog(s)",

"translation": {

"select": {

"feature": "plural",

"arg": "Num",

"cases": {

"=0": "Il n'y a pas de chien",

"one": "Il y a {Num} chien",

"<1000000": "Il y a {Num} chiens",

"other": "Il y a {Num} de chiens"

}

}

},

"placeholders": [{

"id": "Num",

"string": "%[1]d",

"type": "int",

"underlyingType": "int",

"argNum": 1,

"expr": "num"

}]

},

```

As the number `1,000,000` is read as an `int16`, `gotext` raises an error:

```

gotext: generation failed: error: plural: invalid number in selector "<1000000": strconv.ParseUint: parsing "1000000": value out of range

test.go:3: running "gotext": exit status 1

```

### What did you expect to see?

```

Il y a 999 999 chiens

Il y a 1 000 000 de chiens

Il y a 1 000 001 de chiens

```

### What did you see instead?

```

gotext: generation failed: error: plural: invalid number in selector "<1000000": strconv.ParseUint: parsing "1000000": value out of range

test.go:3: running "gotext": exit status 1

```

|

NeedsInvestigation

|

low

|

Critical

|

351,662,938 |

react

|

onChange doesn't fire if input re-renders due to a setState() in a non-React capture phase listener

|

Extracting from https://github.com/facebook/react/issues/12643.

This issue has always been in React. I can reproduce it up to React 0.11. However **it's probably extremely rare in practice and isn't worth fixing**. I'm just filing this for posterity.

Here is a minimal example.

```js

class App extends React.Component {

state = {value: ''}

handleChange = (e) => {

this.setState({

value: e.target.value

});

}

componentDidMount() {

document.addEventListener(

"input",

() => {

// COMMENT OUT THIS LINE TO FIX:

this.setState({});

},

true

);

}

render() {

return (

<div>

<input

value={this.state.value}

onChange={this.handleChange}

/>

</div>

);

}

}

ReactDOM.render(<App />, document.getElementById("container"));

```

Typing doesn't work — unless I comment out that `setState` call in the capture phase listener.

Say the input is empty and we're typing `a`.

What happens here is that `setState({})` in the capture phase non-React listener runs first. When re-rendering due to that first empty `setState({})`, input props still contain the old value (`""`) while the DOM node's value is new (`"a"`). They're not equal, so we'll set the DOM node value to `""` (according to the props) and remember `""` as the current value.

<img width="549" alt="screen shot 2018-08-17 at 1 08 42 am" src="https://user-images.githubusercontent.com/810438/44241204-4b0e0880-a1ba-11e8-847d-bf9ca43eb954.png">

Then, `ChangeEventPlugin` tries to decide whether to emit a change event. It asks the tracker whether the value has changed. The tracker compares the presumably "new" `node.value` (it's `""` — we've just set it earlier!) with the `lastValue` it has stored (also `""` — and also just updated). No changes!

<img width="505" alt="screen shot 2018-08-17 at 1 10 59 am" src="https://user-images.githubusercontent.com/810438/44241293-e0110180-a1ba-11e8-9c5a-b0d808f745cd.png">

Our `"a"` update is lost. We never get the change event, and never actually get a chance to set the correct state.

|

Type: Bug,Component: DOM,React Core Team

|

medium

|

Major

|

351,678,753 |

electron

|

Split session 'Media' permission to 'Camera' and 'Microphone'

|

**Is your feature request related to a problem? Please describe.**

In the actual Electron Session permission request handler, the 'media' permission englobes both microphone and camera.

**Describe the solution you'd like**

I suggest that it should be splited into microphone and camera, in order to work like Chrome, Firefox and other major browsers.

It will also bring a more detailed option for a specific set of applications, that only require access to either microphone or the webcam of the user.

|

enhancement :sparkles:

|

low

|

Minor

|

351,679,975 |

rust

|

Query system cycle errors should be extendable with notes

|

Relevant PR: https://github.com/rust-lang/rust/pull/53316

Relevant Issue: #52985

The [query system](https://rust-lang-nursery.github.io/rustc-guide/query.html) automatically detects and emits a cycle error if a cycle occurs when dependency nodes are added to the query DAG. This error is extensible with a custom main message defined as below to help human readability,

https://github.com/rust-lang/rust/blob/a385095f9a6d4d068102b6c72fbdc86ac2667e51/src/librustc/ty/query/config.rs#L93

but is otherwise closed for modification outside of the query::plumbing module:

https://github.com/rust-lang/rust/blob/b2397437530eecef72a1524a7e0a4b42034fa360/src/librustc/ty/query/plumbing.rs#L248

It would be nice to have a mechanism in addition that allows custom notes and suggestions to be added to these errors to help illustrate why a cycle occurred, not just where. It may be possible to expose the `DiagnosticBuilder` or provide wrappers for methods like `span_suggestion()` and `span_note()`

|

C-enhancement,A-diagnostics,T-compiler

|

low

|

Critical

|

351,698,237 |

go

|

x/net/idna: Display returns invalid label for r4---sn-a5uuxaxjvh-gpm6.googlevideo.com.

|

### What version of Go are you using (`go version`)?

```

go version go1.10.3 linux/amd64 via docker golang:1.10.3-alpine

(also go version go1.10.3 darwin/amd64)

```

### Does this issue reproduce with the latest release?

```

Yes

```

### What operating system and processor architecture are you using (`go env`)?

```

GOARCH="amd64"

GOBIN=""

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/go"

GORACE=""

GOROOT="/usr/local/go"

GOTMPDIR=""

GOTOOLDIR="/usr/local/go/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build623899479=/tmp/go-build -gno-record-gcc-switches"

```

### What did you do?

Transforming domain name to human-readable form with "golang.org/x/net/idna".

```golang

package main

import (

"fmt"

"golang.org/x/net/idna"

)

func main() {

_, e := idna.ToUnicode("r4---sn-a5uuxaxjvh-gpm6.googlevideo.com")

fmt.Println(e)

_, e = idna.Display.ToUnicode("r4---sn-a5uuxaxjvh-gpm6.googlevideo.com")

fmt.Println(e)

}

```

### What did you expect to see?

I expect to see no error.

```

<nil>

<nil>

```

### What did you see instead?

But `idna.Display` does not agree to accept the host name as a valid one.

```

<nil>

idna: invalid label "r4---sn-a5uuxaxjvh-gpm6"

```

`.ToASCII` has the same property.

|

NeedsInvestigation

|

low

|

Critical

|

351,733,588 |

vscode

|

[npm] hover should show relevant latest version

|

In a `package.json`, when you hover the version for a dependency it will show the "[Latest version](https://github.com/Microsoft/vscode/blob/9a03a86c0a54a24c355bd950ddad91a0e74de6dd/extensions/npm/src/features/packageJSONContribution.ts#L303)". I think it would be useful to (also) show the _relevant_ latest version, which might differ if using a version prefix like `^` or `~`.

|

feature-request,json

|

low

|

Minor

|

351,769,355 |

flutter

|

Expose the tri-state checkbox "indeterminate" state in the semantics data

|

Right now we treat "indeterminate" and "unchecked" as the same. This works for Android and iOS since their accessibility APIs don't support tristate checkboxes, but hopefully Fuchsia's API will support tristate checkboxes so we should expose the data there at least.

|

framework,a: accessibility,platform-fuchsia,c: proposal,P2,team-framework,triaged-framework

|

low

|

Minor

|

351,771,464 |

awesome-mac

|

Recategorize Art/Protoyping/Modeling Software

|

It should really be better organized, and the categories we have now are insufficient.

Maybe add a `3D Modeling` category?

Also, maybe rename `Screenshot Tools` to `Screencapturing Software`?

- [x] I have checked for other similar issues

- [x] I have explained why this change is important

|

help wanted,organization

|

low

|

Minor

|

351,783,002 |

flutter

|

Box shadow doesn't have flexible features.

|

I love flutter. I have been using it for a year. I'm working on a client project. And the demand is We need Box-shadow for only 3 sides. How can I achieve this? If it would have been like `BoxShadow.only` as in the case of `EdgeInsted.only`, that would be much better. So that as a Developer, I can't say "No" to my Client. And that's what Flutter is For. I don't know where to suggest this. So I'm reporting here.

|

c: new feature,framework,P2,team-framework,triaged-framework

|

low

|

Major

|

351,789,591 |

flutter

|

[web] Drawer menu button is not reachable by screen readers

|

framework,f: material design,a: accessibility,platform-web,has reproducible steps,P2,found in release: 3.3,found in release: 3.6,team-web,triaged-web

|

low

|

Minor

|

|

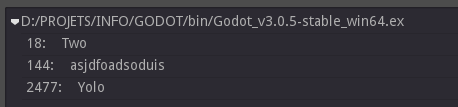

351,797,063 |

godot

|

How to Scroll via ScrollContainer in android or touch screen devices

|

Godot version: 3.1.dev

commit 15ee6b7

OS/device including version: MacOSX High Sierra, Android

Issue description:How to scroll via ScrollContainer. It doesnt scroll in android or touch screen or via dragging

Steps to reproduce:

1. Add Scroll Container in the scene

2. Add VBoxContainer as child of scrollContainer

3. Add Panel with min. size with a sprite inside

Note: Scrolling via mouse wheel works or dragging the scrollBar but if its in android It should be able to scroll via dragging the content right?

Thanks for the response in advance

|

bug,platform:android,platform:macos,confirmed,usability,topic:input,topic:gui

|

medium

|

Critical

|

351,804,670 |

TypeScript

|

Add an option to force source maps to use absolute paths?

|

Previous related issue: https://github.com/Microsoft/TypeScript/issues/23180

It seems VS2017 is incompatible with relative paths, when debugging a UWP app now tries to load source maps from the app URI and the host does not support it.

```

'WWAHost.exe' (Script): Loaded 'Script Code (MSAppHost/3.0)'.

SourceMap ms-appx://6b826582-e42b-45b9-b4e6-dd210285d94b/js/require-config.js.map read failed: The URI prefix is not recognized..

```

Hard-coding the full path in `tsconfig.json` is not a good idea here, I hope we have an option to behave as 2.9.2 so that we can get the auto-resolved full path.

Edit: My tsconfig.json that works in TS2.9 but not in TS3.0:

```json

{

"compilerOptions": {

"strict": true,

"noEmitOnError": true,

"removeComments": false,

"sourceMap": true,

"target": "es2017",

"outDir": "js/",

"mapRoot": "js/",

"sourceRoot": "sources/",

"emitBOM": true,

"module": "amd",

"moduleResolution": "node"

},

"exclude": [

"node_modules",

"wwwroot"

]

}

```

My failed trial to fix this: (removed `mapRoot` and changed `sourceRoot`)

```json

{

"compilerOptions": {

"strict": true,

"noEmitOnError": true,

"removeComments": false,

"sourceMap": true,

"target": "es2017",

"outDir": "js/",

"sourceRoot": "../sources/",

"emitBOM": true,

"module": "amd",

"moduleResolution": "node"

},

"exclude": [

"node_modules",

"wwwroot"

]

}

```

|

Suggestion,In Discussion,Add a Flag

|

low

|

Critical

|

351,818,447 |

TypeScript

|

Proposal: `export as namespace` for UMD module output

|

## Current problem

TypeScript supports UMD output but does not support exporting as a global namespace.

## Syntax

_NamespaceExportDeclaration:_

`export` `as` `namespace` _IdentifierPath_

## Behavior

```ts

export var x = 0;

export function y() {}

export default {};

export as namespace My.Custom.Namespace;

// emits:

(function (global, factory) {

if (typeof module === "object" && typeof module.exports === "object") {

var v = factory(require, exports);

if (v !== undefined) module.exports = v;

}

else if (typeof define === "function" && define.amd) {

define(["require", "exports"], factory);

}

else {

global.My = global.My || {};

global.My.Custom = global.My.Custom || {};

global.My.Custom.Namespace = global.My.Custom.Namespace || {};

var exports = global.My.Custom.Namespace;

factory(global.require, exports);

}

})(this, function (require, exports) {

"use strict";

exports.__esModule = true;

exports.x = 0;

function y() { }

exports.y = y;

exports["default"] = {};

});

```

## Note

* This proposal basically follows Babel behavior.

* Importing any module without a module loader will throw in this proposal. A further extension may use global namespaces as Babel does.

* Babel overwrites on the existing namespace whereas this proposal extends the existing one, as TS `namespace` does.

* Rollup has a special behavior where `export default X` works like CommonJS `module.exports = X` whereas this proposal does not.

Prior arts: [Babel exactGlobals](https://babeljs.io/docs/en/babel-plugin-transform-es2015-modules-umd/#more-flexible-semantics-with-exactglobals-true), [Webpack multi part library](https://github.com/webpack/webpack/tree/v4.16.5/examples/multi-part-library), [Rollup `output.name` option with namespace support](https://rollupjs.org/guide/en#core-functionality)

## See also

#8436

#10907

#20990

|

Suggestion,In Discussion

|

low

|

Major

|

351,828,283 |

godot

|

Allow SkeletonIK to ignore target's rotation

|

**Godot version:**

`master` / c93888ae

**OS/device including version:**

Manjaro Linux 17.1

**Issue description:**

Currently, `SkeletonIK` will try to match the bone hierarchy to a given target's transform which is not always desired.

For example, IK can be used to make a character stretch its hand towards an item when picking it up. And in this case, the arm could be twisted in an unnatural manner, if the character is positioned in a 'wrong' direction from the item.

As an item should be picked up from any direction, it would be much easier if the IK system allowed matching a bone chain to its target's position, without affecting rotation of its constiuent parts.

(For those who need such a feature now: you can change `SkeletonIK.Target` in each frame, so that it matches rotation with the assigned target.)

|

enhancement,topic:core

|

low

|

Minor

|

351,833,060 |

flutter

|

FlutterDriver locator and fluent-style improvements

|

To be more like Selenium-WebDriver, FlutterDriver would be better if it had a mechanism to find lists of matches for a given locator.

```java

// WebDriver's Java API:

Element element = webDriver.findElement(locator); // singular match

List<WebElement> elements = webDriver.findElement(locator); // multiple matches, returned as list

String secondElemText = elements.get(1).getText();

```

FlutterDriver only has mechanisms to target single matches to locators, right now.

To be honest, FlutterDriver's API feels more like Selenium-RC from 2004 (I co-created that) rather than the superior Selenium2 API (WebDriver) that came a few years later.

|

a: tests,c: new feature,framework,t: flutter driver,customer: crowd,P2,team-framework,triaged-framework

|

low

|

Major

|

351,835,598 |

go

|

crypto/tls: fix pseudo-constant mitigation for lucky 13

|

As detailed in the paper "Pseudo Constant Time Implementations of TLS

Are Only Pseudo Secure"

https://eprint.iacr.org/2018/747

|

NeedsInvestigation

|

low

|

Major

|

351,885,929 |

youtube-dl

|