id

int64 393k

2.82B

| repo

stringclasses 68

values | title

stringlengths 1

936

| body

stringlengths 0

256k

⌀ | labels

stringlengths 2

508

| priority

stringclasses 3

values | severity

stringclasses 3

values |

|---|---|---|---|---|---|---|

326,864,065 | pytorch | [Caffe2] convert ONNX to caffe2 | I used this program to generate pb model.

> convert-caffe2-to-onnx trainednet.onnx --output predict_net.pb

My Error

```

WARNING:root:This caffe2 python run does not have GPU support. Will run in CPU only mode.

WARNING:root:Debug message: No module named caffe2_pybind11_state_gpu

Traceback (most recent call last):

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/bin/convert-caffe2-to-onnx", line 11, in <module>

sys.exit(caffe2_to_onnx())

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/click/core.py", line 722, in __call__

return self.main(*args, **kwargs)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/click/core.py", line 697, in main

rv = self.invoke(ctx)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/click/core.py", line 895, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/click/core.py", line 535, in invoke

return callback(*args, **kwargs)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/onnx_caffe2/bin/conversion.py", line 42, in caffe2_to_onnx

c2_net_proto.ParseFromString(caffe2_net.read())

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/message.py", line 185, in ParseFromString

self.MergeFromString(serialized)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/python_message.py", line 1083, in MergeFromString

if self._InternalParse(serialized, 0, length) != length:

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/python_message.py", line 1120, in InternalParse

pos = field_decoder(buffer, new_pos, end, self, field_dict)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/decoder.py", line 612, in DecodeRepeatedField

if value.add()._InternalParse(buffer, pos, new_pos) != new_pos:

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/python_message.py", line 1109, in InternalParse

new_pos = local_SkipField(buffer, new_pos, end, tag_bytes)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/decoder.py", line 850, in SkipField

return WIRETYPE_TO_SKIPPER[wire_type](buffer, pos, end)

File "/Users/rafalpilarczyk/anaconda3/envs/caffe2/lib/python2.7/site-packages/google/protobuf/internal/decoder.py", line 820, in _RaiseInvalidWireType

raise _DecodeError('Tag had invalid wire type.')

google.protobuf.message.DecodeError: Tag had invalid wire type.

```

I have similar issues with ONNX-MXNet and tensorflow. I assume that my ONNX model is not compatible with current onnx_caffe2 module? I exported this model using Matlab ONNX exporter. https://www.mathworks.com/matlabcentral/fileexchange/67296-neural-network-toolbox-converter-for-onnx-model-format

I work on MacOS with conda enviroment, python 2.7.15. I tried this also on virtual machine with P4000, Cuda 9.0 and 9.1, but results were the same.

| caffe2 | low | Critical |

326,882,364 | pytorch | [feature request] batch_first of RNN hidden weight for Multi GPU training | As we know, the input and output tensor support ```batch_first``` for RNN training, but as for hidden state, the tensor shape is forced to be ```(num_layers * num_directions, batch, hidden_size)```, even we set ```batch_first = True```. So there is a problem when we do multi GPU training by using ```torch.nn.DataParallel```.

For example, if we initialize a hidden state with shape ```(num_layers * num_directions, batch, hidden_size)``` and set ```hidden = init_hidden(batch_size)``` like [word_language_model](https://github.com/pytorch/examples/blob/f9820471d615d848c14661b2d582417ca3aee8a3/word_language_model/main.py#L150),then if we set ```hidden = init_hidden(batch_size)``` and use parallel training, the hidden size will be ```(num_layers * num_directions / num_gpu, batch, hidden_size)``` while the correct shape is ```(num_layers * num_directions, batch / num_gpu, hidden_size)```. So I was wondering if PyTorch Teams prepare to support ```batch_first``` for RNN hidden state.

Another possible way to avoid this problem is to use the following codes:

```

def init_hidden(self, batch_size):

weight = next(self.parameters())

# The hidden weight format is not consisten with PyTorch's LSTM

# impelementation, so we will transpose it

hidden = weight.new_zeros(batch_size, self.num_layers * 2,

self.hidden_size, requires_grad=False)

hidden = [hidden, hidden]

return hidden

def forward(self, inputs, hidden, length):

hidden[0] = hidden[0].permute(1, 0, 2).contiguous()

hidden[1] = hidden[1].permute(1, 0, 2).contiguous()

inputs_pack = pack_padded_sequence(inputs, length, batch_first=True)

# self.blstm.flatten_parameters()

output, hidden = self.blstm(inputs_pack, hidden)

......

hidden = list(hidden)

hidden[0] = hidden[0].permute(1, 0, 2).contiguous()

hidden[1] = hidden[1].permute(1, 0, 2).contiguous()

return output, hidden

```

But this implementation is very ugly. Also, this implementation faces another problem like the following warning log:

```

/mnt/workspace/pytorch/deep_clustering/model/blstm_upit.py:65: UserWarning: RNN module weights are not part of single contiguous chunk of memory. This means they need to be compacted at every call, possibly greatly increasing memory usage. To compact weights again call flatten_parameters().

```

If we add ```self.blstm.flatten_parameters()``` before ```output, hidden = self.blstm(inputs_pack, hidden)```, we will face another problem like #7092

cc @albanD @mruberry | module: nn,triaged,enhancement,module: data parallel | low | Major |

327,013,320 | rust | Defaulted unit types no longer error out (regression?) | This currently compiles (on stable and nightly). Till 1.25, it would trigger a [lint](https://github.com/rust-lang/rust/issues/39216) because it has inference default to `()` instead of throwing an error.

```rust

fn main() {}

struct Err;

fn load<T: Default>() -> Result<T, Err> {

Ok(T::default())

}

fn foo() -> Result<(), Err> {

let val = load()?; // defaults to ()

Ok(())

}

```

([playpen](https://play.rust-lang.org/?gist=6f7cf9dafb9d1651b659c1f029413fc5&version=nightly&mode=debug))

That lint indicates that it would become a hard error in the future, but it's not erroring. It seems like a bunch of this was changed when we [stabilized `!`](https://github.com/rust-lang/rust/issues/48950).

That issue says

> Type inference will now default unconstrained type variables to `!` instead of `()`. The [`resolve_trait_on_defaulted_unit`](https://github.com/rust-lang/rust/issues/39216) lint has been retired. An example of where this comes up is if you have something like:

Though this doesn't really _make sense_, this looks like a safe way to produce nevers, which should, in short, never happen. It seems like this is related to https://github.com/rust-lang/rust/issues/40801 -- but that was closed as it seems to be a more drastic change.

Also, if you print `val`, it's clear that the compiler thought it was a unit type. This seems like one of those cases where attempting to observe the situation changes it.

We _should_ have a hard error here, this looks like a footgun, and as @SimonSapin mentioned has broken some unsafe code already. We had an upgrade lint for the hard error and we'll need to reintroduce it for a cycle or two since we've had a release without it. AFAICT this is a less drastic change than #40801, and we seem to have _intended_ for this to be a hard error before so it probably is minor enough that it's fine.

cc @nikomatsakis @eddyb

h/t @spacekookie for finding this | T-compiler,regression-from-stable-to-stable,A-inference,C-bug | low | Critical |

327,031,030 | pytorch | [caffe2] build from source, cannot find my cudnn7.1 | I am building pytorch from source. My cudnn version is 7.1, however, it seems that caffe2 cannot succeed to use this verion of cudnn 7.1, but found a low version 5 instead.

errors like below:

```

[ 87%] Building CXX object caffe2/CMakeFiles/caffe2_gpu.dir/__/aten/src/ATen/native/cuda/CUDAReduceOps.cpp.o

[ 87%] Building CXX object caffe2/CMakeFiles/caffe2_gpu.dir/__/aten/src/ATen/CUDACharStorage.cpp.o

[ 87%] Building CXX object caffe2/CMakeFiles/caffe2_gpu.dir/__/aten/src/ATen/CUDAByteStorage.cpp.o

[ 87%] Building CXX object caffe2/CMakeFiles/caffe2_gpu.dir/__/aten/src/ATen/CUDAByteTensor.cpp.o

In file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Exceptions.h:3:0,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Descriptors.h:3,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/native/cudnn/BatchNorm.cpp:31:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:10:2: error: #error "CuDNN version not supported"

#error "CuDNN version not supported"

^

In file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/native/cudnn/RNN.cpp:58:0:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:10:2: error: #error "CuDNN version not supported"

#error "CuDNN version not supported"

^

In file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Exceptions.h:3:0,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Descriptors.h:3,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/native/cudnn/GridSampler.cpp:27:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:10:2: error: #error "CuDNN version not supported"

#error "CuDNN version not supported"

^

In file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cuda/detail/CUDAHooks.cpp:11:0:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:10:2: error: #error "CuDNN version not supported"

#error "CuDNN version not supported"

```

and

```

n file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Exceptions.h:3:0,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/Descriptors.h:3,

from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/native/cudnn/BatchNorm.cpp:31:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:9:198: note: #pragma message: CuDNN v5 found, but need

at least CuDNN v6. You can get the latest version of CuDNN from https://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1

#pragma message ("CuDNN v" STRING(CUDNN_MAJOR) " found, but need at least CuDNN v6. You can get the latest version of CuDNN from https

://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1")

^

In file included from /home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/native/cudnn/AffineGridGenerator.cpp:28:0:

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:9:198: note: #pragma message: CuDNN v5 found, but need

at least CuDNN v6. You can get the latest version of CuDNN from https://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1

at least CuDNN v6. You can get the latest version of CuDNN from https://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1")

^

/home/hxw/Ananaconda_Pytorch_Related/pytorch/aten/src/ATen/cudnn/cudnn-wrapper.h:9:198: note: #pragma message: CuDNN v5 found, but need

at least CuDNN v6. You can get the latest version of CuDNN from https://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1

#pragma message ("CuDNN v" STRING(CUDNN_MAJOR) " found, but need at least CuDNN v6. You can get the latest version of CuDNN from https

://developer.nvidia.com/cudnn or disable CuDNN with NO_CUDNN=1")

```

does anyone know how to solve this problem. | caffe2 | low | Critical |

327,032,058 | three.js | Add shadow map support for RectAreaLights (brainstorming, R&D) | ##### Description of the problem

It would be very useful for realism to support shadows on RectAreaLights.

I am unsure of the best technique to use here as I have not researched it yet beyond some quick Google searches. I am not yet sure what is the accepted best practice in the industry?

Two simple techniques I can think of:

- one could place a PointLightShadowMap at the center of a rect area light and it would sort of work.

- less accurate, one could place a SpotLightShadowMap with a fairly high FOV (upwards of 120 deg, but less than 180 deg as that would cause it to fail) at the center of the rect area light and point it in the light direction.

(I believe with the spot light shadow map you may be able to get better results for large area lights if you moved the shadow map behind the area light surface so that the front near clip plane in the shadow map frustum was roughly the side of the area light as it passed through the area light plane. I believe I read this in some paper once, but I can remember the source of it.) | Enhancement | high | Critical |

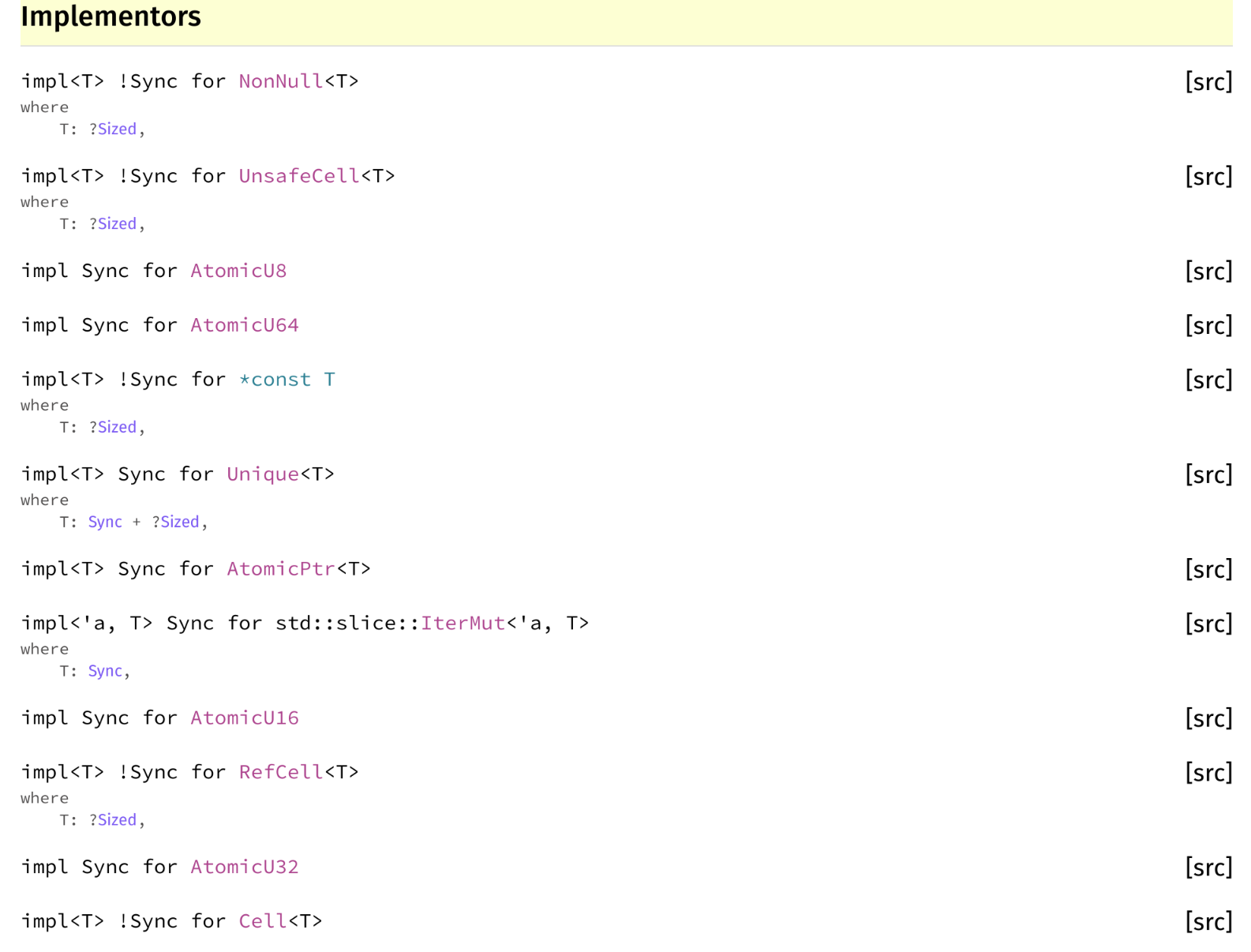

327,048,239 | rust | [rustdoc] Implementors section of Sync (and other similar traits) should separate implementors and !implementors |

The `!Sync` implementations and the `Sync` implementations really ought to be separated apart, otherwise it makes it harder to skim.

https://doc.rust-lang.org/stable/std/marker/trait.Sync.html#implementors | T-rustdoc,C-enhancement,E-mentor | low | Major |

327,059,337 | opencv | Suggestion for the CUDA stream module | Hi, I'd like to suggest something related to the CUDA stream module.

It seems that `cv::cuda::Stream` class encapsulates a feature related to CUDA memory allocation using `StackAllocator` class and `MemoryPool` class.

This feature is described in detail at [this documentation for `BufferPool`](https://docs.opencv.org/master/d5/d08/classcv_1_1cuda_1_1BufferPool.html). In short, it seems that this feature is designed to bypass CUDA memory allocation API calls to speed up performance as follows:

- When a `Stream` class is constructed, some amount of CUDA memory is pre-allocated and assigned to the `Stream` instance.

- If an OpenCV algorithm is run on that stream, the algorithm internally uses the `BufferPool` class to get buffer memory (if needed) from the pre-allocted area, rather than calling the CUDA memory allocation API.

- This reduces overhead.

Since [there](https://github.com/opencv/opencv/blob/master/modules/cudaimgproc/src/histogram.cpp#L311) [are](https://github.com/opencv/opencv/blob/master/modules/cudaobjdetect/src/cascadeclassifier.cpp#L536) [many](https://github.com/opencv/opencv/blob/master/modules/cudaobjdetect/src/hog.cpp#L494) [cases](https://github.com/opencv/opencv/blob/master/modules/cudaarithm/src/cuda/integral.cu#L93) where CUDA algorithms need some amount of GPU memory (sometimes as small as [a](https://github.com/opencv/opencv/blob/master/modules/cudaarithm/src/cuda/norm.cu#L86) [few](https://github.com/opencv/opencv/blob/master/modules/cudaarithm/src/cuda/normalize.cu#L145) [bytes](https://github.com/opencv/opencv/blob/master/modules/cudaarithm/src/cuda/minmax.cu#L77)) for internal buffer, it seems reasonable to take this memory pre-allocation approach. I suppose that's the original reason behind this design, and this feature was enabled by default before https://github.com/opencv/opencv/pull/10751. It is now disabled by default by the mentioned PR, while users can still turn it on by `cv::cuda::setBufferPoolUsage(true);`.

However, in my opinion, _this feature should not be used since enabling it may lead to some problems_.

The problems are:

1. The current design does not work well with device resetting function. The allocated CUDA memory is deallocated when the CUDA context is reset with `cv::cuda::resetDevice()`, but this is not recognized by the `Stream` module, leading to failures. The following code snippets fail to run due to this.

- https://github.com/nglee/opencv_test/blob/e6e7aab4202d285965d62dd79ff66e410fecc85c/cuda_stream_master/cuda_stream_master.cpp#L11-L28

- https://github.com/nglee/opencv_test/blob/e6e7aab4202d285965d62dd79ff66e410fecc85c/cuda_stream_master/cuda_stream_master.cpp#L30-L48

2. It is error-prone since users would have to consider the deallocation order. The following code will show different images for seemingly unchanged variable.

- https://github.com/nglee/opencv_test/blob/e6e7aab4202d285965d62dd79ff66e410fecc85c/cuda_stream_master/cuda_stream_master.cpp#L50-L77

3. The `Stream` module becomes not safe to multi-threaded application.

- The thread-non-safety of `Stream` is already mentioned in the documentation: https://docs.opencv.org/master/d9/df3/classcv_1_1cuda_1_1Stream.html

- It would be tempting for users to use the default stream for multi-threaded application, but running the following code snippet displays thread-non-safety when using the default stream. Some thread prints different result.

https://github.com/nglee/opencv_test/blob/e6e7aab4202d285965d62dd79ff66e410fecc85c/cuda_stream_master/cuda_stream_master.cpp#L81-L107

All code snippets mentioned above run without error when memory pre-allocation mechanism is disabled by replacing `setBufferPoolUsage(true);` to `setBufferPoolUsage(false);` (or just by deleting the `setBufferPoolUsage(true);` line).

So I'm suggesting that the memory pre-allocation feature of `Stream` module should be blocked. But to achieve this, the `StackAllocator`, `MemoryPool`, and `BufferPool` class should be blocked or removed. Also, existing CUDA memory allocation using `BufferPool` should be replaced by ordinary `GpuMat` allocation using the `DefaultAllocator`.

_Since all these changes seem too radical_, another option I can think of is that we might stay the way as it is right now (disable the feature by default) and warn the users who would like to enable this feature by rewriting the documentation of `setBufferPoolUsage` function. | category: gpu/cuda (contrib),RFC | low | Critical |

327,063,120 | rust | Borrowing an immutable reference of a mutable reference through a function call in a loop is not accepted | This code appears to be sound because `event` should either leave the loop or be thrown away before the next iteration:

```rust

#![feature(nll)]

fn next<'buf>(buffer: &'buf mut String) -> &'buf str {

loop {

let event = parse(buffer);

if true {

return event;

}

}

}

fn parse<'buf>(_buffer: &'buf mut String) -> &'buf str {

unimplemented!()

}

fn main() {}

```

The current (1.28.0-nightly 2018-05-25 990d8aa743b1dda3cc0f) implementation still marks this as an error:

```

error[E0499]: cannot borrow `*buffer` as mutable more than once at a time

--> src/main.rs:5:27

|

5 | let event = parse(buffer);

| ^^^^^^ mutable borrow starts here in previous iteration of loop

|

note: borrowed value must be valid for the lifetime 'buf as defined on the function body at 3:1...

--> src/main.rs:3:1

|

3 | fn next<'buf>(buffer: &'buf mut String) -> &'buf str {

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

```

@nikomatsakis said:

> this should throw an error (for now) but this error should eventually go away. This is precisely a case where "Location sensitivity" is needed, but we removed that feature in the name of performance — once polonius support lands, though, this code would be accepted. That may or may not be before the edition. | C-enhancement,T-lang,A-NLL,NLL-polonius | low | Critical |

327,108,944 | pytorch | Deprecate torch.Tensor | This:

```python

>>> torch.Tensor(torch.tensor(0.5))

RuntimeError: slice() cannot be applied to a 0-dim tensor.

```

should work and be a no-op I guess?

I'm running pytorch CPU, built from source at fece8787d98c99177505c9357850171457397b61. | triaged,module: deprecation,module: tensor creation | low | Critical |

327,150,371 | pytorch | TracedModules don't support parameter sharing between modules | I have a multihead module with 2 heads that share parameters during training. I want to use the JIT compiler to increase the performance only during the inference when only 1 head is used. When I create a jit compiled module, JIT code looks at all of the parameters, even those that will never be used in the 2nd head and raises the exception above. Instead of the exception, please create a warning here:

File "anaconda2/lib/python2.7/site-packages/torch/jit/__init__.py", line 643, in check_unique

raise ValueError("TracedModules don't support parameter sharing between modules")

| oncall: jit | low | Critical |

327,157,973 | go | cmd/objdump: x86 disassembler does not recognize PDEPQ | ### What version of Go are you using (`go version`)?

```

go version go1.10.2 darwin/amd64

```

### Does this issue reproduce with the latest release?

Yes, confirmed on 1.9.2 and 1.10.2. Not tested on master.

### What operating system and processor architecture are you using (`go env`)?

```

GOARCH="amd64"

GOBIN=""

GOCACHE="/Users/michaelmcloughlin/Library/Caches/go-build"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOOS="darwin"

GOPATH="/Users/michaelmcloughlin/gocode"

GORACE=""

GOROOT="/usr/local/Cellar/go/1.10.2/libexec"

GOTMPDIR=""

GOTOOLDIR="/usr/local/Cellar/go/1.10.2/libexec/pkg/tool/darwin_amd64"

GCCGO="gccgo"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/p5/84p384bs42v7pbgfx0db9gq80000gn/T/go-build505202882=/tmp/go-build -gno-record-gcc-switches -fno-common"

```

### What did you do?

Compiled package with assembly code using `PDEPQ`. Viewed resulting assembly with `go tool objdump`.

Gist https://gist.github.com/mmcloughlin/b5bf1bcc7f31222ff2bc510f2777cd79 is a minimal example.

### What did you expect to see?

Expect `go tool objdump` to show the `PDEPQ` instruction.

### What did you see instead?

Output from `objdump.sh` script is as follows. The `objdump` tool does not recognize `PDEPQ`, and instead parses it as a sequence of instructions. Note that Apple's LLVM objdump correctly identifies the instruction.

```

+ go version

go version go1.10.2 darwin/amd64

+ go test -c

+ go tool objdump -s bmi.PDep bmi.test

TEXT github.com/mmcloughlin/bmi.PDep(SB) /Users/michaelmcloughlin/gocode/src/github.com/mmcloughlin/bmi/pdep.s

pdep.s:7 0x10e7860 4c8b442408 MOVQ 0x8(SP), R8

pdep.s:8 0x10e7865 4c8b4c2410 MOVQ 0x10(SP), R9

pdep.s:10 0x10e786a c442b3f5 CMC

pdep.s:10 0x10e786e d04c8954 RORB $0x1, 0x54(CX)(CX*4)

pdep.s:12 0x10e7872 2418 ANDL $0x18, AL

pdep.s:13 0x10e7874 c3 RET

:-1 0x10e7875 cc INT $0x3

:-1 0x10e7876 cc INT $0x3

:-1 0x10e7877 cc INT $0x3

:-1 0x10e7878 cc INT $0x3

:-1 0x10e7879 cc INT $0x3

:-1 0x10e787a cc INT $0x3

:-1 0x10e787b cc INT $0x3

:-1 0x10e787c cc INT $0x3

:-1 0x10e787d cc INT $0x3

:-1 0x10e787e cc INT $0x3

:-1 0x10e787f cc INT $0x3

+ objdump -disassemble-all bmi.test

+ grep -A 4 bmi.PDep:

github.com/mmcloughlin/bmi.PDep:

10e7860: 4c 8b 44 24 08 movq 8(%rsp), %r8

10e7865: 4c 8b 4c 24 10 movq 16(%rsp), %r9

10e786a: c4 42 b3 f5 d0 pdepq %r8, %r9, %r10

10e786f: 4c 89 54 24 18 movq %r10, 24(%rsp)

```

| help wanted,NeedsFix,compiler/runtime | low | Critical |

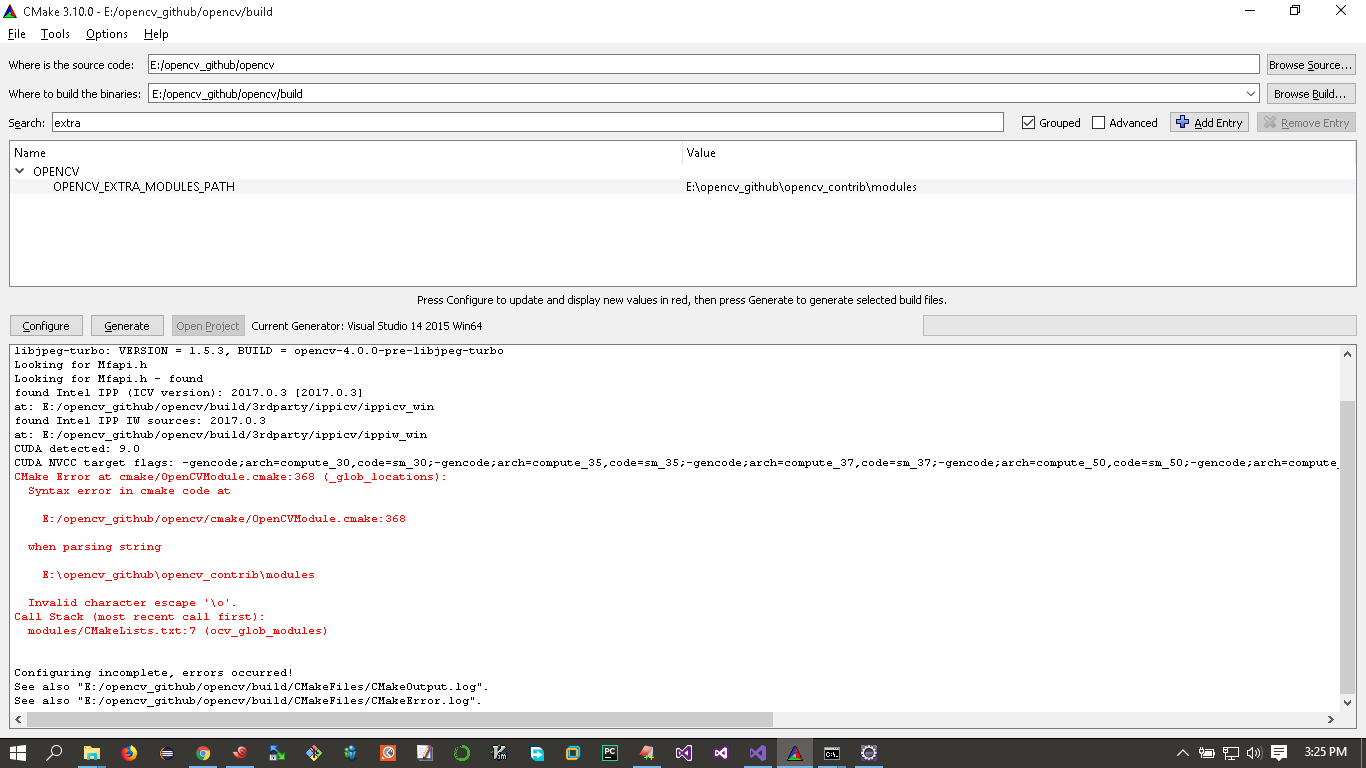

327,226,910 | pytorch | [Caffe2] Operators of Detectron module not registered/compiled when built on windows | ## Issue description

I am using Caffe2+Detectron in Windows. After successfully building Caffe2 (with CUDA, cuDNN, OpenCV), COCOAPI and Detectron modules, I ran the `tools/train_net.py` script in Detectron, trying to train Faster R-CNN on Pascal VOC. But the following errors appeared, reporting a Detectron operator `AffineChannel` not registered. With different configurations, similar errors for other Detectron operators happen.

```

...

File "D:/repo/github/Detectron_facebookresearch\detectron\utils\train.py", line 53, in train_model

model, weights_file, start_iter, checkpoints, output_dir = create_model()

File "D:/repo/github/Detectron_facebookresearch\detectron\utils\train.py", line 132, in create_model

model = model_builder.create(cfg.MODEL.TYPE, train=True)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\model_builder.py", line 124, in create

return get_func(model_type_func)(model)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\model_builder.py", line 89, in generalized_rcnn

freeze_conv_body=cfg.TRAIN.FREEZE_CONV_BODY

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\model_builder.py", line 229, in build_generic_detection_model

optim.build_data_parallel_model(model, _single_gpu_build_func)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\optimizer.py", line 40, in build_data_parallel_model

all_loss_gradients = _build_forward_graph(model, single_gpu_build_func)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\optimizer.py", line 63, in _build_forward_graph

all_loss_gradients.update(single_gpu_build_func(model))

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\model_builder.py", line 169, in _single_gpu_build_func

blob_conv, dim_conv, spatial_scale_conv = add_conv_body_func(model)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\ResNet.py", line 36, in add_ResNet50_conv4_body

return add_ResNet_convX_body(model, (3, 4, 6))

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\ResNet.py", line 98, in add_ResNet_convX_body

p, dim_in = globals()[cfg.RESNETS.STEM_FUNC](model, 'data')

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\ResNet.py", line 252, in basic_bn_stem

p = model.AffineChannel(p, 'res_conv1_bn', dim=dim, inplace=True)

File "D:/repo/github/Detectron_facebookresearch\detectron\modeling\detector.py", line 103, in AffineChannel

return self.net.AffineChannel([blob_in, scale, bias], blob_in)

File "D:/repo/github/pytorch/build\caffe2\python\core.py", line 2067, in __getattr__

",".join(workspace.C.nearby_opnames(op_type)) + ']'

AttributeError: Method AffineChannel is not a registered operator. Did you mean: []

```

I have modified `import_detectron_ops()` in `detectron/utils/c2.py` to use my `caffe2_detectron_ops_gpu.dll` path.

I have added the following path with `sys.path.insert(0, path)` in the training script.

- pytorch build directory

- detectron root directory

- COCOAPI PythonAPI directory

I have added the following path to my `PATH` variable.

- cuDNN bin directory

- pytorch build bin directory (`pytorch/build/bin/Release`), which contains `caffe2_detectron_ops_gpu.dll`

- OpenCV bin directory

The `import` commands seem to have all been successful. So I guess the environment setting should be OK.

I used the dumpbin tool to examine my `caffe2_detectron_ops_gpu.dll`, which only has a size of ~5.5MB.

With the `EXPORTS` option, the results are as follows:

```

Microsoft (R) COFF/PE Dumper Version 14.00.24215.1

Copyright (C) Microsoft Corporation. All rights reserved.

Dump of file D:\repo\github\pytorch\build\bin\Release\caffe2_detectron_ops_gpu.dll

File Type: DLL

Section contains the following exports for caffe2_detectron_ops_gpu.dll

00000000 characteristics

5B0CBE13 time date stamp Tue May 29 10:42:27 2018

0.00 version

1 ordinal base

1 number of functions

1 number of names

ordinal hint RVA name

1 0 003393E8 NvOptimusEnablementCuda

Summary

13000 .data

1000 .gfids

1000 .nvFatBi

20C000 .nv_fatb

23000 .pdata

E6000 .rdata

5000 .reloc

1000 .rsrc

252000 .text

1000 .tls

```

With the `SYMBOLS` option, the results are as follows:

```

Microsoft (R) COFF/PE Dumper Version 14.00.24215.1

Copyright (C) Microsoft Corporation. All rights reserved.

Dump of file D:\repo\github\pytorch\build\bin\Release\caffe2_detectron_ops_gpu.dll

File Type: DLL

Summary

13000 .data

1000 .gfids

1000 .nvFatBi

20C000 .nv_fatb

23000 .pdata

E6000 .rdata

5000 .reloc

1000 .rsrc

252000 .text

1000 .tls

```

Does this mean the Detectron operators are actually not compiled? If so, what could possibly be the reason and how can I make them compile?

## Code example

1. Build Caffe2 with CUDA, cuDNN, OpenCV.

2. Build COCOAPI modules.

3. Build Detectron modules.

4. Add all sorts of paths properly (as described above).

5. Run `tools/train_net.py` in Detectron with proper arguments.

## System Info

- PyTorch or Caffe2: **Caffe2**

- How you installed PyTorch (conda, pip, source): **source**

- Build command you used (if compiling from source): **scripts/build_windows.bat**

- OS: **Windows 10 Home Edition**

- PyTorch version: (Latest clone)

- Python version: **Python 2.7.12 :: Anaconda custom (64-bit)**

- CUDA/cuDNN version: **8.0/cudnn-8.0-windows10-x64-v7**

- GPU models and configuration: (GTX 1050)

- GCC version (if compiling from source):

- CMake version: **cmake-3.7.2-win64-x64**

- Versions of any other relevant libraries: **Visual Studio 2015, OpenCV 3.2.0-vc14**

| caffe2 | low | Critical |

327,247,193 | vue | Transition using js hooks always run the initial render | ### Version

2.5.16

### Reproduction link

[https://jsfiddle.net/p1dthw6z/](https://jsfiddle.net/p1dthw6z/)

### Steps to reproduce

In the demo link, toggle checkbox 'odd'.

### What is expected?

When rows appeared, both inner element transition using CSS (fade) and transition using hooks (slide) should not do initial render.

### What is actually happening?

Transition using CSS do not run the initial render, as expected.

But the transition using hooks run the initial render, as if I have used `<transition appear>`, which I didn't.

---

I don't know if it is the intended behavior. If it is, I would like to know how to avoid the initial render in the hooked transition.

<!-- generated by vue-issues. DO NOT REMOVE --> | transition | medium | Minor |

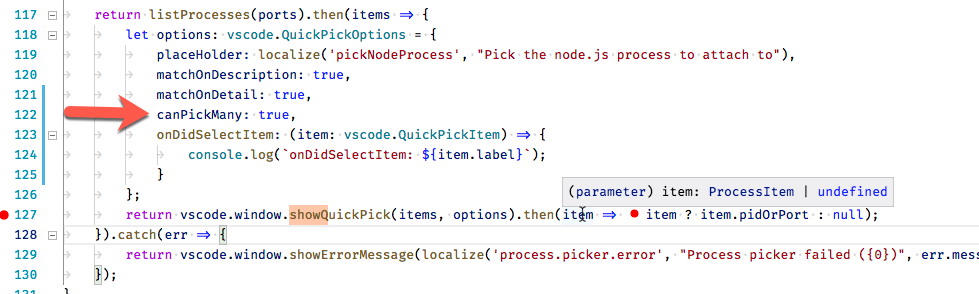

327,252,852 | vscode | Cannot exclude root folders while searching | Testing #50498

- In a MR workspace, try to exclude root folder using **/folder1/** and search. Results are still shown in folder1.

This happens irrespective of setting `search.enableSearchProviders" `

| help wanted,feature-request,search | medium | Major |

327,353,740 | opencv | RTSP streams freeze at version 3.1+ but not at 3.0 | <!--

If you have a question rather than reporting a bug please go to http://answers.opencv.org where you get much faster responses.

If you need further assistance please read [How To Contribute](https://github.com/opencv/opencv/wiki/How_to_contribute).

This is a template helping you to create an issue which can be processed as quickly as possible. This is the bug reporting section for the OpenCV library.

-->

##### System information (version)

<!-- Example

- OpenCV => 3.1

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2015

-->

- OpenCV => 3.1+ including 3.4.1

- Operating System / Platform => Windows .Net C# x86

- Compiler => Visual Studio 2017

##### Detailed description

Using an RTSP camera such as "AimCam", the stream freezes after 10 seconds. It does not freeze when using EMGU 3.0. It does not freeze when run through VLC utility either

##### Steps to reproduce

<!-- to add code example fence it with triple backticks and optional file extension

```.cpp

// cpp code

```

or attach as .txt or .zip file

-->

```.cs

_capture = new VideoCapture("rtsp://192.168.1.254/12345678.mov");

_capture.ImageGrabbed += processFrame;

_capture.Start();

…

// processFrame

_capture.Retrieve(_frame);

_frameBmp?.Dispose();

_frameBmp = _frame.Bitmap;

``` | priority: low,category: videoio,incomplete | low | Critical |

327,380,459 | rust | when suggesting to remove a crate, we leave a blank line | As of https://github.com/rust-lang/rust/pull/51015, the rustfix result for:

```rust

#![warn(unused_crates)]

extern crate foo;

fn main() { }

```

is

```rust

#![warn(unused_crates)]

fn main() { }

```

but not

```rust

#![warn(unused_crates)]

fn main() { }

```

| C-enhancement,A-diagnostics,T-compiler,E-help-wanted,WG-epoch | low | Minor |

327,382,289 | rust | unused macros fails some obvious cases due to prelude | This example does not warn, even in Rust 2018 edition:

```rust

// compile-flags: --edition 2018

extern crate foo;

fn main() { foo::bar(); }

```

The reason is that we resolve `foo` against the extern crate, when in fact it could *also* be resolved by the extern prelude fallback. But that's tricky! Something like this could also happen:

```rust

// compile-flags: --edition 2018

mod bar { mod foo { } }

use bar::*; // `foo` here is shadowed by `foo` below

extern crate foo;

fn main() { foo::bar(); }

```

In which case, removing `foo` would result in importing the module, since (I believe) that would shadow the implicit prelude imports.

Therefore: we can do better here, but with caution -- for example, we could suggest removing an extern crate in a scenario like this, but only if there are no glob imports in the current module. Not sure it's worth it though. | C-enhancement,T-compiler,WG-epoch | low | Minor |

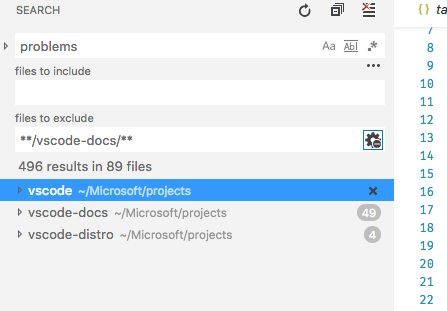

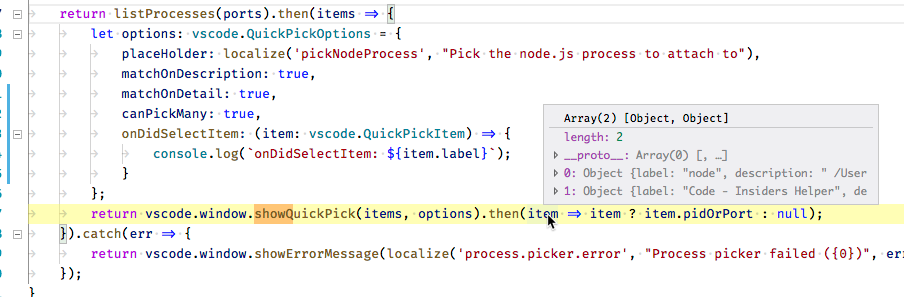

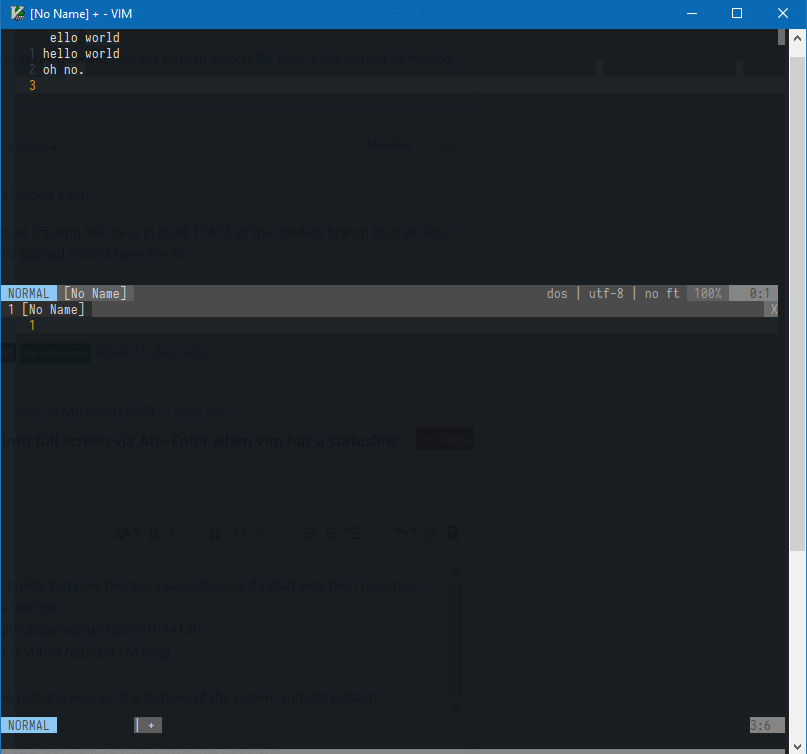

327,396,102 | vscode | QuickPick API is no longer type safe | Testing #50574:

I've added `canPickMany: true` to my `vscode.QuickPickOptions` and now I'm receiving an array through a parameter that does not include the array type:

Debugger hover shows:

but Intellisense does not offer the array type:

| api,debt | low | Critical |

327,396,229 | rust | libcore: add defaults for empty iterators | I'm looking for a way to create empty iterators.

I have a few places in my application where I keep an iterator around as part of a data structure, and will advance that from time to time. After a few rounds, I will reset the iterator to start from the beginning again. (The iterator is supposed to point into the data structure, so [I got some problems](https://users.rust-lang.org/t/struct-containing-reference-to-own-field/1894/2) with implementing that design even though the data is never dropped, but let's ignore that here).

To initialise my structure, I want to create an empty iterator, i.e. one that has no elements left and will only yield `None`.

```rs

pub struct X;

pub struct Example {

iterator: Once<X>

}

impl Example {

pub fn reset(&mut self) {

self.iterator = once(X);

}

}

let mut e = Example {

iterator: ??? // how to create a consumed Once?

};

e.iterator.next(); // should yield None

e.reset();

e.iterator.next(); // will yield Option(X)

e.iterator.next(); // will yield None

```

In this particular case, I can use `option::IntoIter` directly instead of `Once` and write

```rs

pub struct Example {

iterator: option::IntoIter<X>

}

impl Example {

pub fn reset(&mut self) {

self.iterator = Some(X).into_iter();

}

}

let mut e = Example {

iterator: None.into_iter()

};

```

but that doesn't express my intent as well as using `Once`. Also, given that in my actual code the iterator type is generic, I would need an additional method `get_init` next to `get_reset` in my trait. I'd rather have my trait say that the iterator type needs to implement `Default`, and the default value should be an instance of the iterator that doesn't yield anything.

My suggestion: **Have all builtin iterators implement `Default`**.

For example,

```rs

impl<A> Default for Item<A> {

#[inline]

fn default() -> Item<A> { Item { opt: None } }

}

impl<A> Default for Iter<A> {

fn default() -> Iter<A> { Iter { inner: default() } }

}

impl<A> Default for IterMut<A> {

fn default() -> IterMut<A> { IterMut { inner: default() } }

}

impl<A> Default for IntoIter<A> {

fn default() -> IntoIter<A> { IntoIter { inner: default() } }

}

impl<T> Default for Once<T> {

fn default() -> Once<T> { Once { inner: default() } }

}

// (not sure whether all of these could simply be derived)

```

Does this need to go through the RFC process? Should I simply create a pull request? | T-libs-api,C-feature-request,A-iterators | low | Major |

327,402,320 | angular | Formbuilder group from value object with array | ## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[ x] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Performance issue

[ ] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

[ ] Other... Please describe:

</code></pre>

## Current behavior

<pre><code>

this.myObject = {myobject: [{id: 'test1'}, {id: 'test2'}]};

this.myFormGroup = this.fb.group(this.myObject);

</code></pre>

RESULT

<pre><code>

myFormGroup.value : { "myobject": { "id": "test1" } }

</code></pre>

## Expected behavior

<pre><code>

myFormGroup.value : {myobject: [{id: 'test1'}, {id: 'test2'}]}

</code></pre>

## Minimal reproduction of the problem with instructions

https://stackblitz.com/edit/angular-fromgroup-with-array

## What is the motivation / use case for changing the behavior?

It seems to be a bug, FormGroup build FormControl tree without considering if it's an array or object

It'is ok with a FormArray

## Environment

<pre><code>

Angular version: 5.0.0

Angular version: 6.0.2

| type: bug/fix,effort3: weeks,freq1: low,area: forms,state: confirmed,design complexity: major,P4 | medium | Critical |

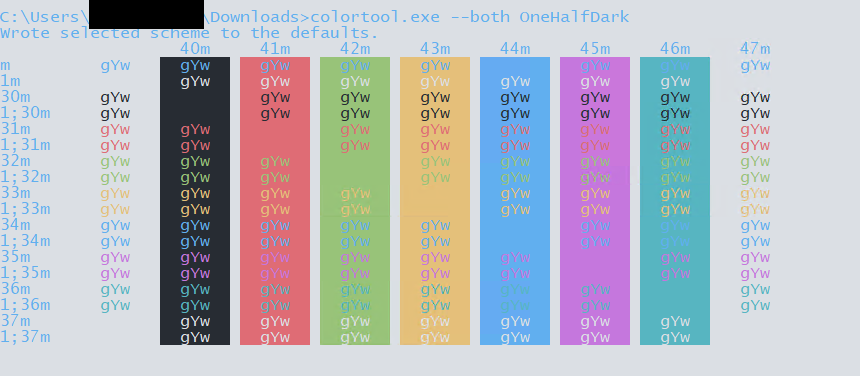

327,487,364 | terminal | colortool.exe does not exit after executing | This bug-tracker is monitored by Windows Console development team and other technical types. **We like detail!**

If you have a feature request, please post to [the UserVoice](https://wpdev.uservoice.com/forums/266908).

> **Important: When reporting BSODs or security issues, DO NOT attach memory dumps, logs, or traces to Github issues**. Instead, send dumps/traces to [email protected], referencing this GitHub issue.

Please use this form and describe your issue, concisely but precisely, with as much detail as possible

* Your Windows build number: (Type `ver` at a Windows Command Prompt)

Microsoft Windows [Version 10.0.17134.48]

* What you're doing and what's happening: (Copy & paste specific commands and their output, or include screen shots)

I'm trying to use the color tool to change the color scheme. Whenever I execute it within cmd.exe, it does not exit. Also the effects (even with `--both`) don't seem to persist when I close the hung window and open a new one.

* What's wrong / what should be happening instead:

1. Color tool does not exit and freezes cmd

To repro:

```

cd Downloads

colortool.exe --both OneHalfDark

```

Please see attached screenshot:

| Product-Colortool,Help Wanted,Area-Interaction,Issue-Bug | low | Critical |

327,500,183 | opencv | Cuda 9.1 NVCUVID not working with opencv test program but works with nvidia test source code. | ##### System information (version)

- OpenCV => 3.4 (checkout from git)

- Operating System / Platform => Linux Ubuntu 16.04 64 Bit

- Compiler => gcc (Ubuntu 5.4.0-6ubuntu1~16.04.9) 5.4.0 20160609

##### Detailed description

When using cuda9.1, opencv's own test program does not work:

./example_gpu_video_reader plush1_720p_10s.m2v

It creates with a segfault.

cuda-sample test program works (it includes source code):

cuda-9.1/samples/3_Imaging/cudaDecodeGL/cudaDecodeGL

So, I conclude from this that the cuda drivers are installed correctly and that they work.

There must be something wrong with the opencv software, maybe using the cuda API wrongly.

Both the above test programs are supposed to utilize

libnvcuvid.so.390.30

<!-- your description -->

##### Steps to reproduce

./example_gpu_video_reader plush1_720p_10s.m2v

.... -> segfault.

The "plush1_720p_10s.m2v" is part of cuda-samples, supplied by Nvidia.

This used to work with cuda8.

The cuda supplied source code that tests the NVCUVID api works fine.

| priority: low,category: build/install,category: gpu/cuda (contrib) | low | Major |

327,522,428 | go | cmd/compile: confusing internal error when importing different packages with same name from different paths | This is a follow-up on #25568: It is possible to get an internal compiler error when invoking the compiler with plausible but incorrect `-I` arguments leading to selection of different but identically named packages ("io" in this case). To reproduce:

1) `cd $GOROOT/test`

2) `go tool compile fixedbugs/bug345.dir/io.go`

2) `go tool compile -I . fixedbugs/bug345.dir/main.go`

=>

```

fixedbugs/bug345.dir/main.go:10:2: internal compiler error: conflicting package heights 4 and 0 for path "io"

```

The issue here is an incorrect argument for `-I`. The following invocation:

`go tool compile -I $HOME/test/fixedbugs/bug345.dir fixedbugs/bug345.dir/main.go`

works as expected.

The internal error is confusing. We should be able to provide a better error message.

| NeedsFix,compiler/runtime | low | Critical |

327,532,825 | flutter | Instrument code to give visual cue to developers when their native code is taking too long | Following a discussion with peers I noticed that a pain point and source of misunderstanding around Method Channels running on main native thread.

Following a suggestion from @dnfield, it would be nice if method channels had a way to know if they're taking too long and freezing the UI. That would benefit people coming from different backgrounds that are not aware that method channels use the native UI thread and that's very bad for long operations.

I think the ideal case would have the method channel time the elapsed time in case the response happens in the main thread, or something along these lines. Feedback could flash the screen or something clearer / more obvious like a banner.

My low-hanging-fruit approach would be for debug builds to hook into Strict Mode api and set up some defaults for immediate results

```dart

StrictMode.setThreadPolicy(new StrictMode.ThreadPolicy.Builder()

.detectDiskReads()

.detectDiskWrites()

.detectNetwork()

.penaltyLog()

// .penaltyDialog()

.penaltyFlashScreen()

.build());

```

https://developer.android.com/reference/android/os/StrictMode

| engine,c: performance,c: proposal,P3,team-engine,triaged-engine | low | Critical |

327,542,135 | rust | Command's Debug impl has incorrect shell escaping | On Unix, the Debug impl for Command prints the command using quotes around each argument, e.g.

"ls" "-la" "\"foo \""

The use of spaces as a delimiter suggests that the output is suitable to be passed to a shell. While it's debatable whether users should be depending on any specific debug representation, in practice, at least rustc itself uses it for user-facing output (when passing `-Z print-link-args`).

There are two problems with this:

1. It's insecure! The quoting is performed, via the `Debug` impl for `CStr`, by `ascii::escape_default`, whose escaping rules are

> chosen with a bias toward producing literals that are legal in a variety of languages, including C++11 and similar C-family languages.

However, this does not include escaping the characters $, \`, and !, which have a special meaning within double quotes in Unix shell syntax. So, for example:

```rust

let mut cmd = Command::new("foo");

cmd.arg("`echo 123`");

println!("{:?}", cmd);

```

prints

```

"foo" "`echo 123`"

```

but if you run that in a shell, it won't produce the same behavior as the original command.

2. It's noisy. In a long command line like those produced by `-Z print-link-args`, most arguments don't contain any characters that need to be quoted or escaped, and the output is long enough without unnecessary quotes.

Cargo uses the `shell-escape` crate for this purpose; perhaps that code can be copied into libstd.

| C-bug,T-libs,A-fmt | low | Critical |

327,569,088 | pytorch | Better error message in DataChannelTCP::_receive | ## Issue description

The following code will produce an error from the TCP distributed backend:

```python

import os

import torch

import torch.distributed as dist

import sys

os.environ['MASTER_ADDR'] = '127.0.0.1'

os.environ['MASTER_PORT'] = '29500'

dist.init_process_group('tcp', rank=int(sys.argv[1]), world_size=2)

if dist.get_rank() == 0:

t = torch.arange(9)

dist.send(tensor=t, dst=1)

else:

t = torch.zeros(9)

dist.recv(tensor=t, src=0)

```

Error:

```

Traceback (most recent call last):

File "/Users/shendrickson/pytorch_dist.py", line 19, in <module>

dist.recv(tensor=t, src=0)

File "/Users/shendrickson/anaconda2/envs/torchdev/lib/python3.6/site-packages/torch/distributed/__init__.py", line 230, in recv

return torch._C._dist_recv(tensor, src)

RuntimeError: Tensor sizes do not match

```

This is a bit misleading, since the sizes of the tensors are the same but the types are different. I think it would be better to give a message along the lines of:

```

RuntimeError: Expected to receive 72 bytes, but got 36 bytes instead. Are tensors of same size and type?

```

I'd be happy to submit a PR for this if others agree that the original message should be changed. | triaged,module: backend | low | Critical |

327,577,134 | pytorch | [Caffe2]How to convert Caffe's mean file to Caffe2's ? | I have tried to use Caffe's mean file directly in Caffe2, but it complains the mean file doesn't contain qtensor fields.

Is there any way to translate Caffe's mean file to Caffe2's?

Thansk. | caffe2 | low | Minor |

327,587,604 | pytorch | how to use Softmax when do segmentation | I want implement FCN by caffe2 to do semantic segmentation, but I do not konw how to implement Softmax as FCN in caffe version.

Could you help me, Thanks!

the code of FCN's softmax using caffe is as follows:

```

layer {

name: "upscore"

type: "Deconvolution"

bottom: "score_fr"

top: "upscore"

param {

lr_mult: 0

}

convolution_param {

num_output: 21

bias_term: false

kernel_size: 64

stride: 32

}

}

layer {

name: "score"

type: "Crop"

bottom: "upscore"

bottom: "data"

top: "score"

crop_param {

axis: 2

offset: 19

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "score"

bottom: "label"

top: "loss"

loss_param {

ignore_label: 255

normalize: false

}

}

```

| caffe2 | low | Minor |

327,631,847 | rust | assert_eq!(a,b) fails to compile for slices while assert!(a == b) works fine | Example ([playground](https://play.rust-lang.org/?gist=1515ab97f5153b600fe3542b50aba925&version=stable&mode=debug)):

```rust

pub fn foo(xs: &[u32], k: u32) -> (&[u32], u32) { (xs, 0) }

fn main() {

assert!(foo(&[], 10) == (&[], 0)); // OK

assert_eq!(foo(&[], 10), (&[], 0)); // FAILS

}

````

| C-enhancement,T-libs-api | low | Critical |

327,674,010 | opencv | VideoCapture can't read some png files | ##### System information (version)

- OpenCV => 4.0.0-dev (todays)

- Operating System / Platform => Windows 64 Bit

- Compiler => mingw64

opencv_ffmpeg400_64.dll has 2018-03-01 timestamp

##### Detailed description

the dnn samples make heavy use of loading images through the videocapture, but trying with

http://answers.opencv.org/upfiles/15276026856434307.png or the humble

http://answers.opencv.org/m/opencv/media/images/logo.png?v=6 it fails to open.

<strike>renaming an older opencv_ffmpeg341_64.dll to opencv_ffmpeg400_64 made it work nicely, so there seems to be some regression here.</strike>

##### Steps to reproduce:

VideoCapture cap("logo.png");

cout << cap.isOpened() << endl;

| category: videoio,incomplete | low | Minor |

327,676,172 | gin | Question: How to write request log and error log in a separate manner. | I could write everything into a file with #805 post.

Related to this, how could it be possible to write error into a separate log?

--

I assume to use gin.DefaultErrorWriter, but it seems that log.SetOuput is only possible with a single file.

Current output (everything into a single file)

```

[GIN] 2018/05/30 - 19:21:17 | 404 | 114.656µs | ::1 | GET /comment/view/99999

Error #01: th size of input parameter is not 40

[GIN] 2018/05/30 - 19:21:20 | 200 | 1.82468ms | ::1 | GET /comment/view/00001

```

Current code

```

logfile, err := os.OpenFile(c.GinLogPath, os.O_RDWR|os.O_CREATE|os.O_APPEND, 0666)

if err != nil {

log.Fatalln("Failed to create request log file:", err)

}

errlogfile, err := os.OpenFile(c.GinErrorLogPath, os.O_RDWR|os.O_CREATE|os.O_APPEND, 0666)

if err != nil {

log.Fatalln("Failed to create request log file:", err)

}

// set request logging

gin.DefaultWriter = io.MultiWriter(logfile)

gin.DefaultErrorWriter = io.MultiWriter(errlogfile)

log.SetFlags(log.Ldate | log.Ltime | log.Lshortfile)

log.SetOutput(gin.DefaultWriter)

``` | question | low | Critical |

327,713,679 | create-react-app | Add React version into eslint-config-react-app | Hello everyone,

### Is this a bug report?

No

### Situation

I'm working on a project that use React (**[email protected]**). I use **react-scripts** (1.0.11) to start and build my project.

According to my React version, I use some deprecated function:

- **componentWillMount** (https://reactjs.org/docs/react-component.html#unsafe_componentwillmount)

- **componentWillReceiveProps** (https://reactjs.org/docs/react-component.html#unsafe_componentwillreceiveprops)

These functions are deprecated in 16.3.0 of React.

### Problems

Now, when I start or build my project, I get several warnings inside console and browser for this deprecated function.

### Explanation

I try to understand why I got this message for the React 15.6.2 which are not concern by this warning. In fact, the package **[email protected]** set the ESLint configuration for the actions of **react-scripts**.

The ESLint's plugin **eslint-plugin-react** have a rule: "_react/no-deprecated_" that created the warnings. This rule match on deprecated code, according to the configured React version number given in the ESLint config file. But, in this case, **eslint-config-react-app** does not define React version. So, as explain here: https://github.com/yannickcr/eslint-plugin-react#configuration, the default value is the latest React stable release.

That explain why I got warnings aiming React 16.3 in my project which use React 15.6.2.

### Solution

I suggest as solution to define React version in the configuration file of **eslint-config-react-app**.

I resolve my problem on adding this:

```

settings: {

react: {

version: require('react').version

}

},

```

inside the file index.js of **eslint-config-react-app**.

This solution could have some problems, especially, the package **react** is now mandatory (we could add a verification if package **react** exist before).

I know, that I could also "_eject_" my project and edit the config files, but I think this modification should be helpful for other users.

### Discussion

In first time, I enjoy to know if my solution is correct on the content and the style. In second time, if people agree with me, I will create a pull request to integrate this modification inside the project.

Thanks for the reading. I hope that my post is clear enough.

| issue: proposal | low | Critical |

327,723,946 | vue | Make vue available to other libraries without having to import it | Title needs work, idk what to call this.

### What problem does this feature solve?

Writing a component library with typescript requires importing Vue so you can use `Vue.extend(...)` to get typings. This causes problems when webpack decides to load a different instance of vue.

See https://github.com/vuetifyjs/vuetify/issues/4068

### What does the proposed API look like?

[Local registration](https://vuejs.org/v2/guide/components-registration.html#Local-Registration) to accept a function that **synchronously** returns a component, calling it with the parent vue instance.

The library can then do:

```ts

export default function MyComponent (Vue: VueConstructor) {

return Vue.extend({ ... })

}

```

And be used like:

```js

import MyComponent from 'some-library'

export default {

components: { MyComponent }

}

```

Of course that would then cause other problems, particularly where we use methods directly from other components. Maybe something that adds types like `Vue.extend()` but doesn't have any runtime behaviour would be better instead?

```ts

// When used, this will behave the same as a bare options object, instead of being an entire vue instance

export default Vue.component({

...

})

```

<!-- generated by vue-issues. DO NOT REMOVE --> | discussion | medium | Major |

327,745,880 | flutter | ListTile needs Material Design guidance: title overflow, textScaleFactor != 1.0 | We've updated the Flutter ListTile (like list items) widget to match: https://material.io/design/components/lists.html#specs

There are still some loose ends that the spec doesn't cover:

- Overflow: what happens when the title or subtitle wraps?

- Text scale factor: how should the layout scale when the text scale factor != 1.0?

There were also some issues with centering and the implicit case analysis in the specs. We need to confirm that the following rules are correct:

- If a leading widget is specified then the leading edge of the titles is 56.0, unless the leading widget's intrinsic width is > 40. In that case title's begin at leading_width + 16

- The leading and trailing widgets are always centered within the list tile. | framework,f: material design,P2,team-design,triaged-design | low | Minor |

327,798,737 | rust | Problem with type inference resolution | A problem was found with the type inference resoultion when the env_logger crate was imported. The issue was reported on [reddit](https://old.reddit.com/r/rust/comments/8n83ph/compile_issue_when_adding_a_dependency) and errors on the latest stable and nightly.

```rust

// Uncomment this and it breaks...

extern crate env_logger;

fn main() {

let string = String::new();

let str = <&str>::from(&string);

println!("{:?}", str);

}

``` | A-trait-system,T-compiler,A-inference,T-types | low | Critical |

327,802,686 | flutter | Text does not conform to DefaultTextStyle which is beyond Card | <!-- Thank you for using Flutter!

If you are looking for support, please check out our documentation

or consider asking a question on Stack Overflow:

* https://flutter.io/

* https://docs.flutter.io/

* https://stackoverflow.com/questions/tagged/flutter?sort=frequent

If you have found a bug or if our documentation doesn't have an answer

to what you're looking for, then fill our the template below. Please read

our guide to filing a bug first: https://flutter.io/bug-reports/

-->

## Steps to Reproduce

<!--

Please tell us exactly how to reproduce the problem you are running into.

Please attach a small application (ideally just one main.dart file) that

reproduces the problem. You could use https://gist.github.com/ for this.

If the problem is with your application's rendering, then please attach

a screenshot and explain what the problem is.

-->

I'm using DefaultTextStyle to set default TextStyle to all Text inside a Card. Widget tree:

- DefaultTextStyle -> Card -> Text : not work

- Card -> DefaultTextStyle -> Text : work

```dart

import 'package:flutter/material.dart';

void main() => runApp(MyApp());

class MyApp extends StatelessWidget {

@override

Widget build(BuildContext context) {

return MaterialApp(

title: 'Demo',

theme: ThemeData(

primarySwatch: Colors.blue,

),

home: FooPage(),

);

}

}

class FooPage extends StatefulWidget {

@override

State<StatefulWidget> createState() => FooPageState();

}

class FooPageState extends State<FooPage> {

@override

Widget build(BuildContext context) {

return Scaffold(

appBar: AppBar(title: new Text("Demo")),

body: Column(

children: <Widget>[

DefaultTextStyle(

style: TextStyle(fontSize: 30.0, color: Colors.blue),

child: Card(

child: Text('DefaultTextStyle outside Card does not work'),

),

),

Card(

child: DefaultTextStyle(

style: TextStyle(fontSize: 30.0, color: Colors.blue),

child: Text('DefaultTextStyle inside Card works'),

),

),

],

),

);

}

}

```

## Logs

<!--

Run your application with `flutter run --verbose` and attach all the

log output below between the lines with the backticks. If there is an

exception, please see if the error message includes enough information

to explain how to solve the issue.

-->

<!--

Run `flutter analyze` and attach any output of that command below.

If there are any analysis errors, try resolving them before filing this issue.

-->

<!-- Finally, paste the output of running `flutter doctor -v` here. -->

```

[✓] Flutter (Channel dev, v0.5.0, on Mac OS X 10.13.4 17E202, locale en-US)

• Flutter version 0.5.0 at /Users/rain/flutter

• Framework revision a863817c04 (4 days ago), 2018-05-26 12:29:21 -0700

• Engine revision 2b1f3dbe25

• Dart version 2.0.0-dev.58.0.flutter-97b6c2e09d

[✓] Android toolchain - develop for Android devices (Android SDK 27.0.3)

• Android SDK at /Users/rain/Library/Android/sdk

• Android NDK at /Users/rain/Library/Android/sdk/ndk-bundle

• Platform android-27, build-tools 27.0.3

• Java binary at: /Applications/Android Studio.app/Contents/jre/jdk/Contents/Home/bin/java

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b01)

• All Android licenses accepted.

[✓] iOS toolchain - develop for iOS devices (Xcode 9.3.1)

• Xcode at /Applications/Xcode.app/Contents/Developer

• Xcode 9.3.1, Build version 9E501

• ios-deploy 1.9.2

• CocoaPods version 1.5.2

[✓] Android Studio (version 3.1)

• Android Studio at /Applications/Android Studio.app/Contents

• Flutter plugin version 24.2.1

• Dart plugin version 173.4700

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b01)

[✓] VS Code (version 1.23.1)

• VS Code at /Applications/Visual Studio Code.app/Contents

• Dart Code extension version 2.12.1

```

| framework,f: material design,a: typography,has reproducible steps,P2,found in release: 2.5,found in release: 2.6,team-design,triaged-design | low | Critical |

327,873,076 | vscode | Support customisable alias for commands in command palette | - VSCode Version: 1.23.0

- OS Version: Linux

----

**Is there a way to define an alias for a command so that the command can be called under multiple different names (original name and the alias)?**

This is useful in different cases:

- If you switch from a different editor that uses a command palette (like emacs, ... ) you might have learned the names of commands over many years. Relearning takes more time than making an alias once. This would also allow to use two editors in parallel without interference. Before I came to VSCode I used emacs. I regularly want to call `diff-buffer-with-file` and it takes me some time to figure out that in VSCode it's called `Compare active file with saved`.

- You could define abbreviations that are quicker to type than selecting regular command names with the fuzzy search, e.g. `tt`. This is quite popular in emacs, see e.g. http://ergoemacs.org/emacs/emacs_alias.html

- if you regularly hit keys in the wrong order an alias might be useful for command you regularly use.

I think I'm not the only one who likes such a feature: When I used emacs I checked quite a few popular user configurations that users keep on github. A lot of these contain aliases by using the command `(defalias ...)` - including popular/influential ones like [spacemacs](https://github.com/syl20bnr/spacemacs/), [abo-abo's oremacs](https://github.com/abo-abo/oremacs), the [config of the emacs maintainer](https://github.com/jwiegley/dot-emacs) ...

This has been asked on [Reddit](https://www.reddit.com/r/vscode/comments/8bj63w/can_i_set_an_alias_for_the_command_palette/) and [stackoverflow](https://stackoverflow.com/questions/50143258/add-alias-for-commands-in-command-palette) without any useful answer.

| feature-request,quick-open | medium | Major |

327,888,684 | vscode | Support folding ranges inside a line | Hey, I have read through the folding-related issues (like #3422, the linked ones and some other ones related to the `FoldingRangeProvider` API specifically), but I don't seem to have come across a conversation about inline folding ranges.

Has this been discussed - or is it okay to start a discussion on the topic now?

I am interested in these, because I'd like to improve readability of MarkDown documents (using an extension) by collapsing MarkDown link targets (the URLs - which can be quite long) and instead linkifying the text range (using a `DocumentLinkProvider`).

Researching the `FoldingRange` API though, I can see it only has start and end lines, not `Position`s. Is this an immutable deliberate design decision or something open to alternative with enough support behind it?

I think other possible use cases could be folding of ternary expression branches, one-liner `if` statements and stuff like that. (But I'm mostly interested in my use-case described above.) | feature-request,editor-folding | high | Critical |

327,892,047 | rust | rustdoc: accept a "test runner" argument to wrap around doctest executables | Unit tests are currently built and run in separate steps: First you call `rustc --test` to build up a test runner executable, then you run the executable it outputs to actually execute the tests. This provides a great advantage: The unit tests can be built for a different platform than the host. Cargo takes advantage of this by allowing you to hand it a "test runner" command that it will run the tests in.

Rustdoc, on the other hand, collects, compiles, and runs the tests in one fell swoop. Specifically, it compiles the doctest as part of "running" the test, so compile errors in doctests are reported as test errors rather than as compile errors prior to test execution. Moreover, it runs the resulting executable directly:

https://github.com/rust-lang/rust/blob/fddb46eda35be880945348e1ec40260af9306d74/src/librustdoc/test.rs#L338-L354

This can be a problem when you're trying to run doctests for a platform that's fairly far removed from your host platform - e.g. for a different processor architecture. It would be ideal if rustdoc could accept some wrapper command so that people could run their doctests in e.g. qemu rather than on the host environment. | T-rustdoc,C-enhancement,A-doctests | low | Critical |

327,901,269 | flutter | Improve error message when a qualified import should be relative | I had a PR validation fail because I used a fully qualified import where I should have used a relative import. The error message in the out was as follows:

```bash

dart ./dev/bots/test.dart

SHARD=analyze

⏩ RUNNING: cd examples/hello_world; ../../bin/flutter inject-plugins

Unhandled exception:

NoSuchMethodError: The getter 'iterator' was called on null.

Receiver: null

Tried calling: iterator

#0 Object.noSuchMethod (dart:core-patch/dart:core/object_patch.dart:46)

#1 _deepSearch (file:///tmp/flutter%20sdk/dev/bots/test.dart:520:17)

#2 _deepSearch (file:///tmp/flutter%20sdk/dev/bots/test.dart:525:28)

#3 _verifyNoBadImportsInFlutter (file:///tmp/flutter%20sdk/dev/bots/test.dart:454:31)

<asynchronous suspension>

#4 _analyzeRepo (file:///tmp/flutter%20sdk/dev/bots/test.dart:100:9)

<asynchronous suspension>

#5 main (file:///tmp/flutter%20sdk/dev/bots/test.dart:56:26)

<asynchronous suspension>

#6 _startIsolate.<anonymous closure> (dart:isolate-patch/dart:isolate/isolate_patch.dart:277)

#7 _RawReceivePortImpl._handleMessage (dart:isolate-patch/dart:isolate/isolate_patch.dart:165)

```

We should have a better error message that points in the direction of the problem.

The specific import was in:

`circle_avatar.dart`

I initially imported material colors with full qualification:

`import 'package:flutter/src/material/colors.dart';`

The correct import was a relative one:

`import 'colors.dart'` | a: tests,team,framework,a: error message,P2,team-framework,triaged-framework | low | Critical |

327,938,554 | TypeScript | Add a Mutable type (opposite of Readonly) to lib.d.ts | <!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Please read the FAQ first, especially the "Common Feature Requests" section.

-->

## Search Terms

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

mutable, mutable type

## Suggestion

lib.d.ts contains a `Readonly` type, but not a `Mutable` type. This type would be the opposite of `Readonly`, defined as:

```typescript

type Mutable<T> = {

-readonly[P in keyof T]: T[P]

};

```

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

This is useful as a lightweight builder for a type with readonly properties (especially useful when some of the properties are optional and conditional logic is needed before assignment).

## Examples

<!-- Show how this would be used and what the behavior would be -->

```typescript

type Mutable<T> = {-readonly[P in keyof T]: T[P]};

interface Foobar {

readonly a: number;

readonly b: number;

readonly x?: string;

}

function newFoobar(baz: string): Foobar {

const foobar: Mutable<Foobar> = {a: 1, b: 2};

if (shouldHaveAnX(baz)) {

foobar.x = 'someValue';

}

return foobar;

}

```

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

| Suggestion,Awaiting More Feedback,Fix Available | high | Critical |

327,967,867 | go | runtime/pprof: possible sampling error with large memprofile rate | Split out from #25096, where @hyangah wrote:

> With the default memprofile sampling rate, however, the results look different. The results are different from the results with -memprofilerate=1, too. The order in the top15 list varies a lot. This seems to me errors from sampling-based estimation, not the bug the original report suggested.

This is an issue to investigate that. | NeedsInvestigation,compiler/runtime | low | Critical |

327,978,466 | go | x/image/vector: rasterizer shifts alpha mask and is slow when target is offset and small relative image size | Please answer these questions before submitting your issue. Thanks!

### What version of Go are you using (`go version`)?

1.10.2

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

linux/amd64

### What did you do?

In the golang.org/x/image/vector.go there is this function:

```func (z *Rasterizer) Draw(dst draw.Image, r image.Rectangle, src image.Image, sp image.Point)```

r is the target rectangle for rendering the image. When r is a smaller rectangle than the bounds of the destination image dst, and offset into the middle of dst, two problems emerge:

First, the alpha mask, which is the whole point of the Draw command shifts with the target rectangle rather than staying fixed to the upper left corner of the image. I believe this should not be the default behavior. It is not consistent with other rasterizers, such as the free type rasterizer. If the mask shifts with the target and you are rendering a path using, say, Bezier curves, and want to draw that path onto an image, you must either determine before hand the exact boundaries of the path and shift the coordinates accordingly, because as it is, a target drawn somewhere in the middle of an image will always draw the mask relative to the upper left corner of the target rectangle. Having to determine the boundaries before hand requires flattening the curves and either storing the resulting line segments or repeating the process when actually rendering the alpha mask. It is much simpler to keep the alpha mask fixed to the upper left corner of the image boundary rather than move with the target rectangle.

Second, no matter how big the target rectangle is relative to the entire image, the "accumulate step" traverses the entire image, which is inefficient when the target is a small fraction of the image.

I have created a modified version of the rasterizer here: [https://github.com/srwiley/image](https://github.com/srwiley/image)

In the vector_test.go file are modified versions of the TestBasicPathDstAlpha and TestBasicPathDstRGBA test functions. These are changed to test for fixing the alpha mask to the image and not shifting with the target. They will fail with the current version of vector.go, but not the forked version.

There are also a series of benchmarks showing the improved performance when the target is small relative the destination image. This is what the benchmark output looks like:

```

BenchmarkDrawPathBounds1000-16 30 44412739 ns/op

BenchmarkDrawImageBounds1000-16 20 53485352 ns/op

BenchmarkDrawPathBounds100-16 3000 458989 ns/op

BenchmarkDrawImageBounds100-16 20 53309779 ns/op

BenchmarkDrawPathBounds10-16 200000 6054 ns/op

BenchmarkDrawImageBounds10-16 20 55934650 ns/op

BenchmarkDrawPathBounds2-16 1000000 1021 ns/op

BenchmarkDrawImageBounds2-16 20 54077408 ns/op

```

BenchmarkDrawPathBoundsX draw only the path boundary for a path of a hexagon of the indicated radius X in a 2200x2200 sized image. BenchmarkDrawImageBoundsX draws the entire image, which is what the current vector.go file does. So, as the size of the path gets smaller the speed difference increases.

### What did you expect to see?

Significantly greater speed when the target is small compared to the image size and the alpha mask fixed to the upper left of the destination image.

### What did you see instead?

Inefficient speed for small target sizes relative the image size, and the alpha mask fixed relative the target rectangle upper left corner and not the destination image bounds.

| Performance,NeedsInvestigation | low | Major |

328,000,762 | puppeteer | Support ServiceWorkers | We need an API to connect and manage ServiceWorkers.

At the very least, we need to:

- have a way to shut down service worker (requested at https://github.com/GoogleChrome/puppeteer/issues/1396)

- have a way to see service worker traffic (requested at https://github.com/GoogleChrome/puppeteer/issues/2617)

- support code coverage for service workers (requested at https://github.com/GoogleChrome/puppeteer/issues/2092)

| feature,chromium | low | Major |

328,051,788 | rust | Tracking issue for the OOM hook (`alloc_error_hook`) | PR #50880 added an API to override the std OOM handler, similarly to the panic hook. This was discussed previously in issue #49668, after PR #50144 moved OOM handling out of the `Alloc`/`GlobalAlloc` traits. The API is somewhat similar to what existed before PR #42727 removed it without an explanation. This issue tracks the stabilization of this API.

Defined in the `std::alloc` module:

<del>

```rust

pub fn set_oom_hook(hook: fn(Layout) -> !);

pub fn take_oom_hook() -> fn(Layout) -> !;

```

</del>

```rust

pub fn set_alloc_error_hook(hook: fn(Layout));

pub fn take_alloc_error_hook() -> fn(Layout);

```

CC @rust-lang/libs, @SimonSapin

## Unresolved questions

- [x] ~~Name of the functions. The API before #42727 used `_handler`, I made it `_hook` in #50880 because that's the terminology used for the panic hook (OTOH, the panic hook returns, contrary to the OOM hook).~~ #51264

- [ ] Should this move to its own module, or stay in `std::alloc`?

- [x] Interaction with unwinding. `alloc::alloc::oom` is marked `#[rustc_allocator_nounwind]`, so theoretically, the hook shouldn't panic (except when panic=abort). Yet if the hook does panic, unwinding seems to happen properly except it doesn't.

- [ ] Should we have this, or https://github.com/rust-lang/rust/issues/51540, or both?

- [ ] https://github.com/rust-lang/unsafe-code-guidelines/issues/506 | A-allocators,T-libs-api,B-unstable,C-tracking-issue,Libs-Tracked | medium | Critical |

328,053,026 | flutter | [proposal] make flutter compatible with Appium Tests | Hi,

we are writing a new flutter app and want it to be acceptance-tested by a bunch of Appium Tests.

As first we want to test on an iPhone, so we are not sure if the same problem also exsits for Android.

The flutter app cannot be tested because the PageSource always returns the one of the LaunchImage. Although the screenshot in Appium Desktop shows the correct view of the app. We verified it with other flutter apps from the AppStore, they have the same behavior. Means whatever view is open on the app, we always see the same page source.

The page source looks like

```xml

<?xml version="1.0" encoding="UTF-8"?>

<XCUIElementTypeApplication type="XCUIElementTypeApplication" name="test_app" label="test_app" enabled="true" visible="true" x="0" y="0" width="375" height="667">

<XCUIElementTypeWindow type="XCUIElementTypeWindow" enabled="true" visible="true" x="0" y="0" width="375" height="667">

<XCUIElementTypeOther type="XCUIElementTypeOther" enabled="true" visible="true" x="0" y="0" width="375" height="667"/>

</XCUIElementTypeWindow>

<XCUIElementTypeWindow type="XCUIElementTypeWindow" enabled="true" visible="false" x="0" y="0" width="375" height="667">

<XCUIElementTypeOther type="XCUIElementTypeOther" enabled="true" visible="false" x="0" y="0" width="375" height="667">

<XCUIElementTypeOther type="XCUIElementTypeOther" enabled="true" visible="false" x="0" y="667" width="375" height="216"/>

</XCUIElementTypeOther>

</XCUIElementTypeWindow>

<XCUIElementTypeWindow type="XCUIElementTypeWindow" enabled="true" visible="true" x="0" y="0" width="375" height="667">