id

int64 393k

2.82B

| repo

stringclasses 68

values | title

stringlengths 1

936

| body

stringlengths 0

256k

⌀ | labels

stringlengths 2

508

| priority

stringclasses 3

values | severity

stringclasses 3

values |

|---|---|---|---|---|---|---|

324,212,268 |

TypeScript

|

In JS, prototype-assignment methods can't find method-local type parameters

|

```js

/**

* @constructor

* @template K, V

*/

var Multimap = function() {

/** @type {!Map.<K, !Set.<!V>>} */

this._map = new Map();

};

/**

* @param {!S} o <------ error here, cannot find name 'S'

* @template S

*/

Multimap.prototype.lowerBound = function(o) {

};

```

**Expected behavior:**

No error, and `o: S`

**Actual behavior:**

Error on o's param tag: "cannot find name 'S'".

It looks like type resolution on prototype-assignment methods only looks at the class to find type parameters, meaning that method-local type parameters are missed. You can refer to `K` in the above example:

```js

/**

* @param {!K} object

* @template S

*/

Multimap.prototype.lowerBound = function(o) {

return 0;

};

```

And you can refer to `S` on a standalone function:

```js

/**

* @param {!S} object

* @template S

*/

var lowerBound = function(o) {

return 0;

};

```

|

Bug,Domain: JSDoc,Domain: JavaScript

|

low

|

Critical

|

324,280,512 |

go

|

x/build/cmd/gitmirror: lock conversations on GitHub commits

|

Occasionally a GitHub user will post a comment on a commit. Frequently these are spam which leads to nuisance emails generated if you watch the repo. Very occasionally these are requests for help, which currently require a human to reply, reminding the OP to raise an issue. This also generates nuisance emails.

I propose that the bot should blanket lock discussion on all commits in this repo.

|

Builders,Proposal,Proposal-Accepted,NeedsFix,FeatureRequest

|

low

|

Major

|

324,301,876 |

electron

|

Feature Request: Add query methods for Visual/Layout ZoomLevelLimits

|

**Is your feature request related to a problem? Please describe.**

The related PR - Microsoft/vscode#49858 (Issue: Microsoft/vscode#48357) - The UI setting of the zoom level was unbounded, although the actual zoom level has clear limits (`+9` <-> `-8`).

We use the `webFrame` module of `electron` the get the zoom level, but it seems to be unbounded, at least not to reasonable values.

I've looked at the `.d.ts` declaration and at the `web-frame.js` and the `c++` header which defines `WebFrame`, and I can only see a method to `Set` the layout and visual zoom level limits, but not retrieve them.

**Describe the solution you'd like**

I want to be able to logically bind the UI settings the values that `electron` itself bounds, not hard coded values that might be right for one screen/resolution/etc.

Currently in the PR listed above, the bounds are hard-coded, and I enforce them myself, logically, because `webFrame.setZoomLevel` and `webFrame.getZoomLevel` do not match the visual zoom level bounds.

I would want to have methods in [webFrame](https://github.com/electron/electron/blob/4fcd178c368e67e543ee10ee84a0947342412a4c/atom/renderer/api/atom_api_web_frame.h), probably called:

```cpp

v8::Local<v8::Value> GetVisualZoomLevelLimits() const;

v8::Local<v8::Value> GetLayoutZoomLevelLimits() const;

```

Which would be exported to the `js` `webFrame` module, which would in-turn allow me to query for the electron bounds value instead of hard coded ones, specifically [here](https://github.com/Microsoft/vscode/pull/49858/files#diff-c93a0e42070e15efe5973a7aa2f71f53), since currently, `webFrame.getZoomLevel`/`webFrame.setZoomLevel` are not limiting values to the visual zoom level limits.

**Describe alternatives you've considered**

Currently, the PR is not in yet in production, but the workaround was to get the `min`/`max` zoom level limits via trial and error, and hard code those limits.

Thanks a lot for reading, perhaps tho I've just missed the methods which allow to do this in my research. But if not, that could help a lot.

If it's not too complicated, I'd love to PR it as well.

|

enhancement :sparkles:

|

low

|

Critical

|

324,316,303 |

pytorch

|

Caffe2 network exported from ONNX does not initialize the model inputs

|

## Issue description

When exporting an ONNX model to Caffe2 with the [conversion tool](pytorch/pytorch/blob/master/caffe2/python/onnx/bin/conversion.py), the resulting init net does not initialize the model inputs. When running this network in Caffe2, you therefore get the error `Encountered a non-existing input blob: <name of input blob>`, unless you explicitly create the input blob before running the network.

I would like the network to create the blob automatically, so that I don't have to specify the name and size of the input blob manually (this information should be part of the exported network imho).

This problem was already fixed in onnx/onnx-caffe2#48, but it was later changed again by https://github.com/onnx/onnx-caffe2/commit/ff82dca5551fce84d781bb23719fbe2913b30327. I'm not sure which behavior is actually intended.

This is probably related to #6505, but I'm not sure whether it's the same problem.

## System Info

- PyTorch or Caffe2: Caffe2

- How you installed Caffe2: conda

- OS: Ubuntu 16.04

- Caffe2 version: 0.8.dev

- Versions of any other relevant libraries: onnx 1.1.1 from pip

|

caffe2

|

low

|

Critical

|

324,328,682 |

vscode

|

Git - Support Co-Authored-By

|

Issue Type: <b>Feature Request</b>

Github introduced a convention where the commit message contains a list of co-authors, for use when pairing.

Example:

```

commit 032db38255275dd7372f575e3d06947c878ef4c6

Author: Tommy Brunn <[email protected]>

Date: Thu May 17 10:21:16 2018 +0200

Encode int64

Co-Authored-By: Tulio Ornelas <[email protected]>

```

Both Github and Atom then displays both authors whenever they are showing author information. See http://blog.atom.io/2018/04/18/atom-1-26.html#github-package-improvements-1 for an example of what it looks like in Atom.

VS Code version: Code 1.23.1 (d0182c3417d225529c6d5ad24b7572815d0de9ac, 2018-05-10T16:03:31.083Z)

OS version: Darwin x64 16.7.0

<!-- generated by issue reporter -->

|

help wanted,feature-request,git

|

medium

|

Major

|

324,357,767 |

kubernetes

|

StatefulSet with long name can not create pods

|

**Is this a BUG REPORT or FEATURE REQUEST?**:

/kind bug

**What happened**:

Creating a StatefulSet with a name containing 57 characters resulted could not start any pods as kubernetes added the label "controller-revision-hash" to the pod which apparently contains the StatefulSet name and a hash appended.

The label is not truncated to 63 characters, therefore the creation of the pod fails with the error message

`statefulset-controller create Pod long-redacted-statefulset-name-xxxxxxxxxxxxxxxxxxxxxxxxx-0 in StatefulSet long-redacted-statefulset-name-xxxxxxxxxxxxxxxxxxxxxxxxx failed error: Pod "long-redacted-statefulset-name-xxxxxxxxxxxxxxxxxxxxxxxxx-0" is invalid: metadata.labels: Invalid value: "long-redacted-statefulset-name-xxxxxxxxxxxxxxxxxxxxxxxxx-58d5fbb889": must be no more than 63 characters

`

**What you expected to happen**:

The label should be truncated or StatefulSets should enforce shorter names.

**How to reproduce it (as minimally and precisely as possible)**:

Create a StatefulSet with a name longer than 57 characters:

```apiVersion: apps/v1

kind: StatefulSet

metadata:

labels:

app: sset

name: long-redacted-statefulset-name-xxxxxxxxxxxxxxxxxxxxxxxxx

spec:

serviceName: ""

replicas: 1

selector:

matchLabels:

app: sset

template:

metadata:

labels:

app: sset

spec:

containers:

- image: alpine

name: sset-container

command:

- sleep

- "300"

```

**Anything else we need to know?**:

**Environment**:

- Kubernetes version (use `kubectl version`): Server Version: "v1.10.2"

- Cloud provider or hardware configuration: baremetal

- OS (e.g. from /etc/os-release): CentOS-7.5.1804

- Kernel (e.g. `uname -a`): 3.10.0-862.2.3.el7.x86_64

- Install tools: kubeadm

|

kind/bug,sig/apps,lifecycle/frozen,needs-triage

|

medium

|

Critical

|

324,369,541 |

godot

|

Ragdoll / Physical Bones issue

|

As described in #11973

I've attached an example project below:

[Ragdoll Test.zip](https://github.com/godotengine/godot/files/2016597/Ragdoll.Test.zip)

Example contains 1 scene with 2 skeletons.

First example - a simple worm skeleton that contorts wildly.

Second Example - Robot from 3D platformer, by default I've set the physics simulation to just his arms. They don't stop moving, as if he's very excited about something.

If you hit Enter (ui_accept) the rest of his bones are activated, and he crumples to the floor in quite an unnatural way, not like in the example posted by Andrea (https://godotengine.org/article/godot-ragdoll-system)

|

bug,confirmed,topic:physics,topic:3d

|

medium

|

Major

|

324,395,107 |

three.js

|

Improved Alpha Testing via Alpha Distributions

|

Improved alpha testing in mipmapped texture hierarchies via alpha distributions, rather than averaging.

http://www.cemyuksel.com/research/alphadistribution/

http://www.cemyuksel.com/research/alphadistribution/alpha_distribution.pdf

|

Enhancement

|

low

|

Minor

|

324,506,615 |

rust

|

Confusing error message when using Self in where clause bounds errornously

|

The source `trait SelfReferential<T> where T: Self {}` gives the error

```

error[E0411]: expected trait, found self type `Self`

--> src/main.rs:13:35

|

13 | trait SelfReferential<T> where T: Self {}

| ^^^^ `Self` is only available in traits and impls

```

This is confusing because the `Self` is in a trait. It just doesn't seem to be valid to use it as the trait bounds of a type in a where clause.

|

C-enhancement,A-diagnostics,A-trait-system,T-compiler,D-papercut

|

low

|

Critical

|

324,506,658 |

rust

|

Calling `borrow_mut` on a `Box`ed trait object and passing the result to a function can cause a spurious compile error

|

This code does not compile:

```rust

use std::borrow::BorrowMut;

pub trait Trait {}

pub struct Struct {}

impl Trait for Struct {}

fn func(_: &mut Trait) {}

fn main() {

let mut foo: Box<Trait> = Box::new(Struct{});

func(foo.borrow_mut());

}

```

However, if you use `as` to be clear on what you expect `borrow_mut()` to return:

```rust

func(foo.borrow_mut() as &mut Trait);

```

It will then compile.

This does not happen when the boxed type is a struct, only a trait. Simple assignments don't seem to trigger it either. (**Edit:** Forgot to add that this happens both on current stable and current nightly.)

Here is the output when compiling on the Playground:

```

Compiling playground v0.0.1 (file:///playground)

error[E0597]: `foo` does not live long enough

--> src/main.rs:11:10

|

11 | func(foo.borrow_mut());

| ^^^ borrowed value does not live long enough

12 | }

| - `foo` dropped here while still borrowed

|

= note: values in a scope are dropped in the opposite order they are created

warning: variable does not need to be mutable

--> src/main.rs:10:9

|

10 | let mut foo: Box<Trait> = Box::new(Struct{});

| ----^^^

| |

| help: remove this `mut`

|

= note: #[warn(unused_mut)] on by default

error: aborting due to previous error

For more information about this error, try `rustc --explain E0597`.

error: Could not compile `playground`.

To learn more, run the command again with --verbose.

```

|

A-lifetimes,T-compiler,C-bug,T-types,A-trait-objects

|

low

|

Critical

|

324,522,844 |

pytorch

|

Only one thread is used on macOS (super slow on CPU)

|

## Issue description

Computations on my macbook's CPU are extremely slow.

`torch.get_num_threads()` always says 1, and `torch.set_num_threads(n)` has no effect.

I also tried building from source using the workaround described [here](https://github.com/pytorch/pytorch/issues/6328#issuecomment-383290539) in order to enable libomp support, but nothing changed.

## System Info

PyTorch version: 0.5.0a0+cf0c585

Is debug build: No

CUDA used to build PyTorch: None

OS: Mac OSX 10.13.4

GCC version: Could not collect

CMake version: version 3.11.0

Python version: 3.6

Is CUDA available: No

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

Versions of relevant libraries:

[pip3] numpy (1.14.3)

[pip3] torch (0.5.0a0+cf0c585)

[pip3] torchvision (0.2.1)

[conda] Could not collect

|

triaged,module: macos,module: multithreading

|

medium

|

Critical

|

324,552,759 |

angular

|

Calling formGroup.updateValueAndValidity() does not update child controls that have `onUpdate` set to `'submit'`

|

<!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[x] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Performance issue

[x] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

[ ] Other... Please describe:

</code></pre>

## Current behavior

Calling formGroup.updateValueAndValidity() does not update child control and therefore form.value with data pending in child controls if child controls onUpdate is set to 'submit' .

## Expected behavior

The **formGroup.updateValueAndValidity()** should raise child validation and update values. What would also be acceptable is **formhooks** having a 'manual' value that if a control's **onUpdate** is set to this, **formGroup.updateValueAndValidity()** would update it.

## Minimal reproduction of the problem with instructions

the demo has instructions

https://stackblitz.com/edit/angular-rx-validation-form-bug-demo

## What is the motivation / use case for changing the behavior?

calling **formGroup.updateValueAndValidity()** should mean that regardless of what **onUpdate** is, the child controls should be validated and updated.

## Environment

<pre><code>

Angular version: 5.2.0

Browser:

- [x ] Chrome (desktop) version XX

- [ ] Chrome (Android) version XX

- [ ] Chrome (iOS) version XX

- [ ] Firefox version XX

- [ ] Safari (desktop) version XX

- [ ] Safari (iOS) version XX

- [ ] IE version XX

- [ ] Edge version XX

</code></pre>

|

type: bug/fix,freq1: low,area: forms,state: needs more investigation,P4

|

low

|

Critical

|

324,591,315 |

vscode

|

Support syntax highlighting with tree-sitter

|

Please consider supporting [tree-sitter](https://github.com/tree-sitter/tree-sitter) grammars in addition to TextMate grammars. TextMate grammars are incredibly difficult to author and maintain and impossible to get right. The over 500 (!) issues reported against https://github.com/Microsoft/TypeScript-TmLanguage are a living proof of this.

This presentation explains the motivation and goals for tree-sitter: https://www.youtube.com/watch?v=a1rC79DHpmY

tree-sitter already ships with Atom and is also used on github.com.

|

feature-request,languages-basic,tokenization

|

high

|

Critical

|

324,600,855 |

go

|

x/build/maintner: growing files are not cleaned up

|

When the full file is downloaded, the corresponding growing file is not removed, so over time growing files accumulate. For example this is my cache directory at the moment:

```

[...]

0033.7b5b6f92e2eecdbfcfb06d7ed7a4f95f66092b2bb3693f41407bb546.mutlog

0034.435067e2842b0a7363a09a259e5aa1a2006787d95fae3a14a33b79b2.mutlog

0034.growing.mutlog

0035.f59248bfa6248f1cd081601c2d0331c97ecfd7807c3a4805d8fb65a8.mutlog

0035.growing.mutlog

0036.growing.mutlog

```

/cc @bradfitz

|

help wanted,Builders,NeedsFix

|

low

|

Minor

|

324,602,741 |

TypeScript

|

[SALSA] @callback's @param tags should not require names

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!-- Please try to reproduce the issue with `typescript@next`. It may have already been fixed. -->

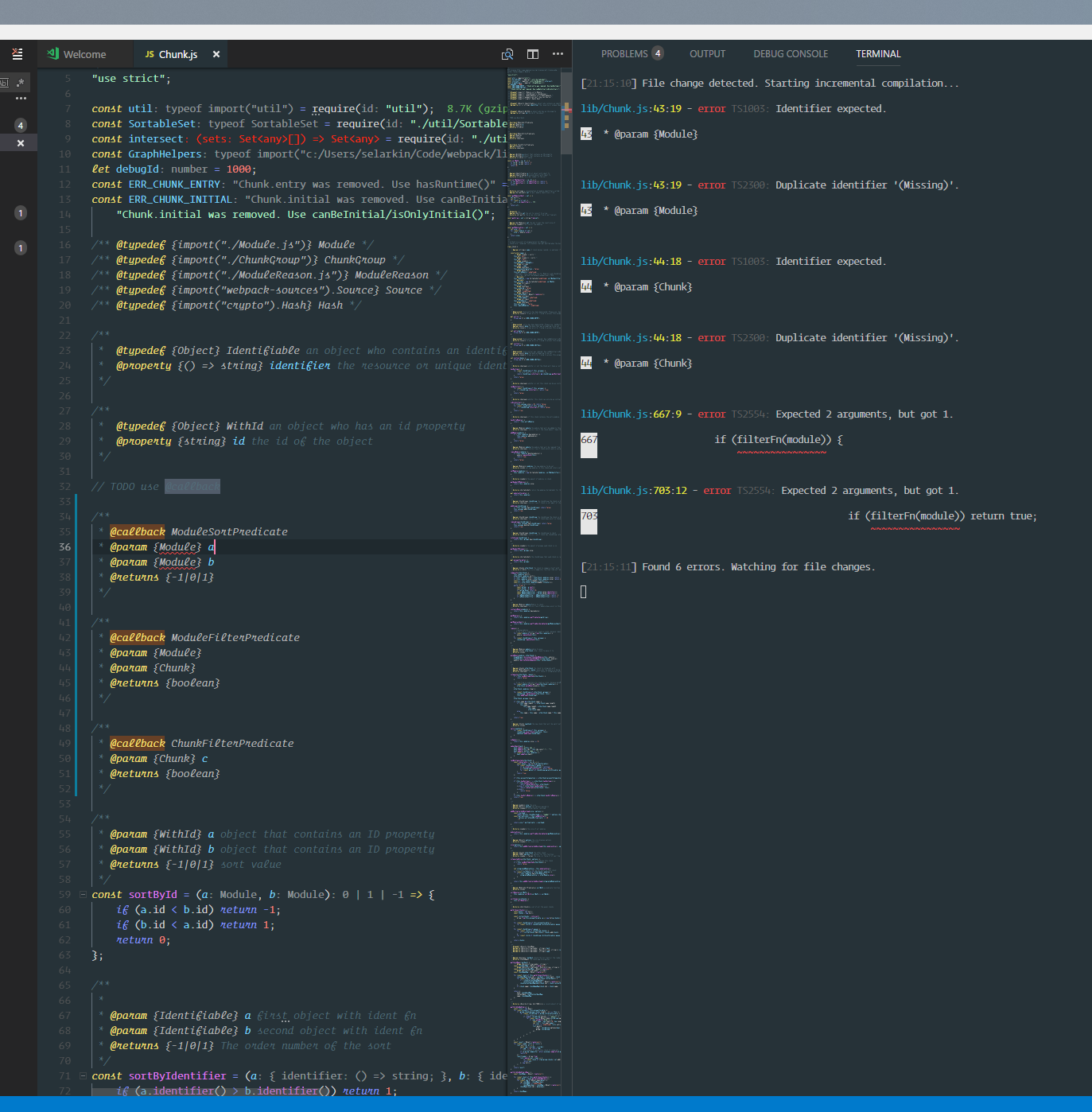

**TypeScript Version:** 2.9.0-dev.20180518

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:**

`@callback` `@params` duplicate identifier error

**Code**

```js

/**

* @callback ModuleSortPredicate

* @param {Module} a

* @param {Module} b

* @returns {-1|0|1}

*/

/**

* @callback ModuleFilterPredicate

* @param {Module}

* @param {Chunk}

* @returns {boolean}

*/

/**

* @callback ChunkFilterPredicate

* @param {Chunk} c

* @returns {boolean}

*/

```

**Expected behavior:**

No errors, or syntax highlighting errors.

**Actual behavior:**

```

lib/Chunk.js:44:18 - error TS1003: Identifier expected.

44 * @param {Chunk}

lib/Chunk.js:44:18 - error TS2300: Duplicate identifier '(Missing)'.

44 * @param {Chunk}

```

**Playground Link:** <!-- A link to a TypeScript Playground "Share" link which demonstrates this behavior -->

**Related Issues:** <!-- Did you find other bugs that looked similar? -->

Here is an image of it in action. I'm not sure if it is interaction with other things at the same time. I'm happy to pull information for you @sandersn. If you goto webpack/webpack#feature/type-compiler-compilation-save branch you can go to those lines of the code and test it as well.

|

Suggestion,Awaiting More Feedback,Domain: JavaScript

|

low

|

Critical

|

324,615,175 |

opencv

|

I hope 2 features could be added into opencv 4.0.

|

1. Can set OpenCL device by thread in program. Like cuda::setDevice().

2. Adapt more functions for CUDA or OpenCL device, e.g. findHomography, findContours...

|

feature,category: ocl,RFC

|

low

|

Minor

|

324,621,600 |

angular

|

[Feature] Allow to clear Service Worker cache

|

<!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[ ] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Performance issue

[X] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

[ ] Other... Please describe:

</code></pre>

## Current behavior

As a developer, I clear the cookies, data and relevant user information from the browser when the user logs out.

For privacy and security reasons and to avoid keeping relevant data in the browser's cache, I delete all the relevant data of the user from the cookies and localStorage.

However I cannot do the same from the service worker cache.

## Expected behavior

I'd like to be able to clear the Service Worker cache whenever a user logs out, to make sure no other user has access to the cache in any way.

This could be added as a function in the Service Worker provider.

## What is the motivation / use case for changing the behavior?

A problem is that if a user [bob] does log in with his/her user account after a logout from another user [alice], the cache of the Service Worker might answer with data from alice's requests, having a privacy problem here... The only difference between the requests is the access Token, and is set in the header, which is not taken in account when caching in the service worker. Thus a properly made request while being offline, would be able to answer the data from another user.

Another problem is that a user could access the cache from the browser web tools and check the contents of the cache, even after the user has logged out.

## Environment

<pre><code>

Angular version: 6.0.2

<!-- Check whether this is still an issue in the most recent Angular version -->

Browser:

- All

|

feature,area: service-worker,feature: under consideration

|

medium

|

Critical

|

324,635,104 |

TypeScript

|

Wrong createElementNS() type definitions

|

`Document.createElementNS()` allows to create a few nonexistent SVG elements. In particular, this function has signatures that allow creating `componentTransferFunction`, `textContent` and `textPositioning`, but these correspond to SVG interfaces which do not have any real element associated.

This can be easily verified by running:

```ts

document.createElementNS('http://www.w3.org/2000/svg', 'textContent') instanceof SVGTextElement; // false

document.createElementNS('http://www.w3.org/2000/svg', 'text') instanceof SVGTextElement; // true

```

(Note that [SVGTextElement](https://developer.mozilla.org/en-US/docs/Web/API/SVGTextElement) implements [SVGTextContentElement](https://developer.mozilla.org/en-US/docs/Web/API/SVGTextContentElement).)

|

Bug,Help Wanted,Domain: lib.d.ts

|

low

|

Major

|

324,643,787 |

rust

|

Bogus note with duplicate function names in --test / --bench mode and elsewhere.

|

This file:

```

#[test]

pub fn test() { }

#[test]

pub fn test() { }

```

When run with `rustc --test`.

Produces this error:

```

error[E0428]: the name `test` is defined multiple times

--> t.rs:5:1

|

2 | pub fn test() { }

| ------------- previous definition of the value `test` here

...

5 | pub fn test() { }

| ^^^^^^^^^^^^^ `test` redefined here

|

= note: `test` must be defined only once in the value namespace of this module

error[E0252]: the name `test` is defined multiple times

|

= note: `test` must be defined only once in the value namespace of this module

help: You can use `as` to change the binding name of the import

|

1 | as other_test#[test]

| ^^^^^^^^^^^^^

error: aborting due to 2 previous errors

Some errors occurred: E0252, E0428.

For more information about an error, try `rustc --explain E0252`.

```

The note is producing bogus syntax, possibly from the reexport magic that makes testing work.

Tested on

```

rustc 1.26.0 (a77568041 2018-05-07)

rustc 1.27.0-beta.5 (84b5a46f8 2018-05-15)

rustc 1.27.0-nightly (2f2a11dfc 2018-05-16)

```

|

A-diagnostics,T-compiler,C-bug

|

low

|

Critical

|

324,662,294 |

TypeScript

|

Suggestion: Allow interfaces to "implement" (vs extend) other interfaces

|

## Search Terms

interface, implements

## Suggestion

Allow declaring that an interface "implements" another interface or interfaces, which means the compiler checks conformance, but unlike the "extends" clause, no members are inherited:

```

interface Foo {

foo(): void;

}

interface Bar implements Foo {

foo(): void; // must be present to satisfy type-checker

bar(): void;

}

```

## Use Cases

It is very common for one interface to be an "extension" of another, but the "extends" keyword is not a universal way to make this fact explicit in code. Because of structural typing, the fact that one interface is assignable to another is true with or without "extends," so you might say the "extends" keyword serves primarily to inherit members, and secondarily to document and enforce the relationship between the two types. Inheriting members comes with a readability trade-off and is not always desirable, so it would be useful to be able to document and enforce the relationship between two interfaces without inheriting members.

Consider code such as:

```

import { GestureHandler } from './GestureHandler'

import { DropTarget } from './DropTarget'

export interface DragAndDropHandler extends GestureHandler {

updateDropTarget(dropTarget: DropTaget): void;

}

function createDragAndDropHandler(/*...*/): DragAndDropHandler {

//...

}

```

While this code is not bad, it is notable that DragAndDropHandler omits some of its members simply because it has a relationship with GestureHandler. What are those members? What if I would like to declare them explicitly, just as I would if GestureHandler didn't exist, or if DragAndDropHandler were a class that implemented GestureHandler? I could write them in, but the compiler won't check that I have included all of them. I could omit `extends GestureHandler`, but then the type-checking will happen where DragAndDropHandler is used as a GestureHandler, not where it is defined.

What I really want to do is be explicit about — _and have the compiler check_ — that I am specifying all members of this interface, and also that it conforms to GestureHandler.

I would like to be able to write:

```

export interface DragAndDropHandler implements GestureHandler {

updateDropTarget(dropTarget: DropTaget): void;

move(gestureInfo: GestureInfo): void

finish(gestureInfo: GestureInfo, success: boolean): void

}

```

## Examples

See above

## Checklist

My suggestion meets these guidelines:

* [X] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [X] This wouldn't change the runtime behavior of existing JavaScript code

* [X] This could be implemented without emitting different JS based on the types of the expressions

* [X] This isn't a runtime feature (e.g. new expression-level syntax)

|

Suggestion,Awaiting More Feedback

|

high

|

Critical

|

324,663,304 |

neovim

|

extended registers: associate more info with a register

|

This might be a bit of a stretch, but would you consider making available certain information about the buffer the contents of a register were copied from, so that it may be retrieved at the time the buffer contents are pasted?

Use case: copy text from buffer a and paste it into new buffer b, and since there is no ft in buffer b, deduce ft from that of buffer a and apply it to buffer b?

I can understand if it's just not happening :)

Cheers!

|

enhancement

|

low

|

Minor

|

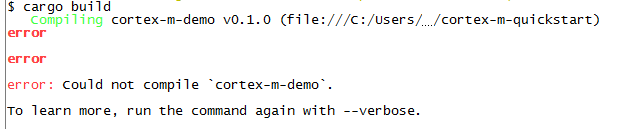

324,663,503 |

rust

|

Highlighting still assumes a dark background

|

Like #7737.

I'm using Git's MINGW64 with a white background, and the error message is not visible at all. Using the verbose flag didn't show any further information but that `rustc` failed with exit code 101. It wasn't until I tried to copy the terminal output into a bug report that I realised there actually was some text.

```

Compiling cortex-m-demo v0.1.0 (file:///C:/Users/…/cortex-m-quickstart)

error: language item required, but not found: `panic_fmt`

error: aborting due to previous error

error: Could not compile `cortex-m-demo`.

To learn more, run the command again with --verbose.

```

|

O-windows,A-diagnostics,T-compiler,C-bug,D-papercut

|

low

|

Critical

|

324,669,130 |

flutter

|

flutter doctor issue with symbolic links ?

|

my /opt is linked to /data/opt and flutter doctor see 2 installations, which are the same path, and one is failing

my emulators shows up in flutter and not in android studio (which has plugin)

```

flutter doctor -v

[✓] Flutter (Channel beta, v0.3.2, on Linux, locale en_CA.UTF-8)

• Flutter version 0.3.2 at /data/opt/flutter

• Framework revision 44b7e7d3f4 (il y a 4 semaines), 2018-04-20 01:02:44 -0700

• Engine revision 09d05a3891

• Dart version 2.0.0-dev.48.0.flutter-fe606f890b

[✓] Android toolchain - develop for Android devices (Android SDK 27.0.3)

• Android SDK at /data/opt/android-sdk

• Android NDK at /data/opt/android-sdk/ndk-bundle

• Platform android-27, build-tools 27.0.3

• ANDROID_HOME = /data/opt/android-sdk

• Java binary at: /data/opt/android-studio/jre/bin/java

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b01)

• All Android licenses accepted.

[✓] Android Studio (version 3.1)

• Android Studio at /data/opt/android-studio

• Flutter plugin version 24.2.1

• Dart plugin version 173.4700

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b01)

[✓] Android Studio

• Android Studio at /opt/android-studio

✗ Flutter plugin not installed; this adds Flutter specific functionality.

✗ Dart plugin not installed; this adds Dart specific functionality.

• Java version OpenJDK Runtime Environment (build 1.8.0_152-release-1024-b01)

[✓] Connected devices (2 available)

• Android SDK built for x86 • emulator-5556 • android-x86 • Android 8.1.0 (API 27) (emulator)

• Android SDK built for x86 • emulator-5554 • android-x86 • Android 8.1.0 (API 27) (emulator)

```

|

tool,t: flutter doctor,P3,team-tool,triaged-tool

|

low

|

Minor

|

324,699,040 |

TypeScript

|

Generics; ReturnType<Foo> != ReturnType<typeof foo>

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!-- Please try to reproduce the issue with `typescript@next`. It may have already been fixed. -->

**TypeScript Version:** Version 2.9.0-dev.20180516

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** generic function returntype

I couldn't think of a good title for this,

**Code**

```ts

type Delegate<T> = () => T;

function executeGeneric<

X,

DelegateT extends Delegate<X>

>(delegate: DelegateT): ReturnType<DelegateT> {

//Type 'X' is not assignable to type 'ReturnType<DelegateT>'.

const x: ReturnType<typeof delegate> = delegate();

//Type 'X' is not assignable to type 'ReturnType<DelegateT>'.

const y: ReturnType<DelegateT> = delegate();

//Type 'X' is not assignable to type 'ReturnType<DelegateT>'.

return delegate();

}

```

**Expected behavior:**

Return successfully.

Intuitively, to me,

1. `delegate` is of type`DelegateT`.

1. `ReturnType<DelegateT>` and `ReturnType<typeof delegate>` should be the same

However,

**Actual behavior:**

`Type 'X' is not assignable to type 'ReturnType<DelegateT>'.`

**Playground Link:** [Here](http://www.typescriptlang.org/play/#src=type%20Delegate%3CT%3E%20%3D%20()%20%3D%3E%20T%3B%0D%0A%0D%0Afunction%20executeGeneric%3C%0D%0A%20%20%20%20X%2C%0D%0A%20%20%20%20DelegateT%20extends%20Delegate%3CX%3E%20%0D%0A%3E(delegate%3A%20DelegateT)%3A%20ReturnType%3CDelegateT%3E%20%7B%0D%0A%20%20%20%20%2F%2FType%20'X'%20is%20not%20assignable%20to%20type%20'ReturnType%3CDelegateT%3E'.%0D%0A%20%20%20%20const%20x%3A%20ReturnType%3Ctypeof%20delegate%3E%20%3D%20delegate()%3B%0D%0A%20%20%20%20%2F%2FType%20'X'%20is%20not%20assignable%20to%20type%20'ReturnType%3CDelegateT%3E'.%0D%0A%20%20%20%20const%20y%3A%20ReturnType%3CDelegateT%3E%20%3D%20delegate()%3B%0D%0A%20%20%20%20%2F%2FType%20'X'%20is%20not%20assignable%20to%20type%20'ReturnType%3CDelegateT%3E'.%0D%0A%20%20%20%20return%20delegate()%3B%0D%0A%7D)

**Related Issues:** <!-- Did you find other bugs that looked similar? -->

|

Suggestion,Awaiting More Feedback

|

low

|

Critical

|

324,722,858 |

nvm

|

Add a new command nvm local which creates a .nvmrc in the cwd

|

This is a simple feature request - add a new command nvm local <version> which creates a new .nvmrc file with the version provided.

|

feature requests

|

low

|

Minor

|

324,743,038 |

go

|

time: Sleep requires ~7 syscalls

|

### What version of Go are you using (`go version`)?

`go version go1.10.1 linux/amd64`

### Does this issue reproduce with the latest release?

Yes (`1.10.2`).

### What operating system and processor architecture are you using (`go env`)?

```

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/bas/.cache/go-build"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/bas/go"

GORACE=""

GOROOT="/usr/lib/go-1.10"

GOTMPDIR=""

GOTOOLDIR="/usr/lib/go-1.10/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build723289083=/tmp/go-build -gno-record-gcc-switches"

```

### What did you do?

The following Go program calls `time.Sleep` the number of times given as a commandline argument.

```go

package main

import (

"os"

"strconv"

"time"

)

var max int

func main() {

max, _ = strconv.Atoi(os.Args[1])

n := 0

for {

time.Sleep(time.Second / 100)

n += 1

if n >= max {

return

}

}

}

```

If track the number of sys calls using `strace -f -c`, we find

```

bas@fourier2:~/gosleeptest$ strace -c -f ./gosleeptest 1

strace: Process 3115 attached

strace: Process 3114 attached

strace: Process 3116 attached

strace: Process 3117 attached

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

0.00 0.000000 0 8 mmap

0.00 0.000000 0 1 munmap

0.00 0.000000 0 114 rt_sigaction

0.00 0.000000 0 14 rt_sigprocmask

0.00 0.000000 0 4 clone

0.00 0.000000 0 1 execve

0.00 0.000000 0 10 sigaltstack

0.00 0.000000 0 5 arch_prctl

0.00 0.000000 0 9 gettid

0.00 0.000000 0 8 1 futex

0.00 0.000000 0 1 sched_getaffinity

0.00 0.000000 0 1 readlinkat

0.00 0.000000 0 22 pselect6

------ ----------- ----------- --------- --------- ----------------

100.00 0.000000 198 1 total

bas@fourier2:~/gosleeptest$ strace -c -f ./gosleeptest 10

strace: Process 3919 attached

strace: Process 3918 attached

strace: Process 3917 attached

strace: Process 3927 attached

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

0.00 0.000000 0 8 mmap

0.00 0.000000 0 1 munmap

0.00 0.000000 0 114 rt_sigaction

0.00 0.000000 0 14 rt_sigprocmask

0.00 0.000000 0 2 sched_yield

0.00 0.000000 0 4 clone

0.00 0.000000 0 1 execve

0.00 0.000000 0 10 sigaltstack

0.00 0.000000 0 5 arch_prctl

0.00 0.000000 0 9 gettid

0.00 0.000000 0 74 12 futex

0.00 0.000000 0 1 sched_getaffinity

0.00 0.000000 0 1 readlinkat

0.00 0.000000 0 69 pselect6

------ ----------- ----------- --------- --------- ----------------

100.00 0.000000 313 12 total

bas@fourier2:~/gosleeptest$ strace -c -f ./gosleeptest 100

strace: Process 4491 attached

strace: Process 4490 attached

strace: Process 4489 attached

strace: Process 4532 attached

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

89.01 0.043330 82 530 104 futex

9.76 0.004751 21 228 pselect6

0.27 0.000131 1 114 rt_sigaction

0.23 0.000114 23 5 arch_prctl

0.19 0.000091 9 10 sigaltstack

0.18 0.000086 10 9 gettid

0.13 0.000061 4 14 rt_sigprocmask

0.07 0.000035 4 8 mmap

0.07 0.000033 33 1 readlinkat

0.03 0.000017 4 4 clone

0.03 0.000017 17 1 execve

0.02 0.000009 9 1 munmap

0.01 0.000003 3 1 sched_getaffinity

------ ----------- ----------- --------- --------- ----------------

100.00 0.048678 926 104 total

```

### What did you expect to see?

A single `time.Sleep` should use approximately one syscall. (Python's `time.sleep` does only use one syscall, for instance.)

### What did you see instead?

Approximately seven sys calls per `time.Sleep`. As a consequence, the go process also uses quite a bit of CPU time per `time.Sleep`: 500us (compared to 13us for Python's `time.sleep`).

### Notes

I encountered this issue while debugging unexpectedly high idle CPU usage by `wireguard-go`.

|

Performance,NeedsInvestigation

|

medium

|

Critical

|

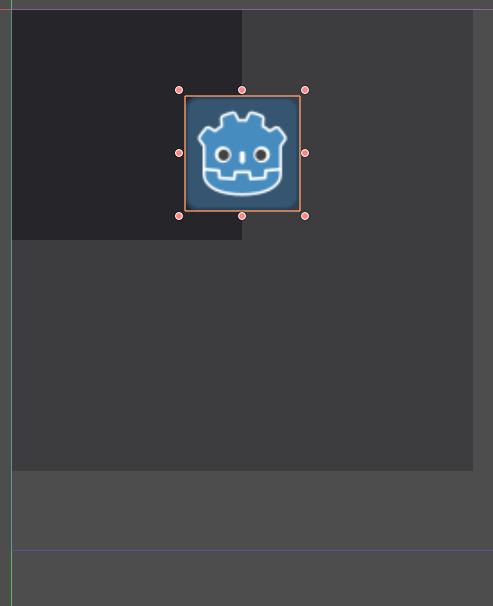

324,744,503 |

godot

|

Scaled Controls don't align properly

|

**Godot version:** 3.0

**OS/device including version:**

**Issue description:**

Margins ignore the scale of the control.

The top-left corner is set as if the node was not scaled.

Scale: 1x1, Anchor: center right

Scale: 2x2, Anchor: center right (the transparent panel is also scaled 2x2, but the textureRect didn't align to it's center right)

What was expected: (with Anchor: center left)

**Steps to reproduce:**

Add a Control to a parent Control.

Change the child's scale.

Change the child's anchor from the Layout menu (to a layout different from top left).

**Minimal reproduction project:**

[align-scaled-issue.zip](https://github.com/godotengine/godot/files/2020719/align-scaled-issue.zip)

|

bug,confirmed,topic:gui

|

medium

|

Major

|

324,750,503 |

neovim

|

Numeric prefixes not always rehighlighted when arabicshape is off

|

- `nvim --version`: #8421, 60dae5a9efe6c8b928ebc278ac4aa9d87bafa660

- Vim (version: ) behaves differently? no such feature in Vim

### Steps to reproduce

```

# nvim -u NONE -i NONE --cmd 'set noarabicshape' --cmd 'syntax on'

i<C-r>=0x1F<BS>

```

### Actual behaviour

`0x` is highlighted the same before and after typing `1F`, but gets rehighlighted as numeric prefix once `<BS>` is typed.

### Expected behaviour

`0x` is highlighted as error before typing `1` and as numeric prefix after that event.

|

bug,vimscript,syntax

|

low

|

Critical

|

324,806,676 |

youtube-dl

|

Support Xiami MV (Taobao video player)

|

Hi,

I'm using linux YouTube-dl to download a video from xiami.com;

the command is:

```shell

youtube-dl -v 'https://www.xiami.com/mv/K6YmI0'

```

And I got the following output:

```shell

[debug] System config: []

[debug] User config: []

[debug] Custom config: []

[debug] Command-line args: [u'-v', u'https://www.xiami.com/mv/K6YmI0']

[debug] Encodings: locale UTF-8, fs UTF-8, out UTF-8, pref UTF-8

[debug] youtube-dl version 2017.12.23

[debug] Python version 2.7.12 - Linux-4.4.0-119-generic-x86_64-with-Ubuntu-16.04-xenial

[debug] exe versions: avconv 2.8.14-0ubuntu0.16.04.1, avprobe 2.8.14-0ubuntu0.16.04.1, ffmpeg 2.8.14-0ubuntu0.16.04.1, ffprobe 2.8.14-0ubuntu0.16.04.1

[debug] Proxy map: {}

[generic] K6YmI0: Requesting header

WARNING: Falling back on generic information extractor.

[generic] K6YmI0: Downloading webpage

[generic] K6YmI0: Extracting information

ERROR: Unsupported URL: https://www.xiami.com/mv/K6YmI0

Traceback (most recent call last):

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/generic.py", line 2163, in _real_extract

doc = compat_etree_fromstring(webpage.encode('utf-8'))

File "/usr/local/bin/youtube-dl/youtube_dl/compat.py", line 2539, in compat_etree_fromstring

doc = _XML(text, parser=etree.XMLParser(target=_TreeBuilder(element_factory=_element_factory)))

File "/usr/local/bin/youtube-dl/youtube_dl/compat.py", line 2528, in _XML

parser.feed(text)

File "/usr/lib/python2.7/xml/etree/ElementTree.py", line 1653, in feed

self._raiseerror(v)

File "/usr/lib/python2.7/xml/etree/ElementTree.py", line 1517, in _raiseerror

raise err

ParseError: syntax error: line 4, column 0

Traceback (most recent call last):

File "/usr/local/bin/youtube-dl/youtube_dl/YoutubeDL.py", line 784, in extract_info

ie_result = ie.extract(url)

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/common.py", line 438, in extract

ie_result = self._real_extract(url)

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/generic.py", line 3063, in _real_extract

raise UnsupportedError(url)

UnsupportedError: Unsupported URL: https://www.xiami.com/mv/K6YmI0

```

Seems like YouTube-dl don't support it.

FIX IT plz, thx

|

site-support-request

|

low

|

Critical

|

324,817,108 |

youtube-dl

|

Support Better Homes and Gardens (BHG)

|

## Please follow the guide below

- You will be asked some questions and requested to provide some information, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your *issue* (like this: `[x]`)

- Use the *Preview* tab to see what your issue will actually look like

---

### Make sure you are using the *latest* version: run `youtube-dl --version` and ensure your version is *2018.05.18*. If it's not, read [this FAQ entry](https://github.com/rg3/youtube-dl/blob/master/README.md#how-do-i-update-youtube-dl) and update. Issues with outdated version will be rejected.

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2018.05.18**

### Before submitting an *issue* make sure you have:

- [x] At least skimmed through the [README](https://github.com/rg3/youtube-dl/blob/master/README.md), **most notably** the [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

- [x] Checked that provided video/audio/playlist URLs (if any) are alive and playable in a browser

### What is the purpose of your *issue*?

- [ ] Bug report (encountered problems with youtube-dl)

- [x] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

### If the purpose of this *issue* is a *site support request* please provide all kinds of example URLs support for which should be included (replace following example URLs by **yours**):

- Single video: https://www.bhg.com.au/koala-hospital?category=TV

- Single video: https://www.bhg.com.au/magnolia-manor?category=TV

Note that **youtube-dl does not support sites dedicated to [copyright infringement](https://github.com/rg3/youtube-dl#can-you-add-support-for-this-anime-video-site-or-site-which-shows-current-movies-for-free)**. In order for site support request to be accepted all provided example URLs should not violate any copyrights.

---

### Description of your *issue*, suggested solution and other information

Please add support for https://www.bhg.com.au/ site. Thank you

|

site-support-request

|

low

|

Critical

|

324,817,672 |

pytorch

|

[Caffe2][Caffe] Caffe to Caffe2 ParseError

|

`google.protobuf.text_format.ParseError: 78:5 : Message type "caffe.PoolingParameter" has no field named "torch_pooling".

`

So I am trying to convert from Caffe to Caffe 2 with custom pooling parameter using floor and ceil.

implemented it like this in `pooling_layer.cpp`

```

template <typename Dtype>

void PoolingLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

//unrelated code

switch (this->layer_param_.pooling_param().torch_pooling()) {

/// implementation

}

}

```

Found out on [caffe_translator.py](https://github.com/caffe2/caffe2/blob/master/caffe2/python/caffe_translator.py) line 521, that facebook has basically the same thing.

```

# In the Facebook port of Caffe, a torch_pooling field was added to

# map the pooling computation of Torch. Essentially, it uses

# floor((height + 2 * padding - kernel) / stride) + 1

# instead of

# ceil((height + 2 * padding - kernel) / stride) + 1

# which is Caffe's version.

# Torch pooling is actually the same as Caffe2 pooling, so we don't

# need to do anything.

```

I dont exactly understand how to register it so the parser will understand it.

I tried, in `caffe.proto`:

```

message LayerParameter {

optional PoolingParameter torch_pooling = 149;

```

Is there any documentation how facebook implemented its torch_pooling? I didn't find any information on the internet.

If the question above is unansverable, can you help me to register that pooling parameter to be parsed succesfully?

|

caffe2

|

low

|

Critical

|

324,865,207 |

gin

|

how do i get response body in after router middleware?

|

rt

```

r := gin.Default()

r.GET("/test",handler,middleware())

func middleware() gin.HandlerFunc{

// get response body in here;

...

}

```

|

question

|

low

|

Major

|

324,909,575 |

rust

|

Higher ranked trivial bounds are not checked

|

As can be seen in the example:

```rust

trait Trait {}

// Checked

fn foo() where i32 : Trait {}

// Not checked.

fn bar() where for<'a> fn(&'a ()) : Trait {}

```

In light of #2056 this will become just a lint, but still we should lint in both cases rather than just the first. This would be fixed by having well-formedness not ignore higher-ranked predicates. See #50815 for an attempted fix which resulted in too many regressions, probably for other bugs in handling higher-ranked stuff. We can try revisiting this after more chalkification.

|

C-enhancement,A-trait-system,T-compiler,T-types,S-types-tracked,A-higher-ranked

|

low

|

Critical

|

324,961,942 |

electron

|

Electron defaults to en-US and MM/dd/YY date formats regardless of system settings

|

* Electron Version: 2.0.0, 4.2.5

* Operating System (Platform and Version): Windows 10

* Last known working Electron version: Unknown

**Expected Behavior**

Electron should default to the OS's current culture/locale and date format settings.

**Actual behavior**

Electron defaults to en-US and the MM/dd/YY format for dates. More specifically:

- Entering `navigator.language` should return whatever my OS is set to.

- Entering `new Date().toLocaleString()` should return a date string in the format set by my OS.

**To Reproduce**

1. If in the US, change your OS language/region to something else (like United Kingdom or Sweden).

2. Install and run the Electron Quick Start sample application.

3. Open the developer tools and enter `navigator.language` in the console window.

4. In the console window, enter `new Date().toLocaleString()`.

|

platform/windows,bug :beetle:,blocked/upstream ❌,2-0-x,4-2-x,5-0-x,6-1-x,7-1-x,10-x-y,stale-exempt

|

high

|

Critical

|

324,978,186 |

pytorch

|

Inserting a tensor into a python dict causes strange behavior

|

Reported from https://discuss.pytorch.org/t/why-tensor-is-hashable/18225

```

T = torch.randn(5,5)

c = hash(T) # i.e. c = 140676925984200

dic = dict()

dic[T] = 100

dic[c]

RuntimeError: bool value of Tensor with more than one value is ambiguous.

```

I'm not sure if we support using Tensors as keys in python dicts; but Tensor does have a __hash__ method.

cc @albanD @mruberry

|

todo,module: nn,triaged

|

medium

|

Critical

|

325,023,266 |

pytorch

|

Conv3D can be optimized for cases when kernel is spatial (probably)

|

While using 3D CNNs we may need to use kernels with temporal dimension 1 (i.e. 1xHxW kernel), example 3D Resnet.

It would be similar to using a 2D Conv on reshaped input. What I have observed is that if i reshape, then do a 2D conv and then reshape back I get quicker results than 3D conv (which is maybe not very surprising).

[Here](https://discuss.pytorch.org/t/conv3d-can-be-optimized-for-case-when-no-temporal-stride-done/18184) is link to form post I created referencing this issue.

While measuring speed I used `torch.cuda.synchronize` so I hope my calculations were correct.

[Here](https://www.cse.iitb.ac.in/~namanjain/test2DConv.py) is the script I used for method using 2D Convolutions and [here](https://www.cse.iitb.ac.in/~namanjain/test3DConv.py)

is the one that used plane 3D convs.

I might have miscalculated the times but my observations showed scope of improvement which I believe would be useful..

What do you guys think???

cc @VitalyFedyunin @ngimel

|

module: performance,module: convolution,triaged

|

low

|

Minor

|

325,047,510 |

flutter

|

Let’s Rewrite Buildroot

|

The current buildroot was forked from Chromium many years ago. Due to different project priorities, a lot of features were tacked on in an entirely ad-hoc fashion. Changing project priorities and the lack of a clear owner has left a lot of unused cruft in the source tree.

For instance, there are definitions for platforms that don’t exist (fnl), or, have no users in the engine source tree (cros). Many toolchain definitions reference toolchains that don’t exist at all (GCC toolchains and many of the toolchains for architectures that we don’t target). There are also tools and templates for features that we no longer use (like preparing APKs in the source tree). Many unused python and shell utilities reference Chromium infrastructure.

Many of the features we want to add support for are not fully implemented and the existing definitions cause confusion. This includes adding support for sanitizers or supporting custom toolchains and sysroots. This technical debt is currently limiting development velocity and developer productivity.

The Flutter engine is a relatively simple project that would be better served with a simpler buildroot. I propose we write one from scratch.

|

team,engine,P2,team-engine,triaged-engine

|

low

|

Major

|

325,047,950 |

TypeScript

|

Support @param tag on function type

|

## Examples

```ts

/**

* @param a A doc

* @param b B doc

*/

type F = (a: number, b: number) => number;

```

Hover over `a` or `b` -- I would expect to see parameter documentation.

It would also be nice to get it at `a` in `const f: F = (a, b) => a + b;`.

|

Suggestion,In Discussion,Domain: JSDoc,Domain: Signature Help,Domain: Quick Info

|

low

|

Minor

|

325,064,131 |

go

|

net/http: investigate and fix uncaught allocations and regressions

|

I just made a fix for https://golang.org/issue/25383 with https://go-review.googlesource.com/c/go/+/113996 and that CL just puts a bandaid on the issue which was a regression that was manually noticed by @Quasilyte.

The real issue as raised by @bradfitz is that there have been a bunch of allocations and regressions that have crept into net/http code over the past couple of months/years, that are more concerning.

This issue is to track the mentioned the problem raised. I am currently too swamped to comprehensively do investigative and performance work but if anyone would like to take on this issue or would like to work on this for Go1.11 or perhaps during Go1.12 or so, I would be very happy to help out whether pairing or as a "bounty", please feel free to reach out to me.

|

Performance,NeedsInvestigation

|

low

|

Major

|

325,075,565 |

go

|

x/tools: write release tool to run your package's callers' tests

|

In https://github.com/golang/go/issues/24301#issuecomment-390788506 I proposed in a comment:

> We've been discussing some sort of `go release` command that both makes releases/tagging easy, but also checks API compatibility (like the Go-internal `go tool api` checker I wrote for Go releases). It might also be able to query `godoc.org` and find callers of your package and run their tests against your new version too at pre-release time, before any tag is pushed. etc.

And later in that issue, I said:

> With all the cloud providers starting to offer pay-by-the-second containers-as-a-service, I see no reason we couldn't provide this as an open source tool that anybody can run and pay the $0.57 or $1.34 they need to to run a bazillion tests over a bunch of hosts for a few minutes.

@SamWhited and @mattfarina had objections (https://github.com/golang/go/issues/24301#issuecomment-390792056, https://github.com/golang/go/issues/24301#issuecomment-390794717) about the inclusivity of such a tool, so I'm opening this bug so we don't cause too much noise in the other issue.

Such a tool would involve:

* querying a service (such as godoc.org or anything implementing the "Go workspace abstraction") to find your callers

* running the tests (or a fraction thereof) either

* locally, possibly overnight

* on a cloud provider of your choice (AWS Fargate, Azure Container Service, Digital Ocean, GCP, etc)

... and telling you if they pass before your change, but fail after your change, so you can release a new version of your package with confidence.

In local mode, it'd use your local GOOS/GOARCH. On cloud, Linux containers are cheapest, but it could also spin up Windows VMs like we do with Go. (Each Go commit gets a fresh new Windows VM that boots in under a minute and runs a few tests and then the VM is destroyed).

None of this costs much, and the assumption is that this would be used by people (optionally) who are willing to pay for the extra assurance, and/or those whose time needed to fix regressions later is worth more than the cloud costs.

And maybe some cloud CI/CD company(s) could sponsor such builders.

|

NeedsFix

|

medium

|

Critical

|

325,087,482 |

pytorch

|

[Caffe2] Align Element-Wise Ops Broadcasting to Numpy

|

- [x] Add

- [x] Div

- [x] Mul

- [ ] Pow

- [x] Sub

- [ ] And

- [ ] Or

- [ ] Xor

- [x] Equal

- [x] Greater

- [x] Less

- [ ] Gemm

- [ ] PRelu

|

caffe2

|

low

|

Minor

|

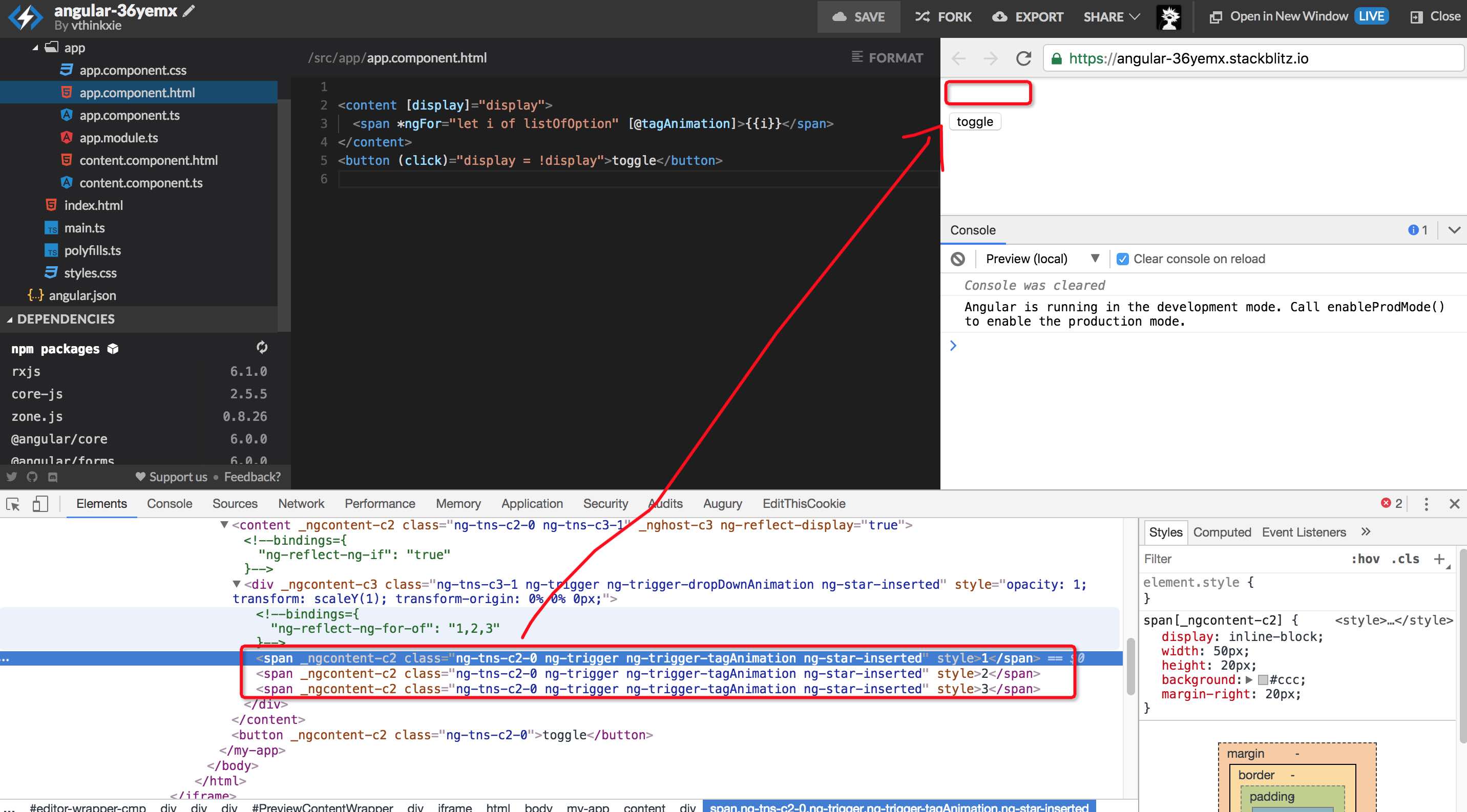

325,131,988 |

angular

|

bug happened when animations work with ng-content

|

<!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[x] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Performance issue

[ ] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

[ ] Other... Please describe:

</code></pre>

## Current behavior

<!-- Describe how the issue manifests. -->

When there is an animation in the parent div of ng-content, the animation in ng-content will break and go wrong.

## Expected behavior

<!-- Describe what the desired behavior would be. -->

animation works fine.

## Minimal reproduction of the problem with instructions

<!--

For bug reports please provide the *STEPS TO REPRODUCE* and if possible a *MINIMAL DEMO* of the problem via

https://stackblitz.com or similar (you can use this template as a starting point: https://stackblitz.com/fork/angular-gitter).

-->

https://stackblitz.com/edit/angular-36yemx?file=src%2Fapp%2Fcontent.component.html

click toggle button twice, you can see all `span` are gone, which expected to display again with animations

## What is the motivation / use case for changing the behavior?

<!-- Describe the motivation or the concrete use case. -->

## Environment

<pre><code>

Angular version: latest

<!-- Check whether this is still an issue in the most recent Angular version -->

Browser:

- [x] Chrome (desktop) version latest

- [ ] Chrome (Android) version XX

- [ ] Chrome (iOS) version XX

- [ ] Firefox version XX

- [ ] Safari (desktop) version XX

- [ ] Safari (iOS) version XX

- [ ] IE version XX

- [ ] Edge version XX

For Tooling issues:

- Node version: XX <!-- run `node --version` -->

- Platform: <!-- Mac, Linux, Windows -->

Others:

<!-- Anything else relevant? Operating system version, IDE, package manager, HTTP server, ... -->

</code></pre>

remove animation in the `content component` or do it without `ng-content`, every thing will be ok

|

type: bug/fix,area: animations,freq2: medium,P3

|

medium

|

Critical

|

325,134,333 |

flutter

|

Circular Progress Indicator CPU Spike

|

My Flutter application heats up my MacBook Pro Late 2015 15" whenever I use a Circular Progress Indicator on the Android Emulator (Running Nougat)

## Steps to Reproduce

<!--

Please tell us exactly how to reproduce the problem you are running into.

Please attach a small application (ideally just one main.dart file) that

reproduces the problem. You could use https://gist.github.com/ for this.

If the problem is with your application's rendering, then please attach

a screenshot and explain what the problem is.

-->

1. Use CircleProgressIndicator Widget

|

framework,f: material design,c: performance,has reproducible steps,P2,found in release: 3.3,found in release: 3.7,team-design,triaged-design

|

low

|

Major

|

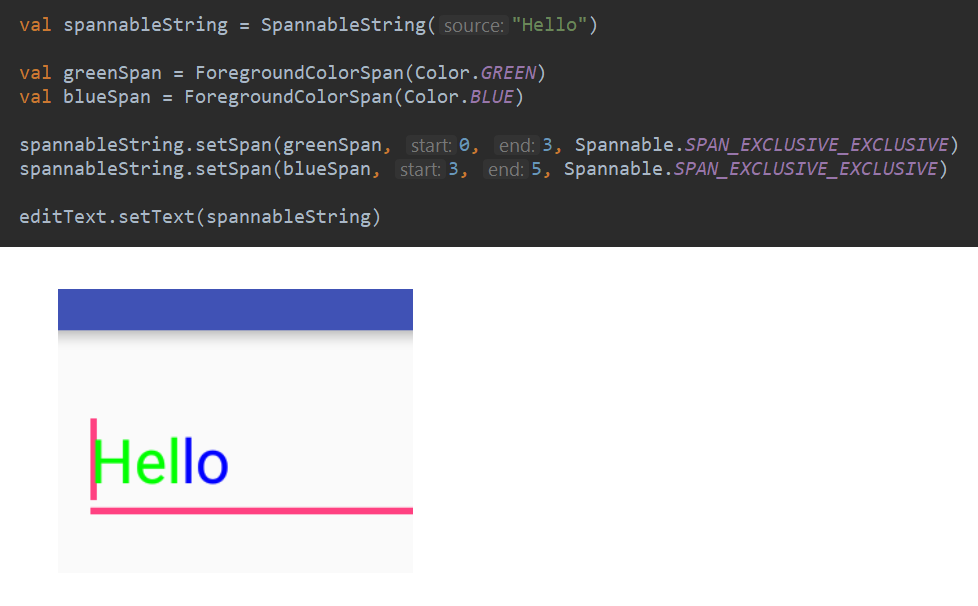

325,341,009 |

flutter

|

Support TextSpan in TextFields

|

Android's `EditText` and `TextView` both support rich text spans.

But in Flutter, only `RichText` widget support rich text spans.

`TextField` should support rich text spans natively using `TextSpan`.

Android example of using text span in edit text component:

|

a: text input,c: new feature,framework,P3,team-framework,triaged-framework

|

medium

|

Major

|

325,348,066 |

youtube-dl

|

vlive extractor recognition error

|

## Please follow the guide below

- You will be asked some questions and requested to provide some information, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your *issue* (like this: `[x]`)

- Use the *Preview* tab to see what your issue will actually look like

---

### Make sure you are using the *latest* version: run `youtube-dl --version` and ensure your version is *2018.05.18*. If it's not, read [this FAQ entry](https://github.com/rg3/youtube-dl/blob/master/README.md#how-do-i-update-youtube-dl) and update. Issues with outdated version will be rejected.

- [ x ] I've **verified** and **I assure** that I'm running youtube-dl **2018.05.18**

### Before submitting an *issue* make sure you have:

- [x ] At least skimmed through the [README](https://github.com/rg3/youtube-dl/blob/master/README.md), **most notably** the [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [ ] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

- [ x ] Checked that provided video/audio/playlist URLs (if any) are alive and playable in a browser

### What is the purpose of your *issue*?

- [ x ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

### The following sections concretize particular purposed issues, you can erase any section (the contents between triple ---) not applicable to your *issue*

---

### If the purpose of this *issue* is a *bug report*, *site support request* or you are not completely sure provide the full verbose output as follows:

Add the `-v` flag to **your command line** you run youtube-dl with (`youtube-dl -v <your command line>`), copy the **whole** output and insert it here. It should look similar to one below (replace it with **your** log inserted between triple ```):

```

C:\Users\nichi_000\Desktop\youtube_dl>youtube-dl.exe --config-location C:\Users\nichi_000\AppData\Roaming\youtube-dl\vlive.txt --cookies C:\Users\nichi_000\Desktop\youtube_dl\Cookies\vlive_cookies.txt -v https://www.vlive.tv/video/vplus/9427

[debug] System config: []

[debug] User config: []

[debug] Custom config: ['--prefer-ffmpeg', '--no-mark-watched', '-f', 'bestvideo[ext=mp4]+bestaudio[ext=m4a]/best[ext=mp4]/best', '--merge-output-format', 'mp4', '--ffmpeg-location', 'C:\\video\\ffmpeg.exe', '-o', 'C:\\Vlive\\%(uploader)s - %(title)s.%(ext)s', '--no-check-certificate', '--abort-on-unavailable-fragment', '--write-sub', '--sub-lang', 'en', '--write-auto-sub', '--convert-subs', 'srt', '--write-thumbnail', '--add-metadata']

[debug] Command-line args: ['--config-location', 'C:\\Users\\nichi_000\\AppData\\Roaming\\youtube-dl\\vlive.txt', '--cookies', 'C:\\Users\\nichi_000\\Desktop\\youtube_dl\\Cookies\\vlive_cookies.txt', '-v', 'https://www.vlive.tv/video/vplus/9427']

[debug] Encodings: locale cp1250, fs mbcs, out cp852, pref cp1250

[debug] youtube-dl version 2018.05.18

[debug] Python version 3.4.4 (CPython) - Windows-10-10.0.16299

[debug] exe versions: ffmpeg N-65426-gd6af706, ffprobe N-65426-gd6af706

[debug] Proxy map: {}

[generic] 9427: Requesting header

WARNING: Falling back on generic information extractor.

[generic] 9427: Downloading webpage

[generic] 9427: Extracting information

ERROR: Unsupported URL: https://www.vlive.tv/video/vplus/9427

Traceback (most recent call last):

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\rg3\tmpco7jv88i\build\youtube_dl\YoutubeDL.py", line 792, in extract_info

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\rg3\tmpco7jv88i\build\youtube_dl\extractor\common.py", line 503, in extract

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\rg3\tmpco7jv88i\build\youtube_dl\extractor\generic.py", line 3201, in _real_extract

youtube_dl.utils.UnsupportedError: Unsupported URL: https://www.vlive.tv/video/vplus/9427

---

### Description of your *issue*, suggested solution and other information

Explanation of your *issue* in arbitrary form goes here. Please make sure the [description is worded well enough to be understood](https://github.com/rg3/youtube-dl#is-the-description-of-the-issue-itself-sufficient). Provide as much context and examples as possible.

If work on your *issue* requires account credentials please provide them or explain how one can obtain them.

|

account-needed

|

low

|

Critical

|

325,378,167 |

vscode

|

Intellisense tooltip with filter category like Visual Studio

|

It would be awesome if in the moment to bring the intellisense of properties or classes on Typescript and C# or other language we can filter this like the little icons on the bottom of the tooltip as visual studio,

or control + shift + p + @: but for the intellisense.

|

feature-request,suggest

|

high

|

Critical

|

325,391,243 |

TypeScript

|

Add `SharedWorker` to the library

|

Details in https://developer.mozilla.org/en-US/docs/Web/API/SharedWorker

This should work

```ts

var myWorker = new SharedWorker('worker.js');

```

|

Bug,Help Wanted,Domain: lib.d.ts

|

low

|

Major

|

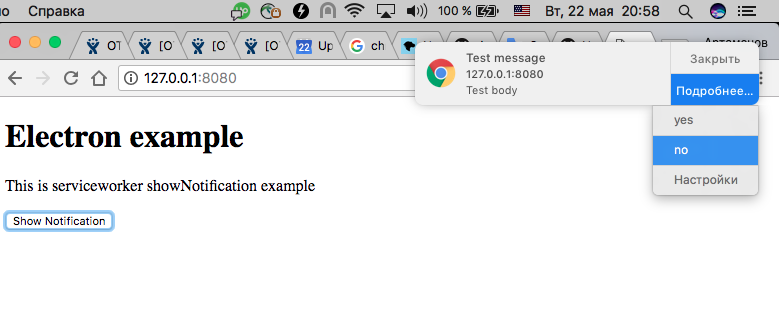

325,403,852 |

electron

|

serviceWorkerRegistration.showNotification does not work

|

* Electron Version: 1.8.x - 2.x

* Operating System (Platform and Version): Win, Linux, MacOSX

* Last known working Electron version: no

**Expected Behavior**

notification should be shown

**Actual behavior**

notification not shown

**To Reproduce**

here example: https://github.com/dregenor/showNotification.git

1) clone,

2) npm install,

3) npm start

click "Show Notification"

```sh

$ git clone https://github.com/dregenor/showNotification.git -b master

$ npm install

$ npm start

```

in browser all works fine.

example:

1) open src folder and start simpleHttp server

```sh

python -m SimpleHTTPServer 8080

```

2) open in Google Chrome http://127.0.0.1:8080

3) press "Show Notification" button

result:

|

enhancement :sparkles:,platform/all,2-0-x,5-0-x,6-0-x,6-1-x,9-x-y,11-x-y

|

medium

|

Major

|

325,474,335 |

pytorch

|

[feature request] Add Local Contrast Normalization

|

## Issue description

As mentioned in this paper :- http://yann.lecun.com/exdb/publis/pdf/jarrett-iccv-09.pdf

I noticed that Local Response Norm is present. This is will be a good addition too.

I have an implementation ready and can create a PR soon, if approved.

cc @albanD @mruberry

|

module: nn,triaged,enhancement

|

low

|

Minor

|

325,508,978 |

opencv

|

JVM crash while reading from camera

|

##### System information (version)

- OpenCV => 3.4.1

- Operating System / Platform => OpenSUSE Leap

- Compiler => gcc 4.8.5

##### Detailed description

```

uvcvideo: Failed to query (GET_CUR) UVC control 4 on unit 1: -110 (exp. 4).

uvcvideo: Failed to query (GET_CUR) UVC control 3 on unit 1: -110 (exp. 1).

libv4l2: error setting pixformat: Input/output error

VIDEOIO ERROR: libv4l unable to ioctl S_FMT

uvcvideo: Failed to set UVC probe control : -32 (exp. 26).

VIDIOC_G_FMT: Bad file descriptor

ERROR: V4L: Unable to determine size of incoming image

*** Error in `/usr/lib64/jvm/jre/bin/java': double free or corruption (fasttop): 0x00007f93200a40e0 ***

======= Backtrace: =========

/lib64/libc.so.6(+0x740ef)[0x7f937f4bc0ef]

/lib64/libc.so.6(+0x79646)[0x7f937f4c1646]

/lib64/libc.so.6(+0x7a393)[0x7f937f4c2393]

/usr/lib64/libopencv_videoio.so.3.4(+0x173c2)[0x7f93243993c2]

/usr/lib64/libopencv_videoio.so.3.4(+0x175e4)[0x7f93243995e4]

/usr/lib64/libopencv_videoio.so.3.4(cvReleaseCapture+0x1f)[0x7f93243891df]

/usr/lib64/libopencv_videoio.so.3.4(_ZNK2cv14DefaultDeleterI9CvCaptureEclEPS1_+0x15)[0x7f9324389205]

/usr/lib64/libopencv_videoio.so.3.4(+0x7229)[0x7f9324389229]

/usr/lib64/libopencv_videoio.so.3.4(_ZN2cv12VideoCapture7releaseEv+0x86)[0x7f9324388e36]

/usr/share/OpenCV/java/libopencv_java341.so(Java_org_opencv_videoio_VideoCapture_release_10+0x15)[0x7f932533a7c5]

[0x7f9369017e47]

```

This doesn't happen consistently (maybe one out of 50 attempts to open the camera), but it always includes the uvcvideo errors (from the kernel) and v4l errors.

When this happens the calls to `open` and `isOpen` on `VideoCamera` both return true, but I can detect that there's a problem by setting the resolution, reading back the resolution and seeing that it didn't take. If at that point I don't call `release` i can avoid this particular crash, but then the JVM still crashes a few seconds later in some random place.

I saw the same issue with OpenCV 3.1.

|

bug,category: videoio(camera)

|

low

|

Critical

|

325,529,323 |

pytorch

|

[Caffe2] Fail to build after upgrading to cuda 9.2

|

## Issue description

I am baffled by this actually (probably because I don't understand cmake very well). After I upgraded to cuda-9.2, wiped out the whole build directory, and reran the build flow, Caffe2 cannot be run:

`WARNING:root:Debug message: libcurand.so.9.1: cannot open shared object file: No such file or directory`

While 'ldd libcaffe2_gpu.so' gives:

` libcurand.so.9.2 => /usr/local/cuda-9.2/targets/x86_64-linux/lib/libcurand.so.9.2 (0x00007f7b4965c000)`

## Code example

## System Info

- PyTorch or Caffe2: Caffe2

- How you installed PyTorch (conda, pip, source): Source build

- Build command you used (if compiling from source): `mkdir build && cd mkdir && cmake $(python3 ../scripts/get_python_cmake_flags.py) -DUSE_NATIVE_ARCH=ON -DUSE_CUDA=ON .. && make && sudo make install`

- OS: Ubuntu 1604

- PyTorch version:

- Python version: 3.5.2

- CUDA/cuDNN version: 9.2

- GPU models and configuration: GTX 1070

- GCC version (if compiling from source): gcc (Ubuntu 5.4.0-6ubuntu1~16.04.9) 5.4.0 20160609

- CMake version: 3.5.1

- Versions of any other relevant libraries:

|

caffe2

|

low

|

Critical

|

325,557,821 |

TypeScript

|

Assume arity of tuples when declared as literal

|

## Search Terms

tuples, length, arity

## Suggestion

Now that #17765 is out I'm curious about if we could change the arity of tuples declared as literals. This was proposed as part of #16896 but I thought it might be better to pull this part out to have a discussion about this part of that proposal.

With fixed length tuples TypeScript allows you to convert `[number, number]` to `number[]` but not the other way around (which is great).

```ts

const foo = [1, 2];

const bar = [1, 2] as [number, number];

const foo2: [number, number] = foo;

const bar2: number[] = bar;

```

If you declare a constant such as `foo` above it would be nice if it would be nice if it would have the length as part of its type, that is, if `foo` was assumed to be of type `[number, number]` not `number[]`.

This would have potential issues with mutable arrays allowing you to call `push` and `pop` although this isn't different to present and was discussed a bit in #6229.

```ts

const foo = [1, 2] as [number, number]; // After this proposal TypeScript would infer the type as `[number, number]` not `number[]`.

foo.push(3); // foo is of type `[number, number]` even though it now has 3 elements.

foo.splice(2); // And now it has 2 elements again.

```

## Use Cases

When using a function such as `fromPairs` from lodash it requires that the type is a list of tuples. A simplified version is

```ts

function fromPairs<T>(values: [PropertyKey, T][]): { [key: string]: T } {

return values.reduce((acc, [key, value]) => ({ ...acc, [key]: value }), {});

}

```

If I do

```ts

const foo = fromPairs(Object.entries({ a: 1, b: 2 }));

```

it works because the type passed into `fromPairs` is `[string, number][]`, but if I try to say map the values to double their value I get a compile error:

```ts

const bar = fromPairs(Object.entries({ a: 1, b: 2 }).map(([key, value]) => [key, value * 2]));

```

as the parameter is of type `(string | number)[][]`

This can be fixed by going

```ts

const bar = fromPairs(Object.entries({ a: 1, b: 2 }).map(([key, value]) => [key, value * 2] as [string, number]));

```

but this is cumbersome.

## Checklist

My suggestion meets these guidelines:

* [ ] This wouldn't be a breaking change in existing TypeScript / JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. new expression-level syntax)

|

Suggestion,Revisit

|

medium

|

Critical

|

325,590,018 |

pytorch

|

[feature request] Simple and Efficient way to get gradients of each element of a sum

|

For some application, I need to get gradients for each elements of a sum.

I am aware that this issue has already been raised previously, in various forms ([here](https://discuss.pytorch.org/t/gradient-w-r-t-each-sample/1433/2), [here](https://discuss.pytorch.org/t/efficient-per-example-gradient-computations/17204), [here](https://discuss.pytorch.org/t/quickly-get-individual-gradients-not-sum-of-gradients-of-all-network-outputs/8405) and possibly related to [here](https://github.com/pytorch/pytorch/issues/1407))

and has also been raised for other autodifferentiation libraries (some examples for TensorFlow: [here](https://github.com/tensorflow/tensorflow/issues/675), long discussion [here](https://github.com/tensorflow/tensorflow/issues/4897))

While the feature does exists in that there is a way to get the desired output,

I have not found an efficient way of computing it after investing a significant amount of time.

I am bringing it up again, with some numbers showing the inefficiency of the existing solutions I have been able to find.

It is possible that the running time I observed is not an issue with the existing solutions but only with my implementation of them,