id (int64, 393k to 2.82B) | repo (string, 68 classes) | title (string, 1 to 936 chars) | body (string, 0 to 256k chars, nullable) | labels (string, 2 to 508 chars) | priority (string, 3 classes) | severity (string, 3 classes) |

|---|---|---|---|---|---|---|

269,302,686 | TypeScript | `SVGElement.className` should be read-only | When trying to assign a value to it, the browser yells: `TypeError: Cannot assign to read only property 'className' of object '#<SVGAElement>'`.

Furthermore, the type of the `className` property is set as `any`, but it should be `SVGAnimatedString`. | Bug,Help Wanted,Domain: lib.d.ts | low | Critical |

269,306,626 | rust | Use systems page size instead of a hard-coded constant for File I/O? | Hi, I have a question regarding the following constant that is used throughout the `BufReader`:

https://github.com/rust-lang/rust/blob/6ccfe68076abc78392ab9e1d81b5c1a2123af657/src/libstd/sys_common/io.rs#L10

Shouldn't this constant be the system's page size (determined at runtime) rather than a hard-coded 8KB, for better I/O performance? That would keep the system from accidentally splitting memory across pages when it should really be contiguous on one page. For example, if my page size was 16KB, the system could allocate the memory in an unfortunate way so that the 8KB from the `BufReader` is split across two pages. If it used 16KB pages, the system could map it 1:1 to a memory page. Why is the `BufReader` size hard-coded to 8KB? | I-slow,C-enhancement,T-libs,A-io | low | Major |

269,332,389 | go | cmd/compile: reordering struct field accesses can alter performance | ### What version of Go are you using (`go version`)?

go version devel +47c868dc1c Sat Oct 28 11:53:49 2017 +0000 linux/amd64

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

```

GOARCH="amd64"

GOBIN=""

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/mvdan/go/land:/home/mvdan/go"

GORACE=""

GOROOT="/home/mvdan/tip"

GOTOOLDIR="/home/mvdan/tip/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build268906853=/tmp/go-build -gno-record-gcc-switches"

```

### What did you do?

https://play.golang.org/p/d5g7tuaxHW

go test -bench=.

### What did you expect to see?

Both of them performing equally.

### What did you see instead?

With 6 runs of each on an idle machine:

```

name time/op

Separate-4 2.48ns ± 1%

Contiguous-4 2.15ns ± 2%

```

This is a minified version of a performance issue I had in a very hot function. In particular, the function is the ASCII fast path of a rune advance method.

Here's the assembly for the two funcs:

```

"".(*T).separate STEXT nosplit size=26 args=0x10 locals=0x0

0x0000 00000 (f_test.go:9) TEXT "".(*T).separate(SB), NOSPLIT, $0-16

0x0000 00000 (f_test.go:9) MOVQ "".t+8(SP), AX

0x0005 00005 (f_test.go:10) INCQ 8(AX)

0x0009 00009 (f_test.go:11) MOVQ $0, (AX)

0x0010 00016 (f_test.go:12) MOVQ 8(AX), AX

0x0014 00020 (f_test.go:12) MOVQ AX, "".~r0+16(SP)

0x0019 00025 (f_test.go:12) RET

"".(*T).contiguous STEXT nosplit size=29 args=0x10 locals=0x0

0x0000 00000 (f_test.go:15) TEXT "".(*T).contiguous(SB), NOSPLIT, $0-16

0x0000 00000 (f_test.go:15) MOVQ "".t+8(SP), AX

0x0005 00005 (f_test.go:16) MOVQ $0, (AX)

0x000c 00012 (f_test.go:17) MOVQ 8(AX), CX

0x0010 00016 (f_test.go:17) INCQ CX

0x0013 00019 (f_test.go:17) MOVQ CX, 8(AX)

0x0017 00023 (f_test.go:18) MOVQ CX, "".~r0+16(SP)

0x001c 00028 (f_test.go:18) RET

```

Funnily enough, the faster one results in an extra instruction. How that makes sense is beyond me. Perhaps it's because the first one accesses `8(AX)` three times, and the second only twice?

My understanding of the compiler and assembly is limited, so any pointers are welcome.

/cc @randall77 @philhofer | Performance,NeedsInvestigation,compiler/runtime | low | Critical |

269,346,921 | go | proposal: encoding/json: add omitnil option | Note: This proposal already has a [patch] from 2015 by @bakineggs, but it appears to have fallen through the cracks.

I have the following case:

```go

type Join struct {

ChannelId string `json:"channel_id"`

Accounts []Ident `json:"accounts,omitempty"`

History []TextEntry `json:"history,omitempty"`

}

```

This struct is used for message passing and the slices are only relevant (and set to non-`nil`) in some cases. However, since `omitempty` in `encoding/json` does not differentiate between a `nil` slice and an empty slice, there will be legitimate cases where a field is excluded when it's not expected to be (e.g., the `History` slice is set, but empty).

I reiterate the proposal by Dan in his patch referred to above to support an `omitnil` option which allows this differentiation for slices and maps.

*Note for hypothetical Go 2.0:* This is already how `omitempty` works for pointers to Go's basic types (e.g., `(*int)(nil)` is omitted while pointer to `0` is not). For Go 2.0 the behavior of `omitempty` could change to omit both `nil` and `0` when specified, and then only `nil` would be omitted when `omitnil` is specified.
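To make the distinction concrete, here is a minimal sketch in Python rather than Go. It only models the two omission policies being discussed; the `encode` helper and its `omit` parameter are hypothetical names for illustration and are not part of `encoding/json`. `None` stands in for a `nil` slice and `[]` for an empty, non-`nil` slice.

```python
import json


def encode(fields, omit="empty"):
    """Serialize a dict, dropping fields according to an omission policy.

    omit="empty": drop None and zero-length lists/dicts (models omitempty).
    omit="nil":   drop only None (models the proposed omitnil).
    """
    def keep(value):
        if value is None:
            return False
        if omit == "empty" and isinstance(value, (list, dict)) and len(value) == 0:
            return False
        return True

    return json.dumps({k: v for k, v in fields.items() if keep(v)}, sort_keys=True)


# accounts is unset (nil); history is set but empty.
join = {"channel_id": "c1", "accounts": None, "history": []}

with_omitempty = encode(join, omit="empty")
with_omitnil = encode(join, omit="nil")
print(with_omitempty)  # {"channel_id": "c1"}
print(with_omitnil)    # {"channel_id": "c1", "history": []}
```

Under the `omitnil`-style policy the receiver can tell "history was requested and is empty" apart from "history was not included", which is the case the `Join` struct needs.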

[patch]: https://go-review.googlesource.com/c/go/+/10686 | Proposal,Proposal-Hold | high | Critical |

269,396,714 | rust | Consider more fine grained grouping for built-in lints | Copying the comment from https://github.com/rust-lang/rust/pull/45424#issuecomment-338345084:

I've also audited the remaining ungrouped lints.

In principle new lint groups can be created for them, but it looks like fine-grained lint grouping didn't find its use even in clippy, so I didn't do anything.

**Unused++.**

These can also be quite reasonably added into the `unused` group, but less obviously than those I added into it in this PR.

```

STABLE_FEATURES

RENAMED_AND_REMOVED_LINTS

UNKNOWN_LINTS

UNUSED_COMPARISONS

```

**Bad style++.**

Probably can be added into the `bad_style` group, but it currently consists only of casing-related lints.

```

NON_SHORTHAND_FIELD_PATTERNS

WHILE_TRUE

```

**Future compatibility++.**

Errors that are reported as lints for some reasons unknown to me.

See the question in https://github.com/rust-lang/rust/pull/45424#discussion_r146084996 as well.

```

CONST_ERR // ?

UNKNOWN_CRATE_TYPES // Deny-by-default

NO_MANGLE_CONST_ITEMS // Deny-by-default

NO_MANGLE_GENERIC_ITEMS

```

**Restrictions.**

Something generally reasonable that can be prohibited if necessary.

```

BOX_POINTERS // Allow-by-default

UNSAFE_CODE // Allow-by-default

UNSTABLE_FEATURES // Allow-by-default

MISSING_DOCS // Allow-by-default

MISSING_COPY_IMPLEMENTATIONS // Allow-by-default

MISSING_DEBUG_IMPLEMENTATIONS // Allow-by-default

```

**Pedantic.**

Something not bad enough to always report/fix.

```

UNUSED_RESULTS // Allow-by-default

UNUSED_IMPORT_BRACES // Allow-by-default

UNUSED_QUALIFICATIONS // Allow-by-default

TRIVIAL_CASTS // Allow-by-default

TRIVIAL_NUMERIC_CASTS // Allow-by-default

VARIANT_SIZE_DIFFERENCES // Allow-by-default

UNIONS_WITH_DROP_FIELDS

```

**Obvious mistakes.**

Prevent foot shooting, some can become hard errors in principle.

```

OVERFLOWING_LITERALS

EXCEEDING_BITSHIFTS // Deny-by-default

UNCONDITIONAL_RECURSION

MUTABLE_TRANSMUTES // Deny-by-default

IMPROPER_CTYPES

PLUGIN_AS_LIBRARY

PRIVATE_NO_MANGLE_FNS

PRIVATE_NO_MANGLE_STATICS

```

**General purpose lints.**

```

WARNINGS

DEPRECATED

``` | C-enhancement,A-lints,T-lang | low | Critical |

269,404,494 | go | lib/time: update tzdata before release | The timezone database in lib/time should be updated shortly before the 1.10 release (to whatever tzdata release is current then). There was https://golang.org/cl/74230 attempting to do this just now, but it was too early. @ALTree suggested to open an issue about this, so we don't forget.

The latest available version is shown at https://www.iana.org/time-zones. | NeedsFix,release-blocker,recurring | high | Critical |

269,420,235 | pytorch | Data sampling seems to be more complicated than necessary | I love Pytorch for its flexibility and debugging friendly environment, and like Andrej Karpathy said after using Pytorch, "I have more energy. My skin is clearer. My eye sight has improved."

However, I am finding sampling from datasets a bit more convoluted than it needs to be. I was hoping for a way to efficiently extract samples (using multiprocessing) from the dataset by providing a batch index list as input, e.g.

```python

batch_indices = [5,4,2]

loader.get_indices(batch_indices)

```

where `loader` is a `DataLoader` object or a `torch.utils.data.Dataset` object. In other words, I am looking for a simple, yet flexible sampling interface.

Currently, if I want to sample using a non-uniform distribution, first I have to define a sampler class for the `loader`, then within the class I have to define a generator that returns indices from a pre-defined list. Later, whenever the sampling distribution changes I have to re-create the sampler object that takes input values which are used to compute the new sampling distribution. I didn't find an easier way yet.

As a result, defining the data loader would be something like,

```python

loader = data.DataLoader(train_set, sampler=sampler(train_set), num_workers=2)

```

for the initialization step; and

```python

loader.sampler = sampler(train_set, some_values)

```

between epochs.

This seems to give me certain restrictions (please correct me if I am wrong),

1. dynamic sampling is not well supported with this approach - consider the case where the sampling distribution or the batch size changes after every iteration (not epoch); and

2. this makes it necessary to have an epoch-based outer loop. Once I tried to avoid having epochs by using `itertools.cycle` on a `DataLoader` with `RandomSampler`, but it gave me a bad memory leak.
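One possible contributor to the memory growth in point 2 (an assumption, not something confirmed here) is that `itertools.cycle` saves a copy of every element it yields so it can replay them. A minimal sketch of an alternative that restarts the iterable instead of caching it is shown below; `repeat_forever` is a hypothetical helper name, and it assumes the iterable can be iterated more than once, as a `DataLoader` can.

```python
import itertools


def repeat_forever(iterable):
    """Yield items endlessly by re-iterating, without caching past items."""
    while True:
        for item in iterable:
            yield item


# Stand-in for a re-iterable loader; a plain list is enough for the sketch.
batches = [1, 2, 3]
stream = repeat_forever(batches)
first_seven = list(itertools.islice(stream, 7))
print(first_seven)  # [1, 2, 3, 1, 2, 3, 1]
```

Because each pass calls `iter()` on the underlying object again, a loader with a random sampler would also draw a fresh permutation on every pass rather than replaying the first one.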

Therefore, is it an issue if we have the API allow for a sampling procedure that looks like the code below ? My goal is to have a flexible data sampling interface while harnessing the multiprocessing power of `DataLoader`.

```python

for i in range(n_iters):
    # 1. Sample according to `probs`
    indices = np.random.choice(n, batch_size, p=probs)
    batch = loader.get_indices(indices)

    # 2. Optimization step
    opt.zero_grad()
    loss = model.compute_loss(batch)
    loss.backward()
    opt.step()

    # 3. Change probs values according to some criterion
    probs = get_newProbs(probs, loss)

```

If the `DataLoader` **api** can't be changed, what if we add three extra functions to `torch.utils.data.Dataset`?

1. `.get_indices(batch_indices, collate_fn)` - to extract a batch from indices using a collate function;

2. `.spawn_workers(num_workers)` - to initialize workers for sampling with multiprocessing; and

3. `.terminate_workers()` - to terminate the worker threads.
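The three functions listed above could look roughly like the following. This is only a sketch of the proposed interface using the standard library: the `IndexableLoader` class is hypothetical, it uses a thread pool in place of the multiprocessing workers a real `DataLoader` would use, and a plain list stands in for the dataset.

```python
import random
from concurrent.futures import ThreadPoolExecutor


class IndexableLoader:
    """Hypothetical wrapper that fetches arbitrary index batches in parallel."""

    def __init__(self, dataset, collate_fn=list):
        self.dataset = dataset
        self.collate_fn = collate_fn
        self.pool = None

    def spawn_workers(self, num_workers):
        # Threads here; a real implementation would likely use processes.
        self.pool = ThreadPoolExecutor(max_workers=num_workers)

    def get_indices(self, batch_indices):
        # Executor.map returns results in the order of batch_indices.
        samples = self.pool.map(self.dataset.__getitem__, batch_indices)
        return self.collate_fn(samples)

    def terminate_workers(self):
        self.pool.shutdown()
        self.pool = None


dataset = [x * x for x in range(10)]
loader = IndexableLoader(dataset)
loader.spawn_workers(num_workers=2)

# Fixed batch, as in the `batch_indices = [5,4,2]` example.
fixed = loader.get_indices([5, 4, 2])

# Weighted sampling that can change on every iteration.
probs = [1.0] * len(dataset)
indices = random.choices(range(len(dataset)), weights=probs, k=3)
batch = loader.get_indices(indices)

loader.terminate_workers()
print(fixed)  # [25, 16, 4]
```

Keeping the sampling decision outside the loader is what allows `probs` or the batch size to change after every iteration without rebuilding a sampler object.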

What do you think ?

I would be happy to submit a pull request for this feature!

cc @SsnL | module: dataloader,triaged | low | Critical |

269,446,431 | TypeScript | __metadata should register function that returns type instead of literal type | Imagine case with circular dependencies

```

class Car {

@Field owner: Person // !!! Error: Person is not defined.

}

class Person {

@Field car: Car;

}

// car has owner, owner has car

```

Typescript metadata would be emitted here like

eg.

`__metadata('design:type', Person)`.

As `Person` is referenced for the first time before the `Person` class is initialized, this results in a `ReferenceError` saying `Person is not defined`.

If it'd emit metadata like:

```__metadata('design:type', () => Person)```

it'd be fine.

Later on, when using Reflect.metadata, it would also need to call meta function instead of just returning the type.
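The same idea can be sketched outside TypeScript. The Python analogy below is illustrative only: `Field` is a hypothetical descriptor-like class, not the decorator from the example, and it stores a function that returns the type instead of the type itself, so the lookup happens after both classes exist.

```python
class Field:
    """Hypothetical field marker that stores a thunk returning the type."""

    def __init__(self, type_thunk):
        self._type_thunk = type_thunk

    def resolve(self):
        # The name is looked up only now, when both classes are defined.
        return self._type_thunk()


class Car:
    # Writing Field(Person) here would raise NameError: Person is not defined yet.
    owner = Field(lambda: Person)


class Person:
    car = Field(lambda: Car)


owner_type = Car.owner.resolve()
car_type = Person.car.resolve()
print(owner_type.__name__, car_type.__name__)  # Person Car
```

The cost is the one noted above: every consumer of the metadata has to call the stored function rather than use the value directly.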

If you think it's a good idea, do you have any suggestions about a starting point for a PR that would implement this change?

| Suggestion,Revisit,Domain: Decorators | low | Critical |

269,497,493 | rust | link_dead_code flag breaks sodiumoxide build | When trying to disable dead code elimination, the project fails to build.

To be clear, this works:

`cargo test --no-run`

this doesn't:

`RUSTFLAGS=-Clink_dead_code cargo test --no-run`

```

Build log:

error: linking with `cc` failed: exit code: 1

|

= note: "cc" "-m64" "-L" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper0.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper1.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper10.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper11.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper12.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper13.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper14.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper15.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper2.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper3.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper4.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper5.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper6.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper7.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper8.rust-cgu.o" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.libwhisper9.rust-cgu.o" "-o" 
"/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libwhisper-3838df784f3557e7.crate.allocator.rust-cgu.o" "-nodefaultlibs" "-L" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps" "-L" "/usr/local/Cellar/libsodium/1.0.15/lib" "-L" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libchrono-a2ac93e1e7f3829f.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libnum-c49ae6ecf79aff3b.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libnum_iter-529703f02f959052.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libnum_integer-ef7f59b4e3fd69a0.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libnom-d63d0bb4b90726c4.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libmemchr-90d85a68cc7f0681.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libtime-9ed328ba0dc074f6.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libnum_traits-5f9924077010f966.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libsodiumoxide-18211dc8e7914ec4.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libserde-9cbcd9e9b1f85d31.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/liblibsodium_sys-0b032eb7c19f6f3e.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libbytes-d7c1bd52839d451a.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libiovec-c288cddee47c6551.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/liblibc-1d475d610e8905d5.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libbyteorder-330a9355803e29d8.rlib" "/Users/andoriyu/Dev/Heaven/libwhisper-rs/target/debug/deps/libquick_error-8a3cabb77e931a5b.rlib" 
"/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libtest-191b92e1a25a742e.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libterm-16adb5ef965afad6.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libgetopts-f78c669374ceb40f.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libstd-a812896ed8dd253f.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libpanic_unwind-f80668a71535d14a.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/liballoc_jemalloc-e7385b9dc1f6352a.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libunwind-fa5ca42c4beb9fd9.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/liballoc_system-39205359e68fcafd.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/liblibc-6d1727ccc0bf3375.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/liballoc-59037b68a5b9d10d.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libstd_unicode-db482e95dfaeb4c7.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/librand-6dde5ed2dcdc460f.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libcore-ef96fd3d49f3c876.rlib" "/Users/andoriyu/.rustup/toolchains/nightly-x86_64-apple-darwin/lib/rustlib/x86_64-apple-darwin/lib/libcompiler_builtins-213aeb9cef9ff383.rlib" "-l" "sodium" "-l" "System" "-l" "resolv" "-l" "pthread" "-l" "c" "-l" "m"

= note: Undefined symbols for architecture x86_64:

"_crypto_stream_aes128ctr", referenced from:

sodiumoxide::crypto::stream::aes128ctr::stream::h096683557c24d55a in libsodiumoxide-18211dc8e7914ec4.rlib(sodiumoxide-18211dc8e7914ec4.sodiumoxide1.rust-cgu.o)

"_crypto_stream_aes128ctr_xor", referenced from:

sodiumoxide::crypto::stream::aes128ctr::stream_xor::h446c7cf4f1728666 in libsodiumoxide-18211dc8e7914ec4.rlib(sodiumoxide-18211dc8e7914ec4.sodiumoxide1.rust-cgu.o)

sodiumoxide::crypto::stream::aes128ctr::stream_xor_inplace::h15e74395e3a300a9 in libsodiumoxide-18211dc8e7914ec4.rlib(sodiumoxide-18211dc8e7914ec4.sodiumoxide1.rust-cgu.o)

ld: symbol(s) not found for architecture x86_64

clang: error: linker command failed with exit code 1 (use -v to see invocation)

```

Pretty odd...

## Meta

`rustc --version --verbose`:

```

rustc 1.23.0-nightly (269cf5026 2017-10-28)

binary: rustc

commit-hash: 269cf5026cdac6ff47f886a948e99101316d7091

commit-date: 2017-10-28

host: x86_64-apple-darwin

release: 1.23.0-nightly

LLVM version: 4.0

```

`cargo --version --verbose`:

```

cargo 0.24.0-nightly (e5562ddb0 2017-10-26)

release: 0.24.0

commit-hash: e5562ddb061b8eb5a0e754d702f164a1d42d0a21

commit-date: 2017-10-26

```

Backtrace:

| A-linkage,I-crash,P-medium,T-compiler,C-bug,link-dead-code | low | Critical |

269,565,373 | pytorch | type of torch.bernoulli and torch.multinomial inconsistent | type of `torch.bernoulli` and `torch.multinomial` inconsistent:

- `torch.bernoulli` => torch.FloatTensor

- `torch.multinomial` => torch.LongTensor

I think the types should be self-consistent, ie both FloatTensor, or both LongTensor.

cc @vincentqb @fritzo @neerajprad @alicanb @vishwakftw | module: distributions,triaged | low | Minor |

269,623,019 | TypeScript | @ts-ignore for the block scope and imports | currently @ts-ignore only mutes the errors from the line immediately below it

would be great to have the same for

1. the whole next block

2. also for all imports

### use case:

refactoring: commenting out a piece of code to see what would break without it, while avoiding having to deal with the errors (which can be many) in the file that contains the commented-out code | Suggestion,Awaiting More Feedback,VS Code Tracked | high | Critical |

269,671,588 | opencv | Annotation squares have an offset to the cursor in `opencv_annotation` | ##### System information (version)

- OpenCV => 3.3.0

- Operating System / Platform => macOS High Sierra (10.13)

- Compiler => Installed with homebrew

##### Detailed description

When annotating images using `opencv_annotation`, the annotation program starts the annotation markings (the red/green squares) with an offset from the cursor in the y-axis that depends on the y-position of the cursor (proportionally, it seems). The offset does not depend on where the annotation/marking started, only on the current position of the cursor.

At the bottom (y~0, assuming [0,0] is in the bottom left corner) the marking starts with a negative offset from the cursor, and then the offset gradually increases as the y-position of the cursor increases. It seems the offset is 0 around one third of the way to the top (the y-position of the cursor is 1/3 of the height of the image).

##### Steps to reproduce (example)

If I start an annotation on the bottom of an image, the marking appears below the cursor:

<img width="400" alt="bottom" src="https://user-images.githubusercontent.com/6630430/32182217-74de28f6-bd96-11e7-8008-b22fb351a954.png">

About one third up the image, the marking is where it should be:

<img width="577" alt="one-third" src="https://user-images.githubusercontent.com/6630430/32182261-845b8562-bd96-11e7-8bf1-a3fac2dd7772.png">

When I reach the top, the marking appears above the cursor:

<img width="523" alt="top" src="https://user-images.githubusercontent.com/6630430/32182700-9d475280-bd97-11e7-8e05-3901ab362d90.png">

This makes it hard to annotate objects near the top and the bottom:

<img width="600" alt="hard annotation" src="https://user-images.githubusercontent.com/6630430/32182733-ad783552-bd97-11e7-8452-5cb092dc5068.png">

| bug,priority: low,category: highgui-gui,platform: ios/osx,category: apps | low | Minor |

269,673,629 | puppeteer | Support extensions execution contexts | The [documentation](https://github.com/GoogleChrome/puppeteer/blob/master/docs/api.md#overview) shows that Frames can be associated with extensions, but there's no documentation on how to register an extension and run a script under one of the extension's environments (option page/background page/page action/etc.).

It would be awesome to run automated tests for web-extension developers. | feature,chromium | medium | Critical |

269,687,481 | opencv | The output of cv::convertPointsFromHomogeneous does not match the documentation for points at infinity. | <!--

If you have a question rather than reporting a bug please go to http://answers.opencv.org where you get much faster responses.

If you need further assistance please read [How To Contribute](https://github.com/opencv/opencv/wiki/How_to_contribute).

This is a template helping you to create an issue which can be processed as quickly as possible. This is the bug reporting section for the OpenCV library.

-->

##### System information (version)

<!-- Example

- OpenCV => 3.1

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2015

-->

- OpenCV => :master:

##### Detailed description

The documentation <https://github.com/opencv/opencv/blob/master/modules/calib3d/include/opencv2/calib3d.hpp#L1290>

says the output should be (0,0,...,0) for points at infinity.

But the implementation just discards the last component.

Please refer to

<https://github.com/opencv/opencv/blob/master/modules/calib3d/src/fundam.cpp#L875>

```.cpp

float scale = sptr[i][3] != 0 ? 1.f/sptr[i][3] : 1.f;

dptr[i] = Point2f(sptr[i].x*scale, sptr[i].y*scale);

```

<!-- your description -->

##### Steps to reproduce

```.cpp

#include <iostream>

#include <opencv2/core.hpp>

#include <opencv2/calib3d.hpp>

int main()

{

std::vector<cv::Point3f> x1;

x1.push_back({2,3,0});

cv::Mat x2;

cv::convertPointsFromHomogeneous(x1, x2);

std::cout << x2 << std::endl;

return 0;

}

```

Output:

```

[2, 3]

```

instead of the expected

```

[0, 0]

```
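The difference between the two behaviours can be modelled in a few lines. This is a plain-Python sketch, not OpenCV code: both function names are hypothetical, the "documented" variant follows the reading of the documentation given above, and the "observed" variant mirrors the `scale` logic quoted from `fundam.cpp`.

```python
def from_homogeneous_documented(point):
    """Divide by the last component; map points at infinity to all zeros."""
    *coords, w = point
    if w == 0:
        return [0.0] * len(coords)
    return [c / w for c in coords]


def from_homogeneous_observed(point):
    """Mirror the quoted snippet: a zero last component gives scale = 1."""
    *coords, w = point
    scale = 1.0 / w if w != 0 else 1.0
    return [c * scale for c in coords]


at_infinity = (2, 3, 0)
finite = (4, 6, 2)

documented = from_homogeneous_documented(at_infinity)
observed = from_homogeneous_observed(at_infinity)
print(documented)  # [0.0, 0.0]
print(observed)    # [2.0, 3.0]
```

For finite points such as `(4, 6, 2)` both variants agree, so the mismatch only shows up when the last component is exactly zero.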

<!-- to add code example fence it with triple backticks and optional file extension

```.cpp

// C++ code example

```

or attach as .txt or .zip file

--> | category: documentation,category: calib3d | low | Critical |

269,731,667 | angular | Animation state is not reapplied | <!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[x] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

</code></pre>

## Current behavior

<!-- Describe how the issue manifests. -->

Even when disabled, styles of animation are preserved. This is not bad; however, when the animation state changes and we enable animation, styles are not updated.

## Expected behavior

<!-- Describe what the desired behavior would be. -->

Styles should update when animation becomes active again.

## Minimal reproduction of the problem with instructions

<!--

For bug reports please provide the *STEPS TO REPRODUCE* and if possible a *MINIMAL DEMO* of the problem via

https://plnkr.co or similar (you can use this template as a starting point: http://plnkr.co/edit/tpl:AvJOMERrnz94ekVua0u5).

-->

https://stackblitz.com/edit/angular-ds3u1p

sidenav switches between mobile and mini variant depending on window width(xs - mobile, gt-xs -mini)

1) Go to "mini" variant(`collapse` state is active),

2) Go to mobile variant(`collapse` state is still active)

3) Open sidenav and close it(`collapse` is no longer active)

4) Go to "mini" variant(`collapse` is not active <- problem)

## What is the motivation / use case for changing the behavior?

<!-- Describe the motivation or the concrete use case. -->

inconsistency

## Environment

<pre><code>

Angular version: 4.4.6

<!-- Check whether this is still an issue in the most recent Angular version -->

Browser:

- [x] Chrome (desktop) version 59

- [ ] Chrome (Android) version XX

- [ ] Chrome (iOS) version XX

- [ ] Firefox version XX

- [ ] Safari (desktop) version XX

- [ ] Safari (iOS) version XX

- [ ] IE version XX

- [ ] Edge version XX

For Tooling issues:

- Node version: XX <!-- run `node --version` -->

- Platform: Linux(Ubuntu 16.04) <!-- Mac, Linux, Windows -->

Others:

<!-- Anything else relevant? Operating system version, IDE, package manager, HTTP server, ... -->

</code></pre>

| type: bug/fix,area: animations,freq2: medium,state: needs more investigation,P3 | low | Critical |

269,745,508 | TypeScript | hook into `tsc --watch` using stdout -- the unix way | This is a **feature request**.

I would like to launch `tsc -w` and then read from stdout to know when tsc -w has started and completed a build. Like so:

```js

const cp = require('child_process');

const k = cp.spawn('bash');

setImmediate(function(){

k.stdin.end(`\n tsc --watch --project x \n`);

});

let stdout = '';

k.stdout.on('data', function(d){

stdout += String(d);

if(/foobar/.test(stdout)){

makeMyDay();

}

});

```

Right now, `tsc --watch` outputs stdout that is mostly just human readable, and doesn't provide very useful information:

```

1:33:44 PM - File change detected. Starting incremental compilation...

../suman-types/dts/it.d.ts(6,18): error TS2430: Interface 'ITestDataObj' incorrectly extends interface 'ITestOrHookBase'.

Property 'cb' is optional in type 'ITestDataObj' but required in type 'ITestOrHookBase'.

../suman-types/dts/test-suite.d.ts(13,23): error TS2503: Cannot find namespace 'Chai'.

1:33:44 PM - Compilation complete. Watching for file changes.

```

**Proposal:**

`tsc --watch` should have a new flag, that tells `tsc --watch` to output machine readable data to stdout, instead of human readable data. For example:

`tsc --watch --machine-stdio`

Using this new flag, no existing users will be affected.

Ideally, output JSON to stdout, something like this upon a file change:

```js

const data = {source: '@tscwatch', event: 'file_change', srcfilePath: filePath, willTranspile: true/false, destinationFilePath: null / destinationFilePath};

console.log(JSON.stringify(data));

```

then after transpilation finishes, write something like this to stdout:

```js

const data = {source: '@tscwatch', event: 'compilation_complete', errors: null / errors:[]};

console.log(JSON.stringify(data));

```

if it's behind a flag (`--machine-stdio`) then it won't affect any current users, so should be safe.

I need a truly unique field value like '@tscwatch' so that I know that the JSON is coming from a certain process. In general, it's possible to be parsing more than one JSON stream from a process, so having a unique field in each JSON object makes this better.
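On the consuming side, a reader of such a stream might look like the sketch below. It assumes one JSON object per line and the `source`/`event` field names from the examples above; the `--machine-stdio` flag and this output format are part of the proposal, not an existing `tsc` feature, and `tscwatch_events` is a hypothetical helper.

```python
import json


def tscwatch_events(lines, source="@tscwatch"):
    """Yield JSON objects tagged with `source`; skip everything else."""
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # human-readable output or other noise
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict) and obj.get("source") == source:
            yield obj


# Mixed stdout: plain text, JSON from another tool, and the proposed events.
sample_stdout = [
    "1:33:44 PM - File change detected. Starting incremental compilation...",
    '{"source": "@tscwatch", "event": "file_change", "srcfilePath": "a.ts"}',
    '{"source": "other-tool", "event": "noise"}',
    '{"source": "@tscwatch", "event": "compilation_complete", "errors": null}',
]

events = [e["event"] for e in tscwatch_events(sample_stdout)]
print(events)  # ['file_change', 'compilation_complete']
```

The filter on the `source` field is what makes it safe to share one stdout stream between several JSON-emitting processes.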

| Suggestion,Awaiting More Feedback | low | Critical |

269,770,729 | create-react-app | Parse build output into a rich format we can display properly | The build overlay still looks less rich than the runtime one:

<img width="813" alt="screen shot 2017-10-30 at 22 07 12" src="https://user-images.githubusercontent.com/810438/32198125-b434c83e-bdbe-11e7-8cc3-8390b197376b.png">

It would be nice to actually parse this (if it matches known Babel and ESLint formats) and display a richer version with:

* Error message and file information in a runtime stack frame-like view

* Highlighted line that causes the issue

There would need to be tests verifying we don't regress if the message format changes. | tag: enhancement | low | Critical |

269,794,239 | TypeScript | Implicit any quick fix should infer from JSX component usage | ```ts

function Foo(props) {

return <div>

{props.x}, {props.y}, {props.z}

</div>

}

let a = <Foo x={100} y="hello" z={true} />;

```

Expected:

```ts

function Foo(props: { x: number, y: string, z: boolean}) {

return <div>

{props.x}, {props.y}, {props.z}

</div>

}

let a = <Foo x={100} y="hello" z={true} />;

```

Actual:

```ts

function Foo(props: { x: React.ReactNode; y: React.ReactNode; z: React.ReactNode; }) {

return <div>

{props.x}, {props.y}, {props.z}

</div>

}

let a = <Foo x={100} y="hello" z={true} />;

```

| Bug,Domain: Quick Fixes | low | Minor |

269,801,548 | go | go/importer: fix and enable TestFor for gccgo | The go command and gccgo don't get along at the moment. Once they do, importer.TestFor needs to be enabled for gccgo and checked. It may just work. | NeedsFix | low | Minor |

269,933,043 | pytorch | High CPU use by clock_gettime syscall | I noticed, while training a network via https://github.com/abhiskk/fast-neural-style, that CPU use is consistently high in kernel time that is not IO wait. I suspected this was caused by excessive syscalls or context switches.

My platform is Ubuntu 16.04.2, kernel 4.10, CUDA 8, cudnn 6 with a 1080 Ti

To reproduce, I run `python3 neural_style/neural_style.py train --dataset /path/to/COCO2014 --vgg-model-dir vgg --batch-size 4 --save-model-dir saved-models --cuda 1`

`htop` will show high kernel time use (in detailed mode, so it isn't confused with IO waits)

`strace -f -p <PID>` shows that the calls are for `CLOCK_MONOTONIC_RAW`, which is indeed unsupported in vDSO and falls back to a syscall (see http://elixir.free-electrons.com/linux/v4.10.17/source/arch/x86/entry/vdso/vclock_gettime.c#L245). `argdist` for low overhead tracing shows ~2.5m calls per second (`sudo ./argdist -p <PID> -C 'p::sys_clock_gettime(clockid_t clk_id, struct timespec *tp):int'`)

I started manually running parts of the code in the interpreter while sampling the number of calls via `argdist` on the python interpreter, and the culprits are `.cuda()` and `.cpu()` calls on tensors, each of which triggers many calls to `clock_gettime()` (counts are inconsistent and range between hundreds and tens of thousands). I haven't gone down further, but it seems to me that if it's at all possible to replace `CLOCK_MONOTONIC_RAW` with `CLOCK_MONOTONIC`, it would give a sizable performance gain for such a minor change. Not sure if it's pytorch or CUDA that actually contains said code, though.

cc @ngimel @VitalyFedyunin | module: performance,module: cuda,triaged | low | Major |

270,010,833 | pytorch | CUDA topk is slow for some input sizes | Hi,

I was able to reproduce a configuration in which I see what I believe is a GPU synchronization issue:

```python
import torch
from torch.autograd import Variable


def accuracy_2d(output, target, topk=(1,)):
    """
    Computes the precision@k for the specified values of k
    Considers output is : NxCxHxW and target is : NxHxW
    """
    maxk = max(topk)
    total_nelem = target.size(0) * target.size(1) * target.size(2)

    _, pred = output.topk(maxk, 1, True, True)
    correct = target.unsqueeze(1).expand(pred.size())
    correct = pred.eq(correct)

    res = []
    for k in topk:
        correct_k = correct[:, :k].contiguous().view(-1).float().sum(0)
        res.append(correct_k.mul_(100.0 / total_nelem))
    return res


class AverageMeter(object):
    """Computes and stores the average and current value"""

    def __init__(self):
        self.reset()

    def reset(self):
        self.val = 0
        self.avg = 0
        self.sum = 0
        self.count = 0

    def update(self, val, n=1):
        self.val = val
        self.sum += val * n
        self.count += n
        self.avg = self.sum / self.count


top1 = AverageMeter()
top3 = AverageMeter()

target = torch.LongTensor(16, 172, 172).cuda()
target_var = Variable(target)
pred = Variable(torch.FloatTensor(16, 135, 172, 172).cuda())

prec1, prec3 = accuracy_2d(pred.data, target, topk=(1, 3))
top1.update(prec1[0], 16)
top3.update(prec3[0], 16)
```

I noticed that removing the last two lines of code does not produce the expected behaviour, so I suspect this is where the synchronization happens. Also, I think this is related to how `.topk` handles large kernels (cf @soumith).

cc @ngimel @VitalyFedyunin | module: performance,module: cuda,triaged,module: sorting and selection | low | Major |

270,120,147 | react | Treat value={null} as empty string | Per @gaearon's request, I'm opening up a new issue based on https://github.com/facebook/react/issues/5013#issuecomment-340898727.

Currently, if you create an input like `<input value={null} onChange={this.handleChange} />`, the null value is a flag for React to treat this as an uncontrolled input, and a console warning is generated. However, this is often a valid condition. For example, when creating a new object (initialized w/ default values from the server then passed to the component as props) in a form that requires address, Address Line 2 is often optional. As such, passing null as value to this controlled component is a very reasonable thing to do.

One can do a workaround, i.e. `<input value={foo || ''} onChange={this.handleChange} />`, but this is an error-prone approach and quite awkward.

Per issue referenced above, the React team has planned on treating null as an empty string, but that hasn't yet occurred. I'd like to propose tackling this problem in the near future.

Please let me know if I can help further. | Component: DOM,Type: Discussion | medium | Critical |

270,137,440 | go | encoding/json: JSON tags don't handle empty properties, non-standard characters | #### What did you do?

https://play.golang.org/p/RRB1VFNufW

Trying to unmarshal with tags like this:

```

type Data struct {

Foo string `json:"Foo"`

Empty string `json:""`

Quote string "json:\"\\\""

Smiley string "json:\"\U0001F610\""

}

```

#### What did you expect to see?

```

{"Foo": "bla", "": "nothing", "\"": "quux", "😐": ":-|"}

{"Foo":"bla","":"nothing","\"":"quux","😐":":-|"}

```

#### What did you see instead?

```

{"Foo": "bla", "": "nothing", "\"": "quux", "😐": ":-|"}

{"Foo":"bla","Empty":"","Quote":"","Smiley":""}

```
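A possible workaround, sketched under assumptions: decode the object into a `map[string]string` inside a custom `UnmarshalJSON` and copy the awkward keys by hand. The `decode` helper and the method below are illustrative only and are not part of `encoding/json`; the sketch also assumes every value in the object is a JSON string, as in the example input.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Data mirrors the struct from the report, without the problematic tags.
type Data struct {
	Foo    string
	Empty  string
	Quote  string
	Smiley string
}

// UnmarshalJSON is a hand-written (hypothetical) decoder: it reads the
// object into a map first, so any key can be looked up, whatever it contains.
func (d *Data) UnmarshalJSON(b []byte) error {
	var raw map[string]string
	if err := json.Unmarshal(b, &raw); err != nil {
		return err
	}
	d.Foo = raw["Foo"]
	d.Empty = raw[""]            // empty property name
	d.Quote = raw["\""]          // property name is a single double quote
	d.Smiley = raw["\U0001F610"] // property name is the emoji
	return nil
}

// decode is a small helper used only for this illustration.
func decode(input string) (Data, error) {
	var d Data
	err := json.Unmarshal([]byte(input), &d)
	return d, err
}

func main() {
	d, err := decode(`{"Foo": "bla", "": "nothing", "\"": "quux", "😐": ":-|"}`)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Printf("%+v\n", d)
}
```

Encoding would need a matching `MarshalJSON` that writes the same keys back; that half is left out to keep the sketch short.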

#### System details

```

go version go1.9.2 darwin/amd64

GOARCH="amd64"

GOBIN=""

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOOS="darwin"

GOPATH="/Users/schani/go"

GORACE=""

GOROOT="/usr/local/go"

GOTOOLDIR="/usr/local/go/pkg/tool/darwin_amd64"

GCCGO="gccgo"

CC="clang"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/1h/7ghts1ys53xdk8y1czrjzdmw0000gn/T/go-build770874315=/tmp/go-build -gno-record-gcc-switches -fno-common"

CXX="clang++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOROOT/bin/go version: go version go1.9.2 darwin/amd64

GOROOT/bin/go tool compile -V: compile version go1.9.2

uname -v: Darwin Kernel Version 16.7.0: Thu Jun 15 17:36:27 PDT 2017; root:xnu-3789.70.16~2/RELEASE_X86_64

ProductName: Mac OS X

ProductVersion: 10.12.6

BuildVersion: 16G29

lldb --version: lldb-900.0.50.1

Swift-4.0

gdb --version: GNU gdb (GDB) 7.12.1

```

| NeedsDecision | medium | Critical |

270,148,445 | flutter | flutter_driver finders should allow accessing descendants, first, and last | The [flutter_driver finders](https://docs.flutter.io/flutter/flutter_driver/CommonFinders-class.html) are very limited, making it difficult to find a single instance of any repeated widget. If the finders supported descendant, first, and last (as they do in [flutter_test](https://docs.flutter.io/flutter/flutter_test/CommonFinders-class.html)) this would become much easier.

As is, the only way to find one of a set of repeated elements is to assign a predictable unique key to the first element in the list. This often requires some hackery: we usually don't generate lists based on indices; generation happens in a callback that takes some renderable data type. | c: new feature,framework,t: flutter driver,P3,team-framework,triaged-framework | medium | Critical |

270,156,954 | TypeScript | TS auto import should support configuring whether a star or a qualified import is used. | _From @dbaeumer on October 31, 2017 11:29_

Testing: #37177

- vscode source code opened in VS Code

- open a file that doesn't import `'vs/base/common/types'`

- type isStringArray

- select the entry from code complete list

- the following import is inserted:

```ts

import { isStringArray } from 'vs/base/common/types';

```

However what I want in this case is

```ts

import * as Types from 'vs/base/common/types';

```

and the code should become `Types.isStringArray`

Would be cool if I can control this via a setting.

_Copied from original issue: Microsoft/vscode#37258_ | Suggestion,Awaiting More Feedback,VS Code Tracked | medium | Critical |

270,161,625 | go | archive/zip: FileHeader.Extra API is problematic | The `FileHeader.Extra` field is used by the `Writer` to write the "extra" field for the local file header and the central-directory file header. This is problematic because the Go implementation assumes that the extra bytes used in the two headers are the same. While this is often the case, it is not always true.

See http://mdfs.net/Docs/Comp/Archiving/Zip/ExtraField and you will notice that it frequently describes a "Local-header version" and a "Central-header version", where the formats sometimes differ.

The `Reader` does not have this problem because it entirely ignores the local headers.

I haven't thought much about what the right action is moving forward, whether to deprecate this field or add new API. I just want to file this issue, so I remember to address it later. | NeedsFix | low | Minor |

270,221,205 | TypeScript | Fix setTimeout/setInterval/setImmediate functions | Fix too lax typings.

**TypeScript Version:** master

**Expected behavior:**

```ts

declare function setTimeout(handler: (...args: any[]) => void, timeout?: number, ...args: any[]): number;

```

**Actual behavior:**

```ts

declare function setTimeout(handler: (...args: any[]) => void, timeout: number): number;

declare function setTimeout(handler: any, timeout?: any, ...args: any[]): number;

``` | Bug,Help Wanted,Domain: lib.d.ts | low | Major |

270,237,733 | kubernetes | Support disk io requests and limits | <!-- This form is for bug reports and feature requests ONLY!

If you're looking for help check [Stack Overflow](https://stackoverflow.com/questions/tagged/kubernetes) and the [troubleshooting guide](https://kubernetes.io/docs/tasks/debug-application-cluster/troubleshooting/).

-->

**Is this a BUG REPORT or FEATURE REQUEST?**:

/kind feature

Kubernetes should support disk io requests and limits.

/cc @kubernetes/sig-node-feature-requests | sig/node,kind/feature,lifecycle/frozen,needs-triage | medium | Critical |

270,250,759 | TypeScript | Error "'this' implicitly has type 'any'" when used .bind() | ```sh

› tsc --version

Version 2.7.0-dev.20171020

```

**Code**

tsconfig.json:

```json

{

"compilerOptions": {

"allowJs": true,

"checkJs": true,

"noEmit": true,

"strict": true

},

"files": [

"index.d.ts",

"index.js"

]

}

```

index.js:

```js

/** @type {MyObj} */

const o = {

foo: function() {

(function() {

console.log(this); // <- Unexpected error here.

}.bind(this))();

}

};

```

index.d.ts:

```ts

interface MyObj {

foo(this: { a: number }): void;

}

```

**How it looks in the editor:**

Context of `foo()` is defined:

But context passed to the nested function is lost:

**Expected behavior:**

There should be no error, because `this` is explicitly specified by `.bind()`.

**Actual behavior:**

```sh

› tsc

index.js(5,25): error TS2683: 'this' implicitly has type 'any' because it does not have a type annotation.

```

| Suggestion,Awaiting More Feedback,Domain: JavaScript | medium | Critical |

270,308,907 | rust | Lint for undesirable, implicit copies | As part of https://github.com/rust-lang/rust/issues/44619, one topic that keeps coming up is that we have to find some way to mitigate the risk of large, implicit copies. Indeed, this risk exists today even without any changes to the language:

```rust

let x = [0; 1024 * 10];

let y = x; // maybe you meant to copy 10K words, but maybe you didn't.

```

In addition to performance hazards, implicit copies can have surprising semantics. For example, there are several iterator types that *would* implement `Copy`, but we were afraid that people would be surprised. Another, clearer example is a type like `Cell<i32>`, which could certainly be copy, but for this interaction:

```rust

let x = Cell::new(22);

let y = x;

x.set(23);

println!("{}", y.get()); // prints 22

```

For a time before 1.0, we briefly considered introducing a new `Pod` trait that acted like `Copy` (memcpy is safe) but without the implicit copy semantics. At the time, @eddyb argued persuasively against this, basically saying (and rightly so) that this is more of a linting concern than anything else -- the implicit copies in the example above, after all, don't lead to any sort of unsoundness, they just may not be the semantics you expected.

Since then, a number of use cases have arisen where having some kind of warning against implicit, unexpected copies would be useful:

- Iterators implementing `Copy`

- Copy/clone closures (closures that are copy can be surprising just as iterators can be)

- `Cell`, ~~`RefCell`, and other types with interior mutability implementing `Copy`~~

- Behavior of `#[derive(PartialEq)]` and friends with packed structs

- A coercion from `&T` to `T` (i.e., it'd be nice to be able to do `foo(x)` where `foo: fn(u32)` and `x: &u32`)

I will write up a more specific proposal in the thread below.

| A-lints,T-lang,C-tracking-issue,S-tracking-design-concerns | medium | Major |

270,362,664 | rust | assertion failed: !are_upstream_rust_objects_already_included(sess) when building rustc_private with monolithic lto | EDIT: repo for reproducing: https://github.com/matthiaskrgr/rustc_crashtest_lto , run cargo build --release

````

rustc --version #rustc 1.23.0-nightly (8b22e70b2 2017-10-31)

git clone https://github.com/rust-lang-nursery/rustfmt

cd rustfmt

git checkout 0af8825eb104e6c7b9444693d583b5fa0bd55ceb

echo "

[profile.release]

opt-level = 3

lto = true

" >> Cargo.toml

RUST_BACKTRACE=full cargo build --release --verbose

````

crashes rustc:

````

Fresh quote v0.3.15

Fresh utf8-ranges v1.0.0

Fresh num-traits v0.1.40

Fresh unicode-xid v0.0.4

Fresh getopts v0.2.15

Fresh serde v1.0.16

Fresh itoa v0.3.4

Fresh void v1.0.2

Fresh dtoa v0.4.2

Fresh diff v0.1.10

Fresh term v0.4.6

Fresh regex-syntax v0.4.1

Fresh unicode-segmentation v1.2.0

Fresh log v0.3.8

Fresh lazy_static v0.2.9

Fresh libc v0.2.32

Fresh synom v0.11.3

Fresh toml v0.4.5

Fresh unreachable v1.0.0

Fresh serde_json v1.0.4

Fresh strings v0.1.0

Fresh memchr v1.0.2

Fresh syn v0.11.11

Fresh thread_local v0.3.4

Fresh aho-corasick v0.6.3

Fresh serde_derive_internals v0.16.0

Fresh regex v0.2.2

Fresh serde_derive v1.0.16

Fresh env_logger v0.4.3

Compiling rustfmt-nightly v0.2.13 (file:///home/matthias/vcs/github/rustfmt)

Running `rustc --crate-name rustfmt src/bin/rustfmt.rs --crate-type bin --emit=dep-info,link -C opt-level=3 -C lto --cfg 'feature="cargo-fmt"' --cfg 'feature="default"' --cfg 'feature="rustfmt-format-diff"' -C metadata=8286bf522b4875a9 -C extra-filename=-8286bf522b4875a9 --out-dir /home/matthias/vcs/github/rustfmt/target/release/deps -L dependency=/home/matthias/vcs/github/rustfmt/target/release/deps --extern serde_derive=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde_derive-2b4ee28cf16ac2a4.so --extern term=/home/matthias/vcs/github/rustfmt/target/release/deps/libterm-752362bbc8237001.rlib --extern serde=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde-45127027bf81d438.rlib --extern log=/home/matthias/vcs/github/rustfmt/target/release/deps/liblog-d09fa7f67c1f577c.rlib --extern diff=/home/matthias/vcs/github/rustfmt/target/release/deps/libdiff-6cc97c0e6df9495d.rlib --extern getopts=/home/matthias/vcs/github/rustfmt/target/release/deps/libgetopts-8ff6434fa2a5d019.rlib --extern unicode_segmentation=/home/matthias/vcs/github/rustfmt/target/release/deps/libunicode_segmentation-6bb2cdd83d97a0ec.rlib --extern serde_json=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde_json-53e4f5d05eed2957.rlib --extern strings=/home/matthias/vcs/github/rustfmt/target/release/deps/libstrings-04c4ec84130f6565.rlib --extern regex=/home/matthias/vcs/github/rustfmt/target/release/deps/libregex-48d942f70d747749.rlib --extern toml=/home/matthias/vcs/github/rustfmt/target/release/deps/libtoml-65d6559cb921e7a7.rlib --extern env_logger=/home/matthias/vcs/github/rustfmt/target/release/deps/libenv_logger-10d3b6fcb2fa4ecb.rlib --extern libc=/home/matthias/vcs/github/rustfmt/target/release/deps/liblibc-2029413d0fb43b31.rlib --extern rustfmt_nightly=/home/matthias/vcs/github/rustfmt/target/release/deps/librustfmt_nightly-13335655e8960ac0.rlib -C target-cpu=native`

error: internal compiler error: unexpected panic

note: the compiler unexpectedly panicked. this is a bug.

note: we would appreciate a bug report: https://github.com/rust-lang/rust/blob/master/CONTRIBUTING.md#bug-reports

note: rustc 1.23.0-nightly (8b22e70b2 2017-10-31) running on x86_64-unknown-linux-gnu

note: run with `RUST_BACKTRACE=1` for a backtrace

thread 'rustc' panicked at 'assertion failed: !sess.lto()', /checkout/src/librustc_trans/back/link.rs:1287:8

stack backtrace:

0: 0x7fc18198a153 - std::sys::imp::backtrace::tracing::imp::unwind_backtrace::hf409d569470ae30b

at /checkout/src/libstd/sys/unix/backtrace/tracing/gcc_s.rs:49

1: 0x7fc1819847f0 - std::sys_common::backtrace::_print::h9f8ff77762968e1c

at /checkout/src/libstd/sys_common/backtrace.rs:69

2: 0x7fc181997473 - std::panicking::default_hook::{{closure}}::h233cc40af697cbfb

at /checkout/src/libstd/sys_common/backtrace.rs:58

at /checkout/src/libstd/panicking.rs:381

3: 0x7fc18199717d - std::panicking::default_hook::hefff18022ca24d92

at /checkout/src/libstd/panicking.rs:391

4: 0x7fc181997907 - std::panicking::rust_panic_with_hook::hd94a4492e4561dca

at /checkout/src/libstd/panicking.rs:577

5: 0x7fc17f79a6c1 - std::panicking::begin_panic::h1d4a7052e8a95c5a

6: 0x7fc17f75d24d - _ZN11rustc_trans4back4link13link_natively17hfbc8890611f67b24E.llvm.C0978D50

7: 0x7fc17f75879d - rustc_trans::back::link::link_binary::ha0632ae2f8eab4a2

8: 0x7fc17f770135 - <rustc_trans::LlvmTransCrate as rustc_trans_utils::trans_crate::TransCrate>::link_binary::hef3d77a5e1caaee2

9: 0x7fc181d5d03b - rustc_driver::driver::compile_input::h6d65afe4a82d280a

10: 0x7fc181da41a0 - rustc_driver::run_compiler::h6a01af2106f7c680

11: 0x7fc181d330f2 - _ZN3std10sys_common9backtrace28__rust_begin_short_backtrace17h5665586c1dd72980E.llvm.B78FDE68

12: 0x7fc1819e0a0e - __rust_maybe_catch_panic

at /checkout/src/libpanic_unwind/lib.rs:99

13: 0x7fc181d4e7a2 - _ZN50_$LT$F$u20$as$u20$alloc..boxed..FnBox$LT$A$GT$$GT$8call_box17hda2c4e140d408872E.llvm.B78FDE68

14: 0x7fc18199634b - std::sys::imp::thread::Thread::new::thread_start::h024eb26cf106639b

at /checkout/src/liballoc/boxed.rs:772

at /checkout/src/libstd/sys_common/thread.rs:24

at /checkout/src/libstd/sys/unix/thread.rs:90

15: 0x7fc17bd51739 - start_thread

16: 0x7fc18165ce7e - clone

17: 0x0 - <unknown>

error: Could not compile `rustfmt-nightly`.

Caused by:

process didn't exit successfully: `rustc --crate-name rustfmt src/bin/rustfmt.rs --crate-type bin --emit=dep-info,link -C opt-level=3 -C lto --cfg feature="cargo-fmt" --cfg feature="default" --cfg feature="rustfmt-format-diff" -C metadata=8286bf522b4875a9 -C extra-filename=-8286bf522b4875a9 --out-dir /home/matthias/vcs/github/rustfmt/target/release/deps -L dependency=/home/matthias/vcs/github/rustfmt/target/release/deps --extern serde_derive=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde_derive-2b4ee28cf16ac2a4.so --extern term=/home/matthias/vcs/github/rustfmt/target/release/deps/libterm-752362bbc8237001.rlib --extern serde=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde-45127027bf81d438.rlib --extern log=/home/matthias/vcs/github/rustfmt/target/release/deps/liblog-d09fa7f67c1f577c.rlib --extern diff=/home/matthias/vcs/github/rustfmt/target/release/deps/libdiff-6cc97c0e6df9495d.rlib --extern getopts=/home/matthias/vcs/github/rustfmt/target/release/deps/libgetopts-8ff6434fa2a5d019.rlib --extern unicode_segmentation=/home/matthias/vcs/github/rustfmt/target/release/deps/libunicode_segmentation-6bb2cdd83d97a0ec.rlib --extern serde_json=/home/matthias/vcs/github/rustfmt/target/release/deps/libserde_json-53e4f5d05eed2957.rlib --extern strings=/home/matthias/vcs/github/rustfmt/target/release/deps/libstrings-04c4ec84130f6565.rlib --extern regex=/home/matthias/vcs/github/rustfmt/target/release/deps/libregex-48d942f70d747749.rlib --extern toml=/home/matthias/vcs/github/rustfmt/target/release/deps/libtoml-65d6559cb921e7a7.rlib --extern env_logger=/home/matthias/vcs/github/rustfmt/target/release/deps/libenv_logger-10d3b6fcb2fa4ecb.rlib --extern libc=/home/matthias/vcs/github/rustfmt/target/release/deps/liblibc-2029413d0fb43b31.rlib --extern rustfmt_nightly=/home/matthias/vcs/github/rustfmt/target/release/deps/librustfmt_nightly-13335655e8960ac0.rlib -C target-cpu=native` (exit code: 101)

````

| I-ICE,E-needs-test,T-compiler,C-bug | low | Critical |

270,446,108 | go | proposal: encoding/json: preserve unknown fields | Yesterday I implemented https://github.com/golang/go/issues/15314, which allows JSON decoding to optionally fail if an object has a key which cannot be mapped to a field in the destination struct.

In the discussion of that proposal, a few people floated the idea of having a mechanism to collect such keys/values instead of silently ignoring them or failing to parse.

The main use case I can think of is allowing for JSON to be decoded into structs, modified, and serialized back while preserving unknown keys (modulo the order in which they appeared, and potentially "duplicate" keys that are dropped due to uppercase/lowercase collisions, etc.). This behavior is supported by many languages / libraries and other serialization systems such as protocol buffers.

I propose to add this type to the JSON package:

```go

type UnknownFields map[string]interface{}

```

Users of the JSON package can then embed this type in structs for which they'd like to use the feature:

```go

type Data struct {

json.UnknownFields

FirstField int

SecondField string

}

```

On decoding, any object key/value which cannot be mapped to a field in the destination struct would be decoded and stored in UnknownFields. On encoding, any key present in UnknownFields would be added to the serialized object.

I can think of a couple edge cases which are tricky, and I propose to resolve them as follows:

##### Nested structs

It's possible for nested structs to also declare UnknownFields. In such cases any UnknownFields in nested structs should be ignored, both when decoding and encoding. Pros: it is consistent with how we already flatten fields, and it's the only way to ensure decoding is unambiguous. Cons: keys that somehow were set to UnknownFields in a child struct would be ignored on encoding.

##### Key collisions

When encoding it's possible that a key in UnknownFields would collide with another field on the struct. In such cases the key in UnknownFields should be ignored. Pros: it is consistent with the behavior in absence of UnknownFields, seems generally less error prone, it cannot happen in a plain decode/edit/encode cycle, it's unambiguous. Cons: it can possibly lead to silently dropping some values.
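Until something like this exists, the decode/edit/encode cycle can be emulated by hand. The following is only a sketch under assumptions: the `Unknown` field, the two methods, and the `roundTrip` helper are hypothetical names, the known field is matched by exact key only (the real decoder also matches case-insensitively), and on a key collision the struct field wins, as proposed above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Data has one known field plus a catch-all map for everything else.
// Unknown is a hypothetical stand-in for the proposed json.UnknownFields.
type Data struct {
	FirstField int
	Unknown    map[string]json.RawMessage
}

// UnmarshalJSON decodes the known field and keeps the remaining keys raw.
func (d *Data) UnmarshalJSON(b []byte) error {
	raw := map[string]json.RawMessage{}
	if err := json.Unmarshal(b, &raw); err != nil {
		return err
	}
	if v, ok := raw["FirstField"]; ok {
		if err := json.Unmarshal(v, &d.FirstField); err != nil {
			return err
		}
		delete(raw, "FirstField")
	}
	d.Unknown = raw
	return nil
}

// MarshalJSON writes the unknown keys back, then the known field on top,
// so the struct field wins if the same key appears in both places.
func (d Data) MarshalJSON() ([]byte, error) {
	out := make(map[string]json.RawMessage, len(d.Unknown)+1)
	for k, v := range d.Unknown {
		out[k] = v
	}
	first, err := json.Marshal(d.FirstField)
	if err != nil {
		return nil, err
	}
	out["FirstField"] = first
	return json.Marshal(out)
}

// roundTrip decodes, edits the known field, and encodes again.
func roundTrip(input string) (string, error) {
	var d Data
	if err := json.Unmarshal([]byte(input), &d); err != nil {
		return "", err
	}
	d.FirstField++
	b, err := json.Marshal(d)
	return string(b), err
}

func main() {
	out, err := roundTrip(`{"FirstField":1,"extra":"kept"}`)
	fmt.Println(out, err)
}
```

Because the output goes through a map, keys come back in sorted order rather than input order, which matches the "modulo the order in which they appeared" caveat above.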

PS: I'm happy to do the implementation should the proposal or some variation of it be approved. | Proposal,Proposal-Hold | medium | Critical |

270,462,099 | go | image: support LJPEG | Please consider adding support for Lossless JPEG (SOF3, aka LJPEG). This format is used in medical imaging (DICOM) and RAW files of digital cameras (DNG). An argument for this to be in standard library is that most of the required parts are already in place (JPEG parser, Huffman coding), whereas an external library would need to replicate those private methods. | help wanted,NeedsFix | low | Major |

270,470,531 | kubernetes | Create guidelines, documentation and tooling about apiextension PKI | * how do I deploy extensions securely?

* how do I implement rotation?

* can I use the kubernetes certificates API?

* is there any way to improve automation of this? | priority/important-soon,kind/documentation,sig/api-machinery,sig/auth,lifecycle/frozen | low | Major |

270,473,557 | youtube-dl | youtube-dl does not download from the new IPRIMA TV page | youtube-dl -u ******@seznam.cz --verbose http://play.iprima.cz/filmy/bitva-o-sevastopol

[debug] System config: []

[debug] User config: []

[debug] Custom config: []

[debug] Command-line args: [u'-u', u'PRIVATE', u'--verbose', u'-F', u'http://play.iprima.cz/filmy/bitva-o-sevastopol']

Type account password and press [Return]:

[debug] Encodings: locale UTF-8, fs UTF-8, out UTF-8, pref UTF-8

[debug] youtube-dl version 2017.10.29

[debug] Python version 2.7.13 - Linux-4.9.0-4-amd64-x86_64-with-debian-9.2

[debug] exe versions: avconv 3.2.8-1, avprobe 3.2.8-1, ffmpeg 3.2.8-1, ffprobe 3.2.8-1

[debug] Proxy map: {}

[IPrima] bitva-o-sevastopol: Downloading webpage

[IPrima] p396592: Downloading player

ERROR: No video formats found; please report this issue on https://yt-dl.org/bug . Make sure you are using the latest version; type youtube-dl -U to update. Be sure to call youtube-dl with the --verbose flag and include its complete output.

Traceback (most recent call last):

File "/usr/local/bin/youtube-dl/youtube_dl/YoutubeDL.py", line 784, in extract_info

ie_result = ie.extract(url)

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/common.py", line 434, in extract

ie_result = self._real_extract(url)

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/iprima.py", line 93, in _real_extract

self._sort_formats(formats)

File "/usr/local/bin/youtube-dl/youtube_dl/extractor/common.py", line 1072, in _sort_formats

raise ExtractorError('No video formats found')

ExtractorError: No video formats found; please report this issue on https://yt-dl.org/bug . Make sure you are using the latest version; type youtube-dl -U to update. Be sure to call youtube-dl with the --verbose flag and include its complete output.

| geo-restricted,account-needed | low | Critical |

270,492,153 | go | net/http: Transport: add a ConnectionManager interface to separate the connection management from http.Transport | `http.Transport` gives us a real solid HTTP client functionality and a faithful protocol implementation. The connection pooling/management is also bundled into `http.Transport`. `http.Transport` connection management takes a stance on a few areas. For example,

- it does not limit the number of *active* connections

- it reuses available connections in a LIFO manner

There are real needs and use cases where we need a different behavior there. We may want to limit the number of active connections. We may want to have a different connection pooling policy (e.g. FIFO). But today it is not possible if you use `http.Transport`. The only option is to implement the HTTP client, but we like the protocol implementation that exists in `http.Transport`.

There are several issues filed because of the inability to override or modify the connection management behavior of `http.Transport`:

- #14984

- #6785

- #17775

- #17776

among others.

It would be great if the connection management aspect of `http.Transport` is separated from the protocol aspect of `http.Transport` and becomes pluggable (e.g. a `ConnectionManager` interface). Then we could choose to provide a different connection management implementation and mix it with the protocol support of `http.Transport`.

The `http.Transport` API would add this new optional field:

```go

type Transport struct {

...

// ConnMgr provides the connection management behavior

// if nil, a default connection manager is used (yet to be named)

ConnMgr ConnectionManager

}

```

The connection manager should have a fairly simple API while encapsulating the complex behavior in the implementation. An incomplete API might look like:

```go

type ConnectionManager interface {

Get() (net.Conn, error)

Put(conn net.Conn)

}

```

It would be a pretty straightforward pool-like API. The only wrinkle might be that it should allow for the possibility that `Get` may be blocking (with a timeout) for certain implementations that want to allow timed waits for obtaining a connection from the connection manager.
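To make the idea concrete, here is a rough sketch of one possible implementation of the interface above. Everything in it is an assumption for illustration: `boundedManager`, `newBoundedManager`, `errGetTimeout`, and `demo` are made-up names, and `net.Pipe` stands in for a real dial. It caps the number of active connections, hands out idle connections in FIFO order, and lets `Get` block with a timeout.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"time"
)

// ConnectionManager is the interface proposed in the text above.
type ConnectionManager interface {
	Get() (net.Conn, error)
	Put(conn net.Conn)
}

var errGetTimeout = errors.New("timed out waiting for a connection")

// boundedManager is a hypothetical manager that limits active connections.
type boundedManager struct {
	slots chan struct{} // one token per active connection
	idle  chan net.Conn // idle connections, handed out FIFO
	dial  func() (net.Conn, error)
	wait  time.Duration
}

func newBoundedManager(max int, wait time.Duration, dial func() (net.Conn, error)) *boundedManager {
	return &boundedManager{
		slots: make(chan struct{}, max),
		idle:  make(chan net.Conn, max),
		dial:  dial,
		wait:  wait,
	}
}

// Get waits up to m.wait for a free slot, then reuses an idle connection
// if one exists and dials a new one otherwise.
func (m *boundedManager) Get() (net.Conn, error) {
	timer := time.NewTimer(m.wait)
	defer timer.Stop()
	select {
	case m.slots <- struct{}{}:
	case <-timer.C:
		return nil, errGetTimeout
	}
	select {
	case c := <-m.idle:
		return c, nil
	default:
	}
	c, err := m.dial()
	if err != nil {
		<-m.slots // give the slot back on dial failure
		return nil, err
	}
	return c, nil
}

// Put returns a connection to the idle queue and frees its slot.
func (m *boundedManager) Put(c net.Conn) {
	select {
	case m.idle <- c:
	default:
		c.Close() // idle queue full: drop the connection
	}
	<-m.slots
}

// demo exercises the limit with a maximum of one active connection.
func demo() (reused, timedOut bool) {
	dial := func() (net.Conn, error) {
		client, _ := net.Pipe() // in-memory stand-in for a real dial
		return client, nil
	}
	var mgr ConnectionManager = newBoundedManager(1, 10*time.Millisecond, dial)
	first, err := mgr.Get()
	if err != nil {
		return false, false
	}
	_, err = mgr.Get() // limit reached: this call should time out
	timedOut = errors.Is(err, errGetTimeout)
	mgr.Put(first)
	second, err := mgr.Get()
	reused = err == nil && second == first
	return reused, timedOut
}

func main() {
	reused, timedOut := demo()
	fmt.Println("reused:", reused, "timed out:", timedOut)
}
```

A real manager would also need per-host keys, health checks, and idle expiry, none of which the two-method interface addresses yet.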

It'd be great if the current "connection manager" is available publicly so some implementations can start with the base implementation and configure/customize it or override some methods as needed. | NeedsInvestigation,FeatureRequest | low | Critical |

270,525,618 | kubernetes | All etcd3 watches close after 10m or so | It appears that we are not properly handling the watch expired error by continuing the watch from the most recent RV seen on a watch. When compaction occurs watches appear to terminate (the RV we started the watch on no longer exists). We should be able to restart the watch at the last RV we received on the watch at the storage level and continue.
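The restart behaviour described above could look roughly like the loop below. This is a simplified sketch, not the apiserver code: `watchFunc`, `watchWithResume`, `fakeWatch`, and `errCompacted` are hypothetical names, and the fake watch only simulates a single compaction error. It also assumes the last delivered revision is itself still available after compaction.

```go
package main

import (
	"errors"
	"fmt"
)

// errCompacted stands in for the "required revision has been compacted" error.
var errCompacted = errors.New("mvcc: required revision has been compacted")

// watchFunc is a hypothetical storage-level watch: it delivers every event
// with a revision greater than fromRev and returns when the watch ends.
type watchFunc func(fromRev int64, emit func(rev int64)) error

// watchWithResume restarts the watch after a compaction error, continuing
// from the last revision that was actually delivered.
func watchWithResume(startRev int64, watch watchFunc, emit func(rev int64), maxRestarts int) error {
	last := startRev
	for restarts := 0; ; restarts++ {
		err := watch(last, func(rev int64) {
			last = rev // remember progress before handing the event on
			emit(rev)
		})
		if !errors.Is(err, errCompacted) || restarts >= maxRestarts {
			return err
		}
	}
}

// fakeWatch simulates revisions 1..6 and fails once after revision 3.
func fakeWatch() watchFunc {
	calls := 0
	return func(fromRev int64, emit func(rev int64)) error {
		calls++
		for rev := fromRev + 1; rev <= 6; rev++ {
			if calls == 1 && rev > 3 {
				return errCompacted
			}
			emit(rev)
		}
		return nil
	}
}

// collect runs the resumable watch against the fake and records what it saw.
func collect() ([]int64, error) {
	var seen []int64
	err := watchWithResume(0, fakeWatch(), func(rev int64) {
		seen = append(seen, rev)
	}, 3)
	return seen, err
}

func main() {
	seen, err := collect()
	fmt.Println(seen, err)
}
```

The error currently logged when the watch ends looks like this: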

```

E0607 17:45:11.447234 367 watcher.go:188] watch chan error: etcdserver: mvcc: required revision has been compacted

``` | kind/bug,priority/backlog,sig/scalability,sig/api-machinery,lifecycle/frozen | medium | Critical |

270,537,122 | flutter | DefaultTextStyle docs are lacking | https://api.flutter.dev/flutter/widgets/DefaultTextStyle-class.html

Doesn't say much. Topics it might explain are things like "when would you use one of these" or "what widgets set one of these for you", etc.

My understanding is MaterialApp sets a crazy default text style, might be useful to note that here (and how you're expected to interact with that default). | framework,d: api docs,a: typography,has reproducible steps,P2,found in release: 3.3,found in release: 3.7,team-framework,triaged-framework | low | Minor |

270,542,429 | rust | private_in_public lint triggered for pub associated type computed using non-pub trait | I was surprised to get a private_in_public deprecation warning for using a private trait to _compute_ an associated type that's itself pub.

De-macro'd, shortened example of what I was doing:

```rust

#![feature(try_from)]

trait Bar {

type Inner;

}

pub struct B16(u16);

pub struct B32(u32);

impl Bar for B16 {

type Inner = u16;

}

impl Bar for B32 {

type Inner = u32;

}

use std::convert::{TryFrom, TryInto};

impl TryFrom<B32> for B16 {

type Error = <<B16 as Bar>::Inner as TryFrom<<B32 as Bar>::Inner>>::Error;

// ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

// warning: private type `<B16 as Bar>::Inner` in public interface (error E0446)

// (Despite the fact that that actual type is core::num::TryFromIntError)

fn try_from(x: B32) -> Result<Self, Self::Error> {

Ok(B16(x.0.try_into()?))

}

}

fn main() {}

```

Repro: https://play.rust-lang.org/?gist=1f84c630e07ddd54d2bf208aa85ed8bb&version=nightly

I don't understand how that type is part of the public interface, since I can't get to it using TryFrom.

(Do close if this is known and covered by things like https://github.com/rust-lang/rfcs/pull/1671#issuecomment-268422405, but I couldn't find any issues talking about this part at least.) | C-enhancement,A-visibility,T-compiler | low | Critical |

270,656,049 | flutter | Make it less confusing to use Cupertino with text under MaterialApp | Avoid user confusion wrt the default error text style from MaterialApp when using Cupertino widgets. | framework,f: material design,f: cupertino,a: quality,a: typography,P2,team-design,triaged-design | low | Critical |

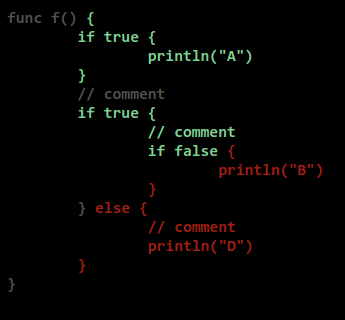

270,731,203 | go | cmd/cover: inconsistent treatment of comments | Run coverage on the following program using these commands:

```

$ cd $GOROOT/src/test

$ cat test.go

package test

func f() {

if true {

println("A")

}

// comment

if true {

// comment

if false {

println("B")

}

} else {

// comment

println("D")

}

}

$ cat test_test.go

package test

import "testing"

func Test(t *testing.T) { f() }

$ go test -coverprofile=c.out test

ok test 0.015s coverage: 66.7% of statements

$ command go tool cover -html=c.out

```

This is the rendered HTML output:

Observe that the first comment is grey, not green, even though it was covered. Is this a simple bookkeeping error, or is there a design reason why we shouldn't consider the entire span from `func f() {` up to `if false` as covered, and render it green?

(This is Google internal issue 68650370.) | help wanted,NeedsInvestigation,compiler/runtime | low | Critical |

270,788,506 | rust | Literate doctests | This might be RFC-worthy.

What if you could tie several sequential doctests together so that they operate as a single code block, but you can still write narrative comments in between in a literate style? This could cut down on `ignore`/`no_run` code blocks as well as hidden lines at the same time.

### Example

Here is an example of using mutable variables. First, let's make some bindings.

```rust

let a = 1;

let mut b = 2;

```

`a` can't be modified, but `b` can!

```rust,cont

// a += 1; // this would be an error!

b += 1;

```

Clicking the run button on either code block would open a playpen with all the code. Syntax up for debate.

cc @QuietMisdreavus | T-rustdoc,C-feature-request,A-doctests | low | Critical |

270,818,914 | go | cmd/vet: flag atomic.Value usages with interface types | Consider the following:

```go

var v atomic.Value

var err error

err = &http.ProtocolError{}

v.Store(err)

err = io.EOF

v.Store(err)

```

The intention is to have an atomic value store for errors. However, running this code panics:

```

panic: sync/atomic: store of inconsistently typed value into Value

```

This is because `atomic.Value` requires that the underlying *concrete* type be the same (which is a reasonable expectation for its implementation). When going through the `atomic.Value.Store` method call, the fact that both these are of the `error` interface is lost.

Perhaps we should add a vet check that flags usages of `atomic.Value` where the argument passed in is an interface type?

Vet criteria:

* frequency: not sure, I haven't done an analysis across the whole Go corpus.

* correctness: this is almost always wrong. Any "correct" usages should type assert to the concrete value first.

* accuracy: if the type information available can conclusively show an argument is an interface type, then very accurate.

\cc @robpike

\cc @dominikh for `staticcheck` | NeedsDecision,Analysis | low | Critical |

270,820,725 | nvm | `lts/*` should point to latest installed line, not latest available line | - Operating system and version:

Ubuntu 16.04.3 LTS

- `nvm debug` output:

<details>

<!-- do not delete the following blank line -->

```sh

nvm --version: v0.33.6

$SHELL: /bin/bash

$HOME: /home/build

$NVM_DIR: '$HOME/.nvm'

$PREFIX: ''

$NPM_CONFIG_PREFIX: ''

$NVM_NODEJS_ORG_MIRROR: ''

$NVM_IOJS_ORG_MIRROR: ''

shell version: 'GNU bash, version 4.3.48(1)-release (x86_64-pc-linux-gnu)'

uname -a: 'Linux 4.4.0-98-generic #121-Ubuntu SMP Tue Oct 10 14:24:03 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux'

OS version: Ubuntu 16.04.3 LTS

sed: -e expression #1, char 9: Unmatched ) or \)

curl: , curl 7.47.0 (x86_64-pc-linux-gnu) libcurl/7.47.0 GnuTLS/3.4.10 zlib/1.2.8 libidn/1.32 librtmp/2.3

wget: /usr/bin/wget, GNU Wget 1.17.1 built on linux-gnu.

git: /usr/bin/git, git version 2.7.4

grep: /bin/grep (grep --color=auto), grep (GNU grep) 2.25

awk: /usr/bin/awk, GNU Awk 4.1.3, API: 1.1 (GNU MPFR 3.1.4, GNU MP 6.1.0)

sed: /bin/sed, sed (GNU sed) 4.2.2

cut: /usr/bin/cut, cut (GNU coreutils) 8.25

basename: /usr/bin/basename, basename (GNU coreutils) 8.25

rm: /bin/rm, rm (GNU coreutils) 8.25

sed: -e expression #1, char 9: Unmatched ) or \)

mkdir: , mkdir (GNU coreutils) 8.25

xargs: /usr/bin/xargs, xargs (GNU findutils) 4.7.0-git

nvm current: none

which node:

which iojs:

which npm:

npm config get prefix: The program 'npm' is currently not installed. You can install it by typing:

sudo apt install npm

npm root -g: The program 'npm' is currently not installed. You can install it by typing:

sudo apt install npm

```

</details>

- `nvm ls` output:

<details>

<!-- do not delete the following blank line -->

```sh

v4.8.3

v5.3.0

v6.10.3

v6.11.0

v6.11.1

v6.11.2

v6.11.3

v6.11.4

v6.11.5

default -> lts/* (-> N/A)

node -> stable (-> v6.11.5) (default)

stable -> 6.11 (-> v6.11.5) (default)

iojs -> N/A (default)

lts/* -> lts/carbon (-> N/A)

lts/argon -> v4.8.5 (-> N/A)

lts/boron -> v6.11.5

lts/carbon -> v8.9.0 (-> N/A)

```

</details>

- How did you install `nvm`? (e.g. install script in readme, homebrew):

install script in readme

- What steps did you perform?

Opened a new terminal session (e.g. SSH client or new tmux tab).

- What happened?

Error message:

````

N/A: version "N/A -> N/A" is not yet installed.

You need to run "nvm install N/A" to install it before using it.

````

- What did you expect to happen?

No error message.

- Is there anything in any of your profile files (`.bashrc`, `.bash_profile`, `.zshrc`, etc) that modifies the `PATH`?

No. | feature requests,bugs | medium | Critical |
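
The requested behavior can be sketched as follows. This is not nvm's implementation (nvm is a shell script); it is a hypothetical Go illustration that assumes the LTS lines are already ordered from newest to oldest and that the set of installed versions is known. The names `ltsLine` and `latestInstalledLTS` are made up for this example, and the data mirrors the `nvm ls` output above.

```go
package main

import "fmt"

// ltsLine pairs an LTS codename with the version its alias currently targets.
type ltsLine struct {
	name    string
	version string
}

// latestInstalledLTS walks the lines from newest to oldest and returns the
// first one whose target version is installed locally.
func latestInstalledLTS(lines []ltsLine, installed map[string]bool) (ltsLine, bool) {
	for _, l := range lines {
		if installed[l.version] {
			return l, true
		}
	}
	return ltsLine{}, false
}

func main() {
	// Newest line first, as assumed by latestInstalledLTS.
	lines := []ltsLine{
		{"carbon", "v8.9.0"},
		{"boron", "v6.11.5"},
		{"argon", "v4.8.5"},
	}
	installed := map[string]bool{
		"v4.8.3": true, "v5.3.0": true, "v6.10.3": true, "v6.11.5": true,
	}
	if l, ok := latestInstalledLTS(lines, installed); ok {
		fmt.Printf("lts/* -> lts/%s (-> %s)\n", l.name, l.version)
	} else {
		fmt.Println("lts/* -> N/A")
	}
}
```

With the versions from this report, the sketch resolves `lts/*` to `lts/boron`, because `v8.9.0` is not installed and `v6.11.5` is.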

270,822,351 | angular | Compiling with Closure Compiler requires unnecessary extern | <!--

PLEASE HELP US PROCESS GITHUB ISSUES FASTER BY PROVIDING THE FOLLOWING INFORMATION.

ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION.

-->

## I'm submitting a...

<!-- Check one of the following options with "x" -->

<pre><code>

[ ] Regression (a behavior that used to work and stopped working in a new release)

[ ] Bug report <!-- Please search GitHub for a similar issue or PR before submitting -->

[ ] Feature request

[ ] Documentation issue or request

[ ] Support request => Please do not submit support request here, instead see https://github.com/angular/angular/blob/master/CONTRIBUTING.md#question

</code></pre>

## Current behavior

<!-- Describe how the issue manifests. -->

When compiling an AOT-compiled bundle with Closure Compiler, an error prevents the bundle from being compiled.

[14:46:34] WARN node_modules/@angular/platform-browser/esm2015/platform-browser.js:1542:

Originally at:

node_modules/packages/platform-browser/src/dom/util.js:38: ERROR - variable COMPILED is undeclared

1 error(s), 0 warning(s)

As a workaround, I made COMPILED an extern, but this variable is not needed in my application. I believe this had something to do with this change:

https://github.com/angular/angular/commit/db74f44a97b545488c4e05bf4210dfb733fe8d6f

## Expected behavior

<!-- Describe what the desired behavior would be. -->

COMPILED should not be required by an application to compile AOT compiled code with Closure Compiler.

## Minimal reproduction of the problem with instructions

Follow the instructions in the README.md of this repo:

https://github.com/steveblue/angular5-closure-extern-bug

## What is the motivation / use case for changing the behavior?

<!-- Describe the motivation or the concrete use case. -->

Angular should not require a dev to include unnecessary externs just to build with Closure Compiler.

## Environment

<pre><code>

Angular version: 5.0.0

<!-- Check whether this is still an issue in the most recent Angular version -->

Browser:

- [ ] Chrome (desktop) version XX

- [ ] Chrome (Android) version XX

- [ ] Chrome (iOS) version XX

- [ ] Firefox version XX

- [ ] Safari (desktop) version XX

- [ ] Safari (iOS) version XX

- [ ] IE version XX

- [ ] Edge version XX

For Tooling issues:

- Node version: 6.11.0

- Platform: MacOS 11.12.6

</code></pre> | freq1: low,area: compiler,type: use-case,P3 | low | Critical |

270,828,042 | opencv | cv2.so link two versions: libopencv_imgcodecs.so,libopencv_imgproc.so,libopencv_core.so | - OpenCV => 3.3

- Operating System / Platform => ubuntu 16.04 64 Bit

- Compiler => gcc version 5.4.0 20160609

I cleaned out opencv-3.1 on Ubuntu 16.04, then built 3.3. When I run

`ldd /usr/lib/python2.7/dist-packages/cv2.so|grep 3.1`

it gives:

```

/lib64/ld-linux-x86-64.so.2 (0x0000557321d2a000)

libopencv_imgcodecs.so.3.1 => not found

libopencv_imgproc.so.3.1 => not found

libopencv_core.so.3.1 => not found

```

As you can see, cv2.so (from OpenCV 3.3) is **linked against three nonexistent libs** from opencv-3.1.

When I run

`ldd /usr/lib/python2.7/dist-packages/cv2.so|grep libopencv_core`

it gives

```

libopencv_core.so.3.3 => /usr/lib/x86_64-linux-gnu/libopencv_core.so.3.3..

libopencv_core.so.3.1 => not found

```

Obviously, **two versions of libopencv_core got linked into cv2.so**. Can anybody tell me:

How did this happen?

How can I solve this problem?

my steps:

```

cmake -D CMAKE_BUILD_TYPE=RELEASE \
  -D CMAKE_INSTALL_PREFIX=$(python -c "import sys; print(sys.prefix)") \
  -D PYTHON_EXECUTABLE=$(which python) \
  -D OPENCV_EXTRA_MODULES_PATH=/home/user/opencv_contrib/modules \
  -D WITH_QT=ON -D WITH_OPENGL=ON -D WITH_IPP=ON -D WITH_OPENNI2=ON \
  -D WITH_V4L=ON -D WITH_FFMPEG=ON -D WITH_GSTREAMER=OFF -D WITH_OPENMP=ON \
  -D WITH_VTK=ON -D BUILD_opencv_java=OFF -D BUILD_opencv_python3=OFF \
  -D WITH_CUDA=ON -D ENABLE_FAST_MATH=1 -D WITH_NVCUVID=ON -D CUDA_FAST_MATH=ON \
  -D BUILD_opencv_cnn_3dobj=ON -D FORCE_VTK=ON -D WITH_TBB=ON -D WITH_CUBLAS=ON \
  -D CUDA_NVCC_FLAGS="-D_FORCE_INLINES" -D WITH_GDAL=ON -D WITH_XINE=ON ..

```

`make -j 48`

`make install -j 48` | category: build/install,incomplete | low | Minor |

270,846,133 | go | runtime: windows-amd64-race builder fails with errno=1455 | I noticed some failures on windows-amd64-race builder:

https://build.golang.org/log/51b118b069de539851ff1cc07769de863bf47797

```

--- FAIL: TestRace (2.21s)

race_test.go:71: failed to parse test output:

# command-line-arguments_test

runtime: VirtualAlloc of 1048576 bytes failed with errno=1455

fatal error: out of memory

...

FAIL

FAIL runtime/race 40.936s

```

and

https://build.golang.org/log/f73793faa51acd4b45e9ef88a18e462a4913448c

```

==2820==ERROR: ThreadSanitizer failed to allocate 0x000000400000 (4194304) bytes at 0x040177c00000 (error code: 1455)

runtime: newstack sp=0x529fdf0 stack=[0xc042200000, 0xc042202000]

morebuf={pc:0x4083a4 sp:0x529fe00 lr:0x0}

sched={pc:0x47bec7 sp:0x529fdf8 lr:0x0 ctxt:0x0}

runtime: gp=0xc0421ec300, gp->status=0x2

runtime: split stack overflow: 0x529fdf0 < 0xc042200000

fatal error: runtime: split stack overflow

...

FAIL runtime/trace 14.859s

...

--- FAIL: TestMutexMisuse (0.28s)

mutex_test.go:173: Mutex.Unlock: did not find failure with message about unlocked lock: fork/exec C:\Users\gopher\AppData\Local\Temp\go-build022577002\b075\sync.test.exe: The paging file is too small for this operation to complete.

mutex_test.go:173: Mutex.Unlock2: did not find failure with message about unlocked lock: fork/exec C:\Users\gopher\AppData\Local\Temp\go-build022577002\b075\sync.test.exe: The paging file is too small for this operation to complete.

mutex_test.go:173: RWMutex.Unlock: did not find failure with message about unlocked lock: fork/exec C:\Users\gopher\AppData\Local\Temp\go-build022577002\b075\sync.test.exe: The paging file is too small for this operation to complete.