| id | repo | title | body | labels | priority | severity |
|---|---|---|---|---|---|---|

656,784,787 |

pytorch

|

[jit] support for generators and `yield`

|

Background on what generators are: https://wiki.python.org/moin/Generators

The use cases for TorchScript are

1. some classes want to use generators to implement `__iter__` and such, especially in Torchtext

2. the PyTorch optimizers make use of generators
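For illustration, here is a minimal plain-Python sketch of the first use case: a class whose `__iter__` is written as a generator. The `TokenBatches` class and its fields are hypothetical and not taken from Torchtext. It shows the eager-mode pattern that TorchScript would need to accept, not a TorchScript implementation.

```python
from typing import Iterator, List


class TokenBatches:
    """Hypothetical container that yields fixed-size batches of tokens."""

    def __init__(self, tokens: List[str], batch_size: int) -> None:
        self.tokens = tokens
        self.batch_size = batch_size

    def __iter__(self) -> Iterator[List[str]]:
        # `yield` makes this method a generator: each batch is produced lazily.
        for start in range(0, len(self.tokens), self.batch_size):
            yield self.tokens[start:start + self.batch_size]


# Iterating the object drives the generator to completion.
batches = list(TokenBatches(["a", "b", "c", "d", "e"], batch_size=2))
```

Because the batches are produced on demand, the caller can stop iterating early without the remaining slices ever being built.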

cc @suo @gmagogsfm

|

oncall: jit,months,TSUsability,TSRootCause:UnsupportedConstructs

|

low

|

Minor

|

656,785,699 |

pytorch

|

[jit] support class polymorphism

|

Today, classes cannot inherit from other classes. This is a surprising limitation for people who want to use inheritance for code re-use, or are scripting already-existing codebases that use inheritance. Also: the PyTorch optimizers are polymorphic.
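As a rough sketch of the pattern in question, the plain-Python example below uses a base class with overriding subclasses and a caller that dispatches through the base type. The `Optimizer`, `SGD`, and `SignSGD` classes here are hypothetical stand-ins written for this example, not PyTorch's optimizers.

```python
from typing import List


class Optimizer:
    """Hypothetical base class; subclasses override `step`."""

    def __init__(self, lr: float) -> None:
        self.lr = lr

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        raise NotImplementedError


class SGD(Optimizer):
    def step(self, params: List[float], grads: List[float]) -> List[float]:
        # Plain gradient descent update.
        return [p - self.lr * g for p, g in zip(params, grads)]


class SignSGD(Optimizer):
    def step(self, params: List[float], grads: List[float]) -> List[float]:
        # Update using only the sign of each gradient.
        return [p - self.lr * ((g > 0) - (g < 0)) for p, g in zip(params, grads)]


def train_step(opt: Optimizer, params: List[float], grads: List[float]) -> List[float]:
    # Dispatches to whichever subclass `opt` happens to be.
    return opt.step(params, grads)


sgd_result = train_step(SGD(lr=0.5), [1.0, 2.0], [2.0, -4.0])
sign_result = train_step(SignSGD(lr=0.5), [1.0, 2.0], [2.0, -4.0])
```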

cc @suo @gmagogsfm

|

oncall: jit,months,TSRootCause:DynamicBehaviors,TSUsability,TSRootCause:UnsupportedConstructs

|

low

|

Major

|

656,787,164 |

pytorch

|

[jit] support `rpc_remote` and `rpc_sync`

|

We registered `rpc_async` in TorchScript, but not `rpc_remote` and `rpc_sync`. We should register these two as well, as they have exactly the same semantics as `rpc_async`. This is a mid-pri request from PyPER.

cc @suo @gmagogsfm

|

oncall: jit,days

|

low

|

Minor

|

656,787,950 |

pytorch

|

[jit] Support NamedTuple in tracing

|

We should be able to take `NamedTuples` as input and output of traced modules. As a starting point, see https://github.com/pytorch/pytorch/pull/29751 for a rough implementation.

cc @suo @gmagogsfm

|

oncall: jit,module: bootcamp,days

|

low

|

Minor

|

656,811,631 |

TypeScript

|

No error using imports/exports with --module=none and --target=es2015+

|

<!-- 🚨 STOP 🚨 STOP 🚨 STOP 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!--

Please try to reproduce the issue with the latest published version. It may have already been fixed.

For npm: `typescript@next`

This is also the 'Nightly' version in the playground: http://www.typescriptlang.org/play/?ts=Nightly

-->

**TypeScript Version:** 4.0.0-dev.20200713

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** module none target

**Code**

```ts

// @module: none

// @target: es2015

export class Foo {}

```

**Expected behavior:**

`TS1148 Cannot use imports, exports, or module augmentations when '--module' is 'none'` and emits `export class Foo {}`

**Actual behavior:**

No error, and emits as CommonJS.

**Playground Link:** https://www.typescriptlang.org/play/?module=0#code/KYDwDg9gTgLgBAYwDYEMDOa4DEITgbwF8AoIA

**Related issues:** #39597

|

Bug,Breaking Change,Rescheduled

|

low

|

Critical

|

656,908,826 |

pytorch

|

Add a done() API to torch.futures.Future and ProcessGroup::Work

|

Discussion in https://discuss.pytorch.org/t/how-to-check-if-irecv-got-a-message/55725 asks for an API to check the completion of a `ProcessGroup::Work` from `isend` / `irecv`. A temporary solution would be implementing the `isCompleted` API properly, which is already exposed to Python.

https://github.com/pytorch/pytorch/blob/e2c4c2f102af3bf81b1a7a7e4d2165cebda4995b/torch/lib/c10d/ProcessGroup.hpp#L43

https://github.com/pytorch/pytorch/blob/e2c4c2f102af3bf81b1a7a7e4d2165cebda4995b/torch/csrc/distributed/c10d/init.cpp#L660-L674

In the long run, as we are going to replace `ProcessGroup::Work` with `torch.futures.Future`, we should also add a [`done`](https://docs.python.org/3/library/asyncio-future.html#asyncio.Future.done) API to the Future type.
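To make the requested semantics concrete, the sketch below uses the standard library's `concurrent.futures.Future`, whose `done()` is a non-blocking completion check like the asyncio method linked above. This is only an analogy under that assumption. It does not use `torch.futures.Future` or `ProcessGroup::Work`.

```python
from concurrent.futures import Future

# A future that nothing has completed yet, standing in for a pending irecv.
fut: Future = Future()

# done() returns immediately and never blocks waiting for the result.
before = fut.done()

# Simulate the message arriving.
fut.set_result(42)

# The same non-blocking check now reports completion.
after = fut.done()
value = fut.result()
```

A caller can therefore poll `done()` in a loop and do other work in between, instead of blocking in `wait()`.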

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @xush6528 @osalpekar @jiayisuse @agolynski

|

oncall: distributed,triaged

|

low

|

Minor

|

656,920,012 |

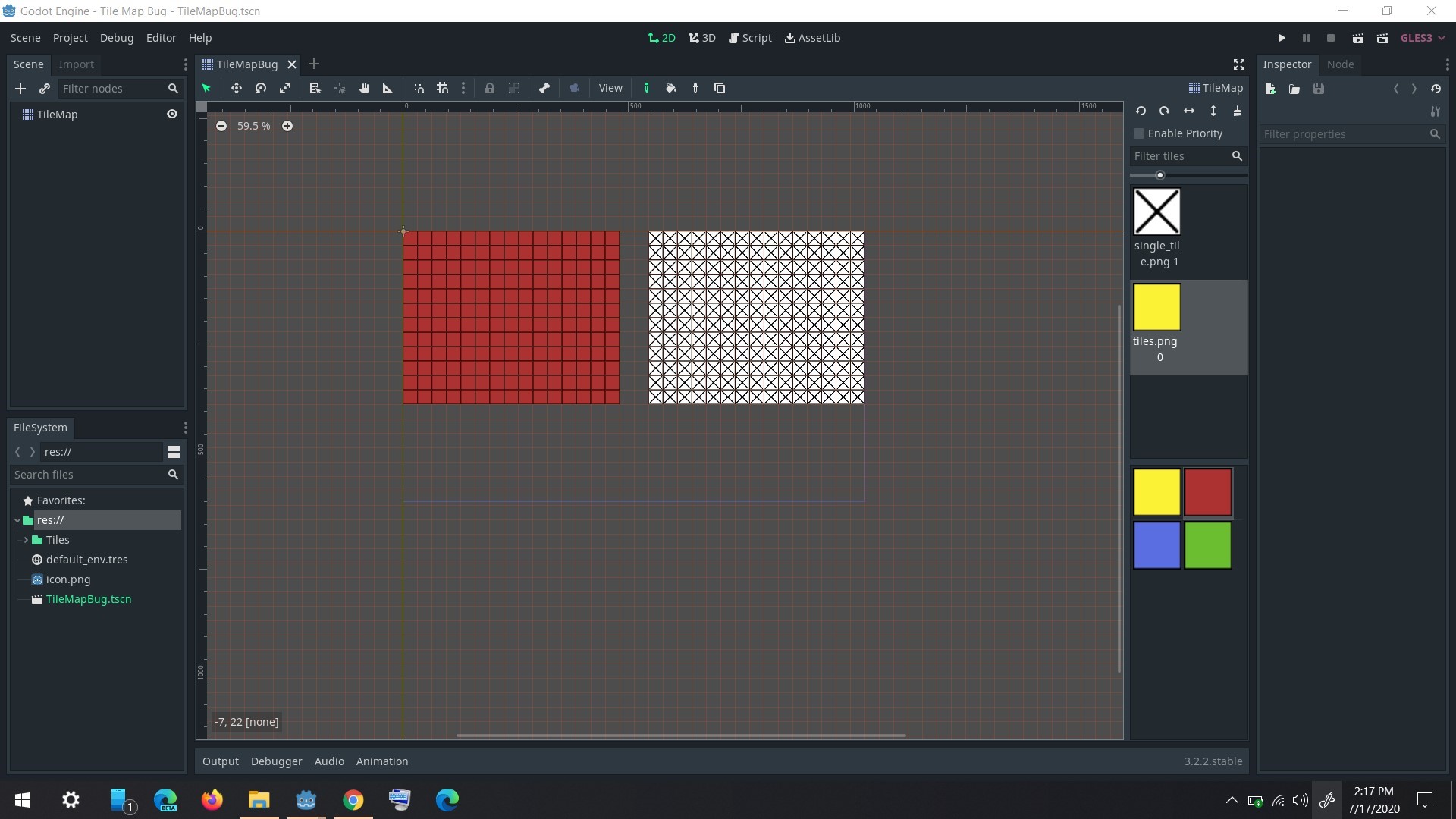

godot

|

Crash when overriding _set (?)

|

<!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:**

v3.2.2.stable.official / Steam

<!-- Specify commit hash if using non-official build. -->

**OS/device including version:**

Windows 10 / 2004

<!-- Specify GPU model, drivers, and the backend (GLES2, GLES3, Vulkan) if graphics-related. -->

**Issue description:**

Godot crashes; it is reproducible almost every time. It crashes when running the example project.

I override `_set(property, value)`, which may lead to that crash.

Error message:

```

ERROR: get: FATAL: Index p_index = 7 is out of bounds (size() = 7).

At: ./core/cowdata.h:152

```

<!-- What happened, and what was expected. -->

**Steps to reproduce:**

Execute the main scene.

**Minimal reproduction project:**

[gd_crash.zip](https://github.com/godotengine/godot/files/4921701/gd_crash.zip)

<!-- A small Godot project which reproduces the issue. Drag and drop a zip archive to upload it. -->

Another example: replace the `_ready()` function with this:

```gdscript

func _ready():
    #self.my_dict["_"] = MyType.new("1", 1)
    self._non_existent

```

Will lead to a crash too.

|

bug,topic:gdscript,topic:editor,confirmed,crash

|

low

|

Critical

|

656,958,986 |

pytorch

|

[JIT][to-backend] `selective_to_backend` infra

|

Now that #41146 has landed, we need to create and make available some utility functions to help users write code that selectively lowers some modules in a module hierarchy. The main consideration here is that the JIT type of the lowered module and all of its ancestors needs to be updated after lowering to a backend.

cc @suo @gmagogsfm

|

oncall: jit

|

low

|

Minor

|

656,971,413 |

PowerToys

|

Touchscreen Gesture Customization

|

I feel that, with so many touchscreen devices out there, we would benefit from customizable touch gestures. There are many different touch gestures that could be implemented.

- swipe up from the bottom to go to desktop (similar to iPhone/Android)

- swipe from the sides to switch desktops/applications

- swipe to display multi-view of currently open apps

- swipe and hold to quick split screen apps

|

Idea-New PowerToy

|

low

|

Major

|

656,991,642 |

rust

|

Documentation for stdout does not mention flushing

|

From https://github.com/rust-dc/fish-manpage-completions/pull/96#discussion_r453728093.

The documentation for [`std::io::stdout()`](https://doc.rust-lang.org/stable/std/io/fn.stdout.html) does not mention "flush" anywhere, neither does `Stdout` or `StdoutLock`. I think it would be helpful for the documentation to indicate the behaviour, i.e. if it should be flushed manually or it's flushed once it goes out of scope.

The same would apply for `stderr`. I'm not sure if the behaviour of #23205 has changed since, or whether not documenting flushing was an oversight or intended to allow breakage in that regard.

|

C-enhancement,T-libs-api,A-docs

|

low

|

Minor

|

657,004,263 |

youtube-dl

|

--datebefore now-1day doesn't download videos which are 24 hours old or older

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- Look through the README (http://yt-dl.org/readme) and FAQ (http://yt-dl.org/faq) for similar questions

- Search the bugtracker for similar questions: http://yt-dl.org/search-issues

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm asking a question

- [ ] I've looked through the README and FAQ for similar questions

- [x] I've searched the bugtracker for similar questions including closed ones

## Question

<!--

Ask your question in an arbitrary form. Please make sure it's worded well enough to be understood, see https://github.com/ytdl-org/youtube-dl#is-the-description-of-the-issue-itself-sufficient.

-->

I used --datebefore now-1day when downloading all videos on this channel: https://www.youtube.com/channel/UCfwE_ODI1YTbdjkzuSi1Nag/videos

However instead of downloading videos which were created 24 hours ago or older, it downloaded videos which were created 4 hours ago or older. I have to use this instead which does indeed download videos created 2 days ago or older:

--datebefore now-1day
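One possible explanation, offered as an assumption and not a confirmed diagnosis: if the filter compares calendar dates and ignores the time of day, `now-1day` means "uploaded on or before yesterday's date". Shortly after midnight that can include a video that is only a few hours old. The sketch below models such a comparison with the standard library; `passes_datebefore` is a hypothetical helper, not youtube-dl's code.

```python
from datetime import date, datetime, timedelta


def passes_datebefore(upload: datetime, now: datetime, days: int) -> bool:
    """Hypothetical day-granularity filter: compare dates, ignore time of day."""
    cutoff: date = now.date() - timedelta(days=days)
    return upload.date() <= cutoff


now = datetime(2020, 7, 15, 3, 0)       # 03:00 on 15 July
upload = now - timedelta(hours=4)       # 23:00 on 14 July, only 4 hours old

# The upload date (14 July) is not after the cutoff date (14 July), so it passes.
four_hours_old_passes = passes_datebefore(upload, now, days=1)
```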

|

question

|

low

|

Critical

|

657,079,895 |

opencv

|

openCV DNN:: cv::ocl::Queue segfault 'scalar deleting destructor' [OpenCL]

|

##### System information (version) #################

- OpenCV => 4.3

- Operating System / Platform => Windows 10, 64 Bit

- Compiler => Visual Studio 2019

##### Detailed description #################

When running an SSD-MobileNet v2 model converted from a TensorFlow model (pb) with the DNN module, it produces a result and then throws a segfault in the destructor.

` objectDetectionNet.setInput(blob);

cv::Mat output = objectDetectionNet.forward();`

Throws a segfault error.

I could see a [INFO] message that OpenCL is initialized. Hardware is Intel HD graphics.

########### ############

PID 10620 received SIGSEGV for address: 0x718ffb65

OS-Version: 10.0.18362 () 0x100-0x1

00007FFF719385B6 (ntdll): (filename not available): RtlIsGenericTableEmpty

00007FFF7192A056 (ntdll): (filename not available): RtlRaiseException

00007FFF7195FE3E (ntdll): (filename not available): KiUserExceptionDispatcher

00007FFF718FFB65 (ntdll): (filename not available): RtlFreeHeap

00007FFF34B3078C (igdrcl64): (filename not available): clGetCLObjectInfoINTEL

00007FFF34B1E4BE (igdrcl64): (filename not available): clGetCLObjectInfoINTEL

00007FFF3491B634 (igdrcl64): (filename not available): (function-name not available)

00007FFF349197D9 (igdrcl64): (filename not available): (function-name not available)

00007FFF34919284 (igdrcl64): (filename not available): (function-name not available)

00007FFF34903D02 (igdrcl64): (filename not available): (function-name not available)

00007FFF34927876 (igdrcl64): (filename not available): (function-name not available)

D:\cosmos\libs\opencv-4.3.0\modules\core\src\opencl\runtime\autogenerated\opencl_core_impl.hpp (391): OPENCL_FN_clFinish_switch_fn

D:\cosmos\libs\opencv-4.3.0\modules\core\src\ocl.cpp (2656): cv::ocl::Queue::Impl::~Impl

00007FFF0DD90E0C (SiviVision): (filename not available): cv::ocl::Queue::Impl::`scalar deleting destructor'

D:\cosmos\libs\opencv-4.3.0\modules\core\src\ocl.cpp (2689): cv::ocl::Queue::Impl::release

D:\cosmos\libs\opencv-4.3.0\modules\core\src\ocl.cpp (2728): cv::ocl::Queue::~Queue

00007FFF0DB9E5EF (SiviVision): (filename not available): cv::CoreTLSData::~CoreTLSData

00007FFF0DB9F81C (SiviVision): (filename not available): cv::CoreTLSData::`scalar deleting destructor'

D:\cosmos\libs\opencv-4.3.0\modules\core\include\opencv2\core\utils\tls.hpp (80): cv::TLSData<cv::CoreTLSData>::deleteDataInstance

D:\cosmos\libs\opencv-4.3.0\modules\core\src\system.cpp (1543): cv::details::TlsStorage::releaseThread

D:\cosmos\libs\opencv-4.3.0\modules\core\src\system.cpp (1719): cv::details::opencv_fls_destructor

00007FFF719340C9 (ntdll): (filename not available): RtlFlsFree

00007FFF6F2DAA7B (KERNELBASE): (filename not available): FlsFree

D:\cosmos\libs\opencv-4.3.0\modules\core\src\system.cpp (1442): cv::details::TlsAbstraction::~TlsAbstraction

00007FFF106CC3F1 (SiviVision): (filename not available): `cv::details::getTlsAbstraction_'::`2'::`dynamic atexit destructor for 'g_tls''

minkernel\crts\ucrt\src\appcrt\startup\onexit.cpp (206): <lambda_d121dba8a4adeaf3a9819e48611155df>::operator()

vccrt\vcruntime\inc\internal_shared.h (204): __crt_seh_guarded_call<int>::operator()<<lambda_6a47f4c8fd0152770a780fc1d70204eb>,<lambda_d121dba8a4adeaf3a9819e48611155df> &,<lambda_6aaa2265f5b6a89667e7d7630012e97a> >

minkernel\crts\ucrt\inc\corecrt_internal.h (975): __acrt_lock_and_call<<lambda_d121dba8a4adeaf3a9819e48611155df> >

minkernel\crts\ucrt\src\appcrt\startup\onexit.cpp (231): _execute_onexit_table

minkernel\crts\ucrt\src\appcrt\startup\exit.cpp (226): <lambda_6e4b09c48022b2350581041d5f6b0c4c>::operator()

vccrt\vcruntime\inc\internal_shared.h (224): __crt_seh_guarded_call<void>::operator()<<lambda_d80eeec6fff315bfe5c115232f3240e3>,<lambda_6e4b09c48022b2350581041d5f6b0c4c> &,<lambda_2358e3775559c9db80273638284d5e45> >

minkernel\crts\ucrt\inc\corecrt_internal.h (975): __acrt_lock_and_call<<lambda_6e4b09c48022b2350581041d5f6b0c4c> >

minkernel\crts\ucrt\src\appcrt\startup\exit.cpp (259): common_exit

minkernel\crts\ucrt\src\appcrt\startup\exit.cpp (314): _cexit

d:\A01\_work\6\s\src\vctools\crt\vcstartup\src\utility\utility.cpp (407): __scrt_dllmain_uninitialize_c

d:\A01\_work\6\s\src\vctools\crt\vcstartup\src\startup\dll_dllmain.cpp (182): dllmain_crt_process_detach

d:\A01\_work\6\s\src\vctools\crt\vcstartup\src\startup\dll_dllmain.cpp (220): dllmain_crt_dispatch

d:\A01\_work\6\s\src\vctools\crt\vcstartup\src\startup\dll_dllmain.cpp (293): dllmain_dispatch

d:\A01\_work\6\s\src\vctools\crt\vcstartup\src\startup\dll_dllmain.cpp (335): _DllMainCRTStartup

00007FFF718E5021 (ntdll): (filename not available): RtlActivateActivationContextUnsafeFast

00007FFF7192AA82 (ntdll): (filename not available): LdrShutdownProcess

00007FFF7192A92D (ntdll): (filename not available): RtlExitUserProcess

00007FFF6FE1CD8A (KERNEL32): (filename not available): ExitProcess

00007FF702271B49 (node): (filename not available): v8::internal::SetupIsolateDelegate::SetupHeap

00007FF702271AFF (node): (filename not available): v8::internal::SetupIsolateDelegate::SetupHeap

00007FF702245DBF (node): (filename not available): v8::internal::SetupIsolateDelegate::SetupHeap

00007FFF6FE17BD4 (KERNEL32): (filename not available): BaseThreadInitThunk

00007FFF7192CE51 (ntdll): (filename not available): RtlUserThreadStart

|

bug,category: core,category: ocl,platform: win32

|

low

|

Critical

|

657,109,591 |

vscode

|

User settings for language configurations

|

<!-- ⚠️⚠️ Do Not Delete This! feature_request_template ⚠️⚠️ -->

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Describe the feature you'd like. -->

Please add the ability to set all Autoclosing, Surrounding, and Indentation settings, and to enable Indentation Folding (if possible, on top of the language-specific one), so that they cannot be overwritten by language configs.

When multiple languages are used, the kaleidoscopic switching of these settings is disturbing and/or loads memory for no benefit. Editing the config files for each language is inconsistent and slow.

|

feature-request,languages-basic

|

low

|

Major

|

657,172,495 |

create-react-app

|

Create React App script not working in vscode

|

PS E:\jitsi\MERN> create-react-app MERN

create-react-app : File C:\Users\marsec developer\AppData\Roaming\npm\create-react-app.ps1 cannot be loaded because running scripts is disabled

on this system. For more information, see about_Execution_Policies at https:/go.microsoft.com/fwlink/?LinkID=135170.

At line:1 char:1

+ create-react-app MERN

+ CategoryInfo : SecurityError: (:) [], PSSecurityException

+ FullyQualifiedErrorId : UnauthorizedAccess

PS E:\jitsi\MERN> create-react-app mern

create-react-app : File C:\Users\marsec developer\AppData\Roaming\npm\create-react-app.ps1 cannot be loaded because running scripts is disabled

on this system. For more information, see about_Execution_Policies at https:/go.microsoft.com/fwlink/?LinkID=135170.

At line:1 char:1

+ create-react-app mern

+ ~~~~~~~~~~~~~~~~

+ CategoryInfo : SecurityError: (:) [], PSSecurityException

+ FullyQualifiedErrorId : UnauthorizedAccess

PS E:\jitsi\MERN> create-react-app

create-react-app : File C:\Users\marsec developer\AppData\Roaming\npm\create-react-app.ps1 cannot be loaded because running scripts is disabled

on this system. For more information, see about_Execution_Policies at https:/go.microsoft.com/fwlink/?LinkID=135170.

At line:1 char:1

+ create-react-app

+ ~~~~~~~~~~~~~~~~

+ CategoryInfo : SecurityError: (:) [], PSSecurityException

+ FullyQualifiedErrorId : UnauthorizedAccess

If you're looking for general information on using React, the React docs have a list of resources: https://reactjs.org/community/support.html

If you've discovered a bug or would like to propose a change please use one of the other issue templates.

Thanks!

|

stale,needs triage

|

low

|

Critical

|

657,203,549 |

godot

|

Cryptic GDScript Error Message for A Beginner Programming Mistake - "Unexpected token"

|

**Godot version:** v3.2.2 stable

**OS/device including version:** Windows 7

**Issue description:**

This 1-line beginner program throws a cryptic error message:

> print("Hello World")

Corresponding error message:

> "Unexpected token: Built-In Func:"

And it's elaborated on in the Errors tab as:

> "get_token_identifier: Condition "tk_rb[ofs].type != TK_IDENTIFIER" is true. Returned: StringName()"

**Expected Behavior**: GDScript is advertised to new users for its similarity to Python. So it's bad when trivial code doesn't work for incomprehensible reasons - nobody should have to check documentation or have to google to debug a "Hello World" program.

**How to Fix it**: GDScript doesn't like the code above because you aren't allowed to do much of anything but define variables or functions at the base level of a script. So either make the reason for this specific error message crystal clear, or make the "Unexpected token" error message in general more clear.

**Elaboration**: Comprehensible error messages are very important for programming. Personally, I tried Godot Engine and GDScript for the reasons mentioned above, then got this bizarre error even when following basic, community-approved programming tutorials. For instance, I followed this tutorial by GDQuest (https://www.youtube.com/watch?v=UcdwP1Q2UlU ), and copying the code on most of the slides in that video causes the error mentioned in this issue, unless you code inside a function like _ready. Naturally, an incomprehensible error message in that situation is *really bad*.

|

enhancement,topic:gdscript,usability

|

low

|

Critical

|

657,207,623 |

flutter

|

CupertinoTextSelectionToolbar doesn't vertically centre align text in Chinese language

|

1. Setup localizations settings

pubspec.yaml

```

flutter_localizations:
  sdk: flutter

```

MyApp

```

class MyApp extends StatelessWidget {
  // This widget is the root of your application.
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'Flutter Demo',
      theme: ThemeData(
        primarySwatch: Colors.blue,
        visualDensity: VisualDensity.adaptivePlatformDensity,
      ),
      localizationsDelegates: [
        GlobalCupertinoLocalizations.delegate,
        DefaultCupertinoLocalizations.delegate,
        GlobalMaterialLocalizations.delegate,
        GlobalWidgetsLocalizations.delegate,
      ],
      supportedLocales: [
        const Locale('zh', 'CH'),
        const Locale('en', 'US'),
      ],
      locale: Locale('zh', 'CH'),
      home: MyHomePage(title: 'Flutter Demo Home Page'),
    );
  }
}

```

2. Add a TextField to the page.

```

Padding(
  padding: EdgeInsets.fromLTRB(20, 0, 20, 0),
  child: TextField(),
),

```

3. Double-tapping the TextField shows the CupertinoTextSelectionToolbar in Chinese, but its text is not vertically centered.

<img width="406" alt="WX20200715-172840@2x" src="https://user-images.githubusercontent.com/11239033/87528825-a7d50f00-c6c0-11ea-8de4-12fbf416ef22.png">

When I change the locale to en, it displays correctly.

```

locale: Locale('en', 'US'),

// locale: Locale('zh', 'CH'),

```

<img width="442" alt="WX20200715-173057@2x" src="https://user-images.githubusercontent.com/11239033/87529029-f682a900-c6c0-11ea-995a-1f736a1ee052.png">

flutter doctor -v

```

[✓] Flutter (Channel stable, v1.17.5, on Mac OS X 10.15.5 19F101, locale en)

• Flutter version 1.17.5 at /Users/wangyu/Yu/flutter

• Framework revision 8af6b2f038 (2 weeks ago), 2020-06-30 12:53:55 -0700

• Engine revision ee76268252

• Dart version 2.8.4

[!] Android toolchain - develop for Android devices (Android SDK version 29.0.3)

• Android SDK at /Users/wangyu/Library/Android/sdk

• Platform android-29, build-tools 29.0.3

• Java binary at: /Applications/Android

Studio.app/Contents/jre/jdk/Contents/Home/bin/java

• Java version OpenJDK Runtime Environment (build

1.8.0_242-release-1644-b3-6222593)

✗ Android license status unknown.

Try re-installing or updating your Android SDK Manager.

See https://developer.android.com/studio/#downloads or visit visit

https://flutter.dev/docs/get-started/install/macos#android-setup for

detailed instructions.

[✓] Xcode - develop for iOS and macOS (Xcode 11.5)

• Xcode at /Applications/Xcode.app/Contents/Developer

• Xcode 11.5, Build version 11E608c

• CocoaPods version 1.9.1

[!] Android Studio (version 4.0)

• Android Studio at /Applications/Android Studio.app/Contents

✗ Flutter plugin not installed; this adds Flutter specific functionality.

✗ Dart plugin not installed; this adds Dart specific functionality.

• Java version OpenJDK Runtime Environment (build

1.8.0_242-release-1644-b3-6222593)

[✓] IntelliJ IDEA Ultimate Edition (version 2019.2.2)

• IntelliJ at /Applications/IntelliJ IDEA.app

• Flutter plugin version 31.3.3

• Dart plugin version 182.5124

[✓] VS Code (version 1.47.0)

• VS Code at /Applications/Visual Studio Code.app/Contents

• Flutter extension version 3.12.2

[✓] Connected device (1 available)

• iPhone 11 Pro Max • B3A87334-6C79-4ADD-A38E-BF76EC71D171 • ios •

com.apple.CoreSimulator.SimRuntime.iOS-13-5 (simulator)

! Doctor found issues in 2 categories.

```

|

a: text input,framework,a: internationalization,f: cupertino,has reproducible steps,P2,found in release: 3.3,found in release: 3.7,team-design,triaged-design

|

low

|

Major

|

657,211,837 |

realworld

|

Bearer Authentication

|

In the API spec `swagger.json`, the authentication scheme is defined as:

```

"Token": {

"description": "For accessing the protected API resources, you must have received a a valid JWT token after registering or logging in. This JWT token must then be used for all protected resources by passing it in via the 'Authorization' header.\n\nA JWT token is generated by the API by either registering via /users or logging in via /users/login.\n\nThe following format must be in the 'Authorization' header :\n\n Token: xxxxxx.yyyyyyy.zzzzzz\n \n",

"type": "apiKey",

"name": "Authorization",

"in": "header"

}

```

Shouldn't it be of `"type": "http"`, `"scheme": "bearer"`, `"bearerFormat": "JWT"`?
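For comparison, here is a sketch of the two definitions written as Python dictionaries. Note that `"type": "http"` with `"scheme": "bearer"` is OpenAPI 3.0 syntax; Swagger 2.0 security definitions only allow `basic`, `apiKey`, and `oauth2`, so the change would presumably require migrating the spec. The variable names and the `bearer_header` helper are illustrative assumptions.

```python
import json
from typing import Dict

# Current definition, as quoted above (description omitted for brevity).
api_key_scheme = {"type": "apiKey", "name": "Authorization", "in": "header"}

# Proposed definition, valid only in an OpenAPI 3.0 document.
bearer_scheme = {"type": "http", "scheme": "bearer", "bearerFormat": "JWT"}


def bearer_header(token: str) -> Dict[str, str]:
    """Hypothetical helper: build the header a bearer scheme implies."""
    return {"Authorization": f"Bearer {token}"}


header = bearer_header("xxxxxx.yyyyyyy.zzzzzz")
print(json.dumps(bearer_scheme, indent=2))
```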

|

help wanted,good first issue,Status: Approved,v2 changelog

|

low

|

Minor

|

657,257,334 |

go

|

runtime: reducing preemption in suspendG when G is running large nosplit functions

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions please use one of our forums: https://github.com/golang/go/wiki/Questions

-->

### What version of Go are you using (`go version`)?

<pre>

$ go version

tip version, on arm64

</pre>

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

</pre></details>

GO111MODULE=""

GOARCH="arm64"

GOBIN=""

GOCACHE="/home/xiaji01/.cache/go-build"

GOENV="/home/xiaji01/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="arm64"

GOHOSTOS="linux"

GOINSECURE=""

GOMODCACHE="/home/xiaji01/.go/pkg/mod"

GONOPROXY=""

GONOSUMDB=""

GOOS="linux"

GOPATH="/home/xiaji01/.go"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/home/xiaji01/src/go.gc"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/home/xiaji01/src/go.gc/pkg/tool/linux_arm64"

GCCGO="gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD="/home/xiaji01/src/go.gc/src/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build944660995=/tmp/go-build -gno-record-gcc-switches"

### What did you do?

<!--

If possible, provide a recipe for reproducing the error.

A complete runnable program is good.

A link on play.golang.org is best.

-->

Live-lock problems were spotted on arm64 in a couple of micro-benchmarks from text/tabwriter (there are probably more similar cases).

If a goroutine is running time-consuming 'nosplit' functions, like

bulkBarrierPreWriteSrcOnly

memmove

called in growslice when the slice is large, and a background worker tries to suspend it to do stack scanning, the G under preemption may hardly make progress, especially on arm64 machines, which seem to have poor signal handling performance.

pprof data of BenchmarkTable/1x100000/new on an arm64 machine:

```

(pprof) top

Showing nodes accounting for 12.46s, 95.70% of 13.02s total

Dropped 118 nodes (cum <= 0.07s)

Showing top 10 nodes out of 40

flat flat% sum% cum cum%

5.41s 41.55% 41.55% 5.41s 41.55% runtime.futex

3.22s 24.73% 66.28% 3.59s 27.57% runtime.nanotime (inline)

0.99s 7.60% 73.89% 0.99s 7.60% runtime.tgkill

0.84s 6.45% 80.34% 0.84s 6.45% runtime.osyield

0.77s 5.91% 86.25% 0.77s 5.91% runtime.epollwait

0.37s 2.84% 89.09% 0.37s 2.84% runtime.nanotime1

0.33s 2.53% 91.63% 6.38s 49.00% runtime.suspendG

0.30s 2.30% 93.93% 0.30s 2.30% runtime.getpid

0.14s 1.08% 95.01% 0.14s 1.08% runtime.procyield

0.09s 0.69% 95.70% 1.38s 10.60% runtime.preemptM (inline)

```

I'm thinking of introducing a flag in G that indicates whether it is running a time-consuming nosplit function, and letting suspendG yield when the flag is set, to avoid the live-lock. The flag is set manually for known functions. I tried it for bulkBarrierPreWriteSrcOnly and memmove in runtime.growslice and runtime.makeslicecopy when the slice is longer than a threshold (4K for now), and the text/tabwriter package showed significant improvement on arm64:

arm64-1 perf:

```

name old time/op new time/op delta

Table/1x10/new-224 6.59µs ± 8% 6.67µs ± 7% ~ (p=0.841 n=5+5)

Table/1x10/reuse-224 1.94µs ± 1% 1.94µs ± 1% ~ (p=0.802 n=5+5)

Table/1x1000/new-224 494µs ±13% 381µs ± 3% -22.85% (p=0.008 n=5+5)

Table/1x1000/reuse-224 185µs ± 0% 185µs ± 0% -0.37% (p=0.016 n=5+4)

Table/1x100000/new-224 2.73s ±73% 0.04s ± 2% -98.46% (p=0.008 n=5+5)

Table/1x100000/reuse-224 4.25s ±76% 0.02s ± 1% -99.55% (p=0.008 n=5+5)

Table/10x10/new-224 20.7µs ± 6% 20.1µs ± 5% ~ (p=0.421 n=5+5)

Table/10x10/reuse-224 8.89µs ± 0% 8.91µs ± 0% +0.21% (p=0.032 n=5+5)

Table/10x1000/new-224 1.70ms ± 7% 1.59ms ± 6% ~ (p=0.151 n=5+5)

Table/10x1000/reuse-224 908µs ± 0% 902µs ± 0% -0.59% (p=0.032 n=5+5)

Table/10x100000/new-224 2.34s ±62% 0.14s ± 2% -94.21% (p=0.008 n=5+5)

Table/10x100000/reuse-224 911ms ±72% 103ms ± 2% -88.66% (p=0.008 n=5+5)

Table/100x10/new-224 143µs ±15% 138µs ± 5% ~ (p=0.841 n=5+5)

Table/100x10/reuse-224 78.1µs ± 0% 78.1µs ± 0% ~ (p=0.841 n=5+5)

Table/100x1000/new-224 13.1ms ± 6% 11.9ms ± 5% -9.16% (p=0.008 n=5+5)

Table/100x1000/reuse-224 8.13ms ± 0% 8.15ms ± 1% ~ (p=1.000 n=5+5)

Table/100x100000/new-224 1.29s ±17% 1.31s ±10% ~ (p=0.310 n=5+5)

Table/100x100000/reuse-224 1.23s ± 2% 1.26s ± 5% ~ (p=0.286 n=4+5)

```

arm64-2 perf:

```

name old time/op new time/op delta

Table/1x10/new-64 4.33µs ± 1% 5.40µs ± 4% +24.92% (p=0.004 n=6+5)

Table/1x10/reuse-64 1.77µs ± 0% 1.77µs ± 0% -0.36% (p=0.024 n=6+6)

Table/1x1000/new-64 318µs ±10% 379µs ±10% +19.04% (p=0.008 n=5+5)

Table/1x1000/reuse-64 172µs ± 0% 171µs ± 0% ~ (p=0.537 n=6+5)

Table/1x100000/new-64 4.26s ±88% 0.05s ± 4% -98.77% (p=0.002 n=6+6)

Table/1x100000/reuse-64 4.79s ±73% 0.02s ± 2% -99.61% (p=0.004 n=6+5)

Table/10x10/new-64 14.6µs ± 3% 16.4µs ± 9% +12.51% (p=0.004 n=5+6)

Table/10x10/reuse-64 8.76µs ± 0% 8.79µs ± 0% ~ (p=0.329 n=5+6)

Table/10x1000/new-64 1.18ms ± 3% 1.32ms ± 4% +12.12% (p=0.002 n=6+6)

Table/10x1000/reuse-64 890µs ± 0% 897µs ± 0% +0.84% (p=0.002 n=6+6)

Table/10x100000/new-64 1.43s ±36% 0.16s ± 3% -88.56% (p=0.004 n=5+6)

Table/10x100000/reuse-64 375ms ±55% 138ms ±11% -63.31% (p=0.004 n=5+6)

Table/100x10/new-64 103µs ± 0% 105µs ± 4% ~ (p=0.429 n=5+6)

Table/100x10/reuse-64 79.6µs ± 0% 80.0µs ± 1% ~ (p=0.329 n=5+6)

Table/100x1000/new-64 10.2ms ± 3% 10.5ms ± 1% ~ (p=0.052 n=6+5)

Table/100x1000/reuse-64 8.75ms ± 0% 9.23ms ± 6% ~ (p=0.126 n=5+6)

Table/100x100000/new-64 1.42s ± 6% 1.46s ±11% ~ (p=0.394 n=6+6)

Table/100x100000/reuse-64 1.40s ± 0% 1.45s ± 6% ~ (p=0.690 n=5+5)

Pyramid/10-64 15.3µs ±45% 14.5µs ±20% ~ (p=0.662 n=6+5)

Pyramid/100-64 1.16ms ±13% 0.88ms ±11% -23.90% (p=0.002 n=6+6)

Pyramid/1000-64 71.6ms ± 8% 77.8ms ±12% ~ (p=0.056 n=5+5)

Ragged/10-64 13.2µs ±17% 14.0µs ± 2% ~ (p=0.792 n=6+5)

Ragged/100-64 104µs ± 3% 123µs ± 2% +18.21% (p=0.008 n=5+5)

Ragged/1000-64 1.12ms ±12% 1.35ms ± 9% +20.55% (p=0.002 n=6+6)

Code-64 3.51µs ± 1% 3.80µs ± 3% +8.46% (p=0.004 n=5+6)

```

x86 perf:

```

name old time/op new time/op delta

Table/1x10/new-32 5.36µs ± 2% 5.58µs ± 3% +4.03% (p=0.004 n=6+6)

Table/1x10/reuse-32 1.50µs ± 8% 1.48µs ± 0% ~ (p=0.433 n=6+5)

Table/1x1000/new-32 318µs ± 2% 355µs ± 2% +11.43% (p=0.004 n=5+6)

Table/1x1000/reuse-32 127µs ± 0% 134µs ± 1% +5.36% (p=0.004 n=6+5)

Table/1x100000/new-32 49.9ms ± 3% 48.1ms ± 3% -3.57% (p=0.026 n=6+6)

Table/1x100000/reuse-32 13.8ms ± 1% 15.2ms ± 1% +9.87% (p=0.002 n=6+6)

Table/10x10/new-32 17.4µs ± 3% 17.6µs ± 2% ~ (p=0.310 n=6+6)

Table/10x10/reuse-32 7.20µs ± 1% 7.21µs ± 0% ~ (p=0.429 n=5+6)

Table/10x1000/new-32 1.38ms ± 1% 1.43ms ± 2% +4.16% (p=0.002 n=6+6)

Table/10x1000/reuse-32 687µs ± 1% 693µs ± 0% ~ (p=0.052 n=6+5)

Table/10x100000/new-32 131ms ± 2% 133ms ± 5% ~ (p=0.699 n=6+6)

Table/10x100000/reuse-32 89.2ms ± 2% 90.3ms ± 2% ~ (p=0.177 n=5+6)

Table/100x10/new-32 122µs ± 1% 122µs ± 1% ~ (p=0.632 n=6+5)

Table/100x10/reuse-32 62.3µs ± 0% 62.4µs ± 0% ~ (p=0.429 n=5+6)

Table/100x1000/new-32 12.2ms ± 4% 12.0ms ± 3% ~ (p=0.180 n=6+6)

Table/100x1000/reuse-32 6.29ms ± 0% 6.32ms ± 0% +0.54% (p=0.015 n=6+6)

Table/100x100000/new-32 1.01s ± 2% 1.00s ± 4% ~ (p=0.429 n=6+5)

Table/100x100000/reuse-32 972ms ±10% 962ms ±18% ~ (p=1.000 n=6+6)

Pyramid/10-32 14.3µs ± 4% 14.8µs ± 6% ~ (p=0.240 n=6+6)

Pyramid/100-32 833µs ± 2% 841µs ± 2% ~ (p=0.394 n=6+6)

Pyramid/1000-32 53.5ms ± 1% 56.2ms ± 3% +4.98% (p=0.004 n=5+6)

Ragged/10-32 15.1µs ± 0% 14.8µs ± 1% -1.75% (p=0.008 n=5+5)

Ragged/100-32 127µs ± 4% 130µs ± 2% ~ (p=0.180 n=6+6)

Ragged/1000-32 1.27ms ± 5% 1.33ms ± 3% +3.96% (p=0.026 n=6+6)

Code-32 3.75µs ± 1% 3.81µs ± 2% +1.67% (p=0.048 n=5+6)

```

The potential overhead is acquiring the current G in the two slice functions, which seems to be tiny.

I'm working on benchmarking more packages and evaluating the impact on the x86 platform.

Any comment is highly appreciated.

### What did you expect to see?

Better performance.

### What did you see instead?

|

Performance,NeedsInvestigation,compiler/runtime

|

low

|

Critical

|

657,293,506 |

electron

|

WebAuthn FIDO/FIDO2 Support

|

<!-- As an open source project with a dedicated but small maintainer team, it can sometimes take a long time for issues to be addressed so please be patient and we will get back to you as soon as we can.

-->

### Preflight Checklist

<!-- Please ensure you've completed the following steps by replacing [ ] with [x]-->

* [x] I have read the [Contributing Guidelines](https://github.com/electron/electron/blob/master/CONTRIBUTING.md) for this project.

* [x] I agree to follow the [Code of Conduct](https://github.com/electron/electron/blob/master/CODE_OF_CONDUCT.md) that this project adheres to.

* [x] I have searched the issue tracker for an issue that matches the one I want to file, without success.

### Issue Details

* **Electron Version:**

* <!-- (output of `node_modules/.bin/electron --version`) e.g. 4.0.3 --> 8.4.0, 9, 10, (added 07.04.2021) 11, 12

* **Operating System:**

* <!-- (Platform and Version) e.g. macOS 10.13.6 / Windows 10 (1803) / Ubuntu 18.04 x64 --> macOS 10.15.5

* **Last Known Working Electron version:**

* <!-- (if applicable) e.g. 3.1.0 --> -

It is not clear how to make [WebAuthn](https://w3c.github.io/webauthn/) work in an Electron app when the page is loaded locally rather than from a web server.

### Expected Behavior

<!-- A clear and concise description of what you expected to happen. -->

WebAuthn should work when the page is loaded from a `standard` and `secure` scheme: https://www.electronjs.org/docs/api/protocol#protocolregisterschemesasprivilegedcustomschemes

### Actual Behavior

<!-- A clear and concise description of what actually happened. -->

I encountered the following error:

```

Uncaught (in promise) DOMException: Public-key credentials are only available to HTTPS origin or HTTP origins that fall under 'localhost'. See https://crbug.com/824383

```

### To Reproduce

<!--

Your best chance of getting this bug looked at quickly is to provide an example.

-->

https://gist.github.com/mahnunchik/165a117564ebc632a3723d2666f5024c

<!--

For bugs that can be encapsulated in a small experiment, you can use Electron Fiddle (https://github.com/electron/fiddle) to publish your example to a GitHub Gist and link it your bug report.

-->

<!--

If Fiddle is insufficient to produce an example, please provide an example REPOSITORY that can be cloned and run. You can fork electron-quick-start (https://github.com/electron/electron-quick-start) and include a link to the branch with your changes.

-->

<!--

If you provide a URL, please list the commands required to clone/setup/run your repo e.g.

```sh

$ git clone $YOUR_URL -b $BRANCH

$ npm install

$ npm start || electron .

```

-->

### Additional Information

<!-- Add any other context about the problem here. -->

Related issues:

* [WebAuthn Support #15404](https://github.com/electron/electron/issues/15404)

* [protocol.intercept{Any}Protocol handler ability to call original handler #15434](https://github.com/electron/electron/issues/15434)

|

enhancement :sparkles:

|

high

|

Critical

|

657,489,534 |

pytorch

|

Implement backend fallback for Tracer

|

Now that @ljk53 has extracted tracing functionality into a separate dispatch key, we should now be able to write a single generic fallback for tracing. This is in two parts:

1. Write the generic fallback. This will give us automatic support for tracing custom ops

2. Remove all code generated tracing for anything that is subsumed by the fallback

cc @ezyang @bhosmer @smessmer @ljk53

|

module: internals,triaged

|

low

|

Minor

|

657,534,592 |

pytorch

|

GLOO infiniband with PyTorch

|

## 🚀 Feature

## Motivation

* From https://discuss.pytorch.org/t/gloo-and-infiniband/89303. Some GPUs, such as the RTX 2080 Ti, do not support GPUDirect/RDMA anyway.

* Even on GPUs with GPUDirect/RDMA, in some scenarios we would still like to use CPU RDMA to avoid the device-to-host (DtoH) memory copy, which introduces synchronization and breaks pipelining.

For these use cases, GLOO infiniband could help achieve lower latency and higher bandwidth, and remove host/device synchronicity.

## Pitch

GLOO has an ibverbs transport in place: https://github.com/facebookincubator/gloo/tree/master/gloo/transport/ibverbs. However, it has not been tested or used with PyTorch. We would like to test it and integrate it with the PyTorch c10d library.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @xush6528 @osalpekar @jiayisuse @agolynski

|

oncall: distributed,feature,triaged

|

low

|

Minor

|

657,547,107 |

pytorch

|

LSTMs leak memory in CPU PyTorch 1.5.1, 1.6, and 1.7 on Linux

|

## 🐛 Bug

On Linux, when using the CPU (whether a CPU device in a CUDA-capable PyTorch, or using a CPU-only PyTorch distribution), merely _instantiating_ modules with an LSTM in them claims memory that is never released until the process is killed. _Training_ using one of these modules consumes more and more memory during training, in what seems to be a nondeterministic way. I have reproduced this in PyTorch 1.5.1, 1.6.0, and 1.7.0 on Linux.

Oddly, the _MacOS_ CPU-only PyTorch distribution _does not_ leak in this way.

I was not able to resolve the leak in the below allocation test (tested with the 1.6 nightly) using any combination of `MKL_DISABLE_FAST_MM=1` or `OMP_NUM_THREADS=4`, so I believe this leak is distinct from other issues I came across trying to troubleshoot it.

## To Reproduce

Steps to reproduce the behavior:

1. On Linux, using any of PyTorch `1.5.1+cpu`, `1.6.0.dev20200625+cpu`, or `1.7.0.dev20200715+cpu`, run the below allocation_test.py script

1. Using `htop`, find the PID output near the top of the script. You should see that the resident set (`RES`) for this process in memory is near 1GB (on my machine, 946M).

1. Once it finishes the deallocation step, note that a very small amount of memory has been recovered, but most of it has not been.

1. Repeat these steps on MacOS and notice that the memory is almost entirely recovered (on my machine, a little under 100MB is left resident, and increasing the size of the test doesn't increase this much on MacOS).

```{python}

# allocation_test.py

import os

import torch

import torch.nn as nn

import gc

from time import sleep

print(os.getpid())

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

throwaway = torch.ones((1,1)).to(device) # load CUDA context

class Encoder(nn.Module):

    def __init__(self, input_dim, hidden_dim, n_layers, dropout_perc):

        super().__init__()

        self.hidden_dim, self.n_layers = (hidden_dim, n_layers)

        self.rnn = nn.LSTM(input_dim,hidden_dim,n_layers,dropout=dropout_perc)

    def forward(self,x):

        outputs, (hidden, cell) = self.rnn(x)

        return hidden, cell

print('allocating memory')

pile=[]

for i in range(1500):

    pile.append(Encoder(102,64,4,0.5).to(device))

print('waiting two seconds')

sleep(2)

print('hypothetically de-allocating memory')

del pile

gc.collect()

if torch.cuda.is_available():

    torch.cuda.empty_cache()

print('waiting forever')

while True:

    sleep(1)

```
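
The resident set size read from `htop` in the steps above can also be logged from inside the process. The following is a minimal sketch, assuming a Linux system where `/proc/<pid>/status` contains a `VmRSS` line; the helper names `parse_vm_rss_kib` and `current_rss_kib` are hypothetical and not part of PyTorch.

```python
import os


def parse_vm_rss_kib(status_text):
    """Return the VmRSS value (in KiB) from /proc/<pid>/status text, or None."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # The line looks like "VmRSS:    968704 kB".
            return int(line.split()[1])
    return None


def current_rss_kib():
    """Read this process's resident set size on Linux; None if unavailable."""
    path = f"/proc/{os.getpid()}/status"
    if not os.path.exists(path):
        return None
    with open(path) as handle:
        return parse_vm_rss_kib(handle.read())


# Illustrative status text (made-up numbers), followed by a live reading.
sample = "Name:\tpython\nVmPeak:\t 1200000 kB\nVmRSS:\t  968704 kB\n"
print(parse_vm_rss_kib(sample))
print(current_rss_kib())
```

Calling `current_rss_kib()` once after the allocation loop and once after `gc.collect()` would give the two numbers that the reproduction steps compare by eye.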

## Expected behavior

The MacOS behavior (near-immediate full recovery of all memory allocated by the LSTM module) is expected on Linux as well.

## Environment

Collecting environment information...

PyTorch version: 1.5.1+cpu

Is debug build: No

CUDA used to build PyTorch: Could not collect

OS: Ubuntu 18.04.4 LTS

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

CMake version: version 3.10.2

Python version: 3.8

Is CUDA available: No

CUDA runtime version: Could not collect

GPU models and configuration:

GPU 0: GeForce GTX 1080 Ti

GPU 1: GeForce GTX 1080 Ti

Nvidia driver version: 440.100

cuDNN version: /usr/local/cuda-9.2/targets/x86_64-linux/lib/libcudnn.so.7.2.1

Versions of relevant libraries:

[pip3] numpy==1.19.0

[pip3] torch==1.5.1+cpu

[conda] Could not collect

## Additional context

There is some other diagnostic information and discussion here:

https://discuss.pytorch.org/t/lstm-on-cpu-wont-release-memory-when-all-refs-deleted/89026

cc @ezyang @gchanan @zou3519 @VitalyFedyunin

|

high priority,module: rnn,module: cpu,module: memory usage,triaged

|

medium

|

Critical

|

657,582,430 |

rust

|

Emit noundef LLVM attribute

|

LLVM 11 introduces a new `noundef` attribute, with the following semantics:

> This attribute applies to parameters and return values. If the value representation contains any undefined or poison bits, the behavior is undefined. Note that this does not refer to padding introduced by the type’s storage representation.

In LLVM 11 itself it doesn't do anything yet, but this will become important in the future to reduce the impact of `freeze` instructions.

We need to figure out for which parameters / return values we can emit this attribute. We generally can't do so if any bits are unspecified, e.g. due to padding. More problematic for Rust is https://github.com/rust-lang/unsafe-code-guidelines/issues/71, i.e. the question of whether integers are allowed to contain uninitialized bits without going through something like MaybeUninit.

If we go with aggressive emission of noundef, we probably need to ~~punish~~ safe-guard `mem::uninitialized()` users with liberal application of `freeze`.

cc @RalfJung

|

A-LLVM,T-compiler

|

low

|

Major

|

657,592,151 |

pytorch

|

Make torch.iinfo/torch.finfo torchscriptable

|

It would be helpful for torch.iinfo, torch.finfo, and other dtype attributes like is_floating_point to be torch scriptable.

## Motivation

Information like `torch.iinfo(dtype).max` (and `dtype.is_floating_point`) can be helpful in image transformations, but can't be used in transformations that need to be scriptable.

E.g.:

https://github.com/pytorch/vision/blob/master/torchvision/transforms/functional.py#L164

## Alternatives

For `dtype.is_floating_point` we can work around this by using `torch.empty(0, dtype=dtype).is_floating_point()`, but I'm not aware of a scriptable alternative for getting other dtype info like `max`.
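
For integer types, one possible stopgap is to compute the bounds from the bit width with plain integer arithmetic. This is only a sketch under the assumption that the caller already knows the bit width and signedness of the dtype; the helper `int_bounds` is hypothetical, is not a PyTorch API, and does not cover floating-point limits.

```python
def int_bounds(bits, signed=True):
    """Return (min, max) for a two's-complement integer of the given width."""
    if signed:
        half = 1 << (bits - 1)
        return -half, half - 1
    return 0, (1 << bits) - 1


# Widths corresponding to uint8, int16 and int32.
print(int_bounds(8, signed=False))
print(int_bounds(16))
print(int_bounds(32))
```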

## Additional context

This came up as part of this PR:

https://github.com/pytorch/vision/pull/2459

cc @ezyang @gchanan @zou3519 @suo @gmagogsfm

|

triage review,oncall: jit,days

|

low

|

Minor

|

657,599,927 |

rust

|

Should include crate name in error message for E0391 (and perhaps others)

|

While doing a bootstrap build of the compiler with some other build artifacts lying around, I saw the following error message (a small snippet from the whole output):

```

[RUSTC-TIMING] cfg_if test:false 0.035

Compiling lock_api v0.3.4

[RUSTC-TIMING] scopeguard test:false 0.068

Compiling crossbeam-utils v0.6.5

Compiling tracing-core v0.1.10

Compiling log_settings v0.1.2

error[E0391]: cycle detected when running analysis passes on this crate

|

= note: ...which again requires running analysis passes on this crate, completing the cycle

error: aborting due to previous error

For more information about this error, try `rustc --explain E0391`.

error[E0391]: cycle detected when running analysis passes on this crate

|

= note: ...which again requires running analysis passes on this crate, completing the cycle

[RUSTC-TIMING] lazy_static test:false 0.079

error: aborting due to previous error

```

It is sub-optimal to say "on this crate" in a diagnostic like this.

Multiple crates can be compiled in parallel, and thus it can be ambiguous which crate a diagnostic like that is associated with.

In general it would be better to extract a crate name, if possible, and include that in the message, rather than using the simpler-but-potentially-ambiguous "on this crate."

|

A-diagnostics,T-compiler,C-bug

|

low

|

Critical

|

657,617,744 |

godot

|

[4.0] External changes to shader overwritten if builtin editor has ever shown that shader

|

**Godot version:**

4.0.dev.calinou.3ed5ff244

**OS/device including version:**

Windows 10.0.18362.900, NVIDIA / Vulkan

**Issue description:**

When a shader is changed from an external program, it is updated as expected. However, if the shader is opened in the text editor (double clicking the shader resource, or selecting it in a material), the original contents of the shader are displayed.

If the text editor is hidden/closed, the in-memory shader is replaced with the original version, even though the on-disk contents contain the external shader, and this state persists until the project is closed and later reopened. (When the project is closed or saved, the shader on disk is overwritten with an old copy.)

So basically, the behavior here depends on whether the shader code editor at the bottom has ever been viewed for a given shader (such as by expanding a ShaderMaterial) or not, within a given godot editor session.

**Steps to reproduce:**

(If using the attached project, open BUG.tscn and skip steps 1-2)

1. Create sprite with a simple shader, and save it to file:

```

shader_type canvas_item;

render_mode unshaded;

void fragment() {

    COLOR = vec4(1.0,1.0,0.0,1.0);

}

```

2. Quit to Project List and reopen (optional).

3. Open the shader in VS Code or another external text editor.

4. Open the scene, and observe the object is yellow.

5. In the external editor, modify the color to `vec4(0.0,1.0,0.0,1.0);` (green)

6. Alt-tab back to Godot, observe that the object is now green.

7. Double-click the shader, or expand the ShaderMaterial section of the inspector.

8. Observe that the code editor shows the new code: `vec4(0.0,1.0,0.0,1.0)` (green).

9. In the external editor, modify the color to `vec4(1.0,0.0,1.0,1.0);` (pink)

10. Alt-tab back to Godot, observe that the object is now pink.

11. Observe that the code editor still shows the previous code: `vec4(0.0,1.0,0.0,1.0)` (green).

12. Select the root Node2D in the scene, such that the code editor closes.

13. The object color has reverted to green.

14. Quit to Project List and reopen. (Alternatively hit ctrl-S to save all resources).

15. The object in the scene is still green.

16. Observe that the shader on disk is overwritten with the old version previously visible in the code editor: both the code editor and the external editor show `vec4(0.0,1.0,0.0,1.0);` (green)

---

17. This process can now be repeated indefinitely: undo and save the old shader in the external editor, and observe that it is reflected.

18. Hit ctrl-s in godot editor and the shader is reverted both in scene and on disk.

etc.

19. Quit to Project List and reopen.

20. Now, never open the shader in the text editor (do not select the shader or expand the ShaderMaterial).

21. Change the shader externally and save.

22. Open godot and observe that the shader is updated.

23. Hit ctrl-s in godot. The shader on disk is *not* reverted, and nothing changes in the scene. This is the same result we observed in steps 5-8 earlier.

**Minimal reproduction project:**

[ExternalEditTest.zip](https://github.com/godotengine/godot/files/4927636/ExternalEditTest.zip)

|

bug,topic:editor,topic:shaders

|

low

|

Critical

|

657,643,862 |

opencv

|

Moves for basic types have copy semantics

|

PR 11899 manually implemented moves for types like Point and Rect. std::move() leaves source POD values unchanged, so all this extra code has no apparent effect.

A better idiom would be e.g. `width(std::exchange(r.width, 0))`.

|

category: build/install,RFC

|

low

|

Minor

|

657,676,538 |

pytorch

|

Helping test example code blocks in the docs

|

## 📚 Documentation

<!-- A clear and concise description of what content in https://pytorch.org/docs is an issue. If this has to do with the general https://pytorch.org website, please file an issue at https://github.com/pytorch/pytorch.github.io/issues/new/choose instead. If this has to do with https://pytorch.org/tutorials, please file an issue at https://github.com/pytorch/tutorials/issues/new -->

Hello, I noticed this older issue https://github.com/pytorch/pytorch/issues/6662 is still open and looked through this PR https://github.com/pytorch/pytorch/pull/24435 about adding `doctest` to jit. If it would be helpful I can work on other parts of the docs to convert code blocks to use `doctest`. Currently it seems there are over 400 code blocks in the docs using the format `Example::` that are not being tested, e.g. a bunch are in this [file](https://github.com/pytorch/pytorch/blob/master/torch/_torch_docs.py).

cc @jlin27 @mruberry @VitalyFedyunin

|

module: docs,feature,module: tests,triaged

|

low

|

Major

|

657,734,703 |

vscode

|

Allow tooltips/hovering on symbols in outline view and breadcrumbs

|

<!-- ⚠️⚠️ Do Not Delete This! feature_request_template ⚠️⚠️ -->

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Describe the feature you'd like. -->

Currently, the information which breadcrumbs and the outline view can provide is limited to two strings: the title and the "detail" (breadcrumbs are not even able to display the detail string). For the underlying complexity of the information being presented, this seems quite limiting.

In order to optionally provide more information about a symbol (and without cluttering the UI), it would be really nice if symbols could at least provide a tooltip, through an extremely simple `DocumentSymbol.tooltip: string` property (basically identical to the underlying tooltip property of `TreeItem`).

If this feature were to be taken all the way, hovering over symbols in the breadcrumbs or outline view could display information about the symbol from the document's registered HoverProvider for the symbol's selectionRange. This could be enabled/disabled on a symbol-by-symbol basis (something along the lines of `DocumentSymbol.triggerhoverprovider: boolean`). This would make the breadcrumbs and outline view significantly more useful for understanding the structure of a document without fully diving into the code.

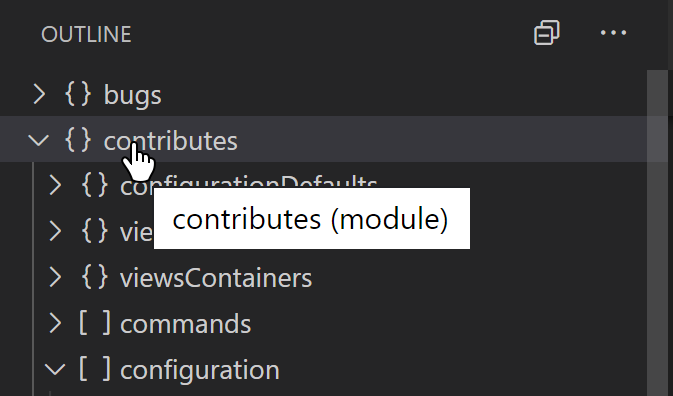

### Bad behaviour

*unhelpful, repetitive, ugly: i already know the name of the symbol, it's just there*

### Good behaviour

*helpful, useful, and pretty: the only way I could know what this part of the document does is by clicking the symbol and hovering over it in the editor*

DocumentSymbols help explain the structure of the code - why limit this explanation to the name, especially when there's more information about them so readily available?

|

feature-request,outline,breadcrumbs

|

low

|

Minor

|

657,738,751 |

pytorch

|

nn.MultiheadAttention causes gradients to become NaN under some use cases

|

## 🐛 Bug

Using key_padding_mask and attn_mask with nn.MultiheadAttention causes gradients to become NaN under some use cases.

## To Reproduce

Steps to reproduce the behavior:

Backwards pass through nn.MultiheadAttention layer where the forward pass used:

1. attn_mask limiting context in both directions (e.g. bucketed attention)

2. key_padding_mask where there is padding for at least one sequence (and there is also at least one valid entry for every sequence, as expected)

3. The dimensions that were masked are not used to calculate the loss

4. The loss is a real number (not NaN)

```python

import torch

torch.manual_seed(0)

'''Create attention layer'''

attn = torch.nn.MultiheadAttention(embed_dim=1, num_heads=1)

'''Create dummy input'''

x = torch.rand(3, 2, 1)

'''Padding mask, second sequence can only see first embedding'''

key_padding_mask = torch.as_tensor([[False, False, False], [False, True, True]], dtype=torch.bool)

'''Attention mask, bucketing attention to current and previous time steps'''

attn_mask = torch.as_tensor([[0., float('-inf'), float('-inf')], [0., 0., float('-inf')], [float('-inf'), 0., 0.]])

'''Generate attention embedding'''

output, scores = attn(x, x, x, key_padding_mask=key_padding_mask, attn_mask=attn_mask)

print("scores")

print(scores)

'''Create a dummy loss, only use the first embedding which is defined for all sequences'''

loss = output[0, :].sum()

print("loss")

print(loss)

'''Backwards pass and gradients'''

loss.backward()

print("grads")

for n, p in attn.named_parameters():

    print(n, p.grad)

> scores

> tensor([[[1.0000, 0.0000, 0.0000],

> [0.4468, 0.5532, 0.0000],

> [0.0000, 0.5379, 0.4621]],

> [[1.0000, 0.0000, 0.0000],

> [1.0000, 0.0000, 0.0000],

> [ nan, nan, nan]]], grad_fn=<DivBackward0>)

> loss

> tensor(0.0040, grad_fn=<SumBackward0>)

> grads

> in_proj_weight tensor([[nan],

> [nan],

> [nan]])

> in_proj_bias tensor([nan, nan, nan])

> out_proj.weight tensor([[nan]])

> out_proj.bias tensor([2.])

```
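
The `nan` row in `scores` corresponds to a query whose keys are all masked once `attn_mask` and `key_padding_mask` are combined. The sketch below reproduces that effect with a plain-Python softmax; it is an illustration of the arithmetic only, not PyTorch's implementation, and the logit values are arbitrary. A row of NaN attention weights like this is a plausible source of the NaN gradients, even though the loss itself does not use that row.

```python
import math

NEG_INF = float("-inf")


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    # If every entry is -inf, (-inf) - (-inf) is NaN and the NaN propagates.
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [value / total for value in exps]


# Third query of the second sequence: attn_mask row plus key padding.
attn_mask_row = [NEG_INF, 0.0, 0.0]    # may not attend to the first key
padding_row = [0.0, NEG_INF, NEG_INF]  # second and third keys are padding
logits = [0.3, 0.1, 0.2]               # arbitrary illustrative scores

masked = [l + a + p for l, a, p in zip(logits, attn_mask_row, padding_row)]
print(softmax(masked))

# A query that still has one visible key gets a well-defined distribution.
print(softmax([0.3, NEG_INF, NEG_INF]))
```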

## Expected behavior

Gradients should not be NaN

## Environment

PyTorch version: 1.5.1

Is debug build: No

CUDA used to build PyTorch: None

OS: Ubuntu 18.04.4 LTS

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

CMake version: version 3.10.2

Python version: 3.7

Is CUDA available: No

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

Versions of relevant libraries:

[pip3] numpy==1.18.5

[pip3] torch==1.5.1

[conda] blas 1.0 mkl

[conda] cpuonly 1.0 0 pytorch

[conda] mkl 2020.1 217

[conda] mkl-service 2.3.0 py37he904b0f_0

[conda] mkl_fft 1.1.0 py37h23d657b_0

[conda] mkl_random 1.1.1 py37h0573a6f_0

[conda] numpy 1.18.5 py37ha1c710e_0

[conda] numpy-base 1.18.5 py37hde5b4d6_0

[conda] pytorch 1.5.1 py3.7_cpu_0 [cpuonly] pytorch

Also fails when using GPU.

cc @ezyang @gchanan @zou3519 @bdhirsh @jbschlosser @albanD @mruberry @zhangguanheng66

|

high priority,module: nn,triaged,module: NaNs and Infs

|

high

|

Critical

|

657,753,167 |

pytorch

|

Tensor.new_tensor is not supported

|

This comes up as a common error in Parity Bench. (scripting)

Here is the error message:

```

Tried to access nonexistent attribute or method 'new_tensor' of type 'Tensor (inferred)'.:

File ""/tmp/paritybench1zfkgifq/pbqmuageme.py"", line 15

:param x: Long tensor of size ``(batch_size, num_fields)``

""""""

x = x + x.new_tensor(self.offsets).unsqueeze(0)

~~~~~~~~~~~~ <--- HERE

xs = [self.embeddings[i](x) for i in range(self.num_fields)]

ix = list()

```

[Concrete example](https://github.com/jansel/pytorch-jit-paritybench/blob/570400b612332ecaec0cf850cdc70a6d4ddc374b/generated/test_rixwew_pytorch_fm.py#L126)

cc @suo @gmagogsfm

|

oncall: jit,days,TSUsability,TSRootCause:BetterEngineering

|

low

|

Critical

|

657,754,260 |

pytorch

|

Python object None check not supported

|

This comes up as a common error in Parity Bench.

It is common to check for the None-ness of an object to determine next steps of calculation in a dynamic model. But this is not supported well in JIT today.

Error message:

```

compile,97,RuntimeError: Could not cast value of type __torch__.torch.nn.modules.instancenorm.InstanceNorm2d to bool:,"RuntimeError:

Could not cast value of type __torch__.torch.nn.modules.instancenorm.InstanceNorm2d to bool:

File ""/tmp/paritybench819sw5wj/pbzmu661cu.py"", line 41

def forward(self, x):

x = self.conv(self.pad(x))

if self.norm:

~~~~~~~~~ <--- HERE

x = self.norm(x)

if self.activation:

```

Concrete [example](https://github.com/jansel/pytorch-jit-paritybench/blob/570400b612332ecaec0cf850cdc70a6d4ddc374b/generated/test_junyanz_VON.py#L685)
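
The error message points at the conversion of the module to `bool`. An explicit `is not None` comparison states the same intent without any such conversion. The following pure-Python sketch only illustrates the difference between truthiness and a None check; the classes `Block` and `EmptyContainer` are hypothetical, and whether a given TorchScript version accepts this form for module attributes should be verified separately.

```python
class Block:
    """Hypothetical stand-in for a module with an optional sub-layer."""

    def __init__(self, norm=None):
        self.norm = norm

    def forward(self, x):
        # Explicit comparison: no bool() conversion of self.norm is needed.
        if self.norm is not None:
            x = self.norm(x)
        return x


class EmptyContainer:
    """Falsy but not None, like an empty container-style layer."""

    def __len__(self):
        return 0

    def __call__(self, x):
        return x + 1


print(Block().forward(1))
print(Block(EmptyContainer()).forward(1))
print(bool(EmptyContainer()))
```

With `if self.norm:` the second call would skip the layer, because an object whose `__len__` returns 0 is falsy even though it is not None.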

cc @suo @gmagogsfm

|

oncall: jit,days,TSUsability,TSRootCause:UnsupportedConstructs

|

low

|

Critical

|

657,768,330 |

rust

|

LLVM unrolls loops fully, leading to non-linear compilation time

|

<!--

Thank you for filing a bug report! 🐛 Please provide a short summary of the bug,

along with any information you feel relevant to replicating the bug.

-->

I tried this code:

```rust

#[derive(Copy, Clone)]

pub enum Foo {

    A,

    B(u8),

}

pub fn foo() -> Box<[[[Foo; 50]; 50]; 50]> {

    Box::new([[[Foo::A; 50]; 50]; 50])

}

```

I expected to see this happen:

```sh

cargo build --release

```

(Above command eventually should terminate)

Instead, this happened: Compiler doesn't terminate.

### Meta

<!--

If you're using the stable version of the compiler, you should also check if the

bug also exists in the beta or nightly versions.

-->

`rustc --version --verbose`:

```

rustc 1.44.1 (c7087fe00 2020-06-17)

rustc 1.46.0-nightly (346aec9b0 2020-07-11)

```

A crate with the repro can be found here: https://github.com/io12/llvm-rustc-bug-repro.

It seems like this is an LLVM bug.

|

A-LLVM,P-medium,T-compiler,C-bug,I-hang,ICEBreaker-LLVM

|

medium

|

Critical

|

657,830,774 |

godot

|

Input Map action doesn't support two directions of same joy axis

|

**Godot version:**

3.2.2

**OS/device including version:**

Windows 10

**Issue description:**

If you set both directions of the same joy axis of the same device to one action, `Input.is_action_pressed` is only true when the first joy axis direction is pressed.

**Steps to reproduce:**

1. Add a new action `test` to the Input Map

2. Add Joy Axis `Device 0, Axis 0 - (Left Stick Left)` to `test`

3. Add Joy Axis `Device 0, Axis 0 + (Left Stick Right)` to `test`

4. On `_process` check for `Input.is_action_pressed("test")`

**Minimal reproduction project:**

[InputMapBug.zip](https://github.com/godotengine/godot/files/4929246/InputMapBug.zip)

|

topic:input

|

low

|

Critical

|

657,862,693 |

godot

|

[Mono] 3.2.1 don't work virtual method

|

<!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:**

<!-- Specify commit hash if using non-official build. -->

3.2.1

**OS/device including version:**

<!-- Specify GPU model, drivers, and the backend (GLES2, GLES3, Vulkan) if graphics-related. -->

Windows 10

**Issue description:**

<!-- What happened, and what was expected. -->

The problem occurs when compiling Godot with Zylann's voxel module. Notably, Godot does not compile the module's virtual methods and mark them as virtual. Because of this, I cannot use proper inheritance to create my own stream for generation.

Issue: https://github.com/Zylann/godot_voxel/issues/81

**Steps to reproduce:**

Compile Godot with this module and create your own subclass of VoxelStream.

|

topic:dotnet

|

low

|

Minor

|

657,897,862 |

pytorch

|

c++ indexing vs python

|

## 🐛 Bug

I am working on translating PyTorch code to a C++ environment.

In the Python API, a tensor can be indexed with tensors of indices along different dimensions, which changes the shape of the output tensor.

This feature is not supported in the C++ frontend.

Steps to reproduce the behavior:

In PyTorch:

1. tensor = torch.arange(25*4*96*170).reshape(25,4,96,170)

2. a = torch.arange(25)

3. x=a

4. y=a

5. output = tensor[a, :, y, x]

output shape is: [25,4]

I thought about using the torch::index function, but it fails when the number of dimensions is larger than 3.

How do I produce the same behavior in the C++ frontend?
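
For reference, the indexing expression above selects `output[i][c] = tensor[a[i]][c][y[i]][x[i]]`, which is why the result has shape `[25, 4]`. The sketch below spells out that semantics with nested Python lists and no dependencies, using smaller dimensions than the original; `make_tensor` and `gather_rows` are hypothetical helper names. An explicit loop of this form could serve as a fallback when porting, although it says nothing about which C++ API call is the right one.

```python
def make_tensor(n, c, h, w):
    """Nested-list stand-in for arange(n*c*h*w).reshape(n, c, h, w)."""
    return [[[[((i * c + j) * h + k) * w + l for l in range(w)]
              for k in range(h)]
             for j in range(c)]
            for i in range(n)]


def gather_rows(tensor, a, y, x):
    """Emulate tensor[a, :, y, x] for equal-length index lists a, y, x."""
    channels = len(tensor[0])
    return [[tensor[a[i]][ch][y[i]][x[i]] for ch in range(channels)]
            for i in range(len(a))]


tensor = make_tensor(5, 4, 6, 7)  # smaller than the 25x4x96x170 case above
a = list(range(5))
output = gather_rows(tensor, a, a, a)
print(len(output), len(output[0]))
```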

- PyTorch Version (e.g., 1.0): torch==1.3.0

- OS (e.g., Linux): Linux

- Python version: Python 3.6.8

cc @yf225 @glaringlee

|

module: cpp,triaged

|

low

|

Critical

|

657,959,278 |

next.js

|

Inconsistent css import order between Prod and Dev for Material-UI

|

# Bug report

## Describe the bug

When using Material-UI components, the import order of the related CSS within the output HTML is not stable. This produces inconsistent styling due to inconsistent overrides.

This is affected by three different situations:

1. Whether we are using destructuring to import the components or direct imports

```tsx

import Button from '@material-ui/core/Button'

import Typography from '@material-ui/core/Typography'

```

gives different results than

```tsx

import { Button, Typography } from '@material-ui/core'

```

2. The order of direct imports

```tsx

import Button from '@material-ui/core/Button'

import Typography from '@material-ui/core/Typography'

```

gives different results than

```tsx

import Typography from '@material-ui/core/Typography'

import Button from '@material-ui/core/Button'

```

3. Prod vs dev

`yarn dev` gives different results than `yarn build && yarn start`

## To Reproduce

Steps to reproduce the behavior, please provide code snippets or a repository:

1. Clone https://github.com/karpkarp/mui-next-repo

2. Run in Dev vs Prod to see difference

3. Change component import in `/src/SideNav.component.tsx` and run in Prod to see difference

change

```tsx

import { Button, Typography } from '@material-ui/core'

```

to

``` tsx

import Button from '@material-ui/core/Button'

import Typography from '@material-ui/core/Typography'

```

4. Change import order and run in Prod to see difference

```tsx

import Button from '@material-ui/core/Button'

import Typography from '@material-ui/core/Typography'

```

to

```tsx

import Typography from '@material-ui/core/Typography'

import Button from '@material-ui/core/Button'

```

## Expected behavior

The CSS load order in the HTML is expected to be static. The lack of a static order makes development tedious and styling overrides unpredictable.

## Screenshots

Using dev

Using prod

## System information

- OS: MacOS

- Browser: Chrome

- Version of Next.js: 9.4.4

- Version of Node.js: v10.16.0

|

bug,Webpack

|

low

|

Critical

|

657,963,834 |

pytorch

|

torch.nn.functional.grid_sample()is doing bilinear interpolation when the input is 5D, i think the mode should add 'trilinear'

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

## To Reproduce

Steps to reproduce the behavior:

1.

1.

1.

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

Please copy and paste the output from our

[environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(or fill out the checklist below manually).

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch Version (e.g., 1.0):

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, source):

- Build command you used (if compiling from source):

- Python version:

- CUDA/cuDNN version:

- GPU models and configuration:

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

cc @ezyang @gchanan @zou3519 @jlin27 @albanD @mruberry

|

module: nn,triaged

|

low

|

Critical

|

657,996,513 |

flutter

|

Implement PrimaryScrollController inside CupertinoTabScaffold

|

iOS native widgets have two features that CupertinoTabScaffold doesn't support yet.

- Scroll to the top when the status bar is tapped. (Currently this is only possible with the Material Scaffold.)

- Scroll to the top when the active tab inside the CupertinoTabBar is tapped again.

I would like to propose adding a `PrimaryScrollController` that handles both cases. The implementation would be very similar to the one in Scaffold [here](https://github.com/flutter/flutter/blob/9c4a5ef1ed2bc88960fbf3b04d7bafd1c630414b/packages/flutter/lib/src/material/scaffold.dart#L2117).

I would be able to open a PR if you are interested in implementing this.

<img height="400" src="https://user-images.githubusercontent.com/19904063/87650810-891f5880-c752-11ea-91ef-f57016af9047.gif" />

|

c: new feature,framework,a: fidelity,f: scrolling,f: cupertino,c: proposal,P3,team-design,triaged-design

|

low

|

Minor

|

658,055,781 |

tensorflow

|

tf.keras cannot weight classes when using multiple outputs

|

This post is a mirror of https://github.com/keras-team/keras/issues/11735, showing the need to handle class weight for multiple outputs.

Version 2.2.0 used.

------

This is a minimal source code, by @GalAvineri, to reproduce the issue (please comment/uncomment the class weight line):

````python3

from tensorflow.python.keras.models import Model

from tensorflow.python.keras.layers import Input, Dense

from tensorflow.python.data import Dataset

import tensorflow as tf

import numpy as np

def preprocess_sample(features, labels):

    label1, label2 = labels

    label1 = tf.one_hot(label1, 2)

    label2 = tf.one_hot(label2, 3)

    return features, (label1, label2)

batch_size = 32

num_samples = 1000

num_features = 10

features = np.random.rand(num_samples, num_features)

labels1 = np.random.randint(2, size=num_samples)

labels2 = np.random.randint(3, size=num_samples)

train = Dataset.from_tensor_slices((features, (labels1, labels2))).map(preprocess_sample).batch(batch_size).repeat()

# Model

inputs = Input(shape=(num_features, ))

output1 = Dense(2, activation='softmax', name='output1')(inputs)

output2 = Dense(3, activation='softmax', name='output2')(inputs)

model = Model(inputs, [output1, output2])

model.compile(loss='categorical_crossentropy', optimizer='adam')

class_weights = {'output1': {0: 1, 1: 10}, 'output2': {0: 5, 1: 1, 2: 10}}

model.fit(train, epochs=10, steps_per_epoch=num_samples // batch_size,
          # class_weight=class_weights
          )

````

Uncommenting yields this error:

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-38-d137ff6fb3f9> in <module>

33 class_weights = {'output1': {0: 1, 1: 10}, 'output2': {0: 5, 1: 1, 2: 10}}

34 model.fit(train, epochs=10, steps_per_epoch=num_samples // batch_size,

---> 35 class_weight=class_weights

36 )

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py in _method_wrapper(self, *args, **kwargs)

64 def _method_wrapper(self, *args, **kwargs):

65 if not self._in_multi_worker_mode(): # pylint: disable=protected-access

---> 66 return method(self, *args, **kwargs)

67

68 # Running inside `run_distribute_coordinator` already.

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

813 workers=workers,

814 use_multiprocessing=use_multiprocessing,

--> 815 model=self)

816

817 # Container that configures and calls `tf.keras.Callback`s.

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/data_adapter.py in __init__(self, x, y, sample_weight, batch_size, steps_per_epoch, initial_epoch, epochs, shuffle, class_weight, max_queue_size, workers, use_multiprocessing, model)

1115 dataset = self._adapter.get_dataset()

1116 if class_weight:

-> 1117 dataset = dataset.map(_make_class_weight_map_fn(class_weight))

1118 self._inferred_steps = self._infer_steps(steps_per_epoch, dataset)

1119 self._dataset = strategy.experimental_distribute_dataset(dataset)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/data_adapter.py in _make_class_weight_map_fn(class_weight)

1233 "Expected `class_weight` to be a dict with keys from 0 to one less "

1234 "than the number of classes, found {}").format(class_weight)

-> 1235 raise ValueError(error_msg)

1236

1237 class_weight_tensor = ops.convert_to_tensor_v2(

ValueError: Expected `class_weight` to be a dict with keys from 0 to one less than the number of classes, found {'output1': {0: 1, 1: 10}, 'output2': {0: 5, 1: 1, 2: 10}}

````
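A possible workaround, sketched under assumptions rather than confirmed against this TensorFlow version: since the check in `_make_class_weight_map_fn` only accepts a flat dict keyed `0..n-1`, the per-output class weights can be translated into per-sample weights before one-hot encoding. The helper name `per_output_sample_weights` is hypothetical, and whether `fit` accepts one weight array per output (for example as a third element of each dataset item) is an assumption that needs checking.

```python
def per_output_sample_weights(labels_by_output, class_weights_by_output):
    """Map integer class labels to per-sample weights, one list per output.

    Hypothetical helper: it only does the lookup and makes no Keras calls.
    """
    weights = {}
    for name, labels in labels_by_output.items():
        table = class_weights_by_output[name]
        # Each sample receives the weight assigned to its class id.
        weights[name] = [float(table[int(c)]) for c in labels]
    return weights


class_weights = {'output1': {0: 1, 1: 10}, 'output2': {0: 5, 1: 1, 2: 10}}

# Integer labels as they are before the one-hot step in preprocess_sample.
labels = {'output1': [0, 1, 1], 'output2': [2, 0, 1]}

sample_weights = per_output_sample_weights(labels, class_weights)
```

The lookup has to run on the integer labels (`labels1`, `labels2` in the reproduction), because after `tf.one_hot` the class id is no longer directly available.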

|

stat:awaiting tensorflower,type:bug,comp:keras,TF 2.5

|

high

|

Critical

|

658,076,441 |

TypeScript

|

Cast Method of a class to a certain type

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker.

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ, especially the "Common Feature Requests" section: https://github.com/Microsoft/TypeScript/wiki/FAQ

-->

## Search Terms

casting

type

interface

method

function

parameters

inference

class

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

## Suggestion

<!-- A summary of what you'd like to see added or changed -->

Currently, there is no way to give a class method its parameter types and return type from an existing function type.

The goal is to declare a method without repeating the parameter types and the return type. I used a TypeScript `type` alias for that.

But there is currently no way to do this without changing the compiled JavaScript code.

type IBar = (x:number, y: number)=>number;

I tried

    type IBar = (x:number, y: number)=>number;

    class Foo {
        sum:IBar=(x,y)=>{
            return x+y;
        }
    }

Unfortunately, this changes the compiled JavaScript to:

    class Foo {
        constructor() {
            this.sum = (x, y) => {
                return x + y;
            };
        }
    }

I am looking for something that compiles into:

    class Foo {
        sum(x, y) {
            return x + y;
        }
    }

Another option currently is to declare an interface and implement it on the class like so:

```

interface IBar { sum(x: number, y: number): number; }

class Foo implements IBar {
    public sum(x: number, y: number): number {
        return x + y;
    }
}

```

However, this approach binds the method name to `sum` only. What if I want to use the same method type under a different name, like `divide` or `multiply`?

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

It would be used like this:

    class Foo {
        sum(x,y){
            return x+y;
        } as IBar
    }

## Examples

    class Foo {
        sum(x,y){
            return x+y;
        } as IBar
    }

or

    class Foo {
        divide(x,y){
            return x/y;
        } as IBar
    }

or

    class Foo {
        multiply(x,y){
            return x*y;
        } as IBar
    }

<!-- Show how this would be used and what the behavior would be -->

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

|

Suggestion,Awaiting More Feedback

|

low

|

Critical

|

658,078,322 |

flutter

|

Support custom ADB sockets while debugging

|

Hi,

I am working with a vscode devcontainer that contains the flutter installation.

My environment is Windows 10 with WSL2 and Docker (WSL2-backend). The devcontainer runs on this WSL2-backend-Docker instance.

I first opened https://github.com/Dart-Code/Dart-Code/issues/2640, but it appears to be a problem with Flutter itself.

Because it is currently not possible to attach USB devices directly in WSL2, ADB in the devcontainer needs to communicate with the ADB on Windows.