id

int64 393k

2.82B

| repo

stringclasses 68

values | title

stringlengths 1

936

| body

stringlengths 0

256k

⌀ | labels

stringlengths 2

508

| priority

stringclasses 3

values | severity

stringclasses 3

values |

|---|---|---|---|---|---|---|

617,453,878 |

TypeScript

|

Much better comment preservation

|

some comments are removed (even even removeComments to false)

**TypeScript Version:** Version 3.9.1-rc

**Search Terms:**

Much better comment preservation

**Code**

```ts

// my comment 1

import { something } from './somefile.js';

// my comment 2

declare let test1: something;

let test = new something( {} );

// also

let item = [

// my comment 3

{ a: 0 },

{ a: 0 },

{ a: 0 },

// my comment 4

];

```

```js

**Expected behavior:**

// my comment 1

import { something } from './somefile.js';

// my comment 2

let test = new something( {} );

// also

let item = [

// my comment 3

{ a: 0 },

{ a: 0 },

{ a: 0 },

// my comment 4

];

```

**Actual behavior:**

```js

// my comment 1

import { something }from '/lib/backend/./somefile.js';

let test = new something({});

// also

let item = [

// my comment 3

{ a: 0 },

{ a: 0 },

{ a: 0 },

];

```

**Related Issues:**

sound like previous bugs report but not.

#1665

#16727

|

Needs Investigation

|

low

|

Critical

|

617,471,220 |

go

|

cmd/compile: generated DWARF triggers objdump warning for large uint64 const

|

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.2 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes.

### What did you do?

The Go compiler is emitting DWARF information for certain constants that is causing warnings from 'objdump --dwarf', details below. This is a comparatively minor problem (since as far as I can tell the debugging experience is not impacted) but the warning makes it a pain to read objdump output while looking for other problems (as a Go developer, not a Go user).

Code:

```

package main

import "fmt"

const neg64 uint64 = 1 << 63

func main() {

fmt.Println(neg64)

}

```

Symptoms:

```

$ go build neg64.go

$ objdump --dwarf=info neg64 > /dev/null

objdump: Error: LEB value too large

objdump: Error: LEB value too large

objdump: Error: LEB value too large

objdump: Error: LEB value too large

objdump: Error: LEB value too large

objdump: Error: LEB value too large

```

### What did you expect to see?

clean objdump output with no warnings

### What did you see instead?

LEB value too large warnings.

DWARF output excerpt:

```

<1><79f2b>: Abbrev Number: 9 (DW_TAG_constant)

<79f2c> DW_AT_name : main.neg64

<79f37> DW_AT_type : <0x3bf51>

<79f3b> DW_AT_const_value :objdump: Error: LEB value too large

-9223372036854775808

```

where the type in question is listed as

```

<1><3bf51>: Abbrev Number: 27 (DW_TAG_base_type)

<3bf52> DW_AT_name : uint64

<3bf59> DW_AT_encoding : 7 (unsigned)

<3bf5a> DW_AT_byte_size : 8

<3bf5b> Unknown AT value: 2900: 11

<3bf5c> Unknown AT value: 2904: 0xdec0

```

I think what's happening here is that the abbrev entry looks like this:

```

DW_TAG_constant [no children]

DW_AT_name DW_FORM_string

DW_AT_type DW_FORM_ref_addr

DW_AT_const_value DW_FORM_sdata

DW_AT value: 0 DW_FORM value: 0

```

Note the DW_FORM_sdata payload -- this is a signed value. I am assuming that objdump is interpreting this combination as something along the lines of "take this signed value and put it into an 8-byte signed container" (at which point it decides it can't fit).

A possible workaround would be to use a different abbrev with FORM_udata instead.

It's also arguable that we should instead file a binutils bug and ask them to change the objdump behavior -- you can make case that the Go DWARF is ok and it should be getting the "signedness" from the destination type and not the form (just depends on how you interpret things).

|

NeedsInvestigation,compiler/runtime

|

low

|

Critical

|

617,475,820 |

rust

|

clippy is not installed to destination dir if 'tools' config variable not explicitly defined.

|

Found whilst performing a extended tools release bootstrap build on aarch64:

Unless 'tools' is explicitly specified in config.toml with an extended build config, clippy is not installed, it is explicitly skipped as it does not appear in builder.config.tools (src/install.rs, Line: Line: 158, Line: 222)

Clippy is actually built for stage 2, but not installed by install.py.

Of the default extended tools built when extended-tools are enabled, only clippy seems to behave like this.

If this is intended behaviour, then a note in the config.toml indicating this would be helpful :-)

This occurs using the default channel (dev) in config.toml, constructed only using ./configure --enable-full-tools --prefix=$RUST_TOOLCHAIN_DIR --sysconfdir=$RUST_TOOLCHAIN_DIR on aarch64 native host.

<!-- TRIAGEBOT_START -->

<!-- TRIAGEBOT_ASSIGN_START -->

<!-- TRIAGEBOT_ASSIGN_DATA_START$${"user":null}$$TRIAGEBOT_ASSIGN_DATA_END -->

<!-- TRIAGEBOT_ASSIGN_END -->

<!-- TRIAGEBOT_END -->

|

T-bootstrap

|

low

|

Major

|

617,531,113 |

flutter

|

GeneratedPluginRegistrant.java still no re-generated using FlutterEngine still using PluginRegistry

|

I have a real project that already published on Play Store and App Store, but when I want to use [file_picker](https://pub.dev/packages/file_picker) it's giving me an [error](https://github.com/miguelpruivo/flutter_file_picker/issues/225).

As mentioned, the solution it's to `upgrading pre 1.12 Android projects` and I follow this [instruction](https://github.com/flutter/flutter/wiki/Upgrading-pre-1.12-Android-projects).

I also already add

```

<meta-data

android:name="flutterEmbedding"

android:value="2" />

```

Already update splash screen behavior and etc. Also on MainActivity.kt use this code.

```

package com.rifafauzi.fluttertestcamera

import androidx.annotation.NonNull;

import io.flutter.embedding.android.FlutterActivity

import io.flutter.embedding.engine.FlutterEngine

import io.flutter.plugins.GeneratedPluginRegistrant

class MainActivity: FlutterActivity() {

override fun configureFlutterEngine(@NonNull flutterEngine: FlutterEngine) {

GeneratedPluginRegistrant.registerWith(flutterEngine);

// My logic native side here

}

}

```

But after I upgrading that, I get the error too:

```

Type mismatch: inferred type is FlutterEngine but PluginRegistry! was expected

```

That because of my `GeneratedPluginRegistrant` not re-generate so still using `PluginRegistry` not using `FlutterEngine` like in `pre 1.12 Android projects` or if we create `new project` will generating `GeneratedPluginRegistrant` with `FlutterEngine` not with `PluginRegistry`.

So my question is, how to re-generate `GeneratedPluginRegistrant` class so I can use new classic with `FlutterEngine`?

Fyi, I already run `flutter clean`, `clean project`, `rebuild project`, and also `Invalidate Cache and Restart` but still not re-generate.

|

platform-android,engine,a: quality,P2,team-android,triaged-android

|

low

|

Critical

|

617,553,927 |

go

|

runtime/race: potential false positive from race detector

|

I've gone over this so many times, thinking I must've missed something, but I do believe I have found a case where the race detector returns a false positive (ie data race where there really isn't a data race). It seems to be something that happens when writing to a channel in a `select-case` statement directly.

The unit tests trigger the race detector, even though I'm ensuring all calls accessing the channel have been made using a callback and a waitgroup.

I have the channels in a map, which I access through a mutex. The data race vanishes the moment I explicitly remove the type that holds the channel from this map. The only way I am able to do is because the mutex is released, so once again: I'm certain everything behaves correctly. Code below

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.2 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/XXXX/.cache/go-build"

GOENV="/home/XXXX/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOINSECURE=""

GONOPROXY=""

GONOSUMDB=""

GOOS="linux"

GOPATH="/home/XXXX/go"

GOPRIVATE=""

GOPROXY="direct"

GOROOT="/usr/lib/golang"

GOSUMDB="off"

GOTMPDIR=""

GOTOOLDIR="/usr/lib/golang/pkg/tool/linux_amd64"

GCCGO="gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD="/home/XXXX/projects/datarace/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build729449265=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?

I'm writing a simple message event bus where the broker pushes data onto a channel of its subscribers/consumers. If the channel buffer is full, I don't want the broker to block, so I'm using routines, and a `select` statement to skip writes to a channel with a full buffer. To make life easier WRT testing, I'm mocking a subscriber interface, and I'm exposing the channels through functions (similar to `context.Context.Done()` and the like).

My tests all pass, and everything behaves as expected. However, running the same tests with the race detector, I'm getting what I believe to be a false positive. I have a test where I send data to a subscriber that isn't consuming the messages. The channel buffer is full, and I want to ensure that the broker doesn't block. To make sure I've tried to send all data, I'm using a waitgroup to check if the subscriber has indeed been accessed N number of times (where N is the number of events I'm sending). Once the waitgroup is done, I validate what data is on the channel, make sure it's empty, and then close it. The statement where I close the channel is marked as a data race.

If I do the exact same thing, but remove the subscriber from the broker, the data race magically is no more. Here's the code to reproduce the issue:

broker.go

```go

package broker

import (

"context"

"log"

"sync"

)

//go:generate go run github.com/golang/mock/mockgen -destination mocks/sub_mock.go -package mocks my.pkg/race/broker Sub

type Sub interface {

C() chan<- interface{}

Done() <-chan struct{}

}

type Broker struct {

mu sync.Mutex

ctx context.Context

subs map[int]Sub

keys []int

}

func New(ctx context.Context) *Broker {

return &Broker{

ctx: ctx,

subs: map[int]Sub{},

keys: []int{},

}

}

func (b *Broker) Send(v interface{}) {

b.mu.Lock()

go func() {

rm := make([]int, 0, len(b.subs))

defer func() {

if len(rm) > 0 {

b.unsub(rm...)

}

b.mu.Unlock()

}()

for k, s := range b.subs {

select {

case <-b.ctx.Done():

return

case <-s.Done():

rm = append(rm, k)

case s.C() <- v:

continue

default:

log.Printf("Skipped sub %d", k)

}

}

}()

}

func (b *Broker) Subscribe(s Sub) int {

b.mu.Lock()

k := b.key()

b.subs[k] = s

b.mu.Unlock()

return k

}

func (b *Broker) Unsubscribe(k int) {

b.mu.Lock()

b.unsub(k)

b.mu.Unlock()

}

func (b *Broker) key() int {

if len(b.keys) > 0 {

k := b.keys[0]

b.keys = b.keys[1:]

return k

}

return len(b.subs) + 1

}

func (b *Broker) unsub(keys ...int) {

for _, k := range keys {

if _, ok := b.subs[k]; !ok {

return

}

delete(b.subs, k)

b.keys = append(b.keys, k)

}

}

```

broker_test.go

```go

package broker_test

import (

"context"

"sync"

"testing"

"my.pkg/race/broker"

"my.pkg/race/broker/mocks"

"github.com/golang/mock/gomock"

"github.com/tj/assert"

)

type tstBroker struct {

*broker.Broker

cfunc context.CancelFunc

ctx context.Context

ctrl *gomock.Controller

}

func getBroker(t *testing.T) *tstBroker {

ctx, cfunc := context.WithCancel(context.Background())

ctrl := gomock.NewController(t)

return &tstBroker{

Broker: broker.New(ctx),

cfunc: cfunc,

ctx: ctx,

ctrl: ctrl,

}

}

func TestRace(t *testing.T) {

broker := getBroker(t)

defer broker.Finish()

sub := mocks.NewMockSub(broker.ctrl)

cCh, dCh := make(chan interface{}, 1), make(chan struct{})

vals := []interface{}{1, 2, 3}

wg := sync.WaitGroup{}

wg.Add(len(vals))

sub.EXPECT().Done().Times(len(vals)).Return(dCh)

sub.EXPECT().C().Times(len(vals)).Return(cCh).Do(func() {

wg.Done()

})

k := broker.Subscribe(sub)

assert.NotZero(t, k)

for _, v := range vals {

broker.Send(v)

}

wg.Wait()

// I've tried to send all 3 values, channels should be safe to close now

close(dCh)

// channel had buffer of 1, so first value should be present

assert.Equal(t, vals[0], <-cCh)

// other values should be skipped due to default select

assert.Equal(t, 0, len(cCh))

close(cCh)

}

func TestNoRace(t *testing.T) {

broker := getBroker(t)

defer broker.Finish()

sub := mocks.NewMockSub(broker.ctrl)

cCh, dCh := make(chan interface{}, 1), make(chan struct{})

vals := []interface{}{1, 2, 3}

wg := sync.WaitGroup{}

wg.Add(len(vals))

sub.EXPECT().Done().Times(len(vals)).Return(dCh)

sub.EXPECT().C().Times(len(vals)).Return(cCh).Do(func() {

wg.Done()

})

k := broker.Subscribe(sub)

assert.NotZero(t, k)

for _, v := range vals {

broker.Send(v)

}

wg.Wait()

// I've tried to send all 3 values, channels should be safe to close now

close(dCh)

// channel had buffer of 1, so first value should be present

assert.Equal(t, vals[0], <-cCh)

// other values should be skipped due to default select

assert.Equal(t, 0, len(cCh))

// add this line, and data race magically vanishes

broker.Unsubscribe(k)

close(cCh)

}

func (b *tstBroker) Finish() {

b.cfunc()

b.ctrl.Finish()

}

```

See the data race by running: `go test -v -race ./broker/... -run TestRace`

### What did you expect to see?

I expect to see log output showing that the subscriber was skipped twice (output I do indeed see), and *no* data race

### What did you see instead?

I still saw the code behaved as expected, but I do see a data race reported:

```

go test -v -race ./broker/... -run TestRace

=== RUN TestRace

2020/05/13 16:24:06 Skipped sub 1

2020/05/13 16:24:06 Skipped sub 1

==================

WARNING: DATA RACE

Write at 0x00c00011a4f0 by goroutine 7:

runtime.closechan()

/usr/lib/golang/src/runtime/chan.go:335 +0x0

my.pkg/race/broker_test.TestRace()

/home/XXXX/projects/race/broker/stuff_test.go:56 +0x7c8

testing.tRunner()

/usr/lib/golang/src/testing/testing.go:991 +0x1eb

Previous read at 0x00c00011a4f0 by goroutine 10:

runtime.chansend()

/usr/lib/golang/src/runtime/chan.go:142 +0x0

my.pkg/race/broker.(*Broker).Send.func1()

/home/XXXX/projects/race/broker/stuff.go:46 +0x369

Goroutine 7 (running) created at:

testing.(*T).Run()

/usr/lib/golang/src/testing/testing.go:1042 +0x660

testing.runTests.func1()

/usr/lib/golang/src/testing/testing.go:1284 +0xa6

testing.tRunner()

/usr/lib/golang/src/testing/testing.go:991 +0x1eb

testing.runTests()

/usr/lib/golang/src/testing/testing.go:1282 +0x527

testing.(*M).Run()

/usr/lib/golang/src/testing/testing.go:1199 +0x2ff

main.main()

_testmain.go:48 +0x223

Goroutine 10 (finished) created at:

my.pkg/race/broker.(*Broker).Send()

/home/XXXX/projects/race/broker/stuff.go:32 +0x70

my.pkg/race/broker_test.TestRace()

/home/XXXX/projects/race/broker/stuff_test.go:47 +0x664

testing.tRunner()

/usr/lib/golang/src/testing/testing.go:991 +0x1eb

==================

TestRace: testing.go:906: race detected during execution of test

--- FAIL: TestRace (0.00s)

: testing.go:906: race detected during execution of test

FAIL

FAIL my.pkg/race/broker 0.009s

? my.pkg/race/broker/mocks [no test files]

```

Though I'm not certain, my guess is that the expression `s.C() <- v`, because it's a case expression, is what trips the race detector up here. The channel buffer is full, so any writes would be blocking if I'd put the channel write in the `default` case. As it stands, the write cannot possibly be executed, so instead my code logs the fact that a subscriber is being skipped, the routine ends (defer func unlocks the mutex), and the mock callback decrements the waitgroup. Once the waitgroup is empty, all calls to my mock subscriber have been made, and the channel can be safely closed.

It seems, however, that I need to add the additional call, removing the mock from the broker to _"reset"_ the race detector state. I'll try and have a look at the source, maybe something jumps out.

|

RaceDetector,NeedsInvestigation,compiler/runtime

|

low

|

Critical

|

617,564,169 |

go

|

cmd/link: include per-package aggregate static temp symbols?

|

I've been working on a Go [binary size analysis tool](https://github.com/bradfitz/shotizam) that aims to attribute ~each byte of the binary back to a function or package.

One thing it's not great at doing at the moment in the general case is summing the size of static temp (..stmp_NNN) because those symbols are removed by default (except in external linking mode).

Worse, with Macho-O binaries not having sizes on symbols, I can't even accurately count the sizes of symbols that do exist because the stmp values are in the DATA, but lacking symbols, so I end up calculating the wrong size of existing symbols:

e.g. this `float64info` should actually be the same size as the `float32info`, but a bunch of stmp_NNN are omitted between `float64info` and `hash..inittask`:

```

sym "debug/dwarf._Class_index" (at 19138544), size=464

sym "strconv.float32info" (at 19139008), size=32

sym "strconv.float64info" (at 19139040), size=1664

sym "hash..inittask" (at 19140704), size=32

sym "internal/bytealg..inittask" (at 19140736), size=32

sym "internal/reflectlite..inittask" (at 19140768), size=32

sym "internal/singleflight..inittask" (at 19140800), size=32

```

Looking at a binary with the `stmp` symbols, I get the accurate size for `float64info`:

```

sym "unicode..stmp_539" (at 1005d4260), size=32

sym "unicode..stmp_553" (at 1005d4280), size=32

sym "unicode..stmp_558" (at 1005d42a0), size=32

sym "crypto..stmp_2" (at 1005d42c0), size=32

sym "crypto/tls..stmp_205" (at 1005d42e0), size=32

sym "strconv.float32info" (at 1005d4300), size=32

sym "strconv.float64info" (at 1005d4320), size=32

sym "unicode..stmp_11" (at 1005d4340), size=32

sym "unicode..stmp_113" (at 1005d4360), size=32

sym "unicode..stmp_121" (at 1005d4380), size=32

sym "unicode..stmp_147" (at 1005d43a0), size=32

sym "unicode..stmp_150" (at 1005d43c0), size=32

```

So, my request: can we aggregate all the `stmp_NNN` symbols together per-package and emit one symbol per section per Go package, like:

```

unicode..stmp_pkg

```

Then I can both calculate the sum stmp sizes per package (e.g. unicode is 68KB, crypto/tls is 12KB), and I can also accurately calculate the size of other symbols (Mach-o symbols without a size)

This would make binaries a tiny bit bigger (but bounded by number of packages at least) but would permit more analysis at making them much smaller, IMO. (Or output it to a separate file.)

It would also require sorting the stmp values all together in the binary. They're currently scattered around:

```

sym "vendor/golang.org/x/net/route.rtmVersion" (at 100615964), size=2

sym "runtime..stmp_40" (at 100615966), size=2

sym "context.goroutines" (at 100615968), size=4

sym "runtime..stmp_41" (at 10061596c), size=4

sym "runtime.argc" (at 100615970), size=4

sym "runtime.crashing" (at 100615974), size=4

```

(Note a `context` symbol between `runtime.stmp_40` and `stmp_41` )

What I'd like to see is something like:

```

sym "vendor/golang.org/x/net/route.rtmVersion" (at 100615964), size=2

sym "context.goroutines" (at 100615966), size=4

sym "runtime..stmp_pkg" (at 100615970), size=6

sym "runtime.argc" (at 100615970), size=4

sym "runtime.crashing" (at 100615974), size=4

```

Thoughts? Alternatives?

/cc @ianlancetaylor (who schooled me on some of this), @cherrymui, @thanm, @randall77, @josharian, @jeremyfaller

|

NeedsInvestigation,compiler/runtime

|

low

|

Critical

|

617,591,257 |

go

|

x/build: reduce ambiguity in long test of main Go repo

|

Taking into account the build infrastructure at [build.golang.org](https://build.golang.org), local development, and issues such as #26473, #34707, I understand we currently have the goal of having good support for two well-known testing configurations (for a given GOOS/GOARCH pair) for the main Go repository:

- Short, as implemented by `all.bash`

- target of 3-4 minutes, acceptable to skip slow tests as implemented by `go test -short`

- Long, as implemented by `-longtest` post-submit builders (e.g., `linux-amd64-longtest`)

- no goal of skipping tests for performance reasons

While investigating #29252, I found that there is some ambiguity in what it means to test the main Go repo in long mode. It's not easy to say "in order to run a long test, do this" and have a predictable outcome. We currently say "run `all.bash` and `go test std cmd`" in some places, but there's room for improvement.

We want to ensure long tests are passing for Go releases. To support that goal, I think it will helpful to reduce ambiguity in what it means to run a long test on the Go repo.

This is a high level tracking issue for improvements in this area, and for any discussion that may need to happen.

/cc @andybons @cagedmantis @toothrot @golang/osp-team @bradfitz @rsc

|

Builders,NeedsInvestigation

|

low

|

Major

|

617,654,600 |

go

|

github: consider downscoping "GitHub Editors" access to Triage role

|

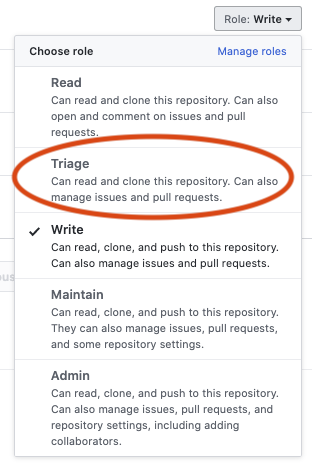

The Go project offers access to a set of people to edit metadata on GitHub issues, in order to help with [gardening](https://golang.org/wiki/Gardening). This access is documented at https://golang.org/wiki/GithubAccess#editors.

Back when the "go-approvers" group was created, GitHub did not offer granular access levels, it was either Read (no access to triage), Write (access to triage and push), Admin. We used Write since it was the most fitting option.

The GitHub repository at https://github.com/golang/go is a mirror of the canonical repository at https://go.googlesource.com/go where code review happens, and any changes to it are automatically overwritten by [`gitmirror`](https://pkg.go.dev/golang.org/x/build/cmd/gitmirror). However, accidents happen occasionally, and people may unintentionally create new branches (e.g., see https://groups.google.com/d/msg/golang-dev/EqqZf5kTRqI/9BEDmjHwBwAJ).

By now, GitHub seems to offer more granularity in access controls, including a "Triage" role:

It's documented in more details at https://help.github.com/en/github/setting-up-and-managing-organizations-and-teams/repository-permission-levels-for-an-organization.

We should investigate and confirm whether it's safe to downscope the go-approvers team to Triage access without causing unintended inconvenience to people who rely on it, and if so, apply the change.

/cc @golang/osp-team @katiehockman @FiloSottile

|

Security,Builders,NeedsInvestigation,Community

|

low

|

Major

|

617,656,164 |

pytorch

|

No MKL Compatible Conda installation for PyTorch 1.5

|

## 🚀 Feature

Right now having the conda nomkl package installed is a comfortable way to ensure that numpy is linking to openblas instead of Intel mkl, which is (in my experience) slower for AMD processors. If the no mkl package is installed, the conda pytorch installation resolves to an older pytorch version.

I.e. the command (in an otherwise empty conda environment)

`conda install pytorch torchvision cudatoolkit=10.2 -c pytorch`

installs WITHOUT the nomkl package

`pytorch pytorch/linux-64::pytorch-1.5.0-py3.7_cuda10.2.89_cudnn7.6.5_0`

and WITH the nomkl package

`pytorch pytorch/linux-64::pytorch-1.0.0-py3.7_cuda9.0.176_cudnn7.4.1_1`

It would be great to have a conda compatible installation with nomkl, as AMD processors have gotten good in terms of # of fast cores and value. So if it would not require to much effort it would be great to have. And also a bit of documentation on how to ensure linking to openblas instead of mkl would be great as AMD processor have become more widespread in recent years.

## Alternatives

To get pytorch 1.5 with nomkl, one can simply install pytorch 1.5 via pip.

cc @ezyang @seemethere

|

module: binaries,triaged,module: mkl

|

medium

|

Major

|

617,670,965 |

flutter

|

Allow copying engine artifacts before starting native build tool

|

Currently, for all of the desktop builds, we trigger the native build (Xcode, make, etc.) and that eventually calls into the backend script that copies engine artifacts like the library and the C++ client wrappers.

I'm in the process of converting Linux (and maybe Windows) to CMake, and with CMake the best option is to have the build files live next to the things they are building; in the case of then engine artifacts it would not only make the build structure cleaner and more modular, but it would mean that we could retain long-term control over those files. For instance, the client wrapper targets could be updated if the set of client wrapper files changed, without breaking existing consumers.

However, the CMake step is the very first step in the build, so we can't call it without already having all the `CMakeLists.txt` files in place. In order for them to live with the artifacts, we'd need to be able to trigger the artifact copying earlier, from, e.g., build_linux.dart, just before doing CMake generation.

/cc @jonahwilliams

|

tool,platform-windows,platform-linux,a: desktop,a: build,P3,team-tool,triaged-tool

|

low

|

Major

|

617,677,740 |

pytorch

|

[RFC] Add tar-based IterableDataset implementation to PyTorch

|

Problem

=======

As datasets become larger and larger, storing training samples as individual files becomes impractical and inefficient. This can be addressed using sequential storage formats and sharding (see "Alternatives Considered" for other implementations). PyTorch lacks such a common storage format right now.

Proposal

========

[WebDataset](http://github.com/tmbdev/webdataset) provides an implementation of `IterableDataset` based on sharded tar archives. This format provides efficient I/O for very large datasets, makes migration from file-based I/O easy, and works well locally, with cloud storage, and web servers. It also provides a simple, standard format in which large datasets can be distributed easily and used directly without unpacking.

The implementation is small (1000-1800 LOC) and has no external dependencies. The proposal is to incorporate the `webdataset.Dataset` class into the PyTorch base distribution. The source repository is here:

http://github.com/tmbdev/webdataset

My suggestion would be to incorporate the library with minimal changes into its own subpackage. I can perform the integration and generate a PR once there is general agreement.

More Background

===============

For a general introduction to how we handle large scale training with WebDataset, see [this YouTube playlist](https://www.youtube.com/playlist?list=PL0dsKxFNMcX4XcB0w1Wm-pvSfQu-eWM26)

The WebDataset library (github.com/tmbdev/webdataset) provides an implementation of `IterableDataset` that uses POSIX tar archives as its native storage format. The format itself is based on a simple convention:

- datasets are POSIX tar archives

- each training sample consists of adjacent files with the same basename

- shards are numbered consecutively

For example, ImageNet is stored in 147 separate 1 Gbyte shards with names `imagenet-train-0000.tar` to `imagenet-train-0147.tar`; the contents of the first shard are:

```

-r--r--r-- bigdata/bigdata 3 2020-05-08 21:23 n03991062_24866.cls

-r--r--r-- bigdata/bigdata 108611 2020-05-08 21:23 n03991062_24866.jpg

-r--r--r-- bigdata/bigdata 3 2020-05-08 21:23 n07749582_9506.cls

-r--r--r-- bigdata/bigdata 129044 2020-05-08 21:23 n07749582_9506.jpg

-r--r--r-- bigdata/bigdata 3 2020-05-08 21:23 n03425413_23604.cls

-r--r--r-- bigdata/bigdata 106255 2020-05-08 21:23 n03425413_23604.jpg

-r--r--r-- bigdata/bigdata 3 2020-05-08 21:23 n02795169_27274.cls

```

Datasets in WebDataset format can be used directly after downloading without unpacking; they can also be mounted as a file system. Content in WebDataset format can used any file-based compression scheme. In addition, the tar file itself can also be compressed and WebDataset will transparently decompress it.

WebDatsets can be used directly from local disk, from web servers (hence the name), from cloud storage, and from object stores, just by changing a URL.

WebDataset readers/writers are easy to implement (we have Python, Golang, and C++ implementations).

WebDataset performs shuffling both at the shard level and at the sample level. Splitting of data across multiple workers is performed at the shard level using a user-provided `shard_selection` function that defaults to a function that splits based on `get_worker_info`. (WebDataset can be combined with the `tensorcom` library to offload decompression/data augmentation and provide RDMA and direct-to-GPU loading; see below.)

We are storing and processing petabytes of training data as tar archives and are using the format for unsupervised learning from video, OCR and scene text recognition, and large scale object recognition experiments. The same code and I/O pipelines work efficiently on the desktop, on a local cluster, or in the cloud. [Our benchmarks](https://arxiv.org/abs/2001.01858) show scalability and the ability to take advantage of the full I/O bandwidth of each disk drive across very large training jobs and storage clusters.

Code Sample

===========

This shows how to use WebDataset with ImageNet.

```Python

import webdataset as wds

improt ...

shardurl = "/imagenet/imagenet-train-{0000..0147}.tar"

normalize = transforms.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

preproc = transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

normalize,

])

dataset = (

wds.Dataset(shardurl)

.shuffle(1000)

.decode("pil")

.rename(image="jpg;png", data="json")

.map_dict(image=preproc)

.to_tuple("image", "data")

)

loader = torch.utils.data.DataLoader(dataset, batch_size=64, num_workers=8)

for inputs, targets in loader:

...

```

WebDataset uses a fluent API for configuration that internally builds up a processing pipeline. Without any added processing stages, WebDataset just iterates through each training sample as a dictionary:

```Python

# load from a web server using a separate client process

shardurl = "pipe:curl -s http://server/imagenet/imagenet-train-{0000..0147}.tar"

dataset = wds.Dataset(shardurl)

for sample in dataset:

# sample["jpg"] contains the raw image data

# sample["cls"] contains the class

...

```

Alternatives Considered

=======================

Keeping WebDataset as as Separate Library

-----------------------------------------

WebDataset is perfectly usable as a separate library, so why not keep it that way?

- Users of deep learning libraries expect an efficient data format that avoids the "many small file" problem; Tensorflow provides TFRecord/tf.Example Making WebDataset part of PyTorch itself provides a straightforward solution for most users and reduces dependencies.

- Providing a format reader in the standard library encourages dataset standardization, tool building, and adoption of the same format by other libraries.

- Having a realistic implementation of `IterableDataset` in PyTorch provides a common reference against which to address issues and provide test cases in the `DataLoader` implementation and its use of `IterableDataset`

Many of the larger datasets distributed in `torchvision` could be distributed easily in WebDataset format. This would allow users to either train directly against web-hosted data, or to train on the datasets immediately after downloading without unpacking. The way data is arranged in WebDataset also allows users to download just a few shards for testing code locally, and then use the entire dataset when running on a cluster. Furthermore, the way `webdataset.Dataset` works in most cases, no special code is needed in order to read them; many training jobs can be retargeted to different datasets simply by using a different URL for the dataset.

TFRecord+protobuf, Parquet

----------------------------

These formats are suitable for large scale data processing for machine learning and deep learning applications and some datasets exist in this format and more will continue to be generated for the Tensorflow ecosystem. However, they are not good candidates for incorporating into PyTorch as core feature because:

- TFRecord+protobuf (tf.Example) and Parquet have significant external library dependencies; in contrast, WebDataset is pure Python, using the built-in libraries for tar decoding.

- Protobuf and Parquet represent serialized data structures, while WebDataset uses file-based representations. File based representations make creation of WebDatasets easy, result in bit-identical representations of data in WebDatasets, and allow existing file-based codecs and I/O pipelines to be reused easily.

- Except for their native frameworks, there is not much third party support for these formats; in contrast, POSIX tar archive libraries exist in all major programming languages and are easily processed, either by unpacking shards or using tools like `tarp`.

Note that WebDataset supports usage scenarios similar to TFRecord+protobuf, since serialized data structures can be incorporated as files, and WebDataset will decode them automatically. For example, OpenImages multi-instance data is simply stored in a `.json` file accompanying each `.jpg` file:

```

$ curl -s http://storage.googleapis.com/nvdata-openimages/openimages-train-000554.tar | tar tf -

0b946d1d5201c06c.jpg

0b946d1d5201c06c.json

05c9d72ff9e64010.jpg

05c9d72ff9e64010.json

...

$

```

zip instead of tar

--------------------

The zip format is another archival format. Unlike tar format, which is just a sequence of records, zip format stores a file index at the very end of the file, making it unsuitable for streaming. Tar files can be made random access (and, in fact, can be mounted as file systems), but they use a separate index file to support that functionality.

LMDB, HDF5, Databases

---------------------

These formats are not suitable for streaming and require the entire dataset to fit onto local disk. In addition, while they nominally solve the "many small file problems", they don't solve the problem that indexing into the dataset still results in expensive seek operations.

Local File System Caches

------------------------

An approach for extending file-system based I/O pipelines to large distributed storage systems is to use some form of "pre-caching" or "staging" on a local NVMe drive. Generally, there is little advantage to this. For large datasets, it does not increase throughput. Input pipelines still need to be modified to schedule the pre-caching. And generally, this requires volume plugins or virtual file system support. A similar effect can be achieved with WebDataset by simply unpacking shards to the local file system when direct file access is required.

Related Software

================

[AIStore](http://github.com/NVIDIA/AIStore) is an open source object store capable of full bandwidth disk-to-GPU data delivery (meaning that if you have 1000 rotational drives with 200 MB/s read speed, AIStore actually delivers an aggregate bandwidth of 200 GB/s to the GPUs). AIStore is fully compatible with WebDataset as a client, and in addition understands the WebDataset format, permitting it to perform shuffling, sorting, ETL, and some map-reduce operations directly in the storage system.

[tarp](http://github.com/tmbdev/tarp) is a small command line program for splitting, merging, shuffling, and processing tar archives and WebDataset datasets.

[tensorcom](http://github.com/NVLabs/tensorcom) is a library supporting distributed data augmentation and RDMA to GPU.

[webdataset-examples](http://github.com/tmbdev/webdataset-examples) contains an example (and soon more examples) of how to use WebDataset in practice.

[Bigdata 2019 Paper with Benchmarks](https://arxiv.org/abs/2001.01858)

cc @SsnL

|

feature,module: dataloader,triaged

|

high

|

Critical

|

617,683,936 |

angular

|

Angular Module instance never get destroyed

|

No matter what we do lazy loading or eager loading Angular Module instance never gets destroyed.

When we route across components or use *ngIF Components get destroyed and recreated but its not the same case when we navigate from one module to another.

While lazy loading every module gets loaded dynamically. Also, the instance get created but that instance never gets destroyed.

To check the same I put the breakpoint in Module constructor.

Anybody know the reason for such behavior. I feel it bad because all services registered on those modules get remain forever then too.

I felt like it a bug than a feature request.

|

type: bug/fix,feature,area: router,core: NgModule,router: lazy loading,needs: discussion,feature: under consideration

|

high

|

Critical

|

617,722,117 |

flutter

|

[webview_flutter] Error while trying to play a Google Drive video preview link

|

I have an app that needs to play Google Drive videos preview, using webview_flutter plugin, the thumbnail appears but when I tap play I get the errors below:

**Code:**

```dart

String url = 'https://drive.google.com/file/d/1O8WF2MsdyoKpQZE2973IFPRpqwKUjm_q/preview';

WebView(

onWebViewCreated: (WebViewController controller){

webViewController = controller;

},

initialUrl: url,

javascriptMode: JavascriptMode.unrestricted,

initialMediaPlaybackPolicy: AutoMediaPlaybackPolicy.always_allow,

),

```

**Errors:**

```

"The deviceorientation events are blocked by feature policy. See https://github.com/WICG/feature-policy/blo

b/master/features.md#sensor-features", source: https://youtube.googleapis.com/s/player/64dddad9/player_ias.vflset/pt_BR/base.js (263)

```

**The second time I tap play button:**

```

I/chromium(29212): [INFO:CONSOLE(1472)] "Uncaught (in promise) Error: Untrusted URL: https://youtube.googleapis.com/videoplayback?expire=158836583

1&ei=x1GsXtyWNuzPj-8Px_eH2Aw&ip=2804:431:c7da:c52b:854e:e83e:e7c5:eb3e&cp=QVNNWkRfVFhRQlhOOk5mQ0FhT0J5Y0k2T3ZDdjJLa0UzQVRiaHNoQlVHeXpjV3BtYW9YT2Rk

YUM&id=eb79141269cc6ad3&itag=18&source=webdrive&requiressl=yes&mh=F-&mm=32&mn=sn-bg0eznll&ms=su&mv=m&mvi=4&pl=47&ttl=transient&susc=dr&driveid=1O8

WF2MsdyoKpQZE2973IFPRpqwKUjm_q&app=explorer&mime=video/mp4&dur=2.043&lmt=1551969798109476&mt=1588351334&sparams=expire,ei,ip,cp,id,itag,source,req

uiressl,ttl,susc,driveid,app,mime,dur,lmt&sig=AOq0QJ8wRAIgEzxYGpS8RI0CRVPdZrMxdDGfkYfCezdOkiJ7iUcl5XMCIHiDsmbGel8tWT6XIU8dWdfjLJWdOlI_WHNtDNwYszU9

&lsparams=mh,mm,mn,ms,mv,mvi,pl&lsig=AG3C_xAwRAIgTq3W38roufwBwSPXe4fxB25kANk3s42N5x2oBvVWonoCIDaYJVrPpmNzcoU6q4bqogHP6W-Mw4p_5CRrwh59kZM4&cpn=bCev

241Hx8eXmwyo&c=WEB_EMBEDDED_PLAYER&cver=20200429", source: https://youtube.googleapis.com/s/player/64dddad9/player_ias.vflset/pt_BR/base.js (1472)

```

Does anyone can explain me this errors please? Through browser the link is working.

<details>

<summary>flutter doctor -v</summary>

```bash

**flutter doctor -v**

[√] Flutter (Channel master, 1.18.0-12.0.pre, on Microsoft Windows [versão 10.0.18363.778], locale pt-BR)

• Flutter version 1.18.0-12.0.pre at C:\src\flutter

• Framework revision c2b7342ca4 (7 days ago), 2020-05-06 23:16:03 +0800

• Engine revision 33d2367950

• Dart version 2.9.0 (build 2.9.0-5.0.dev 9c94f08410)

[√] Android toolchain - develop for Android devices (Android SDK version 29.0.3)

• Android SDK at C:\Users\Dih\AppData\Local\Android\sdk

• Platform android-29, build-tools 29.0.3

• Java binary at: C:\Program Files\Android\Android Studio\jre\bin\java

• Java version OpenJDK Runtime Environment (build 1.8.0_212-release-1586-b04)

• All Android licenses accepted.

[√] Chrome - develop for the web

• Chrome at C:\Program Files (x86)\Google\Chrome\Application\chrome.exe

[√] Android Studio (version 3.6)

• Android Studio at C:\Program Files\Android\Android Studio

• Flutter plugin version 45.1.1

• Dart plugin version 192.7761

• Java version OpenJDK Runtime Environment (build 1.8.0_212-release-1586-b04)

[√] Connected device (3 available)

• LG K430 • LGK4305DKZJJKN • android-arm • Android 6.0 (API 23)

• Web Server • web-server • web-javascript • Flutter Tools

• Chrome • chrome • web-javascript • Google Chrome 81.0.4044.138

• No issues found!

```

</details>

|

platform-android,a: video,p: video_player,package,has reproducible steps,P3,found in release: 2.2,found in release: 2.3,team-android,triaged-android

|

low

|

Critical

|

617,739,531 |

kubernetes

|

Add a `Plan` kind

|

**What would you like to be added**:

A way (through a `Plan` kind?) to save a list of changes to be applied later, with

the guarantee to not cause any additional conflicts/resource addition/resource deletion.

The workflow would look like:

- Generate a `Plan` with `kubectl diff --recursive --server-side -oyaml -f manifests.yaml > plan.yaml`

- This would be even more useful if/when `kubectl diff` supports `--prune` and `--selector` flags (that behave like their kubectl apply counterparts).

- Apply it with `kubectl apply --server-side -f plan.yaml`

**Why is this needed**:

Reviewing a change before applying it (`kubectl apply --dry-run` or `kubectl diff`) currently does not give confidence that the real change made to the cluster will match what was expected.

For example, if I want to update an already deployed resource:

- I run `kubectl diff` and validate that the output corresponds to what I expect

- Meanwhile, the resource is deleted in the cluster (I am not aware of that)

- I run `kubectl apply` expecting to update a field, but I actually create a resource

The same can lead to unexpected resource deletion (with `--prune` flag) or unexpected update of fields not owned by my workflow (with `--force-conflicts`).

This is especially true in a GitOps workflow where the diff is automatically printed in the PR to help the reviewer and the apply can take place several hours later.

Having a structured "plan" is also useful:

- For automated policy enforcement, e.g. using OPA

- To make `kubectl apply --recursive --prune` safer

/kind feature

/sig api-machinery

/wg api-expression

|

sig/api-machinery,kind/feature,lifecycle/frozen,wg/api-expression

|

low

|

Major

|

617,752,294 |

create-react-app

|

Allow full URL with hostname/port for PUBLIC_URL in development

|

### Is your proposal related to a problem?

Recently there was a change so that PUBLIC_URL is no longer ignored in development mode. This is a great change, however it seems limited in that PUBLIC_URL in development can only be a path

while environments outside of development can be full URLs with hostname/port, etc.

### Describe the solution you'd like

<!--

Provide a clear and concise description of what you want to happen.

-->

Please allow for PUBLIC_URL to specify a URL in development, not just a path.

### Describe alternatives you've considered

<!--

Let us know about other solutions you've tried or researched.

-->

Only other solution we have to work with is change our entire server-side setup (quite involved) or utilize patch-package (ew)

|

issue: proposal,needs triage

|

medium

|

Major

|

617,759,692 |

flutter

|

"resizeToAvoidBottomInset property" Documentation should have a video to demonstrate how it changes the view

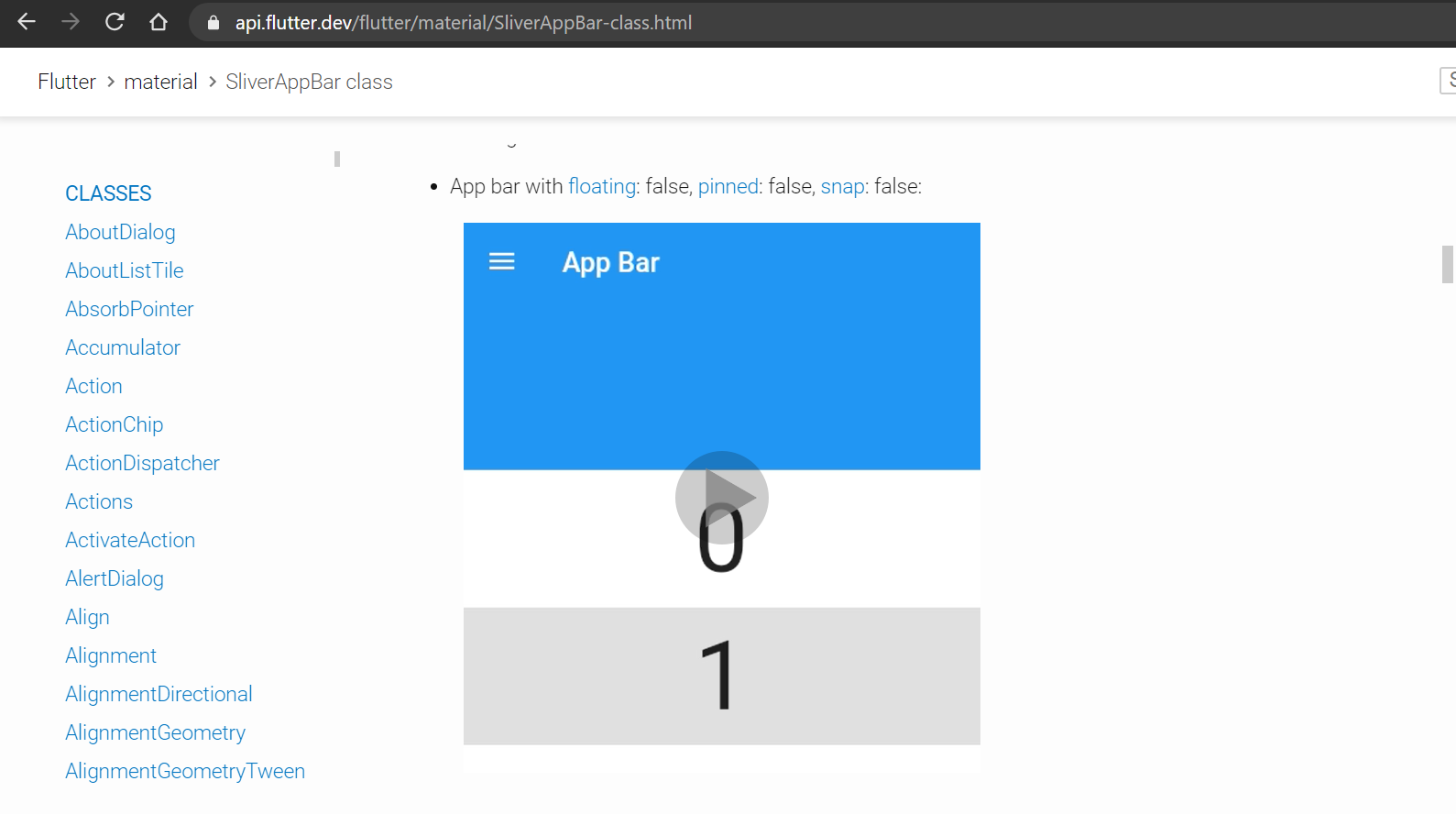

|

In the [documentation page](https://api.flutter.dev/flutter/material/Scaffold/resizeToAvoidBottomInset.html), there must be a video to show how changing the boolean value of "resizeToAvoidBottomInset" changes the view in the actual app.

For example, such a demonstration must be added ⬇

https://flutter.github.io/assets-for-api-docs/assets/material/app_bar.mp4

|

framework,f: material design,d: api docs,d: examples,c: proposal,team-design,triaged-design

|

low

|

Minor

|

617,771,285 |

pytorch

|

[JIT] torch.tensor needs a Tensor overload

|

## 🐛 Bug

`torch.tensor` in python eager can accept a Tensor, which it then copies into a new tensor. Because JIT does not have a tensor overload, an implicit conversion to float gets inserted which will fail at runtime.

```

import torch

import torch.nn as nn

from typing import List

def foo(x):

return torch.tensor(x)

input = torch.rand([2, 2])

foo(input)

# fine

torch.jit.script(foo)(input)

```

> ... <--- HERERuntimeError: Cannot input a tensor of dimension other than 0 as a scalar argument

cc @suo

|

oncall: jit,module: bootcamp,triaged,small

|

low

|

Critical

|

617,776,344 |

flutter

|

Tool crashes when launching adb when getcwd fails with EACCES.

|

Sometimes when launched from an IDE, the tool can crash when the tool process does not have read/list permissions from / up to the cwd.

```

FileSystemException: FileSystemException: Getting current working directory failed, path = '' (OS Error: Operation not permitted, errno = 1)

| at _Directory.current | (directory_impl.dart:49)

| at Directory.current | (directory.dart:161)

| at LocalFileSystem.currentDirectory | (local_file_system.dart:44)

| at getExecutablePath | (common.dart:59)

| at _getExecutable | (local_process_manager.dart:125)

| at LocalProcessManager.run | (local_process_manager.dart:68)

| at _DefaultProcessUtils.run | (process.dart:313)

| at AndroidDevices.pollingGetDevices | (android_device_discovery.dart:54)

| at PollingDeviceDiscovery._initTimer.<anonymous closure> | (device.dart:295)

| at _rootRun | (zone.dart:1180)

| at _CustomZone.run | (zone.dart:1077)

| at _CustomZone.runGuarded | (zone.dart:979)

| at _CustomZone.bindCallbackGuarded.<anonymous closure> | (zone.dart:1019)

| at _rootRun | (zone.dart:1184)

| at _CustomZone.run | (zone.dart:1077)

| at _CustomZone.bindCallback.<anonymous closure> | (zone.dart:1003)

| at Timer._createTimer.<anonymous closure> | (timer_patch.dart:23)

| at _Timer._runTimers | (timer_impl.dart:398)

| at _Timer._handleMessage | (timer_impl.dart:429)

| at _RawReceivePortImpl._handleMessage | (isolate_patch.dart:168)

```

Supposing that `getAdbPath` ([here](https://github.com/flutter/flutter/blob/master/packages/flutter_tools/lib/src/android/android_device_discovery.dart#L48)) is giving an absolute path, the problem seems to be in `package:process`, which tries to grab the cwd even though the path might be absolute ([here](https://github.com/google/process.dart/blob/master/lib/src/interface/common.dart#L59)). `package:process` should probably skip the cwd relative search if grabbing the cwd fails.

|

c: crash,tool,P2,team-tool,triaged-tool

|

low

|

Critical

|

617,791,934 |

vscode

|

No images in jsdoc hover or notebooks in remote/web

|

- Add an image in markdown syntax to a jsdoc comment

- In a local vscode window, hover the identifier with the command

- The image is rendered

- Do the same in a remote window

- The image is broken

Same issue with notebooks. @mjbvz Is it possible to hook into the same system that webviews use, or is that only tied to webviews?

|

bug,typescript,javascript,web,notebook-workbench-integration

|

low

|

Critical

|

617,805,498 |

opencv

|

OpenCV 4.3.0 RELEASE build error in traits.hpp

|

##### System information (version)

- OpenCV => 4.3.0

- Operating System / Platform => Windows 10 64 Bit v 1909

- Compiler => Visual Studio 2019 community

- CUDA => v10.2.89

- GSTREAMER => v1.16.2

- NINJA => v1.10.0

- CMAKE => v3.17.2

- NVIDIA RTX2070 with Q-Max design

Please refer to the attached build config "BuildCONFIG.txt"

[BuildCONFIG.txt](https://github.com/opencv/opencv/files/4625103/BuildCONFIG.txt)

Please refer to the build file (NinjaRELEASECuDNNGStreamerNonFree.txt) here:

[NinjaRELEASECuDNNGStreamerNonFree.txt](https://github.com/opencv/opencv/files/4625106/NinjaRELEASECuDNNGStreamerNonFree.txt)

##### Detailed description

When i build "RELEASE" version, I see the following error in building gpu_mat.cu

And the error is:

**gpu_mat.cu**

C:/Nitin/OpenCVCode/opencv4_3_0/modules/core/include\opencv2/core/traits.hpp(374): **error C2993: 'T': illegal type for non-type template parameter '__formal'**

C:/Nitin/OpenCVCode/opencv4_3_0/modules/core/include\opencv2/core/traits.hpp(374): note: see reference to class template instantiation 'cv::traits::internal::CheckMember_fmt<T>' being compiled

C:/Nitin/OpenCVCode/opencv4_3_0/modules/core/include\opencv2/core/traits.hpp(374): **error C2065: '__T0': undeclared identifier**

C:/Nitin/OpenCVCode/opencv4_3_0/modules/core/include\opencv2/core/traits.hpp(374): **error C2923: 'std::_Select<__formal>::_Apply': '__T0' is not a valid template type argument for parameter '<unnamed-symbol>'**

C:/Nitin/OpenCVCode/opencv4_3_0/modules/core/include\opencv2/core/traits.hpp(374): e**rror C2062: type 'unknown-type' unexpected

CMake Error at cuda_compile_1_generated_gpu_mat.cu.obj.Release.cmake:280 (message):

Error generating file**

C:/Nitin/OpenCVCode/opencv4_3_0/build/modules/world/CMakeFiles/cuda_compile_1.dir/__/core/src/cuda/./cuda_compile_1_generated_gpu_mat.cu.obj

Whereas, DEBUG build builds just fine !

|

category: build/install,category: gpu/cuda (contrib),platform: win32

|

low

|

Critical

|

617,809,065 |

electron

|

I can still interact with disabled windows after a call to setEnabled(false)

|

### Preflight Checklist

* [x] I have read the [Contributing Guidelines](https://github.com/electron/electron/blob/master/CONTRIBUTING.md) for this project.

* [x] I agree to follow the [Code of Conduct](https://github.com/electron/electron/blob/master/CODE_OF_CONDUCT.md) that this project adheres to.

* [x] I have searched the issue tracker for an issue that matches the one I want to file, without success.

### Issue Details

* **Electron Version:** 8.2.0

* **Operating System:** Windows 10

### Expected Behavior

When I call `win.setEnabled(false)`, I expect that I won't be able to interact with the window.

### Actual Behavior

I can still interact if I Alt+Tab to it.

### To Reproduce

main.js:

```

const { app, BrowserWindow, ipcMain } = require('electron')

async function createWindow() {

const mainWindow = new BrowserWindow({ webPreferences: { nodeIntegration: true } });

await mainWindow.loadFile("./index.html");

mainWindow.webContents.openDevTools({ mode: "detach" });

ipcMain.on("disableWindows", (e, arg) => {

BrowserWindow.getAllWindows().forEach((win) => {

if (win.id != mainWindow.id) {

win.setEnabled(false);

}

});

});

}

app.on('ready', createWindow)

```

index.html:

```

<body>

<button id="b">Cliek me</button>

<script src="./renderer.js">

</script>

</body>

```

renderer.js:

```

const { ipcRenderer } = require("electron");

var b = document.getElementById("b");

b.onclick = () => {

ipcRenderer.send("disableWindows");

}

```

Launch the app. In the devtools, open a window to google. Make sure focus is in the search field.

Then click the button in the main window to disable the window. Alt+tab back to the disabled window and type something. notice that that still works and I can search for results.

Gif:

|

platform/windows,bug :beetle:,8-x-y,10-x-y

|

medium

|

Major

|

617,857,226 |

godot

|

Scrollcontainer which doesn't show the last element when supposed to spawn outside of Scrollbox

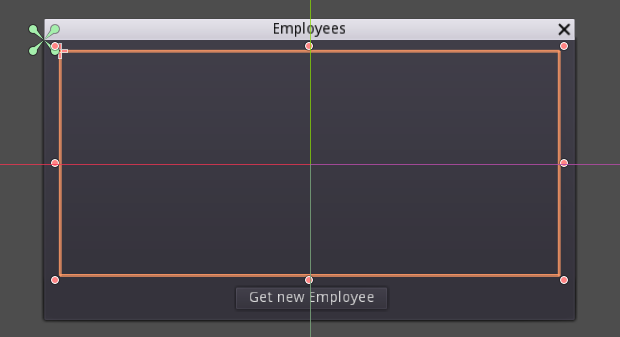

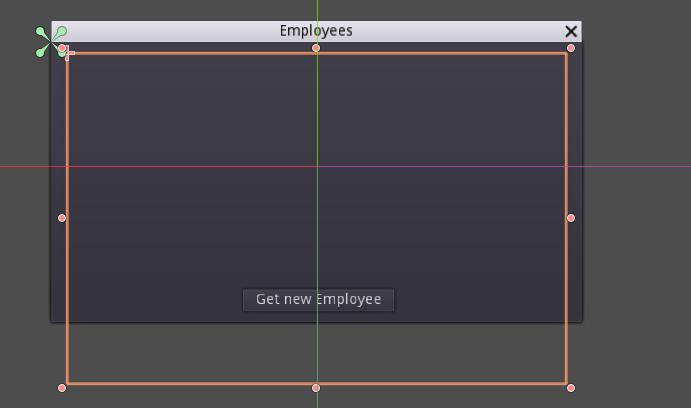

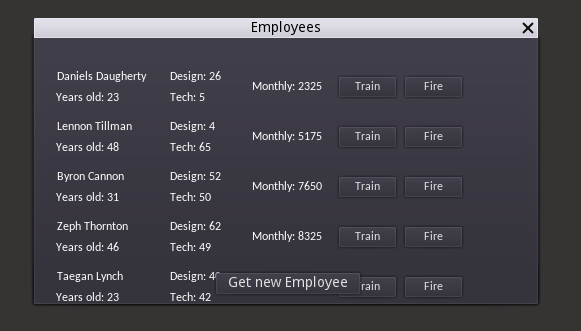

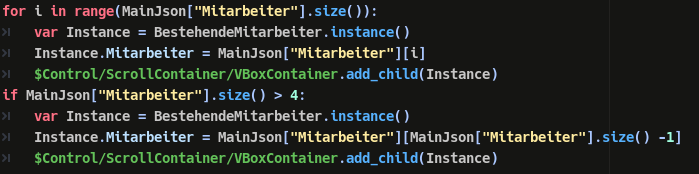

|

**Godot version:** v3.2.stable.official and v3.2.1.stable.official

**OS/device including version:** Windows 64bit

**Issue description:** If your Scrollcontainer is too small, then the last element of an array wont load into the "scrolling" of the Container.

Like you see in this picture I do have 5 elements in my array but as you'll see in the next screenshot the 5th element isn't loaded / properly spawned.

What I did find out is that the fifth element **is** loading but it doesn't spawn it into the scrolling of the container. It spawns outside of the box so you'll never see it (That's what I saw. It could also be another problem with maybe the sizing of the Scrollcontainer or even a bug in the Vertical- and HorizontalBox).

So I only found out one solution to this problem that is to manually spawn the last element of the array you are using.

**Steps to reproduce:**

- make an array (a good number is 10)

- make a little scene you want to instance into the scrollingcontainer and then try to run a for loop to instance all elements

**Minimal reproduction project:**

[Scrollingcontainer Bug.zip](https://github.com/godotengine/godot/files/4625485/Scrollingcontainer.Bug.zip)

In this project you should see a difference between the size of the array and how many actually spawned. (The instances are numbered, It goes from 0 to 7 with an array of 9 elements)

|

bug,confirmed,topic:gui

|

low

|

Critical

|

617,866,941 |

go

|

x/tools/gopls: use RelatedInformation for diagnostics on interface implementation errors

|

See the example in https://play.golang.org/p/2TrSYmBsdLU.

In this case, both the compiler and go/types report error messages on L17 where the error occurs. However, in cases like these, the user is most likely interested in fixing their mistake on L9 by changing the return type of `Hello`. We should add this position in the `RelatedInformation` field of diagnostics.

|

FeatureRequest,gopls,Tools

|

low

|

Critical

|

617,877,489 |

terminal

|

Include a profile that will connect to Visual Studio Codespaces

|

# Description of the new feature/enhancement

When using VSCode with [Visual Studio Codespaces ](https://online.visualstudio.com/), the integrated terminal is "connected" to the codespace and all commands execute in the Codespace environment.

I would like a profile in Terminal that connects to a Codespace. I would expect it to function similar to the Azure Cloud Shell profile.

|

Issue-Feature,Help Wanted,Area-Extensibility,Product-Terminal

|

low

|

Minor

|

617,883,309 |

go

|

net/http: Transport doesn't discard connections which received a 408 Request Timeout

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions please use one of our forums: https://github.com/golang/go/wiki/Questions

-->

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.2 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/root/.cache/go-build"

GOENV="/root/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOINSECURE=""

GONOPROXY=""

GONOSUMDB=""

GOOS="linux"

GOPATH="/go"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/usr/local/go"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/usr/local/go/pkg/tool/linux_amd64"

GCCGO="gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD=""

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build338312077=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?

I'm reading net/http for implementing HTTP client in Go. I want to use connections pool functionality of it but not sure how to deal with the stale connections.

`*http.Transport` says it retries the request if a connection got closed by server `while it was trying to read the response`.

https://github.com/golang/go/blob/a88c26eb286098b4c8f322f5076e933556fce5ac/src/net/http/transport.go#L640-L645

Since #32310, the connection is discarded also when `408 Request Timeout` is sent on it.

https://github.com/golang/go/blob/a88c26eb286098b4c8f322f5076e933556fce5ac/src/net/http/transport.go#L2127-L2135

But if a connection receives `408` *as a response to the request*, no retry is held and that connection doesn't get discarded. So the client of `*http.Transport` sees `408` response repeatedly until that connection actually gets closed from the server.

Two open questions:

- Is it the client(of `*http.Transport`)'s responsibility to retry the request when it sees `408`?

- And why `*http.Transport` doesn't discard connections which got `408`?

You can reproduce this situation with the server which responses `408` for requests but doesn't close the connection immediately:

```go

package main

import (

"context"

"fmt"

"io"

"io/ioutil"

"net/http"

"net/http/httptest"

"net/http/httptrace"

"runtime"

"time"

)

func main() {

fmt.Println(runtime.Version())

server := httptest.NewServer(http.HandlerFunc(func(rw http.ResponseWriter, req *http.Request) {

http.Error(rw, "", http.StatusRequestTimeout)

}))

defer server.Close()

req, err := http.NewRequestWithContext(

httptrace.WithClientTrace(context.Background(), &httptrace.ClientTrace{

GotConn: func(info httptrace.GotConnInfo) {

fmt.Printf("WasIdle: %v\n", info.WasIdle)

},

}),

"GET", server.URL, nil,

)

if err != nil {

panic(err)

}

for i := 0; i < 3; i++ {

fmt.Printf("===== %d =====\n", i)

res, err := http.DefaultTransport.RoundTrip(req)

if err != nil {

panic(err)

}

fmt.Println(res)

io.Copy(ioutil.Discard, res.Body)

res.Body.Close()

time.Sleep(time.Second)

}

}

```

Then the client always sees `408` through the cached connection.

```console

go1.14.2

===== 0 =====

WasIdle: false

&{408 Request Timeout 408 HTTP/1.1 1 1 map[Content-Length:[1] Content-Type:[text/plain; charset=utf-8] Date:[Thu, 14 May 2020 02:00:53 GMT] X-Content-Type-Options:[nosniff]] 0xc000190080 1 [] false false map[] 0xc000142000 <nil>}

===== 1 =====

WasIdle: true

&{408 Request Timeout 408 HTTP/1.1 1 1 map[Content-Length:[1] Content-Type:[text/plain; charset=utf-8] Date:[Thu, 14 May 2020 02:00:54 GMT] X-Content-Type-Options:[nosniff]] 0xc000092240 1 [] false false map[] 0xc000142000 <nil>}

===== 2 =====

WasIdle: true

&{408 Request Timeout 408 HTTP/1.1 1 1 map[Content-Length:[1] Content-Type:[text/plain; charset=utf-8] Date:[Thu, 14 May 2020 02:00:55 GMT] X-Content-Type-Options:[nosniff]] 0xc00021e080 1 [] false false map[] 0xc000142000 <nil>}

```

### What did you expect to see?

When `*http.Transport` get 408 as a response, it should discard the connection and retry with another.

### What did you see instead?

When `*http.Transport` get 408 as a response, it doesn't discard the connection nor retry.

|

NeedsInvestigation

|

low

|

Critical

|

617,889,112 |

PowerToys

|

Nightly releases

|

Not really a feature request, more like a helpful suggestion I could do.

I have a server and maybe if you guys think I should setup a release system?

Such as: Master, Stable, Nightly builds?

Up to you! Please react with reactions if you think this is a good idea, -3 reactions and i'll close this.

(I'm not sure how I would do this but, it's up to the person running this!).

More information on how I would run this:

1) It would run jenkins.

2) Links could be in readme.md

3) I'll give the operator of this repository full access of the Jenkins website.

Thank's all!

|

Area-Setup/Install

|

low

|

Major

|

617,906,801 |

flutter

|

type 'MaterialPageRoute<dynamic>' is not a subtype of type 'Route<String?>?' of ' in type cast'

|

### Hi, I found an uncertain problem!

#### Problem description:

> I expect to use `Navigator.of(context).pop<String>` to return a value of a certain type, but the result is always a value of dynamic type.

#### Code:

```dart

// First screen

Navigator.of(context).pushNamed<String>('login').then((String result){

print('login.result runtimeType: ${result.runtimeType}');

});

// Second screen

Navigator.of(context).pop<String>('success');

```

#### A type error will occur in the above code:

```bash

E/flutter (16614): [ERROR:flutter/lib/ui/ui_dart_state.cc(157)] Unhandled Exception: type 'MaterialPageRoute<dynamic>' is not a subtype of type 'Route<String>' in type cast

E/flutter (16614): #0 NavigatorState._routeNamed (package:flutter/src/widgets/navigator.dart:3138:55)

E/flutter (16614): #1 NavigatorState.pushNamed (package:flutter/src/widgets/navigator.dart:3190:20)

E/flutter (16614): #2 _Transfer.build.<anonymous closure> (package:panda_exchange/views/FirstScreen.dart:157:55)

E/flutter (16614): #3 GestureRecognizer.invokeCallback (package:flutter/src/gestures/recognizer.dart:182:24)

E/flutter (16614): #4 TapGestureRecognizer.handleTapUp (package:flutter/src/gestures/tap.dart:504:11)

E/flutter (16614): #5 BaseTapGestureRecognizer._checkUp (package:flutter/src/gestures/tap.dart:282:5)

E/flutter (16614): #6 BaseTapGestureRecognizer.acceptGesture (package:flutter/src/gestures/tap.dart:254:7)

E/flutter (16614): #7 GestureArenaManager.sweep (package:flutter/src/gestures/arena.dart:156:27)

E/flutter (16614): #8 GestureBinding.handleEvent (package:flutter/src/gestures/binding.dart:222:20)

E/flutter (16614): #9 GestureBinding.dispatchEvent (package:flutter/src/gestures/binding.dart:198:22)

E/flutter (16614): #10 GestureBinding._handlePointerEvent (package:flutter/src/gestures/binding.dart:156:7)

E/flutter (16614): #11 GestureBinding._flushPointerEventQueue (package:flutter/src/gestures/binding.dart:102:7)

E/flutter (16614): #12 GestureBinding._handlePointerDataPacket (package:flutter/src/gestures/binding.dart:86:7)

E/flutter (16614): #13 _rootRunUnary (dart:async/zone.dart:1196:13)

E/flutter (16614): #14 _CustomZone.runUnary (dart:async/zone.dart:1085:19)

E/flutter (16614): #15 _CustomZone.runUnaryGuarded (dart:async/zone.dart:987:7)

E/flutter (16614): #16 _invoke1 (dart:ui/hooks.dart:275:10)

E/flutter (16614): #17 _dispatchPointerDataPacket (dart:ui/hooks.dart:184:5)

E/flutter (16614):

```

|

framework,f: material design,f: routes,has reproducible steps,P2,found in release: 3.3,found in release: 3.7,team-design,triaged-design

|

low

|

Critical

|

617,907,544 |

ant-design

|

How could I format the week num in WeekPicker

|

- [x] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### What problem does this feature solve?

Now the week num in WeekPicker is the number of year , but for some one that is not clear, they want the number of month.

现在WeekPicker的组件里,周数指的是一年当中的第几周,但对一些客户来说,这个不够明确,他们比较希望的表达是某个月的第几周。

### What does the proposed API look like?

I hope there is a method to format the week number, just like this.

```

<WeekPicker

weekNumberFormatter = {(beginDate, endDate) => ''}

/>

```

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

Inactive

|

low

|

Minor

|

617,930,185 |

vscode

|

Tab key when indenting with spaces does not insert correct number of spaces

|

<!-- ⚠️⚠️ Do Not Delete This! bug_report_template ⚠️⚠️ -->

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Also please test using the latest insiders build to make sure your issue has not already been fixed: https://code.visualstudio.com/insiders/ -->

<!-- Use Help > Report Issue to prefill these. -->

- VSCode Version: 1.45.0

- OS Version: macOS Catalina 10.15.4

This is a re-filing of #80129 which was closed erroneously. This is NOT caused by an extension and reproduces when editing even a plain text document when extensions are all disabled.

Related to #2798.

If the insertion point is *anywhere* inside the indentation at the start of the line, and I press <kbd>Tab</kbd>, the indentation should be increased to the next full tab stop (multiple of the tab width).

I'll use the period `.` character instead of spaces in indentation so that it is easier to see what I'm talking about.

If I have a line of code indented by 4 spaces like this:

```python

....do_something()

```

If I place the insertion point on column 2 and press tab, I get _7_ spaces. That's really irritating, it means I have to be hyper precise placing my cursor when I want to indent a line, even when there's a very wide click target I should be able to hit anywhere.

```python

.......do_something()

```

**Xcode** does this properly, it increases the indentation to 8 spaces and moves the cursor to the end of the indentation. **TextMate** behaves the same as Xcode.

<!-- Launch with `code --disable-extensions` to check. -->

Does this issue occur when all extensions are disabled?: Yes

|

feature-request,editor-commands

|

low

|

Critical

|

617,946,963 |

pytorch

|

Named Tensor and Indexing

|

First of all, thanks for all the great work on PyTorch! It's been a pleasure to use, and to watch it continue to improve. I was also excited about Named Tensor, for all the reasons described in the initial proposal: there's painful gotchas in unnamed dimensions, and opportunity to write clearer, safer code with named dimensions.

However, when we tried to use named tensors, we ran into some trouble, mostly around indexing. This post will be part feature request, and part general observation / comment.

### Indexing Not Supported - Feature Request

We make use of indexing, primarily for embedding lookups, which is not supported with named tensors (as of 1.5):

```

named_tensor = torch.tensor([100, 101, 102], names=('foo',))

named_tensor[[0,2]] # -> RuntimeError: index is not yet supported with named tensors.

```

Similarly for `index_select`. The runtime error recommends removing the names and recreating them. Since this is rather cumbersome, it would be great if named tensor could support indexing more naturally.

### Named Dimension vs Labeled Index

One slightly unexpected observation in our exploration of named tensor is that we realized we actually had more cases where a labeled index would help with legibility and safety, than for named dimensions. Of course, both together would be better still. As a reference, consider the popular pandas dataframe API. For a concrete example, imagine we have a grocery model with a fruit embedding tensor represented as a pandas dataframe:

```

df = pd.DataFrame({'apple':[0,0],'pear':[1,1],'orange':[2,2]})

```

We can also make things even more explicit if we wish, analogous to named tensor, by assigning names to the index/columns (the pandas analogy breaks down slightly because dataframes are inherently 2-dimensional and don't fully generalize to tensors, so I'll stick with a 2 dimensional example here for clarity). But either way, we can index into this dataframe with labels rather than numeric indices:

```

df[['apple','orange']]

```

This is handy because it lets us standardize a label vocabulary, and avoids exposing the internal implementation detail of an arbitrary numeric index. When working with pytorch I find myself rolling my own indexing solutions, which would be simple enough in this toy example but can get quite messy as things become more complicated. Besides the potential complexity and error-prone nature of bespoke index tracking, there's also the drawback of missing out on a tighter integration of indexing with pytorch, which could be helpful for inspecting model objects.

Overall, it would simplify our model interface substantially if pytorch could provide built-in support for a labled index, possibly augmenting tensors in a similar fashion to named tensor. Of course this is way beyond the scope of named tensor itself, but perhaps this can help motivate future exploration, or if there is existing work in this direction I'd love to hear about it!

cc @zou3519

|

triaged,module: named tensor

|

low

|

Critical

|

618,063,771 |

TypeScript

|

templated function with multiple signatures does not have options merged for autocomplete.

|

```ts

function regular(options: {abc: 'hello'});

function regular(options: {abc: 'world'});

function regular(options: any) {

}

regular({abc: '' });

function templated<K extends string>(options: {abc: 'hello'});

function templated<K extends string>(options: {abc: 'world'});

function templated(options: any) {

}

templated({abc: ''});

```

With the `regular` function, asking for autocomplete inside the quotes for `abc` gives `"hello"` and `"world"`. With the `templated` function, only `"hello"` is given. Type checking itself works fine.

|

Bug,Domain: Completion Lists

|

low

|

Minor

|

618,079,361 |

youtube-dl

|

ondamedia.cl

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2020.05.08. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.

- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.

- Make sure that site you are requesting is not dedicated to copyright infringement, see https://yt-dl.org/copyright-infringement. youtube-dl does not support such sites. In order for site support request to be accepted all provided example URLs should not violate any copyrights.

- Search the bugtracker for similar site support requests: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a new site support request

- [x] I've verified that I'm running youtube-dl version **2020.05.08**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that none of provided URLs violate any copyrights

- [x] I've searched the bugtracker for similar site support requests including closed ones

## Example URLs

<!--

Provide all kinds of example URLs support for which should be included. Replace following example URLs by yours.

-->

- Single video: https://ondamedia.cl/#/player/velodromo

- Single video: https://ondamedia.cl/#/player/hoy-y-no-manana

- Playlist: https://ondamedia.cl/#/result?query=ondasinfronteras

## Description

<!--

Provide any additional information.

If work on your issue requires account credentials please provide them or explain how one can obtain them.

-->

Collection of Chilean movies, documentaries and similar items hosted by the Chilean Ministry of Culture

|

site-support-request

|

low

|

Critical

|

618,126,175 |

pytorch

|

[Feature] Option to have zeros/ones/full output tensor with zero strides

|

## 🚀 Feature

An option to return a tensor with all strides set to 0 for `torch.zeros`, `torch.ones`, `torch.Tensor.new_ones`, `torch.Tensor.new_zeros`, etc. for smaller memory usage and better performance.

## Motivation

For now, an alternative way of doing this is by calling two methods, e.g.:

`x.new_full((1,), value).expand_as(x)`

But it is a pretty unfriendly approach, therefore, I have mostly seen a simple `torch.full_like(x, value)` being used instead, which can unnecessarily allocate a huge chunk of the memory.

## Pitch

Possible implementations: