| problem_id (string, length 18 to 22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_49673 | rasdani/github-patches | git_diff | kserve__kserve-2835 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

No matches for kind \"HorizontalPodAutoscaler\" in version \"autoscaling/v2beta2\

/kind bug

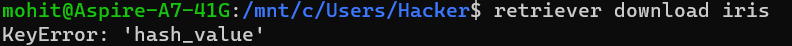

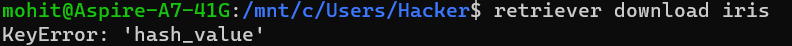

**What steps did you take and what happened:**

Deploy kserve in raw mode on kubernetes 1.26 where autoscaling/v2beta2 is no longer available

**What did you expect to happen:**

Kserve should support v2 of the api

</issue>

<code>

[start of hack/python-sdk/update_release_version_helper.py]

1 #!/usr/bin/env python3
2
3 # Copyright 2023 The KServe Authors.
4 #
5 # Licensed under the Apache License, Version 2.0 (the "License");
6 # you may not use this file except in compliance with the License.
7 # You may obtain a copy of the License at
8 #
9 #     http://www.apache.org/licenses/LICENSE-2.0
10 #
11 # Unless required by applicable law or agreed to in writing, software
12 # distributed under the License is distributed on an "AS IS" BASIS,
13 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
14 # See the License for the specific language governing permissions and
15 # limitations under the License.
16
17 import tomlkit
18 import argparse
19
20 parser = argparse.ArgumentParser(description="Update release version in python toml files")
21 parser.add_argument("version", type=str, help="release version")
22 args, _ = parser.parse_known_args()
23
24 toml_files = [
25     "python/kserve/pyproject.toml",
26     "python/aiffairness/pyproject.toml",
27     "python/aixexplainer/pyproject.toml",
28     "python/alibiexplainer/pyproject.toml",
29     "python/artexplainer/pyproject.toml",
30     "python/custom_model/pyproject.toml",
31     "python/custom_transformer/pyproject.toml",
32     "python/lgbserver/pyproject.toml",
33     "python/paddleserver/pyproject.toml",
34     "python/pmmlserver/pyproject.toml",
35     "python/sklearnserver/pyproject.toml",
36     "python/xgbserver/pyproject.toml",
37 ]
38
39 for toml_file in toml_files:
40     with open(toml_file, "r") as file:
41         toml_config = tomlkit.load(file)
42         toml_config['tool']['poetry']['version'] = args.version
43
44     with open(toml_file, "w") as file:
45         tomlkit.dump(toml_config, file)
46

[end of hack/python-sdk/update_release_version_helper.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/hack/python-sdk/update_release_version_helper.py b/hack/python-sdk/update_release_version_helper.py
--- a/hack/python-sdk/update_release_version_helper.py
+++ b/hack/python-sdk/update_release_version_helper.py
@@ -24,7 +24,6 @@
 toml_files = [
     "python/kserve/pyproject.toml",
     "python/aiffairness/pyproject.toml",
-    "python/aixexplainer/pyproject.toml",
     "python/alibiexplainer/pyproject.toml",
     "python/artexplainer/pyproject.toml",
     "python/custom_model/pyproject.toml",

|

{"golden_diff": "diff --git a/hack/python-sdk/update_release_version_helper.py b/hack/python-sdk/update_release_version_helper.py\n--- a/hack/python-sdk/update_release_version_helper.py\n+++ b/hack/python-sdk/update_release_version_helper.py\n@@ -24,7 +24,6 @@\n toml_files = [\n \"python/kserve/pyproject.toml\",\n \"python/aiffairness/pyproject.toml\",\n- \"python/aixexplainer/pyproject.toml\",\n \"python/alibiexplainer/pyproject.toml\",\n \"python/artexplainer/pyproject.toml\",\n \"python/custom_model/pyproject.toml\",\n", "issue": "No matches for kind \\\"HorizontalPodAutoscaler\\\" in version \\\"autoscaling/v2beta2\\\n/kind bug\r\n\r\n**What steps did you take and what happened:**\r\nDeploy kserve in raw mode on kubernetes 1.26 where autoscaling/v2beta2 is no longer available\r\n\r\n\r\n**What did you expect to happen:**\r\nKserve should support v2 of the api\r\n\n", "before_files": [{"content": "#!/usr/bin/env python3\n\n# Copyright 2023 The KServe Authors.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport tomlkit\nimport argparse\n\nparser = argparse.ArgumentParser(description=\"Update release version in python toml files\")\nparser.add_argument(\"version\", type=str, help=\"release version\")\nargs, _ = parser.parse_known_args()\n\ntoml_files = [\n \"python/kserve/pyproject.toml\",\n \"python/aiffairness/pyproject.toml\",\n \"python/aixexplainer/pyproject.toml\",\n \"python/alibiexplainer/pyproject.toml\",\n \"python/artexplainer/pyproject.toml\",\n 
\"python/custom_model/pyproject.toml\",\n \"python/custom_transformer/pyproject.toml\",\n \"python/lgbserver/pyproject.toml\",\n \"python/paddleserver/pyproject.toml\",\n \"python/pmmlserver/pyproject.toml\",\n \"python/sklearnserver/pyproject.toml\",\n \"python/xgbserver/pyproject.toml\",\n]\n\nfor toml_file in toml_files:\n with open(toml_file, \"r\") as file:\n toml_config = tomlkit.load(file)\n toml_config['tool']['poetry']['version'] = args.version\n\n with open(toml_file, \"w\") as file:\n tomlkit.dump(toml_config, file)\n", "path": "hack/python-sdk/update_release_version_helper.py"}]}

| 1,115 | 133 |

gh_patches_debug_30014 | rasdani/github-patches | git_diff | DDMAL__CantusDB-1183 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`created_by` and `last_updated_by` fields should be read only on source admin change page

They should be readonly fields instead. I don't recall seeing them at the top of the page like they are now. Maybe previously they were hidden and now they are visible.

</issue>

<code>

[start of django/cantusdb_project/main_app/admin.py]

1 from django.contrib import admin
2 from main_app.models import *
3 from main_app.forms import (
4     AdminCenturyForm,
5     AdminChantForm,
6     AdminFeastForm,
7     AdminGenreForm,
8     AdminNotationForm,
9     AdminOfficeForm,
10     AdminProvenanceForm,
11     AdminRismSiglumForm,
12     AdminSegmentForm,
13     AdminSequenceForm,
14     AdminSourceForm,
15 )
16
17 # these fields should not be editable by all classes
18 EXCLUDE = (
19     "created_by",
20     "last_updated_by",
21     "json_info",
22 )
23
24
25 class BaseModelAdmin(admin.ModelAdmin):
26     exclude = EXCLUDE
27
28     # if an object is created in the admin interface, assign the user to the created_by field
29     # else if an object is updated in the admin interface, assign the user to the last_updated_by field
30     def save_model(self, request, obj, form, change):
31         if change:
32             obj.last_updated_by = request.user
33         else:
34             obj.created_by = request.user
35         super().save_model(request, obj, form, change)
36
37
38 class CenturyAdmin(BaseModelAdmin):
39     search_fields = ("name",)
40     form = AdminCenturyForm
41
42
43 class ChantAdmin(BaseModelAdmin):
44     @admin.display(description="Source Siglum")
45     def get_source_siglum(self, obj):
46         if obj.source:
47             return obj.source.siglum
48
49     list_display = (
50         "incipit",
51         "get_source_siglum",
52         "genre",
53     )
54     search_fields = (
55         "title",
56         "incipit",
57         "cantus_id",
58         "id",
59     )
60
61     readonly_fields = (
62         "date_created",
63         "date_updated",
64     )
65
66     list_filter = (
67         "genre",
68         "office",
69     )
70     exclude = EXCLUDE + (
71         "col1",
72         "col2",
73         "col3",
74         "next_chant",
75         "s_sequence",
76         "is_last_chant_in_feast",
77         "visible_status",
78         "date",
79         "volpiano_notes",
80         "volpiano_intervals",
81         "title",
82         "differentiae_database",
83     )
84     form = AdminChantForm
85     raw_id_fields = (
86         "source",
87         "feast",
88     )
89     ordering = ("source__siglum",)
90
91
92 class DifferentiaAdmin(BaseModelAdmin):
93     search_fields = (
94         "differentia_id",
95         "id",
96     )
97
98
99 class FeastAdmin(BaseModelAdmin):
100     search_fields = (
101         "name",
102         "feast_code",
103     )
104     list_display = (
105         "name",
106         "month",
107         "day",
108         "feast_code",
109     )
110     form = AdminFeastForm
111
112
113 class GenreAdmin(BaseModelAdmin):
114     search_fields = ("name",)
115     form = AdminGenreForm
116
117
118 class NotationAdmin(BaseModelAdmin):
119     search_fields = ("name",)
120     form = AdminNotationForm
121
122
123 class OfficeAdmin(BaseModelAdmin):
124     search_fields = ("name",)
125     form = AdminOfficeForm
126
127
128 class ProvenanceAdmin(BaseModelAdmin):
129     search_fields = ("name",)
130     form = AdminProvenanceForm
131
132
133 class RismSiglumAdmin(BaseModelAdmin):
134     search_fields = ("name",)
135     form = AdminRismSiglumForm
136
137
138 class SegmentAdmin(BaseModelAdmin):
139     search_fields = ("name",)
140     form = AdminSegmentForm
141
142
143 class SequenceAdmin(BaseModelAdmin):
144     @admin.display(description="Source Siglum")
145     def get_source_siglum(self, obj):
146         if obj.source:
147             return obj.source.siglum
148
149     search_fields = (
150         "title",
151         "incipit",
152         "cantus_id",
153         "id",
154     )
155     exclude = EXCLUDE + (
156         "c_sequence",
157         "next_chant",
158         "is_last_chant_in_feast",
159         "visible_status",
160     )
161     list_display = ("incipit", "get_source_siglum", "genre")
162     list_filter = (
163         "genre",
164         "office",
165     )
166     raw_id_fields = (
167         "source",
168         "feast",
169     )
170     ordering = ("source__siglum",)
171     form = AdminSequenceForm
172
173
174 class SourceAdmin(BaseModelAdmin):
175     exclude = ("source_status",)
176
177     # These search fields are also available on the user-source inline relationship in the user admin page
178     search_fields = (
179         "siglum",
180         "title",
181         "id",
182     )
183     readonly_fields = (
184         "number_of_chants",
185         "number_of_melodies",
186         "date_created",
187         "date_updated",
188     )
189     # from the Django docs:
190     # Adding a ManyToManyField to this list will instead use a nifty unobtrusive JavaScript “filter” interface
191     # that allows searching within the options. The unselected and selected options appear in two boxes side by side.
192     filter_horizontal = (
193         "century",
194         "notation",
195         "current_editors",
196         "inventoried_by",
197         "full_text_entered_by",
198         "melodies_entered_by",
199         "proofreaders",
200         "other_editors",
201     )
202
203     list_display = (
204         "title",
205         "siglum",
206         "id",
207     )
208
209     list_filter = (
210         "full_source",
211         "segment",
212         "source_status",
213         "published",
214         "century",
215     )
216
217     ordering = ("siglum",)
218
219     form = AdminSourceForm
220
221
222 admin.site.register(Century, CenturyAdmin)
223 admin.site.register(Chant, ChantAdmin)
224 admin.site.register(Differentia, DifferentiaAdmin)
225 admin.site.register(Feast, FeastAdmin)
226 admin.site.register(Genre, GenreAdmin)
227 admin.site.register(Notation, NotationAdmin)
228 admin.site.register(Office, OfficeAdmin)
229 admin.site.register(Provenance, ProvenanceAdmin)
230 admin.site.register(RismSiglum, RismSiglumAdmin)
231 admin.site.register(Segment, SegmentAdmin)
232 admin.site.register(Sequence, SequenceAdmin)
233 admin.site.register(Source, SourceAdmin)
234

[end of django/cantusdb_project/main_app/admin.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/django/cantusdb_project/main_app/admin.py b/django/cantusdb_project/main_app/admin.py
--- a/django/cantusdb_project/main_app/admin.py
+++ b/django/cantusdb_project/main_app/admin.py
@@ -16,14 +16,17 @@
 
 # these fields should not be editable by all classes
 EXCLUDE = (
-    "created_by",
-    "last_updated_by",
     "json_info",
 )
 
+READ_ONLY = (
+    "created_by",
+    "last_updated_by",
+)
 
 class BaseModelAdmin(admin.ModelAdmin):
     exclude = EXCLUDE
+    readonly_fields = READ_ONLY
 
     # if an object is created in the admin interface, assign the user to the created_by field
     # else if an object is updated in the admin interface, assign the user to the last_updated_by field
@@ -58,7 +61,7 @@
         "id",
     )
 
-    readonly_fields = (
+    readonly_fields = READ_ONLY + (
         "date_created",
         "date_updated",
     )
@@ -172,7 +175,7 @@
 
 
 class SourceAdmin(BaseModelAdmin):
-    exclude = ("source_status",)
+    exclude = EXCLUDE + ("source_status",)
 
     # These search fields are also available on the user-source inline relationship in the user admin page
     search_fields = (
@@ -180,7 +183,7 @@
         "title",
         "id",
     )
-    readonly_fields = (
+    readonly_fields = READ_ONLY + (
         "number_of_chants",
         "number_of_melodies",
         "date_created",

|

{"golden_diff": "diff --git a/django/cantusdb_project/main_app/admin.py b/django/cantusdb_project/main_app/admin.py\n--- a/django/cantusdb_project/main_app/admin.py\n+++ b/django/cantusdb_project/main_app/admin.py\n@@ -16,14 +16,17 @@\n \n # these fields should not be editable by all classes\n EXCLUDE = (\n- \"created_by\",\n- \"last_updated_by\",\n \"json_info\",\n )\n \n+READ_ONLY = (\n+ \"created_by\",\n+ \"last_updated_by\",\n+)\n \n class BaseModelAdmin(admin.ModelAdmin):\n exclude = EXCLUDE\n+ readonly_fields = READ_ONLY\n \n # if an object is created in the admin interface, assign the user to the created_by field\n # else if an object is updated in the admin interface, assign the user to the last_updated_by field\n@@ -58,7 +61,7 @@\n \"id\",\n )\n \n- readonly_fields = (\n+ readonly_fields = READ_ONLY + (\n \"date_created\",\n \"date_updated\",\n )\n@@ -172,7 +175,7 @@\n \n \n class SourceAdmin(BaseModelAdmin):\n- exclude = (\"source_status\",)\n+ exclude = EXCLUDE + (\"source_status\",)\n \n # These search fields are also available on the user-source inline relationship in the user admin page\n search_fields = (\n@@ -180,7 +183,7 @@\n \"title\",\n \"id\",\n )\n- readonly_fields = (\n+ readonly_fields = READ_ONLY + (\n \"number_of_chants\",\n \"number_of_melodies\",\n \"date_created\",\n", "issue": "`created_by` and `last_updated_by` fields should be read only on source admin change page\nThey should be readonly fields instead. I don't recall seeing them at the top of the page like they are now. 
Maybe previously they were hidden and now they are visible.\n", "before_files": [{"content": "from django.contrib import admin\nfrom main_app.models import *\nfrom main_app.forms import (\n AdminCenturyForm,\n AdminChantForm,\n AdminFeastForm,\n AdminGenreForm,\n AdminNotationForm,\n AdminOfficeForm,\n AdminProvenanceForm,\n AdminRismSiglumForm,\n AdminSegmentForm,\n AdminSequenceForm,\n AdminSourceForm,\n)\n\n# these fields should not be editable by all classes\nEXCLUDE = (\n \"created_by\",\n \"last_updated_by\",\n \"json_info\",\n)\n\n\nclass BaseModelAdmin(admin.ModelAdmin):\n exclude = EXCLUDE\n\n # if an object is created in the admin interface, assign the user to the created_by field\n # else if an object is updated in the admin interface, assign the user to the last_updated_by field\n def save_model(self, request, obj, form, change):\n if change:\n obj.last_updated_by = request.user\n else:\n obj.created_by = request.user\n super().save_model(request, obj, form, change)\n\n\nclass CenturyAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminCenturyForm\n\n\nclass ChantAdmin(BaseModelAdmin):\n @admin.display(description=\"Source Siglum\")\n def get_source_siglum(self, obj):\n if obj.source:\n return obj.source.siglum\n\n list_display = (\n \"incipit\",\n \"get_source_siglum\",\n \"genre\",\n )\n search_fields = (\n \"title\",\n \"incipit\",\n \"cantus_id\",\n \"id\",\n )\n\n readonly_fields = (\n \"date_created\",\n \"date_updated\",\n )\n\n list_filter = (\n \"genre\",\n \"office\",\n )\n exclude = EXCLUDE + (\n \"col1\",\n \"col2\",\n \"col3\",\n \"next_chant\",\n \"s_sequence\",\n \"is_last_chant_in_feast\",\n \"visible_status\",\n \"date\",\n \"volpiano_notes\",\n \"volpiano_intervals\",\n \"title\",\n \"differentiae_database\",\n )\n form = AdminChantForm\n raw_id_fields = (\n \"source\",\n \"feast\",\n )\n ordering = (\"source__siglum\",)\n\n\nclass DifferentiaAdmin(BaseModelAdmin):\n search_fields = (\n \"differentia_id\",\n \"id\",\n 
)\n\n\nclass FeastAdmin(BaseModelAdmin):\n search_fields = (\n \"name\",\n \"feast_code\",\n )\n list_display = (\n \"name\",\n \"month\",\n \"day\",\n \"feast_code\",\n )\n form = AdminFeastForm\n\n\nclass GenreAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminGenreForm\n\n\nclass NotationAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminNotationForm\n\n\nclass OfficeAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminOfficeForm\n\n\nclass ProvenanceAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminProvenanceForm\n\n\nclass RismSiglumAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminRismSiglumForm\n\n\nclass SegmentAdmin(BaseModelAdmin):\n search_fields = (\"name\",)\n form = AdminSegmentForm\n\n\nclass SequenceAdmin(BaseModelAdmin):\n @admin.display(description=\"Source Siglum\")\n def get_source_siglum(self, obj):\n if obj.source:\n return obj.source.siglum\n\n search_fields = (\n \"title\",\n \"incipit\",\n \"cantus_id\",\n \"id\",\n )\n exclude = EXCLUDE + (\n \"c_sequence\",\n \"next_chant\",\n \"is_last_chant_in_feast\",\n \"visible_status\",\n )\n list_display = (\"incipit\", \"get_source_siglum\", \"genre\")\n list_filter = (\n \"genre\",\n \"office\",\n )\n raw_id_fields = (\n \"source\",\n \"feast\",\n )\n ordering = (\"source__siglum\",)\n form = AdminSequenceForm\n\n\nclass SourceAdmin(BaseModelAdmin):\n exclude = (\"source_status\",)\n\n # These search fields are also available on the user-source inline relationship in the user admin page\n search_fields = (\n \"siglum\",\n \"title\",\n \"id\",\n )\n readonly_fields = (\n \"number_of_chants\",\n \"number_of_melodies\",\n \"date_created\",\n \"date_updated\",\n )\n # from the Django docs:\n # Adding a ManyToManyField to this list will instead use a nifty unobtrusive JavaScript \u201cfilter\u201d interface\n # that allows searching within the options. 
The unselected and selected options appear in two boxes side by side.\n filter_horizontal = (\n \"century\",\n \"notation\",\n \"current_editors\",\n \"inventoried_by\",\n \"full_text_entered_by\",\n \"melodies_entered_by\",\n \"proofreaders\",\n \"other_editors\",\n )\n\n list_display = (\n \"title\",\n \"siglum\",\n \"id\",\n )\n\n list_filter = (\n \"full_source\",\n \"segment\",\n \"source_status\",\n \"published\",\n \"century\",\n )\n\n ordering = (\"siglum\",)\n\n form = AdminSourceForm\n\n\nadmin.site.register(Century, CenturyAdmin)\nadmin.site.register(Chant, ChantAdmin)\nadmin.site.register(Differentia, DifferentiaAdmin)\nadmin.site.register(Feast, FeastAdmin)\nadmin.site.register(Genre, GenreAdmin)\nadmin.site.register(Notation, NotationAdmin)\nadmin.site.register(Office, OfficeAdmin)\nadmin.site.register(Provenance, ProvenanceAdmin)\nadmin.site.register(RismSiglum, RismSiglumAdmin)\nadmin.site.register(Segment, SegmentAdmin)\nadmin.site.register(Sequence, SequenceAdmin)\nadmin.site.register(Source, SourceAdmin)\n", "path": "django/cantusdb_project/main_app/admin.py"}]}

| 2,512 | 365 |

gh_patches_debug_10660 | rasdani/github-patches | git_diff | mozilla__pontoon-2816 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Don't submit pretranslated strings with warnings or errors

If possible, it would be great to have 2 different behaviors:

* Strings with warning should be suggested, instead of submitted, because they might be OK (i.e. the warning could be ignored), or at least it might be still useful to post-edit them instead of starting from scratch.

* String with errors shouldn't be even suggested.

If not possible, I guess storing them both as suggestions would work, since the localizer wouldn't be able to confirm a string with errors (but it will be confusing).

The important piece is not storing them in VCS, because it will create a chain of errors, especially in parts that we don't control (the final product).

</issue>

<code>

[start of pontoon/pretranslation/tasks.py]

1 import logging
2
3 from django.db.models import Q, CharField, Value as V
4 from django.db.models.functions import Concat
5 from django.conf import settings
6 from pontoon.base.models import (
7     Project,
8     Entity,
9     TranslatedResource,
10     Translation,
11 )
12 from pontoon.actionlog.models import ActionLog
13 from pontoon.pretranslation.pretranslate import (
14     get_pretranslations,
15     update_changed_instances,
16 )
17 from pontoon.base.tasks import PontoonTask
18 from pontoon.sync.core import serial_task
19 from pontoon.checks.utils import bulk_run_checks
20
21
22 log = logging.getLogger(__name__)
23
24
25 @serial_task(settings.SYNC_TASK_TIMEOUT, base=PontoonTask, lock_key="project={0}")
26 def pretranslate(self, project_pk, locales=None, entities=None):
27     """
28     Identifies strings without any translations and any suggestions.
29     Engages TheAlgorithm (bug 1552796) to gather pretranslations.
30     Stores pretranslations as suggestions (approved=False) to DB.
31
32     :arg project_pk: the pk of the project to be pretranslated
33     :arg Queryset locales: the locales for the project to be pretranslated
34     :arg Queryset entites: the entities for the project to be pretranslated
35
36     :returns: None
37     """
38     project = Project.objects.get(pk=project_pk)
39
40     if not project.pretranslation_enabled:
41         log.info(f"Pretranslation not enabled for project {project.name}")
42         return
43
44     if locales:
45         locales = project.locales.filter(pk__in=locales)
46     else:
47         locales = project.locales
48
49     locales = locales.filter(
50         project_locale__project=project,
51         project_locale__pretranslation_enabled=True,
52         project_locale__readonly=False,
53     )
54
55     if not locales:
56         log.info(
57             f"Pretranslation not enabled for any locale within project {project.name}"
58         )
59         return
60
61     log.info(f"Fetching pretranslations for project {project.name} started")
62
63     if not entities:
64         entities = Entity.objects.filter(
65             resource__project=project,
66             obsolete=False,
67         )
68
69     entities = entities.prefetch_related("resource")
70
71     # get available TranslatedResource pairs
72     tr_pairs = (
73         TranslatedResource.objects.filter(
74             resource__project=project,
75             locale__in=locales,
76         )
77         .annotate(
78             locale_resource=Concat(
79                 "locale_id", V("-"), "resource_id", output_field=CharField()
80             )
81         )
82         .values_list("locale_resource", flat=True)
83         .distinct()
84     )
85
86     # Fetch all distinct locale-entity pairs for which translation exists
87     translated_entities = (
88         Translation.objects.filter(
89             locale__in=locales,
90             entity__in=entities,
91         )
92         .annotate(
93             locale_entity=Concat(
94                 "locale_id", V("-"), "entity_id", output_field=CharField()
95             )
96         )
97         .values_list("locale_entity", flat=True)
98         .distinct()
99     )
100
101     translated_entities = list(translated_entities)
102
103     translations = []
104
105     # To keep track of changed TranslatedResources and their latest_translation
106     tr_dict = {}
107
108     tr_filter = []
109     index = -1
110
111     for locale in locales:
112         log.info(f"Fetching pretranslations for locale {locale.code} started")
113         for entity in entities:
114             locale_entity = f"{locale.id}-{entity.id}"
115             locale_resource = f"{locale.id}-{entity.resource.id}"
116             if locale_entity in translated_entities or locale_resource not in tr_pairs:
117                 continue
118
119             pretranslations = get_pretranslations(entity, locale)
120
121             if not pretranslations:
122                 continue
123
124             for string, plural_form, user in pretranslations:
125                 t = Translation(
126                     entity=entity,
127                     locale=locale,
128                     string=string,
129                     user=user,
130                     approved=False,
131                     pretranslated=True,
132                     active=True,
133                     plural_form=plural_form,
134                 )
135
136                 index += 1
137                 translations.append(t)
138
139                 if locale_resource not in tr_dict:
140                     tr_dict[locale_resource] = index
141
142                     # Add query for fetching respective TranslatedResource.
143                     tr_filter.append(
144                         Q(locale__id=locale.id) & Q(resource__id=entity.resource.id)
145                     )
146
147                 # Update the latest translation index
148                 tr_dict[locale_resource] = index
149
150         log.info(f"Fetching pretranslations for locale {locale.code} done")
151
152     if len(translations) == 0:
153         return
154
155     translations = Translation.objects.bulk_create(translations)
156
157     # Log creating actions
158     actions_to_log = [
159         ActionLog(
160             action_type=ActionLog.ActionType.TRANSLATION_CREATED,
161             performed_by=t.user,
162             translation=t,
163         )
164         for t in translations
165     ]
166
167     ActionLog.objects.bulk_create(actions_to_log)
168
169     # Run checks on all translations
170     translation_pks = {translation.pk for translation in translations}
171     bulk_run_checks(Translation.objects.for_checks().filter(pk__in=translation_pks))
172
173     # Mark translations as changed
174     changed_translations = Translation.objects.filter(pk__in=translation_pks)
175     changed_translations.bulk_mark_changed()
176
177     # Update latest activity and stats for changed instances.
178     update_changed_instances(tr_filter, tr_dict, translations)
179
180     log.info(f"Fetching pretranslations for project {project.name} done")
181

[end of pontoon/pretranslation/tasks.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/pontoon/pretranslation/tasks.py b/pontoon/pretranslation/tasks.py
--- a/pontoon/pretranslation/tasks.py
+++ b/pontoon/pretranslation/tasks.py
@@ -171,7 +171,12 @@
     bulk_run_checks(Translation.objects.for_checks().filter(pk__in=translation_pks))
 
     # Mark translations as changed
-    changed_translations = Translation.objects.filter(pk__in=translation_pks)
+    changed_translations = Translation.objects.filter(
+        pk__in=translation_pks,
+        # Do not sync translations with errors and warnings
+        errors__isnull=True,
+        warnings__isnull=True,
+    )
     changed_translations.bulk_mark_changed()
 
     # Update latest activity and stats for changed instances.

|

{"golden_diff": "diff --git a/pontoon/pretranslation/tasks.py b/pontoon/pretranslation/tasks.py\n--- a/pontoon/pretranslation/tasks.py\n+++ b/pontoon/pretranslation/tasks.py\n@@ -171,7 +171,12 @@\n bulk_run_checks(Translation.objects.for_checks().filter(pk__in=translation_pks))\n \n # Mark translations as changed\n- changed_translations = Translation.objects.filter(pk__in=translation_pks)\n+ changed_translations = Translation.objects.filter(\n+ pk__in=translation_pks,\n+ # Do not sync translations with errors and warnings\n+ errors__isnull=True,\n+ warnings__isnull=True,\n+ )\n changed_translations.bulk_mark_changed()\n \n # Update latest activity and stats for changed instances.\n", "issue": "Don't submit pretranslated strings with warnings or errors\nIf possible, it would be great to have 2 different behaviors:\r\n* Strings with warning should be suggested, instead of submitted, because they might be OK (i.e. the warning could be ignored), or at least it might be still useful to post-edit them instead of starting from scratch.\r\n* String with errors shouldn't be even suggested.\r\n\r\nIf not possible, I guess storing them both as suggestions would work, since the localizer wouldn't be able to confirm a string with errors (but it will be confusing).\r\n\r\nThe important piece is not storing them in VCS, because it will create a chain of errors, especially in parts that we don't control (the final product).\n", "before_files": [{"content": "import logging\n\nfrom django.db.models import Q, CharField, Value as V\nfrom django.db.models.functions import Concat\nfrom django.conf import settings\nfrom pontoon.base.models import (\n Project,\n Entity,\n TranslatedResource,\n Translation,\n)\nfrom pontoon.actionlog.models import ActionLog\nfrom pontoon.pretranslation.pretranslate import (\n get_pretranslations,\n update_changed_instances,\n)\nfrom pontoon.base.tasks import PontoonTask\nfrom pontoon.sync.core import serial_task\nfrom pontoon.checks.utils import 
bulk_run_checks\n\n\nlog = logging.getLogger(__name__)\n\n\n@serial_task(settings.SYNC_TASK_TIMEOUT, base=PontoonTask, lock_key=\"project={0}\")\ndef pretranslate(self, project_pk, locales=None, entities=None):\n \"\"\"\n Identifies strings without any translations and any suggestions.\n Engages TheAlgorithm (bug 1552796) to gather pretranslations.\n Stores pretranslations as suggestions (approved=False) to DB.\n\n :arg project_pk: the pk of the project to be pretranslated\n :arg Queryset locales: the locales for the project to be pretranslated\n :arg Queryset entites: the entities for the project to be pretranslated\n\n :returns: None\n \"\"\"\n project = Project.objects.get(pk=project_pk)\n\n if not project.pretranslation_enabled:\n log.info(f\"Pretranslation not enabled for project {project.name}\")\n return\n\n if locales:\n locales = project.locales.filter(pk__in=locales)\n else:\n locales = project.locales\n\n locales = locales.filter(\n project_locale__project=project,\n project_locale__pretranslation_enabled=True,\n project_locale__readonly=False,\n )\n\n if not locales:\n log.info(\n f\"Pretranslation not enabled for any locale within project {project.name}\"\n )\n return\n\n log.info(f\"Fetching pretranslations for project {project.name} started\")\n\n if not entities:\n entities = Entity.objects.filter(\n resource__project=project,\n obsolete=False,\n )\n\n entities = entities.prefetch_related(\"resource\")\n\n # get available TranslatedResource pairs\n tr_pairs = (\n TranslatedResource.objects.filter(\n resource__project=project,\n locale__in=locales,\n )\n .annotate(\n locale_resource=Concat(\n \"locale_id\", V(\"-\"), \"resource_id\", output_field=CharField()\n )\n )\n .values_list(\"locale_resource\", flat=True)\n .distinct()\n )\n\n # Fetch all distinct locale-entity pairs for which translation exists\n translated_entities = (\n Translation.objects.filter(\n locale__in=locales,\n entity__in=entities,\n )\n .annotate(\n locale_entity=Concat(\n 
\"locale_id\", V(\"-\"), \"entity_id\", output_field=CharField()\n )\n )\n .values_list(\"locale_entity\", flat=True)\n .distinct()\n )\n\n translated_entities = list(translated_entities)\n\n translations = []\n\n # To keep track of changed TranslatedResources and their latest_translation\n tr_dict = {}\n\n tr_filter = []\n index = -1\n\n for locale in locales:\n log.info(f\"Fetching pretranslations for locale {locale.code} started\")\n for entity in entities:\n locale_entity = f\"{locale.id}-{entity.id}\"\n locale_resource = f\"{locale.id}-{entity.resource.id}\"\n if locale_entity in translated_entities or locale_resource not in tr_pairs:\n continue\n\n pretranslations = get_pretranslations(entity, locale)\n\n if not pretranslations:\n continue\n\n for string, plural_form, user in pretranslations:\n t = Translation(\n entity=entity,\n locale=locale,\n string=string,\n user=user,\n approved=False,\n pretranslated=True,\n active=True,\n plural_form=plural_form,\n )\n\n index += 1\n translations.append(t)\n\n if locale_resource not in tr_dict:\n tr_dict[locale_resource] = index\n\n # Add query for fetching respective TranslatedResource.\n tr_filter.append(\n Q(locale__id=locale.id) & Q(resource__id=entity.resource.id)\n )\n\n # Update the latest translation index\n tr_dict[locale_resource] = index\n\n log.info(f\"Fetching pretranslations for locale {locale.code} done\")\n\n if len(translations) == 0:\n return\n\n translations = Translation.objects.bulk_create(translations)\n\n # Log creating actions\n actions_to_log = [\n ActionLog(\n action_type=ActionLog.ActionType.TRANSLATION_CREATED,\n performed_by=t.user,\n translation=t,\n )\n for t in translations\n ]\n\n ActionLog.objects.bulk_create(actions_to_log)\n\n # Run checks on all translations\n translation_pks = {translation.pk for translation in translations}\n bulk_run_checks(Translation.objects.for_checks().filter(pk__in=translation_pks))\n\n # Mark translations as changed\n changed_translations = 
Translation.objects.filter(pk__in=translation_pks)\n changed_translations.bulk_mark_changed()\n\n # Update latest activity and stats for changed instances.\n update_changed_instances(tr_filter, tr_dict, translations)\n\n log.info(f\"Fetching pretranslations for project {project.name} done\")\n", "path": "pontoon/pretranslation/tasks.py"}]}

| 2,257 | 170 |

gh_patches_debug_20429

|

rasdani/github-patches

|

git_diff

|

liqd__a4-meinberlin-2905

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

only module can be removed from published project

**URL:** https://meinberlin-dev.liqd.net/dashboard/projects/lorem-ipsum/basic/

**user:** initiator

**expected behaviour:** when a project is published, at least one modle should always be added to the project, the initiator shouldn't be able to remove the last one

**behaviour:** last added module can be removed, resulting ind strange project detail view ("Die Beteiligung ist aktuell nicht möglich. Sie startet am None. ") with an empty project without any participation.

**important screensize:**

**device & browser:**

**Comment/Question:** @CarolingerSeilchenspringer I am not sure we decided it should be like that (or can you just not delete the last module and only delete it after it has been removed?), but I think this needs to be fixed. Do you agree?

Screenshot?

</issue>

<code>

[start of meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py]

1 import json

2 from urllib.parse import unquote

3

4 from django import template

5

6 register = template.Library()

7

8

9 @register.simple_tag(takes_context=True)

10 def closed_accordeons(context, project_id):

11 request = context['request']

12 cookie = request.COOKIES.get('dashboard_projects_closed_accordeons', '[]')

13 ids = json.loads(unquote(cookie))

14 if project_id in ids:

15 ids.append(-1)

16 return ids

17

18

19 @register.filter

20 def is_publishable(project, project_progress):

21 """Check if project can be published.

22

23 Required project details need to be filled in and at least one module

24 has to be published (added to the project).

25 """

26 return (project_progress['project_is_complete']

27 and project.published_modules.count() >= 1)

28

29

30 @register.filter

31 def has_unpublishable_modules(project):

32 """Check if modules can be removed from project.

33

34 Modules can be removed if the project is not yet published and there is

35 another module published for (added to) the project.

36 """

37 return (project.is_draft

38 and project.published_modules.count() <= 1)

39

40

41 @register.filter

42 def project_nav_is_active(dashboard_menu_project):

43 """Check if the view is in the project dashboard nav."""

44 for item in dashboard_menu_project:

45 if item['is_active']:

46 return True

47 return False

48

49

50 @register.filter

51 def module_nav_is_active(dashboard_menu_modules):

52 """Check if the view is in the project dashboard nav."""

53 for module_menu in dashboard_menu_modules:

54 for item in module_menu['menu']:

55 if item['is_active']:

56 return True

57 return False

58

59

60 @register.filter

61 def has_publishable_module(dashboard_menu_modules):

62 for module_menu in dashboard_menu_modules:

63 if module_menu['is_complete']:

64 return True

65 return False

66

[end of meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py]

[start of meinberlin/apps/dashboard/views.py]

1 import json

2 from urllib import parse

3

4 from django.apps import apps

5 from django.contrib import messages

6 from django.contrib.messages.views import SuccessMessageMixin

7 from django.http import HttpResponseRedirect

8 from django.urls import resolve

9 from django.urls import reverse

10 from django.utils.translation import ugettext_lazy as _

11 from django.views import generic

12 from django.views.generic.detail import SingleObjectMixin

13

14 from adhocracy4.dashboard import mixins

15 from adhocracy4.dashboard import signals

16 from adhocracy4.dashboard import views as a4dashboard_views

17 from adhocracy4.dashboard.blueprints import get_blueprints

18 from adhocracy4.modules import models as module_models

19 from adhocracy4.phases import models as phase_models

20 from adhocracy4.projects import models as project_models

21 from adhocracy4.projects.mixins import ProjectMixin

22 from meinberlin.apps.dashboard.forms import DashboardProjectCreateForm

23

24

25 class ModuleBlueprintListView(ProjectMixin,

26 mixins.DashboardBaseMixin,

27 mixins.BlueprintMixin,

28 generic.DetailView):

29 template_name = 'meinberlin_dashboard/module_blueprint_list_dashboard.html'

30 permission_required = 'a4projects.change_project'

31 model = project_models.Project

32 slug_url_kwarg = 'project_slug'

33 menu_item = 'project'

34

35 @property

36 def blueprints(self):

37 return get_blueprints()

38

39 def get_permission_object(self):

40 return self.project

41

42

43 class ModuleCreateView(ProjectMixin,

44 mixins.DashboardBaseMixin,

45 mixins.BlueprintMixin,

46 SingleObjectMixin,

47 generic.View):

48 permission_required = 'a4projects.change_project'

49 model = project_models.Project

50 slug_url_kwarg = 'project_slug'

51 success_message = _('The module was created')

52

53 def post(self, request, *args, **kwargs):

54 project = self.get_object()

55 weight = 1

56 if project.modules:

57 weight = max(

58 project.modules.values_list('weight', flat=True)

59 ) + 1

60 module = module_models.Module(

61 name=self.blueprint.title,

62 weight=weight,

63 project=project,

64 is_draft=True,

65 )

66 module.save()

67 signals.module_created.send(sender=None,

68 module=module,

69 user=self.request.user)

70

71 self._create_module_settings(module)

72 self._create_phases(module, self.blueprint.content)

73 messages.success(self.request, self.success_message)

74

75 cookie = request.COOKIES.get('dashboard_projects_closed_accordeons',

76 '[]')

77 ids = json.loads(parse.unquote(cookie))

78 if self.project.id not in ids:

79 ids.append(self.project.id)

80

81 cookie = parse.quote(json.dumps(ids))

82

83 response = HttpResponseRedirect(self.get_next(module))

84 response.set_cookie('dashboard_projects_closed_accordeons', cookie)

85 return response

86

87 def _create_module_settings(self, module):

88 if self.blueprint.settings_model:

89 settings_model = apps.get_model(*self.blueprint.settings_model)

90 module_settings = settings_model(module=module)

91 module_settings.save()

92

93 def _create_phases(self, module, blueprint_phases):

94 for index, phase_content in enumerate(blueprint_phases):

95 phase = phase_models.Phase(

96 type=phase_content.identifier,

97 name=phase_content.name,

98 description=phase_content.description,

99 weight=index,

100 module=module,

101 )

102 phase.save()

103

104 def get_next(self, module):

105 return reverse('a4dashboard:dashboard-module_basic-edit', kwargs={

106 'module_slug': module.slug

107 })

108

109 def get_permission_object(self):

110 return self.project

111

112

113 class ModulePublishView(SingleObjectMixin,

114 generic.View):

115 permission_required = 'a4projects.change_project'

116 model = module_models.Module

117 slug_url_kwarg = 'module_slug'

118

119 def get_permission_object(self):

120 return self.get_object().project

121

122 def post(self, request, *args, **kwargs):

123 action = request.POST.get('action', None)

124 if action == 'publish':

125 self.publish_module()

126 elif action == 'unpublish':

127 self.unpublish_module()

128 else:

129 messages.warning(self.request, _('Invalid action'))

130

131 return HttpResponseRedirect(self.get_next())

132

133 def get_next(self):

134 if 'referrer' in self.request.POST:

135 return self.request.POST['referrer']

136 elif 'HTTP_REFERER' in self.request.META:

137 return self.request.META['HTTP_REFERER']

138

139 return reverse('a4dashboard:project-edit', kwargs={

140 'project_slug': self.project.slug

141 })

142

143 def publish_module(self):

144 module = self.get_object()

145 if not module.is_draft:

146 messages.info(self.request, _('Module is already added'))

147 return

148

149 module.is_draft = False

150 module.save()

151

152 signals.module_published.send(sender=None,

153 module=module,

154 user=self.request.user)

155

156 messages.success(self.request,

157 _('The module is displayed in the project.'))

158

159 def unpublish_module(self):

160 module = self.get_object()

161 if module.is_draft:

162 messages.info(self.request, _('Module is already removed'))

163 return

164

165 module.is_draft = True

166 module.save()

167

168 signals.module_unpublished.send(sender=None,

169 module=module,

170 user=self.request.user)

171

172 messages.success(self.request,

173 _('The module is no longer displayed in the project.'

174 ))

175

176

177 class ModuleDeleteView(generic.DeleteView):

178 permission_required = 'a4projects.change_project'

179 model = module_models.Module

180 success_message = _('The module has been deleted')

181

182 def delete(self, request, *args, **kwargs):

183 messages.success(self.request, self.success_message)

184 return super().delete(request, *args, **kwargs)

185

186 def get_permission_object(self):

187 return self.get_object().project

188

189 def get_success_url(self):

190 referrer = self.request.POST.get('referrer', None) \

191 or self.request.META.get('HTTP_REFERER', None)

192 if referrer:

193 view, args, kwargs = resolve(referrer)

194 if 'module_slug' not in kwargs \

195 or not kwargs['module_slug'] == self.get_object().slug:

196 return referrer

197

198 return reverse('a4dashboard:project-edit', kwargs={

199 'project_slug': self.get_object().project.slug

200 })

201

202

203 class DashboardProjectListView(a4dashboard_views.ProjectListView):

204 def get_queryset(self):

205 return super().get_queryset().filter(

206 projectcontainer=None,

207 externalproject=None

208 )

209

210

211 class ProjectCreateView(mixins.DashboardBaseMixin,

212 SuccessMessageMixin,

213 generic.CreateView):

214 model = project_models.Project

215 slug_url_kwarg = 'project_slug'

216 form_class = DashboardProjectCreateForm

217 template_name = 'a4dashboard/project_create_form.html'

218 permission_required = 'a4projects.add_project'

219 menu_item = 'project'

220 success_message = _('Project was created.')

221

222 def get_permission_object(self):

223 return self.organisation

224

225 def get_form_kwargs(self):

226 kwargs = super().get_form_kwargs()

227 kwargs['organisation'] = self.organisation

228 kwargs['creator'] = self.request.user

229 return kwargs

230

231 def get_success_url(self):

232 return reverse('a4dashboard:project-edit',

233 kwargs={'project_slug': self.object.slug})

234

235 def form_valid(self, form):

236 response = super().form_valid(form)

237 signals.project_created.send(sender=None,

238 project=self.object,

239 user=self.request.user)

240

241 return response

242

[end of meinberlin/apps/dashboard/views.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py b/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py

--- a/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py

+++ b/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py

@@ -35,7 +35,7 @@

another module published for (added to) the project.

"""

return (project.is_draft

- and project.published_modules.count() <= 1)

+ and project.published_modules.count() > 1)

@register.filter

diff --git a/meinberlin/apps/dashboard/views.py b/meinberlin/apps/dashboard/views.py

--- a/meinberlin/apps/dashboard/views.py

+++ b/meinberlin/apps/dashboard/views.py

@@ -161,6 +161,16 @@

if module.is_draft:

messages.info(self.request, _('Module is already removed'))

return

+ if not module.project.is_draft:

+ messages.error(self.request,

+ _('Module cannot be removed '

+ 'from a published project.'))

+ return

+ if module.project.published_modules.count() == 1:

+ messages.error(self.request,

+ _('Module cannot be removed. '

+ 'It is the only module added to the project.'))

+ return

module.is_draft = True

module.save()

|

{"golden_diff": "diff --git a/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py b/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py\n--- a/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py\n+++ b/meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py\n@@ -35,7 +35,7 @@\n another module published for (added to) the project.\n \"\"\"\n return (project.is_draft\n- and project.published_modules.count() <= 1)\n+ and project.published_modules.count() > 1)\n \n \n @register.filter\ndiff --git a/meinberlin/apps/dashboard/views.py b/meinberlin/apps/dashboard/views.py\n--- a/meinberlin/apps/dashboard/views.py\n+++ b/meinberlin/apps/dashboard/views.py\n@@ -161,6 +161,16 @@\n if module.is_draft:\n messages.info(self.request, _('Module is already removed'))\n return\n+ if not module.project.is_draft:\n+ messages.error(self.request,\n+ _('Module cannot be removed '\n+ 'from a published project.'))\n+ return\n+ if module.project.published_modules.count() == 1:\n+ messages.error(self.request,\n+ _('Module cannot be removed. '\n+ 'It is the only module added to the project.'))\n+ return\n \n module.is_draft = True\n module.save()\n", "issue": "only module can be removed from published project\n**URL:** https://meinberlin-dev.liqd.net/dashboard/projects/lorem-ipsum/basic/\r\n**user:** initiator\r\n**expected behaviour:** when a project is published, at least one modle should always be added to the project, the initiator shouldn't be able to remove the last one \r\n**behaviour:** last added module can be removed, resulting ind strange project detail view (\"Die Beteiligung ist aktuell nicht m\u00f6glich. Sie startet am None. 
\") with an empty project without any participation.\r\n**important screensize:**\r\n**device & browser:** \r\n**Comment/Question:** @CarolingerSeilchenspringer I am not sure we decided it should be like that (or can you just not delete the last module and only delete it after it has been removed?), but I think this needs to be fixed. Do you agree?\r\n\r\nScreenshot?\r\n\n", "before_files": [{"content": "import json\nfrom urllib.parse import unquote\n\nfrom django import template\n\nregister = template.Library()\n\n\n@register.simple_tag(takes_context=True)\ndef closed_accordeons(context, project_id):\n request = context['request']\n cookie = request.COOKIES.get('dashboard_projects_closed_accordeons', '[]')\n ids = json.loads(unquote(cookie))\n if project_id in ids:\n ids.append(-1)\n return ids\n\n\n@register.filter\ndef is_publishable(project, project_progress):\n \"\"\"Check if project can be published.\n\n Required project details need to be filled in and at least one module\n has to be published (added to the project).\n \"\"\"\n return (project_progress['project_is_complete']\n and project.published_modules.count() >= 1)\n\n\n@register.filter\ndef has_unpublishable_modules(project):\n \"\"\"Check if modules can be removed from project.\n\n Modules can be removed if the project is not yet published and there is\n another module published for (added to) the project.\n \"\"\"\n return (project.is_draft\n and project.published_modules.count() <= 1)\n\n\n@register.filter\ndef project_nav_is_active(dashboard_menu_project):\n \"\"\"Check if the view is in the project dashboard nav.\"\"\"\n for item in dashboard_menu_project:\n if item['is_active']:\n return True\n return False\n\n\n@register.filter\ndef module_nav_is_active(dashboard_menu_modules):\n \"\"\"Check if the view is in the project dashboard nav.\"\"\"\n for module_menu in dashboard_menu_modules:\n for item in module_menu['menu']:\n if item['is_active']:\n return True\n return False\n\n\n@register.filter
\ndef has_publishable_module(dashboard_menu_modules):\n for module_menu in dashboard_menu_modules:\n if module_menu['is_complete']:\n return True\n return False\n", "path": "meinberlin/apps/dashboard/templatetags/meinberlin_dashboard_tags.py"}, {"content": "import json\nfrom urllib import parse\n\nfrom django.apps import apps\nfrom django.contrib import messages\nfrom django.contrib.messages.views import SuccessMessageMixin\nfrom django.http import HttpResponseRedirect\nfrom django.urls import resolve\nfrom django.urls import reverse\nfrom django.utils.translation import ugettext_lazy as _\nfrom django.views import generic\nfrom django.views.generic.detail import SingleObjectMixin\n\nfrom adhocracy4.dashboard import mixins\nfrom adhocracy4.dashboard import signals\nfrom adhocracy4.dashboard import views as a4dashboard_views\nfrom adhocracy4.dashboard.blueprints import get_blueprints\nfrom adhocracy4.modules import models as module_models\nfrom adhocracy4.phases import models as phase_models\nfrom adhocracy4.projects import models as project_models\nfrom adhocracy4.projects.mixins import ProjectMixin\nfrom meinberlin.apps.dashboard.forms import DashboardProjectCreateForm\n\n\nclass ModuleBlueprintListView(ProjectMixin,\n mixins.DashboardBaseMixin,\n mixins.BlueprintMixin,\n generic.DetailView):\n template_name = 'meinberlin_dashboard/module_blueprint_list_dashboard.html'\n permission_required = 'a4projects.change_project'\n model = project_models.Project\n slug_url_kwarg = 'project_slug'\n menu_item = 'project'\n\n @property\n def blueprints(self):\n return get_blueprints()\n\n def get_permission_object(self):\n return self.project\n\n\nclass ModuleCreateView(ProjectMixin,\n mixins.DashboardBaseMixin,\n mixins.BlueprintMixin,\n SingleObjectMixin,\n generic.View):\n permission_required = 'a4projects.change_project'\n model = project_models.Project\n slug_url_kwarg = 'project_slug'\n success_message = _('The module was created')\n\n def post(self, request, 
*args, **kwargs):\n project = self.get_object()\n weight = 1\n if project.modules:\n weight = max(\n project.modules.values_list('weight', flat=True)\n ) + 1\n module = module_models.Module(\n name=self.blueprint.title,\n weight=weight,\n project=project,\n is_draft=True,\n )\n module.save()\n signals.module_created.send(sender=None,\n module=module,\n user=self.request.user)\n\n self._create_module_settings(module)\n self._create_phases(module, self.blueprint.content)\n messages.success(self.request, self.success_message)\n\n cookie = request.COOKIES.get('dashboard_projects_closed_accordeons',\n '[]')\n ids = json.loads(parse.unquote(cookie))\n if self.project.id not in ids:\n ids.append(self.project.id)\n\n cookie = parse.quote(json.dumps(ids))\n\n response = HttpResponseRedirect(self.get_next(module))\n response.set_cookie('dashboard_projects_closed_accordeons', cookie)\n return response\n\n def _create_module_settings(self, module):\n if self.blueprint.settings_model:\n settings_model = apps.get_model(*self.blueprint.settings_model)\n module_settings = settings_model(module=module)\n module_settings.save()\n\n def _create_phases(self, module, blueprint_phases):\n for index, phase_content in enumerate(blueprint_phases):\n phase = phase_models.Phase(\n type=phase_content.identifier,\n name=phase_content.name,\n description=phase_content.description,\n weight=index,\n module=module,\n )\n phase.save()\n\n def get_next(self, module):\n return reverse('a4dashboard:dashboard-module_basic-edit', kwargs={\n 'module_slug': module.slug\n })\n\n def get_permission_object(self):\n return self.project\n\n\nclass ModulePublishView(SingleObjectMixin,\n generic.View):\n permission_required = 'a4projects.change_project'\n model = module_models.Module\n slug_url_kwarg = 'module_slug'\n\n def get_permission_object(self):\n return self.get_object().project\n\n def post(self, request, *args, **kwargs):\n action = request.POST.get('action', None)\n if action == 'publish':\n 
self.publish_module()\n elif action == 'unpublish':\n self.unpublish_module()\n else:\n messages.warning(self.request, _('Invalid action'))\n\n return HttpResponseRedirect(self.get_next())\n\n def get_next(self):\n if 'referrer' in self.request.POST:\n return self.request.POST['referrer']\n elif 'HTTP_REFERER' in self.request.META:\n return self.request.META['HTTP_REFERER']\n\n return reverse('a4dashboard:project-edit', kwargs={\n 'project_slug': self.project.slug\n })\n\n def publish_module(self):\n module = self.get_object()\n if not module.is_draft:\n messages.info(self.request, _('Module is already added'))\n return\n\n module.is_draft = False\n module.save()\n\n signals.module_published.send(sender=None,\n module=module,\n user=self.request.user)\n\n messages.success(self.request,\n _('The module is displayed in the project.'))\n\n def unpublish_module(self):\n module = self.get_object()\n if module.is_draft:\n messages.info(self.request, _('Module is already removed'))\n return\n\n module.is_draft = True\n module.save()\n\n signals.module_unpublished.send(sender=None,\n module=module,\n user=self.request.user)\n\n messages.success(self.request,\n _('The module is no longer displayed in the project.'\n ))\n\n\nclass ModuleDeleteView(generic.DeleteView):\n permission_required = 'a4projects.change_project'\n model = module_models.Module\n success_message = _('The module has been deleted')\n\n def delete(self, request, *args, **kwargs):\n messages.success(self.request, self.success_message)\n return super().delete(request, *args, **kwargs)\n\n def get_permission_object(self):\n return self.get_object().project\n\n def get_success_url(self):\n referrer = self.request.POST.get('referrer', None) \\\n or self.request.META.get('HTTP_REFERER', None)\n if referrer:\n view, args, kwargs = resolve(referrer)\n if 'module_slug' not in kwargs \\\n or not kwargs['module_slug'] == self.get_object().slug:\n return referrer\n\n return reverse('a4dashboard:project-edit', 
kwargs={\n 'project_slug': self.get_object().project.slug\n })\n\n\nclass DashboardProjectListView(a4dashboard_views.ProjectListView):\n def get_queryset(self):\n return super().get_queryset().filter(\n projectcontainer=None,\n externalproject=None\n )\n\n\nclass ProjectCreateView(mixins.DashboardBaseMixin,\n SuccessMessageMixin,\n generic.CreateView):\n model = project_models.Project\n slug_url_kwarg = 'project_slug'\n form_class = DashboardProjectCreateForm\n template_name = 'a4dashboard/project_create_form.html'\n permission_required = 'a4projects.add_project'\n menu_item = 'project'\n success_message = _('Project was created.')\n\n def get_permission_object(self):\n return self.organisation\n\n def get_form_kwargs(self):\n kwargs = super().get_form_kwargs()\n kwargs['organisation'] = self.organisation\n kwargs['creator'] = self.request.user\n return kwargs\n\n def get_success_url(self):\n return reverse('a4dashboard:project-edit',\n kwargs={'project_slug': self.object.slug})\n\n def form_valid(self, form):\n response = super().form_valid(form)\n signals.project_created.send(sender=None,\n project=self.object,\n user=self.request.user)\n\n return response\n", "path": "meinberlin/apps/dashboard/views.py"}]}

| 3,499 | 321 |

gh_patches_debug_24899

|

rasdani/github-patches

|

git_diff

|

sktime__sktime-2279

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] odd failure in `fh._coerce_to_period` that occurs only on windows, in context of hierarchical forecast

When making hierarchical forecasts on default output of `_make_hierarchical`, `fh.coerce_to_period` fails, on a windows run:

https://github.com/alan-turing-institute/sktime/runs/5503723513

This is quite strange, and I cannot reproduce the behaviour locally on my windows machine (python 3.8 or 3.9).

It looks like `freq` is not correctly preserved in subsetting from a hierarchical multi-index ... but only under specific conditions on a windows system???

Expected behaviour: the test does not fail, just like on linux/max and as on my local system.

</issue>

<code>

[start of sktime/datatypes/_vectorize.py]

1 # -*- coding: utf-8 -*-

2 # copyright: sktime developers, BSD-3-Clause License (see LICENSE file)

3 """Wrapper for easy vectorization/iteration of time series data.

4

5 Contains VectorizedDF class.

6 """

7

8 import pandas as pd

9

10 from sktime.datatypes._check import check_is_scitype, mtype

11 from sktime.datatypes._convert import convert_to

12

13

14 class VectorizedDF:

15 """Wrapper for easy vectorization/iteration over instances.

16

17 VectorizedDF is an iterable that returns pandas.DataFrame

18 in sktime Series or Panel format.

19 Elements are all Series or Panels in X, these are iterated over.

20

21 Parameters

22 ----------

23 X : object in sktime compatible Panel or Hierarchical format

24 the data container to vectorize over

25 y : placeholder argument, not used currently

26 is_scitype : str ("Panel", "Hierarchical") or None, default = "Panel"

27 scitype of X, if known; if None, will be inferred

28 provide to constructor if known to avoid superfluous checks

29 Caution: will not conduct checks if provided, assumes checks done

30 iterate_as : str ("Series", "Panel")

31 scitype of the iteration

32 for instance, if X is Panel and iterate_as is "Series"

33 then the class will iterate over individual Series in X

34 or, if X is Hierarchical and iterate_as is "Panel"

35 then the class will iterate over individual Panels in X

36 (Panel = flat/non-hierarchical collection of Series)

37

38 Methods

39 -------

40 self[i] or self.__getitem__(i)

41 Returns i-th Series/Panel (depending on iterate_as) in X

42 as pandas.DataFrame with Index or MultiIndex (in sktime pandas format)

43 len(self) or self.__len__

44 returns number of Series/Panel in X

45 get_iter_indices()

46 Returns pandas.(Multi)Index that are iterated over

47 reconstruct(self, df_list, convert_back=False)

48 Takes iterable df_list and returns as an object of is_scitype.

49 Used to obtain original format after applying operations to self iterated

50 """

51

52 def __init__(self, X, y=None, iterate_as="Series", is_scitype="Panel"):

53

54 self.X = X

55

56 if is_scitype is None:

57 possible_scitypes = ["Panel", "Hierarchical"]

58 _, _, metadata = check_is_scitype(

59 X, scitype=possible_scitypes, return_metadata=True

60 )

61 is_scitype = metadata["scitype"]

62 X_orig_mtype = metadata["mtype"]

63 else:

64 X_orig_mtype = None

65

66 if is_scitype is not None and is_scitype not in ["Hierarchical", "Panel"]:

67 raise ValueError(

68 'is_scitype must be None, "Hierarchical" or "Panel", ',

69 f"found {is_scitype}",

70 )

71 self.iterate_as = iterate_as

72

73 self.is_scitype = is_scitype

74 self.X_orig_mtype = X_orig_mtype

75

76 if iterate_as not in ["Series", "Panel"]:

77 raise ValueError(

78 f'iterate_as must be "Series" or "Panel", found {iterate_as}'

79 )

80 self.iterate_as = iterate_as

81

82 if iterate_as == "Panel" and is_scitype == "Panel":

83 raise ValueError(

84 'If is_scitype is "Panel", then iterate_as must be "Series"'

85 )

86

87 self.converter_store = dict()

88

89 self.X_multiindex = self._init_conversion(X)

90 self.iter_indices = self._init_iter_indices()

91

92 def _init_conversion(self, X):

93 """Convert X to a pandas multiindex format."""

94 is_scitype = self.is_scitype

95

96 if is_scitype == "Panel":

97 return convert_to(

98 X,

99 to_type="pd-multiindex",

100 as_scitype="Panel",

101 store=self.converter_store,

102 )

103 elif is_scitype == "Hierarchical":

104 return convert_to(

105 X,

106 to_type="pd_multiindex_hier",

107 as_scitype="Hierarchical",

108 store=self.converter_store,

109 )

110 else:

111 raise RuntimeError(

112 f"unexpected value found for attribute self.is_scitype: {is_scitype}"

113 'must be "Panel" or "Hierarchical"'

114 )

115

116 def _init_iter_indices(self):

117 """Initialize indices that are iterated over in vectorization."""

118 iterate_as = self.iterate_as

119 X = self.X_multiindex

120

121 if iterate_as == "Series":

122 ix = X.index.droplevel(-1).unique()

123 return ix

124 elif iterate_as == "Panel":

125 ix = X.index.droplevel([-1, -2]).unique()

126 return ix

127 else:

128 raise RuntimeError(

129 f"unexpected value found for attribute self.iterate_as: {iterate_as}"

130 'must be "Series" or "Panel"'

131 )

132

133 def get_iter_indices(self):

134 """Get indices that are iterated over in vectorization.

135

136 Returns

137 -------

138 pandas.Index or pandas.MultiIndex

139 index with unique indices that are iterated over

140 use to reconstruct data frame after iteration

141 """

142 return self.iter_indices

143

144 def __len__(self):

145 """Return number of indices to iterate over."""

146 return len(self.get_iter_indices())

147

148 def __getitem__(self, i: int):

149 """Return the i-th element iterated over in vectorization."""

150 X = self.X_multiindex

151 ind = self.get_iter_indices()[i]

152 item = X.loc[ind]

153 # pd-multiindex type (Panel case) expects these index names:

154 if self.iterate_as == "Panel":

155 item.index.set_names(["instances", "timepoints"], inplace=True)

156 return item

157

158 def as_list(self):

159 """Shorthand to retrieve self (iterator) as list."""

160 return list(self)

161

162 def reconstruct(self, df_list, convert_back=False, overwrite_index=True):

163 """Reconstruct original format from iterable of vectorization instances.

164

165 Parameters

166 ----------

167 df_list : iterable of objects of same type as __getitem__ returns.

168 can be self, but will in general be another object to be useful.

169 Example: [some_operation(df) for df in self] that leaves types the same

170 convert_back : bool, default = False

171 whether to convert output back to mtype of X in __init__

172 if False, the return will be a pandas.DataFrame with Index or multiIndex

173 if True, the return is converted to the mtype of X in __init__

174 overwrite_index : bool, default = True

175 if True, the resulting return will have index overwritten by that of X

176 only if applies, i.e., overwrite is possible and X had an index

177 if False, no index overwrite will happen

178

179 Returns

180 -------

181 X_reconstructed_orig_format : row-concatenation of df-list,

182 with keys and additional level from self.get_iter_indices

183 if convert_back=False, always a pd.DataFrame in a sktime MultiIndex format

184 (pd-multiindex mtype for Panel, or pd_multiindex_hier for Hierarchical)

185 if convert_back=True, will have same format and mtype as X input to __init__

186 """

187 ix = self.get_iter_indices()

188 X_mi_reconstructed = pd.concat(df_list, keys=ix)

189

190 X_mi_index = X_mi_reconstructed.index

191 X_orig_index = self.X_multiindex.index

192 if overwrite_index and len(X_mi_index.names) == len(X_orig_index.names):

193 X_mi_reconstructed.index.set_names(X_orig_index.names)

194

195 if not convert_back:

196 return X_mi_reconstructed

197 else:

198 X_orig_mtype = self.X_orig_mtype

199 is_scitype = self.is_scitype

200 if X_orig_mtype is None:

201 X_orig_mtype = mtype(self.X, as_scitype=self.is_scitype)

202

203 X_reconstructed_orig_format = convert_to(

204 X_mi_reconstructed,

205 to_type=X_orig_mtype,

206 as_scitype=is_scitype,

207 store=self.converter_store,

208 )

209

210 return X_reconstructed_orig_format

211

[end of sktime/datatypes/_vectorize.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/sktime/datatypes/_vectorize.py b/sktime/datatypes/_vectorize.py

--- a/sktime/datatypes/_vectorize.py

+++ b/sktime/datatypes/_vectorize.py

@@ -4,7 +4,6 @@

Contains VectorizedDF class.

"""

-

import pandas as pd

from sktime.datatypes._check import check_is_scitype, mtype

@@ -150,6 +149,7 @@

X = self.X_multiindex

ind = self.get_iter_indices()[i]

item = X.loc[ind]

+ item = _enforce_index_freq(item)

# pd-multiindex type (Panel case) expects these index names:

if self.iterate_as == "Panel":

item.index.set_names(["instances", "timepoints"], inplace=True)

@@ -208,3 +208,20 @@

)

return X_reconstructed_orig_format

+

+

+def _enforce_index_freq(item: pd.Series) -> pd.Series:

+ """Enforce the frequency of a Series index using pd.infer_freq.

+

+ Parameters

+ ----------

+ item : pd.Series

+ Returns

+ -------

+ pd.Series

+ Pandas series with the inferred frequency. If the frequency cannot be inferred

+ it will stay None

+ """

+ if hasattr(item.index, "freq") and item.index.freq is None:

+ item.index.freq = pd.infer_freq(item.index)

+ return item

|