| problem_id (string, lengths 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13 to 58) | prompt (string, lengths 1.71k to 18.9k) | golden_diff (string, lengths 145 to 5.13k) | verification_info (string, lengths 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

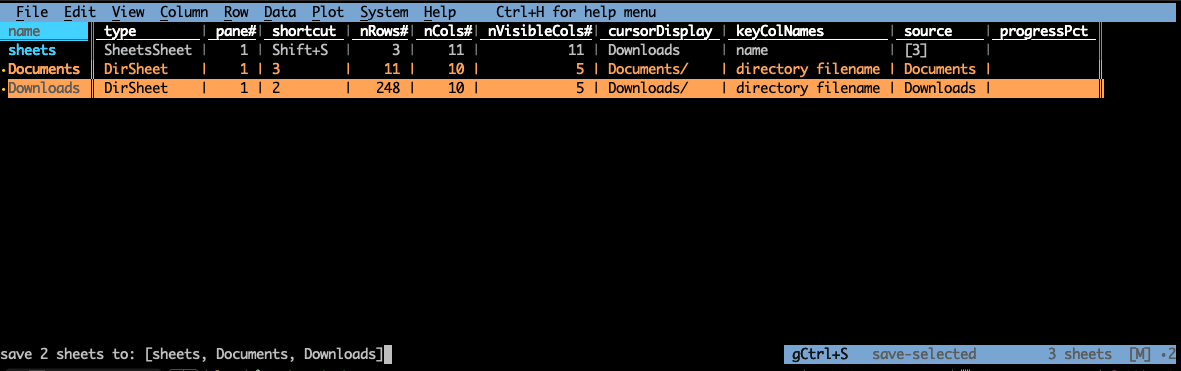

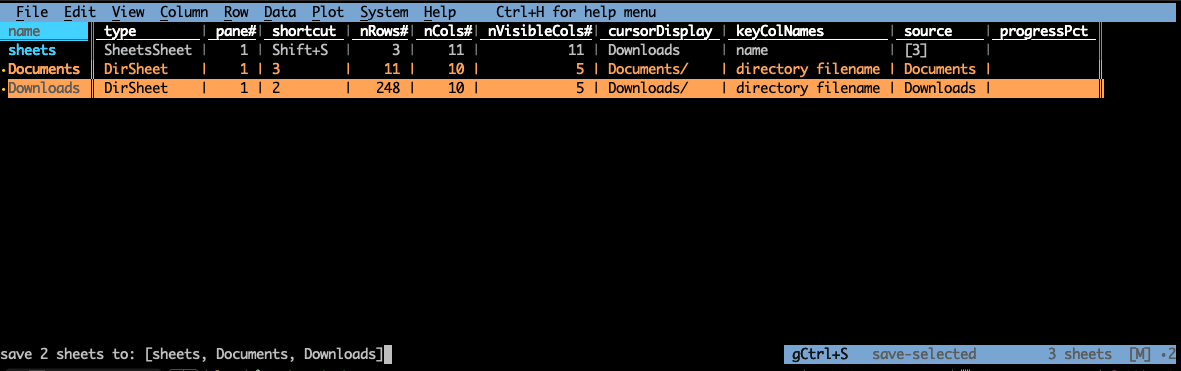

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_27046

|

rasdani/github-patches

|

git_diff

|

CTFd__CTFd-410

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Move plugin focused functions to the plugins folder

`override_template`

`register_plugin_script`

`register_plugin_stylesheet`

These should move to the plugins directory.

</issue>

<code>

[start of CTFd/plugins/__init__.py]

1 import glob

2 import importlib

3 import os

4

5 from flask.helpers import safe_join

6 from flask import send_file, send_from_directory, abort

7 from CTFd.utils import admins_only as admins_only_wrapper

8

9

10 def register_plugin_assets_directory(app, base_path, admins_only=False):

11 """

12 Registers a directory to serve assets

13

14 :param app: A CTFd application

15 :param string base_path: The path to the directory

16 :param boolean admins_only: Whether or not the assets served out of the directory should be accessible to the public

17 :return:

18 """

19 base_path = base_path.strip('/')

20

21 def assets_handler(path):

22 return send_from_directory(base_path, path)

23

24 if admins_only:

25 asset_handler = admins_only_wrapper(assets_handler)

26

27 rule = '/' + base_path + '/<path:path>'

28 app.add_url_rule(rule=rule, endpoint=base_path, view_func=assets_handler)

29

30

31 def register_plugin_asset(app, asset_path, admins_only=False):

32 """

33 Registers an file path to be served by CTFd

34

35 :param app: A CTFd application

36 :param string asset_path: The path to the asset file

37 :param boolean admins_only: Whether or not this file should be accessible to the public

38 :return:

39 """

40 asset_path = asset_path.strip('/')

41

42 def asset_handler():

43 return send_file(asset_path)

44

45 if admins_only:

46 asset_handler = admins_only_wrapper(asset_handler)

47 rule = '/' + asset_path

48 app.add_url_rule(rule=rule, endpoint=asset_path, view_func=asset_handler)

49

50

51 def init_plugins(app):

52 """

53 Searches for the load function in modules in the CTFd/plugins folder. This function is called with the current CTFd

54 app as a parameter. This allows CTFd plugins to modify CTFd's behavior.

55

56 :param app: A CTFd application

57 :return:

58 """

59 modules = glob.glob(os.path.dirname(__file__) + "/*")

60 blacklist = {'__pycache__'}

61 for module in modules:

62 module_name = os.path.basename(module)

63 if os.path.isdir(module) and module_name not in blacklist:

64 module = '.' + module_name

65 module = importlib.import_module(module, package='CTFd.plugins')

66 module.load(app)

67 print(" * Loaded module, %s" % module)

68

[end of CTFd/plugins/__init__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/CTFd/plugins/__init__.py b/CTFd/plugins/__init__.py

--- a/CTFd/plugins/__init__.py

+++ b/CTFd/plugins/__init__.py

@@ -3,8 +3,13 @@

import os

from flask.helpers import safe_join

-from flask import send_file, send_from_directory, abort

-from CTFd.utils import admins_only as admins_only_wrapper

+from flask import current_app as app, send_file, send_from_directory, abort

+from CTFd.utils import (

+ admins_only as admins_only_wrapper,

+ override_template as utils_override_template,

+ register_plugin_script as utils_register_plugin_script,

+ register_plugin_stylesheet as utils_register_plugin_stylesheet

+)

def register_plugin_assets_directory(app, base_path, admins_only=False):

@@ -48,6 +53,29 @@

app.add_url_rule(rule=rule, endpoint=asset_path, view_func=asset_handler)

+def override_template(*args, **kwargs):

+ """

+ Overrides a template with the provided html content.

+

+ e.g. override_template('scoreboard.html', '<h1>scores</h1>')

+ """

+ utils_override_template(*args, **kwargs)

+

+

+def register_plugin_script(*args, **kwargs):

+ """

+ Adds a given script to the base.html template which all pages inherit from

+ """

+ utils_register_plugin_script(*args, **kwargs)

+

+

+def register_plugin_stylesheet(*args, **kwargs):

+ """

+ Adds a given stylesheet to the base.html template which all pages inherit from.

+ """

+ utils_register_plugin_stylesheet(*args, **kwargs)

+

+

def init_plugins(app):

"""

Searches for the load function in modules in the CTFd/plugins folder. This function is called with the current CTFd

|

{"golden_diff": "diff --git a/CTFd/plugins/__init__.py b/CTFd/plugins/__init__.py\n--- a/CTFd/plugins/__init__.py\n+++ b/CTFd/plugins/__init__.py\n@@ -3,8 +3,13 @@\n import os\n \n from flask.helpers import safe_join\n-from flask import send_file, send_from_directory, abort\n-from CTFd.utils import admins_only as admins_only_wrapper\n+from flask import current_app as app, send_file, send_from_directory, abort\n+from CTFd.utils import (\n+ admins_only as admins_only_wrapper,\n+ override_template as utils_override_template,\n+ register_plugin_script as utils_register_plugin_script,\n+ register_plugin_stylesheet as utils_register_plugin_stylesheet\n+)\n \n \n def register_plugin_assets_directory(app, base_path, admins_only=False):\n@@ -48,6 +53,29 @@\n app.add_url_rule(rule=rule, endpoint=asset_path, view_func=asset_handler)\n \n \n+def override_template(*args, **kwargs):\n+ \"\"\"\n+ Overrides a template with the provided html content.\n+\n+ e.g. override_template('scoreboard.html', '<h1>scores</h1>')\n+ \"\"\"\n+ utils_override_template(*args, **kwargs)\n+\n+\n+def register_plugin_script(*args, **kwargs):\n+ \"\"\"\n+ Adds a given script to the base.html template which all pages inherit from\n+ \"\"\"\n+ utils_register_plugin_script(*args, **kwargs)\n+\n+\n+def register_plugin_stylesheet(*args, **kwargs):\n+ \"\"\"\n+ Adds a given stylesheet to the base.html template which all pages inherit from.\n+ \"\"\"\n+ utils_register_plugin_stylesheet(*args, **kwargs)\n+\n+\n def init_plugins(app):\n \"\"\"\n Searches for the load function in modules in the CTFd/plugins folder. 
This function is called with the current CTFd\n", "issue": "Move plugin focused functions to the plugins folder\n`override_template`\r\n`register_plugin_script`\r\n`register_plugin_stylesheet`\r\n\r\nThese should move to the plugins directory.\n", "before_files": [{"content": "import glob\nimport importlib\nimport os\n\nfrom flask.helpers import safe_join\nfrom flask import send_file, send_from_directory, abort\nfrom CTFd.utils import admins_only as admins_only_wrapper\n\n\ndef register_plugin_assets_directory(app, base_path, admins_only=False):\n \"\"\"\n Registers a directory to serve assets\n\n :param app: A CTFd application\n :param string base_path: The path to the directory\n :param boolean admins_only: Whether or not the assets served out of the directory should be accessible to the public\n :return:\n \"\"\"\n base_path = base_path.strip('/')\n\n def assets_handler(path):\n return send_from_directory(base_path, path)\n\n if admins_only:\n asset_handler = admins_only_wrapper(assets_handler)\n\n rule = '/' + base_path + '/<path:path>'\n app.add_url_rule(rule=rule, endpoint=base_path, view_func=assets_handler)\n\n\ndef register_plugin_asset(app, asset_path, admins_only=False):\n \"\"\"\n Registers an file path to be served by CTFd\n\n :param app: A CTFd application\n :param string asset_path: The path to the asset file\n :param boolean admins_only: Whether or not this file should be accessible to the public\n :return:\n \"\"\"\n asset_path = asset_path.strip('/')\n\n def asset_handler():\n return send_file(asset_path)\n\n if admins_only:\n asset_handler = admins_only_wrapper(asset_handler)\n rule = '/' + asset_path\n app.add_url_rule(rule=rule, endpoint=asset_path, view_func=asset_handler)\n\n\ndef init_plugins(app):\n \"\"\"\n Searches for the load function in modules in the CTFd/plugins folder. This function is called with the current CTFd\n app as a parameter. 
This allows CTFd plugins to modify CTFd's behavior.\n\n :param app: A CTFd application\n :return:\n \"\"\"\n modules = glob.glob(os.path.dirname(__file__) + \"/*\")\n blacklist = {'__pycache__'}\n for module in modules:\n module_name = os.path.basename(module)\n if os.path.isdir(module) and module_name not in blacklist:\n module = '.' + module_name\n module = importlib.import_module(module, package='CTFd.plugins')\n module.load(app)\n print(\" * Loaded module, %s\" % module)\n", "path": "CTFd/plugins/__init__.py"}]}

| 1,214 | 399 |

gh_patches_debug_33168

|

rasdani/github-patches

|

git_diff

|

tensorflow__addons-2265

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Drop GELU for 0.13 release

https://www.tensorflow.org/api_docs/python/tf/keras/activations/gelu will be available in TF2.4. Deprecation warning is already set for our upcming 0.12 release

</issue>

<code>

[start of tensorflow_addons/activations/gelu.py]

1 # Copyright 2019 The TensorFlow Authors. All Rights Reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 # ==============================================================================

15

16 import tensorflow as tf

17 import math

18 import warnings

19

20 from tensorflow_addons.utils import types

21

22

23 @tf.keras.utils.register_keras_serializable(package="Addons")

24 def gelu(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:

25 r"""Gaussian Error Linear Unit.

26

27 Computes gaussian error linear:

28

29 $$

30 \mathrm{gelu}(x) = x \Phi(x),

31 $$

32

33 where

34

35 $$

36 \Phi(x) = \frac{1}{2} \left[ 1 + \mathrm{erf}(\frac{x}{\sqrt{2}}) \right]$

37 $$

38

39 when `approximate` is `False`; or

40

41 $$

42 \Phi(x) = \frac{x}{2} \left[ 1 + \tanh(\sqrt{\frac{2}{\pi}} \cdot (x + 0.044715 \cdot x^3)) \right]

43 $$

44

45 when `approximate` is `True`.

46

47 See [Gaussian Error Linear Units (GELUs)](https://arxiv.org/abs/1606.08415)

48 and [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding](https://arxiv.org/abs/1810.04805).

49

50 Usage:

51

52 >>> tfa.options.TF_ADDONS_PY_OPS = True

53 >>> x = tf.constant([-1.0, 0.0, 1.0])

54 >>> tfa.activations.gelu(x, approximate=False)

55 <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15865529, 0. , 0.8413447 ], dtype=float32)>

56 >>> tfa.activations.gelu(x, approximate=True)

57 <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.158808, 0. , 0.841192], dtype=float32)>

58

59 Args:

60 x: A `Tensor`. Must be one of the following types:

61 `float16`, `float32`, `float64`.

62 approximate: bool, whether to enable approximation.

63 Returns:

64 A `Tensor`. Has the same type as `x`.

65 """

66 warnings.warn(

67 "gelu activation has been migrated to core TensorFlow, "

68 "and will be deprecated in Addons 0.13.",

69 DeprecationWarning,

70 )

71

72 x = tf.convert_to_tensor(x)

73

74 return _gelu_py(x, approximate)

75

76

77 def _gelu_py(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:

78 x = tf.convert_to_tensor(x)

79 if approximate:

80 pi = tf.cast(math.pi, x.dtype)

81 coeff = tf.cast(0.044715, x.dtype)

82 return 0.5 * x * (1.0 + tf.tanh(tf.sqrt(2.0 / pi) * (x + coeff * tf.pow(x, 3))))

83 else:

84 return 0.5 * x * (1.0 + tf.math.erf(x / tf.cast(tf.sqrt(2.0), x.dtype)))

85

[end of tensorflow_addons/activations/gelu.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/tensorflow_addons/activations/gelu.py b/tensorflow_addons/activations/gelu.py

--- a/tensorflow_addons/activations/gelu.py

+++ b/tensorflow_addons/activations/gelu.py

@@ -18,6 +18,7 @@

import warnings

from tensorflow_addons.utils import types

+from distutils.version import LooseVersion

@tf.keras.utils.register_keras_serializable(package="Addons")

@@ -47,6 +48,9 @@

See [Gaussian Error Linear Units (GELUs)](https://arxiv.org/abs/1606.08415)

and [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding](https://arxiv.org/abs/1810.04805).

+ Note that `approximate` will default to `False` from TensorFlow version 2.4 onwards.

+ Consider using `tf.nn.gelu` instead.

+

Usage:

>>> tfa.options.TF_ADDONS_PY_OPS = True

@@ -54,7 +58,7 @@

>>> tfa.activations.gelu(x, approximate=False)

<tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15865529, 0. , 0.8413447 ], dtype=float32)>

>>> tfa.activations.gelu(x, approximate=True)

- <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.158808, 0. , 0.841192], dtype=float32)>

+ <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15880796, 0. , 0.841192 ], dtype=float32)>

Args:

x: A `Tensor`. Must be one of the following types:

@@ -71,7 +75,15 @@

x = tf.convert_to_tensor(x)

- return _gelu_py(x, approximate)

+ if LooseVersion(tf.__version__) >= "2.4":

+ gelu_op = tf.nn.gelu

+ warnings.warn(

+ "Default value of `approximate` is changed from `True` to `False`"

+ )

+ else:

+ gelu_op = _gelu_py

+

+ return gelu_op(x, approximate)

def _gelu_py(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:

|

{"golden_diff": "diff --git a/tensorflow_addons/activations/gelu.py b/tensorflow_addons/activations/gelu.py\n--- a/tensorflow_addons/activations/gelu.py\n+++ b/tensorflow_addons/activations/gelu.py\n@@ -18,6 +18,7 @@\n import warnings\n \n from tensorflow_addons.utils import types\n+from distutils.version import LooseVersion\n \n \n @tf.keras.utils.register_keras_serializable(package=\"Addons\")\n@@ -47,6 +48,9 @@\n See [Gaussian Error Linear Units (GELUs)](https://arxiv.org/abs/1606.08415)\n and [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding](https://arxiv.org/abs/1810.04805).\n \n+ Note that `approximate` will default to `False` from TensorFlow version 2.4 onwards.\n+ Consider using `tf.nn.gelu` instead.\n+\n Usage:\n \n >>> tfa.options.TF_ADDONS_PY_OPS = True\n@@ -54,7 +58,7 @@\n >>> tfa.activations.gelu(x, approximate=False)\n <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15865529, 0. , 0.8413447 ], dtype=float32)>\n >>> tfa.activations.gelu(x, approximate=True)\n- <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.158808, 0. , 0.841192], dtype=float32)>\n+ <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15880796, 0. , 0.841192 ], dtype=float32)>\n \n Args:\n x: A `Tensor`. Must be one of the following types:\n@@ -71,7 +75,15 @@\n \n x = tf.convert_to_tensor(x)\n \n- return _gelu_py(x, approximate)\n+ if LooseVersion(tf.__version__) >= \"2.4\":\n+ gelu_op = tf.nn.gelu\n+ warnings.warn(\n+ \"Default value of `approximate` is changed from `True` to `False`\"\n+ )\n+ else:\n+ gelu_op = _gelu_py\n+\n+ return gelu_op(x, approximate)\n \n \n def _gelu_py(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:\n", "issue": "Drop GELU for 0.13 release\nhttps://www.tensorflow.org/api_docs/python/tf/keras/activations/gelu will be available in TF2.4. Deprecation warning is already set for our upcming 0.12 release\n", "before_files": [{"content": "# Copyright 2019 The TensorFlow Authors. 
All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ==============================================================================\n\nimport tensorflow as tf\nimport math\nimport warnings\n\nfrom tensorflow_addons.utils import types\n\n\[email protected]_keras_serializable(package=\"Addons\")\ndef gelu(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:\n r\"\"\"Gaussian Error Linear Unit.\n\n Computes gaussian error linear:\n\n $$\n \\mathrm{gelu}(x) = x \\Phi(x),\n $$\n\n where\n\n $$\n \\Phi(x) = \\frac{1}{2} \\left[ 1 + \\mathrm{erf}(\\frac{x}{\\sqrt{2}}) \\right]$\n $$\n\n when `approximate` is `False`; or\n\n $$\n \\Phi(x) = \\frac{x}{2} \\left[ 1 + \\tanh(\\sqrt{\\frac{2}{\\pi}} \\cdot (x + 0.044715 \\cdot x^3)) \\right]\n $$\n\n when `approximate` is `True`.\n\n See [Gaussian Error Linear Units (GELUs)](https://arxiv.org/abs/1606.08415)\n and [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding](https://arxiv.org/abs/1810.04805).\n\n Usage:\n\n >>> tfa.options.TF_ADDONS_PY_OPS = True\n >>> x = tf.constant([-1.0, 0.0, 1.0])\n >>> tfa.activations.gelu(x, approximate=False)\n <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.15865529, 0. , 0.8413447 ], dtype=float32)>\n >>> tfa.activations.gelu(x, approximate=True)\n <tf.Tensor: shape=(3,), dtype=float32, numpy=array([-0.158808, 0. , 0.841192], dtype=float32)>\n\n Args:\n x: A `Tensor`. 
Must be one of the following types:\n `float16`, `float32`, `float64`.\n approximate: bool, whether to enable approximation.\n Returns:\n A `Tensor`. Has the same type as `x`.\n \"\"\"\n warnings.warn(\n \"gelu activation has been migrated to core TensorFlow, \"\n \"and will be deprecated in Addons 0.13.\",\n DeprecationWarning,\n )\n\n x = tf.convert_to_tensor(x)\n\n return _gelu_py(x, approximate)\n\n\ndef _gelu_py(x: types.TensorLike, approximate: bool = True) -> tf.Tensor:\n x = tf.convert_to_tensor(x)\n if approximate:\n pi = tf.cast(math.pi, x.dtype)\n coeff = tf.cast(0.044715, x.dtype)\n return 0.5 * x * (1.0 + tf.tanh(tf.sqrt(2.0 / pi) * (x + coeff * tf.pow(x, 3))))\n else:\n return 0.5 * x * (1.0 + tf.math.erf(x / tf.cast(tf.sqrt(2.0), x.dtype)))\n", "path": "tensorflow_addons/activations/gelu.py"}]}

| 1,628 | 586 |
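The two formulas quoted in the `gelu` docstring of the row above can be checked without TensorFlow. The sketch below uses only the standard `math` module and assumes scalar float inputs; the names `gelu_exact` and `gelu_tanh` are illustrative and not part of any library. The tanh expression in the docstring already carries the factor `x`, so it is read here as the approximate `gelu(x)` itself rather than as `\Phi(x)`.

```python
import math


def gelu_exact(x: float) -> float:
    """x * Phi(x), where Phi is the standard normal CDF written via erf."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation using the 0.044715 cubic coefficient."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Same sample points as the docstring's usage example.
for x in (-1.0, 0.0, 1.0):
    print(x, gelu_exact(x), gelu_tanh(x))
```

Because Python floats are double precision while the docstring shows `float32` output, the values should agree with the quoted arrays only to roughly six significant digits.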

gh_patches_debug_5095

|

rasdani/github-patches

|

git_diff

|

kivy__kivy-3104

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Issues with popup on py3

When running the following code in py3 on windows I get the following error:

``` py

from kivy.uix.widget import Widget

from kivy.uix.popup import Popup

w1 = Widget()

w2 = Widget()

p1 = Popup(content=w1)

p2 = Popup(content=w2)

```

```

Traceback (most recent call last):

File "playground8.py", line 7, in <module>

p2 = Popup(content=w2)

File "C:\Users\Matthew Einhorn\Desktop\Kivy-1.8.0-py3.3-win32\kivy\kivy\uix\modalview.py", line 152, in __init__

super(ModalView, self).__init__(**kwargs)

File "C:\Users\Matthew Einhorn\Desktop\Kivy-1.8.0-py3.3-win32\kivy\kivy\uix\anchorlayout.py", line 68, in __init__

super(AnchorLayout, self).__init__(**kwargs)

File "C:\Users\Matthew Einhorn\Desktop\Kivy-1.8.0-py3.3-win32\kivy\kivy\uix\layout.py", line 66, in __init__

super(Layout, self).__init__(**kwargs)

File "C:\Users\Matthew Einhorn\Desktop\Kivy-1.8.0-py3.3-win32\kivy\kivy\uix\widget.py", line 261, in __init__

super(Widget, self).__init__(**kwargs)

File "kivy\_event.pyx", line 271, in kivy._event.EventDispatcher.__init__ (kivy\_event.c:4933)

File "kivy\properties.pyx", line 397, in kivy.properties.Property.__set__ (kivy\properties.c:4680)

File "kivy\properties.pyx", line 429, in kivy.properties.Property.set (kivy\properties.c:5203)

File "kivy\properties.pyx", line 480, in kivy.properties.Property.dispatch (kivy\properties.c:5779)

File "kivy\_event.pyx", line 1168, in kivy._event.EventObservers.dispatch (kivy\_event.c:12154)

File "kivy\_event.pyx", line 1074, in kivy._event.EventObservers._dispatch (kivy\_event.c:11451)

File "C:\Users\Matthew Einhorn\Desktop\Kivy-1.8.0-py3.3-win32\kivy\kivy\uix\popup.py", line 188, in on_content

if not hasattr(value, 'popup'):

File "kivy\properties.pyx", line 402, in kivy.properties.Property.__get__ (kivy\properties.c:4776)

File "kivy\properties.pyx", line 435, in kivy.properties.Property.get (kivy\properties.c:5416)

KeyError: 'popup'

```

The reason is because of https://github.com/kivy/kivy/blob/master/kivy/uix/popup.py#L188. Both Widgets are created first. Then upon creation of first Popup its `on_content` is executed and a property in that widget as well in Widget class is created. However, it's only initialized for w1, w2 `__storage` has not been initialized for w2. So when hasattr is called on widget 2 and in python 3 it causes obj.__storage['popup']` to be executed from get, because storage has not been initialized for 'popup' in this widget it crashes.

The question is, why does the Popup code do this `create_property` stuff?

</issue>
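The traceback in the issue is consistent with a known difference between Python versions: in Python 3, `hasattr` suppresses only `AttributeError`, whereas Python 2 suppressed nearly any exception raised during the lookup. The sketch below reproduces that behavior with a toy class. `Storage` and its `_values` dict are hypothetical and only imitate a getter that reads uninitialized storage; they are not Kivy's property machinery.

```python
class Storage:
    """Toy object whose attribute lookup fails with KeyError, not AttributeError."""

    _values = {}

    @property
    def popup(self):
        # Imitates a getter that reads storage never initialized for this key.
        return self._values["popup"]


obj = Storage()
try:
    hasattr(obj, "popup")
    outcome = "hasattr returned normally"
except KeyError as exc:
    # On Python 3 the KeyError escapes hasattr and lands here.
    outcome = "KeyError propagated: %s" % exc

print(outcome)
```

Under this reading, a guard such as `hasattr(value, 'popup')` is only safe when the lookup can fail with nothing other than `AttributeError`.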

<code>

[start of kivy/uix/popup.py]

1 '''

2 Popup

3 =====

4

5 .. versionadded:: 1.0.7

6

7 .. image:: images/popup.jpg

8 :align: right

9

10 The :class:`Popup` widget is used to create modal popups. By default, the popup

11 will cover the whole "parent" window. When you are creating a popup, you

12 must at least set a :attr:`Popup.title` and :attr:`Popup.content`.

13

14 Remember that the default size of a Widget is size_hint=(1, 1). If you don't

15 want your popup to be fullscreen, either use size hints with values less than 1

16 (for instance size_hint=(.8, .8)) or deactivate the size_hint and use

17 fixed size attributes.

18

19

20 .. versionchanged:: 1.4.0

21 The :class:`Popup` class now inherits from

22 :class:`~kivy.uix.modalview.ModalView`. The :class:`Popup` offers a default

23 layout with a title and a separation bar.

24

25 Examples

26 --------

27

28 Example of a simple 400x400 Hello world popup::

29

30 popup = Popup(title='Test popup',

31 content=Label(text='Hello world'),

32 size_hint=(None, None), size=(400, 400))

33

34 By default, any click outside the popup will dismiss/close it. If you don't

35 want that, you can set

36 :attr:`~kivy.uix.modalview.ModalView.auto_dismiss` to False::

37

38 popup = Popup(title='Test popup', content=Label(text='Hello world'),

39 auto_dismiss=False)

40 popup.open()

41

42 To manually dismiss/close the popup, use

43 :attr:`~kivy.uix.modalview.ModalView.dismiss`::

44

45 popup.dismiss()

46

47 Both :meth:`~kivy.uix.modalview.ModalView.open` and

48 :meth:`~kivy.uix.modalview.ModalView.dismiss` are bindable. That means you

49 can directly bind the function to an action, e.g. to a button's on_press::

50

51 # create content and add to the popup

52 content = Button(text='Close me!')

53 popup = Popup(content=content, auto_dismiss=False)

54

55 # bind the on_press event of the button to the dismiss function

56 content.bind(on_press=popup.dismiss)

57

58 # open the popup

59 popup.open()

60

61

62 Popup Events

63 ------------

64

65 There are two events available: `on_open` which is raised when the popup is

66 opening, and `on_dismiss` which is raised when the popup is closed.

67 For `on_dismiss`, you can prevent the

68 popup from closing by explictly returning True from your callback::

69

70 def my_callback(instance):

71 print('Popup', instance, 'is being dismissed but is prevented!')

72 return True

73 popup = Popup(content=Label(text='Hello world'))

74 popup.bind(on_dismiss=my_callback)

75 popup.open()

76

77 '''

78

79 __all__ = ('Popup', 'PopupException')

80

81 from kivy.uix.modalview import ModalView

82 from kivy.properties import (StringProperty, ObjectProperty, OptionProperty,

83 NumericProperty, ListProperty)

84

85

86 class PopupException(Exception):

87 '''Popup exception, fired when multiple content widgets are added to the

88 popup.

89

90 .. versionadded:: 1.4.0

91 '''

92

93

94 class Popup(ModalView):

95 '''Popup class. See module documentation for more information.

96

97 :Events:

98 `on_open`:

99 Fired when the Popup is opened.

100 `on_dismiss`:

101 Fired when the Popup is closed. If the callback returns True, the

102 dismiss will be canceled.

103 '''

104

105 title = StringProperty('No title')

106 '''String that represents the title of the popup.

107

108 :attr:`title` is a :class:`~kivy.properties.StringProperty` and defaults to

109 'No title'.

110 '''

111

112 title_size = NumericProperty('14sp')

113 '''Represents the font size of the popup title.

114

115 .. versionadded:: 1.6.0

116

117 :attr:`title_size` is a :class:`~kivy.properties.NumericProperty` and

118 defaults to '14sp'.

119 '''

120

121 title_align = OptionProperty('left',

122 options=['left', 'center', 'right', 'justify'])

123 '''Horizontal alignment of the title.

124

125 .. versionadded:: 1.9.0

126

127 :attr:`title_align` is a :class:`~kivy.properties.OptionProperty` and

128 defaults to 'left'. Available options are left, middle, right and justify.

129 '''

130

131 title_font = StringProperty('DroidSans')

132 '''Font used to render the title text.

133

134 .. versionadded:: 1.9.0

135

136 :attr:`title_font` is a :class:`~kivy.properties.StringProperty` and

137 defaults to 'DroidSans'.

138 '''

139

140 content = ObjectProperty(None)

141 '''Content of the popup that is displayed just under the title.

142

143 :attr:`content` is an :class:`~kivy.properties.ObjectProperty` and defaults

144 to None.

145 '''

146

147 title_color = ListProperty([1, 1, 1, 1])

148 '''Color used by the Title.

149

150 .. versionadded:: 1.8.0

151

152 :attr:`title_color` is a :class:`~kivy.properties.ListProperty` and

153 defaults to [1, 1, 1, 1].

154 '''

155

156 separator_color = ListProperty([47 / 255., 167 / 255., 212 / 255., 1.])

157 '''Color used by the separator between title and content.

158

159 .. versionadded:: 1.1.0

160

161 :attr:`separator_color` is a :class:`~kivy.properties.ListProperty` and

162 defaults to [47 / 255., 167 / 255., 212 / 255., 1.]

163 '''

164

165 separator_height = NumericProperty('2dp')

166 '''Height of the separator.

167

168 .. versionadded:: 1.1.0

169

170 :attr:`separator_height` is a :class:`~kivy.properties.NumericProperty` and

171 defaults to 2dp.

172 '''

173

174 # Internal properties used for graphical representation.

175

176 _container = ObjectProperty(None)

177

178 def add_widget(self, widget):

179 if self._container:

180 if self.content:

181 raise PopupException(

182 'Popup can have only one widget as content')

183 self.content = widget

184 else:

185 super(Popup, self).add_widget(widget)

186

187 def on_content(self, instance, value):

188 if not hasattr(value, 'popup'):

189 value.create_property('popup')

190 value.popup = self

191 if self._container:

192 self._container.clear_widgets()

193 self._container.add_widget(value)

194

195 def on__container(self, instance, value):

196 if value is None or self.content is None:

197 return

198 self._container.clear_widgets()

199 self._container.add_widget(self.content)

200

201 def on_touch_down(self, touch):

202 if self.disabled and self.collide_point(*touch.pos):

203 return True

204 return super(Popup, self).on_touch_down(touch)

205

206

207 if __name__ == '__main__':

208 from kivy.base import runTouchApp

209 from kivy.uix.button import Button

210 from kivy.uix.label import Label

211 from kivy.uix.gridlayout import GridLayout

212 from kivy.core.window import Window

213

214 # add popup

215 content = GridLayout(cols=1)

216 content_cancel = Button(text='Cancel', size_hint_y=None, height=40)

217 content.add_widget(Label(text='This is a hello world'))

218 content.add_widget(content_cancel)

219 popup = Popup(title='Test popup',

220 size_hint=(None, None), size=(256, 256),

221 content=content, disabled=True)

222 content_cancel.bind(on_release=popup.dismiss)

223

224 layout = GridLayout(cols=3)

225 for x in range(9):

226 btn = Button(text=str(x))

227 btn.bind(on_release=popup.open)

228 layout.add_widget(btn)

229

230 Window.add_widget(layout)

231

232 popup.open()

233

234 runTouchApp()

235

[end of kivy/uix/popup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/kivy/uix/popup.py b/kivy/uix/popup.py
--- a/kivy/uix/popup.py
+++ b/kivy/uix/popup.py
@@ -185,9 +185,6 @@
             super(Popup, self).add_widget(widget)
 
     def on_content(self, instance, value):
-        if not hasattr(value, 'popup'):
-            value.create_property('popup')
-        value.popup = self
         if self._container:
             self._container.clear_widgets()
             self._container.add_widget(value)

|

{"golden_diff": "diff --git a/kivy/uix/popup.py b/kivy/uix/popup.py\n--- a/kivy/uix/popup.py\n+++ b/kivy/uix/popup.py\n@@ -185,9 +185,6 @@\n super(Popup, self).add_widget(widget)\n \n def on_content(self, instance, value):\n- if not hasattr(value, 'popup'):\n- value.create_property('popup')\n- value.popup = self\n if self._container:\n self._container.clear_widgets()\n self._container.add_widget(value)\n", "issue": "Issues with popup on py3\nWhen running the following code in py3 on windows I get the following error:\n\n``` py\nfrom kivy.uix.widget import Widget\nfrom kivy.uix.popup import Popup\n\nw1 = Widget()\nw2 = Widget()\np1 = Popup(content=w1)\np2 = Popup(content=w2)\n```\n\n```\n Traceback (most recent call last):\n File \"playground8.py\", line 7, in <module>\n p2 = Popup(content=w2)\n File \"C:\\Users\\Matthew Einhorn\\Desktop\\Kivy-1.8.0-py3.3-win32\\kivy\\kivy\\uix\\modalview.py\", line 152, in __init__\n super(ModalView, self).__init__(**kwargs)\n File \"C:\\Users\\Matthew Einhorn\\Desktop\\Kivy-1.8.0-py3.3-win32\\kivy\\kivy\\uix\\anchorlayout.py\", line 68, in __init__\n super(AnchorLayout, self).__init__(**kwargs)\n File \"C:\\Users\\Matthew Einhorn\\Desktop\\Kivy-1.8.0-py3.3-win32\\kivy\\kivy\\uix\\layout.py\", line 66, in __init__\n super(Layout, self).__init__(**kwargs)\n File \"C:\\Users\\Matthew Einhorn\\Desktop\\Kivy-1.8.0-py3.3-win32\\kivy\\kivy\\uix\\widget.py\", line 261, in __init__\n super(Widget, self).__init__(**kwargs)\n File \"kivy\\_event.pyx\", line 271, in kivy._event.EventDispatcher.__init__ (kivy\\_event.c:4933)\n File \"kivy\\properties.pyx\", line 397, in kivy.properties.Property.__set__ (kivy\\properties.c:4680)\n File \"kivy\\properties.pyx\", line 429, in kivy.properties.Property.set (kivy\\properties.c:5203)\n File \"kivy\\properties.pyx\", line 480, in kivy.properties.Property.dispatch (kivy\\properties.c:5779)\n File \"kivy\\_event.pyx\", line 1168, in kivy._event.EventObservers.dispatch (kivy\\_event.c:12154)\n File 
\"kivy\\_event.pyx\", line 1074, in kivy._event.EventObservers._dispatch (kivy\\_event.c:11451)\n File \"C:\\Users\\Matthew Einhorn\\Desktop\\Kivy-1.8.0-py3.3-win32\\kivy\\kivy\\uix\\popup.py\", line 188, in on_content\n if not hasattr(value, 'popup'):\n File \"kivy\\properties.pyx\", line 402, in kivy.properties.Property.__get__ (kivy\\properties.c:4776)\n File \"kivy\\properties.pyx\", line 435, in kivy.properties.Property.get (kivy\\properties.c:5416)\n KeyError: 'popup'\n```\n\nThe reason is because of https://github.com/kivy/kivy/blob/master/kivy/uix/popup.py#L188. Both Widgets are created first. Then upon creation of first Popup its `on_content` is executed and a property in that widget as well in Widget class is created. However, it's only initialized for w1, w2 `__storage` has not been initialized for w2. So when hasattr is called on widget 2 and in python 3 it causes obj.__storage['popup']` to be executed from get, because storage has not been initialized for 'popup' in this widget it crashes.\n\nThe question is, why does the Popup code do this `create_property` stuff?\n\n", "before_files": [{"content": "'''\nPopup\n=====\n\n.. versionadded:: 1.0.7\n\n.. image:: images/popup.jpg\n :align: right\n\nThe :class:`Popup` widget is used to create modal popups. By default, the popup\nwill cover the whole \"parent\" window. When you are creating a popup, you\nmust at least set a :attr:`Popup.title` and :attr:`Popup.content`.\n\nRemember that the default size of a Widget is size_hint=(1, 1). If you don't\nwant your popup to be fullscreen, either use size hints with values less than 1\n(for instance size_hint=(.8, .8)) or deactivate the size_hint and use\nfixed size attributes.\n\n\n.. versionchanged:: 1.4.0\n The :class:`Popup` class now inherits from\n :class:`~kivy.uix.modalview.ModalView`. 
The :class:`Popup` offers a default\n layout with a title and a separation bar.\n\nExamples\n--------\n\nExample of a simple 400x400 Hello world popup::\n\n popup = Popup(title='Test popup',\n content=Label(text='Hello world'),\n size_hint=(None, None), size=(400, 400))\n\nBy default, any click outside the popup will dismiss/close it. If you don't\nwant that, you can set\n:attr:`~kivy.uix.modalview.ModalView.auto_dismiss` to False::\n\n popup = Popup(title='Test popup', content=Label(text='Hello world'),\n auto_dismiss=False)\n popup.open()\n\nTo manually dismiss/close the popup, use\n:attr:`~kivy.uix.modalview.ModalView.dismiss`::\n\n popup.dismiss()\n\nBoth :meth:`~kivy.uix.modalview.ModalView.open` and\n:meth:`~kivy.uix.modalview.ModalView.dismiss` are bindable. That means you\ncan directly bind the function to an action, e.g. to a button's on_press::\n\n # create content and add to the popup\n content = Button(text='Close me!')\n popup = Popup(content=content, auto_dismiss=False)\n\n # bind the on_press event of the button to the dismiss function\n content.bind(on_press=popup.dismiss)\n\n # open the popup\n popup.open()\n\n\nPopup Events\n------------\n\nThere are two events available: `on_open` which is raised when the popup is\nopening, and `on_dismiss` which is raised when the popup is closed.\nFor `on_dismiss`, you can prevent the\npopup from closing by explictly returning True from your callback::\n\n def my_callback(instance):\n print('Popup', instance, 'is being dismissed but is prevented!')\n return True\n popup = Popup(content=Label(text='Hello world'))\n popup.bind(on_dismiss=my_callback)\n popup.open()\n\n'''\n\n__all__ = ('Popup', 'PopupException')\n\nfrom kivy.uix.modalview import ModalView\nfrom kivy.properties import (StringProperty, ObjectProperty, OptionProperty,\n NumericProperty, ListProperty)\n\n\nclass PopupException(Exception):\n '''Popup exception, fired when multiple content widgets are added to the\n popup.\n\n .. 
versionadded:: 1.4.0\n '''\n\n\nclass Popup(ModalView):\n '''Popup class. See module documentation for more information.\n\n :Events:\n `on_open`:\n Fired when the Popup is opened.\n `on_dismiss`:\n Fired when the Popup is closed. If the callback returns True, the\n dismiss will be canceled.\n '''\n\n title = StringProperty('No title')\n '''String that represents the title of the popup.\n\n :attr:`title` is a :class:`~kivy.properties.StringProperty` and defaults to\n 'No title'.\n '''\n\n title_size = NumericProperty('14sp')\n '''Represents the font size of the popup title.\n\n .. versionadded:: 1.6.0\n\n :attr:`title_size` is a :class:`~kivy.properties.NumericProperty` and\n defaults to '14sp'.\n '''\n\n title_align = OptionProperty('left',\n options=['left', 'center', 'right', 'justify'])\n '''Horizontal alignment of the title.\n\n .. versionadded:: 1.9.0\n\n :attr:`title_align` is a :class:`~kivy.properties.OptionProperty` and\n defaults to 'left'. Available options are left, middle, right and justify.\n '''\n\n title_font = StringProperty('DroidSans')\n '''Font used to render the title text.\n\n .. versionadded:: 1.9.0\n\n :attr:`title_font` is a :class:`~kivy.properties.StringProperty` and\n defaults to 'DroidSans'.\n '''\n\n content = ObjectProperty(None)\n '''Content of the popup that is displayed just under the title.\n\n :attr:`content` is an :class:`~kivy.properties.ObjectProperty` and defaults\n to None.\n '''\n\n title_color = ListProperty([1, 1, 1, 1])\n '''Color used by the Title.\n\n .. versionadded:: 1.8.0\n\n :attr:`title_color` is a :class:`~kivy.properties.ListProperty` and\n defaults to [1, 1, 1, 1].\n '''\n\n separator_color = ListProperty([47 / 255., 167 / 255., 212 / 255., 1.])\n '''Color used by the separator between title and content.\n\n .. 
versionadded:: 1.1.0\n\n :attr:`separator_color` is a :class:`~kivy.properties.ListProperty` and\n defaults to [47 / 255., 167 / 255., 212 / 255., 1.]\n '''\n\n separator_height = NumericProperty('2dp')\n '''Height of the separator.\n\n .. versionadded:: 1.1.0\n\n :attr:`separator_height` is a :class:`~kivy.properties.NumericProperty` and\n defaults to 2dp.\n '''\n\n # Internal properties used for graphical representation.\n\n _container = ObjectProperty(None)\n\n def add_widget(self, widget):\n if self._container:\n if self.content:\n raise PopupException(\n 'Popup can have only one widget as content')\n self.content = widget\n else:\n super(Popup, self).add_widget(widget)\n\n def on_content(self, instance, value):\n if not hasattr(value, 'popup'):\n value.create_property('popup')\n value.popup = self\n if self._container:\n self._container.clear_widgets()\n self._container.add_widget(value)\n\n def on__container(self, instance, value):\n if value is None or self.content is None:\n return\n self._container.clear_widgets()\n self._container.add_widget(self.content)\n\n def on_touch_down(self, touch):\n if self.disabled and self.collide_point(*touch.pos):\n return True\n return super(Popup, self).on_touch_down(touch)\n\n\nif __name__ == '__main__':\n from kivy.base import runTouchApp\n from kivy.uix.button import Button\n from kivy.uix.label import Label\n from kivy.uix.gridlayout import GridLayout\n from kivy.core.window import Window\n\n # add popup\n content = GridLayout(cols=1)\n content_cancel = Button(text='Cancel', size_hint_y=None, height=40)\n content.add_widget(Label(text='This is a hello world'))\n content.add_widget(content_cancel)\n popup = Popup(title='Test popup',\n size_hint=(None, None), size=(256, 256),\n content=content, disabled=True)\n content_cancel.bind(on_release=popup.dismiss)\n\n layout = GridLayout(cols=3)\n for x in range(9):\n btn = Button(text=str(x))\n btn.bind(on_release=popup.open)\n layout.add_widget(btn)\n\n 
Window.add_widget(layout)\n\n popup.open()\n\n runTouchApp()\n", "path": "kivy/uix/popup.py"}]}

| 3,785 | 122 |

gh_patches_debug_60845

|

rasdani/github-patches

|

git_diff

|

uclapi__uclapi-226

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

timetable/data/departments endpoint returns 500 error

The timetable/data/departments endpoint is currently returning a 500 error on any request.

I know it's not a documented endpoint, but it would be nice if it worked :)

It looks to me like the problem is line 85 below - `rate_limiting_data` is being passed as an argument to `append`.

https://github.com/uclapi/uclapi/blob/cfd6753ae3d979bbe53573dad68babc2de19e04d/backend/uclapi/timetable/views.py#L82-L85

Removing that and replacing with this:

```python

depts["departments"].append({

"department_id": dept.deptid,

"name": dept.name})

```

should fix it, though I don't have the whole API setup installed, so I can't be sure.
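
The failure described above can be reproduced without Django. The following is a minimal sketch, assuming plain Python lists and tuples in place of the `Depts` queryset; `broken_append` and `build_departments` are hypothetical names used only for illustration. `list.append` takes exactly one positional argument and no keyword arguments, so the original call raises a `TypeError`, which an unhandled view would presumably surface as a 500 response.

```python
def broken_append():
    """Mimic the original call: a keyword argument passed to list.append."""
    items = []
    try:
        # list.append accepts no keyword arguments, so this raises TypeError.
        items.append({"name": "x"}, rate_limiting_data={})
    except TypeError as exc:
        return type(exc).__name__
    return None


def build_departments(rows):
    """Build the response dict the way the proposed fix does.

    `rows` is an assumed stand-in for the queryset: (dept_id, name) tuples.
    """
    depts = {"ok": True, "departments": []}
    for dept_id, name in rows:
        # Only the dict is appended; rate limiting data belongs to the
        # response object, not to the list.
        depts["departments"].append({
            "department_id": dept_id,
            "name": name,
        })
    return depts


if __name__ == "__main__":
    print(broken_append())
    print(build_departments([("D1", "Mathematics")]))
```

Keeping `rate_limiting_data=kwargs` only on the final `JsonResponse(...)` call matches how the other views in the same file are written.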

</issue>

<code>

[start of backend/uclapi/timetable/views.py]

1 from django.conf import settings
2
3 from rest_framework.decorators import api_view
4
5 from common.helpers import PrettyJsonResponse as JsonResponse
6
7 from .models import Lock, Course, Depts, ModuleA, ModuleB
8
9 from .app_helpers import get_student_timetable, get_custom_timetable
10
11 from common.decorators import uclapi_protected_endpoint
12
13 _SETID = settings.ROOMBOOKINGS_SETID
14
15
16 @api_view(["GET"])
17 @uclapi_protected_endpoint(personal_data=True, required_scopes=['timetable'])
18 def get_personal_timetable(request, *args, **kwargs):
19     token = kwargs['token']
20     user = token.user
21     try:
22         date_filter = request.GET["date_filter"]
23         timetable = get_student_timetable(user.employee_id, date_filter)
24     except KeyError:
25         timetable = get_student_timetable(user.employee_id)
26
27     response = {
28         "ok": True,
29         "timetable": timetable
30     }
31     return JsonResponse(response, rate_limiting_data=kwargs)
32
33
34 @api_view(["GET"])
35 @uclapi_protected_endpoint()
36 def get_modules_timetable(request, *args, **kwargs):
37     module_ids = request.GET.get("modules")
38     if module_ids is None:
39         return JsonResponse({
40             "ok": False,
41             "error": "No module IDs provided."
42         }, rate_limiting_data=kwargs)
43
44     try:
45         modules = module_ids.split(',')
46     except ValueError:
47         return JsonResponse({
48             "ok": False,
49             "error": "Invalid module IDs provided."
50         }, rate_limiting_data=kwargs)
51
52     try:
53         date_filter = request.GET["date_filter"]
54         custom_timetable = get_custom_timetable(modules, date_filter)
55     except KeyError:
56         custom_timetable = get_custom_timetable(modules)
57
58     if custom_timetable:
59         response_json = {
60             "ok": True,
61             "timetable": custom_timetable
62         }
63         return JsonResponse(response_json, rate_limiting_data=kwargs)
64     else:
65         response_json = {
66             "ok": False,
67             "error": "One or more invalid Module IDs supplied."
68         }
69         response = JsonResponse(response_json, rate_limiting_data=kwargs)
70         response.status_code = 400
71         return response
72
73
74 @api_view(["GET"])
75 @uclapi_protected_endpoint()
76 def get_departments(request, *args, **kwargs):
77     """
78     Returns all departments at UCL
79     """
80     depts = {"ok": True, "departments": []}
81     for dept in Depts.objects.all():
82         depts["departments"].append({
83             "department_id": dept.deptid,
84             "name": dept.name
85         }, rate_limiting_data=kwargs)
86     return JsonResponse(depts, rate_limiting_data=kwargs)
87
88
89 @api_view(["GET"])
90 @uclapi_protected_endpoint()
91 def get_department_courses(request, *args, **kwargs):
92     """
93     Returns all the courses in UCL with relevant ID
94     """
95     try:
96         department_id = request.GET["department"]
97     except KeyError:
98         response = JsonResponse({
99             "ok": False,
100             "error": "Supply a Department ID using the department parameter."
101         }, rate_limiting_data=kwargs)
102         response.status_code = 400
103         return response
104
105     courses = {"ok": True, "courses": []}
106     for course in Course.objects.filter(owner=department_id, setid=_SETID):
107         courses["courses"].append({
108             "course_name": course.name,
109             "course_id": course.courseid,
110             "years": course.numyears
111         })
112     return JsonResponse(courses, rate_limiting_data=kwargs)
113
114
115 @api_view(["GET"])
116 @uclapi_protected_endpoint()
117 def get_department_modules(request, *args, **kwargs):
118     """
119     Returns all modules taught by a particular department.
120     """
121     try:
122         department_id = request.GET["department"]
123     except KeyError:
124         response = JsonResponse({
125             "ok": False,
126             "error": "Supply a Department ID using the department parameter."
127         }, rate_limiting_data=kwargs)
128         response.status_code = 400
129         return response
130
131     modules = {"ok": True, "modules": []}
132     lock = Lock.objects.all()[0]
133     m = ModuleA if lock.a else ModuleB
134     for module in m.objects.filter(owner=department_id, setid=_SETID):
135         modules["modules"].append({
136             "module_id": module.moduleid,
137             "name": module.name,
138             "module_code": module.linkcode,
139             "class_size": module.csize
140         })
141
142     return JsonResponse(modules, rate_limiting_data=kwargs)
143

[end of backend/uclapi/timetable/views.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/backend/uclapi/timetable/views.py b/backend/uclapi/timetable/views.py
--- a/backend/uclapi/timetable/views.py
+++ b/backend/uclapi/timetable/views.py
@@ -82,7 +82,7 @@
         depts["departments"].append({
             "department_id": dept.deptid,
             "name": dept.name
-        }, rate_limiting_data=kwargs)
+        })
     return JsonResponse(depts, rate_limiting_data=kwargs)

|

{"golden_diff": "diff --git a/backend/uclapi/timetable/views.py b/backend/uclapi/timetable/views.py\n--- a/backend/uclapi/timetable/views.py\n+++ b/backend/uclapi/timetable/views.py\n@@ -82,7 +82,7 @@\n depts[\"departments\"].append({\n \"department_id\": dept.deptid,\n \"name\": dept.name\n- }, rate_limiting_data=kwargs)\n+ })\n return JsonResponse(depts, rate_limiting_data=kwargs)\n", "issue": "timetable/data/departments endpoint returns 500 error\nThe timetable/data/departments endpoint is currently returning a 500 error on any request.\r\n\r\nI know it's not a documented endpoint, but it would be nice if it worked :)\r\n\r\nIt looks to me like the problem is line 85 below - `rate_limiting_data` is being passed as an argument to `append`. \r\n\r\nhttps://github.com/uclapi/uclapi/blob/cfd6753ae3d979bbe53573dad68babc2de19e04d/backend/uclapi/timetable/views.py#L82-L85\r\n\r\nRemoving that and replacing with this:\r\n```python\r\ndepts[\"departments\"].append({ \r\n\"department_id\": dept.deptid, \r\n\"name\": dept.name}) \r\n```\r\nshould fix it, though I don't have the whole API setup installed, so I can't be sure.\n", "before_files": [{"content": "from django.conf import settings\n\nfrom rest_framework.decorators import api_view\n\nfrom common.helpers import PrettyJsonResponse as JsonResponse\n\nfrom .models import Lock, Course, Depts, ModuleA, ModuleB\n\nfrom .app_helpers import get_student_timetable, get_custom_timetable\n\nfrom common.decorators import uclapi_protected_endpoint\n\n_SETID = settings.ROOMBOOKINGS_SETID\n\n\n@api_view([\"GET\"])\n@uclapi_protected_endpoint(personal_data=True, required_scopes=['timetable'])\ndef get_personal_timetable(request, *args, **kwargs):\n token = kwargs['token']\n user = token.user\n try:\n date_filter = request.GET[\"date_filter\"]\n timetable = get_student_timetable(user.employee_id, date_filter)\n except KeyError:\n timetable = get_student_timetable(user.employee_id)\n\n response = {\n \"ok\": True,\n \"timetable\": 
timetable\n }\n return JsonResponse(response, rate_limiting_data=kwargs)\n\n\n@api_view([\"GET\"])\n@uclapi_protected_endpoint()\ndef get_modules_timetable(request, *args, **kwargs):\n module_ids = request.GET.get(\"modules\")\n if module_ids is None:\n return JsonResponse({\n \"ok\": False,\n \"error\": \"No module IDs provided.\"\n }, rate_limiting_data=kwargs)\n\n try:\n modules = module_ids.split(',')\n except ValueError:\n return JsonResponse({\n \"ok\": False,\n \"error\": \"Invalid module IDs provided.\"\n }, rate_limiting_data=kwargs)\n\n try:\n date_filter = request.GET[\"date_filter\"]\n custom_timetable = get_custom_timetable(modules, date_filter)\n except KeyError:\n custom_timetable = get_custom_timetable(modules)\n\n if custom_timetable:\n response_json = {\n \"ok\": True,\n \"timetable\": custom_timetable\n }\n return JsonResponse(response_json, rate_limiting_data=kwargs)\n else:\n response_json = {\n \"ok\": False,\n \"error\": \"One or more invalid Module IDs supplied.\"\n }\n response = JsonResponse(response_json, rate_limiting_data=kwargs)\n response.status_code = 400\n return response\n\n\n@api_view([\"GET\"])\n@uclapi_protected_endpoint()\ndef get_departments(request, *args, **kwargs):\n \"\"\"\n Returns all departments at UCL\n \"\"\"\n depts = {\"ok\": True, \"departments\": []}\n for dept in Depts.objects.all():\n depts[\"departments\"].append({\n \"department_id\": dept.deptid,\n \"name\": dept.name\n }, rate_limiting_data=kwargs)\n return JsonResponse(depts, rate_limiting_data=kwargs)\n\n\n@api_view([\"GET\"])\n@uclapi_protected_endpoint()\ndef get_department_courses(request, *args, **kwargs):\n \"\"\"\n Returns all the courses in UCL with relevant ID\n \"\"\"\n try:\n department_id = request.GET[\"department\"]\n except KeyError:\n response = JsonResponse({\n \"ok\": False,\n \"error\": \"Supply a Department ID using the department parameter.\"\n }, rate_limiting_data=kwargs)\n response.status_code = 400\n return response\n\n courses = 
{\"ok\": True, \"courses\": []}\n for course in Course.objects.filter(owner=department_id, setid=_SETID):\n courses[\"courses\"].append({\n \"course_name\": course.name,\n \"course_id\": course.courseid,\n \"years\": course.numyears\n })\n return JsonResponse(courses, rate_limiting_data=kwargs)\n\n\n@api_view([\"GET\"])\n@uclapi_protected_endpoint()\ndef get_department_modules(request, *args, **kwargs):\n \"\"\"\n Returns all modules taught by a particular department.\n \"\"\"\n try:\n department_id = request.GET[\"department\"]\n except KeyError:\n response = JsonResponse({\n \"ok\": False,\n \"error\": \"Supply a Department ID using the department parameter.\"\n }, rate_limiting_data=kwargs)\n response.status_code = 400\n return response\n\n modules = {\"ok\": True, \"modules\": []}\n lock = Lock.objects.all()[0]\n m = ModuleA if lock.a else ModuleB\n for module in m.objects.filter(owner=department_id, setid=_SETID):\n modules[\"modules\"].append({\n \"module_id\": module.moduleid,\n \"name\": module.name,\n \"module_code\": module.linkcode,\n \"class_size\": module.csize\n })\n\n return JsonResponse(modules, rate_limiting_data=kwargs)\n", "path": "backend/uclapi/timetable/views.py"}]}

| 2,053 | 111 |

gh_patches_debug_21817

|

rasdani/github-patches

|

git_diff

|

pantsbuild__pants-18258

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`shfmt` assumes downloaded executable will be named `shfmt_{version}_{platform}`, and breaks if it isn't

**Describe the bug**

To reduce network transfer & flakiness during CI, we've pre-cached all the "external" tools used by Pants in our executor container. As part of this I've overridden the `url_template` for each tool to use a `file://` URL. The URL-paths I ended up using in the image were "simplified" from the defaults - for example, I have:

```toml

[shfmt]

url_template = "file:///opt/pants-tools/shfmt/{version}/shfmt"

```

When CI runs with this config, it fails with:

```

Error launching process: Os { code: 2, kind: NotFound, message: "No such file or directory" }

```

I `ssh`'d into one of the executors that hit this failure, and looked inside the failing sandbox. There I saw:

1. The `shfmt` binary _was_ in the sandbox, and runnable

2. According to `__run.sh`, Pants was trying to invoke `./shfmt_v3.2.4_linux_amd64` instead of plain `./shfmt`

I believe this is happening because the `shfmt` subsystem defines `generate_exe` to hard-code the same naming pattern as is used in the default `url_pattern`: https://github.com/pantsbuild/pants/blob/ac9e27b142b14f079089522c1175a9e380291100/src/python/pants/backend/shell/lint/shfmt/subsystem.py#L56-L58

I think things would operate as expected if we deleted that `generate_exe` override, since the `shfmt` download is the executable itself.
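
The mismatch described above can be sketched in isolation. This is a minimal illustration, not Pants code: `exe_from_url` and `hard_coded_exe` are hypothetical helpers, and the sketch assumes that, once the override is removed, the executable name is taken from the last path segment of the rendered URL. Under that assumption the two approaches agree for the default template and diverge for the custom `file://` template.

```python
DEFAULT_TEMPLATE = (
    "https://github.com/mvdan/sh/releases/download/{version}/shfmt_{version}_{platform}"
)
CUSTOM_TEMPLATE = "file:///opt/pants-tools/shfmt/{version}/shfmt"


def exe_from_url(url_template: str, version: str, platform: str) -> str:
    """Hypothetical: derive the executable name from the rendered URL."""
    # str.format ignores keyword arguments the template does not use,
    # so the custom template (which has no {platform}) renders fine.
    url = url_template.format(version=version, platform=platform)
    return "./" + url.rsplit("/", 1)[-1]


def hard_coded_exe(version: str, platform: str) -> str:
    """Hypothetical: mirror the naming pattern hard-coded in the override."""
    return f"./shfmt_{version}_{platform}"


if __name__ == "__main__":
    version, platform = "v3.2.4", "linux_amd64"
    print(exe_from_url(DEFAULT_TEMPLATE, version, platform))
    print(exe_from_url(CUSTOM_TEMPLATE, version, platform))
    print(hard_coded_exe(version, platform))
```

With the custom template the file placed in the sandbox would be named `shfmt`, while the hard-coded pattern still points at `shfmt_v3.2.4_linux_amd64`, which is consistent with the `NotFound` error reported above.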

**Pants version**

2.15.0rc4

**OS**

Observed on Linux

**Additional info**

https://app.toolchain.com/organizations/color/repos/color/builds/pants_run_2023_02_15_12_48_26_897_660d20c55cc041fbb63374c79a4402b0/

</issue>

<code>

[start of src/python/pants/backend/shell/lint/shfmt/subsystem.py]

1 # Copyright 2021 Pants project contributors (see CONTRIBUTORS.md).
2 # Licensed under the Apache License, Version 2.0 (see LICENSE).
3
4 from __future__ import annotations
5
6 import os.path
7 from typing import Iterable
8
9 from pants.core.util_rules.config_files import ConfigFilesRequest
10 from pants.core.util_rules.external_tool import TemplatedExternalTool
11 from pants.engine.platform import Platform
12 from pants.option.option_types import ArgsListOption, BoolOption, SkipOption
13 from pants.util.strutil import softwrap
14
15
16 class Shfmt(TemplatedExternalTool):
17     options_scope = "shfmt"
18     name = "shfmt"
19     help = "An autoformatter for shell scripts (https://github.com/mvdan/sh)."
20
21     default_version = "v3.6.0"
22     default_known_versions = [
23         "v3.2.4|macos_arm64 |e70fc42e69debe3e400347d4f918630cdf4bf2537277d672bbc43490387508ec|2998546",
24         "v3.2.4|macos_x86_64|43a0461a1b54070ddc04fbbf1b78f7861ee39a65a61f5466d15a39c4aba4f917|2980208",
25         "v3.2.4|linux_arm64 |6474d9cc08a1c9fe2ef4be7a004951998e3067d46cf55a011ddd5ff7bfab3de6|2752512",
26         "v3.2.4|linux_x86_64|3f5a47f8fec27fae3e06d611559a2063f5d27e4b9501171dde9959b8c60a3538|2797568",
27         "v3.6.0|macos_arm64 |633f242246ee0a866c5f5df25cbf61b6af0d5e143555aca32950059cf13d91e0|3065202",
28         "v3.6.0|macos_x86_64|b8c9c025b498e2816b62f0b717f6032e9ab49e725a45b8205f52f66318f17185|3047552",
29         "v3.6.0|linux_arm64 |fb1cf0af3dbe9aac7d98e38e3c7426765208ecfe23cb2da51037bb234776fd70|2818048",
30         "v3.6.0|linux_x86_64|5741a02a641de7e56b8da170e71a97e58050d66a3cf485fb268d6a5a8bb74afb|2850816",
31     ]
32
33     default_url_template = (
34         "https://github.com/mvdan/sh/releases/download/{version}/shfmt_{version}_{platform}"
35     )
36     default_url_platform_mapping = {
37         "macos_arm64": "darwin_arm64",
38         "macos_x86_64": "darwin_amd64",
39         "linux_arm64": "linux_arm64",
40         "linux_x86_64": "linux_amd64",
41     }
42
43     skip = SkipOption("fmt", "lint")
44     args = ArgsListOption(example="-i 2")
45     config_discovery = BoolOption(
46         default=True,
47         advanced=True,
48         help=softwrap(
49             """
50             If true, Pants will include all relevant `.editorconfig` files during runs.
51             See https://editorconfig.org.
52             """
53         ),
54     )
55
56     def generate_exe(self, plat: Platform) -> str:
57         plat_str = self.default_url_platform_mapping[plat.value]
58         return f"./shfmt_{self.version}_{plat_str}"
59
60     def config_request(self, dirs: Iterable[str]) -> ConfigFilesRequest:
61         # Refer to https://editorconfig.org/#file-location for how config files are discovered.
62         candidates = (os.path.join(d, ".editorconfig") for d in ("", *dirs))
63         return ConfigFilesRequest(
64             discovery=self.config_discovery,
65             check_content={fp: b"[*.sh]" for fp in candidates},
66         )
67

[end of src/python/pants/backend/shell/lint/shfmt/subsystem.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/src/python/pants/backend/shell/lint/shfmt/subsystem.py b/src/python/pants/backend/shell/lint/shfmt/subsystem.py
--- a/src/python/pants/backend/shell/lint/shfmt/subsystem.py
+++ b/src/python/pants/backend/shell/lint/shfmt/subsystem.py
@@ -8,7 +8,6 @@
 
 from pants.core.util_rules.config_files import ConfigFilesRequest
 from pants.core.util_rules.external_tool import TemplatedExternalTool
-from pants.engine.platform import Platform
 from pants.option.option_types import ArgsListOption, BoolOption, SkipOption
 from pants.util.strutil import softwrap
 
@@ -53,10 +52,6 @@
         ),
     )
 
-    def generate_exe(self, plat: Platform) -> str:
-        plat_str = self.default_url_platform_mapping[plat.value]
-        return f"./shfmt_{self.version}_{plat_str}"
-
     def config_request(self, dirs: Iterable[str]) -> ConfigFilesRequest:
         # Refer to https://editorconfig.org/#file-location for how config files are discovered.
         candidates = (os.path.join(d, ".editorconfig") for d in ("", *dirs))

|

{"golden_diff": "diff --git a/src/python/pants/backend/shell/lint/shfmt/subsystem.py b/src/python/pants/backend/shell/lint/shfmt/subsystem.py\n--- a/src/python/pants/backend/shell/lint/shfmt/subsystem.py\n+++ b/src/python/pants/backend/shell/lint/shfmt/subsystem.py\n@@ -8,7 +8,6 @@\n \n from pants.core.util_rules.config_files import ConfigFilesRequest\n from pants.core.util_rules.external_tool import TemplatedExternalTool\n-from pants.engine.platform import Platform\n from pants.option.option_types import ArgsListOption, BoolOption, SkipOption\n from pants.util.strutil import softwrap\n \n@@ -53,10 +52,6 @@\n ),\n )\n \n- def generate_exe(self, plat: Platform) -> str:\n- plat_str = self.default_url_platform_mapping[plat.value]\n- return f\"./shfmt_{self.version}_{plat_str}\"\n-\n def config_request(self, dirs: Iterable[str]) -> ConfigFilesRequest:\n # Refer to https://editorconfig.org/#file-location for how config files are discovered.\n candidates = (os.path.join(d, \".editorconfig\") for d in (\"\", *dirs))\n", "issue": "`shfmt` assumes downloaded executable will be named `shfmt_{version}_{platform}`, and breaks if it isn't\n**Describe the bug**\r\n\r\nTo reduce network transfer & flakiness during CI, we've pre-cached all the \"external\" tools used by Pants in our executor container. As part of this I've overridden the `url_template` for each tool to use a `file://` URL. The URL-paths I ended up using in the image were \"simplified\" from the defaults - for example, I have:\r\n```toml\r\n[shfmt]\r\nurl_template = \"file:///opt/pants-tools/shfmt/{version}/shfmt\"\r\n```\r\n\r\nWhen CI runs with this config, it fails with:\r\n```\r\nError launching process: Os { code: 2, kind: NotFound, message: \"No such file or directory\" }\r\n```\r\n\r\nI `ssh`'d into one of the executors that hit this failure, and looked inside the failing sandbox. There I saw:\r\n1. The `shfmt` binary _was_ in the sandbox, and runnable\r\n2. 
According to `__run.sh`, Pants was trying to invoke `./shfmt_v3.2.4_linux_amd64` instead of plain `./shfmt`\r\n\r\nI believe this is happening because the `shfmt` subsystem defines `generate_exe` to hard-code the same naming pattern as is used in the default `url_pattern`: https://github.com/pantsbuild/pants/blob/ac9e27b142b14f079089522c1175a9e380291100/src/python/pants/backend/shell/lint/shfmt/subsystem.py#L56-L58\r\n\r\nI think things would operate as expected if we deleted that `generate_exe` override, since the `shfmt` download is the executable itself.\r\n\r\n**Pants version**\r\n\r\n2.15.0rc4\r\n\r\n**OS**\r\n\r\nObserved on Linux\r\n\r\n**Additional info**\r\n\r\nhttps://app.toolchain.com/organizations/color/repos/color/builds/pants_run_2023_02_15_12_48_26_897_660d20c55cc041fbb63374c79a4402b0/\r\n\n", "before_files": [{"content": "# Copyright 2021 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import annotations\n\nimport os.path\nfrom typing import Iterable\n\nfrom pants.core.util_rules.config_files import ConfigFilesRequest\nfrom pants.core.util_rules.external_tool import TemplatedExternalTool\nfrom pants.engine.platform import Platform\nfrom pants.option.option_types import ArgsListOption, BoolOption, SkipOption\nfrom pants.util.strutil import softwrap\n\n\nclass Shfmt(TemplatedExternalTool):\n options_scope = \"shfmt\"\n name = \"shfmt\"\n help = \"An autoformatter for shell scripts (https://github.com/mvdan/sh).\"\n\n default_version = \"v3.6.0\"\n default_known_versions = [\n \"v3.2.4|macos_arm64 |e70fc42e69debe3e400347d4f918630cdf4bf2537277d672bbc43490387508ec|2998546\",\n \"v3.2.4|macos_x86_64|43a0461a1b54070ddc04fbbf1b78f7861ee39a65a61f5466d15a39c4aba4f917|2980208\",\n \"v3.2.4|linux_arm64 |6474d9cc08a1c9fe2ef4be7a004951998e3067d46cf55a011ddd5ff7bfab3de6|2752512\",\n 
\"v3.2.4|linux_x86_64|3f5a47f8fec27fae3e06d611559a2063f5d27e4b9501171dde9959b8c60a3538|2797568\",\n \"v3.6.0|macos_arm64 |633f242246ee0a866c5f5df25cbf61b6af0d5e143555aca32950059cf13d91e0|3065202\",\n \"v3.6.0|macos_x86_64|b8c9c025b498e2816b62f0b717f6032e9ab49e725a45b8205f52f66318f17185|3047552\",\n \"v3.6.0|linux_arm64 |fb1cf0af3dbe9aac7d98e38e3c7426765208ecfe23cb2da51037bb234776fd70|2818048\",\n \"v3.6.0|linux_x86_64|5741a02a641de7e56b8da170e71a97e58050d66a3cf485fb268d6a5a8bb74afb|2850816\",\n ]\n\n default_url_template = (\n \"https://github.com/mvdan/sh/releases/download/{version}/shfmt_{version}_{platform}\"\n )\n default_url_platform_mapping = {\n \"macos_arm64\": \"darwin_arm64\",\n \"macos_x86_64\": \"darwin_amd64\",\n \"linux_arm64\": \"linux_arm64\",\n \"linux_x86_64\": \"linux_amd64\",\n }\n\n skip = SkipOption(\"fmt\", \"lint\")\n args = ArgsListOption(example=\"-i 2\")\n config_discovery = BoolOption(\n default=True,\n advanced=True,\n help=softwrap(\n \"\"\"\n If true, Pants will include all relevant `.editorconfig` files during runs.\n See https://editorconfig.org.\n \"\"\"\n ),\n )\n\n def generate_exe(self, plat: Platform) -> str:\n plat_str = self.default_url_platform_mapping[plat.value]\n return f\"./shfmt_{self.version}_{plat_str}\"\n\n def config_request(self, dirs: Iterable[str]) -> ConfigFilesRequest:\n # Refer to https://editorconfig.org/#file-location for how config files are discovered.\n candidates = (os.path.join(d, \".editorconfig\") for d in (\"\", *dirs))\n return ConfigFilesRequest(\n discovery=self.config_discovery,\n check_content={fp: b\"[*.sh]\" for fp in candidates},\n )\n", "path": "src/python/pants/backend/shell/lint/shfmt/subsystem.py"}]}

| 2,317 | 253 |

gh_patches_debug_3045

|

rasdani/github-patches

|

git_diff

|

ethereum__web3.py-1095

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Disallow Python 3.5.1

### What was wrong?

It looks like `typing.NewType` may not be available in python 3.5.1

https://github.com/ethereum/web3.py/issues/1091

### How can it be fixed?

Check which version added `NewType` and restrict the Python versions declared in `setup.py` to `>=` that version

</issue>

<code>

[start of setup.py]

1 #!/usr/bin/env python

2 # -*- coding: utf-8 -*-

3 from setuptools import (

4 find_packages,

5 setup,

6 )

7

8 extras_require = {

9 'tester': [

10 "eth-tester[py-evm]==0.1.0-beta.32",

11 "py-geth>=2.0.1,<3.0.0",

12 ],

13 'testrpc': ["eth-testrpc>=1.3.3,<2.0.0"],

14 'linter': [

15 "flake8==3.4.1",

16 "isort>=4.2.15,<5",

17 ],

18 'docs': [

19 "mock",

20 "sphinx-better-theme>=0.1.4",

21 "click>=5.1",

22 "configparser==3.5.0",

23 "contextlib2>=0.5.4",

24 #"eth-testrpc>=0.8.0",

25 #"ethereum-tester-client>=1.1.0",

26 "ethtoken",

27 "py-geth>=1.4.0",

28 "py-solc>=0.4.0",

29 "pytest>=2.7.2",

30 "sphinx",

31 "sphinx_rtd_theme>=0.1.9",

32 "toposort>=1.4",

33 "urllib3",

34 "web3>=2.1.0",

35 "wheel"

36 ],

37 'dev': [

38 "bumpversion",

39 "flaky>=3.3.0",

40 "hypothesis>=3.31.2",

41 "pytest>=3.5.0,<4",

42 "pytest-mock==1.*",

43 "pytest-pythonpath>=0.3",

44 "pytest-watch==4.*",

45 "pytest-xdist==1.*",

46 "setuptools>=36.2.0",

47 "tox>=1.8.0",

48 "tqdm",

49 "when-changed"

50 ]

51 }

52

53 extras_require['dev'] = (

54 extras_require['tester'] +

55 extras_require['linter'] +

56 extras_require['docs'] +

57 extras_require['dev']

58 )

59

60 setup(

61 name='web3',

62 # *IMPORTANT*: Don't manually change the version here. Use the 'bumpversion' utility.

63 version='4.7.1',

64 description="""Web3.py""",

65 long_description_markdown_filename='README.md',

66 author='Piper Merriam',

67 author_email='[email protected]',

68 url='https://github.com/ethereum/web3.py',

69 include_package_data=True,

70 install_requires=[

71 "toolz>=0.9.0,<1.0.0;implementation_name=='pypy'",

72 "cytoolz>=0.9.0,<1.0.0;implementation_name=='cpython'",

73 "eth-abi>=1.2.0,<2.0.0",

74 "eth-account>=0.2.1,<0.4.0",

75 "eth-utils>=1.0.1,<2.0.0",

76 "hexbytes>=0.1.0,<1.0.0",

77 "lru-dict>=1.1.6,<2.0.0",

78 "eth-hash[pycryptodome]>=0.2.0,<1.0.0",

79 "requests>=2.16.0,<3.0.0",

80 "websockets>=6.0.0,<7.0.0",

81 "pypiwin32>=223;platform_system=='Windows'",

82 ],

83 setup_requires=['setuptools-markdown'],

84 python_requires='>=3.5, <4',

85 extras_require=extras_require,

86 py_modules=['web3', 'ens'],

87 license="MIT",

88 zip_safe=False,

89 keywords='ethereum',

90 packages=find_packages(exclude=["tests", "tests.*"]),

91 classifiers=[

92 'Development Status :: 5 - Production/Stable',

93 'Intended Audience :: Developers',

94 'License :: OSI Approved :: MIT License',

95 'Natural Language :: English',

96 'Programming Language :: Python :: 3',

97 'Programming Language :: Python :: 3.5',

98 'Programming Language :: Python :: 3.6',

99 ],

100 )

101

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -81,7 +81,7 @@

"pypiwin32>=223;platform_system=='Windows'",

],

setup_requires=['setuptools-markdown'],

- python_requires='>=3.5, <4',

+ python_requires='>=3.5.2, <4',

extras_require=extras_require,

py_modules=['web3', 'ens'],

license="MIT",

|