| problem_id (string, lengths 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13–58) | prompt (string, lengths 1.71k–18.9k) | golden_diff (string, lengths 145–5.13k) | verification_info (string, lengths 465–23.6k) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_3600

|

rasdani/github-patches

|

git_diff

|

interlegis__sapl-1349

|

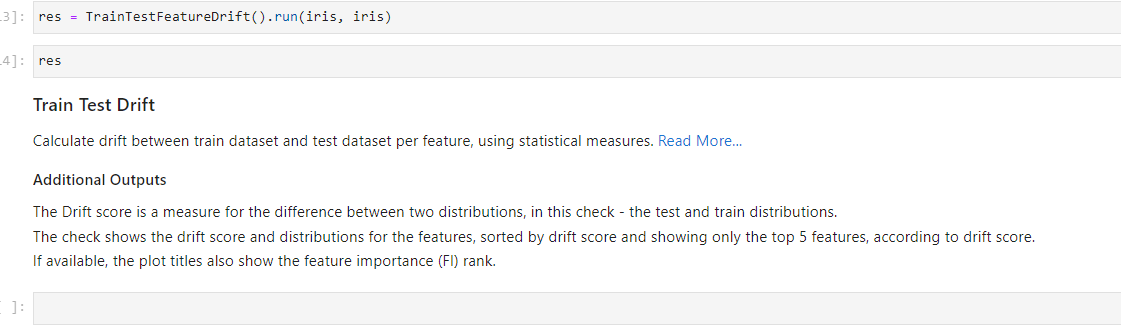

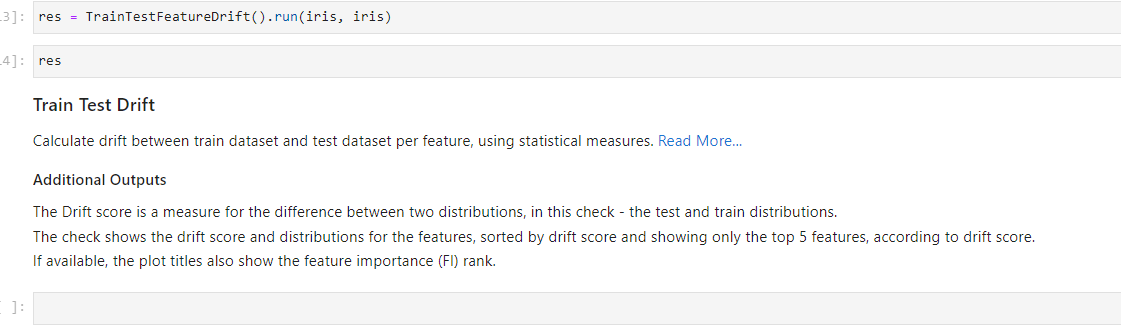

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Missing "processo" (process) label in the matéria details

The process number is lost among the details of the matéria (legislative matter). The "processo" label is missing.

</issue>

<code>

[start of sapl/crispy_layout_mixin.py]

1 from math import ceil

2

3 from crispy_forms.bootstrap import FormActions

4 from crispy_forms.helper import FormHelper

5 from crispy_forms.layout import HTML, Div, Fieldset, Layout, Submit

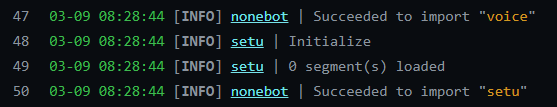

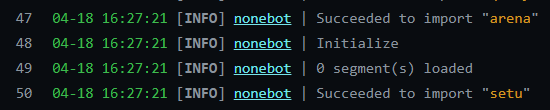

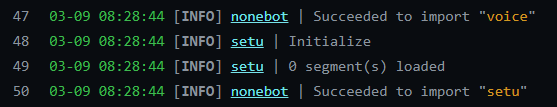

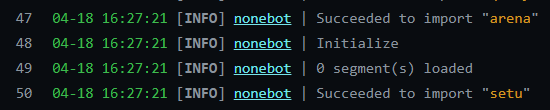

6 from django import template

7 from django.core.urlresolvers import reverse

8 from django.utils import formats

9 from django.utils.translation import ugettext as _

10 import rtyaml

11

12

13 def heads_and_tails(list_of_lists):

14 for alist in list_of_lists:

15 yield alist[0], alist[1:]

16

17

18 def to_column(name_span):

19 fieldname, span = name_span

20 return Div(fieldname, css_class='col-md-%d' % span)

21

22

23 def to_row(names_spans):

24 return Div(*map(to_column, names_spans), css_class='row-fluid')

25

26

27 def to_fieldsets(fields):

28 for field in fields:

29 if isinstance(field, list):

30 legend, row_specs = field[0], field[1:]

31 rows = [to_row(name_span_list) for name_span_list in row_specs]

32 yield Fieldset(legend, *rows)

33 else:

34 yield field

35

36

37 def form_actions(more=[], save_label=_('Salvar')):

38 return FormActions(

39 Submit('salvar', save_label, css_class='pull-right'), *more)

40

41

42 class SaplFormLayout(Layout):

43

44 def __init__(self, *fields, cancel_label=_('Cancelar'),

45 save_label=_('Salvar'), actions=None):

46

47 buttons = actions

48 if not buttons:

49 buttons = form_actions(save_label=save_label, more=[

50 HTML('<a href="{{ view.cancel_url }}"'

51 ' class="btn btn-inverse">%s</a>' % cancel_label)

52 if cancel_label else None])

53

54 _fields = list(to_fieldsets(fields))

55 if buttons:

56 _fields += [to_row([(buttons, 12)])]

57 super(SaplFormLayout, self).__init__(*_fields)

58

59

60 def get_field_display(obj, fieldname):

61 field = ''

62 try:

63 field = obj._meta.get_field(fieldname)

64 except Exception as e:

65 """ nos casos que o fieldname não é um field_model,

66 ele pode ser um aggregate, annotate, um property, um manager,

67 ou mesmo uma método no model.

68 """

69 value = getattr(obj, fieldname)

70 verbose_name = ''

71

72 else:

73 verbose_name = str(field.verbose_name)\

74 if hasattr(field, 'verbose_name') else ''

75

76 if hasattr(field, 'choices') and field.choices:

77 value = getattr(obj, 'get_%s_display' % fieldname)()

78 else:

79 value = getattr(obj, fieldname)

80

81 str_type_from_value = str(type(value))

82 str_type_from_field = str(type(field))

83

84 if value is None:

85 display = ''

86 elif 'date' in str_type_from_value:

87 display = formats.date_format(value, "SHORT_DATE_FORMAT")

88 elif 'bool' in str_type_from_value:

89 display = _('Sim') if value else _('Não')

90 elif 'ImageFieldFile' in str(type(value)):

91 if value:

92 display = '<img src="{}" />'.format(value.url)

93 else:

94 display = ''

95 elif 'FieldFile' in str_type_from_value:

96 if value:

97 display = '<a href="{}">{}</a>'.format(

98 value.url,

99 value.name.split('/')[-1:][0])

100 else:

101 display = ''

102 elif 'ManyRelatedManager' in str_type_from_value\

103 or 'RelatedManager' in str_type_from_value\

104 or 'GenericRelatedObjectManager' in str_type_from_value:

105 display = '<ul>'

106 for v in value.all():

107 display += '<li>%s</li>' % str(v)

108 display += '</ul>'

109 if not verbose_name:

110 if hasattr(field, 'related_model'):

111 verbose_name = str(

112 field.related_model._meta.verbose_name_plural)

113 elif hasattr(field, 'model'):

114 verbose_name = str(field.model._meta.verbose_name_plural)

115 elif 'GenericForeignKey' in str_type_from_field:

116 display = '<a href="{}">{}</a>'.format(

117 reverse(

118 '%s:%s_detail' % (

119 value._meta.app_config.name, obj.content_type.model),

120 args=(value.id,)),

121 value)

122 else:

123 display = str(value)

124 return verbose_name, display

125

126

127 class CrispyLayoutFormMixin:

128

129 @property

130 def layout_key(self):

131 if hasattr(super(CrispyLayoutFormMixin, self), 'layout_key'):

132 return super(CrispyLayoutFormMixin, self).layout_key

133 else:

134 return self.model.__name__

135

136 @property

137 def layout_key_set(self):

138 if hasattr(super(CrispyLayoutFormMixin, self), 'layout_key_set'):

139 return super(CrispyLayoutFormMixin, self).layout_key_set

140 else:

141 obj = self.crud if hasattr(self, 'crud') else self

142 return getattr(obj.model,

143 obj.model_set).field.model.__name__

144

145 def get_layout(self):

146 yaml_layout = '%s/layouts.yaml' % self.model._meta.app_config.label

147 return read_layout_from_yaml(yaml_layout, self.layout_key)

148

149 def get_layout_set(self):

150 obj = self.crud if hasattr(self, 'crud') else self

151 yaml_layout = '%s/layouts.yaml' % getattr(

152 obj.model, obj.model_set).field.model._meta.app_config.label

153 return read_layout_from_yaml(yaml_layout, self.layout_key_set)

154

155 @property

156 def fields(self):

157 if hasattr(self, 'form_class') and self.form_class:

158 return None

159 else:

160 '''Returns all fields in the layout'''

161 return [fieldname for legend_rows in self.get_layout()

162 for row in legend_rows[1:]

163 for fieldname, span in row]

164

165 def get_form(self, form_class=None):

166 try:

167 form = super(CrispyLayoutFormMixin, self).get_form(form_class)

168 except AttributeError:

169 # simply return None if there is no get_form on super

170 pass

171 else:

172 if self.layout_key:

173 form.helper = FormHelper()

174 form.helper.layout = SaplFormLayout(*self.get_layout())

175 return form

176

177 @property

178 def list_field_names(self):

179 '''The list of field names to display on table

180

181 This base implementation returns the field names

182 in the first fieldset of the layout.

183 '''

184 obj = self.crud if hasattr(self, 'crud') else self

185 if hasattr(obj, 'list_field_names') and obj.list_field_names:

186 return obj.list_field_names

187 rows = self.get_layout()[0][1:]

188 return [fieldname for row in rows for fieldname, __ in row]

189

190 @property

191 def list_field_names_set(self):

192 '''The list of field names to display on table

193

194 This base implementation returns the field names

195 in the first fieldset of the layout.

196 '''

197 rows = self.get_layout_set()[0][1:]

198 return [fieldname for row in rows for fieldname, __ in row]

199

200 def get_column(self, fieldname, span):

201 obj = self.get_object()

202 verbose_name, text = get_field_display(obj, fieldname)

203 return {

204 'id': fieldname,

205 'span': span,

206 'verbose_name': verbose_name,

207 'text': text,

208 }

209

210 @property

211 def layout_display(self):

212

213 return [

214 {'legend': legend,

215 'rows': [[self.get_column(fieldname, span)

216 for fieldname, span in row]

217 for row in rows]

218 } for legend, rows in heads_and_tails(self.get_layout())]

219

220

221 def read_yaml_from_file(yaml_layout):

222 # TODO cache this at application level

223 t = template.loader.get_template(yaml_layout)

224 # aqui é importante converter para str pois, dependendo do ambiente,

225 # o rtyaml pode usar yaml.CSafeLoader, que exige str ou stream

226 rendered = str(t.render())

227 return rtyaml.load(rendered)

228

229

230 def read_layout_from_yaml(yaml_layout, key):

231 # TODO cache this at application level

232 yaml = read_yaml_from_file(yaml_layout)

233 base = yaml[key]

234

235 def line_to_namespans(line):

236 split = [cell.split(':') for cell in line.split()]

237 namespans = [[s[0], int(s[1]) if len(s) > 1 else 0] for s in split]

238 remaining = 12 - sum(s for n, s in namespans)

239 nondefined = [ns for ns in namespans if not ns[1]]

240 while nondefined:

241 span = ceil(remaining / len(nondefined))

242 namespan = nondefined.pop(0)

243 namespan[1] = span

244 remaining = remaining - span

245 return list(map(tuple, namespans))

246

247 return [[legend] + [line_to_namespans(l) for l in lines]

248 for legend, lines in base.items()]

249

[end of sapl/crispy_layout_mixin.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/sapl/crispy_layout_mixin.py b/sapl/crispy_layout_mixin.py

--- a/sapl/crispy_layout_mixin.py

+++ b/sapl/crispy_layout_mixin.py

@@ -67,7 +67,10 @@

ou mesmo uma método no model.

"""

value = getattr(obj, fieldname)

- verbose_name = ''

+ try:

+ verbose_name = value.model._meta.verbose_name

+ except AttributeError:

+ verbose_name = ''

else:

verbose_name = str(field.verbose_name)\

|

{"golden_diff": "diff --git a/sapl/crispy_layout_mixin.py b/sapl/crispy_layout_mixin.py\n--- a/sapl/crispy_layout_mixin.py\n+++ b/sapl/crispy_layout_mixin.py\n@@ -67,7 +67,10 @@\n ou mesmo uma m\u00e9todo no model.\n \"\"\"\n value = getattr(obj, fieldname)\n- verbose_name = ''\n+ try:\n+ verbose_name = value.model._meta.verbose_name\n+ except AttributeError:\n+ verbose_name = ''\n \n else:\n verbose_name = str(field.verbose_name)\\\n", "issue": "Falta label de processo nos detalhes da mat\u00e9ria\nO n\u00famero do processo est\u00e1 perdido em meio aos detalhes da mat\u00e9ria. Falta o label processo \r\n\r\n\r\n\r\n\r\n\r\n\r\n\r\n\r\n\n", "before_files": [{"content": "from math import ceil\n\nfrom crispy_forms.bootstrap import FormActions\nfrom crispy_forms.helper import FormHelper\nfrom crispy_forms.layout import HTML, Div, Fieldset, Layout, Submit\nfrom django import template\nfrom django.core.urlresolvers import reverse\nfrom django.utils import formats\nfrom django.utils.translation import ugettext as _\nimport rtyaml\n\n\ndef heads_and_tails(list_of_lists):\n for alist in list_of_lists:\n yield alist[0], alist[1:]\n\n\ndef to_column(name_span):\n fieldname, span = name_span\n return Div(fieldname, css_class='col-md-%d' % span)\n\n\ndef to_row(names_spans):\n return Div(*map(to_column, names_spans), css_class='row-fluid')\n\n\ndef to_fieldsets(fields):\n for field in fields:\n if isinstance(field, list):\n legend, row_specs = field[0], field[1:]\n rows = [to_row(name_span_list) for name_span_list in row_specs]\n yield Fieldset(legend, *rows)\n else:\n yield field\n\n\ndef form_actions(more=[], save_label=_('Salvar')):\n return FormActions(\n Submit('salvar', save_label, css_class='pull-right'), *more)\n\n\nclass SaplFormLayout(Layout):\n\n def __init__(self, *fields, cancel_label=_('Cancelar'),\n save_label=_('Salvar'), actions=None):\n\n buttons = actions\n if not buttons:\n buttons = form_actions(save_label=save_label, more=[\n HTML('<a href=\"{{ 
view.cancel_url }}\"'\n ' class=\"btn btn-inverse\">%s</a>' % cancel_label)\n if cancel_label else None])\n\n _fields = list(to_fieldsets(fields))\n if buttons:\n _fields += [to_row([(buttons, 12)])]\n super(SaplFormLayout, self).__init__(*_fields)\n\n\ndef get_field_display(obj, fieldname):\n field = ''\n try:\n field = obj._meta.get_field(fieldname)\n except Exception as e:\n \"\"\" nos casos que o fieldname n\u00e3o \u00e9 um field_model,\n ele pode ser um aggregate, annotate, um property, um manager,\n ou mesmo uma m\u00e9todo no model.\n \"\"\"\n value = getattr(obj, fieldname)\n verbose_name = ''\n\n else:\n verbose_name = str(field.verbose_name)\\\n if hasattr(field, 'verbose_name') else ''\n\n if hasattr(field, 'choices') and field.choices:\n value = getattr(obj, 'get_%s_display' % fieldname)()\n else:\n value = getattr(obj, fieldname)\n\n str_type_from_value = str(type(value))\n str_type_from_field = str(type(field))\n\n if value is None:\n display = ''\n elif 'date' in str_type_from_value:\n display = formats.date_format(value, \"SHORT_DATE_FORMAT\")\n elif 'bool' in str_type_from_value:\n display = _('Sim') if value else _('N\u00e3o')\n elif 'ImageFieldFile' in str(type(value)):\n if value:\n display = '<img src=\"{}\" />'.format(value.url)\n else:\n display = ''\n elif 'FieldFile' in str_type_from_value:\n if value:\n display = '<a href=\"{}\">{}</a>'.format(\n value.url,\n value.name.split('/')[-1:][0])\n else:\n display = ''\n elif 'ManyRelatedManager' in str_type_from_value\\\n or 'RelatedManager' in str_type_from_value\\\n or 'GenericRelatedObjectManager' in str_type_from_value:\n display = '<ul>'\n for v in value.all():\n display += '<li>%s</li>' % str(v)\n display += '</ul>'\n if not verbose_name:\n if hasattr(field, 'related_model'):\n verbose_name = str(\n field.related_model._meta.verbose_name_plural)\n elif hasattr(field, 'model'):\n verbose_name = str(field.model._meta.verbose_name_plural)\n elif 'GenericForeignKey' in str_type_from_field:\n 
display = '<a href=\"{}\">{}</a>'.format(\n reverse(\n '%s:%s_detail' % (\n value._meta.app_config.name, obj.content_type.model),\n args=(value.id,)),\n value)\n else:\n display = str(value)\n return verbose_name, display\n\n\nclass CrispyLayoutFormMixin:\n\n @property\n def layout_key(self):\n if hasattr(super(CrispyLayoutFormMixin, self), 'layout_key'):\n return super(CrispyLayoutFormMixin, self).layout_key\n else:\n return self.model.__name__\n\n @property\n def layout_key_set(self):\n if hasattr(super(CrispyLayoutFormMixin, self), 'layout_key_set'):\n return super(CrispyLayoutFormMixin, self).layout_key_set\n else:\n obj = self.crud if hasattr(self, 'crud') else self\n return getattr(obj.model,\n obj.model_set).field.model.__name__\n\n def get_layout(self):\n yaml_layout = '%s/layouts.yaml' % self.model._meta.app_config.label\n return read_layout_from_yaml(yaml_layout, self.layout_key)\n\n def get_layout_set(self):\n obj = self.crud if hasattr(self, 'crud') else self\n yaml_layout = '%s/layouts.yaml' % getattr(\n obj.model, obj.model_set).field.model._meta.app_config.label\n return read_layout_from_yaml(yaml_layout, self.layout_key_set)\n\n @property\n def fields(self):\n if hasattr(self, 'form_class') and self.form_class:\n return None\n else:\n '''Returns all fields in the layout'''\n return [fieldname for legend_rows in self.get_layout()\n for row in legend_rows[1:]\n for fieldname, span in row]\n\n def get_form(self, form_class=None):\n try:\n form = super(CrispyLayoutFormMixin, self).get_form(form_class)\n except AttributeError:\n # simply return None if there is no get_form on super\n pass\n else:\n if self.layout_key:\n form.helper = FormHelper()\n form.helper.layout = SaplFormLayout(*self.get_layout())\n return form\n\n @property\n def list_field_names(self):\n '''The list of field names to display on table\n\n This base implementation returns the field names\n in the first fieldset of the layout.\n '''\n obj = self.crud if hasattr(self, 'crud') else 
self\n if hasattr(obj, 'list_field_names') and obj.list_field_names:\n return obj.list_field_names\n rows = self.get_layout()[0][1:]\n return [fieldname for row in rows for fieldname, __ in row]\n\n @property\n def list_field_names_set(self):\n '''The list of field names to display on table\n\n This base implementation returns the field names\n in the first fieldset of the layout.\n '''\n rows = self.get_layout_set()[0][1:]\n return [fieldname for row in rows for fieldname, __ in row]\n\n def get_column(self, fieldname, span):\n obj = self.get_object()\n verbose_name, text = get_field_display(obj, fieldname)\n return {\n 'id': fieldname,\n 'span': span,\n 'verbose_name': verbose_name,\n 'text': text,\n }\n\n @property\n def layout_display(self):\n\n return [\n {'legend': legend,\n 'rows': [[self.get_column(fieldname, span)\n for fieldname, span in row]\n for row in rows]\n } for legend, rows in heads_and_tails(self.get_layout())]\n\n\ndef read_yaml_from_file(yaml_layout):\n # TODO cache this at application level\n t = template.loader.get_template(yaml_layout)\n # aqui \u00e9 importante converter para str pois, dependendo do ambiente,\n # o rtyaml pode usar yaml.CSafeLoader, que exige str ou stream\n rendered = str(t.render())\n return rtyaml.load(rendered)\n\n\ndef read_layout_from_yaml(yaml_layout, key):\n # TODO cache this at application level\n yaml = read_yaml_from_file(yaml_layout)\n base = yaml[key]\n\n def line_to_namespans(line):\n split = [cell.split(':') for cell in line.split()]\n namespans = [[s[0], int(s[1]) if len(s) > 1 else 0] for s in split]\n remaining = 12 - sum(s for n, s in namespans)\n nondefined = [ns for ns in namespans if not ns[1]]\n while nondefined:\n span = ceil(remaining / len(nondefined))\n namespan = nondefined.pop(0)\n namespan[1] = span\n remaining = remaining - span\n return list(map(tuple, namespans))\n\n return [[legend] + [line_to_namespans(l) for l in lines]\n for legend, lines in base.items()]\n", "path": 
"sapl/crispy_layout_mixin.py"}]}

| 3,346 | 126 |

gh_patches_debug_14404

|

rasdani/github-patches

|

git_diff

|

napari__napari-1147

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Toggle current layer visibility w/keyboard shortcut

## 🚀 Feature

<!-- A clear and concise description of the feature proposal -->

I'd like to introduce a new keyboard shortcut to toggle visibility of current layer

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->

When working with a few layers, I often find myself wanting to toggle the visibility of a particular layer to look clearly at one beneath. This has been coming up most recently when painting labels.

## Pitch

<!-- A clear and concise description of what you want to happen. -->

I'd like to propose a new keyboard shortcut to toggle the visibility of the current layer.

This part is up for debate, but if this is very useful for people, it could be a single key, like `H`, or a combo like `Ctrl+H`, or some other key(s) entirely.

From looking at the other key bindings, I assume this would be straightforward to do, unless there is some `Qt` issue I'm not familiar with.

## Alternatives

<!-- A clear and concise description of any alternative solutions or features you've considered, if any. -->

At the moment, I use the mouse to manually toggle the visibility.

</issue>

<code>

[start of napari/components/layerlist.py]

1 from ..layers import Layer

2 from ..utils.naming import inc_name_count

3 from ..utils.list import ListModel

4

5

6 def _add(event):

7 """When a layer is added, set its name."""

8 layers = event.source

9 layer = event.item

10 layer.name = layers._coerce_name(layer.name, layer)

11 layer.events.name.connect(lambda e: layers._update_name(e))

12 layers.unselect_all(ignore=layer)

13

14

15 class LayerList(ListModel):

16 """List-like layer collection with built-in reordering and callback hooks.

17

18 Parameters

19 ----------

20 iterable : iterable

21 Iterable of napari.layer.Layer

22

23 Attributes

24 ----------

25 events : vispy.util.event.EmitterGroup

26 Event hooks:

27 * added(item, index): whenever an item is added

28 * removed(item): whenever an item is removed

29 * reordered(): whenever the list is reordered

30 """

31

32 def __init__(self, iterable=()):

33 super().__init__(

34 basetype=Layer,

35 iterable=iterable,

36 lookup={str: lambda q, e: q == e.name},

37 )

38

39 self.events.added.connect(_add)

40

41 def __newlike__(self, iterable):

42 return ListModel(self._basetype, iterable, self._lookup)

43

44 def _coerce_name(self, name, layer=None):

45 """Coerce a name into a unique equivalent.

46

47 Parameters

48 ----------

49 name : str

50 Original name.

51 layer : napari.layers.Layer, optional

52 Layer for which name is generated.

53

54 Returns

55 -------

56 new_name : str

57 Coerced, unique name.

58 """

59 for l in self:

60 if l is layer:

61 continue

62 if l.name == name:

63 name = inc_name_count(name)

64

65 return name

66

67 def _update_name(self, event):

68 """Coerce name of the layer in `event.layer`."""

69 layer = event.source

70 layer.name = self._coerce_name(layer.name, layer)

71

72 def move_selected(self, index, insert):

73 """Reorder list by moving the item at index and inserting it

74 at the insert index. If additional items are selected these will

75 get inserted at the insert index too. This allows for rearranging

76 the list based on dragging and dropping a selection of items, where

77 index is the index of the primary item being dragged, and insert is

78 the index of the drop location, and the selection indicates if

79 multiple items are being dragged. If the moved layer is not selected

80 select it.

81

82 Parameters

83 ----------

84 index : int

85 Index of primary item to be moved

86 insert : int

87 Index that item(s) will be inserted at

88 """

89 total = len(self)

90 indices = list(range(total))

91 if not self[index].selected:

92 self.unselect_all()

93 self[index].selected = True

94 selected = [i for i in range(total) if self[i].selected]

95

96 # remove all indices to be moved

97 for i in selected:

98 indices.remove(i)

99 # adjust offset based on selected indices to move

100 offset = sum([i < insert and i != index for i in selected])

101 # insert indices to be moved at correct start

102 for insert_idx, elem_idx in enumerate(selected, start=insert - offset):

103 indices.insert(insert_idx, elem_idx)

104 # reorder list

105 self[:] = self[tuple(indices)]

106

107 def unselect_all(self, ignore=None):

108 """Unselects all layers expect any specified in ignore.

109

110 Parameters

111 ----------

112 ignore : Layer | None

113 Layer that should not be unselected if specified.

114 """

115 for layer in self:

116 if layer.selected and layer != ignore:

117 layer.selected = False

118

119 def select_all(self):

120 """Selects all layers."""

121 for layer in self:

122 if not layer.selected:

123 layer.selected = True

124

125 def remove_selected(self):

126 """Removes selected items from list."""

127 to_delete = []

128 for i in range(len(self)):

129 if self[i].selected:

130 to_delete.append(i)

131 to_delete.reverse()

132 for i in to_delete:

133 self.pop(i)

134 if len(to_delete) > 0:

135 first_to_delete = to_delete[-1]

136 if first_to_delete == 0 and len(self) > 0:

137 self[0].selected = True

138 elif first_to_delete > 0:

139 self[first_to_delete - 1].selected = True

140

141 def select_next(self, shift=False):

142 """Selects next item from list.

143 """

144 selected = []

145 for i in range(len(self)):

146 if self[i].selected:

147 selected.append(i)

148 if len(selected) > 0:

149 if selected[-1] == len(self) - 1:

150 if shift is False:

151 self.unselect_all(ignore=self[selected[-1]])

152 elif selected[-1] < len(self) - 1:

153 if shift is False:

154 self.unselect_all(ignore=self[selected[-1] + 1])

155 self[selected[-1] + 1].selected = True

156 elif len(self) > 0:

157 self[-1].selected = True

158

159 def select_previous(self, shift=False):

160 """Selects previous item from list.

161 """

162 selected = []

163 for i in range(len(self)):

164 if self[i].selected:

165 selected.append(i)

166 if len(selected) > 0:

167 if selected[0] == 0:

168 if shift is False:

169 self.unselect_all(ignore=self[0])

170 elif selected[0] > 0:

171 if shift is False:

172 self.unselect_all(ignore=self[selected[0] - 1])

173 self[selected[0] - 1].selected = True

174 elif len(self) > 0:

175 self[0].selected = True

176

[end of napari/components/layerlist.py]

[start of napari/_viewer_key_bindings.py]

1 import numpy as np

2 from .viewer import Viewer

3

4

5 @Viewer.bind_key('Control-F')

6 def toggle_fullscreen(viewer):

7 """Toggle fullscreen mode."""

8 if viewer.window._qt_window.isFullScreen():

9 viewer.window._qt_window.showNormal()

10 else:

11 viewer.window._qt_window.showFullScreen()

12

13

14 @Viewer.bind_key('Control-Y')

15 def toggle_ndisplay(viewer):

16 """Toggle ndisplay."""

17 if viewer.dims.ndisplay == 3:

18 viewer.dims.ndisplay = 2

19 else:

20 viewer.dims.ndisplay = 3

21

22

23 @Viewer.bind_key('Left')

24 def increment_dims_left(viewer):

25 """Increment dimensions slider to the left."""

26 axis = viewer.window.qt_viewer.dims.last_used

27 if axis is not None:

28 cur_point = viewer.dims.point[axis]

29 axis_range = viewer.dims.range[axis]

30 new_point = np.clip(

31 cur_point - axis_range[2],

32 axis_range[0],

33 axis_range[1] - axis_range[2],

34 )

35 viewer.dims.set_point(axis, new_point)

36

37

38 @Viewer.bind_key('Right')

39 def increment_dims_right(viewer):

40 """Increment dimensions slider to the right."""

41 axis = viewer.window.qt_viewer.dims.last_used

42 if axis is not None:

43 cur_point = viewer.dims.point[axis]

44 axis_range = viewer.dims.range[axis]

45 new_point = np.clip(

46 cur_point + axis_range[2],

47 axis_range[0],

48 axis_range[1] - axis_range[2],

49 )

50 viewer.dims.set_point(axis, new_point)

51

52

53 @Viewer.bind_key('Control-E')

54 def roll_axes(viewer):

55 """Change order of the visible axes, e.g. [0, 1, 2] -> [2, 0, 1]."""

56 viewer.dims._roll()

57

58

59 @Viewer.bind_key('Control-T')

60 def transpose_axes(viewer):

61 """Transpose order of the last two visible axes, e.g. [0, 1] -> [1, 0]."""

62 viewer.dims._transpose()

63

64

65 @Viewer.bind_key('Alt-Up')

66 def focus_axes_up(viewer):

67 """Move focus of dimensions slider up."""

68 viewer.window.qt_viewer.dims.focus_up()

69

70

71 @Viewer.bind_key('Alt-Down')

72 def focus_axes_down(viewer):

73 """Move focus of dimensions slider down."""

74 viewer.window.qt_viewer.dims.focus_down()

75

76

77 @Viewer.bind_key('Control-Backspace')

78 def remove_selected(viewer):

79 """Remove selected layers."""

80 viewer.layers.remove_selected()

81

82

83 @Viewer.bind_key('Control-A')

84 def select_all(viewer):

85 """Selected all layers."""

86 viewer.layers.select_all()

87

88

89 @Viewer.bind_key('Control-Shift-Backspace')

90 def remove_all_layers(viewer):

91 """Remove all layers."""

92 viewer.layers.select_all()

93 viewer.layers.remove_selected()

94

95

96 @Viewer.bind_key('Up')

97 def select_layer_above(viewer):

98 """Select layer above."""

99 viewer.layers.select_next()

100

101

102 @Viewer.bind_key('Down')

103 def select_layer_below(viewer):

104 """Select layer below."""

105 viewer.layers.select_previous()

106

107

108 @Viewer.bind_key('Shift-Up')

109 def also_select_layer_above(viewer):

110 """Also select layer above."""

111 viewer.layers.select_next(shift=True)

112

113

114 @Viewer.bind_key('Shift-Down')

115 def also_select_layer_below(viewer):

116 """Also select layer below."""

117 viewer.layers.select_previous(shift=True)

118

119

120 @Viewer.bind_key('Control-R')

121 def reset_view(viewer):

122 """Reset view to original state."""

123 viewer.reset_view()

124

125

126 @Viewer.bind_key('Control-G')

127 def toggle_grid(viewer):

128 """Toggle grid mode."""

129 if np.all(viewer.grid_size == (1, 1)):

130 viewer.grid_view()

131 else:

132 viewer.stack_view()

133

134

135 @Viewer.bind_key('Control-Alt-P')

136 def play(viewer):

137 """Toggle animation on the first axis"""

138 if viewer.window.qt_viewer.dims.is_playing:

139 viewer.window.qt_viewer.dims.stop()

140 else:

141 axis = viewer.window.qt_viewer.dims.last_used or 0

142 viewer.window.qt_viewer.dims.play(axis)

143

[end of napari/_viewer_key_bindings.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/napari/_viewer_key_bindings.py b/napari/_viewer_key_bindings.py

--- a/napari/_viewer_key_bindings.py

+++ b/napari/_viewer_key_bindings.py

@@ -140,3 +140,9 @@

else:

axis = viewer.window.qt_viewer.dims.last_used or 0

viewer.window.qt_viewer.dims.play(axis)

+

+

+@Viewer.bind_key('V')

+def toggle_selected_visibility(viewer):

+ """Toggle visibility of selected layers"""

+ viewer.layers.toggle_selected_visibility()

diff --git a/napari/components/layerlist.py b/napari/components/layerlist.py

--- a/napari/components/layerlist.py

+++ b/napari/components/layerlist.py

@@ -173,3 +173,9 @@

self[selected[0] - 1].selected = True

elif len(self) > 0:

self[0].selected = True

+

+ def toggle_selected_visibility(self):

+ """Toggle visibility of selected layers"""

+ for layer in self:

+ if layer.selected:

+ layer.visible = not layer.visible

|

{"golden_diff": "diff --git a/napari/_viewer_key_bindings.py b/napari/_viewer_key_bindings.py\n--- a/napari/_viewer_key_bindings.py\n+++ b/napari/_viewer_key_bindings.py\n@@ -140,3 +140,9 @@\n else:\n axis = viewer.window.qt_viewer.dims.last_used or 0\n viewer.window.qt_viewer.dims.play(axis)\n+\n+\n+@Viewer.bind_key('V')\n+def toggle_selected_visibility(viewer):\n+ \"\"\"Toggle visibility of selected layers\"\"\"\n+ viewer.layers.toggle_selected_visibility()\ndiff --git a/napari/components/layerlist.py b/napari/components/layerlist.py\n--- a/napari/components/layerlist.py\n+++ b/napari/components/layerlist.py\n@@ -173,3 +173,9 @@\n self[selected[0] - 1].selected = True\n elif len(self) > 0:\n self[0].selected = True\n+\n+ def toggle_selected_visibility(self):\n+ \"\"\"Toggle visibility of selected layers\"\"\"\n+ for layer in self:\n+ if layer.selected:\n+ layer.visible = not layer.visible\n", "issue": "Toggle current layer visibility w/keyboard shortcut\n## \ud83d\ude80 Feature\r\n<!-- A clear and concise description of the feature proposal -->\r\nI'd like to introduce a new keyboard shortcut to toggle visibility of current layer\r\n\r\n## Motivation\r\n\r\n<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->\r\nWhen working with a few layers, I often find myself wanting to toggle the visibility of a particular layer to look clearly at one beneath. This has been coming up most recently when painting labels.\r\n\r\n## Pitch\r\n\r\n<!-- A clear and concise description of what you want to happen. -->\r\nI'd like to propose a new keyboard shortcut to toggle the visibility of the current layer. \r\n\r\nThis part is up for debate, but if this is very useful for people, it could be a single key, like `H`, or a combo like `Ctrl+H`, or some other key(s) entirely. 
\r\n\r\nFrom looking at the other key bindings, I assume this would be straightforward to do, unless there is some `Qt` issue I'm not familiar with.\r\n\r\n## Alternatives\r\n\r\n<!-- A clear and concise description of any alternative solutions or features you've considered, if any. -->\r\nAt the moment, I use the mouse to manually toggle the visibility.\n", "before_files": [{"content": "from ..layers import Layer\nfrom ..utils.naming import inc_name_count\nfrom ..utils.list import ListModel\n\n\ndef _add(event):\n \"\"\"When a layer is added, set its name.\"\"\"\n layers = event.source\n layer = event.item\n layer.name = layers._coerce_name(layer.name, layer)\n layer.events.name.connect(lambda e: layers._update_name(e))\n layers.unselect_all(ignore=layer)\n\n\nclass LayerList(ListModel):\n \"\"\"List-like layer collection with built-in reordering and callback hooks.\n\n Parameters\n ----------\n iterable : iterable\n Iterable of napari.layer.Layer\n\n Attributes\n ----------\n events : vispy.util.event.EmitterGroup\n Event hooks:\n * added(item, index): whenever an item is added\n * removed(item): whenever an item is removed\n * reordered(): whenever the list is reordered\n \"\"\"\n\n def __init__(self, iterable=()):\n super().__init__(\n basetype=Layer,\n iterable=iterable,\n lookup={str: lambda q, e: q == e.name},\n )\n\n self.events.added.connect(_add)\n\n def __newlike__(self, iterable):\n return ListModel(self._basetype, iterable, self._lookup)\n\n def _coerce_name(self, name, layer=None):\n \"\"\"Coerce a name into a unique equivalent.\n\n Parameters\n ----------\n name : str\n Original name.\n layer : napari.layers.Layer, optional\n Layer for which name is generated.\n\n Returns\n -------\n new_name : str\n Coerced, unique name.\n \"\"\"\n for l in self:\n if l is layer:\n continue\n if l.name == name:\n name = inc_name_count(name)\n\n return name\n\n def _update_name(self, event):\n \"\"\"Coerce name of the layer in `event.layer`.\"\"\"\n layer = 
event.source\n layer.name = self._coerce_name(layer.name, layer)\n\n def move_selected(self, index, insert):\n \"\"\"Reorder list by moving the item at index and inserting it\n at the insert index. If additional items are selected these will\n get inserted at the insert index too. This allows for rearranging\n the list based on dragging and dropping a selection of items, where\n index is the index of the primary item being dragged, and insert is\n the index of the drop location, and the selection indicates if\n multiple items are being dragged. If the moved layer is not selected\n select it.\n\n Parameters\n ----------\n index : int\n Index of primary item to be moved\n insert : int\n Index that item(s) will be inserted at\n \"\"\"\n total = len(self)\n indices = list(range(total))\n if not self[index].selected:\n self.unselect_all()\n self[index].selected = True\n selected = [i for i in range(total) if self[i].selected]\n\n # remove all indices to be moved\n for i in selected:\n indices.remove(i)\n # adjust offset based on selected indices to move\n offset = sum([i < insert and i != index for i in selected])\n # insert indices to be moved at correct start\n for insert_idx, elem_idx in enumerate(selected, start=insert - offset):\n indices.insert(insert_idx, elem_idx)\n # reorder list\n self[:] = self[tuple(indices)]\n\n def unselect_all(self, ignore=None):\n \"\"\"Unselects all layers expect any specified in ignore.\n\n Parameters\n ----------\n ignore : Layer | None\n Layer that should not be unselected if specified.\n \"\"\"\n for layer in self:\n if layer.selected and layer != ignore:\n layer.selected = False\n\n def select_all(self):\n \"\"\"Selects all layers.\"\"\"\n for layer in self:\n if not layer.selected:\n layer.selected = True\n\n def remove_selected(self):\n \"\"\"Removes selected items from list.\"\"\"\n to_delete = []\n for i in range(len(self)):\n if self[i].selected:\n to_delete.append(i)\n to_delete.reverse()\n for i in to_delete:\n self.pop(i)\n 
if len(to_delete) > 0:\n first_to_delete = to_delete[-1]\n if first_to_delete == 0 and len(self) > 0:\n self[0].selected = True\n elif first_to_delete > 0:\n self[first_to_delete - 1].selected = True\n\n def select_next(self, shift=False):\n \"\"\"Selects next item from list.\n \"\"\"\n selected = []\n for i in range(len(self)):\n if self[i].selected:\n selected.append(i)\n if len(selected) > 0:\n if selected[-1] == len(self) - 1:\n if shift is False:\n self.unselect_all(ignore=self[selected[-1]])\n elif selected[-1] < len(self) - 1:\n if shift is False:\n self.unselect_all(ignore=self[selected[-1] + 1])\n self[selected[-1] + 1].selected = True\n elif len(self) > 0:\n self[-1].selected = True\n\n def select_previous(self, shift=False):\n \"\"\"Selects previous item from list.\n \"\"\"\n selected = []\n for i in range(len(self)):\n if self[i].selected:\n selected.append(i)\n if len(selected) > 0:\n if selected[0] == 0:\n if shift is False:\n self.unselect_all(ignore=self[0])\n elif selected[0] > 0:\n if shift is False:\n self.unselect_all(ignore=self[selected[0] - 1])\n self[selected[0] - 1].selected = True\n elif len(self) > 0:\n self[0].selected = True\n", "path": "napari/components/layerlist.py"}, {"content": "import numpy as np\nfrom .viewer import Viewer\n\n\n@Viewer.bind_key('Control-F')\ndef toggle_fullscreen(viewer):\n \"\"\"Toggle fullscreen mode.\"\"\"\n if viewer.window._qt_window.isFullScreen():\n viewer.window._qt_window.showNormal()\n else:\n viewer.window._qt_window.showFullScreen()\n\n\n@Viewer.bind_key('Control-Y')\ndef toggle_ndisplay(viewer):\n \"\"\"Toggle ndisplay.\"\"\"\n if viewer.dims.ndisplay == 3:\n viewer.dims.ndisplay = 2\n else:\n viewer.dims.ndisplay = 3\n\n\n@Viewer.bind_key('Left')\ndef increment_dims_left(viewer):\n \"\"\"Increment dimensions slider to the left.\"\"\"\n axis = viewer.window.qt_viewer.dims.last_used\n if axis is not None:\n cur_point = viewer.dims.point[axis]\n axis_range = viewer.dims.range[axis]\n 
new_point = np.clip(\n cur_point - axis_range[2],\n axis_range[0],\n axis_range[1] - axis_range[2],\n )\n viewer.dims.set_point(axis, new_point)\n\n\n@Viewer.bind_key('Right')\ndef increment_dims_right(viewer):\n \"\"\"Increment dimensions slider to the right.\"\"\"\n axis = viewer.window.qt_viewer.dims.last_used\n if axis is not None:\n cur_point = viewer.dims.point[axis]\n axis_range = viewer.dims.range[axis]\n new_point = np.clip(\n cur_point + axis_range[2],\n axis_range[0],\n axis_range[1] - axis_range[2],\n )\n viewer.dims.set_point(axis, new_point)\n\n\n@Viewer.bind_key('Control-E')\ndef roll_axes(viewer):\n \"\"\"Change order of the visible axes, e.g. [0, 1, 2] -> [2, 0, 1].\"\"\"\n viewer.dims._roll()\n\n\n@Viewer.bind_key('Control-T')\ndef transpose_axes(viewer):\n \"\"\"Transpose order of the last two visible axes, e.g. [0, 1] -> [1, 0].\"\"\"\n viewer.dims._transpose()\n\n\n@Viewer.bind_key('Alt-Up')\ndef focus_axes_up(viewer):\n \"\"\"Move focus of dimensions slider up.\"\"\"\n viewer.window.qt_viewer.dims.focus_up()\n\n\n@Viewer.bind_key('Alt-Down')\ndef focus_axes_down(viewer):\n \"\"\"Move focus of dimensions slider down.\"\"\"\n viewer.window.qt_viewer.dims.focus_down()\n\n\n@Viewer.bind_key('Control-Backspace')\ndef remove_selected(viewer):\n \"\"\"Remove selected layers.\"\"\"\n viewer.layers.remove_selected()\n\n\n@Viewer.bind_key('Control-A')\ndef select_all(viewer):\n \"\"\"Selected all layers.\"\"\"\n viewer.layers.select_all()\n\n\n@Viewer.bind_key('Control-Shift-Backspace')\ndef remove_all_layers(viewer):\n \"\"\"Remove all layers.\"\"\"\n viewer.layers.select_all()\n viewer.layers.remove_selected()\n\n\n@Viewer.bind_key('Up')\ndef select_layer_above(viewer):\n \"\"\"Select layer above.\"\"\"\n viewer.layers.select_next()\n\n\n@Viewer.bind_key('Down')\ndef select_layer_below(viewer):\n \"\"\"Select layer below.\"\"\"\n viewer.layers.select_previous()\n\n\n@Viewer.bind_key('Shift-Up')\ndef 
also_select_layer_above(viewer):\n \"\"\"Also select layer above.\"\"\"\n viewer.layers.select_next(shift=True)\n\n\n@Viewer.bind_key('Shift-Down')\ndef also_select_layer_below(viewer):\n \"\"\"Also select layer below.\"\"\"\n viewer.layers.select_previous(shift=True)\n\n\n@Viewer.bind_key('Control-R')\ndef reset_view(viewer):\n \"\"\"Reset view to original state.\"\"\"\n viewer.reset_view()\n\n\n@Viewer.bind_key('Control-G')\ndef toggle_grid(viewer):\n \"\"\"Toggle grid mode.\"\"\"\n if np.all(viewer.grid_size == (1, 1)):\n viewer.grid_view()\n else:\n viewer.stack_view()\n\n\n@Viewer.bind_key('Control-Alt-P')\ndef play(viewer):\n \"\"\"Toggle animation on the first axis\"\"\"\n if viewer.window.qt_viewer.dims.is_playing:\n viewer.window.qt_viewer.dims.stop()\n else:\n axis = viewer.window.qt_viewer.dims.last_used or 0\n viewer.window.qt_viewer.dims.play(axis)\n", "path": "napari/_viewer_key_bindings.py"}]}

| 3,738 | 251 |
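
The `toggle_selected_visibility` method added by the golden diff in the record above can be tried in isolation. What follows is a minimal sketch, not napari's actual code: `Layer` is a hypothetical stand-in that carries only the `selected` and `visible` flags, and `LayerList` is a plain `list` subclass rather than napari's `ListModel`.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    # Hypothetical stand-in for a napari layer; only the flags used below.
    name: str
    selected: bool = False
    visible: bool = True


class LayerList(list):
    # Simplified assumption: a bare list, with no events or name handling.
    def toggle_selected_visibility(self):
        """Flip `visible` on every selected layer; leave the others alone."""
        for layer in self:
            if layer.selected:
                layer.visible = not layer.visible


layers = LayerList([Layer("image"), Layer("labels", selected=True)])
layers.toggle_selected_visibility()
```

In the record itself the method is reached through the `V` key binding, which calls `viewer.layers.toggle_selected_visibility()`.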

gh_patches_debug_100 | rasdani/github-patches | git_diff | jazzband__pip-tools-555 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

pip-sync uninstalls pkg-resources, breaks psycopg2

`pip-sync` uninstalls `pkg-resources`, which in turn breaks installation of many other packages. `pkg-resources` is a new "system" package that was recently extracted from `setuptools` (since version 31, I believe). I think it must be handled similarly to `setuptools`.

##### Steps to replicate

On a fully updated Ubuntu 16.04 LTS:

```console
semenov@dev2:~/tmp$ rm -rf ~/.cache/pip
semenov@dev2:~/tmp$ virtualenv --python=$(which python3) test
Already using interpreter /usr/bin/python3
Using base prefix '/usr'
New python executable in /home/semenov/tmp/test/bin/python3
Also creating executable in /home/semenov/tmp/test/bin/python
Installing setuptools, pkg_resources, pip, wheel...done.
semenov@dev2:~/tmp$ cd test
semenov@dev2:~/tmp/test$ . bin/activate
(test) semenov@dev2:~/tmp/test$ pip install pip-tools
Collecting pip-tools
  Downloading pip_tools-1.8.0-py2.py3-none-any.whl
Collecting six (from pip-tools)
  Downloading six-1.10.0-py2.py3-none-any.whl
Collecting first (from pip-tools)
  Downloading first-2.0.1-py2.py3-none-any.whl
Collecting click>=6 (from pip-tools)
  Downloading click-6.6-py2.py3-none-any.whl (71kB)
    100% |████████████████████████████████| 71kB 559kB/s
Installing collected packages: six, first, click, pip-tools
Successfully installed click-6.6 first-2.0.1 pip-tools-1.8.0 six-1.10.0
(test) semenov@dev2:~/tmp/test$ echo psycopg2 > requirements.in
(test) semenov@dev2:~/tmp/test$ pip-compile
#
# This file is autogenerated by pip-compile
# To update, run:
#
# pip-compile --output-file requirements.txt requirements.in
#
psycopg2==2.6.2
(test) semenov@dev2:~/tmp/test$ pip-sync
Uninstalling pkg-resources-0.0.0:
  Successfully uninstalled pkg-resources-0.0.0
Collecting psycopg2==2.6.2
  Downloading psycopg2-2.6.2.tar.gz (376kB)
    100% |████████████████████████████████| 378kB 2.4MB/s
Could not import setuptools which is required to install from a source distribution.
Traceback (most recent call last):
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/pip/req/req_install.py", line 387, in setup_py
    import setuptools # noqa
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/setuptools/__init__.py", line 10, in <module>
    from setuptools.extern.six.moves import filter, filterfalse, map
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/setuptools/extern/__init__.py", line 1, in <module>
    from pkg_resources.extern import VendorImporter
ImportError: No module named 'pkg_resources.extern'

Traceback (most recent call last):
  File "/home/semenov/tmp/test/bin/pip-sync", line 11, in <module>
    sys.exit(cli())
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py", line 716, in __call__
    return self.main(*args, **kwargs)
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py", line 696, in main
    rv = self.invoke(ctx)
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py", line 889, in invoke
    return ctx.invoke(self.callback, **ctx.params)
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py", line 534, in invoke
    return callback(*args, **kwargs)
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/piptools/scripts/sync.py", line 72, in cli
    install_flags=install_flags))
  File "/home/semenov/tmp/test/lib/python3.5/site-packages/piptools/sync.py", line 157, in sync
    check_call([pip, 'install'] + pip_flags + install_flags + sorted(to_install))
  File "/usr/lib/python3.5/subprocess.py", line 581, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command '['pip', 'install', 'psycopg2==2.6.2']' returned non-zero exit status 1
```

##### Expected result

`pip-sync` keeps `pkg-resources` in place, `psycopg2` installs normally.

##### Actual result

`pip-sync` uninstalls `pkg-resources`, then `psycopg2` installation fails with: `ImportError: No module named 'pkg_resources.extern'`

</issue>

<code>

[start of piptools/sync.py]
1 import collections
2 import os
3 import sys
4 from subprocess import check_call
5
6 from . import click
7 from .exceptions import IncompatibleRequirements, UnsupportedConstraint
8 from .utils import flat_map, format_requirement, key_from_req
9
10 PACKAGES_TO_IGNORE = [
11     'pip',
12     'pip-tools',
13     'pip-review',
14     'setuptools',
15     'wheel',
16 ]
17
18
19 def dependency_tree(installed_keys, root_key):
20     """
21     Calculate the dependency tree for the package `root_key` and return
22     a collection of all its dependencies. Uses a DFS traversal algorithm.
23
24     `installed_keys` should be a {key: requirement} mapping, e.g.
25         {'django': from_line('django==1.8')}
26     `root_key` should be the key to return the dependency tree for.
27     """
28     dependencies = set()
29     queue = collections.deque()
30
31     if root_key in installed_keys:
32         dep = installed_keys[root_key]
33         queue.append(dep)
34
35     while queue:
36         v = queue.popleft()
37         key = key_from_req(v)
38         if key in dependencies:
39             continue
40
41         dependencies.add(key)
42
43         for dep_specifier in v.requires():
44             dep_name = key_from_req(dep_specifier)
45             if dep_name in installed_keys:
46                 dep = installed_keys[dep_name]
47
48                 if dep_specifier.specifier.contains(dep.version):
49                     queue.append(dep)
50
51     return dependencies
52
53
54 def get_dists_to_ignore(installed):
55     """
56     Returns a collection of package names to ignore when performing pip-sync,
57     based on the currently installed environment. For example, when pip-tools
58     is installed in the local environment, it should be ignored, including all
59     of its dependencies (e.g. click). When pip-tools is not installed
60     locally, click should also be installed/uninstalled depending on the given
61     requirements.
62     """
63     installed_keys = {key_from_req(r): r for r in installed}
64     return list(flat_map(lambda req: dependency_tree(installed_keys, req), PACKAGES_TO_IGNORE))
65
66
67 def merge(requirements, ignore_conflicts):
68     by_key = {}
69
70     for ireq in requirements:
71         if ireq.link is not None and not ireq.editable:
72             msg = ('pip-compile does not support URLs as packages, unless they are editable. '
73                    'Perhaps add -e option?')
74             raise UnsupportedConstraint(msg, ireq)
75
76         key = ireq.link or key_from_req(ireq.req)
77
78         if not ignore_conflicts:
79             existing_ireq = by_key.get(key)
80             if existing_ireq:
81                 # NOTE: We check equality here since we can assume that the
82                 # requirements are all pinned
83                 if ireq.specifier != existing_ireq.specifier:
84                     raise IncompatibleRequirements(ireq, existing_ireq)
85
86         # TODO: Always pick the largest specifier in case of a conflict
87         by_key[key] = ireq
88
89     return by_key.values()
90
91
92 def diff(compiled_requirements, installed_dists):
93     """
94     Calculate which packages should be installed or uninstalled, given a set
95     of compiled requirements and a list of currently installed modules.
96     """
97     requirements_lut = {r.link or key_from_req(r.req): r for r in compiled_requirements}
98
99     satisfied = set() # holds keys
100     to_install = set() # holds InstallRequirement objects
101     to_uninstall = set() # holds keys
102
103     pkgs_to_ignore = get_dists_to_ignore(installed_dists)
104     for dist in installed_dists:
105         key = key_from_req(dist)
106         if key not in requirements_lut:
107             to_uninstall.add(key)
108         elif requirements_lut[key].specifier.contains(dist.version):
109             satisfied.add(key)
110
111     for key, requirement in requirements_lut.items():
112         if key not in satisfied:
113             to_install.add(requirement)
114
115     # Make sure to not uninstall any packages that should be ignored
116     to_uninstall -= set(pkgs_to_ignore)
117
118     return (to_install, to_uninstall)
119
120
121 def sync(to_install, to_uninstall, verbose=False, dry_run=False, pip_flags=None, install_flags=None):
122     """
123     Install and uninstalls the given sets of modules.
124     """
125     if not to_uninstall and not to_install:
126         click.echo("Everything up-to-date")
127
128     if pip_flags is None:
129         pip_flags = []
130
131     if not verbose:
132         pip_flags += ['-q']
133
134     if os.environ.get('VIRTUAL_ENV'):
135         # find pip via PATH
136         pip = 'pip'
137     else:
138         # find pip in same directory as pip-sync entry-point script
139         pip = os.path.join(os.path.dirname(os.path.abspath(sys.argv[0])), 'pip')
140
141     if to_uninstall:
142         if dry_run:
143             click.echo("Would uninstall:")
144             for pkg in to_uninstall:
145                 click.echo(" {}".format(pkg))
146         else:
147             check_call([pip, 'uninstall', '-y'] + pip_flags + sorted(to_uninstall))
148
149     if to_install:
150         if install_flags is None:
151             install_flags = []
152         if dry_run:
153             click.echo("Would install:")
154             for ireq in to_install:
155                 click.echo(" {}".format(format_requirement(ireq)))
156         else:
157             package_args = []
158             for ireq in sorted(to_install):
159                 if ireq.editable:
160                     package_args.extend(['-e', str(ireq.link or ireq.req)])
161                 else:
162                     package_args.append(str(ireq.req))
163             check_call([pip, 'install'] + pip_flags + install_flags + package_args)
164     return 0
165
[end of piptools/sync.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)


 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1

-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/piptools/sync.py b/piptools/sync.py
--- a/piptools/sync.py
+++ b/piptools/sync.py
@@ -11,6 +11,7 @@
     'pip',
     'pip-tools',
     'pip-review',
+    'pkg-resources',
     'setuptools',
     'wheel',
 ]

|

{"golden_diff": "diff --git a/piptools/sync.py b/piptools/sync.py\n--- a/piptools/sync.py\n+++ b/piptools/sync.py\n@@ -11,6 +11,7 @@\n 'pip',\n 'pip-tools',\n 'pip-review',\n+ 'pkg-resources',\n 'setuptools',\n 'wheel',\n ]\n", "issue": "pip-sync uninstalls pkg-resources, breaks psycopg2\n`pip-sync` uninstalls `pkg-resources`, which in turn breaks installation of many other packages. `pkg-resources` is a new \"system\" package that was recently extracted from `setuptools` (since version 31, I believe). I think it must be handled similarly to `setuptools`.\r\n\r\n##### Steps to replicate\r\n\r\nOn a fully updated Ubuntu 16.04 LTS:\r\n\r\n```console\r\nsemenov@dev2:~/tmp$ rm -rf ~/.cache/pip\r\nsemenov@dev2:~/tmp$ virtualenv --python=$(which python3) test\r\nAlready using interpreter /usr/bin/python3\r\nUsing base prefix '/usr'\r\nNew python executable in /home/semenov/tmp/test/bin/python3\r\nAlso creating executable in /home/semenov/tmp/test/bin/python\r\nInstalling setuptools, pkg_resources, pip, wheel...done.\r\nsemenov@dev2:~/tmp$ cd test\r\nsemenov@dev2:~/tmp/test$ . 
bin/activate\r\n(test) semenov@dev2:~/tmp/test$ pip install pip-tools\r\nCollecting pip-tools\r\n Downloading pip_tools-1.8.0-py2.py3-none-any.whl\r\nCollecting six (from pip-tools)\r\n Downloading six-1.10.0-py2.py3-none-any.whl\r\nCollecting first (from pip-tools)\r\n Downloading first-2.0.1-py2.py3-none-any.whl\r\nCollecting click>=6 (from pip-tools)\r\n Downloading click-6.6-py2.py3-none-any.whl (71kB)\r\n 100% |\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588| 71kB 559kB/s\r\nInstalling collected packages: six, first, click, pip-tools\r\nSuccessfully installed click-6.6 first-2.0.1 pip-tools-1.8.0 six-1.10.0\r\n(test) semenov@dev2:~/tmp/test$ echo psycopg2 > requirements.in\r\n(test) semenov@dev2:~/tmp/test$ pip-compile\r\n#\r\n# This file is autogenerated by pip-compile\r\n# To update, run:\r\n#\r\n# pip-compile --output-file requirements.txt requirements.in\r\n#\r\npsycopg2==2.6.2\r\n(test) semenov@dev2:~/tmp/test$ pip-sync\r\nUninstalling pkg-resources-0.0.0:\r\n Successfully uninstalled pkg-resources-0.0.0\r\nCollecting psycopg2==2.6.2\r\n Downloading psycopg2-2.6.2.tar.gz (376kB)\r\n 100% |\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588\u2588| 378kB 2.4MB/s\r\nCould not import setuptools which is required to install from a source distribution.\r\nTraceback (most recent call last):\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/pip/req/req_install.py\", line 387, in setup_py\r\n import setuptools # noqa\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/setuptools/__init__.py\", line 10, in <module>\r\n from setuptools.extern.six.moves import filter, filterfalse, map\r\n File 
\"/home/semenov/tmp/test/lib/python3.5/site-packages/setuptools/extern/__init__.py\", line 1, in <module>\r\n from pkg_resources.extern import VendorImporter\r\nImportError: No module named 'pkg_resources.extern'\r\n\r\nTraceback (most recent call last):\r\n File \"/home/semenov/tmp/test/bin/pip-sync\", line 11, in <module>\r\n sys.exit(cli())\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py\", line 716, in __call__\r\n return self.main(*args, **kwargs)\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py\", line 696, in main\r\n rv = self.invoke(ctx)\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py\", line 889, in invoke\r\n return ctx.invoke(self.callback, **ctx.params)\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/click/core.py\", line 534, in invoke\r\n return callback(*args, **kwargs)\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/piptools/scripts/sync.py\", line 72, in cli\r\n install_flags=install_flags))\r\n File \"/home/semenov/tmp/test/lib/python3.5/site-packages/piptools/sync.py\", line 157, in sync\r\n check_call([pip, 'install'] + pip_flags + install_flags + sorted(to_install))\r\n File \"/usr/lib/python3.5/subprocess.py\", line 581, in check_call\r\n raise CalledProcessError(retcode, cmd)\r\nsubprocess.CalledProcessError: Command '['pip', 'install', 'psycopg2==2.6.2']' returned non-zero exit status 1\r\n```\r\n\r\n##### Expected result\r\n\r\n`pip-sync` keeps `pkg-resources` in place, `psycopg2` installs normally.\r\n\r\n##### Actual result\r\n\r\n`pip-sync` uninstalls `pkg-resources`, then `psycopg2` installation fails with: `ImportError: No module named 'pkg_resources.extern'`\n", "before_files": [{"content": "import collections\nimport os\nimport sys\nfrom subprocess import check_call\n\nfrom . 
import click\nfrom .exceptions import IncompatibleRequirements, UnsupportedConstraint\nfrom .utils import flat_map, format_requirement, key_from_req\n\nPACKAGES_TO_IGNORE = [\n 'pip',\n 'pip-tools',\n 'pip-review',\n 'setuptools',\n 'wheel',\n]\n\n\ndef dependency_tree(installed_keys, root_key):\n \"\"\"\n Calculate the dependency tree for the package `root_key` and return\n a collection of all its dependencies. Uses a DFS traversal algorithm.\n\n `installed_keys` should be a {key: requirement} mapping, e.g.\n {'django': from_line('django==1.8')}\n `root_key` should be the key to return the dependency tree for.\n \"\"\"\n dependencies = set()\n queue = collections.deque()\n\n if root_key in installed_keys:\n dep = installed_keys[root_key]\n queue.append(dep)\n\n while queue:\n v = queue.popleft()\n key = key_from_req(v)\n if key in dependencies:\n continue\n\n dependencies.add(key)\n\n for dep_specifier in v.requires():\n dep_name = key_from_req(dep_specifier)\n if dep_name in installed_keys:\n dep = installed_keys[dep_name]\n\n if dep_specifier.specifier.contains(dep.version):\n queue.append(dep)\n\n return dependencies\n\n\ndef get_dists_to_ignore(installed):\n \"\"\"\n Returns a collection of package names to ignore when performing pip-sync,\n based on the currently installed environment. For example, when pip-tools\n is installed in the local environment, it should be ignored, including all\n of its dependencies (e.g. click). When pip-tools is not installed\n locally, click should also be installed/uninstalled depending on the given\n requirements.\n \"\"\"\n installed_keys = {key_from_req(r): r for r in installed}\n return list(flat_map(lambda req: dependency_tree(installed_keys, req), PACKAGES_TO_IGNORE))\n\n\ndef merge(requirements, ignore_conflicts):\n by_key = {}\n\n for ireq in requirements:\n if ireq.link is not None and not ireq.editable:\n msg = ('pip-compile does not support URLs as packages, unless they are editable. 
'\n 'Perhaps add -e option?')\n raise UnsupportedConstraint(msg, ireq)\n\n key = ireq.link or key_from_req(ireq.req)\n\n if not ignore_conflicts:\n existing_ireq = by_key.get(key)\n if existing_ireq:\n # NOTE: We check equality here since we can assume that the\n # requirements are all pinned\n if ireq.specifier != existing_ireq.specifier:\n raise IncompatibleRequirements(ireq, existing_ireq)\n\n # TODO: Always pick the largest specifier in case of a conflict\n by_key[key] = ireq\n\n return by_key.values()\n\n\ndef diff(compiled_requirements, installed_dists):\n \"\"\"\n Calculate which packages should be installed or uninstalled, given a set\n of compiled requirements and a list of currently installed modules.\n \"\"\"\n requirements_lut = {r.link or key_from_req(r.req): r for r in compiled_requirements}\n\n satisfied = set() # holds keys\n to_install = set() # holds InstallRequirement objects\n to_uninstall = set() # holds keys\n\n pkgs_to_ignore = get_dists_to_ignore(installed_dists)\n for dist in installed_dists:\n key = key_from_req(dist)\n if key not in requirements_lut:\n to_uninstall.add(key)\n elif requirements_lut[key].specifier.contains(dist.version):\n satisfied.add(key)\n\n for key, requirement in requirements_lut.items():\n if key not in satisfied:\n to_install.add(requirement)\n\n # Make sure to not uninstall any packages that should be ignored\n to_uninstall -= set(pkgs_to_ignore)\n\n return (to_install, to_uninstall)\n\n\ndef sync(to_install, to_uninstall, verbose=False, dry_run=False, pip_flags=None, install_flags=None):\n \"\"\"\n Install and uninstalls the given sets of modules.\n \"\"\"\n if not to_uninstall and not to_install:\n click.echo(\"Everything up-to-date\")\n\n if pip_flags is None:\n pip_flags = []\n\n if not verbose:\n pip_flags += ['-q']\n\n if os.environ.get('VIRTUAL_ENV'):\n # find pip via PATH\n pip = 'pip'\n else:\n # find pip in same directory as pip-sync entry-point script\n pip = 
os.path.join(os.path.dirname(os.path.abspath(sys.argv[0])), 'pip')\n\n if to_uninstall:\n if dry_run:\n click.echo(\"Would uninstall:\")\n for pkg in to_uninstall:\n click.echo(\" {}\".format(pkg))\n else:\n check_call([pip, 'uninstall', '-y'] + pip_flags + sorted(to_uninstall))\n\n if to_install:\n if install_flags is None:\n install_flags = []\n if dry_run:\n click.echo(\"Would install:\")\n for ireq in to_install:\n click.echo(\" {}\".format(format_requirement(ireq)))\n else:\n package_args = []\n for ireq in sorted(to_install):\n if ireq.editable:\n package_args.extend(['-e', str(ireq.link or ireq.req)])\n else:\n package_args.append(str(ireq.req))\n check_call([pip, 'install'] + pip_flags + install_flags + package_args)\n return 0\n", "path": "piptools/sync.py"}]}

| 3,310 | 78 |
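
The effect of the one-line fix in the record above can be illustrated with a simplified model of the uninstall calculation. This is a sketch under stated assumptions: `packages_to_uninstall` is a hypothetical helper that works on plain sets of package names, whereas the real `diff()` in `piptools/sync.py` works on requirement objects and also protects the dependencies of every ignored package.

```python
# Ignore list as it stands after the golden diff adds 'pkg-resources'.
PACKAGES_TO_IGNORE = {
    "pip",
    "pip-tools",
    "pip-review",
    "pkg-resources",
    "setuptools",
    "wheel",
}


def packages_to_uninstall(installed, required, ignore=PACKAGES_TO_IGNORE):
    """Return installed names that are neither required nor ignored.

    Hypothetical helper: it mirrors only the final set subtraction in
    diff(), not the dependency-tree walk done by get_dists_to_ignore().
    """
    not_required = {name for name in installed if name not in required}
    return not_required - set(ignore)


installed = {"pip", "setuptools", "pkg-resources", "wheel", "requests"}
required = {"psycopg2"}
result = packages_to_uninstall(installed, required)
```

With `pkg-resources` in the ignore set, only the package that is neither required nor ignored (`requests` in this made-up environment) is scheduled for removal, so `setuptools` stays importable when `pip install` builds `psycopg2` from source.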

gh_patches_debug_3781 | rasdani/github-patches | git_diff | beeware__toga-2356 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

On GTK, `App.current_window` returns the last active window even when all windows are hidden

### Describe the bug

On Cocoa and WinForms, it returns `None` in this situation, which I think makes more sense.

### Environment

- Software versions:

- Toga: main (536a60f)

</issue>

<code>

[start of gtk/src/toga_gtk/app.py]
1 import asyncio
2 import signal
3 import sys
4 from pathlib import Path
5
6 import gbulb
7
8 import toga
9 from toga import App as toga_App
10 from toga.command import Command, Separator
11
12 from .keys import gtk_accel
13 from .libs import TOGA_DEFAULT_STYLES, Gdk, Gio, GLib, Gtk
14 from .window import Window
15
16
17 class MainWindow(Window):
18     def create(self):
19         self.native = Gtk.ApplicationWindow()
20         self.native.set_role("MainWindow")
21         icon_impl = toga_App.app.icon._impl
22         self.native.set_icon(icon_impl.native_72)
23
24     def gtk_delete_event(self, *args):
25         # Return value of the GTK on_close handler indicates
26         # whether the event has been fully handled. Returning
27         # False indicates the event handling is *not* complete,
28         # so further event processing (including actually
29         # closing the window) should be performed; so
30         # "should_exit == True" must be converted to a return
31         # value of False.
32         self.interface.app.on_exit()
33         return True
34
35
36 class App:
37     """
38     Todo:
39         * Creation of Menus is not working.
40         * Disabling of menu items is not working.
41         * App Icon is not showing up
42     """
43
44     def __init__(self, interface):
45         self.interface = interface
46         self.interface._impl = self
47
48         gbulb.install(gtk=True)
49         self.loop = asyncio.new_event_loop()
50
51         self.create()
52
53     def create(self):
54         # Stimulate the build of the app
55         self.native = Gtk.Application(
56             application_id=self.interface.app_id,
57             flags=Gio.ApplicationFlags.FLAGS_NONE,
58         )
59         self.native_about_dialog = None
60
61         # Connect the GTK signal that will cause app startup to occur
62         self.native.connect("startup", self.gtk_startup)
63         self.native.connect("activate", self.gtk_activate)
64
65         self.actions = None
66
67     def gtk_startup(self, data=None):
68         # Set up the default commands for the interface.
69         self.interface.commands.add(
70             Command(
71                 self._menu_about,
72                 "About " + self.interface.formal_name,
73                 group=toga.Group.HELP,
74             ),
75             Command(None, "Preferences", group=toga.Group.APP),
76             # Quit should always be the last item, in a section on its own
77             Command(
78                 self._menu_quit,
79                 "Quit " + self.interface.formal_name,
80                 shortcut=toga.Key.MOD_1 + "q",
81                 group=toga.Group.APP,
82                 section=sys.maxsize,
83             ),
84         )
85         self._create_app_commands()
86
87         self.interface._startup()
88
89         # Create the lookup table of menu items,
90         # then force the creation of the menus.
91         self.create_menus()
92
93         # Now that we have menus, make the app take responsibility for
94         # showing the menubar.
95         # This is required because of inconsistencies in how the Gnome
96         # shell operates on different windowing environments;
97         # see #872 for details.
98         settings = Gtk.Settings.get_default()
99         settings.set_property("gtk-shell-shows-menubar", False)
100
101         # Set any custom styles
102         css_provider = Gtk.CssProvider()
103         css_provider.load_from_data(TOGA_DEFAULT_STYLES)
104
105         context = Gtk.StyleContext()
106         context.add_provider_for_screen(
107             Gdk.Screen.get_default(), css_provider, Gtk.STYLE_PROVIDER_PRIORITY_USER
108         )
109
110     def _create_app_commands(self):
111         # No extra menus
112         pass
113
114     def gtk_activate(self, data=None):
115         pass
116
117     def _menu_about(self, command, **kwargs):
118         self.interface.about()
119
120     def _menu_quit(self, command, **kwargs):
121         self.interface.on_exit()
122
123     def create_menus(self):
124         # Only create the menu if the menu item index has been created.
125         self._menu_items = {}
126         self._menu_groups = {}
127
128         # Create the menu for the top level menubar.
129         menubar = Gio.Menu()
130         section = None
131         for cmd in self.interface.commands:
132             if isinstance(cmd, Separator):
133                 section = None
134             else:
135                 submenu, created = self._submenu(cmd.group, menubar)
136                 if created:
137                     section = None
138
139                 if section is None:
140                     section = Gio.Menu()
141                     submenu.append_section(None, section)
142
143                 cmd_id = "command-%s" % id(cmd)
144                 action = Gio.SimpleAction.new(cmd_id, None)
145                 action.connect("activate", cmd._impl.gtk_activate)
146
147                 cmd._impl.native.append(action)
148                 cmd._impl.set_enabled(cmd.enabled)
149                 self._menu_items[action] = cmd

150 self.native.add_action(action)

151

152 item = Gio.MenuItem.new(cmd.text, "app." + cmd_id)

153 if cmd.shortcut:

154 item.set_attribute_value(

155 "accel", GLib.Variant("s", gtk_accel(cmd.shortcut))

156 )

157

158 section.append_item(item)

159

160 # Set the menu for the app.

161 self.native.set_menubar(menubar)

162

163 def _submenu(self, group, menubar):

164 try:

165 return self._menu_groups[group], False

166 except KeyError:

167 if group is None:

168 submenu = menubar

169 else:

170 parent_menu, _ = self._submenu(group.parent, menubar)

171 submenu = Gio.Menu()

172 self._menu_groups[group] = submenu

173

174 text = group.text

175 if text == "*":

176 text = self.interface.formal_name

177 parent_menu.append_submenu(text, submenu)

178

179 # Install the item in the group cache.

180 self._menu_groups[group] = submenu

181

182 return submenu, True

183

184 def main_loop(self):

185 # Modify signal handlers to make sure Ctrl-C is caught and handled.

186 signal.signal(signal.SIGINT, signal.SIG_DFL)

187

188 self.loop.run_forever(application=self.native)

189

190 def set_main_window(self, window):

191 pass

192

193 def show_about_dialog(self):

194 self.native_about_dialog = Gtk.AboutDialog()

195 self.native_about_dialog.set_modal(True)

196

197 icon_impl = toga_App.app.icon._impl

198 self.native_about_dialog.set_logo(icon_impl.native_72)

199

200 self.native_about_dialog.set_program_name(self.interface.formal_name)

201 if self.interface.version is not None:

202 self.native_about_dialog.set_version(self.interface.version)

203 if self.interface.author is not None:

204 self.native_about_dialog.set_authors([self.interface.author])

205 if self.interface.description is not None:

206 self.native_about_dialog.set_comments(self.interface.description)

207 if self.interface.home_page is not None:

208 self.native_about_dialog.set_website(self.interface.home_page)

209

210 self.native_about_dialog.show()

211 self.native_about_dialog.connect("close", self._close_about)

212

213 def _close_about(self, dialog):

214 self.native_about_dialog.destroy()

215 self.native_about_dialog = None

216

217 def beep(self):

218 Gdk.beep()

219

220 # We can't call this under test conditions, because it would kill the test harness

221 def exit(self): # pragma: no cover

222 self.native.quit()

223

224 def get_current_window(self):

225 return self.native.get_active_window()._impl

226

227 def set_current_window(self, window):

228 window._impl.native.present()

229

230 def enter_full_screen(self, windows):

231 for window in windows:

232 window._impl.set_full_screen(True)

233

234 def exit_full_screen(self, windows):

235 for window in windows:

236 window._impl.set_full_screen(False)

237

238 def show_cursor(self):

239 self.interface.factory.not_implemented("App.show_cursor()")

240

241 def hide_cursor(self):

242 self.interface.factory.not_implemented("App.hide_cursor()")

243

244

245 class DocumentApp(App): # pragma: no cover

246 def _create_app_commands(self):

247 self.interface.commands.add(

248 toga.Command(

249 self.open_file,

250 text="Open...",

251 shortcut=toga.Key.MOD_1 + "o",

252 group=toga.Group.FILE,

253 section=0,

254 ),

255 )

256

257 def gtk_startup(self, data=None):

258 super().gtk_startup(data=data)

259

260 try:

261 # Look for a filename specified on the command line

262 self.interface._open(Path(sys.argv[1]))

263 except IndexError:

264 # Nothing on the command line; open a file dialog instead.

265 # Create a temporary window so we have context for the dialog

266 m = toga.Window()

267 m.open_file_dialog(

268 self.interface.formal_name,

269 file_types=self.interface.document_types.keys(),

270 on_result=lambda dialog, path: self.interface._open(path)

271 if path

272 else self.exit(),

273 )

274

275 def open_file(self, widget, **kwargs):

276 # Create a temporary window so we have context for the dialog

277 m = toga.Window()

278 m.open_file_dialog(

279 self.interface.formal_name,

280 file_types=self.interface.document_types.keys(),

281 on_result=lambda dialog, path: self.interface._open(path) if path else None,

282 )

283

[end of gtk/src/toga_gtk/app.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0: