| problem_id (string, length 18 to 22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_21757

|

rasdani/github-patches

|

git_diff

|

zestedesavoir__zds-site-3209

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

The latest topics listed as followed "this week" are not necessarily from this week.

As I post this issue it is Monday, and my followed topics show topics from:

- today ("Aujourd'hui"): fine

- yesterday, Sunday ("Hier"): fine

- this week ("Cette semaine"): the wording is the problem here, because "this week" actually started today. What the code really means is "the last 7 days".

So I am not sure what the right fix is: rename "Cette semaine", or change the behavior so that only what happened this week is shown?

Either way, the display does not match reality. Judging from the code, the same problem exists for "Ce mois-ci" (this month), which should instead be called "Les 30 derniers jours" (the last 30 days) to be consistent with reality.

</issue>
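
The mismatch described in the issue can be sketched in a few lines. This is only an illustration, assuming the thresholds of the `periods` tuple in `followed_topics`; the helper names `rolling_bucket` and `in_calendar_week` and the sample dates are hypothetical and do not exist in the repository.

```python
from datetime import date, timedelta

# Same (key, days) pairs as the `periods` tuple in followed_topics.
ROLLING_PERIODS = ((1, 0), (2, 1), (3, 7), (4, 30), (5, 360))


def rolling_bucket(pubdate, today):
    """Hypothetical helper: return the first period key whose rolling window contains pubdate."""
    for key, days in ROLLING_PERIODS:
        if pubdate >= today - timedelta(days=days):
            return key
    return None  # older than 360 days: no period matches


def in_calendar_week(pubdate, today):
    """Hypothetical helper: True only if pubdate lies between this week's Monday and today."""
    week_start = today - timedelta(days=today.weekday())  # weekday() is 0 on Monday
    return week_start <= pubdate <= today


monday = date(2015, 11, 23)         # sample "today", a Monday
last_thursday = date(2015, 11, 19)  # four days earlier, in the previous calendar week

print(rolling_bucket(last_thursday, monday))    # key 3, the label shown as "Cette semaine"
print(in_calendar_week(last_thursday, monday))  # False: not actually this week
```

Under these assumptions, key 3 behaves as a rolling 7-day window, so a label such as "Les 7 derniers jours" describes it more accurately than "Cette semaine".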

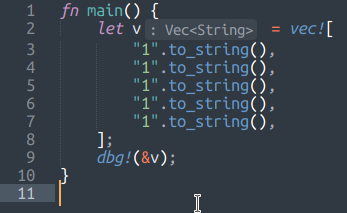

<code>

[start of zds/utils/templatetags/interventions.py]

1 # coding: utf-8

2

3 from datetime import datetime, timedelta

4 import time

5

6 from django import template

7 from django.db.models import F

8

9 from zds.forum.models import TopicFollowed, never_read as never_read_topic, Post, TopicRead

10 from zds.mp.models import PrivateTopic

11

12 from zds.utils.models import Alert

13 from zds.tutorialv2.models.models_database import ContentRead, ContentReaction

14

15 register = template.Library()

16

17

18 @register.filter('is_read')

19 def is_read(topic):

20 if never_read_topic(topic):

21 return False

22 else:

23 return True

24

25

26 @register.filter('humane_delta')

27 def humane_delta(value):

28 # mapping between label day and key

29 const = {1: "Aujourd'hui", 2: "Hier", 3: "Cette semaine", 4: "Ce mois-ci", 5: "Cette année"}

30

31 return const[value]

32

33

34 @register.filter('followed_topics')

35 def followed_topics(user):

36 topicsfollowed = TopicFollowed.objects.select_related("topic").filter(user=user)\

37 .order_by('-topic__last_message__pubdate')[:10]

38 # This period is a map for link a moment (Today, yesterday, this week, this month, etc.) with

39 # the number of days for which we can say we're still in the period

40 # for exemple, the tuple (2, 1) means for the period "2" corresponding to "Yesterday" according

41 # to humane_delta, means if your pubdate hasn't exceeded one day, we are always at "Yesterday"

42 # Number is use for index for sort map easily

43 periods = ((1, 0), (2, 1), (3, 7), (4, 30), (5, 360))

44 topics = {}

45 for tfollowed in topicsfollowed:

46 for period in periods:

47 if tfollowed.topic.last_message.pubdate.date() >= (datetime.now() - timedelta(days=int(period[1]),

48 hours=0,

49 minutes=0,

50 seconds=0)).date():

51 if period[0] in topics:

52 topics[period[0]].append(tfollowed.topic)

53 else:

54 topics[period[0]] = [tfollowed.topic]

55 break

56 return topics

57

58

59 def comp(dated_element1, dated_element2):

60 version1 = int(time.mktime(dated_element1['pubdate'].timetuple()))

61 version2 = int(time.mktime(dated_element2['pubdate'].timetuple()))

62 if version1 > version2:

63 return -1

64 elif version1 < version2:

65 return 1

66 else:

67 return 0

68

69

70 @register.filter('interventions_topics')

71 def interventions_topics(user):

72 topicsfollowed = TopicFollowed.objects.filter(user=user).values("topic").distinct().all()

73

74 topics_never_read = TopicRead.objects\

75 .filter(user=user)\

76 .filter(topic__in=topicsfollowed)\

77 .select_related("topic")\

78 .exclude(post=F('topic__last_message')).all()

79

80 content_followed_pk = ContentReaction.objects\

81 .filter(author=user, related_content__public_version__isnull=False)\

82 .values_list('related_content__pk', flat=True)

83

84 content_to_read = ContentRead.objects\

85 .select_related('note')\

86 .select_related('note__author')\

87 .select_related('content')\

88 .select_related('note__related_content__public_version')\

89 .filter(user=user)\

90 .exclude(note__pk=F('content__last_note__pk')).all()

91

92 posts_unread = []

93

94 for top in topics_never_read:

95 content = top.topic.first_unread_post()

96 if content is None:

97 content = top.topic.last_message

98 posts_unread.append({'pubdate': content.pubdate,

99 'author': content.author,

100 'title': top.topic.title,

101 'url': content.get_absolute_url()})

102

103 for content_read in content_to_read:

104 content = content_read.content

105 if content.pk not in content_followed_pk and user not in content.authors.all():

106 continue

107 reaction = content.first_unread_note()

108 if reaction is None:

109 reaction = content.first_note()

110 if reaction is None:

111 continue

112 posts_unread.append({'pubdate': reaction.pubdate,

113 'author': reaction.author,

114 'title': content.title,

115 'url': reaction.get_absolute_url()})

116

117 posts_unread.sort(cmp=comp)

118

119 return posts_unread

120

121

122 @register.filter('interventions_privatetopics')

123 def interventions_privatetopics(user):

124

125 # Raw query because ORM doesn't seems to allow this kind of "left outer join" clauses.

126 # Parameters = list with 3x the same ID because SQLite backend doesn't allow map parameters.

127 privatetopics_unread = PrivateTopic.objects.raw(

128 '''

129 select distinct t.*

130 from mp_privatetopic t

131 left outer join mp_privatetopic_participants p on p.privatetopic_id = t.id

132 left outer join mp_privatetopicread r on r.user_id = %s and r.privatepost_id = t.last_message_id

133 where (t.author_id = %s or p.user_id = %s)

134 and r.id is null

135 order by t.pubdate desc''',

136 [user.id, user.id, user.id])

137

138 # "total" re-do the query, but there is no other way to get the length as __len__ is not available on raw queries.

139 topics = list(privatetopics_unread)

140 return {'unread': topics, 'total': len(topics)}

141

142

143 @register.filter(name='alerts_list')

144 def alerts_list(user):

145 total = []

146 alerts = Alert.objects.select_related('author', 'comment').all().order_by('-pubdate')[:10]

147 nb_alerts = Alert.objects.count()

148 for alert in alerts:

149 if alert.scope == Alert.FORUM:

150 post = Post.objects.select_related('topic').get(pk=alert.comment.pk)

151 total.append({'title': post.topic.title,

152 'url': post.get_absolute_url(),

153 'pubdate': alert.pubdate,

154 'author': alert.author,

155 'text': alert.text})

156

157 elif alert.scope == Alert.CONTENT:

158 note = ContentReaction.objects.select_related('related_content').get(pk=alert.comment.pk)

159 total.append({'title': note.related_content.title,

160 'url': note.get_absolute_url(),

161 'pubdate': alert.pubdate,

162 'author': alert.author,

163 'text': alert.text})

164

165 return {'alerts': total, 'nb_alerts': nb_alerts}

166

[end of zds/utils/templatetags/interventions.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/zds/utils/templatetags/interventions.py b/zds/utils/templatetags/interventions.py

--- a/zds/utils/templatetags/interventions.py

+++ b/zds/utils/templatetags/interventions.py

@@ -5,10 +5,10 @@

from django import template

from django.db.models import F

+from django.utils.translation import ugettext_lazy as _

from zds.forum.models import TopicFollowed, never_read as never_read_topic, Post, TopicRead

from zds.mp.models import PrivateTopic

-

from zds.utils.models import Alert

from zds.tutorialv2.models.models_database import ContentRead, ContentReaction

@@ -25,8 +25,19 @@

@register.filter('humane_delta')

def humane_delta(value):

- # mapping between label day and key

- const = {1: "Aujourd'hui", 2: "Hier", 3: "Cette semaine", 4: "Ce mois-ci", 5: "Cette année"}

+ """

+ Mapping between label day and key

+

+ :param int value:

+ :return: string

+ """

+ const = {

+ 1: _("Aujourd'hui"),

+ 2: _("Hier"),

+ 3: _("Les 7 derniers jours"),

+ 4: _("Les 30 derniers jours"),

+ 5: _("Plus ancien")

+ }

return const[value]

|

{"golden_diff": "diff --git a/zds/utils/templatetags/interventions.py b/zds/utils/templatetags/interventions.py\n--- a/zds/utils/templatetags/interventions.py\n+++ b/zds/utils/templatetags/interventions.py\n@@ -5,10 +5,10 @@\n \n from django import template\n from django.db.models import F\n+from django.utils.translation import ugettext_lazy as _\n \n from zds.forum.models import TopicFollowed, never_read as never_read_topic, Post, TopicRead\n from zds.mp.models import PrivateTopic\n-\n from zds.utils.models import Alert\n from zds.tutorialv2.models.models_database import ContentRead, ContentReaction\n \n@@ -25,8 +25,19 @@\n \n @register.filter('humane_delta')\n def humane_delta(value):\n- # mapping between label day and key\n- const = {1: \"Aujourd'hui\", 2: \"Hier\", 3: \"Cette semaine\", 4: \"Ce mois-ci\", 5: \"Cette ann\u00e9e\"}\n+ \"\"\"\n+ Mapping between label day and key\n+\n+ :param int value:\n+ :return: string\n+ \"\"\"\n+ const = {\n+ 1: _(\"Aujourd'hui\"),\n+ 2: _(\"Hier\"),\n+ 3: _(\"Les 7 derniers jours\"),\n+ 4: _(\"Les 30 derniers jours\"),\n+ 5: _(\"Plus ancien\")\n+ }\n \n return const[value]\n", "issue": "Les derniers sujets suivis cette semaine ne le sont pas forc\u00e9ment.\nA l'heure ou je poste cette issue, on est lundi et dans mes sujets suivis j'ai des sujets suivis de : \n- aujourd'hui : normal\n- hier (dimanche) : normal\n- cette semaine : il y'a un probl\u00e8me de vocable ici. Car \"cette semaine\" \u00e0 commenc\u00e9e en fait aujourd'hui. Le code lui veut plut\u00f4t parler des \"7 derniers jours\".\n\nDonc j'ignore la bonne fa\u00e7on de faire ici ? renommer le \"cette semaine\" ou modifier le comportement pour n'avoir que ce qui s'est pass\u00e9 cette semaine ?\n\nMais dans tout les cas l'affichage ne correspond pas \u00e0 la r\u00e9alit\u00e9. 
Au vu du code, le probl\u00e8me est aussi pr\u00e9sent pour \"Ce mois\" qui devrait plut\u00f4t s'appeler \"Les 30 derniers jours\" pour \u00eatre coh\u00e9rent avec la r\u00e9alit\u00e9.\n\n", "before_files": [{"content": "# coding: utf-8\n\nfrom datetime import datetime, timedelta\nimport time\n\nfrom django import template\nfrom django.db.models import F\n\nfrom zds.forum.models import TopicFollowed, never_read as never_read_topic, Post, TopicRead\nfrom zds.mp.models import PrivateTopic\n\nfrom zds.utils.models import Alert\nfrom zds.tutorialv2.models.models_database import ContentRead, ContentReaction\n\nregister = template.Library()\n\n\n@register.filter('is_read')\ndef is_read(topic):\n if never_read_topic(topic):\n return False\n else:\n return True\n\n\n@register.filter('humane_delta')\ndef humane_delta(value):\n # mapping between label day and key\n const = {1: \"Aujourd'hui\", 2: \"Hier\", 3: \"Cette semaine\", 4: \"Ce mois-ci\", 5: \"Cette ann\u00e9e\"}\n\n return const[value]\n\n\n@register.filter('followed_topics')\ndef followed_topics(user):\n topicsfollowed = TopicFollowed.objects.select_related(\"topic\").filter(user=user)\\\n .order_by('-topic__last_message__pubdate')[:10]\n # This period is a map for link a moment (Today, yesterday, this week, this month, etc.) 
with\n # the number of days for which we can say we're still in the period\n # for exemple, the tuple (2, 1) means for the period \"2\" corresponding to \"Yesterday\" according\n # to humane_delta, means if your pubdate hasn't exceeded one day, we are always at \"Yesterday\"\n # Number is use for index for sort map easily\n periods = ((1, 0), (2, 1), (3, 7), (4, 30), (5, 360))\n topics = {}\n for tfollowed in topicsfollowed:\n for period in periods:\n if tfollowed.topic.last_message.pubdate.date() >= (datetime.now() - timedelta(days=int(period[1]),\n hours=0,\n minutes=0,\n seconds=0)).date():\n if period[0] in topics:\n topics[period[0]].append(tfollowed.topic)\n else:\n topics[period[0]] = [tfollowed.topic]\n break\n return topics\n\n\ndef comp(dated_element1, dated_element2):\n version1 = int(time.mktime(dated_element1['pubdate'].timetuple()))\n version2 = int(time.mktime(dated_element2['pubdate'].timetuple()))\n if version1 > version2:\n return -1\n elif version1 < version2:\n return 1\n else:\n return 0\n\n\n@register.filter('interventions_topics')\ndef interventions_topics(user):\n topicsfollowed = TopicFollowed.objects.filter(user=user).values(\"topic\").distinct().all()\n\n topics_never_read = TopicRead.objects\\\n .filter(user=user)\\\n .filter(topic__in=topicsfollowed)\\\n .select_related(\"topic\")\\\n .exclude(post=F('topic__last_message')).all()\n\n content_followed_pk = ContentReaction.objects\\\n .filter(author=user, related_content__public_version__isnull=False)\\\n .values_list('related_content__pk', flat=True)\n\n content_to_read = ContentRead.objects\\\n .select_related('note')\\\n .select_related('note__author')\\\n .select_related('content')\\\n .select_related('note__related_content__public_version')\\\n .filter(user=user)\\\n .exclude(note__pk=F('content__last_note__pk')).all()\n\n posts_unread = []\n\n for top in topics_never_read:\n content = top.topic.first_unread_post()\n if content is None:\n content = top.topic.last_message\n 
posts_unread.append({'pubdate': content.pubdate,\n 'author': content.author,\n 'title': top.topic.title,\n 'url': content.get_absolute_url()})\n\n for content_read in content_to_read:\n content = content_read.content\n if content.pk not in content_followed_pk and user not in content.authors.all():\n continue\n reaction = content.first_unread_note()\n if reaction is None:\n reaction = content.first_note()\n if reaction is None:\n continue\n posts_unread.append({'pubdate': reaction.pubdate,\n 'author': reaction.author,\n 'title': content.title,\n 'url': reaction.get_absolute_url()})\n\n posts_unread.sort(cmp=comp)\n\n return posts_unread\n\n\n@register.filter('interventions_privatetopics')\ndef interventions_privatetopics(user):\n\n # Raw query because ORM doesn't seems to allow this kind of \"left outer join\" clauses.\n # Parameters = list with 3x the same ID because SQLite backend doesn't allow map parameters.\n privatetopics_unread = PrivateTopic.objects.raw(\n '''\n select distinct t.*\n from mp_privatetopic t\n left outer join mp_privatetopic_participants p on p.privatetopic_id = t.id\n left outer join mp_privatetopicread r on r.user_id = %s and r.privatepost_id = t.last_message_id\n where (t.author_id = %s or p.user_id = %s)\n and r.id is null\n order by t.pubdate desc''',\n [user.id, user.id, user.id])\n\n # \"total\" re-do the query, but there is no other way to get the length as __len__ is not available on raw queries.\n topics = list(privatetopics_unread)\n return {'unread': topics, 'total': len(topics)}\n\n\n@register.filter(name='alerts_list')\ndef alerts_list(user):\n total = []\n alerts = Alert.objects.select_related('author', 'comment').all().order_by('-pubdate')[:10]\n nb_alerts = Alert.objects.count()\n for alert in alerts:\n if alert.scope == Alert.FORUM:\n post = Post.objects.select_related('topic').get(pk=alert.comment.pk)\n total.append({'title': post.topic.title,\n 'url': post.get_absolute_url(),\n 'pubdate': alert.pubdate,\n 'author': 
alert.author,\n 'text': alert.text})\n\n elif alert.scope == Alert.CONTENT:\n note = ContentReaction.objects.select_related('related_content').get(pk=alert.comment.pk)\n total.append({'title': note.related_content.title,\n 'url': note.get_absolute_url(),\n 'pubdate': alert.pubdate,\n 'author': alert.author,\n 'text': alert.text})\n\n return {'alerts': total, 'nb_alerts': nb_alerts}\n", "path": "zds/utils/templatetags/interventions.py"}]}

| 2,591 | 333 |

gh_patches_debug_16231

|

rasdani/github-patches

|

git_diff

|

ytdl-org__youtube-dl-28074

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

urplay

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of all, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2021.02.04.1. If it's not, see https://yt-dl.org/update on how to update. Issues with an outdated version will be REJECTED.

- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.

- Make sure that all URLs and arguments with special characters are properly quoted or escaped as explained in http://yt-dl.org/escape.

- Search the bugtracker for similar issues: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a broken site support

- [x] I've verified that I'm running youtube-dl version **2021.02.04.1**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that all URLs and arguments with special characters are properly quoted or escaped

- [x] I've searched the bugtracker for similar issues including closed ones

## Verbose log

<!--

Provide the complete verbose output of youtube-dl that clearly demonstrates the problem.

Add the `-v` flag to your command line you run youtube-dl with (`youtube-dl -v <your command line>`), copy the WHOLE output and insert it below. It should look similar to this:

[debug] System config: []

[debug] User config: []

[debug] Command-line args: [u'-v', u'http://www.youtube.com/watch?v=BaW_jenozKcj']

[debug] Encodings: locale cp1251, fs mbcs, out cp866, pref cp1251

[debug] youtube-dl version 2021.02.04.1

[debug] Python version 2.7.11 - Windows-2003Server-5.2.3790-SP2

[debug] exe versions: ffmpeg N-75573-g1d0487f, ffprobe N-75573-g1d0487f, rtmpdump 2.4

[debug] Proxy map: {}

<more lines>

-->

```

[debug] System config: []

[debug] User config: []

[debug] Custom config: []

[debug] Command-line args: ['--proxy', 'https://127.0.0.1:10809', '-J', 'https://urplay.se/program/220659-seniorsurfarskolan-social-pa-distans?autostart=true', '-v']

[debug] Encodings: locale cp936, fs mbcs, out cp936, pref cp936

[debug] youtube-dl version 2021.02.04.1

[debug] Python version 3.4.4 (CPython) - Windows-7-6.1.7601-SP1

[debug] exe versions: none

[debug] Proxy map: {'http': 'https://127.0.0.1:10809', 'https': 'https://127.0.0.1:10809'}

ERROR: Unable to download webpage: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:600)> (caused by URLError(SSLError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:600)'),))

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\ytdl-org\tmpgi7ngq0n\build\youtube_dl\extractor\common.py", line 632, in _request_webpage

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\ytdl-org\tmpgi7ngq0n\build\youtube_dl\YoutubeDL.py", line 2275, in urlopen

File "C:\Python\Python34\lib\urllib\request.py", line 464, in open

File "C:\Python\Python34\lib\urllib\request.py", line 482, in _open

File "C:\Python\Python34\lib\urllib\request.py", line 442, in _call_chain

File "C:\Users\dst\AppData\Roaming\Build archive\youtube-dl\ytdl-org\tmpgi7ngq0n\build\youtube_dl\utils.py", line 2736, in https_open

File "C:\Python\Python34\lib\urllib\request.py", line 1185, in do_open

```

## Description

<!--

Provide an explanation of your issue in an arbitrary form. Provide any additional information, suggested solution and as much context and examples as possible.

If work on your issue requires account credentials please provide them or explain how one can obtain them.

-->

This problem is exactly the same as #26815. I saw that issue was closed, but the problem is still there. I hope you can fix it!

</issue>

<code>

[start of youtube_dl/extractor/urplay.py]

1 # coding: utf-8

2 from __future__ import unicode_literals

3

4 from .common import InfoExtractor

5 from ..utils import (

6 dict_get,

7 int_or_none,

8 unified_timestamp,

9 )

10

11

12 class URPlayIE(InfoExtractor):

13 _VALID_URL = r'https?://(?:www\.)?ur(?:play|skola)\.se/(?:program|Produkter)/(?P<id>[0-9]+)'

14 _TESTS = [{

15 'url': 'https://urplay.se/program/203704-ur-samtiden-livet-universum-och-rymdens-markliga-musik-om-vetenskap-kritiskt-tankande-och-motstand',

16 'md5': 'ff5b0c89928f8083c74bbd5099c9292d',

17 'info_dict': {

18 'id': '203704',

19 'ext': 'mp4',

20 'title': 'UR Samtiden - Livet, universum och rymdens märkliga musik : Om vetenskap, kritiskt tänkande och motstånd',

21 'description': 'md5:5344508a52aa78c1ced6c1b8b9e44e9a',

22 'timestamp': 1513292400,

23 'upload_date': '20171214',

24 },

25 }, {

26 'url': 'https://urskola.se/Produkter/190031-Tripp-Trapp-Trad-Sovkudde',

27 'info_dict': {

28 'id': '190031',

29 'ext': 'mp4',

30 'title': 'Tripp, Trapp, Träd : Sovkudde',

31 'description': 'md5:b86bffdae04a7e9379d1d7e5947df1d1',

32 'timestamp': 1440086400,

33 'upload_date': '20150820',

34 },

35 }, {

36 'url': 'http://urskola.se/Produkter/155794-Smasagor-meankieli-Grodan-i-vida-varlden',

37 'only_matching': True,

38 }]

39

40 def _real_extract(self, url):

41 video_id = self._match_id(url)

42 url = url.replace('skola.se/Produkter', 'play.se/program')

43 webpage = self._download_webpage(url, video_id)

44 urplayer_data = self._parse_json(self._html_search_regex(

45 r'data-react-class="components/Player/Player"[^>]+data-react-props="({.+?})"',

46 webpage, 'urplayer data'), video_id)['currentProduct']

47 episode = urplayer_data['title']

48 raw_streaming_info = urplayer_data['streamingInfo']['raw']

49 host = self._download_json(

50 'http://streaming-loadbalancer.ur.se/loadbalancer.json',

51 video_id)['redirect']

52

53 formats = []

54 for k, v in raw_streaming_info.items():

55 if not (k in ('sd', 'hd') and isinstance(v, dict)):

56 continue

57 file_http = v.get('location')

58 if file_http:

59 formats.extend(self._extract_wowza_formats(

60 'http://%s/%splaylist.m3u8' % (host, file_http),

61 video_id, skip_protocols=['f4m', 'rtmp', 'rtsp']))

62 self._sort_formats(formats)

63

64 image = urplayer_data.get('image') or {}

65 thumbnails = []

66 for k, v in image.items():

67 t = {

68 'id': k,

69 'url': v,

70 }

71 wh = k.split('x')

72 if len(wh) == 2:

73 t.update({

74 'width': int_or_none(wh[0]),

75 'height': int_or_none(wh[1]),

76 })

77 thumbnails.append(t)

78

79 series = urplayer_data.get('series') or {}

80 series_title = dict_get(series, ('seriesTitle', 'title')) or dict_get(urplayer_data, ('seriesTitle', 'mainTitle'))

81

82 return {

83 'id': video_id,

84 'title': '%s : %s' % (series_title, episode) if series_title else episode,

85 'description': urplayer_data.get('description'),

86 'thumbnails': thumbnails,

87 'timestamp': unified_timestamp(urplayer_data.get('publishedAt')),

88 'series': series_title,

89 'formats': formats,

90 'duration': int_or_none(urplayer_data.get('duration')),

91 'categories': urplayer_data.get('categories'),

92 'tags': urplayer_data.get('keywords'),

93 'season': series.get('label'),

94 'episode': episode,

95 'episode_number': int_or_none(urplayer_data.get('episodeNumber')),

96 }

97

[end of youtube_dl/extractor/urplay.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/youtube_dl/extractor/urplay.py b/youtube_dl/extractor/urplay.py

--- a/youtube_dl/extractor/urplay.py

+++ b/youtube_dl/extractor/urplay.py

@@ -42,8 +42,8 @@

url = url.replace('skola.se/Produkter', 'play.se/program')

webpage = self._download_webpage(url, video_id)

urplayer_data = self._parse_json(self._html_search_regex(

- r'data-react-class="components/Player/Player"[^>]+data-react-props="({.+?})"',

- webpage, 'urplayer data'), video_id)['currentProduct']

+ r'data-react-class="routes/Product/components/ProgramContainer/ProgramContainer"[^>]+data-react-props="({.+?})"',

+ webpage, 'urplayer data'), video_id)['accessibleEpisodes'][0]

episode = urplayer_data['title']

raw_streaming_info = urplayer_data['streamingInfo']['raw']

host = self._download_json(

|

{"golden_diff": "diff --git a/youtube_dl/extractor/urplay.py b/youtube_dl/extractor/urplay.py\n--- a/youtube_dl/extractor/urplay.py\n+++ b/youtube_dl/extractor/urplay.py\n@@ -42,8 +42,8 @@\n url = url.replace('skola.se/Produkter', 'play.se/program')\n webpage = self._download_webpage(url, video_id)\n urplayer_data = self._parse_json(self._html_search_regex(\n- r'data-react-class=\"components/Player/Player\"[^>]+data-react-props=\"({.+?})\"',\n- webpage, 'urplayer data'), video_id)['currentProduct']\n+ r'data-react-class=\"routes/Product/components/ProgramContainer/ProgramContainer\"[^>]+data-react-props=\"({.+?})\"',\n+ webpage, 'urplayer data'), video_id)['accessibleEpisodes'][0]\n episode = urplayer_data['title']\n raw_streaming_info = urplayer_data['streamingInfo']['raw']\n host = self._download_json(\n", "issue": "urplay\n<!--\r\n\r\n######################################################################\r\n WARNING!\r\n IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE\r\n######################################################################\r\n\r\n-->\r\n\r\n\r\n## Checklist\r\n\r\n<!--\r\nCarefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:\r\n- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2021.02.04.1. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.\r\n- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.\r\n- Make sure that all URLs and arguments with special characters are properly quoted or escaped as explained in http://yt-dl.org/escape.\r\n- Search the bugtracker for similar issues: http://yt-dl.org/search-issues. 
DO NOT post duplicates.\r\n- Finally, put x into all relevant boxes (like this [x])\r\n-->\r\n\r\n- [x] I'm reporting a broken site support\r\n- [x] I've verified that I'm running youtube-dl version **2021.02.04.1**\r\n- [x] I've checked that all provided URLs are alive and playable in a browser\r\n- [x] I've checked that all URLs and arguments with special characters are properly quoted or escaped\r\n- [x] I've searched the bugtracker for similar issues including closed ones\r\n\r\n\r\n## Verbose log\r\n\r\n<!--\r\nProvide the complete verbose output of youtube-dl that clearly demonstrates the problem.\r\nAdd the `-v` flag to your command line you run youtube-dl with (`youtube-dl -v <your command line>`), copy the WHOLE output and insert it below. It should look similar to this:\r\n [debug] System config: []\r\n [debug] User config: []\r\n [debug] Command-line args: [u'-v', u'http://www.youtube.com/watch?v=BaW_jenozKcj']\r\n [debug] Encodings: locale cp1251, fs mbcs, out cp866, pref cp1251\r\n [debug] youtube-dl version 2021.02.04.1\r\n [debug] Python version 2.7.11 - Windows-2003Server-5.2.3790-SP2\r\n [debug] exe versions: ffmpeg N-75573-g1d0487f, ffprobe N-75573-g1d0487f, rtmpdump 2.4\r\n [debug] Proxy map: {}\r\n <more lines>\r\n-->\r\n\r\n```\r\n[debug] System config: []\r\n[debug] User config: []\r\n[debug] Custom config: []\r\n[debug] Command-line args: ['--proxy', 'https://127.0.0.1:10809', '-J', 'https://urplay.se/program/220659-seniorsurfarskolan-social-pa-distans?autostart=true', '-v']\r\n[debug] Encodings: locale cp936, fs mbcs, out cp936, pref cp936\r\n[debug] youtube-dl version 2021.02.04.1\r\n[debug] Python version 3.4.4 (CPython) - Windows-7-6.1.7601-SP1\r\n[debug] exe versions: none\r\n[debug] Proxy map: {'http': 'https://127.0.0.1:10809', 'https': 'https://127.0.0.1:10809'}\r\nERROR: Unable to download webpage: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:600)> (caused by URLError(SSLError(1, '[SSL: 
CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:600)'),))\r\n File \"C:\\Users\\dst\\AppData\\Roaming\\Build archive\\youtube-dl\\ytdl-org\\tmpgi7ngq0n\\build\\youtube_dl\\extractor\\common.py\", line 632, in _request_webpage\r\n File \"C:\\Users\\dst\\AppData\\Roaming\\Build archive\\youtube-dl\\ytdl-org\\tmpgi7ngq0n\\build\\youtube_dl\\YoutubeDL.py\", line 2275, in urlopen\r\n File \"C:\\Python\\Python34\\lib\\urllib\\request.py\", line 464, in open\r\n File \"C:\\Python\\Python34\\lib\\urllib\\request.py\", line 482, in _open\r\n File \"C:\\Python\\Python34\\lib\\urllib\\request.py\", line 442, in _call_chain\r\n File \"C:\\Users\\dst\\AppData\\Roaming\\Build archive\\youtube-dl\\ytdl-org\\tmpgi7ngq0n\\build\\youtube_dl\\utils.py\", line 2736, in https_open\r\n File \"C:\\Python\\Python34\\lib\\urllib\\request.py\", line 1185, in do_open\r\n```\r\n\r\n\r\n## Description\r\n\r\n<!--\r\nProvide an explanation of your issue in an arbitrary form. Provide any additional information, suggested solution and as much context and examples as possible.\r\nIf work on your issue requires account credentials please provide them or explain how one can obtain them.\r\n-->\r\n\r\n This problem is exsactly same as #26815, but I saw that issue is closed, now the problem is still there, hope you guys can fix it!\r\n\n", "before_files": [{"content": "# coding: utf-8\nfrom __future__ import unicode_literals\n\nfrom .common import InfoExtractor\nfrom ..utils import (\n dict_get,\n int_or_none,\n unified_timestamp,\n)\n\n\nclass URPlayIE(InfoExtractor):\n _VALID_URL = r'https?://(?:www\\.)?ur(?:play|skola)\\.se/(?:program|Produkter)/(?P<id>[0-9]+)'\n _TESTS = [{\n 'url': 'https://urplay.se/program/203704-ur-samtiden-livet-universum-och-rymdens-markliga-musik-om-vetenskap-kritiskt-tankande-och-motstand',\n 'md5': 'ff5b0c89928f8083c74bbd5099c9292d',\n 'info_dict': {\n 'id': '203704',\n 'ext': 'mp4',\n 'title': 'UR Samtiden - Livet, universum och rymdens m\u00e4rkliga musik : 
Om vetenskap, kritiskt t\u00e4nkande och motst\u00e5nd',\n 'description': 'md5:5344508a52aa78c1ced6c1b8b9e44e9a',\n 'timestamp': 1513292400,\n 'upload_date': '20171214',\n },\n }, {\n 'url': 'https://urskola.se/Produkter/190031-Tripp-Trapp-Trad-Sovkudde',\n 'info_dict': {\n 'id': '190031',\n 'ext': 'mp4',\n 'title': 'Tripp, Trapp, Tr\u00e4d : Sovkudde',\n 'description': 'md5:b86bffdae04a7e9379d1d7e5947df1d1',\n 'timestamp': 1440086400,\n 'upload_date': '20150820',\n },\n }, {\n 'url': 'http://urskola.se/Produkter/155794-Smasagor-meankieli-Grodan-i-vida-varlden',\n 'only_matching': True,\n }]\n\n def _real_extract(self, url):\n video_id = self._match_id(url)\n url = url.replace('skola.se/Produkter', 'play.se/program')\n webpage = self._download_webpage(url, video_id)\n urplayer_data = self._parse_json(self._html_search_regex(\n r'data-react-class=\"components/Player/Player\"[^>]+data-react-props=\"({.+?})\"',\n webpage, 'urplayer data'), video_id)['currentProduct']\n episode = urplayer_data['title']\n raw_streaming_info = urplayer_data['streamingInfo']['raw']\n host = self._download_json(\n 'http://streaming-loadbalancer.ur.se/loadbalancer.json',\n video_id)['redirect']\n\n formats = []\n for k, v in raw_streaming_info.items():\n if not (k in ('sd', 'hd') and isinstance(v, dict)):\n continue\n file_http = v.get('location')\n if file_http:\n formats.extend(self._extract_wowza_formats(\n 'http://%s/%splaylist.m3u8' % (host, file_http),\n video_id, skip_protocols=['f4m', 'rtmp', 'rtsp']))\n self._sort_formats(formats)\n\n image = urplayer_data.get('image') or {}\n thumbnails = []\n for k, v in image.items():\n t = {\n 'id': k,\n 'url': v,\n }\n wh = k.split('x')\n if len(wh) == 2:\n t.update({\n 'width': int_or_none(wh[0]),\n 'height': int_or_none(wh[1]),\n })\n thumbnails.append(t)\n\n series = urplayer_data.get('series') or {}\n series_title = dict_get(series, ('seriesTitle', 'title')) or dict_get(urplayer_data, ('seriesTitle', 'mainTitle'))\n\n return {\n 'id': 
video_id,\n 'title': '%s : %s' % (series_title, episode) if series_title else episode,\n 'description': urplayer_data.get('description'),\n 'thumbnails': thumbnails,\n 'timestamp': unified_timestamp(urplayer_data.get('publishedAt')),\n 'series': series_title,\n 'formats': formats,\n 'duration': int_or_none(urplayer_data.get('duration')),\n 'categories': urplayer_data.get('categories'),\n 'tags': urplayer_data.get('keywords'),\n 'season': series.get('label'),\n 'episode': episode,\n 'episode_number': int_or_none(urplayer_data.get('episodeNumber')),\n }\n", "path": "youtube_dl/extractor/urplay.py"}]}

| 3,070 | 236 |

gh_patches_debug_42286

|

rasdani/github-patches

|

git_diff

|

ethereum__web3.py-785

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Better error message on failed encoding

* Version: 4.1.0

### What was wrong?

A field of type `HexBytes` slipped through the pythonic middleware and the web3 provider tried to encode it with json. The only error message was:

> TypeError: Object of type 'HexBytes' is not JSON serializable

### How can it be fixed?

Catch type errors in:

```

~/web3/providers/base.py in encode_rpc_request(self, method, params)

65 "method": method,

---> 66 "params": params or [],

67 "id": next(self.request_counter),

68 }))

69

```

In case of a `TypeError`, inspect `params` and raise another `TypeError` saying which parameter index was failing to json encode, and (if applicable) which key in the `dict` at that index.

</issue>
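
The idea in the issue (catch the `TypeError`, then report which parameter index and which dict key could not be encoded) can be sketched in isolation as follows. This is only an illustrative sketch, not the project's implementation: `Opaque`, `find_unencodable`, and `encode_params` are hypothetical names introduced here, and `Opaque` merely stands in for a value such as `HexBytes` that `json.dumps` rejects.

```python
import json


class Opaque:
    """Hypothetical stand-in for a value that json cannot serialize."""


def find_unencodable(params):
    """Return (index, key) pairs for entries of params that json.dumps rejects.

    key is None when the failing entry is not a dict, or when no single
    dict value could be blamed.
    """
    failures = []
    for index, param in enumerate(params):
        try:
            json.dumps(param)
            continue  # this parameter encodes fine
        except TypeError:
            pass
        bad_keys = []
        if isinstance(param, dict):
            # Probe each value separately to find the offending keys.
            for key, value in param.items():
                try:
                    json.dumps(value)
                except TypeError:
                    bad_keys.append(key)
        if bad_keys:
            failures.extend((index, key) for key in bad_keys)
        else:
            failures.append((index, None))
    return failures


def encode_params(params):
    """Encode params as JSON, re-raising TypeError with the failing positions."""
    try:
        return json.dumps(params)
    except TypeError as exc:
        where = find_unencodable(params)
        raise TypeError(
            "Could not encode params to JSON at (index, key) {0!r}: {1}".format(where, exc)
        ) from exc
```

Calling `encode_params([1, {"data": Opaque()}])` would raise a `TypeError` whose message names index 1 and key `'data'`, while the original exception stays attached as the cause.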

<code>

[start of web3/utils/encoding.py]

1 # String encodings and numeric representations

2 import re

3

4 from eth_utils import (

5 add_0x_prefix,

6 big_endian_to_int,

7 decode_hex,

8 encode_hex,

9 int_to_big_endian,

10 is_boolean,

11 is_bytes,

12 is_hex,

13 is_integer,

14 remove_0x_prefix,

15 to_hex,

16 )

17

18 from web3.utils.abi import (

19 is_address_type,

20 is_array_type,

21 is_bool_type,

22 is_bytes_type,

23 is_int_type,

24 is_string_type,

25 is_uint_type,

26 size_of_type,

27 sub_type_of_array_type,

28 )

29 from web3.utils.toolz import (

30 curry,

31 )

32 from web3.utils.validation import (

33 assert_one_val,

34 validate_abi_type,

35 validate_abi_value,

36 )

37

38

39 def hex_encode_abi_type(abi_type, value, force_size=None):

40 """

41 Encodes value into a hex string in format of abi_type

42 """

43 validate_abi_type(abi_type)

44 validate_abi_value(abi_type, value)

45

46 data_size = force_size or size_of_type(abi_type)

47 if is_array_type(abi_type):

48 sub_type = sub_type_of_array_type(abi_type)

49 return "".join([remove_0x_prefix(hex_encode_abi_type(sub_type, v, 256)) for v in value])

50 elif is_bool_type(abi_type):

51 return to_hex_with_size(value, data_size)

52 elif is_uint_type(abi_type):

53 return to_hex_with_size(value, data_size)

54 elif is_int_type(abi_type):

55 return to_hex_twos_compliment(value, data_size)

56 elif is_address_type(abi_type):

57 return pad_hex(value, data_size)

58 elif is_bytes_type(abi_type):

59 if is_bytes(value):

60 return encode_hex(value)

61 else:

62 return value

63 elif is_string_type(abi_type):

64 return to_hex(text=value)

65 else:

66 raise ValueError(

67 "Unsupported ABI type: {0}".format(abi_type)

68 )

69

70

71 def to_hex_twos_compliment(value, bit_size):

72 """

73 Converts integer value to two's complement hex representation with given bit_size

74 """

75 if value >= 0:

76 return to_hex_with_size(value, bit_size)

77

78 value = (1 << bit_size) + value

79 hex_value = hex(value)

80 hex_value = hex_value.rstrip("L")

81 return hex_value

82

83

84 def to_hex_with_size(value, bit_size):

85 """

86 Converts a value to hex with given bit_size:

87 """

88 return pad_hex(to_hex(value), bit_size)

89

90

91 def pad_hex(value, bit_size):

92 """

93 Pads a hex string up to the given bit_size

94 """

95 value = remove_0x_prefix(value)

96 return add_0x_prefix(value.zfill(int(bit_size / 4)))

97

98

99 def trim_hex(hexstr):

100 if hexstr.startswith('0x0'):

101 hexstr = re.sub('^0x0+', '0x', hexstr)

102 if hexstr == '0x':

103 hexstr = '0x0'

104 return hexstr

105

106

107 def to_int(value=None, hexstr=None, text=None):

108 """

109 Converts value to its integer representation.

110

111 Values are converted this way:

112

113 * value:

114 * bytes: big-endian integer

115 * bool: True => 1, False => 0

116 * hexstr: interpret hex as integer

117 * text: interpret as string of digits, like '12' => 12

118 """

119 assert_one_val(value, hexstr=hexstr, text=text)

120

121 if hexstr is not None:

122 return int(hexstr, 16)

123 elif text is not None:

124 return int(text)

125 elif isinstance(value, bytes):

126 return big_endian_to_int(value)

127 elif isinstance(value, str):

128 raise TypeError("Pass in strings with keyword hexstr or text")

129 else:

130 return int(value)

131

132

133 @curry

134 def pad_bytes(fill_with, num_bytes, unpadded):

135 return unpadded.rjust(num_bytes, fill_with)

136

137

138 zpad_bytes = pad_bytes(b'\0')

139

140

141 def to_bytes(primitive=None, hexstr=None, text=None):

142 assert_one_val(primitive, hexstr=hexstr, text=text)

143

144 if is_boolean(primitive):

145 return b'\x01' if primitive else b'\x00'

146 elif isinstance(primitive, bytes):

147 return primitive

148 elif is_integer(primitive):

149 return to_bytes(hexstr=to_hex(primitive))

150 elif hexstr is not None:

151 if len(hexstr) % 2:

152 hexstr = '0x0' + remove_0x_prefix(hexstr)

153 return decode_hex(hexstr)

154 elif text is not None:

155 return text.encode('utf-8')

156 raise TypeError("expected an int in first arg, or keyword of hexstr or text")

157

158

159 def to_text(primitive=None, hexstr=None, text=None):

160 assert_one_val(primitive, hexstr=hexstr, text=text)

161

162 if hexstr is not None:

163 return to_bytes(hexstr=hexstr).decode('utf-8')

164 elif text is not None:

165 return text

166 elif isinstance(primitive, str):

167 return to_text(hexstr=primitive)

168 elif isinstance(primitive, bytes):

169 return primitive.decode('utf-8')

170 elif is_integer(primitive):

171 byte_encoding = int_to_big_endian(primitive)

172 return to_text(byte_encoding)

173 raise TypeError("Expected an int, bytes or hexstr.")

174

175

176 @curry

177 def text_if_str(to_type, text_or_primitive):

178 '''

179 Convert to a type, assuming that strings can be only unicode text (not a hexstr)

180

181 @param to_type is a function that takes the arguments (primitive, hexstr=hexstr, text=text),

182 eg~ to_bytes, to_text, to_hex, to_int, etc

183 @param text_or_primitive in bytes, str, or int.

184 '''

185 if isinstance(text_or_primitive, str):

186 (primitive, text) = (None, text_or_primitive)

187 else:

188 (primitive, text) = (text_or_primitive, None)

189 return to_type(primitive, text=text)

190

191

192 @curry

193 def hexstr_if_str(to_type, hexstr_or_primitive):

194 '''

195 Convert to a type, assuming that strings can be only hexstr (not unicode text)

196

197 @param to_type is a function that takes the arguments (primitive, hexstr=hexstr, text=text),

198 eg~ to_bytes, to_text, to_hex, to_int, etc

199 @param hexstr_or_primitive in bytes, str, or int.

200 '''

201 if isinstance(hexstr_or_primitive, str):

202 (primitive, hexstr) = (None, hexstr_or_primitive)

203 if remove_0x_prefix(hexstr) and not is_hex(hexstr):

204 raise ValueError(

205 "when sending a str, it must be a hex string. Got: {0!r}".format(

206 hexstr_or_primitive,

207 )

208 )

209 else:

210 (primitive, hexstr) = (hexstr_or_primitive, None)

211 return to_type(primitive, hexstr=hexstr)

212

[end of web3/utils/encoding.py]

[start of web3/providers/__init__.py]

1 from .base import ( # noqa: F401

2 BaseProvider,

3 )

4 from .rpc import HTTPProvider # noqa: F401

5 from .ipc import IPCProvider # noqa: F401

6 from .websocket import WebsocketProvider # noqa: F401

7 from .auto import AutoProvider # noqa: F401

8

[end of web3/providers/__init__.py]

[start of web3/providers/base.py]

1 import itertools

2 import json

3

4 from eth_utils import (

5 to_bytes,

6 to_text,

7 )

8

9 from web3.middleware import (

10 combine_middlewares,

11 )

12

13

14 class BaseProvider:

15 _middlewares = ()

16 _request_func_cache = (None, None) # a tuple of (all_middlewares, request_func)

17

18 @property

19 def middlewares(self):

20 return self._middlewares

21

22 @middlewares.setter

23 def middlewares(self, values):

24 self._middlewares = tuple(values)

25

26 def request_func(self, web3, outer_middlewares):

27 '''

28 @param outer_middlewares is an iterable of middlewares, ordered by first to execute

29 @returns a function that calls all the middleware and eventually self.make_request()

30 '''

31 all_middlewares = tuple(outer_middlewares) + tuple(self.middlewares)

32

33 cache_key = self._request_func_cache[0]

34 if cache_key is None or cache_key != all_middlewares:

35 self._request_func_cache = (

36 all_middlewares,

37 self._generate_request_func(web3, all_middlewares)

38 )

39 return self._request_func_cache[-1]

40

41 def _generate_request_func(self, web3, middlewares):

42 return combine_middlewares(

43 middlewares=middlewares,

44 web3=web3,

45 provider_request_fn=self.make_request,

46 )

47

48 def make_request(self, method, params):

49 raise NotImplementedError("Providers must implement this method")

50

51 def isConnected(self):

52 raise NotImplementedError("Providers must implement this method")

53

54

55 class JSONBaseProvider(BaseProvider):

56 def __init__(self):

57 self.request_counter = itertools.count()

58

59 def decode_rpc_response(self, response):

60 return json.loads(to_text(response))

61

62 def encode_rpc_request(self, method, params):

63 return to_bytes(text=json.dumps({

64 "jsonrpc": "2.0",

65 "method": method,

66 "params": params or [],

67 "id": next(self.request_counter),

68 }))

69

70 def isConnected(self):

71 try:

72 response = self.make_request('web3_clientVersion', [])

73 except IOError:

74 return False

75 else:

76 assert response['jsonrpc'] == '2.0'

77 assert 'error' not in response

78 return True

79 assert False

80

[end of web3/providers/base.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/web3/providers/__init__.py b/web3/providers/__init__.py

--- a/web3/providers/__init__.py

+++ b/web3/providers/__init__.py

@@ -1,6 +1,8 @@

from .base import ( # noqa: F401

BaseProvider,

+ JSONBaseProvider,

)

+

from .rpc import HTTPProvider # noqa: F401

from .ipc import IPCProvider # noqa: F401

from .websocket import WebsocketProvider # noqa: F401

diff --git a/web3/providers/base.py b/web3/providers/base.py

--- a/web3/providers/base.py

+++ b/web3/providers/base.py

@@ -1,5 +1,4 @@

import itertools

-import json

from eth_utils import (

to_bytes,

@@ -9,6 +8,9 @@

from web3.middleware import (

combine_middlewares,

)

+from web3.utils.encoding import (

+ FriendlyJsonSerde,

+)

class BaseProvider:

@@ -57,15 +59,18 @@

self.request_counter = itertools.count()

def decode_rpc_response(self, response):

- return json.loads(to_text(response))

+ text_response = to_text(response)

+ return FriendlyJsonSerde().json_decode(text_response)

def encode_rpc_request(self, method, params):

- return to_bytes(text=json.dumps({

+ rpc_dict = {

"jsonrpc": "2.0",

"method": method,

"params": params or [],

"id": next(self.request_counter),

- }))

+ }

+ encoded = FriendlyJsonSerde().json_encode(rpc_dict)

+ return to_bytes(text=encoded)

def isConnected(self):

try:

diff --git a/web3/utils/encoding.py b/web3/utils/encoding.py

--- a/web3/utils/encoding.py

+++ b/web3/utils/encoding.py

@@ -1,4 +1,5 @@

# String encodings and numeric representations

+import json

import re

from eth_utils import (

@@ -11,6 +12,7 @@

is_bytes,

is_hex,

is_integer,

+ is_list_like,

remove_0x_prefix,

to_hex,

)

@@ -209,3 +211,54 @@

else:

(primitive, hexstr) = (hexstr_or_primitive, None)

return to_type(primitive, hexstr=hexstr)

+

+

+class FriendlyJsonSerde:

+ '''

+ Friendly JSON serializer & deserializer

+

+ When encoding or decoding fails, this class collects

+ information on which fields failed, to show more

+ helpful information in the raised error messages.

+ '''

+ def _json_mapping_errors(self, mapping):

+ for key, val in mapping.items():

+ try:

+ self._friendly_json_encode(val)

+ except TypeError as exc:

+ yield "%r: because (%s)" % (key, exc)

+

+ def _json_list_errors(self, iterable):

+ for index, element in enumerate(iterable):

+ try:

+ self._friendly_json_encode(element)

+ except TypeError as exc:

+ yield "%d: because (%s)" % (index, exc)

+

+ def _friendly_json_encode(self, obj):

+ try:

+ encoded = json.dumps(obj)

+ return encoded

+ except TypeError as full_exception:

+ if hasattr(obj, 'items'):

+ item_errors = '; '.join(self._json_mapping_errors(obj))

+ raise TypeError("dict had unencodable value at keys: {{{}}}".format(item_errors))

+ elif is_list_like(obj):

+ element_errors = '; '.join(self._json_list_errors(obj))

+ raise TypeError("list had unencodable value at index: [{}]".format(element_errors))

+ else:

+ raise full_exception

+

+ def json_decode(self, json_str):

+ try:

+ decoded = json.loads(json_str)

+ return decoded

+ except json.decoder.JSONDecodeError as exc:

+ err_msg = 'Could not decode {} because of {}.'.format(repr(json_str), exc)

+ raise ValueError(err_msg)

+

+ def json_encode(self, obj):

+ try:

+ return self._friendly_json_encode(obj)

+ except TypeError as exc:

+ raise TypeError("Could not encode to JSON: {}".format(exc))

|

{"golden_diff": "diff --git a/web3/providers/__init__.py b/web3/providers/__init__.py\n--- a/web3/providers/__init__.py\n+++ b/web3/providers/__init__.py\n@@ -1,6 +1,8 @@\n from .base import ( # noqa: F401\n BaseProvider,\n+ JSONBaseProvider,\n )\n+\n from .rpc import HTTPProvider # noqa: F401\n from .ipc import IPCProvider # noqa: F401\n from .websocket import WebsocketProvider # noqa: F401\ndiff --git a/web3/providers/base.py b/web3/providers/base.py\n--- a/web3/providers/base.py\n+++ b/web3/providers/base.py\n@@ -1,5 +1,4 @@\n import itertools\n-import json\n \n from eth_utils import (\n to_bytes,\n@@ -9,6 +8,9 @@\n from web3.middleware import (\n combine_middlewares,\n )\n+from web3.utils.encoding import (\n+ FriendlyJsonSerde,\n+)\n \n \n class BaseProvider:\n@@ -57,15 +59,18 @@\n self.request_counter = itertools.count()\n \n def decode_rpc_response(self, response):\n- return json.loads(to_text(response))\n+ text_response = to_text(response)\n+ return FriendlyJsonSerde().json_decode(text_response)\n \n def encode_rpc_request(self, method, params):\n- return to_bytes(text=json.dumps({\n+ rpc_dict = {\n \"jsonrpc\": \"2.0\",\n \"method\": method,\n \"params\": params or [],\n \"id\": next(self.request_counter),\n- }))\n+ }\n+ encoded = FriendlyJsonSerde().json_encode(rpc_dict)\n+ return to_bytes(text=encoded)\n \n def isConnected(self):\n try:\ndiff --git a/web3/utils/encoding.py b/web3/utils/encoding.py\n--- a/web3/utils/encoding.py\n+++ b/web3/utils/encoding.py\n@@ -1,4 +1,5 @@\n # String encodings and numeric representations\n+import json\n import re\n \n from eth_utils import (\n@@ -11,6 +12,7 @@\n is_bytes,\n is_hex,\n is_integer,\n+ is_list_like,\n remove_0x_prefix,\n to_hex,\n )\n@@ -209,3 +211,54 @@\n else:\n (primitive, hexstr) = (hexstr_or_primitive, None)\n return to_type(primitive, hexstr=hexstr)\n+\n+\n+class FriendlyJsonSerde:\n+ '''\n+ Friendly JSON serializer & deserializer\n+\n+ When encoding or decoding fails, this class collects\n+ information 
on which fields failed, to show more\n+ helpful information in the raised error messages.\n+ '''\n+ def _json_mapping_errors(self, mapping):\n+ for key, val in mapping.items():\n+ try:\n+ self._friendly_json_encode(val)\n+ except TypeError as exc:\n+ yield \"%r: because (%s)\" % (key, exc)\n+\n+ def _json_list_errors(self, iterable):\n+ for index, element in enumerate(iterable):\n+ try:\n+ self._friendly_json_encode(element)\n+ except TypeError as exc:\n+ yield \"%d: because (%s)\" % (index, exc)\n+\n+ def _friendly_json_encode(self, obj):\n+ try:\n+ encoded = json.dumps(obj)\n+ return encoded\n+ except TypeError as full_exception:\n+ if hasattr(obj, 'items'):\n+ item_errors = '; '.join(self._json_mapping_errors(obj))\n+ raise TypeError(\"dict had unencodable value at keys: {{{}}}\".format(item_errors))\n+ elif is_list_like(obj):\n+ element_errors = '; '.join(self._json_list_errors(obj))\n+ raise TypeError(\"list had unencodable value at index: [{}]\".format(element_errors))\n+ else:\n+ raise full_exception\n+\n+ def json_decode(self, json_str):\n+ try:\n+ decoded = json.loads(json_str)\n+ return decoded\n+ except json.decoder.JSONDecodeError as exc:\n+ err_msg = 'Could not decode {} because of {}.'.format(repr(json_str), exc)\n+ raise ValueError(err_msg)\n+\n+ def json_encode(self, obj):\n+ try:\n+ return self._friendly_json_encode(obj)\n+ except TypeError as exc:\n+ raise TypeError(\"Could not encode to JSON: {}\".format(exc))\n", "issue": "Better error message on failed encoding\n* Version: 4.1.0\r\n\r\n### What was wrong?\r\n\r\nA field of type `HexBytes` slipped through the pythonic middleware and the web3 provider tried to encode it with json. 
The only error message was:\r\n\r\n> TypeError: Object of type 'HexBytes' is not JSON serializable\r\n\r\n\r\n### How can it be fixed?\r\n\r\nCatch type errors in:\r\n\r\n```\r\n~/web3/providers/base.py in encode_rpc_request(self, method, params)\r\n 65 \"method\": method,\r\n---> 66 \"params\": params or [],\r\n 67 \"id\": next(self.request_counter),\r\n 68 }))\r\n 69\r\n```\r\nIn case of a `TypeError`, inspect `params` and raise another `TypeError` saying which parameter index was failing to json encode, and (if applicable) which key in the `dict` at that index.\n", "before_files": [{"content": "# String encodings and numeric representations\nimport re\n\nfrom eth_utils import (\n add_0x_prefix,\n big_endian_to_int,\n decode_hex,\n encode_hex,\n int_to_big_endian,\n is_boolean,\n is_bytes,\n is_hex,\n is_integer,\n remove_0x_prefix,\n to_hex,\n)\n\nfrom web3.utils.abi import (\n is_address_type,\n is_array_type,\n is_bool_type,\n is_bytes_type,\n is_int_type,\n is_string_type,\n is_uint_type,\n size_of_type,\n sub_type_of_array_type,\n)\nfrom web3.utils.toolz import (\n curry,\n)\nfrom web3.utils.validation import (\n assert_one_val,\n validate_abi_type,\n validate_abi_value,\n)\n\n\ndef hex_encode_abi_type(abi_type, value, force_size=None):\n \"\"\"\n Encodes value into a hex string in format of abi_type\n \"\"\"\n validate_abi_type(abi_type)\n validate_abi_value(abi_type, value)\n\n data_size = force_size or size_of_type(abi_type)\n if is_array_type(abi_type):\n sub_type = sub_type_of_array_type(abi_type)\n return \"\".join([remove_0x_prefix(hex_encode_abi_type(sub_type, v, 256)) for v in value])\n elif is_bool_type(abi_type):\n return to_hex_with_size(value, data_size)\n elif is_uint_type(abi_type):\n return to_hex_with_size(value, data_size)\n elif is_int_type(abi_type):\n return to_hex_twos_compliment(value, data_size)\n elif is_address_type(abi_type):\n return pad_hex(value, data_size)\n elif is_bytes_type(abi_type):\n if is_bytes(value):\n return 
encode_hex(value)\n else:\n return value\n elif is_string_type(abi_type):\n return to_hex(text=value)\n else:\n raise ValueError(\n \"Unsupported ABI type: {0}\".format(abi_type)\n )\n\n\ndef to_hex_twos_compliment(value, bit_size):\n \"\"\"\n Converts integer value to twos compliment hex representation with given bit_size\n \"\"\"\n if value >= 0:\n return to_hex_with_size(value, bit_size)\n\n value = (1 << bit_size) + value\n hex_value = hex(value)\n hex_value = hex_value.rstrip(\"L\")\n return hex_value\n\n\ndef to_hex_with_size(value, bit_size):\n \"\"\"\n Converts a value to hex with given bit_size:\n \"\"\"\n return pad_hex(to_hex(value), bit_size)\n\n\ndef pad_hex(value, bit_size):\n \"\"\"\n Pads a hex string up to the given bit_size\n \"\"\"\n value = remove_0x_prefix(value)\n return add_0x_prefix(value.zfill(int(bit_size / 4)))\n\n\ndef trim_hex(hexstr):\n if hexstr.startswith('0x0'):\n hexstr = re.sub('^0x0+', '0x', hexstr)\n if hexstr == '0x':\n hexstr = '0x0'\n return hexstr\n\n\ndef to_int(value=None, hexstr=None, text=None):\n \"\"\"\n Converts value to it's integer representation.\n\n Values are converted this way:\n\n * value:\n * bytes: big-endian integer\n * bool: True => 1, False => 0\n * hexstr: interpret hex as integer\n * text: interpret as string of digits, like '12' => 12\n \"\"\"\n assert_one_val(value, hexstr=hexstr, text=text)\n\n if hexstr is not None:\n return int(hexstr, 16)\n elif text is not None:\n return int(text)\n elif isinstance(value, bytes):\n return big_endian_to_int(value)\n elif isinstance(value, str):\n raise TypeError(\"Pass in strings with keyword hexstr or text\")\n else:\n return int(value)\n\n\n@curry\ndef pad_bytes(fill_with, num_bytes, unpadded):\n return unpadded.rjust(num_bytes, fill_with)\n\n\nzpad_bytes = pad_bytes(b'\\0')\n\n\ndef to_bytes(primitive=None, hexstr=None, text=None):\n assert_one_val(primitive, hexstr=hexstr, text=text)\n\n if is_boolean(primitive):\n return b'\\x01' if primitive else b'\\x00'\n 
elif isinstance(primitive, bytes):\n return primitive\n elif is_integer(primitive):\n return to_bytes(hexstr=to_hex(primitive))\n elif hexstr is not None:\n if len(hexstr) % 2:\n hexstr = '0x0' + remove_0x_prefix(hexstr)\n return decode_hex(hexstr)\n elif text is not None:\n return text.encode('utf-8')\n raise TypeError(\"expected an int in first arg, or keyword of hexstr or text\")\n\n\ndef to_text(primitive=None, hexstr=None, text=None):\n assert_one_val(primitive, hexstr=hexstr, text=text)\n\n if hexstr is not None:\n return to_bytes(hexstr=hexstr).decode('utf-8')\n elif text is not None:\n return text\n elif isinstance(primitive, str):\n return to_text(hexstr=primitive)\n elif isinstance(primitive, bytes):\n return primitive.decode('utf-8')\n elif is_integer(primitive):\n byte_encoding = int_to_big_endian(primitive)\n return to_text(byte_encoding)\n raise TypeError(\"Expected an int, bytes or hexstr.\")\n\n\n@curry\ndef text_if_str(to_type, text_or_primitive):\n '''\n Convert to a type, assuming that strings can be only unicode text (not a hexstr)\n\n @param to_type is a function that takes the arguments (primitive, hexstr=hexstr, text=text),\n eg~ to_bytes, to_text, to_hex, to_int, etc\n @param hexstr_or_primitive in bytes, str, or int.\n '''\n if isinstance(text_or_primitive, str):\n (primitive, text) = (None, text_or_primitive)\n else:\n (primitive, text) = (text_or_primitive, None)\n return to_type(primitive, text=text)\n\n\n@curry\ndef hexstr_if_str(to_type, hexstr_or_primitive):\n '''\n Convert to a type, assuming that strings can be only hexstr (not unicode text)\n\n @param to_type is a function that takes the arguments (primitive, hexstr=hexstr, text=text),\n eg~ to_bytes, to_text, to_hex, to_int, etc\n @param text_or_primitive in bytes, str, or int.\n '''\n if isinstance(hexstr_or_primitive, str):\n (primitive, hexstr) = (None, hexstr_or_primitive)\n if remove_0x_prefix(hexstr) and not is_hex(hexstr):\n raise ValueError(\n \"when sending a str, it must 
be a hex string. Got: {0!r}\".format(\n hexstr_or_primitive,\n )\n )\n else:\n (primitive, hexstr) = (hexstr_or_primitive, None)\n return to_type(primitive, hexstr=hexstr)\n", "path": "web3/utils/encoding.py"}, {"content": "from .base import ( # noqa: F401\n BaseProvider,\n)\nfrom .rpc import HTTPProvider # noqa: F401\nfrom .ipc import IPCProvider # noqa: F401\nfrom .websocket import WebsocketProvider # noqa: F401\nfrom .auto import AutoProvider # noqa: F401\n", "path": "web3/providers/__init__.py"}, {"content": "import itertools\nimport json\n\nfrom eth_utils import (\n to_bytes,\n to_text,\n)\n\nfrom web3.middleware import (\n combine_middlewares,\n)\n\n\nclass BaseProvider:\n _middlewares = ()\n _request_func_cache = (None, None) # a tuple of (all_middlewares, request_func)\n\n @property\n def middlewares(self):\n return self._middlewares\n\n @middlewares.setter\n def middlewares(self, values):\n self._middlewares = tuple(values)\n\n def request_func(self, web3, outer_middlewares):\n '''\n @param outer_middlewares is an iterable of middlewares, ordered by first to execute\n @returns a function that calls all the middleware and eventually self.make_request()\n '''\n all_middlewares = tuple(outer_middlewares) + tuple(self.middlewares)\n\n cache_key = self._request_func_cache[0]\n if cache_key is None or cache_key != all_middlewares:\n self._request_func_cache = (\n all_middlewares,\n self._generate_request_func(web3, all_middlewares)\n )\n return self._request_func_cache[-1]\n\n def _generate_request_func(self, web3, middlewares):\n return combine_middlewares(\n middlewares=middlewares,\n web3=web3,\n provider_request_fn=self.make_request,\n )\n\n def make_request(self, method, params):\n raise NotImplementedError(\"Providers must implement this method\")\n\n def isConnected(self):\n raise NotImplementedError(\"Providers must implement this method\")\n\n\nclass JSONBaseProvider(BaseProvider):\n def __init__(self):\n self.request_counter = itertools.count()\n\n def 
decode_rpc_response(self, response):\n return json.loads(to_text(response))\n\n def encode_rpc_request(self, method, params):\n return to_bytes(text=json.dumps({\n \"jsonrpc\": \"2.0\",\n \"method\": method,\n \"params\": params or [],\n \"id\": next(self.request_counter),\n }))\n\n def isConnected(self):\n try:\n response = self.make_request('web3_clientVersion', [])\n except IOError:\n return False\n else:\n assert response['jsonrpc'] == '2.0'\n assert 'error' not in response\n return True\n assert False\n", "path": "web3/providers/base.py"}]}

| 3,584 | 965 |

gh_patches_debug_36645

|

rasdani/github-patches

|

git_diff

|

scikit-hep__awkward-2029

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Checking isinstance of a Protocol is slow

### Version of Awkward Array

2.0.2

### Description and code to reproduce

In a profiling run of a coffea processor with awkward2 (eager mode) I found that 20% of the time was spent in the following line:

https://github.com/scikit-hep/awkward/blob/7e6f504c3cb0310cdbe0be7b5d662722ee73aaa7/src/awkward/contents/content.py#L94

This instance check would normally be very fast but I suspect because the type is a `@runtime_checkable` protocol, it is doing more work.

https://github.com/scikit-hep/awkward/blob/7e6f504c3cb0310cdbe0be7b5d662722ee73aaa7/src/awkward/_backends.py#L42-L45

Perhaps there is a way to have it first check the class `__mro__` and then fall back to the protocol?

If this time is removed from the profile, the remaining time is in line with what I get running the same processor in awkward 1.

</issue>
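
The difference the issue describes can be reproduced outside Awkward with a small, self-contained sketch. Everything below is hypothetical and only mirrors the shape of the `Backend` protocol: `BackendProtocol`, `BackendABC`, `StructuralBackend`, `NominalBackend`, and `time_checks` are names invented for this illustration. A `@runtime_checkable` protocol is checked structurally, member by member, whereas an abstract base class is checked through the class hierarchy. Using an ABC is shown only as one possible alternative, not as the fix the project adopted.

```python
import timeit
from abc import ABC, abstractmethod
from typing import Protocol, runtime_checkable


@runtime_checkable
class BackendProtocol(Protocol):
    """Hypothetical structural interface, checked member by member."""

    name: str

    @property
    def nplike(self): ...


class BackendABC(ABC):
    """Hypothetical nominal interface, checked through the class hierarchy."""

    name: str

    @property
    @abstractmethod
    def nplike(self): ...


class StructuralBackend:
    # Does not inherit from BackendProtocol; it only has the right members.
    name = "cpu"

    @property
    def nplike(self):
        return "numpy"


class NominalBackend(BackendABC):
    name = "cpu"

    @property
    def nplike(self):
        return "numpy"


def time_checks(number=10_000):
    """Return (protocol_seconds, abc_seconds) for `number` isinstance calls each."""
    structural, nominal = StructuralBackend(), NominalBackend()
    protocol_seconds = timeit.timeit(
        lambda: isinstance(structural, BackendProtocol), number=number
    )
    abc_seconds = timeit.timeit(
        lambda: isinstance(nominal, BackendABC), number=number
    )
    return protocol_seconds, abc_seconds


if __name__ == "__main__":
    print("protocol: %.4fs, abc: %.4fs" % time_checks())
```

The absolute timings depend on the Python version and machine, so no expected numbers are given here. The sketch is only meant to make the two kinds of check easy to compare locally.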

<code>

[start of src/awkward/_backends.py]

1 from __future__ import annotations
2
3 from abc import abstractmethod
4
5 import awkward_cpp
6
7 import awkward as ak
8 from awkward._nplikes import (
9     Cupy,
10     CupyKernel,
11     Jax,
12     JaxKernel,
13     Numpy,
14     NumpyKernel,
15     NumpyLike,
16     NumpyMetadata,
17     Singleton,
18     nplike_of,
19 )
20 from awkward._typetracer import NoKernel, TypeTracer
21 from awkward.typing import (
22     Any,
23     Callable,
24     Final,
25     Protocol,
26     Self,
27     Tuple,
28     TypeAlias,
29     TypeVar,
30     Unpack,
31     runtime_checkable,
32 )
33
34 np = NumpyMetadata.instance()
35
36
37 T = TypeVar("T", covariant=True)
38 KernelKeyType: TypeAlias = Tuple[str, Unpack[Tuple[np.dtype, ...]]]
39 KernelType: TypeAlias = Callable[..., None]
40
41
42 @runtime_checkable
43 class Backend(Protocol[T]):
44     name: str
45
46     @property
47     @abstractmethod
48     def nplike(self) -> NumpyLike:
49         raise ak._errors.wrap_error(NotImplementedError)
50
51     @property
52     @abstractmethod
53     def index_nplike(self) -> NumpyLike:
54         raise ak._errors.wrap_error(NotImplementedError)
55
56     @classmethod
57     @abstractmethod
58     def instance(cls) -> Self:
59         raise ak._errors.wrap_error(NotImplementedError)
60
61     def __getitem__(self, key: KernelKeyType) -> KernelType:
62         raise ak._errors.wrap_error(NotImplementedError)
63
64
65 class NumpyBackend(Singleton, Backend[Any]):
66     name: Final[str] = "cpu"
67
68     _numpy: Numpy
69
70     @property
71     def nplike(self) -> Numpy:
72         return self._numpy
73
74     @property
75     def index_nplike(self) -> Numpy:
76         return self._numpy
77
78     def __init__(self):
79         self._numpy = Numpy.instance()
80
81     def __getitem__(self, index: KernelKeyType) -> NumpyKernel:
82         return NumpyKernel(awkward_cpp.cpu_kernels.kernel[index], index)
83
84
85 class CupyBackend(Singleton, Backend[Any]):
86     name: Final[str] = "cuda"
87
88     _cupy: Cupy
89
90     @property
91     def nplike(self) -> Cupy:
92         return self._cupy
93
94     @property
95     def index_nplike(self) -> Cupy:
96         return self._cupy
97
98     def __init__(self):
99         self._cupy = Cupy.instance()
100
101     def __getitem__(self, index: KernelKeyType) -> CupyKernel | NumpyKernel:
102         from awkward._connect import cuda
103
104         cupy = cuda.import_cupy("Awkward Arrays with CUDA")
105         _cuda_kernels = cuda.initialize_cuda_kernels(cupy)
106         func = _cuda_kernels[index]
107         if func is not None:
108             return CupyKernel(func, index)
109         else:
110             raise ak._errors.wrap_error(
111                 AssertionError(f"CuPyKernel not found: {index!r}")
112             )
113
114
115 class JaxBackend(Singleton, Backend[Any]):
116     name: Final[str] = "jax"
117
118     _jax: Jax
119     _numpy: Numpy
120
121     @property
122     def nplike(self) -> Jax:
123         return self._jax
124
125     @property
126     def index_nplike(self) -> Numpy:
127         return self._numpy
128
129     def __init__(self):
130         self._jax = Jax.instance()
131         self._numpy = Numpy.instance()
132
133     def __getitem__(self, index: KernelKeyType) -> JaxKernel:
134         # JAX uses Awkward's C++ kernels for index-only operations
135         return JaxKernel(awkward_cpp.cpu_kernels.kernel[index], index)
136
137
138 class TypeTracerBackend(Singleton, Backend[Any]):
139     name: Final[str] = "typetracer"
140
141     _typetracer: TypeTracer
142
143     @property
144     def nplike(self) -> TypeTracer:
145         return self._typetracer
146
147     @property
148     def index_nplike(self) -> TypeTracer:
149         return self._typetracer
150
151     def __init__(self):
152         self._typetracer = TypeTracer.instance()
153
154     def __getitem__(self, index: KernelKeyType) -> NoKernel:
155         return NoKernel(index)
156
157
158 def _backend_for_nplike(nplike: ak._nplikes.NumpyLike) -> Backend:
159     # Currently there exists a one-to-one relationship between the nplike
160     # and the backend. In future, this might need refactoring
161     if isinstance(nplike, Numpy):
162         return NumpyBackend.instance()
163     elif isinstance(nplike, Cupy):
164         return CupyBackend.instance()
165     elif isinstance(nplike, Jax):
166         return JaxBackend.instance()
167     elif isinstance(nplike, TypeTracer):
168         return TypeTracerBackend.instance()
169     else:
170         raise ak._errors.wrap_error(ValueError("unrecognised nplike", nplike))
171
172
173 _UNSET = object()
174 D = TypeVar("D")
175
176
177 def backend_of(*objects, default: D = _UNSET) -> Backend | D:
178     """
179     Args:
180         objects: objects for which to find a suitable backend
181         default: value to return if no backend is found.
182
183     Return the most suitable backend for the given objects (e.g. arrays, layouts). If no
184     suitable backend is found, return the `default` value, or raise a `ValueError` if
185     no default is given.
186     """
187     nplike = nplike_of(*objects, default=None)
188     if nplike is not None:
189         return _backend_for_nplike(nplike)
190     elif default is _UNSET:
191         raise ak._errors.wrap_error(ValueError("could not find backend for", objects))
192     else:
193         return default
194
195
196 _backends: Final[dict[str, type[Backend]]] = {
197     b.name: b for b in (NumpyBackend, CupyBackend, JaxBackend, TypeTracerBackend)
198 }
199
200
201 def regularize_backend(backend: str | Backend) -> Backend:
202     if isinstance(backend, Backend):
203         return backend
204     elif backend in _backends:
205         return _backends[backend].instance()
206     else:
207         raise ak._errors.wrap_error(ValueError(f"No such backend {backend!r} exists."))

[end of src/awkward/_backends.py]

</code>
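The listing above declares `Backend` as a `runtime_checkable` `Protocol`, and `regularize_backend` tests it with `isinstance`. The Python `typing` documentation notes that such checks can be noticeably slower than `isinstance` against an ordinary class. The following is a minimal sketch, assuming that this check is the kind of overhead the issue describes. It compares the two styles on stand-in classes. The names `ProtoBackend`, `AbcBackend`, `ConcreteProto`, `ConcreteAbc`, and `time_checks` are hypothetical and are not part of Awkward Array.

```python
import timeit
from abc import ABC, abstractmethod
from typing import Protocol, runtime_checkable


@runtime_checkable
class ProtoBackend(Protocol):
    """Hypothetical stand-in for a Protocol-based backend interface."""

    name: str

    @property
    def nplike(self): ...

    def __getitem__(self, key): ...


class AbcBackend(ABC):
    """Hypothetical stand-in for an ABC-based backend interface."""

    name: str

    @property
    @abstractmethod
    def nplike(self): ...

    @abstractmethod
    def __getitem__(self, key): ...


class ConcreteProto(ProtoBackend):
    name = "cpu"

    @property
    def nplike(self):
        return None

    def __getitem__(self, key):
        return None


class ConcreteAbc(AbcBackend):
    name = "cpu"

    @property
    def nplike(self):
        return None

    def __getitem__(self, key):
        return None


def time_checks(number=100_000):
    """Return (protocol_seconds, abc_seconds) for `number` isinstance calls each."""
    proto, abc_obj = ConcreteProto(), ConcreteAbc()
    t_proto = timeit.timeit(lambda: isinstance(proto, ProtoBackend), number=number)
    t_abc = timeit.timeit(lambda: isinstance(abc_obj, AbcBackend), number=number)
    return t_proto, t_abc


if __name__ == "__main__":
    t_proto, t_abc = time_checks()
    print(f"Protocol isinstance: {t_proto:.4f} s, ABC isinstance: {t_abc:.4f} s")
```

The absolute timings depend on the Python version and the machine, so the sketch prints both numbers rather than asserting which is smaller.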

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/src/awkward/_backends.py b/src/awkward/_backends.py
--- a/src/awkward/_backends.py
+++ b/src/awkward/_backends.py
@@ -1,6 +1,6 @@
 from __future__ import annotations
 
-from abc import abstractmethod
+from abc import ABC, abstractmethod
 
 import awkward_cpp
 
@@ -18,18 +18,7 @@
     nplike_of,
 )
 from awkward._typetracer import NoKernel, TypeTracer
-from awkward.typing import (
-    Any,
-    Callable,
-    Final,
-    Protocol,
-    Self,
-    Tuple,
-    TypeAlias,
-    TypeVar,
-    Unpack,
-    runtime_checkable,
-)
+from awkward.typing import Callable, Final, Tuple, TypeAlias, TypeVar, Unpack
 
 np = NumpyMetadata.instance()
 
@@ -39,8 +28,7 @@
 KernelType: TypeAlias = Callable[..., None]
 
 
-@runtime_checkable
-class Backend(Protocol[T]):
+class Backend(Singleton, ABC):
     name: str
 
     @property
@@ -53,16 +41,11 @@
     def index_nplike(self) -> NumpyLike:
         raise ak._errors.wrap_error(NotImplementedError)
 
-    @classmethod
-    @abstractmethod
-    def instance(cls) -> Self:
-        raise ak._errors.wrap_error(NotImplementedError)
-
     def __getitem__(self, key: KernelKeyType) -> KernelType:
         raise ak._errors.wrap_error(NotImplementedError)
 
 
-class NumpyBackend(Singleton, Backend[Any]):
+class NumpyBackend(Backend):
     name: Final[str] = "cpu"
 
     _numpy: Numpy
@@ -82,7 +65,7 @@
         return NumpyKernel(awkward_cpp.cpu_kernels.kernel[index], index)
 
 
-class CupyBackend(Singleton, Backend[Any]):
+class CupyBackend(Backend):
     name: Final[str] = "cuda"
 
     _cupy: Cupy
@@ -112,7 +95,7 @@
             )
 
 
-class JaxBackend(Singleton, Backend[Any]):
+class JaxBackend(Backend):
     name: Final[str] = "jax"
 
     _jax: Jax
@@ -135,7 +118,7 @@
         return JaxKernel(awkward_cpp.cpu_kernels.kernel[index], index)
 
 
-class TypeTracerBackend(Singleton, Backend[Any]):
+class TypeTracerBackend(Backend):
     name: Final[str] = "typetracer"
 
     _typetracer: TypeTracer

|