problem_id (string, 18-22 chars) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, 13-58 chars) | prompt (string, 1.71k-18.9k chars) | golden_diff (string, 145-5.13k chars) | verification_info (string, 465-23.6k chars) | num_tokens_prompt (int64, 556-4.1k) | num_tokens_diff (int64, 47-1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_54968 | rasdani/github-patches | git_diff | fedora-infra__bodhi-4148 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Crash in automatic update handler when submitting work_on_bugs_task

From bodhi-consumer logs:

```

2020-10-25 11:17:14,460 INFO [fedora_messaging.twisted.protocol][MainThread] Consuming message from topic org.fedoraproject.prod.buildsys.tag (message id c2d97737-444f-49b4-b4ca-1efb3a05e941)

2020-10-25 11:17:14,463 INFO [bodhi][PoolThread-twisted.internet.reactor-1] Received message from fedora-messaging with topic: org.fedoraproject.prod.buildsys.tag

2020-10-25 11:17:14,463 INFO [bodhi][PoolThread-twisted.internet.reactor-1] ginac-1.7.9-5.fc34 tagged into f34-updates-candidate

2020-10-25 11:17:14,469 INFO [bodhi][PoolThread-twisted.internet.reactor-1] Build was not submitted, skipping

2020-10-25 11:17:14,838 INFO [bodhi.server][PoolThread-twisted.internet.reactor-1] Sending mail to [email protected]: [Fedora Update] [comment] ginac-1.7.9-5.fc34

2020-10-25 11:17:15,016 ERROR [bodhi][PoolThread-twisted.internet.reactor-1] Instance <Update at 0x7fa3740f5910> is not bound to a Session; attribute refresh operation cannot proceed (Background on this error at: http://sqlalche.me/e/13/bhk3): Unable to handle message in Automatic Update handler: Id: c2d97737-444f-49b4-b4ca-1efb3a05e941

Topic: org.fedoraproject.prod.buildsys.tag

Headers: {

"fedora_messaging_schema": "base.message",

"fedora_messaging_severity": 20,

"sent-at": "2020-10-25T11:17:14+00:00"

}

Body: {

"build_id": 1634116,

"instance": "primary",

"name": "ginac",

"owner": "---",

"release": "5.fc34",

"tag": "f34-updates-candidate",

"tag_id": 27040,

"user": "---",

"version": "1.7.9"

}

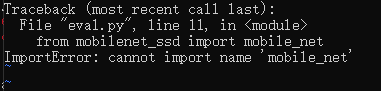

Traceback (most recent call last):

File "/usr/local/lib/python3.8/site-packages/bodhi/server/consumers/__init__.py", line 79, in __call__

handler_info.handler(msg)

File "/usr/local/lib/python3.8/site-packages/bodhi/server/consumers/automatic_updates.py", line 197, in __call__

alias = update.alias

File "/usr/lib64/python3.8/site-packages/sqlalchemy/orm/attributes.py", line 287, in __get__

return self.impl.get(instance_state(instance), dict_)

File "/usr/lib64/python3.8/site-packages/sqlalchemy/orm/attributes.py", line 718, in get

value = state._load_expired(state, passive)

File "/usr/lib64/python3.8/site-packages/sqlalchemy/orm/state.py", line 652, in _load_expired

self.manager.deferred_scalar_loader(self, toload)

File "/usr/lib64/python3.8/site-packages/sqlalchemy/orm/loading.py", line 944, in load_scalar_attributes

raise orm_exc.DetachedInstanceError(

sqlalchemy.orm.exc.DetachedInstanceError: Instance <Update at 0x7fa3740f5910> is not bound to a Session; attribute refresh operation cannot proceed (Background on this error at: http://sqlalche.me/e/13/bhk3 )

2020-10-25 11:17:15,053 WARNI [fedora_messaging.twisted.protocol][MainThread] Returning message id c2d97737-444f-49b4-b4ca-1efb3a05e941 to the queue

```
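The traceback above is consistent with an ORM attribute being read after its session scope has already ended. The following is a toy model of that situation, not Bodhi or SQLAlchemy code: `ToyRecord`, `toy_session`, `DetachedError`, and the alias value are all hypothetical names made up for illustration, and the sketch assumes that leaving the scope expires attributes and closes the session.

```python
from contextlib import contextmanager


class DetachedError(Exception):
    """Toy stand-in for an ORM 'detached instance' error (hypothetical)."""


class ToyRecord:
    """Hypothetical ORM-like object whose attributes expire when the scope ends."""

    def __init__(self, alias):
        self._alias = alias
        self._session_open = True
        self._expired = False

    @property
    def alias(self):
        # An expired attribute needs a refresh, and a refresh needs a live session.
        if self._expired and not self._session_open:
            raise DetachedError("record is not bound to a session")
        return self._alias


@contextmanager
def toy_session(record):
    """Hypothetical session scope: on exit, expire attributes and close."""
    try:
        yield record
    finally:
        record._expired = True
        record._session_open = False


def read_after_close():
    record = ToyRecord("EXAMPLE-ALIAS")  # made-up alias value
    with toy_session(record):
        pass
    return record.alias  # attribute access after the scope ended: raises


def read_before_close():
    record = ToyRecord("EXAMPLE-ALIAS")  # made-up alias value
    with toy_session(record):
        alias = record.alias  # copy the plain string while the session is live
    return alias  # a plain value, safe to hand to a background task
```

Under these assumptions, copying the needed value into a local variable inside the scope and passing only that value onward avoids touching the detached object later.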

</issue>

<code>

[start of bodhi/server/consumers/automatic_updates.py]

1 # Copyright © 2019 Red Hat, Inc. and others.

2 #

3 # This file is part of Bodhi.

4 #

5 # This program is free software; you can redistribute it and/or

6 # modify it under the terms of the GNU General Public License

7 # as published by the Free Software Foundation; either version 2

8 # of the License, or (at your option) any later version.

9 #

10 # This program is distributed in the hope that it will be useful,

11 # but WITHOUT ANY WARRANTY; without even the implied warranty of

12 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

13 # GNU General Public License for more details.

14 #

15 # You should have received a copy of the GNU General Public License along with

16 # this program; if not, write to the Free Software Foundation, Inc., 51

17 # Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.

18 """

19 The Bodhi handler that creates updates automatically from tagged builds.

20

21 This module is responsible for the process of creating updates when builds are

22 tagged with certain tags.

23 """

24

25 import logging

26 import re

27

28 import fedora_messaging

29

30 from bodhi.server import buildsys

31 from bodhi.server.config import config

32 from bodhi.server.models import (

33 Bug, Build, ContentType, Package, Release, Update, UpdateStatus, UpdateType, User)

34 from bodhi.server.tasks import work_on_bugs_task

35 from bodhi.server.util import transactional_session_maker

36

37 log = logging.getLogger('bodhi')

38

39

40 class AutomaticUpdateHandler:

41 """

42 The Bodhi Automatic Update Handler.

43

44 A consumer that listens for messages about tagged builds and creates

45 updates from them.

46 """

47

48 def __init__(self, db_factory: transactional_session_maker = None):

49 """

50 Initialize the Automatic Update Handler.

51

52 Args:

53 db_factory: If given, used as the db_factory for this handler. If

54 None (the default), a new TransactionalSessionMaker is created and

55 used.

56 """

57 if not db_factory:

58 self.db_factory = transactional_session_maker()

59 else:

60 self.db_factory = db_factory

61

62 def __call__(self, message: fedora_messaging.api.Message) -> None:

63 """Create updates from appropriately tagged builds.

64

65 Args:

66 message: The message we are processing.

67 """

68 body = message.body

69

70 missing = []

71 for mandatory in ('tag', 'build_id', 'name', 'version', 'release'):

72 if mandatory not in body:

73 missing.append(mandatory)

74 if missing:

75 log.debug(f"Received incomplete tag message. Missing: {', '.join(missing)}")

76 return

77

78 btag = body['tag']

79 bnvr = '{name}-{version}-{release}'.format(**body)

80

81 koji = buildsys.get_session()

82

83 kbuildinfo = koji.getBuild(bnvr)

84 if not kbuildinfo:

85 log.debug(f"Can't find Koji build for {bnvr}.")

86 return

87

88 if 'nvr' not in kbuildinfo:

89 log.debug(f"Koji build info for {bnvr} doesn't contain 'nvr'.")

90 return

91

92 if 'owner_name' not in kbuildinfo:

93 log.debug(f"Koji build info for {bnvr} doesn't contain 'owner_name'.")

94 return

95

96 if kbuildinfo['owner_name'] in config.get('automatic_updates_blacklist'):

97 log.debug(f"{bnvr} owned by {kbuildinfo['owner_name']} who is listed in "

98 "automatic_updates_blacklist, skipping.")

99 return

100

101 # some APIs want the Koji build info, some others want the same

102 # wrapped in a larger (request?) structure

103 rbuildinfo = {

104 'info': kbuildinfo,

105 'nvr': kbuildinfo['nvr'].rsplit('-', 2),

106 }

107

108 with self.db_factory() as dbsession:

109 rel = dbsession.query(Release).filter_by(create_automatic_updates=True,

110 candidate_tag=btag).first()

111 if not rel:

112 log.debug(f"Ignoring build being tagged into {btag!r}, no release configured for "

113 "automatic updates for it found.")

114 return

115

116 bcls = ContentType.infer_content_class(Build, kbuildinfo)

117 build = bcls.get(bnvr)

118 if build and build.update:

119 log.info(f"Build, active update for {bnvr} exists already, skipping.")

120 return

121

122 if not build:

123 log.debug(f"Build for {bnvr} doesn't exist yet, creating.")

124

125 # Package.get_or_create() infers content type already

126 log.debug("Getting/creating related package object.")

127 pkg = Package.get_or_create(dbsession, rbuildinfo)

128

129 log.debug("Creating build object, adding it to the DB.")

130 build = bcls(nvr=bnvr, package=pkg, release=rel)

131 dbsession.add(build)

132

133 owner_name = kbuildinfo['owner_name']

134 user = User.get(owner_name)

135 if not user:

136 log.debug(f"Creating bodhi user for '{owner_name}'.")

137 # Leave email, groups blank, these will be filled

138 # in or updated when they log into Bodhi next time, see

139 # bodhi.server.security:remember_me().

140 user = User(name=owner_name)

141 dbsession.add(user)

142

143 log.debug(f"Creating new update for {bnvr}.")

144 changelog = build.get_changelog(lastupdate=True)

145 closing_bugs = []

146 if changelog:

147 log.debug("Adding changelog to update notes.")

148 notes = f"""Automatic update for {bnvr}.

149

150 ##### **Changelog**

151

152 ```

153 {changelog}

154 ```"""

155

156 for b in re.finditer(config.get('bz_regex'), changelog, re.IGNORECASE):

157 idx = int(b.group(1))

158 log.debug(f'Adding bug #{idx} to the update.')

159 bug = Bug.get(idx)

160 if bug is None:

161 bug = Bug(bug_id=idx)

162 dbsession.add(bug)

163 dbsession.flush()

164 if bug not in closing_bugs:

165 closing_bugs.append(bug)

166 else:

167 notes = f"Automatic update for {bnvr}."

168 update = Update(

169 release=rel,

170 builds=[build],

171 bugs=closing_bugs,

172 notes=notes,

173 type=UpdateType.unspecified,

174 stable_karma=3,

175 unstable_karma=-3,

176 autokarma=False,

177 user=user,

178 status=UpdateStatus.pending,

179 )

180

181 # Comment on the update that it was automatically created.

182 update.comment(

183 dbsession,

184 str("This update was automatically created"),

185 author="bodhi",

186 )

187

188 update.add_tag(update.release.pending_signing_tag)

189

190 log.debug("Adding new update to the database.")

191 dbsession.add(update)

192

193 log.debug("Flushing changes to the database.")

194 dbsession.flush()

195

196 # Obsolete older updates which may be stuck in testing due to failed gating

197 try:

198 update.obsolete_older_updates(dbsession)

199 except Exception as e:

200 log.error(f'Problem obsoleting older updates: {e}')

201

202 # This must be run after dbsession is closed so changes are committed to db

203 alias = update.alias

204 work_on_bugs_task.delay(alias, closing_bugs)

205

[end of bodhi/server/consumers/automatic_updates.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/bodhi/server/consumers/automatic_updates.py b/bodhi/server/consumers/automatic_updates.py

--- a/bodhi/server/consumers/automatic_updates.py

+++ b/bodhi/server/consumers/automatic_updates.py

@@ -199,6 +199,7 @@

except Exception as e:

log.error(f'Problem obsoleting older updates: {e}')

+ alias = update.alias

+

# This must be run after dbsession is closed so changes are committed to db

- alias = update.alias

work_on_bugs_task.delay(alias, closing_bugs)

| {"golden_diff": "diff --git a/bodhi/server/consumers/automatic_updates.py b/bodhi/server/consumers/automatic_updates.py\n--- a/bodhi/server/consumers/automatic_updates.py\n+++ b/bodhi/server/consumers/automatic_updates.py\n@@ -199,6 +199,7 @@\n except Exception as e:\n log.error(f'Problem obsoleting older updates: {e}')\n \n+ alias = update.alias\n+\n # This must be run after dbsession is closed so changes are committed to db\n- alias = update.alias\n work_on_bugs_task.delay(alias, closing_bugs)\n", "issue": "Crash in automatic update handler when submitting work_on_bugs_task\nFrom bodhi-consumer logs:\r\n```\r\n2020-10-25 11:17:14,460 INFO [fedora_messaging.twisted.protocol][MainThread] Consuming message from topic org.fedoraproject.prod.buildsys.tag (message id c2d97737-444f-49b4-b4ca-1efb3a05e941)\r\n2020-10-25 11:17:14,463 INFO [bodhi][PoolThread-twisted.internet.reactor-1] Received message from fedora-messaging with topic: org.fedoraproject.prod.buildsys.tag\r\n2020-10-25 11:17:14,463 INFO [bodhi][PoolThread-twisted.internet.reactor-1] ginac-1.7.9-5.fc34 tagged into f34-updates-candidate\r\n2020-10-25 11:17:14,469 INFO [bodhi][PoolThread-twisted.internet.reactor-1] Build was not submitted, skipping\r\n2020-10-25 11:17:14,838 INFO [bodhi.server][PoolThread-twisted.internet.reactor-1] Sending mail to [email protected]: [Fedora Update] [comment] ginac-1.7.9-5.fc34\r\n2020-10-25 11:17:15,016 ERROR [bodhi][PoolThread-twisted.internet.reactor-1] Instance <Update at 0x7fa3740f5910> is not bound to a Session; attribute refresh operation cannot proceed (Background on this error at: http://sqlalche.me/e/13/bhk3): Unable to handle message in Automatic Update handler: Id: c2d97737-444f-49b4-b4ca-1efb3a05e941\r\nTopic: org.fedoraproject.prod.buildsys.tag\r\nHeaders: {\r\n \"fedora_messaging_schema\": \"base.message\",\r\n \"fedora_messaging_severity\": 20,\r\n \"sent-at\": \"2020-10-25T11:17:14+00:00\"\r\n}\r\nBody: {\r\n \"build_id\": 1634116,\r\n \"instance\": 
\"primary\",\r\n \"name\": \"ginac\",\r\n \"owner\": \"---\",\r\n \"release\": \"5.fc34\",\r\n \"tag\": \"f34-updates-candidate\",\r\n \"tag_id\": 27040,\r\n \"user\": \"---\",\r\n \"version\": \"1.7.9\"\r\n}\r\nTraceback (most recent call last):\r\n File \"/usr/local/lib/python3.8/site-packages/bodhi/server/consumers/__init__.py\", line 79, in __call__\r\n handler_info.handler(msg)\r\n File \"/usr/local/lib/python3.8/site-packages/bodhi/server/consumers/automatic_updates.py\", line 197, in __call__\r\n alias = update.alias\r\n File \"/usr/lib64/python3.8/site-packages/sqlalchemy/orm/attributes.py\", line 287, in __get__\r\n return self.impl.get(instance_state(instance), dict_)\r\n File \"/usr/lib64/python3.8/site-packages/sqlalchemy/orm/attributes.py\", line 718, in get\r\n value = state._load_expired(state, passive)\r\n File \"/usr/lib64/python3.8/site-packages/sqlalchemy/orm/state.py\", line 652, in _load_expired\r\n self.manager.deferred_scalar_loader(self, toload)\r\n File \"/usr/lib64/python3.8/site-packages/sqlalchemy/orm/loading.py\", line 944, in load_scalar_attributes\r\n raise orm_exc.DetachedInstanceError(\r\nsqlalchemy.orm.exc.DetachedInstanceError: Instance <Update at 0x7fa3740f5910> is not bound to a Session; attribute refresh operation cannot proceed (Background on this error at: http://sqlalche.me/e/13/bhk3 )\r\n2020-10-25 11:17:15,053 WARNI [fedora_messaging.twisted.protocol][MainThread] Returning message id c2d97737-444f-49b4-b4ca-1efb3a05e941 to the queue\r\n```\n", "before_files": [{"content": "# Copyright \u00a9 2019 Red Hat, Inc. 
and others.\n#\n# This file is part of Bodhi.\n#\n# This program is free software; you can redistribute it and/or\n# modify it under the terms of the GNU General Public License\n# as published by the Free Software Foundation; either version 2\n# of the License, or (at your option) any later version.\n#\n# This program is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License along with\n# this program; if not, write to the Free Software Foundation, Inc., 51\n# Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.\n\"\"\"\nThe Bodhi handler that creates updates automatically from tagged builds.\n\nThis module is responsible for the process of creating updates when builds are\ntagged with certain tags.\n\"\"\"\n\nimport logging\nimport re\n\nimport fedora_messaging\n\nfrom bodhi.server import buildsys\nfrom bodhi.server.config import config\nfrom bodhi.server.models import (\n Bug, Build, ContentType, Package, Release, Update, UpdateStatus, UpdateType, User)\nfrom bodhi.server.tasks import work_on_bugs_task\nfrom bodhi.server.util import transactional_session_maker\n\nlog = logging.getLogger('bodhi')\n\n\nclass AutomaticUpdateHandler:\n \"\"\"\n The Bodhi Automatic Update Handler.\n\n A consumer that listens for messages about tagged builds and creates\n updates from them.\n \"\"\"\n\n def __init__(self, db_factory: transactional_session_maker = None):\n \"\"\"\n Initialize the Automatic Update Handler.\n\n Args:\n db_factory: If given, used as the db_factory for this handler. 
If\n None (the default), a new TransactionalSessionMaker is created and\n used.\n \"\"\"\n if not db_factory:\n self.db_factory = transactional_session_maker()\n else:\n self.db_factory = db_factory\n\n def __call__(self, message: fedora_messaging.api.Message) -> None:\n \"\"\"Create updates from appropriately tagged builds.\n\n Args:\n message: The message we are processing.\n \"\"\"\n body = message.body\n\n missing = []\n for mandatory in ('tag', 'build_id', 'name', 'version', 'release'):\n if mandatory not in body:\n missing.append(mandatory)\n if missing:\n log.debug(f\"Received incomplete tag message. Missing: {', '.join(missing)}\")\n return\n\n btag = body['tag']\n bnvr = '{name}-{version}-{release}'.format(**body)\n\n koji = buildsys.get_session()\n\n kbuildinfo = koji.getBuild(bnvr)\n if not kbuildinfo:\n log.debug(f\"Can't find Koji build for {bnvr}.\")\n return\n\n if 'nvr' not in kbuildinfo:\n log.debug(f\"Koji build info for {bnvr} doesn't contain 'nvr'.\")\n return\n\n if 'owner_name' not in kbuildinfo:\n log.debug(f\"Koji build info for {bnvr} doesn't contain 'owner_name'.\")\n return\n\n if kbuildinfo['owner_name'] in config.get('automatic_updates_blacklist'):\n log.debug(f\"{bnvr} owned by {kbuildinfo['owner_name']} who is listed in \"\n \"automatic_updates_blacklist, skipping.\")\n return\n\n # some APIs want the Koji build info, some others want the same\n # wrapped in a larger (request?) 
structure\n rbuildinfo = {\n 'info': kbuildinfo,\n 'nvr': kbuildinfo['nvr'].rsplit('-', 2),\n }\n\n with self.db_factory() as dbsession:\n rel = dbsession.query(Release).filter_by(create_automatic_updates=True,\n candidate_tag=btag).first()\n if not rel:\n log.debug(f\"Ignoring build being tagged into {btag!r}, no release configured for \"\n \"automatic updates for it found.\")\n return\n\n bcls = ContentType.infer_content_class(Build, kbuildinfo)\n build = bcls.get(bnvr)\n if build and build.update:\n log.info(f\"Build, active update for {bnvr} exists already, skipping.\")\n return\n\n if not build:\n log.debug(f\"Build for {bnvr} doesn't exist yet, creating.\")\n\n # Package.get_or_create() infers content type already\n log.debug(\"Getting/creating related package object.\")\n pkg = Package.get_or_create(dbsession, rbuildinfo)\n\n log.debug(\"Creating build object, adding it to the DB.\")\n build = bcls(nvr=bnvr, package=pkg, release=rel)\n dbsession.add(build)\n\n owner_name = kbuildinfo['owner_name']\n user = User.get(owner_name)\n if not user:\n log.debug(f\"Creating bodhi user for '{owner_name}'.\")\n # Leave email, groups blank, these will be filled\n # in or updated when they log into Bodhi next time, see\n # bodhi.server.security:remember_me().\n user = User(name=owner_name)\n dbsession.add(user)\n\n log.debug(f\"Creating new update for {bnvr}.\")\n changelog = build.get_changelog(lastupdate=True)\n closing_bugs = []\n if changelog:\n log.debug(\"Adding changelog to update notes.\")\n notes = f\"\"\"Automatic update for {bnvr}.\n\n##### **Changelog**\n\n```\n{changelog}\n```\"\"\"\n\n for b in re.finditer(config.get('bz_regex'), changelog, re.IGNORECASE):\n idx = int(b.group(1))\n log.debug(f'Adding bug #{idx} to the update.')\n bug = Bug.get(idx)\n if bug is None:\n bug = Bug(bug_id=idx)\n dbsession.add(bug)\n dbsession.flush()\n if bug not in closing_bugs:\n closing_bugs.append(bug)\n else:\n notes = f\"Automatic update for {bnvr}.\"\n update = Update(\n 
release=rel,\n builds=[build],\n bugs=closing_bugs,\n notes=notes,\n type=UpdateType.unspecified,\n stable_karma=3,\n unstable_karma=-3,\n autokarma=False,\n user=user,\n status=UpdateStatus.pending,\n )\n\n # Comment on the update that it was automatically created.\n update.comment(\n dbsession,\n str(\"This update was automatically created\"),\n author=\"bodhi\",\n )\n\n update.add_tag(update.release.pending_signing_tag)\n\n log.debug(\"Adding new update to the database.\")\n dbsession.add(update)\n\n log.debug(\"Flushing changes to the database.\")\n dbsession.flush()\n\n # Obsolete older updates which may be stuck in testing due to failed gating\n try:\n update.obsolete_older_updates(dbsession)\n except Exception as e:\n log.error(f'Problem obsoleting older updates: {e}')\n\n # This must be run after dbsession is closed so changes are committed to db\n alias = update.alias\n work_on_bugs_task.delay(alias, closing_bugs)\n", "path": "bodhi/server/consumers/automatic_updates.py"}]} | 3,695 | 138 |

gh_patches_debug_33977 | rasdani/github-patches | git_diff | interlegis__sapl-3282 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Matéria Legislativa (legislative matter) link in Audiência (public hearing)

## Current Behavior

<!--- If you are describing a bug, tell us what happens instead of the expected behavior. -->

<!--- If you are suggesting a change/improvement, explain the difference from the current behavior. -->

Clicking the Matéria Legislativa link redirects to a different Matéria Legislativa, not the one named in the link title.

## Possible Solution

<!--- Not required, but suggest a possible fix/reason for the bug -->

<!--- or ideas on how to implement the addition/change. -->

The audiência (hearing) numbers are repeated. That may be what is causing the bug.
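The suspicion about repeated numbers can be illustrated with a minimal sketch. It assumes a lookup table is built with the hearing number as the key; `Hearing` and the sample values are hypothetical stand-ins, not the SAPL models, and the actual fix in the repository may differ.

```python
from collections import namedtuple

# Hypothetical stand-in for a hearing row; the field names echo the issue,
# but this is not the real SAPL model.
Hearing = namedtuple("Hearing", ["pk", "numero", "materia"])

hearings = [
    Hearing(pk=10, numero=1, materia="PL 12/2019"),
    Hearing(pk=11, numero=1, materia="REQ 7/2020"),  # same numero, different hearing
]


def materia_by_numero(rows):
    """Index by hearing number: a later row overwrites an earlier one."""
    return {str(h.numero): h.materia for h in rows}


def materia_by_pk(rows):
    """Index by primary key: every row keeps its own entry."""
    return {h.pk: h.materia for h in rows}
```

With a non-unique key, both rows resolve to whichever value was stored last, which would match the symptom of a link pointing at the wrong record. Keying by a unique identifier keeps the entries apart.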

</issue>

<code>

[start of sapl/audiencia/views.py]

1 import sapl

2

3 from django.http import HttpResponse

4 from django.urls import reverse

5 from django.views.decorators.clickjacking import xframe_options_exempt

6 from django.views.generic import UpdateView

7 from sapl.crud.base import RP_DETAIL, RP_LIST, Crud, MasterDetailCrud

8

9 from .forms import AudienciaForm, AnexoAudienciaPublicaForm

10 from .models import AudienciaPublica, AnexoAudienciaPublica

11

12

13 def index(request):

14 return HttpResponse("Audiência Pública")

15

16

17 class AudienciaCrud(Crud):

18 model = AudienciaPublica

19 public = [RP_LIST, RP_DETAIL, ]

20

21 class BaseMixin(Crud.BaseMixin):

22 list_field_names = ['numero', 'nome', 'tipo', 'materia',

23 'data']

24 ordering = '-data', 'nome', 'numero', 'tipo'

25

26 class ListView(Crud.ListView):

27 paginate_by = 10

28

29 def get_context_data(self, **kwargs):

30 context = super().get_context_data(**kwargs)

31

32 audiencia_materia = {}

33 for o in context['object_list']:

34 # indexed by the hearing number

35 audiencia_materia[str(o.numero)] = o.materia

36

37 for row in context['rows']:

38 coluna_materia = row[3] # if the listing column order changes, update this index

39 if coluna_materia[0]:

40 materia = audiencia_materia[row[0][0]]

41 if materia:

42 url_materia = reverse('sapl.materia:materialegislativa_detail',

43 kwargs={'pk': materia.id})

44 else:

45 url_materia = None

46 row[3] = (coluna_materia[0], url_materia)

47 return context

48

49 class CreateView(Crud.CreateView):

50 form_class = AudienciaForm

51

52 def form_valid(self, form):

53 return super(Crud.CreateView, self).form_valid(form)

54

55 class UpdateView(Crud.UpdateView):

56 form_class = AudienciaForm

57

58 def get_initial(self):

59 initial = super(UpdateView, self).get_initial()

60 if self.object.materia:

61 initial['tipo_materia'] = self.object.materia.tipo.id

62 initial['numero_materia'] = self.object.materia.numero

63 initial['ano_materia'] = self.object.materia.ano

64 return initial

65

66 class DeleteView(Crud.DeleteView):

67 pass

68

69 class DetailView(Crud.DetailView):

70

71 layout_key = 'AudienciaPublicaDetail'

72

73 @xframe_options_exempt

74 def get(self, request, *args, **kwargs):

75 return super().get(request, *args, **kwargs)

76

77

78 class AudienciaPublicaMixin:

79

80 def has_permission(self):

81 app_config = sapl.base.models.AppConfig.objects.last()

82 if app_config and app_config.documentos_administrativos == 'O':

83 return True

84

85 return super().has_permission()

86

87

88 class AnexoAudienciaPublicaCrud(MasterDetailCrud):

89 model = AnexoAudienciaPublica

90 parent_field = 'audiencia'

91 help_topic = 'numeracao_docsacess'

92 public = [RP_LIST, RP_DETAIL, ]

93

94 class BaseMixin(MasterDetailCrud.BaseMixin):

95 list_field_names = ['assunto']

96

97 class CreateView(MasterDetailCrud.CreateView):

98 form_class = AnexoAudienciaPublicaForm

99 layout_key = None

100

101 class UpdateView(MasterDetailCrud.UpdateView):

102 form_class = AnexoAudienciaPublicaForm

103

104 class ListView(AudienciaPublicaMixin, MasterDetailCrud.ListView):

105

106 def get_queryset(self):

107 qs = super(MasterDetailCrud.ListView, self).get_queryset()

108 kwargs = {self.crud.parent_field: self.kwargs['pk']}

109 return qs.filter(**kwargs).order_by('-data', '-id')

110

111 class DetailView(AudienciaPublicaMixin, MasterDetailCrud.DetailView):

112 pass

113

[end of sapl/audiencia/views.py]

[start of sapl/audiencia/forms.py]

1 import logging

2

3 from django import forms

4 from django.core.exceptions import ObjectDoesNotExist, ValidationError

5 from django.db import transaction

6 from django.utils.translation import ugettext_lazy as _

7

8 from crispy_forms.layout import Button, Column, Fieldset, HTML, Layout

9

10 from sapl.audiencia.models import AudienciaPublica, TipoAudienciaPublica, AnexoAudienciaPublica

11 from sapl.crispy_layout_mixin import form_actions, SaplFormHelper, SaplFormLayout, to_row

12 from sapl.materia.models import MateriaLegislativa, TipoMateriaLegislativa

13 from sapl.parlamentares.models import Parlamentar

14 from sapl.utils import timezone, FileFieldCheckMixin, validar_arquivo

15

16 class AudienciaForm(FileFieldCheckMixin, forms.ModelForm):

17 logger = logging.getLogger(__name__)

18 data_atual = timezone.now()

19

20 tipo = forms.ModelChoiceField(

21 required=True,

22 label=_('Tipo de Audiência Pública'),

23 queryset=TipoAudienciaPublica.objects.all().order_by('nome'))

24

25 tipo_materia = forms.ModelChoiceField(

26 label=_('Tipo Matéria'),

27 required=False,

28 queryset=TipoMateriaLegislativa.objects.all(),

29 empty_label=_('Selecione'))

30

31 numero_materia = forms.CharField(

32 label=_('Número Matéria'),

33 required=False)

34

35 ano_materia = forms.CharField(

36 label=_('Ano Matéria'),

37 required=False)

38

39 materia = forms.ModelChoiceField(

40 required=False,

41 widget=forms.HiddenInput(),

42 queryset=MateriaLegislativa.objects.all())

43

44 parlamentar_autor = forms.ModelChoiceField(

45 label=_("Parlamentar Autor"),

46 required=False,

47 queryset=Parlamentar.objects.all())

48

49 requerimento = forms.ModelChoiceField(

50 label=_("Requerimento"),

51 required=False,

52 queryset=MateriaLegislativa.objects.select_related("tipo").filter(tipo__descricao="Requerimento"))

53

54 class Meta:

55 model = AudienciaPublica

56 fields = ['tipo', 'numero', 'nome',

57 'tema', 'data', 'hora_inicio', 'hora_fim',

58 'observacao', 'audiencia_cancelada', 'parlamentar_autor', 'requerimento', 'url_audio',

59 'url_video', 'upload_pauta', 'upload_ata',

60 'upload_anexo', 'tipo_materia', 'numero_materia',

61 'ano_materia', 'materia']

62

63 def __init__(self, **kwargs):

64 super(AudienciaForm, self).__init__(**kwargs)

65

66 tipos = []

67

68 if not self.fields['tipo'].queryset:

69 tipos.append(TipoAudienciaPublica.objects.create(nome='Audiência Pública', tipo='A'))

70 tipos.append(TipoAudienciaPublica.objects.create(nome='Plebiscito', tipo='P'))

71 tipos.append(TipoAudienciaPublica.objects.create(nome='Referendo', tipo='R'))

72 tipos.append(TipoAudienciaPublica.objects.create(nome='Iniciativa Popular', tipo='I'))

73

74 for t in tipos:

75 t.save()

76

77 def clean(self):

78 cleaned_data = super(AudienciaForm, self).clean()

79 if not self.is_valid():

80 return cleaned_data

81

82 materia = cleaned_data['numero_materia']

83 ano_materia = cleaned_data['ano_materia']

84 tipo_materia = cleaned_data['tipo_materia']

85 parlamentar_autor = cleaned_data["parlamentar_autor"]

86 requerimento = cleaned_data["requerimento"]

87

88 if materia and ano_materia and tipo_materia:

89 try:

90 self.logger.debug("Tentando obter MateriaLegislativa %s nº %s/%s." % (tipo_materia, materia, ano_materia))

91 materia = MateriaLegislativa.objects.get(

92 numero=materia,

93 ano=ano_materia,

94 tipo=tipo_materia)

95 except ObjectDoesNotExist:

96 msg = _('A matéria %s nº %s/%s não existe no cadastro'

97 ' de matérias legislativas.' % (tipo_materia, materia, ano_materia))

98 self.logger.warning(

99 'A MateriaLegislativa %s nº %s/%s não existe no cadastro'

100 ' de matérias legislativas.' % (tipo_materia, materia, ano_materia)

101 )

102 raise ValidationError(msg)

103 else:

104 self.logger.info("MateriaLegislativa %s nº %s/%s obtida com sucesso." % (tipo_materia, materia, ano_materia))

105 cleaned_data['materia'] = materia

106

107 else:

108 campos = [materia, tipo_materia, ano_materia]

109 if campos.count(None) + campos.count('') < len(campos):

110 msg = _('Preencha todos os campos relacionados à Matéria Legislativa')

111 self.logger.warning(

112 'Algum campo relacionado à MatériaLegislativa %s nº %s/%s \

113 não foi preenchido.' % (tipo_materia, materia, ano_materia)

114 )

115 raise ValidationError(msg)

116

117 if not cleaned_data['numero']:

118

119 ultima_audiencia = AudienciaPublica.objects.all().order_by('numero').last()

120 if ultima_audiencia:

121 cleaned_data['numero'] = ultima_audiencia.numero + 1

122 else:

123 cleaned_data['numero'] = 1

124

125 if self.cleaned_data['hora_inicio'] and self.cleaned_data['hora_fim']:

126 if self.cleaned_data['hora_fim'] < self.cleaned_data['hora_inicio']:

127 msg = _('A hora de fim ({}) não pode ser anterior a hora de início({})'

128 .format(self.cleaned_data['hora_fim'], self.cleaned_data['hora_inicio']))

129 self.logger.warning(

130 'Hora de fim anterior à hora de início.'

131 )

132 raise ValidationError(msg)

133

134 # requerimento is optional

135 if parlamentar_autor and requerimento:

136 if parlamentar_autor.autor.first() not in requerimento.autores.all():

137 raise ValidationError("Parlamentar Autor selecionado não faz"

138 " parte da autoria do Requerimento "

139 "selecionado.")

140 elif parlamentar_autor:

141 raise ValidationError("Para informar um autor deve-se informar um requerimento.")

142 elif requerimento:

143 raise ValidationError("Para informar um requerimento deve-se informar um autor.")

144

145

146 upload_pauta = self.cleaned_data.get('upload_pauta', False)

147 upload_ata = self.cleaned_data.get('upload_ata', False)

148 upload_anexo = self.cleaned_data.get('upload_anexo', False)

149

150 if upload_pauta:

151 validar_arquivo(upload_pauta, "Pauta da Audiência Pública")

152

153 if upload_ata:

154 validar_arquivo(upload_ata, "Ata da Audiência Pública")

155

156 if upload_anexo:

157 validar_arquivo(upload_anexo, "Anexo da Audiência Pública")

158

159 return cleaned_data

160

161

162 class AnexoAudienciaPublicaForm(forms.ModelForm):

163

164 class Meta:

165 model = AnexoAudienciaPublica

166 fields = ['arquivo',

167 'assunto']

168

169 def __init__(self, *args, **kwargs):

170

171 row1 = to_row(

172 [('arquivo', 4)])

173

174 row2 = to_row(

175 [('assunto', 12)])

176

177 self.helper = SaplFormHelper()

178 self.helper.layout = SaplFormLayout(

179 Fieldset(_('Identificação Básica'),

180 row1, row2))

181 super(AnexoAudienciaPublicaForm, self).__init__(

182 *args, **kwargs)

183

184 def clean(self):

185 super(AnexoAudienciaPublicaForm, self).clean()

186

187 if not self.is_valid():

188 return self.cleaned_data

189

190 arquivo = self.cleaned_data.get('arquivo', False)

191

192 if arquivo:

193 validar_arquivo(arquivo, "Arquivo")

194

195 return self.cleaned_data

196

[end of sapl/audiencia/forms.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/sapl/audiencia/forms.py b/sapl/audiencia/forms.py

--- a/sapl/audiencia/forms.py

+++ b/sapl/audiencia/forms.py

@@ -115,12 +115,14 @@

raise ValidationError(msg)

if not cleaned_data['numero']:

-

ultima_audiencia = AudienciaPublica.objects.all().order_by('numero').last()

if ultima_audiencia:

cleaned_data['numero'] = ultima_audiencia.numero + 1

else:

cleaned_data['numero'] = 1

+ else:

+ if AudienciaPublica.objects.filter(numero=cleaned_data['numero']).exclude(pk=self.instance.pk).exists():

+ raise ValidationError(f"Já existe uma audiência com a numeração {cleaned_data['numero']}.")

if self.cleaned_data['hora_inicio'] and self.cleaned_data['hora_fim']:

if self.cleaned_data['hora_fim'] < self.cleaned_data['hora_inicio']:

diff --git a/sapl/audiencia/views.py b/sapl/audiencia/views.py

--- a/sapl/audiencia/views.py

+++ b/sapl/audiencia/views.py

@@ -29,15 +29,13 @@

def get_context_data(self, **kwargs):

context = super().get_context_data(**kwargs)

- audiencia_materia = {}

- for o in context['object_list']:

- # indexado pelo numero da audiencia

- audiencia_materia[str(o.numero)] = o.materia

+ audiencia_materia = {str(a.id): a.materia for a in context['object_list']}

for row in context['rows']:

- coluna_materia = row[3] # se mudar a ordem de listagem mudar aqui

+ coluna_materia = row[3] # se mudar a ordem de listagem mudar aqui

if coluna_materia[0]:

- materia = audiencia_materia[row[0][0]]

+ audiencia_id = row[0][1].split('/')[-1]

+ materia = audiencia_materia[audiencia_id]

if materia:

url_materia = reverse('sapl.materia:materialegislativa_detail',

kwargs={'pk': materia.id})

| {"golden_diff": "diff --git a/sapl/audiencia/forms.py b/sapl/audiencia/forms.py\n--- a/sapl/audiencia/forms.py\n+++ b/sapl/audiencia/forms.py\n@@ -115,12 +115,14 @@\n raise ValidationError(msg)\n \n if not cleaned_data['numero']:\n-\n ultima_audiencia = AudienciaPublica.objects.all().order_by('numero').last()\n if ultima_audiencia:\n cleaned_data['numero'] = ultima_audiencia.numero + 1\n else:\n cleaned_data['numero'] = 1\n+ else:\n+ if AudienciaPublica.objects.filter(numero=cleaned_data['numero']).exclude(pk=self.instance.pk).exists():\n+ raise ValidationError(f\"J\u00e1 existe uma audi\u00eancia com a numera\u00e7\u00e3o {cleaned_data['numero']}.\")\n \n if self.cleaned_data['hora_inicio'] and self.cleaned_data['hora_fim']:\n if self.cleaned_data['hora_fim'] < self.cleaned_data['hora_inicio']:\ndiff --git a/sapl/audiencia/views.py b/sapl/audiencia/views.py\n--- a/sapl/audiencia/views.py\n+++ b/sapl/audiencia/views.py\n@@ -29,15 +29,13 @@\n def get_context_data(self, **kwargs):\n context = super().get_context_data(**kwargs)\n \n- audiencia_materia = {}\n- for o in context['object_list']:\n- # indexado pelo numero da audiencia\n- audiencia_materia[str(o.numero)] = o.materia\n+ audiencia_materia = {str(a.id): a.materia for a in context['object_list']}\n \n for row in context['rows']:\n- coluna_materia = row[3] # se mudar a ordem de listagem mudar aqui\n+ coluna_materia = row[3] # se mudar a ordem de listagem mudar aqui\n if coluna_materia[0]:\n- materia = audiencia_materia[row[0][0]]\n+ audiencia_id = row[0][1].split('/')[-1]\n+ materia = audiencia_materia[audiencia_id]\n if materia:\n url_materia = reverse('sapl.materia:materialegislativa_detail',\n kwargs={'pk': materia.id})\n", "issue": "Link de Mat\u00e9ria Legislative em Audi\u00eancia\n## Comportamento Atual\r\n<!--- Se est\u00e1 descrevendo um bug, conte-nos o que acontece em vez do comportamento esperado. 
-->\r\n<!--- Se est\u00e1 sugerindo uma mudan\u00e7a/melhoria, explique a diferen\u00e7a com o comportamento atual. -->\r\nQuando clica-se no link da Mat\u00e9ria Legislativa, \u00e9 redirecionado para outra Mat\u00e9ria Legislativa e n\u00e3o a com o t\u00edtulo no link\r\n\r\n## Poss\u00edvel Solu\u00e7\u00e3o\r\n<!--- N\u00e3o \u00e9 obrigat\u00f3rio, mas sugira uma poss\u00edvel corre\u00e7\u00e3o/raz\u00e3o para o bug -->\r\n<!--- ou ideias de como implementar a adi\u00e7\u00e3o/mudan\u00e7a. -->\r\nN\u00famero das audi\u00eancias est\u00e3o repetidos. Talvez isso t\u00e1 causando o bug.\n", "before_files": [{"content": "import sapl\n\nfrom django.http import HttpResponse\nfrom django.urls import reverse\nfrom django.views.decorators.clickjacking import xframe_options_exempt\nfrom django.views.generic import UpdateView\nfrom sapl.crud.base import RP_DETAIL, RP_LIST, Crud, MasterDetailCrud\n\nfrom .forms import AudienciaForm, AnexoAudienciaPublicaForm\nfrom .models import AudienciaPublica, AnexoAudienciaPublica\n\n\ndef index(request):\n return HttpResponse(\"Audi\u00eancia P\u00fablica\")\n\n\nclass AudienciaCrud(Crud):\n model = AudienciaPublica\n public = [RP_LIST, RP_DETAIL, ]\n\n class BaseMixin(Crud.BaseMixin):\n list_field_names = ['numero', 'nome', 'tipo', 'materia',\n 'data'] \n ordering = '-data', 'nome', 'numero', 'tipo'\n\n class ListView(Crud.ListView):\n paginate_by = 10\n\n def get_context_data(self, **kwargs):\n context = super().get_context_data(**kwargs)\n\n audiencia_materia = {}\n for o in context['object_list']:\n # indexado pelo numero da audiencia\n audiencia_materia[str(o.numero)] = o.materia\n\n for row in context['rows']:\n coluna_materia = row[3] # se mudar a ordem de listagem mudar aqui\n if coluna_materia[0]:\n materia = audiencia_materia[row[0][0]]\n if materia:\n url_materia = reverse('sapl.materia:materialegislativa_detail',\n kwargs={'pk': materia.id})\n else:\n url_materia = None\n row[3] = (coluna_materia[0], url_materia)\n 
return context\n\n class CreateView(Crud.CreateView):\n form_class = AudienciaForm\n\n def form_valid(self, form):\n return super(Crud.CreateView, self).form_valid(form)\n\n class UpdateView(Crud.UpdateView):\n form_class = AudienciaForm\n\n def get_initial(self):\n initial = super(UpdateView, self).get_initial()\n if self.object.materia:\n initial['tipo_materia'] = self.object.materia.tipo.id\n initial['numero_materia'] = self.object.materia.numero\n initial['ano_materia'] = self.object.materia.ano\n return initial\n \n class DeleteView(Crud.DeleteView):\n pass\n\n class DetailView(Crud.DetailView):\n\n layout_key = 'AudienciaPublicaDetail'\n\n @xframe_options_exempt\n def get(self, request, *args, **kwargs):\n return super().get(request, *args, **kwargs)\n\n\nclass AudienciaPublicaMixin:\n\n def has_permission(self):\n app_config = sapl.base.models.AppConfig.objects.last()\n if app_config and app_config.documentos_administrativos == 'O':\n return True\n\n return super().has_permission()\n\n\nclass AnexoAudienciaPublicaCrud(MasterDetailCrud):\n model = AnexoAudienciaPublica\n parent_field = 'audiencia'\n help_topic = 'numeracao_docsacess'\n public = [RP_LIST, RP_DETAIL, ]\n\n class BaseMixin(MasterDetailCrud.BaseMixin):\n list_field_names = ['assunto']\n\n class CreateView(MasterDetailCrud.CreateView):\n form_class = AnexoAudienciaPublicaForm\n layout_key = None\n\n class UpdateView(MasterDetailCrud.UpdateView):\n form_class = AnexoAudienciaPublicaForm\n\n class ListView(AudienciaPublicaMixin, MasterDetailCrud.ListView):\n\n def get_queryset(self):\n qs = super(MasterDetailCrud.ListView, self).get_queryset()\n kwargs = {self.crud.parent_field: self.kwargs['pk']}\n return qs.filter(**kwargs).order_by('-data', '-id')\n\n class DetailView(AudienciaPublicaMixin, MasterDetailCrud.DetailView):\n pass\n", "path": "sapl/audiencia/views.py"}, {"content": "import logging\n\nfrom django import forms\nfrom django.core.exceptions import ObjectDoesNotExist, 
ValidationError\nfrom django.db import transaction\nfrom django.utils.translation import ugettext_lazy as _\n\nfrom crispy_forms.layout import Button, Column, Fieldset, HTML, Layout\n\nfrom sapl.audiencia.models import AudienciaPublica, TipoAudienciaPublica, AnexoAudienciaPublica\nfrom sapl.crispy_layout_mixin import form_actions, SaplFormHelper, SaplFormLayout, to_row\nfrom sapl.materia.models import MateriaLegislativa, TipoMateriaLegislativa\nfrom sapl.parlamentares.models import Parlamentar\nfrom sapl.utils import timezone, FileFieldCheckMixin, validar_arquivo\n\nclass AudienciaForm(FileFieldCheckMixin, forms.ModelForm):\n logger = logging.getLogger(__name__)\n data_atual = timezone.now()\n\n tipo = forms.ModelChoiceField(\n required=True,\n label=_('Tipo de Audi\u00eancia P\u00fablica'),\n queryset=TipoAudienciaPublica.objects.all().order_by('nome'))\n\n tipo_materia = forms.ModelChoiceField(\n label=_('Tipo Mat\u00e9ria'),\n required=False,\n queryset=TipoMateriaLegislativa.objects.all(),\n empty_label=_('Selecione'))\n\n numero_materia = forms.CharField(\n label=_('N\u00famero Mat\u00e9ria'),\n required=False)\n\n ano_materia = forms.CharField(\n label=_('Ano Mat\u00e9ria'),\n required=False)\n\n materia = forms.ModelChoiceField(\n required=False,\n widget=forms.HiddenInput(),\n queryset=MateriaLegislativa.objects.all())\n\n parlamentar_autor = forms.ModelChoiceField(\n label=_(\"Parlamentar Autor\"),\n required=False,\n queryset=Parlamentar.objects.all())\n\n requerimento = forms.ModelChoiceField(\n label=_(\"Requerimento\"),\n required=False,\n queryset=MateriaLegislativa.objects.select_related(\"tipo\").filter(tipo__descricao=\"Requerimento\"))\n\n class Meta:\n model = AudienciaPublica\n fields = ['tipo', 'numero', 'nome',\n 'tema', 'data', 'hora_inicio', 'hora_fim',\n 'observacao', 'audiencia_cancelada', 'parlamentar_autor', 'requerimento', 'url_audio',\n 'url_video', 'upload_pauta', 'upload_ata',\n 'upload_anexo', 'tipo_materia', 'numero_materia',\n 
'ano_materia', 'materia']\n\n def __init__(self, **kwargs):\n super(AudienciaForm, self).__init__(**kwargs)\n\n tipos = []\n\n if not self.fields['tipo'].queryset:\n tipos.append(TipoAudienciaPublica.objects.create(nome='Audi\u00eancia P\u00fablica', tipo='A'))\n tipos.append(TipoAudienciaPublica.objects.create(nome='Plebiscito', tipo='P'))\n tipos.append(TipoAudienciaPublica.objects.create(nome='Referendo', tipo='R'))\n tipos.append(TipoAudienciaPublica.objects.create(nome='Iniciativa Popular', tipo='I'))\n\n for t in tipos:\n t.save()\n\n def clean(self):\n cleaned_data = super(AudienciaForm, self).clean()\n if not self.is_valid():\n return cleaned_data\n\n materia = cleaned_data['numero_materia']\n ano_materia = cleaned_data['ano_materia']\n tipo_materia = cleaned_data['tipo_materia']\n parlamentar_autor = cleaned_data[\"parlamentar_autor\"]\n requerimento = cleaned_data[\"requerimento\"]\n\n if materia and ano_materia and tipo_materia:\n try:\n self.logger.debug(\"Tentando obter MateriaLegislativa %s n\u00ba %s/%s.\" % (tipo_materia, materia, ano_materia))\n materia = MateriaLegislativa.objects.get(\n numero=materia,\n ano=ano_materia,\n tipo=tipo_materia)\n except ObjectDoesNotExist:\n msg = _('A mat\u00e9ria %s n\u00ba %s/%s n\u00e3o existe no cadastro'\n ' de mat\u00e9rias legislativas.' % (tipo_materia, materia, ano_materia))\n self.logger.warning(\n 'A MateriaLegislativa %s n\u00ba %s/%s n\u00e3o existe no cadastro'\n ' de mat\u00e9rias legislativas.' 
% (tipo_materia, materia, ano_materia)\n )\n raise ValidationError(msg)\n else:\n self.logger.info(\"MateriaLegislativa %s n\u00ba %s/%s obtida com sucesso.\" % (tipo_materia, materia, ano_materia))\n cleaned_data['materia'] = materia\n\n else:\n campos = [materia, tipo_materia, ano_materia]\n if campos.count(None) + campos.count('') < len(campos):\n msg = _('Preencha todos os campos relacionados \u00e0 Mat\u00e9ria Legislativa')\n self.logger.warning(\n 'Algum campo relacionado \u00e0 Mat\u00e9riaLegislativa %s n\u00ba %s/%s \\\n n\u00e3o foi preenchido.' % (tipo_materia, materia, ano_materia)\n )\n raise ValidationError(msg)\n\n if not cleaned_data['numero']:\n\n ultima_audiencia = AudienciaPublica.objects.all().order_by('numero').last()\n if ultima_audiencia:\n cleaned_data['numero'] = ultima_audiencia.numero + 1\n else:\n cleaned_data['numero'] = 1\n\n if self.cleaned_data['hora_inicio'] and self.cleaned_data['hora_fim']:\n if self.cleaned_data['hora_fim'] < self.cleaned_data['hora_inicio']:\n msg = _('A hora de fim ({}) n\u00e3o pode ser anterior a hora de in\u00edcio({})'\n .format(self.cleaned_data['hora_fim'], self.cleaned_data['hora_inicio']))\n self.logger.warning(\n 'Hora de fim anterior \u00e0 hora de in\u00edcio.'\n )\n raise ValidationError(msg)\n\n # requerimento \u00e9 optativo\n if parlamentar_autor and requerimento:\n if parlamentar_autor.autor.first() not in requerimento.autores.all():\n raise ValidationError(\"Parlamentar Autor selecionado n\u00e3o faz\"\n \" parte da autoria do Requerimento \"\n \"selecionado.\")\n elif parlamentar_autor:\n raise ValidationError(\"Para informar um autor deve-se informar um requerimento.\")\n elif requerimento:\n raise ValidationError(\"Para informar um requerimento deve-se informar um autor.\")\n\n\n upload_pauta = self.cleaned_data.get('upload_pauta', False)\n upload_ata = self.cleaned_data.get('upload_ata', False)\n upload_anexo = self.cleaned_data.get('upload_anexo', False)\n\n if upload_pauta:\n 
validar_arquivo(upload_pauta, \"Pauta da Audi\u00eancia P\u00fablica\")\n\n if upload_ata:\n validar_arquivo(upload_ata, \"Ata da Audi\u00eancia P\u00fablica\")\n\n if upload_anexo:\n validar_arquivo(upload_anexo, \"Anexo da Audi\u00eancia P\u00fablica\")\n\n return cleaned_data\n\n\nclass AnexoAudienciaPublicaForm(forms.ModelForm):\n\n class Meta:\n model = AnexoAudienciaPublica\n fields = ['arquivo',\n 'assunto']\n\n def __init__(self, *args, **kwargs):\n\n row1 = to_row(\n [('arquivo', 4)])\n\n row2 = to_row(\n [('assunto', 12)])\n\n self.helper = SaplFormHelper()\n self.helper.layout = SaplFormLayout(\n Fieldset(_('Identifica\u00e7\u00e3o B\u00e1sica'),\n row1, row2))\n super(AnexoAudienciaPublicaForm, self).__init__(\n *args, **kwargs)\n\n def clean(self):\n super(AnexoAudienciaPublicaForm, self).clean()\n\n if not self.is_valid():\n return self.cleaned_data\n\n arquivo = self.cleaned_data.get('arquivo', False)\n\n if arquivo:\n validar_arquivo(arquivo, \"Arquivo\")\n\n return self.cleaned_data\n", "path": "sapl/audiencia/forms.py"}]} | 4,060 | 507 |

gh_patches_debug_5135 | rasdani/github-patches | git_diff | mitmproxy__mitmproxy-4845 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

WebSocket view jumps to top on new message

The WebSocket view keeps jumping to the top every time a new message arrives. This makes it basically impossible to work with while the connection is open. I hold down arrow -> it scrolls a bit -> message arrives -> I'm back at the top

_Originally posted by @Prinzhorn in https://github.com/mitmproxy/mitmproxy/issues/4486#issuecomment-796578909_

</issue>

<code>

[start of mitmproxy/tools/console/searchable.py]

1 import urwid

2

3 from mitmproxy.tools.console import signals

4

5

6 class Highlight(urwid.AttrMap):

7

8 def __init__(self, t):

9 urwid.AttrMap.__init__(

10 self,

11 urwid.Text(t.text),

12 "focusfield",

13 )

14 self.backup = t

15

16

17 class Searchable(urwid.ListBox):

18

19 def __init__(self, contents):

20 self.walker = urwid.SimpleFocusListWalker(contents)

21 urwid.ListBox.__init__(self, self.walker)

22 self.search_offset = 0

23 self.current_highlight = None

24 self.search_term = None

25 self.last_search = None

26

27 def keypress(self, size, key):

28 if key == "/":

29 signals.status_prompt.send(

30 prompt = "Search for",

31 text = "",

32 callback = self.set_search

33 )

34 elif key == "n":

35 self.find_next(False)

36 elif key == "N":

37 self.find_next(True)

38 elif key == "m_start":

39 self.set_focus(0)

40 self.walker._modified()

41 elif key == "m_end":

42 self.set_focus(len(self.walker) - 1)

43 self.walker._modified()

44 else:

45 return super().keypress(size, key)

46

47 def set_search(self, text):

48 self.last_search = text

49 self.search_term = text or None

50 self.find_next(False)

51

52 def set_highlight(self, offset):

53 if self.current_highlight is not None:

54 old = self.body[self.current_highlight]

55 self.body[self.current_highlight] = old.backup

56 if offset is None:

57 self.current_highlight = None

58 else:

59 self.body[offset] = Highlight(self.body[offset])

60 self.current_highlight = offset

61

62 def get_text(self, w):

63 if isinstance(w, urwid.Text):

64 return w.text

65 elif isinstance(w, Highlight):

66 return w.backup.text

67 else:

68 return None

69

70 def find_next(self, backwards):

71 if not self.search_term:

72 if self.last_search:

73 self.search_term = self.last_search

74 else:

75 self.set_highlight(None)

76 return

77 # Start search at focus + 1

78 if backwards:

79 rng = range(len(self.body) - 1, -1, -1)

80 else:

81 rng = range(1, len(self.body) + 1)

82 for i in rng:

83 off = (self.focus_position + i) % len(self.body)

84 w = self.body[off]

85 txt = self.get_text(w)

86 if txt and self.search_term in txt:

87 self.set_highlight(off)

88 self.set_focus(off, coming_from="above")

89 self.body._modified()

90 return

91 else:

92 self.set_highlight(None)

93 signals.status_message.send(message="Search not found.", expire=1)

94

[end of mitmproxy/tools/console/searchable.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/mitmproxy/tools/console/searchable.py b/mitmproxy/tools/console/searchable.py

--- a/mitmproxy/tools/console/searchable.py

+++ b/mitmproxy/tools/console/searchable.py

@@ -19,6 +19,7 @@

def __init__(self, contents):

self.walker = urwid.SimpleFocusListWalker(contents)

urwid.ListBox.__init__(self, self.walker)

+ self.set_focus(len(self.walker) - 1)

self.search_offset = 0

self.current_highlight = None

self.search_term = None

| {"golden_diff": "diff --git a/mitmproxy/tools/console/searchable.py b/mitmproxy/tools/console/searchable.py\n--- a/mitmproxy/tools/console/searchable.py\n+++ b/mitmproxy/tools/console/searchable.py\n@@ -19,6 +19,7 @@\n def __init__(self, contents):\n self.walker = urwid.SimpleFocusListWalker(contents)\n urwid.ListBox.__init__(self, self.walker)\n+ self.set_focus(len(self.walker) - 1)\n self.search_offset = 0\n self.current_highlight = None\n self.search_term = None\n", "issue": "WebSocket view jumps to top on new message\nThe WebSocket view keeps jumping to the top every time a new message arrives. This makes it basically impossible to work with while the connection is open. I hold down arrow -> it scrolls a bit -> message arrives -> I'm back at the top\r\n\r\n_Originally posted by @Prinzhorn in https://github.com/mitmproxy/mitmproxy/issues/4486#issuecomment-796578909_\n", "before_files": [{"content": "import urwid\n\nfrom mitmproxy.tools.console import signals\n\n\nclass Highlight(urwid.AttrMap):\n\n def __init__(self, t):\n urwid.AttrMap.__init__(\n self,\n urwid.Text(t.text),\n \"focusfield\",\n )\n self.backup = t\n\n\nclass Searchable(urwid.ListBox):\n\n def __init__(self, contents):\n self.walker = urwid.SimpleFocusListWalker(contents)\n urwid.ListBox.__init__(self, self.walker)\n self.search_offset = 0\n self.current_highlight = None\n self.search_term = None\n self.last_search = None\n\n def keypress(self, size, key):\n if key == \"/\":\n signals.status_prompt.send(\n prompt = \"Search for\",\n text = \"\",\n callback = self.set_search\n )\n elif key == \"n\":\n self.find_next(False)\n elif key == \"N\":\n self.find_next(True)\n elif key == \"m_start\":\n self.set_focus(0)\n self.walker._modified()\n elif key == \"m_end\":\n self.set_focus(len(self.walker) - 1)\n self.walker._modified()\n else:\n return super().keypress(size, key)\n\n def set_search(self, text):\n self.last_search = text\n self.search_term = text or None\n self.find_next(False)\n\n def 
set_highlight(self, offset):\n if self.current_highlight is not None:\n old = self.body[self.current_highlight]\n self.body[self.current_highlight] = old.backup\n if offset is None:\n self.current_highlight = None\n else:\n self.body[offset] = Highlight(self.body[offset])\n self.current_highlight = offset\n\n def get_text(self, w):\n if isinstance(w, urwid.Text):\n return w.text\n elif isinstance(w, Highlight):\n return w.backup.text\n else:\n return None\n\n def find_next(self, backwards):\n if not self.search_term:\n if self.last_search:\n self.search_term = self.last_search\n else:\n self.set_highlight(None)\n return\n # Start search at focus + 1\n if backwards:\n rng = range(len(self.body) - 1, -1, -1)\n else:\n rng = range(1, len(self.body) + 1)\n for i in rng:\n off = (self.focus_position + i) % len(self.body)\n w = self.body[off]\n txt = self.get_text(w)\n if txt and self.search_term in txt:\n self.set_highlight(off)\n self.set_focus(off, coming_from=\"above\")\n self.body._modified()\n return\n else:\n self.set_highlight(None)\n signals.status_message.send(message=\"Search not found.\", expire=1)\n", "path": "mitmproxy/tools/console/searchable.py"}]} | 1,425 | 127 |

gh_patches_debug_24462 | rasdani/github-patches | git_diff | projectmesa__mesa-1343 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

UX: Split UserSettableParameter into various classes

What if, instead of

```python

number_option = UserSettableParameter('number', 'My Number', value=123)

boolean_option = UserSettableParameter('checkbox', 'My Boolean', value=True)

slider_option = UserSettableParameter('slider', 'My Slider', value=123, min_value=10, max_value=200, step=0.1)

```

we do

```python

number_option = Number("My Number", 123)

boolean_option = Checkbox("My boolean", True)

slider_option = Slider("My slider", 123, min=10, max=200, step=0.1)

```

In addition to looking simpler, more importantly, this allows users to define their own UserParam class.

</issue>
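
The proposal in the issue above can be sketched in plain Python. This is a minimal illustration, not mesa's actual implementation: the `UserParam` base class, the `Slider` signature, and the user-defined `EvenNumber` class are simplified, hypothetical stand-ins written for this example, and the real classes may differ in names and behaviour.

```python
# Hypothetical, simplified stand-ins for the classes proposed in the issue.
# They do not import mesa and are not guaranteed to match its API.


class UserParam:
    """Base class: stores a value and serialises itself to a plain dict."""

    param_type = None

    def __init__(self, name, value, description=None):
        self.name = name
        self.description = description
        self._value = value

    @property
    def value(self):
        return self._value

    @value.setter
    def value(self, value):
        self._value = value

    @property
    def json(self):
        # Expose the private _value under the public key "value".
        result = self.__dict__.copy()
        result["value"] = result.pop("_value")
        result["param_type"] = self.param_type
        return result


class Slider(UserParam):
    """Numeric parameter whose value is clamped to [min_value, max_value]."""

    param_type = "slider"

    def __init__(self, name, value, min_value, max_value, step=1, description=None):
        super().__init__(name, value, description)
        self.min_value = min_value
        self.max_value = max_value
        self.step = step

    @UserParam.value.setter
    def value(self, value):
        # Reuse the inherited getter; only the setter is overridden.
        self._value = max(self.min_value, min(self.max_value, value))


class EvenNumber(UserParam):
    """A user-defined parameter type: rounds input down to an even integer."""

    param_type = "even_number"

    @UserParam.value.setter
    def value(self, value):
        self._value = int(value) // 2 * 2


slider_option = Slider("My slider", 123, min_value=10, max_value=200, step=0.1)
slider_option.value = 500   # above max_value, so it is clamped to 200

agents_option = EvenNumber("Number of agents", 10)
agents_option.value = 7     # rounded down to 6
```

Under these assumptions, each parameter type keeps its own validation in its own setter, so adding a new kind of parameter means writing a small subclass rather than extending one large `if`/`elif` chain.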

<code>

[start of mesa/visualization/UserParam.py]

1 NUMBER = "number"

2 CHECKBOX = "checkbox"

3 CHOICE = "choice"

4 SLIDER = "slider"

5 STATIC_TEXT = "static_text"

6

7

8 class UserSettableParameter:

9 """A class for providing options to a visualization for a given parameter.

10

11 UserSettableParameter can be used instead of keyword arguments when specifying model parameters in an

12 instance of a `ModularServer` so that the parameter can be adjusted in the UI without restarting the server.

13

14 Validation of correctly-specified params happens on startup of a `ModularServer`. Each param is handled

15 individually in the UI and sends callback events to the server when an option is updated. That option is then

16 re-validated, in the `value.setter` property method to ensure input is correct from the UI to `reset_model`

17 callback.

18

19 Parameter types include:

20 - 'number' - a simple numerical input

21 - 'checkbox' - boolean checkbox

22 - 'choice' - String-based dropdown input, for selecting choices within a model

23 - 'slider' - A number-based slider input with settable increment

24 - 'static_text' - A non-input textbox for displaying model info.

25

26 Examples:

27

28 # Simple number input

29 number_option = UserSettableParameter('number', 'My Number', value=123)

30

31 # Checkbox input

32 boolean_option = UserSettableParameter('checkbox', 'My Boolean', value=True)

33

34 # Choice input

35 choice_option = UserSettableParameter('choice', 'My Choice', value='Default choice',

36 choices=['Default Choice', 'Alternate Choice'])

37

38 # Slider input

39 slider_option = UserSettableParameter('slider', 'My Slider', value=123, min_value=10, max_value=200, step=0.1)

40

41 # Static text

42 static_text = UserSettableParameter('static_text', value="This is a descriptive textbox")

43 """

44

45 NUMBER = NUMBER

46 CHECKBOX = CHECKBOX

47 CHOICE = CHOICE

48 SLIDER = SLIDER

49 STATIC_TEXT = STATIC_TEXT

50

51 TYPES = (NUMBER, CHECKBOX, CHOICE, SLIDER, STATIC_TEXT)

52

53 _ERROR_MESSAGE = "Missing or malformed inputs for '{}' Option '{}'"

54

55 def __init__(

56 self,

57 param_type=None,

58 name="",

59 value=None,

60 min_value=None,

61 max_value=None,

62 step=1,

63 choices=None,

64 description=None,

65 ):

66 if choices is None:

67 choices = list()

68 if param_type not in self.TYPES:

69 raise ValueError(f"{param_type} is not a valid Option type")

70 self.param_type = param_type

71 self.name = name

72 self._value = value

73 self.min_value = min_value

74 self.max_value = max_value

75 self.step = step

76 self.choices = choices

77 self.description = description

78

79 # Validate option types to make sure values are supplied properly

80 msg = self._ERROR_MESSAGE.format(self.param_type, name)

81 valid = True

82

83 if self.param_type == self.NUMBER:

84 valid = not (self.value is None)

85

86 elif self.param_type == self.SLIDER:

87 valid = not (

88 self.value is None or self.min_value is None or self.max_value is None

89 )

90

91 elif self.param_type == self.CHOICE:

92 valid = not (self.value is None or len(self.choices) == 0)

93

94 elif self.param_type == self.CHECKBOX:

95 valid = isinstance(self.value, bool)

96

97 elif self.param_type == self.STATIC_TEXT:

98 valid = isinstance(self.value, str)

99

100 if not valid:

101 raise ValueError(msg)

102

103 @property

104 def value(self):

105 return self._value

106

107 @value.setter

108 def value(self, value):

109 self._value = value

110 if self.param_type == self.SLIDER:

111 if self._value < self.min_value:

112 self._value = self.min_value

113 elif self._value > self.max_value:

114 self._value = self.max_value

115 elif self.param_type == self.CHOICE:

116 if self._value not in self.choices:

117 print(

118 "Selected choice value not in available choices, selected first choice from 'choices' list"

119 )

120 self._value = self.choices[0]

121

122 @property

123 def json(self):

124 result = self.__dict__.copy()

125 result["value"] = result.pop(

126 "_value"

127 ) # Return _value as value, value is the same

128 return result

129

130

131 class UserParam:

132 _ERROR_MESSAGE = "Missing or malformed inputs for '{}' Option '{}'"

133

134 @property

135 def json(self):

136 result = self.__dict__.copy()

137 result["value"] = result.pop(

138 "_value"

139 ) # Return _value as value, value is the same

140 return result

141

142 def maybe_raise_error(self, valid):

143 if not valid:

144 msg = self._ERROR_MESSAGE.format(self.param_type, self.name)

145 raise ValueError(msg)

146

147 @property

148 def value(self):

149 return self._value

150

151 @value.setter

152 def value(self, value):

153 self._value = value

154

155

156 class Slider(UserParam):

157 """

158 A number-based slider input with settable increment.

159

160 Example:

161

162 slider_option = Slider("My Slider", value=123, min_value=10, max_value=200, step=0.1)

163 """

164

165 def __init__(

166 self,

167 name="",

168 value=None,

169 min_value=None,

170 max_value=None,

171 step=1,

172 description=None,

173 ):

174 self.param_type = SLIDER

175 self.name = name

176 self._value = value

177 self.min_value = min_value

178 self.max_value = max_value

179 self.step = step

180 self.description = description

181

182 # Validate option type to make sure values are supplied properly

183 valid = not (

184 self.value is None or self.min_value is None or self.max_value is None

185 )

186 self.maybe_raise_error(valid)

187

188 @property

189 def value(self):

190 return self._value

191

192 @value.setter

193 def value(self, value):

194 self._value = value

195 if self._value < self.min_value:

196 self._value = self.min_value

197 elif self._value > self.max_value:

198 self._value = self.max_value

199

200

201 class Checkbox(UserParam):

202 """

203 Boolean checkbox.

204

205 Example:

206

207 boolean_option = Checkbox('My Boolean', True)

208 """

209

210 def __init__(self, name="", value=None, description=None):

211 self.param_type = CHECKBOX

212 self.name = name

213 self._value = value

214 self.description = description

215

216 # Validate option type to make sure values are supplied properly

217 valid = isinstance(self.value, bool)

218 self.maybe_raise_error(valid)

219

220

221 class Choice(UserParam):

222 """

223 String-based dropdown input, for selecting choices within a model

224

225 Example:

226 choice_option = Choice(

227 'My Choice',

228 value='Default choice',

229 choices=['Default Choice', 'Alternate Choice']

230 )

231 """

232

233 def __init__(self, name="", value=None, choices=None, description=None):

234 self.param_type = CHOICE

235 self.name = name

236 self._value = value

237 self.choices = choices

238 self.description = description

239

240 # Validate option type to make sure values are supplied properly

241 valid = not (self.value is None or len(self.choices) == 0)

242 self.maybe_raise_error(valid)

243

244 @property

245 def value(self):

246 return self._value

247

248 @value.setter

249 def value(self, value):

250 self._value = value

251 if self._value not in self.choices:

252 print(

253 "Selected choice value not in available choices, selected first choice from 'choices' list"

254 )

255 self._value = self.choices[0]

256

257

258 class StaticText(UserParam):

259 """

260 A non-input textbox for displaying model info.

261

262 Example:

263 static_text = StaticText("This is a descriptive textbox")

264 """

265

266 def __init__(self, value=None):

267 self.param_type = STATIC_TEXT

268 self._value = value

269 valid = isinstance(self.value, str)

270 self.maybe_raise_error(valid)

271

[end of mesa/visualization/UserParam.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/mesa/visualization/UserParam.py b/mesa/visualization/UserParam.py

--- a/mesa/visualization/UserParam.py

+++ b/mesa/visualization/UserParam.py

@@ -1,3 +1,7 @@

+from numbers import Number

+from warnings import warn

+

+

NUMBER = "number"

CHECKBOX = "checkbox"

CHOICE = "choice"

@@ -63,6 +67,13 @@

choices=None,

description=None,

):

+

+ warn(

+ "UserSettableParameter is deprecated in favor of UserParam objects "

+ "such as Slider, Checkbox, Choice, StaticText, NumberInput. "

+ "See the examples folder for how to use them. "

+ "UserSettableParameter will be removed in the next major release."

+ )

if choices is None:

choices = list()

if param_type not in self.TYPES:

@@ -268,3 +279,19 @@

self._value = value

valid = isinstance(self.value, str)

self.maybe_raise_error(valid)

+

+

+class NumberInput(UserParam):

+ """

+ a simple numerical input

+

+ Example:

+ number_option = NumberInput("My Number", value=123)

+ """

+

+ def __init__(self, name="", value=None, description=None):

+ self.param_type = NUMBER

+ self.name = name

+ self._value = value

+ valid = isinstance(self.value, Number)

+ self.maybe_raise_error(valid)