| problem_id (string, 18-22 chars) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, 13-58 chars) | prompt (string, 1.71k-18.9k chars) | golden_diff (string, 145-5.13k chars) | verification_info (string, 465-23.6k chars) | num_tokens_prompt (int64, 556-4.1k) | num_tokens_diff (int64, 47-1.02k) |
|---|---|---|---|---|---|---|---|---|

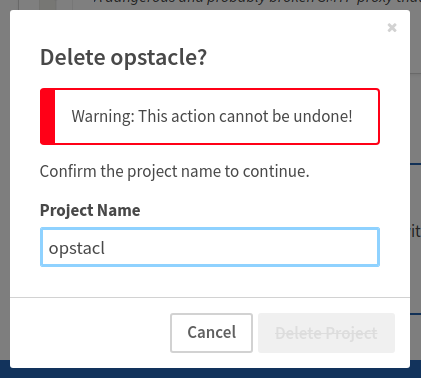

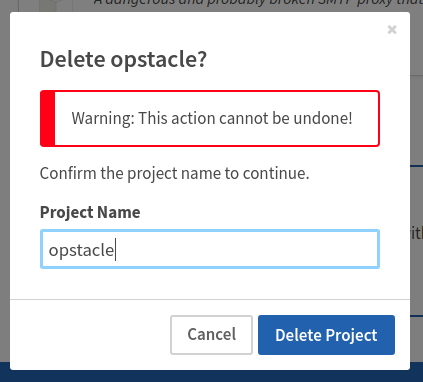

gh_patches_debug_38039 | rasdani/github-patches | git_diff | ansible__ansible-43947 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Serverless Module - Support Verbose Mode

<!---

Verify first that your issue/request is not already reported on GitHub.

Also test if the latest release, and master branch are affected too.

-->

##### ISSUE TYPE

<!--- Pick one below and delete the rest: -->

- Feature Idea

##### COMPONENT NAME

<!--- Name of the module/plugin/task/feature -->

<!--- Please do not include extra details here, e.g. "vyos_command" not "the network module vyos_command" -->

- serverless.py

##### ANSIBLE VERSION

<!--- Paste verbatim output from "ansible --version" between quotes below -->

```

ansible 2.4.0.0

```

##### CONFIGURATION

<!---

If using Ansible 2.4 or above, paste the results of "ansible-config dump --only-changed"

Otherwise, mention any settings you have changed/added/removed in ansible.cfg

(or using the ANSIBLE_* environment variables).

-->

```

DEFAULT_HOST_LIST(env: ANSIBLE_INVENTORY) = [u'/.../inventory/dev']

```

##### OS / ENVIRONMENT

<!---

Mention the OS you are running Ansible from, and the OS you are

managing, or say "N/A" for anything that is not platform-specific.

Also mention the specific version of what you are trying to control,

e.g. if this is a network bug the version of firmware on the network device.

-->

- N/A

##### SUMMARY

<!--- Explain the problem briefly -->

When using Ansible to deploy Serverless projects it would be very helpful to be able to turn on verbose mode (`-v`)

**Reference:** https://serverless.com/framework/docs/providers/aws/cli-reference/deploy/

##### STEPS TO REPRODUCE

<!---

For bugs, show exactly how to reproduce the problem, using a minimal test-case.

For new features, show how the feature would be used.

-->

<!--- Paste example playbooks or commands between quotes below -->

Add `verbose="true"` to `serverless` command and/or piggyback on Ansible verbose mode

```yaml

- name: configure | Run Serverless

serverless: stage="{{ env }}" region="{{ ec2_region }}" service_path="{{ serverless_service_path }}" verbose="true"

```

<!--- You can also paste gist.github.com links for larger files -->

##### EXPECTED RESULTS

<!--- What did you expect to happen when running the steps above? -->

You would see Serverless verbose logging in the Ansible log

```

Serverless: Packaging service...

Serverless: Creating Stack...

Serverless: Checking Stack create progress...

CloudFormation - CREATE_IN_PROGRESS - AWS::CloudFormation::Stack - ***

CloudFormation - CREATE_IN_PROGRESS - AWS::S3::Bucket - ServerlessDeploymentBucket

(removed additional printout)

Serverless: Operation failed!

```

##### ACTUAL RESULTS

<!--- What actually happened? If possible run with extra verbosity (-vvvv) -->

<!--- Paste verbatim command output between quotes below -->

```

Serverless: Packaging service...

Serverless: Creating Stack...

Serverless: Checking Stack create progress...

....

Serverless: Operation failed!

```

**Note:** The `....` seen above is Serverless not listing all AWS commands because it's not in verbose mode

</issue>

<code>

[start of lib/ansible/modules/cloud/misc/serverless.py]

1 #!/usr/bin/python

2 # -*- coding: utf-8 -*-

3

4 # (c) 2016, Ryan Scott Brown <[email protected]>

5 # GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)

6

7 from __future__ import absolute_import, division, print_function

8 __metaclass__ = type

9

10

11 ANSIBLE_METADATA = {'metadata_version': '1.1',

12 'status': ['preview'],

13 'supported_by': 'community'}

14

15

16 DOCUMENTATION = '''

17 ---

18 module: serverless

19 short_description: Manages a Serverless Framework project

20 description:

21 - Provides support for managing Serverless Framework (https://serverless.com/) project deployments and stacks.

22 version_added: "2.3"

23 options:

24 state:

25 choices: ['present', 'absent']

26 description:

27 - Goal state of given stage/project

28 required: false

29 default: present

30 serverless_bin_path:

31 description:

32 - The path of a serverless framework binary relative to the 'service_path' eg. node_module/.bin/serverless

33 required: false

34 version_added: "2.4"

35 service_path:

36 description:

37 - The path to the root of the Serverless Service to be operated on.

38 required: true

39 stage:

40 description:

41 - The name of the serverless framework project stage to deploy to. This uses the serverless framework default "dev".

42 required: false

43 functions:

44 description:

45 - A list of specific functions to deploy. If this is not provided, all functions in the service will be deployed.

46 required: false

47 default: []

48 region:

49 description:

50 - AWS region to deploy the service to

51 required: false

52 default: us-east-1

53 deploy:

54 description:

55 - Whether or not to deploy artifacts after building them. When this option is `false` all the functions will be built, but no stack update will be

56 run to send them out. This is mostly useful for generating artifacts to be stored/deployed elsewhere.

57 required: false

58 default: true

59 notes:

60 - Currently, the `serverless` command must be in the path of the node executing the task. In the future this may be a flag.

61 requirements: [ "serverless", "yaml" ]

62 author: "Ryan Scott Brown @ryansb"

63 '''

64

65 EXAMPLES = """

66 # Basic deploy of a service

67 - serverless:

68 service_path: '{{ project_dir }}'

69 state: present

70

71 # Deploy specific functions

72 - serverless:

73 service_path: '{{ project_dir }}'

74 functions:

75 - my_func_one

76 - my_func_two

77

78 # deploy a project, then pull its resource list back into Ansible

79 - serverless:

80 stage: dev

81 region: us-east-1

82 service_path: '{{ project_dir }}'

83 register: sls

84 # The cloudformation stack is always named the same as the full service, so the

85 # cloudformation_facts module can get a full list of the stack resources, as

86 # well as stack events and outputs

87 - cloudformation_facts:

88 region: us-east-1

89 stack_name: '{{ sls.service_name }}'

90 stack_resources: true

91

92 # Deploy a project but use a locally installed serverless binary instead of the global serverless binary

93 - serverless:

94 stage: dev

95 region: us-east-1

96 service_path: '{{ project_dir }}'

97 serverless_bin_path: node_modules/.bin/serverless

98 """

99

100 RETURN = """

101 service_name:

102 type: string

103 description: The service name specified in the serverless.yml that was just deployed.

104 returned: always

105 sample: my-fancy-service-dev

106 state:

107 type: string

108 description: Whether the stack for the serverless project is present/absent.

109 returned: always

110 command:

111 type: string

112 description: Full `serverless` command run by this module, in case you want to re-run the command outside the module.

113 returned: always

114 sample: serverless deploy --stage production

115 """

116

117 import os

118 import traceback

119

120 try:

121 import yaml

122 HAS_YAML = True

123 except ImportError:

124 HAS_YAML = False

125

126 from ansible.module_utils.basic import AnsibleModule

127

128

129 def read_serverless_config(module):

130 path = module.params.get('service_path')

131

132 try:

133 with open(os.path.join(path, 'serverless.yml')) as sls_config:

134 config = yaml.safe_load(sls_config.read())

135 return config

136 except IOError as e:

137 module.fail_json(msg="Could not open serverless.yml in {}. err: {}".format(path, str(e)), exception=traceback.format_exc())

138

139 module.fail_json(msg="Failed to open serverless config at {}".format(

140 os.path.join(path, 'serverless.yml')))

141

142

143 def get_service_name(module, stage):

144 config = read_serverless_config(module)

145 if config.get('service') is None:

146 module.fail_json(msg="Could not read `service` key from serverless.yml file")

147

148 if stage:

149 return "{}-{}".format(config['service'], stage)

150

151 return "{}-{}".format(config['service'], config.get('stage', 'dev'))

152

153

154 def main():

155 module = AnsibleModule(

156 argument_spec=dict(

157 service_path=dict(required=True, type='path'),

158 state=dict(default='present', choices=['present', 'absent'], required=False),

159 functions=dict(type='list', required=False),

160 region=dict(default='', required=False),

161 stage=dict(default='', required=False),

162 deploy=dict(default=True, type='bool', required=False),

163 serverless_bin_path=dict(required=False, type='path')

164 ),

165 )

166

167 if not HAS_YAML:

168 module.fail_json(msg='yaml is required for this module')

169

170 service_path = module.params.get('service_path')

171 state = module.params.get('state')

172 functions = module.params.get('functions')

173 region = module.params.get('region')

174 stage = module.params.get('stage')

175 deploy = module.params.get('deploy', True)

176 serverless_bin_path = module.params.get('serverless_bin_path')

177

178 if serverless_bin_path is not None:

179 command = serverless_bin_path + " "

180 else:

181 command = "serverless "

182

183 if state == 'present':

184 command += 'deploy '

185 elif state == 'absent':

186 command += 'remove '

187 else:

188 module.fail_json(msg="State must either be 'present' or 'absent'. Received: {}".format(state))

189

190 if not deploy and state == 'present':

191 command += '--noDeploy '

192 if region:

193 command += '--region {} '.format(region)

194 if stage:

195 command += '--stage {} '.format(stage)

196

197 rc, out, err = module.run_command(command, cwd=service_path)

198 if rc != 0:

199 if state == 'absent' and "-{}' does not exist".format(stage) in out:

200 module.exit_json(changed=False, state='absent', command=command,

201 out=out, service_name=get_service_name(module, stage))

202

203 module.fail_json(msg="Failure when executing Serverless command. Exited {}.\nstdout: {}\nstderr: {}".format(rc, out, err))

204

205 # gather some facts about the deployment

206 module.exit_json(changed=True, state='present', out=out, command=command,

207 service_name=get_service_name(module, stage))

208

209

210 if __name__ == '__main__':

211 main()

212

[end of lib/ansible/modules/cloud/misc/serverless.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/lib/ansible/modules/cloud/misc/serverless.py b/lib/ansible/modules/cloud/misc/serverless.py

--- a/lib/ansible/modules/cloud/misc/serverless.py

+++ b/lib/ansible/modules/cloud/misc/serverless.py

@@ -56,6 +56,18 @@

run to send them out. This is mostly useful for generating artifacts to be stored/deployed elsewhere.

required: false

default: true

+ force:

+ description:

+ - Whether or not to force full deployment, equivalent to serverless `--force` option.

+ required: false

+ default: false

+ version_added: "2.7"

+ verbose:

+ description:

+ - Shows all stack events during deployment, and display any Stack Output.

+ required: false

+ default: false

+ version_added: "2.7"

notes:

- Currently, the `serverless` command must be in the path of the node executing the task. In the future this may be a flag.

requirements: [ "serverless", "yaml" ]

@@ -160,7 +172,9 @@

region=dict(default='', required=False),

stage=dict(default='', required=False),

deploy=dict(default=True, type='bool', required=False),

- serverless_bin_path=dict(required=False, type='path')

+ serverless_bin_path=dict(required=False, type='path'),

+ force=dict(default=False, required=False),

+ verbose=dict(default=False, required=False)

),

)

@@ -173,6 +187,8 @@

region = module.params.get('region')

stage = module.params.get('stage')

deploy = module.params.get('deploy', True)

+ force = module.params.get('force', False)

+ verbose = module.params.get('verbose', False)

serverless_bin_path = module.params.get('serverless_bin_path')

if serverless_bin_path is not None:

@@ -187,12 +203,18 @@

else:

module.fail_json(msg="State must either be 'present' or 'absent'. Received: {}".format(state))

- if not deploy and state == 'present':

- command += '--noDeploy '

+ if state == 'present':

+ if not deploy:

+ command += '--noDeploy '

+ elif force:

+ command += '--force '

+

if region:

command += '--region {} '.format(region)

if stage:

command += '--stage {} '.format(stage)

+ if verbose:

+ command += '--verbose '

rc, out, err = module.run_command(command, cwd=service_path)

if rc != 0:

| {"golden_diff": "diff --git a/lib/ansible/modules/cloud/misc/serverless.py b/lib/ansible/modules/cloud/misc/serverless.py\n--- a/lib/ansible/modules/cloud/misc/serverless.py\n+++ b/lib/ansible/modules/cloud/misc/serverless.py\n@@ -56,6 +56,18 @@\n run to send them out. This is mostly useful for generating artifacts to be stored/deployed elsewhere.\n required: false\n default: true\n+ force:\n+ description:\n+ - Whether or not to force full deployment, equivalent to serverless `--force` option.\n+ required: false\n+ default: false\n+ version_added: \"2.7\"\n+ verbose:\n+ description:\n+ - Shows all stack events during deployment, and display any Stack Output.\n+ required: false\n+ default: false\n+ version_added: \"2.7\"\n notes:\n - Currently, the `serverless` command must be in the path of the node executing the task. In the future this may be a flag.\n requirements: [ \"serverless\", \"yaml\" ]\n@@ -160,7 +172,9 @@\n region=dict(default='', required=False),\n stage=dict(default='', required=False),\n deploy=dict(default=True, type='bool', required=False),\n- serverless_bin_path=dict(required=False, type='path')\n+ serverless_bin_path=dict(required=False, type='path'),\n+ force=dict(default=False, required=False),\n+ verbose=dict(default=False, required=False)\n ),\n )\n \n@@ -173,6 +187,8 @@\n region = module.params.get('region')\n stage = module.params.get('stage')\n deploy = module.params.get('deploy', True)\n+ force = module.params.get('force', False)\n+ verbose = module.params.get('verbose', False)\n serverless_bin_path = module.params.get('serverless_bin_path')\n \n if serverless_bin_path is not None:\n@@ -187,12 +203,18 @@\n else:\n module.fail_json(msg=\"State must either be 'present' or 'absent'. 
Received: {}\".format(state))\n \n- if not deploy and state == 'present':\n- command += '--noDeploy '\n+ if state == 'present':\n+ if not deploy:\n+ command += '--noDeploy '\n+ elif force:\n+ command += '--force '\n+\n if region:\n command += '--region {} '.format(region)\n if stage:\n command += '--stage {} '.format(stage)\n+ if verbose:\n+ command += '--verbose '\n \n rc, out, err = module.run_command(command, cwd=service_path)\n if rc != 0:\n", "issue": "Serverless Module - Support Verbose Mode\n<!---\r\nVerify first that your issue/request is not already reported on GitHub.\r\nAlso test if the latest release, and master branch are affected too.\r\n-->\r\n\r\n##### ISSUE TYPE\r\n<!--- Pick one below and delete the rest: -->\r\n - Feature Idea\r\n\r\n##### COMPONENT NAME\r\n<!--- Name of the module/plugin/task/feature -->\r\n<!--- Please do not include extra details here, e.g. \"vyos_command\" not \"the network module vyos_command\" -->\r\n- serverless.py\r\n\r\n##### ANSIBLE VERSION\r\n<!--- Paste verbatim output from \"ansible --version\" between quotes below -->\r\n```\r\nansible 2.4.0.0\r\n```\r\n\r\n##### CONFIGURATION\r\n<!---\r\nIf using Ansible 2.4 or above, paste the results of \"ansible-config dump --only-changed\"\r\n\r\nOtherwise, mention any settings you have changed/added/removed in ansible.cfg\r\n(or using the ANSIBLE_* environment variables).\r\n-->\r\n```\r\nDEFAULT_HOST_LIST(env: ANSIBLE_INVENTORY) = [u'/.../inventory/dev']\r\n```\r\n\r\n##### OS / ENVIRONMENT\r\n<!---\r\nMention the OS you are running Ansible from, and the OS you are\r\nmanaging, or say \"N/A\" for anything that is not platform-specific.\r\nAlso mention the specific version of what you are trying to control,\r\ne.g. 
if this is a network bug the version of firmware on the network device.\r\n-->\r\n- N/A\r\n\r\n##### SUMMARY\r\n<!--- Explain the problem briefly -->\r\n\r\nWhen using Ansible to deploy Serverless projects it would be very helpful to be able to turn on verbose mode (`-v`)\r\n\r\n**Reference:** https://serverless.com/framework/docs/providers/aws/cli-reference/deploy/\r\n\r\n##### STEPS TO REPRODUCE\r\n<!---\r\nFor bugs, show exactly how to reproduce the problem, using a minimal test-case.\r\nFor new features, show how the feature would be used.\r\n-->\r\n\r\n<!--- Paste example playbooks or commands between quotes below -->\r\nAdd `verbose=\"true\"` to `serverless` command and/or piggyback on Ansible verbose mode\r\n```yaml\r\n- name: configure | Run Serverless\r\n serverless: stage=\"{{ env }}\" region=\"{{ ec2_region }}\" service_path=\"{{ serverless_service_path }}\" verbose=\"true\"\r\n```\r\n\r\n<!--- You can also paste gist.github.com links for larger files -->\r\n\r\n##### EXPECTED RESULTS\r\n<!--- What did you expect to happen when running the steps above? -->\r\nYou would see Serverless verbose logging in the Ansible log\r\n```\r\nServerless: Packaging service...\r\nServerless: Creating Stack...\r\nServerless: Checking Stack create progress...\r\nCloudFormation - CREATE_IN_PROGRESS - AWS::CloudFormation::Stack - ***\r\nCloudFormation - CREATE_IN_PROGRESS - AWS::S3::Bucket - ServerlessDeploymentBucket\r\n(removed additional printout)\r\nServerless: Operation failed!\r\n```\r\n##### ACTUAL RESULTS\r\n<!--- What actually happened? 
If possible run with extra verbosity (-vvvv) -->\r\n\r\n<!--- Paste verbatim command output between quotes below -->\r\n```\r\nServerless: Packaging service...\r\nServerless: Creating Stack...\r\nServerless: Checking Stack create progress...\r\n....\r\nServerless: Operation failed!\r\n```\r\n**Note:** The `....` seen above is Serverless not listing all AWS commands because it's not in verbose mode\n", "before_files": [{"content": "#!/usr/bin/python\n# -*- coding: utf-8 -*-\n\n# (c) 2016, Ryan Scott Brown <[email protected]>\n# GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)\n\nfrom __future__ import absolute_import, division, print_function\n__metaclass__ = type\n\n\nANSIBLE_METADATA = {'metadata_version': '1.1',\n 'status': ['preview'],\n 'supported_by': 'community'}\n\n\nDOCUMENTATION = '''\n---\nmodule: serverless\nshort_description: Manages a Serverless Framework project\ndescription:\n - Provides support for managing Serverless Framework (https://serverless.com/) project deployments and stacks.\nversion_added: \"2.3\"\noptions:\n state:\n choices: ['present', 'absent']\n description:\n - Goal state of given stage/project\n required: false\n default: present\n serverless_bin_path:\n description:\n - The path of a serverless framework binary relative to the 'service_path' eg. node_module/.bin/serverless\n required: false\n version_added: \"2.4\"\n service_path:\n description:\n - The path to the root of the Serverless Service to be operated on.\n required: true\n stage:\n description:\n - The name of the serverless framework project stage to deploy to. This uses the serverless framework default \"dev\".\n required: false\n functions:\n description:\n - A list of specific functions to deploy. 
If this is not provided, all functions in the service will be deployed.\n required: false\n default: []\n region:\n description:\n - AWS region to deploy the service to\n required: false\n default: us-east-1\n deploy:\n description:\n - Whether or not to deploy artifacts after building them. When this option is `false` all the functions will be built, but no stack update will be\n run to send them out. This is mostly useful for generating artifacts to be stored/deployed elsewhere.\n required: false\n default: true\nnotes:\n - Currently, the `serverless` command must be in the path of the node executing the task. In the future this may be a flag.\nrequirements: [ \"serverless\", \"yaml\" ]\nauthor: \"Ryan Scott Brown @ryansb\"\n'''\n\nEXAMPLES = \"\"\"\n# Basic deploy of a service\n- serverless:\n service_path: '{{ project_dir }}'\n state: present\n\n# Deploy specific functions\n- serverless:\n service_path: '{{ project_dir }}'\n functions:\n - my_func_one\n - my_func_two\n\n# deploy a project, then pull its resource list back into Ansible\n- serverless:\n stage: dev\n region: us-east-1\n service_path: '{{ project_dir }}'\n register: sls\n# The cloudformation stack is always named the same as the full service, so the\n# cloudformation_facts module can get a full list of the stack resources, as\n# well as stack events and outputs\n- cloudformation_facts:\n region: us-east-1\n stack_name: '{{ sls.service_name }}'\n stack_resources: true\n\n# Deploy a project but use a locally installed serverless binary instead of the global serverless binary\n- serverless:\n stage: dev\n region: us-east-1\n service_path: '{{ project_dir }}'\n serverless_bin_path: node_modules/.bin/serverless\n\"\"\"\n\nRETURN = \"\"\"\nservice_name:\n type: string\n description: The service name specified in the serverless.yml that was just deployed.\n returned: always\n sample: my-fancy-service-dev\nstate:\n type: string\n description: Whether the stack for the serverless project is 
present/absent.\n returned: always\ncommand:\n type: string\n description: Full `serverless` command run by this module, in case you want to re-run the command outside the module.\n returned: always\n sample: serverless deploy --stage production\n\"\"\"\n\nimport os\nimport traceback\n\ntry:\n import yaml\n HAS_YAML = True\nexcept ImportError:\n HAS_YAML = False\n\nfrom ansible.module_utils.basic import AnsibleModule\n\n\ndef read_serverless_config(module):\n path = module.params.get('service_path')\n\n try:\n with open(os.path.join(path, 'serverless.yml')) as sls_config:\n config = yaml.safe_load(sls_config.read())\n return config\n except IOError as e:\n module.fail_json(msg=\"Could not open serverless.yml in {}. err: {}\".format(path, str(e)), exception=traceback.format_exc())\n\n module.fail_json(msg=\"Failed to open serverless config at {}\".format(\n os.path.join(path, 'serverless.yml')))\n\n\ndef get_service_name(module, stage):\n config = read_serverless_config(module)\n if config.get('service') is None:\n module.fail_json(msg=\"Could not read `service` key from serverless.yml file\")\n\n if stage:\n return \"{}-{}\".format(config['service'], stage)\n\n return \"{}-{}\".format(config['service'], config.get('stage', 'dev'))\n\n\ndef main():\n module = AnsibleModule(\n argument_spec=dict(\n service_path=dict(required=True, type='path'),\n state=dict(default='present', choices=['present', 'absent'], required=False),\n functions=dict(type='list', required=False),\n region=dict(default='', required=False),\n stage=dict(default='', required=False),\n deploy=dict(default=True, type='bool', required=False),\n serverless_bin_path=dict(required=False, type='path')\n ),\n )\n\n if not HAS_YAML:\n module.fail_json(msg='yaml is required for this module')\n\n service_path = module.params.get('service_path')\n state = module.params.get('state')\n functions = module.params.get('functions')\n region = module.params.get('region')\n stage = module.params.get('stage')\n deploy 
= module.params.get('deploy', True)\n serverless_bin_path = module.params.get('serverless_bin_path')\n\n if serverless_bin_path is not None:\n command = serverless_bin_path + \" \"\n else:\n command = \"serverless \"\n\n if state == 'present':\n command += 'deploy '\n elif state == 'absent':\n command += 'remove '\n else:\n module.fail_json(msg=\"State must either be 'present' or 'absent'. Received: {}\".format(state))\n\n if not deploy and state == 'present':\n command += '--noDeploy '\n if region:\n command += '--region {} '.format(region)\n if stage:\n command += '--stage {} '.format(stage)\n\n rc, out, err = module.run_command(command, cwd=service_path)\n if rc != 0:\n if state == 'absent' and \"-{}' does not exist\".format(stage) in out:\n module.exit_json(changed=False, state='absent', command=command,\n out=out, service_name=get_service_name(module, stage))\n\n module.fail_json(msg=\"Failure when executing Serverless command. Exited {}.\\nstdout: {}\\nstderr: {}\".format(rc, out, err))\n\n # gather some facts about the deployment\n module.exit_json(changed=True, state='present', out=out, command=command,\n service_name=get_service_name(module, stage))\n\n\nif __name__ == '__main__':\n main()\n", "path": "lib/ansible/modules/cloud/misc/serverless.py"}]} | 3,376 | 586 |
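As a reading aid for the `golden_diff` of the record above, the flag handling it introduces can be sketched as a standalone function. This is only an illustration under stated assumptions: `build_command` is a hypothetical helper that does not exist in the Ansible module, which builds the string inline inside `main()` and reports an invalid state through `module.fail_json` rather than by raising an exception.

```python
def build_command(state, deploy=True, force=False, verbose=False,
                  region="", stage="", serverless_bin_path=None):
    """Hypothetical helper mirroring the command assembly in the patched main()."""
    # Use the project-local binary when one is given, else the global one.
    if serverless_bin_path is not None:
        command = serverless_bin_path + " "
    else:
        command = "serverless "

    if state == "present":
        command += "deploy "
    elif state == "absent":
        command += "remove "
    else:
        # The real module calls module.fail_json here; raising is an assumption
        # made so the sketch runs without Ansible installed.
        raise ValueError("State must either be 'present' or 'absent'. "
                         "Received: {}".format(state))

    # After the patch, --noDeploy and --force only apply to a deploy,
    # and --noDeploy takes precedence over --force.
    if state == "present":
        if not deploy:
            command += "--noDeploy "
        elif force:
            command += "--force "

    if region:
        command += "--region {} ".format(region)
    if stage:
        command += "--stage {} ".format(stage)
    # --verbose is appended for both deploy and remove.
    if verbose:
        command += "--verbose "
    return command


print(build_command("present", stage="dev", verbose=True))
```

Keeping the assembly in a pure function like this would make the precedence between `deploy` and `force` straightforward to unit-test, although the diff itself keeps everything inline.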

gh_patches_debug_31936 | rasdani/github-patches | git_diff | WordPress__openverse-api-210 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[Feature] Add new Authority Type

## Problem

<!-- Describe a problem solved by this feature; or delete the section entirely. -->

We currently lack an authority type for curated image galleries: places like https://stocksnap.io where content is manually curated by the platform, but it isn't a site with social, user-uploaded content, or isn't a formal GLAM institution.

## Description

<!-- Describe the feature and how it solves the problem. -->

Our current authorities:

https://github.com/WordPress/openverse-api/blob/9d0d724651f18cc9f96931e01bea92b8032bd6a0/ingestion_server/ingestion_server/authority.py#L32-L36

Should be modified to:

```diff

boost = {

- AuthorityTypes.CURATED: 90,

+ AuthorityTypes.CURATED: 87.5,

+ AuthorityTypes.CULTURAL_INSTITUTIONS: 90,

AuthorityTypes.SOCIAL_MEDIA: 80,

AuthorityTypes.DEFAULT: 85

}

```

We'll also need to re-classify the existing providers classified as `CURATED` to `CULTURAL_INSTITUTIONS` and add a line for StockSnap here (we might also want to sort these alphabetically):

https://github.com/WordPress/openverse-api/blob/9d0d724651f18cc9f96931e01bea92b8032bd6a0/ingestion_server/ingestion_server/authority.py#L37-L53

## Alternatives

<!-- Describe any alternative solutions or features you have considered. How is this feature better? -->

## Additional context

<!-- Add any other context about the feature here; or delete the section entirely. -->

## Implementation

<!-- Replace the [ ] with [x] to check the box. -->

- [ ] 🙋 I would be interested in implementing this feature.

</issue>

<code>

[start of ingestion_server/ingestion_server/authority.py]

1 from enum import Enum, auto

2

3

4 """

5 Authority is a ranking from 0 to 100 (with 0 being least authoritative)

6 indicating the pedigree of an image. Some examples of things that could impact

7 authority:

8 - The reputation of the website that posted an image

9 - The popularity of the uploader on a social media site in terms of number of

10 followers

11 - Whether the uploader has uploaded images that have previously been flagged for

12 copyright infringement.

13 - etc

14

15 The authority can be set from the catalog layer through the meta_data field

16 or through the ingestion layer. As of now, we are only factoring in the

17 reputation of the website as a static hand-picked list based on experience

18 and search result quality, with the intention to add more sophisticated and

19 tailored measures of authority later on.

20

21 Also note that this is just one factor in rankings, and the magnitude of the

22 boost can be adjusted at search-time.

23 """

24

25

26 class AuthorityTypes(Enum):

27 CURATED = auto()

28 SOCIAL_MEDIA = auto()

29 DEFAULT = auto()

30

31

32 # We want to boost curated collections where each image has been vetted for

33 # cultural significance.

34 boost = {

35 AuthorityTypes.CURATED: 90,

36 AuthorityTypes.SOCIAL_MEDIA: 80,

37 AuthorityTypes.DEFAULT: 85,

38 }

39 authority_types = {

40 "flickr": AuthorityTypes.SOCIAL_MEDIA,

41 "behance": AuthorityTypes.SOCIAL_MEDIA,

42 "thingiverse": AuthorityTypes.SOCIAL_MEDIA,

43 "sketchfab": AuthorityTypes.SOCIAL_MEDIA,

44 "deviantart": AuthorityTypes.SOCIAL_MEDIA,

45 "thorvaldsensmuseum": AuthorityTypes.CURATED,

46 "svgsilh": AuthorityTypes.CURATED,

47 "smithsonian": AuthorityTypes.CURATED,

48 "rijksmuseum": AuthorityTypes.CURATED,

49 "museumsvictoria": AuthorityTypes.CURATED,

50 "met": AuthorityTypes.CURATED,

51 "mccordsmuseum": AuthorityTypes.CURATED,

52 "digitaltmuseum": AuthorityTypes.CURATED,

53 "clevelandmuseum": AuthorityTypes.CURATED,

54 "brooklynmuseum": AuthorityTypes.CURATED,

55 }

56

57

58 def get_authority_boost(source):

59 authority_boost = None

60 if source in authority_types:

61 authority_type = authority_types[source]

62 if authority_type in boost:

63 authority_boost = boost[authority_type]

64 else:

65 authority_boost = boost[AuthorityTypes.DEFAULT]

66 return authority_boost

67

[end of ingestion_server/ingestion_server/authority.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/ingestion_server/ingestion_server/authority.py b/ingestion_server/ingestion_server/authority.py

--- a/ingestion_server/ingestion_server/authority.py

+++ b/ingestion_server/ingestion_server/authority.py

@@ -25,6 +25,7 @@

class AuthorityTypes(Enum):

CURATED = auto()

+ CULTURAL_INSTITUTION = auto()

SOCIAL_MEDIA = auto()

DEFAULT = auto()

@@ -32,26 +33,29 @@

# We want to boost curated collections where each image has been vetted for

# cultural significance.

boost = {

- AuthorityTypes.CURATED: 90,

- AuthorityTypes.SOCIAL_MEDIA: 80,

- AuthorityTypes.DEFAULT: 85,

+ AuthorityTypes.CURATED: 85,

+ AuthorityTypes.CULTURAL_INSTITUTION: 90,

+ AuthorityTypes.SOCIAL_MEDIA: 75,

+ AuthorityTypes.DEFAULT: 80,

}

+

authority_types = {

"flickr": AuthorityTypes.SOCIAL_MEDIA,

"behance": AuthorityTypes.SOCIAL_MEDIA,

"thingiverse": AuthorityTypes.SOCIAL_MEDIA,

"sketchfab": AuthorityTypes.SOCIAL_MEDIA,

"deviantart": AuthorityTypes.SOCIAL_MEDIA,

- "thorvaldsensmuseum": AuthorityTypes.CURATED,

- "svgsilh": AuthorityTypes.CURATED,

- "smithsonian": AuthorityTypes.CURATED,

- "rijksmuseum": AuthorityTypes.CURATED,

- "museumsvictoria": AuthorityTypes.CURATED,

- "met": AuthorityTypes.CURATED,

- "mccordsmuseum": AuthorityTypes.CURATED,

- "digitaltmuseum": AuthorityTypes.CURATED,

- "clevelandmuseum": AuthorityTypes.CURATED,

- "brooklynmuseum": AuthorityTypes.CURATED,

+ "thorvaldsensmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "svgsilh": AuthorityTypes.CULTURAL_INSTITUTION,

+ "smithsonian": AuthorityTypes.CULTURAL_INSTITUTION,

+ "rijksmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "museumsvictoria": AuthorityTypes.CULTURAL_INSTITUTION,

+ "met": AuthorityTypes.CULTURAL_INSTITUTION,

+ "mccordsmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "digitaltmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "clevelandmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "brooklynmuseum": AuthorityTypes.CULTURAL_INSTITUTION,

+ "stocksnap": AuthorityTypes.CURATED,

}

| {"golden_diff": "diff --git a/ingestion_server/ingestion_server/authority.py b/ingestion_server/ingestion_server/authority.py\n--- a/ingestion_server/ingestion_server/authority.py\n+++ b/ingestion_server/ingestion_server/authority.py\n@@ -25,6 +25,7 @@\n \n class AuthorityTypes(Enum):\n CURATED = auto()\n+ CULTURAL_INSTITUTION = auto()\n SOCIAL_MEDIA = auto()\n DEFAULT = auto()\n \n@@ -32,26 +33,29 @@\n # We want to boost curated collections where each image has been vetted for\n # cultural significance.\n boost = {\n- AuthorityTypes.CURATED: 90,\n- AuthorityTypes.SOCIAL_MEDIA: 80,\n- AuthorityTypes.DEFAULT: 85,\n+ AuthorityTypes.CURATED: 85,\n+ AuthorityTypes.CULTURAL_INSTITUTION: 90,\n+ AuthorityTypes.SOCIAL_MEDIA: 75,\n+ AuthorityTypes.DEFAULT: 80,\n }\n+\n authority_types = {\n \"flickr\": AuthorityTypes.SOCIAL_MEDIA,\n \"behance\": AuthorityTypes.SOCIAL_MEDIA,\n \"thingiverse\": AuthorityTypes.SOCIAL_MEDIA,\n \"sketchfab\": AuthorityTypes.SOCIAL_MEDIA,\n \"deviantart\": AuthorityTypes.SOCIAL_MEDIA,\n- \"thorvaldsensmuseum\": AuthorityTypes.CURATED,\n- \"svgsilh\": AuthorityTypes.CURATED,\n- \"smithsonian\": AuthorityTypes.CURATED,\n- \"rijksmuseum\": AuthorityTypes.CURATED,\n- \"museumsvictoria\": AuthorityTypes.CURATED,\n- \"met\": AuthorityTypes.CURATED,\n- \"mccordsmuseum\": AuthorityTypes.CURATED,\n- \"digitaltmuseum\": AuthorityTypes.CURATED,\n- \"clevelandmuseum\": AuthorityTypes.CURATED,\n- \"brooklynmuseum\": AuthorityTypes.CURATED,\n+ \"thorvaldsensmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"svgsilh\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"smithsonian\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"rijksmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"museumsvictoria\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"met\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"mccordsmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"digitaltmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"clevelandmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ 
\"brooklynmuseum\": AuthorityTypes.CULTURAL_INSTITUTION,\n+ \"stocksnap\": AuthorityTypes.CURATED,\n }\n", "issue": "[Feature] Add new Authority Type\n## Problem\r\n<!-- Describe a problem solved by this feature; or delete the section entirely. -->\r\n\r\nWe currently lack an authority type for curated image galleries: places like https://stocksnap.io where content is manually curated by the platform, but it isn't a site with social, user-uploaded content, or isn't a formal GLAM institution.\r\n\r\n## Description\r\n<!-- Describe the feature and how it solves the problem. -->\r\n\r\nOur current authorities:\r\n\r\nhttps://github.com/WordPress/openverse-api/blob/9d0d724651f18cc9f96931e01bea92b8032bd6a0/ingestion_server/ingestion_server/authority.py#L32-L36\r\n\r\nShould be modified to:\r\n\r\n\r\n```diff\r\nboost = {\r\n- AuthorityTypes.CURATED: 90,\r\n+ AuthorityTypes.CURATED: 87.5,\r\n+ AuthorityTypes.CULTURAL_INSTITUTIONS: 90,\r\n AuthorityTypes.SOCIAL_MEDIA: 80,\r\n AuthorityTypes.DEFAULT: 85\r\n}\r\n```\r\n\r\nWe'll also need to re-classify the existing providers classified as `CURATED` to `CULTURAL_INSTITUTIONS` and add a line for StockSnap here (we might also want to sort these alphabetically):\r\n\r\nhttps://github.com/WordPress/openverse-api/blob/9d0d724651f18cc9f96931e01bea92b8032bd6a0/ingestion_server/ingestion_server/authority.py#L37-L53\r\n\r\n\r\n\r\n## Alternatives\r\n<!-- Describe any alternative solutions or features you have considered. How is this feature better? -->\r\n\r\n## Additional context\r\n<!-- Add any other context about the feature here; or delete the section entirely. -->\r\n\r\n## Implementation\r\n<!-- Replace the [ ] with [x] to check the box. -->\r\n- [ ] \ud83d\ude4b I would be interested in implementing this feature.\r\n\n", "before_files": [{"content": "from enum import Enum, auto\n\n\n\"\"\"\nAuthority is a ranking from 0 to 100 (with 0 being least authoritative)\nindicating the pedigree of an image. 
Some examples of things that could impact\nauthority:\n- The reputation of the website that posted an image\n- The popularity of the uploader on a social media site in terms of number of\nfollowers\n- Whether the uploader has uploaded images that have previously been flagged for\ncopyright infringement.\n- etc\n\nThe authority can be set from the catalog layer through the meta_data field\nor through the ingestion layer. As of now, we are only factoring in the\nreputation of the website as a static hand-picked list based on experience\nand search result quality, with the intention to add more sophisticated and\ntailored measures of authority later on.\n\nAlso note that this is just one factor in rankings, and the magnitude of the\nboost can be adjusted at search-time.\n\"\"\"\n\n\nclass AuthorityTypes(Enum):\n CURATED = auto()\n SOCIAL_MEDIA = auto()\n DEFAULT = auto()\n\n\n# We want to boost curated collections where each image has been vetted for\n# cultural significance.\nboost = {\n AuthorityTypes.CURATED: 90,\n AuthorityTypes.SOCIAL_MEDIA: 80,\n AuthorityTypes.DEFAULT: 85,\n}\nauthority_types = {\n \"flickr\": AuthorityTypes.SOCIAL_MEDIA,\n \"behance\": AuthorityTypes.SOCIAL_MEDIA,\n \"thingiverse\": AuthorityTypes.SOCIAL_MEDIA,\n \"sketchfab\": AuthorityTypes.SOCIAL_MEDIA,\n \"deviantart\": AuthorityTypes.SOCIAL_MEDIA,\n \"thorvaldsensmuseum\": AuthorityTypes.CURATED,\n \"svgsilh\": AuthorityTypes.CURATED,\n \"smithsonian\": AuthorityTypes.CURATED,\n \"rijksmuseum\": AuthorityTypes.CURATED,\n \"museumsvictoria\": AuthorityTypes.CURATED,\n \"met\": AuthorityTypes.CURATED,\n \"mccordsmuseum\": AuthorityTypes.CURATED,\n \"digitaltmuseum\": AuthorityTypes.CURATED,\n \"clevelandmuseum\": AuthorityTypes.CURATED,\n \"brooklynmuseum\": AuthorityTypes.CURATED,\n}\n\n\ndef get_authority_boost(source):\n authority_boost = None\n if source in authority_types:\n authority_type = authority_types[source]\n if authority_type in boost:\n authority_boost = 
boost[authority_type]\n else:\n authority_boost = boost[AuthorityTypes.DEFAULT]\n return authority_boost\n", "path": "ingestion_server/ingestion_server/authority.py"}]} | 1,636 | 614 |
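
The lookup that the row above patches can be sketched in isolation. This is a simplified rewrite for illustration, not the repository's code: the provider table is abbreviated to three entries, and the fallback to `DEFAULT` for unknown sources is an assumption about the intended behavior. The boost values are the ones shown in the golden diff.

```python
from enum import Enum, auto


class AuthorityTypes(Enum):
    CURATED = auto()
    CULTURAL_INSTITUTION = auto()
    SOCIAL_MEDIA = auto()
    DEFAULT = auto()


# Boost values as they appear in the golden diff above.
boost = {
    AuthorityTypes.CURATED: 85,
    AuthorityTypes.CULTURAL_INSTITUTION: 90,
    AuthorityTypes.SOCIAL_MEDIA: 75,
    AuthorityTypes.DEFAULT: 80,
}

# Abbreviated provider table; the real file lists more sources.
authority_types = {
    "flickr": AuthorityTypes.SOCIAL_MEDIA,
    "met": AuthorityTypes.CULTURAL_INSTITUTION,
    "stocksnap": AuthorityTypes.CURATED,
}


def get_authority_boost(source):
    # Assumption: sources missing from the table fall back to DEFAULT.
    authority_type = authority_types.get(source, AuthorityTypes.DEFAULT)
    return boost[authority_type]
```

With this table, a manually curated gallery such as `stocksnap` ranks between the social media sources and the cultural institutions, which is the ordering the issue asks for.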

gh_patches_debug_1353 | rasdani/github-patches | git_diff | microsoft__Qcodes-87 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

PR #70 breaks parameter .get and .set functionality

I cannot debug the issue properly because all the objects are `multiprocessing` objects. A minimal example showing the issue:

``` python

%matplotlib nbagg

import matplotlib.pyplot as plt

import time

import numpy as np

import qcodes as qc

from toymodel import AModel, MockGates, MockSource, MockMeter, AverageGetter, AverageAndRaw

# now create this "experiment"

model = AModel()

gates = MockGates('gates', model=model)

c0, c1, c2 = gates.chan0, gates.chan1, gates.chan2

print('fine so far...')

print('error...')

c2.get()

print('no effect?')

c2.set(0.5)

```

</issue>

<code>

[start of docs/examples/toymodel.py]

1 # code for example notebook
2
3 import math
4
5 from qcodes import MockInstrument, MockModel, Parameter, Loop, DataArray
6 from qcodes.utils.validators import Numbers
7
8
9 class AModel(MockModel):
10     def __init__(self):
11         self._gates = [0.0, 0.0, 0.0]
12         self._excitation = 0.1
13         super().__init__()
14
15     def _output(self):
16         # my super exciting model!
17         # make a nice pattern that looks sort of double-dotty
18         # with the first two gates controlling the two dots,
19         # and the third looking like Vsd
20         delta_i = 10
21         delta_j = 10
22         di = (self._gates[0] + delta_i / 2) % delta_i - delta_i / 2
23         dj = (self._gates[1] + delta_j / 2) % delta_j - delta_j / 2
24         vsd = math.sqrt(self._gates[2]**2 + self._excitation**2)
25         dij = math.sqrt(di**2 + dj**2) - vsd
26         g = (vsd**2 + 1) * (1 / (dij**2 + 1) +
27                             0.1 * (math.atan(-dij) + math.pi / 2))
28         return g
29
30     def fmt(self, value):
31         return '{:.3f}'.format(value)
32
33     def gates_set(self, parameter, value):
34         if parameter[0] == 'c':
35             self._gates[int(parameter[1:])] = float(value)
36         elif parameter == 'rst' and value is None:
37             self._gates = [0.0, 0.0, 0.0]
38         else:
39             raise ValueError
40
41     def gates_get(self, parameter):
42         if parameter[0] == 'c':
43             return self.fmt(self.gates[int(parameter[1:])])
44         else:
45             raise ValueError
46
47     def source_set(self, parameter, value):
48         if parameter == 'ampl':
49             self._excitation = float(value)
50         else:
51             raise ValueError
52
53     def source_get(self, parameter):
54         if parameter == 'ampl':
55             return self.fmt(self._excitation)
56         else:
57             raise ValueError
58
59     def meter_get(self, parameter):
60         if parameter == 'ampl':
61             return self.fmt(self._output() * self._excitation)
62         else:
63             raise ValueError
64
65
66 # make our mock instruments
67 # real instruments would subclass IPInstrument or VisaInstrument
68 # or just the base Instrument instead of MockInstrument,
69 # and be instantiated with an address rather than a model
70 class MockGates(MockInstrument):
71     def __init__(self, name, model=None, **kwargs):
72         super().__init__(name, model=model, **kwargs)
73
74         for i in range(3):
75             cmdbase = 'c{}'.format(i)
76             self.add_parameter('chan{}'.format(i),
77                                label='Gate Channel {} (mV)'.format(i),
78                                get_cmd=cmdbase + '?',
79                                set_cmd=cmdbase + ':{:.4f}',
80                                get_parser=float,
81                                vals=Numbers(-100, 100))
82
83         self.add_function('reset', call_cmd='rst')
84
85
86 class MockSource(MockInstrument):
87     def __init__(self, name, model=None, **kwargs):
88         super().__init__(name, model=model, **kwargs)
89
90         # this parameter uses built-in sweeping to change slowly
91         self.add_parameter('amplitude',
92                            label='Source Amplitude (\u03bcV)',
93                            get_cmd='ampl?',
94                            set_cmd='ampl:{:.4f}',
95                            get_parser=float,
96                            vals=Numbers(0, 10),
97                            sweep_step=0.1,
98                            sweep_delay=0.05)
99
100
101 class MockMeter(MockInstrument):
102     def __init__(self, name, model=None, **kwargs):
103         super().__init__(name, model=model, **kwargs)
104
105         self.add_parameter('amplitude',
106                            label='Current (nA)',
107                            get_cmd='ampl?',
108                            get_parser=float)
109
110
111 class AverageGetter(Parameter):
112     def __init__(self, measured_param, sweep_values, delay):
113         super().__init__(name='avg_' + measured_param.name)
114         self.measured_param = measured_param
115         self.sweep_values = sweep_values
116         self.delay = delay
117         if hasattr(measured_param, 'label'):
118             self.label = 'Average: ' + measured_param.label
119
120     def get(self):
121         loop = Loop(self.sweep_values, self.delay).each(self.measured_param)
122         data = loop.run_temp()
123         return data.arrays[self.measured_param.name].mean()
124
125
126 class AverageAndRaw(Parameter):
127     def __init__(self, measured_param, sweep_values, delay):
128         name = measured_param.name
129         super().__init__(names=(name, 'avg_' + name))
130         self.measured_param = measured_param
131         self.sweep_values = sweep_values
132         self.delay = delay
133         self.sizes = (len(sweep_values), None)
134         set_array = DataArray(parameter=sweep_values.parameter,
135                               preset_data=sweep_values)
136         self.setpoints = (set_array, None)
137         if hasattr(measured_param, 'label'):
138             self.labels = (measured_param.label,
139                            'Average: ' + measured_param.label)
140
141     def get(self):
142         loop = Loop(self.sweep_values, self.delay).each(self.measured_param)
143         data = loop.run_temp()
144         array = data.arrays[self.measured_param.name]
145         return (array, array.mean())
146

[end of docs/examples/toymodel.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/docs/examples/toymodel.py b/docs/examples/toymodel.py

--- a/docs/examples/toymodel.py

+++ b/docs/examples/toymodel.py

@@ -40,7 +40,7 @@

def gates_get(self, parameter):

if parameter[0] == 'c':

- return self.fmt(self.gates[int(parameter[1:])])

+ return self.fmt(self._gates[int(parameter[1:])])

else:

raise ValueError

| {"golden_diff": "diff --git a/docs/examples/toymodel.py b/docs/examples/toymodel.py\n--- a/docs/examples/toymodel.py\n+++ b/docs/examples/toymodel.py\n@@ -40,7 +40,7 @@\n \n def gates_get(self, parameter):\n if parameter[0] == 'c':\n- return self.fmt(self.gates[int(parameter[1:])])\n+ return self.fmt(self._gates[int(parameter[1:])])\n else:\n raise ValueError\n", "issue": "PR #70 breaks parameter .get and .set functionality\nI cannot debug the issue properly because all the objects are `multiprocessing` objects. A minimal example showing the issue:\n\n``` python\n%matplotlib nbagg\nimport matplotlib.pyplot as plt\nimport time\nimport numpy as np\nimport qcodes as qc\n\nfrom toymodel import AModel, MockGates, MockSource, MockMeter, AverageGetter, AverageAndRaw\n\n# now create this \"experiment\"\nmodel = AModel()\ngates = MockGates('gates', model=model)\n\nc0, c1, c2 = gates.chan0, gates.chan1, gates.chan2\nprint('fine so far...')\n\nprint('error...')\nc2.get()\nprint('no effect?')\nc2.set(0.5)\n\n```\n\n", "before_files": [{"content": "# code for example notebook\n\nimport math\n\nfrom qcodes import MockInstrument, MockModel, Parameter, Loop, DataArray\nfrom qcodes.utils.validators import Numbers\n\n\nclass AModel(MockModel):\n def __init__(self):\n self._gates = [0.0, 0.0, 0.0]\n self._excitation = 0.1\n super().__init__()\n\n def _output(self):\n # my super exciting model!\n # make a nice pattern that looks sort of double-dotty\n # with the first two gates controlling the two dots,\n # and the third looking like Vsd\n delta_i = 10\n delta_j = 10\n di = (self._gates[0] + delta_i / 2) % delta_i - delta_i / 2\n dj = (self._gates[1] + delta_j / 2) % delta_j - delta_j / 2\n vsd = math.sqrt(self._gates[2]**2 + self._excitation**2)\n dij = math.sqrt(di**2 + dj**2) - vsd\n g = (vsd**2 + 1) * (1 / (dij**2 + 1) +\n 0.1 * (math.atan(-dij) + math.pi / 2))\n return g\n\n def fmt(self, value):\n return '{:.3f}'.format(value)\n\n def gates_set(self, parameter, value):\n if 
parameter[0] == 'c':\n self._gates[int(parameter[1:])] = float(value)\n elif parameter == 'rst' and value is None:\n self._gates = [0.0, 0.0, 0.0]\n else:\n raise ValueError\n\n def gates_get(self, parameter):\n if parameter[0] == 'c':\n return self.fmt(self.gates[int(parameter[1:])])\n else:\n raise ValueError\n\n def source_set(self, parameter, value):\n if parameter == 'ampl':\n self._excitation = float(value)\n else:\n raise ValueError\n\n def source_get(self, parameter):\n if parameter == 'ampl':\n return self.fmt(self._excitation)\n else:\n raise ValueError\n\n def meter_get(self, parameter):\n if parameter == 'ampl':\n return self.fmt(self._output() * self._excitation)\n else:\n raise ValueError\n\n\n# make our mock instruments\n# real instruments would subclass IPInstrument or VisaInstrument\n# or just the base Instrument instead of MockInstrument,\n# and be instantiated with an address rather than a model\nclass MockGates(MockInstrument):\n def __init__(self, name, model=None, **kwargs):\n super().__init__(name, model=model, **kwargs)\n\n for i in range(3):\n cmdbase = 'c{}'.format(i)\n self.add_parameter('chan{}'.format(i),\n label='Gate Channel {} (mV)'.format(i),\n get_cmd=cmdbase + '?',\n set_cmd=cmdbase + ':{:.4f}',\n get_parser=float,\n vals=Numbers(-100, 100))\n\n self.add_function('reset', call_cmd='rst')\n\n\nclass MockSource(MockInstrument):\n def __init__(self, name, model=None, **kwargs):\n super().__init__(name, model=model, **kwargs)\n\n # this parameter uses built-in sweeping to change slowly\n self.add_parameter('amplitude',\n label='Source Amplitude (\\u03bcV)',\n get_cmd='ampl?',\n set_cmd='ampl:{:.4f}',\n get_parser=float,\n vals=Numbers(0, 10),\n sweep_step=0.1,\n sweep_delay=0.05)\n\n\nclass MockMeter(MockInstrument):\n def __init__(self, name, model=None, **kwargs):\n super().__init__(name, model=model, **kwargs)\n\n self.add_parameter('amplitude',\n label='Current (nA)',\n get_cmd='ampl?',\n get_parser=float)\n\n\nclass 
AverageGetter(Parameter):\n def __init__(self, measured_param, sweep_values, delay):\n super().__init__(name='avg_' + measured_param.name)\n self.measured_param = measured_param\n self.sweep_values = sweep_values\n self.delay = delay\n if hasattr(measured_param, 'label'):\n self.label = 'Average: ' + measured_param.label\n\n def get(self):\n loop = Loop(self.sweep_values, self.delay).each(self.measured_param)\n data = loop.run_temp()\n return data.arrays[self.measured_param.name].mean()\n\n\nclass AverageAndRaw(Parameter):\n def __init__(self, measured_param, sweep_values, delay):\n name = measured_param.name\n super().__init__(names=(name, 'avg_' + name))\n self.measured_param = measured_param\n self.sweep_values = sweep_values\n self.delay = delay\n self.sizes = (len(sweep_values), None)\n set_array = DataArray(parameter=sweep_values.parameter,\n preset_data=sweep_values)\n self.setpoints = (set_array, None)\n if hasattr(measured_param, 'label'):\n self.labels = (measured_param.label,\n 'Average: ' + measured_param.label)\n\n def get(self):\n loop = Loop(self.sweep_values, self.delay).each(self.measured_param)\n data = loop.run_temp()\n array = data.arrays[self.measured_param.name]\n return (array, array.mean())\n", "path": "docs/examples/toymodel.py"}]} | 2,262 | 105 |
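
The one-character fix in the row above can be reproduced without qcodes. The class below is a hypothetical stand-in for `AModel`, and the `'c2'` parameter string is an assumption about what reaches `gates_get`. It only shows why reading `self.gates` fails while `self._gates` works.

```python
class ToyModel:
    """Hypothetical stand-in for AModel; it does not use qcodes."""

    def __init__(self):
        self._gates = [0.0, 0.0, 0.0]

    def fmt(self, value):
        return '{:.3f}'.format(value)

    def gates_get_buggy(self, parameter):
        # Reads self.gates, which __init__ never defined.
        return self.fmt(self.gates[int(parameter[1:])])

    def gates_get_fixed(self, parameter):
        # Reads the private list that __init__ actually created.
        return self.fmt(self._gates[int(parameter[1:])])


model = ToyModel()

try:
    model.gates_get_buggy('c2')
    buggy_result = 'no error'
except AttributeError as exc:
    buggy_result = type(exc).__name__

fixed_result = model.gates_get_fixed('c2')
```

In the real setup the model runs behind `multiprocessing` objects, which is presumably why the issue reporter could not see this `AttributeError` directly.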

gh_patches_debug_38636 | rasdani/github-patches | git_diff | e-valuation__EvaP-1105 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Release Sisyphus data only after successful post

When a user enters answers on the student vote page and then logs out in another window before submitting the form, Sisyphus releases the form data on the form submit, because a 302 redirect to the login page is not an error case.

The data should be kept in browser storage until the vote was successfully counted.

Release Sisyphus data only after successful post

When a user enters answers on the student vote page and then logs out in another window before submitting the form, Sisyphus releases the form data on the form submit, because a 302 redirect to the login page is not an error case.

The data should be kept in browser storage until the vote was successfully counted.

</issue>
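
The requirement in the issue can be sketched as a small decision function. Everything here is hypothetical: the marker string, the function name, and the idea of checking the response body are assumptions made for illustration, and the real check would run in the browser rather than in Python.

```python
# Hypothetical marker that the server would send only after a vote is stored.
SUCCESS_MARKER = 'vote submitted successfully'


def should_release_saved_answers(status_code, body):
    """Hypothetical client-side check, written in Python for illustration."""
    # A login redirect (302) or any other page is not proof that the vote
    # was counted, so only an exact marker match clears the stored answers.
    return status_code == 200 and body == SUCCESS_MARKER
```

Under this scheme a redirect to the login page leaves the stored answers in place, so the user can log in again and resubmit them.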

<code>

[start of evap/student/views.py]

1 from collections import OrderedDict

2

3 from django.contrib import messages

4 from django.core.exceptions import PermissionDenied, SuspiciousOperation

5 from django.db import transaction

6 from django.shortcuts import get_object_or_404, redirect, render

7 from django.utils.translation import ugettext as _

8

9 from evap.evaluation.auth import participant_required

10 from evap.evaluation.models import Course, Semester

11 from evap.evaluation.tools import STUDENT_STATES_ORDERED

12

13 from evap.student.forms import QuestionsForm

14 from evap.student.tools import question_id

15

16

17 @participant_required

18 def index(request):

19 # retrieve all courses, where the user is a participant and that are not new

20 courses = list(set(Course.objects.filter(participants=request.user).exclude(state="new")))

21 voted_courses = list(set(Course.objects.filter(voters=request.user)))

22 due_courses = list(set(Course.objects.filter(participants=request.user, state='in_evaluation').exclude(voters=request.user)))

23

24 sorter = lambda course: (list(STUDENT_STATES_ORDERED.keys()).index(course.student_state), course.vote_end_date, course.name)

25 courses.sort(key=sorter)

26

27 semesters = Semester.objects.all()

28 semester_list = [dict(semester_name=semester.name, id=semester.id, is_active_semester=semester.is_active_semester,

29 courses=[course for course in courses if course.semester_id == semester.id]) for semester in semesters]

30

31 template_data = dict(

32 semester_list=semester_list,

33 voted_courses=voted_courses,

34 due_courses=due_courses,

35 can_download_grades=request.user.can_download_grades,

36 )

37 return render(request, "student_index.html", template_data)

38

39

40 def vote_preview(request, course, for_rendering_in_modal=False):

41 """

42 Renders a preview of the voting page for the given course.

43 Not used by the student app itself, but by staff and contributor.

44 """

45 form_groups = helper_create_voting_form_groups(request, course.contributions.all())

46 course_form_group = form_groups.pop(course.general_contribution)

47 contributor_form_groups = list((contribution.contributor, contribution.label, form_group, False) for contribution, form_group in form_groups.items())

48

49 template_data = dict(

50 errors_exist=False,

51 course_form_group=course_form_group,

52 contributor_form_groups=contributor_form_groups,

53 course=course,

54 preview=True,

55 for_rendering_in_modal=for_rendering_in_modal)

56 return render(request, "student_vote.html", template_data)

57

58

59 @participant_required

60 def vote(request, course_id):

61 # retrieve course and make sure that the user is allowed to vote

62 course = get_object_or_404(Course, id=course_id)

63 if not course.can_user_vote(request.user):

64 raise PermissionDenied

65

66

67 # prevent a user from voting on themselves.

68 contributions_to_vote_on = course.contributions.exclude(contributor=request.user).all()

69 form_groups = helper_create_voting_form_groups(request, contributions_to_vote_on)

70

71 if not all(all(form.is_valid() for form in form_group) for form_group in form_groups.values()):

72 errors_exist = any(helper_has_errors(form_group) for form_group in form_groups.values())

73

74 course_form_group = form_groups.pop(course.general_contribution)

75

76 contributor_form_groups = list((contribution.contributor, contribution.label, form_group, helper_has_errors(form_group)) for contribution, form_group in form_groups.items())

77

78 template_data = dict(

79 errors_exist=errors_exist,

80 course_form_group=course_form_group,

81 contributor_form_groups=contributor_form_groups,

82 course=course,

83 participants_warning=course.num_participants <= 5,

84 preview=False,

85 vote_end_datetime=course.vote_end_datetime,

86 hours_left_for_evaluation=course.time_left_for_evaluation.seconds//3600,

87 minutes_left_for_evaluation=(course.time_left_for_evaluation.seconds//60)%60,

88 evaluation_ends_soon=course.evaluation_ends_soon())

89 return render(request, "student_vote.html", template_data)

90

91 # all forms are valid, begin vote operation

92 with transaction.atomic():

93 # add user to course.voters

94 # not using course.voters.add(request.user) since it fails silently when done twice.

95 # manually inserting like this gives us the 'created' return value and ensures at the database level that nobody votes twice.

96 __, created = course.voters.through.objects.get_or_create(userprofile_id=request.user.pk, course_id=course.pk)

97 if not created: # vote already got recorded, bail out

98 raise SuspiciousOperation("A second vote has been received shortly after the first one.")

99

100 for contribution, form_group in form_groups.items():

101 for questionnaire_form in form_group:

102 questionnaire = questionnaire_form.questionnaire

103 for question in questionnaire.question_set.all():

104 identifier = question_id(contribution, questionnaire, question)

105 value = questionnaire_form.cleaned_data.get(identifier)

106

107 if question.is_text_question:

108 if value:

109 question.answer_class.objects.create(

110 contribution=contribution,

111 question=question,

112 answer=value)

113 elif question.is_heading_question:

114 pass # ignore these

115 else:

116 if value != 6:

117 answer_counter, __ = question.answer_class.objects.get_or_create(contribution=contribution, question=question, answer=value)

118 answer_counter.add_vote()

119 answer_counter.save()

120

121 course.course_evaluated.send(sender=Course, request=request, semester=course.semester)

122

123 messages.success(request, _("Your vote was recorded."))

124 return redirect('student:index')

125

126

127 def helper_create_voting_form_groups(request, contributions):

128 form_groups = OrderedDict()

129 for contribution in contributions:

130 questionnaires = contribution.questionnaires.all()

131 if not questionnaires.exists():

132 continue

133 form_groups[contribution] = [QuestionsForm(request.POST or None, contribution=contribution, questionnaire=questionnaire) for questionnaire in questionnaires]

134 return form_groups

135

136

137 def helper_has_errors(form_group):

138 return any(form.errors for form in form_group)

139

[end of evap/student/views.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/evap/student/views.py b/evap/student/views.py

--- a/evap/student/views.py

+++ b/evap/student/views.py

@@ -3,7 +3,9 @@

from django.contrib import messages

from django.core.exceptions import PermissionDenied, SuspiciousOperation

from django.db import transaction

+from django.http import HttpResponse

from django.shortcuts import get_object_or_404, redirect, render

+from django.urls import reverse

from django.utils.translation import ugettext as _

from evap.evaluation.auth import participant_required

@@ -13,6 +15,7 @@

from evap.student.forms import QuestionsForm

from evap.student.tools import question_id

+SUCCESS_MAGIC_STRING = 'vote submitted successfully'

@participant_required

def index(request):

@@ -58,12 +61,11 @@

@participant_required

def vote(request, course_id):

- # retrieve course and make sure that the user is allowed to vote

+

course = get_object_or_404(Course, id=course_id)

if not course.can_user_vote(request.user):

raise PermissionDenied

-

# prevent a user from voting on themselves.

contributions_to_vote_on = course.contributions.exclude(contributor=request.user).all()

form_groups = helper_create_voting_form_groups(request, contributions_to_vote_on)

@@ -85,6 +87,8 @@

vote_end_datetime=course.vote_end_datetime,

hours_left_for_evaluation=course.time_left_for_evaluation.seconds//3600,

minutes_left_for_evaluation=(course.time_left_for_evaluation.seconds//60)%60,

+ success_magic_string=SUCCESS_MAGIC_STRING,

+ success_redirect_url=reverse('student:index'),

evaluation_ends_soon=course.evaluation_ends_soon())

return render(request, "student_vote.html", template_data)

@@ -121,7 +125,7 @@

course.course_evaluated.send(sender=Course, request=request, semester=course.semester)

messages.success(request, _("Your vote was recorded."))

- return redirect('student:index')

+ return HttpResponse(SUCCESS_MAGIC_STRING)

def helper_create_voting_form_groups(request, contributions):

| {"golden_diff": "diff --git a/evap/student/views.py b/evap/student/views.py\n--- a/evap/student/views.py\n+++ b/evap/student/views.py\n@@ -3,7 +3,9 @@\n from django.contrib import messages\n from django.core.exceptions import PermissionDenied, SuspiciousOperation\n from django.db import transaction\n+from django.http import HttpResponse\n from django.shortcuts import get_object_or_404, redirect, render\n+from django.urls import reverse\n from django.utils.translation import ugettext as _\n \n from evap.evaluation.auth import participant_required\n@@ -13,6 +15,7 @@\n from evap.student.forms import QuestionsForm\n from evap.student.tools import question_id\n \n+SUCCESS_MAGIC_STRING = 'vote submitted successfully'\n \n @participant_required\n def index(request):\n@@ -58,12 +61,11 @@\n \n @participant_required\n def vote(request, course_id):\n- # retrieve course and make sure that the user is allowed to vote\n+\n course = get_object_or_404(Course, id=course_id)\n if not course.can_user_vote(request.user):\n raise PermissionDenied\n \n- \n # prevent a user from voting on themselves.\n contributions_to_vote_on = course.contributions.exclude(contributor=request.user).all()\n form_groups = helper_create_voting_form_groups(request, contributions_to_vote_on)\n@@ -85,6 +87,8 @@\n vote_end_datetime=course.vote_end_datetime,\n hours_left_for_evaluation=course.time_left_for_evaluation.seconds//3600,\n minutes_left_for_evaluation=(course.time_left_for_evaluation.seconds//60)%60,\n+ success_magic_string=SUCCESS_MAGIC_STRING,\n+ success_redirect_url=reverse('student:index'),\n evaluation_ends_soon=course.evaluation_ends_soon())\n return render(request, \"student_vote.html\", template_data)\n \n@@ -121,7 +125,7 @@\n course.course_evaluated.send(sender=Course, request=request, semester=course.semester)\n \n messages.success(request, _(\"Your vote was recorded.\"))\n- return redirect('student:index')\n+ return HttpResponse(SUCCESS_MAGIC_STRING)\n \n \n def 
helper_create_voting_form_groups(request, contributions):\n", "issue": "Release Sisyphus data only after successful post\nWhen a user enters answers on the student vote page and then logs out in another window before submitting the form, Sisyphus releases the form data on the form submit, because a 302 redirect to the login page is not an error case.\r\nThe data should be kept in browser storage until the vote was successfully counted.\nRelease Sisyphus data only after successful post\nWhen a user enters answers on the student vote page and then logs out in another window before submitting the form, Sisyphus releases the form data on the form submit, because a 302 redirect to the login page is not an error case.\r\nThe data should be kept in browser storage until the vote was successfully counted.\n", "before_files": [{"content": "from collections import OrderedDict\n\nfrom django.contrib import messages\nfrom django.core.exceptions import PermissionDenied, SuspiciousOperation\nfrom django.db import transaction\nfrom django.shortcuts import get_object_or_404, redirect, render\nfrom django.utils.translation import ugettext as _\n\nfrom evap.evaluation.auth import participant_required\nfrom evap.evaluation.models import Course, Semester\nfrom evap.evaluation.tools import STUDENT_STATES_ORDERED\n\nfrom evap.student.forms import QuestionsForm\nfrom evap.student.tools import question_id\n\n\n@participant_required\ndef index(request):\n # retrieve all courses, where the user is a participant and that are not new\n courses = list(set(Course.objects.filter(participants=request.user).exclude(state=\"new\")))\n voted_courses = list(set(Course.objects.filter(voters=request.user)))\n due_courses = list(set(Course.objects.filter(participants=request.user, state='in_evaluation').exclude(voters=request.user)))\n\n sorter = lambda course: (list(STUDENT_STATES_ORDERED.keys()).index(course.student_state), course.vote_end_date, course.name)\n courses.sort(key=sorter)\n\n semesters = 
Semester.objects.all()\n semester_list = [dict(semester_name=semester.name, id=semester.id, is_active_semester=semester.is_active_semester,\n courses=[course for course in courses if course.semester_id == semester.id]) for semester in semesters]\n\n template_data = dict(\n semester_list=semester_list,\n voted_courses=voted_courses,\n due_courses=due_courses,\n can_download_grades=request.user.can_download_grades,\n )\n return render(request, \"student_index.html\", template_data)\n\n\ndef vote_preview(request, course, for_rendering_in_modal=False):\n \"\"\"\n Renders a preview of the voting page for the given course.\n Not used by the student app itself, but by staff and contributor.\n \"\"\"\n form_groups = helper_create_voting_form_groups(request, course.contributions.all())\n course_form_group = form_groups.pop(course.general_contribution)\n contributor_form_groups = list((contribution.contributor, contribution.label, form_group, False) for contribution, form_group in form_groups.items())\n\n template_data = dict(\n errors_exist=False,\n course_form_group=course_form_group,\n contributor_form_groups=contributor_form_groups,\n course=course,\n preview=True,\n for_rendering_in_modal=for_rendering_in_modal)\n return render(request, \"student_vote.html\", template_data)\n\n\n@participant_required\ndef vote(request, course_id):\n # retrieve course and make sure that the user is allowed to vote\n course = get_object_or_404(Course, id=course_id)\n if not course.can_user_vote(request.user):\n raise PermissionDenied\n\n \n # prevent a user from voting on themselves.\n contributions_to_vote_on = course.contributions.exclude(contributor=request.user).all()\n form_groups = helper_create_voting_form_groups(request, contributions_to_vote_on)\n\n if not all(all(form.is_valid() for form in form_group) for form_group in form_groups.values()):\n errors_exist = any(helper_has_errors(form_group) for form_group in form_groups.values())\n\n course_form_group = 
form_groups.pop(course.general_contribution)\n\n contributor_form_groups = list((contribution.contributor, contribution.label, form_group, helper_has_errors(form_group)) for contribution, form_group in form_groups.items())\n\n template_data = dict(\n errors_exist=errors_exist,\n course_form_group=course_form_group,\n contributor_form_groups=contributor_form_groups,\n course=course,\n participants_warning=course.num_participants <= 5,\n preview=False,\n vote_end_datetime=course.vote_end_datetime,\n hours_left_for_evaluation=course.time_left_for_evaluation.seconds//3600,\n minutes_left_for_evaluation=(course.time_left_for_evaluation.seconds//60)%60,\n evaluation_ends_soon=course.evaluation_ends_soon())\n return render(request, \"student_vote.html\", template_data)\n\n # all forms are valid, begin vote operation\n with transaction.atomic():\n # add user to course.voters\n # not using course.voters.add(request.user) since it fails silently when done twice.\n # manually inserting like this gives us the 'created' return value and ensures at the database level that nobody votes twice.\n __, created = course.voters.through.objects.get_or_create(userprofile_id=request.user.pk, course_id=course.pk)\n if not created: # vote already got recorded, bail out\n raise SuspiciousOperation(\"A second vote has been received shortly after the first one.\")\n\n for contribution, form_group in form_groups.items():\n for questionnaire_form in form_group:\n questionnaire = questionnaire_form.questionnaire\n for question in questionnaire.question_set.all():\n identifier = question_id(contribution, questionnaire, question)\n value = questionnaire_form.cleaned_data.get(identifier)\n\n if question.is_text_question:\n if value:\n question.answer_class.objects.create(\n contribution=contribution,\n question=question,\n answer=value)\n elif question.is_heading_question:\n pass # ignore these\n else:\n if value != 6:\n answer_counter, __ = 
question.answer_class.objects.get_or_create(contribution=contribution, question=question, answer=value)\n answer_counter.add_vote()\n answer_counter.save()\n\n course.course_evaluated.send(sender=Course, request=request, semester=course.semester)\n\n messages.success(request, _(\"Your vote was recorded.\"))\n return redirect('student:index')\n\n\ndef helper_create_voting_form_groups(request, contributions):\n form_groups = OrderedDict()\n for contribution in contributions:\n questionnaires = contribution.questionnaires.all()\n if not questionnaires.exists():\n continue\n form_groups[contribution] = [QuestionsForm(request.POST or None, contribution=contribution, questionnaire=questionnaire) for questionnaire in questionnaires]\n return form_groups\n\n\ndef helper_has_errors(form_group):\n return any(form.errors for form in form_group)\n", "path": "evap/student/views.py"}]} | 2,276 | 474 |

gh_patches_debug_38096 | rasdani/github-patches | git_diff | Qiskit__qiskit-7447 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

dag_drawer should check the existence of filename extension

### Information

- **Qiskit Terra version**: 0.10.0.dev0+831d942

- **Python version**: 3.7

- **Operating system**: Mac

### What is the current behavior?

If a filename without extension is passed to the function `dag_drawer`, this [line](https://github.com/Qiskit/qiskit-terra/blob/d090eca91dc1afdb68f563885c4ccf13b31de20e/qiskit/visualization/dag_visualization.py#L91) reports two errors:

```

nxpd.pydot.InvocationException: Program terminated with status: 1. stderr follows: Format: "XXXXX" not recognized. Use one of: ......

During handling of the above exception, another exception occurred:

qiskit.visualization.exceptions.VisualizationError: 'dag_drawer requires GraphViz installed in the system. Check https://www.graphviz.org/download/ for details on how to install GraphViz in your system.'

```

This is confusing because the second error thrown by Qiskit is not the cause of the problem.

### Steps to reproduce the problem

Try `dag_drawer(dag, filename='abc')`

### What is the expected behavior?

Make the error catching better.

### Suggested solutions

We could either catch this error by reading and filtering the error message, or we could check the existence of the filename's extension, and provide a default one.
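
A minimal sketch of the second option, checking for an extension and falling back to a default one. The helper name `ensure_extension` and the default of `png` are assumptions made for illustration; neither is taken from Qiskit.

```python
import os


def ensure_extension(filename, default_ext="png"):
    """Return filename unchanged if it has an extension, else append default_ext.

    Hypothetical helper: the name and the default format are illustrative only.
    """
    # os.path.splitext only inspects the last path component, so a dot in a
    # directory name is not mistaken for a file extension.
    _, ext = os.path.splitext(filename)
    if ext:
        return filename
    return f"{filename}.{default_ext}"
```

With this check in place, `ensure_extension("abc")` gives `abc.png`, while a name such as `abc.pdf` is passed through untouched, so the format handed to GraphViz is always explicit.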

</issue>

<code>

[start of qiskit/exceptions.py]

1 # This code is part of Qiskit.

2 #

3 # (C) Copyright IBM 2017, 2020.

4 #

5 # This code is licensed under the Apache License, Version 2.0. You may

6 # obtain a copy of this license in the LICENSE.txt file in the root directory

7 # of this source tree or at http://www.apache.org/licenses/LICENSE-2.0.

8 #

9 # Any modifications or derivative works of this code must retain this

10 # copyright notice, and modified files need to carry a notice indicating

11 # that they have been altered from the originals.

12

13 """Exceptions for errors raised by Qiskit."""

14

15 from typing import Optional

16 import warnings

17

18

19 class QiskitError(Exception):

20 """Base class for errors raised by Qiskit."""

21

22 def __init__(self, *message):

23 """Set the error message."""

24 super().__init__(" ".join(message))

25 self.message = " ".join(message)

26

27 def __str__(self):

28 """Return the message."""

29 return repr(self.message)

30

31

32 class QiskitIndexError(QiskitError, IndexError):

33 """Raised when a sequence subscript is out of range."""

34

35 def __init__(self, *args):

36 """Set the error message."""

37 warnings.warn(

38 "QiskitIndexError class is being deprecated and it is going to be remove in the future",

39 DeprecationWarning,

40 stacklevel=2,

41 )

42 super().__init__(*args)

43

44