| problem_id (string, 18–22 chars) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, 13–58 chars) | prompt (string, 1.71k–18.9k chars) | golden_diff (string, 145–5.13k chars) | verification_info (string, 465–23.6k chars) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_56973 | rasdani/github-patches | git_diff | bookwyrm-social__bookwyrm-3126 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Saved lists pagination is broken

**Describe the bug**

Trying to move through pages of saved lists is broken. Instead, one moves back to all lists.

**To Reproduce**

Steps to reproduce the behavior:

1. Save enough lists to have at least two pages

2. Go to [`Lists -> Saved Lists`](https://bookwyrm.social/list/saved)

3. Click on `Next`

4. Wonder why the lists shown are not the ones you saved

5. Realize you're back on `All Lists`

**Expected behavior**

One should be able to paginate through saved lists

**Instance**

[bookwyrm.social](https://bookwyrm.social/)

**Additional comments**

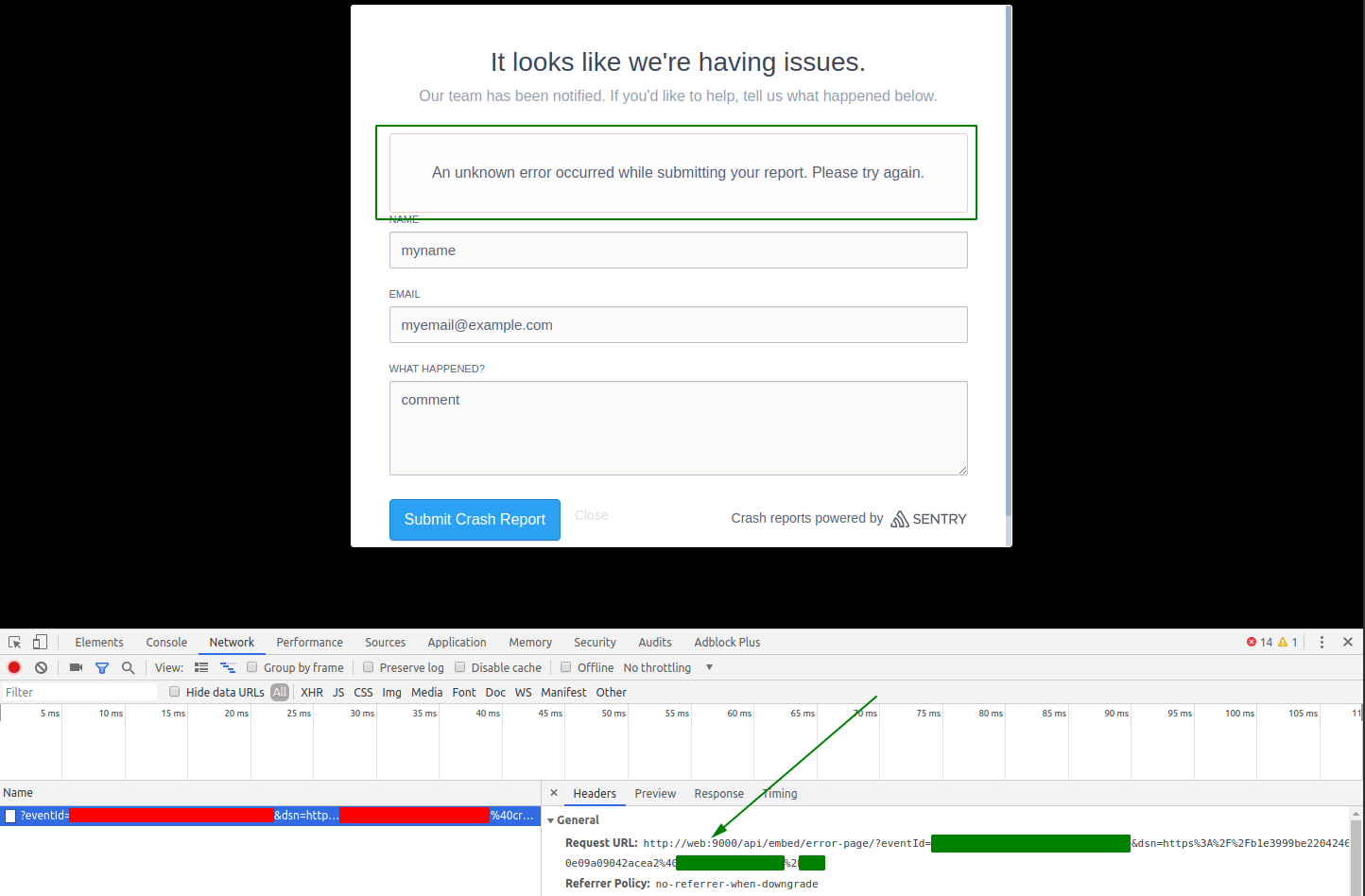

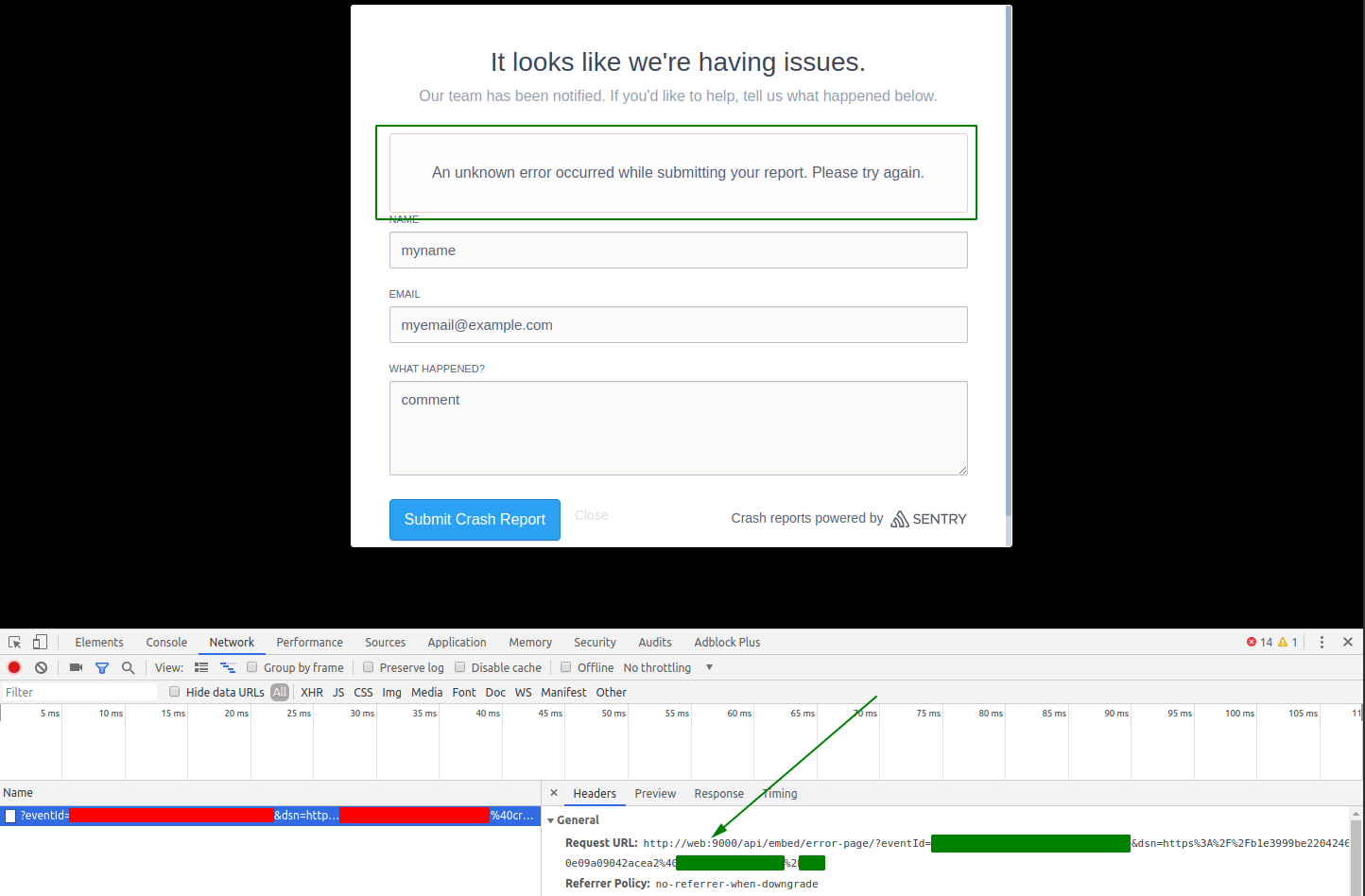

I'm trying to dig through the code a bit, but I don't have time to setup dev environment and populate local dbs and whatever needs to be done. It might just be that `path` needs to be changed to `/list/saved` [here](https://github.com/bookwyrm-social/bookwyrm/blob/6667178703b93d0d1874f1fd547e97c12a2ec144/bookwyrm/views/list/lists.py#L62)? But idk, this isn't a stack I'm very intimate with 🤷

</issue>

<code>

[start of bookwyrm/views/list/lists.py]

1 """ book list views"""

2 from django.contrib.auth.decorators import login_required

3 from django.core.paginator import Paginator

4 from django.shortcuts import redirect

5 from django.template.response import TemplateResponse

6 from django.utils.decorators import method_decorator

7 from django.views import View

8

9 from bookwyrm import forms, models

10 from bookwyrm.lists_stream import ListsStream

11 from bookwyrm.views.helpers import get_user_from_username

12

13

14 # pylint: disable=no-self-use

15 class Lists(View):

16 """book list page"""

17

18 def get(self, request):

19 """display a book list"""

20 if request.user.is_authenticated:

21 lists = ListsStream().get_list_stream(request.user)

22 else:

23 lists = models.List.objects.filter(privacy="public")

24 paginated = Paginator(lists, 12)

25 data = {

26 "lists": paginated.get_page(request.GET.get("page")),

27 "list_form": forms.ListForm(),

28 "path": "/list",

29 }

30 return TemplateResponse(request, "lists/lists.html", data)

31

32 @method_decorator(login_required, name="dispatch")

33 # pylint: disable=unused-argument

34 def post(self, request):

35 """create a book_list"""

36 form = forms.ListForm(request.POST)

37 if not form.is_valid():

38 return redirect("lists")

39 book_list = form.save(request, commit=False)

40

41 # list should not have a group if it is not group curated

42 if not book_list.curation == "group":

43 book_list.group = None

44 book_list.save()

45

46 return redirect(book_list.local_path)

47

48

49 @method_decorator(login_required, name="dispatch")

50 class SavedLists(View):

51 """saved book list page"""

52

53 def get(self, request):

54 """display book lists"""

55 # hide lists with no approved books

56 lists = request.user.saved_lists.order_by("-updated_date")

57

58 paginated = Paginator(lists, 12)

59 data = {

60 "lists": paginated.get_page(request.GET.get("page")),

61 "list_form": forms.ListForm(),

62 "path": "/list",

63 }

64 return TemplateResponse(request, "lists/lists.html", data)

65

66

67 @method_decorator(login_required, name="dispatch")

68 class UserLists(View):

69 """a user's book list page"""

70

71 def get(self, request, username):

72 """display a book list"""

73 user = get_user_from_username(request.user, username)

74 lists = models.List.privacy_filter(request.user).filter(user=user)

75 paginated = Paginator(lists, 12)

76

77 data = {

78 "user": user,

79 "is_self": request.user.id == user.id,

80 "lists": paginated.get_page(request.GET.get("page")),

81 "list_form": forms.ListForm(),

82 "path": user.local_path + "/lists",

83 }

84 return TemplateResponse(request, "user/lists.html", data)

85

[end of bookwyrm/views/list/lists.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/bookwyrm/views/list/lists.py b/bookwyrm/views/list/lists.py

--- a/bookwyrm/views/list/lists.py

+++ b/bookwyrm/views/list/lists.py

@@ -59,7 +59,7 @@

data = {

"lists": paginated.get_page(request.GET.get("page")),

"list_form": forms.ListForm(),

- "path": "/list",

+ "path": "/list/saved",

}

return TemplateResponse(request, "lists/lists.html", data)

| {"golden_diff": "diff --git a/bookwyrm/views/list/lists.py b/bookwyrm/views/list/lists.py\n--- a/bookwyrm/views/list/lists.py\n+++ b/bookwyrm/views/list/lists.py\n@@ -59,7 +59,7 @@\n data = {\n \"lists\": paginated.get_page(request.GET.get(\"page\")),\n \"list_form\": forms.ListForm(),\n- \"path\": \"/list\",\n+ \"path\": \"/list/saved\",\n }\n return TemplateResponse(request, \"lists/lists.html\", data)\n", "issue": "Saved lists pagination is broken\n**Describe the bug**\r\nTrying to move through pages of saved lists is broken. Instead, one moves back to all lists.\r\n\r\n**To Reproduce**\r\nSteps to reproduce the behavior:\r\n1. Save enough lists to have at least two pages\r\n2. Go to [`Lists -> Saved Lists`](https://bookwyrm.social/list/saved)\r\n3. Click on `Next`\r\n4. Wonder why the lists shown are not the ones you saved\r\n5. Realize you're back on `All Lists`\r\n\r\n**Expected behavior**\r\nOne should be able to paginate through saved lists\r\n\r\n**Instance**\r\n[bookwyrm.social](https://bookwyrm.social/)\r\n\r\n**Additional comments**\r\nI'm trying to dig through the code a bit, but I don't have time to setup dev environment and populate local dbs and whatever needs to be done. It might just be that `path` needs to be changed to `/list/saved` [here](https://github.com/bookwyrm-social/bookwyrm/blob/6667178703b93d0d1874f1fd547e97c12a2ec144/bookwyrm/views/list/lists.py#L62)? 
But idk, this isn't a stack I'm very intimate with \ud83e\udd37\r\n\n", "before_files": [{"content": "\"\"\" book list views\"\"\"\nfrom django.contrib.auth.decorators import login_required\nfrom django.core.paginator import Paginator\nfrom django.shortcuts import redirect\nfrom django.template.response import TemplateResponse\nfrom django.utils.decorators import method_decorator\nfrom django.views import View\n\nfrom bookwyrm import forms, models\nfrom bookwyrm.lists_stream import ListsStream\nfrom bookwyrm.views.helpers import get_user_from_username\n\n\n# pylint: disable=no-self-use\nclass Lists(View):\n \"\"\"book list page\"\"\"\n\n def get(self, request):\n \"\"\"display a book list\"\"\"\n if request.user.is_authenticated:\n lists = ListsStream().get_list_stream(request.user)\n else:\n lists = models.List.objects.filter(privacy=\"public\")\n paginated = Paginator(lists, 12)\n data = {\n \"lists\": paginated.get_page(request.GET.get(\"page\")),\n \"list_form\": forms.ListForm(),\n \"path\": \"/list\",\n }\n return TemplateResponse(request, \"lists/lists.html\", data)\n\n @method_decorator(login_required, name=\"dispatch\")\n # pylint: disable=unused-argument\n def post(self, request):\n \"\"\"create a book_list\"\"\"\n form = forms.ListForm(request.POST)\n if not form.is_valid():\n return redirect(\"lists\")\n book_list = form.save(request, commit=False)\n\n # list should not have a group if it is not group curated\n if not book_list.curation == \"group\":\n book_list.group = None\n book_list.save()\n\n return redirect(book_list.local_path)\n\n\n@method_decorator(login_required, name=\"dispatch\")\nclass SavedLists(View):\n \"\"\"saved book list page\"\"\"\n\n def get(self, request):\n \"\"\"display book lists\"\"\"\n # hide lists with no approved books\n lists = request.user.saved_lists.order_by(\"-updated_date\")\n\n paginated = Paginator(lists, 12)\n data = {\n \"lists\": paginated.get_page(request.GET.get(\"page\")),\n \"list_form\": forms.ListForm(),\n 
\"path\": \"/list\",\n }\n return TemplateResponse(request, \"lists/lists.html\", data)\n\n\n@method_decorator(login_required, name=\"dispatch\")\nclass UserLists(View):\n \"\"\"a user's book list page\"\"\"\n\n def get(self, request, username):\n \"\"\"display a book list\"\"\"\n user = get_user_from_username(request.user, username)\n lists = models.List.privacy_filter(request.user).filter(user=user)\n paginated = Paginator(lists, 12)\n\n data = {\n \"user\": user,\n \"is_self\": request.user.id == user.id,\n \"lists\": paginated.get_page(request.GET.get(\"page\")),\n \"list_form\": forms.ListForm(),\n \"path\": user.local_path + \"/lists\",\n }\n return TemplateResponse(request, \"user/lists.html\", data)\n", "path": "bookwyrm/views/list/lists.py"}]} | 1,582 | 112 |

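The shared `lists/lists.html` template is not part of this row, so the following is a minimal sketch built on one assumption: the template creates its Previous/Next links by appending `?page=N` to the `path` context value. `page_link` is a hypothetical helper, not a BookWyrm function. If that assumption holds, the hard-coded `"/list"` in `SavedLists.get` sends the Next link to the all-lists view, which matches the reported behavior.

```python
from urllib.parse import urlencode


def page_link(path: str, page: int) -> str:
    """Hypothetical stand-in for the template's pagination link.

    Assumes the link is simply the view's `path` plus a `page` query string.
    """
    return f"{path}?{urlencode({'page': page})}"


# Before the fix: SavedLists passes the same path as the Lists view.
buggy = page_link("/list", 2)
# After the fix: the path points back at the saved-lists view.
fixed = page_link("/list/saved", 2)

print(buggy)  # /list?page=2
print(fixed)  # /list/saved?page=2
```

Under the same assumption, `UserLists` does not have this problem because it derives `path` from `user.local_path` instead of hard-coding it.
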
gh_patches_debug_1469 | rasdani/github-patches | git_diff | microsoft__DeepSpeed-5577 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] fp_quantizer is not correctly built when non-jit installation

**Describe the bug**

fp_quantizer is not correctly built when non-jit installation.

**To Reproduce**

Steps to reproduce the behavior:

```

DS_BUILD_FP_QUANTIZER=1 pip install deepspeed

```

install will succeed but

```

from deepspeed.ops.fp_quantizer import FP_Quantize

FP_Quantize()

```

will raise `ImportError: dynamic module does not define module export function (PyInit_fp_quantizer_op)`

**Expected behavior**

Renaming csrc/fp_quantizer/quantize.cu may solve the issue.

This restriction seems to be cause of the bug.

> Note that setuptools cannot handle files with the same name but different extensions

https://pytorch.org/tutorials/advanced/cpp_extension.html

</issue>

<code>

[start of op_builder/fp_quantizer.py]

1 # Copyright (c) Microsoft Corporation.

2 # SPDX-License-Identifier: Apache-2.0

3

4 # DeepSpeed Team

5

6 from .builder import CUDAOpBuilder, installed_cuda_version

7

8

9 class FPQuantizerBuilder(CUDAOpBuilder):

10 BUILD_VAR = "DS_BUILD_FP_QUANTIZER"

11 NAME = "fp_quantizer"

12

13 def __init__(self, name=None):

14 name = self.NAME if name is None else name

15 super().__init__(name=name)

16

17 def absolute_name(self):

18 return f'deepspeed.ops.fp_quantizer.{self.NAME}_op'

19

20 def is_compatible(self, verbose=True):

21 try:

22 import torch

23 except ImportError:

24 self.warning("Please install torch if trying to pre-compile inference kernels")

25 return False

26

27 cuda_okay = True

28 if not self.is_rocm_pytorch() and torch.cuda.is_available(): #ignore-cuda

29 sys_cuda_major, _ = installed_cuda_version()

30 torch_cuda_major = int(torch.version.cuda.split('.')[0])

31 cuda_capability = torch.cuda.get_device_properties(0).major #ignore-cuda

32 if cuda_capability < 8:

33 self.warning("NVIDIA Inference is only supported on Ampere and newer architectures")

34 cuda_okay = False

35 if cuda_capability >= 8:

36 if torch_cuda_major < 11 or sys_cuda_major < 11:

37 self.warning("On Ampere and higher architectures please use CUDA 11+")

38 cuda_okay = False

39 return super().is_compatible(verbose) and cuda_okay

40

41 def filter_ccs(self, ccs):

42 ccs_retained = []

43 ccs_pruned = []

44 for cc in ccs:

45 if int(cc[0]) >= 8:

46 ccs_retained.append(cc)

47 else:

48 ccs_pruned.append(cc)

49 if len(ccs_pruned) > 0:

50 self.warning(f"Filtered compute capabilities {ccs_pruned}")

51 return ccs_retained

52

53 def sources(self):

54 return [

55 "csrc/fp_quantizer/quantize.cu",

56 "csrc/fp_quantizer/quantize.cpp",

57 ]

58

59 def extra_ldflags(self):

60 return ['-lcurand']

61

62 def include_paths(self):

63 return ['csrc/fp_quantizer/includes', 'csrc/includes']

64

[end of op_builder/fp_quantizer.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/op_builder/fp_quantizer.py b/op_builder/fp_quantizer.py

--- a/op_builder/fp_quantizer.py

+++ b/op_builder/fp_quantizer.py

@@ -52,8 +52,8 @@

def sources(self):

return [

- "csrc/fp_quantizer/quantize.cu",

- "csrc/fp_quantizer/quantize.cpp",

+ "csrc/fp_quantizer/fp_quantize.cu",

+ "csrc/fp_quantizer/fp_quantize.cpp",

]

def extra_ldflags(self):

| {"golden_diff": "diff --git a/op_builder/fp_quantizer.py b/op_builder/fp_quantizer.py\n--- a/op_builder/fp_quantizer.py\n+++ b/op_builder/fp_quantizer.py\n@@ -52,8 +52,8 @@\n \n def sources(self):\n return [\n- \"csrc/fp_quantizer/quantize.cu\",\n- \"csrc/fp_quantizer/quantize.cpp\",\n+ \"csrc/fp_quantizer/fp_quantize.cu\",\n+ \"csrc/fp_quantizer/fp_quantize.cpp\",\n ]\n \n def extra_ldflags(self):\n", "issue": "[BUG] fp_quantizer is not correctly built when non-jit installation\n**Describe the bug**\r\nfp_quantizer is not correctly built when non-jit installation.\r\n\r\n**To Reproduce**\r\nSteps to reproduce the behavior:\r\n```\r\nDS_BUILD_FP_QUANTIZER=1 pip install deepspeed\r\n```\r\ninstall will succeed but\r\n```\r\nfrom deepspeed.ops.fp_quantizer import FP_Quantize\r\nFP_Quantize()\r\n```\r\nwill raise `ImportError: dynamic module does not define module export function (PyInit_fp_quantizer_op)`\r\n\r\n**Expected behavior**\r\n\r\nRenaming csrc/fp_quantizer/quantize.cu may solve the issue.\r\nThis restriction seems to be cause of the bug.\r\n> Note that setuptools cannot handle files with the same name but different extensions\r\nhttps://pytorch.org/tutorials/advanced/cpp_extension.html\r\n\n", "before_files": [{"content": "# Copyright (c) Microsoft Corporation.\n# SPDX-License-Identifier: Apache-2.0\n\n# DeepSpeed Team\n\nfrom .builder import CUDAOpBuilder, installed_cuda_version\n\n\nclass FPQuantizerBuilder(CUDAOpBuilder):\n BUILD_VAR = \"DS_BUILD_FP_QUANTIZER\"\n NAME = \"fp_quantizer\"\n\n def __init__(self, name=None):\n name = self.NAME if name is None else name\n super().__init__(name=name)\n\n def absolute_name(self):\n return f'deepspeed.ops.fp_quantizer.{self.NAME}_op'\n\n def is_compatible(self, verbose=True):\n try:\n import torch\n except ImportError:\n self.warning(\"Please install torch if trying to pre-compile inference kernels\")\n return False\n\n cuda_okay = True\n if not self.is_rocm_pytorch() and torch.cuda.is_available(): 
#ignore-cuda\n sys_cuda_major, _ = installed_cuda_version()\n torch_cuda_major = int(torch.version.cuda.split('.')[0])\n cuda_capability = torch.cuda.get_device_properties(0).major #ignore-cuda\n if cuda_capability < 8:\n self.warning(\"NVIDIA Inference is only supported on Ampere and newer architectures\")\n cuda_okay = False\n if cuda_capability >= 8:\n if torch_cuda_major < 11 or sys_cuda_major < 11:\n self.warning(\"On Ampere and higher architectures please use CUDA 11+\")\n cuda_okay = False\n return super().is_compatible(verbose) and cuda_okay\n\n def filter_ccs(self, ccs):\n ccs_retained = []\n ccs_pruned = []\n for cc in ccs:\n if int(cc[0]) >= 8:\n ccs_retained.append(cc)\n else:\n ccs_pruned.append(cc)\n if len(ccs_pruned) > 0:\n self.warning(f\"Filtered compute capabilities {ccs_pruned}\")\n return ccs_retained\n\n def sources(self):\n return [\n \"csrc/fp_quantizer/quantize.cu\",\n \"csrc/fp_quantizer/quantize.cpp\",\n ]\n\n def extra_ldflags(self):\n return ['-lcurand']\n\n def include_paths(self):\n return ['csrc/fp_quantizer/includes', 'csrc/includes']\n", "path": "op_builder/fp_quantizer.py"}]} | 1,341 | 131 |

gh_patches_debug_16543 | rasdani/github-patches | git_diff | web2py__web2py-1496 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

IS_EMPTY_OR validator returns incorrect "empty" value

When setting default validators, the https://github.com/web2py/web2py/commit/bdbc053285b67fd3ee02f2ea862b30ca495f33e2 commit mistakenly sets the `null` attribute of the `IS_EMPTY_OR` validator to `''` for _all_ field types rather than just the string based field types.

[This line](https://github.com/web2py/web2py/blob/1877f497309e71918aa78e1a1288cbe3cb5392ec/gluon/dal.py#L81):

```

requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)

```

should instead be:

```

requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field.type in ('string', 'text', 'password') else None)

```

Notice, `field.type` rather than `field`.

</issue>

<code>

[start of gluon/dal.py]

1 #!/usr/bin/env python

2 # -*- coding: utf-8 -*-

3

4 """

5 | This file is part of the web2py Web Framework

6 | Copyrighted by Massimo Di Pierro <[email protected]>

7 | License: LGPLv3 (http://www.gnu.org/licenses/lgpl.html)

8

9 Takes care of adapting pyDAL to web2py's needs

10 -----------------------------------------------

11 """

12

13 from pydal import DAL as DAL

14 from pydal import Field

15 from pydal.objects import Row, Rows, Table, Query, Set, Expression

16 from pydal import SQLCustomType, geoPoint, geoLine, geoPolygon

17

18 def _default_validators(db, field):

19 """

20 Field type validation, using web2py's validators mechanism.

21

22 makes sure the content of a field is in line with the declared

23 fieldtype

24 """

25 from gluon import validators

26 field_type, field_length = field.type, field.length

27 requires = []

28

29 if field_type in (('string', 'text', 'password')):

30 requires.append(validators.IS_LENGTH(field_length))

31 elif field_type == 'json':

32 requires.append(validators.IS_EMPTY_OR(validators.IS_JSON()))

33 elif field_type == 'double' or field_type == 'float':

34 requires.append(validators.IS_FLOAT_IN_RANGE(-1e100, 1e100))

35 elif field_type == 'integer':

36 requires.append(validators.IS_INT_IN_RANGE(-2**31, 2**31))

37 elif field_type == 'bigint':

38 requires.append(validators.IS_INT_IN_RANGE(-2**63, 2**63))

39 elif field_type.startswith('decimal'):

40 requires.append(validators.IS_DECIMAL_IN_RANGE(-10**10, 10**10))

41 elif field_type == 'date':

42 requires.append(validators.IS_DATE())

43 elif field_type == 'time':

44 requires.append(validators.IS_TIME())

45 elif field_type == 'datetime':

46 requires.append(validators.IS_DATETIME())

47 elif db and field_type.startswith('reference') and \

48 field_type.find('.') < 0 and \

49 field_type[10:] in db.tables:

50 referenced = db[field_type[10:]]

51 if hasattr(referenced, '_format') and referenced._format:

52 requires = validators.IS_IN_DB(db, referenced._id,

53 referenced._format)

54 if field.unique:

55 requires._and = validators.IS_NOT_IN_DB(db, field)

56 if field.tablename == field_type[10:]:

57 return validators.IS_EMPTY_OR(requires)

58 return requires

59 elif db and field_type.startswith('list:reference') and \

60 field_type.find('.') < 0 and \

61 field_type[15:] in db.tables:

62 referenced = db[field_type[15:]]

63 if hasattr(referenced, '_format') and referenced._format:

64 requires = validators.IS_IN_DB(db, referenced._id,

65 referenced._format, multiple=True)

66 else:

67 requires = validators.IS_IN_DB(db, referenced._id,

68 multiple=True)

69 if field.unique:

70 requires._and = validators.IS_NOT_IN_DB(db, field)

71 if not field.notnull:

72 requires = validators.IS_EMPTY_OR(requires)

73 return requires

74 # does not get here for reference and list:reference

75 if field.unique:

76 requires.insert(0, validators.IS_NOT_IN_DB(db, field))

77 excluded_fields = ['string', 'upload', 'text', 'password', 'boolean']

78 if (field.notnull or field.unique) and not field_type in excluded_fields:

79 requires.insert(0, validators.IS_NOT_EMPTY())

80 elif not field.notnull and not field.unique and requires:

81 requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)

82 return requires

83

84 from gluon.serializers import custom_json, xml

85 from gluon.utils import web2py_uuid

86 from gluon import sqlhtml

87

88

89 DAL.serializers = {'json': custom_json, 'xml': xml}

90 DAL.validators_method = _default_validators

91 DAL.uuid = lambda x: web2py_uuid()

92 DAL.representers = {

93 'rows_render': sqlhtml.represent,

94 'rows_xml': sqlhtml.SQLTABLE

95 }

96 DAL.Field = Field

97 DAL.Table = Table

98

99 #: add web2py contrib drivers to pyDAL

100 from pydal.drivers import DRIVERS

101 if not DRIVERS.get('pymysql'):

102 try:

103 from .contrib import pymysql

104 DRIVERS['pymysql'] = pymysql

105 except:

106 pass

107 if not DRIVERS.get('pyodbc'):

108 try:

109 from .contrib import pypyodbc as pyodbc

110 DRIVERS['pyodbc'] = pyodbc

111 except:

112 pass

113 if not DRIVERS.get('pg8000'):

114 try:

115 from .contrib import pg8000

116 DRIVERS['pg8000'] = pg8000

117 except:

118 pass

119

[end of gluon/dal.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/gluon/dal.py b/gluon/dal.py

--- a/gluon/dal.py

+++ b/gluon/dal.py

@@ -75,10 +75,10 @@

if field.unique:

requires.insert(0, validators.IS_NOT_IN_DB(db, field))

excluded_fields = ['string', 'upload', 'text', 'password', 'boolean']

- if (field.notnull or field.unique) and not field_type in excluded_fields:

+ if (field.notnull or field.unique) and field_type not in excluded_fields:

requires.insert(0, validators.IS_NOT_EMPTY())

elif not field.notnull and not field.unique and requires:

- requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)

+ requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field.type in ('string', 'text', 'password') else None)

return requires

from gluon.serializers import custom_json, xml

| {"golden_diff": "diff --git a/gluon/dal.py b/gluon/dal.py\n--- a/gluon/dal.py\n+++ b/gluon/dal.py\n@@ -75,10 +75,10 @@\n if field.unique:\n requires.insert(0, validators.IS_NOT_IN_DB(db, field))\n excluded_fields = ['string', 'upload', 'text', 'password', 'boolean']\n- if (field.notnull or field.unique) and not field_type in excluded_fields:\n+ if (field.notnull or field.unique) and field_type not in excluded_fields:\n requires.insert(0, validators.IS_NOT_EMPTY())\n elif not field.notnull and not field.unique and requires:\n- requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)\n+ requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field.type in ('string', 'text', 'password') else None)\n return requires\n \n from gluon.serializers import custom_json, xml\n", "issue": "IS_EMPTY_OR validator returns incorrect \"empty\" value\nWhen setting default validators, the https://github.com/web2py/web2py/commit/bdbc053285b67fd3ee02f2ea862b30ca495f33e2 commit mistakenly sets the `null` attribute of the `IS_EMPTY_OR` validator to `''` for _all_ field types rather than just the string based field types.\n\n[This line](https://github.com/web2py/web2py/blob/1877f497309e71918aa78e1a1288cbe3cb5392ec/gluon/dal.py#L81):\n\n```\n requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)\n```\n\nshould instead be:\n\n```\n requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field.type in ('string', 'text', 'password') else None)\n```\n\nNotice, `field.type` rather than `field`.\n\n", "before_files": [{"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n\n\"\"\"\n| This file is part of the web2py Web Framework\n| Copyrighted by Massimo Di Pierro <[email protected]>\n| License: LGPLv3 (http://www.gnu.org/licenses/lgpl.html)\n\nTakes care of adapting pyDAL to web2py's needs\n-----------------------------------------------\n\"\"\"\n\nfrom pydal import 
DAL as DAL\nfrom pydal import Field\nfrom pydal.objects import Row, Rows, Table, Query, Set, Expression\nfrom pydal import SQLCustomType, geoPoint, geoLine, geoPolygon\n\ndef _default_validators(db, field):\n \"\"\"\n Field type validation, using web2py's validators mechanism.\n\n makes sure the content of a field is in line with the declared\n fieldtype\n \"\"\"\n from gluon import validators\n field_type, field_length = field.type, field.length\n requires = []\n\n if field_type in (('string', 'text', 'password')):\n requires.append(validators.IS_LENGTH(field_length))\n elif field_type == 'json':\n requires.append(validators.IS_EMPTY_OR(validators.IS_JSON()))\n elif field_type == 'double' or field_type == 'float':\n requires.append(validators.IS_FLOAT_IN_RANGE(-1e100, 1e100))\n elif field_type == 'integer':\n requires.append(validators.IS_INT_IN_RANGE(-2**31, 2**31))\n elif field_type == 'bigint':\n requires.append(validators.IS_INT_IN_RANGE(-2**63, 2**63))\n elif field_type.startswith('decimal'):\n requires.append(validators.IS_DECIMAL_IN_RANGE(-10**10, 10**10))\n elif field_type == 'date':\n requires.append(validators.IS_DATE())\n elif field_type == 'time':\n requires.append(validators.IS_TIME())\n elif field_type == 'datetime':\n requires.append(validators.IS_DATETIME())\n elif db and field_type.startswith('reference') and \\\n field_type.find('.') < 0 and \\\n field_type[10:] in db.tables:\n referenced = db[field_type[10:]]\n if hasattr(referenced, '_format') and referenced._format:\n requires = validators.IS_IN_DB(db, referenced._id,\n referenced._format)\n if field.unique:\n requires._and = validators.IS_NOT_IN_DB(db, field)\n if field.tablename == field_type[10:]:\n return validators.IS_EMPTY_OR(requires)\n return requires\n elif db and field_type.startswith('list:reference') and \\\n field_type.find('.') < 0 and \\\n field_type[15:] in db.tables:\n referenced = db[field_type[15:]]\n if hasattr(referenced, '_format') and referenced._format:\n requires = 
validators.IS_IN_DB(db, referenced._id,\n referenced._format, multiple=True)\n else:\n requires = validators.IS_IN_DB(db, referenced._id,\n multiple=True)\n if field.unique:\n requires._and = validators.IS_NOT_IN_DB(db, field)\n if not field.notnull:\n requires = validators.IS_EMPTY_OR(requires)\n return requires\n # does not get here for reference and list:reference\n if field.unique:\n requires.insert(0, validators.IS_NOT_IN_DB(db, field))\n excluded_fields = ['string', 'upload', 'text', 'password', 'boolean']\n if (field.notnull or field.unique) and not field_type in excluded_fields:\n requires.insert(0, validators.IS_NOT_EMPTY())\n elif not field.notnull and not field.unique and requires:\n requires[0] = validators.IS_EMPTY_OR(requires[0], null='' if field in ('string', 'text', 'password') else None)\n return requires\n\nfrom gluon.serializers import custom_json, xml\nfrom gluon.utils import web2py_uuid\nfrom gluon import sqlhtml\n\n\nDAL.serializers = {'json': custom_json, 'xml': xml}\nDAL.validators_method = _default_validators\nDAL.uuid = lambda x: web2py_uuid()\nDAL.representers = {\n 'rows_render': sqlhtml.represent,\n 'rows_xml': sqlhtml.SQLTABLE\n}\nDAL.Field = Field\nDAL.Table = Table\n\n#: add web2py contrib drivers to pyDAL\nfrom pydal.drivers import DRIVERS\nif not DRIVERS.get('pymysql'):\n try:\n from .contrib import pymysql\n DRIVERS['pymysql'] = pymysql\n except:\n pass\nif not DRIVERS.get('pyodbc'):\n try:\n from .contrib import pypyodbc as pyodbc\n DRIVERS['pyodbc'] = pyodbc\n except:\n pass\nif not DRIVERS.get('pg8000'):\n try:\n from .contrib import pg8000\n DRIVERS['pg8000'] = pg8000\n except:\n pass\n", "path": "gluon/dal.py"}]} | 2,107 | 233 |

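The symptom described in this issue, where `null=''` is applied to every field type, fits how Python evaluates membership. For a tuple, `x in t` is equivalent to `any(x is e or x == e for e in t)`. If `x` overloads `==` to build a query object and that object is always truthy, the test succeeds for every field. The minimal sketch below assumes the field class behaves this way. `FakeField` and `FakeQuery` are hypothetical stand-ins, not pyDAL classes.

```python
STRING_TYPES = ('string', 'text', 'password')


class FakeQuery:
    """Hypothetical query object: no __bool__ or __len__, so it is always truthy."""

    def __init__(self, left, right):
        self.left, self.right = left, right


class FakeField:
    """Hypothetical DAL-style field that overloads == to build a query."""

    def __init__(self, name, field_type):
        self.name = name
        self.type = field_type

    def __eq__(self, other):
        # Instead of returning True/False, return a query object.
        return FakeQuery(self, other)

    # Defining __eq__ would otherwise make instances unhashable.
    __hash__ = object.__hash__


def null_value_buggy(field):
    # `field in STRING_TYPES` compares each string with the field object;
    # str.__eq__ returns NotImplemented, so FakeField.__eq__ runs and its
    # truthy FakeQuery makes the membership test succeed for every field.
    return '' if field in STRING_TYPES else None


def null_value_fixed(field):
    # Compare the field's type name, which is a plain string.
    return '' if field.type in STRING_TYPES else None


age = FakeField('age', 'integer')
name = FakeField('name', 'string')
print(repr(null_value_buggy(age)))   # ''
print(repr(null_value_fixed(age)))   # None
print(repr(null_value_fixed(name)))  # ''
```

The golden diff in this row applies the same change, replacing `field` with `field.type` in the membership test.
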
gh_patches_debug_4775 | rasdani/github-patches | git_diff | nilearn__nilearn-1949 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

line_width param in view_connectome has no effect

The parameter `line_width` in `html_connectome.view_connectome()` is not associated with any functionality.

Should we remove it or is there a reason for it to be there?

</issue>

<code>

[start of nilearn/plotting/html_connectome.py]

1 import functools

2 import json

3 import warnings

4

5 import numpy as np

6 from scipy import sparse

7

8 from nilearn._utils import replace_parameters

9 from .. import datasets

10 from . import cm

11

12 from .js_plotting_utils import (add_js_lib, HTMLDocument, mesh_to_plotly,

13 encode, colorscale, get_html_template,

14 to_color_strings)

15

16

17 class ConnectomeView(HTMLDocument):

18 pass

19

20

21 def _prepare_line(edges, nodes):

22 path_edges = np.zeros(len(edges) * 3, dtype=int)

23 path_edges[::3] = edges

24 path_edges[1::3] = edges

25 path_nodes = np.zeros(len(nodes) * 3, dtype=int)

26 path_nodes[::3] = nodes[:, 0]

27 path_nodes[1::3] = nodes[:, 1]

28 return path_edges, path_nodes

29

30

31 def _get_connectome(adjacency_matrix, coords, threshold=None,

32 marker_size=None, cmap=cm.cold_hot, symmetric_cmap=True):

33 connectome = {}

34 coords = np.asarray(coords, dtype='<f4')

35 adjacency_matrix = adjacency_matrix.copy()

36 colors = colorscale(

37 cmap, adjacency_matrix.ravel(), threshold=threshold,

38 symmetric_cmap=symmetric_cmap)

39 connectome['colorscale'] = colors['colors']

40 connectome['cmin'] = float(colors['vmin'])

41 connectome['cmax'] = float(colors['vmax'])

42 if threshold is not None:

43 adjacency_matrix[

44 np.abs(adjacency_matrix) <= colors['abs_threshold']] = 0

45 s = sparse.coo_matrix(adjacency_matrix)

46 nodes = np.asarray([s.row, s.col], dtype=int).T

47 edges = np.arange(len(nodes))

48 path_edges, path_nodes = _prepare_line(edges, nodes)

49 connectome["_con_w"] = encode(np.asarray(s.data, dtype='<f4')[path_edges])

50 c = coords[path_nodes]

51 if np.ndim(marker_size) > 0:

52 marker_size = np.asarray(marker_size)

53 marker_size = marker_size[path_nodes]

54 x, y, z = c.T

55 for coord, cname in [(x, "x"), (y, "y"), (z, "z")]:

56 connectome["_con_{}".format(cname)] = encode(

57 np.asarray(coord, dtype='<f4'))

58 connectome["markers_only"] = False

59 if hasattr(marker_size, 'tolist'):

60 marker_size = marker_size.tolist()

61 connectome['marker_size'] = marker_size

62 return connectome

63

64

65 def _get_markers(coords, colors):

66 connectome = {}

67 coords = np.asarray(coords, dtype='<f4')

68 x, y, z = coords.T

69 for coord, cname in [(x, "x"), (y, "y"), (z, "z")]:

70 connectome["_con_{}".format(cname)] = encode(

71 np.asarray(coord, dtype='<f4'))

72 connectome["marker_color"] = to_color_strings(colors)

73 connectome["markers_only"] = True

74 return connectome

75

76

77 def _make_connectome_html(connectome_info, embed_js=True):

78 plot_info = {"connectome": connectome_info}

79 mesh = datasets.fetch_surf_fsaverage()

80 for hemi in ['pial_left', 'pial_right']:

81 plot_info[hemi] = mesh_to_plotly(mesh[hemi])

82 as_json = json.dumps(plot_info)

83 as_html = get_html_template(

84 'connectome_plot_template.html').safe_substitute(

85 {'INSERT_CONNECTOME_JSON_HERE': as_json})

86 as_html = add_js_lib(as_html, embed_js=embed_js)

87 return ConnectomeView(as_html)

88

89

90 def _replacement_params_view_connectome():

91 """ Returns a dict containing deprecated & replacement parameters

92 as key-value pair for view_connectome().

93 Avoids cluttering the global namespace.

94 """

95 return {

96 'coords': 'node_coords',

97 'threshold': 'edge_threshold',

98 'cmap': 'edge_cmap',

99 'marker_size': 'node_size',

100 }

101

102 @replace_parameters(replacement_params=_replacement_params_view_connectome(),

103 end_version='0.6.0',

104 lib_name='Nilearn',

105 )

106 def view_connectome(adjacency_matrix, node_coords, edge_threshold=None,

107 edge_cmap=cm.bwr, symmetric_cmap=True,

108 linewidth=6., node_size=3.,

109 ):

110 """

111 Insert a 3d plot of a connectome into an HTML page.

112

113 Parameters

114 ----------

115 adjacency_matrix : ndarray, shape=(n_nodes, n_nodes)

116 the weights of the edges.

117

118 node_coords : ndarray, shape=(n_nodes, 3)

119 the coordinates of the nodes in MNI space.

120

121 edge_threshold : str, number or None, optional (default=None)

122 If None, no thresholding.

123 If it is a number only connections of amplitude greater

124 than threshold will be shown.

125 If it is a string it must finish with a percent sign,

126 e.g. "25.3%", and only connections of amplitude above the

127 given percentile will be shown.

128

129 edge_cmap : str or matplotlib colormap, optional

130

131 symmetric_cmap : bool, optional (default=True)

132 Make colormap symmetric (ranging from -vmax to vmax).

133

134 linewidth : float, optional (default=6.)

135 Width of the lines that show connections.

136

137 node_size : float, optional (default=3.)

138 Size of the markers showing the seeds in pixels.

139

140 Returns

141 -------

142 ConnectomeView : plot of the connectome.

143 It can be saved as an html page or rendered (transparently) by the

144 Jupyter notebook. Useful methods are :

145

146 - 'resize' to resize the plot displayed in a Jupyter notebook

147 - 'save_as_html' to save the plot to a file

148 - 'open_in_browser' to save the plot and open it in a web browser.

149

150 See Also

151 --------

152 nilearn.plotting.plot_connectome:

153 projected views of a connectome in a glass brain.

154

155 nilearn.plotting.view_markers:

156 interactive plot of colored markers

157

158 nilearn.plotting.view_surf, nilearn.plotting.view_img_on_surf:

159 interactive view of statistical maps or surface atlases on the cortical

160 surface.

161

162 """

163 connectome_info = _get_connectome(

164 adjacency_matrix, node_coords, threshold=edge_threshold, cmap=edge_cmap,

165 symmetric_cmap=symmetric_cmap, marker_size=node_size)

166 return _make_connectome_html(connectome_info)

167

168

169 def _replacement_params_view_markers():

170 """ Returns a dict containing deprecated & replacement parameters

171 as key-value pair for view_markers().

172 Avoids cluttering the global namespace.

173 """

174 return {'coords': 'marker_coords',

175 'colors': 'marker_color',

176 }

177

178

179 @replace_parameters(replacement_params=_replacement_params_view_markers(),

180 end_version='0.6.0',

181 lib_name='Nilearn',

182 )

183 def view_markers(marker_coords, marker_color=None, marker_size=5.):

184 """

185 Insert a 3d plot of markers in a brain into an HTML page.

186

187 Parameters

188 ----------

189 marker_coords : ndarray, shape=(n_nodes, 3)

190 the coordinates of the nodes in MNI space.

191

192 marker_color : ndarray, shape=(n_nodes,)

193 colors of the markers: list of strings, hex rgb or rgba strings, rgb

194 triplets, or rgba triplets (i.e. formats accepted by matplotlib, see

195 https://matplotlib.org/users/colors.html#specifying-colors)

196

197 marker_size : float or array-like, optional (default=3.)

198 Size of the markers showing the seeds in pixels.

199

200 Returns

201 -------

202 ConnectomeView : plot of the markers.

203 It can be saved as an html page or rendered (transparently) by the

204 Jupyter notebook. Useful methods are :

205

206 - 'resize' to resize the plot displayed in a Jupyter notebook

207 - 'save_as_html' to save the plot to a file

208 - 'open_in_browser' to save the plot and open it in a web browser.

209

210 See Also

211 --------

212 nilearn.plotting.plot_connectome:

213 projected views of a connectome in a glass brain.

214

215 nilearn.plotting.view_connectome:

216 interactive plot of a connectome.

217

218 nilearn.plotting.view_surf, nilearn.plotting.view_img_on_surf:

219 interactive view of statistical maps or surface atlases on the cortical

220 surface.

221

222 """

223 if marker_color is None:

224 marker_color = ['red' for i in range(len(marker_coords))]

225 connectome_info = _get_markers(marker_coords, marker_color)

226 if hasattr(marker_size, 'tolist'):

227 marker_size = marker_size.tolist()

228 connectome_info["marker_size"] = marker_size

229 return _make_connectome_html(connectome_info)

230

[end of nilearn/plotting/html_connectome.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/nilearn/plotting/html_connectome.py b/nilearn/plotting/html_connectome.py

--- a/nilearn/plotting/html_connectome.py

+++ b/nilearn/plotting/html_connectome.py

@@ -159,6 +159,7 @@

connectome_info = _get_connectome(

adjacency_matrix, node_coords, threshold=edge_threshold, cmap=edge_cmap,

symmetric_cmap=symmetric_cmap, marker_size=node_size)

+ connectome_info['line_width'] = linewidth

return _make_connectome_html(connectome_info)

| {"golden_diff": "diff --git a/nilearn/plotting/html_connectome.py b/nilearn/plotting/html_connectome.py\n--- a/nilearn/plotting/html_connectome.py\n+++ b/nilearn/plotting/html_connectome.py\n@@ -159,6 +159,7 @@\n connectome_info = _get_connectome(\n adjacency_matrix, node_coords, threshold=edge_threshold, cmap=edge_cmap,\n symmetric_cmap=symmetric_cmap, marker_size=node_size)\n+ connectome_info['line_width'] = linewidth\n return _make_connectome_html(connectome_info)\n", "issue": "line_width param in view_connectome has no effect\nThe parameter `line_width` in `html_connectome.view_connectome()` is not associated with any functionality.\r\nShould we remove it or is there a reason for it to be there?\n", "before_files": [{"content": "import functools\nimport json\nimport warnings\n\nimport numpy as np\nfrom scipy import sparse\n\nfrom nilearn._utils import replace_parameters\nfrom .. import datasets\nfrom . import cm\n\nfrom .js_plotting_utils import (add_js_lib, HTMLDocument, mesh_to_plotly,\n encode, colorscale, get_html_template,\n to_color_strings)\n\n\nclass ConnectomeView(HTMLDocument):\n pass\n\n\ndef _prepare_line(edges, nodes):\n path_edges = np.zeros(len(edges) * 3, dtype=int)\n path_edges[::3] = edges\n path_edges[1::3] = edges\n path_nodes = np.zeros(len(nodes) * 3, dtype=int)\n path_nodes[::3] = nodes[:, 0]\n path_nodes[1::3] = nodes[:, 1]\n return path_edges, path_nodes\n\n\ndef _get_connectome(adjacency_matrix, coords, threshold=None,\n marker_size=None, cmap=cm.cold_hot, symmetric_cmap=True):\n connectome = {}\n coords = np.asarray(coords, dtype='<f4')\n adjacency_matrix = adjacency_matrix.copy()\n colors = colorscale(\n cmap, adjacency_matrix.ravel(), threshold=threshold,\n symmetric_cmap=symmetric_cmap)\n connectome['colorscale'] = colors['colors']\n connectome['cmin'] = float(colors['vmin'])\n connectome['cmax'] = float(colors['vmax'])\n if threshold is not None:\n adjacency_matrix[\n np.abs(adjacency_matrix) <= colors['abs_threshold']] = 0\n s 
= sparse.coo_matrix(adjacency_matrix)\n nodes = np.asarray([s.row, s.col], dtype=int).T\n edges = np.arange(len(nodes))\n path_edges, path_nodes = _prepare_line(edges, nodes)\n connectome[\"_con_w\"] = encode(np.asarray(s.data, dtype='<f4')[path_edges])\n c = coords[path_nodes]\n if np.ndim(marker_size) > 0:\n marker_size = np.asarray(marker_size)\n marker_size = marker_size[path_nodes]\n x, y, z = c.T\n for coord, cname in [(x, \"x\"), (y, \"y\"), (z, \"z\")]:\n connectome[\"_con_{}\".format(cname)] = encode(\n np.asarray(coord, dtype='<f4'))\n connectome[\"markers_only\"] = False\n if hasattr(marker_size, 'tolist'):\n marker_size = marker_size.tolist()\n connectome['marker_size'] = marker_size\n return connectome\n\n\ndef _get_markers(coords, colors):\n connectome = {}\n coords = np.asarray(coords, dtype='<f4')\n x, y, z = coords.T\n for coord, cname in [(x, \"x\"), (y, \"y\"), (z, \"z\")]:\n connectome[\"_con_{}\".format(cname)] = encode(\n np.asarray(coord, dtype='<f4'))\n connectome[\"marker_color\"] = to_color_strings(colors)\n connectome[\"markers_only\"] = True\n return connectome\n\n\ndef _make_connectome_html(connectome_info, embed_js=True):\n plot_info = {\"connectome\": connectome_info}\n mesh = datasets.fetch_surf_fsaverage()\n for hemi in ['pial_left', 'pial_right']:\n plot_info[hemi] = mesh_to_plotly(mesh[hemi])\n as_json = json.dumps(plot_info)\n as_html = get_html_template(\n 'connectome_plot_template.html').safe_substitute(\n {'INSERT_CONNECTOME_JSON_HERE': as_json})\n as_html = add_js_lib(as_html, embed_js=embed_js)\n return ConnectomeView(as_html)\n\n\ndef _replacement_params_view_connectome():\n \"\"\" Returns a dict containing deprecated & replacement parameters\n as key-value pair for view_connectome().\n Avoids cluttering the global namespace.\n \"\"\"\n return {\n 'coords': 'node_coords',\n 'threshold': 'edge_threshold',\n 'cmap': 'edge_cmap',\n 'marker_size': 'node_size',\n 
}\n\n@replace_parameters(replacement_params=_replacement_params_view_connectome(),\n end_version='0.6.0',\n lib_name='Nilearn',\n )\ndef view_connectome(adjacency_matrix, node_coords, edge_threshold=None,\n edge_cmap=cm.bwr, symmetric_cmap=True,\n linewidth=6., node_size=3.,\n ):\n \"\"\"\n Insert a 3d plot of a connectome into an HTML page.\n\n Parameters\n ----------\n adjacency_matrix : ndarray, shape=(n_nodes, n_nodes)\n the weights of the edges.\n\n node_coords : ndarray, shape=(n_nodes, 3)\n the coordinates of the nodes in MNI space.\n\n edge_threshold : str, number or None, optional (default=None)\n If None, no thresholding.\n If it is a number only connections of amplitude greater\n than threshold will be shown.\n If it is a string it must finish with a percent sign,\n e.g. \"25.3%\", and only connections of amplitude above the\n given percentile will be shown.\n\n edge_cmap : str or matplotlib colormap, optional\n\n symmetric_cmap : bool, optional (default=True)\n Make colormap symmetric (ranging from -vmax to vmax).\n\n linewidth : float, optional (default=6.)\n Width of the lines that show connections.\n\n node_size : float, optional (default=3.)\n Size of the markers showing the seeds in pixels.\n\n Returns\n -------\n ConnectomeView : plot of the connectome.\n It can be saved as an html page or rendered (transparently) by the\n Jupyter notebook. 
Useful methods are :\n\n - 'resize' to resize the plot displayed in a Jupyter notebook\n - 'save_as_html' to save the plot to a file\n - 'open_in_browser' to save the plot and open it in a web browser.\n\n See Also\n --------\n nilearn.plotting.plot_connectome:\n projected views of a connectome in a glass brain.\n\n nilearn.plotting.view_markers:\n interactive plot of colored markers\n\n nilearn.plotting.view_surf, nilearn.plotting.view_img_on_surf:\n interactive view of statistical maps or surface atlases on the cortical\n surface.\n\n \"\"\"\n connectome_info = _get_connectome(\n adjacency_matrix, node_coords, threshold=edge_threshold, cmap=edge_cmap,\n symmetric_cmap=symmetric_cmap, marker_size=node_size)\n return _make_connectome_html(connectome_info)\n\n\ndef _replacement_params_view_markers():\n \"\"\" Returns a dict containing deprecated & replacement parameters\n as key-value pair for view_markers().\n Avoids cluttering the global namespace.\n \"\"\"\n return {'coords': 'marker_coords',\n 'colors': 'marker_color',\n }\n\n\n@replace_parameters(replacement_params=_replacement_params_view_markers(),\n end_version='0.6.0',\n lib_name='Nilearn',\n )\ndef view_markers(marker_coords, marker_color=None, marker_size=5.):\n \"\"\"\n Insert a 3d plot of markers in a brain into an HTML page.\n\n Parameters\n ----------\n marker_coords : ndarray, shape=(n_nodes, 3)\n the coordinates of the nodes in MNI space.\n\n marker_color : ndarray, shape=(n_nodes,)\n colors of the markers: list of strings, hex rgb or rgba strings, rgb\n triplets, or rgba triplets (i.e. formats accepted by matplotlib, see\n https://matplotlib.org/users/colors.html#specifying-colors)\n\n marker_size : float or array-like, optional (default=3.)\n Size of the markers showing the seeds in pixels.\n\n Returns\n -------\n ConnectomeView : plot of the markers.\n It can be saved as an html page or rendered (transparently) by the\n Jupyter notebook. 
Useful methods are :\n\n - 'resize' to resize the plot displayed in a Jupyter notebook\n - 'save_as_html' to save the plot to a file\n - 'open_in_browser' to save the plot and open it in a web browser.\n\n See Also\n --------\n nilearn.plotting.plot_connectome:\n projected views of a connectome in a glass brain.\n\n nilearn.plotting.view_connectome:\n interactive plot of a connectome.\n\n nilearn.plotting.view_surf, nilearn.plotting.view_img_on_surf:\n interactive view of statistical maps or surface atlases on the cortical\n surface.\n\n \"\"\"\n if marker_color is None:\n marker_color = ['red' for i in range(len(marker_coords))]\n connectome_info = _get_markers(marker_coords, marker_color)\n if hasattr(marker_size, 'tolist'):\n marker_size = marker_size.tolist()\n connectome_info[\"marker_size\"] = marker_size\n return _make_connectome_html(connectome_info)\n", "path": "nilearn/plotting/html_connectome.py"}]} | 3,104 | 129 |

gh_patches_debug_7174 | rasdani/github-patches | git_diff | cowrie__cowrie-1054 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Bug in csirtg plugin

@wesyoung Not sure when this bug started, but just looked today at my honeypots and saw this happening all over the place in the logs.

```

2018-02-11T16:53:14-0500 [twisted.internet.defer#critical] Unhandled error in Deferred:

2018-02-11T16:53:14-0500 [twisted.internet.defer#critical]

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/tcp.py", line 289, in connectionLost

protocol.connectionLost(reason)

File "/usr/local/lib/python2.7/dist-packages/twisted/web/client.py", line 223, in connectionLost

self.factory._disconnectedDeferred.callback(None)

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 459, in callback

self._startRunCallbacks(result)

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 567, in _startRunCallbacks

self._runCallbacks()

--- <exception caught here> ---

File "/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py", line 653, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/home/cowrie/cowrie/cowrie/commands/wget.py", line 241, in error

url=self.url)

File "/home/cowrie/cowrie/cowrie/shell/protocol.py", line 80, in logDispatch

pt.factory.logDispatch(*msg, **args)

File "/home/cowrie/cowrie/cowrie/telnet/transport.py", line 43, in logDispatch

output.logDispatch(*msg, **args)

File "/home/cowrie/cowrie/cowrie/core/output.py", line 117, in logDispatch

self.emit(ev)

File "/home/cowrie/cowrie/cowrie/core/output.py", line 206, in emit

self.write(ev)

File "/home/cowrie/cowrie/cowrie/output/csirtg.py", line 43, in write

system = e['system']

exceptions.KeyError: 'system'

```

</issue>

<code>

[start of src/cowrie/output/csirtg.py]

1 from __future__ import absolute_import, division

2

3 import os

4 from datetime import datetime

5

6 from csirtgsdk.client import Client

7 from csirtgsdk.indicator import Indicator

8

9 from twisted.python import log

10

11 import cowrie.core.output

12 from cowrie.core.config import CONFIG

13

14 USERNAME = os.environ.get('CSIRTG_USER')

15 FEED = os.environ.get('CSIRTG_FEED')

16 TOKEN = os.environ.get('CSIRG_TOKEN')

17 DESCRIPTION = os.environ.get('CSIRTG_DESCRIPTION', 'random scanning activity')

18

19

20 class Output(cowrie.core.output.Output):

21 def __init__(self):

22 self.user = CONFIG.get('output_csirtg', 'username') or USERNAME

23 self.feed = CONFIG.get('output_csirtg', 'feed') or FEED

24 self.token = CONFIG.get('output_csirtg', 'token') or TOKEN

25 try:

26 self.description = CONFIG.get('output_csirtg', 'description')

27 except Exception:

28 self.description = DESCRIPTION

29 self.context = {}

30 self.client = Client(token=self.token)

31 cowrie.core.output.Output.__init__(self)

32

33 def start(self, ):

34 pass

35

36 def stop(self):

37 pass

38

39 def write(self, e):

40 peerIP = e['src_ip']

41 ts = e['timestamp']

42 system = e['system']

43

44 if system not in ['cowrie.ssh.factory.CowrieSSHFactory', 'cowrie.telnet.transport.HoneyPotTelnetFactory']:

45 return

46

47 today = str(datetime.now().date())

48

49 if not self.context.get(today):

50 self.context = {}

51 self.context[today] = set()

52

53 key = ','.join([peerIP, system])

54

55 if key in self.context[today]:

56 return

57

58 self.context[today].add(key)

59

60 tags = 'scanner,ssh'

61 port = 22

62 if e['system'] == 'cowrie.telnet.transport.HoneyPotTelnetFactory':

63 tags = 'scanner,telnet'

64 port = 23

65

66 i = {

67 'user': self.user,

68 'feed': self.feed,

69 'indicator': peerIP,

70 'portlist': port,

71 'protocol': 'tcp',

72 'tags': tags,

73 'firsttime': ts,

74 'lasttime': ts,

75 'description': self.description

76 }

77

78 ret = Indicator(self.client, i).submit()

79 log.msg('logged to csirtg %s ' % ret['location'])

80

[end of src/cowrie/output/csirtg.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/src/cowrie/output/csirtg.py b/src/cowrie/output/csirtg.py

--- a/src/cowrie/output/csirtg.py

+++ b/src/cowrie/output/csirtg.py

@@ -39,9 +39,10 @@

def write(self, e):

peerIP = e['src_ip']

ts = e['timestamp']

- system = e['system']

+ system = e.get('system', None)

- if system not in ['cowrie.ssh.factory.CowrieSSHFactory', 'cowrie.telnet.transport.HoneyPotTelnetFactory']:

+ if system not in ['cowrie.ssh.factory.CowrieSSHFactory',

+ 'cowrie.telnet.transport.HoneyPotTelnetFactory']:

return

today = str(datetime.now().date())

| {"golden_diff": "diff --git a/src/cowrie/output/csirtg.py b/src/cowrie/output/csirtg.py\n--- a/src/cowrie/output/csirtg.py\n+++ b/src/cowrie/output/csirtg.py\n@@ -39,9 +39,10 @@\n def write(self, e):\n peerIP = e['src_ip']\n ts = e['timestamp']\n- system = e['system']\n+ system = e.get('system', None)\n \n- if system not in ['cowrie.ssh.factory.CowrieSSHFactory', 'cowrie.telnet.transport.HoneyPotTelnetFactory']:\n+ if system not in ['cowrie.ssh.factory.CowrieSSHFactory',\n+ 'cowrie.telnet.transport.HoneyPotTelnetFactory']:\n return\n \n today = str(datetime.now().date())\n", "issue": "Bug in csirtg plugin\n@wesyoung Not sure when this bug started, but just looked today at my honeypots and saw this happening all over the place in the logs.\r\n\r\n```\r\n2018-02-11T16:53:14-0500 [twisted.internet.defer#critical] Unhandled error in Deferred:\r\n2018-02-11T16:53:14-0500 [twisted.internet.defer#critical]\r\n\tTraceback (most recent call last):\r\n\t File \"/usr/local/lib/python2.7/dist-packages/twisted/internet/tcp.py\", line 289, in connectionLost\r\n\t protocol.connectionLost(reason)\r\n\t File \"/usr/local/lib/python2.7/dist-packages/twisted/web/client.py\", line 223, in connectionLost\r\n\t self.factory._disconnectedDeferred.callback(None)\r\n\t File \"/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py\", line 459, in callback\r\n\t self._startRunCallbacks(result)\r\n\t File \"/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py\", line 567, in _startRunCallbacks\r\n\t self._runCallbacks()\r\n\t--- <exception caught here> ---\r\n\t File \"/usr/local/lib/python2.7/dist-packages/twisted/internet/defer.py\", line 653, in _runCallbacks\r\n\t current.result = callback(current.result, *args, **kw)\r\n\t File \"/home/cowrie/cowrie/cowrie/commands/wget.py\", line 241, in error\r\n\t url=self.url)\r\n\t File \"/home/cowrie/cowrie/cowrie/shell/protocol.py\", line 80, in logDispatch\r\n\t pt.factory.logDispatch(*msg, **args)\r\n\t File 
\"/home/cowrie/cowrie/cowrie/telnet/transport.py\", line 43, in logDispatch\r\n\t output.logDispatch(*msg, **args)\r\n\t File \"/home/cowrie/cowrie/cowrie/core/output.py\", line 117, in logDispatch\r\n\t self.emit(ev)\r\n\t File \"/home/cowrie/cowrie/cowrie/core/output.py\", line 206, in emit\r\n\t self.write(ev)\r\n\t File \"/home/cowrie/cowrie/cowrie/output/csirtg.py\", line 43, in write\r\n\t system = e['system']\r\n\texceptions.KeyError: 'system'\r\n```\n", "before_files": [{"content": "from __future__ import absolute_import, division\n\nimport os\nfrom datetime import datetime\n\nfrom csirtgsdk.client import Client\nfrom csirtgsdk.indicator import Indicator\n\nfrom twisted.python import log\n\nimport cowrie.core.output\nfrom cowrie.core.config import CONFIG\n\nUSERNAME = os.environ.get('CSIRTG_USER')\nFEED = os.environ.get('CSIRTG_FEED')\nTOKEN = os.environ.get('CSIRG_TOKEN')\nDESCRIPTION = os.environ.get('CSIRTG_DESCRIPTION', 'random scanning activity')\n\n\nclass Output(cowrie.core.output.Output):\n def __init__(self):\n self.user = CONFIG.get('output_csirtg', 'username') or USERNAME\n self.feed = CONFIG.get('output_csirtg', 'feed') or FEED\n self.token = CONFIG.get('output_csirtg', 'token') or TOKEN\n try:\n self.description = CONFIG.get('output_csirtg', 'description')\n except Exception:\n self.description = DESCRIPTION\n self.context = {}\n self.client = Client(token=self.token)\n cowrie.core.output.Output.__init__(self)\n\n def start(self, ):\n pass\n\n def stop(self):\n pass\n\n def write(self, e):\n peerIP = e['src_ip']\n ts = e['timestamp']\n system = e['system']\n\n if system not in ['cowrie.ssh.factory.CowrieSSHFactory', 'cowrie.telnet.transport.HoneyPotTelnetFactory']:\n return\n\n today = str(datetime.now().date())\n\n if not self.context.get(today):\n self.context = {}\n self.context[today] = set()\n\n key = ','.join([peerIP, system])\n\n if key in self.context[today]:\n return\n\n self.context[today].add(key)\n\n tags = 'scanner,ssh'\n port = 
22\n if e['system'] == 'cowrie.telnet.transport.HoneyPotTelnetFactory':\n tags = 'scanner,telnet'\n port = 23\n\n i = {\n 'user': self.user,\n 'feed': self.feed,\n 'indicator': peerIP,\n 'portlist': port,\n 'protocol': 'tcp',\n 'tags': tags,\n 'firsttime': ts,\n 'lasttime': ts,\n 'description': self.description\n }\n\n ret = Indicator(self.client, i).submit()\n log.msg('logged to csirtg %s ' % ret['location'])\n", "path": "src/cowrie/output/csirtg.py"}]} | 1,779 | 180 |

gh_patches_debug_5664 | rasdani/github-patches | git_diff | cisagov__manage.get.gov-278 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

DomainApplication list on logged in user homepage

Outcome: A list (limited to one now) of domain applications on the homepage of a logged in user.

A/C: As a user I want to see my in progress applications for a domain. As a user I want to be able to select an application that is `In Progress` and continue my progress. As a user I want to be able to view submitted applications.

</issue>

<code>

[start of src/registrar/views/index.py]

1 from django.shortcuts import render

2

3

4 def index(request):

5 """This page is available to anyone without logging in."""

6 return render(request, "home.html")

7

[end of src/registrar/views/index.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/src/registrar/views/index.py b/src/registrar/views/index.py

--- a/src/registrar/views/index.py

+++ b/src/registrar/views/index.py

@@ -1,6 +1,12 @@

from django.shortcuts import render

+from registrar.models import DomainApplication

+

def index(request):

"""This page is available to anyone without logging in."""

- return render(request, "home.html")

+ context = {}

+ if request.user.is_authenticated:

+ applications = DomainApplication.objects.filter(creator=request.user)

+ context["domain_applications"] = applications

+ return render(request, "home.html", context)

| {"golden_diff": "diff --git a/src/registrar/views/index.py b/src/registrar/views/index.py\n--- a/src/registrar/views/index.py\n+++ b/src/registrar/views/index.py\n@@ -1,6 +1,12 @@\n from django.shortcuts import render\n \n+from registrar.models import DomainApplication\n+\n \n def index(request):\n \"\"\"This page is available to anyone without logging in.\"\"\"\n- return render(request, \"home.html\")\n+ context = {}\n+ if request.user.is_authenticated:\n+ applications = DomainApplication.objects.filter(creator=request.user)\n+ context[\"domain_applications\"] = applications\n+ return render(request, \"home.html\", context)\n", "issue": "DomainApplication list on logged in user homepage\nOutcome: A list (limited to one now) of domain applications on the homepage of a logged in user. \n\nA/C: As a user I want to see my in progress applications for a domain. As a user I want to be able to select an application that is `In Progress` and continue my progress. As a user I want to be able to view submitted applications. \n", "before_files": [{"content": "from django.shortcuts import render\n\n\ndef index(request):\n \"\"\"This page is available to anyone without logging in.\"\"\"\n return render(request, \"home.html\")\n", "path": "src/registrar/views/index.py"}]} | 664 | 138 |

gh_patches_debug_57620 | rasdani/github-patches | git_diff | SigmaHQ__sigma-2144 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

sigmac - invalid value in STATE filter

In specification it states that there are three statuses:

* stable

* test

* experimental

https://github.com/SigmaHQ/sigma/wiki/Specification#status-optional

Problem arises when using sigmac with filter option and trying to filter out status **test**, because in filter options it states that status is one of these values: experimental, testing, stable.

Specification says **test**, but filter looks for status **testing**.

```

--filter FILTER, -f FILTER

Define comma-separated filters that must match (AND-

linked) to rule to be processed. Valid filters:

level<=x, level>=x, level=x, status=y, logsource=z,

tag=t. x is one of: low, medium, high, critical. y is

one of: experimental, testing, stable. z is a word

appearing in an arbitrary log source attribute. t is a

tag that must appear in the rules tag list, case-

insensitive matching. Multiple log source

specifications are AND linked.

```

sigma-master\tools\sigma\filter.py

`line 27: STATES = ["experimental", "testing", "stable"] `

</issue>
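A minimal sketch of the mismatch described above, assuming the fix is simply to make the filter accept the status values listed in the specification. `SPEC_STATES` and `parse_status` are hypothetical names for illustration, not part of `tools/sigma/filter.py`; the parsing and validation logic mirrors the `status=` branch of `SigmaRuleFilter.__init__`.

```python
# Hypothetical: status values as listed in the Sigma specification
# ("test", not "testing").
SPEC_STATES = ["stable", "test", "experimental"]


class SigmaRuleFilterParseException(Exception):
    pass


def parse_status(cond):
    """Parse a 'status=y' filter condition and validate y against the spec."""
    status = cond[cond.index("=") + 1:]
    if status not in SPEC_STATES:
        raise SigmaRuleFilterParseException(
            "Unknown status '%s' in condition '%s'" % (status, cond))
    return status
```

With this list, `status=test` is accepted and `status=testing` is rejected, so the filter agrees with the values rules actually carry in their `status` field.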

<code>

[start of tools/sigma/filter.py]

1 # Sigma parser

2 # Copyright 2016-2018 Thomas Patzke, Florian Roth

3

4 # This program is free software: you can redistribute it and/or modify

5 # it under the terms of the GNU Lesser General Public License as published by

6 # the Free Software Foundation, either version 3 of the License, or

7 # (at your option) any later version.

8

9 # This program is distributed in the hope that it will be useful,

10 # but WITHOUT ANY WARRANTY; without even the implied warranty of

11 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

12 # GNU Lesser General Public License for more details.

13

14 # You should have received a copy of the GNU Lesser General Public License

15 # along with this program. If not, see <http://www.gnu.org/licenses/>.

16

17 # Rule Filtering

18 import datetime

19 class SigmaRuleFilter:

20 """Filter for Sigma rules with conditions"""

21 LEVELS = {

22 "low" : 0,

23 "medium" : 1,

24 "high" : 2,

25 "critical" : 3

26 }

27 STATES = ["experimental", "testing", "stable"]

28

29 def __init__(self, expr):

30 self.minlevel = None

31 self.maxlevel = None

32 self.status = None

33 self.tlp = None

34 self.target = None

35 self.logsources = list()

36 self.notlogsources = list()

37 self.tags = list()

38 self.nottags = list()

39 self.inlastday = None

40 self.condition = list()

41 self.notcondition = list()

42

43 for cond in [c.replace(" ", "") for c in expr.split(",")]:

44 if cond.startswith("level<="):

45 try:

46 level = cond[cond.index("=") + 1:]

47 self.maxlevel = self.LEVELS[level]

48 except KeyError as e:

49 raise SigmaRuleFilterParseException("Unknown level '%s' in condition '%s'" % (level, cond)) from e

50 elif cond.startswith("level>="):

51 try:

52 level = cond[cond.index("=") + 1:]

53 self.minlevel = self.LEVELS[level]

54 except KeyError as e:

55 raise SigmaRuleFilterParseException("Unknown level '%s' in condition '%s'" % (level, cond)) from e

56 elif cond.startswith("level="):

57 try:

58 level = cond[cond.index("=") + 1:]

59 self.minlevel = self.LEVELS[level]

60 self.maxlevel = self.minlevel

61 except KeyError as e:

62 raise SigmaRuleFilterParseException("Unknown level '%s' in condition '%s'" % (level, cond)) from e

63 elif cond.startswith("status="):

64 self.status = cond[cond.index("=") + 1:]

65 if self.status not in self.STATES:

66 raise SigmaRuleFilterParseException("Unknown status '%s' in condition '%s'" % (self.status, cond))

67 elif cond.startswith("tlp="):

68 self.tlp = cond[cond.index("=") + 1:].upper() #tlp is always uppercase

69 elif cond.startswith("target="):

70 self.target = cond[cond.index("=") + 1:].lower() # lower to make caseinsensitive

71 elif cond.startswith("logsource="):

72 self.logsources.append(cond[cond.index("=") + 1:])

73 elif cond.startswith("logsource!="):

74 self.notlogsources.append(cond[cond.index("=") + 1:])

75 elif cond.startswith("tag="):

76 self.tags.append(cond[cond.index("=") + 1:].lower())

77 elif cond.startswith("tag!="):

78 self.nottags.append(cond[cond.index("=") + 1:].lower())

79 elif cond.startswith("condition="):

80 self.condition.append(cond[cond.index("=") + 1:].lower())

81 elif cond.startswith("condition!="):

82 self.notcondition.append(cond[cond.index("=") + 1:].lower())

83 elif cond.startswith("inlastday="):

84 nbday = cond[cond.index("=") + 1:]

85 try:

86 self.inlastday = int(nbday)

87 except ValueError as e:

88 raise SigmaRuleFilterParseException("Unknown number '%s' in condition '%s'" % (nbday, cond)) from e

89 else:

90 raise SigmaRuleFilterParseException("Unknown condition '%s'" % cond)

91

92 def match(self, yamldoc):

93 """Match filter conditions against rule"""

94 # Levels

95 if self.minlevel is not None or self.maxlevel is not None:

96 try:

97 level = self.LEVELS[yamldoc['level']]

98 except KeyError: # missing or invalid level

99 return False # User wants level restriction, but it's not possible here

100

101 # Minimum level

102 if self.minlevel is not None:

103 if level < self.minlevel:

104 return False

105 # Maximum level

106 if self.maxlevel is not None:

107 if level > self.maxlevel:

108 return False

109

110 # Status

111 if self.status is not None:

112 try:

113 status = yamldoc['status']

114 except KeyError: # missing status

115 return False # User wants status restriction, but it's not possible here

116 if status != self.status:

117 return False

118

119 # Tlp

120 if self.tlp is not None:

121 try:

122 tlp = yamldoc['tlp']

123 except KeyError: # missing tlp

124 tlp = "WHITE" # tlp is WHITE by default

125 if tlp != self.tlp:

126 return False

127

128 #Target

129 if self.target:

130 try:

131 targets = [ target.lower() for target in yamldoc['target']]

132 except (KeyError, AttributeError): # no target set

133 return False

134 if self.target not in targets:

135 return False

136

137 # Log Sources

138 if self.logsources:

139 try:

140 logsources = { value for key, value in yamldoc['logsource'].items() }

141 except (KeyError, AttributeError): # no log source set

142 return False # User wants status restriction, but it's not possible here

143

144 for logsrc in self.logsources:

145 if logsrc not in logsources:

146 return False

147

148 # NOT Log Sources

149 if self.notlogsources:

150 try:

151 notlogsources = { value for key, value in yamldoc['logsource'].items() }

152 except (KeyError, AttributeError): # no log source set

153 return False # User wants status restriction, but it's not possible here

154

155 for logsrc in self.notlogsources:

156 if logsrc in notlogsources:

157 return False

158

159 # Tags

160 if self.tags:

161 try:

162 tags = [ tag.lower() for tag in yamldoc['tags']]

163 except (KeyError, AttributeError): # no tags set

164 return False

165

166 for tag in self.tags:

167 if tag not in tags:

168 return False

169 # NOT Tags

170 if self.nottags:

171 try:

172 nottags = [ tag.lower() for tag in yamldoc['tags']]

173 except (KeyError, AttributeError): # no tags set

174 return False

175

176 for tag in self.nottags:

177 if tag in nottags:

178 return False

179

180 # date in the last N days

181 if self.inlastday:

182 try:

183 date_str = yamldoc['date']

184 except KeyError: # missing date

185 return False # User wants date time restriction, but it's not possible here

186

187 try:

188 modified_str = yamldoc['modified']

189 except KeyError: # no update

190 modified_str = None

191 if modified_str:

192 date_str = modified_str

193

194 date_object = datetime.datetime.strptime(date_str, '%Y/%m/%d')

195 today_objet = datetime.datetime.now()

196 delta = today_objet - date_object

197 if delta.days > self.inlastday:

198 return False

199

200 if self.condition:

201 try:

202 conditions = yamldoc['detection']['condition']

203 if isinstance(conditions,list): # sone time conditions are list even with only 1 line

204 s_condition = ' '.join(conditions)

205 else:

206 s_condition = conditions

207 except KeyError: # missing condition

208 return False # User wants condition restriction, but it's not possible here

209 for val in self.condition:

210 if not val in s_condition:

211 return False

212

213 if self.notcondition:

214 try:

215 conditions = yamldoc['detection']['condition']

216 if isinstance(conditions,list): # sone time conditions are list even with only 1 line

217 s_condition = ' '.join(conditions)

218 else:

219 s_condition = conditions

220 except KeyError: # missing condition

221 return False # User wants condition restriction, but it's not possible here

222 for val in self.notcondition:

223 if val in s_condition:

224 return False

225

226 # all tests passed

227 return True

228

229 class SigmaRuleFilterParseException(Exception):

230 pass

231

[end of tools/sigma/filter.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []