| problem_id (stringlengths 18 to 22) | source (stringclasses 1 value) | task_type (stringclasses 1 value) | in_source_id (stringlengths 13 to 58) | prompt (stringlengths 1.71k to 18.9k) | golden_diff (stringlengths 145 to 5.13k) | verification_info (stringlengths 465 to 23.6k) | num_tokens_prompt (int64 556 to 4.1k) | num_tokens_diff (int64 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_52175

|

rasdani/github-patches

|

git_diff

|

microsoft__ptvsd-167

|

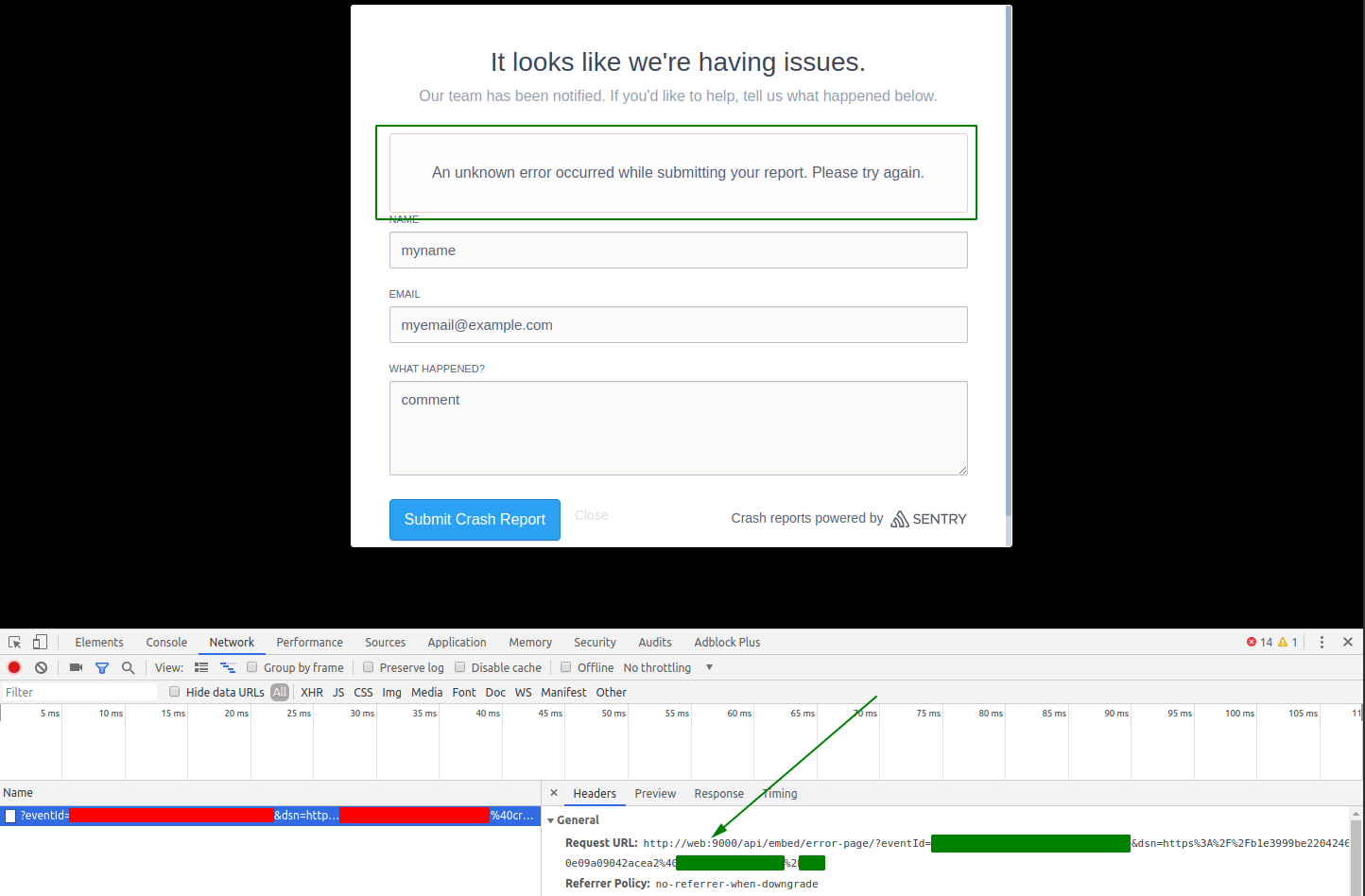

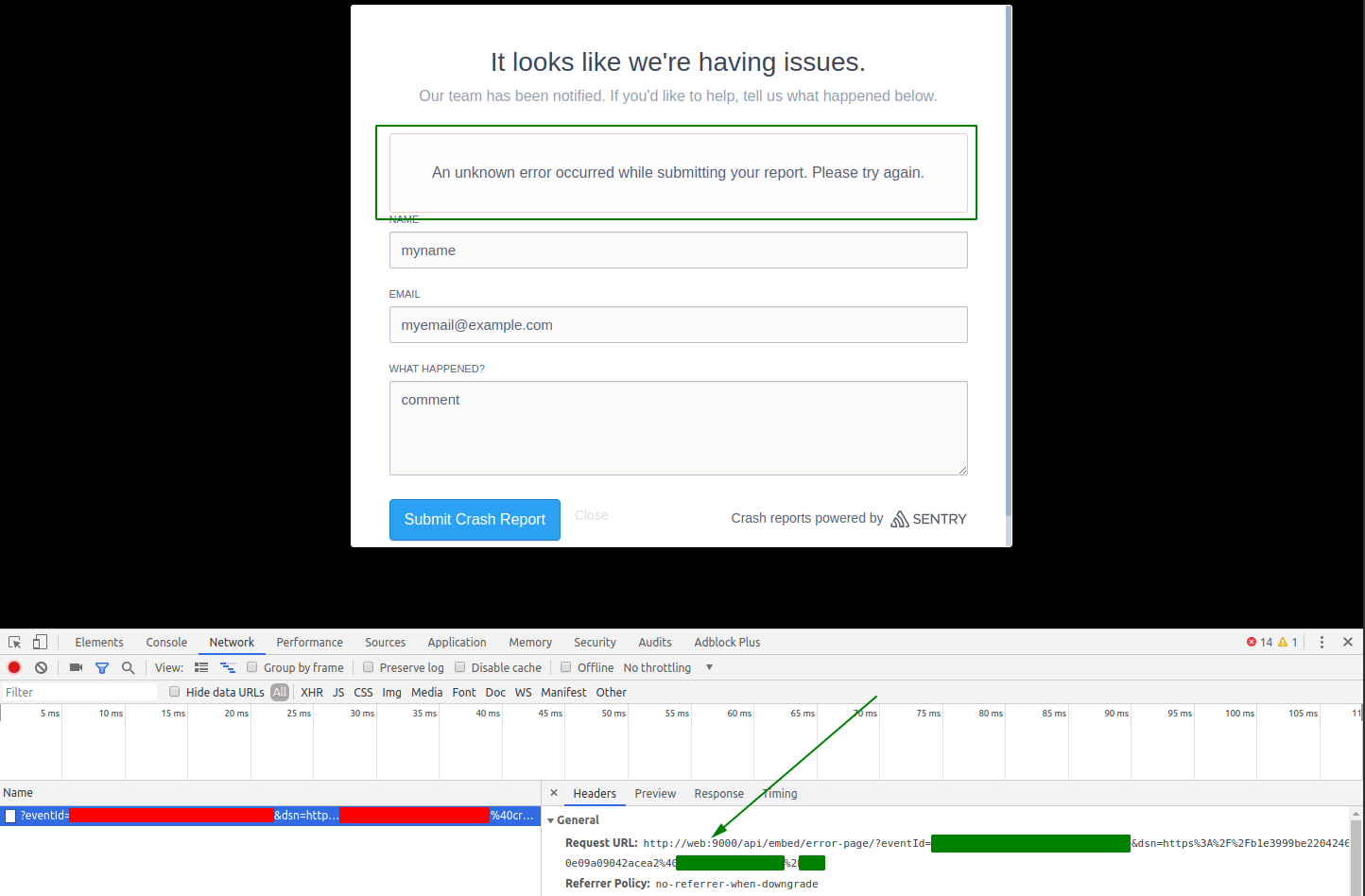

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Error reading integer

From VS (might not be a ptvsd bug, not sure at this point):

Create new python application

Add new item, python unit test

Set the unit test as startup file

F5

Result:

```

---------------------------

Microsoft Visual Studio

---------------------------

Error reading integer. Unexpected token: Boolean. Path 'exitCode'.

---------------------------

OK

---------------------------

```

</issue>

<code>

[start of ptvsd/debugger.py]

1 # Copyright (c) Microsoft Corporation. All rights reserved.

2 # Licensed under the MIT License. See LICENSE in the project root

3 # for license information.

4

5 import sys

6

7

8 __author__ = "Microsoft Corporation <[email protected]>"

9 __version__ = "4.0.0a1"

10

11 DONT_DEBUG = []

12

13

14 def debug(filename, port_num, debug_id, debug_options, run_as):

15     # TODO: docstring

16

17     # import the wrapper first, so that it gets a chance

18     # to detour pydevd socket functionality.

19     import ptvsd.wrapper

20     import pydevd

21

22     args = [

23         '--port', str(port_num),

24         '--client', '127.0.0.1',

25     ]

26     if run_as == 'module':

27         args.append('--module')

28         args.extend(('--file', filename + ":"))

29     else:

30         args.extend(('--file', filename))

31     sys.argv[1:0] = args

32     try:

33         pydevd.main()

34     except SystemExit as ex:

35         ptvsd.wrapper.ptvsd_sys_exit_code = ex.code

36         raise

37

[end of ptvsd/debugger.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

 def euclidean(a, b):

-    while b:

-        a, b = b, a % b

-    return a

+    if b == 0:

+        return a

+    return euclidean(b, a % b)

 def bresenham(x0, y0, x1, y1):

     points = []

     dx = abs(x1 - x0)

     dy = abs(y1 - y0)

-    sx = 1 if x0 < x1 else -1

-    sy = 1 if y0 < y1 else -1

-    err = dx - dy

+    x, y = x0, y0

+    sx = -1 if x0 > x1 else 1

+    sy = -1 if y0 > y1 else 1

-    while True:

-        points.append((x0, y0))

-        if x0 == x1 and y0 == y1:

-            break

-        e2 = 2 * err

-        if e2 > -dy:

-            err -= dy

-            x0 += sx

-        if e2 < dx:

-            err += dx

-            y0 += sy

+    if dx > dy:

+        err = dx / 2.0

+        while x != x1:

+            points.append((x, y))

+            err -= dy

+            if err < 0:

+                y += sy

+                err += dx

+            x += sx

+    else:

+        err = dy / 2.0

+        while y != y1:

+            points.append((x, y))

+            err -= dx

+            if err < 0:

+                x += sx

+                err += dy

+            y += sy

+

+    points.append((x, y))

     return points

</patch>

|

diff --git a/ptvsd/debugger.py b/ptvsd/debugger.py

--- a/ptvsd/debugger.py

+++ b/ptvsd/debugger.py

@@ -32,5 +32,5 @@

     try:

         pydevd.main()

     except SystemExit as ex:

-        ptvsd.wrapper.ptvsd_sys_exit_code = ex.code

+        ptvsd.wrapper.ptvsd_sys_exit_code = int(ex.code)

         raise
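
The one-line change in this diff coerces the exit code to an integer before it is stored. The following is a minimal sketch of why that can matter, assuming (as the Visual Studio error message suggests) that the receiving side parses `exitCode` as an integer. `SystemExit.code` holds whatever object was passed to `sys.exit()`, so a boolean argument is stored as a boolean. The `capture_exit_code` helper and the JSON payload shape are hypothetical and only illustrate the type difference; they are not taken from ptvsd.

```python
import json
import sys


def capture_exit_code(func):
    """Run func and return the code carried by the SystemExit it raises."""
    try:
        func()
    except SystemExit as ex:
        return ex.code
    return None


# sys.exit(False) stores the boolean itself on the exception.
raw_code = capture_exit_code(lambda: sys.exit(False))

# int() turns False into 0 (and True into 1).
coerced_code = int(raw_code)

# Hypothetical payload: a JSON consumer sees a boolean for the raw value
# and an integer after coercion.
raw_payload = json.dumps({"exitCode": raw_code})
coerced_payload = json.dumps({"exitCode": coerced_code})

print(raw_payload)      # {"exitCode": false}
print(coerced_payload)  # {"exitCode": 0}
```

Note that `int()` raises for `None` or a non-numeric string, so this sketch covers only the boolean case described in the issue.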

|

{"golden_diff": "diff --git a/ptvsd/debugger.py b/ptvsd/debugger.py\n--- a/ptvsd/debugger.py\n+++ b/ptvsd/debugger.py\n@@ -32,5 +32,5 @@\n try:\n pydevd.main()\n except SystemExit as ex:\n- ptvsd.wrapper.ptvsd_sys_exit_code = ex.code\n+ ptvsd.wrapper.ptvsd_sys_exit_code = int(ex.code)\n raise\n", "issue": "Error reading integer\nFrom VS (might not be a ptvsd bug, not sure at this point):\r\nCreate new python application\r\nAdd new item, python unit test\r\nSet the unit test as startup file\r\nF5\r\n\r\nResult:\r\n```\r\n---------------------------\r\nMicrosoft Visual Studio\r\n---------------------------\r\nError reading integer. Unexpected token: Boolean. Path 'exitCode'.\r\n---------------------------\r\nOK \r\n---------------------------\r\n```\n", "before_files": [{"content": "# Copyright (c) Microsoft Corporation. All rights reserved.\n# Licensed under the MIT License. See LICENSE in the project root\n# for license information.\n\nimport sys\n\n\n__author__ = \"Microsoft Corporation <[email protected]>\"\n__version__ = \"4.0.0a1\"\n\nDONT_DEBUG = []\n\n\ndef debug(filename, port_num, debug_id, debug_options, run_as):\n # TODO: docstring\n\n # import the wrapper first, so that it gets a chance\n # to detour pydevd socket functionality.\n import ptvsd.wrapper\n import pydevd\n\n args = [\n '--port', str(port_num),\n '--client', '127.0.0.1',\n ]\n if run_as == 'module':\n args.append('--module')\n args.extend(('--file', filename + \":\"))\n else:\n args.extend(('--file', filename))\n sys.argv[1:0] = args\n try:\n pydevd.main()\n except SystemExit as ex:\n ptvsd.wrapper.ptvsd_sys_exit_code = ex.code\n raise\n", "path": "ptvsd/debugger.py"}]}

| 931 | 104 |

gh_patches_debug_57163

|

rasdani/github-patches

|

git_diff

|

Bitmessage__PyBitmessage-2004

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Adding Protocol specification to docs (WIP)

I am slowly formatting Protocol Specification doc. I see some typos and mistakes in the wiki, which I also hope to fix.

[A quick preview](https://pybitmessage-test.readthedocs.io/en/doc/protocol.html)

</issue>

<code>

[start of docs/conf.py]

1 # -*- coding: utf-8 -*-

2 """

3 Configuration file for the Sphinx documentation builder.

4

5 For a full list of options see the documentation:

6 http://www.sphinx-doc.org/en/master/config

7 """

8

9 import os

10 import sys

11

12 sys.path.insert(0, os.path.abspath('../src'))

13

14 from importlib import import_module

15

16 import version # noqa:E402

17

18

19 # -- Project information -----------------------------------------------------

20

21 project = u'PyBitmessage'

22 copyright = u'2019, The Bitmessage Team' # pylint: disable=redefined-builtin

23 author = u'The Bitmessage Team'

24

25 # The short X.Y version

26 version = unicode(version.softwareVersion)

27

28 # The full version, including alpha/beta/rc tags

29 release = version

30

31 # -- General configuration ---------------------------------------------------

32

33 # If your documentation needs a minimal Sphinx version, state it here.

34 #

35 # needs_sphinx = '1.0'

36

37 # Add any Sphinx extension module names here, as strings. They can be

38 # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

39 # ones.

40 extensions = [

41 'sphinx.ext.autodoc',

42 'sphinx.ext.coverage', # FIXME: unused

43 'sphinx.ext.imgmath', # legacy unused

44 'sphinx.ext.intersphinx',

45 'sphinx.ext.linkcode',

46 'sphinx.ext.napoleon',

47 'sphinx.ext.todo',

48 'sphinxcontrib.apidoc',

49 'm2r',

50 ]

51

52 default_role = 'obj'

53

54 # Add any paths that contain templates here, relative to this directory.

55 templates_path = ['_templates']

56

57 # The suffix(es) of source filenames.

58 # You can specify multiple suffix as a list of string:

59 #

60 source_suffix = ['.rst', '.md']

61

62 # The master toctree document.

63 master_doc = 'index'

64

65 # The language for content autogenerated by Sphinx. Refer to documentation

66 # for a list of supported languages.

67 #

68 # This is also used if you do content translation via gettext catalogs.

69 # Usually you set "language" from the command line for these cases.

70 # language = None

71

72 # List of patterns, relative to source directory, that match files and

73 # directories to ignore when looking for source files.

74 # This pattern also affects html_static_path and html_extra_path .

75 exclude_patterns = ['_build']

76

77 # The name of the Pygments (syntax highlighting) style to use.

78 pygments_style = 'sphinx'

79

80 # Don't prepend every class or function name with full module path

81 add_module_names = False

82

83 # A list of ignored prefixes for module index sorting.

84 modindex_common_prefix = ['pybitmessage.']

85

86

87 # -- Options for HTML output -------------------------------------------------

88

89 # The theme to use for HTML and HTML Help pages. See the documentation for

90 # a list of builtin themes.

91 #

92 html_theme = 'sphinx_rtd_theme'

93

94 # Theme options are theme-specific and customize the look and feel of a theme

95 # further. For a list of options available for each theme, see the

96 # documentation.

97 #

98 # html_theme_options = {}

99

100 # Add any paths that contain custom static files (such as style sheets) here,

101 # relative to this directory. They are copied after the builtin static files,

102 # so a file named "default.css" will overwrite the builtin "default.css".

103 html_static_path = ['_static']

104

105 html_css_files = [

106 'custom.css',

107 ]

108

109 # Custom sidebar templates, must be a dictionary that maps document names

110 # to template names.

111 #

112 # The default sidebars (for documents that don't match any pattern) are

113 # defined by theme itself. Builtin themes are using these templates by

114 # default: ``['localtoc.html', 'relations.html', 'sourcelink.html',

115 # 'searchbox.html']``.

116 #

117 # html_sidebars = {}

118

119 html_show_sourcelink = False

120

121 # -- Options for HTMLHelp output ---------------------------------------------

122

123 # Output file base name for HTML help builder.

124 htmlhelp_basename = 'PyBitmessagedoc'

125

126

127 # -- Options for LaTeX output ------------------------------------------------

128

129 latex_elements = {

130 # The paper size ('letterpaper' or 'a4paper').

131 #

132 # 'papersize': 'letterpaper',

133

134 # The font size ('10pt', '11pt' or '12pt').

135 #

136 # 'pointsize': '10pt',

137

138 # Additional stuff for the LaTeX preamble.

139 #

140 # 'preamble': '',

141

142 # Latex figure (float) alignment

143 #

144 # 'figure_align': 'htbp',

145 }

146

147 # Grouping the document tree into LaTeX files. List of tuples

148 # (source start file, target name, title,

149 # author, documentclass [howto, manual, or own class]).

150 latex_documents = [

151 (master_doc, 'PyBitmessage.tex', u'PyBitmessage Documentation',

152 u'The Bitmessage Team', 'manual'),

153 ]

154

155

156 # -- Options for manual page output ------------------------------------------

157

158 # One entry per manual page. List of tuples

159 # (source start file, name, description, authors, manual section).

160 man_pages = [

161 (master_doc, 'pybitmessage', u'PyBitmessage Documentation',

162 [author], 1)

163 ]

164

165

166 # -- Options for Texinfo output ----------------------------------------------

167

168 # Grouping the document tree into Texinfo files. List of tuples

169 # (source start file, target name, title, author,

170 # dir menu entry, description, category)

171 texinfo_documents = [

172 (master_doc, 'PyBitmessage', u'PyBitmessage Documentation',

173 author, 'PyBitmessage', 'One line description of project.',

174 'Miscellaneous'),

175 ]

176

177

178 # -- Options for Epub output -------------------------------------------------

179

180 # Bibliographic Dublin Core info.

181 epub_title = project

182 epub_author = author

183 epub_publisher = author

184 epub_copyright = copyright

185

186 # The unique identifier of the text. This can be a ISBN number

187 # or the project homepage.

188 #

189 # epub_identifier = ''

190

191 # A unique identification for the text.

192 #

193 # epub_uid = ''

194

195 # A list of files that should not be packed into the epub file.

196 epub_exclude_files = ['search.html']

197

198

199 # -- Extension configuration -------------------------------------------------

200

201 autodoc_mock_imports = [

202 'debug',

203 'pybitmessage.bitmessagekivy',

204 'pybitmessage.bitmessageqt.foldertree',

205 'pybitmessage.helper_startup',

206 'pybitmessage.mock',

207 'pybitmessage.network.httpd',

208 'pybitmessage.network.https',

209 'ctypes',

210 'dialog',

211 'gi',

212 'kivy',

213 'logging',

214 'msgpack',

215 'numpy',

216 'pkg_resources',

217 'pycanberra',

218 'pyopencl',

219 'PyQt4',

220 'PyQt5',

221 'qrcode',

222 'stem',

223 'xdg',

224 ]

225 autodoc_member_order = 'bysource'

226

227 # Apidoc settings

228 apidoc_module_dir = '../pybitmessage'

229 apidoc_output_dir = 'autodoc'

230 apidoc_excluded_paths = [

231 'bitmessagekivy', 'build_osx.py',

232 'bitmessageqt/addressvalidator.py', 'bitmessageqt/foldertree.py',

233 'bitmessageqt/migrationwizard.py', 'bitmessageqt/newaddresswizard.py',

234 'helper_startup.py',

235 'kivymd', 'mock', 'main.py', 'navigationdrawer', 'network/http*',

236 'src', 'tests', 'version.py'

237 ]

238 apidoc_module_first = True

239 apidoc_separate_modules = True

240 apidoc_toc_file = False

241 apidoc_extra_args = ['-a']

242

243 # Napoleon settings

244 napoleon_google_docstring = True

245

246

247 # linkcode function

248 def linkcode_resolve(domain, info):

249     """This generates source URL's for sphinx.ext.linkcode"""

250     if domain != 'py' or not info['module']:

251         return

252     try:

253         home = os.path.abspath(import_module('pybitmessage').__path__[0])

254         mod = import_module(info['module']).__file__

255     except ImportError:

256         return

257     repo = 'https://github.com/Bitmessage/PyBitmessage/blob/v0.6/src%s'

258     path = mod.replace(home, '')

259     if path != mod:

260         # put the link only for top level definitions

261         if len(info['fullname'].split('.')) > 1:

262             return

263         if path.endswith('.pyc'):

264             path = path[:-1]

265         return repo % path

266

267

268 # -- Options for intersphinx extension ---------------------------------------

269

270 # Example configuration for intersphinx: refer to the Python standard library.

271 intersphinx_mapping = {'https://docs.python.org/2.7/': None}

272

273 # -- Options for todo extension ----------------------------------------------

274

275 # If true, `todo` and `todoList` produce output, else they produce nothing.

276 todo_include_todos = True

277

[end of docs/conf.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

 def euclidean(a, b):

-    while b:

-        a, b = b, a % b

-    return a

+    if b == 0:

+        return a

+    return euclidean(b, a % b)

 def bresenham(x0, y0, x1, y1):

     points = []

     dx = abs(x1 - x0)

     dy = abs(y1 - y0)

-    sx = 1 if x0 < x1 else -1

-    sy = 1 if y0 < y1 else -1

-    err = dx - dy

+    x, y = x0, y0

+    sx = -1 if x0 > x1 else 1

+    sy = -1 if y0 > y1 else 1

-    while True:

-        points.append((x0, y0))

-        if x0 == x1 and y0 == y1:

-            break

-        e2 = 2 * err

-        if e2 > -dy:

-            err -= dy

-            x0 += sx

-        if e2 < dx:

-            err += dx

-            y0 += sy

+    if dx > dy:

+        err = dx / 2.0

+        while x != x1:

+            points.append((x, y))

+            err -= dy

+            if err < 0:

+                y += sy

+                err += dx

+            x += sx

+    else:

+        err = dy / 2.0

+        while y != y1:

+            points.append((x, y))

+            err -= dx

+            if err < 0:

+                x += sx

+                err += dy

+            y += sy

+

+    points.append((x, y))

     return points

</patch>

|

diff --git a/docs/conf.py b/docs/conf.py

--- a/docs/conf.py

+++ b/docs/conf.py

@@ -19,7 +19,7 @@

# -- Project information -----------------------------------------------------

project = u'PyBitmessage'

-copyright = u'2019, The Bitmessage Team' # pylint: disable=redefined-builtin

+copyright = u'2019-2022, The Bitmessage Team' # pylint: disable=redefined-builtin

author = u'The Bitmessage Team'

# The short X.Y version

|

{"golden_diff": "diff --git a/docs/conf.py b/docs/conf.py\n--- a/docs/conf.py\n+++ b/docs/conf.py\n@@ -19,7 +19,7 @@\n # -- Project information -----------------------------------------------------\n \n project = u'PyBitmessage'\n-copyright = u'2019, The Bitmessage Team' # pylint: disable=redefined-builtin\n+copyright = u'2019-2022, The Bitmessage Team' # pylint: disable=redefined-builtin\n author = u'The Bitmessage Team'\n \n # The short X.Y version\n", "issue": "Adding Protocol specification to docs (WIP)\nI am slowly formatting Protocol Specification doc. I see some typos and mistakes in the wiki, which I also hope to fix.\r\n\r\n[A quick preview](https://pybitmessage-test.readthedocs.io/en/doc/protocol.html)\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\"\"\"\nConfiguration file for the Sphinx documentation builder.\n\nFor a full list of options see the documentation:\nhttp://www.sphinx-doc.org/en/master/config\n\"\"\"\n\nimport os\nimport sys\n\nsys.path.insert(0, os.path.abspath('../src'))\n\nfrom importlib import import_module\n\nimport version # noqa:E402\n\n\n# -- Project information -----------------------------------------------------\n\nproject = u'PyBitmessage'\ncopyright = u'2019, The Bitmessage Team' # pylint: disable=redefined-builtin\nauthor = u'The Bitmessage Team'\n\n# The short X.Y version\nversion = unicode(version.softwareVersion)\n\n# The full version, including alpha/beta/rc tags\nrelease = version\n\n# -- General configuration ---------------------------------------------------\n\n# If your documentation needs a minimal Sphinx version, state it here.\n#\n# needs_sphinx = '1.0'\n\n# Add any Sphinx extension module names here, as strings. 
They can be\n# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom\n# ones.\nextensions = [\n 'sphinx.ext.autodoc',\n 'sphinx.ext.coverage', # FIXME: unused\n 'sphinx.ext.imgmath', # legacy unused\n 'sphinx.ext.intersphinx',\n 'sphinx.ext.linkcode',\n 'sphinx.ext.napoleon',\n 'sphinx.ext.todo',\n 'sphinxcontrib.apidoc',\n 'm2r',\n]\n\ndefault_role = 'obj'\n\n# Add any paths that contain templates here, relative to this directory.\ntemplates_path = ['_templates']\n\n# The suffix(es) of source filenames.\n# You can specify multiple suffix as a list of string:\n#\nsource_suffix = ['.rst', '.md']\n\n# The master toctree document.\nmaster_doc = 'index'\n\n# The language for content autogenerated by Sphinx. Refer to documentation\n# for a list of supported languages.\n#\n# This is also used if you do content translation via gettext catalogs.\n# Usually you set \"language\" from the command line for these cases.\n# language = None\n\n# List of patterns, relative to source directory, that match files and\n# directories to ignore when looking for source files.\n# This pattern also affects html_static_path and html_extra_path .\nexclude_patterns = ['_build']\n\n# The name of the Pygments (syntax highlighting) style to use.\npygments_style = 'sphinx'\n\n# Don't prepend every class or function name with full module path\nadd_module_names = False\n\n# A list of ignored prefixes for module index sorting.\nmodindex_common_prefix = ['pybitmessage.']\n\n\n# -- Options for HTML output -------------------------------------------------\n\n# The theme to use for HTML and HTML Help pages. See the documentation for\n# a list of builtin themes.\n#\nhtml_theme = 'sphinx_rtd_theme'\n\n# Theme options are theme-specific and customize the look and feel of a theme\n# further. 
For a list of options available for each theme, see the\n# documentation.\n#\n# html_theme_options = {}\n\n# Add any paths that contain custom static files (such as style sheets) here,\n# relative to this directory. They are copied after the builtin static files,\n# so a file named \"default.css\" will overwrite the builtin \"default.css\".\nhtml_static_path = ['_static']\n\nhtml_css_files = [\n 'custom.css',\n]\n\n# Custom sidebar templates, must be a dictionary that maps document names\n# to template names.\n#\n# The default sidebars (for documents that don't match any pattern) are\n# defined by theme itself. Builtin themes are using these templates by\n# default: ``['localtoc.html', 'relations.html', 'sourcelink.html',\n# 'searchbox.html']``.\n#\n# html_sidebars = {}\n\nhtml_show_sourcelink = False\n\n# -- Options for HTMLHelp output ---------------------------------------------\n\n# Output file base name for HTML help builder.\nhtmlhelp_basename = 'PyBitmessagedoc'\n\n\n# -- Options for LaTeX output ------------------------------------------------\n\nlatex_elements = {\n # The paper size ('letterpaper' or 'a4paper').\n #\n # 'papersize': 'letterpaper',\n\n # The font size ('10pt', '11pt' or '12pt').\n #\n # 'pointsize': '10pt',\n\n # Additional stuff for the LaTeX preamble.\n #\n # 'preamble': '',\n\n # Latex figure (float) alignment\n #\n # 'figure_align': 'htbp',\n}\n\n# Grouping the document tree into LaTeX files. List of tuples\n# (source start file, target name, title,\n# author, documentclass [howto, manual, or own class]).\nlatex_documents = [\n (master_doc, 'PyBitmessage.tex', u'PyBitmessage Documentation',\n u'The Bitmessage Team', 'manual'),\n]\n\n\n# -- Options for manual page output ------------------------------------------\n\n# One entry per manual page. 
List of tuples\n# (source start file, name, description, authors, manual section).\nman_pages = [\n (master_doc, 'pybitmessage', u'PyBitmessage Documentation',\n [author], 1)\n]\n\n\n# -- Options for Texinfo output ----------------------------------------------\n\n# Grouping the document tree into Texinfo files. List of tuples\n# (source start file, target name, title, author,\n# dir menu entry, description, category)\ntexinfo_documents = [\n (master_doc, 'PyBitmessage', u'PyBitmessage Documentation',\n author, 'PyBitmessage', 'One line description of project.',\n 'Miscellaneous'),\n]\n\n\n# -- Options for Epub output -------------------------------------------------\n\n# Bibliographic Dublin Core info.\nepub_title = project\nepub_author = author\nepub_publisher = author\nepub_copyright = copyright\n\n# The unique identifier of the text. This can be a ISBN number\n# or the project homepage.\n#\n# epub_identifier = ''\n\n# A unique identification for the text.\n#\n# epub_uid = ''\n\n# A list of files that should not be packed into the epub file.\nepub_exclude_files = ['search.html']\n\n\n# -- Extension configuration -------------------------------------------------\n\nautodoc_mock_imports = [\n 'debug',\n 'pybitmessage.bitmessagekivy',\n 'pybitmessage.bitmessageqt.foldertree',\n 'pybitmessage.helper_startup',\n 'pybitmessage.mock',\n 'pybitmessage.network.httpd',\n 'pybitmessage.network.https',\n 'ctypes',\n 'dialog',\n 'gi',\n 'kivy',\n 'logging',\n 'msgpack',\n 'numpy',\n 'pkg_resources',\n 'pycanberra',\n 'pyopencl',\n 'PyQt4',\n 'PyQt5',\n 'qrcode',\n 'stem',\n 'xdg',\n]\nautodoc_member_order = 'bysource'\n\n# Apidoc settings\napidoc_module_dir = '../pybitmessage'\napidoc_output_dir = 'autodoc'\napidoc_excluded_paths = [\n 'bitmessagekivy', 'build_osx.py',\n 'bitmessageqt/addressvalidator.py', 'bitmessageqt/foldertree.py',\n 'bitmessageqt/migrationwizard.py', 'bitmessageqt/newaddresswizard.py',\n 'helper_startup.py',\n 'kivymd', 'mock', 'main.py', 
'navigationdrawer', 'network/http*',\n 'src', 'tests', 'version.py'\n]\napidoc_module_first = True\napidoc_separate_modules = True\napidoc_toc_file = False\napidoc_extra_args = ['-a']\n\n# Napoleon settings\nnapoleon_google_docstring = True\n\n\n# linkcode function\ndef linkcode_resolve(domain, info):\n \"\"\"This generates source URL's for sphinx.ext.linkcode\"\"\"\n if domain != 'py' or not info['module']:\n return\n try:\n home = os.path.abspath(import_module('pybitmessage').__path__[0])\n mod = import_module(info['module']).__file__\n except ImportError:\n return\n repo = 'https://github.com/Bitmessage/PyBitmessage/blob/v0.6/src%s'\n path = mod.replace(home, '')\n if path != mod:\n # put the link only for top level definitions\n if len(info['fullname'].split('.')) > 1:\n return\n if path.endswith('.pyc'):\n path = path[:-1]\n return repo % path\n\n\n# -- Options for intersphinx extension ---------------------------------------\n\n# Example configuration for intersphinx: refer to the Python standard library.\nintersphinx_mapping = {'https://docs.python.org/2.7/': None}\n\n# -- Options for todo extension ----------------------------------------------\n\n# If true, `todo` and `todoList` produce output, else they produce nothing.\ntodo_include_todos = True\n", "path": "docs/conf.py"}]}

| 3,222 | 123 |

gh_patches_debug_3811

|

rasdani/github-patches

|

git_diff

|

openmc-dev__openmc-926

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Unable to run examples/python/pincell

Hi,

After generating the xml files and trying to `openmc` I get the following error:

```

Reading tallies XML file...

ERROR: Two or more meshes use the same unique ID: 1

```

</issue>

<code>

[start of examples/python/pincell/build-xml.py]

1 import openmc

2

3 ###############################################################################

4 # Simulation Input File Parameters

5 ###############################################################################

6

7 # OpenMC simulation parameters

8 batches = 100

9 inactive = 10

10 particles = 1000

11

12

13 ###############################################################################

14 # Exporting to OpenMC materials.xml file

15 ###############################################################################

16

17

18 # Instantiate some Materials and register the appropriate Nuclides

19 uo2 = openmc.Material(material_id=1, name='UO2 fuel at 2.4% wt enrichment')

20 uo2.set_density('g/cm3', 10.29769)

21 uo2.add_element('U', 1., enrichment=2.4)

22 uo2.add_element('O', 2.)

23

24 helium = openmc.Material(material_id=2, name='Helium for gap')

25 helium.set_density('g/cm3', 0.001598)

26 helium.add_element('He', 2.4044e-4)

27

28 zircaloy = openmc.Material(material_id=3, name='Zircaloy 4')

29 zircaloy.set_density('g/cm3', 6.55)

30 zircaloy.add_element('Sn', 0.014 , 'wo')

31 zircaloy.add_element('Fe', 0.00165, 'wo')

32 zircaloy.add_element('Cr', 0.001 , 'wo')

33 zircaloy.add_element('Zr', 0.98335, 'wo')

34

35 borated_water = openmc.Material(material_id=4, name='Borated water')

36 borated_water.set_density('g/cm3', 0.740582)

37 borated_water.add_element('B', 4.0e-5)

38 borated_water.add_element('H', 5.0e-2)

39 borated_water.add_element('O', 2.4e-2)

40 borated_water.add_s_alpha_beta('c_H_in_H2O')

41

42 # Instantiate a Materials collection and export to XML

43 materials_file = openmc.Materials([uo2, helium, zircaloy, borated_water])

44 materials_file.export_to_xml()

45

46

47 ###############################################################################

48 # Exporting to OpenMC geometry.xml file

49 ###############################################################################

50

51 # Instantiate ZCylinder surfaces

52 fuel_or = openmc.ZCylinder(surface_id=1, x0=0, y0=0, R=0.39218, name='Fuel OR')

53 clad_ir = openmc.ZCylinder(surface_id=2, x0=0, y0=0, R=0.40005, name='Clad IR')

54 clad_or = openmc.ZCylinder(surface_id=3, x0=0, y0=0, R=0.45720, name='Clad OR')

55 left = openmc.XPlane(surface_id=4, x0=-0.62992, name='left')

56 right = openmc.XPlane(surface_id=5, x0=0.62992, name='right')

57 bottom = openmc.YPlane(surface_id=6, y0=-0.62992, name='bottom')

58 top = openmc.YPlane(surface_id=7, y0=0.62992, name='top')

59

60 left.boundary_type = 'reflective'

61 right.boundary_type = 'reflective'

62 top.boundary_type = 'reflective'

63 bottom.boundary_type = 'reflective'

64

65 # Instantiate Cells

66 fuel = openmc.Cell(cell_id=1, name='cell 1')

67 gap = openmc.Cell(cell_id=2, name='cell 2')

68 clad = openmc.Cell(cell_id=3, name='cell 3')

69 water = openmc.Cell(cell_id=4, name='cell 4')

70

71 # Use surface half-spaces to define regions

72 fuel.region = -fuel_or

73 gap.region = +fuel_or & -clad_ir

74 clad.region = +clad_ir & -clad_or

75 water.region = +clad_or & +left & -right & +bottom & -top

76

77 # Register Materials with Cells

78 fuel.fill = uo2

79 gap.fill = helium

80 clad.fill = zircaloy

81 water.fill = borated_water

82

83 # Instantiate Universe

84 root = openmc.Universe(universe_id=0, name='root universe')

85

86 # Register Cells with Universe

87 root.add_cells([fuel, gap, clad, water])

88

89 # Instantiate a Geometry, register the root Universe, and export to XML

90 geometry = openmc.Geometry(root)

91 geometry.export_to_xml()

92

93

94 ###############################################################################

95 # Exporting to OpenMC settings.xml file

96 ###############################################################################

97

98 # Instantiate a Settings object, set all runtime parameters, and export to XML

99 settings_file = openmc.Settings()

100 settings_file.batches = batches

101 settings_file.inactive = inactive

102 settings_file.particles = particles

103

104 # Create an initial uniform spatial source distribution over fissionable zones

105 bounds = [-0.62992, -0.62992, -1, 0.62992, 0.62992, 1]

106 uniform_dist = openmc.stats.Box(bounds[:3], bounds[3:], only_fissionable=True)

107 settings_file.source = openmc.source.Source(space=uniform_dist)

108

109 entropy_mesh = openmc.Mesh()

110 entropy_mesh.lower_left = [-0.39218, -0.39218, -1.e50]

111 entropy_mesh.upper_right = [0.39218, 0.39218, 1.e50]

112 entropy_mesh.dimension = [10, 10, 1]

113 settings_file.entropy_mesh = entropy_mesh

114 settings_file.export_to_xml()

115

116

117 ###############################################################################

118 # Exporting to OpenMC tallies.xml file

119 ###############################################################################

120

121 # Instantiate a tally mesh

122 mesh = openmc.Mesh(mesh_id=1)

123 mesh.type = 'regular'

124 mesh.dimension = [100, 100, 1]

125 mesh.lower_left = [-0.62992, -0.62992, -1.e50]

126 mesh.upper_right = [0.62992, 0.62992, 1.e50]

127

128 # Instantiate some tally Filters

129 energy_filter = openmc.EnergyFilter([0., 4., 20.e6])

130 mesh_filter = openmc.MeshFilter(mesh)

131

132 # Instantiate the Tally

133 tally = openmc.Tally(tally_id=1, name='tally 1')

134 tally.filters = [energy_filter, mesh_filter]

135 tally.scores = ['flux', 'fission', 'nu-fission']

136

137 # Instantiate a Tallies collection and export to XML

138 tallies_file = openmc.Tallies([tally])

139 tallies_file.export_to_xml()

140

[end of examples/python/pincell/build-xml.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

 def euclidean(a, b):

-    while b:

-        a, b = b, a % b

-    return a

+    if b == 0:

+        return a

+    return euclidean(b, a % b)

 def bresenham(x0, y0, x1, y1):

     points = []

     dx = abs(x1 - x0)

     dy = abs(y1 - y0)

-    sx = 1 if x0 < x1 else -1

-    sy = 1 if y0 < y1 else -1

-    err = dx - dy

+    x, y = x0, y0

+    sx = -1 if x0 > x1 else 1

+    sy = -1 if y0 > y1 else 1

-    while True:

-        points.append((x0, y0))

-        if x0 == x1 and y0 == y1:

-            break

-        e2 = 2 * err

-        if e2 > -dy:

-            err -= dy

-            x0 += sx

-        if e2 < dx:

-            err += dx

-            y0 += sy

+    if dx > dy:

+        err = dx / 2.0

+        while x != x1:

+            points.append((x, y))

+            err -= dy

+            if err < 0:

+                y += sy

+                err += dx

+            x += sx

+    else:

+        err = dy / 2.0

+        while y != y1:

+            points.append((x, y))

+            err -= dx

+            if err < 0:

+                x += sx

+                err += dy

+            y += sy

+

+    points.append((x, y))

     return points

</patch>

|

diff --git a/examples/python/pincell/build-xml.py b/examples/python/pincell/build-xml.py

--- a/examples/python/pincell/build-xml.py

+++ b/examples/python/pincell/build-xml.py

@@ -119,7 +119,7 @@

###############################################################################

# Instantiate a tally mesh

-mesh = openmc.Mesh(mesh_id=1)

+mesh = openmc.Mesh()

mesh.type = 'regular'

mesh.dimension = [100, 100, 1]

mesh.lower_left = [-0.62992, -0.62992, -1.e50]

|

{"golden_diff": "diff --git a/examples/python/pincell/build-xml.py b/examples/python/pincell/build-xml.py\n--- a/examples/python/pincell/build-xml.py\n+++ b/examples/python/pincell/build-xml.py\n@@ -119,7 +119,7 @@\n ###############################################################################\n \n # Instantiate a tally mesh\n-mesh = openmc.Mesh(mesh_id=1)\n+mesh = openmc.Mesh()\n mesh.type = 'regular'\n mesh.dimension = [100, 100, 1]\n mesh.lower_left = [-0.62992, -0.62992, -1.e50]\n", "issue": "Unable to run examples/python/pincell\nHi,\r\n\r\nAfter generating the xml files and trying to `openmc` I get the following error:\r\n```\r\nReading tallies XML file...\r\nERROR: Two or more meshes use the same unique ID: 1\r\n```\n", "before_files": [{"content": "import openmc\n\n###############################################################################\n# Simulation Input File Parameters\n###############################################################################\n\n# OpenMC simulation parameters\nbatches = 100\ninactive = 10\nparticles = 1000\n\n\n###############################################################################\n# Exporting to OpenMC materials.xml file\n###############################################################################\n\n\n# Instantiate some Materials and register the appropriate Nuclides\nuo2 = openmc.Material(material_id=1, name='UO2 fuel at 2.4% wt enrichment')\nuo2.set_density('g/cm3', 10.29769)\nuo2.add_element('U', 1., enrichment=2.4)\nuo2.add_element('O', 2.)\n\nhelium = openmc.Material(material_id=2, name='Helium for gap')\nhelium.set_density('g/cm3', 0.001598)\nhelium.add_element('He', 2.4044e-4)\n\nzircaloy = openmc.Material(material_id=3, name='Zircaloy 4')\nzircaloy.set_density('g/cm3', 6.55)\nzircaloy.add_element('Sn', 0.014 , 'wo')\nzircaloy.add_element('Fe', 0.00165, 'wo')\nzircaloy.add_element('Cr', 0.001 , 'wo')\nzircaloy.add_element('Zr', 0.98335, 'wo')\n\nborated_water = openmc.Material(material_id=4, 
name='Borated water')\nborated_water.set_density('g/cm3', 0.740582)\nborated_water.add_element('B', 4.0e-5)\nborated_water.add_element('H', 5.0e-2)\nborated_water.add_element('O', 2.4e-2)\nborated_water.add_s_alpha_beta('c_H_in_H2O')\n\n# Instantiate a Materials collection and export to XML\nmaterials_file = openmc.Materials([uo2, helium, zircaloy, borated_water])\nmaterials_file.export_to_xml()\n\n\n###############################################################################\n# Exporting to OpenMC geometry.xml file\n###############################################################################\n\n# Instantiate ZCylinder surfaces\nfuel_or = openmc.ZCylinder(surface_id=1, x0=0, y0=0, R=0.39218, name='Fuel OR')\nclad_ir = openmc.ZCylinder(surface_id=2, x0=0, y0=0, R=0.40005, name='Clad IR')\nclad_or = openmc.ZCylinder(surface_id=3, x0=0, y0=0, R=0.45720, name='Clad OR')\nleft = openmc.XPlane(surface_id=4, x0=-0.62992, name='left')\nright = openmc.XPlane(surface_id=5, x0=0.62992, name='right')\nbottom = openmc.YPlane(surface_id=6, y0=-0.62992, name='bottom')\ntop = openmc.YPlane(surface_id=7, y0=0.62992, name='top')\n\nleft.boundary_type = 'reflective'\nright.boundary_type = 'reflective'\ntop.boundary_type = 'reflective'\nbottom.boundary_type = 'reflective'\n\n# Instantiate Cells\nfuel = openmc.Cell(cell_id=1, name='cell 1')\ngap = openmc.Cell(cell_id=2, name='cell 2')\nclad = openmc.Cell(cell_id=3, name='cell 3')\nwater = openmc.Cell(cell_id=4, name='cell 4')\n\n# Use surface half-spaces to define regions\nfuel.region = -fuel_or\ngap.region = +fuel_or & -clad_ir\nclad.region = +clad_ir & -clad_or\nwater.region = +clad_or & +left & -right & +bottom & -top\n\n# Register Materials with Cells\nfuel.fill = uo2\ngap.fill = helium\nclad.fill = zircaloy\nwater.fill = borated_water\n\n# Instantiate Universe\nroot = openmc.Universe(universe_id=0, name='root universe')\n\n# Register Cells with Universe\nroot.add_cells([fuel, gap, clad, water])\n\n# Instantiate a Geometry, 
register the root Universe, and export to XML\ngeometry = openmc.Geometry(root)\ngeometry.export_to_xml()\n\n\n###############################################################################\n# Exporting to OpenMC settings.xml file\n###############################################################################\n\n# Instantiate a Settings object, set all runtime parameters, and export to XML\nsettings_file = openmc.Settings()\nsettings_file.batches = batches\nsettings_file.inactive = inactive\nsettings_file.particles = particles\n\n# Create an initial uniform spatial source distribution over fissionable zones\nbounds = [-0.62992, -0.62992, -1, 0.62992, 0.62992, 1]\nuniform_dist = openmc.stats.Box(bounds[:3], bounds[3:], only_fissionable=True)\nsettings_file.source = openmc.source.Source(space=uniform_dist)\n\nentropy_mesh = openmc.Mesh()\nentropy_mesh.lower_left = [-0.39218, -0.39218, -1.e50]\nentropy_mesh.upper_right = [0.39218, 0.39218, 1.e50]\nentropy_mesh.dimension = [10, 10, 1]\nsettings_file.entropy_mesh = entropy_mesh\nsettings_file.export_to_xml()\n\n\n###############################################################################\n# Exporting to OpenMC tallies.xml file\n###############################################################################\n\n# Instantiate a tally mesh\nmesh = openmc.Mesh(mesh_id=1)\nmesh.type = 'regular'\nmesh.dimension = [100, 100, 1]\nmesh.lower_left = [-0.62992, -0.62992, -1.e50]\nmesh.upper_right = [0.62992, 0.62992, 1.e50]\n\n# Instantiate some tally Filters\nenergy_filter = openmc.EnergyFilter([0., 4., 20.e6])\nmesh_filter = openmc.MeshFilter(mesh)\n\n# Instantiate the Tally\ntally = openmc.Tally(tally_id=1, name='tally 1')\ntally.filters = [energy_filter, mesh_filter]\ntally.scores = ['flux', 'fission', 'nu-fission']\n\n# Instantiate a Tallies collection and export to XML\ntallies_file = openmc.Tallies([tally])\ntallies_file.export_to_xml()\n", "path": "examples/python/pincell/build-xml.py"}]}

| 2,378 | 139 |

gh_patches_debug_7240

|

rasdani/github-patches

|

git_diff

|

ansible__awx-14489

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Tower Settings of type on/off idempotency not working

### Please confirm the following

- [X] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).

- [X] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.

- [X] I understand that AWX is open source software provided for free and that I might not receive a timely response.

- [X] I am **NOT** reporting a (potential) security vulnerability. (These should be emailed to `[email protected]` instead.)

### Bug Summary

When trying to set the setting named AWX_MOUNT_ISOLATED_PATHS_ON_K8S to true, no matter what I put in the playbook it is always marked as "changed: true", even if the setting is already true..

Sample Task:

```

- name: SET AWX EXPOSE HOST PATHS

awx.awx.settings:

name: "AWX_MOUNT_ISOLATED_PATHS_ON_K8S"

value: true

```

When I change the playbook value and register the results of the task to review the results I get this:

| Value In Playbook | Resulting Debug|

| ------- | ------- |

| true | ok: [awxlab] => {<br> "this_setting": {<br> "changed": true,<br> "failed": false,<br> "new_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": "True"<br> },<br> "old_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": true<br> },<br> "value": true<br> }<br>}|

| True | ok: [awxlab] => {<br> "this_setting": {<br> "changed": true,<br> "failed": false,<br> "new_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": "True"<br> },<br> "old_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": true<br> },<br> "value": true<br> }<br>}|

| "true" | ok: [awxlab] => {<br> "this_setting": {<br> "changed": true,<br> "failed": false,<br> "new_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": "true"<br> },<br> "old_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": true<br> },<br> "value": true<br> }<br>}|

| "True" | ok: [awxlab] => {<br> "this_setting": {<br> "changed": true,<br> "failed": false,<br> "new_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": "True"<br> },<br> "old_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": true<br> },<br> "value": true<br> }<br>}

| yes | ok: [awxlab] => {<br> "this_setting": {<br> "changed": true,<br> "failed": false,<br> "new_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": "True"<br> },<br> "old_values": {<br> "AWX_MOUNT_ISOLATED_PATHS_ON_K8S": true<br> },<br> "value": true<br> }<br>}

The documentation says this:

```> AWX.AWX.SETTINGS ([...]collections/ansible_collections/awx/awx/plugins/modules/settings.py)

Modify Automation Platform Controller settings. See https://www.ansible.com/tower for an overview.

OPTIONS (= is mandatory):

- value

Value to be modified for given setting.

**If given a non-string type, will make best effort to cast it to type API expects.**

For better control over types, use the `settings' param instead.

default: null

type: str

```

This leads me to believe that the logic used to sanitize the input might be doing a little extra or the conversion of 'settings' parameters to/from name/value parameters.

The documentation does show an example of how to use both the settings parameter and the name/value parameter. So, this may be lower priority but I wanted to get this somewhere that could be search by others who may be running into this issue.

### AWX version

23.1.0

### Select the relevant components

- [ ] UI

- [ ] UI (tech preview)

- [ ] API

- [ ] Docs

- [X] Collection

- [ ] CLI

- [ ] Other

### Installation method

kubernetes

### Modifications

no

### Ansible version

2.15.4

### Operating system

CentOS Stream release 9

### Web browser

Firefox

### Steps to reproduce

Use a playbook that modifies a boolean setting.

Use the awx.awx.setting module.

Use the name/value parameters instead of the settings paremeter.

Sample:

```

- name: SET AWX EXPOSE HOST PATHS

awx.awx.settings:

name: "AWX_MOUNT_ISOLATED_PATHS_ON_K8S"

value: true

register: this_setting

- name: Debug this setting

debug: var=this_setting

```

### Expected results

When the setting is already true, idempotency keeps the playbook from trying to update the setting again.

### Actual results

Regardless if the setting is true or not, the playbook always updates the setting.

### Additional information

_No response_

</issue>
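
One way the behaviour described in the issue could arise is sketched below. The two helper names are hypothetical and only mirror the boolean branch of `coerce_type` in the listing that follows. The sketch assumes the value reaches the module as a string such as `"True"`.

```python
def coerce_bool_without_call(value):
    # `value.lower` is the bound method object, not the lowercased text,
    # so this membership test never succeeds and the string passes through.
    if value.lower in ('true', 'false', 't', 'f'):
        return {'t': True, 'f': False}[value[0].lower()]
    return value


def coerce_bool_with_call(value):
    # Calling the method yields the lowercased string, so the branch is taken.
    if value.lower() in ('true', 'false', 't', 'f'):
        return {'t': True, 'f': False}[value[0].lower()]
    return value


unchanged = coerce_bool_without_call("True")
coerced = coerce_bool_with_call("True")
```

If the string `"True"` is later compared with the boolean `true` returned by the API, the two never match, which would be consistent with the task always reporting `changed: true`.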

<code>

[start of awx_collection/plugins/modules/settings.py]

1 #!/usr/bin/python

2 # coding: utf-8 -*-

3

4 # (c) 2018, Nikhil Jain <[email protected]>

5 # GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)

6

7 from __future__ import absolute_import, division, print_function

8

9 __metaclass__ = type

10

11

12 ANSIBLE_METADATA = {'metadata_version': '1.1', 'status': ['preview'], 'supported_by': 'community'}

13

14

15 DOCUMENTATION = '''

16 ---

17 module: settings

18 author: "Nikhil Jain (@jainnikhil30)"

19 short_description: Modify Automation Platform Controller settings.

20 description:

21 - Modify Automation Platform Controller settings. See

22 U(https://www.ansible.com/tower) for an overview.

23 options:

24 name:

25 description:

26 - Name of setting to modify

27 type: str

28 value:

29 description:

30 - Value to be modified for given setting.

31 - If given a non-string type, will make best effort to cast it to type API expects.

32 - For better control over types, use the C(settings) param instead.

33 type: str

34 settings:

35 description:

36 - A data structure to be sent into the settings endpoint

37 type: dict

38 requirements:

39 - pyyaml

40 extends_documentation_fragment: awx.awx.auth

41 '''

42

43 EXAMPLES = '''

44 - name: Set the value of AWX_ISOLATION_BASE_PATH

45 settings:

46 name: AWX_ISOLATION_BASE_PATH

47 value: "/tmp"

48 register: testing_settings

49

50 - name: Set the value of AWX_ISOLATION_SHOW_PATHS

51 settings:

52 name: "AWX_ISOLATION_SHOW_PATHS"

53 value: "'/var/lib/awx/projects/', '/tmp'"

54 register: testing_settings

55

56 - name: Set the LDAP Auth Bind Password

57 settings:

58 name: "AUTH_LDAP_BIND_PASSWORD"

59 value: "Password"

60 no_log: true

61

62 - name: Set all the LDAP Auth Bind Params

63 settings:

64 settings:

65 AUTH_LDAP_BIND_PASSWORD: "password"

66 AUTH_LDAP_USER_ATTR_MAP:

67 email: "mail"

68 first_name: "givenName"

69 last_name: "surname"

70 '''

71

72 from ..module_utils.controller_api import ControllerAPIModule

73

74 try:

75 import yaml

76

77 HAS_YAML = True

78 except ImportError:

79 HAS_YAML = False

80

81

82 def coerce_type(module, value):

83 # If our value is already None we can just return directly

84 if value is None:

85 return value

86

87 yaml_ish = bool((value.startswith('{') and value.endswith('}')) or (value.startswith('[') and value.endswith(']')))

88 if yaml_ish:

89 if not HAS_YAML:

90 module.fail_json(msg="yaml is not installed, try 'pip install pyyaml'")

91 return yaml.safe_load(value)

92 elif value.lower in ('true', 'false', 't', 'f'):

93 return {'t': True, 'f': False}[value[0].lower()]

94 try:

95 return int(value)

96 except ValueError:

97 pass

98 return value

99

100

101 def main():

102 # Any additional arguments that are not fields of the item can be added here

103 argument_spec = dict(

104 name=dict(),

105 value=dict(),

106 settings=dict(type='dict'),

107 )

108

109 # Create a module for ourselves

110 module = ControllerAPIModule(

111 argument_spec=argument_spec,

112 required_one_of=[['name', 'settings']],

113 mutually_exclusive=[['name', 'settings']],

114 required_if=[['name', 'present', ['value']]],

115 )

116

117 # Extract our parameters

118 name = module.params.get('name')

119 value = module.params.get('value')

120 new_settings = module.params.get('settings')

121

122 # If we were given a name/value pair we will just make settings out of that and proceed normally

123 if new_settings is None:

124 new_value = coerce_type(module, value)

125

126 new_settings = {name: new_value}

127

128 # Load the existing settings

129 existing_settings = module.get_endpoint('settings/all')['json']

130

131 # Begin a json response

132 json_output = {'changed': False, 'old_values': {}, 'new_values': {}}

133

134 # Check any of the settings to see if anything needs to be updated

135 needs_update = False

136 for a_setting in new_settings:

137 if a_setting not in existing_settings or existing_settings[a_setting] != new_settings[a_setting]:

138 # At least one thing is different so we need to patch

139 needs_update = True

140 json_output['old_values'][a_setting] = existing_settings[a_setting]

141 json_output['new_values'][a_setting] = new_settings[a_setting]

142

143 if module._diff:

144 json_output['diff'] = {'before': json_output['old_values'], 'after': json_output['new_values']}

145

146 # If nothing needs an update we can simply exit with the response (as not changed)

147 if not needs_update:

148 module.exit_json(**json_output)

149

150 if module.check_mode and module._diff:

151 json_output['changed'] = True

152 module.exit_json(**json_output)

153

154 # Make the call to update the settings

155 response = module.patch_endpoint('settings/all', **{'data': new_settings})

156

157 if response['status_code'] == 200:

158 # Set the changed response to True

159 json_output['changed'] = True

160

161 # To deal with the old style values we need to return 'value' in the response

162 new_values = {}

163 for a_setting in new_settings:

164 new_values[a_setting] = response['json'][a_setting]

165

166 # If we were using a name we will just add a value of a string, otherwise we will return an array in values

167 if name is not None:

168 json_output['value'] = new_values[name]

169 else:

170 json_output['values'] = new_values

171

172 module.exit_json(**json_output)

173 elif 'json' in response and '__all__' in response['json']:

174 module.fail_json(msg=response['json']['__all__'])

175 else:

176 module.fail_json(**{'msg': "Unable to update settings, see response", 'response': response})

177

178

179 if __name__ == '__main__':

180 main()

181

[end of awx_collection/plugins/modules/settings.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/awx_collection/plugins/modules/settings.py b/awx_collection/plugins/modules/settings.py

--- a/awx_collection/plugins/modules/settings.py

+++ b/awx_collection/plugins/modules/settings.py

@@ -89,7 +89,7 @@

if not HAS_YAML:

module.fail_json(msg="yaml is not installed, try 'pip install pyyaml'")

return yaml.safe_load(value)

- elif value.lower in ('true', 'false', 't', 'f'):

+ elif value.lower() in ('true', 'false', 't', 'f'):

return {'t': True, 'f': False}[value[0].lower()]

try:

return int(value)

|

{"golden_diff": "diff --git a/awx_collection/plugins/modules/settings.py b/awx_collection/plugins/modules/settings.py\n--- a/awx_collection/plugins/modules/settings.py\n+++ b/awx_collection/plugins/modules/settings.py\n@@ -89,7 +89,7 @@\n if not HAS_YAML:\n module.fail_json(msg=\"yaml is not installed, try 'pip install pyyaml'\")\n return yaml.safe_load(value)\n- elif value.lower in ('true', 'false', 't', 'f'):\n+ elif value.lower() in ('true', 'false', 't', 'f'):\n return {'t': True, 'f': False}[value[0].lower()]\n try:\n return int(value)\n", "issue": "Tower Settings of type on/off idempotency not working\n### Please confirm the following\n\n- [X] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).\n- [X] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.\n- [X] I understand that AWX is open source software provided for free and that I might not receive a timely response.\n- [X] I am **NOT** reporting a (potential) security vulnerability. 
(These should be emailed to `[email protected]` instead.)\n\n### Bug Summary\n\nWhen trying to set the setting named AWX_MOUNT_ISOLATED_PATHS_ON_K8S to true, no matter what I put in the playbook it is always marked as \"changed: true\", even if the setting is already true..\r\n\r\nSample Task:\r\n```\r\n - name: SET AWX EXPOSE HOST PATHS\r\n awx.awx.settings:\r\n name: \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\"\r\n value: true\r\n\r\n```\r\n\r\nWhen I change the playbook value and register the results of the task to review the results I get this:\r\n\r\n| Value In Playbook | Resulting Debug|\r\n| ------- | ------- |\r\n| true | ok: [awxlab] => {<br> \"this_setting\": {<br> \"changed\": true,<br> \"failed\": false,<br> \"new_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": \"True\"<br> },<br> \"old_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": true<br> },<br> \"value\": true<br> }<br>}|\r\n| True | ok: [awxlab] => {<br> \"this_setting\": {<br> \"changed\": true,<br> \"failed\": false,<br> \"new_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": \"True\"<br> },<br> \"old_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": true<br> },<br> \"value\": true<br> }<br>}|\r\n| \"true\" | ok: [awxlab] => {<br> \"this_setting\": {<br> \"changed\": true,<br> \"failed\": false,<br> \"new_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": \"true\"<br> },<br> \"old_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": true<br> },<br> \"value\": true<br> }<br>}|\r\n| \"True\" | ok: [awxlab] => {<br> \"this_setting\": {<br> \"changed\": true,<br> \"failed\": false,<br> \"new_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": \"True\"<br> },<br> \"old_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": true<br> },<br> \"value\": true<br> }<br>}\r\n| yes | ok: [awxlab] => {<br> \"this_setting\": {<br> \"changed\": true,<br> \"failed\": false,<br> \"new_values\": {<br> \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": \"True\"<br> },<br> \"old_values\": {<br> 
\"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\": true<br> },<br> \"value\": true<br> }<br>}\r\n\r\n\r\nThe documentation says this:\r\n```> AWX.AWX.SETTINGS ([...]collections/ansible_collections/awx/awx/plugins/modules/settings.py)\r\n\r\n Modify Automation Platform Controller settings. See https://www.ansible.com/tower for an overview.\r\n\r\nOPTIONS (= is mandatory):\r\n- value\r\n Value to be modified for given setting.\r\n **If given a non-string type, will make best effort to cast it to type API expects.**\r\n For better control over types, use the `settings' param instead.\r\n default: null\r\n type: str\r\n```\r\n\r\nThis leads me to believe that the logic used to sanitize the input might be doing a little extra or the conversion of 'settings' parameters to/from name/value parameters.\r\n\r\nThe documentation does show an example of how to use both the settings parameter and the name/value parameter. So, this may be lower priority but I wanted to get this somewhere that could be search by others who may be running into this issue.\n\n### AWX version\n\n23.1.0\n\n### Select the relevant components\n\n- [ ] UI\n- [ ] UI (tech preview)\n- [ ] API\n- [ ] Docs\n- [X] Collection\n- [ ] CLI\n- [ ] Other\n\n### Installation method\n\nkubernetes\n\n### Modifications\n\nno\n\n### Ansible version\n\n2.15.4\n\n### Operating system\n\nCentOS Stream release 9\n\n### Web browser\n\nFirefox\n\n### Steps to reproduce\n\nUse a playbook that modifies a boolean setting.\r\nUse the awx.awx.setting module.\r\nUse the name/value parameters instead of the settings paremeter.\r\nSample:\r\n```\r\n - name: SET AWX EXPOSE HOST PATHS\r\n awx.awx.settings:\r\n name: \"AWX_MOUNT_ISOLATED_PATHS_ON_K8S\"\r\n value: true\r\n register: this_setting\r\n \r\n - name: Debug this setting\r\n debug: var=this_setting\r\n```\n\n### Expected results\n\nWhen the setting is already true, idempotency keeps the playbook from trying to update the setting again.\n\n### Actual results\n\nRegardless if the setting is 
true or not, the playbook always updates the setting.\n\n### Additional information\n\n_No response_\n", "before_files": [{"content": "#!/usr/bin/python\n# coding: utf-8 -*-\n\n# (c) 2018, Nikhil Jain <[email protected]>\n# GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)\n\nfrom __future__ import absolute_import, division, print_function\n\n__metaclass__ = type\n\n\nANSIBLE_METADATA = {'metadata_version': '1.1', 'status': ['preview'], 'supported_by': 'community'}\n\n\nDOCUMENTATION = '''\n---\nmodule: settings\nauthor: \"Nikhil Jain (@jainnikhil30)\"\nshort_description: Modify Automation Platform Controller settings.\ndescription:\n - Modify Automation Platform Controller settings. See\n U(https://www.ansible.com/tower) for an overview.\noptions:\n name:\n description:\n - Name of setting to modify\n type: str\n value:\n description:\n - Value to be modified for given setting.\n - If given a non-string type, will make best effort to cast it to type API expects.\n - For better control over types, use the C(settings) param instead.\n type: str\n settings:\n description:\n - A data structure to be sent into the settings endpoint\n type: dict\nrequirements:\n - pyyaml\nextends_documentation_fragment: awx.awx.auth\n'''\n\nEXAMPLES = '''\n- name: Set the value of AWX_ISOLATION_BASE_PATH\n settings:\n name: AWX_ISOLATION_BASE_PATH\n value: \"/tmp\"\n register: testing_settings\n\n- name: Set the value of AWX_ISOLATION_SHOW_PATHS\n settings:\n name: \"AWX_ISOLATION_SHOW_PATHS\"\n value: \"'/var/lib/awx/projects/', '/tmp'\"\n register: testing_settings\n\n- name: Set the LDAP Auth Bind Password\n settings:\n name: \"AUTH_LDAP_BIND_PASSWORD\"\n value: \"Password\"\n no_log: true\n\n- name: Set all the LDAP Auth Bind Params\n settings:\n settings:\n AUTH_LDAP_BIND_PASSWORD: \"password\"\n AUTH_LDAP_USER_ATTR_MAP:\n email: \"mail\"\n first_name: \"givenName\"\n last_name: \"surname\"\n'''\n\nfrom ..module_utils.controller_api import 
ControllerAPIModule\n\ntry:\n import yaml\n\n HAS_YAML = True\nexcept ImportError:\n HAS_YAML = False\n\n\ndef coerce_type(module, value):\n # If our value is already None we can just return directly\n if value is None:\n return value\n\n yaml_ish = bool((value.startswith('{') and value.endswith('}')) or (value.startswith('[') and value.endswith(']')))\n if yaml_ish:\n if not HAS_YAML:\n module.fail_json(msg=\"yaml is not installed, try 'pip install pyyaml'\")\n return yaml.safe_load(value)\n elif value.lower in ('true', 'false', 't', 'f'):\n return {'t': True, 'f': False}[value[0].lower()]\n try:\n return int(value)\n except ValueError:\n pass\n return value\n\n\ndef main():\n # Any additional arguments that are not fields of the item can be added here\n argument_spec = dict(\n name=dict(),\n value=dict(),\n settings=dict(type='dict'),\n )\n\n # Create a module for ourselves\n module = ControllerAPIModule(\n argument_spec=argument_spec,\n required_one_of=[['name', 'settings']],\n mutually_exclusive=[['name', 'settings']],\n required_if=[['name', 'present', ['value']]],\n )\n\n # Extract our parameters\n name = module.params.get('name')\n value = module.params.get('value')\n new_settings = module.params.get('settings')\n\n # If we were given a name/value pair we will just make settings out of that and proceed normally\n if new_settings is None:\n new_value = coerce_type(module, value)\n\n new_settings = {name: new_value}\n\n # Load the existing settings\n existing_settings = module.get_endpoint('settings/all')['json']\n\n # Begin a json response\n json_output = {'changed': False, 'old_values': {}, 'new_values': {}}\n\n # Check any of the settings to see if anything needs to be updated\n needs_update = False\n for a_setting in new_settings:\n if a_setting not in existing_settings or existing_settings[a_setting] != new_settings[a_setting]:\n # At least one thing is different so we need to patch\n needs_update = True\n json_output['old_values'][a_setting] = 
existing_settings[a_setting]\n json_output['new_values'][a_setting] = new_settings[a_setting]\n\n if module._diff:\n json_output['diff'] = {'before': json_output['old_values'], 'after': json_output['new_values']}\n\n # If nothing needs an update we can simply exit with the response (as not changed)\n if not needs_update:\n module.exit_json(**json_output)\n\n if module.check_mode and module._diff:\n json_output['changed'] = True\n module.exit_json(**json_output)\n\n # Make the call to update the settings\n response = module.patch_endpoint('settings/all', **{'data': new_settings})\n\n if response['status_code'] == 200:\n # Set the changed response to True\n json_output['changed'] = True\n\n # To deal with the old style values we need to return 'value' in the response\n new_values = {}\n for a_setting in new_settings:\n new_values[a_setting] = response['json'][a_setting]\n\n # If we were using a name we will just add a value of a string, otherwise we will return an array in values\n if name is not None:\n json_output['value'] = new_values[name]\n else:\n json_output['values'] = new_values\n\n module.exit_json(**json_output)\n elif 'json' in response and '__all__' in response['json']:\n module.fail_json(msg=response['json']['__all__'])\n else:\n module.fail_json(**{'msg': \"Unable to update settings, see response\", 'response': response})\n\n\nif __name__ == '__main__':\n main()\n", "path": "awx_collection/plugins/modules/settings.py"}]}

| 3,673 | 151 |

gh_patches_debug_29809

|

rasdani/github-patches

|

git_diff

|

DataBiosphere__toil-3691

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add type hints to humanize.py

Add type hints to `src/toil/lib/humanize.py` so it can be checked under mypy during linting.

Refers to #3568.

┆Issue is synchronized with this [Jira Task](https://ucsc-cgl.atlassian.net/browse/TOIL-946)

┆Issue Number: TOIL-946

</issue>
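
As a rough illustration of what the issue asks for, a fully annotated wrapper might look like the sketch below. `_h2b_stand_in` is a hypothetical placeholder for `toil.lib.conversions.human2bytes` and only handles plain integer strings. The `str -> int` signature is an assumption based on the docstring in the listing that follows.

```python
import logging

logger = logging.getLogger(__name__)


def _h2b_stand_in(s: str) -> int:
    """Hypothetical placeholder for the real converter; plain integer strings only."""
    return int(s)


def human2bytes(s: str) -> int:
    """Deprecated wrapper with parameter and return annotations for mypy."""
    logger.warning('Deprecated toil method. Please use "toil.lib.conversions.human2bytes()" instead.')
    return _h2b_stand_in(s)
```

With both the parameter and the return value annotated, mypy can check callers of the wrapper instead of treating the function as untyped.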

<code>

[start of src/toil/lib/humanize.py]

1 # Used by cactus; now a wrapper and not used in Toil.

2 # TODO: Remove from cactus and then remove from Toil.

3 # See https://github.com/DataBiosphere/toil/pull/3529#discussion_r611735988

4

5 # http://code.activestate.com/recipes/578019-bytes-to-human-human-to-bytes-converter/

6 import logging

7 from typing import Optional, SupportsInt

8 from toil.lib.conversions import bytes2human as b2h, human2bytes as h2b

9

10 """

11 Bytes-to-human / human-to-bytes converter.

12 Based on: http://goo.gl/kTQMs

13 Working with Python 2.x and 3.x.

14

15 Author: Giampaolo Rodola' <g.rodola [AT] gmail [DOT] com>

16 License: MIT

17 """

18

19 logger = logging.getLogger(__name__)

20

21

22 def bytes2human(n: SupportsInt, fmt: Optional[str] = None, symbols: Optional[str] = None) -> str:

23 """

24 Convert n bytes into a human readable string based on format.

25 symbols can be either "customary", "customary_ext", "iec" or "iec_ext",

26 see: http://goo.gl/kTQMs

27 """

28 logger.warning('Deprecated toil method. Please use "toil.lib.conversions.bytes2human()" instead."')

29 return b2h(n)

30

31

32 def human2bytes(s):

33 """

34 Attempts to guess the string format based on default symbols

35 set and return the corresponding bytes as an integer.

36

37 When unable to recognize the format ValueError is raised.

38 """

39 logger.warning('Deprecated toil method. Please use "toil.lib.conversions.human2bytes()" instead."')

40 return h2b(s)

41

[end of src/toil/lib/humanize.py]

[start of contrib/admin/mypy-with-ignore.py]

1 #!/usr/bin/env python3

2 """

3 Runs mypy and ignores files that do not yet have passing type hints.

4

5 Does not type check test files (any path including "src/toil/test").

6 """

7 import os

8 import subprocess

9 import sys

10

11 os.environ['MYPYPATH'] = 'contrib/typeshed'

12 pkg_root = os.path.abspath(os.path.join(os.path.dirname(__file__), '..', '..')) # noqa

13 sys.path.insert(0, pkg_root) # noqa

14

15 from src.toil.lib.resources import glob # type: ignore

16

17

18 def main():

19 all_files_to_check = []

20 for d in ['dashboard', 'docker', 'docs', 'src']:

21 all_files_to_check += glob(glob_pattern='*.py', directoryname=os.path.join(pkg_root, d))

22

23 # TODO: Remove these paths as typing is added and mypy conflicts are addressed

24 ignore_paths = [os.path.abspath(f) for f in [

25 'docker/Dockerfile.py',

26 'docs/conf.py',

27 'docs/vendor/sphinxcontrib/fulltoc.py',

28 'docs/vendor/sphinxcontrib/__init__.py',

29 'src/toil/job.py',

30 'src/toil/leader.py',

31 'src/toil/common.py',

32 'src/toil/worker.py',

33 'src/toil/toilState.py',

34 'src/toil/__init__.py',

35 'src/toil/resource.py',

36 'src/toil/deferred.py',

37 'src/toil/version.py',

38 'src/toil/wdl/utils.py',

39 'src/toil/wdl/wdl_types.py',

40 'src/toil/wdl/wdl_synthesis.py',

41 'src/toil/wdl/wdl_analysis.py',

42 'src/toil/wdl/wdl_functions.py',

43 'src/toil/wdl/toilwdl.py',

44 'src/toil/wdl/versions/draft2.py',

45 'src/toil/wdl/versions/v1.py',

46 'src/toil/wdl/versions/dev.py',

47 'src/toil/provisioners/clusterScaler.py',

48 'src/toil/provisioners/abstractProvisioner.py',

49 'src/toil/provisioners/gceProvisioner.py',

50 'src/toil/provisioners/__init__.py',

51 'src/toil/provisioners/node.py',

52 'src/toil/provisioners/aws/boto2Context.py',

53 'src/toil/provisioners/aws/awsProvisioner.py',

54 'src/toil/provisioners/aws/__init__.py',

55 'src/toil/batchSystems/slurm.py',

56 'src/toil/batchSystems/gridengine.py',

57 'src/toil/batchSystems/singleMachine.py',

58 'src/toil/batchSystems/abstractBatchSystem.py',

59 'src/toil/batchSystems/parasol.py',

60 'src/toil/batchSystems/kubernetes.py',

61 'src/toil/batchSystems/torque.py',

62 'src/toil/batchSystems/options.py',

63 'src/toil/batchSystems/registry.py',

64 'src/toil/batchSystems/lsf.py',

65 'src/toil/batchSystems/__init__.py',

66 'src/toil/batchSystems/abstractGridEngineBatchSystem.py',

67 'src/toil/batchSystems/lsfHelper.py',

68 'src/toil/batchSystems/htcondor.py',

69 'src/toil/batchSystems/mesos/batchSystem.py',

70 'src/toil/batchSystems/mesos/executor.py',

71 'src/toil/batchSystems/mesos/conftest.py',

72 'src/toil/batchSystems/mesos/__init__.py',

73 'src/toil/batchSystems/mesos/test/__init__.py',

74 'src/toil/cwl/conftest.py',

75 'src/toil/cwl/__init__.py',

76 'src/toil/cwl/cwltoil.py',

77 'src/toil/fileStores/cachingFileStore.py',

78 'src/toil/fileStores/abstractFileStore.py',

79 'src/toil/fileStores/nonCachingFileStore.py',

80 'src/toil/fileStores/__init__.py',

81 'src/toil/jobStores/utils.py',

82 'src/toil/jobStores/conftest.py',

83 'src/toil/jobStores/fileJobStore.py',

84 'src/toil/jobStores/__init__.py',

85 'src/toil/jobStores/googleJobStore.py',

86 'src/toil/jobStores/aws/utils.py',

87 'src/toil/jobStores/aws/jobStore.py',

88 'src/toil/jobStores/aws/__init__.py',

89 'src/toil/utils/__init__.py',

90 'src/toil/lib/memoize.py',

91 'src/toil/lib/throttle.py',

92 'src/toil/lib/humanize.py',

93 'src/toil/lib/iterables.py',

94 'src/toil/lib/bioio.py',

95 'src/toil/lib/ec2.py',

96 'src/toil/lib/expando.py',

97 'src/toil/lib/exceptions.py',

98 'src/toil/lib/__init__.py',

99 'src/toil/lib/generatedEC2Lists.py',

100 'src/toil/lib/retry.py',

101 'src/toil/lib/objects.py',

102 'src/toil/lib/io.py',

103 'src/toil/lib/docker.py',

104 'src/toil/lib/encryption/_dummy.py',

105 'src/toil/lib/encryption/conftest.py',

106 'src/toil/lib/encryption/__init__.py',

107 'src/toil/lib/aws/utils.py',

108 'src/toil/lib/aws/__init__.py'

109 ]]

110

111 filtered_files_to_check = []

112 for file_path in all_files_to_check:

113 if file_path not in ignore_paths and 'src/toil/test' not in file_path:

114 filtered_files_to_check.append(file_path)

115 # follow-imports type checks pypi projects we don't control, so we skip it; why is this their default?

116 args = ['mypy', '--follow-imports=skip'] + filtered_files_to_check

117 p = subprocess.run(args=args, stdout=subprocess.PIPE)

118 result = p.stdout.decode()

119 print(result)

120 if 'Success: no issues found' not in result:

121 exit(1)

122

123

124 if __name__ == '__main__':

125 main()

126

[end of contrib/admin/mypy-with-ignore.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/contrib/admin/mypy-with-ignore.py b/contrib/admin/mypy-with-ignore.py

--- a/contrib/admin/mypy-with-ignore.py

+++ b/contrib/admin/mypy-with-ignore.py

@@ -89,7 +89,6 @@

'src/toil/utils/__init__.py',

'src/toil/lib/memoize.py',

'src/toil/lib/throttle.py',

- 'src/toil/lib/humanize.py',

'src/toil/lib/iterables.py',

'src/toil/lib/bioio.py',

'src/toil/lib/ec2.py',

diff --git a/src/toil/lib/humanize.py b/src/toil/lib/humanize.py

--- a/src/toil/lib/humanize.py

+++ b/src/toil/lib/humanize.py

@@ -4,7 +4,7 @@

# http://code.activestate.com/recipes/578019-bytes-to-human-human-to-bytes-converter/

import logging

-from typing import Optional, SupportsInt

+from typing import SupportsInt

from toil.lib.conversions import bytes2human as b2h, human2bytes as h2b

"""

@@ -19,17 +19,15 @@

logger = logging.getLogger(__name__)

-def bytes2human(n: SupportsInt, fmt: Optional[str] = None, symbols: Optional[str] = None) -> str:

+def bytes2human(n: SupportsInt) -> str:

"""

- Convert n bytes into a human readable string based on format.

- symbols can be either "customary", "customary_ext", "iec" or "iec_ext",

- see: http://goo.gl/kTQMs

+ Convert n bytes into a human readable string.

"""

logger.warning('Deprecated toil method. Please use "toil.lib.conversions.bytes2human()" instead."')

return b2h(n)

-def human2bytes(s):

+def human2bytes(s: str) -> int:

"""

Attempts to guess the string format based on default symbols

set and return the corresponding bytes as an integer.

|