| problem_id (string, lengths 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, lengths 13–58) | prompt (string, lengths 1.71k–18.9k) | golden_diff (string, lengths 145–5.13k) | verification_info (string, lengths 465–23.6k) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_50209

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-2286

|

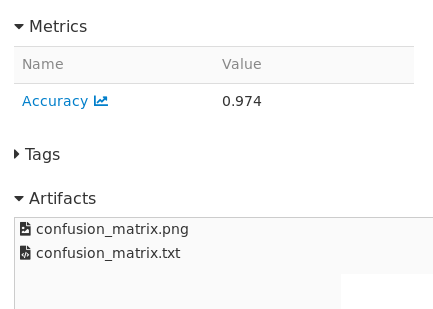

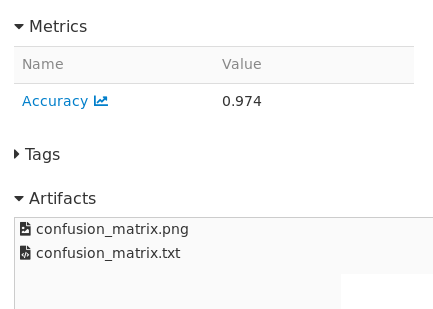

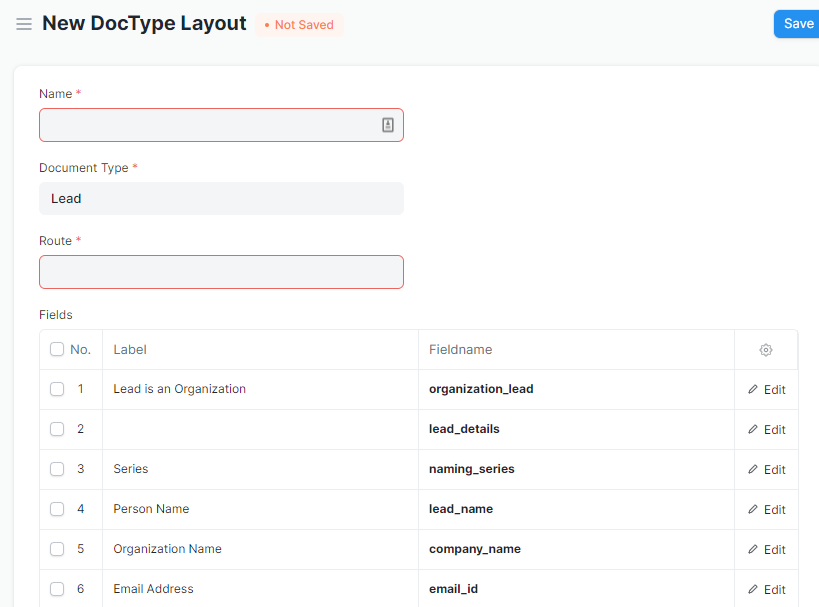

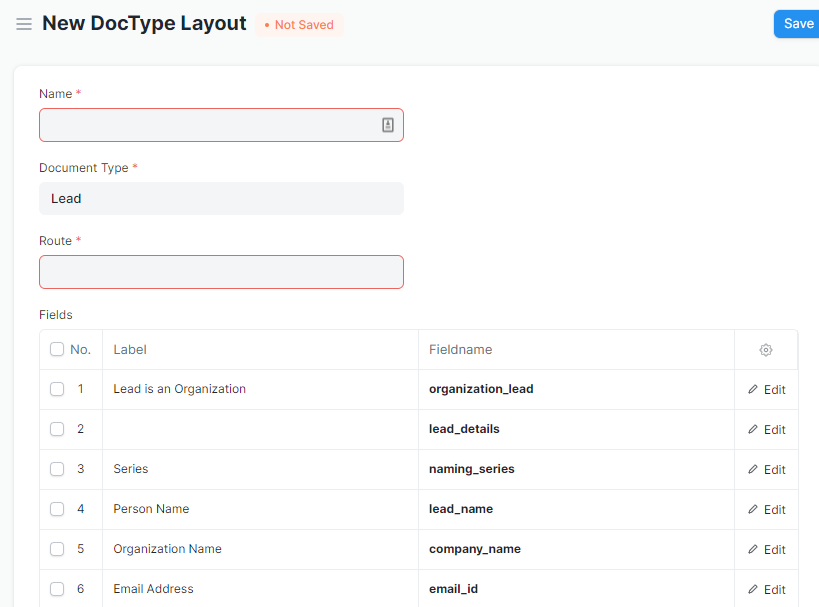

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

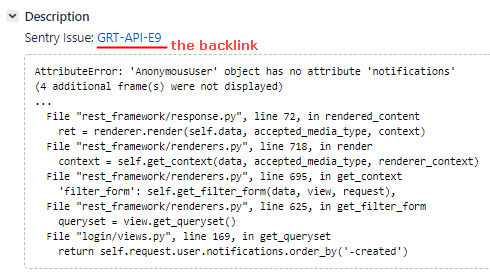

<issue>

`venv create` no longer includes `--sources-directory` contents when all files are nested

It seems like there was a regression from 2.1.148 -> 2.1.149 with the behaviour of `venv create` with a `--pex-repository` that was created with `--sources-directory`: those sources aren't included in the final venv.

Reproducer:

```shell
cd $(mktemp -d)

# create our dummy file
mkdir -p source_files/foo
touch source_files/foo/bar.py # NB.1
# touch source_files/qux.py # NB.2

for version in v2.1.148 v2.1.149; do
  curl -s -L https://github.com/pantsbuild/pex/releases/download/$version/pex > pex-$version
  chmod +x pex-$version

  # NB.3
  ./pex-$version --output-file=repository-$version.pex --sources-directory=source_files

  # NB.4
  PEX_SCRIPT=pex3 ./pex-$version venv create --dest-dir=dest-$version --pex-repository=repository-$version.pex --layout=flat

  # what was included?
  tree dest-$version
done
```

Running that shows that the contents of the `dest-...` directory depends on the version, without the `bar.py` file when using v2.1.149, but should be the same:

```
dest-v2.1.148
└── foo
    └── bar.py

1 directory, 1 file
dest-v2.1.149

0 directories, 0 files
```

Ablative studies:

- uncommenting `NB.2` line (to have two files) passes ✅ (both versions have both `foo/bar.py` and `qux.py`)

- _replacing_ the `NB.1` with `NB.2` (to just `qux.py` at the top level) passes ✅

- _always_ using v2.1.148 on line `NB.3` (create the pex) and v2.1.149 on line `NB.4` (create the venv) passes ✅

- v2.1.149 for `NB.3` and v2.1.148 for `NB.4` fails ❌

- I think third-party dependencies work okay, but haven't confirmed in this reduced setting

- This reproduces without `--layout`, but the output is simpler with `--layout=flat`

(First observed in https://github.com/pantsbuild/pants/pull/20149.)

</issue>

<code>

[start of pex/util.py]

1 # Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).
2 # Licensed under the Apache License, Version 2.0 (see LICENSE).
3
4 from __future__ import absolute_import
5
6 import contextlib
7 import hashlib
8 import importlib
9 import os
10 import shutil
11 import tempfile
12 from hashlib import sha1
13 from site import makepath # type: ignore[attr-defined]
14
15 from pex import hashing
16 from pex.common import is_pyc_dir, is_pyc_file, safe_mkdir, safe_mkdtemp
17 from pex.compatibility import ( # type: ignore[attr-defined] # `exec_function` is defined dynamically
18     PY2,
19     exec_function,
20 )
21 from pex.orderedset import OrderedSet
22 from pex.typing import TYPE_CHECKING
23
24 if TYPE_CHECKING:
25     from typing import IO, Any, Callable, Iterator, Optional, Text
26
27     from pex.hashing import Hasher
28
29
30 class DistributionHelper(object):
31     # TODO(#584: This appears unused, but clients might still use it. We cannot remove until we
32     # have a deprecation policy.
33     @classmethod
34     def access_zipped_assets(cls, static_module_name, static_path, dir_location=None):
35         # type: (str, str, Optional[str]) -> str
36         """Create a copy of static resource files as we can't serve them from within the pex file.
37
38         :param static_module_name: Module name containing module to cache in a tempdir
39         :param static_path: Module name, for example 'serverset'
40         :param dir_location: create a new temporary directory inside, or None to have one created
41         :returns temp_dir: Temporary directory with the zipped assets inside
42         """
43         if dir_location is None:
44             temp_dir = safe_mkdtemp()
45         else:
46             temp_dir = dir_location
47
48         module = importlib.import_module(static_module_name)
49         # N.B.: This handles namespace packages new and old.
50         paths = OrderedSet(os.path.realpath(d) for d in getattr(module, "__path__", []))
51         if module.__file__:
52             # And this handles old-style __init__.py packages.
53             paths.add(os.path.realpath(module.__file__))
54
55         safe_mkdir(temp_dir)
56         for path in paths:
57             resource_dir = os.path.realpath(os.path.join(path, static_path))
58             if os.path.isdir(resource_dir):
59                 for root, dirs, files in os.walk(resource_dir):
60                     for d in dirs:
61                         safe_mkdir(
62                             os.path.join(
63                                 temp_dir, os.path.relpath(os.path.join(root, d), resource_dir)
64                             )
65                         )
66                     for f in files:
67                         src = os.path.join(root, f)
68                         shutil.copy(src, os.path.join(temp_dir, os.path.relpath(src, resource_dir)))
69         return temp_dir
70
71
72 class CacheHelper(object):
73     @classmethod
74     def hash(cls, path, digest=None, hasher=sha1):
75         # type: (Text, Optional[Hasher], Callable[[], Hasher]) -> str
76         """Return the digest of a single file in a memory-efficient manner."""
77         if digest is None:
78             digest = hasher()
79         hashing.file_hash(path, digest)
80         return digest.hexdigest()
81
82     @classmethod
83     def pex_code_hash(cls, directory):
84         # type: (str) -> str
85         """Return a reproducible hash of the contents of a loose PEX; excluding all `.pyc` files."""
86         digest = hashlib.sha1()
87         hashing.dir_hash(
88             directory=directory,
89             digest=digest,
90             dir_filter=is_pyc_dir,
91             file_filter=lambda file_path: not is_pyc_file(file_path)
92             and not file_path.startswith("."),
93         )
94         return digest.hexdigest()
95
96     @classmethod
97     def dir_hash(cls, directory, digest=None, hasher=sha1):
98         # type: (str, Optional[Hasher], Callable[[], Hasher]) -> str
99         """Return a reproducible hash of the contents of a directory; excluding all `.pyc` files."""
100         if digest is None:
101             digest = hasher()
102         hashing.dir_hash(
103             directory=directory,
104             digest=digest,
105             dir_filter=lambda d: not is_pyc_dir(d),
106             file_filter=lambda f: not is_pyc_file(f),
107         )
108         return digest.hexdigest()
109
110     @classmethod
111     def zip_hash(
112         cls,
113         zip_path, # type: str
114         relpath=None, # type: Optional[str]
115     ):
116         # type: (...) -> str
117         """Return a reproducible hash of the contents of a zip; excluding all `.pyc` files."""
118         digest = hashlib.sha1()
119         hashing.zip_hash(
120             zip_path=zip_path,
121             digest=digest,
122             relpath=relpath,
123             dir_filter=lambda d: not is_pyc_dir(d),
124             file_filter=lambda f: not is_pyc_file(f),
125         )
126         return digest.hexdigest()
127
128
129 @contextlib.contextmanager
130 def named_temporary_file(**kwargs):
131     # type: (**Any) -> Iterator[IO]
132     """Due to a bug in python (https://bugs.python.org/issue14243), we need this to be able to use
133     the temporary file without deleting it."""
134     assert "delete" not in kwargs
135     kwargs["delete"] = False
136     fp = tempfile.NamedTemporaryFile(**kwargs)
137     try:
138         with fp:
139             yield fp
140     finally:
141         os.remove(fp.name)
142

[end of pex/util.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/pex/util.py b/pex/util.py
--- a/pex/util.py
+++ b/pex/util.py
@@ -87,7 +87,7 @@
         hashing.dir_hash(
             directory=directory,
             digest=digest,
-            dir_filter=is_pyc_dir,
+            dir_filter=lambda d: not is_pyc_dir(d),
             file_filter=lambda file_path: not is_pyc_file(file_path)
             and not file_path.startswith("."),
         )
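
The one-line change in this diff flips the directory filter from "keep only `.pyc` cache directories" to "keep everything except them". The following is a minimal sketch of why that matters, under stated assumptions: `walk_hash` is a hypothetical stand-in written for this illustration, not pex's `hashing.dir_hash`, and the local `is_pyc_dir` is assumed to match only `__pycache__` directories. It only shows that an inverted filter makes nested sources invisible to a walk-based hash while top-level files would still be seen, which is consistent with the ablation results in the issue; it does not attempt to reproduce how pex consumes the hash.

```python
import hashlib
import os
import tempfile


def is_pyc_dir(dir_path):
    # Assumed stand-in for pex.common.is_pyc_dir: true only for bytecode cache dirs.
    return os.path.basename(dir_path) == "__pycache__"


def walk_hash(directory, dir_filter, file_filter):
    """Hash the relative path and contents of every file the filters let through.

    Hypothetical helper for illustration; returns (hexdigest, list_of_hashed_paths).
    """
    digest = hashlib.sha1()
    hashed = []
    for root, dirs, files in os.walk(directory):
        # Prune in place: only directories accepted by dir_filter are descended into.
        dirs[:] = sorted(d for d in dirs if dir_filter(os.path.join(root, d)))
        for name in sorted(files):
            path = os.path.join(root, name)
            if not file_filter(path):
                continue
            rel = os.path.relpath(path, directory)
            digest.update(rel.encode("utf-8"))
            with open(path, "rb") as fp:
                digest.update(fp.read())
            hashed.append(rel)
    return digest.hexdigest(), hashed


def demo():
    # Mirror the reproducer: a single source file nested one directory deep.
    root = tempfile.mkdtemp()
    os.makedirs(os.path.join(root, "foo"))
    with open(os.path.join(root, "foo", "bar.py"), "w") as fp:
        fp.write("print('bar')\n")

    def keep_source(path):
        return not path.endswith(".pyc")

    # Inverted filter (as before the diff): only __pycache__ dirs are walked.
    buggy = walk_hash(root, is_pyc_dir, keep_source)
    # Corrected filter (as after the diff): everything except __pycache__ is walked.
    fixed = walk_hash(root, lambda d: not is_pyc_dir(d), keep_source)
    return buggy, fixed
```

With the inverted filter, `foo/` is pruned before its files are visited, so the digest equals that of an empty tree; with the corrected filter, `foo/bar.py` contributes to the digest.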

|

{"golden_diff": "diff --git a/pex/util.py b/pex/util.py\n--- a/pex/util.py\n+++ b/pex/util.py\n@@ -87,7 +87,7 @@\n hashing.dir_hash(\n directory=directory,\n digest=digest,\n- dir_filter=is_pyc_dir,\n+ dir_filter=lambda d: not is_pyc_dir(d),\n file_filter=lambda file_path: not is_pyc_file(file_path)\n and not file_path.startswith(\".\"),\n )\n", "issue": "`venv create` no longer includes `--sources-directory` contents when all files are nested\nIt seems like there was a regression from 2.1.148 -> 2.1.149 with the behaviour of `venv create` with a `--pex-repository` that was created with `--sources-directory`: those sources aren't included in the final venv.\r\n\r\nReproducer:\r\n\r\n```shell\r\ncd $(mktemp -d)\r\n\r\n# create our dummy file\r\nmkdir -p source_files/foo\r\ntouch source_files/foo/bar.py # NB.1\r\n# touch source_files/qux.py # NB.2\r\n\r\nfor version in v2.1.148 v2.1.149; do\r\n curl -s -L https://github.com/pantsbuild/pex/releases/download/$version/pex > pex-$version\r\n chmod +x pex-$version\r\n\r\n # NB.3\r\n ./pex-$version --output-file=repository-$version.pex --sources-directory=source_files\r\n\r\n # NB.4\r\n PEX_SCRIPT=pex3 ./pex-$version venv create --dest-dir=dest-$version --pex-repository=repository-$version.pex --layout=flat\r\n\r\n # what was included?\r\n tree dest-$version\r\ndone\r\n```\r\n\r\nRunning that shows that the contents of the `dest-...` directory depends on the version, without the `bar.py` file when using v2.1.149, but should be the same:\r\n\r\n```\r\ndest-v2.1.148\r\n\u2514\u2500\u2500 foo\r\n \u2514\u2500\u2500 bar.py\r\n\r\n1 directory, 1 file\r\ndest-v2.1.149\r\n\r\n0 directories, 0 files\r\n```\r\n\r\nAblative studies:\r\n\r\n- uncommenting `NB.2` line (to have two files) passes \u2705 (both versions have both `foo/bar.py` and `qux.py`)\r\n- _replacing_ the `NB.1` with `NB.2` (to just `qux.py` at the top level) passes \u2705 \r\n- _always_ using v2.1.148 on line `NB.3` (create the pex) and v2.1.149 on line `NB.4` 
(create the venv) passes \u2705 \r\n- v2.1.149 for `NB.3` and v2.1.148 for `NB.4` fails \u274c \r\n- I think third-party dependencies work okay, but haven't confirmed in this reduced setting\r\n- This reproduces without `--layout`, but the output is simpler with `--layout=flat`\r\n\r\n(First observed in https://github.com/pantsbuild/pants/pull/20149.)\n", "before_files": [{"content": "# Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import absolute_import\n\nimport contextlib\nimport hashlib\nimport importlib\nimport os\nimport shutil\nimport tempfile\nfrom hashlib import sha1\nfrom site import makepath # type: ignore[attr-defined]\n\nfrom pex import hashing\nfrom pex.common import is_pyc_dir, is_pyc_file, safe_mkdir, safe_mkdtemp\nfrom pex.compatibility import ( # type: ignore[attr-defined] # `exec_function` is defined dynamically\n PY2,\n exec_function,\n)\nfrom pex.orderedset import OrderedSet\nfrom pex.typing import TYPE_CHECKING\n\nif TYPE_CHECKING:\n from typing import IO, Any, Callable, Iterator, Optional, Text\n\n from pex.hashing import Hasher\n\n\nclass DistributionHelper(object):\n # TODO(#584: This appears unused, but clients might still use it. 
We cannot remove until we\n # have a deprecation policy.\n @classmethod\n def access_zipped_assets(cls, static_module_name, static_path, dir_location=None):\n # type: (str, str, Optional[str]) -> str\n \"\"\"Create a copy of static resource files as we can't serve them from within the pex file.\n\n :param static_module_name: Module name containing module to cache in a tempdir\n :param static_path: Module name, for example 'serverset'\n :param dir_location: create a new temporary directory inside, or None to have one created\n :returns temp_dir: Temporary directory with the zipped assets inside\n \"\"\"\n if dir_location is None:\n temp_dir = safe_mkdtemp()\n else:\n temp_dir = dir_location\n\n module = importlib.import_module(static_module_name)\n # N.B.: This handles namespace packages new and old.\n paths = OrderedSet(os.path.realpath(d) for d in getattr(module, \"__path__\", []))\n if module.__file__:\n # And this handles old-style __init__.py packages.\n paths.add(os.path.realpath(module.__file__))\n\n safe_mkdir(temp_dir)\n for path in paths:\n resource_dir = os.path.realpath(os.path.join(path, static_path))\n if os.path.isdir(resource_dir):\n for root, dirs, files in os.walk(resource_dir):\n for d in dirs:\n safe_mkdir(\n os.path.join(\n temp_dir, os.path.relpath(os.path.join(root, d), resource_dir)\n )\n )\n for f in files:\n src = os.path.join(root, f)\n shutil.copy(src, os.path.join(temp_dir, os.path.relpath(src, resource_dir)))\n return temp_dir\n\n\nclass CacheHelper(object):\n @classmethod\n def hash(cls, path, digest=None, hasher=sha1):\n # type: (Text, Optional[Hasher], Callable[[], Hasher]) -> str\n \"\"\"Return the digest of a single file in a memory-efficient manner.\"\"\"\n if digest is None:\n digest = hasher()\n hashing.file_hash(path, digest)\n return digest.hexdigest()\n\n @classmethod\n def pex_code_hash(cls, directory):\n # type: (str) -> str\n \"\"\"Return a reproducible hash of the contents of a loose PEX; excluding all `.pyc` 
files.\"\"\"\n digest = hashlib.sha1()\n hashing.dir_hash(\n directory=directory,\n digest=digest,\n dir_filter=is_pyc_dir,\n file_filter=lambda file_path: not is_pyc_file(file_path)\n and not file_path.startswith(\".\"),\n )\n return digest.hexdigest()\n\n @classmethod\n def dir_hash(cls, directory, digest=None, hasher=sha1):\n # type: (str, Optional[Hasher], Callable[[], Hasher]) -> str\n \"\"\"Return a reproducible hash of the contents of a directory; excluding all `.pyc` files.\"\"\"\n if digest is None:\n digest = hasher()\n hashing.dir_hash(\n directory=directory,\n digest=digest,\n dir_filter=lambda d: not is_pyc_dir(d),\n file_filter=lambda f: not is_pyc_file(f),\n )\n return digest.hexdigest()\n\n @classmethod\n def zip_hash(\n cls,\n zip_path, # type: str\n relpath=None, # type: Optional[str]\n ):\n # type: (...) -> str\n \"\"\"Return a reproducible hash of the contents of a zip; excluding all `.pyc` files.\"\"\"\n digest = hashlib.sha1()\n hashing.zip_hash(\n zip_path=zip_path,\n digest=digest,\n relpath=relpath,\n dir_filter=lambda d: not is_pyc_dir(d),\n file_filter=lambda f: not is_pyc_file(f),\n )\n return digest.hexdigest()\n\n\[email protected]\ndef named_temporary_file(**kwargs):\n # type: (**Any) -> Iterator[IO]\n \"\"\"Due to a bug in python (https://bugs.python.org/issue14243), we need this to be able to use\n the temporary file without deleting it.\"\"\"\n assert \"delete\" not in kwargs\n kwargs[\"delete\"] = False\n fp = tempfile.NamedTemporaryFile(**kwargs)\n try:\n with fp:\n yield fp\n finally:\n os.remove(fp.name)\n", "path": "pex/util.py"}]}

| 2,612 | 103 |

gh_patches_debug_28189

|

rasdani/github-patches

|

git_diff

|

netbox-community__netbox-4718

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

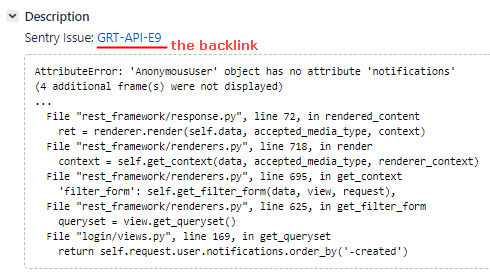

<issue>

API Definitions for available-ips and available-prefixes are incorrect

<!--

NOTE: IF YOUR ISSUE DOES NOT FOLLOW THIS TEMPLATE, IT WILL BE CLOSED.

Please indicate the nature of the change by placing an X in one of the

boxes below.

-->

### Change Type

[ ] Addition

[x] Correction

[ ] Deprecation

[ ] Cleanup (formatting, typos, etc.)

### Area

[ ] Installation instructions

[ ] Configuration parameters

[ ] Functionality/features

[x] REST API

[ ] Administration/development

[ ] Other

<!-- Describe the proposed change(s). -->

### Proposed Changes

I'm writing a ipam project with go-netbox(https://github.com/kobayashi/go-netbox for v2.8), which generate the client from swagger. And I'm using the prefix/available-ips api to create unused ip address. I notice that the api documents in http://netbox/api/docs/ has difference with actually code:

* The request model should be IPAddress rather than WritablePrefix

_ref: https://github.com/netbox-community/netbox/blob/v2.8.3/netbox/ipam/api/views.py#L211_

* The return code should be 201 rather than 200

_ref: https://github.com/netbox-community/netbox/blob/v2.8.3/netbox/ipam/api/views.py#L218_

These mismatches make go-netbox client unable to work correctly. I would appreciate if this can be fixed.

</issue>

<code>

[start of netbox/ipam/api/views.py]

1 from django.conf import settings
2 from django.db.models import Count
3 from django.shortcuts import get_object_or_404
4 from django_pglocks import advisory_lock
5 from drf_yasg.utils import swagger_auto_schema
6 from rest_framework import status
7 from rest_framework.decorators import action
8 from rest_framework.exceptions import PermissionDenied
9 from rest_framework.response import Response
10
11 from extras.api.views import CustomFieldModelViewSet
12 from ipam import filters
13 from ipam.models import Aggregate, IPAddress, Prefix, RIR, Role, Service, VLAN, VLANGroup, VRF
14 from utilities.api import ModelViewSet
15 from utilities.constants import ADVISORY_LOCK_KEYS
16 from utilities.utils import get_subquery
17 from . import serializers
18
19
20 #
21 # VRFs
22 #
23
24 class VRFViewSet(CustomFieldModelViewSet):
25     queryset = VRF.objects.prefetch_related('tenant').prefetch_related('tags').annotate(
26         ipaddress_count=get_subquery(IPAddress, 'vrf'),
27         prefix_count=get_subquery(Prefix, 'vrf')
28     )
29     serializer_class = serializers.VRFSerializer
30     filterset_class = filters.VRFFilterSet
31
32
33 #
34 # RIRs
35 #
36
37 class RIRViewSet(ModelViewSet):
38     queryset = RIR.objects.annotate(
39         aggregate_count=Count('aggregates')
40     )
41     serializer_class = serializers.RIRSerializer
42     filterset_class = filters.RIRFilterSet
43
44
45 #
46 # Aggregates
47 #
48
49 class AggregateViewSet(CustomFieldModelViewSet):
50     queryset = Aggregate.objects.prefetch_related('rir').prefetch_related('tags')
51     serializer_class = serializers.AggregateSerializer
52     filterset_class = filters.AggregateFilterSet
53
54
55 #
56 # Roles
57 #
58
59 class RoleViewSet(ModelViewSet):
60     queryset = Role.objects.annotate(
61         prefix_count=get_subquery(Prefix, 'role'),
62         vlan_count=get_subquery(VLAN, 'role')
63     )
64     serializer_class = serializers.RoleSerializer
65     filterset_class = filters.RoleFilterSet
66
67
68 #
69 # Prefixes
70 #
71
72 class PrefixViewSet(CustomFieldModelViewSet):
73     queryset = Prefix.objects.prefetch_related('site', 'vrf__tenant', 'tenant', 'vlan', 'role', 'tags')
74     serializer_class = serializers.PrefixSerializer
75     filterset_class = filters.PrefixFilterSet
76
77     @swagger_auto_schema(
78         methods=['get', 'post'],
79         responses={
80             200: serializers.AvailablePrefixSerializer(many=True),
81         }
82     )
83     @action(detail=True, url_path='available-prefixes', methods=['get', 'post'])
84     @advisory_lock(ADVISORY_LOCK_KEYS['available-prefixes'])
85     def available_prefixes(self, request, pk=None):
86         """
87         A convenience method for returning available child prefixes within a parent.
88
89         The advisory lock decorator uses a PostgreSQL advisory lock to prevent this API from being
90         invoked in parallel, which results in a race condition where multiple insertions can occur.
91         """
92         prefix = get_object_or_404(Prefix, pk=pk)
93         available_prefixes = prefix.get_available_prefixes()
94
95         if request.method == 'POST':
96
97             # Permissions check
98             if not request.user.has_perm('ipam.add_prefix'):
99                 raise PermissionDenied()
100
101             # Validate Requested Prefixes' length
102             serializer = serializers.PrefixLengthSerializer(
103                 data=request.data if isinstance(request.data, list) else [request.data],
104                 many=True,
105                 context={
106                     'request': request,
107                     'prefix': prefix,
108                 }
109             )
110             if not serializer.is_valid():
111                 return Response(
112                     serializer.errors,
113                     status=status.HTTP_400_BAD_REQUEST
114                 )
115
116             requested_prefixes = serializer.validated_data
117             # Allocate prefixes to the requested objects based on availability within the parent
118             for i, requested_prefix in enumerate(requested_prefixes):
119
120                 # Find the first available prefix equal to or larger than the requested size
121                 for available_prefix in available_prefixes.iter_cidrs():
122                     if requested_prefix['prefix_length'] >= available_prefix.prefixlen:
123                         allocated_prefix = '{}/{}'.format(available_prefix.network, requested_prefix['prefix_length'])
124                         requested_prefix['prefix'] = allocated_prefix
125                         requested_prefix['vrf'] = prefix.vrf.pk if prefix.vrf else None
126                         break
127                 else:
128                     return Response(
129                         {
130                             "detail": "Insufficient space is available to accommodate the requested prefix size(s)"
131                         },
132                         status=status.HTTP_204_NO_CONTENT
133                     )
134
135                 # Remove the allocated prefix from the list of available prefixes
136                 available_prefixes.remove(allocated_prefix)
137
138             # Initialize the serializer with a list or a single object depending on what was requested
139             context = {'request': request}
140             if isinstance(request.data, list):
141                 serializer = serializers.PrefixSerializer(data=requested_prefixes, many=True, context=context)
142             else:
143                 serializer = serializers.PrefixSerializer(data=requested_prefixes[0], context=context)
144
145             # Create the new Prefix(es)
146             if serializer.is_valid():
147                 serializer.save()
148                 return Response(serializer.data, status=status.HTTP_201_CREATED)
149
150             return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)
151
152         else:
153
154             serializer = serializers.AvailablePrefixSerializer(available_prefixes.iter_cidrs(), many=True, context={
155                 'request': request,
156                 'vrf': prefix.vrf,
157             })
158
159             return Response(serializer.data)
160
161     @swagger_auto_schema(
162         methods=['get', 'post'],
163         responses={
164             200: serializers.AvailableIPSerializer(many=True),
165         }
166     )
167     @action(detail=True, url_path='available-ips', methods=['get', 'post'])
168     @advisory_lock(ADVISORY_LOCK_KEYS['available-ips'])
169     def available_ips(self, request, pk=None):
170         """
171         A convenience method for returning available IP addresses within a prefix. By default, the number of IPs
172         returned will be equivalent to PAGINATE_COUNT. An arbitrary limit (up to MAX_PAGE_SIZE, if set) may be passed,
173         however results will not be paginated.
174
175         The advisory lock decorator uses a PostgreSQL advisory lock to prevent this API from being
176         invoked in parallel, which results in a race condition where multiple insertions can occur.
177         """
178         prefix = get_object_or_404(Prefix, pk=pk)
179
180         # Create the next available IP within the prefix
181         if request.method == 'POST':
182
183             # Permissions check
184             if not request.user.has_perm('ipam.add_ipaddress'):
185                 raise PermissionDenied()
186
187             # Normalize to a list of objects
188             requested_ips = request.data if isinstance(request.data, list) else [request.data]
189
190             # Determine if the requested number of IPs is available
191             available_ips = prefix.get_available_ips()
192             if available_ips.size < len(requested_ips):
193                 return Response(
194                     {
195                         "detail": "An insufficient number of IP addresses are available within the prefix {} ({} "
196                                   "requested, {} available)".format(prefix, len(requested_ips), len(available_ips))
197                     },
198                     status=status.HTTP_204_NO_CONTENT
199                 )
200
201             # Assign addresses from the list of available IPs and copy VRF assignment from the parent prefix
202             available_ips = iter(available_ips)
203             prefix_length = prefix.prefix.prefixlen
204             for requested_ip in requested_ips:
205                 requested_ip['address'] = '{}/{}'.format(next(available_ips), prefix_length)
206                 requested_ip['vrf'] = prefix.vrf.pk if prefix.vrf else None
207
208             # Initialize the serializer with a list or a single object depending on what was requested
209             context = {'request': request}
210             if isinstance(request.data, list):
211                 serializer = serializers.IPAddressSerializer(data=requested_ips, many=True, context=context)
212             else:
213                 serializer = serializers.IPAddressSerializer(data=requested_ips[0], context=context)
214
215             # Create the new IP address(es)
216             if serializer.is_valid():
217                 serializer.save()
218                 return Response(serializer.data, status=status.HTTP_201_CREATED)
219
220             return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)
221
222         # Determine the maximum number of IPs to return
223         else:
224             try:
225                 limit = int(request.query_params.get('limit', settings.PAGINATE_COUNT))
226             except ValueError:
227                 limit = settings.PAGINATE_COUNT
228             if settings.MAX_PAGE_SIZE:
229                 limit = min(limit, settings.MAX_PAGE_SIZE)
230
231             # Calculate available IPs within the prefix
232             ip_list = []
233             for index, ip in enumerate(prefix.get_available_ips(), start=1):
234                 ip_list.append(ip)
235                 if index == limit:
236                     break
237             serializer = serializers.AvailableIPSerializer(ip_list, many=True, context={
238                 'request': request,
239                 'prefix': prefix.prefix,
240                 'vrf': prefix.vrf,
241             })
242
243             return Response(serializer.data)
244
245
246 #
247 # IP addresses
248 #
249
250 class IPAddressViewSet(CustomFieldModelViewSet):
251     queryset = IPAddress.objects.prefetch_related(
252         'vrf__tenant', 'tenant', 'nat_inside', 'interface__device__device_type', 'interface__virtual_machine',
253         'nat_outside', 'tags',
254     )
255     serializer_class = serializers.IPAddressSerializer
256     filterset_class = filters.IPAddressFilterSet
257
258
259 #
260 # VLAN groups
261 #
262
263 class VLANGroupViewSet(ModelViewSet):
264     queryset = VLANGroup.objects.prefetch_related('site').annotate(
265         vlan_count=Count('vlans')
266     )
267     serializer_class = serializers.VLANGroupSerializer
268     filterset_class = filters.VLANGroupFilterSet
269
270
271 #
272 # VLANs
273 #
274
275 class VLANViewSet(CustomFieldModelViewSet):
276     queryset = VLAN.objects.prefetch_related(
277         'site', 'group', 'tenant', 'role', 'tags'
278     ).annotate(
279         prefix_count=get_subquery(Prefix, 'vlan')
280     )
281     serializer_class = serializers.VLANSerializer
282     filterset_class = filters.VLANFilterSet
283
284
285 #
286 # Services
287 #
288
289 class ServiceViewSet(ModelViewSet):
290     queryset = Service.objects.prefetch_related('device').prefetch_related('tags')
291     serializer_class = serializers.ServiceSerializer
292     filterset_class = filters.ServiceFilterSet
293

[end of netbox/ipam/api/views.py]

</code>
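
The allocation loop in `available_prefixes` in the listing above (find the first free block at least as large as the request, carve the child from its start, then remove it from the free space) can be sketched outside Django with the standard-library `ipaddress` module. This is an illustrative sketch only: `allocate_prefixes` is a hypothetical helper, NetBox itself works on a `netaddr` set rather than a list, and the sketch assumes all networks belong to a single address family. Where the view returns an HTTP response on insufficient space, the sketch returns `None`.

```python
import ipaddress


def allocate_prefixes(available, requested_lengths):
    """Carve one child prefix per requested length out of the free blocks.

    Hypothetical helper: returns the allocated networks in request order,
    or None if any request cannot be satisfied.
    """
    free = sorted(ipaddress.ip_network(n) for n in available)
    allocated = []
    for length in requested_lengths:
        for block in free:
            # A free block can host the request when it is at least as large,
            # i.e. its prefix length is no greater than the requested one.
            if length >= block.prefixlen:
                child = ipaddress.ip_network("{}/{}".format(block.network_address, length))
                break
        else:
            # No free block is large enough for this request.
            return None
        # Remove the allocation from the free space, keeping what is left of the block.
        free.remove(block)
        free.extend(block.address_exclude(child))
        free.sort()
        allocated.append(child)
    return allocated
```

For example, requesting two /26 networks from a free `10.0.0.0/24` would yield `10.0.0.0/26` followed by `10.0.0.64/26`, while requesting a /24 from a free /30 yields `None`.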

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/netbox/ipam/api/views.py b/netbox/ipam/api/views.py
--- a/netbox/ipam/api/views.py
+++ b/netbox/ipam/api/views.py
@@ -74,12 +74,8 @@
     serializer_class = serializers.PrefixSerializer
     filterset_class = filters.PrefixFilterSet
 
-    @swagger_auto_schema(
-        methods=['get', 'post'],
-        responses={
-            200: serializers.AvailablePrefixSerializer(many=True),
-        }
-    )
+    @swagger_auto_schema(method='get', responses={200: serializers.AvailablePrefixSerializer(many=True)})
+    @swagger_auto_schema(method='post', responses={201: serializers.AvailablePrefixSerializer(many=True)})
     @action(detail=True, url_path='available-prefixes', methods=['get', 'post'])
     @advisory_lock(ADVISORY_LOCK_KEYS['available-prefixes'])
     def available_prefixes(self, request, pk=None):
@@ -158,12 +154,9 @@
 
         return Response(serializer.data)
 
-    @swagger_auto_schema(
-        methods=['get', 'post'],
-        responses={
-            200: serializers.AvailableIPSerializer(many=True),
-        }
-    )
+    @swagger_auto_schema(method='get', responses={200: serializers.AvailableIPSerializer(many=True)})
+    @swagger_auto_schema(method='post', responses={201: serializers.AvailableIPSerializer(many=True)},
+                         request_body=serializers.AvailableIPSerializer(many=False))
     @action(detail=True, url_path='available-ips', methods=['get', 'post'])
     @advisory_lock(ADVISORY_LOCK_KEYS['available-ips'])
     def available_ips(self, request, pk=None):

|

{"golden_diff": "diff --git a/netbox/ipam/api/views.py b/netbox/ipam/api/views.py\n--- a/netbox/ipam/api/views.py\n+++ b/netbox/ipam/api/views.py\n@@ -74,12 +74,8 @@\n serializer_class = serializers.PrefixSerializer\n filterset_class = filters.PrefixFilterSet\n \n- @swagger_auto_schema(\n- methods=['get', 'post'],\n- responses={\n- 200: serializers.AvailablePrefixSerializer(many=True),\n- }\n- )\n+ @swagger_auto_schema(method='get', responses={200: serializers.AvailablePrefixSerializer(many=True)})\n+ @swagger_auto_schema(method='post', responses={201: serializers.AvailablePrefixSerializer(many=True)})\n @action(detail=True, url_path='available-prefixes', methods=['get', 'post'])\n @advisory_lock(ADVISORY_LOCK_KEYS['available-prefixes'])\n def available_prefixes(self, request, pk=None):\n@@ -158,12 +154,9 @@\n \n return Response(serializer.data)\n \n- @swagger_auto_schema(\n- methods=['get', 'post'],\n- responses={\n- 200: serializers.AvailableIPSerializer(many=True),\n- }\n- )\n+ @swagger_auto_schema(method='get', responses={200: serializers.AvailableIPSerializer(many=True)})\n+ @swagger_auto_schema(method='post', responses={201: serializers.AvailableIPSerializer(many=True)},\n+ request_body=serializers.AvailableIPSerializer(many=False))\n @action(detail=True, url_path='available-ips', methods=['get', 'post'])\n @advisory_lock(ADVISORY_LOCK_KEYS['available-ips'])\n def available_ips(self, request, pk=None):\n", "issue": "API Definitions for available-ips and available-prefixes are incorrect\n<!--\r\n NOTE: IF YOUR ISSUE DOES NOT FOLLOW THIS TEMPLATE, IT WILL BE CLOSED.\r\n\r\n Please indicate the nature of the change by placing an X in one of the\r\n boxes below.\r\n-->\r\n### Change Type\r\n[ ] Addition\r\n[x] Correction\r\n[ ] Deprecation\r\n[ ] Cleanup (formatting, typos, etc.)\r\n\r\n### Area\r\n[ ] Installation instructions\r\n[ ] Configuration parameters\r\n[ ] Functionality/features\r\n[x] REST API\r\n[ ] Administration/development\r\n[ ] Other\r\n\r\n<!-- 
Describe the proposed change(s). -->\r\n### Proposed Changes\r\nI'm writing a ipam project with go-netbox(https://github.com/kobayashi/go-netbox for v2.8), which generate the client from swagger. And I'm using the prefix/available-ips api to create unused ip address. I notice that the api documents in http://netbox/api/docs/ has difference with actually code:\r\n* The request model should be IPAddress rather than WritablePrefix\r\n _ref: https://github.com/netbox-community/netbox/blob/v2.8.3/netbox/ipam/api/views.py#L211_\r\n* The return code should be 201 rather than 200\r\n _ref: https://github.com/netbox-community/netbox/blob/v2.8.3/netbox/ipam/api/views.py#L218_\r\n\r\nThese mismatches make go-netbox client unable to work correctly. I would appreciate if this can be fixed.\n", "before_files": [{"content": "from django.conf import settings\nfrom django.db.models import Count\nfrom django.shortcuts import get_object_or_404\nfrom django_pglocks import advisory_lock\nfrom drf_yasg.utils import swagger_auto_schema\nfrom rest_framework import status\nfrom rest_framework.decorators import action\nfrom rest_framework.exceptions import PermissionDenied\nfrom rest_framework.response import Response\n\nfrom extras.api.views import CustomFieldModelViewSet\nfrom ipam import filters\nfrom ipam.models import Aggregate, IPAddress, Prefix, RIR, Role, Service, VLAN, VLANGroup, VRF\nfrom utilities.api import ModelViewSet\nfrom utilities.constants import ADVISORY_LOCK_KEYS\nfrom utilities.utils import get_subquery\nfrom . 
import serializers\n\n\n#\n# VRFs\n#\n\nclass VRFViewSet(CustomFieldModelViewSet):\n queryset = VRF.objects.prefetch_related('tenant').prefetch_related('tags').annotate(\n ipaddress_count=get_subquery(IPAddress, 'vrf'),\n prefix_count=get_subquery(Prefix, 'vrf')\n )\n serializer_class = serializers.VRFSerializer\n filterset_class = filters.VRFFilterSet\n\n\n#\n# RIRs\n#\n\nclass RIRViewSet(ModelViewSet):\n queryset = RIR.objects.annotate(\n aggregate_count=Count('aggregates')\n )\n serializer_class = serializers.RIRSerializer\n filterset_class = filters.RIRFilterSet\n\n\n#\n# Aggregates\n#\n\nclass AggregateViewSet(CustomFieldModelViewSet):\n queryset = Aggregate.objects.prefetch_related('rir').prefetch_related('tags')\n serializer_class = serializers.AggregateSerializer\n filterset_class = filters.AggregateFilterSet\n\n\n#\n# Roles\n#\n\nclass RoleViewSet(ModelViewSet):\n queryset = Role.objects.annotate(\n prefix_count=get_subquery(Prefix, 'role'),\n vlan_count=get_subquery(VLAN, 'role')\n )\n serializer_class = serializers.RoleSerializer\n filterset_class = filters.RoleFilterSet\n\n\n#\n# Prefixes\n#\n\nclass PrefixViewSet(CustomFieldModelViewSet):\n queryset = Prefix.objects.prefetch_related('site', 'vrf__tenant', 'tenant', 'vlan', 'role', 'tags')\n serializer_class = serializers.PrefixSerializer\n filterset_class = filters.PrefixFilterSet\n\n @swagger_auto_schema(\n methods=['get', 'post'],\n responses={\n 200: serializers.AvailablePrefixSerializer(many=True),\n }\n )\n @action(detail=True, url_path='available-prefixes', methods=['get', 'post'])\n @advisory_lock(ADVISORY_LOCK_KEYS['available-prefixes'])\n def available_prefixes(self, request, pk=None):\n \"\"\"\n A convenience method for returning available child prefixes within a parent.\n\n The advisory lock decorator uses a PostgreSQL advisory lock to prevent this API from being\n invoked in parallel, which results in a race condition where multiple insertions can occur.\n \"\"\"\n prefix = 
get_object_or_404(Prefix, pk=pk)\n available_prefixes = prefix.get_available_prefixes()\n\n if request.method == 'POST':\n\n # Permissions check\n if not request.user.has_perm('ipam.add_prefix'):\n raise PermissionDenied()\n\n # Validate Requested Prefixes' length\n serializer = serializers.PrefixLengthSerializer(\n data=request.data if isinstance(request.data, list) else [request.data],\n many=True,\n context={\n 'request': request,\n 'prefix': prefix,\n }\n )\n if not serializer.is_valid():\n return Response(\n serializer.errors,\n status=status.HTTP_400_BAD_REQUEST\n )\n\n requested_prefixes = serializer.validated_data\n # Allocate prefixes to the requested objects based on availability within the parent\n for i, requested_prefix in enumerate(requested_prefixes):\n\n # Find the first available prefix equal to or larger than the requested size\n for available_prefix in available_prefixes.iter_cidrs():\n if requested_prefix['prefix_length'] >= available_prefix.prefixlen:\n allocated_prefix = '{}/{}'.format(available_prefix.network, requested_prefix['prefix_length'])\n requested_prefix['prefix'] = allocated_prefix\n requested_prefix['vrf'] = prefix.vrf.pk if prefix.vrf else None\n break\n else:\n return Response(\n {\n \"detail\": \"Insufficient space is available to accommodate the requested prefix size(s)\"\n },\n status=status.HTTP_204_NO_CONTENT\n )\n\n # Remove the allocated prefix from the list of available prefixes\n available_prefixes.remove(allocated_prefix)\n\n # Initialize the serializer with a list or a single object depending on what was requested\n context = {'request': request}\n if isinstance(request.data, list):\n serializer = serializers.PrefixSerializer(data=requested_prefixes, many=True, context=context)\n else:\n serializer = serializers.PrefixSerializer(data=requested_prefixes[0], context=context)\n\n # Create the new Prefix(es)\n if serializer.is_valid():\n serializer.save()\n return Response(serializer.data, 
status=status.HTTP_201_CREATED)\n\n return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)\n\n else:\n\n serializer = serializers.AvailablePrefixSerializer(available_prefixes.iter_cidrs(), many=True, context={\n 'request': request,\n 'vrf': prefix.vrf,\n })\n\n return Response(serializer.data)\n\n @swagger_auto_schema(\n methods=['get', 'post'],\n responses={\n 200: serializers.AvailableIPSerializer(many=True),\n }\n )\n @action(detail=True, url_path='available-ips', methods=['get', 'post'])\n @advisory_lock(ADVISORY_LOCK_KEYS['available-ips'])\n def available_ips(self, request, pk=None):\n \"\"\"\n A convenience method for returning available IP addresses within a prefix. By default, the number of IPs\n returned will be equivalent to PAGINATE_COUNT. An arbitrary limit (up to MAX_PAGE_SIZE, if set) may be passed,\n however results will not be paginated.\n\n The advisory lock decorator uses a PostgreSQL advisory lock to prevent this API from being\n invoked in parallel, which results in a race condition where multiple insertions can occur.\n \"\"\"\n prefix = get_object_or_404(Prefix, pk=pk)\n\n # Create the next available IP within the prefix\n if request.method == 'POST':\n\n # Permissions check\n if not request.user.has_perm('ipam.add_ipaddress'):\n raise PermissionDenied()\n\n # Normalize to a list of objects\n requested_ips = request.data if isinstance(request.data, list) else [request.data]\n\n # Determine if the requested number of IPs is available\n available_ips = prefix.get_available_ips()\n if available_ips.size < len(requested_ips):\n return Response(\n {\n \"detail\": \"An insufficient number of IP addresses are available within the prefix {} ({} \"\n \"requested, {} available)\".format(prefix, len(requested_ips), len(available_ips))\n },\n status=status.HTTP_204_NO_CONTENT\n )\n\n # Assign addresses from the list of available IPs and copy VRF assignment from the parent prefix\n available_ips = iter(available_ips)\n prefix_length = 
prefix.prefix.prefixlen\n for requested_ip in requested_ips:\n requested_ip['address'] = '{}/{}'.format(next(available_ips), prefix_length)\n requested_ip['vrf'] = prefix.vrf.pk if prefix.vrf else None\n\n # Initialize the serializer with a list or a single object depending on what was requested\n context = {'request': request}\n if isinstance(request.data, list):\n serializer = serializers.IPAddressSerializer(data=requested_ips, many=True, context=context)\n else:\n serializer = serializers.IPAddressSerializer(data=requested_ips[0], context=context)\n\n # Create the new IP address(es)\n if serializer.is_valid():\n serializer.save()\n return Response(serializer.data, status=status.HTTP_201_CREATED)\n\n return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)\n\n # Determine the maximum number of IPs to return\n else:\n try:\n limit = int(request.query_params.get('limit', settings.PAGINATE_COUNT))\n except ValueError:\n limit = settings.PAGINATE_COUNT\n if settings.MAX_PAGE_SIZE:\n limit = min(limit, settings.MAX_PAGE_SIZE)\n\n # Calculate available IPs within the prefix\n ip_list = []\n for index, ip in enumerate(prefix.get_available_ips(), start=1):\n ip_list.append(ip)\n if index == limit:\n break\n serializer = serializers.AvailableIPSerializer(ip_list, many=True, context={\n 'request': request,\n 'prefix': prefix.prefix,\n 'vrf': prefix.vrf,\n })\n\n return Response(serializer.data)\n\n\n#\n# IP addresses\n#\n\nclass IPAddressViewSet(CustomFieldModelViewSet):\n queryset = IPAddress.objects.prefetch_related(\n 'vrf__tenant', 'tenant', 'nat_inside', 'interface__device__device_type', 'interface__virtual_machine',\n 'nat_outside', 'tags',\n )\n serializer_class = serializers.IPAddressSerializer\n filterset_class = filters.IPAddressFilterSet\n\n\n#\n# VLAN groups\n#\n\nclass VLANGroupViewSet(ModelViewSet):\n queryset = VLANGroup.objects.prefetch_related('site').annotate(\n vlan_count=Count('vlans')\n )\n serializer_class = 
serializers.VLANGroupSerializer\n filterset_class = filters.VLANGroupFilterSet\n\n\n#\n# VLANs\n#\n\nclass VLANViewSet(CustomFieldModelViewSet):\n queryset = VLAN.objects.prefetch_related(\n 'site', 'group', 'tenant', 'role', 'tags'\n ).annotate(\n prefix_count=get_subquery(Prefix, 'vlan')\n )\n serializer_class = serializers.VLANSerializer\n filterset_class = filters.VLANFilterSet\n\n\n#\n# Services\n#\n\nclass ServiceViewSet(ModelViewSet):\n queryset = Service.objects.prefetch_related('device').prefetch_related('tags')\n serializer_class = serializers.ServiceSerializer\n filterset_class = filters.ServiceFilterSet\n", "path": "netbox/ipam/api/views.py"}]}

| 3,790 | 392 |

gh_patches_debug_40882

|

rasdani/github-patches

|

git_diff

|

pytorch__vision-3193

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Clean up and Document the io.image enhancements

PR #3024 introduced a few enhancements on the `io.image` methods used for reading JPEG and PNG images. The code changes were merged but there are a few nice-to-have improvements missing.

More specifically:

- [x] Provide better error messages for [JPEG](https://github.com/pytorch/vision/pull/3024#discussion_r527066925) and [PNG](https://github.com/pytorch/vision/pull/3024#discussion_r527093866) when a specific `ImageReadMode` value is not supported.

- [x] Ensure that `image_read_mode.h` has a new line at the [end of the file](https://github.com/pytorch/vision/pull/3024#discussion_r526710809).

- [x] [Replace](https://github.com/pytorch/vision/pull/3024#discussion_r527065253) the old `#define` with the newer `constexpr` expression at `image_read_mode.h`.

- [x] [Move the documentation](https://github.com/pytorch/vision/pull/3024#discussion_r527095264) of `ImageReadMode` values from the methods to the enum.

**Note:** `constexpr` might cause problems on Windows. If that's the case, try using const instead.

</issue>

<code>

[start of torchvision/io/image.py]

1 import torch
2
3 import os
4 import os.path as osp
5 import importlib.machinery
6
7 from enum import Enum
8
9 _HAS_IMAGE_OPT = False
10
11 try:
12     lib_dir = osp.abspath(osp.join(osp.dirname(__file__), ".."))
13
14     loader_details = (
15         importlib.machinery.ExtensionFileLoader,
16         importlib.machinery.EXTENSION_SUFFIXES
17     )
18
19     extfinder = importlib.machinery.FileFinder(lib_dir, loader_details)  # type: ignore[arg-type]
20     ext_specs = extfinder.find_spec("image")
21
22     if os.name == 'nt':
23         # Load the image extension using LoadLibraryExW
24         import ctypes
25         import sys
26
27         kernel32 = ctypes.WinDLL('kernel32.dll', use_last_error=True)
28         with_load_library_flags = hasattr(kernel32, 'AddDllDirectory')
29         prev_error_mode = kernel32.SetErrorMode(0x0001)
30
31         kernel32.LoadLibraryW.restype = ctypes.c_void_p
32         if with_load_library_flags:
33             kernel32.LoadLibraryExW.restype = ctypes.c_void_p
34
35         if ext_specs is not None:
36             res = kernel32.LoadLibraryExW(ext_specs.origin, None, 0x00001100)
37             if res is None:
38                 err = ctypes.WinError(ctypes.get_last_error())
39                 err.strerror += (f' Error loading "{ext_specs.origin}" or any or '
40                                  'its dependencies.')
41                 raise err
42
43         kernel32.SetErrorMode(prev_error_mode)
44
45     if ext_specs is not None:
46         torch.ops.load_library(ext_specs.origin)
47         _HAS_IMAGE_OPT = True
48 except (ImportError, OSError):
49     pass
50
51
52 class ImageReadMode(Enum):
53     UNCHANGED = 0
54     GRAY = 1
55     GRAY_ALPHA = 2
56     RGB = 3
57     RGB_ALPHA = 4
58
59
60 def read_file(path: str) -> torch.Tensor:
61     """
62     Reads and outputs the bytes contents of a file as a uint8 Tensor
63     with one dimension.
64
65     Arguments:
66         path (str): the path to the file to be read
67
68     Returns:
69         data (Tensor)
70     """
71     data = torch.ops.image.read_file(path)
72     return data
73
74
75 def write_file(filename: str, data: torch.Tensor) -> None:
76     """
77     Writes the contents of a uint8 tensor with one dimension to a
78     file.
79
80     Arguments:
81         filename (str): the path to the file to be written
82         data (Tensor): the contents to be written to the output file
83     """
84     torch.ops.image.write_file(filename, data)
85
86
87 def decode_png(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:
88     """
89     Decodes a PNG image into a 3 dimensional RGB Tensor.
90     Optionally converts the image to the desired format.
91     The values of the output tensor are uint8 between 0 and 255.
92
93     Arguments:
94         input (Tensor[1]): a one dimensional uint8 tensor containing
95             the raw bytes of the PNG image.
96         mode (ImageReadMode): the read mode used for optionally
97             converting the image. Use `ImageReadMode.UNCHANGED` for loading
98             the image as-is, `ImageReadMode.GRAY` for converting to grayscale,
99             `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,
100             `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for
101             RGB with transparency. Default: `ImageReadMode.UNCHANGED`
102
103     Returns:
104         output (Tensor[image_channels, image_height, image_width])
105     """
106     output = torch.ops.image.decode_png(input, mode.value)
107     return output
108
109
110 def encode_png(input: torch.Tensor, compression_level: int = 6) -> torch.Tensor:
111     """
112     Takes an input tensor in CHW layout and returns a buffer with the contents
113     of its corresponding PNG file.
114
115     Parameters
116     ----------
117     input: Tensor[channels, image_height, image_width]
118         int8 image tensor of `c` channels, where `c` must 3 or 1.
119     compression_level: int
120         Compression factor for the resulting file, it must be a number
121         between 0 and 9. Default: 6
122
123     Returns
124     -------
125     output: Tensor[1]
126         A one dimensional int8 tensor that contains the raw bytes of the
127         PNG file.
128     """
129     output = torch.ops.image.encode_png(input, compression_level)
130     return output
131
132
133 def write_png(input: torch.Tensor, filename: str, compression_level: int = 6):
134     """
135     Takes an input tensor in CHW layout (or HW in the case of grayscale images)
136     and saves it in a PNG file.
137
138     Parameters
139     ----------
140     input: Tensor[channels, image_height, image_width]
141         int8 image tensor of `c` channels, where `c` must be 1 or 3.
142     filename: str
143         Path to save the image.
144     compression_level: int
145         Compression factor for the resulting file, it must be a number
146         between 0 and 9. Default: 6
147     """
148     output = encode_png(input, compression_level)
149     write_file(filename, output)
150
151
152 def decode_jpeg(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:
153     """
154     Decodes a JPEG image into a 3 dimensional RGB Tensor.
155     Optionally converts the image to the desired format.
156     The values of the output tensor are uint8 between 0 and 255.
157
158     Arguments:
159         input (Tensor[1]): a one dimensional uint8 tensor containing
160             the raw bytes of the JPEG image.
161         mode (ImageReadMode): the read mode used for optionally
162             converting the image. Use `ImageReadMode.UNCHANGED` for loading
163             the image as-is, `ImageReadMode.GRAY` for converting to grayscale
164             and `ImageReadMode.RGB` for RGB. Default: `ImageReadMode.UNCHANGED`
165
166     Returns:
167         output (Tensor[image_channels, image_height, image_width])
168     """
169     output = torch.ops.image.decode_jpeg(input, mode.value)
170     return output
171
172
173 def encode_jpeg(input: torch.Tensor, quality: int = 75) -> torch.Tensor:
174     """
175     Takes an input tensor in CHW layout and returns a buffer with the contents
176     of its corresponding JPEG file.
177
178     Parameters
179     ----------
180     input: Tensor[channels, image_height, image_width])
181         int8 image tensor of `c` channels, where `c` must be 1 or 3.
182     quality: int
183         Quality of the resulting JPEG file, it must be a number between
184         1 and 100. Default: 75
185
186     Returns
187     -------
188     output: Tensor[1]
189         A one dimensional int8 tensor that contains the raw bytes of the
190         JPEG file.
191     """
192     if quality < 1 or quality > 100:
193         raise ValueError('Image quality should be a positive number '
194                          'between 1 and 100')
195
196     output = torch.ops.image.encode_jpeg(input, quality)
197     return output
198
199
200 def write_jpeg(input: torch.Tensor, filename: str, quality: int = 75):
201     """
202     Takes an input tensor in CHW layout and saves it in a JPEG file.
203
204     Parameters
205     ----------
206     input: Tensor[channels, image_height, image_width]
207         int8 image tensor of `c` channels, where `c` must be 1 or 3.
208     filename: str
209         Path to save the image.
210     quality: int
211         Quality of the resulting JPEG file, it must be a number
212         between 1 and 100. Default: 75
213     """
214     output = encode_jpeg(input, quality)
215     write_file(filename, output)
216
217
218 def decode_image(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:
219     """
220     Detects whether an image is a JPEG or PNG and performs the appropriate
221     operation to decode the image into a 3 dimensional RGB Tensor.
222
223     Optionally converts the image to the desired format.
224     The values of the output tensor are uint8 between 0 and 255.
225
226     Parameters
227     ----------
228     input: Tensor
229         a one dimensional uint8 tensor containing the raw bytes of the
230         PNG or JPEG image.
231     mode: ImageReadMode
232         the read mode used for optionally converting the image. JPEG
233         and PNG images have different permitted values. The default
234         value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.
235         See `decode_jpeg()` and `decode_png()` for more information.
236         Default: `ImageReadMode.UNCHANGED`
237
238     Returns
239     -------
240     output: Tensor[image_channels, image_height, image_width]
241     """
242     output = torch.ops.image.decode_image(input, mode.value)
243     return output
244
245
246 def read_image(path: str, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:
247     """
248     Reads a JPEG or PNG image into a 3 dimensional RGB Tensor.
249     Optionally converts the image to the desired format.
250     The values of the output tensor are uint8 between 0 and 255.
251
252     Parameters
253     ----------
254     path: str
255         path of the JPEG or PNG image.
256     mode: ImageReadMode
257         the read mode used for optionally converting the image. JPEG
258         and PNG images have different permitted values. The default
259         value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.
260         See `decode_jpeg()` and `decode_png()` for more information.
261         Default: `ImageReadMode.UNCHANGED`
262
263     Returns
264     -------
265     output: Tensor[image_channels, image_height, image_width]
266     """
267     data = read_file(path)
268     return decode_image(data, mode)
269

[end of torchvision/io/image.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
 
+    points.append((x, y))
     return points

</patch>

|

diff --git a/torchvision/io/image.py b/torchvision/io/image.py
--- a/torchvision/io/image.py
+++ b/torchvision/io/image.py
@@ -50,6 +50,15 @@
 
 
 class ImageReadMode(Enum):
+    """
+    Support for various modes while reading images.
+
+    Use `ImageReadMode.UNCHANGED` for loading the image as-is,
+    `ImageReadMode.GRAY` for converting to grayscale,
+    `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,
+    `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for
+    RGB with transparency.
+    """
     UNCHANGED = 0
     GRAY = 1
     GRAY_ALPHA = 2
@@ -94,11 +103,9 @@
         input (Tensor[1]): a one dimensional uint8 tensor containing
             the raw bytes of the PNG image.
         mode (ImageReadMode): the read mode used for optionally
-            converting the image. Use `ImageReadMode.UNCHANGED` for loading
-            the image as-is, `ImageReadMode.GRAY` for converting to grayscale,
-            `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,
-            `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for
-            RGB with transparency. Default: `ImageReadMode.UNCHANGED`
+            converting the image. Default: `ImageReadMode.UNCHANGED`.
+            See `ImageReadMode` class for more information on various
+            available modes.
 
     Returns:
         output (Tensor[image_channels, image_height, image_width])
@@ -159,9 +166,9 @@
         input (Tensor[1]): a one dimensional uint8 tensor containing
             the raw bytes of the JPEG image.
         mode (ImageReadMode): the read mode used for optionally
-            converting the image. Use `ImageReadMode.UNCHANGED` for loading
-            the image as-is, `ImageReadMode.GRAY` for converting to grayscale
-            and `ImageReadMode.RGB` for RGB. Default: `ImageReadMode.UNCHANGED`
+            converting the image. Default: `ImageReadMode.UNCHANGED`.
+            See `ImageReadMode` class for more information on various
+            available modes.
 
     Returns:
         output (Tensor[image_channels, image_height, image_width])
@@ -229,11 +236,10 @@
         a one dimensional uint8 tensor containing the raw bytes of the
         PNG or JPEG image.
     mode: ImageReadMode
-        the read mode used for optionally converting the image. JPEG
-        and PNG images have different permitted values. The default
-        value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.
-        See `decode_jpeg()` and `decode_png()` for more information.
-        Default: `ImageReadMode.UNCHANGED`
+        the read mode used for optionally converting the image.
+        Default: `ImageReadMode.UNCHANGED`.
+        See `ImageReadMode` class for more information on various
+        available modes.
 
     Returns
     -------
@@ -254,11 +260,10 @@
     path: str
         path of the JPEG or PNG image.
     mode: ImageReadMode
-        the read mode used for optionally converting the image. JPEG
-        and PNG images have different permitted values. The default
-        value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.
-        See `decode_jpeg()` and `decode_png()` for more information.
-        Default: `ImageReadMode.UNCHANGED`
+        the read mode used for optionally converting the image.
+        Default: `ImageReadMode.UNCHANGED`.
+        See `ImageReadMode` class for more information on various
+        available modes.
 
     Returns
     -------

|

{"golden_diff": "diff --git a/torchvision/io/image.py b/torchvision/io/image.py\n--- a/torchvision/io/image.py\n+++ b/torchvision/io/image.py\n@@ -50,6 +50,15 @@\n \n \n class ImageReadMode(Enum):\n+ \"\"\"\n+ Support for various modes while reading images.\n+\n+ Use `ImageReadMode.UNCHANGED` for loading the image as-is,\n+ `ImageReadMode.GRAY` for converting to grayscale,\n+ `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,\n+ `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for\n+ RGB with transparency.\n+ \"\"\"\n UNCHANGED = 0\n GRAY = 1\n GRAY_ALPHA = 2\n@@ -94,11 +103,9 @@\n input (Tensor[1]): a one dimensional uint8 tensor containing\n the raw bytes of the PNG image.\n mode (ImageReadMode): the read mode used for optionally\n- converting the image. Use `ImageReadMode.UNCHANGED` for loading\n- the image as-is, `ImageReadMode.GRAY` for converting to grayscale,\n- `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,\n- `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for\n- RGB with transparency. Default: `ImageReadMode.UNCHANGED`\n+ converting the image. Default: `ImageReadMode.UNCHANGED`.\n+ See `ImageReadMode` class for more information on various\n+ available modes.\n \n Returns:\n output (Tensor[image_channels, image_height, image_width])\n@@ -159,9 +166,9 @@\n input (Tensor[1]): a one dimensional uint8 tensor containing\n the raw bytes of the JPEG image.\n mode (ImageReadMode): the read mode used for optionally\n- converting the image. Use `ImageReadMode.UNCHANGED` for loading\n- the image as-is, `ImageReadMode.GRAY` for converting to grayscale\n- and `ImageReadMode.RGB` for RGB. Default: `ImageReadMode.UNCHANGED`\n+ converting the image. 
Default: `ImageReadMode.UNCHANGED`.\n+ See `ImageReadMode` class for more information on various\n+ available modes.\n \n Returns:\n output (Tensor[image_channels, image_height, image_width])\n@@ -229,11 +236,10 @@\n a one dimensional uint8 tensor containing the raw bytes of the\n PNG or JPEG image.\n mode: ImageReadMode\n- the read mode used for optionally converting the image. JPEG\n- and PNG images have different permitted values. The default\n- value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.\n- See `decode_jpeg()` and `decode_png()` for more information.\n- Default: `ImageReadMode.UNCHANGED`\n+ the read mode used for optionally converting the image.\n+ Default: `ImageReadMode.UNCHANGED`.\n+ See `ImageReadMode` class for more information on various\n+ available modes.\n \n Returns\n -------\n@@ -254,11 +260,10 @@\n path: str\n path of the JPEG or PNG image.\n mode: ImageReadMode\n- the read mode used for optionally converting the image. JPEG\n- and PNG images have different permitted values. The default\n- value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.\n- See `decode_jpeg()` and `decode_png()` for more information.\n- Default: `ImageReadMode.UNCHANGED`\n+ the read mode used for optionally converting the image.\n+ Default: `ImageReadMode.UNCHANGED`.\n+ See `ImageReadMode` class for more information on various\n+ available modes.\n \n Returns\n -------\n", "issue": "Clean up and Document the io.image enhancements\nPR #3024 introduced a few enhancements on the `io.image` methods used for reading JPEG and PNG images. 
The code changes were merged but there are a few nice-to-have improvements missing.\r\n\r\nMore specifically:\r\n- [x] Provide better error messages for [JPEG](https://github.com/pytorch/vision/pull/3024#discussion_r527066925) and [PNG](https://github.com/pytorch/vision/pull/3024#discussion_r527093866) when a specific `ImageReadMode` value is not supported.\r\n- [x] Ensure that `image_read_mode.h` has a new line at the [end of the file](https://github.com/pytorch/vision/pull/3024#discussion_r526710809).\r\n- [x] [Replace](https://github.com/pytorch/vision/pull/3024#discussion_r527065253) the old `#define` with the newer `constexpr` expression at `image_read_mode.h`.\r\n- [x] [Move the documentation](https://github.com/pytorch/vision/pull/3024#discussion_r527095264) of `ImageReadMode` values from the methods to the enum.\r\n\r\n**Note:** `constexpr` might cause problems on Windows. If that's the case, try using const instead.\n", "before_files": [{"content": "import torch\n\nimport os\nimport os.path as osp\nimport importlib.machinery\n\nfrom enum import Enum\n\n_HAS_IMAGE_OPT = False\n\ntry:\n lib_dir = osp.abspath(osp.join(osp.dirname(__file__), \"..\"))\n\n loader_details = (\n importlib.machinery.ExtensionFileLoader,\n importlib.machinery.EXTENSION_SUFFIXES\n )\n\n extfinder = importlib.machinery.FileFinder(lib_dir, loader_details) # type: ignore[arg-type]\n ext_specs = extfinder.find_spec(\"image\")\n\n if os.name == 'nt':\n # Load the image extension using LoadLibraryExW\n import ctypes\n import sys\n\n kernel32 = ctypes.WinDLL('kernel32.dll', use_last_error=True)\n with_load_library_flags = hasattr(kernel32, 'AddDllDirectory')\n prev_error_mode = kernel32.SetErrorMode(0x0001)\n\n kernel32.LoadLibraryW.restype = ctypes.c_void_p\n if with_load_library_flags:\n kernel32.LoadLibraryExW.restype = ctypes.c_void_p\n\n if ext_specs is not None:\n res = kernel32.LoadLibraryExW(ext_specs.origin, None, 0x00001100)\n if res is None:\n err = 
ctypes.WinError(ctypes.get_last_error())\n err.strerror += (f' Error loading \"{ext_specs.origin}\" or any or '\n 'its dependencies.')\n raise err\n\n kernel32.SetErrorMode(prev_error_mode)\n\n if ext_specs is not None:\n torch.ops.load_library(ext_specs.origin)\n _HAS_IMAGE_OPT = True\nexcept (ImportError, OSError):\n pass\n\n\nclass ImageReadMode(Enum):\n UNCHANGED = 0\n GRAY = 1\n GRAY_ALPHA = 2\n RGB = 3\n RGB_ALPHA = 4\n\n\ndef read_file(path: str) -> torch.Tensor:\n \"\"\"\n Reads and outputs the bytes contents of a file as a uint8 Tensor\n with one dimension.\n\n Arguments:\n path (str): the path to the file to be read\n\n Returns:\n data (Tensor)\n \"\"\"\n data = torch.ops.image.read_file(path)\n return data\n\n\ndef write_file(filename: str, data: torch.Tensor) -> None:\n \"\"\"\n Writes the contents of a uint8 tensor with one dimension to a\n file.\n\n Arguments:\n filename (str): the path to the file to be written\n data (Tensor): the contents to be written to the output file\n \"\"\"\n torch.ops.image.write_file(filename, data)\n\n\ndef decode_png(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:\n \"\"\"\n Decodes a PNG image into a 3 dimensional RGB Tensor.\n Optionally converts the image to the desired format.\n The values of the output tensor are uint8 between 0 and 255.\n\n Arguments:\n input (Tensor[1]): a one dimensional uint8 tensor containing\n the raw bytes of the PNG image.\n mode (ImageReadMode): the read mode used for optionally\n converting the image. Use `ImageReadMode.UNCHANGED` for loading\n the image as-is, `ImageReadMode.GRAY` for converting to grayscale,\n `ImageReadMode.GRAY_ALPHA` for grayscale with transparency,\n `ImageReadMode.RGB` for RGB and `ImageReadMode.RGB_ALPHA` for\n RGB with transparency. 
Default: `ImageReadMode.UNCHANGED`\n\n Returns:\n output (Tensor[image_channels, image_height, image_width])\n \"\"\"\n output = torch.ops.image.decode_png(input, mode.value)\n return output\n\n\ndef encode_png(input: torch.Tensor, compression_level: int = 6) -> torch.Tensor:\n \"\"\"\n Takes an input tensor in CHW layout and returns a buffer with the contents\n of its corresponding PNG file.\n\n Parameters\n ----------\n input: Tensor[channels, image_height, image_width]\n int8 image tensor of `c` channels, where `c` must 3 or 1.\n compression_level: int\n Compression factor for the resulting file, it must be a number\n between 0 and 9. Default: 6\n\n Returns\n -------\n output: Tensor[1]\n A one dimensional int8 tensor that contains the raw bytes of the\n PNG file.\n \"\"\"\n output = torch.ops.image.encode_png(input, compression_level)\n return output\n\n\ndef write_png(input: torch.Tensor, filename: str, compression_level: int = 6):\n \"\"\"\n Takes an input tensor in CHW layout (or HW in the case of grayscale images)\n and saves it in a PNG file.\n\n Parameters\n ----------\n input: Tensor[channels, image_height, image_width]\n int8 image tensor of `c` channels, where `c` must be 1 or 3.\n filename: str\n Path to save the image.\n compression_level: int\n Compression factor for the resulting file, it must be a number\n between 0 and 9. Default: 6\n \"\"\"\n output = encode_png(input, compression_level)\n write_file(filename, output)\n\n\ndef decode_jpeg(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:\n \"\"\"\n Decodes a JPEG image into a 3 dimensional RGB Tensor.\n Optionally converts the image to the desired format.\n The values of the output tensor are uint8 between 0 and 255.\n\n Arguments:\n input (Tensor[1]): a one dimensional uint8 tensor containing\n the raw bytes of the JPEG image.\n mode (ImageReadMode): the read mode used for optionally\n converting the image. 
Use `ImageReadMode.UNCHANGED` for loading\n the image as-is, `ImageReadMode.GRAY` for converting to grayscale\n and `ImageReadMode.RGB` for RGB. Default: `ImageReadMode.UNCHANGED`\n\n Returns:\n output (Tensor[image_channels, image_height, image_width])\n \"\"\"\n output = torch.ops.image.decode_jpeg(input, mode.value)\n return output\n\n\ndef encode_jpeg(input: torch.Tensor, quality: int = 75) -> torch.Tensor:\n \"\"\"\n Takes an input tensor in CHW layout and returns a buffer with the contents\n of its corresponding JPEG file.\n\n Parameters\n ----------\n input: Tensor[channels, image_height, image_width])\n int8 image tensor of `c` channels, where `c` must be 1 or 3.\n quality: int\n Quality of the resulting JPEG file, it must be a number between\n 1 and 100. Default: 75\n\n Returns\n -------\n output: Tensor[1]\n A one dimensional int8 tensor that contains the raw bytes of the\n JPEG file.\n \"\"\"\n if quality < 1 or quality > 100:\n raise ValueError('Image quality should be a positive number '\n 'between 1 and 100')\n\n output = torch.ops.image.encode_jpeg(input, quality)\n return output\n\n\ndef write_jpeg(input: torch.Tensor, filename: str, quality: int = 75):\n \"\"\"\n Takes an input tensor in CHW layout and saves it in a JPEG file.\n\n Parameters\n ----------\n input: Tensor[channels, image_height, image_width]\n int8 image tensor of `c` channels, where `c` must be 1 or 3.\n filename: str\n Path to save the image.\n quality: int\n Quality of the resulting JPEG file, it must be a number\n between 1 and 100. 
Default: 75\n \"\"\"\n output = encode_jpeg(input, quality)\n write_file(filename, output)\n\n\ndef decode_image(input: torch.Tensor, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:\n \"\"\"\n Detects whether an image is a JPEG or PNG and performs the appropriate\n operation to decode the image into a 3 dimensional RGB Tensor.\n\n Optionally converts the image to the desired format.\n The values of the output tensor are uint8 between 0 and 255.\n\n Parameters\n ----------\n input: Tensor\n a one dimensional uint8 tensor containing the raw bytes of the\n PNG or JPEG image.\n mode: ImageReadMode\n the read mode used for optionally converting the image. JPEG\n and PNG images have different permitted values. The default\n value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.\n See `decode_jpeg()` and `decode_png()` for more information.\n Default: `ImageReadMode.UNCHANGED`\n\n Returns\n -------\n output: Tensor[image_channels, image_height, image_width]\n \"\"\"\n output = torch.ops.image.decode_image(input, mode.value)\n return output\n\n\ndef read_image(path: str, mode: ImageReadMode = ImageReadMode.UNCHANGED) -> torch.Tensor:\n \"\"\"\n Reads a JPEG or PNG image into a 3 dimensional RGB Tensor.\n Optionally converts the image to the desired format.\n The values of the output tensor are uint8 between 0 and 255.\n\n Parameters\n ----------\n path: str\n path of the JPEG or PNG image.\n mode: ImageReadMode\n the read mode used for optionally converting the image. JPEG\n and PNG images have different permitted values. The default\n value is `ImageReadMode.UNCHANGED` and it keeps the image as-is.\n See `decode_jpeg()` and `decode_png()` for more information.\n Default: `ImageReadMode.UNCHANGED`\n\n Returns\n -------\n output: Tensor[image_channels, image_height, image_width]\n \"\"\"\n data = read_file(path)\n return decode_image(data, mode)\n", "path": "torchvision/io/image.py"}]}

| 3,707 | 858 |

gh_patches_debug_6597

|

rasdani/github-patches

|

git_diff

|

mlflow__mlflow-2196

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Cannot preview artifacts in UI when uploaded to remote machine via FTP

### System information

- **Have I written custom code (as opposed to using a stock example script provided in MLflow)**:

No

- **OS Platform and Distribution (e.g., Linux Ubuntu 16.04)**:

Linux Ubuntu 18.04

- **MLflow installed from (source or binary)**:

installed from PyPI via pip

- **MLflow version (run ``mlflow --version``)**:

0.9.1

- **Python version**:

3.6.7

- **npm version (if running the dev UI)**:

- **Exact command to reproduce**:

Server runs with:

```

mlflow server --backend-store-uri ./mlruns --default-artifact-root ./artifacts -h 0.0.0.0 -p 5020

```

within a folder holding an `mlruns` and an `artifacts` folder

### Describe the problem

I am running the server on a remote machine.

On the local machine, which uploads via the tracking API, the

tracking URI is set to `http://path.to.server:port`

and the artifact URI to `ftp://anonymous:@path.to.server/artifacts`.

Uploading the tracking information and artifacts works fine, and everything shows up in the UI.

However, when I click one of the artifacts, I always get a message that something went wrong.

Previewing the artifacts works when I store them locally on my machine, but as soon as I transfer them via FTP and then try to preview them from the remote machine, I get that error.

### Source code / logs

-

</issue>
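
As a rough illustration of the artifact URI quoted in the issue, the sketch below shows how Python's standard `urllib.parse.urlparse` splits it into connection settings and a remote path. It follows the same defaults as `FTPArtifactRepository.__init__` in the listing (port 21, host `localhost` when none is given), but it uses the standard library rather than `six`, and `parse_ftp_uri` is a hypothetical helper name introduced here, not part of MLflow.

```python
from urllib.parse import urlparse


def parse_ftp_uri(artifact_uri):
    """Split an FTP artifact URI into connection settings and a remote path.

    Hypothetical helper for illustration only; not an MLflow API.
    """
    parsed = urlparse(artifact_uri)
    config = {
        # hostname is None when the URI has no network location
        "host": parsed.hostname or "localhost",
        # fall back to the standard FTP control port
        "port": 21 if parsed.port is None else parsed.port,
        "username": parsed.username,
        "password": parsed.password,
    }
    return config, parsed.path


# The artifact URI from the issue: anonymous login with an empty password.
config, path = parse_ftp_uri("ftp://anonymous:@path.to.server/artifacts")
print(config)
print(path)
```

With this URI the remote path is `/artifacts`, which is relative to the FTP server's root and need not match the `./artifacts` directory that the tracking server was started with.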

<code>

[start of mlflow/store/artifact/ftp_artifact_repo.py]

1 import os
2 import ftplib
3 from ftplib import FTP
4 from contextlib import contextmanager
5
6 import posixpath
7 from six.moves import urllib
8
9 from mlflow.entities.file_info import FileInfo
10 from mlflow.store.artifact.artifact_repo import ArtifactRepository
11 from mlflow.utils.file_utils import relative_path_to_artifact_path
12 from mlflow.exceptions import MlflowException
13
14
15 class FTPArtifactRepository(ArtifactRepository):
16     """Stores artifacts as files in a remote directory, via ftp."""
17
18     def __init__(self, artifact_uri):
19         self.uri = artifact_uri
20         parsed = urllib.parse.urlparse(artifact_uri)
21         self.config = {
22             'host': parsed.hostname,
23             'port': 21 if parsed.port is None else parsed.port,
24             'username': parsed.username,
25             'password': parsed.password
26         }
27         self.path = parsed.path
28
29         if self.config['host'] is None:
30             self.config['host'] = 'localhost'
31
32         super(FTPArtifactRepository, self).__init__(artifact_uri)
33
34     @contextmanager
35     def get_ftp_client(self):
36         ftp = FTP()
37         ftp.connect(self.config['host'], self.config['port'])
38         ftp.login(self.config['username'], self.config['password'])
39         yield ftp
40         ftp.close()
41
42     @staticmethod
43     def _is_dir(ftp, full_file_path):
44         try:
45             ftp.cwd(full_file_path)
46             return True
47         except ftplib.error_perm:
48             return False
49
50     @staticmethod
51     def _mkdir(ftp, artifact_dir):
52         try:
53             if not FTPArtifactRepository._is_dir(ftp, artifact_dir):
54                 ftp.mkd(artifact_dir)
55         except ftplib.error_perm:
56             head, _ = posixpath.split(artifact_dir)
57             FTPArtifactRepository._mkdir(ftp, head)
58             FTPArtifactRepository._mkdir(ftp, artifact_dir)
59
60     @staticmethod
61     def _size(ftp, full_file_path):
62         ftp.voidcmd('TYPE I')
63         size = ftp.size(full_file_path)
64         ftp.voidcmd('TYPE A')
65         return size
66
67     def log_artifact(self, local_file, artifact_path=None):
68         with self.get_ftp_client() as ftp:
69             artifact_dir = posixpath.join(self.path, artifact_path) \
70                 if artifact_path else self.path
71             self._mkdir(ftp, artifact_dir)
72             with open(local_file, 'rb') as f:
73                 ftp.cwd(artifact_dir)
74                 ftp.storbinary('STOR ' + os.path.basename(local_file), f)
75
76     def log_artifacts(self, local_dir, artifact_path=None):
77         dest_path = posixpath.join(self.path, artifact_path) \
78             if artifact_path else self.path
79
80         dest_path = posixpath.join(
81             dest_path, os.path.split(local_dir)[1])
82         dest_path_re = os.path.split(local_dir)[1]
83         if artifact_path:
84             dest_path_re = posixpath.join(
85                 artifact_path, os.path.split(local_dir)[1])
86
87         local_dir = os.path.abspath(local_dir)
88         for (root, _, filenames) in os.walk(local_dir):
89             upload_path = dest_path
90             if root != local_dir:
91                 rel_path = os.path.relpath(root, local_dir)
92                 rel_path = relative_path_to_artifact_path(rel_path)
93                 upload_path = posixpath.join(dest_path_re, rel_path)
94             if not filenames:
95                 with self.get_ftp_client() as ftp: