problem_id (string, lengths 18–22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, lengths 13–58) | prompt (string, lengths 1.71k–18.9k) | golden_diff (string, lengths 145–5.13k) | verification_info (string, lengths 465–23.6k) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_4842 | rasdani/github-patches | git_diff | plone__Products.CMFPlone-3972 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

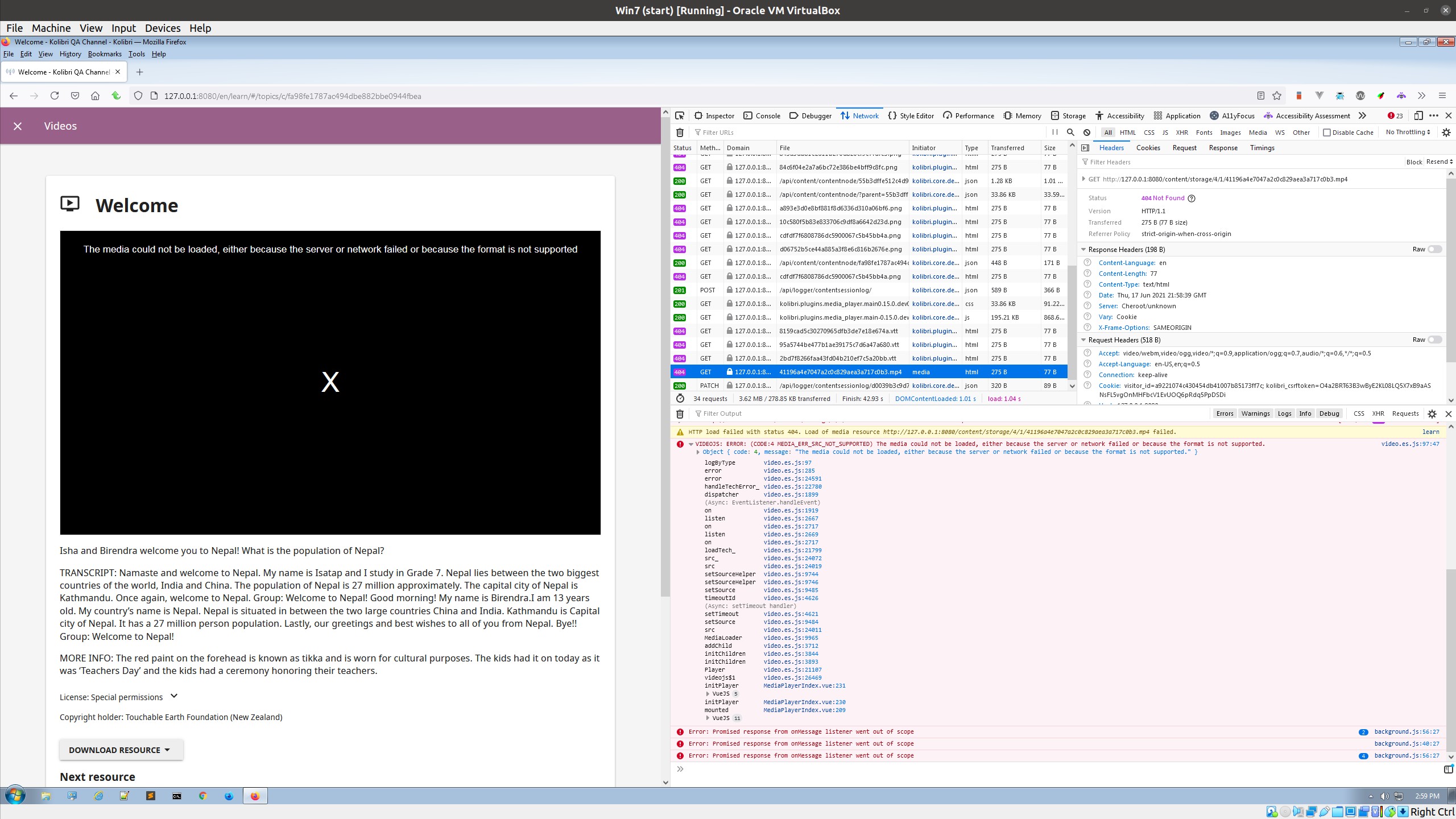

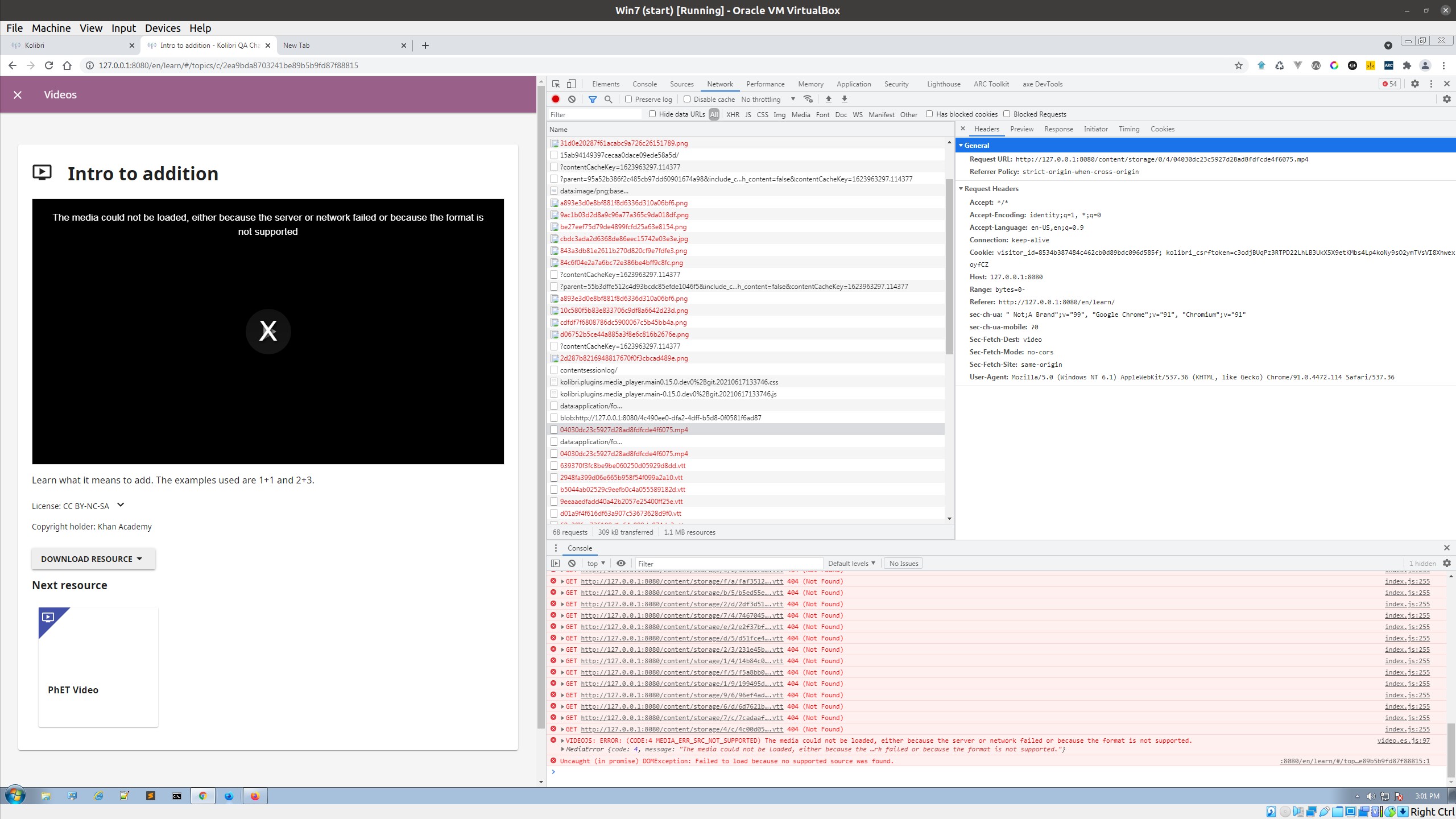

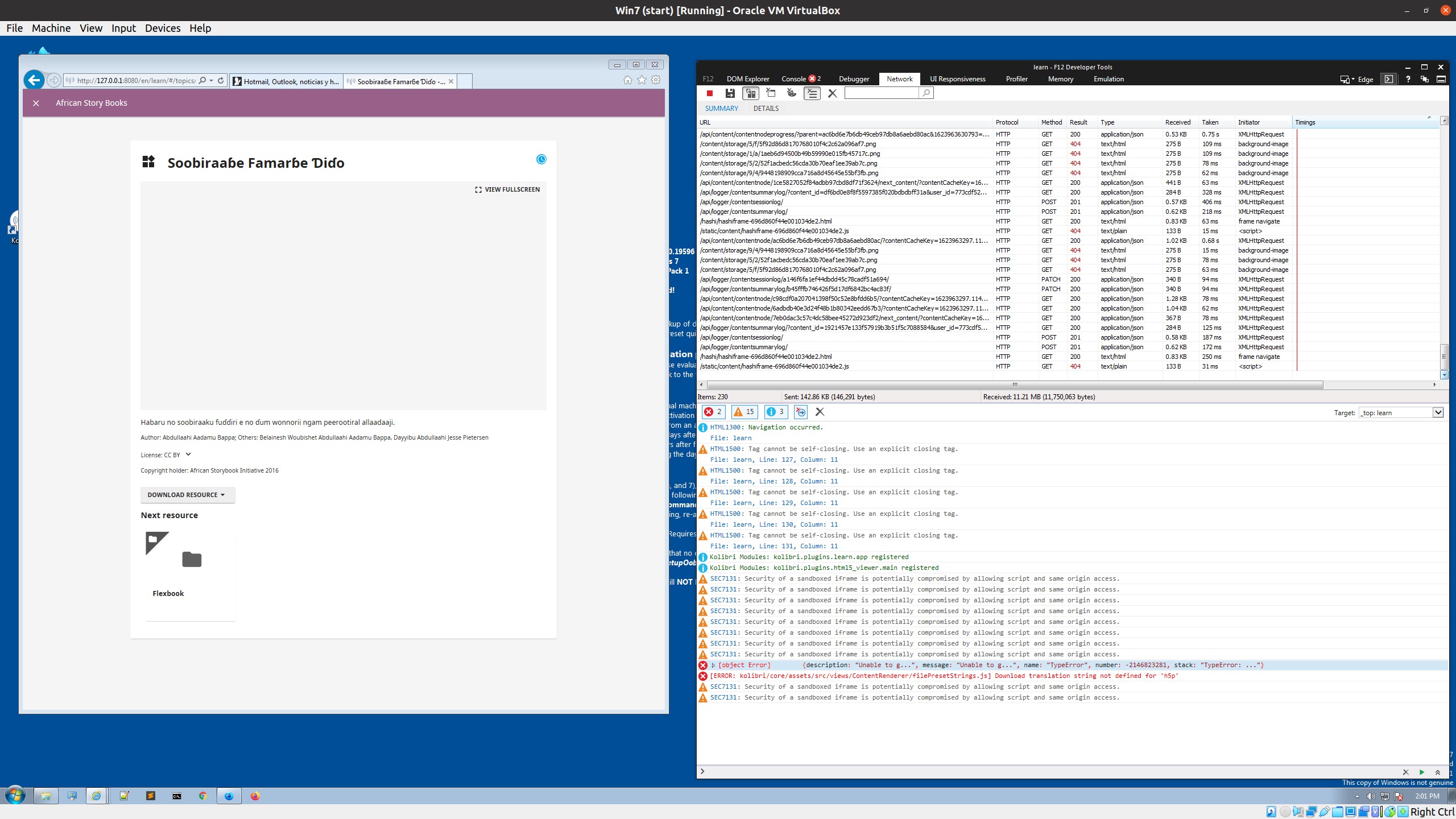

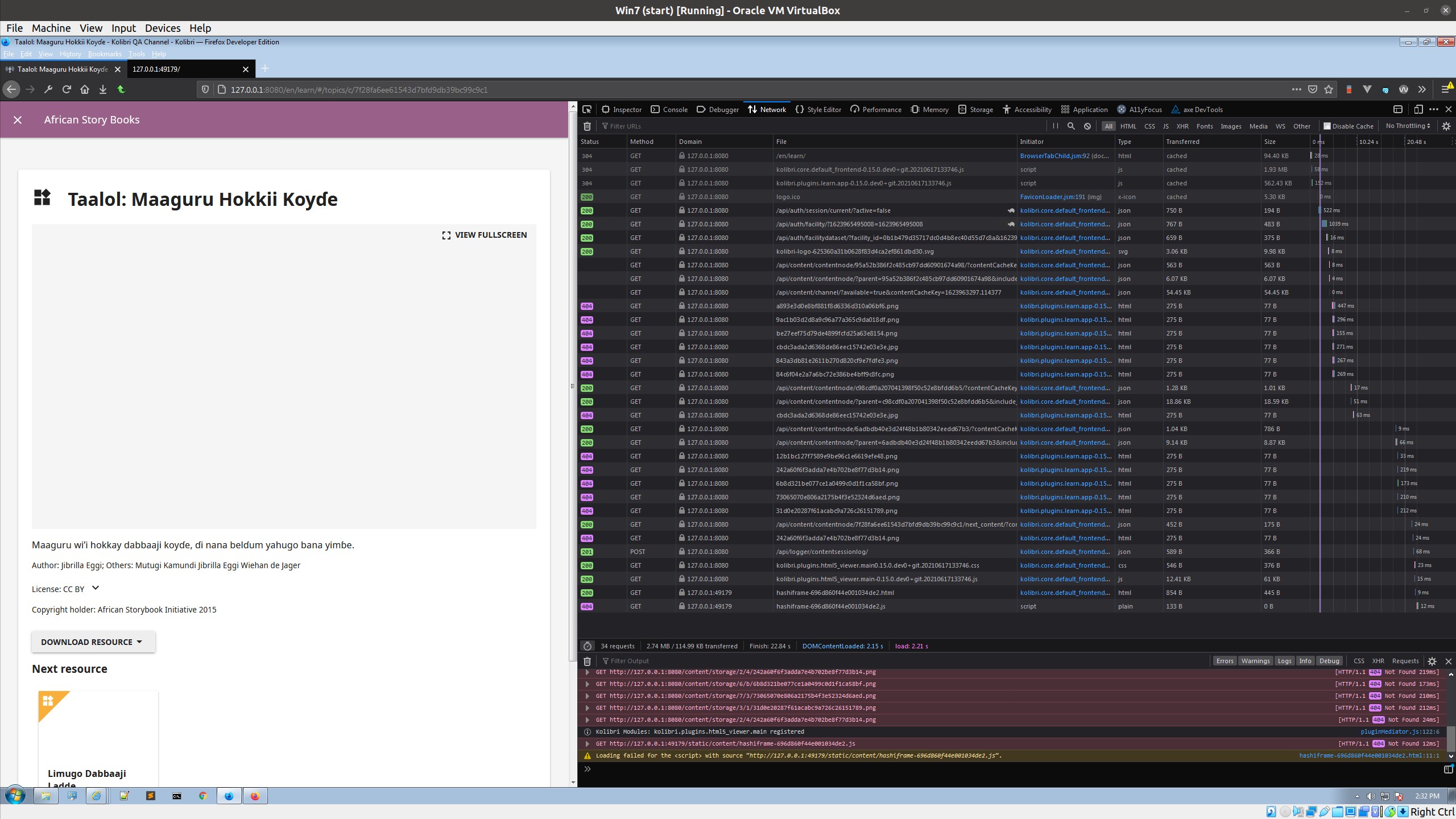

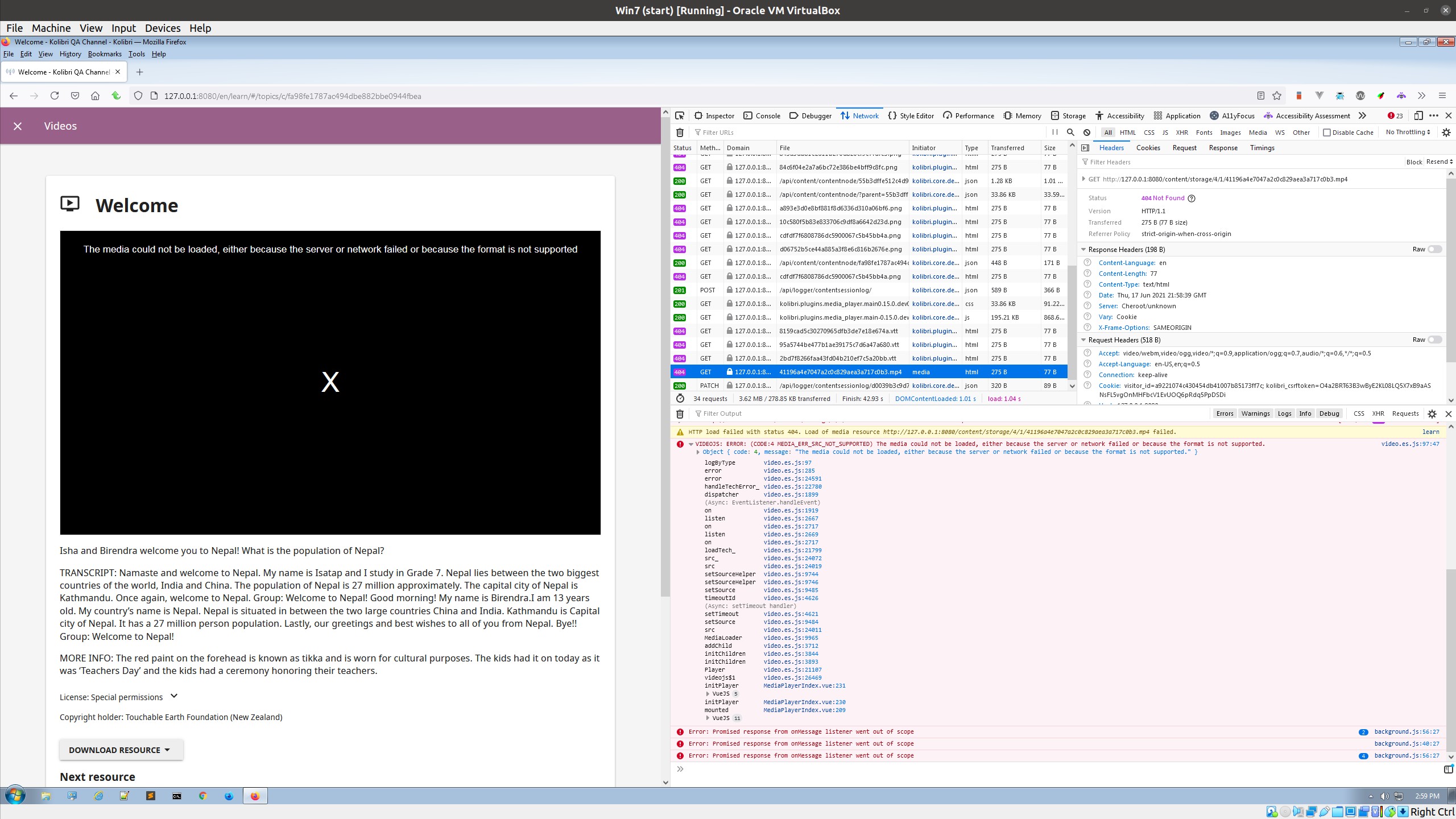

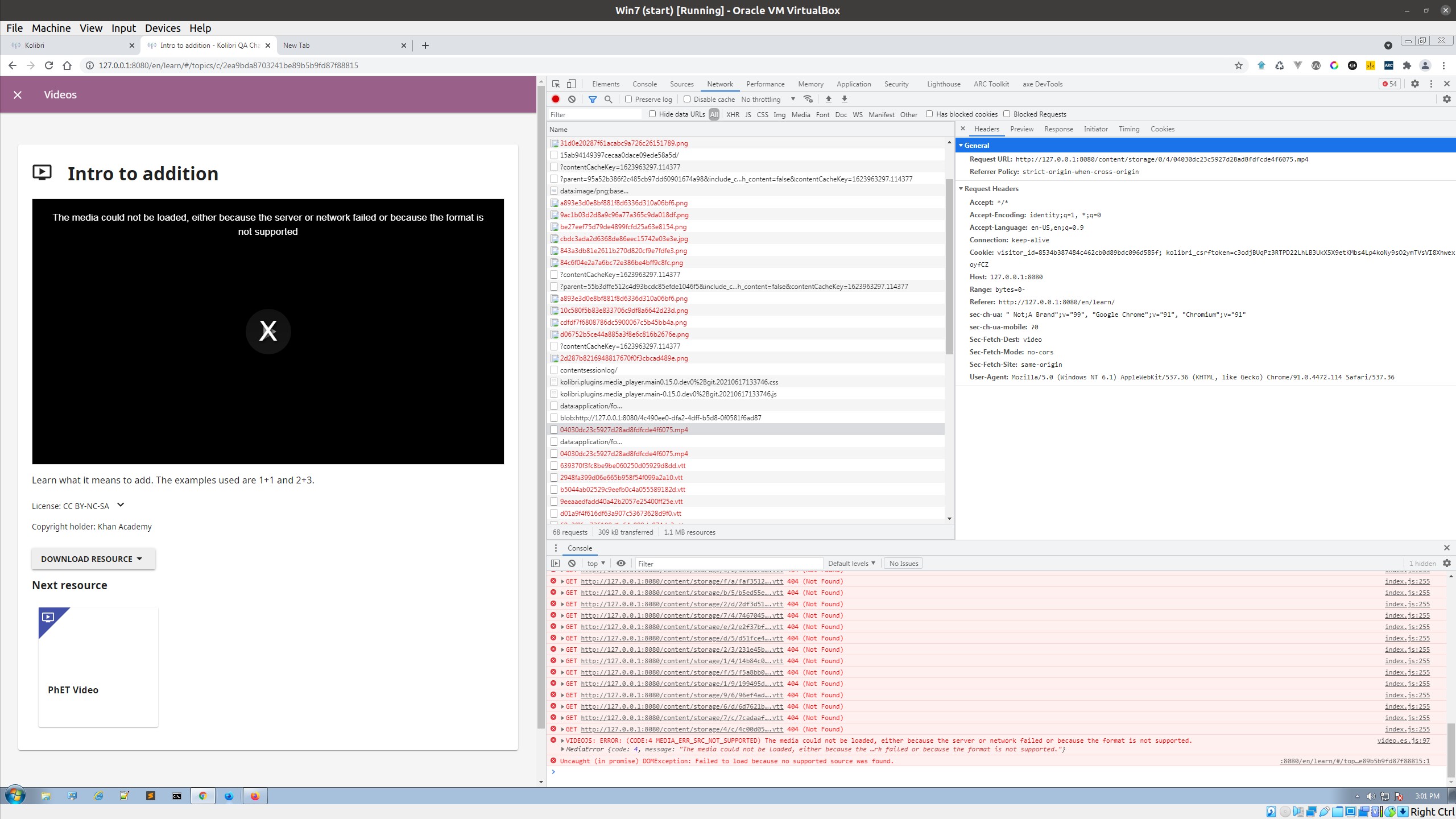

<issue>

TypeError thrown during groups lookup

The offending code is [here](https://github.com/plone/Products.CMFPlone/blob/308aa4d03ee6c0ce9d8119ce4c37955153f0bc6f/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py#L66). The traceback looks like this:

```
Traceback (innermost last):
  Module ZPublisher.WSGIPublisher, line 176, in transaction_pubevents
  Module ZPublisher.WSGIPublisher, line 385, in publish_module
  Module ZPublisher.WSGIPublisher, line 280, in publish
  Module ZPublisher.mapply, line 85, in mapply
  Module ZPublisher.WSGIPublisher, line 63, in call_object
  Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 57, in __call__
  Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 54, in update
  Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 63, in getGroups
TypeError: '<' not supported between instances of 'bool' and 'str'
```

The issue is that when there's a `None` value in the `groupResults` (which is anticipated in the sort code) the lambda returns `False` which fails to compare against the group title/name strings under Python 3. The list comprehension that defines `groupResults` should probably just filter out `None` values to avoid this issue. I'm not entirely sure what circumstances result in a `None` group value, but I am seeing it occur in a real world use case.
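The failure described above can be reproduced outside Plone. The following is only a minimal sketch: the list of titles and the two key functions are hypothetical stand-ins (plain strings instead of group objects, `str.lower` instead of `normalizeString`), chosen to show why a sort key that mixes `False` and `str` values breaks under Python 3 and why a key that always returns a string does not.

```python
# Hypothetical stand-ins for resolved groups; None marks a group that
# could not be looked up. Real code would hold group objects instead.
titles = ["Reviewers", None, "Administrators"]


def old_key(title):
    # Mirrors the shape of the original lambda: False for None, str otherwise.
    return title is not None and title.lower()


def new_key(title):
    # Always returns a str, so any two keys can be compared.
    return title.lower() if title else ""


try:
    sorted(titles, key=old_key)
    old_key_failed = False
except TypeError:
    # Python 3 refuses to order bool against str.
    old_key_failed = True

# With the string-only key, None entries sort first and can be filtered later.
sorted_titles = sorted(titles, key=new_key)
```

Filtering `None` values out before sorting, as suggested above, would avoid the problem as well; the sketch only illustrates the comparison behaviour.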

</issue>

<code>

[start of Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py]

1 from plone.base import PloneMessageFactory as _
2 from Products.CMFCore.utils import getToolByName
3 from Products.CMFPlone.controlpanel.browser.usergroups import (
4     UsersGroupsControlPanelView,
5 )
6 from Products.CMFPlone.utils import normalizeString
7 from zExceptions import Forbidden
8
9
10 class UserMembershipControlPanel(UsersGroupsControlPanelView):
11     def update(self):
12         self.userid = getattr(self.request, "userid")
13         self.gtool = getToolByName(self, "portal_groups")
14         self.mtool = getToolByName(self, "portal_membership")
15         self.member = self.mtool.getMemberById(self.userid)
16
17         form = self.request.form
18
19         self.searchResults = []
20         self.searchString = ""
21         self.newSearch = False
22
23         if form.get("form.submitted", False):
24             delete = form.get("delete", [])
25             if delete:
26                 for groupname in delete:
27                     self.gtool.removePrincipalFromGroup(
28                         self.userid, groupname, self.request
29                     )
30                 self.context.plone_utils.addPortalMessage(_("Changes made."))
31
32             add = form.get("add", [])
33             if add:
34                 for groupname in add:
35                     group = self.gtool.getGroupById(groupname)
36                     if "Manager" in group.getRoles() and not self.is_zope_manager:
37                         raise Forbidden
38
39                     self.gtool.addPrincipalToGroup(self.userid, groupname, self.request)
40                 self.context.plone_utils.addPortalMessage(_("Changes made."))
41
42         search = form.get("form.button.Search", None) is not None
43         findAll = (
44             form.get("form.button.FindAll", None) is not None and not self.many_groups
45         )
46         self.searchString = not findAll and form.get("searchstring", "") or ""
47
48         if findAll or not self.many_groups or self.searchString != "":
49             self.searchResults = self.getPotentialGroups(self.searchString)
50
51         if search or findAll:
52             self.newSearch = True
53
54         self.groups = self.getGroups()
55
56     def __call__(self):
57         self.update()
58         return self.index()
59
60     def getGroups(self):
61         groupResults = [
62             self.gtool.getGroupById(m)
63             for m in self.gtool.getGroupsForPrincipal(self.member)
64         ]
65         groupResults.sort(
66             key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName())
67         )
68         return [i for i in groupResults if i]
69
70     def getPotentialGroups(self, searchString):
71         ignoredGroups = [x.id for x in self.getGroups() if x is not None]
72         return self.membershipSearch(
73             searchString, searchUsers=False, ignore=ignoredGroups
74         )
75

[end of Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py b/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py
--- a/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py
+++ b/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py
@@ -63,7 +63,7 @@
             for m in self.gtool.getGroupsForPrincipal(self.member)
         ]
         groupResults.sort(
-            key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName())
+            key=lambda x: normalizeString(x.getGroupTitleOrName()) if x else ''
         )
         return [i for i in groupResults if i]

|

{"golden_diff": "diff --git a/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py b/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py\n--- a/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py\n+++ b/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py\n@@ -63,7 +63,7 @@\n for m in self.gtool.getGroupsForPrincipal(self.member)\n ]\n groupResults.sort(\n- key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName())\n+ key=lambda x: normalizeString(x.getGroupTitleOrName()) if x else ''\n )\n return [i for i in groupResults if i]\n", "issue": "TypeError thrown when during groups lookup\nThe offending code is [here](https://github.com/plone/Products.CMFPlone/blob/308aa4d03ee6c0ce9d8119ce4c37955153f0bc6f/Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py#L66). The traceback looks like this:\r\n```\r\nTraceback (innermost last):\r\n Module ZPublisher.WSGIPublisher, line 176, in transaction_pubevents\r\n Module ZPublisher.WSGIPublisher, line 385, in publish_module\r\n Module ZPublisher.WSGIPublisher, line 280, in publish\r\n Module ZPublisher.mapply, line 85, in mapply\r\n Module ZPublisher.WSGIPublisher, line 63, in call_object\r\n Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 57, in __call__\r\n Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 54, in update\r\n Module Products.CMFPlone.controlpanel.browser.usergroups_usermembership, line 63, in getGroups\r\nTypeError: '<' not supported between instances of 'bool' and 'str'\r\n```\r\n\r\nThe issue is that when there's a `None` value in the `groupResults` (which is anticipated in the sort code) the lambda returns `False` which fails to compare against the group title/name strings under Python 3. The list comprehension that defines `groupResults` should probably just filter out `None` values to avoid this issue. 
I'm not entirely sure what circumstances result in a `None` group value, but I am seeing it occur in a real world use case.\n", "before_files": [{"content": "from plone.base import PloneMessageFactory as _\nfrom Products.CMFCore.utils import getToolByName\nfrom Products.CMFPlone.controlpanel.browser.usergroups import (\n UsersGroupsControlPanelView,\n)\nfrom Products.CMFPlone.utils import normalizeString\nfrom zExceptions import Forbidden\n\n\nclass UserMembershipControlPanel(UsersGroupsControlPanelView):\n def update(self):\n self.userid = getattr(self.request, \"userid\")\n self.gtool = getToolByName(self, \"portal_groups\")\n self.mtool = getToolByName(self, \"portal_membership\")\n self.member = self.mtool.getMemberById(self.userid)\n\n form = self.request.form\n\n self.searchResults = []\n self.searchString = \"\"\n self.newSearch = False\n\n if form.get(\"form.submitted\", False):\n delete = form.get(\"delete\", [])\n if delete:\n for groupname in delete:\n self.gtool.removePrincipalFromGroup(\n self.userid, groupname, self.request\n )\n self.context.plone_utils.addPortalMessage(_(\"Changes made.\"))\n\n add = form.get(\"add\", [])\n if add:\n for groupname in add:\n group = self.gtool.getGroupById(groupname)\n if \"Manager\" in group.getRoles() and not self.is_zope_manager:\n raise Forbidden\n\n self.gtool.addPrincipalToGroup(self.userid, groupname, self.request)\n self.context.plone_utils.addPortalMessage(_(\"Changes made.\"))\n\n search = form.get(\"form.button.Search\", None) is not None\n findAll = (\n form.get(\"form.button.FindAll\", None) is not None and not self.many_groups\n )\n self.searchString = not findAll and form.get(\"searchstring\", \"\") or \"\"\n\n if findAll or not self.many_groups or self.searchString != \"\":\n self.searchResults = self.getPotentialGroups(self.searchString)\n\n if search or findAll:\n self.newSearch = True\n\n self.groups = self.getGroups()\n\n def __call__(self):\n self.update()\n return self.index()\n\n def 
getGroups(self):\n groupResults = [\n self.gtool.getGroupById(m)\n for m in self.gtool.getGroupsForPrincipal(self.member)\n ]\n groupResults.sort(\n key=lambda x: x is not None and normalizeString(x.getGroupTitleOrName())\n )\n return [i for i in groupResults if i]\n\n def getPotentialGroups(self, searchString):\n ignoredGroups = [x.id for x in self.getGroups() if x is not None]\n return self.membershipSearch(\n searchString, searchUsers=False, ignore=ignoredGroups\n )\n", "path": "Products/CMFPlone/controlpanel/browser/usergroups_usermembership.py"}]}

| 1,641 | 158 |

gh_patches_debug_19305 | rasdani/github-patches | git_diff | fail2ban__fail2ban-940 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

WARNING 'ignoreregex' not defined

Hello there

I'm seeing this error each time I restart fail2ban:

```
WARNING 'ignoreregex' not defined in 'Definition'. Using default one: ''
```

No idea which filter this is referring to. Any ideas?

Here is more information. Thanks!

```
$ fail2ban-client -d -v
INFO Using socket file /var/run/fail2ban/fail2ban.sock
WARNING 'ignoreregex' not defined in 'Definition'. Using default one: ''
['set', 'loglevel', 3]
['set', 'logtarget', '/var/log/fail2ban.log']
...
```

```
$ fail2ban-client -V
Fail2Ban v0.8.11
```

</issue>

<code>

[start of setup.py]

1 #!/usr/bin/python
2 # emacs: -*- mode: python; py-indent-offset: 4; indent-tabs-mode: t -*-
3 # vi: set ft=python sts=4 ts=4 sw=4 noet :
4
5 # This file is part of Fail2Ban.
6 #
7 # Fail2Ban is free software; you can redistribute it and/or modify
8 # it under the terms of the GNU General Public License as published by
9 # the Free Software Foundation; either version 2 of the License, or
10 # (at your option) any later version.
11 #
12 # Fail2Ban is distributed in the hope that it will be useful,
13 # but WITHOUT ANY WARRANTY; without even the implied warranty of
14 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
15 # GNU General Public License for more details.
16 #
17 # You should have received a copy of the GNU General Public License
18 # along with Fail2Ban; if not, write to the Free Software
19 # Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.
20
21 __author__ = "Cyril Jaquier, Steven Hiscocks, Yaroslav Halchenko"
22 __copyright__ = "Copyright (c) 2004 Cyril Jaquier, 2008-2013 Fail2Ban Contributors"
23 __license__ = "GPL"
24
25 try:
26 	import setuptools
27 	from setuptools import setup
28 except ImportError:
29 	setuptools = None
30 	from distutils.core import setup
31
32 try:
33 	# python 3.x
34 	from distutils.command.build_py import build_py_2to3 as build_py
35 	from distutils.command.build_scripts \
36 		import build_scripts_2to3 as build_scripts
37 except ImportError:
38 	# python 2.x
39 	from distutils.command.build_py import build_py
40 	from distutils.command.build_scripts import build_scripts
41 import os
42 from os.path import isfile, join, isdir
43 import sys, warnings
44 from glob import glob
45
46 if setuptools and "test" in sys.argv:
47 	import logging
48 	logSys = logging.getLogger("fail2ban")
49 	hdlr = logging.StreamHandler(sys.stdout)
50 	fmt = logging.Formatter("%(asctime)-15s %(message)s")
51 	hdlr.setFormatter(fmt)
52 	logSys.addHandler(hdlr)
53 	if set(["-q", "--quiet"]) & set(sys.argv):
54 		logSys.setLevel(logging.CRITICAL)
55 		warnings.simplefilter("ignore")
56 		sys.warnoptions.append("ignore")
57 	elif set(["-v", "--verbose"]) & set(sys.argv):
58 		logSys.setLevel(logging.DEBUG)
59 	else:
60 		logSys.setLevel(logging.INFO)
61 elif "test" in sys.argv:
62 	print("python distribute required to execute fail2ban tests")
63 	print("")
64
65 longdesc = '''
66 Fail2Ban scans log files like /var/log/pwdfail or
67 /var/log/apache/error_log and bans IP that makes
68 too many password failures. It updates firewall rules
69 to reject the IP address or executes user defined
70 commands.'''
71
72 if setuptools:
73 	setup_extra = {
74 		'test_suite': "fail2ban.tests.utils.gatherTests",
75 		'use_2to3': True,
76 	}
77 else:
78 	setup_extra = {}
79
80 # Get version number, avoiding importing fail2ban.
81 # This is due to tests not functioning for python3 as 2to3 takes place later
82 exec(open(join("fail2ban", "version.py")).read())
83
84 setup(
85 	name = "fail2ban",
86 	version = version,
87 	description = "Ban IPs that make too many password failures",
88 	long_description = longdesc,
89 	author = "Cyril Jaquier & Fail2Ban Contributors",
90 	author_email = "[email protected]",
91 	url = "http://www.fail2ban.org",
92 	license = "GPL",
93 	platforms = "Posix",
94 	cmdclass = {'build_py': build_py, 'build_scripts': build_scripts},
95 	scripts = [
96 		'bin/fail2ban-client',
97 		'bin/fail2ban-server',
98 		'bin/fail2ban-regex',
99 		'bin/fail2ban-testcases',
100 	],
101 	packages = [
102 		'fail2ban',
103 		'fail2ban.client',
104 		'fail2ban.server',
105 		'fail2ban.tests',
106 		'fail2ban.tests.action_d',
107 	],
108 	package_data = {
109 		'fail2ban.tests':
110 			[ join(w[0], f).replace("fail2ban/tests/", "", 1)
111 				for w in os.walk('fail2ban/tests/files')
112 				for f in w[2]] +
113 			[ join(w[0], f).replace("fail2ban/tests/", "", 1)
114 				for w in os.walk('fail2ban/tests/config')
115 				for f in w[2]] +
116 			[ join(w[0], f).replace("fail2ban/tests/", "", 1)
117 				for w in os.walk('fail2ban/tests/action_d')
118 				for f in w[2]]
119 	},
120 	data_files = [
121 		('/etc/fail2ban',
122 			glob("config/*.conf")
123 		),
124 		('/etc/fail2ban/filter.d',
125 			glob("config/filter.d/*.conf")
126 		),
127 		('/etc/fail2ban/action.d',
128 			glob("config/action.d/*.conf") +
129 			glob("config/action.d/*.py")
130 		),
131 		('/etc/fail2ban/fail2ban.d',
132 			''
133 		),
134 		('/etc/fail2ban/jail.d',
135 			''
136 		),
137 		('/var/lib/fail2ban',
138 			''
139 		),
140 		('/usr/share/doc/fail2ban',
141 			['README.md', 'README.Solaris', 'DEVELOP', 'FILTERS',
142 			 'doc/run-rootless.txt']
143 		)
144 	],
145 	**setup_extra
146 )
147
148 # Do some checks after installation
149 # Search for obsolete files.
150 obsoleteFiles = []
151 elements = {
152 	"/etc/":
153 		[
154 			"fail2ban.conf"
155 		],
156 	"/usr/bin/":
157 		[
158 			"fail2ban.py"
159 		],
160 	"/usr/lib/fail2ban/":
161 		[
162 			"version.py",
163 			"protocol.py"
164 		]
165 }
166
167 for directory in elements:
168 	for f in elements[directory]:
169 		path = join(directory, f)
170 		if isfile(path):
171 			obsoleteFiles.append(path)
172
173 if obsoleteFiles:
174 	print("")
175 	print("Obsolete files from previous Fail2Ban versions were found on "
176 		  "your system.")
177 	print("Please delete them:")
178 	print("")
179 	for f in obsoleteFiles:
180 		print("\t" + f)
181 	print("")
182
183 if isdir("/usr/lib/fail2ban"):
184 	print("")
185 	print("Fail2ban is not installed under /usr/lib anymore. The new "
186 		  "location is under /usr/share. Please remove the directory "
187 		  "/usr/lib/fail2ban and everything under this directory.")
188 	print("")
189
190 # Update config file
191 if sys.argv[1] == "install":
192 	print("")
193 	print("Please do not forget to update your configuration files.")
194 	print("They are in /etc/fail2ban/.")
195 	print("")
196

[end of setup.py]

[start of fail2ban/client/filterreader.py]

1 # emacs: -*- mode: python; py-indent-offset: 4; indent-tabs-mode: t -*-
2 # vi: set ft=python sts=4 ts=4 sw=4 noet :
3
4 # This file is part of Fail2Ban.
5 #
6 # Fail2Ban is free software; you can redistribute it and/or modify
7 # it under the terms of the GNU General Public License as published by
8 # the Free Software Foundation; either version 2 of the License, or
9 # (at your option) any later version.
10 #
11 # Fail2Ban is distributed in the hope that it will be useful,
12 # but WITHOUT ANY WARRANTY; without even the implied warranty of
13 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
14 # GNU General Public License for more details.
15 #
16 # You should have received a copy of the GNU General Public License
17 # along with Fail2Ban; if not, write to the Free Software
18 # Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.
19
20 # Author: Cyril Jaquier
21 #
22
23 __author__ = "Cyril Jaquier"
24 __copyright__ = "Copyright (c) 2004 Cyril Jaquier"
25 __license__ = "GPL"
26
27 import os, shlex
28
29 from .configreader import DefinitionInitConfigReader
30 from ..server.action import CommandAction
31 from ..helpers import getLogger
32
33 # Gets the instance of the logger.
34 logSys = getLogger(__name__)
35
36 class FilterReader(DefinitionInitConfigReader):
37
38 	_configOpts = [
39 		["string", "ignoreregex", None],
40 		["string", "failregex", ""],
41 	]
42
43 	def setFile(self, fileName):
44 		self.__file = fileName
45 		DefinitionInitConfigReader.setFile(self, os.path.join("filter.d", fileName))
46
47 	def getFile(self):
48 		return self.__file
49
50 	def getCombined(self):
51 		combinedopts = dict(list(self._opts.items()) + list(self._initOpts.items()))
52 		if not len(combinedopts):
53 			return {};
54 		opts = CommandAction.substituteRecursiveTags(combinedopts)
55 		if not opts:
56 			raise ValueError('recursive tag definitions unable to be resolved')
57 		return opts;
58
59 	def convert(self):
60 		stream = list()
61 		opts = self.getCombined()
62 		if not len(opts):
63 			return stream;
64 		for opt, value in opts.iteritems():
65 			if opt == "failregex":
66 				for regex in value.split('\n'):
67 					# Do not send a command if the rule is empty.
68 					if regex != '':
69 						stream.append(["set", self._jailName, "addfailregex", regex])
70 			elif opt == "ignoreregex":
71 				for regex in value.split('\n'):
72 					# Do not send a command if the rule is empty.
73 					if regex != '':
74 						stream.append(["set", self._jailName, "addignoreregex", regex])		
75 		if self._initOpts:
76 			if 'maxlines' in self._initOpts:
77 				# We warn when multiline regex is used without maxlines > 1
78 				# therefore keep sure we set this option first.
79 				stream.insert(0, ["set", self._jailName, "maxlines", self._initOpts["maxlines"]])
80 			if 'datepattern' in self._initOpts:
81 				stream.append(["set", self._jailName, "datepattern", self._initOpts["datepattern"]])
82 			# Do not send a command if the match is empty.
83 			if self._initOpts.get("journalmatch", '') != '':
84 				for match in self._initOpts["journalmatch"].split("\n"):
85 					stream.append(
86 						["set", self._jailName, "addjournalmatch"] +
87 						shlex.split(match))
88 		return stream
89
90

[end of fail2ban/client/filterreader.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/fail2ban/client/filterreader.py b/fail2ban/client/filterreader.py
--- a/fail2ban/client/filterreader.py
+++ b/fail2ban/client/filterreader.py
@@ -71,7 +71,7 @@
 				for regex in value.split('\n'):
 					# Do not send a command if the rule is empty.
 					if regex != '':
-						stream.append(["set", self._jailName, "addignoreregex", regex])		
+						stream.append(["set", self._jailName, "addignoreregex", regex])
 		if self._initOpts:
 			if 'maxlines' in self._initOpts:
 				# We warn when multiline regex is used without maxlines > 1
diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -124,6 +124,9 @@
 		('/etc/fail2ban/filter.d',
 			glob("config/filter.d/*.conf")
 		),
+		('/etc/fail2ban/filter.d/ignorecommands',
+			glob("config/filter.d/ignorecommands/*")
+		),
 		('/etc/fail2ban/action.d',
 			glob("config/action.d/*.conf") +
 			glob("config/action.d/*.py")

|

{"golden_diff": "diff --git a/fail2ban/client/filterreader.py b/fail2ban/client/filterreader.py\n--- a/fail2ban/client/filterreader.py\n+++ b/fail2ban/client/filterreader.py\n@@ -71,7 +71,7 @@\n \t\t\t\tfor regex in value.split('\\n'):\n \t\t\t\t\t# Do not send a command if the rule is empty.\n \t\t\t\t\tif regex != '':\n-\t\t\t\t\t\tstream.append([\"set\", self._jailName, \"addignoreregex\", regex])\t\t\n+\t\t\t\t\t\tstream.append([\"set\", self._jailName, \"addignoreregex\", regex])\n \t\tif self._initOpts:\n \t\t\tif 'maxlines' in self._initOpts:\n \t\t\t\t# We warn when multiline regex is used without maxlines > 1\ndiff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -124,6 +124,9 @@\n \t\t('/etc/fail2ban/filter.d',\n \t\t\tglob(\"config/filter.d/*.conf\")\n \t\t),\n+\t\t('/etc/fail2ban/filter.d/ignorecommands',\n+\t\t\tglob(\"config/filter.d/ignorecommands/*\")\n+\t\t),\n \t\t('/etc/fail2ban/action.d',\n \t\t\tglob(\"config/action.d/*.conf\") +\n \t\t\tglob(\"config/action.d/*.py\")\n", "issue": "WARNING 'ignoreregex' not defined\nHello there\n\nI'm seeing this error each time I restart fail2ban:\n\n```\nWARNING 'ignoreregex' not defined in 'Definition'. Using default one: ''\n```\n\nNo idea to which one filter this is referring to. Any ideas?\n\nHere more information. Thanks!\n\n```\n$ fail2ban-client -d -v\nINFO Using socket file /var/run/fail2ban/fail2ban.sock\nWARNING 'ignoreregex' not defined in 'Definition'. 
Using default one: ''\n['set', 'loglevel', 3]\n['set', 'logtarget', '/var/log/fail2ban.log']\n...\n```\n\n```\n$ fail2ban-client -V\nFail2Ban v0.8.11\n```\n\n", "before_files": [{"content": "#!/usr/bin/python\n# emacs: -*- mode: python; py-indent-offset: 4; indent-tabs-mode: t -*-\n# vi: set ft=python sts=4 ts=4 sw=4 noet :\n\n# This file is part of Fail2Ban.\n#\n# Fail2Ban is free software; you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation; either version 2 of the License, or\n# (at your option) any later version.\n#\n# Fail2Ban is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with Fail2Ban; if not, write to the Free Software\n# Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.\n\n__author__ = \"Cyril Jaquier, Steven Hiscocks, Yaroslav Halchenko\"\n__copyright__ = \"Copyright (c) 2004 Cyril Jaquier, 2008-2013 Fail2Ban Contributors\"\n__license__ = \"GPL\"\n\ntry:\n\timport setuptools\n\tfrom setuptools import setup\nexcept ImportError:\n\tsetuptools = None\n\tfrom distutils.core import setup\n\ntry:\n\t# python 3.x\n\tfrom distutils.command.build_py import build_py_2to3 as build_py\n\tfrom distutils.command.build_scripts \\\n\t\timport build_scripts_2to3 as build_scripts\nexcept ImportError:\n\t# python 2.x\n\tfrom distutils.command.build_py import build_py\n\tfrom distutils.command.build_scripts import build_scripts\nimport os\nfrom os.path import isfile, join, isdir\nimport sys, warnings\nfrom glob import glob\n\nif setuptools and \"test\" in sys.argv:\n\timport logging\n\tlogSys = logging.getLogger(\"fail2ban\")\n\thdlr = logging.StreamHandler(sys.stdout)\n\tfmt = 
logging.Formatter(\"%(asctime)-15s %(message)s\")\n\thdlr.setFormatter(fmt)\n\tlogSys.addHandler(hdlr)\n\tif set([\"-q\", \"--quiet\"]) & set(sys.argv):\n\t\tlogSys.setLevel(logging.CRITICAL)\n\t\twarnings.simplefilter(\"ignore\")\n\t\tsys.warnoptions.append(\"ignore\")\n\telif set([\"-v\", \"--verbose\"]) & set(sys.argv):\n\t\tlogSys.setLevel(logging.DEBUG)\n\telse:\n\t\tlogSys.setLevel(logging.INFO)\nelif \"test\" in sys.argv:\n\tprint(\"python distribute required to execute fail2ban tests\")\n\tprint(\"\")\n\nlongdesc = '''\nFail2Ban scans log files like /var/log/pwdfail or\n/var/log/apache/error_log and bans IP that makes\ntoo many password failures. It updates firewall rules\nto reject the IP address or executes user defined\ncommands.'''\n\nif setuptools:\n\tsetup_extra = {\n\t\t'test_suite': \"fail2ban.tests.utils.gatherTests\",\n\t\t'use_2to3': True,\n\t}\nelse:\n\tsetup_extra = {}\n\n# Get version number, avoiding importing fail2ban.\n# This is due to tests not functioning for python3 as 2to3 takes place later\nexec(open(join(\"fail2ban\", \"version.py\")).read())\n\nsetup(\n\tname = \"fail2ban\",\n\tversion = version,\n\tdescription = \"Ban IPs that make too many password failures\",\n\tlong_description = longdesc,\n\tauthor = \"Cyril Jaquier & Fail2Ban Contributors\",\n\tauthor_email = \"[email protected]\",\n\turl = \"http://www.fail2ban.org\",\n\tlicense = \"GPL\",\n\tplatforms = \"Posix\",\n\tcmdclass = {'build_py': build_py, 'build_scripts': build_scripts},\n\tscripts = [\n\t\t'bin/fail2ban-client',\n\t\t'bin/fail2ban-server',\n\t\t'bin/fail2ban-regex',\n\t\t'bin/fail2ban-testcases',\n\t],\n\tpackages = [\n\t\t'fail2ban',\n\t\t'fail2ban.client',\n\t\t'fail2ban.server',\n\t\t'fail2ban.tests',\n\t\t'fail2ban.tests.action_d',\n\t],\n\tpackage_data = {\n\t\t'fail2ban.tests':\n\t\t\t[ join(w[0], f).replace(\"fail2ban/tests/\", \"\", 1)\n\t\t\t\tfor w in os.walk('fail2ban/tests/files')\n\t\t\t\tfor f in w[2]] +\n\t\t\t[ join(w[0], 
f).replace(\"fail2ban/tests/\", \"\", 1)\n\t\t\t\tfor w in os.walk('fail2ban/tests/config')\n\t\t\t\tfor f in w[2]] +\n\t\t\t[ join(w[0], f).replace(\"fail2ban/tests/\", \"\", 1)\n\t\t\t\tfor w in os.walk('fail2ban/tests/action_d')\n\t\t\t\tfor f in w[2]]\n\t},\n\tdata_files = [\n\t\t('/etc/fail2ban',\n\t\t\tglob(\"config/*.conf\")\n\t\t),\n\t\t('/etc/fail2ban/filter.d',\n\t\t\tglob(\"config/filter.d/*.conf\")\n\t\t),\n\t\t('/etc/fail2ban/action.d',\n\t\t\tglob(\"config/action.d/*.conf\") +\n\t\t\tglob(\"config/action.d/*.py\")\n\t\t),\n\t\t('/etc/fail2ban/fail2ban.d',\n\t\t\t''\n\t\t),\n\t\t('/etc/fail2ban/jail.d',\n\t\t\t''\n\t\t),\n\t\t('/var/lib/fail2ban',\n\t\t\t''\n\t\t),\n\t\t('/usr/share/doc/fail2ban',\n\t\t\t['README.md', 'README.Solaris', 'DEVELOP', 'FILTERS',\n\t\t\t 'doc/run-rootless.txt']\n\t\t)\n\t],\n\t**setup_extra\n)\n\n# Do some checks after installation\n# Search for obsolete files.\nobsoleteFiles = []\nelements = {\n\t\"/etc/\":\n\t\t[\n\t\t\t\"fail2ban.conf\"\n\t\t],\n\t\"/usr/bin/\":\n\t\t[\n\t\t\t\"fail2ban.py\"\n\t\t],\n\t\"/usr/lib/fail2ban/\":\n\t\t[\n\t\t\t\"version.py\",\n\t\t\t\"protocol.py\"\n\t\t]\n}\n\nfor directory in elements:\n\tfor f in elements[directory]:\n\t\tpath = join(directory, f)\n\t\tif isfile(path):\n\t\t\tobsoleteFiles.append(path)\n\nif obsoleteFiles:\n\tprint(\"\")\n\tprint(\"Obsolete files from previous Fail2Ban versions were found on \"\n\t\t \"your system.\")\n\tprint(\"Please delete them:\")\n\tprint(\"\")\n\tfor f in obsoleteFiles:\n\t\tprint(\"\\t\" + f)\n\tprint(\"\")\n\nif isdir(\"/usr/lib/fail2ban\"):\n\tprint(\"\")\n\tprint(\"Fail2ban is not installed under /usr/lib anymore. The new \"\n\t\t \"location is under /usr/share. 
Please remove the directory \"\n\t\t \"/usr/lib/fail2ban and everything under this directory.\")\n\tprint(\"\")\n\n# Update config file\nif sys.argv[1] == \"install\":\n\tprint(\"\")\n\tprint(\"Please do not forget to update your configuration files.\")\n\tprint(\"They are in /etc/fail2ban/.\")\n\tprint(\"\")\n", "path": "setup.py"}, {"content": "# emacs: -*- mode: python; py-indent-offset: 4; indent-tabs-mode: t -*-\n# vi: set ft=python sts=4 ts=4 sw=4 noet :\n\n# This file is part of Fail2Ban.\n#\n# Fail2Ban is free software; you can redistribute it and/or modify\n# it under the terms of the GNU General Public License as published by\n# the Free Software Foundation; either version 2 of the License, or\n# (at your option) any later version.\n#\n# Fail2Ban is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with Fail2Ban; if not, write to the Free Software\n# Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.\n\n# Author: Cyril Jaquier\n# \n\n__author__ = \"Cyril Jaquier\"\n__copyright__ = \"Copyright (c) 2004 Cyril Jaquier\"\n__license__ = \"GPL\"\n\nimport os, shlex\n\nfrom .configreader import DefinitionInitConfigReader\nfrom ..server.action import CommandAction\nfrom ..helpers import getLogger\n\n# Gets the instance of the logger.\nlogSys = getLogger(__name__)\n\nclass FilterReader(DefinitionInitConfigReader):\n\n\t_configOpts = [\n\t\t[\"string\", \"ignoreregex\", None],\n\t\t[\"string\", \"failregex\", \"\"],\n\t]\n\n\tdef setFile(self, fileName):\n\t\tself.__file = fileName\n\t\tDefinitionInitConfigReader.setFile(self, os.path.join(\"filter.d\", fileName))\n\t\n\tdef getFile(self):\n\t\treturn self.__file\n\n\tdef getCombined(self):\n\t\tcombinedopts = 
dict(list(self._opts.items()) + list(self._initOpts.items()))\n\t\tif not len(combinedopts):\n\t\t\treturn {};\n\t\topts = CommandAction.substituteRecursiveTags(combinedopts)\n\t\tif not opts:\n\t\t\traise ValueError('recursive tag definitions unable to be resolved')\n\t\treturn opts;\n\t\n\tdef convert(self):\n\t\tstream = list()\n\t\topts = self.getCombined()\n\t\tif not len(opts):\n\t\t\treturn stream;\n\t\tfor opt, value in opts.iteritems():\n\t\t\tif opt == \"failregex\":\n\t\t\t\tfor regex in value.split('\\n'):\n\t\t\t\t\t# Do not send a command if the rule is empty.\n\t\t\t\t\tif regex != '':\n\t\t\t\t\t\tstream.append([\"set\", self._jailName, \"addfailregex\", regex])\n\t\t\telif opt == \"ignoreregex\":\n\t\t\t\tfor regex in value.split('\\n'):\n\t\t\t\t\t# Do not send a command if the rule is empty.\n\t\t\t\t\tif regex != '':\n\t\t\t\t\t\tstream.append([\"set\", self._jailName, \"addignoreregex\", regex])\t\t\n\t\tif self._initOpts:\n\t\t\tif 'maxlines' in self._initOpts:\n\t\t\t\t# We warn when multiline regex is used without maxlines > 1\n\t\t\t\t# therefore keep sure we set this option first.\n\t\t\t\tstream.insert(0, [\"set\", self._jailName, \"maxlines\", self._initOpts[\"maxlines\"]])\n\t\t\tif 'datepattern' in self._initOpts:\n\t\t\t\tstream.append([\"set\", self._jailName, \"datepattern\", self._initOpts[\"datepattern\"]])\n\t\t\t# Do not send a command if the match is empty.\n\t\t\tif self._initOpts.get(\"journalmatch\", '') != '':\n\t\t\t\tfor match in self._initOpts[\"journalmatch\"].split(\"\\n\"):\n\t\t\t\t\tstream.append(\n\t\t\t\t\t\t[\"set\", self._jailName, \"addjournalmatch\"] +\n shlex.split(match))\n\t\treturn stream\n\t\t\n", "path": "fail2ban/client/filterreader.py"}]}

| 3,782 | 287 |

gh_patches_debug_29390

|

rasdani/github-patches

|

git_diff

|

paperless-ngx__paperless-ngx-6302

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG ]At new splitting: "QuerySet' object has no attribute 'extend'" - Workflow adding a custom field on adding new document leads to the error message

### Description

When trying out the new splitting functionality I get the error message:

'QuerySet' object has no attribute 'extend'

A Workflow for adding a custom field on adding new document leads to the error message.

Anybody with the same issue?

### Steps to reproduce

1. Go to Documents

2. Edit Document

3. Split

4. Split Pages 1 and 2

5. Error message

### Webserver logs

```bash

[2024-04-06 11:18:26,873] [DEBUG] [paperless.tasks] Executing plugin WorkflowTriggerPlugin

[2024-04-06 11:18:26,905] [INFO] [paperless.matching] Document matched WorkflowTrigger 3 from Workflow: Benutzerdefinierte Felder hinzufügen

[2024-04-06 11:18:26,910] [ERROR] [paperless.tasks] WorkflowTriggerPlugin failed: 'QuerySet' object has no attribute 'extend'

Traceback (most recent call last):

File "/usr/src/paperless/src/documents/tasks.py", line 144, in consume_file

msg = plugin.run()

^^^^^^^^^^^^

File "/usr/src/paperless/src/documents/consumer.py", line 223, in run

self.metadata.update(overrides)

File "/usr/src/paperless/src/documents/data_models.py", line 64, in update

self.view_users.extend(other.view_users)

^^^^^^^^^^^^^^^^^^^^^^

AttributeError: 'QuerySet' object has no attribute 'extend'

```

### Browser logs

_No response_

### Paperless-ngx version

2.7.0

### Host OS

Docker on Synology NAS - DSM 7.2

### Installation method

Docker - official image

### Browser

Firefox

### Configuration changes

_No response_

### Other

_No response_

### Please confirm the following

- [X] I believe this issue is a bug that affects all users of Paperless-ngx, not something specific to my installation.

- [X] I have already searched for relevant existing issues and discussions before opening this report.

- [X] I have updated the title field above with a concise description.

</issue>
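The failure in the traceback above can be reproduced without Django. The sketch below is only an illustration under stated assumptions: `LazyIds` is a hypothetical stand-in for a lazily evaluated query result (iterable, but with no `extend` method), and `merge_ids` is a hypothetical helper, not part of Paperless-ngx. It shows one way a merge step could avoid depending on list-only methods; the result is sorted here purely to make the output deterministic.

```python
class LazyIds:
    """Hypothetical stand-in for a lazily evaluated query result.

    It can be iterated like a list but, like the object in the traceback,
    it has no ``extend`` method.
    """

    def __init__(self, ids):
        self._ids = tuple(ids)

    def __iter__(self):
        return iter(self._ids)


def merge_ids(current, incoming):
    """Merge two optional ID collections into a de-duplicated, sorted list.

    Only iteration is required of the inputs, so lists and lazy
    collections are handled the same way.
    """
    if current is None:
        return None if incoming is None else sorted(set(incoming))
    if incoming is None:
        return sorted(set(current))
    return sorted(set(current) | set(incoming))


lazy = LazyIds([3, 1])
print(hasattr(lazy, "extend"))   # the lazy object cannot be extended in place
print(merge_ids(lazy, [1, 2]))   # merging still works through plain iteration
```

Converting the lazy result to a `list` at the point where it is created would be an equally valid way to avoid the error.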

<code>

[start of src/documents/data_models.py]
1 import dataclasses
2 import datetime
3 from enum import IntEnum
4 from pathlib import Path
5 from typing import Optional
6
7 import magic
8 from guardian.shortcuts import get_groups_with_perms
9 from guardian.shortcuts import get_users_with_perms
10
11
12 @dataclasses.dataclass
13 class DocumentMetadataOverrides:
14     """
15     Manages overrides for document fields which normally would
16     be set from content or matching. All fields default to None,
17     meaning no override is happening
18     """
19
20     filename: Optional[str] = None
21     title: Optional[str] = None
22     correspondent_id: Optional[int] = None
23     document_type_id: Optional[int] = None
24     tag_ids: Optional[list[int]] = None
25     storage_path_id: Optional[int] = None
26     created: Optional[datetime.datetime] = None
27     asn: Optional[int] = None
28     owner_id: Optional[int] = None
29     view_users: Optional[list[int]] = None
30     view_groups: Optional[list[int]] = None
31     change_users: Optional[list[int]] = None
32     change_groups: Optional[list[int]] = None
33     custom_field_ids: Optional[list[int]] = None
34
35     def update(self, other: "DocumentMetadataOverrides") -> "DocumentMetadataOverrides":
36         """
37         Merges two DocumentMetadataOverrides objects such that object B's overrides
38         are applied to object A or merged if multiple are accepted.
39
40         The update is an in-place modification of self
41         """
42         # only if empty
43         if other.title is not None:
44             self.title = other.title
45         if other.correspondent_id is not None:
46             self.correspondent_id = other.correspondent_id
47         if other.document_type_id is not None:
48             self.document_type_id = other.document_type_id
49         if other.storage_path_id is not None:
50             self.storage_path_id = other.storage_path_id
51         if other.owner_id is not None:
52             self.owner_id = other.owner_id
53
54         # merge
55         if self.tag_ids is None:
56             self.tag_ids = other.tag_ids
57         elif other.tag_ids is not None:
58             self.tag_ids.extend(other.tag_ids)
59             self.tag_ids = list(set(self.tag_ids))
60
61         if self.view_users is None:
62             self.view_users = other.view_users
63         elif other.view_users is not None:
64             self.view_users.extend(other.view_users)
65             self.view_users = list(set(self.view_users))
66
67         if self.view_groups is None:
68             self.view_groups = other.view_groups
69         elif other.view_groups is not None:
70             self.view_groups.extend(other.view_groups)
71             self.view_groups = list(set(self.view_groups))
72
73         if self.change_users is None:
74             self.change_users = other.change_users
75         elif other.change_users is not None:
76             self.change_users.extend(other.change_users)
77             self.change_users = list(set(self.change_users))
78
79         if self.change_groups is None:
80             self.change_groups = other.change_groups
81         elif other.change_groups is not None:
82             self.change_groups.extend(other.change_groups)
83             self.change_groups = list(set(self.change_groups))
84
85         if self.custom_field_ids is None:
86             self.custom_field_ids = other.custom_field_ids
87         elif other.custom_field_ids is not None:
88             self.custom_field_ids.extend(other.custom_field_ids)
89             self.custom_field_ids = list(set(self.custom_field_ids))
90
91         return self
92
93     @staticmethod
94     def from_document(doc) -> "DocumentMetadataOverrides":
95         """
96         Fills in the overrides from a document object
97         """
98         overrides = DocumentMetadataOverrides()
99         overrides.title = doc.title
100         overrides.correspondent_id = doc.correspondent.id if doc.correspondent else None
101         overrides.document_type_id = doc.document_type.id if doc.document_type else None
102         overrides.storage_path_id = doc.storage_path.id if doc.storage_path else None
103         overrides.owner_id = doc.owner.id if doc.owner else None
104         overrides.tag_ids = list(doc.tags.values_list("id", flat=True))
105
106         overrides.view_users = get_users_with_perms(
107             doc,
108             only_with_perms_in=["view_document"],
109         ).values_list("id", flat=True)
110         overrides.change_users = get_users_with_perms(
111             doc,
112             only_with_perms_in=["change_document"],
113         ).values_list("id", flat=True)
114         overrides.custom_field_ids = list(
115             doc.custom_fields.values_list("id", flat=True),
116         )
117
118         groups_with_perms = get_groups_with_perms(
119             doc,
120             attach_perms=True,
121         )
122         overrides.view_groups = [
123             group.id for group, perms in groups_with_perms if "view_document" in perms
124         ]
125         overrides.change_groups = [
126             group.id for group, perms in groups_with_perms if "change_document" in perms
127         ]
128
129         return overrides
130
131
132 class DocumentSource(IntEnum):
133     """
134     The source of an incoming document. May have other uses in the future
135     """
136
137     ConsumeFolder = 1
138     ApiUpload = 2
139     MailFetch = 3
140
141
142 @dataclasses.dataclass
143 class ConsumableDocument:
144     """
145     Encapsulates an incoming document, either from consume folder, API upload
146     or mail fetching and certain useful operations on it.
147     """
148
149     source: DocumentSource
150     original_file: Path
151     mailrule_id: Optional[int] = None
152     mime_type: str = dataclasses.field(init=False, default=None)
153
154     def __post_init__(self):
155         """
156         After a dataclass is initialized, this is called to finalize some data
157         1. Make sure the original path is an absolute, fully qualified path
158         2. Get the mime type of the file
159         """
160         # Always fully qualify the path first thing
161         # Just in case, convert to a path if it's a str
162         self.original_file = Path(self.original_file).resolve()
163
164         # Get the file type once at init
165         # Note this function isn't called when the object is unpickled
166         self.mime_type = magic.from_file(self.original_file, mime=True)
167
[end of src/documents/data_models.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/src/documents/data_models.py b/src/documents/data_models.py
--- a/src/documents/data_models.py
+++ b/src/documents/data_models.py
@@ -103,14 +103,18 @@
         overrides.owner_id = doc.owner.id if doc.owner else None
         overrides.tag_ids = list(doc.tags.values_list("id", flat=True))
 
-        overrides.view_users = get_users_with_perms(
-            doc,
-            only_with_perms_in=["view_document"],
-        ).values_list("id", flat=True)
-        overrides.change_users = get_users_with_perms(
-            doc,
-            only_with_perms_in=["change_document"],
-        ).values_list("id", flat=True)
+        overrides.view_users = list(
+            get_users_with_perms(
+                doc,
+                only_with_perms_in=["view_document"],
+            ).values_list("id", flat=True),
+        )
+        overrides.change_users = list(
+            get_users_with_perms(
+                doc,
+                only_with_perms_in=["change_document"],
+            ).values_list("id", flat=True),
+        )
         overrides.custom_field_ids = list(
             doc.custom_fields.values_list("id", flat=True),
         )
@@ -120,10 +124,14 @@
             attach_perms=True,
         )
         overrides.view_groups = [
-            group.id for group, perms in groups_with_perms if "view_document" in perms
+            group.id
+            for group in groups_with_perms
+            if "view_document" in groups_with_perms[group]
         ]
         overrides.change_groups = [
-            group.id for group, perms in groups_with_perms if "change_document" in perms
+            group.id
+            for group in groups_with_perms
+            if "change_document" in groups_with_perms[group]
         ]
 
         return overrides

|

{"golden_diff": "diff --git a/src/documents/data_models.py b/src/documents/data_models.py\n--- a/src/documents/data_models.py\n+++ b/src/documents/data_models.py\n@@ -103,14 +103,18 @@\n overrides.owner_id = doc.owner.id if doc.owner else None\n overrides.tag_ids = list(doc.tags.values_list(\"id\", flat=True))\n \n- overrides.view_users = get_users_with_perms(\n- doc,\n- only_with_perms_in=[\"view_document\"],\n- ).values_list(\"id\", flat=True)\n- overrides.change_users = get_users_with_perms(\n- doc,\n- only_with_perms_in=[\"change_document\"],\n- ).values_list(\"id\", flat=True)\n+ overrides.view_users = list(\n+ get_users_with_perms(\n+ doc,\n+ only_with_perms_in=[\"view_document\"],\n+ ).values_list(\"id\", flat=True),\n+ )\n+ overrides.change_users = list(\n+ get_users_with_perms(\n+ doc,\n+ only_with_perms_in=[\"change_document\"],\n+ ).values_list(\"id\", flat=True),\n+ )\n overrides.custom_field_ids = list(\n doc.custom_fields.values_list(\"id\", flat=True),\n )\n@@ -120,10 +124,14 @@\n attach_perms=True,\n )\n overrides.view_groups = [\n- group.id for group, perms in groups_with_perms if \"view_document\" in perms\n+ group.id\n+ for group in groups_with_perms\n+ if \"view_document\" in groups_with_perms[group]\n ]\n overrides.change_groups = [\n- group.id for group, perms in groups_with_perms if \"change_document\" in perms\n+ group.id\n+ for group in groups_with_perms\n+ if \"change_document\" in groups_with_perms[group]\n ]\n \n return overrides\n", "issue": "[BUG ]At new splitting: \"QuerySet' object has no attribute 'extend'\" - Workflow adding a custom field on adding new document leads to the error message\n### Description\n\nWhen trying out the new splitting functionality I get the error message: \r\n\r\n 'QuerySet' object has no attribute 'extend'\r\n\r\nA Workflow for adding a custom field on adding new document leads to the error message.\r\n\r\nAnybody with the same issue?\r\n\n\n### Steps to reproduce\n\n1. Go to Documents\r\n2. 
Edit Document\r\n3. Split\r\n4. Split Pages 1 and 2\r\n5. Error message\n\n### Webserver logs\n\n```bash\n[2024-04-06 11:18:26,873] [DEBUG] [paperless.tasks] Executing plugin WorkflowTriggerPlugin\r\n\r\n[2024-04-06 11:18:26,905] [INFO] [paperless.matching] Document matched WorkflowTrigger 3 from Workflow: Benutzerdefinierte Felder hinzuf\u00fcgen\r\n\r\n[2024-04-06 11:18:26,910] [ERROR] [paperless.tasks] WorkflowTriggerPlugin failed: 'QuerySet' object has no attribute 'extend'\r\n\r\nTraceback (most recent call last):\r\n\r\n File \"/usr/src/paperless/src/documents/tasks.py\", line 144, in consume_file\r\n\r\n msg = plugin.run()\r\n\r\n ^^^^^^^^^^^^\r\n\r\n File \"/usr/src/paperless/src/documents/consumer.py\", line 223, in run\r\n\r\n self.metadata.update(overrides)\r\n\r\n File \"/usr/src/paperless/src/documents/data_models.py\", line 64, in update\r\n\r\n self.view_users.extend(other.view_users)\r\n\r\n ^^^^^^^^^^^^^^^^^^^^^^\r\n\r\nAttributeError: 'QuerySet' object has no attribute 'extend'\n```\n\n\n### Browser logs\n\n_No response_\n\n### Paperless-ngx version\n\n2.7.0\n\n### Host OS\n\nDocker on Synology NAS - DSM 7.2\n\n### Installation method\n\nDocker - official image\n\n### Browser\n\nFirefox\n\n### Configuration changes\n\n_No response_\n\n### Other\n\n_No response_\n\n### Please confirm the following\n\n- [X] I believe this issue is a bug that affects all users of Paperless-ngx, not something specific to my installation.\n- [X] I have already searched for relevant existing issues and discussions before opening this report.\n- [X] I have updated the title field above with a concise description.\n", "before_files": [{"content": "import dataclasses\nimport datetime\nfrom enum import IntEnum\nfrom pathlib import Path\nfrom typing import Optional\n\nimport magic\nfrom guardian.shortcuts import get_groups_with_perms\nfrom guardian.shortcuts import get_users_with_perms\n\n\n@dataclasses.dataclass\nclass DocumentMetadataOverrides:\n \"\"\"\n Manages overrides for 
document fields which normally would\n be set from content or matching. All fields default to None,\n meaning no override is happening\n \"\"\"\n\n filename: Optional[str] = None\n title: Optional[str] = None\n correspondent_id: Optional[int] = None\n document_type_id: Optional[int] = None\n tag_ids: Optional[list[int]] = None\n storage_path_id: Optional[int] = None\n created: Optional[datetime.datetime] = None\n asn: Optional[int] = None\n owner_id: Optional[int] = None\n view_users: Optional[list[int]] = None\n view_groups: Optional[list[int]] = None\n change_users: Optional[list[int]] = None\n change_groups: Optional[list[int]] = None\n custom_field_ids: Optional[list[int]] = None\n\n def update(self, other: \"DocumentMetadataOverrides\") -> \"DocumentMetadataOverrides\":\n \"\"\"\n Merges two DocumentMetadataOverrides objects such that object B's overrides\n are applied to object A or merged if multiple are accepted.\n\n The update is an in-place modification of self\n \"\"\"\n # only if empty\n if other.title is not None:\n self.title = other.title\n if other.correspondent_id is not None:\n self.correspondent_id = other.correspondent_id\n if other.document_type_id is not None:\n self.document_type_id = other.document_type_id\n if other.storage_path_id is not None:\n self.storage_path_id = other.storage_path_id\n if other.owner_id is not None:\n self.owner_id = other.owner_id\n\n # merge\n if self.tag_ids is None:\n self.tag_ids = other.tag_ids\n elif other.tag_ids is not None:\n self.tag_ids.extend(other.tag_ids)\n self.tag_ids = list(set(self.tag_ids))\n\n if self.view_users is None:\n self.view_users = other.view_users\n elif other.view_users is not None:\n self.view_users.extend(other.view_users)\n self.view_users = list(set(self.view_users))\n\n if self.view_groups is None:\n self.view_groups = other.view_groups\n elif other.view_groups is not None:\n self.view_groups.extend(other.view_groups)\n self.view_groups = list(set(self.view_groups))\n\n if 
self.change_users is None:\n self.change_users = other.change_users\n elif other.change_users is not None:\n self.change_users.extend(other.change_users)\n self.change_users = list(set(self.change_users))\n\n if self.change_groups is None:\n self.change_groups = other.change_groups\n elif other.change_groups is not None:\n self.change_groups.extend(other.change_groups)\n self.change_groups = list(set(self.change_groups))\n\n if self.custom_field_ids is None:\n self.custom_field_ids = other.custom_field_ids\n elif other.custom_field_ids is not None:\n self.custom_field_ids.extend(other.custom_field_ids)\n self.custom_field_ids = list(set(self.custom_field_ids))\n\n return self\n\n @staticmethod\n def from_document(doc) -> \"DocumentMetadataOverrides\":\n \"\"\"\n Fills in the overrides from a document object\n \"\"\"\n overrides = DocumentMetadataOverrides()\n overrides.title = doc.title\n overrides.correspondent_id = doc.correspondent.id if doc.correspondent else None\n overrides.document_type_id = doc.document_type.id if doc.document_type else None\n overrides.storage_path_id = doc.storage_path.id if doc.storage_path else None\n overrides.owner_id = doc.owner.id if doc.owner else None\n overrides.tag_ids = list(doc.tags.values_list(\"id\", flat=True))\n\n overrides.view_users = get_users_with_perms(\n doc,\n only_with_perms_in=[\"view_document\"],\n ).values_list(\"id\", flat=True)\n overrides.change_users = get_users_with_perms(\n doc,\n only_with_perms_in=[\"change_document\"],\n ).values_list(\"id\", flat=True)\n overrides.custom_field_ids = list(\n doc.custom_fields.values_list(\"id\", flat=True),\n )\n\n groups_with_perms = get_groups_with_perms(\n doc,\n attach_perms=True,\n )\n overrides.view_groups = [\n group.id for group, perms in groups_with_perms if \"view_document\" in perms\n ]\n overrides.change_groups = [\n group.id for group, perms in groups_with_perms if \"change_document\" in perms\n ]\n\n return overrides\n\n\nclass DocumentSource(IntEnum):\n 
\"\"\"\n The source of an incoming document. May have other uses in the future\n \"\"\"\n\n ConsumeFolder = 1\n ApiUpload = 2\n MailFetch = 3\n\n\[email protected]\nclass ConsumableDocument:\n \"\"\"\n Encapsulates an incoming document, either from consume folder, API upload\n or mail fetching and certain useful operations on it.\n \"\"\"\n\n source: DocumentSource\n original_file: Path\n mailrule_id: Optional[int] = None\n mime_type: str = dataclasses.field(init=False, default=None)\n\n def __post_init__(self):\n \"\"\"\n After a dataclass is initialized, this is called to finalize some data\n 1. Make sure the original path is an absolute, fully qualified path\n 2. Get the mime type of the file\n \"\"\"\n # Always fully qualify the path first thing\n # Just in case, convert to a path if it's a str\n self.original_file = Path(self.original_file).resolve()\n\n # Get the file type once at init\n # Note this function isn't called when the object is unpickled\n self.mime_type = magic.from_file(self.original_file, mime=True)\n", "path": "src/documents/data_models.py"}]}

| 2,749 | 397 |

gh_patches_debug_649

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-1997

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Release 2.1.116

On the docket:

+ [x] The --resolve-local-platforms option does not work with --complete-platforms #1899

</issue>

<code>

[start of pex/version.py]
1 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
2 # Licensed under the Apache License, Version 2.0 (see LICENSE).
3
4 __version__ = "2.1.115"
5
[end of pex/version.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/pex/version.py b/pex/version.py
--- a/pex/version.py
+++ b/pex/version.py
@@ -1,4 +1,4 @@
 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
 # Licensed under the Apache License, Version 2.0 (see LICENSE).
 
-__version__ = "2.1.115"
+__version__ = "2.1.116"

|

{"golden_diff": "diff --git a/pex/version.py b/pex/version.py\n--- a/pex/version.py\n+++ b/pex/version.py\n@@ -1,4 +1,4 @@\n # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n # Licensed under the Apache License, Version 2.0 (see LICENSE).\n \n-__version__ = \"2.1.115\"\n+__version__ = \"2.1.116\"\n", "issue": "Release 2.1.116\nOn the docket:\r\n+ [x] The --resolve-local-platforms option does not work with --complete-platforms #1899\n", "before_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = \"2.1.115\"\n", "path": "pex/version.py"}]}

| 623 | 98 |

gh_patches_debug_14330

|

rasdani/github-patches

|

git_diff

|

pyca__cryptography-5825

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Version Scheme Change

We've talked on and off for quite a few years about our versioning scheme for `cryptography`, but #5771 made it very clear that despite our [versioning documentation](https://cryptography.io/en/latest/api-stability.html#versioning) some users still assume it's [SemVer](https://semver.org) and are caught off guard by changes. I propose that we **switch to a [CalVer](https://calver.org) scheme** for the thirty fifth feature release (which we currently would call 3.5). This has the advantage of being unambiguously not semantic versioning but encoding some vaguely useful information (the year of release) in it.

### Alternate Choices

**Stay the course**

We've successfully used our versioning scheme for a bit over 7 years now and the probability of another monumental shift like this is low. There is, however, a constant (but low) background radiation of people who are tripped up by making assumptions about our versioning so I would argue against this choice.

**SemVer**

Switch to using an actual semantic versioning scheme. Without re-litigating years of conversations, I don't believe either @alex or myself are interested in this option. (See https://github.com/pyca/cryptography/issues/5801#issuecomment-776067787 for a bit of elaboration on our position)

**Firefox/Chrome Versioning**

(From @alex)

"Its merits are that it's technically semver compatible, it's fairly predictable, and it communicates at least a little info on the relative age of the project.

Its demerits are that it probably won't solve the real problem here, which was that folks were surprised a major change happened at all."

</issue>

<code>

[start of src/cryptography/__about__.py]
1 # This file is dual licensed under the terms of the Apache License, Version
2 # 2.0, and the BSD License. See the LICENSE file in the root of this repository
3 # for complete details.
4
5
6 __all__ = [
7     "__title__",
8     "__summary__",
9     "__uri__",
10     "__version__",
11     "__author__",
12     "__email__",
13     "__license__",
14     "__copyright__",
15 ]
16
17 __title__ = "cryptography"
18 __summary__ = (
19     "cryptography is a package which provides cryptographic recipes"
20     " and primitives to Python developers."
21 )
22 __uri__ = "https://github.com/pyca/cryptography"
23
24 __version__ = "3.5.dev1"
25
26 __author__ = "The Python Cryptographic Authority and individual contributors"
27 __email__ = "[email protected]"
28
29 __license__ = "BSD or Apache License, Version 2.0"
30 __copyright__ = "Copyright 2013-2021 {}".format(__author__)
31
[end of src/cryptography/__about__.py]
[start of vectors/cryptography_vectors/__about__.py]
1 # This file is dual licensed under the terms of the Apache License, Version
2 # 2.0, and the BSD License. See the LICENSE file in the root of this repository
3 # for complete details.
4
5 __all__ = [
6     "__title__",
7     "__summary__",
8     "__uri__",
9     "__version__",
10     "__author__",
11     "__email__",
12     "__license__",
13     "__copyright__",
14 ]
15
16 __title__ = "cryptography_vectors"
17 __summary__ = "Test vectors for the cryptography package."
18
19 __uri__ = "https://github.com/pyca/cryptography"
20
21 __version__ = "3.5.dev1"
22
23 __author__ = "The Python Cryptographic Authority and individual contributors"
24 __email__ = "[email protected]"
25
26 __license__ = "BSD or Apache License, Version 2.0"
27 __copyright__ = "Copyright 2013-2021 %s" % __author__
28
[end of vectors/cryptography_vectors/__about__.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/src/cryptography/__about__.py b/src/cryptography/__about__.py
--- a/src/cryptography/__about__.py
+++ b/src/cryptography/__about__.py
@@ -21,7 +21,7 @@
 )
 __uri__ = "https://github.com/pyca/cryptography"
 
-__version__ = "3.5.dev1"
+__version__ = "35.0.0.dev1"
 
 __author__ = "The Python Cryptographic Authority and individual contributors"
 __email__ = "[email protected]"
diff --git a/vectors/cryptography_vectors/__about__.py b/vectors/cryptography_vectors/__about__.py
--- a/vectors/cryptography_vectors/__about__.py
+++ b/vectors/cryptography_vectors/__about__.py
@@ -18,7 +18,7 @@
 
 __uri__ = "https://github.com/pyca/cryptography"
 
-__version__ = "3.5.dev1"
+__version__ = "35.0.0.dev1"
 
 __author__ = "The Python Cryptographic Authority and individual contributors"
 __email__ = "[email protected]"

|

{"golden_diff": "diff --git a/src/cryptography/__about__.py b/src/cryptography/__about__.py\n--- a/src/cryptography/__about__.py\n+++ b/src/cryptography/__about__.py\n@@ -21,7 +21,7 @@\n )\n __uri__ = \"https://github.com/pyca/cryptography\"\n \n-__version__ = \"3.5.dev1\"\n+__version__ = \"35.0.0.dev1\"\n \n __author__ = \"The Python Cryptographic Authority and individual contributors\"\n __email__ = \"[email protected]\"\ndiff --git a/vectors/cryptography_vectors/__about__.py b/vectors/cryptography_vectors/__about__.py\n--- a/vectors/cryptography_vectors/__about__.py\n+++ b/vectors/cryptography_vectors/__about__.py\n@@ -18,7 +18,7 @@\n \n __uri__ = \"https://github.com/pyca/cryptography\"\n \n-__version__ = \"3.5.dev1\"\n+__version__ = \"35.0.0.dev1\"\n \n __author__ = \"The Python Cryptographic Authority and individual contributors\"\n __email__ = \"[email protected]\"\n", "issue": "Version Scheme Change\nWe've talked on and off for quite a few years about our versioning scheme for `cryptography`, but #5771 made it very clear that despite our [versioning documentation](https://cryptography.io/en/latest/api-stability.html#versioning) some users still assume it's [SemVer](https://semver.org) and are caught off guard by changes. I propose that we **switch to a [CalVer](https://calver.org) scheme** for the thirty fifth feature release (which we currently would call 3.5). This has the advantage of being unambiguously not semantic versioning but encoding some vaguely useful information (the year of release) in it.\r\n\r\n### Alternate Choices\r\n**Stay the course**\r\nWe've successfully used our versioning scheme for a bit over 7 years now and the probability of another monumental shift like this is low. There is, however, a constant (but low) background radiation of people who are tripped up by making assumptions about our versioning so I would argue against this choice.\r\n\r\n**SemVer**\r\nSwitch to using an actual semantic versioning scheme. 
Without re-litigating years of conversations, I don't believe either @alex or myself are interested in this option. (See https://github.com/pyca/cryptography/issues/5801#issuecomment-776067787 for a bit of elaboration on our position)\r\n\r\n**Firefox/Chrome Versioning**\r\n(From @alex)\r\n\"Its merits are that it's technically semver compatible, it's fairly predictable, and it communicates at least a little info on the relative age of the project.\r\n\r\nIts demerits are that it probably won't solve the real problem here, which was that folks were surprised a major change happened at all.\"\n", "before_files": [{"content": "# This file is dual licensed under the terms of the Apache License, Version\n# 2.0, and the BSD License. See the LICENSE file in the root of this repository\n# for complete details.\n\n\n__all__ = [\n \"__title__\",\n \"__summary__\",\n \"__uri__\",\n \"__version__\",\n \"__author__\",\n \"__email__\",\n \"__license__\",\n \"__copyright__\",\n]\n\n__title__ = \"cryptography\"\n__summary__ = (\n \"cryptography is a package which provides cryptographic recipes\"\n \" and primitives to Python developers.\"\n)\n__uri__ = \"https://github.com/pyca/cryptography\"\n\n__version__ = \"3.5.dev1\"\n\n__author__ = \"The Python Cryptographic Authority and individual contributors\"\n__email__ = \"[email protected]\"\n\n__license__ = \"BSD or Apache License, Version 2.0\"\n__copyright__ = \"Copyright 2013-2021 {}\".format(__author__)\n", "path": "src/cryptography/__about__.py"}, {"content": "# This file is dual licensed under the terms of the Apache License, Version\n# 2.0, and the BSD License. 
See the LICENSE file in the root of this repository\n# for complete details.\n\n__all__ = [\n \"__title__\",\n \"__summary__\",\n \"__uri__\",\n \"__version__\",\n \"__author__\",\n \"__email__\",\n \"__license__\",\n \"__copyright__\",\n]\n\n__title__ = \"cryptography_vectors\"\n__summary__ = \"Test vectors for the cryptography package.\"\n\n__uri__ = \"https://github.com/pyca/cryptography\"\n\n__version__ = \"3.5.dev1\"\n\n__author__ = \"The Python Cryptographic Authority and individual contributors\"\n__email__ = \"[email protected]\"\n\n__license__ = \"BSD or Apache License, Version 2.0\"\n__copyright__ = \"Copyright 2013-2021 %s\" % __author__\n", "path": "vectors/cryptography_vectors/__about__.py"}]}

| 1,457 | 252 |

gh_patches_debug_4829

|

rasdani/github-patches

|

git_diff

|

archlinux__archinstall-2178

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Reset in locales menu causes crash

Using reset in the _Locales_ menu will cause a crash.

```

Traceback (most recent call last):

File "/home/scripttest/archinstall/.venv/bin/archinstall", line 8, in <module>

sys.exit(run_as_a_module())

^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/__init__.py", line 291, in run_as_a_module

importlib.import_module(mod_name)

File "/usr/lib/python3.11/importlib/__init__.py", line 126, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "<frozen importlib._bootstrap>", line 1204, in _gcd_import

File "<frozen importlib._bootstrap>", line 1176, in _find_and_load

File "<frozen importlib._bootstrap>", line 1147, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 690, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 940, in exec_module

File "<frozen importlib._bootstrap>", line 241, in _call_with_frames_removed

File "/home/scripttest/archinstall/archinstall/scripts/guided.py", line 234, in <module>

ask_user_questions()

File "/home/scripttest/archinstall/archinstall/scripts/guided.py", line 99, in ask_user_questions

global_menu.run()

File "/home/scripttest/archinstall/archinstall/lib/menu/abstract_menu.py", line 348, in run

if not self._process_selection(value):

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/lib/menu/abstract_menu.py", line 365, in _process_selection

return self.exec_option(config_name, selector)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/lib/menu/abstract_menu.py", line 386, in exec_option

result = selector.func(presel_val)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/lib/global_menu.py", line 53, in <lambda>

lambda preset: self._locale_selection(preset),

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/lib/global_menu.py", line 246, in _locale_selection

locale_config = LocaleMenu(data_store, preset).run()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/scripttest/archinstall/archinstall/lib/locale/locale_menu.py", line 84, in run

self._data_store['keyboard-layout'],

~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

KeyError: 'keyboard-layout'

```
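The traceback suggests the data store no longer holds the `keyboard-layout` key by the time `run()` reads it. A minimal sketch of a guard follows, assuming that reset leaves the data store empty (an assumption, not confirmed by the issue text). `build_locale_config` is a hypothetical helper for illustration only and not part of archinstall; `LocaleConfiguration` is reduced to the fields shown in the listing.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class LocaleConfiguration:
    # Reduced copy of the dataclass from locale_menu.py, for illustration.
    kb_layout: str
    sys_lang: str
    sys_enc: str

    @staticmethod
    def default() -> "LocaleConfiguration":
        return LocaleConfiguration("us", "en_US", "UTF-8")


def build_locale_config(data_store: Dict[str, Any]) -> LocaleConfiguration:
    # Hypothetical helper (not in archinstall): read each key with a
    # fallback to the defaults instead of indexing the dict directly,
    # so a missing key no longer raises KeyError.
    defaults = LocaleConfiguration.default()
    return LocaleConfiguration(
        data_store.get("keyboard-layout", defaults.kb_layout),
        data_store.get("sys-language", defaults.sys_lang),
        data_store.get("sys-encoding", defaults.sys_enc),
    )
```

With an empty data store this returns the default configuration rather than crashing. Whether falling back to defaults is the right behaviour after a reset is a design decision for the maintainers.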

</issue>

<code>

[start of archinstall/lib/locale/locale_menu.py]

1 from dataclasses import dataclass

2 from typing import Dict, Any, TYPE_CHECKING, Optional

3

4 from .utils import list_keyboard_languages, list_locales, set_kb_layout

5 from ..menu import Selector, AbstractSubMenu, MenuSelectionType, Menu

6

7 if TYPE_CHECKING:

8 _: Any

9

10

11 @dataclass

12 class LocaleConfiguration:

13 kb_layout: str

14 sys_lang: str

15 sys_enc: str

16

17 @staticmethod

18 def default() -> 'LocaleConfiguration':

19 return LocaleConfiguration('us', 'en_US', 'UTF-8')

20

21 def json(self) -> Dict[str, str]:

22 return {

23 'kb_layout': self.kb_layout,

24 'sys_lang': self.sys_lang,

25 'sys_enc': self.sys_enc

26 }

27

28 @classmethod

29 def _load_config(cls, config: 'LocaleConfiguration', args: Dict[str, Any]) -> 'LocaleConfiguration':

30 if 'sys_lang' in args:

31 config.sys_lang = args['sys_lang']

32 if 'sys_enc' in args:

33 config.sys_enc = args['sys_enc']

34 if 'kb_layout' in args:

35 config.kb_layout = args['kb_layout']

36

37 return config

38

39 @classmethod

40 def parse_arg(cls, args: Dict[str, Any]) -> 'LocaleConfiguration':

41 default = cls.default()

42

43 if 'locale_config' in args:

44 default = cls._load_config(default, args['locale_config'])

45 else:

46 default = cls._load_config(default, args)

47

48 return default

49

50

51 class LocaleMenu(AbstractSubMenu):

52 def __init__(

53 self,

54 data_store: Dict[str, Any],

55 locale_conf: LocaleConfiguration

56 ):

57 self._preset = locale_conf

58 super().__init__(data_store=data_store)

59

60 def setup_selection_menu_options(self):

61 self._menu_options['keyboard-layout'] = \

62 Selector(

63 _('Keyboard layout'),

64 lambda preset: self._select_kb_layout(preset),

65 default=self._preset.kb_layout,

66 enabled=True)

67 self._menu_options['sys-language'] = \

68 Selector(

69 _('Locale language'),

70 lambda preset: select_locale_lang(preset),

71 default=self._preset.sys_lang,

72 enabled=True)

73 self._menu_options['sys-encoding'] = \

74 Selector(

75 _('Locale encoding'),

76 lambda preset: select_locale_enc(preset),

77 default=self._preset.sys_enc,

78 enabled=True)

79

80 def run(self, allow_reset: bool = True) -> LocaleConfiguration:

81 super().run(allow_reset=allow_reset)

82

83 return LocaleConfiguration(

84 self._data_store['keyboard-layout'],

85 self._data_store['sys-language'],

86 self._data_store['sys-encoding']

87 )

88

89 def _select_kb_layout(self, preset: Optional[str]) -> Optional[str]:

90 kb_lang = select_kb_layout(preset)

91 if kb_lang:

92 set_kb_layout(kb_lang)

93 return kb_lang

94

95

96 def select_locale_lang(preset: Optional[str] = None) -> Optional[str]:

97 locales = list_locales()

98 locale_lang = set([locale.split()[0] for locale in locales])

99

100 choice = Menu(

101 _('Choose which locale language to use'),

102 list(locale_lang),

103 sort=True,

104 preset_values=preset

105 ).run()

106

107 match choice.type_:

108 case MenuSelectionType.Selection: return choice.single_value

109 case MenuSelectionType.Skip: return preset

110

111 return None

112

113

114 def select_locale_enc(preset: Optional[str] = None) -> Optional[str]:

115 locales = list_locales()

116 locale_enc = set([locale.split()[1] for locale in locales])

117

118 choice = Menu(

119 _('Choose which locale encoding to use'),

120 list(locale_enc),

121 sort=True,

122 preset_values=preset

123 ).run()

124

125 match choice.type_:

126 case MenuSelectionType.Selection: return choice.single_value

127 case MenuSelectionType.Skip: return preset

128

129 return None

130

131

132 def select_kb_layout(preset: Optional[str] = None) -> Optional[str]:

133 """

134 Asks the user to select a language

135 Usually this is combined with :ref:`archinstall.list_keyboard_languages`.

136

137 :return: The language/dictionary key of the selected language

138 :rtype: str

139 """

140 kb_lang = list_keyboard_languages()

141 # sort alphabetically and then by length