| problem_id (string, 18 to 22 chars) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, 13 to 58 chars) | prompt (string, 1.71k to 18.9k chars) | golden_diff (string, 145 to 5.13k chars) | verification_info (string, 465 to 23.6k chars) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_3412

|

rasdani/github-patches

|

git_diff

|

dynaconf__dynaconf-767

|

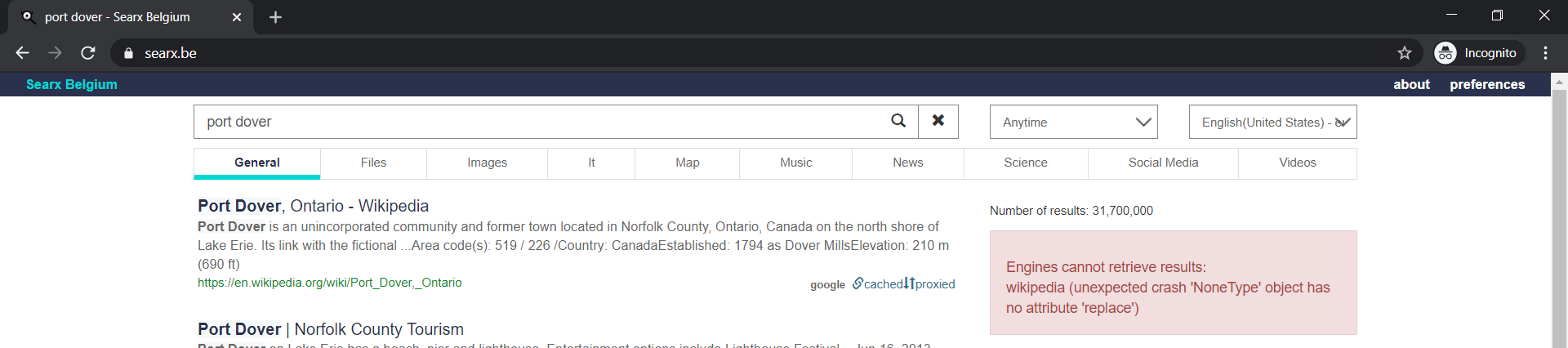

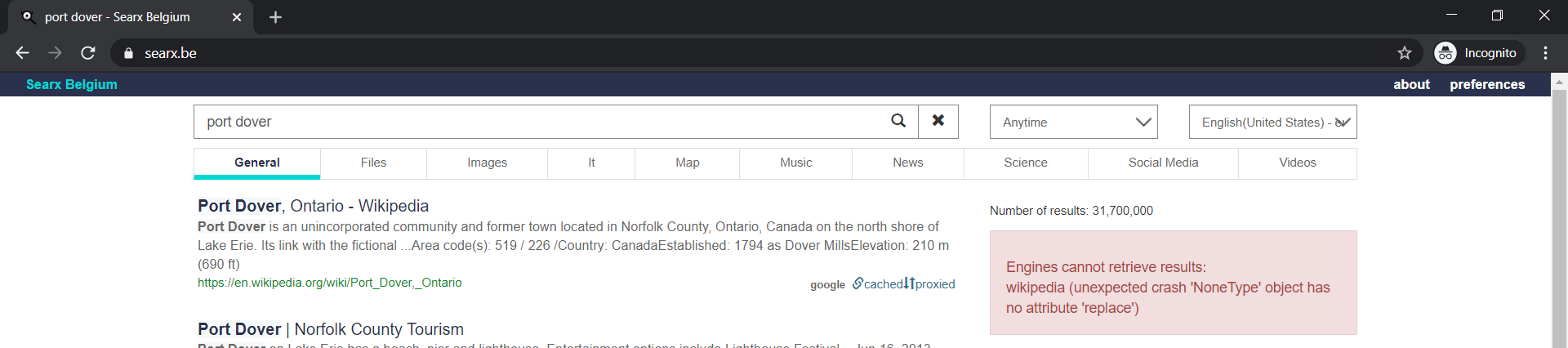

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[bug] filter_strategy config not working

**Describe the bug**

It seems that the `filter_strategy` config (which, by the way, is misspelled in the [docs](https://github.com/rochacbruno/dynaconf/blob/master/docs/configuration.md)) is not taken into account when passed to the `Dynaconf` constructor.

**To Reproduce**

Steps to reproduce the behavior:

1. Having the following folder structure

Just a plain python script.

2. Having the following config files:

No config file, just using env variables

4. Having the following app code:

<details>

<summary> Code </summary>

**/test/test.py**

```python
import dynaconf


class CustomFilter:
    def __call__(self, data):
        print("this is never called")
        return {
            k: v
            for k, v in data.items()
            if k.startswith("PREFIX")
        }


if __name__ == "__main__":
    dc = dynaconf.Dynaconf(
        envvar_prefix=False,
        filter_strategy=CustomFilter(),
    )
    print(dc.as_dict())
```

</details>

5. Executing under the following environment

<details>

<summary> Execution </summary>

```bash
PREFIX_VAR="HELLO" OTHER_VAR="WORLD" python test.py
```

</details>

**Expected behavior**

`CustomFilter` should be called ("this is never called" should be displayed) and only the `PREFIX_VAR` should be in dict, not `OTHER_VAR`

**Environment (please complete the following information):**

- OS: Linux version 5.10.60.1-microsoft-standard-WSL2

- Dynaconf Version 3.1.9

- Framework: None

**Context**

I was looking for a way to filter out empty environment variables.

</issue>

<code>

[start of dynaconf/loaders/env_loader.py]

1 from __future__ import annotations
2
3 from os import environ
4
5 from dynaconf.utils import missing
6 from dynaconf.utils import upperfy
7 from dynaconf.utils.parse_conf import parse_conf_data
8 from dynaconf.vendor.dotenv import cli as dotenv_cli
9
10
11 IDENTIFIER = "env"
12
13
14 def load(obj, env=None, silent=True, key=None):
15     """Loads envvars with prefixes:
16
17     `DYNACONF_` (default global) or `$(ENVVAR_PREFIX_FOR_DYNACONF)_`
18     """
19     global_prefix = obj.get("ENVVAR_PREFIX_FOR_DYNACONF")
20     if global_prefix is False or global_prefix.upper() != "DYNACONF":
21         load_from_env(obj, "DYNACONF", key, silent, IDENTIFIER + "_global")
22
23     # Load the global env if exists and overwrite everything
24     load_from_env(obj, global_prefix, key, silent, IDENTIFIER + "_global")
25
26
27 def load_from_env(
28     obj,
29     prefix=False,
30     key=None,
31     silent=False,
32     identifier=IDENTIFIER,
33     env=False,  # backwards compatibility bc renamed param
34 ):
35     if prefix is False and env is not False:
36         prefix = env
37
38     env_ = ""
39     if prefix is not False:
40         if not isinstance(prefix, str):
41             raise TypeError("`prefix/env` must be str or False")
42
43         prefix = prefix.upper()
44         env_ = f"{prefix}_"
45
46     # Load a single environment variable explicitly.
47     if key:
48         key = upperfy(key)
49         value = environ.get(f"{env_}{key}")
50         if value:
51             try:  # obj is a Settings
52                 obj.set(key, value, loader_identifier=identifier, tomlfy=True)
53             except AttributeError:  # obj is a dict
54                 obj[key] = parse_conf_data(
55                     value, tomlfy=True, box_settings=obj
56                 )
57
58     # Load environment variables in bulk (when matching).
59     else:
60         # Only known variables should be loaded from environment?
61         ignore_unknown = obj.get("IGNORE_UNKNOWN_ENVVARS_FOR_DYNACONF")
62
63         trim_len = len(env_)
64         data = {
65             key[trim_len:]: parse_conf_data(
66                 data, tomlfy=True, box_settings=obj
67             )
68             for key, data in environ.items()
69             if key.startswith(env_)
70             and not (
71                 # Ignore environment variables that haven't been
72                 # pre-defined in settings space.
73                 ignore_unknown
74                 and obj.get(key[trim_len:], default=missing) is missing
75             )
76         }
77         # Update the settings space based on gathered data from environment.
78         if data:
79             obj.update(data, loader_identifier=identifier)
80
81
82 def write(settings_path, settings_data, **kwargs):
83     """Write data to .env file"""
84     for key, value in settings_data.items():
85         quote_mode = (
86             isinstance(value, str)
87             and (value.startswith("'") or value.startswith('"'))
88         ) or isinstance(value, (list, dict))
89         dotenv_cli.set_key(
90             str(settings_path),
91             key,
92             str(value),
93             quote_mode="always" if quote_mode else "none",
94         )
95

[end of dynaconf/loaders/env_loader.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/dynaconf/loaders/env_loader.py b/dynaconf/loaders/env_loader.py
--- a/dynaconf/loaders/env_loader.py
+++ b/dynaconf/loaders/env_loader.py
@@ -76,6 +76,9 @@
         }
         # Update the settings space based on gathered data from environment.
         if data:
+            filter_strategy = obj.get("FILTER_STRATEGY")
+            if filter_strategy:
+                data = filter_strategy(data)
             obj.update(data, loader_identifier=identifier)
 
 

|

{"golden_diff": "diff --git a/dynaconf/loaders/env_loader.py b/dynaconf/loaders/env_loader.py\n--- a/dynaconf/loaders/env_loader.py\n+++ b/dynaconf/loaders/env_loader.py\n@@ -76,6 +76,9 @@\n }\n # Update the settings space based on gathered data from environment.\n if data:\n+ filter_strategy = obj.get(\"FILTER_STRATEGY\")\n+ if filter_strategy:\n+ data = filter_strategy(data)\n obj.update(data, loader_identifier=identifier)\n", "issue": "[bug] filter_strategy config not working\n**Describe the bug**\r\nIt seems that the `filter_strategy` config (which by the way is misspelled int the [docs](https://github.com/rochacbruno/dynaconf/blob/master/docs/configuration.md)) is not taken into account when used in the `Dynaconf` constructor.\r\n\r\n**To Reproduce**\r\nSteps to reproduce the behavior:\r\n\r\n1. Having the following folder structure\r\nJust a plain python script.\r\n\r\n2. Having the following config files:\r\nNo config file, just using env variables\r\n\r\n4. Having the following app code:\r\n\r\n<details>\r\n<summary> Code </summary>\r\n\r\n**/test/test.py**\r\n```python\r\nimport dynaconf\r\n\r\nclass CustomFilter:\r\n def __call__(self, data):\r\n print(\"this is never called\")\r\n return {\r\n k: v\r\n for k, v in data.items()\r\n if k.startswith(\"PREFIX\")\r\n }\r\n\r\n\r\nif __name__ == \"__main__\":\r\n dc = dynaconf.Dynaconf(\r\n envvar_prefix=False,\r\n filter_strategy=CustomFilter(),\r\n )\r\n print(dc.as_dict())\r\n```\r\n\r\n</details>\r\n\r\n5. 
Executing under the following environment\r\n\r\n<details>\r\n<summary> Execution </summary>\r\n\r\n```bash\r\nPREFIX_VAR=\"HELLO\" OTHER_VAR=\"WORLD\" python test.py\r\n```\r\n\r\n</details>\r\n\r\n**Expected behavior**\r\n`CustomFilter` should be called (\"this is never called\" should be displayed) and only the `PREFIX_VAR` should be in dict, not `OTHER_VAR`\r\n\r\n**Environment (please complete the following information):**\r\n - OS: Linux version 5.10.60.1-microsoft-standard-WSL2 \r\n - Dynaconf Version 3.1.9\r\n - Framework: None\r\n\r\n**Context**\r\n\r\nI was looking for a way to filter out empty environment variables.\r\n\n", "before_files": [{"content": "from __future__ import annotations\n\nfrom os import environ\n\nfrom dynaconf.utils import missing\nfrom dynaconf.utils import upperfy\nfrom dynaconf.utils.parse_conf import parse_conf_data\nfrom dynaconf.vendor.dotenv import cli as dotenv_cli\n\n\nIDENTIFIER = \"env\"\n\n\ndef load(obj, env=None, silent=True, key=None):\n \"\"\"Loads envvars with prefixes:\n\n `DYNACONF_` (default global) or `$(ENVVAR_PREFIX_FOR_DYNACONF)_`\n \"\"\"\n global_prefix = obj.get(\"ENVVAR_PREFIX_FOR_DYNACONF\")\n if global_prefix is False or global_prefix.upper() != \"DYNACONF\":\n load_from_env(obj, \"DYNACONF\", key, silent, IDENTIFIER + \"_global\")\n\n # Load the global env if exists and overwrite everything\n load_from_env(obj, global_prefix, key, silent, IDENTIFIER + \"_global\")\n\n\ndef load_from_env(\n obj,\n prefix=False,\n key=None,\n silent=False,\n identifier=IDENTIFIER,\n env=False, # backwards compatibility bc renamed param\n):\n if prefix is False and env is not False:\n prefix = env\n\n env_ = \"\"\n if prefix is not False:\n if not isinstance(prefix, str):\n raise TypeError(\"`prefix/env` must be str or False\")\n\n prefix = prefix.upper()\n env_ = f\"{prefix}_\"\n\n # Load a single environment variable explicitly.\n if key:\n key = upperfy(key)\n value = environ.get(f\"{env_}{key}\")\n if value:\n try: # 
obj is a Settings\n obj.set(key, value, loader_identifier=identifier, tomlfy=True)\n except AttributeError: # obj is a dict\n obj[key] = parse_conf_data(\n value, tomlfy=True, box_settings=obj\n )\n\n # Load environment variables in bulk (when matching).\n else:\n # Only known variables should be loaded from environment?\n ignore_unknown = obj.get(\"IGNORE_UNKNOWN_ENVVARS_FOR_DYNACONF\")\n\n trim_len = len(env_)\n data = {\n key[trim_len:]: parse_conf_data(\n data, tomlfy=True, box_settings=obj\n )\n for key, data in environ.items()\n if key.startswith(env_)\n and not (\n # Ignore environment variables that haven't been\n # pre-defined in settings space.\n ignore_unknown\n and obj.get(key[trim_len:], default=missing) is missing\n )\n }\n # Update the settings space based on gathered data from environment.\n if data:\n obj.update(data, loader_identifier=identifier)\n\n\ndef write(settings_path, settings_data, **kwargs):\n \"\"\"Write data to .env file\"\"\"\n for key, value in settings_data.items():\n quote_mode = (\n isinstance(value, str)\n and (value.startswith(\"'\") or value.startswith('\"'))\n ) or isinstance(value, (list, dict))\n dotenv_cli.set_key(\n str(settings_path),\n key,\n str(value),\n quote_mode=\"always\" if quote_mode else \"none\",\n )\n", "path": "dynaconf/loaders/env_loader.py"}]}

| 1,793 | 111 |
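The dynaconf golden diff above makes the bulk branch of `load_from_env` pass the gathered environment data through the configured `FILTER_STRATEGY` callable before calling `obj.update`. The sketch below mimics that control flow without importing dynaconf and applies it to the reporter's stated goal of dropping empty environment variables. `gather_env`, `DropEmptyValues` and `FilterStrategy` are hypothetical names, a plain dict stands in for `os.environ`, and the real loader additionally parses each value with `parse_conf_data`, which is omitted here.

```python
from typing import Callable, Dict, Mapping, Optional

# Hypothetical alias: a filter strategy takes the gathered env data and returns a filtered dict.
FilterStrategy = Callable[[Dict[str, str]], Dict[str, str]]


class DropEmptyValues:
    """Hypothetical filter strategy: keep only variables with a non-empty value."""

    def __call__(self, data: Dict[str, str]) -> Dict[str, str]:
        return {k: v for k, v in data.items() if v != ""}


def gather_env(
    environ: Mapping[str, str],
    prefix: str = "",
    filter_strategy: Optional[FilterStrategy] = None,
) -> Dict[str, str]:
    """Simplified stand-in for the bulk branch of load_from_env after the patch.

    `prefix` includes the trailing underscore; an empty prefix corresponds to
    envvar_prefix=False, so every variable matches. Values stay raw strings.
    """
    trim_len = len(prefix)
    data = {k[trim_len:]: v for k, v in environ.items() if k.startswith(prefix)}
    # Mirrors the patch: apply the configured strategy (if any) before updating settings.
    if data and filter_strategy:
        data = filter_strategy(data)
    return data


fake_env = {"PREFIX_VAR": "HELLO", "OTHER_VAR": "WORLD", "EMPTY_VAR": ""}
print(gather_env(fake_env, filter_strategy=DropEmptyValues()))
# {'PREFIX_VAR': 'HELLO', 'OTHER_VAR': 'WORLD'}
```

Passing the issue's `CustomFilter()` instead of `DropEmptyValues()` would leave only `PREFIX_VAR` from this fake environment.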

gh_patches_debug_37526

|

rasdani/github-patches

|

git_diff

|

freqtrade__freqtrade-8273

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`stoploss_from_open` is ambiguous when used with leverage

<!--

Have you searched for similar issues before posting it?

If you have discovered a bug in the bot, please [search the issue tracker](https://github.com/freqtrade/freqtrade/issues?q=is%3Aissue).

If it hasn't been reported, please create a new issue.

Please do not use bug reports to request new features.

-->

## Describe your environment

* Operating system: ____ Linux

* Python Version: _____ (`python -V`) 3.10

* CCXT version: _____ (`pip freeze | grep ccxt`) 1.95

* Freqtrade Version: ____ (`freqtrade -V` or `docker compose run --rm freqtrade -V` for Freqtrade running in docker)

Note: All issues other than enhancement requests will be closed without further comment if the above template is deleted or not filled out.

## Describe the problem:

According to the documentation, profit and stoploss are calculated with leverage applied.

However, `stoploss_from_open` (in freqtrade/strategy/strategy_helper.py) can return a wrong result when entering a short with leverage. The leveraged profit ratio can exceed 1 while `open_relative_stop` is still below 1, so the computed stoploss is always negative and the function returns 0.0, even though the trade is actually in profit.

*Explain the problem you have encountered*

### Steps to reproduce:

1. _____

2. _____

3. _____

### Observed Results:

* What happened?

`stoploss_from_open` behaves ambiguously when leverage is used.

* What did you expect to happen?

Either the function should divide by leverage, or its arguments should be explicitly documented as not accounting for leverage.

### Relevant code exceptions or logs

```python
def stoploss_from_open(
    open_relative_stop: float,
    current_profit: float,
    is_short: bool = False
) -> float:
    """
    Given the current profit, and a desired stop loss value relative to the open price,
    return a stop loss value that is relative to the current price, and which can be
    returned from `custom_stoploss`.

    The requested stop can be positive for a stop above the open price, or negative for
    a stop below the open price. The return value is always >= 0.

    Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price

    :param open_relative_stop: Desired stop loss percentage relative to open price
    :param current_profit: The current profit percentage
    :param is_short: When true, perform the calculation for short instead of long
    :return: Stop loss value relative to current price
    """

    # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value
    if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):
        return 1

    if is_short is True:
        stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))
    else:
        stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))

    # negative stoploss values indicate the requested stop price is higher/lower
    # (long/short) than the current price
    return max(stoploss, 0.0)
```

Note: Please copy/paste text of the messages, no screenshots of logs please.

```

// paste your log here

```

</issue>

<code>

[start of freqtrade/strategy/strategy_helper.py]

1 from typing import Optional
2
3 import pandas as pd
4
5 from freqtrade.exchange import timeframe_to_minutes
6
7
8 def merge_informative_pair(dataframe: pd.DataFrame, informative: pd.DataFrame,
9                            timeframe: str, timeframe_inf: str, ffill: bool = True,
10                            append_timeframe: bool = True,
11                            date_column: str = 'date',
12                            suffix: Optional[str] = None) -> pd.DataFrame:
13     """
14     Correctly merge informative samples to the original dataframe, avoiding lookahead bias.
15
16     Since dates are candle open dates, merging a 15m candle that starts at 15:00, and a
17     1h candle that starts at 15:00 will result in all candles to know the close at 16:00
18     which they should not know.
19
20     Moves the date of the informative pair by 1 time interval forward.
21     This way, the 14:00 1h candle is merged to 15:00 15m candle, since the 14:00 1h candle is the
22     last candle that's closed at 15:00, 15:15, 15:30 or 15:45.
23
24     Assuming inf_tf = '1d' - then the resulting columns will be:
25     date_1d, open_1d, high_1d, low_1d, close_1d, rsi_1d
26
27     :param dataframe: Original dataframe
28     :param informative: Informative pair, most likely loaded via dp.get_pair_dataframe
29     :param timeframe: Timeframe of the original pair sample.
30     :param timeframe_inf: Timeframe of the informative pair sample.
31     :param ffill: Forwardfill missing values - optional but usually required
32     :param append_timeframe: Rename columns by appending timeframe.
33     :param date_column: A custom date column name.
34     :param suffix: A string suffix to add at the end of the informative columns. If specified,
35                    append_timeframe must be false.
36     :return: Merged dataframe
37     :raise: ValueError if the secondary timeframe is shorter than the dataframe timeframe
38     """
39
40     minutes_inf = timeframe_to_minutes(timeframe_inf)
41     minutes = timeframe_to_minutes(timeframe)
42     if minutes == minutes_inf:
43         # No need to forwardshift if the timeframes are identical
44         informative['date_merge'] = informative[date_column]
45     elif minutes < minutes_inf:
46         # Subtract "small" timeframe so merging is not delayed by 1 small candle
47         # Detailed explanation in https://github.com/freqtrade/freqtrade/issues/4073
48         informative['date_merge'] = (
49             informative[date_column] + pd.to_timedelta(minutes_inf, 'm') -
50             pd.to_timedelta(minutes, 'm')
51         )
52     else:
53         raise ValueError("Tried to merge a faster timeframe to a slower timeframe."
54                          "This would create new rows, and can throw off your regular indicators.")
55
56     # Rename columns to be unique
57     date_merge = 'date_merge'
58     if suffix and append_timeframe:
59         raise ValueError("You can not specify `append_timeframe` as True and a `suffix`.")
60     elif append_timeframe:
61         date_merge = f'date_merge_{timeframe_inf}'
62         informative.columns = [f"{col}_{timeframe_inf}" for col in informative.columns]
63
64     elif suffix:
65         date_merge = f'date_merge_{suffix}'
66         informative.columns = [f"{col}_{suffix}" for col in informative.columns]
67
68     # Combine the 2 dataframes
69     # all indicators on the informative sample MUST be calculated before this point
70     if ffill:
71         # https://pandas.pydata.org/docs/user_guide/merging.html#timeseries-friendly-merging
72         # merge_ordered - ffill method is 2.5x faster than seperate ffill()
73         dataframe = pd.merge_ordered(dataframe, informative, fill_method="ffill", left_on='date',
74                                      right_on=date_merge, how='left')
75     else:
76         dataframe = pd.merge(dataframe, informative, left_on='date',
77                              right_on=date_merge, how='left')
78     dataframe = dataframe.drop(date_merge, axis=1)
79
80     # if ffill:
81     #     dataframe = dataframe.ffill()
82
83     return dataframe
84
85
86 def stoploss_from_open(
87     open_relative_stop: float,
88     current_profit: float,
89     is_short: bool = False
90 ) -> float:
91     """
92
93     Given the current profit, and a desired stop loss value relative to the open price,
94     return a stop loss value that is relative to the current price, and which can be
95     returned from `custom_stoploss`.
96
97     The requested stop can be positive for a stop above the open price, or negative for
98     a stop below the open price. The return value is always >= 0.
99
100     Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price
101
102     :param open_relative_stop: Desired stop loss percentage relative to open price
103     :param current_profit: The current profit percentage
104     :param is_short: When true, perform the calculation for short instead of long
105     :return: Stop loss value relative to current price
106     """
107
108     # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value
109     if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):
110         return 1
111
112     if is_short is True:
113         stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))
114     else:
115         stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))
116
117     # negative stoploss values indicate the requested stop price is higher/lower
118     # (long/short) than the current price
119     return max(stoploss, 0.0)
120
121
122 def stoploss_from_absolute(stop_rate: float, current_rate: float, is_short: bool = False) -> float:
123     """
124     Given current price and desired stop price, return a stop loss value that is relative to current
125     price.
126
127     The requested stop can be positive for a stop above the open price, or negative for
128     a stop below the open price. The return value is always >= 0.
129
130     Returns 0 if the resulting stop price would be above the current price.
131
132     :param stop_rate: Stop loss price.
133     :param current_rate: Current asset price.
134     :param is_short: When true, perform the calculation for short instead of long
135     :return: Positive stop loss value relative to current price
136     """
137
138     # formula is undefined for current_rate 0, return maximum value
139     if current_rate == 0:
140         return 1
141
142     stoploss = 1 - (stop_rate / current_rate)
143     if is_short:
144         stoploss = -stoploss
145
146     # negative stoploss values indicate the requested stop price is higher/lower
147     # (long/short) than the current price
148     # shorts can yield stoploss values higher than 1, so limit that as well
149     return max(min(stoploss, 1.0), 0.0)
150

[end of freqtrade/strategy/strategy_helper.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/freqtrade/strategy/strategy_helper.py b/freqtrade/strategy/strategy_helper.py
--- a/freqtrade/strategy/strategy_helper.py
+++ b/freqtrade/strategy/strategy_helper.py
@@ -86,37 +86,41 @@
 def stoploss_from_open(
     open_relative_stop: float,
     current_profit: float,
-    is_short: bool = False
+    is_short: bool = False,
+    leverage: float = 1.0
 ) -> float:
     """
-
-    Given the current profit, and a desired stop loss value relative to the open price,
+    Given the current profit, and a desired stop loss value relative to the trade entry price,
     return a stop loss value that is relative to the current price, and which can be
     returned from `custom_stoploss`.
 
     The requested stop can be positive for a stop above the open price, or negative for
     a stop below the open price. The return value is always >= 0.
+    `open_relative_stop` will be considered as adjusted for leverage if leverage is provided..
 
     Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price
 
-    :param open_relative_stop: Desired stop loss percentage relative to open price
+    :param open_relative_stop: Desired stop loss percentage, relative to the open price,
+                               adjusted for leverage
     :param current_profit: The current profit percentage
     :param is_short: When true, perform the calculation for short instead of long
+    :param leverage: Leverage to use for the calculation
     :return: Stop loss value relative to current price
     """
 
     # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value
-    if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):
+    _current_profit = current_profit / leverage
+    if (_current_profit == -1 and not is_short) or (is_short and _current_profit == 1):
         return 1
 
     if is_short is True:
-        stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))
+        stoploss = -1 + ((1 - open_relative_stop / leverage) / (1 - _current_profit))
     else:
-        stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))
+        stoploss = 1 - ((1 + open_relative_stop / leverage) / (1 + _current_profit))
 
     # negative stoploss values indicate the requested stop price is higher/lower
     # (long/short) than the current price
-    return max(stoploss, 0.0)
+    return max(stoploss * leverage, 0.0)
 
 
 def stoploss_from_absolute(stop_rate: float, current_rate: float, is_short: bool = False) -> float:

|

{"golden_diff": "diff --git a/freqtrade/strategy/strategy_helper.py b/freqtrade/strategy/strategy_helper.py\n--- a/freqtrade/strategy/strategy_helper.py\n+++ b/freqtrade/strategy/strategy_helper.py\n@@ -86,37 +86,41 @@\n def stoploss_from_open(\n open_relative_stop: float,\n current_profit: float,\n- is_short: bool = False\n+ is_short: bool = False,\n+ leverage: float = 1.0\n ) -> float:\n \"\"\"\n-\n- Given the current profit, and a desired stop loss value relative to the open price,\n+ Given the current profit, and a desired stop loss value relative to the trade entry price,\n return a stop loss value that is relative to the current price, and which can be\n returned from `custom_stoploss`.\n \n The requested stop can be positive for a stop above the open price, or negative for\n a stop below the open price. The return value is always >= 0.\n+ `open_relative_stop` will be considered as adjusted for leverage if leverage is provided..\n \n Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price\n \n- :param open_relative_stop: Desired stop loss percentage relative to open price\n+ :param open_relative_stop: Desired stop loss percentage, relative to the open price,\n+ adjusted for leverage\n :param current_profit: The current profit percentage\n :param is_short: When true, perform the calculation for short instead of long\n+ :param leverage: Leverage to use for the calculation\n :return: Stop loss value relative to current price\n \"\"\"\n \n # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value\n- if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):\n+ _current_profit = current_profit / leverage\n+ if (_current_profit == -1 and not is_short) or (is_short and _current_profit == 1):\n return 1\n \n if is_short is True:\n- stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))\n+ stoploss = -1 + ((1 - open_relative_stop / leverage) / (1 - _current_profit))\n 
else:\n- stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))\n+ stoploss = 1 - ((1 + open_relative_stop / leverage) / (1 + _current_profit))\n \n # negative stoploss values indicate the requested stop price is higher/lower\n # (long/short) than the current price\n- return max(stoploss, 0.0)\n+ return max(stoploss * leverage, 0.0)\n \n \n def stoploss_from_absolute(stop_rate: float, current_rate: float, is_short: bool = False) -> float:\n", "issue": "stoploss_from_open has some ambiguous with leverage\n<!-- \r\nHave you searched for similar issues before posting it?\r\n\r\nIf you have discovered a bug in the bot, please [search the issue tracker](https://github.com/freqtrade/freqtrade/issues?q=is%3Aissue). \r\nIf it hasn't been reported, please create a new issue.\r\n\r\nPlease do not use bug reports to request new features.\r\n-->\r\n\r\n## Describe your environment\r\n\r\n * Operating system: ____ Linux\r\n * Python Version: _____ (`python -V`) 3.10\r\n * CCXT version: _____ (`pip freeze | grep ccxt`) 1.95\r\n * Freqtrade Version: ____ (`freqtrade -V` or `docker compose run --rm freqtrade -V` for Freqtrade running in docker)\r\n \r\nNote: All issues other than enhancement requests will be closed without further comment if the above template is deleted or not filled out.\r\n\r\n## Describe the problem:\r\n\r\nIn the doc explanation, profit and stoploss is calculated with leverage.\r\n\r\nAnd this function `stoploss_from_open` (in freqtrade/strategy/strategy_helper.py) may wrong when enter short with leverage. Because it's profit_rate may large than 1 , and but open_relative_stop still less than 1, and stoploss be always negative, and function return 0.0. But in fact, it has positive profit.\r\n\r\n*Explain the problem you have encountered*\r\n\r\n### Steps to reproduce:\r\n\r\n 1. _____\r\n 2. _____\r\n 3. _____\r\n \r\n### Observed Results:\r\n\r\n * What happened? 
\r\n stoploss_from_open may have ambiguous when used leverage\r\n * What did you expect to happen?\r\n In function dive leverage, or argument explicitly explanates without leverage\r\n### Relevant code exceptions or logs\r\n``` Python\r\n def stoploss_from_open(\r\n open_relative_stop: float,\r\n current_profit: float,\r\n is_short: bool = False\r\n ) -> float:\r\n \"\"\"\r\n Given the current profit, and a desired stop loss value relative to the open price,\r\n return a stop loss value that is relative to the current price, and which can be\r\n returned from `custom_stoploss`.\r\n \r\n The requested stop can be positive for a stop above the open price, or negative for\r\n a stop below the open price. The return value is always >= 0.\r\n \r\n Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price\r\n \r\n :param open_relative_stop: Desired stop loss percentage relative to open price\r\n :param current_profit: The current profit percentage\r\n :param is_short: When true, perform the calculation for short instead of long\r\n :return: Stop loss value relative to current price\r\n \"\"\"\r\n \r\n # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value\r\n if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):\r\n return 1\r\n \r\n if is_short is True:\r\n stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))\r\n else:\r\n stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))\r\n \r\n # negative stoploss values indicate the requested stop price is higher/lower\r\n # (long/short) than the current price\r\n return max(stoploss, 0.0)\r\n```\r\nNote: Please copy/paste text of the messages, no screenshots of logs please.\r\n\r\n ```\r\n // paste your log here\r\n ```\r\n\n", "before_files": [{"content": "from typing import Optional\n\nimport pandas as pd\n\nfrom freqtrade.exchange import timeframe_to_minutes\n\n\ndef merge_informative_pair(dataframe: 
pd.DataFrame, informative: pd.DataFrame,\n timeframe: str, timeframe_inf: str, ffill: bool = True,\n append_timeframe: bool = True,\n date_column: str = 'date',\n suffix: Optional[str] = None) -> pd.DataFrame:\n \"\"\"\n Correctly merge informative samples to the original dataframe, avoiding lookahead bias.\n\n Since dates are candle open dates, merging a 15m candle that starts at 15:00, and a\n 1h candle that starts at 15:00 will result in all candles to know the close at 16:00\n which they should not know.\n\n Moves the date of the informative pair by 1 time interval forward.\n This way, the 14:00 1h candle is merged to 15:00 15m candle, since the 14:00 1h candle is the\n last candle that's closed at 15:00, 15:15, 15:30 or 15:45.\n\n Assuming inf_tf = '1d' - then the resulting columns will be:\n date_1d, open_1d, high_1d, low_1d, close_1d, rsi_1d\n\n :param dataframe: Original dataframe\n :param informative: Informative pair, most likely loaded via dp.get_pair_dataframe\n :param timeframe: Timeframe of the original pair sample.\n :param timeframe_inf: Timeframe of the informative pair sample.\n :param ffill: Forwardfill missing values - optional but usually required\n :param append_timeframe: Rename columns by appending timeframe.\n :param date_column: A custom date column name.\n :param suffix: A string suffix to add at the end of the informative columns. 
If specified,\n append_timeframe must be false.\n :return: Merged dataframe\n :raise: ValueError if the secondary timeframe is shorter than the dataframe timeframe\n \"\"\"\n\n minutes_inf = timeframe_to_minutes(timeframe_inf)\n minutes = timeframe_to_minutes(timeframe)\n if minutes == minutes_inf:\n # No need to forwardshift if the timeframes are identical\n informative['date_merge'] = informative[date_column]\n elif minutes < minutes_inf:\n # Subtract \"small\" timeframe so merging is not delayed by 1 small candle\n # Detailed explanation in https://github.com/freqtrade/freqtrade/issues/4073\n informative['date_merge'] = (\n informative[date_column] + pd.to_timedelta(minutes_inf, 'm') -\n pd.to_timedelta(minutes, 'm')\n )\n else:\n raise ValueError(\"Tried to merge a faster timeframe to a slower timeframe.\"\n \"This would create new rows, and can throw off your regular indicators.\")\n\n # Rename columns to be unique\n date_merge = 'date_merge'\n if suffix and append_timeframe:\n raise ValueError(\"You can not specify `append_timeframe` as True and a `suffix`.\")\n elif append_timeframe:\n date_merge = f'date_merge_{timeframe_inf}'\n informative.columns = [f\"{col}_{timeframe_inf}\" for col in informative.columns]\n\n elif suffix:\n date_merge = f'date_merge_{suffix}'\n informative.columns = [f\"{col}_{suffix}\" for col in informative.columns]\n\n # Combine the 2 dataframes\n # all indicators on the informative sample MUST be calculated before this point\n if ffill:\n # https://pandas.pydata.org/docs/user_guide/merging.html#timeseries-friendly-merging\n # merge_ordered - ffill method is 2.5x faster than seperate ffill()\n dataframe = pd.merge_ordered(dataframe, informative, fill_method=\"ffill\", left_on='date',\n right_on=date_merge, how='left')\n else:\n dataframe = pd.merge(dataframe, informative, left_on='date',\n right_on=date_merge, how='left')\n dataframe = dataframe.drop(date_merge, axis=1)\n\n # if ffill:\n # dataframe = dataframe.ffill()\n\n return 
dataframe\n\n\ndef stoploss_from_open(\n open_relative_stop: float,\n current_profit: float,\n is_short: bool = False\n) -> float:\n \"\"\"\n\n Given the current profit, and a desired stop loss value relative to the open price,\n return a stop loss value that is relative to the current price, and which can be\n returned from `custom_stoploss`.\n\n The requested stop can be positive for a stop above the open price, or negative for\n a stop below the open price. The return value is always >= 0.\n\n Returns 0 if the resulting stop price would be above/below (longs/shorts) the current price\n\n :param open_relative_stop: Desired stop loss percentage relative to open price\n :param current_profit: The current profit percentage\n :param is_short: When true, perform the calculation for short instead of long\n :return: Stop loss value relative to current price\n \"\"\"\n\n # formula is undefined for current_profit -1 (longs) or 1 (shorts), return maximum value\n if (current_profit == -1 and not is_short) or (is_short and current_profit == 1):\n return 1\n\n if is_short is True:\n stoploss = -1 + ((1 - open_relative_stop) / (1 - current_profit))\n else:\n stoploss = 1 - ((1 + open_relative_stop) / (1 + current_profit))\n\n # negative stoploss values indicate the requested stop price is higher/lower\n # (long/short) than the current price\n return max(stoploss, 0.0)\n\n\ndef stoploss_from_absolute(stop_rate: float, current_rate: float, is_short: bool = False) -> float:\n \"\"\"\n Given current price and desired stop price, return a stop loss value that is relative to current\n price.\n\n The requested stop can be positive for a stop above the open price, or negative for\n a stop below the open price. 
The return value is always >= 0.\n\n Returns 0 if the resulting stop price would be above the current price.\n\n :param stop_rate: Stop loss price.\n :param current_rate: Current asset price.\n :param is_short: When true, perform the calculation for short instead of long\n :return: Positive stop loss value relative to current price\n \"\"\"\n\n # formula is undefined for current_rate 0, return maximum value\n if current_rate == 0:\n return 1\n\n stoploss = 1 - (stop_rate / current_rate)\n if is_short:\n stoploss = -stoploss\n\n # negative stoploss values indicate the requested stop price is higher/lower\n # (long/short) than the current price\n # shorts can yield stoploss values higher than 1, so limit that as well\n return max(min(stoploss, 1.0), 0.0)\n", "path": "freqtrade/strategy/strategy_helper.py"}]}

| 3,213 | 642 |

gh_patches_debug_16832

|

rasdani/github-patches

|

git_diff

|

pantsbuild__pants-20984

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`stevedore_namespace` documentation shows `str`'s doc string

**Describe the bug**

The `stevedore_namespace` BUILD file symbol has a doc-string, but it isn't shown in `pants help-all`. It instead shows what looks like the doc string for `str`.

https://github.com/pantsbuild/pants/blob/ec86d19cd954cd49a9562880a7c0dbc45632778c/src/python/pants/backend/python/framework/stevedore/target_types.py#L13-L30

To reproduce, enable the stevedore backend and look at `help` or `help-all`:

```shell

PANTS_VERSION=2.22.0.dev3 pants --backend-packages=pants.backend.experimental.python.framework.stevedore help stevedore_namespace

```

```

`stevedore_namespace` BUILD file symbol

---------------------------------------

str(object='') -> str

str(bytes_or_buffer[, encoding[, errors]]) -> str

Create a new string object from the given object. If encoding or

errors is specified, then the object must expose a data buffer

that will be decoded using the given encoding and error handler.

Otherwise, returns the result of object.__str__() (if defined)

or repr(object).

encoding defaults to sys.getdefaultencoding().

errors defaults to 'strict'.

```

```shell

PANTS_VERSION=2.22.0.dev3 pants --backend-packages=pants.backend.experimental.python.framework.stevedore help-all | \

jq .name_to_build_file_info.stevedore_namespace

```

```json

{

"documentation": "str(object='') -> str\nstr(bytes_or_buffer[, encoding[, errors]]) -> str\n\nCreate a new string object from the given object. If encoding or\nerrors is specified, then the object must expose a data buffer\nthat will be decoded using the given encoding and error handler.\nOtherwise, returns the result of object.__str__() (if defined)\nor repr(object).\nencoding defaults to sys.getdefaultencoding().\nerrors defaults to 'strict'.",

"is_target": false,

"name": "stevedore_namespace",

"signature": null

}

```

**Pants version**

Seems to be visible in 2.16 through to the currently latest.

**OS**

both

**Additional info**

- Will appear in online docs too after https://github.com/pantsbuild/pantsbuild.org/pull/216

- Relevant issues:

- https://github.com/pantsbuild/pants/discussions/18117

- https://github.com/pantsbuild/pants/issues/14832

</issue>

<code>

[start of src/python/pants/backend/python/framework/stevedore/target_types.py]

1 # Copyright 2023 Pants project contributors (see CONTRIBUTORS.md).

2 # Licensed under the Apache License, Version 2.0 (see LICENSE).

3

4 from __future__ import annotations

5

6 from dataclasses import dataclass

7

8 from pants.backend.python.target_types import PythonDistribution

9 from pants.engine.target import StringSequenceField, Targets

10 from pants.util.strutil import help_text

11

12

13 class StevedoreNamespace(str):

14 f"""Tag a namespace in entry_points as a stevedore namespace.

15

16 This is required for the entry_point to be visible to dep inference

17 based on the `stevedore_namespaces` field.

18

19 For example:

20 {PythonDistribution.alias}(

21 ...

22 entry_points={{

23 stevedore_namespace("a.b.c"): {{

24 "plugin_name": "some.entry:point",

25 }},

26 }},

27 )

28 """

29

30 alias = "stevedore_namespace"

31

32

33 # This is a lot like a SpecialCasedDependencies field, but it doesn't list targets directly.

34 class StevedoreNamespacesField(StringSequenceField):

35 alias = "stevedore_namespaces"

36 help = help_text(

37 f"""

38 List the stevedore namespaces required by this target.

39

40 Code for all `entry_points` on `{PythonDistribution.alias}` targets with

41 these namespaces will be added as dependencies so that they are

42 available on PYTHONPATH during tests. Note that this is only a subset

43 of the `{PythonDistribution.alias}`s dependencies, so the `entry_points`

44 only need to be defined on one `{PythonDistribution.alias}` even if the

45 test only needs some of the `entry_points` namespaces on it.

46

47 Plus, an `entry_points.txt` file will be generated in the sandbox so that

48 each of the `{PythonDistribution.alias}`s appear to be "installed". The

49 `entry_points.txt` file will only include the namespaces requested on this

50 field. Without this, stevedore would not be able to look up plugins in

51 the setuptools `entry_points` metadata.

52

53 NOTE: Each `{PythonDistribution.alias}` must opt-in to being included in

54 this repo-wide inference by tagging the namespaces with

55 `{StevedoreNamespace.alias}("my.stevedore.extension")`.

56

57 The stevedore namespace format (`my.stevedore.extension`) is similar

58 to a Python namespace.

59 """

60 )

61

62

63 class AllStevedoreExtensionTargets(Targets):

64 pass

65

66

67 @dataclass(frozen=True)

68 class StevedoreNamespacesProviderTargetsRequest:

69 stevedore_namespaces: StevedoreNamespacesField

70

71

72 class StevedoreExtensionTargets(Targets):

73 pass

74

[end of src/python/pants/backend/python/framework/stevedore/target_types.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/src/python/pants/backend/python/framework/stevedore/target_types.py b/src/python/pants/backend/python/framework/stevedore/target_types.py

--- a/src/python/pants/backend/python/framework/stevedore/target_types.py

+++ b/src/python/pants/backend/python/framework/stevedore/target_types.py

@@ -11,20 +11,22 @@

class StevedoreNamespace(str):

- f"""Tag a namespace in entry_points as a stevedore namespace.

+ """Tag a namespace in entry_points as a stevedore namespace.

This is required for the entry_point to be visible to dep inference

based on the `stevedore_namespaces` field.

For example:

- {PythonDistribution.alias}(

- ...

- entry_points={{

- stevedore_namespace("a.b.c"): {{

- "plugin_name": "some.entry:point",

- }},

- }},

- )

+ ```python

+ python_distribution(

+ ...

+ entry_points={

+ stevedore_namespace("a.b.c"): {

+ "plugin_name": "some.entry:point",

+ },

+ },

+ )

+ ```

"""

alias = "stevedore_namespace"

|

{"golden_diff": "diff --git a/src/python/pants/backend/python/framework/stevedore/target_types.py b/src/python/pants/backend/python/framework/stevedore/target_types.py\n--- a/src/python/pants/backend/python/framework/stevedore/target_types.py\n+++ b/src/python/pants/backend/python/framework/stevedore/target_types.py\n@@ -11,20 +11,22 @@\n \n \n class StevedoreNamespace(str):\n- f\"\"\"Tag a namespace in entry_points as a stevedore namespace.\n+ \"\"\"Tag a namespace in entry_points as a stevedore namespace.\n \n This is required for the entry_point to be visible to dep inference\n based on the `stevedore_namespaces` field.\n \n For example:\n- {PythonDistribution.alias}(\n- ...\n- entry_points={{\n- stevedore_namespace(\"a.b.c\"): {{\n- \"plugin_name\": \"some.entry:point\",\n- }},\n- }},\n- )\n+ ```python\n+ python_distribution(\n+ ...\n+ entry_points={\n+ stevedore_namespace(\"a.b.c\"): {\n+ \"plugin_name\": \"some.entry:point\",\n+ },\n+ },\n+ )\n+ ```\n \"\"\"\n \n alias = \"stevedore_namespace\"\n", "issue": "`stevedore_namespace` documentation shows `str`'s doc string\n**Describe the bug**\r\n\r\nThe `stevedore_namespace` BUILD file symbol has a doc-string, but it isn't shown in `pants help-all`. It instead shows what looks like the doc string for `str`.\r\n\r\nhttps://github.com/pantsbuild/pants/blob/ec86d19cd954cd49a9562880a7c0dbc45632778c/src/python/pants/backend/python/framework/stevedore/target_types.py#L13-L30\r\n\r\nTo reproduce, enable the stevedore backend and look at `help` or `help-all`:\r\n\r\n```shell\r\nPANTS_VERSION=2.22.0.dev3 pants --backend-packages=pants.backend.experimental.python.framework.stevedore help stevedore_namespace\r\n```\r\n```\r\n`stevedore_namespace` BUILD file symbol\r\n---------------------------------------\r\n\r\nstr(object='') -> str\r\nstr(bytes_or_buffer[, encoding[, errors]]) -> str\r\n\r\nCreate a new string object from the given object. 
If encoding or\r\nerrors is specified, then the object must expose a data buffer\r\nthat will be decoded using the given encoding and error handler.\r\nOtherwise, returns the result of object.__str__() (if defined)\r\nor repr(object).\r\nencoding defaults to sys.getdefaultencoding().\r\nerrors defaults to 'strict'.\r\n```\r\n\r\n```shell\r\nPANTS_VERSION=2.22.0.dev3 pants --backend-packages=pants.backend.experimental.python.framework.stevedore help-all | \\\r\n jq .name_to_build_file_info.stevedore_namespace\r\n```\r\n```json\r\n{\r\n \"documentation\": \"str(object='') -> str\\nstr(bytes_or_buffer[, encoding[, errors]]) -> str\\n\\nCreate a new string object from the given object. If encoding or\\nerrors is specified, then the object must expose a data buffer\\nthat will be decoded using the given encoding and error handler.\\nOtherwise, returns the result of object.__str__() (if defined)\\nor repr(object).\\nencoding defaults to sys.getdefaultencoding().\\nerrors defaults to 'strict'.\",\r\n \"is_target\": false,\r\n \"name\": \"stevedore_namespace\",\r\n \"signature\": null\r\n}\r\n```\r\n\r\n**Pants version**\r\nSeems to be visible in 2.16 through to the currently latest.\r\n\r\n**OS**\r\nboth\r\n\r\n**Additional info**\r\n\r\n- Will appear in online docs too after https://github.com/pantsbuild/pantsbuild.org/pull/216\r\n- Relevant issues:\r\n - https://github.com/pantsbuild/pants/discussions/18117\r\n - https://github.com/pantsbuild/pants/issues/14832\n", "before_files": [{"content": "# Copyright 2023 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\nfrom __future__ import annotations\n\nfrom dataclasses import dataclass\n\nfrom pants.backend.python.target_types import PythonDistribution\nfrom pants.engine.target import StringSequenceField, Targets\nfrom pants.util.strutil import help_text\n\n\nclass StevedoreNamespace(str):\n f\"\"\"Tag a namespace in entry_points as a stevedore 
namespace.\n\n This is required for the entry_point to be visible to dep inference\n based on the `stevedore_namespaces` field.\n\n For example:\n {PythonDistribution.alias}(\n ...\n entry_points={{\n stevedore_namespace(\"a.b.c\"): {{\n \"plugin_name\": \"some.entry:point\",\n }},\n }},\n )\n \"\"\"\n\n alias = \"stevedore_namespace\"\n\n\n# This is a lot like a SpecialCasedDependencies field, but it doesn't list targets directly.\nclass StevedoreNamespacesField(StringSequenceField):\n alias = \"stevedore_namespaces\"\n help = help_text(\n f\"\"\"\n List the stevedore namespaces required by this target.\n\n Code for all `entry_points` on `{PythonDistribution.alias}` targets with\n these namespaces will be added as dependencies so that they are\n available on PYTHONPATH during tests. Note that this is only a subset\n of the `{PythonDistribution.alias}`s dependencies, so the `entry_points`\n only need to be defined on one `{PythonDistribution.alias}` even if the\n test only needs some of the `entry_points` namespaces on it.\n\n Plus, an `entry_points.txt` file will be generated in the sandbox so that\n each of the `{PythonDistribution.alias}`s appear to be \"installed\". The\n `entry_points.txt` file will only include the namespaces requested on this\n field. 
Without this, stevedore would not be able to look up plugins in\n the setuptools `entry_points` metadata.\n\n NOTE: Each `{PythonDistribution.alias}` must opt-in to being included in\n this repo-wide inference by tagging the namespaces with\n `{StevedoreNamespace.alias}(\"my.stevedore.extension\")`.\n\n The stevedore namespace format (`my.stevedore.extension`) is similar\n to a Python namespace.\n \"\"\"\n )\n\n\nclass AllStevedoreExtensionTargets(Targets):\n pass\n\n\n@dataclass(frozen=True)\nclass StevedoreNamespacesProviderTargetsRequest:\n stevedore_namespaces: StevedoreNamespacesField\n\n\nclass StevedoreExtensionTargets(Targets):\n pass\n", "path": "src/python/pants/backend/python/framework/stevedore/target_types.py"}]}

| 1,837 | 272 |

gh_patches_debug_37847

|

rasdani/github-patches

|

git_diff

|

kubeflow__pipelines-3912

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

mount_pvc failing when pipeline includes ResourceOps

### What steps did you take:

Created a persistent volume claim using a `VolumeOp`:

```python

vop = dsl.VolumeOp(

name="shared-data",

resource_name="shared-data",

size="100G",

storage_class="nfs",

).set_display_name("Prepare volume")

```

Attempted to mount the PVC to every operation in a pipeline using:

```python

dsl.get_pipeline_conf().add_op_transformer(

mount_pvc(pvc_name=vop.volume.persistent_volume_claim.claim_name, volume_mount_path=MOUNT_PATH)

)

```

### What happened:

During compilation, the `add_op_transformer` attempts to invoke the `add_volume_mount` method on the `VolumeOp`, producing an error:

```

AttributeError: 'VolumeOp' object has no attribute 'add_volume_mount'

```

### What did you expect to happen:

The documentation states that transformers take and return a `ContainerOp`:

https://kubeflow-pipelines.readthedocs.io/en/latest/source/kfp.dsl.html#kfp.dsl.PipelineConf.add_op_transformer

Given that, I would expect them to be bypassed for `VolumeOp` (or other `ResourceOp` operations), and hence this compilation should succeed.

### Environment:

<!-- Please fill in those that seem relevant. -->

KFP version: Build commit: 9c16e12

KFP SDK version: <!-- Please attach the output of this shell command: $pip list | grep kfp -->

kfp 0.5.1

kfp-server-api 0.3.0

### Anything else you would like to add:

[Miscellaneous information that will assist in solving the issue.]

/kind bug

<!-- Please include labels by uncommenting them to help us better triage issues, choose from the following -->

<!--

// /area frontend

// /area backend

// /area sdk

// /area testing

// /area engprod

-->

</issue>

<code>

[start of sdk/python/kfp/dsl/_pipeline.py]

1 # Copyright 2018-2019 Google LLC

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15

16 from . import _container_op

17 from . import _resource_op

18 from . import _ops_group

19 from ._component_bridge import _create_container_op_from_component_and_arguments

20 from ..components import _components

21 from ..components._naming import _make_name_unique_by_adding_index

22 import sys

23

24

25 # This handler is called whenever the @pipeline decorator is applied.

26 # It can be used by command-line DSL compiler to inject code that runs for every pipeline definition.

27 _pipeline_decorator_handler = None

28

29

30 def pipeline(name : str = None, description : str = None):

31 """Decorator of pipeline functions.

32

33 Usage:

34 ```python

35 @pipeline(

36 name='my awesome pipeline',

37 description='Is it really awesome?'

38 )

39 def my_pipeline(a: PipelineParam, b: PipelineParam):

40 ...

41 ```

42 """

43 def _pipeline(func):

44 if name:

45 func._component_human_name = name

46 if description:

47 func._component_description = description

48

49 if _pipeline_decorator_handler:

50 return _pipeline_decorator_handler(func) or func

51 else:

52 return func

53

54 return _pipeline

55

56 class PipelineConf():

57 """PipelineConf contains pipeline level settings

58 """

59 def __init__(self):

60 self.image_pull_secrets = []

61 self.timeout = 0

62 self.ttl_seconds_after_finished = -1

63 self.op_transformers = []

64 self.default_pod_node_selector = {}

65 self.image_pull_policy = None

66 self.parallelism = None

67

68 def set_image_pull_secrets(self, image_pull_secrets):

69 """Configures the pipeline level imagepullsecret

70

71 Args:

72 image_pull_secrets: a list of Kubernetes V1LocalObjectReference

73 For detailed description, check Kubernetes V1LocalObjectReference definition

74 https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/V1LocalObjectReference.md

75 """

76 self.image_pull_secrets = image_pull_secrets

77 return self

78

79 def set_timeout(self, seconds: int):

80 """Configures the pipeline level timeout

81

82 Args:

83 seconds: number of seconds for timeout

84 """

85 self.timeout = seconds

86 return self

87

88 def set_parallelism(self, max_num_pods: int):

89 """Configures the max number of total parallel pods that can execute at the same time in a workflow.

90

91 Args:

92 max_num_pods (int): max number of total parallel pods.

93 """

94 self.parallelism = max_num_pods

95 return self

96

97 def set_ttl_seconds_after_finished(self, seconds: int):

98 """Configures the ttl after the pipeline has finished.

99

100 Args:

101 seconds: number of seconds for the workflow to be garbage collected after it is finished.

102 """

103 self.ttl_seconds_after_finished = seconds

104 return self

105

106 def set_default_pod_node_selector(self, label_name: str, value: str):

107 """Add a constraint for nodeSelector for a pipeline. Each constraint is a key-value pair label. For the

108 container to be eligible to run on a node, the node must have each of the constraints appeared

109 as labels.

110

111 Args:

112 label_name: The name of the constraint label.

113 value: The value of the constraint label.

114 """

115 self.default_pod_node_selector[label_name] = value

116 return self

117

118

119 def set_image_pull_policy(self, policy: str):

120 """Configures the default image pull policy

121

122 Args:

123 policy: the pull policy, has to be one of: Always, Never, IfNotPresent.

124 For more info: https://github.com/kubernetes-client/python/blob/10a7f95435c0b94a6d949ba98375f8cc85a70e5a/kubernetes/docs/V1Container.md

125 """

126 self.image_pull_policy = policy

127 return self

128

129 def add_op_transformer(self, transformer):

130 """Configures the op_transformers which will be applied to all ops in the pipeline.

131

132 Args:

133 transformer: a function that takes a ContainOp as input and returns a ContainerOp

134 """

135 self.op_transformers.append(transformer)

136

137

138 def get_pipeline_conf():

139 """Configure the pipeline level setting to the current pipeline

140 Note: call the function inside the user defined pipeline function.

141 """

142 return Pipeline.get_default_pipeline().conf

143

144 #TODO: Pipeline is in fact an opsgroup, refactor the code.

145 class Pipeline():

146 """A pipeline contains a list of operators.

147

148 This class is not supposed to be used by pipeline authors since pipeline authors can use

149 pipeline functions (decorated with @pipeline) to reference their pipelines. This class

150 is useful for implementing a compiler. For example, the compiler can use the following

151 to get the pipeline object and its ops:

152

153 ```python

154 with Pipeline() as p:

155 pipeline_func(*args_list)

156

157 traverse(p.ops)

158 ```

159 """

160

161 # _default_pipeline is set when it (usually a compiler) runs "with Pipeline()"

162 _default_pipeline = None

163

164 @staticmethod

165 def get_default_pipeline():

166 """Get default pipeline. """

167 return Pipeline._default_pipeline

168

169 @staticmethod

170 def add_pipeline(name, description, func):

171 """Add a pipeline function with the specified name and description."""

172 # Applying the @pipeline decorator to the pipeline function

173 func = pipeline(name=name, description=description)(func)

174

175 def __init__(self, name: str):

176 """Create a new instance of Pipeline.

177

178 Args:

179 name: the name of the pipeline. Once deployed, the name will show up in Pipeline System UI.

180 """

181 self.name = name

182 self.ops = {}

183 # Add the root group.

184 self.groups = [_ops_group.OpsGroup('pipeline', name=name)]

185 self.group_id = 0

186 self.conf = PipelineConf()

187 self._metadata = None

188

189 def __enter__(self):

190 if Pipeline._default_pipeline:

191 raise Exception('Nested pipelines are not allowed.')

192

193 Pipeline._default_pipeline = self

194 self._old_container_task_constructor = _components._container_task_constructor

195 _components._container_task_constructor = _create_container_op_from_component_and_arguments

196

197 def register_op_and_generate_id(op):

198 return self.add_op(op, op.is_exit_handler)

199

200 self._old__register_op_handler = _container_op._register_op_handler

201 _container_op._register_op_handler = register_op_and_generate_id

202 return self

203

204 def __exit__(self, *args):

205 Pipeline._default_pipeline = None

206 _container_op._register_op_handler = self._old__register_op_handler

207 _components._container_task_constructor = self._old_container_task_constructor

208

209 def add_op(self, op: _container_op.BaseOp, define_only: bool):

210 """Add a new operator.

211

212 Args:

213 op: An operator of ContainerOp, ResourceOp or their inherited types.

214

215 Returns

216 op_name: a unique op name.

217 """

218 #If there is an existing op with this name then generate a new name.

219 op_name = _make_name_unique_by_adding_index(op.human_name, list(self.ops.keys()), ' ')

220

221 self.ops[op_name] = op

222 if not define_only:

223 self.groups[-1].ops.append(op)

224

225 return op_name

226

227 def push_ops_group(self, group: _ops_group.OpsGroup):

228 """Push an OpsGroup into the stack.

229

230 Args:

231 group: An OpsGroup. Typically it is one of ExitHandler, Branch, and Loop.

232 """

233 self.groups[-1].groups.append(group)

234 self.groups.append(group)

235

236 def pop_ops_group(self):

237 """Remove the current OpsGroup from the stack."""

238 del self.groups[-1]

239

240 def remove_op_from_groups(self, op):

241 for group in self.groups:

242 group.remove_op_recursive(op)

243

244 def get_next_group_id(self):

245 """Get next id for a new group. """

246

247 self.group_id += 1

248 return self.group_id

249

250 def _set_metadata(self, metadata):

251 '''_set_metadata passes the containerop the metadata information

252 Args:

253 metadata (ComponentMeta): component metadata

254 '''

255 self._metadata = metadata

256

[end of sdk/python/kfp/dsl/_pipeline.py]

[start of sdk/python/kfp/onprem.py]

1

2 def mount_pvc(pvc_name='pipeline-claim', volume_name='pipeline', volume_mount_path='/mnt/pipeline'):

3 """

4 Modifier function to apply to a Container Op to simplify volume, volume mount addition and

5 enable better reuse of volumes, volume claims across container ops.

6 Usage:

7 train = train_op(...)

8 train.apply(mount_pvc('claim-name', 'pipeline', '/mnt/pipeline'))

9 """

10 def _mount_pvc(task):

11 from kubernetes import client as k8s_client

12 local_pvc = k8s_client.V1PersistentVolumeClaimVolumeSource(claim_name=pvc_name)

13 return (

14 task

15 .add_volume(

16 k8s_client.V1Volume(name=volume_name, persistent_volume_claim=local_pvc)

17 )

18 .add_volume_mount(

19 k8s_client.V1VolumeMount(mount_path=volume_mount_path, name=volume_name)

20 )

21 )

22 return _mount_pvc

23

[end of sdk/python/kfp/onprem.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/sdk/python/kfp/dsl/_pipeline.py b/sdk/python/kfp/dsl/_pipeline.py

--- a/sdk/python/kfp/dsl/_pipeline.py

+++ b/sdk/python/kfp/dsl/_pipeline.py

@@ -102,9 +102,9 @@

"""

self.ttl_seconds_after_finished = seconds

return self

-

- def set_default_pod_node_selector(self, label_name: str, value: str):

- """Add a constraint for nodeSelector for a pipeline. Each constraint is a key-value pair label. For the

+

+ def set_default_pod_node_selector(self, label_name: str, value: str):

+ """Add a constraint for nodeSelector for a pipeline. Each constraint is a key-value pair label. For the

container to be eligible to run on a node, the node must have each of the constraints appeared

as labels.

@@ -114,7 +114,7 @@

"""

self.default_pod_node_selector[label_name] = value

return self

-

+

def set_image_pull_policy(self, policy: str):

"""Configures the default image pull policy

@@ -128,9 +128,10 @@

def add_op_transformer(self, transformer):

"""Configures the op_transformers which will be applied to all ops in the pipeline.

+ The ops can be ResourceOp, VolumenOp, or ContainerOp.

Args:

- transformer: a function that takes a ContainOp as input and returns a ContainerOp

+ transformer: a function that takes a kfp Op as input and returns a kfp Op

"""

self.op_transformers.append(transformer)

diff --git a/sdk/python/kfp/onprem.py b/sdk/python/kfp/onprem.py

--- a/sdk/python/kfp/onprem.py

+++ b/sdk/python/kfp/onprem.py

@@ -1,7 +1,7 @@

def mount_pvc(pvc_name='pipeline-claim', volume_name='pipeline', volume_mount_path='/mnt/pipeline'):

"""

- Modifier function to apply to a Container Op to simplify volume, volume mount addition and

+ Modifier function to apply to a Container Op to simplify volume, volume mount addition and

enable better reuse of volumes, volume claims across container ops.

Usage:

train = train_op(...)

@@ -9,6 +9,10 @@

"""

def _mount_pvc(task):

from kubernetes import client as k8s_client

+ # there can be other ops in a pipeline (e.g. ResourceOp, VolumeOp)

+ # refer to #3906

+ if not hasattr(task, "add_volume") or not hasattr(task, "add_volume_mount"):

+ return task

local_pvc = k8s_client.V1PersistentVolumeClaimVolumeSource(claim_name=pvc_name)

return (

task

|