| problem_id (string, length 18 to 22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_21667

|

rasdani/github-patches

|

git_diff

|

fedora-infra__bodhi-2005

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

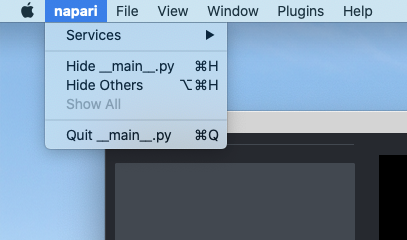

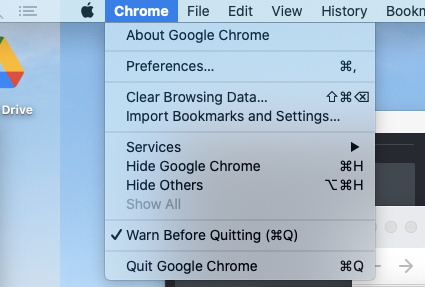

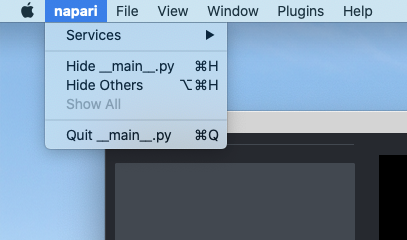

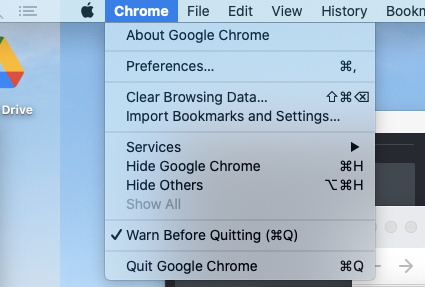

<issue>

bodhi-dequeue-stable dies if any update in the queue is no longer eligible to go stable

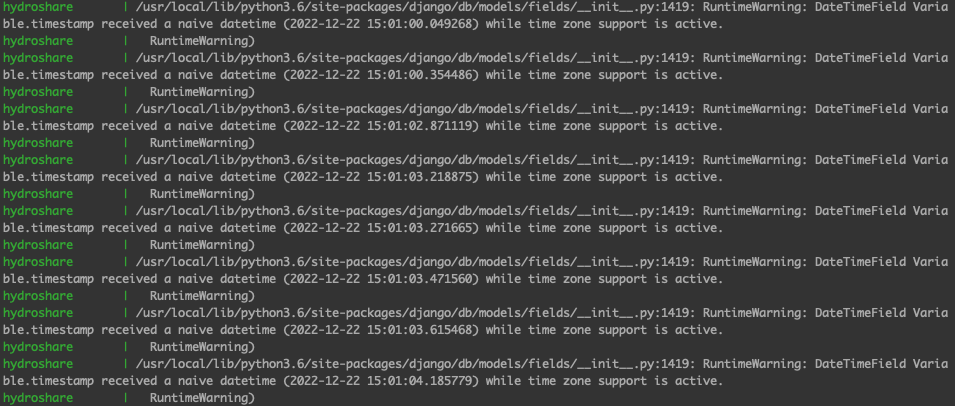

QuLogic from Freenode reported today that batched updates didn't go stable at 03:00 UTC like they should have. I confirmed that the cron job ran, but I didn't see any notes about its output. I then ran the command by hand and received this output:

```
[bowlofeggs@bodhi-backend01 ~][PROD]$ sudo -u apache /usr/bin/bodhi-dequeue-stable
No handlers could be found for logger "bodhi.server"
This update has not yet met the minimum testing requirements defined in the <a href="https://fedoraproject.org/wiki/Package_update_acceptance_criteria">Package Update Acceptance Criteria</a>
```

The [```dequeue_stable()```](https://github.com/fedora-infra/bodhi/blob/3.0.0/bodhi/server/scripts/dequeue_stable.py#L28-L46) function runs a large transaction with only a single try/except. It seems that some update in the queue no longer meets testing requirements (probably due to receiving a -1 karma after going to batched) and is raising an Exception when the tool attempts to mark it for stable. Since there is only one try/except handler, this causes the whole transaction to be rolled back.

It should be easy to fix this - we just need a try/except around each update.
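The per-update handling the issue asks for gives each update its own commit and its own rollback, so one ineligible update is discarded alone instead of undoing the whole batch. The sketch below illustrates that pattern with the standard-library `sqlite3` module standing in for Bodhi's SQLAlchemy session; the `updates` table, the negative-karma rule, and the functions `set_request_stable` and `dequeue_batched` are hypothetical and only approximate Bodhi's real models.

```python
import sqlite3


def set_request_stable(conn, update_id, karma):
    """Hypothetical stand-in for Update.set_request(): refuse ineligible updates."""
    if karma < 0:
        raise ValueError(f"update {update_id} no longer meets testing requirements")
    conn.execute("UPDATE updates SET request = 'stable' WHERE id = ?", (update_id,))


def dequeue_batched(conn):
    """Promote every batched update, committing or rolling back one update at a time."""
    batched = conn.execute(
        "SELECT id, karma FROM updates WHERE request = 'batched' ORDER BY id"
    ).fetchall()
    failures = []
    for update_id, karma in batched:
        try:
            set_request_stable(conn, update_id, karma)
            conn.commit()  # each update gets its own transaction
        except Exception as exc:  # broad, like the per-update handler the issue asks for
            conn.rollback()  # discard only this update's pending changes
            failures.append((update_id, str(exc)))
    return failures


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE updates (id INTEGER PRIMARY KEY, request TEXT, karma INTEGER)")
conn.executemany(
    "INSERT INTO updates (id, request, karma) VALUES (?, 'batched', ?)",
    [(1, 2), (2, -1), (3, 1)],
)
conn.commit()
print(dequeue_batched(conn))
# [(2, 'update 2 no longer meets testing requirements')]
print(conn.execute("SELECT id, request FROM updates ORDER BY id").fetchall())
# [(1, 'stable'), (2, 'batched'), (3, 'stable')]
```

Updates 1 and 3 still go stable even though update 2 fails in between, which is the behaviour the single outer try/except prevented.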

Thanks to QuLogic from Freenode for reporting this issue to me.

</issue>

<code>

[start of bodhi/server/scripts/dequeue_stable.py]

1 # -*- coding: utf-8 -*-
2 # Copyright © 2017 Caleigh Runge-Hottman
3 #
4 # This file is part of Bodhi.
5 #
6 # This program is free software; you can redistribute it and/or
7 # modify it under the terms of the GNU General Public License
8 # as published by the Free Software Foundation; either version 2
9 # of the License, or (at your option) any later version.
10 #
11 # This program is distributed in the hope that it will be useful,
12 # but WITHOUT ANY WARRANTY; without even the implied warranty of
13 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
14 # GNU General Public License for more details.
15 #
16 # You should have received a copy of the GNU General Public License
17 # along with this program; if not, write to the Free Software
18 # Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.
19 """This script is responsible for moving all updates with a batched request to a stable request."""
20
21 import sys
22
23 import click
24
25 from bodhi.server import buildsys, config, models, Session, initialize_db
26
27
28 @click.command()
29 @click.version_option(message='%(version)s')
30 def dequeue_stable():
31     """Convert all batched requests to stable requests."""
32     initialize_db(config.config)
33     buildsys.setup_buildsystem(config.config)
34     db = Session()
35
36     try:
37         batched = db.query(models.Update).filter_by(request=models.UpdateRequest.batched).all()
38         for update in batched:
39             update.set_request(db, models.UpdateRequest.stable, u'bodhi')
40         db.commit()
41
42     except Exception as e:
43         print(str(e))
44         db.rollback()
45         Session.remove()
46         sys.exit(1)
47

[end of bodhi/server/scripts/dequeue_stable.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/bodhi/server/scripts/dequeue_stable.py b/bodhi/server/scripts/dequeue_stable.py
--- a/bodhi/server/scripts/dequeue_stable.py
+++ b/bodhi/server/scripts/dequeue_stable.py
@@ -1,5 +1,5 @@
 # -*- coding: utf-8 -*-
-# Copyright © 2017 Caleigh Runge-Hottman
+# Copyright © 2017 Caleigh Runge-Hottman and Red Hat, Inc.
 #
 # This file is part of Bodhi.
 #
@@ -36,11 +36,17 @@
     try:
         batched = db.query(models.Update).filter_by(request=models.UpdateRequest.batched).all()
         for update in batched:
-            update.set_request(db, models.UpdateRequest.stable, u'bodhi')
-        db.commit()
-
+            try:
+                update.set_request(db, models.UpdateRequest.stable, u'bodhi')
+                db.commit()
+            except Exception as e:
+                print('Unable to stabilize {}: {}'.format(update.alias, str(e)))
+                db.rollback()
+                msg = u"Bodhi is unable to request this update for stabilization: {}"
+                update.comment(db, msg.format(str(e)), author=u'bodhi')
+                db.commit()
     except Exception as e:
         print(str(e))
-        db.rollback()
-        Session.remove()
         sys.exit(1)
+    finally:
+        Session.remove()

|

{"golden_diff": "diff --git a/bodhi/server/scripts/dequeue_stable.py b/bodhi/server/scripts/dequeue_stable.py\n--- a/bodhi/server/scripts/dequeue_stable.py\n+++ b/bodhi/server/scripts/dequeue_stable.py\n@@ -1,5 +1,5 @@\n # -*- coding: utf-8 -*-\n-# Copyright \u00a9 2017 Caleigh Runge-Hottman\n+# Copyright \u00a9 2017 Caleigh Runge-Hottman and Red Hat, Inc.\n #\n # This file is part of Bodhi.\n #\n@@ -36,11 +36,17 @@\n try:\n batched = db.query(models.Update).filter_by(request=models.UpdateRequest.batched).all()\n for update in batched:\n- update.set_request(db, models.UpdateRequest.stable, u'bodhi')\n- db.commit()\n-\n+ try:\n+ update.set_request(db, models.UpdateRequest.stable, u'bodhi')\n+ db.commit()\n+ except Exception as e:\n+ print('Unable to stabilize {}: {}'.format(update.alias, str(e)))\n+ db.rollback()\n+ msg = u\"Bodhi is unable to request this update for stabilization: {}\"\n+ update.comment(db, msg.format(str(e)), author=u'bodhi')\n+ db.commit()\n except Exception as e:\n print(str(e))\n- db.rollback()\n- Session.remove()\n sys.exit(1)\n+ finally:\n+ Session.remove()\n", "issue": "bodhi-dequqe-stable dies if any update in the queue is no longer eligible to go stable\nQuLogic from Freenode reported today that batched updates didn't go stable at 03:00 UTC like they should have. I confirmed that the cron job ran, but I didn't see any notes about its output. 
I then ran the command by hand and received this output:\r\n\r\n```\r\n[bowlofeggs@bodhi-backend01 ~][PROD]$ sudo -u apache /usr/bin/bodhi-dequeue-stable\r\nNo handlers could be found for logger \"bodhi.server\"\r\nThis update has not yet met the minimum testing requirements defined in the <a href=\"https://fedoraproject.org/wiki/Package_update_acceptance_criteria\">Package Update Acceptance Criteria</a>\r\n```\r\n\r\nThe [```dequeue_stable()```](https://github.com/fedora-infra/bodhi/blob/3.0.0/bodhi/server/scripts/dequeue_stable.py#L28-L46) function runs a large transaction with only a single try/except. It seems that some update in the queue no longer meets testing requirements (probably due to receiving a -1 karma after going to batched) and is raising an Exception when the tool attempts to mark it for stable. Since there is only one try/except handler, this causes the whole transaction to be rolled back.\r\n\r\nIt should be easy to fix this - we just need a try/except around each update.\r\n\r\nThanks to QuLogic from Freenode for reporting this issue to me.\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# Copyright \u00a9 2017 Caleigh Runge-Hottman\n#\n# This file is part of Bodhi.\n#\n# This program is free software; you can redistribute it and/or\n# modify it under the terms of the GNU General Public License\n# as published by the Free Software Foundation; either version 2\n# of the License, or (at your option) any later version.\n#\n# This program is distributed in the hope that it will be useful,\n# but WITHOUT ANY WARRANTY; without even the implied warranty of\n# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. 
See the\n# GNU General Public License for more details.\n#\n# You should have received a copy of the GNU General Public License\n# along with this program; if not, write to the Free Software\n# Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.\n\"\"\"This script is responsible for moving all updates with a batched request to a stable request.\"\"\"\n\nimport sys\n\nimport click\n\nfrom bodhi.server import buildsys, config, models, Session, initialize_db\n\n\n@click.command()\n@click.version_option(message='%(version)s')\ndef dequeue_stable():\n \"\"\"Convert all batched requests to stable requests.\"\"\"\n initialize_db(config.config)\n buildsys.setup_buildsystem(config.config)\n db = Session()\n\n try:\n batched = db.query(models.Update).filter_by(request=models.UpdateRequest.batched).all()\n for update in batched:\n update.set_request(db, models.UpdateRequest.stable, u'bodhi')\n db.commit()\n\n except Exception as e:\n print(str(e))\n db.rollback()\n Session.remove()\n sys.exit(1)\n", "path": "bodhi/server/scripts/dequeue_stable.py"}]}

| 1,336 | 319 |

gh_patches_debug_38003

|

rasdani/github-patches

|

git_diff

|

napari__napari-2436

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

mac thinks that napari is named "__main__.py" in bundled app

## 🐛 Bug

My mac seems to think that napari is named "__main__.py" in bundled app

## To Reproduce

Steps to reproduce the behavior:

1. Launch napari bundled app on mac

2. Click "napari" on menu bar

3. See the menu items "Hide __main__.py" and "Close __main__.py"

## Expected behavior

menu items include "Hide napari" and "Close napari", similar to Chrome...

<!-- A clear and concise description of what you expected to happen. -->

## Environment

napari: 0.4.6

Platform: macOS-10.15.7-x86_64-i386-64bit

System: MacOS 10.15.7

Python: 3.8.7 (default, Jan 2 2021, 04:16:43) [Clang 11.0.0 (clang-1100.0.33.17)]

Qt: 5.15.2

PySide2: 5.15.2

NumPy: 1.19.3

SciPy: 1.6.1

Dask: 2021.03.0

VisPy: 0.6.6

OpenGL:

- GL version: 2.1 ATI-3.10.19

- MAX_TEXTURE_SIZE: 16384

Screens:

- screen 1: resolution 1792x1120, scale 2.0

- screen 2: resolution 2560x1440, scale 1.0

Plugins:

- affinder: 0.1.0

- aicsimageio: 0.2.0

- aicsimageio_delayed: 0.2.0

- brainreg: 0.2.3

- brainreg_standard: 0.2.3

- cellfinder: 0.2.1

- console: 0.0.3

- napari-hdf5-labels-io: 0.2.dev1

- ndtiffs: 0.1.1

- svg: 0.1.4

</issue>

<code>

[start of bundle.py]

1 import configparser
2 import os
3 import re
4 import shutil
5 import subprocess
6 import sys
7 import time
8 from contextlib import contextmanager
9
10 import tomlkit
11
12 APP = 'napari'
13
14 # EXTRA_REQS will be added to the bundle, in addition to those specified in
15 # setup.cfg. To add additional packages to the bundle, or to override any of
16 # the packages listed here or in `setup.cfg, use the `--add` command line
17 # argument with a series of "pip install" style strings when running this file.
18 # For example, the following will ADD ome-zarr, and CHANGE the version of
19 # PySide2:
20 # python bundle.py --add 'PySide2==5.15.0' 'ome-zarr'
21
22 EXTRA_REQS = [
23     "pip",
24     "PySide2==5.15.2",
25     "scikit-image",
26     "zarr",
27     "pims",
28     "numpy==1.19.3",
29 ]
30
31 WINDOWS = os.name == 'nt'
32 MACOS = sys.platform == 'darwin'
33 LINUX = sys.platform.startswith("linux")
34 HERE = os.path.abspath(os.path.dirname(__file__))
35 PYPROJECT_TOML = os.path.join(HERE, 'pyproject.toml')
36 SETUP_CFG = os.path.join(HERE, 'setup.cfg')
37
38 if WINDOWS:
39     BUILD_DIR = os.path.join(HERE, 'windows')
40 elif LINUX:
41     BUILD_DIR = os.path.join(HERE, 'linux')
42 elif MACOS:
43     BUILD_DIR = os.path.join(HERE, 'macOS')
44     APP_DIR = os.path.join(BUILD_DIR, APP, f'{APP}.app')
45
46
47 with open(os.path.join(HERE, "napari", "_version.py")) as f:
48     match = re.search(r'version\s?=\s?\'([^\']+)', f.read())
49     if match:
50         VERSION = match.groups()[0].split('+')[0]
51
52
53 @contextmanager
54 def patched_toml():
55     parser = configparser.ConfigParser()
56     parser.read(SETUP_CFG)
57     requirements = parser.get("options", "install_requires").splitlines()
58     requirements = [r.split('#')[0].strip() for r in requirements if r]
59
60     with open(PYPROJECT_TOML) as f:
61         original_toml = f.read()
62
63     toml = tomlkit.parse(original_toml)
64
65     # parse command line arguments
66     if '--add' in sys.argv:
67         for item in sys.argv[sys.argv.index('--add') + 1 :]:
68             if item.startswith('-'):
69                 break
70             EXTRA_REQS.append(item)
71
72     for item in EXTRA_REQS:
73         _base = re.split('<|>|=', item, maxsplit=1)[0]
74         for r in requirements:
75             if r.startswith(_base):
76                 requirements.remove(r)
77                 break
78         if _base.lower().startswith('pyqt5'):
79             try:
80                 i = next(x for x in requirements if x.startswith('PySide'))
81                 requirements.remove(i)
82             except StopIteration:
83                 pass
84
85     requirements += EXTRA_REQS
86
87     toml['tool']['briefcase']['app'][APP]['requires'] = requirements
88     toml['tool']['briefcase']['version'] = VERSION
89
90     print("patching pyroject.toml to version: ", VERSION)
91     print(
92         "patching pyroject.toml requirements to : \n",
93         "\n".join(toml['tool']['briefcase']['app'][APP]['requires']),
94     )
95     with open(PYPROJECT_TOML, 'w') as f:
96         f.write(tomlkit.dumps(toml))
97
98     try:
99         yield
100     finally:
101         with open(PYPROJECT_TOML, 'w') as f:
102             f.write(original_toml)
103
104
105 def patch_dmgbuild():
106     if not MACOS:
107         return
108     from dmgbuild import core
109
110     # will not be required after dmgbuild > v1.3.3
111     # see https://github.com/al45tair/dmgbuild/pull/18
112     with open(core.__file__) as f:
113         src = f.read()
114     with open(core.__file__, 'w') as f:
115         f.write(
116             src.replace(
117                 "shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)",
118                 "shutil.rmtree(os.path.join(mount_point, '.Trashes'), True);time.sleep(30)",
119             )
120         )
121         print("patched dmgbuild.core")
122
123
124 def add_site_packages_to_path():
125     # on mac, make sure the site-packages folder exists even before the user
126     # has pip installed, so it is in sys.path on the first run
127     # (otherwise, newly installed plugins will not be detected until restart)
128     if MACOS:
129         pkgs_dir = os.path.join(
130             APP_DIR,
131             'Contents',
132             'Resources',
133             'Support',
134             'lib',
135             f'python{sys.version_info.major}.{sys.version_info.minor}',
136             'site-packages',
137         )
138         os.makedirs(pkgs_dir)
139         print("created site-packages at", pkgs_dir)
140
141     # on windows, briefcase uses a _pth file to determine the sys.path at
142     # runtime. https://docs.python.org/3/using/windows.html#finding-modules
143     # We update that file with the eventual location of pip site-packages
144     elif WINDOWS:
145         py = "".join(map(str, sys.version_info[:2]))
146         python_dir = os.path.join(BUILD_DIR, APP, 'src', 'python')
147         pth = os.path.join(python_dir, f'python{py}._pth')
148         with open(pth, "a") as f:
149             # Append 'hello' at the end of file
150             f.write(".\\\\Lib\\\\site-packages\n")
151         print("added bundled site-packages to", pth)
152
153         pkgs_dir = os.path.join(python_dir, 'Lib', 'site-packages')
154         os.makedirs(pkgs_dir)
155         print("created site-packages at", pkgs_dir)
156         with open(os.path.join(pkgs_dir, 'readme.txt'), 'w') as f:
157             f.write("this is where plugin packages will go")
158
159
160 def patch_wxs():
161     # must run after briefcase create
162     fname = os.path.join(BUILD_DIR, APP, f'{APP}.wxs')
163
164     if os.path.exists(fname):
165         with open(fname) as f:
166             source = f.read()
167         with open(fname, 'w') as f:
168             f.write(source.replace('pythonw.exe', 'python.exe'))
169             print("patched pythonw.exe -> python.exe")
170
171
172 def make_zip():
173     import glob
174     import zipfile
175
176     if WINDOWS:
177         ext, OS = '*.msi', 'Windows'
178     elif LINUX:
179         ext, OS = '*.AppImage', 'Linux'
180     elif MACOS:
181         ext, OS = '*.dmg', 'macOS'
182     artifact = glob.glob(os.path.join(BUILD_DIR, ext))[0]
183     dest = f'napari-{VERSION}-{OS}.zip'
184
185     with zipfile.ZipFile(dest, 'w', zipfile.ZIP_DEFLATED) as zf:
186         zf.write(artifact, arcname=os.path.basename(artifact))
187     print("created zipfile: ", dest)
188     return dest
189
190
191 def clean():
192     shutil.rmtree(BUILD_DIR, ignore_errors=True)
193
194
195 def bundle():
196     clean()
197
198     if MACOS:
199         patch_dmgbuild()
200
201     # smoke test, and build resources
202     subprocess.check_call([sys.executable, '-m', APP, '--info'])
203
204     with patched_toml():
205         # create
206         cmd = ['briefcase', 'create'] + (['--no-docker'] if LINUX else [])
207         subprocess.check_call(cmd)
208
209         time.sleep(0.5)
210
211         add_site_packages_to_path()
212
213         if WINDOWS:
214             patch_wxs()
215
216     # build
217     cmd = ['briefcase', 'build'] + (['--no-docker'] if LINUX else [])
218     subprocess.check_call(cmd)
219
220     # package
221     cmd = ['briefcase', 'package']
222     cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])
223     subprocess.check_call(cmd)
224
225     # compress
226     dest = make_zip()
227     clean()
228
229     return dest
230
231
232 if __name__ == "__main__":
233     if '--clean' in sys.argv:
234         clean()
235         sys.exit()
236     if '--version' in sys.argv:
237         print(VERSION)
238         sys.exit()
239     print('created', bundle())
240

[end of bundle.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/bundle.py b/bundle.py
--- a/bundle.py
+++ b/bundle.py
@@ -87,9 +87,9 @@
     toml['tool']['briefcase']['app'][APP]['requires'] = requirements
     toml['tool']['briefcase']['version'] = VERSION
 
-    print("patching pyroject.toml to version: ", VERSION)
+    print("patching pyproject.toml to version: ", VERSION)
     print(
-        "patching pyroject.toml requirements to : \n",
+        "patching pyproject.toml requirements to : \n",
         "\n".join(toml['tool']['briefcase']['app'][APP]['requires']),
     )
     with open(PYPROJECT_TOML, 'w') as f:
@@ -107,15 +107,14 @@
         return
     from dmgbuild import core
 
-    # will not be required after dmgbuild > v1.3.3
-    # see https://github.com/al45tair/dmgbuild/pull/18
     with open(core.__file__) as f:
         src = f.read()
     with open(core.__file__, 'w') as f:
         f.write(
             src.replace(
                 "shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)",
-                "shutil.rmtree(os.path.join(mount_point, '.Trashes'), True);time.sleep(30)",
+                "shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)"
+                ";time.sleep(30)",
             )
         )
         print("patched dmgbuild.core")
@@ -201,6 +200,7 @@
     # smoke test, and build resources
     subprocess.check_call([sys.executable, '-m', APP, '--info'])
 
+    # the briefcase calls need to happen while the pyproject toml is patched
     with patched_toml():
         # create
         cmd = ['briefcase', 'create'] + (['--no-docker'] if LINUX else [])
@@ -213,20 +213,20 @@
         if WINDOWS:
             patch_wxs()
 
-    # build
-    cmd = ['briefcase', 'build'] + (['--no-docker'] if LINUX else [])
-    subprocess.check_call(cmd)
+        # build
+        cmd = ['briefcase', 'build'] + (['--no-docker'] if LINUX else [])
+        subprocess.check_call(cmd)
 
-    # package
-    cmd = ['briefcase', 'package']
-    cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])
-    subprocess.check_call(cmd)
+        # package
+        cmd = ['briefcase', 'package']
+        cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])
+        subprocess.check_call(cmd)
 
-    # compress
-    dest = make_zip()
-    clean()
+        # compress
+        dest = make_zip()
+        clean()
 
-    return dest
+        return dest
 
 
 if __name__ == "__main__":

|

{"golden_diff": "diff --git a/bundle.py b/bundle.py\n--- a/bundle.py\n+++ b/bundle.py\n@@ -87,9 +87,9 @@\n toml['tool']['briefcase']['app'][APP]['requires'] = requirements\n toml['tool']['briefcase']['version'] = VERSION\n \n- print(\"patching pyroject.toml to version: \", VERSION)\n+ print(\"patching pyproject.toml to version: \", VERSION)\n print(\n- \"patching pyroject.toml requirements to : \\n\",\n+ \"patching pyproject.toml requirements to : \\n\",\n \"\\n\".join(toml['tool']['briefcase']['app'][APP]['requires']),\n )\n with open(PYPROJECT_TOML, 'w') as f:\n@@ -107,15 +107,14 @@\n return\n from dmgbuild import core\n \n- # will not be required after dmgbuild > v1.3.3\n- # see https://github.com/al45tair/dmgbuild/pull/18\n with open(core.__file__) as f:\n src = f.read()\n with open(core.__file__, 'w') as f:\n f.write(\n src.replace(\n \"shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)\",\n- \"shutil.rmtree(os.path.join(mount_point, '.Trashes'), True);time.sleep(30)\",\n+ \"shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)\"\n+ \";time.sleep(30)\",\n )\n )\n print(\"patched dmgbuild.core\")\n@@ -201,6 +200,7 @@\n # smoke test, and build resources\n subprocess.check_call([sys.executable, '-m', APP, '--info'])\n \n+ # the briefcase calls need to happen while the pyproject toml is patched\n with patched_toml():\n # create\n cmd = ['briefcase', 'create'] + (['--no-docker'] if LINUX else [])\n@@ -213,20 +213,20 @@\n if WINDOWS:\n patch_wxs()\n \n- # build\n- cmd = ['briefcase', 'build'] + (['--no-docker'] if LINUX else [])\n- subprocess.check_call(cmd)\n+ # build\n+ cmd = ['briefcase', 'build'] + (['--no-docker'] if LINUX else [])\n+ subprocess.check_call(cmd)\n \n- # package\n- cmd = ['briefcase', 'package']\n- cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])\n- subprocess.check_call(cmd)\n+ # package\n+ cmd = ['briefcase', 'package']\n+ cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])\n+ 
subprocess.check_call(cmd)\n \n- # compress\n- dest = make_zip()\n- clean()\n+ # compress\n+ dest = make_zip()\n+ clean()\n \n- return dest\n+ return dest\n \n \n if __name__ == \"__main__\":\n", "issue": "mac thinks that napari is named \"__main__.py\" in bundled app\n## \ud83d\udc1b Bug\r\n\r\nMy mac seems to think that napari is named \"__main__.py\" in bundled app\r\n\r\n## To Reproduce\r\n\r\nSteps to reproduce the behavior:\r\n\r\n1. Launch napari bundled app on mac\r\n2. Click \"napari\" on menu bar\r\n3. See the menu items \"Hide __main__.py\" and \"Close __main__.py\"\r\n\r\n\r\n\r\n## Expected behavior\r\n\r\nmenu items include \"Hide napari\" and \"Close napari\", similar to Chrome...\r\n\r\n<!-- A clear and concise description of what you expected to happen. -->\r\n\r\n\r\n## Environment\r\n\r\nnapari: 0.4.6\r\nPlatform: macOS-10.15.7-x86_64-i386-64bit\r\nSystem: MacOS 10.15.7\r\nPython: 3.8.7 (default, Jan 2 2021, 04:16:43) [Clang 11.0.0 (clang-1100.0.33.17)]\r\nQt: 5.15.2\r\nPySide2: 5.15.2\r\nNumPy: 1.19.3\r\nSciPy: 1.6.1\r\nDask: 2021.03.0\r\nVisPy: 0.6.6\r\n\r\nOpenGL:\r\n- GL version: 2.1 ATI-3.10.19\r\n- MAX_TEXTURE_SIZE: 16384\r\n\r\nScreens:\r\n- screen 1: resolution 1792x1120, scale 2.0\r\n- screen 2: resolution 2560x1440, scale 1.0\r\n\r\nPlugins:\r\n- affinder: 0.1.0\r\n- aicsimageio: 0.2.0\r\n- aicsimageio_delayed: 0.2.0\r\n- brainreg: 0.2.3\r\n- brainreg_standard: 0.2.3\r\n- cellfinder: 0.2.1\r\n- console: 0.0.3\r\n- napari-hdf5-labels-io: 0.2.dev1\r\n- ndtiffs: 0.1.1\r\n- svg: 0.1.4\r\n\n", "before_files": [{"content": "import configparser\nimport os\nimport re\nimport shutil\nimport subprocess\nimport sys\nimport time\nfrom contextlib import contextmanager\n\nimport tomlkit\n\nAPP = 'napari'\n\n# EXTRA_REQS will be added to the bundle, in addition to those specified in\n# setup.cfg. 
To add additional packages to the bundle, or to override any of\n# the packages listed here or in `setup.cfg, use the `--add` command line\n# argument with a series of \"pip install\" style strings when running this file.\n# For example, the following will ADD ome-zarr, and CHANGE the version of\n# PySide2:\n# python bundle.py --add 'PySide2==5.15.0' 'ome-zarr'\n\nEXTRA_REQS = [\n \"pip\",\n \"PySide2==5.15.2\",\n \"scikit-image\",\n \"zarr\",\n \"pims\",\n \"numpy==1.19.3\",\n]\n\nWINDOWS = os.name == 'nt'\nMACOS = sys.platform == 'darwin'\nLINUX = sys.platform.startswith(\"linux\")\nHERE = os.path.abspath(os.path.dirname(__file__))\nPYPROJECT_TOML = os.path.join(HERE, 'pyproject.toml')\nSETUP_CFG = os.path.join(HERE, 'setup.cfg')\n\nif WINDOWS:\n BUILD_DIR = os.path.join(HERE, 'windows')\nelif LINUX:\n BUILD_DIR = os.path.join(HERE, 'linux')\nelif MACOS:\n BUILD_DIR = os.path.join(HERE, 'macOS')\n APP_DIR = os.path.join(BUILD_DIR, APP, f'{APP}.app')\n\n\nwith open(os.path.join(HERE, \"napari\", \"_version.py\")) as f:\n match = re.search(r'version\\s?=\\s?\\'([^\\']+)', f.read())\n if match:\n VERSION = match.groups()[0].split('+')[0]\n\n\n@contextmanager\ndef patched_toml():\n parser = configparser.ConfigParser()\n parser.read(SETUP_CFG)\n requirements = parser.get(\"options\", \"install_requires\").splitlines()\n requirements = [r.split('#')[0].strip() for r in requirements if r]\n\n with open(PYPROJECT_TOML) as f:\n original_toml = f.read()\n\n toml = tomlkit.parse(original_toml)\n\n # parse command line arguments\n if '--add' in sys.argv:\n for item in sys.argv[sys.argv.index('--add') + 1 :]:\n if item.startswith('-'):\n break\n EXTRA_REQS.append(item)\n\n for item in EXTRA_REQS:\n _base = re.split('<|>|=', item, maxsplit=1)[0]\n for r in requirements:\n if r.startswith(_base):\n requirements.remove(r)\n break\n if _base.lower().startswith('pyqt5'):\n try:\n i = next(x for x in requirements if x.startswith('PySide'))\n requirements.remove(i)\n except 
StopIteration:\n pass\n\n requirements += EXTRA_REQS\n\n toml['tool']['briefcase']['app'][APP]['requires'] = requirements\n toml['tool']['briefcase']['version'] = VERSION\n\n print(\"patching pyroject.toml to version: \", VERSION)\n print(\n \"patching pyroject.toml requirements to : \\n\",\n \"\\n\".join(toml['tool']['briefcase']['app'][APP]['requires']),\n )\n with open(PYPROJECT_TOML, 'w') as f:\n f.write(tomlkit.dumps(toml))\n\n try:\n yield\n finally:\n with open(PYPROJECT_TOML, 'w') as f:\n f.write(original_toml)\n\n\ndef patch_dmgbuild():\n if not MACOS:\n return\n from dmgbuild import core\n\n # will not be required after dmgbuild > v1.3.3\n # see https://github.com/al45tair/dmgbuild/pull/18\n with open(core.__file__) as f:\n src = f.read()\n with open(core.__file__, 'w') as f:\n f.write(\n src.replace(\n \"shutil.rmtree(os.path.join(mount_point, '.Trashes'), True)\",\n \"shutil.rmtree(os.path.join(mount_point, '.Trashes'), True);time.sleep(30)\",\n )\n )\n print(\"patched dmgbuild.core\")\n\n\ndef add_site_packages_to_path():\n # on mac, make sure the site-packages folder exists even before the user\n # has pip installed, so it is in sys.path on the first run\n # (otherwise, newly installed plugins will not be detected until restart)\n if MACOS:\n pkgs_dir = os.path.join(\n APP_DIR,\n 'Contents',\n 'Resources',\n 'Support',\n 'lib',\n f'python{sys.version_info.major}.{sys.version_info.minor}',\n 'site-packages',\n )\n os.makedirs(pkgs_dir)\n print(\"created site-packages at\", pkgs_dir)\n\n # on windows, briefcase uses a _pth file to determine the sys.path at\n # runtime. 
https://docs.python.org/3/using/windows.html#finding-modules\n # We update that file with the eventual location of pip site-packages\n elif WINDOWS:\n py = \"\".join(map(str, sys.version_info[:2]))\n python_dir = os.path.join(BUILD_DIR, APP, 'src', 'python')\n pth = os.path.join(python_dir, f'python{py}._pth')\n with open(pth, \"a\") as f:\n # Append 'hello' at the end of file\n f.write(\".\\\\\\\\Lib\\\\\\\\site-packages\\n\")\n print(\"added bundled site-packages to\", pth)\n\n pkgs_dir = os.path.join(python_dir, 'Lib', 'site-packages')\n os.makedirs(pkgs_dir)\n print(\"created site-packages at\", pkgs_dir)\n with open(os.path.join(pkgs_dir, 'readme.txt'), 'w') as f:\n f.write(\"this is where plugin packages will go\")\n\n\ndef patch_wxs():\n # must run after briefcase create\n fname = os.path.join(BUILD_DIR, APP, f'{APP}.wxs')\n\n if os.path.exists(fname):\n with open(fname) as f:\n source = f.read()\n with open(fname, 'w') as f:\n f.write(source.replace('pythonw.exe', 'python.exe'))\n print(\"patched pythonw.exe -> python.exe\")\n\n\ndef make_zip():\n import glob\n import zipfile\n\n if WINDOWS:\n ext, OS = '*.msi', 'Windows'\n elif LINUX:\n ext, OS = '*.AppImage', 'Linux'\n elif MACOS:\n ext, OS = '*.dmg', 'macOS'\n artifact = glob.glob(os.path.join(BUILD_DIR, ext))[0]\n dest = f'napari-{VERSION}-{OS}.zip'\n\n with zipfile.ZipFile(dest, 'w', zipfile.ZIP_DEFLATED) as zf:\n zf.write(artifact, arcname=os.path.basename(artifact))\n print(\"created zipfile: \", dest)\n return dest\n\n\ndef clean():\n shutil.rmtree(BUILD_DIR, ignore_errors=True)\n\n\ndef bundle():\n clean()\n\n if MACOS:\n patch_dmgbuild()\n\n # smoke test, and build resources\n subprocess.check_call([sys.executable, '-m', APP, '--info'])\n\n with patched_toml():\n # create\n cmd = ['briefcase', 'create'] + (['--no-docker'] if LINUX else [])\n subprocess.check_call(cmd)\n\n time.sleep(0.5)\n\n add_site_packages_to_path()\n\n if WINDOWS:\n patch_wxs()\n\n # build\n cmd = ['briefcase', 'build'] + 
(['--no-docker'] if LINUX else [])\n subprocess.check_call(cmd)\n\n # package\n cmd = ['briefcase', 'package']\n cmd += ['--no-sign'] if MACOS else (['--no-docker'] if LINUX else [])\n subprocess.check_call(cmd)\n\n # compress\n dest = make_zip()\n clean()\n\n return dest\n\n\nif __name__ == \"__main__\":\n if '--clean' in sys.argv:\n clean()\n sys.exit()\n if '--version' in sys.argv:\n print(VERSION)\n sys.exit()\n print('created', bundle())\n", "path": "bundle.py"}]}

| 3,625 | 697 |

gh_patches_debug_34895

|

rasdani/github-patches

|

git_diff

|

rasterio__rasterio-509

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add test coverage for Cython modules

Right now, we are not getting coverage of Cython modules from our test suite. This would be good to do, especially if it is [pretty straightforward](http://blog.behnel.de/posts/coverage-analysis-for-cython-modules.html).

I'll look into this for #496

</issue>

<code>

[start of setup.py]

1 #!/usr/bin/env python

2

3 # Two environmental variables influence this script.

4 #

5 # GDAL_CONFIG: the path to a gdal-config program that points to GDAL headers,

6 # libraries, and data files.

7 #

8 # PACKAGE_DATA: if defined, GDAL and PROJ4 data files will be copied into the

9 # source or binary distribution. This is essential when creating self-contained

10 # binary wheels.

11

12 import logging

13 import os

14 import pprint

15 import shutil

16 import subprocess

17 import sys

18

19 from setuptools import setup

20 from setuptools.extension import Extension

21

22 logging.basicConfig()

23 log = logging.getLogger()

24

25 # python -W all setup.py ...

26 if 'all' in sys.warnoptions:

27 log.level = logging.DEBUG

28

29 def check_output(cmd):

30 # since subprocess.check_output doesn't exist in 2.6

31 # we wrap it here.

32 try:

33 out = subprocess.check_output(cmd)

34 return out.decode('utf')

35 except AttributeError:

36 # For some reasone check_output doesn't exist

37 # So fall back on Popen

38 p = subprocess.Popen(cmd, stdout=subprocess.PIPE)

39 out, err = p.communicate()

40 return out

41

42 def copy_data_tree(datadir, destdir):

43 try:

44 shutil.rmtree(destdir)

45 except OSError:

46 pass

47 shutil.copytree(datadir, destdir)

48

49 # Parse the version from the rasterio module.

50 with open('rasterio/__init__.py') as f:

51 for line in f:

52 if line.find("__version__") >= 0:

53 version = line.split("=")[1].strip()

54 version = version.strip('"')

55 version = version.strip("'")

56 continue

57

58 with open('VERSION.txt', 'w') as f:

59 f.write(version)

60

61 # Use Cython if available.

62 try:

63 from Cython.Build import cythonize

64 except ImportError:

65 cythonize = None

66

67 # By default we'll try to get options via gdal-config. On systems without,

68 # options will need to be set in setup.cfg or on the setup command line.

69 include_dirs = []

70 library_dirs = []

71 libraries = []

72 extra_link_args = []

73 gdal_output = [None]*3

74

75 try:

76 import numpy

77 include_dirs.append(numpy.get_include())

78 except ImportError:

79 log.critical("Numpy and its headers are required to run setup(). Exiting.")

80 sys.exit(1)

81

82 try:

83 gdal_config = os.environ.get('GDAL_CONFIG', 'gdal-config')

84 for i, flag in enumerate(("--cflags", "--libs", "--datadir")):

85 gdal_output[i] = check_output([gdal_config, flag]).strip()

86

87 for item in gdal_output[0].split():

88 if item.startswith("-I"):

89 include_dirs.extend(item[2:].split(":"))

90 for item in gdal_output[1].split():

91 if item.startswith("-L"):

92 library_dirs.extend(item[2:].split(":"))

93 elif item.startswith("-l"):

94 libraries.append(item[2:])

95 else:

96 # e.g. -framework GDAL

97 extra_link_args.append(item)

98

99 except Exception as e:

100 if os.name == "nt":

101 log.info(("Building on Windows requires extra options to setup.py to locate needed GDAL files.\n"

102 "More information is available in the README."))

103 else:

104 log.warning("Failed to get options via gdal-config: %s", str(e))

105

106

107 # Conditionally copy the GDAL data. To be used in conjunction with

108 # the bdist_wheel command to make self-contained binary wheels.

109 if os.environ.get('PACKAGE_DATA'):

110 destdir = 'rasterio/gdal_data'

111 if gdal_output[2]:

112 log.info("Copying gdal data from %s" % gdal_output[2])

113 copy_data_tree(gdal_output[2], destdir)

114 else:

115 # check to see if GDAL_DATA is defined

116 gdal_data = os.environ.get('GDAL_DATA', None)

117 if gdal_data:

118 log.info("Copying gdal_data from %s" % gdal_data)

119 copy_data_tree(gdal_data, destdir)

120

121 # Conditionally copy PROJ.4 data.

122 projdatadir = os.environ.get('PROJ_LIB', '/usr/local/share/proj')

123 if os.path.exists(projdatadir):

124 log.info("Copying proj_data from %s" % projdatadir)

125 copy_data_tree(projdatadir, 'rasterio/proj_data')

126

127 ext_options = dict(

128 include_dirs=include_dirs,

129 library_dirs=library_dirs,

130 libraries=libraries,

131 extra_link_args=extra_link_args)

132

133 if not os.name == "nt":

134 # These options fail on Windows if using Visual Studio

135 ext_options['extra_compile_args'] = ['-Wno-unused-parameter',

136 '-Wno-unused-function']

137

138 log.debug('ext_options:\n%s', pprint.pformat(ext_options))

139

140 # When building from a repo, Cython is required.

141 if os.path.exists("MANIFEST.in") and "clean" not in sys.argv:

142 log.info("MANIFEST.in found, presume a repo, cythonizing...")

143 if not cythonize:

144 log.critical(

145 "Cython.Build.cythonize not found. "

146 "Cython is required to build from a repo.")

147 sys.exit(1)

148 ext_modules = cythonize([

149 Extension(

150 'rasterio._base', ['rasterio/_base.pyx'], **ext_options),

151 Extension(

152 'rasterio._io', ['rasterio/_io.pyx'], **ext_options),

153 Extension(

154 'rasterio._copy', ['rasterio/_copy.pyx'], **ext_options),

155 Extension(

156 'rasterio._features', ['rasterio/_features.pyx'], **ext_options),

157 Extension(

158 'rasterio._drivers', ['rasterio/_drivers.pyx'], **ext_options),

159 Extension(

160 'rasterio._warp', ['rasterio/_warp.pyx'], **ext_options),

161 Extension(

162 'rasterio._fill', ['rasterio/_fill.pyx', 'rasterio/rasterfill.cpp'], **ext_options),

163 Extension(

164 'rasterio._err', ['rasterio/_err.pyx'], **ext_options),

165 Extension(

166 'rasterio._example', ['rasterio/_example.pyx'], **ext_options),

167 ], quiet=True)

168

169 # If there's no manifest template, as in an sdist, we just specify .c files.

170 else:

171 ext_modules = [

172 Extension(

173 'rasterio._base', ['rasterio/_base.c'], **ext_options),

174 Extension(

175 'rasterio._io', ['rasterio/_io.c'], **ext_options),

176 Extension(

177 'rasterio._copy', ['rasterio/_copy.c'], **ext_options),

178 Extension(

179 'rasterio._features', ['rasterio/_features.c'], **ext_options),

180 Extension(

181 'rasterio._drivers', ['rasterio/_drivers.c'], **ext_options),

182 Extension(

183 'rasterio._warp', ['rasterio/_warp.cpp'], **ext_options),

184 Extension(

185 'rasterio._fill', ['rasterio/_fill.cpp', 'rasterio/rasterfill.cpp'], **ext_options),

186 Extension(

187 'rasterio._err', ['rasterio/_err.c'], **ext_options),

188 Extension(

189 'rasterio._example', ['rasterio/_example.c'], **ext_options),

190 ]

191

192 with open('README.rst') as f:

193 readme = f.read()

194

195 # Runtime requirements.

196 inst_reqs = ['affine', 'cligj', 'numpy', 'snuggs', 'click-plugins']

197

198 if sys.version_info < (3, 4):

199 inst_reqs.append('enum34')

200

201 setup_args = dict(

202 name='rasterio',

203 version=version,

204 description="Fast and direct raster I/O for use with Numpy and SciPy",

205 long_description=readme,

206 classifiers=[

207 'Development Status :: 4 - Beta',

208 'Intended Audience :: Developers',

209 'Intended Audience :: Information Technology',

210 'Intended Audience :: Science/Research',

211 'License :: OSI Approved :: BSD License',

212 'Programming Language :: C',

213 'Programming Language :: Python :: 2.6',

214 'Programming Language :: Python :: 2.7',

215 'Programming Language :: Python :: 3.3',

216 'Programming Language :: Python :: 3.4',

217 'Topic :: Multimedia :: Graphics :: Graphics Conversion',

218 'Topic :: Scientific/Engineering :: GIS'],

219 keywords='raster gdal',

220 author='Sean Gillies',

221 author_email='[email protected]',

222 url='https://github.com/mapbox/rasterio',

223 license='BSD',

224 package_dir={'': '.'},

225 packages=['rasterio', 'rasterio.rio', 'rasterio.tools'],

226 entry_points='''

227 [console_scripts]

228 rio=rasterio.rio.main:main_group

229

230 [rasterio.rio_commands]

231 bounds=rasterio.rio.features:bounds

232 calc=rasterio.rio.calc:calc

233 clip=rasterio.rio.convert:clip

234 convert=rasterio.rio.convert:convert

235 edit-info=rasterio.rio.info:edit

236 env=rasterio.rio.info:env

237 info=rasterio.rio.info:info

238 insp=rasterio.rio.info:insp

239 mask=rasterio.rio.features:mask

240 merge=rasterio.rio.merge:merge

241 overview=rasterio.rio.overview:overview

242 rasterize=rasterio.rio.features:rasterize

243 sample=rasterio.rio.sample:sample

244 shapes=rasterio.rio.features:shapes

245 stack=rasterio.rio.bands:stack

246 warp=rasterio.rio.warp:warp

247 transform=rasterio.rio.info:transform

248 ''',

249 include_package_data=True,

250 ext_modules=ext_modules,

251 zip_safe=False,

252 install_requires=inst_reqs,

253 extras_require={

254 'ipython': ['ipython>=2.0']})

255

256 if os.environ.get('PACKAGE_DATA'):

257 setup_args['package_data'] = {'rasterio': ['gdal_data/*', 'proj_data/*']}

258

259 setup(**setup_args)

260

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -19,12 +19,10 @@

from setuptools import setup

from setuptools.extension import Extension

+

logging.basicConfig()

log = logging.getLogger()

-# python -W all setup.py ...

-if 'all' in sys.warnoptions:

- log.level = logging.DEBUG

def check_output(cmd):

# since subprocess.check_output doesn't exist in 2.6

@@ -39,6 +37,7 @@

out, err = p.communicate()

return out

+

def copy_data_tree(datadir, destdir):

try:

shutil.rmtree(destdir)

@@ -46,6 +45,11 @@

pass

shutil.copytree(datadir, destdir)

+

+# python -W all setup.py ...

+if 'all' in sys.warnoptions:

+ log.level = logging.DEBUG

+

# Parse the version from the rasterio module.

with open('rasterio/__init__.py') as f:

for line in f:

@@ -135,6 +139,13 @@

ext_options['extra_compile_args'] = ['-Wno-unused-parameter',

'-Wno-unused-function']

+cythonize_options = {}

+if os.environ.get('CYTHON_COVERAGE'):

+ cythonize_options['compiler_directives'] = {'linetrace': True}

+ cythonize_options['annotate'] = True

+ ext_options['define_macros'] = [('CYTHON_TRACE', '1'),

+ ('CYTHON_TRACE_NOGIL', '1')]

+

log.debug('ext_options:\n%s', pprint.pformat(ext_options))

# When building from a repo, Cython is required.

@@ -164,7 +175,7 @@

'rasterio._err', ['rasterio/_err.pyx'], **ext_options),

Extension(

'rasterio._example', ['rasterio/_example.pyx'], **ext_options),

- ], quiet=True)

+ ], quiet=True, **cythonize_options)

# If there's no manifest template, as in an sdist, we just specify .c files.

else:

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -19,12 +19,10 @@\n from setuptools import setup\n from setuptools.extension import Extension\n \n+\n logging.basicConfig()\n log = logging.getLogger()\n \n-# python -W all setup.py ...\n-if 'all' in sys.warnoptions:\n- log.level = logging.DEBUG\n \n def check_output(cmd):\n # since subprocess.check_output doesn't exist in 2.6\n@@ -39,6 +37,7 @@\n out, err = p.communicate()\n return out\n \n+\n def copy_data_tree(datadir, destdir):\n try:\n shutil.rmtree(destdir)\n@@ -46,6 +45,11 @@\n pass\n shutil.copytree(datadir, destdir)\n \n+\n+# python -W all setup.py ...\n+if 'all' in sys.warnoptions:\n+ log.level = logging.DEBUG\n+\n # Parse the version from the rasterio module.\n with open('rasterio/__init__.py') as f:\n for line in f:\n@@ -135,6 +139,13 @@\n ext_options['extra_compile_args'] = ['-Wno-unused-parameter',\n '-Wno-unused-function']\n \n+cythonize_options = {}\n+if os.environ.get('CYTHON_COVERAGE'):\n+ cythonize_options['compiler_directives'] = {'linetrace': True}\n+ cythonize_options['annotate'] = True\n+ ext_options['define_macros'] = [('CYTHON_TRACE', '1'),\n+ ('CYTHON_TRACE_NOGIL', '1')]\n+\n log.debug('ext_options:\\n%s', pprint.pformat(ext_options))\n \n # When building from a repo, Cython is required.\n@@ -164,7 +175,7 @@\n 'rasterio._err', ['rasterio/_err.pyx'], **ext_options),\n Extension(\n 'rasterio._example', ['rasterio/_example.pyx'], **ext_options),\n- ], quiet=True)\n+ ], quiet=True, **cythonize_options)\n \n # If there's no manifest template, as in an sdist, we just specify .c files.\n else:\n", "issue": "Add test coverage for Cython modules\nRight now, we are not getting coverage of Cython modules from our test suite. 
This would be good to do, especially if it is [pretty straightforward](http://blog.behnel.de/posts/coverage-analysis-for-cython-modules.html).\n\nI'll look into this for #496\n\n", "before_files": [{"content": "#!/usr/bin/env python\n\n# Two environmental variables influence this script.\n#\n# GDAL_CONFIG: the path to a gdal-config program that points to GDAL headers,\n# libraries, and data files.\n#\n# PACKAGE_DATA: if defined, GDAL and PROJ4 data files will be copied into the\n# source or binary distribution. This is essential when creating self-contained\n# binary wheels.\n\nimport logging\nimport os\nimport pprint\nimport shutil\nimport subprocess\nimport sys\n\nfrom setuptools import setup\nfrom setuptools.extension import Extension\n\nlogging.basicConfig()\nlog = logging.getLogger()\n\n# python -W all setup.py ...\nif 'all' in sys.warnoptions:\n log.level = logging.DEBUG\n\ndef check_output(cmd):\n # since subprocess.check_output doesn't exist in 2.6\n # we wrap it here.\n try:\n out = subprocess.check_output(cmd)\n return out.decode('utf')\n except AttributeError:\n # For some reasone check_output doesn't exist\n # So fall back on Popen\n p = subprocess.Popen(cmd, stdout=subprocess.PIPE)\n out, err = p.communicate()\n return out\n\ndef copy_data_tree(datadir, destdir):\n try:\n shutil.rmtree(destdir)\n except OSError:\n pass\n shutil.copytree(datadir, destdir)\n\n# Parse the version from the rasterio module.\nwith open('rasterio/__init__.py') as f:\n for line in f:\n if line.find(\"__version__\") >= 0:\n version = line.split(\"=\")[1].strip()\n version = version.strip('\"')\n version = version.strip(\"'\")\n continue\n\nwith open('VERSION.txt', 'w') as f:\n f.write(version)\n\n# Use Cython if available.\ntry:\n from Cython.Build import cythonize\nexcept ImportError:\n cythonize = None\n\n# By default we'll try to get options via gdal-config. 
On systems without,\n# options will need to be set in setup.cfg or on the setup command line.\ninclude_dirs = []\nlibrary_dirs = []\nlibraries = []\nextra_link_args = []\ngdal_output = [None]*3\n\ntry:\n import numpy\n include_dirs.append(numpy.get_include())\nexcept ImportError:\n log.critical(\"Numpy and its headers are required to run setup(). Exiting.\")\n sys.exit(1)\n\ntry:\n gdal_config = os.environ.get('GDAL_CONFIG', 'gdal-config')\n for i, flag in enumerate((\"--cflags\", \"--libs\", \"--datadir\")):\n gdal_output[i] = check_output([gdal_config, flag]).strip()\n\n for item in gdal_output[0].split():\n if item.startswith(\"-I\"):\n include_dirs.extend(item[2:].split(\":\"))\n for item in gdal_output[1].split():\n if item.startswith(\"-L\"):\n library_dirs.extend(item[2:].split(\":\"))\n elif item.startswith(\"-l\"):\n libraries.append(item[2:])\n else:\n # e.g. -framework GDAL\n extra_link_args.append(item)\n\nexcept Exception as e:\n if os.name == \"nt\":\n log.info((\"Building on Windows requires extra options to setup.py to locate needed GDAL files.\\n\"\n \"More information is available in the README.\"))\n else:\n log.warning(\"Failed to get options via gdal-config: %s\", str(e))\n\n\n# Conditionally copy the GDAL data. 
To be used in conjunction with\n# the bdist_wheel command to make self-contained binary wheels.\nif os.environ.get('PACKAGE_DATA'):\n destdir = 'rasterio/gdal_data'\n if gdal_output[2]:\n log.info(\"Copying gdal data from %s\" % gdal_output[2])\n copy_data_tree(gdal_output[2], destdir)\n else:\n # check to see if GDAL_DATA is defined\n gdal_data = os.environ.get('GDAL_DATA', None)\n if gdal_data:\n log.info(\"Copying gdal_data from %s\" % gdal_data)\n copy_data_tree(gdal_data, destdir)\n\n # Conditionally copy PROJ.4 data.\n projdatadir = os.environ.get('PROJ_LIB', '/usr/local/share/proj')\n if os.path.exists(projdatadir):\n log.info(\"Copying proj_data from %s\" % projdatadir)\n copy_data_tree(projdatadir, 'rasterio/proj_data')\n\next_options = dict(\n include_dirs=include_dirs,\n library_dirs=library_dirs,\n libraries=libraries,\n extra_link_args=extra_link_args)\n\nif not os.name == \"nt\":\n # These options fail on Windows if using Visual Studio\n ext_options['extra_compile_args'] = ['-Wno-unused-parameter',\n '-Wno-unused-function']\n\nlog.debug('ext_options:\\n%s', pprint.pformat(ext_options))\n\n# When building from a repo, Cython is required.\nif os.path.exists(\"MANIFEST.in\") and \"clean\" not in sys.argv:\n log.info(\"MANIFEST.in found, presume a repo, cythonizing...\")\n if not cythonize:\n log.critical(\n \"Cython.Build.cythonize not found. 
\"\n \"Cython is required to build from a repo.\")\n sys.exit(1)\n ext_modules = cythonize([\n Extension(\n 'rasterio._base', ['rasterio/_base.pyx'], **ext_options),\n Extension(\n 'rasterio._io', ['rasterio/_io.pyx'], **ext_options),\n Extension(\n 'rasterio._copy', ['rasterio/_copy.pyx'], **ext_options),\n Extension(\n 'rasterio._features', ['rasterio/_features.pyx'], **ext_options),\n Extension(\n 'rasterio._drivers', ['rasterio/_drivers.pyx'], **ext_options),\n Extension(\n 'rasterio._warp', ['rasterio/_warp.pyx'], **ext_options),\n Extension(\n 'rasterio._fill', ['rasterio/_fill.pyx', 'rasterio/rasterfill.cpp'], **ext_options),\n Extension(\n 'rasterio._err', ['rasterio/_err.pyx'], **ext_options),\n Extension(\n 'rasterio._example', ['rasterio/_example.pyx'], **ext_options),\n ], quiet=True)\n\n# If there's no manifest template, as in an sdist, we just specify .c files.\nelse:\n ext_modules = [\n Extension(\n 'rasterio._base', ['rasterio/_base.c'], **ext_options),\n Extension(\n 'rasterio._io', ['rasterio/_io.c'], **ext_options),\n Extension(\n 'rasterio._copy', ['rasterio/_copy.c'], **ext_options),\n Extension(\n 'rasterio._features', ['rasterio/_features.c'], **ext_options),\n Extension(\n 'rasterio._drivers', ['rasterio/_drivers.c'], **ext_options),\n Extension(\n 'rasterio._warp', ['rasterio/_warp.cpp'], **ext_options),\n Extension(\n 'rasterio._fill', ['rasterio/_fill.cpp', 'rasterio/rasterfill.cpp'], **ext_options),\n Extension(\n 'rasterio._err', ['rasterio/_err.c'], **ext_options),\n Extension(\n 'rasterio._example', ['rasterio/_example.c'], **ext_options),\n ]\n\nwith open('README.rst') as f:\n readme = f.read()\n\n# Runtime requirements.\ninst_reqs = ['affine', 'cligj', 'numpy', 'snuggs', 'click-plugins']\n\nif sys.version_info < (3, 4):\n inst_reqs.append('enum34')\n\nsetup_args = dict(\n name='rasterio',\n version=version,\n description=\"Fast and direct raster I/O for use with Numpy and SciPy\",\n long_description=readme,\n classifiers=[\n 
'Development Status :: 4 - Beta',\n 'Intended Audience :: Developers',\n 'Intended Audience :: Information Technology',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: BSD License',\n 'Programming Language :: C',\n 'Programming Language :: Python :: 2.6',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3.3',\n 'Programming Language :: Python :: 3.4',\n 'Topic :: Multimedia :: Graphics :: Graphics Conversion',\n 'Topic :: Scientific/Engineering :: GIS'],\n keywords='raster gdal',\n author='Sean Gillies',\n author_email='[email protected]',\n url='https://github.com/mapbox/rasterio',\n license='BSD',\n package_dir={'': '.'},\n packages=['rasterio', 'rasterio.rio', 'rasterio.tools'],\n entry_points='''\n [console_scripts]\n rio=rasterio.rio.main:main_group\n\n [rasterio.rio_commands]\n bounds=rasterio.rio.features:bounds\n calc=rasterio.rio.calc:calc\n clip=rasterio.rio.convert:clip\n convert=rasterio.rio.convert:convert\n edit-info=rasterio.rio.info:edit\n env=rasterio.rio.info:env\n info=rasterio.rio.info:info\n insp=rasterio.rio.info:insp\n mask=rasterio.rio.features:mask\n merge=rasterio.rio.merge:merge\n overview=rasterio.rio.overview:overview\n rasterize=rasterio.rio.features:rasterize\n sample=rasterio.rio.sample:sample\n shapes=rasterio.rio.features:shapes\n stack=rasterio.rio.bands:stack\n warp=rasterio.rio.warp:warp\n transform=rasterio.rio.info:transform\n ''',\n include_package_data=True,\n ext_modules=ext_modules,\n zip_safe=False,\n install_requires=inst_reqs,\n extras_require={\n 'ipython': ['ipython>=2.0']})\n\nif os.environ.get('PACKAGE_DATA'):\n setup_args['package_data'] = {'rasterio': ['gdal_data/*', 'proj_data/*']}\n\nsetup(**setup_args)\n", "path": "setup.py"}]}

| 3,522 | 481 |

gh_patches_debug_33793

|

rasdani/github-patches

|

git_diff

|

Project-MONAI__MONAI-7664

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

SABlock parameters when using more heads

**Describe the bug**

The number of parameters in the SABlock should be increased when increasing the number of heads (_num_heads_). However, this is not the case and limits comparability to famous scaling like ViT-S or ViT-B.

**To Reproduce**

Steps to reproduce the behavior:

```

from monai.networks.nets import ViT

def count_trainable_parameters(model: nn.Module) -> int:

return sum(p.numel() for p in model.parameters() if p.requires_grad)

# Create ViT models with different numbers of heads

vit_b = ViT(1, 224, 16, num_heads=12)

vit_s = ViT(1, 224, 16, num_heads=6)

print("ViT with 12 heads parameters:", count_trainable_parameters(vit_b))

print("ViT with 6 heads parameters:", count_trainable_parameters(vit_s))

>>> ViT with 12 heads parameters: 90282240

>>> ViT with 6 heads parameters: 90282240

```

**Expected behavior**

The number of trainable parameters should be increased with increasing number of heads.

**Environment**

```

================================

Printing MONAI config...

================================

MONAI version: 0.8.1rc4+1384.g139182ea

Numpy version: 1.26.4

Pytorch version: 2.2.2+cpu

MONAI flags: HAS_EXT = False, USE_COMPILED = False, USE_META_DICT = False

MONAI rev id: 139182ea52725aa3c9214dc18082b9837e32f9a2

MONAI __file__: C:\Users\<username>\MONAI\monai\__init__.py

Optional dependencies:

Pytorch Ignite version: 0.4.11

ITK version: 5.3.0

Nibabel version: 5.2.1

scikit-image version: 0.23.1

scipy version: 1.13.0

Pillow version: 10.3.0

Tensorboard version: 2.16.2

gdown version: 4.7.3

TorchVision version: 0.17.2+cpu

tqdm version: 4.66.2

lmdb version: 1.4.1

psutil version: 5.9.8

pandas version: 2.2.2

einops version: 0.7.0

transformers version: 4.39.3

mlflow version: 2.12.1

pynrrd version: 1.0.0

clearml version: 1.15.1

For details about installing the optional dependencies, please visit:

https://docs.monai.io/en/latest/installation.html#installing-the-recommended-dependencies

================================

Printing system config...

================================

System: Windows

Win32 version: ('10', '10.0.22621', 'SP0', 'Multiprocessor Free')

Win32 edition: Professional

Platform: Windows-10-10.0.22621-SP0

Processor: Intel64 Family 6 Model 142 Stepping 12, GenuineIntel

Machine: AMD64

Python version: 3.11.8

Process name: python.exe

Command: ['python', '-c', 'import monai; monai.config.print_debug_info()']

Open files: [popenfile(path='C:\\Windows\\System32\\de-DE\\KernelBase.dll.mui', fd=-1), popenfile(path='C:\\Windows\\System32\\de-DE\\kernel32.dll.mui', fd=-1), popenfile(path='C:\\Windows\\System32\\de-DE\\tzres.dll.mui', fd=-1)]

Num physical CPUs: 4

Num logical CPUs: 8

Num usable CPUs: 8

CPU usage (%): [3.9, 0.2, 3.7, 0.9, 3.9, 3.9, 2.8, 32.2]

CPU freq. (MHz): 1803

Load avg. in last 1, 5, 15 mins (%): [0.0, 0.0, 0.0]

Disk usage (%): 83.1

Avg. sensor temp. (Celsius): UNKNOWN for given OS

Total physical memory (GB): 15.8

Available memory (GB): 5.5

Used memory (GB): 10.2

================================

Printing GPU config...

================================

Num GPUs: 0

Has CUDA: False

cuDNN enabled: False

NVIDIA_TF32_OVERRIDE: None

TORCH_ALLOW_TF32_CUBLAS_OVERRIDE: None

```

</issue>

<code>

[start of monai/networks/blocks/selfattention.py]

1 # Copyright (c) MONAI Consortium

2 # Licensed under the Apache License, Version 2.0 (the "License");

3 # you may not use this file except in compliance with the License.

4 # You may obtain a copy of the License at

5 # http://www.apache.org/licenses/LICENSE-2.0

6 # Unless required by applicable law or agreed to in writing, software

7 # distributed under the License is distributed on an "AS IS" BASIS,

8 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

9 # See the License for the specific language governing permissions and

10 # limitations under the License.

11

12 from __future__ import annotations

13

14 import torch

15 import torch.nn as nn

16

17 from monai.utils import optional_import

18

19 Rearrange, _ = optional_import("einops.layers.torch", name="Rearrange")

20

21

22 class SABlock(nn.Module):

23 """

24 A self-attention block, based on: "Dosovitskiy et al.,

25 An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale <https://arxiv.org/abs/2010.11929>"

26 """

27

28 def __init__(

29 self,

30 hidden_size: int,

31 num_heads: int,

32 dropout_rate: float = 0.0,

33 qkv_bias: bool = False,

34 save_attn: bool = False,

35 ) -> None:

36 """

37 Args:

38 hidden_size (int): dimension of hidden layer.

39 num_heads (int): number of attention heads.

40 dropout_rate (float, optional): fraction of the input units to drop. Defaults to 0.0.

41 qkv_bias (bool, optional): bias term for the qkv linear layer. Defaults to False.

42 save_attn (bool, optional): to make accessible the attention matrix. Defaults to False.

43

44 """

45

46 super().__init__()

47

48 if not (0 <= dropout_rate <= 1):

49 raise ValueError("dropout_rate should be between 0 and 1.")

50

51 if hidden_size % num_heads != 0:

52 raise ValueError("hidden size should be divisible by num_heads.")

53

54 self.num_heads = num_heads

55 self.out_proj = nn.Linear(hidden_size, hidden_size)

56 self.qkv = nn.Linear(hidden_size, hidden_size * 3, bias=qkv_bias)

57 self.input_rearrange = Rearrange("b h (qkv l d) -> qkv b l h d", qkv=3, l=num_heads)

58 self.out_rearrange = Rearrange("b h l d -> b l (h d)")

59 self.drop_output = nn.Dropout(dropout_rate)

60 self.drop_weights = nn.Dropout(dropout_rate)

61 self.head_dim = hidden_size // num_heads

62 self.scale = self.head_dim**-0.5

63 self.save_attn = save_attn

64 self.att_mat = torch.Tensor()

65

66 def forward(self, x):

67 output = self.input_rearrange(self.qkv(x))

68 q, k, v = output[0], output[1], output[2]

69 att_mat = (torch.einsum("blxd,blyd->blxy", q, k) * self.scale).softmax(dim=-1)

70 if self.save_attn:

71 # no gradients and new tensor;

72 # https://pytorch.org/docs/stable/generated/torch.Tensor.detach.html

73 self.att_mat = att_mat.detach()

74

75 att_mat = self.drop_weights(att_mat)

76 x = torch.einsum("bhxy,bhyd->bhxd", att_mat, v)

77 x = self.out_rearrange(x)

78 x = self.out_proj(x)

79 x = self.drop_output(x)

80 return x

81

[end of monai/networks/blocks/selfattention.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/monai/networks/blocks/selfattention.py b/monai/networks/blocks/selfattention.py

--- a/monai/networks/blocks/selfattention.py

+++ b/monai/networks/blocks/selfattention.py

@@ -32,6 +32,7 @@

dropout_rate: float = 0.0,

qkv_bias: bool = False,

save_attn: bool = False,

+ dim_head: int | None = None,

) -> None:

"""

Args:

@@ -40,6 +41,7 @@

dropout_rate (float, optional): fraction of the input units to drop. Defaults to 0.0.

qkv_bias (bool, optional): bias term for the qkv linear layer. Defaults to False.

save_attn (bool, optional): to make accessible the attention matrix. Defaults to False.

+ dim_head (int, optional): dimension of each head. Defaults to hidden_size // num_heads.

"""

@@ -52,14 +54,16 @@

raise ValueError("hidden size should be divisible by num_heads.")

self.num_heads = num_heads

- self.out_proj = nn.Linear(hidden_size, hidden_size)

- self.qkv = nn.Linear(hidden_size, hidden_size * 3, bias=qkv_bias)

+ self.dim_head = hidden_size // num_heads if dim_head is None else dim_head

+ self.inner_dim = self.dim_head * num_heads

+

+ self.out_proj = nn.Linear(self.inner_dim, hidden_size)

+ self.qkv = nn.Linear(hidden_size, self.inner_dim * 3, bias=qkv_bias)

self.input_rearrange = Rearrange("b h (qkv l d) -> qkv b l h d", qkv=3, l=num_heads)

self.out_rearrange = Rearrange("b h l d -> b l (h d)")

self.drop_output = nn.Dropout(dropout_rate)

self.drop_weights = nn.Dropout(dropout_rate)

- self.head_dim = hidden_size // num_heads

- self.scale = self.head_dim**-0.5

+ self.scale = self.dim_head**-0.5

self.save_attn = save_attn

self.att_mat = torch.Tensor()

|

{"golden_diff": "diff --git a/monai/networks/blocks/selfattention.py b/monai/networks/blocks/selfattention.py\n--- a/monai/networks/blocks/selfattention.py\n+++ b/monai/networks/blocks/selfattention.py\n@@ -32,6 +32,7 @@\n dropout_rate: float = 0.0,\n qkv_bias: bool = False,\n save_attn: bool = False,\n+ dim_head: int | None = None,\n ) -> None:\n \"\"\"\n Args:\n@@ -40,6 +41,7 @@\n dropout_rate (float, optional): fraction of the input units to drop. Defaults to 0.0.\n qkv_bias (bool, optional): bias term for the qkv linear layer. Defaults to False.\n save_attn (bool, optional): to make accessible the attention matrix. Defaults to False.\n+ dim_head (int, optional): dimension of each head. Defaults to hidden_size // num_heads.\n \n \"\"\"\n \n@@ -52,14 +54,16 @@\n raise ValueError(\"hidden size should be divisible by num_heads.\")\n \n self.num_heads = num_heads\n- self.out_proj = nn.Linear(hidden_size, hidden_size)\n- self.qkv = nn.Linear(hidden_size, hidden_size * 3, bias=qkv_bias)\n+ self.dim_head = hidden_size // num_heads if dim_head is None else dim_head\n+ self.inner_dim = self.dim_head * num_heads\n+\n+ self.out_proj = nn.Linear(self.inner_dim, hidden_size)\n+ self.qkv = nn.Linear(hidden_size, self.inner_dim * 3, bias=qkv_bias)\n self.input_rearrange = Rearrange(\"b h (qkv l d) -> qkv b l h d\", qkv=3, l=num_heads)\n self.out_rearrange = Rearrange(\"b h l d -> b l (h d)\")\n self.drop_output = nn.Dropout(dropout_rate)\n self.drop_weights = nn.Dropout(dropout_rate)\n- self.head_dim = hidden_size // num_heads\n- self.scale = self.head_dim**-0.5\n+ self.scale = self.dim_head**-0.5\n self.save_attn = save_attn\n self.att_mat = torch.Tensor()\n", "issue": "SABlock parameters when using more heads\n**Describe the bug**\r\nThe number of parameters in the SABlock should be increased when increasing the number of heads (_num_heads_). 
However, this is not the case and limits comparability to famous scaling like ViT-S or ViT-B.\r\n\r\n**To Reproduce**\r\nSteps to reproduce the behavior:\r\n```\r\nfrom monai.networks.nets import ViT\r\n\r\ndef count_trainable_parameters(model: nn.Module) -> int:\r\n return sum(p.numel() for p in model.parameters() if p.requires_grad)\r\n\r\n# Create ViT models with different numbers of heads\r\nvit_b = ViT(1, 224, 16, num_heads=12)\r\nvit_s = ViT(1, 224, 16, num_heads=6)\r\n\r\nprint(\"ViT with 12 heads parameters:\", count_trainable_parameters(vit_b))\r\nprint(\"ViT with 6 heads parameters:\", count_trainable_parameters(vit_s))\r\n\r\n>>> ViT with 12 heads parameters: 90282240\r\n>>> ViT with 6 heads parameters: 90282240\r\n```\r\n\r\n**Expected behavior**\r\nThe number of trainable parameters should be increased with increasing number of heads.\r\n\r\n**Environment**\r\n```\r\n================================\r\nPrinting MONAI config...\r\n================================\r\nMONAI version: 0.8.1rc4+1384.g139182ea\r\nNumpy version: 1.26.4\r\nPytorch version: 2.2.2+cpu\r\nMONAI flags: HAS_EXT = False, USE_COMPILED = False, USE_META_DICT = False\r\nMONAI rev id: 139182ea52725aa3c9214dc18082b9837e32f9a2\r\nMONAI __file__: C:\\Users\\<username>\\MONAI\\monai\\__init__.py\r\n\r\nOptional dependencies:\r\nPytorch Ignite version: 0.4.11\r\nITK version: 5.3.0\r\nNibabel version: 5.2.1\r\nscikit-image version: 0.23.1\r\nscipy version: 1.13.0\r\nPillow version: 10.3.0\r\nTensorboard version: 2.16.2\r\ngdown version: 4.7.3\r\nTorchVision version: 0.17.2+cpu\r\ntqdm version: 4.66.2\r\nlmdb version: 1.4.1\r\npsutil version: 5.9.8\r\npandas version: 2.2.2\r\neinops version: 0.7.0\r\ntransformers version: 4.39.3\r\nmlflow version: 2.12.1\r\npynrrd version: 1.0.0\r\nclearml version: 1.15.1\r\n\r\nFor details about installing the optional dependencies, please visit:\r\n 
https://docs.monai.io/en/latest/installation.html#installing-the-recommended-dependencies\r\n\r\n\r\n================================\r\nPrinting system config...\r\n================================\r\nSystem: Windows\r\nWin32 version: ('10', '10.0.22621', 'SP0', 'Multiprocessor Free')\r\nWin32 edition: Professional\r\nPlatform: Windows-10-10.0.22621-SP0\r\nProcessor: Intel64 Family 6 Model 142 Stepping 12, GenuineIntel\r\nMachine: AMD64\r\nPython version: 3.11.8\r\nProcess name: python.exe\r\nCommand: ['python', '-c', 'import monai; monai.config.print_debug_info()']\r\nOpen files: [popenfile(path='C:\\\\Windows\\\\System32\\\\de-DE\\\\KernelBase.dll.mui', fd=-1), popenfile(path='C:\\\\Windows\\\\System32\\\\de-DE\\\\kernel32.dll.mui', fd=-1), popenfile(path='C:\\\\Windows\\\\System32\\\\de-DE\\\\tzres.dll.mui', fd=-1)]\r\nNum physical CPUs: 4\r\nNum logical CPUs: 8\r\nNum usable CPUs: 8\r\nCPU usage (%): [3.9, 0.2, 3.7, 0.9, 3.9, 3.9, 2.8, 32.2]\r\nCPU freq. (MHz): 1803\r\nLoad avg. in last 1, 5, 15 mins (%): [0.0, 0.0, 0.0]\r\nDisk usage (%): 83.1\r\nAvg. sensor temp. 
(Celsius): UNKNOWN for given OS\r\nTotal physical memory (GB): 15.8\r\nAvailable memory (GB): 5.5\r\nUsed memory (GB): 10.2\r\n\r\n================================\r\nPrinting GPU config...\r\n================================\r\nNum GPUs: 0\r\nHas CUDA: False\r\ncuDNN enabled: False\r\nNVIDIA_TF32_OVERRIDE: None\r\nTORCH_ALLOW_TF32_CUBLAS_OVERRIDE: None\r\n```\n", "before_files": [{"content": "# Copyright (c) MONAI Consortium\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n# http://www.apache.org/licenses/LICENSE-2.0\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom __future__ import annotations\n\nimport torch\nimport torch.nn as nn\n\nfrom monai.utils import optional_import\n\nRearrange, _ = optional_import(\"einops.layers.torch\", name=\"Rearrange\")\n\n\nclass SABlock(nn.Module):\n \"\"\"\n A self-attention block, based on: \"Dosovitskiy et al.,\n An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale <https://arxiv.org/abs/2010.11929>\"\n \"\"\"\n\n def __init__(\n self,\n hidden_size: int,\n num_heads: int,\n dropout_rate: float = 0.0,\n qkv_bias: bool = False,\n save_attn: bool = False,\n ) -> None:\n \"\"\"\n Args:\n hidden_size (int): dimension of hidden layer.\n num_heads (int): number of attention heads.\n dropout_rate (float, optional): fraction of the input units to drop. Defaults to 0.0.\n qkv_bias (bool, optional): bias term for the qkv linear layer. Defaults to False.\n save_attn (bool, optional): to make accessible the attention matrix. 
Defaults to False.\n\n \"\"\"\n\n super().__init__()\n\n if not (0 <= dropout_rate <= 1):\n raise ValueError(\"dropout_rate should be between 0 and 1.\")\n\n if hidden_size % num_heads != 0:\n raise ValueError(\"hidden size should be divisible by num_heads.\")\n\n self.num_heads = num_heads\n self.out_proj = nn.Linear(hidden_size, hidden_size)\n self.qkv = nn.Linear(hidden_size, hidden_size * 3, bias=qkv_bias)\n self.input_rearrange = Rearrange(\"b h (qkv l d) -> qkv b l h d\", qkv=3, l=num_heads)\n self.out_rearrange = Rearrange(\"b h l d -> b l (h d)\")\n self.drop_output = nn.Dropout(dropout_rate)\n self.drop_weights = nn.Dropout(dropout_rate)\n self.head_dim = hidden_size // num_heads\n self.scale = self.head_dim**-0.5\n self.save_attn = save_attn\n self.att_mat = torch.Tensor()\n\n def forward(self, x):\n output = self.input_rearrange(self.qkv(x))\n q, k, v = output[0], output[1], output[2]\n att_mat = (torch.einsum(\"blxd,blyd->blxy\", q, k) * self.scale).softmax(dim=-1)\n if self.save_attn:\n # no gradients and new tensor;\n # https://pytorch.org/docs/stable/generated/torch.Tensor.detach.html\n self.att_mat = att_mat.detach()\n\n att_mat = self.drop_weights(att_mat)\n x = torch.einsum(\"bhxy,bhyd->bhxd\", att_mat, v)\n x = self.out_rearrange(x)\n x = self.out_proj(x)\n x = self.drop_output(x)\n return x\n", "path": "monai/networks/blocks/selfattention.py"}]}

| 2,603 | 497 |
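The golden diff in the record above decouples the per-head width (`dim_head`) from `hidden_size // num_heads`. The following is a minimal arithmetic sketch of why the parameter count now grows with the number of heads when `dim_head` is held fixed. It assumes that only the two linear layers touched by the diff matter (`qkv` and `out_proj`) and that `out_proj` keeps PyTorch's default bias. The helper name `sablock_linear_params` is hypothetical and is not part of MONAI.

```python
def sablock_linear_params(hidden_size, num_heads, dim_head=None, qkv_bias=False):
    """Count parameters in the qkv and out_proj layers of the patched SABlock.

    Hypothetical helper: it mirrors only the layer shapes shown in the golden diff.
    """
    if hidden_size % num_heads != 0:
        raise ValueError("hidden size should be divisible by num_heads.")
    # Leaving dim_head unset reproduces the pre-patch behaviour.
    dim_head = hidden_size // num_heads if dim_head is None else dim_head
    inner_dim = dim_head * num_heads
    # nn.Linear(hidden_size, inner_dim * 3, bias=qkv_bias)
    qkv = hidden_size * inner_dim * 3 + (inner_dim * 3 if qkv_bias else 0)
    # nn.Linear(inner_dim, hidden_size) includes a bias by default
    out_proj = inner_dim * hidden_size + hidden_size
    return qkv + out_proj


# Default head width: the count does not depend on num_heads.
print(sablock_linear_params(768, 12), sablock_linear_params(768, 6))  # → 2360064 2360064
# Fixed head width of 64: halving the heads halves inner_dim.
print(sablock_linear_params(768, 12, dim_head=64), sablock_linear_params(768, 6, dim_head=64))  # → 2360064 1180416
```

With the default, `inner_dim` always equals `hidden_size`, which matches the identical ViT counts reported in the issue. Passing an explicit `dim_head` is what lets ViT-S/ViT-B-style scaling change the model size.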

gh_patches_debug_4008

|

rasdani/github-patches

|

git_diff

|

zestedesavoir__zds-site-2705

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Latest topics on the home page: the date is not optimized for mobile

On mobile we generally do not have much room, so we should avoid displaying the date written out in full, to save space. See screenshot (landscape).

</issue>

<code>

[start of zds/featured/forms.py]

1 # coding: utf-8
2 from crispy_forms.bootstrap import StrictButton
3 from crispy_forms.helper import FormHelper
4 from crispy_forms.layout import Layout, Field, ButtonHolder
5 from django import forms
6 from django.core.urlresolvers import reverse
7 from django.utils.translation import ugettext_lazy as _
8 
9 from zds.featured.models import FeaturedResource, FeaturedMessage
10 
11 
12 class FeaturedResourceForm(forms.ModelForm):
13     class Meta:
14         model = FeaturedResource
15 
16         fields = ['title', 'type', 'authors', 'image_url', 'url']
17 
18     title = forms.CharField(
19         label=_(u'Titre'),
20         max_length=FeaturedResource._meta.get_field('title').max_length,
21         widget=forms.TextInput(
22             attrs={
23                 'required': 'required',
24             }
25         )
26     )
27 
28     type = forms.CharField(
29         label=_(u'Type'),
30         max_length=FeaturedResource._meta.get_field('type').max_length,
31         widget=forms.TextInput(
32             attrs={
33                 'placeholder': _(u'ex: Un projet, un article, un tutoriel...'),
34                 'required': 'required',
35             }
36         )
37     )
38 
39     authors = forms.CharField(
40         label=_('Auteurs'),
41         widget=forms.TextInput(
42             attrs={
43                 'placeholder': _(u'Les auteurs doivent être séparés par une virgule.'),
44                 'required': 'required',
45                 'data-autocomplete': '{ "type": "multiple" }'
46             }
47         )
48     )
49 
50     image_url = forms.CharField(
51         label='Image URL',
52         max_length=FeaturedResource._meta.get_field('image_url').max_length,
53         widget=forms.TextInput(