| Column | Type | Statistics |
|---|---|---|
| problem_id | string | length 18 to 22 |
| source | string (categorical) | 1 distinct value |
| task_type | string (categorical) | 1 distinct value |
| in_source_id | string | length 13 to 58 |
| prompt | string | length 1.71k to 18.9k |
| golden_diff | string | length 145 to 5.13k |
| verification_info | string | length 465 to 23.6k |
| num_tokens_prompt | int64 | 556 to 4.1k |
| num_tokens_diff | int64 | 47 to 1.02k |

gh_patches_debug_29361 | rasdani/github-patches | git_diff | gratipay__gratipay.com-1735 |

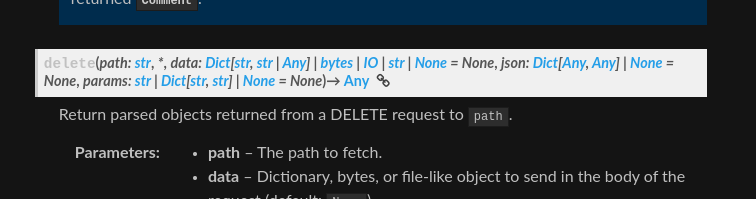

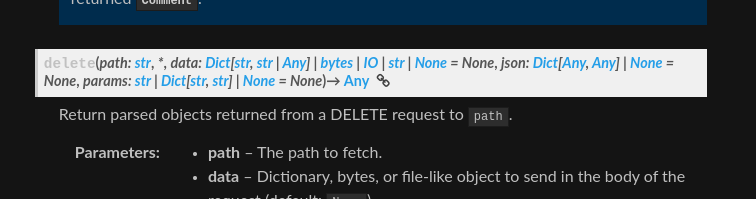

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

per-user charts are scrunched

After #1711 the per-user charts are scrunched:

cc: @dominic @seanlinsley

fake_data paydays should include transfers

We have paydays with `make data`, but we don't have any transfers. We need transfers to see the user charts in dev (cf. #1717).

Charts are too wide in mobile browsers

<img width="33%" src="https://f.cloud.github.com/assets/688886/1649646/413a1054-5a18-11e3-8acb-2d1d5da19d72.png"> <img width="33%" src="https://f.cloud.github.com/assets/688886/1649647/413b9956-5a18-11e3-87c7-582ef4525553.png"> <img width="33%" src="https://f.cloud.github.com/assets/688886/1649655/efdc9cbc-5a18-11e3-81a3-9fab62fd0be7.png">

</issue>

<code>

[start of gittip/utils/fake_data.py]

1 from faker import Factory

2 from gittip import wireup, MAX_TIP, MIN_TIP

3 from gittip.models.participant import Participant

4

5 import decimal

6 import random

7 import string

8 import datetime

9

10

11 faker = Factory.create()

12

13 platforms = ['github', 'twitter', 'bitbucket']

14

15

16 def _fake_thing(db, tablename, **kw):

17 column_names = []

18 column_value_placeholders = []

19 column_values = []

20

21 for k,v in kw.items():

22 column_names.append(k)

23 column_value_placeholders.append("%s")

24 column_values.append(v)

25

26 column_names = ", ".join(column_names)

27 column_value_placeholders = ", ".join(column_value_placeholders)

28

29 db.run( "INSERT INTO {} ({}) VALUES ({})"

30 .format(tablename, column_names, column_value_placeholders)

31 , column_values

32 )

33 return kw

34

35

36 def fake_text_id(size=6, chars=string.ascii_lowercase + string.digits):

37 """Create a random text id.

38 """

39 return ''.join(random.choice(chars) for x in range(size))

40

41

42 def fake_balance(max_amount=100):

43 """Return a random amount between 0 and max_amount.

44 """

45 return random.random() * max_amount

46

47

48 def fake_int_id(nmax=2 ** 31 -1):

49 """Create a random int id.

50 """

51 return random.randint(0, nmax)

52

53

54 def fake_participant(db, number="singular", is_admin=False, anonymous=False):

55 """Create a fake User.

56 """

57 username = faker.firstName() + fake_text_id(3)

58 d = _fake_thing( db

59 , "participants"

60 , id=fake_int_id()

61 , username=username

62 , username_lower=username.lower()

63 , statement=faker.sentence()

64 , ctime=faker.dateTimeThisYear()

65 , is_admin=is_admin

66 , balance=fake_balance()

67 , anonymous=anonymous

68 , goal=fake_balance()

69 , balanced_account_uri=faker.uri()

70 , last_ach_result=''

71 , is_suspicious=False

72 , last_bill_result='' # Needed to not be suspicious

73 , claimed_time=faker.dateTimeThisYear()

74 , number=number

75 )

76 #Call participant constructor to perform other DB initialization

77 return Participant.from_username(username)

78

79

80

81 def fake_tip_amount():

82 amount = ((decimal.Decimal(random.random()) * (MAX_TIP - MIN_TIP))

83 + MIN_TIP)

84

85 decimal_amount = decimal.Decimal(amount).quantize(decimal.Decimal('.01'))

86

87 return decimal_amount

88

89

90 def fake_tip(db, tipper, tippee):

91 """Create a fake tip.

92 """

93 return _fake_thing( db

94 , "tips"

95 , id=fake_int_id()

96 , ctime=faker.dateTimeThisYear()

97 , mtime=faker.dateTimeThisMonth()

98 , tipper=tipper.username

99 , tippee=tippee.username

100 , amount=fake_tip_amount()

101 )

102

103

104 def fake_elsewhere(db, participant, platform=None):

105 """Create a fake elsewhere.

106 """

107 if platform is None:

108 platform = random.choice(platforms)

109

110 info_templates = {

111 "github": {

112 "name": participant.username,

113 "html_url": "https://github.com/" + participant.username,

114 "type": "User",

115 "login": participant.username

116 },

117 "twitter": {

118 "name": participant.username,

119 "html_url": "https://twitter.com/" + participant.username,

120 "screen_name": participant.username

121 },

122 "bitbucket": {

123 "display_name": participant.username,

124 "username": participant.username,

125 "is_team": "False",

126 "html_url": "https://bitbucket.org/" + participant.username,

127 }

128 }

129

130 _fake_thing( db

131 , "elsewhere"

132 , id=fake_int_id()

133 , platform=platform

134 , user_id=fake_text_id()

135 , is_locked=False

136 , participant=participant.username

137 , user_info=info_templates[platform]

138 )

139

140 def fake_transfer(db, tipper, tippee):

141 return _fake_thing( db

142 , "transfers"

143 , id=fake_int_id()

144 , timestamp=faker.dateTimeThisYear()

145 , tipper=tipper.username

146 , tippee=tippee.username

147 , amount=fake_tip_amount()

148 )

149

150

151 def populate_db(db, num_participants=300, num_tips=200, num_teams=5, num_transfers=300):

152 """Populate DB with fake data.

153 """

154 #Make the participants

155 participants = []

156 for i in xrange(num_participants):

157 participants.append(fake_participant(db))

158

159 #Make the "Elsewhere's"

160 for p in participants:

161 #All participants get between 1 and 3 elsewheres

162 num_elsewheres = random.randint(1, 3)

163 for platform_name in platforms[:num_elsewheres]:

164 fake_elsewhere(db, p, platform_name)

165

166 #Make teams

167 for i in xrange(num_teams):

168 t = fake_participant(db, number="plural")

169 #Add 1 to 3 members to the team

170 members = random.sample(participants, random.randint(1, 3))

171 for p in members:

172 t.add_member(p)

173

174 #Make the tips

175 tips = []

176 for i in xrange(num_tips):

177 tipper, tippee = random.sample(participants, 2)

178 tips.append(fake_tip(db, tipper, tippee))

179

180

181 #Make the transfers

182 transfers = []

183 for i in xrange(num_tips):

184 tipper, tippee = random.sample(participants, 2)

185 transfers.append(fake_transfer(db, tipper, tippee))

186

187

188 #Make some paydays

189 #First determine the boundaries - min and max date

190 min_date = min(min(x['ctime'] for x in tips), \

191 min(x['timestamp'] for x in transfers))

192 max_date = max(max(x['ctime'] for x in tips), \

193 max(x['timestamp'] for x in transfers))

194 #iterate through min_date, max_date one week at a time

195 date = min_date

196 while date < max_date:

197 end_date = date + datetime.timedelta(days=7)

198 week_tips = filter(lambda x: date <= x['ctime'] <= end_date, tips)

199 week_transfers = filter(lambda x: date <= x['timestamp'] <= end_date, transfers)

200 week_participants = filter(lambda x: x.ctime.replace(tzinfo=None) <= end_date, participants)

201 actives=set()

202 tippers=set()

203 for xfers in week_tips, week_transfers:

204 actives.update(x['tipper'] for x in xfers)

205 actives.update(x['tippee'] for x in xfers)

206 tippers.update(x['tipper'] for x in xfers)

207 payday = {

208 'id': fake_int_id(),

209 'ts_start': date,

210 'ts_end': end_date,

211 'ntips': len(week_tips),

212 'ntransfers': len(week_transfers),

213 'nparticipants': len(week_participants),

214 'ntippers': len(tippers),

215 'nactive': len(actives),

216 'transfer_volume': sum(x['amount'] for x in week_transfers)

217 }

218 #Make ach_volume and charge_volume between 0 and 10% of transfer volume

219 def rand_part():

220 return decimal.Decimal(random.random()* 0.1)

221 payday['ach_volume'] = -1 * payday['transfer_volume'] * rand_part()

222 payday['charge_volume'] = payday['transfer_volume'] * rand_part()

223 _fake_thing(db, "paydays", **payday)

224 date = end_date

225

226

227

228 def main():

229 populate_db(wireup.db())

230

231 if __name__ == '__main__':

232 main()

233

[end of gittip/utils/fake_data.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/gittip/utils/fake_data.py b/gittip/utils/fake_data.py

--- a/gittip/utils/fake_data.py

+++ b/gittip/utils/fake_data.py

@@ -51,6 +51,12 @@

return random.randint(0, nmax)

+def fake_sentence(start=1, stop=100):

+ """Create a sentence of random length.

+ """

+ return faker.sentence(random.randrange(start,stop))

+

+

def fake_participant(db, number="singular", is_admin=False, anonymous=False):

"""Create a fake User.

"""

@@ -60,7 +66,7 @@

, id=fake_int_id()

, username=username

, username_lower=username.lower()

- , statement=faker.sentence()

+ , statement=fake_sentence()

, ctime=faker.dateTimeThisYear()

, is_admin=is_admin

, balance=fake_balance()

@@ -148,7 +154,7 @@

)

-def populate_db(db, num_participants=300, num_tips=200, num_teams=5, num_transfers=300):

+def populate_db(db, num_participants=100, num_tips=200, num_teams=5, num_transfers=5000):

"""Populate DB with fake data.

"""

#Make the participants

@@ -180,7 +186,7 @@

#Make the transfers

transfers = []

- for i in xrange(num_tips):

+ for i in xrange(num_transfers):

tipper, tippee = random.sample(participants, 2)

transfers.append(fake_transfer(db, tipper, tippee))

|

{"golden_diff": "diff --git a/gittip/utils/fake_data.py b/gittip/utils/fake_data.py\n--- a/gittip/utils/fake_data.py\n+++ b/gittip/utils/fake_data.py\n@@ -51,6 +51,12 @@\n return random.randint(0, nmax)\n \n \n+def fake_sentence(start=1, stop=100):\n+ \"\"\"Create a sentence of random length.\n+ \"\"\"\n+ return faker.sentence(random.randrange(start,stop))\n+\n+\n def fake_participant(db, number=\"singular\", is_admin=False, anonymous=False):\n \"\"\"Create a fake User.\n \"\"\"\n@@ -60,7 +66,7 @@\n , id=fake_int_id()\n , username=username\n , username_lower=username.lower()\n- , statement=faker.sentence()\n+ , statement=fake_sentence()\n , ctime=faker.dateTimeThisYear()\n , is_admin=is_admin\n , balance=fake_balance()\n@@ -148,7 +154,7 @@\n )\n \n \n-def populate_db(db, num_participants=300, num_tips=200, num_teams=5, num_transfers=300):\n+def populate_db(db, num_participants=100, num_tips=200, num_teams=5, num_transfers=5000):\n \"\"\"Populate DB with fake data.\n \"\"\"\n #Make the participants\n@@ -180,7 +186,7 @@\n \n #Make the transfers\n transfers = []\n- for i in xrange(num_tips):\n+ for i in xrange(num_transfers):\n tipper, tippee = random.sample(participants, 2)\n transfers.append(fake_transfer(db, tipper, tippee))\n", "issue": "per-user charts are scrunched\nAfter #1711 the per-user charts are scrunched:\n\n\n\ncc: @dominic @seanlinsley \n\nfake_data paydays should include transfers\nWe have paydays with `make data`, but we don't have any transfers. We need transfers to see the user charts in dev (cf. 
#1717).\n\nCharts are too wide in mobile browsers\n<img width=\"33%\" src=\"https://f.cloud.github.com/assets/688886/1649646/413a1054-5a18-11e3-8acb-2d1d5da19d72.png\"> <img width=\"33%\" src=\"https://f.cloud.github.com/assets/688886/1649647/413b9956-5a18-11e3-87c7-582ef4525553.png\"> <img width=\"33%\" src=\"https://f.cloud.github.com/assets/688886/1649655/efdc9cbc-5a18-11e3-81a3-9fab62fd0be7.png\">\n\n", "before_files": [{"content": "from faker import Factory\nfrom gittip import wireup, MAX_TIP, MIN_TIP\nfrom gittip.models.participant import Participant\n\nimport decimal\nimport random\nimport string\nimport datetime\n\n\nfaker = Factory.create()\n\nplatforms = ['github', 'twitter', 'bitbucket']\n\n\ndef _fake_thing(db, tablename, **kw):\n column_names = []\n column_value_placeholders = []\n column_values = []\n\n for k,v in kw.items():\n column_names.append(k)\n column_value_placeholders.append(\"%s\")\n column_values.append(v)\n\n column_names = \", \".join(column_names)\n column_value_placeholders = \", \".join(column_value_placeholders)\n\n db.run( \"INSERT INTO {} ({}) VALUES ({})\"\n .format(tablename, column_names, column_value_placeholders)\n , column_values\n )\n return kw\n\n\ndef fake_text_id(size=6, chars=string.ascii_lowercase + string.digits):\n \"\"\"Create a random text id.\n \"\"\"\n return ''.join(random.choice(chars) for x in range(size))\n\n\ndef fake_balance(max_amount=100):\n \"\"\"Return a random amount between 0 and max_amount.\n \"\"\"\n return random.random() * max_amount\n\n\ndef fake_int_id(nmax=2 ** 31 -1):\n \"\"\"Create a random int id.\n \"\"\"\n return random.randint(0, nmax)\n\n\ndef fake_participant(db, number=\"singular\", is_admin=False, anonymous=False):\n \"\"\"Create a fake User.\n \"\"\"\n username = faker.firstName() + fake_text_id(3)\n d = _fake_thing( db\n , \"participants\"\n , id=fake_int_id()\n , username=username\n , username_lower=username.lower()\n , statement=faker.sentence()\n , ctime=faker.dateTimeThisYear()\n 
, is_admin=is_admin\n , balance=fake_balance()\n , anonymous=anonymous\n , goal=fake_balance()\n , balanced_account_uri=faker.uri()\n , last_ach_result=''\n , is_suspicious=False\n , last_bill_result='' # Needed to not be suspicious\n , claimed_time=faker.dateTimeThisYear()\n , number=number\n )\n #Call participant constructor to perform other DB initialization\n return Participant.from_username(username)\n\n\n\ndef fake_tip_amount():\n amount = ((decimal.Decimal(random.random()) * (MAX_TIP - MIN_TIP))\n + MIN_TIP)\n\n decimal_amount = decimal.Decimal(amount).quantize(decimal.Decimal('.01'))\n\n return decimal_amount\n\n\ndef fake_tip(db, tipper, tippee):\n \"\"\"Create a fake tip.\n \"\"\"\n return _fake_thing( db\n , \"tips\"\n , id=fake_int_id()\n , ctime=faker.dateTimeThisYear()\n , mtime=faker.dateTimeThisMonth()\n , tipper=tipper.username\n , tippee=tippee.username\n , amount=fake_tip_amount()\n )\n\n\ndef fake_elsewhere(db, participant, platform=None):\n \"\"\"Create a fake elsewhere.\n \"\"\"\n if platform is None:\n platform = random.choice(platforms)\n\n info_templates = {\n \"github\": {\n \"name\": participant.username,\n \"html_url\": \"https://github.com/\" + participant.username,\n \"type\": \"User\",\n \"login\": participant.username\n },\n \"twitter\": {\n \"name\": participant.username,\n \"html_url\": \"https://twitter.com/\" + participant.username,\n \"screen_name\": participant.username\n },\n \"bitbucket\": {\n \"display_name\": participant.username,\n \"username\": participant.username,\n \"is_team\": \"False\",\n \"html_url\": \"https://bitbucket.org/\" + participant.username,\n }\n }\n\n _fake_thing( db\n , \"elsewhere\"\n , id=fake_int_id()\n , platform=platform\n , user_id=fake_text_id()\n , is_locked=False\n , participant=participant.username\n , user_info=info_templates[platform]\n )\n\ndef fake_transfer(db, tipper, tippee):\n return _fake_thing( db\n , \"transfers\"\n , id=fake_int_id()\n , timestamp=faker.dateTimeThisYear()\n , 
tipper=tipper.username\n , tippee=tippee.username\n , amount=fake_tip_amount()\n )\n\n\ndef populate_db(db, num_participants=300, num_tips=200, num_teams=5, num_transfers=300):\n \"\"\"Populate DB with fake data.\n \"\"\"\n #Make the participants\n participants = []\n for i in xrange(num_participants):\n participants.append(fake_participant(db))\n\n #Make the \"Elsewhere's\"\n for p in participants:\n #All participants get between 1 and 3 elsewheres\n num_elsewheres = random.randint(1, 3)\n for platform_name in platforms[:num_elsewheres]:\n fake_elsewhere(db, p, platform_name)\n\n #Make teams\n for i in xrange(num_teams):\n t = fake_participant(db, number=\"plural\")\n #Add 1 to 3 members to the team\n members = random.sample(participants, random.randint(1, 3))\n for p in members:\n t.add_member(p)\n\n #Make the tips\n tips = []\n for i in xrange(num_tips):\n tipper, tippee = random.sample(participants, 2)\n tips.append(fake_tip(db, tipper, tippee))\n\n\n #Make the transfers\n transfers = []\n for i in xrange(num_tips):\n tipper, tippee = random.sample(participants, 2)\n transfers.append(fake_transfer(db, tipper, tippee))\n\n\n #Make some paydays\n #First determine the boundaries - min and max date\n min_date = min(min(x['ctime'] for x in tips), \\\n min(x['timestamp'] for x in transfers))\n max_date = max(max(x['ctime'] for x in tips), \\\n max(x['timestamp'] for x in transfers))\n #iterate through min_date, max_date one week at a time\n date = min_date\n while date < max_date:\n end_date = date + datetime.timedelta(days=7)\n week_tips = filter(lambda x: date <= x['ctime'] <= end_date, tips)\n week_transfers = filter(lambda x: date <= x['timestamp'] <= end_date, transfers)\n week_participants = filter(lambda x: x.ctime.replace(tzinfo=None) <= end_date, participants)\n actives=set()\n tippers=set()\n for xfers in week_tips, week_transfers:\n actives.update(x['tipper'] for x in xfers)\n actives.update(x['tippee'] for x in xfers)\n tippers.update(x['tipper'] for x in 
xfers)\n payday = {\n 'id': fake_int_id(),\n 'ts_start': date,\n 'ts_end': end_date,\n 'ntips': len(week_tips),\n 'ntransfers': len(week_transfers),\n 'nparticipants': len(week_participants),\n 'ntippers': len(tippers),\n 'nactive': len(actives),\n 'transfer_volume': sum(x['amount'] for x in week_transfers)\n }\n #Make ach_volume and charge_volume between 0 and 10% of transfer volume\n def rand_part():\n return decimal.Decimal(random.random()* 0.1)\n payday['ach_volume'] = -1 * payday['transfer_volume'] * rand_part()\n payday['charge_volume'] = payday['transfer_volume'] * rand_part()\n _fake_thing(db, \"paydays\", **payday)\n date = end_date\n\n\n\ndef main():\n populate_db(wireup.db())\n\nif __name__ == '__main__':\n main()\n", "path": "gittip/utils/fake_data.py"}]}

| 3,227 | 385 |
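In the golden diff above, the transfers loop in `populate_db` now honors `num_transfers`. The original loop iterated `num_tips` times, so the `num_transfers` argument was silently ignored. The diff also raises the default to 5000, which should give the per-user charts enough data in dev. The weekly payday aggregation that consumes those transfers can be sketched on its own. This is a minimal, hypothetical sketch: `make_transfers` and `weekly_paydays` are illustrative names, not Gittip functions, plain dicts stand in for database rows, and the amount range is an arbitrary assumption. One deliberate deviation from the original: it uses half-open weekly windows, so a transfer that falls exactly on a boundary is counted once rather than twice.

```python
import datetime
import random
from decimal import Decimal


def make_transfers(participants, num_transfers, start, days=365):
    """Hypothetical stand-in for the fixed loop in populate_db."""
    transfers = []
    # The pre-fix code looped over num_tips here, ignoring num_transfers.
    for _ in range(num_transfers):
        tipper, tippee = random.sample(participants, 2)  # two distinct people
        transfers.append({
            "tipper": tipper,
            "tippee": tippee,
            "timestamp": start + datetime.timedelta(
                seconds=random.randrange(days * 86400)),
            # Arbitrary illustrative range (0.01 to 24.00), not MIN_TIP/MAX_TIP.
            "amount": Decimal(random.randint(1, 2400)) / 100,
        })
    return transfers


def weekly_paydays(transfers):
    """Bucket transfers into consecutive 7-day windows [start, start + 7d)."""
    if not transfers:
        return []
    min_date = min(t["timestamp"] for t in transfers)
    max_date = max(t["timestamp"] for t in transfers)
    paydays = []
    date = min_date
    # "<=" ensures the window containing max_date is still emitted.
    while date <= max_date:
        end_date = date + datetime.timedelta(days=7)
        week = [t for t in transfers if date <= t["timestamp"] < end_date]
        paydays.append({
            "ts_start": date,
            "ts_end": end_date,
            "ntransfers": len(week),
            "transfer_volume": sum((t["amount"] for t in week), Decimal(0)),
        })
        date = end_date
    return paydays
```

Because every transfer lands in exactly one window, the per-week `ntransfers` values sum to `num_transfers`. That makes it easy to check that the loop bound is the one the caller asked for.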

gh_patches_debug_63480 | rasdani/github-patches | git_diff | ansible-collections__community.vmware-1686 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

vmware_migrate_vmk: tests fail with 7.0.3

<!--- Verify first that your issue is not already reported on GitHub -->

<!--- Also test if the latest release and devel branch are affected too -->

<!--- Complete *all* sections as described, this form is processed automatically -->

##### SUMMARY

I get this failure:

```

TASK [vmware_migrate_vmk : Create a new vmkernel] ********************************************************************************************************************************************************************************************************************************************************************************************************************************************

fatal: [testhost]: FAILED! => {"changed": false, "msg": "Failed to add vmk as IP address or Subnet Mask in the IP configuration are invalid or PortGroup does not exist : A specified parameter was not correct: Vim.Host.VirtualNic.Specification.Ip"}

```

##### EXPECTED RESULTS

<!--- Describe what you expected to happen when running the steps above -->

##### ACTUAL RESULTS

<!--- Describe what actually happened. If possible run with extra verbosity (-vvvv) -->

<!--- Paste verbatim command output between quotes -->

```paste below

```

</issue>

<code>

[start of plugins/modules/vmware_migrate_vmk.py]

1 #!/usr/bin/python

2 # -*- coding: utf-8 -*-

3

4 # Copyright: (c) 2015, Joseph Callen <jcallen () csc.com>

5 # GNU General Public License v3.0+ (see LICENSES/GPL-3.0-or-later.txt or https://www.gnu.org/licenses/gpl-3.0.txt)

6 # SPDX-License-Identifier: GPL-3.0-or-later

7

8 from __future__ import absolute_import, division, print_function

9 __metaclass__ = type

10

11

12 DOCUMENTATION = r'''

13 ---

14 module: vmware_migrate_vmk

15 short_description: Migrate a VMK interface from VSS to VDS

16 description:

17 - Migrate a VMK interface from VSS to VDS

18 author:

19 - Joseph Callen (@jcpowermac)

20 - Russell Teague (@mtnbikenc)

21 options:

22 esxi_hostname:

23 description:

24 - ESXi hostname to be managed

25 required: true

26 type: str

27 device:

28 description:

29 - VMK interface name

30 required: true

31 type: str

32 current_switch_name:

33 description:

34 - Switch VMK interface is currently on

35 required: true

36 type: str

37 current_portgroup_name:

38 description:

39 - Portgroup name VMK interface is currently on

40 required: true

41 type: str

42 migrate_switch_name:

43 description:

44 - Switch name to migrate VMK interface to

45 required: true

46 type: str

47 migrate_portgroup_name:

48 description:

49 - Portgroup name to migrate VMK interface to

50 required: true

51 type: str

52 migrate_vlan_id:

53 version_added: '2.4.0'

54 description:

55 - VLAN to use for the VMK interface when migrating from VDS to VSS

56 - Will be ignored when migrating from VSS to VDS

57 type: int

58 extends_documentation_fragment:

59 - community.vmware.vmware.documentation

60

61 '''

62

63 EXAMPLES = r'''

64 - name: Migrate Management vmk

65 community.vmware.vmware_migrate_vmk:

66 hostname: "{{ vcenter_hostname }}"

67 username: "{{ vcenter_username }}"

68 password: "{{ vcenter_password }}"

69 esxi_hostname: "{{ esxi_hostname }}"

70 device: vmk1

71 current_switch_name: temp_vswitch

72 current_portgroup_name: esx-mgmt

73 migrate_switch_name: dvSwitch

74 migrate_portgroup_name: Management

75 delegate_to: localhost

76 '''

77 try:

78 from pyVmomi import vim, vmodl

79 HAS_PYVMOMI = True

80 except ImportError:

81 HAS_PYVMOMI = False

82

83 from ansible.module_utils.basic import AnsibleModule

84 from ansible_collections.community.vmware.plugins.module_utils.vmware import (

85 vmware_argument_spec, find_dvs_by_name, find_hostsystem_by_name,

86 connect_to_api, find_dvspg_by_name)

87

88

89 class VMwareMigrateVmk(object):

90

91 def __init__(self, module):

92 self.module = module

93 self.host_system = None

94 self.migrate_switch_name = self.module.params['migrate_switch_name']

95 self.migrate_portgroup_name = self.module.params['migrate_portgroup_name']

96 self.migrate_vlan_id = self.module.params['migrate_vlan_id']

97 self.device = self.module.params['device']

98 self.esxi_hostname = self.module.params['esxi_hostname']

99 self.current_portgroup_name = self.module.params['current_portgroup_name']

100 self.current_switch_name = self.module.params['current_switch_name']

101 self.content = connect_to_api(module)

102

103 def process_state(self):

104 try:

105 vmk_migration_states = {

106 'migrate_vss_vds': self.state_migrate_vss_vds,

107 'migrate_vds_vss': self.state_migrate_vds_vss,

108 'migrated': self.state_exit_unchanged

109 }

110

111 vmk_migration_states[self.check_vmk_current_state()]()

112

113 except vmodl.RuntimeFault as runtime_fault:

114 self.module.fail_json(msg=runtime_fault.msg)

115 except vmodl.MethodFault as method_fault:

116 self.module.fail_json(msg=method_fault.msg)

117 except Exception as e:

118 self.module.fail_json(msg=str(e))

119

120 def state_exit_unchanged(self):

121 self.module.exit_json(changed=False)

122

123 def create_host_vnic_config_vds_vss(self):

124 host_vnic_config = vim.host.VirtualNic.Config()

125 host_vnic_config.spec = vim.host.VirtualNic.Specification()

126 host_vnic_config.changeOperation = "edit"

127 host_vnic_config.device = self.device

128 host_vnic_config.spec.portgroup = self.migrate_portgroup_name

129 return host_vnic_config

130

131 def create_port_group_config_vds_vss(self):

132 port_group_config = vim.host.PortGroup.Config()

133 port_group_config.spec = vim.host.PortGroup.Specification()

134 port_group_config.changeOperation = "add"

135 port_group_config.spec.name = self.migrate_portgroup_name

136 port_group_config.spec.vlanId = self.migrate_vlan_id if self.migrate_vlan_id is not None else 0

137 port_group_config.spec.vswitchName = self.migrate_switch_name

138 port_group_config.spec.policy = vim.host.NetworkPolicy()

139 return port_group_config

140

141 def state_migrate_vds_vss(self):

142 host_network_system = self.host_system.configManager.networkSystem

143 config = vim.host.NetworkConfig()

144 config.portgroup = [self.create_port_group_config_vds_vss()]

145 host_network_system.UpdateNetworkConfig(config, "modify")

146 config = vim.host.NetworkConfig()

147 config.vnic = [self.create_host_vnic_config_vds_vss()]

148 host_network_system.UpdateNetworkConfig(config, "modify")

149 self.module.exit_json(changed=True)

150

151 def create_host_vnic_config(self, dv_switch_uuid, portgroup_key):

152 host_vnic_config = vim.host.VirtualNic.Config()

153 host_vnic_config.spec = vim.host.VirtualNic.Specification()

154

155 host_vnic_config.changeOperation = "edit"

156 host_vnic_config.device = self.device

157 host_vnic_config.portgroup = ""

158 host_vnic_config.spec.distributedVirtualPort = vim.dvs.PortConnection()

159 host_vnic_config.spec.distributedVirtualPort.switchUuid = dv_switch_uuid

160 host_vnic_config.spec.distributedVirtualPort.portgroupKey = portgroup_key

161

162 return host_vnic_config

163

164 def create_port_group_config(self):

165 port_group_config = vim.host.PortGroup.Config()

166 port_group_config.spec = vim.host.PortGroup.Specification()

167

168 port_group_config.changeOperation = "remove"

169 port_group_config.spec.name = self.current_portgroup_name

170 port_group_config.spec.vlanId = -1

171 port_group_config.spec.vswitchName = self.current_switch_name

172 port_group_config.spec.policy = vim.host.NetworkPolicy()

173

174 return port_group_config

175

176 def state_migrate_vss_vds(self):

177 host_network_system = self.host_system.configManager.networkSystem

178

179 dv_switch = find_dvs_by_name(self.content, self.migrate_switch_name)

180 pg = find_dvspg_by_name(dv_switch, self.migrate_portgroup_name)

181

182 config = vim.host.NetworkConfig()

183 config.portgroup = [self.create_port_group_config()]

184 config.vnic = [self.create_host_vnic_config(dv_switch.uuid, pg.key)]

185 host_network_system.UpdateNetworkConfig(config, "modify")

186 self.module.exit_json(changed=True)

187

188 def check_vmk_current_state(self):

189 self.host_system = find_hostsystem_by_name(self.content, self.esxi_hostname)

190

191 for vnic in self.host_system.configManager.networkSystem.networkInfo.vnic:

192 if vnic.device == self.device:

193 if vnic.spec.distributedVirtualPort is None:

194 std_vswitches = [vswitch.name for vswitch in self.host_system.configManager.networkSystem.networkInfo.vswitch]

195 if self.current_switch_name not in std_vswitches:

196 return "migrated"

197 if vnic.portgroup == self.current_portgroup_name:

198 return "migrate_vss_vds"

199 else:

200 dvs = find_dvs_by_name(self.content, self.current_switch_name)

201 if dvs is None:

202 return "migrated"

203 if vnic.spec.distributedVirtualPort.switchUuid == dvs.uuid:

204 return "migrate_vds_vss"

205

206

207 def main():

208

209 argument_spec = vmware_argument_spec()

210 argument_spec.update(dict(esxi_hostname=dict(required=True, type='str'),

211 device=dict(required=True, type='str'),

212 current_switch_name=dict(required=True, type='str'),

213 current_portgroup_name=dict(required=True, type='str'),

214 migrate_switch_name=dict(required=True, type='str'),

215 migrate_portgroup_name=dict(required=True, type='str'),

216 migrate_vlan_id=dict(required=False, type='int')))

217

218 module = AnsibleModule(argument_spec=argument_spec, supports_check_mode=False)

219

220 if not HAS_PYVMOMI:

221 module.fail_json(msg='pyvmomi required for this module')

222

223 vmware_migrate_vmk = VMwareMigrateVmk(module)

224 vmware_migrate_vmk.process_state()

225

226

227 if __name__ == '__main__':

228 main()

229

[end of plugins/modules/vmware_migrate_vmk.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/plugins/modules/vmware_migrate_vmk.py b/plugins/modules/vmware_migrate_vmk.py

--- a/plugins/modules/vmware_migrate_vmk.py

+++ b/plugins/modules/vmware_migrate_vmk.py

@@ -203,6 +203,8 @@

if vnic.spec.distributedVirtualPort.switchUuid == dvs.uuid:

return "migrate_vds_vss"

+ self.module.fail_json(msg='Unable to find the specified device %s.' % self.device)

+

def main():

|
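Before this golden diff, `check_vmk_current_state` could fall off the end of its loop without returning a state, for example when no vnic matches `device`. In that case it implicitly returns `None`. The `vmk_migration_states[...]` lookup in `process_state` then raises `KeyError(None)`, and the generic `except Exception` reports only `str(e)`, which is the unhelpful message `None`. The fix is to fail explicitly after the loop. The pattern can be shown without pyVmomi. This is a minimal sketch: `Vnic`, `MigrationError`, `current_state`, and `process` are hypothetical stand-ins, and a plain exception replaces `AnsibleModule.fail_json`.

```python
from collections import namedtuple

# Hypothetical stand-in for a host vnic: device name and the switch it sits on.
Vnic = namedtuple("Vnic", ["device", "switch"])


class MigrationError(Exception):
    """Stands in for AnsibleModule.fail_json in this sketch."""


def current_state(vnics, device, current_switch, migrate_switch):
    for vnic in vnics:
        if vnic.device == device:
            if vnic.switch == current_switch:
                return "migrate"
            if vnic.switch == migrate_switch:
                return "migrated"
    # Equivalent of the line added by the golden diff: fail with a clear
    # message instead of implicitly returning None.
    raise MigrationError("Unable to find the specified device %s." % device)


def process(vnics, device, current_switch="vSwitch0", migrate_switch="dvSwitch"):
    # Dispatch table keyed by state name, as in VMwareMigrateVmk.process_state.
    handlers = {
        "migrate": lambda: "changed",
        "migrated": lambda: "unchanged",
    }
    try:
        state = current_state(vnics, device, current_switch, migrate_switch)
        return handlers[state]()
    except MigrationError as e:
        return "failed: %s" % e
```

Raising inside the state check keeps the dispatch table free of a `None` key. It also means the error names the missing device rather than surfacing an opaque `KeyError`.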

{"golden_diff": "diff --git a/plugins/modules/vmware_migrate_vmk.py b/plugins/modules/vmware_migrate_vmk.py\n--- a/plugins/modules/vmware_migrate_vmk.py\n+++ b/plugins/modules/vmware_migrate_vmk.py\n@@ -203,6 +203,8 @@\n if vnic.spec.distributedVirtualPort.switchUuid == dvs.uuid:\n return \"migrate_vds_vss\"\n \n+ self.module.fail_json(msg='Unable to find the specified device %s.' % self.device)\n+\n \n def main():\n", "issue": "vmware_migrate_vmk: tests fail with 7.0.3\n<!--- Verify first that your issue is not already reported on GitHub -->\r\n<!--- Also test if the latest release and devel branch are affected too -->\r\n<!--- Complete *all* sections as described, this form is processed automatically -->\r\n\r\n##### SUMMARY\r\n\r\nI get this failure:\r\n\r\n```\r\nTASK [vmware_migrate_vmk : Create a new vmkernel] ********************************************************************************************************************************************************************************************************************************************************************************************************************************************\r\nfatal: [testhost]: FAILED! => {\"changed\": false, \"msg\": \"Failed to add vmk as IP address or Subnet Mask in the IP configuration are invalid or PortGroup does not exist : A specified parameter was not correct: Vim.Host.VirtualNic.Specification.Ip\"}\r\n```##### EXPECTED RESULTS\r\n<!--- Describe what you expected to happen when running the steps above -->\r\n\r\n\r\n##### ACTUAL RESULTS\r\n<!--- Describe what actually happened. 
If possible run with extra verbosity (-vvvv) -->\r\n\r\n<!--- Paste verbatim command output between quotes -->\r\n```paste below\r\n\r\n```\r\n\n", "before_files": [{"content": "#!/usr/bin/python\n# -*- coding: utf-8 -*-\n\n# Copyright: (c) 2015, Joseph Callen <jcallen () csc.com>\n# GNU General Public License v3.0+ (see LICENSES/GPL-3.0-or-later.txt or https://www.gnu.org/licenses/gpl-3.0.txt)\n# SPDX-License-Identifier: GPL-3.0-or-later\n\nfrom __future__ import absolute_import, division, print_function\n__metaclass__ = type\n\n\nDOCUMENTATION = r'''\n---\nmodule: vmware_migrate_vmk\nshort_description: Migrate a VMK interface from VSS to VDS\ndescription:\n - Migrate a VMK interface from VSS to VDS\nauthor:\n- Joseph Callen (@jcpowermac)\n- Russell Teague (@mtnbikenc)\noptions:\n esxi_hostname:\n description:\n - ESXi hostname to be managed\n required: true\n type: str\n device:\n description:\n - VMK interface name\n required: true\n type: str\n current_switch_name:\n description:\n - Switch VMK interface is currently on\n required: true\n type: str\n current_portgroup_name:\n description:\n - Portgroup name VMK interface is currently on\n required: true\n type: str\n migrate_switch_name:\n description:\n - Switch name to migrate VMK interface to\n required: true\n type: str\n migrate_portgroup_name:\n description:\n - Portgroup name to migrate VMK interface to\n required: true\n type: str\n migrate_vlan_id:\n version_added: '2.4.0'\n description:\n - VLAN to use for the VMK interface when migrating from VDS to VSS\n - Will be ignored when migrating from VSS to VDS\n type: int\nextends_documentation_fragment:\n- community.vmware.vmware.documentation\n\n'''\n\nEXAMPLES = r'''\n- name: Migrate Management vmk\n community.vmware.vmware_migrate_vmk:\n hostname: \"{{ vcenter_hostname }}\"\n username: \"{{ vcenter_username }}\"\n password: \"{{ vcenter_password }}\"\n esxi_hostname: \"{{ esxi_hostname }}\"\n device: vmk1\n current_switch_name: temp_vswitch\n 
current_portgroup_name: esx-mgmt\n migrate_switch_name: dvSwitch\n migrate_portgroup_name: Management\n delegate_to: localhost\n'''\ntry:\n from pyVmomi import vim, vmodl\n HAS_PYVMOMI = True\nexcept ImportError:\n HAS_PYVMOMI = False\n\nfrom ansible.module_utils.basic import AnsibleModule\nfrom ansible_collections.community.vmware.plugins.module_utils.vmware import (\n vmware_argument_spec, find_dvs_by_name, find_hostsystem_by_name,\n connect_to_api, find_dvspg_by_name)\n\n\nclass VMwareMigrateVmk(object):\n\n def __init__(self, module):\n self.module = module\n self.host_system = None\n self.migrate_switch_name = self.module.params['migrate_switch_name']\n self.migrate_portgroup_name = self.module.params['migrate_portgroup_name']\n self.migrate_vlan_id = self.module.params['migrate_vlan_id']\n self.device = self.module.params['device']\n self.esxi_hostname = self.module.params['esxi_hostname']\n self.current_portgroup_name = self.module.params['current_portgroup_name']\n self.current_switch_name = self.module.params['current_switch_name']\n self.content = connect_to_api(module)\n\n def process_state(self):\n try:\n vmk_migration_states = {\n 'migrate_vss_vds': self.state_migrate_vss_vds,\n 'migrate_vds_vss': self.state_migrate_vds_vss,\n 'migrated': self.state_exit_unchanged\n }\n\n vmk_migration_states[self.check_vmk_current_state()]()\n\n except vmodl.RuntimeFault as runtime_fault:\n self.module.fail_json(msg=runtime_fault.msg)\n except vmodl.MethodFault as method_fault:\n self.module.fail_json(msg=method_fault.msg)\n except Exception as e:\n self.module.fail_json(msg=str(e))\n\n def state_exit_unchanged(self):\n self.module.exit_json(changed=False)\n\n def create_host_vnic_config_vds_vss(self):\n host_vnic_config = vim.host.VirtualNic.Config()\n host_vnic_config.spec = vim.host.VirtualNic.Specification()\n host_vnic_config.changeOperation = \"edit\"\n host_vnic_config.device = self.device\n host_vnic_config.spec.portgroup = self.migrate_portgroup_name\n return 
host_vnic_config\n\n def create_port_group_config_vds_vss(self):\n port_group_config = vim.host.PortGroup.Config()\n port_group_config.spec = vim.host.PortGroup.Specification()\n port_group_config.changeOperation = \"add\"\n port_group_config.spec.name = self.migrate_portgroup_name\n port_group_config.spec.vlanId = self.migrate_vlan_id if self.migrate_vlan_id is not None else 0\n port_group_config.spec.vswitchName = self.migrate_switch_name\n port_group_config.spec.policy = vim.host.NetworkPolicy()\n return port_group_config\n\n def state_migrate_vds_vss(self):\n host_network_system = self.host_system.configManager.networkSystem\n config = vim.host.NetworkConfig()\n config.portgroup = [self.create_port_group_config_vds_vss()]\n host_network_system.UpdateNetworkConfig(config, \"modify\")\n config = vim.host.NetworkConfig()\n config.vnic = [self.create_host_vnic_config_vds_vss()]\n host_network_system.UpdateNetworkConfig(config, \"modify\")\n self.module.exit_json(changed=True)\n\n def create_host_vnic_config(self, dv_switch_uuid, portgroup_key):\n host_vnic_config = vim.host.VirtualNic.Config()\n host_vnic_config.spec = vim.host.VirtualNic.Specification()\n\n host_vnic_config.changeOperation = \"edit\"\n host_vnic_config.device = self.device\n host_vnic_config.portgroup = \"\"\n host_vnic_config.spec.distributedVirtualPort = vim.dvs.PortConnection()\n host_vnic_config.spec.distributedVirtualPort.switchUuid = dv_switch_uuid\n host_vnic_config.spec.distributedVirtualPort.portgroupKey = portgroup_key\n\n return host_vnic_config\n\n def create_port_group_config(self):\n port_group_config = vim.host.PortGroup.Config()\n port_group_config.spec = vim.host.PortGroup.Specification()\n\n port_group_config.changeOperation = \"remove\"\n port_group_config.spec.name = self.current_portgroup_name\n port_group_config.spec.vlanId = -1\n port_group_config.spec.vswitchName = self.current_switch_name\n port_group_config.spec.policy = vim.host.NetworkPolicy()\n\n return 
port_group_config\n\n def state_migrate_vss_vds(self):\n host_network_system = self.host_system.configManager.networkSystem\n\n dv_switch = find_dvs_by_name(self.content, self.migrate_switch_name)\n pg = find_dvspg_by_name(dv_switch, self.migrate_portgroup_name)\n\n config = vim.host.NetworkConfig()\n config.portgroup = [self.create_port_group_config()]\n config.vnic = [self.create_host_vnic_config(dv_switch.uuid, pg.key)]\n host_network_system.UpdateNetworkConfig(config, \"modify\")\n self.module.exit_json(changed=True)\n\n def check_vmk_current_state(self):\n self.host_system = find_hostsystem_by_name(self.content, self.esxi_hostname)\n\n for vnic in self.host_system.configManager.networkSystem.networkInfo.vnic:\n if vnic.device == self.device:\n if vnic.spec.distributedVirtualPort is None:\n std_vswitches = [vswitch.name for vswitch in self.host_system.configManager.networkSystem.networkInfo.vswitch]\n if self.current_switch_name not in std_vswitches:\n return \"migrated\"\n if vnic.portgroup == self.current_portgroup_name:\n return \"migrate_vss_vds\"\n else:\n dvs = find_dvs_by_name(self.content, self.current_switch_name)\n if dvs is None:\n return \"migrated\"\n if vnic.spec.distributedVirtualPort.switchUuid == dvs.uuid:\n return \"migrate_vds_vss\"\n\n\ndef main():\n\n argument_spec = vmware_argument_spec()\n argument_spec.update(dict(esxi_hostname=dict(required=True, type='str'),\n device=dict(required=True, type='str'),\n current_switch_name=dict(required=True, type='str'),\n current_portgroup_name=dict(required=True, type='str'),\n migrate_switch_name=dict(required=True, type='str'),\n migrate_portgroup_name=dict(required=True, type='str'),\n migrate_vlan_id=dict(required=False, type='int')))\n\n module = AnsibleModule(argument_spec=argument_spec, supports_check_mode=False)\n\n if not HAS_PYVMOMI:\n module.fail_json(msg='pyvmomi required for this module')\n\n vmware_migrate_vmk = VMwareMigrateVmk(module)\n vmware_migrate_vmk.process_state()\n\n\nif 
__name__ == '__main__':\n main()\n", "path": "plugins/modules/vmware_migrate_vmk.py"}]}

| 3,323 | 119 |
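The vmware_migrate_vmk golden diff above adds a `fail_json` call at the end of `check_vmk_current_state`. Without it, the method falls off the end and returns `None` when no vnic matches the requested device. The dispatch `vmk_migration_states[self.check_vmk_current_state()]()` then raises `KeyError(None)`, and the catch-all `except Exception as e: self.module.fail_json(msg=str(e))` reports only the message "None". The sketch below is a simplified, hypothetical model of that control flow, not the module's real code. `ModuleFailure` stands in for `fail_json`, and vnics are plain `(name, state)` tuples rather than pyVmomi objects.

```python
# Hypothetical, simplified model of vmware_migrate_vmk's state dispatch.
# ModuleFailure stands in for AnsibleModule.fail_json; vnics are plain
# (device_name, state) tuples instead of real pyVmomi objects.


class ModuleFailure(Exception):
    """Raised where the real module would call fail_json(msg=...)."""


def check_state_old(vnics, device):
    """Pre-patch behaviour: implicitly returns None when nothing matches."""
    for name, state in vnics:
        if name == device:
            return state
    return None


def check_state_new(vnics, device):
    """Post-patch behaviour: fail explicitly with a descriptive message."""
    for name, state in vnics:
        if name == device:
            return state
    raise ModuleFailure("Unable to find the specified device %s." % device)


def process_state(check, vnics, device):
    """Look up a handler by state name, as the module does with a dict."""
    handlers = {
        "migrate_vss_vds": lambda: "migrated VSS -> VDS",
        "migrate_vds_vss": lambda: "migrated VDS -> VSS",
        "migrated": lambda: "unchanged",
    }
    try:
        return handlers[check(vnics, device)]()
    except ModuleFailure:
        raise
    except Exception as e:  # mirrors the module's catch-all handler
        raise ModuleFailure(str(e))


try:
    process_state(check_state_old, [("vmk0", "migrated")], "vmk1")
except ModuleFailure as e:
    print("old:", e)  # KeyError(None) surfaces as the bare message "None"
```

With `check_state_new`, the same call fails with "Unable to find the specified device vmk1." instead, which tells the user what went wrong.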

gh_patches_debug_9427

|

rasdani/github-patches

|

git_diff

|

lra__mackup-1244

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Does Mackup still sync SSH keys by default?

Today I was using Mackup and I noticed this in the help documentation:

> By default, Mackup syncs all application data (including private keys!) via Dropbox, but may be configured to exclude applications or use a different backend with a .mackup.cfg file.

I really like Mackup—it saves a ton of time when setting up a new computer. However, the idea of automatically syncing SSH keys by default really scares me. A few years ago I accidentally exposed an SSH key and someone used it to charge a few thousand dollars to AWS for my company. I'd really like to avoid doing anything like this again in the future.

In reading through #512 and #109, it looks like this behavior was turned off. However, the help documentation doesn't seem to indicate that. So which one is correct? I feel strongly that synching private keys by default is not obvious behavior, and it has the potential to have some serious consequences.

Also, will Mackup sync other types of sensitive information in the future? What scares me most about this is not necessarily what Mackup is syncing today, but what it might add in the future that I don't notice.

Thanks!

</issue>

<code>

[start of mackup/main.py]

1 """Mackup.

2

3 Keep your application settings in sync.

4 Copyright (C) 2013-2015 Laurent Raufaste <http://glop.org/>

5

6 Usage:

7 mackup list

8 mackup [options] backup

9 mackup [options] restore

10 mackup [options] uninstall

11 mackup (-h | --help)

12 mackup --version

13

14 Options:

15 -h --help Show this screen.

16 -f --force Force every question asked to be answered with "Yes".

17 -n --dry-run Show steps without executing.

18 -v --verbose Show additional details.

19 --version Show version.

20

21 Modes of action:

22 1. list: display a list of all supported applications.

23 2. backup: sync your conf files to your synced storage, use this the 1st time

24 you use Mackup. (Note that by default this will sync private keys used by

25 GnuPG.)

26 3. restore: link the conf files already in your synced storage on your system,

27 use it on any new system you use.

28 4. uninstall: reset everything as it was before using Mackup.

29

30 By default, Mackup syncs all application data (including some private keys!)

31 via Dropbox, but may be configured to exclude applications or use a different

32 backend with a .mackup.cfg file.

33

34 See https://github.com/lra/mackup/tree/master/doc for more information.

35

36 """

37 from docopt import docopt

38 from .appsdb import ApplicationsDatabase

39 from .application import ApplicationProfile

40 from .constants import MACKUP_APP_NAME, VERSION

41 from .mackup import Mackup

42 from . import utils

43

44

45 class ColorFormatCodes:

46 BLUE = '\033[34m'

47 BOLD = '\033[1m'

48 NORMAL = '\033[0m'

49

50

51 def header(str):

52 return ColorFormatCodes.BLUE + str + ColorFormatCodes.NORMAL

53

54

55 def bold(str):

56 return ColorFormatCodes.BOLD + str + ColorFormatCodes.NORMAL

57

58

59 def main():

60 """Main function."""

61 # Get the command line arg

62 args = docopt(__doc__, version="Mackup {}".format(VERSION))

63

64 mckp = Mackup()

65 app_db = ApplicationsDatabase()

66

67 def printAppHeader(app_name):

68 if verbose:

69 print(("\n{0} {1} {0}").format(header("---"), bold(app_name)))

70

71 # If we want to answer mackup with "yes" for each question

72 if args['--force']:

73 utils.FORCE_YES = True

74

75 dry_run = args['--dry-run']

76

77 verbose = args['--verbose']

78

79 if args['backup']:

80 # Check the env where the command is being run

81 mckp.check_for_usable_backup_env()

82

83 # Backup each application

84 for app_name in sorted(mckp.get_apps_to_backup()):

85 app = ApplicationProfile(mckp,

86 app_db.get_files(app_name),

87 dry_run,

88 verbose)

89 printAppHeader(app_name)

90 app.backup()

91

92 elif args['restore']:

93 # Check the env where the command is being run

94 mckp.check_for_usable_restore_env()

95

96 # Restore the Mackup config before any other config, as we might need

97 # it to know about custom settings

98 mackup_app = ApplicationProfile(mckp,

99 app_db.get_files(MACKUP_APP_NAME),

100 dry_run,

101 verbose)

102 printAppHeader(MACKUP_APP_NAME)

103 mackup_app.restore()

104

105 # Initialize again the apps db, as the Mackup config might have changed

106 # it

107 mckp = Mackup()

108 app_db = ApplicationsDatabase()

109

110 # Restore the rest of the app configs, using the restored Mackup config

111 app_names = mckp.get_apps_to_backup()

112 # Mackup has already been done

113 app_names.discard(MACKUP_APP_NAME)

114

115 for app_name in sorted(app_names):

116 app = ApplicationProfile(mckp,

117 app_db.get_files(app_name),

118 dry_run,

119 verbose)

120 printAppHeader(app_name)

121 app.restore()

122

123 elif args['uninstall']:

124 # Check the env where the command is being run

125 mckp.check_for_usable_restore_env()

126

127 if dry_run or (

128 utils.confirm("You are going to uninstall Mackup.\n"

129 "Every configuration file, setting and dotfile"

130 " managed by Mackup will be unlinked and moved back"

131 " to their original place, in your home folder.\n"

132 "Are you sure ?")):

133

134 # Uninstall the apps except Mackup, which we'll uninstall last, to

135 # keep the settings as long as possible

136 app_names = mckp.get_apps_to_backup()

137 app_names.discard(MACKUP_APP_NAME)

138

139 for app_name in sorted(app_names):

140 app = ApplicationProfile(mckp,

141 app_db.get_files(app_name),

142 dry_run,

143 verbose)

144 printAppHeader(app_name)

145 app.uninstall()

146

147 # Restore the Mackup config before any other config, as we might

148 # need it to know about custom settings

149 mackup_app = ApplicationProfile(mckp,

150 app_db.get_files(MACKUP_APP_NAME),

151 dry_run,

152 verbose)

153 mackup_app.uninstall()

154

155 # Delete the Mackup folder in Dropbox

156 # Don't delete this as there might be other Macs that aren't

157 # uninstalled yet

158 # delete(mckp.mackup_folder)

159

160 print("\n"

161 "All your files have been put back into place. You can now"

162 " safely uninstall Mackup.\n"

163 "\n"

164 "Thanks for using Mackup !")

165

166 elif args['list']:

167 # Display the list of supported applications

168 mckp.check_for_usable_environment()

169 output = "Supported applications:\n"

170 for app_name in sorted(app_db.get_app_names()):

171 output += " - {}\n".format(app_name)

172 output += "\n"

173 output += ("{} applications supported in Mackup v{}"

174 .format(len(app_db.get_app_names()), VERSION))

175 print(output)

176

177 # Delete the tmp folder

178 mckp.clean_temp_folder()

179

[end of mackup/main.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/mackup/main.py b/mackup/main.py

--- a/mackup/main.py

+++ b/mackup/main.py

@@ -27,8 +27,8 @@

use it on any new system you use.

4. uninstall: reset everything as it was before using Mackup.

-By default, Mackup syncs all application data (including some private keys!)

-via Dropbox, but may be configured to exclude applications or use a different

+By default, Mackup syncs all application data (except for private keys) via

+Dropbox, but may be configured to exclude applications or use a different

backend with a .mackup.cfg file.

See https://github.com/lra/mackup/tree/master/doc for more information.

|

{"golden_diff": "diff --git a/mackup/main.py b/mackup/main.py\n--- a/mackup/main.py\n+++ b/mackup/main.py\n@@ -27,8 +27,8 @@\n use it on any new system you use.\n 4. uninstall: reset everything as it was before using Mackup.\n \n-By default, Mackup syncs all application data (including some private keys!)\n-via Dropbox, but may be configured to exclude applications or use a different\n+By default, Mackup syncs all application data (except for private keys) via\n+Dropbox, but may be configured to exclude applications or use a different\n backend with a .mackup.cfg file.\n \n See https://github.com/lra/mackup/tree/master/doc for more information.\n", "issue": "Does Mackup still sync SSH keys by default?\nToday I was using Mackup and I noticed this in the help documentation:\r\n\r\n> By default, Mackup syncs all application data (including private keys!) via Dropbox, but may be configured to exclude applications or use a different backend with a .mackup.cfg file.\r\n\r\nI really like Mackup\u2014it saves a ton of time when setting up a new computer. However, the idea of automatically syncing SSH keys by default really scares me. A few years ago I accidentally exposed an SSH key and someone used it to charge a few thousand dollars to AWS for my company. I'd really like to avoid doing anything like this again in the future.\r\n\r\nIn reading through #512 and #109, it looks like this behavior was turned off. However, the help documentation doesn't seem to indicate that. So which one is correct? I feel strongly that synching private keys by default is not obvious behavior, and it has the potential to have some serious consequences.\r\n\r\nAlso, will Mackup sync other types of sensitive information in the future? 
What scares me most about this is not necessarily what Mackup is syncing today, but what it might add in the future that I don't notice.\r\n\r\nThanks!\n", "before_files": [{"content": "\"\"\"Mackup.\n\nKeep your application settings in sync.\nCopyright (C) 2013-2015 Laurent Raufaste <http://glop.org/>\n\nUsage:\n mackup list\n mackup [options] backup\n mackup [options] restore\n mackup [options] uninstall\n mackup (-h | --help)\n mackup --version\n\nOptions:\n -h --help Show this screen.\n -f --force Force every question asked to be answered with \"Yes\".\n -n --dry-run Show steps without executing.\n -v --verbose Show additional details.\n --version Show version.\n\nModes of action:\n 1. list: display a list of all supported applications.\n 2. backup: sync your conf files to your synced storage, use this the 1st time\n you use Mackup. (Note that by default this will sync private keys used by\n GnuPG.)\n 3. restore: link the conf files already in your synced storage on your system,\n use it on any new system you use.\n 4. uninstall: reset everything as it was before using Mackup.\n\nBy default, Mackup syncs all application data (including some private keys!)\nvia Dropbox, but may be configured to exclude applications or use a different\nbackend with a .mackup.cfg file.\n\nSee https://github.com/lra/mackup/tree/master/doc for more information.\n\n\"\"\"\nfrom docopt import docopt\nfrom .appsdb import ApplicationsDatabase\nfrom .application import ApplicationProfile\nfrom .constants import MACKUP_APP_NAME, VERSION\nfrom .mackup import Mackup\nfrom . 
import utils\n\n\nclass ColorFormatCodes:\n BLUE = '\\033[34m'\n BOLD = '\\033[1m'\n NORMAL = '\\033[0m'\n\n\ndef header(str):\n return ColorFormatCodes.BLUE + str + ColorFormatCodes.NORMAL\n\n\ndef bold(str):\n return ColorFormatCodes.BOLD + str + ColorFormatCodes.NORMAL\n\n\ndef main():\n \"\"\"Main function.\"\"\"\n # Get the command line arg\n args = docopt(__doc__, version=\"Mackup {}\".format(VERSION))\n\n mckp = Mackup()\n app_db = ApplicationsDatabase()\n\n def printAppHeader(app_name):\n if verbose:\n print((\"\\n{0} {1} {0}\").format(header(\"---\"), bold(app_name)))\n\n # If we want to answer mackup with \"yes\" for each question\n if args['--force']:\n utils.FORCE_YES = True\n\n dry_run = args['--dry-run']\n\n verbose = args['--verbose']\n\n if args['backup']:\n # Check the env where the command is being run\n mckp.check_for_usable_backup_env()\n\n # Backup each application\n for app_name in sorted(mckp.get_apps_to_backup()):\n app = ApplicationProfile(mckp,\n app_db.get_files(app_name),\n dry_run,\n verbose)\n printAppHeader(app_name)\n app.backup()\n\n elif args['restore']:\n # Check the env where the command is being run\n mckp.check_for_usable_restore_env()\n\n # Restore the Mackup config before any other config, as we might need\n # it to know about custom settings\n mackup_app = ApplicationProfile(mckp,\n app_db.get_files(MACKUP_APP_NAME),\n dry_run,\n verbose)\n printAppHeader(MACKUP_APP_NAME)\n mackup_app.restore()\n\n # Initialize again the apps db, as the Mackup config might have changed\n # it\n mckp = Mackup()\n app_db = ApplicationsDatabase()\n\n # Restore the rest of the app configs, using the restored Mackup config\n app_names = mckp.get_apps_to_backup()\n # Mackup has already been done\n app_names.discard(MACKUP_APP_NAME)\n\n for app_name in sorted(app_names):\n app = ApplicationProfile(mckp,\n app_db.get_files(app_name),\n dry_run,\n verbose)\n printAppHeader(app_name)\n app.restore()\n\n elif args['uninstall']:\n # Check the env where 
the command is being run\n mckp.check_for_usable_restore_env()\n\n if dry_run or (\n utils.confirm(\"You are going to uninstall Mackup.\\n\"\n \"Every configuration file, setting and dotfile\"\n \" managed by Mackup will be unlinked and moved back\"\n \" to their original place, in your home folder.\\n\"\n \"Are you sure ?\")):\n\n # Uninstall the apps except Mackup, which we'll uninstall last, to\n # keep the settings as long as possible\n app_names = mckp.get_apps_to_backup()\n app_names.discard(MACKUP_APP_NAME)\n\n for app_name in sorted(app_names):\n app = ApplicationProfile(mckp,\n app_db.get_files(app_name),\n dry_run,\n verbose)\n printAppHeader(app_name)\n app.uninstall()\n\n # Restore the Mackup config before any other config, as we might\n # need it to know about custom settings\n mackup_app = ApplicationProfile(mckp,\n app_db.get_files(MACKUP_APP_NAME),\n dry_run,\n verbose)\n mackup_app.uninstall()\n\n # Delete the Mackup folder in Dropbox\n # Don't delete this as there might be other Macs that aren't\n # uninstalled yet\n # delete(mckp.mackup_folder)\n\n print(\"\\n\"\n \"All your files have been put back into place. You can now\"\n \" safely uninstall Mackup.\\n\"\n \"\\n\"\n \"Thanks for using Mackup !\")\n\n elif args['list']:\n # Display the list of supported applications\n mckp.check_for_usable_environment()\n output = \"Supported applications:\\n\"\n for app_name in sorted(app_db.get_app_names()):\n output += \" - {}\\n\".format(app_name)\n output += \"\\n\"\n output += (\"{} applications supported in Mackup v{}\"\n .format(len(app_db.get_app_names()), VERSION))\n print(output)\n\n # Delete the tmp folder\n mckp.clean_temp_folder()\n", "path": "mackup/main.py"}]}

| 2,595 | 167 |

gh_patches_debug_16469

|

rasdani/github-patches

|

git_diff

|

google-deepmind__optax-465

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Better tests for utils.

Optax tests did not catch a problem with one of the type annotations in #367. This is due to `utils` not having good test coverage.

I'm marking this as "good first issue". Any tests for `utils` would be very welcome! No need to write tests for all of them at once, PRs with only a single test at a time are very welcome.

</issue>

<code>

[start of optax/_src/utils.py]

1 # Copyright 2019 DeepMind Technologies Limited. All Rights Reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 # ==============================================================================

15 """Utility functions for testing."""

16

17 from typing import Optional, Tuple, Sequence

18

19 import chex

20 import jax

21 import jax.numpy as jnp

22 import jax.scipy.stats.norm as multivariate_normal

23

24 from optax._src import linear_algebra

25 from optax._src import numerics

26

27

28 def tile_second_to_last_dim(a: chex.Array) -> chex.Array:

29 ones = jnp.ones_like(a)

30 a = jnp.expand_dims(a, axis=-1)

31 return jnp.expand_dims(ones, axis=-2) * a

32

33

34 def canonicalize_dtype(

35 dtype: Optional[chex.ArrayDType]) -> Optional[chex.ArrayDType]:

36 """Canonicalise a dtype, skip if None."""

37 if dtype is not None:

38 return jax.dtypes.canonicalize_dtype(dtype)

39 return dtype

40

41

42 def cast_tree(tree: chex.ArrayTree,

43 dtype: Optional[chex.ArrayDType]) -> chex.ArrayTree:

44 """Cast tree to given dtype, skip if None."""

45 if dtype is not None:

46 return jax.tree_util.tree_map(lambda t: t.astype(dtype), tree)

47 else:

48 return tree

49

50

51 def set_diags(a: chex.Array, new_diags: chex.Array) -> chex.Array:

52 """Set the diagonals of every DxD matrix in an input of shape NxDxD.

53

54 Args:

55 a: rank 3, tensor NxDxD.

56 new_diags: NxD matrix, the new diagonals of each DxD matrix.

57

58 Returns:

59 NxDxD tensor, with the same contents as `a` but with the diagonal

60 changed to `new_diags`.

61 """

62 n, d, d1 = a.shape

63 assert d == d1

64

65 indices1 = jnp.repeat(jnp.arange(n), d)

66 indices2 = jnp.tile(jnp.arange(d), n)

67 indices3 = indices2

68

69 # Use numpy array setting

70 a = a.at[indices1, indices2, indices3].set(new_diags.flatten())

71 return a

72

73

74 class MultiNormalDiagFromLogScale():

75 """MultiNormalDiag which directly exposes its input parameters."""

76

77 def __init__(self, loc: chex.Array, log_scale: chex.Array):

78 self._log_scale = log_scale

79 self._scale = jnp.exp(log_scale)

80 self._mean = loc

81 self._param_shape = jax.lax.broadcast_shapes(

82 self._mean.shape, self._scale.shape)

83

84 def sample(self, shape: Sequence[int],

85 seed: chex.PRNGKey) -> chex.Array:

86 sample_shape = tuple(shape) + self._param_shape

87 return jax.random.normal(

88 seed, shape=sample_shape) * self._scale + self._mean

89

90 def log_prob(self, x: chex.Array) -> chex.Array:

91 log_prob = multivariate_normal.logpdf(x, loc=self._mean, scale=self._scale)

92 # Sum over parameter axes.

93 sum_axis = [-(i + 1) for i in range(len(self._param_shape))]

94 return jnp.sum(log_prob, axis=sum_axis)

95

96 @property

97 def log_scale(self) -> chex.Array:

98 return self._log_scale

99

100 @property

101 def params(self) -> Sequence[chex.Array]:

102 return [self._mean, self._log_scale]

103

104

105 def multi_normal(loc: chex.Array,

106 log_scale: chex.Array) -> MultiNormalDiagFromLogScale:

107 return MultiNormalDiagFromLogScale(loc=loc, log_scale=log_scale)

108

109

110 @jax.custom_vjp

111 def _scale_gradient(inputs: chex.ArrayTree, scale: float) -> chex.ArrayTree:

112 """Internal gradient scaling implementation."""

113 del scale # Only used for the backward pass defined in _scale_gradient_bwd.

114 return inputs

115

116

117 def _scale_gradient_fwd(inputs: chex.ArrayTree,

118 scale: float) -> Tuple[chex.ArrayTree, float]:

119 return _scale_gradient(inputs, scale), scale

120

121

122 def _scale_gradient_bwd(scale: float,

123 g: chex.ArrayTree) -> Tuple[chex.ArrayTree, None]:

124 return (jax.tree_util.tree_map(lambda g_: g_ * scale, g), None)

125

126

127 _scale_gradient.defvjp(_scale_gradient_fwd, _scale_gradient_bwd)

128

129

130 def scale_gradient(inputs: chex.ArrayTree, scale: float) -> chex.ArrayTree:

131 """Scales gradients for the backwards pass.

132

133 Args:

134 inputs: A nested array.

135 scale: The scale factor for the gradient on the backwards pass.

136

137 Returns:

138 An array of the same structure as `inputs`, with scaled backward gradient.

139 """

140 # Special case scales of 1. and 0. for more efficiency.

141 if scale == 1.:

142 return inputs

143 elif scale == 0.:

144 return jax.lax.stop_gradient(inputs)

145 else:

146 return _scale_gradient(inputs, scale)

147

148

149 # TODO(b/183800387): remove legacy aliases.

150 safe_norm = numerics.safe_norm

151 safe_int32_increment = numerics.safe_int32_increment

152 global_norm = linear_algebra.global_norm

153

[end of optax/_src/utils.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/optax/_src/utils.py b/optax/_src/utils.py

--- a/optax/_src/utils.py

+++ b/optax/_src/utils.py

@@ -59,8 +59,22 @@

NxDxD tensor, with the same contents as `a` but with the diagonal

changed to `new_diags`.

"""

+ a_dim, new_diags_dim = len(a.shape), len(new_diags.shape)

+ if a_dim != 3:

+ raise ValueError(f'Expected `a` to be a 3D tensor, got {a_dim}D instead')

+ if new_diags_dim != 2:

+ raise ValueError(

+ f'Expected `new_diags` to be a 2D array, got {new_diags_dim}D instead')

n, d, d1 = a.shape

- assert d == d1

+ n_diags, d_diags = new_diags.shape

+ if d != d1:

+ raise ValueError(

+ f'Shape mismatch: expected `a.shape` to be {(n, d, d)}, '

+ f'got {(n, d, d1)} instead')

+ if d_diags != d or n_diags != n:

+ raise ValueError(

+ f'Shape mismatch: expected `new_diags.shape` to be {(n, d)}, '

+ f'got {(n_diags, d_diags)} instead')

indices1 = jnp.repeat(jnp.arange(n), d)

indices2 = jnp.tile(jnp.arange(d), n)

|

{"golden_diff": "diff --git a/optax/_src/utils.py b/optax/_src/utils.py\n--- a/optax/_src/utils.py\n+++ b/optax/_src/utils.py\n@@ -59,8 +59,22 @@\n NxDxD tensor, with the same contents as `a` but with the diagonal\n changed to `new_diags`.\n \"\"\"\n+ a_dim, new_diags_dim = len(a.shape), len(new_diags.shape)\n+ if a_dim != 3:\n+ raise ValueError(f'Expected `a` to be a 3D tensor, got {a_dim}D instead')\n+ if new_diags_dim != 2:\n+ raise ValueError(\n+ f'Expected `new_diags` to be a 2D array, got {new_diags_dim}D instead')\n n, d, d1 = a.shape\n- assert d == d1\n+ n_diags, d_diags = new_diags.shape\n+ if d != d1:\n+ raise ValueError(\n+ f'Shape mismatch: expected `a.shape` to be {(n, d, d)}, '\n+ f'got {(n, d, d1)} instead')\n+ if d_diags != d or n_diags != n:\n+ raise ValueError(\n+ f'Shape mismatch: expected `new_diags.shape` to be {(n, d)}, '\n+ f'got {(n_diags, d_diags)} instead')\n \n indices1 = jnp.repeat(jnp.arange(n), d)\n indices2 = jnp.tile(jnp.arange(d), n)\n", "issue": "Better tests for utils.\nOptax tests did not catch a problem with one of the type annotations in #367. This is due to `utils` not having good test coverage. \r\n\r\nI'm marking this as \"good first issue\". Any tests for `utils` would be very welcome! No need to write tests for all of them at once, PRs with only a single test at a time are very welcome.\n", "before_files": [{"content": "# Copyright 2019 DeepMind Technologies Limited. 
All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ==============================================================================\n\"\"\"Utility functions for testing.\"\"\"\n\nfrom typing import Optional, Tuple, Sequence\n\nimport chex\nimport jax\nimport jax.numpy as jnp\nimport jax.scipy.stats.norm as multivariate_normal\n\nfrom optax._src import linear_algebra\nfrom optax._src import numerics\n\n\ndef tile_second_to_last_dim(a: chex.Array) -> chex.Array:\n ones = jnp.ones_like(a)\n a = jnp.expand_dims(a, axis=-1)\n return jnp.expand_dims(ones, axis=-2) * a\n\n\ndef canonicalize_dtype(\n dtype: Optional[chex.ArrayDType]) -> Optional[chex.ArrayDType]:\n \"\"\"Canonicalise a dtype, skip if None.\"\"\"\n if dtype is not None:\n return jax.dtypes.canonicalize_dtype(dtype)\n return dtype\n\n\ndef cast_tree(tree: chex.ArrayTree,\n dtype: Optional[chex.ArrayDType]) -> chex.ArrayTree:\n \"\"\"Cast tree to given dtype, skip if None.\"\"\"\n if dtype is not None:\n return jax.tree_util.tree_map(lambda t: t.astype(dtype), tree)\n else:\n return tree\n\n\ndef set_diags(a: chex.Array, new_diags: chex.Array) -> chex.Array:\n \"\"\"Set the diagonals of every DxD matrix in an input of shape NxDxD.\n\n Args:\n a: rank 3, tensor NxDxD.\n new_diags: NxD matrix, the new diagonals of each DxD matrix.\n\n Returns:\n NxDxD tensor, with the same contents as `a` but with the diagonal\n changed to `new_diags`.\n \"\"\"\n n, d, d1 = a.shape\n assert d == d1\n\n 
indices1 = jnp.repeat(jnp.arange(n), d)\n indices2 = jnp.tile(jnp.arange(d), n)\n indices3 = indices2\n\n # Use numpy array setting\n a = a.at[indices1, indices2, indices3].set(new_diags.flatten())\n return a\n\n\nclass MultiNormalDiagFromLogScale():\n \"\"\"MultiNormalDiag which directly exposes its input parameters.\"\"\"\n\n def __init__(self, loc: chex.Array, log_scale: chex.Array):\n self._log_scale = log_scale\n self._scale = jnp.exp(log_scale)\n self._mean = loc\n self._param_shape = jax.lax.broadcast_shapes(\n self._mean.shape, self._scale.shape)\n\n def sample(self, shape: Sequence[int],\n seed: chex.PRNGKey) -> chex.Array:\n sample_shape = tuple(shape) + self._param_shape\n return jax.random.normal(\n seed, shape=sample_shape) * self._scale + self._mean\n\n def log_prob(self, x: chex.Array) -> chex.Array:\n log_prob = multivariate_normal.logpdf(x, loc=self._mean, scale=self._scale)\n # Sum over parameter axes.\n sum_axis = [-(i + 1) for i in range(len(self._param_shape))]\n return jnp.sum(log_prob, axis=sum_axis)\n\n @property\n def log_scale(self) -> chex.Array:\n return self._log_scale\n\n @property\n def params(self) -> Sequence[chex.Array]:\n return [self._mean, self._log_scale]\n\n\ndef multi_normal(loc: chex.Array,\n log_scale: chex.Array) -> MultiNormalDiagFromLogScale:\n return MultiNormalDiagFromLogScale(loc=loc, log_scale=log_scale)\n\n\n@jax.custom_vjp\ndef _scale_gradient(inputs: chex.ArrayTree, scale: float) -> chex.ArrayTree:\n \"\"\"Internal gradient scaling implementation.\"\"\"\n del scale # Only used for the backward pass defined in _scale_gradient_bwd.\n return inputs\n\n\ndef _scale_gradient_fwd(inputs: chex.ArrayTree,\n scale: float) -> Tuple[chex.ArrayTree, float]:\n return _scale_gradient(inputs, scale), scale\n\n\ndef _scale_gradient_bwd(scale: float,\n g: chex.ArrayTree) -> Tuple[chex.ArrayTree, None]:\n return (jax.tree_util.tree_map(lambda g_: g_ * scale, g), None)\n\n\n_scale_gradient.defvjp(_scale_gradient_fwd, 
_scale_gradient_bwd)\n\n\ndef scale_gradient(inputs: chex.ArrayTree, scale: float) -> chex.ArrayTree:\n \"\"\"Scales gradients for the backwards pass.\n\n Args:\n inputs: A nested array.\n scale: The scale factor for the gradient on the backwards pass.\n\n Returns:\n An array of the same structure as `inputs`, with scaled backward gradient.\n \"\"\"\n # Special case scales of 1. and 0. for more efficiency.\n if scale == 1.:\n return inputs\n elif scale == 0.:\n return jax.lax.stop_gradient(inputs)\n else:\n return _scale_gradient(inputs, scale)\n\n\n# TODO(b/183800387): remove legacy aliases.\nsafe_norm = numerics.safe_norm\nsafe_int32_increment = numerics.safe_int32_increment\nglobal_norm = linear_algebra.global_norm\n", "path": "optax/_src/utils.py"}]}

| 2,255 | 348 |

gh_patches_debug_8272

|

rasdani/github-patches

|

git_diff

|

Parsl__parsl-463

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Improve datafuture representation

Here is an example: `<DataFuture at 0x7f305c0b1da0 state=finished returned /home/annawoodard/pnpfit/results/best-fit-cuB.root_file>`

I do not think we should append `_file` to the end of the filepath, it makes it confusing what the actual filepath is.

</issue>

<code>

[start of parsl/app/futures.py]

1 """This module implements DataFutures.

2

3 We have two basic types of futures:

4 1. DataFutures which represent data objects

5 2. AppFutures which represent the futures on App/Leaf tasks.

6 """

7 import os

8 import logging

9 from concurrent.futures import Future

10

11 from parsl.dataflow.futures import AppFuture

12 from parsl.app.errors import *

13 from parsl.data_provider.files import File

14

15 logger = logging.getLogger(__name__)

16

17 # Possible future states (for internal use by the futures package).

18 PENDING = 'PENDING'

19 RUNNING = 'RUNNING'

20 # The future was cancelled by the user...

21 CANCELLED = 'CANCELLED'

22 # ...and _Waiter.add_cancelled() was called by a worker.

23 CANCELLED_AND_NOTIFIED = 'CANCELLED_AND_NOTIFIED'

24 FINISHED = 'FINISHED'

25

26 _STATE_TO_DESCRIPTION_MAP = {

27 PENDING: "pending",

28 RUNNING: "running",

29 CANCELLED: "cancelled",

30 CANCELLED_AND_NOTIFIED: "cancelled",

31 FINISHED: "finished"

32 }

33

34

35 class DataFuture(Future):

36 """A datafuture points at an AppFuture.

37

38 We are simply wrapping a AppFuture, and adding the specific case where, if

39 the future is resolved i.e file exists, then the DataFuture is assumed to be

40 resolved.

41 """

42

43 def parent_callback(self, parent_fu):

44 """Callback from executor future to update the parent.

45

46 Args:

47 - parent_fu (Future): Future returned by the executor along with callback

48

49 Returns:

50 - None

51

52 Updates the super() with the result() or exception()

53 """

54 if parent_fu.done() is True:

55 e = parent_fu._exception

56 if e:

57 super().set_exception(e)

58 else:

59 super().set_result(parent_fu.result())

60 return

61

62 def __init__(self, fut, file_obj, parent=None, tid=None):

63 """Construct the DataFuture object.

64

65 If the file_obj is a string convert to a File.

66

67 Args:

68 - fut (AppFuture) : AppFuture that this DataFuture will track

69 - file_obj (string/File obj) : Something representing file(s)

70

71 Kwargs:

72 - parent ()

73 - tid (task_id) : Task id that this DataFuture tracks

74 """

75 super().__init__()

76 self._tid = tid

77 if isinstance(file_obj, str) and not isinstance(file_obj, File):

78 self.file_obj = File(file_obj)

79 else:

80 self.file_obj = file_obj

81 self.parent = parent

82 self._exception = None

83

84 if fut is None:

85 logger.debug("Setting result to filepath since no future was passed")

86 self.set_result = self.file_obj

87

88 else:

89 if isinstance(fut, Future):

90 self.parent = fut

91 self.parent.add_done_callback(self.parent_callback)

92 else:

93 raise NotFutureError("DataFuture can be created only with a FunctionFuture on None")