| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_4028

|

rasdani/github-patches

|

git_diff

|

diofant__diofant-852

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Project logo

There are few places to put images:

- [x] Top left corner of https://diofant.readthedocs.io/ (see e.g. https://sphinx-rtd-theme.readthedocs.io/)

- [x] favicon.ico.

- [x] logo for pdf logs

- [x] Organization profile on the Github (at least 200x200px)

- [x] ~~repository’s social media preview (640×320px - 1280×640px for best display)~~

--- END ISSUE ---

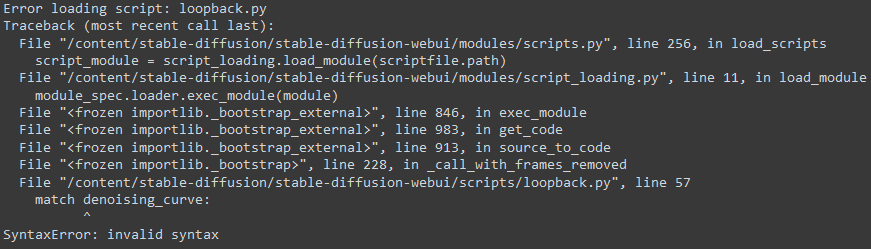

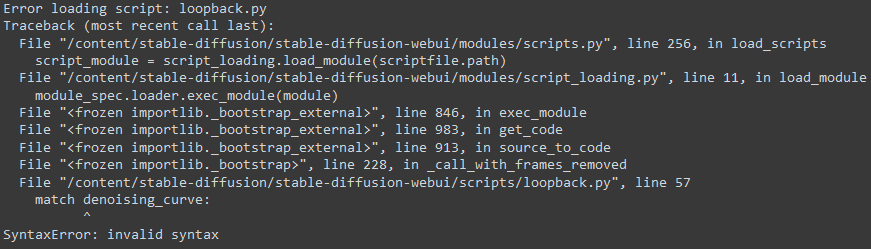

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `docs/conf.py`

Content:

```
1 #
2 # Diofant documentation build configuration file.
3 #
4 # This file is execfile()d with the current directory set to its
5 # containing dir.
6 #
7 # The contents of this file are pickled, so don't put values in the
8 # namespace that aren't pickleable (module imports are okay, they're
9 # removed automatically).
10 #
11
12 import warnings
13
14 import diofant
15
16
17 # Turns numpydoc's section warnings to exceptions, see numpy/numpydoc#58.
18 warnings.simplefilter('error', UserWarning)
19
20 # Add any Sphinx extension module names here, as strings.
21 extensions = ['sphinx.ext.autodoc', 'sphinx.ext.viewcode', 'sphinx.ext.mathjax',
22               'sphinx.ext.graphviz', 'sphinx.ext.intersphinx',
23               'sphinx.ext.extlinks', 'sphinx.ext.napoleon',
24               'sphinxcontrib.bibtex']
25
26 napoleon_google_docstring = False
27 napoleon_use_param = False
28 napoleon_use_rtype = False
29
30 # Sphinx will warn about all references where the target cannot be found.
31 nitpicky = True
32
33 # Glob-style patterns that should be excluded when looking for sources.
34 exclude_patterns = ['README.rst']
35
36 # The document name of the "master" document, that is, the document
37 # that contains the root toctree directive.
38 master_doc = 'index'
39
40 # Project information.
41 project = 'Diofant'
42 copyright = '2006-2018 SymPy Development Team, 2013-2019 Sergey B Kirpichev'
43 version = diofant.__version__
44 release = version
45
46 # The name of default reST role, that is, for text marked up `like this`.
47 default_role = 'math'
48
49 # The theme to use for HTML and HTML Help pages.
50 html_theme = 'sphinx_rtd_theme'
51
52 # The LaTeX engine to build the docs.
53 latex_engine = 'xelatex'
54
55 # If True, the PDF build from the LaTeX files created by Sphinx will use xindy
56 # rather than makeindex.
57 latex_use_xindy = False
58
59 # This value determines how to group the document tree into LaTeX source
60 # files. It must be a list of tuples (startdocname, targetname, title,
61 # author, documentclass, toctree_only),
62 latex_documents = [('index', 'diofant.tex', 'Diofant Documentation',
63                     'Diofant Development Team', 'manual', True)]
64
65 # A dictionary that contains LaTeX snippets that override predefined.
66 latex_elements = {
67     'preamble': r'''
68 \setmainfont{DejaVu Serif}
69 \setsansfont{DejaVu Sans}
70 \setmonofont{DejaVu Sans Mono}
71 % redefine \LaTeX to be usable in math mode
72 \expandafter\def\expandafter\LaTeX\expandafter{\expandafter\text\expandafter{\LaTeX}}
73 '''
74 }
75
76 # Add page references after internal references.
77 latex_show_pagerefs = True
78
79 # The output format for Graphviz when building HTML files.
80 graphviz_output_format = 'svg'
81
82 # Contains mapping the locations and names of other projects that
83 # should be linked to in this documentation.
84 intersphinx_mapping = {
85     'python3': ('https://docs.python.org/3/', None),
86     'numpy': ('https://docs.scipy.org/doc/numpy', None),
87     'scipy': ('https://docs.scipy.org/doc/scipy/reference', None),
88 }
89
90 # Dictionary of external sites, mapping unique short alias names to a
91 # base URL and a prefix.
92 extlinks = {
93     'issue': ('https://github.com/diofant/diofant/issues/%s', '#'),
94     'pull': ('https://github.com/diofant/diofant/pull/%s', '#'),
95     'commit': ('https://github.com/diofant/diofant/commit/%s', ''),
96     'sympyissue': ('https://github.com/sympy/sympy/issues/%s', 'sympy/sympy#'),
97     'sympypull': ('https://github.com/sympy/sympy/pull/%s', 'sympy/sympy#'),
98 }
99
100 # The number of times the linkcheck builder will attempt to check a URL
101 # before declaring it broken.
102 linkcheck_retries = 3
103
104 # A list of regular expressions that match URIs that should not be checked.
105 linkcheck_ignore = [r'https://primes.utm.edu/notes/gaps.html',
106                     r'https://primes.utm.edu/glossary/xpage/BertrandsPostulate.html',
107                     r'https://primes.utm.edu/prove/prove2_3.html',
108                     r'https://primes.utm.edu/glossary/xpage/Pseudoprime.html']
109
110 # This value controls if docstring for classes or methods, if not explicitly
111 # set, is inherited form parents.
112 autodoc_inherit_docstrings = False
113
114 # A list of paths that contain custom static files. Relative paths are taken as
115 # relative to the configuration directory. They are copied to the output’s
116 # _static directory.
117 html_static_path = ['_static']
118
119 # Should we show "Created using Sphinx" in the HTML footer?
120 html_show_sphinx = False
121
122 # Theme-specific options.
123 html_theme_options = {
124     'logo_only': True,
125     'display_version': False,
126 }
127
128 mathjax_config = {
129     'CommonHTML': {'linebreaks': {'automatic': True}},
130     'HTML-CSS': {'linebreaks': {'automatic': True}},
131     'SVG': {'linebreaks': {'automatic': True}},
132 }
133
134
135 # https://docs.readthedocs.io/en/latest/guides/adding-custom-css.html
136 def setup(app):
137     app.add_stylesheet('custom.css')
138
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/docs/conf.py b/docs/conf.py

--- a/docs/conf.py

+++ b/docs/conf.py

@@ -119,6 +119,11 @@

# Should we show "Created using Sphinx" in the HTML footer?

html_show_sphinx = False

+# Paths to the logo and favicon.ico, relative to the conf.py's directory.

+html_logo = '_static/logo.svg'

+html_favicon = '_static/favicon.ico'

+latex_logo = '_static/logo.png'

+

# Theme-specific options.

html_theme_options = {

'logo_only': True,

|

{"golden_diff": "diff --git a/docs/conf.py b/docs/conf.py\n--- a/docs/conf.py\n+++ b/docs/conf.py\n@@ -119,6 +119,11 @@\n # Should we show \"Created using Sphinx\" in the HTML footer?\n html_show_sphinx = False\n \n+# Paths to the logo and favicon.ico, relative to the conf.py's directory.\n+html_logo = '_static/logo.svg'\n+html_favicon = '_static/favicon.ico'\n+latex_logo = '_static/logo.png'\n+\n # Theme-specific options.\n html_theme_options = {\n 'logo_only': True,\n", "issue": "Project logo\nThere are few places to put images:\r\n- [x] Top left corner of https://diofant.readthedocs.io/ (see e.g. https://sphinx-rtd-theme.readthedocs.io/)\r\n- [x] favicon.ico.\r\n- [x] logo for pdf logs\r\n- [x] Organization profile on the Github (at least 200x200px)\r\n- [x] ~~repository\u2019s social media preview (640\u00d7320px - 1280\u00d7640px for best display)~~\r\n\n", "before_files": [{"content": "#\n# Diofant documentation build configuration file.\n#\n# This file is execfile()d with the current directory set to its\n# containing dir.\n#\n# The contents of this file are pickled, so don't put values in the\n# namespace that aren't pickleable (module imports are okay, they're\n# removed automatically).\n#\n\nimport warnings\n\nimport diofant\n\n\n# Turns numpydoc's section warnings to exceptions, see numpy/numpydoc#58.\nwarnings.simplefilter('error', UserWarning)\n\n# Add any Sphinx extension module names here, as strings.\nextensions = ['sphinx.ext.autodoc', 'sphinx.ext.viewcode', 'sphinx.ext.mathjax',\n 'sphinx.ext.graphviz', 'sphinx.ext.intersphinx',\n 'sphinx.ext.extlinks', 'sphinx.ext.napoleon',\n 'sphinxcontrib.bibtex']\n\nnapoleon_google_docstring = False\nnapoleon_use_param = False\nnapoleon_use_rtype = False\n\n# Sphinx will warn about all references where the target cannot be found.\nnitpicky = True\n\n# Glob-style patterns that should be excluded when looking for sources.\nexclude_patterns = ['README.rst']\n\n# The document name of the \"master\" document, that 
is, the document\n# that contains the root toctree directive.\nmaster_doc = 'index'\n\n# Project information.\nproject = 'Diofant'\ncopyright = '2006-2018 SymPy Development Team, 2013-2019 Sergey B Kirpichev'\nversion = diofant.__version__\nrelease = version\n\n# The name of default reST role, that is, for text marked up `like this`.\ndefault_role = 'math'\n\n# The theme to use for HTML and HTML Help pages.\nhtml_theme = 'sphinx_rtd_theme'\n\n# The LaTeX engine to build the docs.\nlatex_engine = 'xelatex'\n\n# If True, the PDF build from the LaTeX files created by Sphinx will use xindy\n# rather than makeindex.\nlatex_use_xindy = False\n\n# This value determines how to group the document tree into LaTeX source\n# files. It must be a list of tuples (startdocname, targetname, title,\n# author, documentclass, toctree_only),\nlatex_documents = [('index', 'diofant.tex', 'Diofant Documentation',\n 'Diofant Development Team', 'manual', True)]\n\n# A dictionary that contains LaTeX snippets that override predefined.\nlatex_elements = {\n 'preamble': r'''\n\\setmainfont{DejaVu Serif}\n\\setsansfont{DejaVu Sans}\n\\setmonofont{DejaVu Sans Mono}\n% redefine \\LaTeX to be usable in math mode\n\\expandafter\\def\\expandafter\\LaTeX\\expandafter{\\expandafter\\text\\expandafter{\\LaTeX}}\n'''\n}\n\n# Add page references after internal references.\nlatex_show_pagerefs = True\n\n# The output format for Graphviz when building HTML files.\ngraphviz_output_format = 'svg'\n\n# Contains mapping the locations and names of other projects that\n# should be linked to in this documentation.\nintersphinx_mapping = {\n 'python3': ('https://docs.python.org/3/', None),\n 'numpy': ('https://docs.scipy.org/doc/numpy', None),\n 'scipy': ('https://docs.scipy.org/doc/scipy/reference', None),\n}\n\n# Dictionary of external sites, mapping unique short alias names to a\n# base URL and a prefix.\nextlinks = {\n 'issue': ('https://github.com/diofant/diofant/issues/%s', '#'),\n 'pull': 
('https://github.com/diofant/diofant/pull/%s', '#'),\n 'commit': ('https://github.com/diofant/diofant/commit/%s', ''),\n 'sympyissue': ('https://github.com/sympy/sympy/issues/%s', 'sympy/sympy#'),\n 'sympypull': ('https://github.com/sympy/sympy/pull/%s', 'sympy/sympy#'),\n}\n\n# The number of times the linkcheck builder will attempt to check a URL\n# before declaring it broken.\nlinkcheck_retries = 3\n\n# A list of regular expressions that match URIs that should not be checked.\nlinkcheck_ignore = [r'https://primes.utm.edu/notes/gaps.html',\n r'https://primes.utm.edu/glossary/xpage/BertrandsPostulate.html',\n r'https://primes.utm.edu/prove/prove2_3.html',\n r'https://primes.utm.edu/glossary/xpage/Pseudoprime.html']\n\n# This value controls if docstring for classes or methods, if not explicitly\n# set, is inherited form parents.\nautodoc_inherit_docstrings = False\n\n# A list of paths that contain custom static files. Relative paths are taken as\n# relative to the configuration directory. 
They are copied to the output\u2019s\n# _static directory.\nhtml_static_path = ['_static']\n\n# Should we show \"Created using Sphinx\" in the HTML footer?\nhtml_show_sphinx = False\n\n# Theme-specific options.\nhtml_theme_options = {\n 'logo_only': True,\n 'display_version': False,\n}\n\nmathjax_config = {\n 'CommonHTML': {'linebreaks': {'automatic': True}},\n 'HTML-CSS': {'linebreaks': {'automatic': True}},\n 'SVG': {'linebreaks': {'automatic': True}},\n}\n\n\n# https://docs.readthedocs.io/en/latest/guides/adding-custom-css.html\ndef setup(app):\n app.add_stylesheet('custom.css')\n", "path": "docs/conf.py"}], "after_files": [{"content": "#\n# Diofant documentation build configuration file.\n#\n# This file is execfile()d with the current directory set to its\n# containing dir.\n#\n# The contents of this file are pickled, so don't put values in the\n# namespace that aren't pickleable (module imports are okay, they're\n# removed automatically).\n#\n\nimport warnings\n\nimport diofant\n\n\n# Turns numpydoc's section warnings to exceptions, see numpy/numpydoc#58.\nwarnings.simplefilter('error', UserWarning)\n\n# Add any Sphinx extension module names here, as strings.\nextensions = ['sphinx.ext.autodoc', 'sphinx.ext.viewcode', 'sphinx.ext.mathjax',\n 'sphinx.ext.graphviz', 'sphinx.ext.intersphinx',\n 'sphinx.ext.extlinks', 'sphinx.ext.napoleon',\n 'sphinxcontrib.bibtex']\n\nnapoleon_google_docstring = False\nnapoleon_use_param = False\nnapoleon_use_rtype = False\n\n# Sphinx will warn about all references where the target cannot be found.\nnitpicky = True\n\n# Glob-style patterns that should be excluded when looking for sources.\nexclude_patterns = ['README.rst']\n\n# The document name of the \"master\" document, that is, the document\n# that contains the root toctree directive.\nmaster_doc = 'index'\n\n# Project information.\nproject = 'Diofant'\ncopyright = '2006-2018 SymPy Development Team, 2013-2019 Sergey B Kirpichev'\nversion = diofant.__version__\nrelease = 
version\n\n# The name of default reST role, that is, for text marked up `like this`.\ndefault_role = 'math'\n\n# The theme to use for HTML and HTML Help pages.\nhtml_theme = 'sphinx_rtd_theme'\n\n# The LaTeX engine to build the docs.\nlatex_engine = 'xelatex'\n\n# If True, the PDF build from the LaTeX files created by Sphinx will use xindy\n# rather than makeindex.\nlatex_use_xindy = False\n\n# This value determines how to group the document tree into LaTeX source\n# files. It must be a list of tuples (startdocname, targetname, title,\n# author, documentclass, toctree_only),\nlatex_documents = [('index', 'diofant.tex', 'Diofant Documentation',\n 'Diofant Development Team', 'manual', True)]\n\n# A dictionary that contains LaTeX snippets that override predefined.\nlatex_elements = {\n 'preamble': r'''\n\\setmainfont{DejaVu Serif}\n\\setsansfont{DejaVu Sans}\n\\setmonofont{DejaVu Sans Mono}\n% redefine \\LaTeX to be usable in math mode\n\\expandafter\\def\\expandafter\\LaTeX\\expandafter{\\expandafter\\text\\expandafter{\\LaTeX}}\n'''\n}\n\n# Add page references after internal references.\nlatex_show_pagerefs = True\n\n# The output format for Graphviz when building HTML files.\ngraphviz_output_format = 'svg'\n\n# Contains mapping the locations and names of other projects that\n# should be linked to in this documentation.\nintersphinx_mapping = {\n 'python3': ('https://docs.python.org/3/', None),\n 'numpy': ('https://docs.scipy.org/doc/numpy', None),\n 'scipy': ('https://docs.scipy.org/doc/scipy/reference', None),\n}\n\n# Dictionary of external sites, mapping unique short alias names to a\n# base URL and a prefix.\nextlinks = {\n 'issue': ('https://github.com/diofant/diofant/issues/%s', '#'),\n 'pull': ('https://github.com/diofant/diofant/pull/%s', '#'),\n 'commit': ('https://github.com/diofant/diofant/commit/%s', ''),\n 'sympyissue': ('https://github.com/sympy/sympy/issues/%s', 'sympy/sympy#'),\n 'sympypull': ('https://github.com/sympy/sympy/pull/%s', 
'sympy/sympy#'),\n}\n\n# The number of times the linkcheck builder will attempt to check a URL\n# before declaring it broken.\nlinkcheck_retries = 3\n\n# A list of regular expressions that match URIs that should not be checked.\nlinkcheck_ignore = [r'https://primes.utm.edu/notes/gaps.html',\n r'https://primes.utm.edu/glossary/xpage/BertrandsPostulate.html',\n r'https://primes.utm.edu/prove/prove2_3.html',\n r'https://primes.utm.edu/glossary/xpage/Pseudoprime.html']\n\n# This value controls if docstring for classes or methods, if not explicitly\n# set, is inherited form parents.\nautodoc_inherit_docstrings = False\n\n# A list of paths that contain custom static files. Relative paths are taken as\n# relative to the configuration directory. They are copied to the output\u2019s\n# _static directory.\nhtml_static_path = ['_static']\n\n# Should we show \"Created using Sphinx\" in the HTML footer?\nhtml_show_sphinx = False\n\n# Paths to the logo and favicon.ico, relative to the conf.py's directory.\nhtml_logo = '_static/logo.svg'\nhtml_favicon = '_static/favicon.ico'\nlatex_logo = '_static/logo.png'\n\n# Theme-specific options.\nhtml_theme_options = {\n 'logo_only': True,\n 'display_version': False,\n}\n\nmathjax_config = {\n 'CommonHTML': {'linebreaks': {'automatic': True}},\n 'HTML-CSS': {'linebreaks': {'automatic': True}},\n 'SVG': {'linebreaks': {'automatic': True}},\n}\n\n\n# https://docs.readthedocs.io/en/latest/guides/adding-custom-css.html\ndef setup(app):\n app.add_stylesheet('custom.css')\n", "path": "docs/conf.py"}]}

| 1,927 | 123 |
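
The golden diff in the record above sets `html_logo`, `html_favicon`, and `latex_logo` to paths relative to the directory that holds `conf.py`. As a minimal sketch only, the check below shows one way to confirm that such paths point at real files before a build. The helper name `missing_assets` and the `ASSET_SETTINGS` dictionary are hypothetical and are not part of Diofant or Sphinx; the three path values are copied from the diff.

```python
import os

# Path values copied from the golden diff; the dictionary itself is illustrative.
ASSET_SETTINGS = {
    'html_logo': '_static/logo.svg',
    'html_favicon': '_static/favicon.ico',
    'latex_logo': '_static/logo.png',
}


def missing_assets(conf_dir, settings=ASSET_SETTINGS):
    """Return the sorted setting names whose file is absent under conf_dir.

    Hypothetical helper: paths are resolved relative to conf_dir, which
    mirrors the comment in the diff ("relative to the conf.py's directory").
    """
    return sorted(
        name for name, rel_path in settings.items()
        if not os.path.isfile(os.path.join(conf_dir, rel_path))
    )
```

Calling `missing_assets('docs')` from the repository root would list any of the three settings whose file has not been committed yet, assuming the documentation lives in `docs/` as the file path in the record suggests.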

gh_patches_debug_16223

|

rasdani/github-patches

|

git_diff

|

microsoft__botbuilder-python-1930

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Bump msrest to the 0.6.19 or higher

Is your feature request related to a problem? Please describe.

Old version of msrest is used in botframework components -> https://github.com/microsoft/botbuilder-python/search?q=msrest%3D%3D0.6.10 . This blocks us to use latest versions of the service bus client or event using the new language studio python libraries.

With msrest=0.6.10, we're blocked to using 0.50 service bus package and other packages like event grid.

Describe the solution you'd like

EDITED: Upgrade msrest to the at least 0.6.19 or higher.

Describe alternatives you've considered

No alternatives.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `libraries/botframework-connector/setup.py`

Content:

```
1 # Copyright (c) Microsoft Corporation. All rights reserved.
2 # Licensed under the MIT License.
3
4 import os
5 from setuptools import setup
6
7 NAME = "botframework-connector"
8 VERSION = os.environ["packageVersion"] if "packageVersion" in os.environ else "4.15.0"
9 REQUIRES = [
10     "msrest==0.6.10",
11     "requests>=2.23.0,<2.26",
12     "PyJWT>=1.5.3,<2.0.0",
13     "botbuilder-schema==4.15.0",
14     "msal==1.6.0",
15 ]
16
17 root = os.path.abspath(os.path.dirname(__file__))
18
19 with open(os.path.join(root, "README.rst"), encoding="utf-8") as f:
20     long_description = f.read()
21
22 setup(
23     name=NAME,
24     version=VERSION,
25     description="Microsoft Bot Framework Bot Builder SDK for Python.",
26     author="Microsoft",
27     url="https://www.github.com/Microsoft/botbuilder-python",
28     keywords=["BotFrameworkConnector", "bots", "ai", "botframework", "botbuilder"],
29     install_requires=REQUIRES,
30     packages=[
31         "botframework.connector",
32         "botframework.connector.auth",
33         "botframework.connector.async_mixin",
34         "botframework.connector.operations",
35         "botframework.connector.models",
36         "botframework.connector.aio",
37         "botframework.connector.aio.operations_async",
38         "botframework.connector.skills",
39         "botframework.connector.teams",
40         "botframework.connector.teams.operations",
41         "botframework.connector.token_api",
42         "botframework.connector.token_api.aio",
43         "botframework.connector.token_api.aio.operations_async",
44         "botframework.connector.token_api.models",
45         "botframework.connector.token_api.operations",
46     ],
47     include_package_data=True,
48     long_description=long_description,
49     long_description_content_type="text/x-rst",
50     license="MIT",
51     classifiers=[
52         "Programming Language :: Python :: 3.7",
53         "Intended Audience :: Developers",
54         "License :: OSI Approved :: MIT License",
55         "Operating System :: OS Independent",
56         "Development Status :: 5 - Production/Stable",
57         "Topic :: Scientific/Engineering :: Artificial Intelligence",
58     ],
59 )
60
```

Path: `libraries/botbuilder-schema/setup.py`

Content:

```
1 # Copyright (c) Microsoft Corporation. All rights reserved.
2 # Licensed under the MIT License.
3
4 import os
5 from setuptools import setup
6
7 NAME = "botbuilder-schema"
8 VERSION = os.environ["packageVersion"] if "packageVersion" in os.environ else "4.15.0"
9 REQUIRES = ["msrest==0.6.10"]
10
11 root = os.path.abspath(os.path.dirname(__file__))
12
13 with open(os.path.join(root, "README.rst"), encoding="utf-8") as f:
14     long_description = f.read()
15
16 setup(
17     name=NAME,
18     version=VERSION,
19     description="BotBuilder Schema",
20     author="Microsoft",
21     url="https://github.com/Microsoft/botbuilder-python",
22     keywords=["BotBuilderSchema", "bots", "ai", "botframework", "botbuilder"],
23     long_description=long_description,
24     long_description_content_type="text/x-rst",
25     license="MIT",
26     install_requires=REQUIRES,
27     packages=["botbuilder.schema", "botbuilder.schema.teams",],
28     include_package_data=True,
29     classifiers=[
30         "Programming Language :: Python :: 3.7",
31         "Intended Audience :: Developers",
32         "License :: OSI Approved :: MIT License",
33         "Operating System :: OS Independent",
34         "Development Status :: 5 - Production/Stable",
35         "Topic :: Scientific/Engineering :: Artificial Intelligence",
36     ],
37 )
38
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/libraries/botbuilder-schema/setup.py b/libraries/botbuilder-schema/setup.py

--- a/libraries/botbuilder-schema/setup.py

+++ b/libraries/botbuilder-schema/setup.py

@@ -6,7 +6,7 @@

NAME = "botbuilder-schema"

VERSION = os.environ["packageVersion"] if "packageVersion" in os.environ else "4.15.0"

-REQUIRES = ["msrest==0.6.10"]

+REQUIRES = ["msrest==0.6.19"]

root = os.path.abspath(os.path.dirname(__file__))

diff --git a/libraries/botframework-connector/setup.py b/libraries/botframework-connector/setup.py

--- a/libraries/botframework-connector/setup.py

+++ b/libraries/botframework-connector/setup.py

@@ -7,7 +7,7 @@

NAME = "botframework-connector"

VERSION = os.environ["packageVersion"] if "packageVersion" in os.environ else "4.15.0"

REQUIRES = [

- "msrest==0.6.10",

+ "msrest==0.6.19",

"requests>=2.23.0,<2.26",

"PyJWT>=1.5.3,<2.0.0",

"botbuilder-schema==4.15.0",

|

{"golden_diff": "diff --git a/libraries/botbuilder-schema/setup.py b/libraries/botbuilder-schema/setup.py\n--- a/libraries/botbuilder-schema/setup.py\n+++ b/libraries/botbuilder-schema/setup.py\n@@ -6,7 +6,7 @@\n \r\n NAME = \"botbuilder-schema\"\r\n VERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\r\n-REQUIRES = [\"msrest==0.6.10\"]\r\n+REQUIRES = [\"msrest==0.6.19\"]\r\n \r\n root = os.path.abspath(os.path.dirname(__file__))\r\n \r\ndiff --git a/libraries/botframework-connector/setup.py b/libraries/botframework-connector/setup.py\n--- a/libraries/botframework-connector/setup.py\n+++ b/libraries/botframework-connector/setup.py\n@@ -7,7 +7,7 @@\n NAME = \"botframework-connector\"\n VERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\n REQUIRES = [\n- \"msrest==0.6.10\",\n+ \"msrest==0.6.19\",\n \"requests>=2.23.0,<2.26\",\n \"PyJWT>=1.5.3,<2.0.0\",\n \"botbuilder-schema==4.15.0\",\n", "issue": "Bump msrest to the 0.6.19 or higher\nIs your feature request related to a problem? Please describe.\r\nOld version of msrest is used in botframework components -> https://github.com/microsoft/botbuilder-python/search?q=msrest%3D%3D0.6.10 . This blocks us to use latest versions of the service bus client or event using the new language studio python libraries.\r\n\r\nWith msrest=0.6.10, we're blocked to using 0.50 service bus package and other packages like event grid.\r\n\r\nDescribe the solution you'd like\r\nEDITED: Upgrade msrest to the at least 0.6.19 or higher.\r\n\r\nDescribe alternatives you've considered\r\nNo alternatives.\r\n\n", "before_files": [{"content": "# Copyright (c) Microsoft Corporation. 
All rights reserved.\n# Licensed under the MIT License.\n\nimport os\nfrom setuptools import setup\n\nNAME = \"botframework-connector\"\nVERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\nREQUIRES = [\n \"msrest==0.6.10\",\n \"requests>=2.23.0,<2.26\",\n \"PyJWT>=1.5.3,<2.0.0\",\n \"botbuilder-schema==4.15.0\",\n \"msal==1.6.0\",\n]\n\nroot = os.path.abspath(os.path.dirname(__file__))\n\nwith open(os.path.join(root, \"README.rst\"), encoding=\"utf-8\") as f:\n long_description = f.read()\n\nsetup(\n name=NAME,\n version=VERSION,\n description=\"Microsoft Bot Framework Bot Builder SDK for Python.\",\n author=\"Microsoft\",\n url=\"https://www.github.com/Microsoft/botbuilder-python\",\n keywords=[\"BotFrameworkConnector\", \"bots\", \"ai\", \"botframework\", \"botbuilder\"],\n install_requires=REQUIRES,\n packages=[\n \"botframework.connector\",\n \"botframework.connector.auth\",\n \"botframework.connector.async_mixin\",\n \"botframework.connector.operations\",\n \"botframework.connector.models\",\n \"botframework.connector.aio\",\n \"botframework.connector.aio.operations_async\",\n \"botframework.connector.skills\",\n \"botframework.connector.teams\",\n \"botframework.connector.teams.operations\",\n \"botframework.connector.token_api\",\n \"botframework.connector.token_api.aio\",\n \"botframework.connector.token_api.aio.operations_async\",\n \"botframework.connector.token_api.models\",\n \"botframework.connector.token_api.operations\",\n ],\n include_package_data=True,\n long_description=long_description,\n long_description_content_type=\"text/x-rst\",\n license=\"MIT\",\n classifiers=[\n \"Programming Language :: Python :: 3.7\",\n \"Intended Audience :: Developers\",\n \"License :: OSI Approved :: MIT License\",\n \"Operating System :: OS Independent\",\n \"Development Status :: 5 - Production/Stable\",\n \"Topic :: Scientific/Engineering :: Artificial Intelligence\",\n ],\n)\n", "path": 
"libraries/botframework-connector/setup.py"}, {"content": "# Copyright (c) Microsoft Corporation. All rights reserved.\r\n# Licensed under the MIT License.\r\n\r\nimport os\r\nfrom setuptools import setup\r\n\r\nNAME = \"botbuilder-schema\"\r\nVERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\r\nREQUIRES = [\"msrest==0.6.10\"]\r\n\r\nroot = os.path.abspath(os.path.dirname(__file__))\r\n\r\nwith open(os.path.join(root, \"README.rst\"), encoding=\"utf-8\") as f:\r\n long_description = f.read()\r\n\r\nsetup(\r\n name=NAME,\r\n version=VERSION,\r\n description=\"BotBuilder Schema\",\r\n author=\"Microsoft\",\r\n url=\"https://github.com/Microsoft/botbuilder-python\",\r\n keywords=[\"BotBuilderSchema\", \"bots\", \"ai\", \"botframework\", \"botbuilder\"],\r\n long_description=long_description,\r\n long_description_content_type=\"text/x-rst\",\r\n license=\"MIT\",\r\n install_requires=REQUIRES,\r\n packages=[\"botbuilder.schema\", \"botbuilder.schema.teams\",],\r\n include_package_data=True,\r\n classifiers=[\r\n \"Programming Language :: Python :: 3.7\",\r\n \"Intended Audience :: Developers\",\r\n \"License :: OSI Approved :: MIT License\",\r\n \"Operating System :: OS Independent\",\r\n \"Development Status :: 5 - Production/Stable\",\r\n \"Topic :: Scientific/Engineering :: Artificial Intelligence\",\r\n ],\r\n)\r\n", "path": "libraries/botbuilder-schema/setup.py"}], "after_files": [{"content": "# Copyright (c) Microsoft Corporation. 
All rights reserved.\n# Licensed under the MIT License.\n\nimport os\nfrom setuptools import setup\n\nNAME = \"botframework-connector\"\nVERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\nREQUIRES = [\n \"msrest==0.6.19\",\n \"requests>=2.23.0,<2.26\",\n \"PyJWT>=1.5.3,<2.0.0\",\n \"botbuilder-schema==4.15.0\",\n \"msal==1.6.0\",\n]\n\nroot = os.path.abspath(os.path.dirname(__file__))\n\nwith open(os.path.join(root, \"README.rst\"), encoding=\"utf-8\") as f:\n long_description = f.read()\n\nsetup(\n name=NAME,\n version=VERSION,\n description=\"Microsoft Bot Framework Bot Builder SDK for Python.\",\n author=\"Microsoft\",\n url=\"https://www.github.com/Microsoft/botbuilder-python\",\n keywords=[\"BotFrameworkConnector\", \"bots\", \"ai\", \"botframework\", \"botbuilder\"],\n install_requires=REQUIRES,\n packages=[\n \"botframework.connector\",\n \"botframework.connector.auth\",\n \"botframework.connector.async_mixin\",\n \"botframework.connector.operations\",\n \"botframework.connector.models\",\n \"botframework.connector.aio\",\n \"botframework.connector.aio.operations_async\",\n \"botframework.connector.skills\",\n \"botframework.connector.teams\",\n \"botframework.connector.teams.operations\",\n \"botframework.connector.token_api\",\n \"botframework.connector.token_api.aio\",\n \"botframework.connector.token_api.aio.operations_async\",\n \"botframework.connector.token_api.models\",\n \"botframework.connector.token_api.operations\",\n ],\n include_package_data=True,\n long_description=long_description,\n long_description_content_type=\"text/x-rst\",\n license=\"MIT\",\n classifiers=[\n \"Programming Language :: Python :: 3.7\",\n \"Intended Audience :: Developers\",\n \"License :: OSI Approved :: MIT License\",\n \"Operating System :: OS Independent\",\n \"Development Status :: 5 - Production/Stable\",\n \"Topic :: Scientific/Engineering :: Artificial Intelligence\",\n ],\n)\n", "path": 
"libraries/botframework-connector/setup.py"}, {"content": "# Copyright (c) Microsoft Corporation. All rights reserved.\r\n# Licensed under the MIT License.\r\n\r\nimport os\r\nfrom setuptools import setup\r\n\r\nNAME = \"botbuilder-schema\"\r\nVERSION = os.environ[\"packageVersion\"] if \"packageVersion\" in os.environ else \"4.15.0\"\r\nREQUIRES = [\"msrest==0.6.19\"]\r\n\r\nroot = os.path.abspath(os.path.dirname(__file__))\r\n\r\nwith open(os.path.join(root, \"README.rst\"), encoding=\"utf-8\") as f:\r\n long_description = f.read()\r\n\r\nsetup(\r\n name=NAME,\r\n version=VERSION,\r\n description=\"BotBuilder Schema\",\r\n author=\"Microsoft\",\r\n url=\"https://github.com/Microsoft/botbuilder-python\",\r\n keywords=[\"BotBuilderSchema\", \"bots\", \"ai\", \"botframework\", \"botbuilder\"],\r\n long_description=long_description,\r\n long_description_content_type=\"text/x-rst\",\r\n license=\"MIT\",\r\n install_requires=REQUIRES,\r\n packages=[\"botbuilder.schema\", \"botbuilder.schema.teams\",],\r\n include_package_data=True,\r\n classifiers=[\r\n \"Programming Language :: Python :: 3.7\",\r\n \"Intended Audience :: Developers\",\r\n \"License :: OSI Approved :: MIT License\",\r\n \"Operating System :: OS Independent\",\r\n \"Development Status :: 5 - Production/Stable\",\r\n \"Topic :: Scientific/Engineering :: Artificial Intelligence\",\r\n ],\r\n)\r\n", "path": "libraries/botbuilder-schema/setup.py"}]}

| 1,390 | 298 |

gh_patches_debug_11520

|

rasdani/github-patches

|

git_diff

|

gratipay__gratipay.com-2999

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Font problem in production

> Font from origin 'https://assets.gratipay.com' has been blocked from loading by Cross-Origin Resource Sharing policy: No 'Access-Control-Allow-Origin' header is present on the requested resource. Origin 'https://gratipay.com' is therefore not allowed access.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `gratipay/utils/cache_static.py`

Content:

```

1 """
2 Handles caching of static resources.
3 """
4 from base64 import b64encode
5 from hashlib import md5
6
7 from aspen import Response
8
9
10 ETAGS = {}
11
12
13 def asset_etag(path):
14     if path.endswith('.spt'):
15         return ''
16     if path in ETAGS:
17         h = ETAGS[path]
18     else:
19         with open(path) as f:
20             h = ETAGS[path] = b64encode(md5(f.read()).digest(), '-_').replace('=', '~')
21     return h
22
23
24 # algorithm functions
25
26 def get_etag_for_file(dispatch_result):
27     return {'etag': asset_etag(dispatch_result.match)}
28
29
30 def try_to_serve_304(website, dispatch_result, request, etag):
31     """Try to serve a 304 for static resources.
32     """
33     if not etag:
34         # This is a request for a dynamic resource.
35         return
36
37     qs_etag = request.line.uri.querystring.get('etag')
38     if qs_etag and qs_etag != etag:
39         # Don't serve one version of a file as if it were another.
40         raise Response(410)
41
42     headers_etag = request.headers.get('If-None-Match')
43     if not headers_etag:
44         # This client doesn't want a 304.
45         return
46
47     if headers_etag != etag:
48         # Cache miss, the client sent an old or invalid etag.
49         return
50
51     # Huzzah!
52     # =======
53     # We can serve a 304! :D
54
55     raise Response(304)
56
57
58 def add_caching_to_response(website, response, request=None, etag=None):
59     """Set caching headers for static resources.
60     """
61     if etag is None:
62         return
63     assert request is not None  # sanity check
64
65     if response.code not in (200, 304):
66         return
67
68     # https://developers.google.com/speed/docs/best-practices/caching
69     response.headers['Vary'] = 'accept-encoding'
70     response.headers['Etag'] = etag
71
72     if request.line.uri.querystring.get('etag'):
73         # We can cache "indefinitely" when the querystring contains the etag.
74         response.headers['Cache-Control'] = 'public, max-age=31536000'
75     else:
76         # Otherwise we cache for 5 seconds
77         response.headers['Cache-Control'] = 'public, max-age=5'
78

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/gratipay/utils/cache_static.py b/gratipay/utils/cache_static.py
--- a/gratipay/utils/cache_static.py
+++ b/gratipay/utils/cache_static.py
@@ -68,6 +68,9 @@
     # https://developers.google.com/speed/docs/best-practices/caching
     response.headers['Vary'] = 'accept-encoding'
     response.headers['Etag'] = etag
+    # Set CORS header for https://assets.gratipay.com (see issue #2970)
+    if 'Access-Control-Allow-Origin' not in response.headers:
+        response.headers['Access-Control-Allow-Origin'] = 'https://gratipay.com'
 
     if request.line.uri.querystring.get('etag'):
         # We can cache "indefinitely" when the querystring contains the etag.

|

{"golden_diff": "diff --git a/gratipay/utils/cache_static.py b/gratipay/utils/cache_static.py\n--- a/gratipay/utils/cache_static.py\n+++ b/gratipay/utils/cache_static.py\n@@ -68,6 +68,9 @@\n # https://developers.google.com/speed/docs/best-practices/caching\n response.headers['Vary'] = 'accept-encoding'\n response.headers['Etag'] = etag\n+ # Set CORS header for https://assets.gratipay.com (see issue #2970)\n+ if 'Access-Control-Allow-Origin' not in response.headers:\n+ response.headers['Access-Control-Allow-Origin'] = 'https://gratipay.com'\n \n if request.line.uri.querystring.get('etag'):\n # We can cache \"indefinitely\" when the querystring contains the etag.\n", "issue": "Font problem in production\n> Font from origin 'https://assets.gratipay.com' has been blocked from loading by Cross-Origin Resource Sharing policy: No 'Access-Control-Allow-Origin' header is present on the requested resource. Origin 'https://gratipay.com' is therefore not allowed access. \n\n", "before_files": [{"content": "\"\"\"\nHandles caching of static resources.\n\"\"\"\nfrom base64 import b64encode\nfrom hashlib import md5\n\nfrom aspen import Response\n\n\nETAGS = {}\n\n\ndef asset_etag(path):\n if path.endswith('.spt'):\n return ''\n if path in ETAGS:\n h = ETAGS[path]\n else:\n with open(path) as f:\n h = ETAGS[path] = b64encode(md5(f.read()).digest(), '-_').replace('=', '~')\n return h\n\n\n# algorithm functions\n\ndef get_etag_for_file(dispatch_result):\n return {'etag': asset_etag(dispatch_result.match)}\n\n\ndef try_to_serve_304(website, dispatch_result, request, etag):\n \"\"\"Try to serve a 304 for static resources.\n \"\"\"\n if not etag:\n # This is a request for a dynamic resource.\n return\n\n qs_etag = request.line.uri.querystring.get('etag')\n if qs_etag and qs_etag != etag:\n # Don't serve one version of a file as if it were another.\n raise Response(410)\n\n headers_etag = request.headers.get('If-None-Match')\n if not headers_etag:\n # This client doesn't want a 304.\n 
return\n\n if headers_etag != etag:\n # Cache miss, the client sent an old or invalid etag.\n return\n\n # Huzzah!\n # =======\n # We can serve a 304! :D\n\n raise Response(304)\n\n\ndef add_caching_to_response(website, response, request=None, etag=None):\n \"\"\"Set caching headers for static resources.\n \"\"\"\n if etag is None:\n return\n assert request is not None # sanity check\n\n if response.code not in (200, 304):\n return\n\n # https://developers.google.com/speed/docs/best-practices/caching\n response.headers['Vary'] = 'accept-encoding'\n response.headers['Etag'] = etag\n\n if request.line.uri.querystring.get('etag'):\n # We can cache \"indefinitely\" when the querystring contains the etag.\n response.headers['Cache-Control'] = 'public, max-age=31536000'\n else:\n # Otherwise we cache for 5 seconds\n response.headers['Cache-Control'] = 'public, max-age=5'\n", "path": "gratipay/utils/cache_static.py"}], "after_files": [{"content": "\"\"\"\nHandles caching of static resources.\n\"\"\"\nfrom base64 import b64encode\nfrom hashlib import md5\n\nfrom aspen import Response\n\n\nETAGS = {}\n\n\ndef asset_etag(path):\n if path.endswith('.spt'):\n return ''\n if path in ETAGS:\n h = ETAGS[path]\n else:\n with open(path) as f:\n h = ETAGS[path] = b64encode(md5(f.read()).digest(), '-_').replace('=', '~')\n return h\n\n\n# algorithm functions\n\ndef get_etag_for_file(dispatch_result):\n return {'etag': asset_etag(dispatch_result.match)}\n\n\ndef try_to_serve_304(website, dispatch_result, request, etag):\n \"\"\"Try to serve a 304 for static resources.\n \"\"\"\n if not etag:\n # This is a request for a dynamic resource.\n return\n\n qs_etag = request.line.uri.querystring.get('etag')\n if qs_etag and qs_etag != etag:\n # Don't serve one version of a file as if it were another.\n raise Response(410)\n\n headers_etag = request.headers.get('If-None-Match')\n if not headers_etag:\n # This client doesn't want a 304.\n return\n\n if headers_etag != etag:\n # Cache miss, the 
client sent an old or invalid etag.\n return\n\n # Huzzah!\n # =======\n # We can serve a 304! :D\n\n raise Response(304)\n\n\ndef add_caching_to_response(website, response, request=None, etag=None):\n \"\"\"Set caching headers for static resources.\n \"\"\"\n if etag is None:\n return\n assert request is not None # sanity check\n\n if response.code not in (200, 304):\n return\n\n # https://developers.google.com/speed/docs/best-practices/caching\n response.headers['Vary'] = 'accept-encoding'\n response.headers['Etag'] = etag\n # Set CORS header for https://assets.gratipay.com (see issue #2970)\n if 'Access-Control-Allow-Origin' not in response.headers:\n response.headers['Access-Control-Allow-Origin'] = 'https://gratipay.com'\n\n if request.line.uri.querystring.get('etag'):\n # We can cache \"indefinitely\" when the querystring contains the etag.\n response.headers['Cache-Control'] = 'public, max-age=31536000'\n else:\n # Otherwise we cache for 5 seconds\n response.headers['Cache-Control'] = 'public, max-age=5'\n", "path": "gratipay/utils/cache_static.py"}]}

| 1,021 | 180 |

gh_patches_debug_2632

|

rasdani/github-patches

|

git_diff

|

hpcaitech__ColossalAI-5433

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[tensor] fix some unittests

[tensor] fix some unittests

[tensor] fix some unittests

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `extensions/inference/inference_ops_cuda.py`

Content:

```

1 from ..cuda_extension import _CudaExtension
2 from ..utils import get_cuda_cc_flag
3
4
5 class InferenceOpsCudaExtension(_CudaExtension):
6     def __init__(self):
7         super().__init__(name="inference_ops_cuda")
8
9     def sources_files(self):
10         ret = [
11             self.csrc_abs_path(fname)
12             for fname in [
13                 "cuda/colossal_inference_C_frontend.cpp",
14                 "cuda/decode_kv_cache_memcpy_kernel.cu",
15             ]
16         ]
17         return ret
18
19     def include_dirs(self):
20         ret = [self.get_cuda_home_include()]
21         return ret
22
23     def cxx_flags(self):
24         version_dependent_macros = ["-DVERSION_GE_1_1", "-DVERSION_GE_1_3", "-DVERSION_GE_1_5"]
25         return ["-O3"] + version_dependent_macros
26
27     def nvcc_flags(self):
28         extra_cuda_flags = ["-lineinfo"]
29         extra_cuda_flags.extend(get_cuda_cc_flag())
30         return ["-O3", "--use_fast_math"] + extra_cuda_flags
31

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/extensions/inference/inference_ops_cuda.py b/extensions/inference/inference_ops_cuda.py
--- a/extensions/inference/inference_ops_cuda.py
+++ b/extensions/inference/inference_ops_cuda.py
@@ -12,6 +12,7 @@
             for fname in [
                 "cuda/colossal_inference_C_frontend.cpp",
                 "cuda/decode_kv_cache_memcpy_kernel.cu",
+                "cuda/activation_kernel.cu",
             ]
         ]
         return ret

|

{"golden_diff": "diff --git a/extensions/inference/inference_ops_cuda.py b/extensions/inference/inference_ops_cuda.py\n--- a/extensions/inference/inference_ops_cuda.py\n+++ b/extensions/inference/inference_ops_cuda.py\n@@ -12,6 +12,7 @@\n for fname in [\n \"cuda/colossal_inference_C_frontend.cpp\",\n \"cuda/decode_kv_cache_memcpy_kernel.cu\",\n+ \"cuda/activation_kernel.cu\",\n ]\n ]\n return ret\n", "issue": "[tensor] fix some unittests\n\n[tensor] fix some unittests\n\n[tensor] fix some unittests\n\n", "before_files": [{"content": "from ..cuda_extension import _CudaExtension\nfrom ..utils import get_cuda_cc_flag\n\n\nclass InferenceOpsCudaExtension(_CudaExtension):\n def __init__(self):\n super().__init__(name=\"inference_ops_cuda\")\n\n def sources_files(self):\n ret = [\n self.csrc_abs_path(fname)\n for fname in [\n \"cuda/colossal_inference_C_frontend.cpp\",\n \"cuda/decode_kv_cache_memcpy_kernel.cu\",\n ]\n ]\n return ret\n\n def include_dirs(self):\n ret = [self.get_cuda_home_include()]\n return ret\n\n def cxx_flags(self):\n version_dependent_macros = [\"-DVERSION_GE_1_1\", \"-DVERSION_GE_1_3\", \"-DVERSION_GE_1_5\"]\n return [\"-O3\"] + version_dependent_macros\n\n def nvcc_flags(self):\n extra_cuda_flags = [\"-lineinfo\"]\n extra_cuda_flags.extend(get_cuda_cc_flag())\n return [\"-O3\", \"--use_fast_math\"] + extra_cuda_flags\n", "path": "extensions/inference/inference_ops_cuda.py"}], "after_files": [{"content": "from ..cuda_extension import _CudaExtension\nfrom ..utils import get_cuda_cc_flag\n\n\nclass InferenceOpsCudaExtension(_CudaExtension):\n def __init__(self):\n super().__init__(name=\"inference_ops_cuda\")\n\n def sources_files(self):\n ret = [\n self.csrc_abs_path(fname)\n for fname in [\n \"cuda/colossal_inference_C_frontend.cpp\",\n \"cuda/decode_kv_cache_memcpy_kernel.cu\",\n \"cuda/activation_kernel.cu\",\n ]\n ]\n return ret\n\n def include_dirs(self):\n ret = [self.get_cuda_home_include()]\n return ret\n\n def cxx_flags(self):\n 
version_dependent_macros = [\"-DVERSION_GE_1_1\", \"-DVERSION_GE_1_3\", \"-DVERSION_GE_1_5\"]\n return [\"-O3\"] + version_dependent_macros\n\n def nvcc_flags(self):\n extra_cuda_flags = [\"-lineinfo\"]\n extra_cuda_flags.extend(get_cuda_cc_flag())\n return [\"-O3\", \"--use_fast_math\"] + extra_cuda_flags\n", "path": "extensions/inference/inference_ops_cuda.py"}]}

| 568 | 103 |

gh_patches_debug_153

|

rasdani/github-patches

|

git_diff

|

bookwyrm-social__bookwyrm-1018

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Ratings don't federate

**Describe the bug**

I do follow someone on bookwyrm.social from bookwyrm.social and wyrms.de. I have seen on b.s that they rated some books without reviewing them, but those ratings do not appear on w.d. All other posts federate properly (I think).

**Expected behaviour**

The rating should show up on connected instances and ideally also be used on those to calculate the average rating of the book.

Here is one example that's not visible from w.d: https://bookwyrm.social/user/tastytea/reviewrating/21469

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `bookwyrm/activitypub/note.py`

Content:

```

1 """ note serializer and children thereof """
2 from dataclasses import dataclass, field
3 from typing import Dict, List
4 from django.apps import apps
5
6 from .base_activity import ActivityObject, Link
7 from .image import Document
8
9
10 @dataclass(init=False)
11 class Tombstone(ActivityObject):
12     """the placeholder for a deleted status"""
13
14     type: str = "Tombstone"
15
16     def to_model(self, *args, **kwargs):  # pylint: disable=unused-argument
17         """this should never really get serialized, just searched for"""
18         model = apps.get_model("bookwyrm.Status")
19         return model.find_existing_by_remote_id(self.id)
20
21
22 @dataclass(init=False)
23 class Note(ActivityObject):
24     """Note activity"""
25
26     published: str
27     attributedTo: str
28     content: str = ""
29     to: List[str] = field(default_factory=lambda: [])
30     cc: List[str] = field(default_factory=lambda: [])
31     replies: Dict = field(default_factory=lambda: {})
32     inReplyTo: str = ""
33     summary: str = ""
34     tag: List[Link] = field(default_factory=lambda: [])
35     attachment: List[Document] = field(default_factory=lambda: [])
36     sensitive: bool = False
37     type: str = "Note"
38
39
40 @dataclass(init=False)
41 class Article(Note):
42     """what's an article except a note with more fields"""
43
44     name: str
45     type: str = "Article"
46
47
48 @dataclass(init=False)
49 class GeneratedNote(Note):
50     """just a re-typed note"""
51
52     type: str = "GeneratedNote"
53
54
55 @dataclass(init=False)
56 class Comment(Note):
57     """like a note but with a book"""
58
59     inReplyToBook: str
60     type: str = "Comment"
61
62
63 @dataclass(init=False)
64 class Quotation(Comment):
65     """a quote and commentary on a book"""
66
67     quote: str
68     type: str = "Quotation"
69
70
71 @dataclass(init=False)
72 class Review(Comment):
73     """a full book review"""
74
75     name: str = None
76     rating: int = None
77     type: str = "Review"
78
79
80 @dataclass(init=False)
81 class Rating(Comment):
82     """just a star rating"""
83
84     rating: int
85     content: str = None
86     type: str = "Rating"
87

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/bookwyrm/activitypub/note.py b/bookwyrm/activitypub/note.py
--- a/bookwyrm/activitypub/note.py
+++ b/bookwyrm/activitypub/note.py
@@ -83,4 +83,5 @@
 
     rating: int
     content: str = None
+    name: str = None  # not used, but the model inherits from Review
     type: str = "Rating"

|

{"golden_diff": "diff --git a/bookwyrm/activitypub/note.py b/bookwyrm/activitypub/note.py\n--- a/bookwyrm/activitypub/note.py\n+++ b/bookwyrm/activitypub/note.py\n@@ -83,4 +83,5 @@\n \n rating: int\n content: str = None\n+ name: str = None # not used, but the model inherits from Review\n type: str = \"Rating\"\n", "issue": "Ratings don't federate\n**Describe the bug**\r\nI do follow someone on bookwyrm.social from bookwyrm.social and wyrms.de. I have seen on b.s that they rated some books without reviewing them, but those ratings do not appear on w.d. All other posts federate properly (I think).\r\n\r\n**Expeceted behaviour**\r\nThe rating should show up on connected instances and ideally also be used on those to calculate the average rating of the book.\r\n\r\nHere is one example that's not visible from w.d: https://bookwyrm.social/user/tastytea/reviewrating/21469\n", "before_files": [{"content": "\"\"\" note serializer and children thereof \"\"\"\nfrom dataclasses import dataclass, field\nfrom typing import Dict, List\nfrom django.apps import apps\n\nfrom .base_activity import ActivityObject, Link\nfrom .image import Document\n\n\n@dataclass(init=False)\nclass Tombstone(ActivityObject):\n \"\"\"the placeholder for a deleted status\"\"\"\n\n type: str = \"Tombstone\"\n\n def to_model(self, *args, **kwargs): # pylint: disable=unused-argument\n \"\"\"this should never really get serialized, just searched for\"\"\"\n model = apps.get_model(\"bookwyrm.Status\")\n return model.find_existing_by_remote_id(self.id)\n\n\n@dataclass(init=False)\nclass Note(ActivityObject):\n \"\"\"Note activity\"\"\"\n\n published: str\n attributedTo: str\n content: str = \"\"\n to: List[str] = field(default_factory=lambda: [])\n cc: List[str] = field(default_factory=lambda: [])\n replies: Dict = field(default_factory=lambda: {})\n inReplyTo: str = \"\"\n summary: str = \"\"\n tag: List[Link] = field(default_factory=lambda: [])\n attachment: List[Document] = field(default_factory=lambda: 
[])\n sensitive: bool = False\n type: str = \"Note\"\n\n\n@dataclass(init=False)\nclass Article(Note):\n \"\"\"what's an article except a note with more fields\"\"\"\n\n name: str\n type: str = \"Article\"\n\n\n@dataclass(init=False)\nclass GeneratedNote(Note):\n \"\"\"just a re-typed note\"\"\"\n\n type: str = \"GeneratedNote\"\n\n\n@dataclass(init=False)\nclass Comment(Note):\n \"\"\"like a note but with a book\"\"\"\n\n inReplyToBook: str\n type: str = \"Comment\"\n\n\n@dataclass(init=False)\nclass Quotation(Comment):\n \"\"\"a quote and commentary on a book\"\"\"\n\n quote: str\n type: str = \"Quotation\"\n\n\n@dataclass(init=False)\nclass Review(Comment):\n \"\"\"a full book review\"\"\"\n\n name: str = None\n rating: int = None\n type: str = \"Review\"\n\n\n@dataclass(init=False)\nclass Rating(Comment):\n \"\"\"just a star rating\"\"\"\n\n rating: int\n content: str = None\n type: str = \"Rating\"\n", "path": "bookwyrm/activitypub/note.py"}], "after_files": [{"content": "\"\"\" note serializer and children thereof \"\"\"\nfrom dataclasses import dataclass, field\nfrom typing import Dict, List\nfrom django.apps import apps\n\nfrom .base_activity import ActivityObject, Link\nfrom .image import Document\n\n\n@dataclass(init=False)\nclass Tombstone(ActivityObject):\n \"\"\"the placeholder for a deleted status\"\"\"\n\n type: str = \"Tombstone\"\n\n def to_model(self, *args, **kwargs): # pylint: disable=unused-argument\n \"\"\"this should never really get serialized, just searched for\"\"\"\n model = apps.get_model(\"bookwyrm.Status\")\n return model.find_existing_by_remote_id(self.id)\n\n\n@dataclass(init=False)\nclass Note(ActivityObject):\n \"\"\"Note activity\"\"\"\n\n published: str\n attributedTo: str\n content: str = \"\"\n to: List[str] = field(default_factory=lambda: [])\n cc: List[str] = field(default_factory=lambda: [])\n replies: Dict = field(default_factory=lambda: {})\n inReplyTo: str = \"\"\n summary: str = \"\"\n tag: List[Link] = 
field(default_factory=lambda: [])\n attachment: List[Document] = field(default_factory=lambda: [])\n sensitive: bool = False\n type: str = \"Note\"\n\n\n@dataclass(init=False)\nclass Article(Note):\n \"\"\"what's an article except a note with more fields\"\"\"\n\n name: str\n type: str = \"Article\"\n\n\n@dataclass(init=False)\nclass GeneratedNote(Note):\n \"\"\"just a re-typed note\"\"\"\n\n type: str = \"GeneratedNote\"\n\n\n@dataclass(init=False)\nclass Comment(Note):\n \"\"\"like a note but with a book\"\"\"\n\n inReplyToBook: str\n type: str = \"Comment\"\n\n\n@dataclass(init=False)\nclass Quotation(Comment):\n \"\"\"a quote and commentary on a book\"\"\"\n\n quote: str\n type: str = \"Quotation\"\n\n\n@dataclass(init=False)\nclass Review(Comment):\n \"\"\"a full book review\"\"\"\n\n name: str = None\n rating: int = None\n type: str = \"Review\"\n\n\n@dataclass(init=False)\nclass Rating(Comment):\n \"\"\"just a star rating\"\"\"\n\n rating: int\n content: str = None\n name: str = None # not used, but the model inherits from Review\n type: str = \"Rating\"\n", "path": "bookwyrm/activitypub/note.py"}]}

| 1,059 | 96 |

gh_patches_debug_591

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-1140

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Release 2.1.23

On the docket:

+ [x] Upgrade Pex to Pip 20.3.1. #1133

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pex/version.py`

Content:

```

1 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
2 # Licensed under the Apache License, Version 2.0 (see LICENSE).
3
4 __version__ = "2.1.22"
5

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/pex/version.py b/pex/version.py
--- a/pex/version.py
+++ b/pex/version.py
@@ -1,4 +1,4 @@
 # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).
 # Licensed under the Apache License, Version 2.0 (see LICENSE).
 
-__version__ = "2.1.22"
+__version__ = "2.1.23"

|

{"golden_diff": "diff --git a/pex/version.py b/pex/version.py\n--- a/pex/version.py\n+++ b/pex/version.py\n@@ -1,4 +1,4 @@\n # Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n # Licensed under the Apache License, Version 2.0 (see LICENSE).\n \n-__version__ = \"2.1.22\"\n+__version__ = \"2.1.23\"\n", "issue": "Release 2.1.23\nOn the docket:\r\n+ [x] Upgrade Pex to Pip 20.3.1. #1133\r\n\n", "before_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = \"2.1.22\"\n", "path": "pex/version.py"}], "after_files": [{"content": "# Copyright 2015 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\n\n__version__ = \"2.1.23\"\n", "path": "pex/version.py"}]}

| 345 | 96 |

gh_patches_debug_13712

|

rasdani/github-patches

|

git_diff

|

chainer__chainer-1312

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

`split_axis` doesn't support empty sections

This code causes a TypeError.

`functions.split_axis(x, [], 0)`

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `chainer/functions/array/split_axis.py`

Content:

```

1 import collections
2
3 import six
4
5 import chainer
6 from chainer import cuda
7 from chainer import function
8 from chainer.utils import type_check
9
10
11 class SplitAxis(function.Function):
12
13     """Function that splits multiple arrays along the specified axis."""
14
15     def __init__(self, indices_or_sections, axis):
16         if not isinstance(indices_or_sections, (int, collections.Iterable)):
17             raise TypeError('indices_or_sections must be integer or 1-D array')
18         self.indices_or_sections = indices_or_sections
19         self.axis = axis
20
21     def check_type_forward(self, in_types):
22         type_check.expect(in_types.size() == 1)
23         type_check.expect(in_types[0].ndim > self.axis)
24
25         if isinstance(self.indices_or_sections, collections.Iterable):
26             max_index = type_check.Variable(
27                 self.indices_or_sections[-1], 'max_index')
28             type_check.expect(in_types[0].shape[self.axis] > max_index)
29         else:
30             sections = type_check.Variable(
31                 self.indices_or_sections, 'sections')
32             type_check.expect(in_types[0].shape[self.axis] % sections == 0)
33
34     def forward(self, x):
35         if isinstance(self.indices_or_sections, collections.Iterable):
36             cdimx = x[0].shape[self.axis]
37             ind = list(self.indices_or_sections)
38             ind.append(cdimx)
39             prev_i = 0
40             for i in ind:
41                 cdimy = max(0, min(i, cdimx) - prev_i)
42                 if cdimy == 0:
43                     raise ValueError('Not support if shape contains 0')
44                 prev_i = i
45         xp = cuda.get_array_module(*x)
46         return tuple(xp.split(x[0], self.indices_or_sections, self.axis))
47
48     def backward(self, x, gys):
49         xp = cuda.get_array_module(*x)
50         if any(gy is None for gy in gys):
51             gx = xp.zeros_like(x[0])
52             gxs = xp.split(gx, self.indices_or_sections, self.axis)
53             for gxi, gy in six.moves.zip(gxs, gys):
54                 if gy is None:
55                     continue
56                 gxi[:] = gy
57             return gx,
58         else:
59             return xp.concatenate(gys, axis=self.axis),
60
61
62 def split_axis(x, indices_or_sections, axis, force_tuple=False):
63     """Splits given variables along an axis.
64
65     Args:
66         x (tuple of Variables): Variables to be split.
67         indices_or_sections (int or 1-D array): If this argument is an integer,
68             N, the array will be divided into N equal arrays along axis.
69             If it is a 1-D array of sorted integers, it
70             indicates the positions where the array is split.
71         axis (int): Axis that the input array is split along.
72         force_tuple (bool): If ``True``, this method returns a tuple even when
73             the number of outputs is one.
74
75     Returns:
76         tuple or Variable: Tuple of :class:`~chainer.Variable` objects
77         if the number of outputs is more than 1 or
78         :class:`~chainer.Variable` otherwise.
79         When ``force_tuple`` is ``True``, returned value is always a tuple
80         regardless of the number of outputs.
81
82     .. note::
83         This function raises :class:`ValueError` if at least
84         one of the outputs is split to zero-size
85         (i.e. ``axis``-th value of its shape is zero).
86
87     """
88     res = SplitAxis(indices_or_sections, axis)(x)
89     if force_tuple and isinstance(res, chainer.Variable):
90         res = (res,)
91     return res
92

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()

```

|

diff --git a/chainer/functions/array/split_axis.py b/chainer/functions/array/split_axis.py
--- a/chainer/functions/array/split_axis.py
+++ b/chainer/functions/array/split_axis.py
@@ -23,9 +23,10 @@
         type_check.expect(in_types[0].ndim > self.axis)
 
         if isinstance(self.indices_or_sections, collections.Iterable):
-            max_index = type_check.Variable(
-                self.indices_or_sections[-1], 'max_index')
-            type_check.expect(in_types[0].shape[self.axis] > max_index)
+            if len(self.indices_or_sections) > 0:
+                max_index = type_check.Variable(
+                    self.indices_or_sections[-1], 'max_index')
+                type_check.expect(in_types[0].shape[self.axis] > max_index)
         else:
             sections = type_check.Variable(
                 self.indices_or_sections, 'sections')

|

{"golden_diff": "diff --git a/chainer/functions/array/split_axis.py b/chainer/functions/array/split_axis.py\n--- a/chainer/functions/array/split_axis.py\n+++ b/chainer/functions/array/split_axis.py\n@@ -23,9 +23,10 @@\n type_check.expect(in_types[0].ndim > self.axis)\n \n if isinstance(self.indices_or_sections, collections.Iterable):\n- max_index = type_check.Variable(\n- self.indices_or_sections[-1], 'max_index')\n- type_check.expect(in_types[0].shape[self.axis] > max_index)\n+ if len(self.indices_or_sections) > 0:\n+ max_index = type_check.Variable(\n+ self.indices_or_sections[-1], 'max_index')\n+ type_check.expect(in_types[0].shape[self.axis] > max_index)\n else:\n sections = type_check.Variable(\n self.indices_or_sections, 'sections')\n", "issue": "`split_axis` doesn't support empty sections\nThis code causes a TypeError.\n`functions.split_axis(x, [], 0)`\n\n", "before_files": [{"content": "import collections\n\nimport six\n\nimport chainer\nfrom chainer import cuda\nfrom chainer import function\nfrom chainer.utils import type_check\n\n\nclass SplitAxis(function.Function):\n\n \"\"\"Function that splits multiple arrays along the specified axis.\"\"\"\n\n def __init__(self, indices_or_sections, axis):\n if not isinstance(indices_or_sections, (int, collections.Iterable)):\n raise TypeError('indices_or_sections must be integer or 1-D array')\n self.indices_or_sections = indices_or_sections\n self.axis = axis\n\n def check_type_forward(self, in_types):\n type_check.expect(in_types.size() == 1)\n type_check.expect(in_types[0].ndim > self.axis)\n\n if isinstance(self.indices_or_sections, collections.Iterable):\n max_index = type_check.Variable(\n self.indices_or_sections[-1], 'max_index')\n type_check.expect(in_types[0].shape[self.axis] > max_index)\n else:\n sections = type_check.Variable(\n self.indices_or_sections, 'sections')\n type_check.expect(in_types[0].shape[self.axis] % sections == 0)\n\n def forward(self, x):\n if isinstance(self.indices_or_sections, 
collections.Iterable):\n cdimx = x[0].shape[self.axis]\n ind = list(self.indices_or_sections)\n ind.append(cdimx)\n prev_i = 0\n for i in ind:\n cdimy = max(0, min(i, cdimx) - prev_i)\n if cdimy == 0:\n raise ValueError('Not support if shape contains 0')\n prev_i = i\n xp = cuda.get_array_module(*x)\n return tuple(xp.split(x[0], self.indices_or_sections, self.axis))\n\n def backward(self, x, gys):\n xp = cuda.get_array_module(*x)\n if any(gy is None for gy in gys):\n gx = xp.zeros_like(x[0])\n gxs = xp.split(gx, self.indices_or_sections, self.axis)\n for gxi, gy in six.moves.zip(gxs, gys):\n if gy is None:\n continue\n gxi[:] = gy\n return gx,\n else:\n return xp.concatenate(gys, axis=self.axis),\n\n\ndef split_axis(x, indices_or_sections, axis, force_tuple=False):\n \"\"\"Splits given variables along an axis.\n\n Args:\n x (tuple of Variables): Variables to be split.\n indices_or_sections (int or 1-D array): If this argument is an integer,\n N, the array will be divided into N equal arrays along axis.\n If it is a 1-D array of sorted integers, it\n indicates the positions where the array is split.\n axis (int): Axis that the input array is split along.\n force_tuple (bool): If ``True``, this method returns a tuple even when\n the number of outputs is one.\n\n Returns:\n tuple or Variable: Tuple of :class:`~chainer.Variable` objects\n if the number of outputs is more than 1 or\n :class:`~chainer.Variable` otherwise.\n When ``force_tuple`` is ``True``, returned value is always a tuple\n regardless of the number of outputs.\n\n .. note::\n This function raises :class:`ValueError` if at least\n one of the outputs is split to zero-size\n (i.e. 
``axis``-th value of its shape is zero).\n\n \"\"\"\n res = SplitAxis(indices_or_sections, axis)(x)\n if force_tuple and isinstance(res, chainer.Variable):\n res = (res,)\n return res\n", "path": "chainer/functions/array/split_axis.py"}], "after_files": [{"content": "import collections\n\nimport six\n\nimport chainer\nfrom chainer import cuda\nfrom chainer import function\nfrom chainer.utils import type_check\n\n\nclass SplitAxis(function.Function):\n\n \"\"\"Function that splits multiple arrays along the specified axis.\"\"\"\n\n def __init__(self, indices_or_sections, axis):\n if not isinstance(indices_or_sections, (int, collections.Iterable)):\n raise TypeError('indices_or_sections must be integer or 1-D array')\n self.indices_or_sections = indices_or_sections\n self.axis = axis\n\n def check_type_forward(self, in_types):\n type_check.expect(in_types.size() == 1)\n type_check.expect(in_types[0].ndim > self.axis)\n\n if isinstance(self.indices_or_sections, collections.Iterable):\n if len(self.indices_or_sections) > 0:\n max_index = type_check.Variable(\n self.indices_or_sections[-1], 'max_index')\n type_check.expect(in_types[0].shape[self.axis] > max_index)\n else:\n sections = type_check.Variable(\n self.indices_or_sections, 'sections')\n type_check.expect(in_types[0].shape[self.axis] % sections == 0)\n\n def forward(self, x):\n if isinstance(self.indices_or_sections, collections.Iterable):\n cdimx = x[0].shape[self.axis]\n ind = list(self.indices_or_sections)\n ind.append(cdimx)\n prev_i = 0\n for i in ind:\n cdimy = max(0, min(i, cdimx) - prev_i)\n if cdimy == 0:\n raise ValueError('Not support if shape contains 0')\n prev_i = i\n xp = cuda.get_array_module(*x)\n return tuple(xp.split(x[0], self.indices_or_sections, self.axis))\n\n def backward(self, x, gys):\n xp = cuda.get_array_module(*x)\n if any(gy is None for gy in gys):\n gx = xp.zeros_like(x[0])\n gxs = xp.split(gx, self.indices_or_sections, self.axis)\n for gxi, gy in six.moves.zip(gxs, gys):\n if gy 
is None:\n continue\n gxi[:] = gy\n return gx,\n else:\n return xp.concatenate(gys, axis=self.axis),\n\n\ndef split_axis(x, indices_or_sections, axis, force_tuple=False):\n \"\"\"Splits given variables along an axis.\n\n Args:\n x (tuple of Variables): Variables to be split.\n indices_or_sections (int or 1-D array): If this argument is an integer,\n N, the array will be divided into N equal arrays along axis.\n If it is a 1-D array of sorted integers, it\n indicates the positions where the array is split.\n axis (int): Axis that the input array is split along.\n force_tuple (bool): If ``True``, this method returns a tuple even when\n the number of outputs is one.\n\n Returns:\n tuple or Variable: Tuple of :class:`~chainer.Variable` objects\n if the number of outputs is more than 1 or\n :class:`~chainer.Variable` otherwise.\n When ``force_tuple`` is ``True``, returned value is always a tuple\n regardless of the number of outputs.\n\n .. note::\n This function raises :class:`ValueError` if at least\n one of the outputs is split to zero-size\n (i.e. ``axis``-th value of its shape is zero).\n\n \"\"\"\n res = SplitAxis(indices_or_sections, axis)(x)\n if force_tuple and isinstance(res, chainer.Variable):\n res = (res,)\n return res\n", "path": "chainer/functions/array/split_axis.py"}]}

| 1,247 | 197 |

gh_patches_debug_26510

|

rasdani/github-patches

|

git_diff

|

mitmproxy__mitmproxy-2325

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

HEAD method not allowed to access mitm.it

##### Steps to reproduce the problem:

1. Using mitmdump in transparent mode, with on-boarding port on 6969 (did not test on 80)

/mitmdump -T --host --insecure --onboarding-port 6969

2. On iPad + Chrome + Transparent mode, access to http://mitm.it:6969

3. An HEAD /cert/pem is sent, receive an "405 Method Not Allowed"

Any idea of any workaround?

##### Any other comments? What have you tried so far?

Exact packet:

Hypertext Transfer Protocol

HEAD /cert/pem HTTP/1.1\r\n

Host: mitm.it:6969\r\n

Connection: keep-alive\r\n

User-Agent: Mozilla/5.0 (iPad; CPU OS 9_2 like Mac OS X) AppleWebKit/601.1 (KHTML, like Gecko) CriOS/55.0.2883.79 Mobile/13C75 Safari/601.1.46\r\n

Accept-Encoding: gzip, deflate, sdch\r\n

Accept-Language: fr-FR,fr;q=0.8,en-US;q=0.6,en;q=0.4\r\n

\r\n

Answer:

Hypertext Transfer Protocol

HTTP/1.1 405 Method Not Allowed\r\n

Server: TornadoServer/4.4.2\r\n

Content-Length: 87\r\n

Date: Tue, 09 May 2017 13:35:38 GMT\r\n

Content-Type: text/html; charset=UTF-8\r\n

\r\n

##### System information

mitmdump --version

Mitmproxy version: 2.0.1 (release version) Precompiled Binary

Python version: 3.5.2

Platform: Darwin-16.5.0-x86_64-i386-64bit

SSL version: OpenSSL 1.0.2j 26 Sep 2016

Mac version: 10.12.4 ('', '', '') x86_64

--- END ISSUE ---
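
The 405 in the report is consistent with how Tornado's `RequestHandler` behaves: any HTTP verb whose method is not overridden is answered with "405 Method Not Allowed", so a handler that defines only `get` will reject HEAD. The usual remedy is to add a HEAD handler that sends the same status and headers as GET but no body. The sketch below shows that idea using only the standard library's `http.server` instead of Tornado, so that it runs without extra dependencies. `CertHandler`, `FAKE_PEM`, `probe`, and the content type are hypothetical and chosen for illustration; none of this is mitmproxy's onboarding code.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Placeholder payload; a real handler would serve the CA certificate bytes.
FAKE_PEM = b"-----BEGIN CERTIFICATE-----\n(placeholder)\n-----END CERTIFICATE-----\n"


class CertHandler(BaseHTTPRequestHandler):
    """Hypothetical handler that answers both GET and HEAD for /cert/pem."""

    def _send_headers(self):
        # Shared by GET and HEAD so both verbs report identical metadata.
        if self.path != "/cert/pem":
            self.send_error(404)
            return False
        self.send_response(200)
        self.send_header("Content-Type", "application/x-x509-ca-cert")
        self.send_header("Content-Length", str(len(FAKE_PEM)))
        self.end_headers()
        return True

    def do_GET(self):
        if self._send_headers():
            self.wfile.write(FAKE_PEM)

    def do_HEAD(self):
        # Same status line and headers as GET, but no body is written.
        self._send_headers()

    def log_message(self, format, *args):
        pass  # keep the example quiet


def probe(method, path="/cert/pem"):
    """Start a throwaway server on a free local port and send one request."""
    server = HTTPServer(("127.0.0.1", 0), CertHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request(method, path)
        response = conn.getresponse()
        body = response.read()
        conn.close()
        return response.status, response.getheader("Content-Length"), body
    finally:
        server.shutdown()
        server.server_close()
```

Calling `probe("HEAD")` and `probe("GET")` lets the two responses be compared directly. In a Tornado handler the analogous change would be a `head` method next to `get`; whether that matches the actual fix should be checked against the record's golden diff.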