| Column | Type | Range |
|---|---|---|
| `problem_id` | string | length 18 to 22 |
| `source` | string (categorical) | 1 distinct value |
| `task_type` | string (categorical) | 1 distinct value |
| `in_source_id` | string | length 13 to 58 |
| `prompt` | string | length 1.1k to 25.4k |
| `golden_diff` | string | length 145 to 5.13k |
| `verification_info` | string | length 582 to 39.1k |
| `num_tokens` | int64 | 271 to 4.1k |
| `num_tokens_diff` | int64 | 47 to 1.02k |

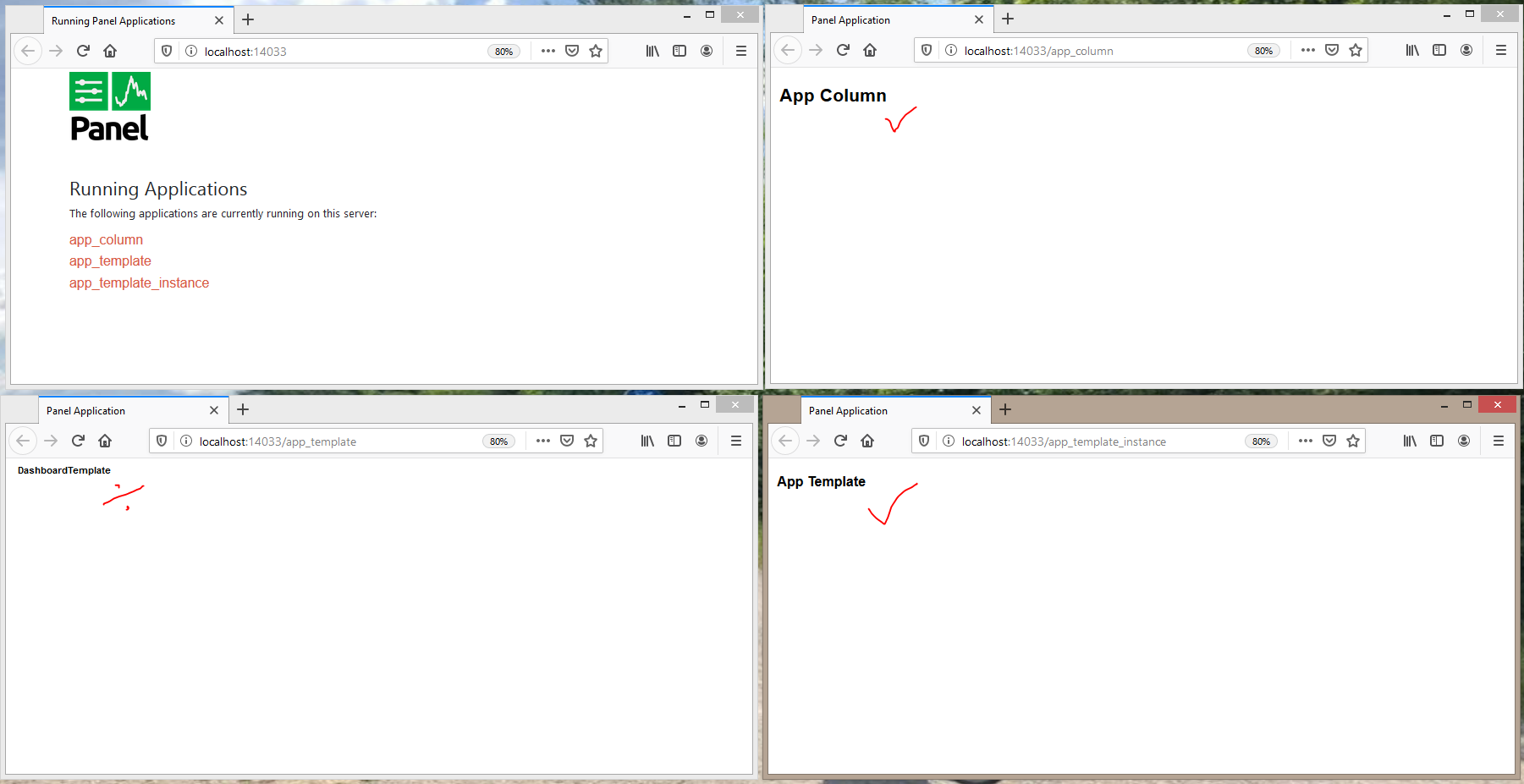

- **problem_id:** `gh_patches_debug_2627`
- **source:** `rasdani/github-patches`
- **task_type:** `git_diff`
- **in_source_id:** `streamlink__streamlink-2171`

**prompt:**

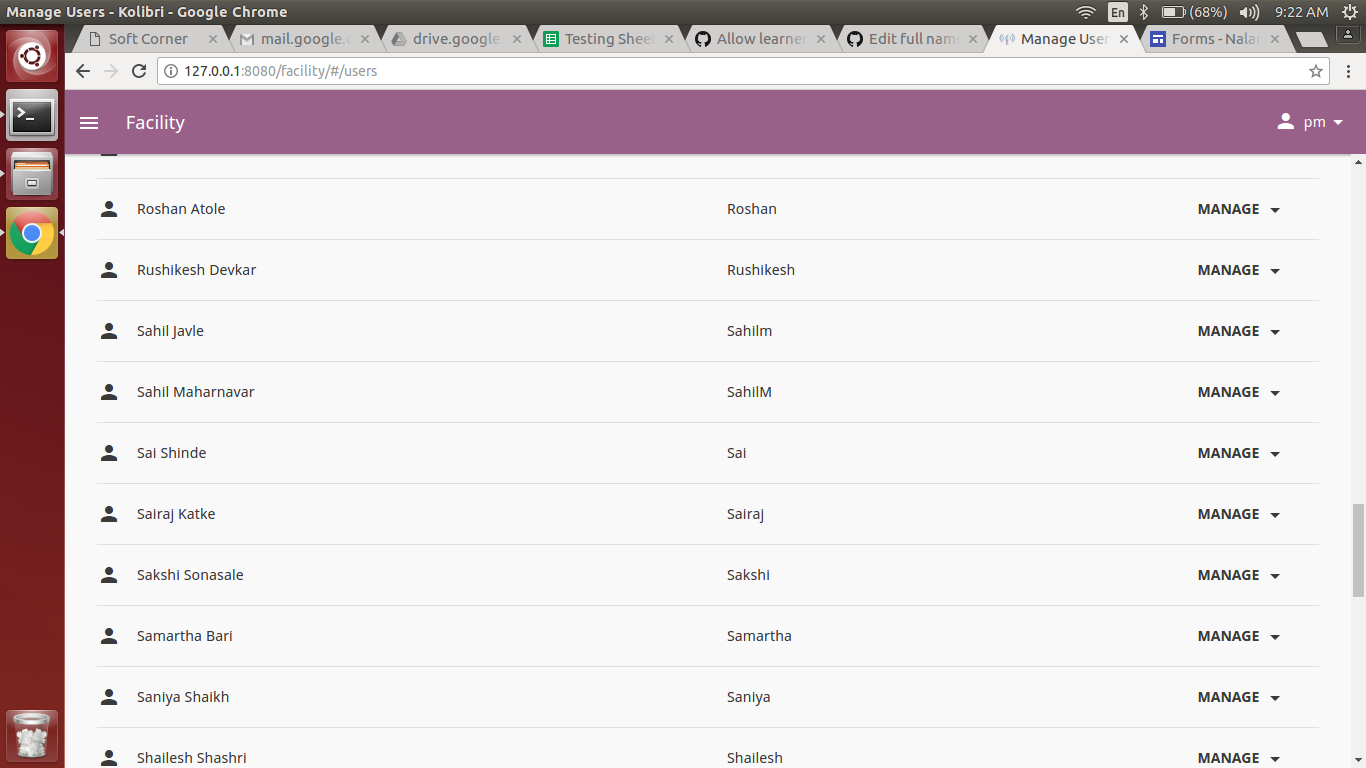

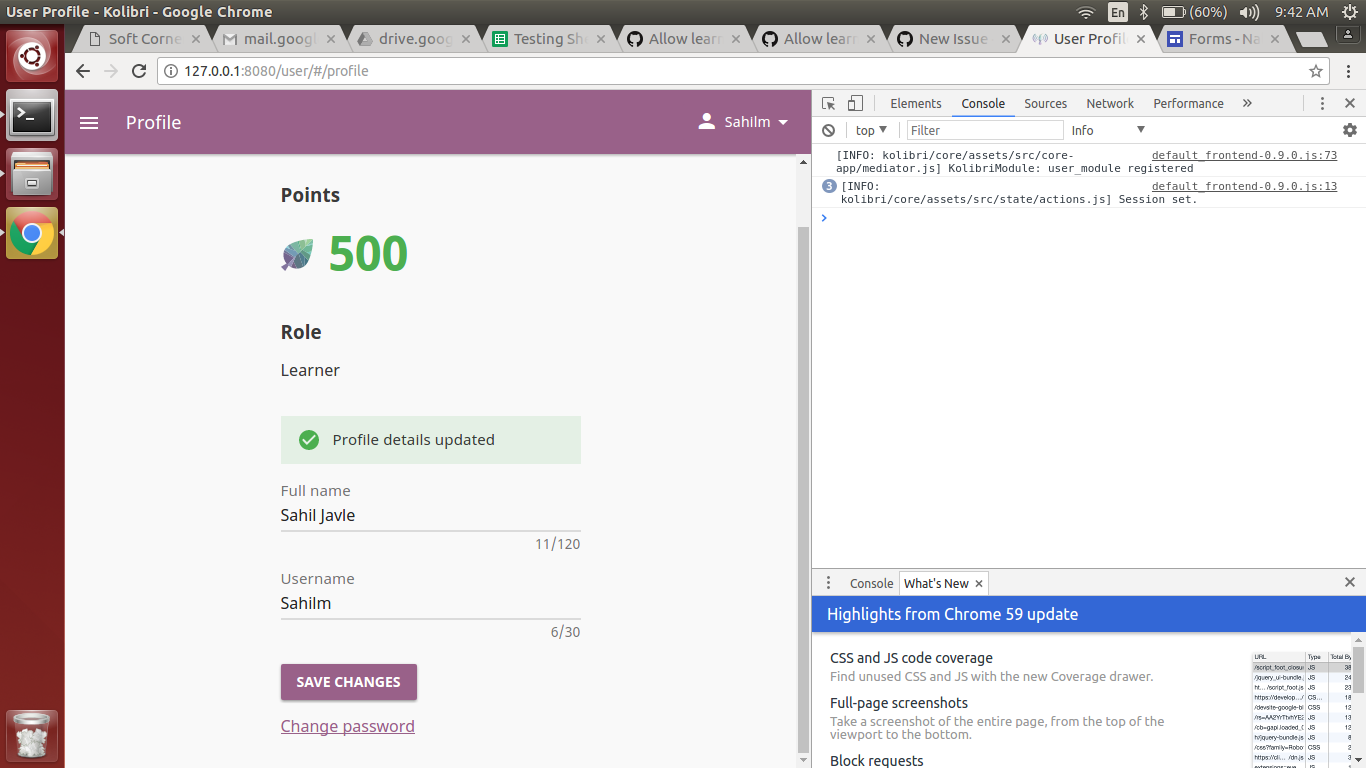

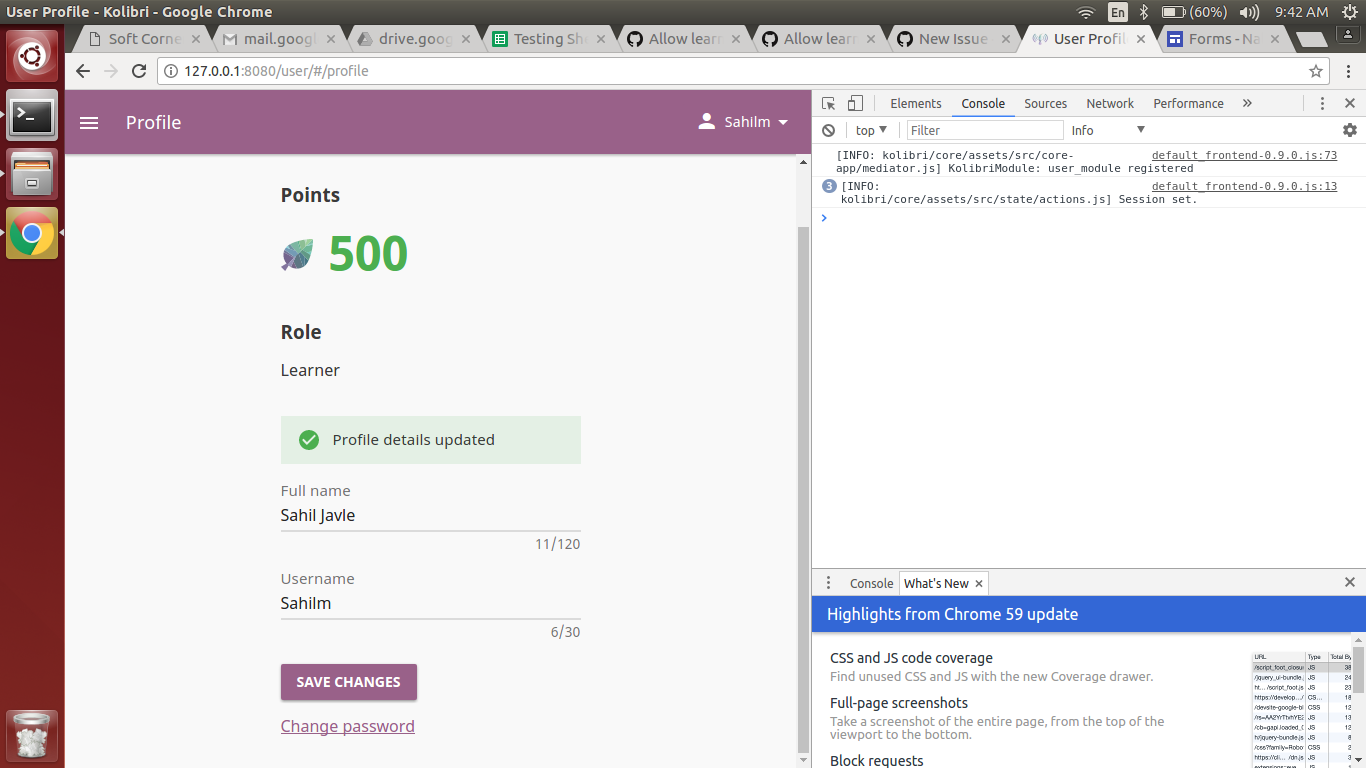

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

INE Plugin

## Plugin Issue

<!-- Replace [ ] with [x] in order to check the box -->

- [X] This is a plugin issue and I have read the contribution guidelines.

### Description

The INE plugin doesn't appear to work on any videos I try.

### Reproduction steps / Explicit stream URLs to test

Try to download a video.

### Log output

<!--

TEXT LOG OUTPUT IS REQUIRED for a plugin issue!

Use the `--loglevel debug` parameter and avoid using parameters which suppress log output.

https://streamlink.github.io/cli.html#cmdoption-l

Make sure to **remove usernames and passwords**

You can copy the output to https://gist.github.com/ or paste it below.

-->

```

streamlink https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/ --http-cookie laravel_session=<Removed> --loglevel debug

[cli][debug] OS: macOS 10.14.1

[cli][debug] Python: 2.7.10

[cli][debug] Streamlink: 0.14.2

[cli][debug] Requests(2.19.1), Socks(1.6.7), Websocket(0.54.0)

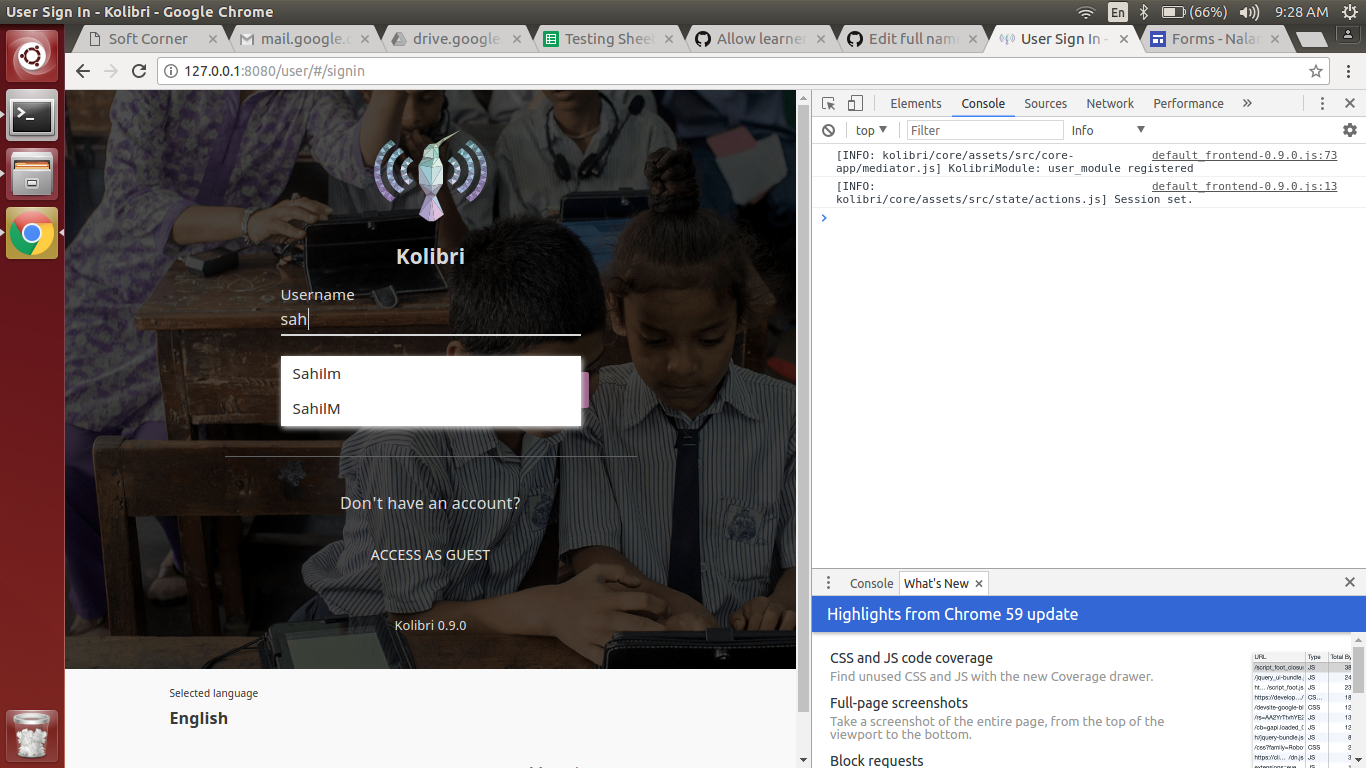

[cli][info] Found matching plugin ine for URL https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/

[plugin.ine][debug] Found video ID: 419cdc1a-a4a8-4eba-b8b3-5dda324daa94

[plugin.ine][debug] Loading player JS: https://content.jwplatform.com/players/yyYIR4k9-p4NBeNN0.js?exp=1543579899&sig=5e0058876669be2e2aafc7e52d067b78

error: Unable to validate result: <_sre.SRE_Match object at 0x106564dc8> does not equal None or Unable to validate key 'playlist': Type of u'//content.jwplatform.com/v2/media/yyYIR4k9?token=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJyZWNvbW1lbmRhdGlvbnNfcGxheWxpc3RfaWQiOiJ5cHQwdDR4aCIsInJlc291cmNlIjoiL3YyL21lZGlhL3l5WUlSNGs5IiwiZXhwIjoxNTQzNTc5OTIwfQ.pHEgoDYzc219-S_slfWRhyEoCsyCZt74BiL8RNs5IJ8' should be 'str' but is 'unicode'

```

### Additional comments, screenshots, etc.

[Love Streamlink? Please consider supporting our collective. Thanks!](https://opencollective.com/streamlink/donate)

INE Plugin

## Plugin Issue

<!-- Replace [ ] with [x] in order to check the box -->

- [X] This is a plugin issue and I have read the contribution guidelines.

### Description

The INE plugin doesn't appear to work on any videos I try.

### Reproduction steps / Explicit stream URLs to test

Try do download a video

### Log output

<!--

TEXT LOG OUTPUT IS REQUIRED for a plugin issue!

Use the `--loglevel debug` parameter and avoid using parameters which suppress log output.

https://streamlink.github.io/cli.html#cmdoption-l

Make sure to **remove usernames and passwords**

You can copy the output to https://gist.github.com/ or paste it below.

-->

```

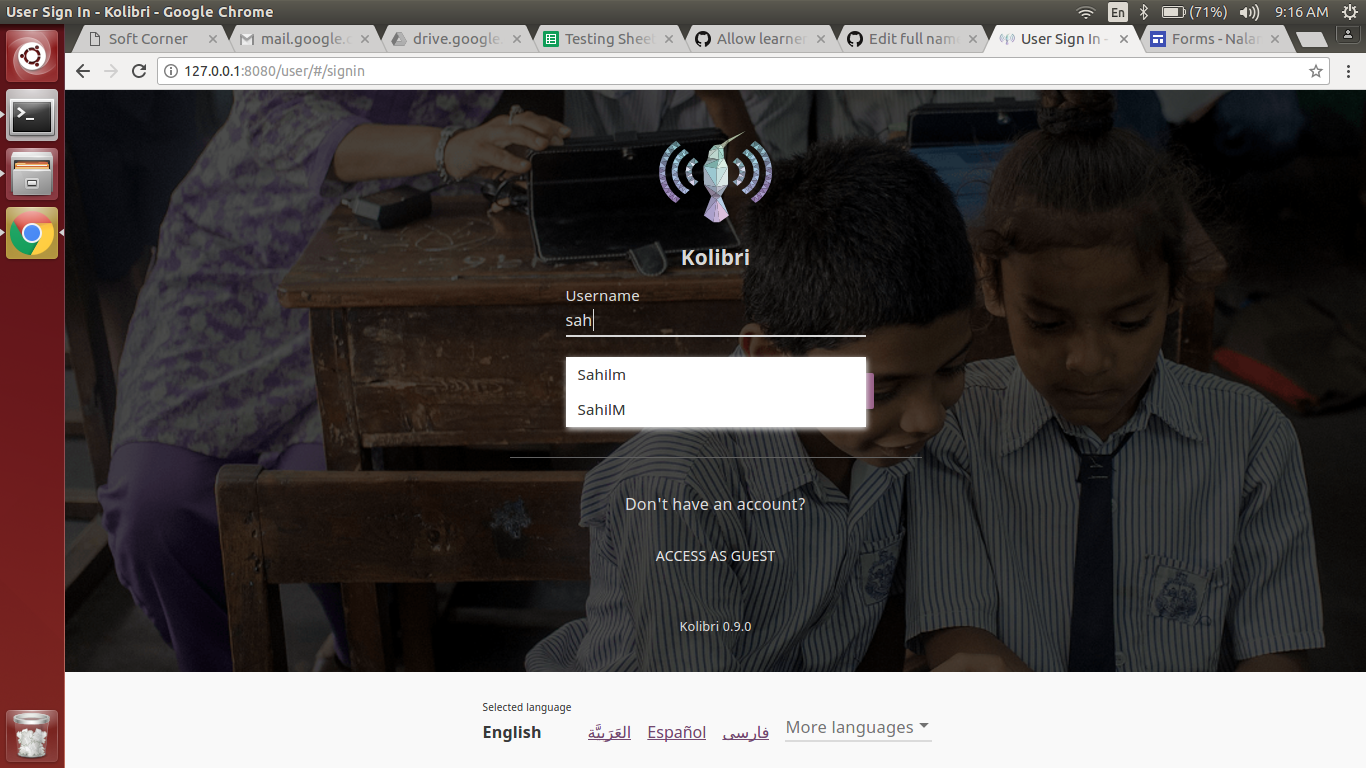

streamlink https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/ --http-cookie laravel_session=<Removed> --loglevel debug

[cli][debug] OS: macOS 10.14.1

[cli][debug] Python: 2.7.10

[cli][debug] Streamlink: 0.14.2

[cli][debug] Requests(2.19.1), Socks(1.6.7), Websocket(0.54.0)

[cli][info] Found matching plugin ine for URL https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/

[plugin.ine][debug] Found video ID: 419cdc1a-a4a8-4eba-b8b3-5dda324daa94

[plugin.ine][debug] Loading player JS: https://content.jwplatform.com/players/yyYIR4k9-p4NBeNN0.js?exp=1543579899&sig=5e0058876669be2e2aafc7e52d067b78

error: Unable to validate result: <_sre.SRE_Match object at 0x106564dc8> does not equal None or Unable to validate key 'playlist': Type of u'//content.jwplatform.com/v2/media/yyYIR4k9?token=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJyZWNvbW1lbmRhdGlvbnNfcGxheWxpc3RfaWQiOiJ5cHQwdDR4aCIsInJlc291cmNlIjoiL3YyL21lZGlhL3l5WUlSNGs5IiwiZXhwIjoxNTQzNTc5OTIwfQ.pHEgoDYzc219-S_slfWRhyEoCsyCZt74BiL8RNs5IJ8' should be 'str' but is 'unicode'

```

### Additional comments, screenshots, etc.

[Love Streamlink? Please consider supporting our collective. Thanks!](https://opencollective.com/streamlink/donate)

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/streamlink/plugins/ine.py`

Content:

```
1 from __future__ import print_function
2
3 import json
4 import re
5
6 from streamlink.plugin import Plugin
7 from streamlink.plugin.api import validate
8 from streamlink.stream import HLSStream, HTTPStream
9 from streamlink.utils import update_scheme
10
11
12 class INE(Plugin):
13     url_re = re.compile(r"""https://streaming.ine.com/play\#?/
14                             ([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})/?
15                             (.*?)""", re.VERBOSE)
16     play_url = "https://streaming.ine.com/play/{vid}/watch"
17     js_re = re.compile(r'''script type="text/javascript" src="(https://content.jwplatform.com/players/.*?)"''')
18     jwplayer_re = re.compile(r'''jwConfig\s*=\s*(\{.*\});''', re.DOTALL)
19     setup_schema = validate.Schema(
20         validate.transform(jwplayer_re.search),
21         validate.any(
22             None,
23             validate.all(
24                 validate.get(1),
25                 validate.transform(json.loads),
26                 {"playlist": str},
27                 validate.get("playlist")
28             )
29         )
30     )
31
32     @classmethod
33     def can_handle_url(cls, url):
34         return cls.url_re.match(url) is not None
35
36     def _get_streams(self):
37         vid = self.url_re.match(self.url).group(1)
38         self.logger.debug("Found video ID: {0}", vid)
39
40         page = self.session.http.get(self.play_url.format(vid=vid))
41         js_url_m = self.js_re.search(page.text)
42         if js_url_m:
43             js_url = js_url_m.group(1)
44             self.logger.debug("Loading player JS: {0}", js_url)
45
46             res = self.session.http.get(js_url)
47             metadata_url = update_scheme(self.url, self.setup_schema.validate(res.text))
48             data = self.session.http.json(self.session.http.get(metadata_url))
49
50             for source in data["playlist"][0]["sources"]:
51                 if source["type"] == "application/vnd.apple.mpegurl":
52                     for s in HLSStream.parse_variant_playlist(self.session, source["file"]).items():
53                         yield s
54                 elif source["type"] == "video/mp4":
55                     yield "{0}p".format(source["height"]), HTTPStream(self.session, source["file"])
56
57
58 __plugin__ = INE
59
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

**golden_diff:**

diff --git a/src/streamlink/plugins/ine.py b/src/streamlink/plugins/ine.py
--- a/src/streamlink/plugins/ine.py
+++ b/src/streamlink/plugins/ine.py
@@ -23,7 +23,7 @@
             validate.all(
                 validate.get(1),
                 validate.transform(json.loads),
-                {"playlist": str},
+                {"playlist": validate.text},
                 validate.get("playlist")
             )
         )

**verification_info:**

{"golden_diff": "diff --git a/src/streamlink/plugins/ine.py b/src/streamlink/plugins/ine.py\n--- a/src/streamlink/plugins/ine.py\n+++ b/src/streamlink/plugins/ine.py\n@@ -23,7 +23,7 @@\n validate.all(\n validate.get(1),\n validate.transform(json.loads),\n- {\"playlist\": str},\n+ {\"playlist\": validate.text},\n validate.get(\"playlist\")\n )\n )\n", "issue": "INE Plugin\n## Plugin Issue\r\n\r\n<!-- Replace [ ] with [x] in order to check the box -->\r\n- [X] This is a plugin issue and I have read the contribution guidelines.\r\n\r\n\r\n### Description\r\n\r\nThe INE plugin doesn't appear to work on any videos I try.\r\n\r\n\r\n### Reproduction steps / Explicit stream URLs to test\r\n\r\nTry do download a video\r\n\r\n### Log output\r\n\r\n<!--\r\nTEXT LOG OUTPUT IS REQUIRED for a plugin issue!\r\nUse the `--loglevel debug` parameter and avoid using parameters which suppress log output.\r\nhttps://streamlink.github.io/cli.html#cmdoption-l\r\n\r\nMake sure to **remove usernames and passwords**\r\nYou can copy the output to https://gist.github.com/ or paste it below.\r\n-->\r\n\r\n```\r\nstreamlink https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/ --http-cookie laravel_session=<Removed> --loglevel debug\r\n[cli][debug] OS: macOS 10.14.1\r\n[cli][debug] Python: 2.7.10\r\n[cli][debug] Streamlink: 0.14.2\r\n[cli][debug] Requests(2.19.1), Socks(1.6.7), Websocket(0.54.0)\r\n[cli][info] Found matching plugin ine for URL https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/\r\n[plugin.ine][debug] Found video ID: 419cdc1a-a4a8-4eba-b8b3-5dda324daa94\r\n[plugin.ine][debug] Loading player JS: https://content.jwplatform.com/players/yyYIR4k9-p4NBeNN0.js?exp=1543579899&sig=5e0058876669be2e2aafc7e52d067b78\r\nerror: Unable to validate result: <_sre.SRE_Match object at 0x106564dc8> does not equal None or Unable to validate key 'playlist': Type of 
u'//content.jwplatform.com/v2/media/yyYIR4k9?token=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJyZWNvbW1lbmRhdGlvbnNfcGxheWxpc3RfaWQiOiJ5cHQwdDR4aCIsInJlc291cmNlIjoiL3YyL21lZGlhL3l5WUlSNGs5IiwiZXhwIjoxNTQzNTc5OTIwfQ.pHEgoDYzc219-S_slfWRhyEoCsyCZt74BiL8RNs5IJ8' should be 'str' but is 'unicode'\r\n```\r\n\r\n\r\n### Additional comments, screenshots, etc.\r\n\r\n\r\n\r\n[Love Streamlink? Please consider supporting our collective. Thanks!](https://opencollective.com/streamlink/donate)\r\n\nINE Plugin\n## Plugin Issue\r\n\r\n<!-- Replace [ ] with [x] in order to check the box -->\r\n- [X] This is a plugin issue and I have read the contribution guidelines.\r\n\r\n\r\n### Description\r\n\r\nThe INE plugin doesn't appear to work on any videos I try.\r\n\r\n\r\n### Reproduction steps / Explicit stream URLs to test\r\n\r\nTry do download a video\r\n\r\n### Log output\r\n\r\n<!--\r\nTEXT LOG OUTPUT IS REQUIRED for a plugin issue!\r\nUse the `--loglevel debug` parameter and avoid using parameters which suppress log output.\r\nhttps://streamlink.github.io/cli.html#cmdoption-l\r\n\r\nMake sure to **remove usernames and passwords**\r\nYou can copy the output to https://gist.github.com/ or paste it below.\r\n-->\r\n\r\n```\r\nstreamlink https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/ --http-cookie laravel_session=<Removed> --loglevel debug\r\n[cli][debug] OS: macOS 10.14.1\r\n[cli][debug] Python: 2.7.10\r\n[cli][debug] Streamlink: 0.14.2\r\n[cli][debug] Requests(2.19.1), Socks(1.6.7), Websocket(0.54.0)\r\n[cli][info] Found matching plugin ine for URL https://streaming.ine.com/play/419cdc1a-a4a8-4eba-b8b3-5dda324daa94/day-1-part-1#/\r\n[plugin.ine][debug] Found video ID: 419cdc1a-a4a8-4eba-b8b3-5dda324daa94\r\n[plugin.ine][debug] Loading player JS: https://content.jwplatform.com/players/yyYIR4k9-p4NBeNN0.js?exp=1543579899&sig=5e0058876669be2e2aafc7e52d067b78\r\nerror: Unable to validate result: <_sre.SRE_Match object at 0x106564dc8> does not equal None 
or Unable to validate key 'playlist': Type of u'//content.jwplatform.com/v2/media/yyYIR4k9?token=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJyZWNvbW1lbmRhdGlvbnNfcGxheWxpc3RfaWQiOiJ5cHQwdDR4aCIsInJlc291cmNlIjoiL3YyL21lZGlhL3l5WUlSNGs5IiwiZXhwIjoxNTQzNTc5OTIwfQ.pHEgoDYzc219-S_slfWRhyEoCsyCZt74BiL8RNs5IJ8' should be 'str' but is 'unicode'\r\n```\r\n\r\n\r\n### Additional comments, screenshots, etc.\r\n\r\n\r\n\r\n[Love Streamlink? Please consider supporting our collective. Thanks!](https://opencollective.com/streamlink/donate)\r\n\n", "before_files": [{"content": "from __future__ import print_function\n\nimport json\nimport re\n\nfrom streamlink.plugin import Plugin\nfrom streamlink.plugin.api import validate\nfrom streamlink.stream import HLSStream, HTTPStream\nfrom streamlink.utils import update_scheme\n\n\nclass INE(Plugin):\n url_re = re.compile(r\"\"\"https://streaming.ine.com/play\\#?/\n ([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})/?\n (.*?)\"\"\", re.VERBOSE)\n play_url = \"https://streaming.ine.com/play/{vid}/watch\"\n js_re = re.compile(r'''script type=\"text/javascript\" src=\"(https://content.jwplatform.com/players/.*?)\"''')\n jwplayer_re = re.compile(r'''jwConfig\\s*=\\s*(\\{.*\\});''', re.DOTALL)\n setup_schema = validate.Schema(\n validate.transform(jwplayer_re.search),\n validate.any(\n None,\n validate.all(\n validate.get(1),\n validate.transform(json.loads),\n {\"playlist\": str},\n validate.get(\"playlist\")\n )\n )\n )\n\n @classmethod\n def can_handle_url(cls, url):\n return cls.url_re.match(url) is not None\n\n def _get_streams(self):\n vid = self.url_re.match(self.url).group(1)\n self.logger.debug(\"Found video ID: {0}\", vid)\n\n page = self.session.http.get(self.play_url.format(vid=vid))\n js_url_m = self.js_re.search(page.text)\n if js_url_m:\n js_url = js_url_m.group(1)\n self.logger.debug(\"Loading player JS: {0}\", js_url)\n\n res = self.session.http.get(js_url)\n metadata_url = update_scheme(self.url, 
self.setup_schema.validate(res.text))\n data = self.session.http.json(self.session.http.get(metadata_url))\n\n for source in data[\"playlist\"][0][\"sources\"]:\n if source[\"type\"] == \"application/vnd.apple.mpegurl\":\n for s in HLSStream.parse_variant_playlist(self.session, source[\"file\"]).items():\n yield s\n elif source[\"type\"] == \"video/mp4\":\n yield \"{0}p\".format(source[\"height\"]), HTTPStream(self.session, source[\"file\"])\n\n\n__plugin__ = INE\n", "path": "src/streamlink/plugins/ine.py"}], "after_files": [{"content": "from __future__ import print_function\n\nimport json\nimport re\n\nfrom streamlink.plugin import Plugin\nfrom streamlink.plugin.api import validate\nfrom streamlink.stream import HLSStream, HTTPStream\nfrom streamlink.utils import update_scheme\n\n\nclass INE(Plugin):\n url_re = re.compile(r\"\"\"https://streaming.ine.com/play\\#?/\n ([0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})/?\n (.*?)\"\"\", re.VERBOSE)\n play_url = \"https://streaming.ine.com/play/{vid}/watch\"\n js_re = re.compile(r'''script type=\"text/javascript\" src=\"(https://content.jwplatform.com/players/.*?)\"''')\n jwplayer_re = re.compile(r'''jwConfig\\s*=\\s*(\\{.*\\});''', re.DOTALL)\n setup_schema = validate.Schema(\n validate.transform(jwplayer_re.search),\n validate.any(\n None,\n validate.all(\n validate.get(1),\n validate.transform(json.loads),\n {\"playlist\": validate.text},\n validate.get(\"playlist\")\n )\n )\n )\n\n @classmethod\n def can_handle_url(cls, url):\n return cls.url_re.match(url) is not None\n\n def _get_streams(self):\n vid = self.url_re.match(self.url).group(1)\n self.logger.debug(\"Found video ID: {0}\", vid)\n\n page = self.session.http.get(self.play_url.format(vid=vid))\n js_url_m = self.js_re.search(page.text)\n if js_url_m:\n js_url = js_url_m.group(1)\n self.logger.debug(\"Loading player JS: {0}\", js_url)\n\n res = self.session.http.get(js_url)\n metadata_url = update_scheme(self.url, 
self.setup_schema.validate(res.text))\n data = self.session.http.json(self.session.http.get(metadata_url))\n\n for source in data[\"playlist\"][0][\"sources\"]:\n if source[\"type\"] == \"application/vnd.apple.mpegurl\":\n for s in HLSStream.parse_variant_playlist(self.session, source[\"file\"]).items():\n yield s\n elif source[\"type\"] == \"video/mp4\":\n yield \"{0}p\".format(source[\"height\"]), HTTPStream(self.session, source[\"file\"])\n\n\n__plugin__ = INE\n", "path": "src/streamlink/plugins/ine.py"}]}

- **num_tokens:** 2,359
- **num_tokens_diff:** 93
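The failure in this record comes from the `{"playlist": str}` type check in `setup_schema`. On Python 2, `json.loads()` returns `unicode` objects rather than `str`, so the decoded player URL is rejected. The golden diff swaps in `validate.text`, which presumably resolves to the interpreter's text type. The sketch below illustrates the idea with a hypothetical `check_playlist` helper and a `TEXT_TYPES` tuple of our own; neither is part of streamlink's API. The original mismatch only reproduces when the code runs under Python 2.

```python
import json
import sys

# Assumption: on Python 2, json.loads() yields `unicode` objects, which are not
# instances of `str`; on Python 3 every decoded JSON string is a `str`.
if sys.version_info[0] >= 3:
    TEXT_TYPES = (str,)
else:
    TEXT_TYPES = (str, unicode)  # noqa: F821  (name exists only on Python 2)


def check_playlist(payload, expected_type):
    """Hypothetical helper mimicking the schema's type check on "playlist"."""
    value = json.loads(payload)["playlist"]
    if not isinstance(value, expected_type):
        raise ValueError("Type of {0!r} should be {1} but is {2}".format(
            value, expected_type, type(value).__name__))
    return value


config = '{"playlist": "//content.jwplatform.com/v2/media/yyYIR4k9"}'
# Checking against TEXT_TYPES accepts the decoded value on Python 2 and 3;
# checking against plain `str` would fail on Python 2, like the issue log.
print(check_playlist(config, TEXT_TYPES))
```

Accepting a tuple of text types, rather than one concrete class, is what keeps the check portable across interpreters.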

- **problem_id:** `gh_patches_debug_24965`
- **source:** `rasdani/github-patches`
- **task_type:** `git_diff`
- **in_source_id:** `google__clusterfuzz-2567`

**prompt:**

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Investigate GitHub login

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/appengine/libs/csp.py`

Content:

```
1 # Copyright 2019 Google LLC
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #      http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 """Helpers used to generate Content Security Policies for pages."""
15 import collections
16
17 from libs import auth
18
19
20 class CSPBuilder(object):
21   """Helper to build a Content Security Policy string."""
22
23   def __init__(self):
24     self.directives = collections.defaultdict(list)
25
26   def add(self, directive, source, quote=False):
27     """Add a source for a given directive."""
28     # Some values for sources are expected to be quoted. No escaping is done
29     # since these are specific literal values that don't require it.
30     if quote:
31       source = '\'{}\''.format(source)
32
33     assert source not in self.directives[directive], (
34         'Duplicate source "{source}" for directive "{directive}"'.format(
35             source=source, directive=directive))
36     self.directives[directive].append(source)
37
38   def add_sourceless(self, directive):
39     assert directive not in self.directives, (
40         'Sourceless directive "{directive}" already exists.'.format(
41             directive=directive))
42
43     self.directives[directive] = []
44
45   def remove(self, directive, source, quote=False):
46     """Remove a source for a given directive."""
47     if quote:
48       source = '\'{}\''.format(source)
49
50     assert source in self.directives[directive], (
51         'Removing nonexistent "{source}" for directive "{directive}"'.format(
52             source=source, directive=directive))
53     self.directives[directive].remove(source)
54
55   def __str__(self):
56     """Convert to a string to send with a Content-Security-Policy header."""
57     parts = []
58
59     # Sort directives for deterministic results.
60     for directive, sources in sorted(self.directives.items()):
61       # Each policy part has the form "directive source1 source2 ...;".
62       parts.append(' '.join([directive] + sources) + ';')
63
64     return ' '.join(parts)
65
66
67 def get_default_builder():
68   """Get a CSPBuilder object for the default policy.
69
70   Can be modified for specific pages if needed."""
71   builder = CSPBuilder()
72
73   # By default, disallow everything. Whitelist only features that are needed.
74   builder.add('default-src', 'none', quote=True)
75
76   # Allow various directives if sourced from self.
77   builder.add('font-src', 'self', quote=True)
78   builder.add('connect-src', 'self', quote=True)
79   builder.add('img-src', 'self', quote=True)
80   builder.add('manifest-src', 'self', quote=True)
81
82   # External scripts. Google analytics, charting libraries.
83   builder.add('script-src', 'www.google-analytics.com')
84   builder.add('script-src', 'www.gstatic.com')
85   builder.add('script-src', 'apis.google.com')
86
87   # Google Analytics also uses connect-src and img-src.
88   builder.add('connect-src', 'www.google-analytics.com')
89   builder.add('img-src', 'www.google-analytics.com')
90
91   # Firebase.
92   builder.add('img-src', 'www.gstatic.com')
93   builder.add('connect-src', 'securetoken.googleapis.com')
94   builder.add('connect-src', 'www.googleapis.com')
95   builder.add('frame-src', auth.auth_domain())
96
97   # External style. Used for fonts, charting libraries.
98   builder.add('style-src', 'fonts.googleapis.com')
99   builder.add('style-src', 'www.gstatic.com')
100
101   # External fonts.
102   builder.add('font-src', 'fonts.gstatic.com')
103
104   # Some upload forms require us to connect to the cloud storage API.
105   builder.add('connect-src', 'storage.googleapis.com')
106
107   # Mixed content is unexpected, but upgrade requests rather than block.
108   builder.add_sourceless('upgrade-insecure-requests')
109
110   # We don't expect object to be used, but it doesn't fall back to default-src.
111   builder.add('object-src', 'none', quote=True)
112
113   # We don't expect workers to be used, but they fall back to script-src.
114   builder.add('worker-src', 'none', quote=True)
115
116   # Add reporting so that violations don't break things silently.
117   builder.add('report-uri', '/report-csp-failure')
118
119   # TODO(mbarbella): Remove Google-specific cases by allowing configuration.
120
121   # Internal authentication.
122   builder.add('manifest-src', 'login.corp.google.com')
123
124   # TODO(mbarbella): Improve the policy by limiting the additions below.
125
126   # Because we use Polymer Bundler to create large files containing all of our
127   # scripts inline, our policy requires this (which weakens CSP significantly).
128   builder.add('script-src', 'unsafe-inline', quote=True)
129
130   # Some of the pages that read responses from json handlers require this.
131   builder.add('script-src', 'unsafe-eval', quote=True)
132
133   # Our Polymer Bundler usage also requires inline style.
134   builder.add('style-src', 'unsafe-inline', quote=True)
135
136   # Some fonts are loaded from data URIs.
137   builder.add('font-src', 'data:')
138
139   return builder
140
141
142 def get_default():
143   """Get the default Content Security Policy as a string."""
144   return str(get_default_builder())
145
```

Path: `src/appengine/libs/auth.py`

Content:

```
1 # Copyright 2019 Google LLC
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #      http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 """Authentication helpers."""
15
16 import collections
17
18 from firebase_admin import auth
19 from google.cloud import ndb
20 from googleapiclient.discovery import build
21 import jwt
22 import requests
23
24 from clusterfuzz._internal.base import memoize
25 from clusterfuzz._internal.base import utils
26 from clusterfuzz._internal.config import local_config
27 from clusterfuzz._internal.datastore import data_types
28 from clusterfuzz._internal.metrics import logs
29 from clusterfuzz._internal.system import environment
30 from libs import request_cache
31
32 User = collections.namedtuple('User', ['email'])
33
34
35 class AuthError(Exception):
36   """Auth error."""
37
38
39 def auth_domain():
40   """Get the auth domain."""
41   domain = local_config.ProjectConfig().get('firebase.auth_domain')
42   if domain:
43     return domain
44
45   return utils.get_application_id() + '.firebaseapp.com'
46
47
48 def is_current_user_admin():
49   """Returns whether or not the current logged in user is an admin."""
50   if environment.is_local_development():
51     return True
52
53   user = get_current_user()
54   if not user:
55     return False
56
57   key = ndb.Key(data_types.Admin, user.email)
58   return bool(key.get())
59
60
61 @memoize.wrap(memoize.FifoInMemory(1))
62 def _project_number_from_id(project_id):
63   """Get the project number from project ID."""
64   resource_manager = build('cloudresourcemanager', 'v1')
65   result = resource_manager.projects().get(projectId=project_id).execute()
66   if 'projectNumber' not in result:
67     raise AuthError('Failed to get project number.')
68
69   return result['projectNumber']
70
71
72 @memoize.wrap(memoize.FifoInMemory(1))
73 def _get_iap_key(key_id):
74   """Retrieves a public key from the list published by Identity-Aware Proxy,
75   re-fetching the key file if necessary.
76   """
77   resp = requests.get('https://www.gstatic.com/iap/verify/public_key')
78   if resp.status_code != 200:
79     raise AuthError('Unable to fetch IAP keys: {} / {} / {}'.format(
80         resp.status_code, resp.headers, resp.text))
81
82   result = resp.json()
83   key = result.get(key_id)
84   if not key:
85     raise AuthError('Key {!r} not found'.format(key_id))
86
87   return key
88
89
90 def _validate_iap_jwt(iap_jwt):
91   """Validate JWT assertion."""
92   project_id = utils.get_application_id()
93   expected_audience = '/projects/{}/apps/{}'.format(
94       _project_number_from_id(project_id), project_id)
95
96   try:
97     key_id = jwt.get_unverified_header(iap_jwt).get('kid')
98     if not key_id:
99       raise AuthError('No key ID.')
100
101     key = _get_iap_key(key_id)
102     decoded_jwt = jwt.decode(
103         iap_jwt,
104         key,
105         algorithms=['ES256'],
106         issuer='https://cloud.google.com/iap',
107         audience=expected_audience)
108     return decoded_jwt['email']
109   except (jwt.exceptions.InvalidTokenError,
110           requests.exceptions.RequestException) as e:
111     raise AuthError('JWT assertion decode error: ' + str(e))
112
113
114 def get_iap_email(current_request):
115   """Get Cloud IAP email."""
116   jwt_assertion = current_request.headers.get('X-Goog-IAP-JWT-Assertion')
117   if not jwt_assertion:
118     return None
119
120   return _validate_iap_jwt(jwt_assertion)
121
122
123 def get_current_user():
124   """Get the current logged in user, or None."""
125   if environment.is_local_development():
126     return User('user@localhost')
127
128   current_request = request_cache.get_current_request()
129   if local_config.AuthConfig().get('enable_loas'):
130     loas_user = current_request.headers.get('X-AppEngine-LOAS-Peer-Username')
131     if loas_user:
132       return User(loas_user + '@google.com')
133
134   iap_email = get_iap_email(current_request)
135   if iap_email:
136     return User(iap_email)
137
138   cache_backing = request_cache.get_cache_backing()
139   oauth_email = getattr(cache_backing, '_oauth_email', None)
140   if oauth_email:
141     return User(oauth_email)
142
143   cached_email = getattr(cache_backing, '_cached_email', None)
144   if cached_email:
145     return User(cached_email)
146
147   session_cookie = get_session_cookie()
148   if not session_cookie:
149     return None
150
151   try:
152     decoded_claims = decode_claims(get_session_cookie())
153   except AuthError:
154     logs.log_warn('Invalid session cookie.')
155     return None
156
157   if not decoded_claims.get('email_verified'):
158     return None
159
160   email = decoded_claims.get('email')
161   if not email:
162     return None
163
164   # We cache the email for this request if we've validated the user to make
165   # subsequent get_current_user() calls fast.
166   setattr(cache_backing, '_cached_email', email)
167   return User(email)
168
169
170 def create_session_cookie(id_token, expires_in):
171   """Create a new session cookie."""
172   try:
173     return auth.create_session_cookie(id_token, expires_in=expires_in)
174   except auth.AuthError:
175     raise AuthError('Failed to create session cookie.')
176
177
178 def get_session_cookie():
179   """Get the current session cookie."""
180   return request_cache.get_current_request().cookies.get('session')
181
182
183 def revoke_session_cookie(session_cookie):
184   """Revoke a session cookie."""
185   decoded_claims = decode_claims(session_cookie)
186   auth.revoke_refresh_tokens(decoded_claims['sub'])
187
188
189 def decode_claims(session_cookie):
190   """Decode the claims for the current session cookie."""
191   try:
192     return auth.verify_session_cookie(session_cookie, check_revoked=True)
193   except (ValueError, auth.AuthError):
194     raise AuthError('Invalid session cookie.')
195
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

**golden_diff:**

diff --git a/src/appengine/libs/auth.py b/src/appengine/libs/auth.py
--- a/src/appengine/libs/auth.py
+++ b/src/appengine/libs/auth.py
@@ -154,7 +154,21 @@
     logs.log_warn('Invalid session cookie.')
     return None
 
-  if not decoded_claims.get('email_verified'):
+  allowed_firebase_providers = local_config.ProjectConfig().get(
+      'firebase.auth_providers', ['google.com'])
+  firebase_info = decoded_claims.get('firebase', {})
+  sign_in_provider = firebase_info.get('sign_in_provider')
+
+  if sign_in_provider not in allowed_firebase_providers:
+    logs.log_error(f'Firebase provider {sign_in_provider} is not enabled.')
+    return None
+
+  # Per https://docs.github.com/en/authentication/
+  # keeping-your-account-and-data-secure/authorizing-oauth-apps
+  # GitHub requires emails to be verified before an OAuth app can be
+  # authorized, so we make an exception.
+  if (not decoded_claims.get('email_verified') and
+      sign_in_provider != 'github.com'):
     return None
 
   email = decoded_claims.get('email')
diff --git a/src/appengine/libs/csp.py b/src/appengine/libs/csp.py
--- a/src/appengine/libs/csp.py
+++ b/src/appengine/libs/csp.py
@@ -92,6 +92,7 @@
   builder.add('img-src', 'www.gstatic.com')
   builder.add('connect-src', 'securetoken.googleapis.com')
   builder.add('connect-src', 'www.googleapis.com')
+  builder.add('connect-src', 'identitytoolkit.googleapis.com')
   builder.add('frame-src', auth.auth_domain())
 
   # External style. Used for fonts, charting libraries.

**verification_info:**

{"golden_diff": "diff --git a/src/appengine/libs/auth.py b/src/appengine/libs/auth.py\n--- a/src/appengine/libs/auth.py\n+++ b/src/appengine/libs/auth.py\n@@ -154,7 +154,21 @@\n logs.log_warn('Invalid session cookie.')\n return None\n \n- if not decoded_claims.get('email_verified'):\n+ allowed_firebase_providers = local_config.ProjectConfig().get(\n+ 'firebase.auth_providers', ['google.com'])\n+ firebase_info = decoded_claims.get('firebase', {})\n+ sign_in_provider = firebase_info.get('sign_in_provider')\n+\n+ if sign_in_provider not in allowed_firebase_providers:\n+ logs.log_error(f'Firebase provider {sign_in_provider} is not enabled.')\n+ return None\n+\n+ # Per https://docs.github.com/en/authentication/\n+ # keeping-your-account-and-data-secure/authorizing-oauth-apps\n+ # GitHub requires emails to be verified before an OAuth app can be\n+ # authorized, so we make an exception.\n+ if (not decoded_claims.get('email_verified') and\n+ sign_in_provider != 'github.com'):\n return None\n \n email = decoded_claims.get('email')\ndiff --git a/src/appengine/libs/csp.py b/src/appengine/libs/csp.py\n--- a/src/appengine/libs/csp.py\n+++ b/src/appengine/libs/csp.py\n@@ -92,6 +92,7 @@\n builder.add('img-src', 'www.gstatic.com')\n builder.add('connect-src', 'securetoken.googleapis.com')\n builder.add('connect-src', 'www.googleapis.com')\n+ builder.add('connect-src', 'identitytoolkit.googleapis.com')\n builder.add('frame-src', auth.auth_domain())\n \n # External style. 
Used for fonts, charting libraries.\n", "issue": "Investigate GitHub login\n\n", "before_files": [{"content": "# Copyright 2019 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"Helpers used to generate Content Security Policies for pages.\"\"\"\nimport collections\n\nfrom libs import auth\n\n\nclass CSPBuilder(object):\n \"\"\"Helper to build a Content Security Policy string.\"\"\"\n\n def __init__(self):\n self.directives = collections.defaultdict(list)\n\n def add(self, directive, source, quote=False):\n \"\"\"Add a source for a given directive.\"\"\"\n # Some values for sources are expected to be quoted. 
No escaping is done\n # since these are specific literal values that don't require it.\n if quote:\n source = '\\'{}\\''.format(source)\n\n assert source not in self.directives[directive], (\n 'Duplicate source \"{source}\" for directive \"{directive}\"'.format(\n source=source, directive=directive))\n self.directives[directive].append(source)\n\n def add_sourceless(self, directive):\n assert directive not in self.directives, (\n 'Sourceless directive \"{directive}\" already exists.'.format(\n directive=directive))\n\n self.directives[directive] = []\n\n def remove(self, directive, source, quote=False):\n \"\"\"Remove a source for a given directive.\"\"\"\n if quote:\n source = '\\'{}\\''.format(source)\n\n assert source in self.directives[directive], (\n 'Removing nonexistent \"{source}\" for directive \"{directive}\"'.format(\n source=source, directive=directive))\n self.directives[directive].remove(source)\n\n def __str__(self):\n \"\"\"Convert to a string to send with a Content-Security-Policy header.\"\"\"\n parts = []\n\n # Sort directives for deterministic results.\n for directive, sources in sorted(self.directives.items()):\n # Each policy part has the form \"directive source1 source2 ...;\".\n parts.append(' '.join([directive] + sources) + ';')\n\n return ' '.join(parts)\n\n\ndef get_default_builder():\n \"\"\"Get a CSPBuilder object for the default policy.\n\n Can be modified for specific pages if needed.\"\"\"\n builder = CSPBuilder()\n\n # By default, disallow everything. Whitelist only features that are needed.\n builder.add('default-src', 'none', quote=True)\n\n # Allow various directives if sourced from self.\n builder.add('font-src', 'self', quote=True)\n builder.add('connect-src', 'self', quote=True)\n builder.add('img-src', 'self', quote=True)\n builder.add('manifest-src', 'self', quote=True)\n\n # External scripts. 
Google analytics, charting libraries.\n builder.add('script-src', 'www.google-analytics.com')\n builder.add('script-src', 'www.gstatic.com')\n builder.add('script-src', 'apis.google.com')\n\n # Google Analytics also uses connect-src and img-src.\n builder.add('connect-src', 'www.google-analytics.com')\n builder.add('img-src', 'www.google-analytics.com')\n\n # Firebase.\n builder.add('img-src', 'www.gstatic.com')\n builder.add('connect-src', 'securetoken.googleapis.com')\n builder.add('connect-src', 'www.googleapis.com')\n builder.add('frame-src', auth.auth_domain())\n\n # External style. Used for fonts, charting libraries.\n builder.add('style-src', 'fonts.googleapis.com')\n builder.add('style-src', 'www.gstatic.com')\n\n # External fonts.\n builder.add('font-src', 'fonts.gstatic.com')\n\n # Some upload forms require us to connect to the cloud storage API.\n builder.add('connect-src', 'storage.googleapis.com')\n\n # Mixed content is unexpected, but upgrade requests rather than block.\n builder.add_sourceless('upgrade-insecure-requests')\n\n # We don't expect object to be used, but it doesn't fall back to default-src.\n builder.add('object-src', 'none', quote=True)\n\n # We don't expect workers to be used, but they fall back to script-src.\n builder.add('worker-src', 'none', quote=True)\n\n # Add reporting so that violations don't break things silently.\n builder.add('report-uri', '/report-csp-failure')\n\n # TODO(mbarbella): Remove Google-specific cases by allowing configuration.\n\n # Internal authentication.\n builder.add('manifest-src', 'login.corp.google.com')\n\n # TODO(mbarbella): Improve the policy by limiting the additions below.\n\n # Because we use Polymer Bundler to create large files containing all of our\n # scripts inline, our policy requires this (which weakens CSP significantly).\n builder.add('script-src', 'unsafe-inline', quote=True)\n\n # Some of the pages that read responses from json handlers require this.\n builder.add('script-src', 
'unsafe-eval', quote=True)\n\n # Our Polymer Bundler usage also requires inline style.\n builder.add('style-src', 'unsafe-inline', quote=True)\n\n # Some fonts are loaded from data URIs.\n builder.add('font-src', 'data:')\n\n return builder\n\n\ndef get_default():\n \"\"\"Get the default Content Security Policy as a string.\"\"\"\n return str(get_default_builder())\n", "path": "src/appengine/libs/csp.py"}, {"content": "# Copyright 2019 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"Authentication helpers.\"\"\"\n\nimport collections\n\nfrom firebase_admin import auth\nfrom google.cloud import ndb\nfrom googleapiclient.discovery import build\nimport jwt\nimport requests\n\nfrom clusterfuzz._internal.base import memoize\nfrom clusterfuzz._internal.base import utils\nfrom clusterfuzz._internal.config import local_config\nfrom clusterfuzz._internal.datastore import data_types\nfrom clusterfuzz._internal.metrics import logs\nfrom clusterfuzz._internal.system import environment\nfrom libs import request_cache\n\nUser = collections.namedtuple('User', ['email'])\n\n\nclass AuthError(Exception):\n \"\"\"Auth error.\"\"\"\n\n\ndef auth_domain():\n \"\"\"Get the auth domain.\"\"\"\n domain = local_config.ProjectConfig().get('firebase.auth_domain')\n if domain:\n return domain\n\n return utils.get_application_id() + '.firebaseapp.com'\n\n\ndef is_current_user_admin():\n \"\"\"Returns whether or not the current logged in user is an admin.\"\"\"\n if 
environment.is_local_development():\n return True\n\n user = get_current_user()\n if not user:\n return False\n\n key = ndb.Key(data_types.Admin, user.email)\n return bool(key.get())\n\n\n@memoize.wrap(memoize.FifoInMemory(1))\ndef _project_number_from_id(project_id):\n \"\"\"Get the project number from project ID.\"\"\"\n resource_manager = build('cloudresourcemanager', 'v1')\n result = resource_manager.projects().get(projectId=project_id).execute()\n if 'projectNumber' not in result:\n raise AuthError('Failed to get project number.')\n\n return result['projectNumber']\n\n\n@memoize.wrap(memoize.FifoInMemory(1))\ndef _get_iap_key(key_id):\n \"\"\"Retrieves a public key from the list published by Identity-Aware Proxy,\n re-fetching the key file if necessary.\n \"\"\"\n resp = requests.get('https://www.gstatic.com/iap/verify/public_key')\n if resp.status_code != 200:\n raise AuthError('Unable to fetch IAP keys: {} / {} / {}'.format(\n resp.status_code, resp.headers, resp.text))\n\n result = resp.json()\n key = result.get(key_id)\n if not key:\n raise AuthError('Key {!r} not found'.format(key_id))\n\n return key\n\n\ndef _validate_iap_jwt(iap_jwt):\n \"\"\"Validate JWT assertion.\"\"\"\n project_id = utils.get_application_id()\n expected_audience = '/projects/{}/apps/{}'.format(\n _project_number_from_id(project_id), project_id)\n\n try:\n key_id = jwt.get_unverified_header(iap_jwt).get('kid')\n if not key_id:\n raise AuthError('No key ID.')\n\n key = _get_iap_key(key_id)\n decoded_jwt = jwt.decode(\n iap_jwt,\n key,\n algorithms=['ES256'],\n issuer='https://cloud.google.com/iap',\n audience=expected_audience)\n return decoded_jwt['email']\n except (jwt.exceptions.InvalidTokenError,\n requests.exceptions.RequestException) as e:\n raise AuthError('JWT assertion decode error: ' + str(e))\n\n\ndef get_iap_email(current_request):\n \"\"\"Get Cloud IAP email.\"\"\"\n jwt_assertion = current_request.headers.get('X-Goog-IAP-JWT-Assertion')\n if not jwt_assertion:\n 
return None\n\n return _validate_iap_jwt(jwt_assertion)\n\n\ndef get_current_user():\n \"\"\"Get the current logged in user, or None.\"\"\"\n if environment.is_local_development():\n return User('user@localhost')\n\n current_request = request_cache.get_current_request()\n if local_config.AuthConfig().get('enable_loas'):\n loas_user = current_request.headers.get('X-AppEngine-LOAS-Peer-Username')\n if loas_user:\n return User(loas_user + '@google.com')\n\n iap_email = get_iap_email(current_request)\n if iap_email:\n return User(iap_email)\n\n cache_backing = request_cache.get_cache_backing()\n oauth_email = getattr(cache_backing, '_oauth_email', None)\n if oauth_email:\n return User(oauth_email)\n\n cached_email = getattr(cache_backing, '_cached_email', None)\n if cached_email:\n return User(cached_email)\n\n session_cookie = get_session_cookie()\n if not session_cookie:\n return None\n\n try:\n decoded_claims = decode_claims(get_session_cookie())\n except AuthError:\n logs.log_warn('Invalid session cookie.')\n return None\n\n if not decoded_claims.get('email_verified'):\n return None\n\n email = decoded_claims.get('email')\n if not email:\n return None\n\n # We cache the email for this request if we've validated the user to make\n # subsequent get_current_user() calls fast.\n setattr(cache_backing, '_cached_email', email)\n return User(email)\n\n\ndef create_session_cookie(id_token, expires_in):\n \"\"\"Create a new session cookie.\"\"\"\n try:\n return auth.create_session_cookie(id_token, expires_in=expires_in)\n except auth.AuthError:\n raise AuthError('Failed to create session cookie.')\n\n\ndef get_session_cookie():\n \"\"\"Get the current session cookie.\"\"\"\n return request_cache.get_current_request().cookies.get('session')\n\n\ndef revoke_session_cookie(session_cookie):\n \"\"\"Revoke a session cookie.\"\"\"\n decoded_claims = decode_claims(session_cookie)\n auth.revoke_refresh_tokens(decoded_claims['sub'])\n\n\ndef decode_claims(session_cookie):\n 
\"\"\"Decode the claims for the current session cookie.\"\"\"\n try:\n return auth.verify_session_cookie(session_cookie, check_revoked=True)\n except (ValueError, auth.AuthError):\n raise AuthError('Invalid session cookie.')\n", "path": "src/appengine/libs/auth.py"}], "after_files": [{"content": "# Copyright 2019 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"Helpers used to generate Content Security Policies for pages.\"\"\"\nimport collections\n\nfrom libs import auth\n\n\nclass CSPBuilder(object):\n \"\"\"Helper to build a Content Security Policy string.\"\"\"\n\n def __init__(self):\n self.directives = collections.defaultdict(list)\n\n def add(self, directive, source, quote=False):\n \"\"\"Add a source for a given directive.\"\"\"\n # Some values for sources are expected to be quoted. 
No escaping is done\n # since these are specific literal values that don't require it.\n if quote:\n source = '\\'{}\\''.format(source)\n\n assert source not in self.directives[directive], (\n 'Duplicate source \"{source}\" for directive \"{directive}\"'.format(\n source=source, directive=directive))\n self.directives[directive].append(source)\n\n def add_sourceless(self, directive):\n assert directive not in self.directives, (\n 'Sourceless directive \"{directive}\" already exists.'.format(\n directive=directive))\n\n self.directives[directive] = []\n\n def remove(self, directive, source, quote=False):\n \"\"\"Remove a source for a given directive.\"\"\"\n if quote:\n source = '\\'{}\\''.format(source)\n\n assert source in self.directives[directive], (\n 'Removing nonexistent \"{source}\" for directive \"{directive}\"'.format(\n source=source, directive=directive))\n self.directives[directive].remove(source)\n\n def __str__(self):\n \"\"\"Convert to a string to send with a Content-Security-Policy header.\"\"\"\n parts = []\n\n # Sort directives for deterministic results.\n for directive, sources in sorted(self.directives.items()):\n # Each policy part has the form \"directive source1 source2 ...;\".\n parts.append(' '.join([directive] + sources) + ';')\n\n return ' '.join(parts)\n\n\ndef get_default_builder():\n \"\"\"Get a CSPBuilder object for the default policy.\n\n Can be modified for specific pages if needed.\"\"\"\n builder = CSPBuilder()\n\n # By default, disallow everything. Whitelist only features that are needed.\n builder.add('default-src', 'none', quote=True)\n\n # Allow various directives if sourced from self.\n builder.add('font-src', 'self', quote=True)\n builder.add('connect-src', 'self', quote=True)\n builder.add('img-src', 'self', quote=True)\n builder.add('manifest-src', 'self', quote=True)\n\n # External scripts. 
Google analytics, charting libraries.\n builder.add('script-src', 'www.google-analytics.com')\n builder.add('script-src', 'www.gstatic.com')\n builder.add('script-src', 'apis.google.com')\n\n # Google Analytics also uses connect-src and img-src.\n builder.add('connect-src', 'www.google-analytics.com')\n builder.add('img-src', 'www.google-analytics.com')\n\n # Firebase.\n builder.add('img-src', 'www.gstatic.com')\n builder.add('connect-src', 'securetoken.googleapis.com')\n builder.add('connect-src', 'www.googleapis.com')\n builder.add('connect-src', 'identitytoolkit.googleapis.com')\n builder.add('frame-src', auth.auth_domain())\n\n # External style. Used for fonts, charting libraries.\n builder.add('style-src', 'fonts.googleapis.com')\n builder.add('style-src', 'www.gstatic.com')\n\n # External fonts.\n builder.add('font-src', 'fonts.gstatic.com')\n\n # Some upload forms require us to connect to the cloud storage API.\n builder.add('connect-src', 'storage.googleapis.com')\n\n # Mixed content is unexpected, but upgrade requests rather than block.\n builder.add_sourceless('upgrade-insecure-requests')\n\n # We don't expect object to be used, but it doesn't fall back to default-src.\n builder.add('object-src', 'none', quote=True)\n\n # We don't expect workers to be used, but they fall back to script-src.\n builder.add('worker-src', 'none', quote=True)\n\n # Add reporting so that violations don't break things silently.\n builder.add('report-uri', '/report-csp-failure')\n\n # TODO(mbarbella): Remove Google-specific cases by allowing configuration.\n\n # Internal authentication.\n builder.add('manifest-src', 'login.corp.google.com')\n\n # TODO(mbarbella): Improve the policy by limiting the additions below.\n\n # Because we use Polymer Bundler to create large files containing all of our\n # scripts inline, our policy requires this (which weakens CSP significantly).\n builder.add('script-src', 'unsafe-inline', quote=True)\n\n # Some of the pages that read responses from 
json handlers require this.\n builder.add('script-src', 'unsafe-eval', quote=True)\n\n # Our Polymer Bundler usage also requires inline style.\n builder.add('style-src', 'unsafe-inline', quote=True)\n\n # Some fonts are loaded from data URIs.\n builder.add('font-src', 'data:')\n\n return builder\n\n\ndef get_default():\n \"\"\"Get the default Content Security Policy as a string.\"\"\"\n return str(get_default_builder())\n", "path": "src/appengine/libs/csp.py"}, {"content": "# Copyright 2019 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"Authentication helpers.\"\"\"\n\nimport collections\n\nfrom firebase_admin import auth\nfrom google.cloud import ndb\nfrom googleapiclient.discovery import build\nimport jwt\nimport requests\n\nfrom clusterfuzz._internal.base import memoize\nfrom clusterfuzz._internal.base import utils\nfrom clusterfuzz._internal.config import local_config\nfrom clusterfuzz._internal.datastore import data_types\nfrom clusterfuzz._internal.metrics import logs\nfrom clusterfuzz._internal.system import environment\nfrom libs import request_cache\n\nUser = collections.namedtuple('User', ['email'])\n\n\nclass AuthError(Exception):\n \"\"\"Auth error.\"\"\"\n\n\ndef auth_domain():\n \"\"\"Get the auth domain.\"\"\"\n domain = local_config.ProjectConfig().get('firebase.auth_domain')\n if domain:\n return domain\n\n return utils.get_application_id() + '.firebaseapp.com'\n\n\ndef is_current_user_admin():\n \"\"\"Returns whether or not the 
current logged in user is an admin.\"\"\"\n if environment.is_local_development():\n return True\n\n user = get_current_user()\n if not user:\n return False\n\n key = ndb.Key(data_types.Admin, user.email)\n return bool(key.get())\n\n\n@memoize.wrap(memoize.FifoInMemory(1))\ndef _project_number_from_id(project_id):\n \"\"\"Get the project number from project ID.\"\"\"\n resource_manager = build('cloudresourcemanager', 'v1')\n result = resource_manager.projects().get(projectId=project_id).execute()\n if 'projectNumber' not in result:\n raise AuthError('Failed to get project number.')\n\n return result['projectNumber']\n\n\n@memoize.wrap(memoize.FifoInMemory(1))\ndef _get_iap_key(key_id):\n \"\"\"Retrieves a public key from the list published by Identity-Aware Proxy,\n re-fetching the key file if necessary.\n \"\"\"\n resp = requests.get('https://www.gstatic.com/iap/verify/public_key')\n if resp.status_code != 200:\n raise AuthError('Unable to fetch IAP keys: {} / {} / {}'.format(\n resp.status_code, resp.headers, resp.text))\n\n result = resp.json()\n key = result.get(key_id)\n if not key:\n raise AuthError('Key {!r} not found'.format(key_id))\n\n return key\n\n\ndef _validate_iap_jwt(iap_jwt):\n \"\"\"Validate JWT assertion.\"\"\"\n project_id = utils.get_application_id()\n expected_audience = '/projects/{}/apps/{}'.format(\n _project_number_from_id(project_id), project_id)\n\n try:\n key_id = jwt.get_unverified_header(iap_jwt).get('kid')\n if not key_id:\n raise AuthError('No key ID.')\n\n key = _get_iap_key(key_id)\n decoded_jwt = jwt.decode(\n iap_jwt,\n key,\n algorithms=['ES256'],\n issuer='https://cloud.google.com/iap',\n audience=expected_audience)\n return decoded_jwt['email']\n except (jwt.exceptions.InvalidTokenError,\n requests.exceptions.RequestException) as e:\n raise AuthError('JWT assertion decode error: ' + str(e))\n\n\ndef get_iap_email(current_request):\n \"\"\"Get Cloud IAP email.\"\"\"\n jwt_assertion = 
current_request.headers.get('X-Goog-IAP-JWT-Assertion')\n if not jwt_assertion:\n return None\n\n return _validate_iap_jwt(jwt_assertion)\n\n\ndef get_current_user():\n \"\"\"Get the current logged in user, or None.\"\"\"\n if environment.is_local_development():\n return User('user@localhost')\n\n current_request = request_cache.get_current_request()\n if local_config.AuthConfig().get('enable_loas'):\n loas_user = current_request.headers.get('X-AppEngine-LOAS-Peer-Username')\n if loas_user:\n return User(loas_user + '@google.com')\n\n iap_email = get_iap_email(current_request)\n if iap_email:\n return User(iap_email)\n\n cache_backing = request_cache.get_cache_backing()\n oauth_email = getattr(cache_backing, '_oauth_email', None)\n if oauth_email:\n return User(oauth_email)\n\n cached_email = getattr(cache_backing, '_cached_email', None)\n if cached_email:\n return User(cached_email)\n\n session_cookie = get_session_cookie()\n if not session_cookie:\n return None\n\n try:\n decoded_claims = decode_claims(get_session_cookie())\n except AuthError:\n logs.log_warn('Invalid session cookie.')\n return None\n\n allowed_firebase_providers = local_config.ProjectConfig().get(\n 'firebase.auth_providers', ['google.com'])\n firebase_info = decoded_claims.get('firebase', {})\n sign_in_provider = firebase_info.get('sign_in_provider')\n\n if sign_in_provider not in allowed_firebase_providers:\n logs.log_error(f'Firebase provider {sign_in_provider} is not enabled.')\n return None\n\n # Per https://docs.github.com/en/authentication/\n # keeping-your-account-and-data-secure/authorizing-oauth-apps\n # GitHub requires emails to be verified before an OAuth app can be\n # authorized, so we make an exception.\n if (not decoded_claims.get('email_verified') and\n sign_in_provider != 'github.com'):\n return None\n\n email = decoded_claims.get('email')\n if not email:\n return None\n\n # We cache the email for this request if we've validated the user to make\n # subsequent 
get_current_user() calls fast.\n setattr(cache_backing, '_cached_email', email)\n return User(email)\n\n\ndef create_session_cookie(id_token, expires_in):\n \"\"\"Create a new session cookie.\"\"\"\n try:\n return auth.create_session_cookie(id_token, expires_in=expires_in)\n except auth.AuthError:\n raise AuthError('Failed to create session cookie.')\n\n\ndef get_session_cookie():\n \"\"\"Get the current session cookie.\"\"\"\n return request_cache.get_current_request().cookies.get('session')\n\n\ndef revoke_session_cookie(session_cookie):\n \"\"\"Revoke a session cookie.\"\"\"\n decoded_claims = decode_claims(session_cookie)\n auth.revoke_refresh_tokens(decoded_claims['sub'])\n\n\ndef decode_claims(session_cookie):\n \"\"\"Decode the claims for the current session cookie.\"\"\"\n try:\n return auth.verify_session_cookie(session_cookie, check_revoked=True)\n except (ValueError, auth.AuthError):\n raise AuthError('Invalid session cookie.')\n", "path": "src/appengine/libs/auth.py"}]}

| 3,687 | 395 |

gh_patches_debug_13091

|

rasdani/github-patches

|

git_diff

|

PrefectHQ__prefect-11999

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

no import statement for wait_for_flow_run

### First check

- [X] I added a descriptive title to this issue.

- [X] I used GitHub search to find a similar request and didn't find it 😇

### Describe the issue

There is no import statement for wait_for_flow_run so typing this code into pycharm shows wait_for_flow_run as an error. Searching the internets, the import statement used to be

_from prefect.tasks.prefect import wait_for_flow_run_

yeah, that doesn't work anymore.

### Describe the proposed change

put the correct import statement in the docs which is

_from prefect.flow_runs import wait_for_flow_run_

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `src/prefect/flow_runs.py`

Content:

```
1 from typing import Optional
2 from uuid import UUID
3 
4 import anyio
5 
6 from prefect.client.orchestration import PrefectClient
7 from prefect.client.schemas import FlowRun
8 from prefect.client.utilities import inject_client
9 from prefect.exceptions import FlowRunWaitTimeout
10 from prefect.logging import get_logger
11 
12 
13 @inject_client
14 async def wait_for_flow_run(
15     flow_run_id: UUID,
16     timeout: Optional[int] = 10800,
17     poll_interval: int = 5,
18     client: Optional[PrefectClient] = None,
19     log_states: bool = False,
20 ) -> FlowRun:
21     """
22     Waits for the prefect flow run to finish and returns the FlowRun
23 
24     Args:
25         flow_run_id: The flow run ID for the flow run to wait for.
26         timeout: The wait timeout in seconds. Defaults to 10800 (3 hours).
27         poll_interval: The poll interval in seconds. Defaults to 5.
28 
29     Returns:
30         FlowRun: The finished flow run.
31 
32     Raises:
33         prefect.exceptions.FlowWaitTimeout: If flow run goes over the timeout.
34 
35     Examples:
36         Create a flow run for a deployment and wait for it to finish:
37             ```python
38             import asyncio
39 
40             from prefect import get_client
41 
42             async def main():
43                 async with get_client() as client:
44                     flow_run = await client.create_flow_run_from_deployment(deployment_id="my-deployment-id")
45                     flow_run = await wait_for_flow_run(flow_run_id=flow_run.id)
46                     print(flow_run.state)
47 
48             if __name__ == "__main__":
49                 asyncio.run(main())
50 
51             ```
52 
53         Trigger multiple flow runs and wait for them to finish:
54             ```python
55             import asyncio
56 
57             from prefect import get_client
58 
59             async def main(num_runs: int):
60                 async with get_client() as client:
61                     flow_runs = [
62                         await client.create_flow_run_from_deployment(deployment_id="my-deployment-id")
63                         for _
64                         in range(num_runs)
65                     ]
66                     coros = [wait_for_flow_run(flow_run_id=flow_run.id) for flow_run in flow_runs]
67                     finished_flow_runs = await asyncio.gather(*coros)
68                     print([flow_run.state for flow_run in finished_flow_runs])
69 
70             if __name__ == "__main__":
71                 asyncio.run(main(num_runs=10))
72 
73             ```
74     """
75     assert client is not None, "Client injection failed"
76     logger = get_logger()
77     with anyio.move_on_after(timeout):
78         while True:
79             flow_run = await client.read_flow_run(flow_run_id)
80             flow_state = flow_run.state
81             if log_states:
82                 logger.info(f"Flow run is in state {flow_run.state.name!r}")
83             if flow_state and flow_state.is_final():
84                 return flow_run
85             await anyio.sleep(poll_interval)
86     raise FlowRunWaitTimeout(
87         f"Flow run with ID {flow_run_id} exceeded watch timeout of {timeout} seconds"
88     )
89 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/src/prefect/flow_runs.py b/src/prefect/flow_runs.py
--- a/src/prefect/flow_runs.py
+++ b/src/prefect/flow_runs.py
@@ -38,6 +38,7 @@
             import asyncio
 
             from prefect import get_client
+            from prefect.flow_runs import wait_for_flow_run
 
             async def main():
                 async with get_client() as client:
@@ -55,6 +56,7 @@
             import asyncio
 
             from prefect import get_client
+            from prefect.flow_runs import wait_for_flow_run
 
             async def main(num_runs: int):
                 async with get_client() as client:

|

{"golden_diff": "diff --git a/src/prefect/flow_runs.py b/src/prefect/flow_runs.py\n--- a/src/prefect/flow_runs.py\n+++ b/src/prefect/flow_runs.py\n@@ -38,6 +38,7 @@\n import asyncio\n \n from prefect import get_client\n+ from prefect.flow_runs import wait_for_flow_run\n \n async def main():\n async with get_client() as client:\n@@ -55,6 +56,7 @@\n import asyncio\n \n from prefect import get_client\n+ from prefect.flow_runs import wait_for_flow_run\n \n async def main(num_runs: int):\n async with get_client() as client:\n", "issue": "no import statement for wait_for_flow_run\n### First check\r\n\r\n- [X] I added a descriptive title to this issue.\r\n- [X] I used GitHub search to find a similar request and didn't find it \ud83d\ude07\r\n\r\n### Describe the issue\r\n\r\nThere is no import statement for wait_for_flow_run so typing this code into pycharm shows wait_for_flow_run as an error. Searching the internets, the import statement used to be\r\n\r\n_from prefect.tasks.prefect import wait_for_flow_run_\r\n\r\nyeah, that doesn't work anymore.\r\n\r\n### Describe the proposed change\r\n\r\nput the correct import statement in the docs which is \r\n\r\n_from prefect.flow_runs import wait_for_flow_run_\r\n\n", "before_files": [{"content": "from typing import Optional\nfrom uuid import UUID\n\nimport anyio\n\nfrom prefect.client.orchestration import PrefectClient\nfrom prefect.client.schemas import FlowRun\nfrom prefect.client.utilities import inject_client\nfrom prefect.exceptions import FlowRunWaitTimeout\nfrom prefect.logging import get_logger\n\n\n@inject_client\nasync def wait_for_flow_run(\n flow_run_id: UUID,\n timeout: Optional[int] = 10800,\n poll_interval: int = 5,\n client: Optional[PrefectClient] = None,\n log_states: bool = False,\n) -> FlowRun:\n \"\"\"\n Waits for the prefect flow run to finish and returns the FlowRun\n\n Args:\n flow_run_id: The flow run ID for the flow run to wait for.\n timeout: The wait timeout in seconds. 
Defaults to 10800 (3 hours).\n poll_interval: The poll interval in seconds. Defaults to 5.\n\n Returns:\n FlowRun: The finished flow run.\n\n Raises:\n prefect.exceptions.FlowWaitTimeout: If flow run goes over the timeout.\n\n Examples:\n Create a flow run for a deployment and wait for it to finish:\n ```python\n import asyncio\n\n from prefect import get_client\n\n async def main():\n async with get_client() as client:\n flow_run = await client.create_flow_run_from_deployment(deployment_id=\"my-deployment-id\")\n flow_run = await wait_for_flow_run(flow_run_id=flow_run.id)\n print(flow_run.state)\n\n if __name__ == \"__main__\":\n asyncio.run(main())\n\n ```\n\n Trigger multiple flow runs and wait for them to finish:\n ```python\n import asyncio\n\n from prefect import get_client\n\n async def main(num_runs: int):\n async with get_client() as client:\n flow_runs = [\n await client.create_flow_run_from_deployment(deployment_id=\"my-deployment-id\")\n for _\n in range(num_runs)\n ]\n coros = [wait_for_flow_run(flow_run_id=flow_run.id) for flow_run in flow_runs]\n finished_flow_runs = await asyncio.gather(*coros)\n print([flow_run.state for flow_run in finished_flow_runs])\n\n if __name__ == \"__main__\":\n asyncio.run(main(num_runs=10))\n\n ```\n \"\"\"\n assert client is not None, \"Client injection failed\"\n logger = get_logger()\n with anyio.move_on_after(timeout):\n while True:\n flow_run = await client.read_flow_run(flow_run_id)\n flow_state = flow_run.state\n if log_states:\n logger.info(f\"Flow run is in state {flow_run.state.name!r}\")\n if flow_state and flow_state.is_final():\n return flow_run\n await anyio.sleep(poll_interval)\n raise FlowRunWaitTimeout(\n f\"Flow run with ID {flow_run_id} exceeded watch timeout of {timeout} seconds\"\n )\n", "path": "src/prefect/flow_runs.py"}], "after_files": [{"content": "from typing import Optional\nfrom uuid import UUID\n\nimport anyio\n\nfrom prefect.client.orchestration import PrefectClient\nfrom 
prefect.client.schemas import FlowRun\nfrom prefect.client.utilities import inject_client\nfrom prefect.exceptions import FlowRunWaitTimeout\nfrom prefect.logging import get_logger\n\n\n@inject_client\nasync def wait_for_flow_run(\n flow_run_id: UUID,\n timeout: Optional[int] = 10800,\n poll_interval: int = 5,\n client: Optional[PrefectClient] = None,\n log_states: bool = False,\n) -> FlowRun:\n \"\"\"\n Waits for the prefect flow run to finish and returns the FlowRun\n\n Args:\n flow_run_id: The flow run ID for the flow run to wait for.\n timeout: The wait timeout in seconds. Defaults to 10800 (3 hours).\n poll_interval: The poll interval in seconds. Defaults to 5.\n\n Returns:\n FlowRun: The finished flow run.\n\n Raises:\n prefect.exceptions.FlowWaitTimeout: If flow run goes over the timeout.\n\n Examples:\n Create a flow run for a deployment and wait for it to finish:\n ```python\n import asyncio\n\n from prefect import get_client\n from prefect.flow_runs import wait_for_flow_run\n\n async def main():\n async with get_client() as client:\n flow_run = await client.create_flow_run_from_deployment(deployment_id=\"my-deployment-id\")\n flow_run = await wait_for_flow_run(flow_run_id=flow_run.id)\n print(flow_run.state)\n\n if __name__ == \"__main__\":\n asyncio.run(main())\n\n ```\n\n Trigger multiple flow runs and wait for them to finish:\n ```python\n import asyncio\n\n from prefect import get_client\n from prefect.flow_runs import wait_for_flow_run\n\n async def main(num_runs: int):\n async with get_client() as client:\n flow_runs = [\n await client.create_flow_run_from_deployment(deployment_id=\"my-deployment-id\")\n for _\n in range(num_runs)\n ]\n coros = [wait_for_flow_run(flow_run_id=flow_run.id) for flow_run in flow_runs]\n finished_flow_runs = await asyncio.gather(*coros)\n print([flow_run.state for flow_run in finished_flow_runs])\n\n if __name__ == \"__main__\":\n asyncio.run(main(num_runs=10))\n\n ```\n \"\"\"\n assert client is not None, \"Client 
injection failed\"\n logger = get_logger()\n with anyio.move_on_after(timeout):\n while True:\n flow_run = await client.read_flow_run(flow_run_id)\n flow_state = flow_run.state\n if log_states:\n logger.info(f\"Flow run is in state {flow_run.state.name!r}\")\n if flow_state and flow_state.is_final():\n return flow_run\n await anyio.sleep(poll_interval)\n raise FlowRunWaitTimeout(\n f\"Flow run with ID {flow_run_id} exceeded watch timeout of {timeout} seconds\"\n )\n", "path": "src/prefect/flow_runs.py"}]}

| 1,205 | 146 |
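`wait_for_flow_run` in the row above polls `client.read_flow_run` every `poll_interval` seconds until the flow run's state is final. It gives up after `timeout` seconds via `anyio.move_on_after`. The block below is a minimal sketch of the same poll-until-final-or-timeout pattern. It uses the standard library's `asyncio.wait_for` in place of anyio. The names `wait_until_final` and `WaitTimeout`, and the plain string states, are hypothetical stand-ins and not Prefect APIs.

```python
import asyncio
from typing import Awaitable, Callable, Optional


class WaitTimeout(Exception):
    """Hypothetical stand-in for prefect.exceptions.FlowRunWaitTimeout."""


async def wait_until_final(
    read_state: Callable[[], Awaitable[Optional[str]]],
    is_final: Callable[[str], bool],
    timeout: Optional[float] = 10800,  # None waits indefinitely
    poll_interval: float = 5,
) -> str:
    """Poll read_state() until it reports a final state or the timeout elapses."""

    async def poll() -> str:
        while True:
            state = await read_state()
            if state is not None and is_final(state):
                return state
            await asyncio.sleep(poll_interval)

    try:
        # wait_for cancels poll() once the timeout expires
        return await asyncio.wait_for(poll(), timeout)
    except asyncio.TimeoutError:
        raise WaitTimeout(f"exceeded watch timeout of {timeout} seconds") from None


async def demo() -> str:
    # Hypothetical state sequence standing in for successive read_flow_run calls
    states = iter(["PENDING", "RUNNING", "COMPLETED"])

    async def read_state() -> str:
        return next(states)

    return await wait_until_final(read_state, lambda s: s == "COMPLETED", timeout=1, poll_interval=0)


if __name__ == "__main__":
    print(asyncio.run(demo()))  # COMPLETED
```

As in the original, passing `timeout=None` disables the deadline, because `asyncio.wait_for` treats `None` as "wait forever".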

gh_patches_debug_3915

|

rasdani/github-patches

|

git_diff

|

fossasia__open-event-server-890

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Show return model of sponsor types list in Swagger spec

Currently no return model (or schema) is shown for the GET API to get sponsor types used in an Event.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `open_event/api/sponsors.py`

Content:

```
1 from flask.ext.restplus import Resource, Namespace
2 
3 from open_event.models.sponsor import Sponsor as SponsorModel
4 
5 from .helpers.helpers import get_paginated_list, requires_auth, get_object_in_event
6 from .helpers.utils import PAGINATED_MODEL, PaginatedResourceBase, ServiceDAO, \
7     PAGE_PARAMS, POST_RESPONSES, PUT_RESPONSES
8 from .helpers import custom_fields as fields
9 
10 api = Namespace('sponsors', description='Sponsors', path='/')
11 
12 SPONSOR = api.model('Sponsor', {
13     'id': fields.Integer(required=True),
14     'name': fields.String(),
15     'url': fields.Uri(),
16     'logo': fields.ImageUri(),
17     'description': fields.String(),
18     'level': fields.String(),
19     'sponsor_type': fields.String(),
20 })
21 
22 SPONSOR_PAGINATED = api.clone('SponsorPaginated', PAGINATED_MODEL, {
23     'results': fields.List(fields.Nested(SPONSOR))
24 })
25 
26 SPONSOR_POST = api.clone('SponsorPost', SPONSOR)
27 del SPONSOR_POST['id']
28 
29 
30 # Create DAO
31 class SponsorDAO(ServiceDAO):
32     def list_types(self, event_id):
33         sponsors = self.list(event_id)
34         return list(set(
35             sponsor.sponsor_type for sponsor in sponsors
36             if sponsor.sponsor_type))
37 
38 
39 DAO = SponsorDAO(SponsorModel, SPONSOR_POST)
40 
41 
42 @api.route('/events/<int:event_id>/sponsors/<int:sponsor_id>')
43 @api.response(404, 'Sponsor not found')
44 @api.response(400, 'Sponsor does not belong to event')
45 class Sponsor(Resource):
46     @api.doc('get_sponsor')
47     @api.marshal_with(SPONSOR)
48     def get(self, event_id, sponsor_id):
49         """Fetch a sponsor given its id"""
50         return DAO.get(event_id, sponsor_id)
51 
52     @requires_auth
53     @api.doc('delete_sponsor')
54     @api.marshal_with(SPONSOR)
55     def delete(self, event_id, sponsor_id):
56         """Delete a sponsor given its id"""
57         return DAO.delete(event_id, sponsor_id)
58 
59     @requires_auth
60     @api.doc('update_sponsor', responses=PUT_RESPONSES)
61     @api.marshal_with(SPONSOR)
62     @api.expect(SPONSOR_POST)
63     def put(self, event_id, sponsor_id):
64         """Update a sponsor given its id"""
65         return DAO.update(event_id, sponsor_id, self.api.payload)
66 
67 
68 @api.route('/events/<int:event_id>/sponsors')
69 class SponsorList(Resource):
70     @api.doc('list_sponsors')
71     @api.marshal_list_with(SPONSOR)
72     def get(self, event_id):
73         """List all sponsors"""
74         return DAO.list(event_id)
75 
76     @requires_auth
77     @api.doc('create_sponsor', responses=POST_RESPONSES)
78     @api.marshal_with(SPONSOR)
79     @api.expect(SPONSOR_POST)
80     def post(self, event_id):
81         """Create a sponsor"""
82         return DAO.create(
83             event_id,
84             self.api.payload,
85             self.api.url_for(self, event_id=event_id)
86         )
87 
88 
89 @api.route('/events/<int:event_id>/sponsors/types')
90 class SponsorTypesList(Resource):
91     @api.doc('list_sponsor_types')
92     def get(self, event_id):
93         """List all sponsor types"""
94         return DAO.list_types(event_id)
95 
96 
97 @api.route('/events/<int:event_id>/sponsors/page')
98 class SponsorListPaginated(Resource, PaginatedResourceBase):
99     @api.doc('list_sponsors_paginated', params=PAGE_PARAMS)
100     @api.marshal_with(SPONSOR_PAGINATED)
101     def get(self, event_id):
102         """List sponsors in a paginated manner"""
103         return get_paginated_list(
104             SponsorModel,
105             self.api.url_for(self, event_id=event_id),
106             args=self.parser.parse_args(),
107             event_id=event_id
108         )
109 
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/open_event/api/sponsors.py b/open_event/api/sponsors.py
--- a/open_event/api/sponsors.py
+++ b/open_event/api/sponsors.py
@@ -88,7 +88,7 @@
 
 @api.route('/events/<int:event_id>/sponsors/types')
 class SponsorTypesList(Resource):
-    @api.doc('list_sponsor_types')
+    @api.doc('list_sponsor_types', model=[fields.String()])
     def get(self, event_id):
         """List all sponsor types"""
         return DAO.list_types(event_id)

|
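The `/events/<int:event_id>/sponsors/types` endpoint returns a plain JSON array of strings built by `SponsorDAO.list_types`. The patch therefore documents the response as a list of strings (`model=[fields.String()]`) rather than a named model. The block below is a minimal, framework-free sketch of that deduplication step. It assumes a hypothetical `Sponsor` dataclass in place of the database model. It also sorts the result, a choice added here, because the original `list(set(...))` returns types in no guaranteed order.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Sponsor:
    """Hypothetical stand-in for open_event.models.sponsor.Sponsor."""
    name: str
    sponsor_type: Optional[str] = None


def list_types(sponsors: Iterable[Sponsor]) -> List[str]:
    """Return each distinct, non-empty sponsor_type once, in sorted order.

    Mirrors SponsorDAO.list_types: falsy types (None or "") are skipped
    and duplicates collapse via a set.
    """
    return sorted({s.sponsor_type for s in sponsors if s.sponsor_type})


if __name__ == "__main__":
    sponsors = [
        Sponsor("Acme", "Gold"),
        Sponsor("Globex", "Silver"),
        Sponsor("Initech", "Gold"),
        Sponsor("Hooli"),  # no type: skipped, like the truthiness check in the DAO
    ]
    print(list_types(sponsors))  # ['Gold', 'Silver']
```

The documented response for such an event would then be a bare array like `["Gold", "Silver"]`.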