| problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.1k to 25.4k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_21404

|

rasdani/github-patches

|

git_diff

|

matrix-org__synapse-6578

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fatal 'Failed to upgrade database' error on startup

As of Synapse 1.7.0, when I start synapse with an old database version, I get this rather cryptic error.

--- END ISSUE ---

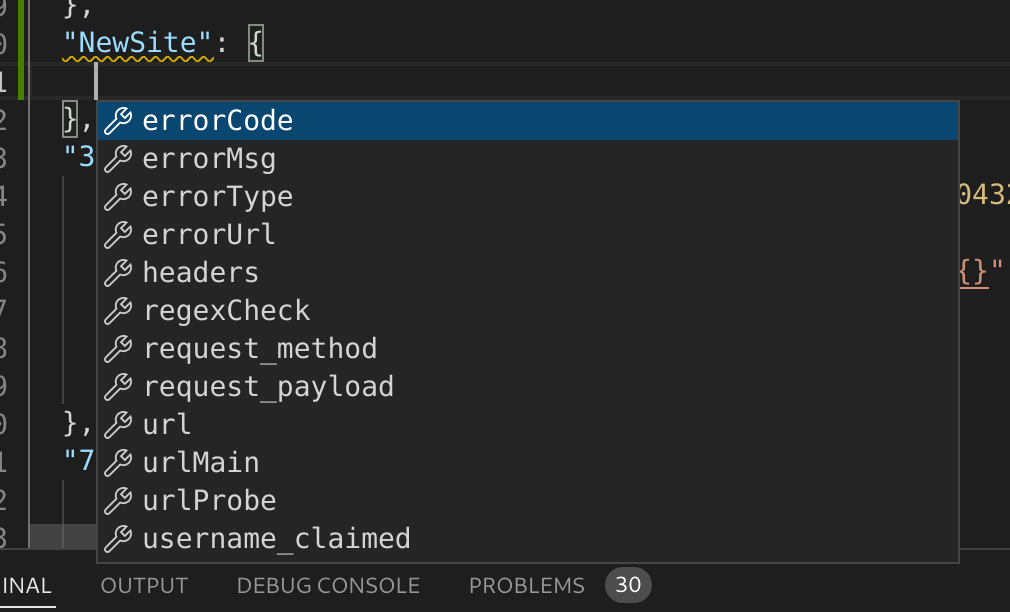

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `synapse/storage/engines/sqlite.py`

Content:

```

1 # -*- coding: utf-8 -*-

2 # Copyright 2015, 2016 OpenMarket Ltd

3 #

4 # Licensed under the Apache License, Version 2.0 (the "License");

5 # you may not use this file except in compliance with the License.

6 # You may obtain a copy of the License at

7 #

8 # http://www.apache.org/licenses/LICENSE-2.0

9 #

10 # Unless required by applicable law or agreed to in writing, software

11 # distributed under the License is distributed on an "AS IS" BASIS,

12 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 # See the License for the specific language governing permissions and

14 # limitations under the License.

15

16 import struct

17 import threading

18

19 from synapse.storage.prepare_database import prepare_database

20

21

22 class Sqlite3Engine(object):

23 single_threaded = True

24

25 def __init__(self, database_module, database_config):

26 self.module = database_module

27

28 # The current max state_group, or None if we haven't looked

29 # in the DB yet.

30 self._current_state_group_id = None

31 self._current_state_group_id_lock = threading.Lock()

32

33 @property

34 def can_native_upsert(self):

35 """

36 Do we support native UPSERTs? This requires SQLite3 3.24+, plus some

37 more work we haven't done yet to tell what was inserted vs updated.

38 """

39 return self.module.sqlite_version_info >= (3, 24, 0)

40

41 @property

42 def supports_tuple_comparison(self):

43 """

44 Do we support comparing tuples, i.e. `(a, b) > (c, d)`? This requires

45 SQLite 3.15+.

46 """

47 return self.module.sqlite_version_info >= (3, 15, 0)

48

49 @property

50 def supports_using_any_list(self):

51 """Do we support using `a = ANY(?)` and passing a list

52 """

53 return False

54

55 def check_database(self, txn):

56 pass

57

58 def convert_param_style(self, sql):

59 return sql

60

61 def on_new_connection(self, db_conn):

62 prepare_database(db_conn, self, config=None)

63 db_conn.create_function("rank", 1, _rank)

64

65 def is_deadlock(self, error):

66 return False

67

68 def is_connection_closed(self, conn):

69 return False

70

71 def lock_table(self, txn, table):

72 return

73

74 def get_next_state_group_id(self, txn):

75 """Returns an int that can be used as a new state_group ID

76 """

77 # We do application locking here since if we're using sqlite then

78 # we are a single process synapse.

79 with self._current_state_group_id_lock:

80 if self._current_state_group_id is None:

81 txn.execute("SELECT COALESCE(max(id), 0) FROM state_groups")

82 self._current_state_group_id = txn.fetchone()[0]

83

84 self._current_state_group_id += 1

85 return self._current_state_group_id

86

87 @property

88 def server_version(self):

89 """Gets a string giving the server version. For example: '3.22.0'

90

91 Returns:

92 string

93 """

94 return "%i.%i.%i" % self.module.sqlite_version_info

95

96

97 # Following functions taken from: https://github.com/coleifer/peewee

98

99

100 def _parse_match_info(buf):

101 bufsize = len(buf)

102 return [struct.unpack("@I", buf[i : i + 4])[0] for i in range(0, bufsize, 4)]

103

104

105 def _rank(raw_match_info):

106 """Handle match_info called w/default args 'pcx' - based on the example rank

107 function http://sqlite.org/fts3.html#appendix_a

108 """

109 match_info = _parse_match_info(raw_match_info)

110 score = 0.0

111 p, c = match_info[:2]

112 for phrase_num in range(p):

113 phrase_info_idx = 2 + (phrase_num * c * 3)

114 for col_num in range(c):

115 col_idx = phrase_info_idx + (col_num * 3)

116 x1, x2 = match_info[col_idx : col_idx + 2]

117 if x1 > 0:

118 score += float(x1) / x2

119 return score

120

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/synapse/storage/engines/sqlite.py b/synapse/storage/engines/sqlite.py

--- a/synapse/storage/engines/sqlite.py

+++ b/synapse/storage/engines/sqlite.py

@@ -25,6 +25,9 @@

def __init__(self, database_module, database_config):

self.module = database_module

+ database = database_config.get("args", {}).get("database")

+ self._is_in_memory = database in (None, ":memory:",)

+

# The current max state_group, or None if we haven't looked

# in the DB yet.

self._current_state_group_id = None

@@ -59,7 +62,12 @@

return sql

def on_new_connection(self, db_conn):

- prepare_database(db_conn, self, config=None)

+ if self._is_in_memory:

+ # In memory databases need to be rebuilt each time. Ideally we'd

+ # reuse the same connection as we do when starting up, but that

+ # would involve using adbapi before we have started the reactor.

+ prepare_database(db_conn, self, config=None)

+

db_conn.create_function("rank", 1, _rank)

def is_deadlock(self, error):

|

{"golden_diff": "diff --git a/synapse/storage/engines/sqlite.py b/synapse/storage/engines/sqlite.py\n--- a/synapse/storage/engines/sqlite.py\n+++ b/synapse/storage/engines/sqlite.py\n@@ -25,6 +25,9 @@\n def __init__(self, database_module, database_config):\n self.module = database_module\n \n+ database = database_config.get(\"args\", {}).get(\"database\")\n+ self._is_in_memory = database in (None, \":memory:\",)\n+\n # The current max state_group, or None if we haven't looked\n # in the DB yet.\n self._current_state_group_id = None\n@@ -59,7 +62,12 @@\n return sql\n \n def on_new_connection(self, db_conn):\n- prepare_database(db_conn, self, config=None)\n+ if self._is_in_memory:\n+ # In memory databases need to be rebuilt each time. Ideally we'd\n+ # reuse the same connection as we do when starting up, but that\n+ # would involve using adbapi before we have started the reactor.\n+ prepare_database(db_conn, self, config=None)\n+\n db_conn.create_function(\"rank\", 1, _rank)\n \n def is_deadlock(self, error):\n", "issue": "Fatal 'Failed to upgrade database' error on startup\nAs of Synapse 1.7.0, when I start synapse with an old database version, I get this rather cryptic error.\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# Copyright 2015, 2016 OpenMarket Ltd\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport struct\nimport threading\n\nfrom synapse.storage.prepare_database import prepare_database\n\n\nclass Sqlite3Engine(object):\n 
single_threaded = True\n\n def __init__(self, database_module, database_config):\n self.module = database_module\n\n # The current max state_group, or None if we haven't looked\n # in the DB yet.\n self._current_state_group_id = None\n self._current_state_group_id_lock = threading.Lock()\n\n @property\n def can_native_upsert(self):\n \"\"\"\n Do we support native UPSERTs? This requires SQLite3 3.24+, plus some\n more work we haven't done yet to tell what was inserted vs updated.\n \"\"\"\n return self.module.sqlite_version_info >= (3, 24, 0)\n\n @property\n def supports_tuple_comparison(self):\n \"\"\"\n Do we support comparing tuples, i.e. `(a, b) > (c, d)`? This requires\n SQLite 3.15+.\n \"\"\"\n return self.module.sqlite_version_info >= (3, 15, 0)\n\n @property\n def supports_using_any_list(self):\n \"\"\"Do we support using `a = ANY(?)` and passing a list\n \"\"\"\n return False\n\n def check_database(self, txn):\n pass\n\n def convert_param_style(self, sql):\n return sql\n\n def on_new_connection(self, db_conn):\n prepare_database(db_conn, self, config=None)\n db_conn.create_function(\"rank\", 1, _rank)\n\n def is_deadlock(self, error):\n return False\n\n def is_connection_closed(self, conn):\n return False\n\n def lock_table(self, txn, table):\n return\n\n def get_next_state_group_id(self, txn):\n \"\"\"Returns an int that can be used as a new state_group ID\n \"\"\"\n # We do application locking here since if we're using sqlite then\n # we are a single process synapse.\n with self._current_state_group_id_lock:\n if self._current_state_group_id is None:\n txn.execute(\"SELECT COALESCE(max(id), 0) FROM state_groups\")\n self._current_state_group_id = txn.fetchone()[0]\n\n self._current_state_group_id += 1\n return self._current_state_group_id\n\n @property\n def server_version(self):\n \"\"\"Gets a string giving the server version. 
For example: '3.22.0'\n\n Returns:\n string\n \"\"\"\n return \"%i.%i.%i\" % self.module.sqlite_version_info\n\n\n# Following functions taken from: https://github.com/coleifer/peewee\n\n\ndef _parse_match_info(buf):\n bufsize = len(buf)\n return [struct.unpack(\"@I\", buf[i : i + 4])[0] for i in range(0, bufsize, 4)]\n\n\ndef _rank(raw_match_info):\n \"\"\"Handle match_info called w/default args 'pcx' - based on the example rank\n function http://sqlite.org/fts3.html#appendix_a\n \"\"\"\n match_info = _parse_match_info(raw_match_info)\n score = 0.0\n p, c = match_info[:2]\n for phrase_num in range(p):\n phrase_info_idx = 2 + (phrase_num * c * 3)\n for col_num in range(c):\n col_idx = phrase_info_idx + (col_num * 3)\n x1, x2 = match_info[col_idx : col_idx + 2]\n if x1 > 0:\n score += float(x1) / x2\n return score\n", "path": "synapse/storage/engines/sqlite.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\n# Copyright 2015, 2016 OpenMarket Ltd\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport struct\nimport threading\n\nfrom synapse.storage.prepare_database import prepare_database\n\n\nclass Sqlite3Engine(object):\n single_threaded = True\n\n def __init__(self, database_module, database_config):\n self.module = database_module\n\n database = database_config.get(\"args\", {}).get(\"database\")\n self._is_in_memory = database in (None, \":memory:\",)\n\n # The current max state_group, or None if we haven't looked\n # in the DB yet.\n 
self._current_state_group_id = None\n self._current_state_group_id_lock = threading.Lock()\n\n @property\n def can_native_upsert(self):\n \"\"\"\n Do we support native UPSERTs? This requires SQLite3 3.24+, plus some\n more work we haven't done yet to tell what was inserted vs updated.\n \"\"\"\n return self.module.sqlite_version_info >= (3, 24, 0)\n\n @property\n def supports_tuple_comparison(self):\n \"\"\"\n Do we support comparing tuples, i.e. `(a, b) > (c, d)`? This requires\n SQLite 3.15+.\n \"\"\"\n return self.module.sqlite_version_info >= (3, 15, 0)\n\n @property\n def supports_using_any_list(self):\n \"\"\"Do we support using `a = ANY(?)` and passing a list\n \"\"\"\n return False\n\n def check_database(self, txn):\n pass\n\n def convert_param_style(self, sql):\n return sql\n\n def on_new_connection(self, db_conn):\n if self._is_in_memory:\n # In memory databases need to be rebuilt each time. Ideally we'd\n # reuse the same connection as we do when starting up, but that\n # would involve using adbapi before we have started the reactor.\n prepare_database(db_conn, self, config=None)\n\n db_conn.create_function(\"rank\", 1, _rank)\n\n def is_deadlock(self, error):\n return False\n\n def is_connection_closed(self, conn):\n return False\n\n def lock_table(self, txn, table):\n return\n\n def get_next_state_group_id(self, txn):\n \"\"\"Returns an int that can be used as a new state_group ID\n \"\"\"\n # We do application locking here since if we're using sqlite then\n # we are a single process synapse.\n with self._current_state_group_id_lock:\n if self._current_state_group_id is None:\n txn.execute(\"SELECT COALESCE(max(id), 0) FROM state_groups\")\n self._current_state_group_id = txn.fetchone()[0]\n\n self._current_state_group_id += 1\n return self._current_state_group_id\n\n @property\n def server_version(self):\n \"\"\"Gets a string giving the server version. 
For example: '3.22.0'\n\n Returns:\n string\n \"\"\"\n return \"%i.%i.%i\" % self.module.sqlite_version_info\n\n\n# Following functions taken from: https://github.com/coleifer/peewee\n\n\ndef _parse_match_info(buf):\n bufsize = len(buf)\n return [struct.unpack(\"@I\", buf[i : i + 4])[0] for i in range(0, bufsize, 4)]\n\n\ndef _rank(raw_match_info):\n \"\"\"Handle match_info called w/default args 'pcx' - based on the example rank\n function http://sqlite.org/fts3.html#appendix_a\n \"\"\"\n match_info = _parse_match_info(raw_match_info)\n score = 0.0\n p, c = match_info[:2]\n for phrase_num in range(p):\n phrase_info_idx = 2 + (phrase_num * c * 3)\n for col_num in range(c):\n col_idx = phrase_info_idx + (col_num * 3)\n x1, x2 = match_info[col_idx : col_idx + 2]\n if x1 > 0:\n score += float(x1) / x2\n return score\n", "path": "synapse/storage/engines/sqlite.py"}]}

| 1,499 | 284 |
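The Synapse golden diff above hinges on one SQLite property: every `":memory:"` connection is a brand-new, empty database, while a file-backed database keeps its schema between connections. The following is a minimal sketch of that behaviour, assuming only the standard-library `sqlite3` module; `is_in_memory` and `list_tables` are hypothetical helpers, with `is_in_memory` mirroring the check the diff adds to `Sqlite3Engine.__init__`.

```python
import sqlite3


def is_in_memory(database_config):
    """Hypothetical helper mirroring the golden diff's check: a missing
    "database" argument or the special ":memory:" name means in-memory."""
    database = database_config.get("args", {}).get("database")
    return database in (None, ":memory:")


def list_tables(conn):
    # sqlite_master holds one row per schema object in this database.
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    return [name for (name,) in rows]


# Every sqlite3.connect(":memory:") call opens a separate, empty database,
# so a schema created on one connection is not visible from the next one.
first = sqlite3.connect(":memory:")
first.execute("CREATE TABLE state_groups (id INTEGER PRIMARY KEY)")
second = sqlite3.connect(":memory:")

print(list_tables(first))   # ['state_groups']
print(list_tables(second))  # []
print(is_in_memory({"args": {"database": "homeserver.db"}}))  # False
print(is_in_memory({}))  # True
```

This is why the diff keeps the per-connection `prepare_database(db_conn, self, config=None)` call only for the in-memory case: a file-backed database already has its schema, so it is no longer re-prepared on every new connection.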

gh_patches_debug_39466

|

rasdani/github-patches

|

git_diff

|

yt-dlp__yt-dlp-1877

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

RedTube DL don't work any more

### Checklist

- [X] I'm reporting a broken site

- [X] I've verified that I'm running yt-dlp version **2021.11.10.1**. ([update instructions](https://github.com/yt-dlp/yt-dlp#update))

- [X] I've checked that all provided URLs are alive and playable in a browser

- [X] I've checked that all URLs and arguments with special characters are [properly quoted or escaped](https://github.com/ytdl-org/youtube-dl#video-url-contains-an-ampersand-and-im-getting-some-strange-output-1-2839-or-v-is-not-recognized-as-an-internal-or-external-command)

- [X] I've searched the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues including closed ones. DO NOT post duplicates

- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)

- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and I'm willing to share it if required

### Region

_No response_

### Description

only downloaded a small file (893 bytes) which is obviously not the real video file...

sample url:

https://www.redtube.com/39016781

### Verbose log

```shell

Download Log for url: https://www.redtube.com/39016781

Waiting

[RedTube] 39016781: Downloading webpage

[RedTube] 39016781: Downloading m3u8 information

[info] 39016781: Downloading 1 format(s): 1

[download] Destination: C:\Users\Sony\xxx\Youtube-Dl\Cute Asian is fucked & creampied!-39016781.mp4

[download] 893.00B at 436.07KiB/s (00:02)

[download] 100% of 893.00B in 00:02

Download Worker Finished.

```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `yt_dlp/extractor/redtube.py`

Content:

```

1 from __future__ import unicode_literals

2

3 import re

4

5 from .common import InfoExtractor

6 from ..utils import (

7 determine_ext,

8 ExtractorError,

9 int_or_none,

10 merge_dicts,

11 str_to_int,

12 unified_strdate,

13 url_or_none,

14 )

15

16

17 class RedTubeIE(InfoExtractor):

18 _VALID_URL = r'https?://(?:(?:\w+\.)?redtube\.com/|embed\.redtube\.com/\?.*?\bid=)(?P<id>[0-9]+)'

19 _TESTS = [{

20 'url': 'http://www.redtube.com/66418',

21 'md5': 'fc08071233725f26b8f014dba9590005',

22 'info_dict': {

23 'id': '66418',

24 'ext': 'mp4',

25 'title': 'Sucked on a toilet',

26 'upload_date': '20110811',

27 'duration': 596,

28 'view_count': int,

29 'age_limit': 18,

30 }

31 }, {

32 'url': 'http://embed.redtube.com/?bgcolor=000000&id=1443286',

33 'only_matching': True,

34 }, {

35 'url': 'http://it.redtube.com/66418',

36 'only_matching': True,

37 }]

38

39 @staticmethod

40 def _extract_urls(webpage):

41 return re.findall(

42 r'<iframe[^>]+?src=["\'](?P<url>(?:https?:)?//embed\.redtube\.com/\?.*?\bid=\d+)',

43 webpage)

44

45 def _real_extract(self, url):

46 video_id = self._match_id(url)

47 webpage = self._download_webpage(

48 'http://www.redtube.com/%s' % video_id, video_id)

49

50 ERRORS = (

51 (('video-deleted-info', '>This video has been removed'), 'has been removed'),

52 (('private_video_text', '>This video is private', '>Send a friend request to its owner to be able to view it'), 'is private'),

53 )

54

55 for patterns, message in ERRORS:

56 if any(p in webpage for p in patterns):

57 raise ExtractorError(

58 'Video %s %s' % (video_id, message), expected=True)

59

60 info = self._search_json_ld(webpage, video_id, default={})

61

62 if not info.get('title'):

63 info['title'] = self._html_search_regex(

64 (r'<h(\d)[^>]+class="(?:video_title_text|videoTitle|video_title)[^"]*">(?P<title>(?:(?!\1).)+)</h\1>',

65 r'(?:videoTitle|title)\s*:\s*(["\'])(?P<title>(?:(?!\1).)+)\1',),

66 webpage, 'title', group='title',

67 default=None) or self._og_search_title(webpage)

68

69 formats = []

70 sources = self._parse_json(

71 self._search_regex(

72 r'sources\s*:\s*({.+?})', webpage, 'source', default='{}'),

73 video_id, fatal=False)

74 if sources and isinstance(sources, dict):

75 for format_id, format_url in sources.items():

76 if format_url:

77 formats.append({

78 'url': format_url,

79 'format_id': format_id,

80 'height': int_or_none(format_id),

81 })

82 medias = self._parse_json(

83 self._search_regex(

84 r'mediaDefinition["\']?\s*:\s*(\[.+?}\s*\])', webpage,

85 'media definitions', default='{}'),

86 video_id, fatal=False)

87 if medias and isinstance(medias, list):

88 for media in medias:

89 format_url = url_or_none(media.get('videoUrl'))

90 if not format_url:

91 continue

92 if media.get('format') == 'hls' or determine_ext(format_url) == 'm3u8':

93 formats.extend(self._extract_m3u8_formats(

94 format_url, video_id, 'mp4',

95 entry_protocol='m3u8_native', m3u8_id='hls',

96 fatal=False))

97 continue

98 format_id = media.get('quality')

99 formats.append({

100 'url': format_url,

101 'ext': 'mp4',

102 'format_id': format_id,

103 'height': int_or_none(format_id),

104 })

105 if not formats:

106 video_url = self._html_search_regex(

107 r'<source src="(.+?)" type="video/mp4">', webpage, 'video URL')

108 formats.append({'url': video_url, 'ext': 'mp4'})

109 self._sort_formats(formats)

110

111 thumbnail = self._og_search_thumbnail(webpage)

112 upload_date = unified_strdate(self._search_regex(

113 r'<span[^>]+>(?:ADDED|Published on) ([^<]+)<',

114 webpage, 'upload date', default=None))

115 duration = int_or_none(self._og_search_property(

116 'video:duration', webpage, default=None) or self._search_regex(

117 r'videoDuration\s*:\s*(\d+)', webpage, 'duration', default=None))

118 view_count = str_to_int(self._search_regex(

119 (r'<div[^>]*>Views</div>\s*<div[^>]*>\s*([\d,.]+)',

120 r'<span[^>]*>VIEWS</span>\s*</td>\s*<td>\s*([\d,.]+)',

121 r'<span[^>]+\bclass=["\']video_view_count[^>]*>\s*([\d,.]+)'),

122 webpage, 'view count', default=None))

123

124 # No self-labeling, but they describe themselves as

125 # "Home of Videos Porno"

126 age_limit = 18

127

128 return merge_dicts(info, {

129 'id': video_id,

130 'ext': 'mp4',

131 'thumbnail': thumbnail,

132 'upload_date': upload_date,

133 'duration': duration,

134 'view_count': view_count,

135 'age_limit': age_limit,

136 'formats': formats,

137 })

138

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/yt_dlp/extractor/redtube.py b/yt_dlp/extractor/redtube.py

--- a/yt_dlp/extractor/redtube.py

+++ b/yt_dlp/extractor/redtube.py

@@ -17,17 +17,20 @@

class RedTubeIE(InfoExtractor):

_VALID_URL = r'https?://(?:(?:\w+\.)?redtube\.com/|embed\.redtube\.com/\?.*?\bid=)(?P<id>[0-9]+)'

_TESTS = [{

- 'url': 'http://www.redtube.com/66418',

- 'md5': 'fc08071233725f26b8f014dba9590005',

+ 'url': 'https://www.redtube.com/38864951',

+ 'md5': '4fba70cbca3aefd25767ab4b523c9878',

'info_dict': {

- 'id': '66418',

+ 'id': '38864951',

'ext': 'mp4',

- 'title': 'Sucked on a toilet',

- 'upload_date': '20110811',

- 'duration': 596,

+ 'title': 'Public Sex on the Balcony in Freezing Paris! Amateur Couple LeoLulu',

+ 'description': 'Watch video Public Sex on the Balcony in Freezing Paris! Amateur Couple LeoLulu on Redtube, home of free Blowjob porn videos and Blonde sex movies online. Video length: (10:46) - Uploaded by leolulu - Verified User - Starring Pornstar: Leolulu',

+ 'upload_date': '20210111',

+ 'timestamp': 1610343109,

+ 'duration': 646,

'view_count': int,

'age_limit': 18,

- }

+ 'thumbnail': r're:https://\wi-ph\.rdtcdn\.com/videos/.+/.+\.jpg',

+ },

}, {

'url': 'http://embed.redtube.com/?bgcolor=000000&id=1443286',

'only_matching': True,

@@ -84,15 +87,25 @@

r'mediaDefinition["\']?\s*:\s*(\[.+?}\s*\])', webpage,

'media definitions', default='{}'),

video_id, fatal=False)

- if medias and isinstance(medias, list):

- for media in medias:

+ for media in medias if isinstance(medias, list) else []:

+ format_url = url_or_none(media.get('videoUrl'))

+ if not format_url:

+ continue

+ format_id = media.get('format')

+ quality = media.get('quality')

+ if format_id == 'hls' or (format_id == 'mp4' and not quality):

+ more_media = self._download_json(format_url, video_id, fatal=False)

+ else:

+ more_media = [media]

+ for media in more_media if isinstance(more_media, list) else []:

format_url = url_or_none(media.get('videoUrl'))

if not format_url:

continue

- if media.get('format') == 'hls' or determine_ext(format_url) == 'm3u8':

+ format_id = media.get('format')

+ if format_id == 'hls' or determine_ext(format_url) == 'm3u8':

formats.extend(self._extract_m3u8_formats(

format_url, video_id, 'mp4',

- entry_protocol='m3u8_native', m3u8_id='hls',

+ entry_protocol='m3u8_native', m3u8_id=format_id or 'hls',

fatal=False))

continue

format_id = media.get('quality')

|

{"golden_diff": "diff --git a/yt_dlp/extractor/redtube.py b/yt_dlp/extractor/redtube.py\n--- a/yt_dlp/extractor/redtube.py\n+++ b/yt_dlp/extractor/redtube.py\n@@ -17,17 +17,20 @@\n class RedTubeIE(InfoExtractor):\n _VALID_URL = r'https?://(?:(?:\\w+\\.)?redtube\\.com/|embed\\.redtube\\.com/\\?.*?\\bid=)(?P<id>[0-9]+)'\n _TESTS = [{\n- 'url': 'http://www.redtube.com/66418',\n- 'md5': 'fc08071233725f26b8f014dba9590005',\n+ 'url': 'https://www.redtube.com/38864951',\n+ 'md5': '4fba70cbca3aefd25767ab4b523c9878',\n 'info_dict': {\n- 'id': '66418',\n+ 'id': '38864951',\n 'ext': 'mp4',\n- 'title': 'Sucked on a toilet',\n- 'upload_date': '20110811',\n- 'duration': 596,\n+ 'title': 'Public Sex on the Balcony in Freezing Paris! Amateur Couple LeoLulu',\n+ 'description': 'Watch video Public Sex on the Balcony in Freezing Paris! Amateur Couple LeoLulu on Redtube, home of free Blowjob porn videos and Blonde sex movies online. Video length: (10:46) - Uploaded by leolulu - Verified User - Starring Pornstar: Leolulu',\n+ 'upload_date': '20210111',\n+ 'timestamp': 1610343109,\n+ 'duration': 646,\n 'view_count': int,\n 'age_limit': 18,\n- }\n+ 'thumbnail': r're:https://\\wi-ph\\.rdtcdn\\.com/videos/.+/.+\\.jpg',\n+ },\n }, {\n 'url': 'http://embed.redtube.com/?bgcolor=000000&id=1443286',\n 'only_matching': True,\n@@ -84,15 +87,25 @@\n r'mediaDefinition[\"\\']?\\s*:\\s*(\\[.+?}\\s*\\])', webpage,\n 'media definitions', default='{}'),\n video_id, fatal=False)\n- if medias and isinstance(medias, list):\n- for media in medias:\n+ for media in medias if isinstance(medias, list) else []:\n+ format_url = url_or_none(media.get('videoUrl'))\n+ if not format_url:\n+ continue\n+ format_id = media.get('format')\n+ quality = media.get('quality')\n+ if format_id == 'hls' or (format_id == 'mp4' and not quality):\n+ more_media = self._download_json(format_url, video_id, fatal=False)\n+ else:\n+ more_media = [media]\n+ for media in more_media if isinstance(more_media, list) else []:\n format_url = 
url_or_none(media.get('videoUrl'))\n if not format_url:\n continue\n- if media.get('format') == 'hls' or determine_ext(format_url) == 'm3u8':\n+ format_id = media.get('format')\n+ if format_id == 'hls' or determine_ext(format_url) == 'm3u8':\n formats.extend(self._extract_m3u8_formats(\n format_url, video_id, 'mp4',\n- entry_protocol='m3u8_native', m3u8_id='hls',\n+ entry_protocol='m3u8_native', m3u8_id=format_id or 'hls',\n fatal=False))\n continue\n format_id = media.get('quality')\n", "issue": "RedTube DL don't work any more\n### Checklist\n\n- [X] I'm reporting a broken site\n- [X] I've verified that I'm running yt-dlp version **2021.11.10.1**. ([update instructions](https://github.com/yt-dlp/yt-dlp#update))\n- [X] I've checked that all provided URLs are alive and playable in a browser\n- [X] I've checked that all URLs and arguments with special characters are [properly quoted or escaped](https://github.com/ytdl-org/youtube-dl#video-url-contains-an-ampersand-and-im-getting-some-strange-output-1-2839-or-v-is-not-recognized-as-an-internal-or-external-command)\n- [X] I've searched the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues including closed ones. 
DO NOT post duplicates\n- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)\n- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and I'm willing to share it if required\n\n### Region\n\n_No response_\n\n### Description\n\nonly downloaded a small file (893 btyes) which is obviously not the real video file...\r\nsample url:\r\nhttps://www.redtube.com/39016781\n\n### Verbose log\n\n```shell\nDownload Log for url: https://www.redtube.com/39016781\r\n\r\nWaiting\r\n[RedTube] 39016781: Downloading webpage\r\n[RedTube] 39016781: Downloading m3u8 information\r\n[info] 39016781: Downloading 1 format(s): 1\r\n[download] Destination: C:\\Users\\Sony\\xxx\\Youtube-Dl\\Cute Asian is fucked & creampied!-39016781.mp4\r\n[download] 893.00B at 436.07KiB/s (00:02)\r\n[download] 100% of 893.00B in 00:02\r\nDownload Worker Finished.\n```\n\n", "before_files": [{"content": "from __future__ import unicode_literals\n\nimport re\n\nfrom .common import InfoExtractor\nfrom ..utils import (\n determine_ext,\n ExtractorError,\n int_or_none,\n merge_dicts,\n str_to_int,\n unified_strdate,\n url_or_none,\n)\n\n\nclass RedTubeIE(InfoExtractor):\n _VALID_URL = r'https?://(?:(?:\\w+\\.)?redtube\\.com/|embed\\.redtube\\.com/\\?.*?\\bid=)(?P<id>[0-9]+)'\n _TESTS = [{\n 'url': 'http://www.redtube.com/66418',\n 'md5': 'fc08071233725f26b8f014dba9590005',\n 'info_dict': {\n 'id': '66418',\n 'ext': 'mp4',\n 'title': 'Sucked on a toilet',\n 'upload_date': '20110811',\n 'duration': 596,\n 'view_count': int,\n 'age_limit': 18,\n }\n }, {\n 'url': 'http://embed.redtube.com/?bgcolor=000000&id=1443286',\n 'only_matching': True,\n }, {\n 'url': 'http://it.redtube.com/66418',\n 'only_matching': True,\n }]\n\n @staticmethod\n def _extract_urls(webpage):\n return re.findall(\n 
r'<iframe[^>]+?src=[\"\\'](?P<url>(?:https?:)?//embed\\.redtube\\.com/\\?.*?\\bid=\\d+)',\n webpage)\n\n def _real_extract(self, url):\n video_id = self._match_id(url)\n webpage = self._download_webpage(\n 'http://www.redtube.com/%s' % video_id, video_id)\n\n ERRORS = (\n (('video-deleted-info', '>This video has been removed'), 'has been removed'),\n (('private_video_text', '>This video is private', '>Send a friend request to its owner to be able to view it'), 'is private'),\n )\n\n for patterns, message in ERRORS:\n if any(p in webpage for p in patterns):\n raise ExtractorError(\n 'Video %s %s' % (video_id, message), expected=True)\n\n info = self._search_json_ld(webpage, video_id, default={})\n\n if not info.get('title'):\n info['title'] = self._html_search_regex(\n (r'<h(\\d)[^>]+class=\"(?:video_title_text|videoTitle|video_title)[^\"]*\">(?P<title>(?:(?!\\1).)+)</h\\1>',\n r'(?:videoTitle|title)\\s*:\\s*([\"\\'])(?P<title>(?:(?!\\1).)+)\\1',),\n webpage, 'title', group='title',\n default=None) or self._og_search_title(webpage)\n\n formats = []\n sources = self._parse_json(\n self._search_regex(\n r'sources\\s*:\\s*({.+?})', webpage, 'source', default='{}'),\n video_id, fatal=False)\n if sources and isinstance(sources, dict):\n for format_id, format_url in sources.items():\n if format_url:\n formats.append({\n 'url': format_url,\n 'format_id': format_id,\n 'height': int_or_none(format_id),\n })\n medias = self._parse_json(\n self._search_regex(\n r'mediaDefinition[\"\\']?\\s*:\\s*(\\[.+?}\\s*\\])', webpage,\n 'media definitions', default='{}'),\n video_id, fatal=False)\n if medias and isinstance(medias, list):\n for media in medias:\n format_url = url_or_none(media.get('videoUrl'))\n if not format_url:\n continue\n if media.get('format') == 'hls' or determine_ext(format_url) == 'm3u8':\n formats.extend(self._extract_m3u8_formats(\n format_url, video_id, 'mp4',\n entry_protocol='m3u8_native', m3u8_id='hls',\n fatal=False))\n continue\n format_id = 
media.get('quality')\n formats.append({\n 'url': format_url,\n 'ext': 'mp4',\n 'format_id': format_id,\n 'height': int_or_none(format_id),\n })\n if not formats:\n video_url = self._html_search_regex(\n r'<source src=\"(.+?)\" type=\"video/mp4\">', webpage, 'video URL')\n formats.append({'url': video_url, 'ext': 'mp4'})\n self._sort_formats(formats)\n\n thumbnail = self._og_search_thumbnail(webpage)\n upload_date = unified_strdate(self._search_regex(\n r'<span[^>]+>(?:ADDED|Published on) ([^<]+)<',\n webpage, 'upload date', default=None))\n duration = int_or_none(self._og_search_property(\n 'video:duration', webpage, default=None) or self._search_regex(\n r'videoDuration\\s*:\\s*(\\d+)', webpage, 'duration', default=None))\n view_count = str_to_int(self._search_regex(\n (r'<div[^>]*>Views</div>\\s*<div[^>]*>\\s*([\\d,.]+)',\n r'<span[^>]*>VIEWS</span>\\s*</td>\\s*<td>\\s*([\\d,.]+)',\n r'<span[^>]+\\bclass=[\"\\']video_view_count[^>]*>\\s*([\\d,.]+)'),\n webpage, 'view count', default=None))\n\n # No self-labeling, but they describe themselves as\n # \"Home of Videos Porno\"\n age_limit = 18\n\n return merge_dicts(info, {\n 'id': video_id,\n 'ext': 'mp4',\n 'thumbnail': thumbnail,\n 'upload_date': upload_date,\n 'duration': duration,\n 'view_count': view_count,\n 'age_limit': age_limit,\n 'formats': formats,\n })\n", "path": "yt_dlp/extractor/redtube.py"}], "after_files": [{"content": "from __future__ import unicode_literals\n\nimport re\n\nfrom .common import InfoExtractor\nfrom ..utils import (\n determine_ext,\n ExtractorError,\n int_or_none,\n merge_dicts,\n str_to_int,\n unified_strdate,\n url_or_none,\n)\n\n\nclass RedTubeIE(InfoExtractor):\n _VALID_URL = r'https?://(?:(?:\\w+\\.)?redtube\\.com/|embed\\.redtube\\.com/\\?.*?\\bid=)(?P<id>[0-9]+)'\n _TESTS = [{\n 'url': 'https://www.redtube.com/38864951',\n 'md5': '4fba70cbca3aefd25767ab4b523c9878',\n 'info_dict': {\n 'id': '38864951',\n 'ext': 'mp4',\n 'title': 'Public Sex on the Balcony in Freezing Paris! 
Amateur Couple LeoLulu',\n 'description': 'Watch video Public Sex on the Balcony in Freezing Paris! Amateur Couple LeoLulu on Redtube, home of free Blowjob porn videos and Blonde sex movies online. Video length: (10:46) - Uploaded by leolulu - Verified User - Starring Pornstar: Leolulu',\n 'upload_date': '20210111',\n 'timestamp': 1610343109,\n 'duration': 646,\n 'view_count': int,\n 'age_limit': 18,\n 'thumbnail': r're:https://\\wi-ph\\.rdtcdn\\.com/videos/.+/.+\\.jpg',\n },\n }, {\n 'url': 'http://embed.redtube.com/?bgcolor=000000&id=1443286',\n 'only_matching': True,\n }, {\n 'url': 'http://it.redtube.com/66418',\n 'only_matching': True,\n }]\n\n @staticmethod\n def _extract_urls(webpage):\n return re.findall(\n r'<iframe[^>]+?src=[\"\\'](?P<url>(?:https?:)?//embed\\.redtube\\.com/\\?.*?\\bid=\\d+)',\n webpage)\n\n def _real_extract(self, url):\n video_id = self._match_id(url)\n webpage = self._download_webpage(\n 'http://www.redtube.com/%s' % video_id, video_id)\n\n ERRORS = (\n (('video-deleted-info', '>This video has been removed'), 'has been removed'),\n (('private_video_text', '>This video is private', '>Send a friend request to its owner to be able to view it'), 'is private'),\n )\n\n for patterns, message in ERRORS:\n if any(p in webpage for p in patterns):\n raise ExtractorError(\n 'Video %s %s' % (video_id, message), expected=True)\n\n info = self._search_json_ld(webpage, video_id, default={})\n\n if not info.get('title'):\n info['title'] = self._html_search_regex(\n (r'<h(\\d)[^>]+class=\"(?:video_title_text|videoTitle|video_title)[^\"]*\">(?P<title>(?:(?!\\1).)+)</h\\1>',\n r'(?:videoTitle|title)\\s*:\\s*([\"\\'])(?P<title>(?:(?!\\1).)+)\\1',),\n webpage, 'title', group='title',\n default=None) or self._og_search_title(webpage)\n\n formats = []\n sources = self._parse_json(\n self._search_regex(\n r'sources\\s*:\\s*({.+?})', webpage, 'source', default='{}'),\n video_id, fatal=False)\n if sources and isinstance(sources, dict):\n for format_id, 
format_url in sources.items():\n if format_url:\n formats.append({\n 'url': format_url,\n 'format_id': format_id,\n 'height': int_or_none(format_id),\n })\n medias = self._parse_json(\n self._search_regex(\n r'mediaDefinition[\"\\']?\\s*:\\s*(\\[.+?}\\s*\\])', webpage,\n 'media definitions', default='{}'),\n video_id, fatal=False)\n for media in medias if isinstance(medias, list) else []:\n format_url = url_or_none(media.get('videoUrl'))\n if not format_url:\n continue\n format_id = media.get('format')\n quality = media.get('quality')\n if format_id == 'hls' or (format_id == 'mp4' and not quality):\n more_media = self._download_json(format_url, video_id, fatal=False)\n else:\n more_media = [media]\n for media in more_media if isinstance(more_media, list) else []:\n format_url = url_or_none(media.get('videoUrl'))\n if not format_url:\n continue\n format_id = media.get('format')\n if format_id == 'hls' or determine_ext(format_url) == 'm3u8':\n formats.extend(self._extract_m3u8_formats(\n format_url, video_id, 'mp4',\n entry_protocol='m3u8_native', m3u8_id=format_id or 'hls',\n fatal=False))\n continue\n format_id = media.get('quality')\n formats.append({\n 'url': format_url,\n 'ext': 'mp4',\n 'format_id': format_id,\n 'height': int_or_none(format_id),\n })\n if not formats:\n video_url = self._html_search_regex(\n r'<source src=\"(.+?)\" type=\"video/mp4\">', webpage, 'video URL')\n formats.append({'url': video_url, 'ext': 'mp4'})\n self._sort_formats(formats)\n\n thumbnail = self._og_search_thumbnail(webpage)\n upload_date = unified_strdate(self._search_regex(\n r'<span[^>]+>(?:ADDED|Published on) ([^<]+)<',\n webpage, 'upload date', default=None))\n duration = int_or_none(self._og_search_property(\n 'video:duration', webpage, default=None) or self._search_regex(\n r'videoDuration\\s*:\\s*(\\d+)', webpage, 'duration', default=None))\n view_count = str_to_int(self._search_regex(\n (r'<div[^>]*>Views</div>\\s*<div[^>]*>\\s*([\\d,.]+)',\n 
r'<span[^>]*>VIEWS</span>\\s*</td>\\s*<td>\\s*([\\d,.]+)',\n r'<span[^>]+\\bclass=[\"\\']video_view_count[^>]*>\\s*([\\d,.]+)'),\n webpage, 'view count', default=None))\n\n # No self-labeling, but they describe themselves as\n # \"Home of Videos Porno\"\n age_limit = 18\n\n return merge_dicts(info, {\n 'id': video_id,\n 'ext': 'mp4',\n 'thumbnail': thumbnail,\n 'upload_date': upload_date,\n 'duration': duration,\n 'view_count': view_count,\n 'age_limit': age_limit,\n 'formats': formats,\n })\n", "path": "yt_dlp/extractor/redtube.py"}]}

| 2,477 | 910 |

gh_patches_debug_19514

|

rasdani/github-patches

|

git_diff

|

pymedusa__Medusa-5684

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Growl not registering

2018-11-10 08:21:42 INFO CHECKVERSION :: [0c0a735] Checking for updates using GIT

**What you did: Input ip:port to register gowl

**What happened: Nothing!

**What you expected: Successful registration.

**Logs:**

2018-11-10 08:22:04 WARNING Thread_1 :: [0c0a735] GROWL: Unable to send growl to 192.168.1.4:23053 - u"error b'encode() takes exactly 1 argument (2 given)'"

2018-11-10 08:22:04 WARNING Thread_1 :: [0c0a735] GROWL: Unable to send growl to 192.168.1.4:23053 - u"error b'encode() takes exactly 1 argument (2 given)'"

Same IP:port work perfectly in rage/chill.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `medusa/notifiers/growl.py`

Content:

```

1 # coding=utf-8

2

3 from __future__ import print_function

4 from __future__ import unicode_literals

5

6 import logging

7 import socket

8 from builtins import object

9

10 import gntp.core

11

12 from medusa import app, common

13 from medusa.helper.exceptions import ex

14 from medusa.logger.adapters.style import BraceAdapter

15

16 log = BraceAdapter(logging.getLogger(__name__))

17 log.logger.addHandler(logging.NullHandler())

18

19

20 class Notifier(object):

21 def test_notify(self, host, password):

22 self._sendRegistration(host, password)

23 return self._sendGrowl('Test Growl', 'Testing Growl settings from Medusa', 'Test', host, password,

24 force=True)

25

26 def notify_snatch(self, title, message):

27 if app.GROWL_NOTIFY_ONSNATCH:

28 self._sendGrowl(title, message)

29

30 def notify_download(self, ep_obj):

31 if app.GROWL_NOTIFY_ONDOWNLOAD:

32 self._sendGrowl(common.notifyStrings[common.NOTIFY_DOWNLOAD], ep_obj.pretty_name_with_quality())

33

34 def notify_subtitle_download(self, ep_obj, lang):

35 if app.GROWL_NOTIFY_ONSUBTITLEDOWNLOAD:

36 self._sendGrowl(common.notifyStrings[common.NOTIFY_SUBTITLE_DOWNLOAD], ep_obj.pretty_name() + ': ' + lang)

37

38 def notify_git_update(self, new_version='??'):

39 update_text = common.notifyStrings[common.NOTIFY_GIT_UPDATE_TEXT]

40 title = common.notifyStrings[common.NOTIFY_GIT_UPDATE]

41 self._sendGrowl(title, update_text + new_version)

42

43 def notify_login(self, ipaddress=''):

44 update_text = common.notifyStrings[common.NOTIFY_LOGIN_TEXT]

45 title = common.notifyStrings[common.NOTIFY_LOGIN]

46 self._sendGrowl(title, update_text.format(ipaddress))

47

48 def _send_growl(self, options, message=None):

49

50 # Initialize Notification

51 notice = gntp.core.GNTPNotice(

52 app=options['app'],

53 name=options['name'],

54 title=options['title'],

55 password=options['password'],

56 )

57

58 # Optional

59 if options['sticky']:

60 notice.add_header('Notification-Sticky', options['sticky'])

61 if options['priority']:

62 notice.add_header('Notification-Priority', options['priority'])

63 if options['icon']:

64 notice.add_header('Notification-Icon', app.LOGO_URL)

65

66 if message:

67 notice.add_header('Notification-Text', message)

68

69 response = self._send(options['host'], options['port'], notice.encode('utf-8'), options['debug'])

70 return True if isinstance(response, gntp.core.GNTPOK) else False

71

72 @staticmethod

73 def _send(host, port, data, debug=False):

74 if debug:

75 print('<Sending>\n', data, '\n</Sending>')

76

77 s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

78 s.connect((host, port))

79 s.send(data)

80 response = gntp.core.parse_gntp(s.recv(1024))

81 s.close()

82

83 if debug:

84 print('<Received>\n', response, '\n</Received>')

85

86 return response

87

88 def _sendGrowl(self, title='Medusa Notification', message=None, name=None, host=None, password=None,

89 force=False):

90 if not app.USE_GROWL and not force:

91 return False

92

93 if name is None:

94 name = title

95

96 if host is None:

97 hostParts = app.GROWL_HOST.split(':')

98 else:

99 hostParts = host.split(':')

100

101 if len(hostParts) != 2 or hostParts[1] == '':

102 port = 23053

103 else:

104 port = int(hostParts[1])

105

106 growlHosts = [(hostParts[0], port)]

107

108 opts = {

109 'name': name,

110 'title': title,

111 'app': 'Medusa',

112 'sticky': None,

113 'priority': None,

114 'debug': False

115 }

116

117 if password is None:

118 opts['password'] = app.GROWL_PASSWORD

119 else:

120 opts['password'] = password

121

122 opts['icon'] = True

123

124 for pc in growlHosts:

125 opts['host'] = pc[0]

126 opts['port'] = pc[1]

127 log.debug(

128 u'GROWL: Sending growl to {host}:{port} - {msg!r}',

129 {'msg': message, 'host': opts['host'], 'port': opts['port']}

130 )

131 try:

132 if self._send_growl(opts, message):

133 return True

134 else:

135 if self._sendRegistration(host, password):

136 return self._send_growl(opts, message)

137 else:

138 return False

139 except Exception as error:

140 log.warning(

141 u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',

142 {'msg': ex(error), 'host': opts['host'], 'port': opts['port']}

143 )

144 return False

145

146 def _sendRegistration(self, host=None, password=None):

147 opts = {}

148

149 if host is None:

150 hostParts = app.GROWL_HOST.split(':')

151 else:

152 hostParts = host.split(':')

153

154 if len(hostParts) != 2 or hostParts[1] == '':

155 port = 23053

156 else:

157 port = int(hostParts[1])

158

159 opts['host'] = hostParts[0]

160 opts['port'] = port

161

162 if password is None:

163 opts['password'] = app.GROWL_PASSWORD

164 else:

165 opts['password'] = password

166

167 opts['app'] = 'Medusa'

168 opts['debug'] = False

169

170 # Send Registration

171 register = gntp.core.GNTPRegister()

172 register.add_header('Application-Name', opts['app'])

173 register.add_header('Application-Icon', app.LOGO_URL)

174

175 register.add_notification('Test', True)

176 register.add_notification(common.notifyStrings[common.NOTIFY_SNATCH], True)

177 register.add_notification(common.notifyStrings[common.NOTIFY_DOWNLOAD], True)

178 register.add_notification(common.notifyStrings[common.NOTIFY_GIT_UPDATE], True)

179

180 if opts['password']:

181 register.set_password(opts['password'])

182

183 try:

184 return self._send(opts['host'], opts['port'], register.encode('utf-8'), opts['debug'])

185 except Exception as error:

186 log.warning(

187 u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',

188 {'msg': ex(error), 'host': opts['host'], 'port': opts['port']}

189 )

190 return False

191

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/medusa/notifiers/growl.py b/medusa/notifiers/growl.py

--- a/medusa/notifiers/growl.py

+++ b/medusa/notifiers/growl.py

@@ -66,7 +66,7 @@

if message:

notice.add_header('Notification-Text', message)

- response = self._send(options['host'], options['port'], notice.encode('utf-8'), options['debug'])

+ response = self._send(options['host'], options['port'], notice.encode(), options['debug'])

return True if isinstance(response, gntp.core.GNTPOK) else False

@staticmethod

@@ -181,7 +181,7 @@

register.set_password(opts['password'])

try:

- return self._send(opts['host'], opts['port'], register.encode('utf-8'), opts['debug'])

+ return self._send(opts['host'], opts['port'], register.encode(), opts['debug'])

except Exception as error:

log.warning(

u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',

|

{"golden_diff": "diff --git a/medusa/notifiers/growl.py b/medusa/notifiers/growl.py\n--- a/medusa/notifiers/growl.py\n+++ b/medusa/notifiers/growl.py\n@@ -66,7 +66,7 @@\n if message:\n notice.add_header('Notification-Text', message)\n \n- response = self._send(options['host'], options['port'], notice.encode('utf-8'), options['debug'])\n+ response = self._send(options['host'], options['port'], notice.encode(), options['debug'])\n return True if isinstance(response, gntp.core.GNTPOK) else False\n \n @staticmethod\n@@ -181,7 +181,7 @@\n register.set_password(opts['password'])\n \n try:\n- return self._send(opts['host'], opts['port'], register.encode('utf-8'), opts['debug'])\n+ return self._send(opts['host'], opts['port'], register.encode(), opts['debug'])\n except Exception as error:\n log.warning(\n u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',\n", "issue": "Growl not registering\n2018-11-10 08:21:42 INFO CHECKVERSION :: [0c0a735] Checking for updates using GIT\r\n\r\n**What you did: Input ip:port to register gowl\r\n**What happened: Nothing!\r\n**What you expected: Successful registration.\r\n\r\n**Logs:**\r\n2018-11-10 08:22:04 WARNING Thread_1 :: [0c0a735] GROWL: Unable to send growl to 192.168.1.4:23053 - u\"error b'encode() takes exactly 1 argument (2 given)'\"\r\n2018-11-10 08:22:04 WARNING Thread_1 :: [0c0a735] GROWL: Unable to send growl to 192.168.1.4:23053 - u\"error b'encode() takes exactly 1 argument (2 given)'\"\r\n\r\nSame IP:port work perfectly in rage/chill.\r\n\n", "before_files": [{"content": "# coding=utf-8\n\nfrom __future__ import print_function\nfrom __future__ import unicode_literals\n\nimport logging\nimport socket\nfrom builtins import object\n\nimport gntp.core\n\nfrom medusa import app, common\nfrom medusa.helper.exceptions import ex\nfrom medusa.logger.adapters.style import BraceAdapter\n\nlog = BraceAdapter(logging.getLogger(__name__))\nlog.logger.addHandler(logging.NullHandler())\n\n\nclass Notifier(object):\n def 
test_notify(self, host, password):\n self._sendRegistration(host, password)\n return self._sendGrowl('Test Growl', 'Testing Growl settings from Medusa', 'Test', host, password,\n force=True)\n\n def notify_snatch(self, title, message):\n if app.GROWL_NOTIFY_ONSNATCH:\n self._sendGrowl(title, message)\n\n def notify_download(self, ep_obj):\n if app.GROWL_NOTIFY_ONDOWNLOAD:\n self._sendGrowl(common.notifyStrings[common.NOTIFY_DOWNLOAD], ep_obj.pretty_name_with_quality())\n\n def notify_subtitle_download(self, ep_obj, lang):\n if app.GROWL_NOTIFY_ONSUBTITLEDOWNLOAD:\n self._sendGrowl(common.notifyStrings[common.NOTIFY_SUBTITLE_DOWNLOAD], ep_obj.pretty_name() + ': ' + lang)\n\n def notify_git_update(self, new_version='??'):\n update_text = common.notifyStrings[common.NOTIFY_GIT_UPDATE_TEXT]\n title = common.notifyStrings[common.NOTIFY_GIT_UPDATE]\n self._sendGrowl(title, update_text + new_version)\n\n def notify_login(self, ipaddress=''):\n update_text = common.notifyStrings[common.NOTIFY_LOGIN_TEXT]\n title = common.notifyStrings[common.NOTIFY_LOGIN]\n self._sendGrowl(title, update_text.format(ipaddress))\n\n def _send_growl(self, options, message=None):\n\n # Initialize Notification\n notice = gntp.core.GNTPNotice(\n app=options['app'],\n name=options['name'],\n title=options['title'],\n password=options['password'],\n )\n\n # Optional\n if options['sticky']:\n notice.add_header('Notification-Sticky', options['sticky'])\n if options['priority']:\n notice.add_header('Notification-Priority', options['priority'])\n if options['icon']:\n notice.add_header('Notification-Icon', app.LOGO_URL)\n\n if message:\n notice.add_header('Notification-Text', message)\n\n response = self._send(options['host'], options['port'], notice.encode('utf-8'), options['debug'])\n return True if isinstance(response, gntp.core.GNTPOK) else False\n\n @staticmethod\n def _send(host, port, data, debug=False):\n if debug:\n print('<Sending>\\n', data, '\\n</Sending>')\n\n s = 
socket.socket(socket.AF_INET, socket.SOCK_STREAM)\n s.connect((host, port))\n s.send(data)\n response = gntp.core.parse_gntp(s.recv(1024))\n s.close()\n\n if debug:\n print('<Received>\\n', response, '\\n</Received>')\n\n return response\n\n def _sendGrowl(self, title='Medusa Notification', message=None, name=None, host=None, password=None,\n force=False):\n if not app.USE_GROWL and not force:\n return False\n\n if name is None:\n name = title\n\n if host is None:\n hostParts = app.GROWL_HOST.split(':')\n else:\n hostParts = host.split(':')\n\n if len(hostParts) != 2 or hostParts[1] == '':\n port = 23053\n else:\n port = int(hostParts[1])\n\n growlHosts = [(hostParts[0], port)]\n\n opts = {\n 'name': name,\n 'title': title,\n 'app': 'Medusa',\n 'sticky': None,\n 'priority': None,\n 'debug': False\n }\n\n if password is None:\n opts['password'] = app.GROWL_PASSWORD\n else:\n opts['password'] = password\n\n opts['icon'] = True\n\n for pc in growlHosts:\n opts['host'] = pc[0]\n opts['port'] = pc[1]\n log.debug(\n u'GROWL: Sending growl to {host}:{port} - {msg!r}',\n {'msg': message, 'host': opts['host'], 'port': opts['port']}\n )\n try:\n if self._send_growl(opts, message):\n return True\n else:\n if self._sendRegistration(host, password):\n return self._send_growl(opts, message)\n else:\n return False\n except Exception as error:\n log.warning(\n u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',\n {'msg': ex(error), 'host': opts['host'], 'port': opts['port']}\n )\n return False\n\n def _sendRegistration(self, host=None, password=None):\n opts = {}\n\n if host is None:\n hostParts = app.GROWL_HOST.split(':')\n else:\n hostParts = host.split(':')\n\n if len(hostParts) != 2 or hostParts[1] == '':\n port = 23053\n else:\n port = int(hostParts[1])\n\n opts['host'] = hostParts[0]\n opts['port'] = port\n\n if password is None:\n opts['password'] = app.GROWL_PASSWORD\n else:\n opts['password'] = password\n\n opts['app'] = 'Medusa'\n opts['debug'] = False\n\n # Send 
Registration\n register = gntp.core.GNTPRegister()\n register.add_header('Application-Name', opts['app'])\n register.add_header('Application-Icon', app.LOGO_URL)\n\n register.add_notification('Test', True)\n register.add_notification(common.notifyStrings[common.NOTIFY_SNATCH], True)\n register.add_notification(common.notifyStrings[common.NOTIFY_DOWNLOAD], True)\n register.add_notification(common.notifyStrings[common.NOTIFY_GIT_UPDATE], True)\n\n if opts['password']:\n register.set_password(opts['password'])\n\n try:\n return self._send(opts['host'], opts['port'], register.encode('utf-8'), opts['debug'])\n except Exception as error:\n log.warning(\n u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',\n {'msg': ex(error), 'host': opts['host'], 'port': opts['port']}\n )\n return False\n", "path": "medusa/notifiers/growl.py"}], "after_files": [{"content": "# coding=utf-8\n\nfrom __future__ import print_function\nfrom __future__ import unicode_literals\n\nimport logging\nimport socket\nfrom builtins import object\n\nimport gntp.core\n\nfrom medusa import app, common\nfrom medusa.helper.exceptions import ex\nfrom medusa.logger.adapters.style import BraceAdapter\n\nlog = BraceAdapter(logging.getLogger(__name__))\nlog.logger.addHandler(logging.NullHandler())\n\n\nclass Notifier(object):\n def test_notify(self, host, password):\n self._sendRegistration(host, password)\n return self._sendGrowl('Test Growl', 'Testing Growl settings from Medusa', 'Test', host, password,\n force=True)\n\n def notify_snatch(self, title, message):\n if app.GROWL_NOTIFY_ONSNATCH:\n self._sendGrowl(title, message)\n\n def notify_download(self, ep_obj):\n if app.GROWL_NOTIFY_ONDOWNLOAD:\n self._sendGrowl(common.notifyStrings[common.NOTIFY_DOWNLOAD], ep_obj.pretty_name_with_quality())\n\n def notify_subtitle_download(self, ep_obj, lang):\n if app.GROWL_NOTIFY_ONSUBTITLEDOWNLOAD:\n self._sendGrowl(common.notifyStrings[common.NOTIFY_SUBTITLE_DOWNLOAD], ep_obj.pretty_name() + ': ' + lang)\n\n def 
notify_git_update(self, new_version='??'):\n update_text = common.notifyStrings[common.NOTIFY_GIT_UPDATE_TEXT]\n title = common.notifyStrings[common.NOTIFY_GIT_UPDATE]\n self._sendGrowl(title, update_text + new_version)\n\n def notify_login(self, ipaddress=''):\n update_text = common.notifyStrings[common.NOTIFY_LOGIN_TEXT]\n title = common.notifyStrings[common.NOTIFY_LOGIN]\n self._sendGrowl(title, update_text.format(ipaddress))\n\n def _send_growl(self, options, message=None):\n\n # Initialize Notification\n notice = gntp.core.GNTPNotice(\n app=options['app'],\n name=options['name'],\n title=options['title'],\n password=options['password'],\n )\n\n # Optional\n if options['sticky']:\n notice.add_header('Notification-Sticky', options['sticky'])\n if options['priority']:\n notice.add_header('Notification-Priority', options['priority'])\n if options['icon']:\n notice.add_header('Notification-Icon', app.LOGO_URL)\n\n if message:\n notice.add_header('Notification-Text', message)\n\n response = self._send(options['host'], options['port'], notice.encode(), options['debug'])\n return True if isinstance(response, gntp.core.GNTPOK) else False\n\n @staticmethod\n def _send(host, port, data, debug=False):\n if debug:\n print('<Sending>\\n', data, '\\n</Sending>')\n\n s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)\n s.connect((host, port))\n s.send(data)\n response = gntp.core.parse_gntp(s.recv(1024))\n s.close()\n\n if debug:\n print('<Received>\\n', response, '\\n</Received>')\n\n return response\n\n def _sendGrowl(self, title='Medusa Notification', message=None, name=None, host=None, password=None,\n force=False):\n if not app.USE_GROWL and not force:\n return False\n\n if name is None:\n name = title\n\n if host is None:\n hostParts = app.GROWL_HOST.split(':')\n else:\n hostParts = host.split(':')\n\n if len(hostParts) != 2 or hostParts[1] == '':\n port = 23053\n else:\n port = int(hostParts[1])\n\n growlHosts = [(hostParts[0], port)]\n\n opts = {\n 'name': name,\n 
'title': title,\n 'app': 'Medusa',\n 'sticky': None,\n 'priority': None,\n 'debug': False\n }\n\n if password is None:\n opts['password'] = app.GROWL_PASSWORD\n else:\n opts['password'] = password\n\n opts['icon'] = True\n\n for pc in growlHosts:\n opts['host'] = pc[0]\n opts['port'] = pc[1]\n log.debug(\n u'GROWL: Sending growl to {host}:{port} - {msg!r}',\n {'msg': message, 'host': opts['host'], 'port': opts['port']}\n )\n try:\n if self._send_growl(opts, message):\n return True\n else:\n if self._sendRegistration(host, password):\n return self._send_growl(opts, message)\n else:\n return False\n except Exception as error:\n log.warning(\n u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',\n {'msg': ex(error), 'host': opts['host'], 'port': opts['port']}\n )\n return False\n\n def _sendRegistration(self, host=None, password=None):\n opts = {}\n\n if host is None:\n hostParts = app.GROWL_HOST.split(':')\n else:\n hostParts = host.split(':')\n\n if len(hostParts) != 2 or hostParts[1] == '':\n port = 23053\n else:\n port = int(hostParts[1])\n\n opts['host'] = hostParts[0]\n opts['port'] = port\n\n if password is None:\n opts['password'] = app.GROWL_PASSWORD\n else:\n opts['password'] = password\n\n opts['app'] = 'Medusa'\n opts['debug'] = False\n\n # Send Registration\n register = gntp.core.GNTPRegister()\n register.add_header('Application-Name', opts['app'])\n register.add_header('Application-Icon', app.LOGO_URL)\n\n register.add_notification('Test', True)\n register.add_notification(common.notifyStrings[common.NOTIFY_SNATCH], True)\n register.add_notification(common.notifyStrings[common.NOTIFY_DOWNLOAD], True)\n register.add_notification(common.notifyStrings[common.NOTIFY_GIT_UPDATE], True)\n\n if opts['password']:\n register.set_password(opts['password'])\n\n try:\n return self._send(opts['host'], opts['port'], register.encode(), opts['debug'])\n except Exception as error:\n log.warning(\n u'GROWL: Unable to send growl to {host}:{port} - {msg!r}',\n {'msg': 
ex(error), 'host': opts['host'], 'port': opts['port']}\n )\n return False\n", "path": "medusa/notifiers/growl.py"}]}

| 2,428 | 252 |

gh_patches_debug_29624

|

rasdani/github-patches

|

git_diff

|

DataDog__dd-trace-py-826

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

nginx-opentracing + libdd_opentracing_plugin: SpanContextCorruptedException: failed to extract span context

I'm trying to set up an integration of nginx + nginx-opentracing module + DataDog tracer plugin + sample python app in order to get working multi-span traces in a manner when an app uses propagated context.

I'm getting the following error on every call:

```

ERROR:root:trace extract failed: failed to extract span context

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/opentracing_instrumentation/http_server.py", line 75, in before_request

format=Format.HTTP_HEADERS, carrier=carrier

File "/usr/local/lib/python2.7/dist-packages/ddtrace-0.20.4-py2.7.egg/ddtrace/opentracer/tracer.py", line 294, in extract

ot_span_ctx = propagator.extract(carrier)

File "/usr/local/lib/python2.7/dist-packages/ddtrace-0.20.4-py2.7.egg/ddtrace/opentracer/propagation/http.py", line 73, in extract

raise SpanContextCorruptedException('failed to extract span context')

SpanContextCorruptedException: failed to extract span context

```

Components used:

- nginx/1.15.7

- nginx-opentracing:

https://github.com/opentracing-contrib/nginx-opentracing/releases/tag/v0.8.0

- DataDog tracer plugin: https://github.com/DataDog/dd-opentracing-cpp/releases/download/v0.4.2/linux-amd64-libdd_opentracing_plugin.so.gz

nginx configuration:

```

# configuration file /etc/nginx/nginx.conf:

load_module modules/ngx_http_opentracing_module.so;

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log debug;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for" $request_id';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 65;

opentracing_load_tracer /etc/nginx/linux-amd64-libdd_opentracing_plugin.so /etc/nginx/dd-config.json;

opentracing on;

opentracing_trace_locations off;

opentracing_tag http_user_agent $http_user_agent;

opentracing_tag http_uri $request_uri;

opentracing_tag http_request_id $request_id;

include /etc/nginx/conf.d/*.conf;

}

# configuration file /etc/nginx/conf.d/default.conf:

upstream u {

server 62.210.92.35:80;

keepalive 20;

zone u 128k;

}

upstream upload-app {

server 127.0.0.1:8080;

}

server {

listen 80 default_server;

server_name localhost;

opentracing_operation_name $uri;

location / {

opentracing_propagate_context;

proxy_set_header Host nginx.org;

proxy_set_header Connection "";

proxy_http_version 1.1;

proxy_pass http://u;

}

location /upload/ {

opentracing_propagate_context;

proxy_pass http://upload-app;

client_max_body_size 256m;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

```

DataDog tracer configuration (/etc/nginx/dd-config.json):

```

{

"service": "nginx",

"operation_name_override": "nginx.handle",

"agent_host": "localhost",

"agent_port": 8126

}

```

DataDog agent version, OS used:

```

# dpkg -s datadog-agent

Package: datadog-agent

Status: install ok installed

Priority: extra

Section: utils

Installed-Size: 390206

Maintainer: Datadog Packages <[email protected]>

Architecture: amd64

Version: 1:6.9.0-1

Description: Datadog Monitoring Agent

The Datadog Monitoring Agent is a lightweight process that monitors system

processes and services, and sends information back to your Datadog account.

.

This package installs and runs the advanced Agent daemon, which queues and

forwards metrics from your applications as well as system services.

.

See http://www.datadoghq.com/ for more information

License: Apache License Version 2.0

Vendor: Datadog <[email protected]>

Homepage: http://www.datadoghq.com

# lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 18.04.1 LTS

Release: 18.04

Codename: bionic

```

App itself:

```

#!/usr/bin/env python

import logging

import hashlib

from flask import Flask

from flask import request

from werkzeug.debug import get_current_traceback

from opentracing_instrumentation import http_server

from opentracing_instrumentation import config

import opentracing

from ddtrace.opentracer import Tracer

from random import randint

application = Flask(__name__)

tracer = None

def init_dd_tracer(service_name='upload-app'):

print "INIT DATADOG TRACER"

return Tracer(service_name=service_name, config={})

@application.before_request

def before_request():

global tracer

request.stderr = request.environ['wsgi.errors'] if 'wsgi.errors' in request.environ else stderr

headers_summary = "HEADERS:\n\n" + "\n".join(["{0}: {1}".format(k, request.headers[k]) for k in sorted(request.headers.keys())]) + "\n"

request.stderr.write(headers_summary)

request.full_url = request.url

    request.remote_ip = request.remote_addr
    request.remote_port = request.environ['REMOTE_PORT']
    request.caller_name = "n/a"
    request.operation = request.method
    if not tracer:
        tracer = init_dd_tracer()
    request.span = http_server.before_request(request=request, tracer=tracer)


@application.route("/", methods=['GET', 'POST'])
def default():
    try:
        environ_summary = "ENVIRON:\n\n" + "\n".join(["{0}: {1}".format(k, request.environ[k]) for k in sorted(request.environ.keys())]) + "\n"
        args = "REQUEST ARGS: %s" % request.args
        body = "REQUEST BODY: %s" % request.data
        return "%s\n%s\n%s\n" % (args, body, environ_summary)
    except Exception as e:
        track = get_current_traceback(skip=1, show_hidden_frames=True, ignore_system_exceptions=False)
        track.log()
        abort(500)


@application.route("/upload/", methods=['GET', 'POST'])
@application.route("/upload-http/", methods=['GET', 'POST'])
def upload():
    global tracer
    with tracer.start_span('ProcessUpload', child_of=request.span) as span:
        span.log_kv({'ProcessUpload': 'started'})
        span.set_tag('payload-size', int(request.headers.get('Content-Length')) if 'Content-Length' in request.headers else 0)
        if 'Content-Length' in request.headers and int(request.headers.get('Content-Length')):
            body = request.stream.read()
            for x in range(1, randint(2, 10)):
                with tracer.start_span('SubPart%02d' % x, child_of=span) as subpart_span:
                    subpart_span.log_kv({'subpart_iteration': x, 'action': 'begin'})
                    m = hashlib.md5()
                    m.update(body)
                    response_body = "%d:%s\n" % (len(body), m.hexdigest())
                    subpart_span.log_kv({'subpart_iteration': x, 'action': 'end'})
            request.stderr.write('ProcessUpload finished with %d iterations\n' % x)
        else:
            response_body = 'no data was uploaded'
        try:
            span.set_tag('iterations', x)
        except NameError:
            pass
        span.log_kv({'ProcessUpload': 'finished'})
        return response_body


@application.errorhandler(500)
def internal_error(error):
    return "500 error"


if __name__ == "__main__":
    application.debug = True
    application.config['PROPAGATE_EXCEPTIONS'] = True
    application.run(host='127.0.0.1', port=8080)
```

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `ddtrace/propagation/http.py`

Content:

```
1 import logging
2
3 from ..context import Context
4
5 from .utils import get_wsgi_header
6
7 log = logging.getLogger(__name__)
8
9 # HTTP headers one should set for distributed tracing.
10 # These are cross-language (eg: Python, Go and other implementations should honor these)
11 HTTP_HEADER_TRACE_ID = "x-datadog-trace-id"
12 HTTP_HEADER_PARENT_ID = "x-datadog-parent-id"
13 HTTP_HEADER_SAMPLING_PRIORITY = "x-datadog-sampling-priority"
14
15
16 # Note that due to WSGI spec we have to also check for uppercased and prefixed
17 # versions of these headers
18 POSSIBLE_HTTP_HEADER_TRACE_IDS = frozenset(
19     [HTTP_HEADER_TRACE_ID, get_wsgi_header(HTTP_HEADER_TRACE_ID)]
20 )
21 POSSIBLE_HTTP_HEADER_PARENT_IDS = frozenset(
22     [HTTP_HEADER_PARENT_ID, get_wsgi_header(HTTP_HEADER_PARENT_ID)]
23 )
24 POSSIBLE_HTTP_HEADER_SAMPLING_PRIORITIES = frozenset(
25     [HTTP_HEADER_SAMPLING_PRIORITY, get_wsgi_header(HTTP_HEADER_SAMPLING_PRIORITY)]
26 )
27
28
29 class HTTPPropagator(object):
30     """A HTTP Propagator using HTTP headers as carrier."""
31
32     def inject(self, span_context, headers):
33         """Inject Context attributes that have to be propagated as HTTP headers.
34
35         Here is an example using `requests`::
36
37             import requests
38             from ddtrace.propagation.http import HTTPPropagator
39
40             def parent_call():
41                 with tracer.trace("parent_span") as span:
42                     headers = {}
43                     propagator = HTTPPropagator()
44                     propagator.inject(span.context, headers)
45                     url = "<some RPC endpoint>"
46                     r = requests.get(url, headers=headers)
47
48         :param Context span_context: Span context to propagate.
49         :param dict headers: HTTP headers to extend with tracing attributes.
50         """
51         headers[HTTP_HEADER_TRACE_ID] = str(span_context.trace_id)
52         headers[HTTP_HEADER_PARENT_ID] = str(span_context.span_id)
53         sampling_priority = span_context.sampling_priority
54         # Propagate priority only if defined
55         if sampling_priority is not None:
56             headers[HTTP_HEADER_SAMPLING_PRIORITY] = str(span_context.sampling_priority)
57
58     @staticmethod
59     def extract_trace_id(headers):
60         trace_id = 0
61
62         for key in POSSIBLE_HTTP_HEADER_TRACE_IDS:
63             if key in headers:
64                 trace_id = headers.get(key)
65
66         return int(trace_id)
67
68     @staticmethod
69     def extract_parent_span_id(headers):
70         parent_span_id = 0
71
72         for key in POSSIBLE_HTTP_HEADER_PARENT_IDS:
73             if key in headers:
74                 parent_span_id = headers.get(key)
75
76         return int(parent_span_id)
77
78     @staticmethod
79     def extract_sampling_priority(headers):
80         sampling_priority = None
81
82         for key in POSSIBLE_HTTP_HEADER_SAMPLING_PRIORITIES:
83             if key in headers:
84                 sampling_priority = headers.get(key)
85
86         return sampling_priority
87
88     def extract(self, headers):
89         """Extract a Context from HTTP headers into a new Context.
90
91         Here is an example from a web endpoint::
92
93             from ddtrace.propagation.http import HTTPPropagator
94
95             def my_controller(url, headers):
96                 propagator = HTTPPropagator()
97                 context = propagator.extract(headers)
98                 tracer.context_provider.activate(context)
99
100                 with tracer.trace("my_controller") as span:
101                     span.set_meta('http.url', url)
102
103         :param dict headers: HTTP headers to extract tracing attributes.
104         :return: New `Context` with propagated attributes.
105         """
106         if not headers:
107             return Context()
108
109         try:
110             trace_id = HTTPPropagator.extract_trace_id(headers)
111             parent_span_id = HTTPPropagator.extract_parent_span_id(headers)
112             sampling_priority = HTTPPropagator.extract_sampling_priority(headers)
113
114             if sampling_priority is not None:
115                 sampling_priority = int(sampling_priority)
116
117             return Context(
118                 trace_id=trace_id,
119                 span_id=parent_span_id,
120                 sampling_priority=sampling_priority,
121             )
122         # If headers are invalid and cannot be parsed, return a new context and log the issue.
123         except Exception as error:
124             try:
125                 log.debug(
126                     "invalid x-datadog-* headers, trace-id: %s, parent-id: %s, priority: %s, error: %s",
127                     headers.get(HTTP_HEADER_TRACE_ID, 0),
128                     headers.get(HTTP_HEADER_PARENT_ID, 0),
129                     headers.get(HTTP_HEADER_SAMPLING_PRIORITY),
130                     error,
131                 )
132             # We might fail on string formatting errors ; in that case only format the first error
133             except Exception:
134                 log.debug(error)
135             return Context()
136
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/ddtrace/propagation/http.py b/ddtrace/propagation/http.py
--- a/ddtrace/propagation/http.py
+++ b/ddtrace/propagation/http.py
@@ -56,34 +56,35 @@
             headers[HTTP_HEADER_SAMPLING_PRIORITY] = str(span_context.sampling_priority)
 
     @staticmethod
-    def extract_trace_id(headers):
-        trace_id = 0
+    def extract_header_value(possible_header_names, headers, default=None):
+        for header, value in headers.items():
+            for header_name in possible_header_names:
+                if header.lower() == header_name.lower():
+                    return value
 
-        for key in POSSIBLE_HTTP_HEADER_TRACE_IDS:
-            if key in headers:
-                trace_id = headers.get(key)
+        return default
 
-        return int(trace_id)
+    @staticmethod
+    def extract_trace_id(headers):
+        return int(
+            HTTPPropagator.extract_header_value(
+                POSSIBLE_HTTP_HEADER_TRACE_IDS, headers, default=0,
+            )
+        )
 
     @staticmethod
     def extract_parent_span_id(headers):
-        parent_span_id = 0
-
-        for key in POSSIBLE_HTTP_HEADER_PARENT_IDS:
-            if key in headers:
-                parent_span_id = headers.get(key)
-
-        return int(parent_span_id)
+        return int(
+            HTTPPropagator.extract_header_value(
+                POSSIBLE_HTTP_HEADER_PARENT_IDS, headers, default=0,
+            )
+        )
 
     @staticmethod
     def extract_sampling_priority(headers):
-        sampling_priority = None
-
-        for key in POSSIBLE_HTTP_HEADER_SAMPLING_PRIORITIES:
-            if key in headers:
-                sampling_priority = headers.get(key)
-
-        return sampling_priority
+        return HTTPPropagator.extract_header_value(
+            POSSIBLE_HTTP_HEADER_SAMPLING_PRIORITIES, headers,
+        )
 
     def extract(self, headers):
         """Extract a Context from HTTP headers into a new Context.

|