| problem_id (string, length 18–22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–10.2k) | golden_diff (string, length 151–4.94k) | verification_info (string, length 582–21k) | num_tokens (int64, 271–2.05k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

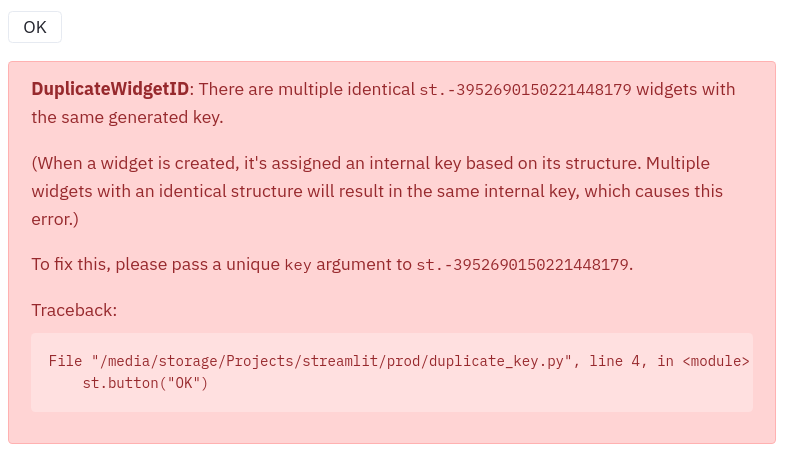

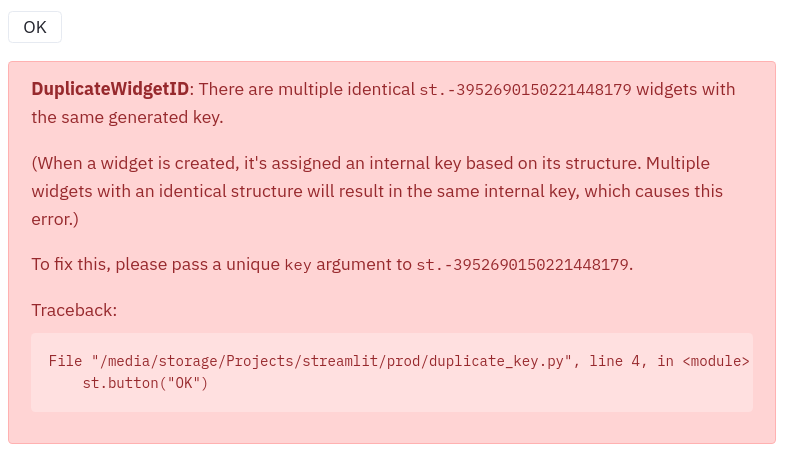

gh_patches_debug_14809 | rasdani/github-patches | git_diff | qtile__qtile-4065 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

All hooks in config will be subscribed and fired twice.

### The issue:

~~This is probably due to configuration testing syntax step.~~

```python
'startup_complete': [<function xstartup_complete at 0x7f2005fc49d0>,
                     <function xstartup_complete at 0x7f2005fc5510>]}
```

Code to reproduce is simple:

in config:

```python
@hook.subscribe.startup_complete
def xstartup_complete():
    ...

logger.warn(pprint.pformat(hook.subscriptions))
```

All hooks are actually being fired twice, not only startup but all for each event.

### Required:

- [X] I have searched past issues to see if this bug has already been reported.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `libqtile/confreader.py`

Content:

```
1 # Copyright (c) 2008, Aldo Cortesi <[email protected]>
2 # Copyright (c) 2011, Andrew Grigorev <[email protected]>
3 #
4 # All rights reserved.
5 #
6 # Permission is hereby granted, free of charge, to any person obtaining a copy
7 # of this software and associated documentation files (the "Software"), to deal
8 # in the Software without restriction, including without limitation the rights
9 # to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
10 # copies of the Software, and to permit persons to whom the Software is
11 # furnished to do so, subject to the following conditions:
12 #
13 # The above copyright notice and this permission notice shall be included in
14 # all copies or substantial portions of the Software.
15 #
16 # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
17 # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
18 # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
19 # AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
20 # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
21 # OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
22 # SOFTWARE.
23
24 from __future__ import annotations
25
26 import importlib
27 import sys
28 from pathlib import Path
29 from typing import TYPE_CHECKING
30
31 from libqtile.backend.x11 import core
32
33 if TYPE_CHECKING:
34     from typing import Any
35
36     from typing_extensions import Literal
37
38     from libqtile.config import Group, Key, Mouse, Rule, Screen
39     from libqtile.layout.base import Layout
40
41
42 class ConfigError(Exception):
43     pass
44
45
46 config_pyi_header = """
47 from typing import Any
48 from typing_extensions import Literal
49 from libqtile.config import Group, Key, Mouse, Rule, Screen
50 from libqtile.layout.base import Layout
51
52 """
53
54
55 class Config:
56     # All configuration options
57     keys: list[Key]
58     mouse: list[Mouse]
59     groups: list[Group]
60     dgroups_key_binder: Any
61     dgroups_app_rules: list[Rule]
62     follow_mouse_focus: bool
63     focus_on_window_activation: Literal["focus", "smart", "urgent", "never"]
64     cursor_warp: bool
65     layouts: list[Layout]
66     floating_layout: Layout
67     screens: list[Screen]
68     auto_fullscreen: bool
69     widget_defaults: dict[str, Any]
70     extension_defaults: dict[str, Any]
71     bring_front_click: bool | Literal["floating_only"]
72     reconfigure_screens: bool
73     wmname: str
74     auto_minimize: bool
75     # Really we'd want to check this Any is libqtile.backend.wayland.ImportConfig, but
76     # doing so forces the import, creating a hard dependency for wlroots.
77     wl_input_rules: dict[str, Any] | None
78
79     def __init__(self, file_path=None, **settings):
80         """Create a Config() object from settings
81
82         Only attributes found in Config.__annotations__ will be added to object.
83         config attribute precedence is 1.) **settings 2.) self 3.) default_config
84         """
85         self.file_path = file_path
86         self.update(**settings)
87
88     def update(self, *, fake_screens=None, **settings):
89         from libqtile.resources import default_config
90
91         if fake_screens:
92             self.fake_screens = fake_screens
93
94         default = vars(default_config)
95         for key in self.__annotations__.keys():
96             try:
97                 value = settings[key]
98             except KeyError:
99                 value = getattr(self, key, default[key])
100             setattr(self, key, value)
101
102     def _reload_config_submodules(self, path: Path) -> None:
103         """Reloads python files from same folder as config file."""
104         folder = path.parent
105         for module in sys.modules.copy().values():
106
107             # Skip built-ins and anything with no filepath.
108             if hasattr(module, "__file__") and module.__file__ is not None:
109                 subpath = Path(module.__file__)
110
111                 # Check if the module is in the config folder or subfolder
112                 # if so, reload it
113                 if folder in subpath.parents:
114                     importlib.reload(module)
115
116     def load(self):
117         if not self.file_path:
118             return
119
120         path = Path(self.file_path)
121         name = path.stem
122         sys.path.insert(0, path.parent.as_posix())
123
124         if name in sys.modules:
125             self._reload_config_submodules(path)
126             config = importlib.reload(sys.modules[name])
127         else:
128             config = importlib.import_module(name)
129
130         self.update(**vars(config))
131
132     def validate(self) -> None:
133         """
134         Validate the configuration against the core.
135         """
136         valid_keys = core.get_keys()
137         valid_mods = core.get_modifiers()
138         # we explicitly do not want to set self.keys and self.mouse above,
139         # because they are dynamically resolved from the default_config. so we
140         # need to ignore the errors here about missing attributes.
141         for k in self.keys:
142             if k.key.lower() not in valid_keys:
143                 raise ConfigError("No such key: %s" % k.key)
144             for m in k.modifiers:
145                 if m.lower() not in valid_mods:
146                     raise ConfigError("No such modifier: %s" % m)
147         for ms in self.mouse:
148             for m in ms.modifiers:
149                 if m.lower() not in valid_mods:
150                     raise ConfigError("No such modifier: %s" % m)
151
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/libqtile/confreader.py b/libqtile/confreader.py
--- a/libqtile/confreader.py
+++ b/libqtile/confreader.py
@@ -108,6 +108,14 @@
             if hasattr(module, "__file__") and module.__file__ is not None:
                 subpath = Path(module.__file__)
 
+                if subpath == path:
+                    # do not reevaluate config itself here, we want only
+                    # reload all submodules. Also we cant reevaluate config
+                    # here, because it will cache all current modules before they
+                    # are reloaded. Thus, config file should be reloaded after
+                    # this routine.
+                    continue
+
                 # Check if the module is in the config folder or subfolder
                 # if so, reload it
                 if folder in subpath.parents:

| {"golden_diff": "diff --git a/libqtile/confreader.py b/libqtile/confreader.py\n--- a/libqtile/confreader.py\n+++ b/libqtile/confreader.py\n@@ -108,6 +108,14 @@\n if hasattr(module, \"__file__\") and module.__file__ is not None:\n subpath = Path(module.__file__)\n \n+ if subpath == path:\n+ # do not reevaluate config itself here, we want only\n+ # reload all submodules. Also we cant reevaluate config\n+ # here, because it will cache all current modules before they\n+ # are reloaded. Thus, config file should be reloaded after\n+ # this routine.\n+ continue\n+\n # Check if the module is in the config folder or subfolder\n # if so, reload it\n if folder in subpath.parents:\n", "issue": "All hooks in config will be subscribed and fired twice.\n### The issue:\r\n\r\n~~This is probably due to configuration testing syntax step.~~\r\n\r\n```python\r\n'startup_complete': [<function xstartup_complete at 0x7f2005fc49d0>,\r\n <function xstartup_complete at 0x7f2005fc5510>]}\r\n```\r\n\r\nCode to reproduce is simple:\r\n\r\nin config:\r\n\r\n```python\r\[email protected]_complete\r\ndef xstartup_complete():\r\n ...\r\n\r\nlogger.warn(pprint.pformat(hook.subscriptions))\r\n```\r\n\r\nAll hooks are actually being fired twice, not only startup but all for each event.\r\n\r\n### Required:\r\n\r\n- [X] I have searched past issues to see if this bug has already been reported.\n", "before_files": [{"content": "# Copyright (c) 2008, Aldo Cortesi <[email protected]>\n# Copyright (c) 2011, Andrew Grigorev <[email protected]>\n#\n# All rights reserved.\n#\n# Permission is hereby granted, free of charge, to any person obtaining a copy\n# of this software and associated documentation files (the \"Software\"), to deal\n# in the Software without restriction, including without limitation the rights\n# to use, copy, modify, merge, publish, distribute, sublicense, and/or sell\n# copies of the Software, and to permit persons to whom the Software is\n# furnished to do so, subject to the following 
conditions:\n#\n# The above copyright notice and this permission notice shall be included in\n# all copies or substantial portions of the Software.\n#\n# THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR\n# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,\n# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE\n# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER\n# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,\n# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE\n# SOFTWARE.\n\nfrom __future__ import annotations\n\nimport importlib\nimport sys\nfrom pathlib import Path\nfrom typing import TYPE_CHECKING\n\nfrom libqtile.backend.x11 import core\n\nif TYPE_CHECKING:\n from typing import Any\n\n from typing_extensions import Literal\n\n from libqtile.config import Group, Key, Mouse, Rule, Screen\n from libqtile.layout.base import Layout\n\n\nclass ConfigError(Exception):\n pass\n\n\nconfig_pyi_header = \"\"\"\nfrom typing import Any\nfrom typing_extensions import Literal\nfrom libqtile.config import Group, Key, Mouse, Rule, Screen\nfrom libqtile.layout.base import Layout\n\n\"\"\"\n\n\nclass Config:\n # All configuration options\n keys: list[Key]\n mouse: list[Mouse]\n groups: list[Group]\n dgroups_key_binder: Any\n dgroups_app_rules: list[Rule]\n follow_mouse_focus: bool\n focus_on_window_activation: Literal[\"focus\", \"smart\", \"urgent\", \"never\"]\n cursor_warp: bool\n layouts: list[Layout]\n floating_layout: Layout\n screens: list[Screen]\n auto_fullscreen: bool\n widget_defaults: dict[str, Any]\n extension_defaults: dict[str, Any]\n bring_front_click: bool | Literal[\"floating_only\"]\n reconfigure_screens: bool\n wmname: str\n auto_minimize: bool\n # Really we'd want to check this Any is libqtile.backend.wayland.ImportConfig, but\n # doing so forces the import, creating a hard dependency for wlroots.\n 
wl_input_rules: dict[str, Any] | None\n\n def __init__(self, file_path=None, **settings):\n \"\"\"Create a Config() object from settings\n\n Only attributes found in Config.__annotations__ will be added to object.\n config attribute precedence is 1.) **settings 2.) self 3.) default_config\n \"\"\"\n self.file_path = file_path\n self.update(**settings)\n\n def update(self, *, fake_screens=None, **settings):\n from libqtile.resources import default_config\n\n if fake_screens:\n self.fake_screens = fake_screens\n\n default = vars(default_config)\n for key in self.__annotations__.keys():\n try:\n value = settings[key]\n except KeyError:\n value = getattr(self, key, default[key])\n setattr(self, key, value)\n\n def _reload_config_submodules(self, path: Path) -> None:\n \"\"\"Reloads python files from same folder as config file.\"\"\"\n folder = path.parent\n for module in sys.modules.copy().values():\n\n # Skip built-ins and anything with no filepath.\n if hasattr(module, \"__file__\") and module.__file__ is not None:\n subpath = Path(module.__file__)\n\n # Check if the module is in the config folder or subfolder\n # if so, reload it\n if folder in subpath.parents:\n importlib.reload(module)\n\n def load(self):\n if not self.file_path:\n return\n\n path = Path(self.file_path)\n name = path.stem\n sys.path.insert(0, path.parent.as_posix())\n\n if name in sys.modules:\n self._reload_config_submodules(path)\n config = importlib.reload(sys.modules[name])\n else:\n config = importlib.import_module(name)\n\n self.update(**vars(config))\n\n def validate(self) -> None:\n \"\"\"\n Validate the configuration against the core.\n \"\"\"\n valid_keys = core.get_keys()\n valid_mods = core.get_modifiers()\n # we explicitly do not want to set self.keys and self.mouse above,\n # because they are dynamically resolved from the default_config. 
so we\n # need to ignore the errors here about missing attributes.\n for k in self.keys:\n if k.key.lower() not in valid_keys:\n raise ConfigError(\"No such key: %s\" % k.key)\n for m in k.modifiers:\n if m.lower() not in valid_mods:\n raise ConfigError(\"No such modifier: %s\" % m)\n for ms in self.mouse:\n for m in ms.modifiers:\n if m.lower() not in valid_mods:\n raise ConfigError(\"No such modifier: %s\" % m)\n", "path": "libqtile/confreader.py"}], "after_files": [{"content": "# Copyright (c) 2008, Aldo Cortesi <[email protected]>\n# Copyright (c) 2011, Andrew Grigorev <[email protected]>\n#\n# All rights reserved.\n#\n# Permission is hereby granted, free of charge, to any person obtaining a copy\n# of this software and associated documentation files (the \"Software\"), to deal\n# in the Software without restriction, including without limitation the rights\n# to use, copy, modify, merge, publish, distribute, sublicense, and/or sell\n# copies of the Software, and to permit persons to whom the Software is\n# furnished to do so, subject to the following conditions:\n#\n# The above copyright notice and this permission notice shall be included in\n# all copies or substantial portions of the Software.\n#\n# THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR\n# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,\n# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. 
IN NO EVENT SHALL THE\n# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER\n# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,\n# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE\n# SOFTWARE.\n\nfrom __future__ import annotations\n\nimport importlib\nimport sys\nfrom pathlib import Path\nfrom typing import TYPE_CHECKING\n\nfrom libqtile.backend.x11 import core\n\nif TYPE_CHECKING:\n from typing import Any\n\n from typing_extensions import Literal\n\n from libqtile.config import Group, Key, Mouse, Rule, Screen\n from libqtile.layout.base import Layout\n\n\nclass ConfigError(Exception):\n pass\n\n\nconfig_pyi_header = \"\"\"\nfrom typing import Any\nfrom typing_extensions import Literal\nfrom libqtile.config import Group, Key, Mouse, Rule, Screen\nfrom libqtile.layout.base import Layout\n\n\"\"\"\n\n\nclass Config:\n # All configuration options\n keys: list[Key]\n mouse: list[Mouse]\n groups: list[Group]\n dgroups_key_binder: Any\n dgroups_app_rules: list[Rule]\n follow_mouse_focus: bool\n focus_on_window_activation: Literal[\"focus\", \"smart\", \"urgent\", \"never\"]\n cursor_warp: bool\n layouts: list[Layout]\n floating_layout: Layout\n screens: list[Screen]\n auto_fullscreen: bool\n widget_defaults: dict[str, Any]\n extension_defaults: dict[str, Any]\n bring_front_click: bool | Literal[\"floating_only\"]\n reconfigure_screens: bool\n wmname: str\n auto_minimize: bool\n # Really we'd want to check this Any is libqtile.backend.wayland.ImportConfig, but\n # doing so forces the import, creating a hard dependency for wlroots.\n wl_input_rules: dict[str, Any] | None\n\n def __init__(self, file_path=None, **settings):\n \"\"\"Create a Config() object from settings\n\n Only attributes found in Config.__annotations__ will be added to object.\n config attribute precedence is 1.) **settings 2.) self 3.) 
default_config\n \"\"\"\n self.file_path = file_path\n self.update(**settings)\n\n def update(self, *, fake_screens=None, **settings):\n from libqtile.resources import default_config\n\n if fake_screens:\n self.fake_screens = fake_screens\n\n default = vars(default_config)\n for key in self.__annotations__.keys():\n try:\n value = settings[key]\n except KeyError:\n value = getattr(self, key, default[key])\n setattr(self, key, value)\n\n def _reload_config_submodules(self, path: Path) -> None:\n \"\"\"Reloads python files from same folder as config file.\"\"\"\n folder = path.parent\n for module in sys.modules.copy().values():\n\n # Skip built-ins and anything with no filepath.\n if hasattr(module, \"__file__\") and module.__file__ is not None:\n subpath = Path(module.__file__)\n\n if subpath == path:\n # do not reevaluate config itself here, we want only\n # reload all submodules. Also we cant reevaluate config\n # here, because it will cache all current modules before they\n # are reloaded. Thus, config file should be reloaded after\n # this routine.\n continue\n\n # Check if the module is in the config folder or subfolder\n # if so, reload it\n if folder in subpath.parents:\n importlib.reload(module)\n\n def load(self):\n if not self.file_path:\n return\n\n path = Path(self.file_path)\n name = path.stem\n sys.path.insert(0, path.parent.as_posix())\n\n if name in sys.modules:\n self._reload_config_submodules(path)\n config = importlib.reload(sys.modules[name])\n else:\n config = importlib.import_module(name)\n\n self.update(**vars(config))\n\n def validate(self) -> None:\n \"\"\"\n Validate the configuration against the core.\n \"\"\"\n valid_keys = core.get_keys()\n valid_mods = core.get_modifiers()\n # we explicitly do not want to set self.keys and self.mouse above,\n # because they are dynamically resolved from the default_config. 
so we\n # need to ignore the errors here about missing attributes.\n for k in self.keys:\n if k.key.lower() not in valid_keys:\n raise ConfigError(\"No such key: %s\" % k.key)\n for m in k.modifiers:\n if m.lower() not in valid_mods:\n raise ConfigError(\"No such modifier: %s\" % m)\n for ms in self.mouse:\n for m in ms.modifiers:\n if m.lower() not in valid_mods:\n raise ConfigError(\"No such modifier: %s\" % m)\n", "path": "libqtile/confreader.py"}]} | 1,970 | 191 |
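The qtile row comes down to `Config.load()` executing the config module twice per reload: once inside `_reload_config_submodules()`, which reloads every module under the config folder, the config file included, and once more through `importlib.reload(sys.modules[name])`. The following is a minimal, self-contained sketch of that mechanism, not qtile code. It assumes an in-memory module named `sketch_hooks` as a stand-in for `libqtile.hook`, a temporary `sketch_config.py` as a stand-in for the user's config, and that a reload starts from a cleared subscription list. The `skip_config` flag mirrors the `if subpath == path: continue` guard from the golden diff.

```python
import importlib
import sys
import tempfile
import types
from pathlib import Path

# Hypothetical stand-in for libqtile.hook: an in-memory module with no
# __file__, so the reload loop below never reloads (and never resets) it.
hooks = types.ModuleType("sketch_hooks")
hooks.subscriptions = []
sys.modules["sketch_hooks"] = hooks

CONFIG_SOURCE = """
import sketch_hooks

def startup_complete():
    pass

# Plays the role of @hook.subscribe.startup_complete: subscribe at import time.
sketch_hooks.subscriptions.append(startup_complete)
"""


def reload_config_submodules(path: Path, skip_config: bool) -> None:
    """Reload every imported module that lives under the config's folder."""
    folder = path.parent
    for module in sys.modules.copy().values():
        file = getattr(module, "__file__", None)
        if file is None:
            continue
        subpath = Path(file)
        if skip_config and subpath == path:
            # The guard from the patch: leave the config itself to load().
            continue
        if folder in subpath.parents:
            importlib.reload(module)


def load(path: Path, skip_config: bool) -> int:
    """Mimic Config.load() and return how many hooks ended up subscribed."""
    hooks.subscriptions.clear()  # assumption: hooks are cleared before a reload
    name = path.stem
    if name in sys.modules:
        reload_config_submodules(path, skip_config)
        importlib.reload(sys.modules[name])
    else:
        importlib.import_module(name)
    return len(hooks.subscriptions)


with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "sketch_config.py"
    config_path.write_text(CONFIG_SOURCE)
    sys.path.insert(0, config_path.parent.as_posix())
    importlib.invalidate_caches()

    first = load(config_path, skip_config=False)  # initial import
    buggy = load(config_path, skip_config=False)  # reload without the guard
    fixed = load(config_path, skip_config=True)   # reload with the guard
    print(first, buggy, fixed)  # 1 2 1
```

Comparing `buggy` with `fixed` shows why the single guard is enough: the config module still runs exactly once per reload, but only in `load()`, after its sibling modules have been refreshed.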

gh_patches_debug_13656 | rasdani/github-patches | git_diff | feast-dev__feast-2676 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

basicConfig is called at the module level

## Expected Behavior

```
import feast
logging.basicConfig(level=level, format=FORMAT)
logging.error("msg")
```

should print logging message according to `FORMAT`

## Current Behavior

It uses the format defined in `feast` at the module level.

## Steps to reproduce

Same as in "Expected Behavior"

### Specifications

- Version: 0.18.1

- Platform: Linux

- Subsystem: -

## Possible Solution

I see that `basicConfig` is called here: https://github.com/feast-dev/feast/blob/c9eda79c7b1169ef05a481a96f07960c014e88b9/sdk/python/feast/cli.py#L84 so it is possible that simply removing this call here is enough: https://github.com/feast-dev/feast/blob/0ca62970dd6bc33c00bd5d8b828752814d480588/sdk/python/feast/__init__.py#L30

If there are any other entry points that need to set up logging, they should call the function, but the call in `__init__.py` must be removed.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `sdk/python/feast/__init__.py`

Content:

```
1 import logging
2
3 from pkg_resources import DistributionNotFound, get_distribution
4
5 from feast.infra.offline_stores.bigquery_source import BigQuerySource
6 from feast.infra.offline_stores.file_source import FileSource
7 from feast.infra.offline_stores.redshift_source import RedshiftSource
8 from feast.infra.offline_stores.snowflake_source import SnowflakeSource
9
10 from .batch_feature_view import BatchFeatureView
11 from .data_source import (
12     KafkaSource,
13     KinesisSource,
14     PushSource,
15     RequestSource,
16     SourceType,
17 )
18 from .entity import Entity
19 from .feature import Feature
20 from .feature_service import FeatureService
21 from .feature_store import FeatureStore
22 from .feature_view import FeatureView
23 from .field import Field
24 from .on_demand_feature_view import OnDemandFeatureView
25 from .repo_config import RepoConfig
26 from .request_feature_view import RequestFeatureView
27 from .stream_feature_view import StreamFeatureView
28 from .value_type import ValueType
29
30 logging.basicConfig(
31     format="%(asctime)s %(levelname)s:%(message)s",
32     datefmt="%m/%d/%Y %I:%M:%S %p",
33     level=logging.INFO,
34 )
35
36 try:
37     __version__ = get_distribution(__name__).version
38 except DistributionNotFound:
39     # package is not installed
40     pass
41
42 __all__ = [
43     "BatchFeatureView",
44     "Entity",
45     "KafkaSource",
46     "KinesisSource",
47     "Feature",
48     "Field",
49     "FeatureService",
50     "FeatureStore",
51     "FeatureView",
52     "OnDemandFeatureView",
53     "RepoConfig",
54     "SourceType",
55     "StreamFeatureView",
56     "ValueType",
57     "BigQuerySource",
58     "FileSource",
59     "RedshiftSource",
60     "RequestFeatureView",
61     "SnowflakeSource",
62     "PushSource",
63     "RequestSource",
64 ]
65
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/sdk/python/feast/__init__.py b/sdk/python/feast/__init__.py
--- a/sdk/python/feast/__init__.py
+++ b/sdk/python/feast/__init__.py
@@ -1,5 +1,3 @@
-import logging
-
 from pkg_resources import DistributionNotFound, get_distribution
 
 from feast.infra.offline_stores.bigquery_source import BigQuerySource
@@ -27,12 +25,6 @@
 from .stream_feature_view import StreamFeatureView
 from .value_type import ValueType
 
-logging.basicConfig(
-    format="%(asctime)s %(levelname)s:%(message)s",
-    datefmt="%m/%d/%Y %I:%M:%S %p",
-    level=logging.INFO,
-)
-
 try:
     __version__ = get_distribution(__name__).version
 except DistributionNotFound:

| {"golden_diff": "diff --git a/sdk/python/feast/__init__.py b/sdk/python/feast/__init__.py\n--- a/sdk/python/feast/__init__.py\n+++ b/sdk/python/feast/__init__.py\n@@ -1,5 +1,3 @@\n-import logging\n-\n from pkg_resources import DistributionNotFound, get_distribution\n \n from feast.infra.offline_stores.bigquery_source import BigQuerySource\n@@ -27,12 +25,6 @@\n from .stream_feature_view import StreamFeatureView\n from .value_type import ValueType\n \n-logging.basicConfig(\n- format=\"%(asctime)s %(levelname)s:%(message)s\",\n- datefmt=\"%m/%d/%Y %I:%M:%S %p\",\n- level=logging.INFO,\n-)\n-\n try:\n __version__ = get_distribution(__name__).version\n except DistributionNotFound:\n", "issue": "basicConfig is called at the module level\n## Expected Behavior \r\n\r\n```\r\nimport feast\r\nlogging.basicConfig(level=level, format=FORMAT)\r\nlogging.error(\"msg\")\r\n```\r\n\r\nshould print logging message according to `FORMAT`\r\n\r\n## Current Behavior\r\n\r\nIt uses the format defined in `feast` at the module level.\r\n\r\n## Steps to reproduce\r\n\r\nSame as in \"Expected Behavior\"\r\n\r\n### Specifications\r\n\r\n- Version: 0.18.1\r\n- Platform: Linux\r\n- Subsystem: -\r\n\r\n## Possible Solution\r\n\r\nI see that `basicConfig` is called here: https://github.com/feast-dev/feast/blob/c9eda79c7b1169ef05a481a96f07960c014e88b9/sdk/python/feast/cli.py#L84 so it is possible that simply removing this call here is enough: https://github.com/feast-dev/feast/blob/0ca62970dd6bc33c00bd5d8b828752814d480588/sdk/python/feast/__init__.py#L30\r\n\r\nIf there are any other entry points that need to set up logging, they should call the function, but the call in `__init__.py` must be removed.\n", "before_files": [{"content": "import logging\n\nfrom pkg_resources import DistributionNotFound, get_distribution\n\nfrom feast.infra.offline_stores.bigquery_source import BigQuerySource\nfrom feast.infra.offline_stores.file_source import FileSource\nfrom 
feast.infra.offline_stores.redshift_source import RedshiftSource\nfrom feast.infra.offline_stores.snowflake_source import SnowflakeSource\n\nfrom .batch_feature_view import BatchFeatureView\nfrom .data_source import (\n KafkaSource,\n KinesisSource,\n PushSource,\n RequestSource,\n SourceType,\n)\nfrom .entity import Entity\nfrom .feature import Feature\nfrom .feature_service import FeatureService\nfrom .feature_store import FeatureStore\nfrom .feature_view import FeatureView\nfrom .field import Field\nfrom .on_demand_feature_view import OnDemandFeatureView\nfrom .repo_config import RepoConfig\nfrom .request_feature_view import RequestFeatureView\nfrom .stream_feature_view import StreamFeatureView\nfrom .value_type import ValueType\n\nlogging.basicConfig(\n format=\"%(asctime)s %(levelname)s:%(message)s\",\n datefmt=\"%m/%d/%Y %I:%M:%S %p\",\n level=logging.INFO,\n)\n\ntry:\n __version__ = get_distribution(__name__).version\nexcept DistributionNotFound:\n # package is not installed\n pass\n\n__all__ = [\n \"BatchFeatureView\",\n \"Entity\",\n \"KafkaSource\",\n \"KinesisSource\",\n \"Feature\",\n \"Field\",\n \"FeatureService\",\n \"FeatureStore\",\n \"FeatureView\",\n \"OnDemandFeatureView\",\n \"RepoConfig\",\n \"SourceType\",\n \"StreamFeatureView\",\n \"ValueType\",\n \"BigQuerySource\",\n \"FileSource\",\n \"RedshiftSource\",\n \"RequestFeatureView\",\n \"SnowflakeSource\",\n \"PushSource\",\n \"RequestSource\",\n]\n", "path": "sdk/python/feast/__init__.py"}], "after_files": [{"content": "from pkg_resources import DistributionNotFound, get_distribution\n\nfrom feast.infra.offline_stores.bigquery_source import BigQuerySource\nfrom feast.infra.offline_stores.file_source import FileSource\nfrom feast.infra.offline_stores.redshift_source import RedshiftSource\nfrom feast.infra.offline_stores.snowflake_source import SnowflakeSource\n\nfrom .batch_feature_view import BatchFeatureView\nfrom .data_source import (\n KafkaSource,\n KinesisSource,\n PushSource,\n 
RequestSource,\n SourceType,\n)\nfrom .entity import Entity\nfrom .feature import Feature\nfrom .feature_service import FeatureService\nfrom .feature_store import FeatureStore\nfrom .feature_view import FeatureView\nfrom .field import Field\nfrom .on_demand_feature_view import OnDemandFeatureView\nfrom .repo_config import RepoConfig\nfrom .request_feature_view import RequestFeatureView\nfrom .stream_feature_view import StreamFeatureView\nfrom .value_type import ValueType\n\ntry:\n __version__ = get_distribution(__name__).version\nexcept DistributionNotFound:\n # package is not installed\n pass\n\n__all__ = [\n \"BatchFeatureView\",\n \"Entity\",\n \"KafkaSource\",\n \"KinesisSource\",\n \"Feature\",\n \"Field\",\n \"FeatureService\",\n \"FeatureStore\",\n \"FeatureView\",\n \"OnDemandFeatureView\",\n \"RepoConfig\",\n \"SourceType\",\n \"StreamFeatureView\",\n \"ValueType\",\n \"BigQuerySource\",\n \"FileSource\",\n \"RedshiftSource\",\n \"RequestFeatureView\",\n \"SnowflakeSource\",\n \"PushSource\",\n \"RequestSource\",\n]\n", "path": "sdk/python/feast/__init__.py"}]} | 1,064 | 183 |
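The feast row turns on a documented property of `logging.basicConfig()`: it does nothing if the root logger already has handlers, unless `force=True` is passed (available since Python 3.8). A package that calls it at import time therefore silently wins over the application's later call. The sketch below reproduces this with in-memory streams. `library_sketch` is a hypothetical logger name, and the `NullHandler` branch follows the pattern the Python Logging HOWTO recommends for libraries. It is not code taken from feast.

```python
import io
import logging


def simulate_import_then_app_config(library_calls_basic_config: bool) -> str:
    """Return what the application's own handler captured for one error."""
    root = logging.getLogger()
    for handler in root.handlers[:]:  # start each scenario from a bare root
        root.removeHandler(handler)

    # --- what happens at "import library" time ---
    if library_calls_basic_config:
        # Pre-patch behaviour: the package configures the *root* logger.
        logging.basicConfig(
            stream=io.StringIO(),
            format="%(asctime)s %(levelname)s:%(message)s",
            level=logging.INFO,
        )
    else:
        # Library-friendly alternative: a named logger with a NullHandler,
        # leaving the root logger for the application to configure.
        logging.getLogger("library_sketch").addHandler(logging.NullHandler())

    # --- the application's configuration, done after the import ---
    app_stream = io.StringIO()
    logging.basicConfig(stream=app_stream, format="APP %(levelname)s %(message)s")
    logging.error("msg")
    return app_stream.getvalue()


print(repr(simulate_import_then_app_config(True)))   # '' (app config ignored)
print(repr(simulate_import_then_app_config(False)))  # 'APP ERROR msg\n'
```

An application that cannot wait for a fixed release can pass `force=True` to its own `basicConfig()` call. This removes and closes any existing root handlers before the new one is added.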

gh_patches_debug_1301 | rasdani/github-patches | git_diff | vega__altair-1844 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix simple typo: packge -> package

There is a small typo in setup.py.

Should read package rather than packge.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 import io
2 import os
3 import re
4
5 try:
6     from setuptools import setup
7 except ImportError:
8     from distutils.core import setup
9
10 #==============================================================================
11 # Utilities
12 #==============================================================================
13
14 def read(path, encoding='utf-8'):
15     path = os.path.join(os.path.dirname(__file__), path)
16     with io.open(path, encoding=encoding) as fp:
17         return fp.read()
18
19
20 def get_install_requirements(path):
21     content = read(path)
22     return [
23         req
24         for req in content.split("\n")
25         if req != '' and not req.startswith('#')
26     ]
27
28
29 def version(path):
30     """Obtain the packge version from a python file e.g. pkg/__init__.py
31
32     See <https://packaging.python.org/en/latest/single_source_version.html>.
33     """
34     version_file = read(path)
35     version_match = re.search(r"""^__version__ = ['"]([^'"]*)['"]""",
36                               version_file, re.M)
37     if version_match:
38         return version_match.group(1)
39     raise RuntimeError("Unable to find version string.")
40
41 HERE = os.path.abspath(os.path.dirname(__file__))
42
43 # From https://github.com/jupyterlab/jupyterlab/blob/master/setupbase.py, BSD licensed
44 def find_packages(top=HERE):
45     """
46     Find all of the packages.
47     """
48     packages = []
49     for d, dirs, _ in os.walk(top, followlinks=True):
50         if os.path.exists(os.path.join(d, '__init__.py')):
51             packages.append(os.path.relpath(d, top).replace(os.path.sep, '.'))
52         elif d != top:
53             # Do not look for packages in subfolders if current is not a package
54             dirs[:] = []
55     return packages
56
57 #==============================================================================
58 # Variables
59 #==============================================================================
60
61 DESCRIPTION = "Altair: A declarative statistical visualization library for Python."
62 LONG_DESCRIPTION = read("README.md")
63 LONG_DESCRIPTION_CONTENT_TYPE = 'text/markdown'
64 NAME = "altair"
65 PACKAGES = find_packages()
66 AUTHOR = "Brian E. Granger / Jake VanderPlas"
67 AUTHOR_EMAIL = "[email protected]"
68 URL = 'http://altair-viz.github.io'
69 DOWNLOAD_URL = 'http://github.com/altair-viz/altair/'
70 LICENSE = 'BSD 3-clause'
71 INSTALL_REQUIRES = get_install_requirements("requirements.txt")
72 PYTHON_REQUIRES = ">=3.5"
73 DEV_REQUIRES = get_install_requirements("requirements_dev.txt")
74 VERSION = version('altair/__init__.py')
75
76
77 setup(name=NAME,
78       version=VERSION,
79       description=DESCRIPTION,
80       long_description=LONG_DESCRIPTION,
81       long_description_content_type=LONG_DESCRIPTION_CONTENT_TYPE,
82       author=AUTHOR,
83       author_email=AUTHOR_EMAIL,
84       url=URL,
85       download_url=DOWNLOAD_URL,
86       license=LICENSE,
87       packages=PACKAGES,
88       include_package_data=True,
89       install_requires=INSTALL_REQUIRES,
90       python_requires=PYTHON_REQUIRES,
91       extras_require={
92           'dev': DEV_REQUIRES
93       },
94       classifiers=[
95           'Development Status :: 5 - Production/Stable',
96           'Environment :: Console',
97           'Intended Audience :: Science/Research',
98           'License :: OSI Approved :: BSD License',
99           'Natural Language :: English',
100           'Programming Language :: Python :: 3.5',
101           'Programming Language :: Python :: 3.6',
102           'Programming Language :: Python :: 3.7',
103           'Programming Language :: Python :: 3.8',
104       ],
105 )
106
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -27,7 +27,7 @@
 
 
 def version(path):
-    """Obtain the packge version from a python file e.g. pkg/__init__.py
+    """Obtain the package version from a python file e.g. pkg/__init__.py
 
     See <https://packaging.python.org/en/latest/single_source_version.html>.
     """

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -27,7 +27,7 @@\n \n \n def version(path):\n- \"\"\"Obtain the packge version from a python file e.g. pkg/__init__.py\n+ \"\"\"Obtain the package version from a python file e.g. pkg/__init__.py\n \n See <https://packaging.python.org/en/latest/single_source_version.html>.\n \"\"\"\n", "issue": "Fix simple typo: packge -> package\nThere is a small typo in setup.py.\nShould read package rather than packge.\n\n\n", "before_files": [{"content": "import io\nimport os\nimport re\n\ntry:\n from setuptools import setup\nexcept ImportError:\n from distutils.core import setup\n\n#==============================================================================\n# Utilities\n#==============================================================================\n\ndef read(path, encoding='utf-8'):\n path = os.path.join(os.path.dirname(__file__), path)\n with io.open(path, encoding=encoding) as fp:\n return fp.read()\n\n\ndef get_install_requirements(path):\n content = read(path)\n return [\n req\n for req in content.split(\"\\n\")\n if req != '' and not req.startswith('#')\n ]\n\n\ndef version(path):\n \"\"\"Obtain the packge version from a python file e.g. 
pkg/__init__.py\n\n See <https://packaging.python.org/en/latest/single_source_version.html>.\n \"\"\"\n version_file = read(path)\n version_match = re.search(r\"\"\"^__version__ = ['\"]([^'\"]*)['\"]\"\"\",\n version_file, re.M)\n if version_match:\n return version_match.group(1)\n raise RuntimeError(\"Unable to find version string.\")\n\nHERE = os.path.abspath(os.path.dirname(__file__))\n\n# From https://github.com/jupyterlab/jupyterlab/blob/master/setupbase.py, BSD licensed\ndef find_packages(top=HERE):\n \"\"\"\n Find all of the packages.\n \"\"\"\n packages = []\n for d, dirs, _ in os.walk(top, followlinks=True):\n if os.path.exists(os.path.join(d, '__init__.py')):\n packages.append(os.path.relpath(d, top).replace(os.path.sep, '.'))\n elif d != top:\n # Do not look for packages in subfolders if current is not a package\n dirs[:] = []\n return packages\n\n#==============================================================================\n# Variables\n#==============================================================================\n\nDESCRIPTION = \"Altair: A declarative statistical visualization library for Python.\"\nLONG_DESCRIPTION = read(\"README.md\")\nLONG_DESCRIPTION_CONTENT_TYPE = 'text/markdown'\nNAME = \"altair\"\nPACKAGES = find_packages()\nAUTHOR = \"Brian E. 
Granger / Jake VanderPlas\"\nAUTHOR_EMAIL = \"[email protected]\"\nURL = 'http://altair-viz.github.io'\nDOWNLOAD_URL = 'http://github.com/altair-viz/altair/'\nLICENSE = 'BSD 3-clause'\nINSTALL_REQUIRES = get_install_requirements(\"requirements.txt\")\nPYTHON_REQUIRES = \">=3.5\"\nDEV_REQUIRES = get_install_requirements(\"requirements_dev.txt\")\nVERSION = version('altair/__init__.py')\n\n\nsetup(name=NAME,\n version=VERSION,\n description=DESCRIPTION,\n long_description=LONG_DESCRIPTION,\n long_description_content_type=LONG_DESCRIPTION_CONTENT_TYPE,\n author=AUTHOR,\n author_email=AUTHOR_EMAIL,\n url=URL,\n download_url=DOWNLOAD_URL,\n license=LICENSE,\n packages=PACKAGES,\n include_package_data=True,\n install_requires=INSTALL_REQUIRES,\n python_requires=PYTHON_REQUIRES,\n extras_require={\n 'dev': DEV_REQUIRES\n },\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Environment :: Console',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: BSD License',\n 'Natural Language :: English',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Programming Language :: Python :: 3.8',\n ],\n )\n", "path": "setup.py"}], "after_files": [{"content": "import io\nimport os\nimport re\n\ntry:\n from setuptools import setup\nexcept ImportError:\n from distutils.core import setup\n\n#==============================================================================\n# Utilities\n#==============================================================================\n\ndef read(path, encoding='utf-8'):\n path = os.path.join(os.path.dirname(__file__), path)\n with io.open(path, encoding=encoding) as fp:\n return fp.read()\n\n\ndef get_install_requirements(path):\n content = read(path)\n return [\n req\n for req in content.split(\"\\n\")\n if req != '' and not req.startswith('#')\n ]\n\n\ndef version(path):\n \"\"\"Obtain the package version from a python file e.g. 
pkg/__init__.py\n\n See <https://packaging.python.org/en/latest/single_source_version.html>.\n \"\"\"\n version_file = read(path)\n version_match = re.search(r\"\"\"^__version__ = ['\"]([^'\"]*)['\"]\"\"\",\n version_file, re.M)\n if version_match:\n return version_match.group(1)\n raise RuntimeError(\"Unable to find version string.\")\n\nHERE = os.path.abspath(os.path.dirname(__file__))\n\n# From https://github.com/jupyterlab/jupyterlab/blob/master/setupbase.py, BSD licensed\ndef find_packages(top=HERE):\n \"\"\"\n Find all of the packages.\n \"\"\"\n packages = []\n for d, dirs, _ in os.walk(top, followlinks=True):\n if os.path.exists(os.path.join(d, '__init__.py')):\n packages.append(os.path.relpath(d, top).replace(os.path.sep, '.'))\n elif d != top:\n # Do not look for packages in subfolders if current is not a package\n dirs[:] = []\n return packages\n\n#==============================================================================\n# Variables\n#==============================================================================\n\nDESCRIPTION = \"Altair: A declarative statistical visualization library for Python.\"\nLONG_DESCRIPTION = read(\"README.md\")\nLONG_DESCRIPTION_CONTENT_TYPE = 'text/markdown'\nNAME = \"altair\"\nPACKAGES = find_packages()\nAUTHOR = \"Brian E. 
Granger / Jake VanderPlas\"\nAUTHOR_EMAIL = \"[email protected]\"\nURL = 'http://altair-viz.github.io'\nDOWNLOAD_URL = 'http://github.com/altair-viz/altair/'\nLICENSE = 'BSD 3-clause'\nINSTALL_REQUIRES = get_install_requirements(\"requirements.txt\")\nPYTHON_REQUIRES = \">=3.5\"\nDEV_REQUIRES = get_install_requirements(\"requirements_dev.txt\")\nVERSION = version('altair/__init__.py')\n\n\nsetup(name=NAME,\n version=VERSION,\n description=DESCRIPTION,\n long_description=LONG_DESCRIPTION,\n long_description_content_type=LONG_DESCRIPTION_CONTENT_TYPE,\n author=AUTHOR,\n author_email=AUTHOR_EMAIL,\n url=URL,\n download_url=DOWNLOAD_URL,\n license=LICENSE,\n packages=PACKAGES,\n include_package_data=True,\n install_requires=INSTALL_REQUIRES,\n python_requires=PYTHON_REQUIRES,\n extras_require={\n 'dev': DEV_REQUIRES\n },\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n 'Environment :: Console',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: BSD License',\n 'Natural Language :: English',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Programming Language :: Python :: 3.8',\n ],\n )\n", "path": "setup.py"}]} | 1,242 | 98 |

gh_patches_debug_22059 | rasdani/github-patches | git_diff | freedomofpress__securedrop-5559 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Update removed `platform.linux_distribution` funtion call in Python 3.8

## Description

We are using [platform.linux_distribution](https://github.com/freedomofpress/securedrop/blob/4c73102ca9151a86a08396de40163b48a5a21768/securedrop/source_app/api.py#L20) function in our metadata endpoint. But, this function was deprecated from Python3.5 and totally removed from Python 3.8.

## Solution

We can directly read the `/etc/lsb-release` and `/etc/os-release` file as required.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `securedrop/source_app/api.py`

Content:

```
1 import json
2 import platform
3
4 from flask import Blueprint, current_app, make_response
5
6 from source_app.utils import get_sourcev2_url, get_sourcev3_url
7
8 import version
9
10
11 def make_blueprint(config):
12     view = Blueprint('api', __name__)
13
14     @view.route('/metadata')
15     def metadata():
16         meta = {
17             'allow_document_uploads': current_app.instance_config.allow_document_uploads,
18             'gpg_fpr': config.JOURNALIST_KEY,
19             'sd_version': version.__version__,
20             'server_os': platform.linux_distribution()[1],
21             'supported_languages': config.SUPPORTED_LOCALES,
22             'v2_source_url': get_sourcev2_url(),
23             'v3_source_url': get_sourcev3_url()
24         }
25         resp = make_response(json.dumps(meta))
26         resp.headers['Content-Type'] = 'application/json'
27         return resp
28
29     return view
30
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/securedrop/source_app/api.py b/securedrop/source_app/api.py
--- a/securedrop/source_app/api.py
+++ b/securedrop/source_app/api.py
@@ -1,5 +1,4 @@
 import json
-import platform
 
 from flask import Blueprint, current_app, make_response
 
@@ -8,6 +7,10 @@
 import version
 
 
+with open("/etc/lsb-release", "r") as f:
+    server_os = f.readlines()[1].split("=")[1].strip("\n")
+
+
 def make_blueprint(config):
     view = Blueprint('api', __name__)
 
@@ -17,7 +20,7 @@
             'allow_document_uploads': current_app.instance_config.allow_document_uploads,
             'gpg_fpr': config.JOURNALIST_KEY,
             'sd_version': version.__version__,
-            'server_os': platform.linux_distribution()[1],
+            'server_os': server_os,
             'supported_languages': config.SUPPORTED_LOCALES,
             'v2_source_url': get_sourcev2_url(),
             'v3_source_url': get_sourcev3_url()

| {"golden_diff": "diff --git a/securedrop/source_app/api.py b/securedrop/source_app/api.py\n--- a/securedrop/source_app/api.py\n+++ b/securedrop/source_app/api.py\n@@ -1,5 +1,4 @@\n import json\n-import platform\n \n from flask import Blueprint, current_app, make_response\n \n@@ -8,6 +7,10 @@\n import version\n \n \n+with open(\"/etc/lsb-release\", \"r\") as f:\n+ server_os = f.readlines()[1].split(\"=\")[1].strip(\"\\n\")\n+\n+\n def make_blueprint(config):\n view = Blueprint('api', __name__)\n \n@@ -17,7 +20,7 @@\n 'allow_document_uploads': current_app.instance_config.allow_document_uploads,\n 'gpg_fpr': config.JOURNALIST_KEY,\n 'sd_version': version.__version__,\n- 'server_os': platform.linux_distribution()[1],\n+ 'server_os': server_os,\n 'supported_languages': config.SUPPORTED_LOCALES,\n 'v2_source_url': get_sourcev2_url(),\n 'v3_source_url': get_sourcev3_url()\n", "issue": "Update removed `platform.linux_distribution` funtion call in Python 3.8\n## Description\r\n\r\nWe are using [platform.linux_distribution](https://github.com/freedomofpress/securedrop/blob/4c73102ca9151a86a08396de40163b48a5a21768/securedrop/source_app/api.py#L20) function in our metadata endpoint. But, this function was deprecated from Python3.5 and totally removed from Python 3.8. 
\r\n\r\n## Solution\r\n\r\nWe can directly read the `/etc/lsb-release` and `/etc/os-release` file as required.\r\n\n", "before_files": [{"content": "import json\nimport platform\n\nfrom flask import Blueprint, current_app, make_response\n\nfrom source_app.utils import get_sourcev2_url, get_sourcev3_url\n\nimport version\n\n\ndef make_blueprint(config):\n view = Blueprint('api', __name__)\n\n @view.route('/metadata')\n def metadata():\n meta = {\n 'allow_document_uploads': current_app.instance_config.allow_document_uploads,\n 'gpg_fpr': config.JOURNALIST_KEY,\n 'sd_version': version.__version__,\n 'server_os': platform.linux_distribution()[1],\n 'supported_languages': config.SUPPORTED_LOCALES,\n 'v2_source_url': get_sourcev2_url(),\n 'v3_source_url': get_sourcev3_url()\n }\n resp = make_response(json.dumps(meta))\n resp.headers['Content-Type'] = 'application/json'\n return resp\n\n return view\n", "path": "securedrop/source_app/api.py"}], "after_files": [{"content": "import json\n\nfrom flask import Blueprint, current_app, make_response\n\nfrom source_app.utils import get_sourcev2_url, get_sourcev3_url\n\nimport version\n\n\nwith open(\"/etc/lsb-release\", \"r\") as f:\n server_os = f.readlines()[1].split(\"=\")[1].strip(\"\\n\")\n\n\ndef make_blueprint(config):\n view = Blueprint('api', __name__)\n\n @view.route('/metadata')\n def metadata():\n meta = {\n 'allow_document_uploads': current_app.instance_config.allow_document_uploads,\n 'gpg_fpr': config.JOURNALIST_KEY,\n 'sd_version': version.__version__,\n 'server_os': server_os,\n 'supported_languages': config.SUPPORTED_LOCALES,\n 'v2_source_url': get_sourcev2_url(),\n 'v3_source_url': get_sourcev3_url()\n }\n resp = make_response(json.dumps(meta))\n resp.headers['Content-Type'] = 'application/json'\n return resp\n\n return view\n", "path": "securedrop/source_app/api.py"}]} | 653 | 245 |

gh_patches_debug_18106 | rasdani/github-patches | git_diff | OpenNMT__OpenNMT-py-1151 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

torchaudio has to be optional

@bpopeters

The last change https://github.com/OpenNMT/OpenNMT-py/pull/1144/files

made torchaudio a requirement, not an optional one as it should be.

Can you fix it please ?

Thanks.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `onmt/inputters/audio_dataset.py`

Content:

```
1 # -*- coding: utf-8 -*-
2 import os
3 from tqdm import tqdm
4
5 import torch
6 import torchaudio
7 import librosa
8 import numpy as np
9
10 from onmt.inputters.dataset_base import DatasetBase
11
12
13 class AudioDataset(DatasetBase):
14     data_type = 'audio'  # get rid of this class attribute asap
15
16     @staticmethod
17     def sort_key(ex):
18         """ Sort using duration time of the sound spectrogram. """
19         return ex.src.size(1)
20
21     @staticmethod
22     def extract_features(audio_path, sample_rate, truncate, window_size,
23                          window_stride, window, normalize_audio):
24         # torchaudio loading options recently changed. It's probably
25         # straightforward to rewrite the audio handling to make use of
26         # up-to-date torchaudio, but in the meantime there is a legacy
27         # method which uses the old defaults
28         sound, sample_rate_ = torchaudio.legacy.load(audio_path)
29         if truncate and truncate > 0:
30             if sound.size(0) > truncate:
31                 sound = sound[:truncate]
32
33         assert sample_rate_ == sample_rate, \
34             'Sample rate of %s != -sample_rate (%d vs %d)' \
35             % (audio_path, sample_rate_, sample_rate)
36
37         sound = sound.numpy()
38         if len(sound.shape) > 1:
39             if sound.shape[1] == 1:
40                 sound = sound.squeeze()
41             else:
42                 sound = sound.mean(axis=1)  # average multiple channels
43
44         n_fft = int(sample_rate * window_size)
45         win_length = n_fft
46         hop_length = int(sample_rate * window_stride)
47         # STFT
48         d = librosa.stft(sound, n_fft=n_fft, hop_length=hop_length,
49                          win_length=win_length, window=window)
50         spect, _ = librosa.magphase(d)
51         spect = np.log1p(spect)
52         spect = torch.FloatTensor(spect)
53         if normalize_audio:
54             mean = spect.mean()
55             std = spect.std()
56             spect.add_(-mean)
57             spect.div_(std)
58         return spect
59
60     @classmethod
61     def make_examples(
62             cls,
63             data,
64             src_dir,
65             side,
66             sample_rate,
67             window_size,
68             window_stride,
69             window,
70             normalize_audio,
71             truncate=None
72     ):
73         """
74         Args:
75             data: sequence of audio paths or path containing these sequences
76             src_dir (str): location of source audio files.
77             side (str): 'src' or 'tgt'.
78             sample_rate (int): sample_rate.
79             window_size (float) : window size for spectrogram in seconds.
80             window_stride (float): window stride for spectrogram in seconds.
81             window (str): window type for spectrogram generation.
82             normalize_audio (bool): subtract spectrogram by mean and divide
83                 by std or not.
84             truncate (int): maximum audio length (0 or None for unlimited).
85
86         Yields:
87             a dictionary containing audio data for each line.
88         """
89         assert src_dir is not None and os.path.exists(src_dir),\
90             "src_dir must be a valid directory if data_type is audio"
91
92         if isinstance(data, str):
93             data = cls._read_file(data)
94
95         for i, line in enumerate(tqdm(data)):
96             audio_path = os.path.join(src_dir, line.strip())
97             if not os.path.exists(audio_path):
98                 audio_path = line.strip()
99
100             assert os.path.exists(audio_path), \
101                 'audio path %s not found' % (line.strip())
102
103             spect = AudioDataset.extract_features(
104                 audio_path, sample_rate, truncate, window_size,
105                 window_stride, window, normalize_audio
106             )
107
108             yield {side: spect, side + '_path': line.strip(),
109                    side + '_lengths': spect.size(1), 'indices': i}
110
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/onmt/inputters/audio_dataset.py b/onmt/inputters/audio_dataset.py
--- a/onmt/inputters/audio_dataset.py
+++ b/onmt/inputters/audio_dataset.py
@@ -3,9 +3,6 @@
 from tqdm import tqdm
 
 import torch
-import torchaudio
-import librosa
-import numpy as np
 
 from onmt.inputters.dataset_base import DatasetBase
 
@@ -21,6 +18,9 @@
     @staticmethod
     def extract_features(audio_path, sample_rate, truncate, window_size,
                          window_stride, window, normalize_audio):
+        import torchaudio
+        import librosa
+        import numpy as np
         # torchaudio loading options recently changed. It's probably
         # straightforward to rewrite the audio handling to make use of
         # up-to-date torchaudio, but in the meantime there is a legacy

| {"golden_diff": "diff --git a/onmt/inputters/audio_dataset.py b/onmt/inputters/audio_dataset.py\n--- a/onmt/inputters/audio_dataset.py\n+++ b/onmt/inputters/audio_dataset.py\n@@ -3,9 +3,6 @@\n from tqdm import tqdm\n \n import torch\n-import torchaudio\n-import librosa\n-import numpy as np\n \n from onmt.inputters.dataset_base import DatasetBase\n \n@@ -21,6 +18,9 @@\n @staticmethod\n def extract_features(audio_path, sample_rate, truncate, window_size,\n window_stride, window, normalize_audio):\n+ import torchaudio\n+ import librosa\n+ import numpy as np\n # torchaudio loading options recently changed. It's probably\n # straightforward to rewrite the audio handling to make use of\n # up-to-date torchaudio, but in the meantime there is a legacy\n", "issue": "torchaudio has to be optional\n@bpopeters \r\nThe last change https://github.com/OpenNMT/OpenNMT-py/pull/1144/files\r\nmade torchaudio a requirement, not an optional one as it should be.\r\n\r\nCan you fix it please ?\r\nThanks.\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nimport os\nfrom tqdm import tqdm\n\nimport torch\nimport torchaudio\nimport librosa\nimport numpy as np\n\nfrom onmt.inputters.dataset_base import DatasetBase\n\n\nclass AudioDataset(DatasetBase):\n data_type = 'audio' # get rid of this class attribute asap\n\n @staticmethod\n def sort_key(ex):\n \"\"\" Sort using duration time of the sound spectrogram. \"\"\"\n return ex.src.size(1)\n\n @staticmethod\n def extract_features(audio_path, sample_rate, truncate, window_size,\n window_stride, window, normalize_audio):\n # torchaudio loading options recently changed. 
It's probably\n # straightforward to rewrite the audio handling to make use of\n # up-to-date torchaudio, but in the meantime there is a legacy\n # method which uses the old defaults\n sound, sample_rate_ = torchaudio.legacy.load(audio_path)\n if truncate and truncate > 0:\n if sound.size(0) > truncate:\n sound = sound[:truncate]\n\n assert sample_rate_ == sample_rate, \\\n 'Sample rate of %s != -sample_rate (%d vs %d)' \\\n % (audio_path, sample_rate_, sample_rate)\n\n sound = sound.numpy()\n if len(sound.shape) > 1:\n if sound.shape[1] == 1:\n sound = sound.squeeze()\n else:\n sound = sound.mean(axis=1) # average multiple channels\n\n n_fft = int(sample_rate * window_size)\n win_length = n_fft\n hop_length = int(sample_rate * window_stride)\n # STFT\n d = librosa.stft(sound, n_fft=n_fft, hop_length=hop_length,\n win_length=win_length, window=window)\n spect, _ = librosa.magphase(d)\n spect = np.log1p(spect)\n spect = torch.FloatTensor(spect)\n if normalize_audio:\n mean = spect.mean()\n std = spect.std()\n spect.add_(-mean)\n spect.div_(std)\n return spect\n\n @classmethod\n def make_examples(\n cls,\n data,\n src_dir,\n side,\n sample_rate,\n window_size,\n window_stride,\n window,\n normalize_audio,\n truncate=None\n ):\n \"\"\"\n Args:\n data: sequence of audio paths or path containing these sequences\n src_dir (str): location of source audio files.\n side (str): 'src' or 'tgt'.\n sample_rate (int): sample_rate.\n window_size (float) : window size for spectrogram in seconds.\n window_stride (float): window stride for spectrogram in seconds.\n window (str): window type for spectrogram generation.\n normalize_audio (bool): subtract spectrogram by mean and divide\n by std or not.\n truncate (int): maximum audio length (0 or None for unlimited).\n\n Yields:\n a dictionary containing audio data for each line.\n \"\"\"\n assert src_dir is not None and os.path.exists(src_dir),\\\n \"src_dir must be a valid directory if data_type is audio\"\n\n if isinstance(data, 
str):\n data = cls._read_file(data)\n\n for i, line in enumerate(tqdm(data)):\n audio_path = os.path.join(src_dir, line.strip())\n if not os.path.exists(audio_path):\n audio_path = line.strip()\n\n assert os.path.exists(audio_path), \\\n 'audio path %s not found' % (line.strip())\n\n spect = AudioDataset.extract_features(\n audio_path, sample_rate, truncate, window_size,\n window_stride, window, normalize_audio\n )\n\n yield {side: spect, side + '_path': line.strip(),\n side + '_lengths': spect.size(1), 'indices': i}\n", "path": "onmt/inputters/audio_dataset.py"}], "after_files": [{"content": "# -*- coding: utf-8 -*-\nimport os\nfrom tqdm import tqdm\n\nimport torch\n\nfrom onmt.inputters.dataset_base import DatasetBase\n\n\nclass AudioDataset(DatasetBase):\n data_type = 'audio' # get rid of this class attribute asap\n\n @staticmethod\n def sort_key(ex):\n \"\"\" Sort using duration time of the sound spectrogram. \"\"\"\n return ex.src.size(1)\n\n @staticmethod\n def extract_features(audio_path, sample_rate, truncate, window_size,\n window_stride, window, normalize_audio):\n import torchaudio\n import librosa\n import numpy as np\n # torchaudio loading options recently changed. 
It's probably\n # straightforward to rewrite the audio handling to make use of\n # up-to-date torchaudio, but in the meantime there is a legacy\n # method which uses the old defaults\n sound, sample_rate_ = torchaudio.legacy.load(audio_path)\n if truncate and truncate > 0:\n if sound.size(0) > truncate:\n sound = sound[:truncate]\n\n assert sample_rate_ == sample_rate, \\\n 'Sample rate of %s != -sample_rate (%d vs %d)' \\\n % (audio_path, sample_rate_, sample_rate)\n\n sound = sound.numpy()\n if len(sound.shape) > 1:\n if sound.shape[1] == 1:\n sound = sound.squeeze()\n else:\n sound = sound.mean(axis=1) # average multiple channels\n\n n_fft = int(sample_rate * window_size)\n win_length = n_fft\n hop_length = int(sample_rate * window_stride)\n # STFT\n d = librosa.stft(sound, n_fft=n_fft, hop_length=hop_length,\n win_length=win_length, window=window)\n spect, _ = librosa.magphase(d)\n spect = np.log1p(spect)\n spect = torch.FloatTensor(spect)\n if normalize_audio:\n mean = spect.mean()\n std = spect.std()\n spect.add_(-mean)\n spect.div_(std)\n return spect\n\n @classmethod\n def make_examples(\n cls,\n data,\n src_dir,\n side,\n sample_rate,\n window_size,\n window_stride,\n window,\n normalize_audio,\n truncate=None\n ):\n \"\"\"\n Args:\n data: sequence of audio paths or path containing these sequences\n src_dir (str): location of source audio files.\n side (str): 'src' or 'tgt'.\n sample_rate (int): sample_rate.\n window_size (float) : window size for spectrogram in seconds.\n window_stride (float): window stride for spectrogram in seconds.\n window (str): window type for spectrogram generation.\n normalize_audio (bool): subtract spectrogram by mean and divide\n by std or not.\n truncate (int): maximum audio length (0 or None for unlimited).\n\n Yields:\n a dictionary containing audio data for each line.\n \"\"\"\n assert src_dir is not None and os.path.exists(src_dir),\\\n \"src_dir must be a valid directory if data_type is audio\"\n\n if isinstance(data, 
str):\n data = cls._read_file(data)\n\n for i, line in enumerate(tqdm(data)):\n audio_path = os.path.join(src_dir, line.strip())\n if not os.path.exists(audio_path):\n audio_path = line.strip()\n\n assert os.path.exists(audio_path), \\\n 'audio path %s not found' % (line.strip())\n\n spect = AudioDataset.extract_features(\n audio_path, sample_rate, truncate, window_size,\n window_stride, window, normalize_audio\n )\n\n yield {side: spect, side + '_path': line.strip(),\n side + '_lengths': spect.size(1), 'indices': i}\n", "path": "onmt/inputters/audio_dataset.py"}]} | 1,367 | 188 |

gh_patches_debug_8986 | rasdani/github-patches | git_diff | facebookresearch__Mephisto-323 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Path changes in cleanup scripts

In `mephisto/scripts/mturk/cleanup.py`: broken imports line 11-15 with the change from `core` and `providers` into `abstraction` - can also submit a PR if that's easier!

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mephisto/scripts/mturk/cleanup.py`

Content:

```
1 #!/usr/bin/env python3
2
3 # Copyright (c) Facebook, Inc. and its affiliates.
4 # This source code is licensed under the MIT license found in the
5 # LICENSE file in the root directory of this source tree.
6
7 """
8 Utility script that finds, expires, and disposes HITs that may not
9 have been taking down during a run that exited improperly.
10 """
11 from mephisto.providers.mturk.mturk_utils import (
12     get_outstanding_hits,
13     expire_and_dispose_hits,
14 )
15 from mephisto.core.local_database import LocalMephistoDB
16
17 db = LocalMephistoDB()
18
19 all_requesters = db.find_requesters(provider_type="mturk")
20 all_requesters += db.find_requesters(provider_type="mturk_sandbox")
21
22 print("You have the following requesters available for mturk and mturk sandbox:")
23 r_names = [r.requester_name for r in all_requesters]
24 print(sorted(r_names))
25
26 use_name = input("Enter the name of the requester to clear HITs from:\n>> ")
27 while use_name not in r_names:
28     use_name = input(
29         f"Sorry, {use_name} is not in the requester list. "
30         f"The following are valid: {r_names}\n"
31         f"Select one:\n>> "
32     )
33
34 requester = db.find_requesters(requester_name=use_name)[0]
35 client = requester._get_client(requester._requester_name)
36
37 outstanding_hit_types = get_outstanding_hits(client)
38 num_hit_types = len(outstanding_hit_types.keys())
39 sum_hits = sum([len(outstanding_hit_types[x]) for x in outstanding_hit_types.keys()])
40
41 all_hits = []
42 for hit_type in outstanding_hit_types.keys():
43     all_hits += outstanding_hit_types[hit_type]
44
45 broken_hits = [
46     h
47     for h in all_hits
48     if h["NumberOfAssignmentsCompleted"] == 0 and h["HITStatus"] != "Reviewable"
49 ]
50
51 print(
52     f"The requester {use_name} has {num_hit_types} outstanding HIT "
53     f"types, with {len(broken_hits)} suspected active or broken HITs.\n"
54     "This may include tasks that are still in-flight, but also "
55     "tasks that have already expired but have not been disposed of yet."
56 )
57
58 run_type = input("Would you like to cleanup by (t)itle, or just clean up (a)ll?\n>> ")
59 use_hits = None
60
61 while use_hits is None:
62     if run_type.lower().startswith("t"):
63         use_hits = []
64         for hit_type in outstanding_hit_types.keys():
65             cur_title = outstanding_hit_types[hit_type][0]["Title"]
66             print(f"HIT TITLE: {cur_title}")
67             print(f"HIT COUNT: {len(outstanding_hit_types[hit_type])}")
68             should_clear = input(
69                 "Should we cleanup this hit type? (y)es for yes, anything else for no: "
70                 "\n>> "
71             )
72             if should_clear.lower().startswith("y"):
73                 use_hits += outstanding_hit_types[hit_type]
74     elif run_type.lower().startswith("a"):
75         use_hits = all_hits
76     else:
77         run_type = input("Options are (t)itle, or (a)ll:\n>> ")
78
79 print(f"Disposing {len(use_hits)} HITs.")
80 remaining_hits = expire_and_dispose_hits(client, use_hits)
81
82 if len(remaining_hits) == 0:
83     print("Disposed!")
84 else:
85     print(
86         f"After disposing, {len(remaining_hits)} could not be disposed.\n"
87         f"These may not have been reviewed yet, or are being actively worked on.\n"
88         "They have been expired though, so please try to dispose later."
89         "The first 20 dispose errors are added below:"
90     )
91     print([h["dispose_exception"] for h in remaining_hits[:20]])
92
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/mephisto/scripts/mturk/cleanup.py b/mephisto/scripts/mturk/cleanup.py
--- a/mephisto/scripts/mturk/cleanup.py
+++ b/mephisto/scripts/mturk/cleanup.py
@@ -8,11 +8,11 @@
 Utility script that finds, expires, and disposes HITs that may not
 have been taking down during a run that exited improperly.
 """
-from mephisto.providers.mturk.mturk_utils import (
+from mephisto.abstractions.providers.mturk.mturk_utils import (
     get_outstanding_hits,
     expire_and_dispose_hits,
 )
-from mephisto.core.local_database import LocalMephistoDB
+from mephisto.abstractions.databases.local_database import LocalMephistoDB
 
 db = LocalMephistoDB()

| {"golden_diff": "diff --git a/mephisto/scripts/mturk/cleanup.py b/mephisto/scripts/mturk/cleanup.py\n--- a/mephisto/scripts/mturk/cleanup.py\n+++ b/mephisto/scripts/mturk/cleanup.py\n@@ -8,11 +8,11 @@\n Utility script that finds, expires, and disposes HITs that may not\n have been taking down during a run that exited improperly.\n \"\"\"\n-from mephisto.providers.mturk.mturk_utils import (\n+from mephisto.abstractions.providers.mturk.mturk_utils import (\n get_outstanding_hits,\n expire_and_dispose_hits,\n )\n-from mephisto.core.local_database import LocalMephistoDB\n+from mephisto.abstractions.databases.local_database import LocalMephistoDB\n \n db = LocalMephistoDB()\n", "issue": "Path changes in cleanup scripts\nIn `mephisto/scripts/mturk/cleanup.py`: broken imports line 11-15 with the change from `core` and `providers` into `abstraction` - can also submit a PR if that's easier!\n", "before_files": [{"content": "#!/usr/bin/env python3\n\n# Copyright (c) Facebook, Inc. and its affiliates.\n# This source code is licensed under the MIT license found in the\n# LICENSE file in the root directory of this source tree.\n\n\"\"\"\nUtility script that finds, expires, and disposes HITs that may not\nhave been taking down during a run that exited improperly.\n\"\"\"\nfrom mephisto.providers.mturk.mturk_utils import (\n get_outstanding_hits,\n expire_and_dispose_hits,\n)\nfrom mephisto.core.local_database import LocalMephistoDB\n\ndb = LocalMephistoDB()\n\nall_requesters = db.find_requesters(provider_type=\"mturk\")\nall_requesters += db.find_requesters(provider_type=\"mturk_sandbox\")\n\nprint(\"You have the following requesters available for mturk and mturk sandbox:\")\nr_names = [r.requester_name for r in all_requesters]\nprint(sorted(r_names))\n\nuse_name = input(\"Enter the name of the requester to clear HITs from:\\n>> \")\nwhile use_name not in r_names:\n use_name = input(\n f\"Sorry, {use_name} is not in the requester list. 
\"\n f\"The following are valid: {r_names}\\n\"\n f\"Select one:\\n>> \"\n )\n\nrequester = db.find_requesters(requester_name=use_name)[0]\nclient = requester._get_client(requester._requester_name)\n\noutstanding_hit_types = get_outstanding_hits(client)\nnum_hit_types = len(outstanding_hit_types.keys())\nsum_hits = sum([len(outstanding_hit_types[x]) for x in outstanding_hit_types.keys()])\n\nall_hits = []\nfor hit_type in outstanding_hit_types.keys():\n all_hits += outstanding_hit_types[hit_type]\n\nbroken_hits = [\n h\n for h in all_hits\n if h[\"NumberOfAssignmentsCompleted\"] == 0 and h[\"HITStatus\"] != \"Reviewable\"\n]\n\nprint(\n f\"The requester {use_name} has {num_hit_types} outstanding HIT \"\n f\"types, with {len(broken_hits)} suspected active or broken HITs.\\n\"\n \"This may include tasks that are still in-flight, but also \"\n \"tasks that have already expired but have not been disposed of yet.\"\n)\n\nrun_type = input(\"Would you like to cleanup by (t)itle, or just clean up (a)ll?\\n>> \")\nuse_hits = None\n\nwhile use_hits is None:\n if run_type.lower().startswith(\"t\"):\n use_hits = []\n for hit_type in outstanding_hit_types.keys():\n cur_title = outstanding_hit_types[hit_type][0][\"Title\"]\n print(f\"HIT TITLE: {cur_title}\")\n print(f\"HIT COUNT: {len(outstanding_hit_types[hit_type])}\")\n should_clear = input(\n \"Should we cleanup this hit type? 
(y)es for yes, anything else for no: \"\n \"\\n>> \"\n )\n if should_clear.lower().startswith(\"y\"):\n use_hits += outstanding_hit_types[hit_type]\n elif run_type.lower().startswith(\"a\"):\n use_hits = all_hits\n else:\n run_type = input(\"Options are (t)itle, or (a)ll:\\n>> \")\n\nprint(f\"Disposing {len(use_hits)} HITs.\")\nremaining_hits = expire_and_dispose_hits(client, use_hits)\n\nif len(remaining_hits) == 0:\n print(\"Disposed!\")\nelse:\n print(\n f\"After disposing, {len(remaining_hits)} could not be disposed.\\n\"\n f\"These may not have been reviewed yet, or are being actively worked on.\\n\"\n \"They have been expired though, so please try to dispose later.\"\n \"The first 20 dispose errors are added below:\"\n )\n print([h[\"dispose_exception\"] for h in remaining_hits[:20]])\n", "path": "mephisto/scripts/mturk/cleanup.py"}], "after_files": [{"content": "#!/usr/bin/env python3\n\n# Copyright (c) Facebook, Inc. and its affiliates.\n# This source code is licensed under the MIT license found in the\n# LICENSE file in the root directory of this source tree.\n\n\"\"\"\nUtility script that finds, expires, and disposes HITs that may not\nhave been taking down during a run that exited improperly.\n\"\"\"\nfrom mephisto.abstractions.providers.mturk.mturk_utils import (\n get_outstanding_hits,\n expire_and_dispose_hits,\n)\nfrom mephisto.abstractions.databases.local_database import LocalMephistoDB\n\ndb = LocalMephistoDB()\n\nall_requesters = db.find_requesters(provider_type=\"mturk\")\nall_requesters += db.find_requesters(provider_type=\"mturk_sandbox\")\n\nprint(\"You have the following requesters available for mturk and mturk sandbox:\")\nr_names = [r.requester_name for r in all_requesters]\nprint(sorted(r_names))\n\nuse_name = input(\"Enter the name of the requester to clear HITs from:\\n>> \")\nwhile use_name not in r_names:\n use_name = input(\n f\"Sorry, {use_name} is not in the requester list. 
\"\n f\"The following are valid: {r_names}\\n\"\n f\"Select one:\\n>> \"\n )\n\nrequester = db.find_requesters(requester_name=use_name)[0]\nclient = requester._get_client(requester._requester_name)\n\noutstanding_hit_types = get_outstanding_hits(client)\nnum_hit_types = len(outstanding_hit_types.keys())\nsum_hits = sum([len(outstanding_hit_types[x]) for x in outstanding_hit_types.keys()])\n\nall_hits = []\nfor hit_type in outstanding_hit_types.keys():\n all_hits += outstanding_hit_types[hit_type]\n\nbroken_hits = [\n h\n for h in all_hits\n if h[\"NumberOfAssignmentsCompleted\"] == 0 and h[\"HITStatus\"] != \"Reviewable\"\n]\n\nprint(\n f\"The requester {use_name} has {num_hit_types} outstanding HIT \"\n f\"types, with {len(broken_hits)} suspected active or broken HITs.\\n\"\n \"This may include tasks that are still in-flight, but also \"\n \"tasks that have already expired but have not been disposed of yet.\"\n)\n\nrun_type = input(\"Would you like to cleanup by (t)itle, or just clean up (a)ll?\\n>> \")\nuse_hits = None\n\nwhile use_hits is None:\n if run_type.lower().startswith(\"t\"):\n use_hits = []\n for hit_type in outstanding_hit_types.keys():\n cur_title = outstanding_hit_types[hit_type][0][\"Title\"]\n print(f\"HIT TITLE: {cur_title}\")\n print(f\"HIT COUNT: {len(outstanding_hit_types[hit_type])}\")\n should_clear = input(\n \"Should we cleanup this hit type? 
(y)es for yes, anything else for no: \"\n \"\\n>> \"\n )\n if should_clear.lower().startswith(\"y\"):\n use_hits += outstanding_hit_types[hit_type]\n elif run_type.lower().startswith(\"a\"):\n use_hits = all_hits\n else:\n run_type = input(\"Options are (t)itle, or (a)ll:\\n>> \")\n\nprint(f\"Disposing {len(use_hits)} HITs.\")\nremaining_hits = expire_and_dispose_hits(client, use_hits)\n\nif len(remaining_hits) == 0:\n print(\"Disposed!\")\nelse:\n print(\n f\"After disposing, {len(remaining_hits)} could not be disposed.\\n\"\n f\"These may not have been reviewed yet, or are being actively worked on.\\n\"\n \"They have been expired though, so please try to dispose later.\"\n \"The first 20 dispose errors are added below:\"\n )\n print([h[\"dispose_exception\"] for h in remaining_hits[:20]])\n", "path": "mephisto/scripts/mturk/cleanup.py"}]} | 1,329 | 192 |

gh_patches_debug_6927 | rasdani/github-patches | git_diff | Cloud-CV__EvalAI-726 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Add fields in ChallengePhaseSerializer

Please add fields `max_submissions_per_day` and `max_submissions` in the `Challenge Phase Serializer`. It is needed for the issue #704 .

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `apps/challenges/serializers.py`

Content:

```

1 from rest_framework import serializers
2
3 from hosts.serializers import ChallengeHostTeamSerializer
4
5 from .models import (
6     Challenge,
7     ChallengePhase,
8     ChallengePhaseSplit,
9     DatasetSplit,)
10
11
12 class ChallengeSerializer(serializers.ModelSerializer):
13
14     is_active = serializers.ReadOnlyField()
15
16     def __init__(self, *args, **kwargs):
17         super(ChallengeSerializer, self).__init__(*args, **kwargs)
18         context = kwargs.get('context')
19         if context and context.get('request').method != 'GET':
20             challenge_host_team = context.get('challenge_host_team')
21             kwargs['data']['creator'] = challenge_host_team.pk
22         else:
23             self.fields['creator'] = ChallengeHostTeamSerializer()
24
25     class Meta:
26         model = Challenge
27         fields = ('id', 'title', 'description', 'terms_and_conditions',
28                   'submission_guidelines', 'evaluation_details',
29                   'image', 'start_date', 'end_date', 'creator',
30                   'published', 'enable_forum', 'anonymous_leaderboard', 'is_active',)
31
32
33 class ChallengePhaseSerializer(serializers.ModelSerializer):
34
35     is_active = serializers.ReadOnlyField()
36
37     def __init__(self, *args, **kwargs):
38         super(ChallengePhaseSerializer, self).__init__(*args, **kwargs)
39         context = kwargs.get('context')
40         if context:
41             challenge = context.get('challenge')
42             kwargs['data']['challenge'] = challenge.pk
43
44     class Meta:
45         model = ChallengePhase