| problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.1k to 10.2k) | golden_diff (string, length 151 to 4.94k) | verification_info (string, length 582 to 21k) | num_tokens (int64, 271 to 2.05k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

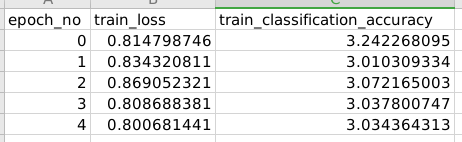

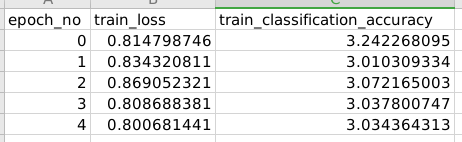

gh_patches_debug_9180 | rasdani/github-patches | git_diff | streamlit__streamlit-1722 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Top Half of Seaborn Chart Title Gets Cut Off

# Summary

When adding a title to a seaborn or perhaps MATPLOTLIB chart (have not tested with MATPLOTLIB yet) chart, the top half of the title is cut off or not displayed

```

# Steps to reproduce

import matplotlib.pyplot as plt

import seaborn as sns

import streamlit as st

mpg = sns.load_dataset("mpg")

option = st.sidebar.multiselect('Choose country of origin:', mpg.origin.unique()

sns.relplot(x="horsepower", y="mpg", hue="origin", size="weight", sizes=(40, 400), alpha=0.5, palette="muted", height=6, data=mpg.query("origin == @option"))

plt.title('MPG vs Weight by Country of Origin')

st.pyplot()

```

## Expected behavior:

seaborn chart title to be fully visible

## Actual behavior:

Top half of seaborn chart title is cut off

## Is this a regression?

That is, did this use to work the way you expected in the past? First time using streamlit, so not sure if worked in the past

yes? maybe?

# Debug info

- Streamlit version: 0.47.4

- Python version: 3.7

- Using Conda

- OS version: Windows 10

- Browser version: Chrome version 77

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `lib/streamlit/elements/pyplot.py`

Content:

```
1 # Copyright 2018-2020 Streamlit Inc.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 # http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 """Streamlit support for Matplotlib PyPlot charts."""
16
17 import io
18
19 try:
20     import matplotlib # noqa: F401
21     import matplotlib.pyplot as plt
22
23     plt.ioff()
24 except ImportError:
25     raise ImportError("pyplot() command requires matplotlib")
26
27 import streamlit.elements.image_proto as image_proto
28
29 from streamlit.logger import get_logger
30
31 LOGGER = get_logger(__name__)
32
33
34 def marshall(coordinates, new_element_proto, fig=None, clear_figure=True, **kwargs):
35     """Construct a matplotlib.pyplot figure.
36
37     See DeltaGenerator.vega_lite_chart for docs.
38     """
39     # You can call .savefig() on a Figure object or directly on the pyplot
40     # module, in which case you're doing it to the latest Figure.
41     if not fig:
42         if clear_figure is None:
43             clear_figure = True
44         fig = plt
45
46     # Normally, dpi is set to 'figure', and the figure's dpi is set to 100.
47     # So here we pick double of that to make things look good in a high
48     # DPI display.
49     options = {"dpi": 200, "format": "png"}
50
51     # If some of the options are passed in from kwargs then replace
52     # the values in options with the ones from kwargs
53     options = {a: kwargs.get(a, b) for a, b in options.items()}
54     # Merge options back into kwargs.
55     kwargs.update(options)
56
57     image = io.BytesIO()
58     fig.savefig(image, **kwargs)
59     image_proto.marshall_images(
60         coordinates,
61         image,
62         None,
63         -2,
64         new_element_proto.imgs,
65         False,
66         channels="RGB",
67         format="PNG",
68     )
69
70     # Clear the figure after rendering it. This means that subsequent
71     # plt calls will be starting fresh.
72     if clear_figure:
73         fig.clf()
74
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/lib/streamlit/elements/pyplot.py b/lib/streamlit/elements/pyplot.py

--- a/lib/streamlit/elements/pyplot.py

+++ b/lib/streamlit/elements/pyplot.py

@@ -46,7 +46,7 @@

# Normally, dpi is set to 'figure', and the figure's dpi is set to 100.

# So here we pick double of that to make things look good in a high

# DPI display.

- options = {"dpi": 200, "format": "png"}

+ options = {"bbox_inches": "tight", "dpi": 200, "format": "png"}

# If some of the options are passed in from kwargs then replace

# the values in options with the ones from kwargs

| {"golden_diff": "diff --git a/lib/streamlit/elements/pyplot.py b/lib/streamlit/elements/pyplot.py\n--- a/lib/streamlit/elements/pyplot.py\n+++ b/lib/streamlit/elements/pyplot.py\n@@ -46,7 +46,7 @@\n # Normally, dpi is set to 'figure', and the figure's dpi is set to 100.\n # So here we pick double of that to make things look good in a high\n # DPI display.\n- options = {\"dpi\": 200, \"format\": \"png\"}\n+ options = {\"bbox_inches\": \"tight\", \"dpi\": 200, \"format\": \"png\"}\n \n # If some of the options are passed in from kwargs then replace\n # the values in options with the ones from kwargs\n", "issue": "Top Half of Seaborn Chart Title Gets Cut Off\n# Summary\r\nWhen adding a title to a seaborn or perhaps MATPLOTLIB chart (have not tested with MATPLOTLIB yet) chart, the top half of the title is cut off or not displayed\r\n\r\n```\r\n# Steps to reproduce\r\nimport matplotlib.pyplot as plt\r\nimport seaborn as sns\r\nimport streamlit as st\r\n\r\nmpg = sns.load_dataset(\"mpg\")\r\noption = st.sidebar.multiselect('Choose country of origin:', mpg.origin.unique()\r\nsns.relplot(x=\"horsepower\", y=\"mpg\", hue=\"origin\", size=\"weight\", sizes=(40, 400), alpha=0.5, palette=\"muted\", height=6, data=mpg.query(\"origin == @option\"))\r\nplt.title('MPG vs Weight by Country of Origin')\r\nst.pyplot()\r\n```\r\n\r\n## Expected behavior:\r\nseaborn chart title to be fully visible\r\n\r\n## Actual behavior:\r\nTop half of seaborn chart title is cut off\r\n\r\n## Is this a regression?\r\nThat is, did this use to work the way you expected in the past? First time using streamlit, so not sure if worked in the past\r\nyes? 
maybe?\r\n\r\n# Debug info\r\n- Streamlit version: 0.47.4\r\n- Python version: 3.7\r\n- Using Conda\r\n- OS version: Windows 10\r\n- Browser version: Chrome version 77\r\n\n", "before_files": [{"content": "# Copyright 2018-2020 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Streamlit support for Matplotlib PyPlot charts.\"\"\"\n\nimport io\n\ntry:\n import matplotlib # noqa: F401\n import matplotlib.pyplot as plt\n\n plt.ioff()\nexcept ImportError:\n raise ImportError(\"pyplot() command requires matplotlib\")\n\nimport streamlit.elements.image_proto as image_proto\n\nfrom streamlit.logger import get_logger\n\nLOGGER = get_logger(__name__)\n\n\ndef marshall(coordinates, new_element_proto, fig=None, clear_figure=True, **kwargs):\n \"\"\"Construct a matplotlib.pyplot figure.\n\n See DeltaGenerator.vega_lite_chart for docs.\n \"\"\"\n # You can call .savefig() on a Figure object or directly on the pyplot\n # module, in which case you're doing it to the latest Figure.\n if not fig:\n if clear_figure is None:\n clear_figure = True\n fig = plt\n\n # Normally, dpi is set to 'figure', and the figure's dpi is set to 100.\n # So here we pick double of that to make things look good in a high\n # DPI display.\n options = {\"dpi\": 200, \"format\": \"png\"}\n\n # If some of the options are passed in from kwargs then replace\n # the values in options with the ones from kwargs\n options = {a: kwargs.get(a, b) for a, b in options.items()}\n # Merge options 
back into kwargs.\n kwargs.update(options)\n\n image = io.BytesIO()\n fig.savefig(image, **kwargs)\n image_proto.marshall_images(\n coordinates,\n image,\n None,\n -2,\n new_element_proto.imgs,\n False,\n channels=\"RGB\",\n format=\"PNG\",\n )\n\n # Clear the figure after rendering it. This means that subsequent\n # plt calls will be starting fresh.\n if clear_figure:\n fig.clf()\n", "path": "lib/streamlit/elements/pyplot.py"}], "after_files": [{"content": "# Copyright 2018-2020 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Streamlit support for Matplotlib PyPlot charts.\"\"\"\n\nimport io\n\ntry:\n import matplotlib # noqa: F401\n import matplotlib.pyplot as plt\n\n plt.ioff()\nexcept ImportError:\n raise ImportError(\"pyplot() command requires matplotlib\")\n\nimport streamlit.elements.image_proto as image_proto\n\nfrom streamlit.logger import get_logger\n\nLOGGER = get_logger(__name__)\n\n\ndef marshall(coordinates, new_element_proto, fig=None, clear_figure=True, **kwargs):\n \"\"\"Construct a matplotlib.pyplot figure.\n\n See DeltaGenerator.vega_lite_chart for docs.\n \"\"\"\n # You can call .savefig() on a Figure object or directly on the pyplot\n # module, in which case you're doing it to the latest Figure.\n if not fig:\n if clear_figure is None:\n clear_figure = True\n fig = plt\n\n # Normally, dpi is set to 'figure', and the figure's dpi is set to 100.\n # So here we pick double of that to make things look good in a high\n # DPI 
display.\n options = {\"bbox_inches\": \"tight\", \"dpi\": 200, \"format\": \"png\"}\n\n # If some of the options are passed in from kwargs then replace\n # the values in options with the ones from kwargs\n options = {a: kwargs.get(a, b) for a, b in options.items()}\n # Merge options back into kwargs.\n kwargs.update(options)\n\n image = io.BytesIO()\n fig.savefig(image, **kwargs)\n image_proto.marshall_images(\n coordinates,\n image,\n None,\n -2,\n new_element_proto.imgs,\n False,\n channels=\"RGB\",\n format=\"PNG\",\n )\n\n # Clear the figure after rendering it. This means that subsequent\n # plt calls will be starting fresh.\n if clear_figure:\n fig.clf()\n", "path": "lib/streamlit/elements/pyplot.py"}]} | 1,246 | 174 |
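
The one-line change in the golden diff above relies on how `marshall` merges its defaults with caller-supplied keyword arguments. The following is a minimal sketch of that merge in isolation, not Streamlit's actual API: the helper name `merge_savefig_options` is hypothetical, and only the two dictionary statements are taken from the file shown in the prompt.

```python
def merge_savefig_options(**kwargs):
    """Hypothetical helper mirroring the option merge inside marshall()."""
    # Defaults as they appear after the patch; "bbox_inches" is the new key.
    options = {"bbox_inches": "tight", "dpi": 200, "format": "png"}

    # A value passed by the caller wins over the default for the same key.
    options = {a: kwargs.get(a, b) for a, b in options.items()}

    # Merge the resolved options back, keeping any extra caller kwargs.
    kwargs.update(options)
    return kwargs


if __name__ == "__main__":
    print(merge_savefig_options())
    print(merge_savefig_options(dpi=72, facecolor="white"))
```

Under this reading, the fix changes only the default: a caller who passes their own `bbox_inches` value still overrides it, and unrelated keyword arguments pass through untouched.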

gh_patches_debug_7479 | rasdani/github-patches | git_diff | ytdl-org__youtube-dl-4389 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

NBA URLs FAIL without INDEX.HTML

the NBA extractor does not work if URL does not explicitly end with index.html (which appears to be the default)

URL: http://www.nba.com/video/games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/

C:>youtube-dl -v http://www.nba.com/video/games/hornets/2014/12/05/0021400276-n

yk-cha-play5.nba/

[debug] System config: []

[debug] User config: []

[debug] Command-line args: ['-v', 'http://www.nba.com/video/games/hornets/2014/1

2/05/0021400276-nyk-cha-play5.nba/']

[debug] Encodings: locale cp1252, fs mbcs, out cp850, pref cp1252

[debug] youtube-dl version 2014.12.06.1

[debug] Python version 2.7.8 - Windows-7-6.1.7601-SP1

[debug] exe versions: ffmpeg N-40824-

[debug] Proxy map: {}

[NBA] /games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/: Downloading webpa

ge

[debug] Invoking downloader on u'http://ht-mobile.cdn.turner.com/nba/big/games/h

ornets/2014/12/05/0021400276-nyk-cha-play5.nba/_nba_1280x720.mp4'

ERROR: unable to download video data: HTTP Error 404: Not Found

Traceback (most recent call last):

File "youtube_dl\YoutubeDL.pyo", line 1091, in process_info

File "youtube_dl\YoutubeDL.pyo", line 1067, in dl

File "youtube_dl\downloader\common.pyo", line 294, in download

File "youtube_dl\downloader\http.pyo", line 66, in real_download

File "youtube_dl\YoutubeDL.pyo", line 1325, in urlopen

File "urllib2.pyo", line 410, in open

File "urllib2.pyo", line 523, in http_response

File "urllib2.pyo", line 448, in error

File "urllib2.pyo", line 382, in _call_chain

File "urllib2.pyo", line 531, in http_error_default

HTTPError: HTTP Error 404: Not Found

(same vid but with index.html)

URL: http://www.nba.com/video/games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/index.html

C:>youtube-dl -v http://www.nba.com/video/games/hornets/2014/12/05/0021400276-n

yk-cha-play5.nba/index.html

[debug] System config: []

[debug] User config: []

[debug] Command-line args: ['-v', 'http://www.nba.com/video/games/hornets/2014/1

2/05/0021400276-nyk-cha-play5.nba/index.html']

[debug] Encodings: locale cp1252, fs mbcs, out cp850, pref cp1252

[debug] youtube-dl version 2014.12.06.1

[debug] Python version 2.7.8 - Windows-7-6.1.7601-SP1

[debug] exe versions: ffmpeg N-40824-

[debug] Proxy map: {}

[NBA] /games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba: Downloading webpag

e

[debug] Invoking downloader on u'http://ht-mobile.cdn.turner.com/nba/big/games/h

ornets/2014/12/05/0021400276-nyk-cha-play5.nba_nba_1280x720.mp4'

[download] Destination: Walker From Behind-0021400276-nyk-cha-play5.nba.mp4

[download] 100% of 5.76MiB in 00:04

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `youtube_dl/extractor/nba.py`

Content:

```
1 from __future__ import unicode_literals
2
3 import re
4
5 from .common import InfoExtractor
6 from ..utils import (
7     remove_end,
8     parse_duration,
9 )
10
11
12 class NBAIE(InfoExtractor):
13     _VALID_URL = r'https?://(?:watch\.|www\.)?nba\.com/(?:nba/)?video(?P<id>/[^?]*?)(?:/index\.html)?(?:\?.*)?$'
14     _TEST = {
15         'url': 'http://www.nba.com/video/games/nets/2012/12/04/0021200253-okc-bkn-recap.nba/index.html',
16         'md5': 'c0edcfc37607344e2ff8f13c378c88a4',
17         'info_dict': {
18             'id': '0021200253-okc-bkn-recap.nba',
19             'ext': 'mp4',
20             'title': 'Thunder vs. Nets',
21             'description': 'Kevin Durant scores 32 points and dishes out six assists as the Thunder beat the Nets in Brooklyn.',
22             'duration': 181,
23         },
24     }
25
26     def _real_extract(self, url):
27         mobj = re.match(self._VALID_URL, url)
28         video_id = mobj.group('id')
29
30         webpage = self._download_webpage(url, video_id)
31
32         video_url = 'http://ht-mobile.cdn.turner.com/nba/big' + video_id + '_nba_1280x720.mp4'
33
34         shortened_video_id = video_id.rpartition('/')[2]
35         title = remove_end(
36             self._og_search_title(webpage, default=shortened_video_id), ' : NBA.com')
37
38         description = self._og_search_description(webpage)
39         duration = parse_duration(
40             self._html_search_meta('duration', webpage, 'duration', fatal=False))
41
42         return {
43             'id': shortened_video_id,
44             'url': video_url,
45             'title': title,
46             'description': description,
47             'duration': duration,
48         }
49
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/youtube_dl/extractor/nba.py b/youtube_dl/extractor/nba.py

--- a/youtube_dl/extractor/nba.py

+++ b/youtube_dl/extractor/nba.py

@@ -10,7 +10,7 @@

class NBAIE(InfoExtractor):

- _VALID_URL = r'https?://(?:watch\.|www\.)?nba\.com/(?:nba/)?video(?P<id>/[^?]*?)(?:/index\.html)?(?:\?.*)?$'

+ _VALID_URL = r'https?://(?:watch\.|www\.)?nba\.com/(?:nba/)?video(?P<id>/[^?]*?)/?(?:/index\.html)?(?:\?.*)?$'

_TEST = {

'url': 'http://www.nba.com/video/games/nets/2012/12/04/0021200253-okc-bkn-recap.nba/index.html',

'md5': 'c0edcfc37607344e2ff8f13c378c88a4',

| {"golden_diff": "diff --git a/youtube_dl/extractor/nba.py b/youtube_dl/extractor/nba.py\n--- a/youtube_dl/extractor/nba.py\n+++ b/youtube_dl/extractor/nba.py\n@@ -10,7 +10,7 @@\n \n \n class NBAIE(InfoExtractor):\n- _VALID_URL = r'https?://(?:watch\\.|www\\.)?nba\\.com/(?:nba/)?video(?P<id>/[^?]*?)(?:/index\\.html)?(?:\\?.*)?$'\n+ _VALID_URL = r'https?://(?:watch\\.|www\\.)?nba\\.com/(?:nba/)?video(?P<id>/[^?]*?)/?(?:/index\\.html)?(?:\\?.*)?$'\n _TEST = {\n 'url': 'http://www.nba.com/video/games/nets/2012/12/04/0021200253-okc-bkn-recap.nba/index.html',\n 'md5': 'c0edcfc37607344e2ff8f13c378c88a4',\n", "issue": "NBA URLs FAIL without INDEX.HTML\nthe NBA extractor does not work if URL does not explicitly end with index.html (which appears to be the default)\n\nURL: http://www.nba.com/video/games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/\n\nC:>youtube-dl -v http://www.nba.com/video/games/hornets/2014/12/05/0021400276-n\nyk-cha-play5.nba/\n[debug] System config: []\n[debug] User config: []\n[debug] Command-line args: ['-v', 'http://www.nba.com/video/games/hornets/2014/1\n2/05/0021400276-nyk-cha-play5.nba/']\n[debug] Encodings: locale cp1252, fs mbcs, out cp850, pref cp1252\n[debug] youtube-dl version 2014.12.06.1\n[debug] Python version 2.7.8 - Windows-7-6.1.7601-SP1\n[debug] exe versions: ffmpeg N-40824-\n[debug] Proxy map: {}\n[NBA] /games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/: Downloading webpa\nge\n[debug] Invoking downloader on u'http://ht-mobile.cdn.turner.com/nba/big/games/h\nornets/2014/12/05/0021400276-nyk-cha-play5.nba/_nba_1280x720.mp4'\nERROR: unable to download video data: HTTP Error 404: Not Found\nTraceback (most recent call last):\n File \"youtube_dl\\YoutubeDL.pyo\", line 1091, in process_info\n File \"youtube_dl\\YoutubeDL.pyo\", line 1067, in dl\n File \"youtube_dl\\downloader\\common.pyo\", line 294, in download\n File \"youtube_dl\\downloader\\http.pyo\", line 66, in real_download\n File \"youtube_dl\\YoutubeDL.pyo\", line 1325, 
in urlopen\n File \"urllib2.pyo\", line 410, in open\n File \"urllib2.pyo\", line 523, in http_response\n File \"urllib2.pyo\", line 448, in error\n File \"urllib2.pyo\", line 382, in _call_chain\n File \"urllib2.pyo\", line 531, in http_error_default\nHTTPError: HTTP Error 404: Not Found\n\n(same vid but with index.html)\nURL: http://www.nba.com/video/games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/index.html\n\nC:>youtube-dl -v http://www.nba.com/video/games/hornets/2014/12/05/0021400276-n\nyk-cha-play5.nba/index.html\n[debug] System config: []\n[debug] User config: []\n[debug] Command-line args: ['-v', 'http://www.nba.com/video/games/hornets/2014/1\n2/05/0021400276-nyk-cha-play5.nba/index.html']\n[debug] Encodings: locale cp1252, fs mbcs, out cp850, pref cp1252\n[debug] youtube-dl version 2014.12.06.1\n[debug] Python version 2.7.8 - Windows-7-6.1.7601-SP1\n[debug] exe versions: ffmpeg N-40824-\n[debug] Proxy map: {}\n[NBA] /games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba: Downloading webpag\ne\n[debug] Invoking downloader on u'http://ht-mobile.cdn.turner.com/nba/big/games/h\nornets/2014/12/05/0021400276-nyk-cha-play5.nba_nba_1280x720.mp4'\n[download] Destination: Walker From Behind-0021400276-nyk-cha-play5.nba.mp4\n[download] 100% of 5.76MiB in 00:04\n\n", "before_files": [{"content": "from __future__ import unicode_literals\n\nimport re\n\nfrom .common import InfoExtractor\nfrom ..utils import (\n remove_end,\n parse_duration,\n)\n\n\nclass NBAIE(InfoExtractor):\n _VALID_URL = r'https?://(?:watch\\.|www\\.)?nba\\.com/(?:nba/)?video(?P<id>/[^?]*?)(?:/index\\.html)?(?:\\?.*)?$'\n _TEST = {\n 'url': 'http://www.nba.com/video/games/nets/2012/12/04/0021200253-okc-bkn-recap.nba/index.html',\n 'md5': 'c0edcfc37607344e2ff8f13c378c88a4',\n 'info_dict': {\n 'id': '0021200253-okc-bkn-recap.nba',\n 'ext': 'mp4',\n 'title': 'Thunder vs. 
Nets',\n 'description': 'Kevin Durant scores 32 points and dishes out six assists as the Thunder beat the Nets in Brooklyn.',\n 'duration': 181,\n },\n }\n\n def _real_extract(self, url):\n mobj = re.match(self._VALID_URL, url)\n video_id = mobj.group('id')\n\n webpage = self._download_webpage(url, video_id)\n\n video_url = 'http://ht-mobile.cdn.turner.com/nba/big' + video_id + '_nba_1280x720.mp4'\n\n shortened_video_id = video_id.rpartition('/')[2]\n title = remove_end(\n self._og_search_title(webpage, default=shortened_video_id), ' : NBA.com')\n\n description = self._og_search_description(webpage)\n duration = parse_duration(\n self._html_search_meta('duration', webpage, 'duration', fatal=False))\n\n return {\n 'id': shortened_video_id,\n 'url': video_url,\n 'title': title,\n 'description': description,\n 'duration': duration,\n }\n", "path": "youtube_dl/extractor/nba.py"}], "after_files": [{"content": "from __future__ import unicode_literals\n\nimport re\n\nfrom .common import InfoExtractor\nfrom ..utils import (\n remove_end,\n parse_duration,\n)\n\n\nclass NBAIE(InfoExtractor):\n _VALID_URL = r'https?://(?:watch\\.|www\\.)?nba\\.com/(?:nba/)?video(?P<id>/[^?]*?)/?(?:/index\\.html)?(?:\\?.*)?$'\n _TEST = {\n 'url': 'http://www.nba.com/video/games/nets/2012/12/04/0021200253-okc-bkn-recap.nba/index.html',\n 'md5': 'c0edcfc37607344e2ff8f13c378c88a4',\n 'info_dict': {\n 'id': '0021200253-okc-bkn-recap.nba',\n 'ext': 'mp4',\n 'title': 'Thunder vs. 
Nets',\n 'description': 'Kevin Durant scores 32 points and dishes out six assists as the Thunder beat the Nets in Brooklyn.',\n 'duration': 181,\n },\n }\n\n def _real_extract(self, url):\n mobj = re.match(self._VALID_URL, url)\n video_id = mobj.group('id')\n\n webpage = self._download_webpage(url, video_id)\n\n video_url = 'http://ht-mobile.cdn.turner.com/nba/big' + video_id + '_nba_1280x720.mp4'\n\n shortened_video_id = video_id.rpartition('/')[2]\n title = remove_end(\n self._og_search_title(webpage, default=shortened_video_id), ' : NBA.com')\n\n description = self._og_search_description(webpage)\n duration = parse_duration(\n self._html_search_meta('duration', webpage, 'duration', fatal=False))\n\n return {\n 'id': shortened_video_id,\n 'url': video_url,\n 'title': title,\n 'description': description,\n 'duration': duration,\n }\n", "path": "youtube_dl/extractor/nba.py"}]} | 1,928 | 260 |
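
The effect of the extra `/?` in the golden diff above can be checked with the standard `re` module alone. This is a small sketch rather than part of youtube-dl: the two patterns and the two URLs are copied from the record, while the helper name `extract_id` and the constant names are assumptions made for illustration.

```python
import re

# Pattern before the patch (from the "before" file in the record).
OLD_VALID_URL = r'https?://(?:watch\.|www\.)?nba\.com/(?:nba/)?video(?P<id>/[^?]*?)(?:/index\.html)?(?:\?.*)?$'
# Pattern after the patch: an optional trailing slash is consumed outside the id group.
NEW_VALID_URL = r'https?://(?:watch\.|www\.)?nba\.com/(?:nba/)?video(?P<id>/[^?]*?)/?(?:/index\.html)?(?:\?.*)?$'

BARE_URL = 'http://www.nba.com/video/games/hornets/2014/12/05/0021400276-nyk-cha-play5.nba/'
INDEX_URL = BARE_URL + 'index.html'


def extract_id(pattern, url):
    """Return the 'id' group the same way _real_extract reads it."""
    return re.match(pattern, url).group('id')


if __name__ == "__main__":
    # With the old pattern the trailing slash ends up inside the id, which
    # then corrupts the CDN URL built from it (".nba/_nba_1280x720.mp4").
    print(extract_id(OLD_VALID_URL, BARE_URL))
    # With the new pattern both URL forms give the same id.
    print(extract_id(NEW_VALID_URL, BARE_URL))
    print(extract_id(NEW_VALID_URL, INDEX_URL))
```

This matches the log in the issue: the failing run reports an id ending in `.nba/`, while the run with `index.html` reports one ending in `.nba`.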

gh_patches_debug_8060 | rasdani/github-patches | git_diff | mkdocs__mkdocs-1318 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Broken links/css in readthedocs 404 page

The [404.html](https://github.com/mkdocs/mkdocs/blob/master/mkdocs/themes/readthedocs/404.html) added in 0.17.0 seems to have broken links and css ([failing CI build](https://travis-ci.org/opensciencegrid/docs/builds/290469999?utm_source=github_status&utm_medium=notification)). The links in the generated `404.html` file all start with a `docs/...` prefix but when I inspect the `site` dir after a `mkdocs build`, there is no `docs` directory.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mkdocs/commands/serve.py`

Content:

```
1 from __future__ import unicode_literals
2
3 import logging
4 import shutil
5 import tempfile
6
7 from os.path import isfile, join
8 from mkdocs.commands.build import build
9 from mkdocs.config import load_config
10
11 log = logging.getLogger(__name__)
12
13
14 def _get_handler(site_dir, StaticFileHandler):
15
16     from tornado.template import Loader
17
18     class WebHandler(StaticFileHandler):
19
20         def write_error(self, status_code, **kwargs):
21
22             if status_code in (404, 500):
23                 error_page = '{}.html'.format(status_code)
24                 if isfile(join(site_dir, error_page)):
25                     self.write(Loader(site_dir).load(error_page).generate())
26                 else:
27                     super(WebHandler, self).write_error(status_code, **kwargs)
28
29     return WebHandler
30
31
32 def _livereload(host, port, config, builder, site_dir):
33
34     # We are importing here for anyone that has issues with livereload. Even if
35     # this fails, the --no-livereload alternative should still work.
36     from livereload import Server
37     import livereload.handlers
38
39     class LiveReloadServer(Server):
40
41         def get_web_handlers(self, script):
42             handlers = super(LiveReloadServer, self).get_web_handlers(script)
43             # replace livereload handler
44             return [(handlers[0][0], _get_handler(site_dir, livereload.handlers.StaticFileHandler), handlers[0][2],)]
45
46     server = LiveReloadServer()
47
48     # Watch the documentation files, the config file and the theme files.
49     server.watch(config['docs_dir'], builder)
50     server.watch(config['config_file_path'], builder)
51
52     for d in config['theme'].dirs:
53         server.watch(d, builder)
54
55     # Run `serve` plugin events.
56     server = config['plugins'].run_event('serve', server, config=config)
57
58     server.serve(root=site_dir, host=host, port=port, restart_delay=0)
59
60
61 def _static_server(host, port, site_dir):
62
63     # Importing here to seperate the code paths from the --livereload
64     # alternative.
65     from tornado import ioloop
66     from tornado import web
67
68     application = web.Application([
69         (r"/(.*)", _get_handler(site_dir, web.StaticFileHandler), {
70             "path": site_dir,
71             "default_filename": "index.html"
72         }),
73     ])
74     application.listen(port=port, address=host)
75
76     log.info('Running at: http://%s:%s/', host, port)
77     log.info('Hold ctrl+c to quit.')
78     try:
79         ioloop.IOLoop.instance().start()
80     except KeyboardInterrupt:
81         log.info('Stopping server...')
82
83
84 def serve(config_file=None, dev_addr=None, strict=None, theme=None,
85           theme_dir=None, livereload='livereload'):
86     """
87     Start the MkDocs development server
88
89     By default it will serve the documentation on http://localhost:8000/ and
90     it will rebuild the documentation and refresh the page automatically
91     whenever a file is edited.
92     """
93
94     # Create a temporary build directory, and set some options to serve it
95     tempdir = tempfile.mkdtemp()
96
97     def builder():
98         log.info("Building documentation...")
99         config = load_config(
100             config_file=config_file,
101             dev_addr=dev_addr,
102             strict=strict,
103             theme=theme,
104             theme_dir=theme_dir
105         )
106         config['site_dir'] = tempdir
107         live_server = livereload in ['dirty', 'livereload']
108         dirty = livereload == 'dirty'
109         build(config, live_server=live_server, dirty=dirty)
110         return config
111
112     try:
113         # Perform the initial build
114         config = builder()
115
116         host, port = config['dev_addr']
117
118         if livereload in ['livereload', 'dirty']:
119             _livereload(host, port, config, builder, tempdir)
120         else:
121             _static_server(host, port, tempdir)
122     finally:
123         shutil.rmtree(tempdir)
124
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

| diff --git a/mkdocs/commands/serve.py b/mkdocs/commands/serve.py

--- a/mkdocs/commands/serve.py

+++ b/mkdocs/commands/serve.py

@@ -103,7 +103,10 @@

theme=theme,

theme_dir=theme_dir

)

+ # Override a few config settings after validation

config['site_dir'] = tempdir

+ config['site_url'] = 'http://{0}/'.format(config['dev_addr'])

+

live_server = livereload in ['dirty', 'livereload']

dirty = livereload == 'dirty'

build(config, live_server=live_server, dirty=dirty)

| {"golden_diff": "diff --git a/mkdocs/commands/serve.py b/mkdocs/commands/serve.py\n--- a/mkdocs/commands/serve.py\n+++ b/mkdocs/commands/serve.py\n@@ -103,7 +103,10 @@\n theme=theme,\n theme_dir=theme_dir\n )\n+ # Override a few config settings after validation\n config['site_dir'] = tempdir\n+ config['site_url'] = 'http://{0}/'.format(config['dev_addr'])\n+\n live_server = livereload in ['dirty', 'livereload']\n dirty = livereload == 'dirty'\n build(config, live_server=live_server, dirty=dirty)\n", "issue": "Broken links/css in readthedocs 404 page \nThe [404.html](https://github.com/mkdocs/mkdocs/blob/master/mkdocs/themes/readthedocs/404.html) added in 0.17.0 seems to have broken links and css ([failing CI build](https://travis-ci.org/opensciencegrid/docs/builds/290469999?utm_source=github_status&utm_medium=notification)). The links in the generated `404.html` file all start with a `docs/...` prefix but when I inspect the `site` dir after a `mkdocs build`, there is no `docs` directory.\n", "before_files": [{"content": "from __future__ import unicode_literals\n\nimport logging\nimport shutil\nimport tempfile\n\nfrom os.path import isfile, join\nfrom mkdocs.commands.build import build\nfrom mkdocs.config import load_config\n\nlog = logging.getLogger(__name__)\n\n\ndef _get_handler(site_dir, StaticFileHandler):\n\n from tornado.template import Loader\n\n class WebHandler(StaticFileHandler):\n\n def write_error(self, status_code, **kwargs):\n\n if status_code in (404, 500):\n error_page = '{}.html'.format(status_code)\n if isfile(join(site_dir, error_page)):\n self.write(Loader(site_dir).load(error_page).generate())\n else:\n super(WebHandler, self).write_error(status_code, **kwargs)\n\n return WebHandler\n\n\ndef _livereload(host, port, config, builder, site_dir):\n\n # We are importing here for anyone that has issues with livereload. 
Even if\n # this fails, the --no-livereload alternative should still work.\n from livereload import Server\n import livereload.handlers\n\n class LiveReloadServer(Server):\n\n def get_web_handlers(self, script):\n handlers = super(LiveReloadServer, self).get_web_handlers(script)\n # replace livereload handler\n return [(handlers[0][0], _get_handler(site_dir, livereload.handlers.StaticFileHandler), handlers[0][2],)]\n\n server = LiveReloadServer()\n\n # Watch the documentation files, the config file and the theme files.\n server.watch(config['docs_dir'], builder)\n server.watch(config['config_file_path'], builder)\n\n for d in config['theme'].dirs:\n server.watch(d, builder)\n\n # Run `serve` plugin events.\n server = config['plugins'].run_event('serve', server, config=config)\n\n server.serve(root=site_dir, host=host, port=port, restart_delay=0)\n\n\ndef _static_server(host, port, site_dir):\n\n # Importing here to seperate the code paths from the --livereload\n # alternative.\n from tornado import ioloop\n from tornado import web\n\n application = web.Application([\n (r\"/(.*)\", _get_handler(site_dir, web.StaticFileHandler), {\n \"path\": site_dir,\n \"default_filename\": \"index.html\"\n }),\n ])\n application.listen(port=port, address=host)\n\n log.info('Running at: http://%s:%s/', host, port)\n log.info('Hold ctrl+c to quit.')\n try:\n ioloop.IOLoop.instance().start()\n except KeyboardInterrupt:\n log.info('Stopping server...')\n\n\ndef serve(config_file=None, dev_addr=None, strict=None, theme=None,\n theme_dir=None, livereload='livereload'):\n \"\"\"\n Start the MkDocs development server\n\n By default it will serve the documentation on http://localhost:8000/ and\n it will rebuild the documentation and refresh the page automatically\n whenever a file is edited.\n \"\"\"\n\n # Create a temporary build directory, and set some options to serve it\n tempdir = tempfile.mkdtemp()\n\n def builder():\n log.info(\"Building documentation...\")\n config = load_config(\n 
config_file=config_file,\n dev_addr=dev_addr,\n strict=strict,\n theme=theme,\n theme_dir=theme_dir\n )\n config['site_dir'] = tempdir\n live_server = livereload in ['dirty', 'livereload']\n dirty = livereload == 'dirty'\n build(config, live_server=live_server, dirty=dirty)\n return config\n\n try:\n # Perform the initial build\n config = builder()\n\n host, port = config['dev_addr']\n\n if livereload in ['livereload', 'dirty']:\n _livereload(host, port, config, builder, tempdir)\n else:\n _static_server(host, port, tempdir)\n finally:\n shutil.rmtree(tempdir)\n", "path": "mkdocs/commands/serve.py"}], "after_files": [{"content": "from __future__ import unicode_literals\n\nimport logging\nimport shutil\nimport tempfile\n\nfrom os.path import isfile, join\nfrom mkdocs.commands.build import build\nfrom mkdocs.config import load_config\n\nlog = logging.getLogger(__name__)\n\n\ndef _get_handler(site_dir, StaticFileHandler):\n\n from tornado.template import Loader\n\n class WebHandler(StaticFileHandler):\n\n def write_error(self, status_code, **kwargs):\n\n if status_code in (404, 500):\n error_page = '{}.html'.format(status_code)\n if isfile(join(site_dir, error_page)):\n self.write(Loader(site_dir).load(error_page).generate())\n else:\n super(WebHandler, self).write_error(status_code, **kwargs)\n\n return WebHandler\n\n\ndef _livereload(host, port, config, builder, site_dir):\n\n # We are importing here for anyone that has issues with livereload. 
Even if\n # this fails, the --no-livereload alternative should still work.\n from livereload import Server\n import livereload.handlers\n\n class LiveReloadServer(Server):\n\n def get_web_handlers(self, script):\n handlers = super(LiveReloadServer, self).get_web_handlers(script)\n # replace livereload handler\n return [(handlers[0][0], _get_handler(site_dir, livereload.handlers.StaticFileHandler), handlers[0][2],)]\n\n server = LiveReloadServer()\n\n # Watch the documentation files, the config file and the theme files.\n server.watch(config['docs_dir'], builder)\n server.watch(config['config_file_path'], builder)\n\n for d in config['theme'].dirs:\n server.watch(d, builder)\n\n # Run `serve` plugin events.\n server = config['plugins'].run_event('serve', server, config=config)\n\n server.serve(root=site_dir, host=host, port=port, restart_delay=0)\n\n\ndef _static_server(host, port, site_dir):\n\n # Importing here to seperate the code paths from the --livereload\n # alternative.\n from tornado import ioloop\n from tornado import web\n\n application = web.Application([\n (r\"/(.*)\", _get_handler(site_dir, web.StaticFileHandler), {\n \"path\": site_dir,\n \"default_filename\": \"index.html\"\n }),\n ])\n application.listen(port=port, address=host)\n\n log.info('Running at: http://%s:%s/', host, port)\n log.info('Hold ctrl+c to quit.')\n try:\n ioloop.IOLoop.instance().start()\n except KeyboardInterrupt:\n log.info('Stopping server...')\n\n\ndef serve(config_file=None, dev_addr=None, strict=None, theme=None,\n theme_dir=None, livereload='livereload'):\n \"\"\"\n Start the MkDocs development server\n\n By default it will serve the documentation on http://localhost:8000/ and\n it will rebuild the documentation and refresh the page automatically\n whenever a file is edited.\n \"\"\"\n\n # Create a temporary build directory, and set some options to serve it\n tempdir = tempfile.mkdtemp()\n\n def builder():\n log.info(\"Building documentation...\")\n config = load_config(\n 
config_file=config_file,\n dev_addr=dev_addr,\n strict=strict,\n theme=theme,\n theme_dir=theme_dir\n )\n # Override a few config settings after validation\n config['site_dir'] = tempdir\n config['site_url'] = 'http://{0}/'.format(config['dev_addr'])\n\n live_server = livereload in ['dirty', 'livereload']\n dirty = livereload == 'dirty'\n build(config, live_server=live_server, dirty=dirty)\n return config\n\n try:\n # Perform the initial build\n config = builder()\n\n host, port = config['dev_addr']\n\n if livereload in ['livereload', 'dirty']:\n _livereload(host, port, config, builder, tempdir)\n else:\n _static_server(host, port, tempdir)\n finally:\n shutil.rmtree(tempdir)\n", "path": "mkdocs/commands/serve.py"}]} | 1,544 | 153 |

gh_patches_debug_1527 | rasdani/github-patches | git_diff | hydroshare__hydroshare-2401 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Discover page: search box does NOT obey SOLR syntax

The helpful text that suggests that SOLR syntax works in the search box has been wrong for over a year. It now tokenizes terms and is not compatible with SOLR syntax.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `hs_core/discovery_form.py`

Content:

```

1 from haystack.forms import FacetedSearchForm

2 from haystack.query import SQ, SearchQuerySet

3 from crispy_forms.layout import *

4 from crispy_forms.bootstrap import *

5 from django import forms

6

7 class DiscoveryForm(FacetedSearchForm):

8 NElat = forms.CharField(widget = forms.HiddenInput(), required=False)

9 NElng = forms.CharField(widget = forms.HiddenInput(), required=False)

10 SWlat = forms.CharField(widget = forms.HiddenInput(), required=False)

11 SWlng = forms.CharField(widget = forms.HiddenInput(), required=False)

12 start_date = forms.DateField(label='From Date', required=False)

13 end_date = forms.DateField(label='To Date', required=False)

14

15 def search(self):

16 if not self.cleaned_data.get('q'):

17 sqs = self.searchqueryset.filter(discoverable=True).filter(is_replaced_by=False)

18 else:

19 # This corrects for an failed match of complete words, as documented in issue #2308.

20 # The text__startswith=cdata matches stemmed words in documents with an unstemmed cdata.

21 # The text=cdata matches stemmed words after stemming cdata as well.

22 # The stem of "Industrial", according to the aggressive default stemmer, is "industri".

23 # Thus "Industrial" does not match "Industrial" in the document according to

24 # startswith, but does match according to text=cdata.

25 cdata = self.cleaned_data.get('q')

26 sqs = self.searchqueryset.filter(SQ(text__startswith=cdata)|SQ(text=cdata))\

27 .filter(discoverable=True)\

28 .filter(is_replaced_by=False)

29

30 geo_sq = SQ()

31 if self.cleaned_data['NElng'] and self.cleaned_data['SWlng']:

32 if float(self.cleaned_data['NElng']) > float(self.cleaned_data['SWlng']):

33 geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)

34 geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)

35 else:

36 geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)

37 geo_sq.add(SQ(coverage_east__lte=float(180)), SQ.OR)

38 geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)

39 geo_sq.add(SQ(coverage_east__gte=float(-180)), SQ.AND)

40

41 if self.cleaned_data['NElat'] and self.cleaned_data['SWlat']:

42 geo_sq.add(SQ(coverage_north__lte=float(self.cleaned_data['NElat'])), SQ.AND)

43 geo_sq.add(SQ(coverage_north__gte=float(self.cleaned_data['SWlat'])), SQ.AND)

44

45 if geo_sq:

46 sqs = sqs.filter(geo_sq)

47

48

49 # Check to see if a start_date was chosen.

50 if self.cleaned_data['start_date']:

51 sqs = sqs.filter(coverage_start_date__gte=self.cleaned_data['start_date'])

52

53 # Check to see if an end_date was chosen.

54 if self.cleaned_data['end_date']:

55 sqs = sqs.filter(coverage_end_date__lte=self.cleaned_data['end_date'])

56

57 author_sq = SQ()

58 subjects_sq = SQ()

59 resource_sq = SQ()

60 public_sq = SQ()

61 owner_sq = SQ()

62 discoverable_sq = SQ()

63 published_sq = SQ()

64 variable_sq = SQ()

65 sample_medium_sq = SQ()

66 units_name_sq = SQ()

67 # We need to process each facet to ensure that the field name and the

68 # value are quoted correctly and separately:

69

70 for facet in self.selected_facets:

71 if ":" not in facet:

72 continue

73

74 field, value = facet.split(":", 1)

75

76 if value:

77 if "creators" in field:

78 author_sq.add(SQ(creators=sqs.query.clean(value)), SQ.OR)

79

80 elif "subjects" in field:

81 subjects_sq.add(SQ(subjects=sqs.query.clean(value)), SQ.OR)

82

83 elif "resource_type" in field:

84 resource_sq.add(SQ(resource_type=sqs.query.clean(value)), SQ.OR)

85

86 elif "public" in field:

87 public_sq.add(SQ(public=sqs.query.clean(value)), SQ.OR)

88

89 elif "owners_names" in field:

90 owner_sq.add(SQ(owners_names=sqs.query.clean(value)), SQ.OR)

91

92 elif "discoverable" in field:

93 discoverable_sq.add(SQ(discoverable=sqs.query.clean(value)), SQ.OR)

94

95 elif "published" in field:

96 published_sq.add(SQ(published=sqs.query.clean(value)), SQ.OR)

97

98 elif 'variable_names' in field:

99 variable_sq.add(SQ(variable_names=sqs.query.clean(value)), SQ.OR)

100

101 elif 'sample_mediums' in field:

102 sample_medium_sq.add(SQ(sample_mediums=sqs.query.clean(value)), SQ.OR)

103

104 elif 'units_names' in field:

105 units_name_sq.add(SQ(units_names=sqs.query.clean(value)), SQ.OR)

106

107 else:

108 continue

109

110 if author_sq:

111 sqs = sqs.filter(author_sq)

112 if subjects_sq:

113 sqs = sqs.filter(subjects_sq)

114 if resource_sq:

115 sqs = sqs.filter(resource_sq)

116 if public_sq:

117 sqs = sqs.filter(public_sq)

118 if owner_sq:

119 sqs = sqs.filter(owner_sq)

120 if discoverable_sq:

121 sqs = sqs.filter(discoverable_sq)

122 if published_sq:

123 sqs = sqs.filter(published_sq)

124 if variable_sq:

125 sqs = sqs.filter(variable_sq)

126 if sample_medium_sq:

127 sqs = sqs.filter(sample_medium_sq)

128 if units_name_sq:

129 sqs = sqs.filter(units_name_sq)

130

131 return sqs

132

```
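
The longitude branch of `search()` in the listing above treats a bounding box whose north-east longitude is smaller than its south-west longitude as one that wraps around the antimeridian. A minimal, library-free sketch of that idea follows. The helper `lng_in_box` is hypothetical and is not part of HydroShare or Haystack, and the handling of equal bounds is a choice made for this sketch only.

```python
def lng_in_box(lng, sw_lng, ne_lng):
    """Return True if longitude `lng` lies inside the box [sw_lng, ne_lng].

    Hypothetical helper for illustration only. When ne_lng < sw_lng the box
    is assumed to wrap around the antimeridian (+/-180 degrees).
    """
    if ne_lng >= sw_lng:
        # Ordinary box: one contiguous interval. Equal bounds are treated
        # as a degenerate ordinary box (an assumption of this sketch).
        return sw_lng <= lng <= ne_lng
    # Wrapped box: the union of [sw_lng, 180] and [-180, ne_lng].
    return (sw_lng <= lng <= 180.0) or (-180.0 <= lng <= ne_lng)


if __name__ == "__main__":
    print(lng_in_box(10, 0, 20))        # ordinary box
    print(lng_in_box(-175, 170, -170))  # box crossing the antimeridian
```

Writing the wrapped case as an explicit union of two intervals keeps the grouping of the AND and OR conditions visible, which is the part that is easy to get wrong when conditions are appended one at a time.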

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/hs_core/discovery_form.py b/hs_core/discovery_form.py

--- a/hs_core/discovery_form.py

+++ b/hs_core/discovery_form.py

@@ -1,7 +1,5 @@

from haystack.forms import FacetedSearchForm

-from haystack.query import SQ, SearchQuerySet

-from crispy_forms.layout import *

-from crispy_forms.bootstrap import *

+from haystack.query import SQ

from django import forms

class DiscoveryForm(FacetedSearchForm):

| {"golden_diff": "diff --git a/hs_core/discovery_form.py b/hs_core/discovery_form.py\n--- a/hs_core/discovery_form.py\n+++ b/hs_core/discovery_form.py\n@@ -1,7 +1,5 @@\n from haystack.forms import FacetedSearchForm\n-from haystack.query import SQ, SearchQuerySet\n-from crispy_forms.layout import *\n-from crispy_forms.bootstrap import *\n+from haystack.query import SQ\n from django import forms\n \n class DiscoveryForm(FacetedSearchForm):\n", "issue": "Discover page: search box does NOT obey SOLR syntax\nThe helpful text that suggests that SOLR syntax works in the search box has been wrong for over a year. It now tokenizes terms and is not compatible with SOLR syntax. \n", "before_files": [{"content": "from haystack.forms import FacetedSearchForm\nfrom haystack.query import SQ, SearchQuerySet\nfrom crispy_forms.layout import *\nfrom crispy_forms.bootstrap import *\nfrom django import forms\n\nclass DiscoveryForm(FacetedSearchForm):\n NElat = forms.CharField(widget = forms.HiddenInput(), required=False)\n NElng = forms.CharField(widget = forms.HiddenInput(), required=False)\n SWlat = forms.CharField(widget = forms.HiddenInput(), required=False)\n SWlng = forms.CharField(widget = forms.HiddenInput(), required=False)\n start_date = forms.DateField(label='From Date', required=False)\n end_date = forms.DateField(label='To Date', required=False)\n\n def search(self):\n if not self.cleaned_data.get('q'):\n sqs = self.searchqueryset.filter(discoverable=True).filter(is_replaced_by=False)\n else:\n # This corrects for an failed match of complete words, as documented in issue #2308.\n # The text__startswith=cdata matches stemmed words in documents with an unstemmed cdata.\n # The text=cdata matches stemmed words after stemming cdata as well.\n # The stem of \"Industrial\", according to the aggressive default stemmer, is \"industri\".\n # Thus \"Industrial\" does not match \"Industrial\" in the document according to\n # startswith, but does match according to text=cdata.\n 
cdata = self.cleaned_data.get('q')\n sqs = self.searchqueryset.filter(SQ(text__startswith=cdata)|SQ(text=cdata))\\\n .filter(discoverable=True)\\\n .filter(is_replaced_by=False)\n\n geo_sq = SQ()\n if self.cleaned_data['NElng'] and self.cleaned_data['SWlng']:\n if float(self.cleaned_data['NElng']) > float(self.cleaned_data['SWlng']):\n geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)\n else:\n geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__lte=float(180)), SQ.OR)\n geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__gte=float(-180)), SQ.AND)\n\n if self.cleaned_data['NElat'] and self.cleaned_data['SWlat']:\n geo_sq.add(SQ(coverage_north__lte=float(self.cleaned_data['NElat'])), SQ.AND)\n geo_sq.add(SQ(coverage_north__gte=float(self.cleaned_data['SWlat'])), SQ.AND)\n\n if geo_sq:\n sqs = sqs.filter(geo_sq)\n\n\n # Check to see if a start_date was chosen.\n if self.cleaned_data['start_date']:\n sqs = sqs.filter(coverage_start_date__gte=self.cleaned_data['start_date'])\n\n # Check to see if an end_date was chosen.\n if self.cleaned_data['end_date']:\n sqs = sqs.filter(coverage_end_date__lte=self.cleaned_data['end_date'])\n\n author_sq = SQ()\n subjects_sq = SQ()\n resource_sq = SQ()\n public_sq = SQ()\n owner_sq = SQ()\n discoverable_sq = SQ()\n published_sq = SQ()\n variable_sq = SQ()\n sample_medium_sq = SQ()\n units_name_sq = SQ()\n # We need to process each facet to ensure that the field name and the\n # value are quoted correctly and separately:\n\n for facet in self.selected_facets:\n if \":\" not in facet:\n continue\n\n field, value = facet.split(\":\", 1)\n\n if value:\n if \"creators\" in field:\n author_sq.add(SQ(creators=sqs.query.clean(value)), SQ.OR)\n\n elif \"subjects\" in field:\n subjects_sq.add(SQ(subjects=sqs.query.clean(value)), 
SQ.OR)\n\n elif \"resource_type\" in field:\n resource_sq.add(SQ(resource_type=sqs.query.clean(value)), SQ.OR)\n\n elif \"public\" in field:\n public_sq.add(SQ(public=sqs.query.clean(value)), SQ.OR)\n\n elif \"owners_names\" in field:\n owner_sq.add(SQ(owners_names=sqs.query.clean(value)), SQ.OR)\n\n elif \"discoverable\" in field:\n discoverable_sq.add(SQ(discoverable=sqs.query.clean(value)), SQ.OR)\n\n elif \"published\" in field:\n published_sq.add(SQ(published=sqs.query.clean(value)), SQ.OR)\n\n elif 'variable_names' in field:\n variable_sq.add(SQ(variable_names=sqs.query.clean(value)), SQ.OR)\n\n elif 'sample_mediums' in field:\n sample_medium_sq.add(SQ(sample_mediums=sqs.query.clean(value)), SQ.OR)\n\n elif 'units_names' in field:\n units_name_sq.add(SQ(units_names=sqs.query.clean(value)), SQ.OR)\n\n else:\n continue\n\n if author_sq:\n sqs = sqs.filter(author_sq)\n if subjects_sq:\n sqs = sqs.filter(subjects_sq)\n if resource_sq:\n sqs = sqs.filter(resource_sq)\n if public_sq:\n sqs = sqs.filter(public_sq)\n if owner_sq:\n sqs = sqs.filter(owner_sq)\n if discoverable_sq:\n sqs = sqs.filter(discoverable_sq)\n if published_sq:\n sqs = sqs.filter(published_sq)\n if variable_sq:\n sqs = sqs.filter(variable_sq)\n if sample_medium_sq:\n sqs = sqs.filter(sample_medium_sq)\n if units_name_sq:\n sqs = sqs.filter(units_name_sq)\n\n return sqs\n", "path": "hs_core/discovery_form.py"}], "after_files": [{"content": "from haystack.forms import FacetedSearchForm\nfrom haystack.query import SQ\nfrom django import forms\n\nclass DiscoveryForm(FacetedSearchForm):\n NElat = forms.CharField(widget = forms.HiddenInput(), required=False)\n NElng = forms.CharField(widget = forms.HiddenInput(), required=False)\n SWlat = forms.CharField(widget = forms.HiddenInput(), required=False)\n SWlng = forms.CharField(widget = forms.HiddenInput(), required=False)\n start_date = forms.DateField(label='From Date', required=False)\n end_date = forms.DateField(label='To Date', required=False)\n\n 
def search(self):\n if not self.cleaned_data.get('q'):\n sqs = self.searchqueryset.filter(discoverable=True).filter(is_replaced_by=False)\n else:\n # This corrects for an failed match of complete words, as documented in issue #2308.\n # The text__startswith=cdata matches stemmed words in documents with an unstemmed cdata.\n # The text=cdata matches stemmed words after stemming cdata as well.\n # The stem of \"Industrial\", according to the aggressive default stemmer, is \"industri\".\n # Thus \"Industrial\" does not match \"Industrial\" in the document according to\n # startswith, but does match according to text=cdata.\n cdata = self.cleaned_data.get('q')\n sqs = self.searchqueryset.filter(SQ(text__startswith=cdata)|SQ(text=cdata))\\\n .filter(discoverable=True)\\\n .filter(is_replaced_by=False)\n\n geo_sq = SQ()\n if self.cleaned_data['NElng'] and self.cleaned_data['SWlng']:\n if float(self.cleaned_data['NElng']) > float(self.cleaned_data['SWlng']):\n geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)\n else:\n geo_sq.add(SQ(coverage_east__gte=float(self.cleaned_data['SWlng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__lte=float(180)), SQ.OR)\n geo_sq.add(SQ(coverage_east__lte=float(self.cleaned_data['NElng'])), SQ.AND)\n geo_sq.add(SQ(coverage_east__gte=float(-180)), SQ.AND)\n\n if self.cleaned_data['NElat'] and self.cleaned_data['SWlat']:\n geo_sq.add(SQ(coverage_north__lte=float(self.cleaned_data['NElat'])), SQ.AND)\n geo_sq.add(SQ(coverage_north__gte=float(self.cleaned_data['SWlat'])), SQ.AND)\n\n if geo_sq:\n sqs = sqs.filter(geo_sq)\n\n\n # Check to see if a start_date was chosen.\n if self.cleaned_data['start_date']:\n sqs = sqs.filter(coverage_start_date__gte=self.cleaned_data['start_date'])\n\n # Check to see if an end_date was chosen.\n if self.cleaned_data['end_date']:\n sqs = sqs.filter(coverage_end_date__lte=self.cleaned_data['end_date'])\n\n 
author_sq = SQ()\n subjects_sq = SQ()\n resource_sq = SQ()\n public_sq = SQ()\n owner_sq = SQ()\n discoverable_sq = SQ()\n published_sq = SQ()\n variable_sq = SQ()\n sample_medium_sq = SQ()\n units_name_sq = SQ()\n # We need to process each facet to ensure that the field name and the\n # value are quoted correctly and separately:\n\n for facet in self.selected_facets:\n if \":\" not in facet:\n continue\n\n field, value = facet.split(\":\", 1)\n\n if value:\n if \"creators\" in field:\n author_sq.add(SQ(creators=sqs.query.clean(value)), SQ.OR)\n\n elif \"subjects\" in field:\n subjects_sq.add(SQ(subjects=sqs.query.clean(value)), SQ.OR)\n\n elif \"resource_type\" in field:\n resource_sq.add(SQ(resource_type=sqs.query.clean(value)), SQ.OR)\n\n elif \"public\" in field:\n public_sq.add(SQ(public=sqs.query.clean(value)), SQ.OR)\n\n elif \"owners_names\" in field:\n owner_sq.add(SQ(owners_names=sqs.query.clean(value)), SQ.OR)\n\n elif \"discoverable\" in field:\n discoverable_sq.add(SQ(discoverable=sqs.query.clean(value)), SQ.OR)\n\n elif \"published\" in field:\n published_sq.add(SQ(published=sqs.query.clean(value)), SQ.OR)\n\n elif 'variable_names' in field:\n variable_sq.add(SQ(variable_names=sqs.query.clean(value)), SQ.OR)\n\n elif 'sample_mediums' in field:\n sample_medium_sq.add(SQ(sample_mediums=sqs.query.clean(value)), SQ.OR)\n\n elif 'units_names' in field:\n units_name_sq.add(SQ(units_names=sqs.query.clean(value)), SQ.OR)\n\n else:\n continue\n\n if author_sq:\n sqs = sqs.filter(author_sq)\n if subjects_sq:\n sqs = sqs.filter(subjects_sq)\n if resource_sq:\n sqs = sqs.filter(resource_sq)\n if public_sq:\n sqs = sqs.filter(public_sq)\n if owner_sq:\n sqs = sqs.filter(owner_sq)\n if discoverable_sq:\n sqs = sqs.filter(discoverable_sq)\n if published_sq:\n sqs = sqs.filter(published_sq)\n if variable_sq:\n sqs = sqs.filter(variable_sq)\n if sample_medium_sq:\n sqs = sqs.filter(sample_medium_sq)\n if units_name_sq:\n sqs = sqs.filter(units_name_sq)\n\n return 
sqs\n", "path": "hs_core/discovery_form.py"}]} | 1,870 | 102 |

gh_patches_debug_19832 | rasdani/github-patches | git_diff | GeotrekCE__Geotrek-admin-2461 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix the migration to 2.44.0

- [x] translation fields _xx are created with NOT NULL and therefore cannot be created

- [x] site geometries need to be converted to geometrycollection

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `geotrek/outdoor/migrations/0003_auto_20201214_1408.py`

Content:

```

1 # Generated by Django 3.1.4 on 2020-12-14 14:08

2

3 from django.conf import settings

4 import django.contrib.gis.db.models.fields

5 from django.db import migrations, models

6 import django.db.models.deletion

7

8

9 class Migration(migrations.Migration):

10

11 dependencies = [

12 ('outdoor', '0002_practice_sitepractice'),

13 ]

14

15 operations = [

16 migrations.AlterModelOptions(

17 name='site',

18 options={'ordering': ('name',), 'verbose_name': 'Outdoor site', 'verbose_name_plural': 'Outdoor sites'},

19 ),

20 migrations.AlterField(

21 model_name='site',

22 name='geom',

23 field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),

24 ),

25 migrations.AlterField(

26 model_name='sitepractice',

27 name='site',

28 field=models.ForeignKey(on_delete=django.db.models.deletion.CASCADE, related_name='site_practices', to='outdoor.site', verbose_name='Outdoor site'),

29 ),

30 ]

31

```
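
The issue asks for existing site geometries to be converted to geometry collections before the column type changes. In a PostGIS database that conversion is done by the database itself (PostGIS provides `ST_ForceCollection` for this). The sketch below only illustrates the idea on WKT strings. The helper `force_collection_wkt` is hypothetical, handles simple geometries as plain text, and does not attempt to reproduce how PostGIS treats multi-geometries.

```python
def force_collection_wkt(wkt):
    """Wrap a WKT geometry string in a GEOMETRYCOLLECTION.

    Hypothetical, text-only illustration: a geometry that is already a
    collection is returned unchanged, anything else is wrapped as the
    single member of a new collection.
    """
    wkt = wkt.strip()
    if wkt.upper().startswith("GEOMETRYCOLLECTION"):
        # Already a collection, nothing to do.
        return wkt
    return "GEOMETRYCOLLECTION({})".format(wkt)


if __name__ == "__main__":
    print(force_collection_wkt("POINT(1 2)"))
```

The point of the sketch is that the stored values have to change shape along with the column type; altering only the declared type would leave rows that no longer match it.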

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/geotrek/outdoor/migrations/0003_auto_20201214_1408.py b/geotrek/outdoor/migrations/0003_auto_20201214_1408.py

--- a/geotrek/outdoor/migrations/0003_auto_20201214_1408.py

+++ b/geotrek/outdoor/migrations/0003_auto_20201214_1408.py

@@ -17,10 +17,17 @@

name='site',

options={'ordering': ('name',), 'verbose_name': 'Outdoor site', 'verbose_name_plural': 'Outdoor sites'},

),

- migrations.AlterField(

- model_name='site',

- name='geom',

- field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),

+ migrations.SeparateDatabaseAndState(

+ database_operations=[

+ migrations.RunSQL('ALTER TABLE "outdoor_site" ALTER COLUMN "geom" TYPE geometry(GeometryCollection,2154) USING ST_ForceCollection(geom);')

+ ],

+ state_operations=[

+ migrations.AlterField(

+ model_name='site',

+ name='geom',

+ field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),

+ ),

+ ]

),

migrations.AlterField(

model_name='sitepractice',

| {"golden_diff": "diff --git a/geotrek/outdoor/migrations/0003_auto_20201214_1408.py b/geotrek/outdoor/migrations/0003_auto_20201214_1408.py\n--- a/geotrek/outdoor/migrations/0003_auto_20201214_1408.py\n+++ b/geotrek/outdoor/migrations/0003_auto_20201214_1408.py\n@@ -17,10 +17,17 @@\n name='site',\n options={'ordering': ('name',), 'verbose_name': 'Outdoor site', 'verbose_name_plural': 'Outdoor sites'},\n ),\n- migrations.AlterField(\n- model_name='site',\n- name='geom',\n- field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),\n+ migrations.SeparateDatabaseAndState(\n+ database_operations=[\n+ migrations.RunSQL('ALTER TABLE \"outdoor_site\" ALTER COLUMN \"geom\" TYPE geometry(GeometryCollection,2154) USING ST_ForceCollection(geom);')\n+ ],\n+ state_operations=[\n+ migrations.AlterField(\n+ model_name='site',\n+ name='geom',\n+ field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),\n+ ),\n+ ]\n ),\n migrations.AlterField(\n model_name='sitepractice',\n", "issue": "Fixer la migration vers la 2.44.0\n- [x] champs de traductions _xx cr\u00e9\u00e9s avec NOT NULL et donc impossible \u00e0 cr\u00e9er\r\n- [x] g\u00e9om\u00e9tries des sites \u00e0 transformer en geometrycollection\n", "before_files": [{"content": "# Generated by Django 3.1.4 on 2020-12-14 14:08\n\nfrom django.conf import settings\nimport django.contrib.gis.db.models.fields\nfrom django.db import migrations, models\nimport django.db.models.deletion\n\n\nclass Migration(migrations.Migration):\n\n dependencies = [\n ('outdoor', '0002_practice_sitepractice'),\n ]\n\n operations = [\n migrations.AlterModelOptions(\n name='site',\n options={'ordering': ('name',), 'verbose_name': 'Outdoor site', 'verbose_name_plural': 'Outdoor sites'},\n ),\n migrations.AlterField(\n model_name='site',\n name='geom',\n field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, 
verbose_name='Location'),\n ),\n migrations.AlterField(\n model_name='sitepractice',\n name='site',\n field=models.ForeignKey(on_delete=django.db.models.deletion.CASCADE, related_name='site_practices', to='outdoor.site', verbose_name='Outdoor site'),\n ),\n ]\n", "path": "geotrek/outdoor/migrations/0003_auto_20201214_1408.py"}], "after_files": [{"content": "# Generated by Django 3.1.4 on 2020-12-14 14:08\n\nfrom django.conf import settings\nimport django.contrib.gis.db.models.fields\nfrom django.db import migrations, models\nimport django.db.models.deletion\n\n\nclass Migration(migrations.Migration):\n\n dependencies = [\n ('outdoor', '0002_practice_sitepractice'),\n ]\n\n operations = [\n migrations.AlterModelOptions(\n name='site',\n options={'ordering': ('name',), 'verbose_name': 'Outdoor site', 'verbose_name_plural': 'Outdoor sites'},\n ),\n migrations.SeparateDatabaseAndState(\n database_operations=[\n migrations.RunSQL('ALTER TABLE \"outdoor_site\" ALTER COLUMN \"geom\" TYPE geometry(GeometryCollection,2154) USING ST_ForceCollection(geom);')\n ],\n state_operations=[\n migrations.AlterField(\n model_name='site',\n name='geom',\n field=django.contrib.gis.db.models.fields.GeometryCollectionField(srid=settings.SRID, verbose_name='Location'),\n ),\n ]\n ),\n migrations.AlterField(\n model_name='sitepractice',\n name='site',\n field=models.ForeignKey(on_delete=django.db.models.deletion.CASCADE, related_name='site_practices', to='outdoor.site', verbose_name='Outdoor site'),\n ),\n ]\n", "path": "geotrek/outdoor/migrations/0003_auto_20201214_1408.py"}]} | 617 | 341 |

gh_patches_debug_15166 | rasdani/github-patches | git_diff | feast-dev__feast-4025 | We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Deprecate PostgreSQLRegistryStore

Right now we have 2 ways to use postgres as a registry backend. The first is with scalable `SqlRegistry` that uses `sqlalchemy`, another is an older option of using `PostgreSQLRegistryStore` which keeps the whole proto in a single table. Since we are [recommending](https://docs.feast.dev/tutorials/using-scalable-registry) the scalable registry anyway, we should deprecate `PostgreSQLRegistryStore` and remove it soon after. Or maybe remove it directly? It's under contribs as of now.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `sdk/python/feast/infra/registry/contrib/postgres/postgres_registry_store.py`

Content:

```

1 from typing import Optional

2

3 import psycopg2

4 from psycopg2 import sql

5

6 from feast.infra.registry.registry_store import RegistryStore

7 from feast.infra.utils.postgres.connection_utils import _get_conn

8 from feast.infra.utils.postgres.postgres_config import PostgreSQLConfig

9 from feast.protos.feast.core.Registry_pb2 import Registry as RegistryProto

10 from feast.repo_config import RegistryConfig

11

12

13 class PostgresRegistryConfig(RegistryConfig):

14 host: str

15 port: int

16 database: str

17 db_schema: str

18 user: str

19 password: str

20 sslmode: Optional[str]

21 sslkey_path: Optional[str]

22 sslcert_path: Optional[str]

23 sslrootcert_path: Optional[str]

24

25

26 class PostgreSQLRegistryStore(RegistryStore):

27 def __init__(self, config: PostgresRegistryConfig, registry_path: str):

28 self.db_config = PostgreSQLConfig(

29 host=config.host,

30 port=config.port,

31 database=config.database,

32 db_schema=config.db_schema,

33 user=config.user,

34 password=config.password,

35 sslmode=getattr(config, "sslmode", None),

36 sslkey_path=getattr(config, "sslkey_path", None),

37 sslcert_path=getattr(config, "sslcert_path", None),

38 sslrootcert_path=getattr(config, "sslrootcert_path", None),

39 )

40 self.table_name = config.path

41 self.cache_ttl_seconds = config.cache_ttl_seconds

42

43 def get_registry_proto(self) -> RegistryProto:

44 registry_proto = RegistryProto()

45 try:

46 with _get_conn(self.db_config) as conn, conn.cursor() as cur:

47 cur.execute(

48 sql.SQL(

49 """

50 SELECT registry

51 FROM {}

52 WHERE version = (SELECT max(version) FROM {})

53 """

54 ).format(

55 sql.Identifier(self.table_name),

56 sql.Identifier(self.table_name),

57 )

58 )

59 row = cur.fetchone()

60 if row:

61 registry_proto = registry_proto.FromString(row[0])

62 except psycopg2.errors.UndefinedTable:

63 pass

64 return registry_proto

65

66 def update_registry_proto(self, registry_proto: RegistryProto):

67 """

68 Overwrites the current registry proto with the proto passed in. This method

69 writes to the registry path.

70

71 Args:

72 registry_proto: the new RegistryProto

73 """

74 schema_name = self.db_config.db_schema or self.db_config.user

75 with _get_conn(self.db_config) as conn, conn.cursor() as cur:

76 cur.execute(

77 """

78 SELECT schema_name

79 FROM information_schema.schemata

80 WHERE schema_name = %s

81 """,

82 (schema_name,),

83 )

84 schema_exists = cur.fetchone()

85 if not schema_exists:

86 cur.execute(

87 sql.SQL("CREATE SCHEMA IF NOT EXISTS {} AUTHORIZATION {}").format(

88 sql.Identifier(schema_name),

89 sql.Identifier(self.db_config.user),

90 ),

91 )

92

93 cur.execute(

94 sql.SQL(

95 """

96 CREATE TABLE IF NOT EXISTS {} (

97 version BIGSERIAL PRIMARY KEY,

98 registry BYTEA NOT NULL

99 );

100 """

101 ).format(sql.Identifier(self.table_name)),

102 )

103 # Do we want to keep track of the history or just keep the latest?

104 cur.execute(

105 sql.SQL(

106 """

107 INSERT INTO {} (registry)

108 VALUES (%s);

109 """

110 ).format(sql.Identifier(self.table_name)),

111 [registry_proto.SerializeToString()],

112 )

113

114 def teardown(self):

115 with _get_conn(self.db_config) as conn, conn.cursor() as cur:

116 cur.execute(

117 sql.SQL(

118 """

119 DROP TABLE IF EXISTS {};

120 """

121 ).format(sql.Identifier(self.table_name))

122 )

123

```
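
The issue proposes deprecating a class before removing it. One common way to do that in Python is to emit a `DeprecationWarning` from the constructor. The following is a minimal sketch under stated assumptions: `LegacyStore` and `create_and_capture` are hypothetical names used for illustration and are not Feast APIs.

```python
import warnings


class LegacyStore:
    """Hypothetical stand-in for a class that is being phased out."""

    def __init__(self):
        # stacklevel=2 attributes the warning to the code that
        # instantiated the class rather than to this constructor.
        warnings.warn(
            "LegacyStore is deprecated; use NewStore instead.",
            DeprecationWarning,
            stacklevel=2,
        )


def create_and_capture():
    """Instantiate LegacyStore and return the warnings it emitted."""
    with warnings.catch_warnings(record=True) as caught:
        # Make sure the warning is recorded regardless of active filters.
        warnings.simplefilter("always")
        LegacyStore()
    return caught


if __name__ == "__main__":
    for w in create_and_capture():
        print(w.category.__name__, w.message)
```

By default Python hides `DeprecationWarning` unless it is attributed to code running in `__main__`, so users may need `-W default` or a test runner that enables warnings in order to see it.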

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

| diff --git a/sdk/python/feast/infra/registry/contrib/postgres/postgres_registry_store.py b/sdk/python/feast/infra/registry/contrib/postgres/postgres_registry_store.py

--- a/sdk/python/feast/infra/registry/contrib/postgres/postgres_registry_store.py

+++ b/sdk/python/feast/infra/registry/contrib/postgres/postgres_registry_store.py

@@ -1,3 +1,4 @@

+import warnings

from typing import Optional

import psycopg2

@@ -37,6 +38,11 @@

sslcert_path=getattr(config, "sslcert_path", None),

sslrootcert_path=getattr(config, "sslrootcert_path", None),

)

+ warnings.warn(

+ "PostgreSQLRegistryStore is deprecated and will be removed in the future releases. Please use SqlRegistry instead.",

+ DeprecationWarning,

+ )

+

self.table_name = config.path

self.cache_ttl_seconds = config.cache_ttl_seconds